Cisco Software-Defined Access Solution Design Guide

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

This document is organized into the following chapters:

| Chapter |

Description |

| Introduction and benefits of SD-Access |

|

| Key components of the SD-Access solution |

|

| Control plane, data plane, policy plane, and management plane technologies |

|

| Fabrics, underlay networks, overlay networks, and shared services |

|

| LAN design principles, Layer 3 routed access, role considerations, and feature considerations |

|

| Site size reference models and topologies |

|

| Migration support and strategies |

|

| Additional references and resources |

Scope

This guide serves as a foundational technical reference for designing Cisco® Software-Defined Access (SD-Access), an intent-based networking architecture that delivers automated, secure, and scalable wired and wireless campus networks. Use this guide to follow best practice recommendations when designing and deploying the solution. In addition to outlining the core architectural components, it highlights key design considerations and migration approaches for existing enterprise LAN environments.

Key focus areas include:

● Solution overview and components: Review of the SD-Access solution building blocks (fabric overlay/underlay, control plane, data plane, policy plane, management plane, and more).

● Design principles and considerations: Core design guidelines across underlay, overlay, segmentation (macro and micro), shared-services integration, wireless, external connectivity, latency, and scale metrics.

● Site reference models: Illustrative models for Fabric in a Box and for small, medium, and large fabric sites.

● Migration and operational readiness: Deployment models and strategies to migrate an existing network to SD-Access.

● Feature scope: Cisco Catalyst™ Center Release 2.3.7.10 and the SD-Access LISP Publisher/Subscriber (Pub/Sub) control plane architecture.

Intended audience

This design guide is intended for stakeholders involved in planning or designing an SD-Access solution—including network architects, implementation engineers, operations teams, and partner organizations—as it outlines the key design concepts needed to build a strong SD-Access foundation. The guide is also aimed at decision-makers who must understand the primary design dimensions and how they affect the overall solution.

Cisco Software-Defined Access is driving the evolution from traditional campus network designs to networks that directly implement the intent of an organization. Running on Cisco Catalyst™ Center hardware, SD-Access is a software application that is used to automate wired and wireless campus networks.

Fabric technology, an integral part of SD-Access, provides wired and wireless campus networks with programmable overlays and easy-to-deploy network virtualization, permitting a physical network to host one or more logical networks to meet the design intent. In addition to network virtualization, fabric technology in the campus network enhances control of communications, providing software-defined segmentation and policy enforcement based on user identity and group membership. Software-defined segmentation is seamlessly integrated using Cisco TrustSec® technology, providing micro-segmentation for groups within a virtual network using Security Group Tags (SGTs). Using Cisco Catalyst Center to automate the creation of virtual networks with integrated security and segmentation reduces operational expenses and reduces risk. Network performance, network insights, and telemetry are provided through assurance and analytics capabilities.

This design guide provides an overview of the requirements driving the evolution of campus network designs, followed by a discussion of the latest technologies and designs that are available for building an SD-Access network to address those requirements.

Benefits of Cisco SD-Access

The following are the key requirements driving the evolution of campus networks to SD-Access:

● Simplified deployment and automation: Network device configuration and management through a centralized controller using open APIs allows for very fast, lower-risk deployment of network devices and services.

● Consistent wired and wireless security capabilities: Security capabilities should be consistent, whether a user is connecting to a wired Ethernet port or connecting over the wireless LAN (WLAN).

● Wireless bandwidth at scale: Placing wireless traffic directly into the switched data plane at the network edge offers wireless endpoints the uplink bandwidth of each access switch. Whether there are 10 or 1000 access switches, this translates to wireless bandwidth that is an order of magnitude higher than the bandwidth available in traditional centrally switched traffic.

● Identity services: Identifying users and devices connecting to the network provides the contextual information required to implement security policies for access control, network segmentation by using scalable group membership, and mapping of devices into virtual networks.

● Network assurance and analytics: The deployment should proactively predict network-related and security-related risks by using telemetry to improve the performance of the network, devices, and applications, even with encrypted traffic.

This chapter is organized into the following sections:

| Chapter |

Section |

| SD-Access solution components |

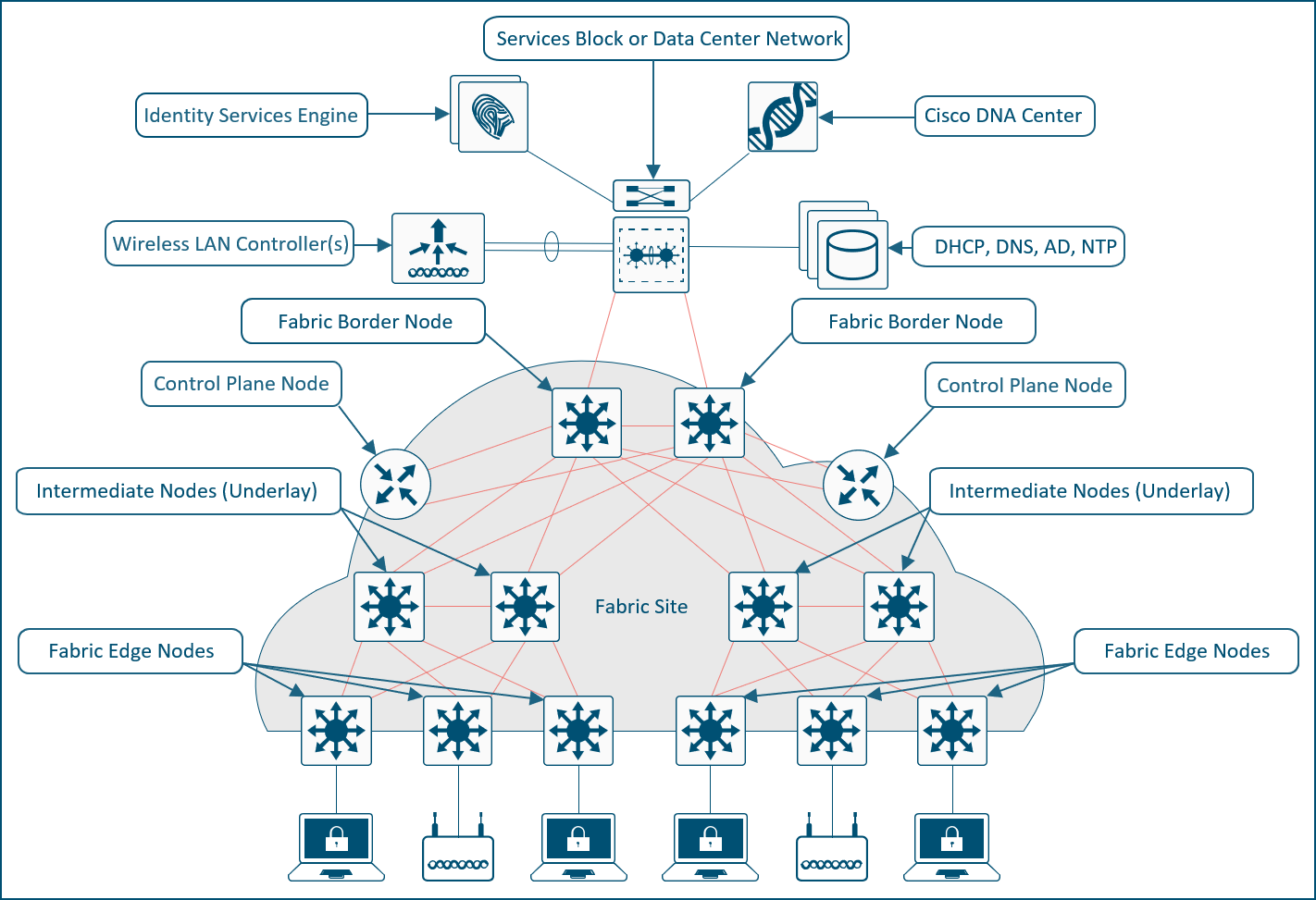

The SD-Access solution is provided through a combination of Cisco Catalyst Center, the Cisco Identity Services Engine (ISE), and wired and wireless device platforms that have fabric functionality. As described later in the Fabric Roles Design Principles section, the wired and wireless device platforms are used to create the elements of a fabric site. This chapter describes the functionality of the remaining two components of SD-Access: Cisco Catalyst Center and the Identity Services Engine.

Cisco Catalyst Center is Cisco's AI-powered network management and automation platform that simplifies operations, secures networks with zero-trust policies, and delivers seamless connectivity for users, applications, and IoT across wired and wireless campus and branch environments. Cisco Catalyst Center can be deployed in physical, virtual, and cloud form factors to match diverse network scales and environments. The physical appliances come in different generations with varying models that differ in hardware specifications, processing power, and form factor. The virtual appliance can be deployed on platforms like VMware ESXi, while cloud options are also available.

| Tech tip |

| For additional information about the Cisco Catalyst Center appliance capabilities, see the data sheet on Cisco.com. |

Cisco Catalyst Center software

Cisco Catalyst Center acts as the centralized manager for a suite of applications and services, powering automation, analytics, visibility, and management across digital-ready infrastructure including Catalyst switches, routers, access points, and Wireless LAN controllers (WLCs). One of its core software packages is SD-Access, which simplifies network design, automates provisioning, and strengthens security across campus environments. With SD-Access, organizations can achieve consistent policy enforcement, highly granular micro-segmentation, and greater operational efficiency, all managed through the intuitive Catalyst Center interface.

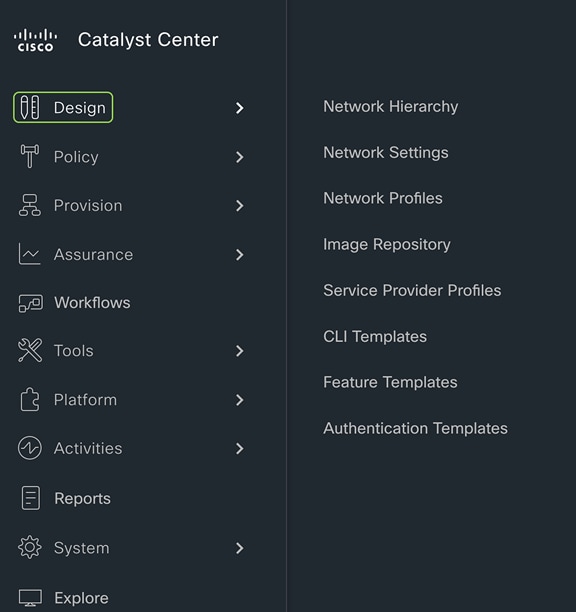

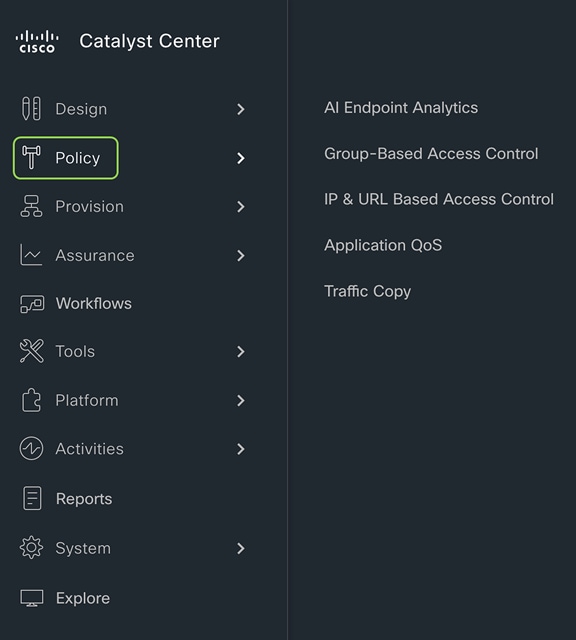

Cisco Catalyst Center centrally manages the following configuration and operations workflow areas:

● Design: Creates the structure and framework of your network, including network hierarchy, network settings, DNS, DHCP, IP addressing, site profiles, Software Image Management (SWIM), device/feature templates, and telemetry settings such as Syslog, SNMP, and NetFlow.

● Policy: Defines business intent by assigning endpoints to Cisco AI Endpoint Analytics, controlling traffic in and out of a device using group-based and IP-based access control policy, and configuring application policies such as quality of service (QoS).

● Provision: Provisions devices and adds them to the managed inventory, supports Cisco Plug and Play and LAN Automation, builds fabric sites with SD-Access components including Zero Trust, establishes virtual networks and transits, and offers a catalog of network services.

● Assurance: Provides proactive monitoring and insights to verify that the user experience matches the intended design, using network, client, and application health dashboards, issue management, sensor-based testing, Cisco AI Network Analytics, and SD-Access.

● Platform: Allows programmatic access to the network and system integration with third-party systems via APIs by using feature set bundles, configurations, a runtime dashboard, and a developer toolkit.

For details of how to implement SD-Access, please refer to SD-Access Deployment Using Cisco Catalyst Center.

Cisco Identity Services Engine (ISE) is a secure network access platform that increases visibility, control, and policy consistency for users and devices connecting to an organization’s network. ISE is a core component of SD-Access, providing network access control by dynamically mapping users and devices to networks and security groups, simplifying end-to-end policy enforcement. Cisco Catalyst Center integrates with ISE over HTTPS for trust establishment, uses REST APIs to automate policy configuration, and leverages Cisco Platform Exchange Grid (pxGrid) for sharing endpoint event and context information.

The SD‑Access solution integrates Cisco TrustSec to provide end‑to‑end, group‑based policy using Security Group Tags (SGTs). SGTs are metadata carried in the header of fabric‑encapsulated packets and are used to enforce identity‑based access controls across the network. Group and policy services are provided by Cisco ISE and orchestrated through Catalyst Center’s policy‑authoring workflows. Cisco ISE is the authoritative system for creating and administering SGTs through tightly integrated REST APIs, while Catalyst Center serves as the single pane of glass to visualize, manage, and consume these SGTs when defining network policies. This architecture simplifies end‑to‑end security policy design, deployment, and enforcement, and scales far beyond traditional approaches that depend on static IP‑based access control lists.

Cisco SD‑Access provides two primary segmentation options, macro‑segmentation with Virtual Networks (VNs) and micro‑segmentation with Security Group Tags (SGTs). Cisco ISE is optional for SD‑Access deployments that require only macro‑segmentation, but it becomes mandatory when identity‑based micro‑segmentation is required. Integrating Catalyst Center with Cisco ISE enables identity‑based policy management that dynamically maps users and devices into security groups while also unlocking a broad set of additional capabilities such as:

● Guest access workflow: Centralized workflow in Catalyst Center automates WLC, fabric nodes, and ISE policy configuration for guest SSIDs.

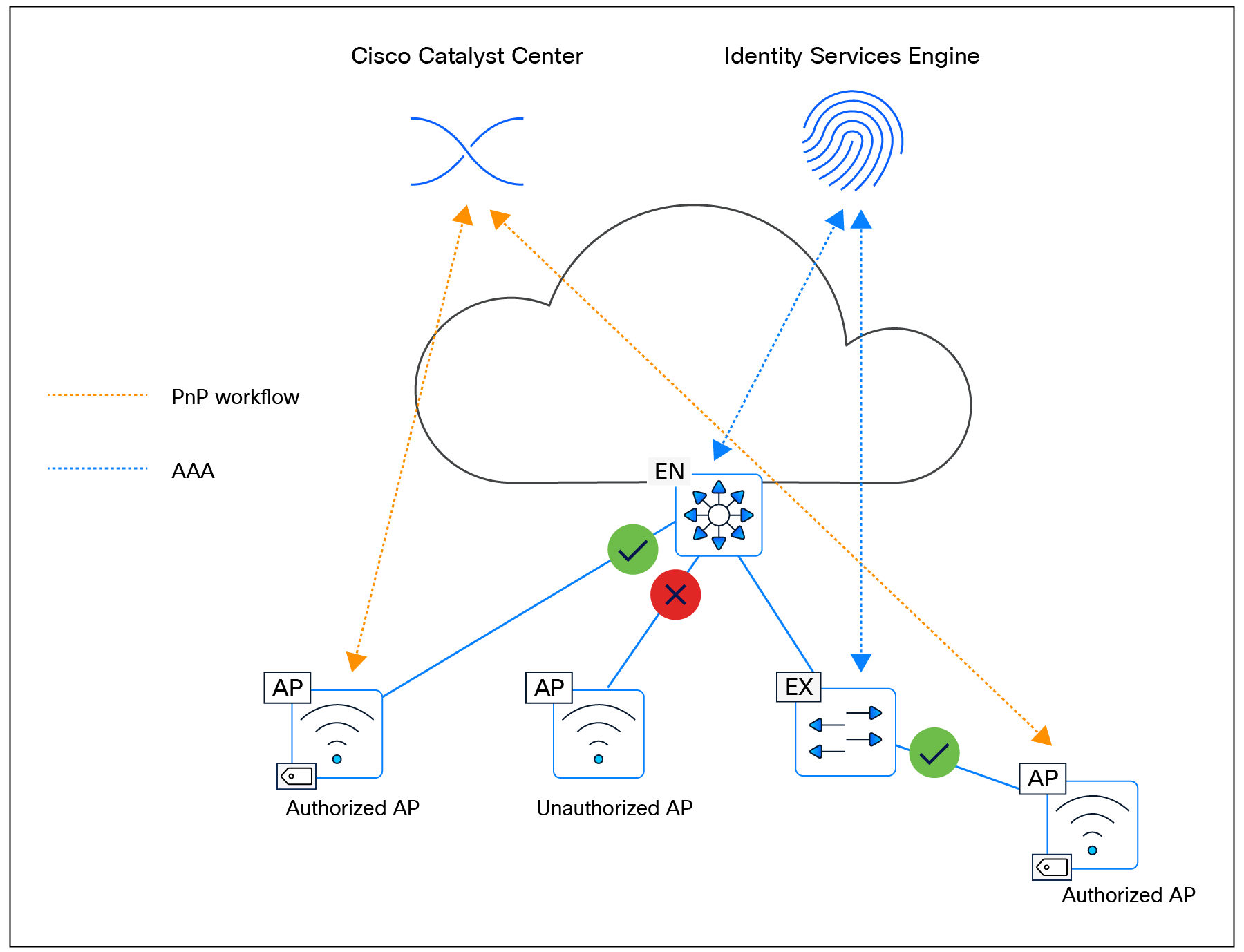

● Host onboarding: Automates the deployment of Identity Based Networking Services (IBNS) 2.0–based 802.1X/MAC Authentication Bypass (MAB) policies; authentication, authorization, and accounting (AAA); and RADIUS settings across all fabric edge nodes through centralized workflows in Catalyst Center.

● Device administration: Automatically deploys centralized AAA and TACACS configurations across all fabric devices.

● Multiple Catalyst Center clusters to ISE: Integrating multiple Catalyst Center clusters with a single Cisco ISE deployment centralizes group‑based policy, and, optionally, Virtual Network definitions, LISP Extranet policies, and shared SD‑Access transits, while still allowing each Catalyst Center cluster to operate and manage its own fabrics independently.

● Asset visibility/AI Endpoint Analytics: Increases endpoint visibility, improves security posture, and reduces the operational effort required to classify and control devices on the network. Uses deep packet inspection on Catalyst 9000 switches plus AI/ML to discover and profile IT, IoT, and OT endpoints, dramatically shrinking the “unknown device” population.

● Group-Based Policy Analytics: Provides insights for creating group-based policies by visualizing communications between security groups, ISE profiles, and Secure Network Analytics host groups to assess the impact of introducing new access controls and understand exactly which protocols are needed in the policies.

● User/device assurance: Combines rich network telemetry with user and device identity to deliver deeper visibility and faster troubleshooting for client issues.

ISE personas

A Cisco ISE node can provide various services based on the persona that it assumes. Personas are simply the services and specific feature set provided by a given ISE node. The four primary personas are:

● Policy Administration node (PAN): A Cisco ISE node with the Administration persona performs all administrative operations on Cisco ISE. It handles all system-related configurations that are related to functionality, such as AAA.

● Monitor and Troubleshooting node (MnT): A Cisco ISE node with the Monitoring persona functions as the log collector and stores log messages from all the Administration and Policy Service nodes in the network. This persona provides advanced monitoring and troubleshooting tools that are used to effectively manage the network and resources. A node with this persona aggregates and correlates the data it collects to provide meaningful information in the form of reports.

● Policy Service node (PSN): A Cisco ISE node with the Policy Service persona provides network access, posture, guest access, client provisioning, and profiling services. This persona evaluates the policies and makes all the decisions. Typically, there would be more than one PSN in a distributed deployment. All PSNs that reside in the same high-speed LAN or behind a load balancer can be grouped together to form a node group.

● Platform Exchange Grid (pxGrid): A Cisco ISE node with the pxGrid persona shares the context-sensitive information from the Cisco ISE session directory with other network systems such as ISE ecosystem partner systems and Cisco platforms. The pxGrid framework can also be used to exchange policy and configuration data between nodes, such as sharing tags and policy objects. TrustSec information such as tag definition, value, and description can be passed from Cisco ISE to other Cisco management platforms such as Catalyst Center and Cisco Secure Network Analytics (formerly Stealthwatch).

ISE supports standalone and distributed deployment models. Multiple distributed nodes can be deployed together to provide failover resiliency and scale. The range of deployment options allows support for hundreds of thousands of endpoint devices. Minimally, a basic two-node ISE deployment is recommended for SD-Access single-site deployments, with each ISE node running all services (personas) for redundancy.

| Tech tip |

| For additional details on ISE personas and services, please see the latest Cisco Identity Services Engine Administrator Guide. For additional ISE deployment and scale details, please see the ISE Performance and Scalability Guide on the Cisco.com Security Community. |

This chapter is organized into the following sections:

| Chapter |

Section |

| SD-Access operational planes |

There are four key technologies that make up the SD-Access solution, each performing distinct activities in different network planes of operation:

● Management plane: Orchestration, assurance, visibility, and management.

● Overlay control plane: Messaging and communication protocol between infrastructure devices in the fabric.

● Data plane: Encapsulation method used for the data packets.

● Policy plane: Used for security and segmentation.

In Cisco SD-Access the management plane is enabled and powered by Cisco Catalyst Center, the control plane is based on LISP (Locator/ID Separation Protocol), the data plane is based on VXLAN (Virtual Extensible LAN), and the policy plane is based on Cisco TrustSec.

Management plane – Cisco Catalyst Center

Cisco Catalyst Center is a foundational component of SD-Access, enabling automation of device deployments and configurations into the network to provide the speed, scale, and consistency required for operational efficiency. Through its automation capabilities, the control plane, data plane, and policy plane for the fabric devices are easily, seamlessly, and consistently deployed. Through the assurance feature, visibility and context are achieved for both the infrastructure devices and endpoints.

A full understanding of LISP and VXLAN is not required to deploy the fabric in SD-Access, nor is there a requirement to know the details of how to configure each individual network component and feature to create the consistent end-to-end behavior offered by SD-Access. Catalyst Center is an intuitive, centralized management system used to automate configuration and policy across the wired and wireless SD-Access network. It takes the user’s intent and programmatically applies it to network devices.

In many networks, the IP address associated with an endpoint defines both its identity and its location in the network. In these networks, the IP address is used both for network layer identification (who the device is on the network) and as a network layer locator (where the device is at in the network or to which network the devices is connected). While an endpoint’s location in the network will change, who this device is and what it can access should not have to change. LISP allows the separation of identity and location though a mapping relationship of these two namespaces: an endpoint’s identity (EID) in relationship to its routing locator (RLOC).

The LISP control plane messaging protocol is an architecture to communicate and exchange the relationship between these two namespaces. This relationship is called an EID-to-RLOC mapping. The EID and RLOC combination provides all the necessary information for traffic forwarding, even if an endpoint uses an unchanged IP address when appearing in a different network location (associated or mapped behind different RLOCs).

Simultaneously, the decoupling of the endpoint identity (EID) from its location allows addresses in the same IP subnet to be available behind multiple Layer 3 gateways in disparate network locations (such as multiple wiring closets), versus the one-to-one coupling of IP subnet with network gateway in traditional networks. The multiple Layer 3 gateways on all the access layer switches are called anycast gateways. This provides the benefit of any subnet being available anywhere without the challenges of Spanning Tree Protocol.

Instead of a typical traditional routing-based decision, the fabric devices query the control plane node to determine the RLOC associated with the destination address (EID-to-RLOC mapping) and use that RLOC information as the traffic destination. In case of a failure to resolve the destination RLOC, the traffic is sent to the default fabric border node. The response received from the control plane node is stored in the LISP map cache, which is merged with the Cisco Express Forwarding table and installed in hardware.

LISP's architecture introduces several key benefits that enhance network scalability, efficiency, and flexibility:

● Selective, pull-based control plane: This approach reduces unnecessary network updates and flooding, leading to more efficient resource use and supporting seamless network scalability.

● Support for extensible addresses: The LISP Canonical Address Format (LCAF) allows for the encoding of additional metadata beyond traditional address families, supporting advanced use cases and future growth of new features and capabilities.

● Superior mobility for wired and wireless endpoints: LISP enables fast convergence and seamless roaming for wireless clients, while also supporting wired endpoint mobility. This means any wired endpoint can connect at any location within the network and automatically receive the correct access, security policies, and services.

● Wired and wireless consistency: Unified control mechanisms help ensure that policies, configurations, and troubleshooting are consistent across both wired and wireless domains.

● Open, standards-based foundation: LISP is based on open standards and has matured over more than a decade, providing long-term stability and interoperability.

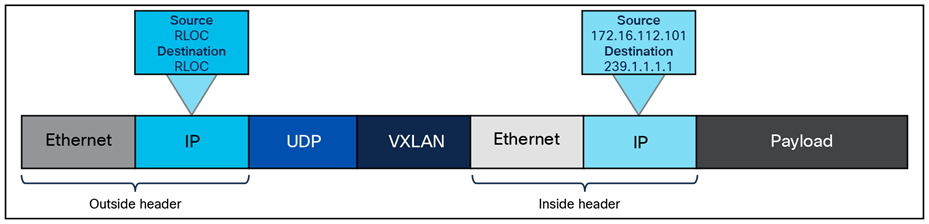

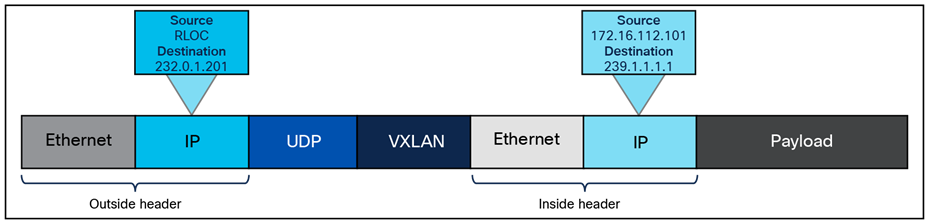

VXLAN is an encapsulation technique for data packets. When encapsulation is added to these data packets, a tunnel network is created. Tunneling encapsulates data packets from one protocol inside a different protocol and transports the original data packets, unchanged, across the network. A lower-layer or same-layer protocol (from the Open Systems Interconnection [OSI] model) can be carried through this tunnel, creating an overlay. In SD-Access, this overlay network is referred to as the fabric.

VXLAN is a MAC-in-IP encapsulation method. It provides a way to carry lower-layer data across the higher Layer 3 infrastructure. Unlike routing protocol tunneling methods, VXLAN preserves the original Ethernet header from the original frame sent from the endpoint. This allows for the creation of an overlay at Layer 2 and at Layer 3, depending on the needs of the original communication.

SD-Access also places additional information in the fabric VXLAN header, including alternative forwarding attributes that can be used to make policy decisions by identifying each overlay network by assigning a VXLAN network identifier (VNI). Layer 2 overlays are identified with a VLAN-to-VNI correlation (Layer 2 VNI), and Layer 3 overlays are identified with a Virtual Routing and Forwarding instance (VRF)-to-VNI correlation (Layer 3 VNI or instance ID in LISP).

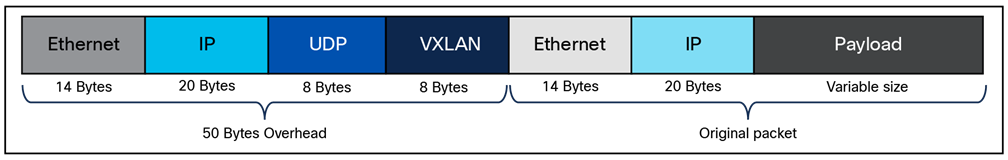

Any encapsulation method is going to create additional MTU (Maximum Transmission Unit) overhead on the original packet. As shown in Figure 1, VXLAN encapsulation uses a User Datagram Protocol (UDP) transport. Along with the VXLAN and UDP headers used to encapsulate the original packet, an outer IP and Ethernet header are necessary to forward the packet across the wire. At a minimum, these extra headers add 50 bytes of overhead to the original packet.

Fabric VXLAN (VNI) encapsulation overhead

Cisco TrustSec decouples access that is based strictly on IP addresses and VLANs by using logical groupings in a method known as Group-Based Access Control (GBAC). The goal of Cisco TrustSec technology is to assign an SGT value to the packet at its ingress to the network. An access policy is then enforced based on this tag information.

An SGT is a form of metadata. It is a 16-bit value assigned by Cisco ISE in an authorization policy when a user, device, or application connects to the network.

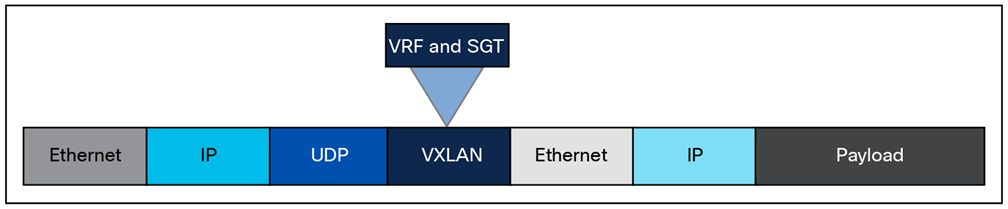

The fabric VXLAN encapsulation method is used both by the data plane and the policy plane. In the policy plane, the alternative forwarding attributes (the SGT value and VRF values) are encoded into the header and carried across the overlay.

Fabric VXLAN alternative forwarding attributes

| Tech tip |

| A bit-level diagram of the VXLAN encapsulation method used in SD-Access fabric, along with low-level details on policy constructs inserted into the header, can be found in Appendix A. |

SD-Access architecture network components

This chapter is organized into the following sections:

| Chapter |

Section |

| SD-Access architecture network components |

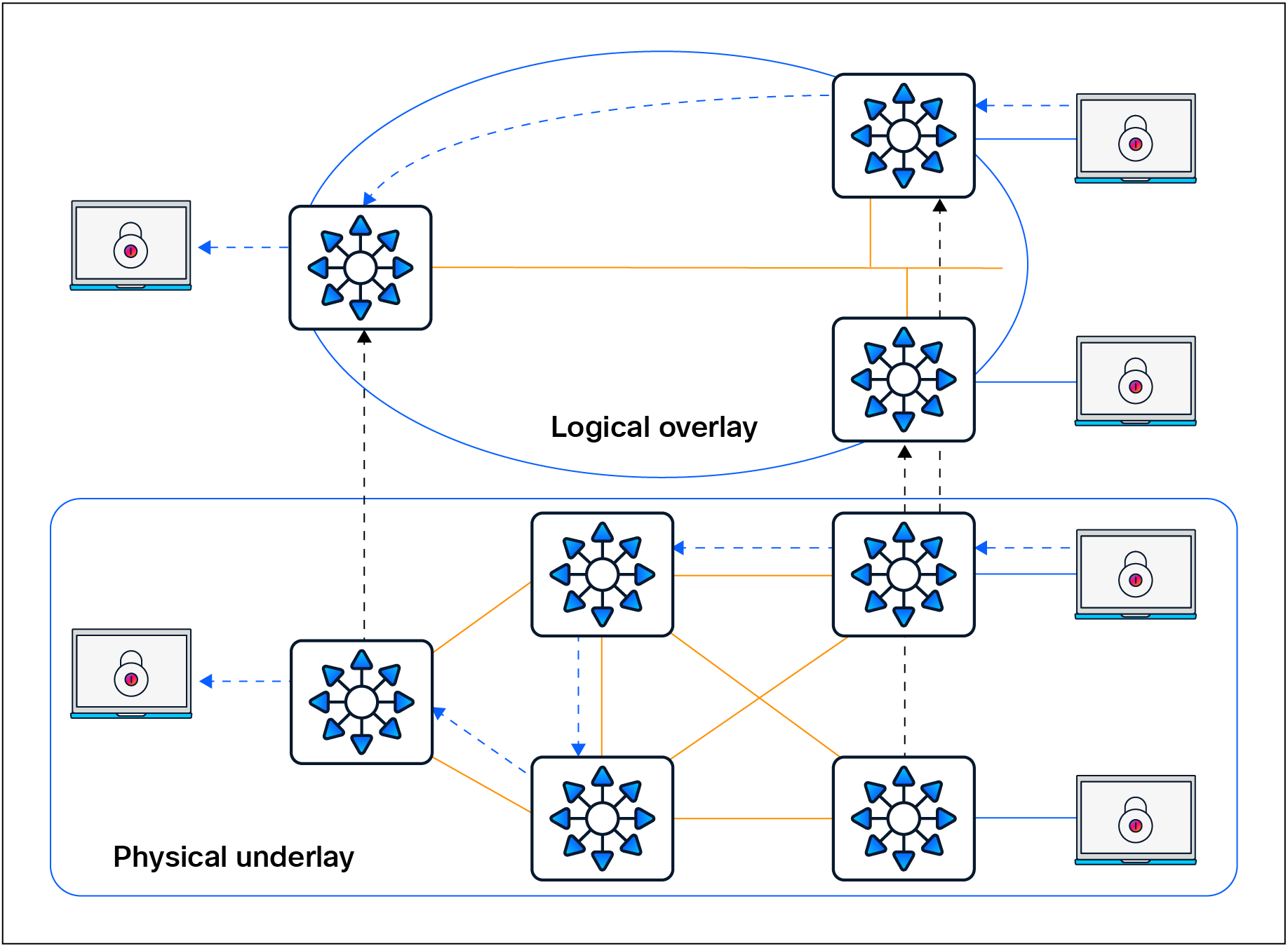

The SD-Access architecture is supported by fabric technology implemented for the campus, enabling the use of virtual networks (overlay networks) running on a physical network (underlay network) and creating alternative topologies to connect devices. This chapter describes and defines the word fabric, discusses the SD-Access fabric underlay and overlay networks, and introduces shared services, which are a shared set of resources accessed by devices in the overlay. It provides an introduction to these fabric-based network terminologies that will be used throughout the rest of this guide. Design consideration for these are covered in the next chapter.

Modern organizations rely on their networks not just for connectivity, but also for authentication, security, reliability, and user experience. They host many user types, IoT devices, applications, and security domains across both wired and wireless access. A network fabric addresses these challenges by delivering:

● A simple and repeatable network foundation (underlay) designed for maximum reliability and resiliency

● Multiple logical overlays to deliver different network services for distinct user and device groups

● Role-based segmentation with VRFs and SGTs, instead of relying solely on VLANs and subnets

● Consistent, centrally defined policy for both wired and wireless users and devices

● Seamless mobility, with devices retaining their IP addresses and policies as they move across the network

● Standardized building blocks, making it much easier to scale the network as requirements grow

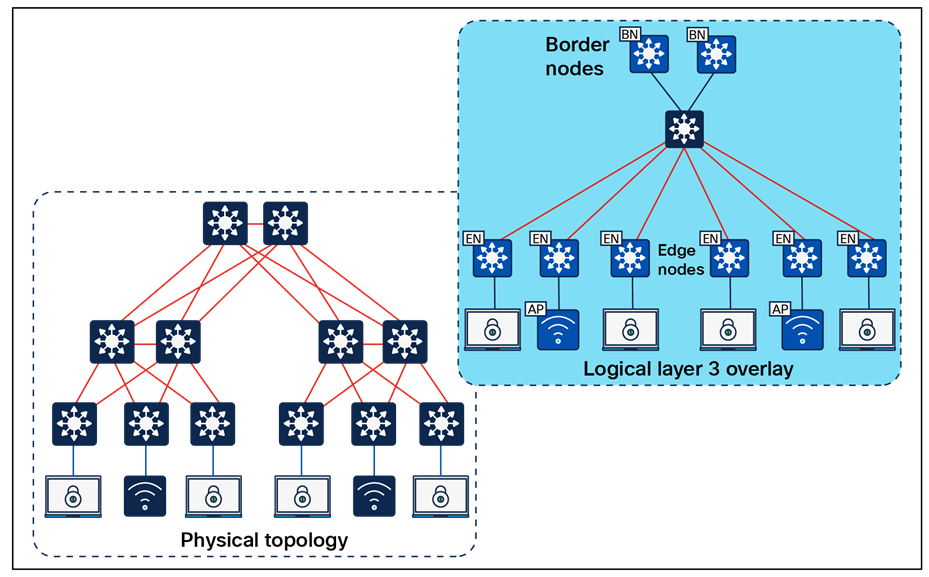

A fabric is simply an overlay network. Overlays are created through encapsulation, a process that adds additional header(s) to the original packet or frame. An overlay network creates a fully meshed logical topology of tunnels used to virtually connect devices that are built over an arbitrary physical underlay topology. In an idealized, theoretical network, every device would be connected to every other device. In this way, any connectivity or topology imagined could be created. While this theoretical network does not exist, there is still a technical desire to have all these devices connected to each other in a full mesh. In networking, an overlay (or tunnel) provides this logical full-mesh connection.

The underlay network is defined by the physical switches and routers that are used to deploy the SD-Access network. All network elements of the underlay must establish IP connectivity via the use of a routing protocol. Instead of using arbitrary network topologies and protocols, the underlay implementation for SD-Access uses a well-designed Layer 3 foundation inclusive of the campus edge switches; this is known as a Layer 3 routed access design. This foundation ensures performance, scalability, and resiliency, and enables deterministic convergence of the network. End-user subnets and endpoints are not part of the underlay network; they are part of the overlay network.

Overlay and underlay relationship

Having a well-designed underlay network helps ensure the stability, performance, and efficient utilization of the SD-Access network. Automation for deploying the underlay is available using the LAN Automation capability of Cisco Catalyst Center, which is discussed in a later section.

Whether using LAN Automation or deploying the network manually, the underlay networks for the fabric have the following general design requirements:

● Layer 3 routed access: The use of a Layer 3 routed access network for the fabric provides the highest level of availability without the need to use loop avoidance protocols such as Spanning Tree Protocol (STP), interface bundling techniques using link aggregation technologies such as EtherChannel, or Layer 2 redundancy technologies like StackWise® Virtual.

● Larger default MTU: VXLAN encapsulation adds 50 bytes of overhead and sets the IP DF (don’t fragment) bit. Enabling a campus-wide and branch-wide MTU of 9100 helps ensure that Ethernet jumbo frames can be transported without being dropped inside the fabric.

● Point-to-point links: Point-to-point routed links provide the quickest convergence times because they eliminate the need to wait for the upper-layer protocol timeouts typical of more complex topologies. Combining point-to-point routed links with the recommended physical topology design provides fast convergence in the event of a link failure.

The fast convergence is a benefit of quick link-failure detection, triggering immediate use of alternate topology entries preexisting in the routing and forwarding table. Implement the point-to-point links using optical technology, as optical (fiber) interfaces are not subject to the same electromagnetic interference as copper links. Copper interfaces can be used, though optical ones are preferred.

● Bidirectional Forwarding Detection (BFD): BFD enhances the fault detection and convergence characteristics of routing protocols. Routing protocols use the absence of hello packets to determine if an adjacent neighbor is down (commonly called a hold timer or dead timer). Thus, the ability to detect liveliness in a neighbor is based on the frequency of hello packets.

● Interior Gateway Protocol (IGP) process for the fabric: While the Intermediate System-to-Intermediate System (IS-IS) Protocol is supported when using LAN Automation, manual deployment of other classless routing protocols such as Open Shortest Path First (OSPF) and Enhanced Interior Gateway Routing Protocol (EIGRP) is also supported.

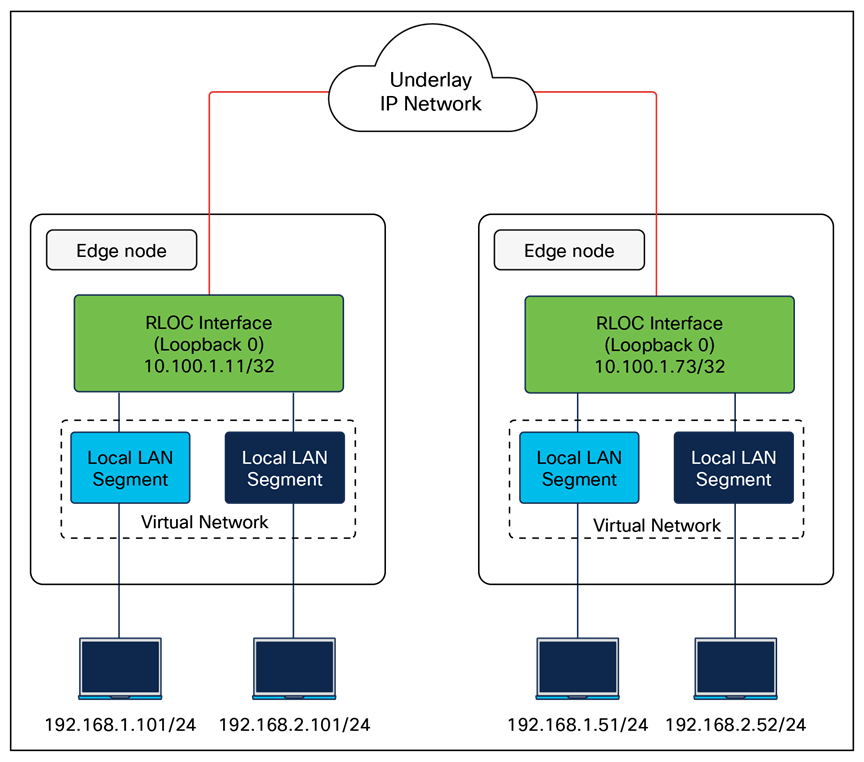

● Loopback propagation: The loopback addresses assigned to the underlay devices need to propagate outside of the fabric to establish connectivity to infrastructure services such as, DNS, DHCP, and AAA. Loopback 0 interfaces (RLOC) require a /32 subnet mask. These addresses can also be propagated throughout the fabric site. Reachability between loopback addresses (RLOCs) cannot use the default route. They must use a /32 route.

● WLC reachability: Connectivity to the WLC should be treated like reachability to the loopback addresses. The access points (APs) cannot use a default route in the underlay to reach the WLCs. A specific (nondefault) route to the WLC IP address must exist in the global routing table at each switch where the APs are physically connected. This can be a host route (/32) or summarized route.

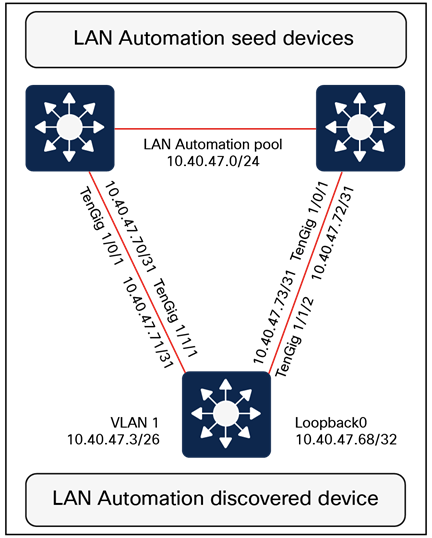

LAN Automation handles the plug-and-play zero-touch automation of the underlay network for the SD-Access solution. The simplified procedure builds a solid, error-free underlay network foundation using the principles of a Layer 3 routed access design. The LAN Automation feature uses components from the Cisco Plug and Play (PnP) solution, where configuration of the underlay can be orchestrated and devices are automatically added to the Cisco Catalyst Center inventory. LAN Automation is an alternative to manual underlay deployments to onboard multiple switches with SWIM and best-practices configuration using an IS-IS routed access design.

Although there are many alternative routing protocols, the IS-IS routing protocol offers operational advantages such as neighbor establishment without IP protocol dependencies, peering capability using loopback addresses, and agnostic treatment of IPv4, IPv6, and non-IP traffic.

Network design considerations for LAN Automation

There are specific considerations for designing a network to support LAN Automation. These include IP reachability, seed peer configuration, hierarchy, device support, IP address pool planning, and multicast. Additional design considerations exist when integrating the LAN automated network into an existing routing domain or when running multiple LAN Automation sessions. Each of these is discussed in detail below.

IP reachability

Cisco LAN Automation in Catalyst Center deploys a Layer 3 underlay using IS-IS as the primary routing protocol. Border Gateway Protocol (BGP) integration occurs only on seed devices (primary and peer), where you preconfigure it manually for reachability. LAN Automation optionally advertises a LAN Automation IP pool summary route into that BGP process to ensure that Catalyst Center can reach discovered devices without static routes.

Peer configuration

The peer device (secondary seed) can be automated and discovered through the LAN Automation process. However, we recommend configuring the device manually. The two seed devices should be configured with a Layer 3 physical interface link between them. Both devices should be configured with IS-IS, and the link between the two should be configured as a point-to-point interface that is part of the IS-IS routing domain. For consistency with the interface automation of the discovered devices, BFD should be enabled on this crosslink between the seeds, the Connectionless Network Service (CLNS) MTU should be set to 1400, Protocol Independent Multicast (PIM) sparse mode should be enabled, and the system MTU should be set to 9100.

Multicast and LAN Automation

Enable Multicast is an optional capability of LAN Automation. It is represented by a check box in the LAN Automation workflow. When this box is checked, seed devices are configured as rendezvous points (RPs) for PIM – Any Source Multicast (PIM-ASM), and PIM sparse mode will be enabled on the Layer 3 point-to-point interfaces. If redundant seeds are defined, Cisco Catalyst Center will automate the configuration of Multicast Source Discovery Protocol (MSDP) between them using Loopback 60000 as the RP interface and Loopback 0 as the unique interface. Overlay features like Layer 2 flooding and native multicast need underlay multicast configuration. If Layer 2 flooding is required and multicast wasn’t enabled during the LAN Automation process, underlay multicast routing needs to be enabled manually on the devices in the fabric site and MSDP should be configured between the RPs in the underlay.

| Tech tip |

| For additional details on multicast RPs, MSDP, and PIM-ASM, please see the Rendezvous Point Design section. |

Additional IS-IS routing considerations

The seed devices are commonly part of a larger, existing deployment that includes a dynamic routing protocol to achieve IP reachability to Cisco Catalyst Center. When a LAN Automation session is started, IS-IS routing is configured on the seed devices in order to prepare them to provide connectivity for the discovered devices. This IS-IS configuration includes routing authentication, BFD, and default route propagation. These provisioned elements should be considered when multiple LAN Automation sessions are completed in the same site, when LAN Automation is used in multiple fabric sites, and when the fabric is part of a larger IS-IS routing domain.

IS-IS domain password

As part of the LAN Automation workflow in Cisco Catalyst Center, an IS-IS domain password is recommended. The IS-IS domain password enables plain-text authentication of IS-IS Level 2 link-state packets. If the seed devices are joining an existing IS-IS routing domain, the password entered in the GUI workflow should be the same as for the existing routing domain to allow the exchange of routing information.

Bidirectional Forwarding Detection

BFD is provisioned on seed devices at the router configuration level and at the interface level connecting to the discovered devices. BFD is also provisioned on the discovered devices at the router configuration level and at the interface configuration level connecting to the upstream peers.

When configuring the seed device pair before beginning LAN Automation, you’ll need to configure a Layer 3 routed link between them and add the link to the IS-IS routing process. On some platforms, if BFD is enabled at the router configuration level only and not also at the interface level, the IS-IS adjacency will drop. Therefore, BFD should be enabled manually on this crosslink interface to ensure that the adjacency remains up once the LAN Automation session is started. This also means that when integrating the seed devices into an existing IS-IS network, BFD should be enabled on the interfaces connecting to the remainder of the network.

Default route propagation

During LAN Automation, the command default-information originate is provisioned under the IS-IS routing process to advertise the default route to all discovered devices. This command is applied to each seed during the LAN Automation process and excluded for subsequent LAN Automation sessions. If you are integrating with an existing IS-IS network and an IS-IS learned default route is present in the route table, default-information originate won’t be configured on the seed devices.

Onboarding guidelines for LAN Automation with Extended Nodes

SD-Access extended nodes are Layer 2 switches that extend SD-Access VLANs into traditional, noncarpeted areas of the business, often called the extended enterprise. An extended node is either directly connected to a fabric edge node or is part of a chain of extended nodes that ultimately connects to a fabric edge node. For more information about extended nodes, refer to the Extended Nodes section.

Factory-default switches that are to be provisioned as extended nodes should not be physically connected to any switch that is acting as a LAN Automation seed or is currently undergoing a LAN Automation discovery process. This ensures that the LAN Automation session can properly discover and provision only the switches that will participate in IS-IS routing.

| Tech tip |

| Please consult the Cisco Catalyst Center Release Notes and Cisco Catalyst Center SD-Access LAN Automation Deployment Guide for updates, additions, and a complete list of devices supported with LAN Automation. |

An overlay network is created on top of the underlay network through virtualization (virtual networks, or VNs). The data plane traffic and control plane signaling are contained within each virtual network, maintaining isolation among the networks and an independence from the underlay network. Multiple overlay networks can run across the same underlay network through virtualization. In SD-Access, the user-defined overlay networks are provisioned as VRF instances that provide separation of routing tables.

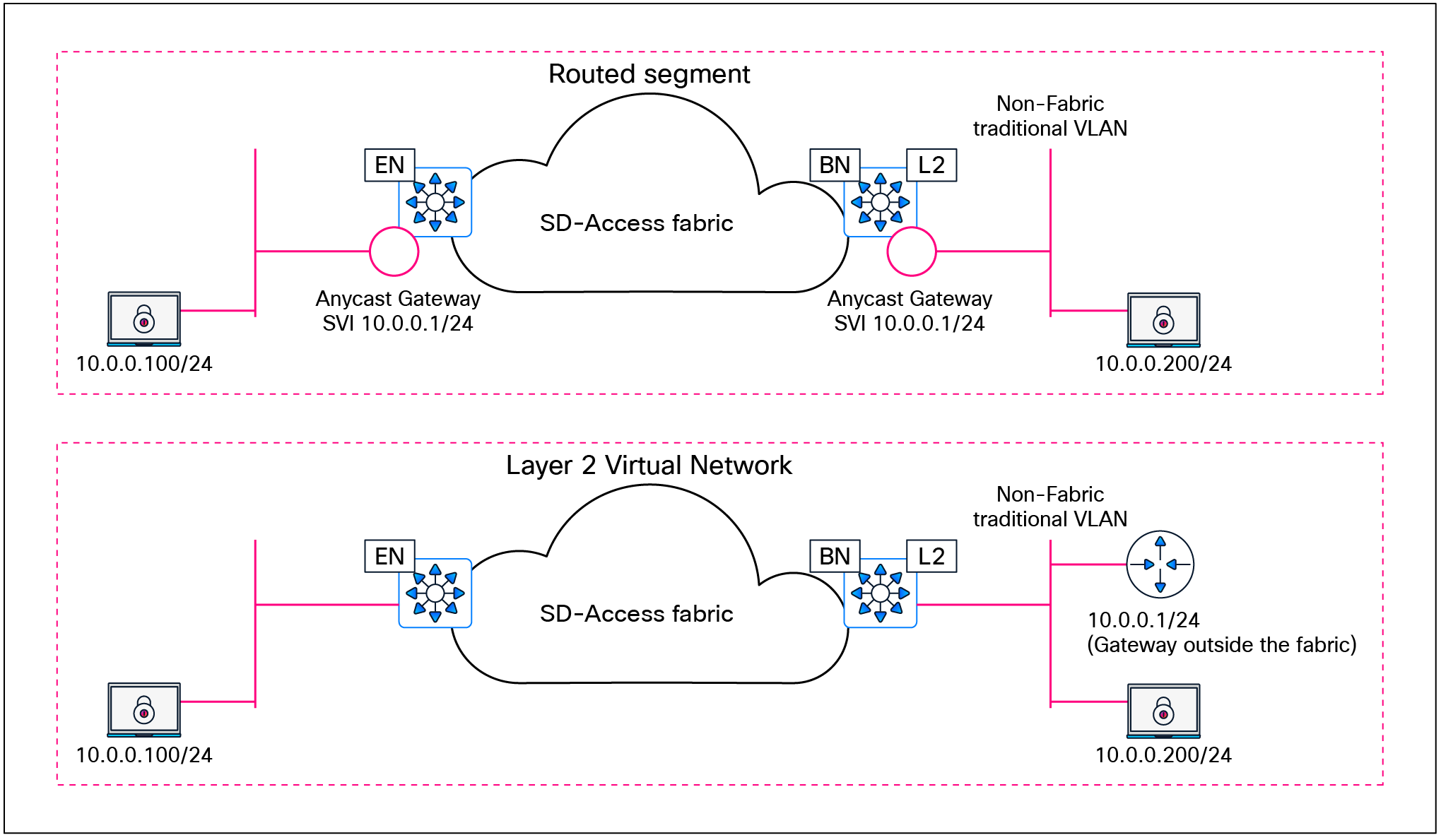

SD-Access allows for the extension of Layer 2 and Layer 3 connectivity across the overlay through the services provided by LISP and VXLAN. Layer 2 overlay services emulate a LAN segment to transport Layer 2 frames by carrying a subnet over the Layer 3 underlay, as shown in Figure 4.

Layer 2 overlay – Logically switched connectivity

Layer 3 overlays abstract the IP-based connectivity from the physical connectivity, as shown in Figure 5. This can allow multiple IP networks to be part of each virtual network. Each Layer 3 overlay, its routing tables, and its associated control planes are completely isolated from one another.

Layer 3 overlay – Logically routed connectivity

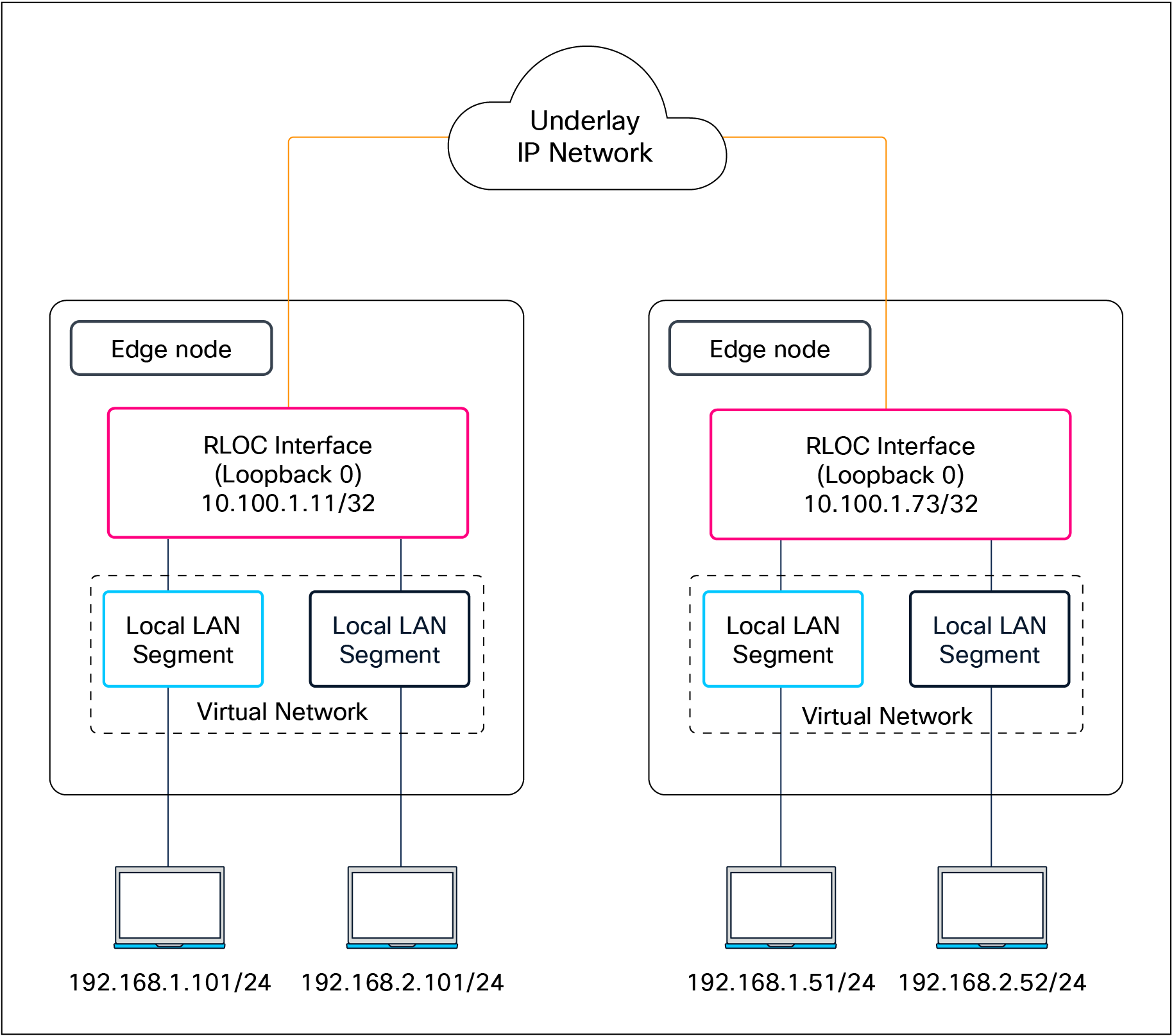

The following figure shows an example of two subnets that are part of the overlay network. The subnets stretch across physically separated Layer 3 devices — two edge nodes. The RLOC interfaces, or Loopback 0 interfaces in SD-Access, are the only underlay routable addresses that are required to establish connectivity between endpoints of the same or different subnets within the same virtual network.

Subnet stretching – Example

In the SD-Access fabric, the overlay networks are used for transporting user traffic across the fabric. The fabric encapsulation also carries security group information used for traffic segmentation inside the overlay virtual networks. Consider the following in the design when deploying virtual networks:

● Virtual networks (macro-segmentation): Use virtual networks when requirements dictate isolation at both the data plane and control plane. In general, if devices need to communicate with each other, they should be placed in the same virtual network. If communication is required between different virtual networks, use an external firewall or other device to enable inter-virtual network communication. Virtual networks provide the same behavior and isolation as VRFs.

● SGTs (micro-segmentation): Segmentation using SGTs allows for simple-to-manage group-based policies and enables granular data plane isolation between groups of endpoints within a virtualized network. Using SGTs also enables scalable deployment of policy without having to do cumbersome updates for these policies based on IP addresses.

● Fewer subnets and simplified DHCP management: In the overlay, IP subnets can be stretched across the fabric without the flooding issues that can happen on large Layer 2 networks. Use fewer subnets and DHCP scopes for simpler IP addressing and DHCP scope management. Subnets are sized according to the services that they support, versus being constrained by the location of a gateway. Enabling the optional broadcast flooding (Layer 2 flooding) feature can limit the subnet size based on the additional bandwidth and endpoint processing requirements for the traffic mix within a specific deployment.

● Overlapping IP subnets: Exactly overlapping anycast gateway addresses are supported for wired endpoints, but only within a single fabric site. Partially overlapping anycast gateways are not supported, for example, 10.0.0.1/24 and 10.0.0.1/23. If overlapping IP subnets need access to shared services, Network Address Translation (NAT) will be required outside of the SD-Access fabric. Overlapping subnets for wired endpoints within Layer 2 VNs (gateway outside the fabric) have no subnet overlap or fabric site restrictions.

| Tech tip |

| The underlay network uses the IPv4 address for the Loopback 0 (RLOC) interfaces on the devices operating in a fabric role. Connectivity in the underlay should use IPv4 routing to propagate the /32 RLOC routes as discussed in the Underlay Network Design section. Endpoints in the overlay space can use IPv4 addresses or dual-stack IPv4/IPv6 addresses. |

Most networks need some form of shared services that can be reused across multiple virtual networks rather than hosting them inside every VN. It is important that those shared services are deployed correctly to preserve the isolation between different VNs accessing those services. The use of a VRF-aware peer directly attached outside of the fabric provides a mechanism for route leaking of shared services prefixes across multiple networks, and the use of firewalls provides an additional layer of security and monitoring of traffic between VNs. Alternatively, SD-Access LISP Extranet can be used to enable access to shared services or internet resources external to the fabric, eliminating the need for traditional BGP-based route leaking between VNs. Examples of shared services include DHCP, DNS, Network Time Protocol (NTP) servers, and internet access, among others. The location of these services is an important consideration in the SD-Access solution. These should be located outside the fabric network, typically upstream of the border node.

● DHCP, DNS, IP Address Management (IPAM), and Active Directory (AD): The same set of infrastructure services can be reused if they support virtual networks. Special capabilities such as advanced DHCP scope selection criteria, multiple domains, and support for overlapping address space are some of the capabilities required to extend the services beyond a single network.

● Internet access: The same set of internet firewalls can be used for multiple virtual networks. If firewall policies need to be unique for each virtual network, the use of a multicontext firewall is recommended.

● IP voice/video collaboration services: When IP phones and other unified communications devices are connected in multiple virtual networks, the call control signaling to the communications manager and the IP traffic between those devices needs to be able to traverse multiple virtual networks in the infrastructure.

● Servers and critical systems: NTP servers, building management systems, network orchestrators, management appliances, support systems, administrative applications, databases, payroll systems, and other critical applications may be required for access by one or many virtual networks.

| Tech tip |

| Place shared‑services points of presence as close as practical to major user populations, and favor regional or local internet and data center exits for latency‑sensitive applications. |

Shared services design

Once the physical design of the services block is determined, its logical design should be considered next. Shared services are commonly deployed in the global routing table, though they are also supported in a VRF. If deployed in a VRF, this routing table should be dedicated only to these shared services.

As discussed in detail later in the External Connectivity section, the endpoint prefix space in the fabric site will be present on the border nodes for advertisement to the external world. However, these prefixes will be in a VRF table, not the global routing table. The External Connectivity section discusses options for connecting the border node to shared services, the internet, and outside the fabric.

With shared services in a dedicated VRF, route leaking (VRF-to-VRF leaking) is administratively straightforward, as it uses BGP route targets under the VRF configuration, although at the expense of creating another VRF to manage. The alternative approach, shared services in the global routing table, requires a different approach to leak routes for access to shared services. The process still requires the same handoff components to the external entity to the border node, though with slightly more touch points. These begin with an IP prefix list for each virtual network in the fabric that references each of the associated subnets. A route map is created to match on each prefix list. Finally, the VRF configuration imports and exports routes that are filtered based on these route maps.

While the second approach, shared services in the global routing table, may have more configuration elements, it also provides the highest degree of granularity. Specific routes can be selectively and systematically leaked from the global routing table to the fabric virtual networks without having to maintain a dedicated VRF for shared services. Both approaches are supported, although the underlying decision on the routing table used by shared services should be based on the entire network, not just the SD-Access fabric sites.

There’s a range of factors that determine the scale of SD-Access fabrics, including the network platforms used, the number of endpoints, and the Cisco Catalyst Center scale, depending on the deployment option and form factor.

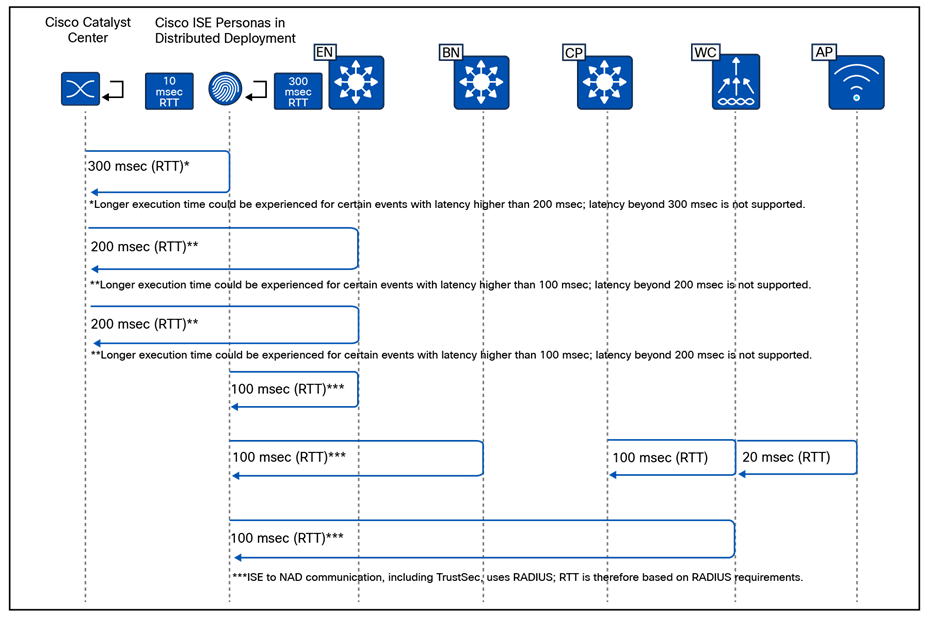

Latency in the network is also an important consideration for performance, and the Round-Trip Time (RTT) between Catalyst Center and any network devices it manages must be within tolerances.

For current latency requirements and scale metrics, please see the latest Cisco Catalyst Center data sheet.

SD-Access design considerations

This chapter is organized into the following sections:

| Chapter |

Section |

| SD-Access design considerations |

Management plane considerations |

This chapter provides design guidelines that are built upon principles that allow an SD-Access network architect to build the fabric using next-generation products and technologies. These principles allow for simplified application integration and enable the network solutions to be seamlessly built on a modular, extensible, and highly available foundation design that can provide continuous, secure, and deterministic network operations.

Management plane considerations

The management plane is a critical component of any SD-Access deployment, as it orchestrates the automation, assurance, identity integration, and policy workflows that shape and govern the fabric.

Cisco Catalyst Center is supported as a single node, in three-node clusters, and in a disaster recovery configuration. Each option has different scale and resiliency characteristics. A single node can be deployed as a standalone physical on-premises appliance or as a virtual appliance on cloud or VMware ESXi. If Catalyst Center is deployed as a single-node cluster, wiring, IP addresses, and connectivity should be planned and configured with future three-node clustering in mind.

For high availability, Catalyst Center should be deployed as a three-node cluster. An odd number of nodes is required to maintain quorum in a distributed system, and Catalyst Center supports only a three-node model (not five or seven). Although the cluster is composed of three physical nodes, it operates as a single logical entity accessed through a virtual IP address that is serviced by the resilient nodes within the cluster.

Within a three-node cluster, service distribution provides distributed processing, database replication, security replication, and file synchronization. Software upgrades are automatically replicated across the nodes in a three-node cluster. A three-node cluster will survive the loss of a single node, though it requires at least two nodes to remain operational. Some maintenance operations, such as software upgrades and file restoration from backup, are restricted until the three-node cluster is fully restored. Additionally, not all assurance data may be protected while in the degraded two-node state.

Disaster recovery (DR) adds an additional layer of resilience by protecting against complete cluster failure. Catalyst Center supports two disaster recovery setups: 1+1+1 and 3+3+1. In the event of a primary cluster outage, management operations can be transferred to a designated DR cluster, helping ensure continuity of fabric management and visibility (see the Cisco Catalyst Center Administrator Guide for detailed DR workflows).

While Catalyst Center is not part of the SD-Access data plane or control plane, its availability directly affects how the fabric is provisioned, maintained, and operated. A Catalyst Center outage does not impact SD-Access wired and wireless traffic forwarding, but it does affect:

● All management plane functions: Key areas influenced include fabric provisioning and automation (creation of fabric sites), virtual networks, IP pools, device role assignments (device onboarding), lifecycle management and Plug-and-Play, SWIM, templates, and compliance checks.

● Assurance and telemetry: Fabric, device, and client health monitoring, as well as troubleshooting and path analytics.

● Identity policy and configuration changes: Deployment and synchronization of segmentation policies, SGT assignments, and network-wide configuration updates.

For assurance communication and provisioning efficiency, a Cisco Catalyst Center should be installed in close network proximity to the greatest number of devices being managed to minimize communication delay to the devices. Additional latency information is discussed in the Scale and Latency section.

In the management plane, as of Catalyst Center Release 2.3.7, interfaces can use either IPv4 or IPv6 addressing. However, integration between Catalyst Center and Cisco ISE remains IPv4-only, so IPv4 is still required on the management path for policy, authentication, and pxGrid/External RESTful Services (ERS) interactions.

Within the SD-Access fabric, the underlay is IPv4 only, while the fabric overlay can be configured as either IPv4 only or dual stack for endpoints. Dual-stack anycast gateways on fabric edge nodes allow both IPv4 and IPv6 clients, carrying IPv6 endpoint traffic over the IPv4 fabric underlay. This model lets you introduce IPv6 services and clients in the overlay while keeping the underlay and management/control integrations anchored in IPv4.

| Tech tip |

| For details on Cisco Catalyst Center capabilities, design, and configuration, please see the Maintain and Operate Guides. |

This section is organized into the following subsections:

| Section |

Subsection |

| Policy plane considerations |

Macro- vs. micro -segmentation |

Cisco SD‑Access provides two primary segmentation options, macro‑segmentation with virtual networks and micro‑segmentation with SGTs. Cisco ISE is optional for SD‑Access deployments that use only macro‑segmentation, but it becomes mandatory when identity‑based micro‑segmentation is required.

Zero trust is a security strategy or a framework and also the end state many customers are targeting. Its core principles are to never trust any endpoint or user unless it’s proven trustworthy and then to assign only the minimum necessary access. The figure shows common SD‑Access adoption paths toward a zero‑trust architecture for both existing and new deployment customers. It highlights how you can phase in macro‑segmentation, analytics, and then micro‑segmentation depending on whether you already have Cisco ISE.

SD-Access flexible deployment options

Customers can start their SD‑Access journey with simple macro‑segmentation using virtual networks and later, after integrating Cisco ISE, enhance the design with AI Endpoint Analytics, Group-Based Policy Analytics, and Trust Analytics to gain identity and behavior visibility, ultimately enabling micro‑segmentation with SGTs and group‑based policy as the end state, progressively marching toward a zero‑trust architecture.

Customers with existing LANs that already use Cisco ISE can begin their SD‑Access journey with fabric‑agnostic capabilities such as AI Endpoint Analytics, Group-Based Policy Analytics, and Trust Analytics to understand existing users, devices, and traffic patterns. They can then introduce macro‑segmentation with Layer 2 or Layer 3 virtual networks and ultimately enable micro‑segmentation with SGTs and group‑based policies as the zero‑trust end state.

When Catalyst Center is integrated to ISE, Catalyst Center by default becomes the primary administration point for group‑based access control, and Cisco ISE functions as a read‑only policy engine for TrustSec data. In this mode, Catalyst Center owns a single policy matrix (Production) on ISE and enforces one access contract (SGACL) per TrustSec policy. All fabric sites managed by Catalyst Center operate under a global default‑permit or default‑deny decision, which you choose in the Group-Based Access Control policy settings, and then it’s applied consistently to every site.

Optionally, Catalyst Center can be configured to have TrustSec policy administered directly on Cisco ISE. ISE then becomes the native policy engine for group‑based access control. In this mode, ISE supports multiple policy matrices, allowing different sites to maintain their own TrustSec matrices with independent default-permit or default-deny behavior per site. The same mode also enables enforcement of multiple SGACLs or access contracts per TrustSec policy entry, providing more granular and flexible access control than the single‑matrix, single‑SGACL model driven from Catalyst Center.

SD‑Access supports hierarchical segmentation options built into the solution. Macro-segmentation uses virtual networks or VRFs to isolate large domains such as users (employees, contractors, etc.), guests, and IoT devices (cameras, HVAC, etc.), with each VN providing complete Layer 3 isolation and no inter-VN communication unless there is route leaking. The generally recommended best practice is to keep the number of VNs small and create only those needed for truly necessary domain separation (for example, Campus, Guest, IoT, and possibly a shared services VN), rather than proliferating VNs everywhere, because each additional VN consumes fabric-wide resources and increases complexity—especially on border and peer devices, where every VN requires VRF Lite handoff and associated BGP neighbors.

Within each VN, micro-segmentation provides a second, finer layer of control between specific roles and device types, producing a hierarchical model that aligns with zero‑trust principles. Micro‑segmentation uses SGTs and group‑based policies to control which specific roles or device groups can talk to each other inside a VN. Catalyst Center and ISE support many SGTs, but best practice design guidance is to keep the SGT set as small and meaningful as possible. Each additional SGT increases the size of the policy matrix (NxN relationships) and consumes resources in ISE and the TrustSec enabled network devices. Many deployments end up with on the order of a few tens of well-defined SGTs (for example, per role or device class) rather than hundreds.

In brownfield environments, some customers need to retain their existing third-party Network Admission Control (NAC) solution. In SD-Access, the third-party NAC can be configured to support authentication, authorization, and micro-segmentation in concert with ISE. In this scenario, ISE remains mandatory for SGTs and group-based policy.

In a first supported model, third‑party RADIUS/NAC servers sit behind ISE: 802.1X/MAB authentications are sent to ISE, which proxies to the third‑party server for posture or profiling. ISE then receives an Access‑Accept with a Cisco attribute-value (AV) pair carrying the SGT and applies SD‑Access policy accordingly. This option requires a full-scale ISE deployment.

In the second model, switches send authentication requests directly to the third‑party NAC server for authentication, profiling, and posture assessment, which returns an Access‑Accept containing VLAN name and Cisco SGT assignment. The switch applies the VLAN locally and then queries Cisco ISE only for group‑based policy, so this model does not require a full‑scale ISE deployment because authentication traffic does not terminate on ISE.

The third and final supported design model uses only third-party RADIUS servers without ISE. The third-party RADIUS server is added to Catalyst Center as an authentication server and SD-Access network access devices (NADs) send AAA purely to the third-party RADIUS server. In this design micro-segmentation is not possible because ISE is not present.

Default permit vs. default deny

SD‑Access supports both default‑permit and default‑deny modes through the TrustSec/SGT policy model, each suited to different deployment phases and risk profiles. This behavior is controlled in Cisco ISE and surfaced in the Catalyst Center UI as part of the single‑pane‑of‑glass experience. By default, a “permit any” policy is used, so any source–destination SGT pair without an explicit SGACL is allowed.

Once default deny is enabled, all traffic in the fabric underlay and overlay is blocked by default, including routing protocols, BFD, SSH/Telnet, and multicast and broadcast traffic, so the following prerequisites must be satisfied before moving to this model.

● Starting with Catalyst Center Release 2.3.7.x, TrustSec enforcement is disabled in the underlay for newly LAN-automated links and on AP/extended node VLANs, which is a best practice for default-deny fabrics. For underlays that were LAN automated prior to Release 2.3.7.x, we recommend disabling TrustSec enforcement on all Layer 3 uplinks using Catalyst Center templates.

Multiple Catalyst Center clusters to ISE

Cisco Catalyst Center and ISE integrate to address multiple SD‑Access use cases, as described earlier. SD‑Access customers with large or distributed enterprise fabrics often deploy multiple Catalyst Center clusters for management simplicity, multiregion operations, and compliance needs, while relying on a single ISE cluster to provide globally consistent group‑based access control policy.

The rules and restrictions can be found in the latest Cisco Catalyst Center Administrator Guide.

When Multiple Catalyst Center operation is enabled in Catalyst Center settings, the first cluster that integrates with ISE is designated as the Author cluster and up to four additional Catalyst Center clusters operate as Reader clusters, inheriting and distributing Group-Based Policy (GBP) data (SGTs, access contracts, GBAC policy) and SD-Access data (LISP Extranet policy and shared SD‑Access transit) from the Author to the Reader nodes. TrustSec policy can be managed either on the Author cluster or directly in Cisco ISE.

If multiple Catalyst Center clusters (up to 10 in Release 2.3.7.x) are integrated with the same ISE instance without enabling Multiple Catalyst Center operation, each cluster operates independently, and no GBP or SD‑Access data is shared or synchronized between them. This integration pattern is supported only when TrustSec policy is managed centrally on Cisco ISE.

This section is organized into the following subsections:

| Section |

Subsection |

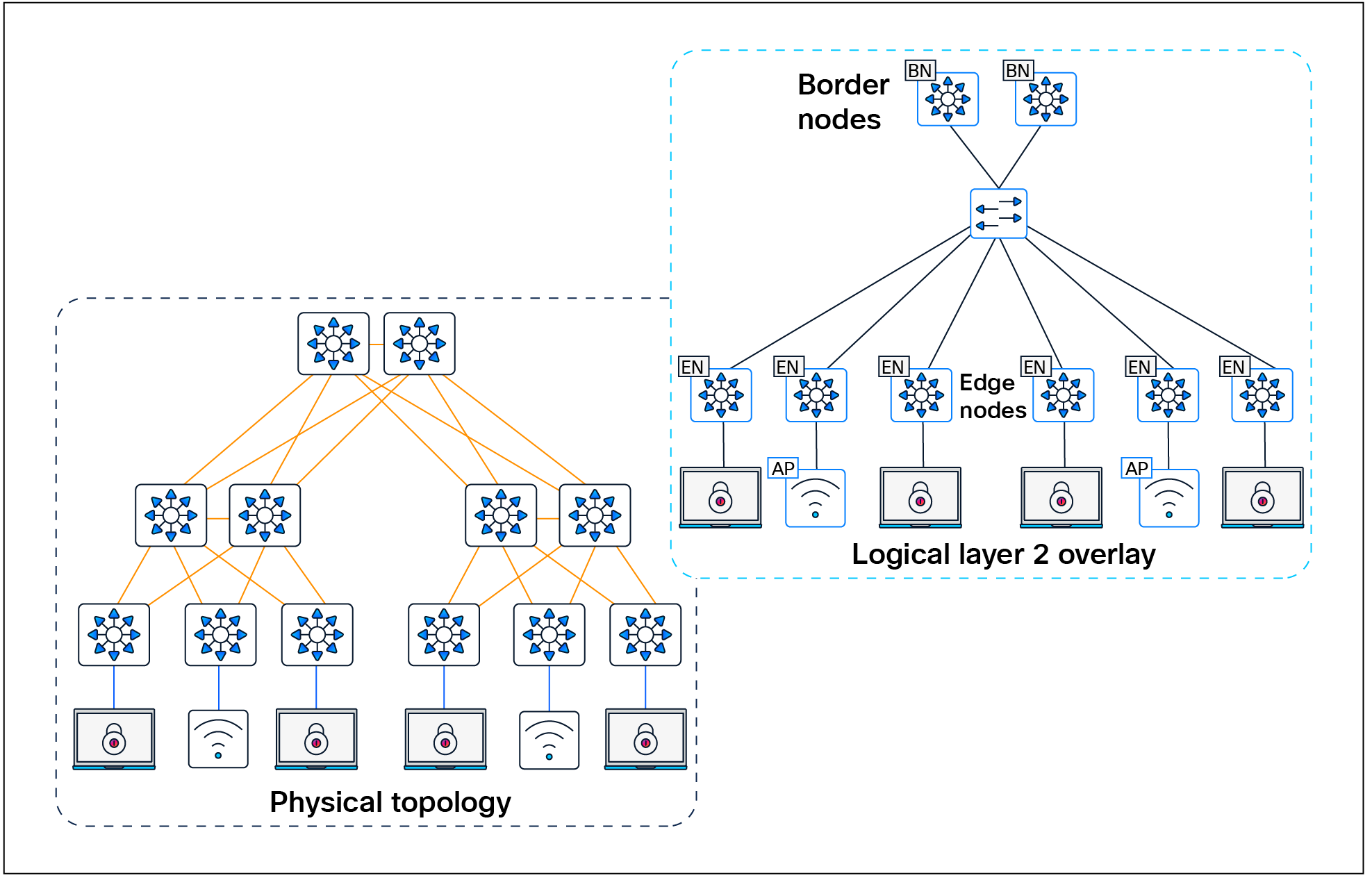

| Fabric role design principles |

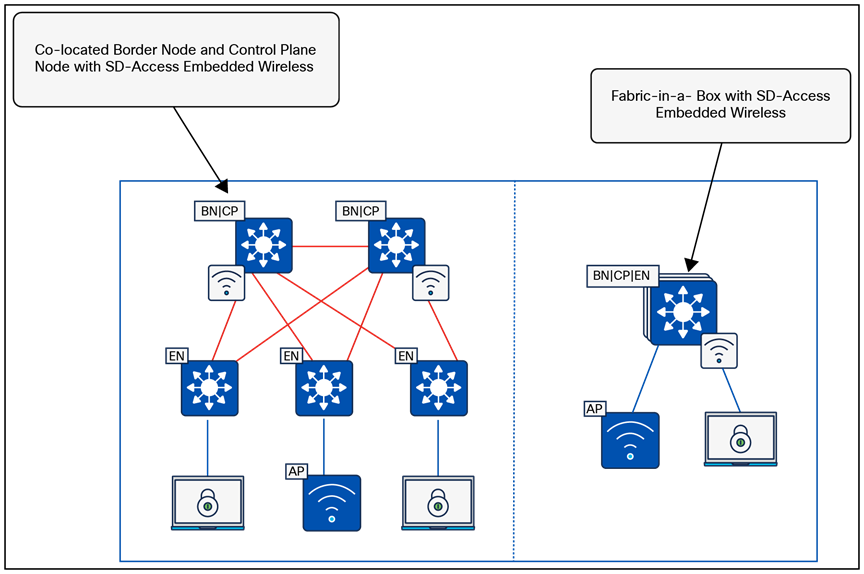

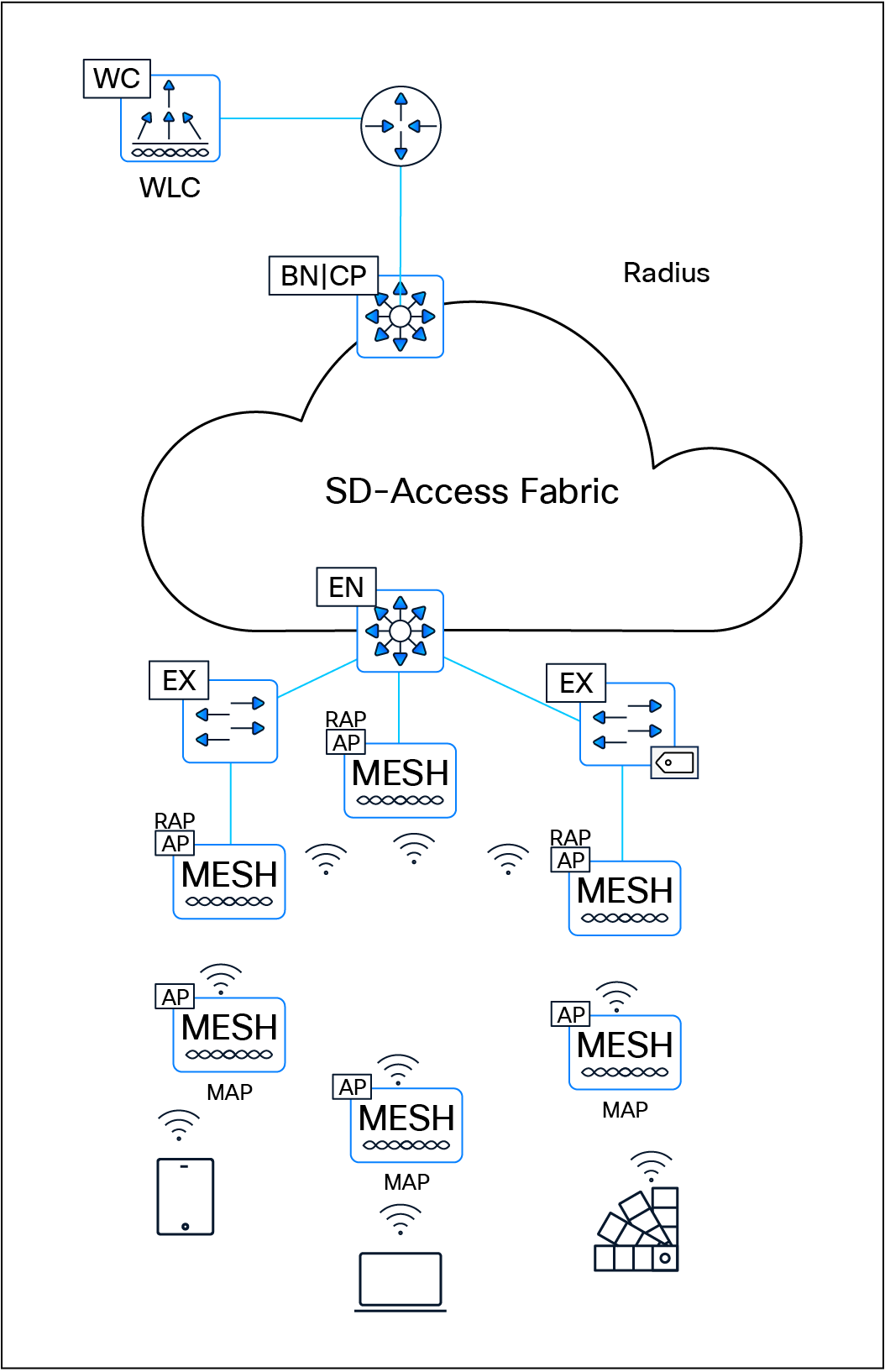

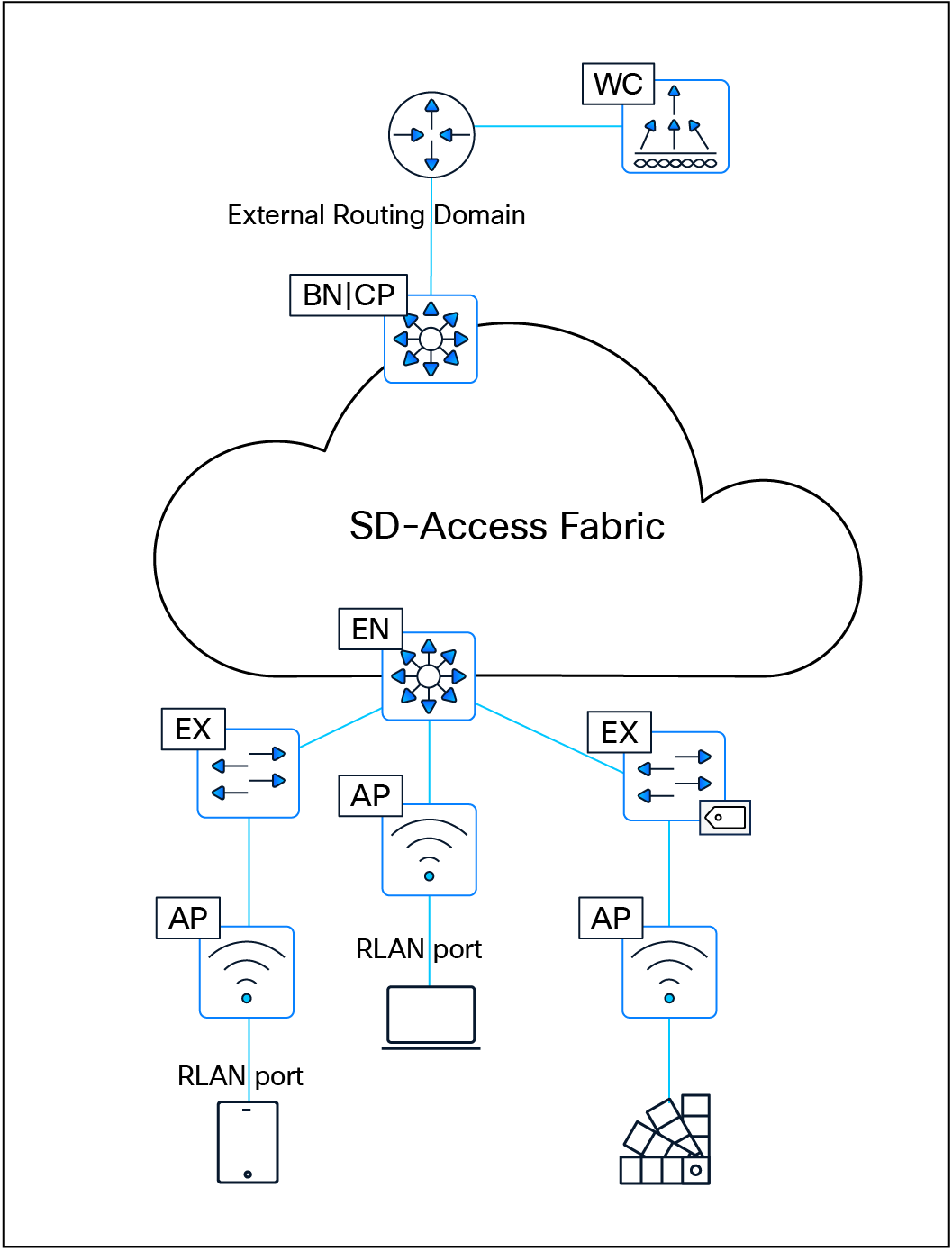

The SD-Access solution is provided through a combination of Cisco Catalyst Center, ISE, and wired and wireless device platforms that support fabric functionality. The wired and wireless device platforms are used to create the elements of a fabric site. A fabric site is a location that has its own border node, control plane node, and edge node. For wireless, a fabric‑mode WLC is dedicated to the site, and Cisco ISE provides centralized policy services.

A fabric role in SD-Access is a software-based function that operates on physical network hardware. These roles are designed for modularity and flexibility—allowing a single device to host one or multiple roles as needed. When provisioning SD-Access fabric roles, it’s important to align their deployment with the underlying network architecture and its functional distribution. Placing different roles on separate devices provides the highest levels of availability, resiliency, and scalability.

This section discusses design principles for SD-Access fabric sites, device roles, and related constructs, including edge nodes, control plane nodes, border nodes, Fabric in a Box, and extended nodes, as well as fabric wireless and transit and peer networks.

The SD-Access fabric edge nodes are the equivalent of an access layer switch in a traditional campus LAN design. The edge node functionality is based on the ingress and egress tunnel routers (xTR) in LISP. The edge nodes must be implemented using a Layer 3 routed access design. An edge node provides the following fabric functions:

● Endpoint registration: Each edge node has a LISP session to all control plane nodes. After an endpoint is detected by the edge node, it is added to a local database called the EID-table. Once the host is added to this local database, the edge node also issues a LISP map-register message to inform the control plane node of the endpoint so the central host tracking database (HTDB) is updated.

● Anycast Layer 3 gateway: A common gateway (IP and MAC addresses) is used at every edge node that shares a common EID subnet, providing optimal forwarding and mobility across different RLOCs. On edge nodes, the anycast Layer 3 gateway is instantiated as a switched virtual interface (SVI) with a hard-coded MAC address that is uniform across all edge nodes within a fabric site.

● Layer 2 bridging: A fabric edge node conducts bridging functionality when traffic originates from and is destined for an endpoint within the same VLAN. The fabric edge nodes exclusively determine whether to route or bridge the packets. They use a Layer 2 VNI (equivalent to a VLAN) within the VXLAN header to bridge the packets to the destination RLOC, where the endpoint is situated. The anycast gateway is an optional component when using the bridging functionality within the fabric. A Layer 2 border is usually deployed when the traffic must bridge out of the fabric.

● Mapping of user to virtual network: An endpoint is placed into a virtual network by assigning the endpoint to a VLAN associated to an SVI that is forwarding for a VRF. Together, these make up the Layer 2 and Layer 3 LISP VNIs, respectively, which maintain fabric segmentation even at the control plane communication level.

● AAA authenticator: The mapping of endpoints into VLANs can be done statically or dynamically using an authentication server. Operating as a Network Access Device (NAD), the edge node plays an integral part in the IEEE 802.1X port-based authentication process by collecting authentication credentials from connected devices, relaying them to the authentication server, and enforcing the authorization result.

● VXLAN encapsulation/de-encapsulation: Packets and frames received from an endpoint, either directly connected to an edge node or through it by way of an extended node or access point, are encapsulated in a fabric VXLAN and forwarded across the overlay. Traffic is sent either to another edge node or to the border node, depending on the destination.

When fabric encapsulated traffic is received for the endpoint, such as from a border node or another edge node, it is de-encapsulated and sent to that endpoint. This encapsulation and de-encapsulation of traffic enables the location of an endpoint to change, as the traffic can be encapsulated toward different edge nodes in the network without the endpoint having to change its address.

In SD-Access, fabric edge nodes represent the access layer in a two- or three-tier hierarchy. The access layer is the edge of the campus. It is the place where end devices attach to the wired portion of the campus network. The edge nodes also represent the place where devices extend the network connectivity out one more layer. These include devices such as IP phones, access points, and extended nodes.

The access layer provides the intelligent demarcation between the network infrastructure and the devices that leverage that infrastructure. As such it provides a trust boundary for QoS, security, and policy. It is the first layer of defense in the network security architecture, and the first point of negotiation between end devices and the network infrastructure. High availability for individual switches is achieved through stacking technologies like StackWise, StackWise Virtual, or chassis-based switches with redundant supervisors and power supplies.

It is recommended that each tier in the network be dual-homed to the next higher tier to ensure robust connectivity; however, if necessary, due to nonhierarchical structured cabling environments, a daisy chain configuration of edge nodes is also supported. The underlay network is routed typically with fast converging protocols such as OSPF or IS-IS, along with features like equal-cost multipath (ECMP), BFD, and NSF with SSO for rapid failover. Design guidelines recommend connecting edge node uplinks to different stack members or supervisors and providing power redundancy beyond minimum requirements to avoid single points of failure, collectively ensuring continuous network availability and fast recovery from failures at the edge node level.

In SD-Access, dual-homing an external Layer 2 switch is supported when it does not create a Layer 2 forwarding path between two edge nodes. The preferred dual-homing design is a port‑channel/Multichassis EtherChannel (MEC) from the external Layer 2 domain into a single logical edge node, implemented as a StackWise switch or StackWise Virtual pair. If StackWise or StackWise Virtual is not possible, another way to achieve dual homing is using active/standby links from the external switching domain, such as Flexlink+, where one link is active and the other is standby, helping ensure that there is never more than one Layer 2 forwarding path into the fabric. Note that correct hardware selection is crucial because not all switch models support Flexlink+.

Dual-homed Layer 2 switches with Flexlink+

The SD-Access fabric also supports dual-homing of wired endpoints to different fabric edge nodes in an active/standby configuration. The endpoint is responsible for keeping one uplink active and forwarding while the other remains in standby and does not forward traffic.

The SD-Access fabric control plane node is built on Locator/ID Separation Protocol (LISP) functionality, combining both the map-server and map-resolver roles within the same node. It maintains a database of all endpoints in the fabric site, mapping each endpoint to its corresponding fabric edge node. This architecture decouples the endpoint’s IP or MAC address from its physical location (the nearest router), enabling optimized mobility, efficient lookups, and seamless policy enforcement across the fabric.

The control plane node enables the following functions:

● Host tracking database (HTDB): The HTDB serves as the central repository of EID-to-RLOC mappings, where the RLOC corresponds to the IP address of the Loopback 0 interface on a fabric node. The HTDB is equivalent to a LISP site in traditional LISP, which includes what EIDs can be and have been registered.

● Endpoint identifier (EID): The EID is an address used for numbering or identifying an endpoint device in the network. The SD-Access solution supports MAC addresses, IPv4 addresses, and IPv6 addresses as EIDs.

● Map server: The LISP map server receives endpoint registrations indicating the associated RLOC and uses these to populate the HTDB.

● Map resolver: The LISP map resolver responds to queries from fabric devices requesting RLOC mapping information from the HTDB in the form of an EID-to-RLOC binding. This tells the requesting device the fabric node to which an endpoint is connected and thus where to direct traffic.

The fabric control plane node contains the database used to identify an endpoint’s location in the network. This is a central and critical function, and is required for the fabric to operate. If the fabric control plane is down, endpoints inside the fabric fail to establish communication to remote endpoints that are not cached in the local database.

For redundancy, we recommend deploying two control plane nodes to ensure high availability of the fabric site, as each node contains a copy of control plane information acting in an active/active state. The devices supporting the control plane should be chosen to support the HTDB (EID-to-RLOC bindings), CPU, and memory needs for an organization based on the number of endpoints. Border nodes and edge nodes register with and use all control plane nodes, so redundant nodes chosen should be of the same type for consistent performance.

The Cisco WLC can communicate with a pair of control plane nodes within a fabric site. The WLC updates the wireless client context information with this pair of control plane nodes. If a fabric site deploys more than a pair of control plane nodes, the client context information may not be updated with all control plane nodes. Therefore, we recommend that you restrict the number of control plane nodes to a pair within a fabric site when using fabric-enabled wireless.

Two LISP control plane implementations are available for use in SD-Access. The original was LISP/BGP, released in 2017, and the more recent one is LISP Pub/Sub (Publish/Subscribe). Pub/Sub offers a range of new capabilities, including:

● Faster convergence: Topology updates are proactively pushed directly to subscribers, enabling faster propagation compared to BGP-based models.

● Reduced protocol complexity: There is no need for BGP in prefix propagation, making the control plane simpler and easier to troubleshoot.

● Configuration simplicity: No mutual redistribution is required between LISP and BGP.

● Scalability and extensibility: Pub/Sub is designed for large and dynamic fabric deployments; adding support for new LISP features is relatively easier.

● Efficient operation: Unnecessary map lookups are reduced and overlay traffic flows are optimized.

LISP Pub/Sub is recommended for all new SD-Access implementations. As of this writing, an automated workflow to migrate existing LISP/BGP sites to LISP Pub/Sub is under development.

In the initial release of the Pub/Sub architecture, subscribers (border nodes) express interest in receiving updates for all registrations for a given instance ID (IID) table, and the publisher (control plane node) proactively pushes reachability information to subscribers. By pushing mappings instead of redistributing them through BGP in LISP/BGP, LISP Pub/Sub removes the dependency on BGP for information exchange within and across fabric sites (when using the optional SD-Access transit control plane). This results in a more efficient, extensible, and scalable control plane architecture.

Cisco IOS® XE Release 17.18.1 introduced support for EID Pub/Sub. It allows edge nodes and Layer 2 border nodes to subscribe to active remote EIDs in the overlay (remote EIDs to which traffic is being sent). Updates for the subscribed EIDs are published immediately to the edge node or Layer 2 border node, further optimizing network convergence speed and efficiency in the LISP Pub/Sub architecture.

Fabric site control plane nodes may be deployed as either dedicated (distributed) or nondedicated (colocated) devices in relation to the fabric border nodes. In a Fabric in a Box deployment, all fabric roles must be colocated on the same device. In most deployments, it is common to deploy a colocated control plane node solution, using the border node and control plane node on the same device.

The control plane node should have ample available memory to store all the registered prefixes. If the control plane node is in the data forwarding path, such as at the distribution layer of a three-tier hierarchy, throughput should be considered, as well as ensuring that the node is capable of CPU-intensive LISP tasks along with the other services and connectivity it is providing.

One consideration for separating control plane functionality onto dedicated devices is to support frequent roaming of endpoints across fabric edge nodes. Roaming across fabric edge nodes causes control plane events in which the WLC updates the control plane nodes on the mobility (EID-to-RLOC mapping) of these roamed endpoints. Although a colocated control plane is the simplest design, adding the control plane node function on border nodes in a high-frequency roam environment can lead to high CPU use on colocated devices. For high-frequency roam environments, a dedicated control plane node should be used.

Intermediate nodes are part of the Layer 3 network used for interconnections among the devices operating in a fabric role, such as the interconnections between border nodes and edge nodes. These interconnections are created in the global routing table on the devices and are also known collectively as the underlay network. For example, if a three-tier campus deployment provisions the core switches as the border nodes and the access switches as the edge nodes, the distribution switches are the intermediate nodes.

The number of intermediate nodes is not limited to a single layer of devices. For example, border nodes may be provisioned on enterprise edge nodes, resulting in the intermediate nodes being the core and distribution layers, as shown in Figure 10.

Intermediate nodes in SD-Access – Example

Intermediate nodes do not have a requirement for VXLAN encapsulation/de-encapsulation, LISP control plane messaging support, or SGT awareness. Their requirement is to provide IP reachability and physical connectivity, and to support the additional MTU requirement to accommodate the larger-sized IP packets encapsulated with fabric VXLAN information. Intermediate nodes simply route and transport IP traffic between the devices operating in fabric roles. Intermediate nodes do not count toward the total number of fabric nodes supported per fabric site.

| Tech tip |

| VXLAN adds 50 bytes to the original packet. The common denominator and recommended MTU value available on devices operating in a fabric role is 9100. Networks should have a minimum starting MTU of at least 1550 bytes to support the fabric overlay. MTU values between 1550 and 9100 are supported, along with MTU values larger than 9100, although there may be additional configuration and limitations based on the original packet size. MTU 9100 is provisioned as part of LAN Automation. Devices in the same routing domain and Layer 2 domain should be configured with a consistent MTU size to support routing protocol adjacencies and packet forwarding without fragmentation. |

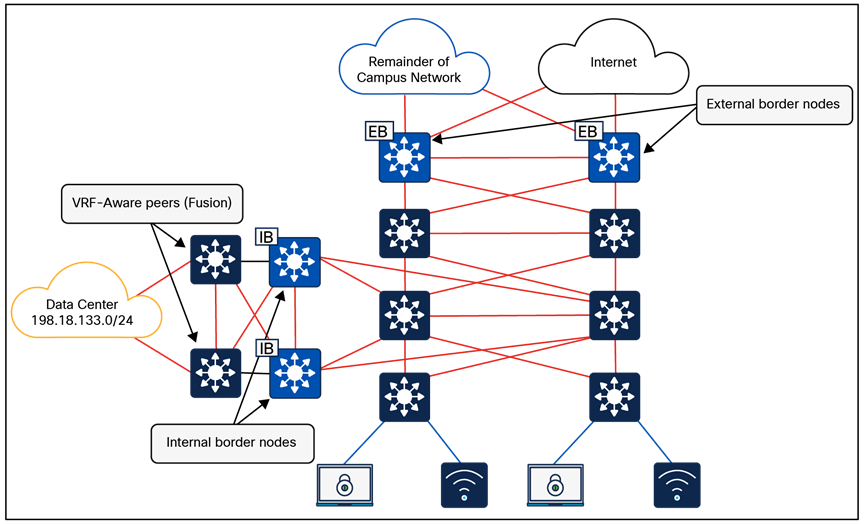

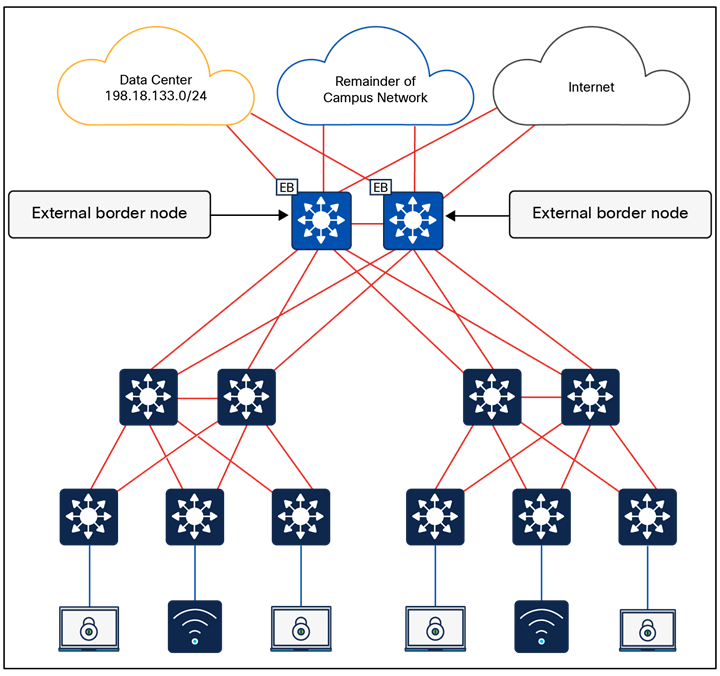

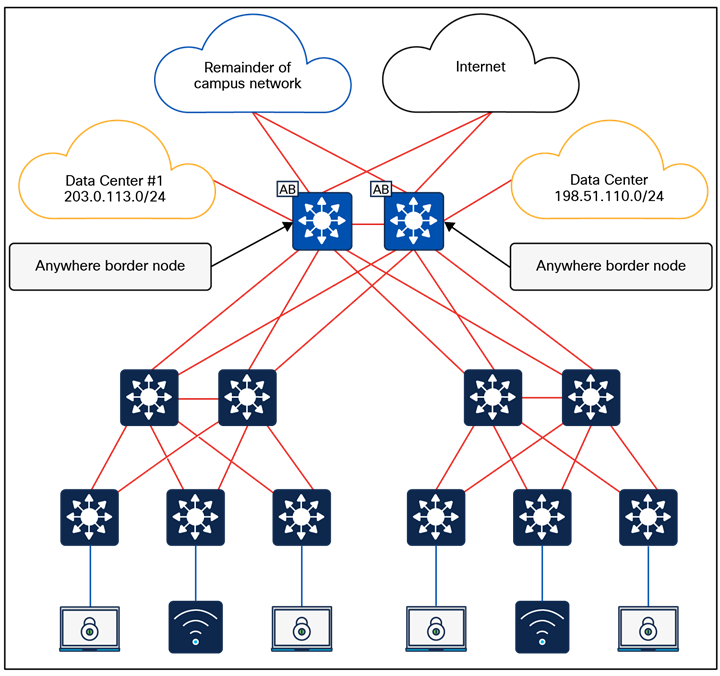

The fabric border nodes serve as the gateway between the SD-Access fabric site and the networks external to the fabric. The border node is responsible for network virtualization interworking and SGT propagation from the fabric to the rest of the network.

Border nodes implement the following functions:

● Advertisement of EID subnets: Border Gateway Protocol (BGP) is the routing protocol provisioned to advertise the coarse-aggregate endpoint prefix space outside the fabric. This is also necessary so that traffic from outside of the fabric destined for endpoints in the fabric is attracted back to the border nodes.

● Fabric site exit point: The external border node is the gateway of last resort for the fabric edge nodes. This is implemented using LISP proxy tunnel router (PxTR) functionality. Also possible is the internal border node, which registers known networks (IP subnets) with the fabric control plane node.

● Network virtualization extension to the external world: The border node can extend network virtualization from inside the fabric to outside the fabric by using VRF-lite and VRF-aware routing protocols to preserve the segmentation.

● Policy mapping: The border node maps SGT information from within the fabric to be appropriately maintained when exiting that fabric. As discussed further in the Micro-segmentation section, when the fabric packet is de-encapsulated at the border, SGT information can be propagated using SGT Exchange Protocol (SXP) or by directly mapping SGTs into the Cisco metadata field in a packet using inline tagging.

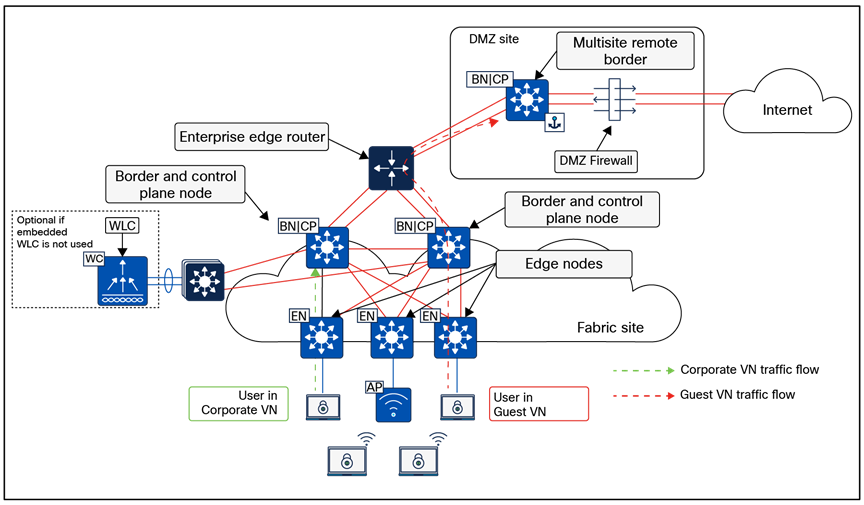

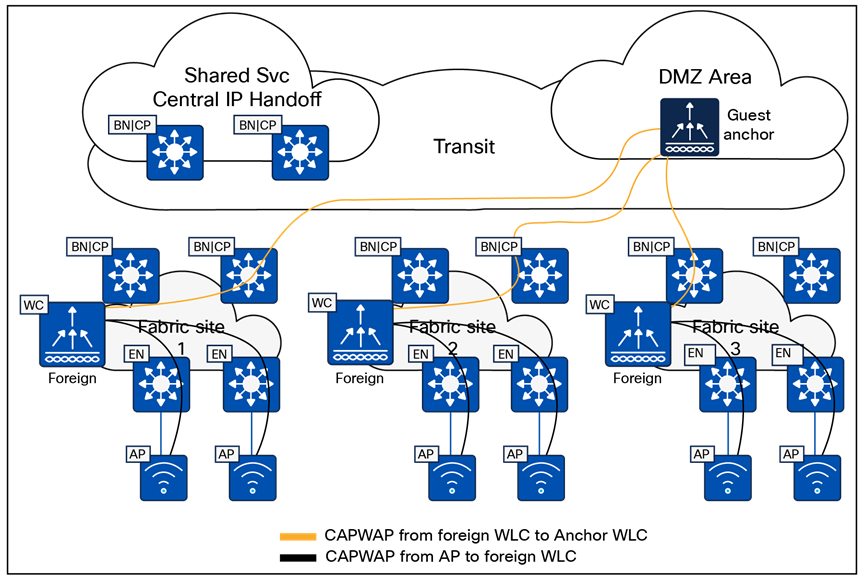

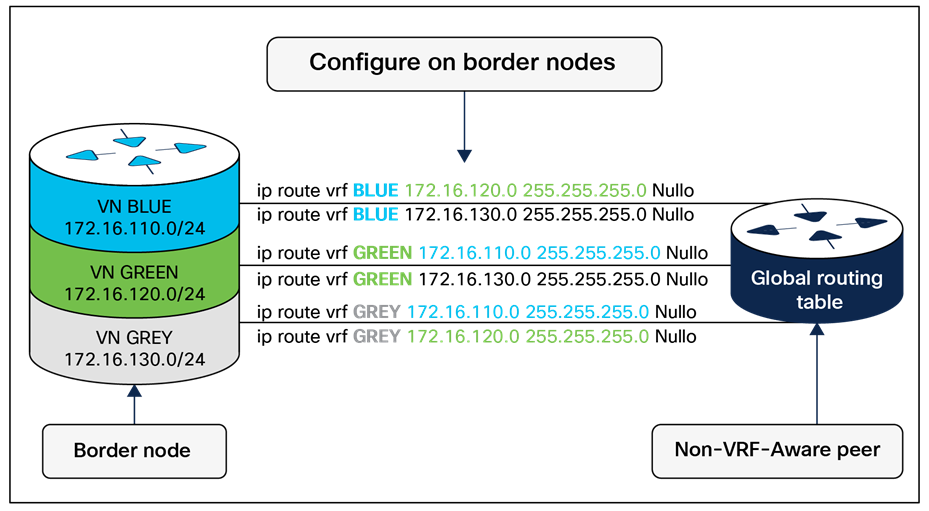

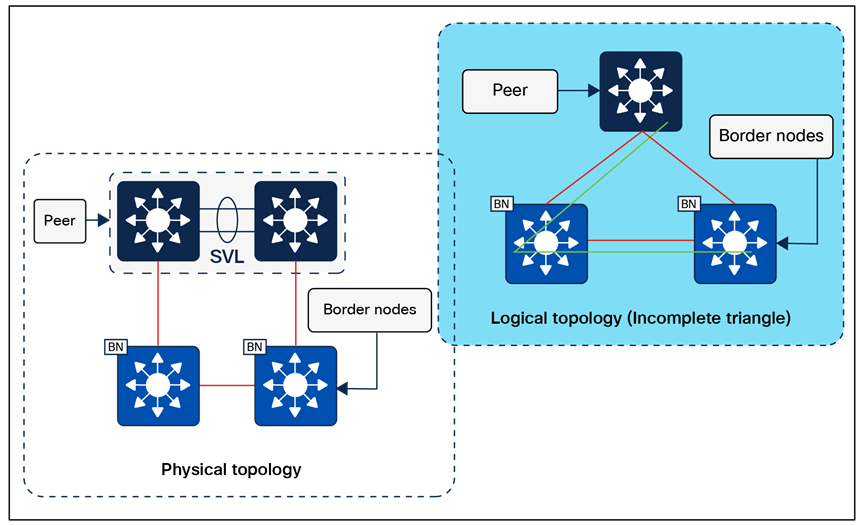

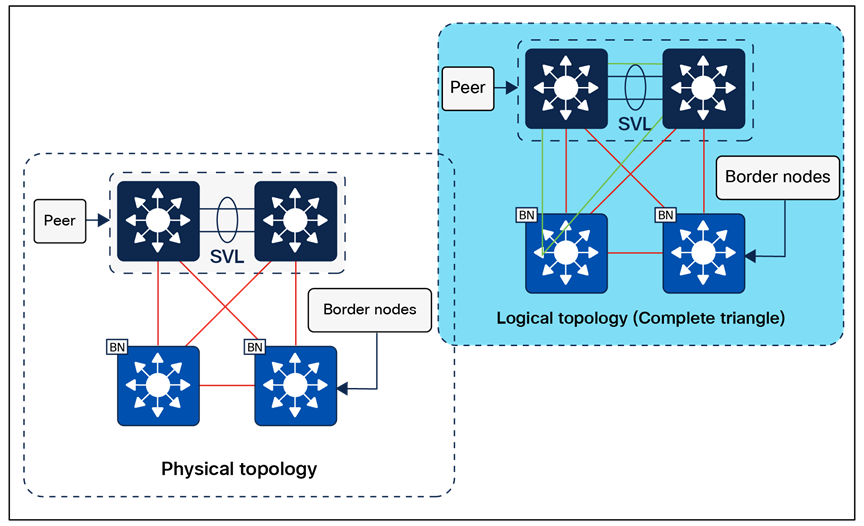

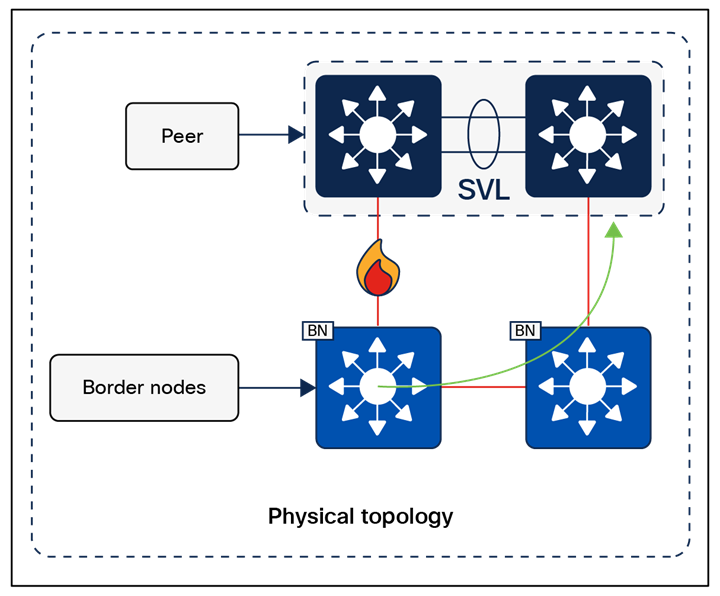

● VXLAN encapsulation/de-encapsulation: Packets and frames received from outside the fabric and destined for an endpoint inside the fabric are encapsulated in the fabric VXLAN by the border node. Packets and frames sourced from inside the fabric and destined outside of the fabric are de-encapsulated by the border node. This is similar to the behavior used by an edge node except that, rather than being connected to endpoints, the border node connects a fabric site to a nonfabric network.