Installation Requirements

The following list contains the prerequisites for installing Cisco Optical Network Controller.

-

Before you install Cisco Optical Network Controller, log in to the VMware customer center and download VMware vCenter Server 7.0 and VMware vSphere Server and Client 7.0. Cisco Optical Network Controller is deployed on rack or blade servers within vSphere.

Attention

Upgrade to VMware vCenter Server 8.0 U2 if you are using VMware vCenter Server 8.0.2 or VMware vCenter Server 8.0.1.

-

Install ESXi version 7.0 or later on the servers so that you can create virtual machines on them.

-

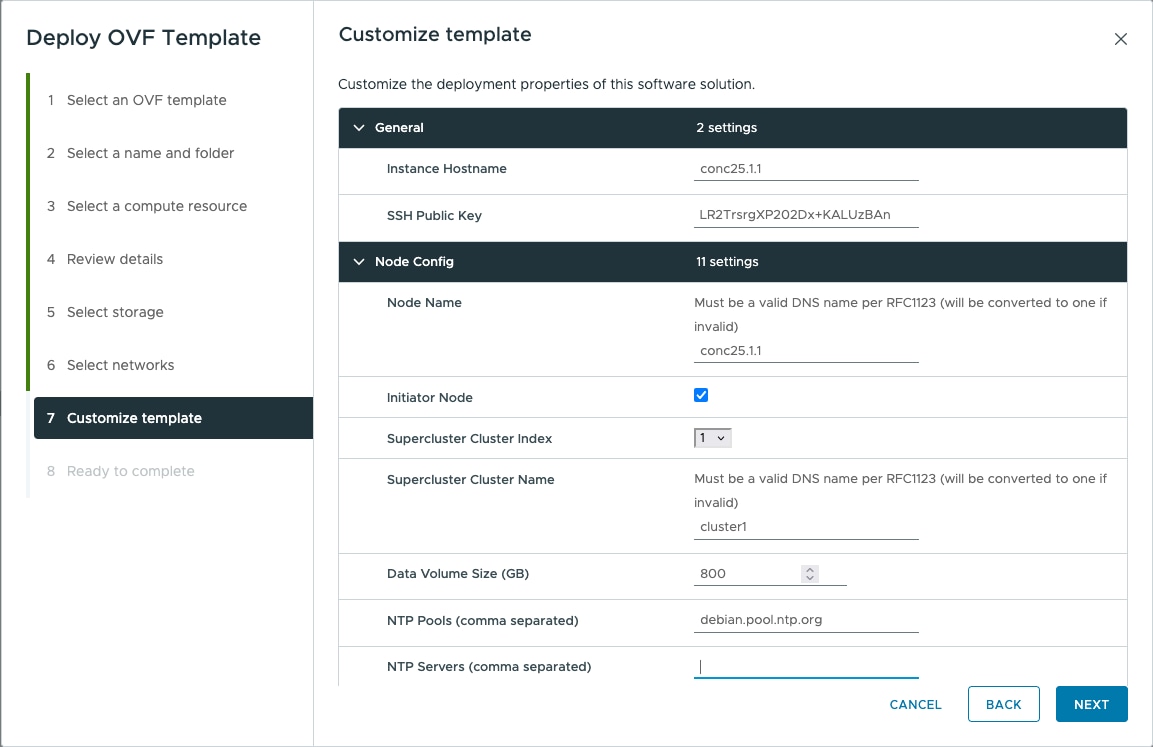

You must have a DNS server. The DNS server can be an internal DNS server if the Cisco Optical Network Controller instance is not exposed to the internet.

-

You must have an NTP server or NTP pool for time synchronization. Configure the same NTP server or pool on Cisco Optical Network Controller, on the PC or VM that you use to access Cisco Optical Network Controller, and on the ESXi host.

-

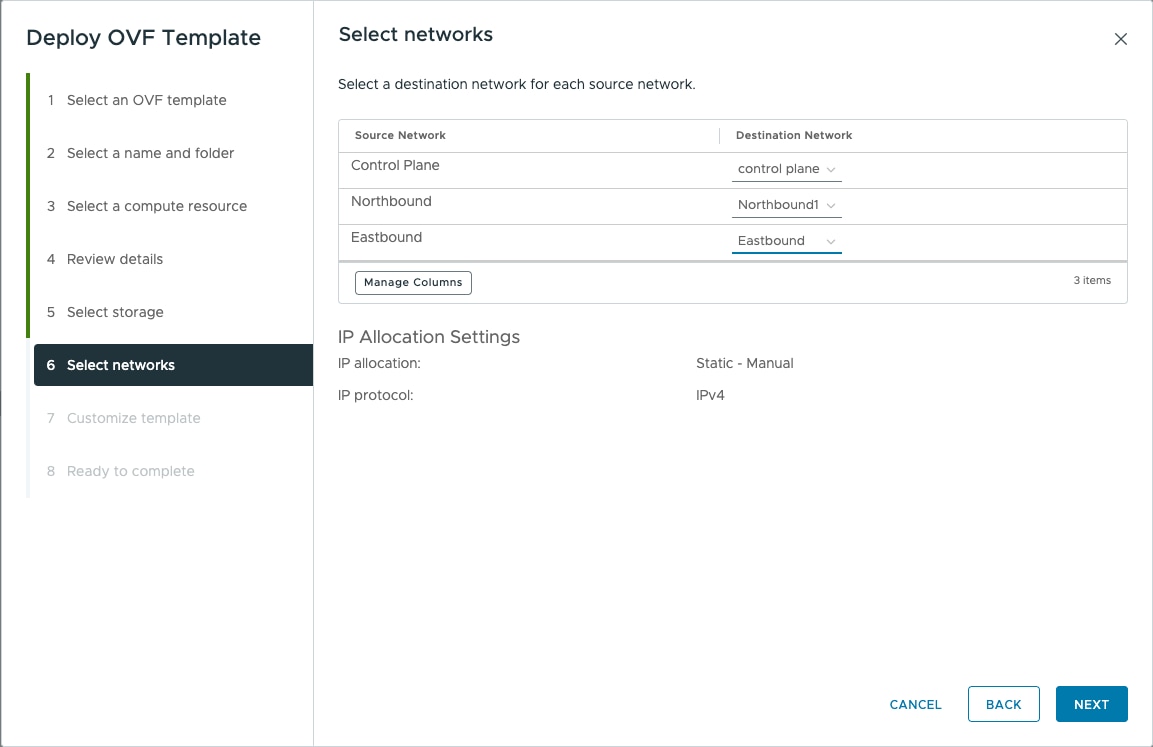

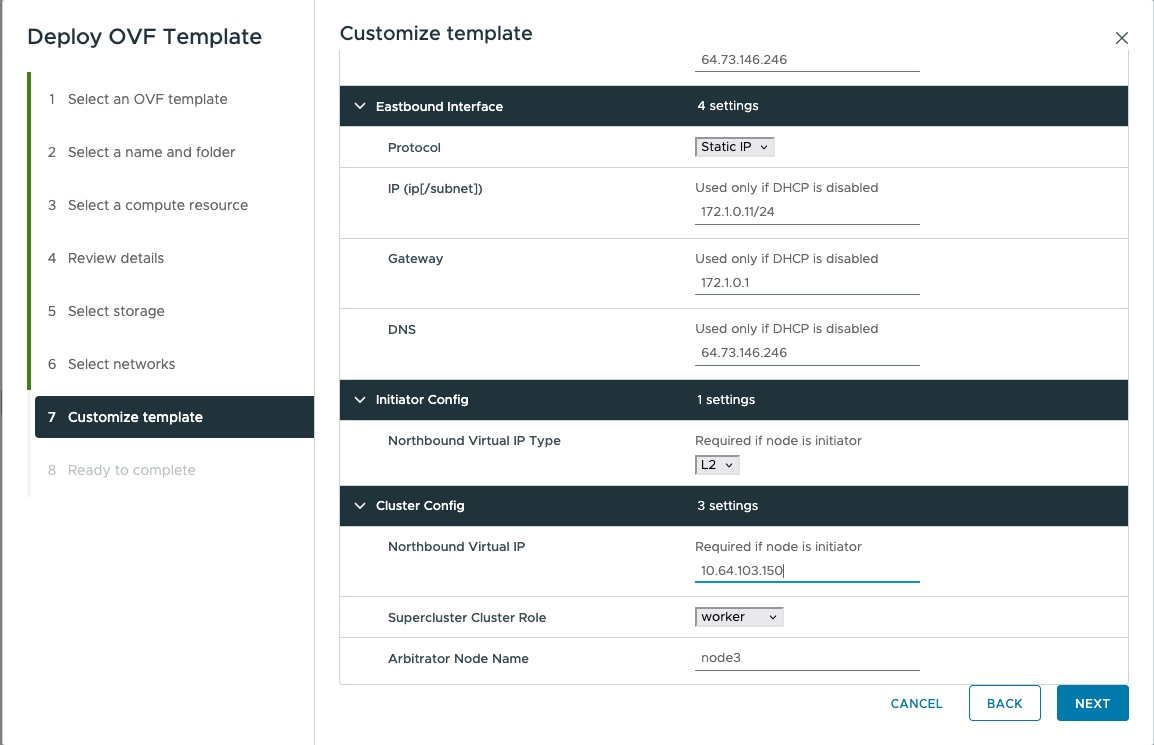

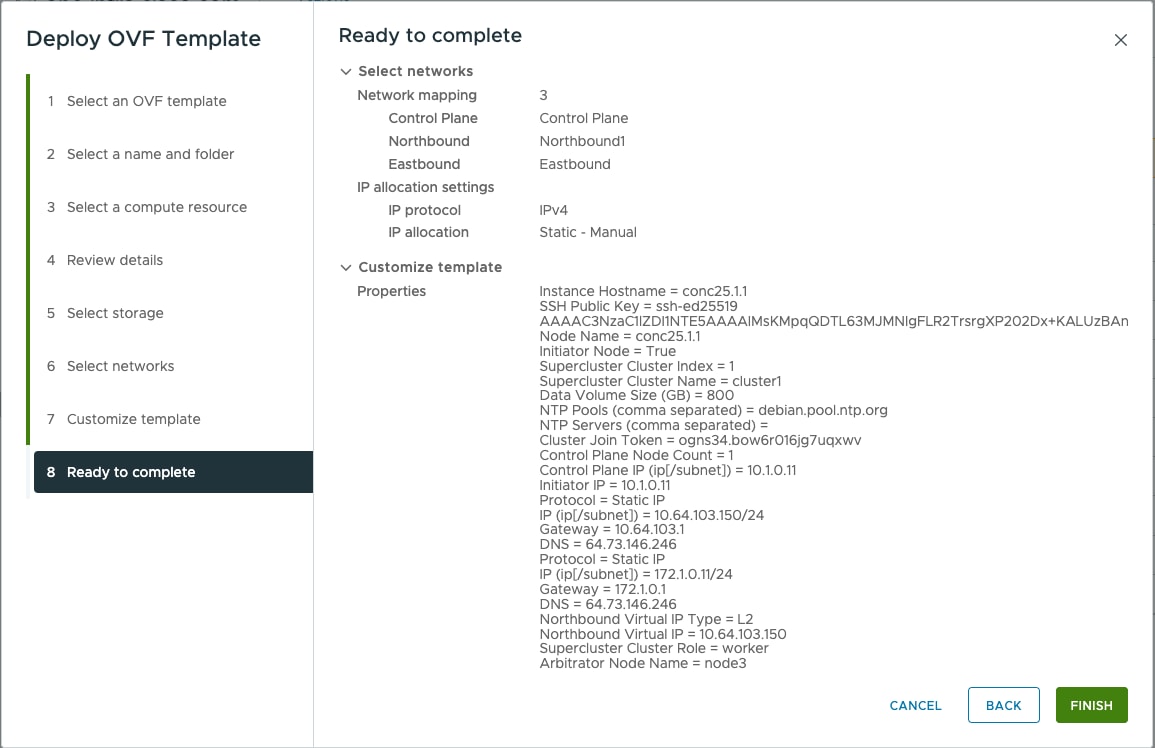

Before you install Cisco Optical Network Controller, create the following three networks:

-

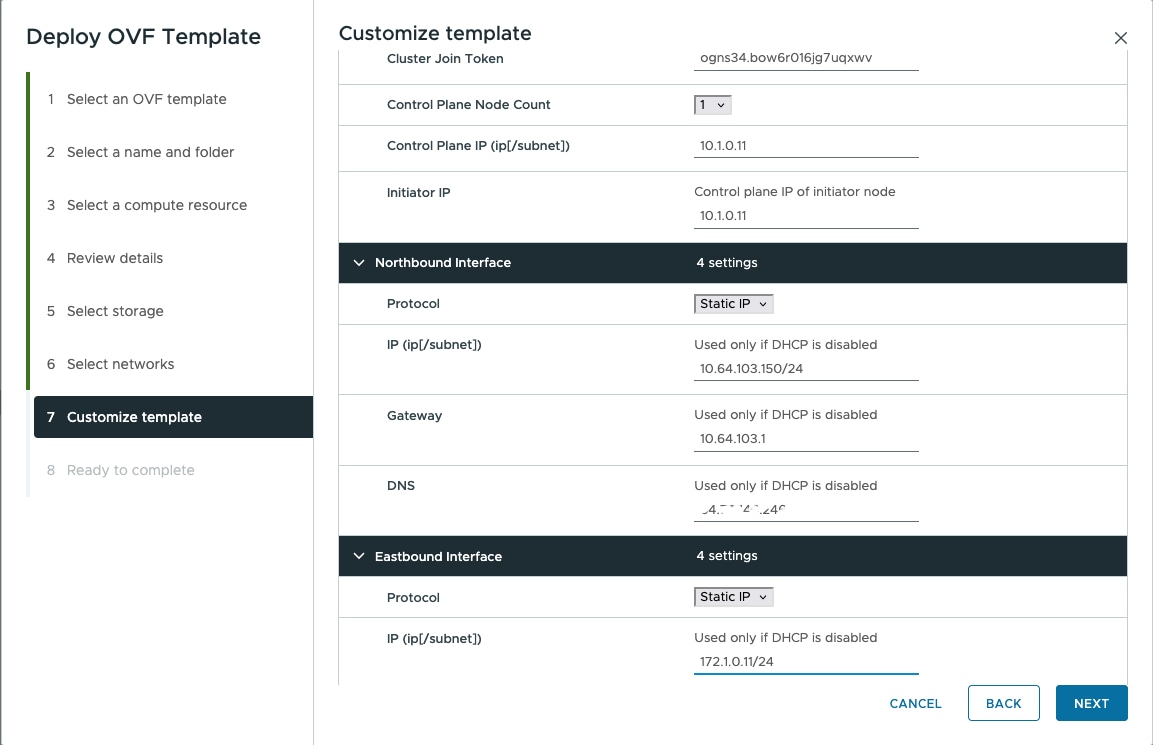

Control Plane Network:

The control plane network carries internal communication between the VMs deployed in a cluster. For a standalone system, it can be any private network.

-

VM Network or Northbound Network:

The VM network carries communication between users and the cluster. It handles all traffic to and from the VMs running on your ESXi hosts, and it is the public network through which the UI is served.

-

Eastbound Network:

The eastbound network carries internal communication between the VMs deployed in a cluster. For a standalone system, it can be any private network.


-

Accept the self-signed certificate from the ESXi host:

-

Access the ESXi host using your web browser.

-

If you receive a security warning indicating that the connection is not private or that the certificate is not trusted, proceed by accepting the risk or bypassing the warning.


Note

For more details on VMware vSphere, see VMware vSphere.
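The DNS and NTP prerequisites can be spot-checked from the PC or VM that you use to access Cisco Optical Network Controller. The following is a minimal sketch, not a Cisco tool: `resolves`, `ntp_offset`, and `query_ntp` are hypothetical helper names, and `query_ntp` assumes the NTP server is reachable over UDP port 123 and answers a single SNTP (RFC 4330) client request. The offset uses the standard NTP estimate from the four exchange timestamps.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01)
NTP_EPOCH_OFFSET = 2208988800


def resolves(hostname: str) -> bool:
    """Return True if this machine's resolver can resolve hostname."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def ntp_offset(t1: float, t2: float, t3: float, t4: float) -> float:
    """Estimate the local clock offset in seconds from the four NTP timestamps.

    t1: client send time, t2: server receive time,
    t3: server send time, t4: client receive time.
    A positive result means the local clock is behind the server.
    """
    return ((t2 - t1) + (t3 - t4)) / 2


def query_ntp(server: str, timeout: float = 2.0) -> float:
    """Send one SNTP client request to server (UDP 123) and return the clock offset."""
    request = b"\x1b" + 47 * b"\0"  # LI=0, VN=3, Mode=3 (client), rest zeroed
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        t1 = time.time()
        sock.sendto(request, (server, 123))
        reply, _ = sock.recvfrom(48)
        t4 = time.time()
    # Bytes 32-39 hold the server receive timestamp, bytes 40-47 the transmit timestamp
    rx_sec, rx_frac, tx_sec, tx_frac = struct.unpack("!4I", reply[32:48])
    t2 = rx_sec - NTP_EPOCH_OFFSET + rx_frac / 2**32
    t3 = tx_sec - NTP_EPOCH_OFFSET + tx_frac / 2**32
    return ntp_offset(t1, t2, t3, t4)
```

For example, running `query_ntp("ntp.example.com")` (a placeholder name for your NTP server) on the access PC and on a VM on the same ESXi host, and comparing the results, gives a rough indication of whether both follow the same time source.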

The minimum hardware requirement for Cisco Optical Network Controller installation is given in this table.

| Sizing | CPU | Memory | Solid State Drive (SSD) |
|---|---|---|---|
| Extra Small (XS) | 16 vCPU | 64 GB | 2 TB |
| Small (S) | 32 vCPU | 128 GB | 4 TB |
| Medium (M) | 48 vCPU | 256 GB | 10 TB |

Storage: Use SSDs that meet the disk write latency requirement of ≤ 100 ms.

Attention

Cisco Optical Network Controller supports only SSDs for storage.
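A rough way to sanity-check the ≤ 100 ms write-latency figure is to time small synchronous writes from a VM whose disk sits on the candidate datastore. This is a minimal sketch under stated assumptions: the 4 KiB block size, the sample count, and the "worst of N write plus fsync" method are illustrative choices, `write_latency_ms` and `meets_latency_requirement` are hypothetical names, and a dedicated benchmark such as fio gives more representative numbers.

```python
import os
import tempfile
import time


def write_latency_ms(directory: str, block_size: int = 4096, samples: int = 20) -> float:
    """Return the worst observed latency (ms) of one block write followed by fsync."""
    data = os.urandom(block_size)
    worst = 0.0
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        for _ in range(samples):
            start = time.perf_counter()
            os.write(fd, data)
            os.fsync(fd)  # force the write to stable storage before stopping the clock
            worst = max(worst, (time.perf_counter() - start) * 1000)
    finally:
        os.close(fd)
        os.remove(path)
    return worst


def meets_latency_requirement(latency_ms: float, limit_ms: float = 100.0) -> bool:
    """Compare a measured latency against the ≤ 100 ms requirement."""
    return latency_ms <= limit_ms
```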

Note

Configure the vCPU and memory according to the VM profile (XS = 16 vCPU + 64 GB, S = 32 vCPU + 128 GB) before you power on the VM in vCenter.

vCPU to physical CPU core ratio: We support a vCPU to physical CPU core ratio of 2:1 if the hardware supports hyperthreading and hyperthreading is enabled. Hyperthreading is enabled by default on Cisco UCS servers that support it. In all other cases, the vCPU to physical CPU core ratio is 1:1.
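The sizing table and the vCPU to core ratio can be combined into a quick capacity calculation. This sketch encodes the minimums from the hardware table; `PROFILES`, `physical_cores_needed`, and `largest_profile` are hypothetical names, and whether hyperthreading is available is an input you determine from the host, not something the code detects.

```python
from math import ceil

# Minimum (vCPU, memory in GB, SSD in TB) per profile, from the hardware table.
# Listed smallest to largest; each larger profile needs more of every resource.
PROFILES = {
    "XS": (16, 64, 2),
    "S": (32, 128, 4),
    "M": (48, 256, 10),
}


def physical_cores_needed(vcpus: int, hyperthreading: bool) -> int:
    """Physical cores required: 2 vCPU per core with hyperthreading, else 1 per core."""
    ratio = 2 if hyperthreading else 1
    return ceil(vcpus / ratio)


def largest_profile(vcpus: int, memory_gb: int, ssd_tb: float):
    """Return the largest profile whose minimums all fit the given resources, or None."""
    fitting = [
        name
        for name, (cpu, mem, disk) in PROFILES.items()
        if vcpus >= cpu and memory_gb >= mem and ssd_tb >= disk
    ]
    return fitting[-1] if fitting else None
```

For example, an XS profile (16 vCPU) needs 8 physical cores on a hyperthreaded Cisco UCS server and 16 cores otherwise.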

The following table lists the requirements for each deployment type.

| Deployment Type | Network | Requirements |
|---|---|---|
| Standalone (SA) | Control Plane Network | Can be a private network for standalone setups. Requires one IP address. Gateway: Required. DNS server: Should be an internal DNS server if the node is not exposed to the internet; otherwise, an internet DNS server can be used. |
| | Northbound Network (VM Network) | Should be a public network. All communication between Cisco Optical Network Controller and devices flows through this network. Requires one public IP address. Gateway: Required. DNS server: Required. Should be an internal DNS server if the node is not exposed to the internet; otherwise, an internet DNS server can be used. |
| | Eastbound Network | Can be a private network for standalone setups. Requires one private IP address. Gateway: Required. DNS server: Required. Should be an internal DNS server if the node is not exposed to the internet; otherwise, an internet DNS server can be used. |

To create the control plane and virtual management networks, follow these steps:

-

From the vSphere client, select the Datacenter where you want to add the ESXi host.

-

Right-click the server in the vCenter inventory and click Add Networking.

-

To create a private network for the Control Plane and Eastbound networks, run the wizard once for each network to add a standard switch:

-

In Select connection type, choose Virtual Machine Port Group for a Standard Switch and click Next.

-

In Select target device, select New Standard Switch (MTU 1500) and click Next.

-

In Create a Standard Switch, click Next, and then confirm the message There are no active physical network adapters for the switch.

-

In Connection settings, enter a network label (Control Plane or Eastbound), set VLAN ID to None (0), and click Next.

-

In Ready to complete, review your configuration and click Finish.

-

After adding the ESXi host, create the Control Plane, Northbound, and Eastbound networks before you deploy Cisco Optical Network Controller.
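If you prefer to script the standard-switch steps, the VMware pyVmomi SDK exposes the same host operations through `HostNetworkSystem.AddVirtualSwitch` and `AddPortGroup`. This is a sketch under assumptions, not a Cisco-validated procedure: it assumes pyVmomi is installed and that you already hold a connected `vim.HostSystem` object for the ESXi host. The switch names `vSwitch-ControlPlane` and `vSwitch-Eastbound` and the `numPorts=128` value are hypothetical choices. The plan mirrors the wizard settings above: MTU 1500, VLAN ID 0, and no physical adapters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivateSwitchPlan:
    """Settings the Add Networking wizard steps choose for one private network."""
    label: str           # network label, for example "Control Plane" or "Eastbound"
    vswitch_name: str    # hypothetical name for the new standard switch
    mtu: int = 1500
    vlan_id: int = 0     # VLAN ID "None (0)"
    uplinks: tuple = ()  # no active physical network adapters


def build_plans() -> list:
    return [
        PrivateSwitchPlan("Control Plane", "vSwitch-ControlPlane"),
        PrivateSwitchPlan("Eastbound", "vSwitch-Eastbound"),
    ]


def apply_plan(host, plan: PrivateSwitchPlan) -> None:
    """Create the switch and port group on a connected pyVmomi vim.HostSystem."""
    from pyVmomi import vim  # imported lazily so the planning code runs without pyVmomi

    net_sys = host.configManager.networkSystem
    vss_spec = vim.host.VirtualSwitch.Specification(numPorts=128, mtu=plan.mtu)
    net_sys.AddVirtualSwitch(vswitchName=plan.vswitch_name, spec=vss_spec)
    pg_spec = vim.host.PortGroup.Specification(
        name=plan.label,
        vlanId=plan.vlan_id,
        vswitchName=plan.vswitch_name,
        policy=vim.host.NetworkPolicy(),  # empty policy: inherit the switch settings
    )
    net_sys.AddPortGroup(portgrp=pg_spec)
```

Keeping the plan separate from `apply_plan` lets you review the intended settings before touching the host.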

This table lists the default port assignments.

| Traffic Type | Port | Description |
|---|---|---|
| Inbound | TCP 22 | SSH remote management |
| | TCP 8443 | HTTPS for UI access |
| Outbound | TCP 22 | NETCONF to routers |
| | TCP 389 | LDAP if using Active Directory |
| | TCP 636 | LDAPS if using Active Directory |
| | Customer specific | HTTP for access to an SDN controller |
| | User specific | HTTPS for access to an SDN controller |
| | TCP 3082, 3083, 2361, 6251 | TL1 to optical devices |
| Eastbound | TCP 10443 | Supercluster join requests |
| | UDP 8472 | VXLAN |
| Syslog | User specific | TCP/UDP |
| Control Plane (internal network between cluster nodes, not exposed) | TCP 443 | Kubernetes |
| | TCP 6443 | Kubernetes |
| | TCP 10250 | Kubernetes |
| | TCP 2379 | etcd |
| | TCP 2380 | etcd |
| | UDP 8472 | VXLAN |
| | ICMP | Ping between nodes (optional) |
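After deployment, the inbound TCP ports in this table can be probed from a user workstation to confirm that firewalls let them through. This is a minimal sketch: `INBOUND_TCP_PORTS`, `tcp_port_open`, and `check_inbound` are hypothetical names, and a successful TCP connect only shows that something is listening, not that the service behind it is healthy.

```python
import socket

# Inbound TCP ports from the default port table
INBOUND_TCP_PORTS = {22: "SSH remote management", 8443: "HTTPS for UI access"}


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_inbound(host: str) -> dict:
    """Map each inbound TCP port to whether it accepted a connection."""
    return {port: tcp_port_open(host, port) for port in INBOUND_TCP_PORTS}
```

A TCP connect test does not cover the UDP ports (such as 8472 for VXLAN) or ICMP; those need a different check.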
