Cisco VXLAN Multi-Site and Service Node Integration

| Date | Description |
| --- | --- |
| January 29, 2024 | First release of this document. |

Introduction

Executive Summary

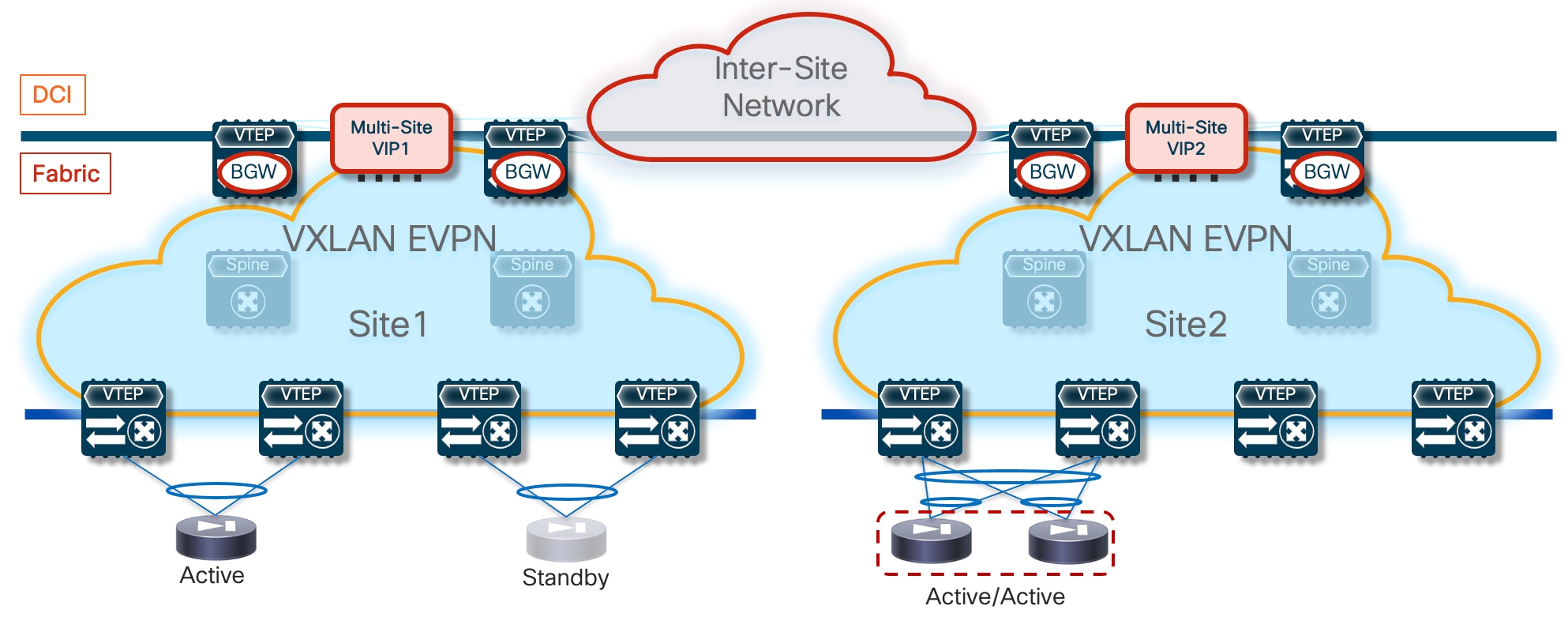

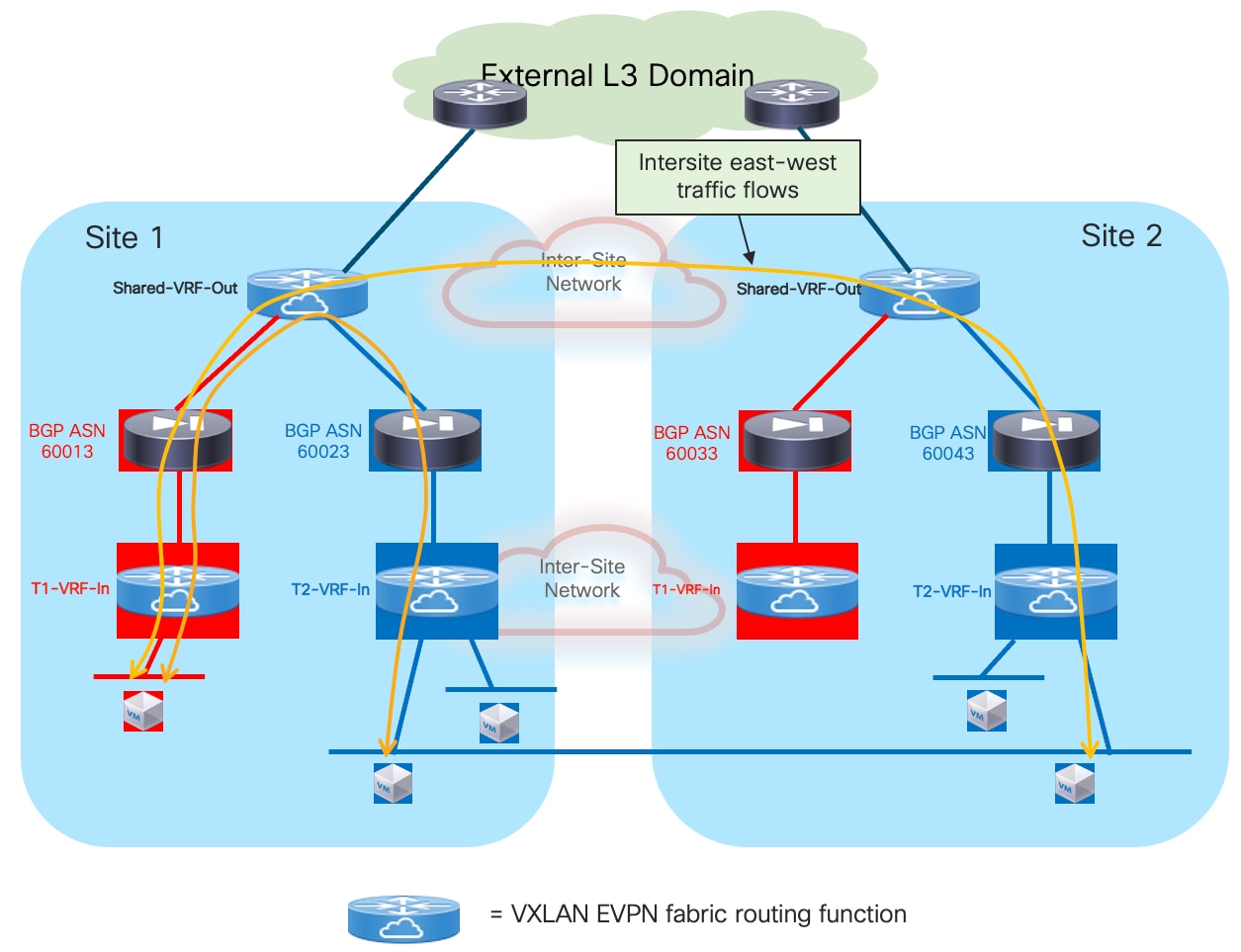

The goal of this paper is to cover the design and deployment considerations for integrating service devices (such as firewalls) in a VXLAN EVPN Multi-Site architecture interconnecting multiple VXLAN EVPN fabrics. Different design options are possible, depending on the chosen service device redundancy model (Active/Standby stretched cluster, Active/Active stretched cluster, independent service nodes in each fabric) and on how the service devices need to be integrated to enforce policy for communication between endpoints connected to the fabrics (East-West traffic flows) or between endpoints and external resources (North-South flows).

The paper is structured in a modular way to ensure all the deployment and configuration information can be found in the section covering each specific use case. Each section covers one of the following three main deployment models, each of them with two different service device redundancy models.

● Firewall deployed as default gateway – this design enforces security policies for all communications between different networks that are part of the same Tenant and with resources external to the Tenant. While this represents an easy way to deploy tight security (each subnet represents a separate security zone), the obvious drawback is that the firewall, which functions as default gateway, must inspect all routed traffic flows and may quickly become a bandwidth bottleneck if not properly dimensioned.

This use case includes two different variations depending on the firewall redundancy model:

◦ Active/Standby firewall pair stretched across two fabrics: this model covers both using static routing or dynamic routing between the Active firewall and the northbound network.

◦ Active/Active firewall cluster stretched across two or more fabrics: this case discusses static routing between the firewall nodes that are part of the cluster and the northbound network.

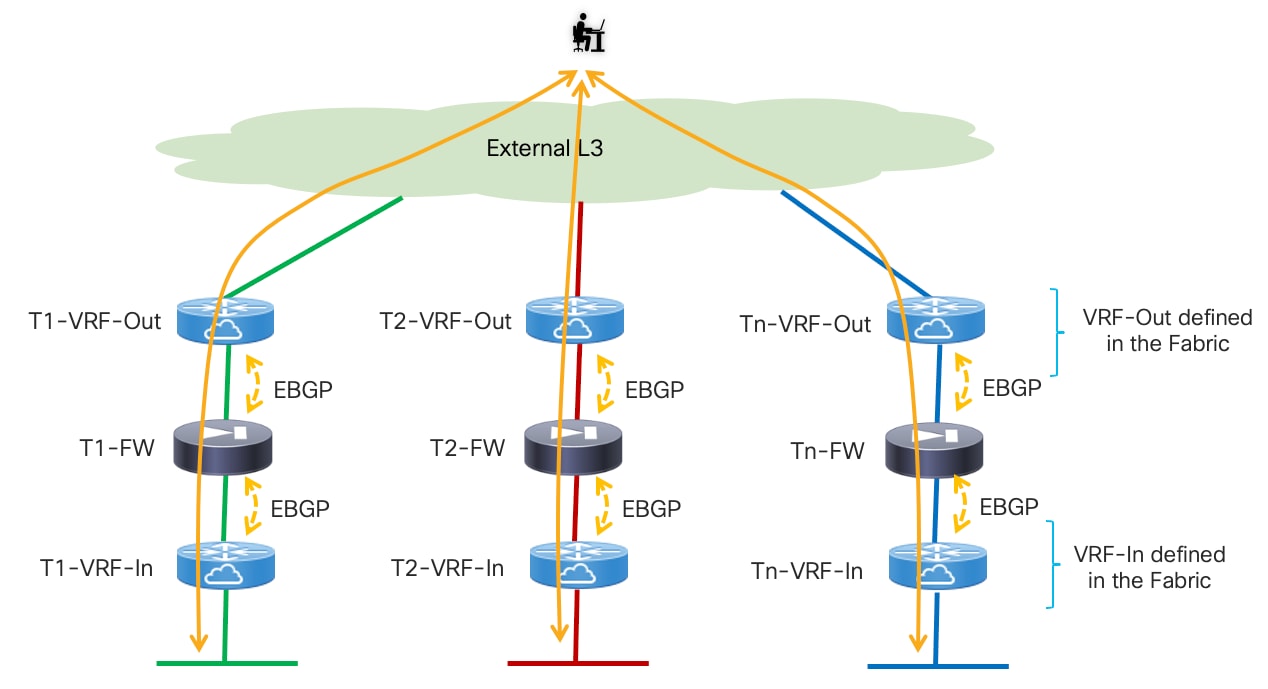

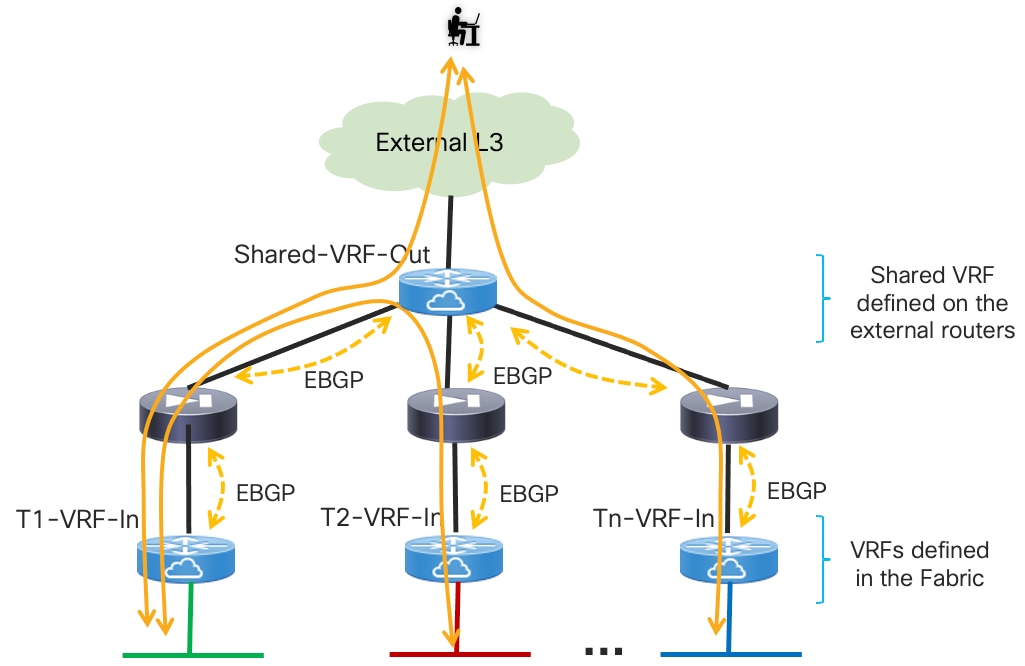

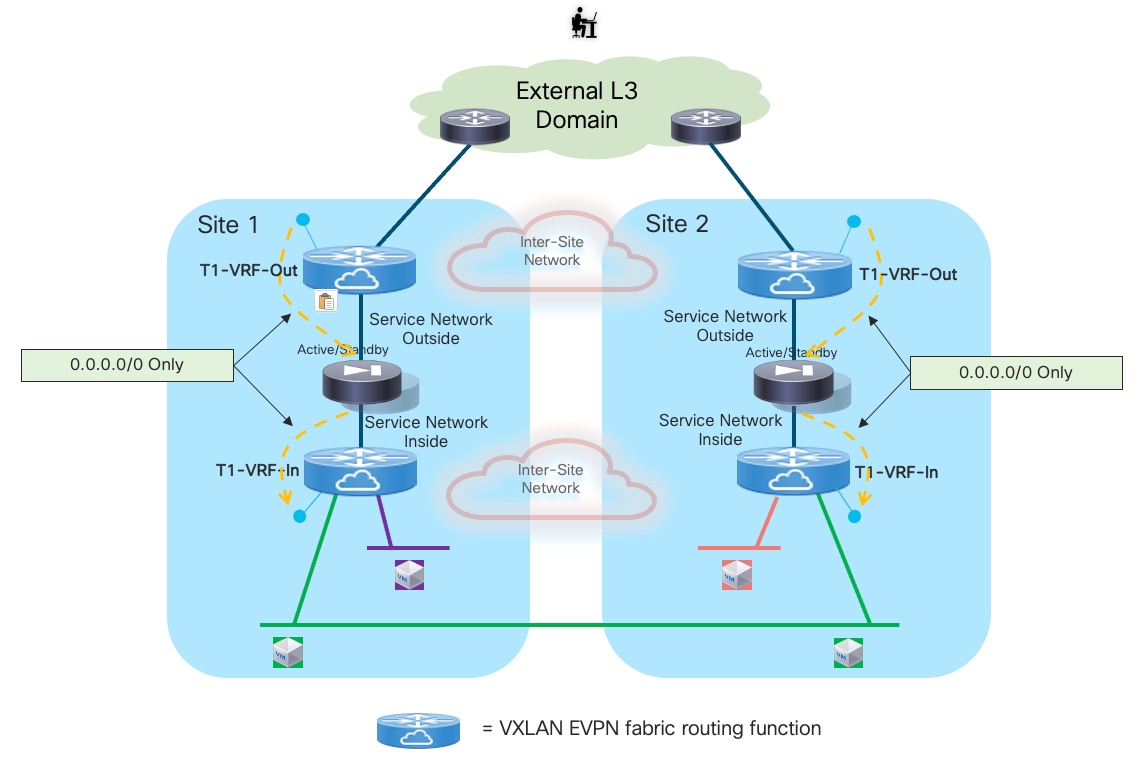

● Firewall deployed as a perimeter device – this design enforces security policies on all traffic flows leaving or entering a specific Tenant (VRF). In this scenario, the function of default gateway for the endpoints’ subnets is performed by the fabric (distributed anycast gateway), and the firewall represents the next Layer 3 hop toward the external network domain.

This use case covers the following firewall redundancy models:

◦ Active/Standby firewall pair stretched across two fabrics: EBGP is the routing protocol of choice for peering the active firewall with the fabric via both the inside and outside interfaces (“VRF sandwich” design).

◦ Independent Firewall Service deployed in each fabric: EBGP is also used to ensure that the active firewall node(s) deployed in each fabric can establish routing adjacencies with the fabric implementing again a VRF-sandwich design.

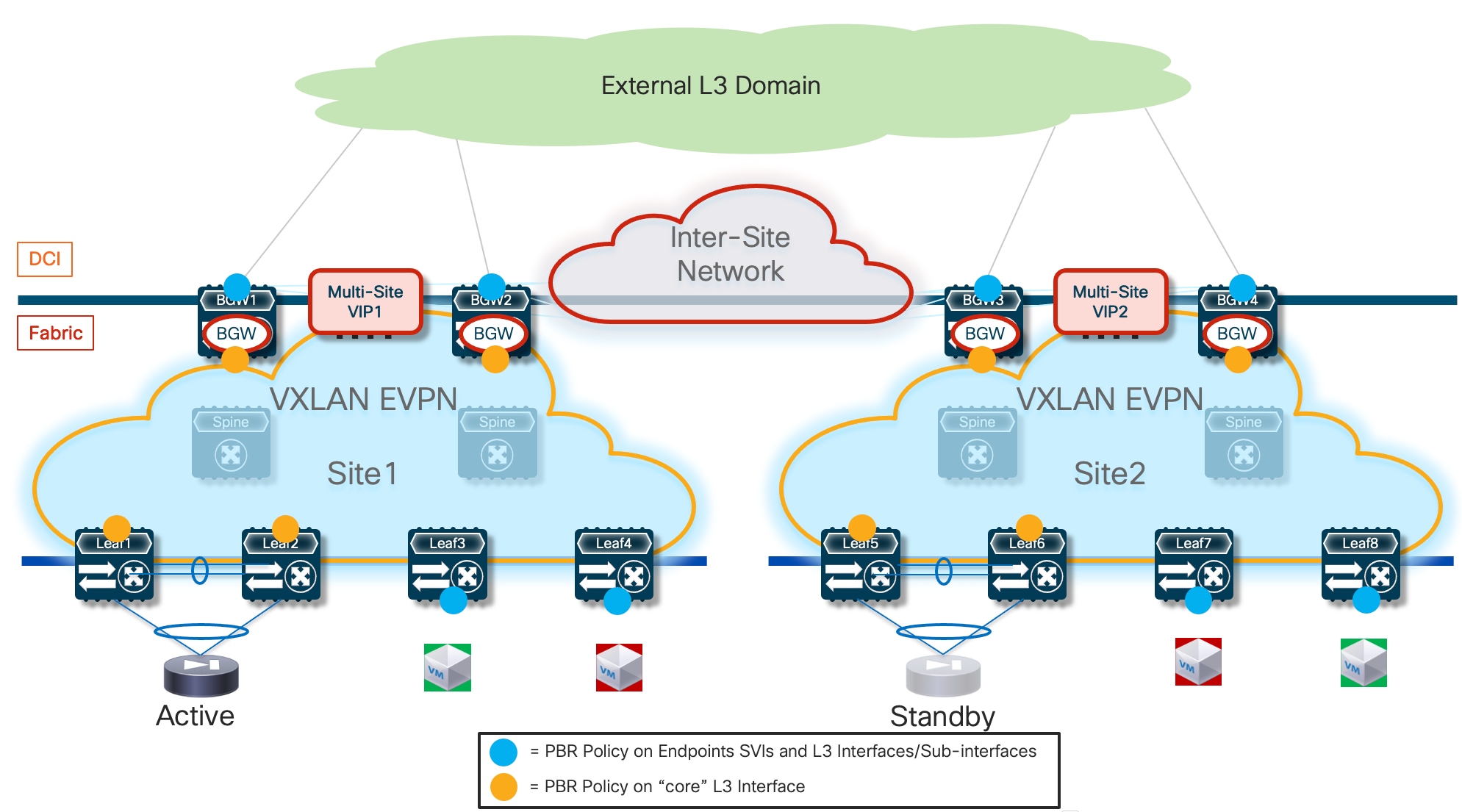

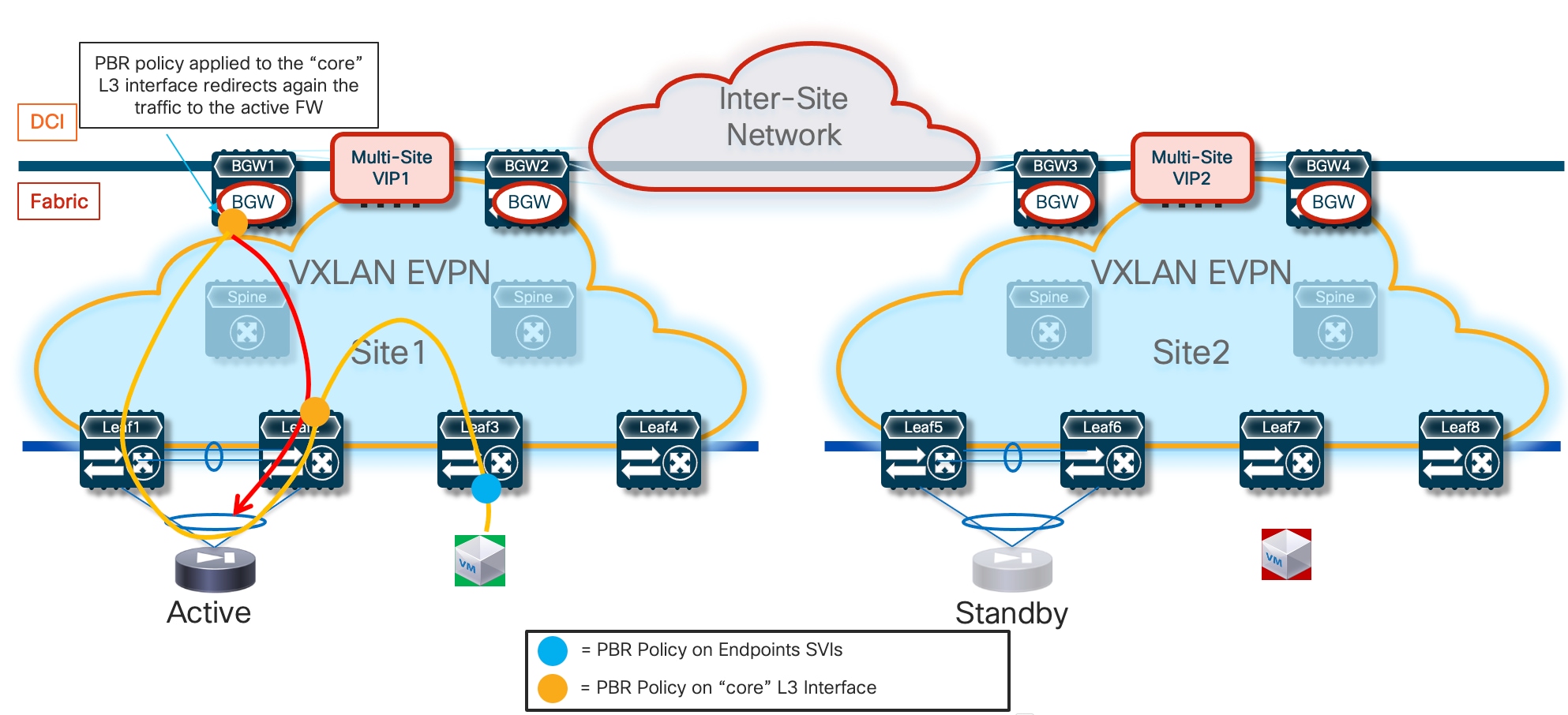

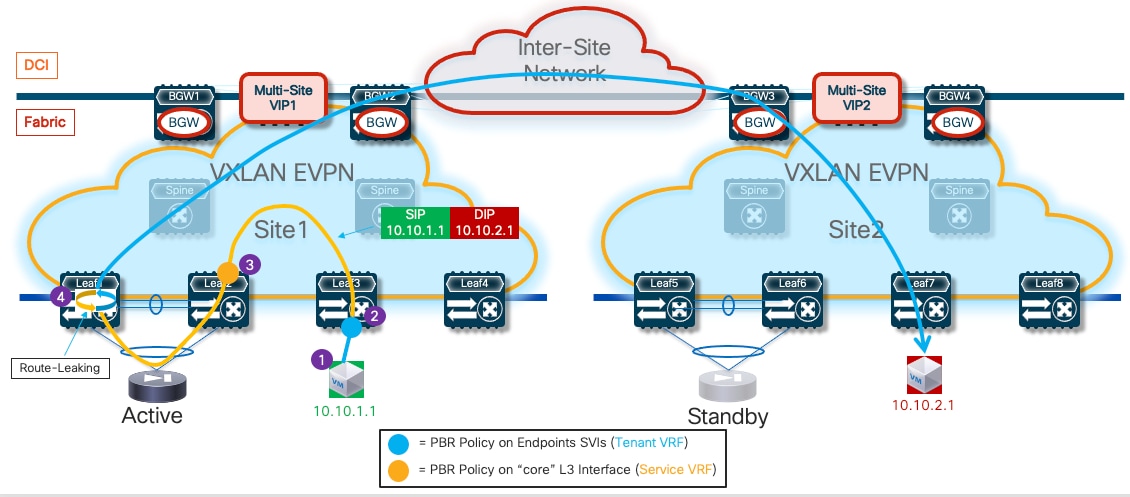

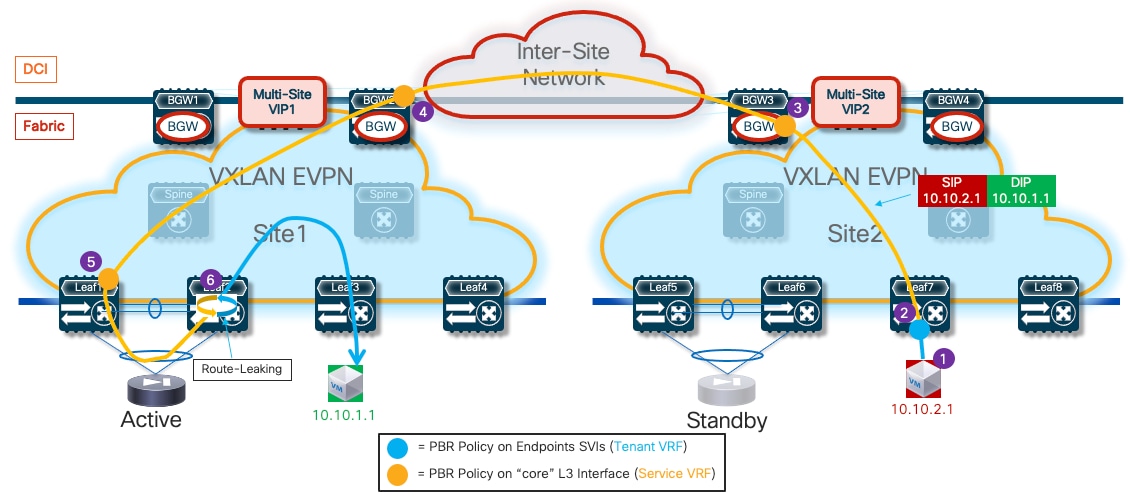

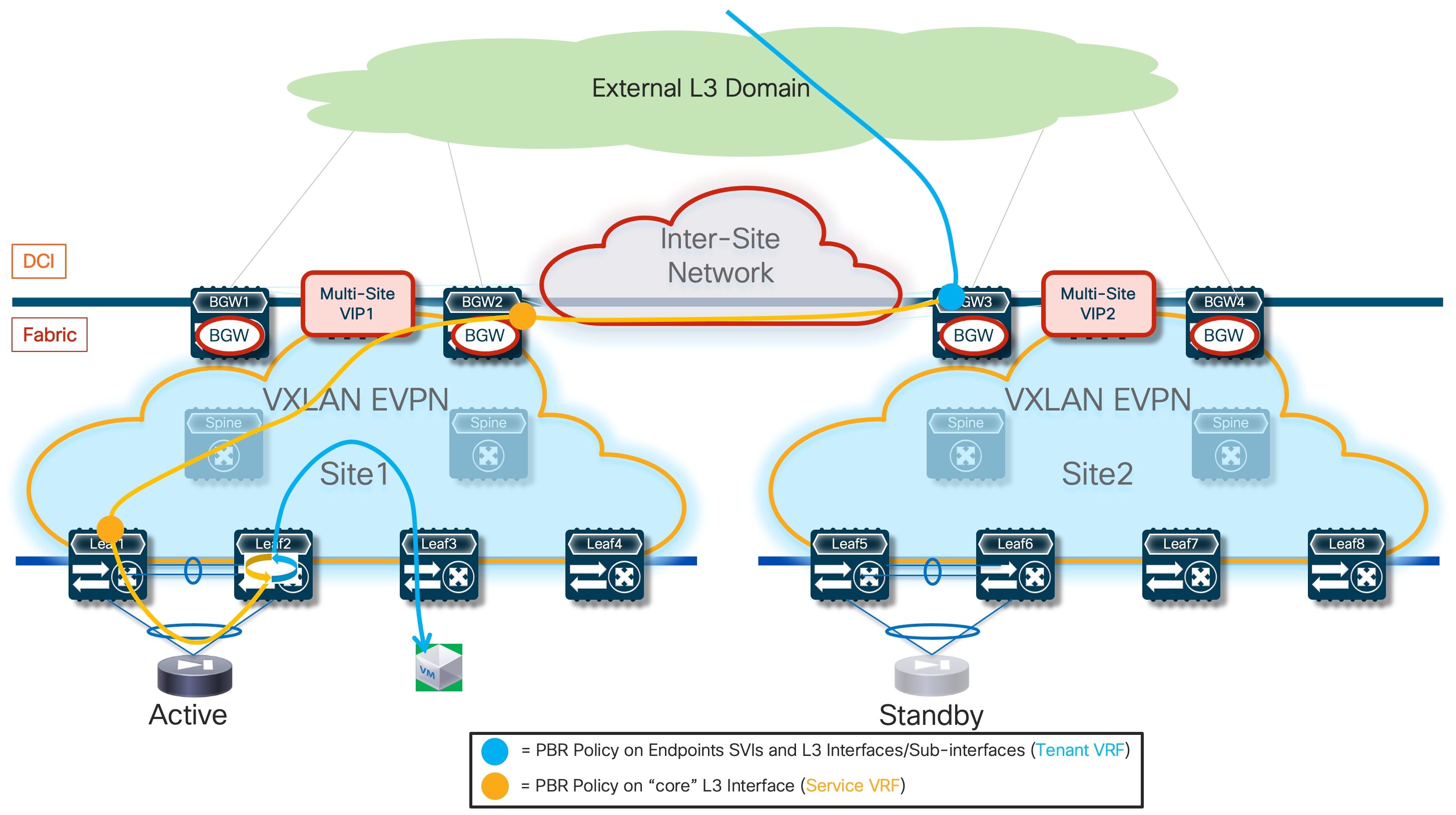

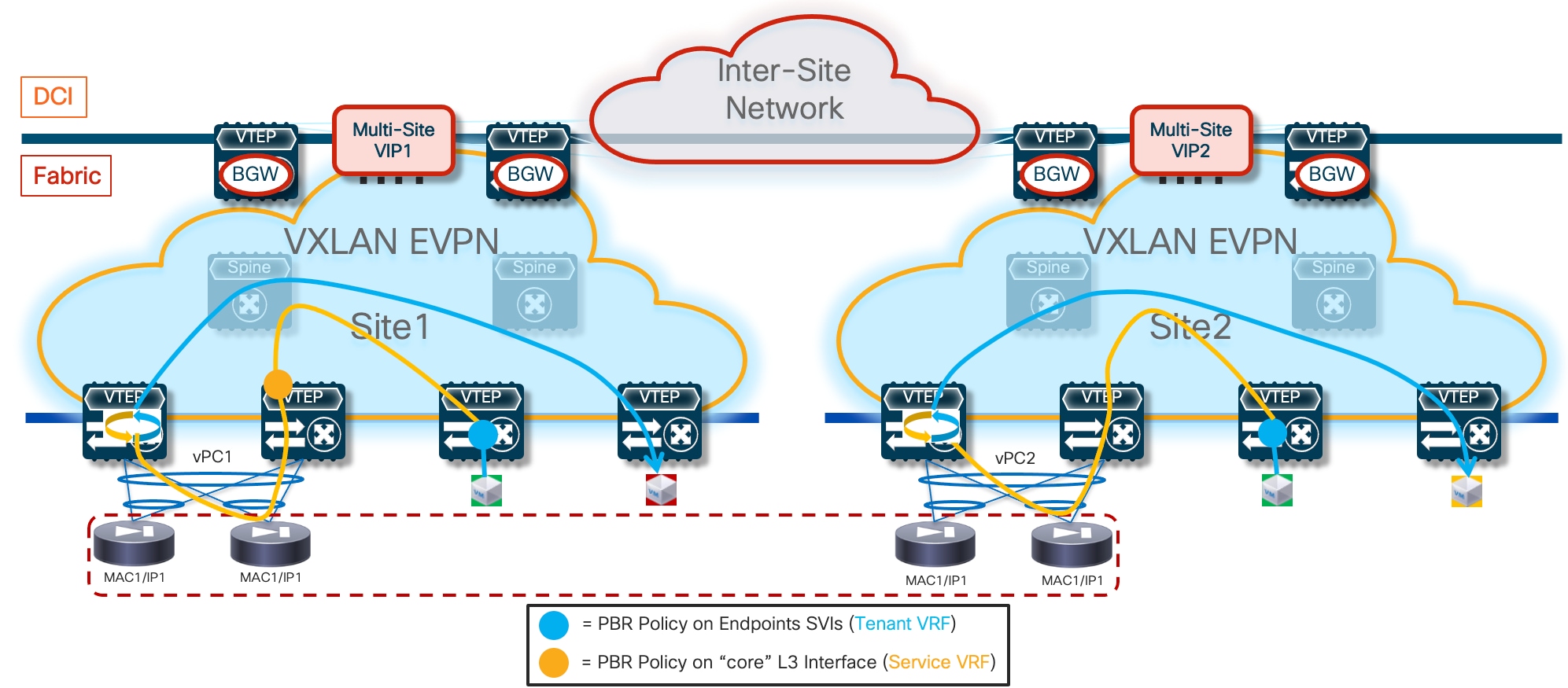

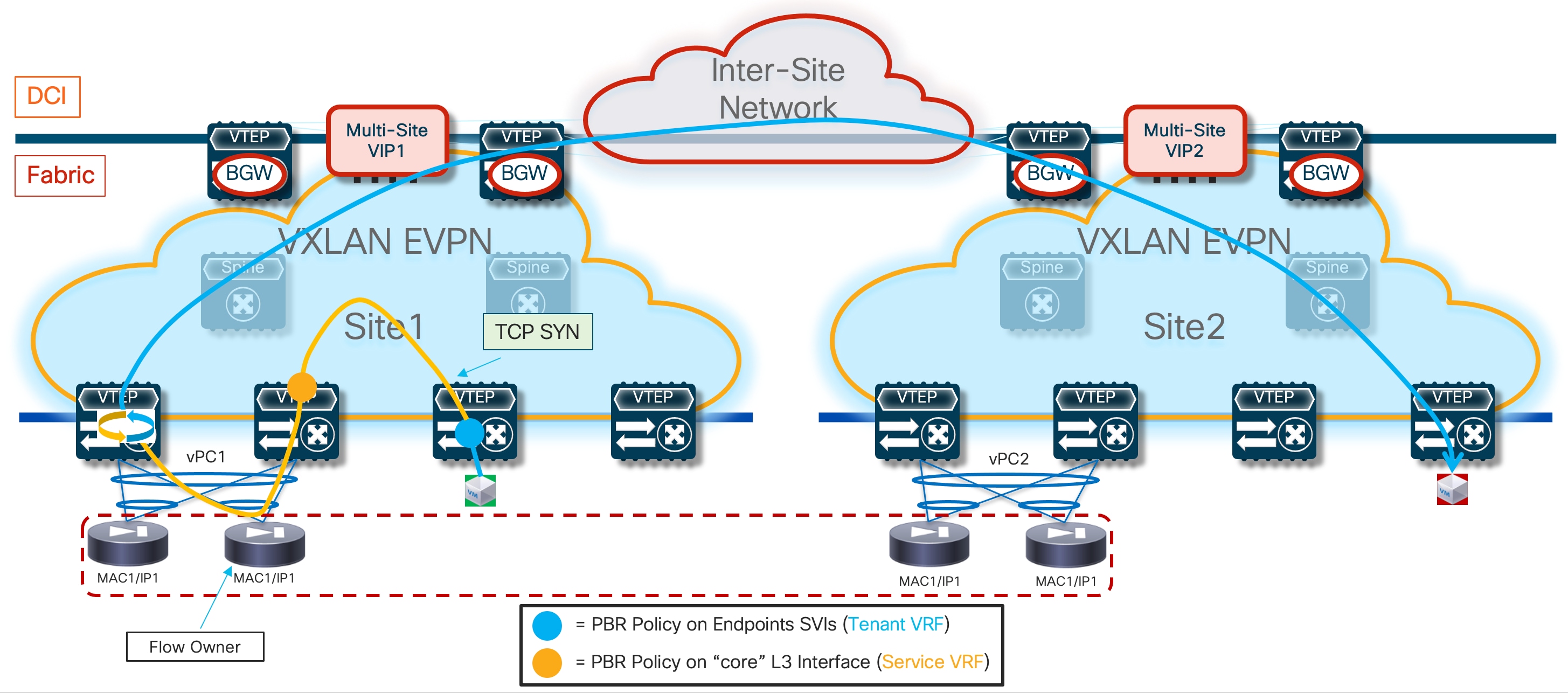

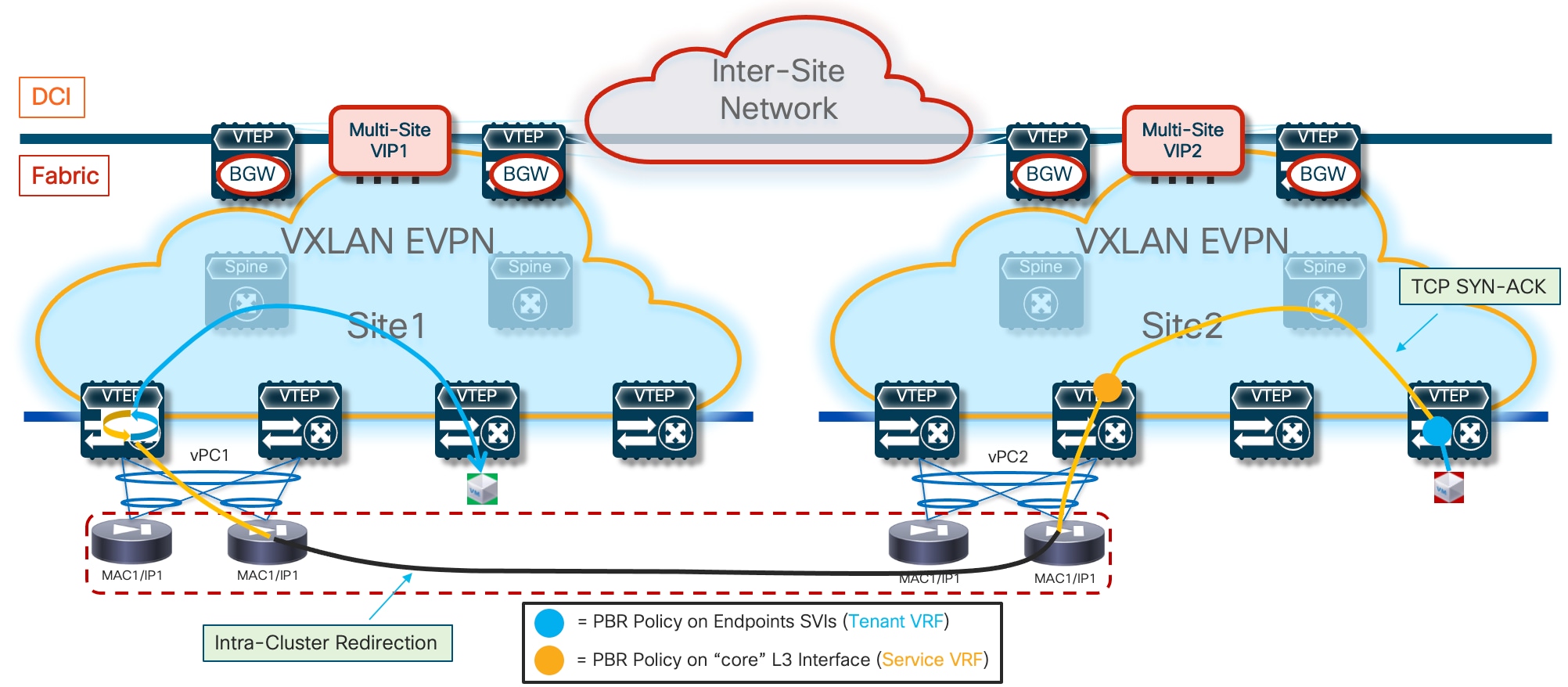

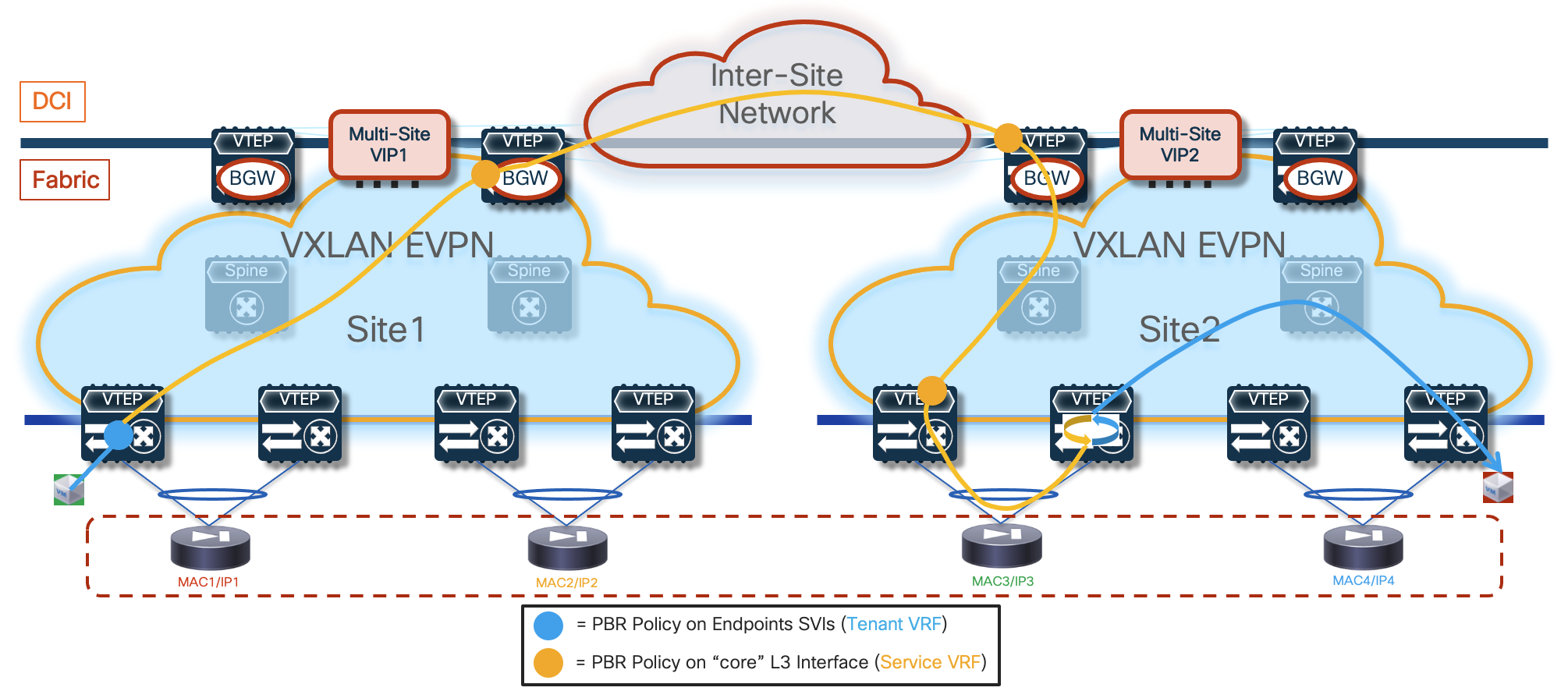

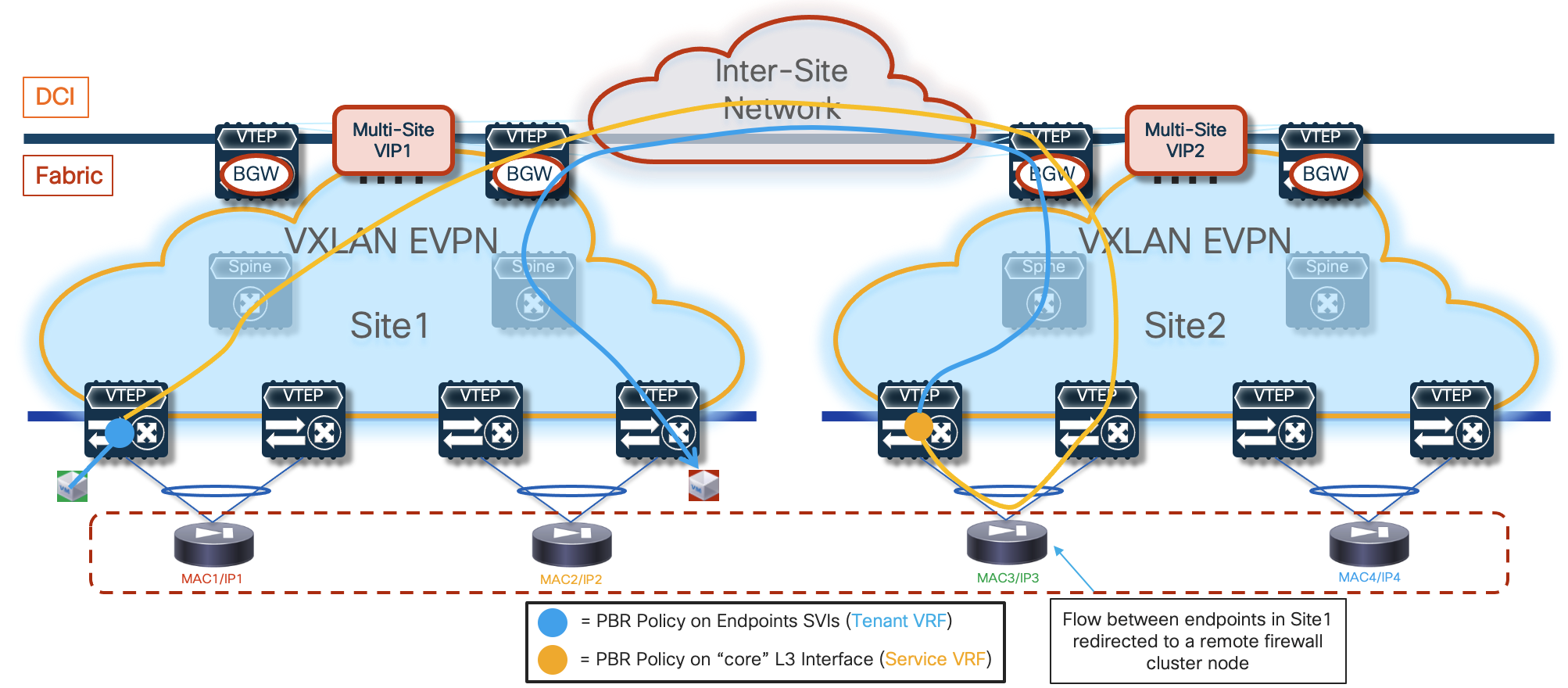

● Traffic stitching to firewall service leveraging policy-based redirection – this design uses the advanced policy based redirection capabilities of a VXLAN EVPN fabric to steer east-west and/or north-south traffic flows to firewall nodes.

This design option discusses the following firewall redundancy models:

◦ Active/Standby firewall pair stretched across two fabrics, with each firewall node connected in one-arm mode to the fabric.

◦ Active/Active firewall cluster stretched across two (or more) fabrics, with each firewall node connected in one-arm mode to the fabric.

Before delving into the details of each design option and service node redundancy model, it is useful to recall the difference between intra-tenant and inter-tenant service node deployments.

Note: The firewall configuration samples shown throughout this document are valid for Cisco Adaptive Security Appliance (ASA) platforms. However, the deployment options discussed in this paper are not limited to the ASA platforms and can be modified to fit other firewall models (from Cisco and other third-party vendors) into these designs according to their clustering capability.

Intra-Tenant Security Enforcement

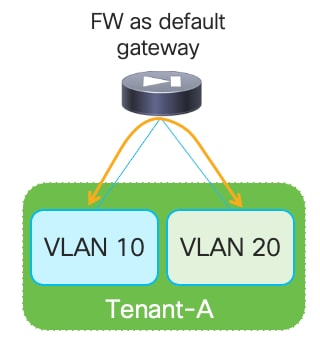

When security policy is enforced within a tenant (VRF), you deploy a firewall within the same VRF instance to filter traffic between network segments. Communication between different network segments within the same tenant (VRF) is also known as East-West traffic. The filtering policy is applied by a firewall at the network segment edge within a VRF and is referred to as Intra-Tenant Service.

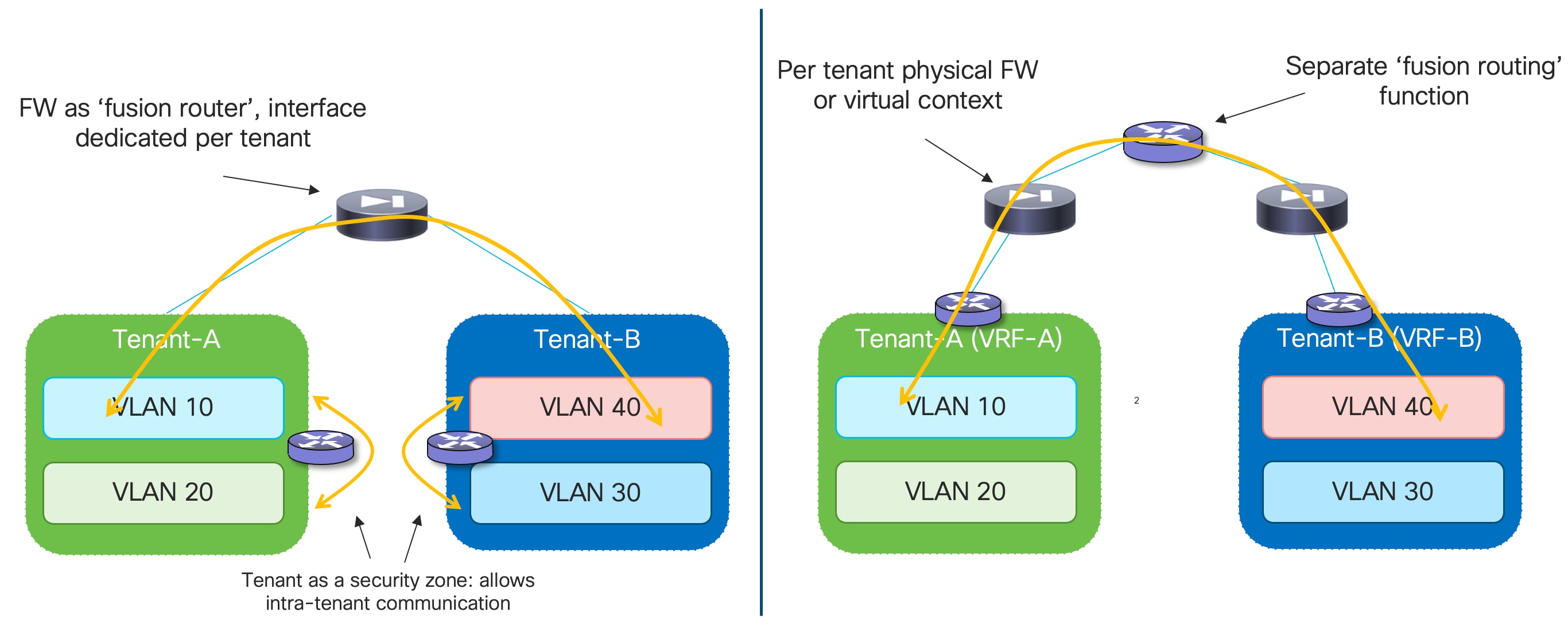

This can be achieved with three different design options:

● The firewall can be deployed as default gateway for multiple network segments that are deployed as Layer 2 only networks.

● Policy based routing (PBR) can be leveraged to redirect traffic to the firewall. In this case the default gateway function is deployed on the fabric and only selective traffic flows can be directed to the firewall for security enforcement. With PBR, it is possible to ensure that each network functions as a separate security zone, but with the flexibility of specifying the subset of traffic flows to inspect (depending on the specific policy configured to redirect the traffic to the firewall instance). Also, because each network is deployed as a Layer 3 segment, it must be associated to a VRF that becomes the tenant identifier.

● A third, less common option is the one where the firewall is deployed in transparent mode, as a bump in the wire.

Figure 1 illustrates the enforcement of security policies within a tenant through the deployment of a firewall as default gateway or by leveraging the PBR functionality.

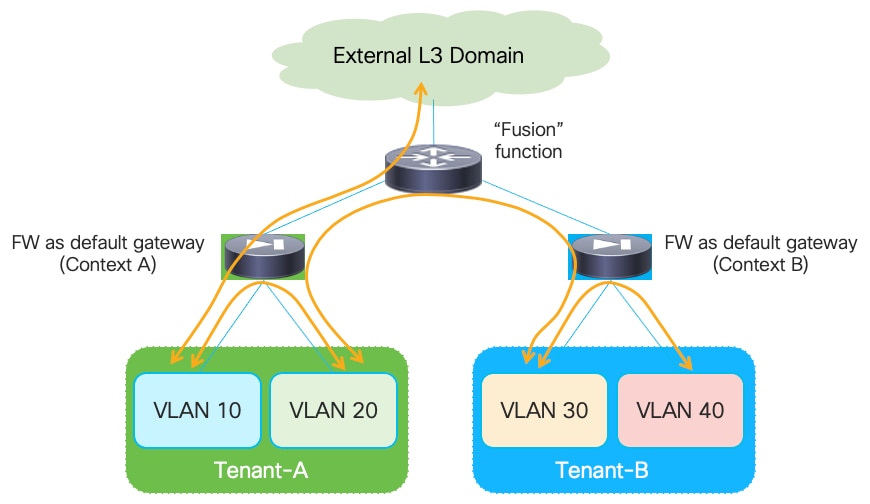

Inter-Tenant Security Enforcement

In a multi-tenant environment, each tenant is usually logically isolated from the others. In such cases, the deployment of a tenant-edge firewall allows you to apply filtering policies to data traffic moving between endpoints that belong to different tenants.

While this is also possible in the use case described in the previous section where the firewall is deployed as default gateway for the tenant’s networks, the goal is often to move the default gateway function into the fabric. In such case, the networks are deployed as Layer 3 and belong to a VRF that represents the security zone for a specific tenant.

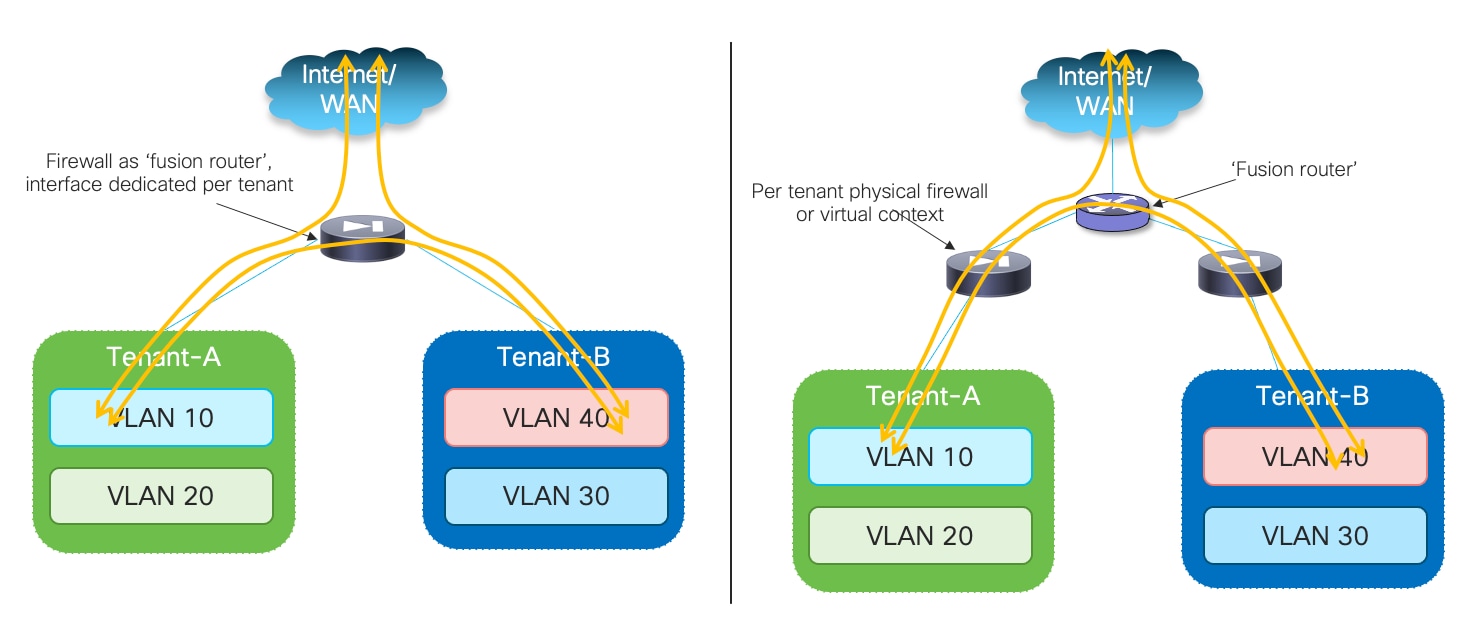

Traffic within the VRF (East-West traffic) is normally allowed, and the communication between overlay networks that are part of a given VRF is typically provided by the VXLAN EVPN routing functionality. To enable secured routing between different VRFs (tenants), a “fusion” function is usually deployed to interconnect the different firewall devices. The fusion devices can be placed at Inter-Tenant Service level or within the routing core.

Figure 2 shows the options of placing a fusion device to enable communication between tenants/VRFs and the concept of tenant/VRF level security zone.

Service Nodes Redundancy Models

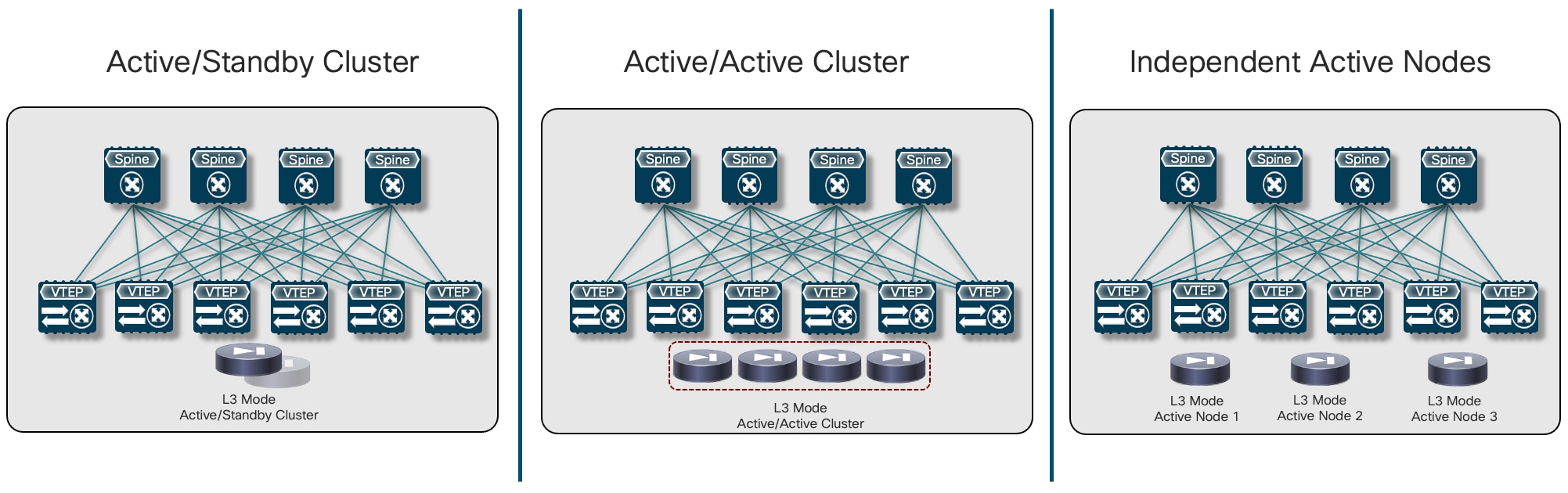

Different redundancy models are available to connect the service nodes to VXLAN EVPN fabrics part of a Multi-Site domain. The right choice depends on multiple factors, such as the desired level of service nodes’ resiliency, HW capabilities for the service node of choice, etc.

This section provides an overview of the three most common redundancy deployment options found in real-life deployments. After the overview, we will describe different deployment options that can leverage any of those redundancy deployment models. Specifically, the deployment of a firewall service as default gateway, the deployment of a firewall as a perimeter device (for North-South and inter-tenant/inter-VRF policy enforcement) and the use of traffic stitching to firewall service leveraging policy-based redirection functionalities.

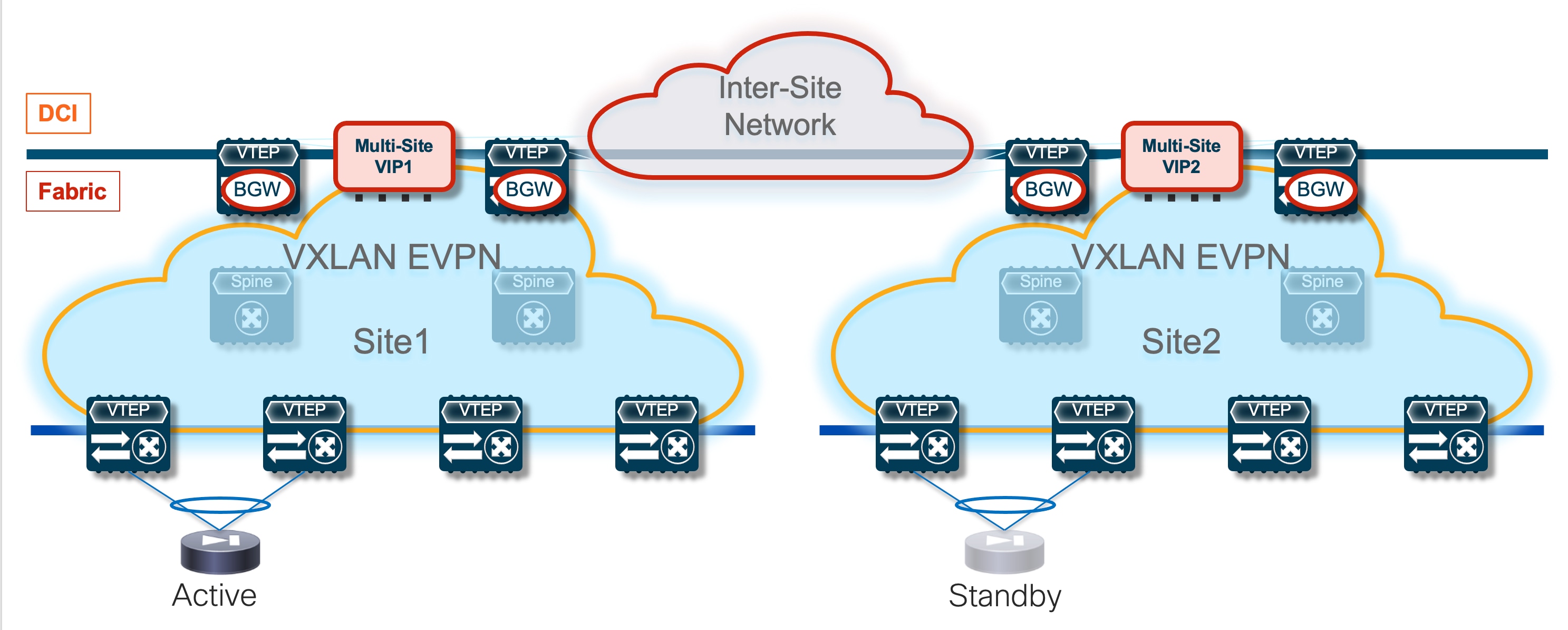

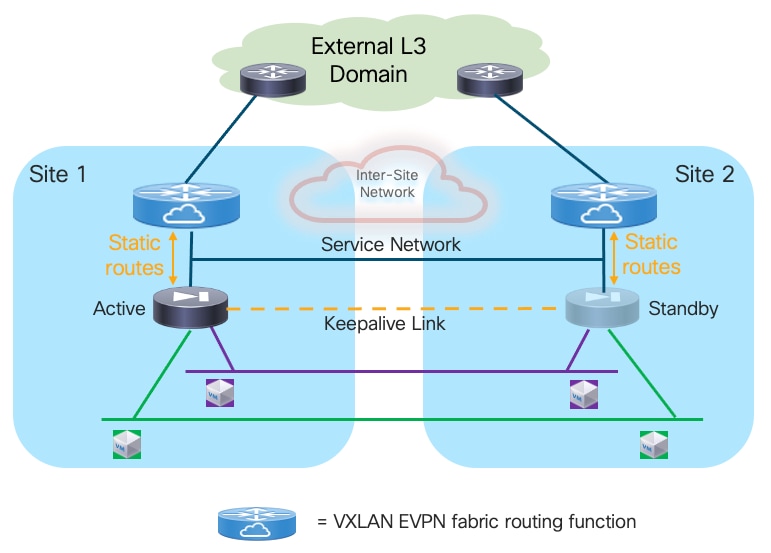

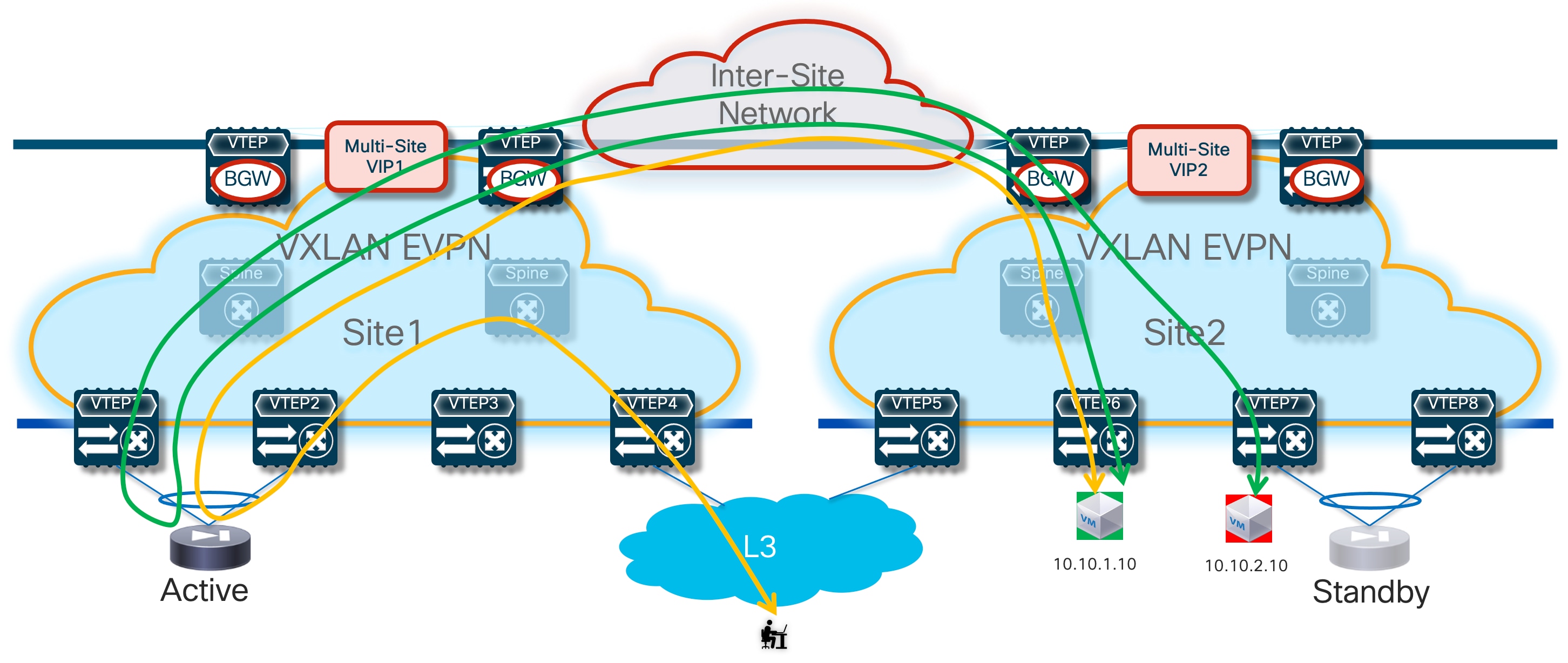

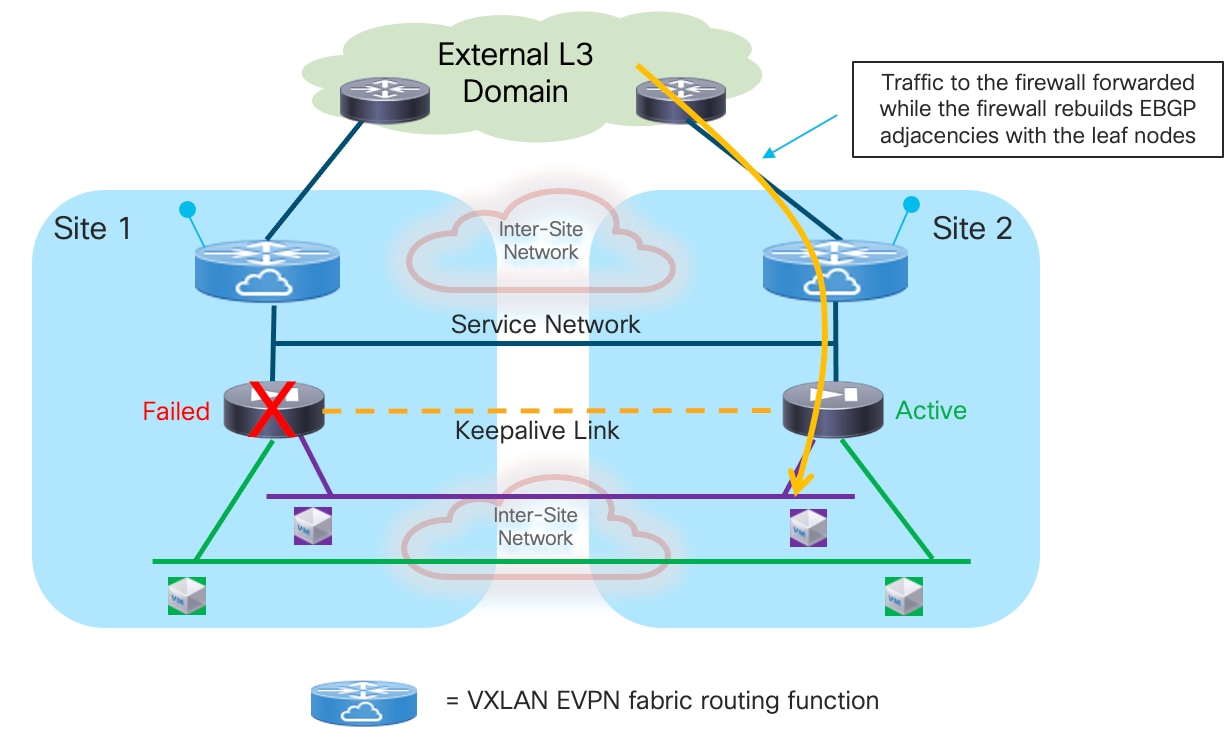

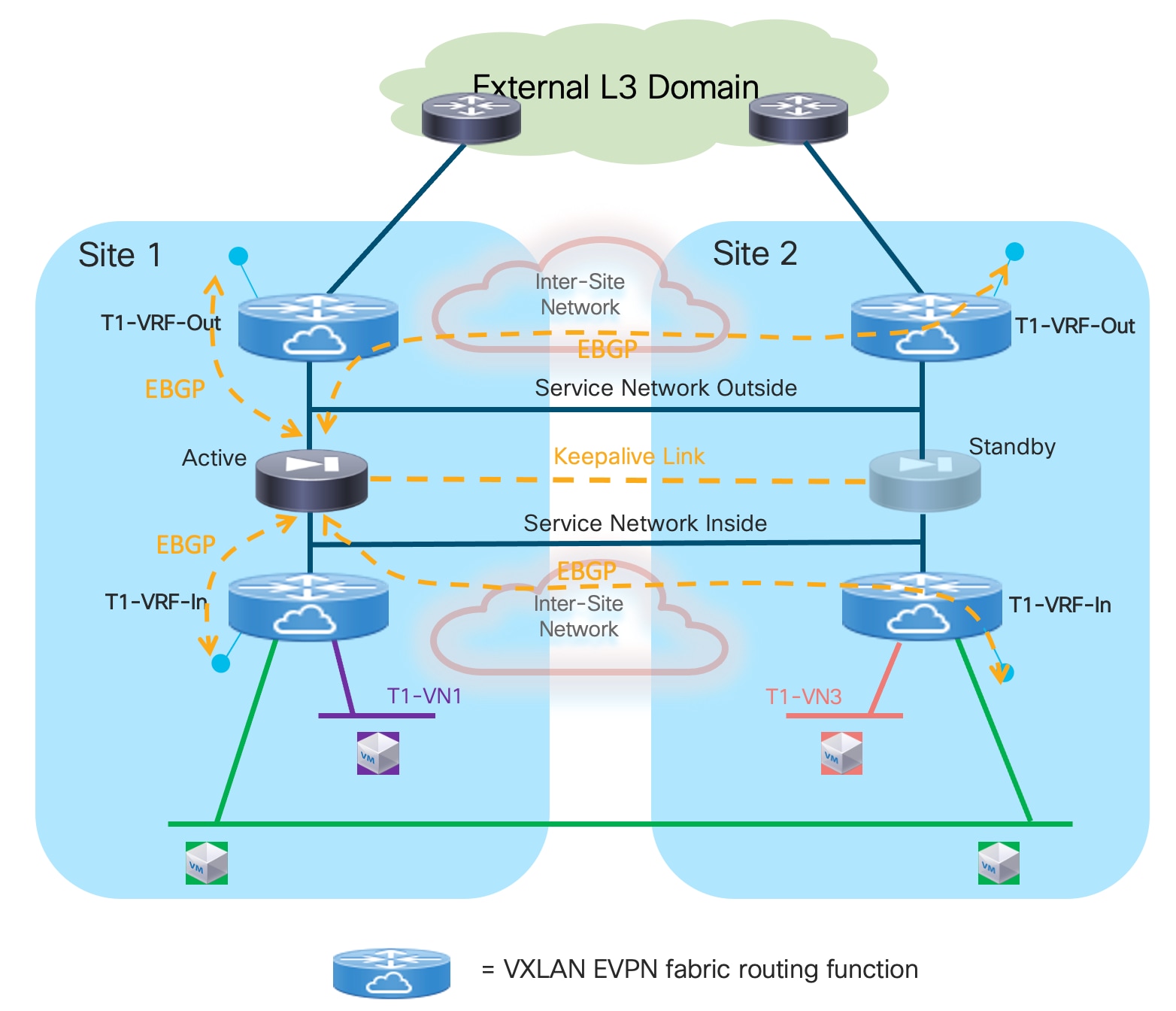

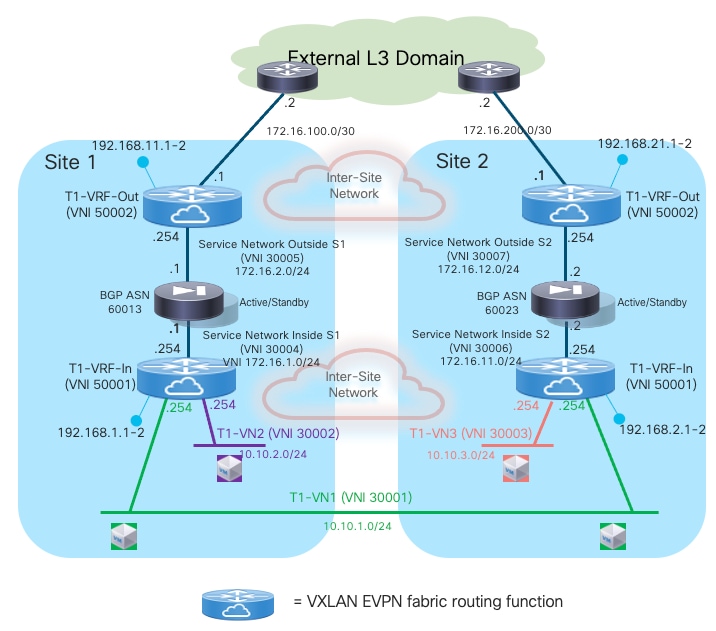

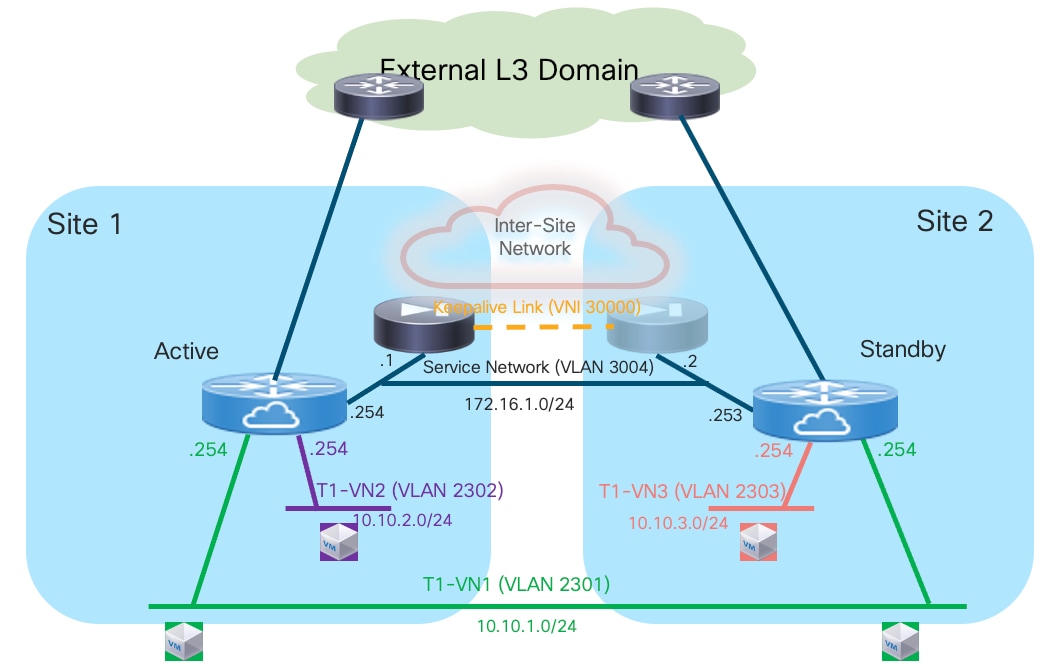

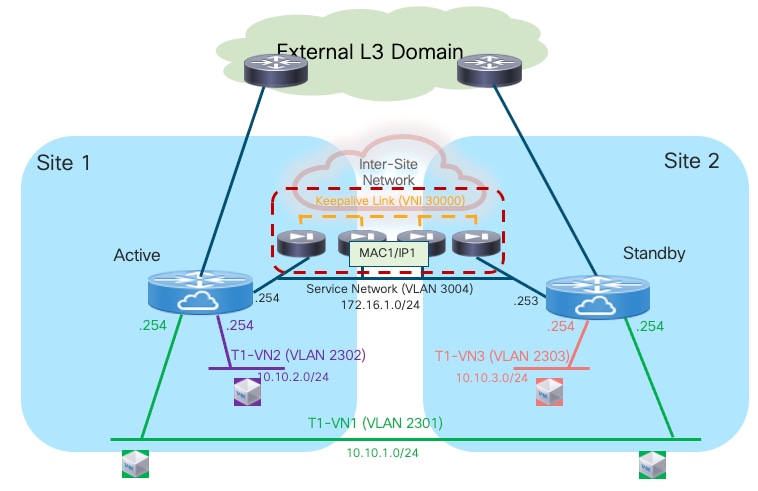

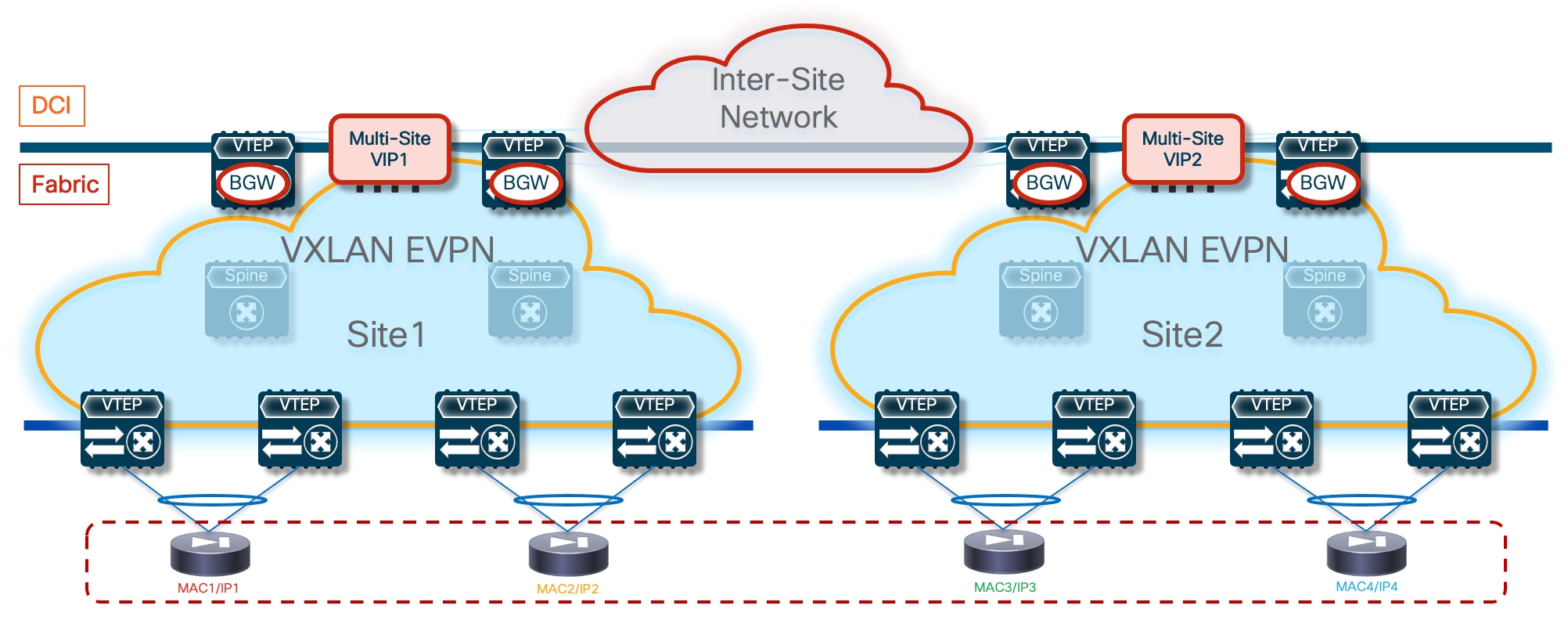

Active/Standby Firewall Cluster Stretched across Sites

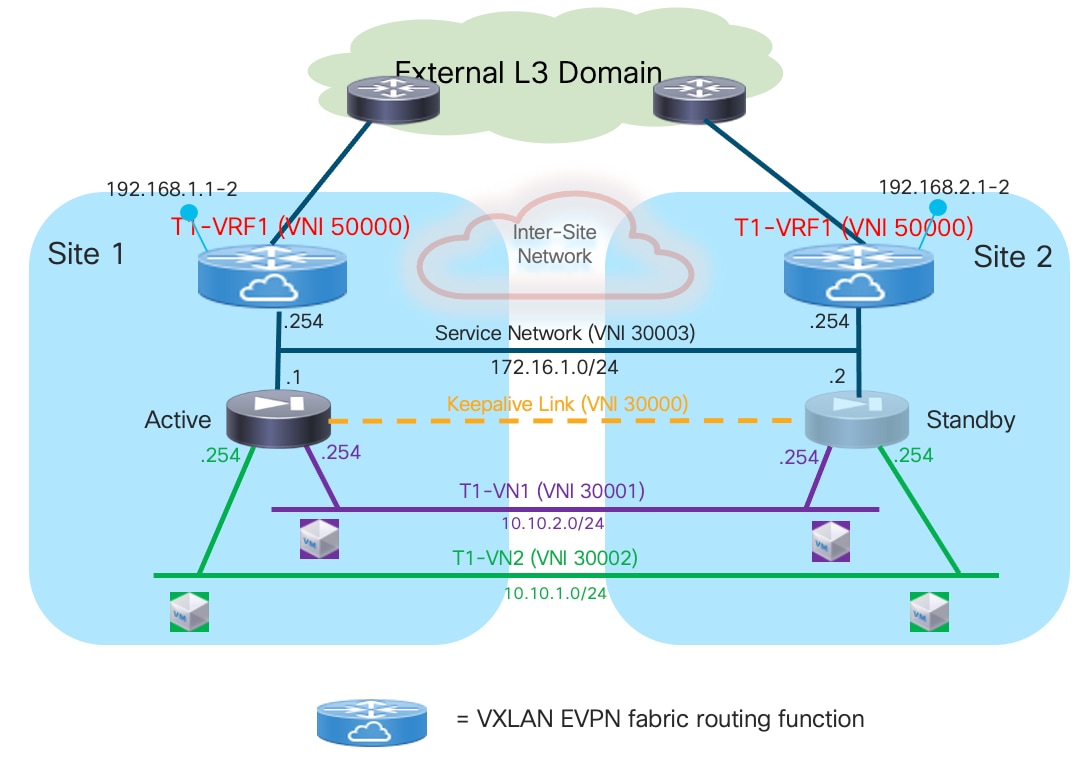

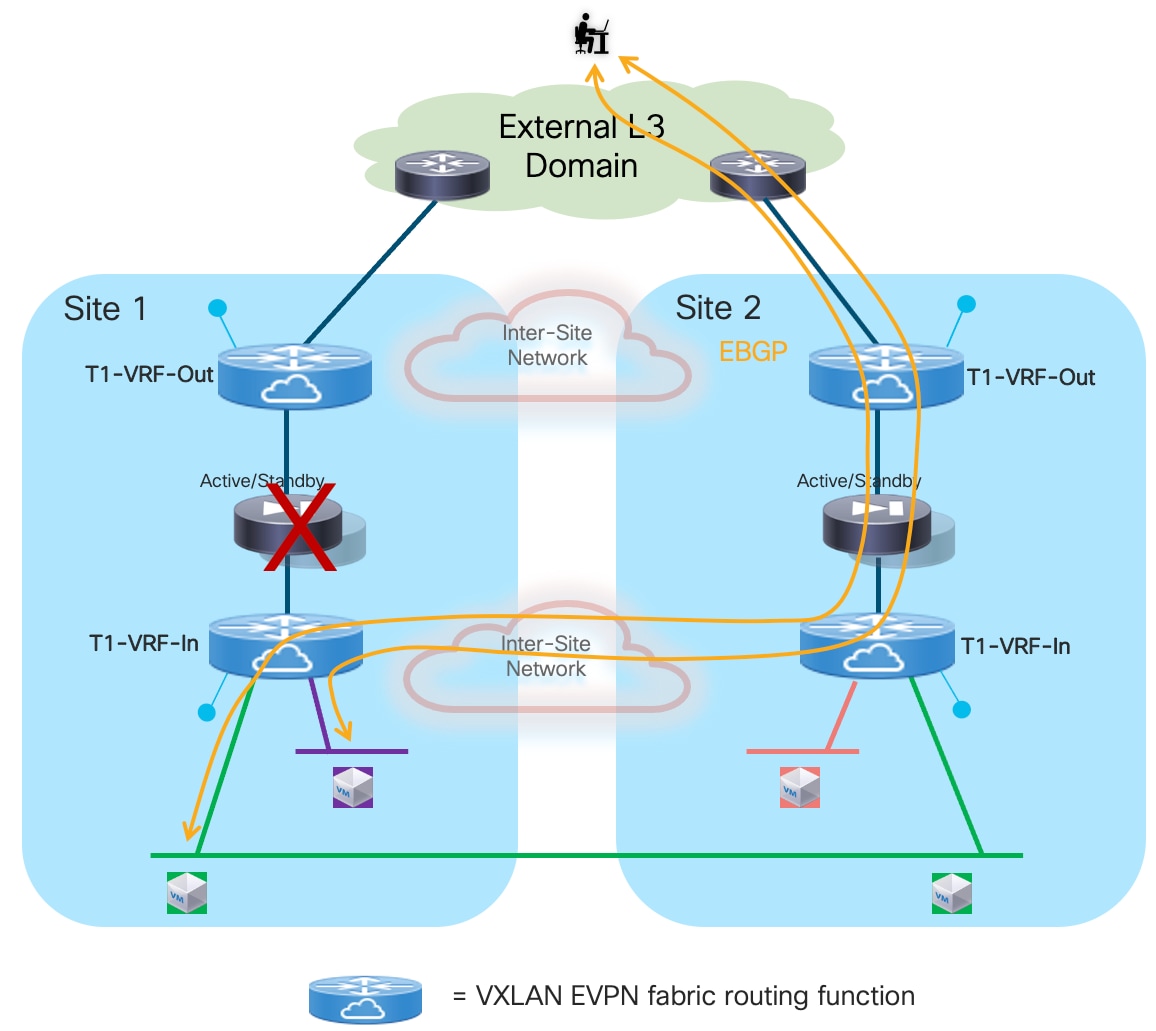

The first simple and quite common redundancy model calls for the deployment of an Active/Standby cluster of service nodes across different fabrics part of the Multi-Site domain, as shown in Figure 3 below.

This approach makes more sense when the fabrics are co-located in the same data center or deployed in locations that are in close geographical proximity (such as metro data centers), because the obvious consequence of stretching the Active/Standby cluster across sites is the hair-pinning of traffic to the fabric where the active service node is deployed.

The connectivity extension services provided by the Border Gateway nodes allow you to abstract the physical location of the service leaf nodes and make them behave as if they were deployed next to each other. This is possible through the extension of the Layer 2 segment used to exchange keepalive, configuration information, and connection state between the active and standby nodes. Additionally, because those service nodes have their data interfaces (inside, outside, DMZ, or one-arm) assigned IP addresses that are part of common subnets (to handle failover scenarios where the units exchange their active and standby roles), those data networks must also be extended across fabrics.

Note: The failover connection can be established between the firewall nodes by extending that network via VXLAN Multi-Site as mentioned above or by leveraging a physically separate infrastructure. This second option allows you to remove any dependency on the fabric itself and provides more robust support for the failover functionality. Finally, when the firewall nodes are co-located in the same physical location, it may also be possible to use dedicated back-to-back connections.

There are different options on how to deploy the service nodes, which could function as default gateway for the endpoints or be connected as devices peering with the leaf nodes of the VXLAN EVPN fabrics. Also, there are different approaches on how to enforce traffic flows through the service nodes, either based on traditional bridging or routing behavior or by leveraging more advanced PBR-based functionalities. The following sections of this paper discuss all these alternative options in greater detail.

The configuration sample below highlights the configuration that must be applied on both firewall nodes to ensure that an Active/Standby cluster can be built. In the example below, a dedicated interface (Port-channel1) is used to establish that communication.

Note: The configuration below is referring to a Cisco Adaptive Security Appliance (ASA) firewall device.

Primary Firewall Node

interface Port-channel1

description LAN Failover Interface

!

failover

failover lan unit primary

failover lan interface fover Port-channel1

failover polltime unit 1 holdtime 3

failover interface ip fover 192.168.1.1 255.255.255.252 standby 192.168.1.2

Secondary Firewall Node

interface Port-channel1

description LAN Failover Interface

!

failover

failover lan unit secondary

failover lan interface fover Port-channel1

failover polltime unit 1 holdtime 3

failover interface ip fover 192.168.1.1 255.255.255.252 standby 192.168.1.2

Once the Active/Standby cluster is established, the provisioning can be done only from the active firewall node. The configuration would then be automatically synchronized to the standby unit.

It is also possible to tune the keepalive timers to speed up the detection of a failure of the active firewall and the activation of the standby unit. We recommend not tuning those timers too aggressively: intersite traffic convergence after various link or node failures can delay keepalives long enough to create a split-brain scenario for the firewall pair. In the specific example above, keepalives are exchanged every second (polltime unit 1) and a firewall is considered failed after a 3-second timer expires (holdtime 3).
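The relationship between the two timers and the intersite convergence time can be sanity-checked with a short calculation. The Python sketch below is illustrative only: the `FailoverTimers` class, the `tolerates` rule of thumb, and the convergence figures are assumptions introduced here, not values or tooling from Cisco.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FailoverTimers:
    """Unit keepalive timers, mirroring 'failover polltime unit X holdtime Y'."""

    polltime_s: float  # seconds between keepalives
    holdtime_s: float  # seconds without keepalives before the peer is declared failed

    def keepalives_per_holdtime(self) -> int:
        # Number of keepalive intervals that fit inside the hold time.
        return int(self.holdtime_s // self.polltime_s)

    def tolerates(self, worst_case_convergence_s: float) -> bool:
        # Assumed rule of thumb: the hold time should exceed the worst-case
        # intersite convergence time, so that a transient fabric event does
        # not make both units believe that the peer has failed.
        return self.holdtime_s > worst_case_convergence_s


# Values taken from the configuration sample above.
timers = FailoverTimers(polltime_s=1, holdtime_s=3)
print(timers.keepalives_per_holdtime())
# Hypothetical convergence times, in seconds, used only for illustration.
print(timers.tolerates(2.0))
print(timers.tolerates(5.0))
```

If the measured worst-case convergence in a given deployment exceeds the hold time, increasing the hold time is the safer choice than accepting the split-brain risk.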

Active/Active Firewall Cluster Stretched across Sites

The evolution of the Active/Standby firewall cluster depicted in Figure 3 above is represented by the deployment of an Active/Active cluster. The immediate advantages of this approach are better use of the deployed resources (all the firewall nodes enforce security policies concurrently) and the avoidance of traffic hair-pinning, because each fabric can have a local firewall service node (part of the stretched cluster) that handles security enforcement for traffic flows between local endpoints (or between local endpoints and the external network domain).

Note: Some traffic hair-pinning may still occur in case of live migration of workloads across sites, depending on the specific clustering functionalities offered by the firewall model of choice. For example, with Cisco ASA and FTD firewalls, an intra-cluster traffic redirection functionality is invoked to steer the traffic back to the specific firewall cluster node that owns the connection state for a specific flow.

Before stretching an Active/Active firewall cluster across data center physical locations, it is important to verify that the Round Trip Time (RTT) latency between those sites is lower than the maximum RTT latency supported by the firewall clustering implementation. Please refer to the vendor’s firewall documentation for this information.
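As a minimal sketch of that verification, the Python function below compares a set of measured intersite RTT samples against a supported maximum. The function name, the sample values, and the 20 ms limit are placeholders assumed for illustration; the real limit must come from the firewall vendor's documentation.

```python
def cluster_stretch_supported(measured_rtt_ms, max_supported_rtt_ms):
    """Return True only if every measured intersite RTT is below the limit."""
    samples = list(measured_rtt_ms)
    if not samples:
        raise ValueError("at least one RTT measurement is required")
    # Use the worst sample: a cluster must stay within the limit at all times.
    return max(samples) < max_supported_rtt_ms


# Placeholder limit; take the real value from the vendor documentation.
vendor_limit_ms = 20.0

print(cluster_stretch_supported([4.1, 4.8, 5.3], vendor_limit_ms))
print(cluster_stretch_supported([4.1, 27.5, 5.3], vendor_limit_ms))
```

Checking the worst sample rather than the average is a deliberate choice here, since a single latency spike is enough to disturb cluster communication.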

There are two main implementations on the market for an Active/Active firewall cluster deployment; both have applicability in the multi-fabric architecture discussed in this paper and will be discussed in the following two sections below.

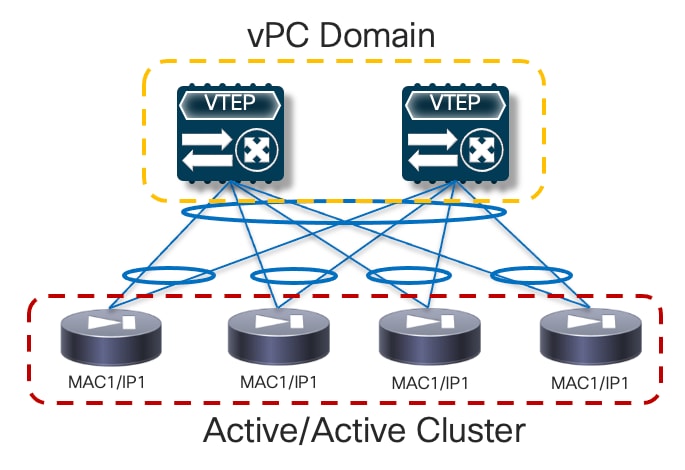

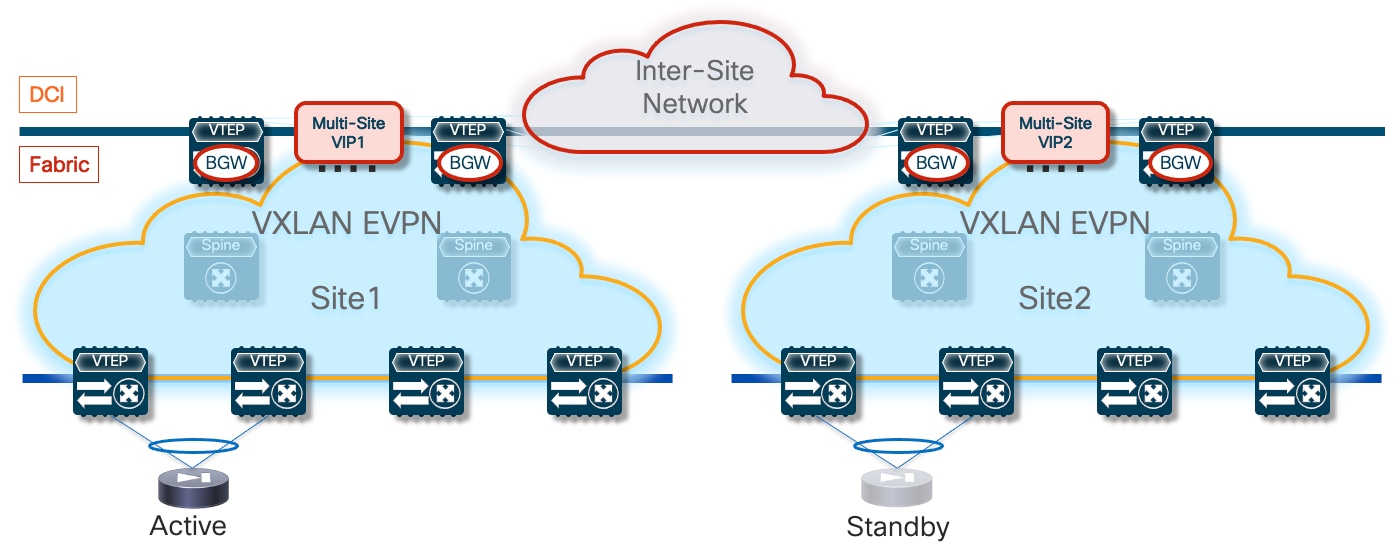

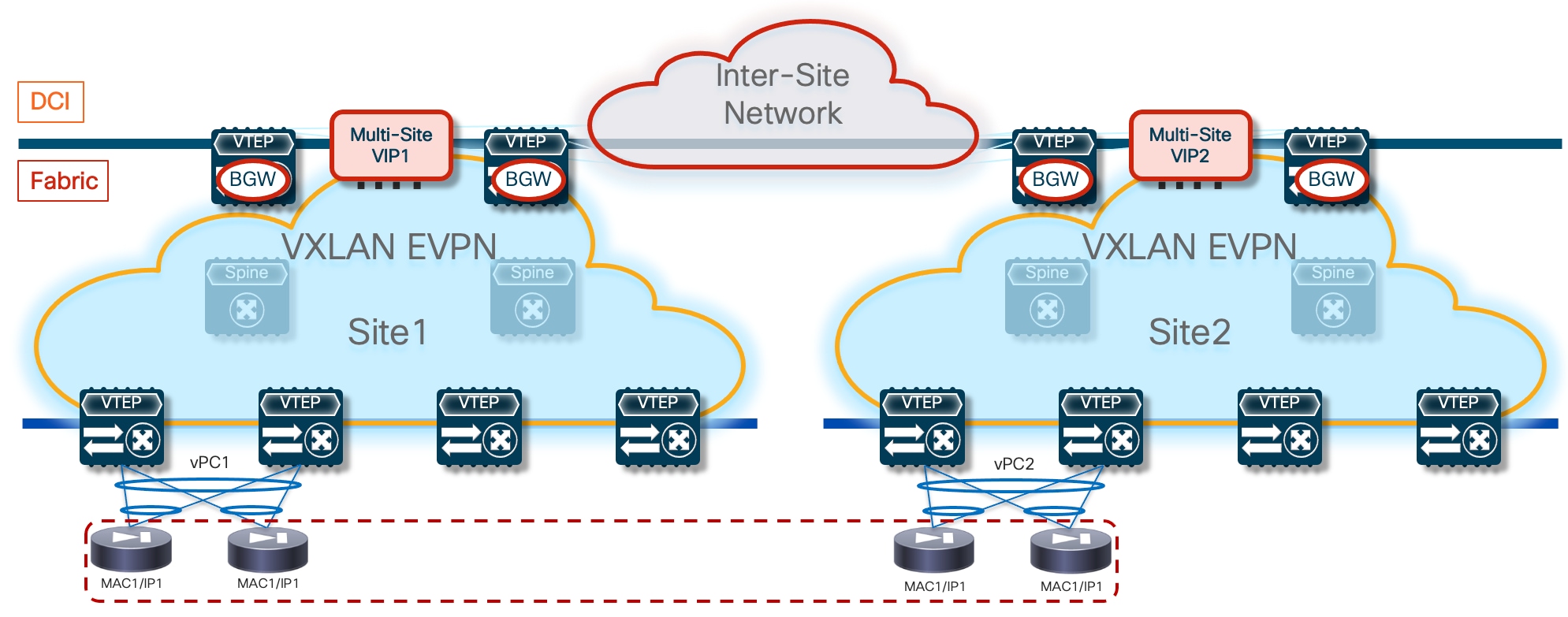

Split Spanned EtherChannels Active/Active Firewall Cluster Mode

The first option consists in “bundling” all the firewall nodes together and making them look like a single logical device to the network infrastructure. For this to happen, all the firewall nodes that are part of the same cluster must share a common virtual MAC and virtual IP addresses pair for each of their data interfaces. In the specific Cisco implementation, this firewall redundancy model is called “Spanned EtherChannels Cluster Mode” because all the firewall nodes that are part of the same cluster build local port-channels that are seen as a single logical connection on the network side (Figure 4).

In the above example, Cisco virtual Port-Channel (vPC) technology is used to implement the single logical connection between the fabric leaf nodes and the firewall nodes that are part of the cluster. Having a single vPC on the fabric side is critical to avoid continuous MAC flapping events across different interfaces, given that all the firewall nodes use the same MAC address to send traffic into the network. The obvious consequence is that all the firewall nodes that are part of the same active/active cluster must be connected to the same pair of vPC leaf nodes.

A communication channel is established between all the firewall nodes that are part of the same cluster leveraging a logical Cluster Control Link (CCL). The CCL is used for control plane activities (such as exchanging keepalives, synchronizing configuration information, etc.) but can also be leveraged for data-plane communication in the scenarios where the two directions of the same traffic flow are sent to different firewall nodes. In a non-cluster scenario, those asymmetric flows would normally be dropped, whereas the use of CCL allows you to redirect and stitch the two legs of the flow via the same firewall cluster node that owns the connection state for that specific flow.
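The redirection of asymmetric flows over the CCL can be pictured with a small model. The Python sketch below is a deliberate simplification and not the actual clustering implementation: the `Cluster` class, the `flow_key` helper, and the rule that the first node to see a flow becomes its owner are assumptions made for illustration.

```python
def flow_key(src_ip, src_port, dst_ip, dst_port, proto):
    """Build a direction-independent key so both legs of a flow map together."""
    low, high = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    return (low, high, proto)


class Cluster:
    """Toy model of connection ownership in an active/active cluster."""

    def __init__(self):
        self._owner = {}  # flow key -> node that holds the connection state

    def receive(self, node, src_ip, src_port, dst_ip, dst_port, proto="tcp"):
        key = flow_key(src_ip, src_port, dst_ip, dst_port, proto)
        # Assumption: the first node that sees a flow becomes its owner.
        owner = self._owner.setdefault(key, node)
        if owner == node:
            return f"{node}: processed locally"
        # The receiving node does not hold the state, so it hands the
        # packet to the owner over the Cluster Control Link.
        return f"{node}: redirected over CCL to {owner}"


cluster = Cluster()
# Forward leg lands on node1, which becomes the owner of the flow.
print(cluster.receive("node1", "10.1.1.10", 40000, "10.2.2.20", 443))
# Return leg lands on node2 and is stitched back to node1 via the CCL.
print(cluster.receive("node2", "10.2.2.20", 443, "10.1.1.10", 40000))
```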

Note: Discussing the details of the Active/Active clustering implementation is out of the scope of this paper. For more information please refer to the documentation available on cisco.com:

https://www.cisco.com/c/en/us/td/docs/security/asa/special/cluster-sec-fw/secure-firewall-cluster.html

From a provisioning perspective, all the configuration is always applied on one node part of the cluster, which assumes the role of the “Master” node. All the other nodes become “Slave” nodes, capable of locally forwarding traffic but receiving configuration information from the master node via the CCL.

The configuration example below shows how to build a Cisco active/active Spanned EtherChannel firewall cluster, specifying the minimum configuration required on the master and slave nodes.

Master Node

!Name the cluster

hostname FW-Cluster

!

! enable SSH access

enable password ***** pbkdf2

crypto key generate rsa general-keys modulus 2048

username admin password testpass

aaa authentication ssh console LOCAL

ssh 0.0.0.0 0.0.0.0 management

!

! Configure the cluster interface mode

cluster interface-mode spanned

!

! Define IP pool for Mgmt

ip local pool Mgmt 10.237.99.23-10.237.99.26 mask 255.255.255.224

!

! Enable and configure the interfaces used for CCL

interface GigabitEthernet0/0

channel-group 1 mode active

no nameif

no security-level

no ip address

!

interface GigabitEthernet0/1

channel-group 1 mode active

no nameif

no security-level

no ip address

!

! Assign a virtual Mgmt IP to the cluster (each node gets also a dedicated Mgmt IP)

interface Management0/0 management-only

nameif management

security-level 100

ip address 10.237.99.22 255.255.255.224 cluster-pool Mgmt

no shutdown

!

! Create a default route for management

route management 0.0.0.0 0.0.0.0 10.237.99.1

!

! Cluster configuration

cluster group cluster1

local-unit node1

cluster-interface Port-channel1 ip 192.168.1.1 255.255.255.0

priority 1

enable

!

! Enable jumbo-frame support on the CCL link

jumbo-frame reservation

mtu cluster 1654

Slave Node

! Enable and configure the interfaces used for CCL

interface GigabitEthernet0/0

channel-group 1 mode active

no nameif

no security-level

no ip address

!

interface GigabitEthernet0/1

channel-group 1 mode active

no nameif

no security-level

no ip address

!

! Cluster configuration

cluster group cluster1

local-unit node2

cluster-interface Port-channel1 ip 192.168.1.2 255.255.255.0

priority 2

enable

Once the firewall nodes discover each other via the CCL interfaces, they are bundled as part of the same cluster:

FW-Cluster# show cluster info

Cluster FW-Cluster: On

Interface mode: spanned

This is "1" in state MASTER

ID : 2

Version : 9.2(2)4

Serial No.: XXXXXXXXXXX

CCL IP : 192.168.1.1

CCL MAC : 006b.f11e.fd5f

Last join : 15:05:59 UTC Nov 22 2023

Last leave: 14:46:02 UTC Nov 22 2023

Other members in the cluster:

Unit "node2" in state SLAVE

ID : 0

Version : 9.2(2)4

Serial No.: XXXXXXXXXXX

CCL IP : 192.168.1.2

CCL MAC : 006b.f11f.2a74

Last join : 16:04:23 UTC Nov 22 2023

Last leave: 16:00:56 UTC Nov 22 2023

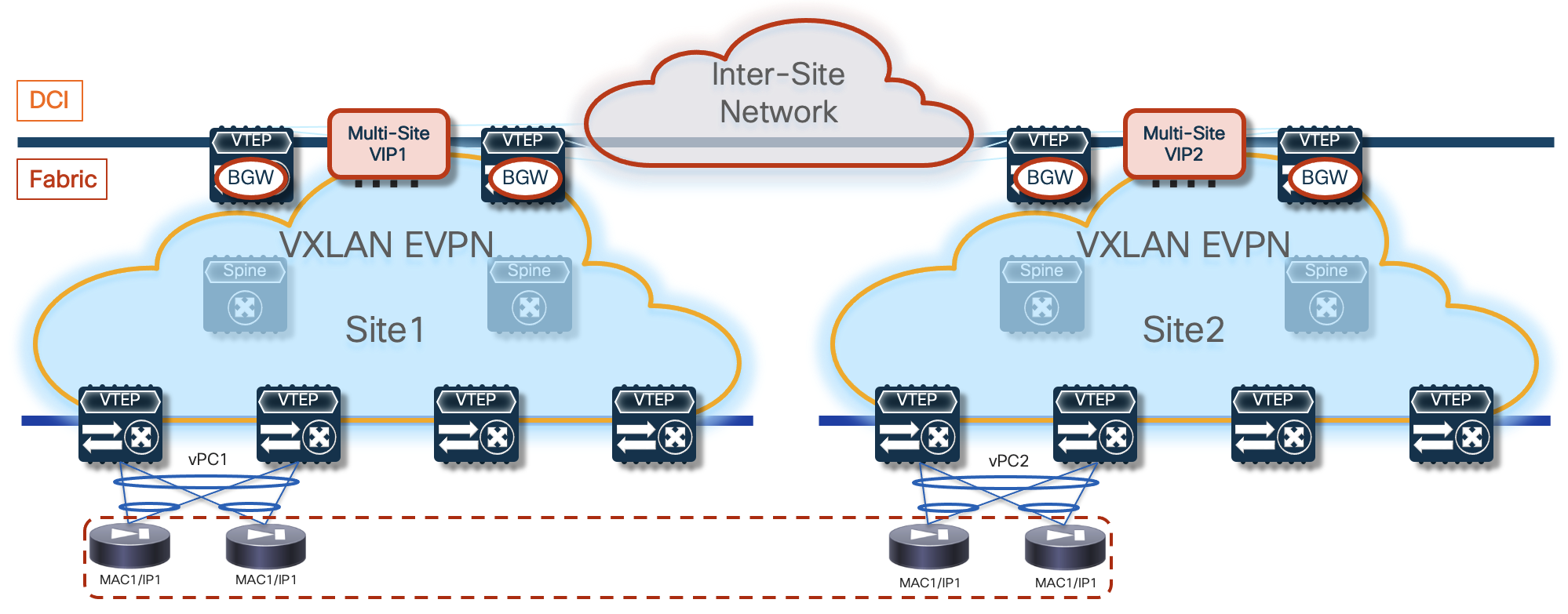

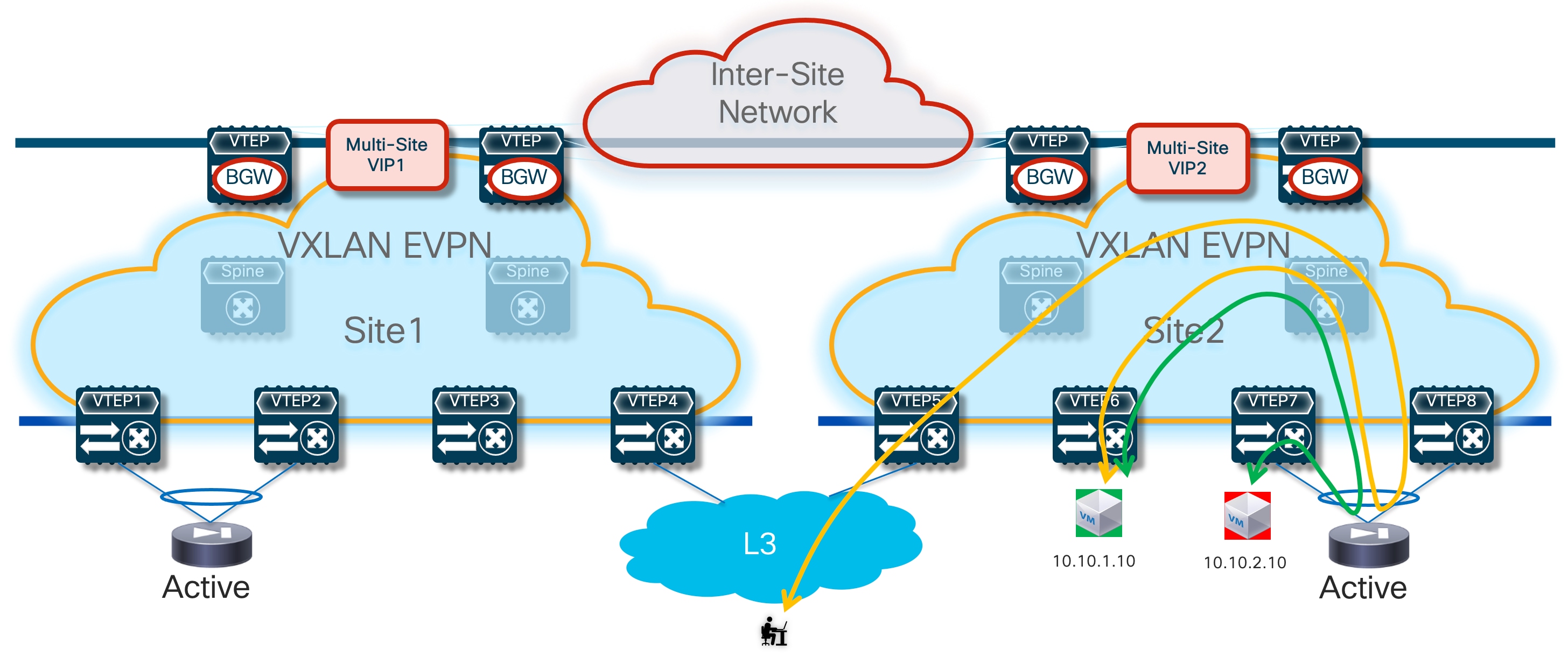

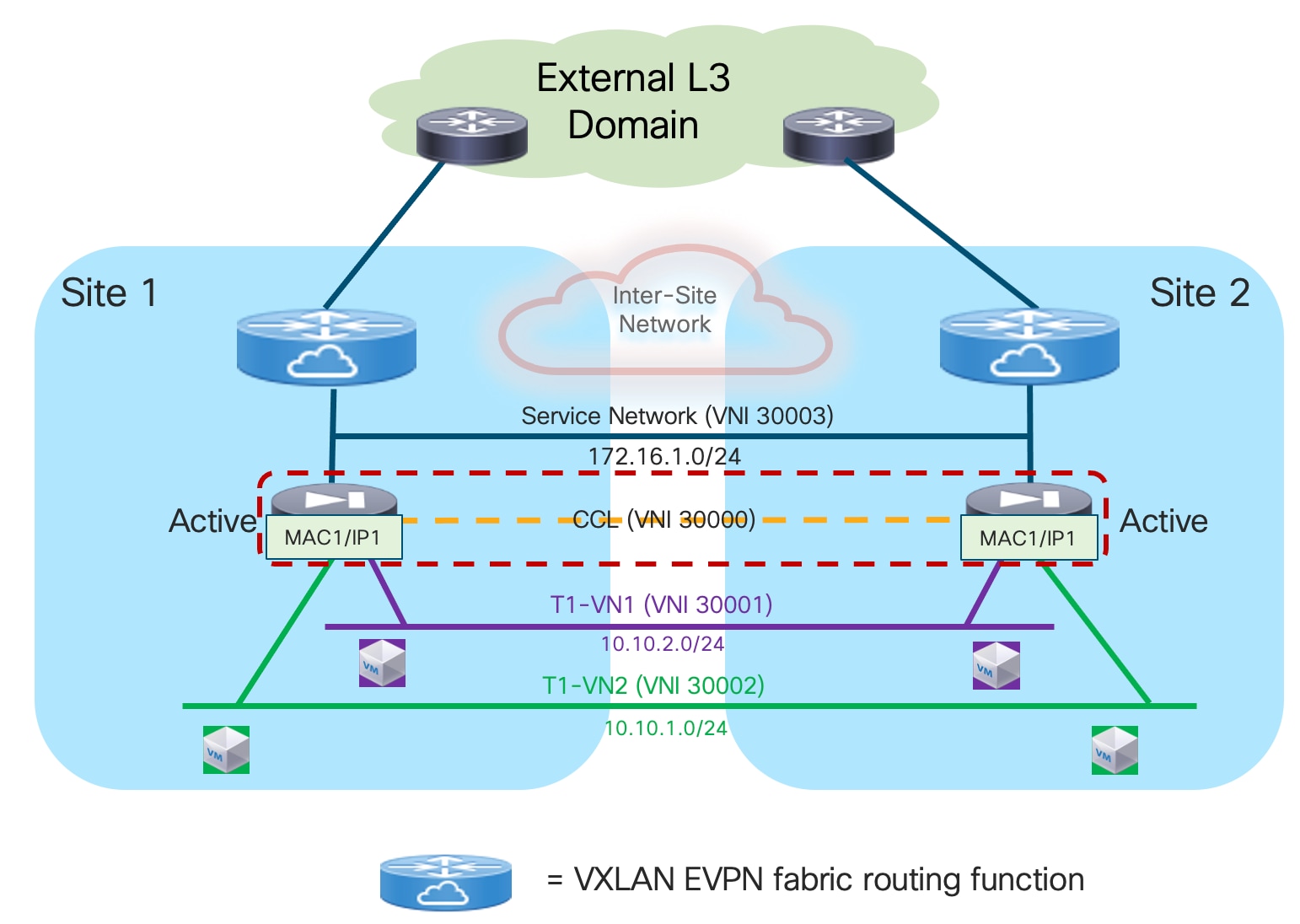

An exception to the above guideline (connecting all the firewall nodes to the same pair of leaf nodes) is required when the active/active cluster needs to be stretched across fabrics that are part of a Multi-Site domain. This design is called “Split Spanned EtherChannels”, as the same topology shown in the figure above can be used inside each fabric, essentially “splitting” the Spanned EtherChannels design (Figure 5).

The same requirement of connecting the firewall nodes to a single pair of leaf devices remains valid inside each fabric, but the firewall nodes must be connected to different leaf nodes across fabrics. Connectivity between nodes via CCL is still possible by ensuring that the CCL interfaces are mapped to a specific network (associated to an L2VNI segment) stretched across sites.

Note: As previously mentioned, CCL connectivity could also be achieved by leveraging a separate physical network infrastructure.

In the following sections of this document, we are going to cover different deployment models for this active/active firewall cluster. In all of those options, the data interface(s) defined on each firewall node that is part of the cluster share the same virtual MAC/virtual IP address (as shown in the figure above). Because of that, a specific challenge arises when stretching the cluster across different fabrics: how to handle the concurrent learning of the same vMAC/vIP information in different fabrics and how to prevent this from being seen as a continuous “live endpoint migration” event.

The specific solution adopted for VXLAN Multi-Site calls for the association of the logical vPC connections defined in each fabric to connect the firewall nodes (vPC1 and vPC2 in Figure 5) to a common Ethernet Segment (ES), leveraging the ESI based multi-homing functionality. This ensures that, at the Multi-Site level, learning of concurrent vMAC/vIP information on vPC1 and vPC2 is not considered a “move” event, as both connections are considered part of the same multi-homed logical segment and all the available paths are considered as “valid” to reach the firewall service.
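The effect of a shared Ethernet Segment on move detection can be illustrated with a toy endpoint table. The Python sketch below is a conceptual model only: the `EndpointTable` class, its return labels, and the location names are assumptions, and it does not reproduce the actual NX-OS learning logic. The ESI value is the one used later in this document.

```python
ZERO_ESI = "0000.0000.0000.0000.0000"  # ESI 0 means "not multi-homed"


class EndpointTable:
    """Toy MAC table that treats a shared non-zero ESI as extra paths."""

    def __init__(self):
        self._entries = {}  # mac -> {"esi": str, "locations": set of str}

    def learn(self, mac, location, esi=ZERO_ESI):
        entry = self._entries.get(mac)
        if entry is None:
            self._entries[mac] = {"esi": esi, "locations": {location}}
            return "new"
        if esi == entry["esi"] and location in entry["locations"]:
            return "refresh"
        if esi != ZERO_ESI and esi == entry["esi"]:
            # Same multi-homed segment: keep every path instead of moving.
            entry["locations"].add(location)
            return "additional-path"
        # Different segment (or no ESI): treated as an endpoint move.
        self._entries[mac] = {"esi": esi, "locations": {location}}
        return "move"

    def locations(self, mac):
        return sorted(self._entries[mac]["locations"])


FW_ESI = "0012.0000.0000.1200.0102"
table = EndpointTable()
print(table.learn("0000.aaaa.bbbb", "Site1-vPC1", FW_ESI))
print(table.learn("0000.aaaa.bbbb", "Site2-vPC2", FW_ESI))
print(table.locations("0000.aaaa.bbbb"))
```

Without the common ESI, the second `learn` call in this model would return `move`, which is the continuous migration event the design is meant to avoid.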

Note: Discussing the details of EVPN multi-homing is out of the scope of this paper. For more information, please refer to the documents below: https://www.rfc-editor.org/rfc/rfc7432.html

https://www.cisco.com/c/en/us/td/docs/dcn/nx-os/nexus9000/104x/configuration/vxlan/cisco-nexus-9000-series-nx-os-vxlan-configuration-guide-release-104x/m-interoperability-with-mvpn-multi-homing-using-esi.html

It is worth noticing how the use of EVPN multi-homing described in this document is specifically focused on the deployment of an active/active firewall stretched across VXLAN EVPN fabrics. Therefore, only a subset of the EVPN multi-homing functionalities discussed in the documents above are required. The points below discuss some functional considerations and provide the configuration required to enable this subset of functionalities.

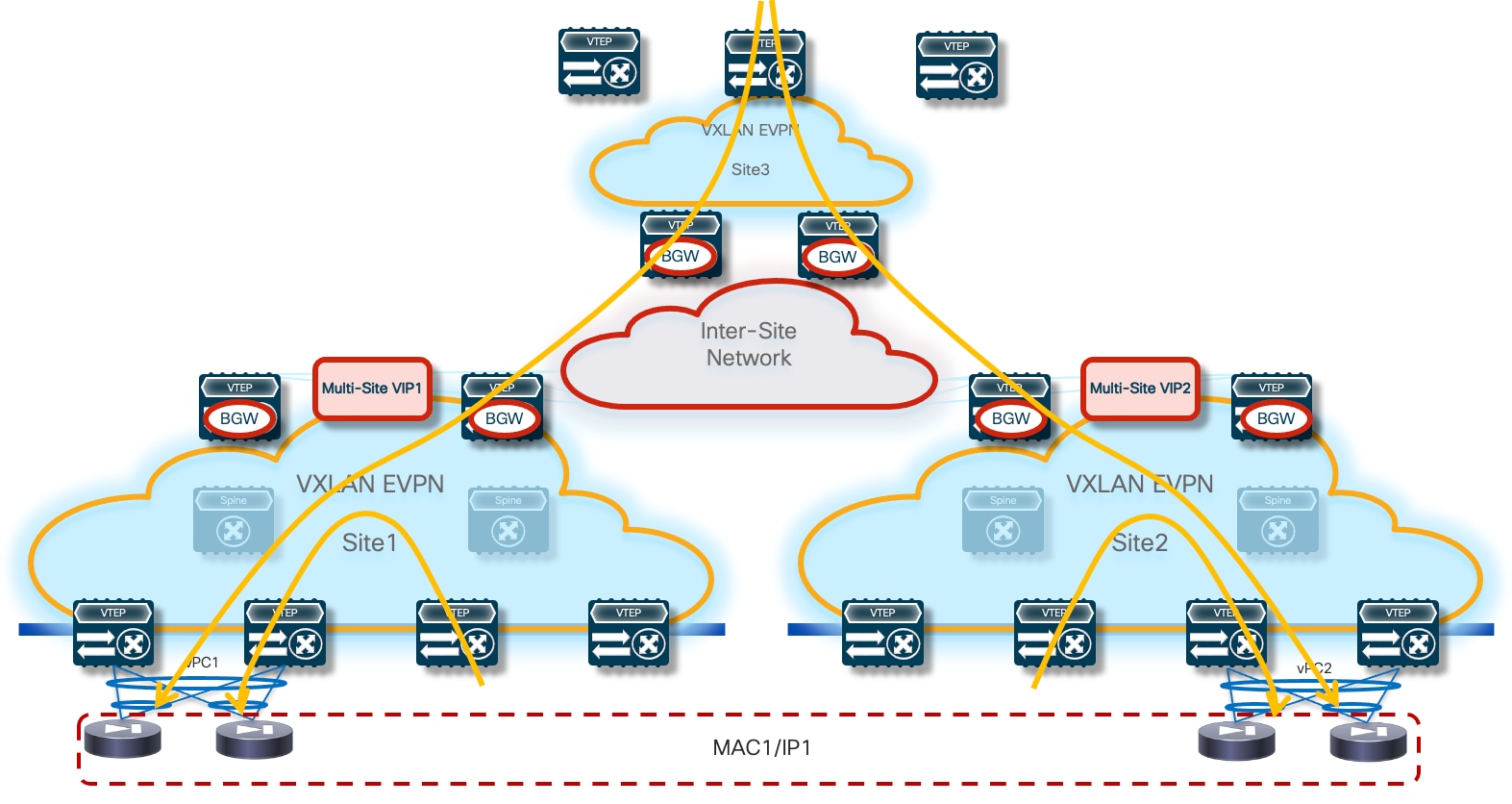

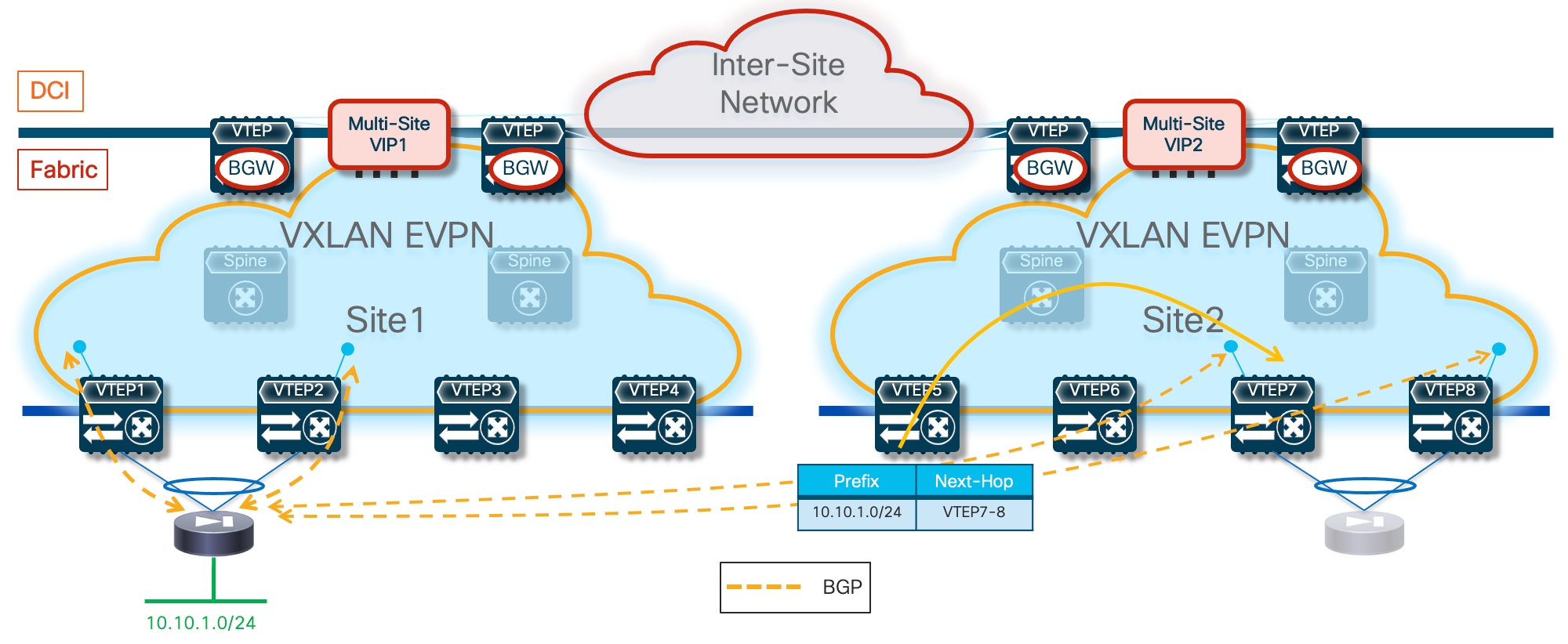

Because firewall nodes with the same vMAC/vIP addresses are connected to different sites, each VTEP device that is part of the Multi-Site domain should be able to properly handle that same vMAC/vIP information pointing to different locations (different fabrics) and determine the best path to access the firewall service. Figure 6 highlights a deployment of three VXLAN EVPN fabrics part of a Multi-Site domain, with the Active/Active firewall cluster stretched across Site 1 and 2.

Any communication originated in Site3 and requiring access to the firewall service would have two paths available, leading to the firewall nodes in Site1 or Site2. Load-balancing of traffic flows will be handled (on a per-flow basis) by the BGW nodes deployed in Site3. Communication initiated from Site1 or Site2 should instead always prefer the connectivity to one of the firewall nodes locally deployed in that site.

Note: On any sites that do not have a firewall service locally deployed (such as Site 3 in the example above), it is necessary to configure “bestpath AS-Path multipath-relax” as part of the BGP configuration on its BGWs so that the BGWs can treat the clustering routes received from remote sites’ BGWs as ECMPs. Without this configuration, the BGWs in Site 3 will not be able to install the remote routes as ECMPs because they will have been received from differing neighboring ASNs. It will also be necessary to configure “maximum-paths” under the L2VPN/EVPN address family so that multiple paths can be selected as ECMPs and installed in the forwarding table.
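The path selection behavior described above (local preference where a firewall node exists, per-flow load balancing elsewhere) can be sketched as follows. This Python example is a conceptual model under stated assumptions: the `pick_firewall_site` function and the SHA-256 based hash are stand-ins chosen for illustration and do not represent the hashing algorithm used by the switches.

```python
import hashlib


def pick_firewall_site(flow, local_site, firewall_sites):
    """Choose the site whose firewall nodes will receive a given flow."""
    if local_site in firewall_sites:
        # Sites with a local firewall node always prefer it.
        return local_site
    # Remote-only sites spread flows across all equal-cost paths.
    # The hash is computed per flow, so every packet of one flow
    # follows the same path.
    candidates = sorted(firewall_sites)
    digest = hashlib.sha256(repr(flow).encode("utf-8")).digest()
    index = int.from_bytes(digest[:4], "big") % len(candidates)
    return candidates[index]


sites_with_firewall = {"Site1", "Site2"}
# Hypothetical 5-tuple: source IP, destination IP, protocol, ports.
flow = ("10.3.1.10", "10.1.1.20", "tcp", 40000, 443)

print(pick_firewall_site(flow, "Site1", sites_with_firewall))  # local node
print(pick_firewall_site(flow, "Site3", sites_with_firewall))  # hashed choice
```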

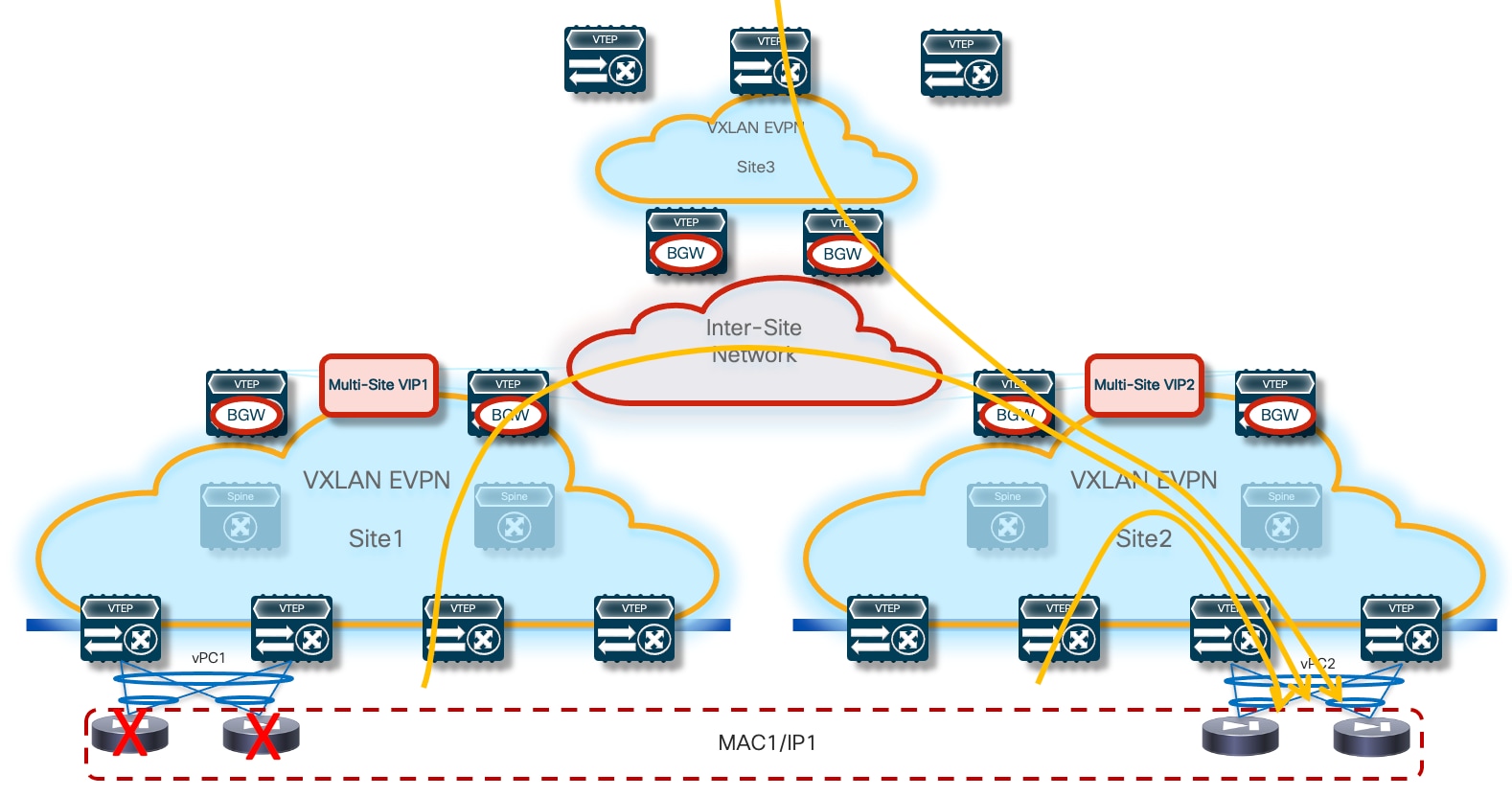

The leaf (and BGW) nodes deployed in Site1 (or Site2) also receive the vMAC/vIP information from the remote site; this ensures that in the specific scenario where all the firewall nodes deployed in a site fail (this could become more likely if a single firewall node was connected per site), traffic could be redirected to the remaining nodes still available in the other sites (Figure 7).

From an architectural perspective, the recommendation is to connect the firewall nodes to a pair of leaf nodes in each fabric and not to the Border Gateway nodes. This allows you to deploy the BGWs in Anycast mode without requiring making them part of a vPC domain (vPC BGW deployment model). If the goal is reducing the switches’ footprint, it is also possible to consolidate the spines and BGW functions on a common set of devices (instead of using a dedicated set of Anycast BGW nodes, as shown in the previous diagrams).

There are specific hardware and software considerations for the switches deployed as part of this solution, depending on the specific function they perform:

● The leaf nodes where the firewall nodes are connected and the BGW nodes must be able to propagate vMAC/vIP information to the rest of the network (across different VXLAN EVPN fabrics) leveraging EVPN multi-homing, a functionality sometimes referred to as “ESI TX”. All those nodes must therefore be Nexus 9000 FX2 platforms (or newer) running NX-OS release 10.1(2) (or newer).

● All the other leaf nodes must instead be able to receive and handle EVPN updates containing an ESI value other than 0, a functionality often referred to as “ESI RX”. This capability is available on all second-generation Nexus 9000 platforms (EX and newer) running NX-OS release 10.2(2)F as a minimum.

As previously mentioned, all the firewall nodes in the same site must connect to the same logical vPC connection defined on a pair of leaf nodes. The different vPCs used in different fabrics need then to be configured as part of the same ethernet-segment (ES), leveraging the simple configuration shown below.

interface port-channel 1

vpc 1

ethernet-segment vpc

esi 0012.0000.0000.1200.0102 tag 1012

The “vpc” keyword must be configured for the firewall clustering feature, because it removes the need to perform the Designated Forwarder (DF) election using EVPN Type-4 advertisements (the DF node is responsible for forwarding BUM traffic for each specific L2VNI segment). The normal vPC DF election is used instead.

The firewall cluster vMAC/vIP addresses are advertised into the multi-fabric control plane as EVPN Route-Type 2 with the ESI set to the configured value on each vPC port-channel interface. Inside each fabric, the next-hop for those routes is always going to be the vPC VIP defined for the local service leaf nodes. When this information is propagated outside of the fabric by the BGW nodes, the next-hop is going to be changed to the Multi-Site VIP address identifying all the BGW nodes in that specific site. Additionally, Ethernet Auto-Discovery (EAD) / Ethernet Segment (ES) information is also injected by the service leaf nodes as EVPN Route-Type 1. Specific configuration should be applied on the service leaf nodes and on the BGW nodes to be able to identify those messages and forward them consistently across fabrics.
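The next-hop handling described above can be summarized with a small data model. The Python sketch below is illustrative only: the `Type2Route` class, the two helper functions, and all MAC and IP addresses are hypothetical, and the ESI is shown as preserved on the assumption that the route-map configuration covered later in this section is in place.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Type2Route:
    """Simplified EVPN Route-Type 2 (MAC/IP) advertisement."""

    mac: str
    ip: str
    esi: str
    next_hop: str


def advertise_from_service_leaf(mac, ip, esi, vpc_vip):
    # Inside the fabric the next-hop is the vPC VIP of the service leaf pair.
    return Type2Route(mac=mac, ip=ip, esi=esi, next_hop=vpc_vip)


def readvertise_from_bgw(route, multisite_vip):
    # Toward remote sites the BGW rewrites the next-hop to the Multi-Site
    # VIP. The ESI is left untouched (assumes 'set esi unchanged' applies).
    return replace(route, next_hop=multisite_vip)


# Hypothetical addresses used only for illustration.
intra_fabric = advertise_from_service_leaf(
    mac="0000.aaaa.bbbb",
    ip="10.10.10.1",
    esi="0012.0000.0000.1200.0102",
    vpc_vip="10.11.0.10",
)
inter_fabric = readvertise_from_bgw(intra_fabric, multisite_vip="10.111.111.1")

print(intra_fabric.next_hop)
print(inter_fabric.next_hop)
print(inter_fabric.esi == intra_fabric.esi)
```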

As shown in the previous configuration sample, the clustering ethernet-segment defined on the vPC service leaf nodes has a 4-byte tag assigned to it. This tag has only local significance and will not be propagated by BGP outside of the originating leaf VTEP, so an originate-map route-map policy needs to be configured to match the tag and attach a community to all matching EVPN Route-Type 1 and 2 advertisements so they can be identified on the BGW nodes as belonging to the firewall cluster.

! Define the route-map to attach the community to T1/T2 routes

route-map SET_FW_COMMUNITY permit 10

match tag 1012

match evpn route-type 1 2

set community 12:10012

!

! Apply the route-map to the L2VPN EVPN address-family

router bgp 65001

address-family l2vpn evpn

originate-map SET_FW_COMMUNITY

On the BGWs of each site where the cluster nodes are deployed, specific route-maps are then defined to match the community applied by the service leaf nodes and to propagate the ESI value received with the routes across the site’s boundary without modifying it. Remote sites receiving the EAD/ES and MAC/IP routes containing the ESI information can then exercise the multi-homing logic to balance traffic across multiple sites attached to the same firewall cluster. Notice how different route-maps are applied on the internal EVPN peerings established with the spines and on the external EVPN peerings established with remote BGW nodes.

! Define a community-list to match the community applied on the service leaf nodes

ip community-list standard MATCH_FW_COMMUNITY seq 5 permit 12:10012

!

! Define the route-map to be associated to EVPN peerings with spines

route-map PRESERVE_ESI_Fabric permit 10

match community MATCH_FW_COMMUNITY exact-match

match evpn route-type 2

set esi unchanged

route-map PRESERVE_ESI_Fabric permit 15

!

! Define the route-map to be associated to EVPN peerings with remote BGWs

route-map PRESERVE_ESI_DCI permit 10

match community MATCH_FW_COMMUNITY exact-match

match evpn route-type 2

set esi unchanged

route-map PRESERVE_ESI_DCI permit 15

match community MATCH_FW_COMMUNITY exact-match

match evpn route-type 1

route-map PRESERVE_ESI_DCI deny 20

match evpn route-type 1

route-map PRESERVE_ESI_DCI permit 30

!

! Apply the defined route-maps to the spine and remote BGW neighbors

router bgp 65001

neighbor 10.12.0.3

remote-as 65001

update-source loopback0

address-family l2vpn evpn

send-community

send-community extended

route-map PRESERVE_ESI_Fabric out

neighbor 10.22.0.3

remote-as 65002

update-source loopback0

ebgp-multihop 5

peer-type fabric-external

address-family l2vpn evpn

send-community

send-community extended

route-map PRESERVE_ESI_DCI out

Note: Each BGW and vPC service leaf node must also configure “send-community” under the L2VPN/EVPN address-family of their BGP peers so that the community attached to the clustering routes can be propagated along the way. If there are route-reflectors and/or route servers, they must be configured accordingly as well.
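To make the evaluation order of the PRESERVE_ESI_DCI route-map easier to follow, the Python sketch below walks a route through the four sequences using ordinary first-match route-map semantics. It is a simplified model, not NX-OS behavior verified on a device: the function name and the tuple it returns are assumptions, and "exact-match" is modeled as the route carrying exactly the firewall community and nothing else.

```python
FW_COMMUNITY = "12:10012"


def preserve_esi_dci(route_type, communities):
    """Return (sequence, permitted, set_esi_unchanged) for an outbound route."""
    from_fw_cluster = set(communities) == {FW_COMMUNITY}
    if from_fw_cluster and route_type == 2:
        # permit 10: firewall MAC/IP routes leave the site with their ESI intact.
        return (10, True, True)
    if from_fw_cluster and route_type == 1:
        # permit 15: firewall EAD/ES routes are allowed across the site boundary.
        return (15, True, False)
    if route_type == 1:
        # deny 20: every other Route-Type 1 advertisement stays inside the site.
        return (20, False, False)
    # permit 30: all remaining routes are sent without further changes.
    return (30, True, False)


print(preserve_esi_dci(2, ["12:10012"]))
print(preserve_esi_dci(1, ["12:10012"]))
print(preserve_esi_dci(1, []))
print(preserve_esi_dci(5, []))
```

The ordering matters: sequence 15 must come before sequence 20, otherwise the EAD/ES routes of the firewall cluster would be dropped together with all other Route-Type 1 advertisements.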

At the end of the configuration, it is possible to verify that the firewall cluster has been properly formed by using the CLI command shown below (valid for a two nodes Active/Active cluster).

FW-Cluster# show cluster info

Cluster FW-Cluster: On

Interface mode: spanned

This is "node1" in state MASTER

ID : 0

Version : 9.2(2)4

Serial No.: XXXXXXXXXXX

CCL IP : 66.66.66.1

CCL MAC : 006b.f11f.2a74

Last join : 04:49:51 UTC Nov 25 2023

Last leave: 04:45:18 UTC Nov 25 2023

Other members in the cluster:

Unit "2" in state SLAVE

ID : 2

Version : 9.2(2)4

Serial No.: XXXXXXXXXXX

CCL IP : 66.66.66.2

CCL MAC : 006b.f11e.fd5f

Last join : 05:13:10 UTC Nov 25 2023

Last leave: 05:10:06 UTC Nov 25 2023

Individual Interface Active/Active Cluster Mode

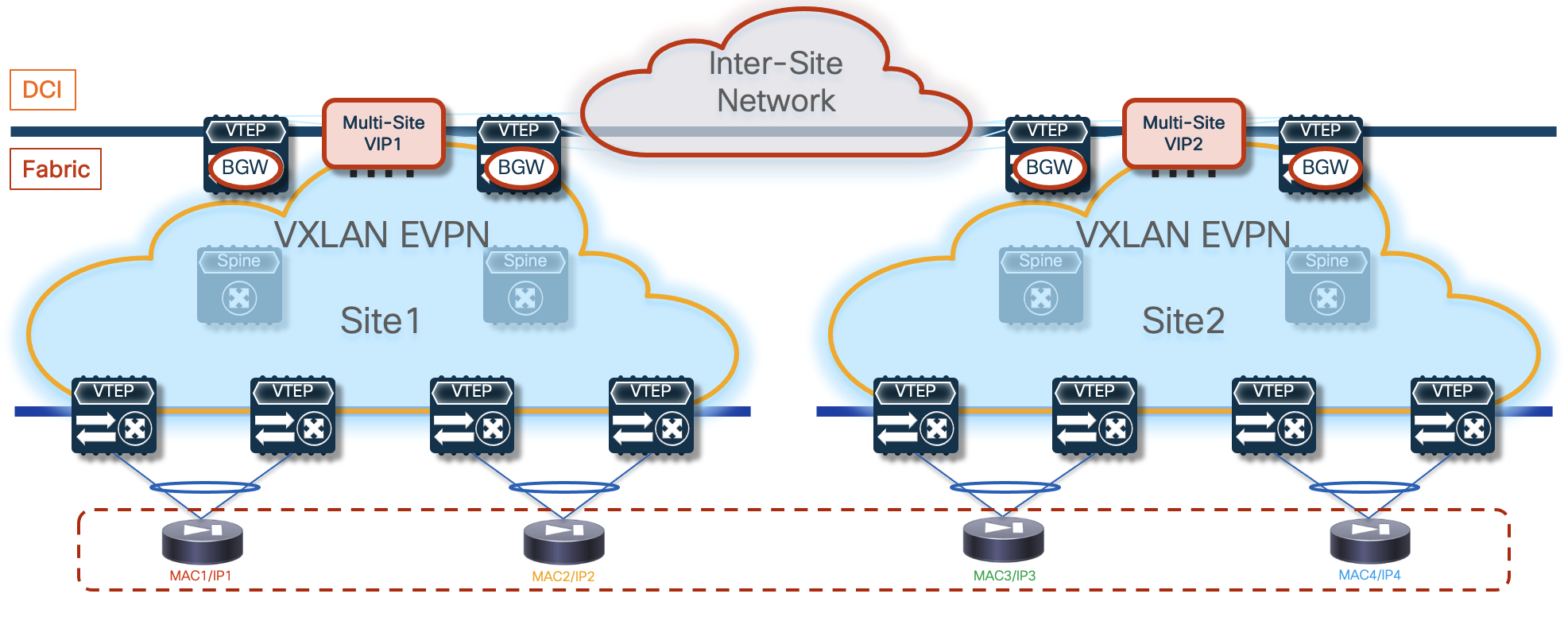

In this second option, each firewall node maintains its own identity (in terms of MAC and IP addresses) for each locally defined data interface. As displayed in Figure 8 below, this provides more flexibility on how to connect the firewall nodes to the fabric, removing the need to use the same vPC pair of devices in each fabric.

The use of the Cluster Control Link also applies to this redundancy model and allows you to achieve the same benefits previously described for the Split Spanned EtherChannel mode.

Below is the configuration that must be applied to the cluster’s control node and to the data nodes in order to ensure the cluster can be successfully formed. The initial configuration for the control node includes the bootstrap configuration followed by the interface configuration that will be replicated to the data nodes that are part of the same cluster.

Control Node

A few important considerations for the configuration required on the control node:

● The cluster interface mode must now be configured as “individual” to distinguish the Active/Active cluster deployment model from the previously described “Split Spanned EtherChannel” mode.

● IP pools must be defined to assign the IP addresses to each node that is part of the cluster. The range must ensure that one address is available for the control node and all the data nodes.

● The control node defines a unique IP address for its management and data interfaces (Mgmt and one-arm in the example below) that is not included in the specified ranges. Those IP addresses are always reachable on the active control node and move to another node of the cluster if the active control node fails. This “virtual IP” is not required for the interface used as cluster control link, because that IP address is configured on each node of the cluster as part of its specific “cluster group” configuration.

● Because the cluster control link traffic includes data packet forwarding, the cluster control link needs to accommodate the entire size of a data packet plus cluster traffic overhead (100 bytes). It is hence recommended to increase the MTU associated with the CCL interface to accommodate this extra information. Doing so requires jumbo frame reservation (see the “jumbo-frame reservation” command).

Note: The control node must be reloaded after entering the commands required to increase MTU on the CCL link.

! Name the cluster

hostname FW-Cluster

!

! enable SSH access

enable password ***** pbkdf2

crypto key generate rsa general-keys modulus 2048

username admin password testpass

aaa authentication ssh console LOCAL

ssh 0.0.0.0 0.0.0.0 management

!

! Configure the cluster interface mode

cluster interface-mode individual

!

! Define IP pools

ip local pool CCL 192.168.1.1-192.168.1.4 mask 255.255.255.0

ip local pool one-arm 172.16.1.11-172.16.1.14 mask 255.255.255.0

ip local pool Mgmt 10.237.99.23-10.237.99.26 mask 255.255.255.224

!

! Enable and configure the interfaces

interface GigabitEthernet0/0

no shutdown

!

interface GigabitEthernet0/1

nameif one-arm

security-level 0

ip address 172.16.1.10 255.255.255.0 cluster-pool one-arm

no shutdown

!

interface Management0/0

management-only

nameif management

security-level 100

ip address 10.237.99.22 255.255.255.224 cluster-pool Mgmt

no shutdown

!

! Create a default route for management

route management 0.0.0.0 0.0.0.0 10.237.99.1

!

! Cluster configuration

cluster group cluster1

local-unit node1

cluster-interface GigabitEthernet0/0 ip 192.168.1.1 255.255.255.0

priority 1

enable

!

jumbo-frame reservation

mtu cluster 1654
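The address pools and the CCL MTU in the control node configuration above can be sanity-checked with a few lines of Python. This is only a sketch: the helper names are hypothetical, the node count of 4 matches the four-node cluster shown later in this section, and the 1500-byte data MTU is an assumption, not a value stated in this paper.

```python
import ipaddress

def pool_addresses(first, last):
    """Expand an 'ip local pool' range (first-last) into individual addresses."""
    start, end = ipaddress.IPv4Address(first), ipaddress.IPv4Address(last)
    return [ipaddress.IPv4Address(i) for i in range(int(start), int(end) + 1)]

def check_pool(first, last, mask, virtual_ip, node_count):
    """Return a list of problems; an empty list means the pool looks consistent."""
    problems = []
    pool = pool_addresses(first, last)
    subnet = ipaddress.IPv4Network(f"{virtual_ip}/{mask}", strict=False)
    if len(pool) < node_count:
        problems.append("pool has fewer addresses than cluster nodes")
    if ipaddress.IPv4Address(virtual_ip) in pool:
        problems.append("virtual IP overlaps the pool")
    if any(addr not in subnet for addr in pool):
        problems.append("pool address outside the interface subnet")
    return problems

def min_ccl_mtu(data_mtu, overhead=100):
    """Data packet size plus the 100 bytes of cluster overhead mentioned above."""
    return data_mtu + overhead

NODES = 4        # assumption: control node plus three data nodes
DATA_MTU = 1500  # assumption: default data interface MTU

one_arm = check_pool("172.16.1.11", "172.16.1.14", "255.255.255.0", "172.16.1.10", NODES)
mgmt = check_pool("10.237.99.23", "10.237.99.26", "255.255.255.224", "10.237.99.22", NODES)
print("one-arm pool problems:", one_arm)
print("Mgmt pool problems:", mgmt)
print("configured CCL MTU is sufficient:", 1654 >= min_ccl_mtu(DATA_MTU))
```

Under those assumptions, both pools provide one address per node and the configured “mtu cluster 1654” leaves room for the cluster overhead.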

Data Nodes

For each data node, the only configuration required to ensure that each data node can join the cluster with the control node (and receive additional required configuration) is the “cluster group” configuration shown below.

! Configure the cluster interface mode

cluster interface-mode individual

!

! Enable CCL interface

interface GigabitEthernet0/0

no shutdown

!

! Cluster configuration

cluster group cluster1

local-unit node2

cluster-interface GigabitEthernet0/0 ip 192.168.1.2 255.255.255.0

priority 2

enable

Note: The only parameters to change on the different data nodes are the “local-unit” name, the cluster interface IP address, and the priority.
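Because only three values differ between data nodes, the bootstrap configuration lends itself to templating. The sketch below renders the data node configuration shown above for a given node index; the function name and the convention of deriving the unit name, CCL address, and priority from the same index are assumptions made for illustration.

```python
def data_node_config(index, ccl_prefix="192.168.1.", ccl_mask="255.255.255.0",
                     group="cluster1", ccl_interface="GigabitEthernet0/0"):
    """Render the bootstrap configuration for data node number `index`."""
    lines = [
        "cluster interface-mode individual",
        "!",
        f"interface {ccl_interface}",
        " no shutdown",
        "!",
        f"cluster group {group}",
        f" local-unit node{index}",                                        # per-node value
        f" cluster-interface {ccl_interface} ip {ccl_prefix}{index} {ccl_mask}",  # per-node value
        f" priority {index}",                                              # per-node value
        " enable",
    ]
    return "\n".join(lines)

# Render the configuration for the three data nodes of a four-node cluster.
for node_index in (2, 3, 4):
    print(data_node_config(node_index))
    print()
```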

At the end of the configuration, it is possible to verify that the firewall cluster has been properly formed by using the CLI command shown below.

FW-Cluster# show cluster info

Cluster cluster1: On

Interface mode: individual

Cluster Member Limit : 16

This is "node1" in state CONTROL_NODE

ID : 0

Version : 9.19(1)

Serial No.: XXXXXXXXXXX

CCL IP : 192.168.1.1

CCL MAC : 0050.56b7.5ece

Module : ASAv

Resource : 4 cores / 8192 MB RAM

Last join : 21:27:34 UTC Oct 27 2023

Last leave: 21:26:30 UTC Oct 27 2023

Other members in the cluster:

Unit "node2" in state DATA_NODE

ID : 1

Version : 9.19(1)

Serial No.: XXXXXXXXXXX

CCL IP : 192.168.1.2

CCL MAC : 0050.56b7.7bca

Module : ASAv

Resource : 4 cores / 8192 MB RAM

Last join : 21:18:42 UTC Oct 27 2023

Last leave: N/A

Unit "node3" in state DATA_NODE

ID : 2

Version : 9.18(3)56

Serial No.: XXXXXXXXXXX

CCL IP : 192.168.1.3

CCL MAC : 0050.56b7.8c90

Module : ASAv

Resource : 4 cores / 8192 MB RAM

Last join : 21:20:05 UTC Oct 27 2023

Last leave: N/A

Unit "node4" in state DATA_NODE

ID : 3

Version : 9.18(3)56

Serial No.: XXXXXXXXXXX

CCL IP : 192.168.1.4

CCL MAC : 0050.56b7.f68f

Module : ASAv

Resource : 4 cores / 8192 MB RAM

Last join : 21:22:00 UTC Oct 27 2023

Last leave: N/A
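When this check is automated, the output above can be reduced to the unit names, states, and software versions. The following is a minimal parsing sketch, assuming the text format shown above; the regular expressions and the shortened sample are illustrative and not part of any Cisco tooling.

```python
import re

# Shortened sample in the same format as the output above.
SAMPLE = '''\
This is "node1" in state CONTROL_NODE
Version : 9.19(1)
Unit "node2" in state DATA_NODE
Version : 9.19(1)
Unit "node3" in state DATA_NODE
Version : 9.18(3)56
'''

UNIT_RE = re.compile(r'(?:This is|Unit) "(?P<name>[^"]+)" in state (?P<state>\S+)')
VERSION_RE = re.compile(r'Version\s*:\s*(?P<version>\S+)')

def parse_cluster_info(text):
    """Return one dict per unit with its name, state, and version."""
    units = []
    for raw in text.splitlines():
        line = raw.strip()
        unit = UNIT_RE.match(line)
        if unit:
            units.append({"name": unit["name"], "state": unit["state"], "version": None})
            continue
        version = VERSION_RE.match(line)
        if version and units:
            units[-1]["version"] = version["version"]  # belongs to the last unit seen
    return units

units = parse_cluster_info(SAMPLE)
control_nodes = [u["name"] for u in units if u["state"] == "CONTROL_NODE"]
versions = {u["version"] for u in units}
print("control node(s):", control_nodes)
print("distinct versions:", sorted(versions))
```

A healthy cluster is expected to report exactly one control node. The set of distinct versions simply makes mixed software releases, such as those visible in the output above, easy to spot.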

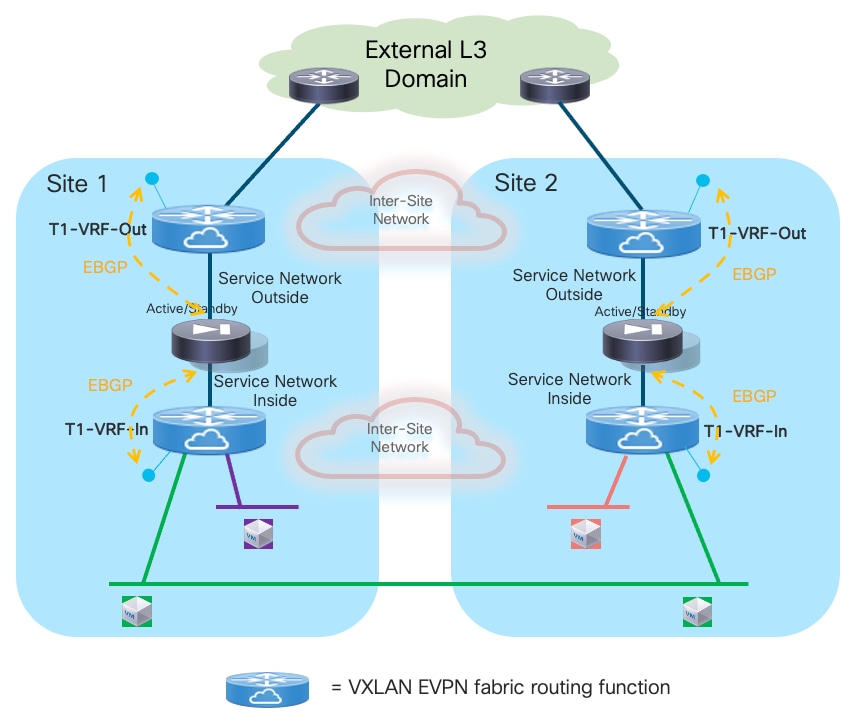

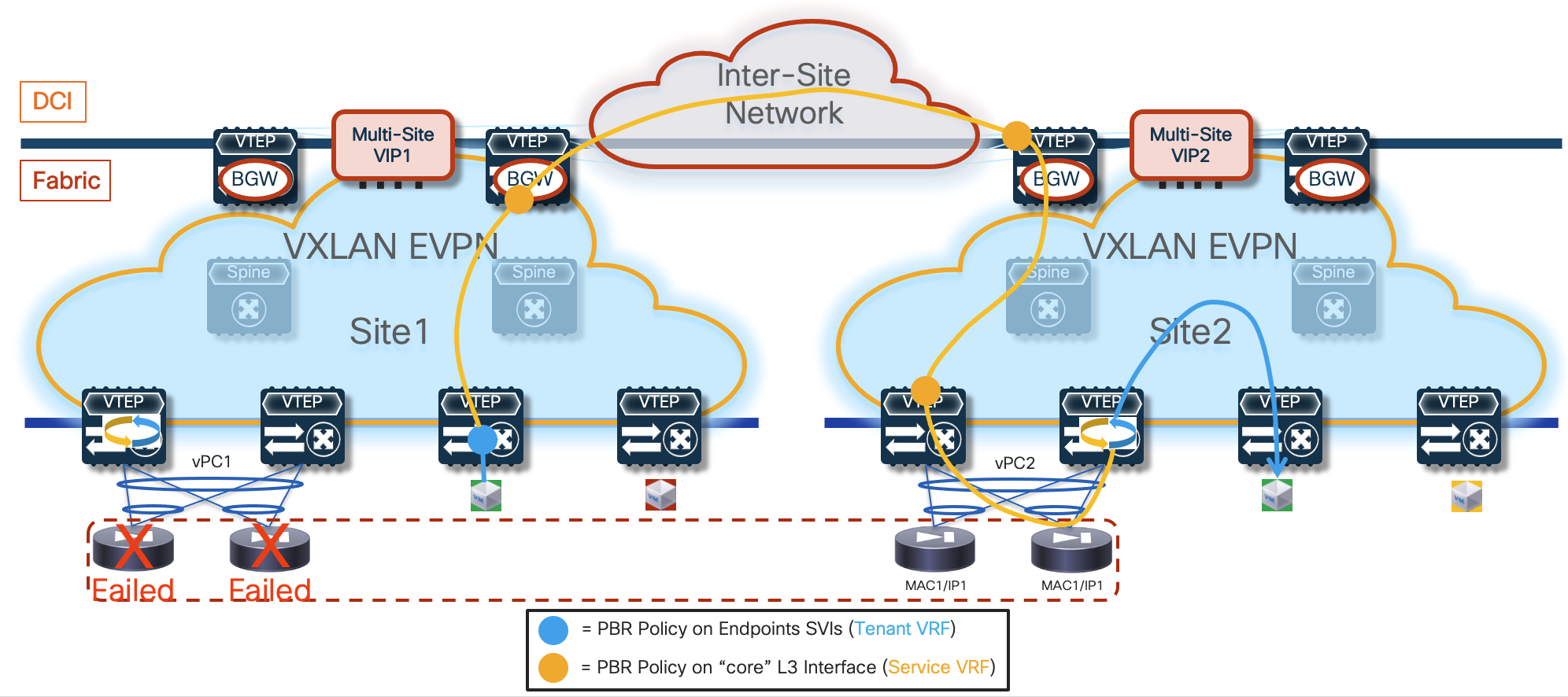

Independent Firewall Services Deployed per Site

This redundancy model is desirable when the goal is to operate the different fabrics in a more “loosely coupled” fashion, removing the need to extend networks across sites for the clustering of service nodes connected to different fabrics. This means that the redundancy of the firewall function must be handled at the single fabric level, which can be achieved by leveraging either of the two clustering options described in the previous sections or by deploying different independent firewall nodes in a given fabric.

Important Considerations for Deploying This Redundancy Model

First, it is possible to “mix and match” the redundancy models used inside each fabric. For example, Figure 10 shows a scenario where an Active/Standby cluster is deployed in fabric 1, whereas an Active/Active cluster is used in fabric 2.

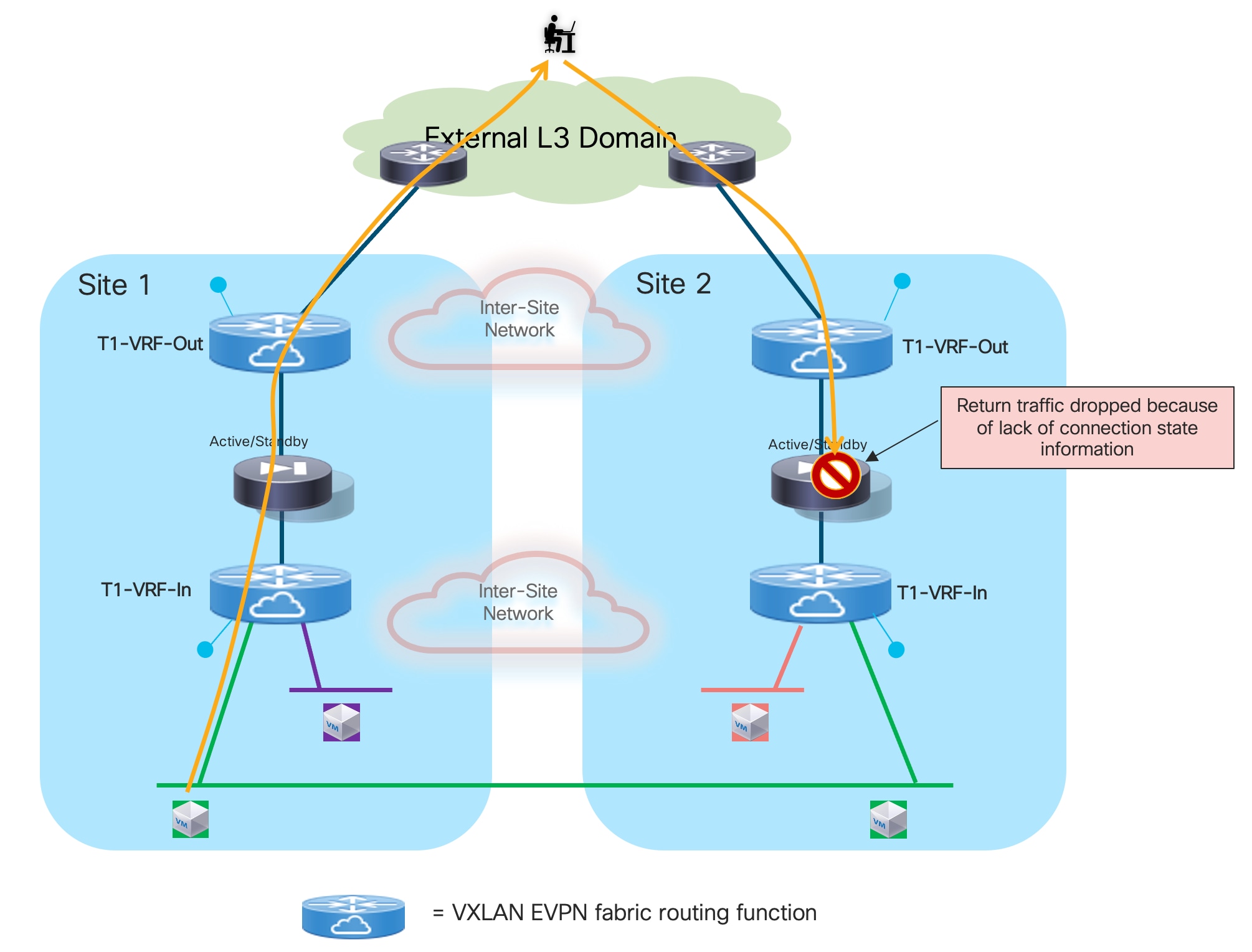

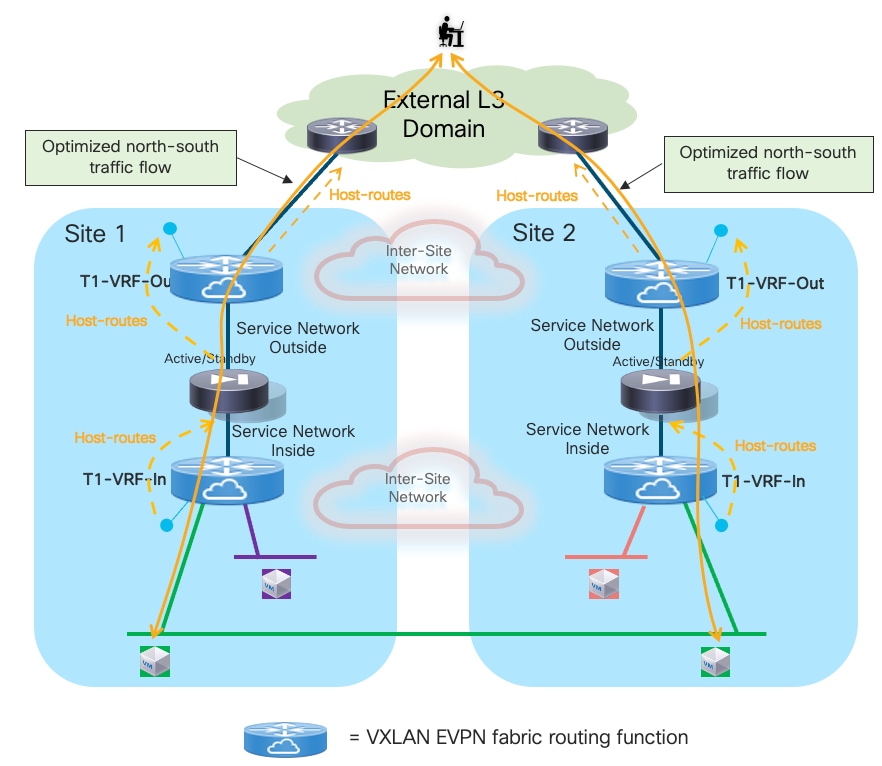

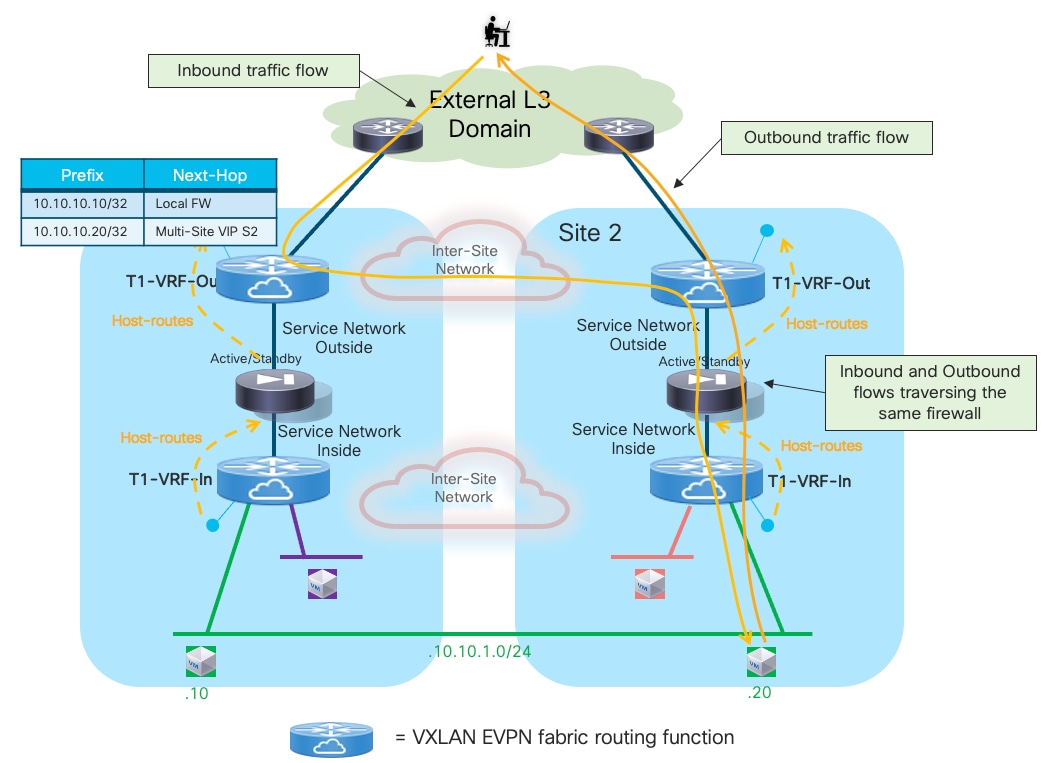

The deployment of independent active nodes inside the same fabric shown on the right of Figure 9 implies that there is no use of any clustering capability between the firewall nodes. Therefore, it becomes the responsibility of the network deployment to ensure that the two legs of the same traffic flow can be stitched through the same firewall node that owns the connection state for that communication. As will become clear in the rest of this paper, doing this via traditional routing configuration becomes quite challenging, and the use of enhanced Policy-Based Redirection (ePBR) is the recommended solution to achieve this goal.

Similar considerations apply to the firewall services deployed across sites, even if a clustering redundancy model is used inside each fabric (as shown in Figure 10). It is mandatory to avoid creating asymmetric traffic paths via the independent firewall services deployed in separate sites, which would result in stateful traffic flows being dropped because connection state is not synchronized between those devices. ePBR provides the easiest answer to this requirement as well. This also implies that, depending on the specific use case under consideration, live migration of endpoints across sites may not preserve the stateful sessions established with the firewall node(s) in the original fabric.
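The reason asymmetric paths break stateful flows can be illustrated with a toy model of two independent firewalls that do not share connection state. This is a deliberately simplified sketch: the class, the firewall names, and the IP addresses are hypothetical, and a real firewall tracks far more than an address pair.

```python
class StatefulFirewall:
    """Toy stateful firewall: return traffic is allowed only for known connections."""

    def __init__(self, name):
        self.name = name
        self.connections = set()  # local state, not synchronized with other nodes

    def forward(self, src, dst, is_initial):
        flow = frozenset((src, dst))  # same key for both directions of the flow
        if is_initial:
            self.connections.add(flow)  # first packet creates the connection entry
            return True
        return flow in self.connections  # return leg needs an existing entry

fw_site1 = StatefulFirewall("fw-site1")
fw_site2 = StatefulFirewall("fw-site2")

# Symmetric path: both legs of the flow cross the firewall in Site1.
request_ok = fw_site1.forward("10.10.10.5", "192.0.2.9", is_initial=True)
reply_ok = fw_site1.forward("192.0.2.9", "10.10.10.5", is_initial=False)

# Asymmetric path: the reply is steered to the firewall in Site2, which has no state.
reply_via_site2_ok = fw_site2.forward("192.0.2.9", "10.10.10.5", is_initial=False)

print(request_ok, reply_ok, reply_via_site2_ok)
```

The last call fails because the Site2 firewall never saw the first packet of the flow, which is the condition that the redirection design must prevent.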

Finally, another consequence of the lack of clustering of services across sites is the requirement of consistently defining the configuration and the security policies on all the firewall nodes deployed across sites. One way to address this is by using a common tool managing the various firewall instances deployed in different fabrics.

Now that we’ve introduced the most common firewall redundancy models, the next sections discuss how to use them in the following distinct deployment scenarios:

● Firewall as Default Gateway connected to the northbound network either via static routing or using a routing protocol.

● Firewall deployed as “perimeter service” to apply security policy to all the flows leaving (or entering) a specific tenant/VRF domain.

● Use of enhanced Policy-Based Redirection (ePBR) to stitch traffic flows between endpoints through a routed firewall service (both for North-South and East-West communication). In this case, the recommendation is to connect the firewall service in one-arm mode to the fabric in order to simplify the routing configuration on the firewall itself.

The deployment of the Firewall function as default gateway for the endpoints connected to the fabric is appropriate when the requirement is the creation of small security zones mapping to each specific IP subnet (on a per VRF level), as shown in the logical representation in Figure 11:

In this case, VXLAN Multi-Site only needs to provide Layer-2 connectivity between the endpoints connected to the different subnets and the default gateway function implemented on the Firewall device.

In a multi-VRF (multi-tenant) deployment, a separate firewall service (physical or logical) is usually assigned to each tenant/VRF, to separate the operational duties for the different tenants and simplify the application of inter-VRF security policies. Most of the firewall products available on the market (including Cisco ASA and FTD models) support device virtualization capabilities that allow you to assign different virtual devices (usually referred to as “contexts”) to each tenant/VRF.

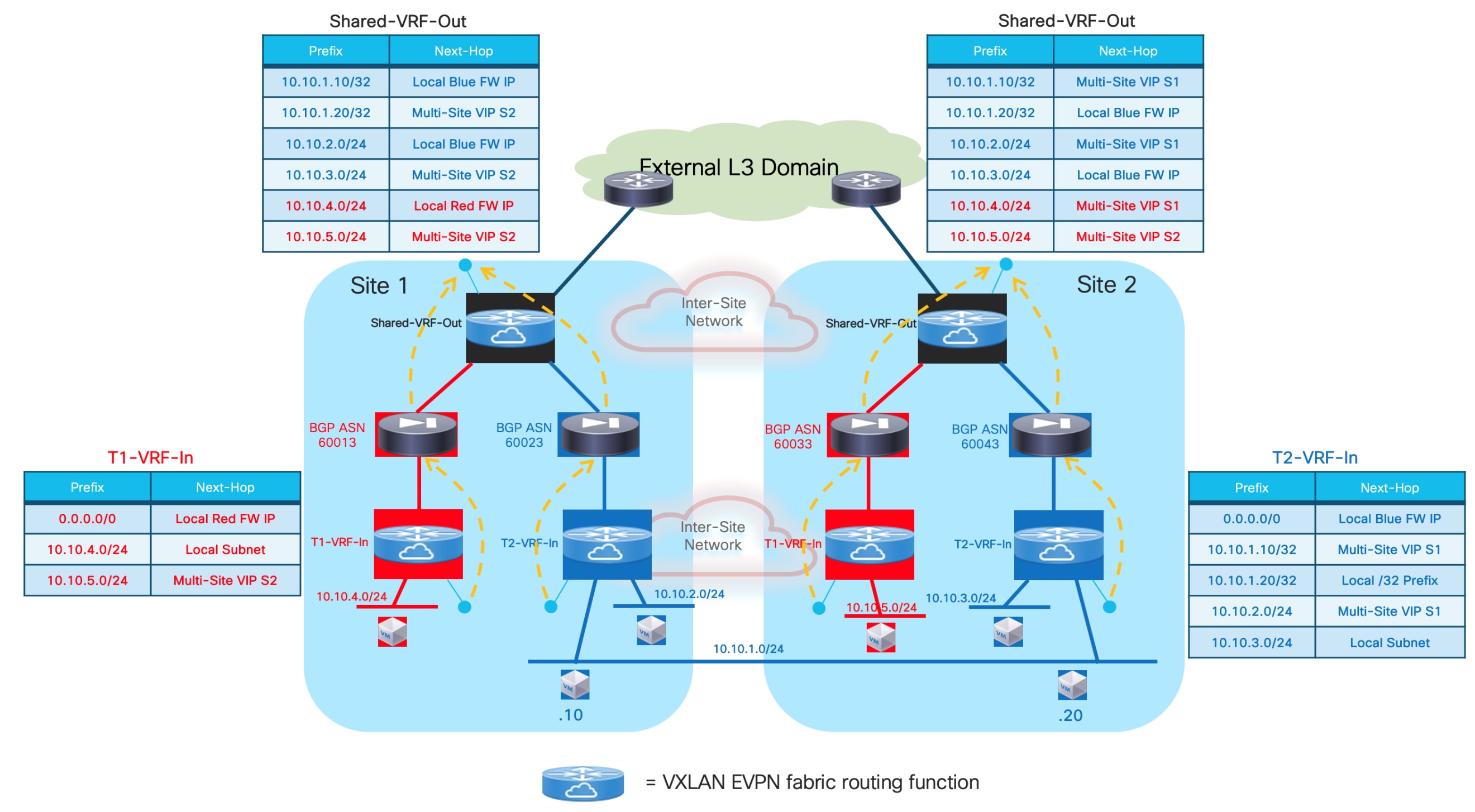

All traffic that needs to leave a specific VRF domain is then sent from the firewall toward a northbound device, based on static routing information configured on the firewall or through the dynamic exchange of reachability information via a routing protocol (IGP or BGP). The northbound device provides a “Fusion” function to connect IP subnets that are part of different tenants/VRFs and to allow connectivity between each VRF routing domain and the external network domain, as highlighted in Figure 12:

The “Fusion” function could be deployed directly on the VXLAN EVPN fabrics (using a dedicated “Outside VRF”) or on physical external devices. Because firewalls are often physically connected to service leaf nodes in the fabric, the definition of an outside VRF is quite common (and recommended) as it allows you to leverage the Layer 3 forwarding capabilities of the fabric, rather than using it only as a Layer 2 service to interconnect the firewall with the external router.

The establishment of the communication patterns shown in the figure above requires the exchange of reachability information between the firewall nodes and the devices performing the “Fusion” function. This can be achieved by leveraging static routing or a dynamic routing protocol, as clarified in the sections below.

When considering the firewall redundancy models discussed in the previous sections of this paper, only the clustering options (Active/Standby or Active/Active) are considered (and recommended) when the firewall is deployed as default gateway. This is because ensuring that the two legs of a given traffic flow are steered through the same firewall node becomes quite challenging if independent firewall services are deployed across different fabrics (and it is also usually not possible to define the same default gateway function on different firewall devices connected in separate sites).

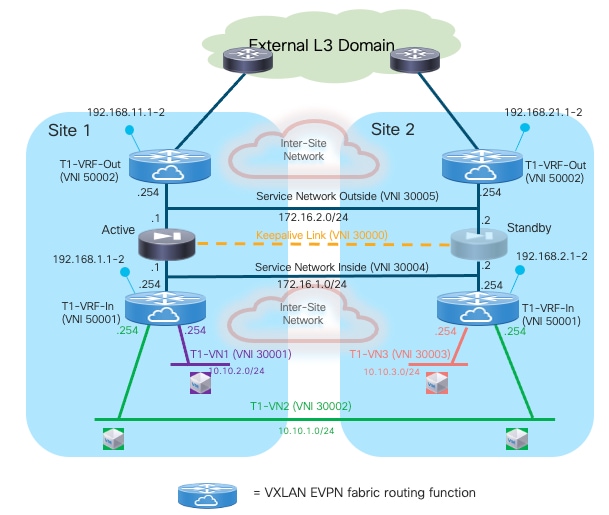

Active/Standby Firewall Cluster as Default Gateway Stretched across Sites

The first redundancy model considered for the firewall as default gateway option is the deployment of an Active/Standby firewall cluster stretched across sites. As mentioned above, this requires establishing Layer 3 connectivity between the active firewall node and a northbound Layer 3 network device.

The following two sections focus on the use case where the northbound device is represented by a dedicated stretched VRF deployed in the VXLAN Multi-Site domain. For the connectivity with the firewall, we cover both the options of static routing and the use of a dynamic routing protocol (BGP).

Note: BGP is always the recommended routing protocol to connect Layer 3 devices (firewall, load-balancer, external routers) to the fabric because of the many advanced functionalities offered by this protocol in terms of prefix filtering, routing loop avoidance, etc.

Use of Static Routing between the Firewall and the Leaf Nodes

Given that all communication between the endpoints and the rest of the network (i.e. the external network domain or a different Tenant/VRF routing domain) is always enforced via the active firewall node functioning as default gateway, the simplest approach consists in using static routing between the active firewall and the northbound network.

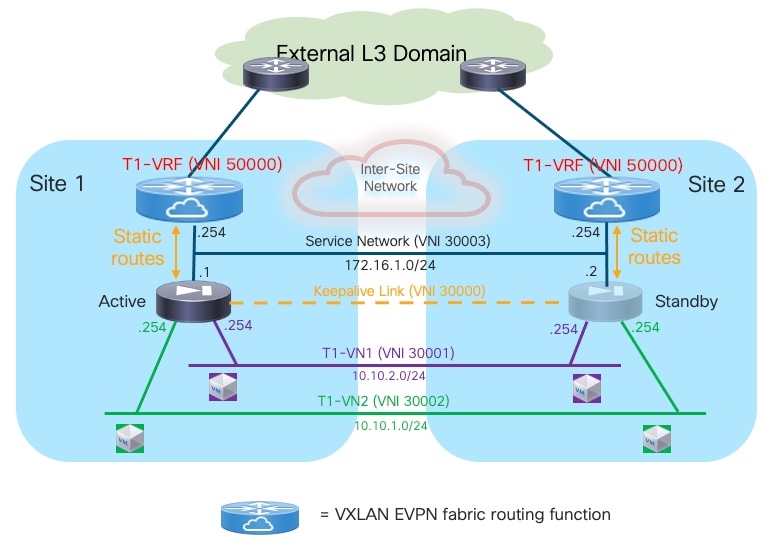

Note: The logical diagram shown in Figure 13 applies to a specific tenant. The same design would need to be replicated ‘n’ times for the multi-tenant scenario shown in Figure 12.

Deploying this use case requires extending several network segments across fabrics. For that purpose, it is advantageous to leverage the Layer-2 extension capabilities offered by the VXLAN Multi-Site architecture.

The specific networks that need to be extended across sites are:

● The Layer 2 segments where the endpoints are connected

This is because the default gateway for those endpoints can move across sites (based on a firewall failover event) and it is required to ensure that the endpoints always have reachability toward their active default gateway. The active and standby firewall nodes have multiple internal interfaces associated to each of those endpoints’ subnets. Those interfaces are usually logical ones, each mapped to a dedicated VLAN trunked on the physical connection (usually a local port-channel or a vPC) with the fabric nodes.

● The keepalive link used for syncing configuration and state information between the Active and Standby service nodes

Assuming the latency does not exceed the maximum value supported for this function by the deployed firewall (always refer to the firewall’s specific documentation), using a Layer 2 segment extended across fabrics is a useful and flexible option for this function, because a direct connection between the firewall nodes may not be viable between separate sites. The recommendation is therefore to use a separate physical interface for this function, connecting the firewall to a pair of leaf nodes (with a local port-channel or a vPC).

● The Service Network used to connect the firewall devices to the upstream Layer-3 devices

This is required to allow the Active and Standby firewalls to get assigned IP addresses on the same IP subnet and to track the health of their interfaces connected to that network. A failover event could for example be triggered if the standby node detected that the interface of the active firewall connecting it to the Service Network has failed, even if the active firewall itself hadn’t failed (this condition could be detected via the dedicated keepalive link). The outside interface of the firewalls is usually a logical one, and the connectivity with the fabric nodes is achieved on a dedicated VLAN carried on the same port-channel (or vPC) already used to trunk the VLANs associated to the internal Layer 2 segments.

Note: In the example shown in Figure 13, the northbound Layer 3 network where the service nodes are connected is represented by a specific VRF routing domain deployed in the VXLAN fabric, as it is a quite common deployment option. However, the same considerations listed above for the Service Network continue to apply even if the VXLAN EVPN fabric only performs Layer 2 duties to connect the service nodes to upstream Layer 3 devices external to the fabric.

The establishment of connectivity between endpoints that are part of subnets of the same Tenant (intra-Tenant East-West communication) is achieved through the active firewall performing the duties of default gateway for all those subnets. The fabric only performs Layer 2 forwarding duties to allow intra-subnet communication between endpoints and to send any routed flows to the firewall.

Traffic flows between endpoints of a specific Tenant and endpoints of a different Tenant (inter-Tenant East-West communication) or between the endpoints of a specific Tenant and the rest of the network (North-South communication) must also be steered to the active firewall performing the duties of default gateway.

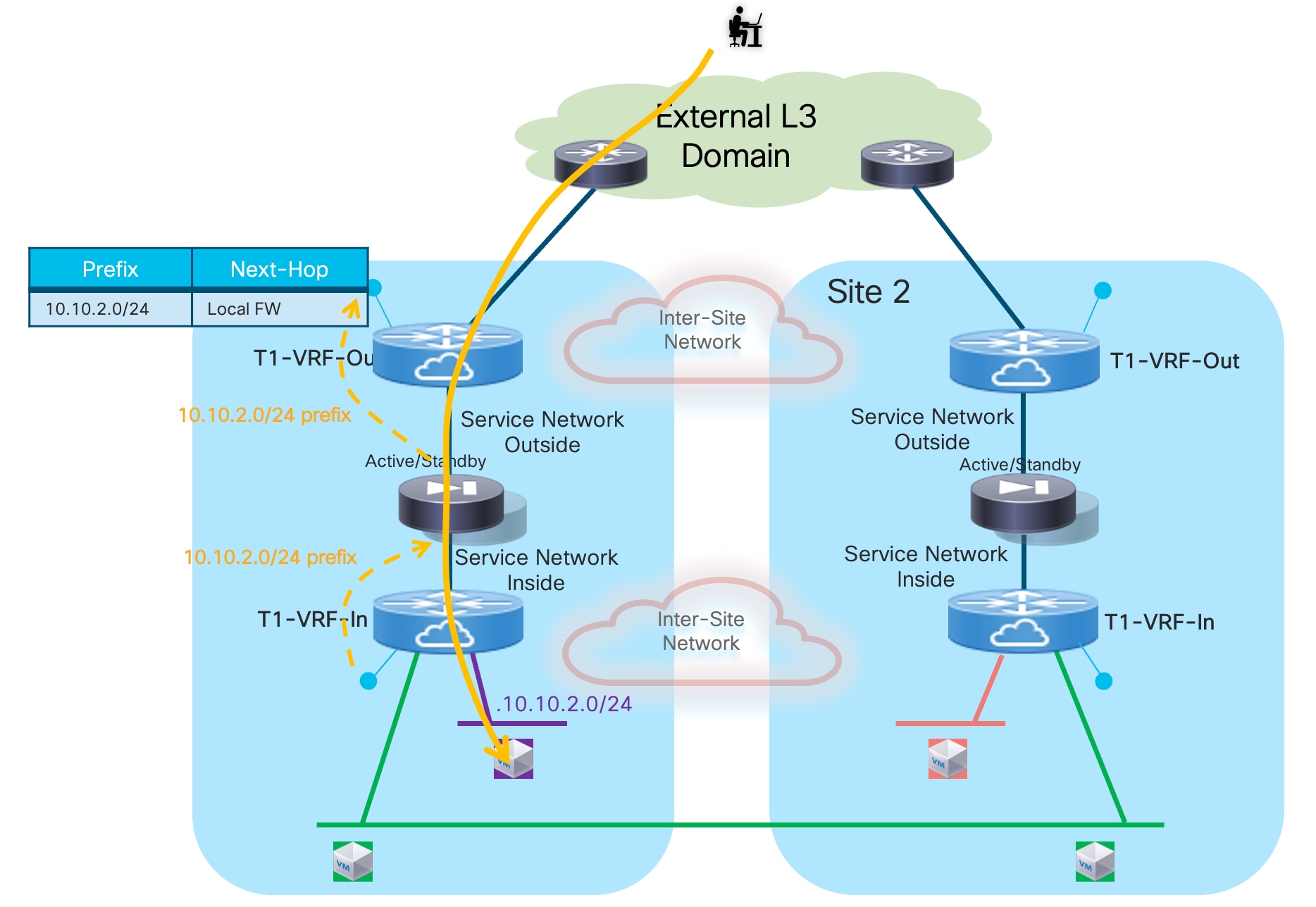

Because that default gateway function is only available in the fabric where the active firewall is connected, the immediate consequence is traffic hair-pinning when endpoints located in remote sites need to communicate with resources outside of their local network. Figure 14 highlights this behavior both for communication between endpoints that are part of different subnets defined inside the data center (intra-Tenant East-West traffic) and for communication with the external network domain (North-South traffic).

Additionally, the establishment of North-South communication requires the configuration of static routes both on the firewall node and on the service leaf nodes.

A default route (0.0.0.0/0) can be configured on the firewall using as next-hop address the anycast gateway IP address defined on the fabric for the Service Network VXLAN segment. A consistent anycast gateway IP address is deployed across fabrics on all the leaf nodes where the active and standby firewall nodes are connected, so that the same next-hop address is always available wherever the firewall gets activated. Because the anycast gateway IP address plus the interfaces of the active and standby firewalls need to be connected to a common Service Network, it is usually necessary to reserve at least a /29 subnet for that purpose (as a separate IP address is normally assigned to the interfaces of the active and standby firewalls connected to that segment).
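The /29 recommendation follows from simple address counting: three addresses are needed (anycast gateway, active firewall, standby firewall), while a /30 offers only two usable host addresses. A short sketch of that arithmetic, with a hypothetical helper name:

```python
def smallest_prefix(required_hosts):
    """Longest IPv4 prefix whose usable host count covers `required_hosts`."""
    for prefix in range(30, 0, -1):
        usable = 2 ** (32 - prefix) - 2  # exclude network and broadcast addresses
        if usable >= required_hosts:
            return prefix
    raise ValueError("no IPv4 prefix is large enough")

# Anycast gateway + active firewall interface + standby firewall interface.
REQUIRED = 3
print(f"/{smallest_prefix(REQUIRED)}")  # prints "/29"
```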

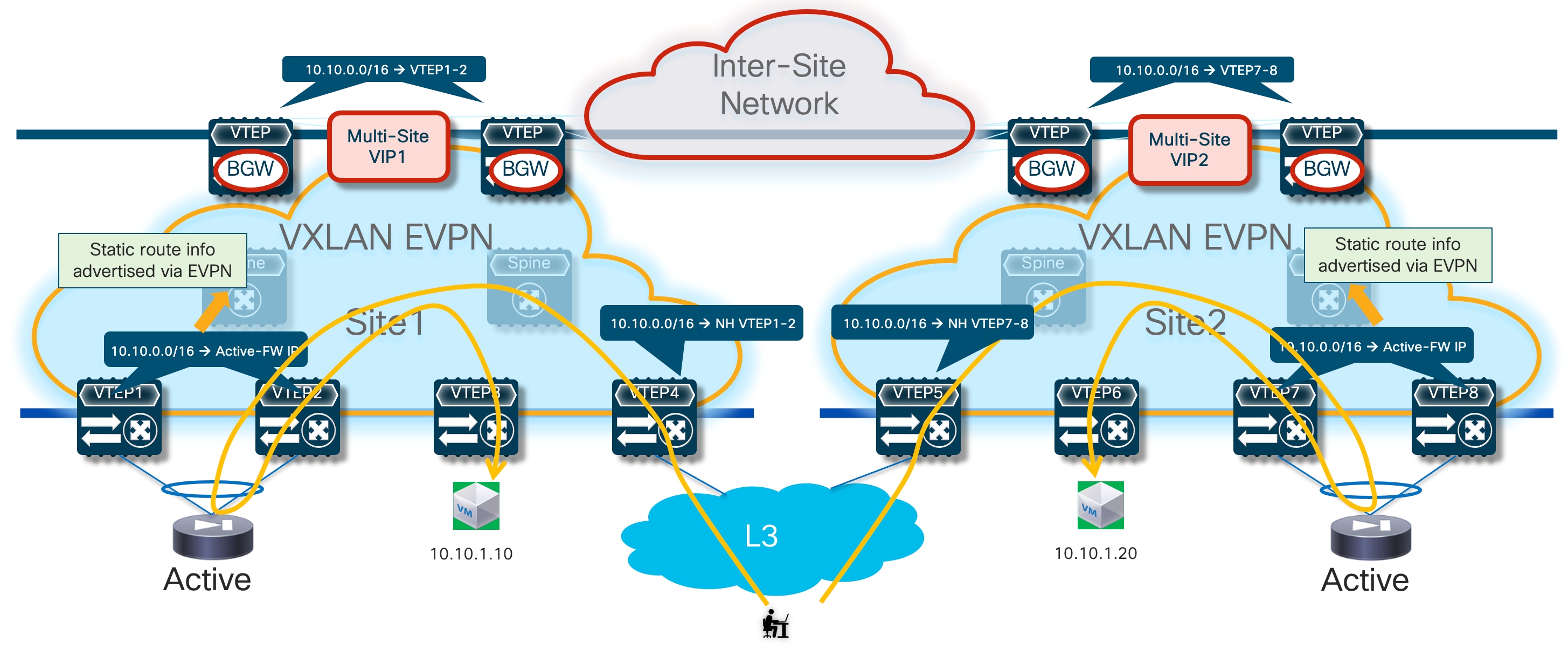

Static entries must also be configured on the service leaf nodes connected to the firewall devices to ensure that traffic originating from other tenants or from the external network, and destined to the subnets of a given tenant connected behind the firewall, can be routed toward the active firewall node. The complexity of the required static routing configuration mostly depends on how well the endpoints’ address space associated with a given tenant/VRF can be summarized.
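How summarization drives the number of static entries can be checked with Python’s standard ipaddress module. In this sketch the function name is hypothetical, and the subnets 10.10.20.0/24 and 10.30.5.0/24 are made-up examples added to the prefixes used elsewhere in this paper.

```python
import ipaddress

def static_prefixes(subnets, summary):
    """Return the prefixes that need a static route on the service leaf nodes."""
    nets = [ipaddress.ip_network(s) for s in subnets]
    aggregate = ipaddress.ip_network(summary)
    if all(net.subnet_of(aggregate) for net in nets):
        return [aggregate]  # one summary route covers every subnet
    # Otherwise fall back to the collapsed list of individual subnets.
    return list(ipaddress.collapse_addresses(nets))

# All tenant subnets fit in the summary: a single static route is enough.
summarizable = static_prefixes(
    ["10.10.1.0/24", "10.10.10.0/24", "10.10.20.0/24"], "10.10.0.0/16")

# One subnet falls outside the summary: each subnet needs its own entry.
scattered = static_prefixes(
    ["10.10.1.0/24", "10.10.10.0/24", "10.30.5.0/24"], "10.10.0.0/16")

print([str(p) for p in summarizable])
print([str(p) for p in scattered])
```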

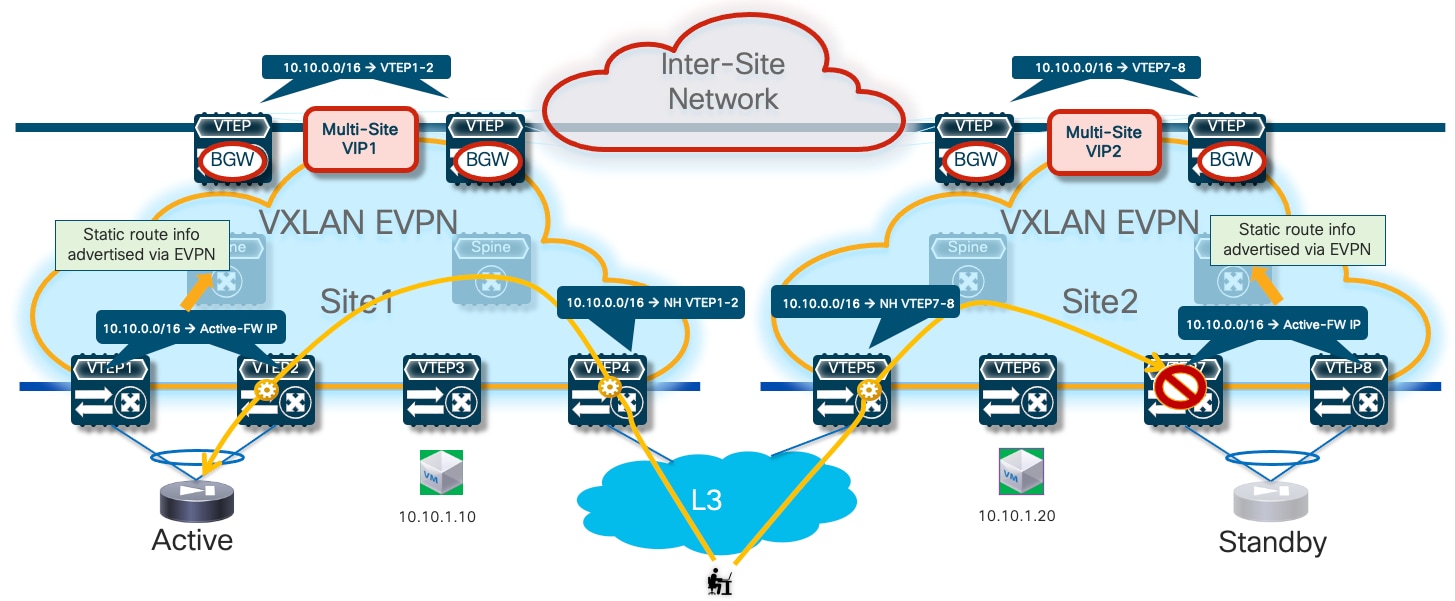

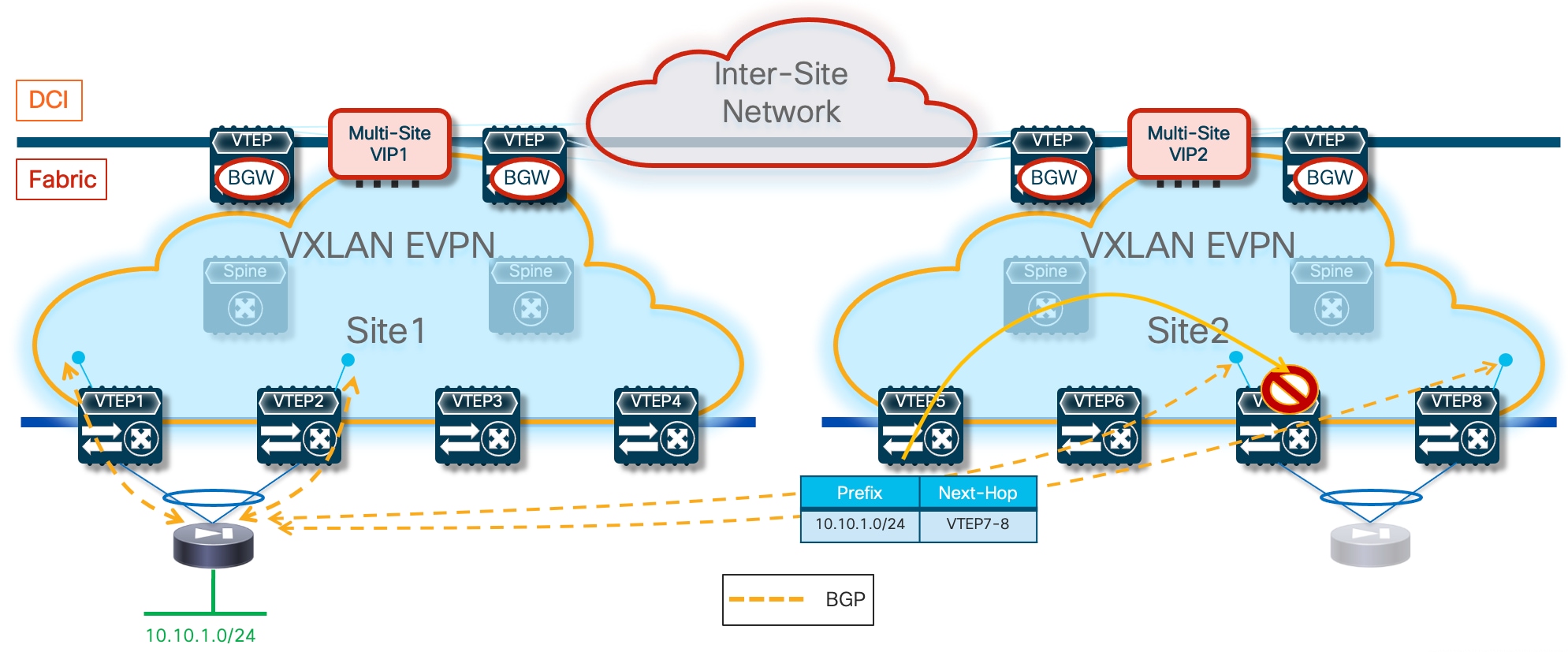

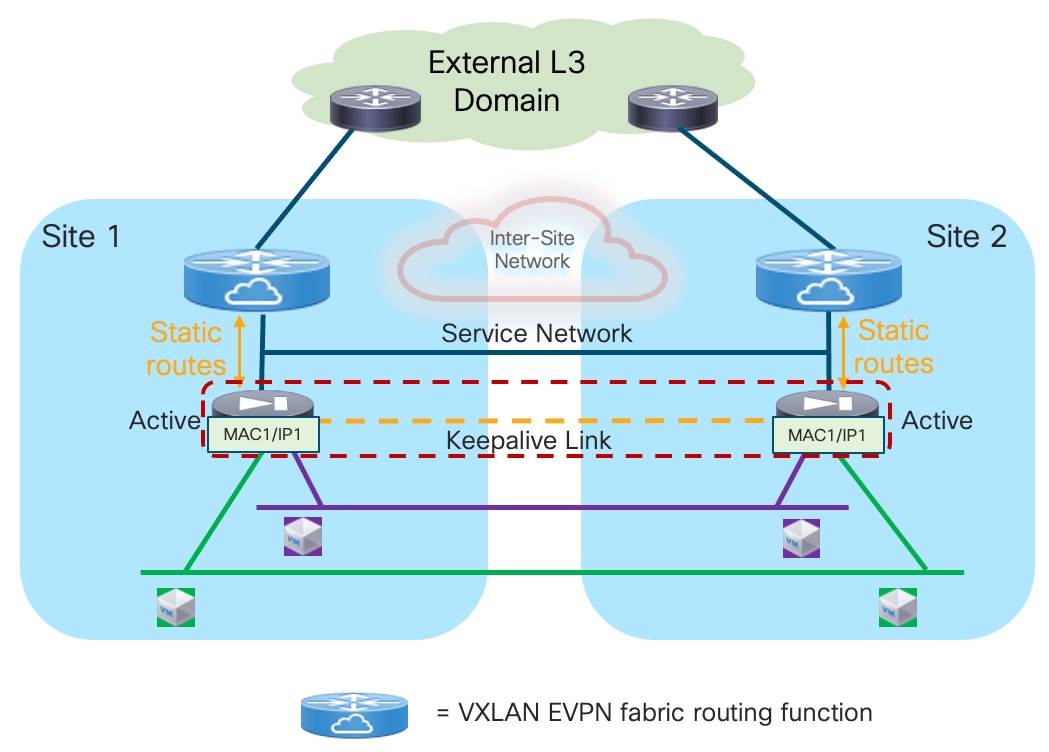

By default, the service leaf nodes advertise the configured static routing information inside the local fabric’s MP-BGP EVPN control plane. As a result, all the switches (compute, border, or BGW nodes) deployed in the fabrics where the active or standby firewall nodes are located will always prefer the path via the local service leaf nodes to reach the endpoints’ subnets behind the firewall. This is the case regardless of whether the local firewall node is functioning in Active or Standby mode (Figure 15).

In the example shown above, routed traffic originated from an external client and destined to the green DC endpoints part of the stretched 10.10.10.0/24 IP subnet behind the firewall, could be sent toward Site1 or Site2 based on the routing information injected in the external Layer 3 network.

If the inbound traffic is received by the border leaf (BL) node in Site1, the BL encapsulates the flow toward the local service leaf nodes connected to the active firewall, and communication is successfully established. If inbound traffic is instead steered toward Site2, the BL node there steers the traffic toward the local service leaf nodes connected to the standby firewall node. This behavior is undesirable not only because of the suboptimal traffic path, but also because it leads to traffic flows being dropped (the service leaf nodes in Site2 are not capable of decapsulating the traffic, performing the Layer 3 lookup, and re-encapsulating it toward the service leaf nodes connected to the active firewall in Site1).

There are a few options to avoid this problem. They are listed below in order of preference and discussed in greater detail.

Optimizing Connectivity to the Active Firewall with “export-gateway-ip”

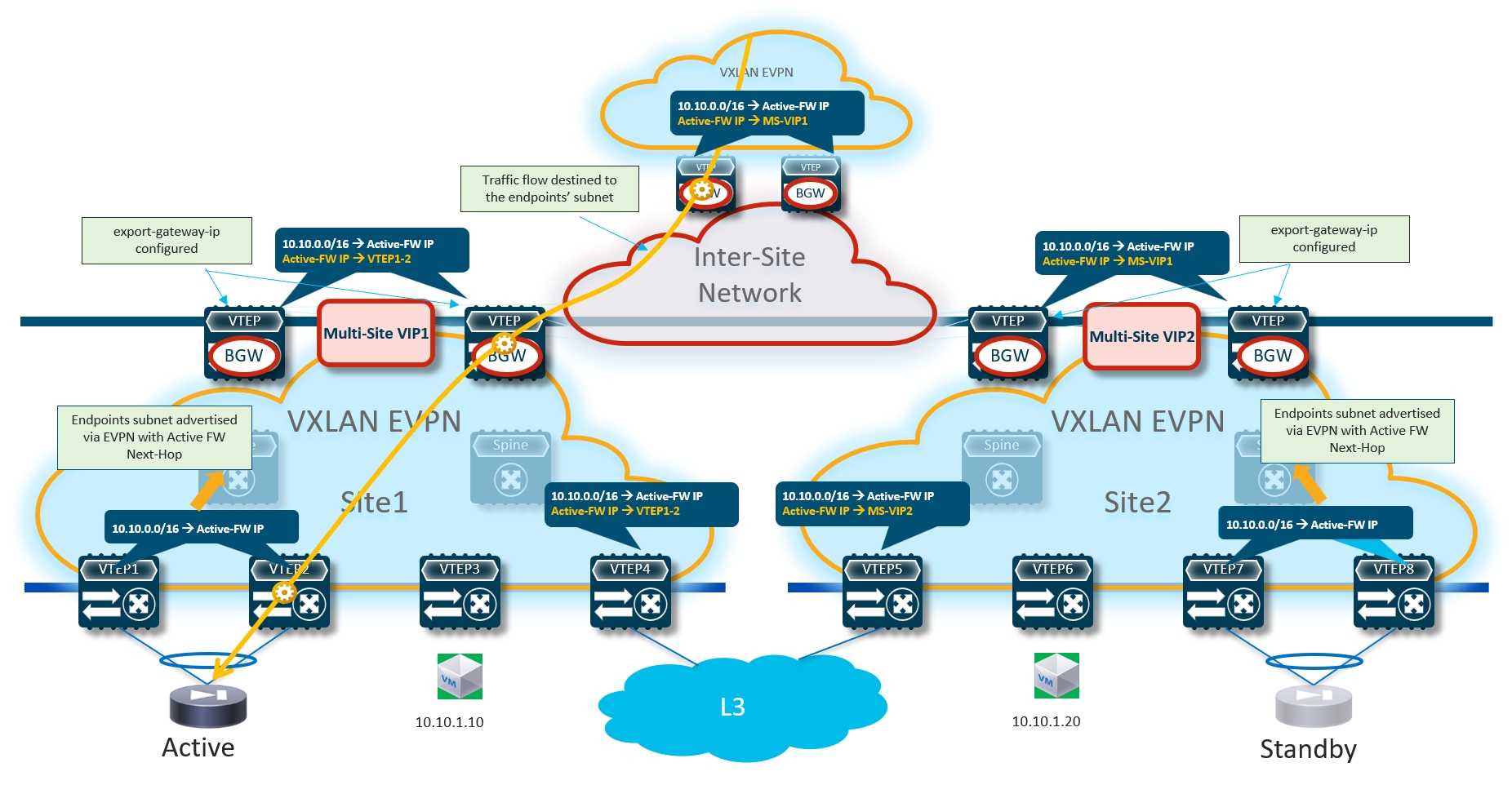

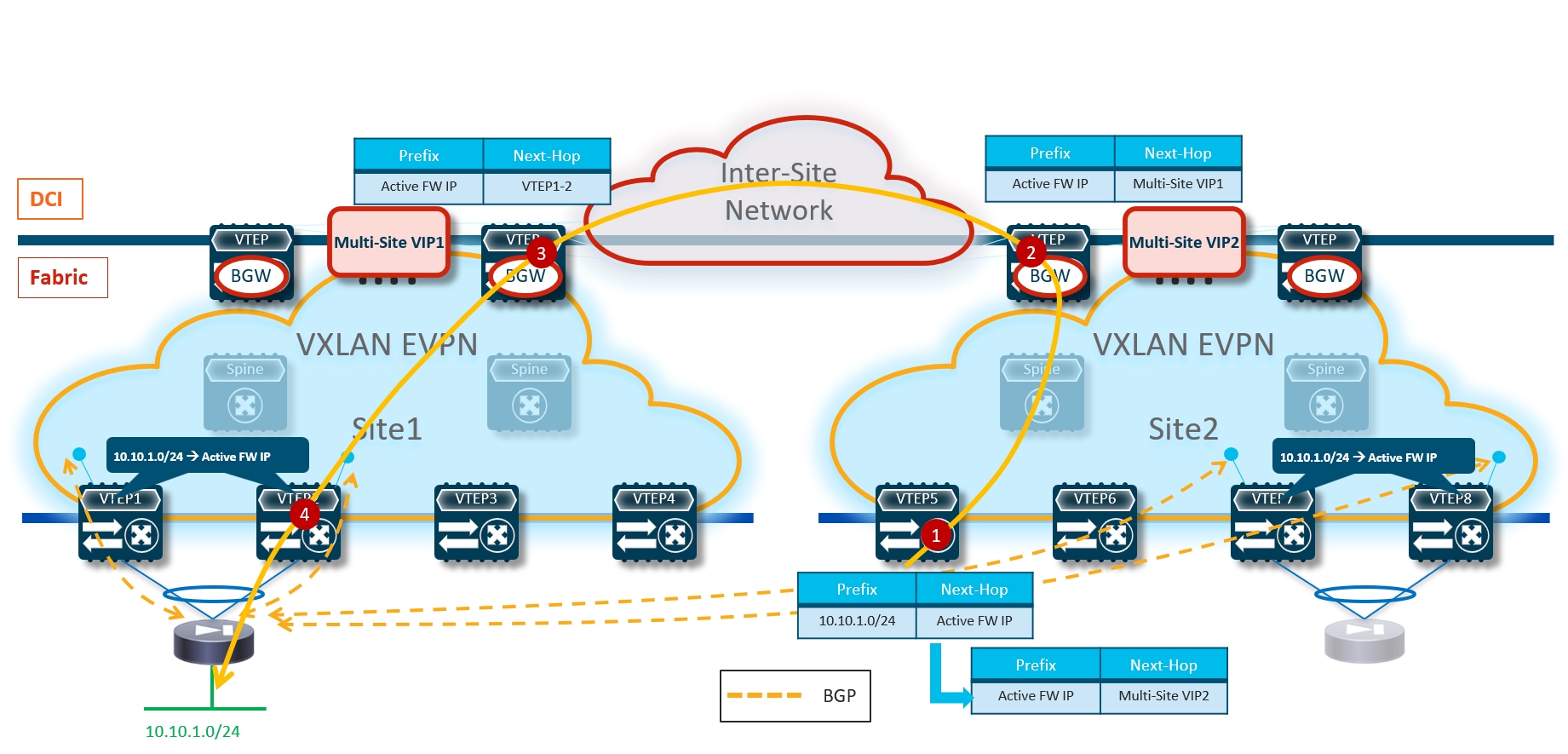

The first, and recommended, option is to enable the “export-gateway-ip” functionality (available since NX-OS release 9.2(1)). With it, all the leaf nodes where the VRF is instantiated (border leaf nodes in the example above, but the same applies to compute and BGW nodes) receive via EVPN the advertisement for the static IP prefix that is configured on the service leaf nodes and covers all the endpoints’ subnets behind the firewall (10.10.0.0/16 in our specific example). That advertisement carries not only the next-hop of the service leaf nodes deployed in the local fabric, but also the IP address of the active firewall, which is the next-hop of the static routes defined on the service leaf nodes.

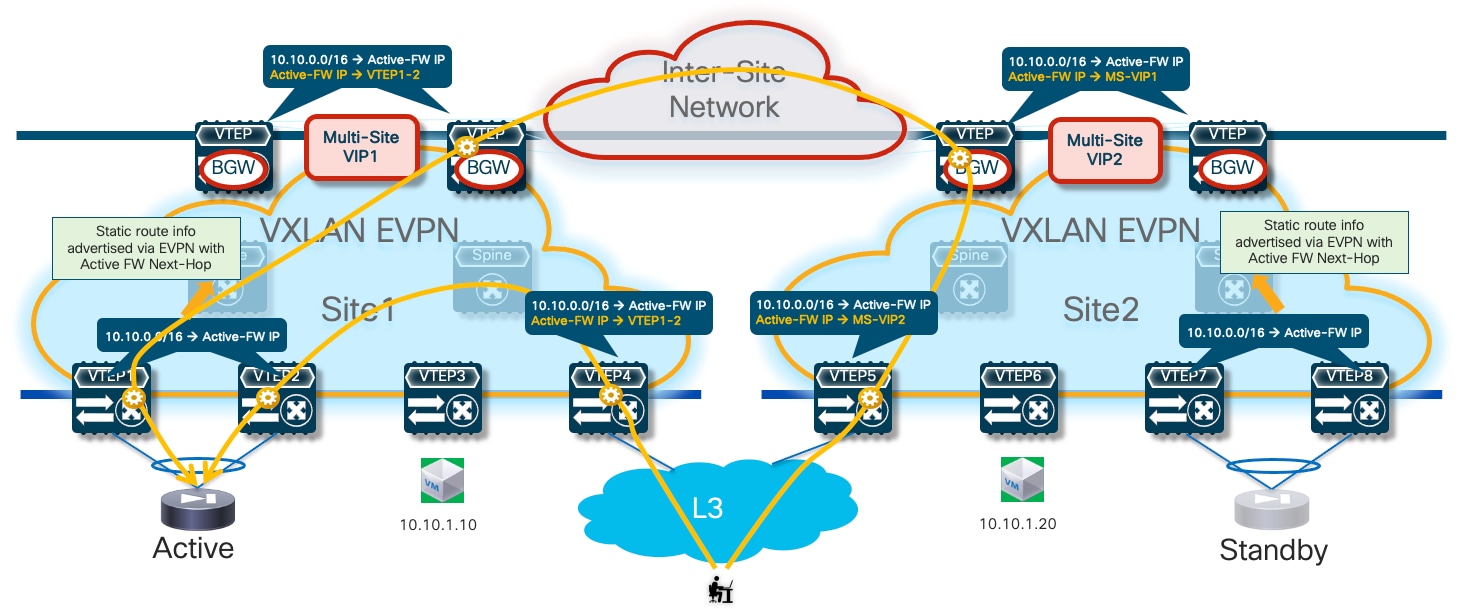

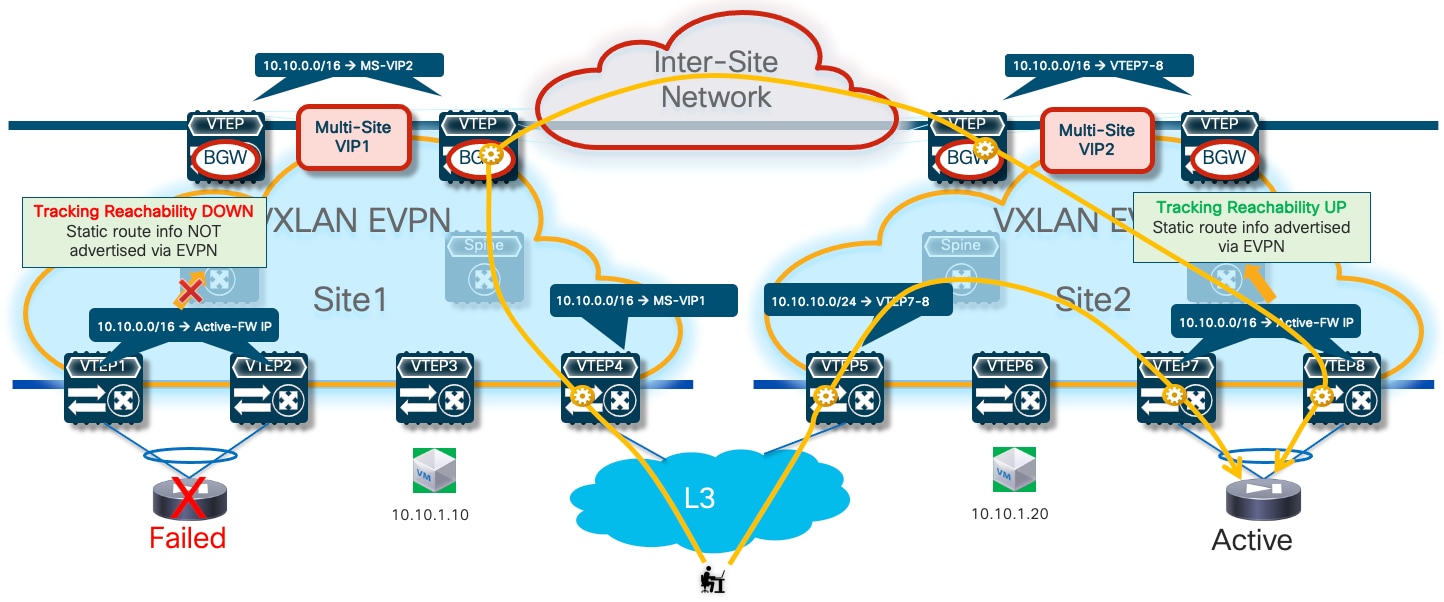

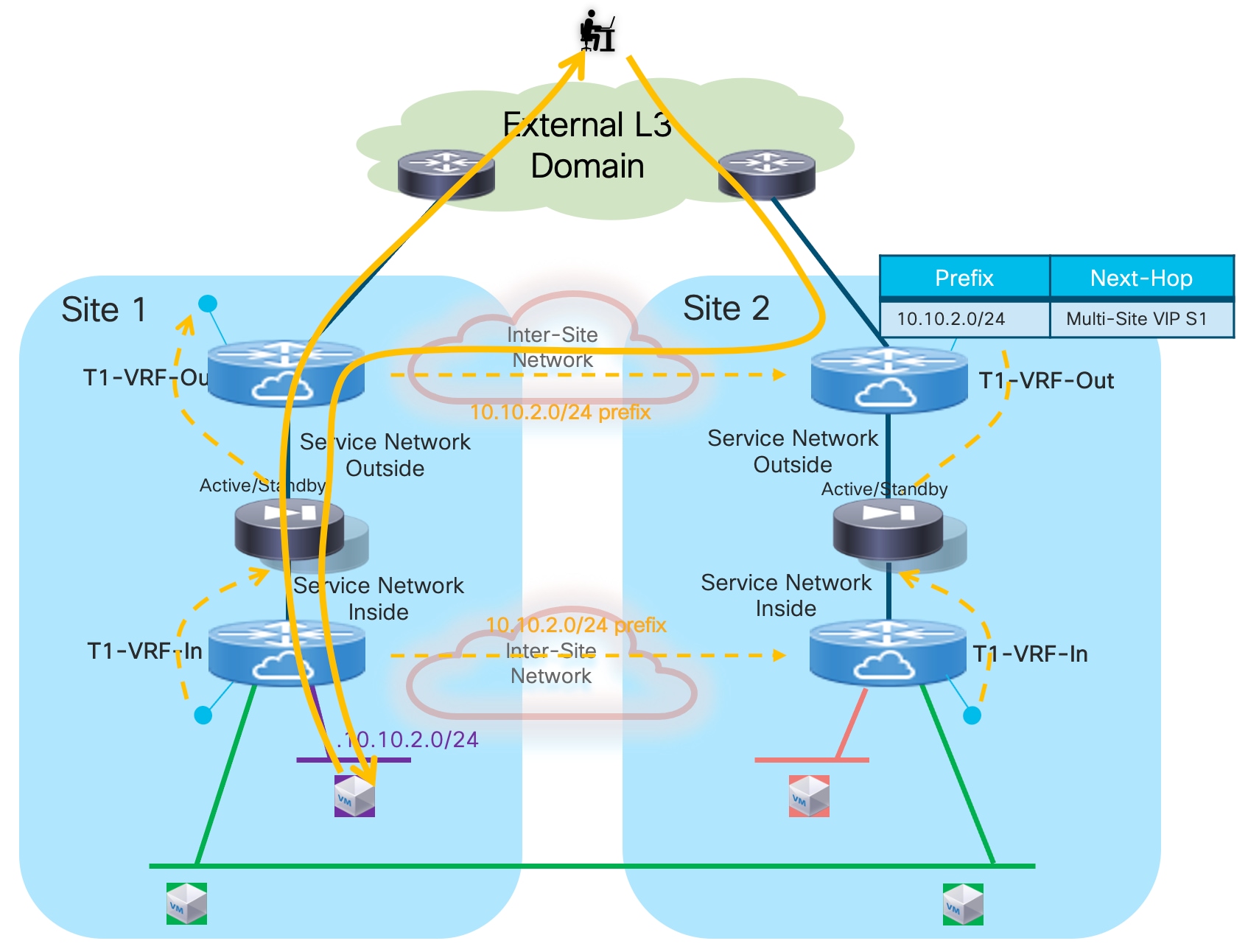

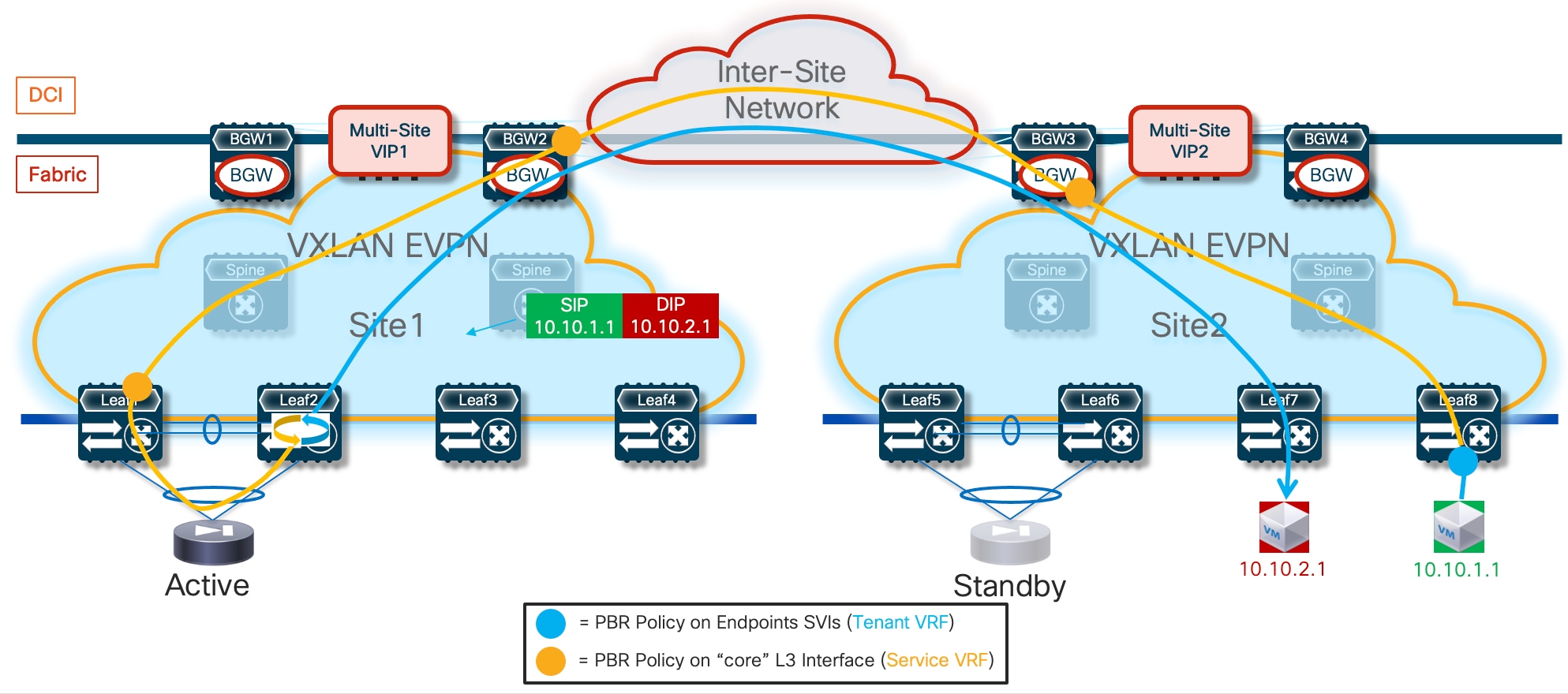

A recursive routing lookup is then performed to determine how to reach that active firewall’s IP address; only the service leaf nodes connected to the active firewall have discovered that IP address as locally connected and advertised it via EVPN inside the fabric. Therefore, all the VXLAN traffic flows are now directed to those VTEPs, independently from the specific fabric where they are located (Figure 16).
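The recursive lookup described above can be modeled in a few lines. This is a conceptual sketch of the resolution logic only, not of the NX-OS implementation: the table layout, the “gateway_ip” key, and the VTEP names are hypothetical, while the prefix and the firewall address are the ones used in this paper’s example.

```python
import ipaddress

def longest_match(table, address):
    """Return the most specific prefix in `table` that contains `address`."""
    addr = ipaddress.ip_address(address)
    matches = [p for p in table if addr in ipaddress.ip_network(p)]
    if not matches:
        return None
    return max(matches, key=lambda p: ipaddress.ip_network(p).prefixlen)

def resolve_vtep(table, destination):
    """Resolve the egress VTEP, recursing through the exported gateway IP if present."""
    prefix = longest_match(table, destination)
    if prefix is None:
        return None
    entry = table[prefix]
    if "gateway_ip" in entry:
        # Recursive step: find how the gateway (active firewall) itself is reached.
        host_prefix = longest_match(table, entry["gateway_ip"])
        return table[host_prefix]["vtep"] if host_prefix else None
    return entry["vtep"]

# View from a border leaf: the summary route carries the firewall IP as gateway,
# and the /32 host route is advertised by the leaf pair that learned it locally.
routes = {
    "10.10.0.0/16": {"gateway_ip": "172.16.1.1"},
    "172.16.1.1/32": {"vtep": "site1-service-leaf"},
}
before_failover = resolve_vtep(routes, "10.10.10.20")

# After a failover the host route is advertised from the other site instead.
routes["172.16.1.1/32"] = {"vtep": "site2-service-leaf"}
after_failover = resolve_vtep(routes, "10.10.10.20")

print(before_failover, after_failover)
```

Only the host route changes during a failover; the summary route stays untouched, which is why traffic follows the active firewall without any change to the static configuration.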

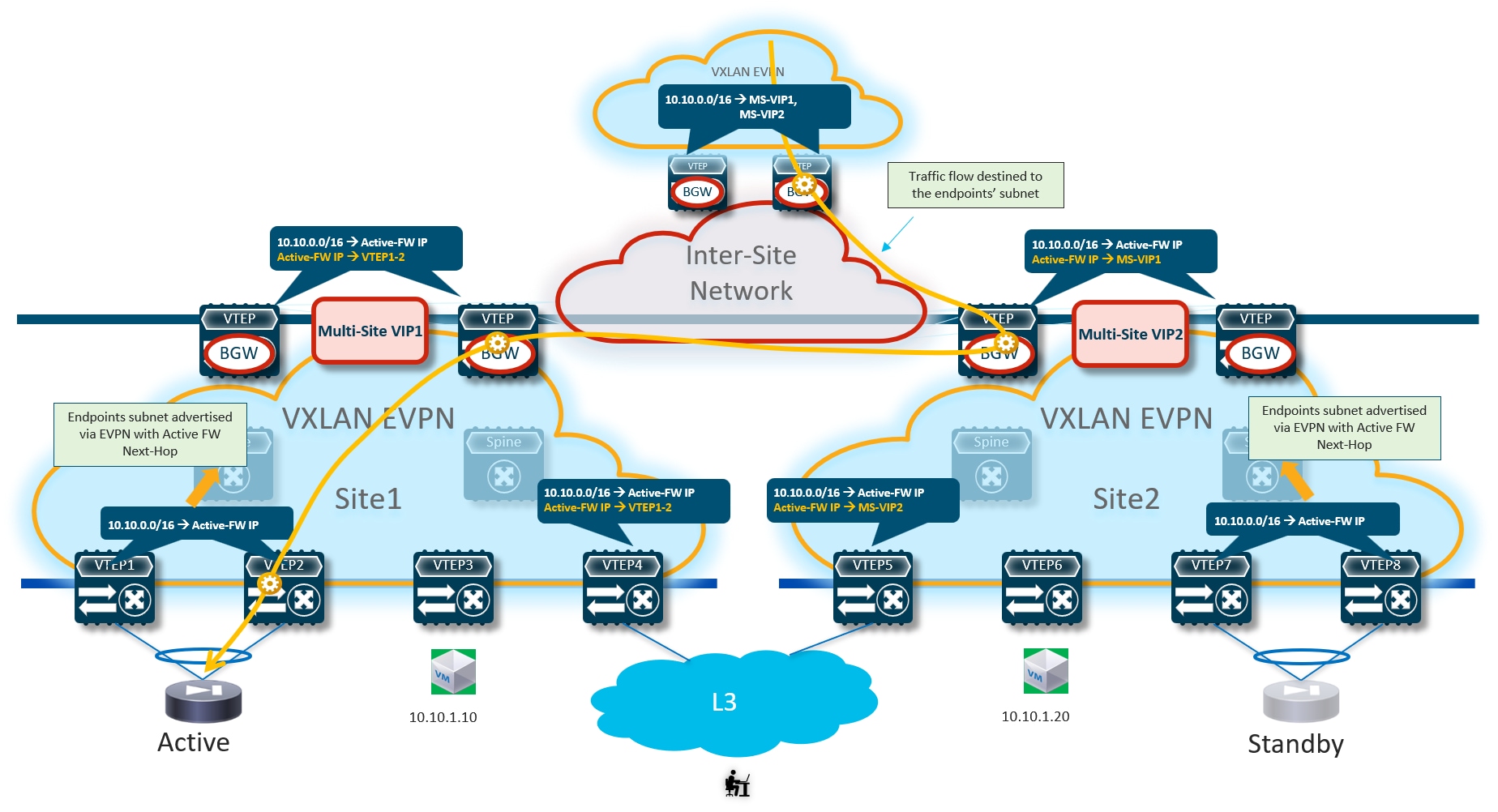

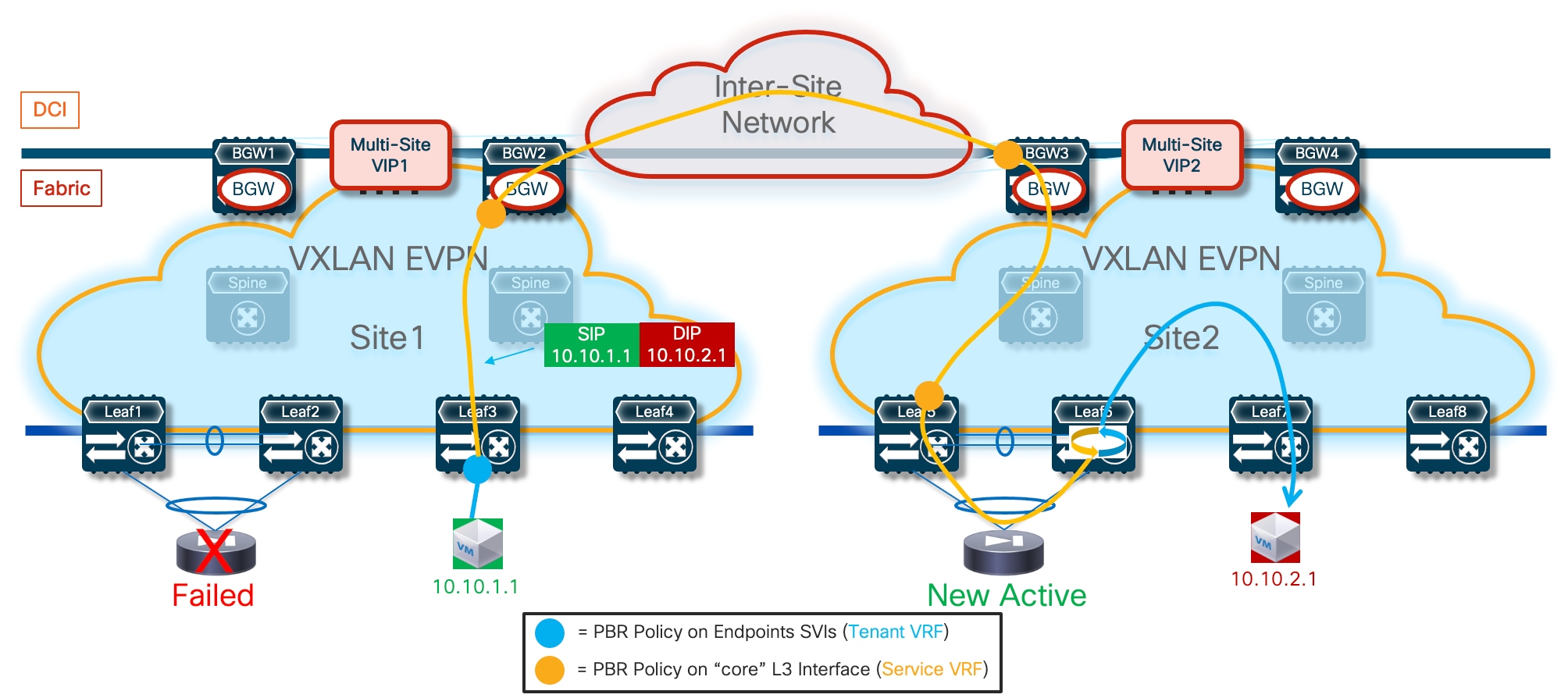

If the active firewall fails, a failover event is triggered, and the standby unit becomes the new active firewall. The service leaf nodes connected to the newly active firewall then originate an EVPN advertisement that is propagated inside the fabric and across the fabrics, steering all the traffic flows destined to the endpoints behind the firewall toward those VTEPs instead (Figure 17).

The convergence time for restoring the traffic flows is quite short and mostly dependent on the time required by the standby unit to detect the failure of the active one and promote itself as new active. As a result of this event, the newly activated firewall originates a GARP frame on the Service Network, allowing the directly connected VTEPs to discover the MAC/IP of the newly activated firewall and advertise that information inside the fabric.

The configuration required to enable the “export-gateway-ip” functionality is shown below:

! Configure the static route under the VRF

vrf context t1-vrf

ip route 10.10.0.0/16 172.16.1.1 tag 12345

!

! Define the route-map to redistribute the static route into BGP

route-map redist-static-routes

match tag 12345

set ip next-hop redist-unchanged

!

! Configure export-gateway-ip and redistribution under BGP

router bgp 65001

vrf t1-vrf

address-family ipv4 unicast

redistribute static route-map redist-static-routes

export-gateway-ip

It is important to note that the “export-gateway-ip” configuration shown above (without the static route definition and redistribution in BGP) should also be applied on the BGW nodes of the fabrics where the active and standby firewalls reside. This ensures that those BGWs advertise the active firewall IP address information in the EVPN updates sent toward the BGWs of other sites, so that those devices can also perform a recursive routing lookup for the active firewall’s address and send the traffic to the BGWs of the site where that active firewall is located (Figure 18).

Figure 19 shows what would happen if “export-gateway-ip” was not configured on the BGW nodes of the fabrics where the active and standby firewalls are connected.

In this case, the BGWs in a third site learn the static route prefix with the Multi-Site VIP addresses of both fabrics where the active and standby firewalls are connected as associated next-hops. Therefore, half of the traffic flows are steered toward the site with the standby firewall (Site2 in the example above). The BGWs in that site can decapsulate the traffic, perform a Layer 3 lookup, and re-encapsulate it toward the BGWs of the site with the active firewall. Even if the traffic is not dropped, this represents a suboptimal behavior that can easily be avoided by configuring “export-gateway-ip” on the BGW nodes.

Optimizing Connectivity to the Active Firewall with Distributed Recursive Static Routes

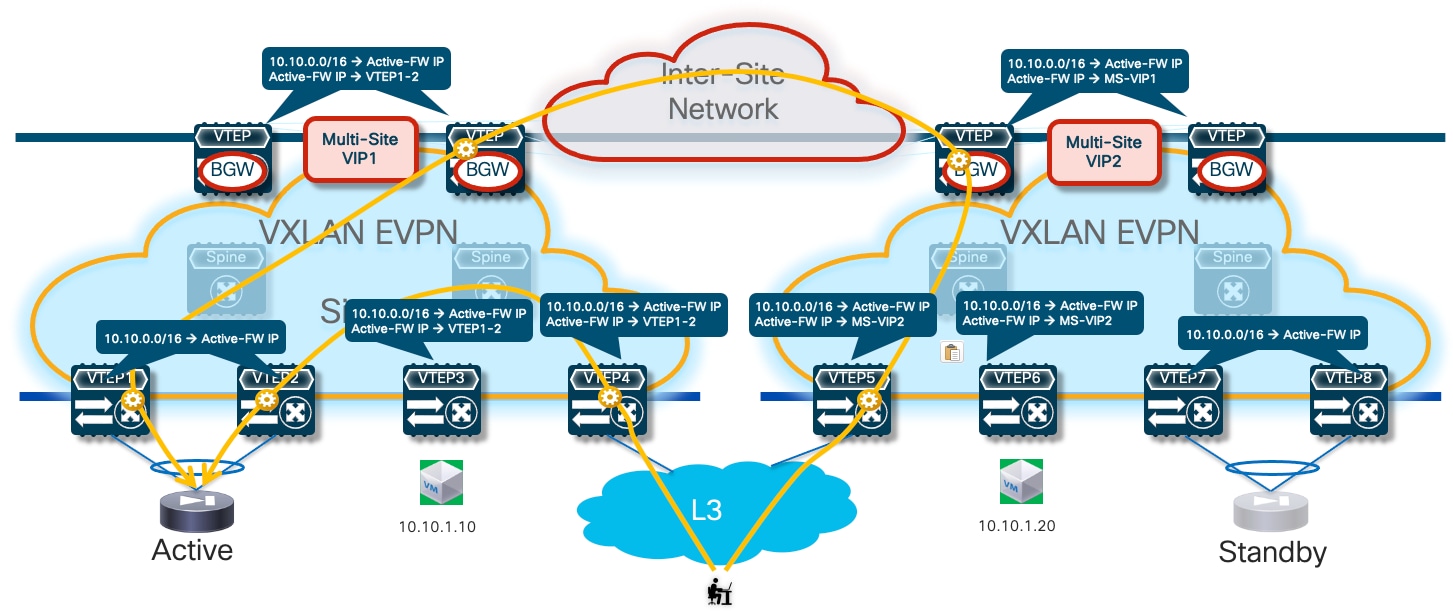

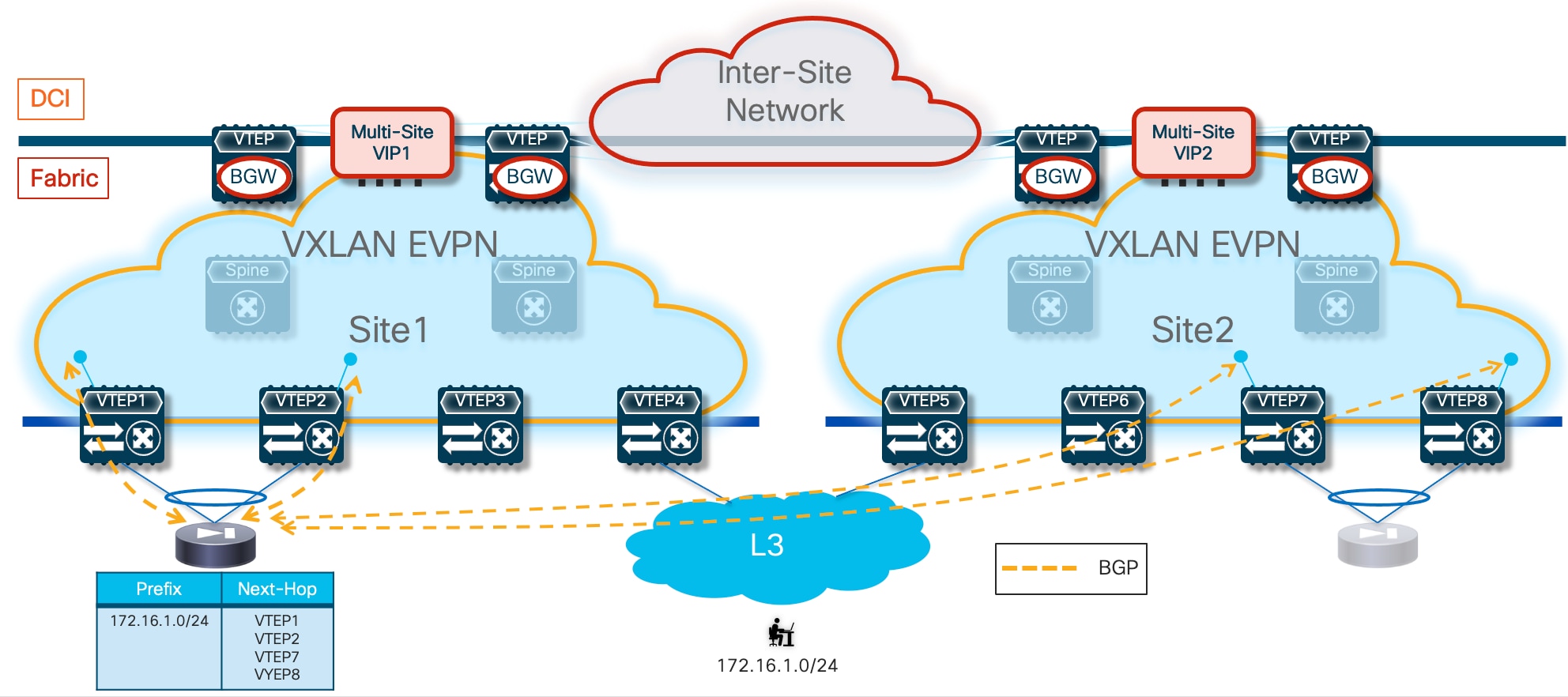

A second option to steer traffic flows only to the service leaf nodes connected to the active firewall again leverages recursive routing (similarly to the “export-gateway-ip” approach just discussed), this time by configuring the same static route not only on the service leaf nodes connected to the firewalls, but also on all the leaf nodes (compute leaf, border leaf, and BGW nodes) where the VRF is instantiated.

Given that the static route configuration is distributed on all the leaf nodes, in this scenario there is no need for the service leaf nodes to redistribute such information in the fabric’s EVPN control plane. Only the border or border gateway nodes must advertise such information toward the external network or toward remote BGW nodes.

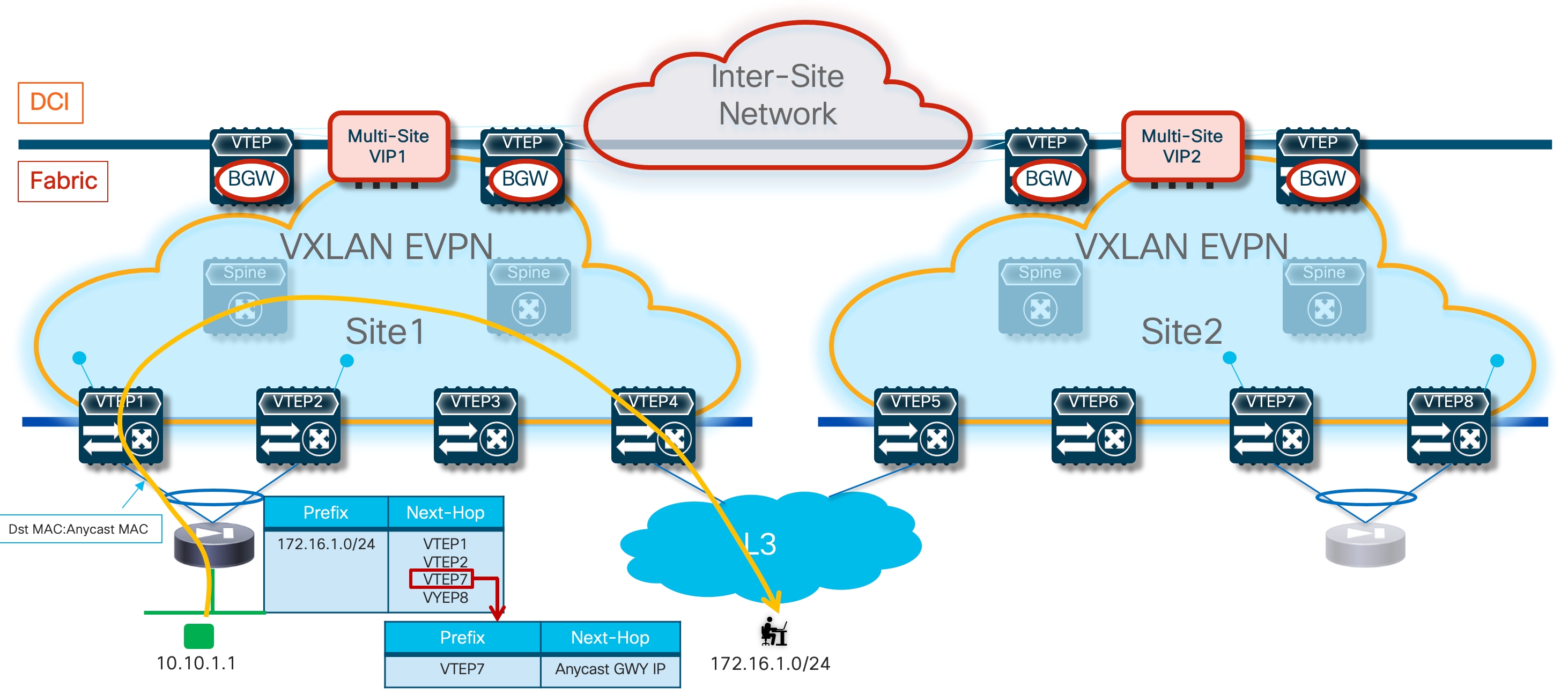

Because the next-hop for the static route is represented by the IP address of the active firewall, a recursive lookup is going to be triggered on all the VTEPs that are not directly connected to the active firewall to determine how to reach that specific IP address. The use of the recursion always ensures that traffic is steered toward the service leaf nodes that discovered the active firewall node as directly connected and injected such information inside the fabric (Figure 20).

As in the scenario leveraging “export-gateway-ip” shown in Figure 17, the traffic convergence after a firewall failover event mainly depends on the activation of the standby firewall node and the discovery of its MAC/IP address on the directly connected service leaf nodes.

The main drawback of this solution is that you must configure the static routes on all the VTEPs where the VRF is instantiated. This concern can be alleviated by deploying a tool, such as Nexus Dashboard Fabric Controller, to automate the provisioning of configuration on multiple fabric nodes. For more information on this approach, please refer to the configuration guide below:

https://www.cisco.com/c/en/us/td/docs/dcn/nx-os/nexus9000/104x/configuration/vxlan/cisco-nexus-9000-series-nx-os-vxlan-configuration-guide-release-104x/m_configuring_layer_4-layer_7_network_services_integration.html?bookSearch=true#Cisco_Concept.dita_aa6ab7d6-ccf5-47b2-90d0-a7c91a94a971

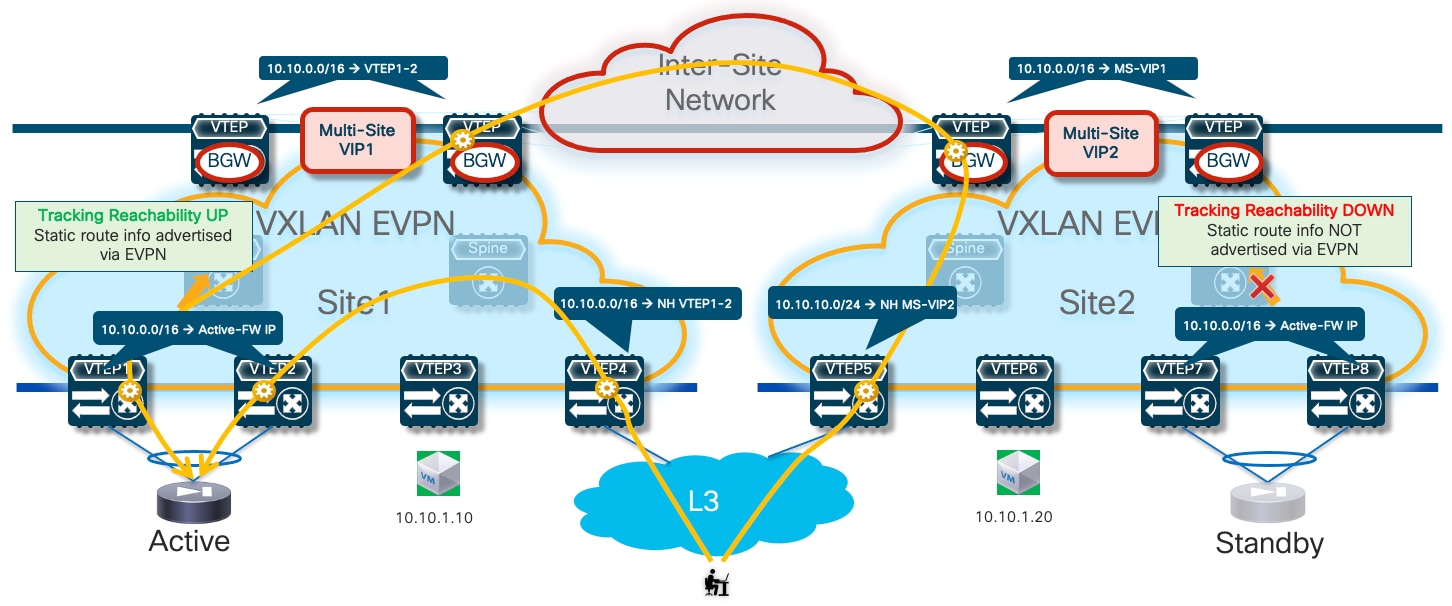

Optimizing Connectivity to the Active Firewall with Centralized Static Routing and HMM Tracking

With this last option, the static routing configuration is only provisioned on the service leaf nodes connected to the firewall devices (as was the case with the “export-gateway-ip” scenario). A tracking mechanism (named “HMM tracking”) is introduced on the service leaf nodes to verify whether the active firewall is directly connected. HMM tracking checks whether the active firewall’s IP address is locally learned on the service leaf nodes (based on the presence of the /32 prefix in the routing table). The CLI output below shows the HMM entry on the service leaf nodes for the firewall’s IP address.

Leaf11# show ip route vrf t1-vrf1

IP Route Table for VRF "t1-vrf1"

'*' denotes best ucast next-hop

'**' denotes best mcast next-hop

'[x/y]' denotes [preference/metric]

'%<string>' in via output denotes VRF <string>

<snip>

172.16.1.1/32, ubest/mbest: 1/0, attached

*via 172.16.1.1, Vlan400, [190/0], 1d02h, hmm

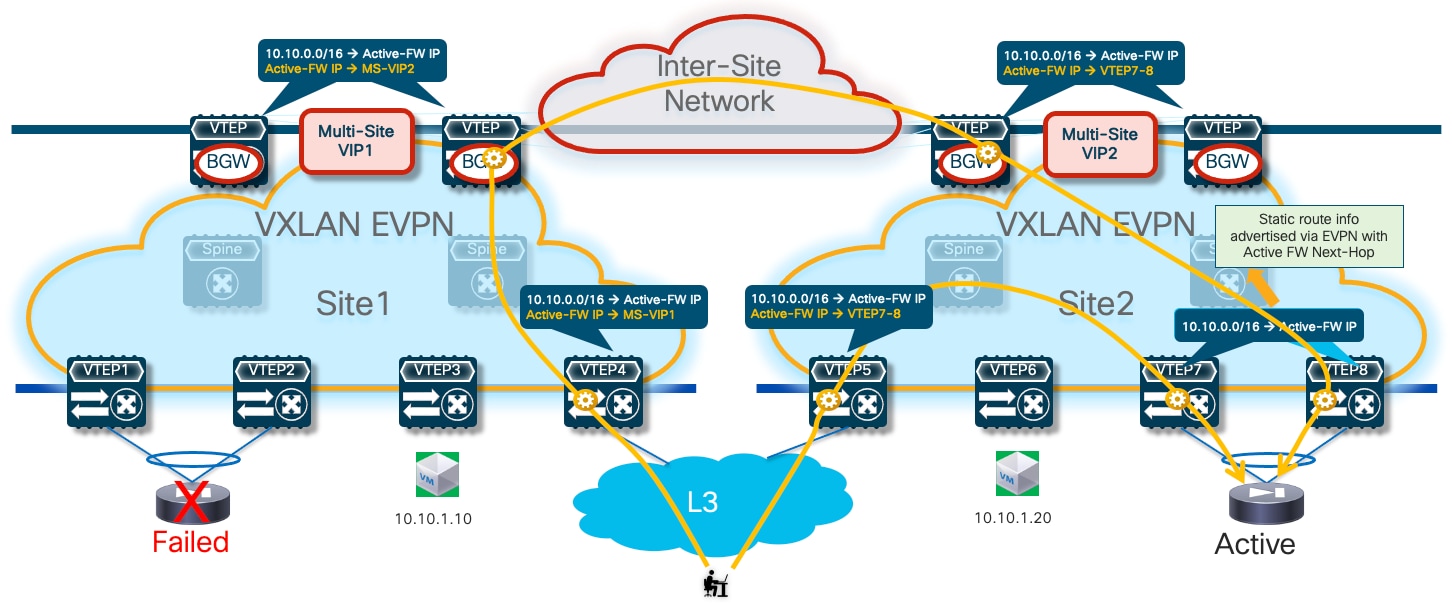

The result of the check is associated with the static route applied for the VRF, so that only the service leaf nodes where the active firewall is connected are allowed to inject the static route information into the EVPN fabric control plane. The consequence is that, as was the case in the scenarios previously described, all the traffic flows destined to the endpoints behind the firewall are steered toward the service leaf nodes with the connected active firewall (Figure 21).

While this approach reduces the configuration touch points when compared to the use of distributed recursive routing (making it similar to the “export-gateway-ip” scenario), the traffic convergence mechanism after a firewall failover event is more complex:

● First, the HMM tracking mechanism needs to detect that the active firewall has moved to a new location (for example, behind VTEP7-8 in the figure above) and unlock the advertisement of static route info into the fabric’s EVPN control plane.

● At the same time, HMM tracking on the service leaf nodes where the failed active was connected needs to detect that the active firewall is gone and therefore stop advertising static route information into the fabric.

● Finally, there will also be scalability implications on the service leaf nodes when provisioning multiple static route entries with different next-hops.

The sample below shows the configuration required on the service leaf nodes to enable HMM tracking and use its result to control the advertisement of static route information into the EVPN control plane.

track 1 ip route 172.16.1.1/32 reachability hmm

vrf member t1-vrf1

!

vrf context t1-vrf1

ip route 10.10.1.0/24 172.16.1.1 track 1
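The gating effect of the tracked static route can be sketched as follows. This is a conceptual model only: the leaf names and the dictionary of locally learned hosts are hypothetical, and the code does not reproduce how NX-OS implements object tracking.

```python
FIREWALL_IP = "172.16.1.1"  # active firewall address tracked in the sample above

def advertisers(locally_learned_hosts, firewall_ip=FIREWALL_IP):
    """Leaf pairs whose track object is up, so the static route is advertised."""
    return sorted(
        leaf for leaf, hosts in locally_learned_hosts.items()
        if firewall_ip in hosts  # /32 HMM entry present locally -> track is up
    )

# Before failover: only the Site1 service leaf pair has learned the firewall IP.
hmm_state = {"site1-service-leaf": {"172.16.1.1"}, "site2-service-leaf": set()}
before_failover = advertisers(hmm_state)

# After failover both conditions must be detected: the old location loses the
# host entry (advertisement stops) and the new location learns it (advertisement starts).
hmm_state["site1-service-leaf"].discard("172.16.1.1")
hmm_state["site2-service-leaf"].add("172.16.1.1")
after_failover = advertisers(hmm_state)

print(before_failover, after_failover)
```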

For more information on the use of the NX-OS object tracking functionality, please refer to the document below:

https://www.cisco.com/c/en/us/td/docs/dcn/nx-os/nexus9000/103x/unicast-routing-configuration/cisco-nexus-9000-series-nx-os-unicast-routing-configuration-guide-release-103x/m_configuring_object_tracking.html

Configuration Samples

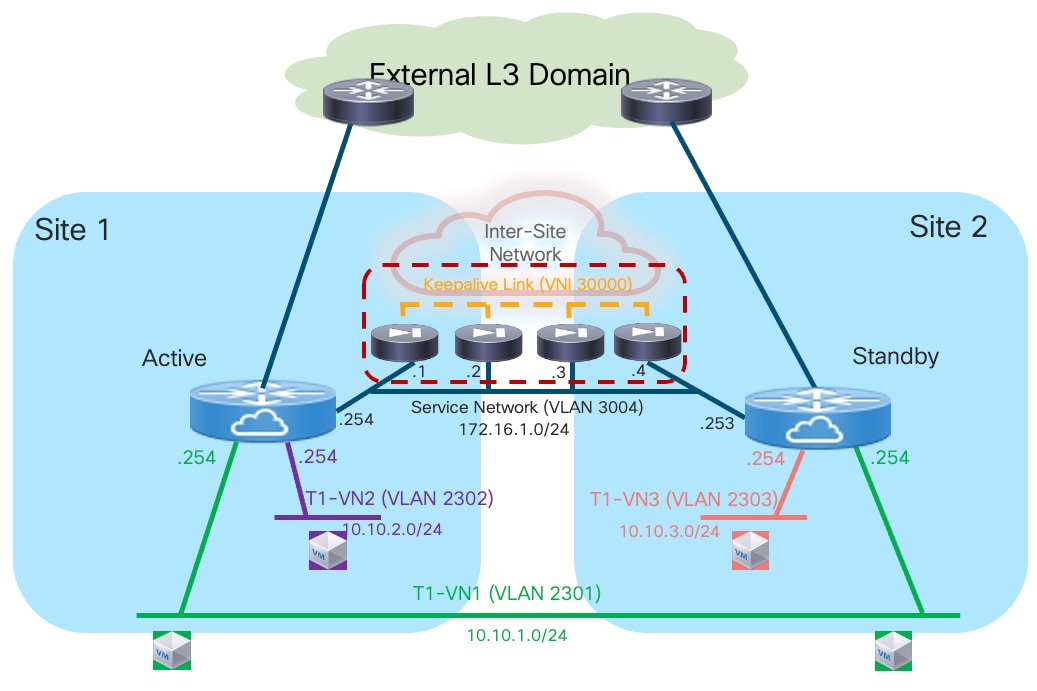

The samples below show the configuration required on the various nodes, based on the reference topology shown in Figure 23:

Compute Leaf Nodes

Define the L2VNI segments (Layer 2 only) representing the subnets where the endpoints are connected.

vlan 2301

vn-segment 30001

!

vlan 2302

vn-segment 30002

!

interface nve1

member vni 30001

mcast-group 239.1.1.1

member vni 30002

mcast-group 239.1.1.1

!

evpn

vni 30001 l2

rd auto

route-target import auto

route-target export auto

vni 30002 l2

rd auto

route-target import auto

route-target export auto

!

interface port-channel1

description vPC to the ESXi host

switchport mode trunk

switchport trunk allowed vlan 2301-2302

spanning-tree port type edge trunk

spanning-tree bpduguard enable

mtu 9216

vpc 1

Service Leaf Nodes

On the leaf nodes where the active and standby service nodes are connected, define a VRF dedicated to each tenant and all the associated configurations to implement the northbound Layer 3 network. Notice the definition of the static route under the VRF and how to redistribute it into the EVPN control plane. In this simple example, the subnets for the endpoints are all summarized with a /16 super-net (10.10.0.0/16), whereas 172.16.1.1 represents the IP address of the active firewall node interface connected to the northbound Service Network. The same configuration must be applied to the service leaf nodes in the remote fabric where the standby firewall node is connected, with the only difference being the fabric’s specific BGP ASN value.

Note: The redistribution of the static route information into the fabric BGP EVPN control plane is required because the configuration example below leverages the “export-gateway-ip” command to optimize the communication with the active firewall node.

vlan 2000

vn-segment 50000

!

vrf context t1-vrf

vni 50000

ip route 10.10.0.0/16 172.16.1.1 tag 12345

rd auto

address-family ipv4 unicast

route-target both auto

route-target both auto evpn

address-family ipv6 unicast

route-target both auto

route-target both auto evpn

!

interface Vlan2000

no shutdown

mtu 9216

vrf member t1-vrf

no ip redirects

ip forward

ipv6 address use-link-local-only

no ipv6 redirects

!

route-map redist-static-routes

match tag 12345

set ip next-hop redist-unchanged

!

router bgp 65001

vrf t1-vrf

address-family ipv4 unicast

advertise l2vpn evpn

redistribute static route-map redist-static-routes

maximum-paths ibgp 2

export-gateway-ip

address-family ipv6 unicast

advertise l2vpn evpn

redistribute static route-map redist-static-routes

maximum-paths ibgp 2

export-gateway-ip

!

interface nve1

member vni 50000 associate-vrf
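
Once the configuration above is applied, the static route and its redistribution could be checked with standard NX-OS show commands such as the ones below. This is a hedged checklist rather than a captured session, so no output is shown. The prefix and VRF name are the ones used in the sample; the comment about the gateway IP field describes the expected effect of “export-gateway-ip” and should be confirmed on the software release in use.

```text
! Static route present in the tenant VRF routing table
show ip route 10.10.0.0/16 vrf t1-vrf
! Static route redistributed into BGP for the tenant VRF
show bgp ipv4 unicast 10.10.0.0/16 vrf t1-vrf
! Resulting EVPN type-5 route; with export-gateway-ip the gateway IP
! field is expected to carry the firewall address 172.16.1.1
show bgp l2vpn evpn 10.10.0.0
```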

Define the L2VNI segment used as firewall Keepalive Link (vn-segment 30000). The corresponding VLAN must then be trunked on the vPC connection toward the Firewall node.

Note: In this example, the endpoints L2VNIs (and associated SVIs) are not defined on the service leaf nodes, but that could obviously be the case if the logical roles of compute nodes and service leaf nodes are co-located on the same set of physical devices.

vlan 2300

vn-segment 30000

!

interface nve1

member vni 30000

mcast-group 239.1.1.1

!

evpn

vni 30000 l2

rd auto

route-target import auto

route-target export auto

!

interface port-channel1

description vPC to the Firewall Node

switchport mode trunk

switchport trunk allowed vlan 2300

spanning-tree port type edge trunk

spanning-tree bpduguard enable

mtu 9216

vpc 1

Define the Service Network used to connect the firewall nodes to the service leaf nodes. This L2VNI also requires an anycast gateway address, which is the next hop of the static default route defined on the firewall (see the Firewall Nodes configuration sample later in this section).

vlan 3000

vn-segment 30003

!

interface Vlan3000

description Service Network

no shutdown

vrf member t1-vrf

ip address 172.16.1.254/24 tag 12345

fabric forwarding mode anycast-gateway

!

interface nve1

member vni 30003

mcast-group 239.1.1.1

!

evpn

vni 30003 l2

rd auto

route-target import auto

route-target export auto

!

interface port-channel1

description vPC to the Firewall Node

switchport mode trunk

switchport trunk allowed vlan 2300,3000

BGW Nodes

Define the VRF for each tenant and all the associated configurations to extend the VRFs between fabrics. This is required to extend the Service Network, which is part of the VRF, across sites. Note that the full BGW configuration is not shown below, so we recommend referencing the VXLAN Multi-Site documentation for more information.

vlan 2000

vn-segment 50000

!

vrf context t1-vrf

vni 50000

rd auto

address-family ipv4 unicast

route-target both auto

route-target both auto evpn

address-family ipv6 unicast

route-target both auto

route-target both auto evpn

!

route-map fabric-rmap-redist-subnet permit 10

match tag 12345

!

router bgp 65001

vrf t1-vrf

address-family ipv4 unicast

advertise l2vpn evpn

redistribute direct route-map fabric-rmap-redist-subnet

maximum-paths ibgp 2

export-gateway-ip

address-family ipv6 unicast

advertise l2vpn evpn

redistribute direct route-map fabric-rmap-redist-subnet

maximum-paths ibgp 2

export-gateway-ip

!

interface nve1

member vni 50000 associate-vrf

!

evpn

vni 30000 l2

rd auto

route-target import auto

route-target export auto

Locally define the L2VNI segments used as firewall Keepalive Link, to connect the endpoints, and as Service Network, so that those networks can be extended across the fabrics.

vlan 2300

vn-segment 30000

!

vlan 2301

vn-segment 30001

!

vlan 2302

vn-segment 30002

!

vlan 3000

vn-segment 30003

!

interface nve1

host-reachability protocol bgp

source-interface loopback1

multisite border-gateway interface loopback100

member vni 30000

multisite ingress-replication

mcast-group 239.1.1.1

member vni 30001

multisite ingress-replication

mcast-group 239.1.1.1

member vni 30002

multisite ingress-replication

mcast-group 239.1.1.1

member vni 30003

multisite ingress-replication

mcast-group 239.1.1.1

!

evpn

vni 30000 l2

rd auto

route-target import auto

route-target export auto

vni 30001 l2

rd auto

route-target import auto

route-target export auto

vni 30002 l2

rd auto

route-target import auto

route-target export auto

vni 30003 l2

rd auto

route-target import auto

route-target export auto

Firewall Nodes

The configuration sample below is taken from a Cisco ASA model but can be easily adapted to apply to different types of firewall devices (physical or virtual form factors). We also assume that the required failover configuration has already been applied to build an Active/Standby firewall pair, as described in the previous “Active/Standby Firewall Cluster Stretched across Sites” section.

Configure the required inside and outside interfaces. A local port-channel interface (Port-channel2) is deployed on each firewall (assuming it is a physical appliance) to carry the data interfaces. Sub-interfaces are created on this port-channel interface to forward traffic toward the endpoints’ subnets and toward the northbound Layer 3 device. A static default route pointing to the anycast gateway IP address defined on the service leaf nodes for the Service Network segment forwards all northbound traffic to destinations external to the specific tenant/VRF domain.

interface Port-channel2.2301

vlan 2301

nameif inside-VLAN2301

security-level 100

ip address 10.10.1.254 255.255.255.0 standby 10.10.1.253

!

interface Port-channel2.2302

vlan 2302

nameif inside-VLAN2302

security-level 100

ip address 10.10.2.254 255.255.255.0 standby 10.10.2.253

!

interface Port-channel2.3000

vlan 3000

nameif outside

security-level 0

ip address 172.16.1.1 255.255.255.0 standby 172.16.1.2

!

access-list permit-any extended permit ip any any

access-group permit-any in interface outside

!

route outside 0.0.0.0 0.0.0.0 172.16.1.254 1

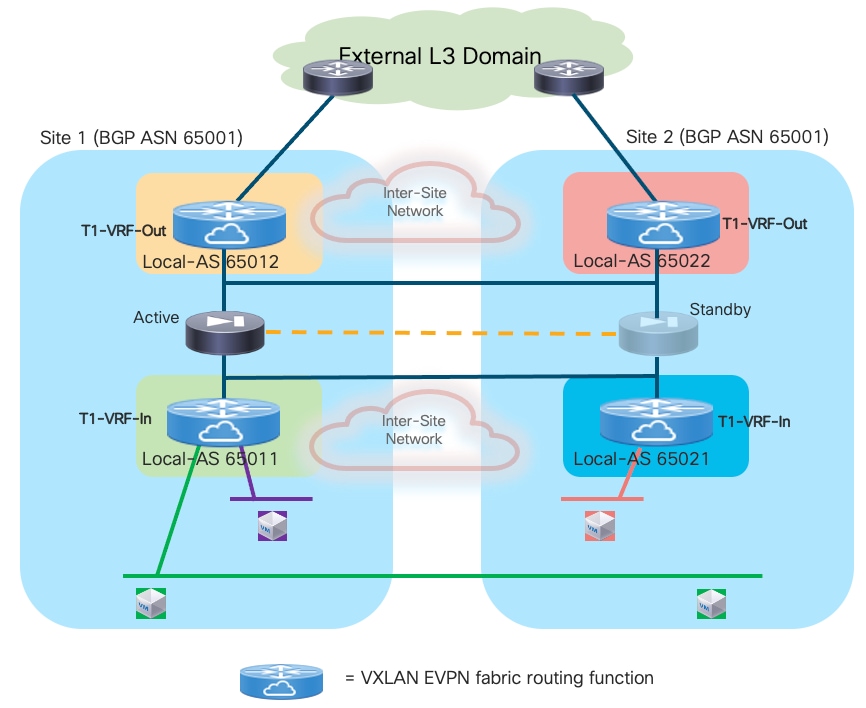

Dynamic Peering between the Active Firewall and the Service Leaf Nodes

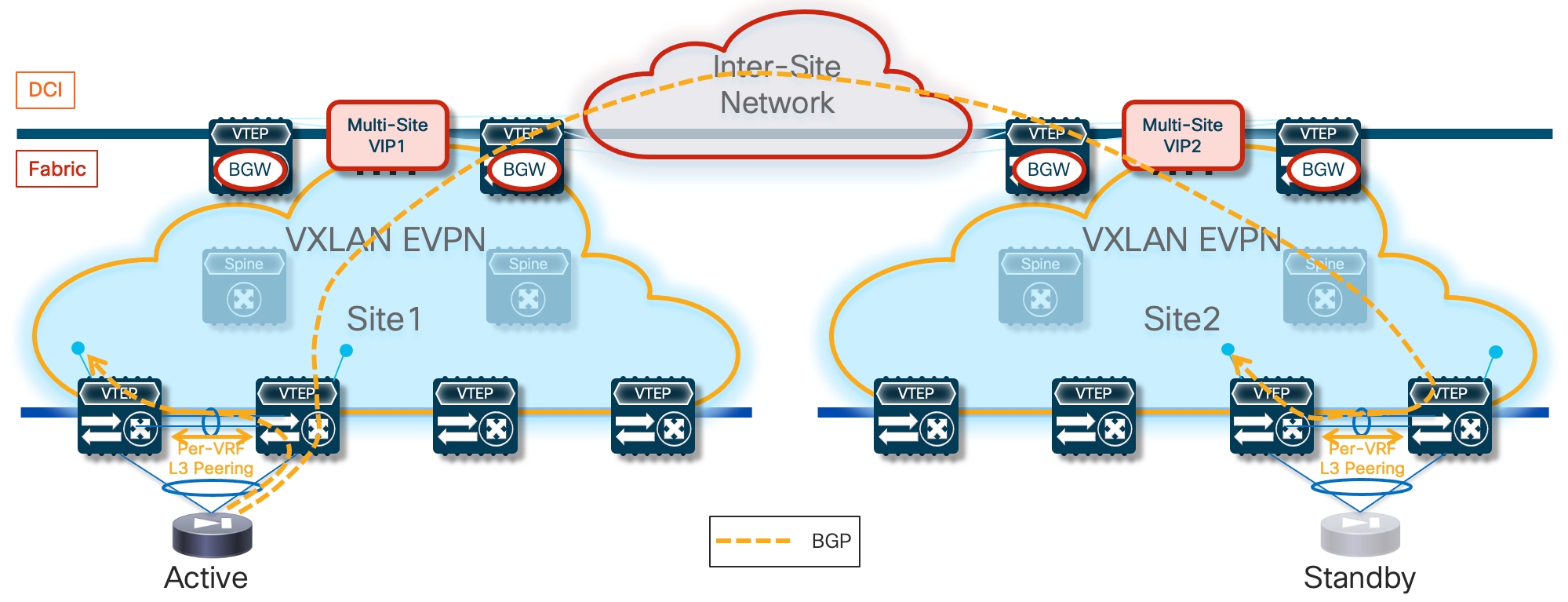

This deployment model is a variation of the one just discussed: the firewall node now establishes dynamic routing peering with the fabric leaf nodes. The most common and recommended option is to use EBGP for the peering, but an IGP can also be used.

EBGP Peering between the Firewall and the Leaf Nodes

The logical diagram in Figure 24 shows the establishment of EBGP connectivity between the firewall and the fabric leaf nodes.

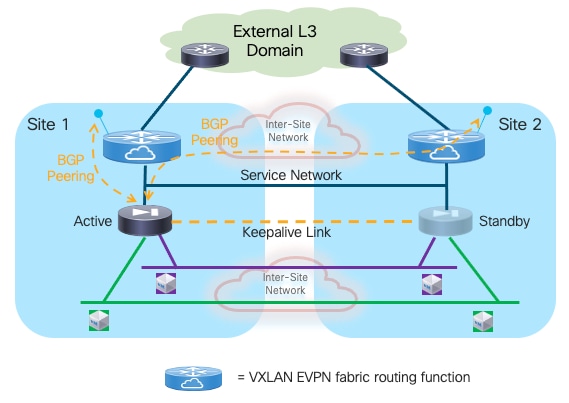

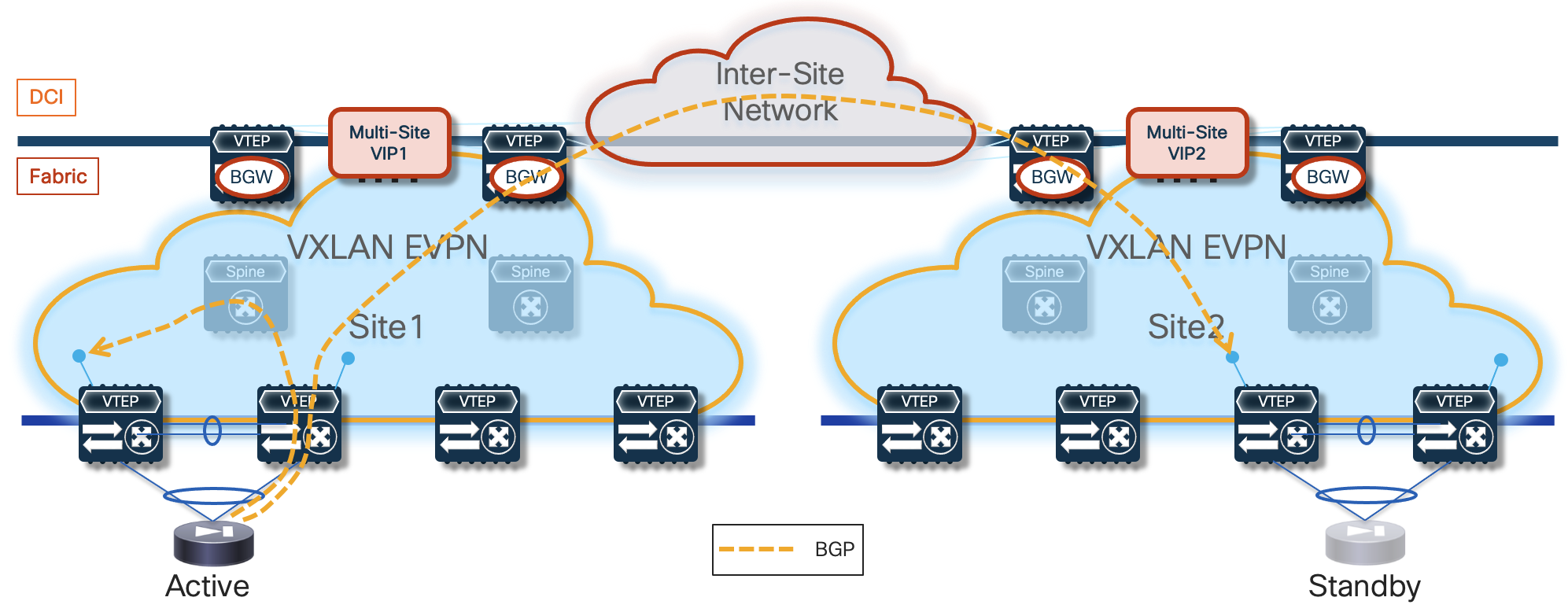

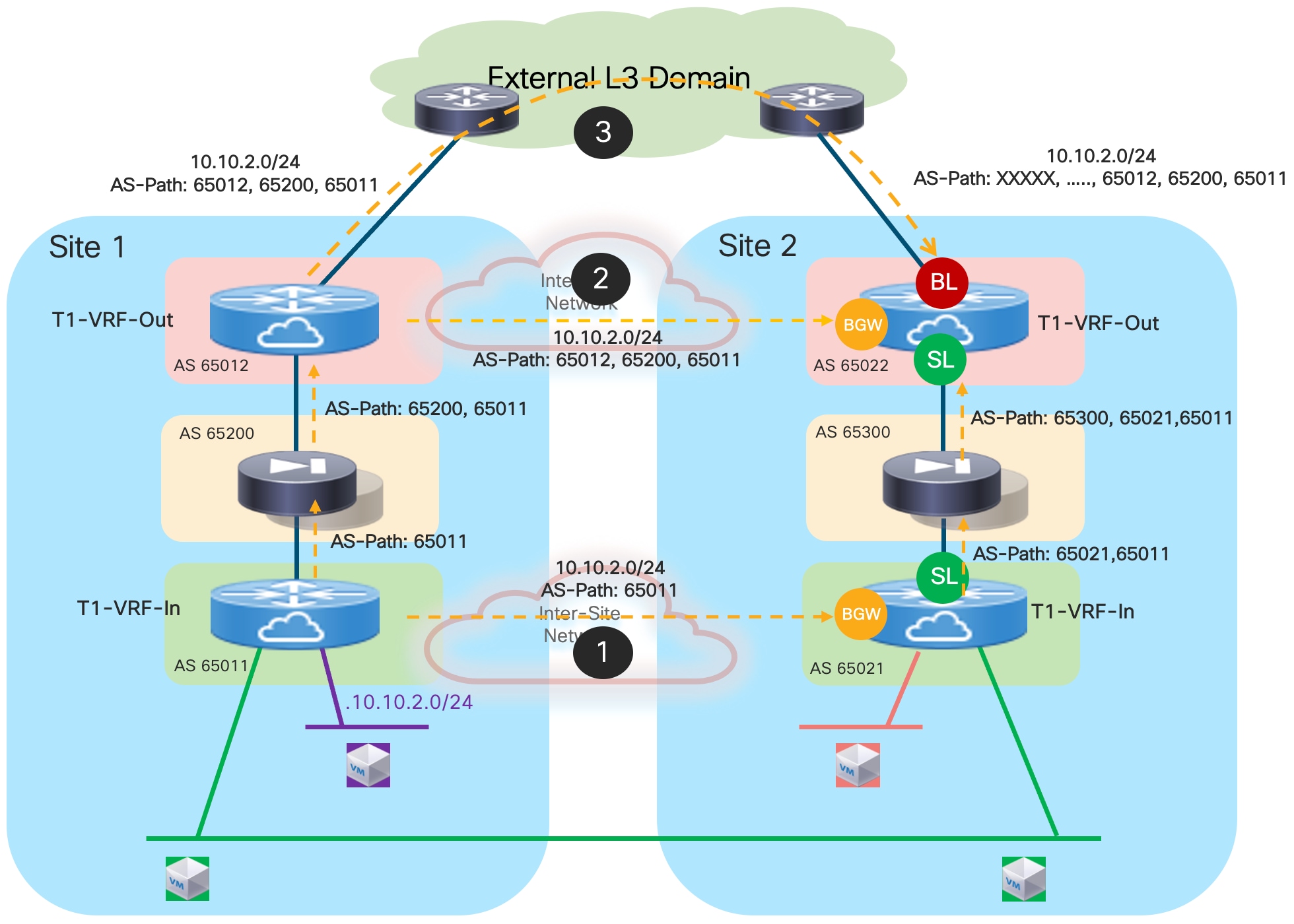

The active firewall node should establish multi-hop EBGP sessions with both the local and remote service leaf nodes (or, better, with unique loopback interfaces defined on those leaf nodes). This is important to minimize the traffic outage during a firewall switchover. To understand why, consider the sequence of events involved in a firewall failover:

1. The initial conditions are the ones shown in Figure 24, where the active firewall in Site 1 has established EBGP adjacencies with the local and remote service leaf nodes.

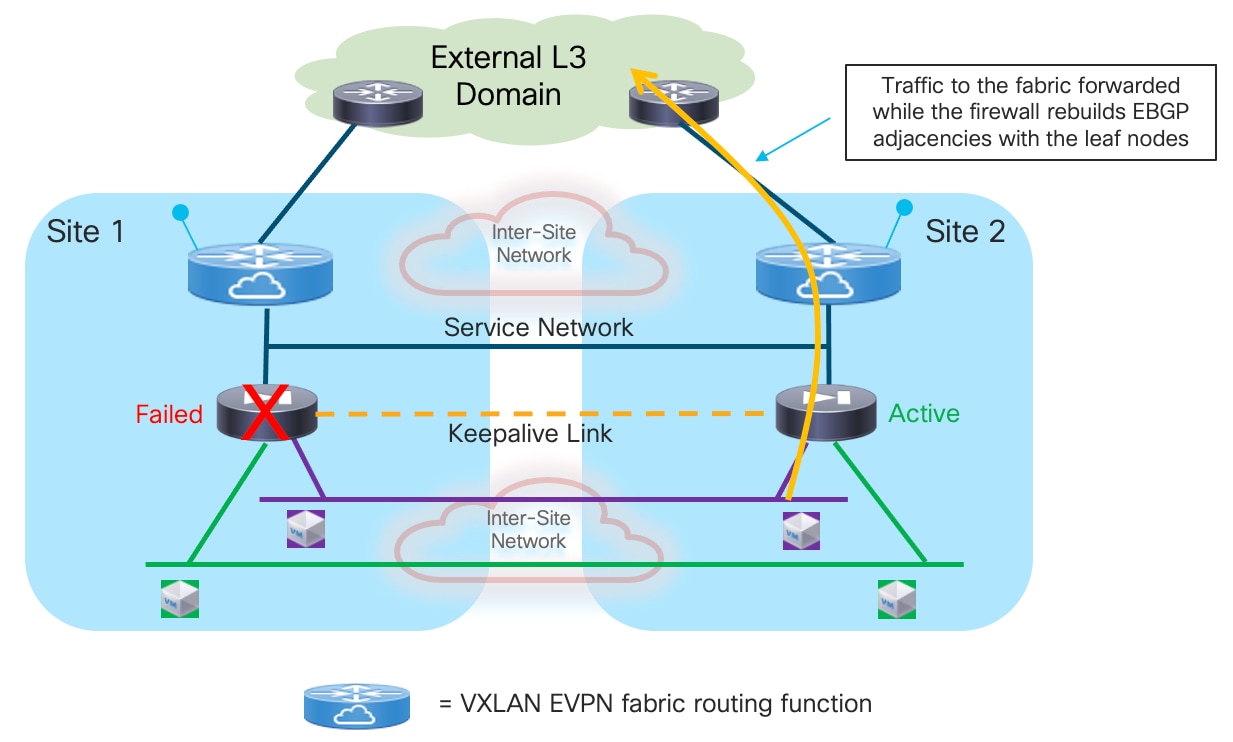

2. Now, a firewall failover event causes the standby firewall in Site 2 to become active. The standby firewall must be configured with BGP Graceful Restart, a feature that enables BGP sessions to be restarted without causing a disruption in the network. It works by allowing the devices to maintain their established routes even after a routing peering session reset or restart, and because the routing table of the newly activated firewall is fully synchronized with the previously active firewall node, it can continue to forward traffic toward the fabric based on previously programmed information (Figure 25).

3. At the same time, the service leaf nodes are aware that the firewall is going through a BGP graceful restart event, so they do not bring down their BGP sessions established with the firewall node and as a consequence can continue to forward traffic toward the firewall based on the routing information they have received from the previously active firewall node (Figure 26). This works as the newly activated firewall inherits the same MAC/IP address of the previously active firewall. Even if the MAC changed (depending on the specific Active/Standby cluster implementation), the new associated MAC address could be learned by the service leaf nodes based on the GARP frame received from the newly activated firewall.
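
The graceful restart behavior described in the steps above could be enabled along the following lines. This is a minimal sketch under stated assumptions, not a validated configuration: the firewall ASN (65099) and the leaf loopback address (10.100.0.1) are hypothetical values, the remaining neighbor settings are omitted, and the exact command set should be checked against the ASA and NX-OS releases in use.

```text
! Firewall (ASA-style syntax). The configuration is synchronized to the
! standby unit through failover. ASN 65099 and 10.100.0.1 are hypothetical.
router bgp 65099
 bgp graceful-restart
 address-family ipv4 unicast
  neighbor 10.100.0.1 remote-as 65001
  neighbor 10.100.0.1 ha-mode graceful-restart
  neighbor 10.100.0.1 activate
!
! Service leaf node (NX-OS). Graceful restart is stated explicitly here
! so that the leaf keeps the routes learned from the firewall during
! the restart window.
router bgp 65001
  graceful-restart
```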

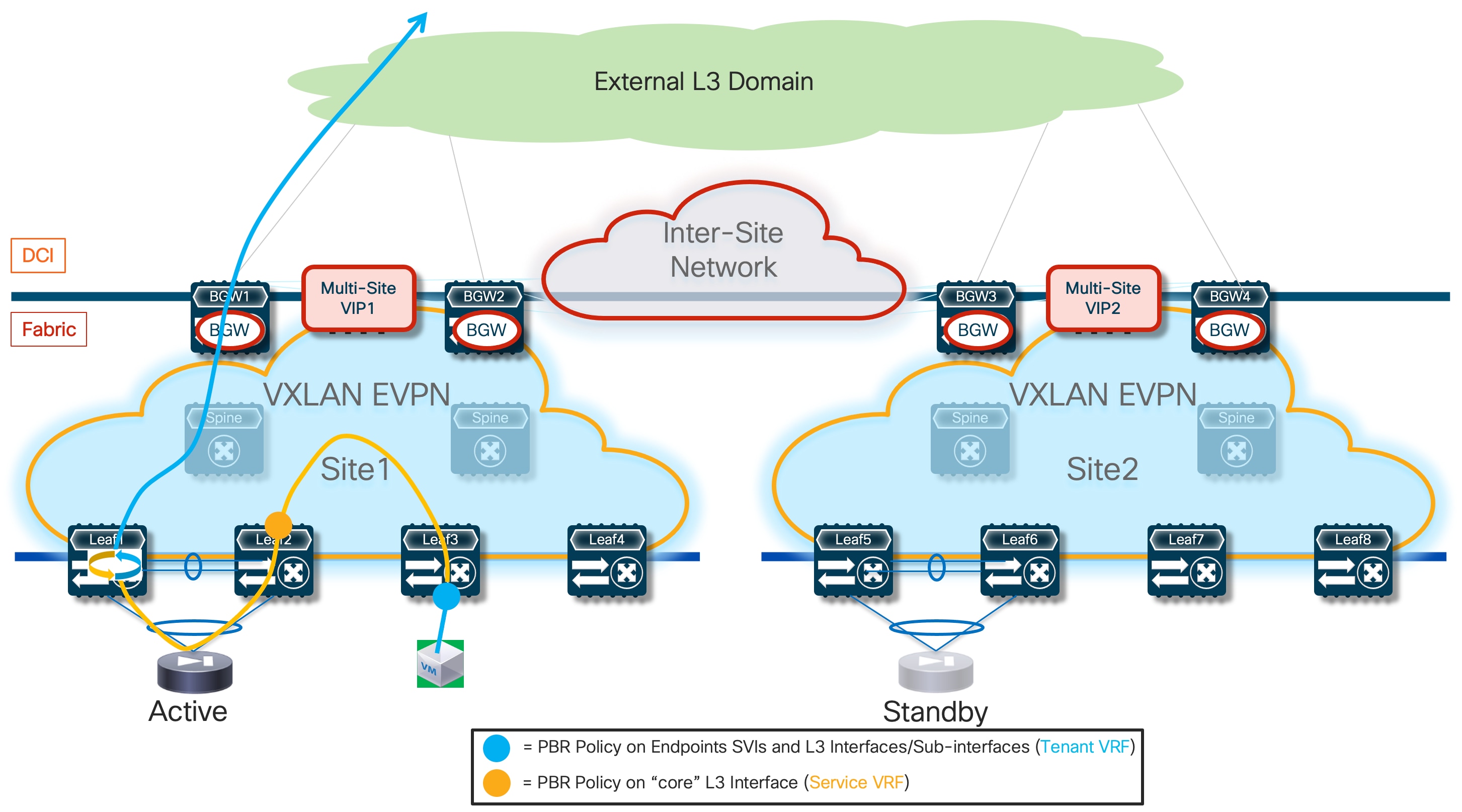

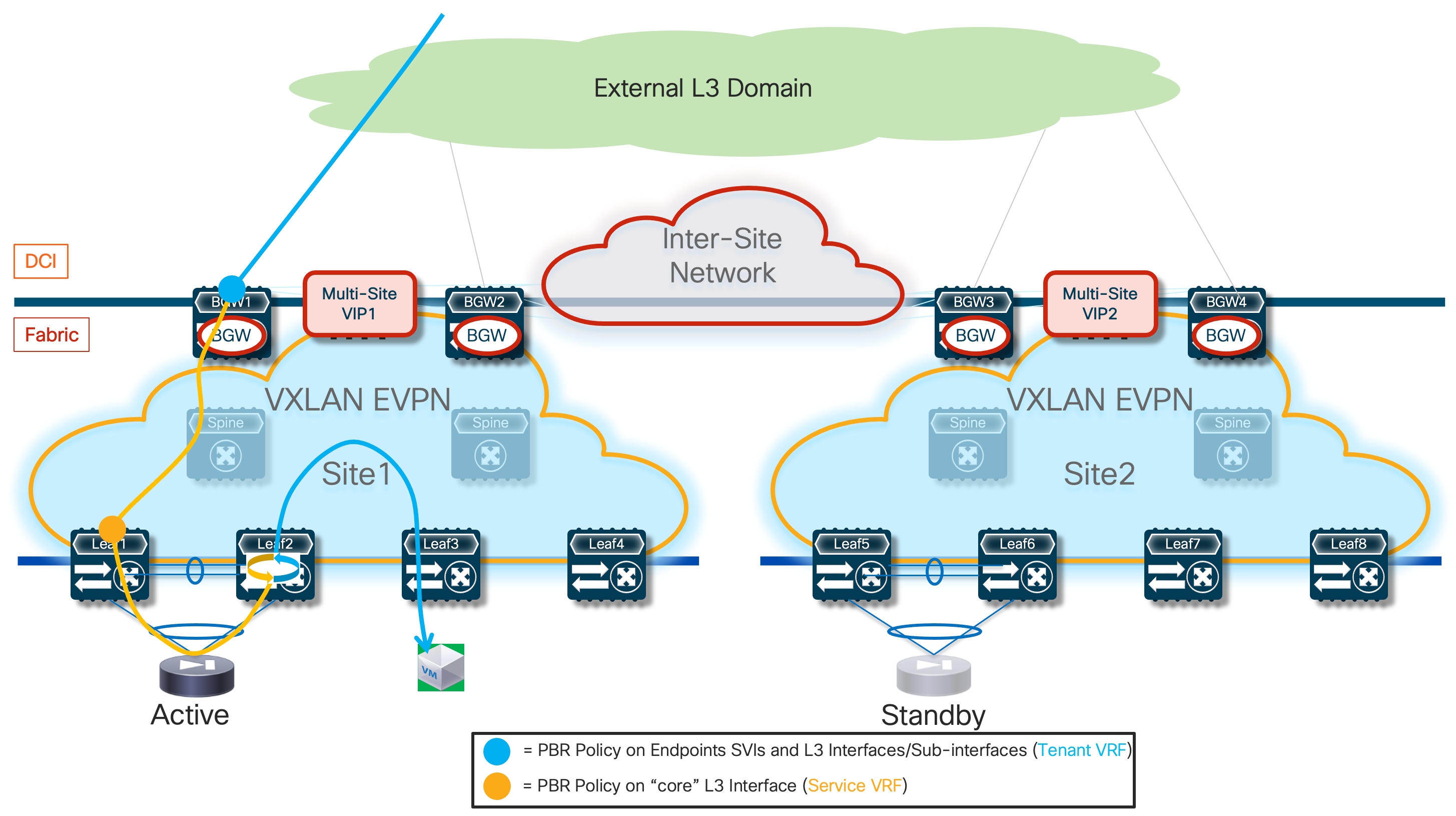

While the active firewall could establish EBGP sessions with the SVI interfaces of the local service leaf nodes connected to the Service Network segment, the same is not possible with the remote service leaf nodes (this limitation applies to both single-fabric and Multi-Site VXLAN EVPN deployments). That is why the recommended design shown in Figure 24 (logical view) and Figure 27 (physical view) calls for loopback interfaces defined on the local and remote service leaf nodes to establish EBGP adjacencies with the active firewall.

Note: The same limitation also applies to Interior Gateway Protocols (IGPs, with OSPF and IS-IS being the most common examples) between the firewall and the service leaf nodes. With an IGP, however, it is not even possible to establish adjacencies with loopback interfaces defined on the remote service leaf nodes, which is one of the reasons why we recommend using EBGP for this use case.
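
The loopback-based peering recommended above could look as follows on one service leaf node. This is a sketch under stated assumptions: the loopback number and address (loopback101, 10.100.0.1/32), the firewall ASN (65099), and the multi-hop TTL are hypothetical, while the VRF name, the tag, and the firewall address 172.16.1.1 are taken from the earlier samples. Each service leaf node, local and remote, would use its own unique loopback address and its fabric's BGP ASN.

```text
! Unique per-leaf loopback in the tenant VRF (hypothetical number and address)
interface loopback101
  description EBGP peering with the active firewall
  vrf member t1-vrf
  ip address 10.100.0.1/32 tag 12345
!
! Advertise the tagged loopback /32 into the tenant VRF
route-map fabric-rmap-redist-subnet permit 10
  match tag 12345
!
router bgp 65001
  vrf t1-vrf
    address-family ipv4 unicast
      advertise l2vpn evpn
      redistribute direct route-map fabric-rmap-redist-subnet
    ! Firewall active IP on the Service Network; 65099 is a hypothetical ASN
    neighbor 172.16.1.1
      remote-as 65099
      update-source loopback101
      ebgp-multihop 5
      address-family ipv4 unicast
```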

The Service Network is deployed on a VLAN mapped to a specific VXLAN segment (L2VNI). The L2VNI is associated to the specific VRF dedicated to the tenant and provides the anycast default gateway functionality. Static routes defined on the firewall (pointing to the L2VNI anycast gateway address) are required to reach the loopback interfaces of the local and remote service leaf nodes.
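
On the firewall side, the static routes toward the leaf loopbacks and the matching EBGP neighbors could be sketched as shown below, in ASA-style syntax. The loopback addresses (10.100.0.1 and 10.100.0.2 in Site 1, 10.100.0.3 and 10.100.0.4 in Site 2), the firewall ASN (65099), and the Site 2 fabric ASN (65002) are assumptions introduced for illustration; the anycast gateway 172.16.1.254 and the Site 1 ASN 65001 come from the earlier samples.

```text
! Host routes to each service leaf loopback via the anycast gateway
route outside 10.100.0.1 255.255.255.255 172.16.1.254 1
route outside 10.100.0.2 255.255.255.255 172.16.1.254 1
route outside 10.100.0.3 255.255.255.255 172.16.1.254 1
route outside 10.100.0.4 255.255.255.255 172.16.1.254 1
!
router bgp 65099
 address-family ipv4 unicast
  ! Local (Site 1) service leaf nodes
  neighbor 10.100.0.1 remote-as 65001
  neighbor 10.100.0.1 ebgp-multihop 5
  neighbor 10.100.0.1 activate
  neighbor 10.100.0.2 remote-as 65001
  neighbor 10.100.0.2 ebgp-multihop 5
  neighbor 10.100.0.2 activate
  ! Remote (Site 2) service leaf nodes
  neighbor 10.100.0.3 remote-as 65002
  neighbor 10.100.0.3 ebgp-multihop 5
  neighbor 10.100.0.3 activate
  neighbor 10.100.0.4 remote-as 65002
  neighbor 10.100.0.4 ebgp-multihop 5
  neighbor 10.100.0.4 activate
```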

When the firewall is connected to the leaf nodes using the traditional vPC configuration (i.e., leveraging a physical vPC peer-link), reachability to the loopbacks of all the service leaf nodes must always be possible, regardless of the physical path used to establish the EBGP sessions. There are two options to ensure this is always the case:

● Establishing a per-VRF Layer 3 peering on a dedicated VLAN carried on the vPC peer-link (Figure 27).

As shown above, this is needed on the local leaf nodes where the active firewall is directly connected because the BGP packets destined to the loopback IP address defined on leaf node 1 may be sent toward leaf node 2. Leaf node 2 by default does not learn the /32 prefix of the loopback interface of leaf node 1, as this prefix is advertised by leaf node 1 as a type-5 EVPN prefix with the vPC TEP as next-hop. Because that vPC TEP is locally defined also on leaf node 2, the received advertisement is discarded.

Note: The vPC TEP is configured as a common secondary IP address on the loopback interfaces used for the Primary IP address (PIP) on both leaf nodes that are part of the same vPC domain.

For the same reason, traffic destined to the loopback address of a remote leaf node is encapsulated by the remote BGWs toward the vPC TEP of that remote pair and may land on the wrong leaf node. The Layer 3 peering on the peer-link would then be needed to deliver the traffic to the loopback defined on the second service leaf node.

● Enabling the “advertise-pip” knob on both the local and remote fabrics avoids both issues described above, either with a traditional vPC deployment (leveraging a physical peer-link connection) or with the vPC fabric peering configuration (this is the best practice recommendation). In both cases, the type-5 advertisements for the loopback /32 prefixes use the unique PIP address defined on each leaf node as next-hop, which always allows the EBGP peerings to be established directly (Figure 28). When adopting this configuration, it is important to consider the scalability impact in terms of the maximum number of leaf nodes that can be supported in each fabric.

Please refer to the latest Nexus 9000 VXLAN EVPN scalability guides for more information:

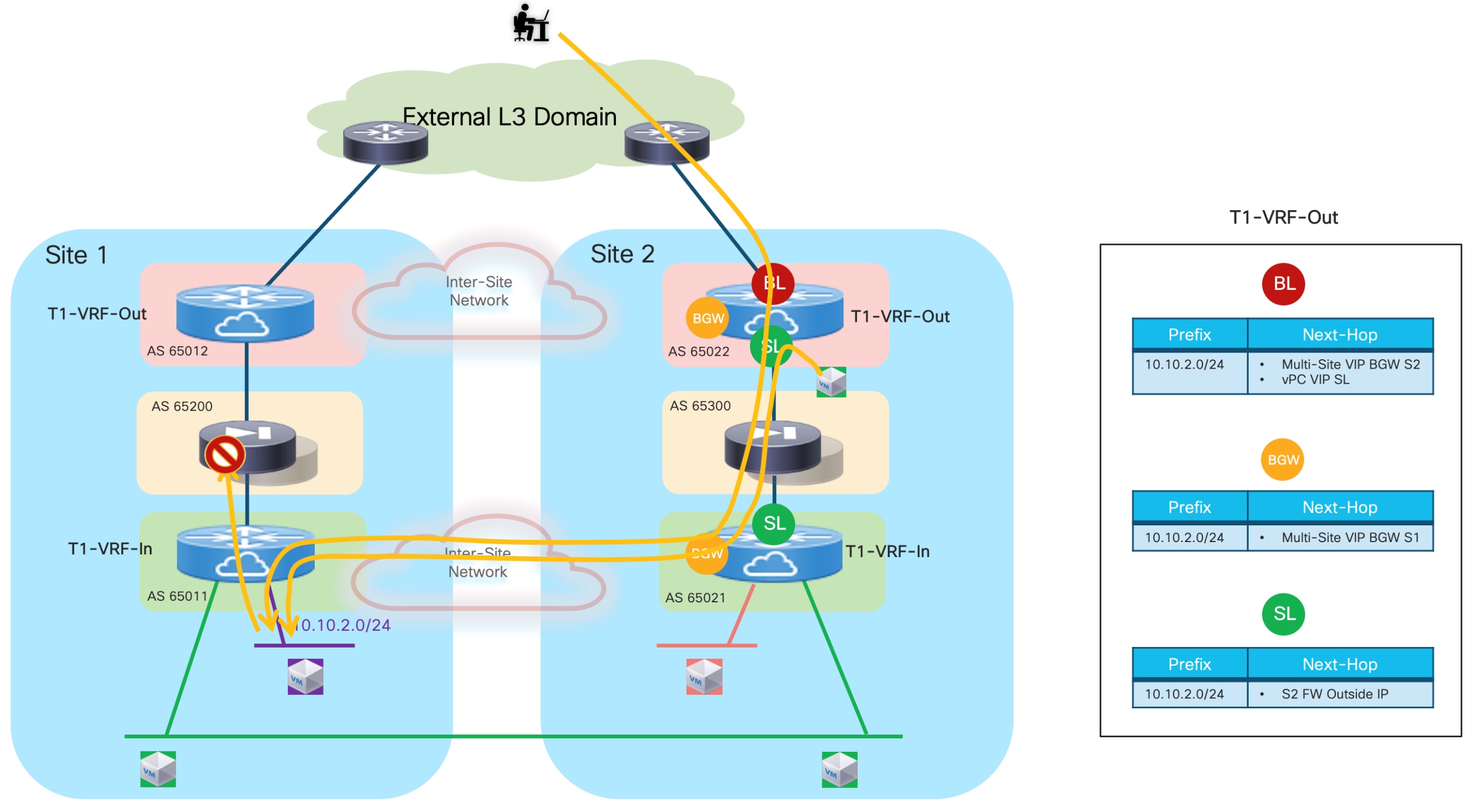

https://www.cisco.com/c/en/us/td/docs/dcn/nx-os/nexus9000/103x/configuration/scalability/cisco-nexus-9000-series-nx-os-verified-scalability-guide-1032.html
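
The two options discussed above for guaranteeing reachability to the leaf loopbacks could be sketched on a service leaf node as follows. Both fragments are illustrative only. For the first option, the VLAN number (3900), the /30 addressing, and the choice of iBGP as the per-VRF routing protocol are assumptions; the VLAN must not be mapped to a VNI and must be allowed on the vPC peer-link. For the second option, the commands are applied on every vPC leaf pair in both fabrics.

```text
! Option 1: per-VRF Layer 3 peering on a dedicated VLAN carried on the
! vPC peer-link (hypothetical VLAN 3900 and addressing; the vPC peer
! would use 192.168.39.2)
vlan 3900
!
interface Vlan3900
  description Per-VRF peering over the vPC peer-link
  no shutdown
  vrf member t1-vrf
  ip address 192.168.39.1/30
!
router bgp 65001
  vrf t1-vrf
    neighbor 192.168.39.2
      remote-as 65001
      address-family ipv4 unicast
!
! Option 2: advertise type-5 routes with the unique PIP as next-hop
router bgp 65001
  address-family l2vpn evpn
    advertise-pip
!
interface nve1
  advertise virtual-rmac
```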