Why Orchestrate Multi-Site Connectivity Using NDFC and NDO

You have two options when orchestrating multi-site connectivity:

- Configuring multi-site connectivity solely through NDFC, or
- Using Nexus Dashboard Orchestrator (NDO) as the controller on top to orchestrate multi-site connectivity

If you were to configure multi-site connectivity solely through NDFC, there are two areas in NDFC that you have to take into consideration:

- Latency concerns: Currently, the latency from NDFC to every device that it manages should be within 150 milliseconds. We do not recommend using NDFC to manage any device beyond that 150-millisecond limit, because at that latency, operations between NDFC and the device might frequently time out.
- Number of devices that can be managed: Beginning with NDFC release 12.1.2, a single instance of NDFC can manage up to 500 devices. If you have a very large fabric that goes beyond that 500-device limit, you cannot manage all of those devices with a single NDFC; you would have to use multiple NDFCs instead.
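The two constraints above can be expressed as a simple pre-check on a device inventory. This is a minimal sketch, assuming you already have a measured NDFC-to-device latency for each device; the `Device` class and the `check_single_ndfc` function are hypothetical illustrations and are not part of any NDFC API.

```python
from dataclasses import dataclass

# Limits quoted above for a single NDFC instance (NDFC 12.1.2 and later).
MAX_DEVICES_PER_NDFC = 500
MAX_NDFC_TO_DEVICE_LATENCY_MS = 150


@dataclass
class Device:
    """Hypothetical inventory record; latency_ms is a measured NDFC-to-device value."""
    name: str
    latency_ms: float


def check_single_ndfc(devices: list[Device]) -> list[str]:
    """Return the reasons why one NDFC instance should not manage these devices."""
    problems = []
    if len(devices) > MAX_DEVICES_PER_NDFC:
        problems.append(
            f"{len(devices)} devices exceeds the {MAX_DEVICES_PER_NDFC}-device limit"
        )
    for dev in devices:
        # "Within 150 ms" means anything above the limit is flagged.
        if dev.latency_ms > MAX_NDFC_TO_DEVICE_LATENCY_MS:
            problems.append(
                f"{dev.name}: {dev.latency_ms} ms exceeds "
                f"{MAX_NDFC_TO_DEVICE_LATENCY_MS} ms"
            )
    return problems


# Example: 800 nearby leaf switches plus one remote switch at 180 ms.
inventory = [Device(f"leaf{i}", 20.0) for i in range(800)]
inventory.append(Device("remote-leaf", 180.0))
for problem in check_single_ndfc(inventory):
    print(problem)
```

Either kind of finding is a signal that a single NDFC is not a good fit for this inventory.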

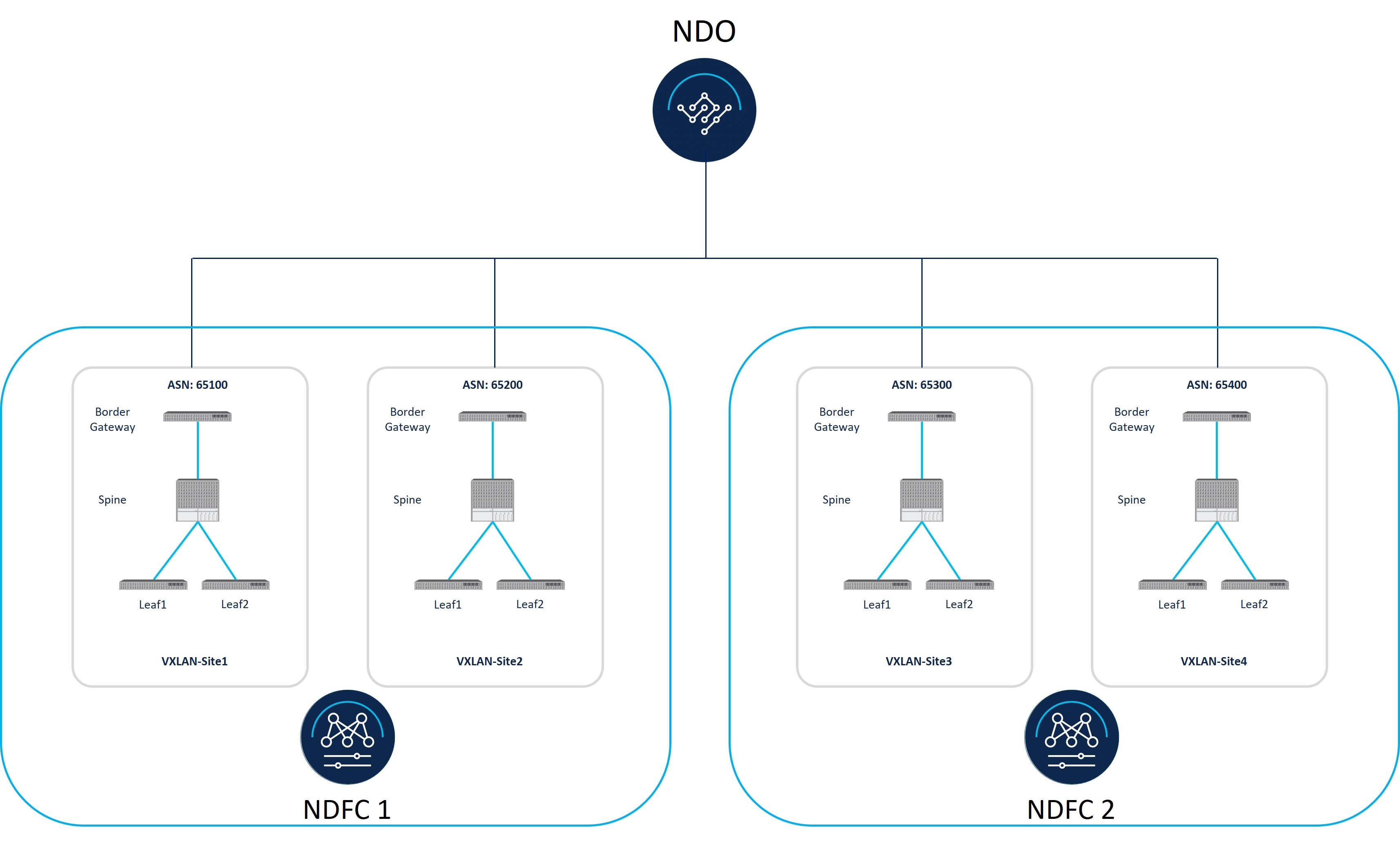

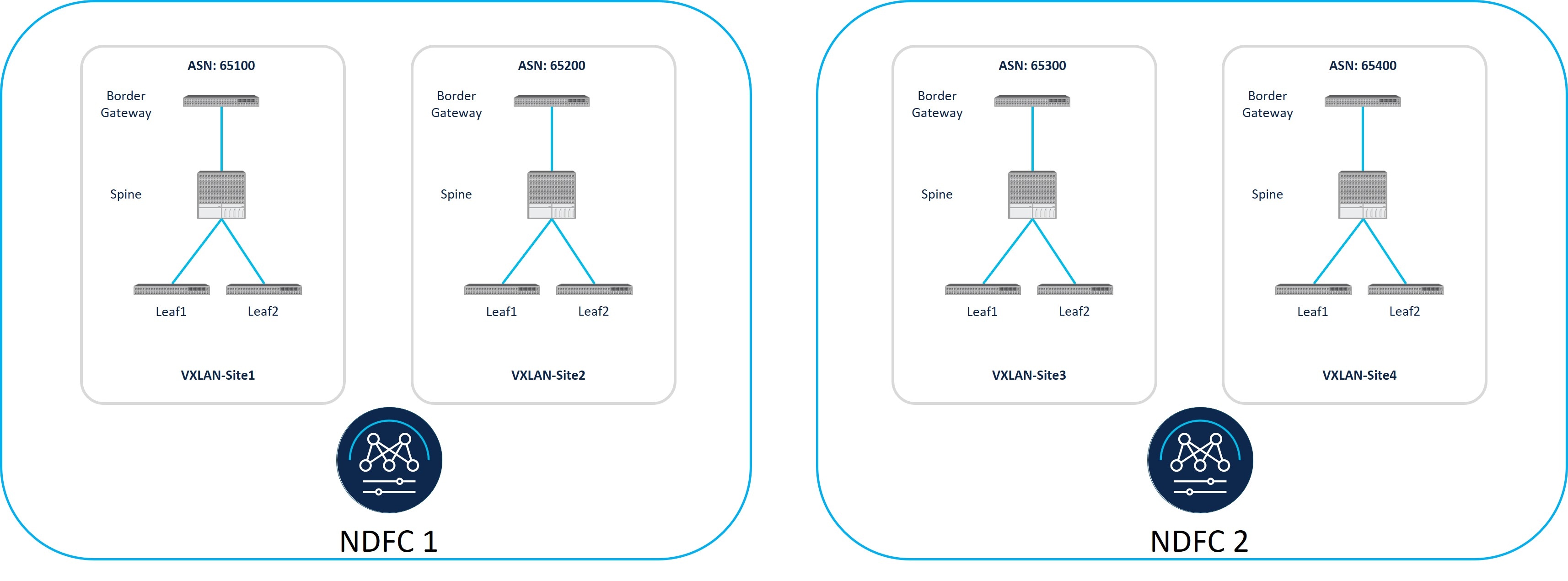

Assume that you have fabrics with 800 devices that you want to manage through NDFC. To stay within the limit of 500 devices per NDFC instance, you could split those 800 devices as follows:

- In the first NDFC, create two fabrics, site1 and site2, each containing 200 devices, for a total of 400 devices managed through the first NDFC.
- In the second NDFC, use a similar configuration: two fabrics, site1 and site2, each containing 200 devices, for a total of 400 devices managed through the second NDFC.
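The arithmetic behind this split can be sketched as follows. The `layout` dictionary and the helper functions are hypothetical illustrations, not NDFC configuration; they only show that 800 devices need at least two NDFC instances and that each NDFC in the example stays at or below the 500-device limit.

```python
import math

MAX_DEVICES_PER_NDFC = 500  # per-instance limit quoted above (NDFC 12.1.2+)


def min_ndfc_instances(total_devices: int) -> int:
    """Smallest number of NDFC instances that could hold total_devices."""
    return math.ceil(total_devices / MAX_DEVICES_PER_NDFC)


def devices_per_ndfc(layout: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum the device counts of all fabrics managed by each NDFC."""
    return {ndfc: sum(fabrics.values()) for ndfc, fabrics in layout.items()}


# Hypothetical layout mirroring the example: NDFC name -> {fabric name: device count}.
layout = {
    "ndfc-1": {"site1": 200, "site2": 200},
    "ndfc-2": {"site1": 200, "site2": 200},
}

totals = devices_per_ndfc(layout)
print(min_ndfc_instances(800))  # 2
print(totals)  # {'ndfc-1': 400, 'ndfc-2': 400}
print(all(n <= MAX_DEVICES_PER_NDFC for n in totals.values()))  # True
```

Note that `min_ndfc_instances` is only a lower bound; in practice, how the devices divide into fabrics also affects how many NDFCs you need.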

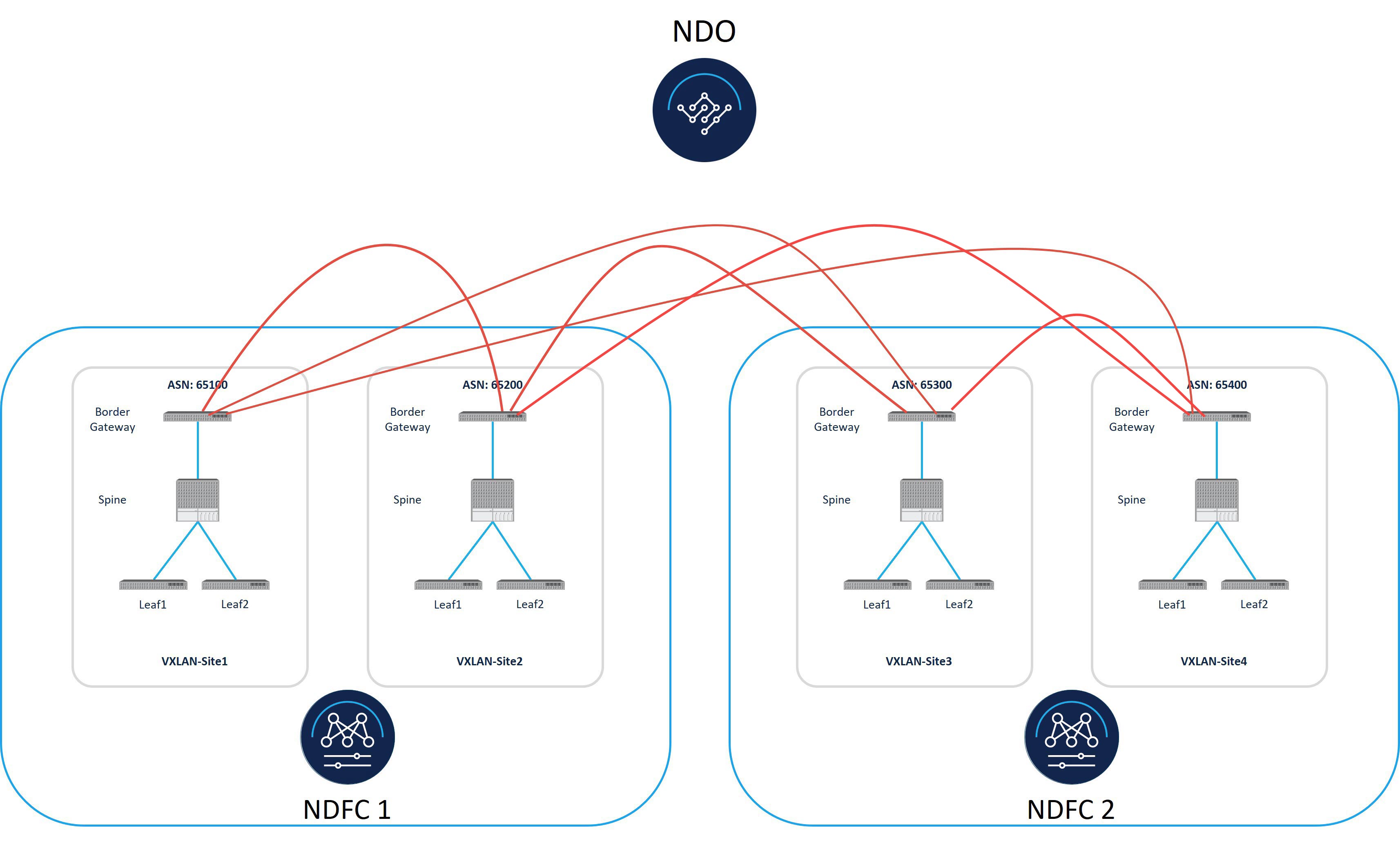

In this way, you can use two NDFCs to manage more devices than the 500-device limit of a single NDFC allows. However, to stretch Layer 2 domains and Layer 3 connectivity between these fabrics, you need to build a VXLAN multi-site between the individual fabrics that are managed by different NDFCs.

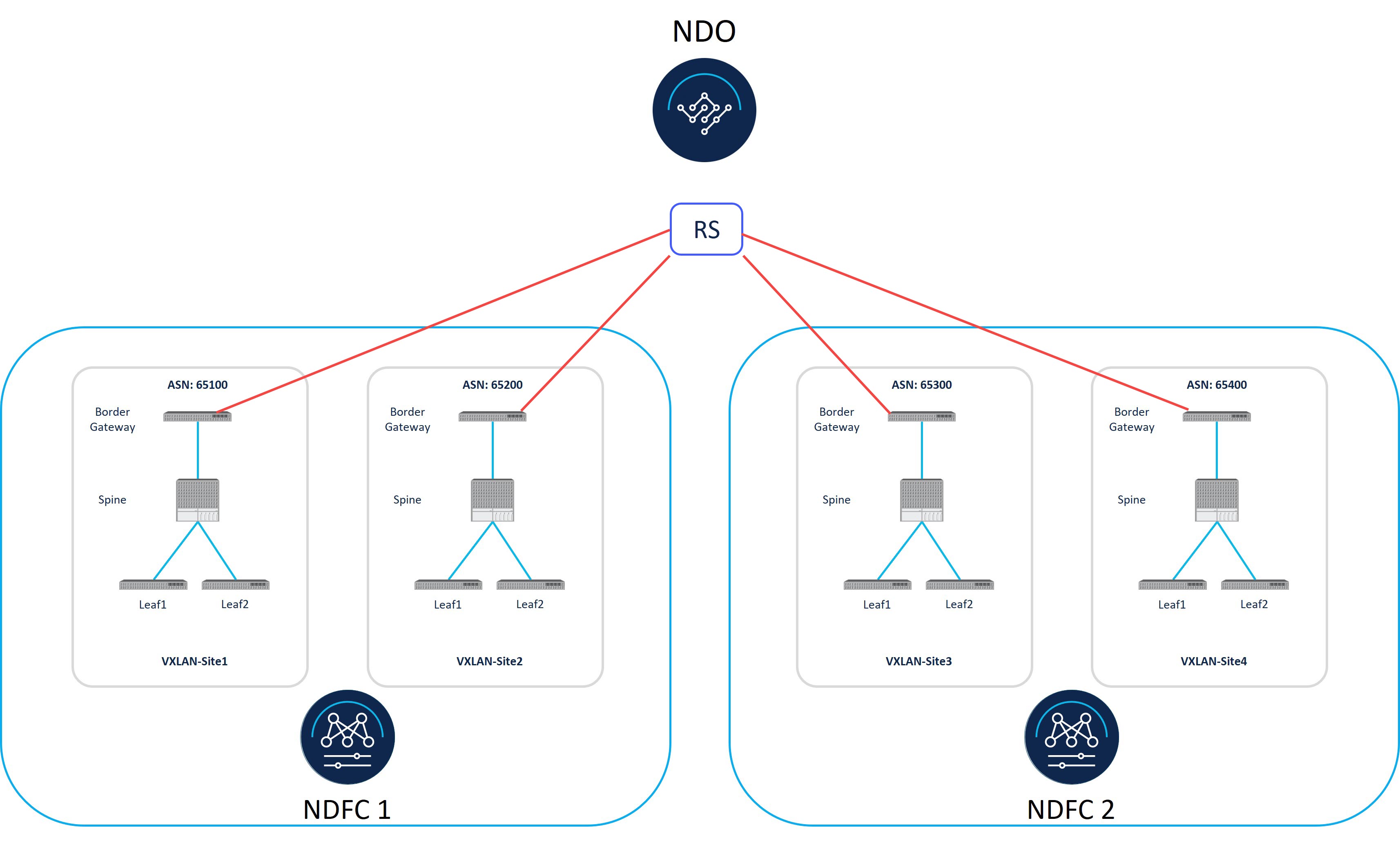

Normally, if your fabrics are managed by a single NDFC, you would use the VXLAN EVPN Multi-Site template within that NDFC to form the VXLAN multi-site. However, to orchestrate VXLAN multi-site connectivity between fabrics that are managed by different NDFCs, you can leverage NDO to deploy the VRFs and networks to the fabrics managed by those two NDFCs.

A similar concern arises when you build a VXLAN multi-site across a wide geographic area, where some devices fall outside the 150-millisecond latency requirement for NDFC. Even if an NDFC manages fewer than 500 devices, and therefore stays within the device limit, some of those devices might exceed the 150-millisecond latency requirement and cause issues. In this situation, creating separate NDFCs, each within 150 milliseconds of the devices it manages, might solve these latency issues, because the latency requirement from NDO to NDFC is 500 milliseconds. In this sort of configuration, NDO does not communicate directly with the devices that are managed by the NDFCs; instead, NDO communicates with the NDFCs themselves, where the latency requirement is 500 milliseconds rather than 150 milliseconds.

By using NDO as the controller on top to orchestrate multi-site connectivity, stitching the tunnels between the fabrics managed by different NDFCs, you can circumvent both the limit on the number of devices that a single NDFC can manage and the 150-millisecond latency problems that might arise with certain devices within an NDFC.
