Cisco Unified Wireless Network Solution Guide

Cisco wireless network solution overview

Built from the ground up for intent-based networking and Cisco DNA Center, the Cisco Catalyst™ 9800 Series Wireless Controllers bring together Cisco IOS® XE Software and Cisco RF excellence to create a best-in-class wireless experience for your evolving and growing organization.

These controllers also support the newest Wi-Fi 6E Cisco Catalyst 9100 Access Points.

This document primarily focuses on the Cisco® wireless on-premises solution, managed by Cisco DNA Center.

The Cisco Catalyst wireless network solution offers secure, scalable, cost-effective wireless LANs for business-critical mobility. It is the industry’s only unified wired and wireless solution to cost-effectively address the deployment, management, security, and network optimization of a Wireless LAN (WLAN) in enterprises.

This powerful indoor and outdoor solution combines the best elements of wired and wireless networking to deliver high-performance, manageable, and secure WLANs with a low total cost of ownership.

1. Next-generation wireless: An introduction

Mobile users require the same accessibility, security, Quality of Service (QoS), and high availability enjoyed by wired users. Whether users are at work, at home, or on the road, locally or internationally, there is a need to connect. The technological challenges are apparent, but mobility plays a role for everyone. Companies are deriving business value from mobile and wireless solutions. What was once a vertical market technology is now mainstream and is an essential tool in getting access to voice, real-time information, and critical applications such as email and calendar, enterprise databases, supply chain management, sales force automation, and customer relationship management.

Benefits of the Catalyst wireless infrastructure

Benefits achieved by WLANs include:

● Mobility within buildings or campus: Facilitates implementation of applications that require an always-on network and that tend to involve movement within a campus environment.

● Convenience: Simplifies networking for large, open areas where people congregate.

● Flexibility: Allows work to be done at the most appropriate or convenient place, rather than where a cable drop terminates. Getting the work done is what is important, not where you are.

● Easier setup of temporary spaces: Promotes quick network setup of meeting rooms, war rooms, or brainstorming rooms tailored to variations in the number of participants.

● Lower cabling costs: Reduces the requirement for contingency cable plant installation because the WLAN can be employed to fill the gaps.

● Easier adds, moves, and changes and lower support and maintenance costs: Enables easier setup of temporary networks, easing migration issues and costly last-minute fixes.

● Improved efficiency: Studies show that WLAN users are connected to the network 15 percent longer per day than hard-wired users.

● Productivity gains: Promotes easier access to network connectivity, resulting in better use of business productivity tools. Productivity studies show a 22 percent increase for WLAN users.

● Easier collaboration: Facilitates access to collaboration tools from any location, such as meeting rooms; files can be shared on the spot and requests for information handled immediately.

● More efficient use of office space: Allows greater flexibility for accommodating groups, such as large team meetings.

● Fewer errors: Data can be entered directly into systems as it is being collected, rather than when network access is available.

● Improved efficiency, performance, and security for enterprise partners and guests: Enables implementation of guest access networks.

● Improved business resilience: Increased mobility of the workforce allows rapid redeployment to other locations with WLANs.

The core components of the Cisco wireless solutions include:

● Cisco Access Points (APs)

● Cisco Catalyst 9800 Series Wireless Controllers (WLC)

● Cisco DNA Center

● Cisco Prime® Infrastructure

● Cisco Identity Services Engine

● Cisco Spaces

For more information about the Cisco wireless network, see the Cisco Wireless and Mobility page at https://www.cisco.com/c/en/us/products/wireless/index.html.

2. Cisco wireless technology and architecture

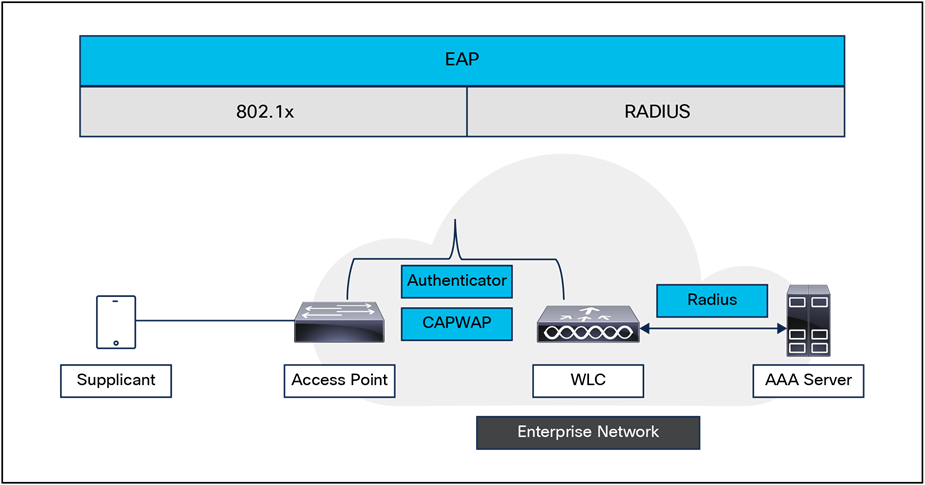

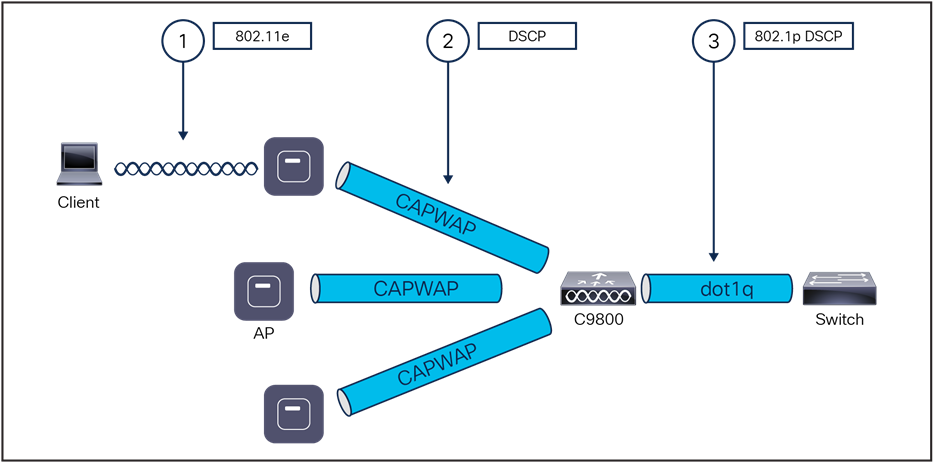

The Internet Engineering Task Force (IETF) Control and Provisioning of Wireless Access Points (CAPWAP) protocol is the underlying protocol used in the Cisco WLAN architecture. CAPWAP provides the configuration and management of APs and WLANs in addition to encapsulation and forwarding of WLAN client traffic between an AP and a WLAN Controller (WLC).

CAPWAP brings additional security with Datagram Transport Layer Security (DTLS) for both management and client traffic. CAPWAP uses the User Datagram Protocol (UDP) and can operate over either IPv4 or IPv6. Table 1 lists the protocol and port implemented for each CAPWAP version.

Table 1. Ports and protocols

| IP version | Protocol number | Destination port | Description |
| --- | --- | --- | --- |
| Version 4 | 17 (UDP) | 5246 | CAPWAPv4 control channel |
| Version 4 | 17 (UDP) | 5247 | CAPWAPv4 data channel |
| Version 6 | 136 (UDP Lite) | 5246 | CAPWAPv6 control channel |
| Version 6 | 136 (UDP Lite) | 5247 | CAPWAPv6 data channel |

Cisco recommends the following guidelines when implementing CAPWAP:

● IP addressing: APs must be assigned a static or dynamic IPv4 or IPv6 address to be able to successfully discover and communicate with a WLC.

● Firewall rules and Access Control Lists (ACLs): All firewall rules and ACLs defined on devices placed between the APs and WLCs must be configured to permit the CAPWAP protocol.

● IPv6 deployments: At least one WLC should be configured for both IPv4 and IPv6 to support APs with older firmware that does not support IPv6.
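When auditing firewall rules or ACLs against the guidelines above, it can help to express Table 1 as data. The following is a minimal sketch only: the function names and the representation of a flow as an (IP version, protocol number, destination port) tuple are assumptions made for illustration, not part of any Cisco tool.

```python
# Table 1 expressed as data:
# (IP version, IP protocol number) -> {destination port: channel description}
CAPWAP_CHANNELS = {
    (4, 17): {5246: "CAPWAPv4 control channel", 5247: "CAPWAPv4 data channel"},
    (6, 136): {5246: "CAPWAPv6 control channel", 5247: "CAPWAPv6 data channel"},
}


def classify_capwap(ip_version, protocol, dst_port):
    """Return the Table 1 description for a flow, or None if it is not CAPWAP."""
    return CAPWAP_CHANNELS.get((ip_version, protocol), {}).get(dst_port)


def missing_capwap_permits(permitted_flows):
    """List the Table 1 flows that are absent from a set of permitted flows.

    permitted_flows is a hypothetical set of
    (ip_version, protocol, dst_port) tuples exported from a rule base.
    """
    required = [
        (version, proto, port)
        for (version, proto), ports in CAPWAP_CHANNELS.items()
        for port in ports
    ]
    return [flow for flow in required if flow not in permitted_flows]


if __name__ == "__main__":
    ipv4_only = {(4, 17, 5246), (4, 17, 5247)}
    print(classify_capwap(4, 17, 5246))
    print(missing_capwap_permits(ipv4_only))
```

A rule base that permits only the IPv4 entries would be reported as missing both IPv6 entries, which matters in dual-stack deployments.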

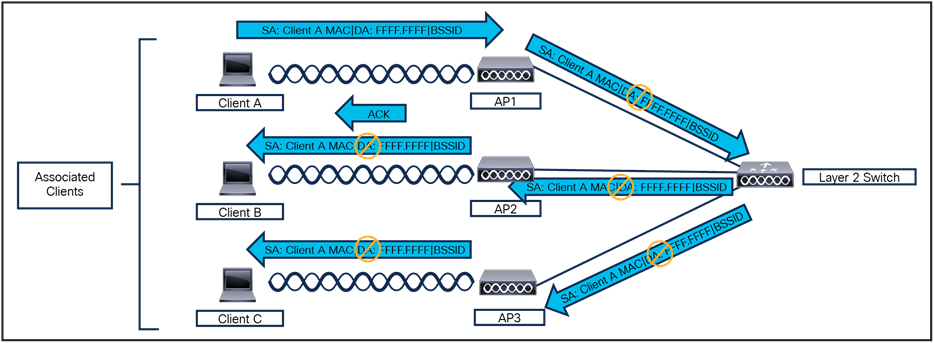

CAPWAP functions

The CAPWAP AP handles the following functions:

● Frame exchange handshake between a client and AP.

● Transmission of beacon frames.

● Buffering and transmission of frames for clients in power save mode.

● Response to probe request frames from clients; the probe requests are also sent to the WLC for processing.

● Forwarding notification of received probe requests to the WLC.

● Provision of real-time signal quality information to the switch with every received frame.

● Monitoring each of the radio channels for noise, interference, and other WLANs.

● Monitoring for the presence of other APs.

● Encryption and decryption of 802.11 frames.

● Data handling in centralized deployments.

● QoS handling.

WLC functions

The Cisco WLC handles the following functions:

● 802.11 authentication.

● 802.11 association and reassociation (mobility) in centralized deployment (local mode).

● 802.11 frame translation and bridging.

● 802.1X, Extensible Authentication Protocol (EAP), and RADIUS processing.

● Termination of 802.11 traffic on a wired interface, except in the case of Cisco FlexConnect® APs.

● Mobility.

● Data handling in centralized deployments.

● QoS handling.

Security and encryption

Communication between the Cisco WLC and APs is secured and encrypted. CAPWAP control and data packets exchanged between an AP and a WLC use DTLS. DTLS is an IETF protocol based on Transport Layer Security (TLS). All Cisco access points and controllers are shipped with a Manufacturing Installed Certificate (MIC), which is used by an AP and WLC by default for mutual authentication and encryption key generation. Cisco also supports Locally Significant Certificates (LSC) to provide additional security for enterprises that wish to issue certificates from their own Certificate Authority (CA).

By default, DTLS uses the RSA 128-bit Advanced Encryption Standard/Secure Hash Algorithm 2 (AES/SHA-2) cipher suite, which is globally defined using the #ap dtls-cipher-suite command. Alternative ciphers include 256-bit AES with SHA-1 or SHA-256. DTLS is enabled by default to secure the CAPWAP control channel but is disabled by default for the data channel. No DTLS license is required to secure the control channel. All CAPWAP management and control traffic exchanged between an AP and WLC is encrypted and secured by default to provide control plane privacy and prevent Man-In-the-Middle (MIM) attacks.

CAPWAP data encryption is optional and is enabled per AP. Data encryption requires a DTLS license to be installed on the WLC prior to being enabled on an AP. When enabled, all WLAN client traffic is encrypted at the AP before being forwarded to the WLC and vice versa. DTLS data encryption is automatically enabled for teleworker APs but is disabled by default for all other APs. Most APs are deployed in a secure network where data encryption is not necessary. In contrast, traffic exchanged between a teleworker AP and WLC is forwarded over an unsecured public network, where data encryption is important.

Discovery process and provisioning

In a CAPWAP environment, an AP discovers a WLC by using a CAPWAP discovery mechanism and then sends the WLC a CAPWAP join request. When an AP joins a WLC, the WLC manages its configuration, firmware, control transactions, and data transactions. A CAPWAP AP must discover and join a WLC before it can become an active part of the Cisco wireless network.

Each Cisco AP supports the following discovery processes:

Step 1. Broadcast discovery: The AP sends a CAPWAP discovery message to the IPv4 broadcast address (255.255.255.255). Any WLC connected to the same VLAN will see the discovery message and will in turn reply with a unicast IPv4 discovery response.

Step 2. Multicast discovery: The AP sends a CAPWAP discovery message to the multicast group address for all controllers (FF01::18C). Any WLC connected to the same VLAN will see the discovery message and will in turn reply with an IPv6 discovery response.

Step 3. Locally stored controller IPv4 or IPv6 address discovery: If the AP was previously associated to a WLC, the IPv4 or IPv6 addresses of the primary, secondary, and tertiary controllers are stored in the AP’s nonvolatile memory (NVRAM). This process of storing controller IPv4 or IPv6 addresses on an AP for later deployment is called priming the access point.

Step 4. Dynamic Host Configuration Protocol (DHCP) discovery: DHCPv4 and/or DHCPv6 servers are configured to advertise WLC IP addresses to APs using vendor-specific options:

◦ DHCPv4 discovery using option 43: DHCPv4 servers use option 43 to provide one or more WLC management IPv4 addresses to the AP. Option 43 values are supplied to an AP in the DHCPv4 offer and acknowledgment packets.

◦ DHCPv6 discovery using option 52: DHCPv6 servers use option 52 to provide one or more WLC management IPv6 addresses to the AP. Option 52 values are supplied to an AP in the DHCPv6 advertise and reply packets.

Step 5. DNS discovery: The AP sends a DNS query to the DNSv4 and/or DNSv6 servers to attempt to resolve cisco-capwap-controller.localdomain (where localdomain is the AP domain name provided by DHCP).

Step 6. The Plug and Play (PnP) server provides staging parameters to an AP before it joins a controller. Using this staging configuration, the AP receives the runtime configuration when it joins the controller.

The AP PnP feature enables the PnP server to provide all tag-related information as part of the preconfigured information to the AP and, in turn, to the controller.

You can upload a configuration to the PnP server in either TXT or JSON format and add the AP details. The AP details are then mapped with the details in the TXT or JSON configuration file. While the AP is being provisioned from the PnP server, it acquires the details of this configuration. Based on the configuration details, the AP then joins the corresponding controller with the tag details.

Step 7. If, after steps 1 through 6, no CAPWAP discovery response is received, the AP resets and restarts the discovery process.
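For the DHCPv4 discovery step, the option 43 value is typically entered on the DHCP server as a hexadecimal string. The sketch below assumes the type-length-value layout commonly documented for Cisco lightweight APs (type 0xF1, a length of 4 bytes per controller, then the controller management IPv4 addresses). That layout is not stated in this guide, so treat it as an assumption and verify it against your AP and DHCP server documentation. The function name is illustrative.

```python
import ipaddress

OPTION43_TYPE = 0xF1  # assumed sub-option type for WLC addresses


def capwap_option43_hex(wlc_ips):
    """Build an option 43 hex string from WLC management IPv4 addresses."""
    addresses = [ipaddress.IPv4Address(ip) for ip in wlc_ips]
    if not addresses:
        raise ValueError("at least one WLC address is required")
    value = b"".join(addr.packed for addr in addresses)
    if len(value) > 255:
        raise ValueError("too many addresses for a one-byte length field")
    # Type, then length in bytes, then the packed addresses.
    return (bytes([OPTION43_TYPE, len(value)]) + value).hex()


if __name__ == "__main__":
    # Two example controller addresses (placeholders).
    print(capwap_option43_hex(["192.168.10.5", "192.168.10.20"]))
    # f108c0a80a05c0a80a14
```

Using the standard `ipaddress` module rejects malformed addresses before they reach the DHCP configuration.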

Cisco Catalyst 9800 Series Wireless Controllers support Cisco access points in Local (centralized and Software-Defined Access [SD-Access] deployment), FlexConnect, Bridge, Flex+Bridge, Sniffer, and Monitor modes.

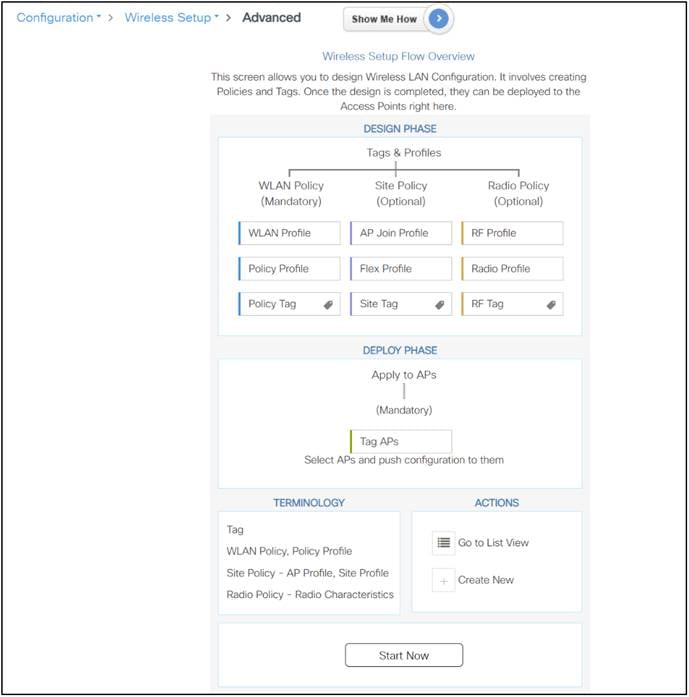

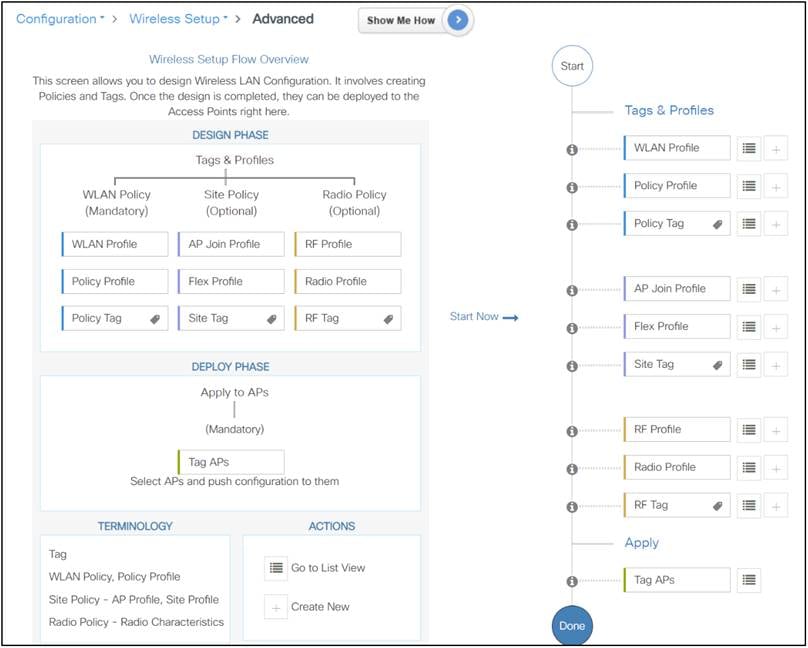

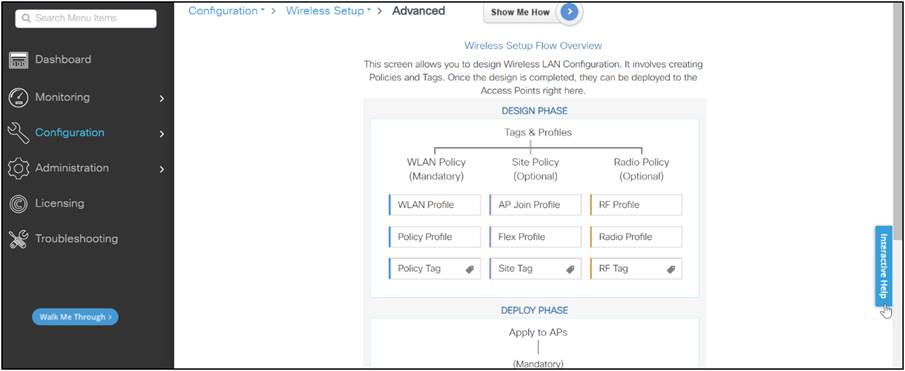

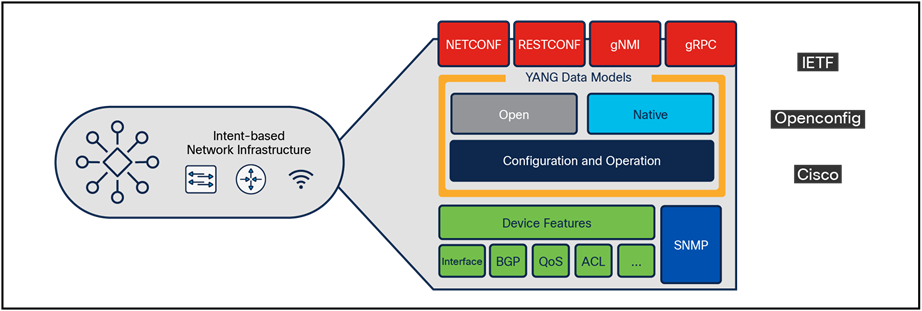

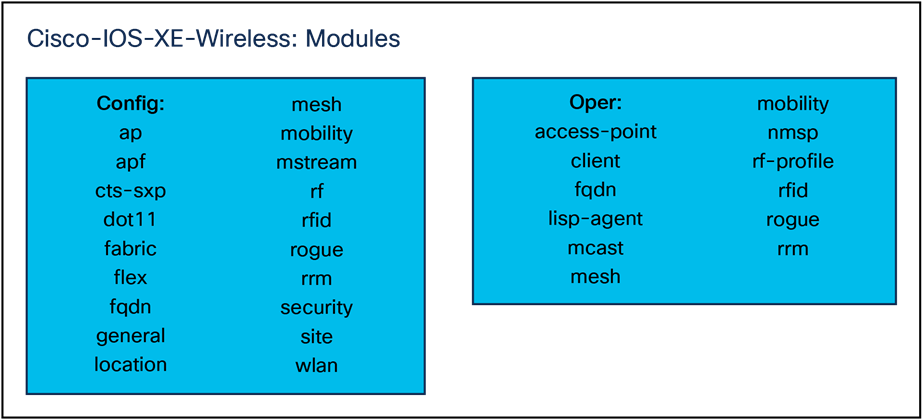

The Cisco Catalyst 9800 Series configuration data model is based on the design principles of reusability, simplified provisioning, enhanced flexibility, and modularization. These principles help manage networks as they scale and simplify the management of dynamically changing business and IT requirements.

This model enables the client/AP devices to derive their configurations from profiles that are contained within tags. APs can be mapped to the tags either statically or as part of the rule engine that runs on the controller and comes into effect during the AP join process. Configuration settings are modularized as objects, which helps in the reusability of configurations. In addition, a flat, tag-based configuration model eliminates the complexities associated with inheritance and container-based grouping, leading to a simpler and more flexible configuration that can ease change management.

If you are familiar with AireOS WLCs, you are aware of AP groups and FlexConnect groups. Those groups allow you to control what capabilities (for example, which WLANs or RF profiles) are available for each AP, based on its AP group association.

On Catalyst 9800 Series WLCs, tags are used to control the features that are available for each AP. Tags are assigned to every AP, and inside every tag you can find all the settings that were applied to the AP.

There are three types of tags:

● Policy tag

● Site tag

● RF tag

A policy tag is the link between a WLAN profile (Service Set Identifier [SSID]) and a policy profile.

● Policy profile: Inside a policy profile you can specify a virtual LAN (VLAN) ID, whether traffic is central or local switching, mobility anchors, QoS, and timers, among other settings.

● SSID: Inside an SSID you can specify the WLAN name, a security type for the WLAN, and advanced protocols such as 802.11k, among other settings.

A site tag defines whether the APs are in Local mode or FlexConnect mode. Other AP modes, such as Sniffer, Sensor, Monitor, and Bridge, can be configured directly on the AP. The site tag also contains the AP Join profile and Flex profile that are applied to the AP.

● AP Join profile: Inside an AP Join profile you can specify settings such as CAPWAP timers, remote access to APs (via Telnet or Secure Shell [SSH]), backup controller configuration, and others.

● Flex profile: On a Flex profile, you have settings such as Address Resolution Protocol (ARP) caching, VLAN/ACL mapping, and so on.

Inside an RF tag you can either select any RF profile or select to use the Global RF configuration.

● 2.4-GHz profile: Allows you to define specific data rates to be used, Transmit Power Control (TPC) settings, Dynamic Channel Assignment (DCA), and some other Radio Resource Management (RRM) settings for the 2.4-GHz band.

● 5-GHz profile: Allows you to define specific data rates to be used, TPC settings, DCA, and some other RRM settings for the 5-GHz band.

By default, the APs get assigned the default tags (default Policy, Site, and RF tags), and the default tags get assigned the default profiles (default Policy, AP Join, and Flex profiles).
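The relationship between APs, tags, and profiles described above can be pictured as a small data model. This is a conceptual sketch only, not the controller's actual schema: the class names, field names, and example profile names are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PolicyTag:
    name: str
    # Links each WLAN profile (SSID) name to a policy profile name.
    wlan_to_policy: Dict[str, str] = field(default_factory=dict)


@dataclass
class SiteTag:
    name: str
    ap_join_profile: str
    flex_profile: Optional[str] = None
    local_mode: bool = True  # True: Local mode, False: FlexConnect mode


@dataclass
class RfTag:
    name: str
    # "global" stands for "use the Global RF configuration".
    profile_24ghz: str = "global"
    profile_5ghz: str = "global"


@dataclass
class AccessPoint:
    name: str
    policy_tag: PolicyTag
    site_tag: SiteTag
    rf_tag: RfTag

    def effective_config(self):
        """Flatten the three tags into the settings this AP would use."""
        return {
            "wlans": dict(self.policy_tag.wlan_to_policy),
            "mode": "Local" if self.site_tag.local_mode else "FlexConnect",
            "ap_join_profile": self.site_tag.ap_join_profile,
            "flex_profile": self.site_tag.flex_profile,
            "rf_24ghz": self.rf_tag.profile_24ghz,
            "rf_5ghz": self.rf_tag.profile_5ghz,
        }


if __name__ == "__main__":
    branch_ap = AccessPoint(
        name="branch-ap-1",
        policy_tag=PolicyTag("branch-policy", {"corp-ssid": "corp-policy-profile"}),
        site_tag=SiteTag("branch-site", "branch-join", "branch-flex", local_mode=False),
        rf_tag=RfTag("branch-rf", profile_24ghz="low-density-24"),
    )
    print(branch_ap.effective_config())
```

Because each AP references exactly one tag of each type, changing a profile inside a tag changes every AP that carries that tag, which is the reusability the flat model aims for.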

For detailed information on configuration model and guidelines, please visit the Cisco Catalyst 9800 Wireless Controller Series Web UI Deployment Guide at https://www.cisco.com/c/dam/en/us/td/docs/wireless/controller/9800/17-4/deployment-guide/c9800-webui-dg.pdf.

The Wireless Management Interface (WMI) is the mandatory Layer 3 interface on the Catalyst 9800 Series. It is used for all communications between the controller and access points. It is also used for all CAPWAP or inter-controller mobility messaging and tunneling traffic.

WMI is also the default interface for in-band management and connectivity to enterprise services, such as authentication, authorization, and accounting (AAA), syslog, Simple Network Management Protocol (SNMP), and so on. You can use the WMI IP address to remotely connect to the device using SSH or Telnet, or access the GUI using HTTP or HTTPS by entering the WMI IP address of the controller in the address field of your browser.

The WMI is a Layer 3 interface and can be configured with an IPv4 address or IPv6 address or by using a dual-stack configuration.

We recommend always using a wireless management VLAN and configuring the WMI as a Switch Virtual Interface (SVI). If the uplink port or port-channel to the next-hop switch is configured as a dot1q trunk, the wireless management VLAN would be one of the allowed tagged VLANs on the trunk.

For centrally switched traffic, it is mandatory to configure a Layer 2 VLAN mapped to the SSID, but the corresponding Layer 3 interface (SVI) is optional. This is different from AireOS, in which a dynamic interface (Layer 3 interface and related IP address) is required. The recommendation for the Catalyst 9800 Series is not to configure an SVI for a client VLAN unless:

● You need to run DHCP relay on the Catalyst 9800, either because this function cannot be configured on the next-hop Layer 3 switch (the default gateway for that VLAN) or because you want to add option 82 information in the DHCP relayed packet.

● You want to enable the mDNS gateway and you are running code before 17.9.1; in 17.9.1 and higher, the mDNS gateway feature no longer needs a client SVI interface.
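The two exceptions above reduce to a short decision rule. The sketch below encodes that rule as stated in this section; the function and parameter names are hypothetical, and the release string is assumed to be in dotted numeric form such as "17.9.1".

```python
# First release in which the mDNS gateway no longer needs a client SVI.
MDNS_NO_SVI_RELEASE = (17, 9, 1)


def parse_release(release):
    """Turn a dotted release string such as '17.9.1' into a comparable tuple."""
    return tuple(int(part) for part in release.split("."))


def client_svi_needed(release, dhcp_relay_on_controller, mdns_gateway_enabled):
    """Return True if a client VLAN SVI is warranted on the controller."""
    if dhcp_relay_on_controller:
        # DHCP relay (including adding option 82) runs on the controller.
        return True
    if mdns_gateway_enabled and parse_release(release) < MDNS_NO_SVI_RELEASE:
        # Older code still requires a client SVI for the mDNS gateway.
        return True
    return False


if __name__ == "__main__":
    print(client_svi_needed("17.6.4", False, True))   # True
    print(client_svi_needed("17.9.1", False, True))   # False
    print(client_svi_needed("17.12.1", True, False))  # True
```

Comparing releases as integer tuples avoids the string-comparison trap in which "17.12.1" would sort before "17.9.1".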

This section describes the basic information necessary to understand RF considerations in planning for various WLAN environments. The topics covered include:

● Regulatory domains and RF considerations.

● IEEE 802.11 standards.

● RF spectrum implementations of 802.11b/g/n/ax (2.4 GHz), 802.11a/n/ac/ax (5 GHz), and 802.11ax (6 GHz).

● Planning for RF deployment.

● Radio Resource Management (RRM) algorithms.

● Antenna choices.

The Federal Communications Commission (FCC), European Telecommunications Standards Institute (ETSI), and other regulatory bodies regulate the use of wireless devices in three main bands (frequency ranges) allocated for unlicensed Industrial, Scientific, and Medical (ISM) usage.

The ISM bands are designated as the:

● 2.4-GHz band (IEEE 802.11b/g/n/ax): 2.4 to 2.4835 GHz. The 2.4-GHz band provides the most coverage but transmits data at slower speeds.

● 5-GHz band (IEEE 802.11a/n/ac/ax): The 5-GHz band provides less coverage but transmits data at faster speeds.

● 6-GHz band (IEEE 802.11ax): The 6-GHz band, introduced with the new Wi-Fi 6E standard, provides the least coverage but transmits data at the fastest speeds of the three frequencies.

Separation of physical groups of clients is performed using different frequency assignments, or channels. For an AP operating on a given channel, there is a finite amount of airtime available, and every client connecting to an AP shares the airtime that the AP channel has to offer. The more clients that are actively using an AP, the less airtime each individual client will get. Supporting a higher data rate for one or more clients (for more efficient use of airtime) will increase available airtime for all clients and result in higher potential bandwidth to the individual user.

All clients on a given channel share a common collision domain that extends to other APs operating on the same channel, regardless of whose network they ultimately belong to. This means that other clients and access points using the same channel, and able to hear one another, share the available airtime. Each additional AP added to a channel brings with it management overhead on the air. The effect of this additional management traffic further reduces the total amount of airtime available for each user and constrains performance. In short:

Bandwidth = Airtime x Data rate.
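As a worked illustration of this relationship, the sketch below treats airtime as a fraction between 0 and 1 and assumes that active clients on a channel share airtime equally. Both are simplifying assumptions: real networks also lose airtime to management overhead and contention, so the result is an upper bound rather than a prediction. The function names are illustrative.

```python
def equal_airtime_share(active_clients):
    """Fraction of airtime per client if all active clients share it equally."""
    if active_clients < 1:
        raise ValueError("at least one active client is required")
    return 1.0 / active_clients


def client_bandwidth_mbps(data_rate_mbps, airtime_share):
    """Bandwidth = Airtime x Data rate, with airtime as a fraction of 1."""
    if not 0.0 <= airtime_share <= 1.0:
        raise ValueError("airtime_share must be between 0 and 1")
    return data_rate_mbps * airtime_share


if __name__ == "__main__":
    # Four active clients, each connected at a 200-Mbps data rate.
    share = equal_airtime_share(4)
    print(client_bandwidth_mbps(200.0, share))  # 50.0
```

Adding clients or same-channel APs shrinks the airtime fraction, while a higher data rate raises what each fraction is worth.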

If you require more bandwidth than can be served from a single AP (for example, if you have many users in a small area), multiple APs will be required. When implemented on nonoverlapping channels, each AP provides an isolated chunk of airtime over its coverage area. APs that are on the same channel must be kept out of range of one another. This is what Cisco’s RRM manages for you—the power and the channel selection to coordinate multiple APs and neighbors for optimal performance.

Channel assignment and reuse for the network is a big factor in determining the airtime efficiency and ultimately the bandwidth that can be delivered to the clients. When two APs can hear one another on the same channel, the result can be co-channel interference unless the overlapping basic service set (BSS) is managed carefully. Whether co-channel interference is the result of your own APs or of your AP and a neighbor doesn’t matter; either way the APs must share the channel. To produce a good physical design, four things must be considered:

● AP placement

● AP operating band (2.4 GHz, 5 GHz, or 6 GHz)

● AP channels

● AP power levels

The goal in a good design is to produce even wireless coverage (similar conditions end to end) with minimal co-channel interference, maximizing the available potential bandwidth for the client devices.

Cisco’s RRM calculates and assigns the best channels and power combinations using measured, over-the-air metrics. Over-the-air observations include Wi-Fi networks operating within the infrastructure as well as existing external users, both Wi-Fi and non-Wi-Fi, of the spectrum. RRM will mitigate co-channel assignments and balance power, but if there are no open channels available, or if the APs are simply too close together, the only choice remaining is to share the channel with an existing user. This happens in congested environments, and two different networks may have to share the same bandwidth. If one is not busy, the other may use all the bandwidth. If both become busy, they will share the bandwidth 50/50 due to 802.11’s contention mechanisms (“listen before talk”) that are designed to ensure fair access.

Devices that operate in unlicensed bands do not require a formal licensing process on the part of the end user. However, equipment designed and built for operating 802.11 in the ISM bands is obligated to follow the government regulations for the region it is to be used in. “Unlicensed” does not mean without rules. Cisco wireless equipment is designed and certified to operate and meet the regulatory requirements for specific regions. Regulatory designations are included in the part numbers for pre-provisioned regions.

The end user bears responsibility for correct implementation and for ensuring that the correct equipment is used for the specified region. Your Cisco sales team can guide you in making a selection.

The regulatory agencies in different regions of the world monitor the unlicensed bands according to their individual criteria. WLAN devices must comply with the specifications of the relevant governing regulatory body. Although the regulatory requirements do not affect the interoperability of IEEE 802.11a/b/g/n/ac/ax-compliant products, the regulatory agencies do set certain criteria in the product implementation. For example, the RF emission requirements for WLAN devices are designed to minimize the amount of interference any radio (not just Wi-Fi) can generate or receive from any other radio within a certain proximity. It is the responsibility of the WLAN vendor to obtain product certification from the relevant regulatory body. And it is the responsibility of the installer to ensure that the resulting installation does not exceed those requirements. We recommend and certify the use of antennas and radio combinations that meet regulatory requirements.

Besides following the requirements of the regulatory agencies, Cisco helps ensure interoperability with other vendors through various Wi-Fi Alliance (WFA) certification programs (www.wi-fi.org).

The 2.4-GHz band regulations of 802.11b/g/n/ax have been relatively constant, given the length of time they have been in operation. The FCC (U.S.) allows for 11 channels, ETSI (and most other parts of the world) allows for up to 13 channels, and Japan allows up to 14 channels but requires a special license and operating modes to operate in channel 14.

Countries that adhere to the 5-GHz band regulations of 802.11a/n/ac/ax are more diverse in the channels they allow and their rules for operation. In addition, with the advancement of 802.11ax, regulatory domains around the world have opened spectrum around 6 GHz for unlicensed communications such as Wi-Fi. As one example, the FCC has proposed opening 1.2 GHz of spectrum between 5.925 GHz and 7.125 GHz, which is more than the total amount of spectrum used for Wi-Fi today.

This new spectrum will be extremely valuable, as the current 2.4-GHz and 5-GHz bands used for Wi-Fi are crowded and heavily used. Additionally, the 6-GHz band will allow only devices supporting the latest 802.11ax Wi-Fi standard. In other words, only HE (High Efficiency) devices will be supported, not HT (High Throughput), VHT (Very High Throughput), or older legacy devices. This will result in 6-GHz Wi-Fi networks being more performant, since the network won’t be slowed down by legacy Wi-Fi devices as the 2.4- and 5-GHz bands are today.

These frequency bands and their associated protocols can and do change as the technology evolves and regulatory rules change. Regulatory certifications and allowed frequencies and channels for all Cisco APs are documented in their individual data sheets.

Should I design for 2.4 GHz, 5 GHz, or 6 GHz?

Wi-Fi is a relatively mature technology today. While there are still places where Wi-Fi is not present, it is hard to find a populated place that doesn’t have some signal coverage. A good way to look at this is: the more independent neighbors you have, the more Wi-Fi interference you either already have or possibly will have. Interference is often at its worst in multi-dwelling facilities, where many disparate company offices share a single building and spectrum.

This issue is of critical importance, since Wi-Fi passes through walls and floors and must accept all interference from other Wi-Fi and non-Wi-Fi devices alike. This means that, to the degree that your network devices can hear other networks, they will share the available airtime with those networks. If you and your neighbor are both heavy users, you will both get less bandwidth than the connection speeds would suggest in the areas where your networks overlap. For both networks, waiting on the other to access the channel costs time, and less time on the air leads to less throughput.

Using 2.4 GHz in a congested metropolitan city, multi-dwelling facility, or shopping mall will produce variable success at best, and at worst can be unusable. Best practices recommend using three nonoverlapping channels (1, 6, and 11) in most of the world.
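The three-channel recommendation follows from simple arithmetic on the 2.4-GHz channel plan. The sketch below assumes the standard channel numbering (center frequency of 2407 + 5 x channel MHz for channels 1 to 13, and 2484 MHz for channel 14) and a 22-MHz channel width, as used by 802.11b; these figures are not given in this guide and are stated here as assumptions. The function names are illustrative.

```python
def center_mhz(channel):
    """Center frequency in MHz of a 2.4-GHz channel (assumed numbering)."""
    if channel == 14:
        return 2484
    if 1 <= channel <= 13:
        return 2407 + 5 * channel
    raise ValueError("2.4-GHz channels are numbered 1 to 14")


def non_overlapping(channels, width_mhz=22):
    """Greedily pick channels whose centers are at least width_mhz apart."""
    chosen = []
    for channel in sorted(channels):
        if all(abs(center_mhz(channel) - center_mhz(c)) >= width_mhz for c in chosen):
            chosen.append(channel)
    return chosen


if __name__ == "__main__":
    print(non_overlapping(range(1, 12)))  # 11-channel plan: [1, 6, 11]
    print(non_overlapping(range(1, 14)))  # 13-channel plan: [1, 6, 11]
```

Under these assumptions, both the 11-channel and the 13-channel plans yield only three channels that do not overlap, which is why 2.4 GHz is so easily congested.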

Use 2.4 GHz for a larger coverage range and in deployments where the use of legacy and Internet of Things (IoT) devices is prevalent.

Table 2. Comparison of 2.4 GHz, 5 GHz, and 6 GHz

| 2.4 GHz overview | 5 GHz overview | 6 GHz overview |
| --- | --- | --- |
| Pros: Larger coverage area, better at penetrating solid objects | Pros: Higher data rate, less prone to interference, usually fewer devices using this frequency | Pros: Higher number of channel bonding, wider range, advanced security |
| Cons: Lower data rate, more prone to interference, usually more devices using this frequency | Cons: Smaller coverage area (except 802.11ac), worse at penetrating solid objects | Cons: Smaller coverage area, reduced cell size, and challenges similar to 5 GHz with regard to penetration |

If an application is critical to business operations and requires higher speeds, plan on using 5 GHz. In the past this was more difficult to do, as 5-GHz devices were less prevalent. This is no longer the case, as most manufacturers are focusing on 802.11ax and Wi-Fi 6E as the standards for their products.

The 6-GHz band is newly certified and is exclusive to devices that support Wi-Fi 6E. This means that on 6 GHz, the Wi-Fi network doesn’t need to slow down to accommodate legacy devices. The 6-GHz band also supports almost twice as many channels as 5 GHz. Fewer devices, more spectrum, and more bandwidth mean less interference and network congestion.

What protocols should I enable?

There are multiple protocol standards available in the 802.11 standard. In fact, everything that has been ratified since 1999 is still required for WFA certification and is present in all hardware that supports the band it belongs to. That doesn’t mean that you need to use it, though. The choices you make in deciding which protocols to support (and which not to) can have a big impact on your network’s efficiency.

By efficiency we mean the use of airtime. The faster a station can get onto and off the air, the more airtime will be available for other stations. 802.11b was one of the first protocols implemented in 2.4 GHz. Today it is unique among Wi-Fi protocols, as both the coding and modulation methods are completely different from those of every protocol that has been ratified since.

802.11n and 802.11ac also provide for block ACK, or block acknowledgments, which allow for higher efficiency gains by allowing a large block of packets to be acknowledged all at once. The legacy protocols all send a packet and get a response, one by one. This adds a considerable number of frames to the transaction for reliability that is largely no longer needed with modern standards.
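A rough frame count shows why block acknowledgments save airtime. The sketch below is a deliberate simplification: it assumes one acknowledgment per data frame for legacy operation and one block acknowledgment per burst otherwise, and it ignores retries, block ACK requests, and session setup. The burst size of 64 frames is an illustrative parameter, not a value taken from this guide.

```python
def frames_with_per_frame_ack(data_frames):
    """Legacy behavior: every data frame is followed by its own ACK."""
    if data_frames < 0:
        raise ValueError("data_frames must not be negative")
    return 2 * data_frames


def frames_with_block_ack(data_frames, burst_size=64):
    """Block ACK: one acknowledgment frame per burst of data frames."""
    if data_frames < 0:
        raise ValueError("data_frames must not be negative")
    if burst_size < 1:
        raise ValueError("burst_size must be at least 1")
    bursts = -(-data_frames // burst_size)  # ceiling division
    return data_frames + bursts


if __name__ == "__main__":
    print(frames_with_per_frame_ack(640))  # 1280
    print(frames_with_block_ack(640))      # 650
```

Under these assumptions, sending 640 data frames takes 1280 frames on the air with per-frame acknowledgments and 650 with block acknowledgments.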

Now, with 802.11ax, we get the capacity, efficiency, coverage, and performance required by users today in the most demanding Wi-Fi environments. The 802.11ax standard emphasizes quality connectivity in locations with hundreds or thousands of connected devices, such as stadiums and other public venues, as well as corporate networks using time-sensitive, high-bandwidth applications. With 802.11ax, devices meet the highest standards for security and interoperability and enable lower battery consumption, making it a solid choice for any environment, including the IoT.

Cisco WLCs have several options available for implementing the most popular and necessary speeds. The various network types and decision points are detailed later in this document to ensure that you understand the need to implement a well-tuned network from the start.

What are DFS channels, and should I use them?

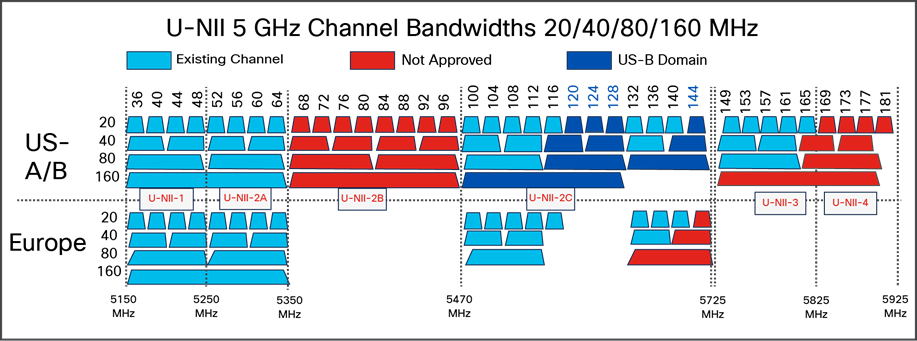

Many of the channels available in 5 GHz are known as Dynamic Frequency Selection, or DFS, channels. Along with Transmit Power Control (TPC), DFS defines coexistence mitigations (that is, detect and avoid) for radar while operating in the UNII-2 and UNII-2e bands (channels 52 to 144). These mechanisms are detailed in an amendment to the 802.11 standard.

The 802.11h standard was crafted to solve problems such as interference with satellites and radar, which also legally use the 5-GHz band as primary users. A primary user has priority over the frequency range of UNII-2 and UNII-2e. It is Wi-Fi’s job, as a condition of using these frequencies, to not interfere with any primary users. While this standard was introduced primarily to address European regulations, it is used by many other regions of the world today to achieve the same goals of enabling more operational 5-GHz spectrum for Wi-Fi.

In 2004, the U.S. added channels 100 to 140 in the UNII-2e (“e” stands for extended) band, with rules requiring 802.11h certification, which allow us to peacefully coexist with primary licensed users of the 5-GHz frequencies in this range. For Europe these channels represent most of their available 5-GHz spectrum today. Before the rules and mechanisms were worked out, Europe was limited to only 4 channels in 5 GHz. At the same time in the U.S., we had UNII-1, 2, and 3, for a total of 13 channels.

To avoid interfering with licensed band users, Wi-Fi equipment must meet a few straightforward requirements:

● The Wi-Fi equipment must be able to detect radar and satellite emissions.

● Before using a channel in this range, a “channel primary” (an infrastructure AP) must first listen for 60 seconds and determine that the channel is clear of radar.

● If a radar signal is detected, the Wi-Fi channel primary, and all the clients associated to it, have to abandon the channel immediately and not return to it for 30 minutes, at which time it can be cleared again for Wi-Fi use if no radar emissions are detected.
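The listen-and-avoid rules above can be sketched as a small state tracker. This is a minimal illustration under stated assumptions, not how Cisco RRM implements DFS: the names `DfsChannelState`, `report_radar`, and `start_listening` are hypothetical, and time is passed in as plain seconds so the logic is easy to follow.

```python
from dataclasses import dataclass
from typing import Optional

LISTEN_SECONDS = 60               # listen time before using a DFS channel (from the text)
NON_OCCUPANCY_SECONDS = 30 * 60   # time to stay off a channel after radar is detected


def is_dfs_channel(channel: int) -> bool:
    """Range check for the UNII-2 and UNII-2e channels (52 to 144).

    Assumption: the caller passes a valid 5-GHz channel number.
    """
    return 52 <= channel <= 144


@dataclass
class DfsChannelState:
    channel: int
    radar_seen_at: Optional[float] = None      # time of last radar detection
    listen_started_at: Optional[float] = None  # start of the current listen period

    def report_radar(self, now: float) -> None:
        """Radar detected: abandon the channel and start the 30-minute block."""
        self.radar_seen_at = now
        self.listen_started_at = None

    def blocked(self, now: float) -> bool:
        return (self.radar_seen_at is not None
                and now - self.radar_seen_at < NON_OCCUPANCY_SECONDS)

    def start_listening(self, now: float) -> bool:
        """Begin the 60-second listen, unless the channel is still blocked."""
        if self.blocked(now):
            return False
        self.listen_started_at = now
        return True

    def usable(self, now: float) -> bool:
        """Non-DFS channels are always usable; DFS channels need a completed listen."""
        if not is_dfs_channel(self.channel):
            return True
        if self.blocked(now) or self.listen_started_at is None:
            return False
        return now - self.listen_started_at >= LISTEN_SECONDS
```

In this sketch a channel becomes usable only after an uninterrupted 60-second listen, and any radar report resets it for 30 minutes before a new listen can begin.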

U-NII-2e channels got a bad name early in 2004 in the U.S. among network administrators. Clients were slow to adopt the new rules initially, so using these channels in the infrastructure meant that you could (and some did) inadvertently configure a channel that some clients wouldn’t be able to use, creating a coverage hole for that client type. There were also many undue concerns about DFS operations in a production network. The concern was that if DFS detected radar, a channel change followed by waiting a full minute before resuming transmissions was viewed as disruptive. However, the behavior is not disruptive, as RRM initially places the AP into a non-DFS channel. The channel is blocked for 30 minutes and then made available again to RRM by means of background scanning to clear the required listening time. Once the channel is available, we can choose to use it or remain on the current channel, depending on which is better for the clients.

It has been a decade since the addition of these channels and 802.11h logic. In Europe, DFS is and has been making 5-GHz Wi-Fi possible and even enabling it to flourish. Client vendors vary; the majority support the DFS channels just fine, as there is no additional logic required by the client.

If you are within 5 miles of an airport or shipping port and have concerns, evaluate by monitoring the channel range with Cisco APs. Cisco leads the industry in certified hardware models and function for DFS operation and flexibility. Monitoring the channels will alert you to any potential interference and will identify the affected channels.

A site survey is an important tool. It will tell you who is operating around you—and, more importantly, where and how much they interfere with your intended coverage zones. It also allows identification of mounting locations, existing cable plants, infrastructure requirements, and architectural oddities and yields a plan to get the coverage your application requires. Because RF interacts with the physical world around it, and all buildings and offices are different, so is each network to a degree. Unfortunately, there is no “one size fits all” for Wi-Fi. There are recommendations by deployment type, and it is possible to generalize what you are likely to encounter. If you have not done a site survey in a while, keep in mind what has changed since the last one before you decide against it:

● The protocols and radio technology.

● How the users will use the network (likely everyone, and for almost anything).

● How many clients the network supports (likely a lot more users; count as at least two devices per user these days, and many have more).

● The primary use of the network (very likely changed since the initial plan and implementation).

While early WLAN designs focused on coverage, giving a few casual users a signal everywhere, today’s WLAN designs are more focused on capacity, as the number of users has increased and what we are demanding of the network has gone up exponentially. A capacity design requires more APs in closer proximity to manage the number of users who are sharing the bandwidth of the cell. Increasing AP placement density requires a plan.

If you decide to conduct your own survey and plan, tools are important. There are multiple free tools online and available as downloads. However, if you want professional results, you need professional tools.

The free tools can provide simple solutions for smaller, less complex projects. But if you are looking to provide ubiquitous multimedia coverage in a multifloor or multibuilding campus, you need a good tool to balance the elements that will be required for success. Planning tools have evolved with the radio technologies and applications in use today. A familiarity with the design elements and applications is required to produce a good plan.

Cisco Prime Infrastructure has a planning tool built in, and you can import and export maps and plans between Cisco Prime and many top survey and planning applications, such as Ekahau ESS and AirMagnet Pro Planner and Survey.

Similarly, the Ekahau Pro tool allows you to create the complete network plan for your enterprise, including floor layout, AP locations, and obstacles. After creating the floor layout, you can export the simulated network plan and the real-world site survey data into a format that Cisco DNA Center can use. You can import the Ekahau project file into Cisco DNA Center for further planning.

Ekahau Pro version 10.2 allows you to automatically create the site hierarchy, save it as a project file, and import it into Cisco DNA Center.

For more on site surveys, visit Understand Site Survey Guidelines for WLAN Deployment at https://www.cisco.com/c/en/us/support/docs/wireless/5500-series-wireless-controllers/116057-site-survey-guidelines-wlan-00.html.

Having a site survey done for 802.11ax now will yield good information that can be used again and again as the network grows and continues to evolve. Whether this is something that you should contract out in part or handle yourself depends on the size of your project and your level of knowledge with regard to Wi-Fi.

Different deployment types for WLAN coverage

The amount of WLAN coverage you plan for in the design of your wireless network depends largely on the usage and density of clients you require. With limited exceptions, all designs should be deployed to minimize retransmission and data rate shifting while supporting good client roaming and throughput. Wireless networks can be deployed for data-only, voice, video, and location-aware services or, more frequently these days, a combination of all of these. The difference between these application types is minimal today, with the requirements of each largely describing good, solid, capacity-based coverage. Location-aware services add some AP placement criteria for good location triangulation and guidelines on hyperlocation technologies. Real-time multimedia (voice and video) applications have different latency requirements for two-way live implementations. But by and large, all describe a minimum coverage level needed to make the application viable for the number of users you expect in any given area.

For most campuses and enterprise installations, coverage and capacity are the primary concerns, and these are easily achievable. High-density client implementations or high-interference locations such as shopping malls or apartment buildings may require additional equipment such as external antennas to properly implement the network to scale. The sections that follow provide in-depth information on application-specific guidelines, recommendations, and configurations.

Most application-specific coverage guidelines describe the signal level or coverage required at the cell edge for good operation as a design recommendation. This is generally a negative Received Signal Strength Indication (RSSI) value such as -67 dBm. It’s important to understand that this number assumes a good signal-to-noise ratio (SNR) of 25 dB with a noise floor of -92 dBm. If the noise floor is higher than -92 dBm, -67 dBm may not be enough signal to support the minimum data rates required for the application to perform its function.
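The relationship between cell-edge RSSI, noise floor, and SNR is simple subtraction in dB. The sketch below uses hypothetical function names, with the 25 dB target taken from the paragraph above, to show why a design value of -67 dBm only holds when the noise floor is at or below -92 dBm.

```python
def snr_db(rssi_dbm: float, noise_floor_dbm: float) -> float:
    """SNR in dB is the signal level minus the noise floor (both in dBm)."""
    return rssi_dbm - noise_floor_dbm


def meets_design_snr(rssi_dbm: float, noise_floor_dbm: float,
                     required_snr_db: float = 25.0) -> bool:
    """True when the cell-edge signal still provides the required SNR."""
    return snr_db(rssi_dbm, noise_floor_dbm) >= required_snr_db


# -67 dBm over a -92 dBm noise floor gives exactly 25 dB of SNR.
print(snr_db(-67, -92))            # 25
# With a noisier floor of -88 dBm, the same signal gives only 21 dB.
print(meets_design_snr(-67, -88))  # False
```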

For location-aware services, deploying a network to a specification of -67 dBm is fine; what matters to location-aware applications is how the network hears the client, not how the client hears the network. For location-aware services we need to hear the client at three APs or more at a level of at least -75 dBm for it to be part of the calculation. (-72 dBm is the recommended design minimum.)
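As a rough illustration of the "three APs at -75 dBm or better" rule, the following sketch checks whether a client is heard well enough to take part in a location calculation. The function name and the AP names are hypothetical; the thresholds come from the paragraph above.

```python
from typing import Mapping


def heard_well_enough(ap_rssi_dbm: Mapping[str, float],
                      min_rssi_dbm: float = -75.0,
                      min_aps: int = 3) -> bool:
    """True when at least `min_aps` APs hear the client at `min_rssi_dbm` or stronger.

    `ap_rssi_dbm` maps an AP name to the RSSI at which that AP hears the client.
    """
    strong_enough = [ap for ap, rssi in ap_rssi_dbm.items() if rssi >= min_rssi_dbm]
    return len(strong_enough) >= min_aps


readings = {"ap-1": -64.0, "ap-2": -71.0, "ap-3": -74.0, "ap-4": -83.0}
print(heard_well_enough(readings))                       # True: three APs at -75 dBm or better
print(heard_well_enough(readings, min_rssi_dbm=-72.0))   # False at the stricter design minimum
```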

Clients are a big consideration when planning coverage. They come in all shapes and sizes these days, and as a result individual implementations can and do vary widely on their opinion of the strength of a given RF signal. For instance, the laptop you are using for surveying may show -67 dBm at the cell edge, the tablet might show -68 dBm, and the smartphone may show -70 dBm. These are all very different opinions and affect roaming and data rates that everyone will use. Overbuilding to accommodate these varying opinions will help assure a trouble-free installation. When taking measurements, using the device that will support the application is the best approach. Understanding that your smartphones are generally 5 dB off from your survey tool will let you develop good rules for design (such as adding or subtracting 5 dB to whatever the reading is from your survey tool). Then test and tune the resulting implementation.
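The "add or subtract 5 dB" rule can be written down as a simple offset. In this sketch the offset is assumed to be measured as device reading minus survey-tool reading at the same spot, so a smartphone that reads 5 dB weaker has an offset of -5. The function names are illustrative only.

```python
def expected_device_rssi(survey_rssi_dbm: float, device_offset_db: float) -> float:
    """Estimate what the client device will report where the survey tool reads `survey_rssi_dbm`."""
    return survey_rssi_dbm + device_offset_db


def survey_target_for_device(device_target_dbm: float, device_offset_db: float) -> float:
    """Survey-tool reading needed so that the client device sees `device_target_dbm`."""
    return device_target_dbm - device_offset_db


# A smartphone that reads 5 dB weaker than the survey tool:
print(expected_device_rssi(-67, -5))      # -72
# To give that smartphone -67 dBm at the cell edge, survey to -62 dBm.
print(survey_target_for_device(-67, -5))  # -62
```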

High-density client coverage requirements

High client density can be defined as any environment with a high number of concentrated clients (1 client at least every 1.5 square meters), such as a conference room, classroom, lecture hall, auditorium, sports arena, or conference hall. The concepts stay the same regardless of the size of the challenge. The tools required and methods employed increase in complexity with the complexity (size) of the challenge.
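The density threshold above can be checked with a short calculation. This sketch assumes "1 client at least every 1.5 square meters" means 1.5 square meters or less of floor area per client; the function name is hypothetical.

```python
def is_high_density(clients: int, area_m2: float,
                    max_area_per_client_m2: float = 1.5) -> bool:
    """True when each client has `max_area_per_client_m2` or less of floor area."""
    if clients <= 0:
        return False
    return area_m2 / clients <= max_area_per_client_m2


print(is_high_density(100, 150.0))  # True: 1.5 square meters per client
print(is_high_density(100, 300.0))  # False: 3 square meters per client
```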

What needs to be managed, and what can be managed, largely remains the same. Two things that remain true about a high-density client environment are:

● You cannot serve more bandwidth than you have available.

● Capitalizing on ALL the potential bandwidth is a matter of proper sizing and an efficient and tuned design.

Visit the Wireless High Client Density Design Guide for more information: https://www.cisco.com/c/en/us/td/docs/wireless/controller/technotes/8-7/b_wireless_high_client_density_design_guide.html.

Roaming and voice coverage requirements

Client roaming enables a client to move from one AP coverage zone into another AP coverage zone, minimizing interruption in service and coverage. This is the very essence of mobility. There are many factors that must be considered for roaming to be effective. For instance, how the client transitions its association and authentication from one AP to another must be considered as well as the time it takes to do so. An often-overlooked aspect is the network design itself. For a client to roam, there must be something to roam to. Cells must overlap with good coverage for a client to gracefully leave coverage of one cell and establish an association within coverage on another without delay. Too little overlap encourages “sticky” clients, meaning a client holding on to an AP well after it moves into the coverage area of another AP.

When designing for network coverage, consider the amount of overlap needed in the required signal range you are getting. Overlap should be 10% to 15% (15% to 20% for voice) of the total coverage area. Voice is particularly sensitive, as the conversation is in real time, and any coverage lapse will result in broken audio or potentially a lost call. An easy way to calculate overlap is to measure the distance from the AP to the point where you reach -67 dBm, then multiply that distance by 1.4 for 15% to 20% or by 1.3 for 10% to 15%, and that’s where your next AP goes.
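The spacing rule of thumb above reduces to one multiplication. A minimal sketch, with a hypothetical function name and the multipliers taken directly from the text:

```python
def next_ap_distance(edge_distance: float, voice: bool = False) -> float:
    """Distance from this AP to the next one.

    `edge_distance` is the measured distance from the AP to the point where the
    signal reaches the design level (for example, -67 dBm), in any length unit.
    The result is in the same unit.
    """
    multiplier = 1.4 if voice else 1.3  # 15% to 20% overlap for voice, 10% to 15% otherwise
    return edge_distance * multiplier


print(next_ap_distance(10.0))              # 13.0
print(next_ap_distance(10.0, voice=True))  # 14.0
```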

Data rates also matter, as the usable cell size increases with lower data rates and decreases with higher data rates. Higher data rates require a higher SNR, and since the noise floor is theoretically constant, the closer the client is to the signal (the AP) the higher the SNR and the resulting data rate will be. We can enforce minimum data rates in configuration, and when a client can no longer support a given data rate, it will have to move.

A good physical design enables and supports roaming at the physical layer. Only the client decides when to roam, though, and the decisions it makes are based on the client’s observation of the network. There have been multiple amendments to the 802.11 specification specifically to help clients make better decisions based on network infrastructure observations. See the following guides for additional information on roaming and configuring Cisco hardware and software to enable good roaming transitions. Cisco supports 802.11r, 802.11k, and 802.11v, which assist capable clients in making good decisions and afford some control from the infrastructure to enforce design goals.

Ascertain Methods for 802.11 WLAN and Fast-Secure Roaming on Cisco Unified Wireless Network: https://www.cisco.com/c/en/us/support/docs/wireless-mobility/wireless-lan-wlan/116493-technote-technology-00.html.

802.11r BSS Fast Transition chapter of Catalyst 9800 Series Wireless Controller Software Configuration Guide: https://www.cisco.com/c/en/us/td/docs/wireless/controller/9800/config-guide/b_wl_16_10_cg/802-11r-bss-fast-transition.html.

Assisted Roaming (802.11k) chapter of Catalyst 9800 Series Wireless Controller Software Configuration Guide: https://www.cisco.com/c/en/us/td/docs/wireless/controller/9800/config-guide/b_wl_16_10_cg/assisted-roaming.html.

Location-aware coverage requirements

Location-aware deployments differ slightly from other types in that the goal of the installation is to provide good location resolution of clients, tags, and IoT sensors in the context of where they are on a given map. We derive this information in its most basic form from client RSSI readings obtained by multiple APs (a minimum of three APs is required to triangulate on the client’s position). The pattern that you choose for deploying your APs can have a big effect on the network’s ability to “locate” a client accurately.

For good location resolution, the APs are laid out in a staggered pattern, with APs defining the borders and corners. It is possible to get coverage using APs in a straight line down the middle of both sections; however, this would not provide enough APs to hear and triangulate on clients in all locations (remember, we need three). Coverage and capacity requirements for this floor require many APs to start with, so it is quite likely, given your coverage requirements, that you already have what is needed to perform good location calculations.

Deployment Best Practices: Location-Aware WLAN Design Considerations (https://www.cisco.com/en/US/docs/solutions/Enterprise/Mobility/emob30dg/Locatn.html#wp1040131) is a must-read chapter and still quite relevant, as the physical requirements for the design have not changed.

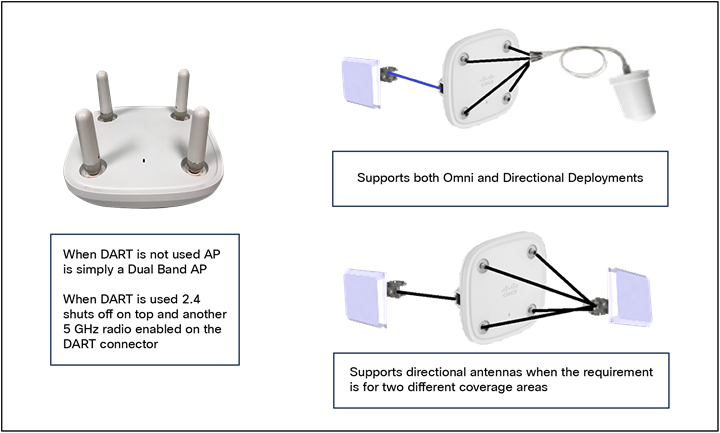

Flexible Radio Assignment (FRA) radios and coverage requirements

A recent Cisco innovation, the flexible radio AP models (Cisco Aironet® 2800, 3800, and 4800 Series and Cisco Catalyst 9120, 9124, 9130, 9136, 9162, 9164, and 9166 Series access points) were designed specifically to solve some of the challenges presented by traditional dual-band radios. Each of the coverage scenarios described above has improved solutions when using these radios.

High density is impacted by the flexible radio AP’s dual 5-GHz ability, allowing for two independent 5-GHz channels from a single AP.

● The internal antenna model will be implemented as a macro/micro cell, or as a cell within a cell. The FRA RRM logic also provides logic for balancing clients between the two cells. This will double the bandwidth within the cell boundary.

● The “E” model or external antenna model can provide two 5-GHz macro cells, which allows the implementation to gain two 5-GHz cells using the same Ethernet cable and switch port. A second antenna, and a DART connector to attach it, are required, but both together are far cheaper than an additional AP and switch ports, and you would still need the antenna. This is particularly beneficial for updating an existing high-density coverage area, as very often you can reuse everything except the APs and dramatically increase the capacity in 5 GHz.

Voice coverage: These APs participate in the FRA RRM algorithm, which will calculate the correct 2.4 vs. 5 GHz balance and prevent overutilization of 2.4-GHz radios. In general, voice should be implemented in 5 GHz only, and FRA helps there significantly by enabling a higher density of 5 GHz while right-sizing the density of 2.4-GHz radios. Protections are built in against being overly dense in 5 GHz by allowing the flexible interface to be placed in a monitoring role (both bands), which increases the resolution of RF metrics (RRM observations, location information).

Table 3. AP models and types of hardware managed by FRA

| AP model | FRA radios | Functions |
| --- | --- | --- |
| Cisco Aironet 2800 Series Access Points | 2.4/5 XOR | 2.4-GHz and 5-GHz or dual 5-GHz operations |
| Cisco Aironet 3800 Series Access Points | 2.4/5 XOR | 2.4-GHz and 5-GHz or dual 5-GHz operations |
| Cisco Aironet 4800 Series Access Points | 2.4/5 XOR | 2.4-GHz and 5-GHz or dual 5-GHz operations |
| Cisco Catalyst 9120 Series Access Points | 2.4/5 XOR | 2.4-GHz and 5-GHz or dual 5-GHz operations |
| Cisco Catalyst 9130 Series Access Points | 5-GHz Tri-Radio | 2.4-GHz 4x4 and single 5-GHz 8x8, or 2.4-GHz 4x4 and dual 5-GHz 4x4 |
| Cisco Catalyst Wireless 9166 Access Points | 5/6-GHz XOR | 2.4-GHz 4x4 and dual 5-GHz 4x4, or 5-GHz 4x4 and 6-GHz 4x4 |

Power level and antenna choice

Power level and antenna design choice go together to determine AP placement and coverage results. Together, these two variables determine where and how powerful the RF is in any given place in the environment. Along with choosing the correct antenna to produce the required coverage area, we recommend that you use RRM to control the power level and provide the optimal channel and power plan. For more information, see the RRM section later in this document.

An antenna gives the wireless system three fundamental properties:

● Gain: A measure of increase in power introduced by the antenna over a theoretical (isotropic) antenna that transmits the RF energy equally in all directions. Gain also affects received signals and can assist weaker client devices by increasing the signal presented to the receiver.

◦ Front-to-back ratio, or FTB: The opposite of gain is signal rejection. The opposite direction of the gain in an antenna is less sensitive than the focus of the antenna, and this property can be used to isolate your cell from unwanted signals behind the antenna, for instance.

● Direction: The shape of the antenna transmission pattern. Different antenna types have different radiation patterns that provide various amounts of gain in different directions. A highly directional antenna will produce a very tight beam pattern. Outside of the area of focus, signals erode quickly, which allows more cells to be placed in the same physical space without interference.

● Polarization: Indicates the direction of the electric field. An RF signal has both an electric field and a magnetic field. If the electric field is orientated vertically, the wave will have a vertical polarization.

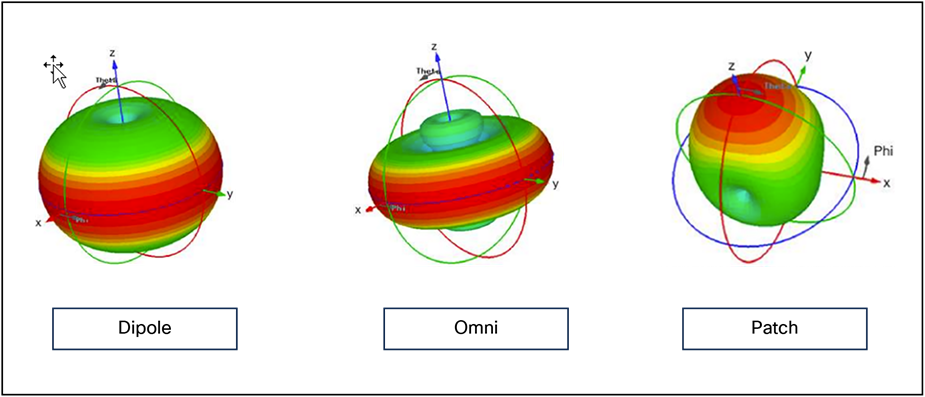

A good analogy for how an antenna works is the reflector in a flashlight. The reflector concentrates and intensifies the light beam in a particular direction, like what a parabolic dish antenna does to an RF source in a radio system. The antenna, however, is both the ears and the mouth of the AP, so the characteristics of a given antenna work for both transmit and receive. Many different antenna designs exist to serve different purposes. Some of the more familiar designs appear in Figure 1.

Antenna design types

Gain and direction mandate range, speed, and reliability, while polarization affects reliability and isolation of noise.

For more information on antenna selection, see the Cisco Aironet and Catalyst Antennas and Accessories Reference Guide at https://www.cisco.com/c/en/us/products/collateral/wireless/aironet-antennas-accessories/product_data_sheet09186a008008883b.html.

Omnidirectional antennas

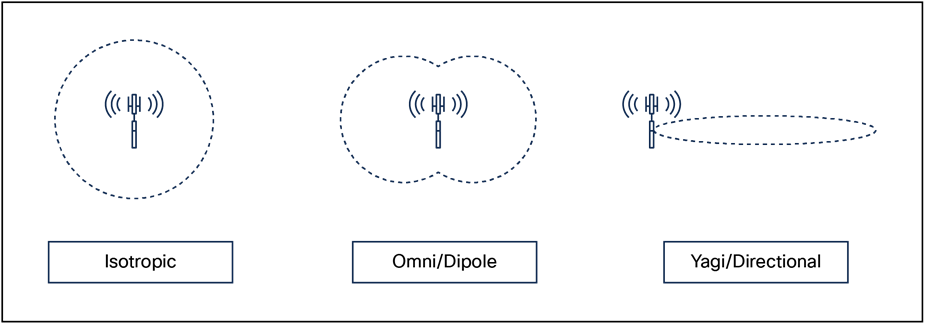

Omnidirectional antennas have a different radiation pattern compared to isotropic antennas; the isotropic antenna is theoretical, and therefore all physical antennas are different from the isotropic antenna. Any change in shape of the radiation pattern of an isotropic antenna is experienced as gain and increases directionality. The dipole omnidirectional antenna features a radiation pattern that is nearly symmetrical about a 360-degree axis in the horizontal plane and 75 degrees in the vertical plane (assuming the dipole antenna is standing vertically). The radiation pattern of an omnidirectional antenna generally resembles a doughnut in shape and hence is directional. The higher the rated gain in dBi of a given omnidirectional antenna, the more focused the energy is (generally in the vertical plane) and the more directional it becomes. See the comparison between an isotropic and omnidirectional dipole antenna in Figure 2 below. Note that the views are from the side.

Omnidirectional antennas work well and are easy to implement – to a point. If you are faced with increasing the density of APs to accommodate more capacity requirements, you will see increasing channel utilization from self-interference. This happens because the antenna pattern is designed for maximum coverage. 3000 to 6000 square feet (280 to 560 square meters) of coverage per AP can be managed with the internal antennas. If your coverage requirements are at the minimum or denser than this, you should consider directional antennas.

Isotropic vs. omnidirectional vs. directional antenna

Directional antennas

A directional antenna differs from an omnidirectional antenna in that the energy is focused in a particular way to achieve different coverage goals. Most people assume that a directional antenna is used specifically for gain – to increase power. While directional antennas can be used for that reason and can achieve greater distances, they are more often used in Wi-Fi to control the size (and shape) of the transmit and receive cell.

For current Cisco indoor APs (Catalyst 9100), the antenna selections are all dual band (each antenna covers 2.4 and 5 GHz) patch-type antennas designed for different coverage distances. The three most popular are shown below.

Directional antenna options

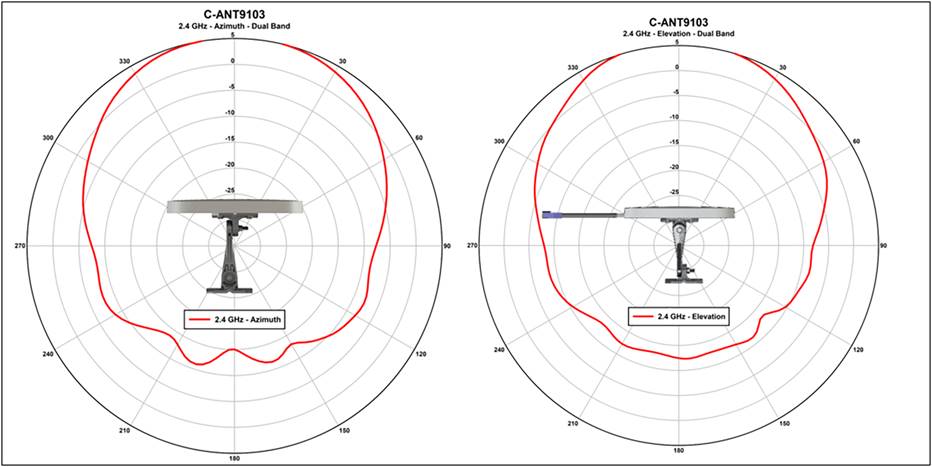

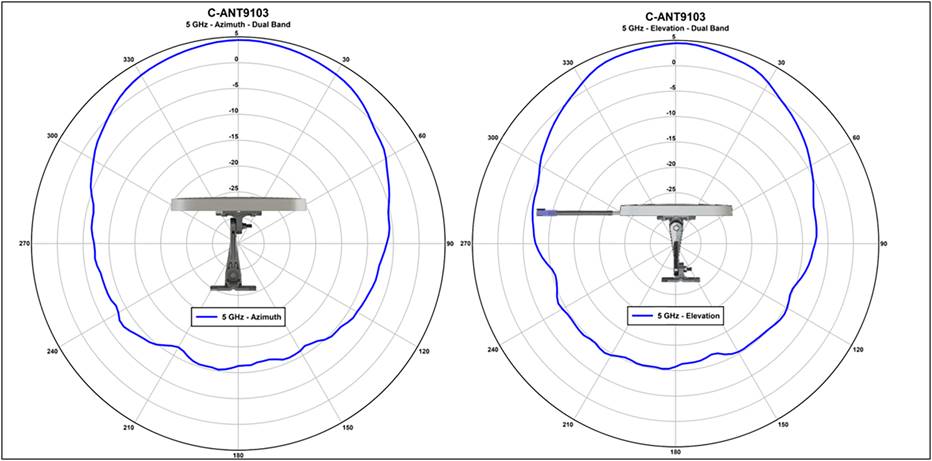

Each antenna is designed for a specific purpose. When selecting an antenna, one of the factors to consider is the beamwidth. Beamwidth describes the coverage area of an antenna; however, it does not describe how hard or soft the edge of that coverage is. For that you need to look at the antenna’s pattern in a plot.

The plot below is from one of the C-ANT9103 antennas. It is designed to provide good coverage over a general area. The beamwidth of this antenna at 2.4 GHz and 5 GHz is 75 degrees; this describes the point where the peak gain of the antenna falls by 3 dB. What’s important in a directional antenna is what happens after that 3 dB. The gain falls sharply after the rated beamwidth. This is exactly what needs to happen to enable more APs to be put closer together for higher capacity.
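A 3 dB drop corresponds to roughly half the power, which is why the rated beamwidth is often called the half-power beamwidth. The short sketch below shows the dB-to-ratio conversion and, assuming the pattern is symmetric about the direction of peak gain, where the 3 dB points fall for a 75-degree beamwidth. The function names are illustrative.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a gain or loss in dB to a linear power ratio."""
    return 10 ** (db / 10)


def beam_edges_degrees(beamwidth_degrees: float) -> tuple:
    """Angles of the 3 dB points, assuming a pattern symmetric about peak gain at 0 degrees."""
    half = beamwidth_degrees / 2
    return (-half, half)


print(round(db_to_power_ratio(-3.0), 3))  # 0.501, about half the peak power
print(beam_edges_degrees(75.0))           # (-37.5, 37.5)
```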

Beamwidth plots for the C-ANT9103 antenna

If the antenna cannot hear a signal, that signal is far less likely to interfere with your AP. We have only three channels in 2.4 GHz; channel reuse in a dense deployment is already a problem there. With a good antenna, you can make the cell size smaller and get more radios closer together to provide adequate capacity in your design for 2.4-GHz users. 5 GHz has more channels; however, with 20-, 40-, and 80-MHz channel widths, we are using channels up faster, and cell isolation is becoming more of a problem.

Other problems that can be solved using directional antennas include high-interference environments – a shopping mall, for instance. Most of the stores in a shopping mall will have installed Wi-Fi, and this creates interference for your Wi-Fi. Using directional antennas, you can isolate your store from the neighbor by focusing the ears of the AP inward and making the receive sensitivity less behind the antenna. The front-to-back ratio of an antenna is responsible for this. Think of it as being like cupping your hands over your ears to hear a distant sound. When you do this, you focus the sound energy into your ears, but you also shield your ears to the surrounding noise, and this produces a better SNR – you experience it as better, more intelligible sound. Putting a directional antenna on your AP will similarly focus its ears, and it will experience better sound with less noise as well.

Newer antenna designs

● The external antenna connectors on the Catalyst APs are identical to the antenna connectors on previous APs. There is no difference in operation when the access point is used in dual-band (2.4 and 5 GHz) operation (the default mode). RF coverage and cell sizes are like those of the previous Aironet 2800 and 3800 Series.

● Like the prior external antenna versions, the new Catalyst 9120 Series access points now support the capability of dual 5-GHz operation. The main serving radios default to the following configuration:

◦ Dedicated 5-GHz radio is tied to the dual-band client-serving antennas at 4 dBi.

◦ The Exclusive OR (XOR) radio (defaulted to 2.4 GHz) is tied to the dual-band client-serving antennas at 3 dBi.

◦ Dual 5-GHz mode: With 2.4 GHz disabled on the XOR radio, the secondary 5-GHz radio is tied to the dedicated 5-GHz antennas at 4 dBi.

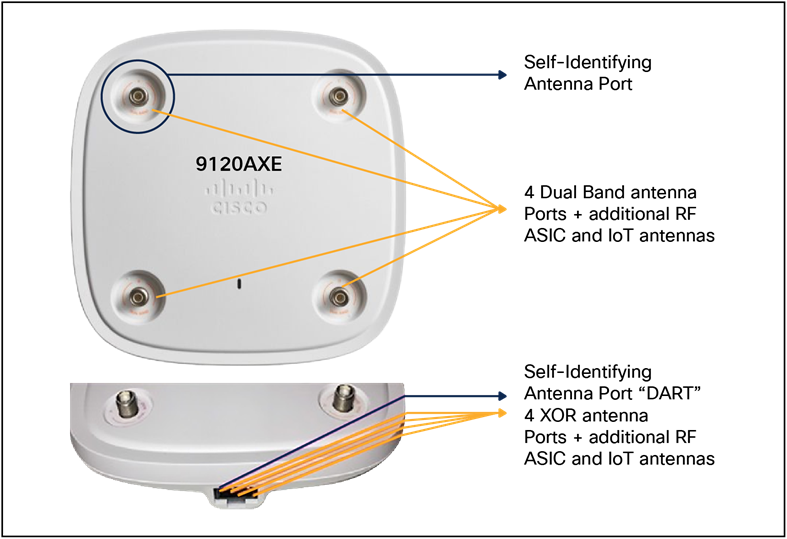

● Similarly, in the Catalyst 9120AXE access point, which has external antenna ports, for dual 5 GHz, a smart antenna connector must be used on the external antennas, as the additional 5-GHz radio cannot use the same top antennas on the access point that are being used by the primary 5-GHz radio.

9120e antenna system using the DART connector for dual 5 GHz

● When a smart antenna connector is installed, the XOR radio (the radio that is defined in software as Radio 0) has its RF switched to the smart antenna connector.

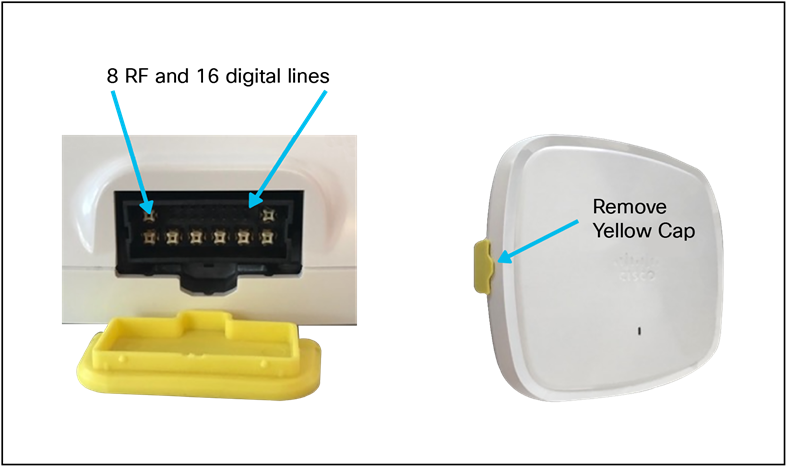

● The smart antenna connector can detect the type of antenna used and has 16 digital lines as well as 4 analog RF lines.

● The self-identifying antenna ports are indicated by a different color (PURPLE). Figure 6 shows the traditional antenna ports and the DART ports.

Catalyst 9120 antenna ports

When the smart antenna is not installed, the antenna on top of the unit is in Dual Radiating Element (DRE) mode. If the smart antenna connector is installed, the XOR (2.4 or 5 GHz, depending on the mode) goes out the smart connector. In this mode the XOR radio (unless in Monitor mode) can be configured for only one band, 2.4 GHz or the other band, 5 GHz. This is in Single Radiating Element (SRE) mode.

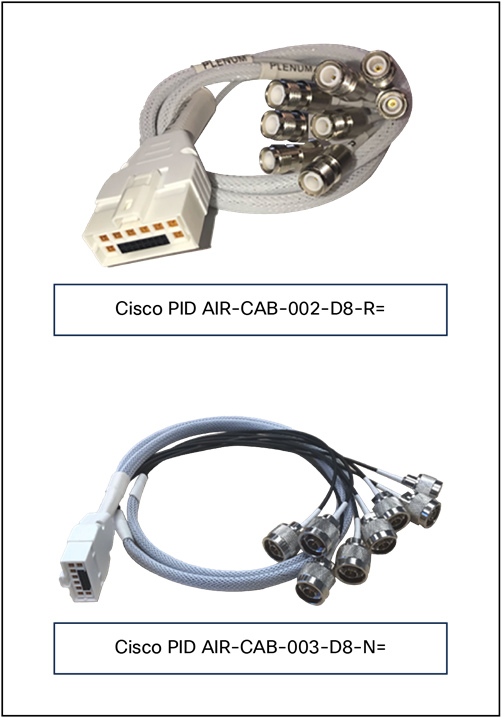

Cables to use with existing RP-TNC

Unlike the Catalyst 9120AXE, the Catalyst 9130AXE does not have antenna ports. The 9130AXE requires the use of an external antenna system. The yellow cover (on the left) must be removed and a suitable antenna system installed via the 8-port DART smart connector, which is exposed once the yellow cover is removed. Do not operate the unit without a suitable antenna.

C9130AXE antenna connector

Three new antennas have been designed to support the Cisco Catalyst C9130AXE:

● C-ANT9101: Ceiling mount omni, like AIR-ANT2524V4C-R=.

● C-ANT9102: Wall/pole mount omni, like AIR-ANT2544V4M-R=.

● C-ANT9103: Wall/pole mount patch, like AIR-ANT2566D4M-R=.

Antenna options

In addition to new antennas being designed with the smart connector, conventional antennas using RP-TNC connectors may attach to the Catalyst 9120 or 9130 Series using the smart connector.

Smart connector

For more on this topic, see the Cisco Catalyst 9130 Series Access Point Deployment Guide at: https://www.cisco.com/c/en/us/products/collateral/wireless/catalyst-9100ax-access-points/deployment-guide-c07-743490.html.

Some design considerations can be addressed by general best practice guidelines. The following applies to most situations:

The number of users per AP that we recommend is as follows:

● 30 to 50 for data-only users.

● 10 to 20 for voice users.

This number should be used as a guideline and can vary depending on the AP model, handset, or application in use. Check your handset/application requirements.

● The AP data rates should be limited to those designed and for which the site survey was performed. Enabling lower data rates can cause increases in co-channel interference and greater throughput variations for clients. A common minimum data rate to start with is 12 Mbps.

● The number of APs depends on coverage and throughput requirements, which can vary. For example, the Cisco internal Information Systems group currently uses one AP per 3000 square feet of floor space.
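The guidelines above can be combined into a rough first estimate of AP count before a survey. This is a planning sketch only, under loud assumptions: it takes the larger of a coverage-driven count (one AP per 3000 square feet, the example figure above) and a capacity-driven count (users divided by users per AP, defaulting to the low end of the 30 to 50 data-user range). The function name and the choice to take the maximum are not from the source, and a site survey should still decide final placement.

```python
import math


def estimate_ap_count(area_sq_ft: float, users: int,
                      users_per_ap: int = 30,
                      sq_ft_per_ap: float = 3000.0) -> int:
    """Rough AP count: the larger of the coverage and capacity requirements."""
    coverage_aps = math.ceil(area_sq_ft / sq_ft_per_ap)
    capacity_aps = math.ceil(users / users_per_ap)
    return max(coverage_aps, capacity_aps, 1)


print(estimate_ap_count(30000, users=200))                  # 10 (coverage-bound)
print(estimate_ap_count(6000, users=300))                   # 10 (capacity-bound)
print(estimate_ap_count(6000, users=40, users_per_ap=10))   # 4 (voice, low end of 10 to 20)
```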

Radio Resource Management (RRM)

The RRM software that is embedded in the device acts as a built-in RF engineer to consistently provide real-time RF management of your wireless network. RRM enables devices to continually monitor their associated lightweight access points for the following information:

● Traffic load: The total bandwidth used for transmitting and receiving traffic. It enables wireless LAN managers to track and plan network growth ahead of client demand.

● Interference: The amount of traffic coming from other 802.11 sources.

● Noise: The amount of non-802.11 traffic that is interfering with the currently assigned channel.

● Coverage: The RSSI and SNR for all connected clients.

● Other: The number of nearby access points.

RRM performs these functions:

● Radio resource monitoring

● Power control transmission

● Dynamic Channel Assignment (DCA)

● Coverage hole detection and correction

● RF grouping

Note: RRM grouping does not occur when an AP operates in a static channel that is not in the DCA channel list. The Neighbor Discovery Protocol (NDP) is sent only on DCA channels; therefore, when a radio operates on a non-DCA channel, it does not receive NDP on the channel.

RRM automatically detects and configures new devices and lightweight access points as they are added to the network. It then automatically adjusts the associated and nearby lightweight access points to optimize coverage and capacity.

Lightweight access points can scan all the valid channels for the country of operation as well as for channels available in other locations. The access points in local mode go off channel for a period not greater than 0 ms to monitor these channels for noise and interference. Packets collected during this time are analyzed to detect rogue access points, rogue clients, ad hoc clients, and interfering access points.

Note: In the presence of voice traffic or other critical traffic (in the last 100 ms), access points can defer off-channel measurements. The access points also defer off-channel measurements based on the WLAN scan priority configurations.

Each access point spends only 0.2 percent of its time off channel. This activity is distributed across all the access points so that adjacent access points are not scanning at the same time, which could adversely affect WLAN performance.
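To put the 0.2 percent figure in perspective, the following one-line calculation converts it to off-channel time over a chosen window. The function name is hypothetical; the fraction comes from the sentence above.

```python
def off_channel_seconds(window_seconds: float, off_channel_fraction: float = 0.002) -> float:
    """Total time an AP spends off channel during `window_seconds`."""
    return window_seconds * off_channel_fraction


# Over one hour, 0.2 percent amounts to about 7.2 seconds of off-channel scanning.
print(round(off_channel_seconds(3600), 1))  # 7.2
```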

An RF group is a logical collection of controllers that coordinate to conduct RRM in a globally optimized manner to perform network calculations on a per-radio basis. Separate RF groups exist for 2.4-GHz and 5-GHz networks. Clustering Catalyst 9800 Series controllers into a single RF group enables the RRM algorithms to scale beyond the capabilities of a single controller.

An RF group is created based on the following parameters:

● User-configured RF network name.

● Neighbor discovery performed at the radio level.

● Country list configured on the controller.

RF grouping runs between controllers.

Lightweight access points periodically send out neighbor messages over the air. Access points using the same RF group name validate messages from each other.

When access points on different controllers hear validated neighbor messages at a signal strength of -80 dBm or stronger, the controllers dynamically form an RF neighborhood in auto mode. In static mode, the leader is manually selected, and the members are added to the RF group.
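
The auto-mode condition above can be sketched in a few lines of Python. This is only an illustration: the `NeighborMessage` record, its field names, and the `controllers_that_can_group` helper are hypothetical and do not correspond to any controller API. The sketch treats the threshold as -80 dBm and assumes a message has already passed validation when its RF group name matches.

```python
from dataclasses import dataclass

# Neighbor messages at this signal strength or stronger count toward grouping.
RSSI_THRESHOLD_DBM = -80


@dataclass(frozen=True)
class NeighborMessage:
    """Hypothetical record of one neighbor message heard over the air."""
    sender_controller: str    # controller the transmitting AP is joined to
    receiver_controller: str  # controller the receiving AP is joined to
    rf_group_name: str        # RF group name used by the sender
    rssi_dbm: float           # received signal strength


def controllers_that_can_group(messages, local_rf_group_name):
    """Return the controller pairs whose APs hear each other well enough."""
    pairs = set()
    for msg in messages:
        if msg.rf_group_name != local_rf_group_name:
            continue  # different RF group name: message is not validated
        if msg.sender_controller == msg.receiver_controller:
            continue  # both APs are on the same controller
        if msg.rssi_dbm >= RSSI_THRESHOLD_DBM:
            pairs.add(frozenset((msg.sender_controller, msg.receiver_controller)))
    return pairs


heard = [
    NeighborMessage("wlc-a", "wlc-b", "campus", -75),  # strong enough
    NeighborMessage("wlc-a", "wlc-c", "campus", -85),  # too weak
    NeighborMessage("wlc-a", "wlc-d", "guest", -60),   # wrong RF group name
]
print(controllers_that_can_group(heard, "campus"))
```

Only the pair wlc-a and wlc-b qualifies here, because the other messages are either weaker than the threshold or carry a different RF group name.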

Note: RF groups and mobility groups are similar in that they both define clusters of controllers, but they are different in terms of their use. An RF group facilitates scalable, systemwide, dynamic RF management, while a mobility group facilitates scalable, systemwide mobility and controller redundancy.

RF group leader

An RF group leader can be configured in one of two ways, as follows:

Note: The RF group leader is chosen based on the controller with the greatest AP capacity (platform limit). If multiple controllers have the same capacity, the leader is the one with the highest management IP address.

● Auto mode: In this mode, the members of an RF group elect an RF group leader to maintain a primary power and channel scheme for the group. The RF grouping algorithm dynamically chooses the RF group leader and ensures that an RF group leader is always present. Group leader assignments can and do change (for instance, if the current RF group leader becomes inoperable or RF group members experience major changes).

● Static mode: In this mode, a user selects a controller as an RF group leader manually. The leader and the members are manually configured and fixed. If the members are unable to join the RF group, the reason is indicated. The leader tries to establish a connection with a member every minute if the member has not joined in the previous attempt.
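
The selection rule from the note above (greatest AP capacity first, then highest management IP address) can be sketched as follows. The `Controller` record and `pick_leader` function are hypothetical names used for illustration only, and the sketch assumes all management addresses belong to the same IP family, because Python cannot order an IPv4 address against an IPv6 address.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Controller:
    """Hypothetical view of one RF group member."""
    name: str
    ap_capacity: int  # platform limit on the number of APs
    mgmt_ip: str      # management IP address as text


def pick_leader(controllers):
    """Prefer the greatest AP capacity; break ties with the highest management IP."""
    return max(
        controllers,
        # Parse the address so that 10.0.0.20 sorts above 10.0.0.5,
        # which a plain string comparison would get wrong.
        key=lambda c: (c.ap_capacity, ipaddress.ip_address(c.mgmt_ip)),
    )


members = [
    Controller("wlc-a", 1000, "10.0.0.9"),
    Controller("wlc-b", 2000, "10.0.0.5"),
    Controller("wlc-c", 2000, "10.0.0.20"),
]
print(pick_leader(members).name)  # wlc-c
```

Comparing parsed addresses rather than strings matters for the tie-break: as text, "10.0.0.5" would sort above "10.0.0.20".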

The RF group leader analyzes real-time radio data collected by the system, calculates the power and channel assignments, and sends them to each of the controllers in the RF group. The RRM algorithms ensure systemwide stability and restrain channel and power scheme changes to the appropriate local RF neighborhoods.

Note: When a controller is both leader and member for a specific radio, both its IPv4 and IPv6 addresses are shown for the group leader.

When Controller A is a member and Controller B is the leader, Controller A displays either the IPv4 or the IPv6 address of Controller B, depending on which address it uses for the connection.

So when the leader and the member are different controllers, the member shows only one address (IPv4 or IPv6) for the group leader.

If DCA used the worst-performing radio as the single criterion for adopting a new channel plan, the result could be pinning or cascading problems.

The main cause of both pinning and cascading is that any potential channel plan changes are controlled by the RF circumstances of the worst-performing radio. The DCA algorithm does not do this; instead, it does the following:

● Multiple local searches: The DCA search algorithm performs multiple local searches initiated by different radios in the same DCA run rather than performing a single global search that is driven by a single radio. This change addresses both pinning and cascading while maintaining the desired flexibility and adaptability of DCA and without jeopardizing stability.

● Multiple Channel Plan Change Initiators (CPCIs): Previously, the single worst radio was the sole initiator of a channel plan change. Now each radio in an RF group is evaluated and prioritized as a potential initiator. Intelligent randomization of the resulting list ensures that every radio is eventually evaluated, which eliminates the potential for pinning.

● Limiting the propagation of channel plan changes (localization): For each CPCI radio, the DCA algorithm performs a local search for a better channel plan, but only the CPCI radio itself and its one-hop neighboring access points are allowed to change their current transmit channels. The impact of an access point triggering a channel plan change is felt only to within two RF hops from that access point, and the actual channel plan changes are confined to within a one-hop RF neighborhood. Because this limitation applies across all CPCI radios, cascading cannot occur.

● Non-RSSI-based cumulative cost metric: A cumulative cost metric measures how well an entire region, neighborhood, or network performs with respect to a given channel plan. The individual cost metrics of all the access points in that area are considered to provide an overall understanding of the channel plan’s quality. These metrics ensure that the improvement or deterioration of each single radio is factored into any channel plan change. The objective is to prevent channel plan changes in which a single radio improves, but at the expense of multiple other radios experiencing a considerable performance decline.
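
The localization and cumulative-cost ideas in the list above can be illustrated with a toy model. This is a sketch under stated assumptions, not the DCA algorithm itself: the guide does not define the cost metric, so the per-radio cost used here (the number of one-hop neighbors sharing the radio's channel) is a hypothetical stand-in, as are the function names and the dictionary-based data layout.

```python
def radio_cost(radio, plan, neighbors):
    """Hypothetical per-radio cost: one-hop neighbors on the same channel."""
    return sum(1 for n in neighbors[radio] if plan[n] == plan[radio])


def cumulative_cost(radios, plan, neighbors):
    """Sum the individual costs of every radio in the region."""
    return sum(radio_cost(r, plan, neighbors) for r in radios)


def try_local_change(cpci, plan, candidate, neighbors):
    """Accept a candidate plan only if it is localized and improves the region.

    Only the CPCI radio and its one-hop neighbors may change channel, and the
    cumulative cost is evaluated over everything within two hops of the CPCI.
    """
    allowed = {cpci} | set(neighbors[cpci])
    changed = {r for r, ch in candidate.items() if plan[r] != ch}
    if not changed <= allowed:
        return plan  # change would propagate beyond the one-hop neighborhood

    region = set(allowed)
    for r in allowed:
        region |= set(neighbors[r])  # extend to two hops from the CPCI

    new_plan = {**plan, **candidate}
    if cumulative_cost(region, new_plan, neighbors) < cumulative_cost(region, plan, neighbors):
        return new_plan
    return plan  # no net improvement for the region: keep the current plan


# Four radios in a line: A - B - C - D
neighbors = {"A": ["B"], "B": ["A", "C"], "C": ["B", "D"], "D": ["C"]}
plan = {"A": 1, "B": 1, "C": 6, "D": 11}

print(try_local_change("A", plan, {"A": 11}, neighbors))  # accepted
print(try_local_change("A", plan, {"D": 1}, neighbors))   # rejected: D is too far away
```

Because the decision depends on the summed cost of the whole region, a change that helps one radio but makes its neighbors worse is rejected.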

The RRM algorithms run at a specified update interval, which is 600 seconds by default. Between update intervals, the RF group leader sends keep-alive messages to each of the RF group members and collects real-time RF data.

For more information on RRM, see the Catalyst 9800 Radio Resource Management Deployment Guide at https://www.cisco.com/c/en/us/td/docs/wireless/controller/technotes/8-8/b_C9800_rrm_dg.html.

RF group name

A controller is configured with an RF group name, which is sent to all the access points joined to the controller and used by the access points as the shared secret for generating the hashed MIC in the neighbor messages. To create an RF group, you configure all the controllers to be included in the group with the same RF group name.
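
To show why a shared RF group name lets access points validate each other's neighbor messages, here is a generic keyed-hash sketch. The guide does not specify the hash algorithm or the message layout, so HMAC-SHA-256 and the payload bytes below are assumptions chosen purely for illustration; the function names are hypothetical.

```python
import hashlib
import hmac


def neighbor_mic(rf_group_name: str, payload: bytes) -> bytes:
    """Compute a keyed hash over the payload, using the RF group name as the secret.

    HMAC-SHA-256 is a stand-in; the actual algorithm is not stated in the guide.
    """
    return hmac.new(rf_group_name.encode("utf-8"), payload, hashlib.sha256).digest()


def is_valid(rf_group_name: str, payload: bytes, mic: bytes) -> bool:
    """A receiver recomputes the MIC with its own RF group name and compares."""
    return hmac.compare_digest(neighbor_mic(rf_group_name, payload), mic)


payload = b"example neighbor message body"  # placeholder content
mic = neighbor_mic("campus", payload)

print(is_valid("campus", payload, mic))  # True: same RF group name
print(is_valid("guest", payload, mic))   # False: different RF group name
```

An access point configured with a different RF group name computes a different MIC, so it cannot validate the message and the sender is not treated as part of the same group.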

If there is any possibility that an access point joined to a controller might hear RF transmissions from an access point on a different controller, you should configure both controllers with the same RF group name. If RF transmissions between access points can be heard, systemwide RRM is recommended to avoid 802.11 interference and contention as much as possible.

Secure RF groups

Secure RF groups enable you to encrypt and secure RF grouping and RRM message exchanges over a DTLS tunnel. During the DTLS handshake, controllers authenticate each other with a wireless management trust-point certificate.

Transmit power control

The device dynamically controls access point transmit power based on the real-time wireless LAN conditions.

The TPC algorithm increases and decreases an access point’s power in response to changes in the RF environment. In most instances, TPC seeks to lower an access point's power to reduce interference, but in the case of a sudden change in the RF coverage – for example, if an access point fails or becomes disabled – TPC can also increase power on the surrounding access points. This feature is different from coverage hole detection, which is primarily concerned with clients. TPC provides enough RF power to achieve the required coverage levels while avoiding channel interference between access points. We recommend that you select TPCv1; the TPCv2 option is deprecated. With TPCv1, you can select the channel-aware mode; we recommend that you select this option for 5 GHz and leave it unchecked for 2.4 GHz.

Overriding the TPC algorithm with minimum and maximum transmit power settings

The TPC algorithm balances RF power in many diverse RF environments. However, it is possible that automatic power control will not be able to resolve some scenarios in which implementing an adequate RF design was not possible due to architectural restrictions or site restrictions – for example, when all the access points must be mounted in a central hallway, requiring them to be placed close together, but coverage is required to the edge of the building.

In these scenarios, you can configure maximum and minimum transmit power limits to override TPC recommendations. The maximum and minimum TPC power settings apply to all the access points through RF profiles in an RF network.

To set the maximum and minimum power level assignments, enter the maximum and minimum transmit power used by RRM in the fields in the Tx Power Control window. The range for these parameters is -10 to 30 dBm. The minimum value cannot be greater than the maximum value.

If you configure a maximum transmit power, RRM does not allow any access point attached to the controller to exceed this transmit power level (whether the power is set by RRM TPC or by coverage hole detection). For example, if you configure a maximum transmit power of 11 dBm, no access point will transmit above 11 dBm, unless the access point is configured manually.

Cisco APs support power level changes in 3-dB granularity. TPC Min and Max power settings allow for values in 1-dB increments. The resulting power level will be rounded to the nearest value supported in the allowed power entry for the AP model and the current serving channel.
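
The rounding behavior described above can be sketched as a clamp followed by a nearest-value lookup. The list of allowed power levels below is hypothetical (real values depend on the AP model, regulatory domain, band, and channel), and the choice to prefer the lower level when two levels are equally close is an assumption, since the guide does not say how ties are resolved.

```python
def effective_power_dbm(requested, tpc_min, tpc_max, allowed_levels):
    """Clamp a requested power to the TPC limits, then round to a supported level."""
    clamped = max(tpc_min, min(tpc_max, requested))
    # Nearest supported level; on a tie, prefer the lower power (assumption).
    return min(allowed_levels, key=lambda level: (abs(level - clamped), level))


# Hypothetical power table with 3-dB steps for one AP model and channel.
levels = [17, 14, 11, 8, 5, 2]

print(effective_power_dbm(20, tpc_min=2, tpc_max=12, allowed_levels=levels))  # 11
print(effective_power_dbm(1, tpc_min=5, tpc_max=17, allowed_levels=levels))   # 5
```

In the first call the request is capped at the 12-dBm maximum, and because 12 dBm is not in the table, the nearest supported level (11 dBm) is used.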

Each AP model has its own set of power levels localized for its regulatory country and region. Moreover, the power levels for the same AP model will vary based on the band and channel it is set to. For more information on allowed power level vs. actual power (in dBm), use the show ap name <name> config slot <0|1|2|3> command to view the specific number of power levels, the range of power levels allowed, and the current power level setting on the AP.

Dynamic Channel Assignment (DCA)

Two adjacent access points on the same channel can cause either signal contention or signal collision. In a collision, data is not received by the access point. This can become a problem when, for example, someone reading an email in a café affects the performance of the access point in a neighboring business. Even though these are separate networks, someone sending traffic to the café on channel 1 can disrupt communication in an enterprise using the same channel. Devices can dynamically allocate access point channel assignments to avoid conflict and increase capacity and performance. Channels are reused to avoid wasting scarce RF resources. In other words, channel 1 is allocated to a different access point far from the café, which is more effective than not using channel 1 altogether.

The device’s DCA capabilities are also useful in minimizing adjacent channel interference between access points. For example, two overlapping channels in the 802.11b/g band, such as 1 and 2, cannot simultaneously use 11 or 54 Mbps. By effectively reassigning channels, the device keeps adjacent channels separated.
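
A short calculation shows why channels 1 and 2 overlap while channels 1, 6, and 11 do not. In the 2.4-GHz band, channels 1 through 13 have center frequencies of 2407 + 5 × channel MHz. The 22-MHz channel width used below is an assumption (the classic 802.11b figure), and the function names are illustrative.

```python
def center_mhz(channel: int) -> int:
    """Center frequency of a 2.4-GHz channel (channels 1 through 13)."""
    if not 1 <= channel <= 13:
        raise ValueError("this formula covers channels 1 through 13 only")
    return 2407 + 5 * channel


def channels_overlap(a: int, b: int, width_mhz: int = 22) -> bool:
    """Two channels overlap when their centers are closer than one channel width."""
    return abs(center_mhz(a) - center_mhz(b)) < width_mhz


print(center_mhz(1), center_mhz(2))  # 2412 2417
print(channels_overlap(1, 2))        # True: centers are only 5 MHz apart
print(channels_overlap(1, 6))        # False: centers are 25 MHz apart
print(channels_overlap(6, 11))       # False
```

This is why channels 1, 6, and 11 are commonly treated as the nonoverlapping set in this band.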

The device examines a variety of real-time RF characteristics to efficiently handle channel assignments as follows:

● Access point received energy: The RSSI measured between each access point and its nearby neighboring access points. Channels are optimized for the highest network capacity.

● Noise: Noise can limit signal quality at the client and access point. An increase in noise reduces the effective cell size and degrades user experience. By optimizing channels to avoid noise sources, the device can optimize coverage while maintaining system capacity. If a channel is unusable due to excessive noise, that channel can be avoided.

● 802.11 interference: Interference is any 802.11 traffic that is not a part of your WLAN, including rogue access points and neighboring wireless networks. Lightweight access points constantly scan all the channels looking for sources of interference. If the amount of 802.11 interference exceeds a predefined configurable threshold (the default is 10 percent), the access point sends an alert to the device. Using the RRM algorithms, the device may then dynamically rearrange channel assignments to increase system performance in the presence of the interference. Such an adjustment could result in adjacent lightweight access points being on the same channel, but this setup is preferable to having the access points remain on a channel that is unusable due to an interfering foreign access point.

● In addition, if other wireless networks are present, the device shifts the usage of channels to complement the other networks. For example, if one network is on channel 6, an adjacent WLAN is assigned to channel 1 or 11. This arrangement increases the capacity of the network by limiting the sharing of frequencies. If a channel has virtually no capacity remaining, the device may choose to avoid this channel. In huge deployments in which all nonoverlapping channels are occupied, the device does its best, but you must consider RF density when setting expectations.

● Load and utilization: When utilization monitoring is enabled, capacity calculations can consider that some access points are deployed in ways that cause them to carry more traffic than other access points, for example, a lobby versus an engineering area. The device can then assign channels to improve the access point that has performed the worst. The load is considered when changing the channel structure to minimize the impact on the clients that are currently in the WLAN. This metric keeps track of every access point’s transmitted and received packet counts to determine how busy the access points are. New clients avoid an overloaded access point and associate to a new access point. This load and utilization parameter is disabled by default.

The device combines this RF characteristic information with RRM algorithms to make systemwide decisions. Conflicting demands are resolved using soft-decision metrics that guarantee the best choice for minimizing network interference. The result is optimal channel configuration in a three-dimensional space, where access points on the floors above and below play a major factor in an overall WLAN configuration.

Note: DCA supports only 20-MHz channels in the 2.4-GHz band.

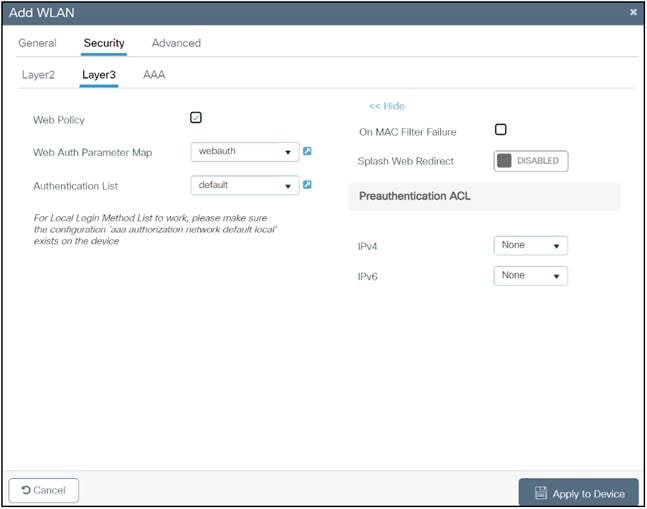

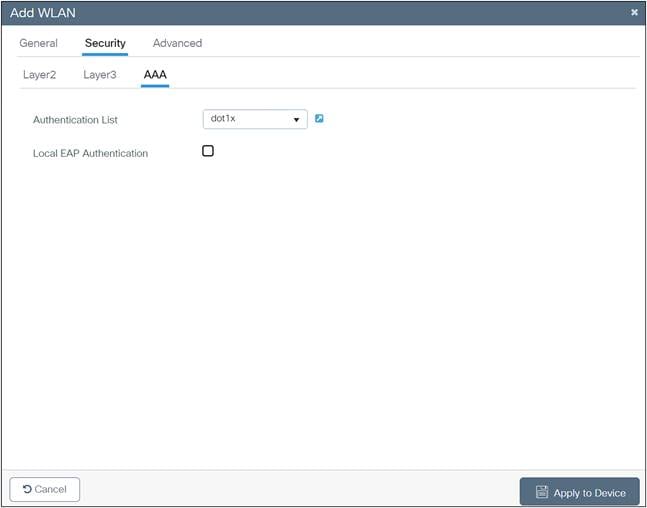

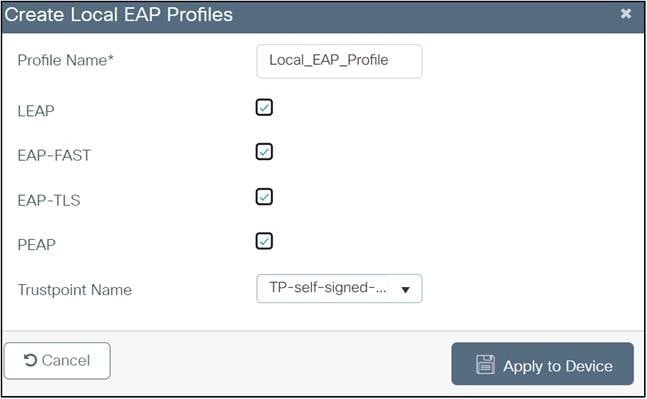

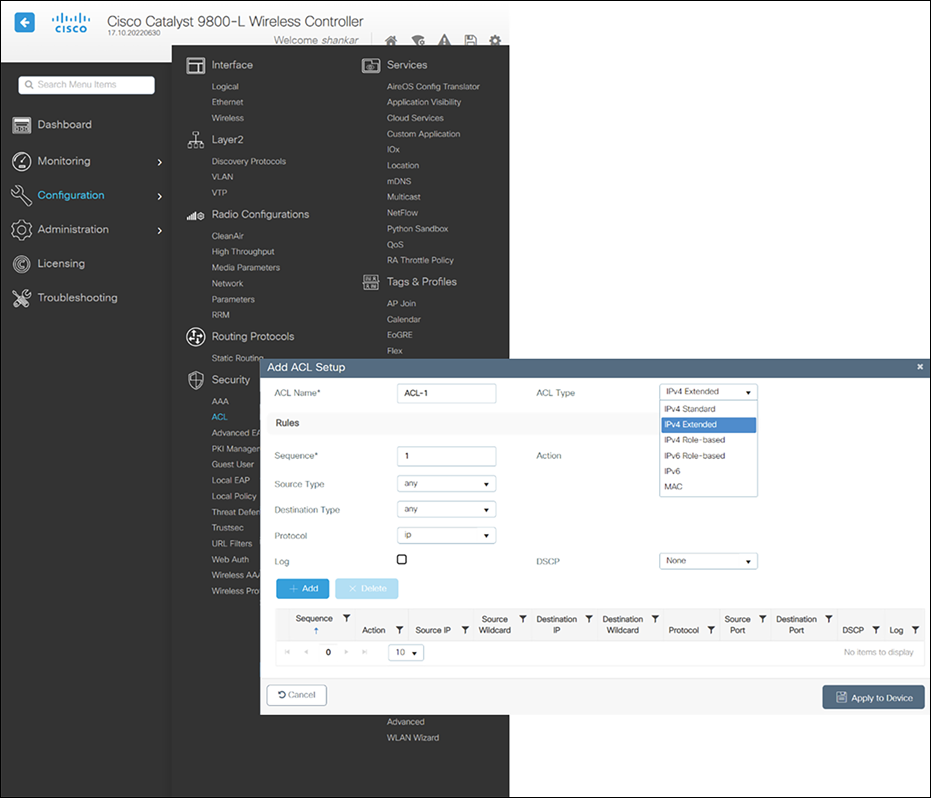

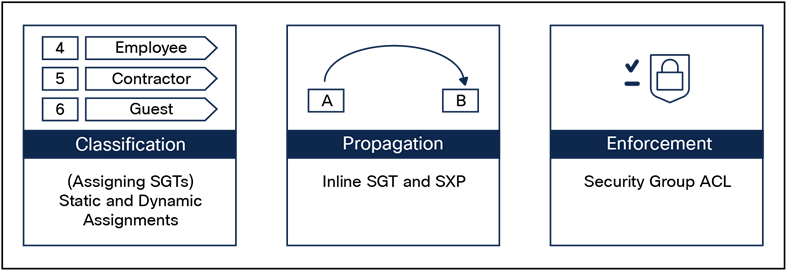

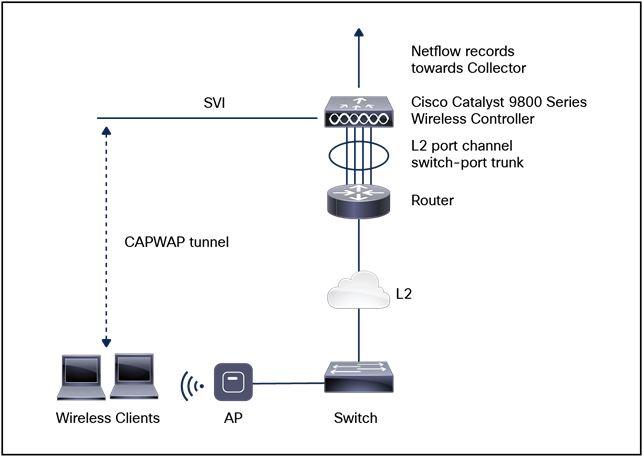

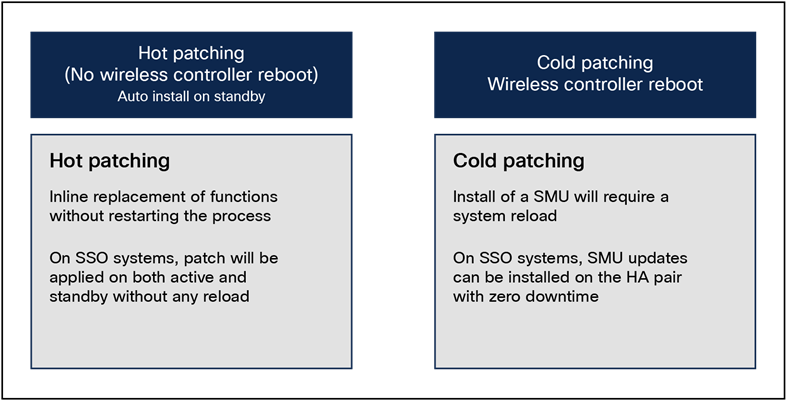

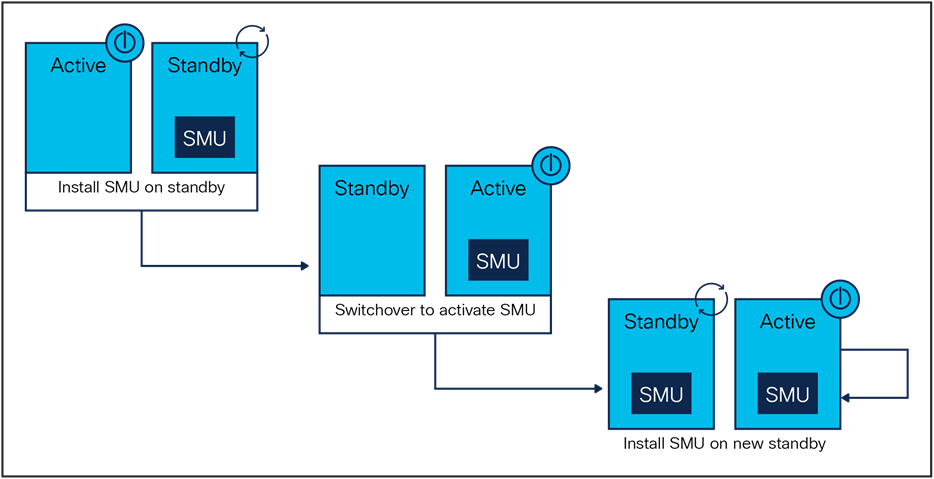

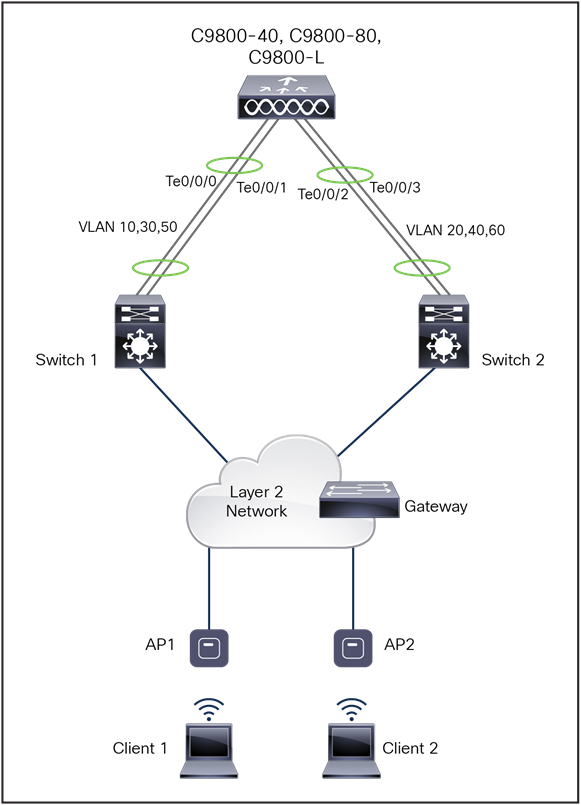

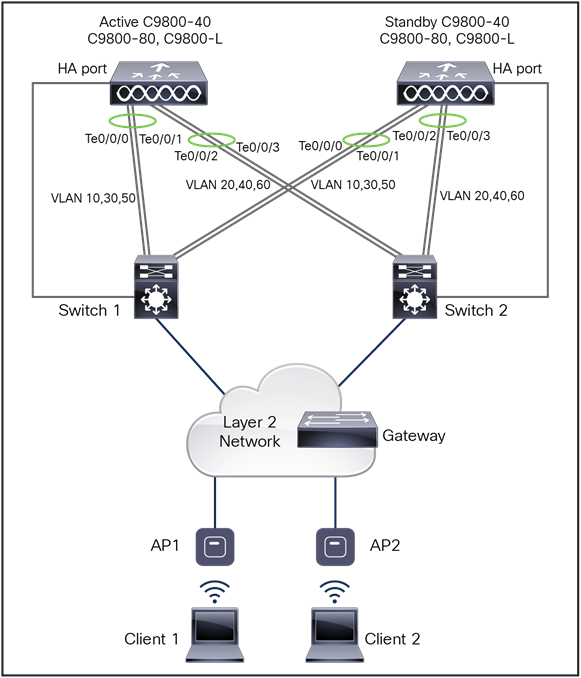

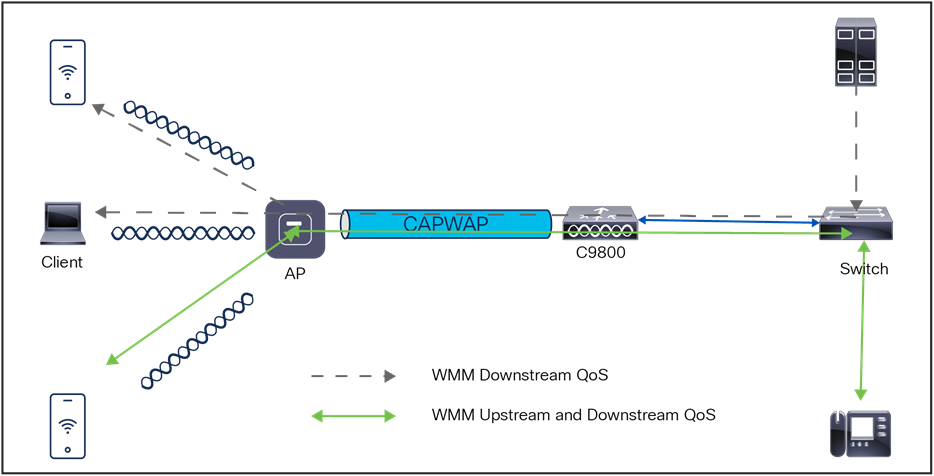

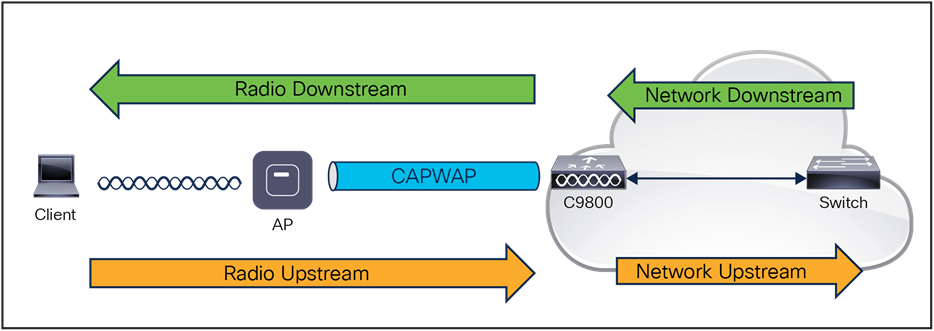

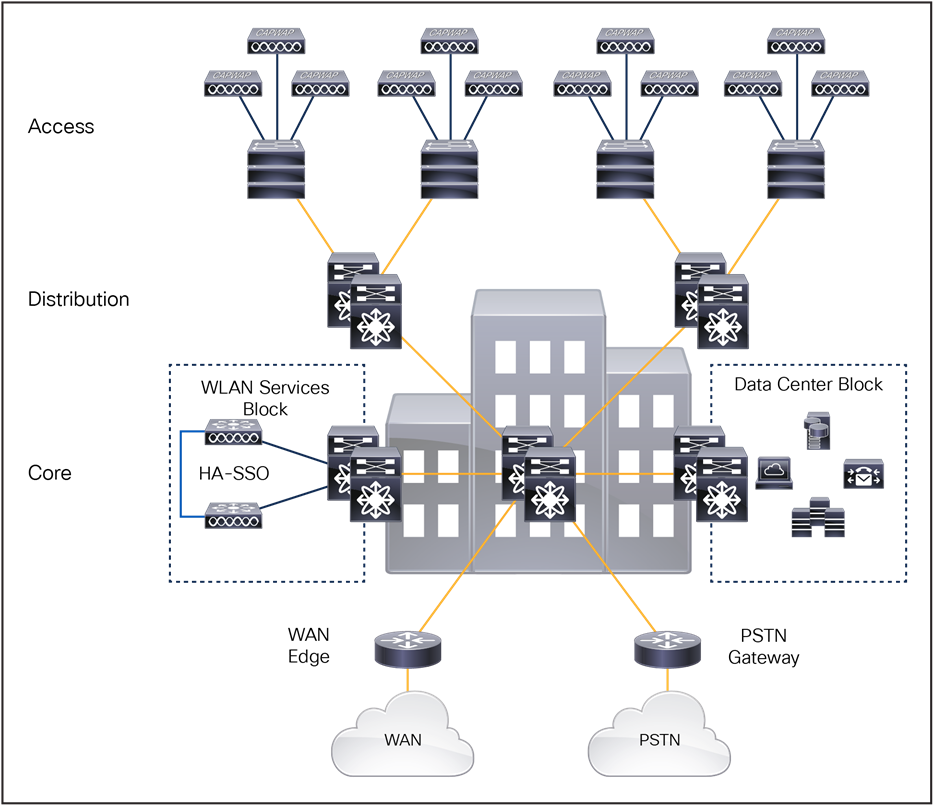

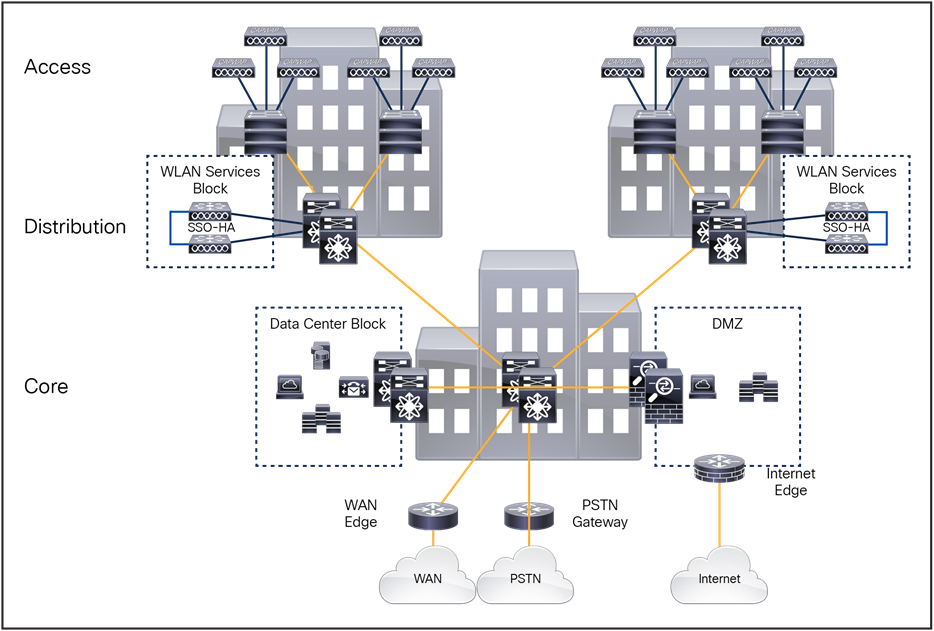

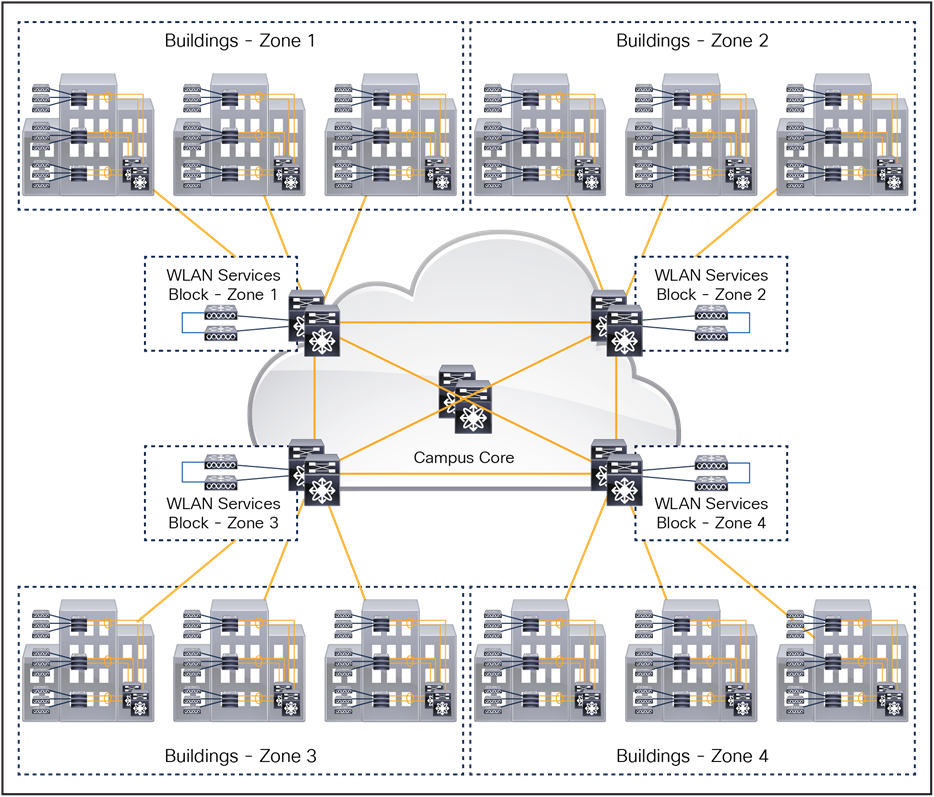

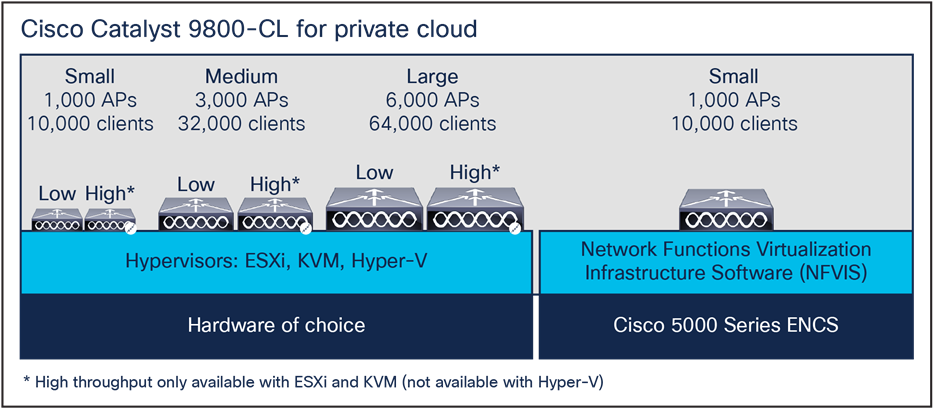

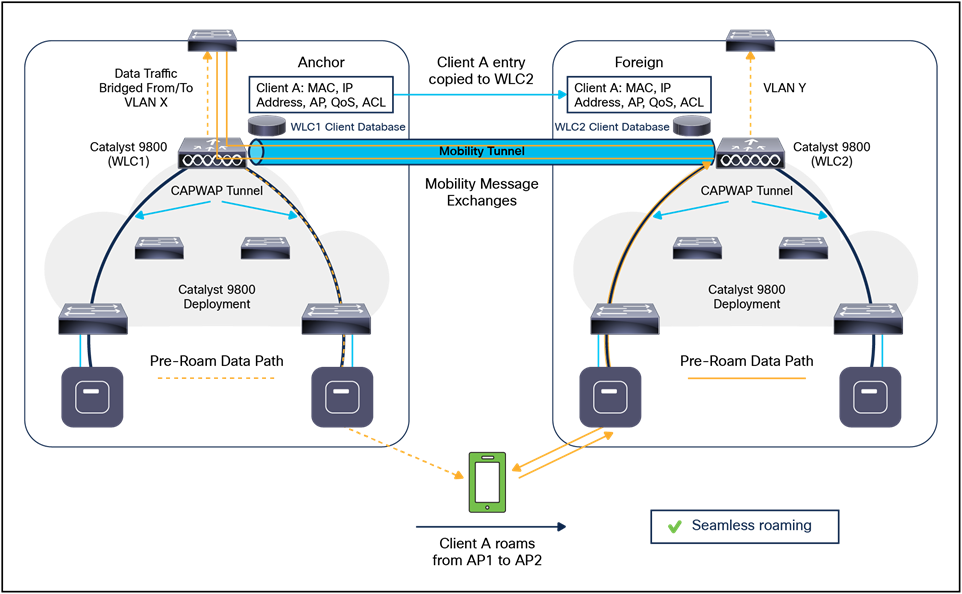

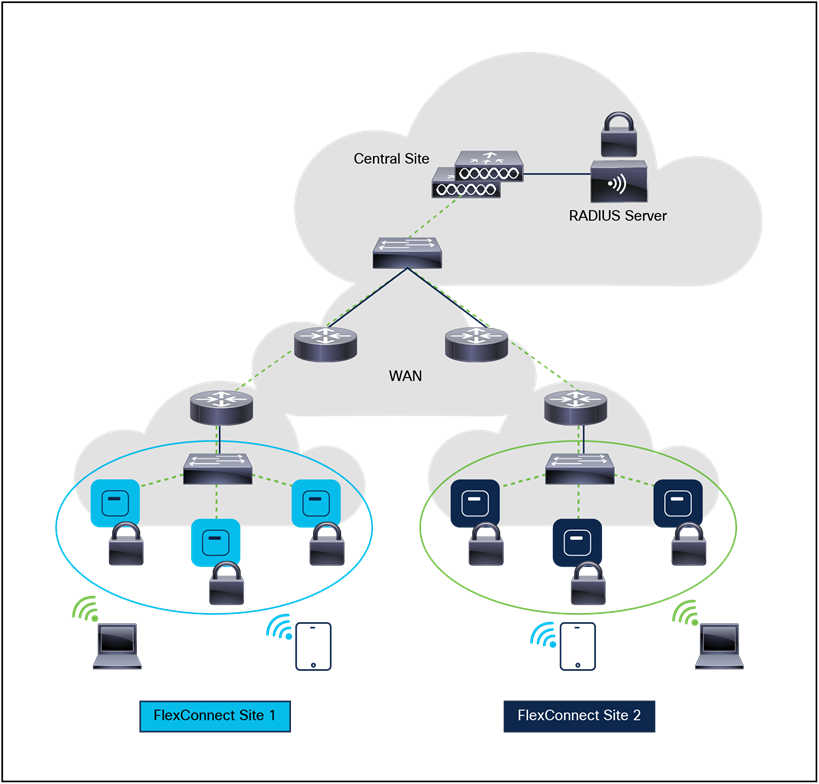

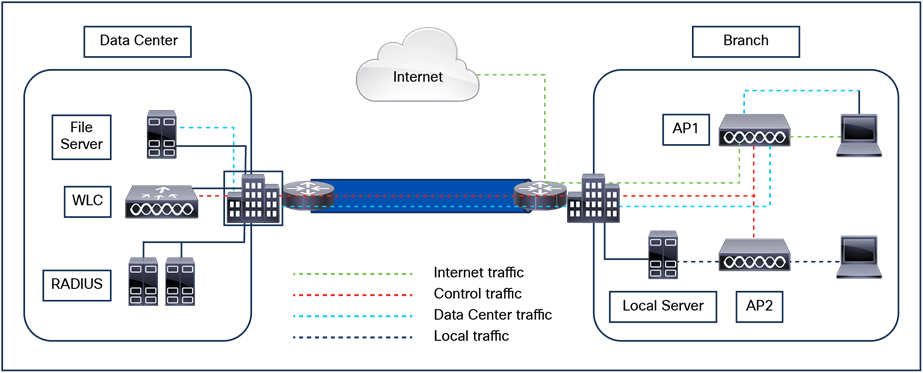

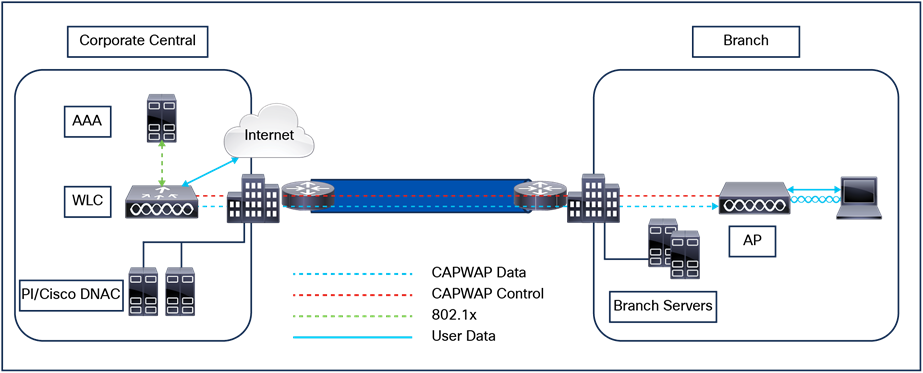

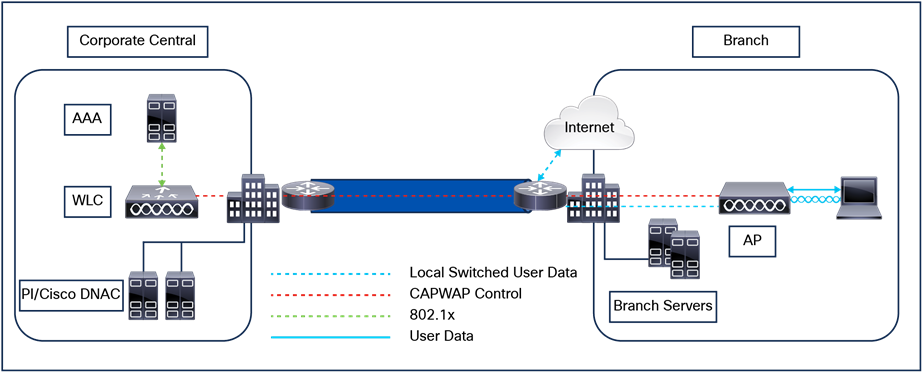

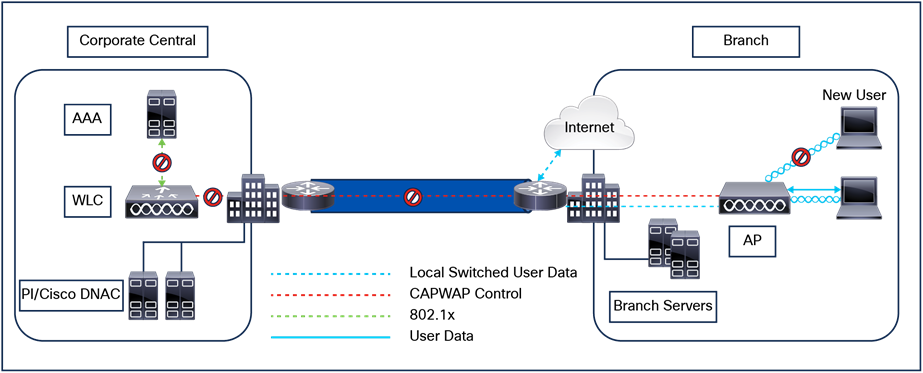

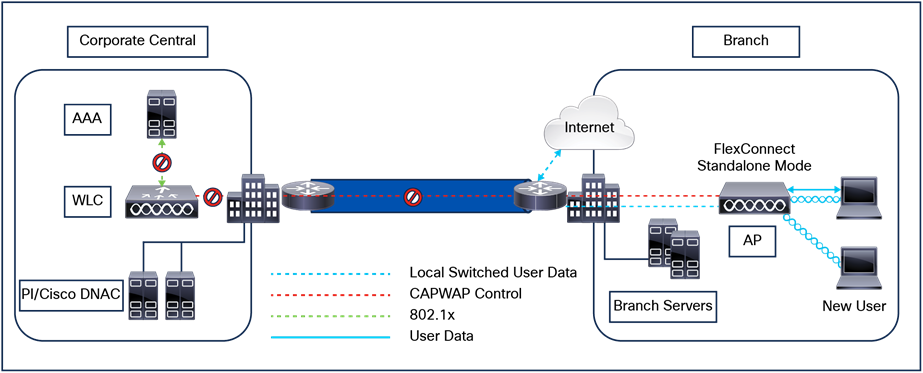

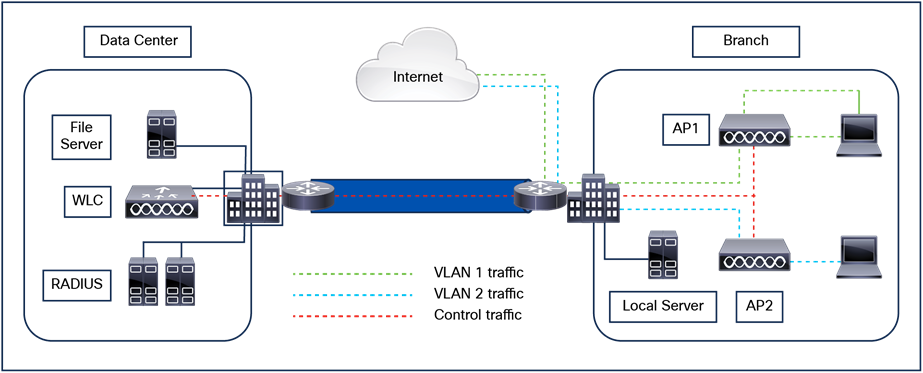

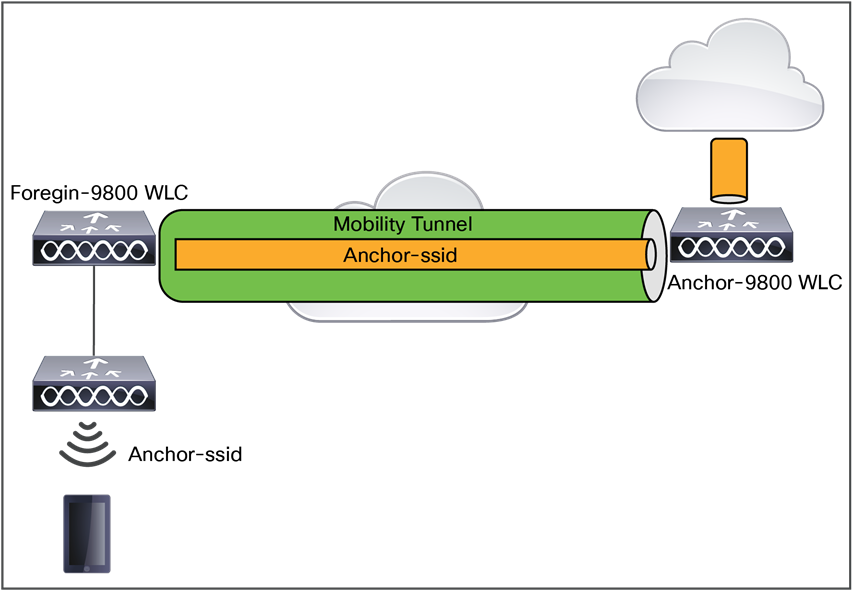

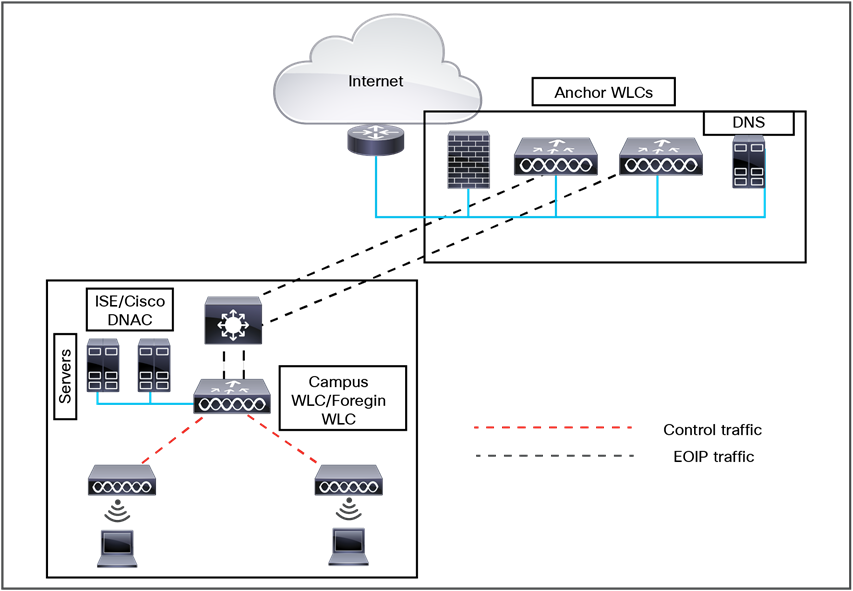

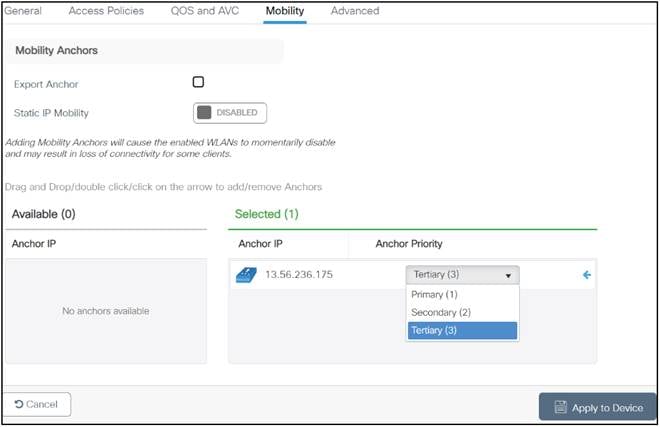

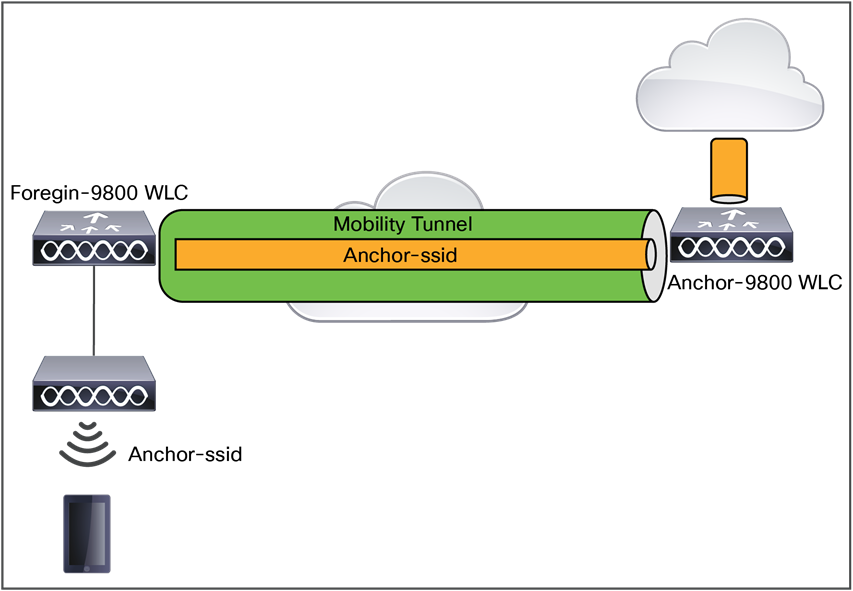

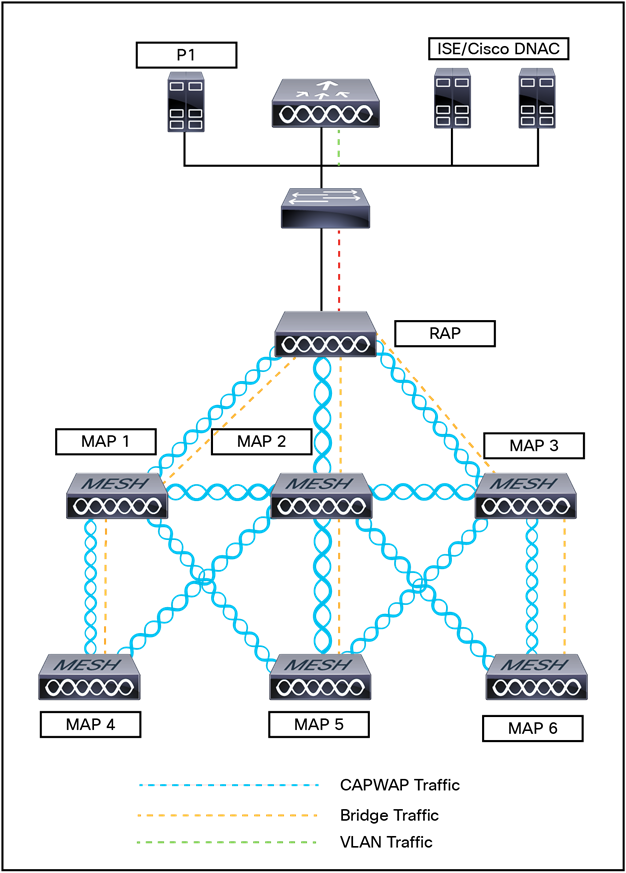

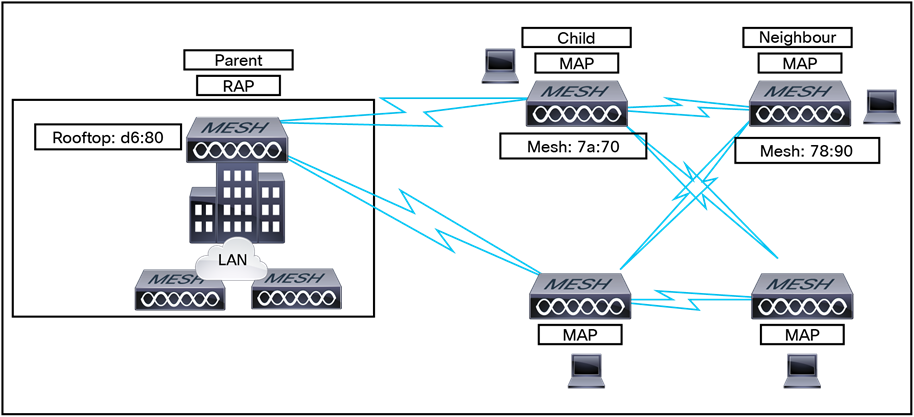

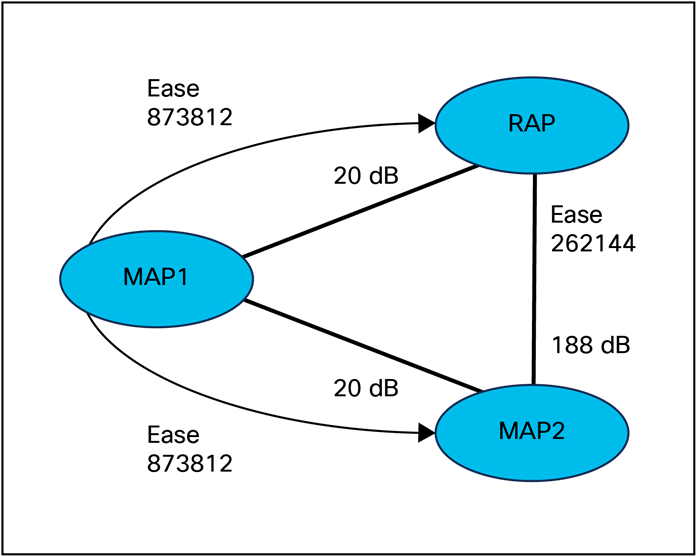

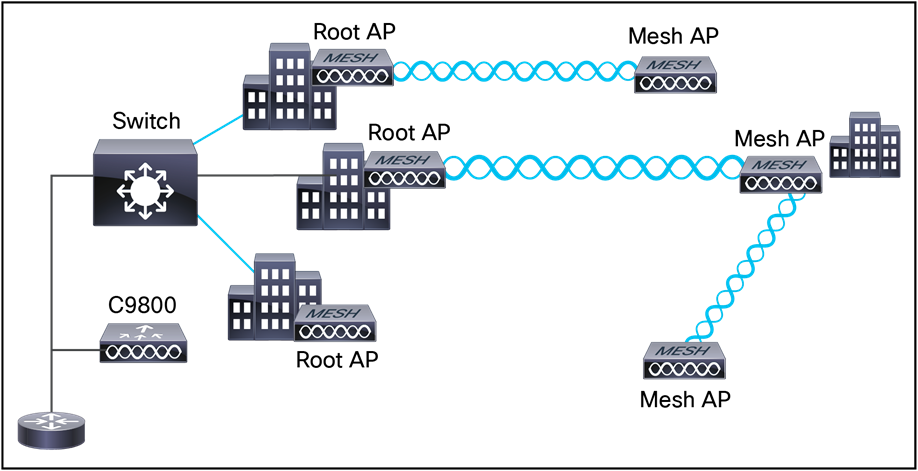

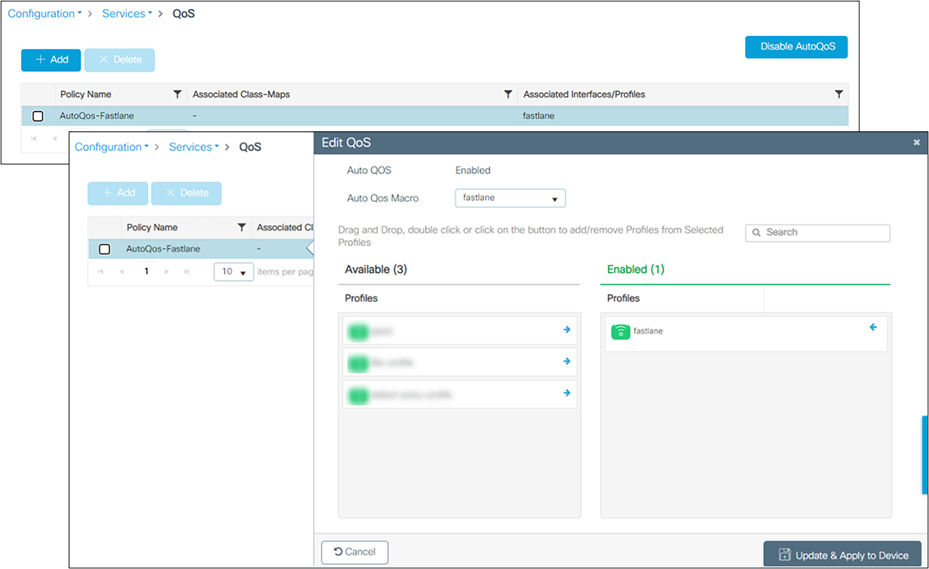

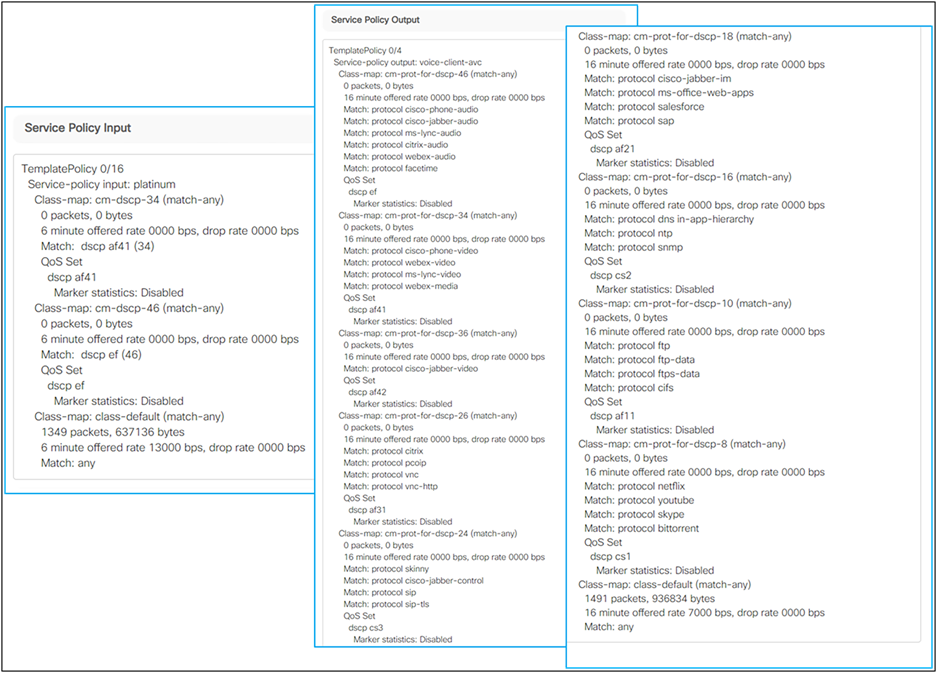

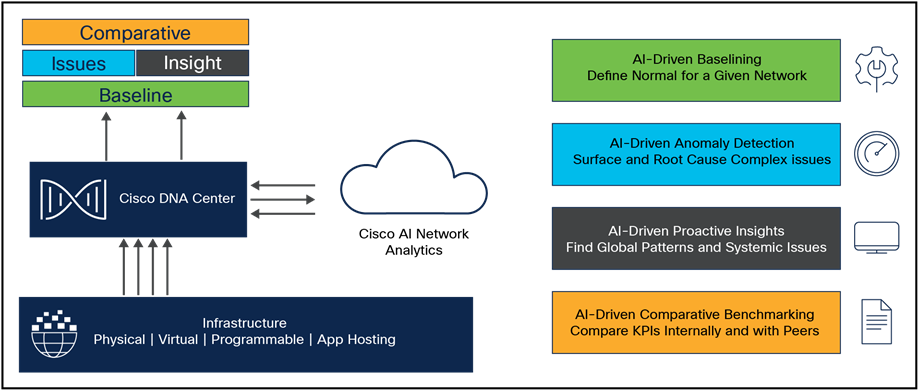

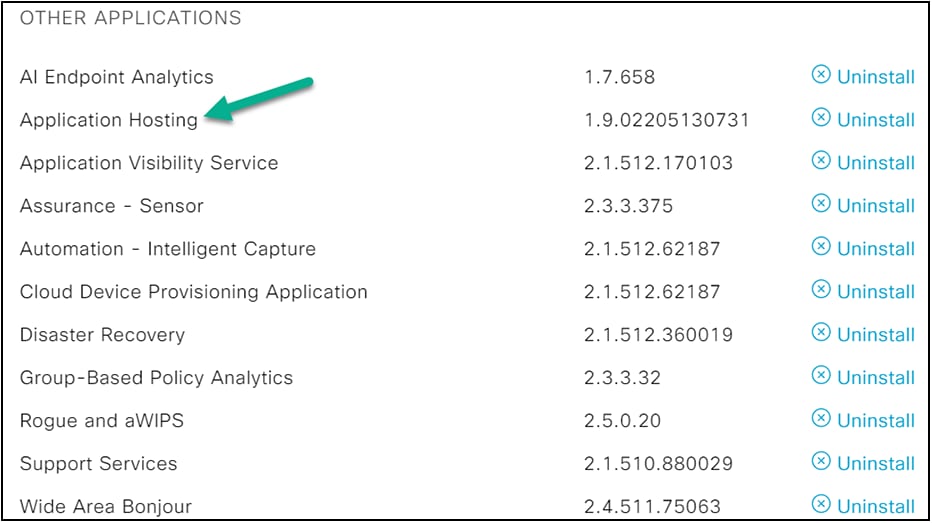

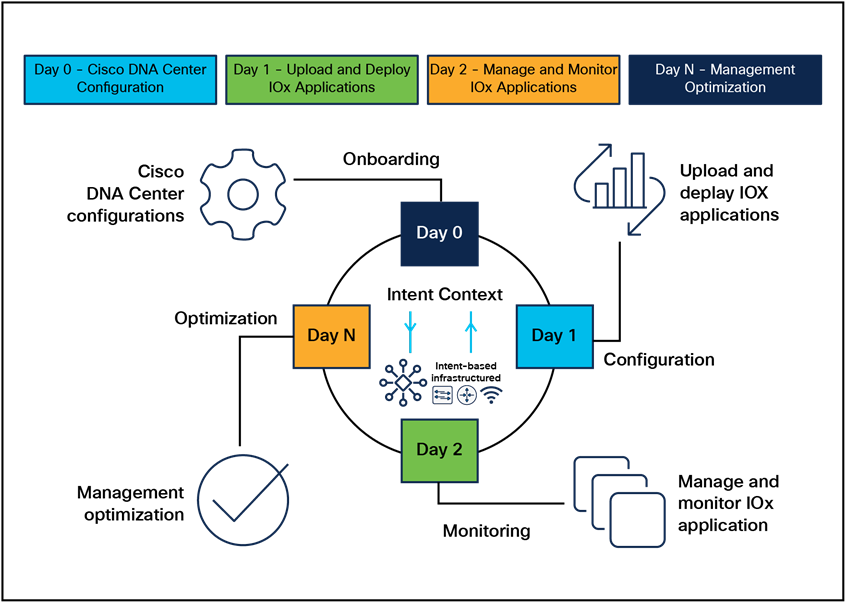

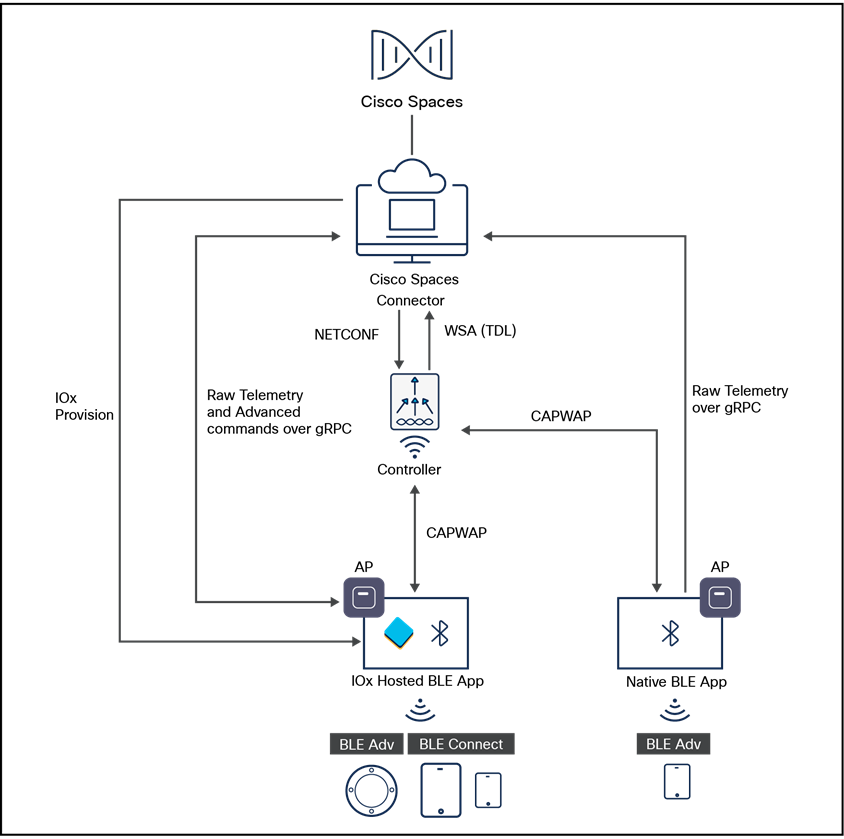

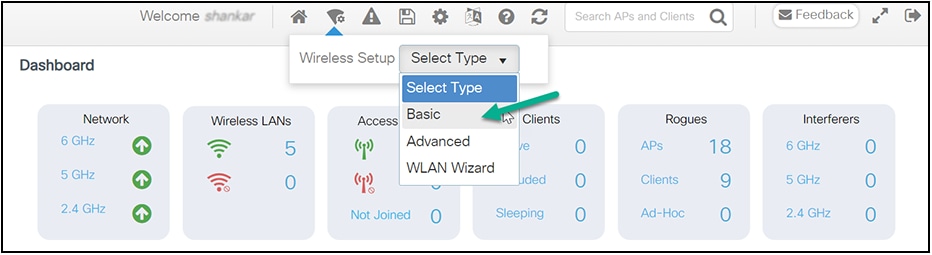

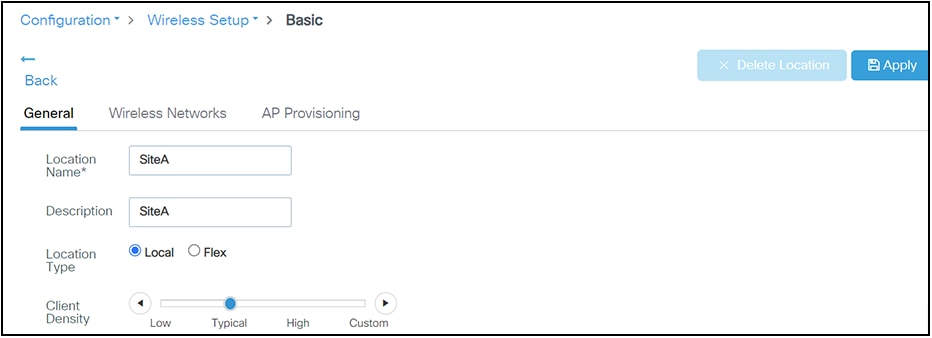

Note: In a Dynamic Frequency Selection (DFS)-enabled AP environment, ensure that you enable the UNII2 channels option under the DCA channel to allow 100-MHz separation for the dual 5-GHz radios.