Transforming Generative AI Workloads with Cisco and Lenovo Integrated AI Solutions: Solution Overview

Lenovo and Cisco Strategic Partnership

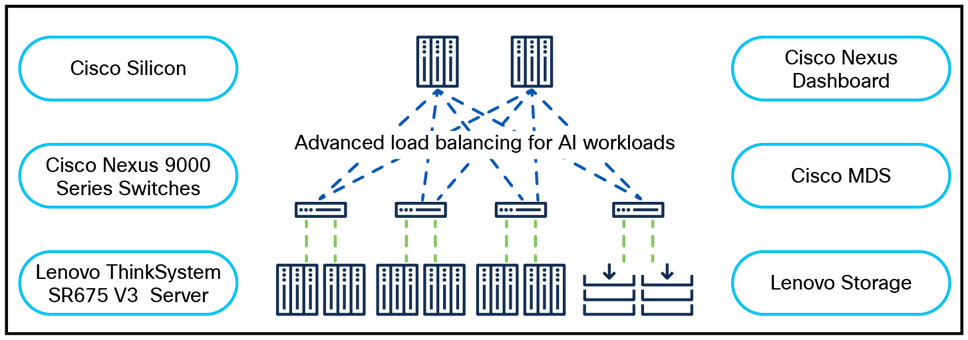

Lenovo and Cisco continue to expand their strategic partnership to keep pace with the evolving world of AI solutions. Our collaboration grows the portfolio of integrated offerings for enterprise AI, built on Lenovo ThinkSystem SR675 V3 servers, Lenovo ThinkSystem DG Storage Arrays, Cisco Nexus® 9000 Series Switches, and Cisco Nexus Dashboard.

Together, we offer an integrated solution designed for a variety of generative AI workloads, including model training, Retrieval-Augmented Generation (RAG), agent creation, and fine-tuning. Customers can tap into the combined AI expertise of Lenovo and Cisco through trusted, integrated solutions that help them get the most from their AI workloads in on-premises and hybrid deployments.

Cisco Nexus 9000 Series Switches for AI

Cisco has the advantage of building its own silicon, systems, software, and operating model. Cisco Nexus 9000 Series Switches, powered by Cisco Silicon One™ and CloudScale ASICs, are designed to deliver high-density, high-performance, low-latency, and sustainable switching in network infrastructures. To meet the stringent performance requirements of AI/ML applications, the switches combine hardware and software support for RDMA over Converged Ethernet version 2 (RoCEv2) with congestion-management mechanisms and advanced load-balancing capabilities. Congestion management includes Priority Flow Control (PFC), Explicit Congestion Notification (ECN), and intelligent buffer management with Approximate Fair Drop (AFD) and Dynamic Packet Prioritization (DPP). Load balancing includes Dynamic Load Balancing (DLB), per-packet load balancing, flow pinning, and weighted multipath load balancing. Coupled with the Cisco Nexus Dashboard operating model for visibility and automation, the Cisco Nexus 9000 Series Switches are ideal platforms for building a high-performance AI network fabric that is ready for Ultra Ethernet Consortium (UEC) standards.

Cisco Nexus 9000 Series 800G and 400G switches

Table 1. Cisco Nexus 9000 Series Switches recommended with the Lenovo ThinkSystem SR675 V3 servers for AI/ML

| Cisco Nexus 9364E-SG2-Q/O | Cisco Nexus 9364D-GX2A | Cisco Nexus 9332D-GX2B |
| --- | --- | --- |
| 64x 800G | 64x 400G | 32x 400G |
| QSFP-DD and OSFP form-factor options | QSFP-DD form factor | QSFP-DD form factor |
| 2RU with 51.2-Tbps unidirectional bandwidth | 2RU with 25.6-Tbps unidirectional bandwidth | 1RU with 12.8-Tbps unidirectional bandwidth |
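The bandwidth figures in Table 1 follow directly from port count multiplied by per-port speed. The short sketch below reproduces that arithmetic; the dictionary and the function name `unidirectional_tbps` are illustrative names introduced here, not part of any Cisco tool.

```python
# Port counts and per-port speeds (in Gbps) copied from Table 1.
SWITCHES = {
    "Cisco Nexus 9364E-SG2-Q/O": (64, 800),
    "Cisco Nexus 9364D-GX2A": (64, 400),
    "Cisco Nexus 9332D-GX2B": (32, 400),
}


def unidirectional_tbps(ports: int, gbps_per_port: int) -> float:
    """Aggregate one-direction bandwidth in Tbps (1 Tbps = 1000 Gbps)."""
    return ports * gbps_per_port / 1000


if __name__ == "__main__":
    for model, (ports, speed) in SWITCHES.items():
        total = unidirectional_tbps(ports, speed)
        print(f"{model}: {ports} x {speed}G = {total:.1f} Tbps")
```

The same calculation covers breakout configurations: 512 ports at 100G also total 51.2 Tbps, the same aggregate as 64 ports at 800G.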

The Cisco Silicon One G200-based Cisco Nexus 9364E-SG2 features the industry’s highest radix with 512x 100G Ethernet ports, allowing customers to build scalable networks. The G200’s high radix also flattens the network: the same number of endpoints can be connected with fewer switch tiers, which shortens the path from five hops to three, reduces latency, and helps lower the Job Completion Time (JCT). This matters most in large-scale and hyperscale networks with many switches, where every additional tier adds latency and operational complexity.
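To see why a higher radix removes a tier, consider the textbook non-blocking folded-Clos (fat-tree) model, in which every switch below the top tier uses half its ports facing down and half facing up. This is a simplified sketch under that assumption, not Cisco's sizing method; real designs also depend on oversubscription, breakout options, and failure domains. The radix-64 comparison and the function names are illustrative choices made here.

```python
def max_endpoints(radix: int, tiers: int) -> int:
    """Endpoints supported by a non-blocking folded-Clos fabric.

    Assumes switches below the top tier split ports half down, half up,
    and top-tier switches use all ports facing down.
    The result is 2 * (radix / 2) ** tiers.
    """
    return 2 * (radix // 2) ** tiers


def worst_case_switch_hops(tiers: int) -> int:
    """Switches traversed between endpoints on different leaves."""
    return 2 * tiers - 1


if __name__ == "__main__":
    # Radix 512 corresponds to the 512x 100G figure quoted in the text.
    # Radix 64 is a hypothetical lower-radix switch used for comparison.
    for radix, tiers in [(64, 2), (64, 3), (512, 2)]:
        print(
            f"radix {radix}, {tiers} tiers: "
            f"{max_endpoints(radix, tiers)} endpoints, "
            f"{worst_case_switch_hops(tiers)} switch hops"
        )
```

Under this model, a two-tier fabric of radix-512 switches reaches more endpoints than a three-tier fabric of radix-64 switches, while the worst-case path drops from five switch hops to three.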

Designed for ultimate flexibility, the Lenovo ThinkSystem SR675 V3 is a versatile GPU-rich 3U rack server that supports multiple GPU configurations, including up to eight double-wide or single-wide GPUs or the NVIDIA HGX 4-GPU with NVLink. Customers need the ability to enhance foundational generative AI models for high-performance inferencing on-premises. Lenovo plays a pivotal role in addressing that challenge with the ThinkSystem SR675 V3 server with L40S, B200NVL, or RTX 6000 NVIDIA GPU options.

Customers gain additional efficiency and performance with Lenovo’s Neptune hybrid liquid-to-air cooling for the accelerators positioned in the front of the server. The design also allows both front and rear I/O connectivity for maximum graphics performance and I/O throughput.

Built on one or two fourth- or fifth-generation AMD EPYC processors, with up to 24 TruDDR5 DIMMs and a choice of high-speed NVMe storage and networking, the ThinkSystem SR675 V3 server is an industry leader on its own. In the OVX node configuration, which leverages PCIe Gen5 and the latest AMD EPYC processor, the ThinkSystem SR675 V3 server supports two to eight PCIe-based NVIDIA GPUs, providing the flexibility to run demanding AI workloads. It is well suited to high-performance graphics, complex simulations, high-resolution rendering, robotics, and autonomous procedures and tasks.

ThinkSystem DG Series storage arrays offer an all-flash QLC storage solution for enterprises that demand industry-leading performance and unified data management. They deliver significant storage capacity in a minimal physical footprint and at a lower storage cost. By integrating cloud platforms with on-premises environments, the ThinkSystem DG Series storage arrays connect cloud platforms and software for data protection, data access, and faster backup and recovery.

Benefits of the Cisco and Lenovo solution

Cisco Nexus 9000 Series Switches with Lenovo ThinkSystem SR675 V3 servers and ThinkSystem DG Storage arrays provide the networking and compute benefits that AI/ML workloads need, including generative AI and agentic AI workloads, advanced AI processes, and AI inferencing. The combined solution provides an architecture that can scale to meet network and compute requirements for performance, scalability, and availability.

Cisco and Lenovo integrated AI solution