Cisco HyperFlex 4.0 Stretched Cluster with Cisco ACI 4.2 Multi-Pod Fabric

Deployment Guide for Cisco HyperFlex 4.0 Stretched Cluster with Cisco ACI 4.2 Multi-Pod Fabric and VMware vSphere 6.7U3

Published: July 2020

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.

Table of Contents

Solution Deployment – ACI Fabric (Single Pod)

Initial Setup of APIC(s) in Pod-1

Deploy Spine and Leaf Switches in Pod-1

Add Leaf Switches to the ACI Fabric

Upgrade Firmware on Leaf Switches in Pod-1 (Optional)

Add Spine Switches to the ACI Fabric

Verify Spine and Leaf Switches are Added to the ACI Fabric

Upgrade Firmware on Spine Switches in Pod-1 (Optional)

Configure Out-of-Band and In-Band Management for Switches in Pod-1

Configure Pod Policies for Pod-1

Update BGP Route Reflector Policy for Pod-1

Update Pod Profile to Apply Pod Policies

Enable/Review ACI Fabric Settings

COS Preservation (Fabric Wide Setting)

Enforce Subnet Check for Endpoint Learning (Fabric Wide Setting)

Limit IP Learning to Subnet (Bridge-domain, Optional)

IP Aging (Fabric Wide Setting)

Pre-configure Access Layer Policies

Solution Deployment – ACI Fabric (to Outside Networks from Pod-1)

Create VLAN Pool for Shared L3Out

Configure Domain Type for L3Out

Create Attachable Access Entity Profile for L3Out

Configure Tenant Networking for Shared L3Out

Configure OSPF Interface Policy for L3Out in Pod-1

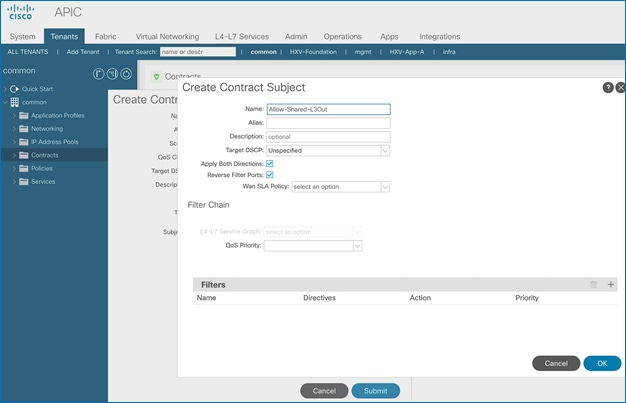

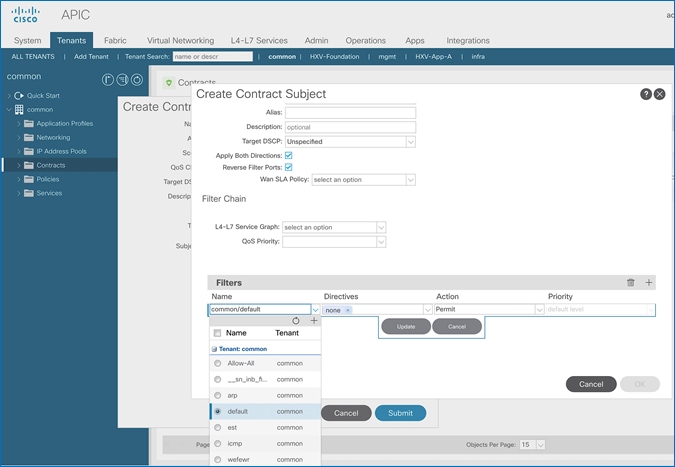

Create Contracts for Shared L3Out in Pod-1

Provide Contracts for Shared L3Out in Pod-1

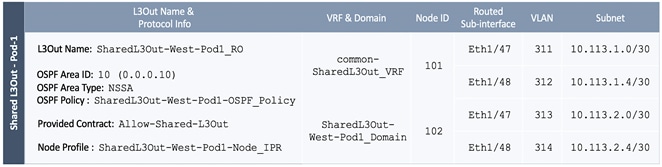

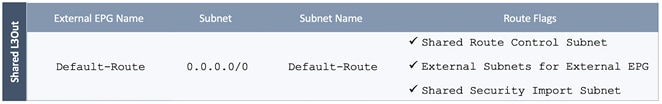

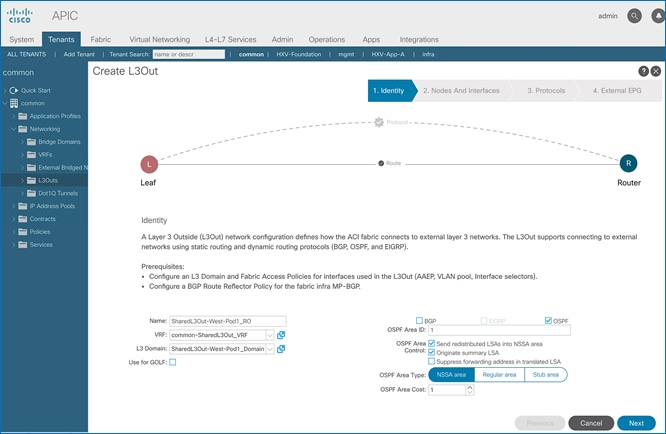

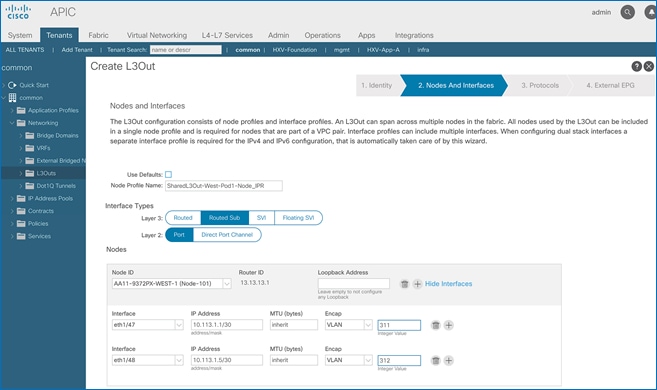

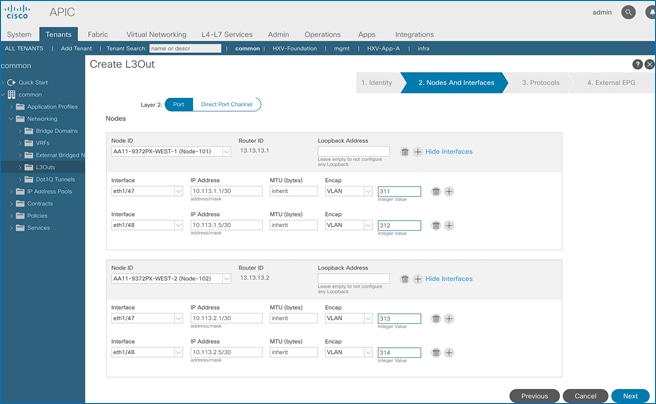

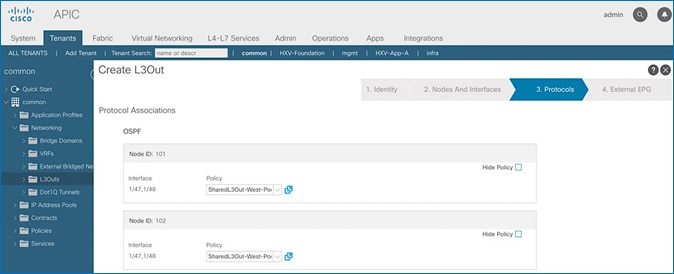

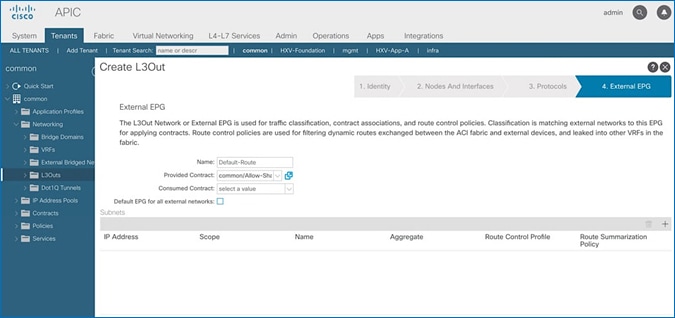

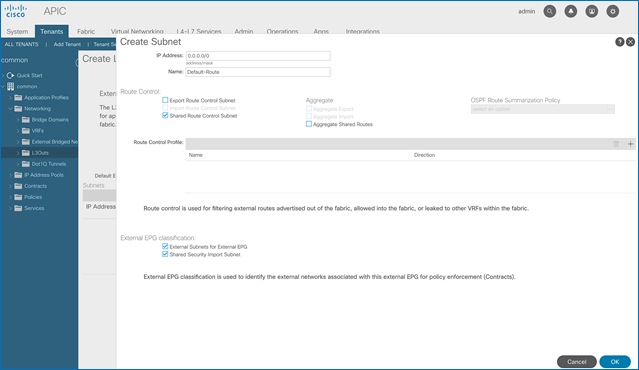

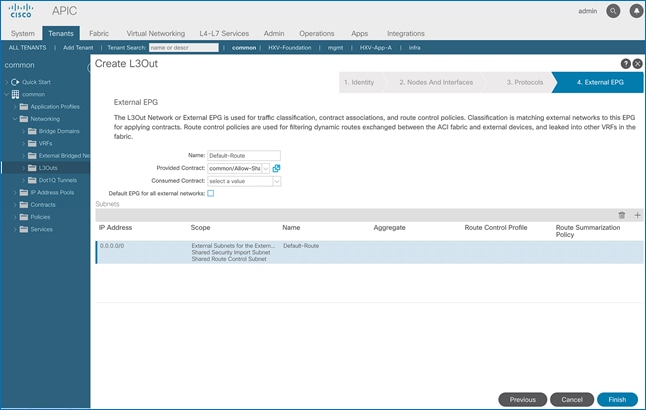

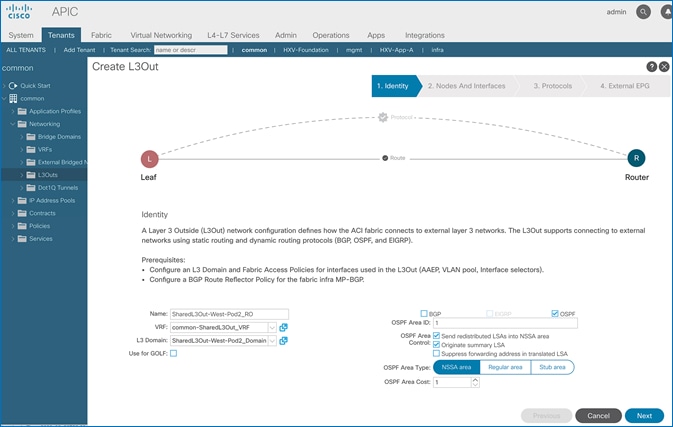

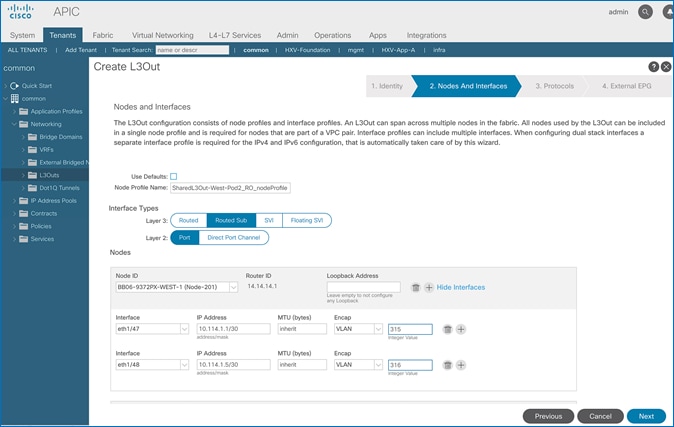

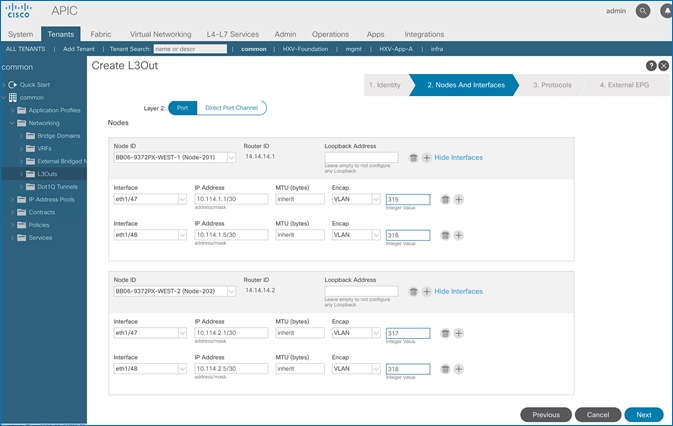

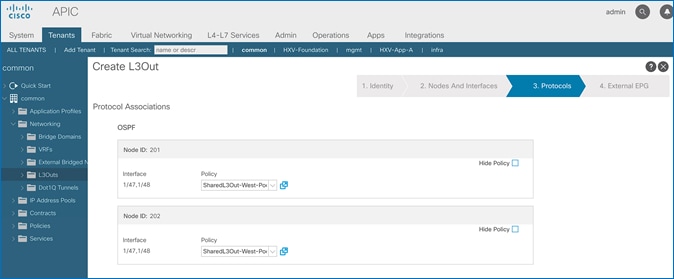

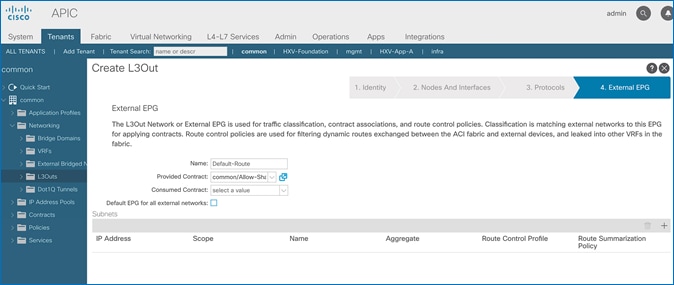

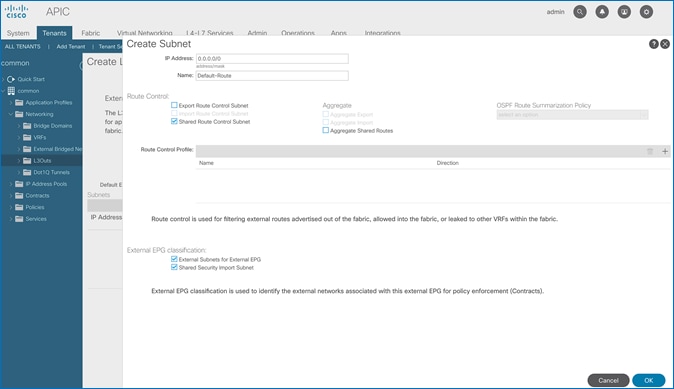

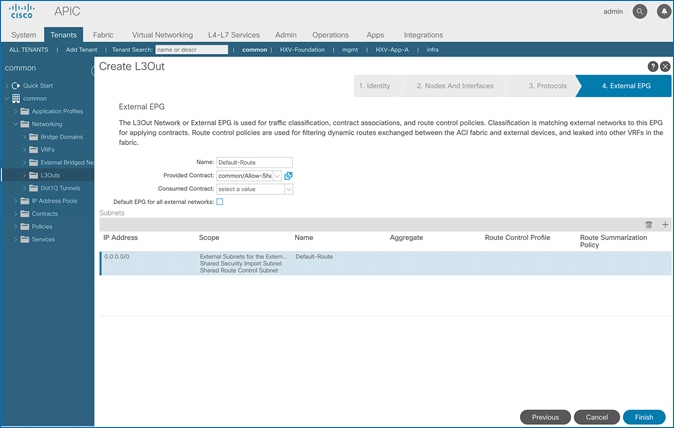

Configure L3Out Connectivity for Pod-1

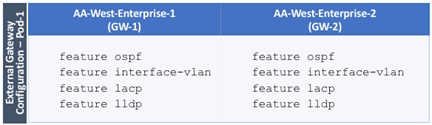

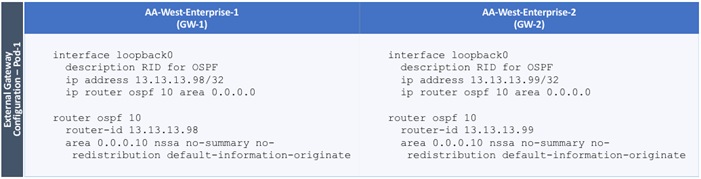

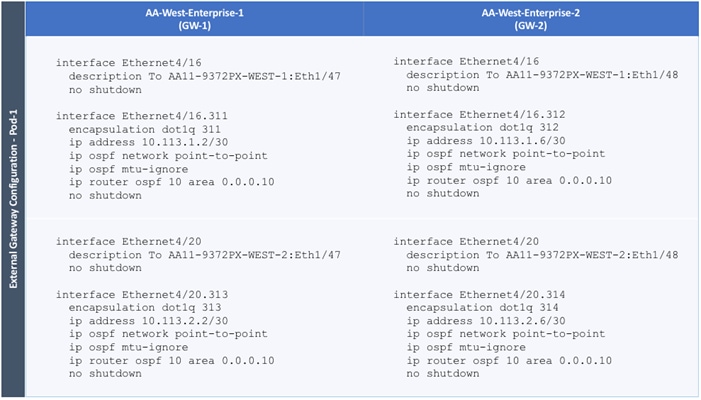

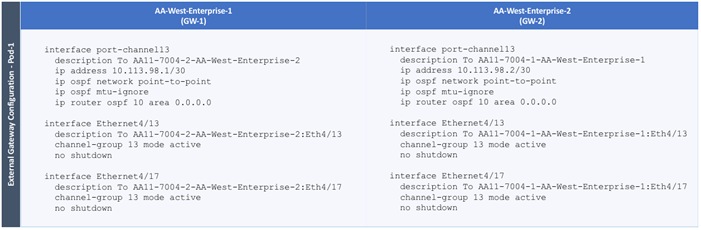

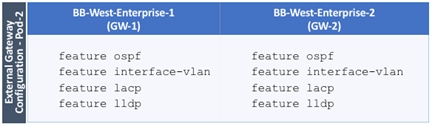

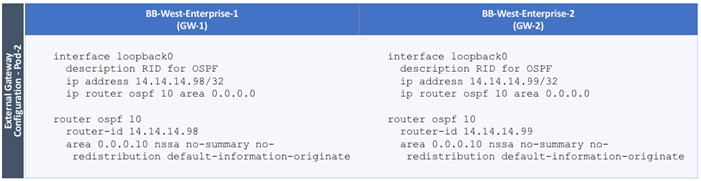

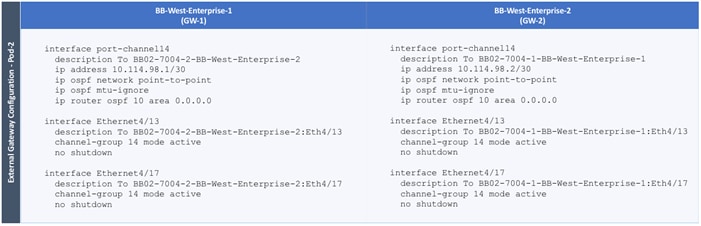

Configure External Gateways in the Outside Network

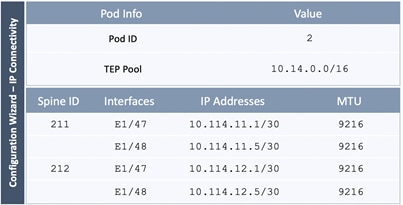

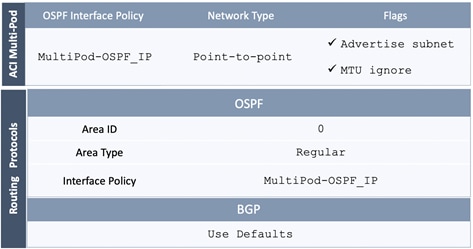

Solution Deployment – ACI Fabric (Multi-Pod)

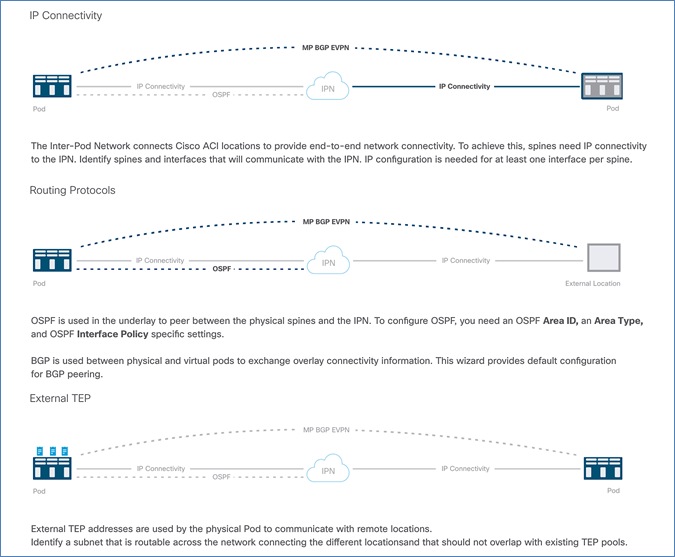

Deploy Inter-Pod Network (IPN)

Setup ACI Fabric for Multi-Pod

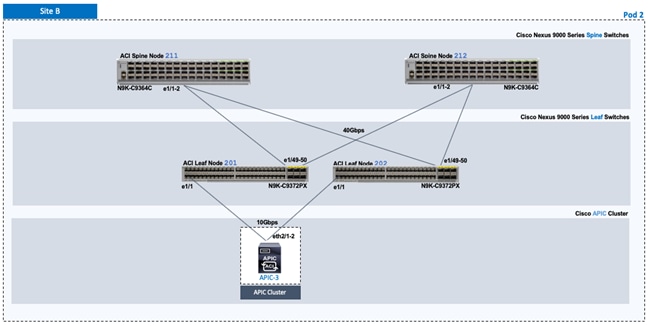

Setup Pod-2 Spine Switches, Leaf Switches, and APICs

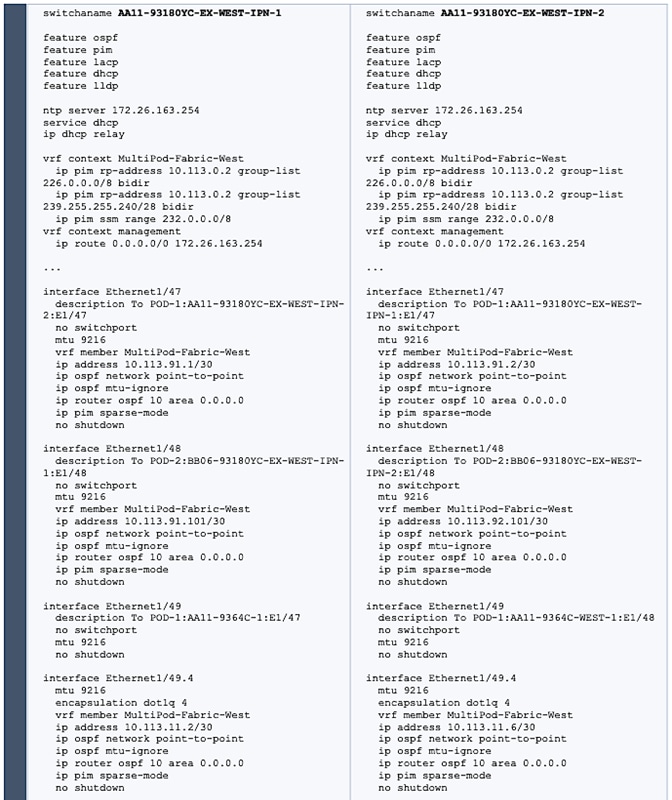

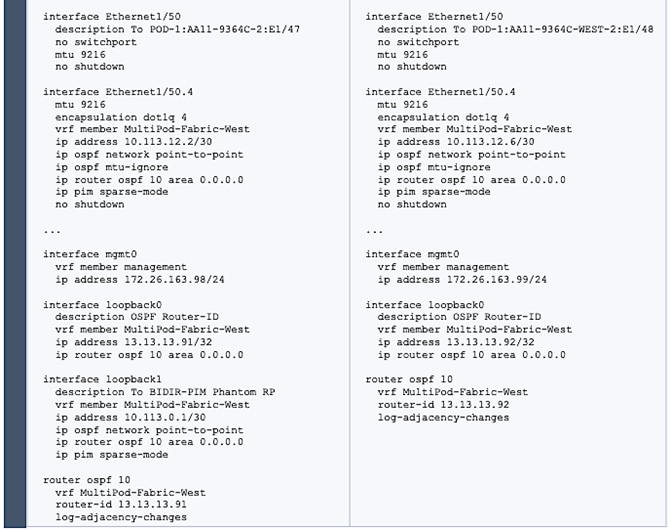

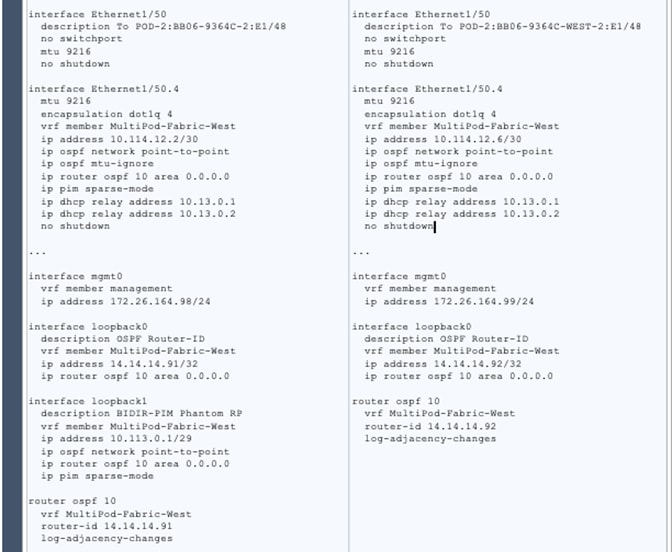

Configure IPN Devices in Pod-1

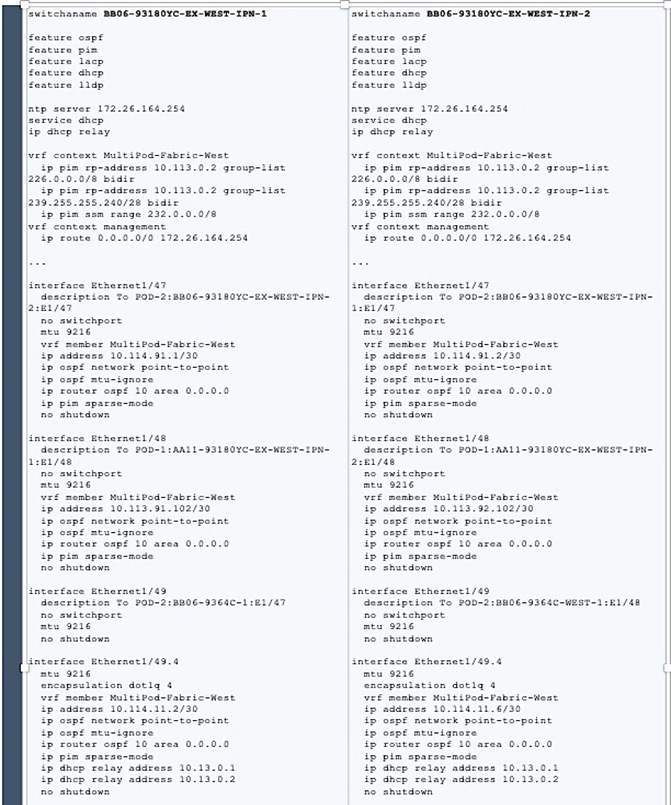

Configure IPN Devices in Pod-2

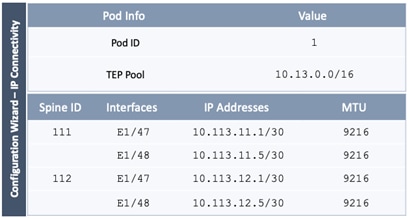

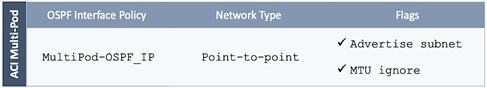

Enable Connectivity to IPN from Pod-1

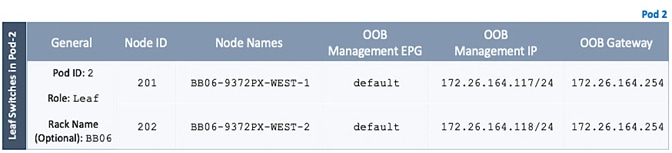

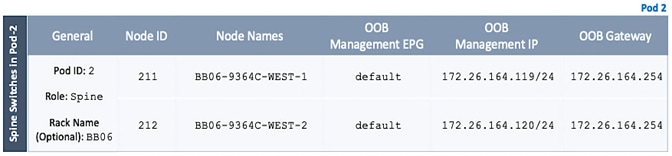

Deploy Spine and Leaf Switches in Pod-2

Update BGP Route Reflector Policy for Pod-2

Update Pod Profile to Apply Pod Policies

Enable Connectivity to IPN from Pod-2

Configure DHCP Relay on IPN Devices

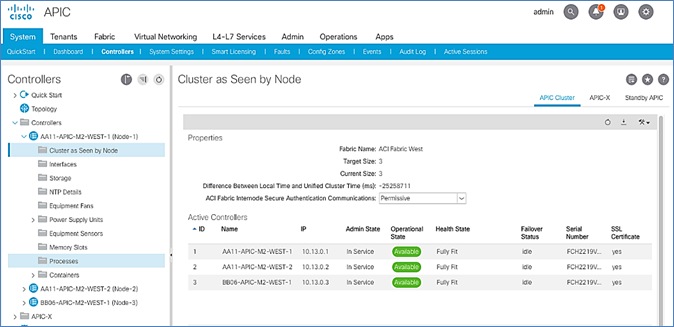

Verify Pod-2 APIC is Part of the APIC Cluster

Add Pod-2 APIC as DHCP Relay Destination

Verify ACI Multi-Pod Fabric Setup

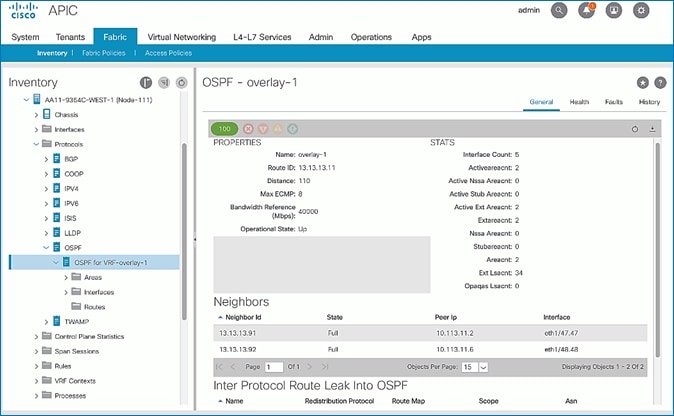

Verify OSPF Status on Spine Switches

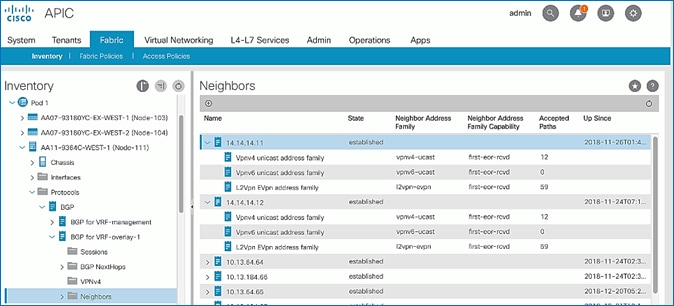

Verify MP-BGP EVPN Status on Spine Switches

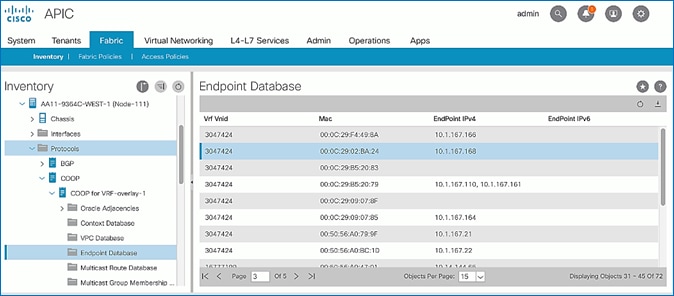

Verify COOP Status on Spine Switches

Solution Deployment – ACI Fabric (To Outside Networks from Pod-2)

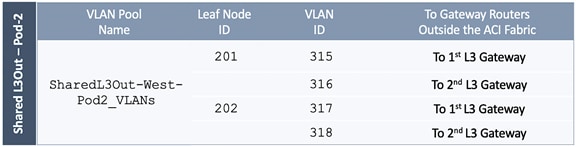

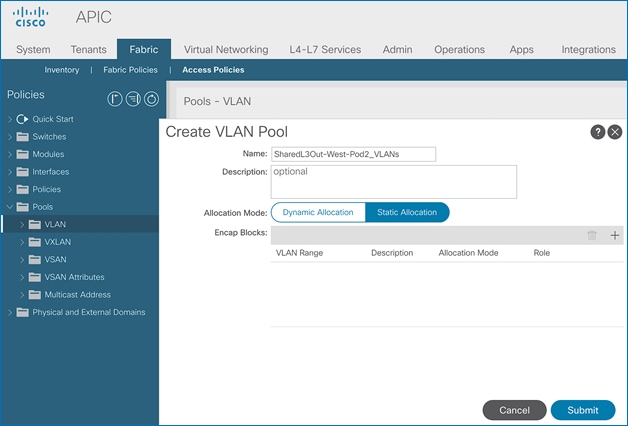

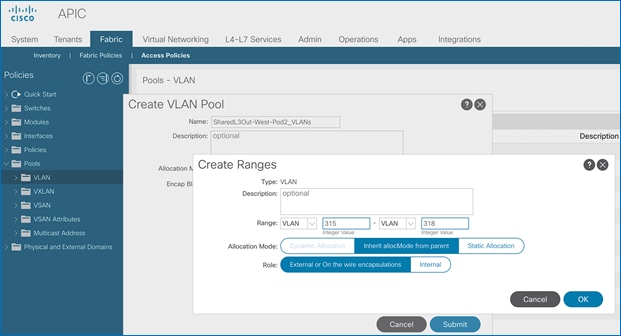

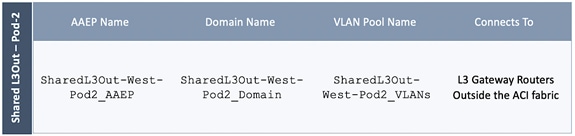

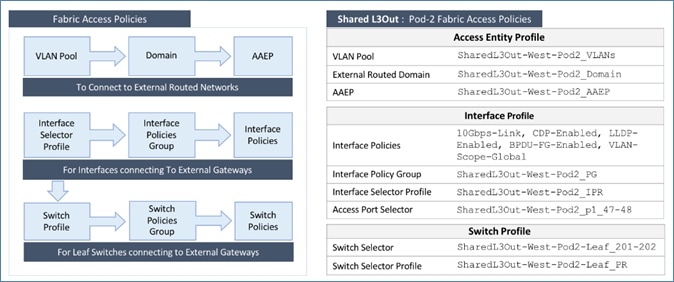

Create VLAN Pool for Shared L3Out

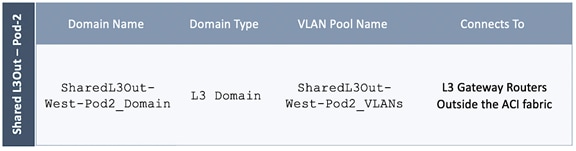

Configure Domain Type for L3Out

Create Attachable Access Entity Profile for L3Out

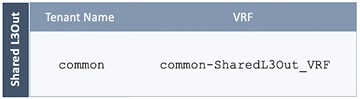

Configure Tenant Networking for Shared L3Out

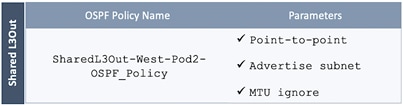

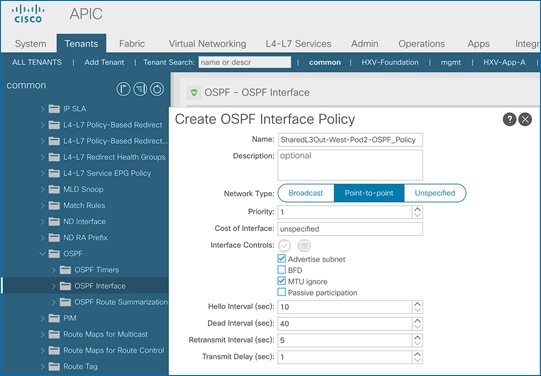

Configure OSPF Interface Policy for L3Out in Pod-2

Create Contracts for Shared L3Out in Pod-2

Provide Contracts for Shared L3Out in Pod-2

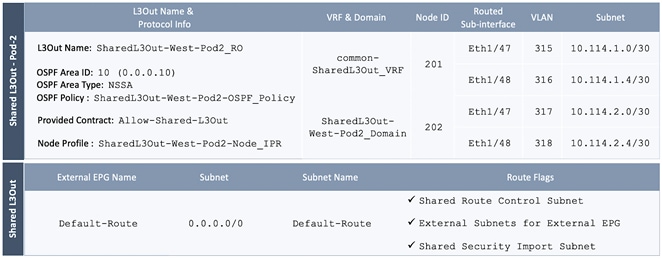

Configure L3Out Connectivity for Pod-2

Configure External Gateways in the Outside Network

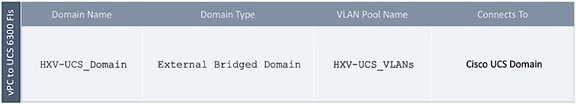

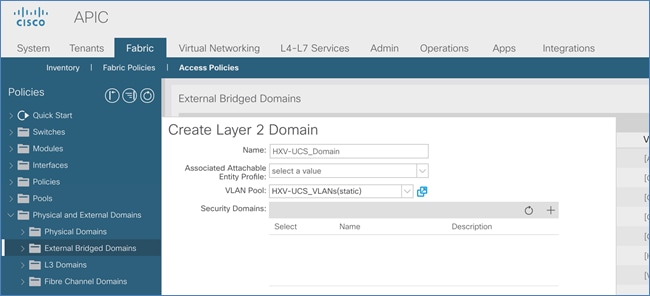

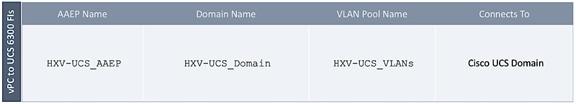

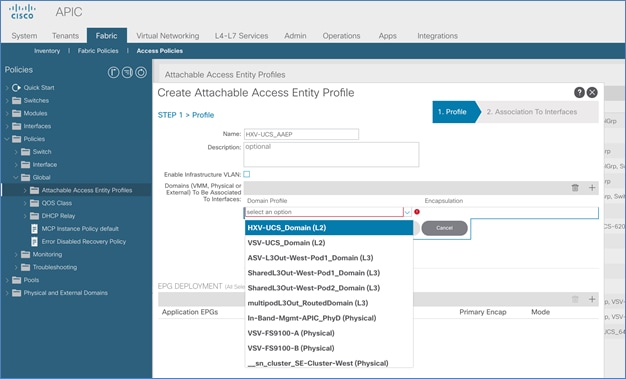

Solution Deployment – ACI Fabric (to Cisco UCS Domains)

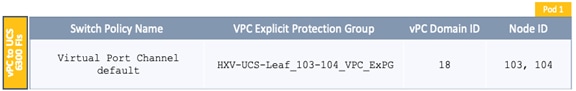

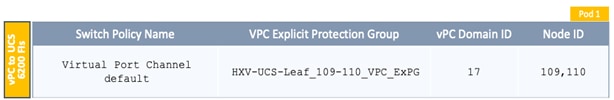

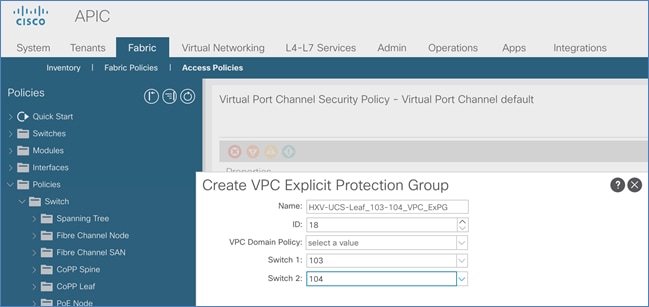

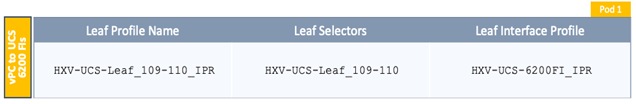

Deploy New Leaf Switches for Connectivity to Cisco UCS Domains

ACI Fabric Discovery of Leaf Switches

Add Nexus 9000 Series Leaf Switches to the ACI Fabric

Setup Out-of-Band and In-Band Management for New Leaf Switches

Enable Access Layer Connectivity to Cisco UCS Domains

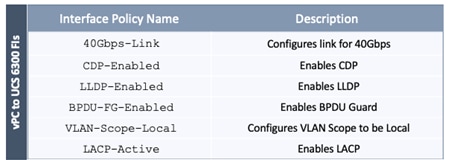

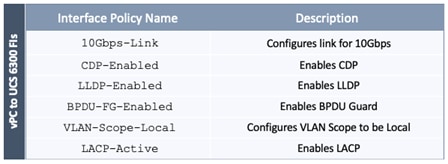

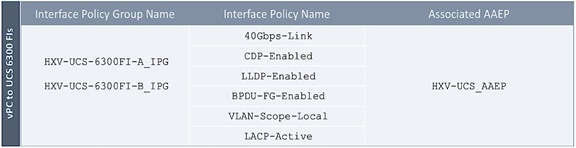

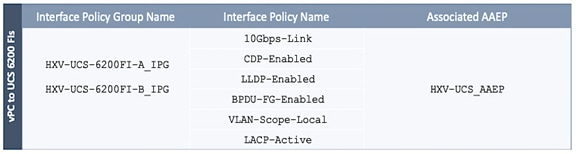

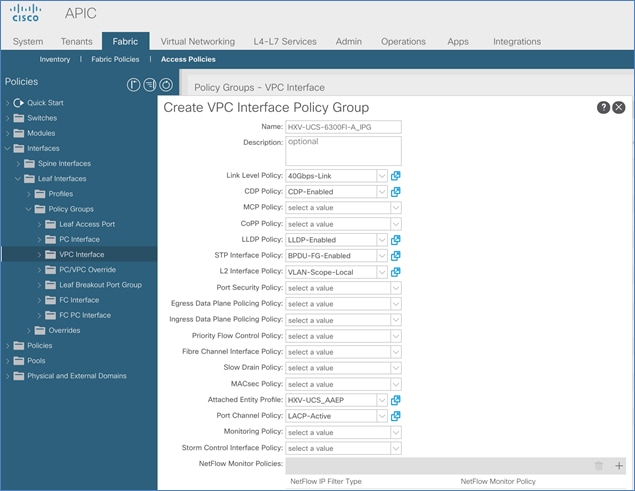

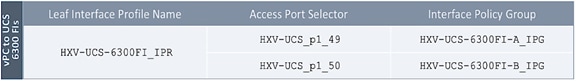

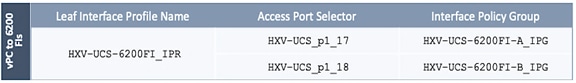

Enable 40Gbps on Links to Cisco UCS Domain

Enable Access Layer Configuration to Cisco UCS Domain

Solution Deployment – Setup Cisco UCS Domains

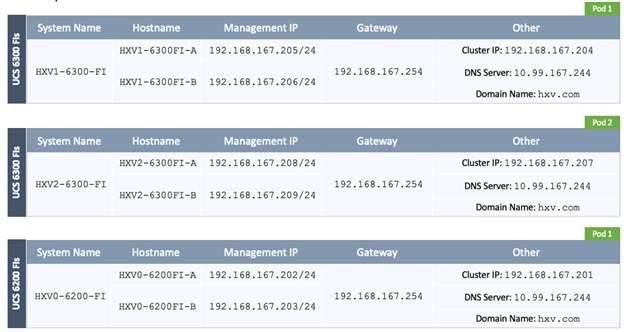

Bring Up Cisco UCS Domain with Fabric Interconnects

Initial Setup of Cisco UCS Domain

Upgrade Cisco UCS Manager Software to Version 4.0(1c)

Configure Cisco UCS Call Home and Anonymous Reporting (Optional)

Configure Uplink Ports on Each FI – To Nexus Leaf Switches in ACI Fabric

Bundle Uplink Ports on each FI – To Nexus Leaf Switches in ACI Fabric

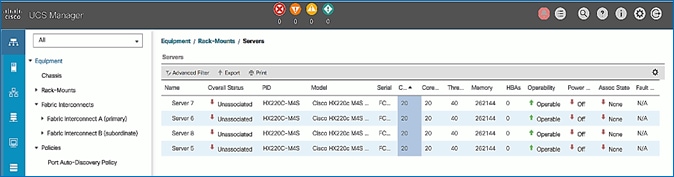

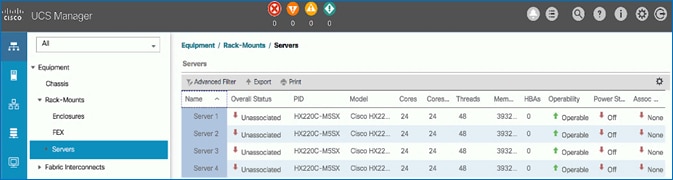

Configuration of Server Ports – To HyperFlex Servers

Auto-Discovery of Server Ports (Option 1)

Manual Configuration of Server Ports (Option 2)

Modify Chassis Discovery Policy – For Blade Servers Only (Optional)

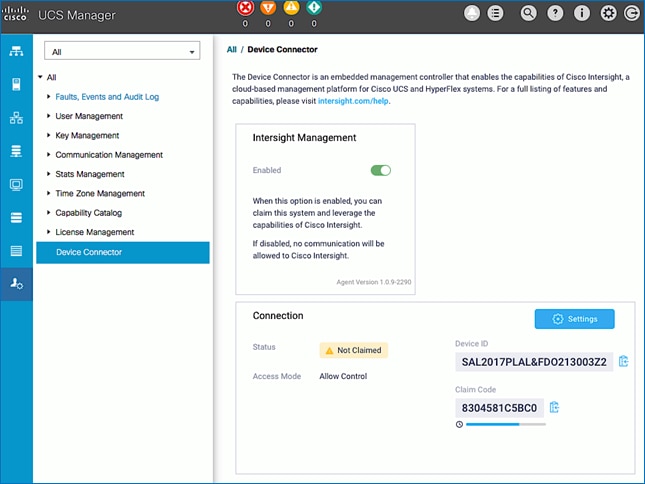

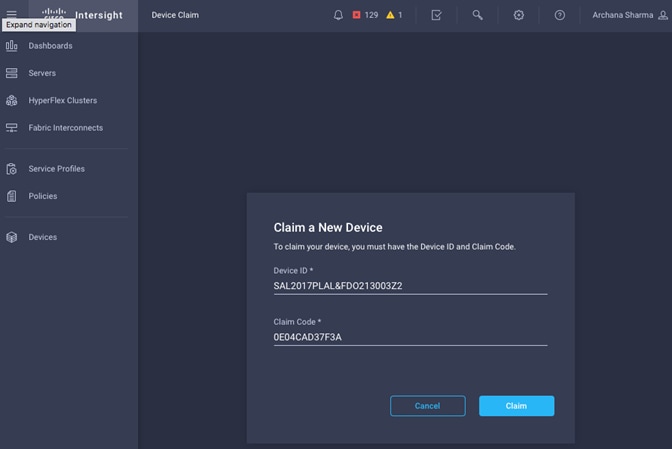

Enable Cisco Intersight Cloud-Based Management

Solution Deployment – Foundational Infrastructure for Cisco HyperFlex

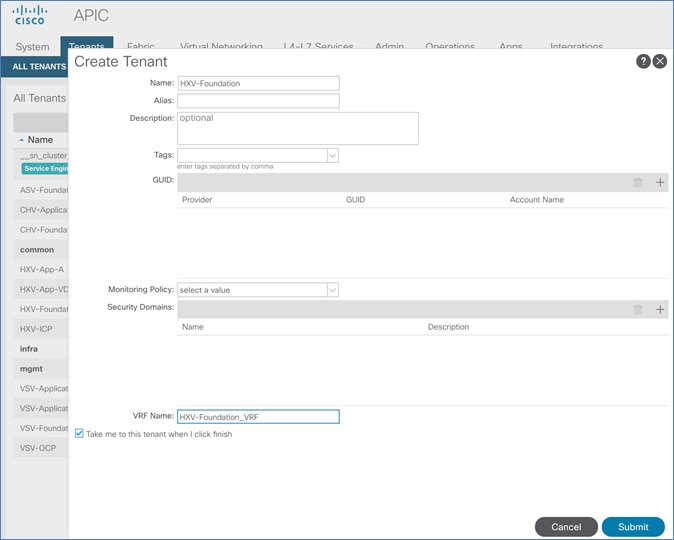

Create Foundation Tenant and VRF

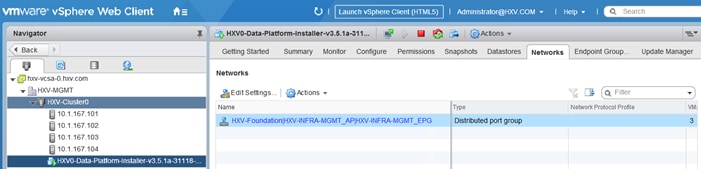

Configure ACI Fabric for HyperFlex In-Band Management

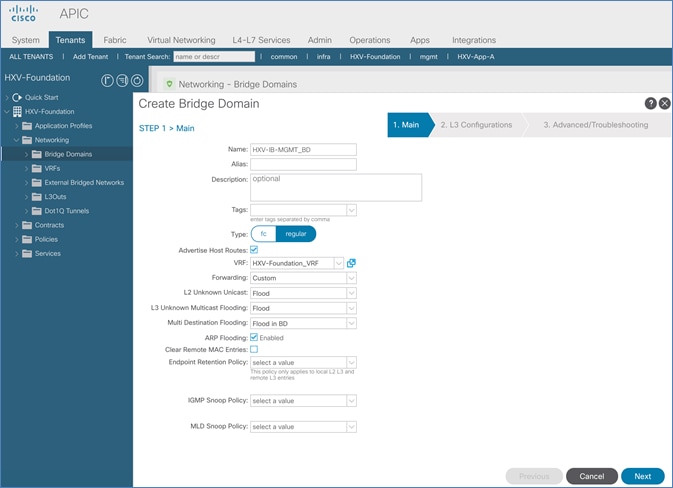

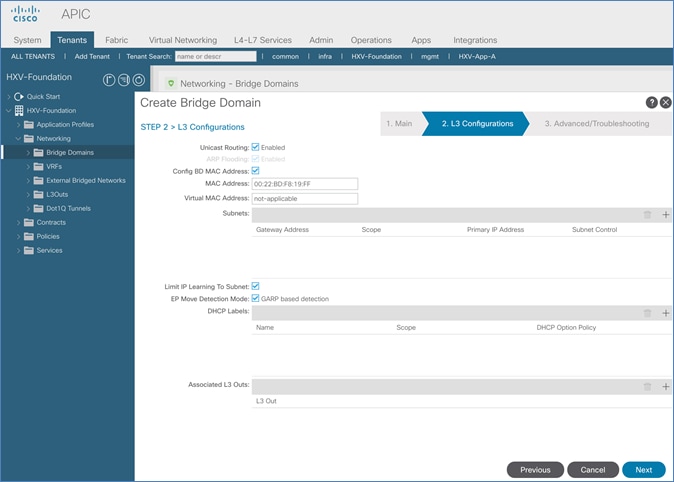

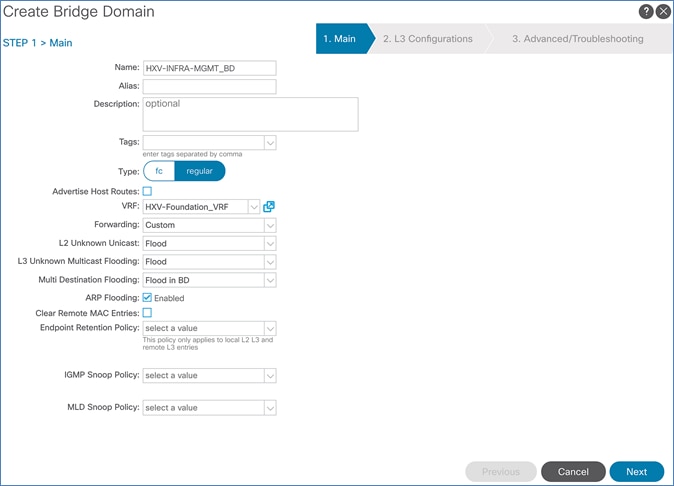

Create Bridge Domain for In-Band Management

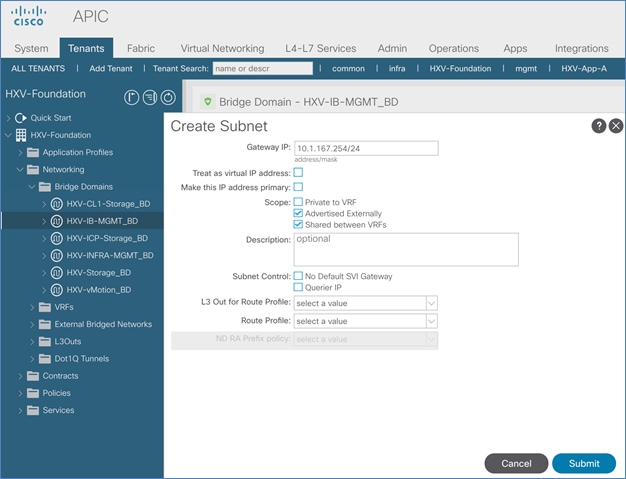

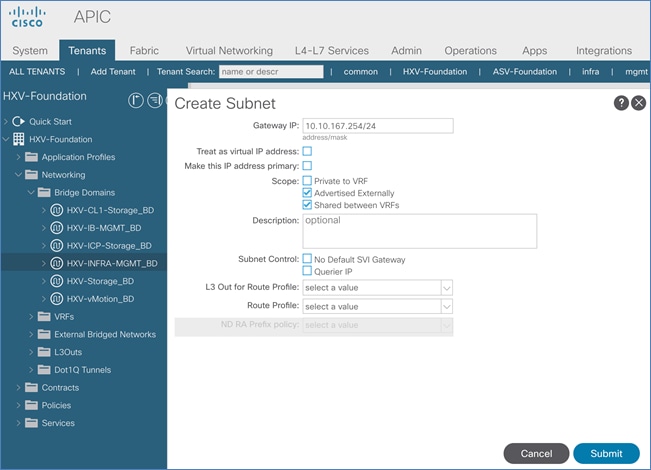

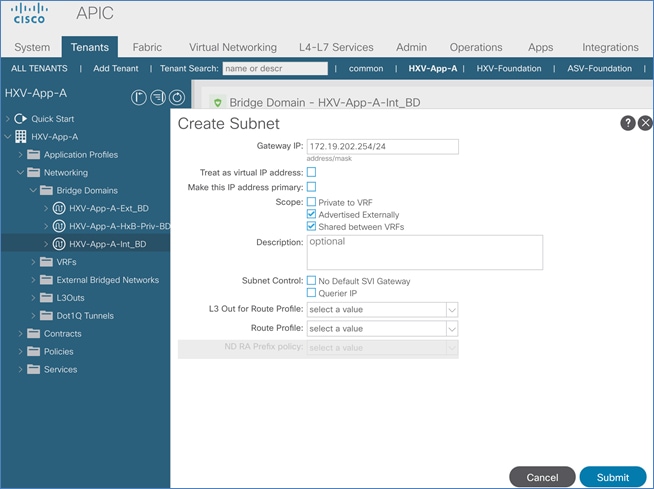

Configure Subnet Gateway for In-Band Management

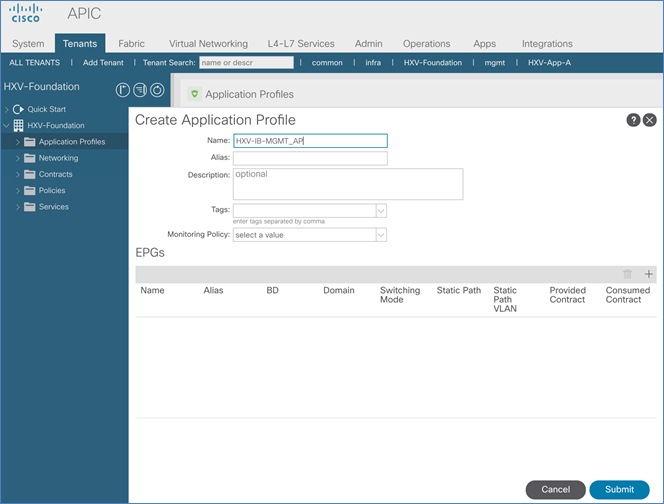

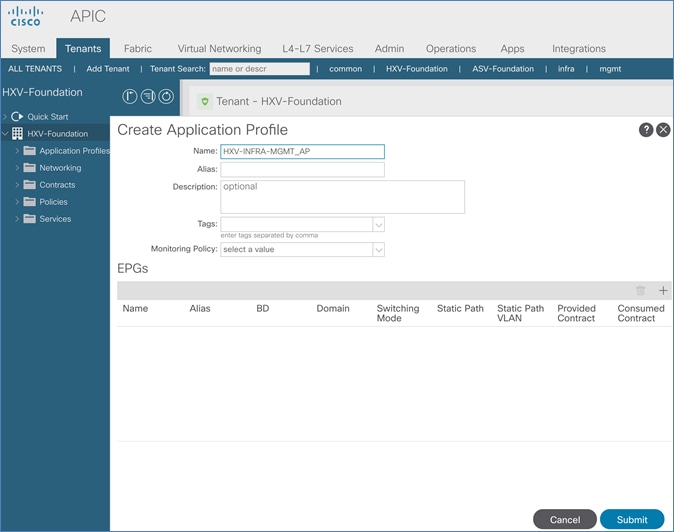

Create Application Profile for In-Band Management

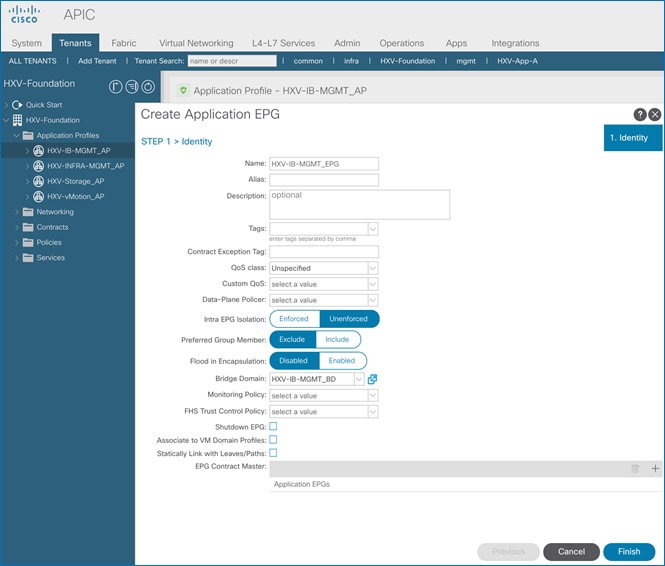

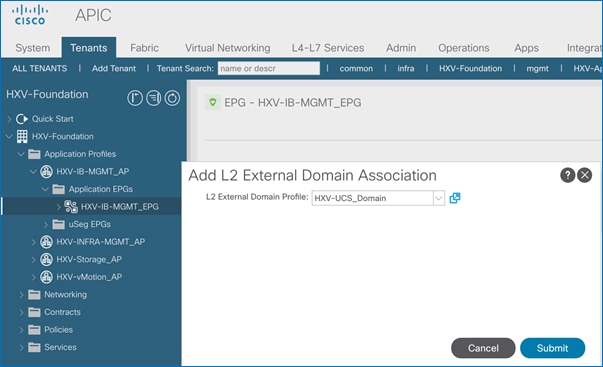

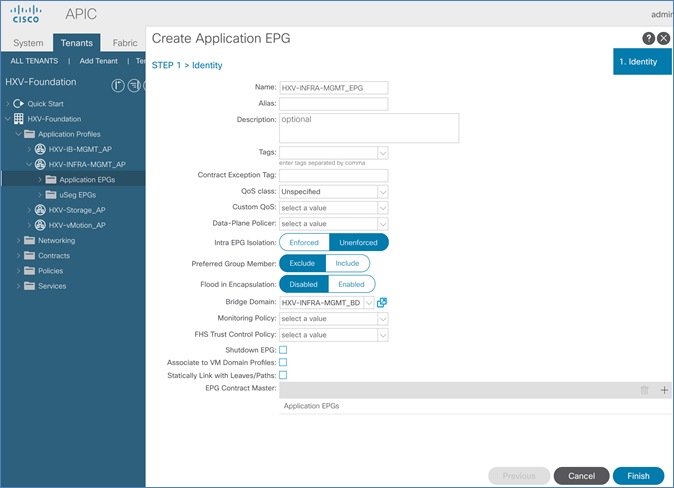

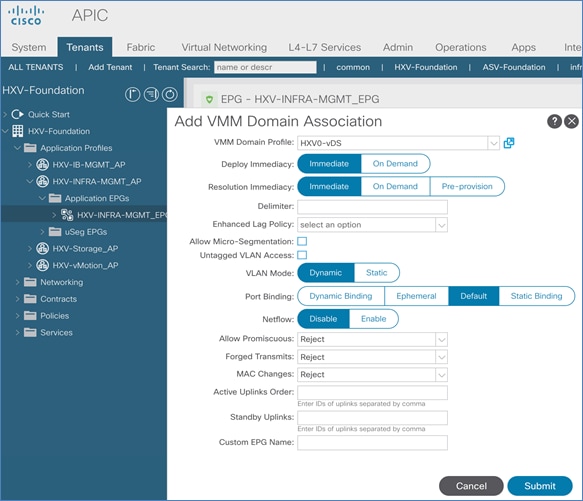

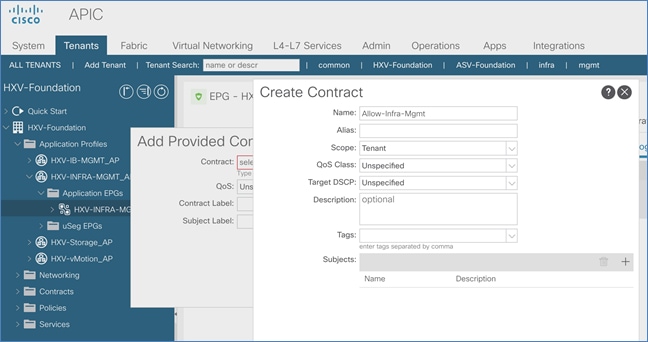

Create EPG for In-Band Management

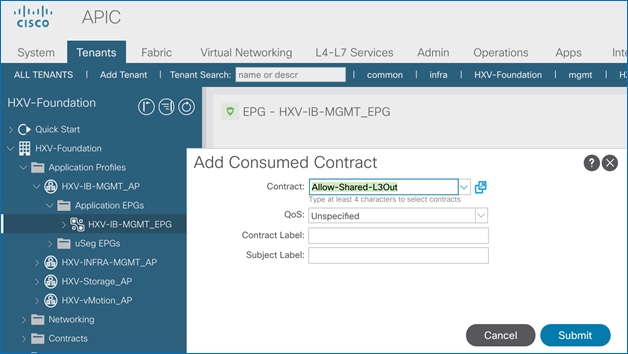

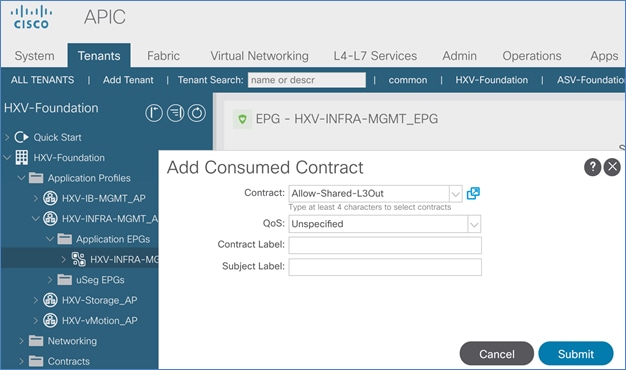

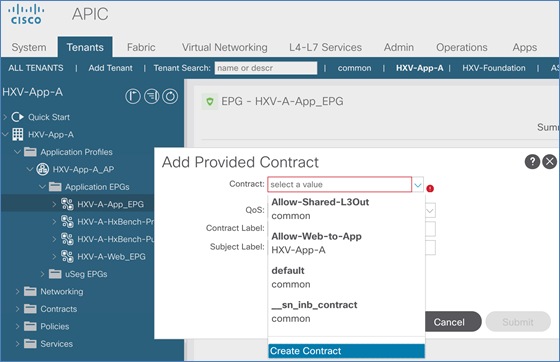

Add Contract to Access Outside Networks and Services

Configure ACI Fabric for HyperFlex vMotion Traffic

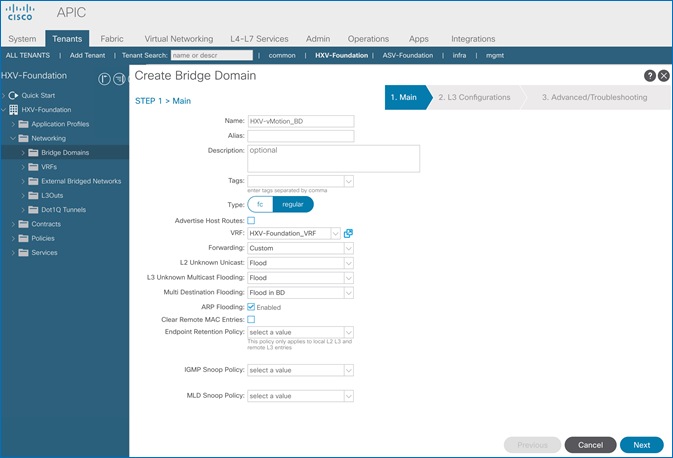

Create Bridge Domain for HyperFlex vMotion Traffic

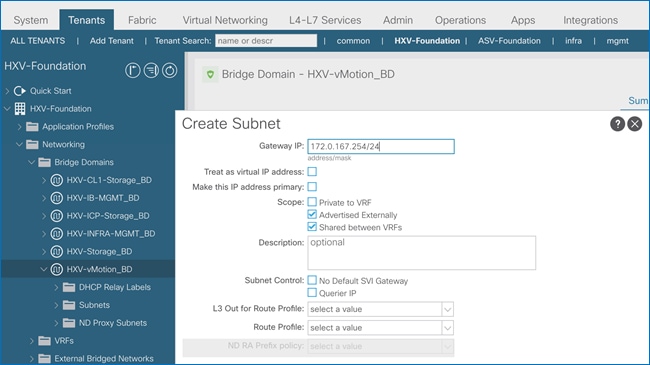

Configure Subnet Gateway for HyperFlex vMotion Traffic

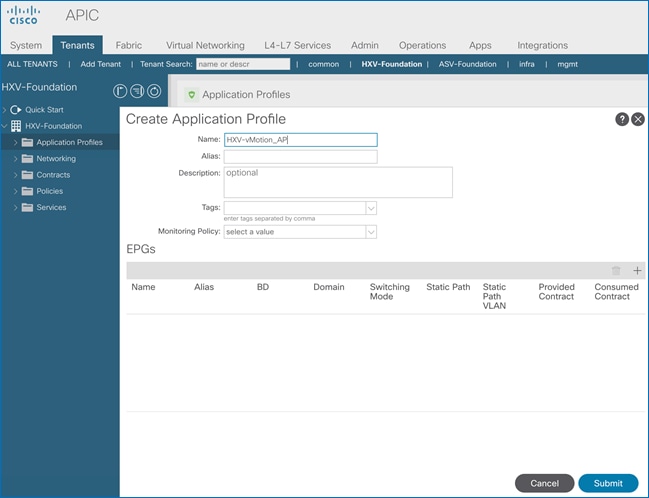

Create Application Profile for HyperFlex vMotion Traffic

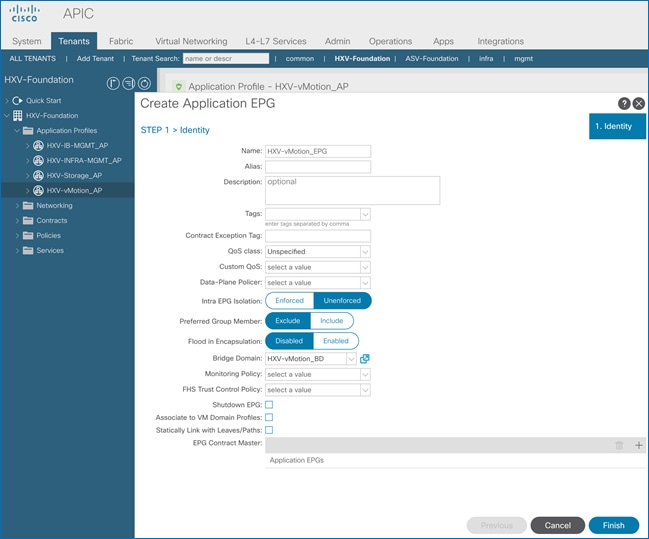

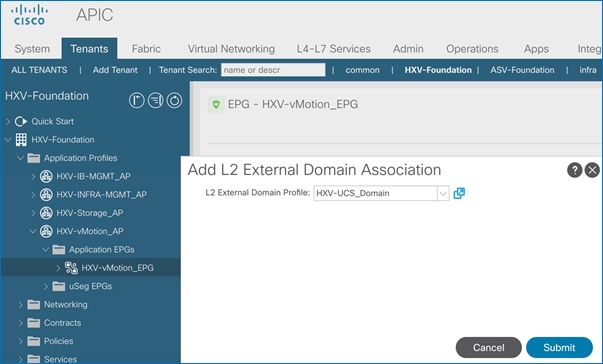

Create EPG for HyperFlex vMotion Traffic

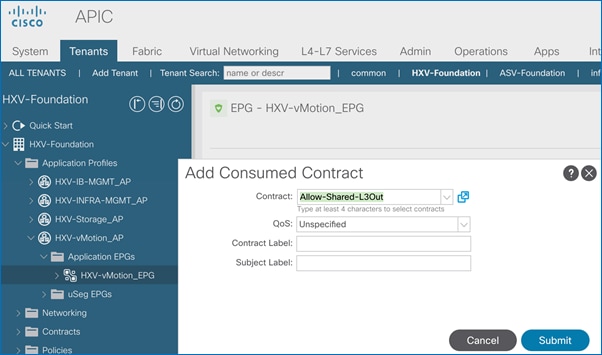

Add Contract to Access Outside Networks and Services (Optional)

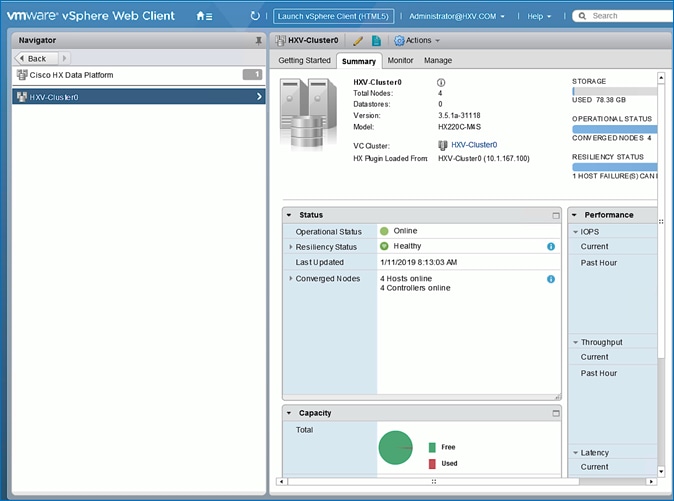

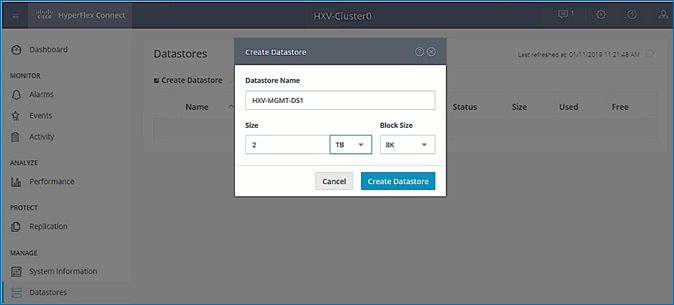

Solution Deployment – HyperFlex Management Cluster

Setup ACI Fabric for HyperFlex Standard Cluster

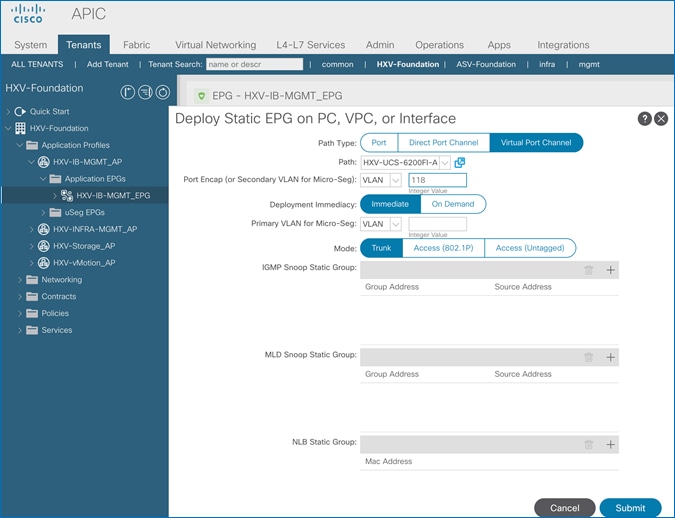

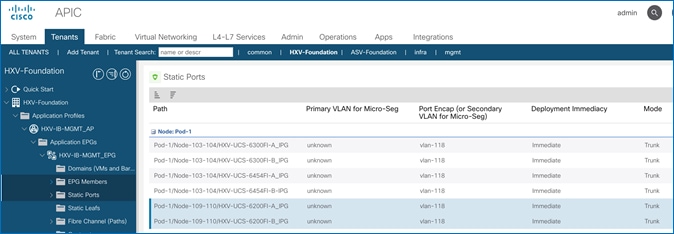

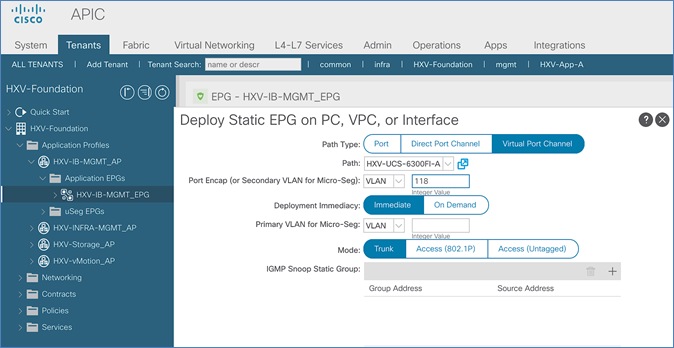

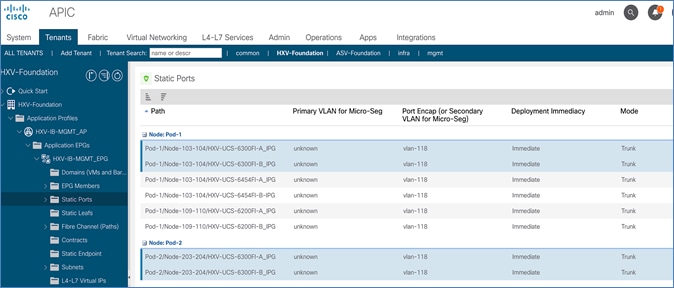

Create Static Binding for In-Band Management to HyperFlex Standard Cluster

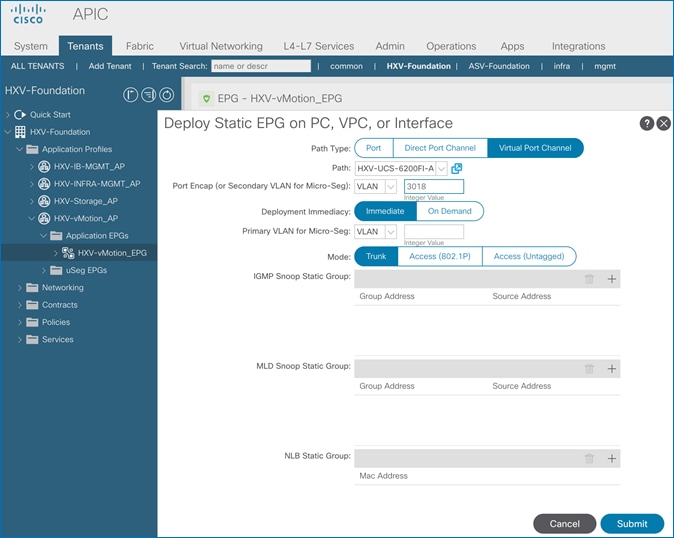

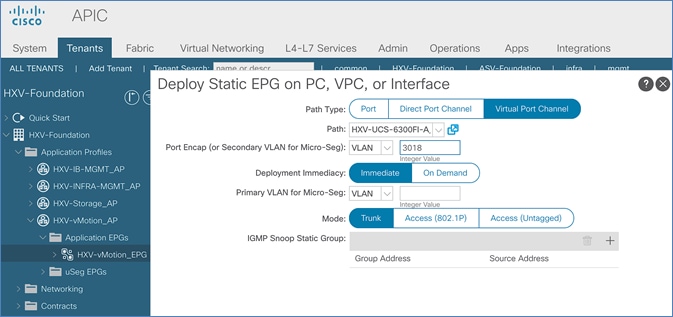

Create Static Binding for vMotion to HyperFlex Standard Cluster

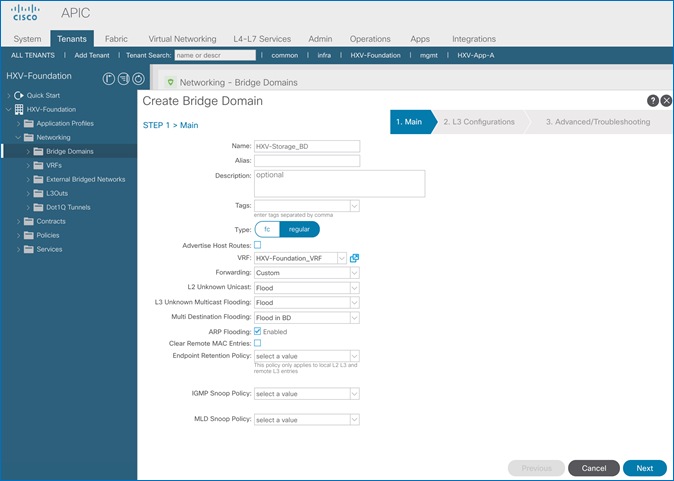

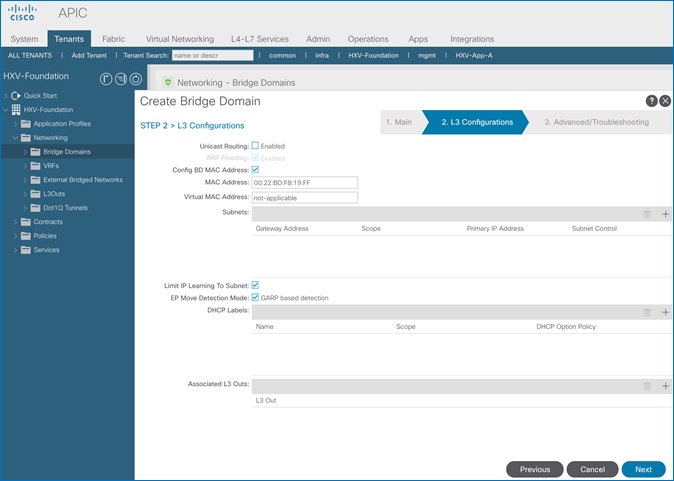

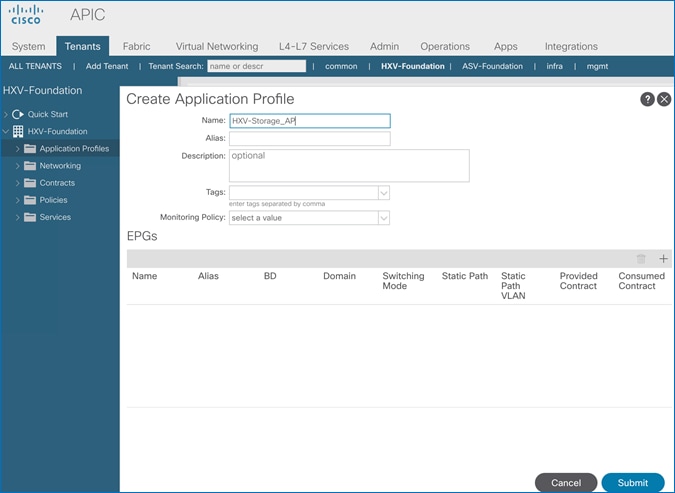

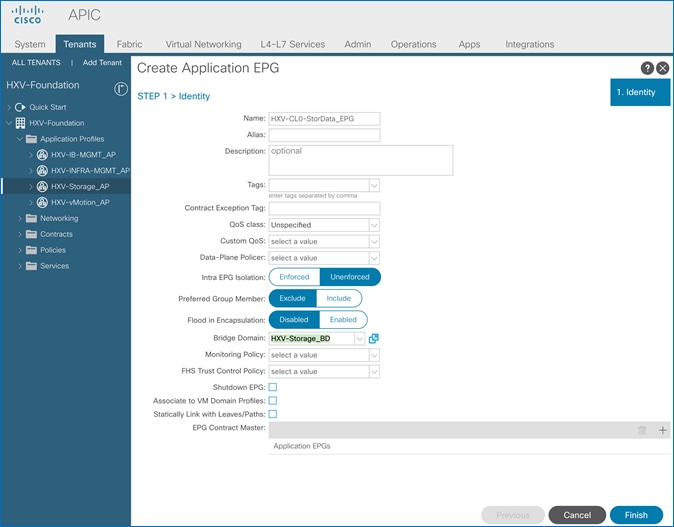

Configure ACI Fabric for Storage Data Traffic on HyperFlex Standard Cluster

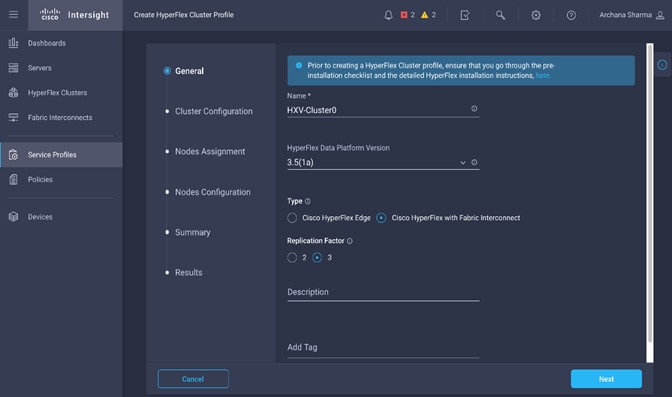

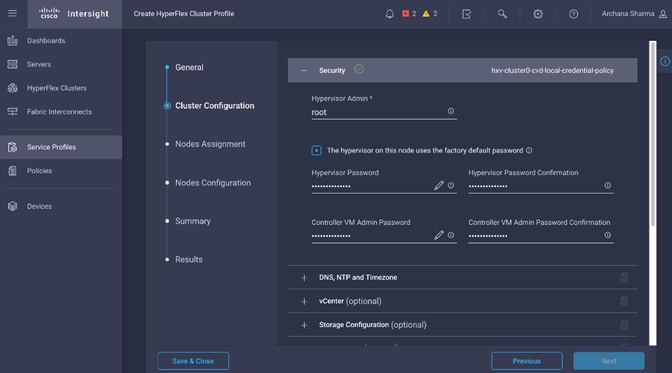

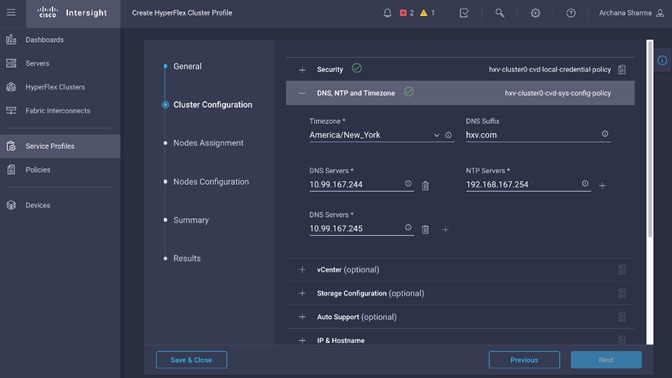

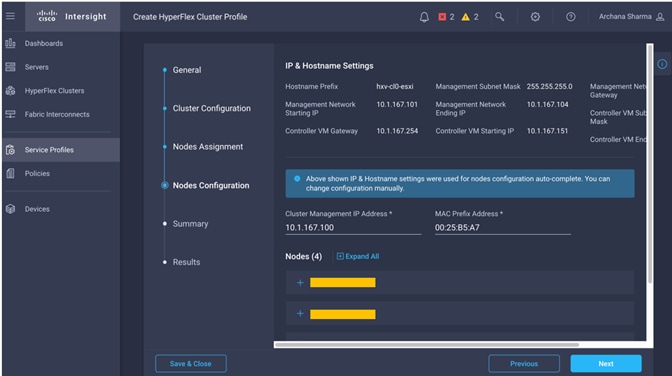

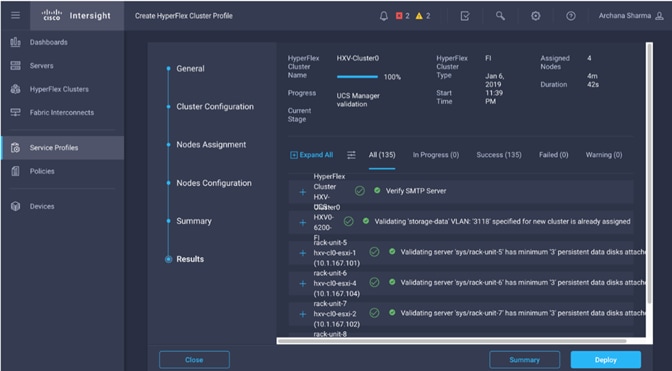

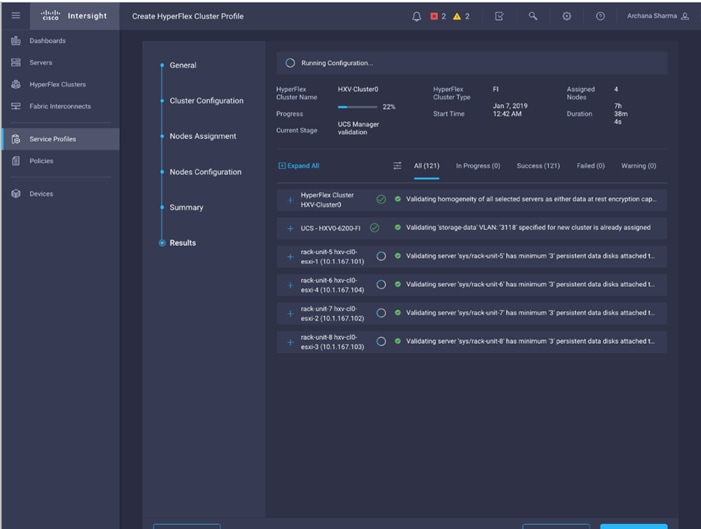

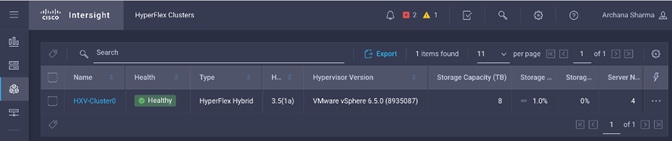

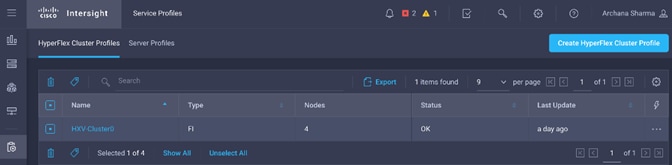

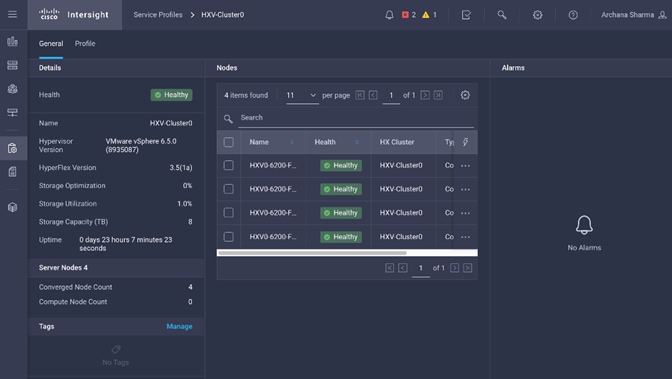

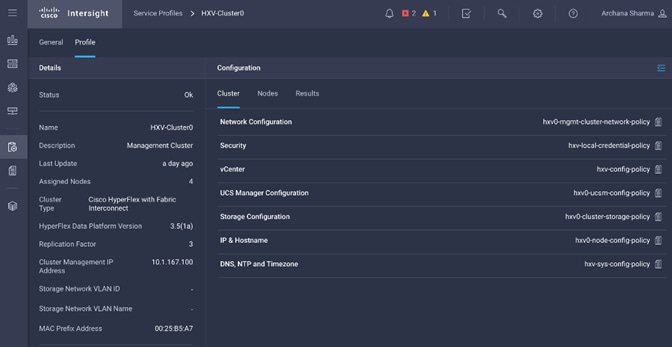

Install HyperFlex Cluster (Management) using Cisco Intersight

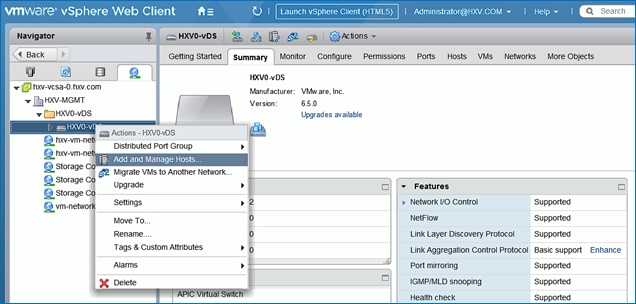

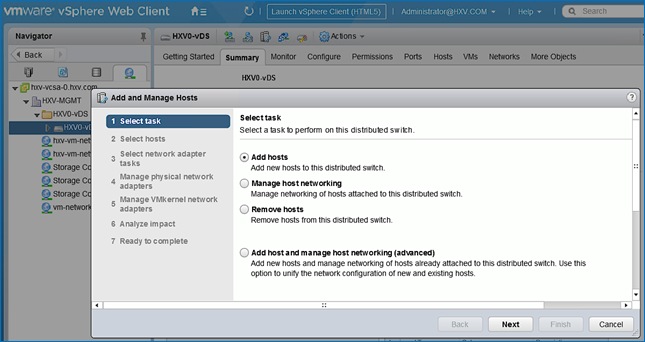

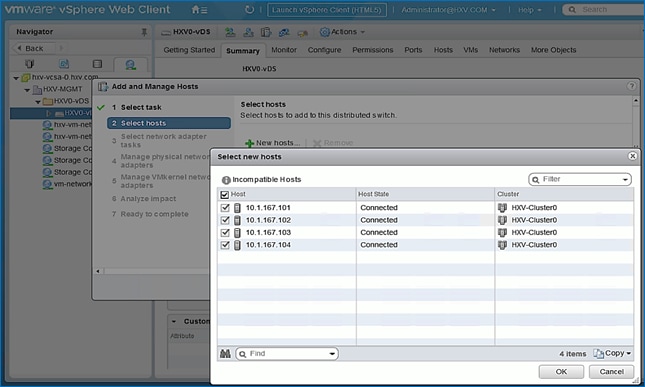

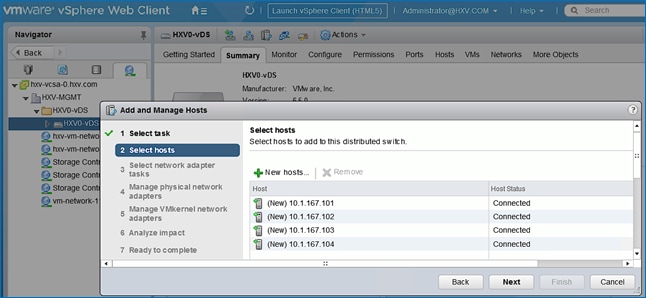

Migrate Virtual Networking to VMware vDS on HyperFlex Management Cluster

Deploy Virtual Machines – Infrastructure Management

Configure ACI Fabric for Infrastructure Management

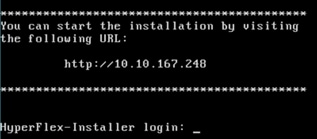

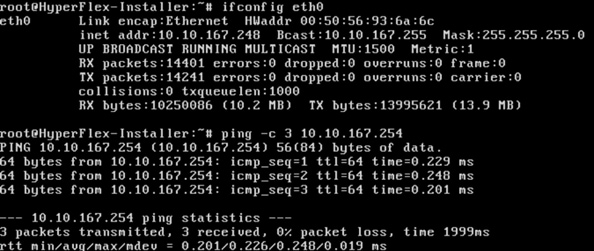

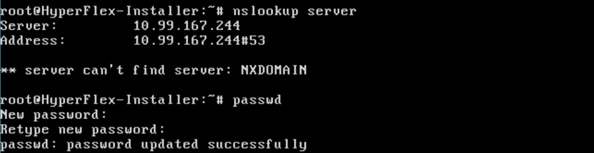

Deploy HX Installer Virtual Machine in the HyperFlex Management Cluster

Solution Deployment – HyperFlex Application Cluster

Setup Cisco UCS Domain for HyperFlex Stretched Cluster

Setup ACI Fabric for HyperFlex Stretched Cluster

Create Static Binding for In-Band Management to HyperFlex Stretched Cluster

Create Static Binding for vMotion to HyperFlex Stretched Cluster

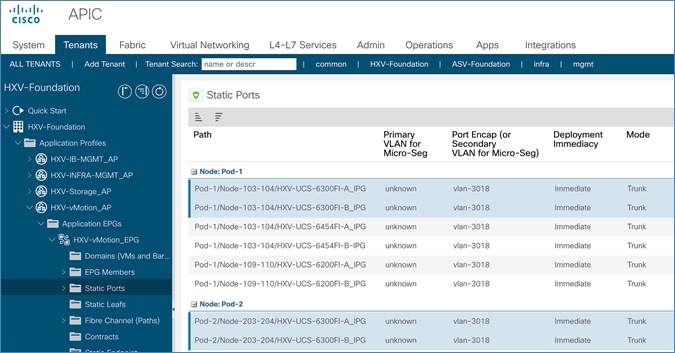

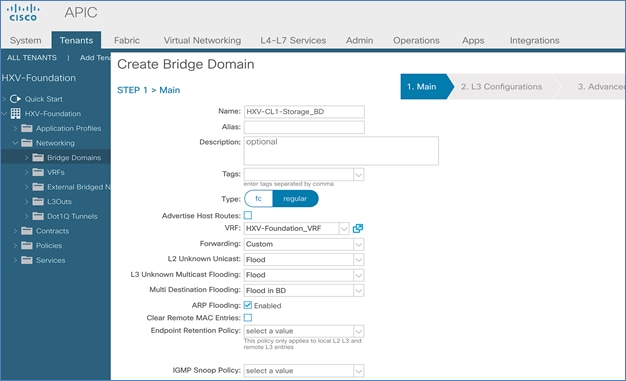

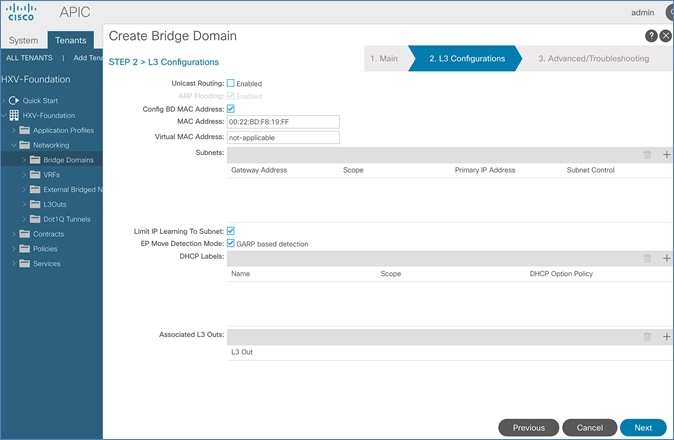

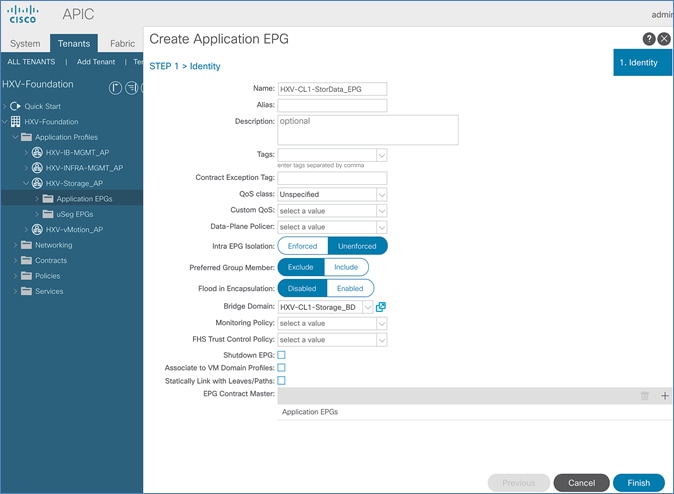

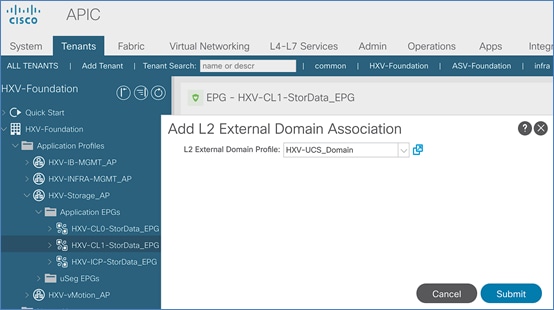

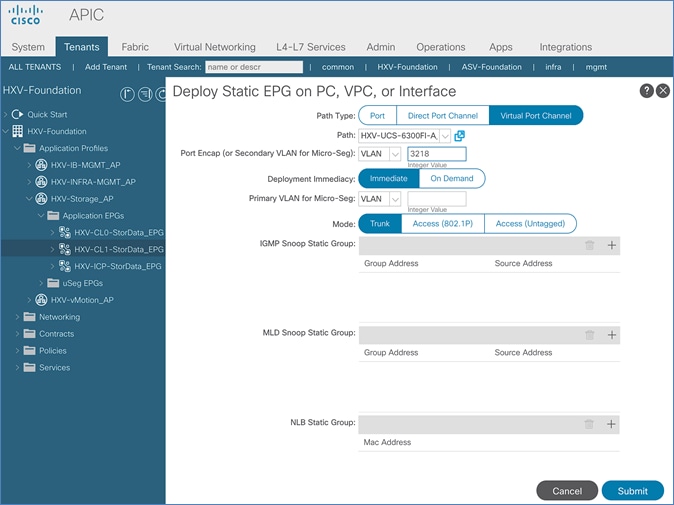

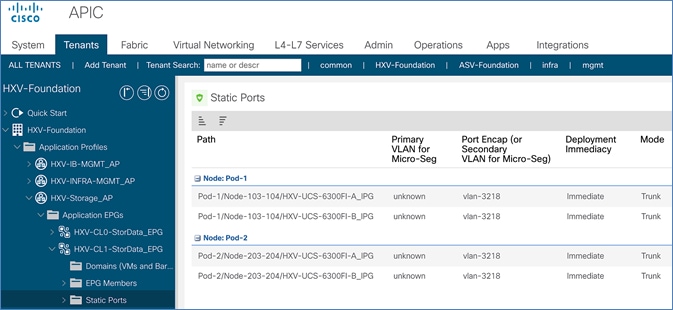

Configure ACI Fabric for Storage Data Traffic on HyperFlex Stretched Cluster

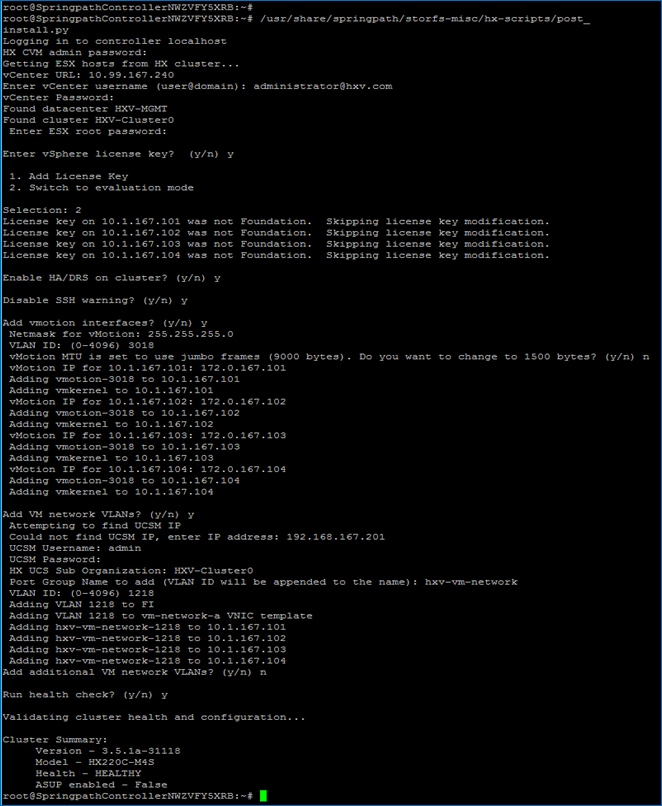

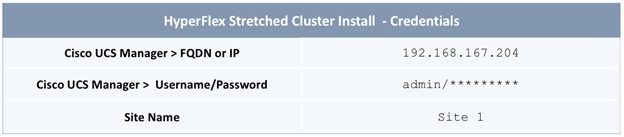

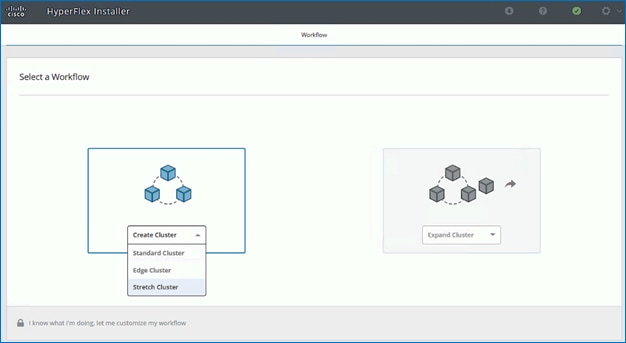

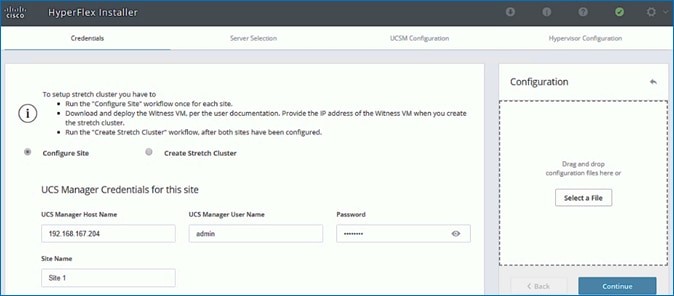

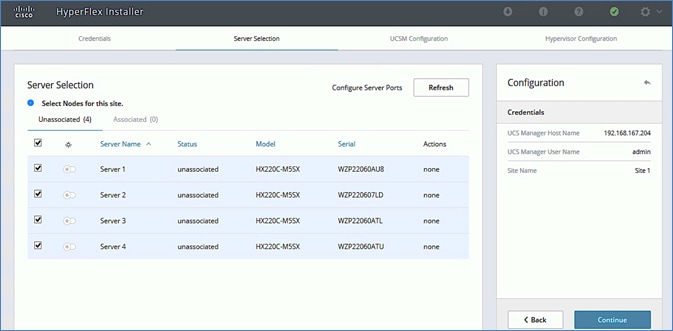

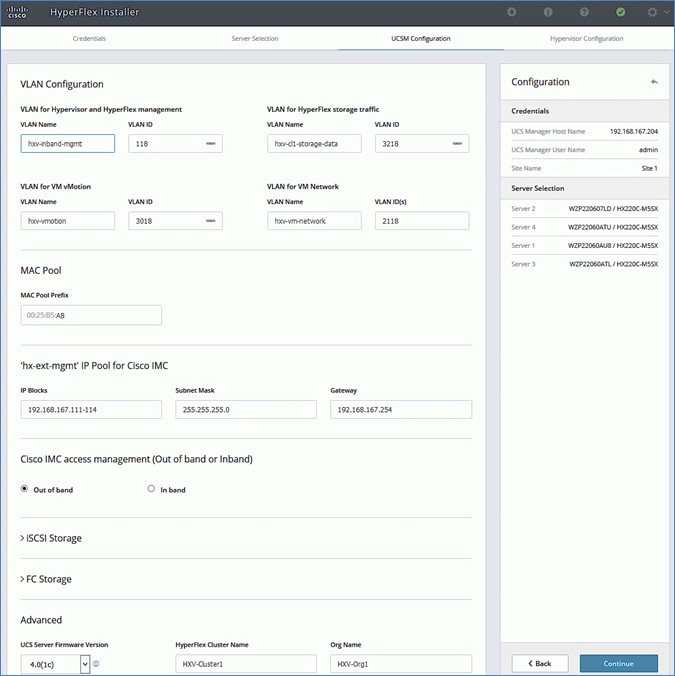

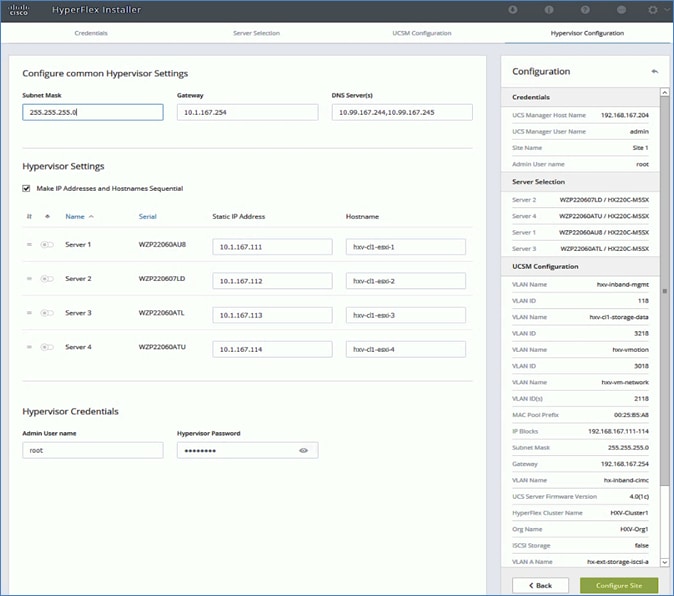

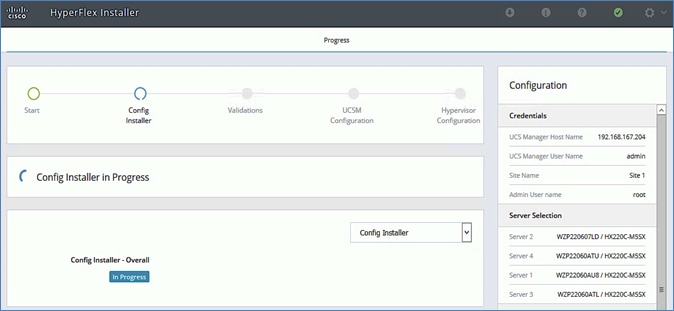

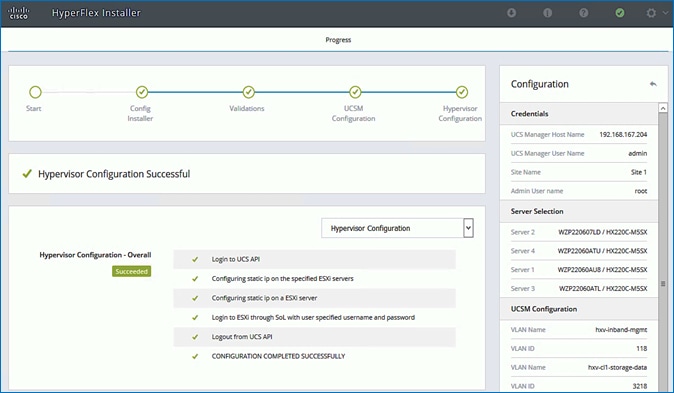

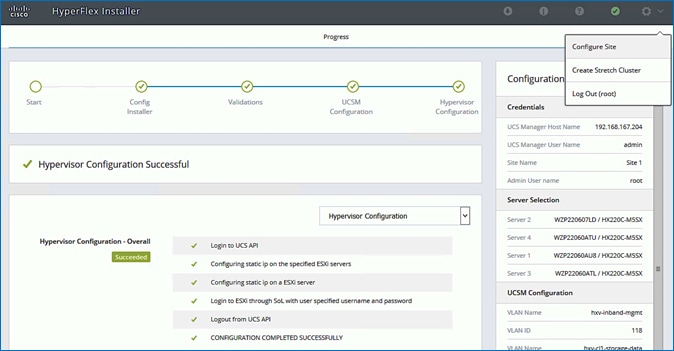

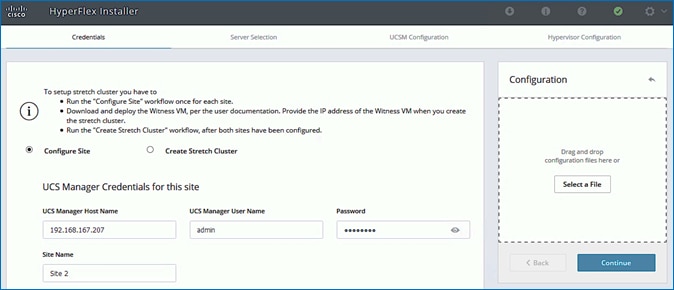

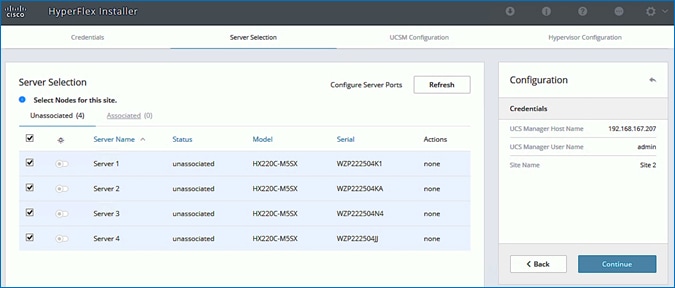

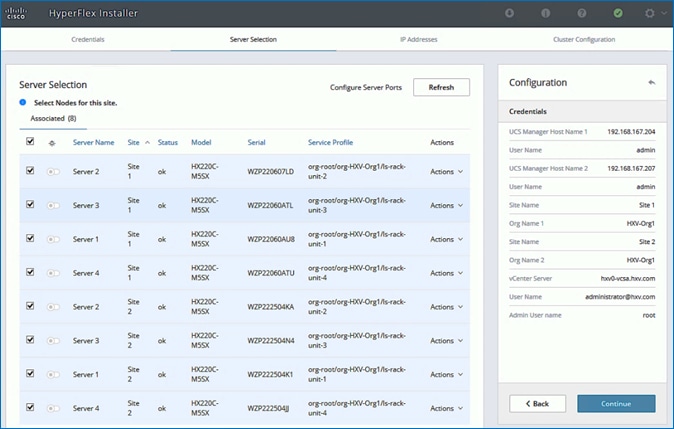

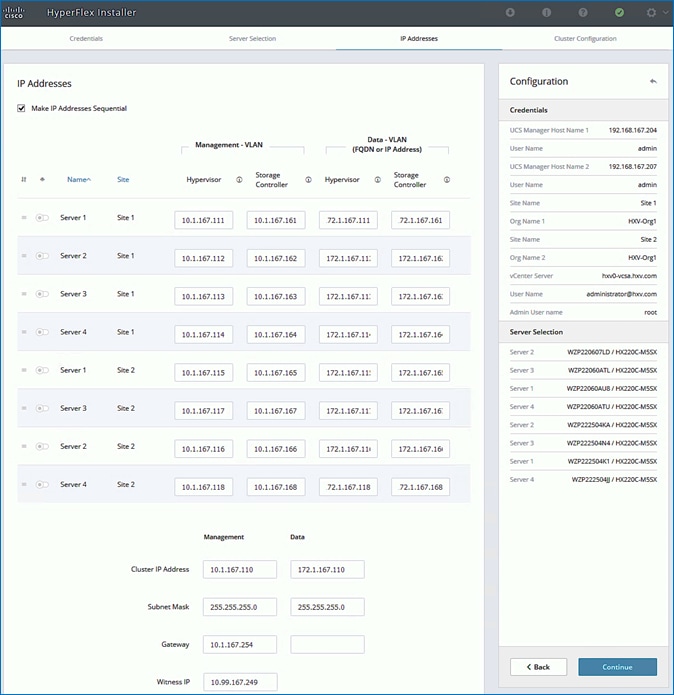

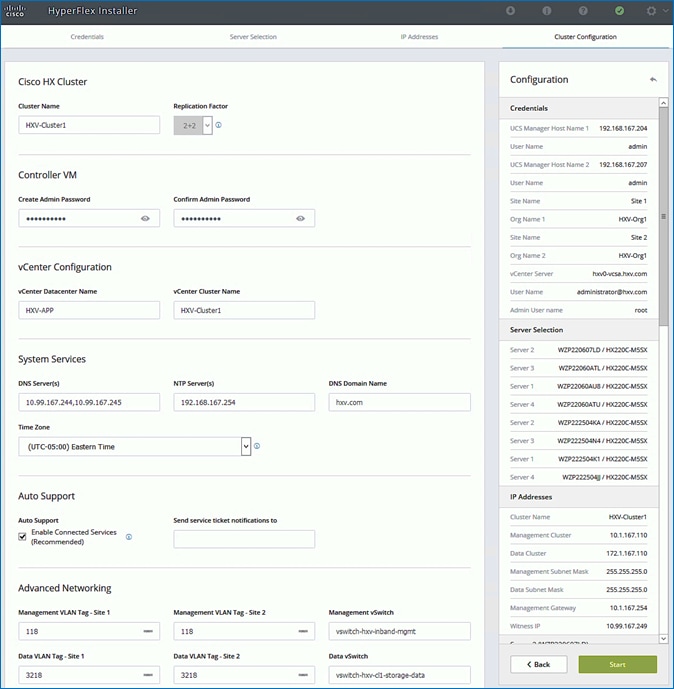

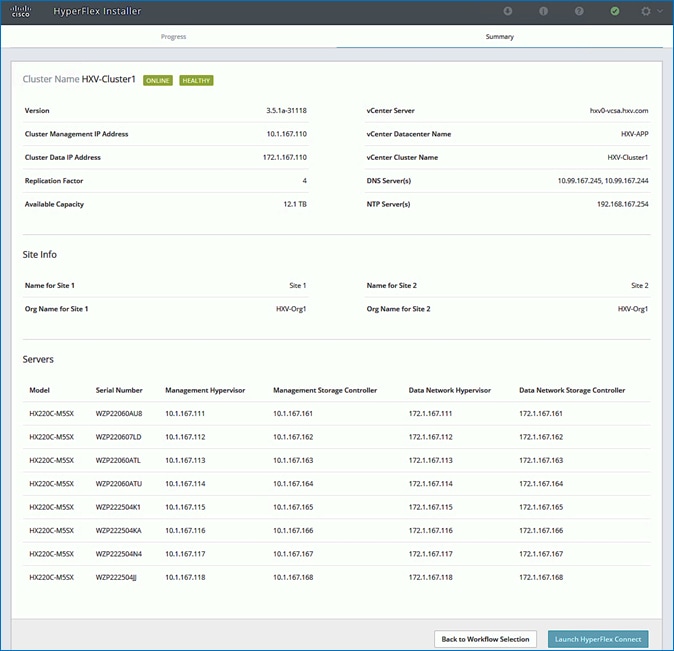

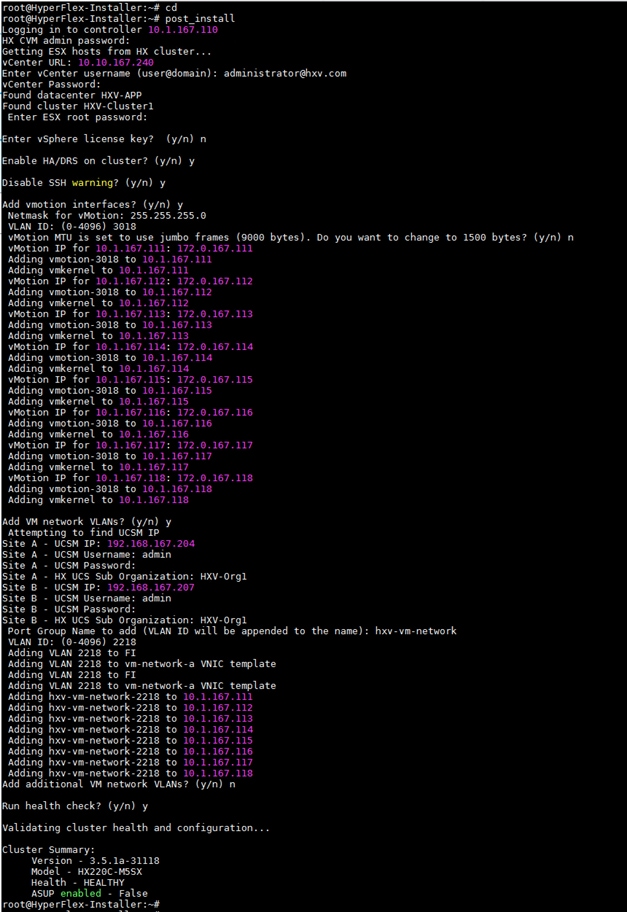

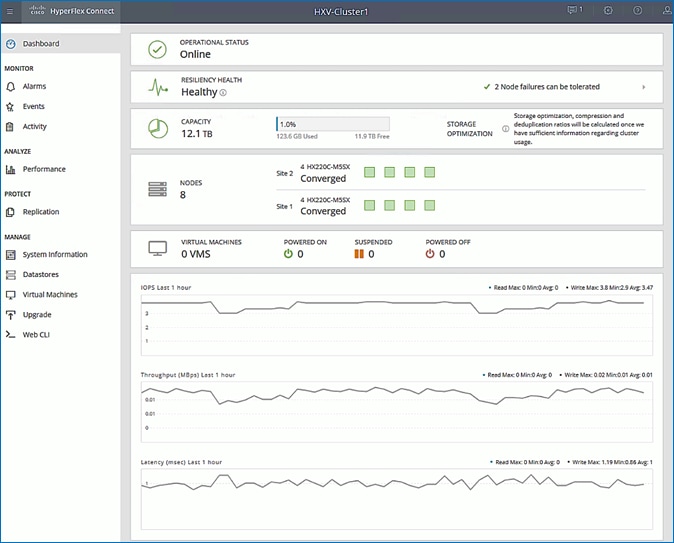

Install HyperFlex Stretched Cluster (Applications) using HyperFlex Installer VM

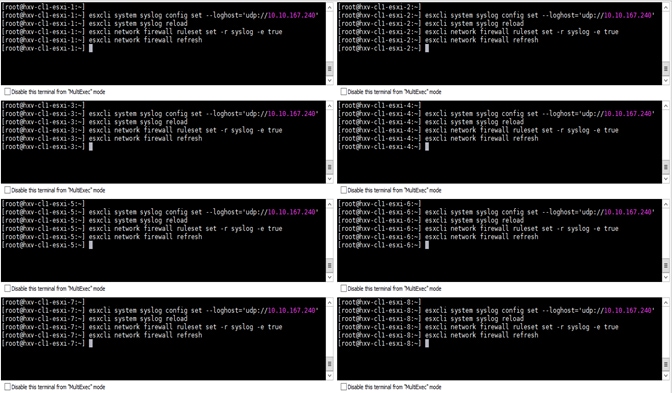

Migrate Virtual Networking to VMware vDS on HyperFlex Application Cluster

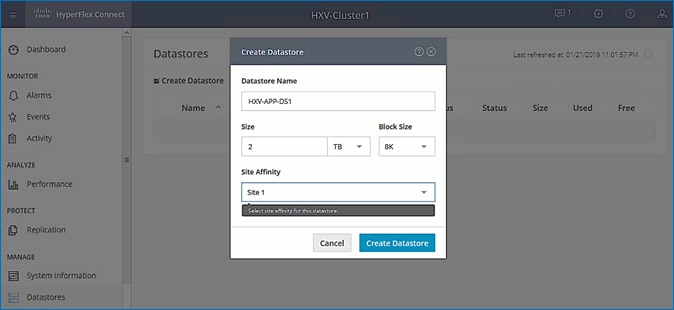

Solution Deployment – Onboarding Multi-Tier Applications

Configure ACI constructs for Application Traffic

Create Tenant and VRF for Application

Verify Virtual Networking for the Application EPGs

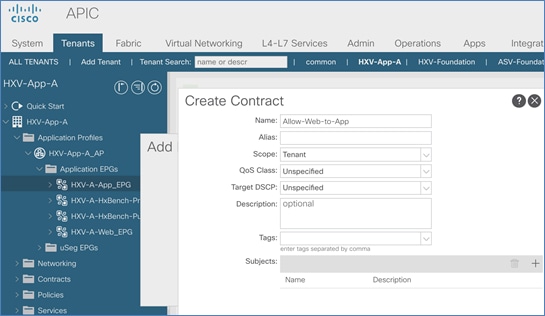

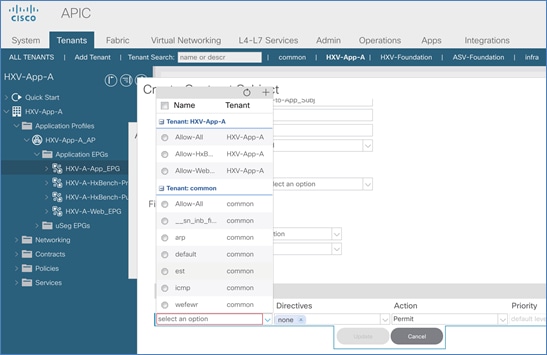

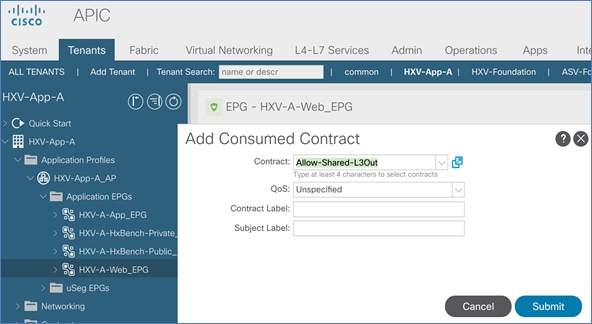

Web-Tier to Shared L3Out Contract

Validated Hardware and Software

Cisco ACI Application Centric Infrastructure (ACI)

Cisco Validated Designs (CVDs) are systems and solutions that are designed, tested, and documented to facilitate and accelerate customer deployments. CVDs incorporate a wide range of technologies, products, and best practices into a portfolio of solutions that address the business needs of our customers. CVDs based on Cisco HyperFlex deliver infrastructure and application solutions using a hyperconverged, software-defined infrastructure. For a complete portfolio of HyperFlex solutions, see: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/data-center-hyperconverged-infrastructure.html

The Virtual Server Infrastructure (VSI) solutions based on Cisco HyperFlex combine software-defined computing using Cisco UCS servers, software-defined storage using Cisco HyperFlex HX Data Platform, and software-defined networking using Cisco Unified Fabric to deliver a foundational, hyperconverged infrastructure platform for Enterprise data centers. When combined with a Cisco Application Centric Infrastructure (Cisco ACI) fabric, the solution extends the software-defined paradigm into the data center network to deliver a comprehensive, scalable, application-centric infrastructure for Enterprise data centers.

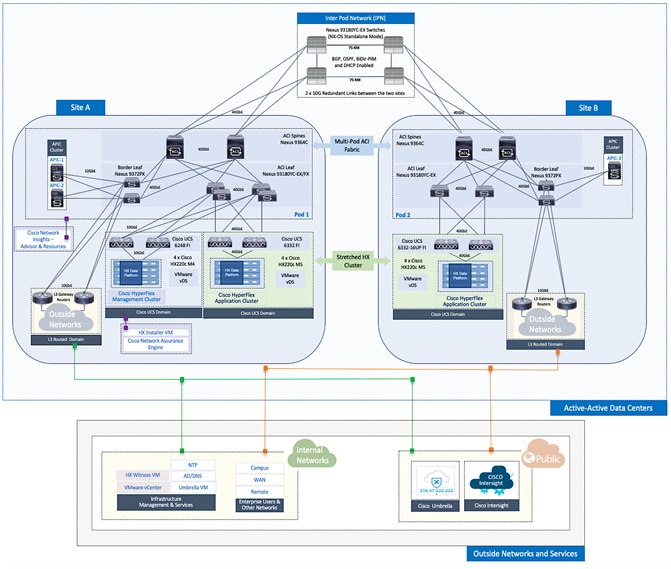

The Cisco HyperFlex Stretched Cluster with Cisco ACI Multi-Pod Fabric solution discussed in this document is a validated reference architecture for building active-active data centers that provide business continuity and disaster avoidance. The solution extends compute, storage, and networking across two data center locations. Workloads can be placed in either data center, with seamless mobility between them. In this design, a Cisco HyperFlex stretched cluster is extended across the active-active data centers to provide the hyperconverged virtual server infrastructure in each data center. The nodes in the cluster are distributed evenly across both data centers and connect through Cisco Unified Fabric (Cisco UCS Fabric Interconnects) to a Cisco ACI fabric in each location. The solution uses a Cisco ACI Multi-Pod fabric as the end-to-end data center fabric, both to interconnect the data centers and to provide connectivity within each data center location. The fabric also provides Layer 2 extension and Layer 3 forwarding between data centers to enable seamless workload mobility and connectivity between them. The data centers can be at geographically separate sites, such as across a metropolitan area, or they can be in the same campus or building. The HyperFlex stretched cluster serves as an Applications cluster in this design. The solution also includes an optional HyperFlex standard cluster as a Management cluster for hosting management and other shared services directly from within the ACI fabric.

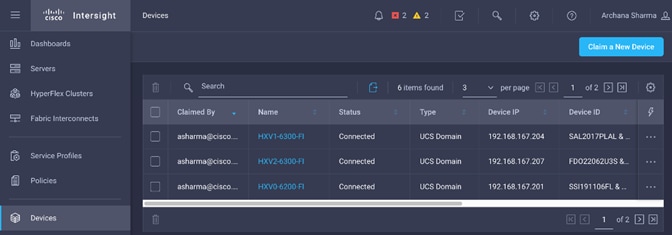

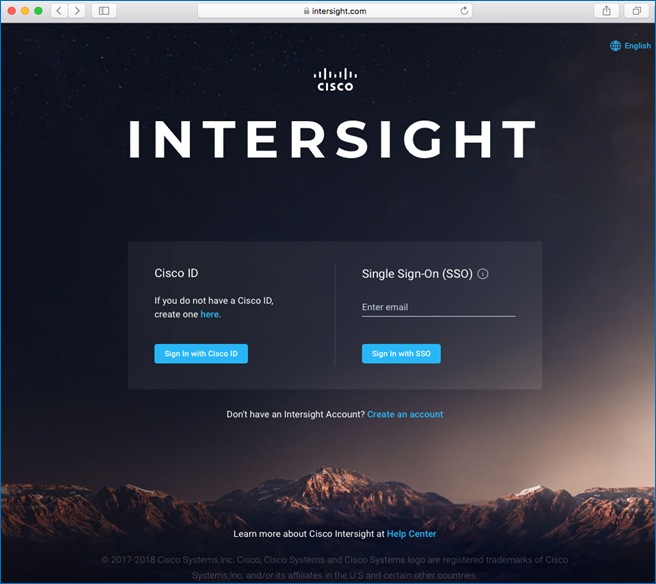

To simplify day-2 operations, the solution uses Cisco Intersight to centrally manage all virtual server infrastructure in the solution. This includes the Applications cluster, the Management cluster, and the Cisco Unified Fabrics in both locations. Cisco Intersight can also be used to manage other data center infrastructure that Enterprises have, and it is used to deploy the Management cluster in the solution. Cisco Intersight is a centralized, cloud-based, software-as-a-service (SaaS) platform that simplifies operations by providing proactive, actionable intelligence to manage and operate Enterprise data centers. Cisco Intersight provides capabilities such as Cisco Technical Assistance Center (TAC) integration for support and Cisco Hardware Compatibility List (HCL) integration for compliance that Enterprises can leverage for their Cisco HyperFlex and UCS systems in all locations. Enterprises can also quickly adopt the new features that are continuously being rolled out in Cisco Intersight. The solution also uses Cisco Network Assurance Engine (Cisco NAE), Cisco Network Insights – Advisor (Cisco NIA), and Cisco Network Insights – Resources (Cisco NIR) to further simplify operations through proactive monitoring of the ACI Multi-Pod fabric. The three tools comprehensively monitor the fabric, leveraging analytics and Cisco expertise in networking to provide assurance that the network is working as intended, and to identify issues proactively with in-depth analysis and guidance for resolving them.

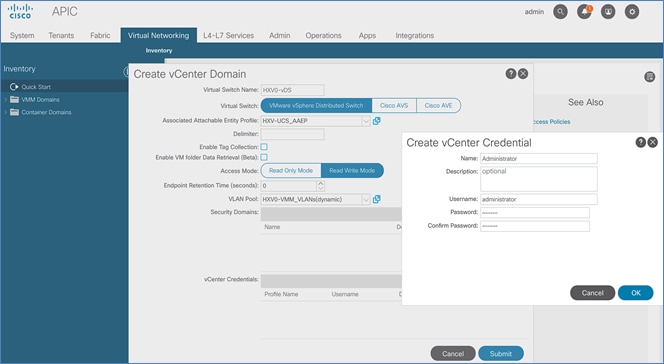

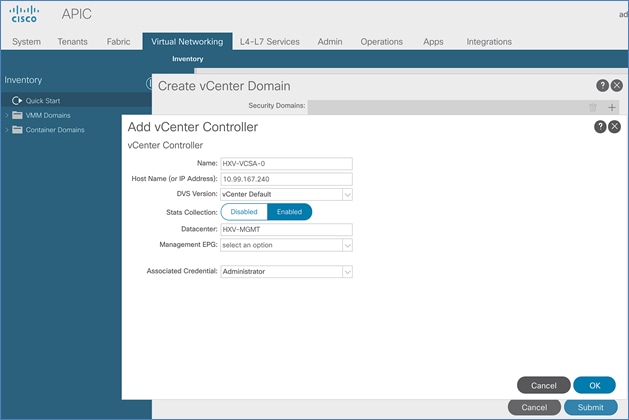

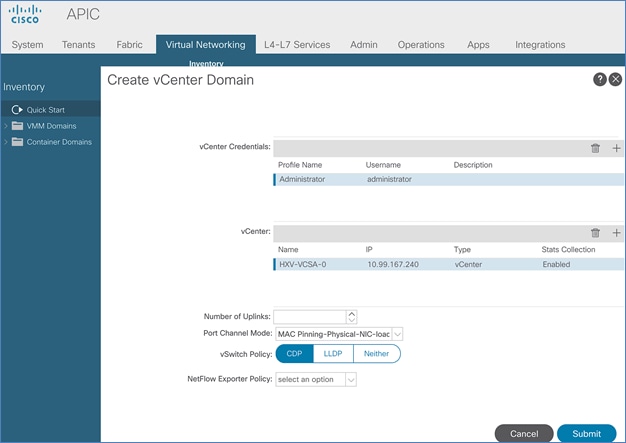

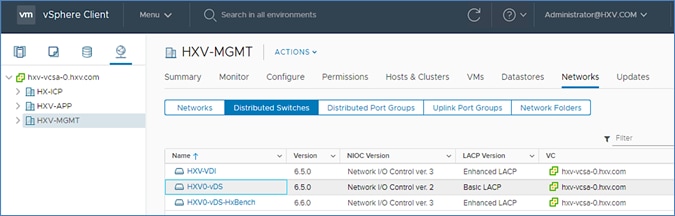

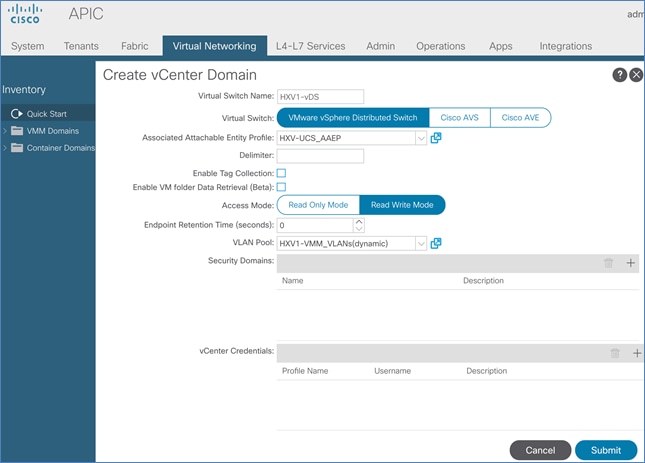

To ease the deployment of virtualized workloads, the solution leverages the Virtual Machine Manager (VMM) integration that ACI provides, with VMware vCenter as the VMM in this case, to dynamically orchestrate and manage the virtual networking using either a VMware virtual Distributed Switch (vDS) or a Cisco ACI Virtualization Edge (AVE) switch. Cisco AVE is a virtual leaf that brings the advanced capabilities of an ACI fabric (for example, application policies, micro-segmentation, security) to the virtualization layer. In this release of the solution, VMware vDS is used in both the HyperFlex standard and stretched clusters.

The Cisco HyperFlex Stretched Cluster with Cisco ACI Multi-Pod Fabric CVD consists of the following documents:

· Design Guide: Cisco HyperFlex 4.0 Stretched Cluster with Cisco ACI 4.2 Multi-Pod Fabric Design Guide

· Deployment Guide: Cisco HyperFlex 4.0 Stretched Cluster with Cisco ACI 4.2 Multi-Pod Fabric

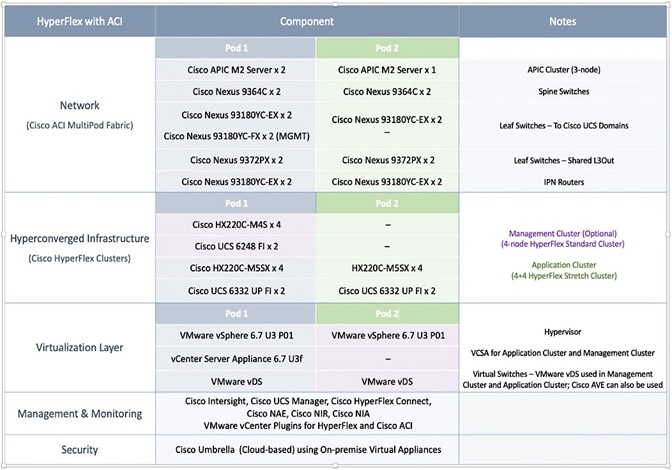

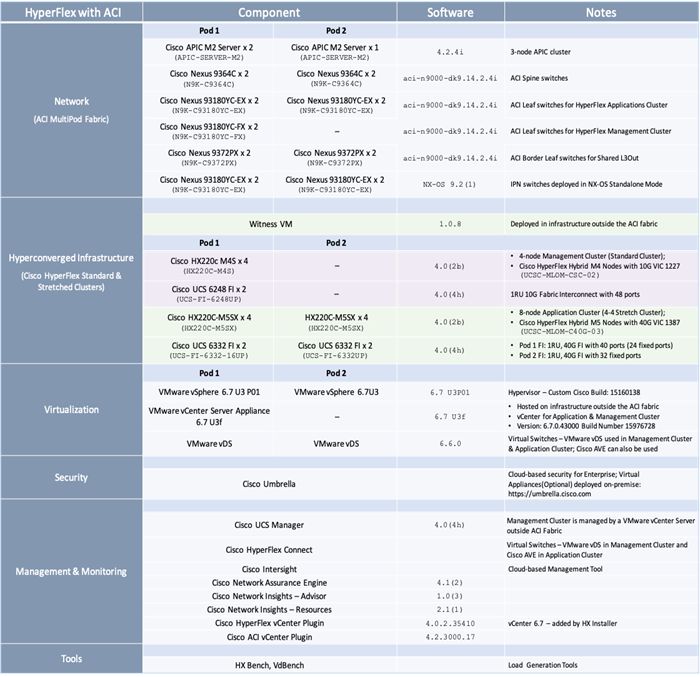

This document is the deployment guide for the solution. The solution was built and validated using Cisco HyperFlex 4.0, Cisco Unified Computing System 4.0, a Cisco ACI 4.2 Multi-Pod fabric running on the Cisco Nexus family of switches, and VMware vSphere 6.7U3. The design guide for the solution is available here.

Introduction

Audience

Purpose of this Document

What’s New in this Release?

· Cisco HyperFlex 4.0(2b), Cisco UCS Manager 4.0(4h), Cisco Intersight

· Cisco ACI 4.2(4i), VMware vDS 6.6.0 and VMware vSphere 6.7U3

Because Cisco Intersight is a SaaS platform to which new features are continuously added, a number of new capabilities and integrations have become available since the last release of this solution, and customers can leverage them as needed. The latest features and capabilities added to the platform are available here.

To further simplify day-2 operations through proactive intelligence and monitoring, this release also adds the following operational tools to the solution. The Cisco Network Insights tools are hosted on a 3-node Cisco Application Services Engine cluster connected to the in-band management network of the ACI fabric. To support these tools, Precision Time Protocol (PTP) was also enabled in the ACI fabric.

· Cisco Network Insights – Advisor (NIA)

· Cisco Network Insights – Resources (NIR)

· Cisco Network Assurance Engine (NAE)

Solution Summary

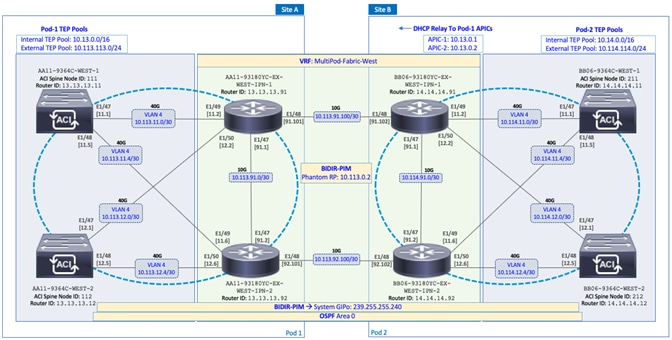

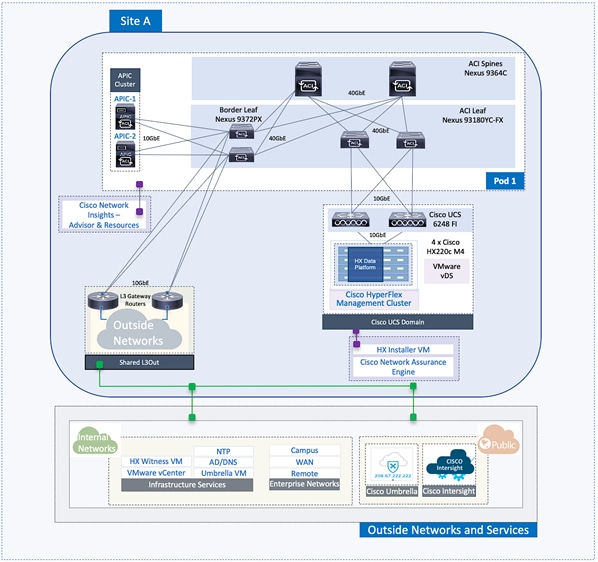

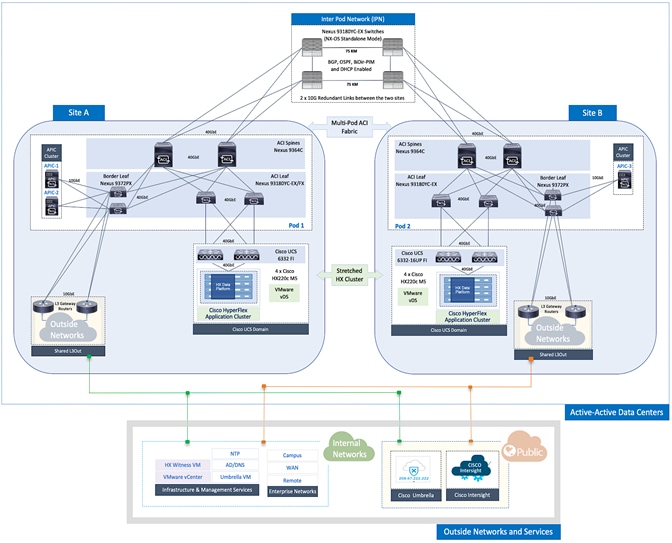

The end-to-end design for the active-active data centers in the Cisco HyperFlex Stretched Cluster with Cisco ACI Multi-Pod Fabric solution is shown in Figure 1.

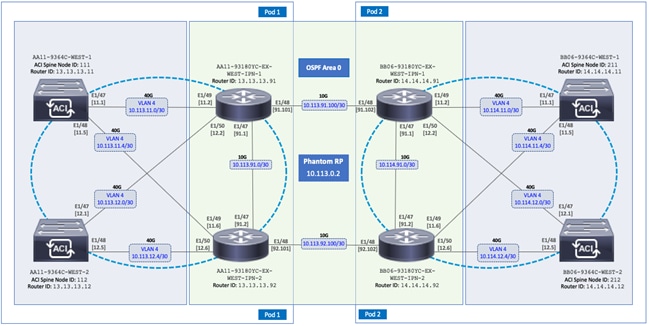

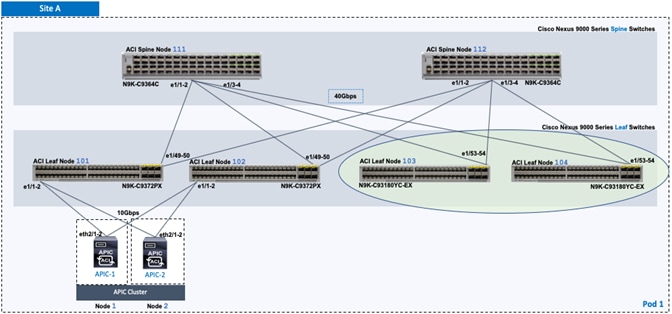

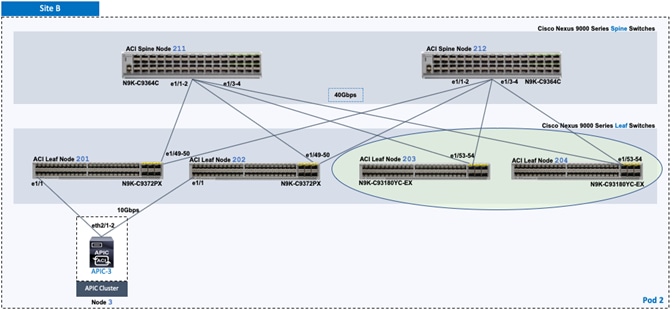

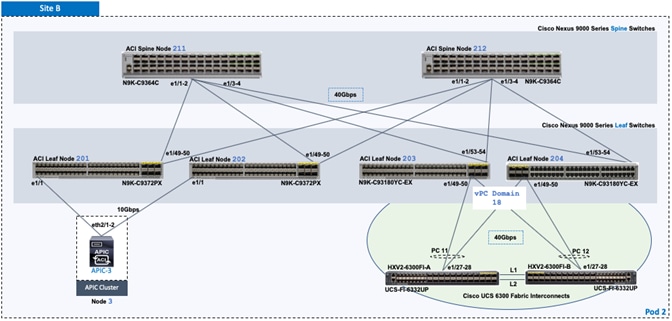

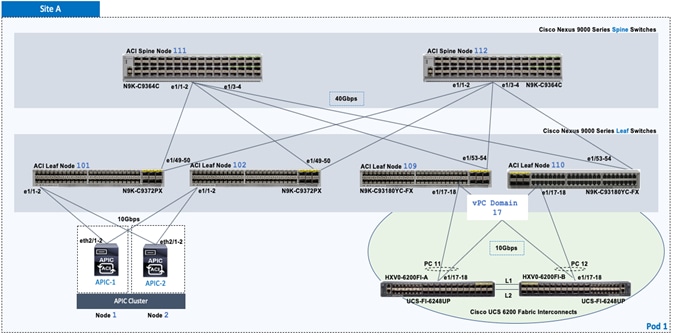

As stated earlier, the active-active data centers in the solution use a HyperFlex stretched cluster to extend the hyperconverged infrastructure across two data centers. The two data centers can be in the same site, such as different buildings in a campus location, or in different geographical locations. In this design, the two data centers are assumed to be in different geographical sites, separated by a distance of 75km as shown in the above figure. The Cisco ACI Multi-Pod fabric provides the network fabric in each site and the connectivity between them using an Inter-Pod Network (IPN). The ACI fabric provides both Layer 2 extension and Layer 3 connectivity between sites, which enables seamless workload placement and mobility between data centers. The fabric in each site is referred to as a Pod in the ACI Multi-Pod architecture, where each Pod is deployed as a standard Spine-Leaf architecture. The fabric is managed using a 3-node APIC cluster with two APICs in the first site and a third APIC in the second site. The physical connectivity is based on 40GbE within the Pod, and 10GbE or 40GbE to connect to the IPN, outside networks, and access layer devices (APICs, UCS Fabric Interconnects). A highly resilient design is used within each Pod to ensure availability of networks and services in the event of a failure.
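The APIC placement described above (two APICs in the first site, one in the second) can be reasoned about with a short sketch. This is only an illustration, assuming a simple majority rule for the 3-node cluster; the APIC names are hypothetical, and the actual behavior of an APIC cluster that loses its majority should be confirmed in the Cisco ACI documentation.

```python
# Placement from the design text: two APICs in the first site, one in the second.
# The APIC names are hypothetical labels used only for this illustration.
APIC_PLACEMENT = {"apic1": "Pod-1", "apic2": "Pod-1", "apic3": "Pod-2"}


def surviving_apics(placement, failed_pods):
    """Return the names of the APICs whose Pod is not in failed_pods."""
    return sorted(name for name, pod in placement.items() if pod not in failed_pods)


def has_majority(placement, failed_pods):
    """True when more than half of the cluster nodes are still available.

    Assumption: a simple majority rule, used here only for illustration.
    """
    return len(surviving_apics(placement, failed_pods)) > len(placement) / 2


if __name__ == "__main__":
    for failed in (set(), {"Pod-2"}, {"Pod-1"}):
        print(sorted(failed),
              surviving_apics(APIC_PLACEMENT, failed),
              has_majority(APIC_PLACEMENT, failed))
```

Under this assumption, losing Pod-2 leaves two of three APICs available, while losing Pod-1 leaves only one, which is worth keeping in mind when planning failure scenarios.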

Each Pod also has a dedicated Layer 3 connection to outside networks to enable direct access from each data center location. As a result, a failure in the remote Pod or an IPN failure will not impact the local Pod’s reachability to/from external networks (for example, Internet or cloud) or internal networks (for example, non-ACI infrastructure or a campus network) outside the ACI fabric. The Layer 3 outside connection can be used to access services hosted outside the fabric, or to give users outside the fabric access to services hosted within the ACI fabric. In this design, all endpoints connected to the ACI fabric share the same Layer 3 connection(s). ACI refers to this type of connection as a Shared L3Out. Shared L3Out connections are typically defined in the ACI system-defined common Tenant, but they can also be defined in a user-defined tenant. Alternatively, a dedicated L3Out can be defined for each tenant. In this design, two Shared L3Out connections are defined in the common Tenant, one for each Pod. The leaf switches that connect to outside networks are referred to as Border Leaf switches in the ACI architecture. A routing protocol (or static routes) is enabled on the border leaf switches and on external gateways outside the fabric to exchange routing information between ACI and outside networks. In this design, OSPF is used as the routing protocol and the border leaf switches in ACI connect to Nexus 7000 series gateways in the outside network.

The solution uses ACI multi-tenancy to isolate and manage the connectivity requirements. In addition to the system-defined common Tenant, the design uses the following user-defined ACI tenants to provide connectivity to HyperFlex clusters and to the workloads hosted on the clusters. Enterprises can define as many tenants as needed to meet the needs of their environment. The two user-defined tenants in this design are:

· HXV-Foundation: This tenant provides infrastructure connectivity between nodes in a HyperFlex cluster. The connectivity provided by this tenant is critical to the health and operation of the HyperFlex clusters. In this design, the infrastructure connectivity for all HyperFlex clusters in the active-active data center is enabled using this tenant.

· HXV-App-A: This tenant provides connectivity to applications, services and any other workload hosted on the HyperFlex clusters.
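As an illustration of how the two user-defined tenants might be described programmatically, the following sketch builds JSON bodies for a tenant with a single VRF. It is not the procedure used in this guide, which configures tenants through the APIC GUI. The class names `fvTenant` and `fvCtx` are the tenant and VRF classes of the APIC object model as commonly documented and should be verified against the APIC Management Information Model reference; the VRF names are hypothetical.

```python
import json

# Tenant names come from the design text; the VRF names are hypothetical.
TENANTS = {
    "HXV-Foundation": "HXV-Foundation_VRF",
    "HXV-App-A": "HXV-App-A_VRF",
}


def tenant_payload(tenant, vrf):
    """Build a JSON-serializable body describing one tenant with one VRF.

    Assumption: fvTenant/fvCtx are the tenant/VRF class names in the APIC
    object model; confirm against the APIC documentation before use.
    """
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [{"fvCtx": {"attributes": {"name": vrf}}}],
        }
    }


if __name__ == "__main__":
    for tenant, vrf in TENANTS.items():
        print(json.dumps(tenant_payload(tenant, vrf), indent=2))
```

The sketch only constructs and prints the bodies; it makes no calls to an APIC, so it can be run safely to inspect the structure.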

The solution uses two types of HyperFlex clusters – a HyperFlex standard cluster for Management (optional) and a HyperFlex stretched cluster for Applications. Both clusters connect to the ACI fabric through Cisco UCS Fabric Interconnects, which in turn connect to leaf switches in the ACI fabric. Though a pair of Cisco UCS Fabric Interconnects can support several HyperFlex clusters, the HyperFlex clusters in this design connect through dedicated Fabric Interconnect pairs and ACI leaf switch pairs that are not shared between clusters. The HyperFlex stretched cluster, which spans two data center locations, uses two pairs of Cisco UCS Fabric Interconnects and ACI leaf switches, one in each site, to connect to the ACI fabric.

For higher bandwidth and resiliency, each Fabric Interconnect pair uses multiple 10GbE or 40GbE links in a Port-channel (PC) configuration to connect to the upstream ACI leaf switches. In this design, the optional HyperFlex Management cluster uses 10GbE links and the HyperFlex Applications cluster uses 40GbE links for connecting to the ACI fabric. The downstream connectivity from the Fabric Interconnects to the HyperFlex nodes in the Management cluster and Applications cluster also uses 10GbE and 40GbE links, respectively.

ACI manages the virtual networking on both HyperFlex clusters by integrating with VMware vCenter that manages the clusters. Cisco APIC deploys a distributed virtual switch and creates port-groups as necessary to manage the virtual networking. In this release of the solution, an APIC-controlled VMware vDS is used in both the Management and Applications clusters.

The HyperFlex and Cisco UCS infrastructure in the solution are also managed from the cloud using Cisco Intersight. Cisco Intersight offers centralized management of virtualized infrastructure in any location with capabilities such as integration with Cisco TAC, proactive monitoring and analytics, integration with Cisco Hardware Compatibility List (HCL) for compliance checks, and so on.

The solution was validated in Cisco Labs using the component models shown in Table 1. Other models are supported, provided the software and hardware combinations are supported per Cisco and VMware’s hardware compatibility lists. See the Solution Validation section of this document for additional details on the testing.

A high-level summary of the implementation steps for deploying the active-active data center solution is provided below. Upcoming sections will provide the detailed procedures for each implementation step.

· Deploy ACI fabric in Pod-1 where the first data center will be located. Though this is an ACI Multi-Pod fabric, the configuration at this stage is the same as that of a single-site ACI fabric.

· Enable connectivity from ACI fabric in Pod-1 to outside networks. These are networks outside the ACI fabric, either internal or external to the Enterprise. In this design, this connection provides reachability to critical functions such as VMware vCenter, HyperFlex Witness and Cisco Intersight.

· Deploy ACI Multi-Pod fabric. This involves enabling the Inter-Pod network and deploying the ACI fabric in the second data center location or Pod-2. It also includes configuration that enables Layer 2 extension and Layer 3 forwarding between Pods or data centers.

· Enable connectivity from ACI fabric in Pod-2 to outside networks. As in Pod-1, this connection provides Pod-2 with reachability to critical functions such as VMware vCenter, HyperFlex Witness and Cisco Intersight.

· Configure Foundation Tenant to enable infrastructure connectivity for Cisco HyperFlex clusters. The tenant will provide reachability between nodes in a cluster. These are networks that are required to stand up the cluster, such as the HyperFlex in-band management and storage-data networks. This tenant is not used for application workloads hosted on the cluster, but it is used by management and other infrastructure VMs such as the HyperFlex Installer virtual machine used for deploying the HyperFlex stretched cluster.

· Enable access-layer connectivity from the ACI fabric in each Pod to Cisco UCS domains that connect to Cisco HyperFlex clusters. This includes connectivity to the UCS domains in Pod-1 for the optional Management cluster, and in Pod-1 and Pod-2 for the HyperFlex stretch cluster.

· Setup Cisco UCS domain for deploying Cisco HyperFlex clusters. Three UCS domains are used in this design – two for the HyperFlex stretch cluster and one for the optional Management cluster.

· Deploy and set up the HyperFlex Management Cluster (optional). This is a HyperFlex standard cluster in Pod-1 and it is deployed from the cloud using Cisco Intersight in this solution. It can also be deployed using an on-premises HyperFlex Installer VM.

· Deploy and set up the HyperFlex Applications Cluster. This is a HyperFlex stretched cluster extended across Pod-1 and Pod-2. It is deployed using the HyperFlex Installer VM hosted on the Management cluster. The cluster is also enabled for Cisco Intersight management.

· On-board multi-tier applications. A separate application tenant is defined in the ACI fabric to meet the connectivity needs of the applications. Virtual networking for these workloads is automatically deployed by the APIC through integration with VMware vCenter.

For this CVD, the solution setup from an earlier release of this CVD was updated to the versions and configurations needed for this release. For this reason, any initial deployment screenshots in this document are from the earlier CVD release - all other screenshots are from this release. The solution was then validated to verify the end-to-end functionality and tested for various failure scenarios to ensure the accuracy of the implementation. The deployment guide for the previously-built solution is available here.

Solution Deployment – ACI Fabric (Single Pod)

This section provides detailed procedures for deploying a new Cisco ACI fabric. This fabric will serve as the first Pod or site (Pod 1 or Site A in Figure 1) in the ACI Multi-Pod fabric. The fabric will provide network connectivity for Cisco UCS domains and Cisco HyperFlex clusters that connect to it. In this solution, half the nodes in the stretched cluster and all nodes in the optional Management cluster will connect to Pod-1.

The procedures in this section are the same as those for deploying a single-site ACI fabric.

Deployment Overview

A high-level overview of the steps involved in deploying a single-site ACI fabric is summarized below:

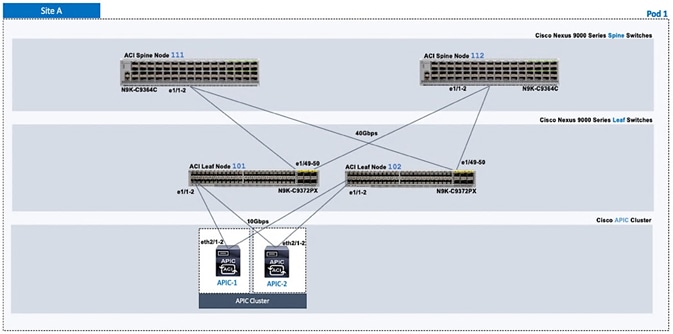

Physical Connectivity

· Complete the physical cabling required to bring up an ACI fabric in Pod-1. An ACI fabric should have a minimum of two Spine switches, two Leaf switches, and a 3-node APIC cluster. In this design, a pair of spine switches and three pairs of leaf switches are deployed in Pod-1. In this section, only the leaf switches that the APICs connect to are deployed in Pod-1. The other leaf switch pairs will be deployed at a later time. Each APIC is dual-homed to a leaf switch pair to provide both switch and link-level redundancy. For APIC high-availability, a 3-node APIC cluster is used with nodes distributed across different Pods in the ACI Multi-Pod fabric. In this design, two APICs are deployed in Pod-1 and one in Pod-2. Pod-2 APIC will be deployed and added to the cluster later in the deployment – in the Deploy APIC(s) in Pod-2 section.

· Complete all out-of-band and in-band management connectivity for Pod-1. The solution uses out-of-band management to access all switches. In this CVD release, in-band management access is also added, primarily to support Cisco Network Insights tools hosted on a dedicated Cisco Application Services Engine cluster.

· Initial setup of the APICs requires access to the keyboard, video, and mouse (KVM) console through the Cisco Integrated Management Controller (CIMC) port on the APIC. Enable CIMC connectivity to APICs in Pod-1.

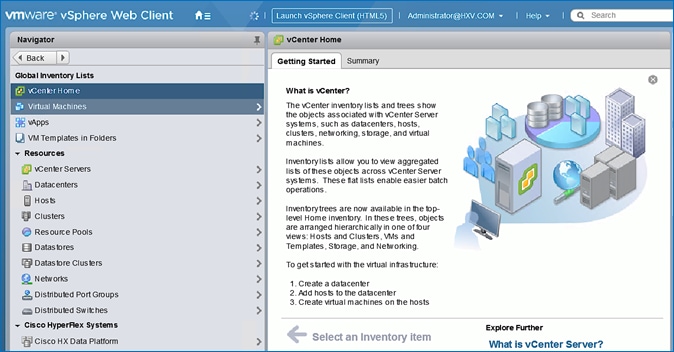

Initial Setup of APIC(s) in Pod-1

Complete the initial setup of the APICs in Pod-1. In Cisco ACI, all configuration is centralized and managed from the APIC - the spine and leaf switches in the fabric are not individually configured. APIC uses Link Layer Discovery Protocol (LLDP) to discover ACI-capable Nexus 9000 series switches (and other APICs) in the fabric. The newly discovered switches are then added, provisioned, and managed from the APIC web GUI. The initial setup establishes key parameters for the fabric such as Fabric ID, Pod ID, and address pools.

Deploy Spine and Leaf switches in Pod-1

Add spine and leaf switches in Pod-1 to the ACI fabric. APICs discover the switches in the fabric through LLDP. APICs can now add the switches to the fabric and manage them. In this step, only the APIC leaf switches are added to the fabric though the physical connectivity is in place for all

Configure Global Policies

Configure fabric-level policies such as Timezone and DNS policies.

Configure Pod Policies for Pod-1

Configure pod-level policies such as NTP, BGP Route Reflector function, Fabric Profiles and Access Policies for Pod-1.

Enable/Review ACI Fabric Settings

Review or enable settings that impact the flow of traffic between endpoints. These policies apply to all endpoints in the ACI Multi-Pod fabric.

Pre-configure Access Layer Policies

Configure common policies for access layer connection to endpoints, gateways or other devices that connect to the fabric. These policies can be re-used across all access layer connections in the ACI Multi-Pod fabric.

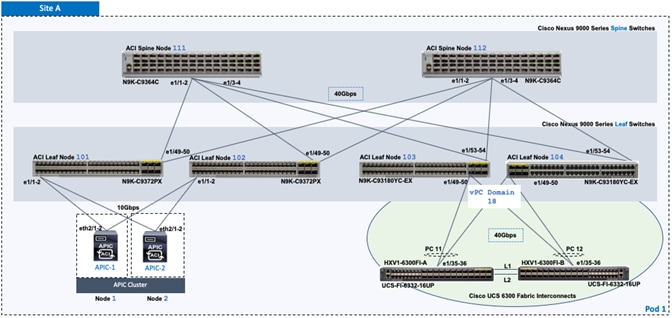

Physical Connectivity

Complete the physical cabling necessary to bring up an ACI Fabric in Pod-1 as shown in Figure 2. Out-of-Band (OOB) management and In-Band management connectivity for all devices and CIMC management for the APICs should also be completed – not shown in the figure.

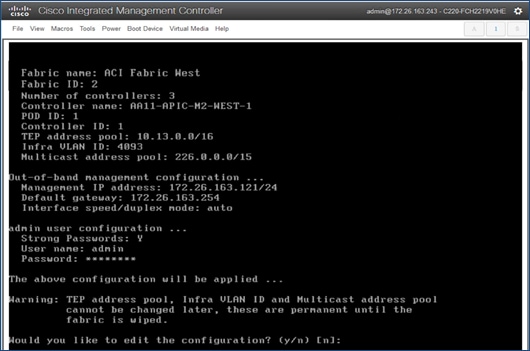

Initial Setup of APIC(s) in Pod-1

Follow the procedures outlined in this section to do an initial setup of the APIC(s) in Pod-1.

The screenshots in this section are from a previous release of this CVD. For this CVD, the previous testbed environment was upgraded and re-configured. Therefore, any screenshots showing the initial setup of the APIC cluster are based on a previous release of this CVD.

Prerequisites

KVM Console access is necessary to do an initial setup and configuration of new APIC(s). KVM access is available through CIMC and therefore access to the CIMC Management interface on each APIC is required. The following CIMC information is also needed:

· CIMC Management IP Address for the APIC(s) being set up

· CIMC log in credentials for the APIC(s) being set up

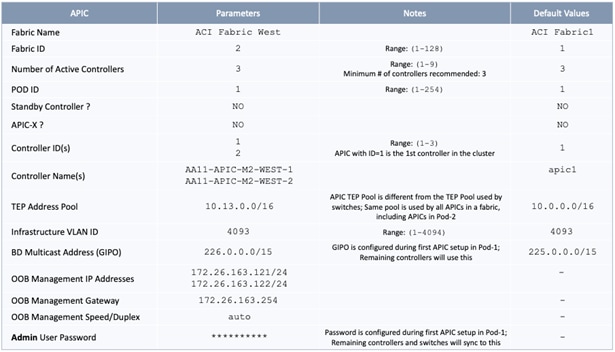

Setup Information

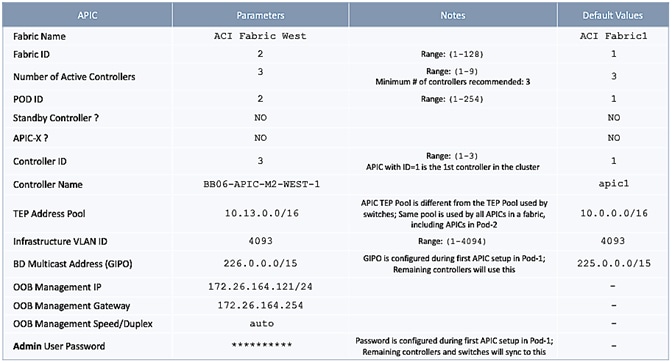

The parameters required for the initial setup of the APICs in Pod-1 are shown in Table 2.

Table 2 Setup Parameters for APICs in Pod-1

The TEP Address Pool specified above is specifically for the APICs, including the APIC in Pod-2. This pool is also used by the ACI fabric switches in Pod-1. The Pod-2 fabric switches use a different TEP pool, though the APIC in Pod-2 will still use the above pool.
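Because the Pod-1 and Pod-2 fabric switches draw from different TEP pools, it can help to confirm that the two planned pools do not overlap before running the setup utility. The following is a minimal sketch using Python's standard `ipaddress` module. The pool values are hypothetical placeholders, not the values from Table 2, and the helper name `tep_pools_overlap` is illustrative only.

```python
import ipaddress


def tep_pools_overlap(pool_a: str, pool_b: str) -> bool:
    """Return True if the two TEP pools share any addresses."""
    return ipaddress.ip_network(pool_a).overlaps(ipaddress.ip_network(pool_b))


# Hypothetical pools: substitute the values planned for each Pod.
POD1_TEP_POOL = "10.11.0.0/16"
POD2_TEP_POOL = "10.12.0.0/16"

if __name__ == "__main__":
    if tep_pools_overlap(POD1_TEP_POOL, POD2_TEP_POOL):
        print("TEP pools overlap; choose a distinct range for each Pod")
    else:
        print("TEP pools are distinct")
```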

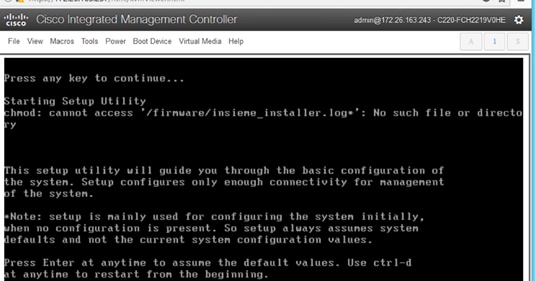

Deployment Steps

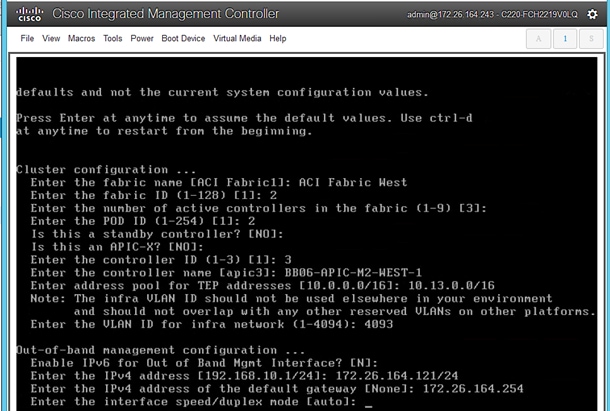

To do an initial setup of the new APICs in Pod-1, follow these steps:

1. Use a browser to navigate to the CIMC IP address of the new APIC. Log in using the admin account.

2. From the top menu, click Launch KVM. Select HTML based KVM from the drop-down list.

3. When the KVM Application launches, the initial APIC setup screen should be visible. Press any key to start the Setup Utility.

If the APIC was previously configured, reset it to factory defaults and wipe it clean before proceeding.

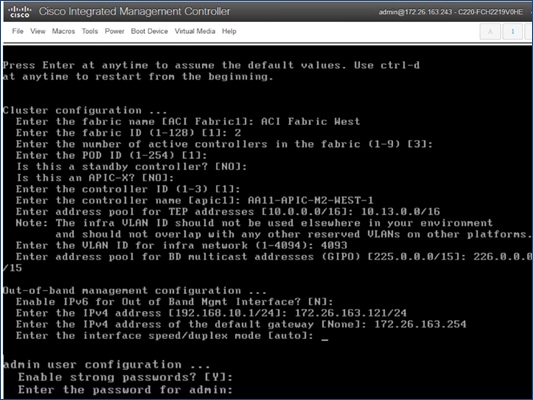

4. Use the Setup information provided above to step through the initial APIC configuration as shown below.

5. Press Enter after the last question (password for admin).

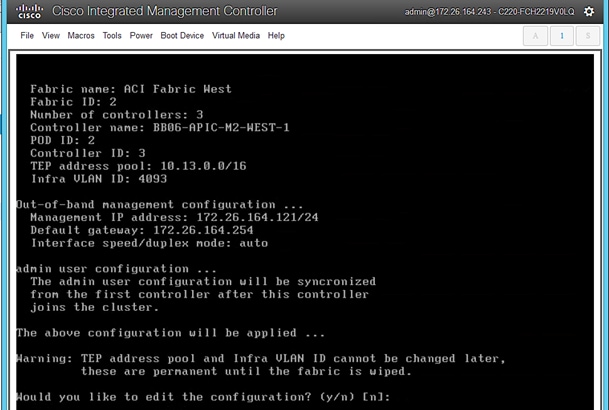

6. Review the configured information. Enter y if necessary to go back and make changes, otherwise press Enter to accept the configuration.

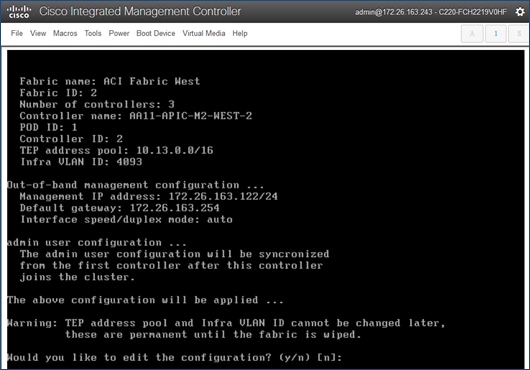

7. Repeat steps 1-6 for the next APIC in Pod-1.

The third APIC in Pod-2 will be set up at a later time, after Inter-Pod connectivity is established between Pods.

The APICs can now be used to configure and manage the ACI fabric by navigating to the Management IP address of any APIC in the cluster. The configuration done from one APIC will be synced to other APICs in the cluster, ensuring a consistent view of the fabric.

Deploy Spine and Leaf Switches in Pod-1

Once an APIC is up and running in Pod-1, it will discover connected spine and leaf switches in Pod-1 through LLDP. Follow the procedures outlined in this section to set up and deploy spine and leaf switches in Pod-1. The leaf switches that connect to Cisco UCS domains are added later.

All screenshots in this section are from a previous release of this CVD. The previous testbed environment was upgraded and re-configured for this CVD. Therefore, any screenshots showing the initial install and setup of the fabric are from the prior CVD release.

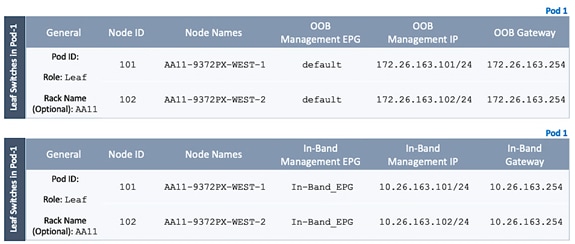

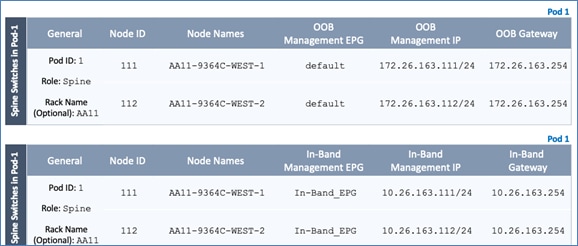

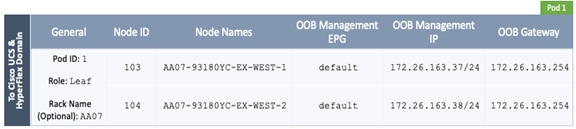

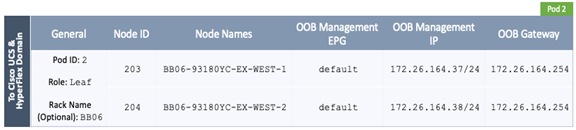

Setup Information

The setup information for deploying Spine and Leaf switches in Pod-1 is shown in the tables below.

Table 3 Setup Information - Leaf Switches

Table 4 Setup Information - Spine Switches

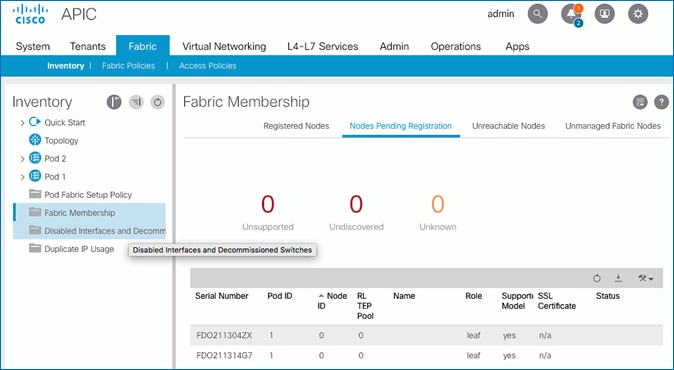

Add Leaf Switches to the ACI Fabric

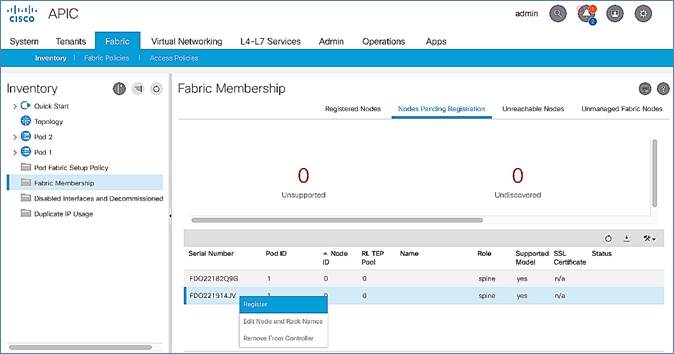

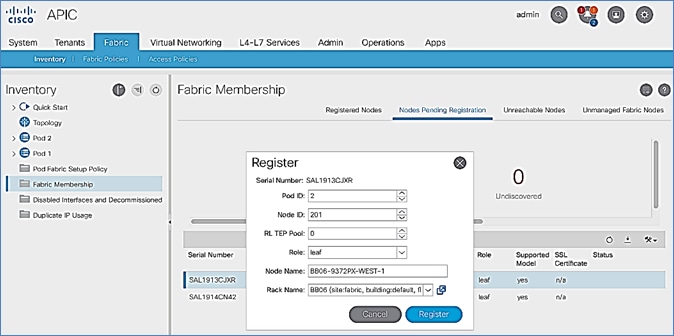

To add the discovered Leaf and Spine switches in Pod-1 to the ACI Fabric, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select Fabric > Inventory.

3. From the left navigation pane, navigate to Fabric Membership.

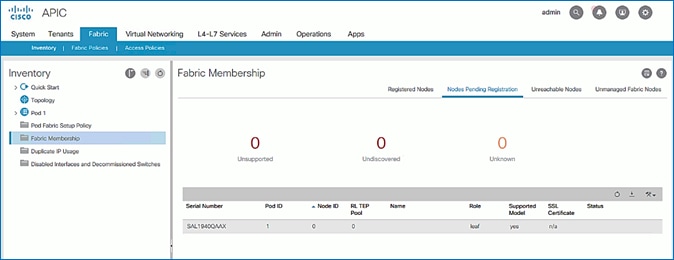

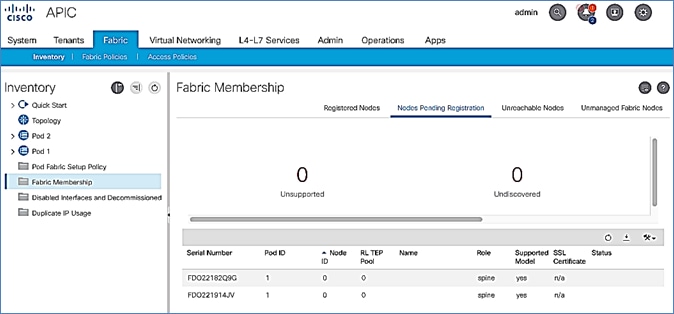

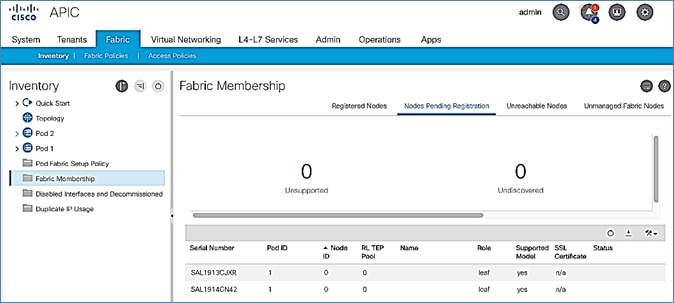

4. In the right navigation pane, go to the Nodes Pending Registration tab.

5. The newly discovered Leaf Switches will be listed with a Node ID of ‘0’. You should see at least one of the Leaf switches – the APIC is dual-homed to a pair of Leaf switches. Note that the switch’s Role is leaf.

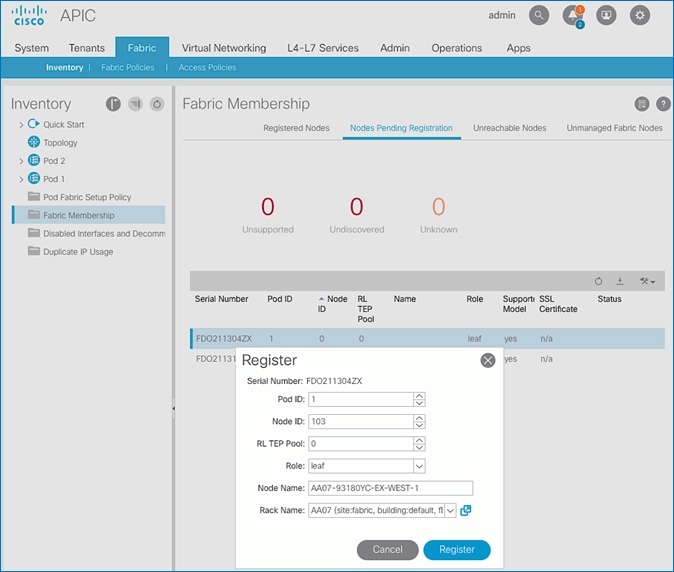

6. Use the serial numbers to identify the new Leaf switch. Collect the setup information for this switch.

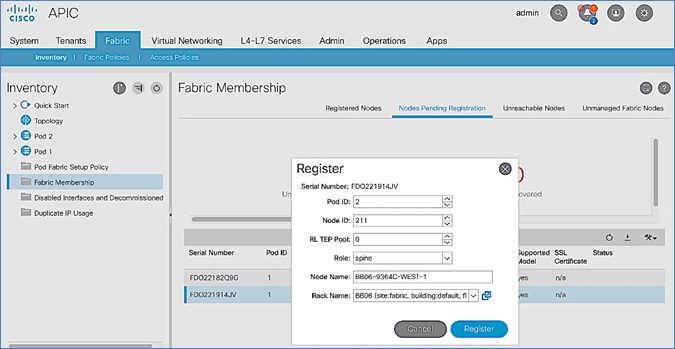

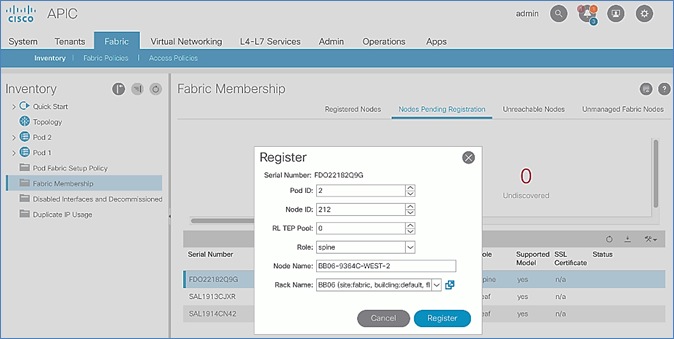

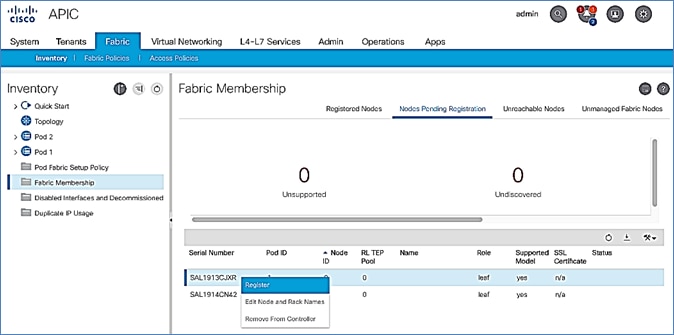

7. In the right windowpane, select the switch. Right-click and select Register.

8. In the Register pop-up window, specify the Pod ID (for example, 1), Node Id (for example, 101), Node Name (for example, AA11-9372PX-WEST-1) and Rack Name (for example, AA11).

9. Click Register.

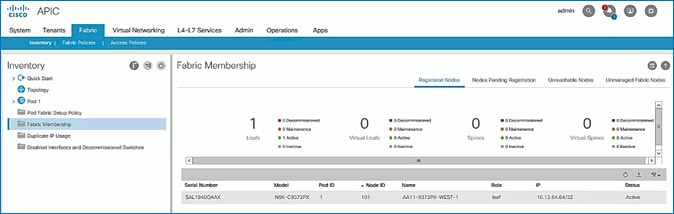

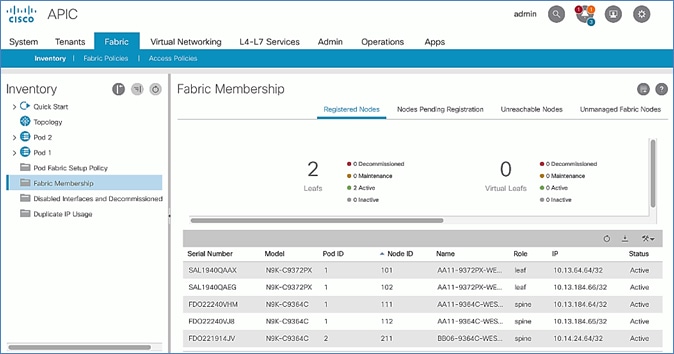

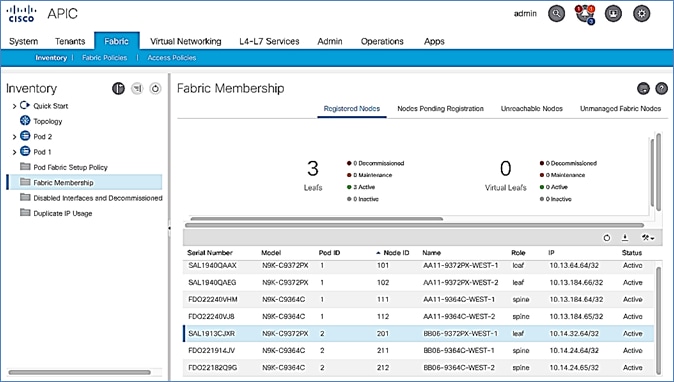

10. Switch to the Registered Nodes tab. The newly configured leaf switch should show up as Active after a few minutes.

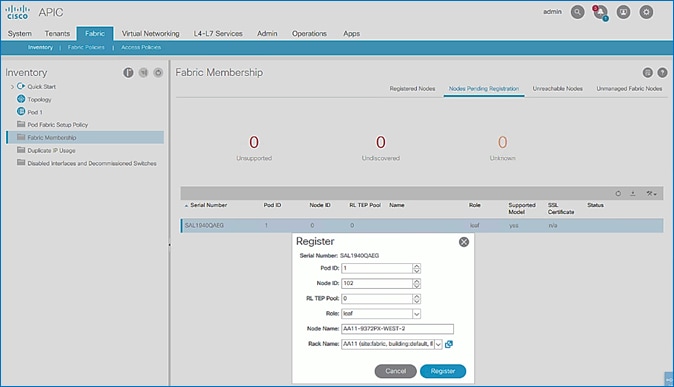

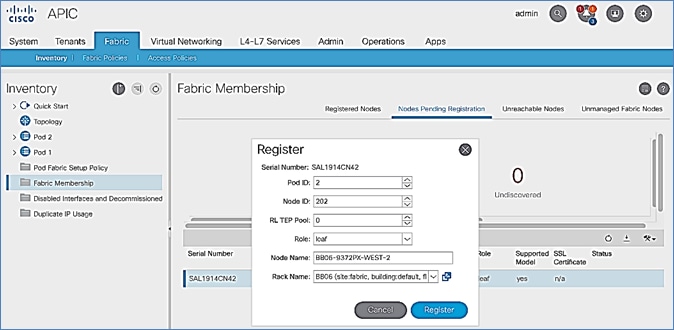

11. In the right navigation pane, go to the Nodes Pending Registration tab.

12. Select the second (-2) Leaf switch using the serial number. Right-click and select Register.

13. In the Register pop-up window, specify the Pod ID (for example, 1), Node Id (for example, 102), Node Name (for example, AA11-9372PX-WEST-2) and Rack Name (for example, AA11).

14. Click Register.

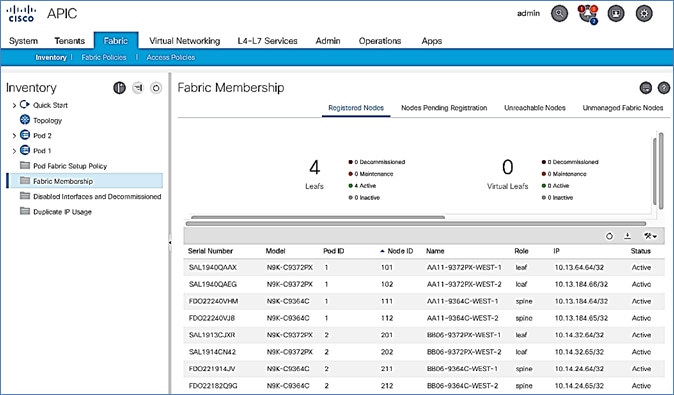

15. You should now see the Leaf switches under the Registered Nodes tab.

16. Repeat steps 1-14 to add additional leaf switch pairs to the fabric.
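The registration steps above can also be expressed as a REST payload. The sketch below only builds the JSON body and does not contact an APIC. The class name `fabricNodeIdentP`, its attributes, and the parent DN `uni/controller/nodeidentpol` are assumptions based on the APIC object model and should be verified against the APIC REST API documentation for the release in use. The serial number is a hypothetical placeholder, and the Rack Name field is intentionally left out because its attribute name is not confirmed here.

```python
import json


def node_registration_payload(serial, node_id, name, pod_id=1):
    """Build a fabric membership entry for one discovered switch.

    Assumed to be POSTed to /api/mo/uni/controller/nodeidentpol.json
    after authenticating to the APIC (not shown).
    """
    return {
        "fabricNodeIdentP": {
            "attributes": {
                "dn": f"uni/controller/nodeidentpol/nodep-{serial}",
                "serial": serial,          # taken from Nodes Pending Registration
                "nodeId": str(node_id),    # for example, 101
                "name": name,              # for example, AA11-9372PX-WEST-1
                "podId": str(pod_id),      # for example, 1
            }
        }
    }


if __name__ == "__main__":
    # "SERIAL-LEAF-1" is a placeholder, not a real serial number.
    payload = node_registration_payload("SERIAL-LEAF-1", 101, "AA11-9372PX-WEST-1")
    print(json.dumps(payload, indent=2))
```

Building the payload separately from sending it makes it easy to review the node ID and name assignments for a whole leaf pair before anything is pushed to the fabric.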

Upgrade Firmware on Leaf Switches in Pod-1 (Optional)

To upgrade the firmware on leaf switches in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, navigate to Admin > Firmware.

3. Select the tabs for Infrastructure > Nodes.

4. Check the Current Firmware version column for the newly deployed Leaf switches to verify they are at the desired version and that it is compatible with the APIC version running.

5. If an upgrade is not required, proceed to the next section. Otherwise, use the product documentation to upgrade the switches.

Add Spine Switches to the ACI Fabric

The screenshots in this section are from a previous release of the CVD available here.

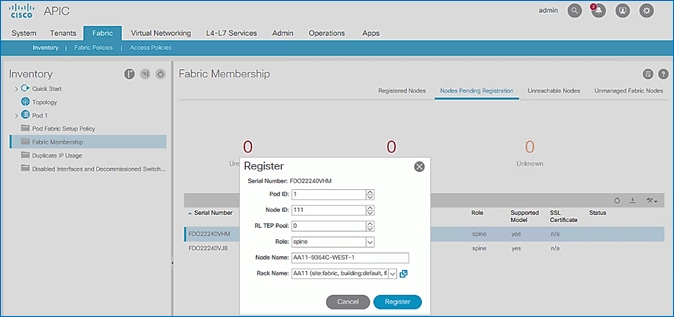

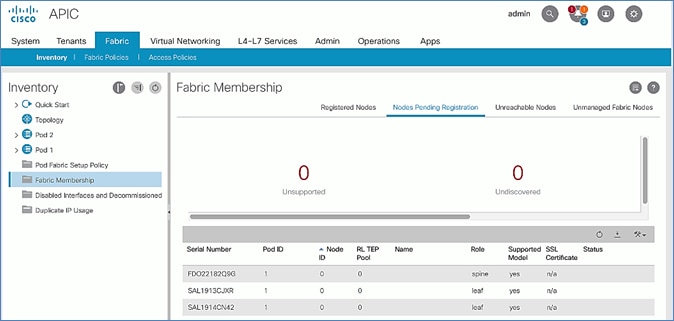

To add spine switches to the ACI fabric, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select Fabric > Inventory.

3. From the left navigation pane, navigate to Fabric Membership.

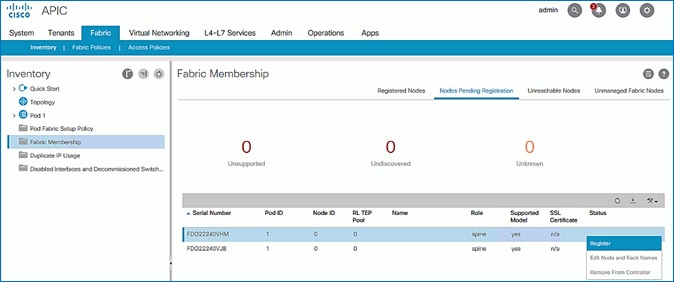

4. In the right navigation pane, go to the Nodes Pending Registration tab.

5. The newly discovered spine switches will be listed with a Node ID of ‘0’, with Role as spine.

6. Use the serial numbers to identify the spine switch pair. Collect the information for each switch.

7. Select the first (-1) spine switch using the serial number. Right-click and select Register.

8. In the Register pop-up window, specify the Pod ID (for example, 1), Node Id (for example, 111), Node Name (for example, AA11-9364C-WEST-1) and Rack Name (for example, AA11).

9. Click Register.

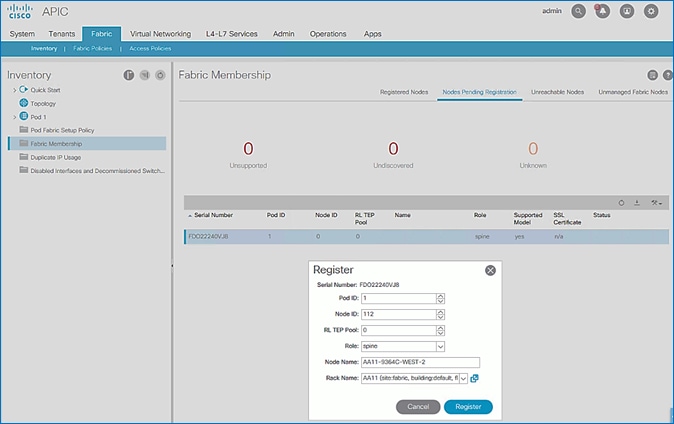

10. Select the second (-2) spine switch using the serial number. Right-click and select Register.

11. In the Register pop-up window, specify the Pod ID (for example, 1), Node Id (for example, 112), Node Name (for example, AA11-9364C-WEST-2) and Rack Name (for example, AA11).

12. Click Register.

13. Repeat steps 1-12 to add additional spine switch pairs to the fabric.

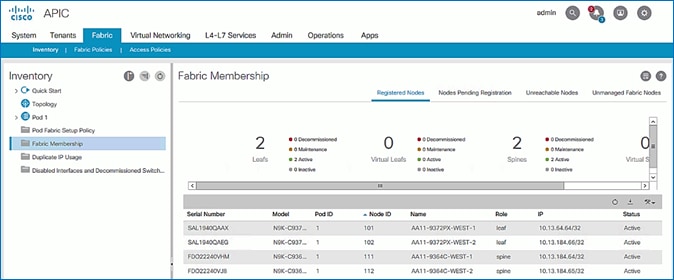

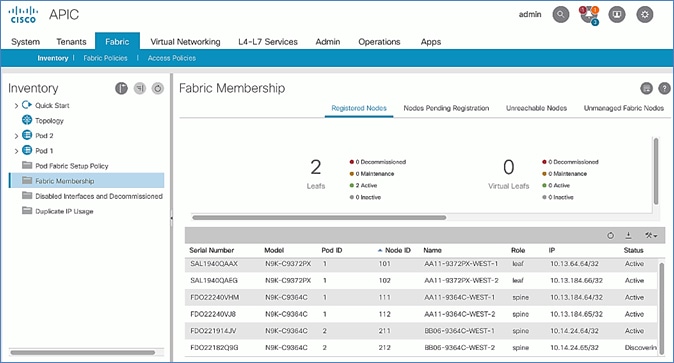

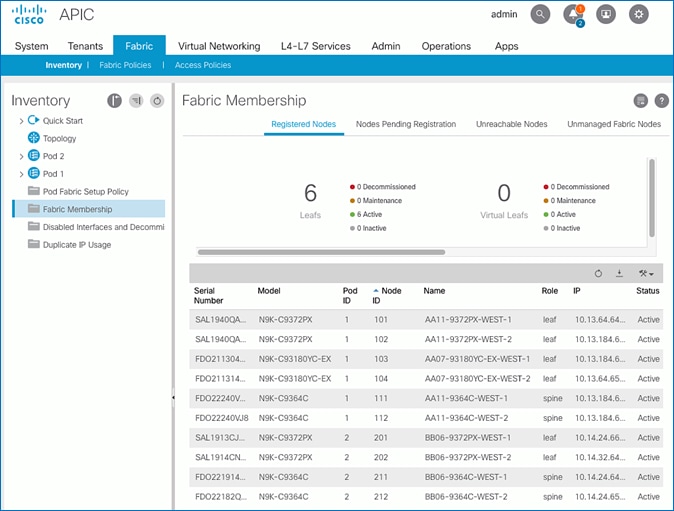

Verify Spine and Leaf Switches are Added to the ACI Fabric

To verify that the spine and leaf switches have been added to the ACI fabric, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select Fabric > Inventory.

3. From the left navigation pane, navigate to Fabric Membership.

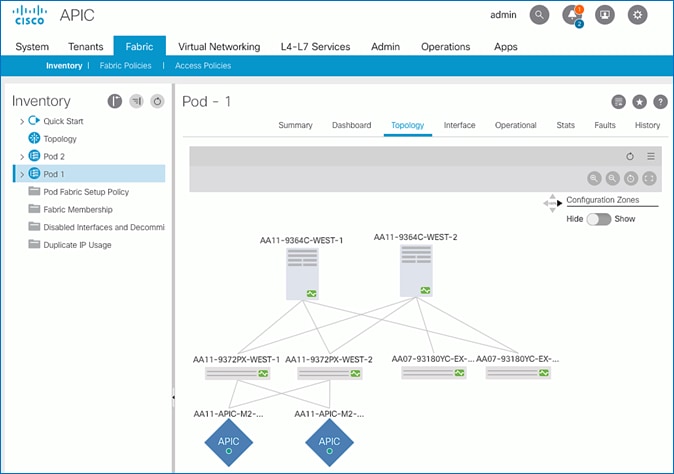

4. In the right navigation pane, go to the Registered Nodes tab.

5. All Spine and Leaf switches are configured and added to the fabric. Note that the APIC has allocated IP addresses from the TEP Pool for Pod-1.

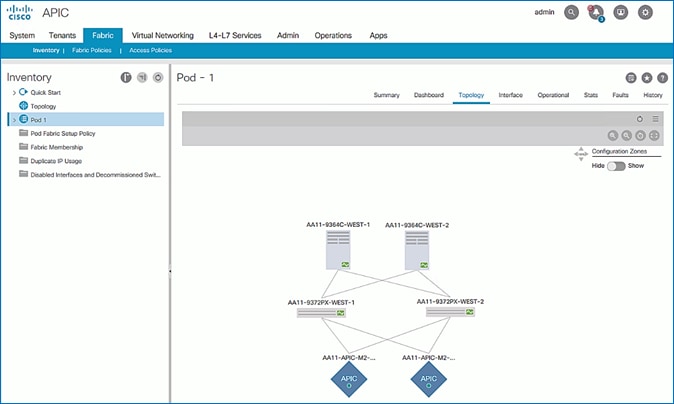

6. From the left navigation pane, select Topology to view the fabric topology after all devices have been added to the fabric.
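The same verification can be scripted against the output of a fabric node query. The sketch below assumes the response of a class query such as `/api/class/fabricNode.json` has already been fetched and parsed into a dictionary. The `fabricNode` class and its `id` and `fabricSt` attributes are assumptions based on the APIC object model, and the sample response is hypothetical.

```python
def nodes_not_active(response, expected_ids):
    """Return the expected node IDs that are missing or not in the active state."""
    active = set()
    for item in response.get("imdata", []):
        attrs = item.get("fabricNode", {}).get("attributes", {})
        if attrs.get("fabricSt") == "active":
            active.add(int(attrs["id"]))
    return set(expected_ids) - active


if __name__ == "__main__":
    # Hypothetical response: both leaf switches and one spine are active.
    sample = {
        "imdata": [
            {"fabricNode": {"attributes": {"id": "101", "role": "leaf", "fabricSt": "active"}}},
            {"fabricNode": {"attributes": {"id": "102", "role": "leaf", "fabricSt": "active"}}},
            {"fabricNode": {"attributes": {"id": "111", "role": "spine", "fabricSt": "active"}}},
            {"fabricNode": {"attributes": {"id": "112", "role": "spine", "fabricSt": "inactive"}}},
        ]
    }
    print(sorted(nodes_not_active(sample, {101, 102, 111, 112})))
```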

Upgrade Firmware on Spine Switches in Pod-1 (Optional)

To upgrade the firmware on the spine switches in Pod-1, follow these steps:

1. From the top menu, navigate to Admin > Firmware.

2. Select the tabs for Infrastructure > Nodes.

3. Check the Current Firmware version column for the newly deployed Spine switches to verify they are compatible with the APIC version running.

4. If an upgrade is not required, proceed to the next section. Otherwise, use the product documentation to upgrade the switches.

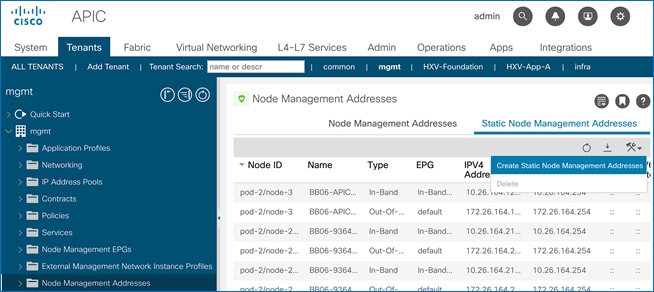

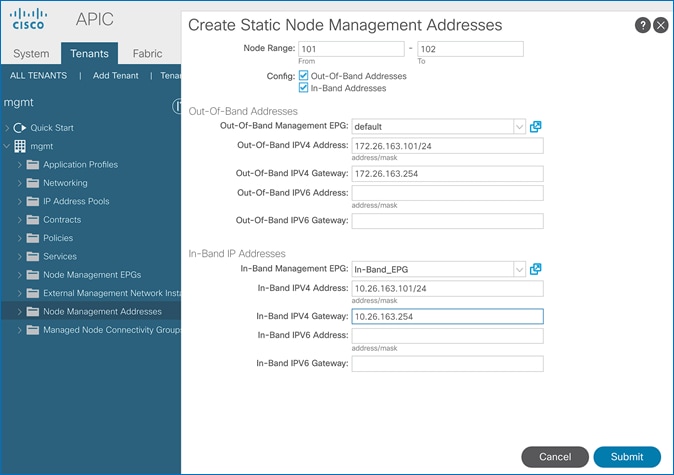

Configure Out-of-Band and In-Band Management for Switches in Pod-1

To configure Out-of-Band (OOB) and In-Band Management for Pod-1 Spine and Leaf switches, follow these steps using the setup information provided in Table 3 and Table 4:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select Tenants > mgmt.

3. From the left navigation pane, expand and select mgmt > Node Management Addresses.

4. In the right windowpane, select the tab for Static Node Management Addresses.

5. Click the arrow next to the Tools icon and select Create Static Node Management Addresses.

6. In the Create Static Node Management Addresses pop-up window, specify a Node Range (for example, 101-102). For Config, select the check boxes for Out-of-Band Addresses and In-Band Addresses.

7. In the Out-of-Band Addresses section of the window, for the Out-of-Band Management EPG, select default from the drop-down list.

8. Specify the Out-of-Band Management IPv4 Address for the first node in the specified node range.

9. Specify the Out-of-Band Management IPv4 Gateway.

10. In the In-Band IP Addresses section of the window, for the In-Band Management EPG, select an existing EPG (for example, In-Band_EPG) or select Create In-Band Management EPG from the drop-down list to create a new EPG.

11. Specify the In-Band Management IPv4 Address for the first node in the specified node range.

12. Specify the In-Band Management IPv4 Gateway.

13. Click Submit to complete.

14. Click Yes in the Confirm pop-up window to assign the IP address to the range of nodes specified.

15. Repeat steps 1-14 for the remaining switches in Pod-1.

16. The switches can now be accessed directly using SSH.

You can deploy contracts to limit access to the Out-of-Band Management network – see the APIC Configuration Guide for more details. Contracts were not deployed in this setup. You may also need to re-add the APIC Out-of-Band Management IP addresses under Node Management Addresses even though they were configured during the initial setup of the APIC. Node IDs for APICs typically start from ‘1’.
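When a node range is specified, the address entered is for the first node in the range and the remaining nodes receive the following addresses. The sketch below illustrates that assignment and builds one static out-of-band entry per node. The class name `mgmtRsOoBStNode`, its attributes, and the target DN format are assumptions based on the APIC object model, and the addresses use a documentation range rather than the values from Table 3 and Table 4.

```python
import ipaddress


def oob_static_addresses(first_node, last_node, first_ip, prefix_len, gateway, pod_id=1):
    """Build one out-of-band address entry per node, counting up from first_ip.

    Entries are assumed to be children of uni/tn-mgmt/mgmtp-default/oob-default.
    """
    start = ipaddress.ip_address(first_ip)
    entries = []
    for offset, node_id in enumerate(range(first_node, last_node + 1)):
        entries.append({
            "mgmtRsOoBStNode": {
                "attributes": {
                    "tDn": f"topology/pod-{pod_id}/node-{node_id}",
                    "addr": f"{start + offset}/{prefix_len}",
                    "gw": gateway,
                }
            }
        })
    return entries


if __name__ == "__main__":
    # Hypothetical addressing for the leaf pair 101-102.
    for entry in oob_static_addresses(101, 102, "192.0.2.11", 24, "192.0.2.1"):
        attrs = entry["mgmtRsOoBStNode"]["attributes"]
        print(attrs["tDn"], attrs["addr"], attrs["gw"])
```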

Configure Global Policies

Follow the procedures outlined in this section to configure fabric-wide policies.

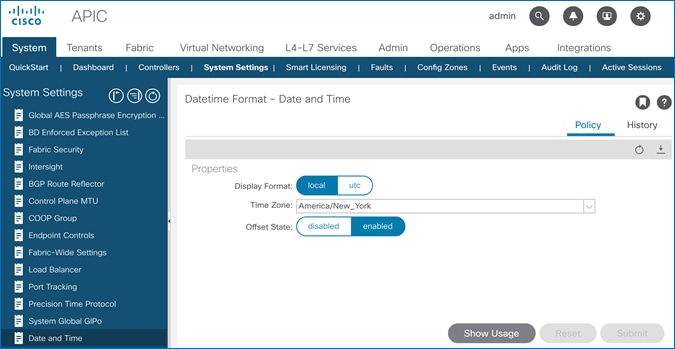

Configure Time Zone Policy

To configure Time Zone for the ACI fabric, follow these steps:

1. Use a browser to navigate to APIC’s Web GUI. Log in using the admin account.

2. From the top menu, select System > System Settings.

3. In the left navigation pane, expand System Settings and select Date and Time.

4. In the right windowpane, select the Policy tab. For the Time Zone, select the time zone for the deployment from the drop-down list and verify that Offset State is enabled.

5. Click Submit.

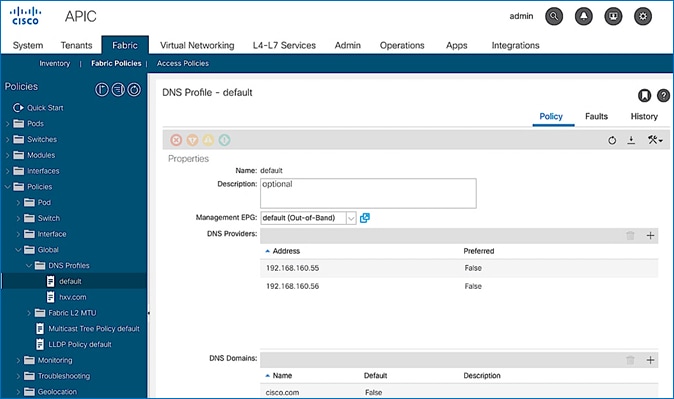

Configure DNS Policy

To configure Domain Name Server (DNS) for the ACI fabric, follow these steps:

1. Use a browser to navigate to APIC’s Web GUI. Log in using the admin account.

2. From the top menu, select Fabric > Fabric Policies.

3. In the left navigation pane, expand and select Policies > Global > DNS Profiles > default.

4. For the Management EPG, select the default (Out-of-Band) from the drop-down list if the DNS servers are reachable through the out of band management subnet.

5. Use the [+] signs to the right of DNS Providers and DNS Domains to add DNS servers and domains as needed.

Configure Pod Policies for Pod-1

To configure Pod-specific policies for Pod-1, complete the procedures outlined in this section.

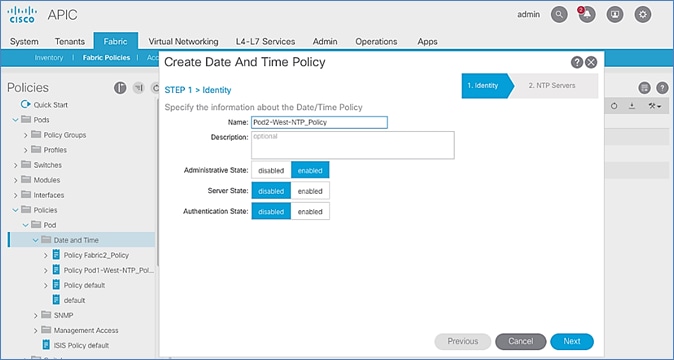

Configure NTP for Pod-1

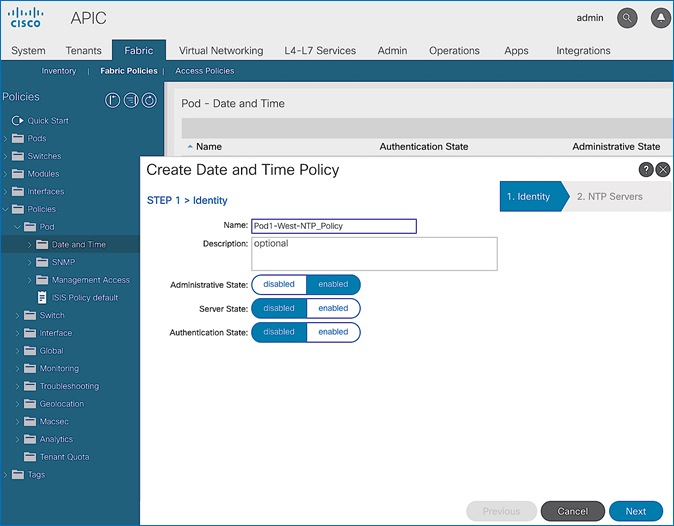

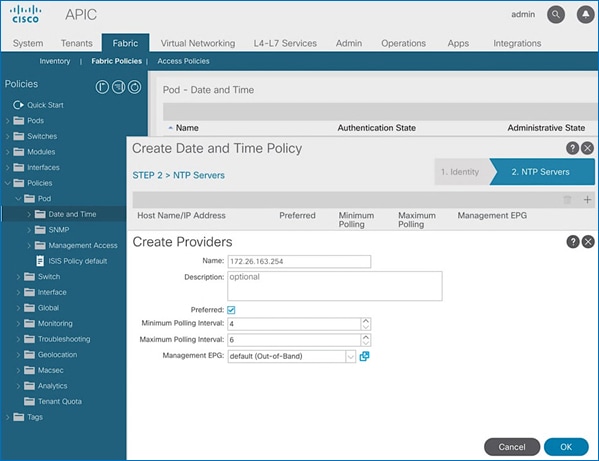

To configure NTP for Pod-1, follow these steps using the setup information provided below:

· NTP Policy Name: Pod1-West-NTP_Policy

· NTP Server: 172.26.163.254

· Management EPG: default(Out-of-Band)

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select Fabric > Fabric Policies.

3. From the left navigation pane, navigate to Policies > Pod > Date and Time.

4. Right-click and select Create Date and Time Policy.

5. In the Create Date and Time Policy pop-up window, specify a Name for Pod-1’s NTP Policy. The Administrative State should be enabled.

6. Click Next.

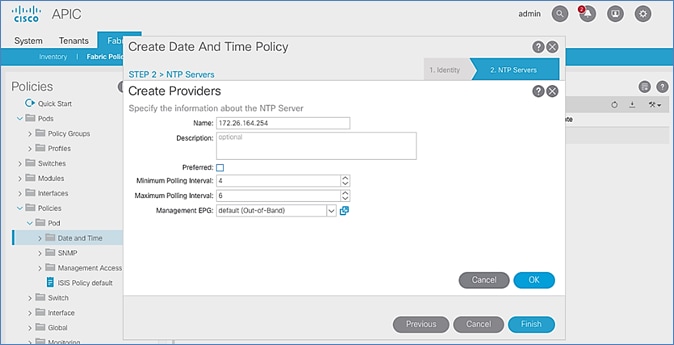

7. In Step 2 > NTP Servers, add NTP server(s) for Pod-1 using the [+] to the right of the list of servers.

8. In the Create Providers pop-up window, specify the Hostname/IP of the NTP server in the Name field. If multiple NTP Providers are being created for Pod-1, select the checkbox for Preferred when creating the preferred provider. For the Management EPG, select default (Out-of-Band) from the drop-down list.

9. Click OK.

10. Click Finish.

The NTP policy is not in effect until it is applied using a Pod Profile in an upcoming section.
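For reference, the NTP policy created in the steps above can be sketched as a REST payload. The policy name and NTP server are the values listed in the setup information. The class names (`datetimePol`, `datetimeNtpProv`, `datetimeRsNtpProvToEpg`) and the DNs are assumptions based on the APIC object model and should be checked against the APIC documentation before use. The sketch only builds the JSON body.

```python
import json

# Assumed DN of the default Out-of-Band management EPG.
OOB_EPG_DN = "uni/tn-mgmt/mgmtp-default/oob-default"


def ntp_policy_payload(policy_name, servers, preferred=None):
    """Build a Date and Time policy with one NTP provider per server."""
    providers = []
    for server in servers:
        providers.append({
            "datetimeNtpProv": {
                "attributes": {
                    "name": server,
                    "preferred": "yes" if server == preferred else "no",
                },
                "children": [
                    {"datetimeRsNtpProvToEpg": {"attributes": {"tDn": OOB_EPG_DN}}}
                ],
            }
        })
    return {
        "datetimePol": {
            "attributes": {
                "dn": f"uni/fabric/time-{policy_name}",
                "name": policy_name,
                "adminSt": "enabled",
            },
            "children": providers,
        }
    }


if __name__ == "__main__":
    payload = ntp_policy_payload("Pod1-West-NTP_Policy", ["172.26.163.254"],
                                 preferred="172.26.163.254")
    print(json.dumps(payload, indent=2))
```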

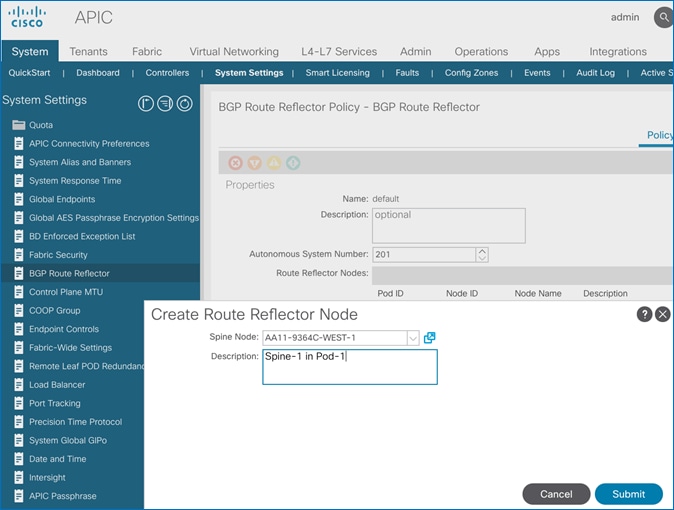

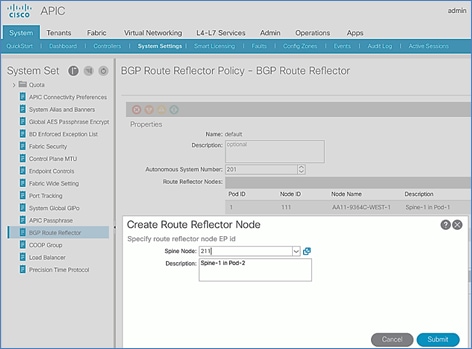

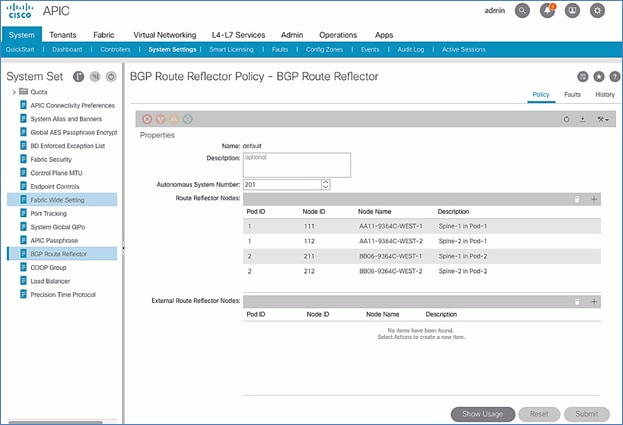

Update BGP Route Reflector Policy for Pod-1

In an ACI fabric with multiple Spine switches, a pair of spine switches is selected as BGP Route Reflectors (RR) to redistribute routes from external domains into the fabric. In a Multi-Pod ACI fabric, each Pod has a pair of RR nodes. The procedures in this section will enable RR functionality on Pod-1 spine switches.

Setup Information

· BGP Route-Reflector Policy Name: default

· Pod-1 Spine Nodes: AA11-9364C-WEST-1, AA11-9364C-WEST-2

Deployment Steps

To enable BGP Route Reflector functionality on spine switches in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select System > System Settings.

3. From the left navigation pane, navigate to BGP Route Reflector.

4. In the right windowpane, select the Policy tab and in the Route Reflector Nodes section, click the [+] on the right to create route reflector nodes.

5. In the Create Route Reflector Node pop-up window, for the Spine Node, select the node name for the first RR spine in Pod-1.

6. Click Submit.

7. Repeat steps 1-6 to add the second RR spine in Pod-1.

8. You should now see two spine switches as Route Reflector Nodes in Pod-1.
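The route reflector assignment above can be sketched as a REST payload that lists the spine nodes under the default BGP Route Reflector policy. The node IDs 111 and 112 follow the example values used when registering the spine switches. The class names `bgpRRP` and `bgpRRNodePEp` and the DN `uni/fabric/bgpInstP-default/rr` are assumptions based on the APIC object model. The sketch does not set the BGP autonomous system number, which is configured separately.

```python
import json


def route_reflector_payload(spine_node_ids, pod_id=1):
    """Build the route reflector node list for the default BGP RR policy."""
    return {
        "bgpRRP": {
            "attributes": {"dn": "uni/fabric/bgpInstP-default/rr"},
            "children": [
                {"bgpRRNodePEp": {"attributes": {"id": str(node_id), "podId": str(pod_id)}}}
                for node_id in spine_node_ids
            ],
        }
    }


if __name__ == "__main__":
    # 111 and 112 are the example spine node IDs for Pod-1.
    print(json.dumps(route_reflector_payload([111, 112]), indent=2))
```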

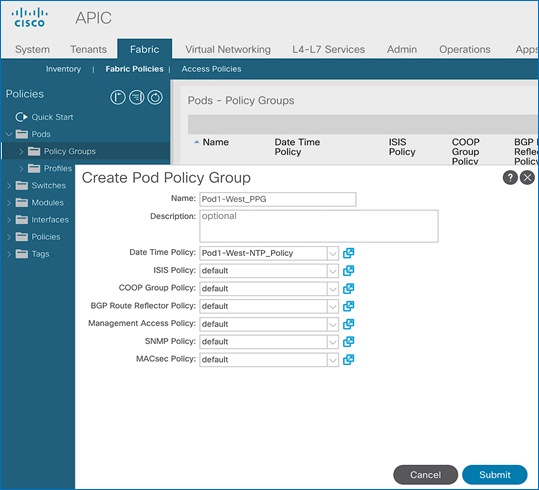

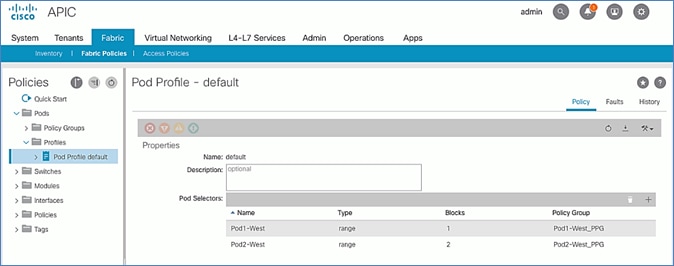

Update Pod Profile to Apply Pod Policies

In ACI, Pod policies (for example, NTP and BGP policies) are applied through a Pod Profile. A Pod Policy Group is used to first group the policies in each Pod before they are applied using a Pod Profile. Pod-1 and Pod-2 policies are applied using the same Pod Profile. The procedures in this section will apply Pod Policies for Pod-1.

Setup Information

· Pod Policy Group Name for Pod-1: Pod1-West_PPG

· Pod Selector Name for Pod-1: Pod1-West

· Pod Profile: default

· ID for Pod-1: 1

· Pod policy names to be applied: Pod1-West-NTP_Policy, default

Deployment Steps

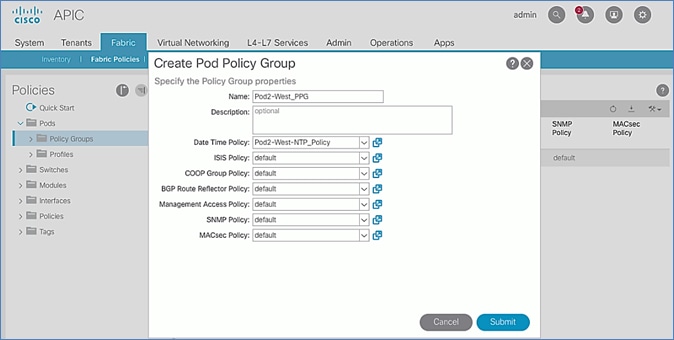

To apply Pod policies for Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top menu, select Fabric > Fabric Policies.

3. From the left navigation pane, navigate to Pods > Policy Groups. Right-click and select Create Pod Policy Group to create a policy group.

4. In the Create Pod Policy Group pop-up window, for Name, specify a Pod Policy Group Name. For the Date Time Policy, select the previously created NTP policy for Pod-1. Select default for the remaining policies.

5. Click Submit.

6. From the left navigation pane, navigate to Pods > Profiles > Pod Profile default.

7. In the right windowpane, select the Policy tab and in the Pod Selectors section, click the [+] icon to add a Pod Selector.

8. In the newly created row, specify a Name. For Type, select Range. For Blocks, specify the Pod Id for Pod-1. For Policy Group, select the previously created Policy Group Name for Pod-1.

9. Click Update and then Submit to apply the Pod Policies for Pod-1.
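The two objects created in the steps above, a Pod Policy Group and a Pod Selector in the default Pod Profile, can be sketched as REST payloads. The names come from the setup information for this section. The class names, attribute names, and DNs are assumptions based on the APIC object model, and the block name `pod1` is a hypothetical placeholder. Verify all of them against the APIC documentation before use.

```python
import json


def pod_policy_group_payload(group_name, ntp_policy, bgp_rr_policy="default"):
    """Group the Date and Time and BGP Route Reflector policies for a Pod."""
    return {
        "fabricPodPGrp": {
            "attributes": {
                "dn": f"uni/fabric/funcprof/podpgrp-{group_name}",
                "name": group_name,
            },
            "children": [
                {"fabricRsTimePol": {"attributes": {"tnDatetimePolName": ntp_policy}}},
                {"fabricRsPodPGrpBGPRRP": {"attributes": {"tnBgpInstPolName": bgp_rr_policy}}},
            ],
        }
    }


def pod_selector_payload(selector_name, pod_id, group_name):
    """Select one Pod by ID and bind it to a Pod Policy Group.

    Assumed to be posted under the default Pod Profile (uni/fabric/podprof-default).
    """
    return {
        "fabricPodS": {
            "attributes": {"name": selector_name, "type": "range"},
            "children": [
                {"fabricPodBlk": {"attributes": {
                    "name": f"pod{pod_id}",  # hypothetical block name
                    "from_": str(pod_id),
                    "to_": str(pod_id),
                }}},
                {"fabricRsPodPGrp": {"attributes": {
                    "tDn": f"uni/fabric/funcprof/podpgrp-{group_name}",
                }}},
            ],
        }
    }


if __name__ == "__main__":
    print(json.dumps(pod_policy_group_payload("Pod1-West_PPG", "Pod1-West-NTP_Policy"), indent=2))
    print(json.dumps(pod_selector_payload("Pod1-West", 1, "Pod1-West_PPG"), indent=2))
```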

Enable/Review ACI Fabric Settings

Customers should evaluate the ACI fabric settings discussed in this section and apply them only if they are appropriate for their environment. Some settings are recommended and required, while others are recommended but optional. The procedures discussed in this section will apply the following fabric settings.

· COS Preservation (Fabric Wide)

· Enforce Subnet Check (Fabric Wide, Optional)

· Limit IP Learning to Subnet (Bridge Domain Level, Optional)

· IP Aging (Fabric Wide, Optional)

· Endpoint Learning Features

- Endpoint Dataplane Learning (Bridge Domain Level, Enabled by default)

- Layer 2 Unknown Unicast (Bridge Domain Level)

- Clear Remote MAC Entries (Bridge Domain Level, Optional)

- Unicast Routing (Bridge Domain Level)

- ARP Flooding (Bridge Domain Level)

- GARP Based Detection for EP Move Detection Mode (Bridge Domain Level)

· Jumbo Frames and MTU

Not all features will be available on first generation ACI leaf switches, but they are available on second generation switches. Models of first and second-generation leaf switches are provided below - see the Cisco Product documentation for a complete list.

· First-generation Cisco ACI leaf switch models: Nexus 9332PQ, Nexus 9372 (PX, PX-E, TX, TX-E), Nexus 9396 (PX, TX), 93120TX, 93128TX switches

· Second-generation Cisco ACI leaf switch models: Nexus 9300-EX and 9300-FX Series, Nexus 9348GC-FXP, Nexus 9336C-FX2, Nexus 93240YC-FX2 switches.

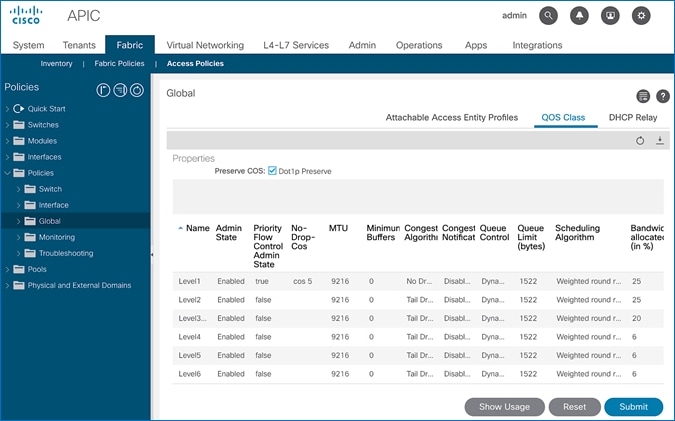

COS Preservation (Fabric Wide Setting)

The Class of Service (COS) Preservation feature in ACI preserves the COS setting in the traffic received from the endpoints. This feature should be enabled in all HyperFlex deployments to preserve the COS end-to-end across an ACI fabric, including an ACI Multi-Pod fabric. This policy has fabric-wide impact.

To enable COS Preservation, follow these steps:

1. Use a browser to navigate to APIC’s Web GUI. Log in using the admin account.

2. From the top menu, select Fabric > Access Policies.

3. In the left navigation pane, select and expand Policies > Policies > Global.

4. In the right windowpane, select the QOS Class tab. For Preserve QOS, select the Dot1p Preserve check box.

5. Click Submit and then Submit Changes in the pop-up window.

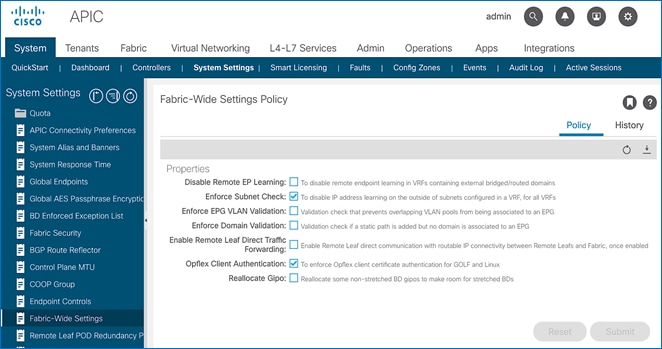

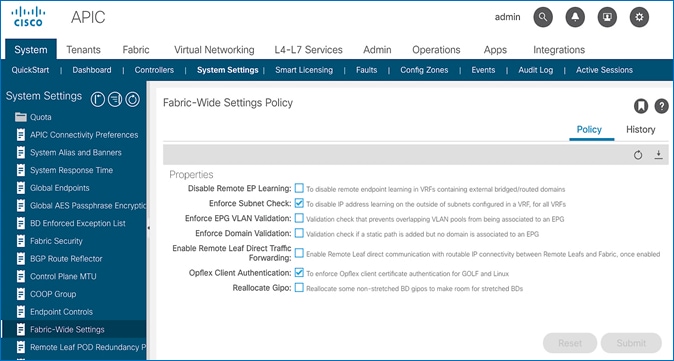

Enforce Subnet Check for Endpoint Learning (Fabric Wide Setting)

This feature limits both local and remote IP endpoint learning in a VRF to only those addresses that belong to one of the bridge domain subnets defined for that VRF. This is a fabric-wide policy that impacts data plane learning on all VRFs. Note that for local learning, the source IP address must also be in the same bridge domain subnet, but for remote learning, the source IP address just needs to match one of the bridge domain subnets for the VRF.

For addresses in subnets outside the VRF, enabling this feature prevents all address learning (MAC and IP) for local endpoints and IP address learning for remote endpoints. This is a stricter check than the Limit IP Learning to Subnet feature discussed in the next section, which applies only to IP addresses (not MAC addresses) and only to local endpoint learning (not remote endpoints). However, Limit IP Learning to Subnet is more granular in scope: it does the subnet check on a per bridge domain basis, while Enforce Subnet Check checks against all subnets at the VRF level and is enabled or disabled at the fabric level, so it applies to all VRFs in the fabric. Limiting endpoint learning reduces ACI fabric resource usage, so this feature is recommended but optional. This feature is disabled by default.
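The local and remote rules described above can be illustrated with a small model. This is a simplified sketch of the decision logic only, not of how the leaf switch hardware implements it. The function name, bridge domain names, and subnets are hypothetical.

```python
import ipaddress


def would_learn_ip(src_ip, ingress_bd, vrf_bd_subnets, local):
    """Model the Enforce Subnet Check decision for one source IP address.

    vrf_bd_subnets maps each bridge domain in the VRF to its list of subnets.
    Local learning requires a match in the ingress bridge domain's subnets.
    Remote learning requires a match in any bridge domain subnet of the VRF.
    """
    ip = ipaddress.ip_address(src_ip)
    if local:
        candidates = vrf_bd_subnets.get(ingress_bd, [])
    else:
        candidates = [s for subnets in vrf_bd_subnets.values() for s in subnets]
    return any(ip in ipaddress.ip_network(subnet) for subnet in candidates)


if __name__ == "__main__":
    # Hypothetical VRF with two bridge domains.
    vrf = {"BD-A": ["10.1.1.0/24"], "BD-B": ["10.1.2.0/24"]}
    print(would_learn_ip("10.1.1.10", "BD-A", vrf, local=True))    # in BD-A subnet
    print(would_learn_ip("10.1.2.10", "BD-A", vrf, local=True))    # wrong BD for local learning
    print(would_learn_ip("10.1.2.10", "BD-A", vrf, local=False))   # any VRF subnet is enough
    print(would_learn_ip("172.16.0.10", "BD-A", vrf, local=False)) # outside the VRF
```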

Some guidelines regarding this feature are provided below:

· This feature is available only on second-generation leaf switches. In a mixed environment with first and second-generation leaf switches, the first-generation switches will ignore this feature.

· Enabling this feature will enable it fabric-wide, across all VRFs though the subnet-check is for the subnets in the VRF.

· Available in APIC Releases 2.2(2q) and higher 2.2 releases and in 3.0(2h) and higher. It is not available in 2.3 or 3.0(1x) releases.

· The feature can be enabled/disabled under Fabric > Access Policies > Global Policies > Fabric Wide Setting Policy in earlier releases.

To enable the Enforce Subnet Check feature, follow these steps:

1. Use a browser to navigate to APIC’s Web GUI. Log in using the admin account.

2. From the top menu, select System > System Settings.

3. In the left navigation pane, select Fabric-Wide Settings.

4. In the right windowpane, select the check box for Enforce Subnet Check.

5. Click Submit.

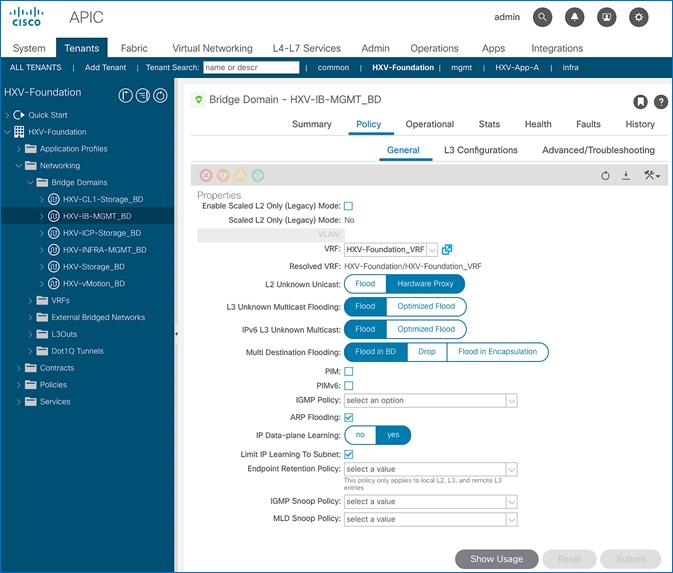

Limit IP Learning to Subnet (Bridge-domain, Optional)

This is a bridge-domain level setting. It is superseded by the Enforce Subnet Check feature in the previous section when that feature is enabled. This feature changes the default endpoint IP address learning behavior of the ACI fabric. When it is enabled, the fabric learns an endpoint IP address only if the source IP address belongs to one of the subnets configured for that bridge domain; addresses in subnets outside the bridge domain are not learned. A bridge domain can have multiple IP subnets, and learning is permitted for all of them. This feature also reduces ACI fabric resource usage and therefore it is recommended but optional.

This feature is available as of APIC release 1.1(1j) and enabled by default as of APIC releases 2.3(1e) and 3.0(1k). This feature can be enabled for HyperFlex deployments as shown in the figure below.

Some guidelines regarding this feature are provided below:

· Available on first and second-generations of ACI leaf switches

· If Enforce Subnet Checking is also enabled, it supersedes this feature.

· This feature should be used when subnet-check is for a specific bridge domain (as opposed to all VRF subnets) or when you have an environment with first-generation leaf switches.

· Prior to APIC release 3.0(1k), toggling this feature with Unicast Routing enabled could result in an impact of 120s. In prior releases, ACI flushed all endpoints addresses and suspended learning on the bridge domain for 120s. The behavior in 3.0(1k) and later releases is to only flush endpoint IP addresses that are not part of the bridge domain subnets and there is no suspension of address learning.

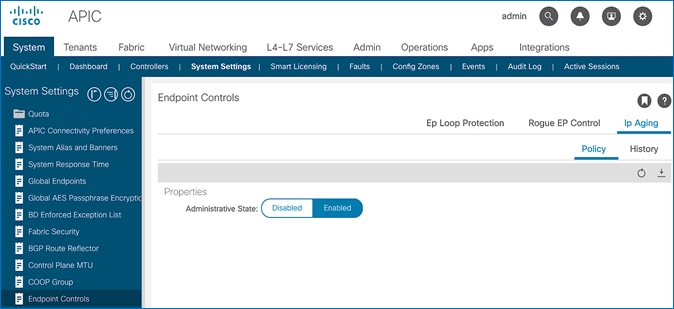

IP Aging (Fabric Wide Setting)

IP Aging tracks the endpoint IP addresses that the fabric has learned and ages out stale entries. This feature also reduces ACI fabric resource usage and therefore it is recommended but optional. This is a fabric-wide setting.

To enable IP aging, follow these steps:

1. Use a browser to navigate to APIC’s Web GUI. Log in using the admin account.

2. From the top menu, select System > System Settings.

3. In the left navigation pane, select Endpoint Controls.

4. In the right windowpane, select the IP Aging tab and then the Policy tab. For Administrative State, click Enabled.

5. Click Submit.
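The fabric-wide settings covered in this part of the guide (COS Preservation, Enforce Subnet Check, and IP Aging) can be sketched as three small REST payloads. The class names, DNs, and attribute values shown are assumptions based on the APIC object model and should be verified against the APIC documentation for the release in use. The sketch only builds the JSON bodies.

```python
import json


def fabric_wide_settings_payloads(dot1p_preserve=True, enforce_subnet_check=True, ip_aging=True):
    """Build one payload per fabric-wide setting."""
    return [
        # COS Preservation (Dot1p Preserve)
        {"qosInstPol": {"attributes": {
            "dn": "uni/infra/qosinst-default",
            "ctrl": "dot1p-preserve" if dot1p_preserve else "",
        }}},
        # Enforce Subnet Check
        {"infraSetPol": {"attributes": {
            "dn": "uni/infra/settings",
            "enforceSubnetCheck": "yes" if enforce_subnet_check else "no",
        }}},
        # IP Aging
        {"epIpAgingP": {"attributes": {
            "dn": "uni/infra/ipAgingP-default",
            "adminSt": "enabled" if ip_aging else "disabled",
        }}},
    ]


if __name__ == "__main__":
    for payload in fabric_wide_settings_payloads():
        print(json.dumps(payload))
```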

Endpoint Learning

Endpoint learning in ACI is primarily done in hardware from data-plane traffic by examining the incoming traffic, specifically the source MAC and IP address fields in the received traffic. ACI can learn the address (MAC, IP) and location of any endpoint that sends traffic to the fabric. ACI provides several configuration settings (mostly at the bridge-domain level) that impact endpoint learning behavior.

IP vs. MAC Learning

By default, ACI learns the MAC address of all endpoints but for any “IP” learning to occur, Unicast Routing must be enabled at the bridge-domain level. Unicast Routing enables both Layer 3 forwarding and IP learning in an ACI fabric. The Endpoint Dataplane Learning feature is available at the bridge-domain level – see next section.

Silent Hosts

ACI typically learns from data-plane traffic but for silent endpoints that do not send any traffic to the fabric, ACI can also use control plane protocols such as ARP and GARP to do endpoint learning. The behavior varies depending on whether the Bridge Domain is doing Layer 2 forwarding (Unicast Routing disabled) or Layer 3 forwarding (Unicast Routing enabled).

For bridge-domains doing Layer 2 forwarding (Unicast Routing disabled), ARP flooding can be used to learn the location of silent endpoints. ARP Flooding enables ACI to learn from the data-plane ARP traffic exchanged between the endpoints. In this scenario, the L2 Unknown Unicast option should also be set to “Flood” to prevent ACI from dropping unicast traffic destined to endpoints that it hasn’t learned of yet.

The APIC GUI automatically enables ARP Flooding if L2 Unknown Unicast is set to “Flood”. However, regardless of the GUI setting, ARP Flooding is always enabled in hardware when Unicast Routing is disabled.

For bridge-domains doing Layer 3 forwarding (Unicast Routing enabled), ACI can learn the location of silent or unknown hosts either by generating an ARP request or from data-plane ARP traffic. If IP subnet(s) are configured for the bridge-domain, ACI can generate an ARP request and learn the location of the unknown endpoint from its ARP response (also known as ARP gleaning). If Unicast Routing is enabled without configuring bridge-domain subnets (not recommended), ACI cannot initiate ARP requests. However, ACI can still learn the endpoint location from the data-plane ARP traffic. Though ARP Flooding is not necessary in the first scenario, it should be enabled so that if the endpoint moves, ACI can learn the new location quickly rather than waiting for the endpoint entry to age out. ACI can also detect endpoint moves using GARP by enabling the GARP-based endpoint move detection feature.

ARP Flooding must be enabled for the GARP-based endpoint move detection feature.
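The learning behavior described above can be summarized as a small decision helper. This is only a simplified sketch of the rules stated in this section; the `BridgeDomain` class and the function names are hypothetical and are not part of any Cisco tool or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BridgeDomain:
    """Hypothetical model of the bridge-domain settings discussed above."""
    unicast_routing: bool
    has_subnet: bool = False
    arp_flooding: bool = False


def silent_endpoint_learning(bd: BridgeDomain) -> list[str]:
    """Return the mechanisms ACI can use to locate a silent endpoint."""
    if not bd.unicast_routing:
        # Layer 2 BD: ARP flooding is always enabled in hardware,
        # regardless of what the GUI setting shows.
        return ["data-plane ARP (flooded)"]
    mechanisms = []
    if bd.has_subnet:
        # Layer 3 BD with a subnet: the fabric can originate ARP requests.
        mechanisms.append("ARP gleaning")
    if bd.arp_flooding:
        # Flooded ARP traffic lets the fabric learn from data-plane ARP.
        mechanisms.append("data-plane ARP (flooded)")
    return mechanisms


def garp_move_detection_allowed(bd: BridgeDomain) -> bool:
    """GARP-based endpoint move detection requires ARP Flooding."""
    return bd.arp_flooding
```

For example, `silent_endpoint_learning(BridgeDomain(unicast_routing=True, has_subnet=True, arp_flooding=True))` lists both ARP gleaning and flooded data-plane ARP, which matches the recommended Layer 3 configuration for deployments with silent hosts.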

Local vs. Remote Endpoints

Endpoint learning in ACI also depends on whether the endpoints are local or remote. For a given leaf switch, local endpoints are those attached to that leaf switch, while remote endpoints connect to other leaf switches. Both local and remote endpoints are learned from data-plane traffic. However, unlike local endpoints, ACI typically learns either the MAC or IP address of remote endpoints but not both. Local endpoint information is sent to the spine switches that maintain the endpoint database, but remote endpoint entries are maintained on the leaf switches. Remote entries are also aged out sooner than local endpoints by default.

As stated earlier, ACI provides several options that impact endpoint learning. These settings are covered in more detail in the upcoming sections.

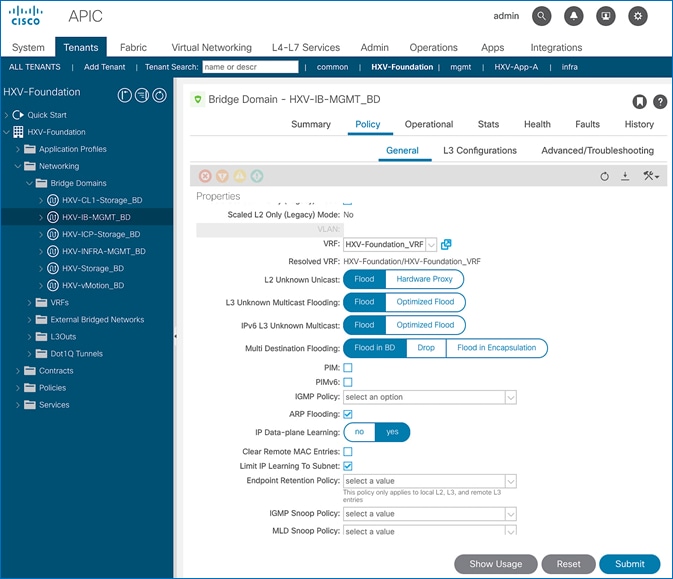

IP Dataplane Learning

IP Dataplane Learning is a bridge-domain level setting that enables or disables “IP” learning in the data-plane. This feature was referred to as Endpoint Dataplane Learning in earlier releases. The feature is available as of APIC release 2.0(1m) and it is enabled by default as shown in the figure below:

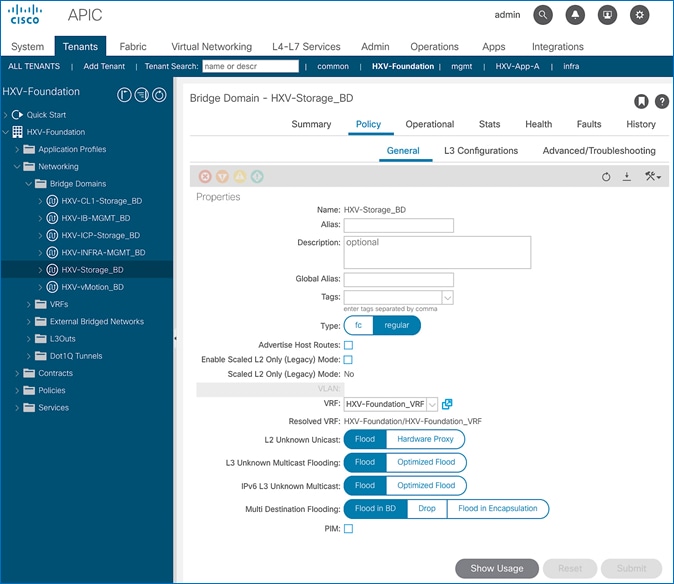

L2 Unknown Unicast

L2 Unknown Unicast is a bridge-domain level setting that specifies how unknown Layer 2 unicast frames should be forwarded within the fabric. This field can be set to “Flood” or “Hardware Proxy” (default) mode. In “Flood mode”, the unknown Layer 2 unicast frames are flooded across all ports in the bridge-domain using the bridge-domain specific multicast tree. In “Hardware Proxy” mode, the unknown unicast frames are sent to the spine switch to do a lookup in the endpoint mapping database. However, if the spine has not learned the address of that endpoint, the unicast traffic will be dropped by the fabric. For this reason, if a Layer 2 bridge-domain has silent endpoints, the L2 Unknown Unicast field should always be set to “Flood”.

The default setting for L2 Unknown Unicast is “Hardware-Proxy” but in this design, this field is set to “Flood” for deployments that may have silent hosts. This feature can be enabled as shown in the figure below:

This feature requires ARP Flooding to be enabled on the bridge-domain. Customers may also want to enable the Clear Remote MAC Entries setting. See upcoming sections for additional information on these two settings.

Clear Remote MAC Entries

This is a bridge-domain level setting that clears the remote Layer 2 MAC addresses on other switches when the corresponding MAC addresses (learned on a vPC) are deleted from a local switch. When an entry is deleted on the local switch, it is cleared on all remote switches. The setting is visible in the GUI when L2 Unknown Unicast is set to “Flood”. This feature is optional but recommended for deployments that may have silent hosts.

Unicast Routing

The Unicast Routing setting on the bridge-domain enables both Layer 3 forwarding and “IP” learning in an ACI fabric. IP endpoint learning is primarily done from data-plane traffic, but ACI can also initiate ARP requests to do endpoint learning in the control plane. ACI can originate ARP requests for unknown endpoints if Unicast Routing is enabled and a bridge-domain subnet is configured. ACI cannot generate ARP requests if a subnet is not configured for the bridge-domain, but it can still learn the location of endpoints from data-plane ARP traffic if ARP Flooding is enabled. In this design, Unicast Routing is enabled on HyperFlex bridge-domains except for the storage-data bridge-domain.

ARP Flooding

ARP Flooding is used for both Layer 2 (Unicast Routing disabled) and Layer 3 bridge-domains (Unicast Routing enabled). By default, ACI fabric will treat ARP requests as unicast packets if Unicast Routing is enabled and forward them using the target IP address in the ARP packets. It will not flood the ARP traffic to all the leaf nodes in the bridge domain. However, the ARP Flooding setting provides the ability to change this default behavior and flood the ARP traffic fabric-wide to all the leaf nodes in a given bridge domain. See Endpoint Learning section above for other scenarios that require ARP Flooding. This feature can be enabled as shown in the figure below.

ARP Flooding is also required in environments that use Gratuitous ARP (GARP) to indicate an endpoint move. If an endpoint move occurs on the same EPG interface, the GARP-based detection feature must be enabled in ACI to detect the move; see the GARP-based Detection section for more details. ARP Flooding is disabled by default, but it is enabled in this design for deployments that may have silent hosts or require GARP.

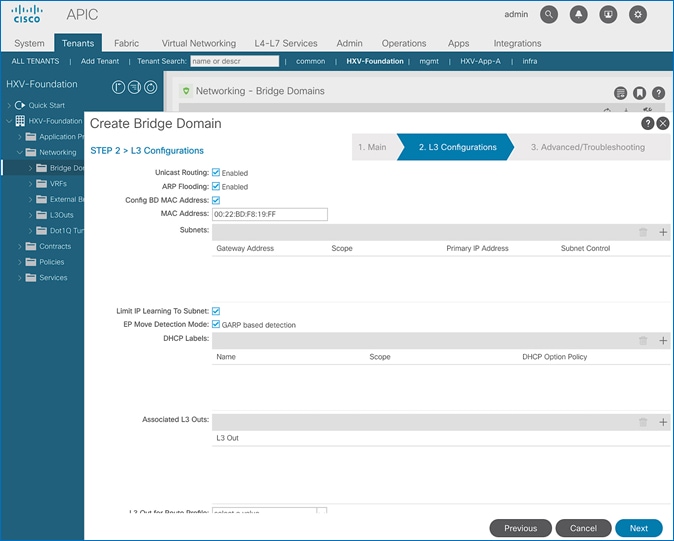

GARP-based Detection

The Gratuitous ARP (GARP) based detection setting enables ACI to detect an endpoint IP move from one MAC address to another when the new MAC is on the same EPG interface as the old MAC. ACI can detect all other endpoint IP address moves, such as moves between ports, switches, EPGs or bridge-domains, but not a move that occurs on the same EPG interface. With this feature, ACI can use GARP to learn of an endpoint IP move on the same EPG interface. This is a bridge-domain level setting that can be enabled as shown in the figure below.

Note that ARP Flooding must be enabled to use this feature. The GARP-based detection setting will not be visible in the GUI until ARP Flooding is enabled on the bridge domain.
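The bridge-domain settings covered in the preceding sections could also be expressed as an APIC REST payload. The sketch below only builds the JSON body and does not contact an APIC. The class name `fvBD` and the attribute names (`unicastRoute`, `arpFlood`, `unkMacUcastAct`, `epMoveDetectMode`, `ipLearning`) reflect our understanding of the ACI object model and should be verified against the APIC Management Information Model reference for the release in use. The function name and the bridge-domain name are placeholders.

```python
import json


def bridge_domain_payload(name: str, routed: bool, silent_hosts: bool) -> dict:
    """Build an fvBD object reflecting the settings discussed above."""
    attrs = {
        "name": name,
        # Unicast Routing: Layer 3 forwarding and IP learning.
        "unicastRoute": "yes" if routed else "no",
        # IP Dataplane Learning is enabled by default.
        "ipLearning": "yes",
        # L2 Unknown Unicast: "proxy" (Hardware Proxy) is the default;
        # "flood" is used when silent hosts may be present.
        "unkMacUcastAct": "flood" if silent_hosts else "proxy",
        "arpFlood": "yes" if silent_hosts else "no",
    }
    if routed and silent_hosts:
        # GARP-based detection requires ARP Flooding, which is set above.
        attrs["epMoveDetectMode"] = "garp"
    return {"fvBD": {"attributes": attrs}}


if __name__ == "__main__":
    # "Example_BD" is a placeholder name, not one used in this design.
    print(json.dumps(bridge_domain_payload("Example_BD", True, True), indent=2))
```

A payload of this shape would typically be posted under the owning tenant; a Layer 2 bridge-domain such as the storage-data bridge-domain in this design would use `routed=False`.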

Jumbo Frames and MTU

Traditional switching fabrics typically use a 1500B MTU and must be configured to support Jumbo frames. However, the ACI fabric by default uses an MTU of 9150B on core-facing ports of leaf and spine switches and 9000B on access ports of leaf switches. Therefore, no configuration is necessary to support Jumbo frames on an ACI fabric.
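One way to read these defaults is that the larger MTU on core-facing ports leaves room for the fabric encapsulation added to frames received on access ports. The arithmetic below is a sketch that assumes a standard VXLAN-over-IPv4 overhead of about 50 bytes; the exact overhead of the ACI fabric encapsulation is not stated in this document.

```python
# Default MTU values quoted in the text above.
FABRIC_PORT_MTU = 9150   # core-facing ports on leaf and spine switches
ACCESS_PORT_MTU = 9000   # access ports on leaf switches

# Assumption: standard VXLAN-over-IPv4 encapsulation overhead
# (Ethernet 14 + IPv4 20 + UDP 8 + VXLAN 8 bytes).
VXLAN_OVERHEAD = 14 + 20 + 8 + 8

# Headroom available for encapsulation on core-facing ports.
headroom = FABRIC_PORT_MTU - ACCESS_PORT_MTU
fits = VXLAN_OVERHEAD <= headroom

print(headroom, VXLAN_OVERHEAD, fits)  # prints: 150 50 True
```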

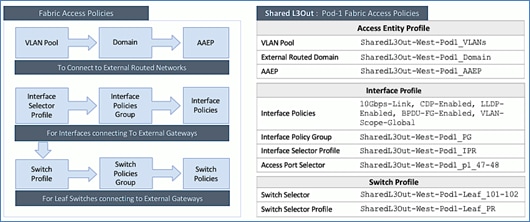

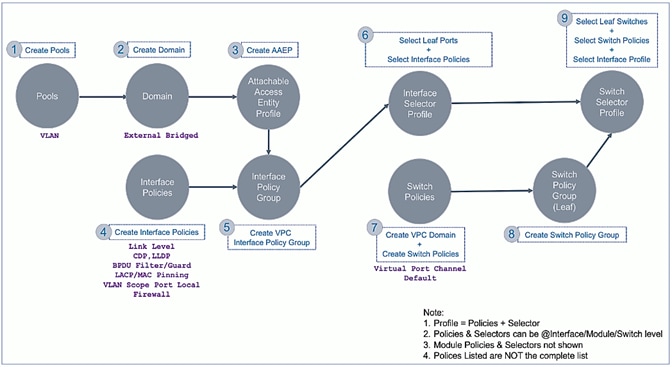

Pre-configure Access Layer Policies

Fabric Access Policies are policies that are applied to access layer connections, typically on leaf switches. The access layer connections can be to a physical domain or a virtual domain managed by a Virtual Machine Manager (VMM). The physical domains in this design include vPC connections to the Cisco UCS/HyperFlex domain and Layer 3 connections to external networks. Cisco recommends configuring all policies explicitly, even when the policies match the defaults, to avoid future issues because defaults can change in newer releases. Policies can be re-used across the fabric to configure any number of access layer connections. The procedures in this section will pre-configure policies that will be used in later stages of the deployment.

Setup Information

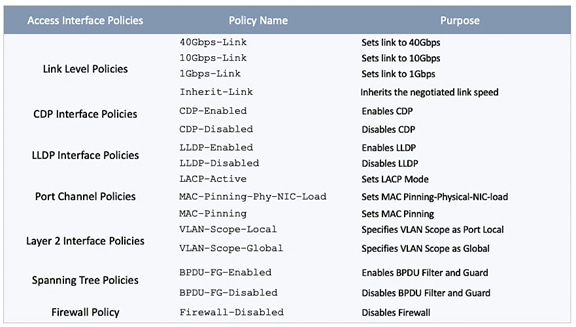

The pre-configured policies used in this design are summarized in Table 5.

Table 5 Fabric Access Policies

Deployment Steps

To navigate to the location in the GUI from which all policies are configured, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top navigation menu, select Fabric > Access Policies.

3. From the left navigation pane, select and expand Policies > Policies > Interface.

4. Create all the policies in Table 5 by following the steps in the next sections.

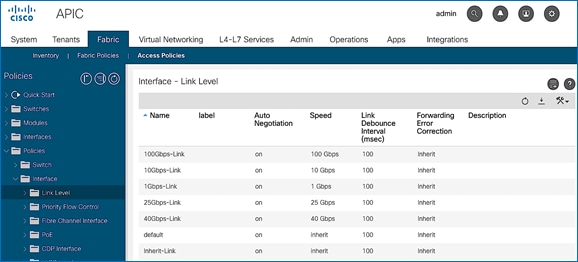

Create Link Level Policies

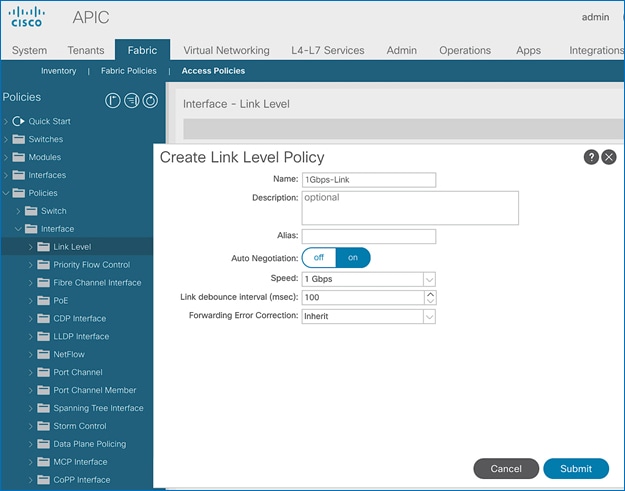

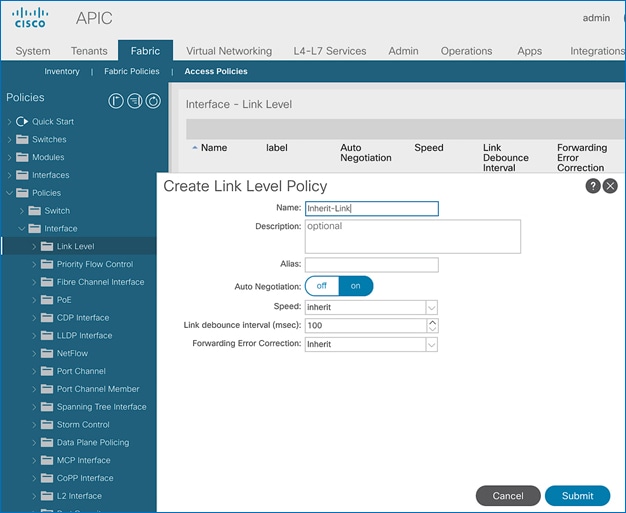

To create the link level policies to specify link speeds of 1/10/40-Gbps and other link policies, follow these steps:

1. From the left navigation pane, select Link Level. Right-click and select Create Link Level Policy.

2. In the Create Link Level Policy pop-up window, specify the policy Name. For the Speed, select 1Gbps from the drop-down list.

3. Click Submit to complete creating the policy.

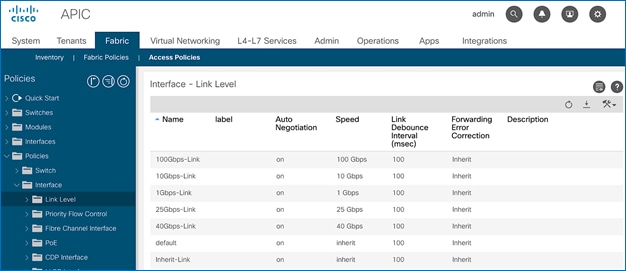

4. Repeat steps 1-3 to create link policies for 10Gbps, 40Gbps and for any other speeds as needed. Also create an inherit link policy as shown below.

5. Click Submit to complete. You should now have the following Link policies in place:

Create CDP Interface Policies

To create CDP interface policies, follow these steps:

1. From the left navigation pane, select CDP Interface. Right-click and select Create CDP Interface Policy.

2. In the Create CDP Interface Policy pop-up window, specify the policy Name. For Admin State, click Enabled.

3. Click Submit to complete creating the policy.

4. Repeat steps 1-3 to create a policy to disable CDP. The Admin State for this policy should be Disabled.

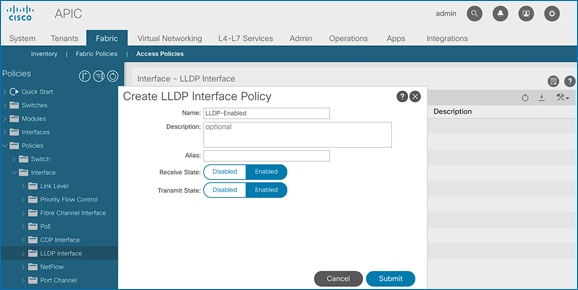

Create LLDP Interface Policies

To create LLDP interface policies, follow these steps:

1. From the left navigation pane, select LLDP Interface. Right-click and select Create LLDP Interface Policy.

2. In the pop-up window, specify a Name. For Receive and Transmit State, click Enabled.

3. Click Submit to complete creating the policy.

4. Repeat steps 1-3 to create a policy to disable LLDP. The Receive and Transmit states for this policy should be Disabled.

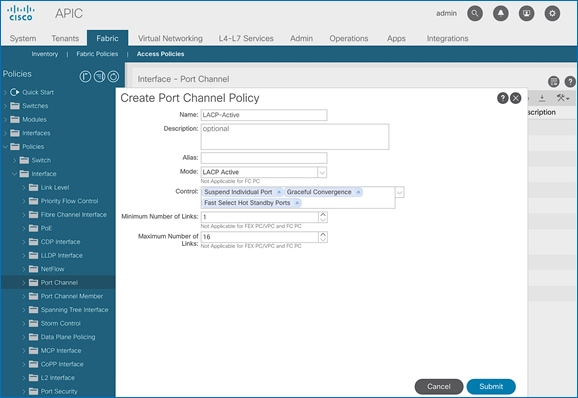

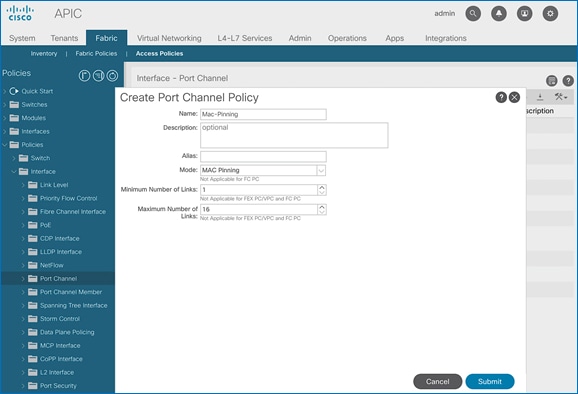

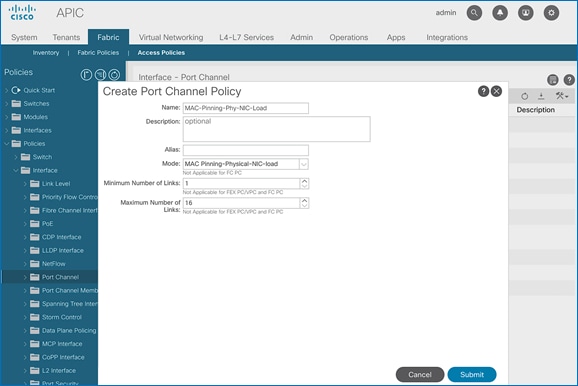
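The Link Level, CDP and LLDP interface policies created in the GUI above could also be described as APIC REST payloads. The following is a minimal sketch that only builds the JSON bodies. The class and attribute names (`fabricHIfPol`, `cdpIfPol`, `lldpIfPol`, `speed`, `adminSt`, `adminRxSt`, `adminTxSt`) reflect our understanding of the ACI object model and should be confirmed for the release in use. The policy names are placeholders and are not taken from Table 5.

```python
def link_level_policy(name: str, speed: str) -> dict:
    """Link Level policy; speed values such as "1G", "10G", "40G" or "inherit"."""
    return {"fabricHIfPol": {"attributes": {"name": name, "speed": speed}}}


def cdp_policy(name: str, enabled: bool) -> dict:
    """CDP interface policy with the Admin State enabled or disabled."""
    state = "enabled" if enabled else "disabled"
    return {"cdpIfPol": {"attributes": {"name": name, "adminSt": state}}}


def lldp_policy(name: str, enabled: bool) -> dict:
    """LLDP interface policy; Receive and Transmit states are set together."""
    state = "enabled" if enabled else "disabled"
    return {"lldpIfPol": {"attributes": {
        "name": name, "adminRxSt": state, "adminTxSt": state}}}


# Placeholder policy names; use the names from the setup information instead.
policies = [
    link_level_policy("1Gbps-Link", "1G"),
    link_level_policy("10Gbps-Link", "10G"),
    link_level_policy("40Gbps-Link", "40G"),
    link_level_policy("Inherit-Link", "inherit"),
    cdp_policy("CDP-Enabled", True),
    cdp_policy("CDP-Disabled", False),
    lldp_policy("LLDP-Enabled", True),
    lldp_policy("LLDP-Disabled", False),
]
```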

Create Port Channel Policies

To create port channel policies, follow these steps:

1. From the left navigation pane, select Port Channel. Right-click and select Create Port Channel Policy.

2. In the Create Port Channel Policy pop-up window, specify a Name for the policy. For the Mode, select LACP-Active from the drop-down list. Leave everything else as-is.

3. Click Submit to complete creating the policy.

4. Repeat steps 1-3 to create a port-channel policy for mac-pinning as shown below.

5. Click Submit to complete creating the policy.

6. Repeat steps 1-3 to create a policy for mac-pinning based on physical NIC load as shown below.

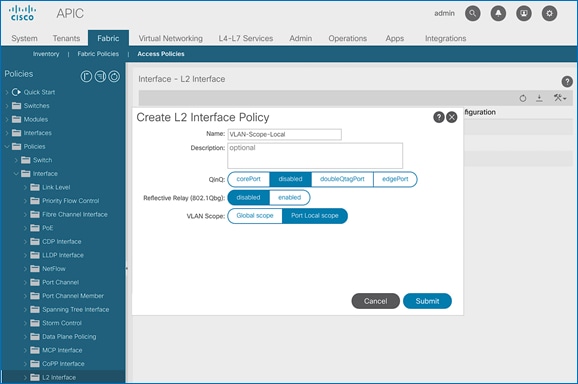

Create L2 Interface (VLAN Scope) Policies

To create L2 interface policies, follow these steps:

1. From the left navigation pane, select L2 Interface. Right-click and select Create L2 Interface Policy.

2. In the Create L2 Interface Policy pop-up window, specify a name for the policy. For VLAN Scope, select Port Local scope.

3. Click Submit to complete creating the policy.

4. Repeat steps 1-3 to create an L2 Interface policy for VLAN scope global. The VLAN Scope for this policy should be Global scope.

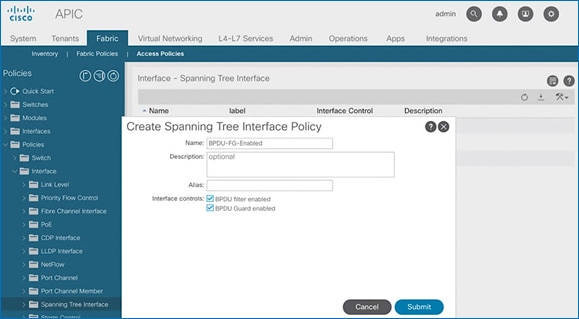

Create Spanning Tree Interface Policies

To create spanning tree interface policies, follow these steps:

1. From the left navigation pane, select Spanning Tree Interface. Right-click and select Create Spanning Tree Interface Policy.

2. In the Create Spanning Tree Interface Policy pop-up window, specify a policy Name. For Interface Controls, select the checkbox for BPDU Filter enabled and BPDU Guard enabled.

3. Click Submit to complete creating the policy.

4. Repeat steps 1-3 to create a policy to disable BPDU Filter and Guard. For this policy, leave both the BPDU Filter enabled and BPDU Guard enabled checkboxes under Interface Controls unchecked.

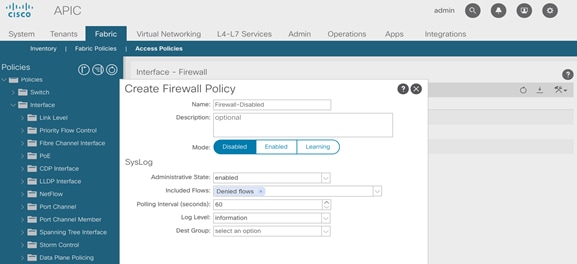
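The Port Channel, L2 Interface and Spanning Tree Interface policies above can be sketched as REST payloads in the same way. The class and attribute names (`lacpLagPol` with `mode`, `l2IfPol` with `vlanScope`, `stpIfPol` with `ctrl`) and their values are given as we understand the ACI object model and should be verified before use. The policy names are placeholders.

```python
def port_channel_policy(name: str, mode: str) -> dict:
    """Port Channel policy; mode such as "active", "mac-pin" or "mac-pin-nicload"."""
    return {"lacpLagPol": {"attributes": {"name": name, "mode": mode}}}


def l2_interface_policy(name: str, vlan_scope: str) -> dict:
    """L2 Interface policy; vlan_scope is "portlocal" or "global"."""
    return {"l2IfPol": {"attributes": {"name": name, "vlanScope": vlan_scope}}}


def spanning_tree_policy(name: str, bpdu_filter: bool, bpdu_guard: bool) -> dict:
    """Spanning Tree Interface policy; ctrl is a comma-separated list of flags."""
    flags = (("bpdu-filter", bpdu_filter), ("bpdu-guard", bpdu_guard))
    controls = [flag for flag, enabled in flags if enabled]
    return {"stpIfPol": {"attributes": {"name": name, "ctrl": ",".join(controls)}}}


# Placeholder policy names; use the names from the setup information instead.
policies = [
    port_channel_policy("LACP-Active", "active"),
    port_channel_policy("MAC-Pinning", "mac-pin"),
    port_channel_policy("MAC-Pinning-NIC-Load", "mac-pin-nicload"),
    l2_interface_policy("VLAN-Scope-Local", "portlocal"),
    l2_interface_policy("VLAN-Scope-Global", "global"),
    spanning_tree_policy("BPDU-FG-Enabled", True, True),
    spanning_tree_policy("BPDU-FG-Disabled", False, False),
]
```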

Create Firewall Policy

To create a firewall policy, follow these steps:

1. From the left navigation pane, select Firewall. Right-click and select Create Firewall Policy.

2. In the Create Firewall Policy pop-up window, specify a policy name. For Mode, select Disabled.

3. Click Submit to complete creating the policy.

The procedures outlined in this section will deploy a shared Layer 3 outside (Shared L3Out) connection in Pod-1 for reachability to networks outside the ACI fabric.

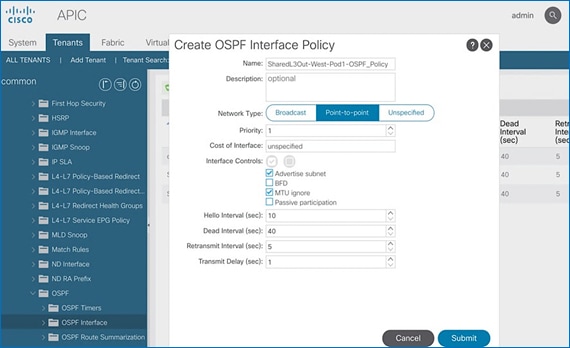

Deployment Overview

In this design, the Shared L3Out connection is established in the system-defined common Tenant so that it can be used by all tenants in the ACI fabric. Tenants must not use overlapping addresses when connecting to the outside networks using a shared L3Out connection. The connectivity is between border leaf switches in Pod-1 and a pair of Nexus 7000 switches in the same location. The Nexus 7000 routers serve as external gateways to networks outside the fabric. OSPF is utilized as the routing protocol to exchange routes between the two networks. Some additional details of this connectivity are provided below:

· A pair of Nexus 7000 routers are connected to a pair of border leaf switches using four 10GbE interfaces – for a total of 4 links. The border leaf switches were deployed earlier. Each link is a separate routed link.

· VLANs are used for connectivity across the 4 links – for a total of 4 VLANs. VLANs are configured on separate sub-interfaces.

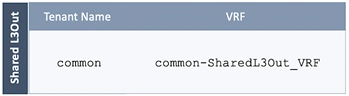

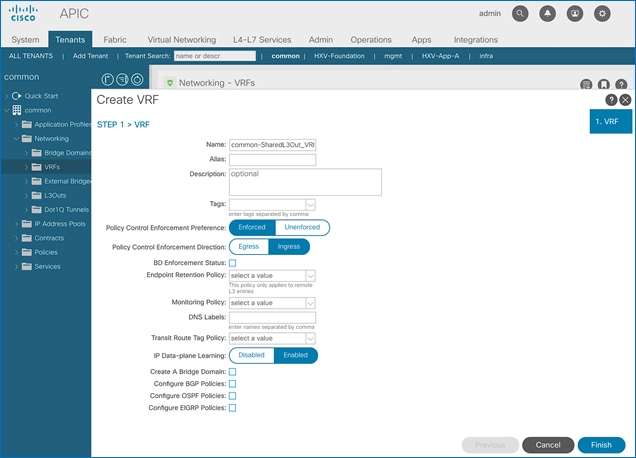

· A dedicated VRF common-SharedL3Out_VRF is configured in Tenant common for this connectivity.

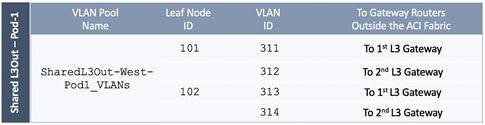

· Fabric Access Policies are configured on the ACI border leaf switches to connect to the external routed domain or Layer 3 Outside (L3Out) domain (via Nexus 7000s) using a VLAN pool (VLANs 311-314).

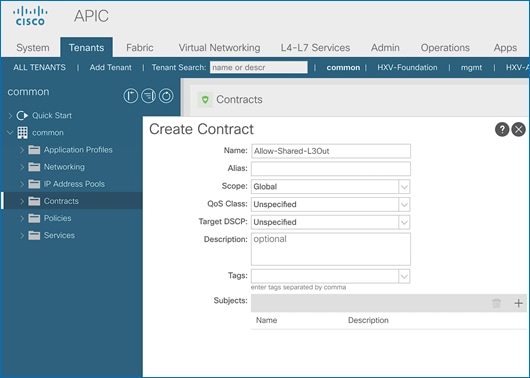

· The shared Layer 3 Out created in common Tenant “provides” an external connectivity contract that can be “consumed” by any tenant.

· The Nexus 7000s are configured to originate and send a default route to the Nexus 9000 leaf switches using OSPF.

· ACI leaf switches in Pod-1 advertise tenant subnets to Nexus 7000 switches in Pod-1.

· Host Routing - In ACI 4.0 release and later, an ACI fabric can also advertise host routes if it is enabled at the bridge-domain level. In this design, host routing is critical for advertising reachability to HyperFlex stretched cluster endpoints from outside the fabric since the nodes are located in different sites but in the same IP subnet. Host routing enables VMware vCenter and HyperFlex Witness in a third location (outside the ACI fabric) to reach the HyperFlex stretched cluster nodes that are in the same subnet but in different sites. This feature is critical to the operation of the HyperFlex stretched cluster in this design.
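To illustrate why host routes matter when nodes in one subnet sit in different sites, the sketch below applies longest-prefix matching to a small routing table. It assumes, for illustration only, that an external router sees a separate path toward each pod. The addresses come from the 192.0.2.0/24 documentation range and the path labels are hypothetical; none of them are taken from this design.

```python
import ipaddress


def best_route(routes: dict, destination: str):
    """Return the path of the longest matching prefix, or None if no match."""
    dest = ipaddress.ip_address(destination)
    matches = []
    for prefix, path in routes.items():
        network = ipaddress.ip_network(prefix)
        if dest in network:
            matches.append((network.prefixlen, path))
    if not matches:
        return None
    # The most specific (longest) prefix wins.
    return max(matches, key=lambda match: match[0])[1]


# Hypothetical table on an external router: one subnet route plus host routes.
routes = {
    "192.0.2.0/24": "pod-1",    # bridge-domain subnet
    "192.0.2.21/32": "pod-1",   # host route for a node in the first site
    "192.0.2.25/32": "pod-2",   # host route for a node in the second site
}

print(best_route(routes, "192.0.2.25"))  # prints: pod-2
print(best_route(routes, "192.0.2.99"))  # prints: pod-1
```

Without the /32 entries, both nodes would match only the subnet route, so the external router could not distinguish between the two sites.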

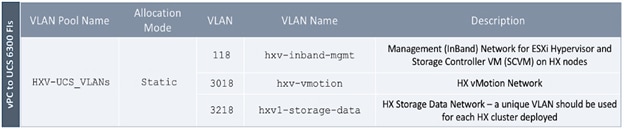

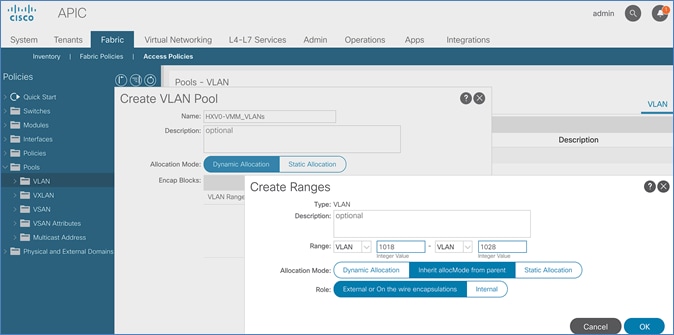

Create VLAN Pool for Shared L3Out

In this section, a VLAN pool is created to enable connectivity to networks outside the ACI fabric. The VLANs in the pool are for the individual routed links that connect the ACI border leaf switches to the gateway routers outside the fabric in Pod-1.

Setup Information

Table 6 VLAN Pool for Shared L3Out in Pod-1

Deployment Steps

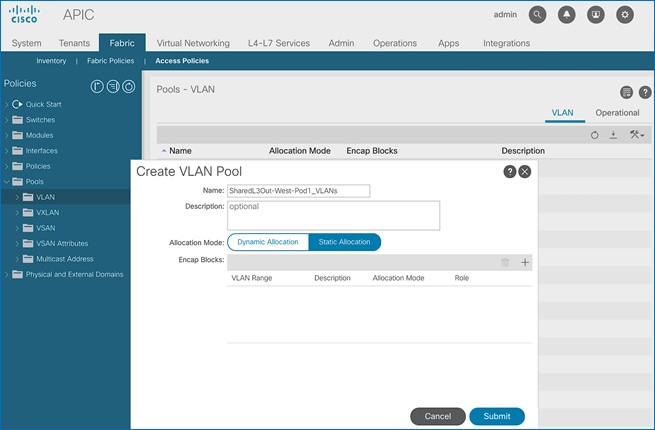

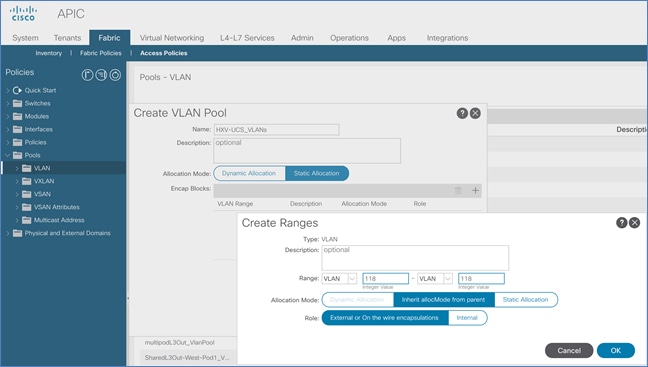

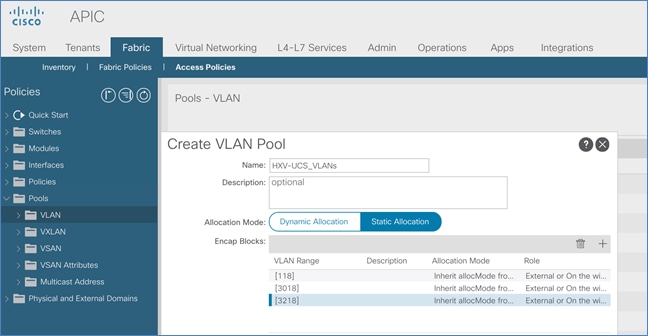

To configure a VLAN pool to connect to external gateways in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top navigation menu, select Fabric > Access Policies.

3. From the left navigation pane, expand and select Pools > VLAN.

4. Right-click and select Create VLAN Pool.

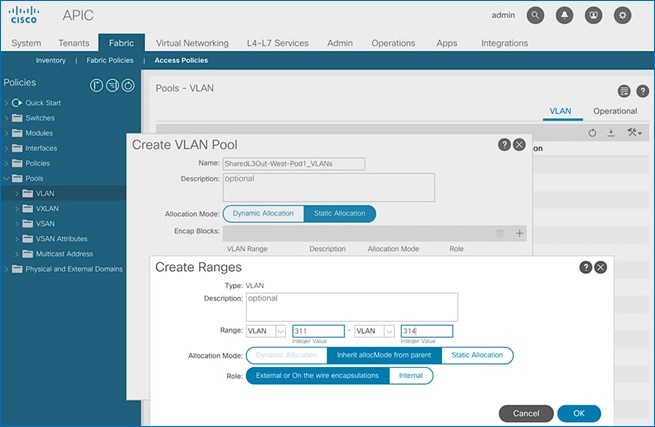

5. In the Create VLAN Pool pop-up window, specify a Name and for Allocation Mode, select Static Allocation. For Encap Blocks, click on the [+] icon on the right to add VLANs to the VLAN Pool.

6. In the Create Ranges pop-up window, configure the VLANs for the border leaf switches that connect to external gateways outside the ACI fabric. Leave the remaining parameters as is.

7. Click OK. Use the same VLAN ranges on the external gateway routers that connect to the ACI Fabric.

8. Click Submit to complete.
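The VLAN pool created in the steps above can be sketched as a REST payload. The VLAN range 311-314 is the one stated in the Deployment Overview. The pool name is a placeholder, and the class and attribute names (`fvnsVlanInstP`, `fvnsEncapBlk`, `allocMode`, `from`, `to`) are given as we understand the ACI object model and should be verified before use.

```python
def vlan_pool_payload(name: str, first_vlan: int, last_vlan: int) -> dict:
    """Build a statically allocated VLAN pool with a single encap block."""
    if first_vlan > last_vlan:
        raise ValueError("first_vlan must not be greater than last_vlan")
    return {
        "fvnsVlanInstP": {
            "attributes": {"name": name, "allocMode": "static"},
            "children": [
                {"fvnsEncapBlk": {"attributes": {
                    "from": f"vlan-{first_vlan}",
                    "to": f"vlan-{last_vlan}",
                }}}
            ],
        }
    }


# "Example-L3Out_VLANs" is a placeholder pool name.
pool = vlan_pool_payload("Example-L3Out_VLANs", 311, 314)
```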

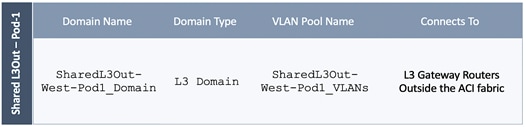

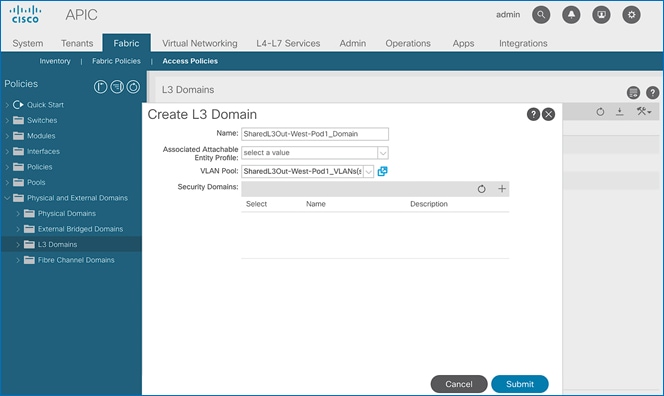

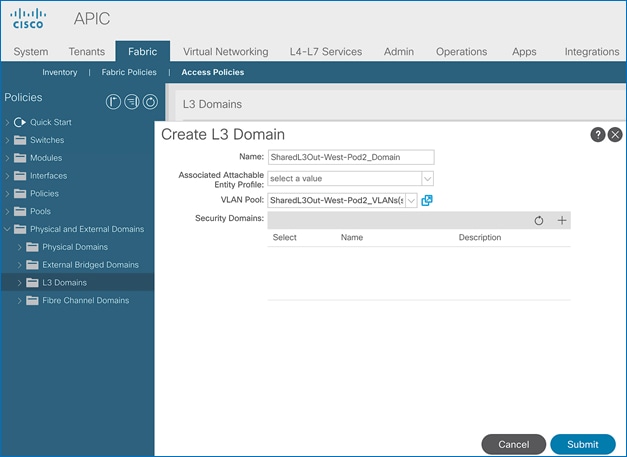

Configure Domain Type for L3Out

Follow the procedures outlined in this section to configure a domain type for the L3Out in Pod-1.

Setup Information

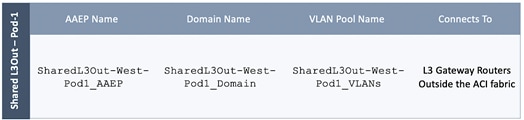

Table 7 Domain Type for Shared L3Out in Pod-1

Deployment Steps

To specify the domain type for the L3Out in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top navigation menu, select Fabric > Access Policies.

3. From the left navigation pane, expand and select Physical and External Domains > L3 Domains.

4. Right-click on L3 Domains and select Create L3 Domain.

5. In the Create L3 Domain pop-up window, specify a Name. For the VLAN Pool, select the previously created VLAN pool from the drop-down list.

6. Click Submit to complete.
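As a sketch, the L3 domain and its association to the VLAN pool could be expressed as follows. The class names `l3extDomP` and `infraRsVlanNs` and the target DN format are given as we understand the ACI object model; the domain and pool names are placeholders.

```python
def l3_domain_payload(name: str, vlan_pool: str, alloc_mode: str = "static") -> dict:
    """Build an L3 domain that references an existing VLAN pool by its DN."""
    pool_dn = f"uni/infra/vlanns-[{vlan_pool}]-{alloc_mode}"
    return {
        "l3extDomP": {
            "attributes": {"name": name},
            "children": [
                {"infraRsVlanNs": {"attributes": {"tDn": pool_dn}}}
            ],
        }
    }


# Placeholder names for the domain and the previously created VLAN pool.
domain = l3_domain_payload("Example-L3Out_Domain", "Example-L3Out_VLANs")
```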

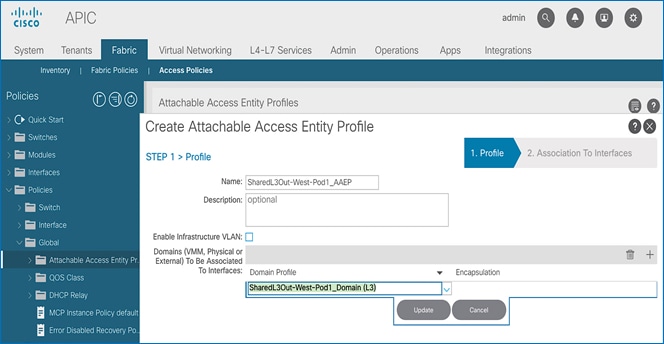

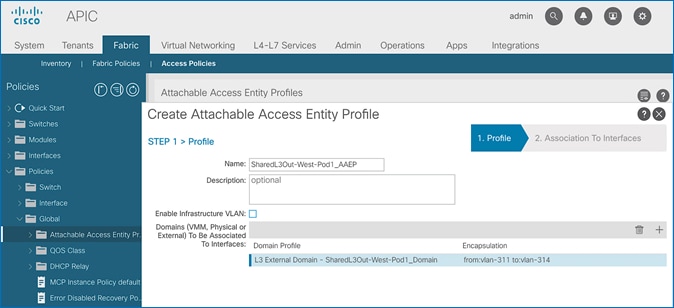

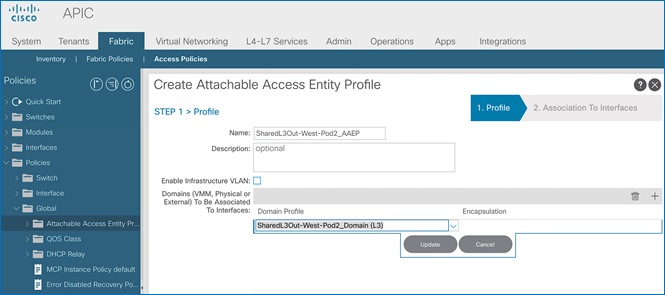

Create Attachable Access Entity Profile for L3Out

To configure an Attachable Access Entity Profile (AAEP) for the L3Out in Pod-1, follow the procedures outlined in this section.

Setup Information

Table 8 AAEP for Shared L3Out in Pod-1

Deployment Steps

To create an AAEP for the L3Out in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top navigation menu, select Fabric > Access Policies.

3. From the left navigation pane, expand and select Policies > Global > Attachable Access Entity Profiles.

4. Right-click and select Create Attachable Access Entity Profile.

5. In the Create Attachable Access Entity Profile pop-up window, specify a Name. Under Domains, click on the [+] icon on the right-side of the window and select the previously created domain for the Domain Profile.

6. Click Update. You should now see the selected domain and the associated VLAN Pool.

7. Click Next. This profile is not associated with interfaces at this time.

8. Click Finish to complete.
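The AAEP and its domain association might look like the following when built as a REST payload. This is a sketch under the same assumptions as before: the class names `infraAttEntityP` and `infraRsDomP` and the `uni/l3dom-` DN prefix reflect our understanding of the object model, and the names are placeholders.

```python
def aaep_payload(name: str, l3_domain: str) -> dict:
    """Build an AAEP that is associated with an existing L3 domain."""
    return {
        "infraAttEntityP": {
            "attributes": {"name": name},
            "children": [
                {"infraRsDomP": {"attributes": {"tDn": f"uni/l3dom-{l3_domain}"}}}
            ],
        }
    }


# Placeholder names for the AAEP and the previously created L3 domain.
aaep = aaep_payload("Example-L3Out_AAEP", "Example-L3Out_Domain")
```

As in the GUI procedure, this object carries no interface association; interfaces are tied to the AAEP later through the interface policy group.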

Configure Interfaces to L3Out

Follow the procedures outlined in this section to configure interfaces to the external routed domain in Pod-1.

Setup Information

Border leaf switches (Node ID: 101,102) in Pod-1 connect to external gateways using 10Gbps links, on ports 1/47 and 1/48. The access layer setup information for this connection is provided below.

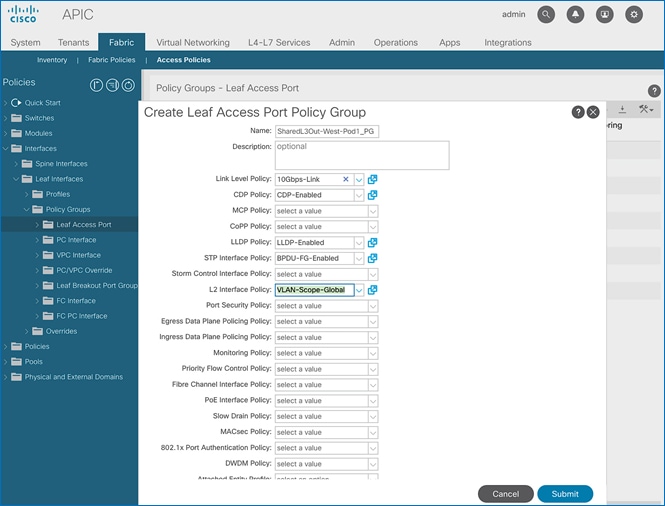

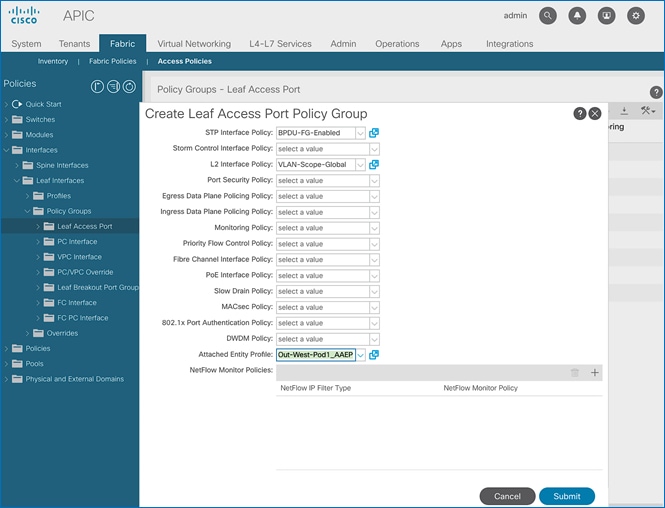

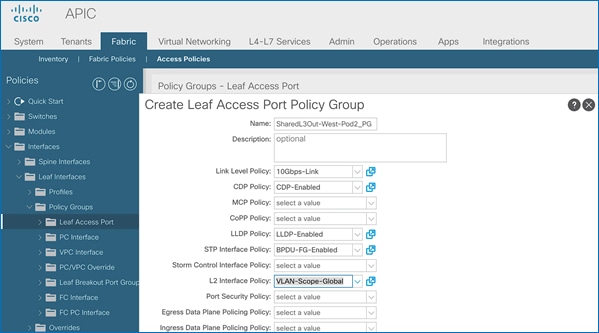

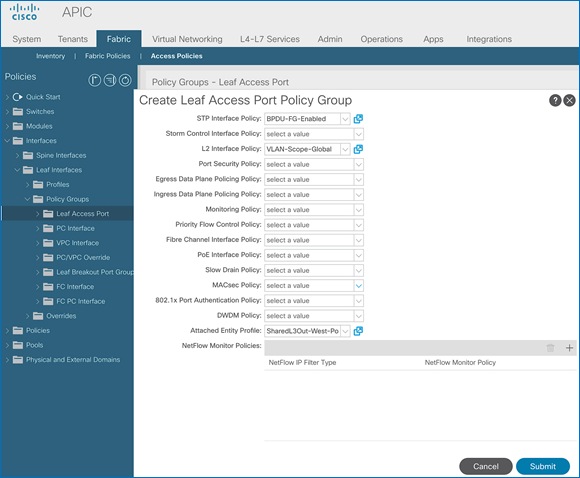

Create Interface Policy Group for L3Out Interfaces

To create an interface policy group for the L3Out in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top navigation menu, select Fabric > Access Policies.

3. From the left navigation pane, expand and select Interfaces > Leaf Interfaces > Policy Groups > Leaf Access Port. Right-click and select Create Leaf Access Port Policy Group.

4. In the Create Leaf Access Port Policy Group pop-up window, specify a Name and select the applicable interface policies from the drop-down list for each field.

5. For the Attached Entity Profile, select the previously created AAEP for the external routed domain.

6. Click Submit to complete.
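A leaf access port policy group ties the interface policies and the AAEP together. The sketch below builds such an object as a REST payload. The class and relation names (`infraAccPortGrp`, `infraRsHIfPol`, `infraRsCdpIfPol`, `infraRsLldpIfPol`, `infraRsAttEntP`) are given as we understand the ACI object model, only three of the interface policies are shown, and all names passed in are placeholders.

```python
def access_port_policy_group(name: str, aaep: str, link_level: str,
                             cdp: str, lldp: str) -> dict:
    """Build a leaf access port policy group referencing existing policies."""
    return {
        "infraAccPortGrp": {
            "attributes": {"name": name},
            "children": [
                # Interface policies are referenced by name.
                {"infraRsHIfPol": {"attributes": {"tnFabricHIfPolName": link_level}}},
                {"infraRsCdpIfPol": {"attributes": {"tnCdpIfPolName": cdp}}},
                {"infraRsLldpIfPol": {"attributes": {"tnLldpIfPolName": lldp}}},
                # The AAEP is referenced by its distinguished name.
                {"infraRsAttEntP": {"attributes": {"tDn": f"uni/infra/attentp-{aaep}"}}},
            ],
        }
    }


# Placeholder names; substitute the policies created earlier.
group = access_port_policy_group(
    "Example-L3Out_IPG", "Example-L3Out_AAEP",
    link_level="10Gbps-Link", cdp="CDP-Enabled", lldp="LLDP-Enabled",
)
```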

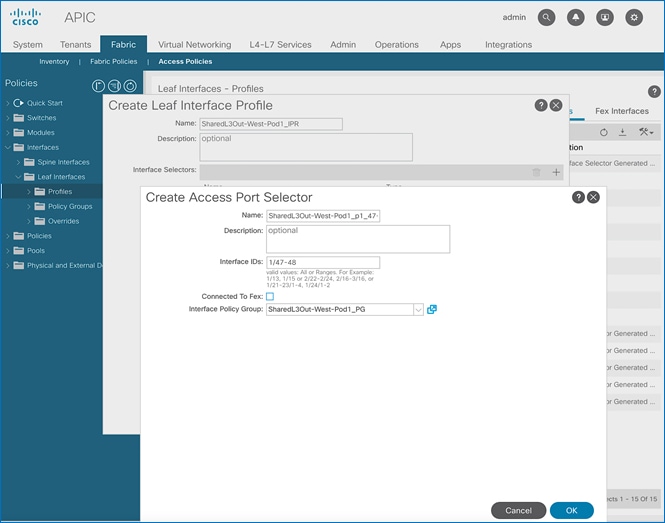

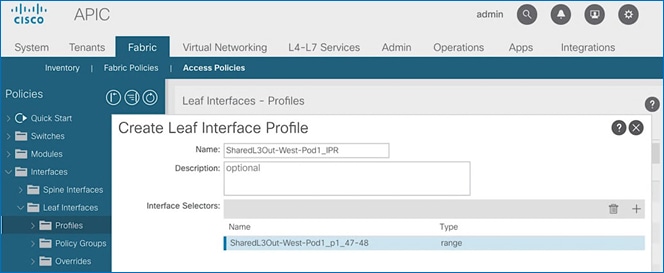

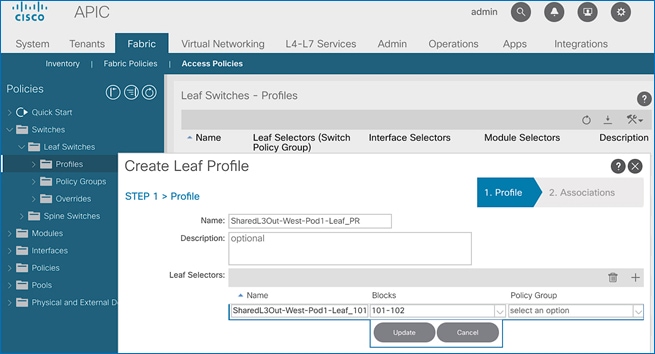

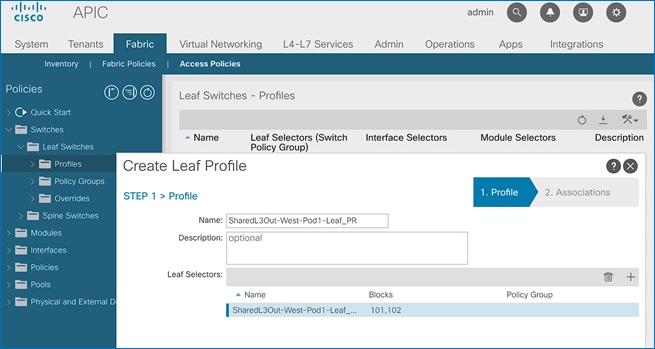

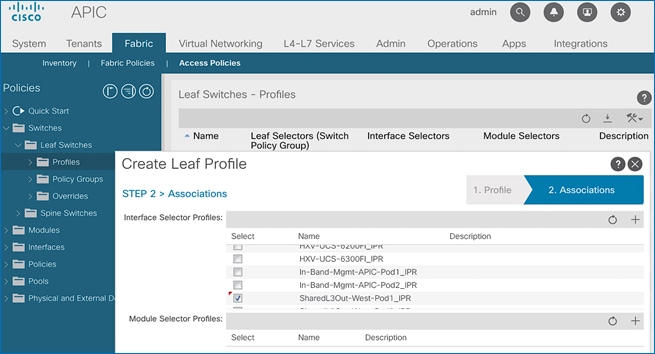

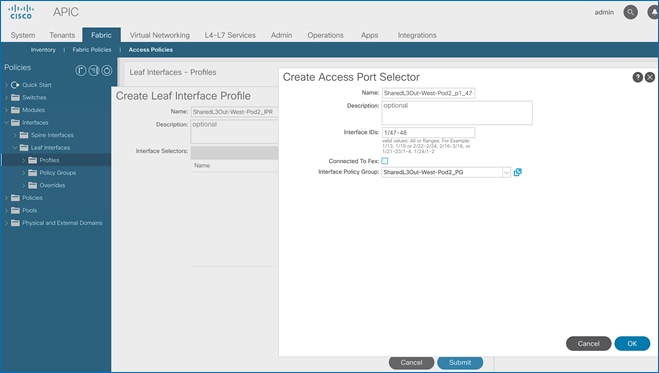

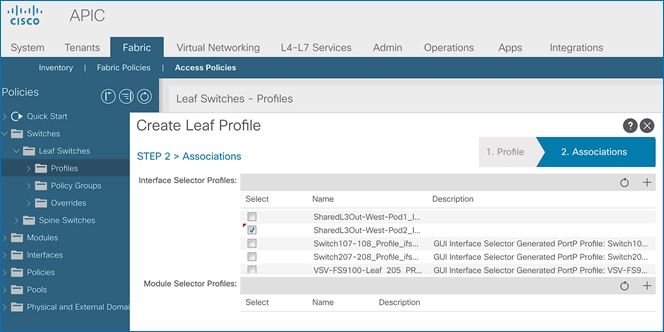

Create Interface Profile for L3Out Interfaces

To create an interface profile for the L3Out in Pod-1, follow these steps:

1. Use a browser to navigate to the APIC GUI. Log in using the admin account.

2. From the top navigation menu, select Fabric > Access Policies.

3. From the left navigation menu, expand and select Interfaces > Leaf Interfaces > Profiles. Right-click and select Create Leaf Interface Profile.

4. In the Create Leaf Interface Profile pop-up window, specify a Name. For Interface Selectors, click on the [+] icon to select access ports to apply interface policies to. In this case, the interfaces are access ports that connect Border leaf switches to gateways outside ACI.

5. In the Create Access Port Selector pop-up window, specify a selector Name. For the Interface IDs, specify the access ports connecting to the two external gateways. For the Interface Policy Group, select the previously created Policy Group from the drop-down list.

6. Click OK to close the Create Access Port Selector pop-up window.

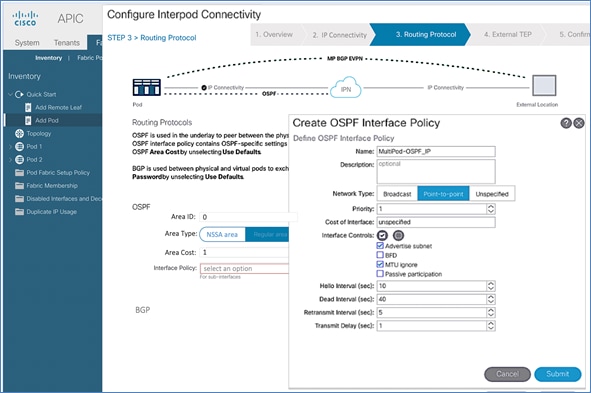

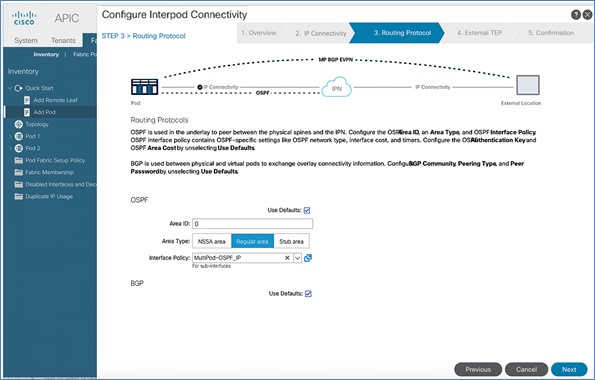

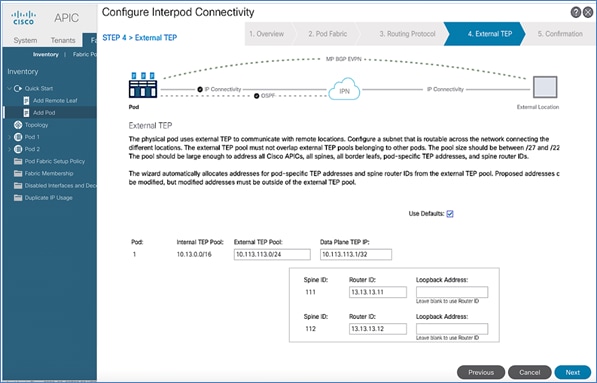

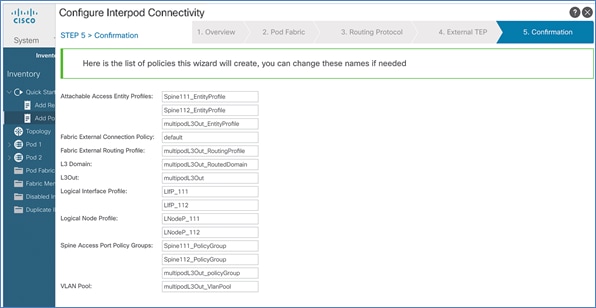

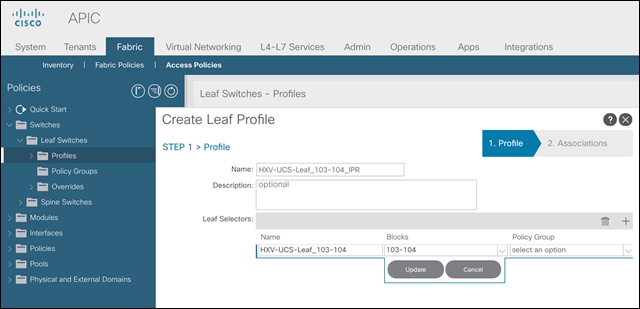

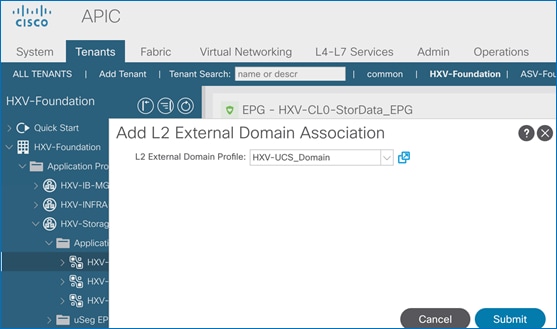

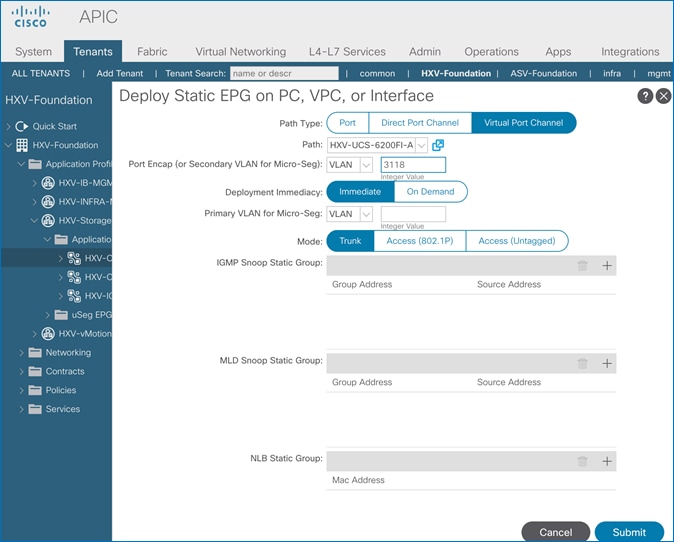

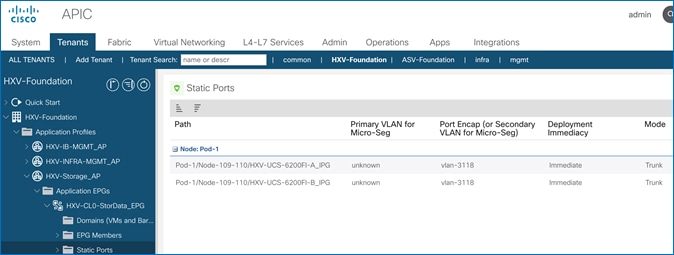

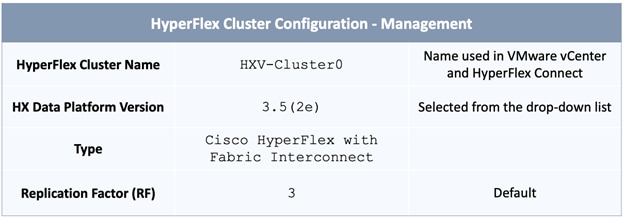

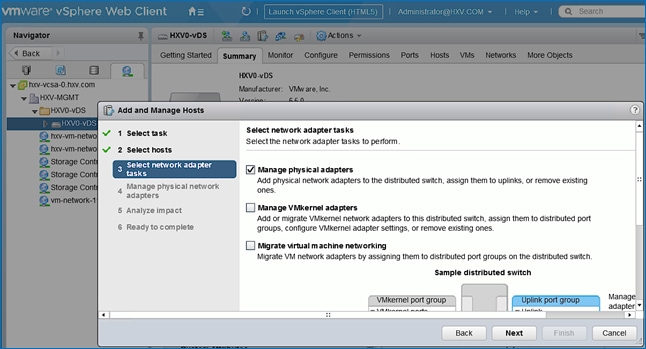

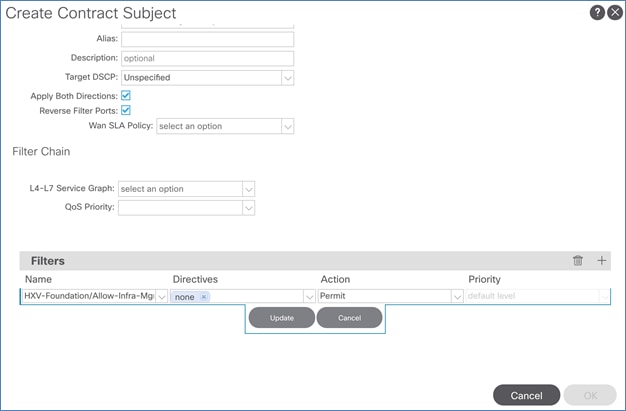

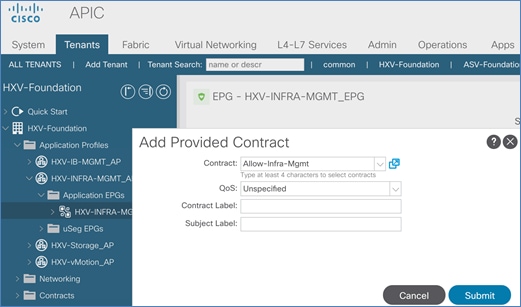

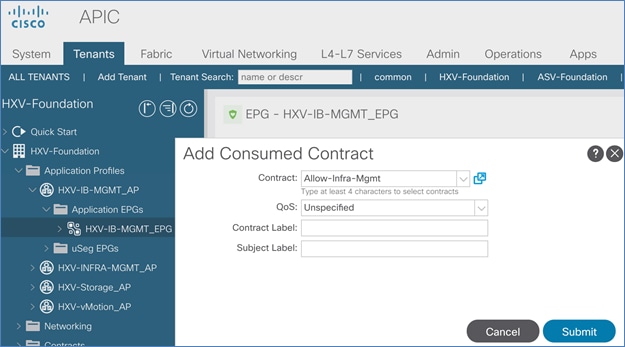

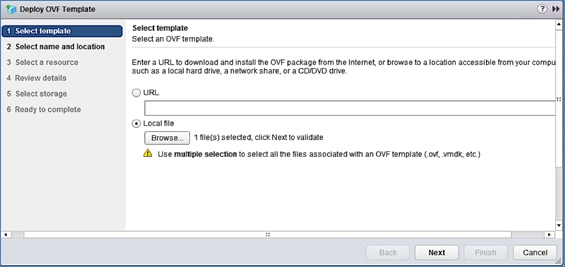

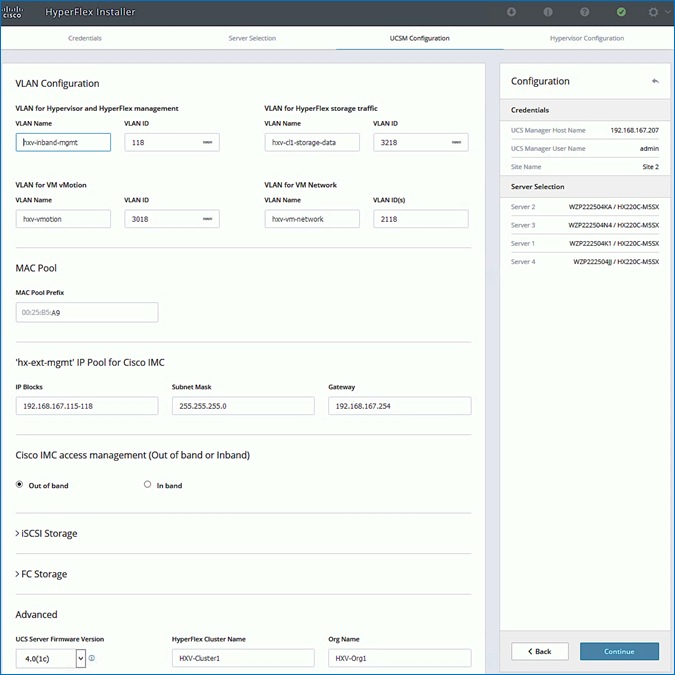

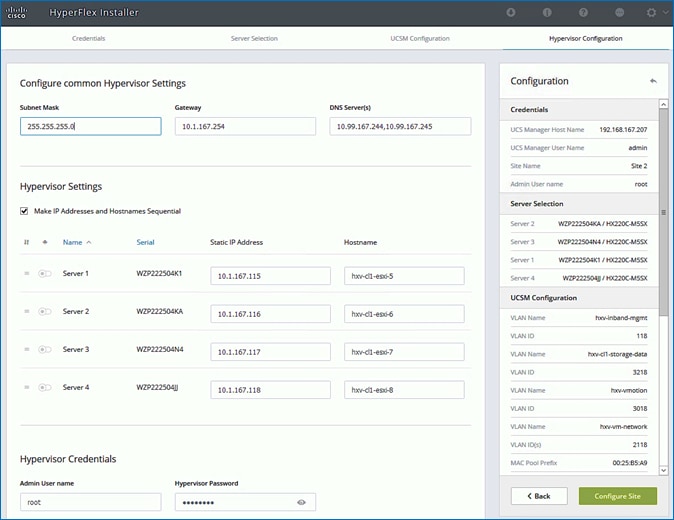

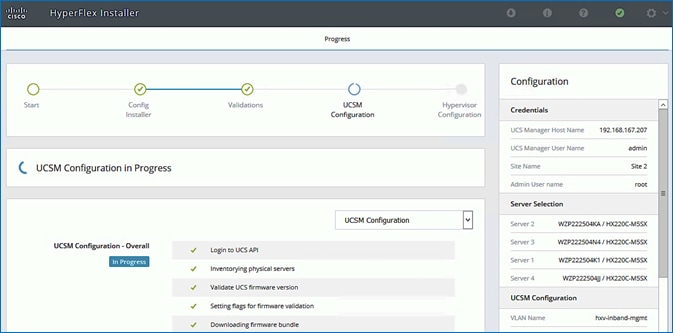

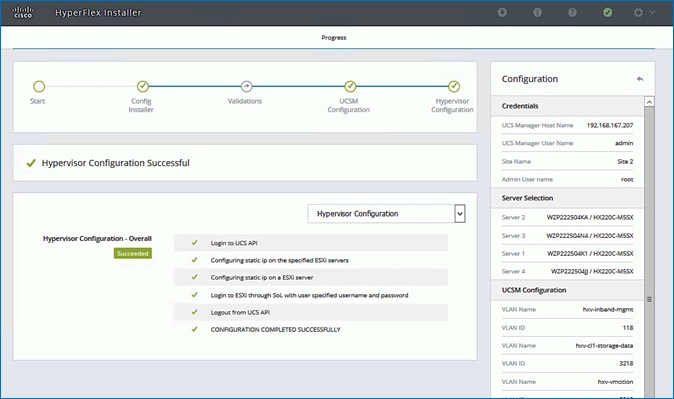

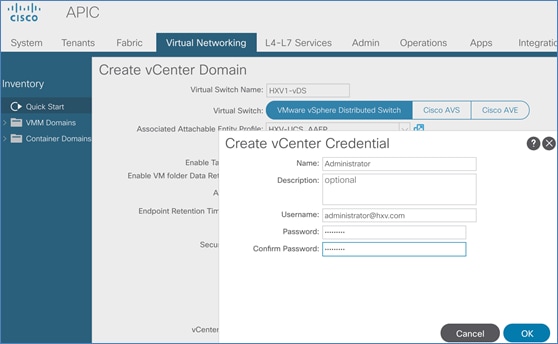

7. Click Submit to complete.
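The leaf interface profile and its access port selector can be sketched as a REST payload as well. Ports 1/47 and 1/48 are the ones listed in the setup information for this connection. The class names (`infraAccPortP`, `infraHPortS`, `infraPortBlk`, `infraRsAccBaseGrp`) and attribute names are given as we understand the ACI object model, and the profile, selector, block and policy group names are placeholders.

```python
def leaf_interface_profile(name: str, selector: str, policy_group: str,
                           first_port: int, last_port: int, card: int = 1) -> dict:
    """Build a leaf interface profile with one access port selector."""
    port_block = {"infraPortBlk": {"attributes": {
        "name": "block1",  # placeholder block name
        "fromCard": str(card), "toCard": str(card),
        "fromPort": str(first_port), "toPort": str(last_port),
    }}}
    group_ref = {"infraRsAccBaseGrp": {"attributes": {
        "tDn": f"uni/infra/funcprof/accportgrp-{policy_group}",
    }}}
    return {
        "infraAccPortP": {
            "attributes": {"name": name},
            "children": [
                {"infraHPortS": {
                    "attributes": {"name": selector, "type": "range"},
                    "children": [port_block, group_ref],
                }}
            ],
        }
    }


# Placeholder names; ports 1/47-48 connect to the external gateways.
profile = leaf_interface_profile(
    "Example-L3Out_IntProfile", "Example-L3Out_Ports", "Example-L3Out_IPG", 47, 48,
)
```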