Feature Summary and Revision History

Summary Data

|

Applicable Products or Functional Area |

SMF |

|

Applicable Platforms |

SMI |

|

Feature Default Setting |

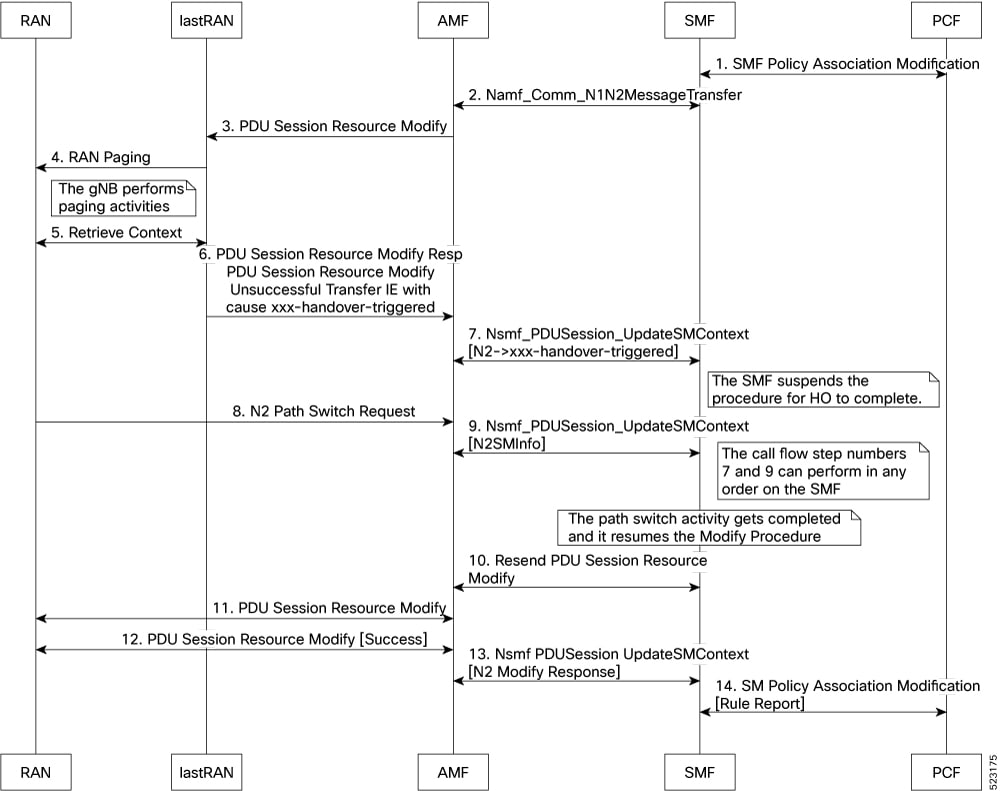

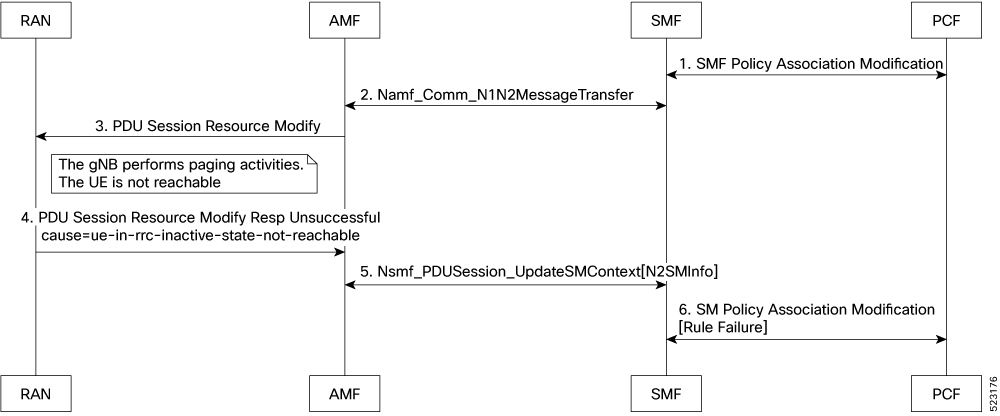

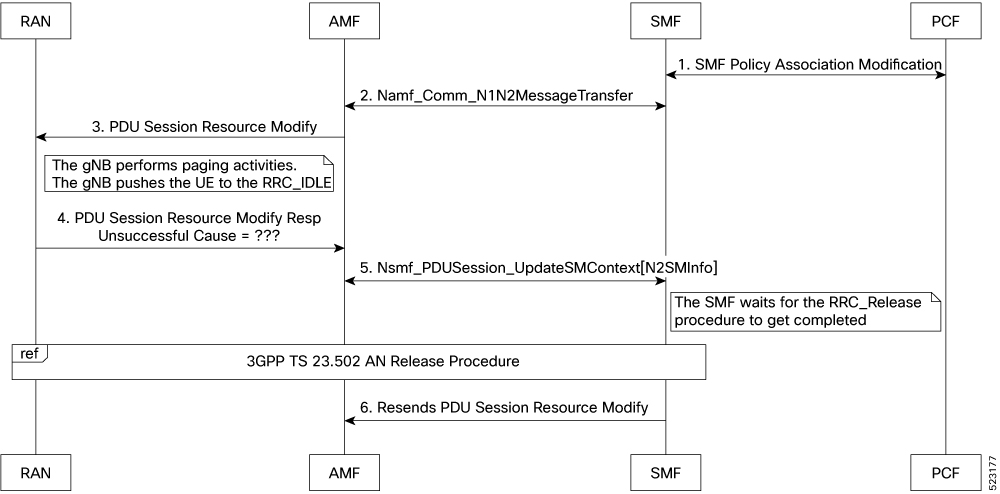

Batch ID Allocation, Release, Reconciliation Support: Disabled – Configuration required to enable Cache Pod Optimization CDL Flush Interval and Session Expiration Tuning Configuration: Enabled – Configuration required to disable Domain-based User Authorization Using Ops Center Edge Echo Implementation: Enabled – Always-on Encoder and Decoder Optimization for GTPC Endpoint Pod: Disabled – Configuration required to enable ETCD Peer Optimization Support: Enabled - Always-on ETCD Traffic Optimization: Enabled - Always-on Flag DB Database Updates: Enabled – Always-on GTPC IPC Cross-rack Support: Disabled – Configuration required to enable Handling PDU Session Modifications based on RRC Inactive Cause Codes: Disabled – Configuration required to enable Interservice Pod Communication: Disabled – Configuration required to enable Resiliency Handling: Disabled – Configuration required to enable Resiliency Handling: Disabled – Configuration required to enable |

|

Related Documentation |

Not Applicable |

Revision History

|

Revision Details |

Release |

|---|---|

|

Added the following support:

|

2023.01.0 |

|

First introduced. Added the following support:

|

2022.04.0 |

Feedback

Feedback