VersaStack Solutions with Red Hat OpenShift Container Platform 4.3 and IBM FlashSystem Family

Design and Deployment Guide for VersaStack User Provisioned Infrastructure for Red Hat OpenShift Container Platform with Cisco UCS, Cisco ACI, IBM FlashSystem Family, and VMware vSphere 6.7U3

Published: June 2020

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.

Table of Contents

Application Modernization and Enterprise Applications

Cisco Unified Computing System

Cisco UCS Fabric Interconnects

Cisco UCS 5108 Blade Server Chassis

Cisco UCS 2408 Fabric Extender

Cisco UCS B-Series Blade Servers

Cisco UCS C-Series Rack Servers

Cisco UCS Virtual Interface Card 1400

2nd Generation Intel® Xeon® Scalable Processors

Cisco Workload Optimization Manager (optional)

Cisco Application Centric Infrastructure and Nexus Switching

IBM Spectrum Virtualize for IBM FlashSystems 9200, 7200 and 5100

IBM FlashSystem 9200, 7200 and 5100 high availability

Data Protection on FlashSystem 9200, 7200, and 5100

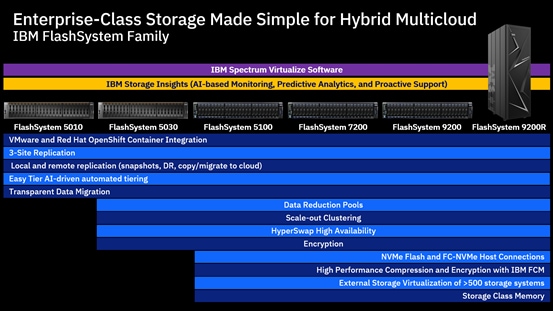

IBM FlashSystem Family Overview

FlashSystem Features for Manageability

VersaStack for Red Hat OpenShift Container Platform Add-on Components

Red Hat OpenShift Container Platform

IBM CSI Driver for Block Storage

VersaStack with OCP Logical Topology

VersaStack Network Connectivity and Design

Application Centric Infrastructure Design

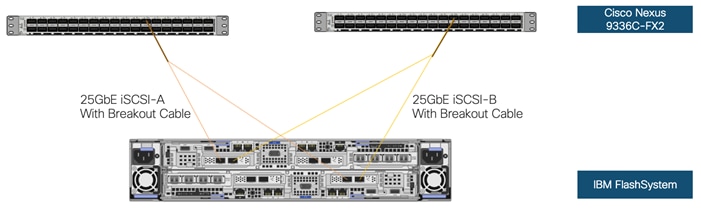

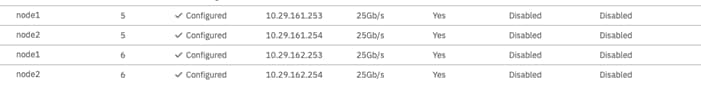

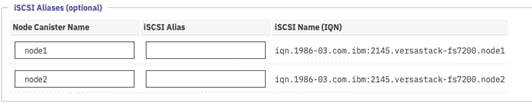

IBM FlashSystem – iSCSI Connectivity

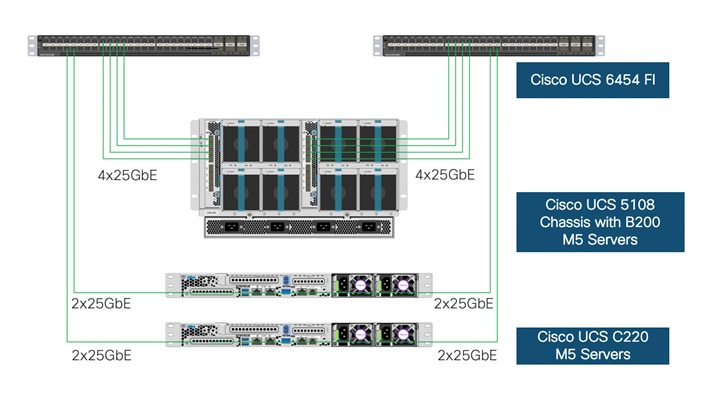

VersaStack Compute Connectivity

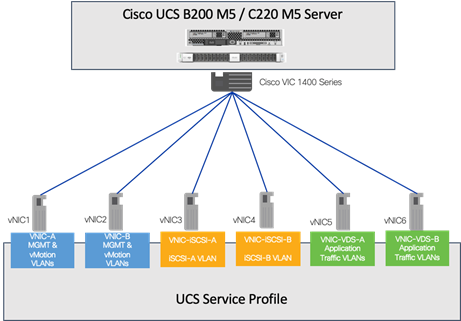

Cisco UCS Server Configuration for VMware vSphere

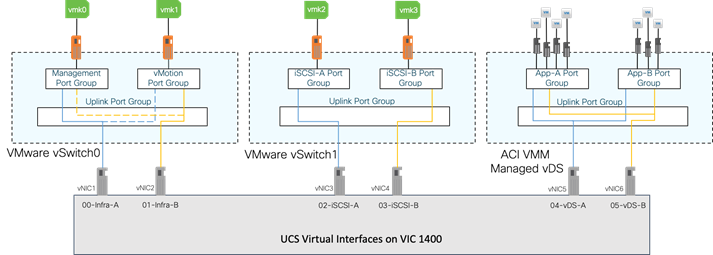

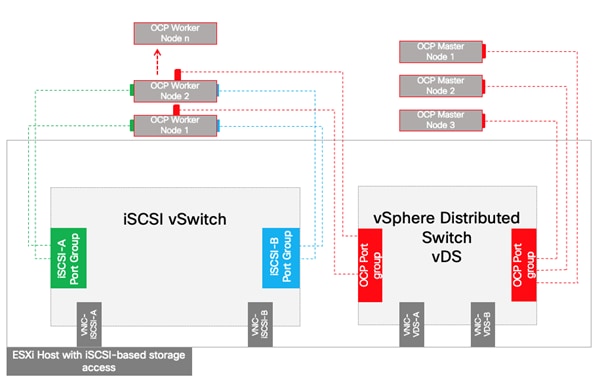

Virtual Switching Architecture

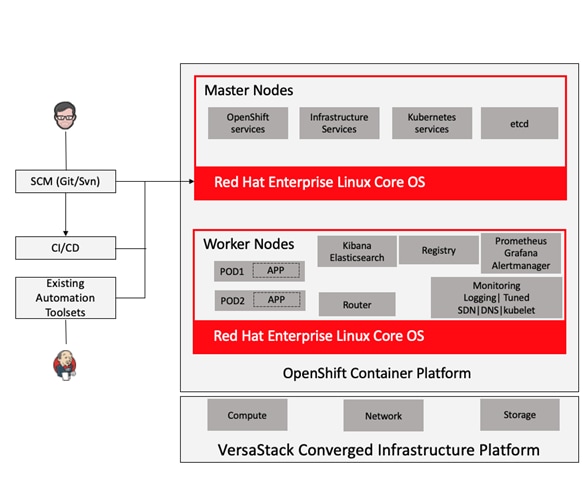

Red Hat OpenShift Container Platform Architecture

OpenShift Ingress and Egress Traffic flow

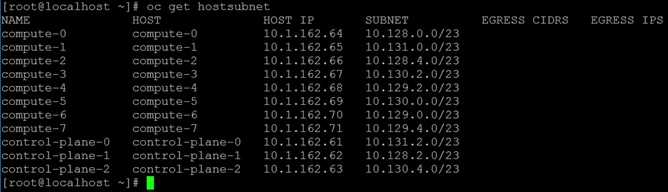

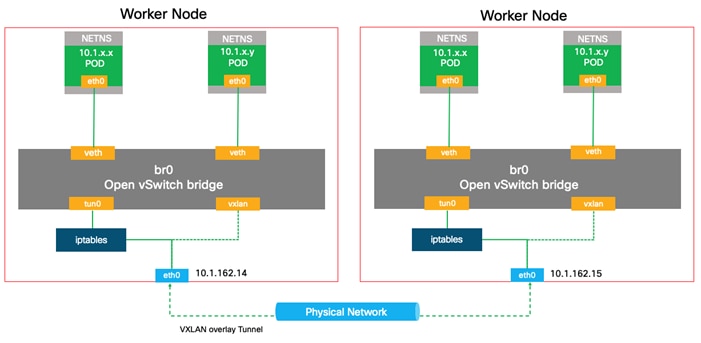

OpenShift internal cluster communication with OpenShift SDN

OpenShift External Cluster Communication

Red Hat OpenShift Container Platform Deployment on VersaStack

OpenShift Cluster Node connectivity on VersaStack

VersaStack Storage Design for OCP

Deployment Hardware and Software

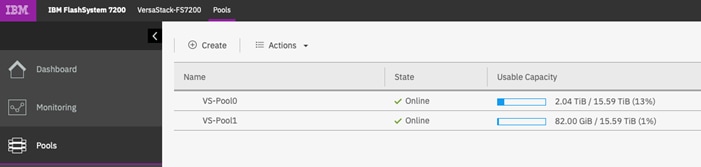

VersaStack Storage Configuration

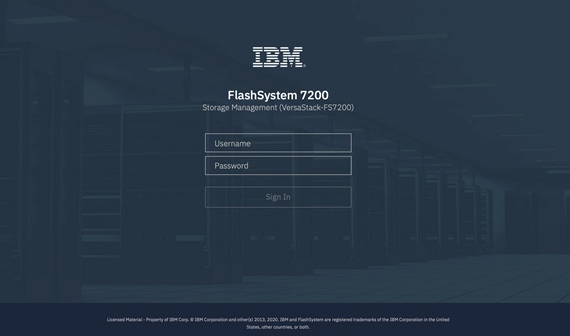

FlashSystem 7200 Storage System – Management Access

Storage Controllers and Storage Pool Configuration

Network Configuration of the IBM FlashSystem

VersaStack Network Configuration

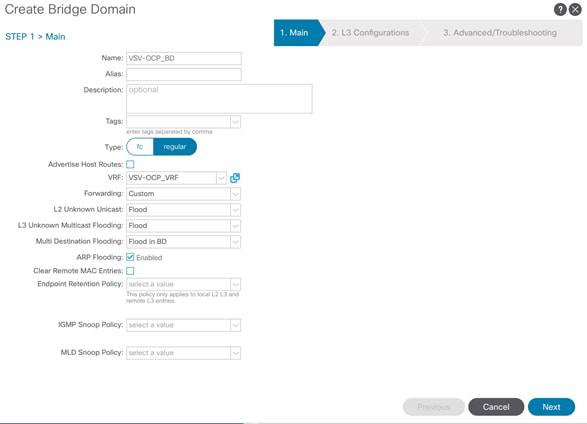

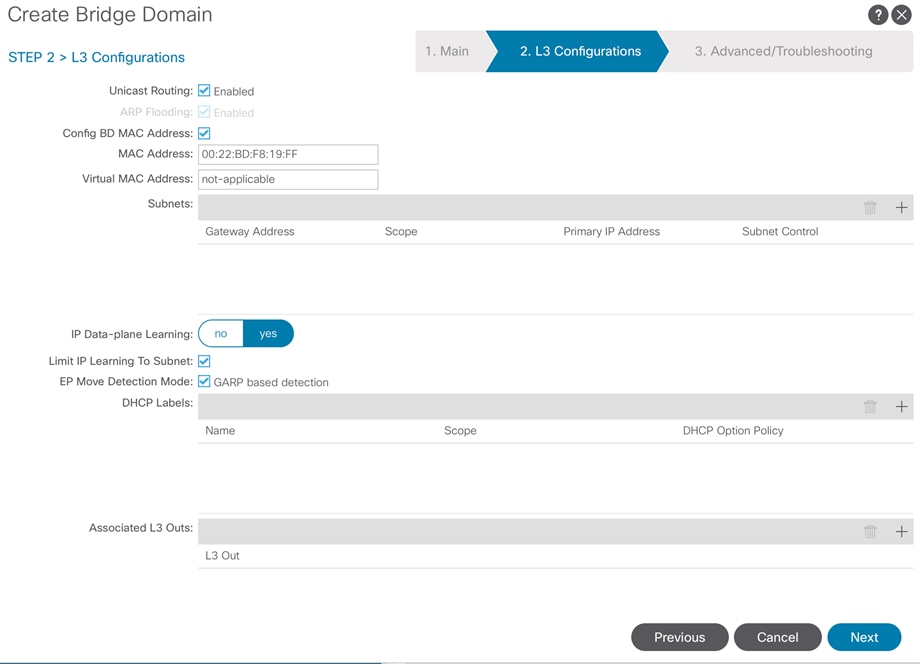

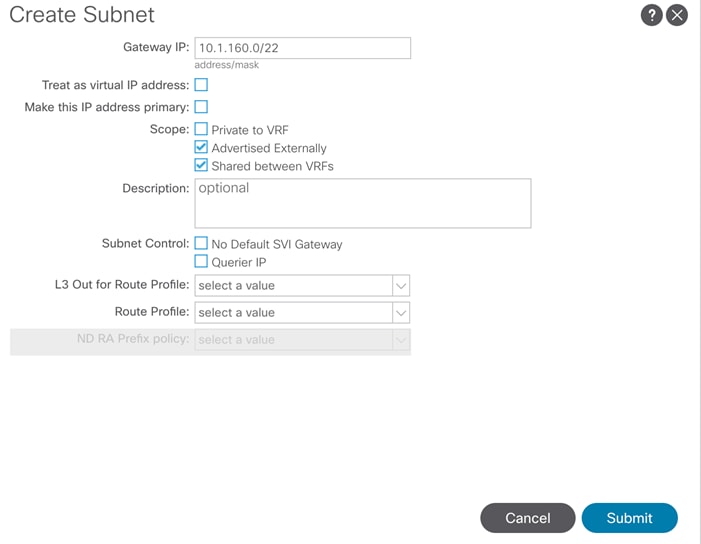

Create an OCP Application Tenant with the Cisco ACI APIC

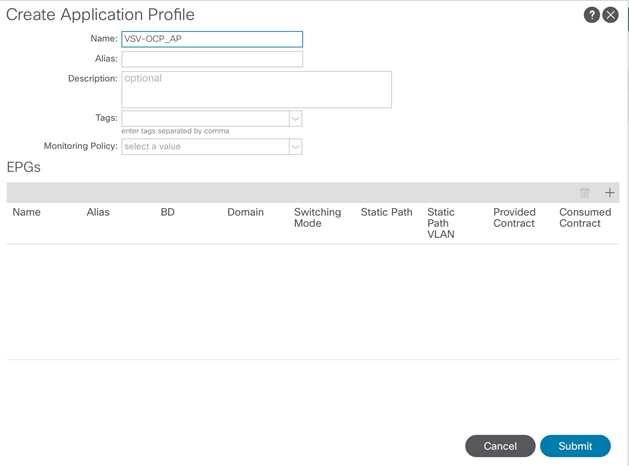

Create Application Profile for OpenShift Container Platform

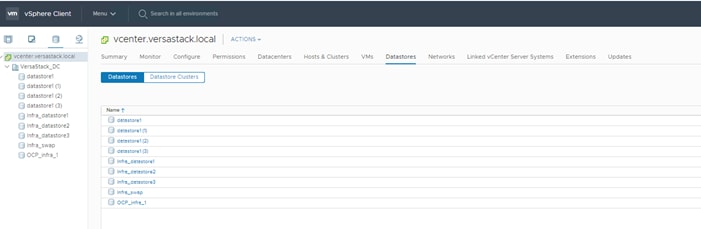

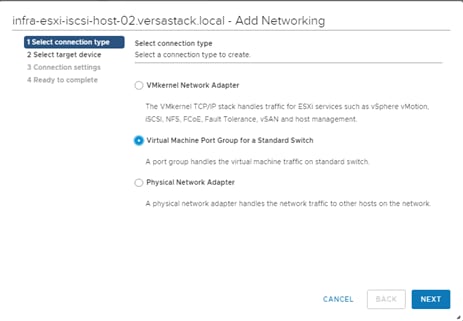

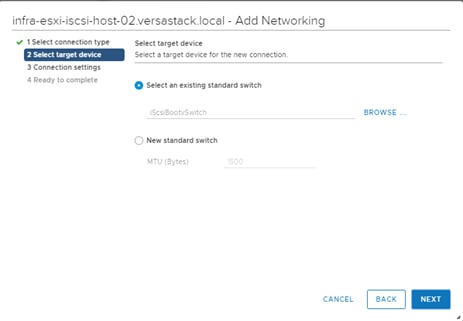

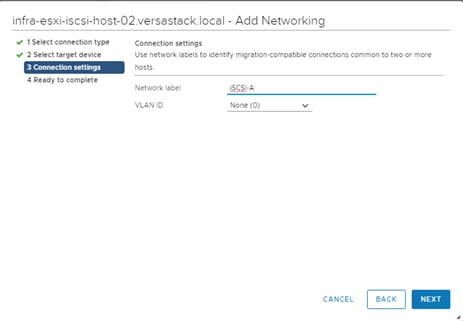

VersaStack VMware vSphere Configuration

Setting Up vSphere Distributed Resource Scheduler (DRS) Rules

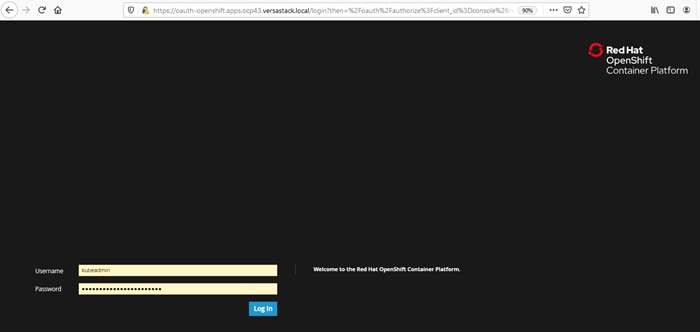

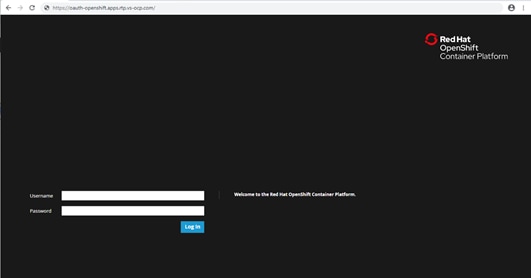

OpenShift Container Platform Installation and Configuration

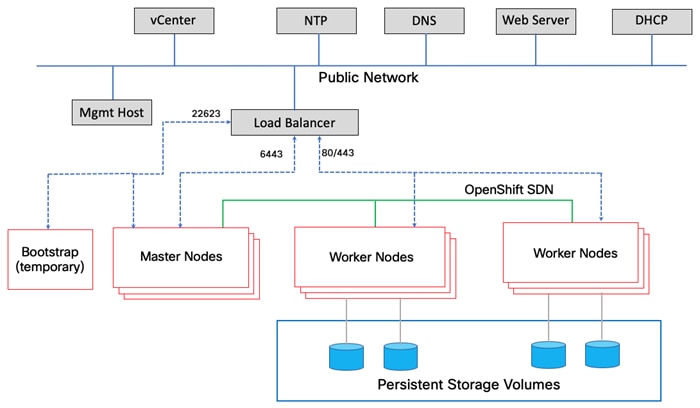

Prerequisites and Preparation Stage

OCP Network Connectivity Requirements

Red Hat OpenShift Container Platform 4.3 Installation Overview

Install Infrastructure Services

Installing and Configuring a DNS server (named)

Installing and Configuring a DHCP Server (dhcp)

Installing and Configuring a Web Server (Apache httpd)

Installing and Configuring the Load Balancer (haproxy)

Generating an SSH Private Key and Adding It to the Agent

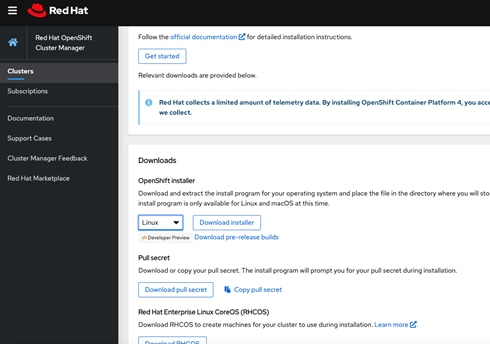

Obtaining the Installation Program

Create the Installation Configuration File

Installing and Creating the Ignition Configuration Files on Mgmt-host

Installing the CLI by Downloading the Binary

Prepare the Terraform Installer

Approving the CSRs for Your Machines (Optional)

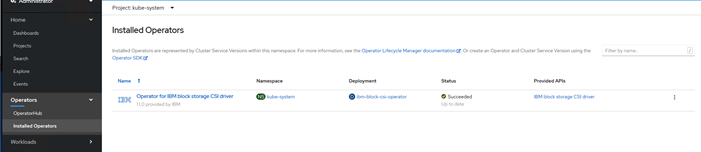

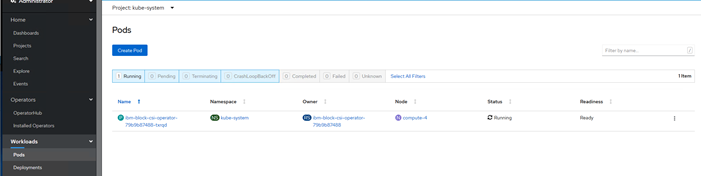

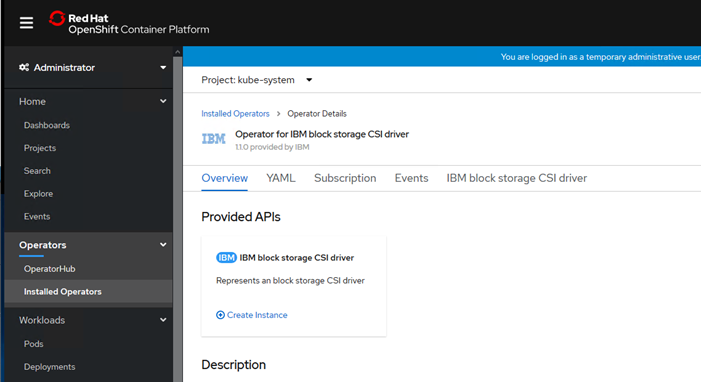

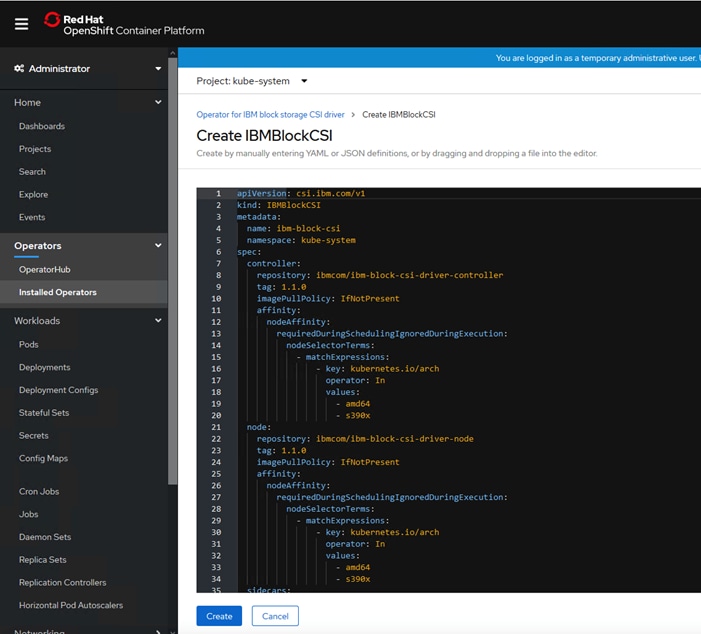

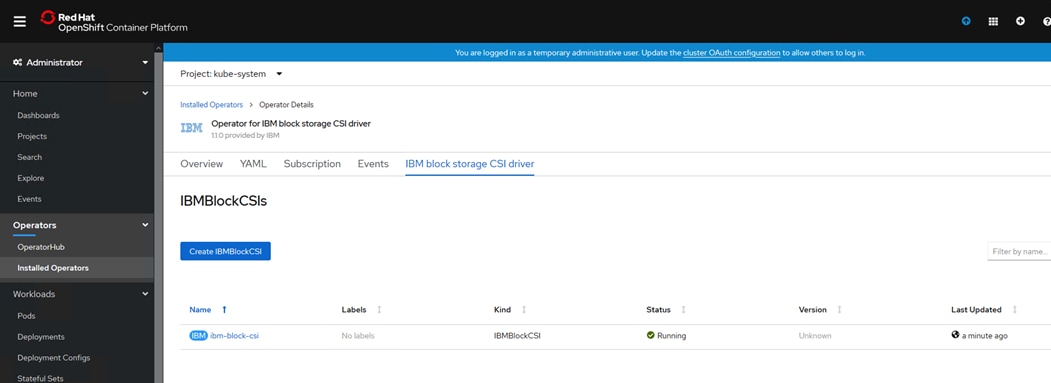

IBM CSI Driver Installation and Configuration

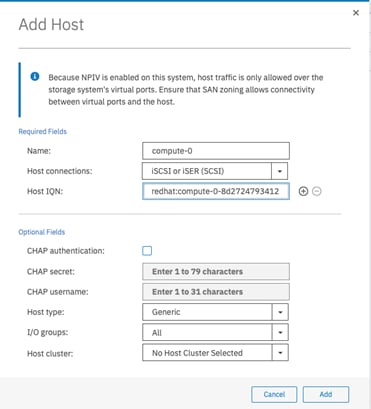

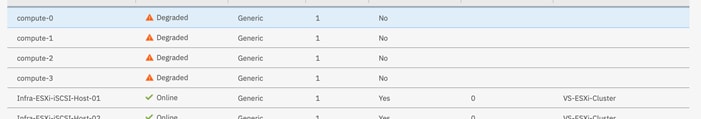

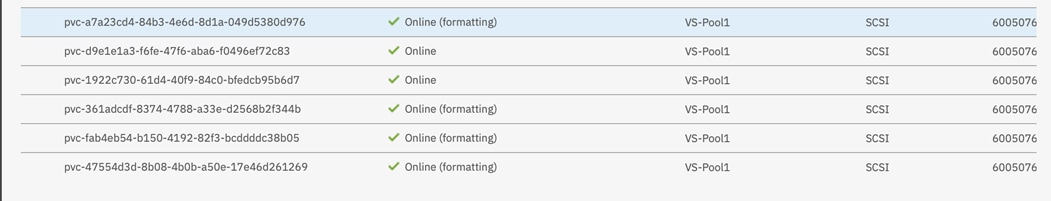

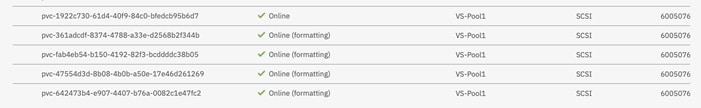

Preparing the IBM FlashSystem Backend Storage

Configuring Worker Nodes Running Red Hat Enterprise Linux CoreOS

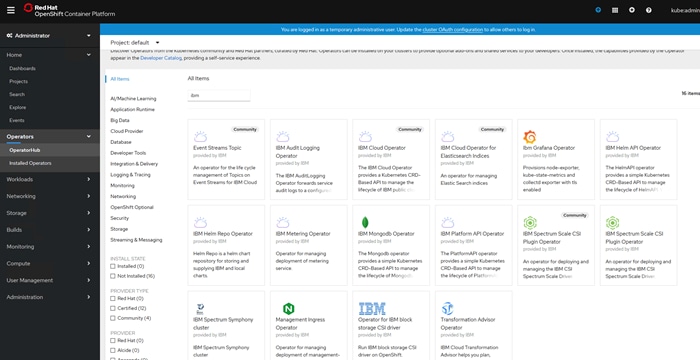

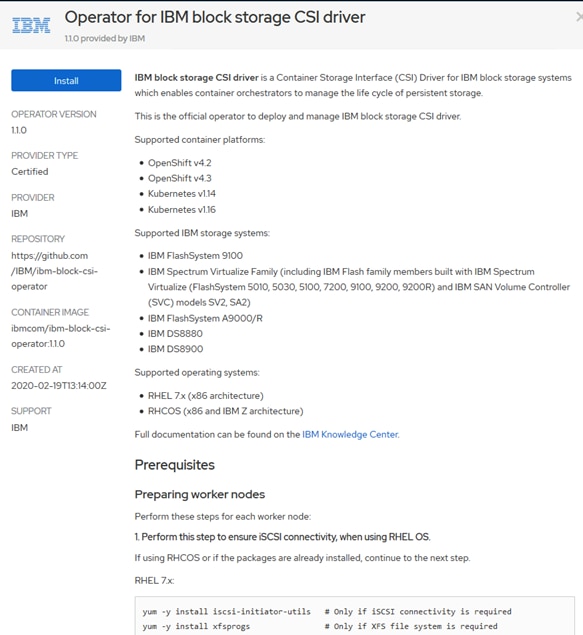

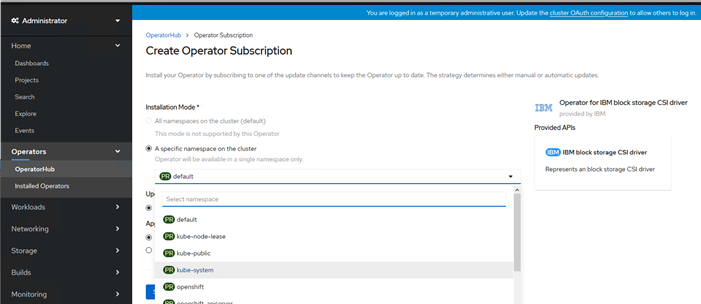

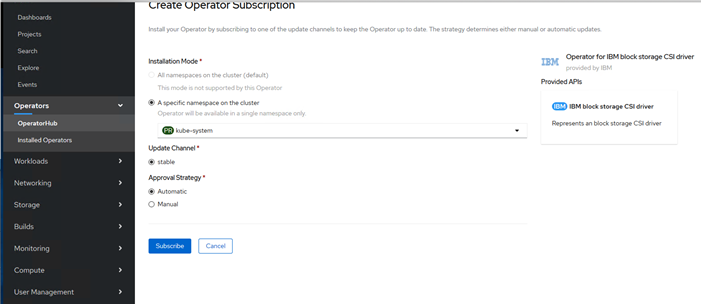

Install IBM Block Storage CSI Driver on Red Hat OpenShift Container Platform

Creating a Test Persistent Volume Claim (PVC)

Applications Deployment on VersaStack for OpenShift Container Platform

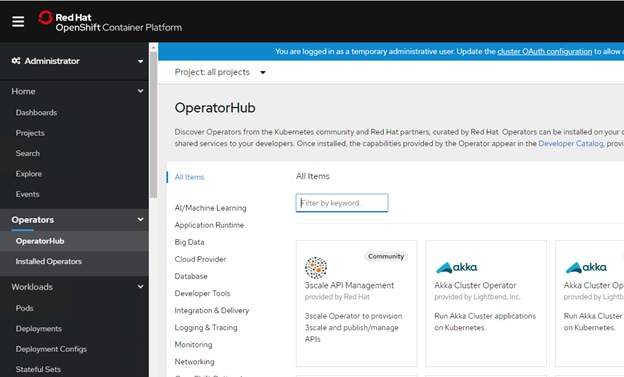

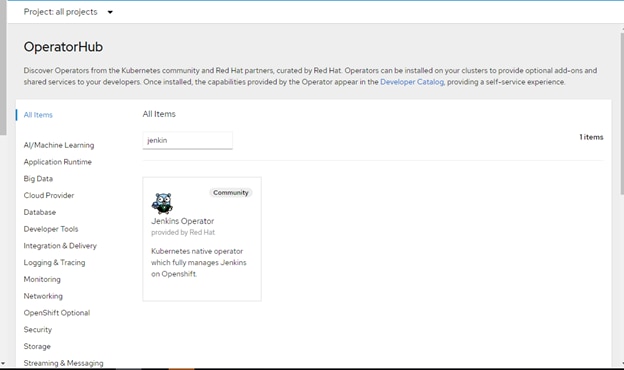

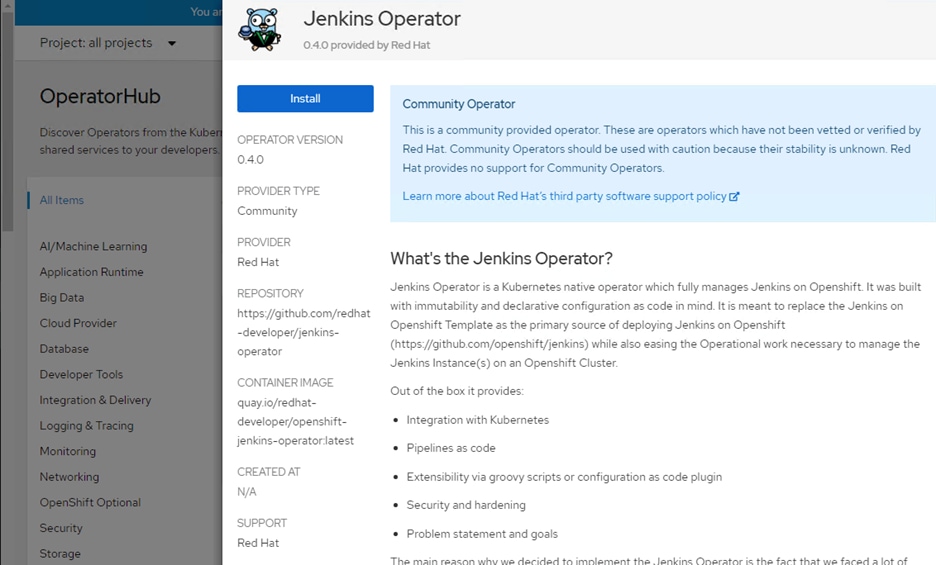

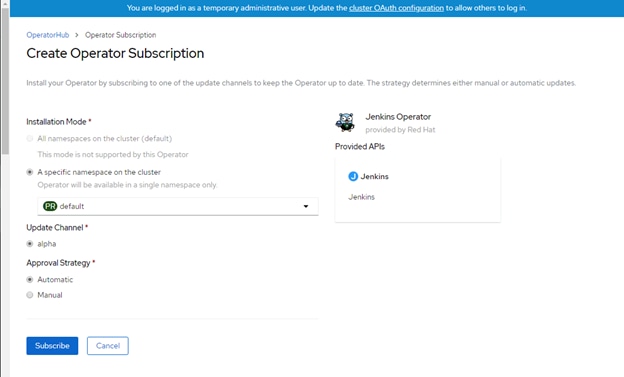

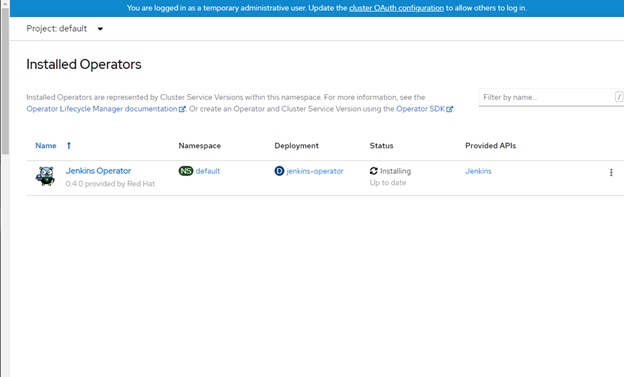

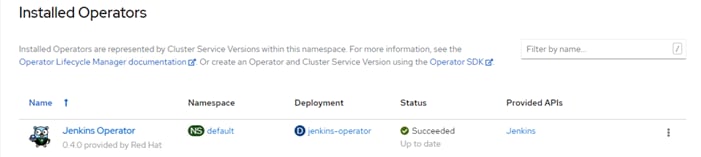

Deploy Applications using OpenShift Operator

Red Hat OpenShift Container Platform Day-2 Operations

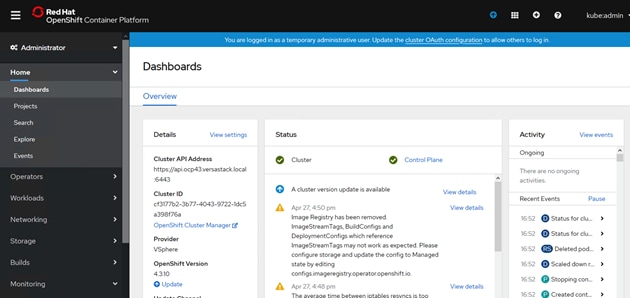

VersaStack Infrastructure Validation

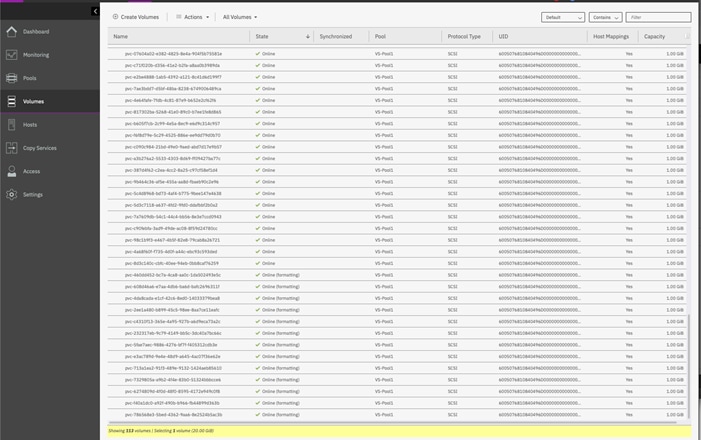

IBM CSI Block Storage Driver Validation

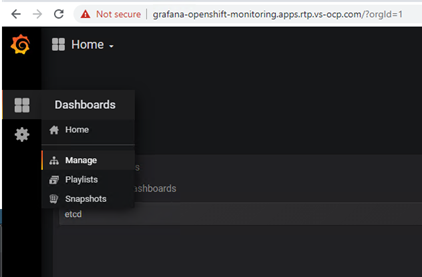

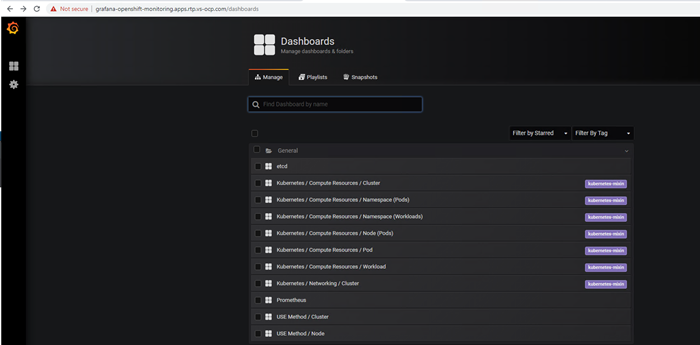

Red Hat OpenShift Container Platform Environment Validation

OpenShift Container Platform Benchmarking with Ripsaw

IBM Cloud Pak for Applications

IBM Cloud Pak for Multi-cloud Management

Accelerate Application Modernization

To help organizations with their digital transformation and to enhance their cloud-native and application modernization practices, Cisco and IBM have partnered to produce this Cisco Validated Design (CVD) for the VersaStack™ user provisioned infrastructure for Red Hat OpenShift Container Platform solution, featuring the IBM FlashSystem family.

VersaStack™ Solutions bring together a comprehensive set of converged infrastructure and software-defined technologies from IBM and Cisco designed for quick deployment and rapid time to value. The solution includes Cisco UCS integrated infrastructure together with IBM’s simplified, distributed storage portfolio to deliver extraordinary levels of agility and efficiency. Combining Red Hat OpenShift with VersaStack™ helps achieve the speed, flexibility, security and scale required for application modernization and digital transformation initiatives. It also improves efficiency, and delivers better data protection, lower risk, and the flexibility to scale this highly available enterprise-grade infrastructure stack to accommodate new business requirements and other changes over time. For many organizations, this means adopting micro-services and DevOps practices and moving away from monolithic application development practices as a first step in creating more business value from their applications.

This CVD supports all products in the IBM FlashSystem family, delivering both hybrid and all-flash options, and running on IBM Spectrum Virtualize software – a common data management software layer enabling hybrid and multi-cloud deployments. The FlashSystem family products are all NVMe-enabled, with performance and scalability that spans entry enterprise/midrange enterprise to high-end enterprise level clients.

IBM FlashSystem storage is enabled for Red Hat® OpenShift® Container Platform deployments through CSI Plug-Ins. Red Hat® OpenShift®, an enterprise-ready Kubernetes container platform with full-stack automated operations to manage hybrid cloud and multi-cloud deployments, is optimized to improve developer productivity and promote innovation. Red Hat OpenShift includes everything needed for hybrid cloud, enterprise container and Kubernetes development and deployments. It includes an enterprise-grade Linux® operating system, container runtime, networking, monitoring, container registry, authentication, and authorization solutions.

This document provides a reference architecture that includes design and deployment guidance, best practices, and other recommendations. With this guide, one can simplify the digital transformation and address many of the associated challenges, including cost and risk reduction, scalability, operational flexibility, and security.

Businesses are increasingly expecting technology to be a centerpiece of every new change. Traditional approaches to leverage technology are too slow in delivering innovation at the pace at which business ecosystems are changing.

Furthermore, there is a rapid industry transition underway. With most organizations now using a mix of cloud models and more than one public cloud provider, and billions of US dollars expected to be spent in 2020 on hybrid multi-cloud infrastructure, hybrid multi-cloud is the new normal - and it is impacting strategic storage and infrastructure choices.

In order to keep up with new technologies or stay one step ahead, enterprises will have to overcome key challenges to accelerate product development, add value and compete better at lower cost.

To succeed, organizations must adopt new technologies, such as the cloud, by updating existing IT infrastructure to accommodate new requirements, including those related to hybrid cloud and multi-cloud environments, security, and data privacy. They must implement new processes and methodologies such as DevOps and micro-services, and they need to address gaps in their current available skill sets.

One observation that is worth unpacking is that the speed of Hybrid Multi-cloud adoption is being driven by the idea that developers can build once and deploy anywhere, freeing them to innovate with speed and agility. Open innovations like Linux and Java were aimed at this goal and each spawned entire industries. VMware made it possible to move virtual machines from one x86 platform to another. Today, however, we've reached an apex with container technology such as Red Hat OpenShift Container Platform.

The need to move fast along the digital transformation path leaves organizations with very little room for errors when making decisions about their infrastructure and its related services. The speed of transformation further increases the exposure to risks associated with any changes.

Even though cloud-based services can accelerate the digital transformation that customers are seeking, using public cloud-based services exclusively can be difficult, and a complete transition might take a significant amount of time. In some cases, it is simply not possible because of regulations or other constraints related to data sensitivity, privacy, and security.

Solution

The VersaStack™ user provisioned infrastructure for Red Hat OpenShift Container Platform solution, featuring IBM FlashSystem, addresses many of these challenges because it allows organizations to move faster with less risk. This solution provides more flexibility and options for managing, operating, and scaling IT infrastructures along the digital transformation journey, while operating within a predictable and economical cost structure.

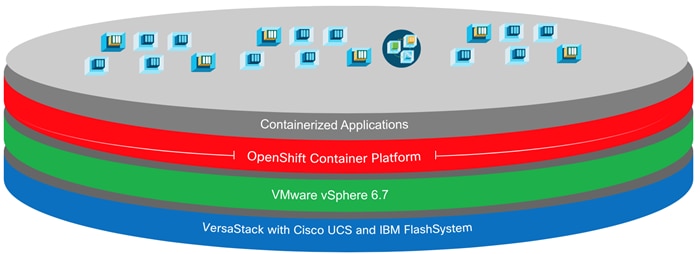

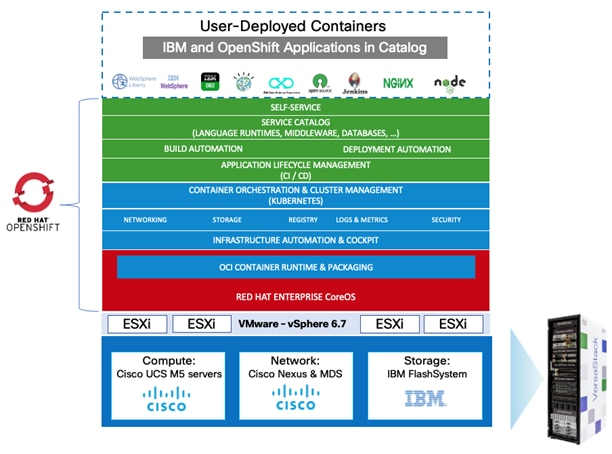

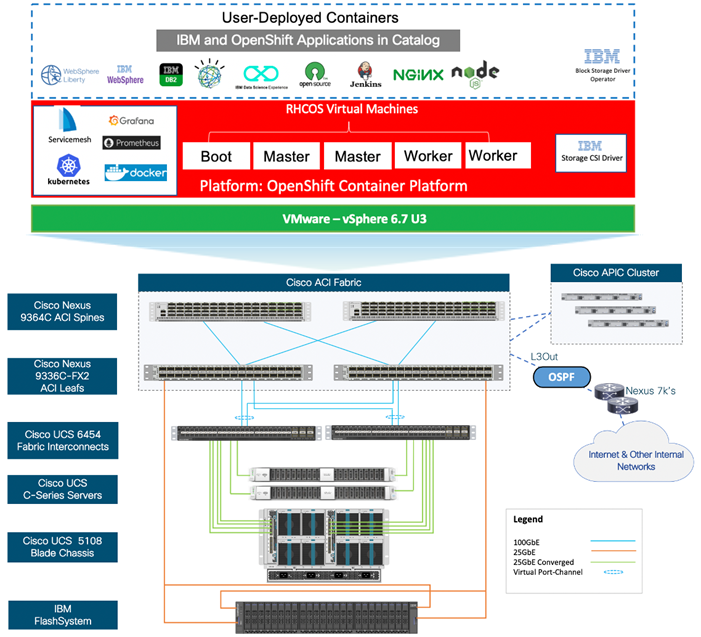

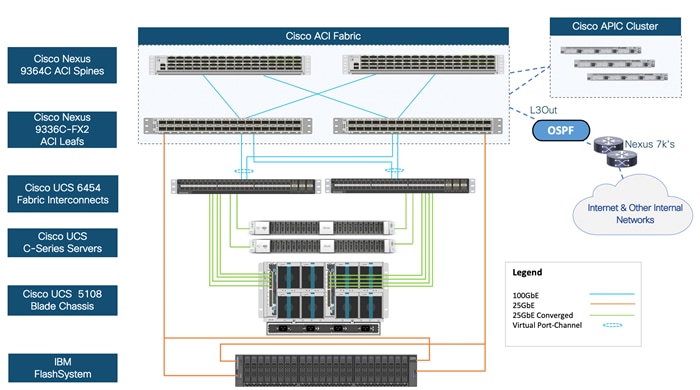

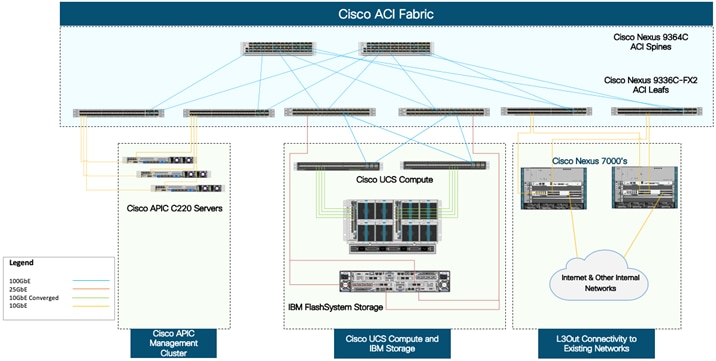

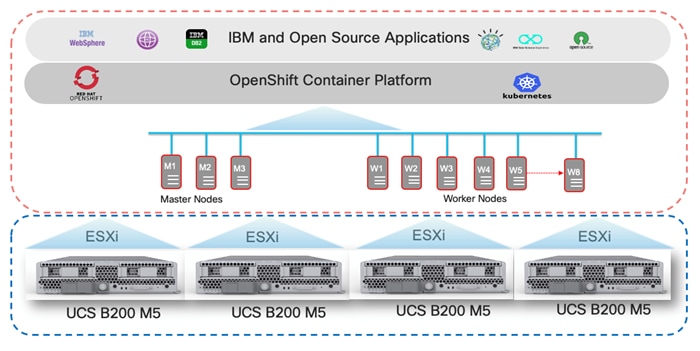

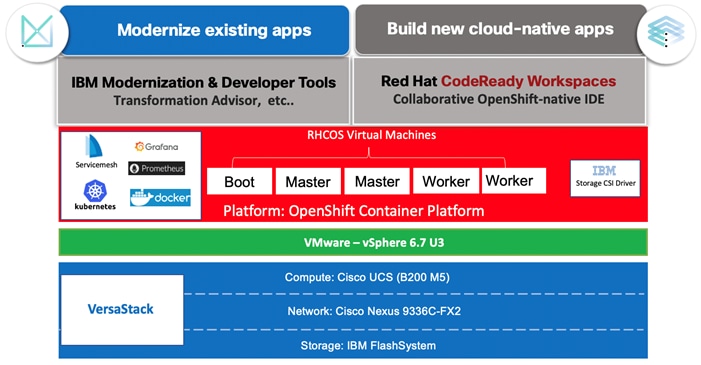

Figure 1 Solution

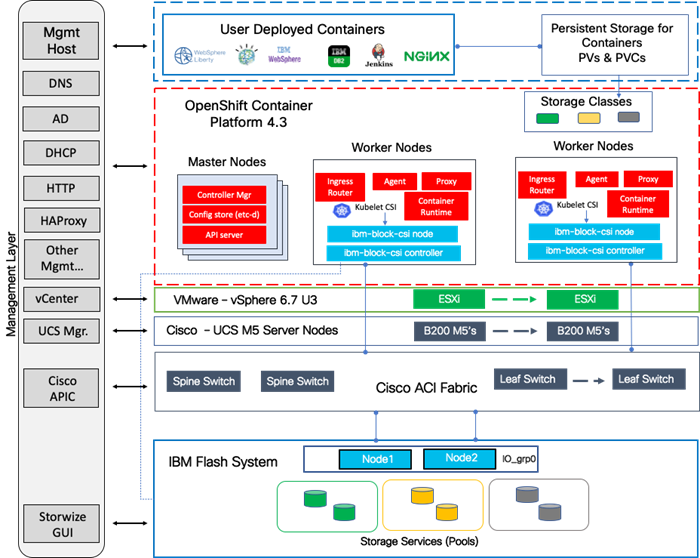

The VersaStack for Red Hat® OpenShift® Container Platform solution covered in this Cisco Validated Design (CVD) is a converged infrastructure (CI) stack featuring IBM FlashSystem storage. Red Hat® OpenShift® Container Platform is a platform for developing and running containerized applications. It is designed to allow applications and the data centers that support them to expand from just a few machines and applications to thousands of machines that serve millions of clients.

The converged infrastructure stack comprises Cisco compute and network switches, IBM FlashSystem family storage, and VMware vSphere as the underlying hypervisor, used as User Provisioned Infrastructure (UPI) for OpenShift Container Platform (OCP). This stack is integrated so customers do not have to allocate time and resources researching the required components and how to optimally integrate them to support OCP. This CI solution was also tested and validated, which saves time and minimizes risks so that you can quickly deploy the solution, become productive in days, and focus on your business rather than worrying about setting up infrastructure.

All the products in the IBM FlashSystem family are supported as part of this CVD. The FlashSystem family simplifies storage for hybrid multi-cloud. With a unified set of software, tools, and APIs, the flash storage platform addresses the entire range of storage needs, all from one data platform that extends enterprise functionality across the storage estate:

· IBM FlashSystem 5010, 5030, and 5100 deliver Entry Enterprise solutions

· FlashSystem 7200 provides a Midrange Enterprise solution

· FlashSystem 9200 and the rack-based FlashSystem 9200R deliver two high-end enterprise solutions

All of these systems are based on IBM Spectrum Virtualize software, the common storage software used in this solution. When the software is enhanced, it is enhanced across the entire product family.

The IBM FlashSystem family delivers excellent performance coupled with a wealth of enterprise features. The majority of these FlashSystem models support both all-flash and hybrid configurations, denoted by “H” in the model name. This flexibility enables you to build a system that is economically targeted to meet your specific needs.

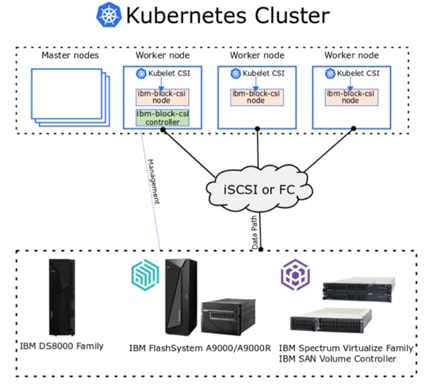

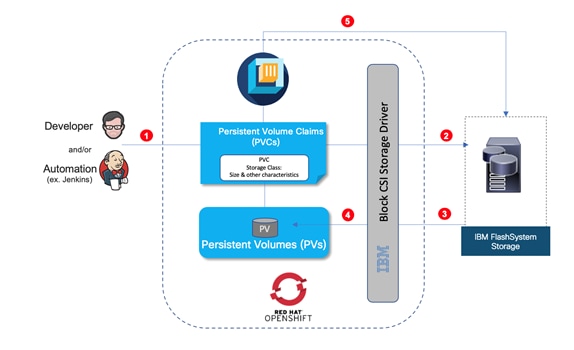

IBM FlashSystem storage integration in this CVD is based on the Container Storage Interface (CSI) Driver for IBM block storage systems, which enables container orchestrators such as Kubernetes to manage the life cycle of persistent storage.

Another key aspect of the solution is minimizing risks by providing more flexibility. VersaStack is a highly flexible and scalable CI platform that is based on a unified architecture. With VersaStack, you can start small and grow as your business needs grow.

Introduction

The featured VersaStack user provisioned infrastructure for OpenShift Container Platform solution is a pre-designed, integrated, and validated architecture for the data center that combines Cisco UCS servers, the Cisco Nexus family of switches, and IBM FlashSystem family storage with IBM Spectrum Virtualize software into a single, flexible architecture. VersaStack is designed for high availability (HA), with no single point of failure, while maintaining cost-effectiveness and flexibility in the design to support a wide variety of workloads. The VersaStack solution covered in this document is for Red Hat OpenShift Container Platform (OCP), built on Enterprise Kubernetes for an on-premises deployment.

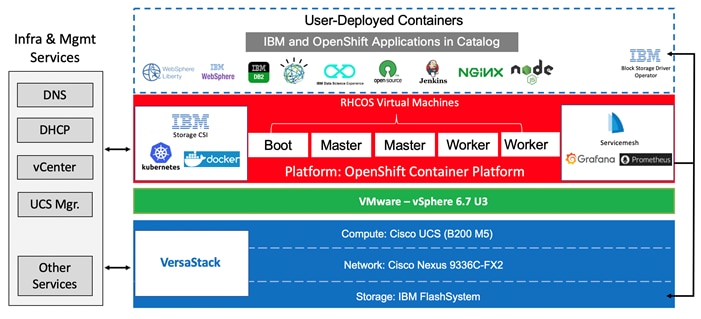

The VersaStack for OpenShift Container Platform solution, in addition to the hardware components, includes VMware vSphere as the hypervisor layer on top of which the OpenShift Container Platform components are deployed.

Integration between OpenShift Container Platform and the storage and data management services occurs at several levels, all of which are captured in the design aspects of this document. The main storage integration is based on the Container Storage Interface (CSI) Driver for IBM block storage systems, which enables container orchestrators such as Kubernetes to manage the life cycle of persistent storage.

After the CSI driver is installed, it becomes part of the Kubernetes framework, which helps accelerate development and complements DevOps practices.
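To make the idea of Kubernetes managing the storage life cycle concrete, the following is a minimal sketch of how an application team might request a persistent volume once a CSI driver is in place. It only builds a standard Kubernetes `PersistentVolumeClaim` manifest and prints it as JSON. The claim name `demo-pvc` and the storage class name `ibm-flashsystem-gold` are hypothetical placeholders, not values taken from this validated design. A storage class backed by the IBM block storage CSI driver would need to exist in the cluster for the claim to be satisfied.

```python
import json


def build_pvc(name: str, storage_class: str, size: str) -> dict:
    """Return a Kubernetes PersistentVolumeClaim manifest as a dictionary.

    The storage class is assumed to be one that an administrator has
    already defined on top of the CSI driver; its name is supplied by
    the caller and is purely illustrative here.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # Hypothetical names; replace with values from your own environment.
    pvc = build_pvc("demo-pvc", "ibm-flashsystem-gold", "1Gi")
    print(json.dumps(pvc, indent=2))
```

Because Kubernetes accepts manifests in JSON as well as YAML, the printed output could be passed to `oc apply -f -` on a cluster where the named storage class exists. The dynamic provisioner would then create the backing volume on the storage system without manual intervention.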

OCP is installed on top of a VMware cluster, with the OCP nodes running as Red Hat Enterprise Linux CoreOS (RHCOS) VMs on ESXi hosts, which are in turn deployed on the Cisco UCS servers.

The following design and deployment aspects of the VersaStack for OCP solution are explained in this document:

· Red Hat OpenShift Container Platform 4.3

· VersaStack converged infrastructure

· VMware vSphere 6.7 U3

· IBM CSI driver – Dynamic storage provisioner for OpenShift

· IBM FlashSystem Family storage featuring IBM Spectrum Virtualize

The document also covers key configurations based on the validated environment and best practices.

Audience

The intended audience for this document includes, but is not limited to, DevOps managers, IT infrastructure managers, application development leaders, business digital transformation leaders, storage and data management managers, sales engineers and architects working with hybrid and private clouds, and other parties that are looking for a tested, market-proven CI solution that offers flexibility and simplicity in support of their cloud-native and application modernization needs along their digital transformation journey.

Purpose of This Document

The purpose of this design and deployment guide is to provide a reference architecture with specific examples indicating how the solution was designed, deployed, and tested. In addition, the document provides several best practices and recommendations that simplify your implementation of this solution.

What’s New?

Although the VersaStack for OCP solution was developed based on an existing CVD (VersaStack with Cisco ACI and IBM FlashSystem family), the following elements distinguish this version of VersaStack from previously published solutions:

· Support for Red Hat OpenShift Container Platform 4.3.

· IBM CSI block storage driver integration with OpenShift Kubernetes for dynamic persistent storage provisioning for applications

· Cisco 2408 Fabric Extender providing 25 Gbps connectivity from the Cisco UCS Chassis to Cisco UCS Fabric Interconnects

· Support for the Cisco UCS release 4.1(1a)

· Support for ACI 4.2(4i)

· IBM FlashSystem storage, built with IBM Spectrum Virtualize software, version 8.3.1

For more information about previous VersaStack designs, see: https://www.cisco.com/c/en/us/solutions/data-center-virtualization/versastack-solution-cisco-ibm/index.html

Solution Summary

This solution includes a hardware stack from Cisco and IBM, a hypervisor from VMware, the OCP software platform from Red Hat, a set of tools for integration and management, and a collection of containerized applications available via Red Hat and IBM Catalogs. These components are integrated so that customers can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the ground up.

Figure 2 VersaStack UPI for OpenShift Container Platform

Solution Benefits

Successful digital transformation requires a solution that brings software, processes, and systems together in a digital-ready infrastructure that is simple, intelligent, automated, and highly secure.

VersaStack combined with the OCP platform provides faster deployment, higher efficiency, a highly scalable and flexible stack, and less risk. These intrinsic capabilities improve developer productivity, reduce build times, and optimize storage with advanced data management capabilities. The solution helps VersaStack customers to modernize existing applications, develop new cloud-native solutions, and leverage cloud-based services.

OpenShift Container Platform helps customers accelerate their digital transformation because it contains all the required tools needed out-of-the-box for consuming on-premises, cloud-based services.

IBM FlashSystem storage, built with IBM Spectrum Virtualize software, is targeted for hybrid multi-cloud solutions and enterprise deployments. Storage Class Memory (SCM) and IBM FlashCore technology bring the performance needed for demanding workloads and AI-driven Easy Tier ensures data is effortlessly positioned on the right storage tier for the right workload.

IBM FlashCore Modules bring unprecedented levels of efficiency with as much as 4PB of data in only 2 rack units. 3-site replication, including replication to the cloud, and improved scalability and data reduction in the cloud transform hybrid multi-cloud deployments and lower critical costs.

Red Hat OpenShift pushes the boundaries of what containers and Kubernetes can do for developers, driving innovation for stateful applications, serverless or event-driven applications, and machine learning. The platform integrates tightly with Jenkins and other standard continuous integration/continuous delivery (CI/CD) tools for security-focused application builds. Red Hat OpenShift helps you build with speed, agility, confidence, and choice so that developers can get back to doing work that matters.

In addition, IBM offers a family of Cloud Paks that give developers, data managers and administrators an open environment to quickly build new cloud-native applications, modernize/extend existing applications, and deploy middleware in a consistent manner across multiple clouds.

Beyond the well-integrated software stack at the OCP platform level, VersaStack offers the same value at the hardware stack level.

This joint solution offers the following benefits:

· Highly available, scalable platform and flexible architecture supports various deployment models

· Accelerated adoption of new technology to modernize heritage applications and cloud native development

· A trusted platform from industry leaders Cisco and IBM

· Enterprise-grade, highly available infrastructure stack

· Converged infrastructure validated for OCP with a published CVD that includes best practices and recommendations, which minimizes risks and accelerates development cycles

· IBM FlashSystems with new roll-out of FlashCore Modules (FCMs). The innovation behind these custom-designed modules delivers improved performance, capacity, and reliability.

· The enterprise-class functionality of IBM Spectrum Virtualize software, including encryption, Copy Services such as synchronous and asynchronous mirroring, deduplication, compression, virtualization of over 500 different storage devices, single pane of glass management, and Easy Tier, among others

· Ecosystem of partners and the recognized and trusted VersaStack brand

· Cooperative support between Red Hat, Cisco, IBM, and VMware

· Easy to deploy, consume, and manage; saves time and resources on research, procurement, and integration

· Flexible and highly scalable architecture with outstanding application performance

· Integration with Kubernetes for dynamic provisioning of persistent storage services with advanced data management capabilities

· Enhanced CI/CD workflow and practices associated with DevOps and micro-services

Solution Components

The solution offers redundant architecture from a compute, network, and storage perspective. The solution consists of the following key components:

· Cisco UCS 6400 Series Fabric Interconnects (FI)

· Cisco UCS 5108 Blade Server chassis

· Cisco Unified Computing System (Cisco UCS) servers with 2nd generation Intel Xeon scalable processors

· Cisco Nexus 9336C-FX2 Switches running in ACI mode

· IBM FlashSystem NVMe-accelerated Storage

· VMware vSphere 6.7 Update 3

· OpenShift Container Platform 4.3

· IBM block storage CSI driver 1.1.0

Use Cases

The Red Hat OpenShift Container Platform gives developers a self-service platform on which to build and run containerized applications. With Red Hat OpenShift you can quickly start creating new cloud-native applications or cloud-enabling existing applications, and spawn an environment for a new microservice in minutes.

Cloud-native development, application modernization, and a model of hosting additional services such as enterprise applications are covered as use cases in this document. The solution also supports many other use cases that are not documented in this guide. These use cases reflect the flexibility of the VersaStack platform to accommodate various requirements and enable the different workloads and topologies you might focus on.

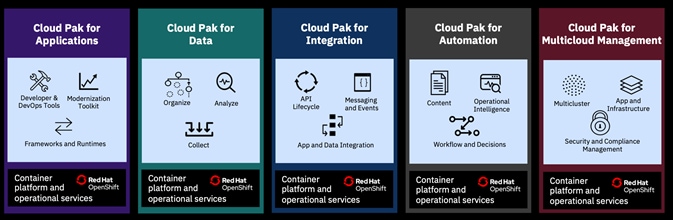

Customers can optionally consider IBM Cloud Paks for their application needs. IBM Cloud Paks are pre-integrated containers and pre-packaged solutions, ready for deployment in production environments. These IBM Cloud Paks can be easily deployed to Kubernetes-based container orchestration platforms. They are software solutions that give clients an open, faster, and more secure way than individual solutions to move core business applications to any cloud. Designed to reduce development time, IBM Cloud Paks include IBM middleware and common software services for development and management, on top of a standard integration layer. They are portable and can run on-premises, on public clouds, or in an integrated system.

IBM currently offers five independent Cloud Paks that provide a Kubernetes environment to help build cloud-native applications or modernize existing applications. IBM Cloud Paks also enable you to quickly bring workloads to an integrated container platform, supporting production-level qualities of service and end-to-end lifecycle management.

For more information on IBM Cloud Paks, refer to the Appendix of this document. However, customers interested in IBM Cloud Paks should refer to the IBM documentation for the installation and usage details or contact IBM or their partners for proper recommendations and guidance.

Cloud-Native

In a cloud-native environment, applications are viewed as collections of microservices with many deployable components that deploy at various speeds and independently of each other. Manually deploying anything becomes an unsustainable effort prone to error, inconsistency, and delays. Automating continuous delivery pipelines spares operations teams from deploying applications they have little knowledge of and rescues developers from complex configurations and deployments. Efficient delivery means more time for innovation, enabling the business to disrupt the market and competitors.

OCP is cloud-native ready out-of-the-box. No additional software packages or tools are required, but you can add to the platform-containerized applications with Red Hat tools. Through automation, self-service, and containerization, Red Hat development platforms let you deliver apps on any architecture faster. With Red Hat open tools, you can migrate your infrastructure and become cloud-native at your own pace, without rebuilding from the ground up.

Figure 3 illustrates a high-level overview of the VersaStack for OCP platform with the cloud-native use case.

Figure 3 VersaStack UPI for OCP – Cloud Native Topology

Application Modernization and Enterprise Applications

Application modernization is key for enterprises moving towards cloud-based infrastructure and services. It is a strategy for helping companies move their legacy application footprint to cloud-based infrastructure such as OpenShift Container Platform.

Applications need to be adapted and ported to the new infrastructure using Cloud-native technologies, such as containers, Kubernetes, and microservices.

VersaStack for OCP supports enterprise applications in several ways. VersaStack offers a hybrid virtualized architecture by combining virtualization for both traditional virtual machines and containers. It is also easy to integrate the VersaStack platform with the existing on-premises infrastructure for consistent management of the entire end-to-end infrastructure. With many customers already having enterprise and middleware applications deployed prior to introducing containers to their environment, and some needing a platform that can support both legacy and modernized components of an application, the VersaStack infrastructure is well suited to application modernization.

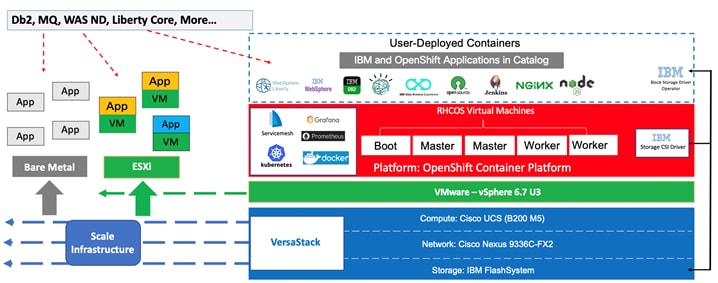

Customers commonly add new applications and tools to the VersaStack OCP platform and also want to integrate with products that are already in place. For example, the organization might use a database deployed in traditional form and might prefer to keep this database in place and allow the containerized applications running within the OCP platform to interact with the database. They might also use other middleware products; for example, IBM offers containerized versions of many of its enterprise applications, including MQ, WebSphere, and several products in the Db2 family. However, some organizations might not deploy these products in their container version and might start with a more traditional approach of hosting the application on a guest OS running as a VM, and in some cases the application may end up being deployed directly on the server (bare metal); VersaStack can be extended to host these applications as well (Figure 4).

Figure 4 Hosting Enterprise Applications on VersaStack for OCP

Additional workloads and applications beyond OCP can be hosted on the VersaStack CI platform. This deployment model can also accommodate IBM enterprise applications that can run on a VM as part of the vSphere environment or in a bare metal fashion directly on the Cisco UCS servers if they are not containerized. The flexibility of the platform to scale improves ROI and other aspects of productivity and, in addition, offers customers more confidence that the platform supports their changing needs over time.

Regarding application modernization, each customer develops their own plan for transforming monolithic applications to a cloud-native topology and the process can be very complex. Therefore, a reliable, flexible, and scalable platform at the infrastructure level that complements the value proposition of the OCP software platform can have tremendous value.

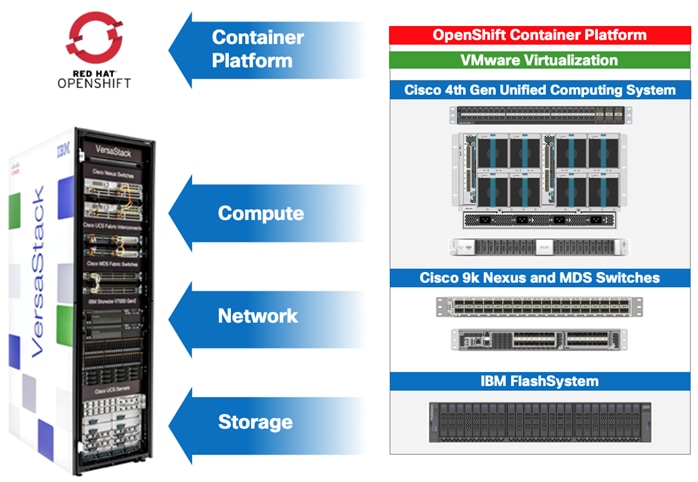

The VersaStack architecture is comprised of the following infrastructure components for compute, network, and storage:

· Cisco Unified Computing System (Cisco UCS)

· Cisco Nexus and Cisco MDS Switches

· IBM SAN Volume Controller and IBM FlashSystem storage

These components are connected and configured according to best practices of both Cisco and IBM and provide an ideal platform for running a variety of workloads with confidence.

The VersaStack reference architecture explained in this document leverages:

· Cisco UCS 6400 Series Fabric Interconnects (FI)

· Cisco UCS 5108 Blade Server chassis

· Cisco Unified Computing System servers with 2nd generation Intel Xeon scalable processors

· Cisco Nexus 9336C-FX2 Switches running ACI mode

· IBM FlashSystem NVMe-accelerated Storage

· VMware vSphere 6.7 Update 3

· Red Hat OpenShift Container Platform (version 4.3)

· IBM Container Storage Interface (CSI) for block storage (version 1.1.0)

Figure 5 VersaStack for OpenShift Container Platform – Components

The following sections provide a technical overview of the compute, network, storage and management components of the VersaStack solution.

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership (TCO) and to increase business agility. The system integrates a low-latency, lossless unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform where all resources are managed through a unified management domain.

The Cisco Unified Computing System consists of the following subsystems:

· Compute - The compute piece of the system incorporates servers based on the latest Intel x86 processors. Servers are available in blade and rack form factors, managed by Cisco UCS Manager.

· Network - The integrated network fabric in the system provides a low-latency, lossless, 10/25/40/100 Gbps Ethernet fabric. Networks for LAN, SAN, and management access are consolidated within the fabric. The unified fabric uses the innovative Single Connect technology to lower costs by reducing the number of network adapters, switches, and cables. This in turn lowers the power and cooling needs of the system.

· Storage access - Cisco UCS system provides consolidated access to both SAN storage and Network Attached Storage over the unified fabric. This provides customers with storage choices and investment protection. The use of Policies, Pools, and Profiles allows for simplified storage connectivity management.

· Management - The system uniquely integrates compute, network and storage access subsystems, enabling it to be managed as a single entity through Cisco UCS Manager software. Cisco UCS Manager increases IT staff productivity by enabling storage, network, and server administrators to collaborate on Service Profiles that define the desired server configurations.

Cisco UCS Management

Cisco UCS® Manager (UCSM) provides unified, integrated management for all software and hardware components in Cisco UCS. UCSM manages, controls, and administers multiple blades and chassis, enabling administrators to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive GUI, a CLI, and a robust API. Cisco UCS Manager is embedded in the Cisco UCS Fabric Interconnects and offers a comprehensive set of XML APIs for third-party application integration.
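As a rough illustration of the XML API mentioned above, the following sketch builds two request bodies of the kind a third-party tool might send to UCSM: a login and a query for one object class. The method names `aaaLogin` and `configResolveClass` and the class `computeBlade` follow the published Cisco UCS Manager XML API and object model; the credentials, the cookie value, and the helper function names are hypothetical placeholders, and nothing is sent over the network.

```python
import xml.etree.ElementTree as ET


def build_login_request(username: str, password: str) -> str:
    """Return an aaaLogin request body (credentials are placeholders)."""
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def build_class_query(cookie: str, class_id: str) -> str:
    """Return a configResolveClass request body for one managed-object class."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "classId": class_id, "inHierarchical": "false"},
    )
    return ET.tostring(elem, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical values; a real session cookie comes from the login response.
    print(build_login_request("admin", "example-password"))
    print(build_class_query("example-cookie", "computeBlade"))
```

In practice these bodies would be posted to the UCSM endpoint over HTTPS, and the session cookie returned by the login would be passed to later queries. Consult the UCS Manager XML API reference before relying on any attribute shown here.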

Cisco Intersight (optional)

The Cisco Intersight™ platform provides intelligent cloud-powered infrastructure management for Cisco Unified Computing System™ (Cisco UCS®) and Cisco HyperFlex™ platforms. Cisco Intersight is a subscription-based cloud service for infrastructure management that simplifies operations by providing proactive, actionable intelligence. Cisco Intersight provides capabilities such as Cisco Technical Assistance Center (TAC) integration for support and Cisco Hardware Compatibility List (HCL) integration for compliance that enterprises can leverage for all their Cisco HyperFlex and Cisco UCS systems in all locations. Cloud-based delivery enables enterprises to quickly adopt the new features that are continuously being rolled out in Cisco Intersight.

Each Cisco UCS server or Cisco HyperFlex system automatically includes a Cisco Intersight Base edition at no additional cost when the customer accesses the Cisco Intersight portal and claims the device. In addition, customers can purchase the Cisco Intersight Essentials edition using the Cisco ordering tool.

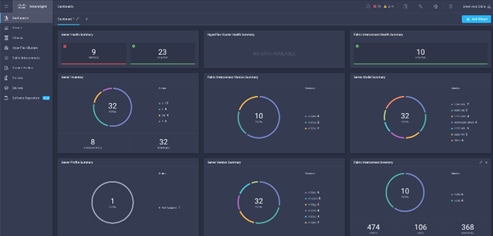

A view of the unified dashboard provided by Intersight can be seen in Figure 6.

Figure 6 Cisco Intersight Dashboard View

For more information on Cisco Intersight, see: https://www.intersight.com/help/getting_started#cisco_intersight_overview

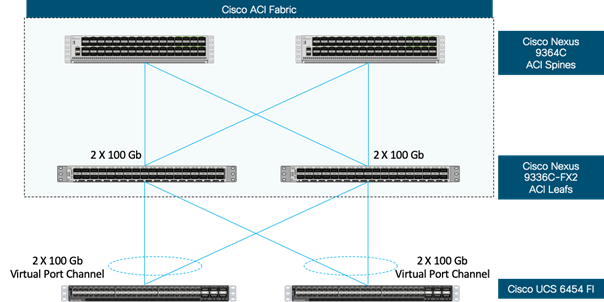

Cisco UCS Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point for connectivity and management for the entire Cisco Unified Computing System. Typically deployed as an active-active pair, the system’s fabric interconnects integrate all components into a single, highly available management domain controlled by the Cisco UCS Manager. Cisco UCS FIs provide a single unified fabric for the system that supports LAN, SAN and management traffic using a single set of cables.

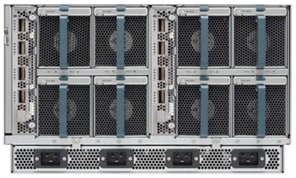

The 4th generation (6454) Fabric Interconnect (Figure 7) leveraged in this VersaStack design provides both network connectivity and management capabilities for the Cisco UCS system. The Cisco UCS 6454 offers line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and 32 Gigabit Fibre Channel functions.

Figure 7 Cisco UCS 6454 Fabric Interconnect


Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5108 Blade Server Chassis (Figure 8) delivers a scalable and flexible blade server architecture. The Cisco UCS blade server chassis uses an innovative unified fabric with fabric-extender technology to lower total cost of ownership by reducing the number of network interface cards (NICs), host bus adapters (HBAs), switches, and cables that need to be managed. Cisco UCS 5108 is a 6-RU chassis that can house up to 8 half-width or 4 full-width Cisco UCS B-Series Blade Servers. A passive mid-plane provides up to 80Gbps of I/O bandwidth per server slot and up to 160Gbps for two slots (full-width). The rear of the chassis contains two I/O bays to house Cisco UCS Fabric Extenders for enabling uplink connectivity to the pair of FIs for both redundancy and bandwidth aggregation.

Figure 8 Cisco UCS 5108 Blade Server Chassis


Cisco UCS 2408 Fabric Extender

The Cisco UCS 2408 Fabric Extender has eight 25-Gigabit Ethernet, FCoE-capable, Small Form-Factor Pluggable (SFP28) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2408 provides four 10-Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis, giving it a total of 32 10G interfaces to Cisco UCS blades. Typically configured in pairs for redundancy, two fabric extenders provide up to 400 Gbps of I/O from the Cisco UCS 6454 FIs to the 5108 chassis.

Figure 9 Cisco UCS 2408 Fabric Extender
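The port and bandwidth figures quoted for the Cisco UCS 2408 can be checked with simple arithmetic. The sketch below is illustrative only: the class and field names are hypothetical, and the value of four midplane ports per slot is derived from the 32 interfaces spread across the eight half-width slots of the 5108 chassis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FabricExtender:
    uplink_ports: int = 8               # 25-Gigabit uplinks to the fabric interconnect
    uplink_gbps: int = 25
    backplane_ports_per_slot: int = 4   # derived: 32 interfaces / 8 half-width slots
    half_width_slots: int = 8           # slots in a Cisco UCS 5108 chassis

    def uplink_bandwidth_gbps(self) -> int:
        """Aggregate uplink bandwidth of one fabric extender."""
        return self.uplink_ports * self.uplink_gbps

    def backplane_interfaces(self) -> int:
        """Total 10G midplane interfaces toward the blades."""
        return self.backplane_ports_per_slot * self.half_width_slots


fex = FabricExtender()
chassis_uplink_gbps = 2 * fex.uplink_bandwidth_gbps()  # redundant pair of FEXs

print(fex.backplane_interfaces())  # 32
print(chassis_uplink_gbps)         # 400
```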

Cisco UCS B-Series Blade Servers

Cisco UCS B-Series Blade Servers are based on Intel Xeon processors; they work with virtualized and non-virtualized applications to increase performance, energy efficiency, flexibility, and administrator productivity. The latest Cisco UCS M5 B-Series blade server models come in two form factors: the half-width Cisco UCS B200 Blade Server and the full-width Cisco UCS B480 Blade Server. Cisco UCS M5 servers use the latest Intel Xeon Scalable processors with up to 28 cores per processor. The Cisco UCS B200 M5 blade server supports 2 sockets, 3 TB of RAM (using 24 x 128-GB DIMMs), 2 drives (SSD, HDD, or NVMe), 2 GPUs, and 80 Gbps of total I/O to each server. The Cisco UCS B480 blade is a 4-socket system offering 6 TB of memory, 4 drives, 4 GPUs, and 160 Gbps of aggregate I/O bandwidth.

The Cisco UCS B200 M5 Blade Server (Figure 10) has been used in this VersaStack architecture.

Figure 10 Cisco UCS B200 M5 Blade Server

Each Cisco UCS M5 B-Series blade server supports the Cisco UCS VIC 1400 series adapters to provide connectivity to the unified fabric.

For more information about Cisco UCS B-series servers, see: https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-b-series-blade-servers/datasheet-c78-739296.html

Cisco UCS C-Series Rack Servers

Cisco UCS C-Series Rack Servers deliver unified computing in an industry-standard form factor to reduce TCO and increase agility. Each server addresses varying workload challenges through a balance of processing, memory, I/O, and internal storage resources. The most recent M5-based Cisco UCS C-Series rack-mount servers come in three main models: the Cisco UCS C220 1RU, the Cisco UCS C240 2RU, and the Cisco UCS C480 4RU chassis, with options within these models to allow for differing local drive types and GPUs.

The enterprise-class Cisco UCS C220 M5 Rack Server (Figure 11) has been leveraged in this VersaStack design.

Figure 11 Cisco UCS C220 M5 LFF Server


For more information about Cisco UCS C-series servers, see:

Cisco UCS Virtual Interface Card 1400

The Cisco UCS Virtual Interface Card (VIC) 1400 Series provides complete programmability of the Cisco UCS I/O infrastructure by presenting virtual NICs (vNICs) as well as virtual HBAs (vHBAs) from the same adapter according to the provisioning specifications within UCSM.

The Cisco UCS VIC 1440 is a dual-port 40-Gbps or dual 4x 10-Gbps Ethernet/FCoE capable modular LAN On Motherboard (mLOM) adapter designed exclusively for the M5 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1440 capabilities are enabled for two ports of 40-Gbps Ethernet. In this CVD, Cisco UCS B200 M5 blade servers were equipped with Cisco VIC 1440.

The Cisco UCS VIC 1457 is a quad-port Small Form-Factor Pluggable (SFP28) mLOM card designed for the M5 generation of Cisco UCS C-Series Rack Servers. The card supports 10/25-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs. In this CVD, Cisco VIC 1457 was installed in Cisco UCS C240 M5 server.

2nd Generation Intel® Xeon® Scalable Processors

This VersaStack architecture includes the 2nd generation Intel Xeon Scalable processors in all the Cisco UCS M5 server models used in this design. These processors provide a foundation for powerful data center platforms with an evolutionary leap in agility and scalability. Disruptive by design, this innovative processor family supports new levels of platform convergence and capabilities across computing, storage, memory, network, and security resources.

Cascade Lake (CLX-SP) is the code name for the next-generation Intel Xeon Scalable processor family that is supported on the Purley platform, serving as the successor to Skylake SP. These chips support up to eight-way multiprocessing, use up to 28 cores, incorporate a new AVX-512 x86 extension for neural-network and deep-learning workloads, and introduce persistent memory support. Cascade Lake SP-based chips are manufactured in an enhanced 14-nanometer (14-nm++) process and use the Lewisburg chipset.

Cisco Umbrella (optional)

Cisco Umbrella delivers secure DNS, a capability that came to Cisco through its acquisition of OpenDNS. Cisco Umbrella stops malware before it can get a foothold by using predictive intelligence to identify threats that next-generation firewalls might miss. Implementation is as easy as pointing to the Umbrella DNS servers, and it is unobtrusive to users except when they are steered toward an identified threat location. In addition to threat prevention, Umbrella provides detailed traffic utilization as shown in Figure 12.

Figure 12 Traffic Breakdown of Activity Seen through Cisco Umbrella

For more information about Cisco Umbrella, see: https://www.cisco.com/c/dam/en/us/products/collateral/security/router-security/opendns-product-overview.pdf

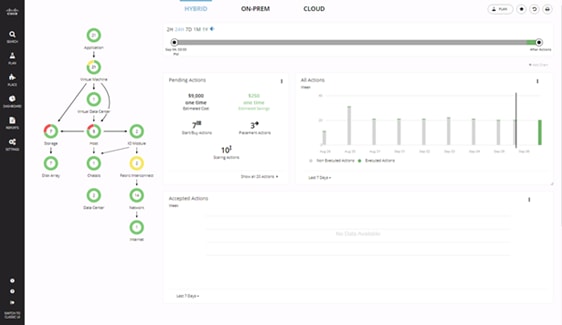

Cisco Workload Optimization Manager (optional)

Cisco Workload Optimization Manager instantly scales resources up or down in response to changing demand to assure workload performance. It drives up utilization and workload density and reduces costs through accurate sizing and forecasting of future capacity.

To perform intelligent workload management, Cisco Workload Optimization Manager (CWOM) models your environment as a market of buyers and sellers linked together in a supply chain. This supply chain represents the flow of resources from the datacenter, through the physical tiers of your environment, into the virtual tier and out to the cloud. By managing relationships between these buyers and sellers, CWOM provides closed-loop management of resources, from the datacenter, through to the application.

When you launch CWOM, the Home Page provides the following options:

· Planning

· Placement

· Reports

· Overall Dashboard

The CWOM dashboard provides views specific to On-Prem, the Cloud, or a Hybrid view of infrastructure, applications, and costs across both.

Figure 13 CWOM Dashboard

For more information about the full capabilities of workload optimization, planning, and reporting, see: https://www.cisco.com/c/en/us/products/servers-unified-computing/workload-optimization-manager/index.html

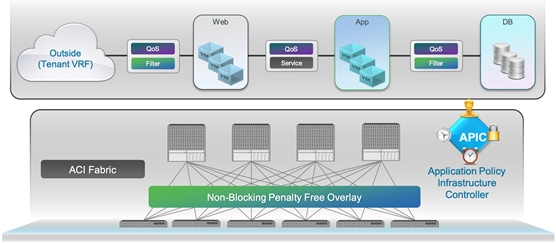

Cisco Application Centric Infrastructure and Nexus Switching

Cisco ACI is an evolutionary leap from SDN’s initial vision of operational efficiency through network agility and programmability. Cisco ACI has industry leading innovations in management automation, programmatic policies, and dynamic workload provisioning. The ACI fabric accomplishes this with a combination of hardware, policy-based control systems, and closely coupled software to provide advantages not possible in other architectures.

Cisco ACI takes a policy-based, systems approach to operationalizing the data center network. The policy is centered around the needs (reachability, access to services, security policies) of the applications. Cisco ACI delivers a resilient fabric to satisfy today's dynamic applications.

Cisco ACI Architecture

The Cisco ACI fabric is a leaf-and-spine architecture where every leaf connects to every spine using high-speed 40/100-Gbps Ethernet links, with no direct connections between spine nodes or leaf nodes. The ACI fabric is a routed fabric with a VXLAN overlay network, where every leaf is a VXLAN Tunnel Endpoint (VTEP). Cisco ACI provides both Layer 2 (L2) and Layer 3 (L3) forwarding across this routed fabric infrastructure.

Figure 14 Cisco ACI High-Level Architecture
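The leaf-and-spine wiring rule described above (every leaf to every spine, no leaf-to-leaf or spine-to-spine links) can be sketched in a few lines. This is a toy model under stated assumptions: the node names and counts are hypothetical and do not reflect the validated topology.

```python
from itertools import product


def fabric_links(leaves: list[str], spines: list[str]) -> list[tuple[str, str]]:
    """Every leaf connects to every spine; no leaf-leaf or spine-spine links."""
    return list(product(leaves, spines))


def leaf_to_leaf_paths(src: str, dst: str, spines: list[str]) -> list[tuple[str, str, str]]:
    """Each spine offers one two-hop path between two distinct leaves."""
    if src == dst:
        return []
    return [(src, spine, dst) for spine in spines]


# Hypothetical node names for illustration.
leaves = ["leaf-101", "leaf-102", "leaf-103", "leaf-104"]
spines = ["spine-201", "spine-202"]

links = fabric_links(leaves, spines)
paths = leaf_to_leaf_paths("leaf-101", "leaf-104", spines)

print(len(links))  # 8
print(len(paths))  # 2
```

The link count grows as leaves x spines, and any two leaves are always exactly two hops apart, with one equal-length path per spine.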

Cisco Nexus 9000 Series Switches

The Cisco ACI fabric is built on a network of Cisco Nexus 9000 series switches that provide low-latency, high-bandwidth connectivity with industry proven protocols and innovative technologies to create a flexible, scalable, and highly available architecture. ACI is supported on several models of Nexus 9000 series switches and line cards. The selection of a Nexus 9000 series switch as an ACI spine or leaf switch will depend on a number of factors such as physical layer connectivity (1/10/25/40/50/100-Gbps), FEX aggregation support, analytics support in hardware (Cloud ASICs), FCoE support, link-level encryption, support for the Multi-Pod, Multi-Site design implementations and so on.

Architectural Building Blocks

The key architectural building blocks of the Cisco ACI fabric are:

· Application Policy Infrastructure Controller (APIC) - Cisco APIC is the unifying point of control in Cisco ACI for automating and managing the end-to-end data center fabric. The Cisco ACI fabric is built on a network of individual components that are provisioned and managed as a single entity. The APIC is a physical appliance that serves as a software controller for the overall fabric. It is based on Cisco UCS C-series rack mount servers with 2x10Gbps links for dual-homed connectivity to a pair of leaf switches and 1Gbps interfaces for out-of-band management.

· Spine Nodes - The spines provide high-speed (40/100-Gbps) connectivity between leaf nodes. The ACI fabric forwards traffic by doing a host lookup in a mapping database that contains information about the leaf node where an endpoint (IP, MAC) resides. All known endpoints are maintained in a hardware database on the spine switches. The number of endpoints or the size of the database is a key factor in the choice of a Nexus 9000 model as a spine switch. Leaf switches also maintain a database, but only for those hosts that send or receive traffic through them.

The Cisco Nexus featured in this design for the ACI spine is the Nexus 9364C implemented in ACI mode (Figure 15).


For more information about Cisco Nexus 9364C switch, see: https://www.cisco.com/c/en/us/products/switches/nexus-9364c-switch/index.html

· Leaf Nodes - Leaf switches are essentially Top-of-Rack (ToR) switches that end devices connect into. They provide Ethernet connectivity to devices such as servers, firewalls, storage, and other network elements. Leaf switches provide access layer functions such as traffic classification, policy enforcement, and L2/L3 forwarding of edge traffic. The criteria for selecting a specific Nexus 9000 model as a leaf switch differ from those for a spine switch.

The Cisco Nexus featured in this design for the ACI leaf is the Nexus 9336C-FX2 implemented in ACI mode (Figure 16).


For more information about Cisco Nexus 9336C-FX2 switch, see: https://www.cisco.com/c/en/us/products/switches/nexus-9336c-fx2-switch/index.html

For more information about Cisco ACI, see: https://www.cisco.com/c/en/us/products/cloud-systemsmanagement/application-policy-infrastructure-controller-apic/index.html
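The division of labor between the spine and leaf endpoint databases described in the building blocks above can be illustrated with a deliberately simplified model. It is not the actual ACI implementation: the class names, addresses, and the caching-on-lookup behavior (which stands in for data-plane learning) are assumptions made for illustration.

```python
from typing import Dict, Optional


class Spine:
    """Holds the full endpoint-to-leaf mapping for the fabric."""

    def __init__(self) -> None:
        self.mapping: Dict[str, str] = {}

    def learn(self, endpoint: str, leaf: str) -> None:
        self.mapping[endpoint] = leaf

    def lookup(self, endpoint: str) -> Optional[str]:
        return self.mapping.get(endpoint)


class Leaf:
    """Keeps entries only for endpoints it has exchanged traffic with."""

    def __init__(self, name: str, spine: Spine) -> None:
        self.name = name
        self.spine = spine
        self.cache: Dict[str, str] = {}

    def attach(self, endpoint: str) -> None:
        # A locally attached endpoint is known to this leaf and reported to the spine.
        self.cache[endpoint] = self.name
        self.spine.learn(endpoint, self.name)

    def resolve(self, endpoint: str) -> Optional[str]:
        # Use the local entry if present; otherwise fall back to the spine's database.
        if endpoint in self.cache:
            return self.cache[endpoint]
        leaf = self.spine.lookup(endpoint)
        if leaf is not None:
            self.cache[endpoint] = leaf  # simplification of remote endpoint learning
        return leaf


spine = Spine()
leaf_a = Leaf("leaf-101", spine)
leaf_b = Leaf("leaf-102", spine)
leaf_c = Leaf("leaf-103", spine)
leaf_a.attach("10.1.1.10")
leaf_b.attach("10.1.2.20")
leaf_c.attach("10.1.3.30")

print(leaf_a.resolve("10.1.2.20"))            # leaf-102
print(len(leaf_a.cache), len(spine.mapping))  # 2 3
```

The spine ends up holding all three endpoints while `leaf_a` holds only the two it has dealt with, which is why endpoint scale weighs more heavily on the choice of spine model.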

IBM Spectrum Virtualize

IBM Spectrum Virtualize is a software-enabled storage virtualization engine that provides a single point of control for storage resources within the data centers. IBM Spectrum Virtualize is a core software engine of well-established and industry-proven IBM storage virtualization solutions. These solutions include IBM SAN Volume Controller and all versions of IBM Storwize and FlashSystem family of products.

The IBM Spectrum Virtualize™ software stack was first introduced as a part of the IBM SAN Volume Controller (SVC) product released in 2003, offering unparalleled storage virtualization capabilities before being integrated into the IBM Storwize platform and more recently, a subset of the IBM FlashSystem storage appliances.

Since the first release of IBM SAN Volume Controller, IBM Spectrum Virtualize has evolved into a feature-rich storage hypervisor over 34 major software releases. It is installed and deployed on more than 250,000 Storwize and 70,000 SVC engines, managing 5000,000 enclosures and virtualizing, managing, and securing 9.6 exabytes of data, with availability exceeding 99.999%.
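To put the 99.999% availability figure in perspective, the short sketch below converts an availability percentage into the downtime it allows per year. The function name is hypothetical and a 365-day year is assumed.

```python
def max_downtime_minutes_per_year(availability_percent: float,
                                  days_per_year: float = 365.0) -> float:
    """Convert an availability percentage into allowed downtime per year."""
    unavailable_fraction = 1.0 - availability_percent / 100.0
    return unavailable_fraction * days_per_year * 24 * 60


downtime = max_downtime_minutes_per_year(99.999)
print(round(downtime, 2))  # about 5.26 minutes per year
```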

This solution supports IBM Spectrum Virtualize firmware version 8.3.1 and any* IBM FlashSystem storage array such as the following:

· IBM FlashSystem 9200

· IBM FlashSystem 7200

· IBM FlashSystem 5100

*The interoperability and configuration steps are consistent between the members of the FlashSystem family.

For reference, the IBM Storwize platforms have been replaced by the following FlashSystem products:

| Original platform | Successor | Drive Type | Performance / Target Market |
| --- | --- | --- | --- |
| Storwize V5010 | FlashSystem 5010 | SAS | Entry Enterprise |
| Storwize V5030 | FlashSystem 5030 | SAS | Entry Enterprise |
| Storwize V5100 | FlashSystem 5100 | NVMe | Entry Enterprise |
| Storwize V7000 Gen3 | FlashSystem 7200 | NVMe | Midrange Enterprise |
| FlashSystem 9110 | Discontinued | NVMe | N/A |
| FlashSystem 9150 | FlashSystem 9200 | NVMe | High-End Enterprise |

The following are some of the new features brought in with IBM Spectrum Virtualize firmware version 8.3.1:

· Ownership groups - An ownership group defines a subset of users and objects within the system. You can create ownership groups to further restrict access to specific resources that are defined in the ownership group. Only users with Security Administrator roles can configure and manage ownership groups.

· Priority flow control - Priority flow control (PFC) is an Ethernet protocol that supports the ability to select the priority of different types of traffic within the network. With PFC, administrators can reduce network congestion by slowing or pausing certain classes of traffic on ports, thus providing better bandwidth for more important traffic. The system supports PFC on various supported Ethernet-based protocols on three types of traffic classes: system, host attachment, and storage traffic.

· Support for IBM® Easy Tier® overallocation limit for pools with IBM FlashCore Module devices as the top tier of storage.

· Support for 32 Gbps Fibre Channel (FC) PCIe adapters.

· Support for expanding distributed arrays - Ability to dynamically expand distributed arrays to increase the available capacity of the array or create additional rebuild space. As part of the expansion, the system automatically migrates data for optimal performance for the new expanded configuration.

· Support for pool level Volume protection - prevents active volumes or host mappings from being deleted inadvertently if the system detects recent I/O activity.

· Support for SNMP protocol version 3 enhanced security features.

· Support for enhanced auditing features for syslog servers.

· Enhanced password security.

· Updated terms and definitions that relate to capacity.

· Support for the new Storage Class Memory (SCM) technology (Optane, 3DXP, and so on).

· 3 site replication - Limited availability at GA and subject to RPQ initially.

· Secure Drive Erase - the ability to completely erase any customer data from an NVMe or SAS SSD drive before it is removed from either the control or expansion enclosure.

For more information, go to the IBM Spectrum Virtualize website: http://www03.ibm.com/systems/storage/spectrum/virtualize/index.html

IBM Spectrum Virtualize for IBM FlashSystems 9200, 7200 and 5100

IBM FlashSystem 9200, 7200, and 5100 all use IBM Spectrum Virtualize™ software, which combines a variety of software-defined functions for managing data on flash storage, such as the following:

· Deduplication

· Compression

· Thin provisioning

· Easy Tier (automatic and dynamic tiering)

· Encryption for internal and virtualized external storage

· SCSI Unmap

· HyperSwap (high availability active-active)

· FlashCopy (snapshot)

· Remote data replication

IBM FlashSystem 9200, 7200 and 5100 high availability

IBM FlashSystem 9100, 9200 and 7200 are designed to offer high system and data availability with the following features:

· HyperSwap support

· Dual-active, intelligent node canisters with mirrored cache

· Dual-port flash drives with automatic drive failure detection and RAID rebuild

· Redundant hardware, including power supplies and fans

· Hot-swappable and customer replaceable components

· Automated path failover support for the data path between the server and the drives

Data Protection on FlashSystem 9200, 7200, and 5100

Data protection from NAND chip and controller failures is managed using two IBM technologies: Variable Stripe RAID (VSR) and DRAID. VSR protects against failures at the chip level within IBM FlashCore modules, and DRAID protects data from the failure of IBM FlashCore modules and industry-standard NVMe drives.

Variable Stripe RAID

Variable Stripe RAID is a patented IBM technology that provides data protection at the page, block, or chip level. It eliminates the need to replace a whole flash module when a single chip or plane fails. This increases the life and endurance of flash modules and considerably reduces maintenance events throughout the life of the system.

DRAID

Distributed RAID functionality is managed by IBM Spectrum Virtualize, which enables a storage array to distribute RAID5 or RAID6 across the largest set of drives. For example, on traditional RAID5, if 8 drives were used, the data was striped across 7 and the parity was on the eighth.

DRAID enhances this method by specifying the stripe width and the number of drives separately. As a result, the setup still has 7 data strips protected by a parity strip, but the 8 drives are selected from the larger set. In addition, with distributed sparing, each drive in the array gives up some of its capacity to make a spare, instead of the array holding an unused spare drive.
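The idea of decoupling stripe width from drive count can be shown with a toy placement model. This is not IBM's placement algorithm and it does not model distributed spare space; the rotation scheme, function names, and drive counts are assumptions chosen only to show how 8-wide stripes can be spread evenly over a larger set of drives.

```python
from collections import Counter


def place_stripes(num_drives: int, stripe_width: int, num_stripes: int) -> list[list[int]]:
    """Assign each stripe to `stripe_width` drives, rotating the start drive by one."""
    if stripe_width > num_drives:
        raise ValueError("stripe width cannot exceed the number of drives")
    layout = []
    for stripe in range(num_stripes):
        start = stripe % num_drives
        layout.append([(start + offset) % num_drives for offset in range(stripe_width)])
    return layout


def strips_per_drive(layout: list[list[int]]) -> Counter:
    """Count how many strips land on each drive."""
    return Counter(drive for stripe in layout for drive in stripe)


# 7 data strips + 1 parity strip per stripe, spread over 24 drives.
layout = place_stripes(num_drives=24, stripe_width=8, num_stripes=24)
load = strips_per_drive(layout)

print(len(load))           # 24 drives participate
print(set(load.values()))  # {8}: every drive carries the same number of strips
```

Because every drive participates, a rebuild after a failure can read from and write to many drives in parallel rather than being limited to the members of a single 8-drive array.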

DRAID6

DRAID6 is advised for FS9100, 9200, 7200 and 5100 systems, and is the only allowed option from the GUI. DRAID5 is configurable using the CLI. DRAID6 creates spare space across all NVMe SSDs or FCMs on the array and, during failure, the array rebuilds data using the spare space faster than traditional RAID rebuilds.

IBM FlashSystem Family Overview

While each member of the FlashSystem family shares a common enclosure and internal architecture principles, the performance of each platform covers a wide range of application requirements.

FlashSystem 5100

Formerly known as the IBM Storwize V5100, the FlashSystem 5100 is a virtualized, software-defined storage system comprised of hardware components and a required licensed software product, IBM Spectrum Virtualize Software. All functional capabilities for the FlashSystem 5100 system are provided through IBM Spectrum Virtualize software. The two models within the 5100 series, 424 and AF4, are designed to meet modern high-performance storage requirements, including ultra-low latency, cost-effectiveness, operational efficiency, and mission-critical reliability.

It is built on a flash-optimized design, with an end-to-end NVMe strategy to bring extremely low latencies to organizations of all sizes. FlashSystem 5100 model AF4 is an all-flash storage system that supports NVMe FlashCore Modules and industry-standard NVMe flash drives in the control enclosure. Model AF4 attaches to expansion enclosures Models AFF and A9F which support SAS Flash drives. FlashSystem 5100 model 424 is a hybrid storage system that supports NVMe FlashCore Modules and industry-standard flash NVMe drives in the control enclosure. Model 424 attaches to expansion enclosures models 12F, 24F, and 92F which support SAS Flash drives and SAS HDD Drives.

FlashSystem 7200

FlashSystem 7200 is an evolution of the previous generation Storwize V7000, with the same basic enclosure hardware (metalwork only) as the other members of the FlashSystem family supporting up to 24 NVMe Flash drives or FCM, with up to four SCM drives per enclosure.

One of the main differences from the previous V7000 hardware is that all six PCIe slots can be populated with Host Interface Cards (HICs), where previously two were always reserved for a SAS expansion attachment card. You still have the option of using a SAS expansion card if you wish to expand the capacity beyond what is supported by the base 24 slots.

However, with each FCM offering a maximum usable capacity of 38.4 TB before data reduction, the base enclosure can hold some 750 TB of usable capacity after RAID and before data reduction. Add in the maximum FCM compression capability of 88 TB per FCM, and that is over 1.5 PB of effective usable capacity from hardware FCM compression alone.
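The capacity figures above can be cross-checked with a short calculation. The sketch below derives the RAID efficiency implied by the quoted numbers (24 slots, 38.4 TB per FCM, roughly 750 TB after RAID) and applies that same ratio to the 88 TB compressed figure. Applying the same ratio is an assumption made for illustration, not an IBM-published sizing rule.

```python
FCM_SLOTS = 24                 # NVMe slots in the base enclosure
FCM_USABLE_TB = 38.4           # per-FCM capacity before data reduction
FCM_MAX_EFFECTIVE_TB = 88.0    # per-FCM maximum with hardware compression
USABLE_AFTER_RAID_TB = 750.0   # approximate figure quoted in the text

raw_tb = FCM_SLOTS * FCM_USABLE_TB
raid_efficiency = USABLE_AFTER_RAID_TB / raw_tb   # implied ratio, not a published value
effective_tb = FCM_SLOTS * FCM_MAX_EFFECTIVE_TB * raid_efficiency

print(f"raw: {raw_tb:.1f} TB")
print(f"implied RAID efficiency: {raid_efficiency:.2f}")
print(f"effective: {effective_tb / 1000:.2f} PB")
```

Under these assumptions the result lands a little above 1.7 PB, which is consistent with the "over 1.5 PB" statement.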

Other notable updates include a base cache memory increase to 256 GiB, upgradable in two stages to 1.5 TiB. The CPUs are updated to Intel's Cascade Lake family, with 32 cores per enclosure. All PCIe slots are 16-lane Gen3 to allow full bandwidth to each 4-port 32-Gbit Fibre Channel card, for up to 24 x 32-Gbit ports per enclosure.

FlashSystem 9200

The FlashSystem 9200 is an evolution of the 9150 and follows the same basic principles and specifications of the 7200 with significantly more horsepower.

FlashSystem 9200 can also support up to 1.5TiB of cache but provides 64 cores per enclosure allowing for even more system throughput, particularly when making use of the advanced functions provided by the embedded Spectrum Virtualize software.

IBM FlashSystem 9200

This section describes the FlashSystem 9200 architectural components.

Integral to the IBM FlashSystem 9200 solution is the IBM FlashCore technology. The recent evolution of this technology saw the introduction of inline hardware compression and decompression with the IBM FlashSystem model AE3 enclosure.

The IBM FlashSystem 9200 system with IBM FlashCore Modules NVMe type drives features built-in hardware data compression as standard, and this data reduction is “always on”. This compression is implemented in hardware by using field-programmable gate arrays (FPGAs) within each module and a modified dynamic GZIP algorithm. With this approach, the solution can deliver the level of performance that you expect without compression, with the added benefit of better utilization of the physical storage. Compression and decompression are transparent above the IBM FlashCore Modules except for management of space. Performance is not affected and scales linearly with the number of instances.

IBM FlashSystem 9200 Control Enclosure Data Reduction Pool compression can increase the effective capacity of your flash memory up to 5x, decreasing the cost for effective capacity up to 80 percent. Data Reduction Pool supports active data, unlike other data reduction solutions.
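The relationship between the two figures above is straightforward: if the same physical capacity stores N times as much data, the cost per effective terabyte drops by 1 - 1/N. A minimal sketch, with a hypothetical function name:

```python
def cost_reduction_percent(capacity_multiplier: float) -> float:
    """Percentage drop in cost per effective TB for a given capacity gain."""
    if capacity_multiplier <= 0:
        raise ValueError("capacity multiplier must be positive")
    return (1.0 - 1.0 / capacity_multiplier) * 100.0


# A 5x effective-capacity gain corresponds to an 80 percent lower cost per effective TB.
print(cost_reduction_percent(5.0))
```

Actual savings depend on how compressible the data is, so both numbers should be read as upper bounds.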

IBM FlashSystem 9200 Control Enclosure offers several features for Data Reduction Pool compression workloads. These features include 16-core Intel processors with up to 1,536 GB of memory per control enclosure, and a built-in compression accelerator for hardware-assisted compression. In addition, the IBM FlashSystem 9200 system with IBM FlashCore Modules NVMe-type drives applies compression to any data that is not already compressed. The IBM FlashSystem 9200 system also supports the new SCM type drives. SCM is a new storage media technology that offers high endurance, high IOPS, and ultra-low latencies.

IBM FlashSystem 9200 Hardware Overview

Each FlashSystem 9200 consists of a control enclosure and IBM FlashCore module drives. The control enclosure is the storage server that runs the IBM Spectrum Virtualize software that controls and provides features to store and manage data on the IBM FlashCore module or industry-standard NVMe drives. The IBM FlashSystem 9200 system has two different types of enclosures: Control Enclosures and Expansion Enclosures.

· A Control Enclosure manages your storage systems, communicates with the host, and manages interfaces. In addition, it can also house up to 24 NVMe-capable flash drives. These drives can be either industry-standard NVMe types or the exclusive IBM FlashCore Module NVMe type and up to 4 optional SCM class drives.

· An Expansion Enclosure increases the available capacity of an IBM FlashSystem 9200 cluster. It communicates with the Control Enclosure through a dual pair of 12 Gbps serial-attached SCSI (SAS) connections. These Expansion Enclosures can house a large number of SAS flash (solid-state drive, SSD) drives, depending on which model of enclosure is ordered.

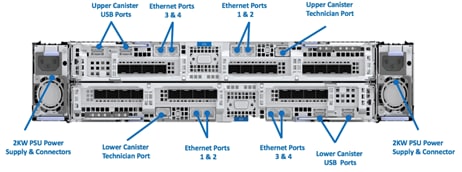

Figure 17 and Figure 18 show the IBM FlashSystem 9200 Control Enclosure front and rear views.

Figure 17 FlashSystem 9200 Front View

Figure 18 FlashSystem 9200 Rear View

Control Enclosures

Each Control Enclosure can have multiple attached Expansion Enclosures, which expand the available capacity of the entire system. The IBM FlashSystem 9200 solution supports up to four Control Enclosures and up to two chains of SAS Expansion Enclosures per Control Enclosure.

The IBM FlashSystem 9200 Control Enclosure supports up to 24 NVMe-capable flash drives in a 2U high form factor and consists of the following machine types:

· The 9846 has one model: AG8

· The 9848 has two models: AG8 and UG8

Expansion Enclosures

The IBM FlashSystem 9000 Expansion Enclosure consists of the following machine types:

· The 9846 has two models: AFF and A9F

· The 9848 has two models: AFF and A9F

New SAS-based small form factor (SFF) and large form factor (LFF) Expansion Enclosures support flash-only MDisks in a storage pool, which can be used for IBM Easy Tier. Consider the following points:

· IBM FlashSystem 9000 SFF Expansion Enclosure Model AFF offers drive options with SSD flash drives. Up to 480 SAS expansion drives are supported per IBM FlashSystem 9200 Control Enclosure. The Expansion Enclosure is 2U high.

· IBM FlashSystem 9000 LFF Expansion Enclosure Model A9F offers drive options with SSD flash drives. Up to 760 SAS expansion drives are supported per IBM FlashSystem 9200 Control Enclosure. The Expansion Enclosure is 5U high.

FlashSystem Features for Manageability

The IBM FlashSystem systems offer the following manageability and serviceability features:

· An intuitive GUI

· IBM Call-home

· IBM Storage Insights

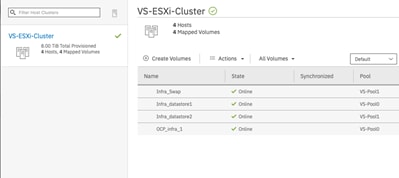

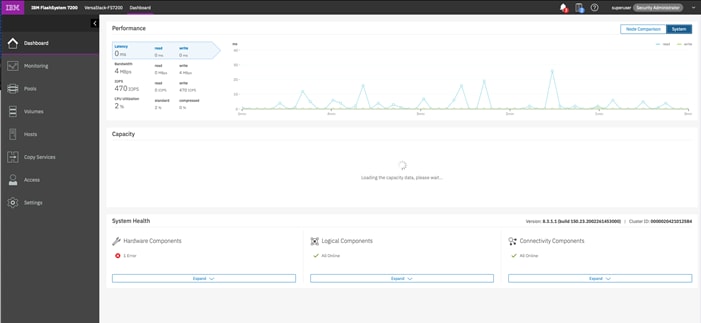

FlashSystem Family System GUI

The IBM FlashSystem family systems include an easy-to-use Spectrum Virtualize management GUI that runs on one of the node canisters in the control enclosure to help you monitor, manage, and configure your system. You can access the GUI by opening any supported web browser and entering the management IP address. The IBM FlashSystem systems use a GUI with the same look and feel as other IBM Storwize family solutions for a consistent management experience across all platforms. The GUI has an improved overview dashboard that presents all information in an easy-to-understand format and enables visualization of effective capacity. With the GUI, you can quickly deploy storage and manage it efficiently.

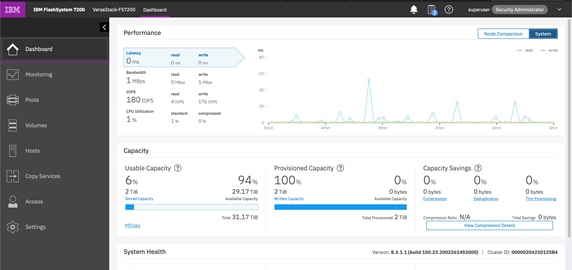

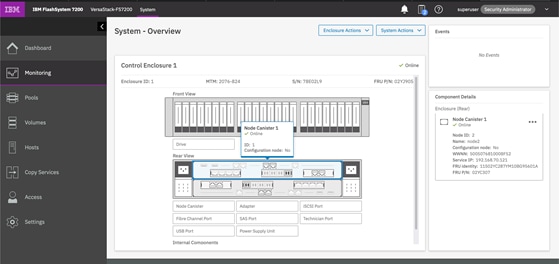

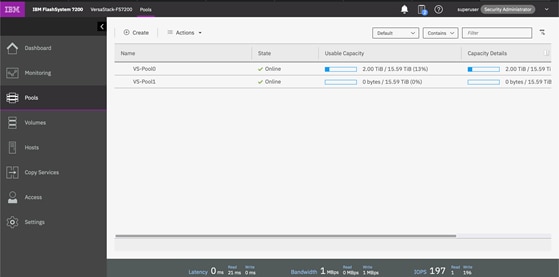

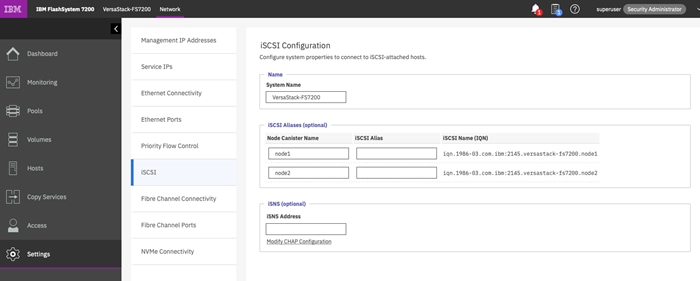

Figure 19 shows the IBM FlashSystem dashboard view.

Figure 19 IBM FlashSystem GUI Dashboard

This is the default view that is displayed after the user logs on to the system. The IBM FlashSystem family systems also provide a CLI, which is useful for advanced configuration and scripting. The FlashSystem family systems support Simple Network Management Protocol (SNMP), email notifications that use Simple Mail Transfer Protocol (SMTP), and syslog redirection for complete enterprise management access.
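As an illustration of CLI scripting, the following sketch parses the colon-delimited output of the Spectrum Virtualize `lssystem -delim :` command into a dictionary. The sample text, its values, and the helper name are hypothetical placeholders. In practice the text would be captured by running the command over SSH against the management IP address.

```python
def parse_delimited_view(text: str, delim: str = ":") -> dict:
    """Turn 'attribute<delim>value' lines into a dictionary.

    Only the first delimiter on each line is treated as the separator,
    so values that themselves contain the delimiter are kept whole.
    """
    result = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        key, _, value = line.partition(delim)
        result[key] = value
    return result


# Hypothetical sample with made-up values; real output would be captured
# from something like:
#   ssh superuser@<management-ip> lssystem -delim :
SAMPLE_OUTPUT = """\
id:0000000000000001
name:example-cluster
code_level:8.3.1.0
"""

if __name__ == "__main__":
    system = parse_delimited_view(SAMPLE_OUTPUT)
    print(system["name"], system["code_level"])
```

Splitting on the first delimiter only is a deliberate choice, because some attribute values can contain colons.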

IBM Call Home

Call home connects the system to IBM Service Personnel who can monitor and respond to system events to ensure that your system remains up and running. The call home function opens a service alert if a serious error occurs in the system, automatically sending details of the error and contact information to IBM Service Personnel. If the system is entitled for support, a Problem Management Record (PMR) is automatically created and assigned to the appropriate IBM support team. The information provided to IBM in this case would be an excerpt from the Event Log containing the details of the error, and client contact information from the system. This enables IBM Service Personnel to contact the client and arrange service on the system, which can greatly improve the speed of resolution by removing the need for the client to detect the error and raise a Support call themselves.

IBM Storage Insights

IBM Storage Insights is an IBM Cloud software as a service offering that can help you monitor and optimize the storage resources in the system and across your data center. IBM Storage Insights monitors your storage environment and provides status about multiple systems in a single dashboard. You can view data from the perspectives of the servers, applications, and file systems. Two versions of IBM Storage Insights are available: IBM Storage Insights and IBM Storage Insights Pro.

When you order the IBM FlashSystem storage, IBM Storage Insights is available for free. With this version, you can monitor the basic health, status, and performance of various storage resources.

IBM Storage Insights Pro is a subscription-based product that provides a more comprehensive view of the performance, capacity, and health of your storage resources. In addition to the features offered by IBM Storage Insights, IBM Storage Insights Pro provides tools for intelligent capacity planning, storage reclamation, storage tiering, and performance troubleshooting services. Together, these features can help you reduce storage costs and optimize your data center.

IBM Storage Insights is part of the monitoring capability and helps to ensure continued availability of the IBM FlashSystem storage.

Cloud-based IBM Storage Insights provides a single dashboard that gives you a clear view of all of your IBM block storage. You can make better decisions by seeing trends in performance and capacity. With storage health information, you can focus on areas that need attention. When IBM support is needed, IBM Storage Insights simplifies uploading logs, speeds resolution with online configuration data, and provides an overview of open tickets, all in one place.

The following features are some of those available with IBM Storage Insights:

· A unified view of IBM systems.

· IBM Storage Insights collects telemetry data and call home data and provides real-time system reporting of capacity and performance.

· Overall storage monitoring, which covers the health of the system, checks of the configuration against preferred practices, and system resource management.

· IBM Storage Insights provides advanced customer service with an event filter to view support tickets and automatic log collection.

Figure 20 IBM Storage Insights Dashboard

In order for IBM Storage Insights to operate, a lightweight data collector is installed in your data center to stream performance, capacity, asset, and configuration metadata to your IBM Cloud instance. The metadata flows in one direction: from your data center to IBM Cloud over HTTPS. Only metadata is collected. The actual application data that is stored on the storage systems can’t be accessed by the data collector. In the IBM Cloud, your metadata is AES256-encrypted and protected by physical, organizational, access, and security controls.

VMware vSphere 6.7 Update 3

VMware vSphere is a virtualization platform for holistically managing large collections of infrastructure resources (CPUs, storage, and networking) as a seamless, versatile, and dynamic operating environment. Unlike traditional operating systems that manage an individual machine, VMware vSphere aggregates the infrastructure of an entire data center to create a single powerhouse with resources that can be allocated quickly and dynamically to any application in need.

vSphere 6.7 Update 3 (U3) provides several improvements including, but not limited to:

· ixgben driver enhancements

· VMXNET3 enhancements

· bnxtnet driver enhancements

· QuickBoot support enhancements

· Configurable shutdown time for the sfcbd service

· NVIDIA virtual GPU (vGPU) enhancements

· New SandyBridge microcode

VersaStack for Red Hat OpenShift Container Platform Add-on Components

The following sections describe the add-on components that are part of the solution along with the core VersaStack converged infrastructure and the vSphere hypervisor. The add-on components are:

· Red Hat OpenShift Container Platform (version 4.3)

· IBM CSI driver for Block Storage, a dynamic storage provisioner for Kubernetes

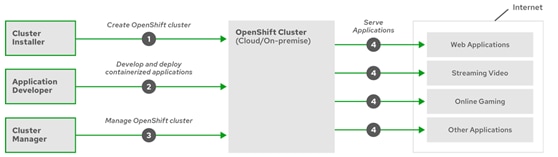

Red Hat OpenShift Container Platform

Red Hat OpenShift is one of the most reliable enterprise-grade container platforms, designed and optimized to easily deploy web applications and services. Categorized as a cloud development Platform as a Service (PaaS), OpenShift allows developers to focus on code while it takes care of the complex IT operations and processes.

One of the main features that OpenShift offers is the ability to handle applications written in different languages, such as Python, Node.js, Java, and Perl.

Red Hat OpenShift is a platform-agnostic Kubernetes distribution focused on developer experience and application security. OpenShift helps you develop and deploy applications to one or more hosts. These can be public-facing web applications or backend applications, including microservices or databases. Applications can be implemented in any programming language you choose. The only requirement is that the application can run within a container.

Additionally, OpenShift Container Platform integrates:

· Source code management, builds, and deployments for developers

· Managing and promoting images at scale as they flow through your system

· Application management at scale

· Team and user tracking for organizing a large developer organization

· Networking infrastructure that supports the cluster

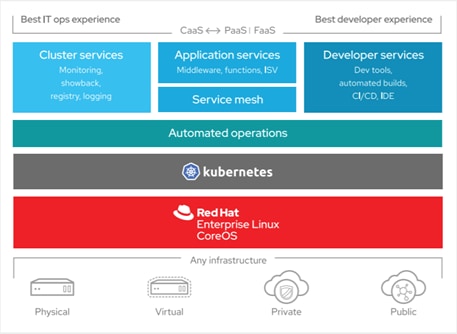

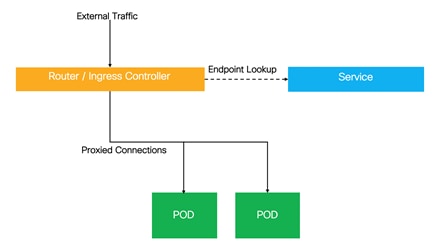

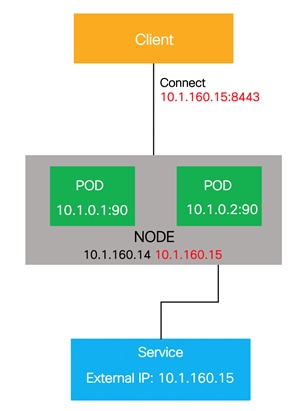

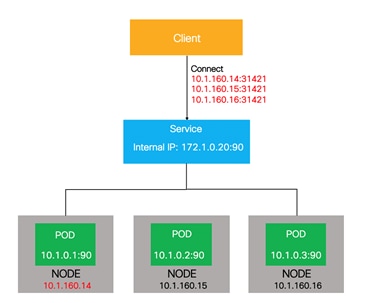

Figure 21 OpenShift Container Platform Overview

OpenShift Container Platform provides enterprise-ready enhancements to Kubernetes, including the following enhancements:

· Hybrid cloud deployments. You can deploy OpenShift Container Platform clusters to a variety of public cloud platforms or in your data center.

· Integrated Red Hat technology. Major components in OpenShift Container Platform come from Red Hat Enterprise Linux and related Red Hat technologies. OpenShift Container Platform benefits from the intense testing and certification initiatives for Red Hat’s enterprise quality software.

· Open source development model. Development is completed in the open, and the source code is available from public software repositories. This open collaboration fosters rapid innovation and development.

Although Kubernetes excels at managing your applications, it does not specify or manage platform-level requirements or deployment processes. Powerful and flexible platform management tools and processes are important benefits that OpenShift Container Platform 4.3 offers. The following sections describe some unique features and benefits of OpenShift Container Platform.

Custom Operating System

OpenShift Container Platform uses Red Hat Enterprise Linux CoreOS (RHCOS), a container-oriented operating system that combines some of the best features and functions of the CoreOS and Red Hat Atomic Host operating systems. RHCOS is specifically designed for running containerized applications from OpenShift Container Platform and works with new tools to provide fast installation, Operator-based management, and simplified upgrades.

RHCOS includes:

· Ignition, which OpenShift Container Platform uses as a firstboot system configuration for initially bringing up and configuring machines.

· CRI-O, a Kubernetes native container runtime implementation that integrates closely with the operating system to deliver an efficient and optimized Kubernetes experience. CRI-O provides facilities for running, stopping, and restarting containers. It fully replaces the Docker Container Engine, which was used in OpenShift Container Platform 3.

· Kubelet, the primary node agent for Kubernetes that is responsible for launching and monitoring containers.
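To make the role of Ignition more concrete, the sketch below builds a very small Ignition-style configuration that writes a host name file on first boot. It assumes the Ignition 2.2.0 specification layout. The helper name, the file path, and the host name are illustrative and are not taken from this design. In an actual installation, the OpenShift installer generates the Ignition files.

```python
import json
from urllib.parse import quote


def build_ignition_config(hostname: str) -> dict:
    """Return a minimal Ignition-style config that writes /etc/hostname."""
    return {
        "ignition": {"version": "2.2.0"},  # assumed spec version
        "storage": {
            "files": [
                {
                    "filesystem": "root",
                    "path": "/etc/hostname",
                    "mode": 420,  # decimal form of octal 0644
                    # File contents are passed inline as a data URL.
                    "contents": {"source": "data:," + quote(hostname)},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical host name used only for illustration.
    config = build_ignition_config("worker-0.example.local")
    print(json.dumps(config, indent=2))
```

The file mode is written in decimal because JSON has no octal literal.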

In OpenShift Container Platform 4.3, you must use RHCOS for all control plane machines, but you can use Red Hat Enterprise Linux (RHEL) as the operating system for compute machines, which are also known as worker machines. If you choose to use RHEL workers, you must perform more system maintenance than if you use RHCOS for all of the cluster machines.

Simplified Installation and Update Process

With OpenShift Container Platform 4.3, if you have an account with the right permissions, you can deploy a production cluster in supported clouds by running a single command and providing a few values. You can also customize your cloud installation or install your cluster in your data center if you use a supported platform.

For clusters that use RHCOS for all machines, updating or upgrading OpenShift Container Platform is a simple, highly automated process. Because OpenShift Container Platform completely controls the systems and services that run on each machine, including the operating system itself, from a central control plane, upgrades are designed to become automatic events. If your cluster contains RHEL worker machines, the control plane benefits from the streamlined update process, but you must perform more tasks to upgrade the RHEL machines. Our recommendation is to use RHCOS for all the cluster nodes for automated installation of the solution and easy maintenance.

Other Key Features

Operators are both the fundamental unit of the OpenShift Container Platform 4.3 code base and a convenient way to deploy applications and software components for your applications to use. In OpenShift Container Platform, Operators serve as the platform foundation and remove the need for manual upgrades of operating systems and control plane applications. OpenShift Container Platform Operators such as the Cluster Version Operator and Machine Config Operator allow simplified, cluster-wide management of those critical components.
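As a hedged illustration of how the Cluster Version Operator exposes cluster-wide state, the sketch below summarizes a ClusterVersion resource such as the JSON returned by `oc get clusterversion version -o json`. The sample document is hand-written and abbreviated, and the helper name is hypothetical. Only the `status.desired.version` and `status.conditions` fields are assumed.

```python
def summarize_cluster_version(resource: dict) -> dict:
    """Extract the desired version and key conditions from a ClusterVersion."""
    status = resource.get("status", {})
    # Conditions are a list of {"type": ..., "status": "True"/"False"} entries.
    conditions = {c["type"]: c["status"] for c in status.get("conditions", [])}
    return {
        "version": status.get("desired", {}).get("version"),
        "available": conditions.get("Available") == "True",
        "progressing": conditions.get("Progressing") == "True",
    }


# Hand-written, abbreviated sample; a real resource carries many more fields.
SAMPLE_CLUSTER_VERSION = {
    "kind": "ClusterVersion",
    "status": {
        "desired": {"version": "4.3.0"},
        "conditions": [
            {"type": "Available", "status": "True"},
            {"type": "Progressing", "status": "False"},
        ],
    },
}

if __name__ == "__main__":
    print(summarize_cluster_version(SAMPLE_CLUSTER_VERSION))
```

A cluster that reports Available as true and Progressing as false is typically at the desired version with no update in flight.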

Operator Lifecycle Manager (OLM) and the OperatorHub provide facilities for storing and distributing Operators to people developing and deploying applications.

The Red Hat Quay Container Registry is a Quay.io container registry that serves most of the container images and Operators to OpenShift Container Platform clusters. Quay.io is a public registry version of Red Hat Quay that stores millions of images and tags.

Other enhancements to Kubernetes in OpenShift Container Platform include improvements in software defined networking (SDN), authentication, log aggregation, monitoring, and routing. OpenShift Container Platform also offers a comprehensive web console and the custom OpenShift CLI (oc) interface.

OpenShift Container Platform Lifecycle

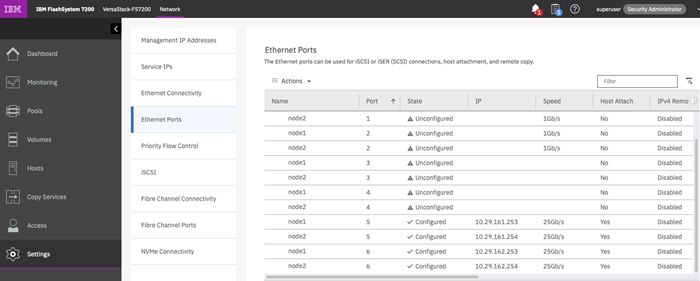

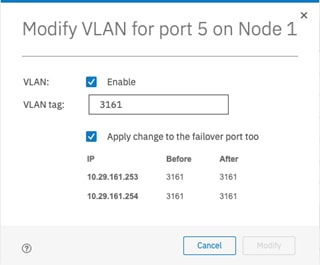

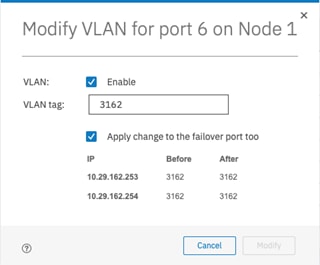

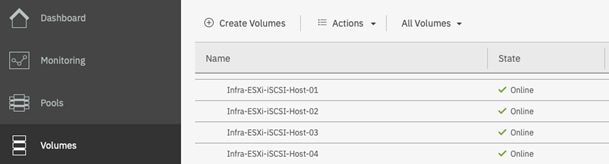

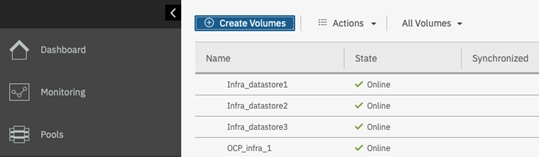

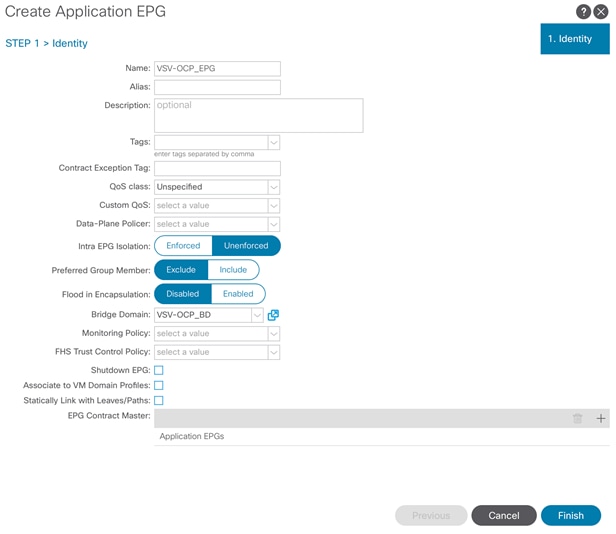

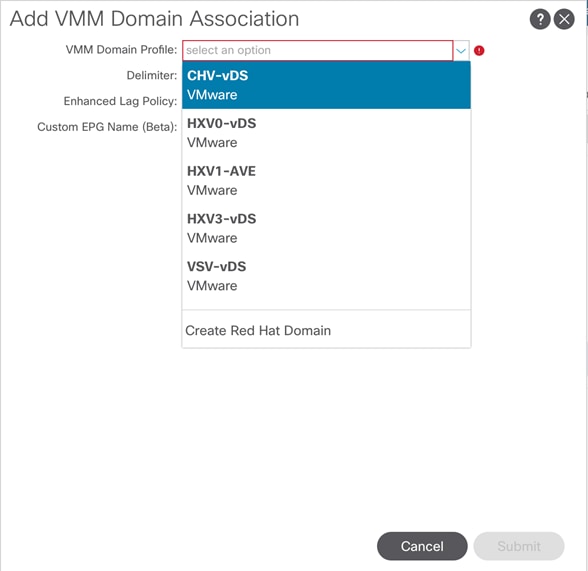

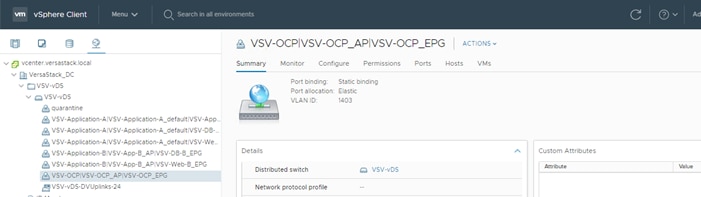

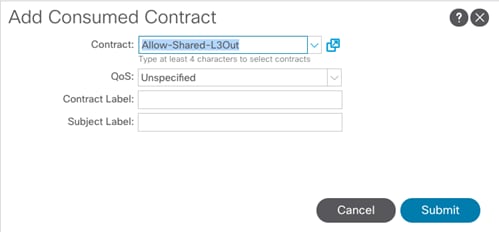

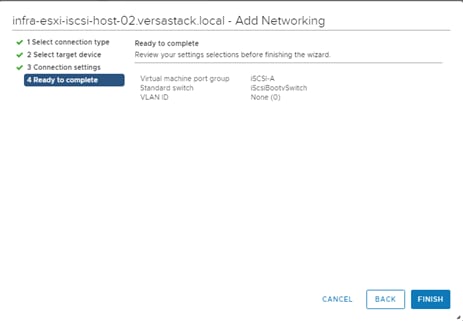

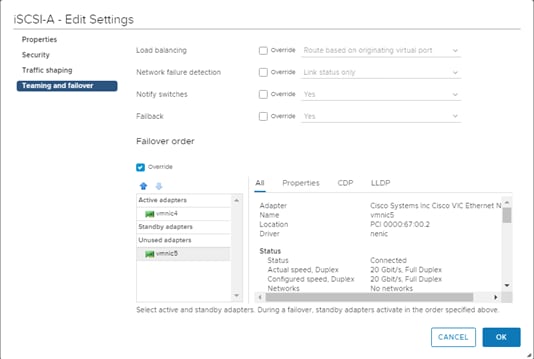

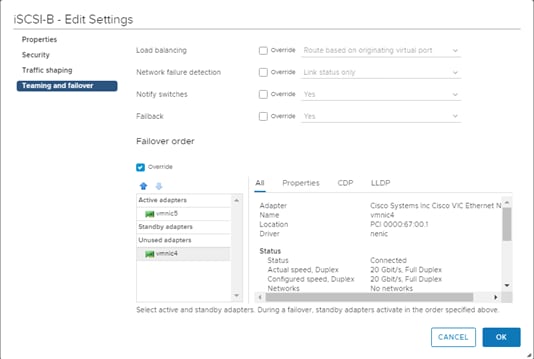

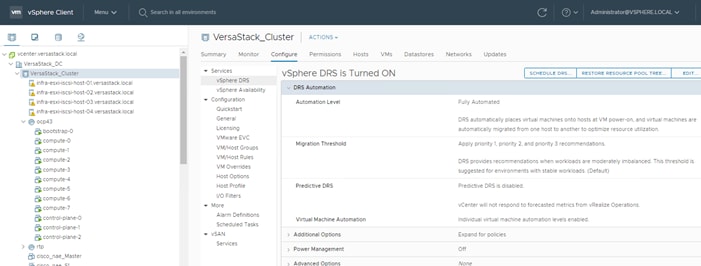

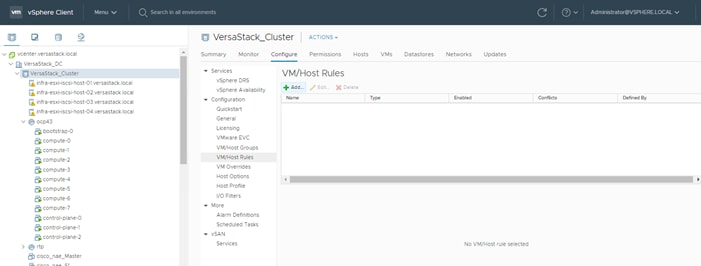

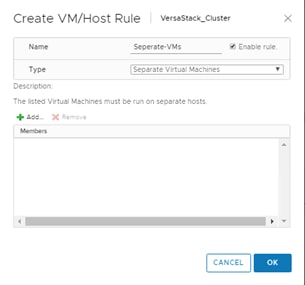

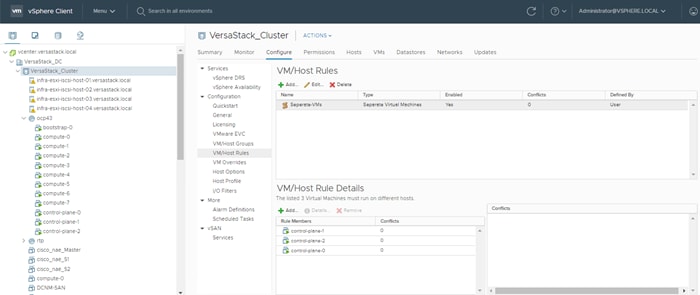

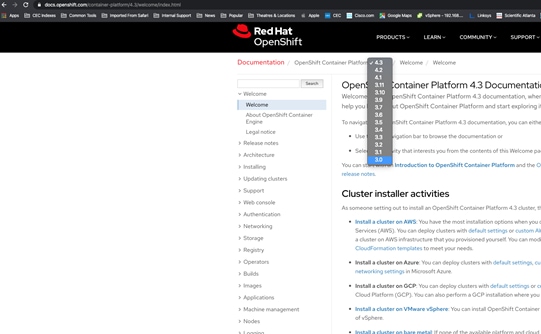

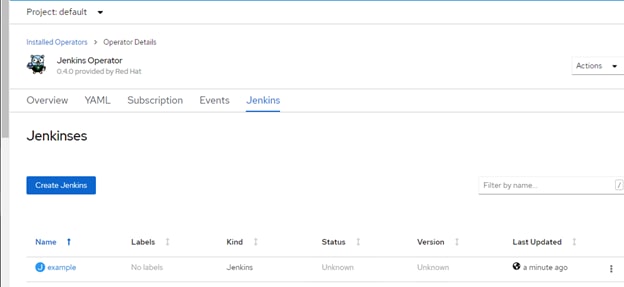

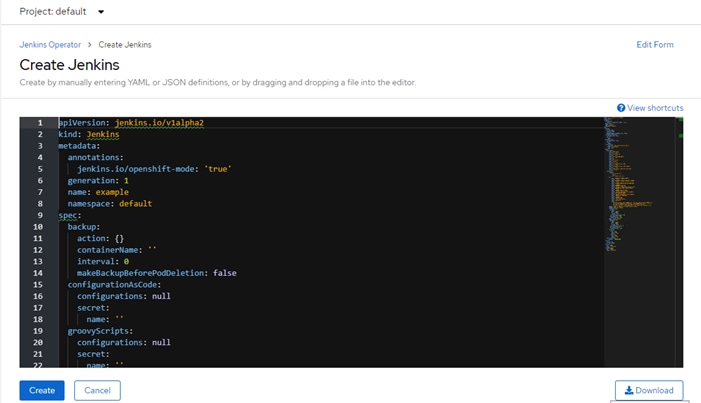

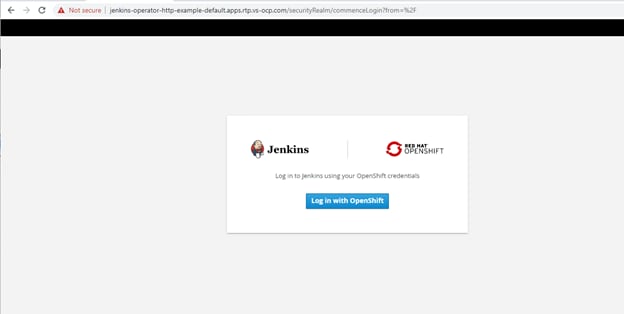

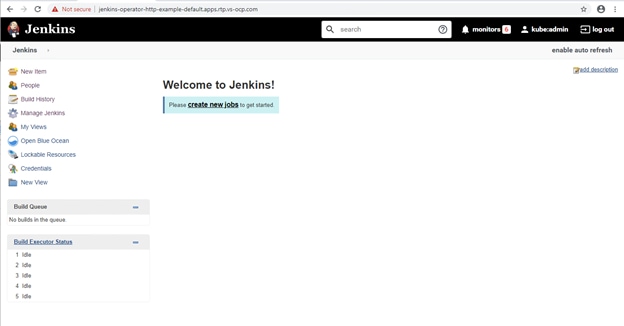

Figure 22 illustrates the basic OpenShift Container Platform lifecycle: