Cisco HyperFlex 5.0 for Virtual Server Infrastructure with VMware ESXi


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Cisco HyperFlex systems have established themselves as a premier hyperconverged hardware platform for computing virtualization in companies of all sizes. Cisco HyperFlex systems are based on Cisco UCS hardware, combining Cisco HX-Series x86 rack-mount servers with industry-leading virtualization hypervisor software from VMware and next-generation software-defined storage technology. The combination creates a complete virtualization platform, which provides the network connectivity for the guest virtual machine (VM) connections and the distributed storage to house the VMs, spread across all of the Cisco UCS x86 servers, rather than relying on specialized storage or networking components. The unique storage features of the HyperFlex log-based filesystem enable rapid cloning of VMs, snapshots without the traditional performance penalties, inline data deduplication and compression, and VM protection via replication.

Cisco HyperFlex 5.0 adds significant new features and capabilities to the platform, including support for our latest M6 generation of HX-series rack mount servers, including models with Intel or AMD processors. Hardware support is also enabled for Intel Optane DC Persistent Memory (DCPMM) in memory mode, for systems that can take advantage of the larger memory space it offers, such as virtual desktops. Cisco HyperFlex supports the latest version of the VMware ESXi 7.0 hypervisor and has updated the snapshot processes for simplified operation. Software encryption enables data-at-rest to be encrypted without the need for an add-in card or self-encrypting drives. Lastly, Cisco HyperFlex offers a native Kubernetes CSI plugin, enabling container platforms deployed on Cisco HyperFlex to use the HyperFlex Data Platform (HXDP) for storage of persistent container data.

The configuration, deployment, management, and monitoring of the Cisco HyperFlex solution can be done with standard tools for Cisco UCS and VMware vSphere, such as the cloud-based management platform Cisco Intersight, the integrated HTML management tool HyperFlex Connect, and traditional tools such as VMware vCenter. This linking of advanced technology stacks into a single, simple, rapidly deployed solution makes Cisco HyperFlex a true second-generation hyperconverged platform.

This chapter contains the following:

● Audience

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers deploying the Cisco HyperFlex System. External references are provided wherever applicable, but readers are expected to be familiar with VMware specific technologies, Cisco ACI, infrastructure concepts, network switching and connectivity, and security policies of the customer installation.

This document describes the best practices and recommendations when deploying Cisco HyperFlex systems using the VMware ESXi hypervisor via the Cisco Intersight cloud-based management portal. The document is based on all known best practices using the software, hardware and firmware revisions specified in the document at the time of publication. As such, recommendations and best practices can be amended with later versions. This document describes the current product requirements and limitations, and relevant considerations when deploying a Cisco HyperFlex cluster using Cisco UCS Fabric Interconnects. Additional information and instructions are offered for enabling and configuring several product features beyond the basic installation of the platform. While readers of this document are expected to have sufficient knowledge to install and configure the products used, configuration details that are important to the deployment of this solution are provided in this paper.

The Cisco HyperFlex system has several new capabilities and enhancements in version 5.0(2b):

● Cisco HyperFlex is supported on Intel or AMD processor based HX220, HX225, HX240 and HX245 model M6 generation servers

● Intel Optane DC Persistent Memory (DCPMM) is supported for use in memory mode

● Support for VMware ESXi 7.0 update 3 as the hypervisor

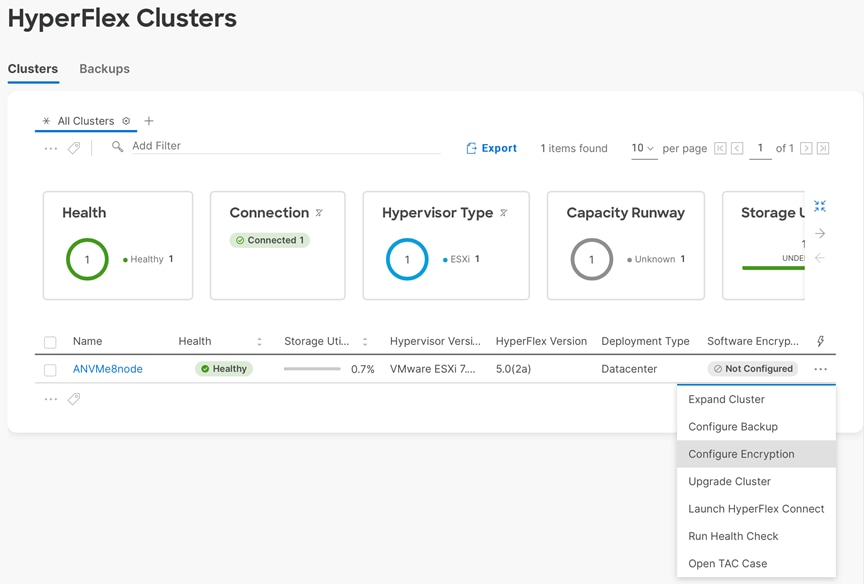

● Software based encryption of data-at-rest when using the required licensing

● Native snapshots of iSCSI-based LUNs stored in the HXDP filesystem, including consistency groups

● Updated snapshot offload mechanism without the use of Sentinel snapshots

● Cisco HXCSI release 1.2(3a) supporting persistent container storage

The Cisco HyperFlex System provides an all-purpose virtualized server platform, with hypervisor hosts, networking connectivity, and virtual server storage across a set of Cisco UCS HX-Series x86 rack-mount servers. Data center architectures have evolved away from the traditional legacy platforms, which typically contained a disparate set of technologies, such as individual servers for applications or hosting virtual machines (VMs), network switches connecting endpoints and transferring Ethernet network traffic, and Fibre Channel (FC) storage arrays providing block-based storage via a dedicated storage area network (SAN). The rapid increase in processing power and storage resources available in modern servers has led to the rise of Software-Defined Storage (SDS), where distributed software replaces the functions of traditional storage controllers. Using a distributed SDS platform, a group of rack-mount servers can effectively be turned into a clustered storage system. If the servers that provide the SDS environment are in fact the same model of server that typically hosts guest VMs, could they simply do both things at once and collapse the two functions into one? This combination of resources is what the industry calls a hyperconverged infrastructure. Hyperconverged infrastructures coalesce the computing, memory, hypervisor, and storage devices of servers into a single platform for virtual servers. There is no longer a separate storage system, because the servers running the hypervisors that host virtual machines also provide the software-defined storage resources that store those virtual machines.

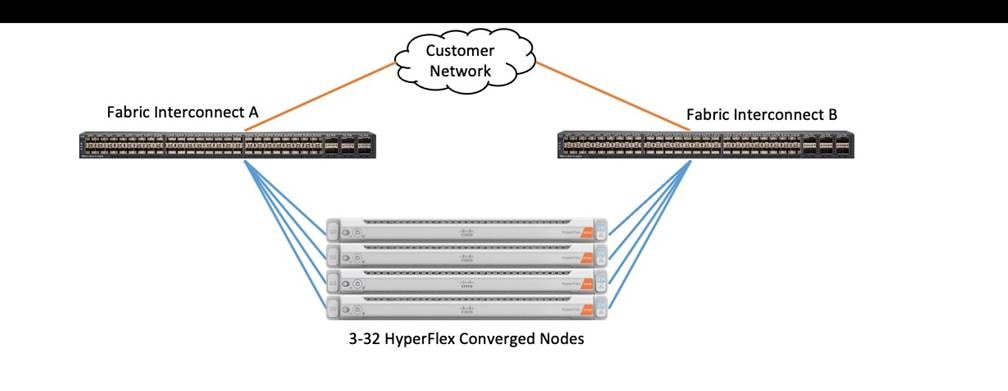

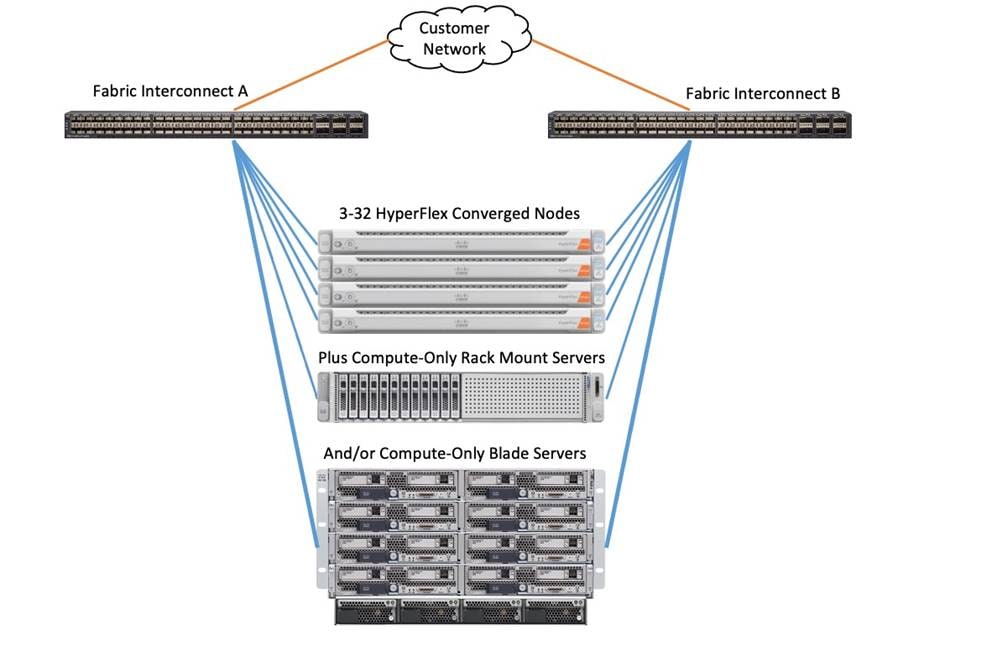

The Cisco HyperFlex system is a next-generation hyperconverged platform, which uniquely combines the convergence of computing and networking provided by Cisco UCS with advanced custom hyperconverged storage software, to provide the compute resources, network connectivity, distributed storage, and hypervisor platform to run an entire virtualized environment, all contained in a single uniform system. Some key advantages of hyperconverged infrastructures are the simplification of deployment and day-to-day management operations, as well as increased agility, which together reduce ongoing operational costs. Since hyperconverged storage can be easily managed by an IT generalist, this can also reduce the technical debt that is accrued from implementing complex systems, which often need dedicated management teams and skillsets. Cisco HyperFlex is available in four core configurations: a single-site cluster managed by Cisco UCS Fabric Interconnects, which is the subject of this document; a split two-site cluster managed by two pairs of Cisco UCS Fabric Interconnects; a smaller scale single-site deployment without the use of Fabric Interconnects, called HyperFlex Edge; and HyperFlex DC-No-FI, which is a larger scale solution also without the use of Fabric Interconnects.

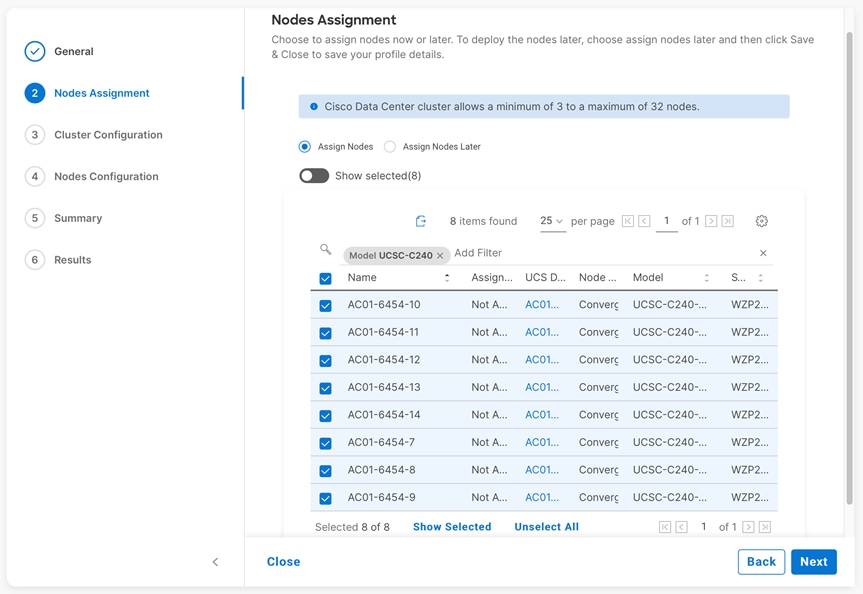

Cisco HyperFlex systems can scale as high as 32 nodes, plus additional but optional compute-only nodes. Network options are available for 10Gb, 25Gb, 40Gb and 100Gb Ethernet connection speeds depending on the hardware chosen, connecting to a pair of Cisco UCS Fabric Interconnects. All current models of Intel or AMD processor based HX-series servers can be used in all configurations, including hybrid, all-flash drives, and all-NVMe drives. Cisco HyperFlex systems are deployed and managed via Cisco Intersight, the cloud-based management platform for Cisco UCS.

The following are the components of a Cisco HyperFlex system using the VMware ESXi Hypervisor:

● A pair of Cisco UCS model 6454 or 64108 Fabric Interconnects

● Three to thirty-two Cisco HyperFlex HX-Series Rack-Mount Servers, choose from models:

◦ Cisco HyperFlex HX220C-M6S Rack-Mount Servers

◦ Cisco HyperFlex HX225C-M6S Rack-Mount Servers

◦ Cisco HyperFlex HX240C-M6SX Rack-Mount Servers

◦ Cisco HyperFlex HX245C-M6SX Rack-Mount Servers

◦ Cisco HyperFlex HXAF220C-M6S All-Flash Rack-Mount Servers

◦ Cisco HyperFlex HXAF225C-M6S All-Flash Rack-Mount Servers

◦ Cisco HyperFlex HXAF240C-M6SX All-Flash Rack-Mount Servers

◦ Cisco HyperFlex HXAF220C-M6SN All-NVMe Rack-Mount Servers

◦ Cisco HyperFlex HXAF245C-M6SX All-Flash Rack-Mount Servers

◦ Cisco HyperFlex HXAF240C-M6SN All-NVMe Rack-Mount Servers

Or

● Three to sixteen Cisco HyperFlex HX-Series Rack-Mount Servers, choose from models:

◦ Cisco HyperFlex HX240C-M6L Rack-Mount Servers

● Cisco HyperFlex Data Platform Software

● VMware vSphere ESXi Hypervisor

● VMware vCenter Server (end-user supplied)

Optional components for additional compute-only resources are:

● Cisco UCS 5108 Chassis

● Cisco UCS 2204XP, 2208XP, 2304 or 2408 model Fabric Extenders

● Cisco UCS B200-M4, B200-M5, B200-M6, B260-M4, B420-M4, B460-M4 or B480-M5 blade servers

● Cisco UCS C220-M4, C220-M5, C220-M6, C240-M4, C240-M5, C240-M6, C460-M4, or C480-M5 rack-mount servers

| Tech tip |

| Cisco HX-series M4 and M5 generation rack mount servers are also supported in Cisco HyperFlex 5.0, however this document does not cover their hardware or software configurations. Please refer to the online compatibility documentation available here: https://www.cisco.com/c/en/us/td/docs/hyperconverged_systems/HyperFlex_HX_DataPlatformSoftware/release-guidelines-and-support-timeline/b-recommended-hx-data-platform-sw-releases/m-software-requirements-5-0.html |

This chapter contains the following:

● Cisco Unified Computing System

● Cisco HyperFlex HX Data Platform Software

● Cisco HyperFlex HX Data Platform Controller

● Data Operations and Distribution

● All-NVMe and All-Flash versus Hybrid Nodes

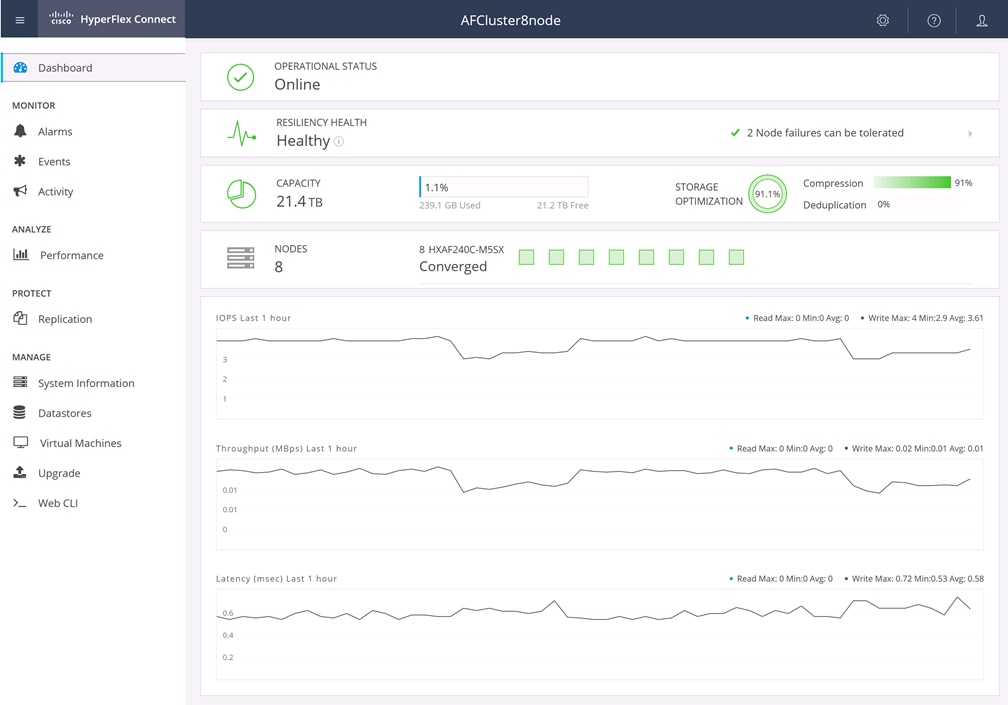

● Cisco HyperFlex Connect HTML 5 Management Webpage

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership and increase business agility. The system integrates a low-latency, lossless 10-100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform with a unified management domain for managing all resources.

Cisco Unified Computing System consists of the following subsystems:

● Compute—The compute piece of the system incorporates servers based on the Intel Xeon Scalable processors. Servers are available in blade and rack form factor, managed by Cisco UCS Manager.

● Network—The integrated network fabric in the system provides a low-latency, lossless, 10/25/40/100 Gbps Ethernet fabric. Networks for LAN, SAN, and management access are consolidated within the fabric. The unified fabric uses the innovative Single Connect technology to lower costs by reducing the number of network adapters, switches, and cables. This in turn lowers the power and cooling needs of the system.

● Virtualization—The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtual environments to support evolving business needs.

Cisco Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

● Embedded Management—In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

● Unified Fabric—In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in reduced cables, SFPs and adapters – reducing capital and operational expenses of the overall solution.

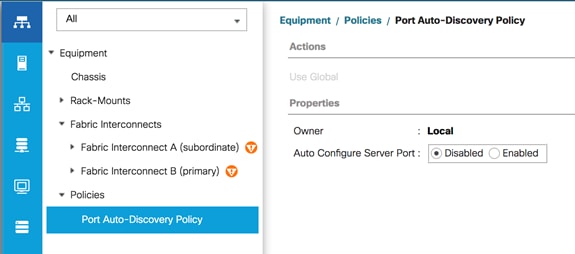

● Auto Discovery—By simply inserting the blade server in the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

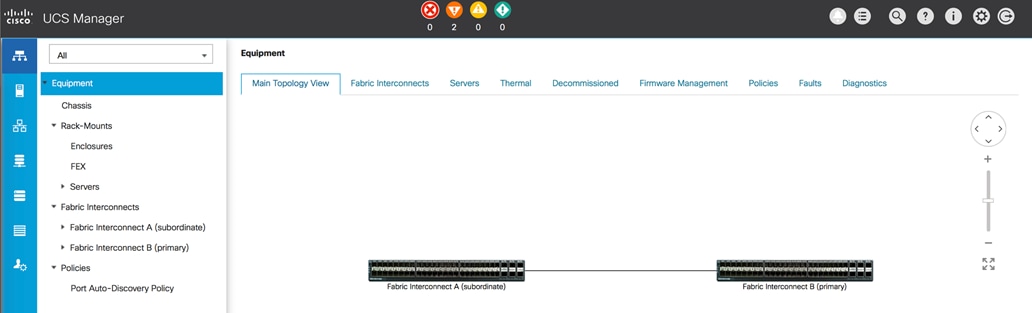

Cisco UCS Manager (UCSM) provides unified, integrated management for all software and hardware components in Cisco UCS. Using Cisco Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive graphical user interface (GUI), a command-line interface (CLI), or a robust application programming interface (API).

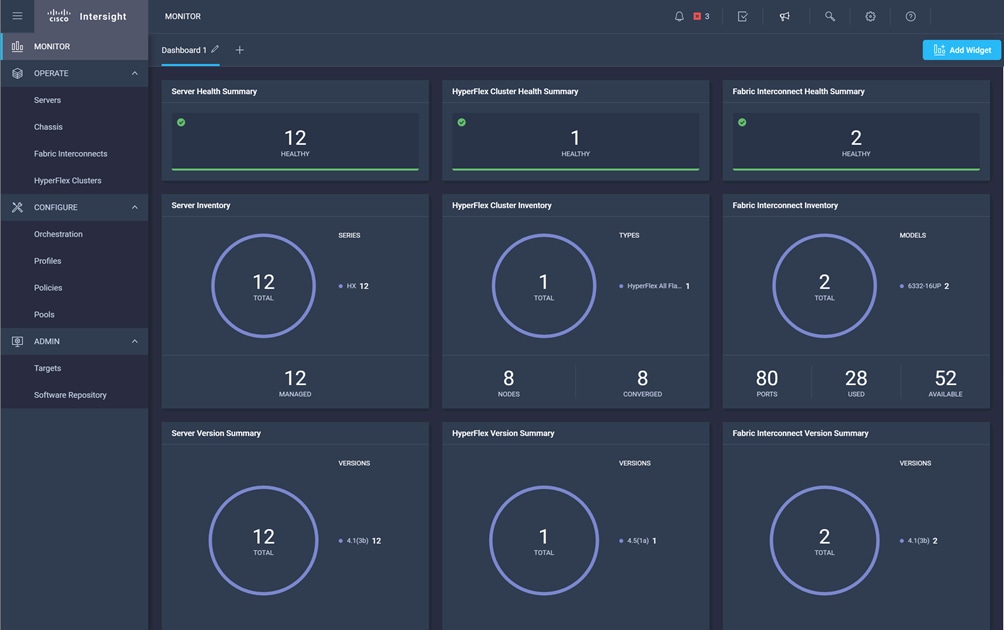

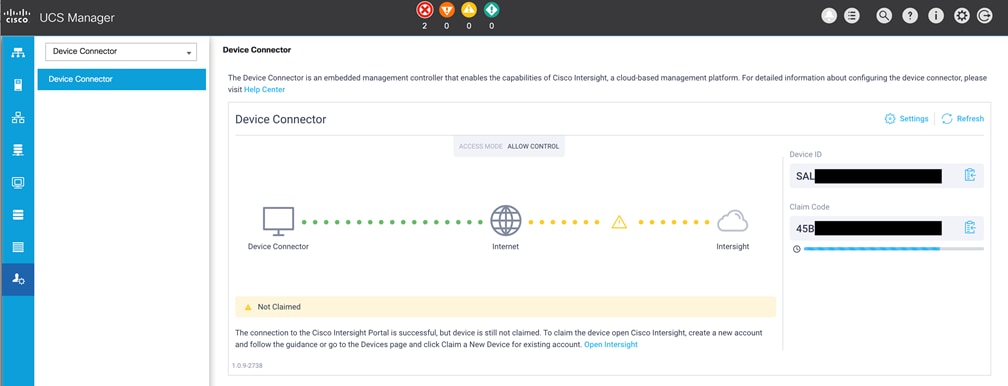

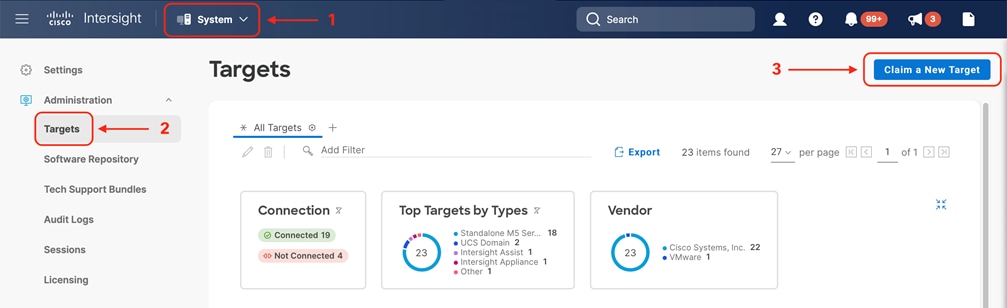

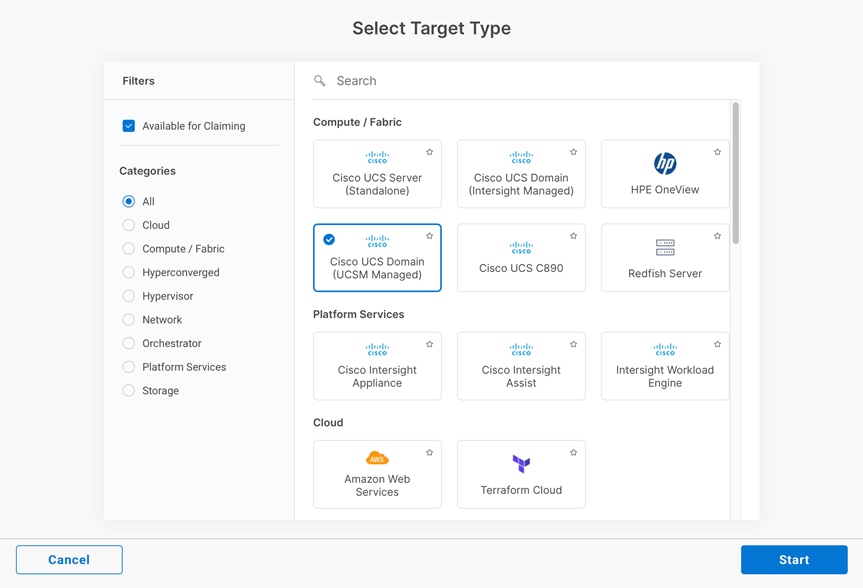

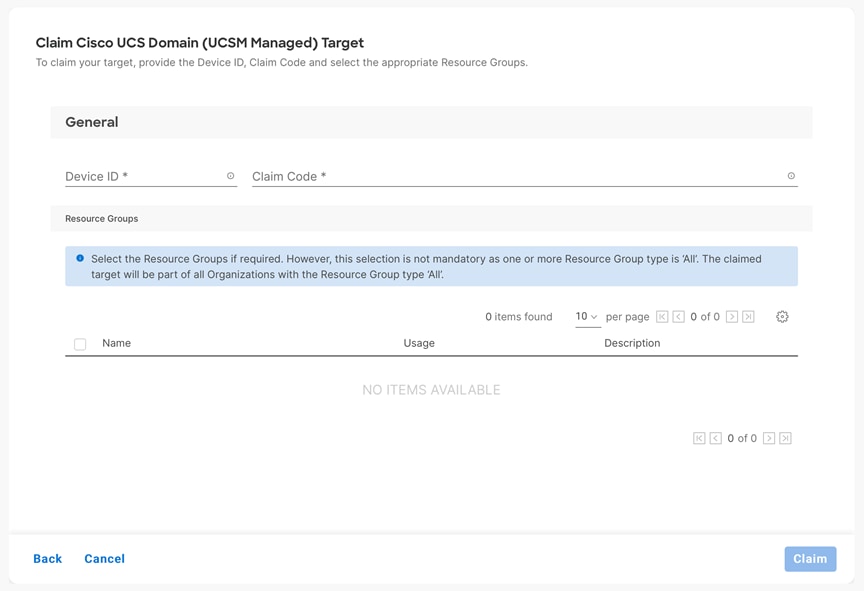

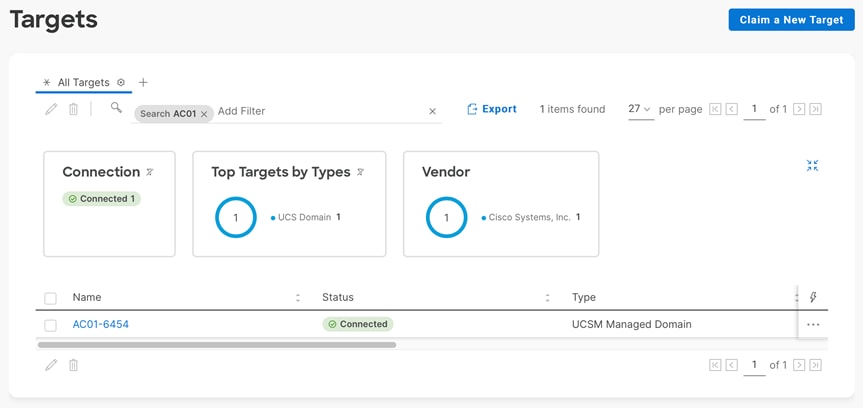

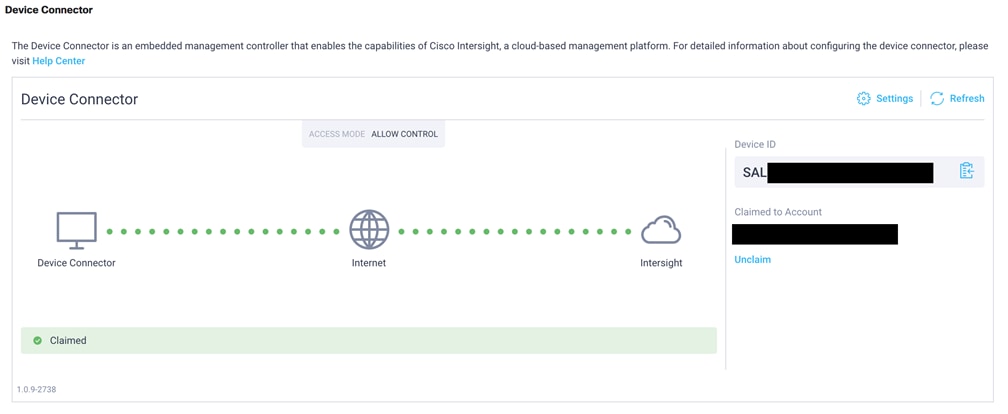

The Cisco Intersight platform (https://intersight.com) is Cisco’s latest cloud-based management tool (Figure 2). It is designed to provide centralized off-site management, monitoring, and reporting capabilities for all your Cisco UCS solutions, and it can be used to deploy and manage Cisco HyperFlex clusters. The Cisco Intersight platform offers direct links to Cisco UCS Manager and Cisco HyperFlex Connect for the systems it is managing and monitoring. The Cisco Intersight website and framework are constantly upgraded and extended with new and enhanced features independently of the products that are managed, meaning that many new features and capabilities can be added with no downtime or upgrades required by the end users. This combination of embedded and online technologies results in a complete cloud-based management solution that can care for your Cisco HyperFlex systems throughout the entire lifecycle, from deployment through retirement.

The Cisco UCS Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, the Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless 10 Gigabit, 25 Gigabit, 40 Gigabit, or 100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for the Cisco UCS C-Series, S-Series and HX-Series Rack-Mount Servers, Cisco UCS B-Series Blade Servers, and Cisco UCS 5100 Series Blade Server Chassis. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabrics, the Cisco UCS Fabric Interconnects provide both the LAN and SAN connectivity for all servers within its domain.

Cisco HyperFlex HX Data Platform Software

The Cisco HyperFlex HX Data Platform is a purpose-built, high-performance, distributed file system with a wide array of enterprise-class data management services. The data platform’s innovations redefine distributed storage technology, exceeding the boundaries of first-generation hyperconverged infrastructures. The data platform has all the features expected in an enterprise shared storage system, eliminating the need to configure and maintain complex Fibre Channel storage networks and devices. The platform simplifies operations and helps ensure data availability. Enterprise-class storage features include the following:

● Data protection creates multiple copies of the data across the cluster so that data availability is not affected if single or multiple components fail (depending on the replication factor configured).

● Deduplication is always on, helping reduce storage requirements in virtualization clusters in which multiple operating system instances in guest virtual machines result in large amounts of replicated data.

● Compression further reduces storage requirements, reducing costs, and the log-structured file system is designed to store variable-sized blocks, reducing internal fragmentation.

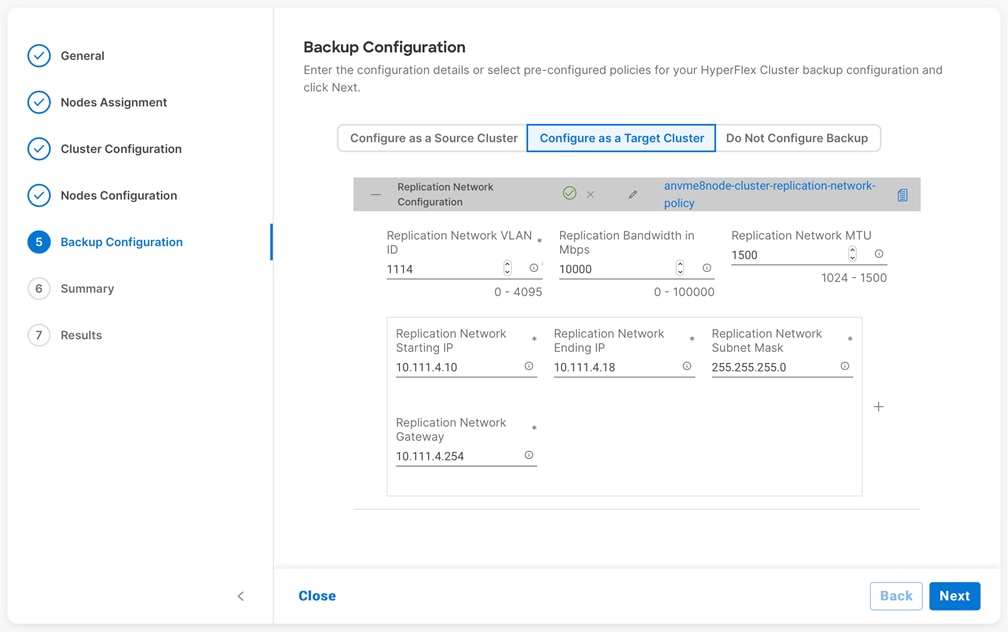

● Replication copies virtual machine–level snapshots from one Cisco HyperFlex cluster to another to facilitate recovery from a cluster or site failure through failover to the secondary site of all the virtual machines.

● Thin provisioning allows large volumes to be created without requiring storage to support them until the need arises, simplifying data volume growth and making storage a "pay as you grow" proposition.

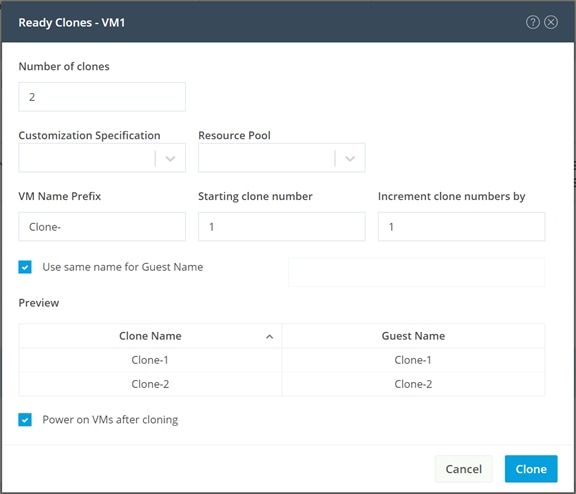

● Fast, space-efficient clones rapidly duplicate virtual storage volumes so that virtual machines can be cloned simply through metadata operations, with actual data copied only for write operations.

● Snapshots help facilitate backup and remote-replication operations, which are needed in enterprises that require always-on data availability.

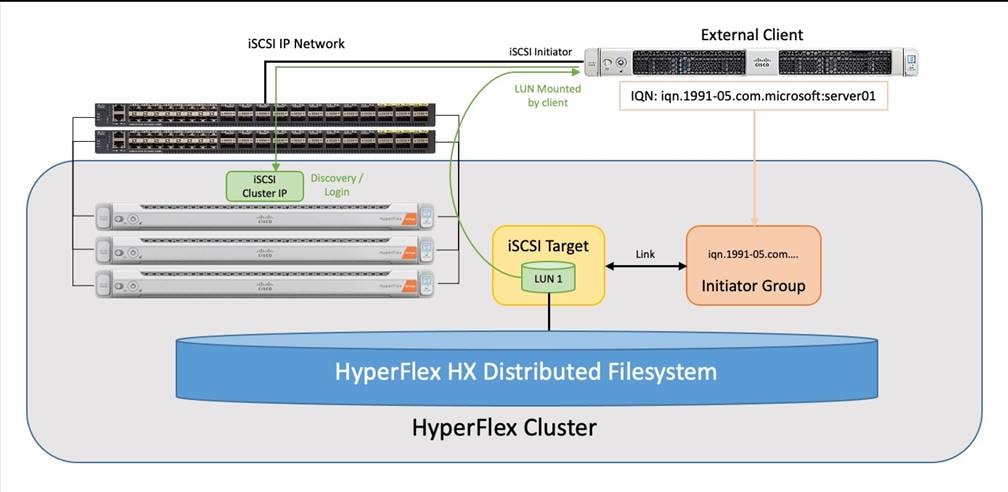

● Small Computer System Interface over IP (iSCSI) connectivity allows external systems to consume HX Data Platform storage by presenting volumes to be mounted by the external systems using the iSCSI protocol.

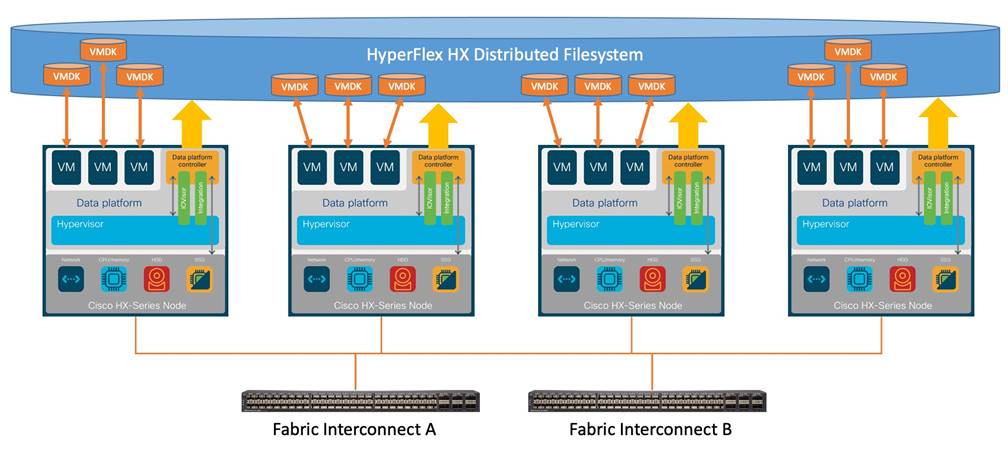

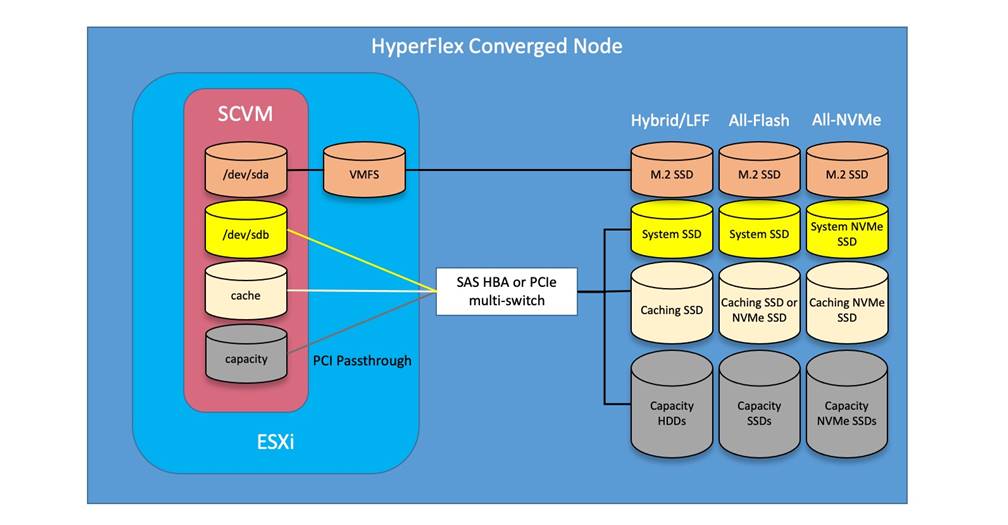

Cisco HyperFlex HX Data Platform Controller

A Cisco HyperFlex HX Data Platform controller resides on each node and implements the distributed file system. The controller runs as software in user space within a virtual machine and intercepts and handles all I/O from the guest virtual machines. The storage controller virtual machine (SCVM) uses the VMDirectPath I/O feature to provide direct PCI passthrough control of the physical server’s SAS disk controller or direct control of the PCI-attached NVMe-based solid-state disks (SSDs). This method gives the controller virtual machine full control of the physical disk resources, using the SSD drives as a read-write caching layer and using the hard-disk drives (HDDs) or SSDs as a capacity layer for distributed storage.

The controller integrates the data platform into the VMware vSphere cluster through the use of three preinstalled VMware ESXi vSphere Installation Bundles (VIBs) on each node:

● scvmclient: This VIB, also called the Cisco HyperFlex IO Visor, provides a network file system (NFS) mount point so that the ESXi hypervisor can access the virtual disks that are attached to individual virtual machines. From the hypervisor’s perspective, it is simply attached to a network file system. The IO Visor intercepts guest virtual machine I/O traffic and intelligently redirects it to the Cisco HyperFlex SCVMs.

● STFSNasPlugin: This VIB is the VMware API for Array Integration (VAAI) storage offload plug-in, which allows vSphere to request advanced file system operations such as snapshots and cloning. The controller implements these operations through manipulation of the file system metadata rather than actual data copying, providing rapid response, and thus rapid deployment of new environments.

● stHypervisorSvc: This VIB adds enhancements and features needed for Cisco HyperFlex data protection and virtual machine replication.

Data Operations and Distribution

The Cisco HyperFlex HX Data Platform controllers handle all read and write operation requests from the guest virtual machines to their virtual disks (VMDKs) stored in the distributed data stores in the cluster. The data platform distributes the data across multiple nodes of the cluster, and also across multiple capacity disks for each node, according to the replication-level policy selected during the cluster setup. This method avoids storage hotspots on specific nodes, and on specific disks of the nodes, and thereby also avoids networking hotspots, or congestion caused by accessing more data on some nodes than others.

The policy for the number of duplicate copies of each storage block is chosen during cluster setup and is referred to as the replication factor (RF).

● Replication factor 3: For every I/O write committed to the storage layer, two additional copies of the blocks written will be created and stored in separate locations, for a total of three copies of the blocks. Blocks are distributed in such a way as to ensure that multiple copies of the blocks are not stored on the same disks, nor on the same nodes of the cluster. This setting can tolerate simultaneous failures of two entire nodes in a cluster of five nodes or more without losing data or requiring restore-from-backup or other recovery processes. RF=3 is recommended for all production systems and is the default for all clusters of three nodes or more.

● Replication factor 2: For every I/O write committed to the storage layer, one additional copy of the blocks written will be created and stored in separate locations, for a total of two copies of the blocks. Blocks are distributed in such a way as to ensure that multiple copies of the blocks are not stored on the same disks, nor on the same nodes of the cluster. This setting can tolerate a failure of one entire node without losing data or requiring restore-from-backup or other recovery processes. RF=2 is suitable for nonproduction systems and for environments for which the extra data protection is not needed.
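The copy counts described for the two replication factors can be worked through with a small calculation. The following is only an illustrative sketch: the function name `replication_summary` and the capacity estimate (raw capacity divided by RF, ignoring metadata overhead and any deduplication or compression savings) are assumptions made for this example and are not part of the HX Data Platform.

```python
def replication_summary(rf: int, raw_capacity_tib: float) -> dict:
    """Summarize what a replication factor implies for block copies.

    Hypothetical helper for illustration only.
    """
    if rf not in (2, 3):
        raise ValueError("only RF=2 and RF=3 are described in this document")
    return {
        # Total copies of every block written, including the original.
        "total_copies": rf,
        # Copies created in addition to the original write.
        "additional_copies": rf - 1,
        # Assumption: a rough estimate that ignores file system overhead
        # and any savings from deduplication and compression.
        "approx_usable_tib": raw_capacity_tib / rf,
    }


if __name__ == "__main__":
    for rf in (2, 3):
        print(rf, replication_summary(rf, raw_capacity_tib=90.0))
```

For actual sizing, a vendor sizing tool should be used, because the real usable capacity depends on overhead and data reduction that this sketch deliberately leaves out.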

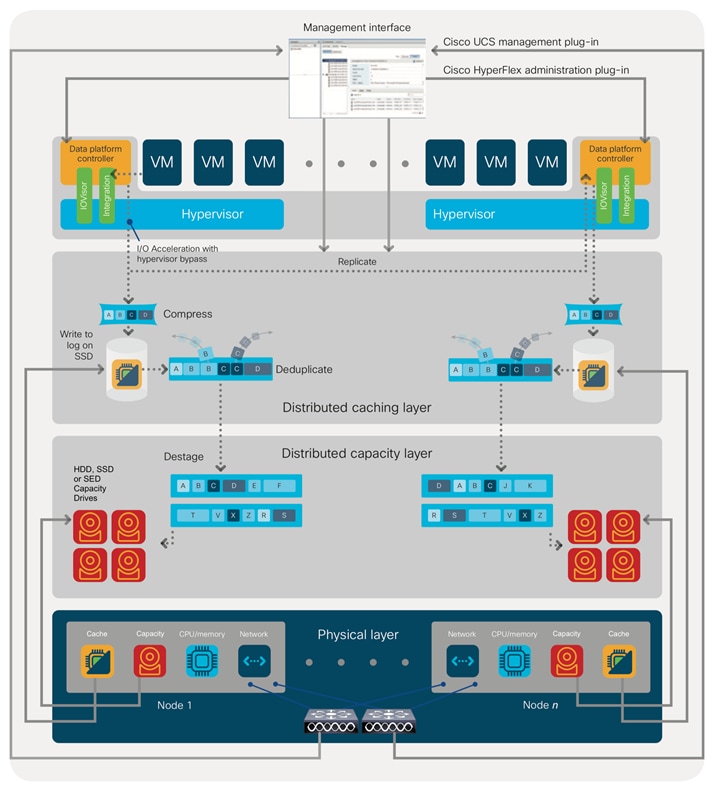

Data write, encryption and compression operations

Internally, the contents of each guest virtual machine's virtual disks are subdivided and spread across multiple servers by the HX Data Platform software. For each write operation, the data is intercepted by the IO Visor module on the node on which the virtual machine is running, a primary node is determined for that particular operation through a hashing algorithm, and then the data is sent to the primary node through the network. The primary node compresses the data in real time and writes the compressed data to the write log on its caching SSD. If the cluster is enabled for data-at-rest encryption, then the data is also encrypted by the primary node when writing it to the caching disk. Next, replica copies of that encrypted and/or compressed data are sent through the network and written to the write log on the caching SSD of the remote nodes in the cluster, according to the replication factor setting.

For example, at RF=3, a write operation will be written to the write log of the primary node for that virtual disk address, and two additional write operations will be committed in parallel on two other nodes. Because the virtual disk contents have been divided and spread out by the hashing algorithm for each unique operation, this method results in all write operations being spread across all nodes, avoiding problems with data locality and with "noisy" virtual machines consuming all the I/O capacity of a single node. The write operation will not be acknowledged until all three copies are written to the caching layer SSDs. Written data is also cached in a write log area resident in memory in the controller virtual machine, along with the write log on the caching SSDs. This process speeds up read requests when read operations are requested for data that has recently been written.
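The write path described above can be sketched in a few lines. This is a simplified model, not the HX Data Platform implementation: the hash function (SHA-256), the key format, the rule of placing replicas on the next nodes in the list, and the names `pick_write_targets` and `write_acknowledged` are all assumptions chosen for illustration. The source states only that a hashing algorithm selects the primary node and that the write is acknowledged after all copies reach the caching SSDs.

```python
import hashlib


def pick_write_targets(vdisk_id: str, block_offset: int,
                       nodes: list[str], rf: int) -> list[str]:
    """Return [primary, replica, ...] for one write operation.

    Hypothetical placement: hash the (disk, offset) pair to pick the
    primary node, then use the following nodes for the replica copies.
    """
    if rf > len(nodes):
        raise ValueError("replication factor cannot exceed the node count")
    key = f"{vdisk_id}:{block_offset}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    primary = digest % len(nodes)
    # Distinct nodes are guaranteed because rf <= len(nodes).
    return [nodes[(primary + i) % len(nodes)] for i in range(rf)]


def write_acknowledged(acks: dict[str, bool], targets: list[str]) -> bool:
    """A write is acknowledged only when every target has logged its copy."""
    return all(acks.get(node, False) for node in targets)


if __name__ == "__main__":
    cluster = ["node1", "node2", "node3", "node4"]
    targets = pick_write_targets("vm1-disk0", 4096, cluster, rf=3)
    print(targets)
    print(write_acknowledged({n: True for n in targets}, targets))
```

Because the target set is derived from the address of each individual write, successive writes from one virtual machine land on different nodes, which is the property the text credits with avoiding "noisy" virtual machine hotspots.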

Data destaging and deduplication

The Cisco HyperFlex HX Data Platform constructs multiple write log caching segments on the caching SSDs of each node in the distributed cluster. As write cache segments become full, and based on policies accounting for I/O load and access patterns, those write cache segments are locked, and new write operations roll over to a new write cache segment. The data in the now-locked cache segment is then deduplicated and destaged to the capacity layer of the nodes for long-term storage. On hybrid systems, the now-deduplicated and compressed data is also written to the dedicated read cache area of the caching SSD, which speeds up read requests for data that has recently been written. When the data is destaged to the capacity disks, it is written in a single sequential operation, avoiding disk-head seek thrashing on the spinning disks and accomplishing the task in the least amount of time. Because the data is already deduplicated and compressed before it is written, the platform avoids the additional I/O overhead often experienced on competing systems, which must later perform a read, deduplicate, compress, and write cycle.
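The segment rollover and destaging behavior can be modeled with a toy write log. The sketch below is an assumption-laden illustration: the class name `WriteLog`, the use of a SHA-256 fingerprint for deduplication, and the rule that a segment is locked purely when it reaches a fixed block count are all invented for this example. The real platform also weighs I/O load and access patterns, which are not modeled here.

```python
import hashlib


class WriteLog:
    """Toy model of a write log segment that destages when full."""

    def __init__(self, segment_capacity: int):
        self.segment_capacity = segment_capacity
        self.active: list[bytes] = []              # current write cache segment
        self.capacity_layer: dict[str, bytes] = {}  # fingerprint -> unique block

    def write(self, block: bytes) -> None:
        self.active.append(block)
        if len(self.active) >= self.segment_capacity:
            self._destage()

    def _destage(self) -> None:
        # Lock the full segment; new writes roll over to a fresh segment.
        locked, self.active = self.active, []
        # Deduplicate while destaging: each unique block is stored once.
        for block in locked:
            fingerprint = hashlib.sha256(block).hexdigest()
            self.capacity_layer.setdefault(fingerprint, block)


if __name__ == "__main__":
    log = WriteLog(segment_capacity=4)
    for block in (b"alpha", b"beta", b"alpha", b"alpha"):
        log.write(block)
    print(len(log.capacity_layer), "unique blocks destaged")
```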

Figure 3 shows this data movement.

For data read operations, data may be read from multiple locations. For data that was very recently written, the data is likely to still exist in the write log of the local platform controller memory or in the write log of the local caching layer disk. If local write logs do not contain the data, the distributed file system metadata will be queried to see if the data is cached elsewhere, either in write logs of remote nodes or in the dedicated read cache area of the local and remote caching SSDs of hybrid nodes. Finally, if the data has not been accessed in a significant amount of time, the file system will retrieve the requested data from the distributed capacity layer. As requests for read operations are made to the distributed file system and the data is retrieved from the capacity layer, the caching SSDs of hybrid nodes populate their dedicated read cache area to speed up subsequent requests for the same data. This multitiered distributed system with several layers of caching techniques helps ensure that data is served at the highest possible speed, using the caching SSDs of the nodes fully and equally. All-flash configurations do not employ a dedicated read cache; such caching would not provide any performance benefit because the persistent data copy already resides on high-performance SSDs.
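The lookup order for reads can be summarized as a tiered search. The following sketch uses plain dictionaries to stand in for each tier; the function name `read_block`, the tier labels, and the data structures are hypothetical and exist only to make the order of checks concrete. Passing `read_cache=None` models an all-flash or all-NVMe node, which has no dedicated read cache.

```python
def read_block(key, mem_log, ssd_log, other_caches, capacity, read_cache=None):
    """Return (tier_name, data) for a read, checking tiers in order.

    Hypothetical model: every tier is a dict keyed by block address.
    """
    tiers = [("memory write log", mem_log), ("SSD write log", ssd_log)]
    # Write logs of remote nodes and other caches found via metadata.
    tiers += [("cached elsewhere", cache) for cache in other_caches]
    if read_cache is not None:
        tiers.append(("read cache", read_cache))
    for name, store in tiers:
        if key in store:
            return name, store[key]
    # Fall back to the distributed capacity layer.
    data = capacity[key]
    if read_cache is not None:
        # Hybrid nodes populate the read cache for subsequent requests.
        read_cache[key] = data
    return "capacity layer", data


if __name__ == "__main__":
    capacity = {"blk42": b"payload"}
    hybrid_read_cache = {}
    print(read_block("blk42", {}, {}, [], capacity, hybrid_read_cache)[0])
    print(read_block("blk42", {}, {}, [], capacity, hybrid_read_cache)[0])
```

In this model the first read of a cold block is served from the capacity layer and the second from the read cache, which mirrors the behavior described for hybrid nodes.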

In summary, the Cisco HyperFlex HX Data Platform implements a distributed, log-structured file system that performs data operations through two configurations:

● In a hybrid configuration, the data platform provides a caching layer using SSDs to accelerate read requests and write responses, and it implements a storage capacity layer using HDDs.

● In an all-flash or all-NVMe configuration, the data platform provides a dedicated caching layer using high-endurance SSDs to accelerate write responses, and it implements a storage capacity layer also using SSDs. Read requests are fulfilled directly from the capacity SSDs, because a dedicated read cache is not needed to accelerate read operations.

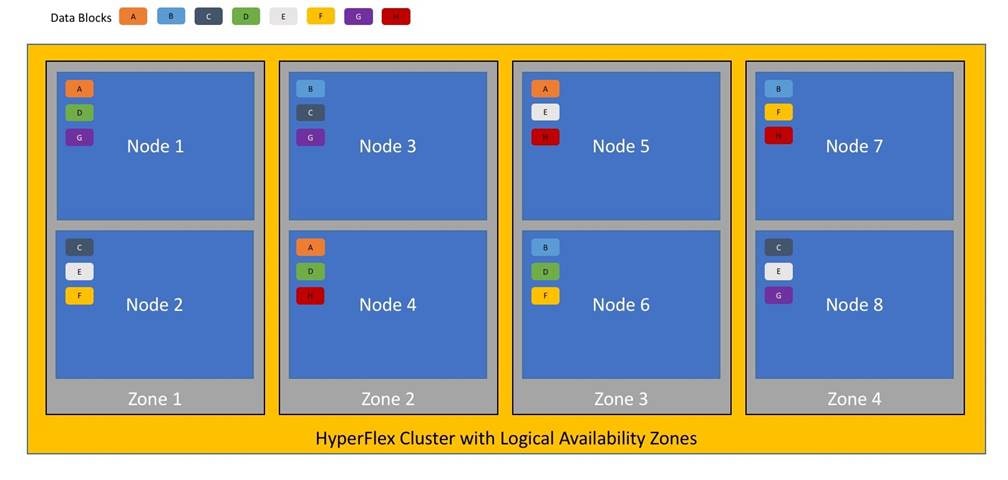

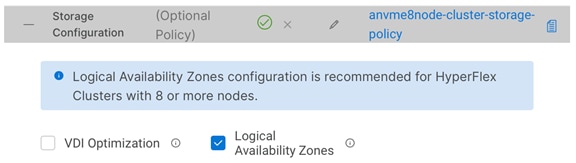

Larger scale HyperFlex clusters are subject to higher failure risks, simply due to the number of nodes in the cluster. While any individual node’s risk of failure is the same no matter how many nodes there are, in clusters of up to 32 converged nodes there is a higher probability that some node will fail than in a cluster with fewer nodes. To mitigate these risks in larger scale clusters, a HyperFlex cluster of eight nodes or more can be configured with a feature called Logical Availability Zones (LAZ). The Logical Availability Zones feature groups two or more HyperFlex nodes together into a logically defined zone. A minimum of three zones is created, and the data in the cluster is distributed in such a way that no block is written to the nodes within a single zone more than once. Due to this enhanced distribution pattern of data across zones, wherein each zone has multiple servers, clusters with LAZ enabled can typically withstand more failures than clusters that operate without it. The number of failures that can be tolerated varies depending on the number of zones in the cluster and the number of servers in each of the zones. Generally speaking, multiple node failures across one or two zones will be tolerated better, and with less risk, than multiple nodes failing across three or more zones. Note that the failure tolerance shown in the HyperFlex Connect dashboard always presents a “worst case scenario” view, meaning that even though the dashboard may state that two failures can be tolerated, two servers could in fact fail while the cluster remains online, and the reported failure tolerance may still remain at two.

Logical availability zones should not be confused with the concept of fault domains. An example of a fault domain would be a subset of the nodes in a single HyperFlex cluster being powered by one uninterruptible power supply (UPS) or connected to one power distribution unit (PDU), while the remaining nodes are connected to another UPS or PDU. If one of the UPSs or PDUs were to fail, there would be a simultaneous failure of multiple nodes. While LAZ may actually prevent the cluster from failing in this scenario, guaranteeing it would require that the zone membership be manually controlled, so that the failure of all of the servers protected by a single UPS or PDU would be distributed in such a way that it would not cause an outage. The LAZ feature is not designed to be manually configured in this way; instead, the zone membership is determined automatically by the system. If a HyperFlex cluster needs to be physically split in half due to a physical limitation, such as the UPS example above, or a distance requirement for fault tolerance, then the cluster should be built as a stretched cluster instead of using LAZ.

Figure 4 illustrates an example of the data distribution method for clusters with Logical Availability Zones enabled, set to replication factor 3, where each zone only contains one of the three copies of the data in the cluster. This cluster consists of eight nodes, which the system configures into four zones.
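The rule that each zone holds at most one copy of a block can be sketched as follows, using the eight-node, four-zone, RF=3 example from the text. This is illustrative only: the round-robin zone assignment and the node selection within a zone are assumptions, since the real system determines zone membership and placement automatically, and the names `build_zones` and `place_copies` are hypothetical.

```python
def build_zones(nodes: list[str], zone_count: int) -> list[list[str]]:
    """Split nodes into zones round-robin (assumed assignment rule)."""
    return [nodes[i::zone_count] for i in range(zone_count)]


def place_copies(block_id: int, zones: list[list[str]], rf: int) -> list[str]:
    """Choose one node in each of rf distinct zones for a block's copies."""
    if rf > len(zones):
        raise ValueError("need at least as many zones as copies")
    start = block_id % len(zones)
    chosen_zones = [zones[(start + i) % len(zones)] for i in range(rf)]
    # One node per chosen zone, so no zone ever holds two copies.
    return [zone[block_id % len(zone)] for zone in chosen_zones]


if __name__ == "__main__":
    nodes = [f"node{i}" for i in range(1, 9)]
    zones = build_zones(nodes, zone_count=4)
    print(zones)
    print(place_copies(block_id=7, zones=zones, rf=3))
```

With this placement, losing every node in one zone removes at most one copy of any block, which is why zone-aligned failures are tolerated better than failures scattered across many zones.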

Logical availability zones are subject to the following requirements and limitations:

● Only HyperFlex clusters with 8 nodes or more can be configured with logical availability zones during the installation process.

● The Logical Availability Zones feature can be enabled during the HyperFlex cluster installation, or it can be enabled via the command line at a later time. It is recommended to enable this feature during installation, in order to avoid a large migration and reorganization of data across the cluster, which would be necessary to comply with the data distribution rules if LAZ is turned on in a cluster already containing data.

● The number of zones can be manually specified as 3, 4, or 5, or you can allow the installer to choose automatically, which is the recommended setting.

● The HyperFlex cluster determines which nodes participate in each zone, and this configuration cannot be modified.

● To maintain the most balanced consumption of space and data distribution, it is recommended that the number of nodes in a cluster be a whole multiple of 3, 4, 5, or 7. For example, 8 nodes would evenly divide into 4 zones of 2 servers each, and 9 nodes would divide evenly into 3 zones of 3 servers each. Eleven nodes would create an unbalanced number of nodes across the zones, leading to unbalanced space consumption on the nodes.

● In addition to the previous point, expansion of a cluster should be done in multiples of the number of zones when the cluster is operating with LAZ enabled. Expanding in such a way preserves a matched number of nodes in each zone and prevents any imbalance of space consumption. For example, a cluster with 3 zones should be expanded by adding 3 more nodes, because adding only 1 or 2 nodes would lead to an imbalance, as would adding 4 nodes.
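The balance guidance in the last two points is simple divisibility arithmetic. The following Python sketch is illustrative only: the function names are hypothetical, the even spread of nodes is an assumption made for the example, and the real zone membership is always chosen automatically by the HyperFlex cluster.

```python
# Illustrative arithmetic only; HyperFlex assigns zone membership itself.
MANUAL_ZONE_COUNTS = (3, 4, 5)  # zone counts that can be specified manually


def nodes_per_zone(node_count: int, zone_count: int) -> list[int]:
    """Spread nodes across zones as evenly as possible (assumed layout)."""
    base, extra = divmod(node_count, zone_count)
    return [base + 1 if i < extra else base for i in range(zone_count)]


def balanced_zone_counts(node_count: int) -> list[int]:
    """Zone counts that give every zone the same number of nodes."""
    return [z for z in MANUAL_ZONE_COUNTS if node_count % z == 0]


def expansion_keeps_balance(added_nodes: int, zone_count: int) -> bool:
    """A balanced cluster stays balanced only when grown by a multiple of its zone count."""
    return added_nodes > 0 and added_nodes % zone_count == 0


if __name__ == "__main__":
    print(nodes_per_zone(8, 4))    # four zones of two nodes each
    print(nodes_per_zone(11, 4))   # uneven: one zone has fewer nodes
    print(balanced_zone_counts(11))
    print(expansion_keeps_balance(3, 3), expansion_keeps_balance(4, 3))
```

A node count for which `balanced_zone_counts` returns an empty list, such as 11, cannot be divided evenly among 3, 4, or 5 zones.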

All-NVMe and All-Flash versus Hybrid Nodes

Cisco HyperFlex systems can be divided logically into two families: a collection of hybrid nodes, and a collection of all-flash or all-NVMe nodes.

Hybrid nodes use a combination of SSDs for the short-term storage caching layer and HDDs for the long-term storage capacity layer. The hybrid Cisco HyperFlex system is an excellent choice for entry-level or midrange storage solutions, and hybrid solutions have been successfully deployed in many nonperformance-sensitive virtual environments.

However, the number of highly performance-sensitive and mission-critical applications being deployed is increasing rapidly. The primary challenge to hybrid Cisco HyperFlex systems for these performance-sensitive applications is their increased sensitivity to storage latency. Due to the characteristics of spinning hard disks, which result in higher latency, HDDs almost inevitably become a bottleneck in a hybrid system. Ideally, if all the storage operations occurred on the caching SSD layer, the hybrid system’s performance would be excellent. But in some scenarios, the amount of data being written and read exceeds the caching layer capacity, placing larger loads on the HDD capacity layer, and the subsequent increase in latency results in reduced performance.

Cisco HyperFlex all-flash and all-NVMe systems are an excellent option for customers with high-performance, latency-sensitive workloads. Because the capacity layer disks are also SSDs, the all-flash and all-NVMe systems avoid the increased latency seen in hybrid nodes when large amounts of data are written and read. With a purpose-built, flash-optimized, high-performance log-based file system, the Cisco HyperFlex all-flash and all-NVMe systems provide these features:

● Predictable high performance across all the virtual machines in the cluster

● Highly consistent and low latency, which benefits data-intensive applications

● Architecture that can continue to meet your needs in the future; it is well suited for flash-memory configuration, reducing write amplification and flash cell wear

● Cloud-scale solution with easy scale-out and distributed infrastructure and the flexibility to scale out independent resources separately

Cisco HyperFlex support for hybrid, all-flash, and all-NVMe models allows customers to choose the right platform configuration based on their capacity, applications, performance, and budget requirements. All-flash configurations offer repeatable and sustainable high performance, especially for scenarios with a larger working set of data—that is, a large amount of data in motion. All-NVMe configurations elevate performance to an even higher level, with lower latencies for the most demanding applications. Hybrid configurations are a good option for customers who want the simplicity of the Cisco HyperFlex solution, but whose needs are focused on capacity-sensitive solutions, lower budgets, and few performance-sensitive applications.

Cisco HyperFlex Connect HTML 5 Management Webpage

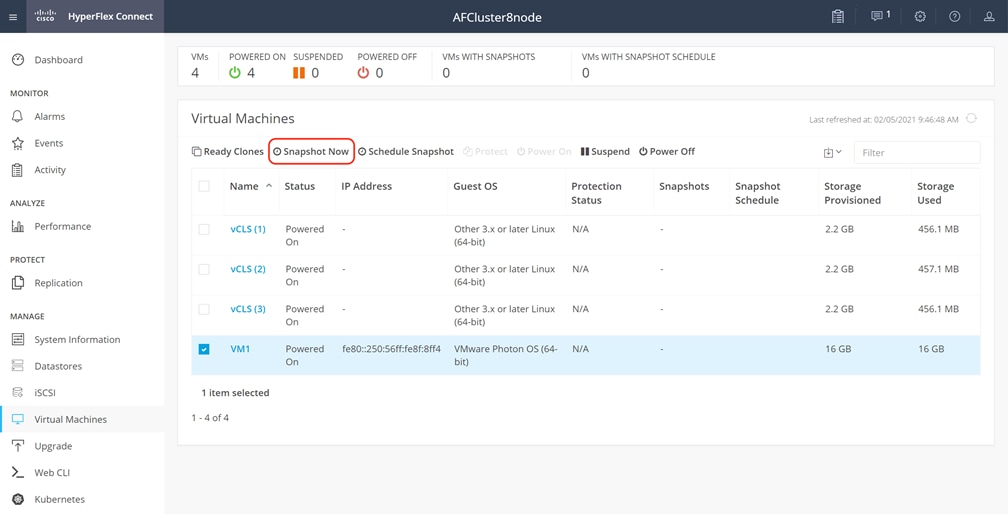

An HTML 5–based web user interface named Cisco HyperFlex Connect is available for use as the primary management tool for Cisco HyperFlex systems (Figure 4). Through this centralized point of control for the cluster, administrators can create data stores, monitor the data platform health and performance, manage resource use, and perform upgrades. Administrators can also use this management portal to predict when the cluster will need to be scaled, create virtual machine snapshot schedules, and configure native virtual machine replication. To use the Cisco HyperFlex Connect user interface, connect using a web browser to the Cisco HyperFlex cluster IP address: http://<hx controller cluster ip>.


Cisco UCS 6454 Fabric Interconnect

The Cisco UCS 6454 Fabric Interconnect is a One-Rack-Unit (1RU) 10/25/40/100 Gigabit Ethernet, FCoE and Fibre Channel switch offering up to 3.82 Tbps throughput and up to 54 ports. The switch has 28 10/25-Gbps Ethernet ports, 4 1/10/25-Gbps Ethernet ports, 6 40/100-Gbps Ethernet uplink ports and 16 unified ports that can support 10/25-Gbps Ethernet ports or 8/16/32-Gbps Fibre Channel ports. All Ethernet ports are capable of supporting FCoE. Cisco HyperFlex nodes can connect at 10-Gbps or 25-Gbps speeds depending on the model of Cisco VIC card in the nodes and the SFP optics or cables chosen.

Cisco UCS 64108 Fabric Interconnect

The Cisco UCS 64108 Fabric Interconnect is a Two-Rack-Unit (2RU) 10/25/40/100 Gigabit Ethernet, FCoE and Fibre Channel switch offering up to 7.42 Tbps throughput and up to 108 ports. The switch has 72 10/25-Gbps Ethernet ports, 8 1/10/25-Gbps Ethernet ports, 12 40/100-Gbps Ethernet uplink ports and 16 unified ports that can support 10/25-Gbps Ethernet ports or 8/16/32-Gbps Fibre Channel ports. All Ethernet ports are capable of supporting FCoE. Cisco HyperFlex nodes can connect at 10-Gbps or 25-Gbps speeds depending on the model of Cisco VIC card in the nodes and the SFP optics or cables chosen.

Cisco HyperFlex HX220c-M6 converged nodes

The Cisco HyperFlex HX220c-M6 converged node is a small footprint (1RU) Cisco HyperFlex model using third-generation Intel Xeon scalable processors and up to 4TB of RAM or DCPMM. It contains one or two 240 GB M.2 form factor solid-state disks (SSD) that act as the boot drive. When two boot drives are ordered, the optional HX-M2-HWRAID controller must be included to enable RAID 1 boot drive redundancy. Optionally, the Cisco HyperFlex Acceleration Engine card can be added to improve write performance and compression. Either the Cisco VIC model 1467 quad-port 10/25 Gb card or the model 1477 quad-port 40/100 Gb card may be selected.

Cisco HyperFlex HX220c-M6S model servers are hybrid nodes, using an SSD for both the system drive and the caching drive, and up to 8 traditional spinning hard disk drives (HDD) for the capacity disks. Cisco HyperFlex HXAF220c-M6S model servers are all-flash nodes and replace the capacity HDDs with SSDs for higher performance. Cisco HyperFlex HXAF220c-M6SN model servers are all-NVMe nodes, which replace all the system, caching, and capacity SSDs with NVMe based flash drives for the highest possible performance. A wide variety of drive models are available for use as the system, caching, and capacity drives, depending on the model of server. Please refer to the current online spec sheet for all of the available drive options: https://www.cisco.com/c/dam/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/hyperflex-hx220c-m6-nvme-spec-sheet.pdf

Cisco HyperFlex HX225c-M6 converged nodes

The HX225c-M6 converged node is a small footprint (1RU) Cisco HyperFlex model using AMD Rome, Milan, or Milan-X series processors and up to 4TB of RAM. It contains one or two 240 GB M.2 form factor solid-state disks (SSD) that act as the boot drive. When two boot drives are ordered, the optional HX-M2-HWRAID controller must be included to enable RAID 1 boot drive redundancy. Either the Cisco VIC model 1467 quad-port 10/25 Gb card or the model 1477 quad-port 40/100 Gb card may be selected.

Cisco HyperFlex HX225c-M6S model servers are hybrid nodes, using an SSD for both the system drive and the caching drive, and up to 8 traditional spinning hard disk drives (HDD) for the capacity disks. Cisco HyperFlex HXAF225c-M6S model servers are all-flash nodes and replace the capacity HDDs with SSDs for higher performance. A wide variety of drive models are available for use as the system, caching, and capacity drives, depending on the model of server. Please refer to the current online spec sheet for all of the available drive options: https://www.cisco.com/c/dam/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/hx225c-m6sx-specsheet.pdf

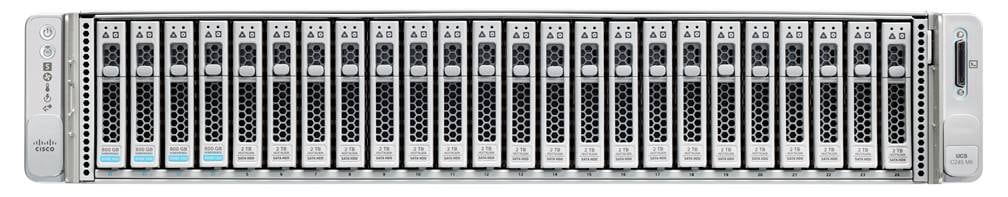

Cisco HyperFlex HX240c-M6 converged nodes

The HX240c-M6 converged node is a capacity optimized (2RU) Cisco HyperFlex model using third-generation Intel Xeon scalable processors and up to 8TB of RAM or DCPMM. It contains one or two 240 GB M.2 form factor solid-state disks (SSD) that act as the boot drive. When two boot drives are ordered, the optional HX-M2-HWRAID controller must be included to enable RAID 1 boot drive redundancy. Optionally, the Cisco HyperFlex Acceleration Engine card can be added to improve write performance and compression. Either the Cisco VIC model 1467 quad-port 10/25 Gb card or the model 1477 quad-port 40/100 Gb card may be selected.

Cisco HyperFlex HX240c-M6S model servers are hybrid nodes, using an SSD for both the system drive and the caching drive, and up to 24 traditional spinning hard disk drives (HDD) for the capacity disks. Cisco HyperFlex HX240c-M6L servers are hybrid models which utilize up to twelve 3.5” large form factor (LFF) HDDs for deployments with higher storage capacity requirements. Cisco HyperFlex HXAF240c-M6S model servers are all-flash nodes and replace the capacity HDDs with SSDs for higher performance. Cisco HyperFlex HXAF240c-M6SN model servers are all-NVMe nodes, which replace all the system, caching, and capacity SSDs with NVMe based flash drives for the highest possible performance. A wide variety of drive models are available for use as the system, caching, and capacity drives, depending on the model of server. Please refer to the current online spec sheet for all of the available drive options: https://www.cisco.com/c/dam/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/hyperflex-hx240c-m6-nvme-spec-sheet.pdf and https://www.cisco.com/c/dam/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/hyperflex-hx240c-m6-lff-spec-sheet.pdf

Cisco HyperFlex HX245c-M6 converged nodes

The HX245c-M6 converged node is a capacity optimized (2RU) Cisco HyperFlex model using AMD Rome, Milan, or Milan-X series processors and up to 8TB of RAM. It contains one or two 240 GB M.2 form factor solid-state disks (SSD) that act as the boot drive. When two boot drives are ordered, the optional HX-M2-HWRAID controller must be included to enable RAID 1 boot drive redundancy. Either the Cisco VIC model 1467 quad-port 10/25 Gb card or the model 1477 quad-port 40/100 Gb card may be selected.

Cisco HyperFlex HX245c-M6S model servers are hybrid nodes, using an SSD for both the system drive and the caching drive, and up to 24 traditional spinning hard disk drives (HDD) for the capacity disks. Cisco HyperFlex HXAF245c-M6S model servers are all-flash nodes and replace the capacity HDDs with SSDs for higher performance. A wide variety of drive models are available for use as the system, caching, and capacity drives, depending on the model of server. Please refer to the current online spec sheet for all of the available drive options: https://www.cisco.com/c/dam/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/hx245c-m6sx-specsheet.pdf

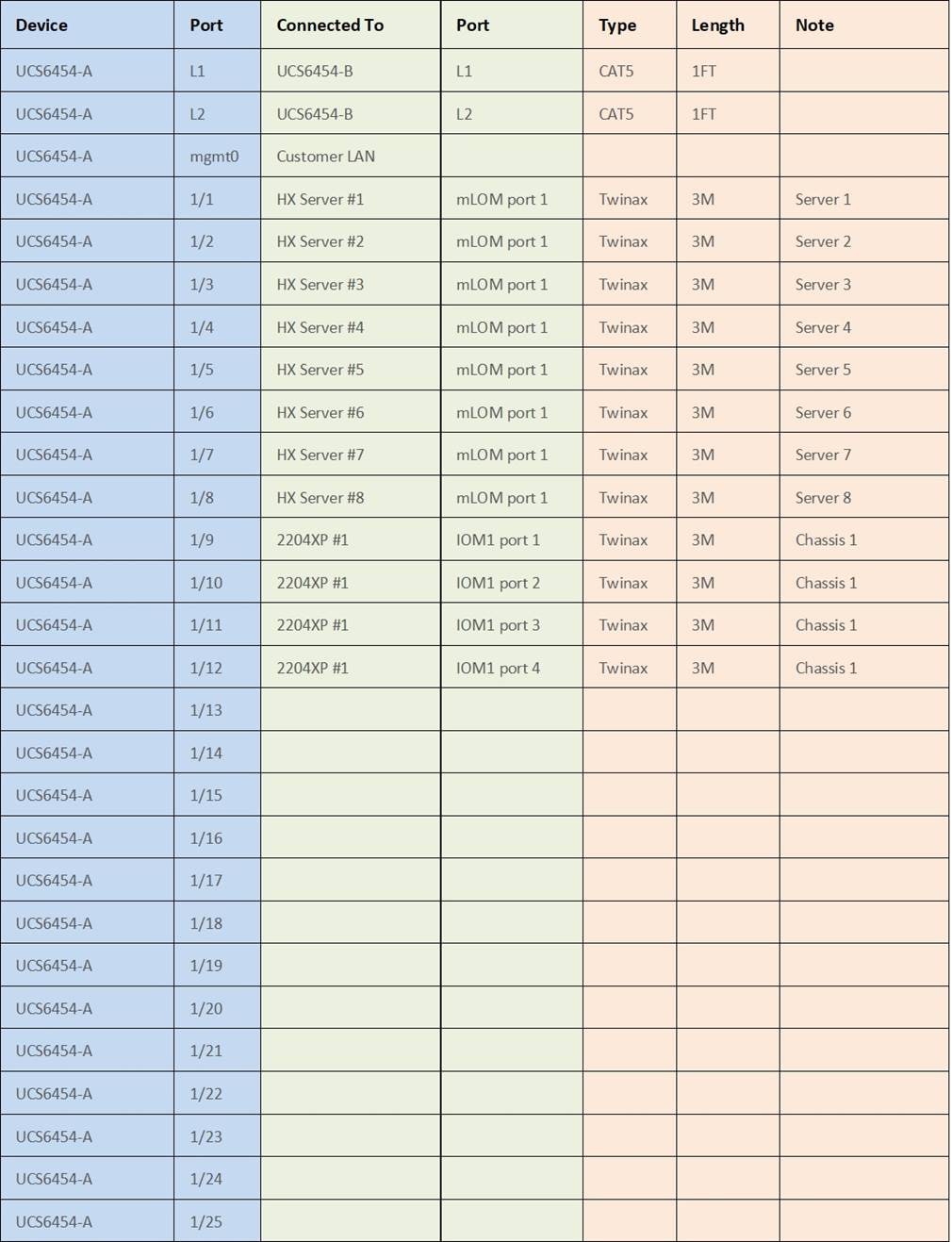

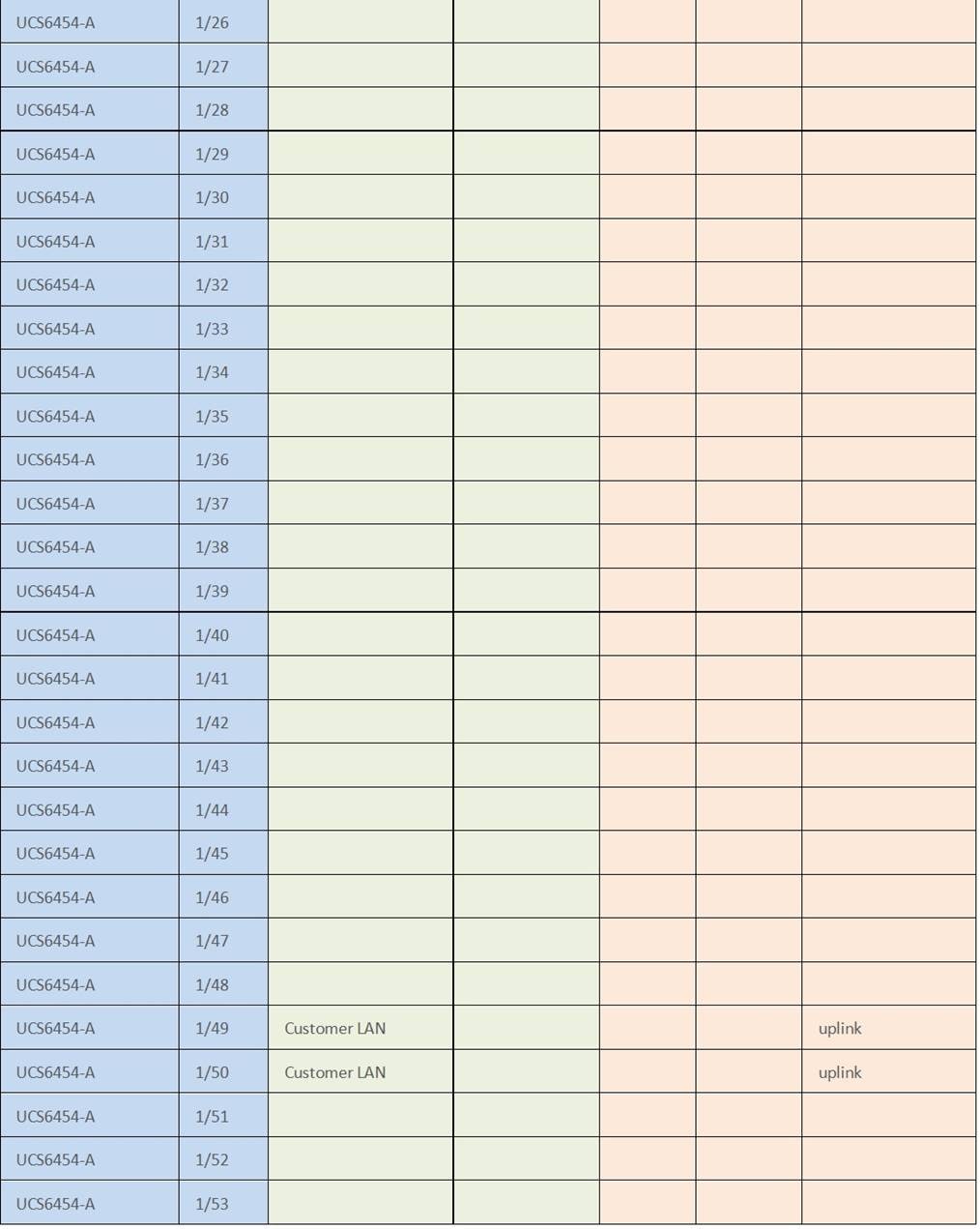

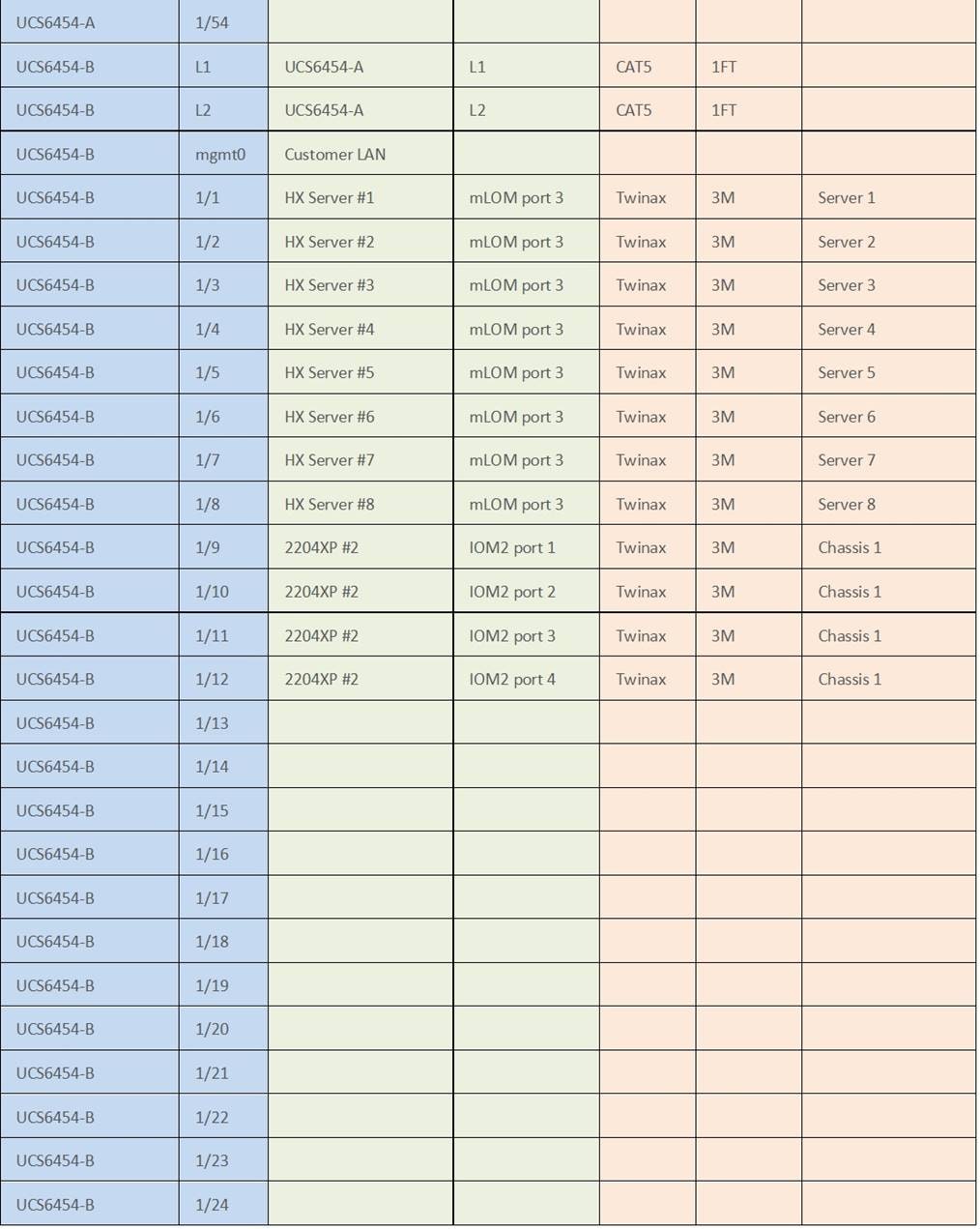

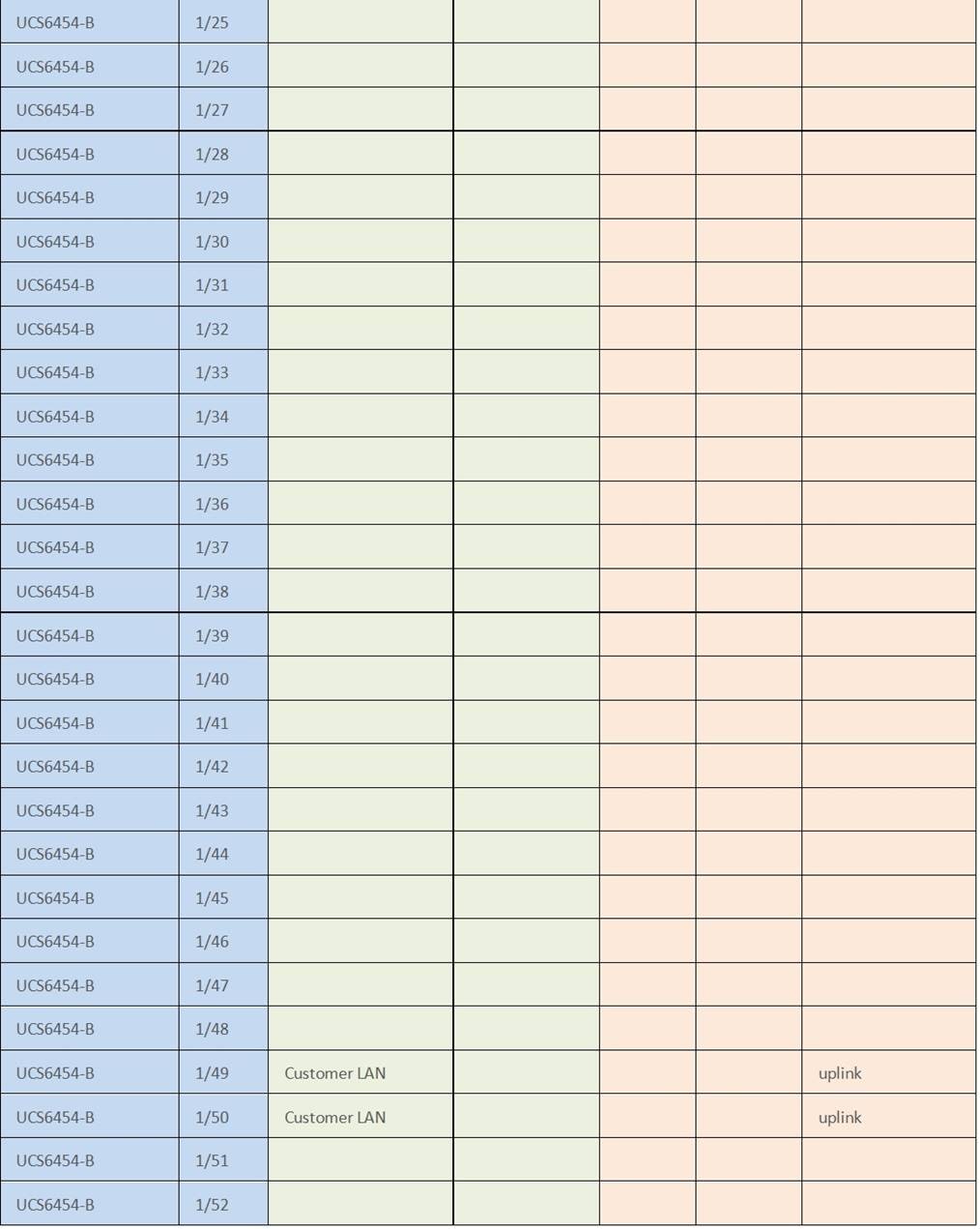

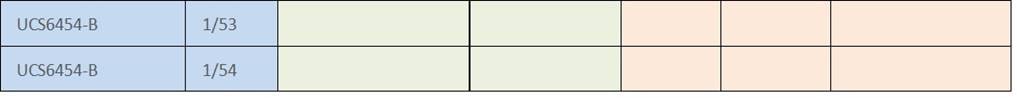

Table 1 lists the required physical components and hardware.


Table 1. Cisco HyperFlex System Components

| Component | Hardware |
| --- | --- |
| Fabric Interconnects | Two (2) Cisco UCS 6454 or 64108 Fabric Interconnects |
| Servers | Minimum of three (3) and up to thirty-two (32) Cisco UCS HX-series servers, chosen from these models: Cisco HyperFlex HX220c M6S; Cisco HyperFlex HX225c M6S; Cisco HyperFlex HX240c M6SX; Cisco HyperFlex HX245c M6SX; Cisco HyperFlex HXAF220c M6S; Cisco HyperFlex HXAF225c M6S; Cisco HyperFlex HXAF240c M6SX; Cisco HyperFlex HXAF245c M6SX; Cisco HyperFlex HXAF220c M6SN; Cisco HyperFlex HXAF240c M6SN. Or a minimum of three (3) and up to sixteen (16) Cisco UCS HX-series servers of this model: Cisco HyperFlex HX240c M6L |
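The server limits in Table 1 can be checked with a few lines of Python when planning a cluster. This is a minimal sketch: the model names and limits are copied from the table, while the function name and data layout are hypothetical and not part of any Cisco tool.

```python
# Node-count limits from Table 1; the helper itself is hypothetical.
MIN_NODES = 3
MAX_NODES_BY_MODEL = {
    "HX220c M6S": 32, "HX225c M6S": 32, "HX240c M6SX": 32, "HX245c M6SX": 32,
    "HXAF220c M6S": 32, "HXAF225c M6S": 32, "HXAF240c M6SX": 32,
    "HXAF245c M6SX": 32, "HXAF220c M6SN": 32, "HXAF240c M6SN": 32,
    "HX240c M6L": 16,  # large form factor model has the lower limit
}


def cluster_size_ok(model: str, node_count: int) -> bool:
    """Return True when node_count is within the Table 1 range for the model."""
    if model not in MAX_NODES_BY_MODEL:
        raise ValueError(f"model not listed in Table 1: {model}")
    return MIN_NODES <= node_count <= MAX_NODES_BY_MODEL[model]


if __name__ == "__main__":
    print(cluster_size_ok("HXAF240c M6SN", 32))
    print(cluster_size_ok("HX240c M6L", 17))
```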

The software components of the Cisco HyperFlex system must meet minimum requirements for the Cisco UCS firmware, hypervisor version, and the Cisco HyperFlex Data Platform software in order to interoperate properly. For the full details of software and hardware version requirements and compatibility, visit this page: https://www.cisco.com/c/en/us/td/docs/hyperconverged_systems/HyperFlex_HX_DataPlatformSoftware/release-guidelines-and-support-timeline/b-recommended-hx-data-platform-sw-releases/m-software-requirements-5-0.html

Table 2 lists the software components and the versions required for a single cluster of the Cisco HyperFlex, as tested, and validated in this document.

Table 2. Software Components and Hardware

| Component | Software |
| --- | --- |
| Hypervisor | VMware ESXi 6.7 U3, 7.0 U2, or 7.0 U3. Cisco Custom Image for ESXi 7.0 Update 3 for HyperFlex: HX-ESXi-7.0U3-20842708-Cisco-Custom-7.3.0.11-install-only.iso |
| Management Server | VMware vCenter 6.7 U3, 7.0 U2, or 7.0 U3 |
| Cisco UCS Firmware | Cisco UCS Infrastructure software, B-Series and C-Series bundles, revision 4.2(2d) or later |
| Cisco HyperFlex | Cisco HyperFlex 5.0(2b) |

Cisco HyperFlex systems must be properly licensed using Cisco Smart Licensing, which is a cloud-based software licensing management solution used to automate many manual, time-consuming, and error-prone licensing tasks. The Cisco HyperFlex system communicates with the Cisco Smart Software Manager (SSM) online service through a Cisco Smart Account to check out or assign available licenses from the account to the Cisco HyperFlex cluster resources. Communications can be direct through the internet. You can also configure communication using a proxy server, or through an internal Cisco SSM satellite server, which caches and periodically synchronizes licensing data.

In a small number of highly secure environments, systems can be provisioned with a permanent license reservation (PLR), which does not need to communicate with Cisco SSM. Contact your Cisco sales representative or partner to discuss whether your security requirements will necessitate use of these permanent licenses.

New Cisco HyperFlex cluster installations will operate for 90 days without licensing to provide an evaluation period. Thereafter, the system will generate alarms and operate in a noncompliant mode. Systems without compliant licensing will not be entitled to technical support.

For more information about the Cisco Smart Software Manager satellite server, visit this website: https://www.cisco.com/c/en/us/buy/smart-accounts/software-manager-satellite.html.

Licensing of the Cisco HyperFlex DC-No-FI system requires one license per node from one of two license editions: Cisco HyperFlex Datacenter Advantage or Datacenter Premier. The type of cluster being installed and the features you want to activate and use in the system determine the licenses you need to purchase and the appropriate licensing tier.

Additional features will be added to the different licensing editions as they are released. The features listed in Table 3 are current as of the publication of this document.

Table 3. Cisco HyperFlex system license editions

| Cisco HyperFlex license edition | Cisco HyperFlex Datacenter Advantage | Cisco HyperFlex Datacenter Premier |
| --- | --- | --- |
| Features | Cisco HyperFlex installation on all supported models other than all-NVMe nodes; 1:1 ratio of compute-only nodes to converged nodes; In-line compression and deduplication; Self-encrypting drives; Logical Availability Zones; iSCSI storage; Snapshots and replication | Everything in the Advantage license plus: Cisco HyperFlex installation on all-NVMe nodes; 2:1 ratio of compute-only nodes to converged nodes; Software encryption; Cisco HyperFlex Acceleration Engine card; Stretch clusters |

| Tech tip |

| To enable software encryption, each server in the cluster also requires, at minimum, an Intersight Essentials license. Before enabling software encryption on a cluster, the customer must also purchase a special software PID (HXDP-SW-PKG-SE-K9=). |

For a comprehensive guide to licensing and all the features in each edition, consult the Cisco HyperFlex Licensing Guide here: https://www.cisco.com/c/en/us/td/docs/hyperconverged_systems/HyperFlex_HX_DataPlatformSoftware/HX-Ordering-and-Licensing-Guide/b_Cisco_HyperFlex_Systems_Ordering_and_Licensing_Guide.html.
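As a rough planning aid, the edition split in Table 3 can be expressed as a lookup. The sketch below is an assumption-laden illustration: the feature keys and the `required_edition` function are hypothetical names invented here, and the licensing guide linked above remains the authoritative source.

```python
# Feature keys are hypothetical labels for the rows of Table 3.
ADVANTAGE_FEATURES = {
    "inline-compression-dedup", "self-encrypting-drives",
    "logical-availability-zones", "iscsi", "snapshots-replication",
}
PREMIER_ONLY_FEATURES = {
    "all-nvme", "software-encryption", "acceleration-engine", "stretch-cluster",
}


def required_edition(features, compute_only: int = 0, converged: int = 3) -> str:
    """Return the lowest edition in Table 3 that covers the requested setup."""
    features = set(features)
    unknown = features - ADVANTAGE_FEATURES - PREMIER_ONLY_FEATURES
    if unknown:
        raise ValueError(f"unknown feature keys: {sorted(unknown)}")
    if compute_only > 2 * converged:
        raise ValueError("more than a 2:1 compute-only to converged ratio")
    # Any Premier-only feature, or a ratio above 1:1, needs Premier.
    if features & PREMIER_ONLY_FEATURES or compute_only > converged:
        return "Datacenter Premier"
    return "Datacenter Advantage"


if __name__ == "__main__":
    print(required_edition({"iscsi", "logical-availability-zones"}))
    print(required_edition({"all-nvme"}))
    print(required_edition(set(), compute_only=8, converged=4))
```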

The Cisco HyperFlex system is composed of a pair of Cisco UCS Fabric Interconnects along with up to thirty-two HX-Series rack-mount servers per cluster. Up to thirty-two compute-only servers can also be added per HyperFlex cluster. Adding Cisco UCS rack-mount servers and/or Cisco UCS 5108 blade chassis, which house Cisco UCS blade servers, allows for additional compute resources in an extended cluster design. The two fabric interconnects both connect to every HX-Series rack-mount server, and both connect to every Cisco UCS 5108 blade chassis and Cisco UCS rack-mount server. Upstream network connections, also referred to as “northbound” network connections, are made from the Fabric Interconnects to the customer datacenter network at the time of installation.

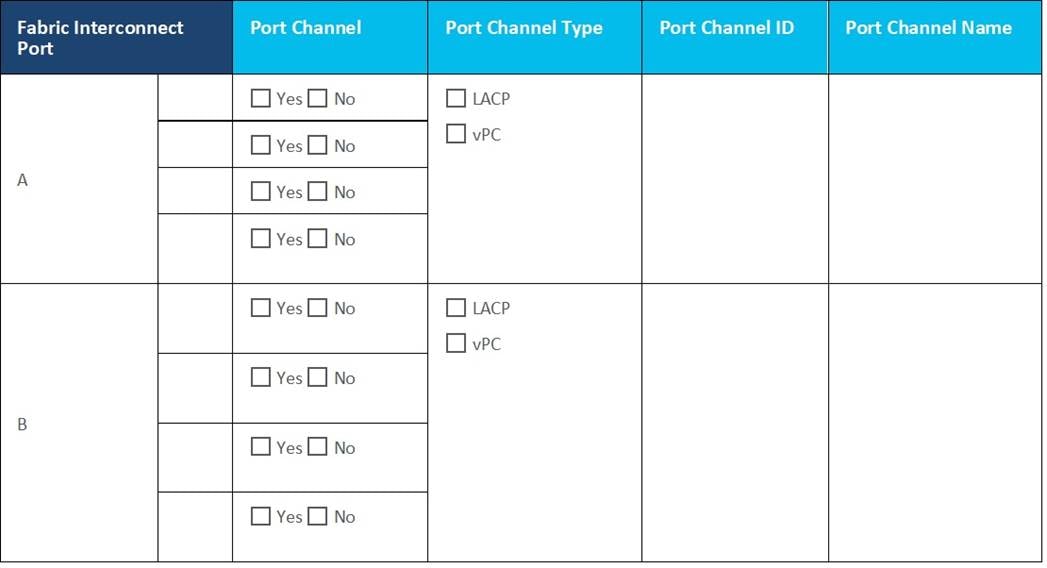

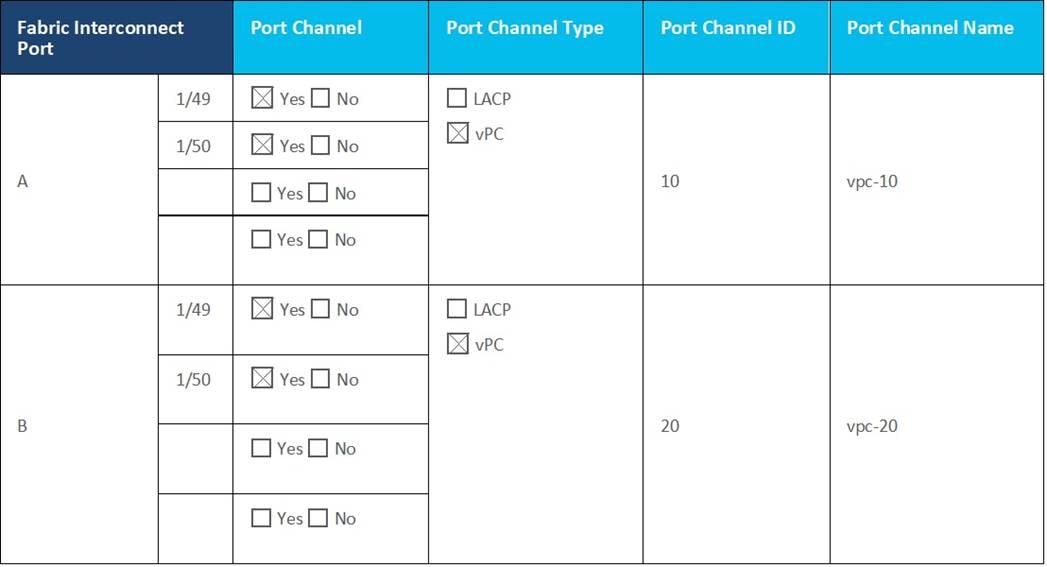

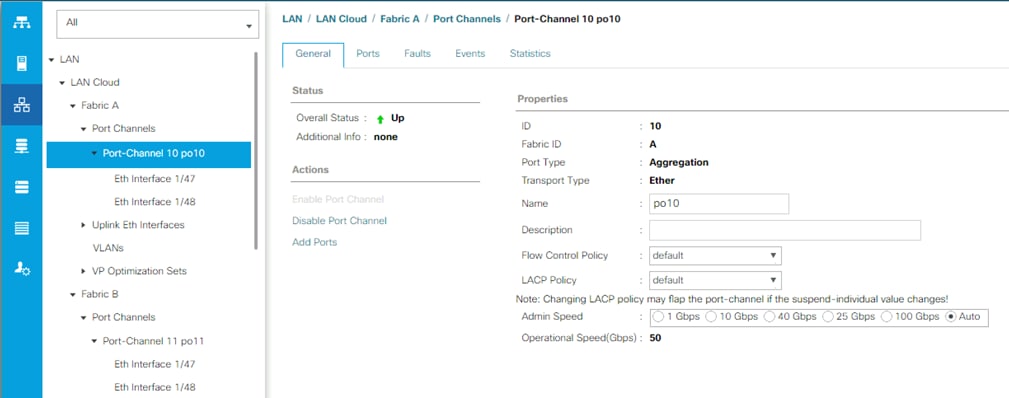

Cisco UCS network uplinks connect “northbound” from the pair of Cisco UCS Fabric Interconnects to the LAN in the customer datacenter. All Cisco UCS uplinks operate as trunks, carrying multiple 802.1Q VLAN IDs across the uplinks. The default Cisco UCS behavior is to assume that all VLAN IDs defined in the Cisco UCS configuration are eligible to be trunked across all available uplinks.

Cisco UCS Fabric Interconnects appear on the network as a collection of endpoints rather than as another network switch. Internally, the Fabric Interconnects do not participate in spanning-tree protocol (STP) domains, and the Fabric Interconnects cannot form a network loop, as they are not connected to each other with a layer 2 Ethernet link. All link up/down decisions via STP will be made by the upstream root bridges.

Uplinks need to be connected and active from both Fabric Interconnects. For redundancy, multiple uplinks can be used on each FI, either as 802.3ad Link Aggregation Control Protocol (LACP) port-channels or using individual links. For the best level of performance and redundancy, uplinks can be made as LACP port-channels to multiple upstream Cisco switches using the virtual port channel (vPC) feature. Using vPC uplinks allows all uplinks to be active passing data, and protects against any individual link failure as well as the failure of an upstream switch. Other uplink configurations can be redundant; however, spanning-tree protocol loop avoidance may disable links if vPC is not available.

All uplink connectivity methods must allow for traffic to pass from one fabric interconnect to the other, or from fabric A to fabric B. There are scenarios where cable, port or link failures would require traffic that normally does not leave the Cisco UCS domain, to instead be directed over the Cisco UCS uplinks because that traffic must travel from fabric A to fabric B, or vice-versa. Additionally, this traffic flow pattern can be seen briefly during maintenance procedures, such as updating firmware on the fabric interconnects, which requires them to be rebooted. Cisco recommends that the uplink bandwidth configured is greater than or equal to double the bandwidth available to each HyperFlex converged node. For example, if the nodes are connected at 10 Gigabit speeds, then each fabric interconnect should have at least 20 Gigabit of uplink bandwidth available. The following sections and figures detail several uplink connectivity options.
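The uplink sizing recommendation above reduces to a single comparison per fabric interconnect. The following Python sketch assumes hypothetical function names and treats the node connection speed as the per-link speed, as in the 10 Gigabit example.

```python
# Sizing rule from the text: uplink bandwidth per FI >= 2 x node link speed.
def min_uplink_gbps(node_link_gbps: float) -> float:
    """Recommended minimum uplink bandwidth for each fabric interconnect."""
    return 2 * node_link_gbps


def uplinks_sufficient(node_link_gbps: float, uplink_speeds_gbps: list[float]) -> bool:
    """Compare the sum of one FI's uplink speeds against the recommendation."""
    return sum(uplink_speeds_gbps) >= min_uplink_gbps(node_link_gbps)


if __name__ == "__main__":
    print(min_uplink_gbps(10))                # nodes at 10 Gb
    print(uplinks_sufficient(25, [40]))       # one 40 Gb uplink per FI
    print(uplinks_sufficient(25, [40, 40]))   # two 40 Gb uplinks per FI
```

For nodes connected at 25 Gb, a single 40 Gb uplink per fabric interconnect falls short of the 50 Gb recommendation, while two such uplinks meet it.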

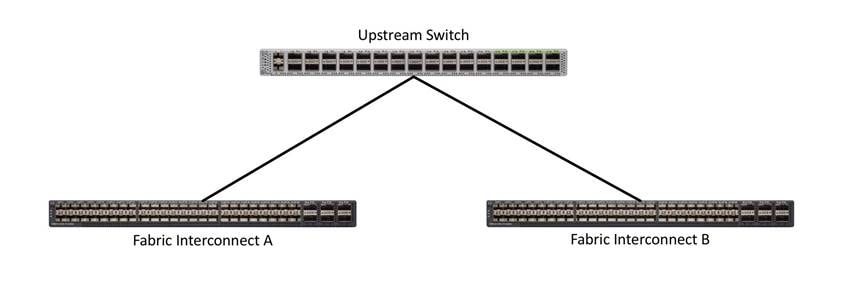

Single Uplinks to Single Switch

This connection design is susceptible to failures at several points; single uplink failures on either fabric interconnect can lead to connectivity losses or functional failures, and the failure of the single uplink switch will cause a complete connectivity outage.

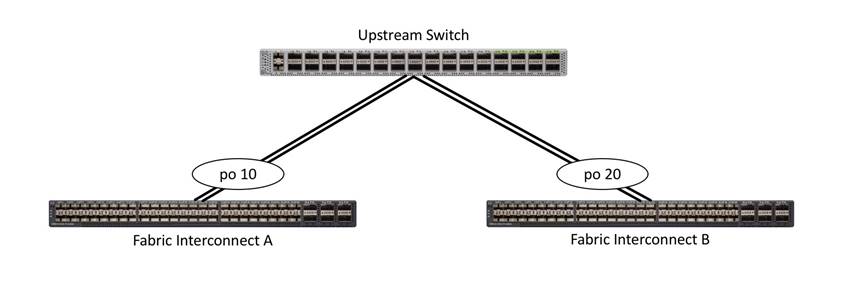

Port Channels to Single Switch

This connection design is now redundant against the loss of a single link but remains susceptible to the failure of the single switch.

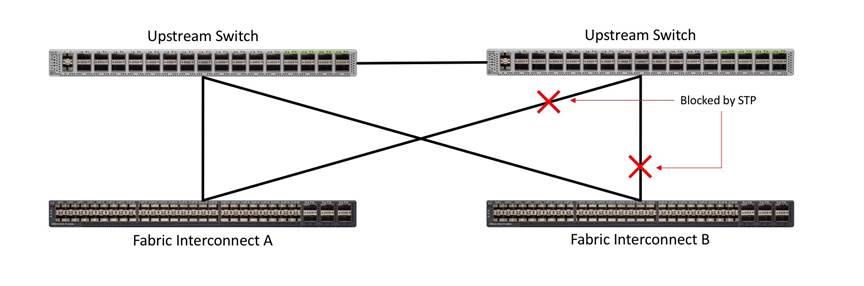

Single Uplinks or Port Channels to Multiple Switches

This connection design is redundant against the failure of an upstream switch, and redundant against a single link failure. In normal operation, STP is likely to block half of the links to avoid a loop across the two upstream switches. The side effect of this is to reduce bandwidth between the Cisco UCS domain and the LAN. If any of the active links were to fail, STP would bring the previously blocked link online to provide access to that fabric interconnect via the other switch. It is not recommended to connect both links from a single FI to a single switch, as that configuration is susceptible to a single switch failure breaking connectivity from fabric A to fabric B. For enhanced redundancy, the single links in the figure below could also be port-channels.

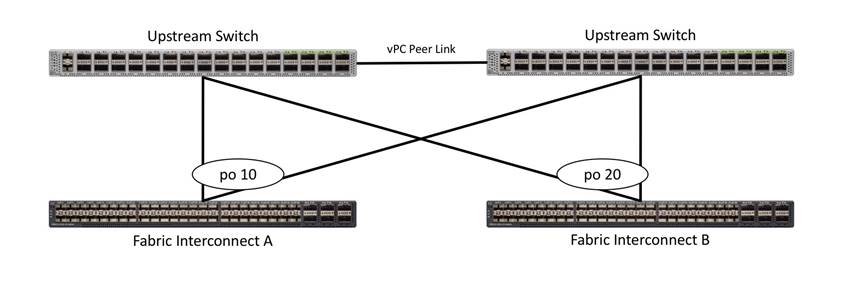

vPC to Multiple Switches

This recommended connection design relies on using Cisco switches that have the virtual port channel feature, such as Catalyst 6000 series switches running VSS, Cisco Nexus 5000 series, and Cisco Nexus 9000 series switches. Logically the two vPC enabled switches appear as one, and therefore spanning-tree protocol will not block any links. This configuration allows for all links to be active, achieving maximum bandwidth potential, and multiple redundancy at each level.

Fabric Interconnects (FI) are deployed in pairs, wherein the two units operate as a management cluster, while forming two separate network fabrics, referred to as the A side and B side fabrics. Therefore, many design elements will refer to FI A or FI B, alternatively called fabric A or fabric B. Both Fabric Interconnects are active at all times, passing data on both network fabrics for a redundant and highly available configuration. Management services, including Cisco UCS Manager, are also provided by the two FIs but in a clustered manner, where one FI is the primary, and one is secondary, with a roaming clustered IP address. This primary/secondary relationship is only for the management cluster and has no effect on data transmission.

Fabric interconnects have the following ports, which must be connected for proper management of the Cisco UCS domain:

● Mgmt: A 10/100/1000 Mbps port for managing the Fabric Interconnect and the Cisco UCS domain via GUI and CLI tools. This port is also used by remote KVM, IPMI and SoL sessions to the managed servers within the domain. This is typically connected to the customer management network.

● L1: A cross connect port for forming the Cisco UCS management cluster. This port is connected directly to the L1 port of the paired Fabric Interconnect using a standard CAT5 or CAT6 Ethernet cable with RJ45 plugs. It is not necessary to connect this to a switch or hub.

● L2: A cross connect port for forming the Cisco UCS management cluster. This port is connected directly to the L2 port of the paired Fabric Interconnect using a standard CAT5 or CAT6 Ethernet cable with RJ45 plugs. It is not necessary to connect this to a switch or hub.

● Console: An RJ45 serial port for direct console access to the fabric interconnect. This port is typically used during the initial FI setup process with the included serial to RJ45 adapter cable. This can also be plugged into a terminal aggregator or remote console server device.

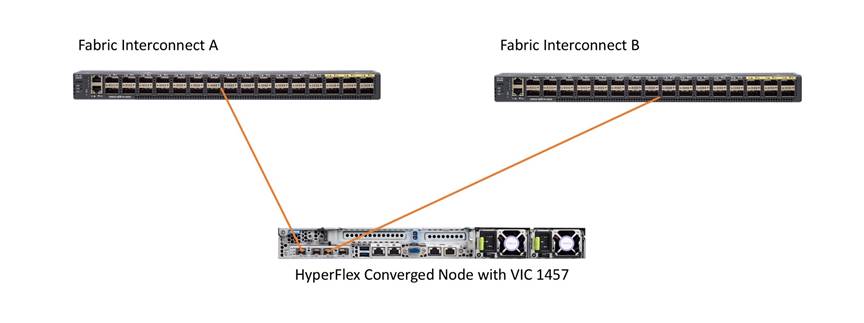

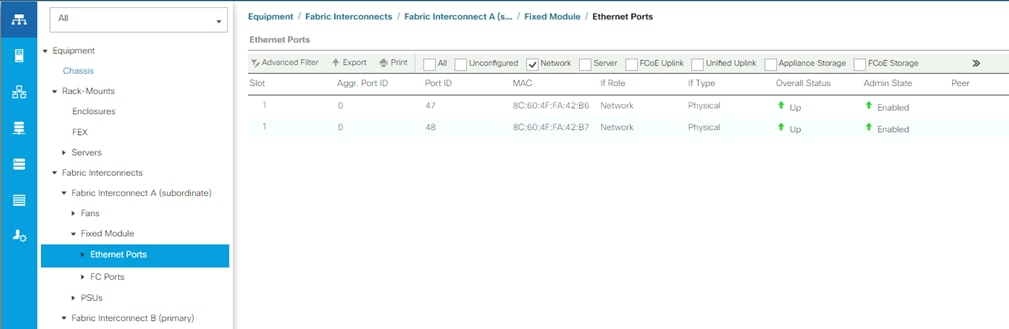

HX-Series Server Connectivity

The HX-Series converged servers are connected directly to the Cisco UCS Fabric Interconnects in Direct Connect mode. This option enables Cisco Intersight and Cisco UCS Manager to manage the HX-Series Rack-Mount Servers using a single cable for both management traffic and data traffic. Cisco HyperFlex M6 generation servers can be configured with the Cisco VIC 1467 or VIC 1477 cards. For the Cisco VIC 1467 and VIC 1477 cards, the standard and redundant practice is to connect port 1 of the VIC card (the leftmost port) to a port on FI A and connect port 3 (the right-center port) to a port on FI B (Figure 18). An optional configuration method for servers containing the Cisco VIC 1467 or VIC 1477 card is to cable the servers with 2 links to each FI, using ports 1 and 2 to FI A, and ports 3 and 4 to FI B. The HyperFlex installer checks for these configurations and verifies that the cabling of all servers matches. Failure to follow this cabling best practice can lead to errors, discovery failures, and loss of redundant connectivity.

All nodes within a Cisco HyperFlex cluster must be connected at the same communication speed; for example, mixing 10 Gb with 25 Gb interfaces is not allowed. All of the nodes within a cluster must also contain the same model of Cisco VIC card. For servers with the Cisco UCS VIC 1467 installed, both 10 Gb and 25 Gb speeds are available when connected to a model 6454 or 64108 Fabric Interconnect. The speed of the links is dependent on the model of optics and cables used to connect the servers to the Fabric Interconnects. For servers with the Cisco UCS VIC 1477 installed, both 40 Gb and 100 Gb speeds are available.
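The cabling and speed rules in this section can be captured in a pre-installation check. The sketch below is a hypothetical helper and does not reproduce the HyperFlex installer's own validation. The `Node` class, its field names, and the example server names are assumptions made for illustration. Only the port-to-fabric mappings and VIC speeds come from the text.

```python
from dataclasses import dataclass

# Speeds stated in the text for each VIC model (Gb).
VIC_SPEEDS_GBPS = {"1467": {10, 25}, "1477": {40, 100}}
# VIC port number -> fabric, for the two cabling layouts described above.
VALID_CABLING = (
    {1: "A", 3: "B"},
    {1: "A", 2: "A", 3: "B", 4: "B"},
)


@dataclass
class Node:
    name: str          # hypothetical server label
    vic_model: str     # "1467" or "1477"
    link_gbps: int     # negotiated link speed
    cabling: dict      # VIC port number -> "A" or "B"


def validate_cluster(nodes: list[Node]) -> list[str]:
    """Return a list of human-readable problems; empty means the plan is consistent."""
    problems = []
    if len({n.vic_model for n in nodes}) > 1:
        problems.append("mixed VIC models")
    if len({n.link_gbps for n in nodes}) > 1:
        problems.append("mixed link speeds")
    for n in nodes:
        if n.link_gbps not in VIC_SPEEDS_GBPS.get(n.vic_model, set()):
            problems.append(f"{n.name}: {n.link_gbps} Gb not available on VIC {n.vic_model}")
        if n.cabling not in VALID_CABLING:
            problems.append(f"{n.name}: cabling does not match a described layout")
    if any(n.cabling != nodes[0].cabling for n in nodes):
        problems.append("cabling differs between servers")
    return problems


if __name__ == "__main__":
    plan = [
        Node("hx-1", "1467", 25, {1: "A", 3: "B"}),
        Node("hx-2", "1467", 10, {1: "A", 3: "B"}),
    ]
    print(validate_cluster(plan))
```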

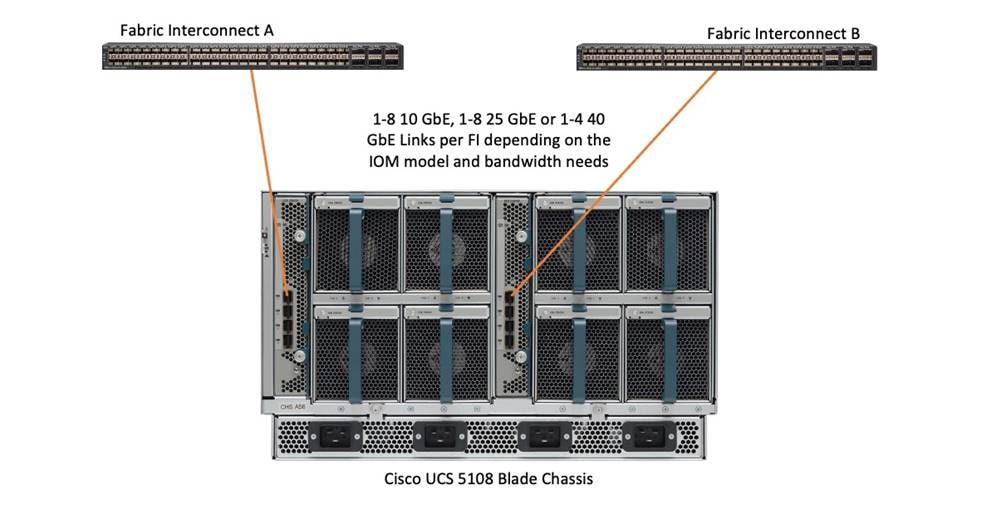

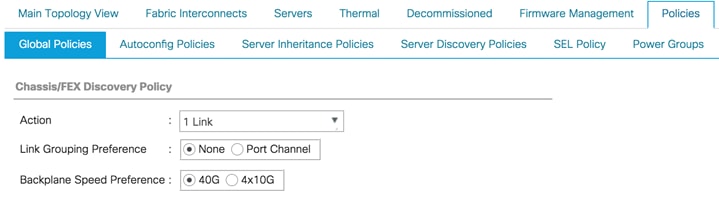

Cisco UCS B-Series Blade Servers

HyperFlex extended clusters can also incorporate 1-32 Cisco UCS blade servers for additional compute capacity. The blade chassis comes populated with 1-4 power supplies, and 8 modular cooling fans. In the rear of the chassis are two bays for installation of Cisco Fabric Extenders. The Fabric Extenders (also commonly called IO Modules, or IOMs) connect the chassis to the Fabric Interconnects. Internally, the Fabric Extenders connect to the Cisco VIC card installed in each blade server across the chassis backplane. The standard connection practice is to connect 1-8 10 GbE links, 1-8 25 GbE links, or 1-4 40 GbE links (depending on the IOMs and FIs purchased) from the left-side IOM, or IOM 1, to FI A, and to connect the same number of 10 GbE, 25 GbE or 40 GbE links from the right-side IOM, or IOM 2, to FI B (Figure 19). All other cabling configurations are invalid, and can lead to errors, discovery failures, and loss of redundant connectivity.

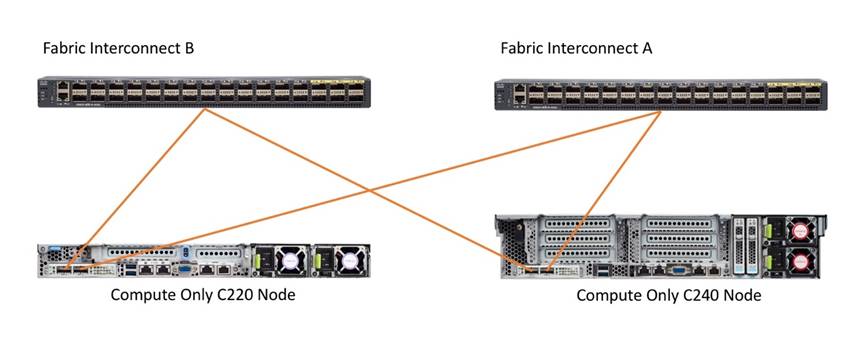

Cisco UCS C-Series Rack-Mount Servers

HyperFlex extended clusters can also incorporate 1-32 Cisco UCS Rack-Mount Servers for additional compute capacity. The Cisco UCS C-Series Rack-Mount Servers are connected directly to the Cisco UCS Fabric Interconnects in Direct Connect mode. The model of Cisco VIC card in the servers, the model of optics or physical transceivers, and the model of fabric interconnects must be compatible to establish links between the equipment. The standard and redundant connection practice for connecting standard Cisco UCS C-Series servers to the fabric interconnects is identical to the method described earlier for the HX-Series servers. Failure to follow this cabling practice can lead to errors, discovery failures, and loss of redundant connectivity.

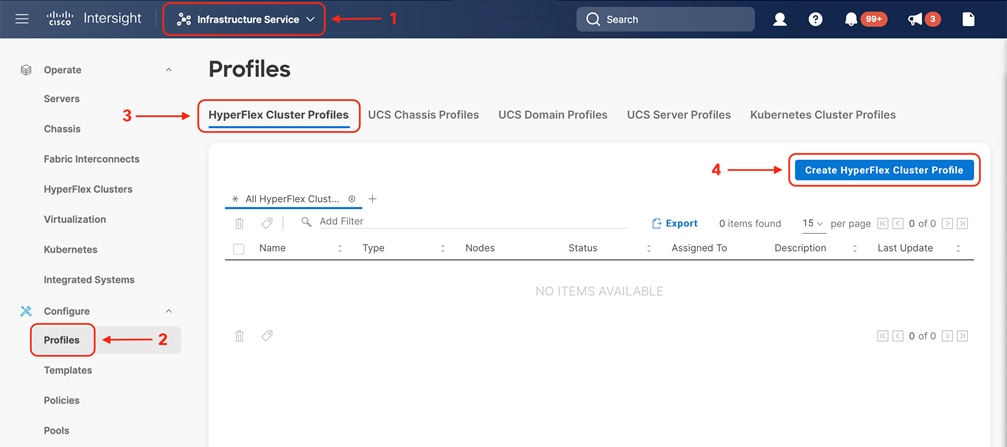

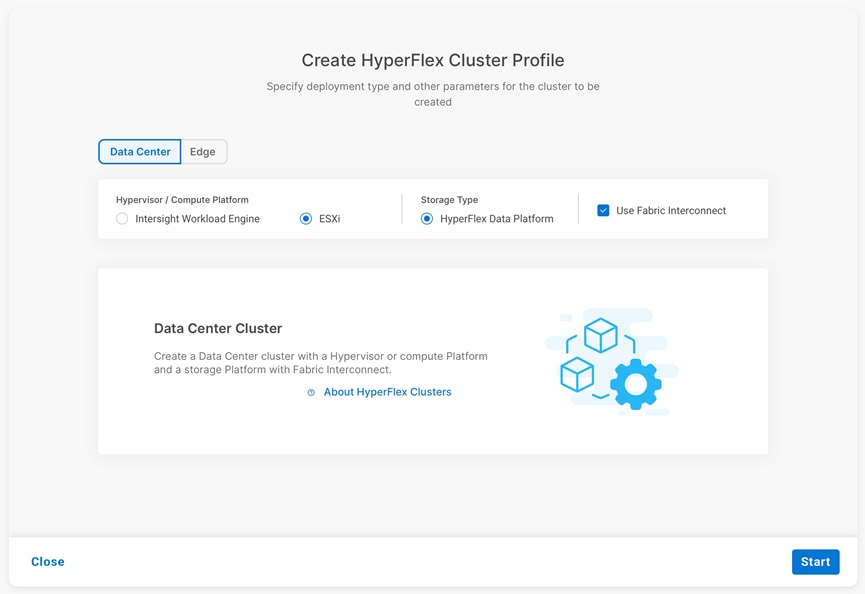

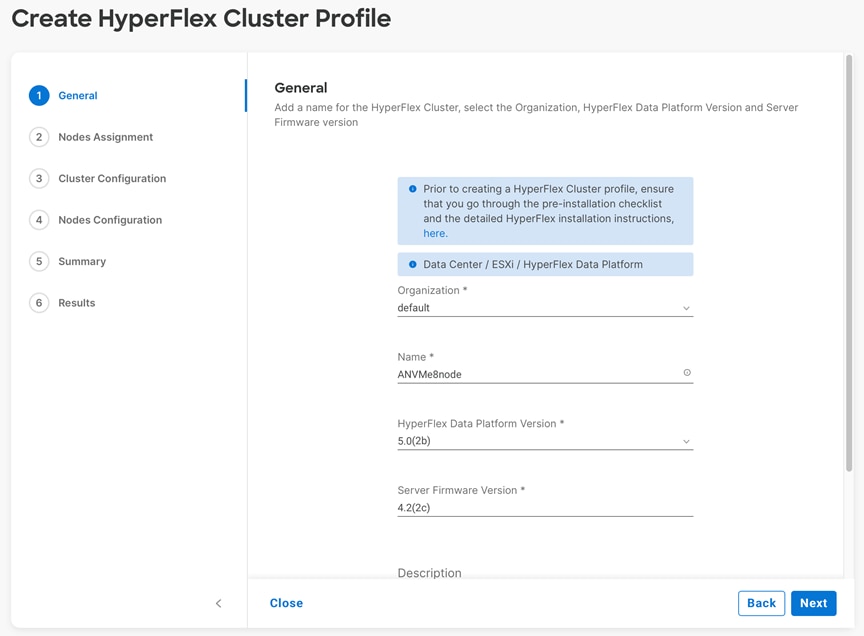

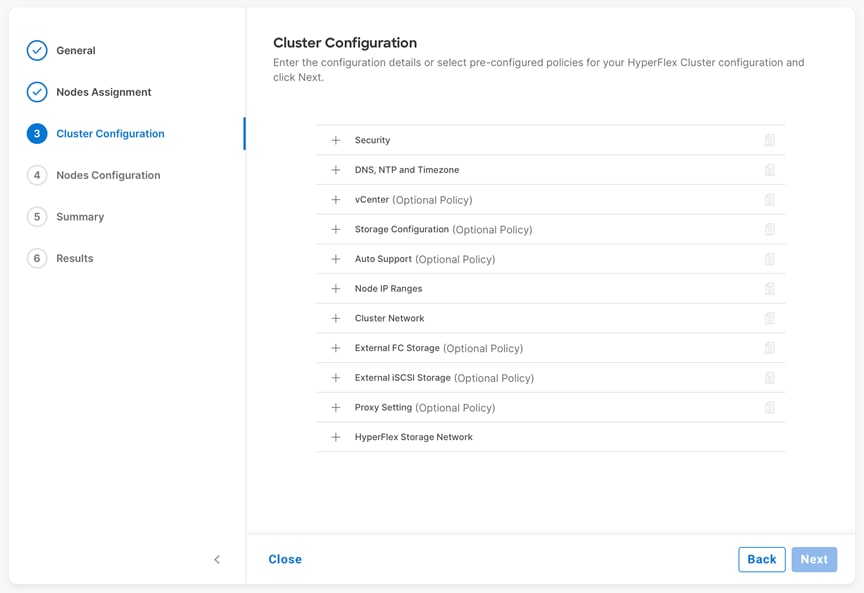

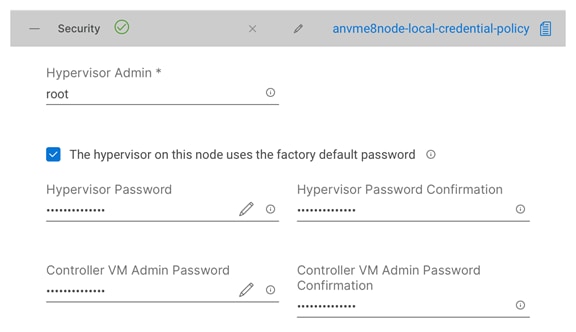

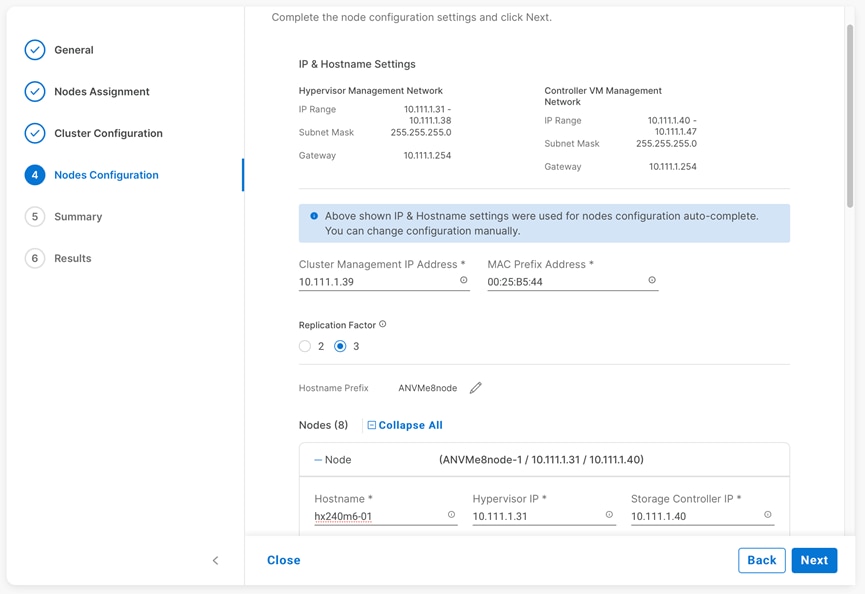

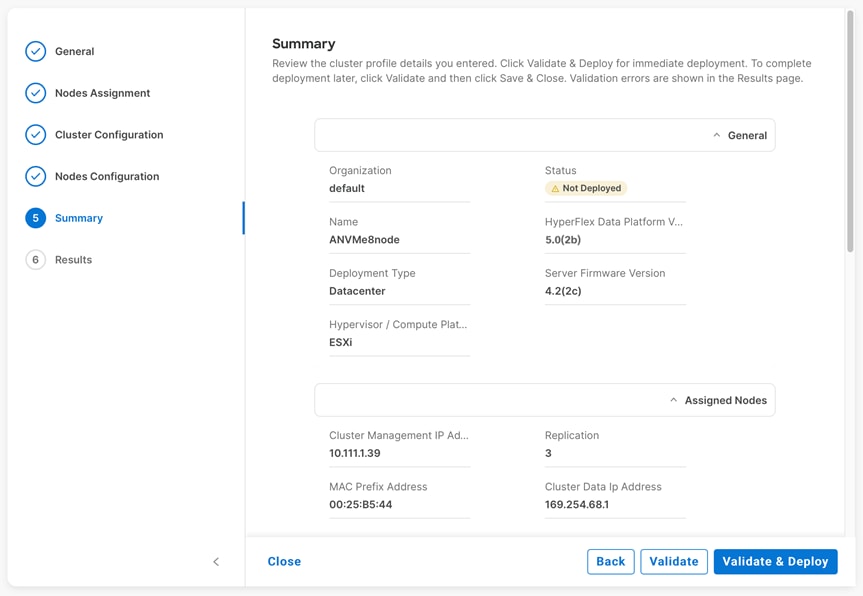

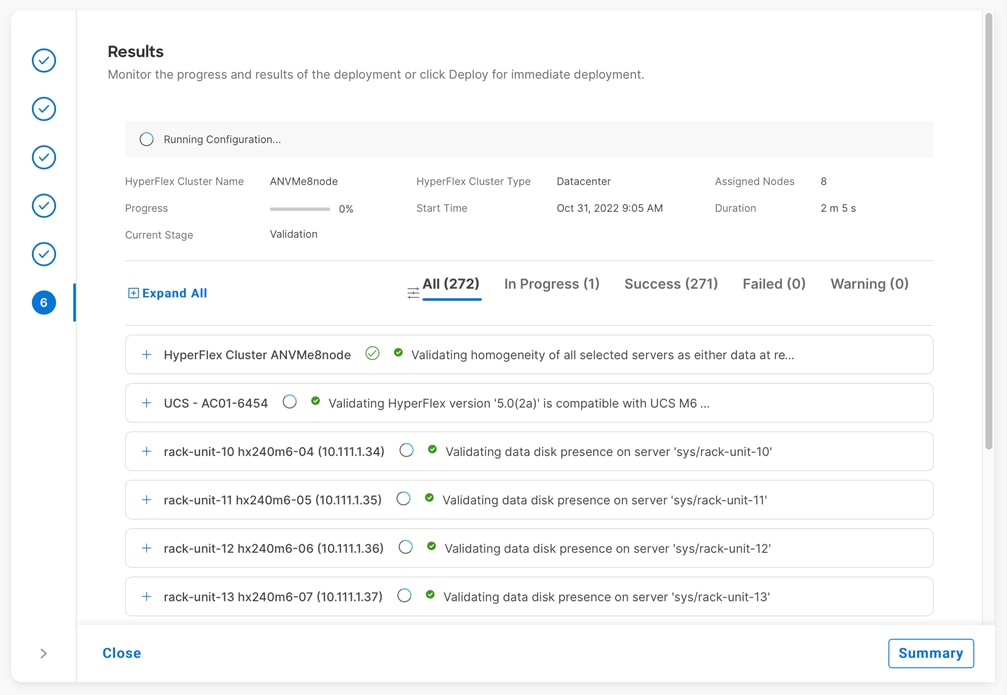

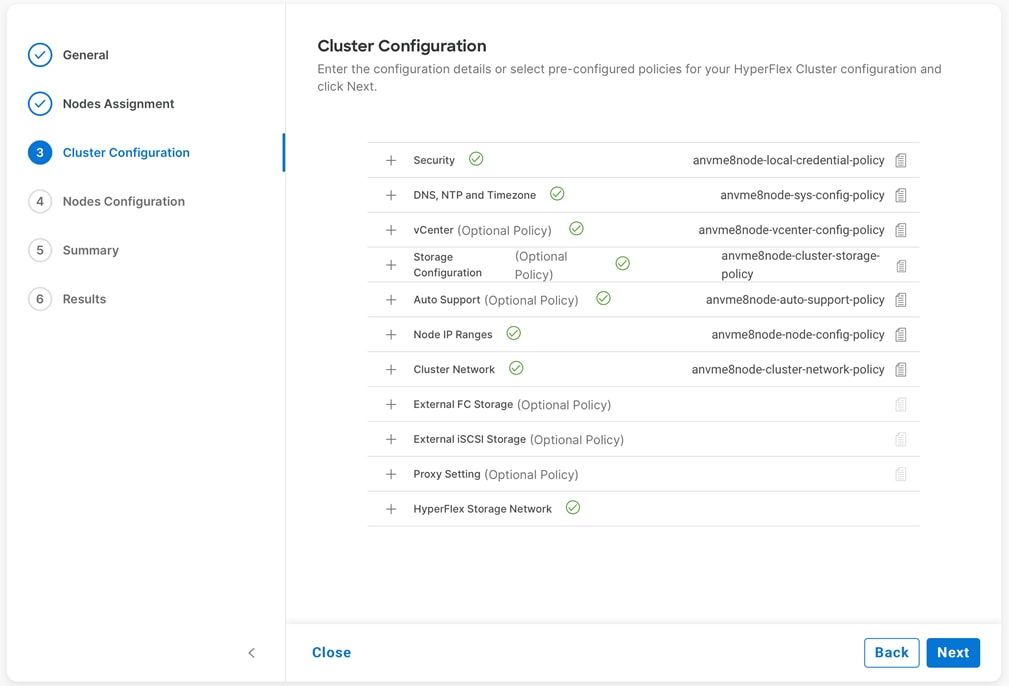

Installing the HyperFlex system is done via the Cisco Intersight online management portal, or through a deployable HyperFlex installer virtual machine, available for download at cisco.com as an OVA file. The installer performs the configuration of Cisco UCS Manager and the physical servers, and also performs significant portions of the ESXi configuration. Finally, the installer installs the HyperFlex HX Data Platform software and creates the HyperFlex cluster. The details of the logical network and ESXi host designs as installed by the HyperFlex installer follow below.

Cisco HyperFlex Logical Network Design

Cisco HyperFlex clusters can be installed with the choice between 10Gb, 25Gb and 40Gb Ethernet bandwidth, with two connections per server to the dual redundant upstream Fabric Interconnects.

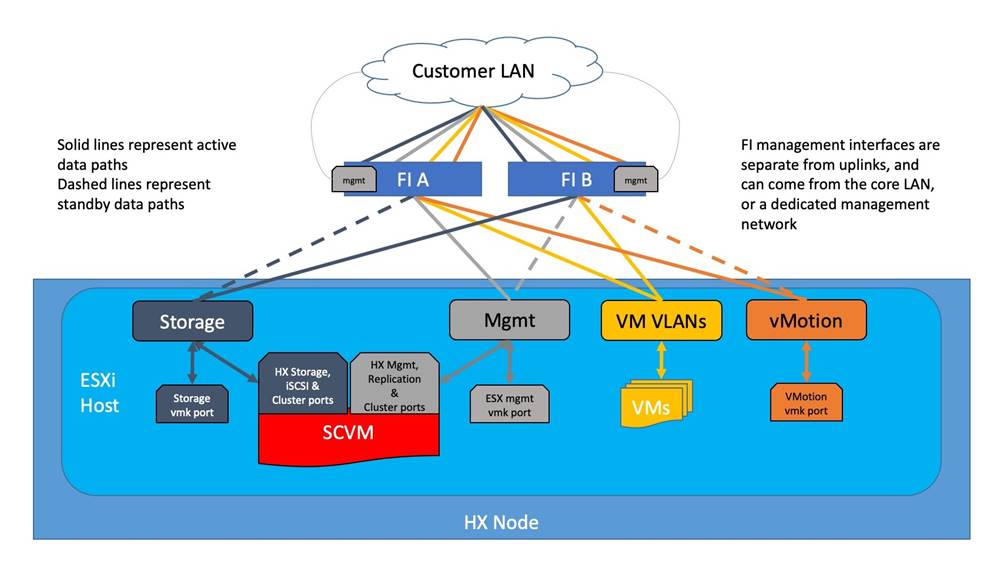

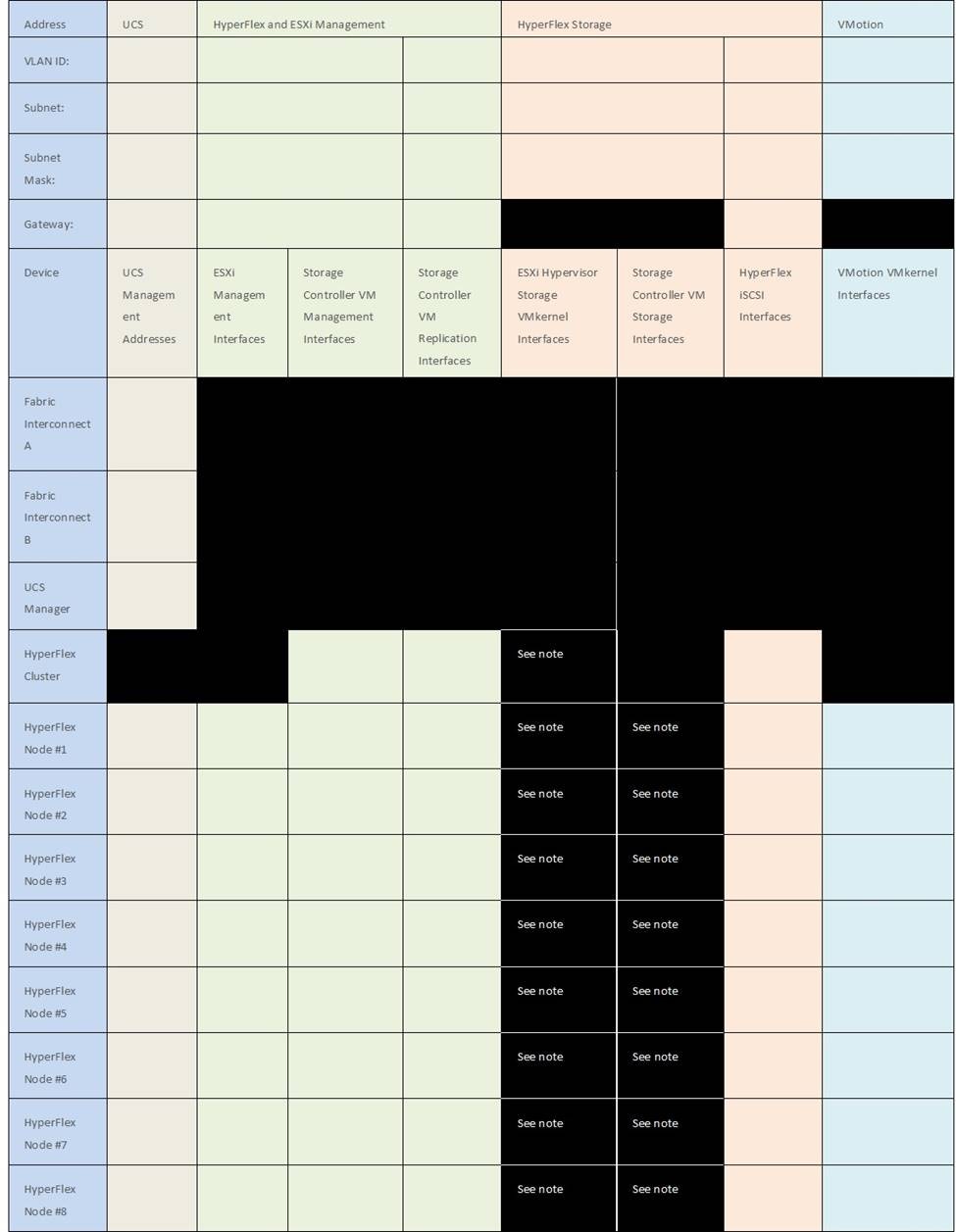

The Cisco HyperFlex system has communication pathways that fall into four defined zones (Figure 20):

● Management Zone: This zone comprises the connections needed to manage the physical hardware, the hypervisor hosts, and the storage platform controller virtual machines (SCVM).

● VM Zone: This zone comprises the connections needed to service network IO to the guest VMs that will run inside the HyperFlex hyperconverged system. This zone typically contains multiple routable VLANs, which are trunked to the Cisco UCS Fabric Interconnects via the network uplinks and tagged with 802.1Q VLAN IDs. For the Red Hat OpenShift Container Platform installation, the RHOCP master and worker VMs' networking endpoints would reside in this zone.

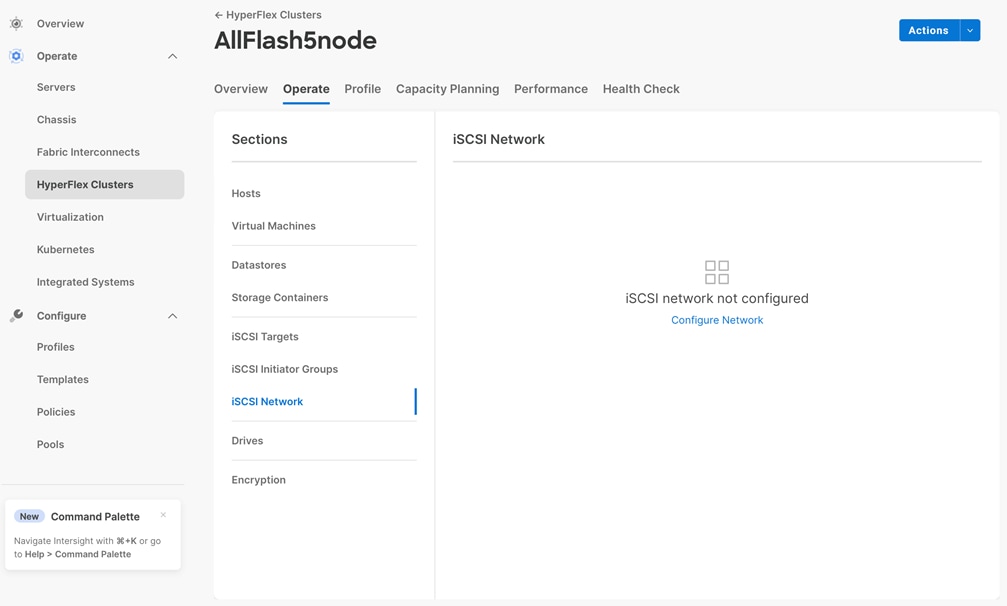

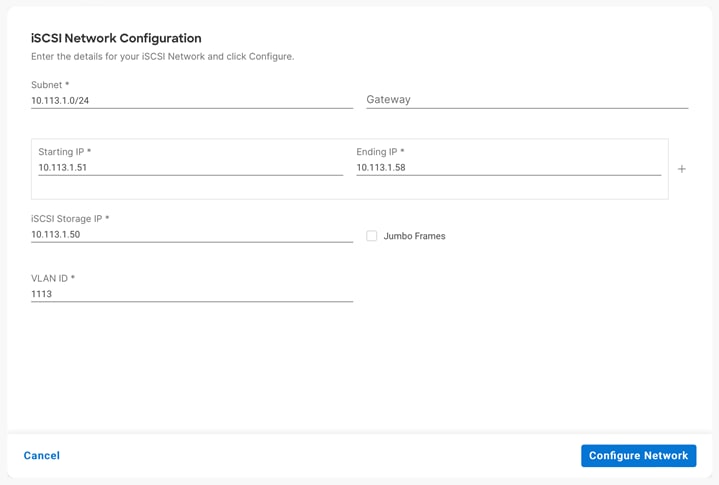

● Storage Zone: This zone comprises the connections used by the Cisco HX Data Platform software, ESXi hosts, and the storage controller VMs to service the HX Distributed Data Filesystem. The HX storage VLAN does not need to be routable to the rest of the LAN, however it must be able to traverse the switches upstream from the Fabric Interconnects. In addition, a VLAN for iSCSI-based traffic is created in this zone, which may or may not be routable according to the network design. Within this VLAN, the iSCSI storage IP addresses, one per node and one for the entire cluster, are created for presenting HXDP storage to external clients via the iSCSI protocol. These addresses are used in this design by the stateful containers which mount iSCSI-based volumes using the HX CSI plugin.

● vMotion Zone: This zone comprises the connections used by the ESXi hosts to enable vMotion of the guest VMs from host to host.

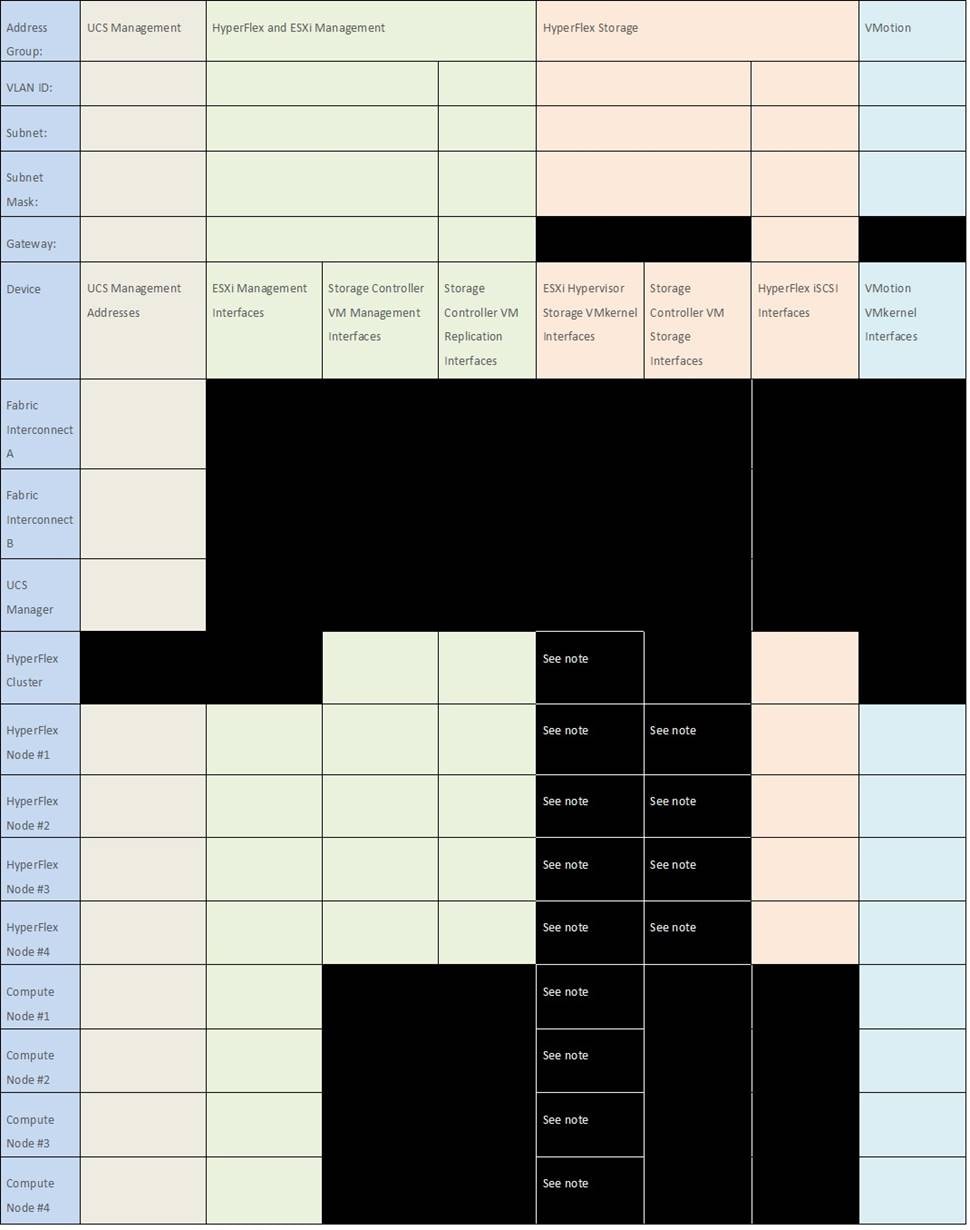

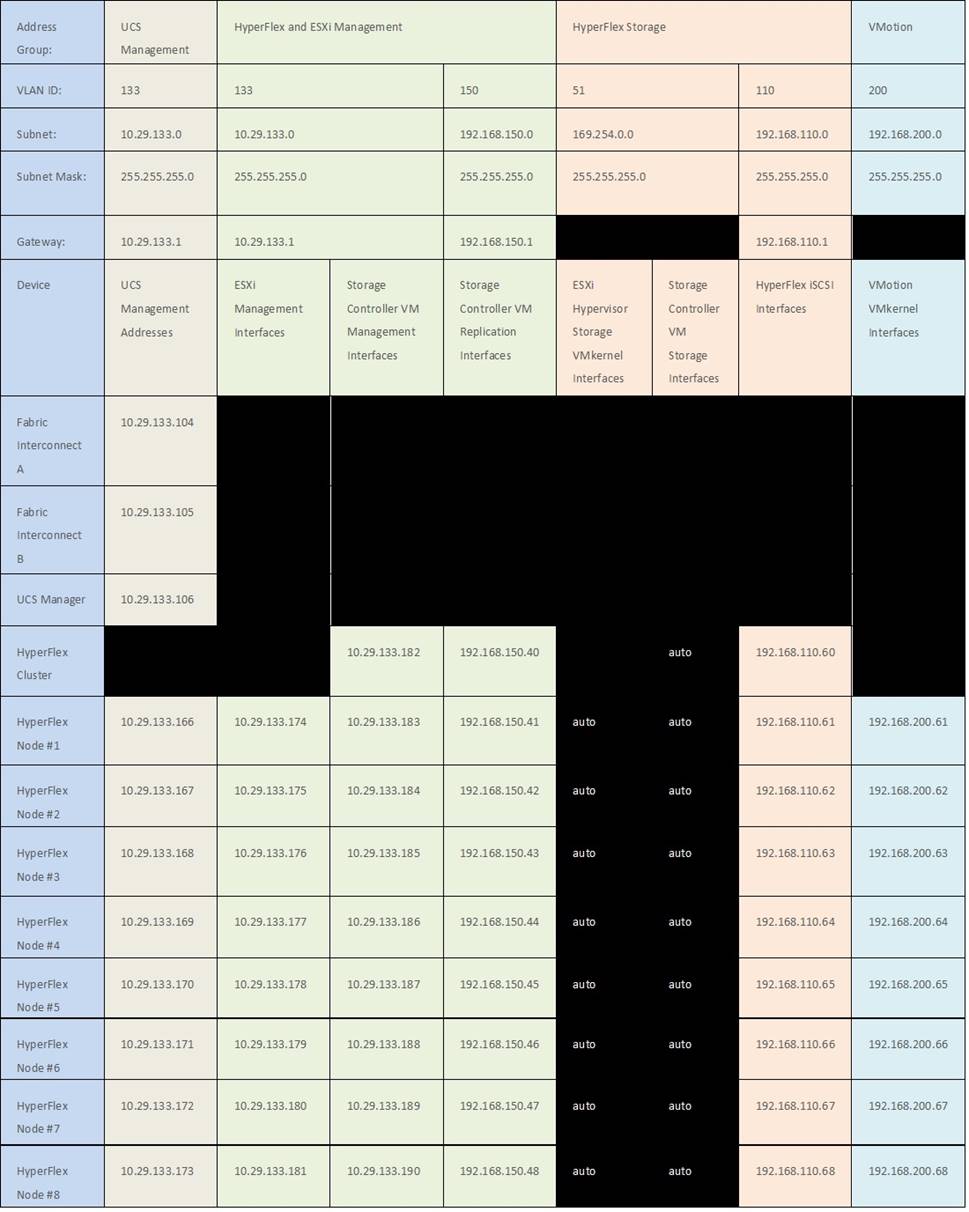

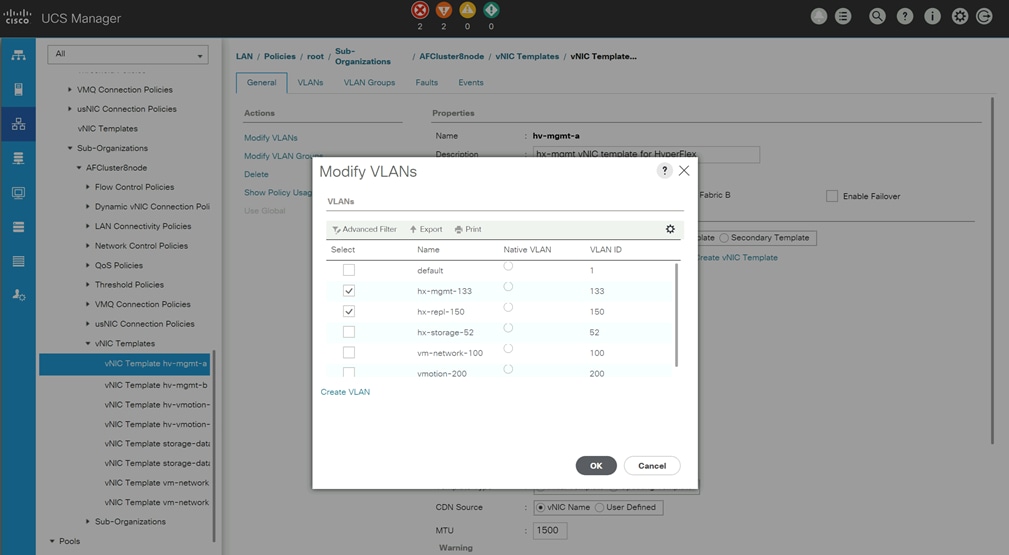

For the base HyperFlex system configuration, multiple VLANs need to be carried to the Cisco UCS domain from the upstream LAN, and these VLANs are also defined in the Cisco UCS configuration. The hx-storage-data VLAN must be a separate VLAN ID from the remaining VLANs. Table 4 lists the VLANs created by the HyperFlex installer in Cisco UCS, and their functions.

| VLAN Name | VLAN ID | Purpose |
| --- | --- | --- |
| hx-inband-mgmt | Customer supplied | ESXi host management interfaces; HX Storage Controller VM management interfaces; HX Storage Cluster roaming management interface |
| hx-inband-repl | Customer supplied | HX Storage Controller VM Replication interfaces; HX Storage Cluster roaming replication interface |
| hx-storage-data | Customer supplied | ESXi host storage VMkernel interfaces; HX Storage Controller storage network interfaces; HX Storage Cluster roaming storage interface |
| hx-inband-iscsi | Customer supplied | iSCSI external storage access |
| vm-network | Customer supplied | Guest VM network interfaces |
| hx-vmotion | Customer supplied | ESXi host vMotion VMkernel interfaces |

Note: A dedicated network or subnet for physical device management is often used in datacenters. In this scenario, the mgmt0 interfaces of the two Fabric Interconnects would be connected to that dedicated network or subnet. This is a valid configuration for HyperFlex installations with the following caveat: wherever the HyperFlex installer is deployed, it must have IP connectivity to the subnet of the mgmt0 interfaces of the Fabric Interconnects, and also to the subnets used by the hx-inband-mgmt VLANs listed above.
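The VLAN requirements above can be checked against a planned configuration before installation. The following is a minimal sketch and not part of the HyperFlex installer: the function name `check_vlan_plan` and the sample VLAN IDs are hypothetical. It checks only what is stated here (the hx-storage-data VLAN must use a different VLAN ID from the remaining VLANs), plus the valid 802.1Q VLAN ID range of 1 to 4094.

```python
def check_vlan_plan(plan: dict[str, int]) -> list[str]:
    """Return a list of problems found in a VLAN name -> VLAN ID plan."""
    problems = []

    # 802.1Q reserves VLAN IDs 0 and 4095, so usable IDs are 1..4094.
    for name, vlan_id in plan.items():
        if not 1 <= vlan_id <= 4094:
            problems.append(f"{name}: VLAN ID {vlan_id} is outside 1-4094")

    # hx-storage-data must not share its VLAN ID with any other VLAN.
    storage_id = plan.get("hx-storage-data")
    for name, vlan_id in plan.items():
        if name != "hx-storage-data" and vlan_id == storage_id:
            problems.append(f"{name}: shares VLAN ID {vlan_id} with hx-storage-data")

    return problems


# Hypothetical example values, for illustration only.
example_plan = {
    "hx-inband-mgmt": 30,
    "hx-inband-repl": 31,
    "hx-storage-data": 101,
    "hx-inband-iscsi": 32,
    "vm-network": 34,
    "hx-vmotion": 35,
}
print(check_vlan_plan(example_plan) or "no problems found")
```

An empty result means the plan meets the two checks above; it does not confirm that the VLANs are actually trunked to the Fabric Interconnects.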

Jumbo Frames

All HyperFlex storage traffic traversing the hx-storage-data VLAN and subnet is configured by default to use jumbo frames; to be precise, all communication is configured to send IP packets with a Maximum Transmission Unit (MTU) size of 9000 bytes. The Cisco HyperFlex installer will configure jumbo frame support on the appropriate interfaces automatically. Jumbo frames could also significantly improve the performance of iSCSI traffic, which would in turn improve the performance of the Cisco HyperFlex CSI plugin for pods that require persistent storage. However, doing so can drastically increase the complexity of the configuration. Jumbo frame support requires that all interfaces, switches, initiators, and endpoints be configured properly to support the larger-than-standard MTU size. By default, the Red Hat OpenShift worker nodes would not be configured with a dedicated iSCSI interface with jumbo frame support enabled, and the Cisco HyperFlex CSI plugin would also not be configured to use jumbo frames. As such, although support for jumbo frames on the Cisco HyperFlex iSCSI network is possible, it is not recommended to attempt to use jumbo frames at this time.
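Because every device in the path must support the larger MTU, the usable MTU of a path is the smallest MTU configured on any hop. The sketch below illustrates that reasoning; the helper names and the sample hop names and MTU values are hypothetical. It also computes the largest ICMP echo payload that fits in a single unfragmented IPv4 packet, assuming a 20-byte IPv4 header without options and an 8-byte ICMP header, which is the value typically used when testing jumbo frames with a do-not-fragment ping.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header
JUMBO_MTU = 9000        # MTU used on the hx-storage-data VLAN


def path_mtu(hop_mtus: dict[str, int]) -> int:
    """The usable MTU of a path is the smallest MTU of any hop."""
    return min(hop_mtus.values())


def undersized_hops(hop_mtus: dict[str, int], required: int = JUMBO_MTU) -> list[str]:
    """Names of hops whose MTU is too small for the required size."""
    return sorted(name for name, mtu in hop_mtus.items() if mtu < required)


def max_icmp_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits in one unfragmented packet."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


# Hypothetical path: one upstream switch port was left at the standard MTU.
hops = {"esxi-vmk1": 9000, "fabric-interconnect": 9216, "upstream-switch": 1500}
print(path_mtu(hops))               # 1500
print(undersized_hops(hops))        # ['upstream-switch']
print(max_icmp_payload(JUMBO_MTU))  # 8972
```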

ESXi Host Design

Building upon the Cisco UCS service profiles and policy designs, the following sections detail the design of the elements within the VMware ESXi hypervisors, system requirements, virtual networking, and the configuration of ESXi for the Cisco HyperFlex HX Distributed Data Platform.

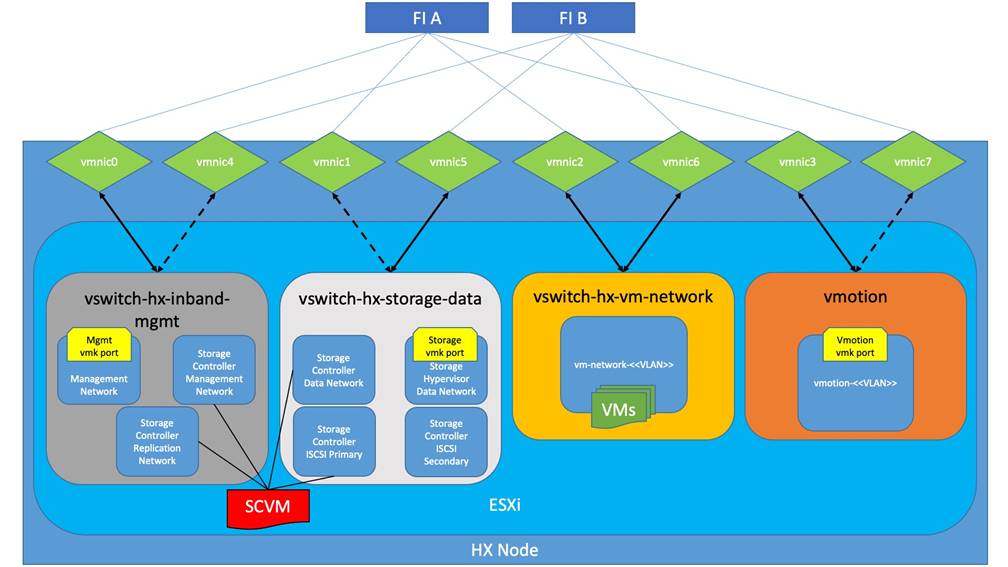

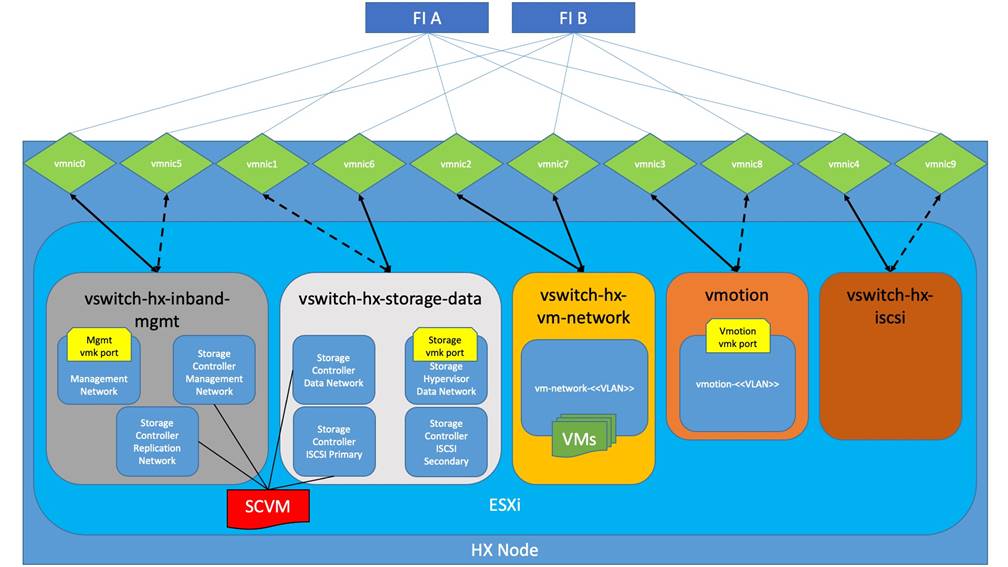

The Cisco HyperFlex system has a pre-defined virtual network design at the ESXi hypervisor level. The ESXi host networking design is derived from the configuration of the nodes as set within Cisco UCS Manager, which is automatically configured via Cisco Intersight during the HyperFlex installation. Four different virtual switches are created by the HyperFlex installer, each using two uplinks, which are each serviced by a vNIC defined in the Cisco UCS service profile. The vSwitches created are:

● vswitch-hx-inband-mgmt: This is the default vSwitch0 which is renamed by the ESXi kickstart file as part of the automated installation. The switch has two uplinks, active on fabric A and standby on fabric B, without jumbo frames. The default VMkernel port, vmk0, is configured in the standard Management Network port group. A second port group is created for the Storage Platform Controller VMs to connect to with their individual management interfaces. A third port group is created for cluster-to-cluster VM snapshot replication traffic. The VLANs are not Native VLANs as assigned to the vNIC templates, and therefore they are defined in ESXi/vSphere.

● vswitch-hx-storage-data: This vSwitch is created as part of the automated installation. The switch has two uplinks, active on fabric B and standby on fabric A, with jumbo frames highly recommended. A VMkernel port, vmk1, is configured in the Storage Hypervisor Data Network port group, which is the interface used for connectivity to the HX Datastores via NFS. A second port group is created for the Storage Platform Controller VMs to connect to with their individual storage interfaces. Third and fourth port groups are created for the primary and secondary paths of external iSCSI traffic, although only the primary port group is used at this time and is assigned the hx-inband-iscsi VLAN ID. The VLANs are not Native VLANs as assigned to the vNIC templates, and therefore they are defined in ESXi/vSphere.

● vswitch-hx-vm-network: This vSwitch is created as part of the automated installation. The switch has two uplinks, active on both fabrics A and B, and without jumbo frames. The VLANs are not Native VLANs as assigned to the vNIC templates, and therefore they are defined in ESXi/vSphere.

● vmotion: This vSwitch is created as part of the automated installation. The switch has two uplinks, active on fabric A and standby on fabric B, with jumbo frames highly recommended. The IP addresses of the VMkernel ports (vmk2) are configured during the post_install script execution. The VLAN is not a Native VLAN as assigned to the vNIC templates, and therefore it is defined in ESXi/vSphere.

● vswitch-hx-iscsi: This additional standard vSwitch is only created when the optional additional pair of vNICs are created during installation for connectivity to external iSCSI storage systems. Port groups and VLAN ID assignment must be done manually as a post-installation activity.

Table 5 and Figure 22 provide more details about the ESXi virtual networking design as built by the HyperFlex installer by default.

Table 5. Default Virtual Switches

| Virtual Switch | Port Groups | Active vmnic(s) | Passive vmnic(s) | VLAN IDs | Jumbo |
| --- | --- | --- | --- | --- | --- |
| vswitch-hx-inband-mgmt | Management Network; Storage Controller Management Network | vmnic0 | vmnic4 | <<hx-inband-mgmt>> | no |
| vswitch-hx-inband-mgmt | Storage Controller Replication Network | vmnic0 | vmnic4 | <<hx-inband-repl>> | no |
| vswitch-hx-storage-data | Storage Controller Data Network; Storage Hypervisor Data Network | vmnic5 | vmnic1 | <<hx-storage-data>> | yes |
| vswitch-hx-storage-data | Storage Controller ISCSI Primary; Storage Controller ISCSI Secondary | vmnic5 | vmnic1 | <<hx-inband-iscsi>> | yes |
| vswitch-hx-vm-network | vm-network-<<VLAN ID>> | vmnic2, vmnic6 | | <<vm-network>> | no |
| vmotion | vmotion-<<VLAN ID>> | vmnic3 | vmnic7 | <<hx-vmotion>> | yes |
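The default layout in Table 5 can be expressed as data, which makes it easier to compare against a deployed host. This is a minimal sketch rather than a Cisco-provided tool: the `VSwitch` class and helper names are hypothetical, and the mapping of vmnic0 through vmnic3 to fabric A and vmnic4 through vmnic7 to fabric B is an inference from the active and standby fabrics described for each vSwitch, not something stated explicitly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VSwitch:
    name: str
    active: tuple[str, ...]
    standby: tuple[str, ...]
    jumbo: bool


# Default virtual switches as listed in Table 5.
DEFAULT_VSWITCHES = (
    VSwitch("vswitch-hx-inband-mgmt", ("vmnic0",), ("vmnic4",), False),
    VSwitch("vswitch-hx-storage-data", ("vmnic5",), ("vmnic1",), True),
    VSwitch("vswitch-hx-vm-network", ("vmnic2", "vmnic6"), (), False),
    VSwitch("vmotion", ("vmnic3",), ("vmnic7",), True),
)


def fabric_of(vmnic: str) -> str:
    """Assumed mapping for the default layout: vmnic0-3 -> A, vmnic4-7 -> B."""
    index = int(vmnic[len("vmnic"):])
    return "A" if index < 4 else "B"


def spans_both_fabrics(vswitch: VSwitch) -> bool:
    """True when the vSwitch has at least one uplink on each fabric."""
    fabrics = {fabric_of(nic) for nic in vswitch.active + vswitch.standby}
    return fabrics == {"A", "B"}


for vs in DEFAULT_VSWITCHES:
    active_fabrics = sorted({fabric_of(nic) for nic in vs.active})
    print(vs.name, "active on fabric", "+".join(active_fabrics),
          "| jumbo:", vs.jumbo, "| redundant:", spans_both_fabrics(vs))
```

The fabric mapping does not carry over to the layout with the additional vNICs, where the vmnic numbering shifts.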

Table 6 and Figure 23 provide more details about the ESXi virtual networking design as built by the HyperFlex installer when the additional vNICs are configured during the installation.

Table 6. Optional Virtual Switches

| Virtual Switch | Port Groups | Active vmnic(s) | Passive vmnic(s) | VLAN IDs | Jumbo |
| --- | --- | --- | --- | --- | --- |
| vswitch-hx-inband-mgmt | Management Network; Storage Controller Management Network | vmnic0 | vmnic5 | <<hx-inband-mgmt>> | no |
| vswitch-hx-inband-mgmt | Storage Controller Replication Network | vmnic0 | vmnic5 | <<hx-inband-repl>> | no |
| vswitch-hx-storage-data | Storage Controller Data Network; Storage Hypervisor Data Network | vmnic6 | vmnic1 | <<hx-storage-data>> | yes |
| vswitch-hx-storage-data | Storage Controller ISCSI Primary; Storage Controller ISCSI Secondary | vmnic6 | vmnic1 | <<hx-inband-iscsi>> | yes |
| vswitch-hx-vm-network | vm-network-<<VLAN ID>> | vmnic2, vmnic7 | | <<vm-network>> | no |
| vmotion | vmotion-<<VLAN ID>> | vmnic3 | vmnic8 | <<hx-vmotion>> | yes |
| vswitch-hx-iscsi | User configured | vmnic4 | vmnic9 | <<hx-ext-iscsi-a>>, <<hx-ext-iscsi-b>> | no |

Controller Virtual Machine Locations

The storage controller VM is operationally no different from any other typical virtual machine in an ESXi environment. The storage controller VM runs custom software and services which work cooperatively to form, manage, and maintain the Cisco HX Distributed Filesystem and service all the guest VM IO requests. The services and processes that run within the controller VMs are not exposed directly to the ESXi hosts, although the controller VMs are configured to automatically start and stop with the ESXi hosts and are protected from accidental deletion. The deployment of the controller VMs and vCenter plugins is done entirely by the Cisco HyperFlex installer and requires no manual steps.

The VM must have a virtual disk with the bootable root filesystem available in a location separate from the SAS HBA that the VM is controlling via VMDirectPath I/O. The server boots the ESXi hypervisor from the internal M.2 form factor SSD(s). The boot disk is partitioned by the ESXi installer, and all remaining space is used as a VMFS datastore. The controller VM’s root filesystem is stored on a 2.5 GB virtual disk, /dev/sda, which is placed on this VMFS datastore. The controller VM has full control of all the front and rear facing SAS or NVMe based hot-swappable disks via PCI passthrough. The controller VM operating system mounts the system disk, also commonly called the “housekeeping” disk, as /dev/sdb and places HyperFlex binaries and logs on this disk. The remaining disks seen by the controller VM OS are used by the HX Distributed Filesystem for the caching and capacity layers.

The following figures detail the Storage Platform Controller VM placement on the ESXi hypervisor hosts.

Prior to the installation of the cluster, proper consideration must be given to the number of nodes required for the overall cluster scale, and the usable capacity that will result.

When using HX-series servers with Intel processors, Cisco HyperFlex standard clusters scale from a minimum of 3 to a maximum of 32 Cisco HX-series converged nodes with small form factor (SFF) disks per cluster. A converged node is a member of the cluster which provides storage resources to the HX Distributed Filesystem. For the compute intensive “extended” cluster design, a configuration with 3 to 32 Cisco HX-series converged nodes can be combined with up to 32 compute nodes. When using the HyperFlex Datacenter Advantage licenses, the number of compute-only nodes must always be less than or equal to the number of converged nodes. If using HyperFlex Datacenter Premier licenses, the number of compute-only nodes can grow to as much as twice the number of converged nodes. Regardless of the licensing used, the combined maximum size of any HyperFlex cluster cannot exceed 96 nodes. Once the maximum size of a single cluster has been reached, the environment can be “scaled out” by adding additional HX-series servers to the Cisco UCS domain, installing an additional HyperFlex cluster on those new servers, and managing them via the same vCenter server. There is no limit to the number of clusters that can be created in a single UCS domain; the practical limit is instead set by the number of ports available on the Fabric Interconnects. Up to 100 HyperFlex clusters can be managed by a single vCenter server. Within Cisco Intersight, there are no practical limits to the number of Cisco HyperFlex clusters being managed.

Cisco HyperFlex HX240c-M6L model servers with large form factor (LFF) disks are limited to a maximum of sixteen nodes per cluster and cannot be mixed within the same cluster as models with small form factor (SFF) disks.

Table 7 lists the minimum and maximum scale for various installations of the Cisco HyperFlex system.

Table 7. HyperFlex Cluster Scale

| Cluster Type | Minimum Converged Nodes Required | Maximum Converged Nodes | Maximum Compute-only Nodes Allowed | Maximum Total Cluster Size |
| --- | --- | --- | --- | --- |
| Intel and AMD based servers with SFF disks | 3 | 32 | 64 | 96 |
| Intel based servers with LFF disks | 3 | 16 | 32 | 48 |
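The scale rules above and the limits in Table 7 can be combined into a simple pre-purchase check. The following is a sketch under stated assumptions, not an official sizing tool: the function and constant names are hypothetical, and it assumes the license-based compute-to-converged ratio (1:1 for Datacenter Advantage, 2:1 for Datacenter Premier) applies to both SFF and LFF clusters.

```python
# Limits taken from Table 7, keyed by capacity disk form factor.
LIMITS = {
    "SFF": {"min_converged": 3, "max_converged": 32, "max_compute": 64, "max_total": 96},
    "LFF": {"min_converged": 3, "max_converged": 16, "max_compute": 32, "max_total": 48},
}

# Maximum compute-only nodes per converged node, by license tier.
COMPUTE_RATIO = {"advantage": 1, "premier": 2}


def check_cluster_size(converged: int, compute_only: int,
                       disk_form: str = "SFF",
                       license_tier: str = "advantage") -> list[str]:
    """Return a list of scale-rule violations; an empty list means none found."""
    limits = LIMITS[disk_form]
    ratio = COMPUTE_RATIO[license_tier]
    problems = []

    if converged < limits["min_converged"]:
        problems.append(f"at least {limits['min_converged']} converged nodes are required")
    if converged > limits["max_converged"]:
        problems.append(f"at most {limits['max_converged']} converged nodes are allowed")
    if compute_only > ratio * converged:
        problems.append(f"{license_tier} license allows at most {ratio * converged} compute-only nodes")
    if compute_only > limits["max_compute"]:
        problems.append(f"at most {limits['max_compute']} compute-only nodes are allowed")
    if converged + compute_only > limits["max_total"]:
        problems.append(f"total cluster size cannot exceed {limits['max_total']} nodes")

    return problems


print(check_cluster_size(32, 64, "SFF", "premier"))    # []
print(check_cluster_size(8, 12, "SFF", "advantage"))   # one violation: ratio exceeded
```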

Overall usable cluster capacity is based on a number of factors. The number of nodes in the cluster, the number and size of the capacity layer disks, and the replication factor of the HyperFlex HX Data Platform, all affect the cluster capacity. Caching disk sizes are not calculated as part of the cluster capacity.

Disk drive manufacturers have adopted a size reporting methodology using calculation by powers of 10, also known as decimal prefix. As an example, a 120 GB disk is listed with a minimum of 120 x 10^9 bytes of usable addressable capacity, or 120 billion bytes. However, many operating systems and filesystems report their space based on standard computer binary exponentiation, or calculation by powers of 2, also called binary prefix. Using this method, 2^10 or 1024 bytes make up a kilobyte, 2^10 kilobytes make up a megabyte, 2^10 megabytes make up a gigabyte, and 2^10 gigabytes make up a terabyte. As the sizes increase, the disparity between the two systems of measurement and notation grows. For example, at the terabyte level, the deviation between a decimal prefix value and a binary prefix value is nearly 10 percent.
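The difference between the two conventions is straightforward to compute. The short sketch below converts the 120 GB example into binary-prefix gigabytes and shows how the gap widens with each prefix step; the function names are illustrative only.

```python
def gb_to_gib(size_gb: float) -> float:
    """Convert decimal gigabytes (10^9 bytes) to binary gigabytes (2^30 bytes)."""
    return size_gb * 10**9 / 2**30


def prefix_gap_percent(step: int) -> float:
    """Percent by which a binary-prefix unit exceeds its decimal counterpart.

    step is 1 for kilo, 2 for mega, 3 for giga, 4 for tera.
    """
    return (1024**step / 1000**step - 1) * 100


print(f"120 GB = {gb_to_gib(120):.2f} binary gigabytes")  # 111.76
for step, name in enumerate(["kilo", "mega", "giga", "tera"], start=1):
    print(f"{name}: {prefix_gap_percent(step):.2f}% gap")  # tera: 9.95% gap
```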

The International System of Units (SI) defines values and decimal prefix by powers of 10 as listed in Table 8.

Table 8. SI Unit Values (Decimal Prefix)

| Value | Symbol | Name |
| --- | --- | --- |
| 1000 bytes | kB | Kilobyte |
| 1000 kB | MB | Megabyte |
| 1000 MB | GB | Gigabyte |
| 1000 GB | TB | Terabyte |

The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) define values and binary prefix by powers of 2 in ISO/IEC 80000-13:2008 Clause 4 as listed in Table 9.

Table 9. IEC Unit Values (Binary Prefix)

| Value | Symbol | Name |
| --- | --- | --- |
| 1024 bytes | KiB | Kibibyte |
| 1024 KiB | MiB | Mebibyte |
| 1024 MiB | GiB | Gibibyte |
| 1024 GiB | TiB | Tebibyte |

For the purpose of this document, the decimal prefix numbers are used only for raw disk capacity as listed by the respective manufacturers. For all calculations where raw or usable capacities are shown from the perspective of Cisco Intersight, Cisco HyperFlex software, filesystems or operating systems, the binary prefix numbers are used. This is done primarily to show a consistent set of values as seen by the end user from within Cisco Intersight or the HyperFlex Connect GUI when viewing cluster capacity, allocation, and consumption, and also within most operating systems.

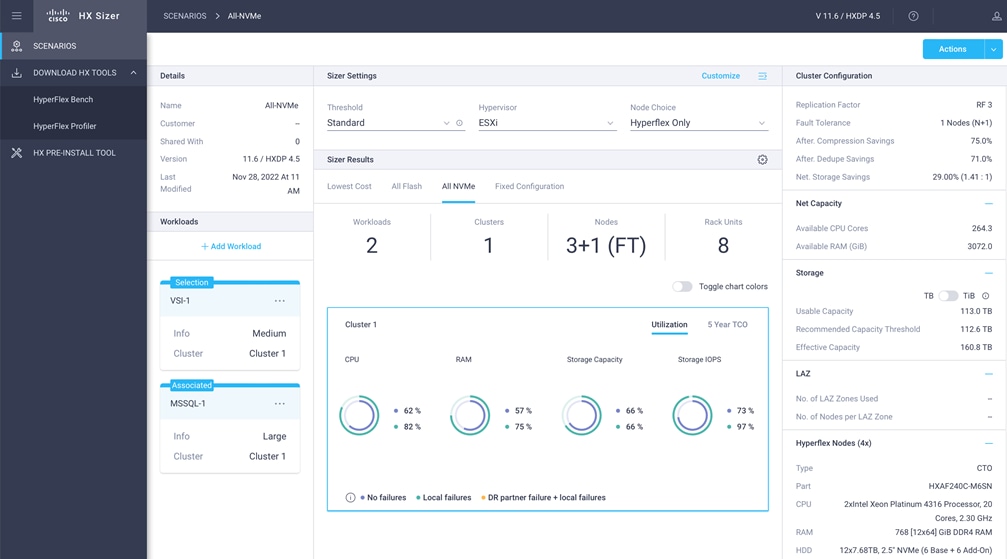

Table 10 lists a set of HyperFlex HX Data Platform cluster usable capacity values, using binary prefix, for an array of cluster configurations. These values provide an example of the capacity calculations, for determining the appropriate size of HX cluster to initially purchase, and how much capacity can be gained by adding capacity disks. The calculations for these values are listed in Appendix A: Cluster Capacity Calculations. The HyperFlex tool to help with sizing is listed in Appendix B: HyperFlex Sizer.

Table 10. Example Cluster Usable Capacities

| HX-Series Server Model | Node Quantity | Capacity Disk Size (each) | Capacity Disk Quantity (per node) | Cluster Usable Capacity at RF=2 | Cluster Usable Capacity at RF=3 |
| --- | --- | --- | --- | --- | --- |
| HXAF220c-M6S | 8 | 960 GB | 8 | 25.7 TiB | 17.1 TiB |
| HXAF220c-M6S | 8 | 1.9 TB | 8 | 50.9 TiB | 33.9 TiB |
| HXAF240c-M6SX | 12 | 1.9 TB | 10 | 95.4 TiB | 63.6 TiB |
| HXAF240c-M6SX | 12 | 1.9 TB | 15 | 143.1 TiB | 95.4 TiB |
| HXAF240c-M6SX | 12 | 1.9 TB | 23 | 219.4 TiB | 146.3 TiB |
| HXAF240c-M6SX | 12 | 7.6 TB | 10 | 381.6 TiB | 254.4 TiB |
| HXAF240c-M6SX | 12 | 7.6 TB | 15 | 572.3 TiB | 381.6 TiB |
| HXAF240c-M6SX | 12 | 7.6 TB | 23 | 877.6 TiB | 585.0 TiB |
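The values in Table 10 can be approximated with a short calculation. The sketch below is an illustration only; the authoritative method is the one given in Appendix A: Cluster Capacity Calculations. It converts the raw decimal capacity to TiB, applies an overhead factor, and divides by the replication factor. The 0.92 factor is an assumption chosen because it reproduces the Table 10 values; the function and constant names are hypothetical.

```python
BYTES_PER_TIB = 1024**4

# ASSUMPTION: fraction of raw capacity left after filesystem overhead.
# Chosen because it reproduces Table 10; see Appendix A for the real method.
ASSUMED_OVERHEAD_FACTOR = 0.92


def usable_capacity_tib(nodes: int, disks_per_node: int,
                        disk_size_gb: float, replication_factor: int) -> float:
    """Approximate cluster usable capacity in TiB.

    disk_size_gb is the manufacturer's decimal size (1 GB = 10^9 bytes).
    """
    raw_bytes = nodes * disks_per_node * disk_size_gb * 10**9
    raw_tib = raw_bytes / BYTES_PER_TIB
    return raw_tib * ASSUMED_OVERHEAD_FACTOR / replication_factor


# First row of Table 10: 8 nodes, 8 x 960 GB capacity disks per node.
print(round(usable_capacity_tib(8, 8, 960, 2), 1))  # 25.7
print(round(usable_capacity_tib(8, 8, 960, 3), 1))  # 17.1
```

Caching disks are excluded from the inputs, consistent with the statement that caching disk sizes are not counted as part of the cluster capacity.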

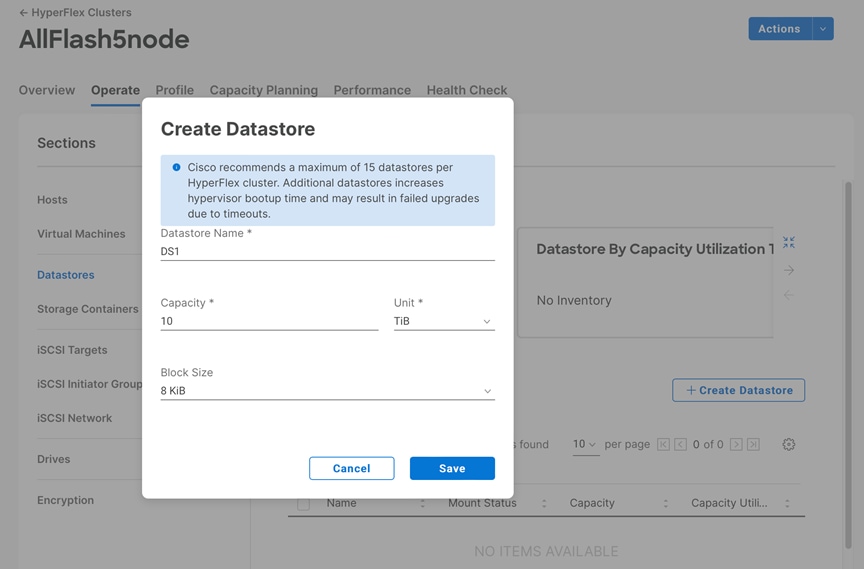

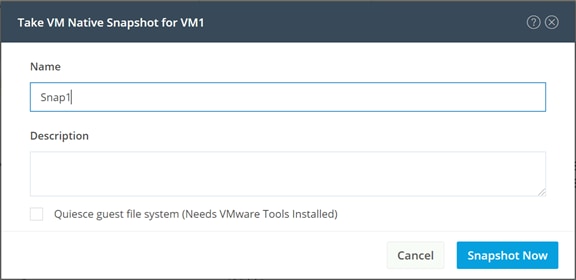

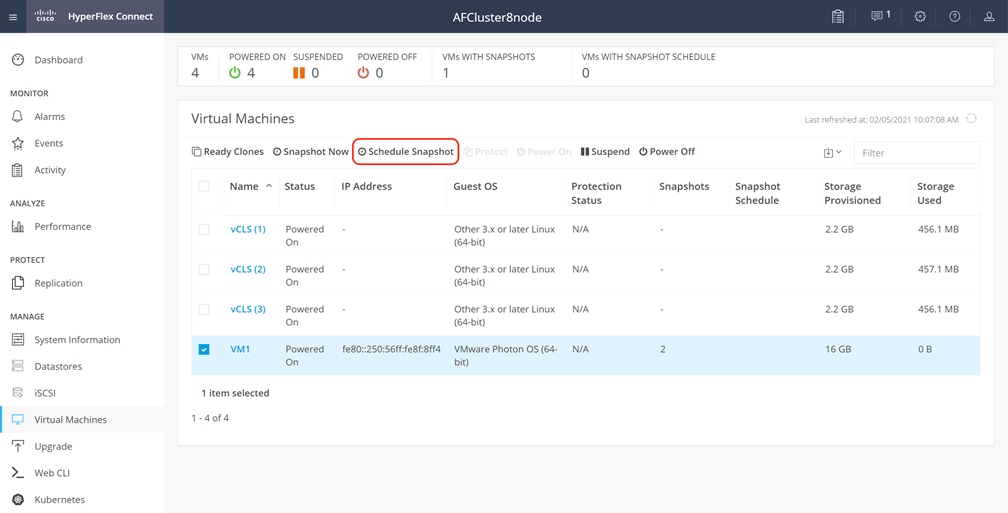

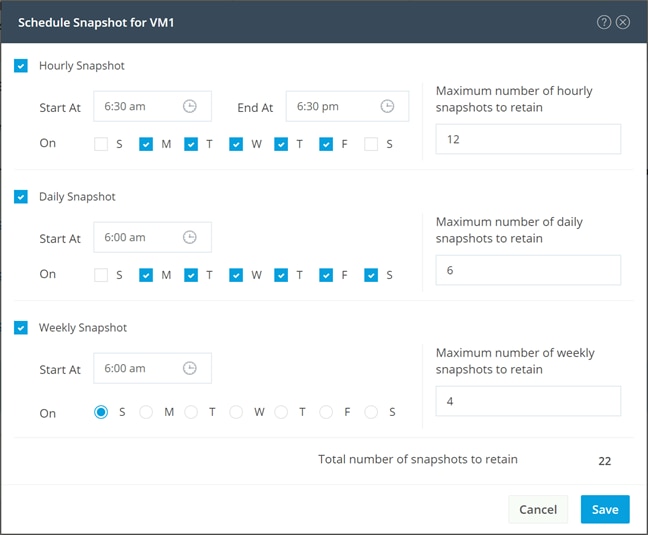

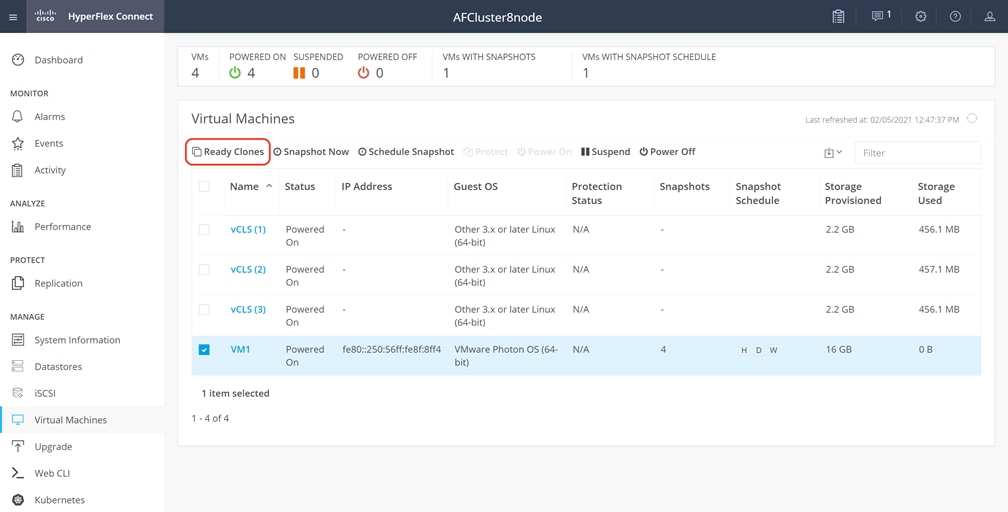

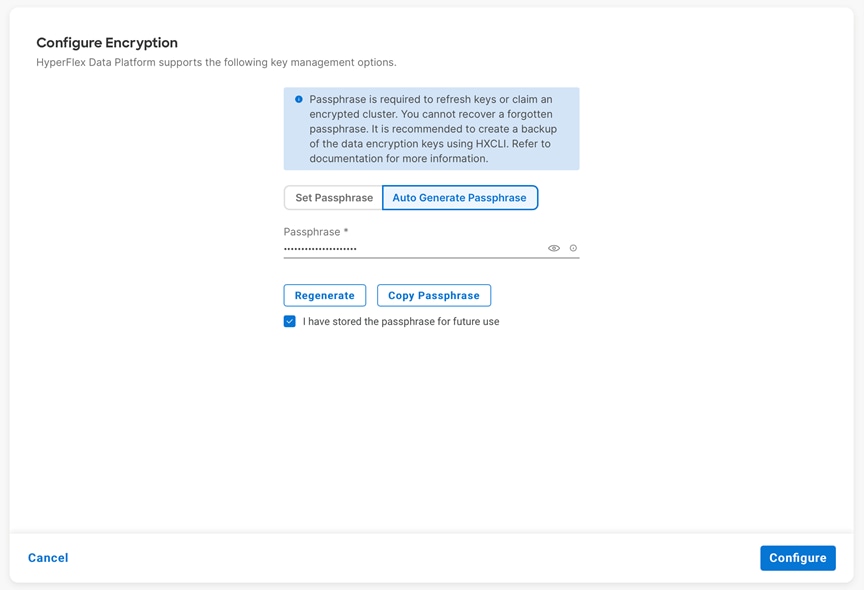

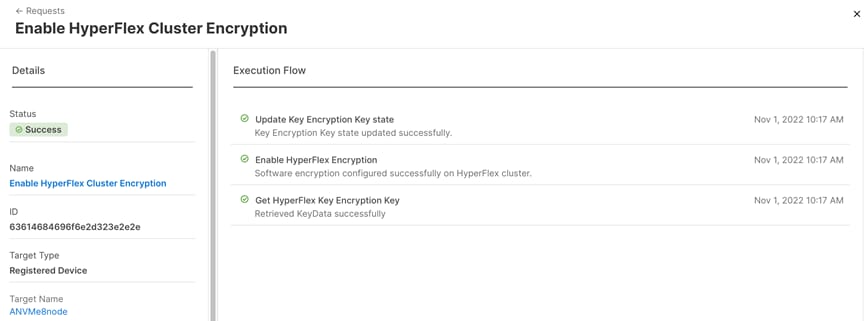

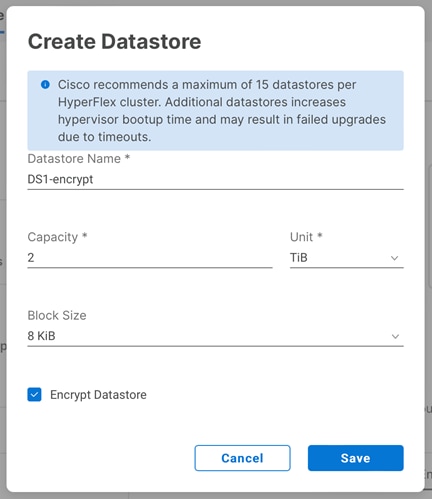

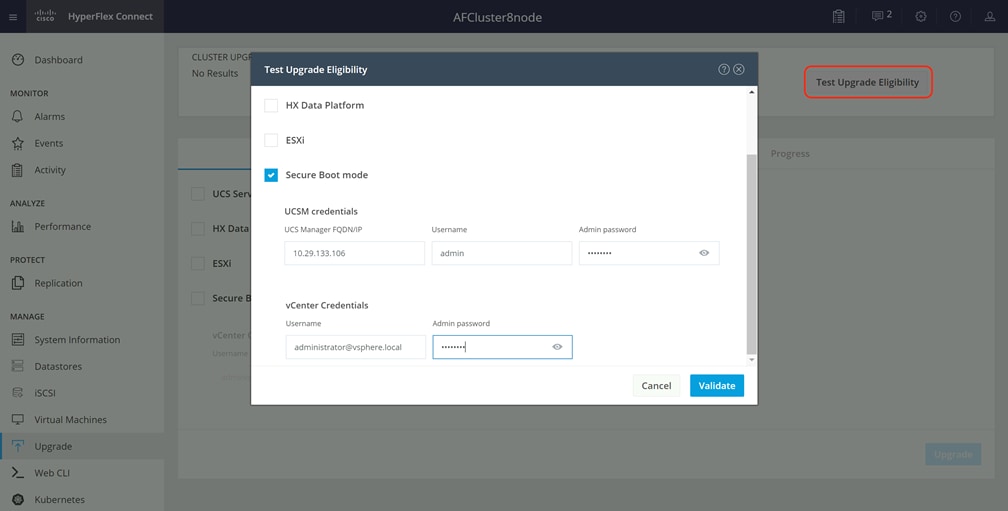

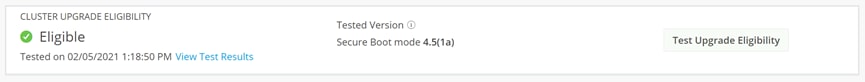

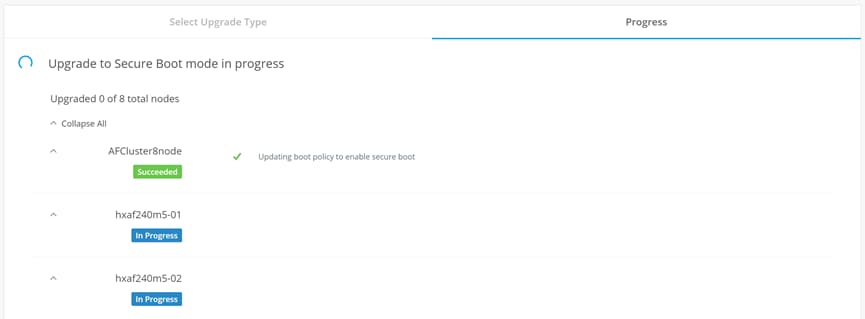

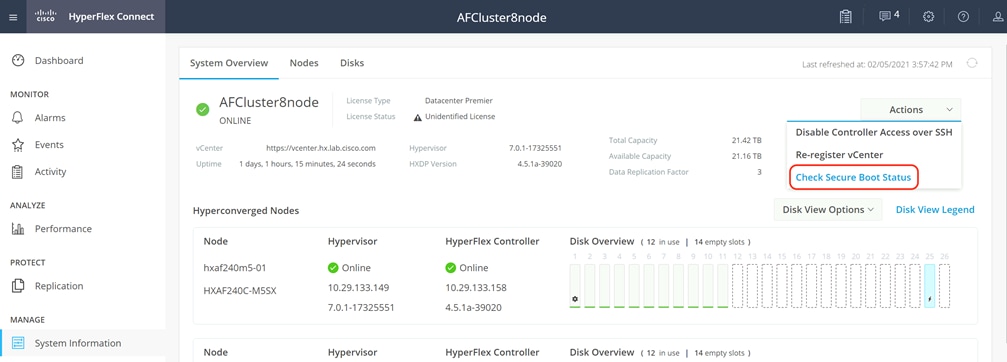

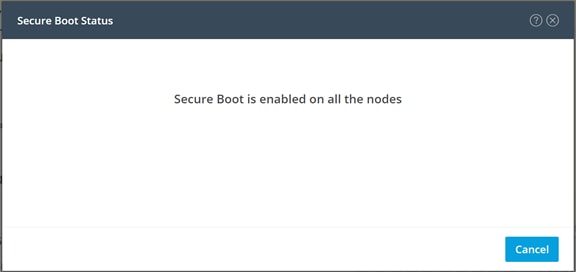

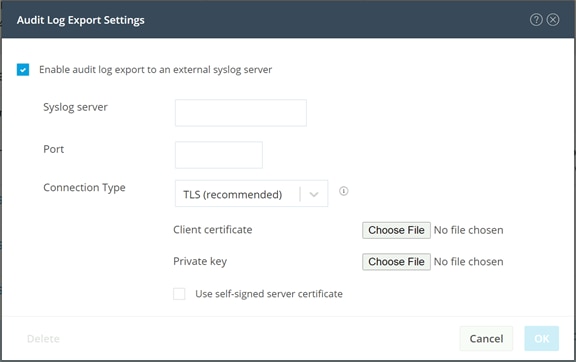

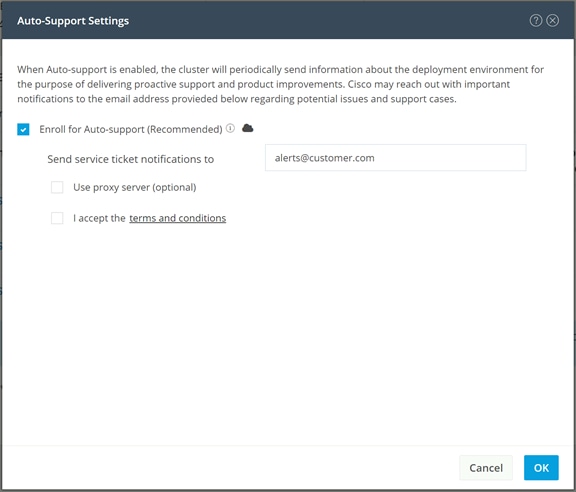

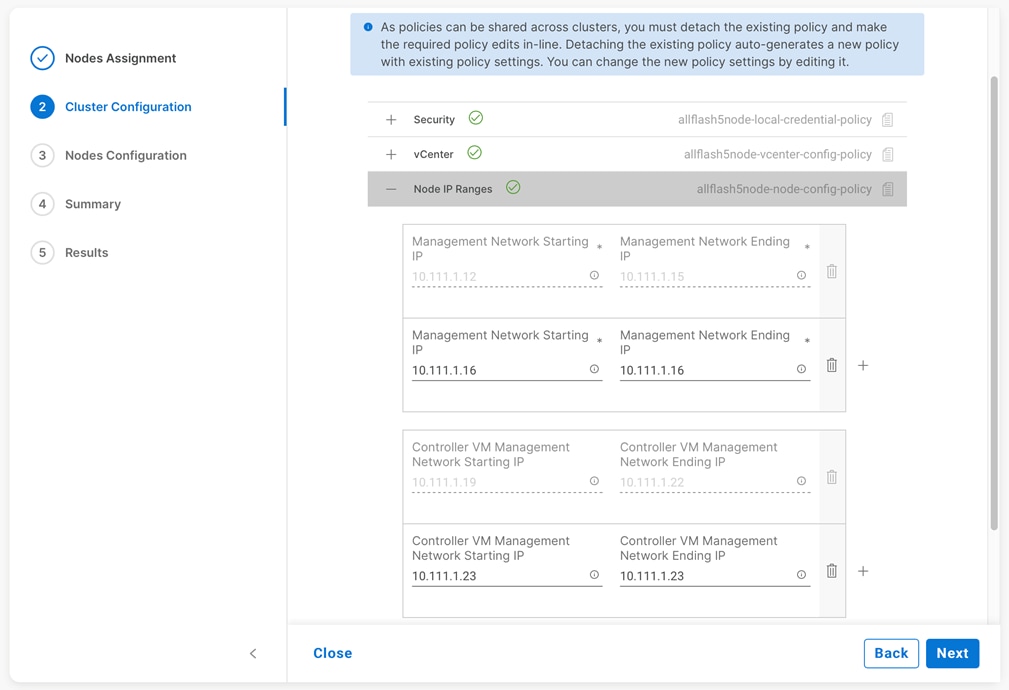

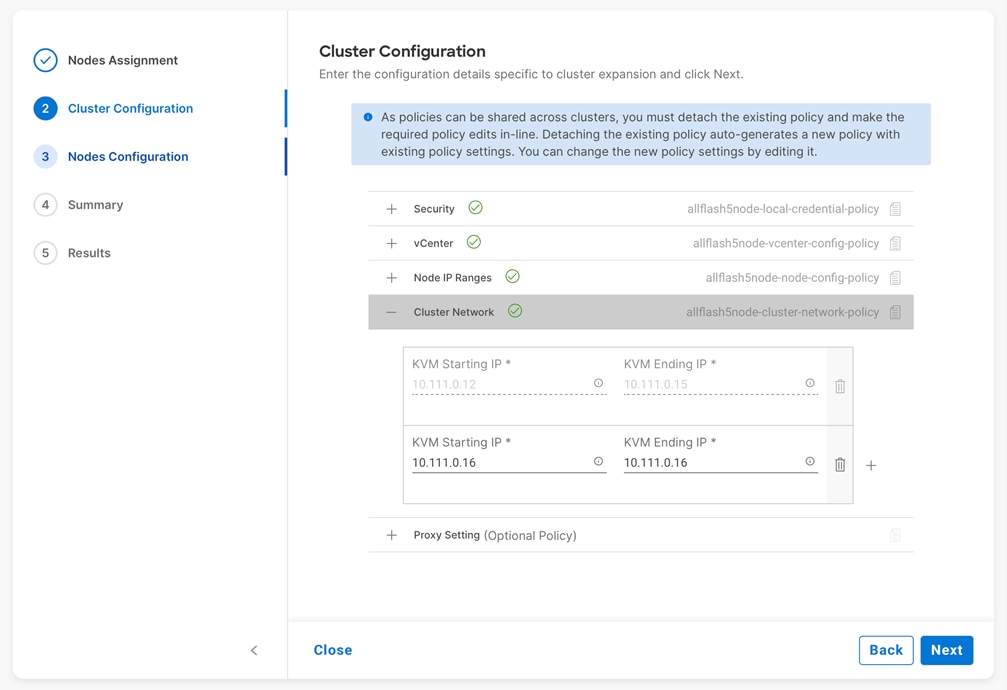

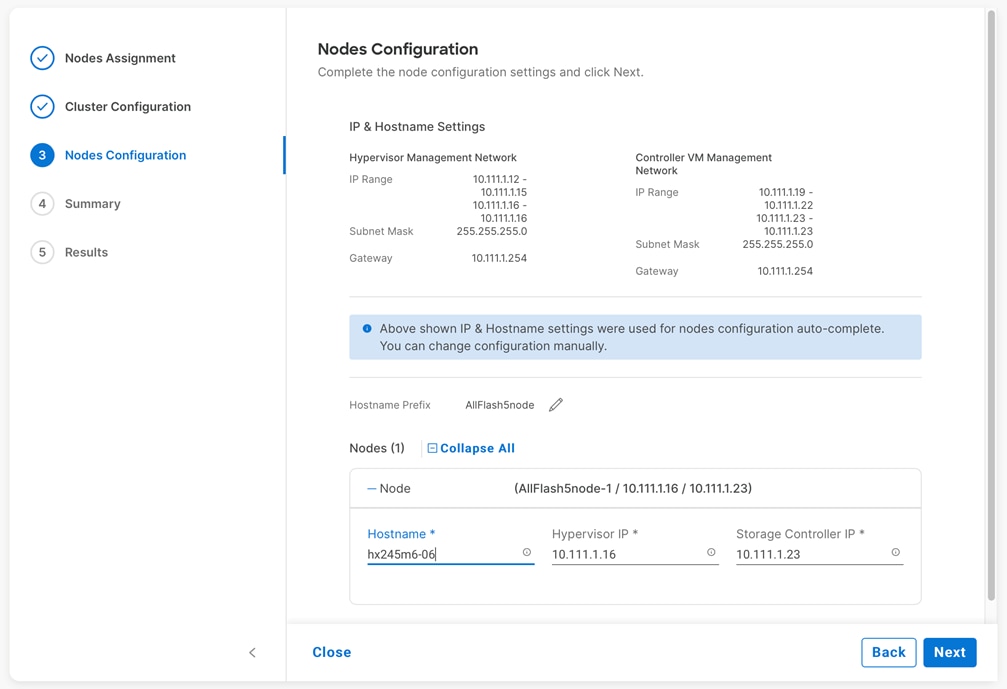

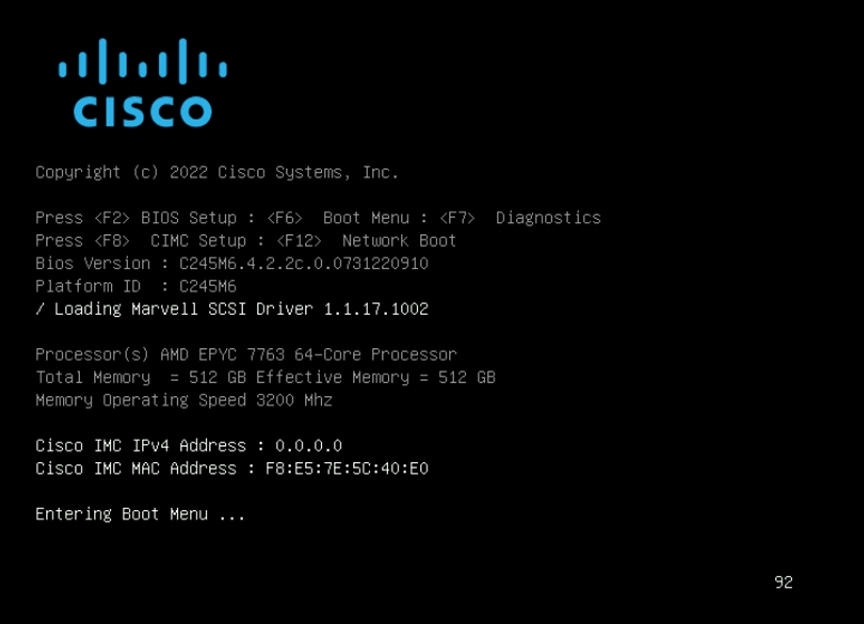

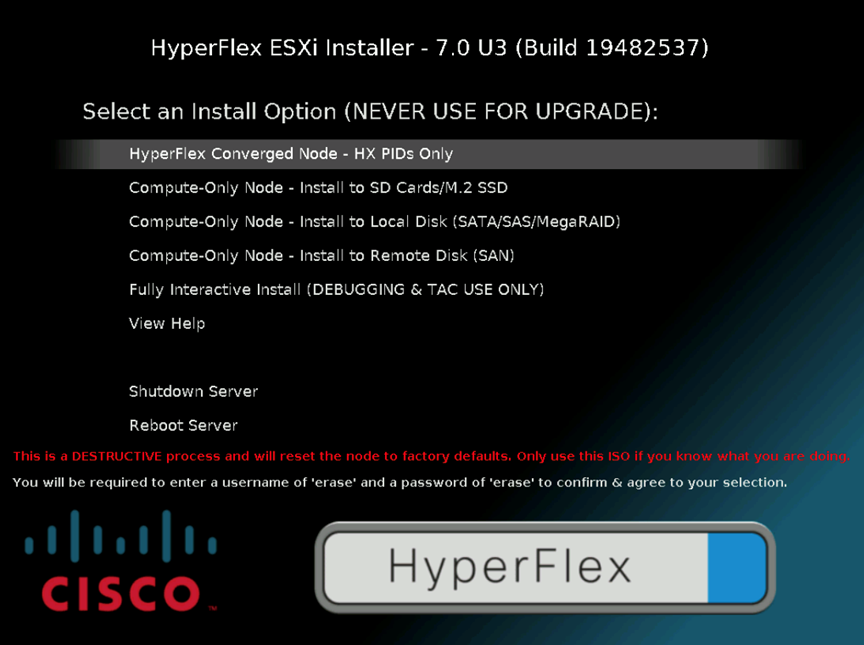
