FlexPod Datacenter with Cisco UCS X-Series for SAP HANA TDI Design Guide


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

The FlexPod Datacenter solution is a validated approach for deploying Cisco and NetApp technologies and products to build shared private and public cloud infrastructure. Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic data-center platforms. The success of the FlexPod solution is driven through its ability to evolve and incorporate both technology and product innovations in the areas of management, compute, storage, and networking.

This document explains the design details of incorporating the Cisco UCS X-Series modular platform into the FlexPod Datacenter for SAP HANA Tailored Datacenter Integration (TDI) implementations, and the ability to manage and orchestrate FlexPod components from the cloud using Cisco Intersight. The key advantages of integrating Cisco UCS X-Series into the FlexPod infrastructure are:

● Simpler and programmable infrastructure: infrastructure as code delivered through a single partner-integrable open API

● Power and cooling innovations: higher power headroom and lower energy loss due to a 54V DC power delivery to the chassis

● Better airflow: midplane-free design with fewer barriers, therefore lower impedance

● Fabric innovations: PCIe/Compute Express Link (CXL) topology for heterogeneous compute and memory composability

● Innovative cloud operations: continuous feature delivery and no need to maintain on-premises virtual machines that support management functions

● Built for investment protection: design ready for future technologies such as liquid cooling and high-wattage CPUs; CXL-ready

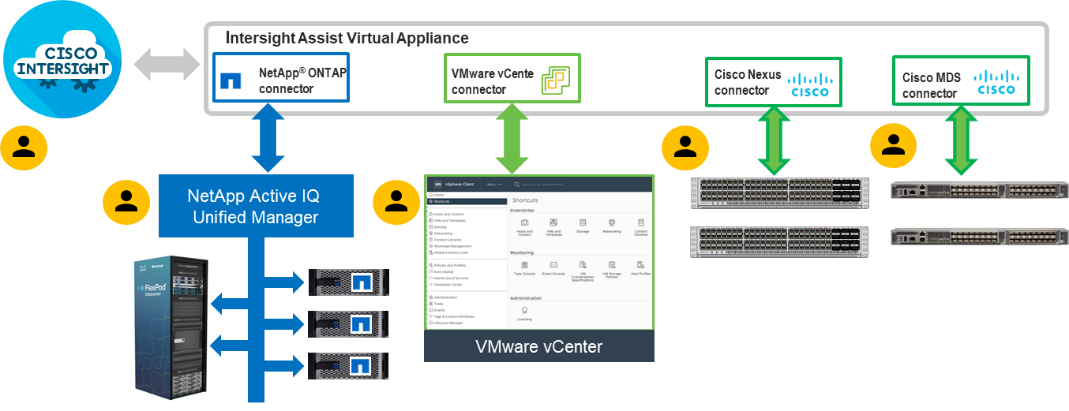

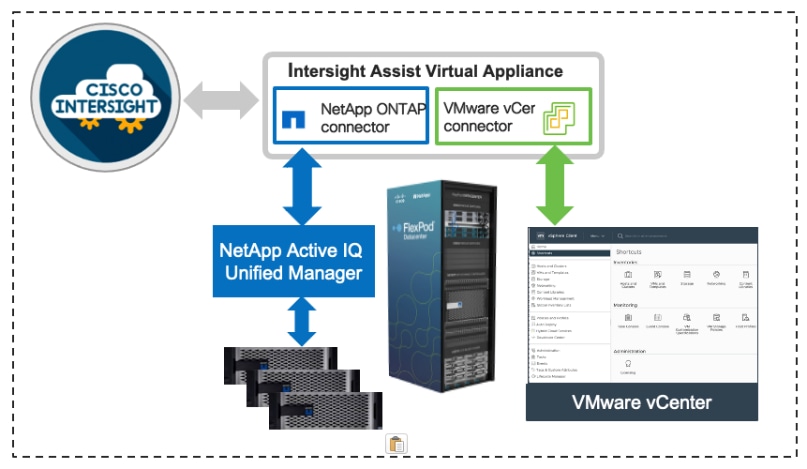

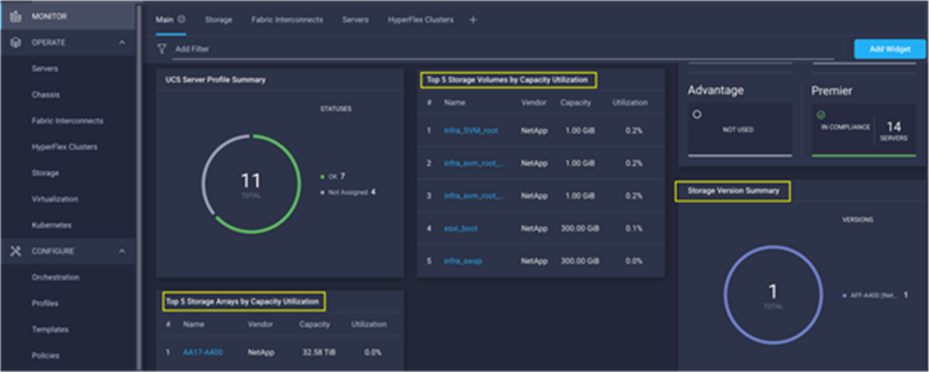

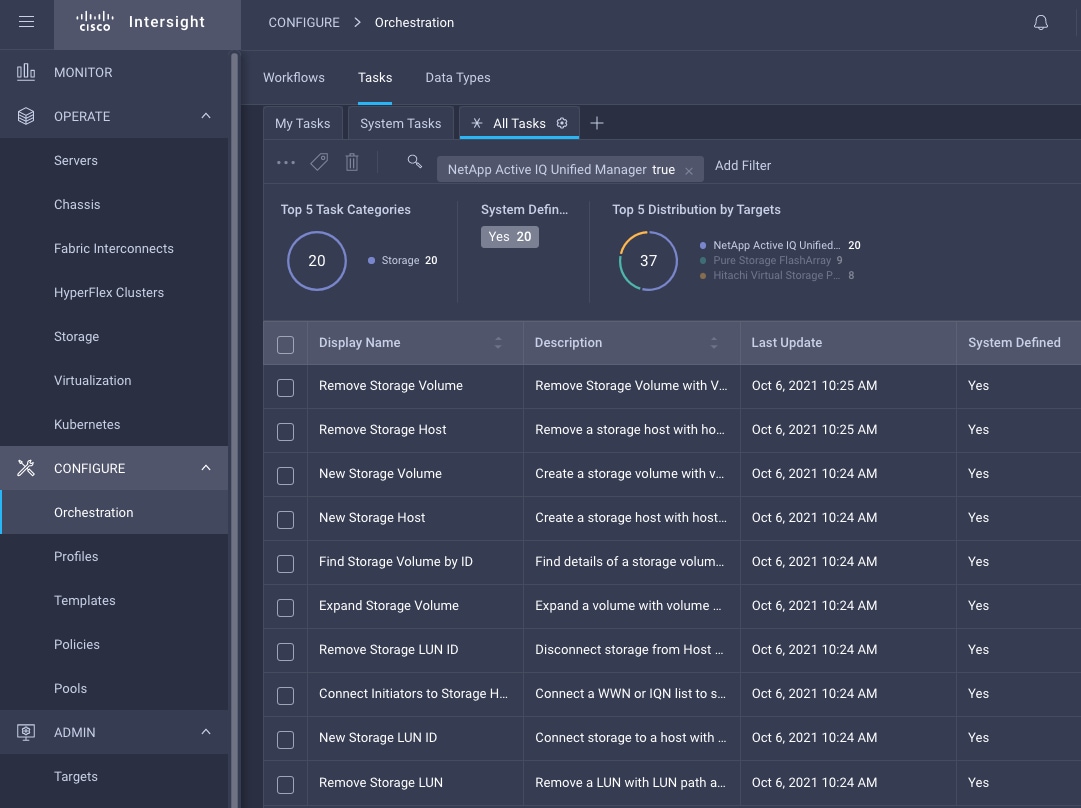

In addition to the compute-specific hardware and software innovations, the integration of the Cisco Intersight cloud platform with VMware vCenter and NetApp Active IQ Unified Manager delivers monitoring, orchestration, and workload optimization capabilities for different layers (virtualization and storage) of the FlexPod infrastructure.

If you’re interested in understanding the FlexPod design and deployment details, including the configuration of various elements of design and associated best practices, refer to Cisco Validated Designs for FlexPod, here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html.

This chapter contains the following:

● Audience

The Cisco Unified Computing System (Cisco UCS) X-Series is a brand-new modular compute system, configured and managed from the cloud. It is designed to meet the needs of modern applications and to improve operational efficiency, agility, and scale through an adaptable, future-ready, modular design. The Cisco Intersight platform is a Software-as-a-Service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support.

The SAP HANA in-memory database handles transactional and analytical workloads with any data type on a single data copy. It breaks down the transactional and analytical silos in organizations for quick decision-making, on premises and in the cloud. SAP HANA offers a multi-engine query-processing environment that supports relational data (with both row- and column-oriented physical representations in a hybrid engine) as well as graph and text processing for semi-structured and unstructured data management within the same system. SAP HANA TDI offers a more open and flexible way to integrate SAP HANA into the data center, with benefits such as virtualization of the SAP HANA platform or a flexible combination of multiple SAP HANA systems on fully certified, converged infrastructure.

Powered by the Cisco Intersight cloud-operations platform, the Cisco UCS X-Series enables the next-generation cloud-operated FlexPod infrastructure that not only simplifies data-center management but also allows the infrastructure to adapt to the unpredictable needs of modern applications as well as traditional workloads. With the Cisco Intersight platform, you get all the benefits of SaaS delivery and the full lifecycle management of Intersight-connected distributed servers and integrated NetApp storage systems across data centers, remote sites, branch offices, and edge environments.

The intended audience of this document includes, but is not limited to, IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who are interested in learning about and deploying the FlexPod Datacenter for SAP and SAP HANA use cases, such as SAP HANA scale-up systems and SAP HANA scale-out systems with NFS or mixed-protocol configurations, on Linux bare-metal as well as VMware ESXi installations.

This document complements the FlexPod Datacenter with Cisco UCS X-Series, VMware 7.0 U2, and NetApp ONTAP 9.9 Design Guide: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/UCS_CVDs/flexpod_xseries_esxi7u2_design.html and explains the design considerations and requirements for SAP HANA TDI deployments, in both virtualized and bare-metal scenarios.

The following design elements distinguish this version of FlexPod from previous models:

● Integration of Cisco UCS X-Series into FlexPod Datacenter for SAP HANA TDI

● Deploying and managing Cisco UCS X-Series from the cloud using Cisco Intersight

● Integration of Cisco Intersight with NetApp Active IQ Unified Manager for storage monitoring and orchestration

● Integration of Cisco Intersight with VMware vCenter for interacting with, monitoring, and orchestrating the virtualized SAP HANA environment

The FlexPod Datacenter solution with Cisco UCS X-Series and NetApp ONTAP 9.9.1 offers the following key benefits:

● Simplified cloud-based management of the solution components

● Hybrid-cloud-ready, policy-driven modular design

● Highly available and scalable platform with flexible architecture that supports various deployment models

● Easy to deploy, consume, and manage architecture, which saves time and resources required to research, procure, and integrate off-the-shelf components

● Support for component monitoring, solution automation and orchestration, and workload optimization

● Stateless architecture, providing the capability to expand and adapt to new business requirements

● Robust components capable of supporting high-performance and high bandwidth for virtualized and non-virtualized applications

● Risk reduction at each level of the design with resiliency built into each touch point

Like other FlexPod solution designs, FlexPod Datacenter with Cisco UCS X-Series and Intersight for SAP HANA TDI is configurable according to demand and usage. You can purchase the exact infrastructure you need for your current application requirements and then scale up by adding more resources to the FlexPod system or scale out by adding more FlexPod instances. By moving the management from the fabric interconnects into the cloud, the solution can respond to the speed and scale of your deployments with a constant stream of new capabilities delivered through the Intersight software-as-a-service model at cloud scale.

This chapter contains the following:

● Cisco Unified Computing System X-Series

● Cisco Nexus Switching Fabric

● Cisco MDS 9132T 32G Multilayer Fabric Switch

● Cisco Nexus Dashboard Fabric Controller

● Red Hat Ansible Automation Platform

● Red Hat Enterprise Linux for SAP Solutions

● SUSE Linux Enterprise Server for SAP Applications

● SAP Application Monitoring with AppDynamics

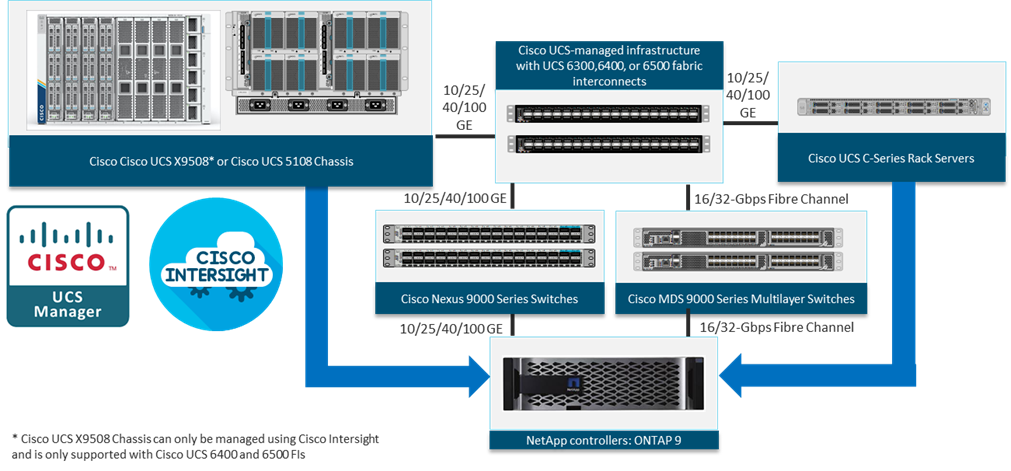

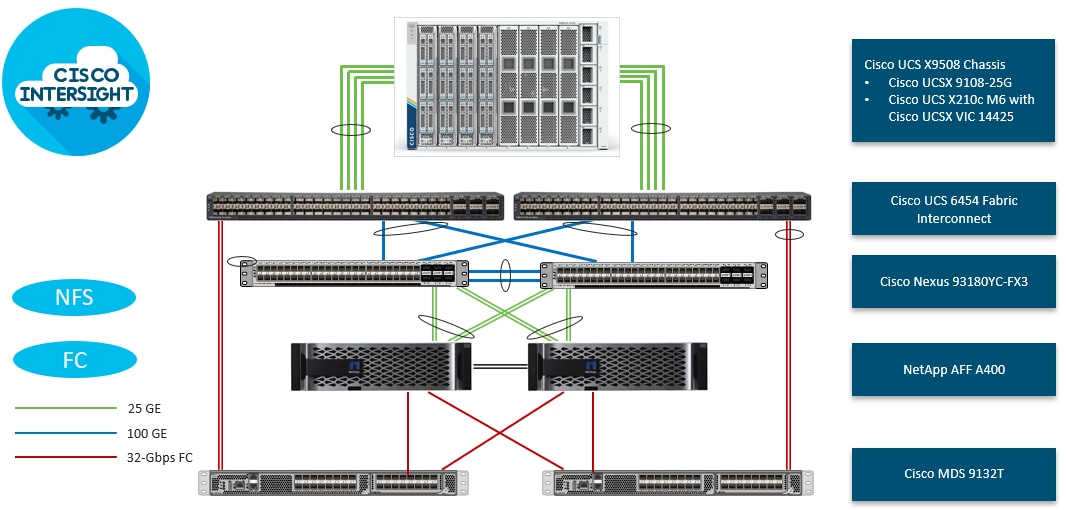

FlexPod Datacenter architecture is built using the following infrastructure components for compute, network, and storage:

● Cisco Unified Computing System (Cisco UCS)

● Cisco Nexus and Cisco MDS switches

● NetApp All Flash FAS (AFF), FAS, and All SAN Array (ASA) storage systems

All the FlexPod components have been integrated so that you can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. One of the main benefits of FlexPod is its ability to maintain consistency at scale. Each of the component families shown in Figure 1 (Cisco UCS, Cisco Nexus, Cisco MDS, and NetApp controllers) offers platform and resource options to scale up or scale out the infrastructure while supporting the same features.

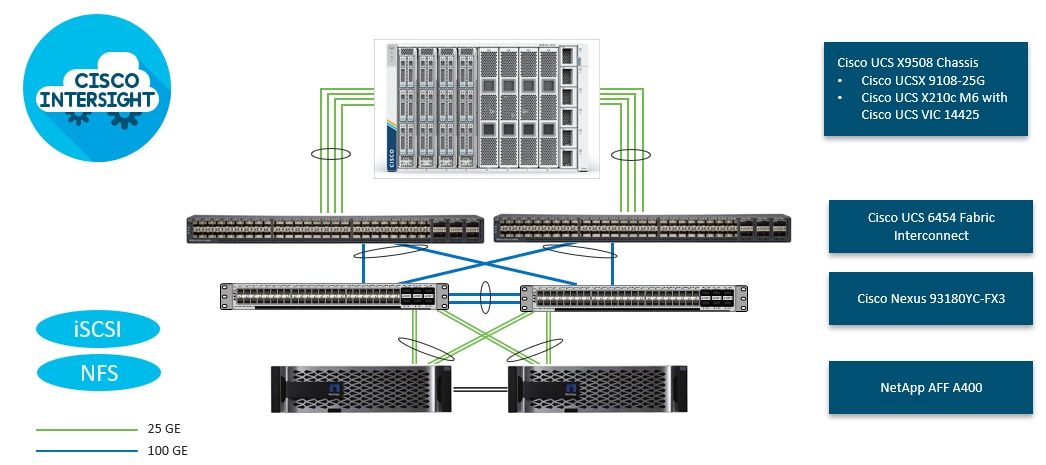

The FlexPod Datacenter solution with Cisco UCS X-Series in this validated design is built using the following hardware components:

● Cisco UCS X9508 Chassis with up to eight Cisco UCS X210c M6 Compute Nodes

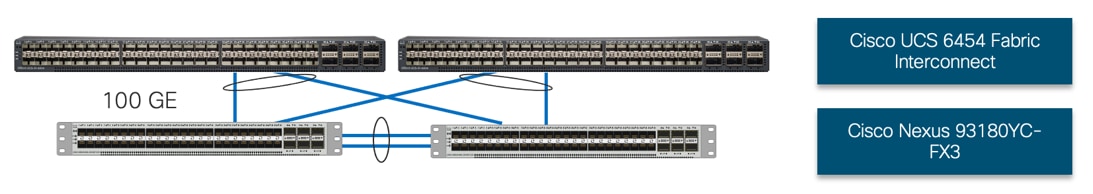

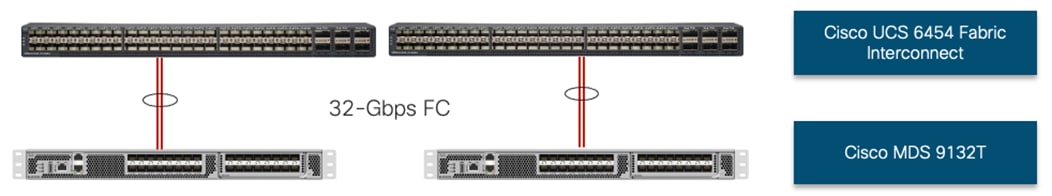

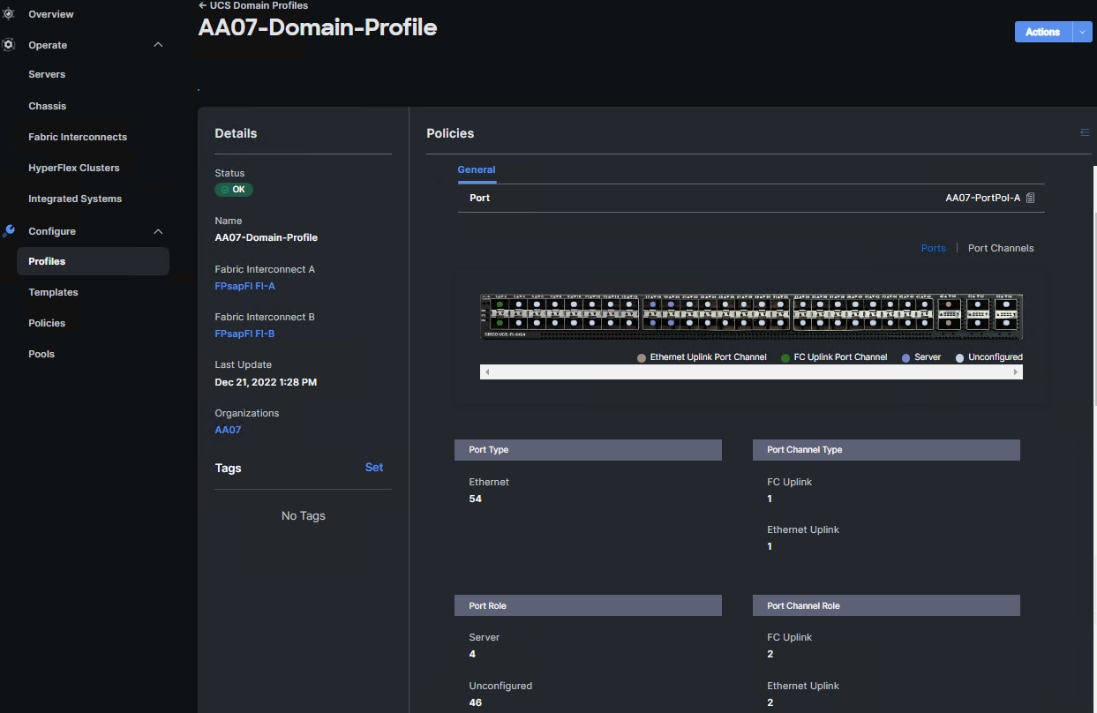

● Fourth-generation Cisco UCS 6454 Fabric Interconnects to support 10GbE, 25GbE, and 100GbE connectivity from various components

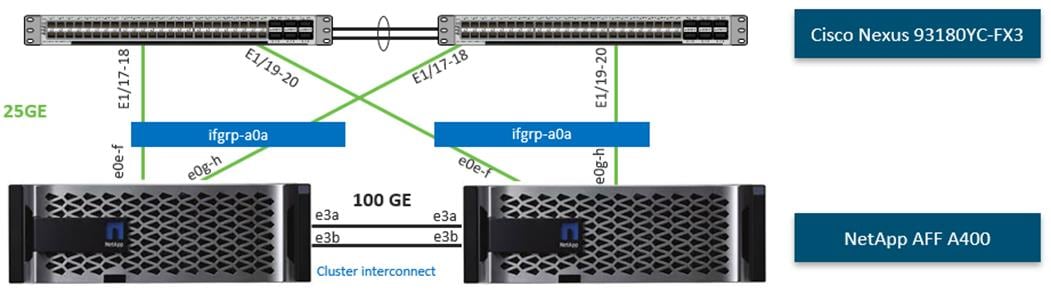

● High-speed Cisco NX-OS-based Nexus 93180YC-FX3 switching design to support up to 100GE connectivity

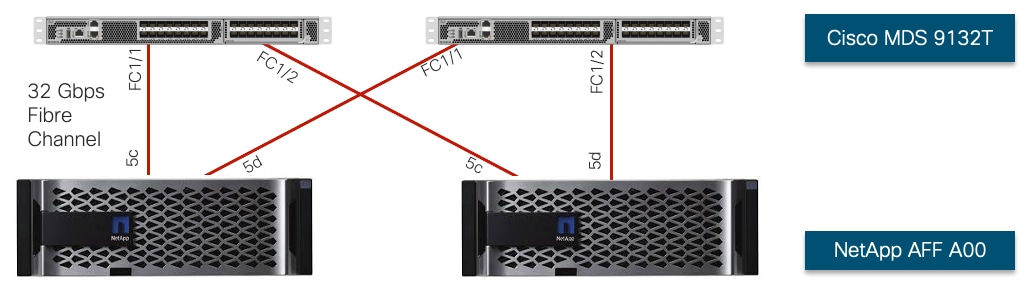

● Cisco MDS 9132T SAN switch to support consistent 32Gbps Fibre Channel port performance

● NetApp AFF A400 end-to-end NVMe storage with high-speed Ethernet and Fibre Channel connectivity

The software components of the solution consist of:

● Cisco Intersight platform to deploy, maintain and support the FlexPod components

● Cisco Intersight Assist Virtual Appliance to help connect NetApp ONTAP and VMware vCenter with Cisco Intersight

● NetApp ONTAP 9.12 and NetApp Active IQ Unified Manager to monitor and manage the storage for NetApp ONTAP integration with Cisco Intersight

● VMware vCenter to set up and manage the virtual infrastructure as well as Cisco Intersight integration

● VMware vSphere 7.0 U3c and later

● VMware vCenter 7.0 and later to set up and manage the virtual infrastructure and integration into Cisco Intersight

● Red Hat Enterprise Linux (RHEL) for SAP Solutions 8.2 and later

● SUSE Linux Enterprise Server (SLES) for SAP Applications 15 SP2 and later

● SAP HANA 1.0 SPS 12 Revision 122.19; SAP HANA 2.0 recommended

| Tech tip |

| The solution consists of VMware certified systems as listed on the VMware hardware compatibility list (HCL) and SAP HANA supported server and storage systems, as listed on the certified and supported SAP HANA hardware directory. |

These key product highlights and features are described in the following sections.

Cisco Unified Computing System X-Series

The Cisco UCS X-Series Modular System is designed to take the current generation of the Cisco UCS platform to the next level with its future-ready design and cloud-based management. Decoupling the platform management and moving it to the cloud allows Cisco UCS to respond to your feature and scalability requirements in a much faster and more efficient manner. Cisco UCS X-Series state-of-the-art hardware simplifies the data-center design by providing flexible server options. A single server type that supports a broader range of workloads results in fewer data-center products to manage and maintain. The Cisco Intersight cloud-management platform manages Cisco UCS X-Series and integrates with third-party devices, including VMware vCenter and NetApp storage, to provide visibility, optimization, and orchestration from a single platform, thereby driving agility and deployment consistency.

The various components of the Cisco UCS X-Series are described in the following sections.

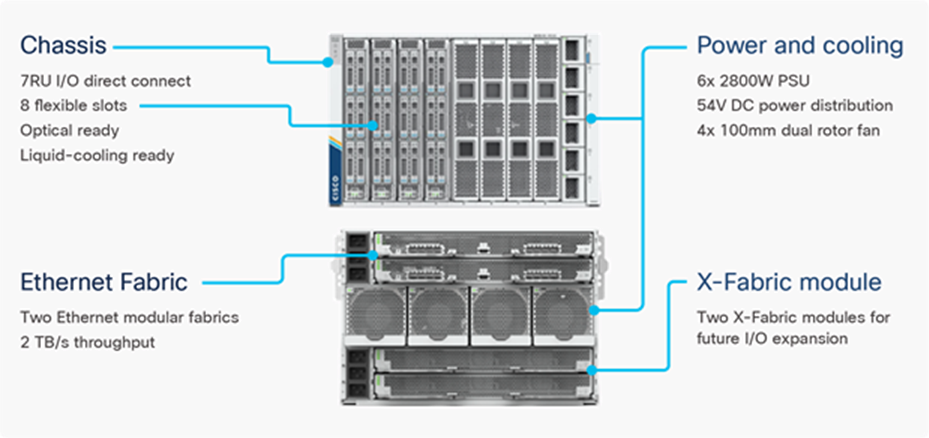

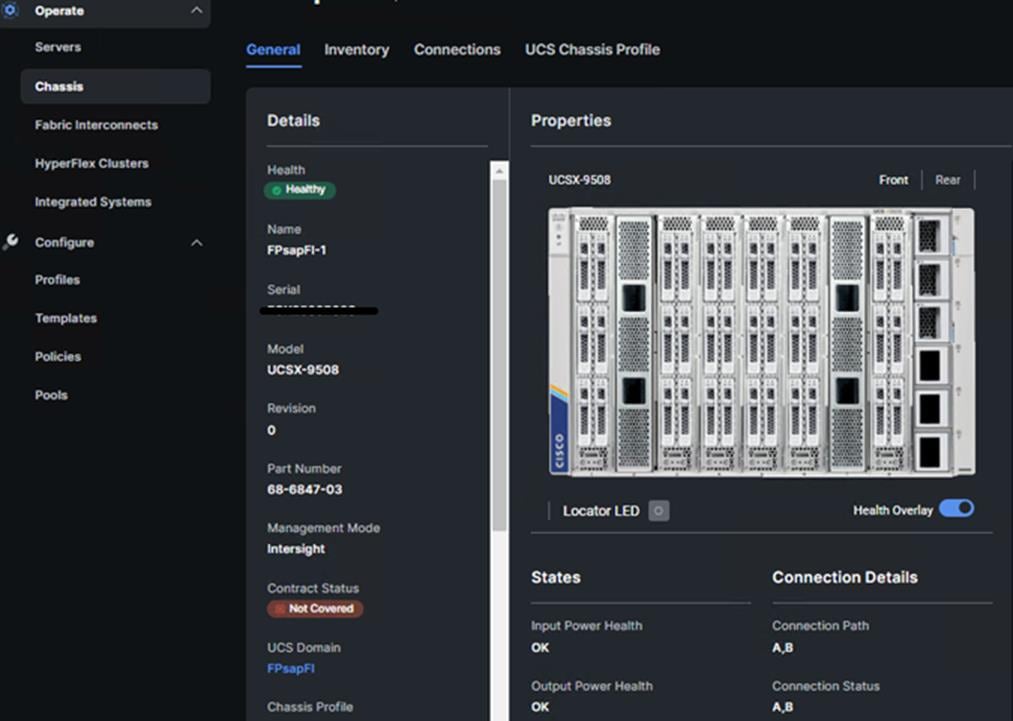

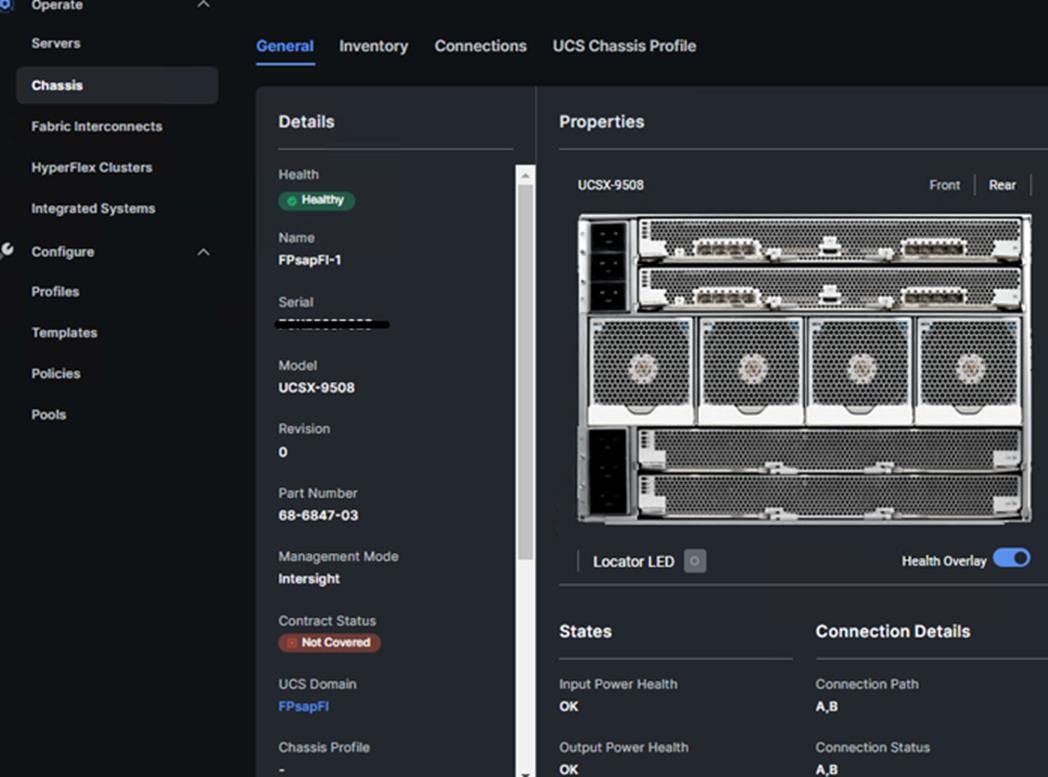

Cisco UCS X9508 Chassis

The Cisco UCS X-Series chassis is engineered to be adaptable and flexible. As seen in Figure 3, the Cisco UCS X9508 Chassis has only a power-distribution midplane. This midplane-free design provides fewer obstructions for better airflow. For I/O connectivity, vertically oriented compute nodes intersect with horizontally oriented fabric modules, allowing the chassis to support future fabric innovations. The superior packaging of the Cisco UCS X9508 Chassis enables larger compute nodes, thereby providing more space for actual compute components, such as memory, GPU, drives, and accelerators. Improved airflow through the chassis enables support for higher-power components, and more space allows for future thermal solutions (such as liquid cooling) without limitations.

The Cisco UCS X9508 7-Rack-Unit (7RU) chassis has eight flexible slots. These slots can house a combination of compute nodes and a pool of future I/O resources that may include GPU accelerators, disk storage, and nonvolatile memory. At the top rear of the chassis are two Intelligent Fabric Modules (IFMs) that connect the chassis to upstream Cisco UCS 6400 Series Fabric Interconnects. At the bottom rear of the chassis are slots ready to house future X-Fabric modules that can flexibly connect the compute nodes with I/O devices. Six 2800W Power Supply Units (PSUs) provide 54V power to the chassis with N, N+1, and N+N redundancy. A higher voltage allows efficient power delivery with less copper and reduced power loss. Efficient, 100mm, dual counter-rotating fans deliver industry-leading airflow and power efficiency, and optimized thermal algorithms enable different cooling modes to best support your environment.
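The effect of the redundancy modes on the power budget can be illustrated with a short calculation. This is a minimal sketch based only on the six-PSU, 2800W figures above. The mapping of each mode to a number of reserved PSUs (0 for N, 1 for N+1, half the supplies for N+N) is an assumption made for illustration, and the result is nameplate power that ignores input-voltage derating and conversion losses.

```python
PSU_COUNT = 6      # from the text: six PSUs per chassis
PSU_WATTS = 2800   # nameplate rating per PSU, from the text

# Assumption for illustration: PSUs held in reserve by each redundancy mode.
RESERVED_PSUS = {"N": 0, "N+1": 1, "N+N": PSU_COUNT // 2}


def usable_watts(mode: str) -> int:
    """Nameplate power still available after every reserved PSU is lost."""
    active_psus = PSU_COUNT - RESERVED_PSUS[mode]
    return active_psus * PSU_WATTS


for mode in RESERVED_PSUS:
    print(f"{mode:>3}: {usable_watts(mode)} W")
```

Under these assumptions, a chassis planned for N+N redundancy has half the nameplate budget of a non-redundant one, which is worth keeping in mind when sizing for high-wattage CPUs.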

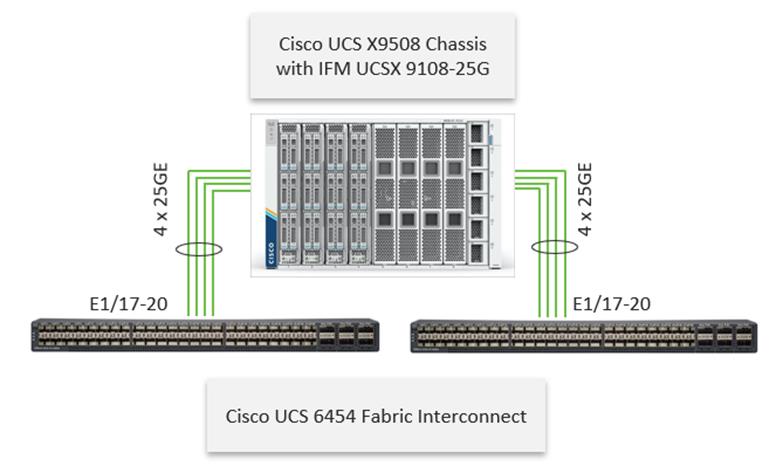

Cisco UCS 9108 25G Intelligent Fabric Modules

For the Cisco UCS X9508 Chassis, the network connectivity is provided by a pair of Cisco UCS 9108 25G Intelligent Fabric Modules (IFMs). Like the fabric extenders used in the Cisco UCS 5108 Blade Server Chassis, these modules carry all network traffic to a pair of Cisco UCS 6400 Series Fabric Interconnects (FIs). IFMs also host the Chassis Management Controller (CMC) for chassis management. In contrast to systems with fixed networking components, Cisco UCS X9508’s midplane-free design enables easy upgrades to new networking technologies as they emerge, making it straightforward to accommodate new network speeds or technologies in the future.

Each IFM supports eight 25Gb uplink ports for connecting the Cisco UCS X9508 Chassis to the FIs and 32 25Gb server ports for the eight compute nodes. IFM server ports can provide up to 200 Gbps of unified fabric connectivity per compute node across the two IFMs. The uplink ports connect the chassis to the UCS FIs, providing up to 400Gbps connectivity across the two IFMs. The unified fabric carries management, VM, and Fibre Channel over Ethernet (FCoE) traffic to the FIs, where management traffic is routed to the Cisco Intersight cloud operations platform, FCoE traffic is forwarded to the native Fibre Channel interfaces through unified ports on the FI (to Cisco MDS switches), and data Ethernet traffic is forwarded upstream to the data center network (via Cisco Nexus switches).

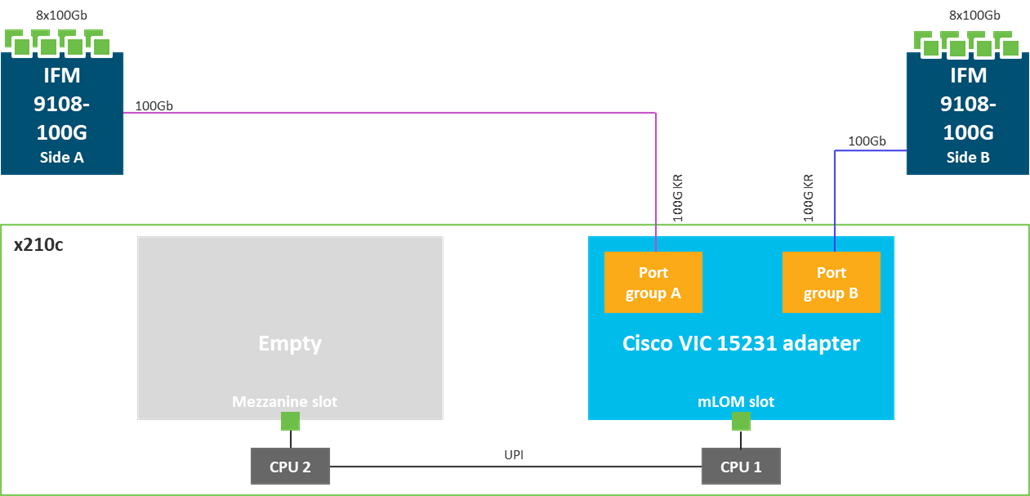

Cisco UCS 9108 100G Intelligent Fabric Modules

In the end-to-end 100Gbps Ethernet design, the network connectivity for the Cisco UCS X9508 Chassis is provided by a pair of Cisco UCS 9108 100G Intelligent Fabric Modules (IFMs). Like the fabric extenders used in the Cisco UCS 5108 Blade Server Chassis, these modules carry all network traffic to a pair of Cisco UCS 6536 Fabric Interconnects (FIs). IFMs also host the Chassis Management Controller (CMC) for chassis management. In contrast to systems with fixed networking components, Cisco UCS X9508’s midplane-free design enables easy upgrades to new networking technologies as they emerge, making it straightforward to accommodate new network speeds or technologies in the future.

Each IFM supports eight 100Gb uplink ports for connecting the Cisco UCS X9508 Chassis to the FIs and 8 100Gb server ports for the eight compute nodes. IFM server ports can provide up to 200 Gbps of unified fabric connectivity per compute node across the two IFMs. The uplink ports connect the chassis to the UCS FIs, providing up to 1600Gbps connectivity across the two IFMs. The unified fabric carries management, VM, and Fibre Channel over Ethernet (FCoE) traffic to the FIs, where management traffic is routed to the Cisco Intersight cloud operations platform, FCoE traffic is forwarded to either native Fibre Channel interfaces through unified ports on the FI (to Cisco MDS switches) or to FCoE uplinks (to Cisco Nexus switches supporting SAN switching), and data Ethernet traffic is forwarded upstream to the data center network (using Cisco Nexus switches).
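The bandwidth figures quoted for the 25G and 100G IFMs follow directly from the port counts. The sketch below reproduces that arithmetic. The class and function names are hypothetical and exist only for this illustration, and the numbers are theoretical maxima derived from the port counts in the text, not measured throughput.

```python
from dataclasses import dataclass

IFMS_PER_CHASSIS = 2
NODES_PER_CHASSIS = 8


@dataclass(frozen=True)
class IfmModel:
    name: str
    port_gbps: int      # speed of each port
    uplink_ports: int   # ports facing the fabric interconnects, per IFM
    server_ports: int   # ports facing the compute nodes, per IFM


def chassis_uplink_gbps(ifm: IfmModel) -> int:
    """Aggregate uplink bandwidth from one chassis across both IFMs."""
    return ifm.port_gbps * ifm.uplink_ports * IFMS_PER_CHASSIS


def per_node_gbps(ifm: IfmModel) -> int:
    """Server-side bandwidth available to each compute node across both IFMs."""
    total = ifm.port_gbps * ifm.server_ports * IFMS_PER_CHASSIS
    return total // NODES_PER_CHASSIS


IFM_25G = IfmModel("UCS 9108 25G", port_gbps=25, uplink_ports=8, server_ports=32)
IFM_100G = IfmModel("UCS 9108 100G", port_gbps=100, uplink_ports=8, server_ports=8)

for ifm in (IFM_25G, IFM_100G):
    print(ifm.name, chassis_uplink_gbps(ifm), per_node_gbps(ifm))
```

Both modules offer the same 200 Gbps per compute node on the server side. The difference is on the uplink side, where the 100G IFM removes the oversubscription between server ports and uplinks.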

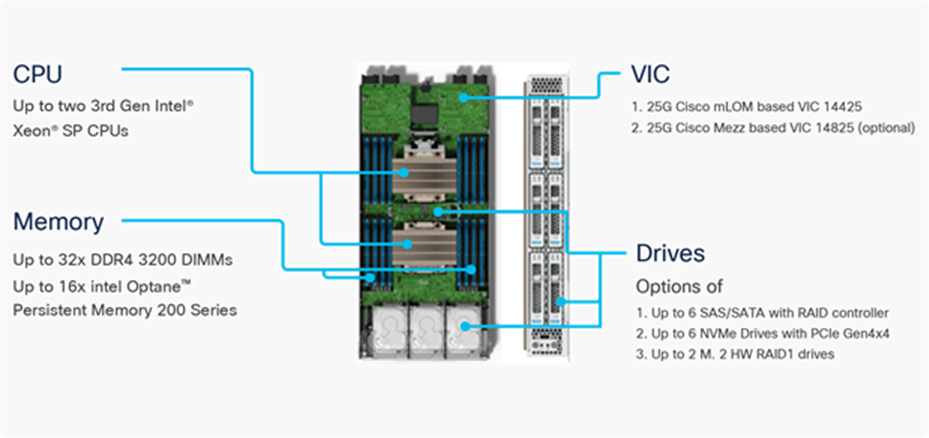

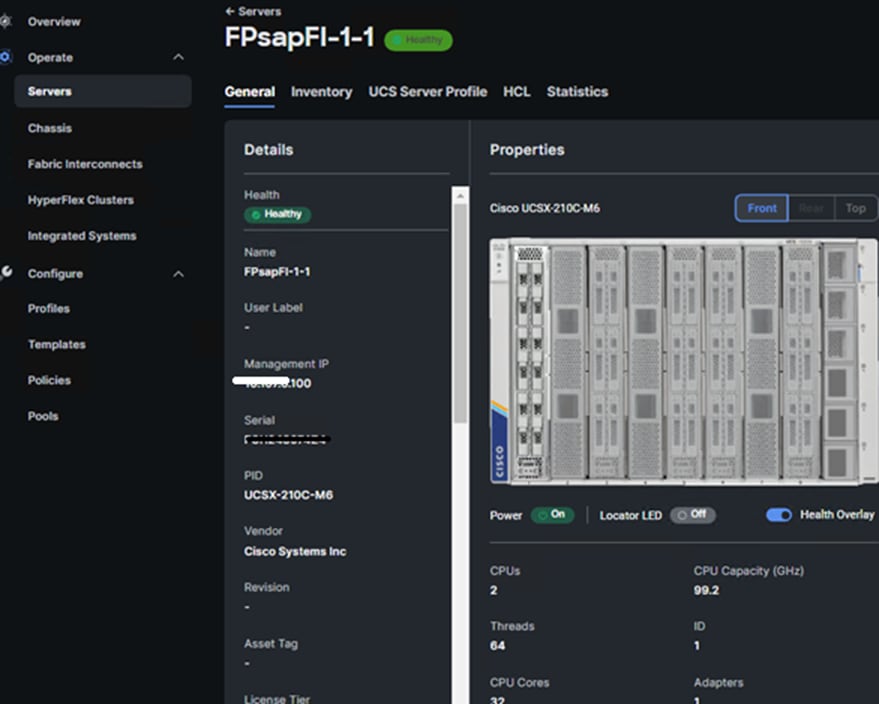

Cisco UCS X210c M6 Compute Node

The Cisco UCS X9508 Chassis is designed to host up to 8 Cisco UCS X210c M6 Compute Nodes. The hardware details of the Cisco UCS X210c M6 Compute Nodes are shown in Figure 6:

The Cisco UCS X210c M6 features:

● CPU: Up to 2x 3rd Gen Intel Xeon Scalable Processors with up to 40 cores per processor and 1.5 MB Level 3 cache per core.

● Memory: Up to 32 x 256 GB DDR4-3200 DIMMs for a maximum of 8 TB of main memory. The Compute Node can also be configured for up to 16 x 512-GB Intel Optane persistent memory DIMMs for a maximum of 12 TB of memory.

● Disk storage: Up to 6 SAS or SATA drives can be configured with an internal RAID controller, or you can configure up to 6 NVMe drives. 2 M.2 memory cards can be added to the Compute Node with RAID 1 mirroring.

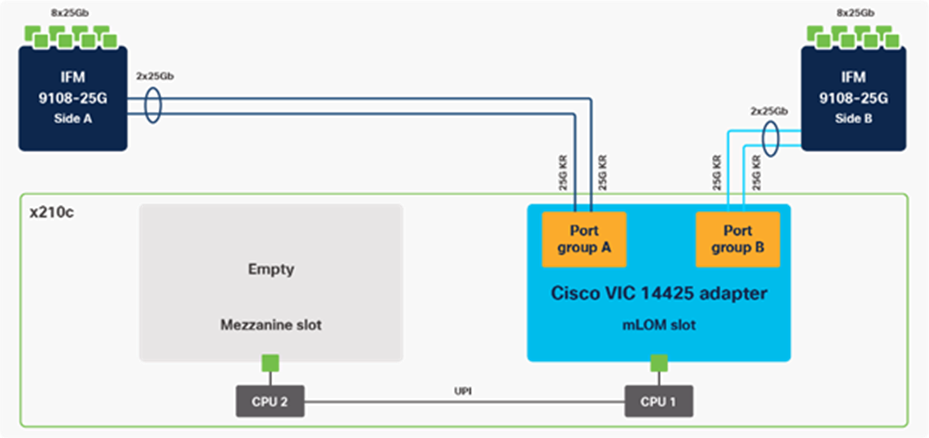

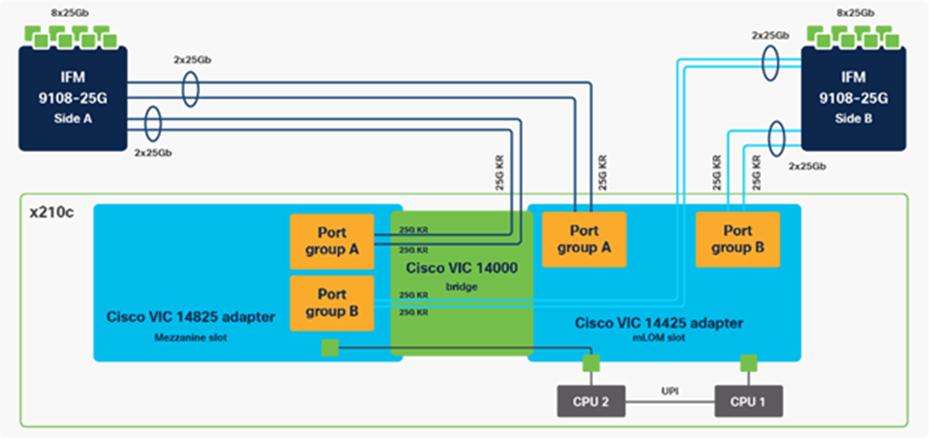

● Virtual Interface Card (VIC): Up to 2 VICs including an mLOM Cisco VIC 14425 and a mezzanine Cisco VIC card 14825 can be installed in a Compute Node.

● Security: The server supports an optional Trusted Platform Module (TPM). Additional security features include a secure boot FPGA and ACT2 anticounterfeit provisions.

● For SAP HANA production systems, the maximum allowed memory configuration is 2 TB of main memory for SAP BW/4HANA or BW on HANA and 4 TB of main memory for SAP S/4HANA or Suite on HANA. As of SAP HANA 2.0 SPS 04, the memory limit can be extended in combination with Intel Optane persistent memory DIMMs operated in AppDirect mode.
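The memory maximums in the feature list can be checked with simple arithmetic. The sketch below assumes that the 12 TB figure refers to a mixed population of 16 DRAM DIMMs and 16 persistent memory DIMMs in the 32 slots. That split is an interpretation of the text, not a statement from it. The helper names are hypothetical, and the limit check covers DRAM only, so it does not model the persistent-memory extension mentioned above.

```python
GB_PER_TB = 1024
DIMM_SLOTS = 32  # from the text: up to 32 DIMMs per compute node

# Production DRAM limits quoted in the text, in TB.
HANA_DRAM_LIMIT_TB = {"BW": 2, "S/4HANA": 4}


def dram_only_tb(dimm_gb: int = 256) -> float:
    """Capacity with every slot populated with DRAM DIMMs of one size."""
    return DIMM_SLOTS * dimm_gb / GB_PER_TB


def dram_plus_pmem_tb(dram_gb: int = 256, pmem_gb: int = 512) -> float:
    """Capacity with half the slots DRAM and half persistent memory (assumed split)."""
    half = DIMM_SLOTS // 2
    return half * (dram_gb + pmem_gb) / GB_PER_TB


def within_hana_dram_limit(workload: str, dram_tb: float) -> bool:
    """True if a DRAM-only configuration stays within the quoted production limit."""
    return dram_tb <= HANA_DRAM_LIMIT_TB[workload]


print(dram_only_tb())                                      # 32 x 256 GB
print(dram_plus_pmem_tb())                                 # 16 x 256 GB + 16 x 512 GB
print(within_hana_dram_limit("S/4HANA", dram_only_tb(128)))
```

The point of the check is that the node can physically hold more DRAM than a production SAP HANA system is allowed to use, so the DIMM size should be chosen against the workload limit rather than the hardware maximum.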

Cisco UCS Virtual Interface Cards (VICs)

Cisco UCS X210c M6 Compute Nodes support the following Cisco fourth- and fifth-generation VIC cards:

● Cisco UCS VIC 14425

Cisco UCS VIC 14425 fits the mLOM slot in the Cisco UCS X210c Compute Node and enables up to 50 Gbps of unified fabric connectivity to each of the chassis IFMs for a total of 100 Gbps of connectivity per server. Cisco UCS VIC 14425 connectivity to the IFM and up to the fabric interconnects is delivered through 4x 25-Gbps connections, which are configured automatically as 2x 50-Gbps port channels. Cisco UCS VIC 14425 supports 256 virtual interfaces (both Fibre Channel and Ethernet).

● Cisco UCS VIC 14825

The optional Cisco UCS VIC 14825 fits the mezzanine slot on the server. A bridge card (UCSX-V4-BRIDGE) extends this VIC’s 2x 50 Gbps of network connections up to the mLOM slot and out through the mLOM’s IFM connectors, bringing the total bandwidth to 100 Gbps per fabric for a total bandwidth of 200 Gbps per server.

● Cisco UCS VIC 15231

Cisco UCS VIC 15231 fits the mLOM slot in the Cisco UCS X210c Compute Node and enables up to 100 Gbps of unified fabric connectivity to each of the chassis IFMs for a total of 200 Gbps of connectivity per server. Cisco UCS VIC 15231 connectivity to the IFM and up to the fabric interconnects is delivered through 100-Gbps connections. Cisco UCS VIC 15231 supports 512 virtual interfaces (both FCoE and Ethernet).
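The per-server bandwidth of the three VIC options described above can be compared side by side. The following sketch only restates the per-fabric figures from the text. The dictionary keys and function name are labels chosen for this illustration, not product identifiers.

```python
FABRICS = 2  # each compute node connects to both IFMs (fabric A and fabric B)

# Bandwidth per fabric in Gbps, taken from the descriptions above.
PER_FABRIC_GBPS = {
    "VIC 14425": 2 * 25,                  # 4x 25G links, two per IFM
    "VIC 14425 + VIC 14825": 2 * 25 + 50, # bridge card adds 50G per fabric
    "VIC 15231": 100,                     # one 100G connection per IFM
}


def per_server_gbps(config: str) -> int:
    """Total unified fabric bandwidth for one compute node."""
    return PER_FABRIC_GBPS[config] * FABRICS


for config in PER_FABRIC_GBPS:
    print(f"{config}: {per_server_gbps(config)} Gbps")
```

The VIC 15231 reaches the same 200 Gbps per server as the two-card fourth-generation combination while leaving the mezzanine slot free.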

Cisco UCS Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point for connectivity and management for the entire Cisco UCS system. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by the Cisco UCS Manager or Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.


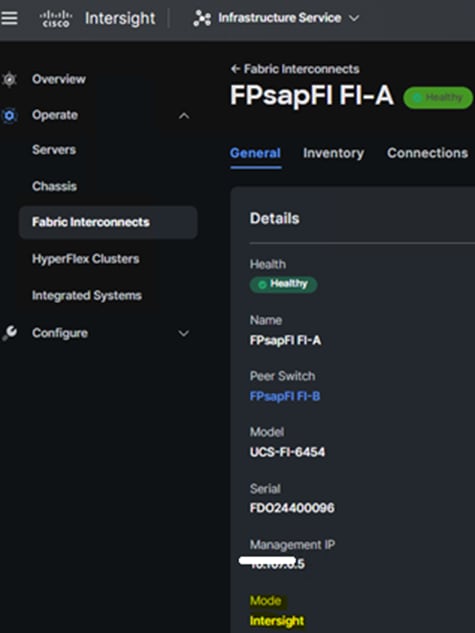

The Cisco UCS 6454 FI used in the current design is a 54-port Fabric Interconnect. This single-RU device includes 28 10/25-Gbps Ethernet ports, 4 1/10/25-Gbps Ethernet ports, 6 40/100-Gbps Ethernet uplink ports, and 16 unified ports that can support 10/25 Gigabit Ethernet or 8/16/32-Gbps Fibre Channel, depending on the SFP.
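As a quick sanity check, the port groups listed above add up to the 54 ports in the product name. The sketch below is only that tally. The group labels are descriptive strings written for this example.

```python
# Port groups of the Cisco UCS 6454 as listed in the text.
PORT_GROUPS_6454 = {
    "10/25G Ethernet": 28,
    "1/10/25G Ethernet": 4,
    "40/100G Ethernet uplink": 6,
    "unified (10/25G Ethernet or 8/16/32G Fibre Channel)": 16,
}

total_ports = sum(PORT_GROUPS_6454.values())

# Only the unified ports can carry native Fibre Channel.
max_fc_ports = PORT_GROUPS_6454["unified (10/25G Ethernet or 8/16/32G Fibre Channel)"]

print(total_ports, max_fc_ports)
```

The tally also makes the Fibre Channel ceiling explicit: at most 16 of the 54 ports can be used for native FC connectivity toward the Cisco MDS switches.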

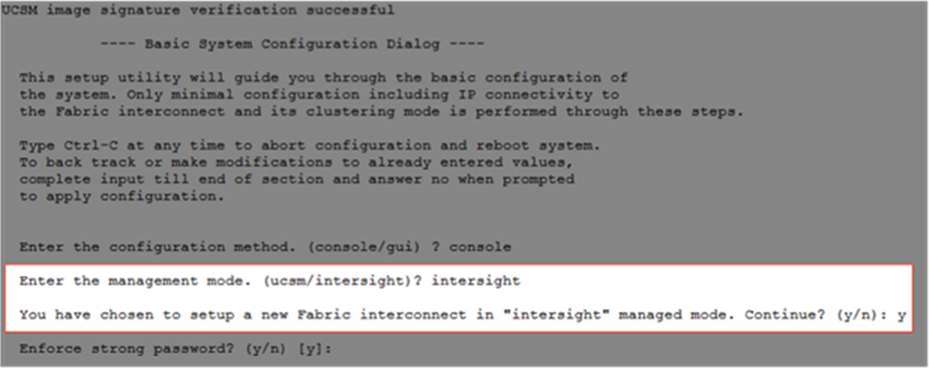

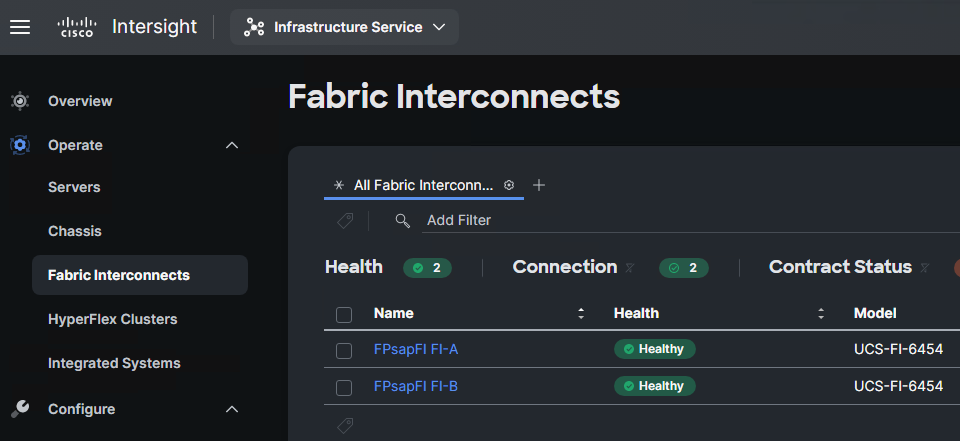

Note: For supporting the Cisco UCS X-Series, the fabric interconnects must be configured in Intersight Managed Mode (IMM). This option replaces the local management with Cisco Intersight cloud- or appliance-based management.

Cisco UCS 6536 Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point for connectivity and management for the entire Cisco Unified Computing System. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.

Note: Currently, the Cisco UCS 6536 does not support Cisco UCS Manager.


This single-RU device includes up to 36 10/25/40/100-Gbps Ethernet ports. Ports 33-36 can instead provide up to 16 8/16/32-Gbps Fibre Channel ports, with each 128-Gbps port broken out into 4x 32-Gbps Fibre Channel connections. All 36 ports support breakout cables or QSA interfaces.
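The trade-off between Ethernet and Fibre Channel ports on the Cisco UCS 6536 can be sketched as a small port budget. The example assumes that a physical port used for a Fibre Channel breakout is no longer available as an Ethernet port. That assumption and the function name are illustrative and not taken from the text.

```python
TOTAL_PORTS = 36
FC_CAPABLE_PORTS = range(33, 37)  # ports 33-36, from the text
FC_BREAKOUT_FACTOR = 4            # one 128G port -> 4x 32G Fibre Channel


def port_budget(fc_physical_ports: int) -> dict:
    """Split the 36 ports between FC breakouts and Ethernet.

    Assumption: a port converted to FC is removed from the Ethernet pool.
    """
    if not 0 <= fc_physical_ports <= len(FC_CAPABLE_PORTS):
        raise ValueError("only ports 33-36 can be converted to Fibre Channel")
    return {
        "fc_32g_ports": fc_physical_ports * FC_BREAKOUT_FACTOR,
        "ethernet_ports": TOTAL_PORTS - fc_physical_ports,
    }


print(port_budget(0))
print(port_budget(4))
```

With all four capable ports converted, the budget yields the 16 Fibre Channel ports quoted above and leaves 32 ports for Ethernet under the stated assumption.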

Note: The Cisco UCS 6536 FI currently only supports Intersight Managed Mode (IMM). This option replaces the local UCS management with Cisco Intersight cloud- or appliance-based management supporting the Cisco UCS X-Series.

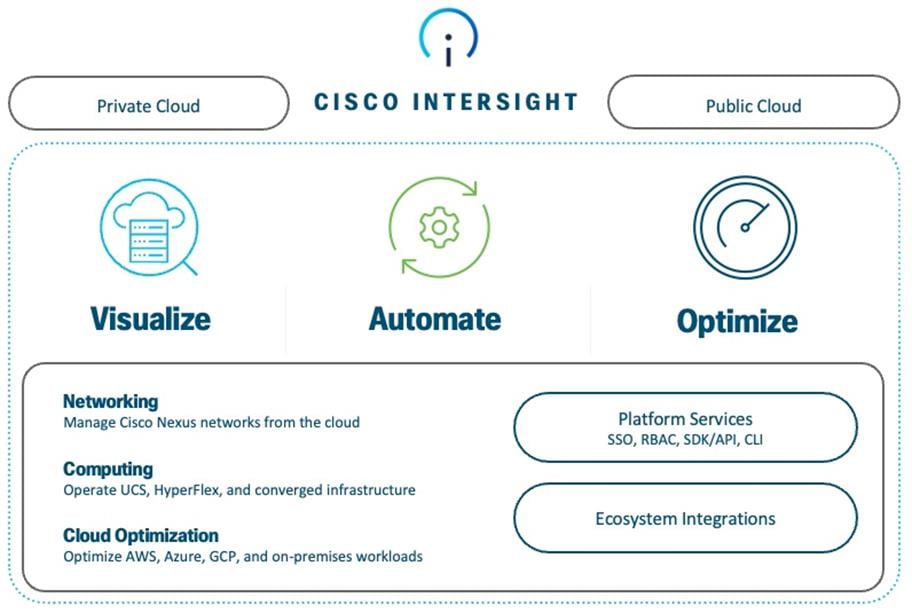

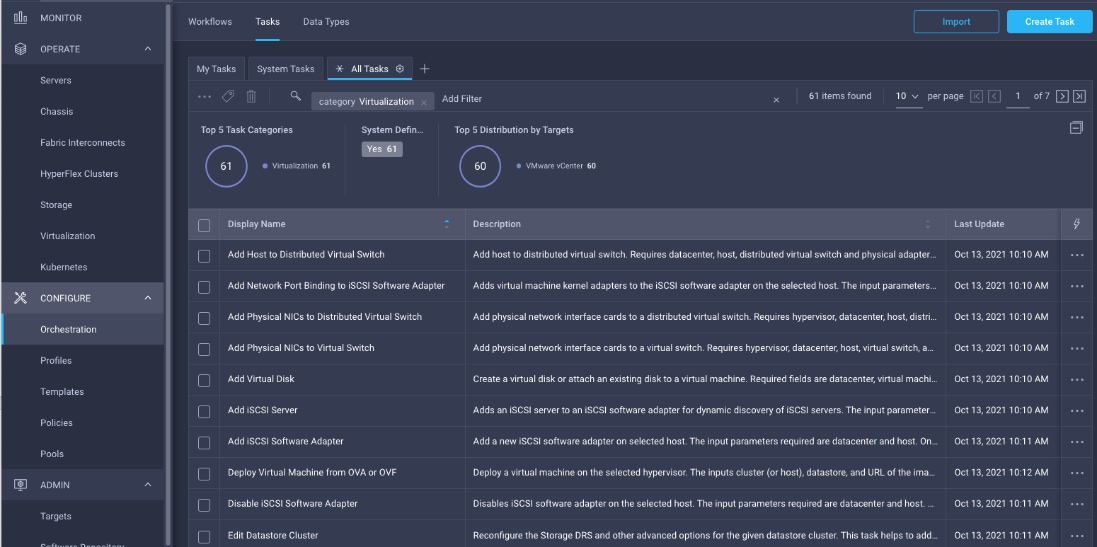

The Cisco Intersight platform is a Software-as-a-Service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support. The Cisco Intersight platform is designed to be modular, so you can adopt services based on your individual requirements. The platform significantly simplifies IT operations by bridging applications with infrastructure, providing visibility and management from bare-metal servers and hypervisors to serverless applications, thereby reducing costs and mitigating risk. This unified SaaS platform uses a unified Open API design that natively integrates with third-party platforms and tools.

The main benefits of Cisco Intersight infrastructure services are as follows:

● Simplify daily operations by automating many daily manual tasks

● Combine the convenience of a SaaS platform with the capability to connect from anywhere and manage infrastructure through a browser or mobile app

● Stay ahead of problems and accelerate trouble resolution through advanced support capabilities

● Gain global visibility of infrastructure health and status along with advanced management and support capabilities

Cisco Intersight Virtual Appliance and Private Virtual Appliance

In addition to the SaaS deployment model running on Intersight.com, on-premises options can be purchased separately. The Cisco Intersight Virtual Appliance and Cisco Intersight Private Virtual Appliance are available for organizations that have additional data locality or security requirements for managing systems. The Cisco Intersight Virtual Appliance delivers the management features of the Cisco Intersight platform in an easy-to-deploy VMware Open Virtualization Appliance (OVA) or Microsoft Hyper-V Server virtual machine that allows you to control the system details that leave your premises. The Cisco Intersight Private Virtual Appliance is provided in a form factor specifically designed for those who operate in disconnected (air gap) environments. The Private Virtual Appliance requires no connection to public networks or back to Cisco to operate.

Cisco Intersight Assist

Cisco Intersight Assist helps you add endpoint devices to Cisco Intersight. A data center could have multiple devices that do not connect directly with Cisco Intersight. Any device that is supported by Cisco Intersight, but does not connect directly with it, will need a connection mechanism. Cisco Intersight Assist provides that connection mechanism. In FlexPod, VMware vCenter and NetApp Active IQ Unified Manager connect to Intersight with the help of Intersight Assist VM.

Cisco Intersight Assist is available within the Cisco Intersight Virtual Appliance, which is distributed as a deployable virtual machine in the Open Virtual Appliance (OVA) file format. More details about the Cisco Intersight Assist VM deployment configuration are covered in later sections.

Licensing Requirements

The Cisco Intersight platform uses a subscription-based license with multiple tiers. You can purchase a subscription duration of one, three, or five years and choose the required Cisco UCS server volume tier for the selected subscription duration. Each Cisco endpoint automatically includes a Cisco Intersight Base license at no additional cost when you access the Cisco Intersight portal and claim a device. You can purchase any of the following higher-tier Cisco Intersight licenses using the Cisco ordering tool:

● Intersight Infrastructure Services - Essentials: Essentials includes Intersight functionality available in UCS and HyperFlex servers along with additional features such as policy-based configuration with Server Profiles, firmware management, and evaluation of compatibility with the Hardware Compatibility List (HCL).

● Intersight Infrastructure Services – Advantage: Advantage includes all features of the Essentials tier.

● Intersight Infrastructure Services - Premier: In addition to the functionality provided in the Advantage tier, the Premier tier includes full subscription entitlement for Cisco UCS Director at no additional cost.

Servers in the Cisco Intersight managed mode require at least the Essentials license. For more information about the features provided in the various licensing tiers, see https://intersight.com/help/saas/getting_started/licensing_requirements

The Cisco Nexus 9000 Series Switches offer both modular and fixed 1/10/25/40/100 Gigabit Ethernet switch configurations with scalability up to 60 Tbps of nonblocking performance, latency of less than five microseconds, and wire-speed VXLAN gateway, bridging, and routing support.

The Cisco Nexus 9000 Series Switch featured in this design is the Cisco Nexus 93180YC-FX3 configured in NX-OS standalone mode. NX-OS is a purpose-built data-center operating system designed for performance, resiliency, scalability, manageability, and programmability at its foundation. It provides a robust and comprehensive feature set that meets the demanding requirements of virtualization and automation.

The Cisco Nexus 93180YC-FX3 Switch is a 1RU switch that supports 3.6 Tbps of bandwidth and 1.2 billion packets per second (bpps). The 48 downlink ports on the 93180YC-FX3 can support 1-, 10-, or 25-Gbps Ethernet, offering deployment flexibility and investment protection. The six uplink ports can be configured as 40- or 100-Gbps Ethernet, offering flexible migration options.
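The 3.6 Tbps figure is consistent with the port counts if every port runs at its maximum speed and both traffic directions are counted. The sketch below shows that calculation. Counting both directions is an assumption about how the headline number is derived, and the function names are hypothetical.

```python
def switching_capacity_tbps(downlinks: int = 48, downlink_gbps: int = 25,
                            uplinks: int = 6, uplink_gbps: int = 100) -> float:
    """Aggregate capacity with all ports at maximum speed, both directions counted."""
    one_way_gbps = downlinks * downlink_gbps + uplinks * uplink_gbps
    return 2 * one_way_gbps / 1000


def oversubscription_ratio(downlinks: int = 48, downlink_gbps: int = 25,
                           uplinks: int = 6, uplink_gbps: int = 100) -> float:
    """Downlink bandwidth divided by uplink bandwidth."""
    return (downlinks * downlink_gbps) / (uplinks * uplink_gbps)


print(switching_capacity_tbps())
print(oversubscription_ratio())
```

The second function is a reminder that with all 48 downlinks at 25 Gbps and all six uplinks at 100 Gbps, downlink capacity is twice the uplink capacity, which matters when planning storage and SAP HANA inter-node traffic across the switch pair.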

Cisco MDS 9132T 32G Multilayer Fabric Switch

The Cisco MDS 9132T 32G Multilayer Fabric Switch is the next generation of the highly reliable, flexible, and low-cost Cisco MDS 9100 Series switches. It combines high performance with exceptional flexibility and cost effectiveness. This powerful, compact one Rack-Unit (1RU) switch scales from 8 to 32 line-rate 32 Gbps Fibre Channel ports.

The Cisco MDS 9132T delivers advanced storage networking features and functions with ease of management and compatibility with the entire Cisco MDS 9000 family portfolio for reliable end-to-end connectivity. This switch also offers state-of-the-art SAN analytics and telemetry capabilities that have been built into this next-generation hardware platform. This new state-of-the-art technology couples the next-generation port ASIC with a fully dedicated network processing unit designed to complete analytics calculations in real time. The telemetry data extracted from the inspection of the frame headers are calculated on board (within the switch) and, using an industry-leading open format, can be streamed to any analytics-visualization platform. This switch also includes a dedicated 10/100/1000BASE-T telemetry port to maximize data delivery to any telemetry receiver, including Cisco Data Center Network Manager.

Cisco Nexus Dashboard Fabric Controller

The Cisco Nexus Dashboard Fabric Controller (NDFC), which is the evolution of Data Center Network Manager (DCNM), makes fabric management simple and reliable. It is the comprehensive management solution for all Cisco NX-OS network deployments spanning SAN fabrics, LAN fabrics, and IP fabric for media (IPFM) networking in the data center. Cisco NDFC provides management, control, automation, monitoring, visualization, and troubleshooting across Cisco Multilayer Switching (MDS) and Cisco Nexus solutions, reducing the complexities and costs of operating Cisco Nexus and storage network deployments while connecting and managing your cloud environments. Cisco NDFC is available through Cisco Data Center Networking (DCN) or NX-OS Essentials, Advantage, or Premier license and runs exclusively as a service on Cisco Nexus Dashboard.

Cisco Nexus Dashboard is managed through a web browser and can be deployed either as a physical appliance or as a virtual appliance, for example as a cluster of three VMware ESX virtual machines.

With the additional capability to discover and report about disk arrays in the fabric and the use of virtual machine managers, Cisco NDFC can effectively provide an end-to-end view of all communication within the data center. If you’re adopting a combination of bare-metal and virtualized servers, the capability of Cisco NDFC to provide visibility into the network, servers, and storage resources is especially valuable, helping provide full control of application Service-Level Agreements (SLAs) and metrics beyond simple host and virtual machine monitoring.

Cisco Intersight Nexus Dashboard Base

The Cisco Nexus Dashboard (ND) Base provides Cisco Technical Assistance Center (TAC) Assist functions that are useful when working with the dashboard. It provides a way for you to collect technical support information across multiple devices and upload the collected tech-support files to Cisco Cloud. The Cisco ND Base offers basic data center network asset, inventory, and status information in Cisco Intersight.

The Cisco ND Base application is connected to Cisco Intersight through a device connector that is embedded in the management controller of the Cisco NDFC platform. The device connector provides a secure way for connected Cisco NDFC to send and receive information from Cisco Intersight by using a secure Internet connection.

The NetApp AFF A400 offers full end-to-end NVMe support. The frontend NVMe/FC connectivity makes it possible to achieve optimal performance from an all-flash array for workloads that include artificial intelligence, machine learning, and real-time analytics as well as business-critical databases. On the back end, the A400 supports both serial-attached SCSI (SAS) and NVMe-attached SSDs, offering the versatility for you to move up from your legacy A-Series systems and satisfying the increasing interest in NVMe-based storage.

The NetApp AFF A400 offers greater port availability, network connectivity, and expandability. The NetApp AFF A400 has 10 PCIe Gen3 slots per high availability pair. It offers 25GbE or 100GbE, as well as 32Gb FC and NVMe/FC network connectivity. This model was created to keep up with changing business needs and performance and workload requirements by merging the latest technology for data acceleration and ultra-low latency in an end-to-end NVMe storage system.

Note: Cisco UCS X-Series is supported with all NetApp AFF systems running a NetApp ONTAP 9 release.

Build your hybrid cloud with ease

Your data fabric built by NetApp helps you simplify and integrate data management across cloud and on-premises environments to meet business demands and gain a competitive edge. With NetApp AFF A-Series, you can connect to more clouds for more data services, data tiering, caching, and disaster recovery. You can also:

● Maximize performance and reduce overall storage costs by automatically tiering cold data to the cloud with FabricPool.

● Instantly deliver data to support efficient collaboration across your hybrid cloud.

● Protect your data by taking advantage of Amazon Simple Storage Service (Amazon S3) cloud resources—on premises and in the public cloud.

● Accelerate read performance for data that is shared widely throughout your organization and across hybrid cloud deployments.

Keep data available, protected, and secure

As organizations become more data driven, the business impact of data loss can be increasingly dramatic—and costly. IT must protect data from both internal and external threats, ensure data availability, eliminate maintenance disruptions, and quickly recover from failures.

With NetApp, you can get disaster recovery and backup in the cloud while protecting data against site failure and freeing up valuable data center resources. In addition, Amazon FSx for NetApp ONTAP is now a production-certified, fully managed file service for SAP HANA. With FlexPod Hybrid cloud integration, you can automate tasks, simplify deployments, and run SAP HANA in-memory database with the flexibility of AWS-certified cloud infrastructure. For more information, refer to https://www.netapp.com/blog/new-fsx-for-ontap-features/

NetApp BlueXP

BlueXP is a unified control plane that enables you to manage your entire data landscape through a single, SaaS-delivered point of control. Gone are the days of deploying, managing, and optimizing every environment in its own management framework and toolset, each requiring specific competencies. BlueXP helps you deploy, discover, manage, and optimize data and infrastructure with the simplicity of SaaS. It combines visibility and manageability of storage instances with data services such as data protection, governance and compliance, mobility, and resource monitoring and optimization. It also enables multicloud operations through a unified control plane to eliminate fragmented and redundant toolsets, frameworks, lexicons, and competencies.

NetApp ONTAP 9.12.1

NetApp storage systems harness the power of ONTAP to simplify the data infrastructure across edge, core, and cloud with a common set of data services and 99.9999 percent availability. NetApp ONTAP 9 data management software enables you to modernize your infrastructure and transition to a cloud-ready data center. ONTAP 9 has a host of features to simplify deployment and data management, accelerate and protect critical data, and make infrastructure future-ready across hybrid-cloud architectures.

NetApp ONTAP 9 is the data management software that is used with the NetApp AFF A400 all-flash storage system in this solution design. ONTAP software offers secure unified storage for applications that read and write data over block- or file-access protocol storage configurations. These storage configurations range from high-speed flash to lower-priced spinning media or cloud-based object storage. ONTAP implementations can run on NetApp engineered FAS or AFF series arrays and in private, public, or hybrid clouds (NetApp Private Storage and NetApp Cloud Volumes ONTAP). Specialized implementations offer best-in-class converged infrastructure, featured here as part of the FlexPod Datacenter solution or with access to third-party storage arrays (NetApp FlexArray virtualization). Together these implementations form the basic framework of the NetApp Data Fabric, with a common software-defined approach to data management, and fast efficient replication across systems. FlexPod and ONTAP architectures can serve as the foundation for both hybrid cloud and private cloud designs.

The following sections provide an overview of how ONTAP 9 is an industry-leading data management software architected on the principles of software defined storage.

Read more about all the capabilities of ONTAP data management software here: https://www.netapp.com/us/products/data-management-software/ontap.aspx.

For more information on new features and functionality in the latest ONTAP software, refer to the ONTAP 9 Release Notes (netapp.com).

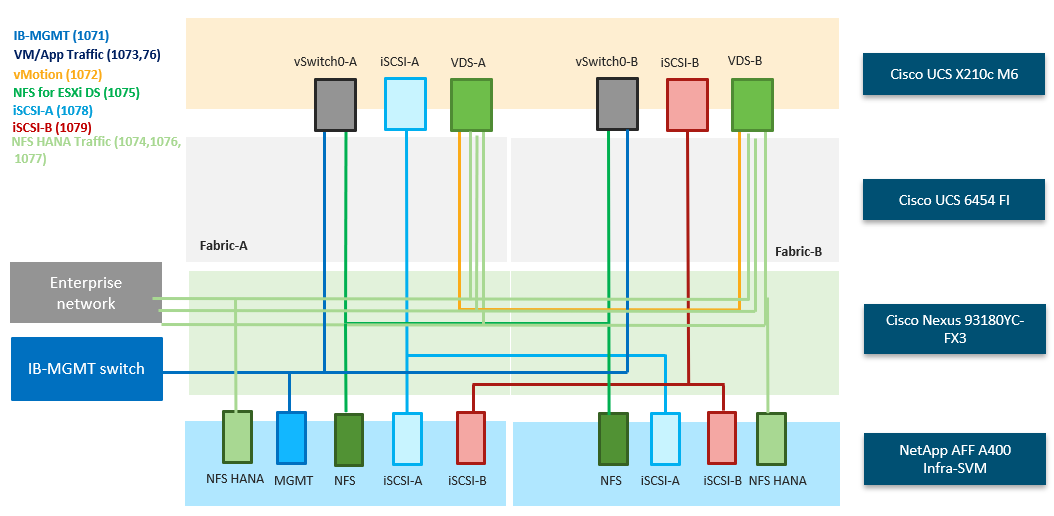

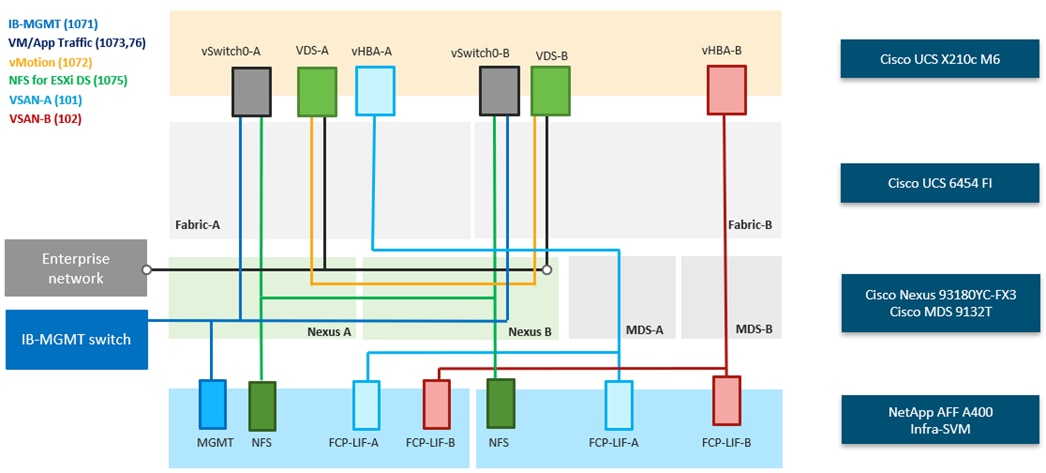

NetApp Storage Virtual Machine

A NetApp ONTAP cluster serves data through at least one, and possibly multiple, storage virtual machines (SVMs). An SVM is a logical abstraction that represents the set of physical resources of the cluster. Data volumes and network LIFs are created and assigned to an SVM and can reside on any node in the cluster to which that SVM has access. An SVM can own resources on multiple nodes concurrently, and those resources can be moved non-disruptively from one node in the storage cluster to another. For example, a NetApp FlexVol flexible volume can be non-disruptively moved to a new node and aggregate, or a data LIF can be transparently reassigned to a different physical network port. The SVM abstracts the cluster hardware, and therefore it is not tied to any specific physical hardware.

An SVM can support multiple data protocols concurrently. Volumes within the SVM can be joined to form a single NAS namespace. The namespace makes all of the SVM's data available through a single share or mount point to NFS and CIFS clients. SVMs also support block-based protocols, and LUNs can be created and exported by using iSCSI, FC, and FCoE. Any or all of these data protocols can be used within a given SVM. Storage administrators and management roles can be associated with an SVM, offering higher security and access control. This security is important in environments that have more than one SVM and when the storage is configured to provide services to different groups or sets of workloads. In addition, you can configure external key management for a named SVM in the cluster. This is a best practice for multitenant environments in which each tenant uses a different SVM (or set of SVMs) to serve data.

Storage Efficiencies

Storage efficiency is a primary architectural design point of ONTAP data management software. A wide array of features enables you to store more data in less space. In addition to deduplication and compression, you can store your data more efficiently by using features such as unified storage, multitenancy, thin provisioning, and NetApp Snapshot technology.

Starting with ONTAP 9, NetApp guarantees that the use of NetApp storage efficiency technologies on AFF systems reduces the total logical capacity used to store your data up to a data reduction ratio of 7:1, based on the workload. This space reduction is enabled by a combination of several different technologies, including deduplication, compression, and compaction.

Compaction, which was introduced in ONTAP 9, is the latest patented storage efficiency technology released by NetApp. In the NetApp WAFL file system, all I/O takes up 4KB of space, even if it does not actually require 4KB of data. Compaction combines multiple blocks that are not using their full 4KB of space together into one block. This single block can be more efficiently stored on the disk to save space. These storage efficiencies improve the ability of ONTAP to store more data in less space, reducing storage costs and maximizing the effective capacity of your storage system.
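The effect of compaction described above can be illustrated with a small sketch. This is a toy model only: it assumes a simple first-fit-decreasing packing heuristic, which is not ONTAP's actual algorithm, and the write sizes are hypothetical sample values. The only detail taken from the text is the 4KB block size.

```python
BLOCK_SIZE = 4096  # WAFL block size in bytes, as stated in the text


def blocks_without_compaction(io_sizes):
    """Each write occupies a whole number of 4KB blocks, even if partly empty."""
    return sum(-(-size // BLOCK_SIZE) for size in io_sizes)  # ceiling division


def blocks_with_compaction(io_sizes):
    """Pack partially filled chunks into shared 4KB blocks.

    Illustrative first-fit-decreasing bin packing, NOT the ONTAP implementation.
    """
    full_blocks = 0
    partial_chunks = []
    for size in io_sizes:
        full_blocks += size // BLOCK_SIZE
        remainder = size % BLOCK_SIZE
        if remainder:
            partial_chunks.append(remainder)

    free_space = []  # free bytes remaining in each shared block
    for chunk in sorted(partial_chunks, reverse=True):
        for i, free in enumerate(free_space):
            if chunk <= free:
                free_space[i] = free - chunk
                break
        else:
            # No existing shared block has room, so open a new one.
            free_space.append(BLOCK_SIZE - chunk)
    return full_blocks + len(free_space)


# Hypothetical write sizes in bytes
io_sizes = [512, 1024, 2048, 300, 4096, 700]
print(blocks_without_compaction(io_sizes), blocks_with_compaction(io_sizes))
```

In this sample, the five small writes share two blocks instead of occupying five, which is the kind of saving the paragraph above describes.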

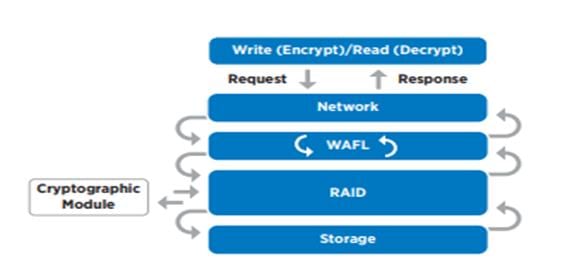

NetApp Volume Encryption (NVE) and NetApp Aggregate Encryption (NAE)

NetApp Volume Encryption is a software-based, data-at-rest encryption solution that is FIPS 140-2 compliant. NVE allows ONTAP to encrypt data at per-volume granularity. NAE is an outgrowth of NVE; it allows ONTAP to encrypt data for each volume, and the volumes can share keys across the aggregate. NVE and NAE enable you to use storage efficiency features that would be lost with encryption at the application layer. For greater storage efficiency, you can use aggregate deduplication with NAE.

Here’s how the process works: The data leaves the disk encrypted, is sent to RAID, is decrypted by the CryptoMod, and is then sent up the rest of the stack. This process is illustrated in Figure 17.

To view the latest security features for ONTAP 9, go to: Security Features in ONTAP 9 | NetApp.

ONTAP REST API

The ONTAP REST API enables you to automate the deployment and administration of your ONTAP storage systems using one of several available options. The ONTAP REST API provides the foundation for all the various ONTAP automation technologies.

Beginning with ONTAP 9.6, ONTAP includes an expansive workflow-driven REST API that you can use to automate deployment and management of your storage. In addition, NetApp provides a Python client library, which makes it easier to write robust code, as well as support for ONTAP automation based on Ansible.
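As a minimal sketch of what a REST call looks like, the following Python snippet builds (but does not send) a request that lists the volumes of one SVM. It uses only the Python standard library rather than the NetApp client library, and it assumes the `/api/storage/volumes` endpoint with the `svm.name` filter and `fields` query parameter. The cluster host name, SVM name, and credentials are hypothetical placeholders.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request


def build_volume_list_request(cluster, svm_name, user, password):
    """Build (without sending) a GET request listing the volumes of one SVM."""
    # Keep the comma in the fields list readable instead of percent-encoding it.
    query = urlencode({"svm.name": svm_name, "fields": "name,size"}, safe=",")
    url = f"https://{cluster}/api/storage/volumes?{query}"
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    headers = {
        "Authorization": f"Basic {token}",  # HTTP basic authentication
        "Accept": "application/json",
    }
    return Request(url, headers=headers, method="GET")


# Hypothetical values for illustration only
req = build_volume_list_request("cluster1.example.com", "hana-svm", "admin", "secret")
print(req.get_method(), req.full_url)
```

To execute the request, you would pass `req` to `urllib.request.urlopen` with a TLS context that trusts the cluster certificate and then parse the JSON response. In production, the NetApp Python client library or the Ansible modules mentioned above are likely the more robust choice.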

FlexClone

NetApp FlexClone technology enables instantaneous point-in-time copies of a FlexVol volume without consuming any additional storage until the cloned data changes from the original. FlexClone volumes add extra agility and efficiency to storage operations. They take only a few seconds to create and do not interrupt access to the parent FlexVol volume. FlexClone volumes use space efficiently, applying the ONTAP architecture to store only data that changes between the parent and clone. FlexClone volumes are suitable for testing or development environments, or any environment where progress is made by locking-in incremental improvements. FlexClone volumes also benefit any business process where you must distribute data in a changeable form without endangering the integrity of the original.
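The space behavior described above can be illustrated with a toy model in which a clone copies only block pointers and new physical blocks are allocated when data is written. This is a conceptual sketch under simplifying assumptions; the `BlockStore` and `Volume` classes are hypothetical and do not represent ONTAP internals.

```python
import itertools


class BlockStore:
    """Shared pool of physical blocks, addressed by an integer ID."""

    def __init__(self):
        self._next_id = itertools.count()
        self.blocks = {}

    def allocate(self, data):
        block_id = next(self._next_id)
        self.blocks[block_id] = data
        return block_id


class Volume:
    """Toy volume: maps logical block numbers to physical block IDs."""

    def __init__(self, store, block_map=None):
        self.store = store
        self.block_map = dict(block_map or {})

    def write(self, logical_block, data):
        # A write always lands in a new physical block, so blocks that are
        # shared with another volume are never overwritten.
        self.block_map[logical_block] = self.store.allocate(data)

    def read(self, logical_block):
        return self.store.blocks[self.block_map[logical_block]]

    def clone(self):
        # Copies the pointer map only; no data blocks are duplicated.
        return Volume(self.store, self.block_map)


store = BlockStore()
parent = Volume(store)
for n in range(4):
    parent.write(n, f"block-{n}".encode())

clone = parent.clone()
blocks_after_clone = len(store.blocks)   # creating the clone adds no blocks
clone.write(2, b"changed")               # only the changed block uses new space
blocks_after_write = len(store.blocks)
```

The parent volume continues to read its original data for block 2, while the clone sees the changed data, and all unchanged blocks remain shared.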

SnapMirror (Data Replication)

NetApp SnapMirror is an asynchronous replication technology for data replication across different sites, within the same data center, on-premises datacenter to cloud, or cloud to on-premises datacenter. SnapMirror Synchronous (SM-S) offers volume granular, zero data loss protection. It extends traditional SnapMirror volume replication to synchronous mode meeting zero recovery point objective (RPO) disaster recovery and compliance objectives. ONTAP 9.7 extends support for SnapMirror Synchronous to application policy-based replication providing a simple and familiar configuration interface that is managed with the same tools as traditional SnapMirror. This includes ONTAP CLI, NetApp ONTAP System Manager, NetApp Active IQ Unified Manager, and NetApp Manageability SDK.

NetApp’s Solutions to Ransomware

It is important for ransomware detection to occur as early as possible so that you can prevent its spread and avoid costly downtime. NetApp offers a layered defense approach with ONTAP software and its native detection and recovery tools. This section summarizes various features and tools that NetApp offers to detect, alert, and recover from ransomware attacks.

● NetApp Active IQ (AIQ) checks NetApp ONTAP systems for adherence to NetApp configuration best practices such as enabling FPolicy.

● NetApp Active IQ Unified Manager (AIQUM) generates alerts for abnormal growth of NetApp Snapshot copies or storage efficiency loss, which can indicate potential ransomware attacks.

● ONTAP System Manager enables you to view Snapshot percent change or storage efficiency savings in real time.

● Autonomous Ransomware Protection (ARP). NetApp ONTAP 9.10.1 and later includes an anti-ransomware feature that uses built-in, on-box machine learning (ML) to analyze volume workload activity and data entropy and automatically detect ransomware. In ONTAP 9.11.1, this feature gained an enhanced analytics engine that catches newer ransomware variants that manipulate data entropy and file extensions. The feature can be integrated with Cloud Secure to track the status of on-box protection in the Cloud Insights dashboard, and it is also supported on FSx and CVO. In ONTAP 9.12.1, the ARP screening profile is transferred as part of NetApp SnapMirror replication, which extends ransomware protection to secondary storage.

● NetApp native FPolicy is a file-access notification framework that is used to monitor and manage file access over the NFS or SMB/CIFS protocol. This zero-trust engine is built around the concept of "never trust, always verify." FPolicy helps you block unwanted files from being stored on the NetApp storage device, and it can be used to block known ransomware file extensions. With ONTAP 9.12.1, FPolicy can be activated with a single click in System Manager or NetApp BlueXP. This feature protects against thousands of known, common ransomware extensions that are used in typical ransomware attacks.

● FPolicy external mode in ONTAP uses user behavior analytics (UBA, sometimes referred to as User and Entity Behavior Analytics, or UEBA) as the key to stopping a zero-day ransomware attack. UBA tracks the data access patterns of users and groups and reports any deviation from those patterns. UBA can also deny access to files when users do something outside their usual pattern. UBA requires an external mode FPolicy server.

Note: Cloud Insights with Cloud Secure is NetApp's own external mode FPolicy server.

● NetApp Snapshot copies. A Snapshot copy is a read-only image of a volume that captures the state of a file system at a point in time. These copies help protect data with no effect on system performance and occupy little storage space. Scheduled Snapshot copies are useful when you need to restore data after an attack.

● NetApp SnapLock is a key component for enterprise data protection and data resiliency against ransomware. It provides a special immutable volume in which data can be stored and committed to a non-erasable, non-rewritable state for a specific retention period. FlexGroup volumes that hold production data can also be created as SnapLock volumes, enabling higher performance and massive scale for indelible, WORM-protected data.
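The list above mentions data entropy as one of the signals used for ransomware detection. The following sketch shows why entropy is a useful signal: encrypted content looks nearly random and therefore has a much higher Shannon entropy than typical text or log data. It is a generic illustration of the concept and makes no claim about how ONTAP computes or evaluates entropy; the sample data is hypothetical, and random bytes stand in for encrypted content.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)  # occurrences of each byte value
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Hypothetical sample data: repetitive text versus random bytes
plain = b"SAP HANA log segment " * 200
random_like = os.urandom(4096)  # stands in for encrypted file content

print(f"plain text: {shannon_entropy(plain):.2f} bits/byte")
print(f"encrypted:  {shannon_entropy(random_like):.2f} bits/byte")
```

A sudden shift of newly written data toward the upper end of the 0 to 8 range is one indication that files are being encrypted. Note that compressed data also has high entropy, which is one reason detection typically combines entropy with other signals such as workload activity and file extensions.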

NetApp Cloud Secure

NetApp Cloud Secure is a feature of NetApp Cloud Insights, an offering from NetApp BlueXP. It provides centralized visibility and control of all corporate data access across on-premises and cloud environments to ensure security and compliance goals are met. It reports access activity from insiders, outsiders, ransomware attacks, and rogue users. It profiles users and groups for normal data access patterns, and if risky behavior is detected, it alerts you and automatically takes a Snapshot copy, which can be used to recover quickly.

Unlike perimeter security tools, which assume that insiders are trusted, NetApp Cloud Secure assumes zero trust for everyone. All activities on the supervised shares are monitored in real time and the data is used to automatically identify the working communities of all users.

Along with the ability to audit all document access, NetApp Cloud Secure helps you to ensure compliance with regulatory requirements.

NetApp Cloud Secure performs four major functions:

● Monitor user activity

● Detect anomalies and identify potential attacks

● Automated response policies

● Forensics and user audit reporting

It provides a graphical interface to slice and dice activity data to perform data breach investigations and generate user data access audit reports. It allows multiple views of file data activities by user, time, activity type, and file attributes.

For more information about NetApp ransomware protection refer to https://bluexp.netapp.com/ransomware-protection.

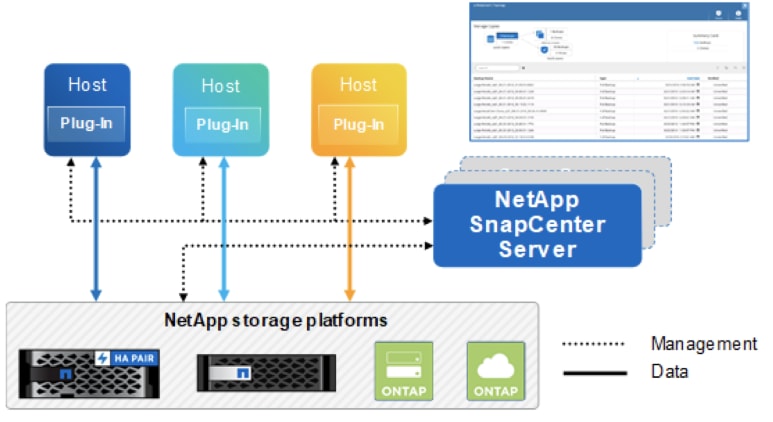

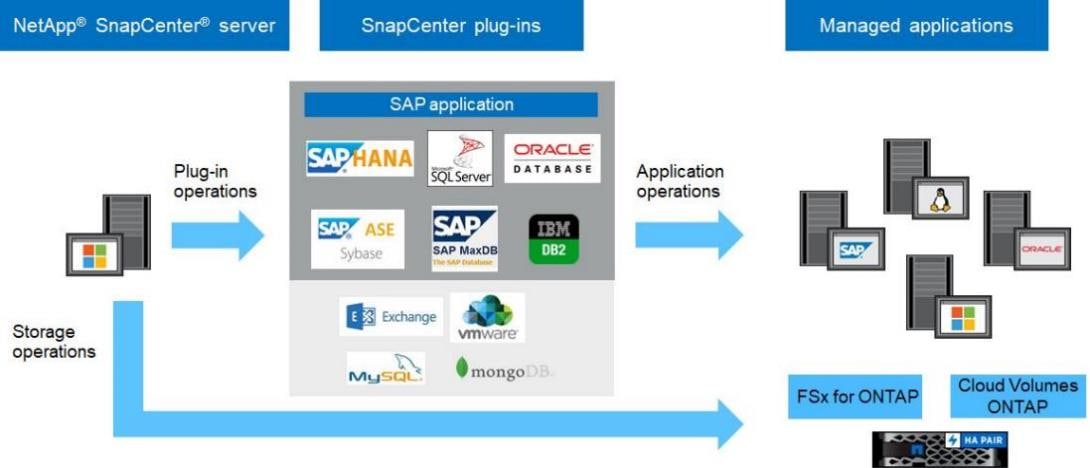

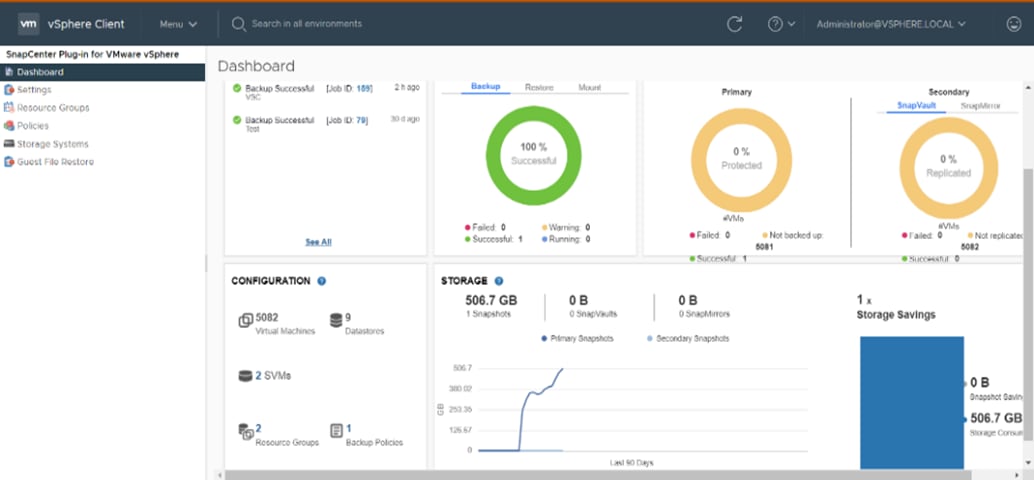

NetApp SnapCenter is next-generation data protection software for tier 1 enterprise applications. SnapCenter software is a simple, centralized, scalable platform that provides application-consistent data protection for applications, databases, host file systems, and virtual machines (VMs) running on ONTAP systems anywhere in the hybrid cloud.

SnapCenter leverages NetApp Snapshot, SnapRestore, FlexClone, SnapMirror, and SnapVault technologies to provide the following:

● Fast, space-efficient, application-consistent, disk-based backups

● Rapid, granular restore and application-consistent recovery

● Quick, space-efficient cloning

SnapCenter enables seamless integration with Oracle, Microsoft, SAP, MongoDB and VMware across FC, iSCSI, and NAS protocols. This integration enables IT organizations to scale their storage infrastructure, meet increasingly stringent SLA commitments, and improve the productivity of administrators across the enterprise.

Starting with SnapCenter 4.3, SnapCenter Server has been decoupled from the SnapCenter plug-in for VMware vSphere and is no longer required to back up VMs and datastores. VM and datastore backup functions have been moved exclusively to the SnapCenter plug-in for VMware vSphere, which was deployed as part of this design. SnapCenter Server is still required for application-level VM backups such as for Microsoft SQL Server, Oracle, and SAP HANA.

The SnapCenter platform is based on a multitiered architecture that includes a centralized management server (SnapCenter Server) and a SnapCenter plug-in host.

Figure 18 illustrates the high-level architecture of the NetApp SnapCenter Server.

The SnapCenter Server includes a web server, a centralized HTML5-based user interface, PowerShell cmdlets, REST APIs, and the SnapCenter repository.

SnapCenter enables load balancing, high availability, and horizontal scaling across multiple SnapCenter Servers within a single user interface. You can accomplish high availability by using Network Load Balancing (NLB) and Application Request Routing (ARR) with SnapCenter. For larger environments with thousands of hosts, adding multiple SnapCenter Servers can help balance the load.

The SnapCenter Server can push out plug-ins to remote hosts. These plug-ins are used to interact with an application, a database, or a file system. The SnapCenter Server and plug-ins communicate with the host agent using HTTPS. Usually, the plug-ins must be present on the remote host so that application-level or database-level commands can be issued from the same host where the application or database is running.

To manage the plug-ins and the interaction between the SnapCenter Server and the plug-in host, SnapCenter uses SM Service. SM Service is a NetApp SnapManager web service running on top of Windows Server Internet Information Services (IIS) on the SnapCenter Server. SM Service takes all client requests such as backup, restore, and clone.

The SnapCenter Server communicates those requests to SMCore, which is a service that runs co-located within the SnapCenter Server and remote servers. SMCore plays a significant role in coordinating with the SnapCenter plug-ins package for Windows.

SnapCenter Features

SnapCenter enables you to create application-consistent Snapshot copies and to complete data protection operations, including Snapshot copy-based backup, clone, restore, and backup-verification operations. SnapCenter provides a centralized management environment, and it uses role-based access control (RBAC) to delegate data protection and management functions to individual application users across the SnapCenter Server and Windows hosts.

SnapCenter includes the following key features:

● A unified and scalable platform across applications and database environments with virtual and nonvirtual storage powered by the SnapCenter Server

● Consistency of features and procedures across plug-ins and environments supported by the SnapCenter GUI

● RBAC for security and centralized role delegation

● Application-consistent Snapshot copy management, restore, clone, and backup verification support from both primary and secondary destinations (NetApp SnapMirror and NetApp SnapVault technology)

● Remote package installation from the SnapCenter GUI

● Nondisruptive, remote upgrades

● A dedicated SnapCenter repository for faster data retrieval

● Load balancing that is implemented by using Microsoft Windows network load balancing (NLB) and application request routing (ARR) with support for horizontal scaling

● Centralized scheduling and policy management to support backup and clone operations

● Centralized reporting, monitoring, and dashboard views

● SnapCenter 4.7 support for data protection for VMware VMs, SQL Server databases, Oracle databases, MySQL, SAP HANA, MongoDB, and Microsoft Exchange

SAP HANA Data Protection with SnapCenter

The FlexPod solution can be extended with additional software and hardware components to cover data protection, backup and recovery, and disaster recovery operations. This section provides a high-level overview of how to enhance SAP HANA backup and disaster recovery using the NetApp SnapCenter plug-in for SAP HANA.

More details on the setup and configuration of SnapCenter for backup and recovery or disaster recovery operations can be found in the following technical reports:

● SAP HANA Backup and Recovery with SnapCenter

● SAP HANA Disaster Recovery with Asynchronous Storage Replication

Storage-based Snapshot backups are a fully supported and integrated backup method available for SAP HANA.

Storage-based Snapshot backups are implemented with the NetApp SnapCenter plug-in for SAP HANA, which creates consistent Snapshot backups by using the interfaces provided by the SAP HANA database. SnapCenter registers the Snapshot backups in the SAP HANA backup catalog so that they are visible within the SAP HANA studio or cockpit and can be selected for restore and recovery operations.

Snapshot copies created within primary storage can be replicated to the secondary backup storage by using NetApp SnapMirror technology controlled by SnapCenter. Different backup retention policies can be defined for backups held on the primary storage and for backups held on the secondary storage. The SnapCenter plug-in for SAP HANA manages the retention of Snapshot copy-based data backups and log backups, including housekeeping of the backup catalog. The SnapCenter plug-in for SAP HANA also allows the execution of a block integrity check of the SAP HANA database by executing a file-based backup.
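The idea of separate retention on primary and secondary storage can be sketched as follows. This is a simplified, count-based illustration only; the function name, the backup schedule, and the retention values are hypothetical and do not reproduce SnapCenter policy semantics.

```python
from datetime import datetime, timedelta


def backups_to_delete(backup_times, keep_count):
    """Return the backups that exceed a count-based retention, oldest first."""
    ordered = sorted(backup_times)
    excess = len(ordered) - keep_count
    return ordered[:excess] if excess > 0 else []


# Hypothetical schedule: one Snapshot backup every 6 hours for 3 days
start = datetime(2023, 1, 1)
backups = [start + timedelta(hours=6 * n) for n in range(12)]

# Hypothetical policies: short retention on primary, longer on secondary
primary_expired = backups_to_delete(backups, keep_count=8)
secondary_expired = backups_to_delete(backups, keep_count=30)

print(len(primary_expired), len(secondary_expired))
```

With these sample values, the four oldest backups are removed from primary storage while all twelve are still retained on the secondary system, which keeps fast restore points close to the database and a longer history offsite.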

Storage-based Snapshot backups provide significant advantages when compared to file-based backups. Advantages include the following:

● Rapid backup (less than a minute)

● Faster restore on the storage layer (less than a minute)

● No performance effect on the SAP HANA database host, network, or storage during backup

● Space-efficient and bandwidth-efficient replication to secondary storage based on block changes

SAP HANA Disaster Recovery with Asynchronous Storage Replication

SAP HANA disaster recovery can be performed either on the database layer by using SAP system replication or on the storage layer by using storage replication technologies. This section provides an overview of disaster recovery solutions based on asynchronous storage replication.

The same SnapCenter plug-in that is described in the section SAP HANA Backup is also used for the asynchronous mirroring solution. A consistent Snapshot image of the database at the primary site is asynchronously replicated to the disaster recovery site with SnapMirror.

High-level Architecture Description

Figure 19 shows a high-level overview of the data protection architecture.

For an offsite backup and disaster recovery solution, the following additional hardware and software components are required:

● A Windows host to run SnapCenter server software

● Offsite backup storage to replicate backups from primary storage to a secondary storage system

● Disaster recovery storage to replicate backups from primary storage to a disaster recovery site

● Amazon FSx for NetApp ONTAP and Cloud Volumes ONTAP at different cloud providers can also be used as backup targets and as a disaster recovery site

The SnapCenter Server must be able to communicate with the SVMs that are used at the primary storage (within the FlexPod instance), the offsite backup location, and the disaster recovery storage.

The primary storage must have a network connection to the offsite storage and the disaster recovery storage. A storage cluster peering must be established between the primary storage, the offsite storage, and the disaster recovery storage.

The SnapCenter Server must have a network connection to the SAP HANA database hosts to deploy the HANA plug-in and to communicate with the plug-in after deployment. As an alternative, the HANA plug-in can also be deployed at the FlexPod management server. See SAP HANA Backup and Recovery with SnapCenter for more details on the deployment options for the HANA plug-in.

SAP HANA System Replication - Backup and Recovery with SnapCenter

SAP HANA System Replication is often used as a high availability or disaster recovery solution for SAP HANA databases. SAP HANA System Replication offers different modes of operation that you can use depending on your use case or availability requirements. A single-node SAP HANA system hosted on a Cisco UCS X-Series node can be configured to replicate to a dedicated secondary SAP HANA host, either for high availability within the same site or for disaster recovery over long distances. In either case, backups must be possible regardless of which SAP HANA host is primary or secondary. For more details on SnapCenter configuration options for system replication, go to: https://docs.netapp.com/de-de/netapp-solutions-sap/backup/saphana-sr-scs-sap-hana-system-replication-overview.html.

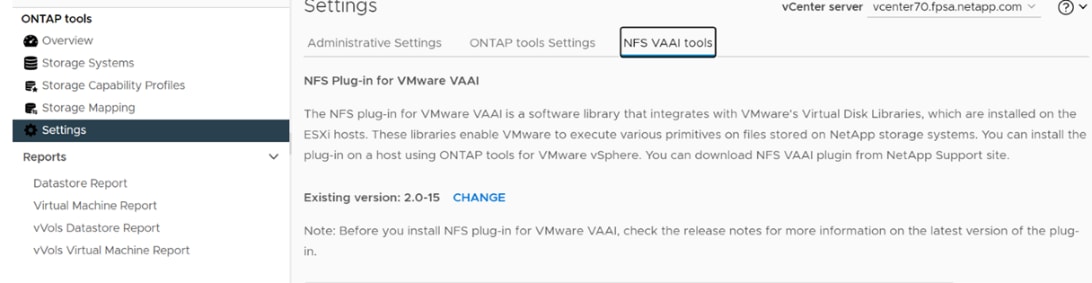

NetApp ONTAP Tools for VMware vSphere

NetApp ONTAP tools for VMware vSphere is a unified appliance that includes Virtual Storage Console (VSC), VASA Provider, and Storage Replication Adapter (SRA). It is a vCenter web client plug-in that provides context-sensitive menus to provision traditional datastores and Virtual Volume (vVol) datastores.

NetApp ONTAP tools provides visibility into the NetApp storage environment from within the VMware vSphere web client. VMware administrators can easily perform tasks that improve both server and storage efficiency while still using role-based access control to define the operations that administrators can perform. It includes enhanced REST APIs that provide vVols metrics for SAN storage systems using NetApp ONTAP 9.7 and later. NetApp OnCommand API Services is no longer required to get metrics for NetApp ONTAP systems 9.7 and later.

To download ONTAP tools for VMware vSphere, go to: https://mysupport.netapp.com/site/products/all/details/otv/downloads-tab.

NetApp NFS Plug-in for VMware VAAI

The NetApp NFS Plug-in for VMware vStorage APIs - Array Integration (VAAI) is a software library that integrates with the VMware Virtual Disk Libraries that are installed on the ESXi host. The VMware VAAI package enables the offloading of certain tasks from the physical hosts to the storage array. Performing those tasks at the array level can reduce the workload on the ESXi hosts.

The copy offload feature and space reservation feature improve the performance of VSC operations. The NetApp NFS Plug-in for VAAI is not shipped with VSC, but you can install it by using VSC. You can download the plug-in installation package and obtain the instructions for installing the plug-in from the NetApp Support site.

For more information about the NetApp VSC for VMware vSphere, see the NetApp Virtual Infrastructure Management Product Page.

Note: While vVol datastores with FCP are supported with virtualized SAP HANA, the preferred option to connect storage to virtual machines is with NFS directly out of the guest operating system. For more information, go to: https://docs.netapp.com/us-en/netapp-solutions-sap/bp/saphana_aff_fc_sap_hana_using_vmware_vsphere.html.

NetApp SnapCenter Plug-In for VMware vSphere

NetApp SnapCenter Plug-in for VMware vSphere enables VM-consistent and crash-consistent backup and restore operations for VMs and datastores from the vCenter server. The NetApp SnapCenter plug-in is deployed as a virtual appliance, and it integrates with the vCenter server web client GUI.

Here are some of the functionalities provided by the SnapCenter plug-in to help protect your VMs and datastores:

● Back up VMs, virtual machine disks (VMDKs), and datastores

◦ You can back up VMs, underlying VMDKs, and datastores. When you back up a datastore, you back up all the VMs in that datastore.

◦ You can create mirror copies of backups on another volume that has a SnapMirror relationship to the primary backup or perform a disk-to-disk backup replication on another volume that has a NetApp SnapVault relationship to the primary backup volume.

◦ Backup operations are performed on all the resources defined in a resource group. If a resource group has a policy attached and a schedule configured, then backups occur automatically according to the schedule.

● Restore VMs and VMDKs from backups

◦ You can restore VMs from either a primary or secondary backup to the same ESXi server. When you restore a VM, you overwrite the existing content with the backup copy that you select.

◦ You can restore one or more VMDKs on a VM to the same datastore. You can restore existing VMDKs, or deleted or detached VMDKs, from either a primary or a secondary backup.

◦ You can attach one or more VMDKs from a primary or secondary backup to the parent VM (the same VM that the VMDK was originally associated with) or an alternate VM. You can detach the VMDK after you have restored the files you need.

◦ You can restore a deleted VM from a datastore primary or secondary backup to an ESXi host that you select.

Note: For application-consistent backup and restore operations, the NetApp SnapCenter Server software is required.

Note: For additional information, requirements, licensing, and limitations of the NetApp SnapCenter Plug-In for VMware vSphere, see the NetApp Product Documentation.

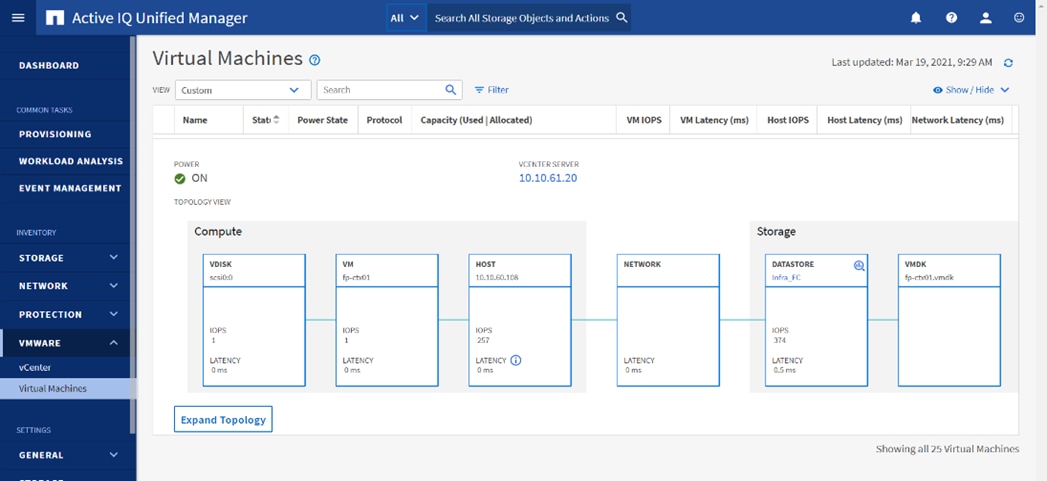

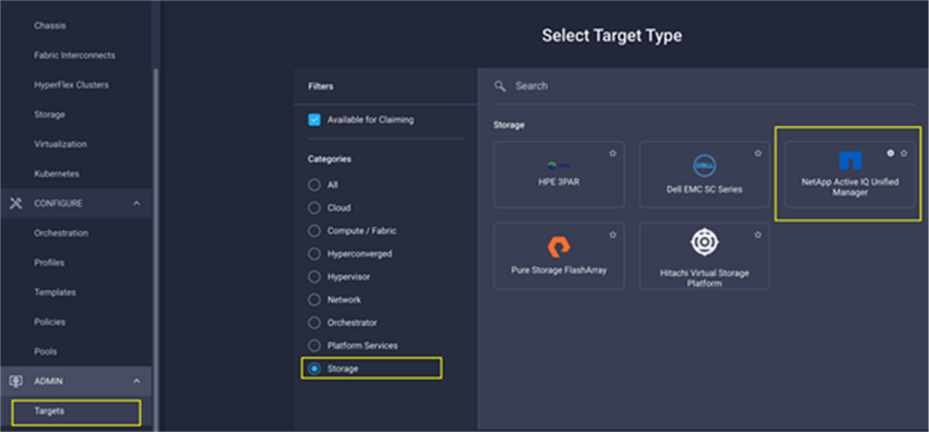

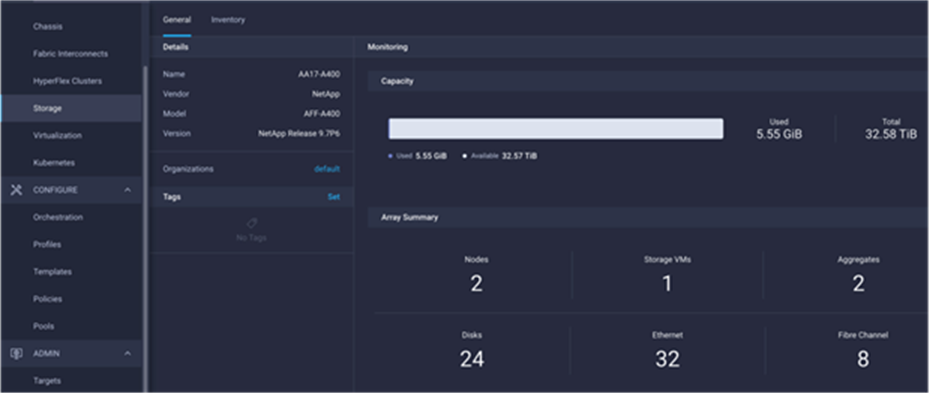

NetApp Active IQ Unified Manager

NetApp Active IQ Unified Manager (Unified Manager) is a comprehensive monitoring and proactive management tool for NetApp ONTAP systems to help manage the availability, capacity, protection, and performance risks of your storage systems and virtual infrastructure. You can deploy NetApp Active IQ Unified Manager on a Linux server, on a Windows server, or as a virtual appliance on a VMware host.

Active IQ Unified Manager enables you to monitor your NetApp ONTAP storage clusters, VMware vCenter server, and VMs from a single redesigned, intuitive interface that delivers intelligence from community wisdom and AI analytics. It provides comprehensive operational, performance, and proactive insights into the storage environment and the VMs running on it. When an issue occurs on the storage or virtual infrastructure, NetApp Active IQ Unified Manager can notify you about the details of the issue to help with identifying the root cause.

NetApp Active IQ Unified Manager enables you to manage storage objects in your environment by associating them with annotations. You can create custom annotations and dynamically associate clusters, SVMs, and volumes with the annotations through rules.

The VM dashboard gives you a view into the performance statistics for the VM so that you can investigate the entire I/O path from the vSphere host down through the network and finally to the storage. Some events also provide remedial actions that can be taken to rectify the issue. You can also configure custom alerts for events so that when issues occur, you are notified through email and SNMP traps.

VMware vSphere is a virtualization platform for holistically managing large collections of infrastructures (resources including CPUs, storage, and networking) as a seamless, versatile, and dynamic operating environment. Unlike traditional operating systems that manage an individual machine, VMware vSphere aggregates the infrastructure of an entire data center to create a single powerhouse with resources that can be allocated quickly and dynamically to any application in need.

Running SAP HANA TDI virtualized on vSphere delivers a software-defined deployment architecture to SAP HANA customers, which allows an easy transition between private, hybrid, or public cloud environments.

Using the SAP HANA platform with VMware vSphere virtualization infrastructure provides an optimal environment for achieving a unique, secure, and cost-effective solution and provides benefits that physical deployments of SAP HANA cannot provide, such as:

● Higher service-level agreements (SLAs) by leveraging vSphere vMotion to live migrate running SAP HANA instances to other vSphere host systems before hardware maintenance or when host resources are constrained.

● Standardized high availability solution based on vSphere High Availability.

● Built-in multitenancy support via SAP HANA system encapsulation in a virtual machine (VM).

● Easier hardware upgrades or migrations due to abstraction of the hardware layer.

● Higher hardware utilization rates.

● Automation, standardization and streamlining of IT operation, processes, and tasks.

● Cloud readiness due to software-defined data center (SDDC) SAP HANA deployments.

These features, available exclusively with virtualization, lower the total cost of ownership and help ensure the best operational performance and availability. FlexPod for SAP HANA TDI fully supports SAP HANA and related software in production environments, as well as SAP HANA features such as SAP HANA multitenant database containers (MDC) or SAP HANA system replication (HSR).

For more information about SAP HANA on VMware vSphere, see: https://launchpad.support.sap.com/#/notes/2937606

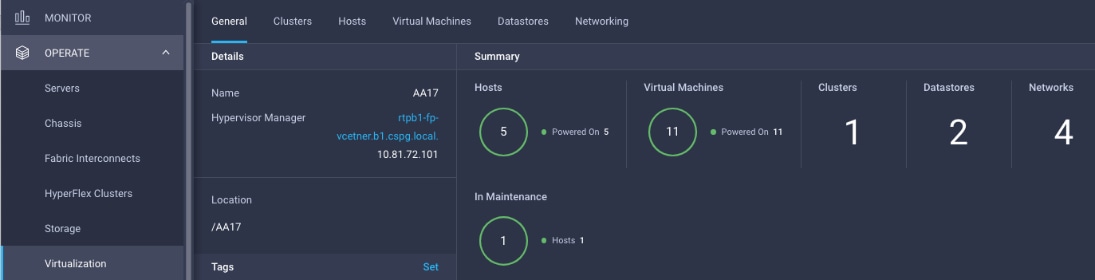

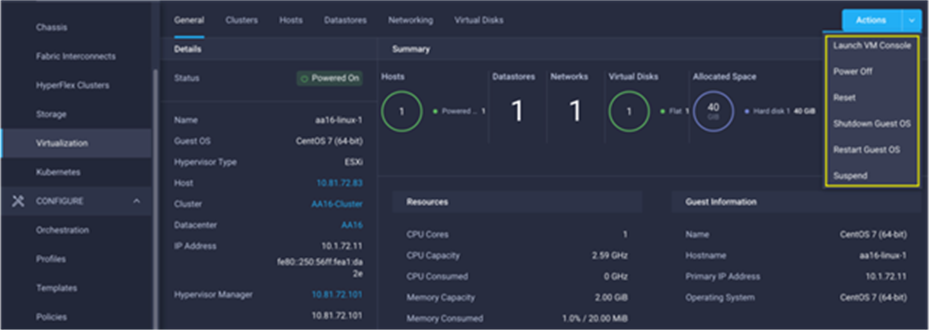

VMware vSphere vCenter

VMware vCenter Server provides unified management of all hosts and VMs from a single console and aggregates performance monitoring of clusters, hosts, and VMs. VMware vCenter Server gives administrators a deep insight into the status and configuration of compute clusters, hosts, VMs, storage, the guest OS, and other critical components of a virtual infrastructure. VMware vCenter manages the rich set of features available in a VMware vSphere environment.

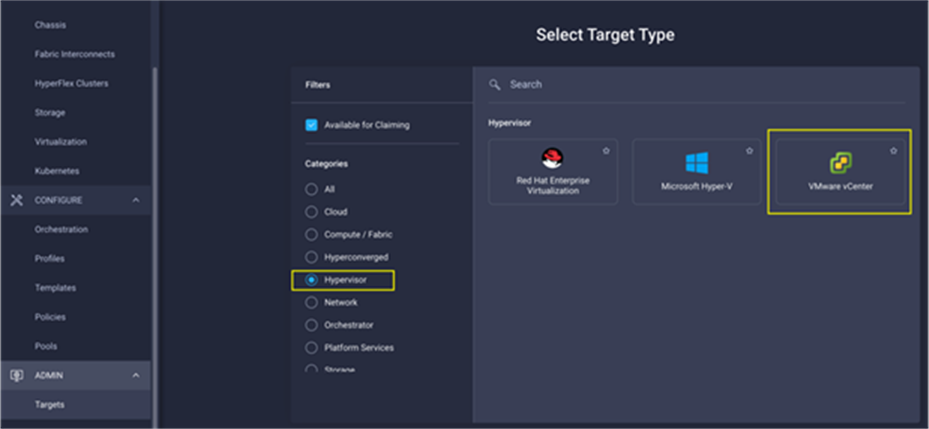

Cisco Intersight uses the device connector running within the Cisco Intersight Assist virtual appliance to communicate with the VMware vCenter, NetApp storage, and Cisco Nexus switches.

The device connector provides a secure way for connected targets to send information and receive control instructions from the Cisco Intersight portal using a secure internet connection. The integration brings the full value and simplicity of Cisco Intersight infrastructure management service to VMware hypervisor and NetApp ONTAP data storage environments.

Enterprise SAN and NAS workloads can benefit equally from the integrated management solution. The integration architecture enables you to use new management capabilities with no compromise in your existing VMware, NetApp ONTAP, or switch operations. IT users can manage heterogeneous infrastructure from a centralized Cisco Intersight portal. At the same time, the IT staff can continue to use VMware vCenter, NetApp Active IQ Unified Manager, and the Cisco switch interfaces for comprehensive analysis, diagnostics, and reporting of virtual, storage, and switching environments. The functionality provided through this integration is explained in the upcoming solution design section.

Red Hat Ansible Automation Platform

Red Hat Ansible Automation Platform provides an enterprise framework for building and operating IT automation across an organization. It is simple and powerful, allowing users to easily manage the various physical devices within FlexPod, including the provisioning of Cisco UCS bare-metal servers, Cisco Nexus and MDS switches, the NetApp AFF A400 storage array, and VMware vSphere. Ansible’s playbook-based automation provides a more secure and stable foundation for deploying end-to-end infrastructure automation.

This solution offers Ansible playbooks, available from a GitHub repository, that you can use to automate the FlexPod deployment.

Red Hat Enterprise Linux for SAP Solutions

Red Hat Enterprise Linux for SAP Solutions is an SAP-specific offering tailored to the needs of SAP workloads such as SAP S/4HANA and the SAP HANA platform. Furthermore, standardizing your SAP environment on Red Hat Enterprise Linux for SAP Solutions helps streamline operations and reduce costs by providing integrated smart management and high availability solutions as part of the offering.

Built on Red Hat Enterprise Linux (RHEL), the RHEL for SAP Solutions subscription offers the following additional components:

● SAP-specific technical components to support SAP S/4HANA, SAP HANA, and SAP Business Applications.

● High Availability solutions for SAP S/4HANA, SAP HANA, and SAP Business Applications.

● RHEL System Roles for SAP, which can be used to automate the configuration of a RHEL system to run SAP workloads.

● Smart Management and Red Hat Insights for lifecycle management and proactive optimization.

● Update Services and Extended Update Support.

SUSE Linux Enterprise Server for SAP Applications

SUSE Linux Enterprise Server (SLES) for SAP Applications is the leading Linux platform for SAP HANA, SAP NetWeaver, and SAP S/4HANA solutions, providing optimized performance, reduced downtime, and faster SAP landscape deployments.

The key features of SLES for SAP Applications are:

● Deploy SAP services faster with automation. Automate the installation of the complete SAP stack including the operating system (OS), SAP workloads, high availability (HA), and monitoring. Avoid delays with the saptune configuration of the OS and HA configuration, optimized for specific SAP applications.

● Reduce downtime and increase security. Reduce outages with HA configurations designed for SAP HANA and SAP applications. Eliminate downtime for Linux security updates with live kernel patching.

● Avoid errors with advanced monitoring. Monitor key metrics with Prometheus exporters for server and cloud instances, SAP HANA, SAP applications, and high availability cluster operations for visualization with SUSE Manager or other graphical display tools.

● Safeguard SAP Systems to prevent errors. Automatically discover and enable full observability of servers, SAP HANA databases, SAP S/4HANA and NetWeaver applications, and clusters with Trento in SAP domain language. Continuously check HA configurations, visualize potential problems, and apply recommended fixes.

SAP Application Monitoring with AppDynamics

AppDynamics is an Application Performance Monitoring (APM) Platform that helps you to understand and optimize the performance of your business, from its software to infrastructure to business journeys.

The AppDynamics APM Platform enables you to monitor and manage your entire application-delivery ecosystem, from the mobile app or browser client request through your network, backend databases and application servers and more. AppDynamics APM gives you a single view across your application landscape, letting you quickly navigate from the global perspective of your distributed application right down to the call graphs or exception reports generated on individual hosts.

AppDynamics has an agent-based architecture. Once the agents are installed, you receive a dynamic flow map, or topology, of your application. It uses the concept of traffic lights to indicate the health of your application (green is good, yellow is slow, and red indicates potential issues) with dynamic baselining. AppDynamics measures application performance based on business transactions, which essentially represent the key functionality of the application. When the application deviates from the baseline, AppDynamics captures and provides deeper diagnostic information to help you troubleshoot more proactively and reduce the mean time to repair (MTTR).
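The traffic-light idea can be illustrated with a minimal Python sketch. This is not the AppDynamics algorithm or API; it only assumes that a baseline is the mean of recent response times and that health is judged by how far a new sample exceeds that mean, measured in standard deviations. The function name `classify_response_time` and the thresholds `slow_factor` and `issue_factor` are hypothetical values chosen for illustration.

```python
from statistics import mean, pstdev


def classify_response_time(baseline_ms, sample_ms,
                           slow_factor=2.0, issue_factor=3.0):
    """Return 'green', 'yellow', or 'red' for one response-time sample.

    baseline_ms  -- recent response times (ms) that form the baseline
    slow_factor  -- hypothetical threshold (in standard deviations) for 'yellow'
    issue_factor -- hypothetical threshold (in standard deviations) for 'red'
    """
    if not baseline_ms:
        raise ValueError("baseline must contain at least one measurement")
    center = mean(baseline_ms)
    spread = pstdev(baseline_ms)
    # Only samples slower than the baseline count against health.
    excess = sample_ms - center
    if excess <= slow_factor * spread:
        return "green"
    if excess <= issue_factor * spread:
        return "yellow"
    return "red"


history = [100, 110, 90, 105, 95]  # illustrative response times in ms
for sample in (104, 120, 150):
    print(sample, classify_response_time(history, sample))
```

Because the baseline is computed from observed behavior rather than from a fixed limit, the same sample can be healthy for one business transaction and slow for another.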

For more information about SAP monitoring using AppDynamics, see: https://docs.appdynamics.com/display/SAP/SAP+Monitoring+Using+AppDynamics.

This chapter contains the following:

● SAP HANA System Implementation Options

● Interoperability and Feature Compatibility

● Network and SAN Design Considerations

● Cisco Nexus Ethernet Connectivity

● Cisco MDS SAN Connectivity – Fibre Channel Only Design

● NetApp AFF A400 – Storage Virtual Machine (SVM) Design

● VMware vSphere – ESXi Design

● Cisco Intersight Integration with VMware vCenter and NetApp Storage

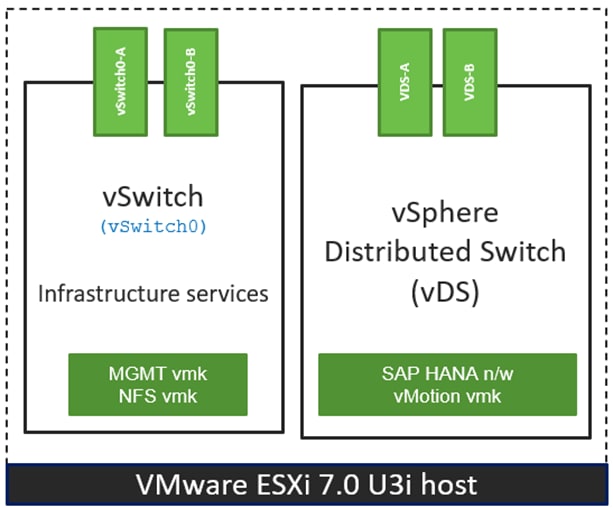

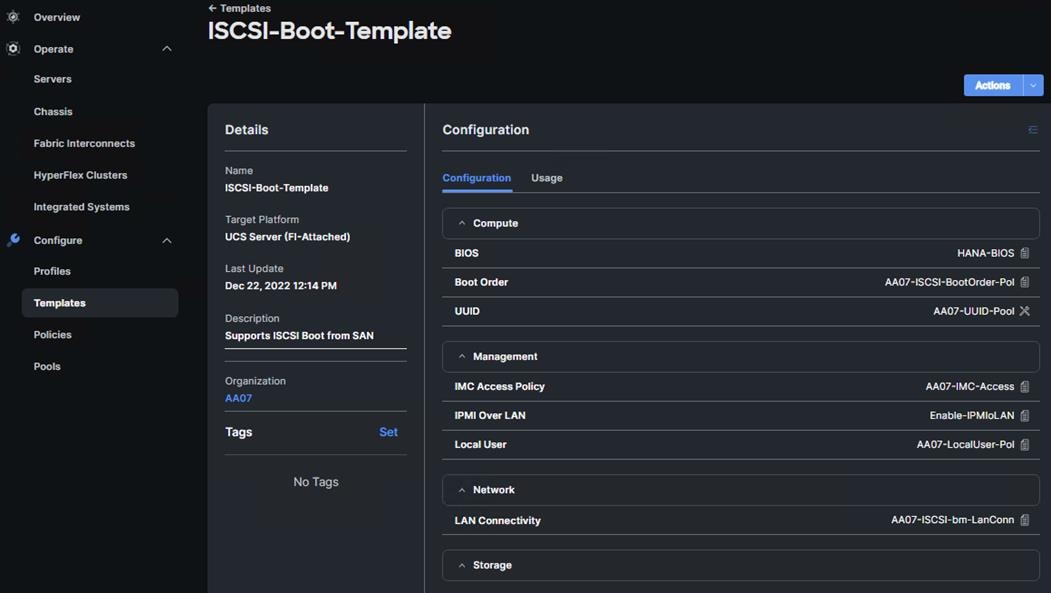

The FlexPod Datacenter with Cisco UCS X-Series and Intersight solution delivers a cloud-managed infrastructure solution on the latest Cisco UCS hardware for both bare-metal and virtualized implementations of SAP HANA. For the virtualized deployments, the VMware vSphere 7.0 U3i hypervisor is installed on the Cisco UCS X210c M6 Compute Nodes, which are configured for a stateless compute design using boot from SAN. NetApp AFF A400 provides the required storage infrastructure. The Cisco Intersight cloud-management platform is used to configure and manage the infrastructure. The solution requirements and design details are covered in this section.

This section explains the SAP HANA system requirements defined by SAP, followed by the reference architecture of the FlexPod Datacenter solution that provides the platform for SAP and SAP HANA.

The FlexPod Datacenter with Cisco UCS X-Series for SAP HANA TDI design meets the following general design requirements:

● Resilient design across all layers of the infrastructure with no single point of failure.

● Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed.

● Modular design that can be replicated to expand and grow as the needs of the business grow.

● Simplified design with ability to integrate and automate with external automation tools.

● Cloud-enabled design which can be configured, managed, and orchestrated from the cloud using GUI or APIs.

● The FlexPod solution is SAP HANA TDI certified to provide organizations with the flexibility to choose the best, cost-effective, and appropriate solution that meets their needs.

SAP HANA System Implementation Options

This section defines the basic requirements for the available implementation options with the Cisco UCS X210c M6-based FlexPod Datacenter.

Single SAP HANA System on a Single Node: Scale-Up (Bare Metal or Virtualized)

A scale-up TDI solution is the simplest of the installation types. All data and processes are located on the same server in this single-node solution. SAP HANA scale-up TDI solutions are based on Cisco UCS X-Series compute nodes and use the intended external storage.

The network requirements for this option depend on the client and application server connectivity, backup and storage connectivity, and optional system replication services access needs. At a minimum, running SAP HANA in a scale-up configuration requires an application server access network and storage networks with bandwidth sized for data, log, and shared file system access.

Co-existing SAP HANA and SAP Application Workloads

Scenarios that combine a bare-metal SAP HANA database installation with virtualized SAP application workloads are common in the datacenter. With SAP HANA TDI, it is possible to run SAP HANA on shared infrastructure that also hosts non-HANA workloads such as standard SAP applications. It is important to segregate storage I/O and network bandwidth appropriately so that the SAP HANA systems receive the resources they need to comfortably satisfy the storage and network KPIs for production support.

Interoperability and Feature Compatibility

The different hardware and software compatibility tools are available at the following links:

● Cisco UCS Hardware and Software Interoperability Matrix

● Cisco MDS and Nexus Interoperability Matrix

● NetApp Interoperability Matrix Tool

In addition to the hardware components, the software product features need to integrate fully with SAP solutions, which is confirmed by the corresponding SAP certifications and SAP notes:

● Certified and supported SAP HANA hardware

● SAP note 2235581 – SAP HANA: Supported Operating Systems

● SAP note 2937606 – SAP HANA on VMware vSphere 7.0 in production

To achieve the performance and reliability requirements for SAP HANA, it is vital to select the correct components and configuration for the SAP landscape.

Bare-metal Installation

The existing core-to-memory ratios for SAP HANA bare-metal environments depend on the Intel CPU architecture and the type of SAP data processing: online analytical processing (OLAP), online transaction processing (OLTP), or a mixed data processing system such as SAP Suite on/for HANA (SoH/S4H).

With these dependencies, the 2-socket Cisco UCS X210c M6 compute node, based on the Intel Ice Lake CPU architecture, can scale up to 2 TB DDR main memory for SAP Business Warehouse (BW) systems or 4 TB DDR main memory for SAP Suite systems.

With SAP expert sizing, mixed memory configurations of DDR memory and Intel Persistent Memory (PMem), using the AppDirect mode of the Intel PMem modules, can further increase the amount of memory available to the SAP HANA in-memory database.
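The DDR limits stated above can be captured in a small pre-check. The following Python sketch only encodes the two figures from this guide (2 TB for BW, 4 TB for SAP Suite on a 2-socket Cisco UCS X210c M6 compute node); the names `Workload`, `MAX_DDR_TB`, and `fits_bare_metal_node` are hypothetical, and the sketch deliberately ignores mixed DDR and PMem configurations, which require SAP expert sizing.

```python
from enum import Enum


class Workload(Enum):
    BW = "SAP Business Warehouse"
    SUITE = "SAP Suite on/for HANA"


# Maximum DDR main memory (TB) per 2-socket X210c M6 node, as stated in this guide.
MAX_DDR_TB = {Workload.BW: 2, Workload.SUITE: 4}


def fits_bare_metal_node(workload, required_memory_tb):
    """Return True if the DDR requirement fits a single 2-socket node."""
    if required_memory_tb <= 0:
        raise ValueError("required memory must be positive")
    return required_memory_tb <= MAX_DDR_TB[workload]


# Illustrative requests only; real sizing comes from the SAP sizing process.
for workload, size_tb in ((Workload.BW, 1.5), (Workload.BW, 3), (Workload.SUITE, 3)):
    print(workload.value, size_tb, "TB ->", fits_bare_metal_node(workload, size_tb))
```

A request that exceeds the limit does not necessarily rule out the platform; it indicates that the standard core-to-memory ratio no longer applies and that expert sizing is the appropriate next step.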

Virtualized Installation

Since SAP HANA TDI Phase 5, it is possible to perform a workload-based sizing (SAP note 2779240) which can deviate from the existing core-to-memory ratio if the following conditions are met:

● Certified SAP HANA hardware

● Validated hypervisor

● Deviations are within the upper and lower limits of the hypervisor
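The three conditions can be read as a simple conjunction. The Python sketch below assumes that the "upper and lower limits of the hypervisor" are expressed as vCPU and memory bounds per VM; that interpretation, the class and function names, and the numeric limits in the example are all hypothetical placeholders rather than VMware or SAP values. Consult SAP note 2779240 and the hypervisor-specific SAP note for the actual limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HypervisorLimits:
    min_vcpus: int
    max_vcpus: int
    min_memory_gib: int
    max_memory_gib: int


@dataclass(frozen=True)
class VmSizing:
    vcpus: int
    memory_gib: int


def workload_based_sizing_allowed(hardware_certified, hypervisor_validated,
                                  vm, limits):
    """Return True only if all three conditions listed above hold."""
    within_limits = (
        limits.min_vcpus <= vm.vcpus <= limits.max_vcpus
        and limits.min_memory_gib <= vm.memory_gib <= limits.max_memory_gib
    )
    return hardware_certified and hypervisor_validated and within_limits


# Placeholder limits for illustration only; not published hypervisor limits.
example_limits = HypervisorLimits(min_vcpus=8, max_vcpus=128,
                                  min_memory_gib=128, max_memory_gib=2048)
print(workload_based_sizing_allowed(True, True, VmSizing(64, 1024), example_limits))
print(workload_based_sizing_allowed(True, True, VmSizing(256, 1024), example_limits))
```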

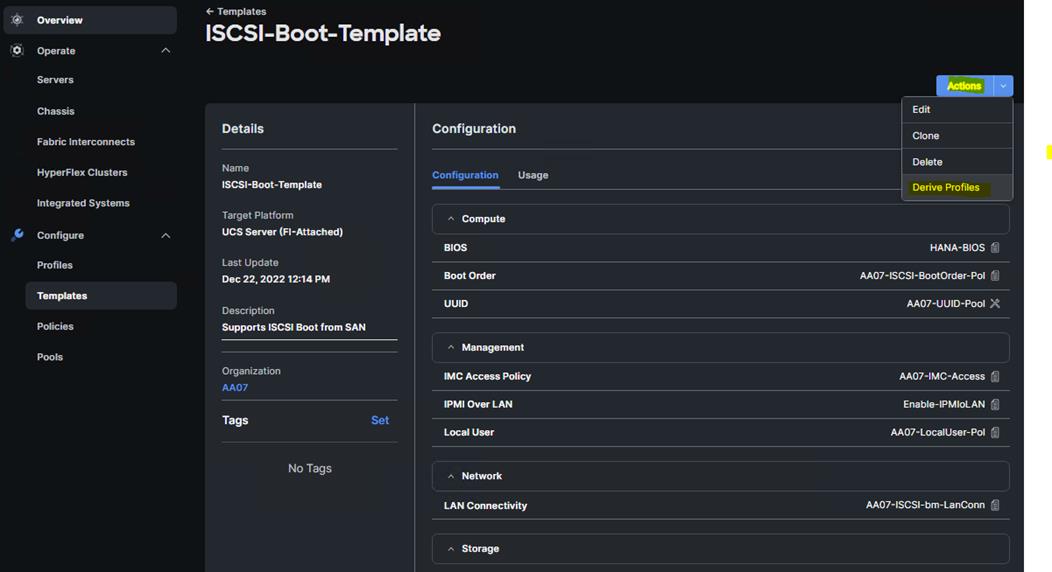

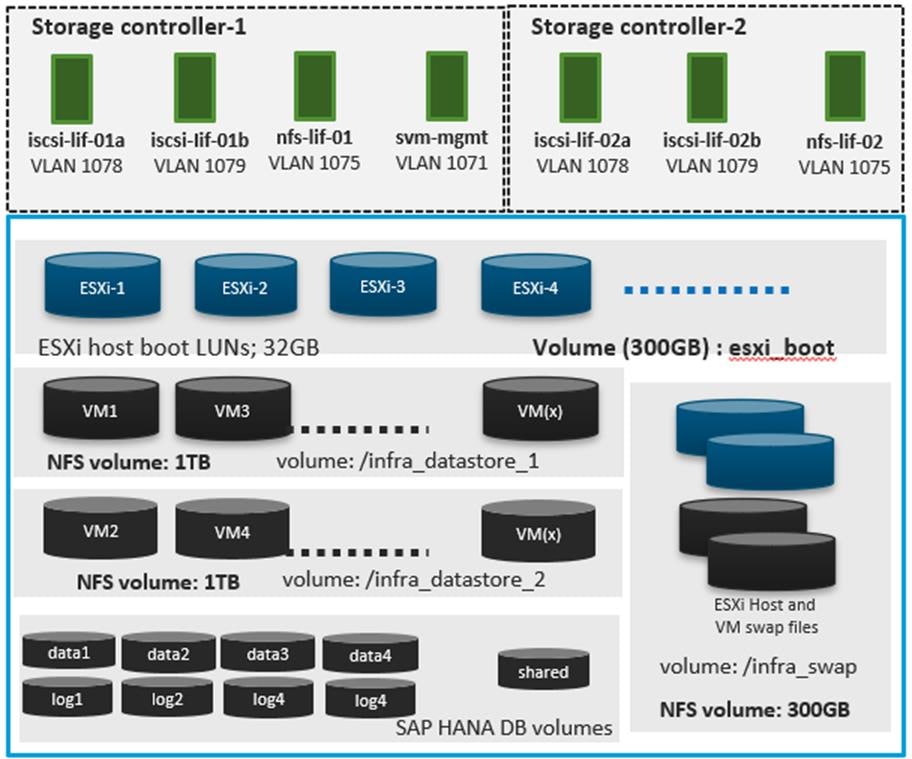

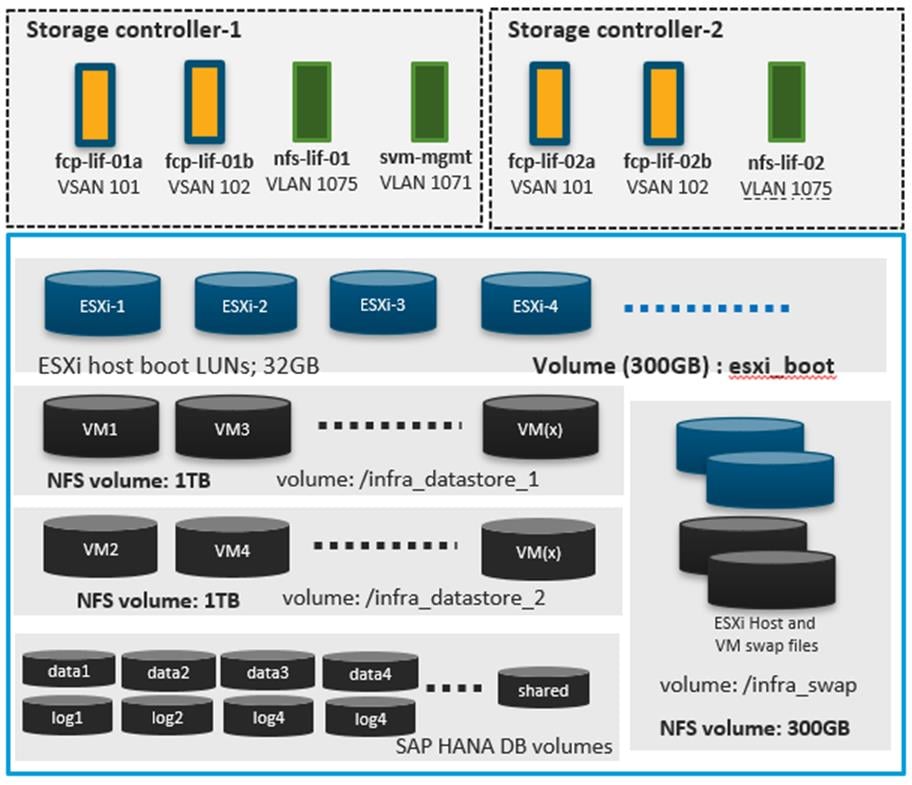

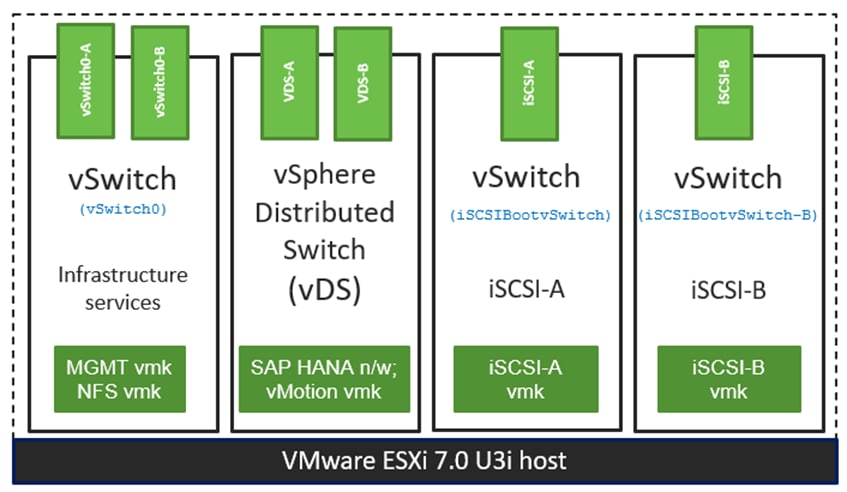

VMware vSphere and Intel Ice Lake CPUs are validated for SAP HANA starting with VMware vSphere 7.0 U3c. Sizing of the virtualized SAP HANA machines (vHANA) depends on the CPU model, the number of cores, and the number of CPU sockets.