FlexPod Datacenter with Red Hat OpenShift AI for MLOps Design and Deployment Guide

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

◦

Published: May 2025

![]()

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Artificial Intelligence (AI) and Machine Learning (ML) are driving unprecedented investment and innovation in enterprise data centers as businesses seek to leverage these technologies across a wide range of applications and quickly deliver them in production. This is particularly challenging considering that each application has its own unique set of requirements based on the specific ML use case it implements and the SLAs it must meet. The ML use case will impact several downstream decisions, such as the model type (generative or predictive or both), selection of model(s), and decisions around whether to build the models in-house or use pre-trained ones and may others. These applications will also utilize Enterprise data, which must be prepped and curated before they can be integrated, further complicating the process.

The delivery of these ML applications in production also requires extensive collaboration as well as integration of tools, processes and workflows between existing teams and newer ML and data teams. Once deployed, these applications, along with the models and data pipeline will need to be improved and maintained over the long term. Application teams will need to integrate the model delivery and data pipelines into their existing application delivery framework which may include continuous integration and continuous delivery (CI/CD), automation and other DevOps best practices. As a result, the application delivery pipeline — with integrated model and data pipelines — is significantly more challenging and complex. Scaling this environment both in terms of the number of models and the applications leveraging them, further adds to the challenges that an Enterprise faces. Gartner estimates that, on average, only 54 percent of AI projects make it from pilot to production, and so, despite the heavy investments in AI, delivering production applications with integrated models and data will continue to be a challenge for the foreseeable future.

To address these challenges and ensure successful outcomes, Enterprises need a strategic, holistic approach to accelerate and quickly operationalize AI/ML models for use by Enterprise applications. A crucial first step in addressing these challenges is implementing Machine Learning Operations (MLOps) to streamline model delivery. Unlike siloed, ad-hoc efforts that are inefficient, MLOps allows organizations to innovate, scale, and bring sustainable value to the business. MLOps uses DevOps principles to accelerate model delivery with consistency and efficiency. Like DevOps practices that integrate software development and IT operations with CI/CD to make the application delivery process more agile, MLOps bring DevOps principles used in application development to model delivery. Adopting MLOps is therefore essential for any organization seeking to scale AI/ML initiatives sustainably.

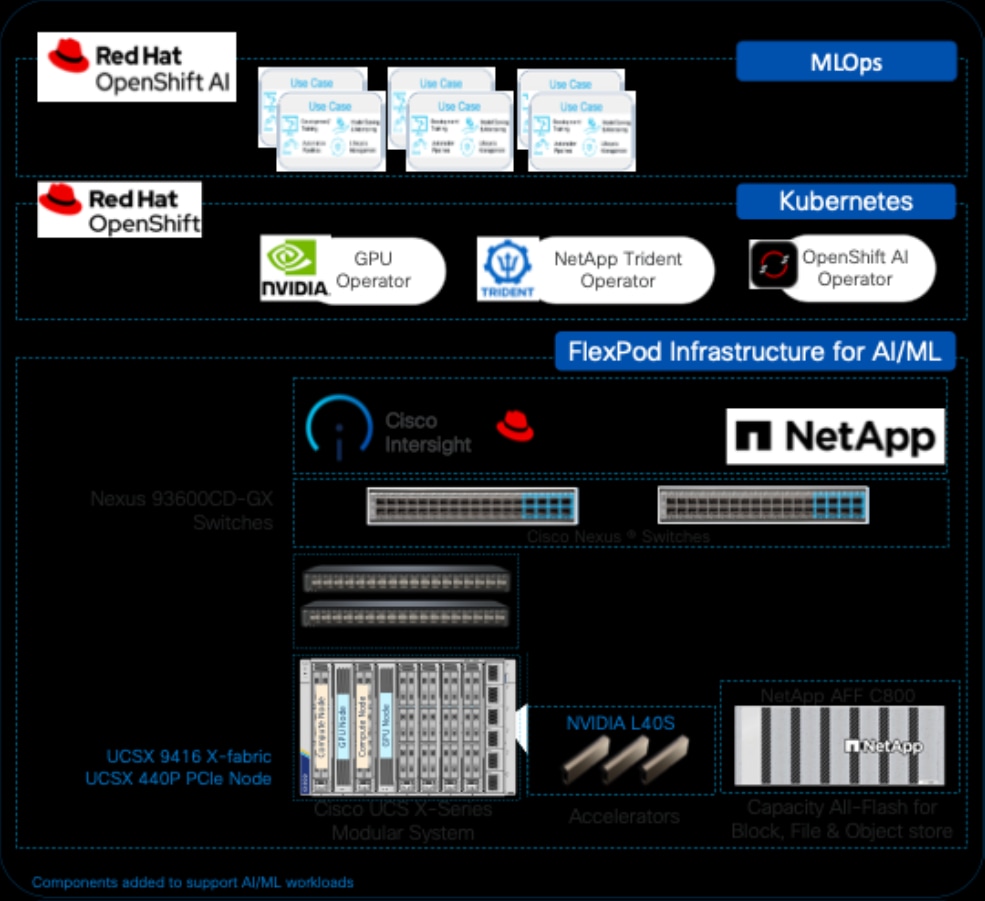

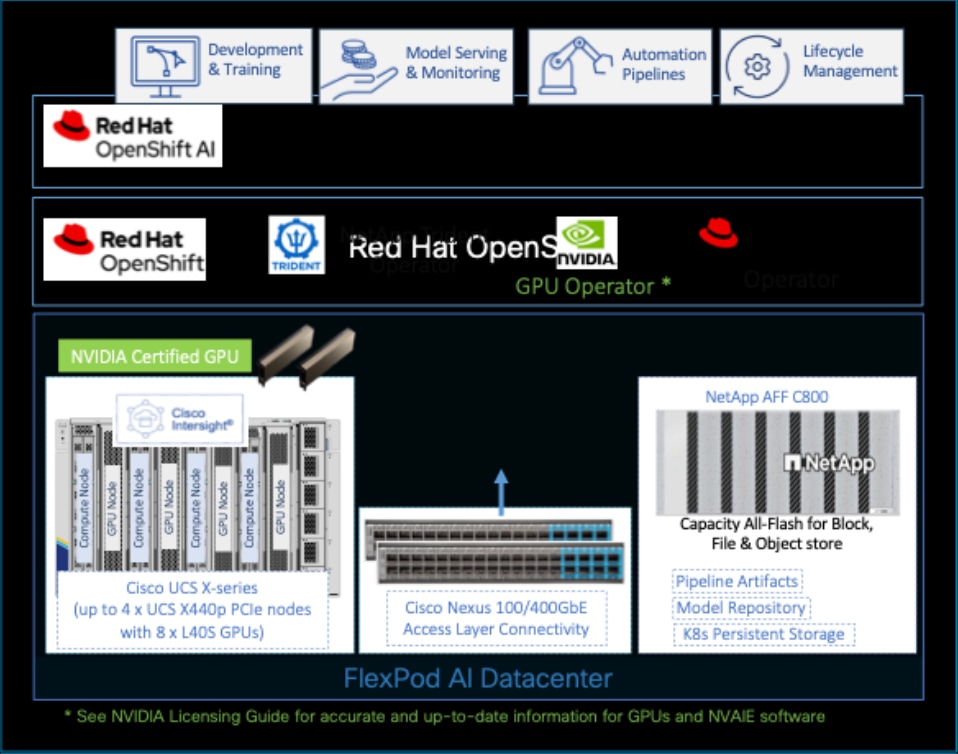

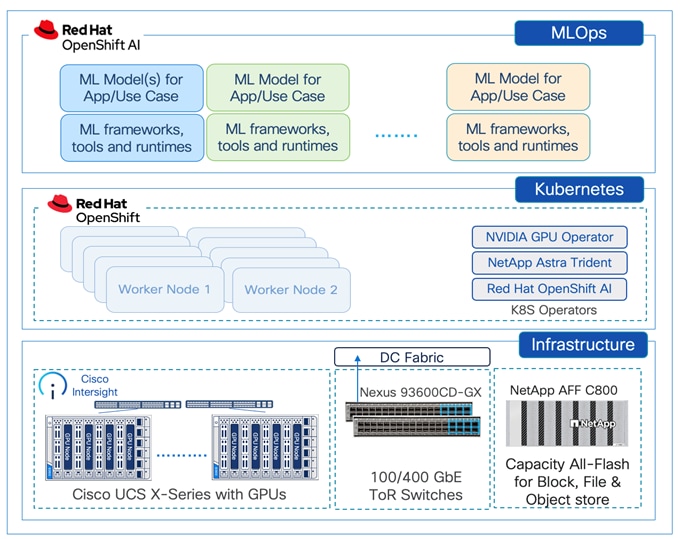

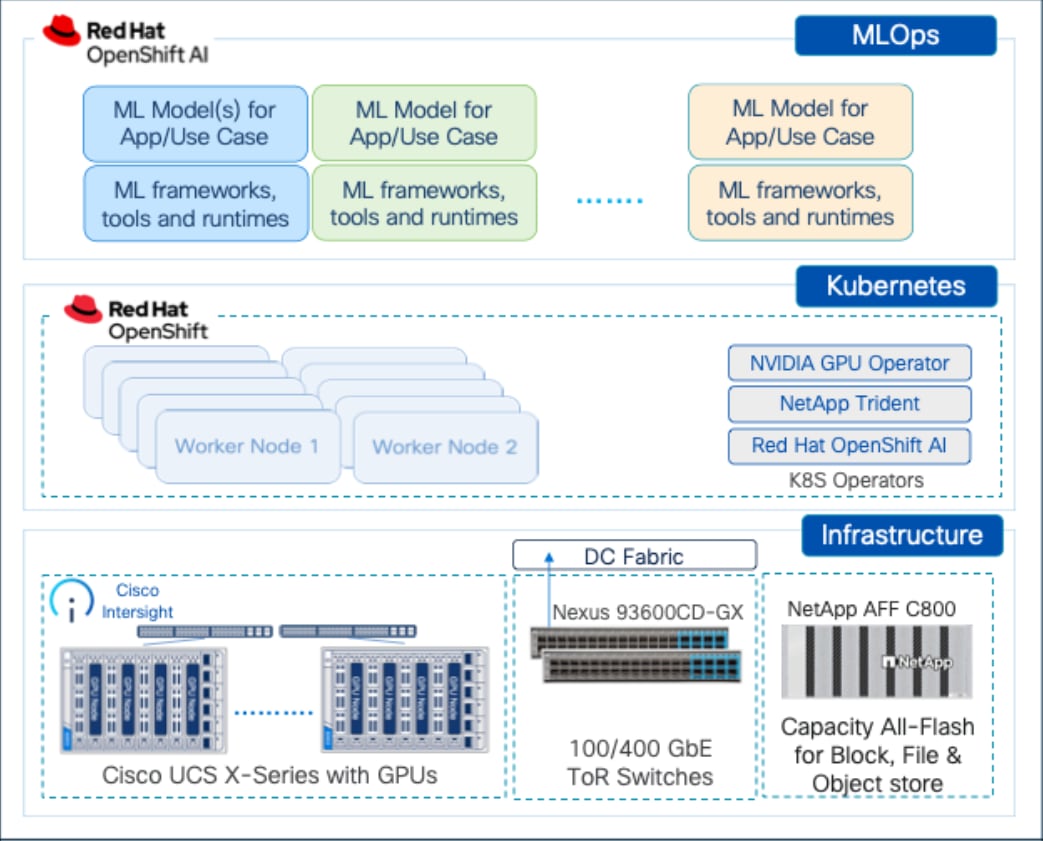

The solution in this design guide delivers a complete infrastructure stack with MLOps that Enterprises can deploy to efficiently manage, accelerate, and scale multiple AI/ML initiatives from incubation to production. The solution uses Red Hat OpenShift AI as the MLOps platform running on FlexPod baremetal infrastructure, (Cisco UCS X-Series, NetApp, and Cisco Nexus) with Red Hat Openshift to support Enterprise AI/ML initiatives at scale.

Audience

This document is intended for, but not limited to, sales engineers, technical consultants, solution architect and enterprise IT, and machine learning teams interested in learning how to design, deploy, and manage a production-ready AI/ML infrastructure for hosting machine learning models and AI-enabled applications.

Purpose of this document

This document serves as a reference architecture for MLOps using Red Hat OpenShift AI to accelerate AI/ML efforts and deliver models that application teams can use to build and deliver integrated ML applications. This document also provides design guidance for building a production-ready AI/ML infrastructure based on Red Hat OpenShift running on FlexPod datacenter infrastructure, leveraging optional NVIDIA AI Enterprise software and GPUs.

This chapter contains the following:

● Compute Unified Device Architecture

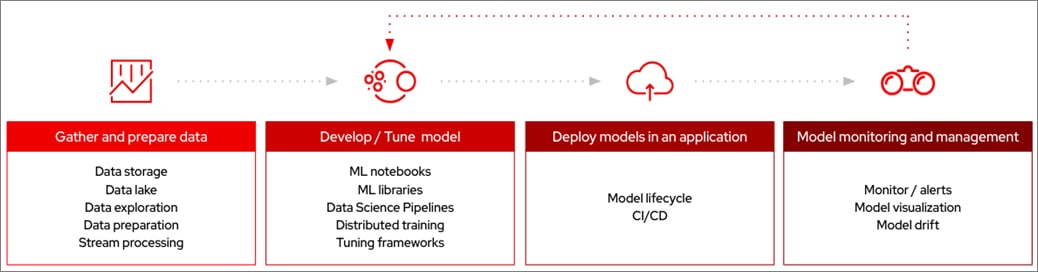

The end goal of an Enterprise’s AI/ML initiatives are to deploy ML-integrated applications that bring value to the business. Figure 1 illustrates the model delivery pipeline of an ML model before it can integrate into the application and the continuous integration and continuous delivery lifecycle that is required to maintain that model. The workflow also highlights integration of the data pipeline with the model pipeline before it is integrated into the application workflow.

Typically, for a given model, the life cycle will have the following stages which are then maintained for the lifetime of that ML model to ensure accuracy and minimize drift.

Data Pipeline – This is represented by the first box in the workflow. In this stage, data is collected and consolidated from various data sources. This is the Enterprise data that is necessary to for the ML use case in question – either for differentiation or to address the limitations that Large Language Models (LLMs) have in an Enterprise use case. Enterprises must continuously manage a steady-stream of continuously changing data from different sources and curate them for use in a model delivery pipeline. Data engineers may have to perform activities such as ingestion, exploration, labeling and preparation to deliver the curated data to the second stage in the above workflow, the model delivery or ML pipeline. This stage is part of the data delivery pipeline with a life cycle of its own and serves as input to the model workflow.

Model Delivery – This is represented by the second box in the workflow (see Figure 1). It includes the development and deployment stages of delivering a model in production, also know as model serving. The published models are made available using a standard interface (RPC, HTTPs) that applications teams can integrate into their CI/CD or development workflow to build and deliver the ML-enabled application. The model delivery pipeline is the focus of MLOps and typically involves the following stages. The next section details the ML pipelines and MLOps.

● Access ML-ready data – This is the output from the Gather and Prepare Data box in the above pipeline. It takes as input consolidated structured or unstructured data that has been cleaned, labeled, and formatted for use by ML engineers to evaluate, train and test the models. The data pipeline for AI delivers a curated dataset that serves as input to ML pipeline.

● Model Training – In this stage, data from the previous stage are used to develop, train, and test a new model from scratch or evaluate and re-train/customize a foundational model using Enterprise data. This stage involves any experimentation and evaluation work involved identify and select a model that best suits the needs of the use case in question. Other model customizations such as fine-tuning, and prompt engineering may also be done in this stage.

● Model Validation – In this stage, the model that has been selected and trained using Enterprise data is tested to ensure that it is ready for production deployment.

● Model Serving – In this stage, the model is deployed into production and available as an inferencing endpoint that applications can then use in their applications to implement the specific ML use case. Models are hosted on inferencing engines (for example, Virtual LLM) and delivered as an API endpoint accessible via a standard interface (RPC or HTTPS) by the application. The inference server or engine must meet the performance criteria of the application – however, the overall application design will determine how the inferencing engine hosting the model is deployed at scale, with resiliency.

● Automation – This stage represents the automation required to maintain the ML model with CI/CD to adapt to new data and other changes that maybe necessary based on feedback from its use in production.

● AI-Enabled Application Deployment – This stage represents the output of ML delivery pipeline which a published model for use in product. Application teams takes the delivered model and integrates it into their software development processes with CI/CD, and other DevOps and GitOps practices to deliver ML-enabled applications that implements a given ML use case. Models are continuously monitored in production with a feedback loop to continuously improve the model’s performance and accuracy.

Machine Learning Operations (MLOps) are a set of best practices to streamline and accelerate the delivery of machine learning (ML) models. The delivery of these ML models for production use or model serving is key to operationalizing AI so that Enterprises can build and deliver ML-enabled applications. Once delivered, the maintenance of the models are critical for ensuring the accuracy and reliability of model predictions and other outputs. MLOps leverages DevOps and GitOps principles to enable continuous retraining, integration, and delivery. Delivering ML-enabled applications involves new roles such as data scientists and ML engineers that weren’t part of traditional software/application development. These new roles will also require new tools and environments to do their work. As such, MLOps platforms will typically include a wide ecosystem of tools, technologies, libraries, and other components, including automation capabilities.

Automation is integral to MLOps to accelerate efforts that minimizes technical debt, enabling Enterprises to deliver and maintain models at scale. MLOps pipelines also need to continuously retrain models to keep up with everchanging data to ensure model performance. MLOps brings consistency and efficiency to the model delivery process.

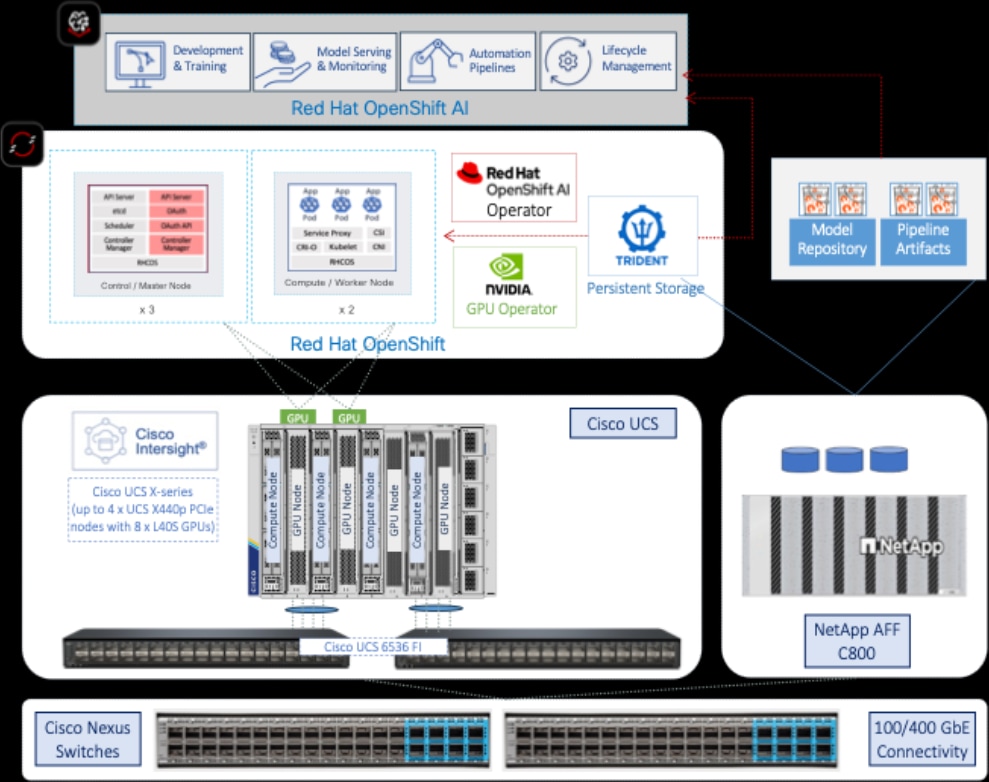

In this solution, MLOps is provided by Red Hat OpenShift AI serves as the MLOps platform to streamline, scale and accelerate model delivery, with Red Hat OpenShift providing cloud-native (Kubernetes) cluster management and orchestration.

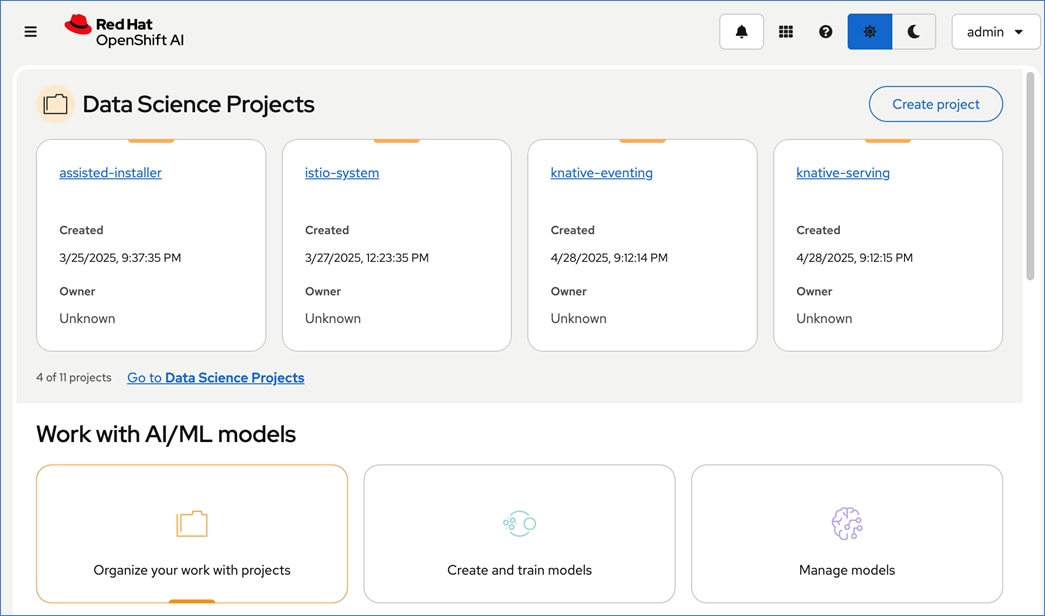

Red Hat OpenShift AI (previously known as Red Hat OpenShift Data Science or RHODS) is a flexible and scalable platform for AI/ML and MLOps that enables enterprises to create and deliver AI-enabled applications at scale. Built using open-source technologies and Red Hat OpenShift as the foundation, OpenShift AI provides a trusted, operationally consistent environment for Enterprise teams to experiment, serve models, and deliver ML-enabled applications. Red Hat OpenShift AI running on OpenShift provides a single enterprise-grade application platform for ML models and applications that use them. Data scientists, engineers, and app developers can collaborate in a single destination that promotes consistency, security, and scalability. OpenShift administrators that manage existing application environment can continue to do the same for OpenShift AI and ML workloads. This also allows application, ML, and data science teams to focus on their areas of work and spend less time managing the infrastructure.

Red Hat OpenShift AI includes key capabilities to accelerate the delivery of AI/ML models and applications in a seamless, consistent manner, at scale. The platform provides the development environment, tools, and frameworks that data scientists and machine learning teams need to build, deploy, and maintain AI/ML models in production. OpenShift AI streamlines the ML model delivery process from development to production deployment (model serving) with efficient life cycle management and pipeline automation. From the OpenShift AI console, AI teams can select from a pre-integrated, Red Hat supported set of tools and technologies or custom components that are enterprise managed, providing the flexibility that teams need to innovate and operate with efficiency. OpenShift AI also makes it easier for multiple teams to collaborate on one or more efforts in parallel.

OpenShift AI is compatible with leading AI tools and frameworks such as TensorFlow, PyTorch, and can work seamlessly with NVIDIA GPUs, to accelerate AI workloads. It provides pre-configured Jupyter notebook images with popular data science libraries. Red Hat tracks, integrates, tests, and supports common AI/ML tooling and model serving on RedHat OpenShift. The latest release of Red Hat OpenShift AI delivers enhanced support for predictive and generative AI model serving and improves efficiency of data processing and model training.

Other key features of OpenShift AI include:

● Collaborative Workspaces: OpenShift offers a collaborative workspace where teams can work together and collaborate on one or more models in parallel.

● Development Environments: ML teams can use Jupyter notebooks as a service using pre-built images, common Python libraries and open-source technologies such as TensorFlow and PyTorch to work on their models. In addition, administrators can add customized environments for specific dependencies or for additional IDEs such as RStudio and VSCode.

● Model Serving at scale: Multiple Models can be served for integration into intelligent AI-enabled applications using inferencing servers (for example, Intel OpenVINO, NVIDIA Triton) using GPU or CPU resources provided by the underlying OpenShift cluster without writing a custom API server. These models can be rebuilt, redeployed, and monitored by making changes to the source notebook.

● Support for enhanced model serving with the ability to use multiple model servers to support both predictive and GenAI, including support for KServe, a Kubernetes custom resource definition that orchestrates serving for all types of models, vLLM and text generation inference server (TGIS), serving engines for LLMs and Caikit-nlp-tgis runtime, which handles natural language processing (NLP) models and tasks. Enhanced model serving allows users to run predictive and GenAI on a single platform for multiple use cases, reducing costs and simplifying operations. This enables out-of-the-box model serving for LLMs and simplifies the surrounding user workflow.

● Innovate with open-source capabilities: Like Red Hat OpenShift, OpenShift AI integrates with open-source tools and leverages a partner ecosystem to enhance the capabilities of the platform, minimizing vendor lock-ins.

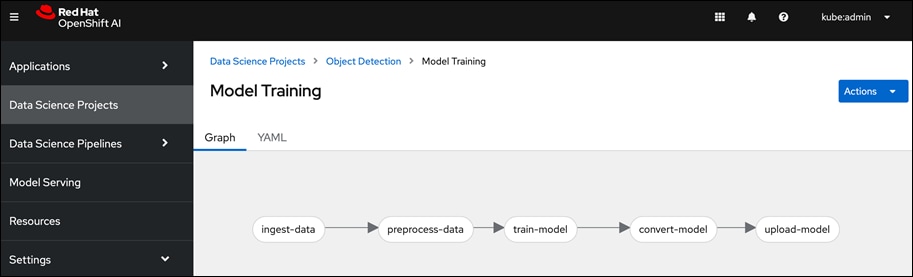

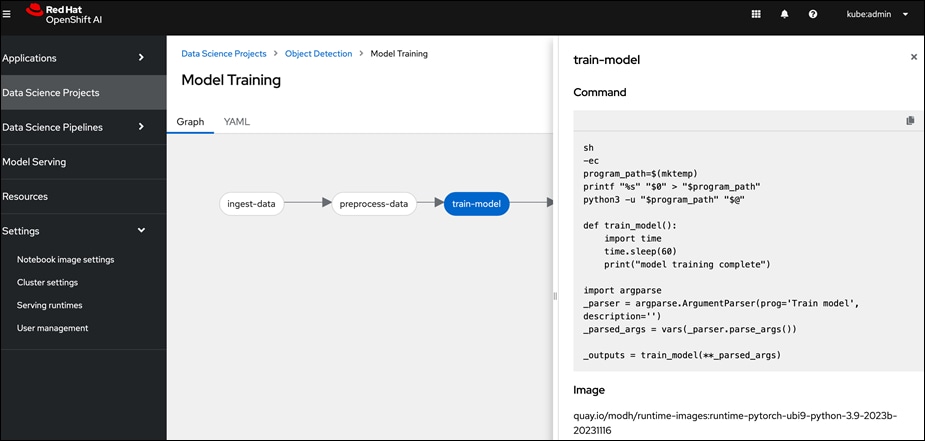

● Data Science Pipelines for GUI-based automation using OpenShift pipelines: OpenShift AI leverages OpenShift pipelines to automate ML workflow using an easy to drag-and-drop web UI as well as code driven development of pipelines using a Python SDK.

● Model monitoring visualizations for performance and operational metrics, improving observability into how AI models are performing.

● New accelerator profiles enable administrators to configure different types of hardware accelerators available for model development and model-serving workflows. This provides simple, self-service user access to the appropriate accelerator type for a specific workload.

By using Red Hat OpenShift AI, enterprises can manage and maintain AI/ML models and the applications using the same models on a single, unified platform and simplify overall management of the environment.

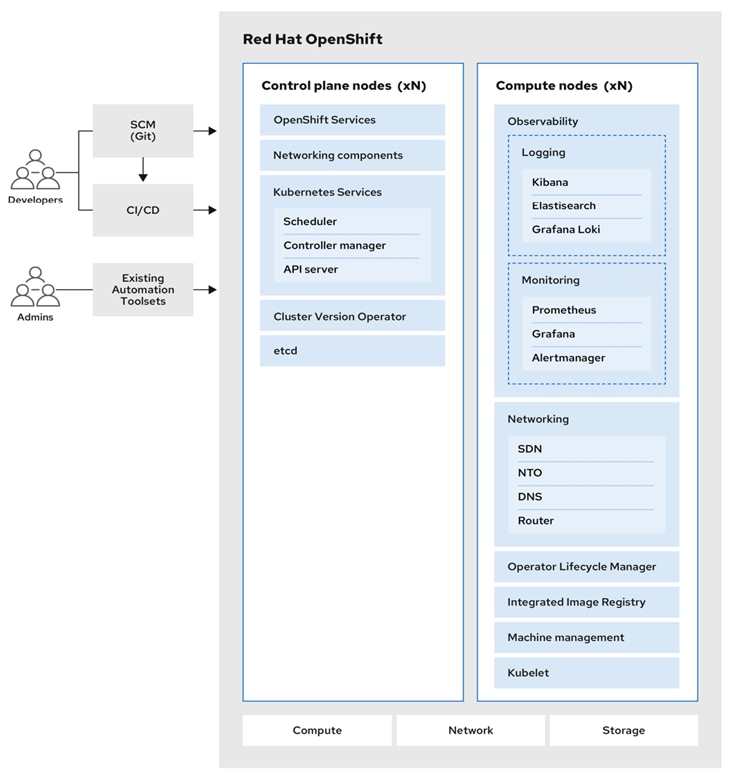

Red Hat OpenShift is a leading enterprise application platform that brings together a comprehensive set of tools and services that streamline the entire application lifecycle, from development to delivery and maintenance of application workloads. It allows organizations to modernize their applications and includes multiple advanced open-source capabilities that are tested and integrated with the underlying certified Kubernetes environment, such as Red Hat OpenShift Serverless, Red Hat OpenShift Pipelines and Red Hat OpenShift GitOps. Red Hat OpenShift offers a complete set of services that helps developers code applications with speed, flexibility, and efficiency. OpenShift is designed to support anywhere from a few machines and applications to thousands of machines and applications and allows enterprises to extend their application environment from on-prem to public cloud and multi-cloud environments.

Figure 2 shows the high-level architecture of Red Hat Openshift.

Red Hat OpenShift uses Red Hat Enterprise Linux CoreOS (RHCOS), a container-oriented operating system that is specifically designed for running containerized applications and provides several tools for fast installation, Operator-based management, and simplified upgrades. RHCOS includes:

● Ignition, which is used as a first-boot system configuration for initially bringing up and configuring machines.

● CRI-O, a Kubernetes native container runtime implementation that integrates closely with the operating system to deliver an efficient and optimized Kubernetes experience. CRI-O provides facilities for running, stopping, and restarting containers.

● Kubelet, the primary node agent for Kubernetes that is responsible for launching and monitoring containers.

Note: The control plane nodes in Red Hat OpenShift must run RHCOS, but Red Hat Enterprise Linux (RHEL) can be used in worker or compute nodes.

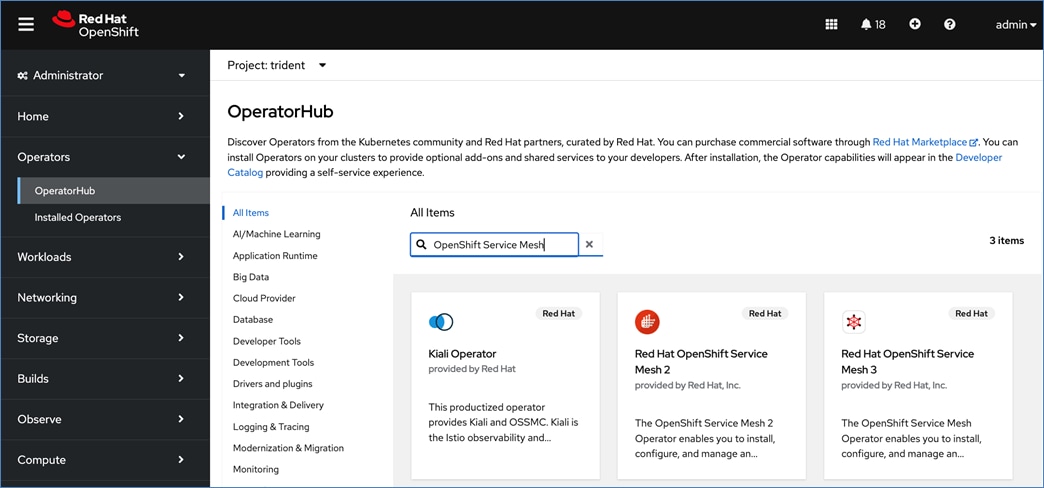

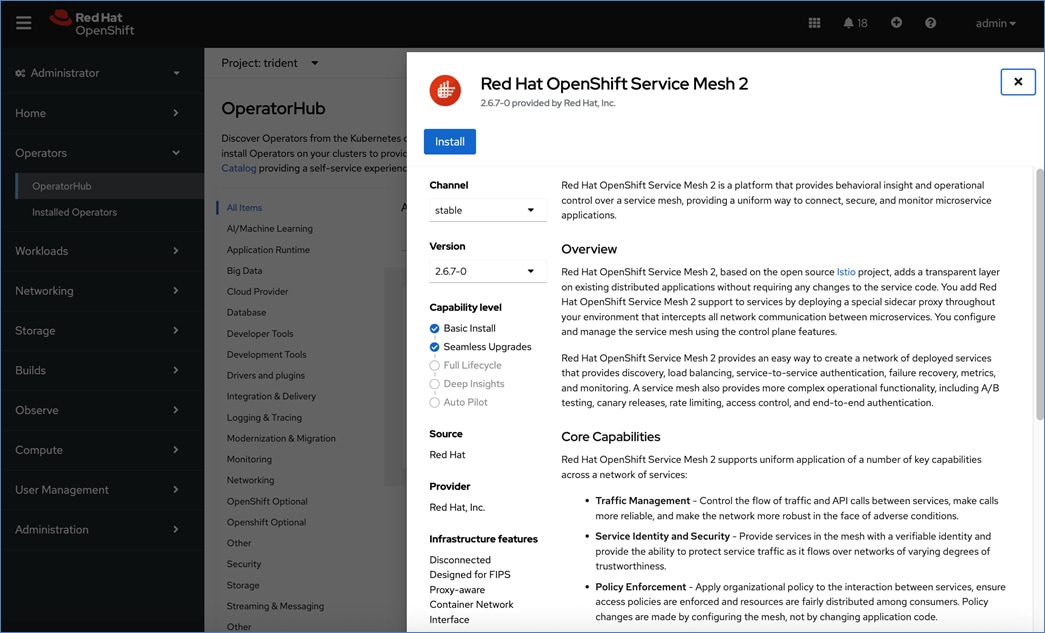

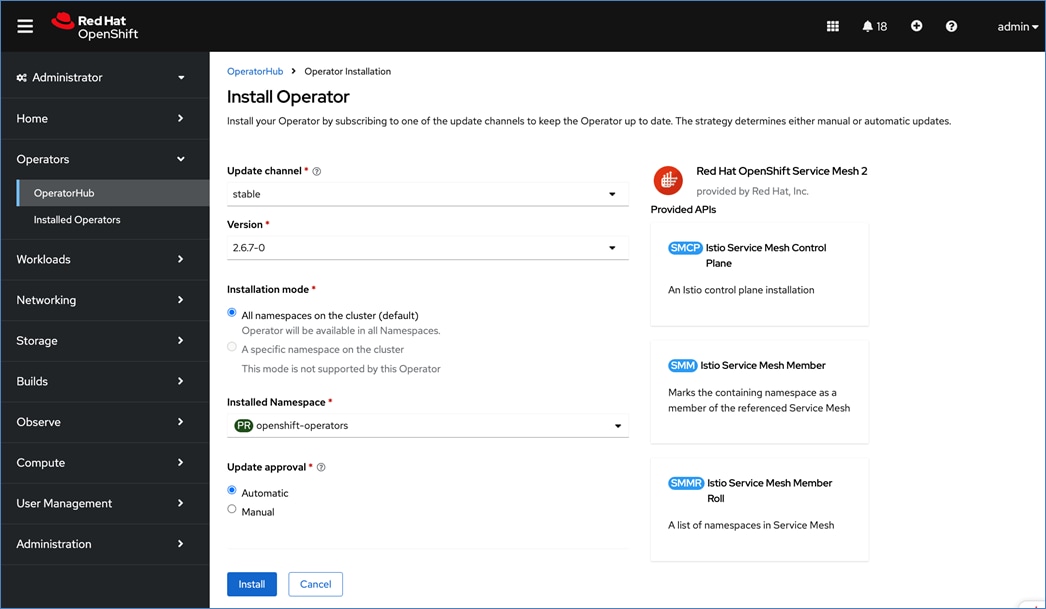

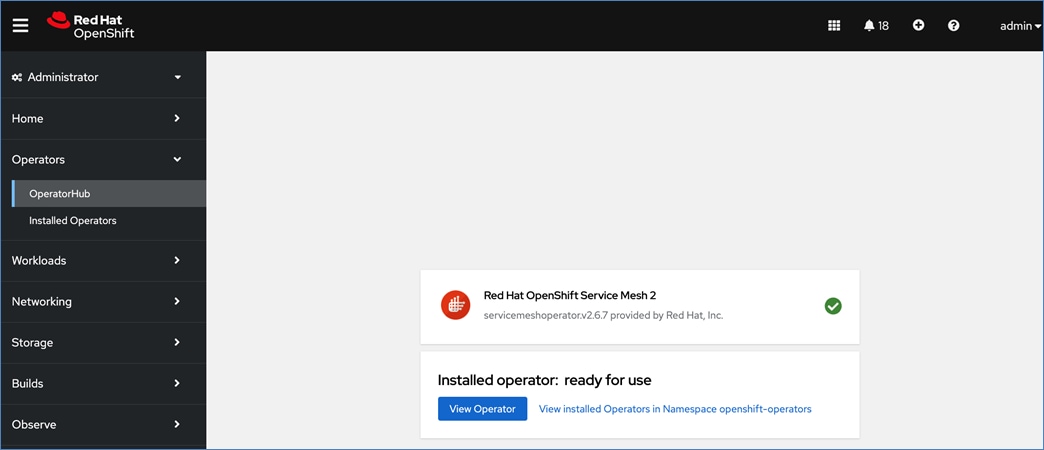

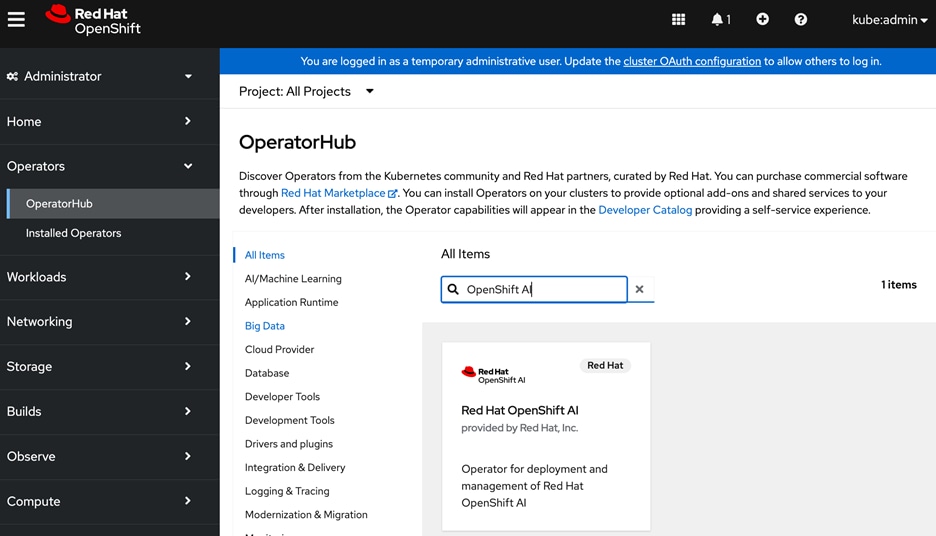

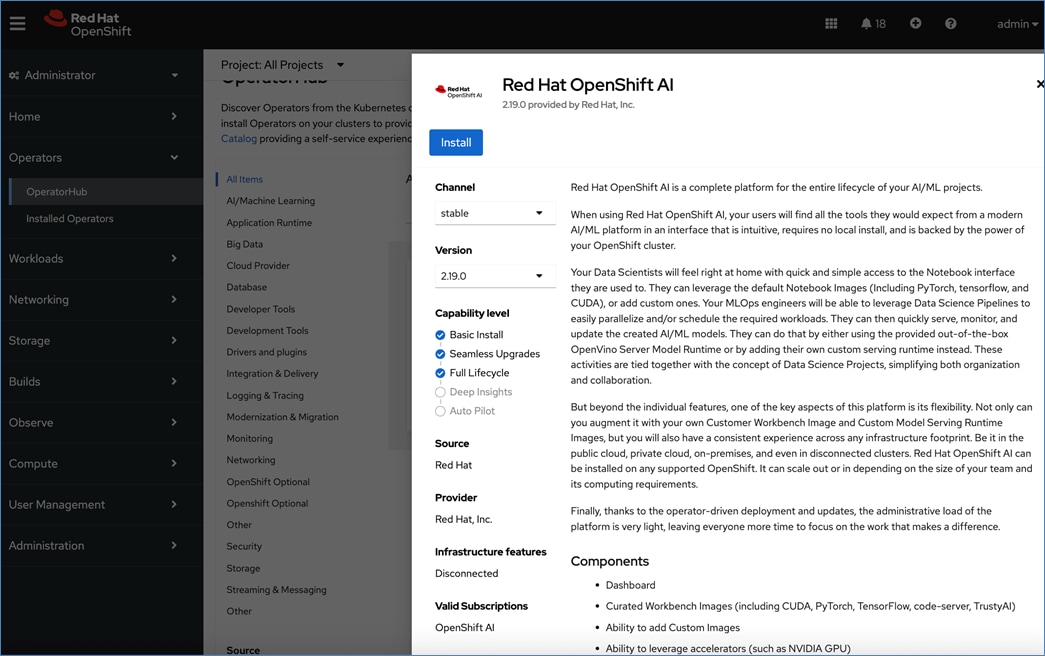

AI/ML workloads, like many modern applications, uses containers and Kubernetes (K8s) orchestration as the de facto development environment for model development and AI-enabled applications. Kubernetes offer several benefits, but one key attribute is its extensibility. Kubernetes provides an Operator framework that vendors and open-source communities can use to develop and deploy self-contained operators that extend the capabilities of the K8s cluster. These operators generally require minimum provisioning and are usually self-managed with automatic updates (unless disabled) and handle life-cycle management. Kubernetes operators are probably the closest thing to an easy-button in infrastructure provisioning (short of IaC). In the Red Hat OpenShift environment that this solution uses, it is even easier to deploy and use operators. Red Hat OpenShift provides an embedded OperatorHub, directly accessible from the cluster console. The Red Hat OperatorHub has hundreds of Red Hat and community certified operators that can be deployed with a few clicks.

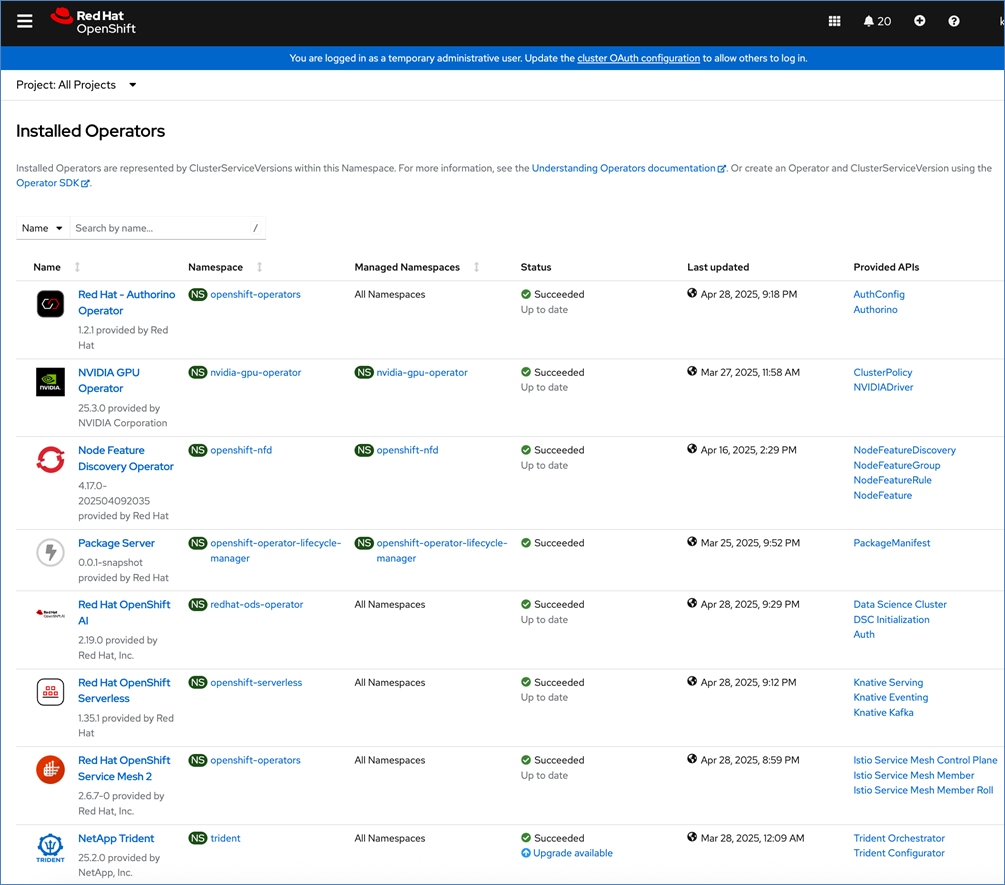

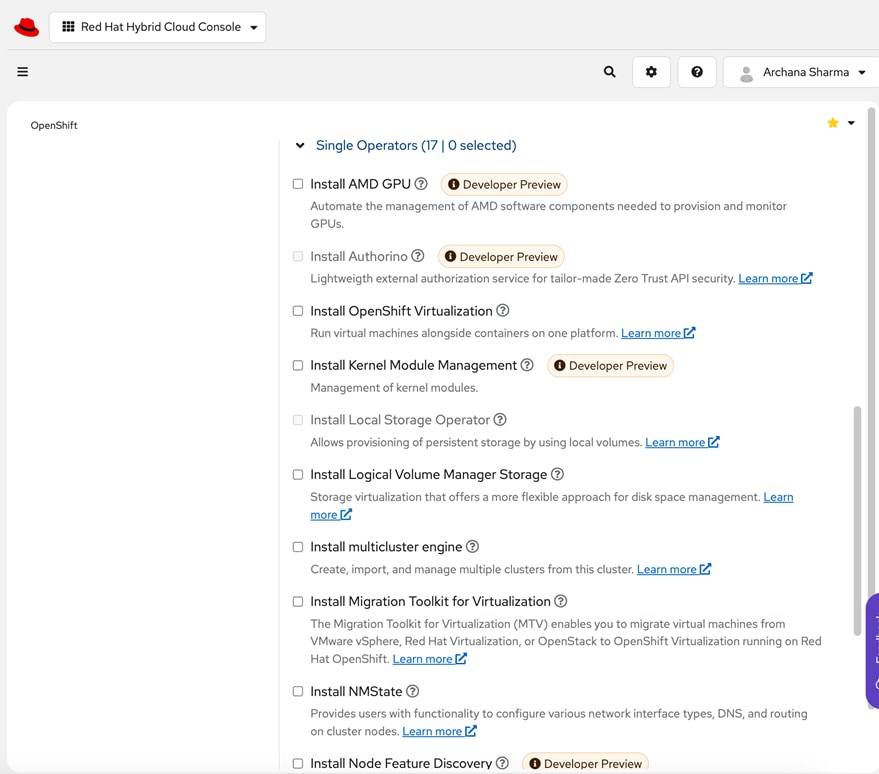

To support AI/ML workloads and OpenShift AI, the following Red Hat OpenShift operators are deployed in this solution to enable GPU, storage, and other resources:

● Red Hat Node Feature Discovery Operator to identify and label hardware resources (for example, NVIDIA GPUs)

● NVIDIA GPU Operator deploys and manages the GPU resource on a Red Hat OpenShift cluster (for example, Guest OS vGPU drivers)

● NetApp Trident Operator for managing container-native persistent storage required for model delivery, backed by NetApp ONTAP storage – file, block, and object store.

● Red Hat OpenShift AI Operator deploys OpenShift AI on any OpenShift cluster

For more information on Red Hat OpenShift Operators, see: https://www.redhat.com/en/technologies/cloud-computing/openshift/what-are-openshift-operators.

NVIDIA AI Enterprise (NVAIE) is a comprehensive suite of enterprise-grade, cloud-native software, hardware, and support services offered by NVIDIA for artificial intelligence (AI) and machine learning (ML) applications. NVIDIA describes NVAIE as the “Operating System” for enterprise AI. NVIDIA AI Enterprise includes key enabling technologies for rapid deployment, management, and scaling of AI workloads. It includes NVIDIA GPUs, Kubernetes Operators for GPUs, virtual GPU (vGPU) technology, and an extensive software library of tools and frameworks optimized for AI that make it easier for enterprises to adopt and scale AI solutions on NVIDIA infrastructure.

NVAIE can be broadly categorized into Infrastructure Management, AI Development, and Application Frameworks optimized for AI. For more details on NVAIE, see: https://www.nvidia.com/en-us/data-center/products/ai-enterprise/.

This solution optionally leverages the NVIDIA AI Enterprise Software suite (along with other complementary partner components) to extend and operationalize a robust, production-ready FlexPod AI infrastructure to support a range of use cases.

NVIDIA’s GPU operator for Red Hat OpenShift provides seamless deployment and management of GPU resources and CUDA libraries for optimal use of GPU to support various AI/ML use cases. NVIDIA AI Enterprise can be used to extend those capabilities even further.

NVAIE is a licensed software from NVIDIA that must be certified to run on the infrastructure servers. For more information on the licensing and certification, click the links below:

● Certification: https://www.nvidia.com/en-us/data-center/products/certified-systems/

For additional information on NVIDIA AI Enterprise, go to: https://www.nvidia.com/.

The NVIDIA L40S GPU Accelerator is a full height, full-length (FHFL), PCI Express Gen4 graphics solution based on the NVIDIA Ada Lovelace architecture. The NVIDIA L40S GPU delivers acceleration for the next generation of AI-enabled applications—from gen AI, LLM inference, small-model training and fine-tuning to 3D graphics, rendering, and video applications.

Note: NVIDIA L40S GPUs does not support Multi-Instance GPUs or MIG that allows a physical GPU to be partitioned into multiple, smaller instances.

Table 1. NVIDIA L40S – Technical Specification

| NVIDIA L40S PCIe 40GB |

|

| GPU Architecture |

NVIDIA Ada Lovelace architecture |

| GPU Memory |

48GB GDDR6 with ECC |

| Memory Bandwidth |

864GB/s |

| Interconnect Interface |

PCIe Gen4 x16: 64GB/s bidirectional |

| NVIDIA Ada Lovelace Architecture-Based CUDA® Cores |

18,176 |

| NVIDIA Third-Generation RT Cores |

142 |

| NVIDIA Fourth-Generation Tensor Cores |

568 |

| RT Core Performance TFLOPS |

209 |

| FP32 TFLOPS |

91.6 |

| TF32 Tensor Core TFLOPS |

183 I 366* |

| BFLOAT16 Tensor Core TFLOPS |

362.05 I 733* |

| FP16 Tensor Core |

362.05 I 733* |

| FP8 Tensor Core |

733 I 1,466* |

| Peak INT8 Tensor TOPS |

733 I 1,466* |

For more information, see: https://resources.nvidia.com/en-us-l40s/l40s-datasheet-28413

Compute Unified Device Architecture

Compute Unified Device Architecture (CUDA) is a parallel computing platform and application programming interface (API) model from NVIDIA that enables general purpose computing on GPUs that were originally designed for graphics. CUDA excels in complex mathematical computations and data processing tasks that can run on thousands of GPU cores in parallel, making it well suited for compute-intensive AI/ML use cases. It also provides memory management to enable efficient data transfers between the CPU and GPU. NVIDIA’s CUDA Toolkit provides developers with the software tools and libraries for developing GPU-accelerated applications that harness the parallel processing capabilities of the GPUs. It includes a compiler, debugger, runtime libraries, and other tools that simplify the process of GPU programming.

The FlexPod Datacenter is a reference architecture for hosting a wide range of enterprise workloads on both virtualized baremetal infrastructure in enterprise data centers. Cisco Validated Designs (CVDs) for FlexPod Datacenter solutions provide design and implementation guidance as well as Infrastructure as Code (IaC) automation using Red Hat Ansible to accelerate enterprise data center infrastructure deployments. The designs incorporate product, technology, and industry best practices to deliver a highly-available, scalable, and flexible architecture.

The key infrastructure components for compute, network, and storage in a FlexPod Data center solution are:

● Cisco Unified Computing System (Cisco UCS) Infrastructure

● Cisco Nexus 9000 switches

● Cisco MDS 9000 SAN switches (when using Fibre Channel SAN)

● NetApp AFF/FAS/ASA storage

FlexPod designs are flexible and can be scaled-up or scaled-out without sacrificing feature/functionality. FlexPod solutions are built and validated in Cisco labs to ensure interoperability and minimize risk in customer deployments. CVD solutions enables enterprise IT teams to save valuable time that would otherwise be spent on designing and integrating the solution in-house.

All FlexPod CVDs are available in the Cisco Design Zone here: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/flexpod-design-guides.html

The FlexPod AI solution in this document serves as a foundational infrastructure architecture to deliver a robust, scalable design for compute, networking, storage and GPU options for Enterprise AI initiatives. Using this solution, enterprises can quickly start on their AI journey and scale incrementally as the enterprise needs grow.

The FlexPod AI design adds the following components to the foundational architecture documented in the foundational FlexPod Datacenter Baremetal infrastructure solution.

● Cisco UCS X440p PCIe nodes, capable of hosting up to four GPUs (only some models). Each PCIe node is paired with a Cisco UCS compute node, specifically the Cisco UCS X210c M7 server but a Cisco UCS X410c can also be used with UCS. Connectivity between the compute node and PCIe node requires a PCIe mezzanine card on the compute node and a pair of X-Fabric modules on the Cisco UCS X9508 server chassis.

● NVIDIA GPUs (L40S-48GB) for accelerating AI/ML workloads and model delivery pipeline.

● Red Hat OpenShift for Kubernetes based container orchestration and management.

● NetApp Trident for persistent storage (backed by NetApp ONTAP Storage).

● Red Hat OpenShift AI for MLOps.

● Object store provided by NetApp AFF storage

Note: Cisco UCS-X supports a range of CPU and GPU options from Intel, AMD and NVIDIA for accelerating AI/ML workloads.

Figure 3 shows the components validated in this solution for hosting AI/ML workloads.

The following sections provide a brief overview of the new components added in this solution to support AI/ML workloads.

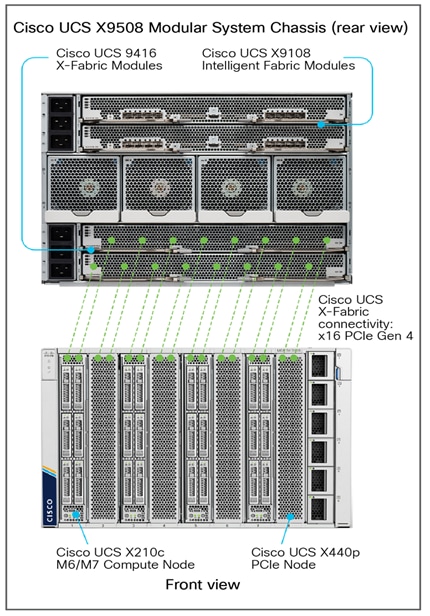

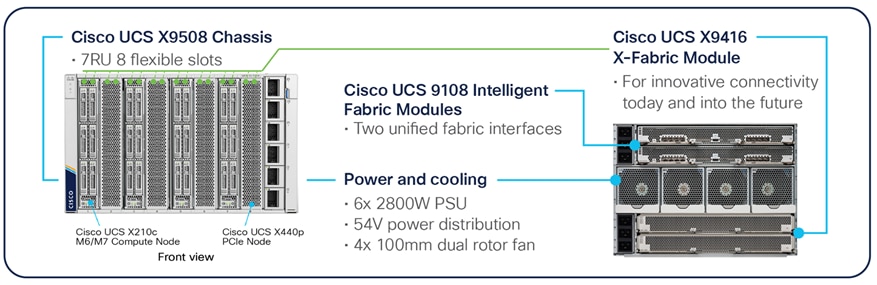

Cisco UCS-X 9508 Server Chassis

Cisco UCS X9508 is a 7RU chassis with eight slots that can support up 4 PCIe nodes, each paired with a compute node for a total of 4 compute nodes as shown in Figure 4.

PCIe or GPU nodes must be deployed in a slot adjacent to the compute node which will enable the compute node to automatically recognize and use the adjacent PCIe node as an extension of itself. To enable this connectivity between GPUs in PCIe nodes and the compute nodes, the following additional components are required to enable PCIe Gen4 connectivity between compute and GPU nodes.

● PCIe mezzanine card on the compute node (UCSX-V4-PCIME)

● Pair of Cisco UCS X9416 X-Fabric Modules deployed to the back of Cisco UCS X-series server chassis (UCSX-F-9416) - see Figure 5

The X-Fabric modules provide a redundant PCIe 4 fabric to enable PCIe connectivity between compute and PCIe/GPU nodes.

The Cisco UCS X9508 Chassis is midplane-less design, provides fewer obstructions for better airflow. The vertically oriented Cisco UCS X210c or X410c compute nodes and the PCIe nodes connect directly to horizontally oriented X-Fabric modules, located at the back of the chassis (see Figure 5). The innovate design enables Cisco UCS X-Series to easily upgrade to newer technologies and hardware without requiring forklift upgrades.

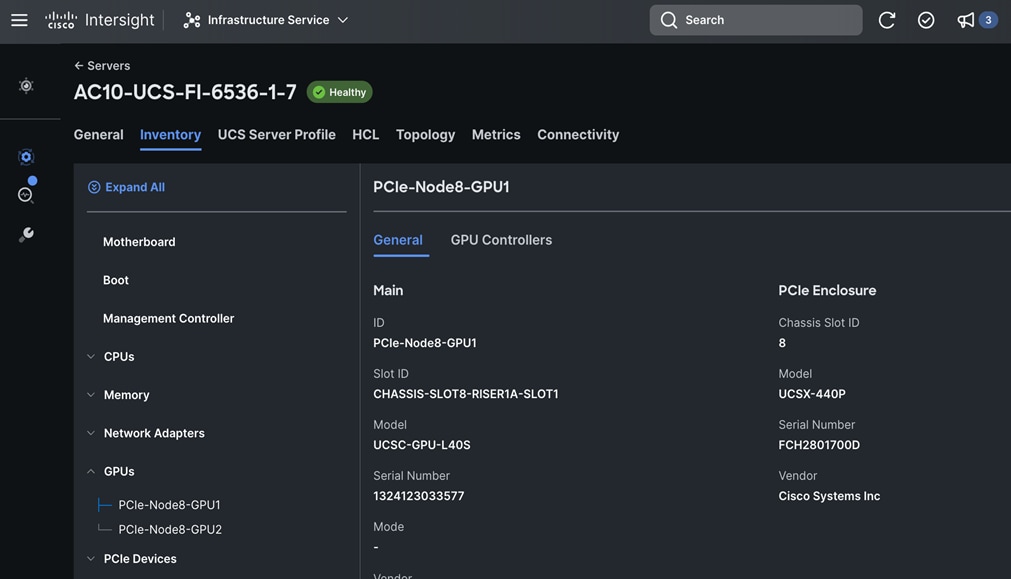

Cisco UCS X440p PCIe Node and GPUs

The Cisco UCS X440p PCIe node (UCSX-440P-U) is the first PCIe node supported on a Cisco UCS X-Series fabric. It is part of the Cisco UCS-X Series modular system, managed using Cisco Intersight and integrated to provide GPU acceleration for workloads running on Cisco UCS compute (X210c, X410c) nodes. GPUs can be installed on the PCIe node and then paired with a compute node in an adjacent slot to support AI/ML, VDI, and other workloads that require GPU resources. GPUs. The PCIe node requires riser cards to support different GPU form factors, either full height, full length (FHFL) or half height, half length (HHHL) GPUs as outlined below:

● Riser Type A: Supports 1 x 16 PCIe connectivity for FHFL GPUs (UCSX-RIS-A-440P)

● Riser Type B: Supports 1 x 8 PCIe connectivity for HHHL GPUs (UCSX-RIS-B-440P)

Each PCIe node supports a maximum of two riser cards, with each riser card capable of supporting up to:

● 1 x 16 FHFL dual slot PCIe cards, one per riser card for a total of two FHFL cards

● 1 x 8 HHHL single slot PCIe card, two per riser card for a total of four HHHL cards

Note: Each PCIe node must have the same type of risers and GPUs. You cannot mix and match riser types and GPU types in the same PCIe node.

The NVIDIA L40S-48GB GPU (UCSX-GPU-L40S) deployed in this solution is a FHFL GPU and uses the Type A riser card.

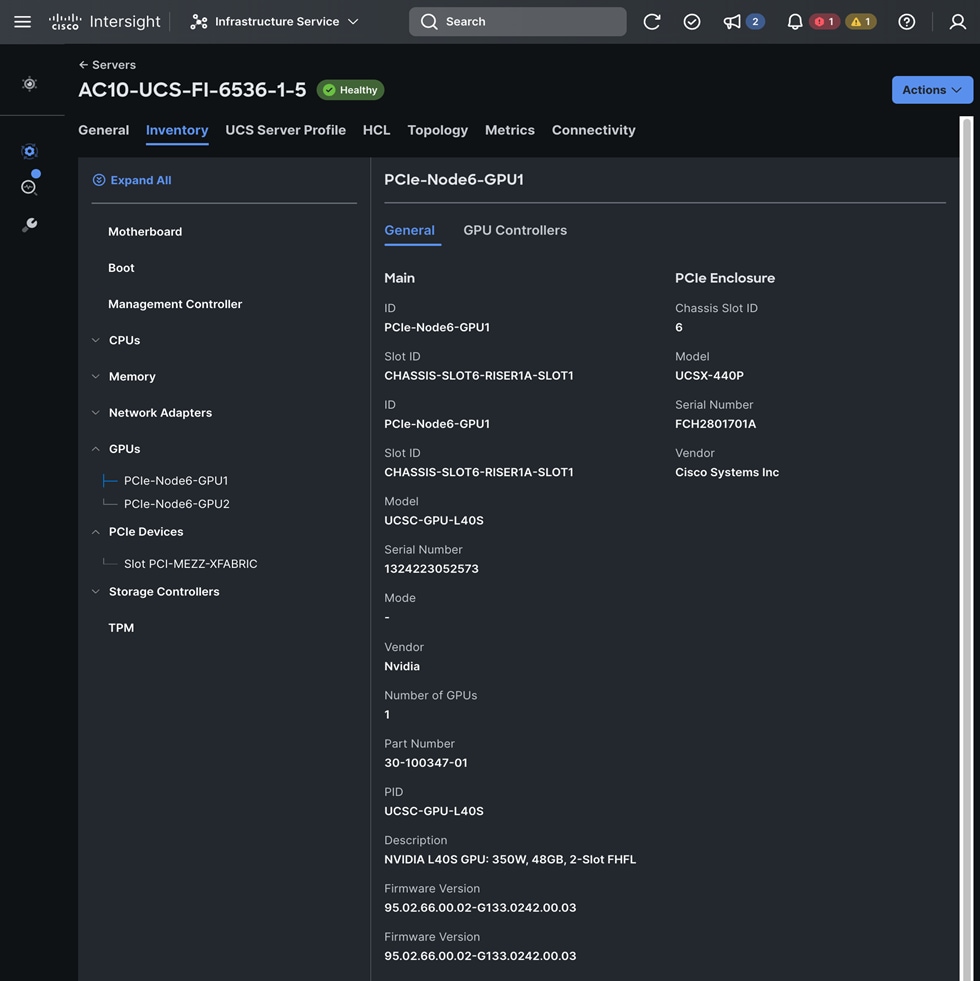

PCIe or GPU nodes must be deployed in a slot adjacent to the compute node and the compute node will automatically recognize and use the adjacent PCIe node as an extension of itself in Cisco Intersight as shown below. The compute node does require a mezzanine card for connecting to the PCIe fabric and node hosting GPUs as shown in the figure below. The figure also shows PCIe node is in slot 6 with two L40S GPUs, as indicated by the node name: PCIe-Node6-GPU-1, -2 with compute node in slot 5.

As stated earlier, each PCIe node allows you to add up to four HHHL GPUs to accelerate workloads running on either a Cisco UCS X210c or Cisco UCS X410c compute node. This provides up to 16 GPUs per chassis. As of the publishing of this document, the following GPU models are supported on a Cisco UCS X440p PCIe node.

Table 2. GPU Options on Cisco UCS X-Series Server System

| GPU Model |

GPUs Supported per PCIe node |

GPUs Supported on |

| NVIDIA H100 NVL Tensor Core GPU |

Max of 2 |

Max of 8 |

| NVIDIA H100 Tensor Core GPU |

Max of 2 |

Max of 8 |

| NVIDIA L40S GPU |

Max of 2 |

Max of 8 |

| NVIDIA L4 Tensor Core GPU |

Max of 4 |

Max of 16 |

| NVIDIA A100 Tensor Core GPU |

Max of 2 |

Max of 8 |

| NVIDIA A16 GPU |

Max of 2 |

Max of 8 |

| NVIDIA A40 GPU |

Max of 2 |

Max of 8 |

| NVIDIA T4 Tensor Core GPUs |

Max of 4 |

Max of 24* |

| Intel® Data Center GPU Flex 140 |

Max of 4 |

Max of 24* |

| Intel Data Center GPU Flex 170 |

Max of 2 |

Max of 8 |

| AMD MI210 GPU |

Max of 2 |

Max of 8 |

*Using the optional front mezzanine GPU adapter (UCSX-X10C-GPUFM-D) on Cisco UCS X210c compute node.

If additional GPUs are needed, up to two GPUs can be added using an optional GPU front mezzanine card on the Cisco UCS X210c or Cisco UCS X410c compute nodes. Only two GPU models are currently supported in this configuration but enables up to have up to 24 GPUs per chassis. Product IDs for enabling GPU acceleration components on Cisco UCS-X are summarized in Table 3.

Table 3. Product IDs for GPU acceleration using PCIe Node

| Component |

PID |

| UCS X-Series Gen 4 PCIe node |

UCSX-440P-U |

| Riser A for 1x dual slot GPU per riser, 440P PCIe node

● Riser 1A (controlled with CPU1 on UCS X210c)

● Riser 2A (controlled with CPU2 on UCS X210c)

|

UCSX-RIS-A-440P |

| Riser B for 2x single slot GPUs per riser, 440P PCIe node

● Riser 1B (controlled with CPU1 on UCS X210c)

● Riser 2B (controlled with CPU2 on UCS X210c)

|

UCSX-RIS-B-440P |

| UCS PCI Mezz card for X‐Fabric connectivity |

UCSX-V4-PCIME |

| UCS X-Fabric module for UCS-X9508 chassis |

UCSX-F-9416 |

| NVIDIA A16 GPUs, 250W, 4x16GB |

UCSX-GPU-A16 |

| NVIDIA A40 GPUs RTX, PASSIVE, 300W, 48GB |

UCSX-GPU-A40 |

| NVIDIA T4 Tensor Core GPUs 75W, 16GB |

UCSX-GPU-T4-16 |

| NVIDIA H100 Tensor Core GPU, 350W, 80GB (2-slot FHFL GPU) |

UCSX-GPU-H100-80 |

| NVIDIA L40 GPU, 300W, 48GB |

UCSX-GPU-L40S |

| NVIDIA L4 Tensor Core GPU, 70W, 24GB |

UCSX-GPU-L4 |

For NVIDIA GPUs, see NVIDIA AI Enterprise Software Licensing guide for up-to-date licensing and support information: https://resources.nvidia.com/en-us-nvaie-resource-center/en-us-nvaie/nvidia-ai-enterprise-licensing-pg?lb-mode=preview

NetApp Trident for Kubernetes Persistent Storage

NetApp Trident is an open-source, fully supported storage orchestrator for containers created by NetApp. It has been designed from the ground up to help you meet your containerized applications persistence demands using industry-standard interfaces, such as the Container Storage Interface (CSI). With Trident, microservices and containerized applications can take advantage of enterprise-class storage services provided by the full NetApp portfolio of storage systems. In a FlexPod environment, Trident is utilized to allow end users to dynamically provision and manage persistent volumes for containers backed by FlexVols and LUNs hosted on ONTAP-based products such as NetApp AFF and FAS systems.

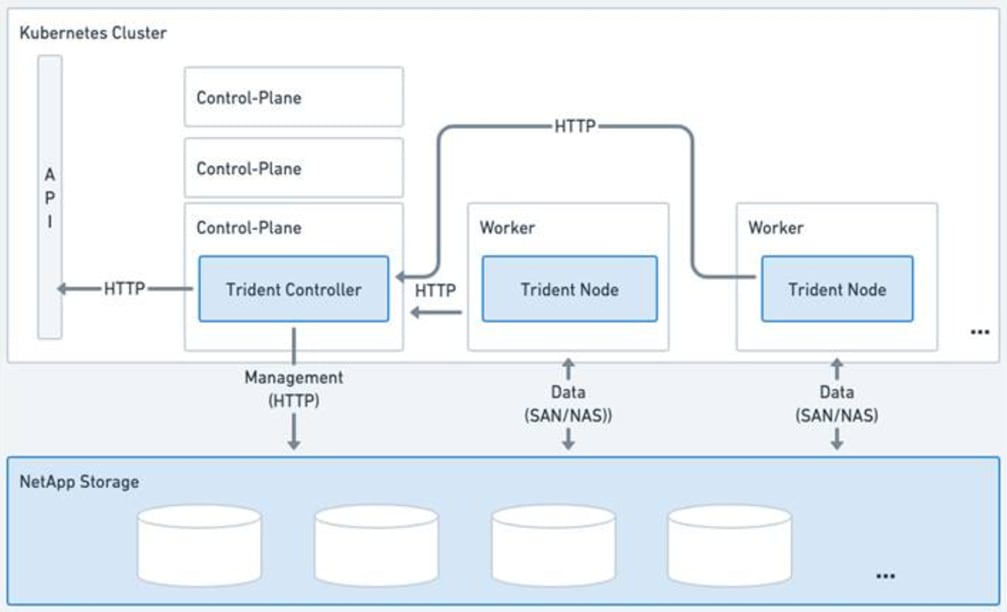

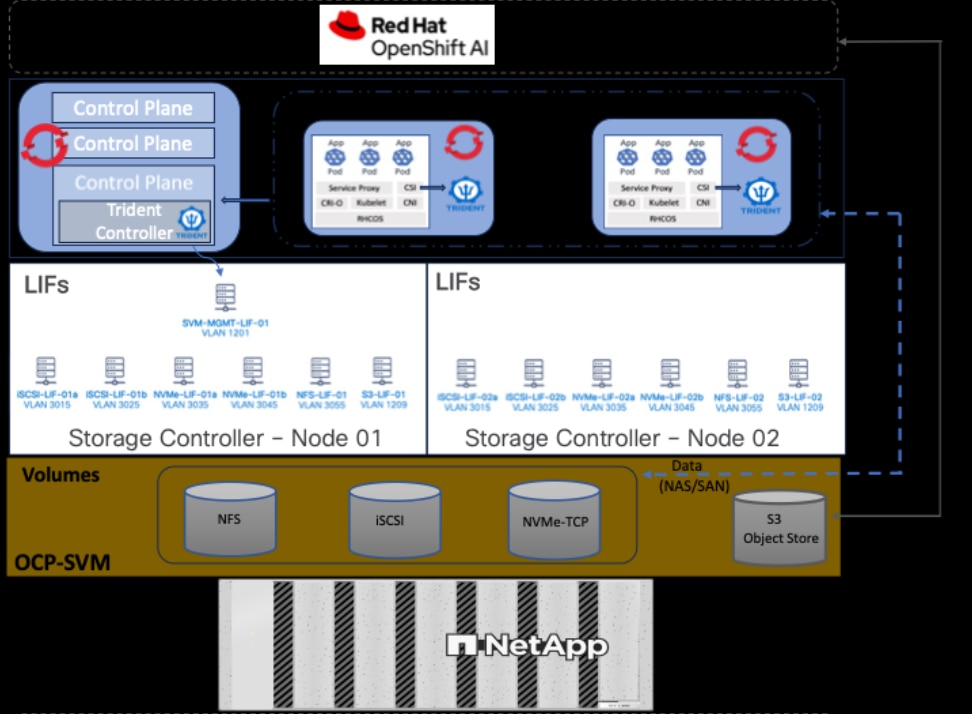

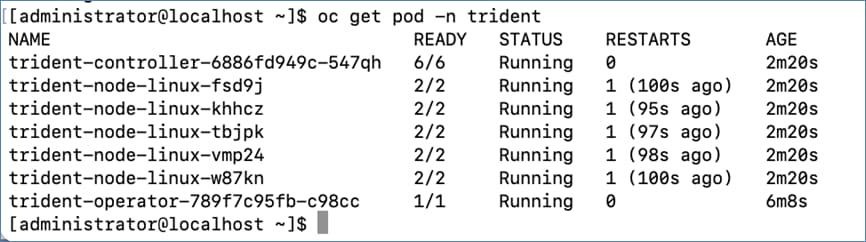

Trident deploys (see Figure 6) as a single Trident Controller Pod and one or more Trident Node Pods on the Kubernetes cluster and uses standard Kubernetes CSI Sidecar Containers to simplify the deployment of CSI plugins. Kubernetes CSI Sidecar Containers are maintained by the Kubernetes Storage community.

Kubernetes node selectors and tolerations and taints are used to constrain a pod to run on a specific or preferred node. You can configure node selectors and tolerations for controller and node pods during Trident installation.

● The controller plugin handles volume provisioning and management, such as snapshots and resizing.

● The node plugin handles attaching the storage to the node.

In this solution, NetApp Trident dynamically provides K8s native persistent storage using both NFS file and iSCSI/NVMe-TCP block storage hosted on a NetApp AFF storage.

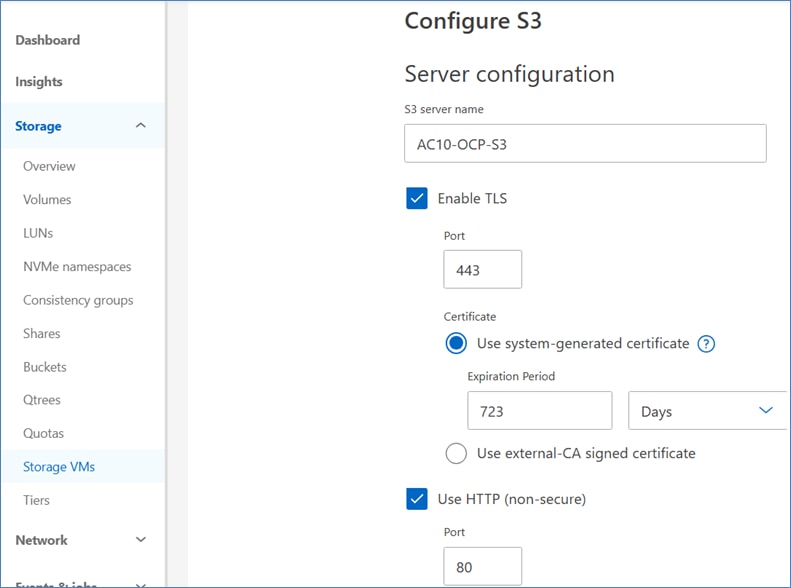

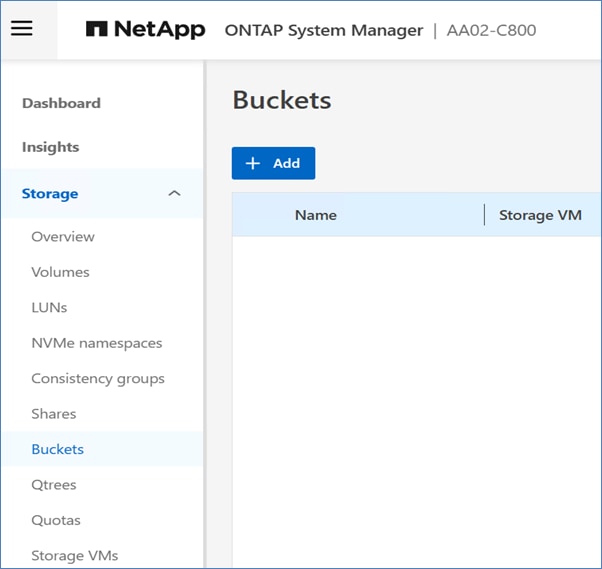

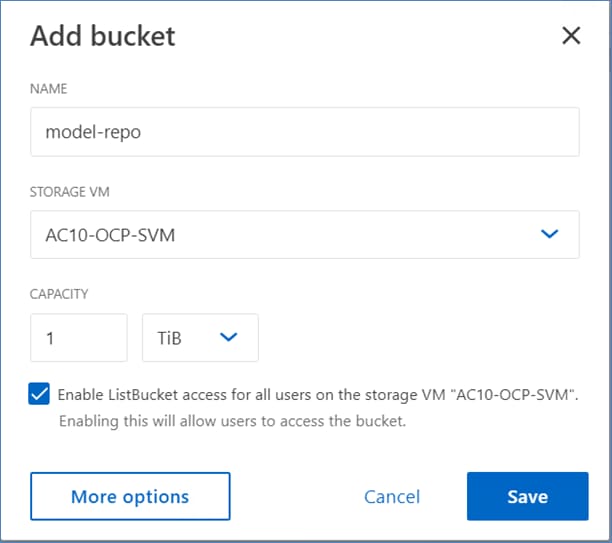

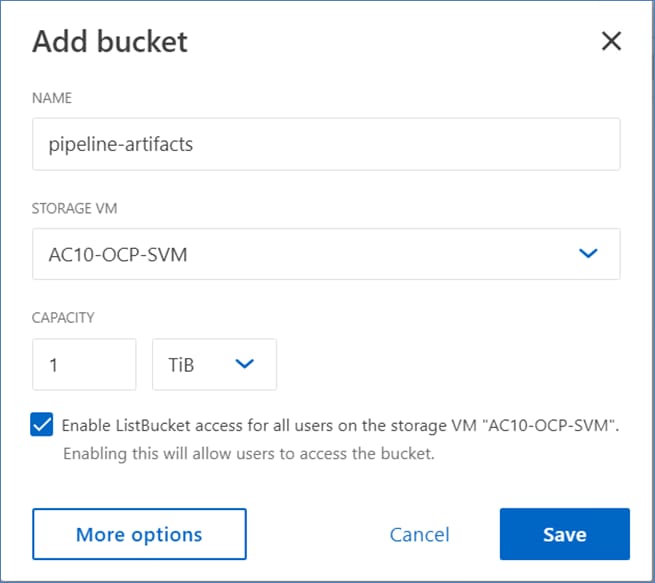

NetApp ONTAP S3

With the growing demand for S3-compatible storage, ONTAP extended its support to include an additional scale-out storage option for S3. Capitalizing on ONTAP's robust data management framework, ONTAP S3 provides S3-compatible object storage capabilities, allowing data to be represented as objects within ONTAP-powered systems, including AFF and FAS. Beginning with ONTAP 9.8, you can enable an ONTAP Simple Storage Service (S3) object storage server in an ONTAP cluster, using familiar manageability tools such as ONTAP System Manager to rapidly provision high-performance object storage for development and operations in ONTAP and taking advantage of ONTAP's storage efficiencies and security.

Solution Design

This chapter contains the following:

The FlexPod Datacenter for AI with Red Hat OpenShift AI solution aims to address the following design goals:

● Best-practices based design for AI/ML workloads, incorporating product, technology, and industry best practices.

● Simplify and streamline operations for AI/ML. Ease integration into existing deployments and processes.

● Flexible design with options for tools, technologies and individual components and sub-systems used in the design can be modified to adapt to changing requirements (for example, storage access, network design)

● Modular design where sub-system components (for example, links, interfaces, model, platform) can be expanded or upgraded as needed.

● Scalable design: As deployments grow, FlexPod Datacenter can be scaled up or out to meet enterprise needs. Each FlexPod Datacenter deployment unit can also be replicated as needed to meet scale requirements.

● Resilient design across all layers of the infrastructure with no single point of failure.

The following sections explain the solution architecture and design that meets these design requirements.

A high-level design of the FlexPod Datacenter AI solution using Red Hat OpenShift AI is shown in Figure 9.

The solution provides a foundational infrastructure design for AI using NVIDIA GPUs, NetApp Storage, Red Hat OpenShift, and MLOps provided by Red Hat OpenShift AI.

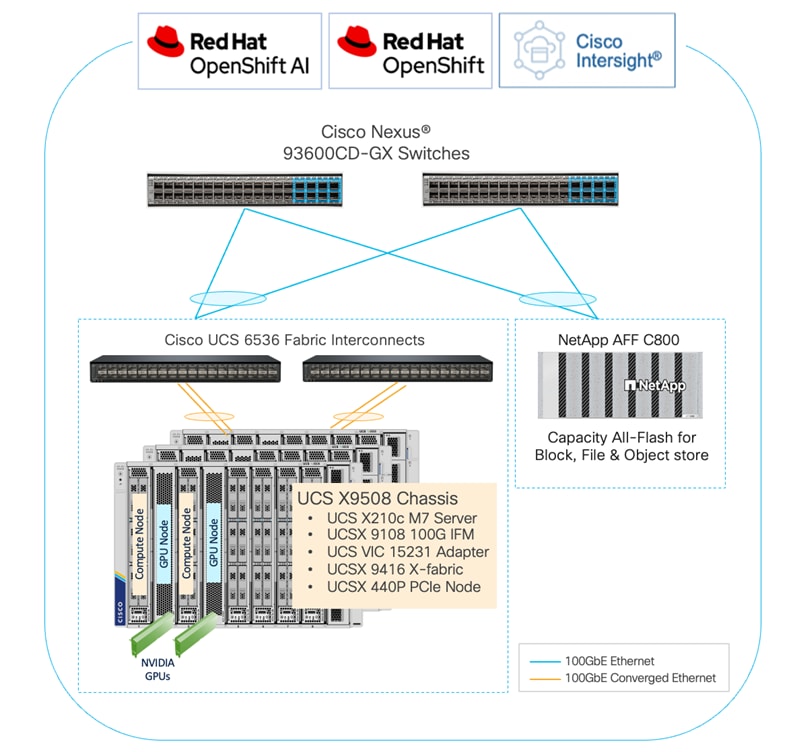

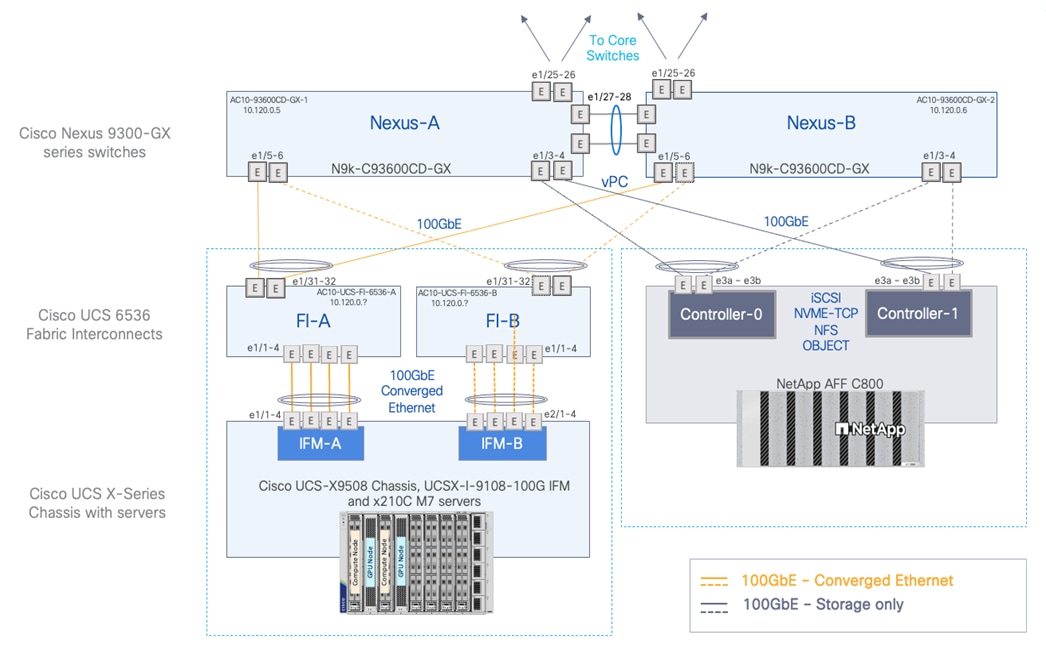

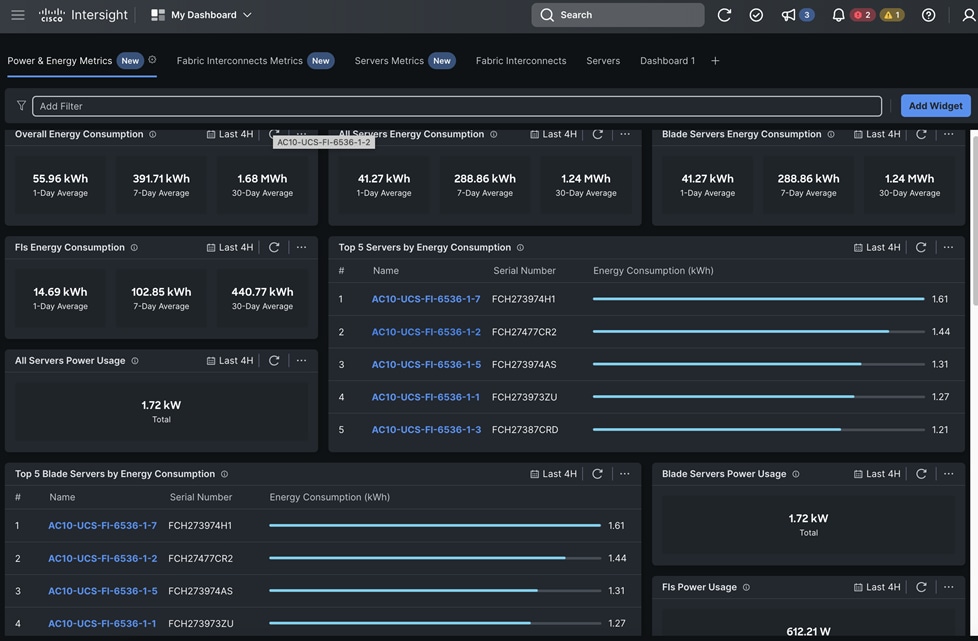

The high-level infrastructure design and topology built in Cisco labs for validating this FlexPod AI Datacenter infrastructure with Red Hat OpenShift AI is shown in Figure 10.

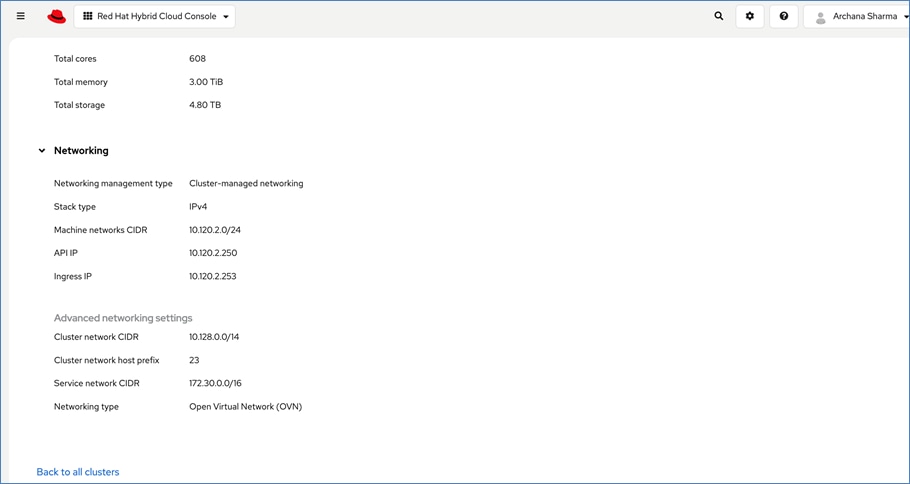

The FlexPod Datacenter infrastructure in this solution is an end-to-end 100Gb Ethernet design using NFS file and block (iSCSI, NVMe-TCP) storage hosted on NetApp AFF C-series storage. The solution provides an OpenShift Kubernetes (K8s) infrastructure for hosting cloud-native application workloads and ML workloads. The Openshift is running on Cisco UCS baremetal servers, specifically Cisco UCS X210C M7 servers with the latest Intel processors. Cisco UCS infrastructure and NetApp storage use multiple virtual port channels (VPCs) to connect to a pair of top-of-rack Cisco Nexus 9000 series switches. The access layer switches can use either multiple 100GbE or 400GbE uplinks to connect into the larger data center fabric. The solution incorporates design, technology, and product best practices to deliver a highly scalable and flexible architecture with no single point of failure.

To support Enterprise AI/ML initiatives and model delivery efforts, FlexPod AI extends the FlexPod Datacenter design as outlined in the upcoming sections to deliver a robust infrastructure design with GPU acceleration.

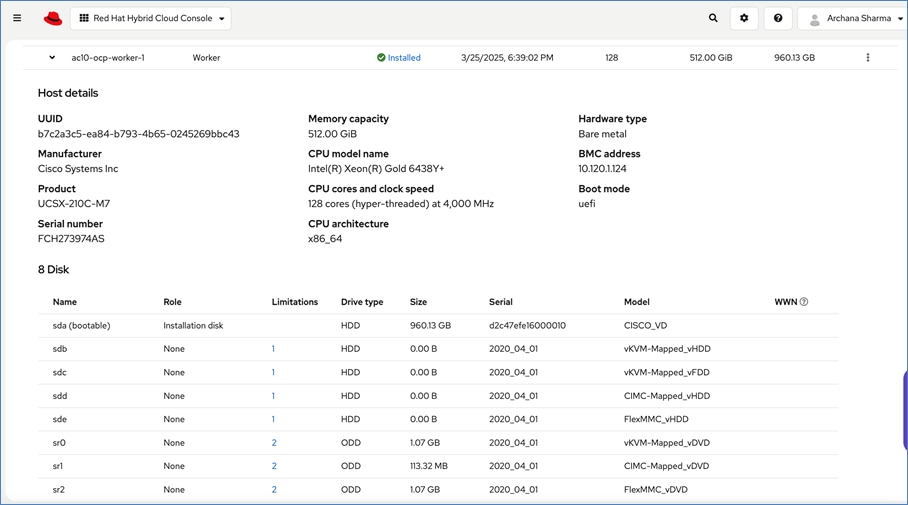

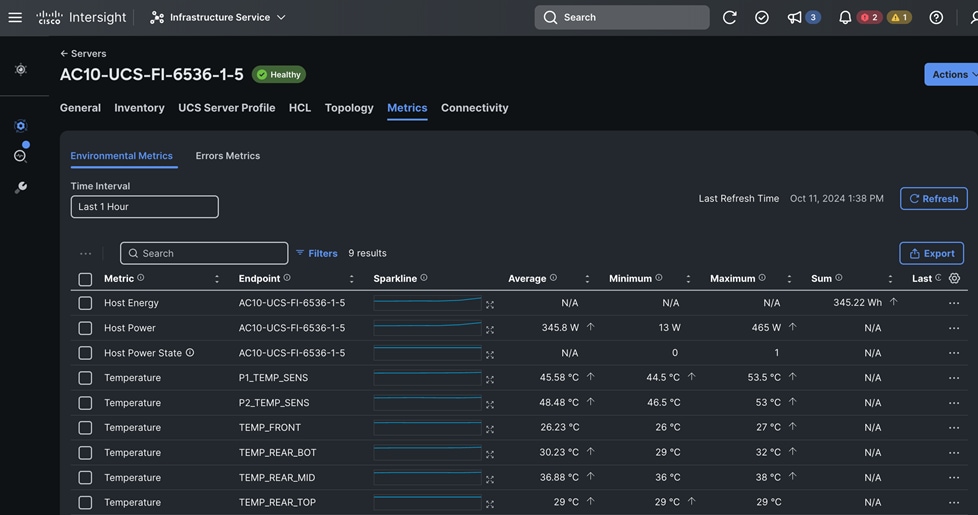

Cisco UCS servers with GPU nodes and NVIDIA GPUs

The design uses the Cisco UCS X-Series server chassis with NVIDIA GPUs to provide the compute and GPU resources in the solution. The Cisco UCS X9508 server chassis is a 7RU chassis with eight slots where Cisco UCS servers and PCIe nodes can be deployed with GPUs to provide a total of 8 FHFL GPUs or 16 HHHL GPUs per chassis. The Cisco UCS X9508 supports up to four PCIe nodes, with each PCIe node paired with a compute node, either a Cisco UCS X210c or X410c server. This design was validated using NVIDIA L40S-48GB GPUs, with two NVIDIA L40S GPUs installed on each GPU worker node. Alternatively, Cisco UCS C-Series Rackmount Servers can also be used. A Cisco UCS C-Series M7 server with Intel processors can support up to 3 x L40S GPUs.

Cisco UCS X-Fabric Technology

To support GPU acceleration on Cisco UCS X-Series systems, Cisco’s first generation UCS X-fabric technology, a pair of Cisco UCS X9416 Fabric modules are deployed to enable PCIe connectivity between UCS servers and UCS X440p PCIe nodes housing NVIDIA GPUs. The first-generation X-fabric supports 32 lanes of PCIe Gen 4 connectivity to each compute node, enabling each server to access a PCIe node, housing either 4 HHHL GPUs (for example, L4) or 2 FHFL GPUs (for example, L40S). Figure 11 shows the X-fabric modules and the connectivity between each compute node and the x440p PCIe node in the adjacent slot.

Note: Each UCS compute server can only access adjacent x440p GPUs through the X-Fabric.

The hardware components and connectivity between them are shown in Figure 12.

● A Cisco UCS 9508 chassis with at least 5 x Cisco UCS X210 M7 servers are connected using 100GbE IFM modules to Cisco UCS Fabric Interconnects, deployed in Intersight Managed Mode (IMM). 4 x 100GbE links from each IFM connect are bundled in a port-channel and connected to each Fabric Interconnect to provide an aggregate bandwidth of 800Gbps to the chassis with up to 8 UCS compute servers.

● Two Cisco Nexus 93600CD-GX 100/400GbE Switches in Cisco NX-OS mode provide top-of-rack switching. The fabric interconnects use multiple 100GbE links to connect to the Nexus switches in a VPC configuration.

● At least two 100 Gigabit Ethernet ports from each FI, in a port-channel configuration are connected to each Nexus 93600CD-GX switch.

● One NetApp AFF C800 HA pair connects to the Cisco Nexus 93600CD-GX Switches using two 100 GE ports from each controller configured as a Port-Channel.

● The high-performance servers are deployed as OpenShift compute nodes and booted using the Assisted Installer deployed RHCOS image on local M.2 boot drives in a RAID1 configuration. The persistent storage volumes are provisioned on the NetApp AFF C800 and accessed using NFS NAS storage, iSCSI and NVMe-TCP storage. ONTAP S3 object storage is also provisioned to store OpenShift AI models, artifacts, and other data.

● Two of the Cisco UCS X210C M7 servers in Cisco UCS X9508 Chassis are paired with Cisco UCS X440p PCIe nodes, with each PCIe node housing 2 x NVIDIA L40S GPUs.

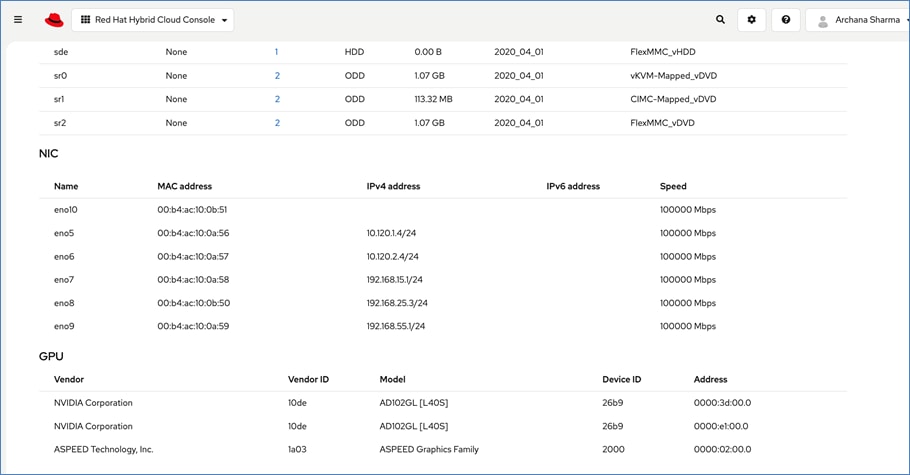

● Each UCS M7 server is equipped with a Cisco VIC 15231 that provides 2 x 100GbE ports for 200Gbps of bandwidth from each server to the chassis.

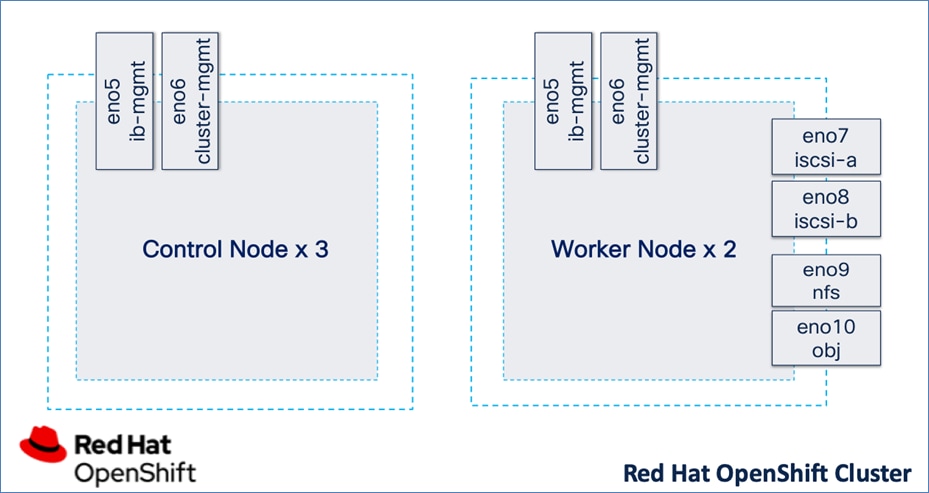

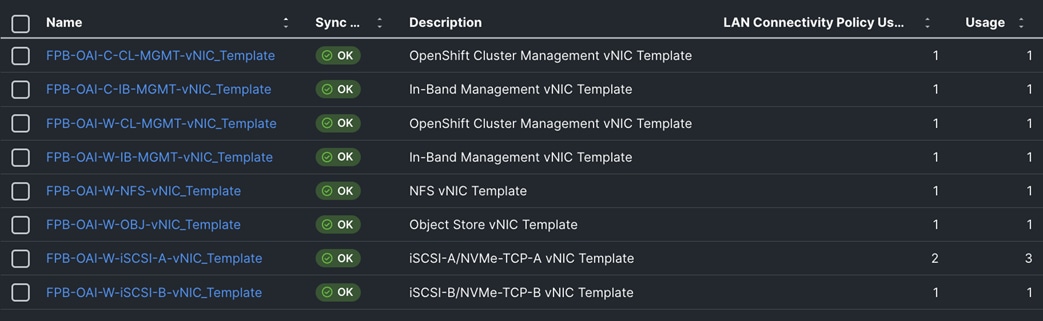

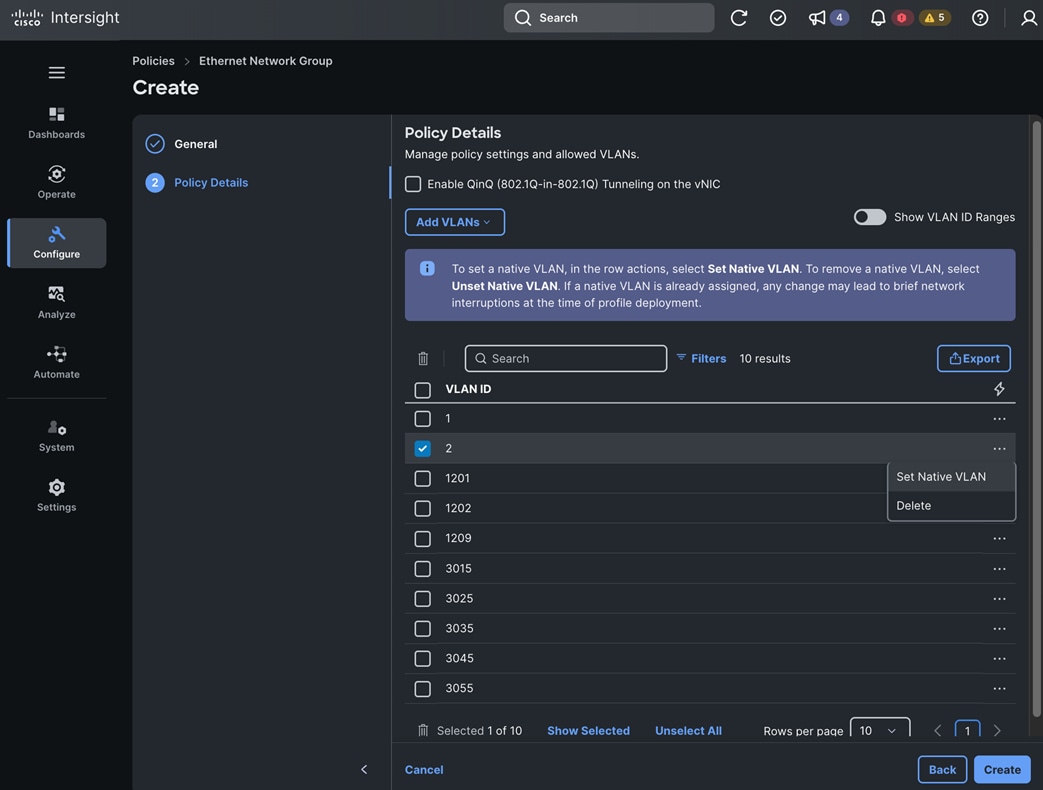

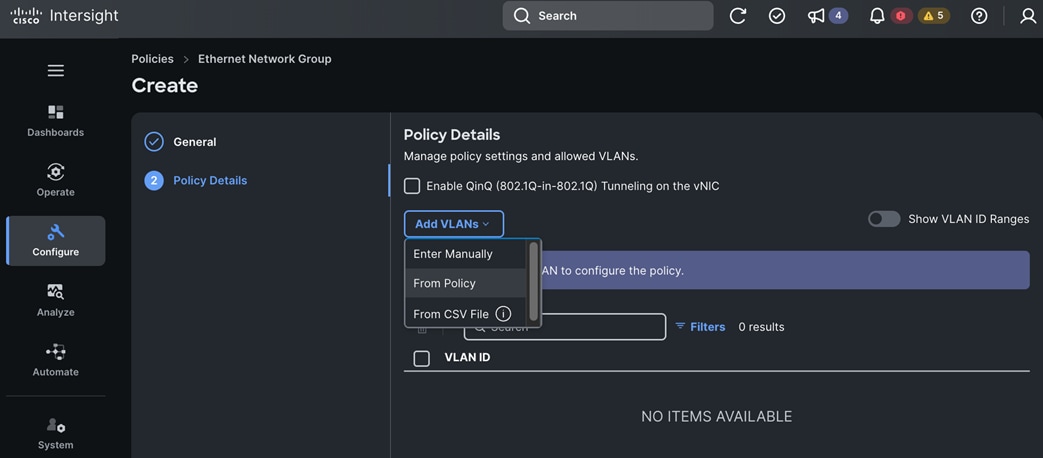

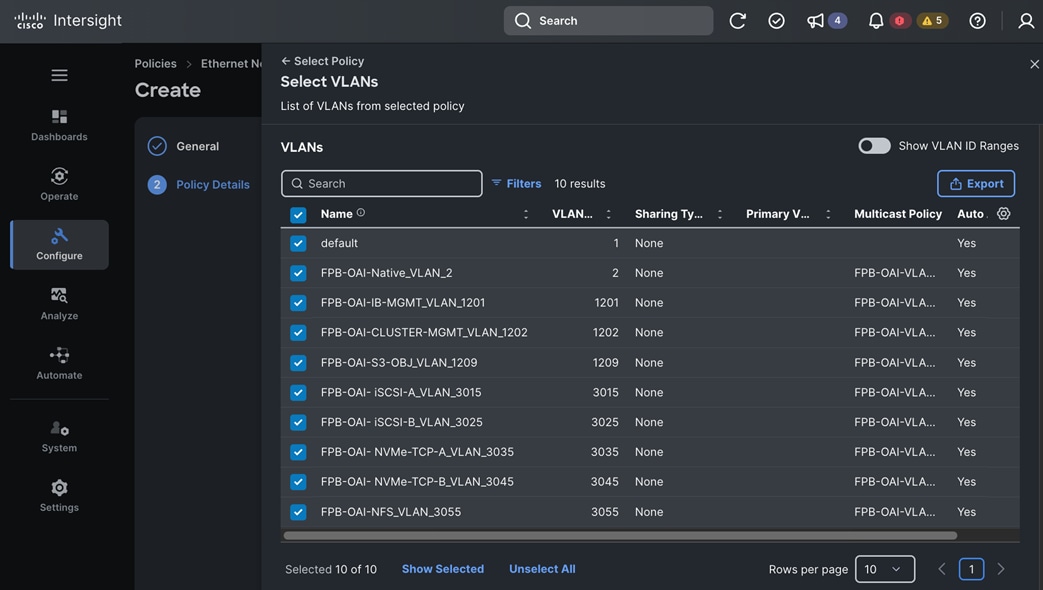

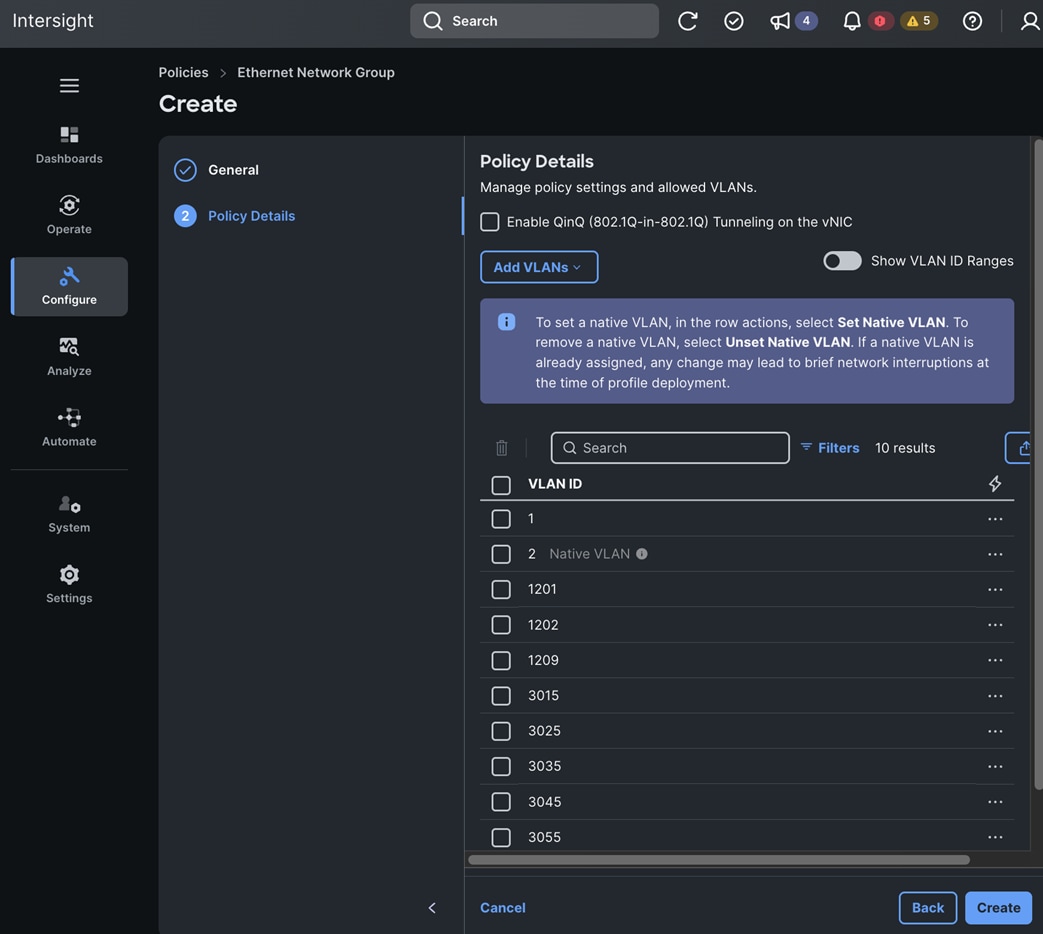

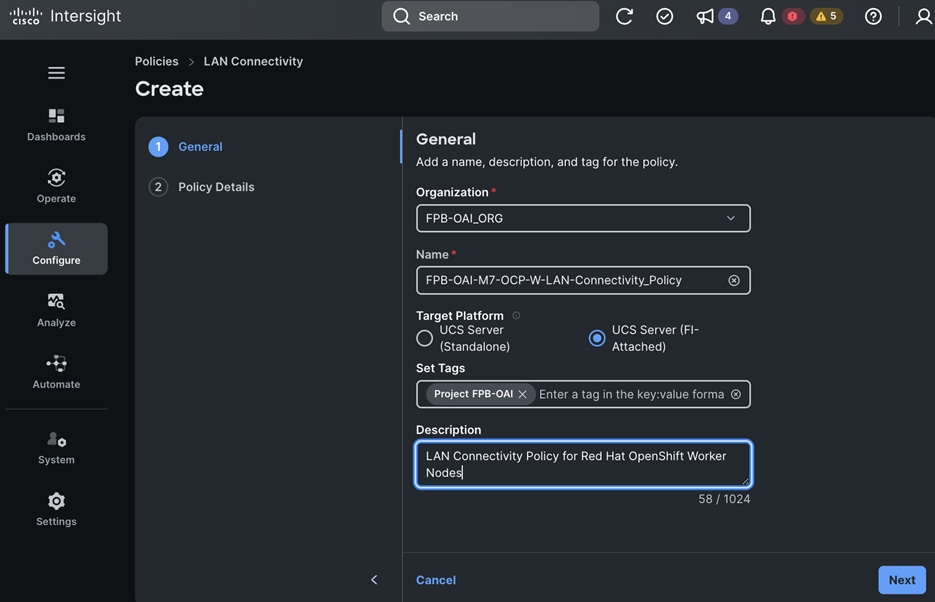

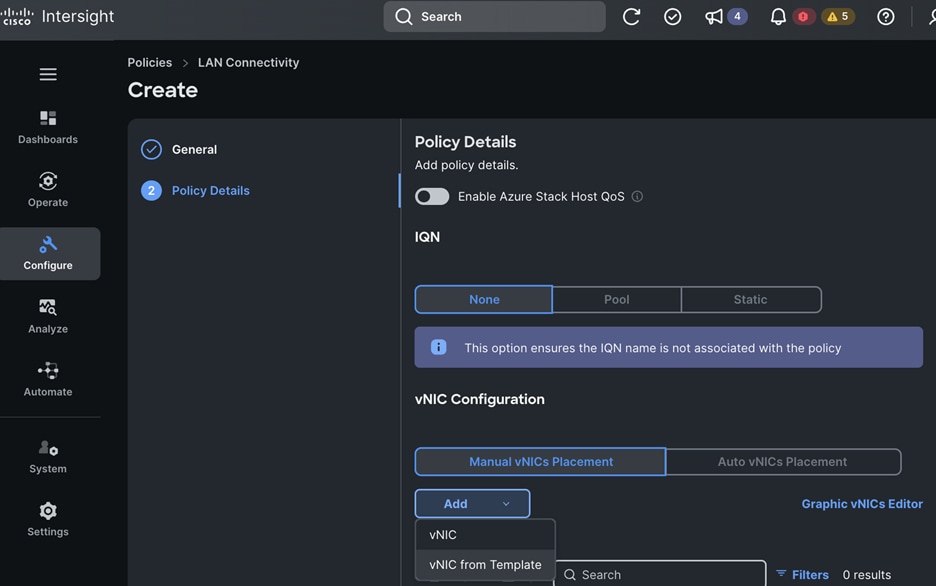

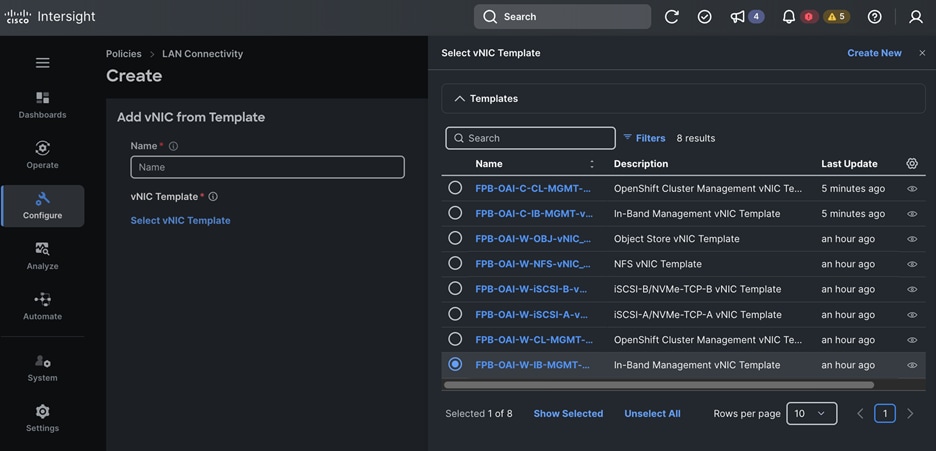

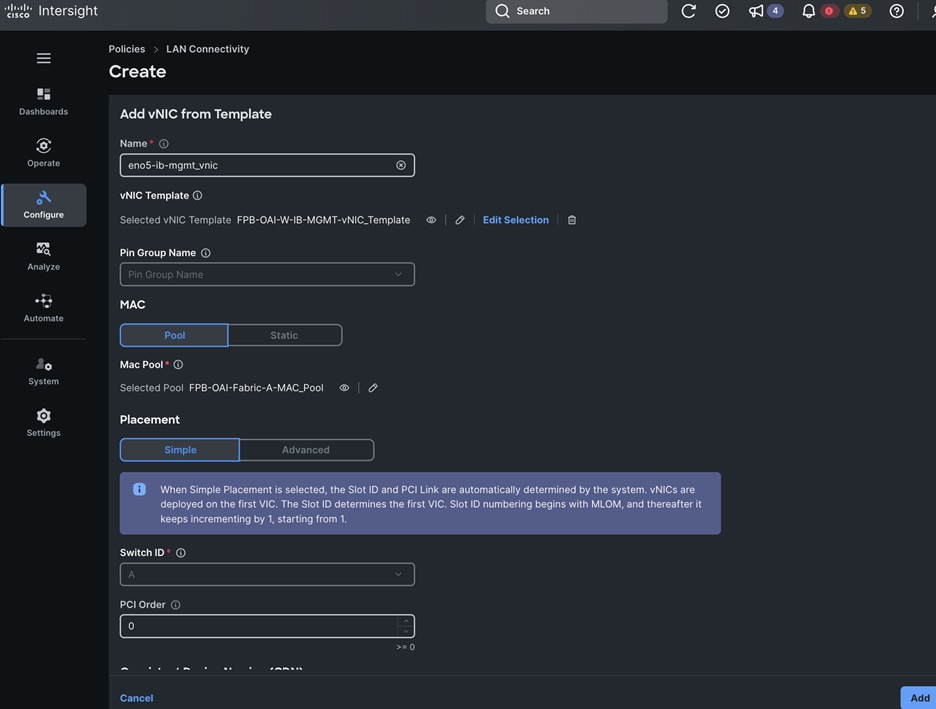

Cisco UCS Server Networking Design

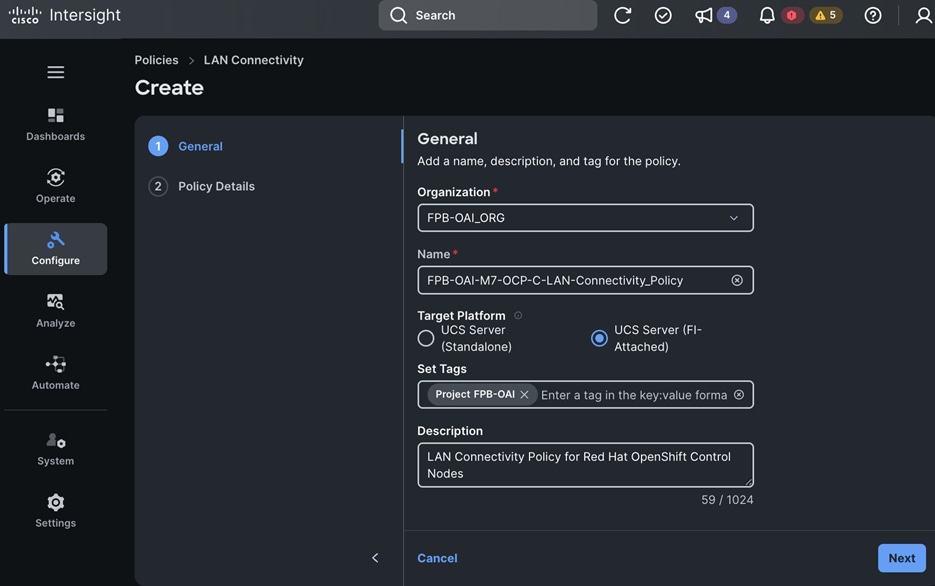

Each server is deployed with multiple virtual NICs (vNICs) and VLANs using Cisco Intersight server profiles as shown in Figure 13. Different vNIC configurations are used on OpenShift control and worker nodes.

The vNICs configuration on worked nodes are for power management, OpenShift cluster networking and storage access as outlined below:

● IB-MGMT (Optional): One vNIC and VLAN with fabric failover enabled on UCS Fabric Interconnects (FI) for in-band power management (for example, IPMI). Alternatively, you can CLUSTER-MGMT vNIC for this.

● CLUSTER-MGMT: One vNIC and VLAN with fabric failover enabled on UCS Fabric Interconnects for all OpenShift cluster networking. This includes both pod and machine networks. The default cluster networking in OpenShift, i.e. Open Virtual Networking (OVN) is used in this solution.

● iSCSI-A, iSCSI-B: Two vNICs and VLANs, one path through each UCS FI for iSCSI storage access.

● NVMe-TCP-A, NVMe-TCP-B: Two VLANs, one path through each UCS FI for NVMe over TCP storage access. These are tagged VLANs using iSCSI-A and iSCSI-B vNICs.

● NFS: One vNIC and VLAN for accessing NFS filesystems, with fabric failover enabled on UCS FIs.

● OBJ: One vNIC and VLAN for accessing S3-compatible object store hosted on NetApp storage, with fabric failover enabled on UCS FIs.

Note: Cisco UCS FI Fabric failover is recommended on non-ISCSI and NVMe-TCP interfaces versus NIC bonding at the operating system level due to the complexity involved. With fabric failover, the management and failover is handled by the Cisco FIs, by enabling it in the vNIC template and LAN connectivity policy used in the server profile template used to provision the servers. This enables it to be deployed with ease without compromising on resiliency.

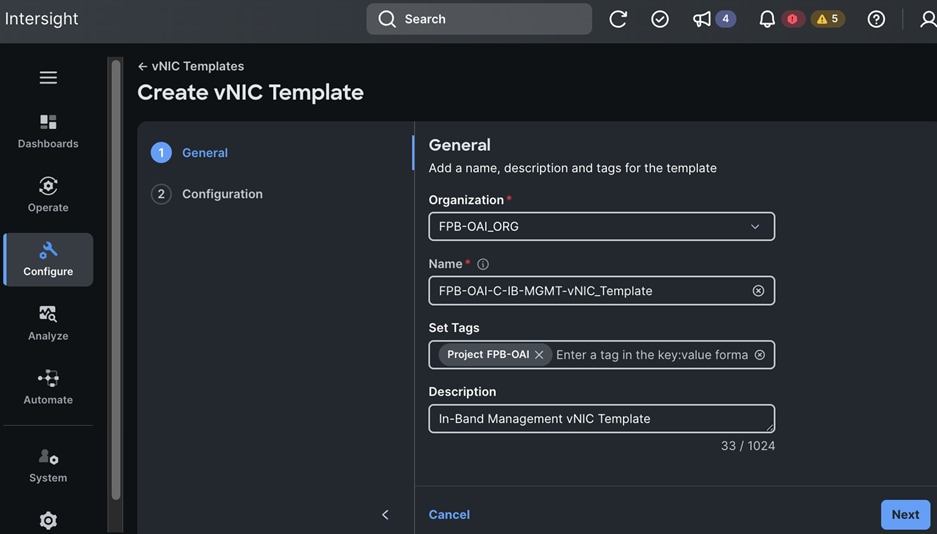

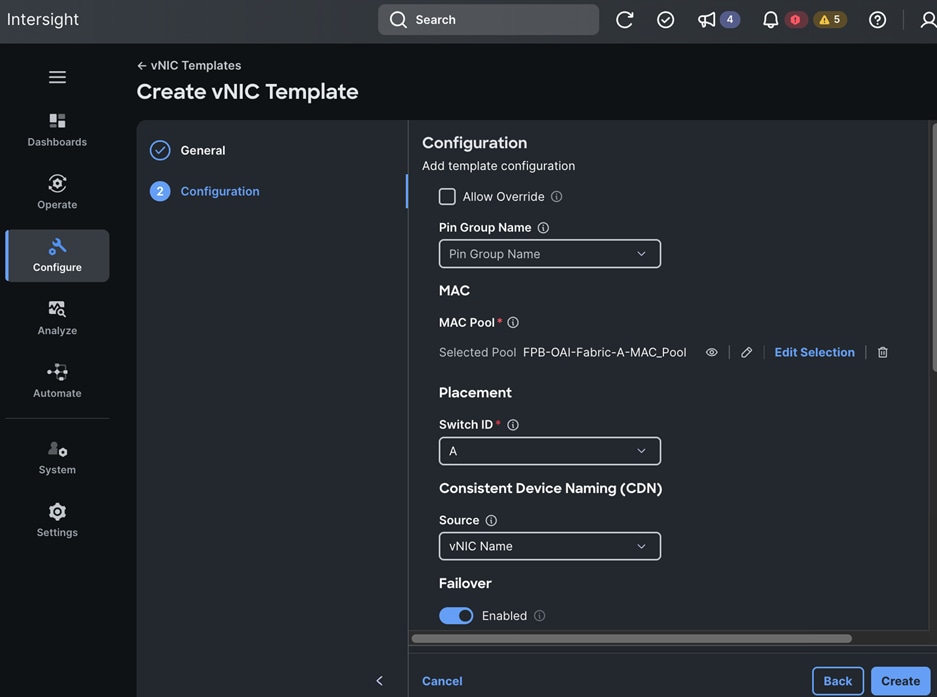

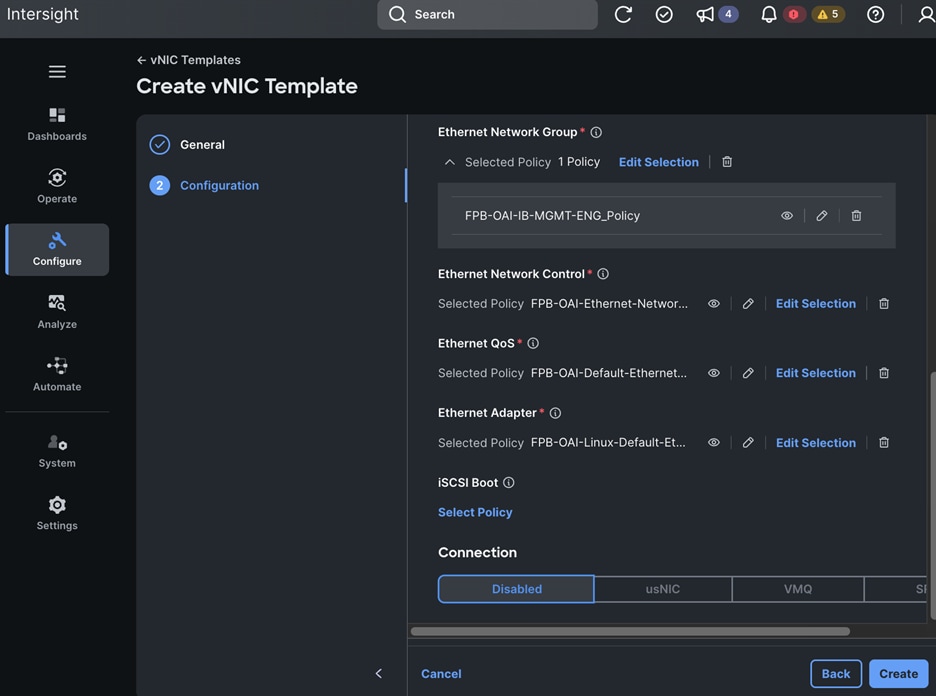

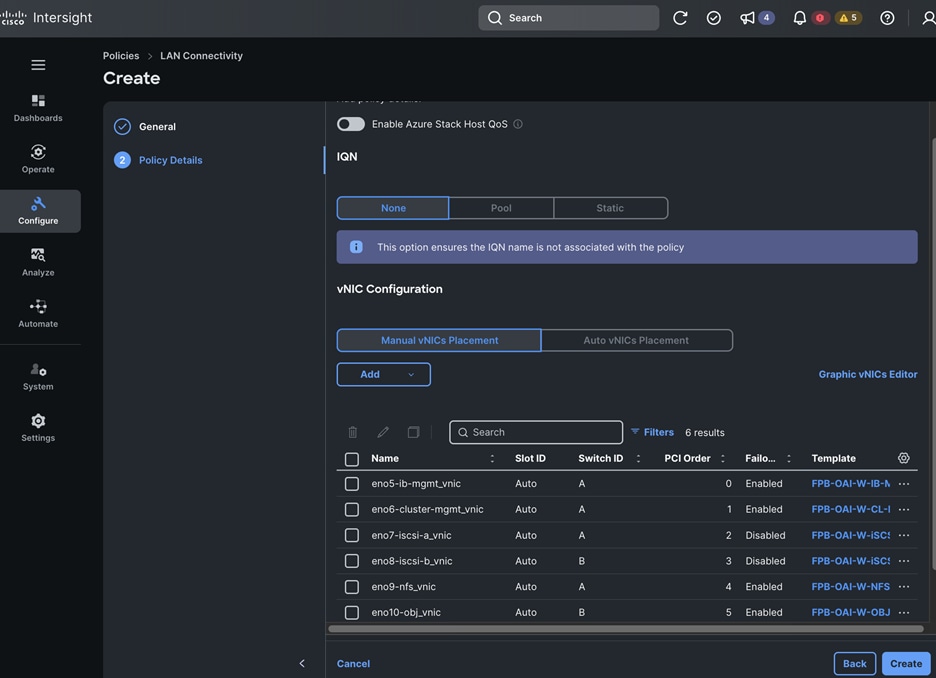

The OpenShift Control node servers in the design are only provisioned for the first two vNICs in the above list. All vNICs are configured using vNIC templates. The vNIC Template used for control (-C) and worker (-W) nodes are shown in Figure 14.

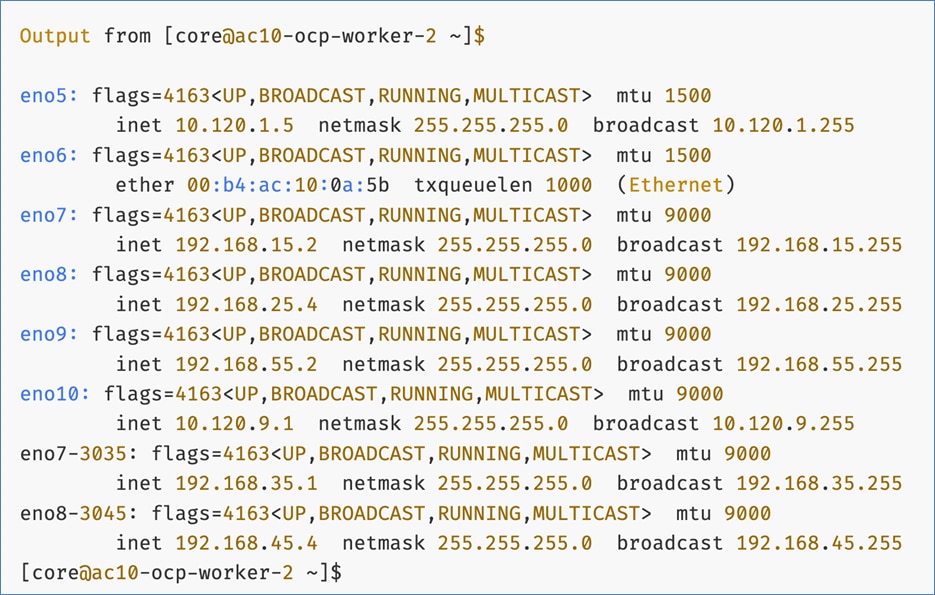

The vNIC configuration on a given UCS server worker node, derived from the above worker node (-W) templates are shown in the figure below. The vNICs name includes the OpenShift interface numbering which starts with ‘eno5’.

Storage Design

The storage is set up with NFS, iSCSI, NVMe-TCP, and S3 protocols, each having VLAN and LIF (see Figure 15). NetApp Trident will handle dynamic storage orchestration for Red Hat OpenShift workloads. Trident can be configured with various storage backends based on requirements. For this solution, NAS, and SAN backends were used. Trident will provision PVCs based on the configured storage class and map them to the containers.

OpenShift AI requires S3-compatible object stores to store artifacts, logs, immediate results used by data science pipelines, and single- or multi-model serving platforms for deploying stored models. ONTAP S3 is configured to provide object stores and is directly presented to OpenShift AI for use in this solution. Following NetApp’s best practices, S3 LIFs are configured on both nodes.

OpenShift Design

Most machine learning (ML) models, frameworks, and test applications from popular sources like Hugging Face and NVIDIA GPU Cloud (NGC) are typically available as pre-packaged containers. The AI/ML ecosystem has also embraced Kubernetes, making containers the primary environment for the development and deployment of AI/ML workloads.

Given these factors and the need for an enterprise-class platform, this solution leverages OpenShift to offer enterprises a secure, robust Kubernetes orchestration and container management platform for developing, deploying, and managing cloud-native applications and ML workloads. By using OpenShift as the foundation for ML model delivery, including applications that integrate production ML models, enterprises can benefit from a unified platform for their AI/ML initiatives.

By combining OpenShift with OpenShift AI (see next section), OpenShift uniquely simplifies and accelerates projects with clear separation of functions. OpenShift administrators can continue to manage Kubernetes infrastructure administration, including provisioning GPUs, role-based access control, and resource utilization, while ML engineers can focus on ML delivery without worrying about the underlying infrastructure.

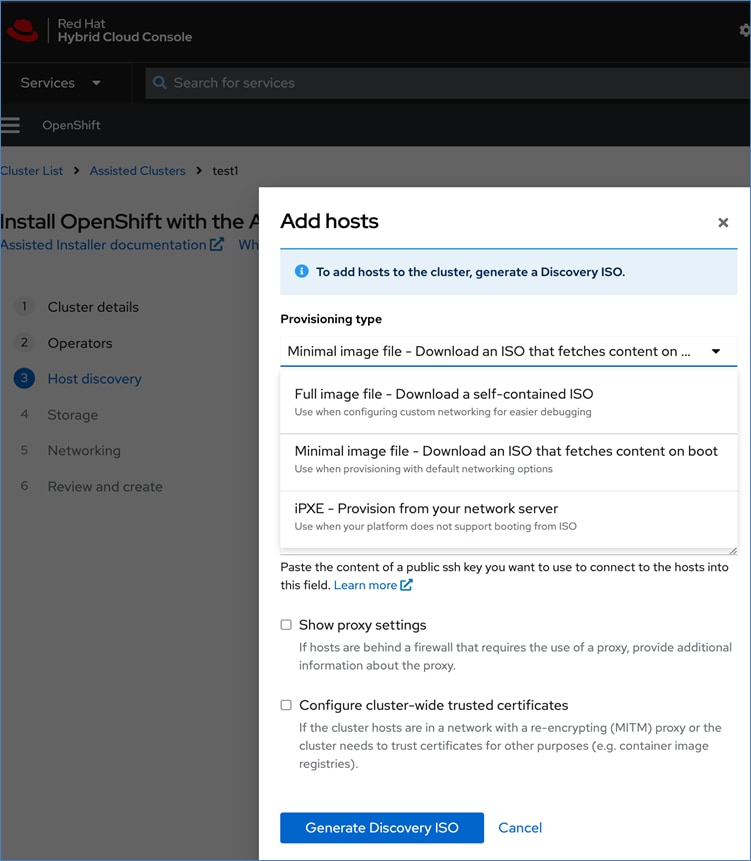

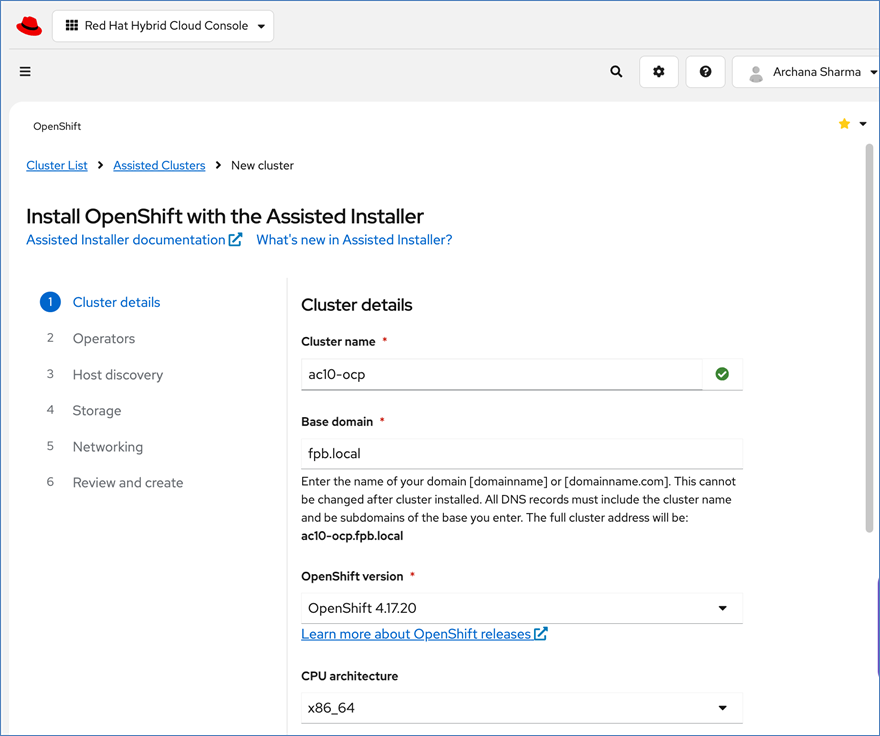

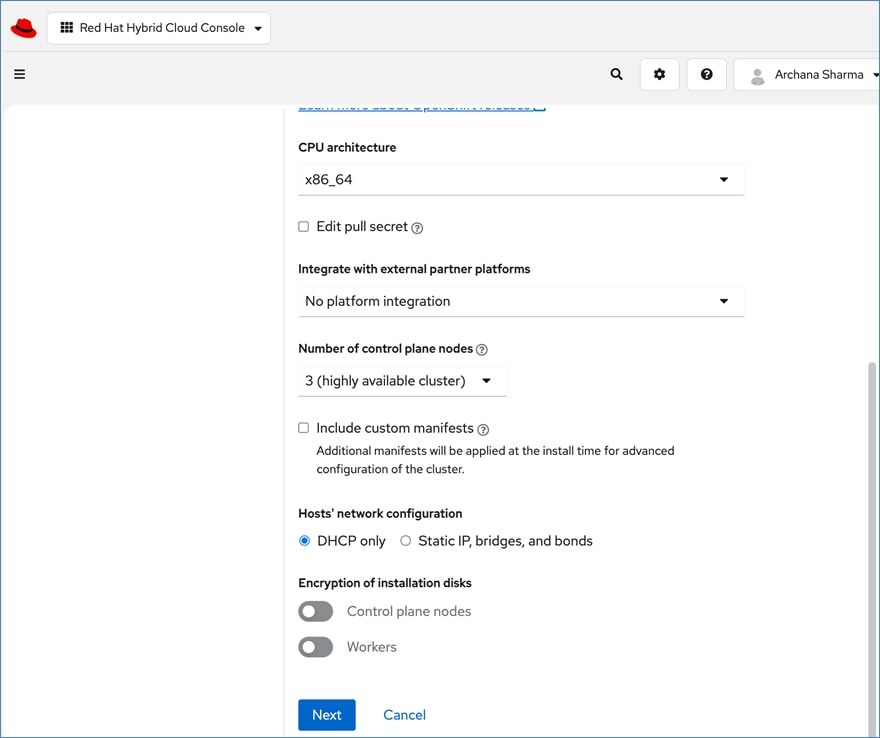

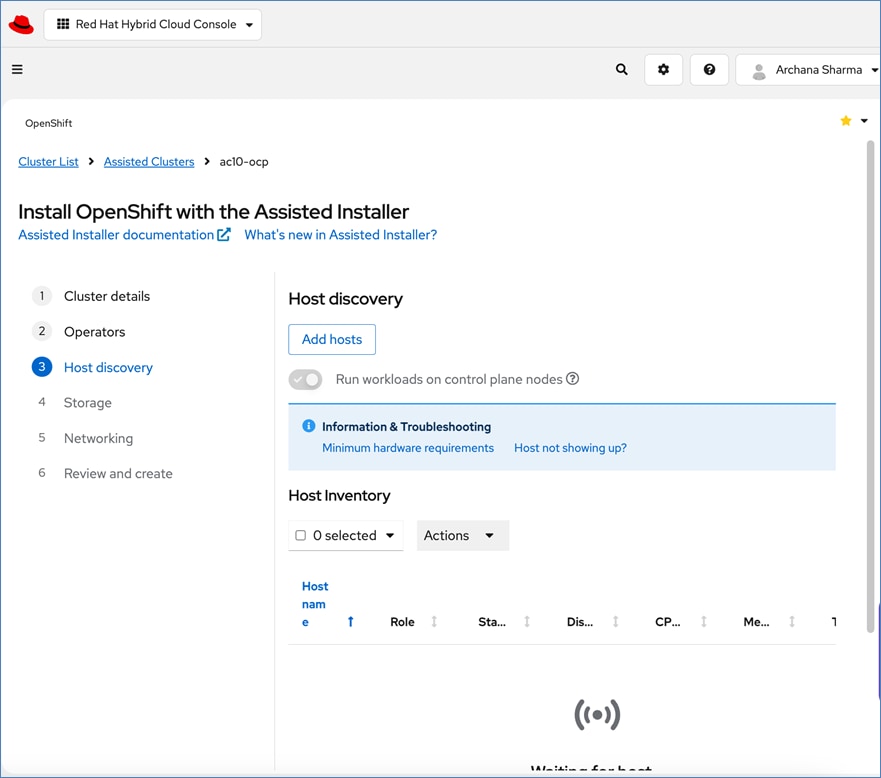

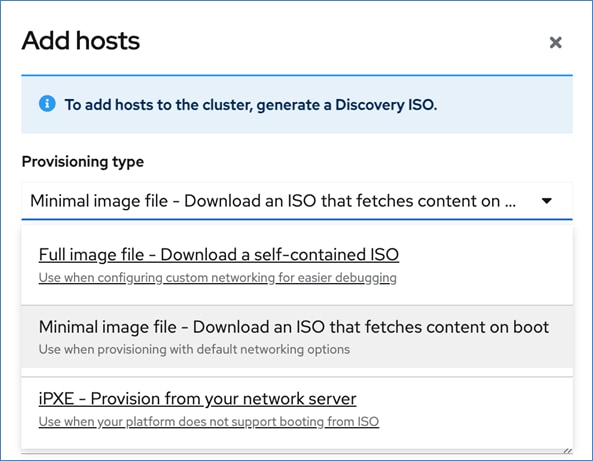

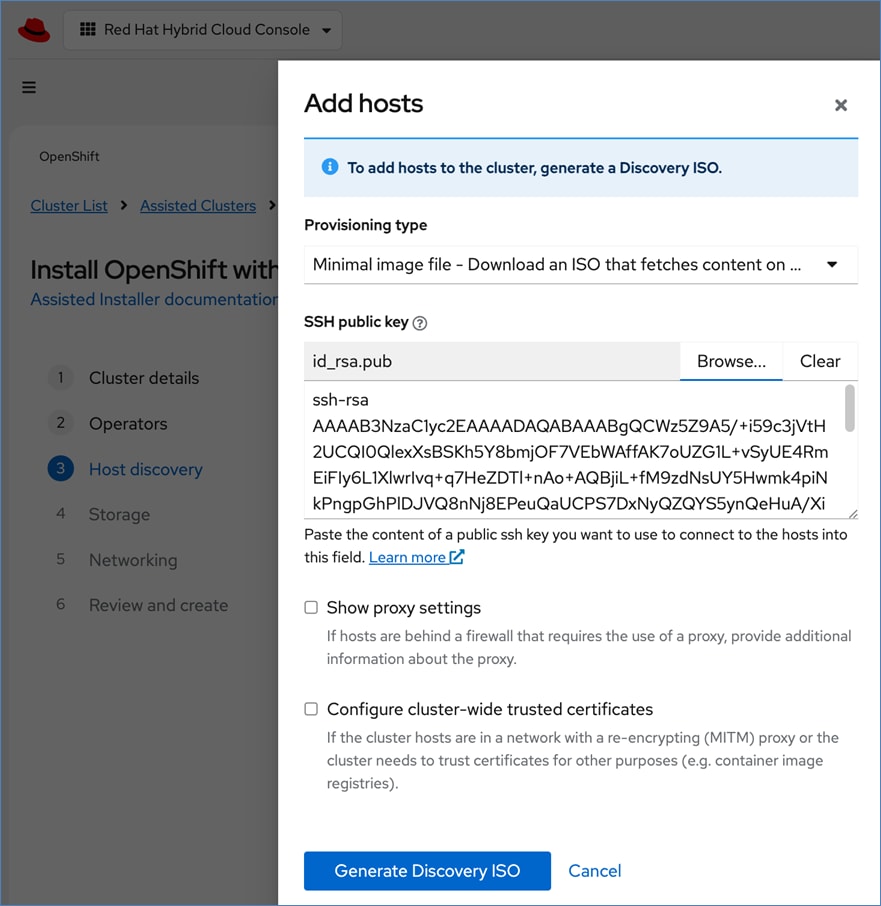

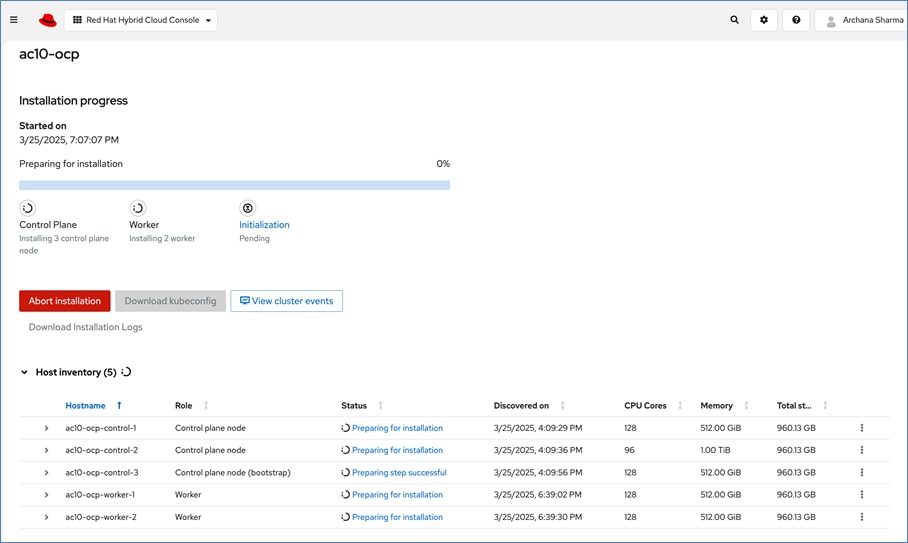

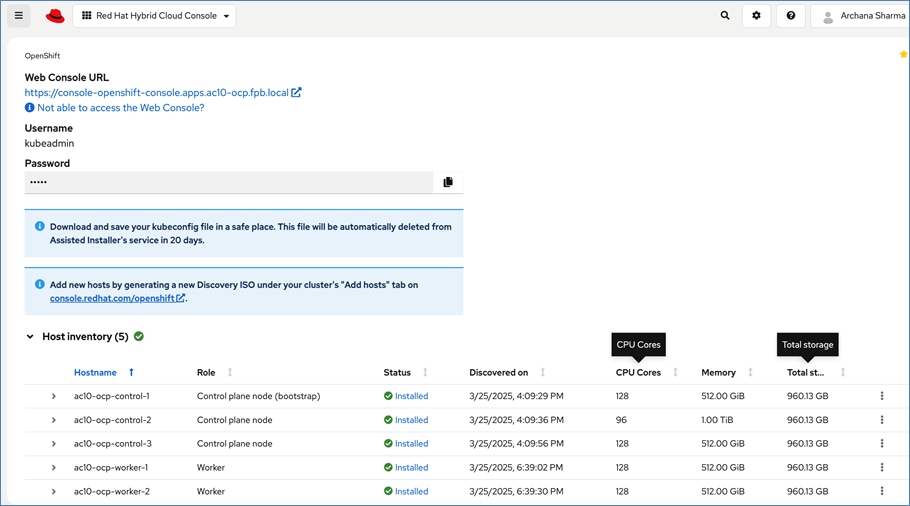

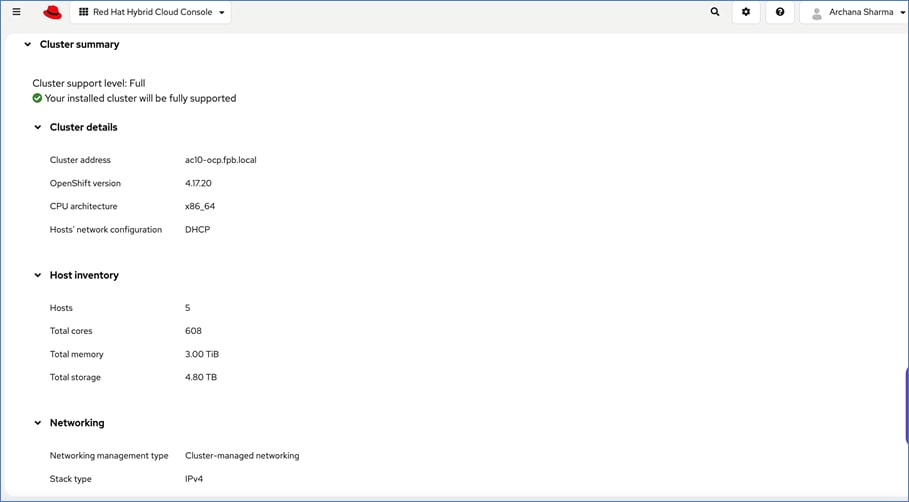

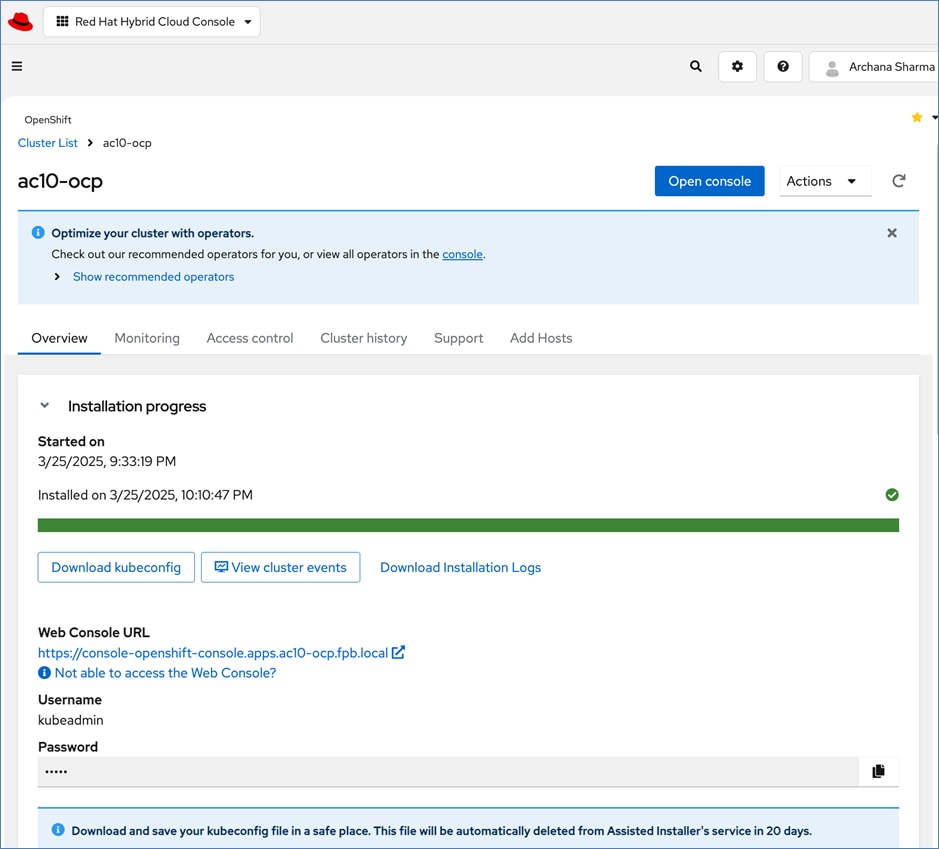

Red Hat offers multiple options for deploying OpenShift clusters, including both on-premises and SaaS solutions for connected and disconnected environments. These deployments can be managed using Advanced Cluster Management (ACM) or the cloud-based Red Hat Hybrid Cloud Console (HCC). In this solution, Red Hat OpenShift is deployed as a self-managed service using the Red Hat-recommended Assisted Installer from the Hybrid Cloud Console.

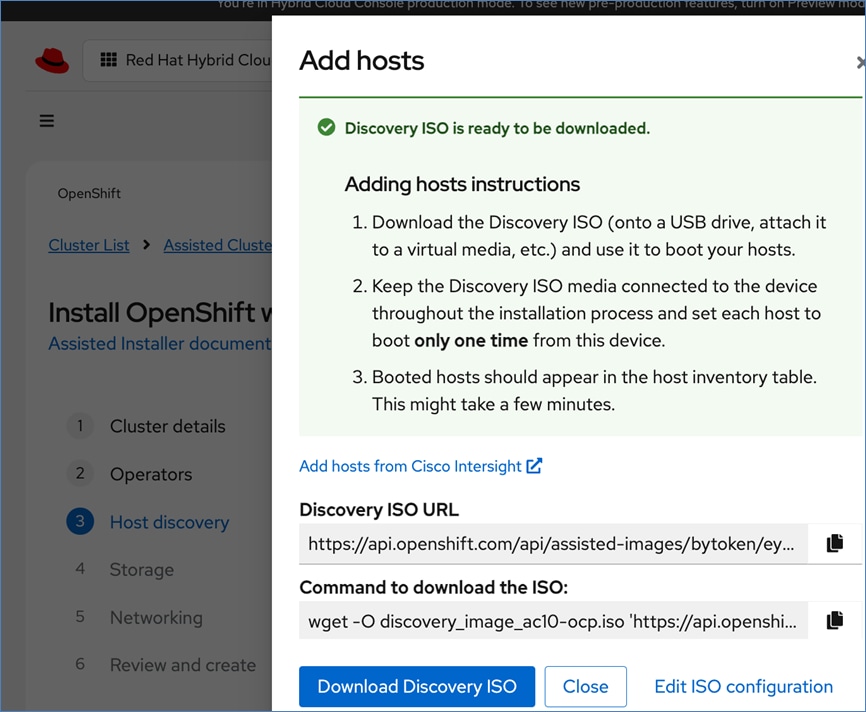

Red Hat recommends using the Assisted Installer for several reasons – one advantage being that it eliminates the need for a separate bootstrap machine during installation. Instead, the Assisted Installer uses one of the cluster nodes to manage the bootstrapping of other nodes in the cluster. Additionally, the Assisted Installer offers REST APIs that can be used to automate the installation process.

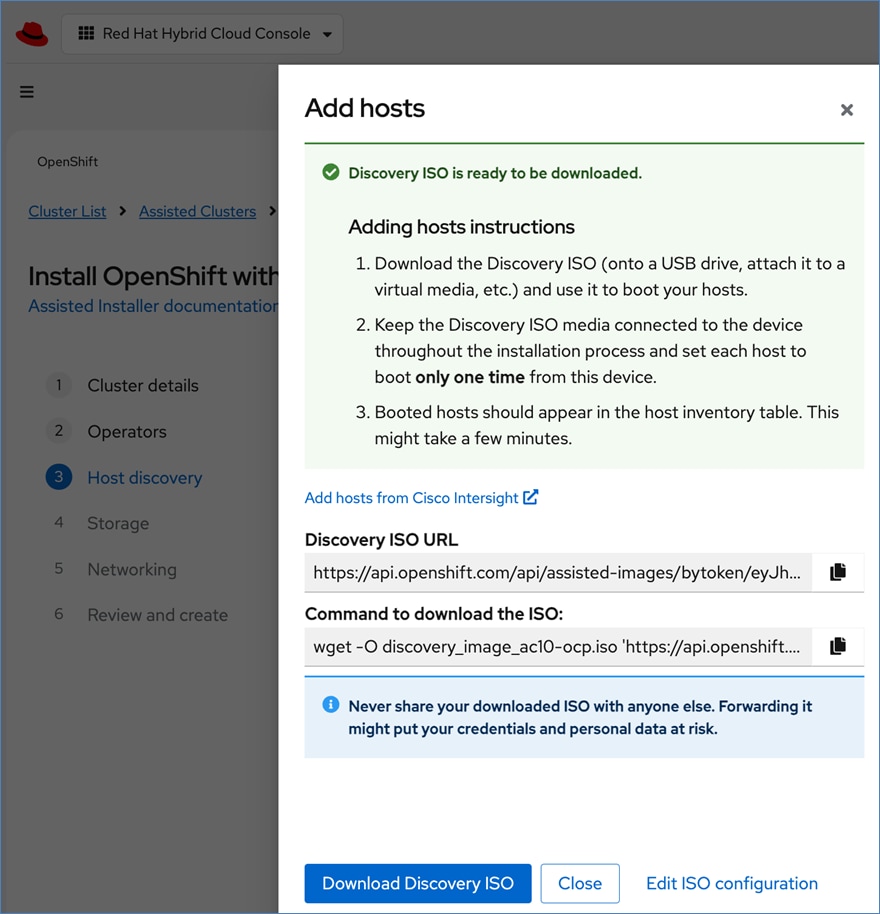

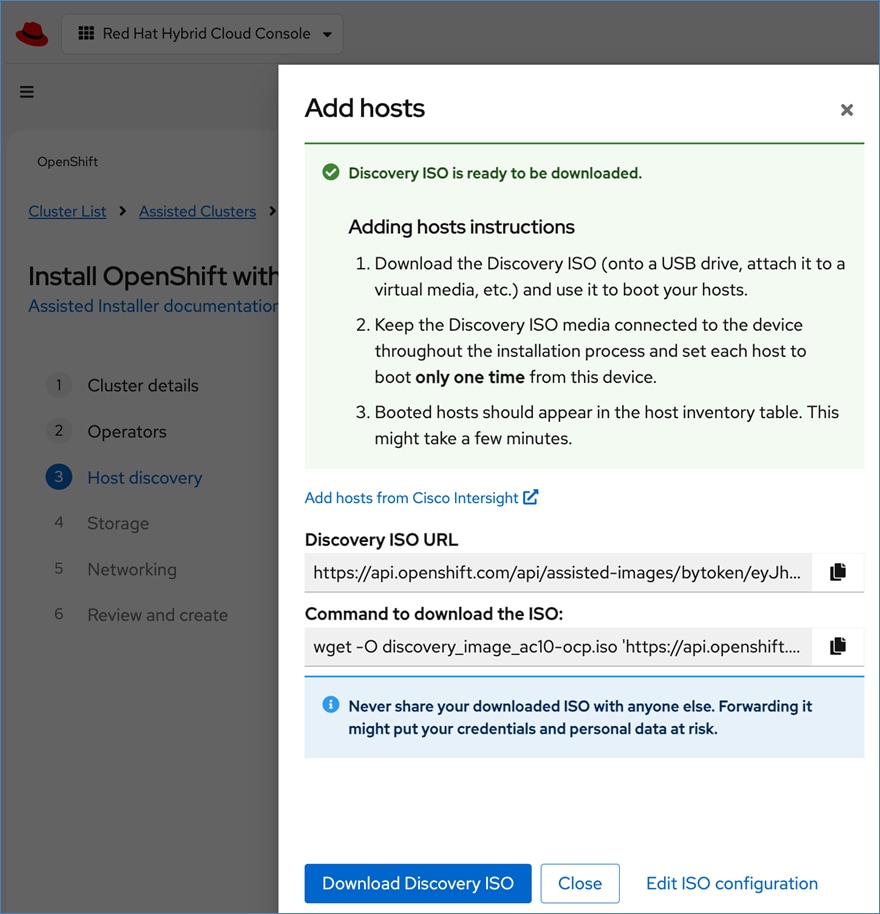

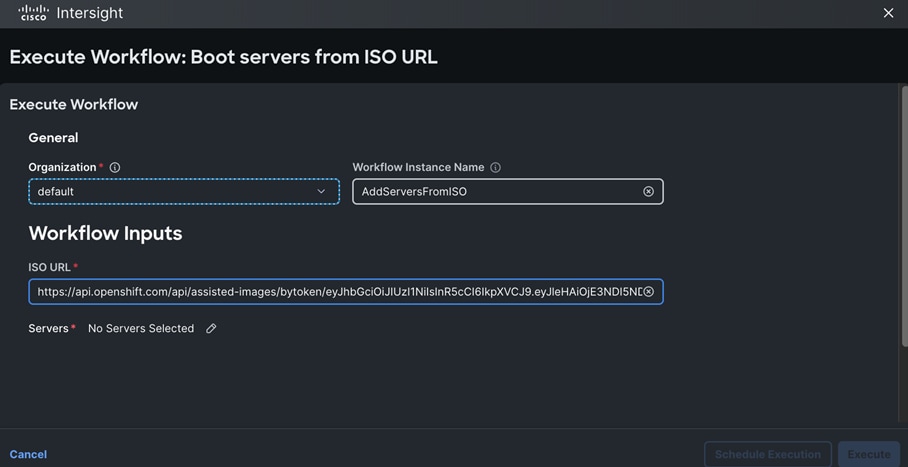

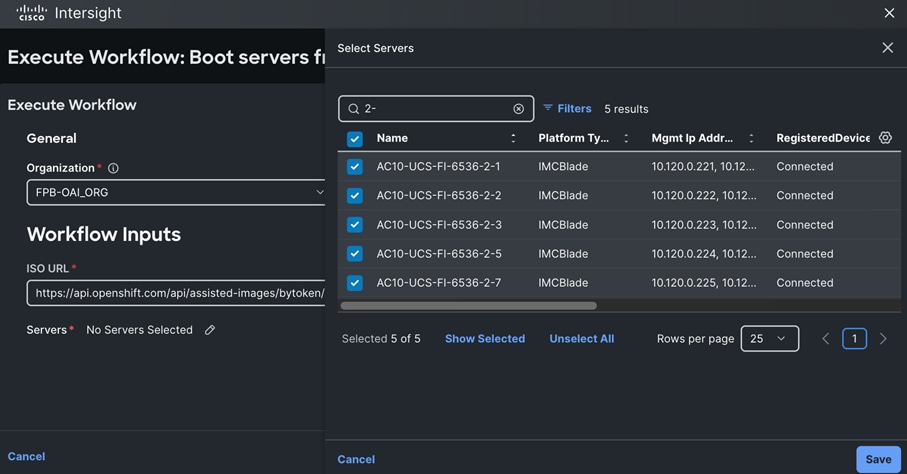

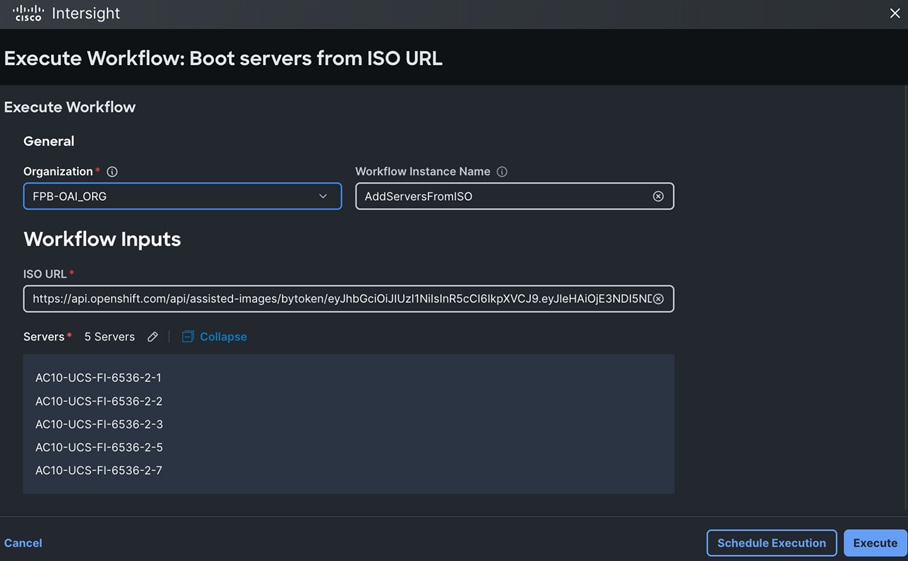

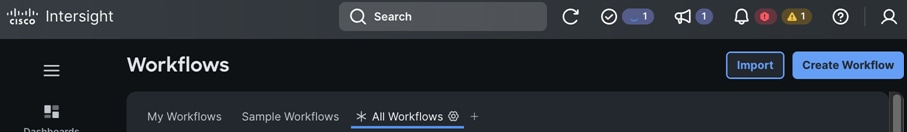

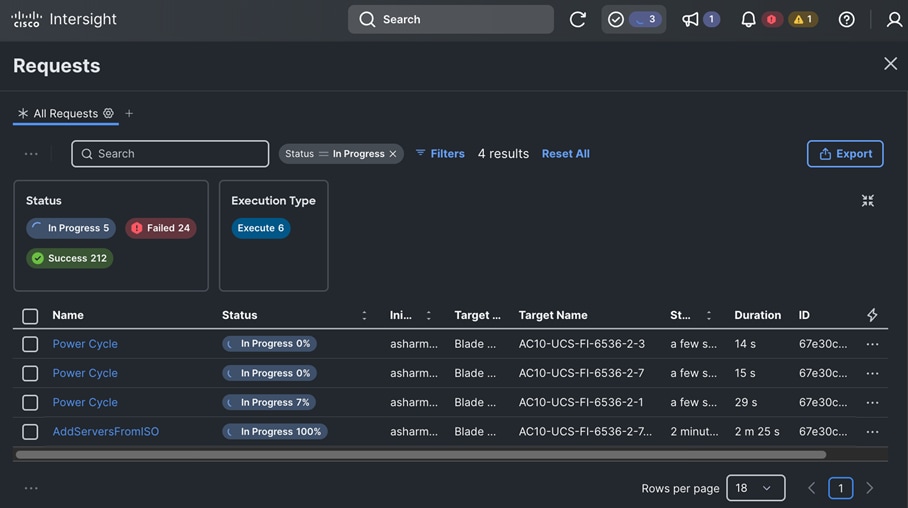

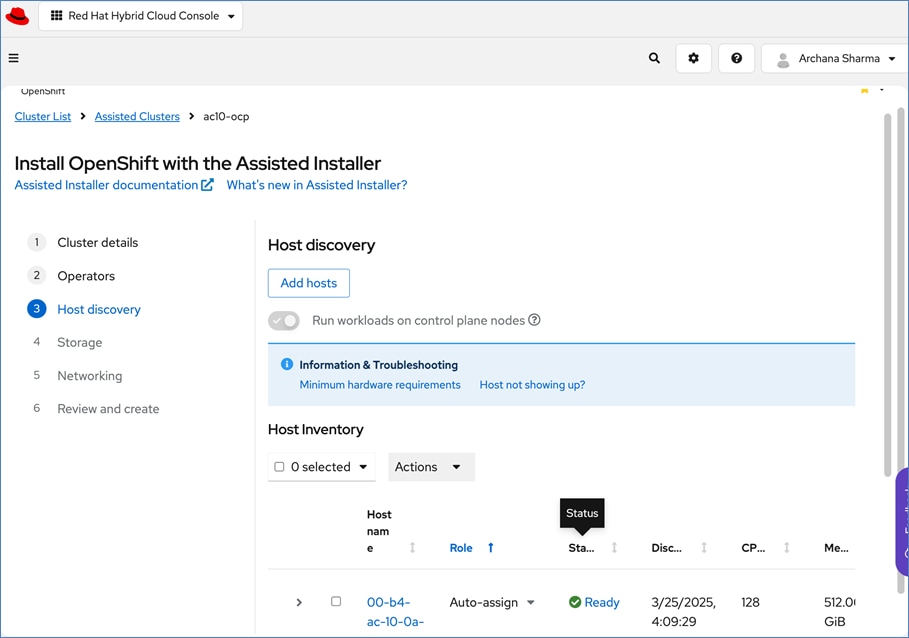

OpenShift is deployed on Cisco UCS-X baremetal servers, which are also managed from the cloud using Cisco Intersight. Red Hat Assisted Installer provides a discovery image (minimal image or full ISO) that must be downloaded and installed on the baremetal servers.

Cisco and Red Hat provides an integration that allows the discovery ISO to be deployed directly on Cisco UCS servers provisioned in Cisco Intersight by clicking on the direct link to Intersight as shown below:

Note: This integration makes bare-metal deployment of an OpenShift cluster on Cisco UCS servers significantly easier.

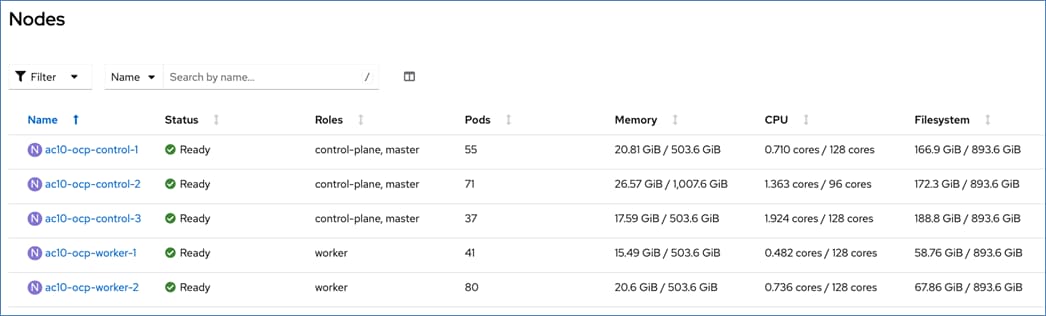

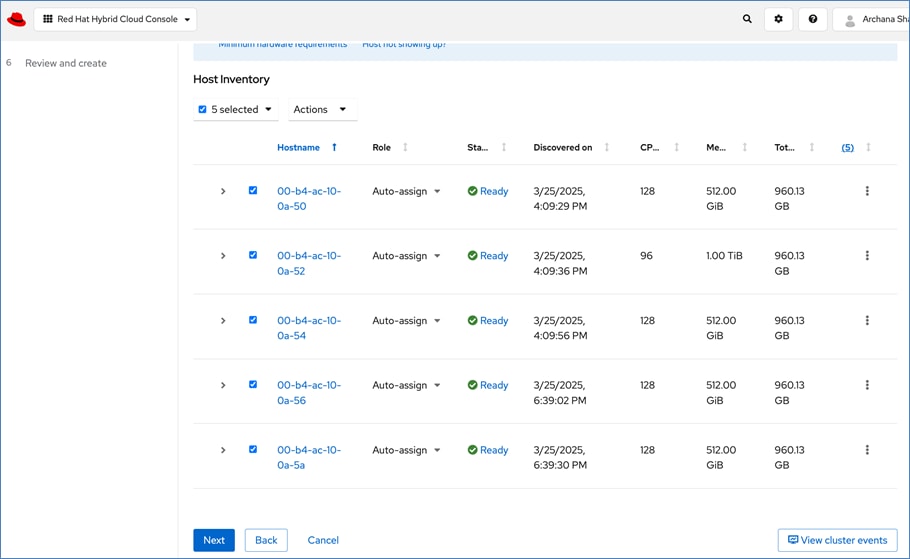

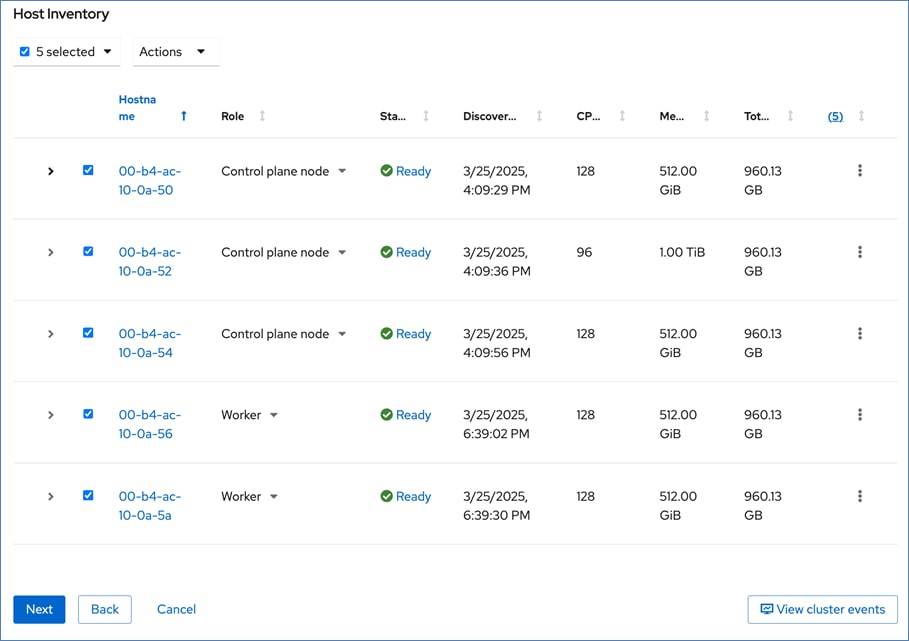

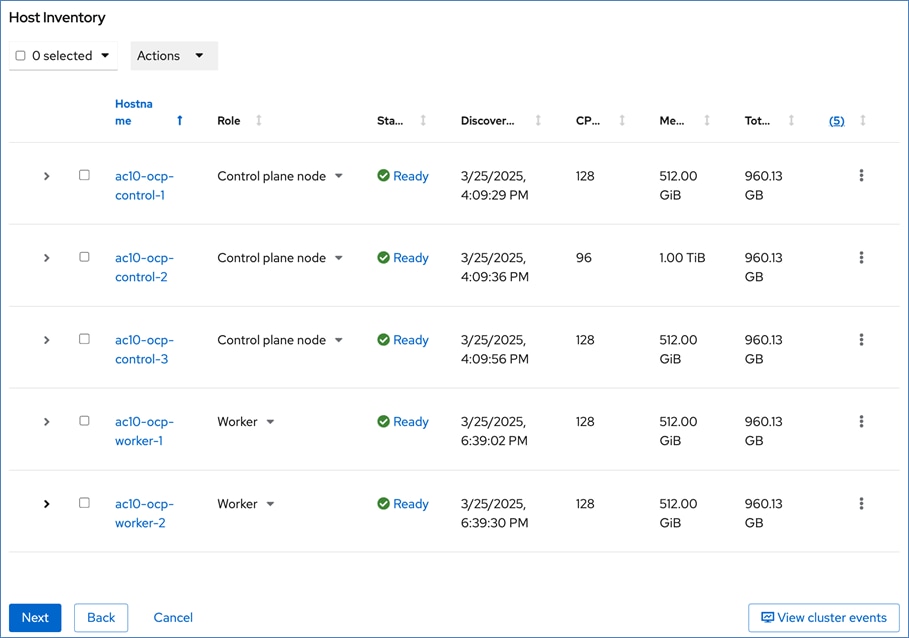

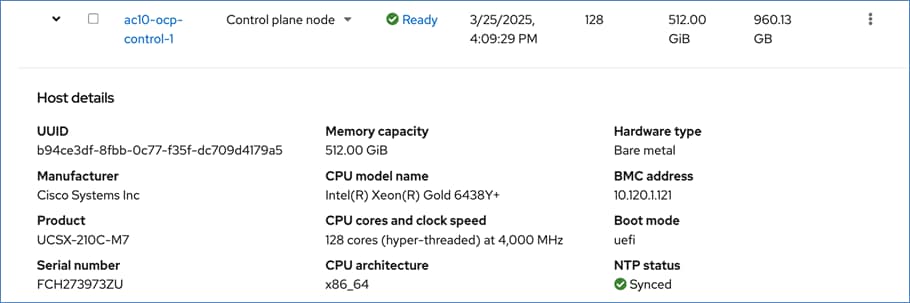

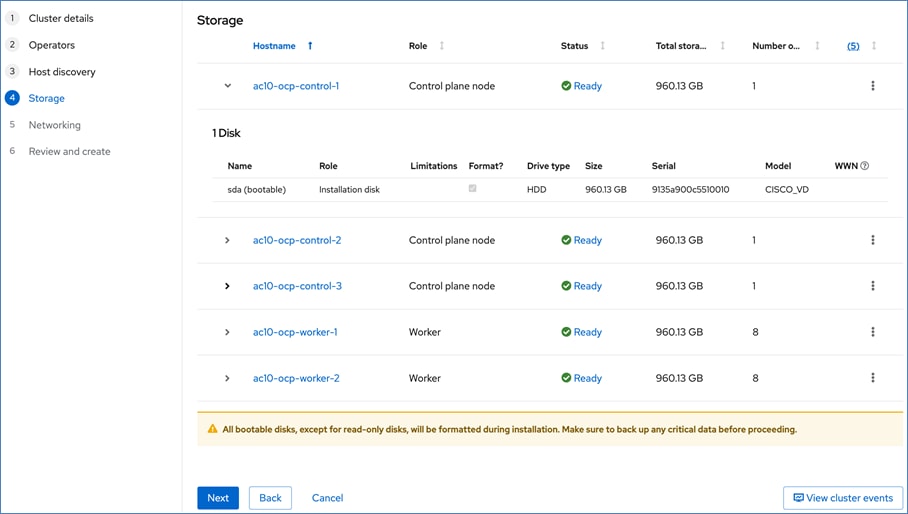

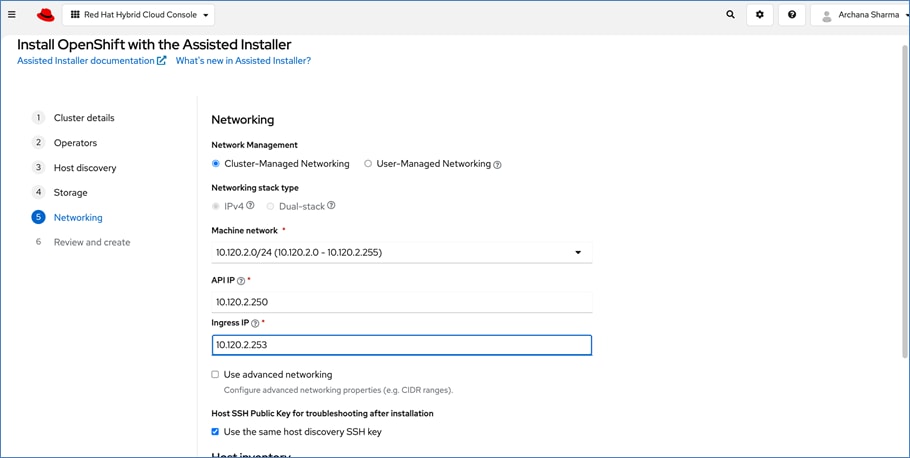

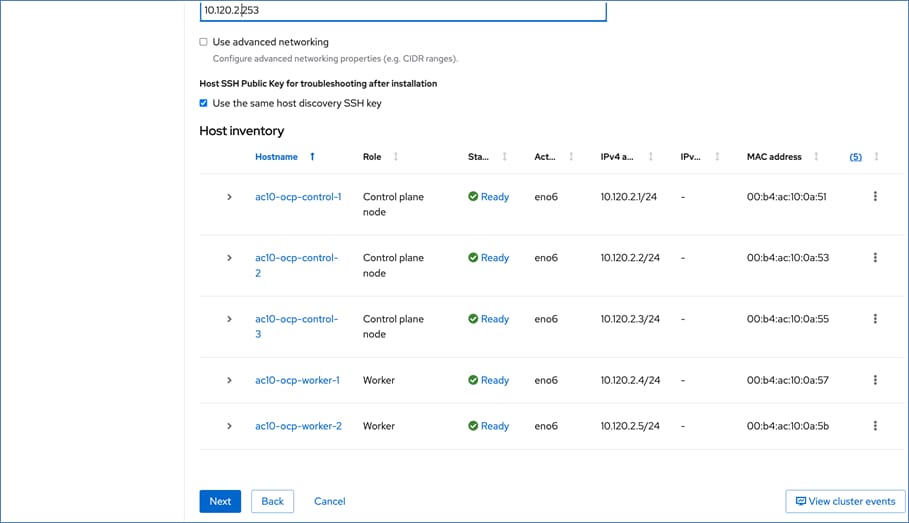

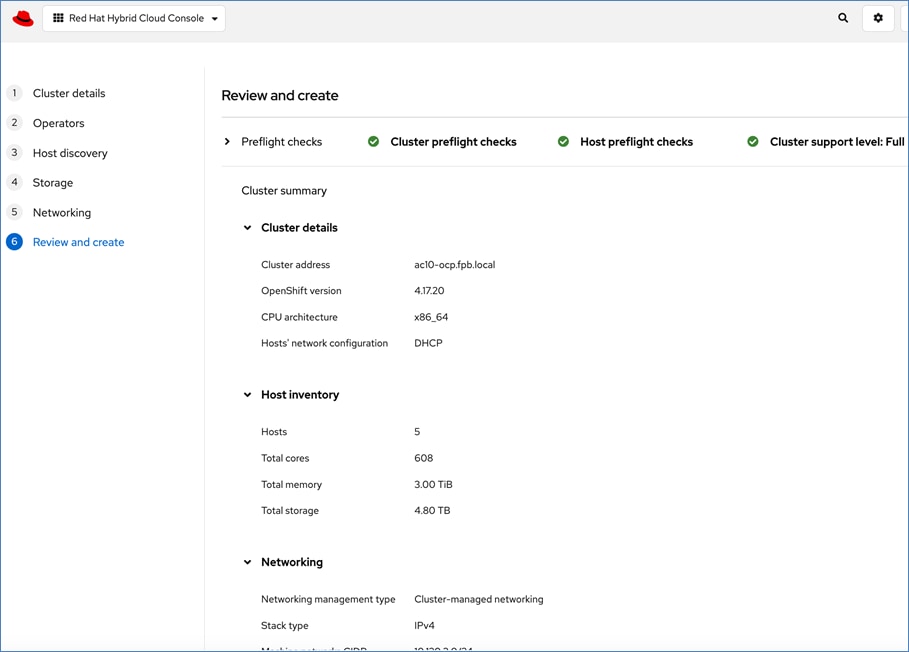

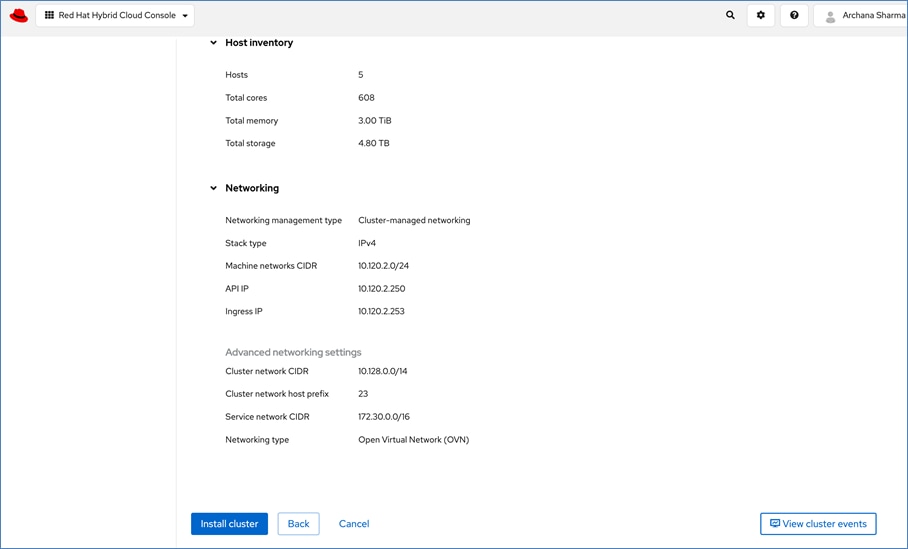

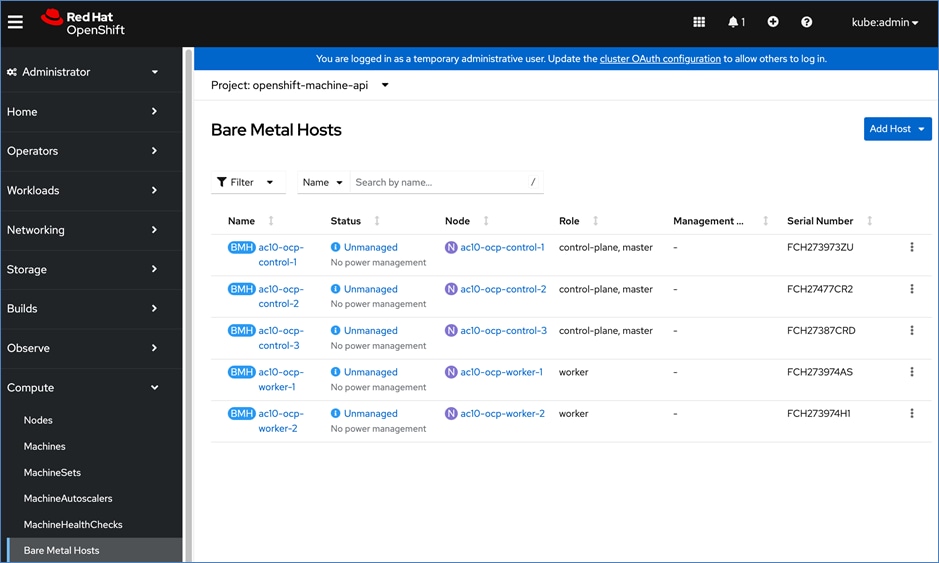

For a high-availability cluster, OpenShift typically requires 3 control nodes and two or more compute (or worker) nodes. The initial cluster used for validation is shown in Figure 17. Additional nodes can be added as needed to scale the cluster.

All network interfaces are provisioned using DHCP – DHCP requests are either routed through the gateway using DHCP relay or directly on the same segment (for storage vNICs) in this design. DNS, DHCP and NTP must all be setup prior to starting the installation.

Post-install, BMC access (for example, IPMI), NTP, etcd backup, storage and other post-install activities will need to be provisioned as needed.

To support AI/ML efforts, the two compute/worker nodes are paired with Cisco UCS X440p nodes in adjacent slots, each equipped with NVIDIA L40S GPUs. These GPUs can be used by workloads running on OpenShift AI or directly on the OpenShift cluster.

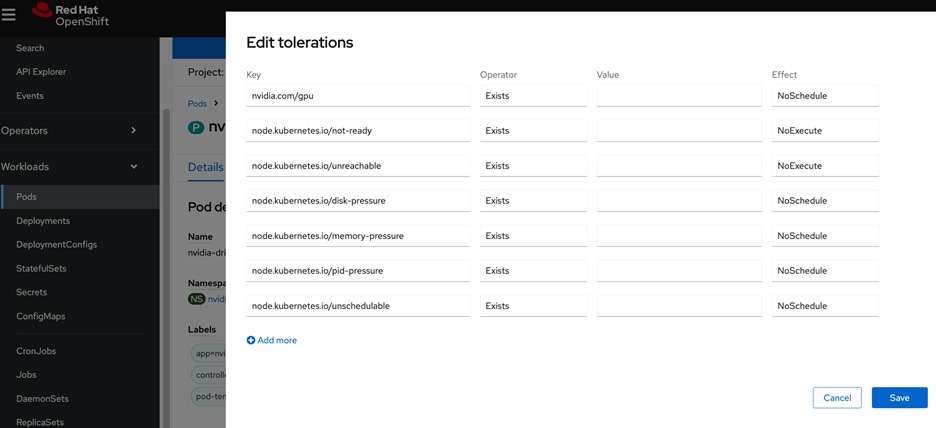

When using GPUs, if not all worker nodes in the cluster are GPU-enabled, it is important to provision taints and tolerations on the nodes and workloads. This ensures that only workloads requiring GPUs are scheduled on the GPU-equipped nodes.

Workloads:

Nodes:

OpenShift Networking Design

OpenShift networking enables communication between various components both internal and external to the cluster.

Control Plane nodes and worker nodes, connect to two networks; OVN-Kubernetes that OpenShift manages and then the physical datacenter network.

For communication outside the cluster, the virtual NICs provisioned on UCS server nodes provide connectivity to the physical data center network.

Table 4. Cisco UCS Server and OpenShift Network Mapping

| UCS vNIC |

Worker Node Interface |

VLAN |

Header D |

Failover |

| IB-MGMT_vNIC |

eno5 |

1201 |

For BMC access and other mgmt. functions |

Fabric Failover on UCS FI |

| CLUSTER-MGMT_vNIC |

eno6 |

1202 |

OpenShift cluster/machine network |

Fabric Failover on UCS FI |

| iSCSI-A_vNIC |

eno7 |

3015 |

For direct iSCSI storage access via Path-A |

Redundant NICs/paths |

| iSCSI-B_vNIC |

eno8 |

3025 |

For direct iSCSI storage access via Path-B |

Redundant NICs/paths |

| NVMe-TCP-A_vNIC |

eno7 |

3035 |

For direct NVMe-TCP storage access via Path-A |

Redundant NICs/paths |

| NVMe-TCP-B_vNIC |

eno8 |

3045 |

For direct NVMe-TCP storage access via Path-B |

Redundant NICs/paths |

| NFS_vNIC |

eno9 |

3055 |

For direct NFS Storage access |

Fabric Failover on UCS FI |

| OBJ_NIC |

eno10 |

1209 |

For S3-compatible object store access |

Fabric Failover on UCS FI |

Note: Cisco UCS FIs do not support LACP based NIC teaming/port-channeling, so it is not an option in this design.

For connectivity within the cluster, OpenShift uses Software-Defined Networking (SDN) to create overlay networks to interconnect pods and services across the cluster. The default networking in OpenShift is Open Virtual Networking – Kubernetes (or OVN-Kubernetes). OVN-Kubernetes is an open-source project that provides networking for Kubernetes clusters with OVN (Open Virtual Networking) and Open vSwitch (Open Virtual Switch) in its core architecture. It is plug-in, specifically designed for Kubernetes, and conforms to Kubernetes Container Network Interface (CNI) specifications.

Figure 18 illustrates the OpenShift networking on control and compute nodes in this solution.

CLUSTER-MGMT network is the cluster or machine network that all control and worker nodes are connected to. Overlay networks are created on this network for pods and services connectivity.

IB-MGMT network is included to provide independent management connectivity to the nodes. This network is used in this solution for management function, for example IPMI access and for loading Red Hat CoreOS to the servers during the install process.

Additional storage network interfaces and VLANs provide connectivity to NetApp storage – either directly or through NetApp Trident (file, block) and S3 compatible object store, also on NetApp storage.

For cluster networking (CLUSTER-MGMT), OVN architecture provides two key components, OVN controller and OVS virtual switch, which are deployed on each node to manage the networking, packet forwarding and policies. OVN configures the OVS on each node to implement the declared network configuration. OVN uses the Geneve (Generic Network Virtualization Encapsulation) protocol to create overlay network between nodes.

The post-install and once DHCP has successfully provisioned the interfaces, each worker node should have a configuration as shown in Figure 19.

By default, Kubernetes (and OpenShift) allocates each pod an internal cluster-wide IP address that it can use for Pod-to-Pod communication using the Pod network. Within a Pod, all containers behave as if they’re on the same logical host and communicate with each other using localhost, using the ports assigned to the containers. For services, OpenShift, as in Kubernetes, exposes services using an internal stable IP address from within the cluster. This internal IP address, known as the ClusterIP, is type of service that allows other pods within the same cluster to communicate with the service without exposing it to the external network.

For communication outside the cluster, OpenShift provides services (node ports, load balancers) and API resources (Ingress, Route) to expose an application or a service outside cluster so that users can securely access the application or service running on the OCP cluster.

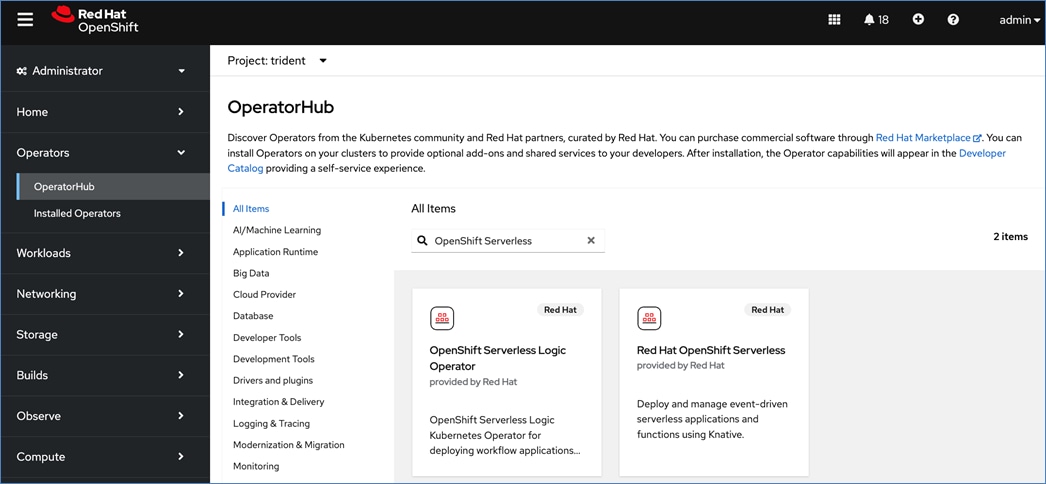

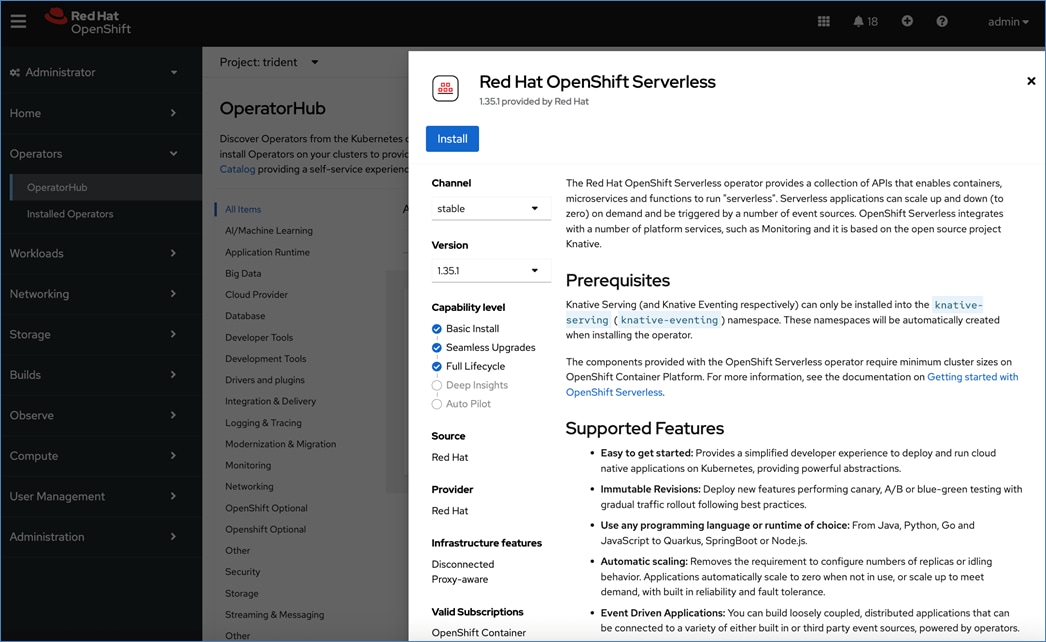

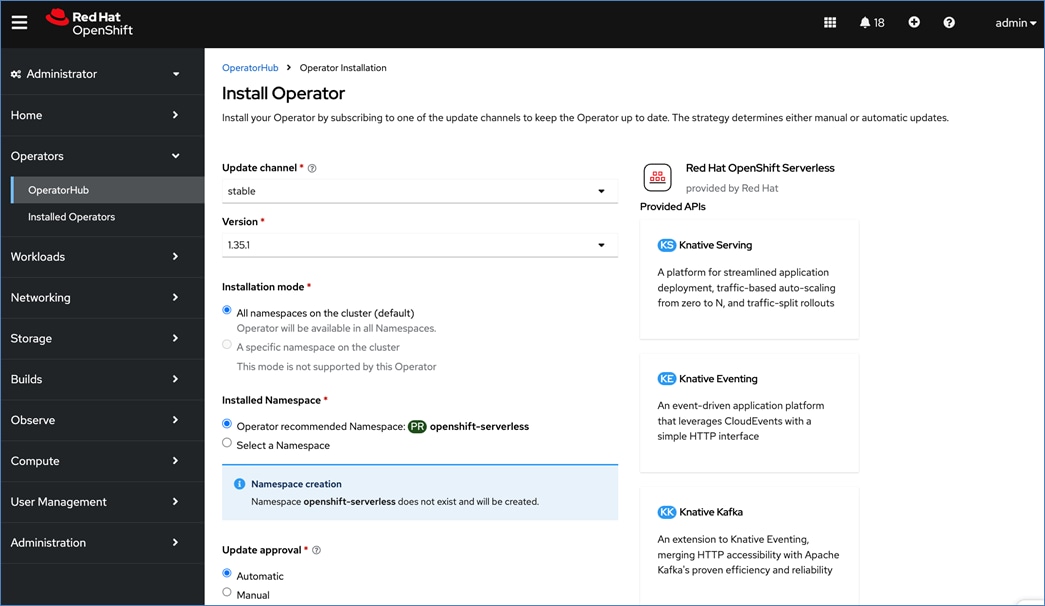

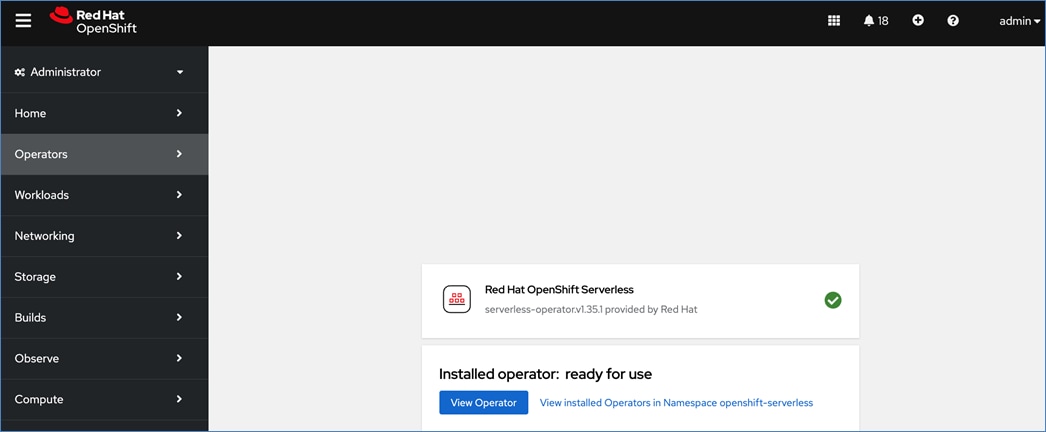

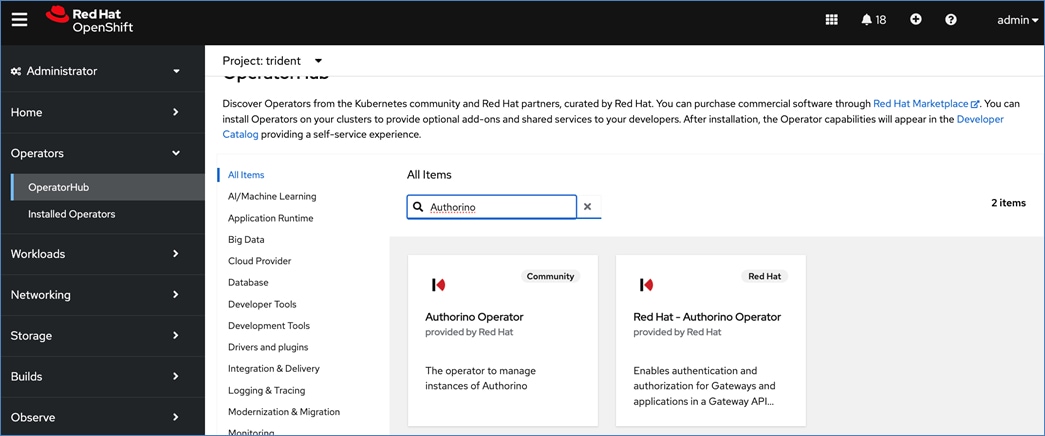

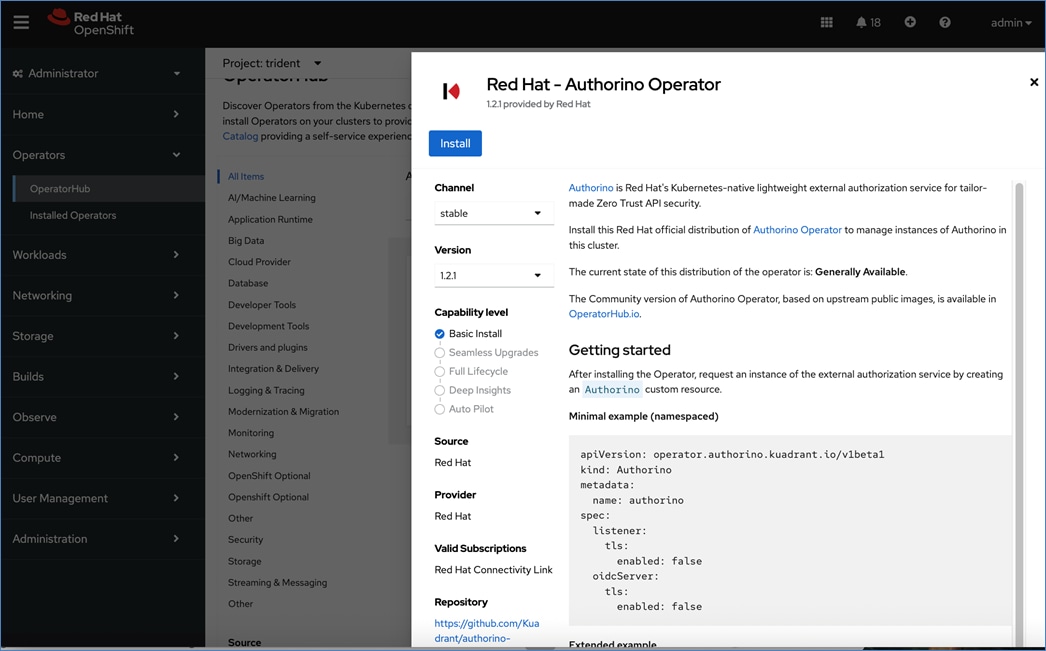

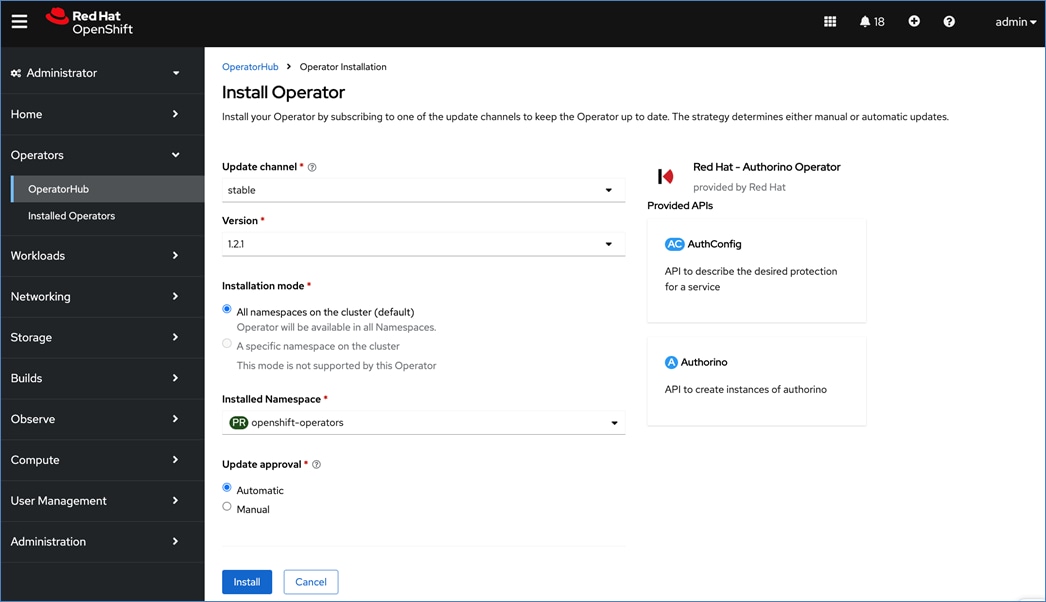

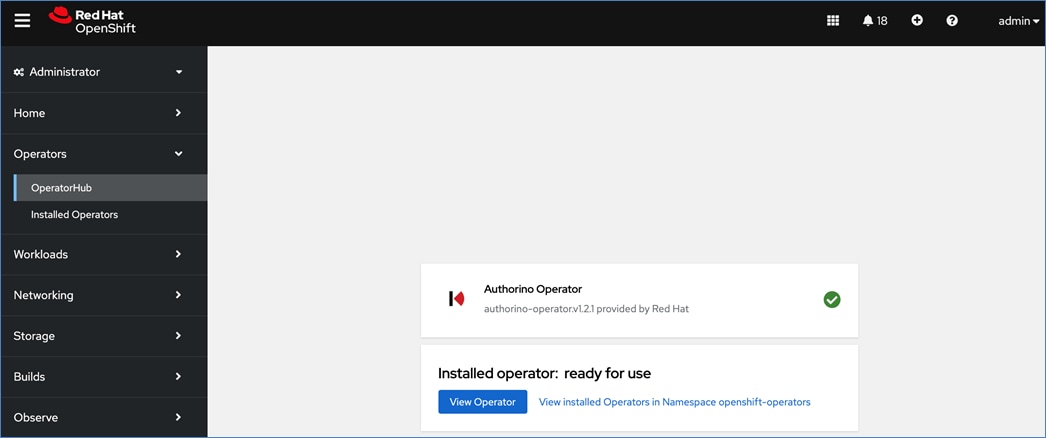

OpenShift Operators

Operators are a powerful tool in Kubernetes. It was designed to extend the capabilities of a Kubernetes cluster without changing the core Kubernetes code. Once a cluster is deployed, Red Hat OpenShift operators can be deployed to enable persistent storage, GPU acceleration and other services. A library of certified and community operators are available on Red Hat’s OperatorHub that is directly accessible from the cluster console. The operators deployed for this solution are shown in Figure 20.

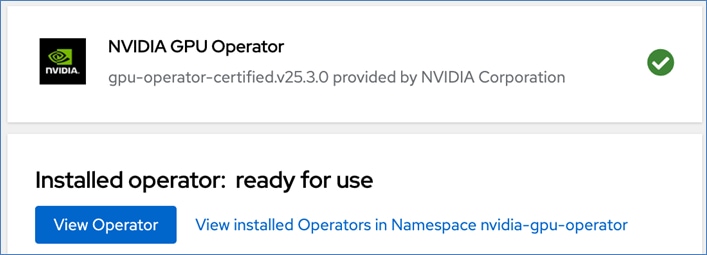

NVIDIA GPU Operator

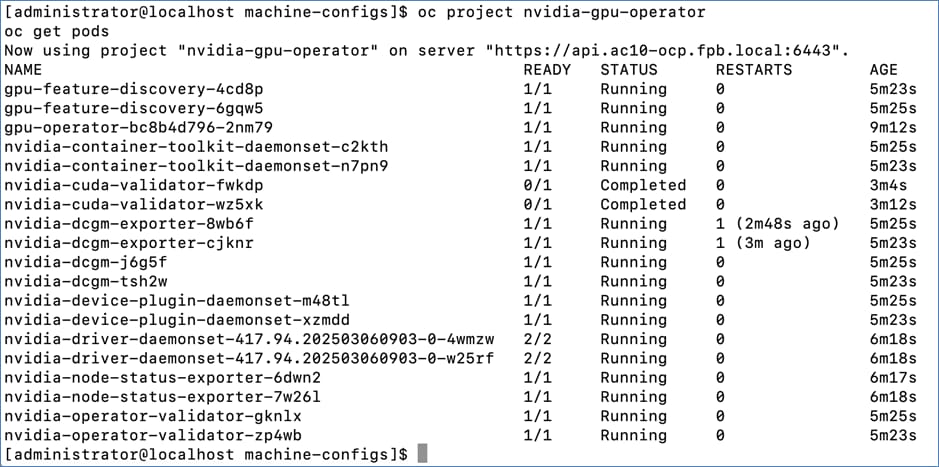

The NVIDIA GPU Operator uses the operator framework within Kubernetes to automate the management of all NVIDIA software components needed to provision and monitor GPUs. These components include:

● NVIDIA drivers (to enable CUDA)

● Kubernetes device plugin for GPUs

● NVIDIA Container Runtime

● Automatic node labeling

● NVIDIA DCGM exporter

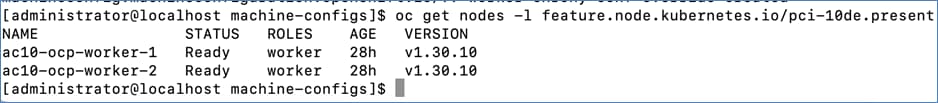

The GPU operator is responsible for enabling GPU acceleration on UCS-X worker nodes with NVIDIA GPUs on X440p GPU nodes. The NVIDIA GPU operator also requires Red Hat’s Node Feature Discovery Operator to detect the GPUs assigned to the worker node.

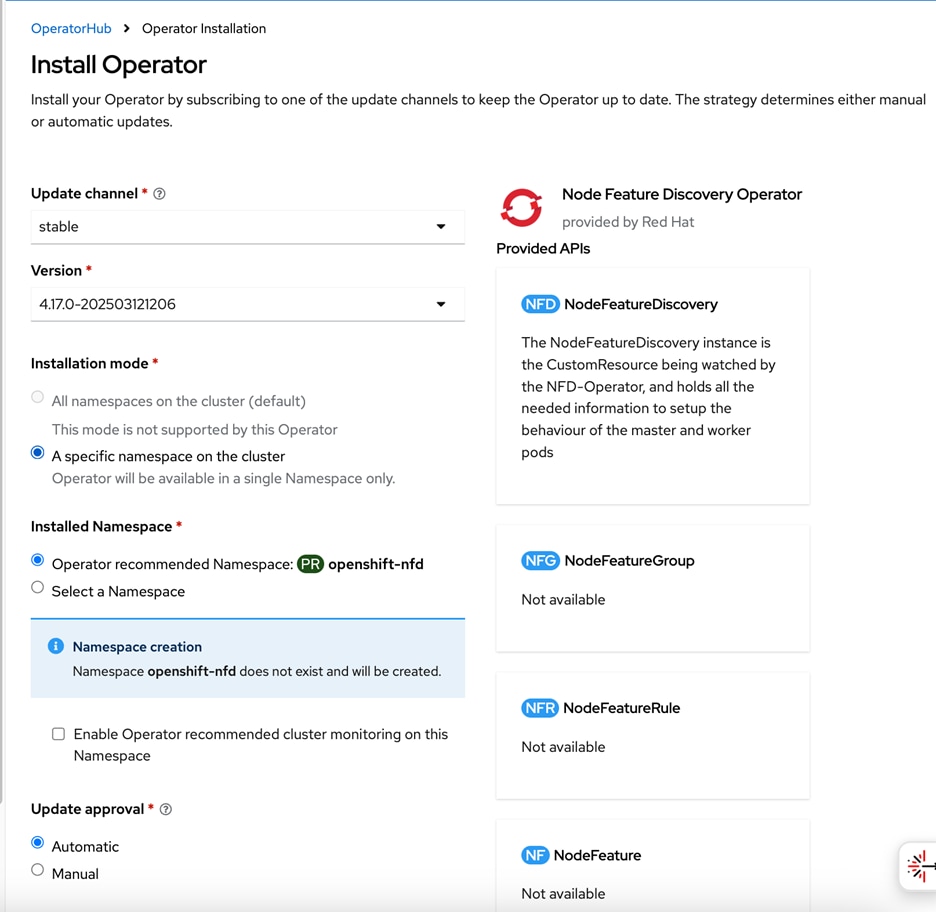

Red Hat Node Feature Discovery Operator

The Node Feature Discovery Operator (NFD) is responsible for detecting hardware capabilities and labeling the nodes with the hardware-specific information so that OpenShift cluster can use them. For NVIDIA GPUs on Cisco UCS worker nodes, the NFD Operator detects and labels it using the following label:

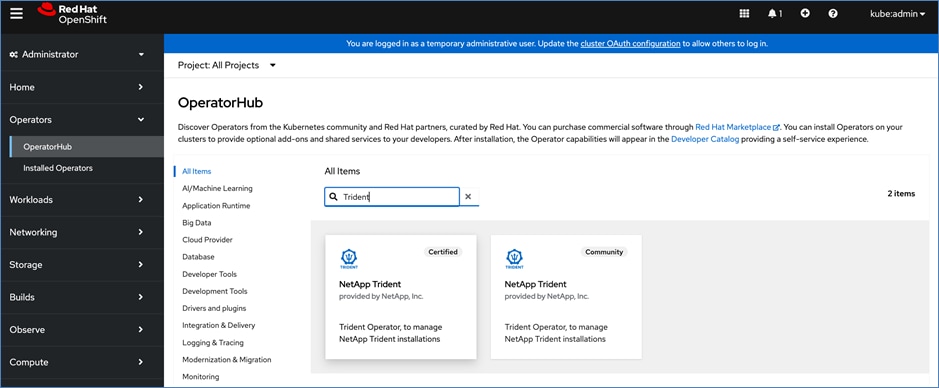

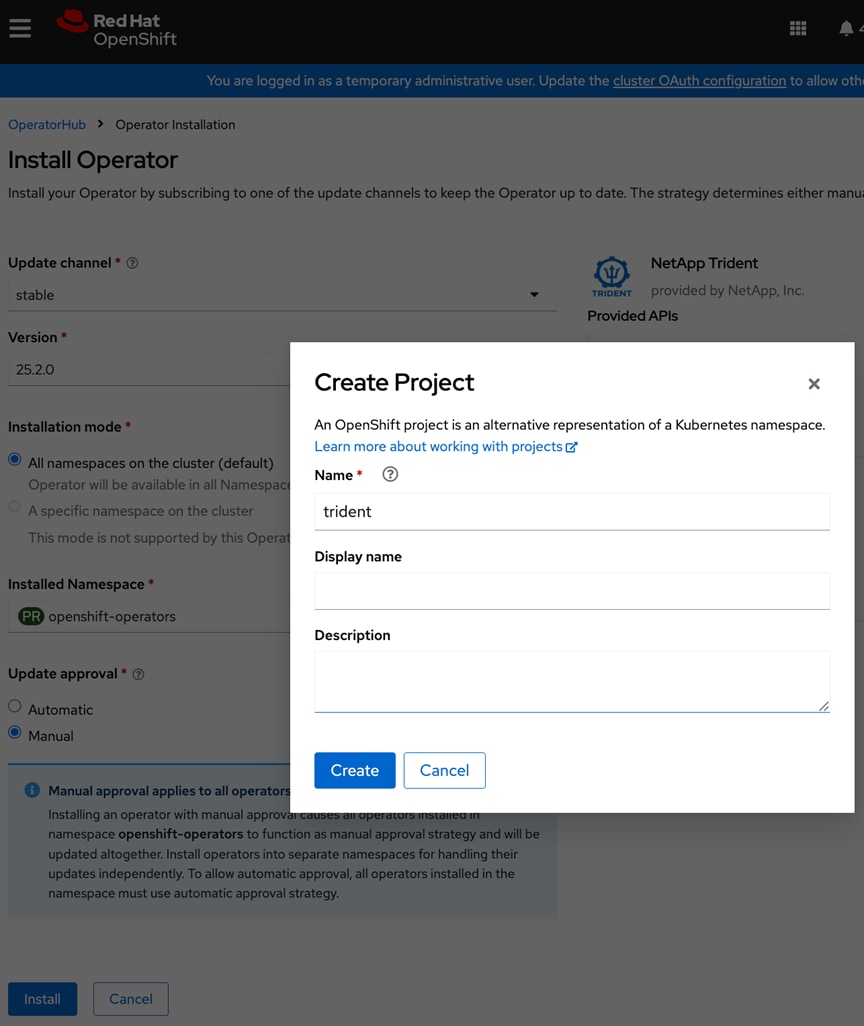

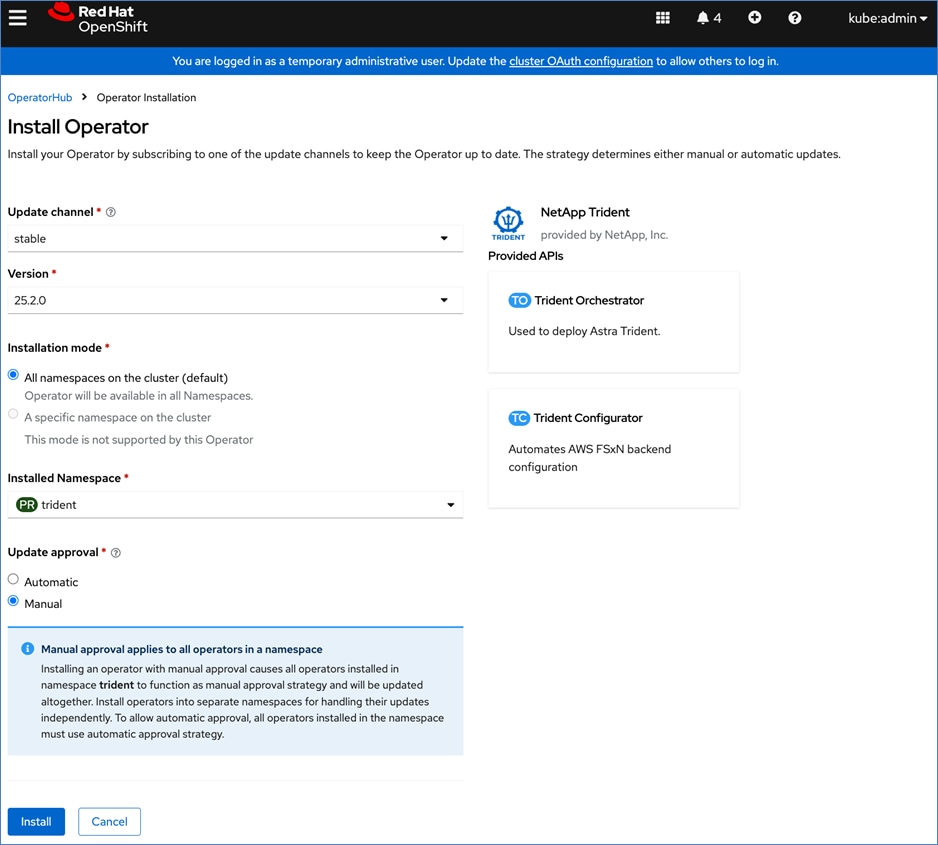

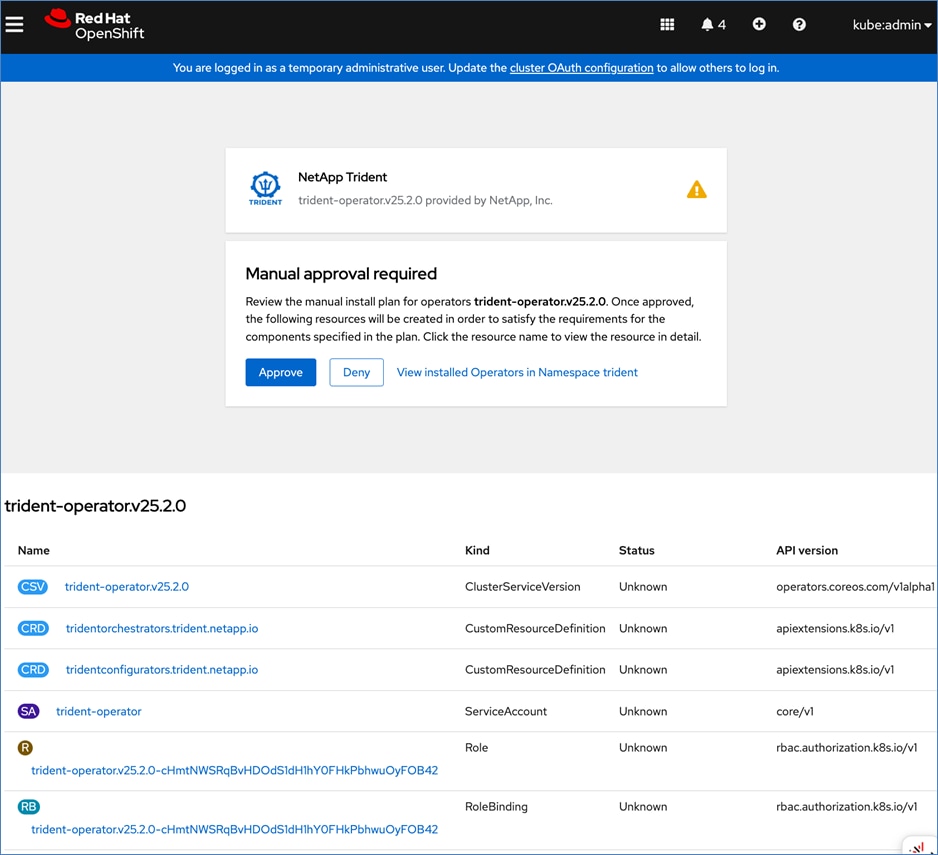

NetApp Trident Operator

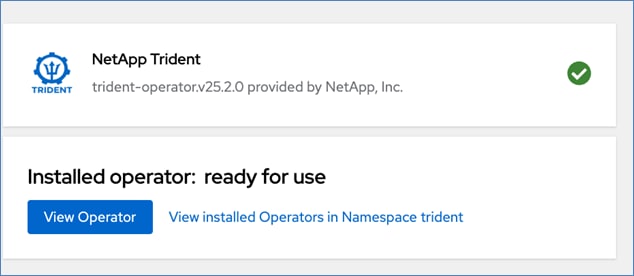

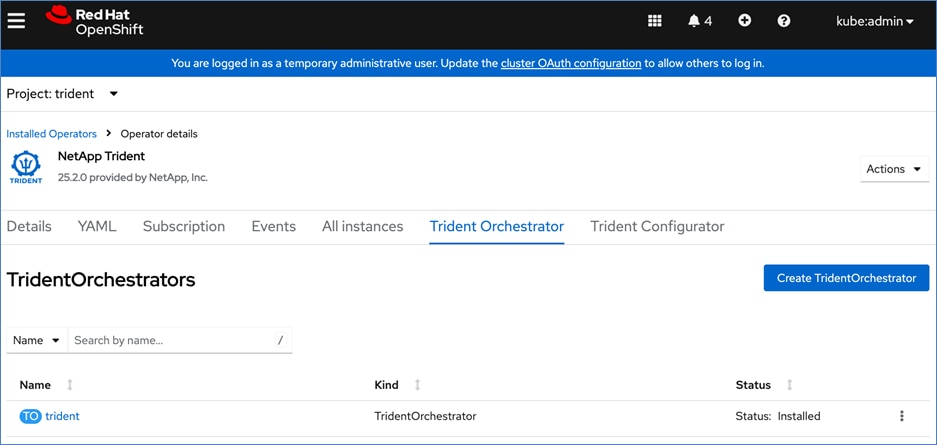

NetApp Trident is an open-source storage provisioner and orchestrator maintained by NetApp. It enables you to create storage volumes for containerized applications managed by Docker and Kubernetes. It has been designed to help you meet your containerized application's persistence demands using industry-standard interfaces, such as the Container Storage Interface (CSI). For the release information, including patch release changes, see https://docs.netapp.com/us-en/trident/trident-rn.html.

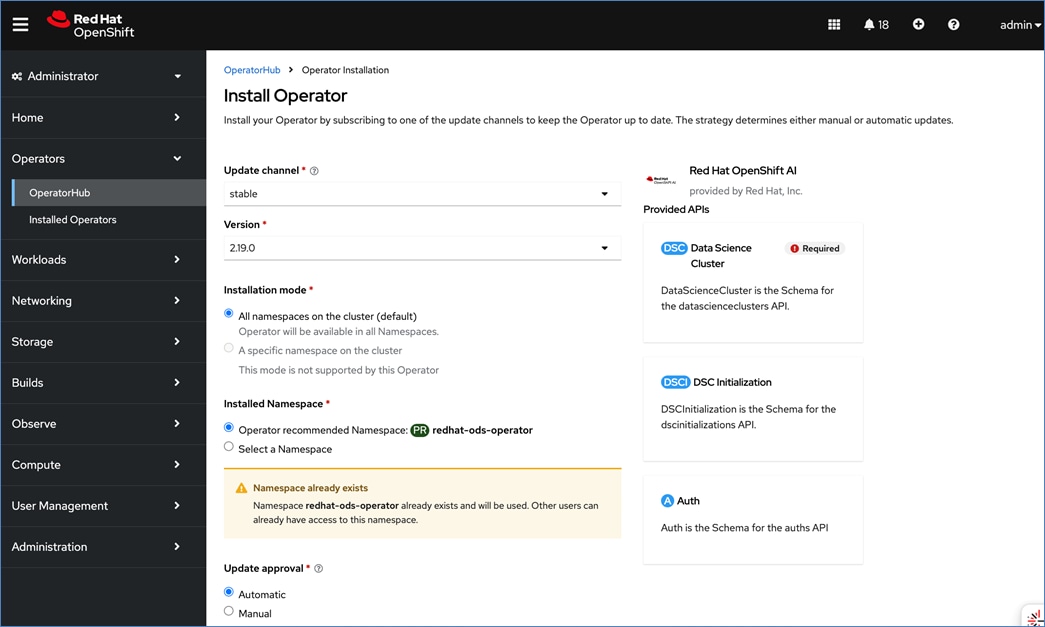

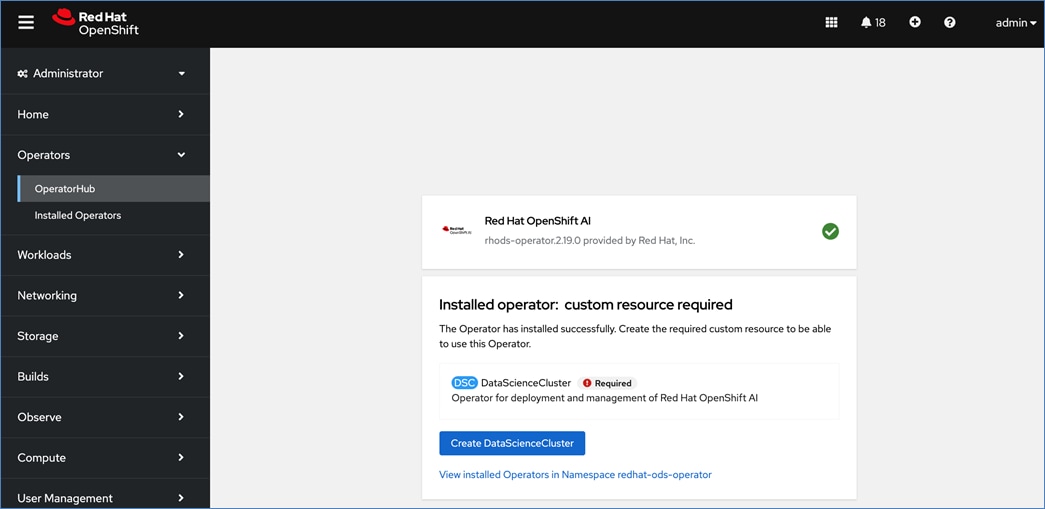

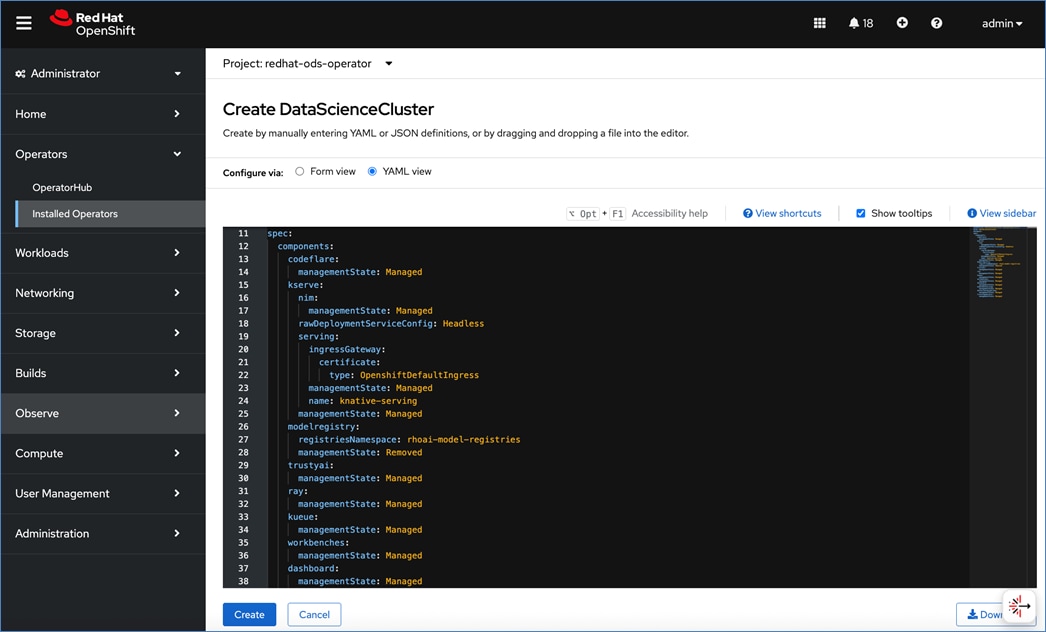

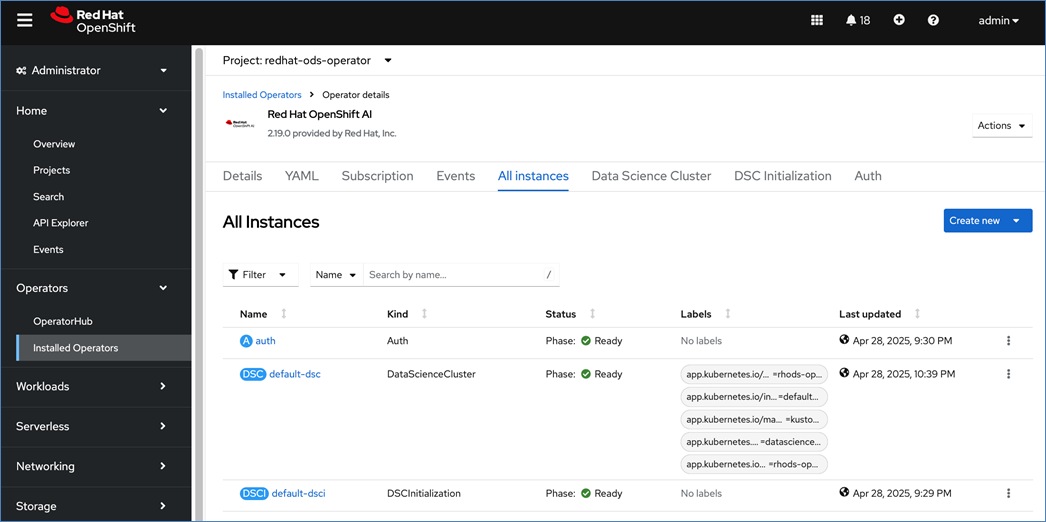

Red Hat OpenShift AI Operator

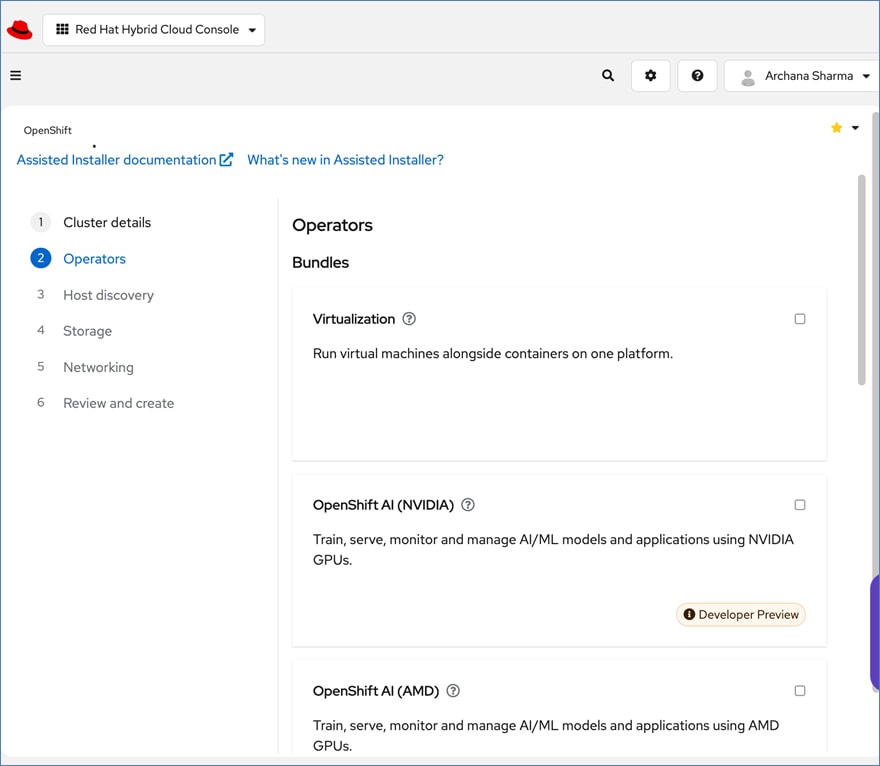

Red Hat OpenShift AI operator deploys OpenShift AI on the OpenShift cluster that enables a fully supported environment for MLOps. The OpenShift AI environment deployed by the operator provides a core environment with built-in tools, libraries, and frameworks that ML engineers and data scientists need to train and deploy models. The GPU resources deployed on the OpenShift cluster are automatically available from the OpenShift AI UI and can be used as needed during various stages of model delivery ( for example, GPUs can be assigned to a Jupyter notebook for use in model experimentation). OpenShift AI includes project workspaces to enable multiple AI/ML efforts in parallel, Jupyter Notebooks with different built-in images to pick from (for example, PyTorch, TensorFlow, CUDA), Data Science Pipelines using OpenShift pipelines, model serving using ModelMesh (and Kserve) with Intel OpenVINO inferencing server. Customers can extend this environment by adding custom images, and other partner and open-source technologies. By using the operator framework, it is also simple to use the lifecycle OpenShift AI.

MLOps using Red Hat OpenShift AI

Red Hat OpenShift includes key capabilities to streamline and scale machine learning operations (MLOps) in a consistent way. By applying DevOps and GitOps principles, organizations automate and simplify the iterative process of integrating ML models into software development processes, production rollout, monitoring, retraining, and redeployment to ensure continued prediction accuracy.

Red Hat OpenShift AI leverages OpenShift’s capabilities in application development and container infrastructure management to enable a robust, scalable, and secure environment for model delivery and MLOps. OpenShift Administrators manage all aspects of the underlying infrastructure, from GPU resources to storage to user access. This eases the operational burden on ML engineers and data scientists, enabling them to focus on model delivery and less time on managing the infrastructure. OpenShift also provides integration with DevOps capabilities (for example, OpenShift Pipelines, OpenShift GitOps, and Red Hat Quay). Also, projects and workbenches deployed in OpenShift AI are projects (or namespaces) in OpenShift, enabling Openshift administrator to monitor and manage the resources in their environment. These operational benefits make it significantly easier for Enterprise teams, enabling them to accelerate their ML efforts .

At a high level, a ML lifecycle can be summarized as follow. Red Hat OpenShift AI provides a unified platform for each of the above stages, along with the required AI/ML tools and applications.

● Gather and prepare (or curate) data to make sure the input data is complete, and of high quality

● Develop model, including training, testing, and selection of the model with the highest prediction accuracy

● Integrate models in application development process, and inferencing

● Model monitoring and management, to measure business performance and address potential production data drift

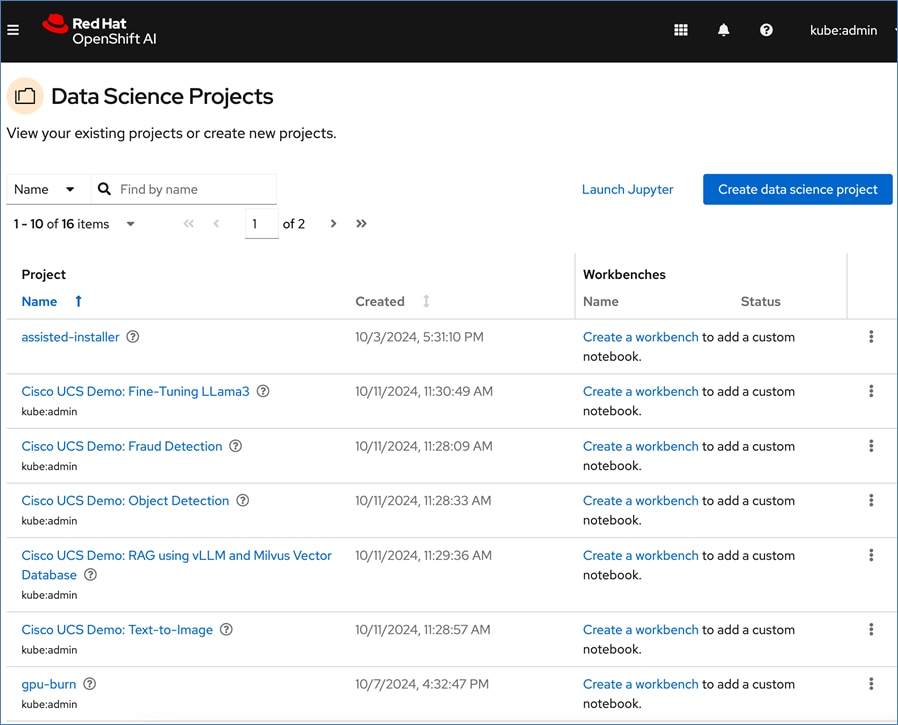

Multiple efforts can run in parallel within OpenShift AI, from incubation projects to production serving of multiple models at scale. Red Hat OpenShift AI platform provides key capabilities to support this and accelerate model delivery as outlined below.

● Seamlessly leverage resources and capabilities from the underlying OpenShift cluster (for example, use OpenShift Identity provider to manage users).

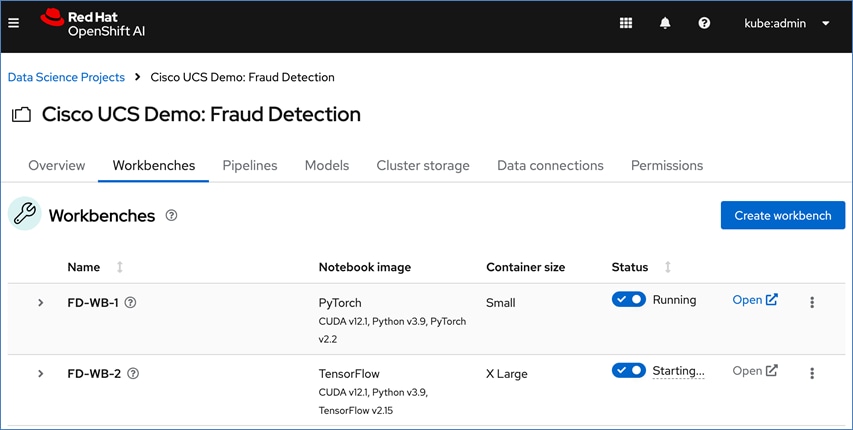

● Support for multiple Data Science Projects to enable parallel AI/ML efforts, including multiple workflows (known as workbenches) within a project.

● Support for multiple work efforts or Workbenches within a given data science project to support parallel work efforts within the same projects. A workbench is an isolated area where you can work with models in your preferred IDE, such as a Jupyter notebook. You can add accelerators and data connections, create pipelines, and add cluster storage in your workbench. The workbenches can be launched with pre-built or custom images with necessary libraries and frameworks.

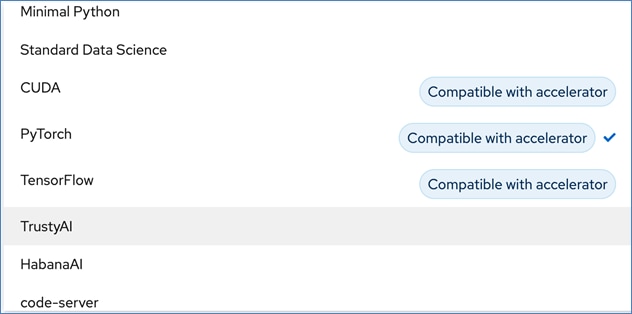

● The pre-built image options available in the release of OpenShift AI used in this design include commonly used images such as: Minimal Python, Standard Data Science, CUDA, PyTorch, TensorFlow, TrustyAI, Habana AI, and Code-server.

● Other notebook options you can select from include:

◦ Container size (Small, Medium, Large, and X Large) based on memory and CPU requirements for project

◦ Number of GPU accelerators (optional)

◦ Persistent Storage – new or existing (provided by NetApp Trident in this solution)

◦ Data Connection to access S3-compatible storage on NetApp ONTAP storage on-prem

● If GPU acceleration is selected, OpenShift AI will detect and make the GPU available for use. The pre-built images that support GPU acceleration will also be updated to indicate that it is available s shown below. Otherwise, CPU resources will be used. Within a given data science project, the parallel efforts on different workbenches can individually select whether to use GPU or CPU resources.

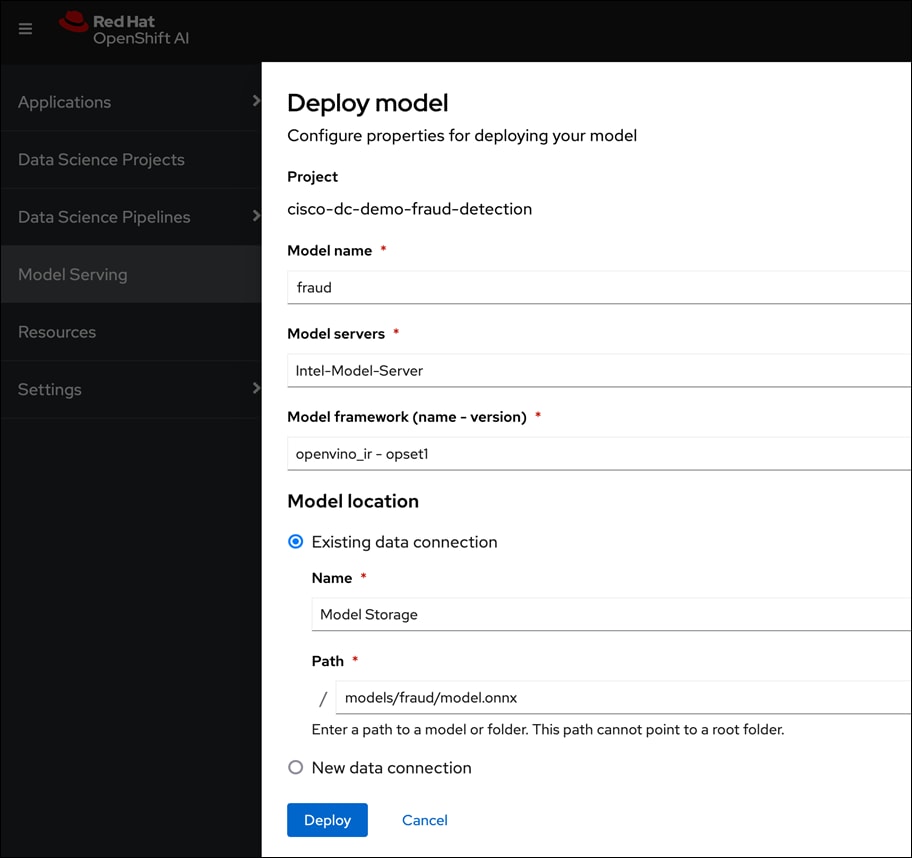

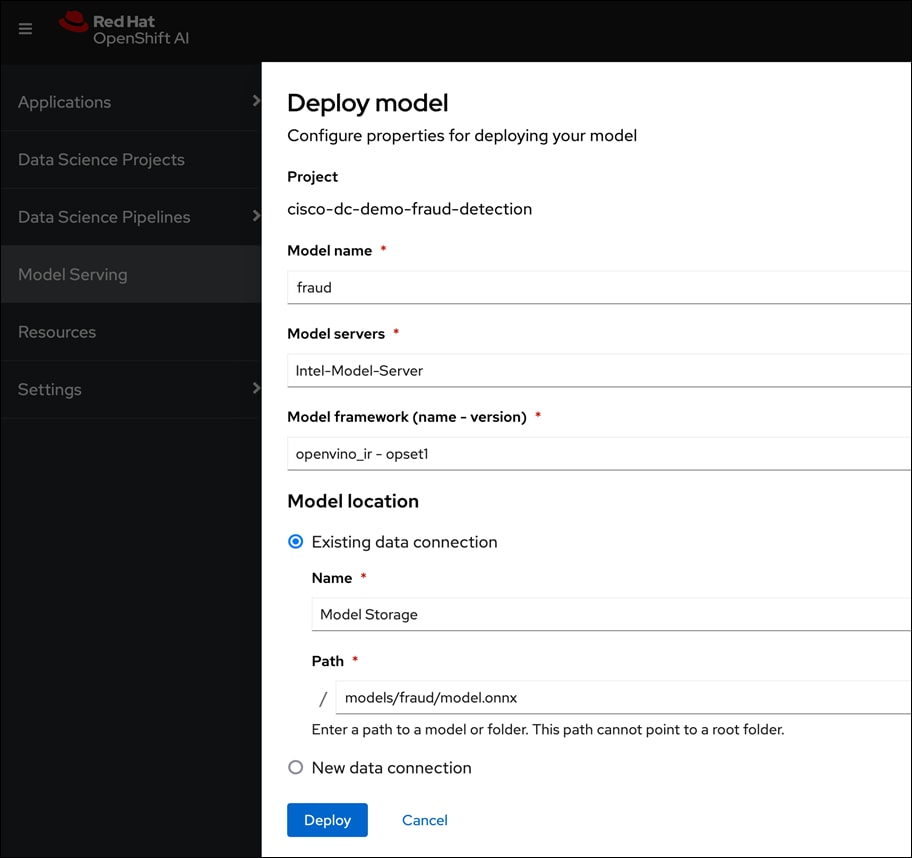

● For model serving using pre-integrated Intel OpenVINO inferencing server or use a custom server such as NVIDIA Triton. For model serving, you can specify the model repository where the model is stored, the format or framework the published model uses (for example, onnx, tensorflow, openvino_ir) as well as the number of GPU accelerators to use.

● Simple drag and drop GUI based Pipeline Automation with options to schedule execution runs.

Red Hat OpenShift AI provides flexibility and scalable platform for an Enterprise AI/ML initiatives by providing pre-integrated both pre-integrate and customizable environments without compromising on flexibility, in an easy-to-use interface with automation, Jupyter notebooks, GitHub access, and multiple storage and database options The scalability of the solution will primarily depend on underlying OpenShift infrastructure and environment.

End-to-End Design

The FlexPod AI solution with MLOps is now capable of supporting a wide range of ML model delivery efforts, including both Predictive AI and Generative AI, single-modal and multi-modal, as well as CPU and GPU-based use cases with flexible inferencing engines and runtimes. The solution offers a foundational infrastructure platform to simplify, streamline and expedite an enterprise's AI/ML initiatives. The comprehensive design for the solution is shown in Figure 21.

Once the models are ready, they can be served and monitored through OpenShift AI. Enterprise applications can leverage this environment for everything from experimentation to production, seamlessly integrating their applications with the served models to support a variety of use cases as illustrated in Figure 22.

Solution Deployment

This chapter contains the following:

● Deploy Networking – Cisco Nexus

● Deploy Storage - NetApp ONTAP

● Deploy Kubernetes – OpenShift on Baremetal UCS Servers

● Deploy NetApp Trident Operator

● Deploy GPU Operator - NVIDIA

● Deploy Red Hat OpenShift AI for MLOps

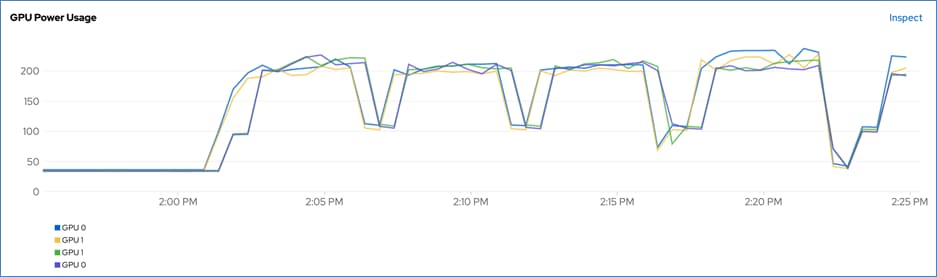

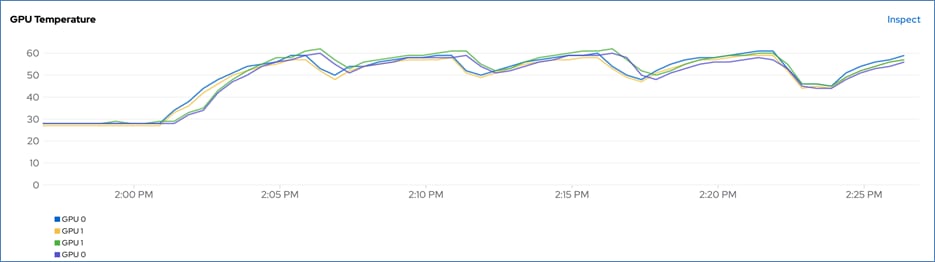

● Visibility and Monitoring – GPU

This chapter provides an high-level overview of the implementation steps for deploying the solution, followed by step-by-step guidance for implementing each step in the overall solution.

The AI/ML infrastructure leverages the latest FlexPod Datacenter CVD for Cisco UCS M7 baremetal servers running Red Hat OpenShift as the foundational design for containerized AI/ML workloads running on Red Hat OpenShift and MLOps for model deployment and maintenance using Red Hat OpenShift AI.

Check Cisco UCS Hardware Compatibility List for NVIDIA GPU support on Cisco UCS running Red Hat OpenShift and upgrade UCS server firmware as needed.

With the FlexPod Datacenter infrastructure in place, an overview of the remaining deployment steps to bring up the AI/ML infrastructure for model serving in production with MLOps are summarized in Table 5.

The detailed procedures for the steps listed in this table will be available on GitHub in the future: https://github.com/ucs-compute-solutions/FlexPod-OpenShift-AI

| Steps |

Deployment Action |

| 01_CVD |

Cisco Nexus Switch Configuration: Configure both Nexus switches for network connectivity. |

| 02_CVD |

NetApp AFF Storage Configuration: Configure NetApp storage for use in OCP Bare Metal and OpenShift AI.

● Setup NFS, iSCSI, NVMe-TCP and S3 related configuration

● Setup ONTAP S3 object store to be used by OpenShift AI for storing pipeline artifacts and model repo

|

| 03_CVD |

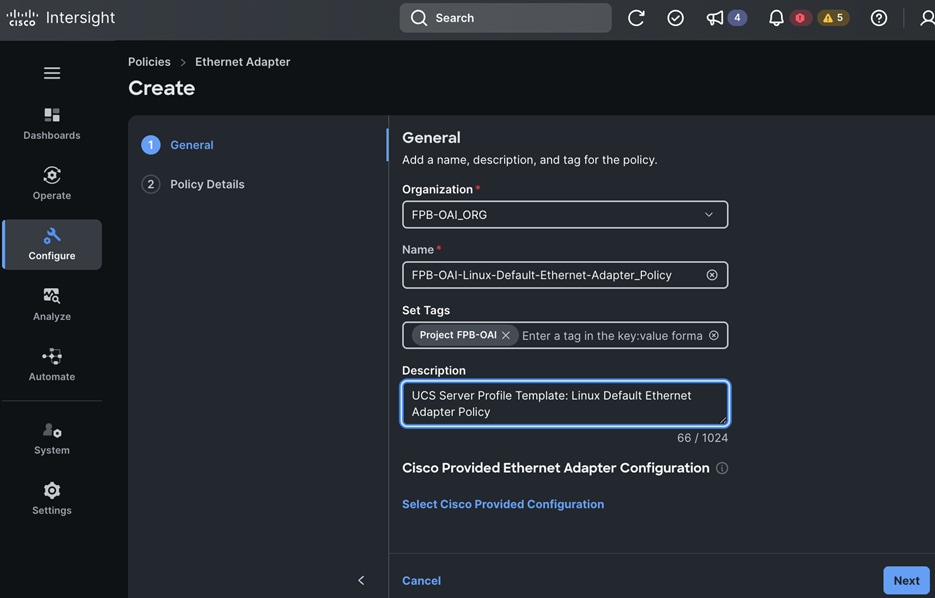

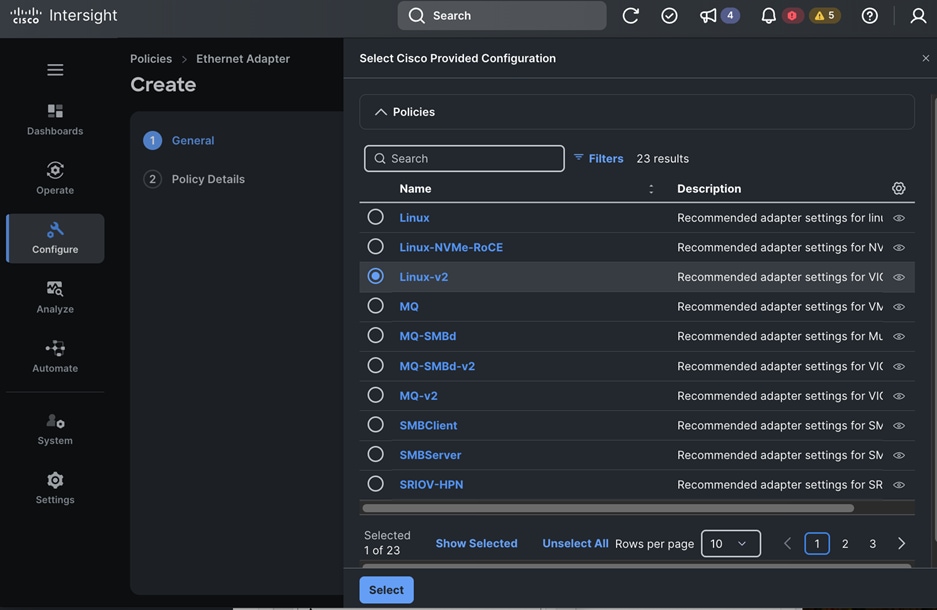

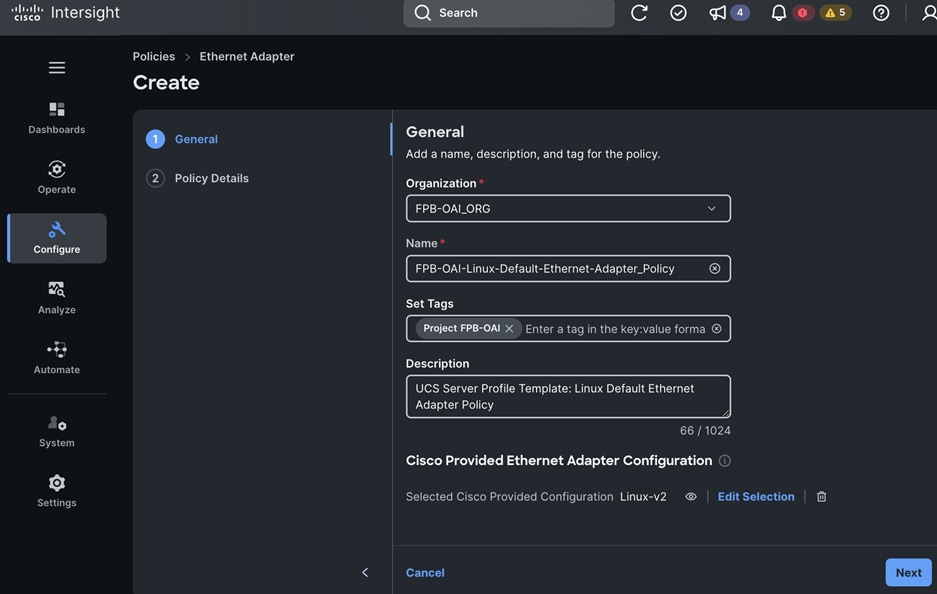

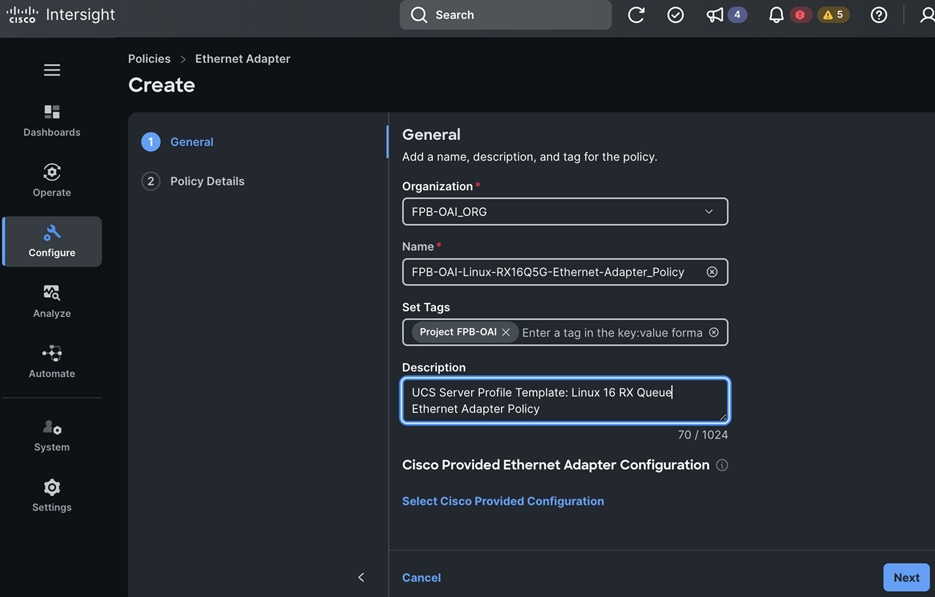

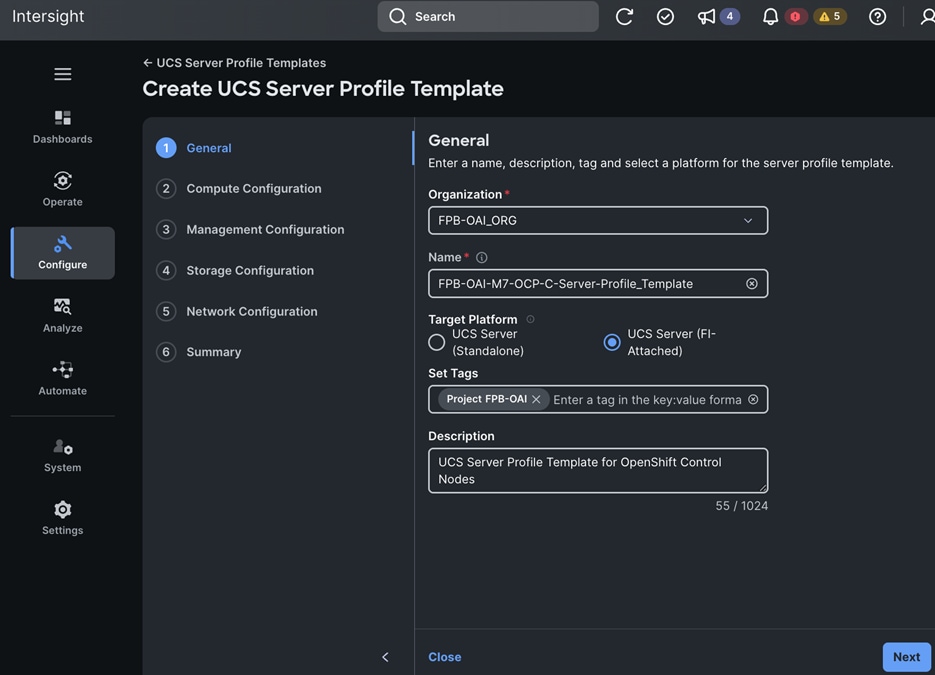

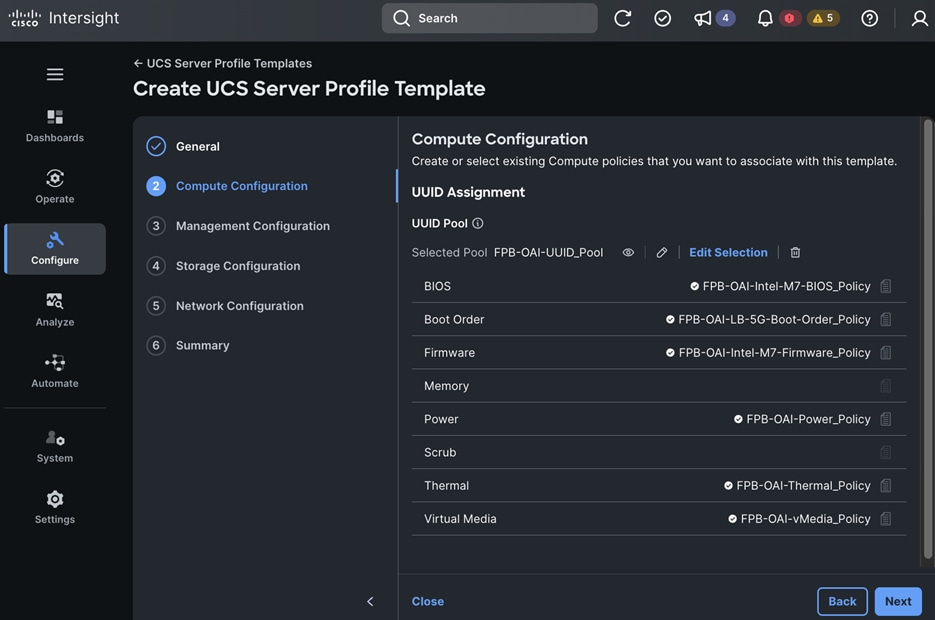

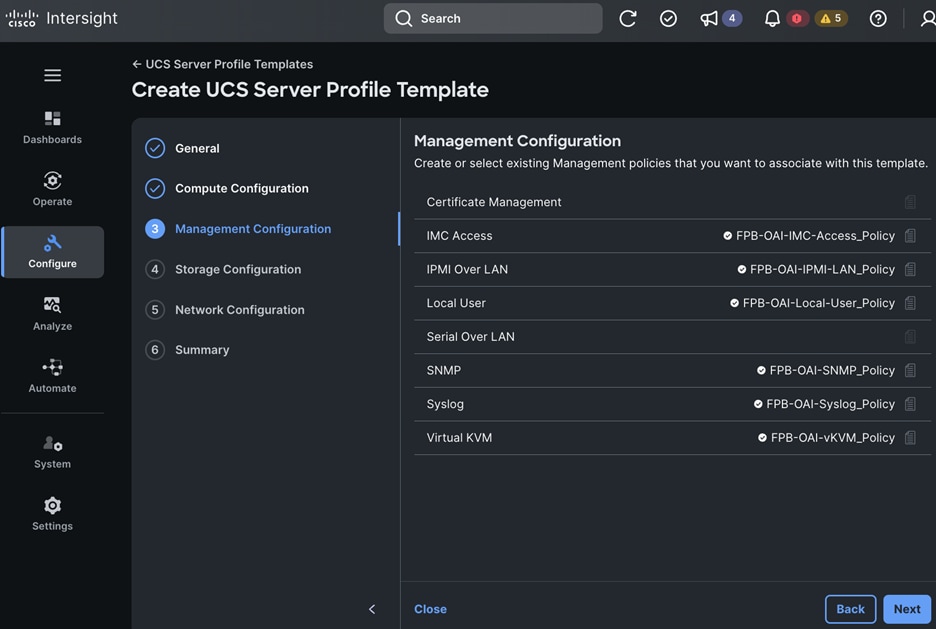

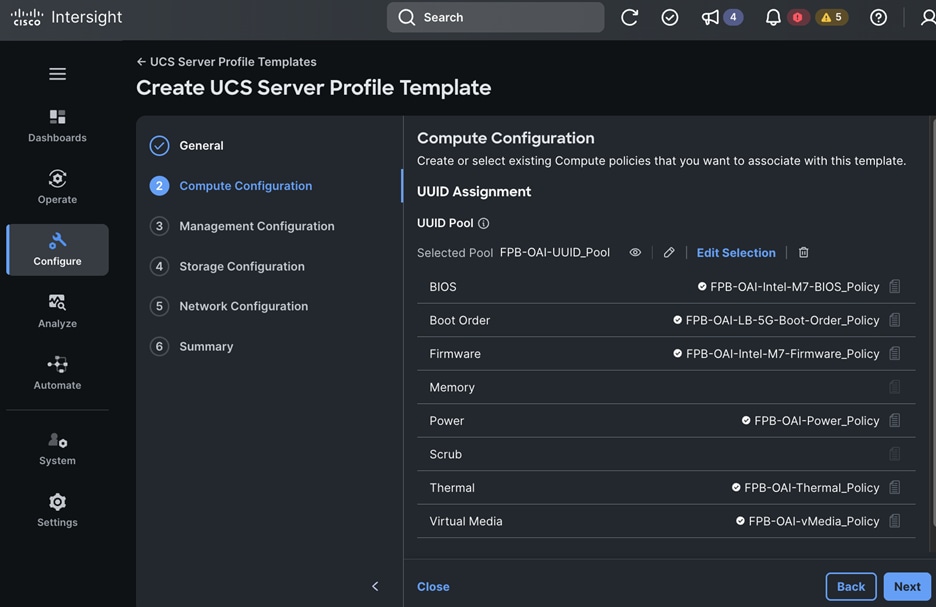

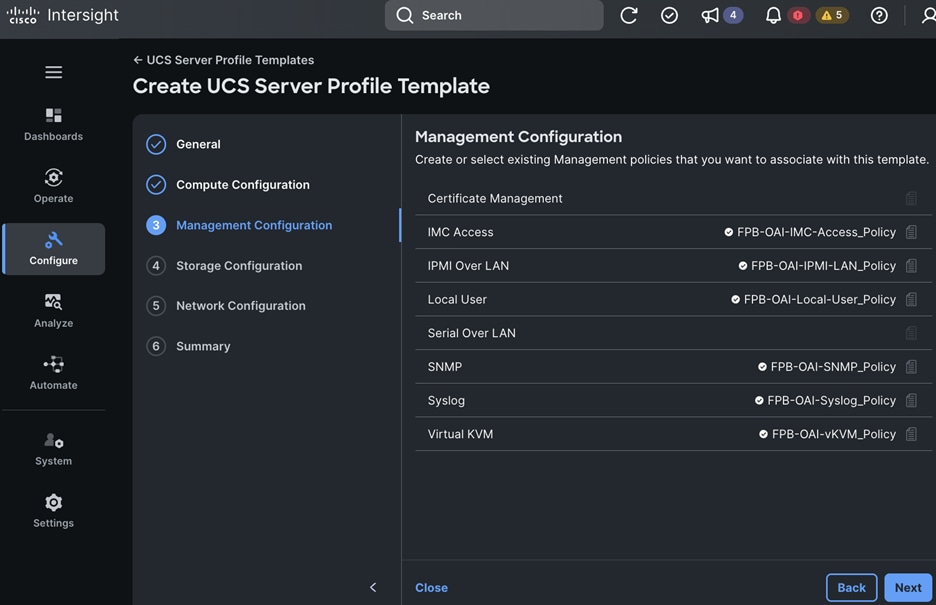

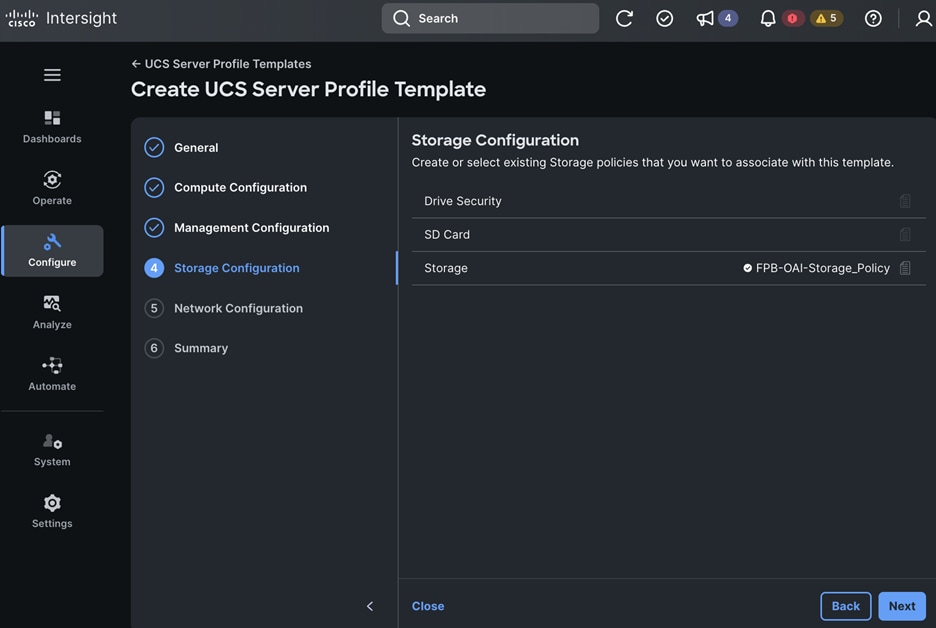

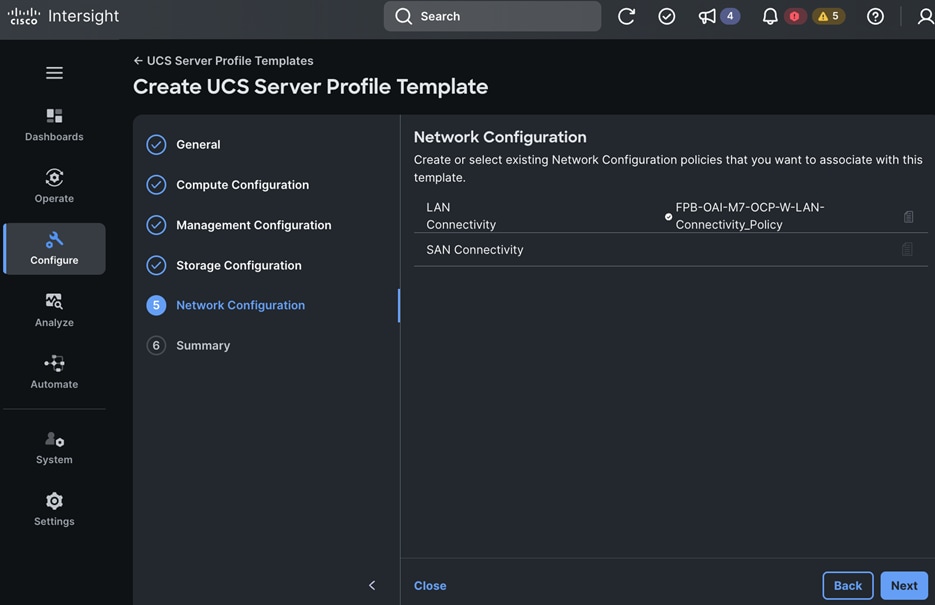

Cisco UCS Server Configuration: Provision Cisco UCS-X servers from Cisco Intersight to support AI/ML workloads. This requires deploying servers with PCIe nodes and GPUs. |

| 04_CVD |

Red Hat OpenShift Prerequisites: Setup and/or verify that the following prerequisites for Red Hat OpenShift are in place.

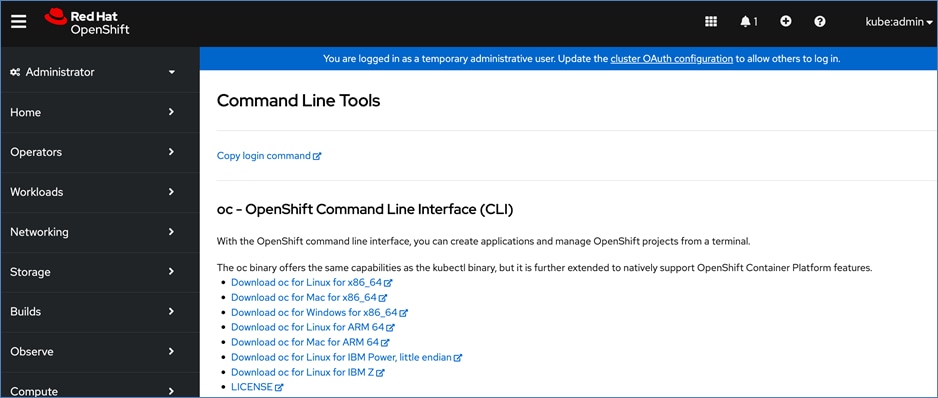

● Deploy an installer machine to remotely manage the OpenShift cluster and to serve as an HTTP server to load openshift images on Cisco UCS servers. Generate public SSH keys on the installer to enable SSH access to OpenShift cluster post-install.

● Valid Red Hat account to access Red Hat Hybrid Cloud Console (HCC) for deploying OpenShift.

● Identify a VLAN, IP subnet and DNS domain for use by Red Hat OpenShift cluster.

● Setup DNS: Add DNS records for API VIP and Ingress Virtual IP (VIP)

● Setup DHCP: Add DHCP pool for OpenShift cluster nodes to use. Configure DHCP options for NTP, Gateway (for routed subnets) and DNS.

● Assisted Installer will check for the following before starting the installation:

◦ Network connectivity ◦ Network bandwidth ◦ Connectivity to the registry ◦ Upstream DNS resolution of the domain name ◦ Time synchronization between cluster nodes ◦ Cluster node hardware ◦ Installation configuration parameters |

| 05_CVD |

Deploy Red Hat OpenShift: Install OpenShift from console.redhat.com using Use Red Hat recommended Assisted Installer to deploy an OpenShift Baremetal cluster for hosting AI/ML workloads. |

| 06_CVD |

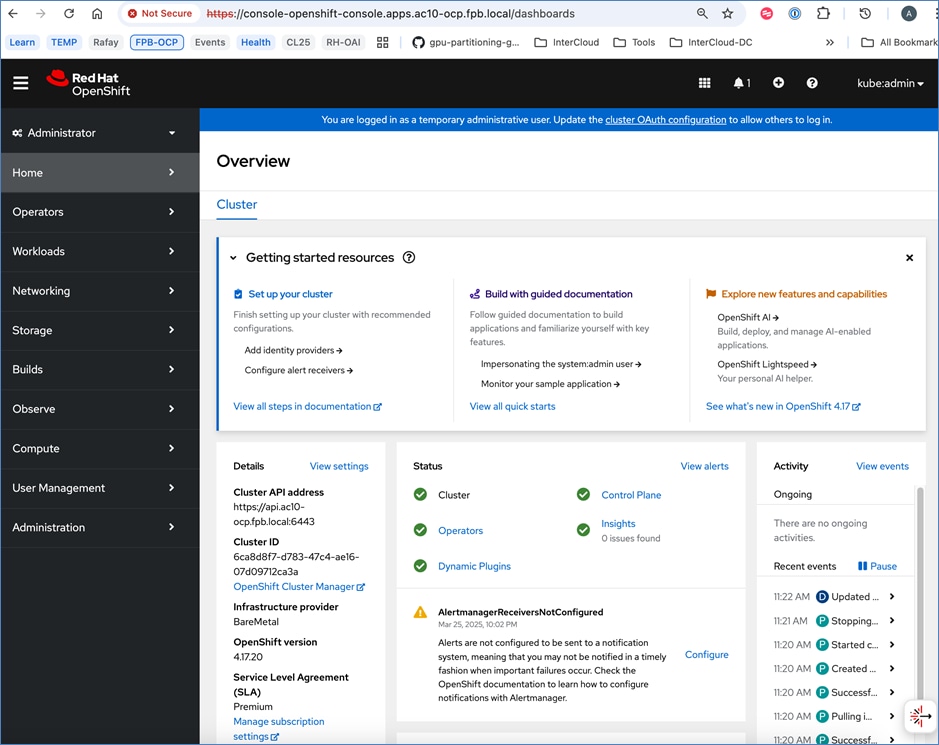

Red Hat OpenShift - Post-Deployment Verification:

● Verify access to OpenShift cluster by navigation to cluster console URL

● Setup/Verify NTP setup on all OpenShift cluster virtual machines (control and worker nodes)

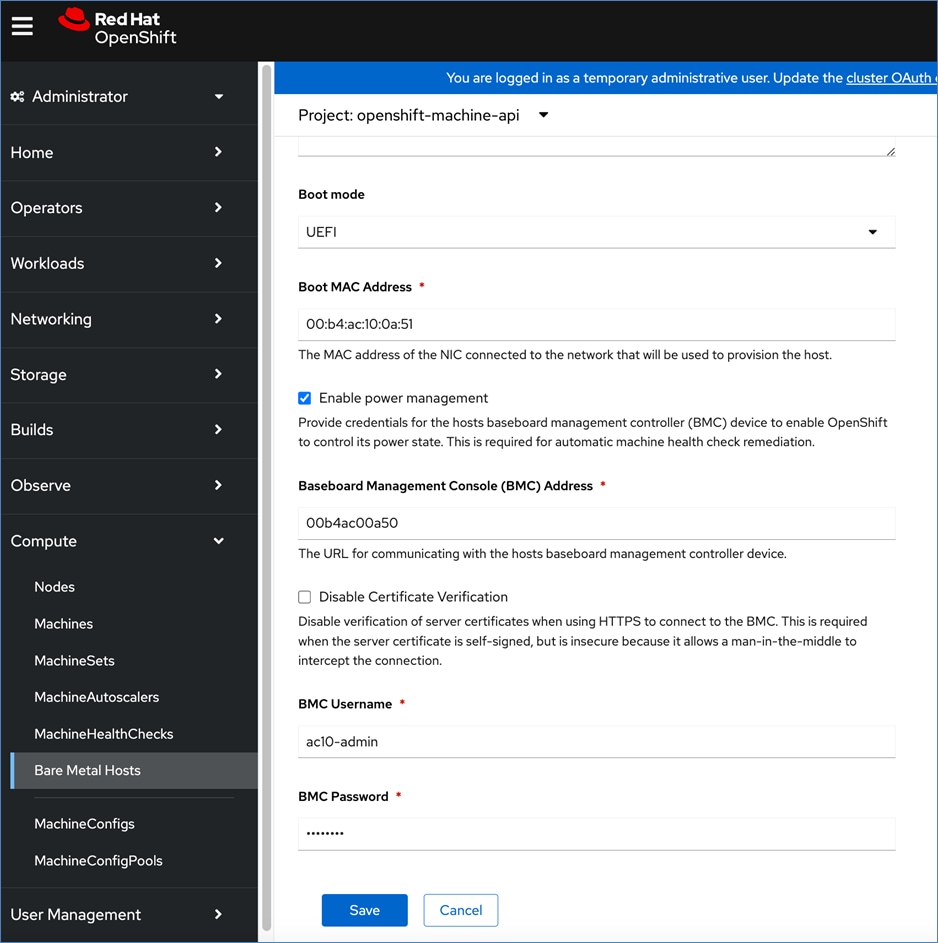

● Enable IPMI for each baremetal host from the OpenShift cluster console

● Verify cluster is registered with

console.redhat.com

● From Red Hat OpenShift cluster console, provision machineset to modify CPU, memory as needed.

● Provision taints on the Openshift worker nodes with GPUs to only allow workloads that require a GPU to be scheduled on them.

|

| 07_CVD |

Deploy NVIDIA GPU Operator on Red Hat OpenShift:

● From the Red Hat OpenShift Console, search and deploy Red Hat’s Node Feature Discovery Operator (NFD).

● Verify that the worker nodes with GPUs have identified and labelled the GPU. Label should be:

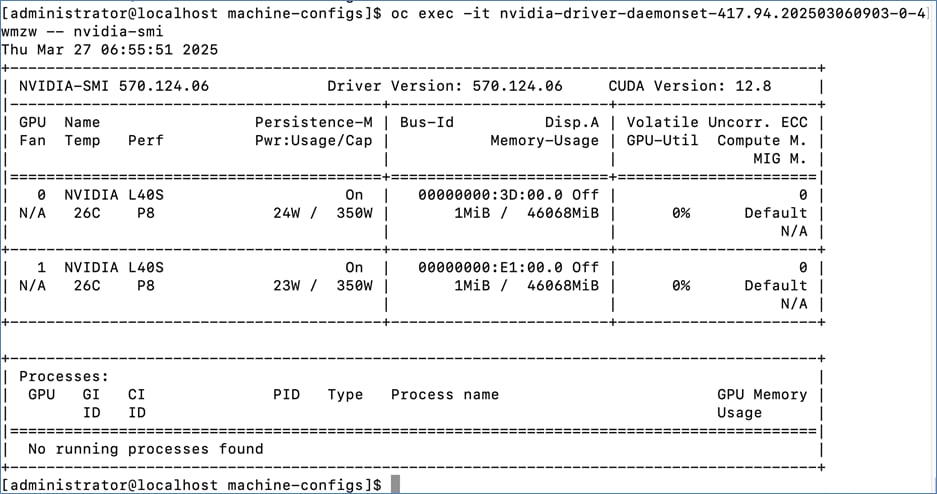

● From the Red Hat OpenShift cluster console, search and deploy the NVIDIA GPU Operator:

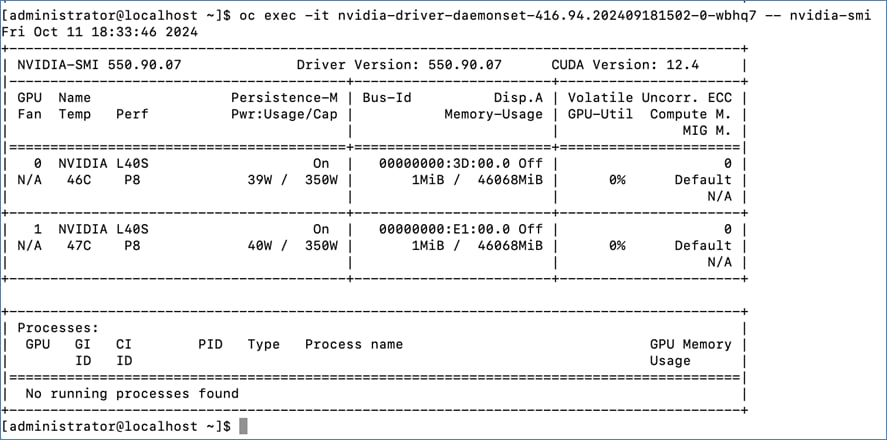

◦ Deploy Cluster Policy instance and ensure that it shows a Status of State: Ready ◦ Use the following command to verify GPU details: oc exec -it <nvidia-driver-daemonset pod name> -- nvidia-smi (option: -q)

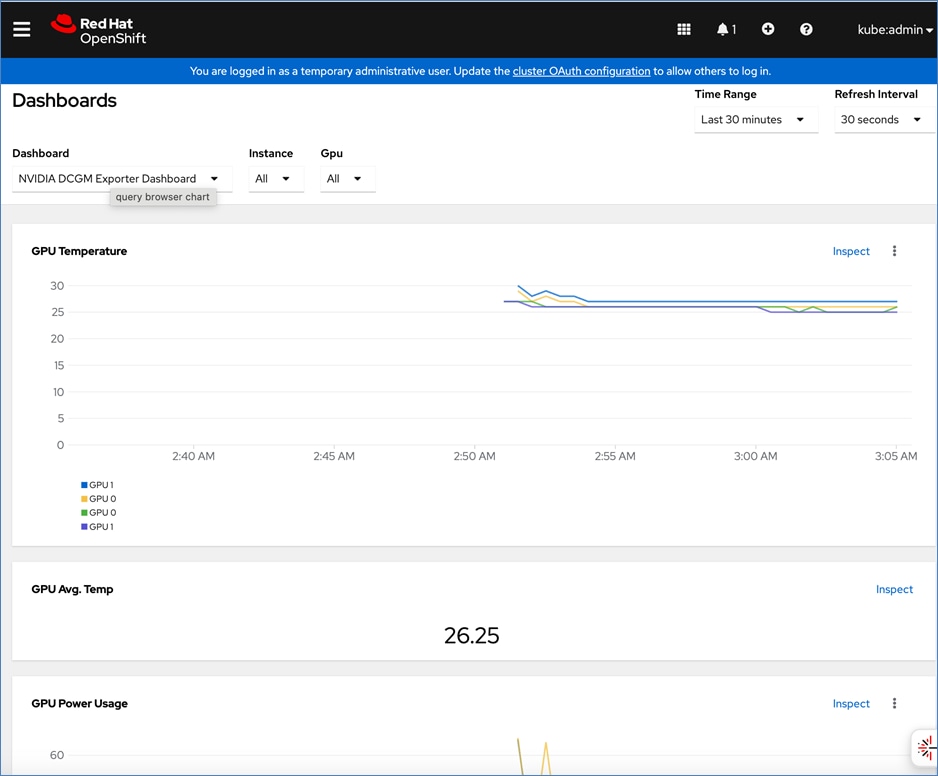

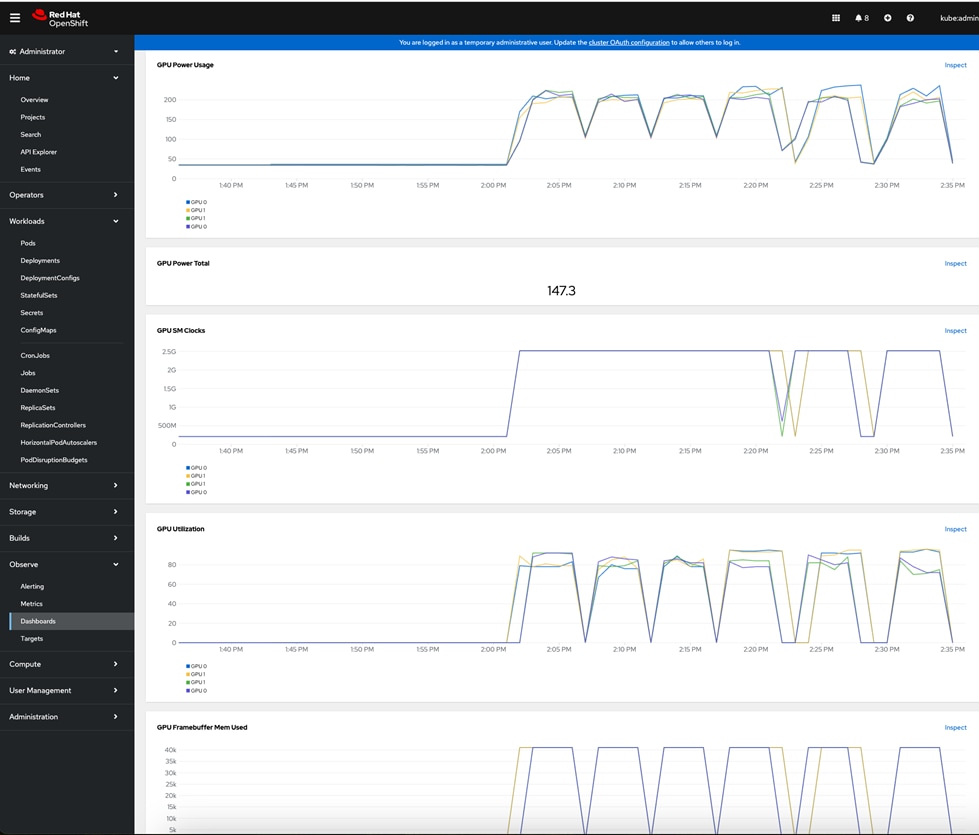

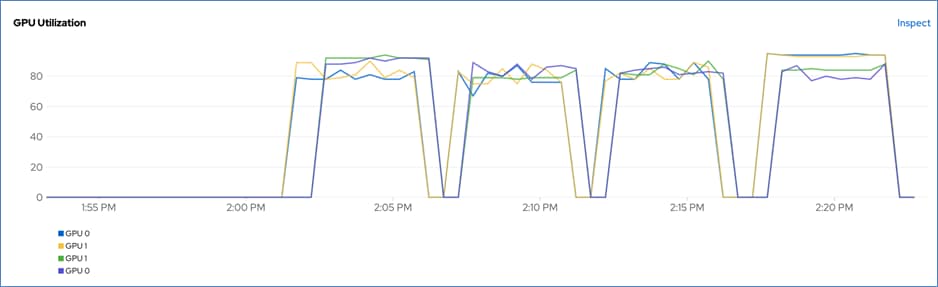

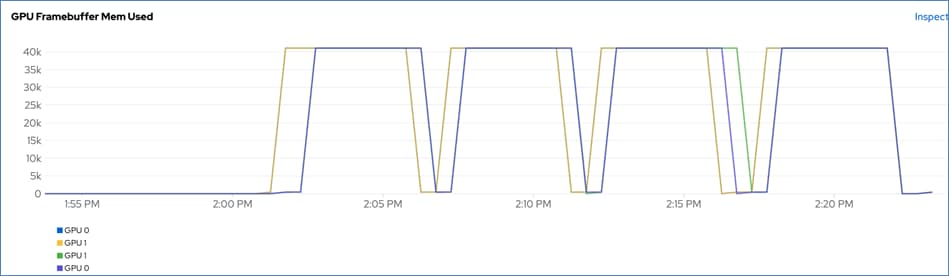

● Enable DCGM GPU Monitoring Dashboard in Red Hat OpenShift:

https://docs.nvidia.com/datacenter/cloud-native/openshift/latest/enable-gpu-monitoring-dashboard.html

|

| 08_CVD |

Deploy Persistent Storage on Red Hat OpenShift using NetApp Trident The persistent storage will be used Red Hat OpenShift containers and AI workloads.

● Deploy NetApp Trident as an operator or using Helm charts, backed by iSCSI, NVMe-TCP or NFS datastores on NetApp ONTAP storage

● Create one or more Storage Classes for above

● Create a test Persistent Volume Claim

● Provision of the newly created storage classes as the default storage class

|

| 09_CVD |

Deploy Red Hat OpenShift AI for MLOps This involves the following prerequisites and high-level tasks:

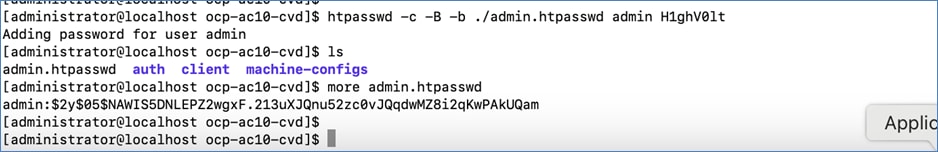

● Provision or use existing identity provider from Red Hat OpenShift.

● Add users and administrator groups in OpenShift to enable for access to OpenShift AI web UI.

● Deploy GPU resources for AI/ML efforts (for efforts that require GPUs)

● Deploy Persistent storage for AI/ML efforts. In this solution, the ML engineer’s work (image, environment) is saved on Trident persistent volumes, backed by NetApp ONTAP storage.

● Deploy S3-compatible object store. In this CVD, it used as model repository and to store pipeline artifacts on NetApp AFF storage

● When using GPUs, if all nodes in the OpenShift cluster are not using GPUs, then taints and tolerations should be provisioned on the nodes to ensure that only workloads requiring GPUs are scheduled on the nodes with GPUs.

● Deploy Red Hat OpenShift AI Operator. The environment is now ready for accelerating and operationalizing enterprise AI/ML efforts at scale.

|

| 10_CVD |

Sanity Tests

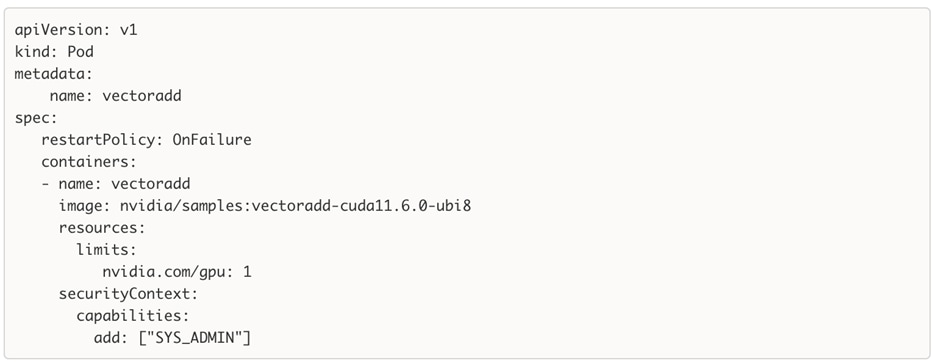

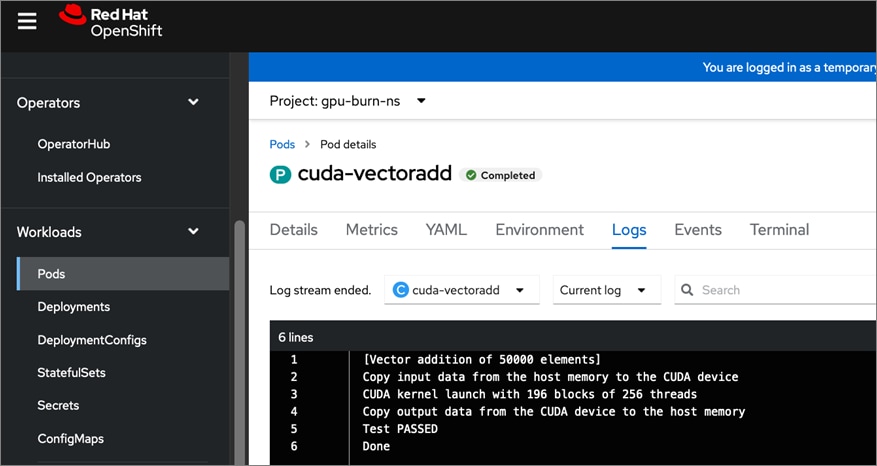

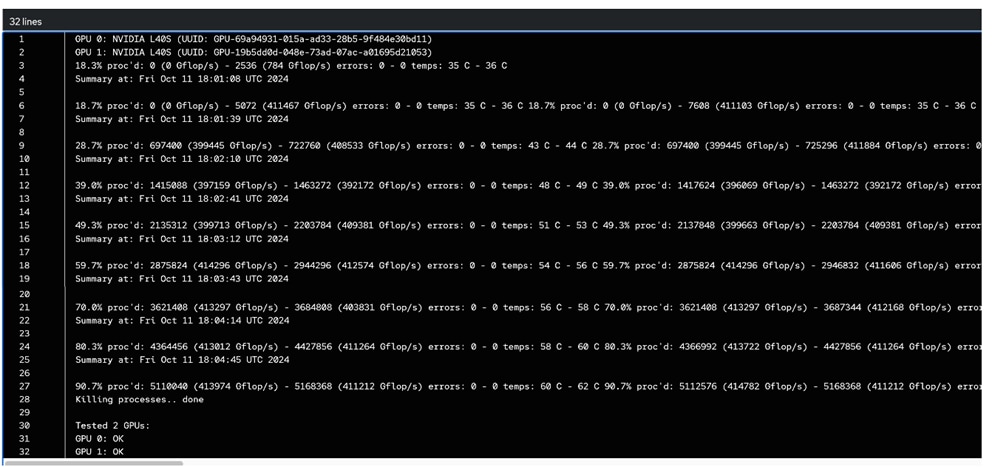

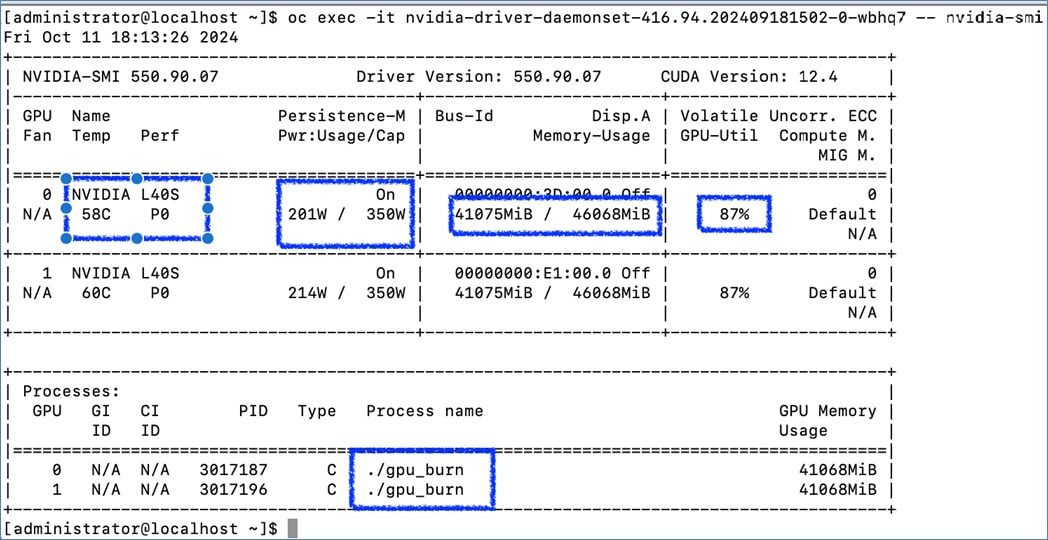

● GPU Functional Validation – Sample CUDA Application

● GPU Burn Test:

https://github.com/wilicc/gpu-burn

● Sample PyTorch script executed from Red Hat OpenShift AI

|

Deploy Networking – Cisco Nexus

The procedures detailed in this section will configure the necessary networking on Cisco Nexus 9000 series switches to enable connectivity between the different components in the solution. This section explains the following:

● Initial setup of Cisco Nexus 9000 series Top-of-Rack (ToR) switches.

● Provision Cisco Nexus switches to enable the connectivity that infrastructure and workloads running on the infrastructure need. This includes connectivity between UCS domains and NetApp storage directly connected to the switches, as well as upstream connectivity to other networks, both within the enterprise and externally.

Note: The Nexus switches used in the solution will be referred to as Nexus-A and Nexus-B. The screenshots will show their actual hostnames.

Initial Setup of Cisco Nexus 9000 Series ToR Switches

This section describes the initial setup of a pair of Cisco Nexus 9000 series Top-of-Rack (ToR) switches used in the solution.

Assumptions and Prerequisites

● Assumes a greenfield deployment with new Nexus switches that have not been configured.

● Console access to both Cisco Nexus switches.

● Collect the setup information for your environment – see Table 6.

Setup Information

Table 6 lists the setup parameters and other information necessary for the procedures in this section. The information also includes access information for devices used in the configuration.

Table 6. Cisco Nexus: Initial Setup Parameters and Information

| Variable/Info |

Value |

Additional Values and Information |

| Console Access: Nexus-A |

<collect> |

|

| Console Access: Nexus-B |

<collect> |

|

| Nexus-A/-B: Admin Username |

admin |

Assuming the same values are used for Nexus-A and Nexus-B |

| Nexus-A/-B: Admin Password |

<specify> |

|

| Nexus-A: Hostname |

AC10-N93600CD-GX-A |

|

| Out-of-Band Management IPv4 Address |

10.120.0.5 |

Options: IPv4 or IPv6 |

| Out-of-Band Management Netmask |

255.255.255.0 |

|

| Out-of-Band Management Gateway |

10.120.0.254 |

|

| Nexus-B: Hostname |

AC10-N93600CD-GX-A |

|

| Out-of-Band Management IPv4 Address |

10.120.0.6 |

Options: IPv4 or IPv6 |

| Out-of-Band Management Netmask |

255.255.255.0 |

|

| Out-of-Band Management Gateway |

10.120.0.254 |

|

Deployment Steps

Use the setup information in Table 6 for the procedures detailed in this section.

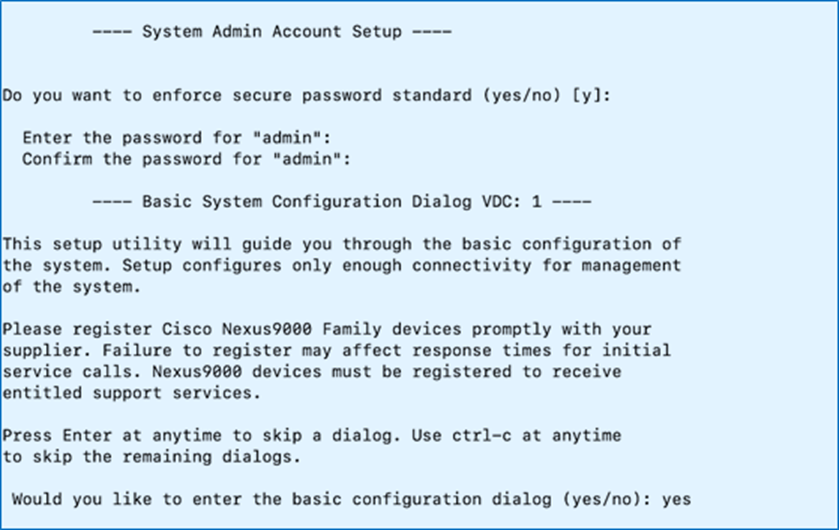

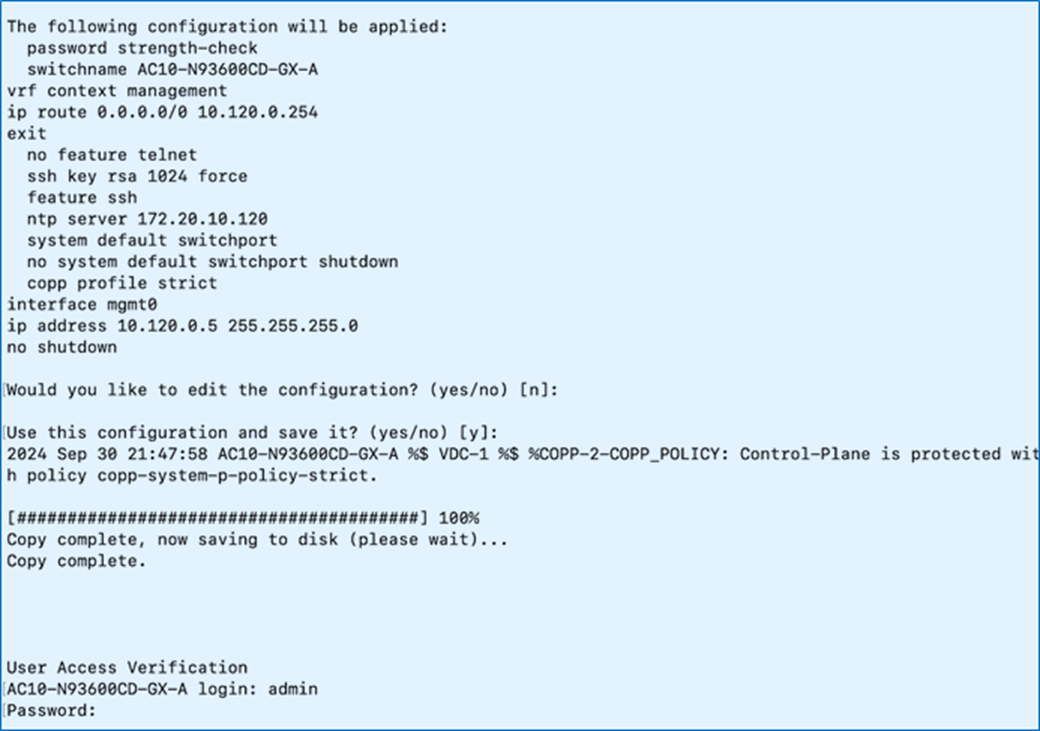

Procedure 1. Initial setup of the Nexus-A switch

Step 1. Connect to the console port of the first Nexus switch (Nexus-A) .

Step 2. Power on the switch.

Note: On bootup, Nexus should automatically start and attempt to enter Power on Auto Provisioning (PoAP).

Step 3. Click Yes to abort PoAP and continue with normal setup.

Step 4. Specify an admin password to access the switch.

Step 5. Click Yes to enter the basic configuration dialog.

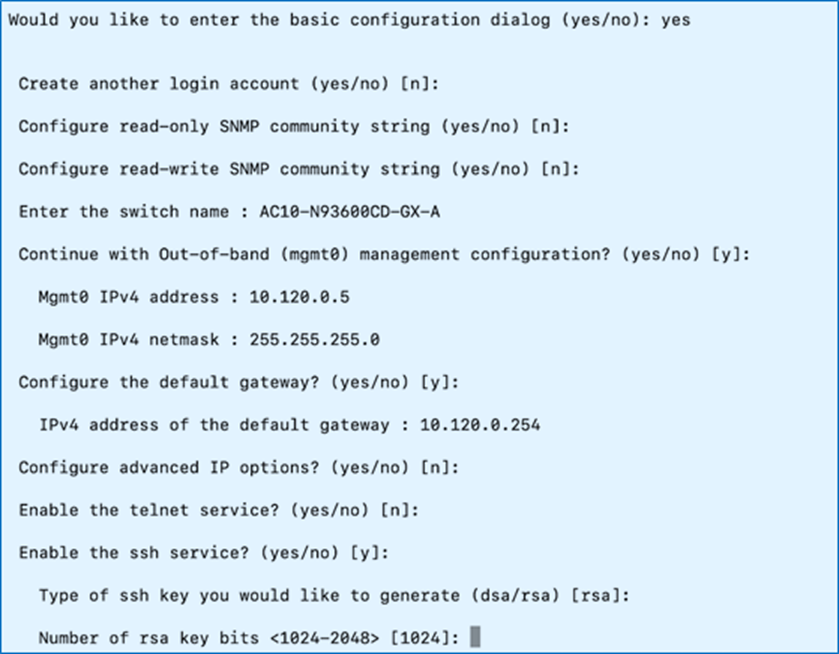

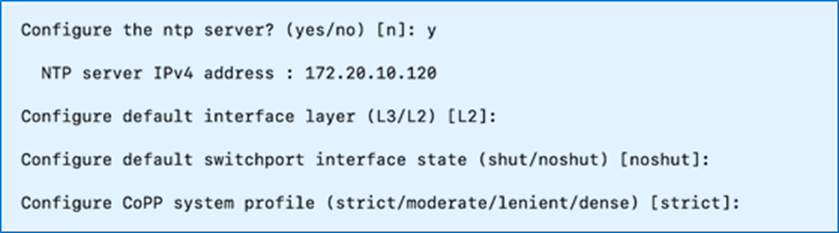

Step 6. Specify a switch name, Mgmt0 IPv4 address, netmask, and default gateway. You can choose the default options for everything else or modify it as you see fit for your environment.

Step 7. Review and Save to use the specified configuration.

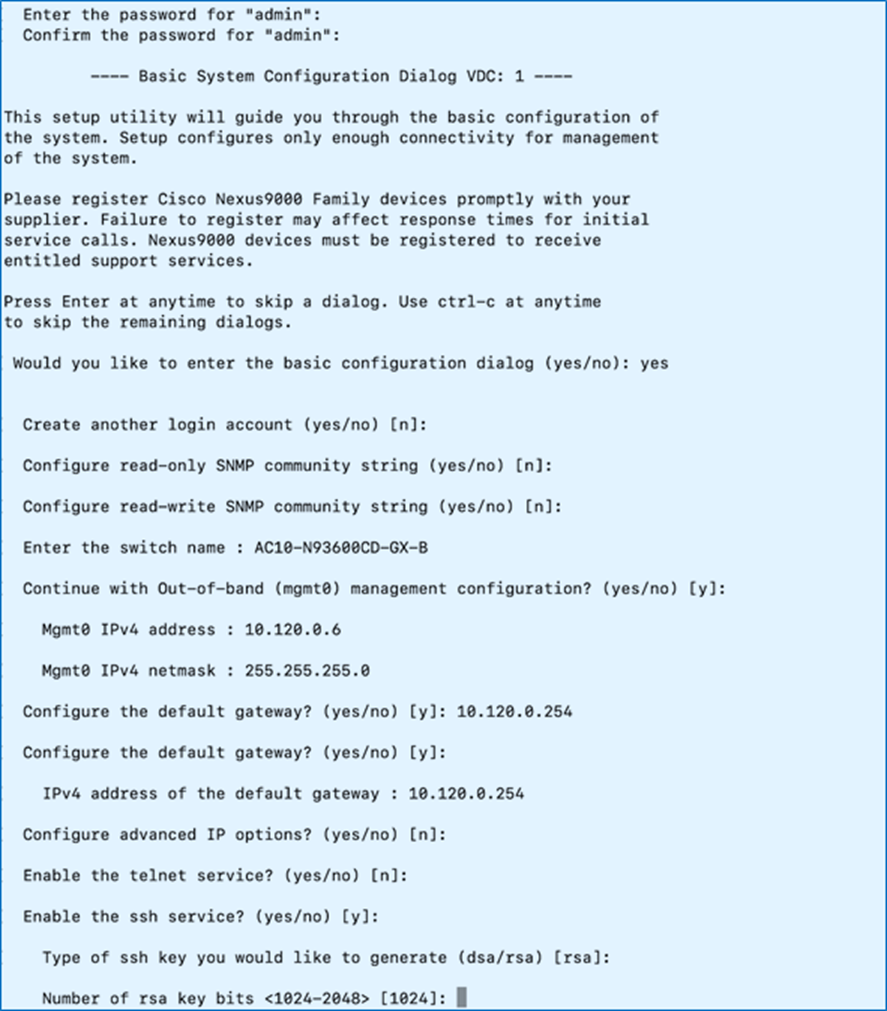

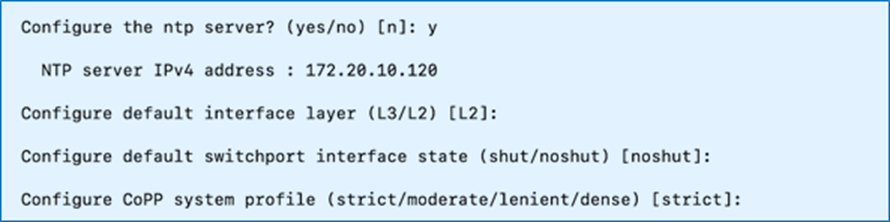

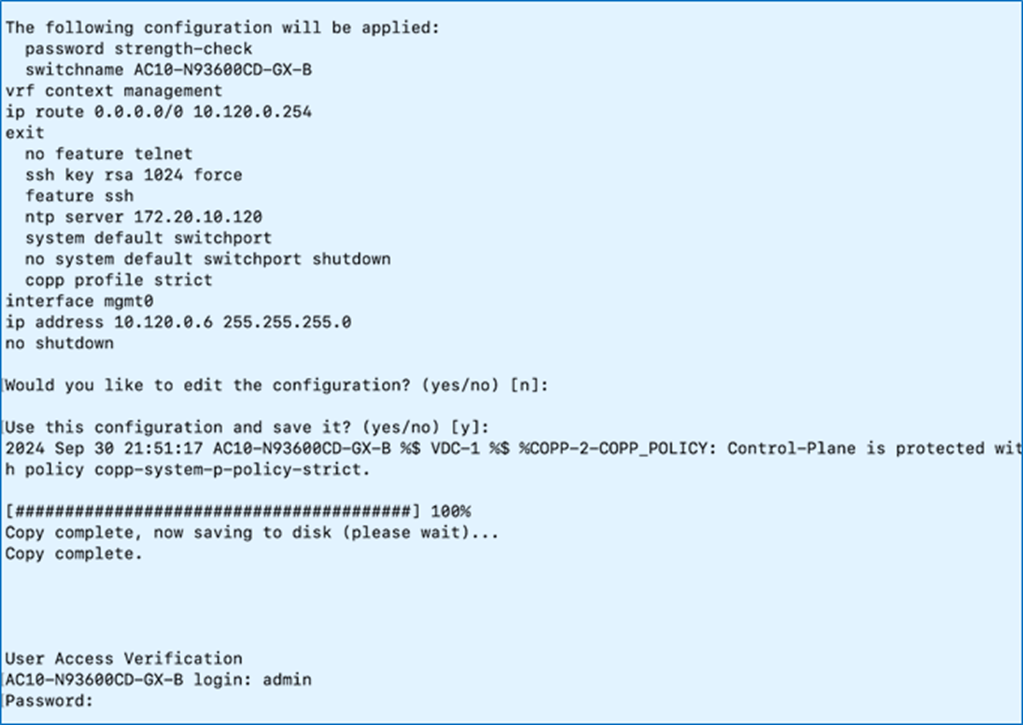

Procedure 2. Initial setup of the Nexus-B switch

Step 1. Repeat the configuration steps in Procedure 1 to set up the Nexus-B switch.

Step 2. Review the configuration. Confirm to use the configuration and Save it.

Provision Cisco Nexus Switches to enable workload and infrastructure connectivity

The procedures detailed in this section configure the Nexus 9000 series switches in the solution to provide the necessary infrastructure and workload connectivity.

Assumptions and Prerequisites

● The initial setup of the Nexus switches is complete.

● Collect the setup information for your environment – see Table 7.

Setup Information

The setup parameters and other information necessary to configure the components in this section, including access information are listed in Table 7.

Table 7. Cisco Nexus: Setup Parameters and Information

| Variable/Info |

Variable Name |

Value |

Additional Values and Information |

| Nexus Global Configuration – General |

|||

| Features |

|

nxapi udld interface-vlan lacp vpc lldp scp-server sftp server |

|

| DNS Server IP |

|

172.20.4.53 172.20.4.54 |

Could be multiple |

| DNS Domain Name |

|

fpb.local |

|

| NTP Server IP |

|

172.20.10.120 |

|

| Clock Time Zone |

|

EST -5 |

|

| Clock Summertime |

|

EDT |

|

| In-Band Management |

|||

| In-Band Management VLAN |

FPB-IB-MGMT_VLAN |

1201 |

|

| VLANs – to Cisco UCS Compute Infrastructure |

|||

| Native VLAN |

FPB-NATIVE_VLAN |

2 |

|

| OpenShift Cluster Management VLAN |

FPB-CLUSTER-MGMT_VLAN |

1202 |

|

| Storage Access – Object Store |

FPB-S3-OBJ_VLAN |

1209 |

|

| Storage Access – iSCSI-A |

FPB-iSCSI-A_VLAN |

3015 |

|

| Storage Access – iSCSI-B |

FPB-iSCSI-B_VLAN |

3025 |

|

| Storage Access – NVMe-TCP-A |

FPB-NVMe-TCP-A_VLAN |

3035 |

|

| Storage Access – NVMe-TCP-B |

FPB-NVMe-TCP-B_VLAN |

3045 |

|

| Storage Access – NFS VLAN |

FPB-NFS_VLAN |

3055 |

|

| Gateway IP |

|||

| Out-of-Band Management Gateway IP |

FPB-OOB-MGMT-GW_IP |

10.120.0.254/24 |

External to Nexus-A, -B |

| In-Band Management Gateway IP |

FPB-IB-MGMT-GW_IP |

10.120.1.254/24 |

External to Nexus-A, -B |

| OpenShift Cluster Management Gateway IP |

FPB-CLUSTER-MGMT-GW _IP |

10.120.2.254/24 |

External to Nexus-A, -B |

| Storage Access – Object Store Gateway IP |

FPB-S3-OBJ-GW_IP |

10.120.9.254/24 |

External to Nexus-A, -B |

| Subnets |

|||

| Storage Access – iSCSI-A Subnet |

|

192.168.10.0/24 |

|

| Storage Access – iSCSI-B Subnet |

|

192.168.20.0/24 |

|

| Storage Access – NVMe-TCP-A Subnet |

|

192.168.30.0/24 |

|

| Storage Access – NVMe-TCP-B Subnet |

|

192.168.40.0/24 |

|

| Storage Access – NFS Subnet |

|

192.168.50.0/24 |

|

| Nexus-A vPC Global Configuration |

To UCS Fabric Interconnects |

||

| vPC Domain ID |

FPB-VPC-DOMAIN_ID |

20 |

|

| vPC Peer Keepalive - Destination |

FPB-OOB-MGMT-B_IP |

OOB Mgmt. IP of Nexus-B |

(for example, 10.120.0.6) |

| vPC Peer Keepalive - Source |

FPB-OOB-MGMT-A_IP |

OOB Mgmt. IP of Nexus-A |

(for example, 10.120.0.5) |

| Nexus-B vPC Global Configuration |

To UCS Fabric Interconnects |

||

| vPC Domain ID |

FPB-VPC-DOMAIN_ID |

20 |

|

| vPC Peer Keepalive - Destination |

FPB-OOB-MGMT-A_IP |

OOB Mgmt. IP of Nexus-A |

(for example, 10.120.0.5) |

| vPC Peer Keepalive - Source |

FPB-OOB-MGMT-B_IP |

OOB Mgmt. IP of Nexus-B |

(for example, 10.120.0.6) |

| Nexus-A Peer-Link Configuration |

|||

| vPC Peer Link – Port Channel ID |

|

100 |

To Nexus-B |

| vPC Peer Link – Interfaces |

|

e1/27-28 |

|

| Nexus-A vPC Configuration to Upstream Datacenter Network |

|||

| vPC ID |

|

120 |

To upstream switch-1,-2 |

| Port Channel ID |

|

120 |

|

| Local Interface |

|

e1/25 |

To upstream switch-1 |

| Remote Interface |

|

e1/49 |

|

| Local Interface |

|

e1/26 |

To upstream switch-2 |

| Remote Interface |

|

e1/49 |

|

| Nexus-A vPC Configuration to UCS Fabric Interconnects |

|||

| vPC ID |

|

11 |

To Fabric Interconnect A |

| Port Channel ID |

|

11 |

|

| Local Interface |

|

e1/5 |

|

| Remote Interface |

|

e1/32 |

|

| vPC ID |

|

12 |

To Fabric Interconnect B |

| Port Channel ID |

|

12 |

|

| Local Interface |

|

e1/6 |

|

| Remote Interface |

|

e1/32 |

|

| Nexus-A Interface Configuration to NetApp Controllers: e3a_ifgrp, e3b_ifgrp |

For iSCSI, NVMe-TCP, NFS |

||

| vPC ID |

|

13 |

To NetApp:e3a_ifgrp |

| Port Channel ID |

|

13 |

|

| Local Interface to e3a_ifgrp |

|

e1/3 |

|

| Remote Interface |

|

e3a |

|

| vPC ID |

|

14 |

To NetApp:e3b_ifgrp |

| Port Channel ID |

|

14 |

|

| Local Interface to e3b_ifgrp |

|

e1/4 |

|

| Remote Interface |

|

e3b |

|

| Nexus-B Peer-Link Configuration |

|||

| vPC Peer Link – Port Channel ID |

|

100 |

To Nexus-A |

| vPC Peer Link – Interfaces |

|

e1/27-28 |

|

| Nexus-B vPC Configuration to Upstream Datacenter Network |

|||

| vPC ID |

|

120 |

To upstream switch-1,-2 |

| Port Channel ID |

|

120 |

|

| Local Interface |

|

e1/25 |

To upstream switch-1 |

| Remote Interface |

|

e1/50 |

|

| Local Interface |

|

e1/26 |

To upstream switch-2 |

| Remote Interface |

|

e1/50 |

|

| Nexus-B vPC Configuration to UCS Fabric Interconnects |

|||

| vPC ID |

|

11 |

To Fabric Interconnect A |

| Port Channel ID |

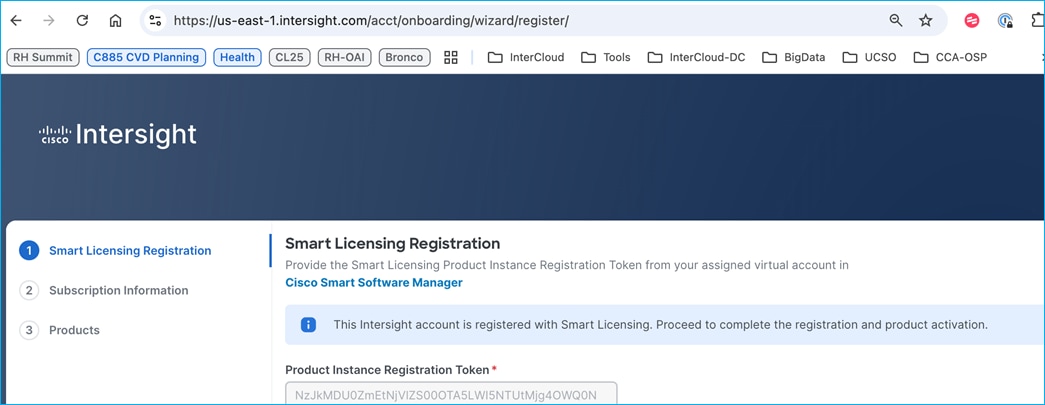

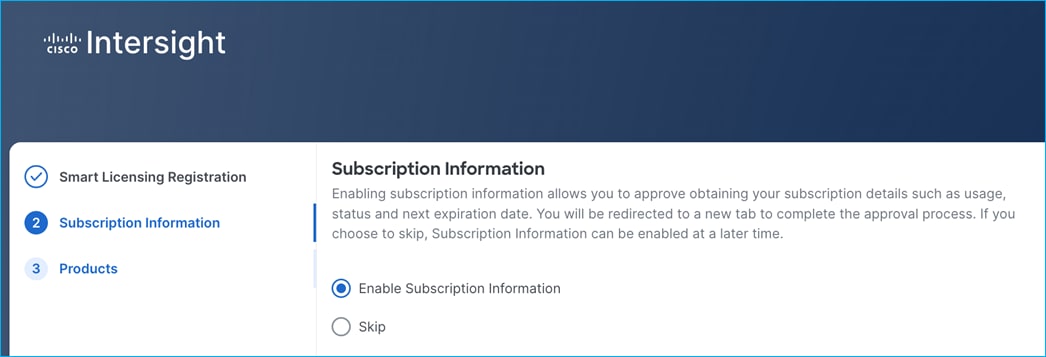

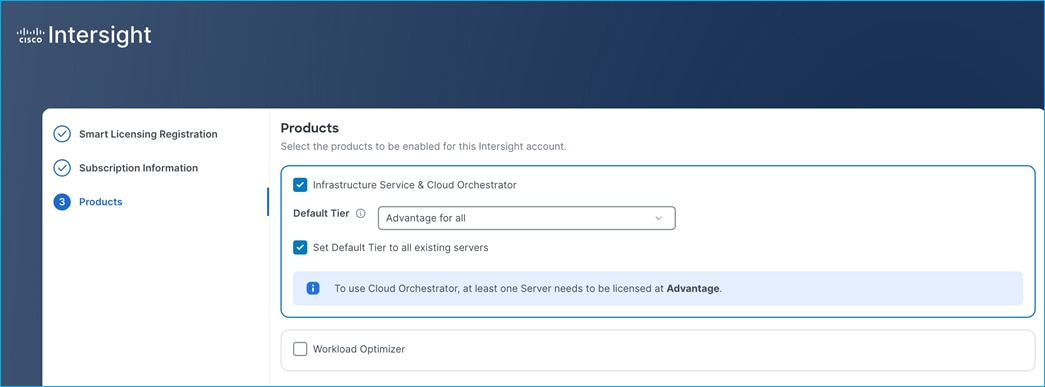

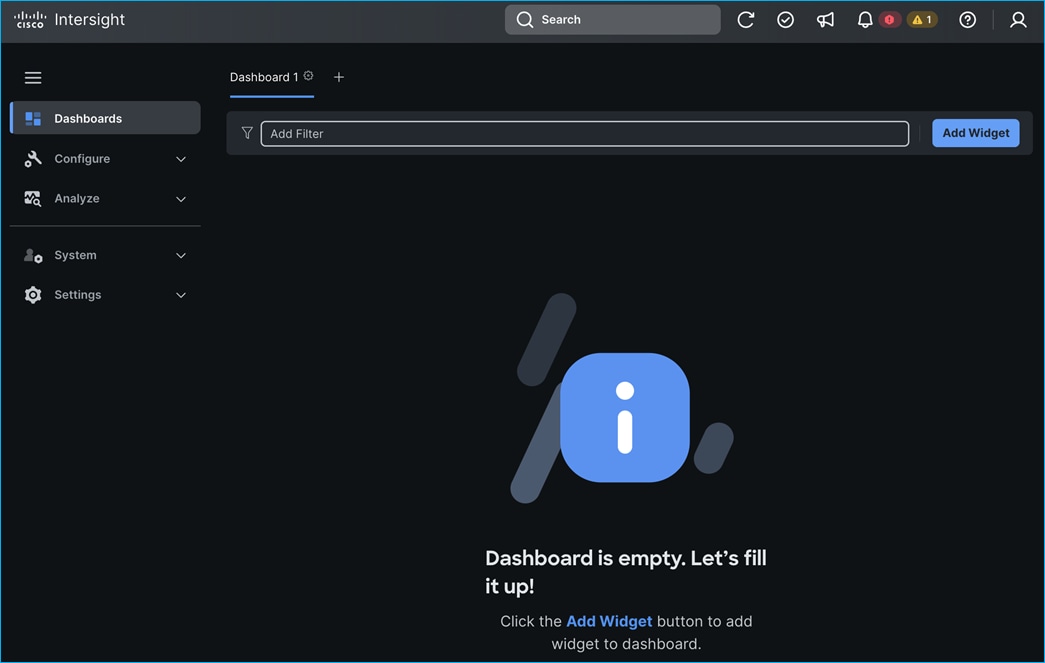

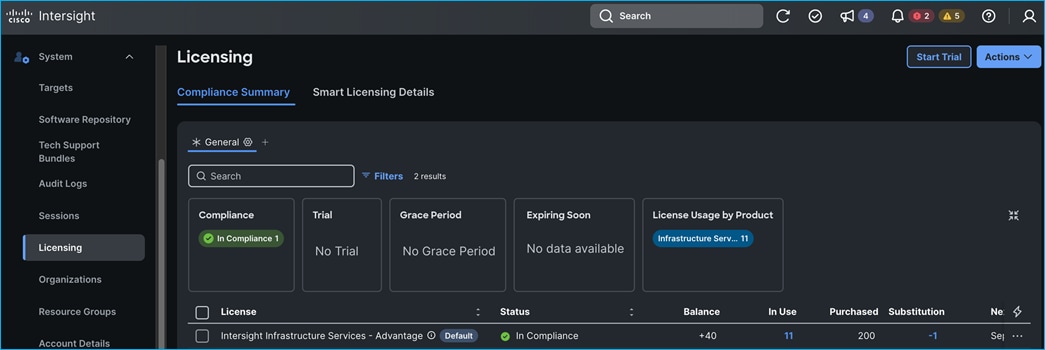

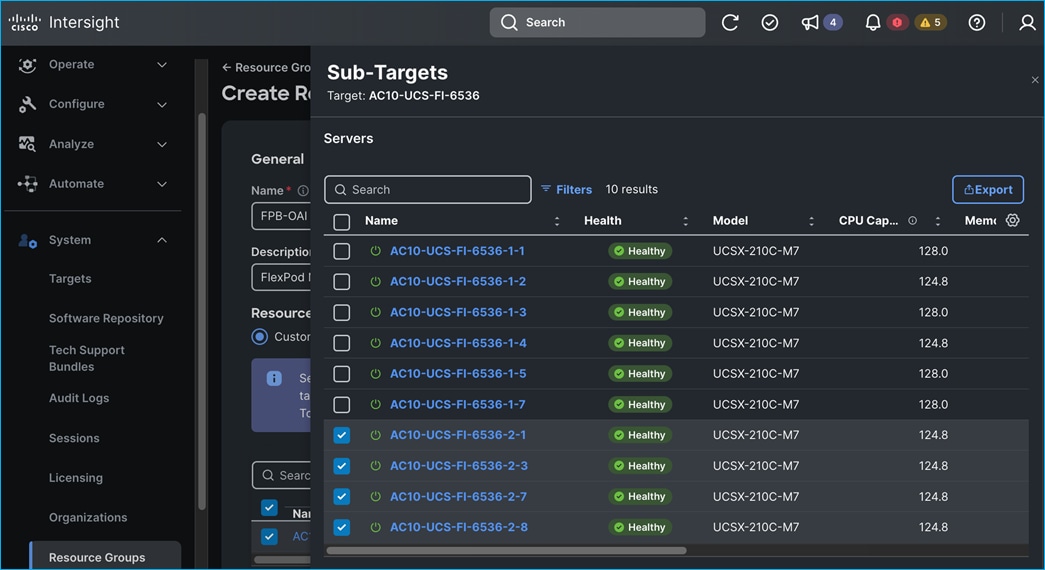

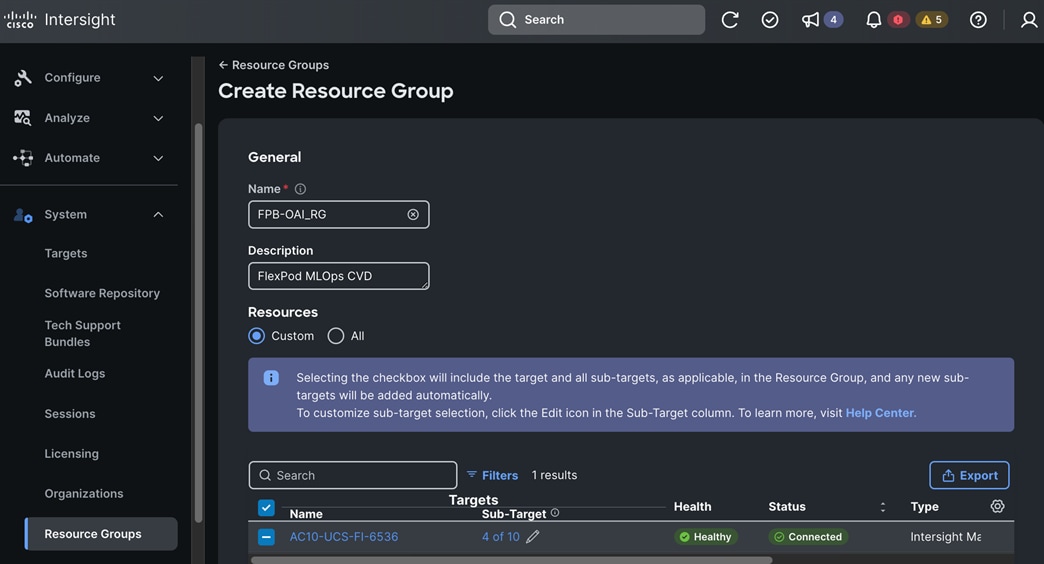

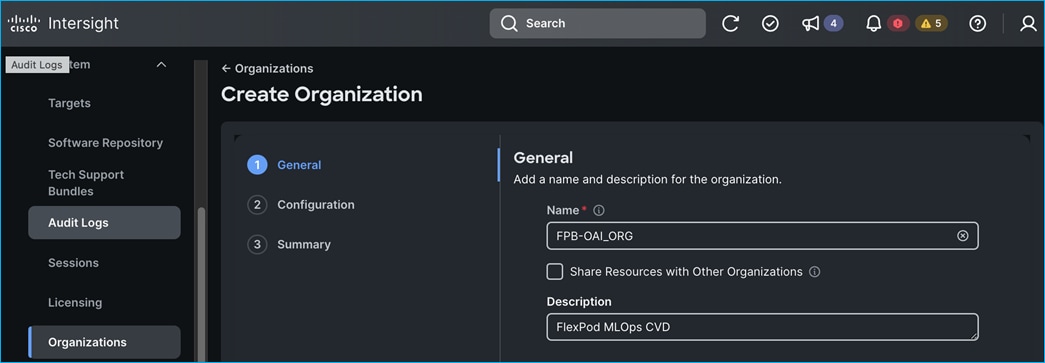

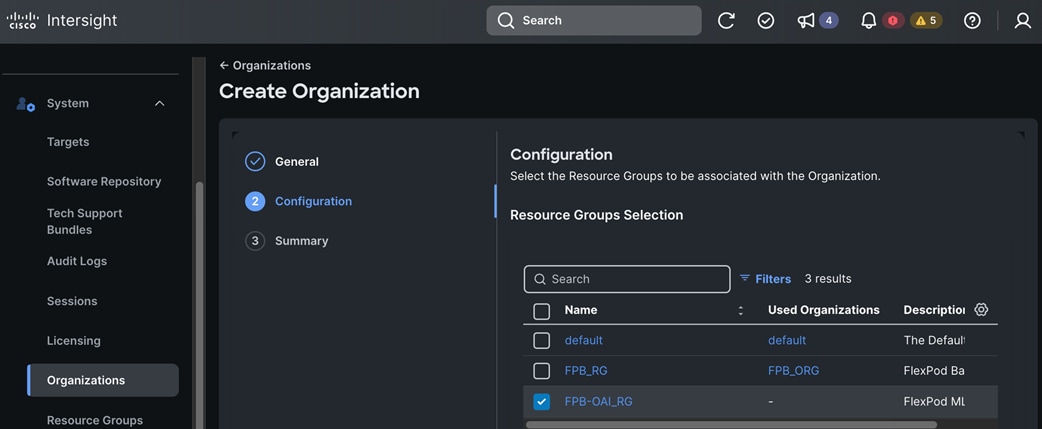

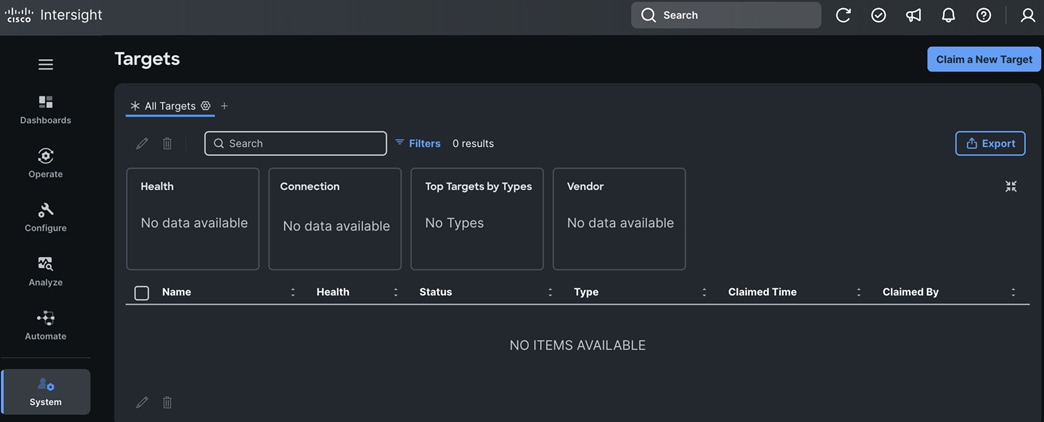

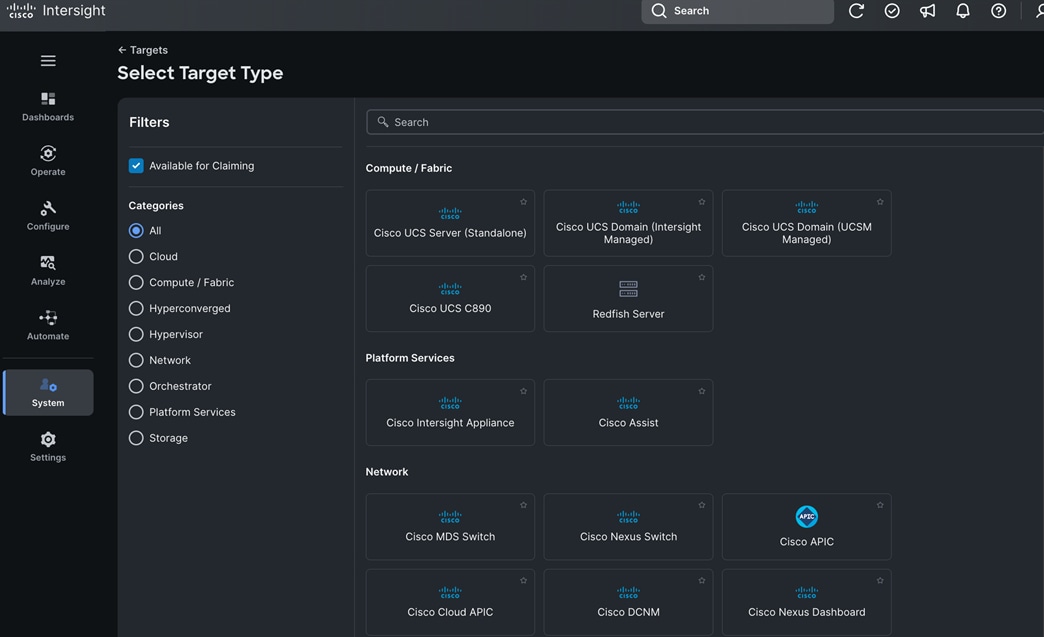

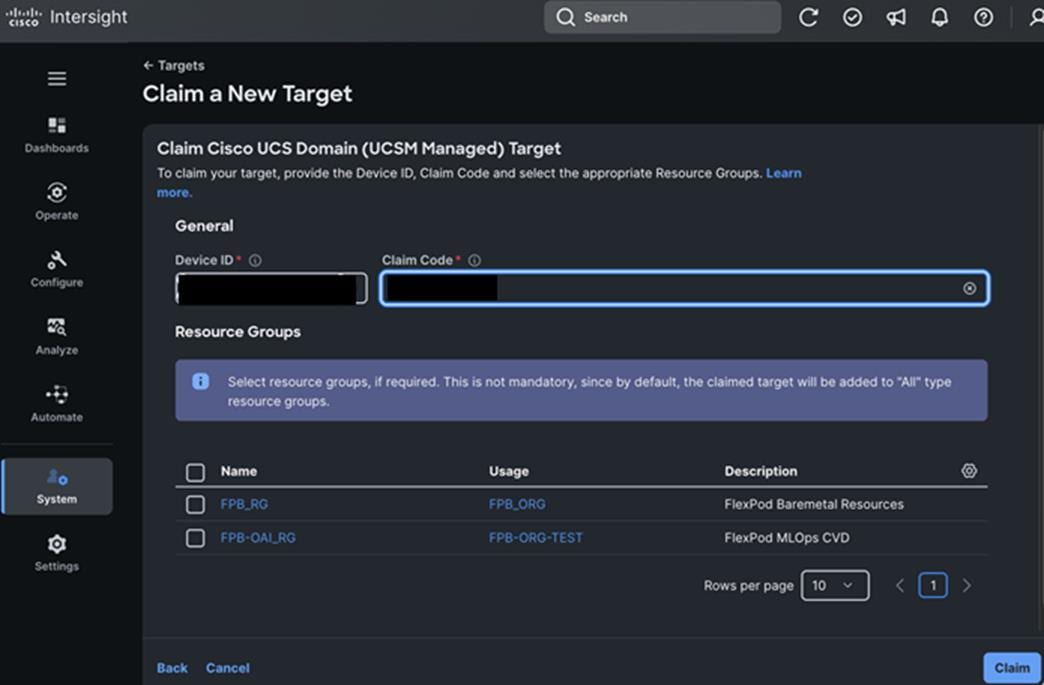

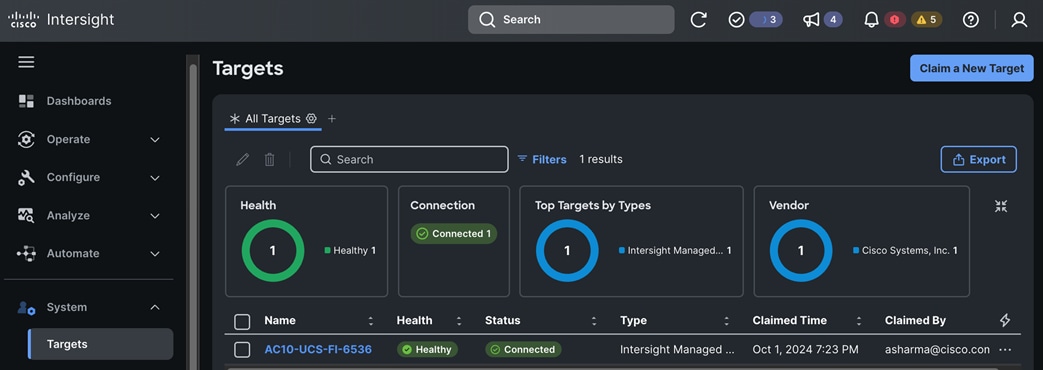

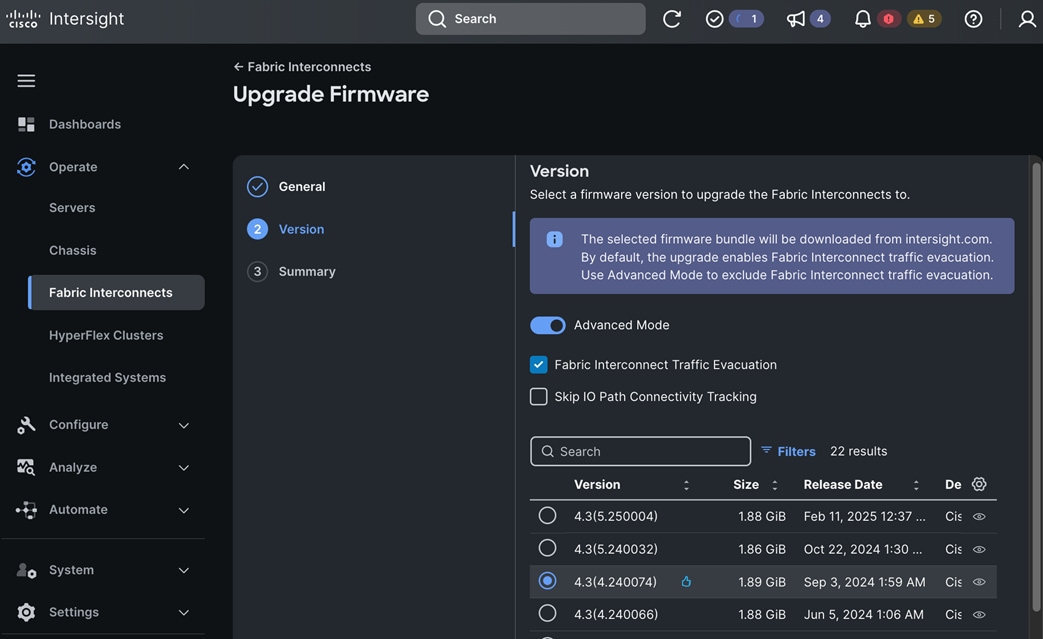

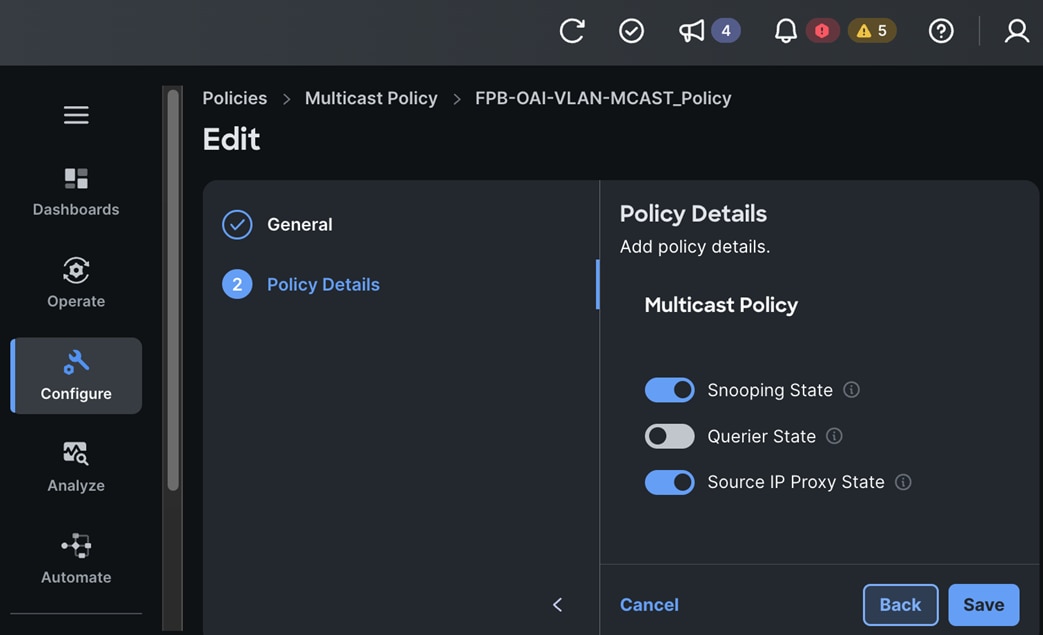

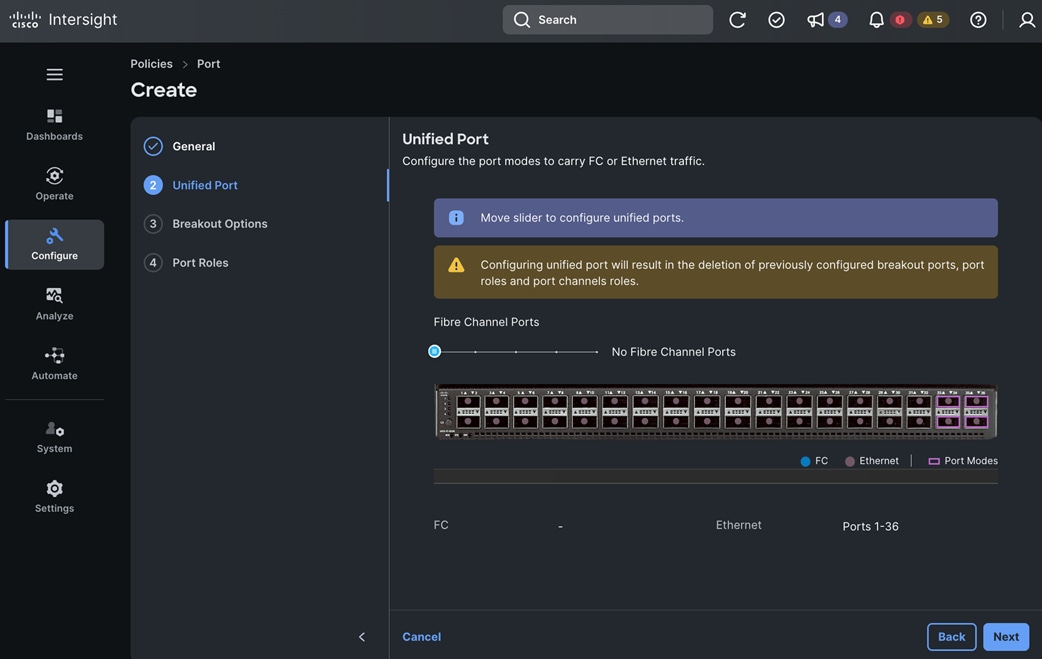

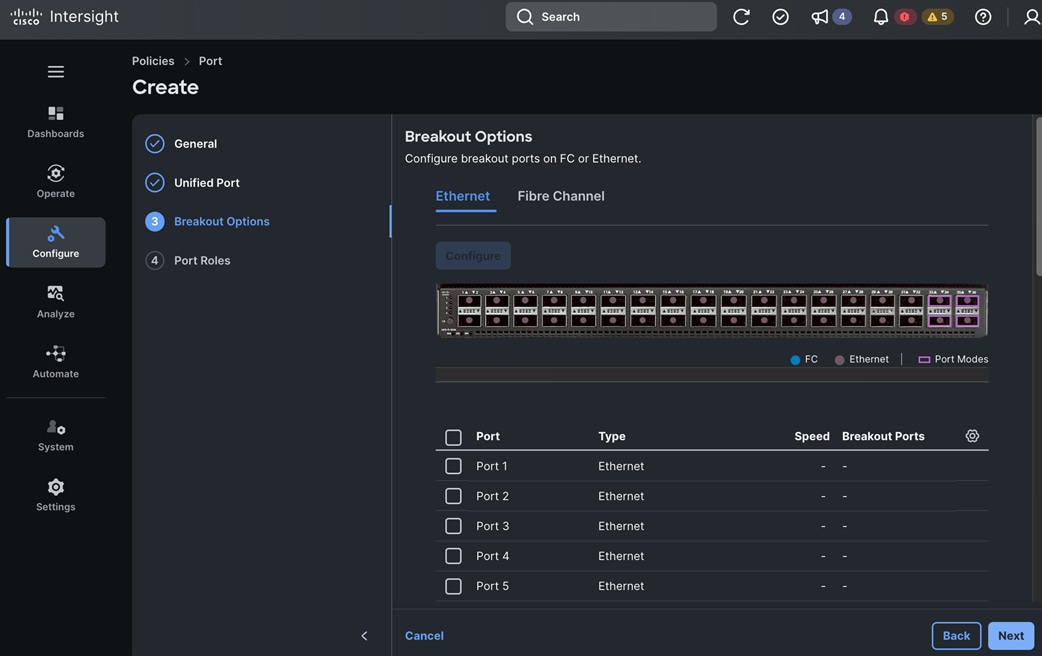

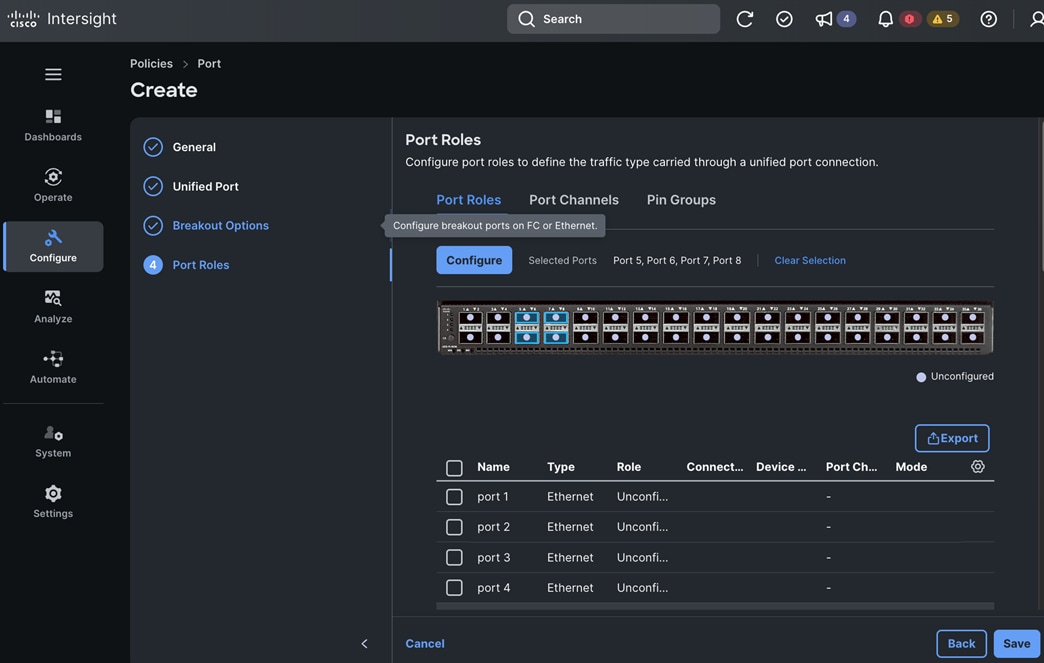

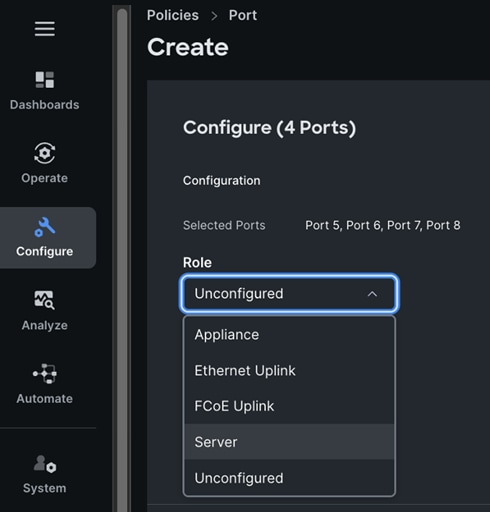

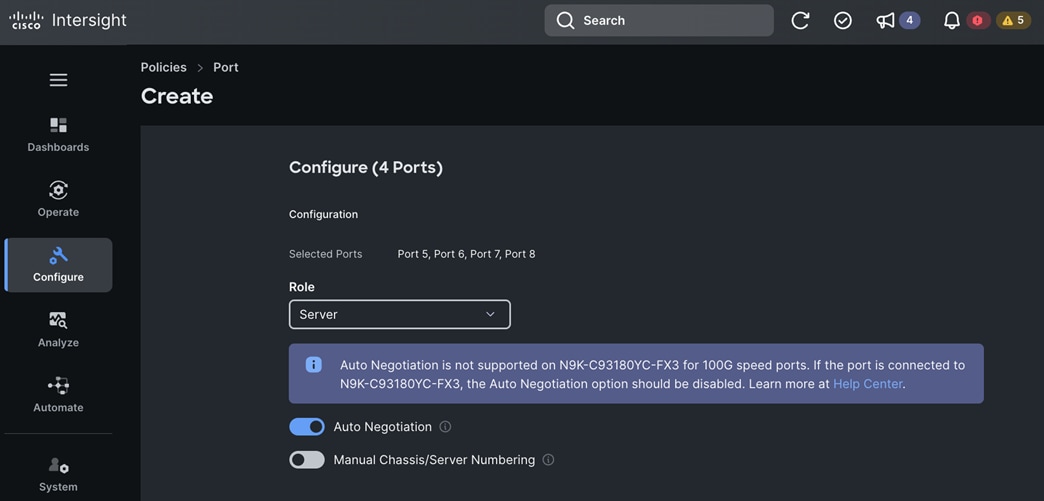

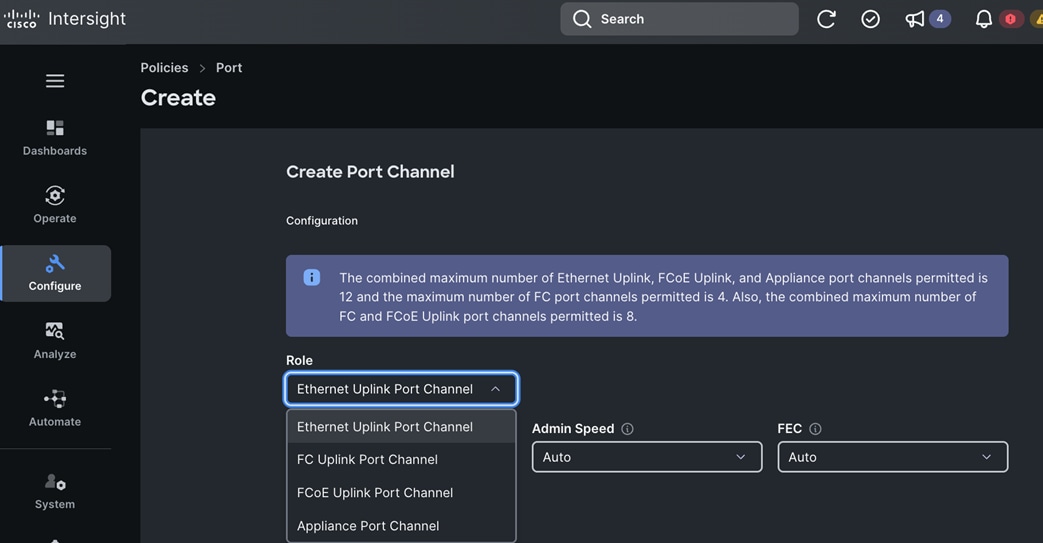

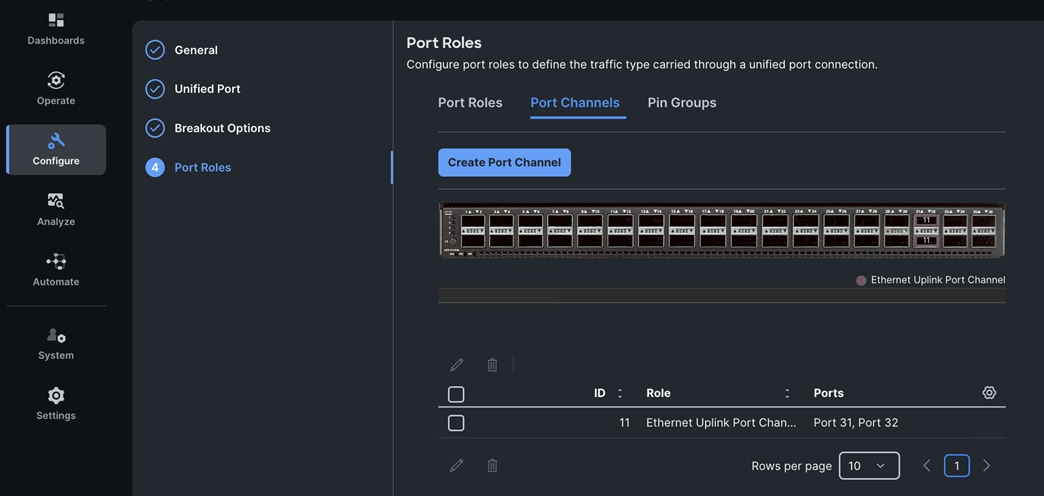

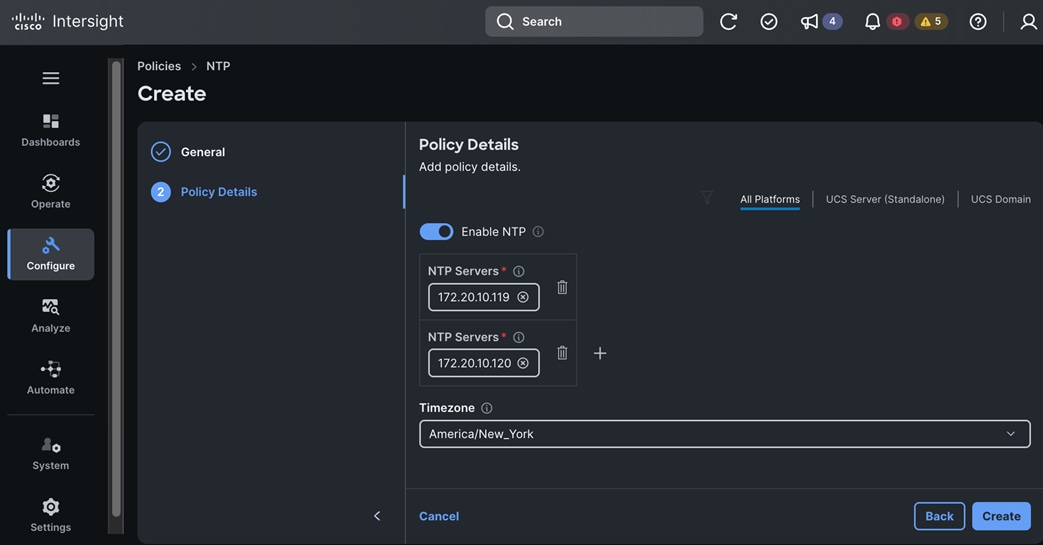

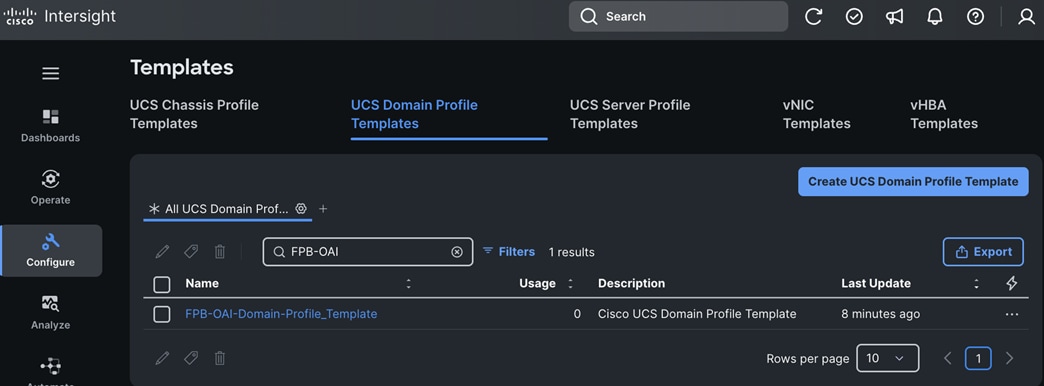

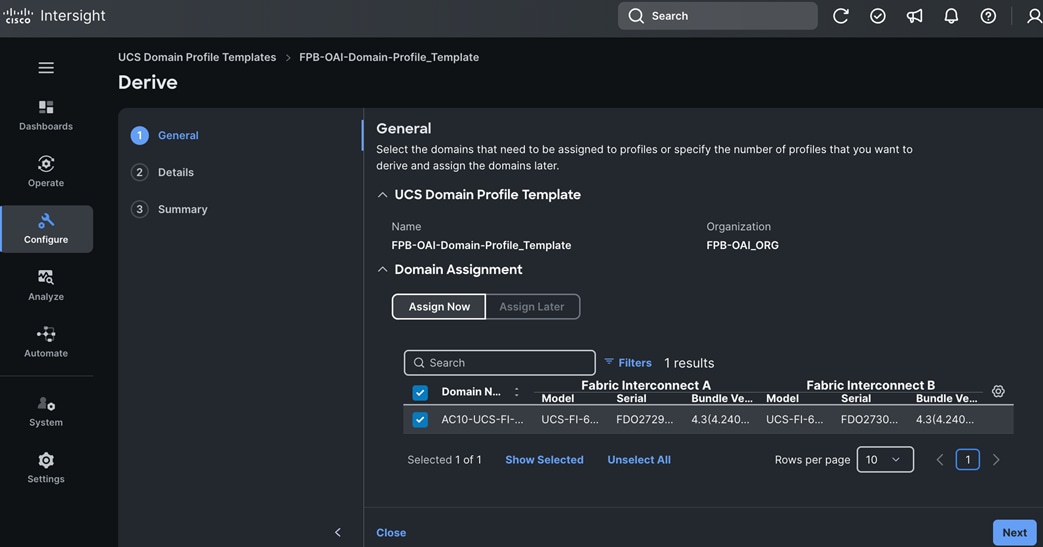

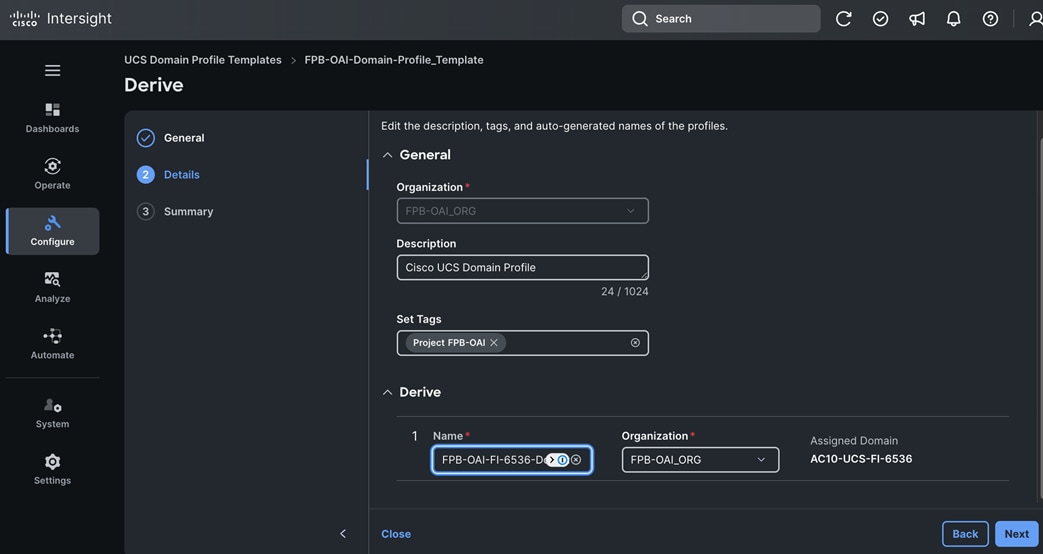

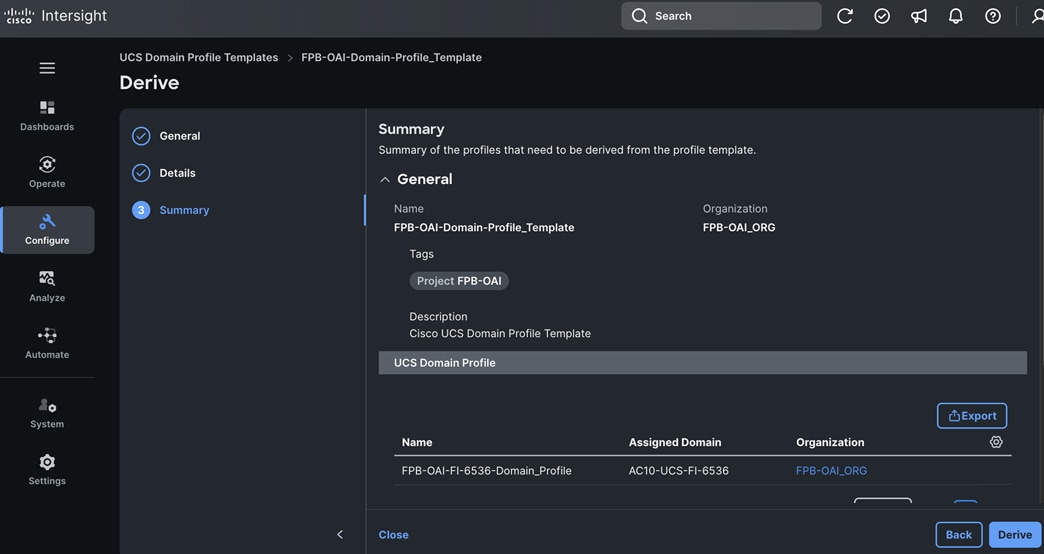

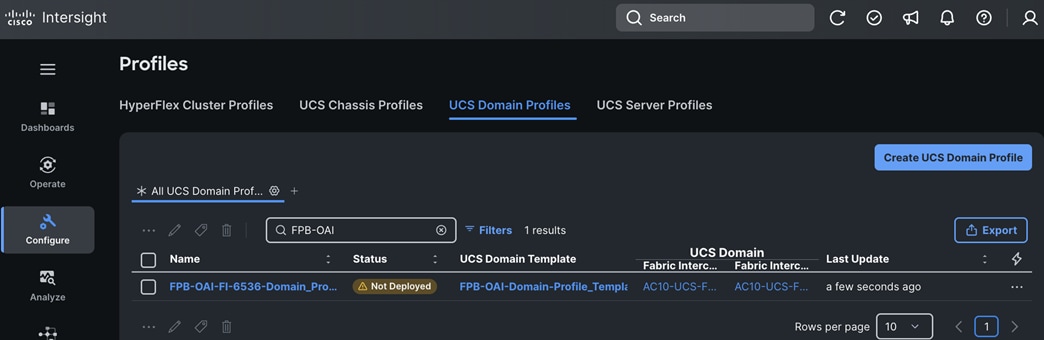

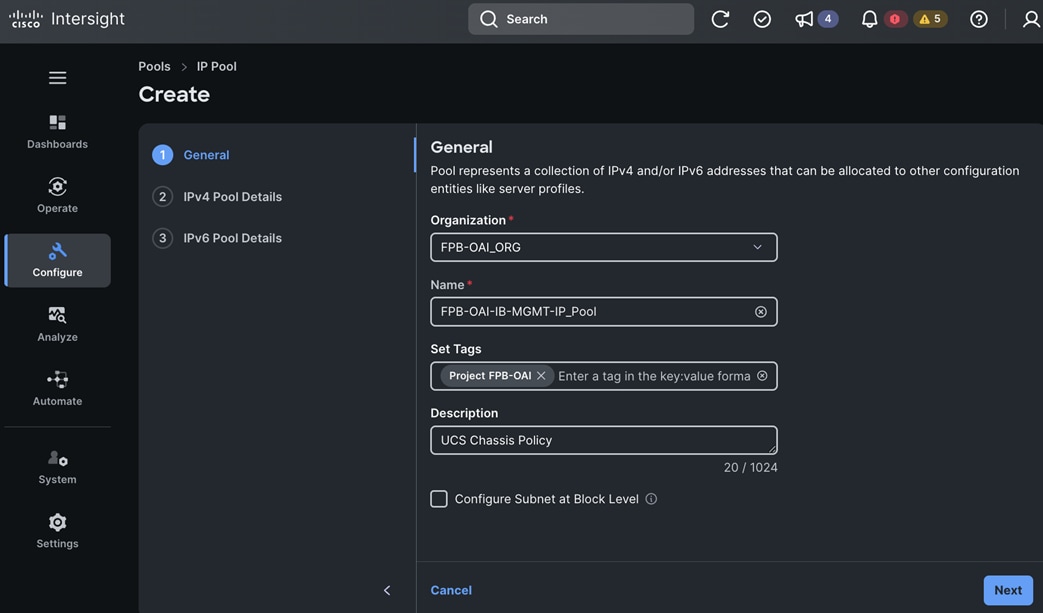

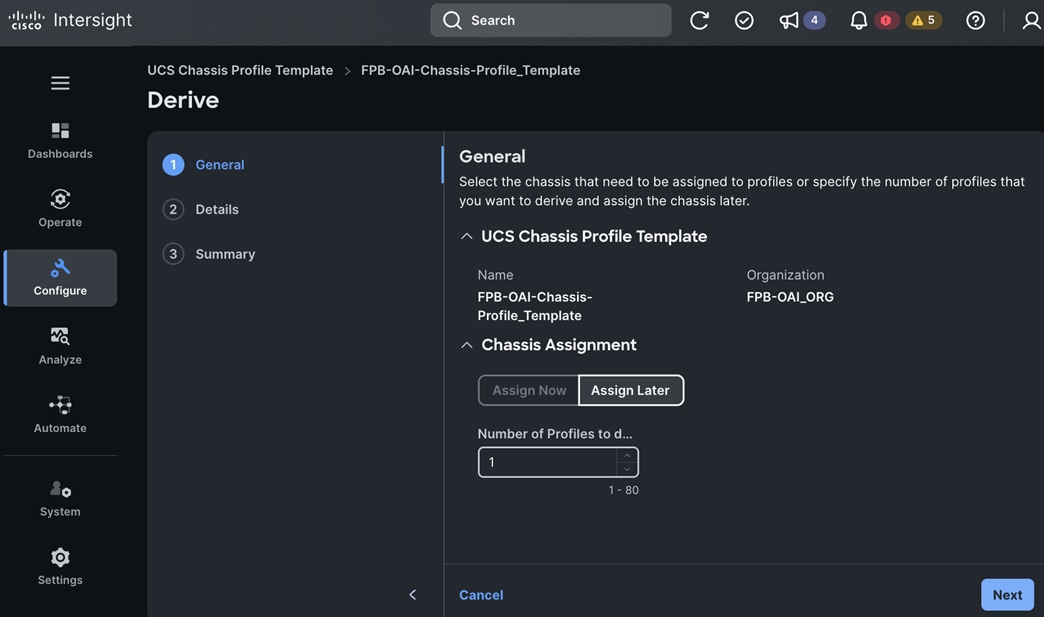

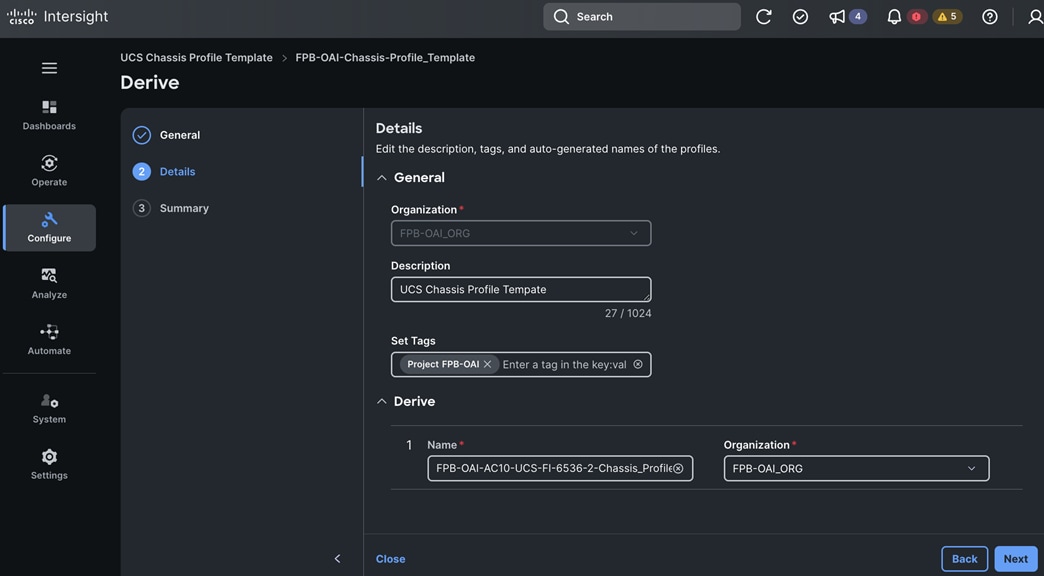

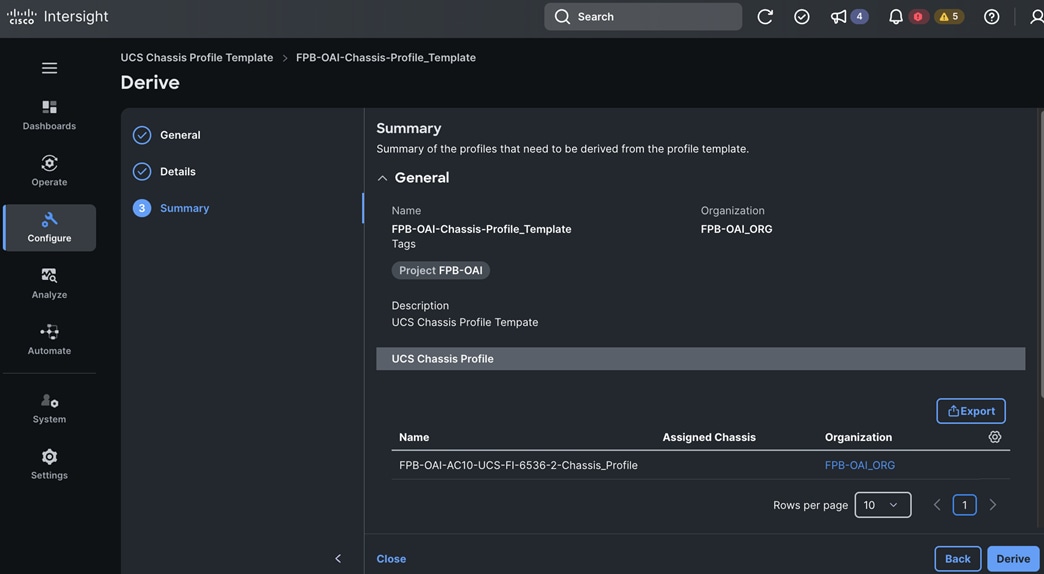

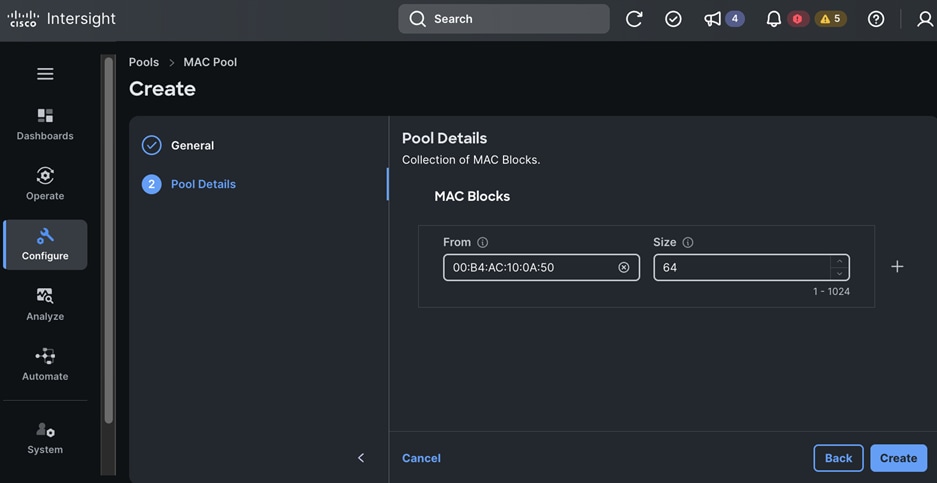

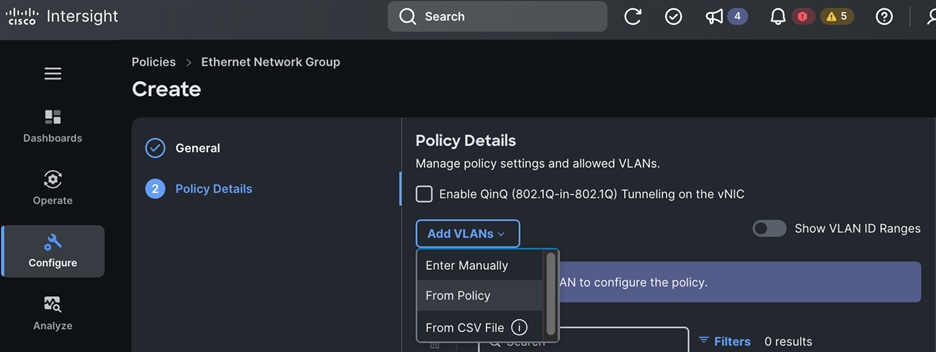

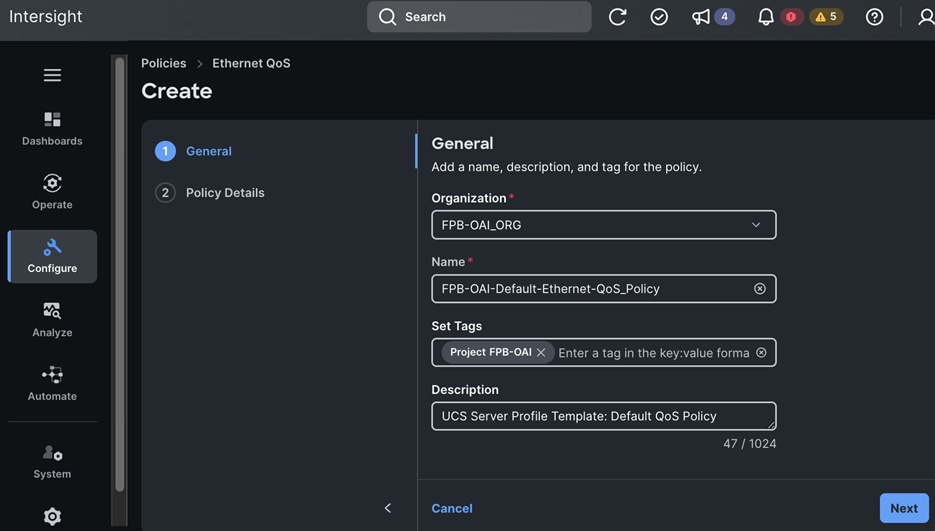

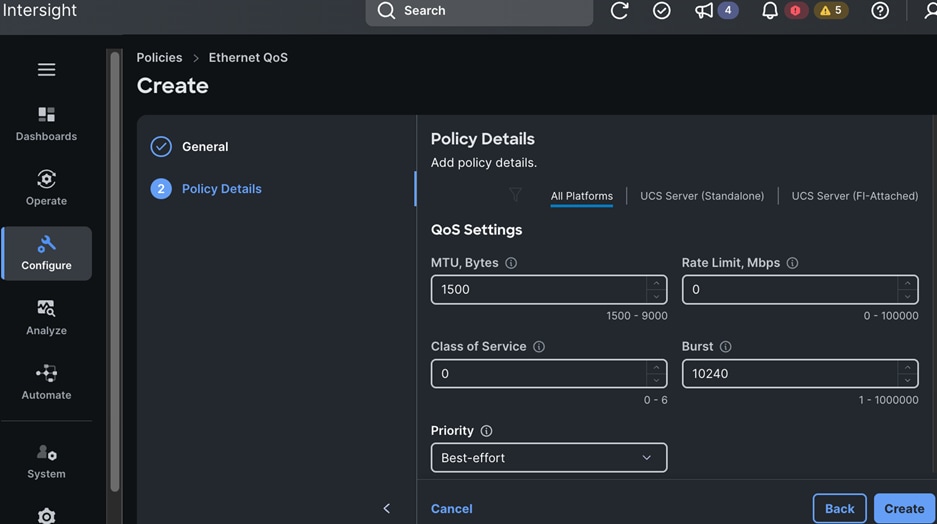

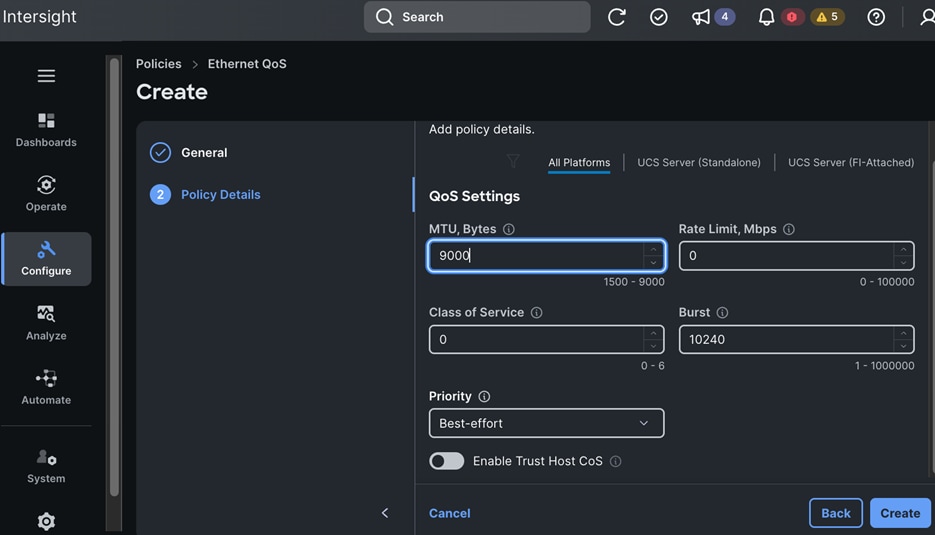

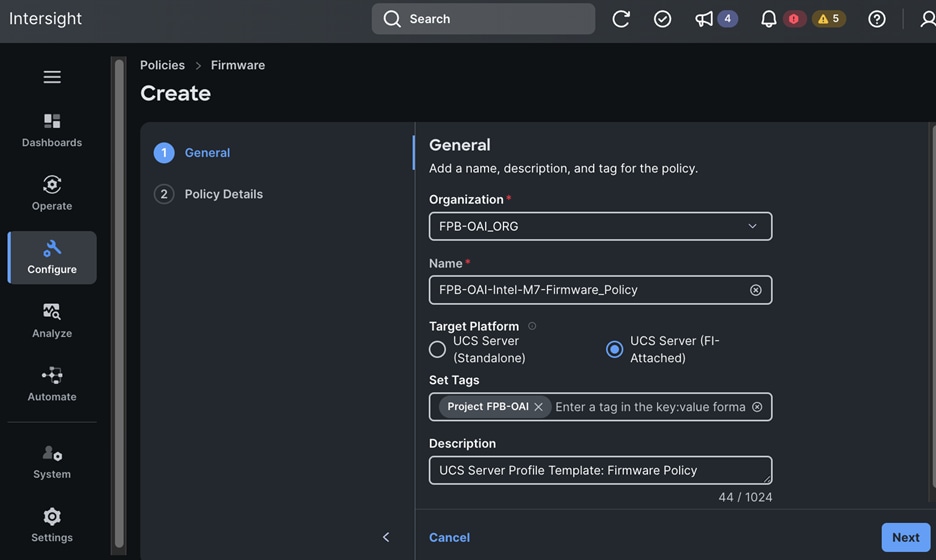

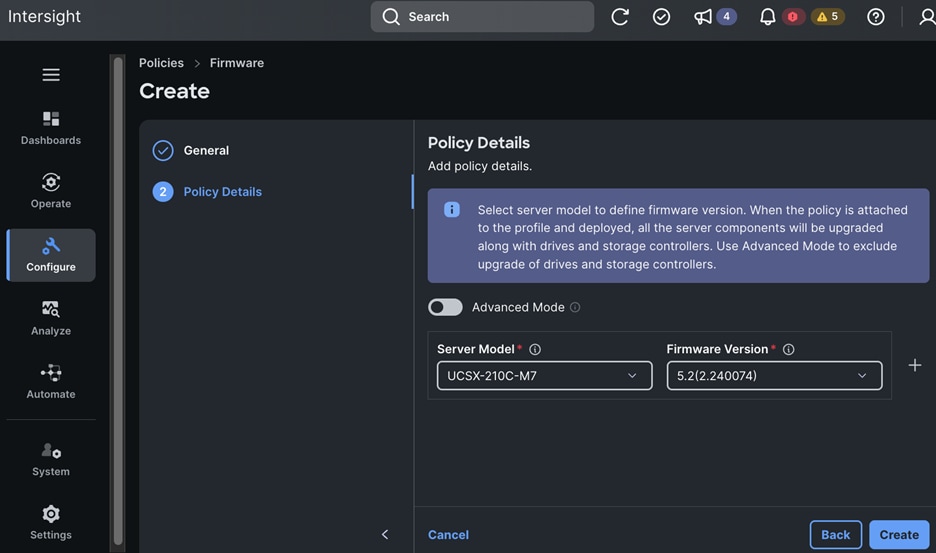

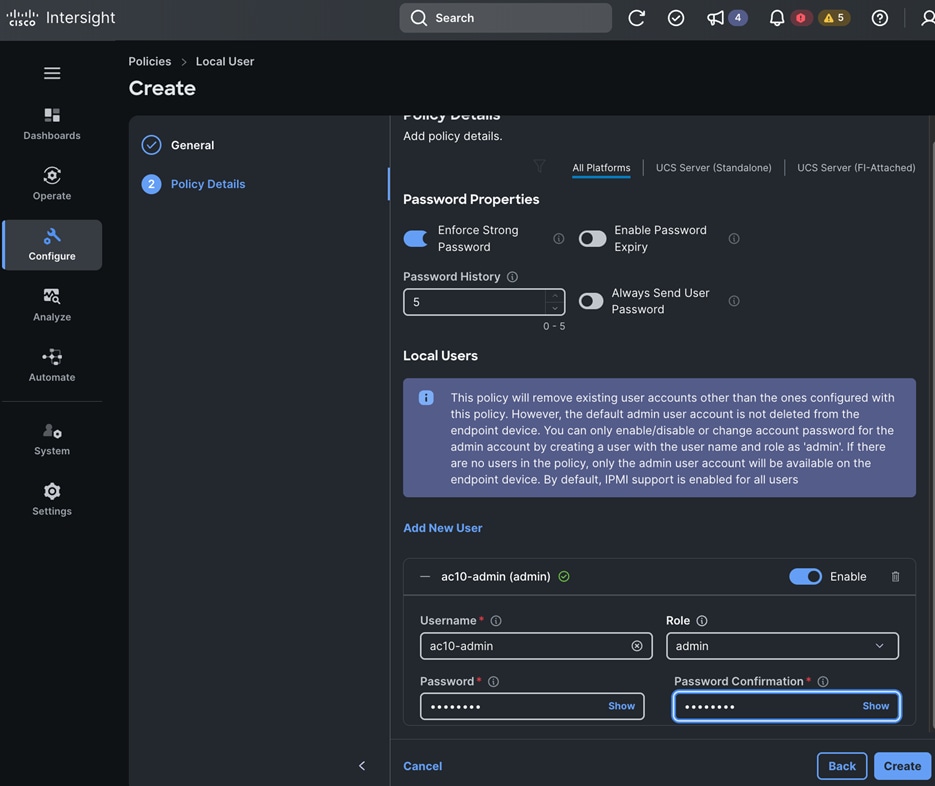

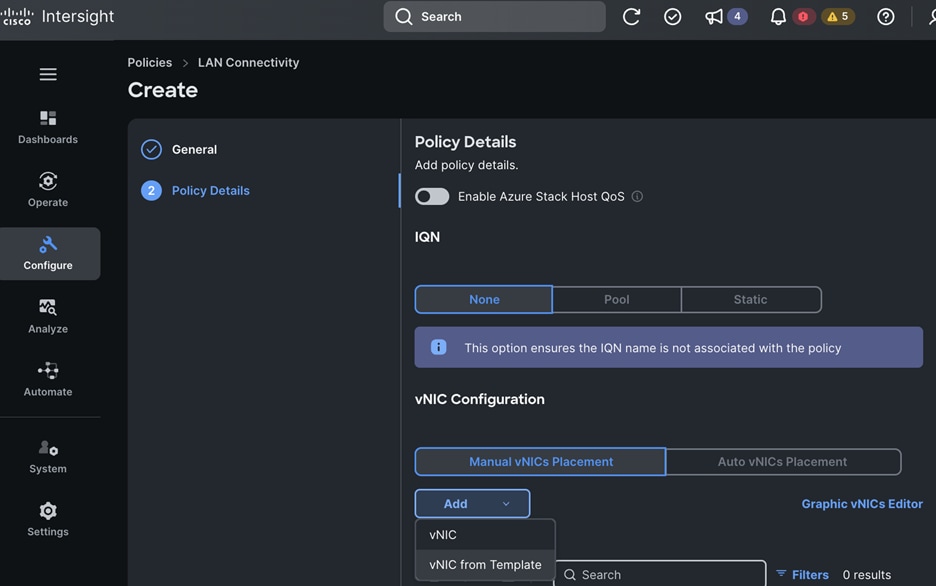

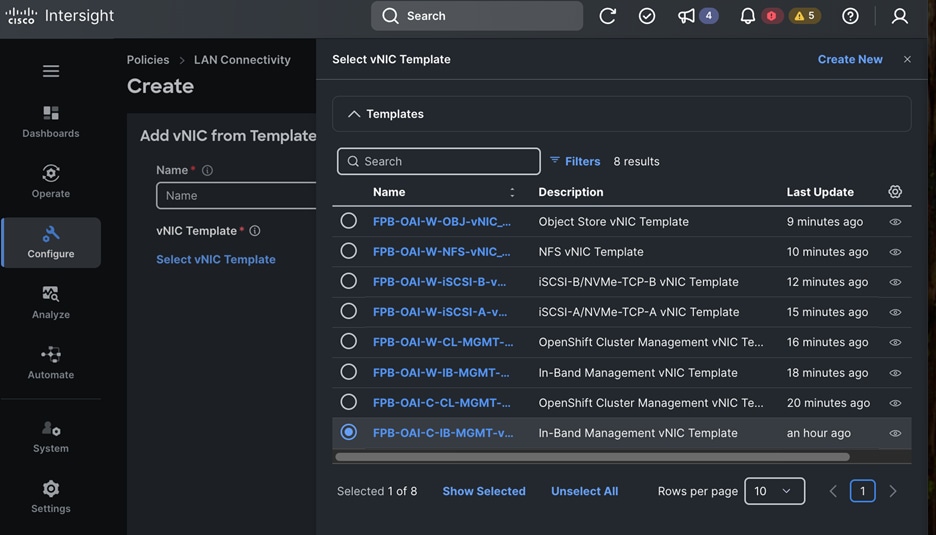

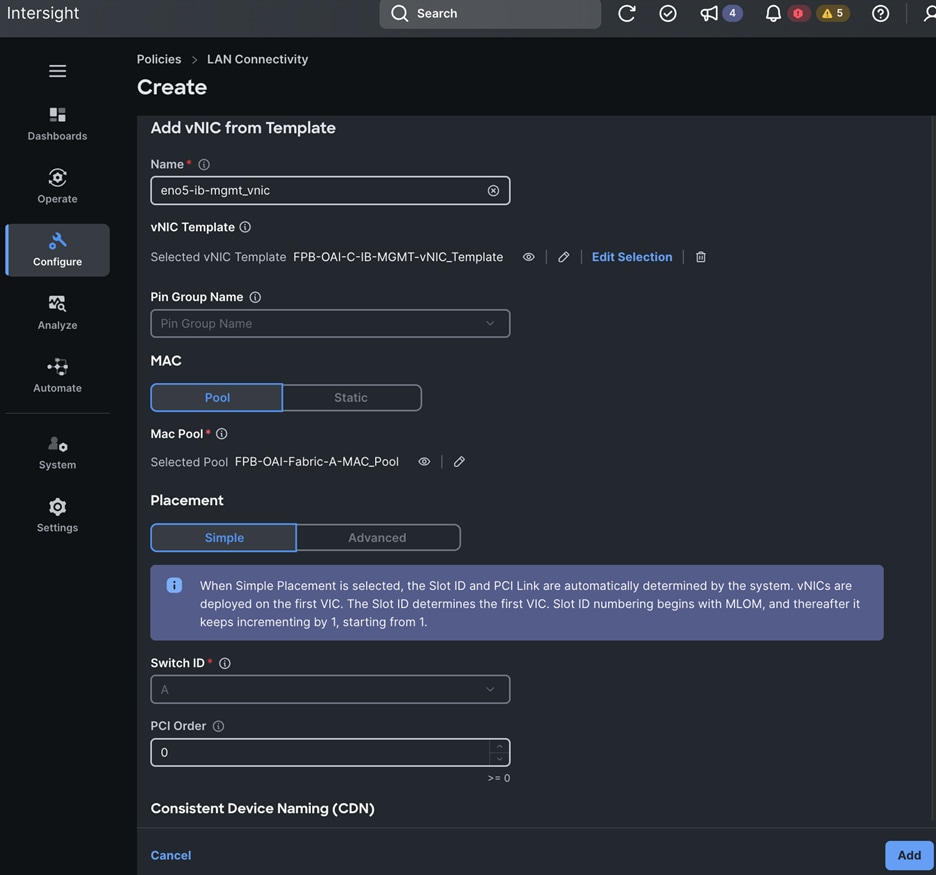

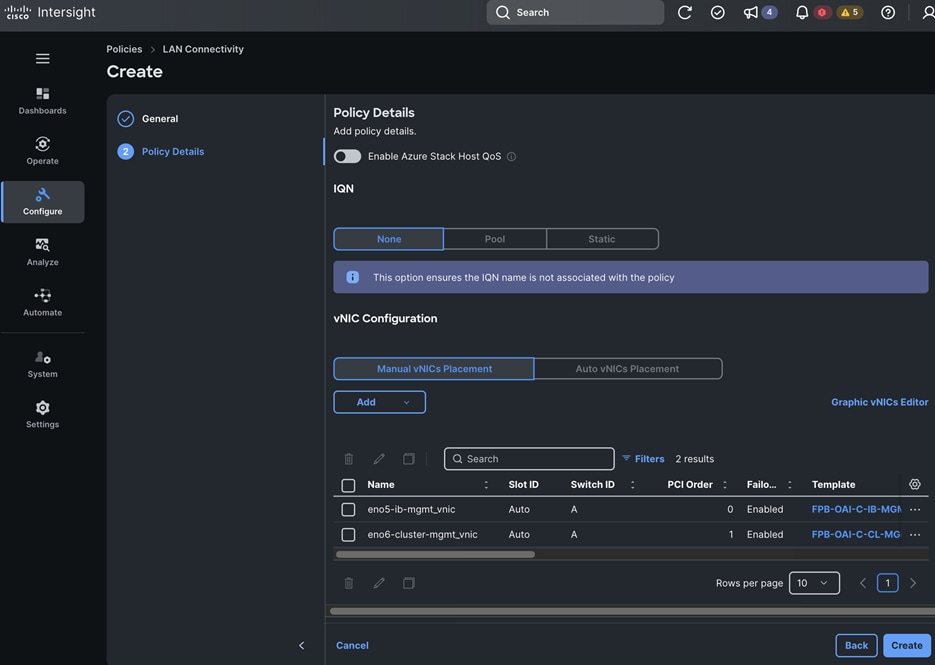

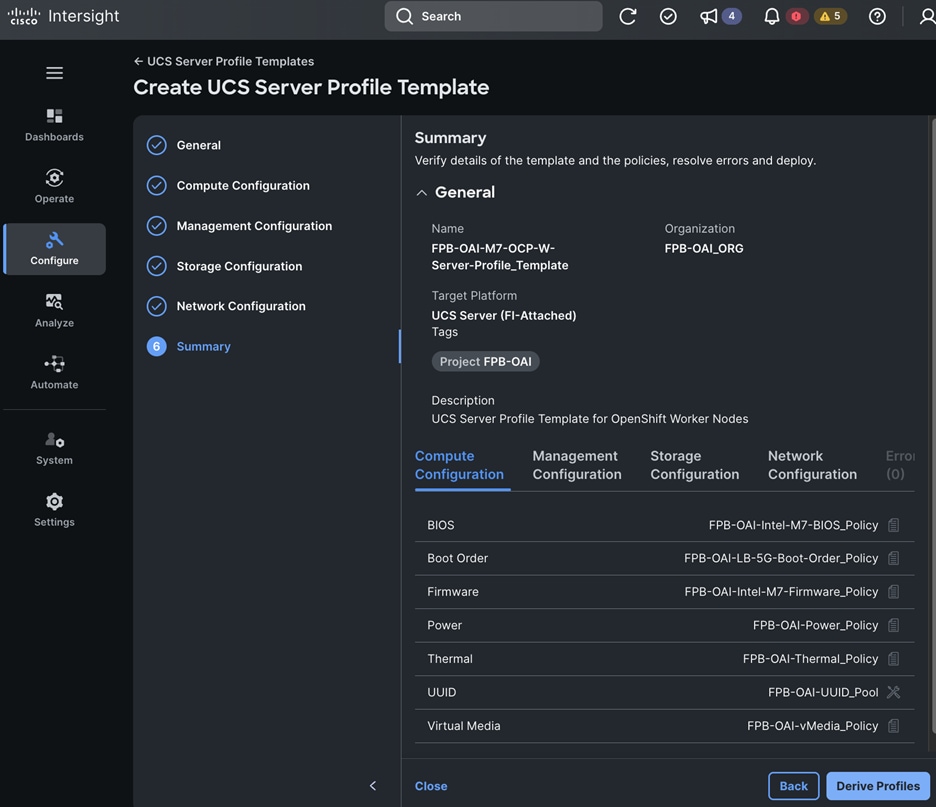

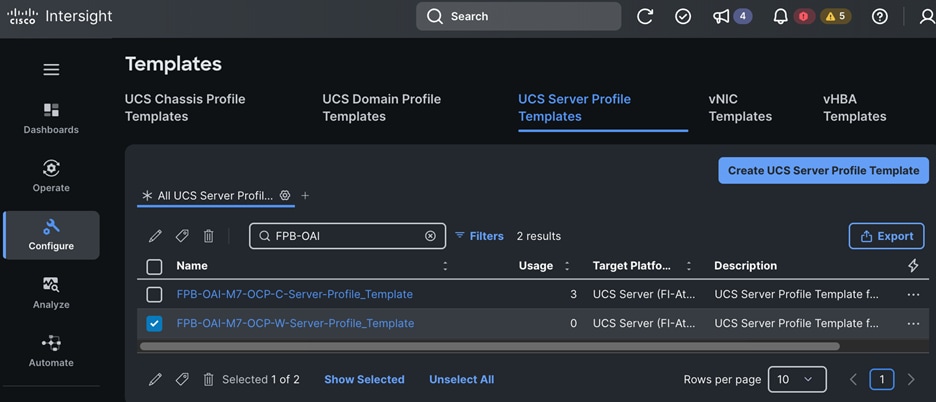

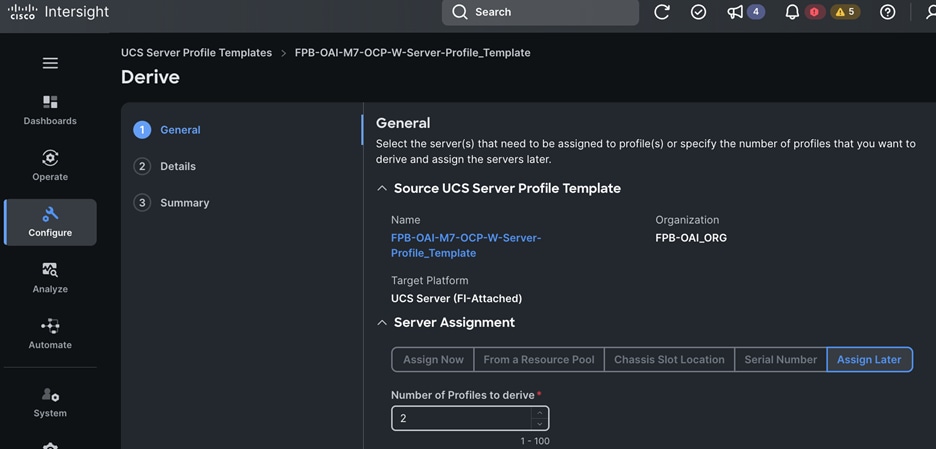

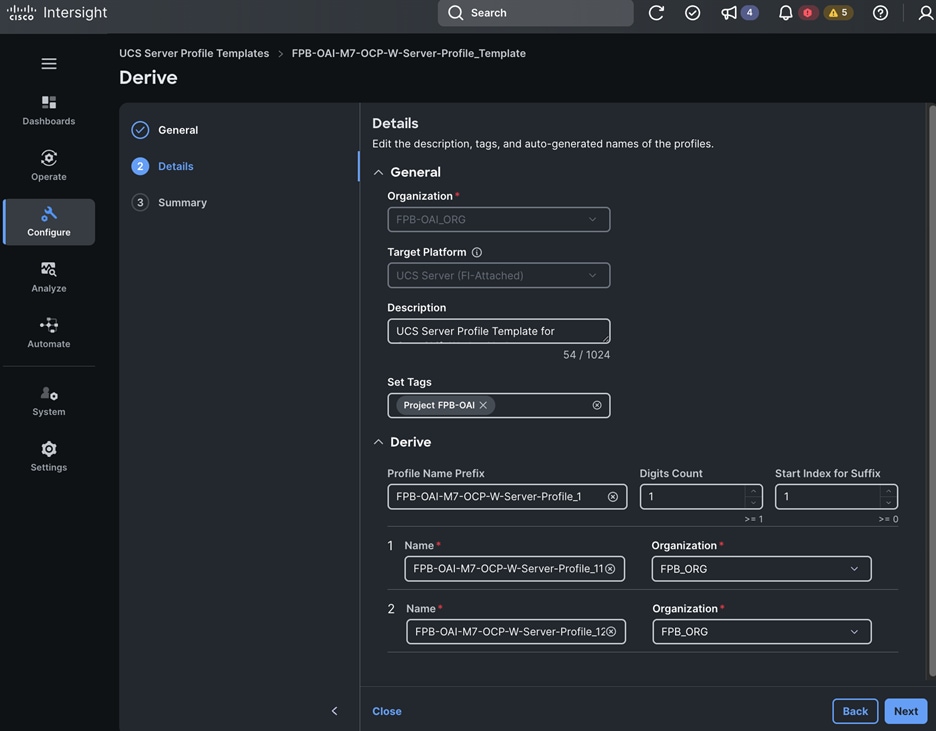

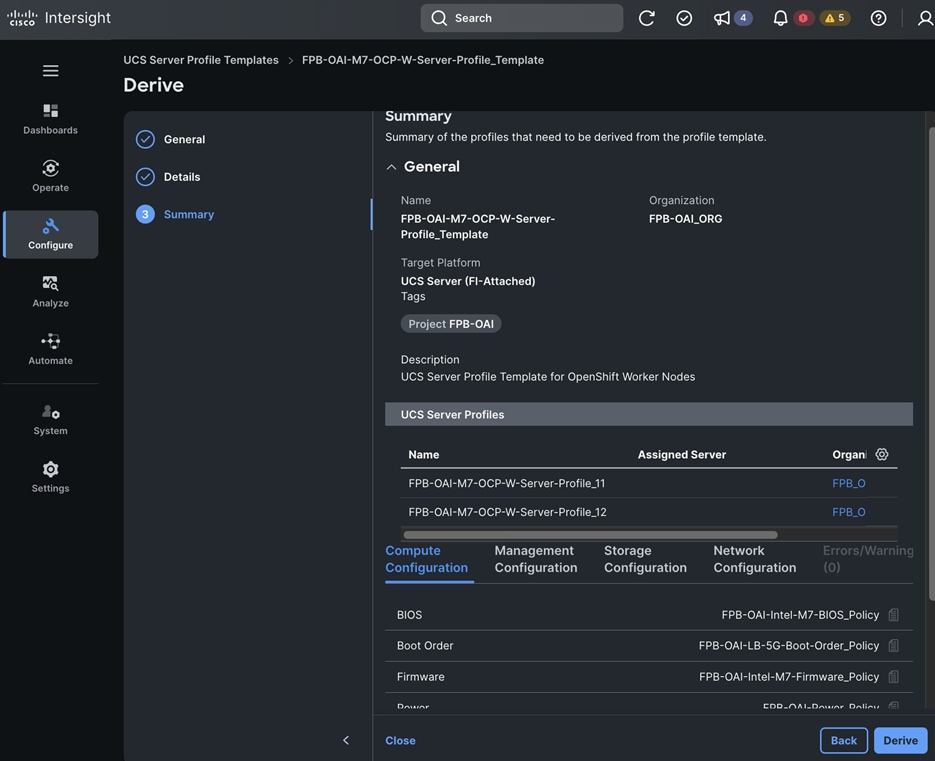

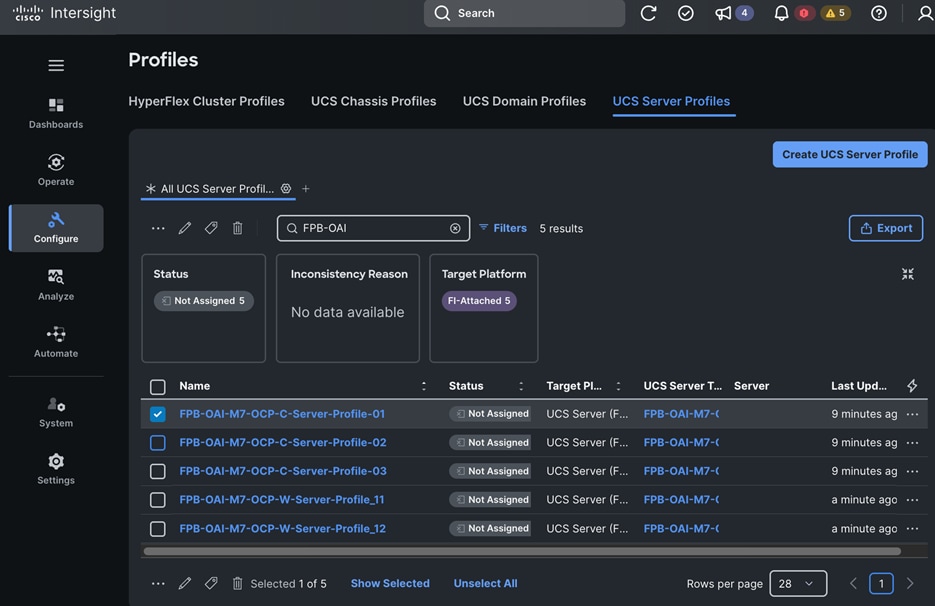

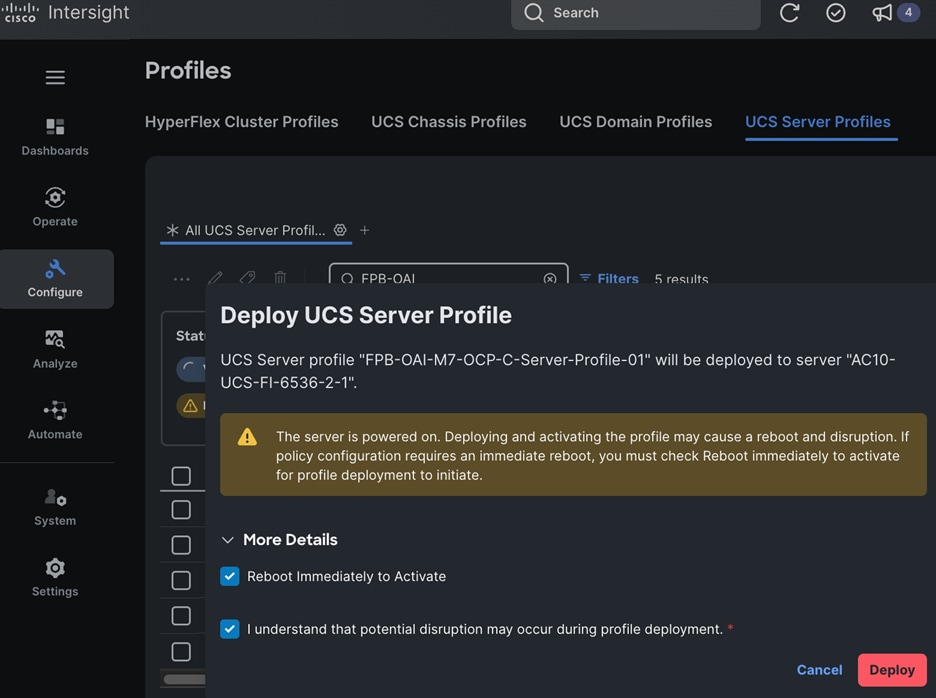

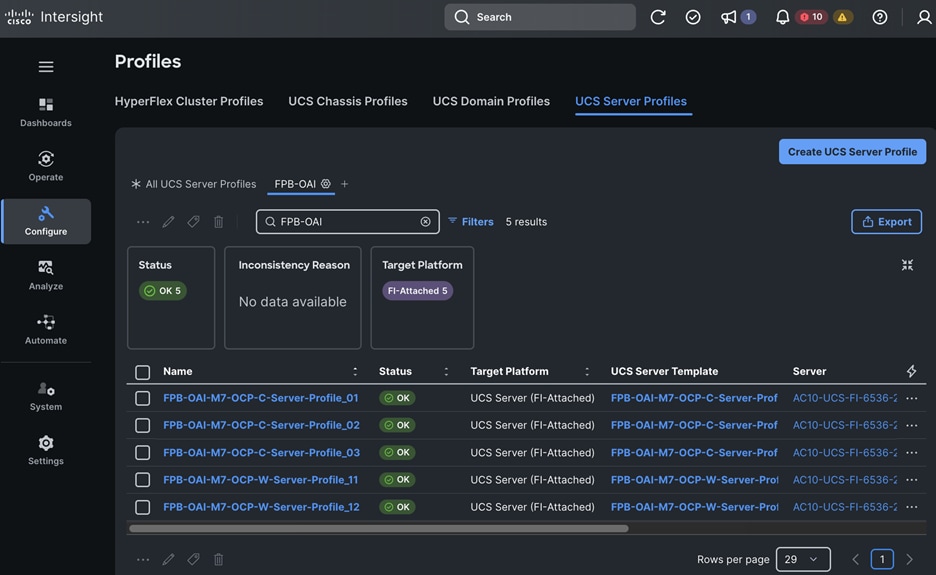

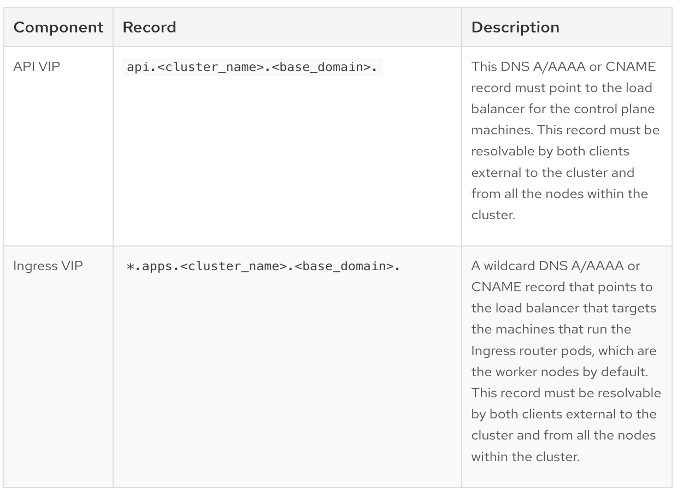

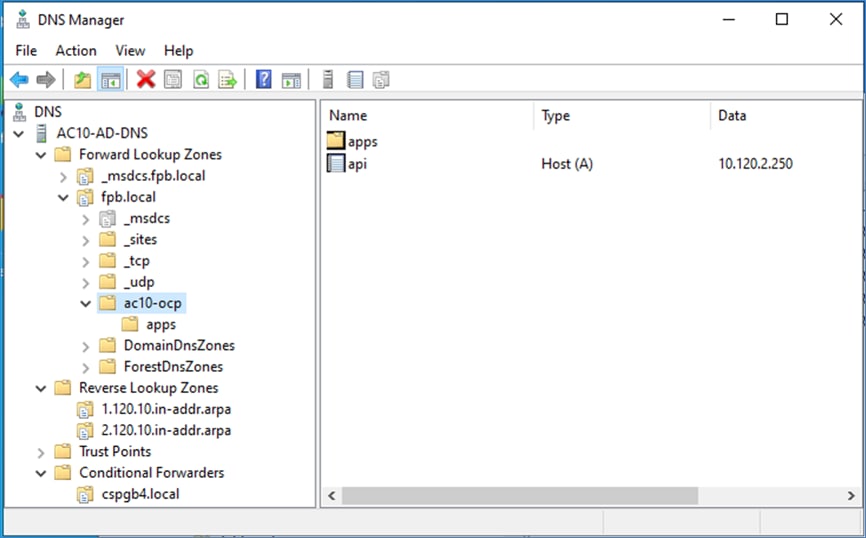

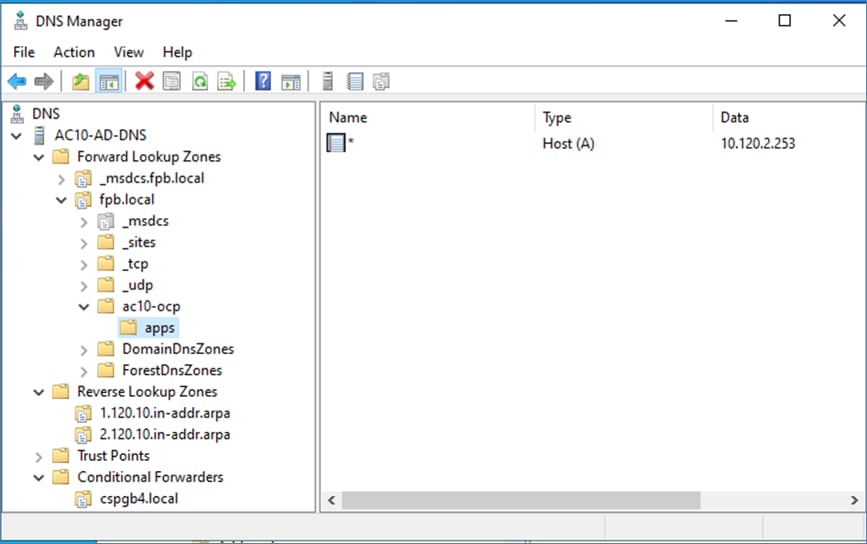

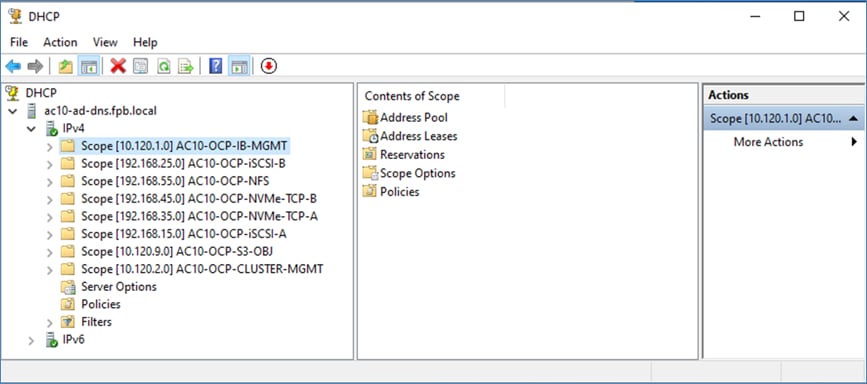

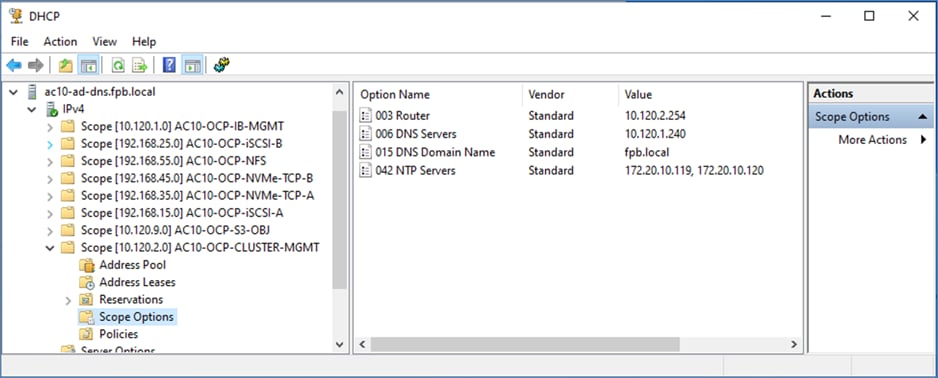

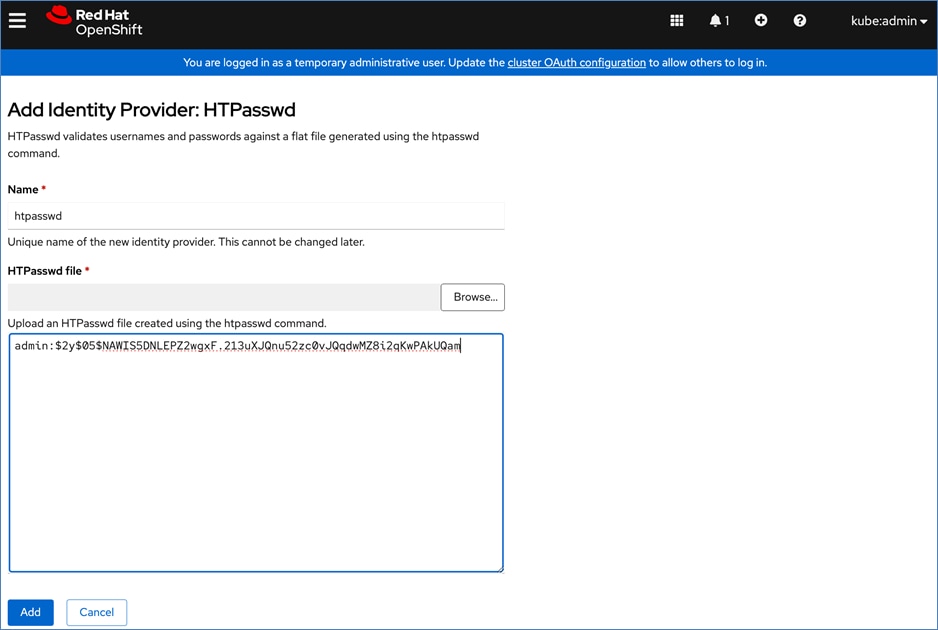

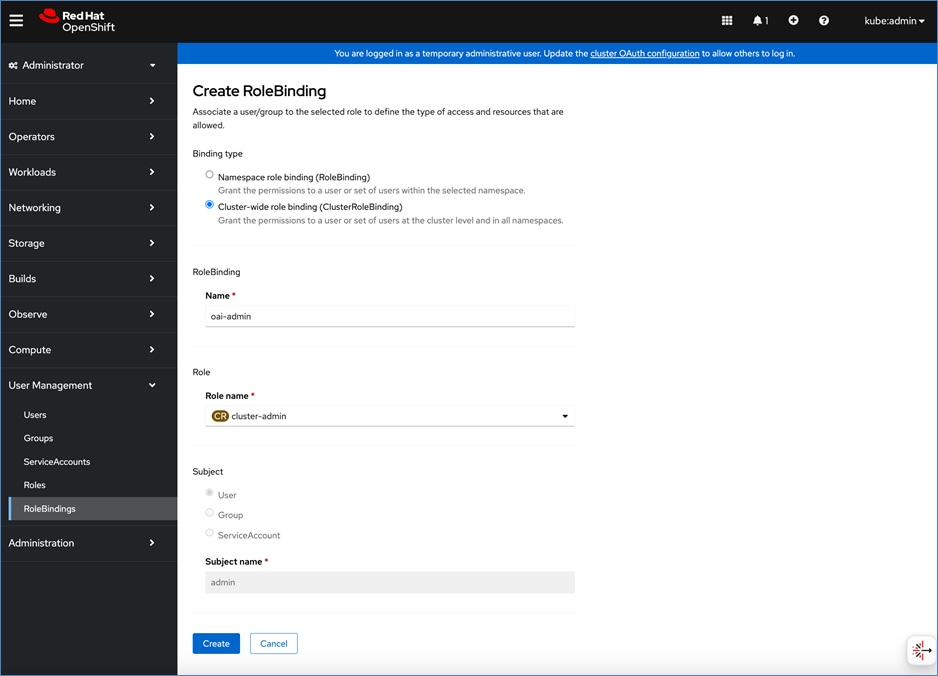

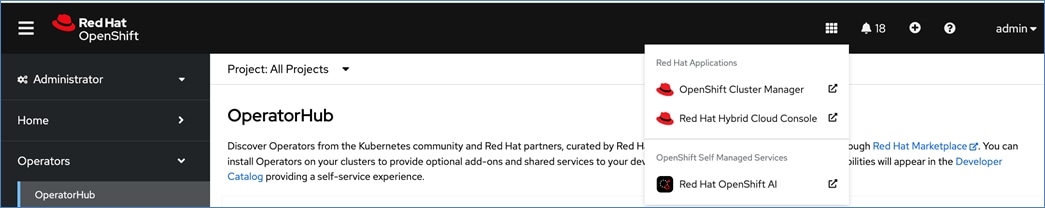

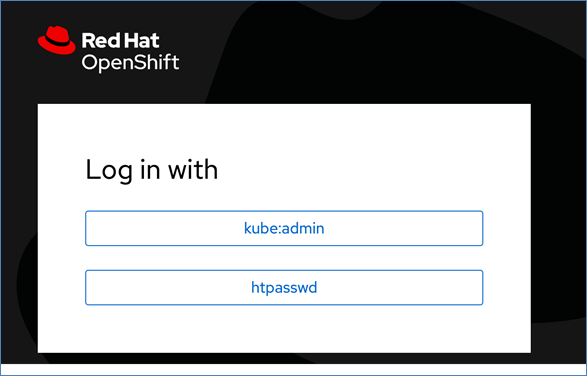

|