Cisco and SAS Edge-to-Enterprise IoT Analytics Platform

Meeting the Challenge of IoT for Industrial Applications

Last Updated: February 24, 2017

About the Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2017 Cisco Systems, Inc. All rights reserved.

Table of Contents

Cisco and SAS Edge-to-Enterprise IoT Analytics Platform

Cisco and SAS Support the Entire IoT Analytics Lifecycle

Cisco Unified Computing System

Cisco IR829G Industrial Integrated Services (IR829)

Cisco 3000 Series Industrial Security Appliance (ISA)

Cisco Unified Computing System

Cisco UCS Integrated Infrastructure for Big Data and Analytics

Cisco UCS 6300 Series Fabric Interconnects

Cisco UCS C-Series Rack Mount Servers

Cisco UCS Virtual Interface Card 1387

Cisco 829 Industrial Integrated Services Router

Cisco Fog Computing Architecture

Cisco Application Centric Infrastructure (ACI) Overview

Architectural Benefits of Using Fabric Interconnect with Cisco ACI

Centralized Management for the Entire Network

Multi-Tenant and Mixed Workload Support

Deep Telemetry of Tenant and Application Network

Cisco Nexus 9000 Series Switches

ACI Spine Line Card for Cisco Nexus 9508

Application Policy Infrastructure Controller (APIC)

SAS Event Stream Processing (ESP)

SAS Event Stream Processing (ESP) Server

Software Distributions and Versions

Red Hat Enterprise Linux (RHEL)

Switch Discovery with the APIC

Switch Registration with the APIC Cluster

Validating the Fabric Topology

Network Configuration and ACI Setup

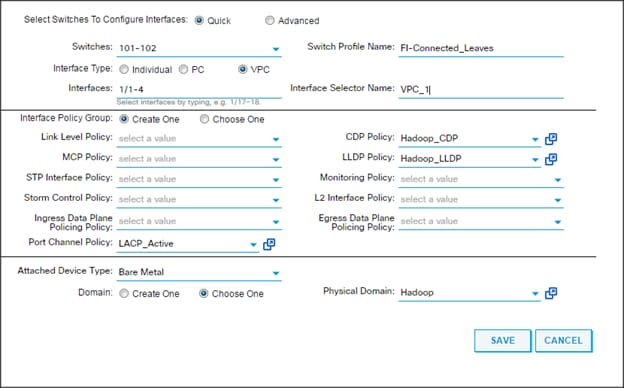

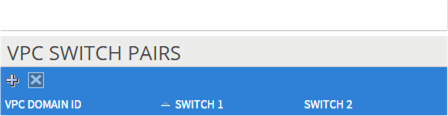

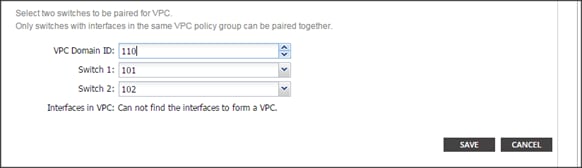

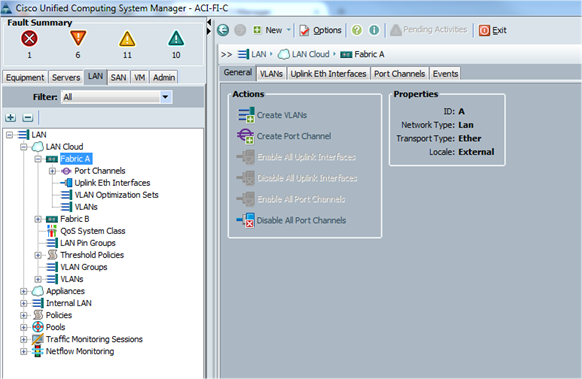

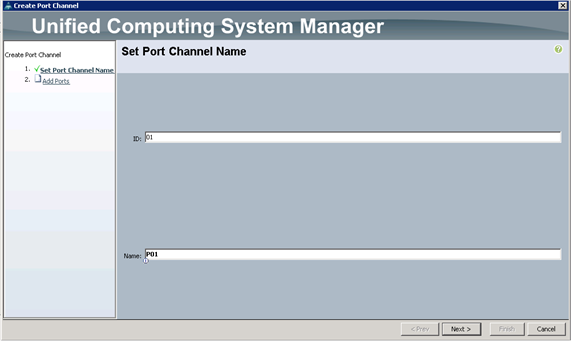

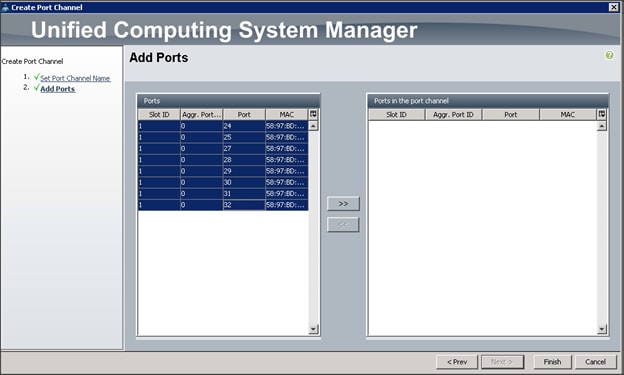

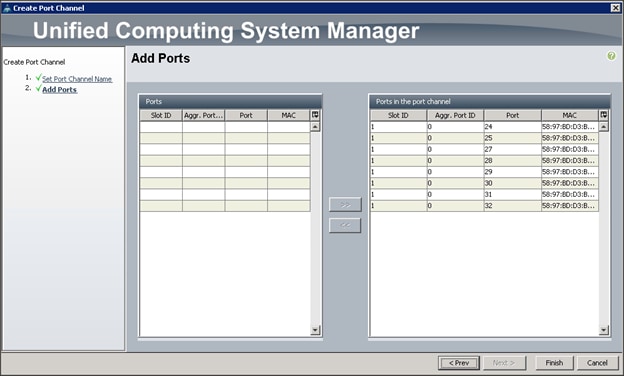

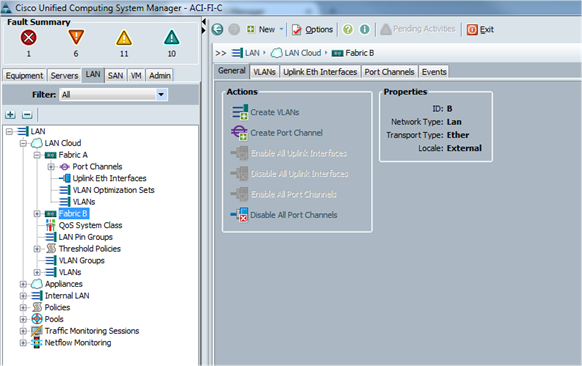

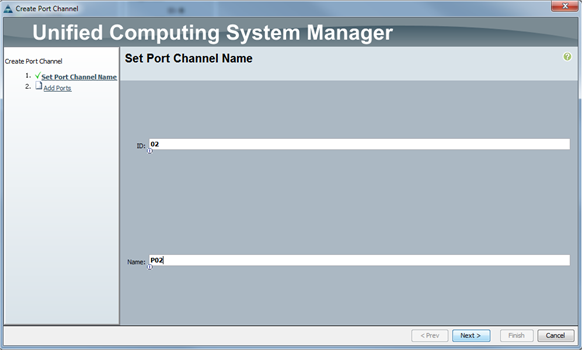

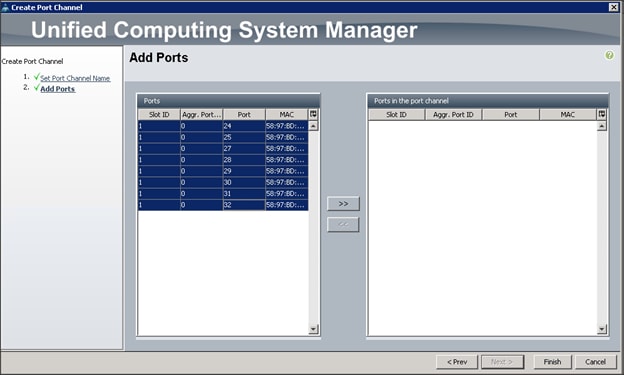

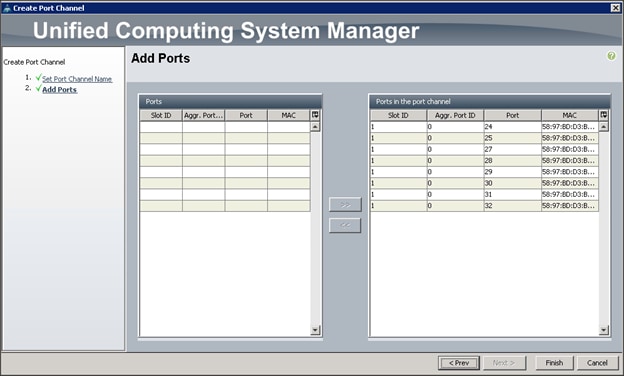

Configuring VPC Ports for Fabric Interconnect

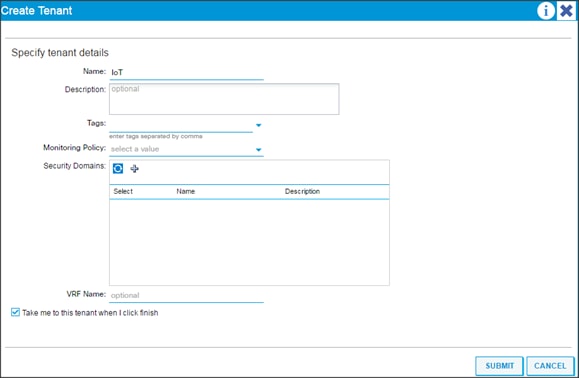

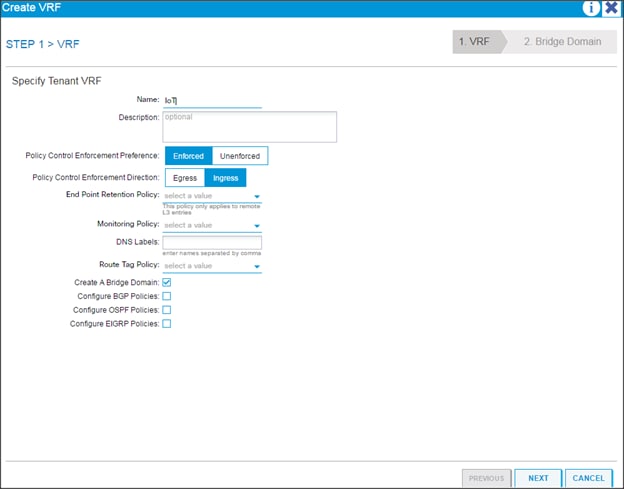

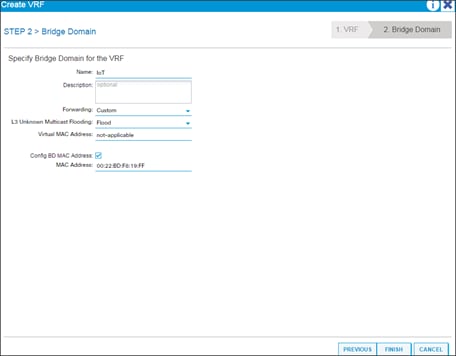

Creating Tenants, Private Networks, and Bridge Domains

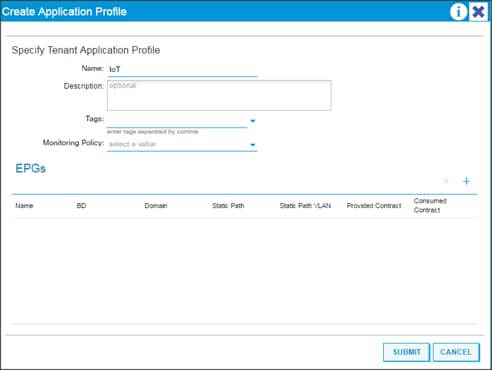

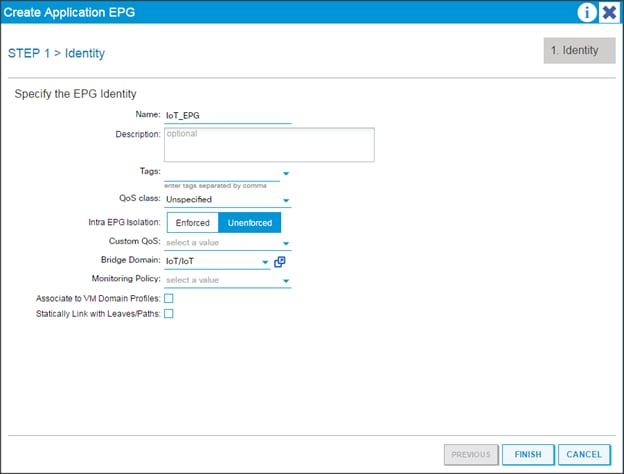

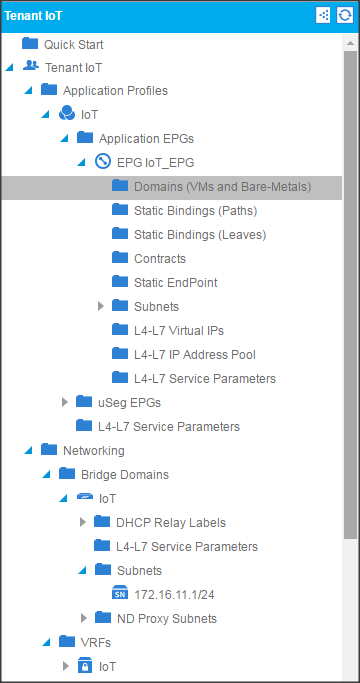

Creating an Application Profile Using the GUI

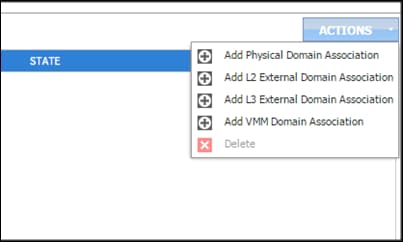

Creating the Static Bindings for the Leaves and Paths

Configure the Ports Connected to the Cisco IR829G Routers

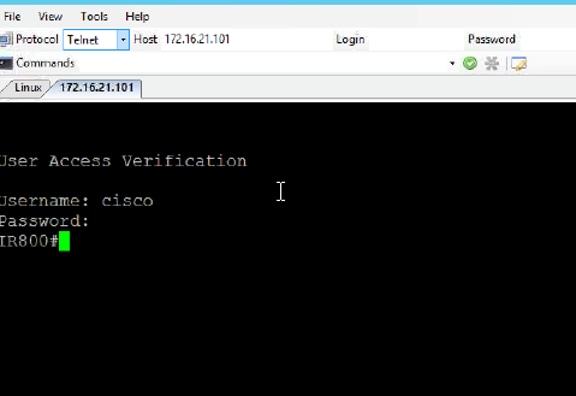

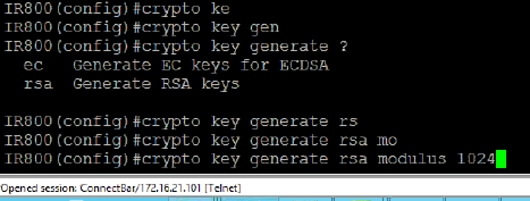

Router Setup and Configuration

Performing Initial Setup of Cisco UCS 6332 Fabric Interconnects

Logging into Cisco UCS Manager

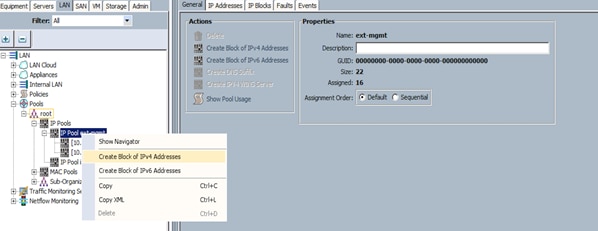

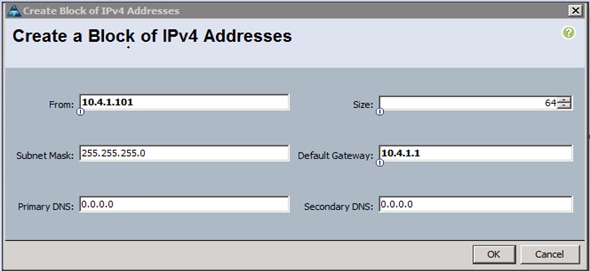

Adding a Block of IP Addresses for KVM Access

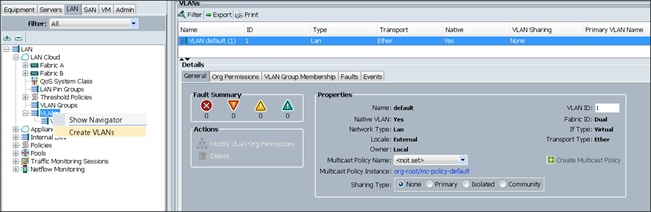

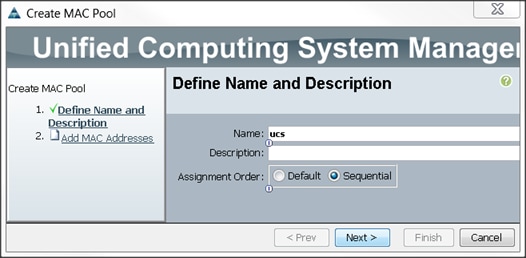

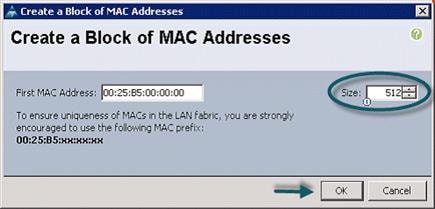

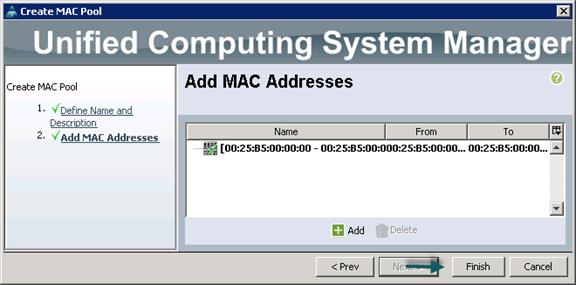

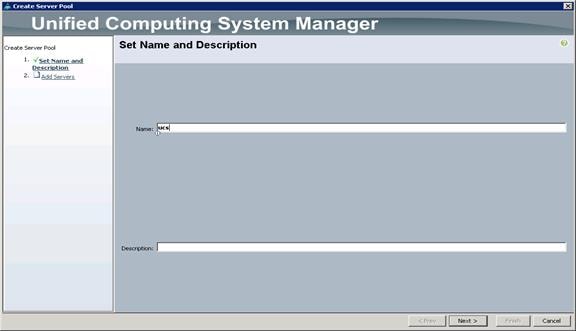

Creating Pools for Service Profile Templates

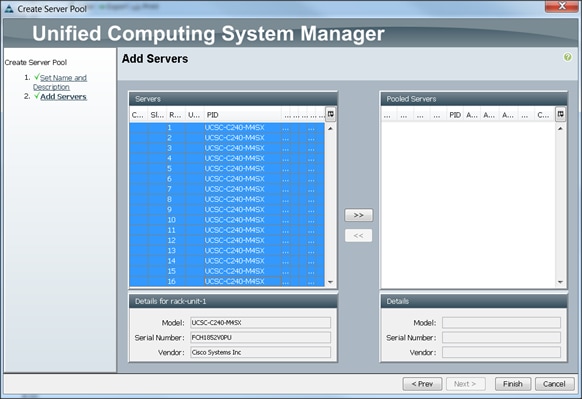

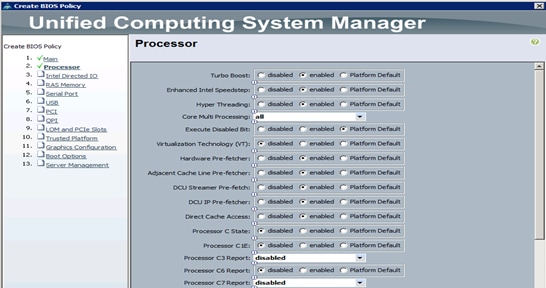

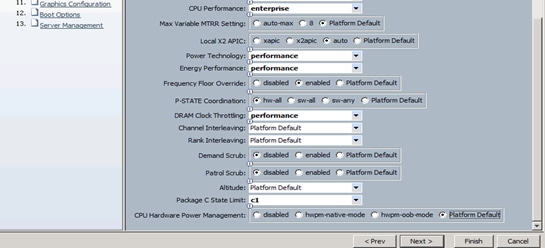

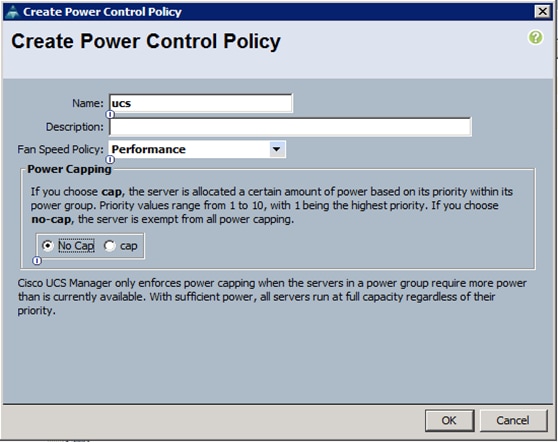

Creating Policies for Service Profile Templates

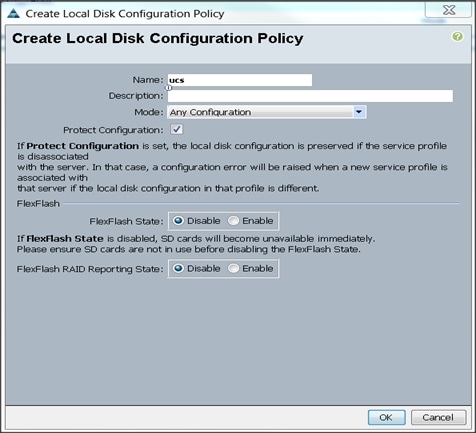

Creating the Local Disk Configuration Policy

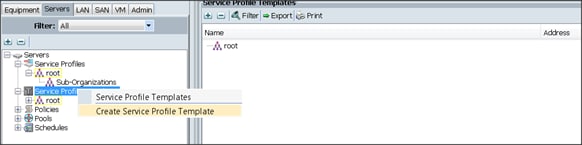

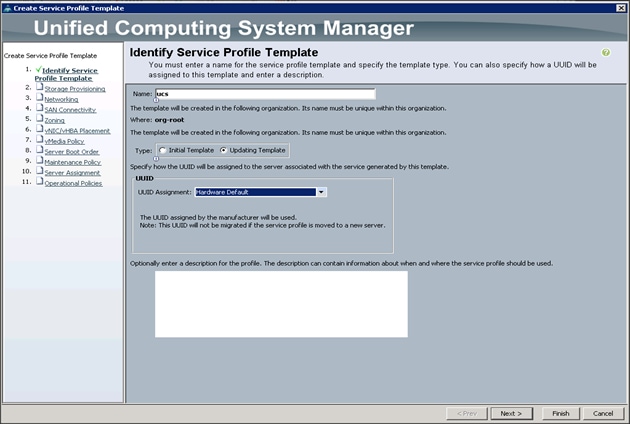

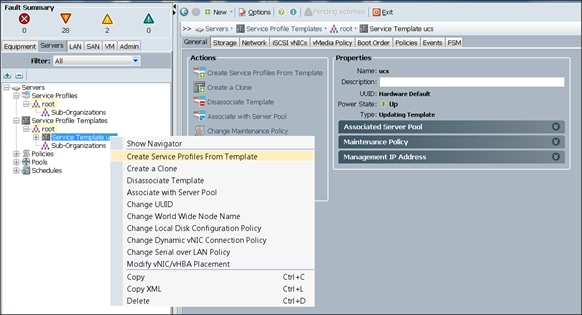

Creating a Service Profile Template

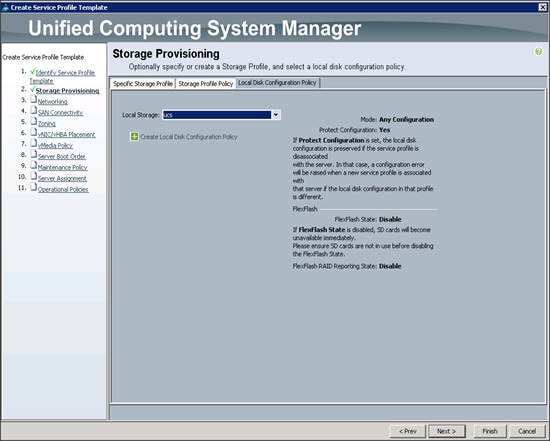

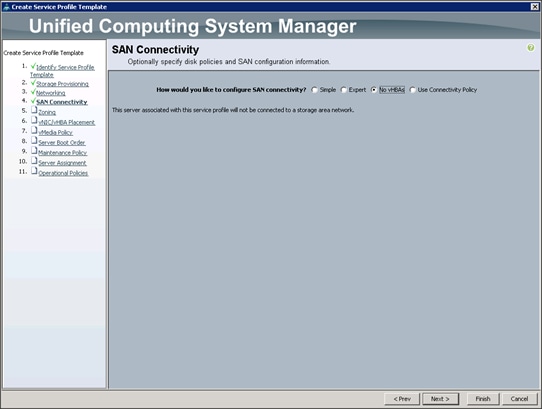

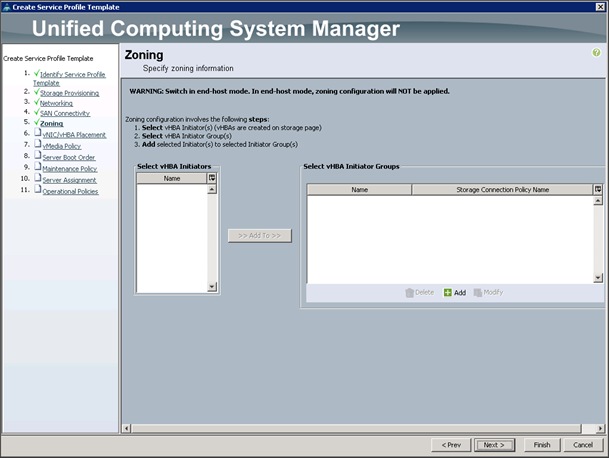

Configuring the Storage Provisioning for the Template

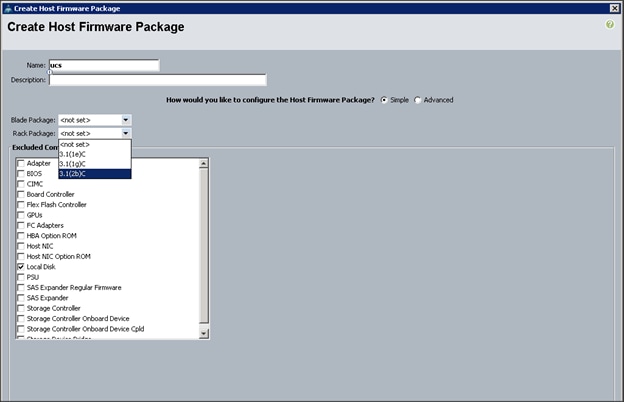

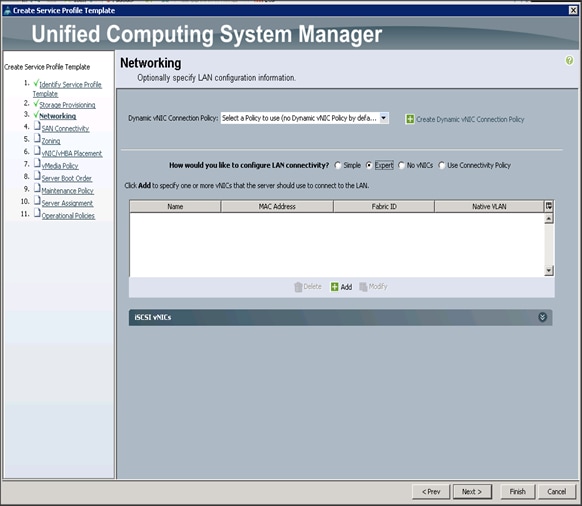

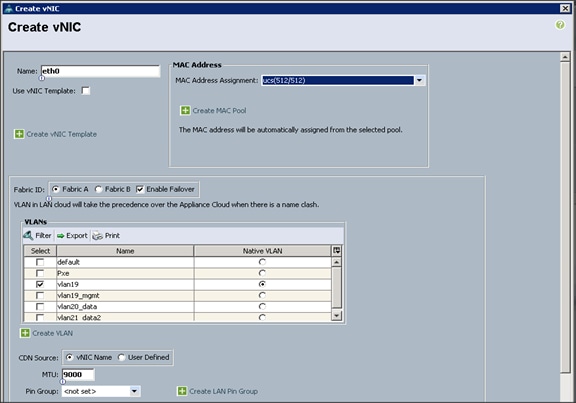

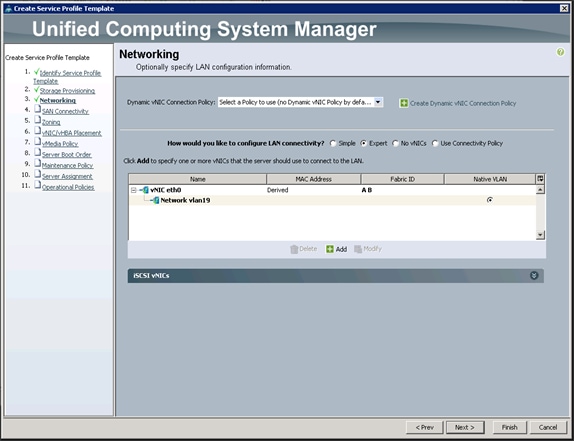

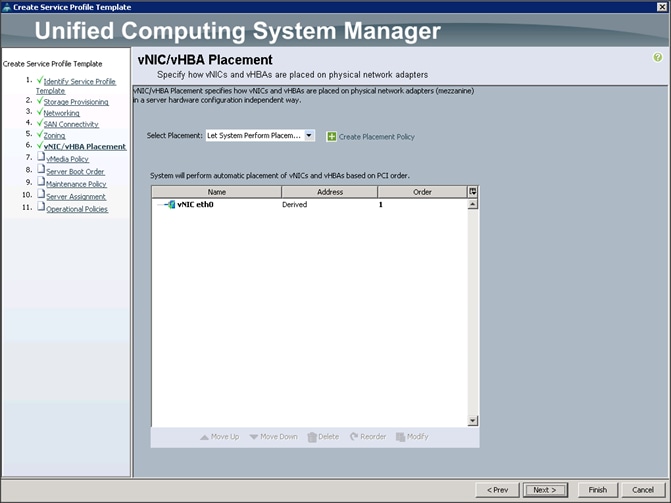

Configuring Network Settings for the Template

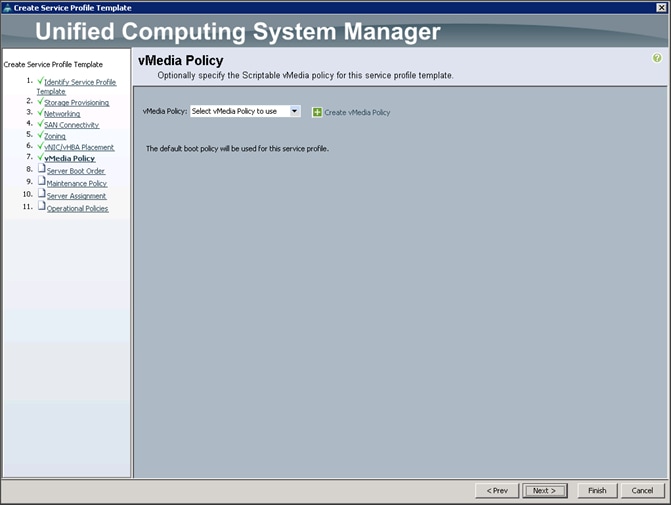

Configuring the vMedia Policy for the Template

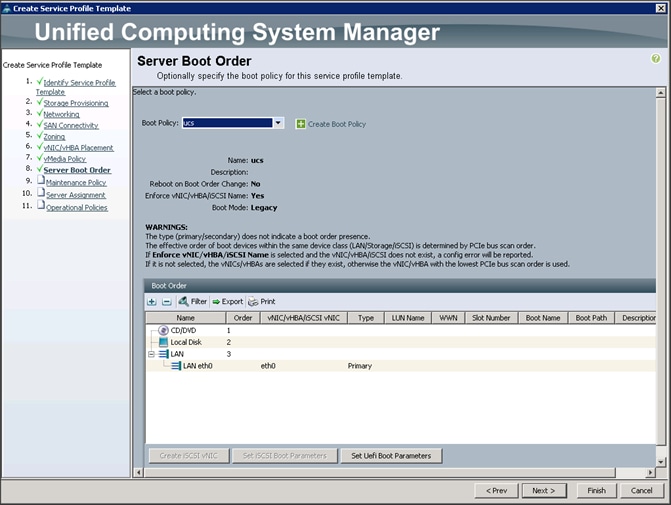

Configuring Server Boot Order for the Template

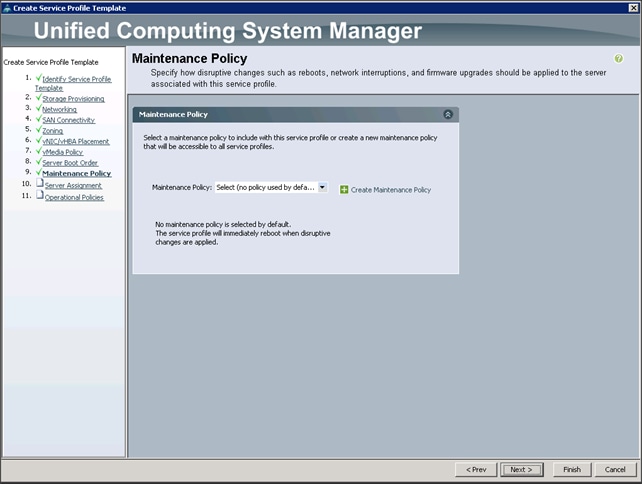

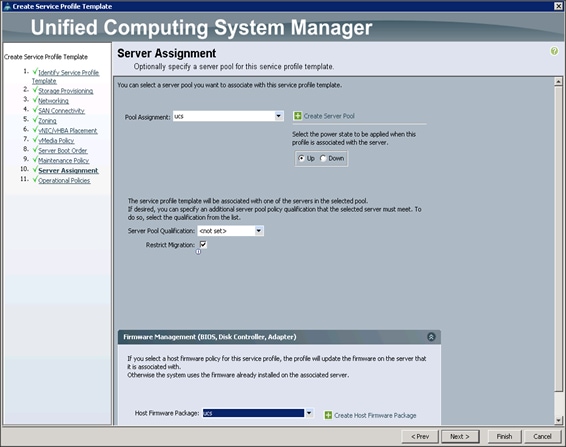

Configuring Server Assignment for the Template

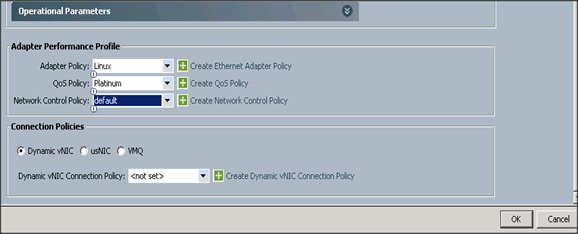

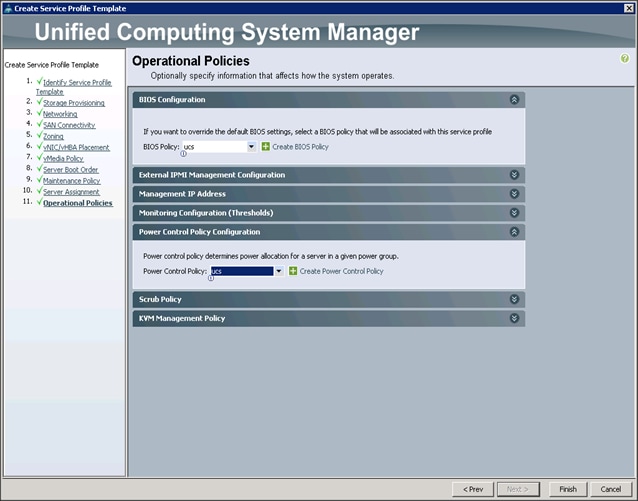

Configuring Operational Policies for the Template

Installing Red Hat Enterprise Linux 7.2

Installing SAS LASR Analytic Server and Visual Analytics

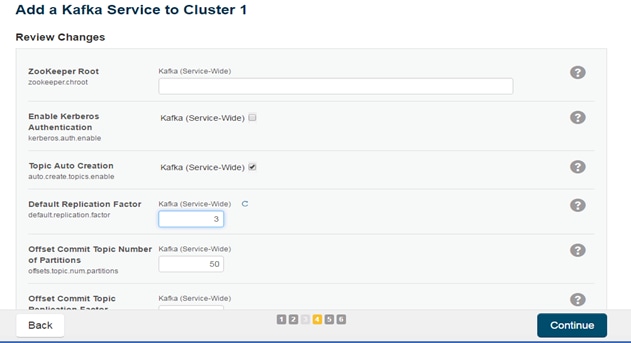

Apache Kafka Installation and Configuration

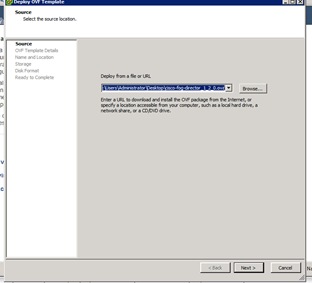

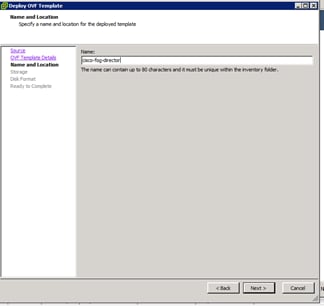

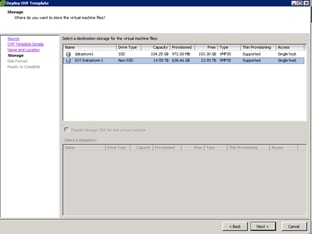

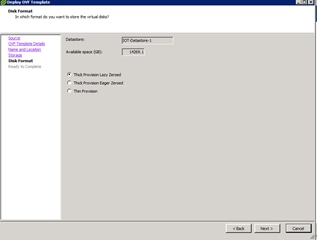

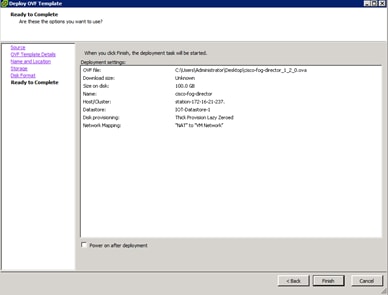

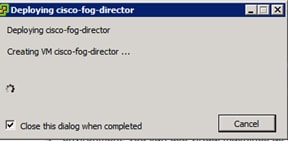

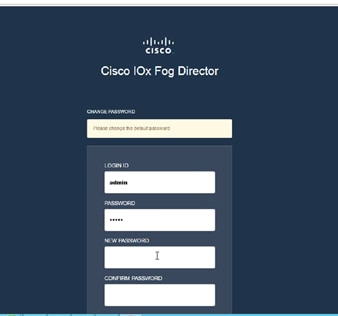

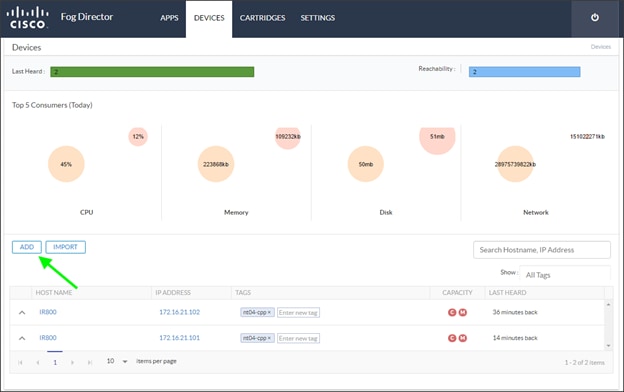

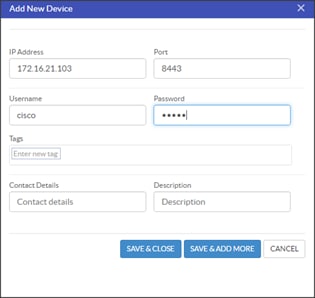

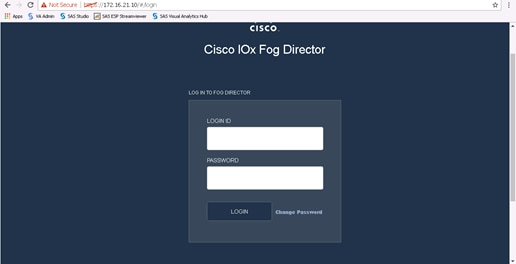

Cisco Fog Director Installation

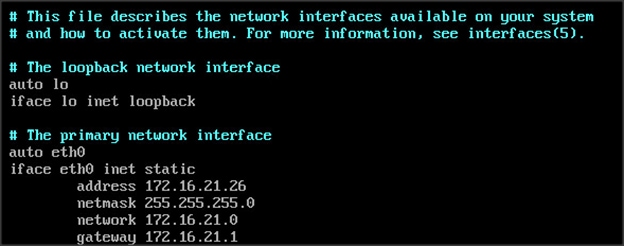

Configuring the Network for Cisco Fog Director

Configuring Cisco Fog Director to Connect to the Routers

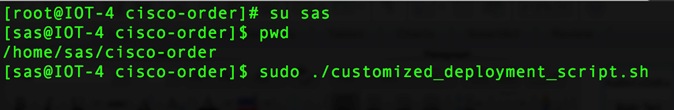

Pre-configuration Requisites for Installing SAS ESP 4.2

Installing SAS Event Stream Processing

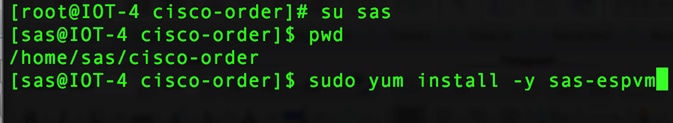

Start SAS Event Stream Processing Studio

Setting Up the Configuration Database

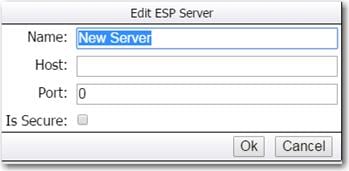

Testing the Server-Database Connection

Running Streamviewer on a Web Server

Connecting to the Configuration Server

Connecting to Event Stream Processing Servers in Streamviewer

Post-Installation Configuration

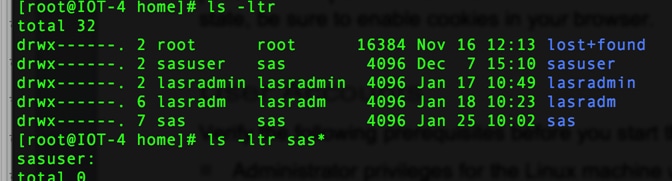

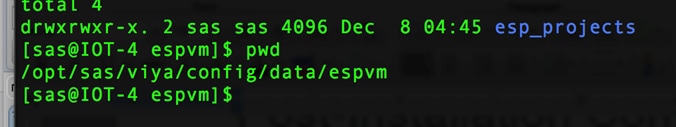

Directory Structure and Permissions

Change the Default Port (Optional)

ESP Server Configuration to Connect to LASR

ESP Server Configuration to Connect to Kafka

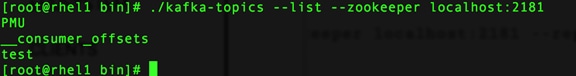

Configure ESP Server to Connect to Kafka Broker

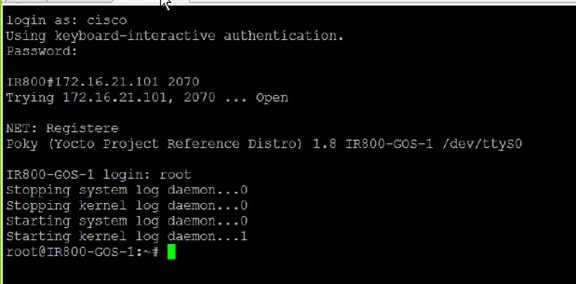

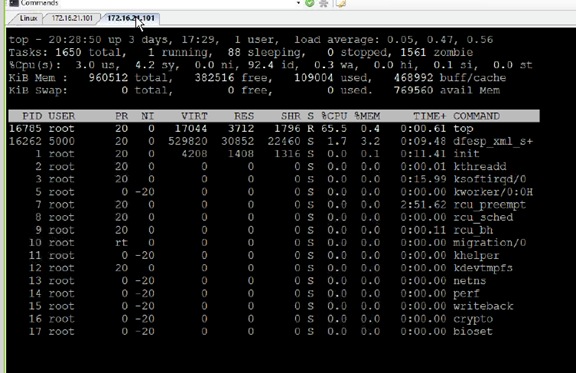

SAS ESP Client and Model Installation on the Edge Router

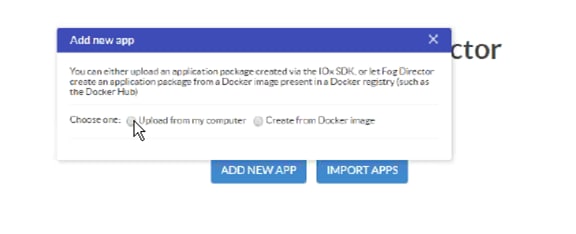

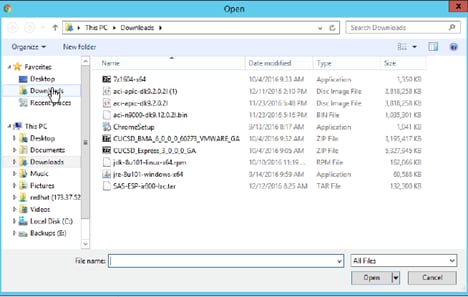

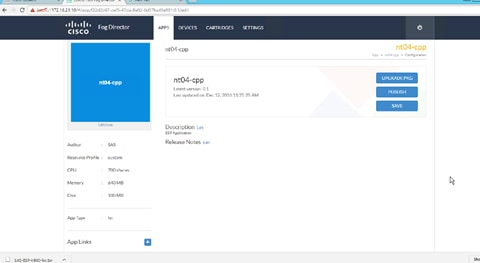

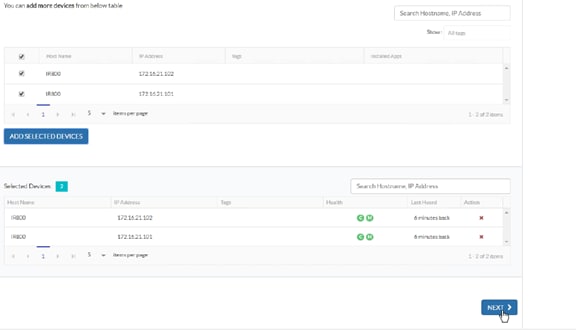

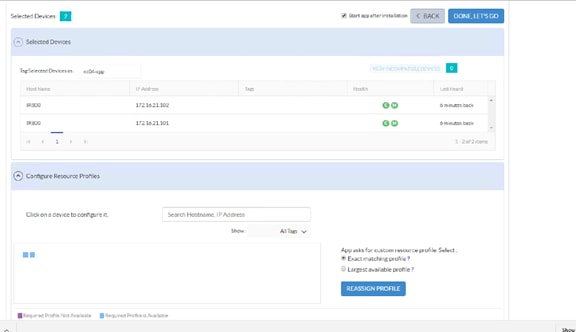

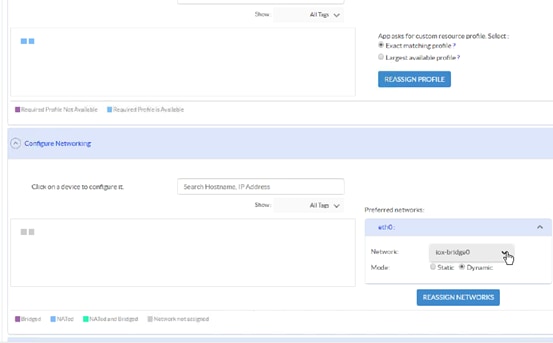

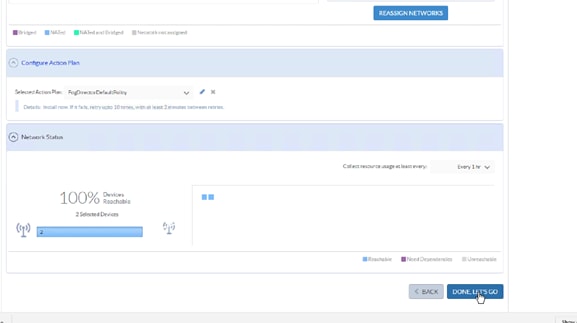

Uploading the Edge Application

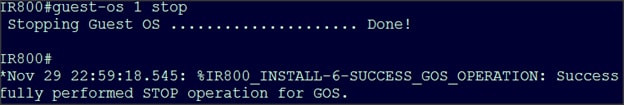

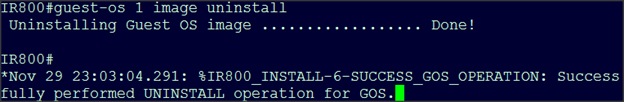

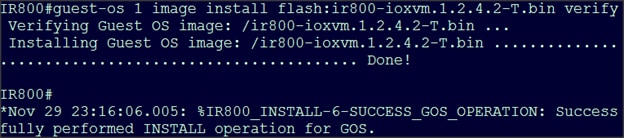

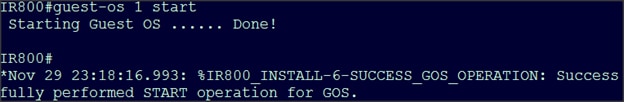

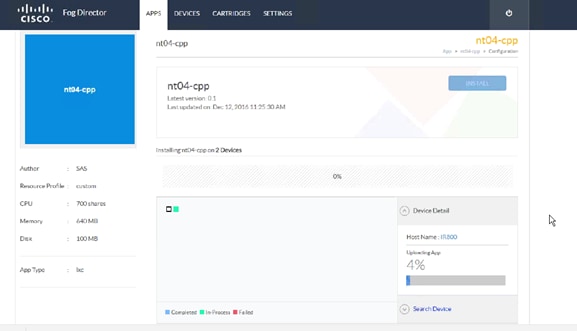

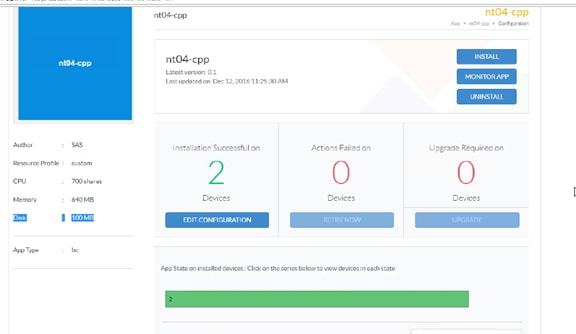

Installing the Edge Application

Verifying Application Deployment

The Internet of Things (IoT) is considered one of the most profound global market transitions in history. There is an enormous surge in the number of things being connected to the Internet, including devices, people, and entire business processes. With the advent of the IoT, petabyte-scale data is being generated in real time. Companies need the ability to capture the data and quickly turn it into business insight. Cisco and SAS have partnered to create edge-to-enterprise analytics systems that allow businesses to quickly collect, process, and analyze massive amounts of data, both at the edge and in the core data center.

According to Cisco, 50 billion devices will be connected to the Internet by 2020 and 500 billion devices are expected to be connected to the Internet by 2030. Each device includes sensors that collect data, interact with the environment and communicate over the network. The Internet of Things (IoT) is the network of these connected devices. As these connections multiply, the result is exponential change, and a digital disruption that creates new revenue streams with better customer and social experiences.

This represents a significant architectural challenge as these devices generate enormous amounts of data, currently about two exabytes per day. In addition, these new IoT-enabled devices produce many different types of data using a variety of protocols. Finally, IoT devices generate data continuously; that data is often very noisy and frequently needs rapid analysis and response.

Traditional computing models send the data to the core data center for analysis. This is impractical in many scenarios given the volume of data being produced and the requirement for real-time analysis and response times measured in milliseconds.

Simply collecting data from connected sensors, systems or products is not enough. To benefit from the promise of IoT data, businesses need to be able to shift analytics from traditional data centers toward devices on the edge – the “things.” The challenges arise from the complexity – and risks – inherent in capturing and analyzing extreme volumes and varieties of the data torrents flowing from ever-increasing numbers of things.

As a result, a new model for analyzing IoT data at the edge of the network has emerged. This model moves the analysis and response close to the devices generating the data, minimizing latency while reducing the load on the network and the core data center.

This paper describes an architecture for edge-to-enterprise analysis of IoT data including real-time analysis and response at the edge of the network, as well as historical analysis, operational control and model development in the core data center.

Cisco UCS Integrated Infrastructure for Big Data and Analytics is a highly scalable architecture for big data and analytics systems that includes computing, storage, and networking resources fully managed through Cisco UCS Manager and linearly scalable to thousands of nodes using Cisco Nexus® 9000 Series Switches and the Cisco Application Centric Infrastructure (Cisco ACI™) platform.

SAS is the market leader in advanced analytics with software that is infused with cutting-edge, innovative algorithms helping to solve even the most intractable problems in a timely manner.

Cloudera, Inc.’s Cloudera Enterprise product is a hardened distribution of Apache Hadoop and related projects designed for the demanding requirements of enterprise customers. This validated design uses Cloudera Enterprise to manage the transfer and storage of the vast amounts of data being generated at the edge.

Together, Cisco, SAS and Cloudera combine to create a dependable deployment model for edge-to-enterprise analytics offering a predictable path for businesses to turn data into information and information into insight.

Audience

The intended audience for this document includes sales engineers, field consultants, professional services, IT managers, partner engineering and customers who want to create an edge-to-enterprise analytics system by deploying SAS advanced analytics software and Cloudera Enterprise 5.7 on the Cisco Unified Computing System.

Solution Summary

In the IoT, objects or sensors with embedded computing devices connect to the Internet to send and receive data. This behavior represents a significant architectural challenge because these devices generate enormous amounts of data, currently about two exabytes per day. In addition, these new IoT-enabled devices produce many different types of data; this data is often very noisy, produced continuously, and uses a variety of protocols; and it all needs real-time analysis and response.

Traditional computing models send the data to the core data center for analysis. However, this approach is impractical in many scenarios because of the volume of data being produced and the need for real-time analysis and response times measured in milliseconds.

Simply collecting data from connected sensors, systems, or products is not enough. To benefit from the promise of IoT data, businesses need to be able to expand the way that analytics processes are run, from traditional data centers to devices on the edge: the “things.” The challenges arise from the complexity—and risks—inherent in capturing and analyzing the huge volumes and varieties of data flowing from the ever-increasing numbers of things.

As a result, a new model for analyzing IoT data at the edge of the network has emerged. This model moves the analysis and response close to the devices that generate the data, reducing latency and also reducing the load on the network and the core data center.

This document describes an architecture for analysis of IoT data, including real-time analysis and response at the edge of the network as well as historical analysis, operation control, and model development in the core data center. This architecture takes into account the often-harsh environment that exists outside the computing center.

Cisco and SAS Edge-to-Enterprise IoT Analytics Platform

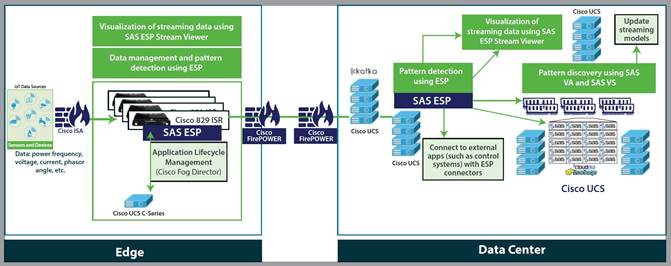

The use of big data analytics in the data center and the application of certain targeted analytics at the network edge together enhance the IoT analytics lifecycle. The IoT analytics lifecycle provides an opportunity to think differently about where and when analytics processes are run. It allows organizations to challenge the barriers of latency, data volume, and connectivity and the costs associated with them.

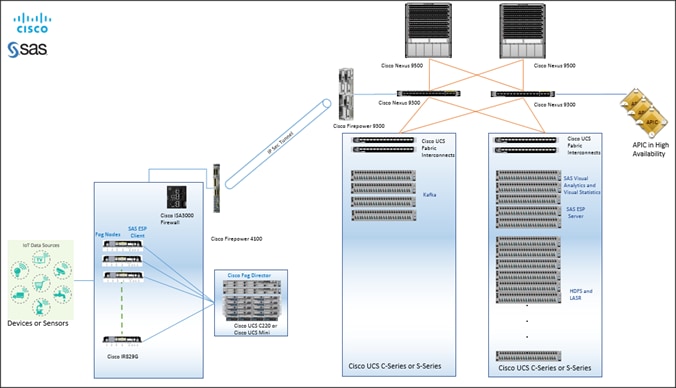

The edge-to-enterprise analytics architecture supports the full IoT analytics lifecycle. It consists of three layers: the edge, the network, and the data center.

Cisco and SAS Support the Entire IoT Analytics Lifecycle

The edge includes physical devices or sensors that interact with the world and collect data. They can be very small in terms of their computing power, perhaps only able to collect their data and transmit it over an industrial protocol. They can also be very powerful, with built-in computing and the capability to route data directly over the network. They are the data producers.

The edge network provided by the Cisco 829 Industrial ISR and Cisco Fog Director acts as a bridge between the IoT devices and the data center. It acts as the collection and routing point for the data produced by large numbers of IoT devices. At a minimum, it routes data to the data center, but in an edge-to-enterprise IoT analytics architecture with SAS Event Stream Processing (ESP), it plays a larger role as a robust analytics processing engine.

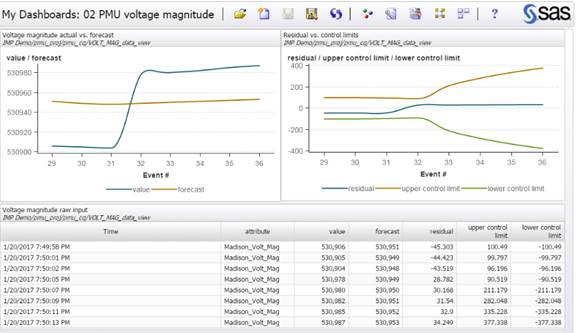

SAS ESP supports real-time analytics using streaming data. These functions of analysis, filtering, and routing by SAS ESP are the main elements that make the whole system work. By performing analysis at the edge, the system can respond very quickly, within milliseconds. By filtering out unnecessary data, the system dramatically reduces the volume of data traversing the network to the data center, reducing the network load and the data ingest and analysis in the core data center. Figure 1 shows one simple example of analytics processing that ESP can perform at the edge. Using analytics, it can detect when a particular variable exceeds its control limit boundaries.

Figure 1 ESP Processing at the edge
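The control-limit check described above can be sketched in a few lines of plain Python. This is not SAS ESP code or its API; it is a minimal illustration that assumes limits are set at the baseline mean plus or minus three sample standard deviations (a common Shewhart-style convention, not something this document specifies). The names `ControlLimits`, `limits_from_baseline`, and `out_of_control`, and all sample values, are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Iterable, Iterator, List


@dataclass
class ControlLimits:
    lower: float
    upper: float


def limits_from_baseline(baseline: List[float], k: float = 3.0) -> ControlLimits:
    """Derive limits as mean +/- k sample standard deviations of a baseline."""
    center = mean(baseline)
    spread = stdev(baseline)
    return ControlLimits(center - k * spread, center + k * spread)


def out_of_control(readings: Iterable[float], limits: ControlLimits) -> Iterator[float]:
    """Yield only the readings that fall outside the control limits."""
    for value in readings:
        if value < limits.lower or value > limits.upper:
            yield value


# Hypothetical baseline and live readings, for illustration only.
baseline = [10.0, 10.2, 9.8, 10.1, 9.9, 10.0]
limits = limits_from_baseline(baseline)
alerts = list(out_of_control([10.1, 9.9, 12.5, 10.0, 7.0], limits))
```

Because only the out-of-limit readings are yielded, the same loop also illustrates the filtering role of the edge: in-limit readings never need to leave the device.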

Note that the conditions at the edge of the network are harsh relative to the controlled environment of the data center. Equipment at the edge needs to be specifically designed to survive these conditions. This has an impact on the choices made regarding what services to put where. For example, the cost of using hardened industrial routers capable of running independent applications may be far less than the cost of maintaining a remote facility for less rugged equipment.

The transfer layer includes real-time data pipelines, such as Apache Kafka, that provide a reliable, fault-tolerant, linearly scalable message bus for data in transit to accommodate the flood of messages from the sensors. The data is retained until it is processed and stored in the data center.

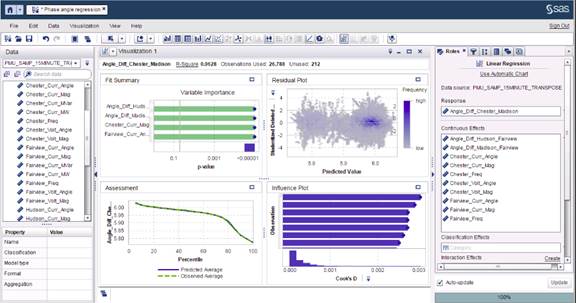

The data center fulfills its traditional role of housing the data and providing the tools to analyze and use the data. The big data analysis needs at the data center are supported by SAS Visual Analytics (VA) and SAS Visual Statistics (VS). Figure 2 is a screen shot from SAS VS illustrating a linear regression model that estimates the phase angle at a point on the power grid. You can use data from substations that have sensors to estimate values in other locations.

Figure 2 SAS Visual Statistics Linear Regression Modeling

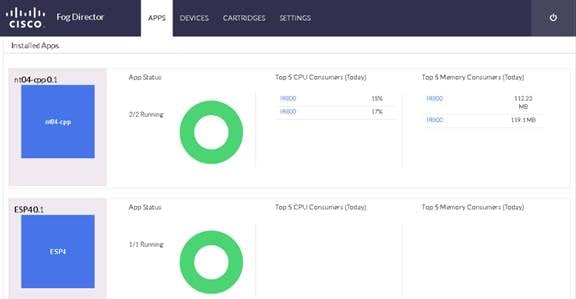

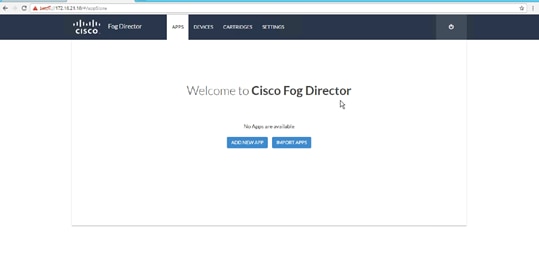

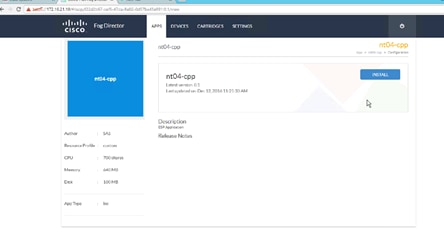

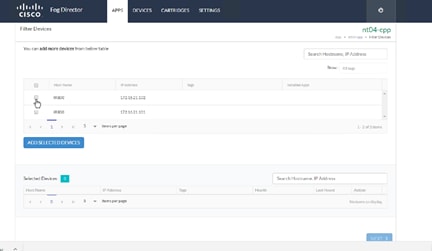

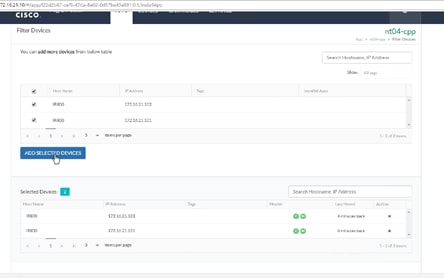
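As a rough illustration of the kind of model shown in Figure 2, the following sketch fits a one-variable ordinary least squares line in plain Python. It is not SAS VS code; the function names and the sample values are hypothetical, chosen only to show how a reading from a monitored substation could be used to estimate a value at a location without a sensor.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = intercept + slope * x."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def predict(intercept: float, slope: float, x: float) -> float:
    """Estimate y for a new x using the fitted line."""
    return intercept + slope * x


# Hypothetical paired readings: x from a substation with a sensor,
# y measured historically at the location to be estimated.
monitored = [1.0, 2.0, 3.0, 4.0]
target = [3.0, 5.0, 7.0, 9.0]
intercept, slope = fit_line(monitored, target)
estimate = predict(intercept, slope, 5.0)
```

A production model would typically use several predictors and report fit diagnostics; the single-predictor form is used here only to keep the arithmetic visible.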

An important function in the data center is the development of models that can be used by the edge systems to analyze the data at the edge. The capability to learn from the deep analysis in the data center and then roll out improved analytical models at the edge provides a continuous feedback loop. Support for a transparent workflow between model development and model deployment is an important differentiator of edge-to-enterprise analytics. For example, Figure 3 shows a screen shot of the Cisco Fog Director application in which the application manages the SAS ESP instances at the edge.

Figure 3 Cisco Fog Director Managing SAS ESP on Edge Routers

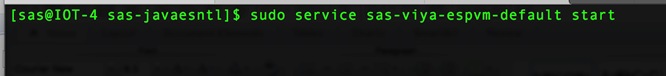

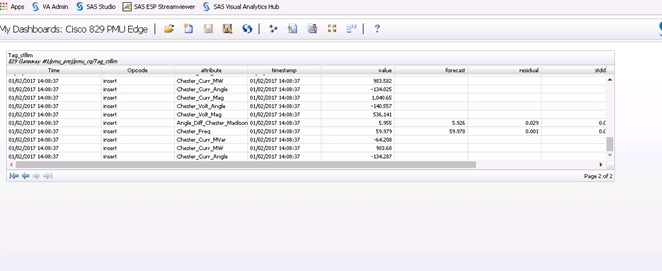

With SAS ESP also present in the data center, organizations can develop pattern-detection capabilities across multiple streams of data. Organizations also can develop real-time control center–type dashboards based on the data streaming from various edge locations. For example, Figure 4 shows the number of signals emerging from the various substations in a utilities scenario.

Figure 4 SAS ESP Aggregate Views in the Data Center

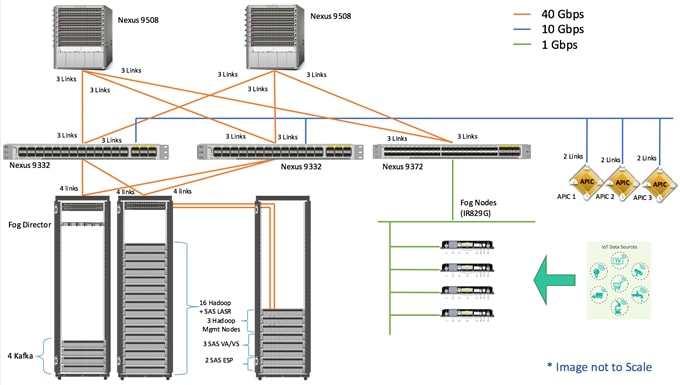
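The per-substation signal count mentioned for Figure 4 is, at its core, a grouped aggregation over an event stream. The sketch below shows that idea in plain Python. It is not SAS ESP code, and the event shape (a substation identifier paired with a value) and the name `count_signals` are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def count_signals(events: Iterable[Tuple[str, float]]) -> Dict[str, int]:
    """Count how many signals arrived from each substation."""
    counts: Counter = Counter()
    for substation_id, _value in events:
        counts[substation_id] += 1
    return dict(counts)


# Hypothetical events: (substation identifier, measured value).
events = [("substation-a", 1.0), ("substation-b", 2.0), ("substation-a", 3.0)]
signal_counts = count_signals(events)
```

A streaming engine would maintain these counts incrementally over a time window rather than over a finite list, but the grouping logic is the same.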

As shown in Figure 5, the Cisco and SAS Edge-to-Enterprise IoT Analytics architecture uses Cisco infrastructure and software technologies to connect to IoT devices at the edge, route data traffic, and run SAS software at both the edge and the core data center. The SAS software at the edge filters data, applies models, and issues alerts.

SAS integrates streaming data with analytics and visualization processes to get more value from the IoT. Whether the data is at the edge, in motion, or at rest, SAS technology helps organizations use it to make decisions quickly, while reducing data movement and storage costs.

Filtered (or relevant) IoT data is routed to the data center by Kafka, a secure, highly available, distributed message broker.

In the data center, data is stored using Hadoop-based big data technologies on Cisco servers. The data is analyzed both in real time using SAS ESP and in Hadoop for historical analysis. The real-time analysis and model development use SAS software, including SAS ESP, SAS VA, SAS VS, and SAS LASR Analytic Server.

Figure 5 Cisco and SAS Edge-to-Enterprise IoT Analytics Platform Reference Architecture

The following sections discuss the details of the edge and data center components.

Edge Components

The reference architecture includes the edge components discussed in this section.

Cisco Unified Computing System

At the edge, Cisco UCS rack-mount servers or Cisco UCS Mini are recommended.

Cisco UCS C-Series Rack Servers deliver unified computing in an industry-standard form factor to reduce TCO and increase agility. Each server addresses varying workload challenges through a balance of processing, memory, I/O, and internal storage resources.

Cisco UCS Mini is optimized for branch and remote offices, point-of-sale locations, and smaller IT environments. It is the ideal solution for customers who need fewer servers but still want the comprehensive management capabilities provided by Cisco UCS Manager. Cisco UCS Mini delivers servers, storage, and 10-Gigabit networking in an easy-to-deploy, compact form factor.

The reference architecture presented in this document uses a Cisco UCS C220 M4 Rack Server, a 1-rack-unit (1RU) server, to deploy Cisco Fog Director. The same server also acts as an edge network server to address additional reporting and analysis requirements.

Cisco IR829G Industrial Integrated Services (IR829)

The Cisco IR829G Industrial Integrated Services Router is a ruggedized integrated services router designed for deployment in harsh industrial environments. The Cisco 829 Industrial ISR has a compact form factor, an integrated 9 to 32V DC power input, and multimode third-generation (3G) and fourth-generation (4G) Long-Term Evolution (LTE) wireless WAN and WLAN connections. It supports rapid deployment of a wide variety of IoT solutions, including fleet management, mass transit, and remote asset monitoring applications.

Cisco IOx software, an open, extensible environment for hosting applications at the network edge, and SAS ESP software are installed on the Cisco 829 Industrial ISR.

Cisco Fog Director

Cisco Fog Director manages the Cisco 829 Industrial ISR. It provides the capability to manage large-scale production deployments of edge applications that support Cisco IOx, and it controls the IOx application lifecycle, from initial deployment through ongoing change management and application retirement.

Cisco Fog Director employs a visual web interface or can be integrated with existing management systems through an API. Cisco Fog Director supports both application-centric and network-centric infrastructure views to optimize productivity.

Cisco Fog Director is used to deploy and manage the application lifecycle of SAS ESP on the edge Cisco 829 Industrial ISR as a containerized application.

SAS Event Stream Processing

SAS ESP analyzes and acts on events in real time as they occur. SAS ESP is highly embeddable, making it well suited for small-form-factor (SFF) edge devices such as IoT gateways. It is deployed both at the edge and in the core data center. It provides a real-time, low-latency, high-throughput event processing solution. It supports a variety of functions, including machine-learning algorithms (such as neural networks, gradient boosting, and decision trees), text analysis to categorize text and extract entities, sentiment analysis, advanced pattern matching, in-stream joins, and data quality.

Cisco 3000 Series Industrial Security Appliance (ISA)

The Cisco 3000 Series ISA helps simplify compliance and protect Internet of Things (IoT) networks from attacks. Industrial networks have advanced threat protection needs, requiring a ruggedized solution that helps to ensure safe, reliable service delivery. Cisco offers a broad portfolio of solutions for industrial control networks, including the Industrial Security Appliance 3000 for the most demanding Industrial Control System (ICS) environments. The ruggedized 3000 appliance currently provides the industry's widest range of OT-specific access control, threat detection, and application visibility for the harshest environments. It operates in a temperature range of -40° to 60°C and is hardened for vibration, shock, surge, and electrical noise immunity. It offers four high-performance Ethernet data links in a DIN rail or rack-mount form factor. The Industrial Security Appliance 3000 extends the network as a sensor and enforcer to IoT environments.

Cisco FirePOWER

The Cisco FirePOWER next-generation firewall (NGFW) is the industry’s first fully integrated, threat-focused next-gen firewall with unified management. It uniquely provides advanced threat protection before, during and after attacks while providing better security, faster speeds and a smaller footprint. The 4100 Series’ 1-rack-unit size is ideal at the Internet edge and in high-performance environments.

Data Center Components

The reference architecture encompasses the components described in this section running on Cisco Unified Computing System™ (Cisco UCS) servers.

Cisco Unified Computing System

As stated earlier, Cisco UCS C-Series Rack Servers and S-Series Storage Servers are industry-leading 2-socket servers designed for both performance and expandability over a wide range of computing and storage-intensive infrastructure workloads. The Cisco UCS C240 Rack Server is an SFF server. It comes with 24 drives and supports a wide range of computing, I/O, and storage demands. The server uses dual Intel Xeon processor E5-2600 v4 series CPUs and supports up to 1.5 TB of main memory and a range of HDD and SSD drive options. This server can be used with the Cisco UCS VIC 1227 or 1387 to provide 10- or 40-Gbps network connectivity.

Kafka

Kafka is an open-source, distributed messaging system that provides fast, highly scalable, and durable messaging through a publish-subscribe model. SAS ESP uses Kafka to handle data pipelines for high-speed filtering and pattern matching. Kafka is co-located in the data center core and deployed on Cisco UCS C240 M4 servers.

SAS Event Stream Processing

SAS ESP provides real-time event stream processing in the data center, capturing data arriving continuously from devices and applications, analyzing it, and acting on new information as it arrives. SAS ESP analyzes millions of events per second, detecting patterns of interest as they occur. It issues alerts and notifications and streams live information to operation dashboards.

SAS ESP in the data center includes a visual model-development environment and a visualization component for building dashboards using streaming data.

SAS Visual Analytics

SAS VA enables organizations to gain insight from all the data, no matter the amount of data, with no need to sample or create subsets of the data. It is implemented as an integrated suite of web applications that offer intuitive, drag-and-drop interactions, rapid, highly visual responses, and role-based access to functions.

SAS Visual Statistics

SAS VS enables organizations to derive predicted values from the predictive models. These new variables contain the prediction information for the models and can be used in other visualizations. Deployed on Cisco UCS C240 servers, SAS VS is fully integrated into SAS VA.

SAS Analytics for IoT Bundle

The SAS ESP, VA, and VS components are encapsulated in a bundle, SAS Analytics for IoT, to provide an industry-independent foundational platform for IoT analytics.

SAS LASR Analytic Server

SAS® LASR Analytic Server is an analytic platform for applying analytics to big data. The server provides speedy, secure, multi-user access to in-memory data in a distributed computing environment.

Hadoop

Apache Hadoop is an open-source initiative focused on the big data challenge and a leading solution for enterprise big data implementations. Hadoop provides the software library needed to store, process, and analyze incredibly large amounts of both structured and unstructured data. Hadoop is designed for high availability and linear scaling. In this solution we use Cloudera Enterprise, explained in detail later.

Reference Architecture

The reference architecture includes the components shown in Figure 6 and listed in Table 1.

Figure 6 Reference Architecture

Table 1 Reference Architecture Configuration Details

| Layer | Component | Configuration |
| --- | --- | --- |
| Edge | Cisco IR829G Industrial Integrated Services Routers | 2 cores (1.25-GHz dual-core CPU, 2-GB DDR3, 8-GB embedded multimedia card [eMMC] storage, and 4 x 10/100/1000 Mbps); IOx: 1.2.0; Guest OS: Ubuntu 14.04.01/03; Cisco IOS® Software Release 15M&T; SAS ESP Client: 4.2.0 |
| | SAS ESP Client | Container application running on the IR829G guest OS |
| | Firewall | Inbound (to IR829): Cisco ISA 3000. Outbound (from IR829 and Cisco Fog Director): Cisco FirePOWER 4100 Series |
| | Cisco Fog Director | Cisco UCS C220 M4 servers with: 2 Intel Xeon processor E5-2680 v4 CPUs (14 cores on each CPU); 256 GB of memory; Cisco 12-Gbps SAS Modular RAID Controller with 2-GB flash-based write cache (FBWC); 8 x 1.2-TB 10k-rpm HDD; Cisco UCS VIC 1227 (with 2 x 10 Gigabit Ethernet); Version: 1.2.0 |
| Data Pipeline (Transfer) | Kafka nodes | Four Cisco UCS C240 M4 servers each with: 2 Intel Xeon processor E5-2680 v4 CPUs (14 cores on each CPU); 256 GB of memory; Cisco 12-Gbps SAS Modular RAID Controller with 2-GB flash-based write cache (FBWC); 24 x 1.8-TB 10k-rpm HDD; 2 x 240-GB 6-Gbps 2.5-inch enterprise value SATA SSD drives for boot; Cisco UCS VIC 1387 (with 2 x 40 Gigabit Ethernet QSFP ports). Alternate Reference Architecture with Cisco S-Series Server: Cisco UCS S3260 M4 servers each with two nodes, each node with: 2 Intel Xeon processor E5-2680 v4 CPUs (14 cores on each CPU); 256 GB of memory; Cisco 12-Gbps SAS Modular RAID Controller with 2-GB flash-based write cache (FBWC); 28 x 4-TB 10k HDD; 2 x 480-GB 6-Gbps 2.5-inch enterprise value SATA SSD drives for boot; Cisco UCS SIOC (with 2 x 40 Gigabit Ethernet QSFP ports) |
| | Firewall | Inbound to Kafka: Cisco FirePOWER 9300 Series |
| Data Center Core | Connectivity | Cisco ACI spine-leaf architecture consisting of: Two Cisco Nexus 9508 Spine switches; Two Cisco Nexus 9332 Leaf switches; One Cisco Nexus 9372 Leaf switch; Three Cisco APIC M2 appliances; Four Cisco UCS 6332 Fabric Interconnects |
| | Hadoop | Management Nodes: 3 nodes. Data Nodes: 16 nodes. All nodes are Cisco UCS C240 M4 servers each with: 2 Intel Xeon processor E5-2690 v4 CPUs (14 cores on each CPU); 512 GB of memory; Cisco 12-Gbps Modular SAS HBA; 8 x 1.6-TB SSD; 2 x 240-GB 6-Gbps 2.5-inch enterprise value SATA SSD drives for boot; Cisco UCS VIC 1387 (with 2 x 40 Gigabit Ethernet QSFP ports). Alternate Reference Architecture with Cisco S-Series Server: Cisco UCS S3260 M4 storage servers each with two nodes, each node with: 2 Intel Xeon processor E5-2690 v4 CPUs (14 cores on each CPU); 512 GB of memory; Cisco 12-Gbps SAS Modular RAID Controller with 2-GB flash-based write cache (FBWC); 8 x 1.6-TB SSD; 12 x 4-TB 10k HDD; 2 x 480-GB 6-Gbps 2.5-inch enterprise value SATA SSD drives for boot; Cisco UCS SIOC (with 2 x 40 Gigabit Ethernet QSFP ports) |
| | SAS LASR | Collocated with all Data Nodes |
| | SAS VA/VS (Visual Analytics and Visual Statistics) | 3 Cisco UCS C240 M4 servers each with: 2 Intel Xeon processor E5-2690 v4 CPUs (14 cores on each CPU); 512 GB of memory; Cisco 12-Gbps Modular SAS HBA; 8 x 1.6-TB SSD; 2 x 240-GB 6-Gbps 2.5-inch enterprise value SATA SSD drives for boot; Cisco UCS VIC 1387 (with 2 x 40 Gigabit Ethernet QSFP ports) |
| | SAS ESP Server | 2 Cisco UCS C240 M4 servers each with: 2 Intel Xeon processor E5-2690 v4 CPUs (14 cores on each CPU); 512 GB of memory; Cisco 12-Gbps Modular SAS HBA; 8 x 1.6-TB SSD; 2 x 240-GB 6-Gbps 2.5-inch enterprise value SATA SSD drives for boot; Cisco UCS VIC 1387 (with 2 x 40 Gigabit Ethernet QSFP ports) |

Sizing Guidelines

Kafka

Table 2 shows the scaling and sizing guidelines for Kafka storage for various drive types and replication factors.

Time to fill one server (hours) = ~((Total Usable Storage in GB) / (Network Bandwidth in GBps)) / 3600

Table 2 Scaling and Sizing Guidelines

| Network Bandwidth | Server Type | Total Usable Storage | Time to Fill One Server | Total Servers (1-way replicated data, full network utilization) | Total Servers (3-way replicated data, full network utilization) |
| --- | --- | --- | --- | --- | --- |
| 10 Gbps (1.25 GBps) | C240 M4 (SFF) with 1.8 TB drives | ~40800 GB | ~9 hours | ~3 servers for storing 1 day of data | ~9 servers for storing 1 day of data |
| 40 Gbps (5 GBps) | C240 M4 (SFF) with 1.8 TB drives | ~40800 GB | ~2.3 hours | ~10 servers for storing 1 day of data | ~30 servers for storing 1 day of data |
| 10 Gbps (1.25 GBps) | C240 M4 (LFF) with 6 TB drives | ~72000 GB | ~16 hours | ~2 servers for storing 1 day of data | ~6 servers for storing 1 day of data |
| 40 Gbps (5 GBps) | C240 M4 (LFF) with 6 TB drives | ~72000 GB | ~4 hours | ~6 servers for storing 1 day of data | ~18 servers for storing 1 day of data |
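
The sizing arithmetic behind Table 2 can be sketched in a few lines of Python. This is an illustrative sketch only: the function names are hypothetical, the retention period defaults to one day, and the server count is rounded up, which is an assumption. The approximate values in Table 2 may have been rounded differently.

```python
import math


def hours_to_fill(total_storage_gb: float, bandwidth_gb_per_s: float) -> float:
    """Hours needed to fill one server at full network utilization."""
    return total_storage_gb / bandwidth_gb_per_s / 3600


def servers_for_retention(
    total_storage_gb: float,
    bandwidth_gb_per_s: float,
    retention_hours: float = 24.0,
    replication_factor: int = 1,
) -> int:
    """Servers needed to hold `retention_hours` of replicated data.

    Rounding up is an assumption of this sketch, not a rule from the guide.
    """
    ingested_gb = bandwidth_gb_per_s * 3600 * retention_hours
    stored_gb = ingested_gb * replication_factor
    return math.ceil(stored_gb / total_storage_gb)


if __name__ == "__main__":
    # (label, usable storage in GB, bandwidth in GBps) taken from Table 2
    rows = [
        ("SFF, 10 Gbps", 40800, 1.25),
        ("SFF, 40 Gbps", 40800, 5),
        ("LFF, 10 Gbps", 72000, 1.25),
        ("LFF, 40 Gbps", 72000, 5),
    ]
    for label, storage_gb, bandwidth in rows:
        print(
            label,
            round(hours_to_fill(storage_gb, bandwidth), 1),
            servers_for_retention(storage_gb, bandwidth),
            servers_for_retention(storage_gb, bandwidth, replication_factor=3),
        )
```

Changing `retention_hours` or `replication_factor` gives a first estimate for other retention targets; actual sizing should also account for sustained ingest rates below full line rate.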

Cisco UCS Integrated Infrastructure for Big Data and Analytics

This solution is based on Cisco UCS Integrated Infrastructure for Big Data and Analytics, a highly scalable architecture designed to meet a variety of scale-out application demands, with seamless data integration and management integration capabilities. It is built using the following components:

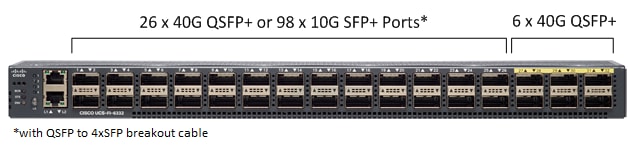

Cisco UCS 6300 Series Fabric Interconnects

Cisco UCS 6300 Series Fabric Interconnects provide high-bandwidth, low-latency, lossless 10 and 40 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions with management capabilities for the system. All servers attached to the Fabric Interconnects become part of a single, highly available management domain.

Deployed in redundant pairs, Cisco UCS Fabric Interconnects offer the full active-active redundancy, performance and exceptional scalability needed to support the large number of nodes that are typical in clusters serving edge-to-enterprise analytic applications. See Figure 7.

Figure 7 Cisco UCS 6332 32-Port Fabric Interconnect

Cisco UCS C-Series Rack Mount Servers

Cisco UCS C-Series Rack Servers deliver unified computing in an industry-standard form factor to reduce total cost of ownership and increase agility. Each product addresses varying workload challenges through a balance of processing, memory, I/O, and internal storage resources.

Cisco UCS unifies computing, networking, management, virtualization, and storage access into a single integrated architecture that can enable edge-to-enterprise server visibility, management, and control in both bare-metal and virtualized environments. With Cisco UCS-managed deployment, UCS C-Series servers take advantage of standards-based unified computing innovations to significantly reduce customers' TCO and increase business agility.

The Cisco UCS C220 M4 and C240 M4 Rack Servers provide:

· Dual Intel Xeon E5-2600 v4 processors for improved performance

· Next-generation double-data-rate 4 (DDR4) memory, 12-Gbps SAS throughput, and NVMe PCIe SSD support

· Innovative Cisco UCS Virtual Interface Card (VIC) support in PCIe or modular LAN-on-motherboard (mLOM) form factor

Cisco UCS C220 M4 Rack Server

The Cisco UCS C220 M4 Rack Server is a versatile, high-density, general-purpose enterprise infrastructure and application server (Figure 8). It delivers world-record performance for a wide range of enterprise workloads.

Table 3 Cisco UCS C220 M4 Rack Server Specifications At-a-Glance

| Item | Specification |
| --- | --- |
| Chassis | One rack-unit (1-RU) server |
| Processor | Either one or two Intel® Xeon® processor E5-2600 v4 product family CPUs |
| Memory | 24 double-data-rate 4 (DDR4) dual in-line memory modules (DIMMs) at speeds of up to 2400 MHz. Up to 1.5 TB of main memory. |
| PCIe slots | Six PCI Express (PCIe) Generation 3 slots (four full-height and full-length; four NCSI-capable and VIC-ready; two GPU-ready) |
| Hard drives | 8 small form-factor (SFF) drives or 4 large form-factor (LFF) drives, plus two optional internal SATA boot drives, and NVMe drive support |

Figure 8 Cisco UCS C220 M4 Rack Server

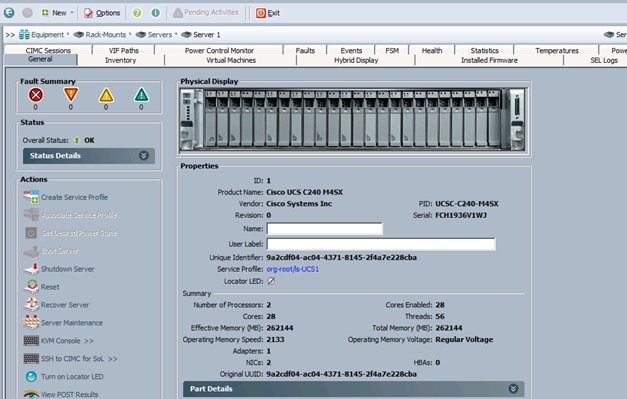

Cisco UCS C240 M4 Rack Server

Figure 9 shows the Cisco UCS C240 M4 Rack Server.

Figure 9 Cisco UCS C240 M4 Rack Server

Table 4 Cisco UCS C240 M4 Rack Server Specifications At-a-Glance

| Item | Specification |
| --- | --- |
| Chassis | Two rack-unit (2-RU) server |
| Processor | Either one or two Intel® Xeon® processor E5-2600 v4 product family CPUs |
| Memory | 24 double-data-rate 4 (DDR4) dual in-line memory modules (DIMMs) at speeds of up to 2400 MHz. Up to 1.5 TB of main memory. |
| PCIe slots | Six PCI Express (PCIe) Generation 3 slots (four full-height and full-length; four NCSI-capable and VIC-ready; two GPU-ready) |
| Hard drives | 24 small form-factor (SFF) drives or 12 large form-factor (LFF) drives, plus two optional internal SATA boot drives, and NVMe drive support |

Cisco UCS Virtual Interface Card 1387

Cisco UCS Virtual Interface Cards (VICs) are unique to Cisco. Cisco UCS Virtual Interface Cards incorporate next-generation converged network adapter (CNA) technology from Cisco. Optimized for virtualized networking, these cards deliver high performance and bandwidth utilization, and support up to 256 virtual devices.

The Cisco UCS Virtual Interface Card 1387 offers dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40 Gigabit Ethernet and Fibre Channel over Ethernet (FCoE) in a modular LAN-on-motherboard (mLOM) form factor. The mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, providing greater I/O expandability. See Figure 10.

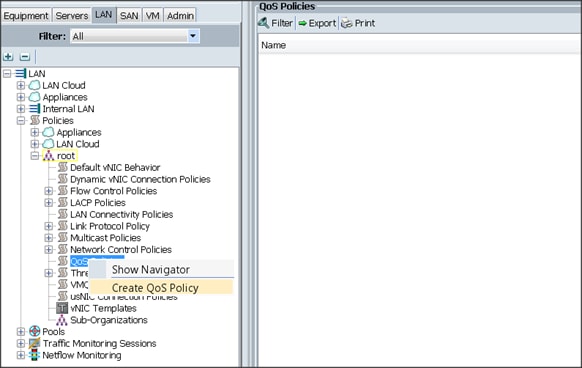

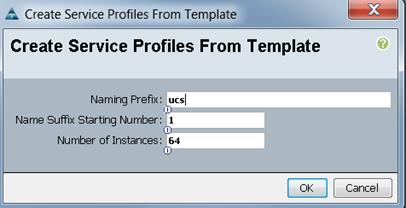

Cisco UCS Manager

Cisco UCS Manager resides within the Cisco UCS 6300-series Fabric Interconnects. It makes the system self-aware and self-integrating, managing all of the system components as a single logical entity. Cisco UCS Manager can be accessed through an intuitive graphical user interface (GUI), a command-line interface (CLI) or an XML application-programming interface (API). Cisco UCS Manager uses service profiles to define the personality, configuration and connectivity of all resources within Cisco UCS, radically simplifying provisioning of resources so that the process takes minutes instead of days.

Cisco UCS Manager enables rapid and consistent server configuration using service profiles, automating ongoing system maintenance activities such as firmware updates across the entire cluster as a single operation. Cisco UCS Manager also offers advanced monitoring with options to raise alarms and send notifications about the health of the entire cluster. The advanced features of the Cisco UCS Manager allow IT departments to shift their focus from constant maintenance to strategic business initiatives. See Figure 11.
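
As an illustration of the XML API mentioned above, the following minimal Python sketch builds the body of a login request. It assumes the `aaaLogin` method with `inName` and `inPassword` attributes from the Cisco UCS Manager XML API; the function name, user name, and password are placeholders, and nothing is sent over the network.

```python
import xml.etree.ElementTree as ET


def build_ucsm_login_body(username: str, password: str) -> bytes:
    """Return the XML body for a UCS Manager XML API login request.

    The body would typically be sent in an HTTPS POST to the UCS Manager
    XML API endpoint (assumed here to be https://<ucsm-host>/nuova).
    """
    element = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(element)


if __name__ == "__main__":
    # Placeholder credentials for illustration only.
    print(build_ucsm_login_body("admin", "example-password").decode("ascii"))
```

A successful login response carries a session cookie that later requests include; consult the UCS Manager XML API guide for the exact response fields before relying on this sketch.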

Cisco 829 Industrial Integrated Services Router

Cisco® 829 Industrial Integrated Services Routers are ruggedized integrated services routers designed for deployment in harsh industrial environments. The Cisco IR829G Industrial Integrated Services Routers have a compact form factor, integrated 9-32 VDC power input, and multimode 3G and 4G LTE wireless WAN and IEEE 802.11a/b/g/n WLAN connections.

With the Cisco IR829G, you can rapidly deploy a wide variety of Internet of Things (IoT) solutions, including fleet management, mass transit, and remote asset monitoring. The Cisco IR829G routers are designed to withstand hostile environments including shock, vibration, dust, humidity, and water sprayed from all directions, as well as a wide temperature range (-40°C to +60°C and type-tested at +85°C for 16 hours).

The Cisco IR829G brings together enterprise-grade, wireline-like services such as quality of service (QoS); Cisco advanced VPN technologies (DMVPN, FlexVPN, and GETVPN) and multi-VRF for WAN; highly secure data, voice, and video communications; and Cisco IOx, an open, extensible environment for hosting applications at the network edge.

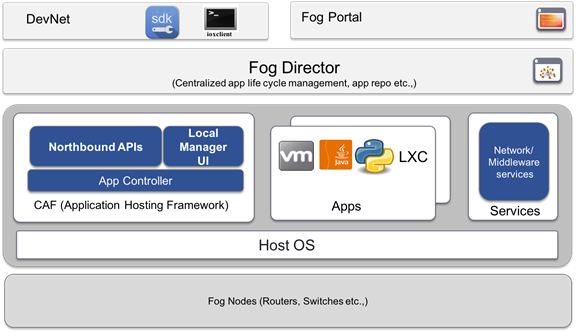

Cisco Fog Director

Cisco Fog Director delivers the capability to manage large-scale production deployments of IOx-enabled fog applications including controlling the IOx application lifecycle from initial deployment through ongoing change management and application retirement. It can be operated from a visual web environment or integrated with existing management systems through APIs. Cisco Fog Director supports both application centric and network infrastructure centric views to optimize productivity.

Cisco Fog Director improves operational effectiveness with a single point of control for applications and associated network infrastructure at production scale. It increases line of business agility with systematic change management of IOx-enabled fog applications, and enhances returns on application development investment through acceleration of deployment and scalable application lifecycle management.

Cisco Fog Computing Architecture

IOx is Cisco’s implementation of Fog Computing. IOx enables hosting of applications and services developed by Cisco, its partners and third party developers in network edge devices in a seamless fashion across diverse and disparate hardware platforms.

IOx provides a seamless application enablement framework and compute platform across various devices operating at the network edge, with the ability to host applications and services and connect them securely and reliably to applications in the cloud. The term application enablement covers all lifecycle aspects of applications, including development, distribution, deployment, hosting, monitoring, and management.

For the following discussion please refer to Figure 12.

Figure 12 IOx High Level Architecture

Fog Nodes

These are the devices that provide compute and runtime resources for applications.

CAF

Cisco application hosting framework (CAF) is responsible for orchestrating and managing applications on Fog nodes. At a high level, it provides:

· Application lifecycle management (Install, Start, Stop, Monitor, Uninstall, Upgrade)

· Resource provisioning and management (CPU, memory, network, storage etc.)

· Application monitoring and metrics collection

· Mechanisms for troubleshooting and debugging (access to application and platform logs, access to the application console, etc.)
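
The lifecycle operations listed above can be pictured as a small state machine. The sketch below is purely illustrative: the state names (`absent`, `installed`, `running`) and the allowed transitions are hypothetical and are not CAF's actual states or API.

```python
# Hypothetical (state, action) -> next state table; not CAF's real state model.
TRANSITIONS = {
    ("absent", "install"): "installed",
    ("installed", "start"): "running",
    ("running", "stop"): "installed",
    ("installed", "upgrade"): "installed",
    ("installed", "uninstall"): "absent",
}


class AppLifecycle:
    """Tracks one application's lifecycle state on a single fog node."""

    def __init__(self) -> None:
        self.state = "absent"

    def apply(self, action: str) -> str:
        """Apply a lifecycle action and return the new state."""
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {action} while {self.state}")
        self.state = TRANSITIONS[key]
        return self.state


if __name__ == "__main__":
    app = AppLifecycle()
    for action in ("install", "start", "stop", "upgrade", "uninstall"):
        print(action, "->", app.apply(action))
```

Rejecting invalid transitions, such as uninstalling a running application, is a design choice of this sketch that keeps each node's state predictable.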

IOx Middleware Services

IOx middleware services provide high-level abstractions and APIs to accelerate the development of IOx applications.

Apps

Apps embody the logic that has to run on a Fog node. IOx supports different application types that cater to a wide variety of use cases.

Local Manager

Local manager is the embedded web UI supported by CAF geared towards application lifecycle management for a single node.

Fog Director

Fog Director provides centralized management services to manage all life cycle aspects of applications and services on thousands of fog nodes enabling operations at scale.

Fog Director also provides uniform northbound RESTful APIs that client programs can use to integrate application management into their workflows. The Fog Director RESTful API documentation and the Fog Director usage and reference guide are available from Cisco.

IOx SDK

The IOx SDK is a set of tools and software packages used by 3rd party developers to build applications that can be hosted by CAF on Cisco's IOx enabled platforms.

ioxclient

ioxclient is a cross platform command line utility primarily meant for assisting application development for Cisco's IOx platforms. It aims to increase developer productivity by providing easy to use CLIs for all application lifecycle tasks.

Fog Portal

Fog Portal is the primary interface for developers to interact with the Cisco IOx ecosystem. It is a one-stop portal that provides all the resources developers need to develop and test their applications and make them available for deployment via Fog Director.

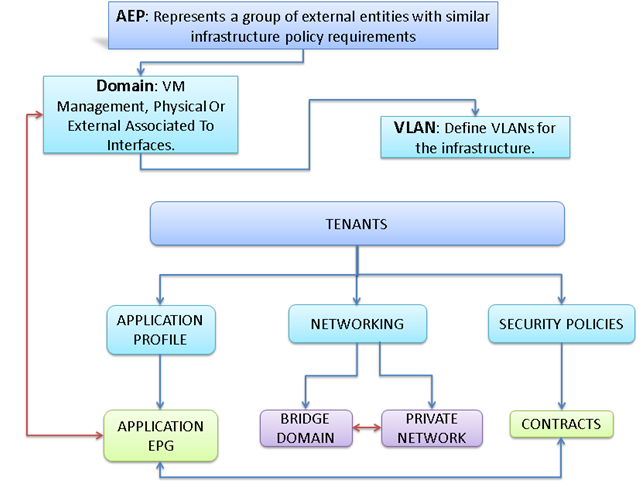

Cisco Application Centric Infrastructure (ACI) Overview

Cisco ACI provides the network the ability to deploy and respond to the needs of applications, both in the data center and in the cloud. The network must be able to deliver the right levels of connectivity, security, compliance, firewalls, and load balancing, and it must be able to do this dynamically and on-demand.

This is accomplished through centrally defined policies and application profiles.

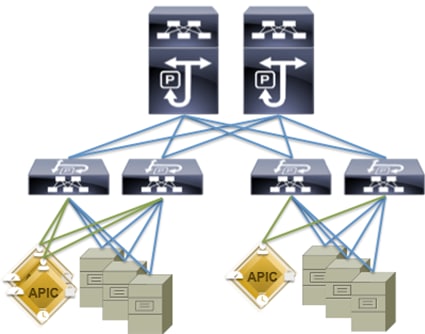

The profiles are managed by the Application Policy Infrastructure Controller (APIC) and distributed to switches such as the Cisco Nexus 9000 Series. Cisco Nexus 9000 Series Switches and the APIC are the building blocks for Cisco ACI.

Cisco ACI is software-defined networking (SDN) plus a whole lot more. Most SDN models stop at the network. Cisco ACI extends the promise of SDN, namely agility and automation, to the applications themselves. Through a policy-driven model, the network can cater to the needs of each application, with security, network segmentation, and automation at scale. And it can do so across physical and virtual environments, with a single pane of management.

The Cisco ACI fabric supports more than 64,000 dedicated tenant networks. A single fabric can support more than one million IPv4/IPv6 endpoints, more than 64,000 tenants, and more than 200,000 10G ports. The Cisco ACI fabric enables any service (physical or virtual) anywhere, with no need for additional software or hardware gateways, to connect between the physical and virtual services, and normalizes encapsulations for Virtual Extensible Local Area Network (VXLAN) / VLAN / Network Virtualization using Generic Routing Encapsulation (NVGRE).

The Cisco ACI fabric decouples the endpoint identity and associated policy from the underlying forwarding graph. It provides a distributed Layer 3 gateway that ensures optimal Layer 3 and Layer 2 forwarding. The fabric supports standard bridging and routing semantics without standard location constraints (any IP address anywhere), and removes flooding requirements for the IP control plane Address Resolution Protocol (ARP) / Gratuitous Address Resolution Protocol (GARP). All traffic within the fabric is encapsulated within VXLAN.

Architectural Benefits of Using Fabric Interconnect with Cisco ACI

The Cisco ACI fabric consists of discrete components that operate as routers and switches, but is provisioned and monitored as a single entity. The operation is like a single switch and router that provides advanced traffic optimization, security, and telemetry functions, stitching together virtual and physical workloads.

Cisco Application Centric Infrastructure (ACI) and Cisco Unified Computing System (Cisco UCS), working together, can cost-effectively scale capacity, and deliver exceptional performance for the growing demands of big data processing, analytics, and storage workflows. For larger clusters and mixed workloads, Cisco ACI uses intelligent, policy-based flowlet switching and packet prioritization to deliver:

· Centralized Management for the entire Network

· Dynamic load balancing

· Dynamic Packet Prioritization

· Multi-Tenant and Mixed Workload Support

· Deep Telemetry

Centralized Management for the Entire Network

Cisco ACI treats the network as a single entity rather than a collection of switches. It uses a central controller to implicitly automate common practices such as Cisco ACI fabric startup, upgrades, and individual element configuration. The Cisco Application Policy Infrastructure Controller (Cisco APIC) is the unifying point of automation and management for the Cisco ACI fabric. This architectural approach dramatically increases the operational efficiency of networks by reducing the time and effort needed to modify the network and to perform root cause analysis and issue resolution.

Dynamic Load Balancing

Cisco ACI is not only aware of congestion points but can also make dynamic decisions about how traffic is switched or routed. These decisions can apply to new flows that are about to start or to existing long flows that would benefit from moving to a less congested route. Dynamic load balancing makes these decisions automatically at run time and helps utilize all links optimally, both healthy and congested. This is useful both when links are congested and when links fail. Even when there is no congestion, it maintains a close-to-optimal distribution of traffic across the spines.

Dynamic Packet Prioritization

Dynamic Packet Prioritization (DPP) prioritizes short flows over long flows; a short flow is one of fewer than approximately 15 packets. Short flows are more sensitive to latency than long ones. Small, urgent data workloads, such as database queries, may suffer latency because larger data sets are being sent across the fabric ahead of them. This presents a challenge when database queries require near-real-time results.

Dynamic Packet Prioritization can improve overall application performance. Together these technologies enable performance enhancements to applications, including Big Data workloads.
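
The short-flow rule described above can be sketched as a per-flow packet counter. This is a conceptual illustration only, not the switch's actual implementation: the class name is hypothetical, and the threshold of 15 packets is the approximate figure quoted in the text.

```python
from collections import defaultdict

# Approximate short-flow threshold quoted in the text (an assumption here).
SHORT_FLOW_PACKETS = 15


class FlowPrioritizer:
    """Marks the first packets of every flow as high priority."""

    def __init__(self, threshold: int = SHORT_FLOW_PACKETS) -> None:
        self.threshold = threshold
        self._packets_seen = defaultdict(int)

    def classify(self, flow_id: str) -> str:
        """Count one packet for `flow_id` and return its priority class."""
        self._packets_seen[flow_id] += 1
        if self._packets_seen[flow_id] <= self.threshold:
            return "high"
        return "normal"


if __name__ == "__main__":
    prioritizer = FlowPrioritizer()
    # A short query finishes entirely in the high-priority class.
    print([prioritizer.classify("db-query") for _ in range(5)])
    # A bulk transfer drops to normal priority after the threshold.
    print([prioritizer.classify("bulk-copy") for _ in range(17)][-3:])
```

Because every flow starts in the high-priority class, short flows complete without waiting behind long transfers, while long flows lose the preference only after they exceed the threshold.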

Multi-Tenant and Mixed Workload Support

Cisco ACI is built to incorporate secure multi-tenancy capabilities. The fabric enables customers to host multiple concurrent Big Data clusters on a shared infrastructure. Cisco ACI provides the capability to enforce proper isolation and SLAs for workloads of different tenants. These benefits extend beyond multiple Big Data workloads: Cisco ACI allows the same cluster to run a variety of application workloads, not just Big Data, with the right level of security and SLA for each workload.

Deep Telemetry of Tenant and Application Network

One of the core design principles behind Cisco ACI is to provide complete visibility into the infrastructure, both physical and virtual. Cisco APIC is designed to provide application and tenant health at a system level by using real-time metrics, latency details, atomic counters, and detailed resource consumption statistics.

If your application is experiencing performance issues, you can easily drill down to the lowest level of granularity, whether at the switch, line card, or port level.

The holistic approach to correlate virtual and physical and tie that intelligence to an application or tenant level ensures that troubleshooting becomes extremely simple across your infrastructure, through a single pane of glass.

Cisco ACI Building Blocks

Cisco ACI consists of:

· Cisco Nexus 9000 Series Switches

· Centralized policy management and Cisco Application Policy Infrastructure Controller (APIC)

Cisco Nexus 9000 Series Switches

The Cisco Nexus 9000 Series Switches offer both modular (9500 switches) and fixed (9300 switches), 1/10/40/100 Gigabit Ethernet switch configurations designed to operate in one of two modes:

· Cisco NX-OS mode for traditional architectures and consistency across the Cisco Nexus portfolio.

· Cisco ACI mode to take full advantage of the policy-driven services and infrastructure automation features of ACI.

The ACI-Ready Cisco Nexus 9000 Series provides:

· Accelerated migration to 40G: zero cabling upgrade cost with Cisco QSFP+ BiDi Transceiver Module innovation.

· Switching platform integration: Cisco Nexus 9000 Series enables a highly scalable architecture and is software upgradable to ACI.

· Streamlined application management: drastically reduce application deployment time and get edge-to-enterprise application visibility.

This architecture consists of Cisco Nexus 9500 series switches acting as the spine, and Nexus 9300 series switches as leaves.

Cisco Nexus 9508 Spine Switch

The Cisco Nexus 9508 Switch offers a comprehensive feature set, high resiliency, and a broad range of 1/10/40 Gigabit Ethernet line cards to meet the most demanding requirements of enterprise, service provider, and cloud data centers. The Cisco Nexus 9508 Switch is an ACI modular spine device enabled by a non-blocking 40 Gigabit Ethernet line card, supervisors, system controllers, and power supplies. See Figure 13.

The Cisco Nexus 9500 platform internally uses a Clos fabric design that interconnects the line cards with rear-mounted fabric modules. The Cisco Nexus 9500 platform supports up to six fabric modules, each of which provides up to 10.24-Tbps line-rate packet forwarding capacity. All fabric cards are directly connected to all line cards. With load balancing across fabric cards, the architecture achieves optimal bandwidth distribution within the chassis.

Figure 13 Cisco Nexus 9508 Switch

ACI Spine Line Card for Cisco Nexus 9508

There are multiple spine line cards supported on Cisco Nexus 9508. This architecture uses the N9K-X9736PQ: 40 Gigabit Ethernet ACI Spine Line Card. See Figure 14.

· 36-port 40 Gigabit Ethernet QSFP+ line card

· Non-blocking

· Designed for use in an ACI spine switch role

· Works only in ACI mode

· Cannot mix with non-spine line cards

· Supported in 8-slot chassis

Figure 14 N9K-X9736PQ Line Card

Cisco Nexus 9332 Leaf Switch

The Cisco Nexus 9332PQ switch delivers comprehensive line-rate Layer 2 and Layer 3 features in a one-rack-unit (1-RU) form factor. It supports line-rate 1/40 Gigabit Ethernet with 2.56 Tbps of bandwidth and over 720 million packets per second (mpps) across 32 fixed 40-Gbps QSFP+ ports. It is ideal for top-of-rack and middle-of-row deployments in both traditional and Cisco Application Centric Infrastructure (ACI)-enabled enterprise, service provider, and cloud environments. See Figure 15.

Figure 15 Cisco Nexus 9332PQ Switch

Application Policy Infrastructure Controller (APIC)

The APIC is the unified point of automation, management, monitoring, and programmability for the Cisco Application Centric Infrastructure. The APIC supports the deployment, management, and monitoring of any application anywhere, with a unified operations model for physical and virtual components of the infrastructure. The APIC programmatically automates network provisioning and control that is based on the application requirements and policies. It is the central control engine for the broader cloud network; it simplifies management and allows flexibility in how application networks are defined and automated. It also provides northbound REST APIs. The APIC is a distributed system that is implemented as a cluster of many controller instances. See Figure 16.
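
As a minimal sketch of the northbound REST API, the following Python code builds, but does not send, an APIC login request. It assumes the `aaaLogin` endpoint and the `aaaUser` payload of the APIC REST API; the function name, host name, and credentials are placeholders.

```python
import json
import urllib.request


def build_apic_login_request(
    apic_host: str, username: str, password: str
) -> urllib.request.Request:
    """Build an HTTPS POST request for APIC REST API authentication."""
    url = f"https://{apic_host}/api/aaaLogin.json"
    payload = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder host and credentials; the request is only constructed here.
    request = build_apic_login_request("apic.example.com", "admin", "example-password")
    print(request.get_method(), request.full_url)
```

Sending the request, for example with `urllib.request.urlopen`, would return a token used by later calls; certificate handling and error checking are omitted from this sketch.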

Cisco ACI Topology

The Cisco ACI topology is a spine-leaf architecture in which each leaf is connected to each spine. It uses an internal routing protocol, Intermediate System to Intermediate System (IS-IS), to establish IP connectivity throughout the fabric among all nodes, including spines and leaves. To transport tenant traffic across the IP fabric, an integrated VXLAN overlay is used. Broadcast ARP traffic coming from endpoints or hosts to the leaf is translated to unicast ARP in the fabric.

Forwarding is host-based. At the leaf layer, user information such as username, IP address, location, and policy group is decoupled from the actual forwarding path, encoded into the fabric VXLAN header, and forwarded to the desired destination.

Each spine has complete forwarding information about the end hosts connected to the fabric, while each leaf holds cached forwarding information. A leaf only needs to know the hosts it needs to talk to. For example, if Server Rack-1 has to send information to Server Rack-2, when a packet arrives at the ingress leaf (LEAF_1), the leaf encapsulates the information in the VXLAN header and forwards it to LEAF_2. If LEAF_1 does not have information about LEAF_2, it uses the spine as a proxy; because the spine has complete information about every end host connected to the fabric, it resolves the egress leaf and forwards the packet to the destination.
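
The cached lookup with spine proxy described above can be modeled in a few lines of Python. This is a conceptual sketch, not ACI's implementation: the class and host names are hypothetical, and the leaf caching the answer after a proxy lookup is a simplifying assumption.

```python
class Spine:
    """Holds the complete endpoint-to-leaf mapping for the fabric."""

    def __init__(self) -> None:
        self.endpoints = {}  # host name -> name of the leaf it is attached to

    def register(self, host: str, leaf_name: str) -> None:
        self.endpoints[host] = leaf_name

    def resolve(self, host: str):
        return self.endpoints.get(host)


class Leaf:
    """Keeps a partial cache and falls back to the spine as a proxy."""

    def __init__(self, name: str, spine: Spine) -> None:
        self.name = name
        self.spine = spine
        self.cache = {}  # host name -> egress leaf name

    def attach(self, host: str) -> None:
        """Attach a local host and report it to the spine."""
        self.cache[host] = self.name
        self.spine.register(host, self.name)

    def forward(self, dst_host: str):
        """Return (egress leaf, lookup path) for a destination host."""
        if dst_host in self.cache:
            return self.cache[dst_host], "direct"
        egress = self.spine.resolve(dst_host)
        if egress is None:
            raise LookupError(f"unknown endpoint: {dst_host}")
        # Simplifying assumption: remember the answer for later packets.
        self.cache[dst_host] = egress
        return egress, "spine-proxy"


if __name__ == "__main__":
    spine = Spine()
    leaf_1, leaf_2 = Leaf("LEAF_1", spine), Leaf("LEAF_2", spine)
    leaf_1.attach("rack1-host")
    leaf_2.attach("rack2-host")
    print(leaf_1.forward("rack2-host"))  # first packet goes through the spine proxy
    print(leaf_1.forward("rack2-host"))  # later packets use the leaf's cache
```

Keeping only a partial table on each leaf limits leaf state to the hosts it actually talks to, while the spine remains the single place that knows every endpoint.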

For connectivity to the outside world, routing protocols can be used to learn outside prefixes, or static routing can be used instead. Routes learned from outside are distributed into the fabric and to the other leaves with Multiprotocol BGP (M-BGP). In the M-BGP topology, the spine nodes act as route reflectors.

The Network topology of ACI is as depicted in Figure 17.

Figure 17 Network Topology Based on Cisco ACI

The Cisco ACI infrastructure incorporates the following components:

· Two Cisco Nexus 9508 Spine Switches

· Cisco ACI Spine Line Card for Nexus 9508

· Cisco Nexus 9332 Leaf Switch for Data Traffic

· Cisco APIC-M2-Cluster with three APIC-M2 appliances

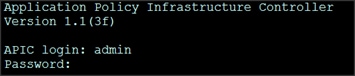

Once the configuration is completed, the APIC boots its APIC IOS image and asks for the login information. The default username is "admin" and the password is the one that was set during the initial configuration. See Figure 18.

SAS Advanced Analytics

SAS is the market leader in advanced analytics with decades of experience and a broad portfolio of innovative products that help businesses turn data into actionable insight. This design uses advanced tools from SAS Institute for data filtering, analysis, and response at the edge of the network, and for historical analysis, real-time analysis, and model development in the data center. These tools include SAS Event Stream Processing (ESP), SAS Visual Analytics (VA), SAS Visual Statistics (VS), SAS Event Stream Processing Server, and SAS LASR Analytic Server.

SAS Event Stream Processing (ESP)

SAS Event Stream Processing analyzes and acts on events as they happen in real-time. Its complex event processing (CEP) platform delivers real-time stream processing and analytics.

SAS Visual Analytics

SAS Visual Analytics enables you to gain insight from all of your data, no matter the size of your data, with no need to subset or sample the data. It is implemented as an integrated suite of web applications that offer intuitive, drag-and-drop interactions, rapid, highly visual responses, and role-based access to functionality.

With SAS Visual Analytics deployed on Cisco UCS C240 servers, data is prepared from data sources and loaded into memory. Analysts interactively explore, analyze, and interpret the data. Report designers create reports and dashboards. Report consumers view reports via a web interface or on their mobile devices.

SAS Visual Statistics

SAS Visual Statistics enables you to derive predicted values from the predictive models. These new variables contain the prediction information for your models and can be used in other visualizations. Deployed on Cisco UCS C240 servers, SAS Visual Statistics is fully integrated into SAS Visual Analytics.

SAS Event Stream Processing (ESP) Server

SAS Event Stream Processing (ESP) Server provides real-time event stream processing in the data center capturing data arriving continuously from devices and applications, analyzing and acting on new information as it arrives. It issues alerts and notifications, and streams live information to operational dashboards.

SAS LASR Analytic Server

SAS® LASR Analytic Server is an analytic platform applying analytics to big data. The server provides speedy, secure, multi-user access to in-memory data in a distributed computing environment. It also handles smaller data sets and supports an alternate, single-machine configuration.

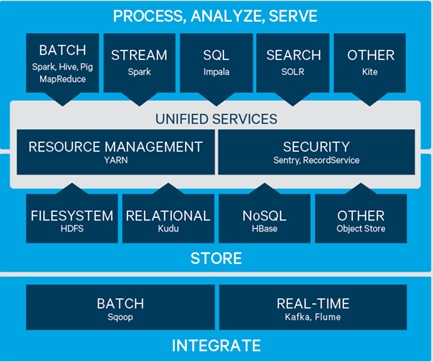

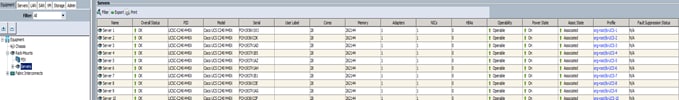

Cloudera Enterprise

Hadoop is a new type of data platform: one place to store unlimited data and access that data with multiple frameworks, all within the same platform. However, all too often, enterprises struggle to turn this new technology into real business value.

Powered by the world's most popular Hadoop distribution, Cloudera Enterprise (Figure 19) makes Hadoop fast, easy, and secure so you can focus on results, not the technology.

Fast for Business - Cloudera Enterprise enables more insights for more users, all within a single platform. With powerful open source tools and active data optimization designed for Hadoop, you can move from big data to results faster. Key features include:

· Fast Analytic SQL: The lowest latency and best concurrency for BI with Apache Impala

· Native Search: Complete user accessibility built into the platform with Apache Solr

· Active Data Optimization: Cloudera Navigator Optimizer helps tune data and workloads for peak performance with Hadoop

Easy to Manage - Hadoop is a complex, evolving ecosystem of open source projects. Cloudera Enterprise makes it simple so you can run at scale, across a variety of environments, all while meeting SLAs. Key features include:

· Powerful Cluster Operations: Cloudera Manager is the Hadoop administration tool trusted by the professionals

· Expert Support: Dedicated help and predictive care, just a click away

· Open Source Leadership: Constant open source development and curation, with the most rigorous testing, for trusted innovation

Secure without Compromise - The potential of big data is huge, but not at the expense of security. Cloudera Enterprise achieves compliance with its comprehensive security and governance. Key features include:

· Enterprise Encryption and Key Management: Protect everything with Navigator Encrypt and Navigator Key Trustee

· Uniform Access Policy Enforcement: Uniformly manage and enforce role-based access controls across the entire platform with Apache Sentry and RecordService

· Automated Data Management: Full-stack audit, lineage, discovery, and lifecycle management for Hadoop with Cloudera Navigator

· Secure Operations: Separation of duties to protect production environments and built-in log and query redaction to protect sensitive information

Apache Kafka

Apache Kafka is a distributed publish-subscribe messaging system that is designed to be fast, scalable and durable. Kafka maintains feeds of messages in topics. Producers write data to topics and consumers read from topics. Since Kafka is a distributed system, topics are partitioned and replicated across multiple nodes. Kafka is designed to allow a single cluster to serve as the central data backbone for a large organization. It can be elastically and transparently expanded without downtime. Data streams are partitioned and spread over a cluster of machines to allow data streams larger than the capability of any single machine and to allow clusters of coordinated consumers.

Messages are simply byte arrays, and developers can use them to store any object in any format, with String, JSON, and Avro being the most common. It is possible to attach a key to each message, in which case the producer guarantees that all messages with the same key arrive at the same partition.

Messages are persisted on disk and replicated within the cluster to prevent data loss. Each broker can handle terabytes of messages without performance impact. When consuming from a topic, it is possible to configure a consumer group with multiple consumers. Each consumer in a consumer group will read messages from a unique subset of partitions in each topic they subscribe to, so each message is delivered to one consumer in the group, and all messages with the same key arrive at the same consumer.
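
The relationship between keys, partitions, and consumer groups can be illustrated with a small stand-alone Python sketch. It does not use a Kafka client: the function names are hypothetical, `zlib.crc32` stands in for Kafka's own key hashing, and the round-robin assignment is only one possible strategy.

```python
import zlib


def partition_for(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition (CRC32 is a stand-in hash)."""
    return zlib.crc32(key) % num_partitions


def assign_partitions(num_partitions: int, consumers: list) -> dict:
    """Give every partition to exactly one consumer in the group."""
    assignment = {consumer: [] for consumer in consumers}
    for partition in range(num_partitions):
        owner = consumers[partition % len(consumers)]
        assignment[owner].append(partition)
    return assignment


def consumer_for(key: bytes, num_partitions: int, assignment: dict) -> str:
    """Find which consumer in the group receives messages with this key."""
    partition = partition_for(key, num_partitions)
    for consumer, partitions in assignment.items():
        if partition in partitions:
            return consumer
    raise LookupError(f"partition {partition} is not assigned")


if __name__ == "__main__":
    group = assign_partitions(6, ["consumer-a", "consumer-b", "consumer-c"])
    print(group)
    # Every message keyed by the same sensor lands on the same consumer.
    print(consumer_for(b"sensor-42", 6, group))
```

Because the key alone decides the partition and each partition has a single owner in the group, per-key ordering is preserved while the load is spread across consumers.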

What makes Kafka unique is that it treats each topic partition as a log (an ordered set of messages). Each message in a partition is assigned a unique offset. Kafka does not attempt to track which messages were read by each consumer and retain only the unread ones; rather, Kafka retains all messages for a set amount of time, and consumers are responsible for tracking their position in each log. Consequently, Kafka can support a large number of consumers and retain large amounts of data with very little overhead.
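The log-and-offset model can be illustrated with a small in-memory sketch. The class names `PartitionLog` and `Consumer` are hypothetical, and time-based retention is left out; the point is only that the log stores messages once and each consumer keeps its own offset.

```python
class PartitionLog:
    """An append-only, ordered list of messages for one topic partition."""

    def __init__(self):
        self._messages = []

    def append(self, message: bytes) -> int:
        """Store a message and return the offset assigned to it."""
        self._messages.append(message)
        return len(self._messages) - 1

    def read(self, offset: int, max_messages: int = 10) -> list:
        """Return up to max_messages starting at offset; the log is not modified."""
        return self._messages[offset:offset + max_messages]


class Consumer:
    """A reader that tracks its own position; the log keeps no per-consumer state."""

    def __init__(self, log: PartitionLog):
        self.log = log
        self.offset = 0

    def poll(self, max_messages: int = 10) -> list:
        batch = self.log.read(self.offset, max_messages)
        self.offset += len(batch)
        return batch


if __name__ == "__main__":
    log = PartitionLog()
    for reading in (b"t=20.1", b"t=20.4", b"t=20.9"):
        log.append(reading)
    fast, slow = Consumer(log), Consumer(log)
    print(fast.poll())       # reads all three messages
    print(slow.poll(1))      # reads only the first; its offset is independent
```

Adding another consumer costs the log nothing, which is the property the paragraph above attributes to Kafka's design.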

Kafka allows clients to choose synchronous or asynchronous replication. In the former case, a message is acknowledged only after it reaches multiple replicas; in the latter case, a message is acknowledged as soon as it reaches one replica. Replication gives Kafka stronger durability and higher availability. Details on how to configure the replicas are captured later in this document.
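The difference between the two acknowledgement modes can be expressed as a simple rule. The sketch below is illustrative only: the function name and the `required_sync_replicas` parameter are assumptions, and the default of 2 merely stands for "multiple replicas".

```python
def is_acknowledged(replicas_written: int, synchronous: bool,
                    required_sync_replicas: int = 2) -> bool:
    """Decide whether a published message can be acknowledged to the producer.

    synchronous=True  -> wait until the message has reached multiple replicas
    synchronous=False -> acknowledge as soon as one replica has the message
    """
    needed = required_sync_replicas if synchronous else 1
    return replicas_written >= needed


if __name__ == "__main__":
    print(is_acknowledged(1, synchronous=False))  # True
    print(is_acknowledged(1, synchronous=True))   # False
    print(is_acknowledged(2, synchronous=True))   # True
```

Synchronous mode trades some publish latency for durability: a message acknowledged in that mode survives the loss of a single replica.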

Please refer to http://www.cloudera.com/products.html for more details.

Requirements

This CVD describes the architecture and deployment procedures to create an edge-to-enterprise analytics system using Cisco servers, switches and routers, SAS analytics software and the Cloudera distribution for big data systems.

The cluster configuration consists of the following:

· 28 Cisco UCS C240 M4 Rack Servers fulfilling a variety of roles:

- 16 x HDFS data nodes collocated with SAS LASR

- 3 x HDFS management nodes

- 3 x SAS VA/VS nodes

- 2 x SAS ESP server nodes

- 4 x Kafka nodes

· One Cisco UCS C220 M4 Rack Server for Cisco Fog Director, used to manage the fog nodes

· Four Cisco UCS 6332 Fabric Interconnects

· Two Cisco Nexus 9508 spine switches

· Two Cisco Nexus 9332 leaf switches

· One Cisco Nexus 9372PX leaf switch

· Three Cisco APIC M2 appliances

· Four Cisco IR829G Industrial Integrated Services Routers

· Cisco R42610 standard racks

· Cisco Vertical Power distribution units (PDUs) (Country Specific)

Software Distributions and Versions

The required software distribution versions are listed below.

Cloudera Enterprise 5.7

The Cloudera Enterprise version used is 5.7. For more information, visit https://www.cloudera.com/documentation/enterprise/release-notes/topics/cdh_vd_cdh5_maven_repo_58x.html#concept_s1z_m5f_x5

SAS

· SAS LASR: 3.4

· SAS Event Stream Processing Client: 4.2

· SAS Event Stream Processing Server: 4.2

· SAS Visual Analytics (VA), SAS Visual Statistics (VS): 7.3

Note: On 9.4 platform (Linux for x64) for Distributed processing.

For more information visit: www.sas.com

Red Hat Enterprise Linux (RHEL)

The operating system implemented is Red Hat Enterprise Linux 7.2. For more information visit http://www.redhat.com.

Software Versions

The software versions tested and validated in this document are shown in Table 5.

|

|

Component |

Version or Release |

| Compute |

Cisco UCS C240-M4 |

C240M4.2.0.13d |

| Cisco UCS C220-M4 |

C220M4.2.0.13d |

|

| Network |

Cisco UCS 6332 |

UCS 3.1(2b) |

| Cisco UCS VIC1387 Firmware |

4.1(2d) |

|

| Cisco UCS VIC1387 Driver |

2.3.0.31 |

|

| Storage |

LSI SAS 3108 |

24.12.1-0049 |

| LSI MegaRAID SAS Driver |

06.810.10.00 |

|

| Software |

Red Hat Enterprise Linux Server |

7.2 (x86_64) |

| Cisco UCS Manager |

3.1(2b) |

|

| CDH |

5.8.0 |

|

| SAS LASR |

3.4 |

|

| SAS Event Stream Processing (ESP) |

4.2 |

|

| SAS Event Stream Processing Server |

4.2 |

|

| SAS Visual Analytics / Visual Statistics (VA/VS) |

7.3 |

|

| Cisco Fog Director |

1.2.0 |

|

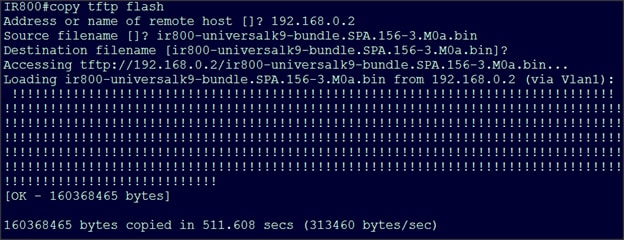

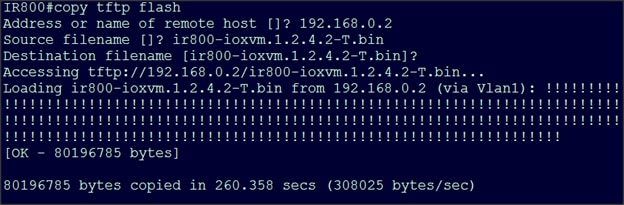

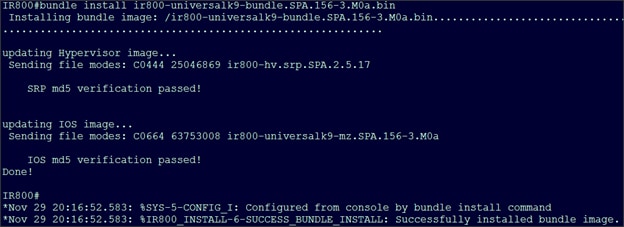

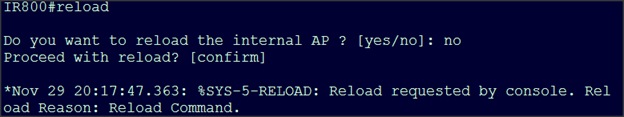

| Cisco IR829G router |

IOS |

15.6.3M |

| Fog node |

1.2.4.2 |

|

| IOx local manager |

1.2.0.0 |

Note: The latest drivers can be downloaded from this link: https://software.cisco.com/download/release.html?mdfid=283862063&release=2.0(13)&relind=AVAILABLE&flowid=25886&softwareid=283853158&rellifecycle=&reltype=latest

Note: The latest supported RAID controller driver is already included with the RHEL 7.2 operating system.

System Architecture

The system architecture is based on Cisco UCS Integrated Infrastructure for Big Data and Analytics and uses Cisco UCS C240 M4 servers in two domains interconnected through the ACI fabric. Each domain can support up to 30 servers under a pair of Fabric Interconnects, depending on the number of uplink ports. This design uses eight uplink ports, leaving 24 ports for the servers.
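The port budget behind these numbers is simple subtraction, sketched below. The total of 32 ports per Fabric Interconnect is an assumption inferred from the figures in the text (8 uplink ports plus 24 server ports); the function name is hypothetical.

```python
# Assumption: ports per Fabric Interconnect, inferred from 8 uplinks + 24 server ports.
FI_PORTS = 32


def server_ports(uplink_ports: int, total_ports: int = FI_PORTS) -> int:
    """Return how many Fabric Interconnect ports remain for servers."""
    if not 0 <= uplink_ports <= total_ports:
        raise ValueError("uplink_ports must be between 0 and total_ports")
    return total_ports - uplink_ports


if __name__ == "__main__":
    print(server_ports(8))  # 24, as used in this design
    print(server_ports(2))  # 30, the stated per-domain maximum
```

Under this assumption, the stated maximum of 30 servers per domain corresponds to reserving only two ports for uplinks.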

The ACI fabric consists of three major components: the Application Policy Infrastructure Controller (APIC), spine switches and leaf switches. These three components handle both the application of network policy and the delivery of packets.

The system architecture consists of two domains (two pairs of FIs) connecting to ACI having:

· Two Cisco Nexus 9508 switches acting as a spine

· Two Cisco Nexus 9332 as the leaf switches

· Three APIC-M2 as APIC appliances

· One Cisco Nexus 9372 leaf switch used to connect the Cisco IR829G routers to the network

Figure 20 shows the overall system architecture of the solution.

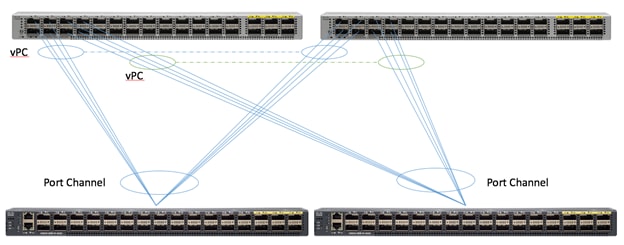

Figure 21 shows the connectivity between the leaf switches and fabric interconnects.

Figure 21 Leaf/Fabric Interconnect

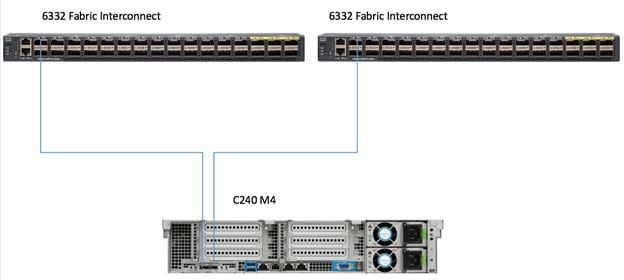

Figure 22 shows the connectivity between the Cisco UCS C240 M4 servers and the Fabric Interconnect pairs.

Figure 22 Cisco UCS C240 M4 Server Connectivity

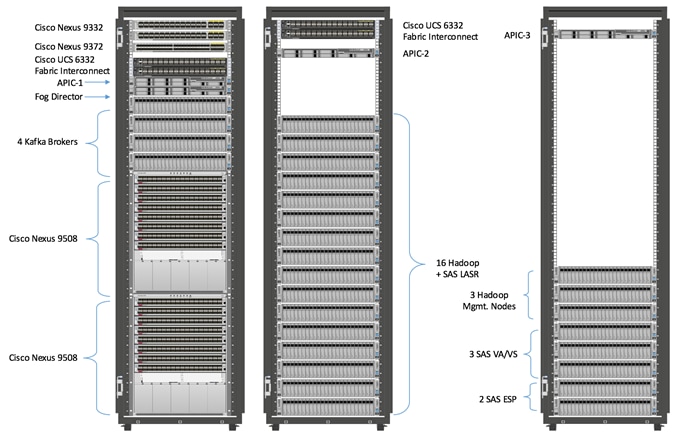

The physical layout for the solution is shown in Figure 23. Each rack consists of two vertical PDUs. The solution consists of three Cisco R42610 racks.

Rack 1 houses all switches for the ACI fabric, one APIC appliance, and two fabric interconnects. It also holds four Cisco UCS C240 M4 servers acting as Kafka nodes and one Cisco UCS C220 M4 server for fog node management.

Rack 2 houses two fabric interconnects, one APIC appliance, and 16 Cisco UCS C240 M4 servers used for the HDFS data and SAS LASR nodes.

Rack 3 houses the final APIC appliance and eight Cisco UCS C240 M4 servers: Hadoop management (3 nodes), SAS Visual Analytics / Visual Statistics (3 nodes), and SAS Event Stream Processing Server (2 nodes).

The Cisco IR829G routers are not shown, as they are not rack mounted. Each Cisco IR829G router is connected to the Cisco Nexus 9372 leaf switch through one 1GE link using a 1/10 GE adapter.

In this design, the Cisco UCS C220 running the Cisco Fog Director software is connected directly to the Fabric Interconnects. In actual practice this server may be anywhere that is appropriate to managing the edge devices.
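As a cross-check, the rack placement described above can be tallied against the cluster configuration listed under Requirements. The sketch below uses only counts stated in this document; the dictionary keys and the function name are hypothetical.

```python
# Server roles and counts from the Requirements section.
ROLE_COUNTS = {
    "hdfs_data_sas_lasr": 16,
    "hdfs_management": 3,
    "sas_va_vs": 3,
    "sas_esp": 2,
    "kafka": 4,
}

# Rack placement from the physical layout description.
RACKS = {
    1: {"c240": {"kafka": 4}, "c220": 1, "apic": 1, "fabric_interconnects": 2},
    2: {"c240": {"hdfs_data_sas_lasr": 16}, "c220": 0, "apic": 1, "fabric_interconnects": 2},
    3: {"c240": {"hdfs_management": 3, "sas_va_vs": 3, "sas_esp": 2},
        "c220": 0, "apic": 1, "fabric_interconnects": 0},
}


def tally(racks: dict) -> dict:
    """Sum per-role C240 counts and shared equipment across all racks."""
    totals = {"c240_by_role": {}, "c220": 0, "apic": 0, "fabric_interconnects": 0}
    for rack in racks.values():
        for role, count in rack["c240"].items():
            by_role = totals["c240_by_role"]
            by_role[role] = by_role.get(role, 0) + count
        for item in ("c220", "apic", "fabric_interconnects"):
            totals[item] += rack[item]
    return totals


if __name__ == "__main__":
    totals = tally(RACKS)
    print(totals["c240_by_role"] == ROLE_COUNTS)   # placement matches the role list
    print(sum(totals["c240_by_role"].values()))    # 28 C240 M4 servers in total
```

The tally accounts for all 28 Cisco UCS C240 M4 servers, the single Cisco UCS C220 M4, three APIC appliances, and four fabric interconnects.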

Figure 23 Reference Architecture-Physical Layout

Configuration of APIC

This section describes loading and configuring the APIC.

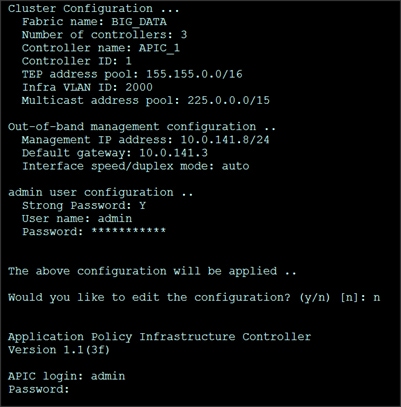

When the APIC appliance is booted for the first time, the APIC console presents a series of initial setup options. For many options, you can press Enter to choose the default setting that is displayed in brackets. At any point in the setup dialog, you can restart the dialog from the beginning by pressing Ctrl-C.

To configure APIC, complete the following steps:

1. Enter the fabric name [ACI Fabric1]:

2. Enter the number of controllers in the fabric (1-9) [3]:3

3. Enter the controller ID (1-3) [1]:1

4. Enter the controller name [apic1]:APIC_1

5. Enter address pool for TEP addresses [10.0.0.0/16]: 155.155.0.0/16

6. Enter the VLAN ID for infra network (1-4094) [4]: 2000

7. Out-of-band management configuration.

8. Enter the IP address for out-of-band management: 10.0.141.8/24

9. Enter the IP address of the default gateway [None]: 10.0.141.1

10. Administrator user configuration.

11. Enable strong passwords? [Y]

12. Enter the password for admin.

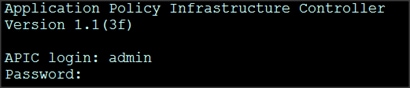

A screenshot of the configuration is shown below.

13. Repeat steps 1 through 12 for the two additional APICs, giving each a unique controller ID, controller name, and out-of-band management IP address.

When the configuration is complete, the APIC boots its software image and prompts for login information. The default username is "admin" and the password is the one that was set during the initial configuration.
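Before typing the answers into the console, it can help to sanity-check them. The sketch below records the values used for the first APIC in this document and validates the ranges shown in the setup prompts. It is not an APIC tool or API; the dictionary keys and the function name are hypothetical.

```python
import ipaddress

# Answers entered for the first controller in the steps above.
APIC_1 = {
    "fabric_name": "ACI Fabric1",
    "controller_count": 3,
    "controller_id": 1,
    "controller_name": "APIC_1",
    "tep_pool": "155.155.0.0/16",
    "infra_vlan": 2000,
    "oob_address": "10.0.141.8/24",
    "oob_gateway": "10.0.141.1",
}


def validate_apic_setup(answers: dict) -> list:
    """Return a list of problems found in the setup answers (empty if none)."""
    errors = []
    if not 1 <= answers["controller_count"] <= 9:
        errors.append("controller count must be 1-9")
    if not 1 <= answers["controller_id"] <= answers["controller_count"]:
        errors.append("controller ID must be between 1 and the controller count")
    if not 1 <= answers["infra_vlan"] <= 4094:
        errors.append("infra VLAN ID must be 1-4094")
    try:
        ipaddress.ip_network(answers["tep_pool"])
    except ValueError:
        errors.append("TEP address pool is not a valid network")
    try:
        oob = ipaddress.ip_interface(answers["oob_address"])
        gateway = ipaddress.ip_address(answers["oob_gateway"])
        if gateway not in oob.network:
            errors.append("default gateway is outside the out-of-band subnet")
    except ValueError:
        errors.append("out-of-band address or gateway is not a valid IP")
    return errors


if __name__ == "__main__":
    print(validate_apic_setup(APIC_1))  # an empty list means no problems found
```

The same check can be run for the other two controllers once their IDs, names, and out-of-band addresses are chosen.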

Switch Discovery with the APIC

The APIC is a central point of automated provisioning and management for all the switches that are part of the ACI fabric. A single data center might include multiple ACI fabrics, each with their own APIC cluster and Cisco Nexus 9000 Series switches that are part of the fabric. To ensure that a switch is managed only by a single APIC cluster, each switch must be registered with that specific APIC cluster that manages the fabric. The APIC discovers new switches that are directly connected to any switch it currently manages. Each APIC instance in the cluster first discovers only the leaf switch to which it is directly connected. After the leaf switch is registered with the APIC, the APIC discovers all spine switches that are directly connected to the leaf switch. As each spine switch is registered, that APIC discovers all the leaf switches that are connected to that spine switch. This cascaded discovery allows the APIC to discover the entire fabric topology in a few simple steps.

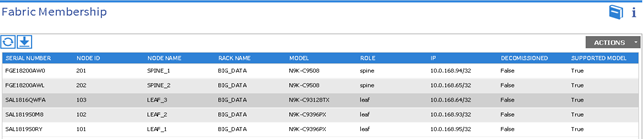
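The cascaded discovery described above behaves like a breadth-first walk of the fabric that starts at the leaf switch attached to the APIC. The sketch below models it under stated assumptions: the switch names follow the IDs used later in this section, the full leaf-to-spine mesh in `LINKS` is assumed for illustration, and each switch is treated as registered as soon as it is discovered.

```python
from collections import deque

# Assumed topology: every leaf connects to every spine.
LINKS = {
    "leaf1": {"spine1", "spine2"},
    "leaf2": {"spine1", "spine2"},
    "leaf3": {"spine1", "spine2"},
    "spine1": {"leaf1", "leaf2", "leaf3"},
    "spine2": {"leaf1", "leaf2", "leaf3"},
}


def discovery_order(links: dict, apic_leaf: str) -> list:
    """Return switches in the order the APIC would discover them.

    Only neighbors of an already registered switch become visible,
    so discovery spreads one hop at a time from the APIC's leaf.
    """
    order = []
    seen = {apic_leaf}
    queue = deque([apic_leaf])
    while queue:
        switch = queue.popleft()
        order.append(switch)  # switch is discovered and registered here
        for neighbor in sorted(links.get(switch, ())):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return order


if __name__ == "__main__":
    print(discovery_order(LINKS, "leaf1"))
    # ['leaf1', 'spine1', 'spine2', 'leaf2', 'leaf3']
```

In the real fabric each step waits for an administrator to register the switch, which is why the registration procedure that follows proceeds leaf first, then spines, then the remaining leaves.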

Switch Registration with the APIC Cluster

When the APIC cluster discovers a switch, the switch must be registered with the APIC to become part of the fabric.

Prerequisite: All switches must be physically connected and booted with the correct ACI image.

To register the switch with the APIC cluster, complete the following steps:

1. Using a web browser, connect to the out-of-band management IP address [10.0.141.8] configured in the initial configuration.

2. On the menu bar, choose FABRIC > INVENTORY. In the Navigation pane, choose the appropriate pod.

3. In the Navigation pane, expand the pod, and click Fabric Membership.

In the Work pane, in the Fabric Membership table, a single leaf switch is displayed with an ID of 0. This is the leaf switch that is connected to the APIC.

To configure the ID, double-click the leaf switch row, and complete the following steps:

1. In the ID field, add the appropriate ID (leaf1 is ID 101, leaf2 is ID 102, and leaf3 is ID 103).

Note: For the purpose of this CVD, only two leaf nodes have been used. Leaf3 is added here only for demonstration.

The ID must be a number that is greater than 100 because the first 100 IDs are for APIC appliance nodes.

2. In the Switch Name field, add the name of the switch, and click Update.

After an ID is assigned, it cannot be updated. The switch name can be updated by double-clicking the name and updating the Switch Name field.

The Success dialog box is displayed. An IP address is assigned to the switch, and in the Navigation pane, the switch is displayed under the pod.

Monitor the Work pane until one or more spine switches appear.

To configure the ID, double-click the spine switch row and complete the following steps:

1. In the ID field, add the appropriate ID (spine1 is ID 201 and spine2 is ID 202). The ID must be a number that is greater than 100.

2. In the Switch Name field, add the name of the switch, and click Update. The Success dialog box is displayed. An IP address is assigned to the switch, and in the Navigation pane, the switch is displayed under the pod. Wait until all remaining switches appear in the Node Configurations table.

For each switch listed in the Fabric Membership table, complete the following steps:

1. Double-click the switch, enter an ID and a Name, and click Update.

2. Repeat these steps for the next switch in the list.
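The registration rules stated in this procedure (IDs greater than 100, an ID that cannot be changed once assigned, and a switch name that can be updated) can be captured in a small model. This is a sketch for planning node IDs, not an APIC interface; the class and method names are hypothetical, and the uniqueness check is an added assumption.

```python
class FabricMembership:
    """Tracks node ID to switch name assignments under the rules in this section."""

    def __init__(self):
        self._nodes = {}  # node ID -> switch name

    def register(self, node_id: int, name: str) -> None:
        """Assign an ID and name to a newly discovered switch."""
        if node_id <= 100:
            raise ValueError("node ID must be greater than 100")
        if node_id in self._nodes:
            # Assumption: two switches may not share a node ID.
            raise ValueError(f"node ID {node_id} is already assigned")
        self._nodes[node_id] = name

    def rename(self, node_id: int, new_name: str) -> None:
        """Update the switch name; the ID itself has no update operation."""
        if node_id not in self._nodes:
            raise KeyError(node_id)
        self._nodes[node_id] = new_name

    def members(self) -> dict:
        return dict(self._nodes)


if __name__ == "__main__":
    fabric = FabricMembership()
    for node_id, name in [(101, "leaf1"), (102, "leaf2"), (201, "spine1"), (202, "spine2")]:
        fabric.register(node_id, name)
    print(fabric.members())
```

Planning the full ID list this way before starting helps, because a mistyped ID cannot be corrected after the switch is registered.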

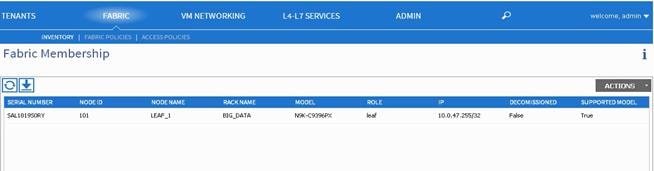

Validating the Switches

To validate the switches, complete the following steps:

1. On the menu bar, choose Fabric > Inventory, and in the Navigation pane, under Pod 1, expand Fabric Membership.

2. The switches in the fabric are displayed with their node IDs. In the Work pane, all the registered switches are displayed with the IP addresses that are assigned to them.

Validating the Fabric Topology

To validate the fabric topology, complete the following steps:

1. On the menu bar, choose Fabric > Inventory.

2. In the Navigation pane, choose the pod that you want to view.

3. In the Work pane, click the Topology tab.

4. The displayed diagram shows all attached switches, APIC instances, and links.

5. (Optional) To view the port-level connectivity of a leaf switch or spine switch, double-click its icon in the topology diagram.

6. To return to the topology diagram, in the upper left corner of the Work pane click the Previous View icon.

7. (Optional) To refresh the topology diagram, in the upper left corner of the Work pane, click the Refresh icon.

Adding Management Access

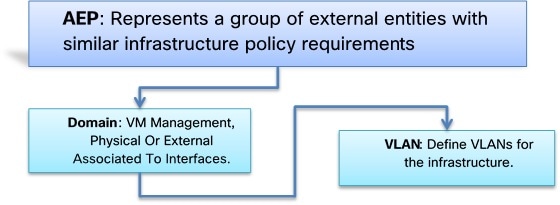

Attachable Entity Profiles (AEP)

The ACI fabric provides multiple attachment points that connect through leaf ports to various external entities such as bare metal servers, hypervisors, Layer 2 switches (for example, the Cisco UCS Fabric Interconnect), and Layer 3 routers (for example, Cisco Nexus 7000 Series switches). These attachment points can be physical ports, port channels, or virtual port channels (vPCs) on the leaf switches.

An attachable entity profile (AEP) represents a group of external entities with similar infrastructure policy requirements. The infrastructure policies consist of physical interface policies, for example, Cisco Discovery Protocol (CDP), Link Layer Discovery Protocol (LLDP), maximum transmission unit (MTU), and Link Aggregation Control Protocol (LACP).

An AEP is required to deploy any VLAN pools on the leaf switches. It is possible to reuse the encapsulation pools (for example, VLAN) across different leaf switches. An AEP implicitly provides the scope of the VLAN pool (associated to the VMM domain) to the physical infrastructure.

Note: An AEP provisions the VLAN pool (and associated VLANs) on the leaf. The VLANs are not actually enabled on the port. No traffic flows unless an EPG is deployed on the port. Without VLAN pool deployment using an AEP, a VLAN is not enabled on the leaf port even if an EPG is provisioned.

Note: A particular VLAN is provisioned or enabled on the leaf port based on EPG events, either a static binding on a leaf port or VM events from external controllers such as VMware vCenter.

Note: A leaf switch does not support overlapping VLAN pools. Different overlapping VLAN pools must not be associated with the same AEP.
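The restriction on overlapping VLAN pools can be checked before pools are associated with an AEP. The sketch below is a planning aid, not an APIC call; the pool names and VLAN ranges in the example are hypothetical, and each pool is modeled as a list of inclusive `(start, end)` VLAN ranges.

```python
from itertools import combinations


def ranges_overlap(a: tuple, b: tuple) -> bool:
    """True if two inclusive (start, end) VLAN ranges share at least one VLAN ID."""
    return a[0] <= b[1] and b[0] <= a[1]


def overlapping_pools(pools: dict) -> list:
    """Return pairs of pool names whose VLAN ranges overlap."""
    conflicts = []
    for (name_a, ranges_a), (name_b, ranges_b) in combinations(sorted(pools.items()), 2):
        if any(ranges_overlap(x, y) for x in ranges_a for y in ranges_b):
            conflicts.append((name_a, name_b))
    return conflicts


if __name__ == "__main__":
    # Hypothetical pools intended for one AEP.
    aep_pools = {
        "pool_data": [(100, 199)],
        "pool_mgmt": [(200, 249)],
        "pool_test": [(150, 160)],
    }
    print(overlapping_pools(aep_pools))  # [('pool_data', 'pool_test')]
```

Any pair reported by the check should be split into non-overlapping ranges or associated with different AEPs.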

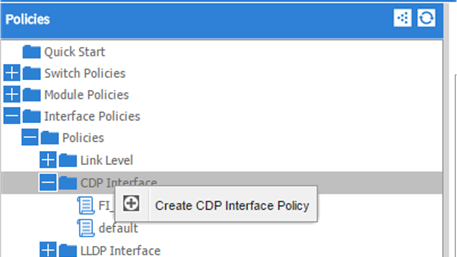

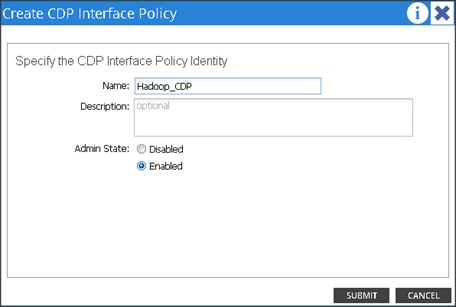

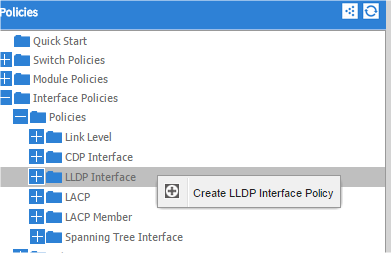

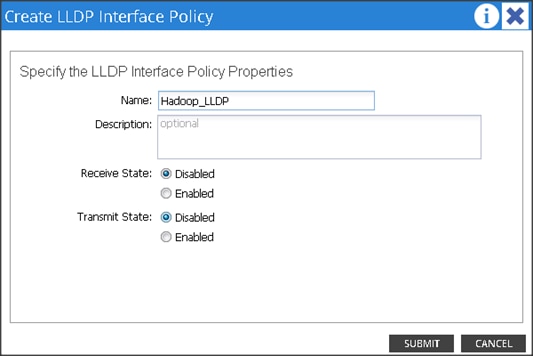

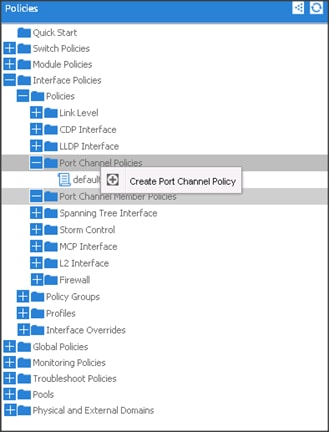

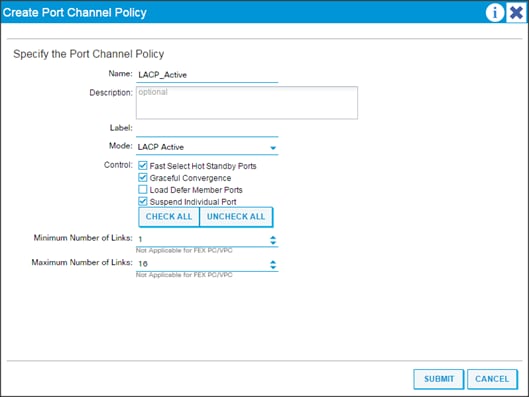

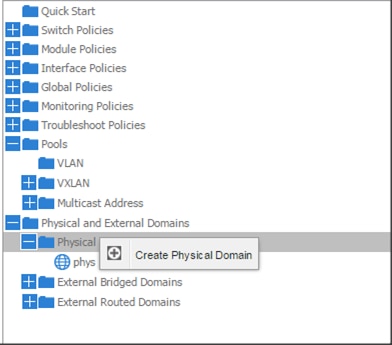

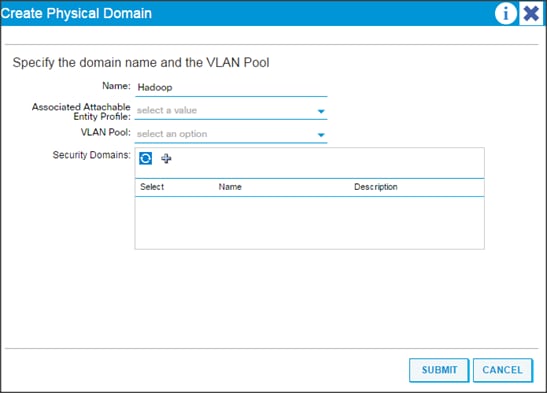

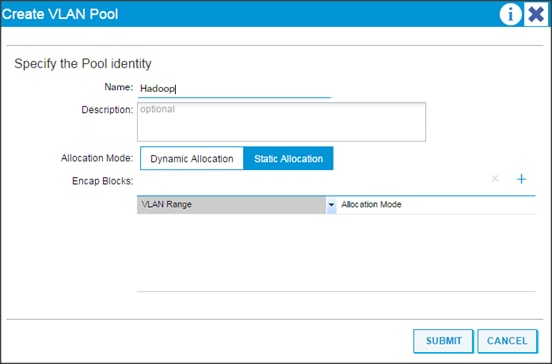

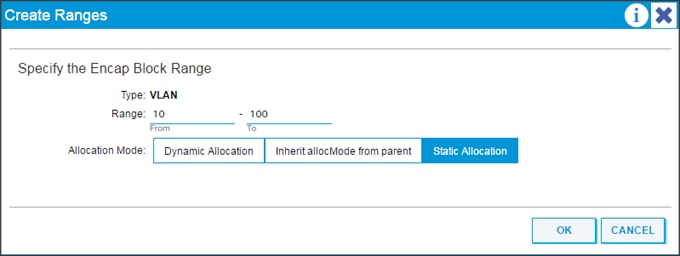

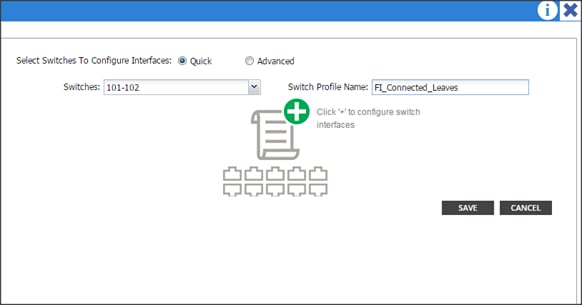

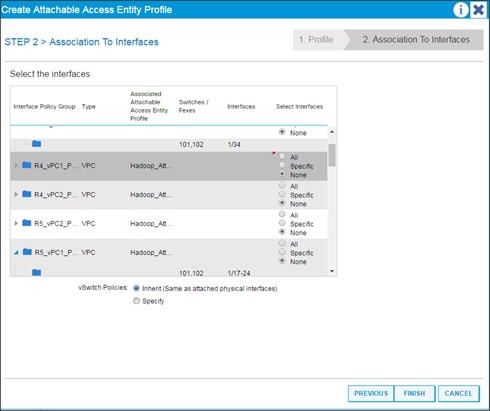

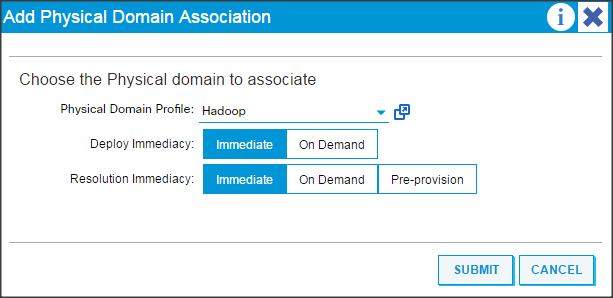

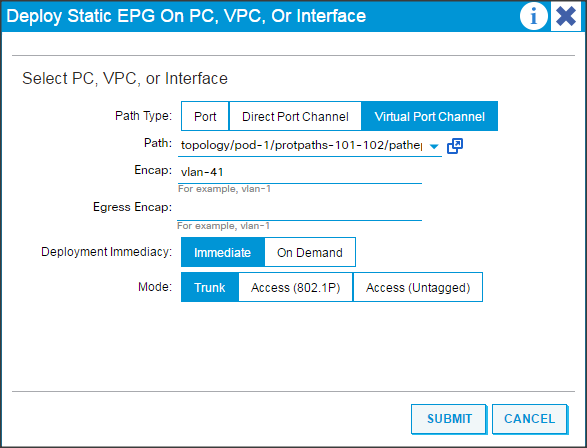

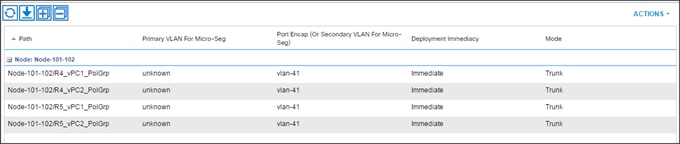

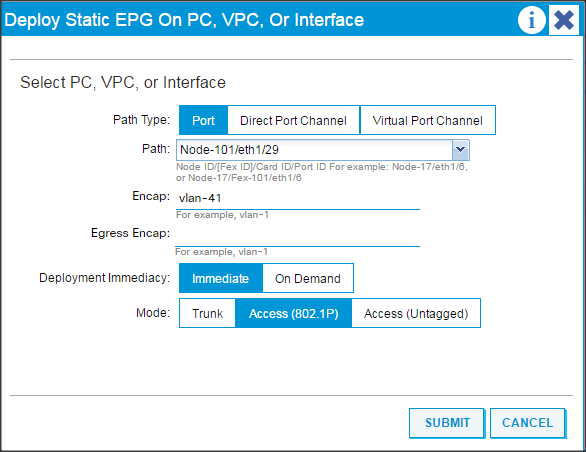

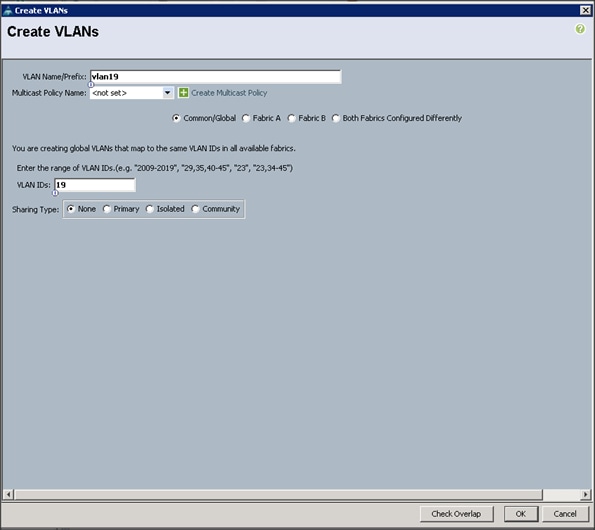

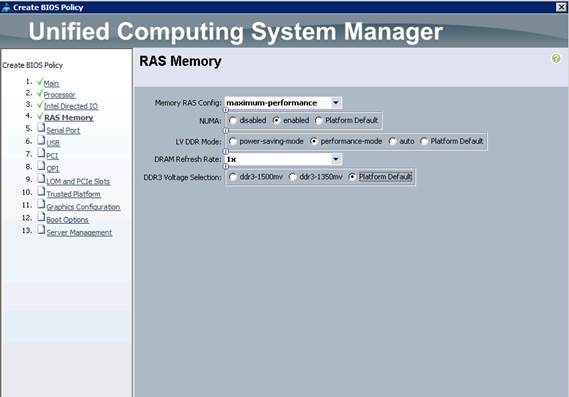

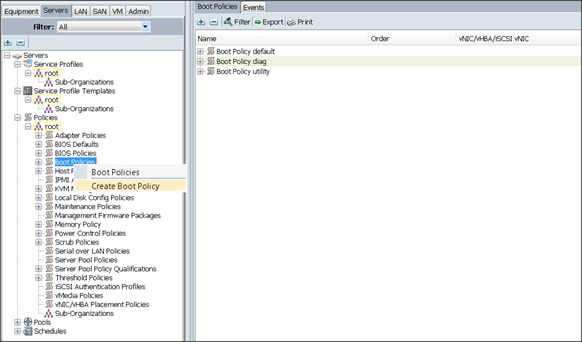

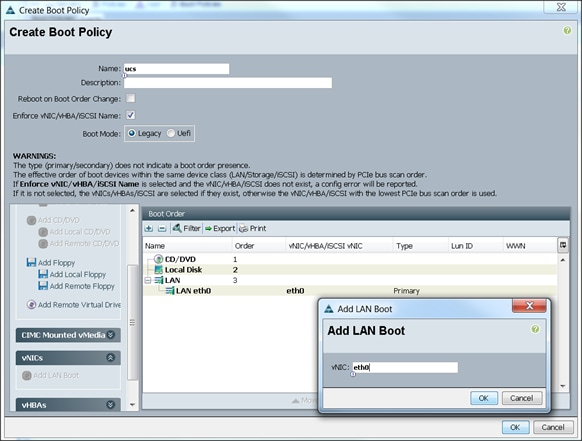

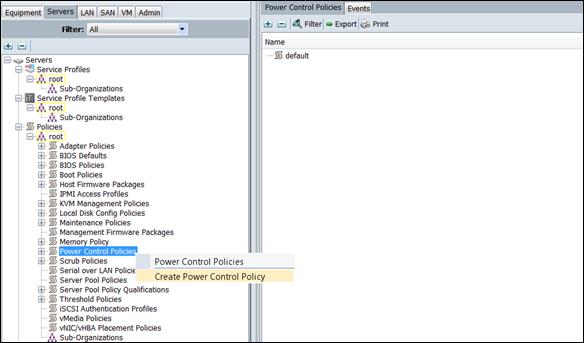

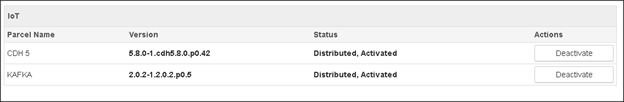

Network Configuration and ACI Setup