- About Cisco Nexus 9000v

- Cisco Nexus 9000v Guidelines and Limitations

- Benefits of Virtualization Using the Cisco Nexus 9000v

- Cisco Nexus 9000v Software Functionality

- Cisco Nexus 9000v System Management Configuration

- Cisco Nexus 9000v Resource Requirements

- VMware ESXi Support Information

Cisco Nexus 9000v

This chapter contains the following sections:

- About Cisco Nexus 9000v

- Cisco Nexus 9000v Guidelines and Limitations

- Benefits of Virtualization Using the Cisco Nexus 9000v

- Cisco Nexus 9000v Software Functionality

- Cisco Nexus 9000v System Management Configuration

- Cisco Nexus 9000v Resource Requirements

- VMware ESXi Support Information

- KVM-QEMU Support Information

- VirtualBox Support Information

- VMware Fusion Support Information

- Cisco Nexus 9000v Installation and Deployment

- Cisco Nexus 9000v Software Upgrade and Downgrade

- Cisco Nexus 9000v Configuration

- Cisco Nexus 9000v Deployment

- Network Topology Examples

About Cisco Nexus 9000v

The Cisco Nexus 9000v is a virtual platform that is designed to simulate the control plane aspects of a network element running Cisco Nexus 9000 software. The Cisco Nexus 9000v shares the same software image running on Cisco Nexus 9000 hardware platform although no specific hardware emulation is implemented. When the software runs as a virtual machine, line card (LC) ASIC provisioning or any interaction from the control plane to hardware ASIC is handled by the Cisco Nexus 9000v software data plane.

The Cisco Nexus 9000v for the Cisco Nexus 9000 Series provides a useful tool to enable the devops model and rapidly test changes to the infrastructure or to infrastructure automation tools. This enables customers to validate configuration changes on a simulated network prior to applying them on a production network. Some users have also expressed interest in using the simulation system for feature test ,verification, and automation tooling development and test simulation prior to deployment. Cisco Nexus 9000v can be used as a programmability vehicle to validate software defined networks (SDNs) and Network Function Virtualization (NFV) based solutions.

Cisco Nexus 9000v Guidelines and Limitations

Cisco Nexus 9000v has the following guidelines and limitations:

-

Cisco Nexus 9000v does not support the VGA console. You must provision a serial console on a VM to access the Nexus 9000v switch prompt on initial boot. See Deploying the Cisco Nexus 9000v on VirtualBox for more information.

-

When N9000v VMs are created by KVM hypervisor, the following issues may occur due to the default setting on the Linux Bridge:

-

LLDP communication between the VMs: The LLDP communication is not established between N9000v. For the solution, the following Linux Bridge settings should be configured. (In the example, assume vb7af2d7ab777d0 is the Linux Bridge that is used for connecting two VMs.

-

Stop STP running on the Linux Bridge using the brctl setageing vb7af2d7ab777d0 0 command.

-

Allow LLDP to be forwarded on the Linux Bridge using the echo 0x4000 > /sys/class/net/vb7af2d7ab777d0/bridge/group_fwd_mask command.

-

Stop LLDP service running on Linux base host (on which the topology is running) using the /etc/init.d/lldpd stop command.

-

[Optional] Disable multicast snooping using the echo 0 > /sys/devices/virtual/net/vb7af2d7ab777d0/bridge/multicast_snooping command.

-

-

LACP connection between the VMs: The LACP connection is not formed between eNXOSv. For the solution, complete the following steps:

-

The Linux kernel should be patched.

-

Group forward mask should be set up using the echo 0x4 > /sys/class/net/vb7af2d7ab777d0/bridge/group_fwd_mask command.

-

-

The multicast packet may not flow through the Linux Bridge. For the solution, use the echo 0 > /sys/devices/virtual/net/vb7af2d7ab777d0/bridge/multicast_snooping command.

-

Some ports may get into STP blocked port by the Linux Bridge. For the solution, disable the STP running on the Linux Bridge using the brctl setageing vb7af2d7ab777d0 0 command.

-

-

After initial setup of the Cisco Nexus 9000v, you must configure the booting image in your system. Otherwise, the Cisco Nexus 9000v drops to the loader> prompt after reload/shut down.

switch# configure terminal switch(config)# boot nxos n9000-dk9.7.0.3.I2.0.454.bin switch(config)# copy running-config startup-config

-

Cisco Nexus 9000v does not support VGA console. You must provision the serial console on any VM to access the Cisco Nexus 9000v switch prompt on initial boot.

-

Beginning with Cisco Nexus Release 7.0(3)I7(1), you can use the Cisco Nexus Network Manager to manage Nexus 9000v chassis node in network management system

Note

The Cisco Nexus 9000v does not support statistics querying.

-

The Cisco Nexus 9000v uses vNICs that are entered from the KVM/QEMU command line or from the GUI on ESXi for networking either externally or internally within a hypervisor server. The first NIC is always used as the Cisco Nexus 9000v management interface. The subsequence NICs are used as data ports as e1/1, e1/2, ... e1/9. Ten NICs are allowed with nine NICs for data ports.

Note

Beginning with Cisco NX-OS Release 7.0(3)I5(2), a maximum of 64 data ports (e1/1, e1/2, ... e1/64) are supported.

Connect only the first NIC for the Cisco Nexus 9000v VM as the management interface to your LAN physical switch or vSwitch (VM Network) connecting directly to a physical switch. Do not connect any data port vNIC to any physical switch that conflicts with your server management connectivity.

-

Cisco Nexus 9000v only supports the ESXi standard vSwitch when VMs are interconnected within a hypervisor or an external physical switch.

-

The vSwitch mapping to data port interface is required to have Promiscuous Mode as the Accept mode in order to pass traffic between VMs.

-

Multicast snooping should be disabled on net-devices configured as bridges on Linux-host machines. These net-devices are used to connect VMs in the Cisco Nexus 9000v setup.

-

The Cisco Nexus 9000v operates as a bridge that generates BPDU packets on its Ethernet interfaces as it participates in Spanning Tree Protocol (STP). It also forwards broadcast, unknown unicast, and multicast traffic as expected by classic bridging logic. Do not connect the Cisco Nexus 9000v data plane interfaces to the upstream network in a manner that would create bridging loops or interfere with upstream STP operation.

-

Beginning with Cisco NX-OS Release 7.0(3)I6(1), Cisco Nexus 9000v is supported in the Virtual Internet Routing Lab (VIRL) and the Cisco Modeling Lab (CML) environment running as a VM.

-

Beginning with Cisco NX-OS Release 7.0(3)I6(1), VXLAN BGP EVPN is supported on Cisco Nexus 9000v. For details on VXLAN configuration, see the Cisco Nexus 9000 Series NX-OS VXLAN Configuration Guide, Release 7.x.

Benefits of Virtualization Using the Cisco Nexus 9000v

This virtual platform provides these virtualization benefits in a cloud environment and you are not limited to the type of hardware as well as other resources.

|

Benefits |

Description |

||

|---|---|---|---|

|

Hardware Independence |

This virtual platform provides these virtualization benefits in a cloud environment and users is not limited to hardware as well as other resources.

|

||

|

Resource Sharing |

The resources used by Cisco Nexus 9000v are managed by the hypervisor, and can be shared among VMs. The amount of hardware resources that VM sever allocates to a specific VM, can be reallocated to another VM on the server. |

||

|

Flexibility in Deployment |

You can easily move a VM from one server to another, Thus, you can move the Cisco Nexus 9000v from a server in one physical location to a server in another physical location without moving any hardware resources. |

||

|

Dynamic Networking |

Users can change network connectivity and configuration in a matter of mins without any physical cabling. |

Cisco Nexus 9000v Software Functionality

NX-OS 7.0(3)I6(1) Release and Earlier Releases

Beginning Cisco NX-OS 7.0(3)I5(1), Cisco Nexus 9000v supports emulation and implementation on a subset of hardware dependent features. Enablement of such features in the forwarding plane allows users to simulate a more realistic deployment scenario such as an NX-OS hardware platform.

The following are restrictions and host environment differences for Cisco Nexus 9000v:

-

Interface throughputs are currently rate-limited to prevent any production usage. The interface rate-limit is set as 4M per device.

-

For the Oracle VM VirtualBox hypervisor, the SATA block device must be used due to the image size increase from the 7.0(3)I5(1) branch which impacts the legacy bios IDE controller size limit. SATA block devices also significantly improve disk performance, especially for initial boot up time. The IDE controller can be used in the VMware ESXi and the KVM/QEMU hypervisor, but it is significantly slower than the SATA controller.

-

A SATA block device can also be used in KVM/QEMU hyerpvisor, but it requires QEMU 2.2.0 or later version. Similar to the Oracle VM VirtualBox hypervisor, a performance improvement can be obtained in the KVM/QEMU environment.

Supported Features

The following table displays specific Layer 2 and Layer 3 software feature support based on branch/lineup.

|

Technology Branch/Lineup |

Nexus Feature Name |

Support Statement Cisco NX-OS 7.0(3)I5(1), 7.0(3)I5(2), and 7.0(3)I6(1) Releases |

||

|---|---|---|---|---|

|

OS Infra |

Bash Shell |

Supported |

||

|

Guest Shell |

Supported |

|||

|

SSH |

Supported |

|||

|

RPM Installation |

Supported |

|||

|

POAP |

Supported for the management interface in Cisco NX-OS Release 7.0(3)I5(1) and for all interfaces in Cisco NX-OS Release 7.0(3)I5(2) and Cisco NX-OS Release 7.0(3)I6(1) |

|||

|

Programmability |

NXAPI |

Supported |

||

|

Puppet Integration (Native) |

Supported |

|||

|

Puppet Integration (Guest Shell) |

Supported |

|||

|

Chef Integration (Native) |

Supported |

|||

|

Chef Integration (Guest Shell) |

Supported |

|||

|

L3 Features |

CDP |

Supported |

||

|

LLDP |

Supported |

|||

|

BGP v4 |

Supported (No BFD, EVPN)

|

|||

|

BGP v6 |

Supported (No BFD, EVPN) |

|||

|

OSPFv2 |

Supported (No BFD, EVPN) |

|||

|

OSPFv3 |

Supported (No BFD, EVPN) |

|||

|

EIGRP |

Supported |

|||

|

RIP |

Supported |

|||

|

L2 Features |

L2 Switching Unicast |

Supported |

||

|

L2 Switching Broadcast |

Supported |

|||

|

L2 Switching Multicast |

Supported as Broadcast (not explicit Mcast) , No PIM or Mcast Group support |

|||

|

ARP Suppression |

Supported in Cisco NX-OS Release 7.0(3)I7(1) and later. |

|||

|

MAC learning |

Supported |

|||

|

Static/Router MAC |

Supported |

|||

|

Switchport |

Supported |

|||

|

802.1q VLAN Trunk/Access |

Supported |

|||

|

STP |

Supported |

|||

|

L3 SVI |

Supported |

|||

|

Subinterfaces |

Supported |

|||

|

VXLAN and VXLAN EVPN |

Supported (Flood and Learn); BGP EVPN without ARP suppression in Cisco NX-OS Release 7.0(3)I6(1) and later. BGP EVPN with ARP suppression in Cisco NX-OS Release 7.0(3)I7(1) and later. |

|||

|

vPC |

Not supported in Cisco NX-OS Release 7.0(3)I5(1), but supported in Cisco NX-OS Release 7.0(3)I5(2) and Cisco NX-OS Release 7.0(3)I6(1) |

|||

|

Port channel |

Supported |

Note | The Cisco Nexus 9000v features in this table have been verified to operate only with the Cisco devices mentioned in this document. |

If a networking or system feature is not identified as a supported feature in this document, it should be considered as unsupported despite that it may seem to work correctly. Unsupported features did not have any level of regression testing on Cisco Nexus 9000v.

|

NX-OS Features |

Limitations |

|---|---|

|

QoS |

Not supported on Cisco Nexus 9000v. |

|

BFD |

Not supported on Cisco Nexus 9000v. |

|

ACL |

Not supported on Cisco Nexus 9000v. |

|

Policy maps |

Not supported on Cisco Nexus 9000v. |

|

SPAN |

Not supported on Cisco Nexus 9000v. |

|

IGMP Snooping |

Not supported on Cisco Nexus 9000v. |

|

AMT |

Not supported on Cisco Nexus 9000v. |

|

LISP |

Not supported on Cisco Nexus 9000v. |

|

OTV |

Not supported on Cisco Nexus 9000v. |

|

EVPN Multi-Site |

Not supported on Cisco Nexus 9000v. |

The following list (not comprehensive) contains known system limitations.

|

System Capabilities |

Limitations |

|---|---|

|

MAC Address |

Cisco Nexus 9000v does not integrate the L2FM module and L2FDWR data plane. It maintains its own MAC Table. Therefore the behavior of the MAC address related CLIs will be different from the physical platform. |

|

Statistics |

Cisco Nexus 9000v re-uses a software data plane that was created for L2FDWR. With this data plane, Cisco Nexus 9000v will not be able to account for and display traffic related statistics (such as interface statistics). |

|

Consistency Checker |

The consistency checker has a hardware dependency and hence is not supported on Cisco Nexus 9000v. All ‘show’ and ‘exec’ commands will result with appropriate error/warnings. |

|

Network Throughput |

Low data plane performance. Additional rate limiter is in place to limit the total amount of traffic received by Cisco Nexus 9000v to 4M. |

|

TOR-ISSU |

TOR-ISSU is not supported. |

|

Link Status |

Cisco Nexus 9000v virtual interfaces serve as the ‘Ethernet Ports’. The link status of these links within the NX-OS is dependent on the Hypervisor’s capability. |

|

Link-down |

Connectivity between the two ends of the interface link is simulated, hence it is important that you shut the interface in both the ends, followed by no shut at both the ends of the interface link. |

Cisco Nexus 9000v Feature UI/CLI Difference From Hardware Platform

Feature enablement in the Cisco Nexus 9000v virtual platform is the same as Cisco Nexus 9000 hardware platform.

For example, the following features can be enabled:

-

feature telnet

-

feature bash-shell

-

feature ospf

-

feature bgp

-

feature interface-vlan

-

feature nv overlay

However, not all commands are available for Cisco Nexus 9000v, such as hardware data plane specific commands. Some of these commands exist in the command parse chain, but these commands might not display correct output information. It is not possible for the virtual platform to verify all commands on Cisco Nexus 9000v that exist for the Cisco Nexus 9000 hardware platform.

A few commands are critical for Cisco Nexus 9000v to display Layer 2/Layer 3 information, but are not provided for the Cisco Nexus 9000v platform. The following displays substitute commands:

|

NX-OS Hardware Platform Commands |

Substitute for Cisco Nexus 9000v |

|---|---|

|

show mac address-table |

show system internal l2fwder mac |

|

clear mac address-table |

clear mac address-table datapath static|dynamic |

Cisco Nexus 9000v System Management Configuration

Beginning Cisco NX-OS 7.0(3)I7(1), Cisco Nexus 9000v runs the same software as Nexus 9000 Series TOR hardware platform in aspect of control plane. All applicable CLIs should be the same as hardware platform. The Simple Network Management Protocol (SNMP) for Nexus 9000v chassis management is added in this release. Nexus 9000v SNMP software inherent basic SNMP infrastructure from Nexus 9000 Series hardware platform. System management configuration should follow Cisco Nexus 9000 series documentation. However, management entity is subject to Nexus 9000v platform specific limitation. For example, interfaces statistics will not be available for any management request because Nexus 9000v platform does not have such data available. For details about supported features, see Cisco Nexus 9000v Software Functionality.

Cisco Nexus 9000v SNMP chassis management supports the following entity MIBs. However, only applicable and meaningful attributes can be retrieved from this platform.

Cisco Nexus 9000v Resource Requirements

The Cisco Nexus 9000v uses the Cisco Nexus 9000 Series hardware software image. It requires the minimum resources as shown in the following list. These resources are generally not oversubscribed on any server.

-

8G memory

-

Minimum 4G. We recommend a 8G VM configuration for complex topology and enabling features.

-

1-4 vCPUs

-

4G hard disk

-

1 serial port

-

1 network interface card (NIC)

Server Software Requirements

The Cisco Nexus 9000v can run on Cisco Unified Computing System (UCS) servers or servers from leading vendors that support VMware ESXi 5.1 (Post Build 1065491/ ESXi 5.5) or the combination of Ubuntu Linux 14.04LTS or later version and KVM-QEMU 2.0.

if you only need a standalone Cisco Nexus 9000v node, the Cisco Nexus 9000v can also be deployed on a laptop or and Apple Mac Pro with a virtual box hypervisor as long as your laptop meets basic resource requirements.

VMware ESXi Support Information

The virtual machine (VM) runs on the VMware vSphere Hypervisor. You can use the same VMware vSphere hypervisor to run serial VMs. Use the VMware vSphere Client GUI to create and manager VMs.

The VMware vSphere Client is an application for creating, configuring, and managing VMs on the VMware vCenter Server. The Cisco Nexus 9000v can boot from a virtual disk located on the data store. You can perform basic administration tasks such as starting and stopping the Cisco Nexus 9000v, using the VMware vSphere Client.

VMWare vCenter Server manages the vSphere environment and provides unified management of all the hosts and VMs in the data center from a single console.

For more information about how Cisco and VMware work together, see https://www.vmware.com/partners/global-alliances/cisco.html.

For more information about VMware features and operations, see the https://www.vmware.com/support/pubs/.

Nexus 9000v on ESXi 6.5 Deployment Notes

If you are deploying Cisco Nexus 9000v on VMware ESXi 6.5, please ensure that you have checked the following:

-

Check that the deployment environment has the correct ESXi 6.5 server and host licenses. Invalid licenses may cause instability in your deployment environment. The instability issues are VM related, such as, no access to a VM serial console,inability to access the Cisco nexus 9000v switch prompt, or incorrect error messages.

-

We recommend using the Opera browser, if you are deploying in a Mac environment: http://www.opera.com.

-

EFI default firmware option: Nexus 9000v requires EFI firmware boot. Download our distributed ova file from http://software.cisco.com. Select EFI from the Edit Virtual Machine setting menu before powering the VM on.

Note

You do not need to perform this if you are deploying the Cisco Nexus 9000v using the previous vSphere client in Windows.

-

The distributed vmdk file downloaded from http://software.cisco.com is not compatible with the ESXi 6.5 release format. To use the old vmdk file, see Using an Old vmdk File with ESXi 6.5.

Using an Old vmdk File with ESXi 6.5

KVM-QEMU Support Information

The kernel-based Virtual Machine (KVM) is an open-source, full-virtualization solution for Linux on x86 hardware, containing virtualization extensions. It consists of a loadable kernel module, kvm.ko, that provides the core virtualization infrastructure and a processor-specific module, ivm-intel.ko or kvm-amd.ko

Quick Emulator (QEMU) is a free and open-source software product that performs hardware virtualization. You can run QEMU on the Cisco UCS server with KVM installed. The recommended version of QEMU for the Cisco Nexus 9000v reference platform is version 2.2.0 or later.

Starting with Cisco NX-OS Release 7.0(3)I7(3), 128 interfaces are suppoted for Cisco Nexus 9000v switches only on KVM hypervisor. This support is applicable for Ubuntu 14.04.4 LTS and 16.04.3 LTS environments and Qemu distort qemu-2.10.0-rc3.tar.xz.

Cisco Nexus 9000v now supports interfaces up to 128+1 (128 data ports, for example, e1/1, e1/2.., e1/128, and management interface). If you do not need 128 interfaces, there is no any negative impact. Only number of vNICs users entered are displayed in the proper interface state. All other interfaces that do not have any associated vNICs display link not connected state.

For the interfaces to work smoothly, make sure that the following criteria is met:

-

Total 129 vNICs (128 data + 1 for management) from KVM hypervisor command line are required in order to have 128 interfaces available.

-

VM resources should be sufficient in terms of memory and vCPUs based on the enabled features and interfaces.

-

Extra 3+ minutes are required to boot up the system due to significant PCI scan time on kernel boot-up. The Qemu 2.9.93 (the tested version) is recommended to reduce the VM boot up time. Typical VM boot up time is proximately 5-6 minutes for all 128 data port interfaces to be able to pass the traffic in a large topology system. Any previous released Qemu version could take longer time to boot up the VM.

-

8G+ memory footprint is required in order to have 128 connected interfaces.

VirtualBox Support Information

VirtualBox is a powerful x86 and AMD64/Intel 64 virtualization product for enterprise as well as for the home user. It is free software available as Open Source Software under the terms of the GNU General Public License (GPL) version 2 and you can obtain more information and download from https://www.virtualbox.org/ web site.

VMware Fusion Support Information

VMware Fusion is also a powerful virtualization product for enterprise as well as PC user.

Cisco Nexus 9000v Installation and Deployment

Cisco Nexus 9000v currently does not support virtio block disk. To optimize performance, specific virtual artifact formats are recommended to be used in particular hypervisor.

|

Hypervisor |

Virtual Artifact Format |

|---|---|

|

EXSi |

Virtual Machine Disk Image (vmdk), Open Virtualization Appliance (ova) |

|

KVM/Qemu |

QEMU Copy On Write (qcow2),Open Virtualization Appliance (ova) |

|

Virtual Box |

Virtual Machine Disk Image (vmdk), or packaged box |

|

VMware Fusion |

Open Virtualization Appliance (ova) |

Cisco Nexus 9000v Software Upgrade and Downgrade

The software upgrade and downgrade of Cisco Nexus 9000v does not follow normal hardware platform procedures. A common upgrade method for Cisco Nexus 9000v is to tftp or scp a new image into the bootflash, then boot the new image from the loader> prompt or set the boot image in "config t; boot nxos bootflash:new_image.bin". A similar approach is used for downgrade.

Note | This approach requires sufficient bootflash disk space to hold another image. As such, the nxos.7.0.3.I2.2a image is not upgradable to a new release. In this case, you can create a new VM based on the nxosv-final.7.0.3.I2.2d release; and then upgrade to a new release. |

Cisco Nexus 9000v Configuration

Beginning with Cisco NX-OS Release 7.0(3)I5(2), Cisco Cisco Nexus 9000v supports the Cisco Virtual Appliance Configuration (CVAC). This out-of-band configuration mechanism is similar to the PowerOn Auto Provisioning (POAP) autoconfiguration, but instead of downloading the configuration across the network as POAP does, CVAC receives the configuration injected into the Cisco Cisco Nexus 9000v environment on a CD-ROM. The configuration is detected and applied at startup time.

CVAC can be used for a bootstrap configuration (supplying just enough configuration to bring the switch into a reachable state suitable for subsequent configuration using Telnet, RESTful APIs, or other standard mechanisms) or a full configuration (taking the entire configuration of another router and replicating it into a newly launched platform VM). The configuration should be in a plain-text file called nxos_config.txt. You can package the configuration file onto the CD-ROM using the following command:

mkisofs -output nxosconfig.iso -l --relaxed-filenames --iso-level 2 <file(s) to add>

If the system does not detect a CVAC configuration, the POAP process begins, and the POAP interface prompts you for the initial installation. See the NX-OS Fundamentals Configuration Guide for information about POAP for a newly installed switch.

The Cisco Cisco Nexus 9000v supports the same control plane features and configuration that are supported on the Cisco Nexus 9000 Series hardware platforms. The configuration commands for the control plane features follow the same syntax as the Cisco Nexus 9000 Series switches.

Cisco Nexus 9000v Deployment

- Provisioning the Cisco Nexus 9000v in the ESXi Hypervisor

- Deploying a Cisco Nexus 9000v on a KVM or QEMU in a Hypervisor

- Deploying the Cisco Nexus 9000v on VirtualBox

- Deleting the VM

Provisioning the Cisco Nexus 9000v in the ESXi Hypervisor

You must have already installed your ESXi hypervisor.

Deploying a Cisco Nexus 9000v on a KVM or QEMU in a Hypervisor

The Cisco Nexus 9000v can be brought up in the KVM or QEMU hypervisor. The following table lists the parameters that are supported for the Cisco Nexus 9000v deployment on KVM or QEMU.

|

Parameter |

Example |

Description |

|---|---|---|

|

/path_to/qemu |

/usr/bin/qemu-system-x86_64 |

Path to QEMU executable. (The QEMU software can be downloaded from http://wiki.qemu.org/download for different versions.) |

|

-nographic |

-nographic |

Recommended, as the Cisco Nexus 9000v does not support VGA. |

|

-bios file |

-bios bios.bin |

Required. The Cisco Nexus 9000v uses EFI boot and requires a compatible BIOS image to operate. We recommend using the latest OVMF BIOS file with the SATA controller for better performance in terms of disk operation. QEMU 2.6 is recommended with the SATA controller. For more information, see http://www.linux-kvm.org/page/OVMF. |

|

-smp |

-smp 4 |

The Cisco Nexus 9000v supports one to four vCPUs, but two to four are recommended. |

|

-m memory |

-m 8096 |

Minimum memory is required. |

|

-serial telnet:host:port,server,nowait |

-serial telnet:localhost:8888,server,nowait or -serial telnet:server_ip:8888,server,nowait |

Requires at least one. |

|

-net ... -net ... or -netdev ... -device ... |

-net socket,vlan=x,name=nl_s0,listen=localhost:12000 -net nic, vlan=x,model=e1000,macaddr=aaaa.bbbb.cccc -netdev socket,listen=localhost:12000,id=eth_s_f -device e1000,addr=s.f,netdev=eth_s_f, mac=aaaa.bbbb.cccc,multifunction=on,romfile= or -netdev tap,ifname=tap_s_f,script=no, downscript=no,id=eth_s_f -device e1000,addr=s.f,netdev=eth_s_f, mac=aaaa.bbbb.ccc,multifunction=on,romfile= |

The net/net or netdev/device pairs are for networking a virtual network interface card (vNIC). The _s_f represents the PCI slot number and function number. QEMU 2.0 or above has the capability to plug in at least 20 PCI slots and four functions, which accommodates about 80 vNICs in total. The slot range is from 3 to 19, and the function number range is from 0 to 3. The mac= option passes the MAC address of each vNIC MAC address to the VM interfaces. The first -netdev is automatically mapped to the mgmt0 interface on the VM. The second -netdev is mapped to the e1/1 interface and so on up to the sixty-fifth on e1/64. Make sure these MAC addresses are unique for each network device. |

|

-enable-kvm |

-enable-kvm |

This flag is required for the Cisco Nexus 9000v. |

|

-drive ... -device ... (for the SATA controller) |

-device ahci,id=ahci0,bus=pci.0 -drive file=img.qcow2,if=none,id=drive-sata-disk0,format=qcow2 -device ide-drive,bus=ahci0.0,drive=drive-sata-disk0,id=drive-sata-disk0 |

Format to use the SATA controller. We recommend using the SATA controller with QEMU 2.6.0 because this controller offers better performance than the IDE controller. However, you can use the IDE controller if you have an early QEMU version that does not support the SATA controller. |

|

-drive ... -device .... (for the IDE controller) |

-drive file=img.qcow2,if=none,id=drive-ide0-0-0,format=qcow2 -device ide-hd,bus=ide.0,unit=1,drive=drive-ide0-0-0,id=ide0-0-0 |

Format to use the IDE controller. |

|

-drive ... media=cdrom |

-drive file=cfg.iso,media=cdrom |

CD-ROM disk containing a switch configuration file that will be applied after the Cisco Nexus 9000v comes up. 1. Name a text file (nxos_config.txt). 2. Use Linux commands to make cfg.iso, mkisofs -o cfg.iso -l --iso-level 2 nxos_config.txt. |

KVM or QEMU Environment Networking

Deploying the Cisco Nexus 9000v on VirtualBox

Cisco Nexus 9000v deployment on VirtualBox uses one of two forms of distributed virtual artifacts: VMDK or Pre-packaged Box. The simplest method is to use Pre-packaged Box along with Vagrant software. However, the box is created for simple standalone VM deployment with very minimal configuration. This procedure is covered in Deploying Cisco Nexus 9000v on VirtualBox with Vagrant Using a Pre-Packaged Box.

Some basic steps and concepts are shown here to create a virtual machine similar to other kinds of VM guests. These instructions are generally for Mac users, but slight differences are highlighted for Window users.

- Creating a VM in a VirtualBox Environment Using VMDK

- Deploying Cisco Nexus 9000v on VirtualBox with Vagrant Using a Pre-Packaged Box

Creating a VM in a VirtualBox Environment Using VMDK

-

Mac OS X

-

Windows

-

Install VirtualBox

-

Install Vagrant

-

Install PuTTY for serial console. (For more information, see www.putty.org.)

-

Install Git to access tools, such as SSH. (For more information, see www.git-for-windows.github.io.)

-

| Command or Action | Purpose | |||

|---|---|---|---|---|

| Step 1 | Copy VMDK to your local hard drive. Launch VirtualBox and click the New icon.

| |||

| Step 2 | Enter the Name as n9kv, select the Type as Linux, and select the Version as Other Linux (64-bit).

| |||

| Step 3 | Enter the Memory size (RAM) as 8192 MB.

| |||

| Step 4 | Select Use an existing virtual hard drive file. Click the folder icon (bottom right side) and select the VMDK created in step 1.

| |||

| Step 5 | Select your VM and click Settings.

| |||

| Step 6 | In the Attributes of Settings, highlight the IDE controller. Select Add SATA controller and add the VMDK file. Click OK to save the settings.

|

| ||

| Step 7 | Click the System icon.

| |||

| Step 8 | Click the Audio icon. Uncheck the Enable Audio checkbox.

| |||

| Step 9 | Click the Network icon.

| |||

| Step 10 | Click the Ports icon.

|

| ||

| Step 11 | Click the Ports icon.

| |||

| Step 12 | Click the Start button.

|

Starts the VM.

|

Setup Serial Console on VirtualBox Environment

Mac OS X

-

Use the 'socat' utility to connect to the serial port (console access) of the VM.

-

Go through the NXOS boot process.

-

Configure the router.

switch# conf t Enter configuration commands, one per line. End with CNTL/Z. switch(config)# interface mgmt0 switch(config-if)# ip address 10.0.2.15/24 <--- NOTE: can use "ip address dhcp" here instead switch(config-if)# no shut switch(config-if)# end switch# conf t Enter configuration commands, one per line. End with CNTL/Z. switch(config)# username vagrant password vagrant role network-admin switch(config)# username vagrant shell bash switch(config)# boot nxos bootflash:nxos.7.0.3.I2.2d.bin <--- Note: use correct image name from "dir" command output switch(config)# copy r s [########################################] 100% Copy complete. switch(config)#

Windows

-

Open PuTTY.

-

Open "Basic options for your PuTTY session".

-

Go through the NXOS boot process.

-

Configure the router.

switch# conf t Enter configuration commands, one per line. End with CNTL/Z. switch(config)# interface mgmt0 switch(config-if)# ip address 10.0.2.15/24 <--- NOTE: can use "ip address dhcp" here instead switch(config-if)# no shut switch(config-if)# end switch# conf t Enter configuration commands, one per line. End with CNTL/Z. switch(config)# username vagrant password vagrant role network-admin switch(config)# username vagrant shell bash switch(config)# boot nxos bootflash:nxos.7.0.3.I2.2d.bin <--- Note: use correct image name from "dir" command output switch(config)# copy r s [########################################] 100% Copy complete. switch(config)#

Set Up SSH Passwordless Connection to VM

Navigate to login screen and login as vagrant (password: vagrant). Place the vagrant insecure key into the 'authorized_keys' file.

switch(config)# exit switch# exit User Access Verification switch login: vagrant Password: -bash-4.2$ -bash-4.2$ pwd /var/home/vagrant -bash-4.2$ cd .ssh -bash-4.2$ pwd /var/home/vagrant/.ssh -bash-4.2$ echo "ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4Tj GYe7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0 aYet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC 6kivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+ R4LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ == vagrant insecure public key" > authorized_keys -bash-4.2$ cat authorized_keys ssh-rsa AAAAB3NzaC1yc2EAAAABIwAAAQEA6NF8iallvQVp22WDkTkyrtvp9eWW6A8YVr+kz4TjG Ye7gHzIw+niNltGEFHzD8+v1I2YJ6oXevct1YeS0o9HZyN1Q9qgCgzUFtdOKLv6IedplqoPkcmF0a Yet2PkEDo3MlTBckFXPITAMzF8dJSIFo9D8HfdOV0IAdx4O7PtixWKn5y2hMNG0zQPyUecp4pzC6k ivAIhyfHilFR61RGL+GPXQ2MWZWFYbAGjyiYJnAmCP3NOTd0jMZEnDkbUvxhMmBYSdETk1rRgm+R4 LOzFUGaHqHDLKLX+FIPKcF96hrucXzcWyLbIbEgE98OHlnVYCzRdK8jlqm8tehUc9c9WhQ == vagrant insecure public key -bash-4.2$

Deploying Cisco Nexus 9000v on VirtualBox with Vagrant Using a Pre-Packaged Box

Starting with Cisco NX-OS Release 7.0(3)I7(3), the Cisco Nexus 9000v Vagrant startup process is streamlined.

See the following customization guidelines and caveats for using Vagrant/vbox:

-

The users' customization in Vagrant file is no longer needed.

-

There is no need to change the named pipe for Windows users. The serial console can be accessed using port 2023. Now all users can use the telnet localhost 2023 command to access the serial console using port 2023.

-

Now the standard box process is used as any other VM distribution. You can simply bring-up a VM using the base box name.

-

The box name can be changed into a different name other than base using the config.vm.box field.

-

The bootstrap configuration is still possible if you want to apply a different configuration on the switch other than pre-baked configuration in .box from the release image file. In this case, vb.customize pre-boot should be used, for example:

vb.customize "pre-boot", [ "storage attach", :id, "--storagectl", "SATA", "--port", "1", "--device", "0", "--type", "dvddrive", "--medium", "./nxosv_config.iso", ] -

The VM interface MAC address can be customized using the config.vm.base_mac field, but this modification must be done prior to entering the vagrant up CLI command and after entering the vagrant init CLI command. If you want to modify the MAC address after entering the vagrant up CLI command or after the VM is created, the box commands should be used to modify the VM.

For example, enter the vboxmanage list vms CLI command to find out the VM that is created by the vagrant up CLI command:

vboxmanage list vms

Use the VM listed from the earlier command output, for example, test_default_1513628849309_59058 is found from the vboxmanage list vms command as displayed in the following example:

vboxmanage modifyvm test_default_1513628849309_59058 --macaddress1 080B206CEEAC

Complete the following steps to deploy Cisco Nexus 9000v on VirtualBox with Vagrant using a pre-packaged box:

| Step 1 | Open a terminal in your Mac or PC (GitBash) and make a directory. |

| Step 2 | Download a released image to this directory (for example, nexus9000v-final.7.0.3.I7.3d.box). |

| Step 3 | Execute vagrant init. |

| Step 4 | Execute vagrant box add base nxosv-final.7.0.3.IGP7.0.365.box. |

| Step 5 | Bring up the VM using the vagrant up command in the current directory. |

| Step 6 | Wait for a few minutes to let the bootup finish. Then proceed to the next step. |

| Step 7 | Execute vagrant ssh to access the Nexus 9000v bash shell and enter vagrant for the password. |

| Step 8 | You can monitor the boot up process from the serial console using telnet localhost 2023. |

Deleting the VM

| Step 1 | Shut down the VM.

nexus9000v-user@fe-ucs-dt13:~/n9kv/box-test$ vagrant halt --force box-test ==> box-test: Forcing shutdown of VM... nexus9000v-user@fe-ucs-dt13:~/n9kv/box-test$ |

| Step 2 | Delete the VM from the system.

nexus9000v-user@fe-ucs-dt13:~/n9kv/box-test$ vagrant destroy box-test

box-test: Are you sure you want to destroy the 'box-test' VM? [y/N] y

==> box-test: Destroying VM and associated drives...

nexus9000v-user@fe-ucs-dt13:~/n9kv/box-test$

|

Network Topology Examples

A key advantage of Cisco Nexus 9000v is that you can set up a quick networking topology without hardware or complicated cabling tasks to obtain a look and feel about a Cisco Nexus 9000 switch platform.

For example, you can quickly set up a two node system with a server connecting to a Cisco Nexus 9000 virtual machine on laptop. A more complex system can also be setup with a large resource server to do a multiple node simulation. With the topology, you can do tooling and automation in a simulated network that could be applied in a real customer network environment. The following examples show how to interconnect VMs on a laptop or UCS servers.

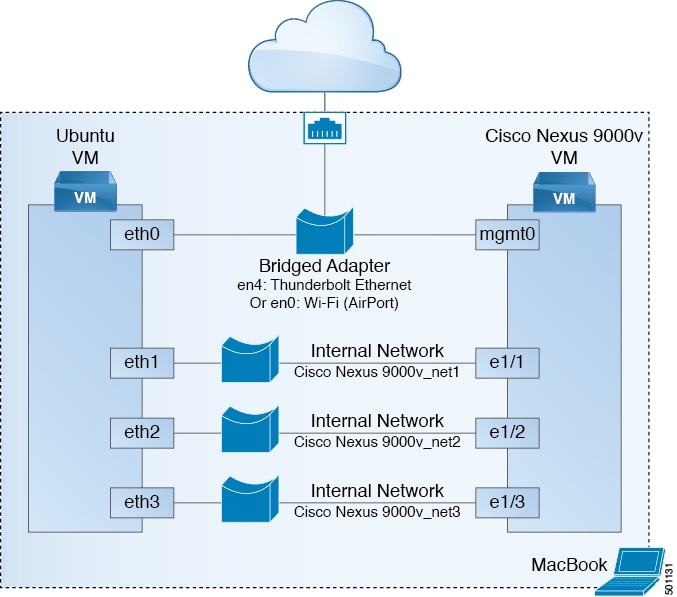

VirtualBox Topology on a Laptop

An example diagram above is a typical configuration with Cisco Nexus 9000v and Ubuntu VM two node system. In this case, Both Ubuntu VM and Cisco Nexus 9000v would obtain IPs statically or dynamically visa DHCP protocol reachable from cloud. Similarly, both Ubuntu and Cisco Nexus 9000v can be managed through management network. Ubuntu VM can send/receive packets to Cisco Nexus 9000v through Cisco Nexus 9000v data ports, eth1/1, eth1/2, and eth1/3, or ... e1/9.

Key to Setup:

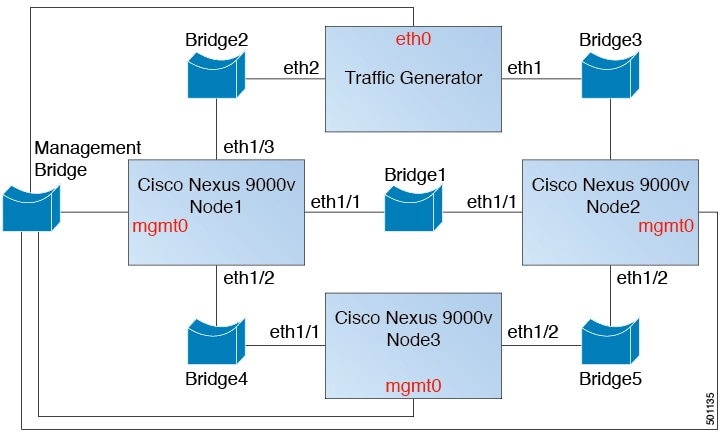

Three Node Topology with Traffic Generator

The nodes in the above diagram are instantiated using the hypervisor specific machine definitions. For networking, each data port interface pair needs to be connected to unique bridge/vSwitch. All the management ports of the Cisco Nexus 9000v (mgmt0) need to be connected to the management bridge and provided a unique IP address, which will enable access to these devices from an external network.

Each data port interface pair that needs to be interconnected should be mapped to the same Bridge/vSwitch. Similar to VirtualBox topology, vSwitch/Bridge must have "Promiscuous Mode" set to "Accept" and "Vlan ID" to "All" for networking to work between Cisco Nexus 9000v nodes. Please read "Troubleshooting" section for hypervisor specific handling for data port communication.

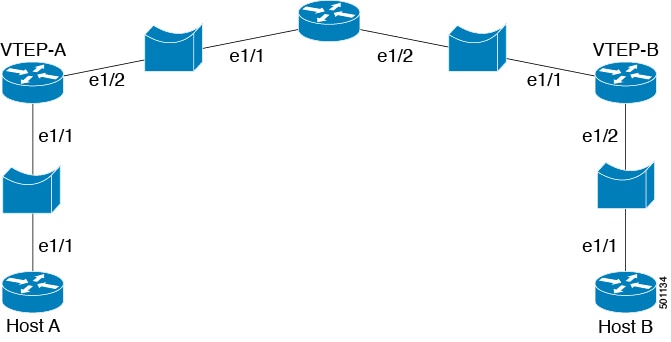

Five Nodes VXLAN Topology

This topology can simulate basic vxlan functionality on Cisco Nexus 9000v platform. Similar bridge/vSwitch setup should be done as shown in other topology examples.

Feedback

Feedback