-

FEX QoS Queuing Support

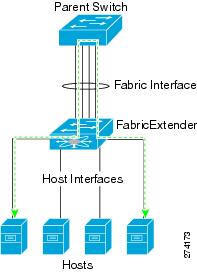

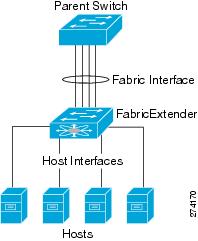

Fabric Extenders (FEXs) follow the network quality of service (QoS) queuing model to support queuing on FEX host interfaces, regardless of whether the FEX is connected to M-series or F-series fabric uplinks.

-

Depending on the network-QoS template that is attached to the system QoS, a FEX inherits the following parameters for queuing support:

-

Number of queues

-

Class of service to queue (CoS2q) mapping

-

Differentiated services code point to queue (DSCP2q) mapping

-

Maximum transmission unit (MTU)

-

For both ingress and egress queuing on the FEX host interfaces, all of the preceding parameters are derived from the ingress queuing parameters that are defined in the active network-QoS policy. The egress queuing parameters of the active network-QoS policy do not affect FEX host-port queuing.

-

Parameters such as bandwidth, queue limit, priority, and set CoS in the network-QoS type queuing-policy maps are not supported for a FEX.

-

Hardware Queue-Limit Support

The following example shows how to configure the queue limit for a FEX by using the hardware fex-type queue-limit command in FEX configuration mode:

switch(config)# fex 101

switch(config-fex)# hardware ?

B22HP Fabric Extender 16x10G SFP+ 8x10G SFP+ Module

N2224TP Fabric Extender 24x1G 2x10G SFP+ Module

N2232P Fabric Extender 32x10G SFP+ 8x10G SFP+ Module

N2232TM Fabric Extender 32x10GBase-T 8x10G SFP+ Module

N2232TM-E Fabric Extender 32x10GBase-T 8x10G SFP+ Module

N2248T Fabric Extender 48x1G 4x10G SFP+ Module

N2248TP-E Fabric Extender 48x1G 4x10G SFP+ Module

switch(config-fex)# hardware N2248T ?

queue-limit Set queue-limit

switch(config-fex)# hardware N2248T queue-limit ?

<5120-652800> Queue limit in bytes ======> The allowed range of values depends on the FEX type for which the queue limit is configured

switch(config-fex)# hardware N2248T queue-limit ======> Default configuration that sets the queue limit to the default value of 66560 bytes

switch(config-fex)# hardware N2248T queue-limit 5120 ======> Sets a user-defined queue limit for FEX type N2248T associated with FEX ID 101

switch(config-fex)# no hardware N2248T queue-limit ======> Disables the queue limit for FEX type N2248T associated with FEX ID 101

switch(config-fex)# hardware N2248TP-E queue-limit ?

<32768-33538048> Queue limit in Bytes

rx Ingress direction

tx Egress direction

switch(config-fex)# hardware N2248TP-E queue-limit 40000 rx

switch(config-fex)# hardware N2248TP-E queue-limit 80000 tx ======> For some FEX types, different queue limits can be configured for the ingress and egress directions
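The allowed queue-limit range depends on the FEX type, as the help output above shows. The following Python sketch is a hypothetical helper, not a Cisco tool: it checks a value against the two ranges that appear in this example (N2248T: 5120 to 652800 bytes; N2248TP-E: 32768 to 33538048 bytes, with an rx or tx direction) and builds the matching configuration line. The names QUEUE_LIMIT_RANGES and build_queue_limit_cmd are illustrative. Ranges for other FEX types are not listed here and should be read from the ? help on your own switch; the sketch also assumes, based only on the help output above, that N2248T offers no rx/tx keyword, and that a value without a direction is accepted for every type.

```python
"""Hypothetical helper: validate a FEX hardware queue-limit value and build the CLI line.

Only the two FEX types whose help output appears in the example are listed.
"""
from typing import Optional

# FEX type -> (minimum bytes, maximum bytes, accepts an rx/tx direction keyword)
QUEUE_LIMIT_RANGES = {
    "N2248T": (5120, 652800, False),
    "N2248TP-E": (32768, 33538048, True),
}


def build_queue_limit_cmd(fex_type: str, limit_bytes: int,
                          direction: Optional[str] = None) -> str:
    """Return 'hardware <type> queue-limit <bytes> [rx|tx]', or raise ValueError."""
    if fex_type not in QUEUE_LIMIT_RANGES:
        raise ValueError(f"no known queue-limit range for FEX type {fex_type}")
    low, high, has_direction = QUEUE_LIMIT_RANGES[fex_type]
    if not low <= limit_bytes <= high:
        raise ValueError(f"{limit_bytes} is outside {low}-{high} bytes for {fex_type}")
    cmd = f"hardware {fex_type} queue-limit {limit_bytes}"
    if direction is None:
        return cmd  # assumption: a value without a direction is accepted for every type
    if not has_direction:
        raise ValueError(f"{fex_type} does not accept an rx/tx direction")
    if direction not in ("rx", "tx"):
        raise ValueError("direction must be 'rx' (ingress) or 'tx' (egress)")
    return f"{cmd} {direction}"


if __name__ == "__main__":
    print(build_queue_limit_cmd("N2248T", 5120))
    print(build_queue_limit_cmd("N2248TP-E", 40000, "rx"))
```

For example, build_queue_limit_cmd("N2248TP-E", 80000, "tx") returns the same line as the last command in the example above, while build_queue_limit_cmd("N2248T", 4096) raises ValueError because 4096 is below the N2248T minimum.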

The queue limit displayed for a FEX interface is 0 bytes until the FEX interface is brought up for the first time. After the interface comes up, the output shows either the default queue limit or the user-defined queue limit, depending on the hardware queue-limit configuration. If the hardware queue limit is unconfigured, “Queue limit: Disabled” is displayed in the command output. The following partial output of the show queuing interface command shows the queue limit that is enforced on a FEX:

switch# show queuing interface ethernet 101/1/48

<snippet>

Queue limit: 66560 bytes

<snippet>
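When the queue limit is checked from a script, the three cases described above (a byte value, 0 bytes before the interface first comes up, and Disabled) need to be told apart. The following is a minimal Python sketch, assuming the raw text of the show queuing interface output is already available as a string; parse_queue_limit is a hypothetical name, and the pattern matches only the line formats shown in this section.

```python
"""Hypothetical parser for the 'Queue limit:' line of show queuing interface output."""
import re
from typing import Optional

# Matches "Queue limit: 66560 bytes", "Queue limit: 0 bytes", or "Queue limit: Disabled".
_QUEUE_LIMIT_RE = re.compile(r"Queue limit:\s*(?:(\d+)\s*bytes|(Disabled))")


def parse_queue_limit(output: str) -> Optional[int]:
    """Return the displayed queue limit in bytes, or None if it is disabled.

    A value of 0 means the interface has not yet come up for the first time,
    so callers should treat it as "not yet known" rather than "no limit".
    Raises ValueError if no 'Queue limit:' line is present.
    """
    match = _QUEUE_LIMIT_RE.search(output)
    if match is None:
        raise ValueError("no 'Queue limit:' line found")
    if match.group(2):  # hardware queue limit is unconfigured
        return None
    return int(match.group(1))
```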

-

Global Enable/Disable Control of DSCP2q

In the following example, the all or f-series keyword enables DSCP2q mapping for the FEX host interfaces, regardless of the module type to which the FEX is connected:

switch(config)# hardware QoS dscp-to-queue ingress module-type ?

all Enable dscp based queuing for all cards

f-series Enable dscp based queuing for f-series cards

m-series Enable dscp based queuing for m-series cards

-

Show Command Support for FEX Host Interfaces

The show queuing interface command is supported for FEX host interfaces. The following sample output of this command for a FEX host interface includes the number of queues used, the mapping for each queue, the corresponding queue MTU, the enforced hardware queue limit, and the ingress and egress queue statistics.

Note: The queuing statistics shown in this output cannot be cleared.

switch# show queuing interface ethernet 199/1/2

slot 1

=======

Interface is not in this module.

slot 2

=======

Interface is not in this module.

slot 4

=======

Interface is not in this module.

slot 6

=======

Interface is not in this module.

slot 9

=======

Ethernet199/1/2 queuing information:

Input buffer allocation:

Qos-group: ctrl

frh: 0

drop-type: drop

cos: 7

xon xoff buffer-size

---------+---------+-----------

2560 7680 10240

Qos-group: 0 2 (shared)

frh: 2

drop-type: drop

cos: 0 1 2 3 4 5 6

xon xoff buffer-size

---------+---------+-----------

34560 39680 48640

Queueing:

queue qos-group cos priority bandwidth mtu

--------+------------+--------------+---------+---------+----

ctrl-hi n/a 7 PRI 0 2400

ctrl-lo n/a 7 PRI 0 2400

2 0 0 1 2 3 4 WRR 80 1600

4 2 5 6 WRR 20 1600

Queue limit: 66560 bytes

Queue Statistics:

queue rx tx flags

------+---------------+---------------+-----

0 0 0 ctrl

1 0 0 ctrl

2 0 0 data

4 0 0 data

Port Statistics:

rx drop rx mcast drop rx error tx drop mux ovflow

---------------+---------------+---------------+---------------+--------------

0 0 0 0 InActive

Priority-flow-control enabled: no

Flow-control status: rx 0x0, tx 0x0, rx_mask 0x0

cos qos-group rx pause tx pause masked rx pause

-------+-----------+---------+---------+---------------

0 0 xon xon xon

1 0 xon xon xon

2 0 xon xon xon

3 0 xon xon xon

4 0 xon xon xon

5 2 xon xon xon

6 2 xon xon xon

7 n/a xon xon xon

DSCP to Queue mapping on FEX

----+--+-----+-------+--+---

FEX TCAM programmed successfully

queue DSCPs

----- -----

02 0-39,

04 40-63,

03 **EMPTY**

05 **EMPTY**

slot 10

=======

slot 11

=======

Interface is not in this module.

slot 15

=======

Interface is not in this module.

slot 16

=======

Interface is not in this module.

slot 17

=======

Interface is not in this module.

slot 18

=======

Interface is not in this module.
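The CoS2q and DSCP2q mappings that a FEX inherits from the network-QoS policy can be read back from this output. The following Python sketch, which assumes the output has been captured as a string, extracts the per-queue CoS list, priority, bandwidth, and MTU from the Queueing table, and the DSCP values for each queue from the DSCP to Queue mapping on FEX section. The function and type names are hypothetical; the parser assumes that PRI and WRR, the only priority keywords in the sample, are the only ones that appear, and that rows are whitespace-separated as shown.

```python
"""Hypothetical parsers for the FEX sections of show queuing interface output."""
import re
from typing import Dict, List, NamedTuple

# Priority keywords seen in the sample output; other keywords are not handled.
_PRIORITIES = {"PRI", "WRR"}


class QueueInfo(NamedTuple):
    qos_group: str
    cos: List[int]
    priority: str
    bandwidth: int
    mtu: int


def parse_queueing_table(output: str) -> Dict[str, QueueInfo]:
    """Parse the 'Queueing:' table into {queue name: QueueInfo}."""
    table: Dict[str, QueueInfo] = {}
    in_table = False
    for raw in output.splitlines():
        line = raw.strip()
        if line == "Queueing:":
            in_table = True
            continue
        if not in_table or not line or line.startswith(("queue", "---")):
            continue  # outside the table, blank, column header, or separator
        if line.startswith("Queue limit:"):
            break  # the table ends where the queue-limit line begins
        tokens = line.split()
        # The priority keyword separates the variable-length CoS list from bandwidth and MTU.
        p = next((i for i, tok in enumerate(tokens) if tok in _PRIORITIES), None)
        if p is None or p + 2 >= len(tokens):
            raise ValueError(f"unrecognized queueing row: {line!r}")
        table[tokens[0]] = QueueInfo(
            qos_group=tokens[1],
            cos=[int(tok) for tok in tokens[2:p]],
            priority=tokens[p],
            bandwidth=int(tokens[p + 1]),
            mtu=int(tokens[p + 2]),
        )
    return table


def parse_dscp_to_queue(output: str) -> Dict[int, List[int]]:
    """Parse 'DSCP to Queue mapping on FEX' into {queue number: [DSCP values]}."""
    mapping: Dict[int, List[int]] = {}
    in_section = False
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("DSCP to Queue mapping on FEX"):
            in_section = True
            continue
        if not in_section:
            continue
        if line.startswith("slot"):
            break  # the next slot block starts
        row = re.fullmatch(r"(\d+)\s+(\S.*)", line)
        if row is None:
            continue  # separators, the TCAM status line, the column header, blanks
        dscps: List[int] = []
        if row.group(2) != "**EMPTY**":
            for item in (part.strip() for part in row.group(2).split(",")):
                if item:
                    low, _, high = item.partition("-")
                    dscps.extend(range(int(low), int(high or low) + 1))
        mapping[int(row.group(1))] = dscps
    return mapping
```

The DSCP section prints queue numbers zero-padded (02, 04), which the sketch converts to integers, while the Queueing table keys keep the queue names exactly as printed (ctrl-hi, 2, 4).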

-

ISSU Behavior

In Cisco NX-OS Release 6.2(2) and later releases, FEX queuing is disabled by default on all existing FEXs after an in-service software upgrade (ISSU). Queuing is enabled on a FEX when the FEX is flapped, so you can reload any FEX to enable queuing on it after an ISSU. After an ISSU, the output of the show queuing interface command for the FEX host interface displays a message, as shown in the following example:

switch# show queuing interface ethernet 133/1/32 module 9

Ethernet133/1/32 queuing information:

Input buffer allocation:

Qos-group: ctrl

frh: 0

drop-type: drop

cos: 7

xon xoff buffer-size

---------+---------+-----------

2560 7680 10240

Qos-group: 0

frh: 8

drop-type: drop

cos: 0 1 2 3 4 5 6

xon xoff buffer-size

---------+---------+-----------

0 126720 151040

Queueing:

queue qos-group cos priority bandwidth mtu

--------+------------+--------------+---------+---------+----

ctrl-hi n/a 7 PRI 0 2400

ctrl-lo n/a 7 PRI 0 2400

2 0 0 1 2 3 4 5 6 WRR 100 9440

Queue limit: 66560 bytes

Queue Statistics:

queue rx tx flags

------+---------------+---------------+-----

0 0 0 ctrl

1 0 0 ctrl

2 0 0 data

Port Statistics:

rx drop rx mcast drop rx error tx drop mux ovflow

---------------+---------------+---------------+---------------+--------------

0 0 0 0 InActive

Priority-flow-control enabled: no

Flow-control status: rx 0x0, tx 0x0, rx_mask 0x0

cos qos-group rx pause tx pause masked rx pause

-------+-----------+---------+---------+---------------

0 0 xon xon xon

1 0 xon xon xon

2 0 xon xon xon

3 0 xon xon xon

4 0 xon xon xon

5 0 xon xon xon

6 0 xon xon xon

7 n/a xon xon xon

***FEX queuing disabled on fex 133. Reload the fex to enable queuing.<======

Queuing is enabled by default on any new FEX that is brought online after an ISSU.

The queue limit is enabled by default for all FEXs, regardless of whether queuing is enabled or disabled for the FEX. In Cisco NX-OS Release 6.2(2), all FEXs come up with the default hardware queue-limit value. Any user-defined queue limit that is configured after an ISSU by using the hardware queue-limit command takes effect even if queuing is not enabled for the FEX.
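Because queuing stays disabled on existing FEXs until they are reloaded, it can help to list which FEXs still report the post-ISSU message. The following is a minimal Python sketch, assuming the show queuing interface output for each FEX host interface has already been collected as strings (how it is collected is outside the scope of the sketch). fexes_needing_reload is a hypothetical name, the pattern matches only the message text shown in the sample output above, and the sketch only reports FEX IDs; it does not reload anything.

```python
"""Hypothetical check for the post-ISSU 'FEX queuing disabled' message."""
import re
from typing import Iterable, List

# Matches the notice shown after an ISSU, for example:
# "***FEX queuing disabled on fex 133. Reload the fex to enable queuing."
_DISABLED_RE = re.compile(r"FEX queuing disabled on fex (\d+)\. Reload the fex", re.IGNORECASE)


def fexes_needing_reload(outputs: Iterable[str]) -> List[int]:
    """Return the sorted, de-duplicated FEX IDs whose output carries the notice."""
    fex_ids = set()
    for text in outputs:
        fex_ids.update(int(fex_id) for fex_id in _DISABLED_RE.findall(text))
    return sorted(fex_ids)
```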

-

No Support on the Cisco Nexus 2248PQ 10-Gigabit Ethernet Fabric Extender

The following sample output shows that FEX queuing is not supported on the Cisco Nexus 2248PQ 10-Gigabit Ethernet Fabric Extender (FEX2248PQ):

switch# show queuing interface ethernet 143/1/1 module 5

Ethernet143/1/1 queuing information:

Network-QOS is disabled for N2248PQ <=======

Displaying the default configurations

Input buffer allocation:

Qos-group: ctrl

frh: 0

drop-type: drop

cos: 7

xon xoff buffer-size

---------+---------+-----------

2560 7680 10240

Qos-group: 0

frh: 8

drop-type: drop

cos: 0 1 2 3 4 5 6

xon xoff buffer-size

---------+---------+-----------

0 126720 151040

Queueing:

queue qos-group cos priority bandwidth mtu

--------+------------+--------------+---------+---------+----

ctrl-hi n/a 7 PRI 0 2400

ctrl-lo n/a 7 PRI 0 2400

2 0 0 1 2 3 4 5 6 WRR 100 9440

Queue limit: 0 bytes

Queue Statistics:

queue rx tx flags

------+---------------+---------------+-----

0 0 0 ctrl

1 0 0 ctrl

2 0 0 data

Port Statistics:

rx drop rx mcast drop rx error tx drop mux ovflow

---------------+---------------+---------------+---------------+--------------

0 0 0 0 InActive

Priority-flow-control enabled: no

Flow-control status: rx 0x0, tx 0x0, rx_mask 0x0

cos qos-group rx pause tx pause masked rx pause

-------+-----------+---------+---------+---------------

0 0 xon xon xon

1 0 xon xon xon

2 0 xon xon xon

3 0 xon xon xon

4 0 xon xon xon

5 0 xon xon xon

6 0 xon xon xon

7 n/a xon xon xon

-

Fabric Port Queuing Restrictions

-

MTU

-

FEX queue MTU configurations are derived from type network-QoS policy-map templates. MTU changes are applied on cloned network-QoS policy maps. The MTU that is configured on a FEX port must match the MTU in the network-QoS policy map so that the FEX MTU can be applied to the FEX host interfaces.

For more information, see the Cisco Nexus 7000 Series NX-OS Quality of Service Configuration Guide.

Note: Starting with Cisco NX-OS Release 6.2(2), the MTU configured for the FEX ports is controlled by the network QoS policy. To change the MTU that is configured on the FEX ports, modify the network QoS policy; when the fabric port MTU is changed, change the network QoS policy as well.

If you change the FEX fabric port MTU on a release earlier than Cisco NX-OS Release 6.2(x) and then perform an ISSU to Cisco NX-OS Release 6.2(x) or a later release, issues do not appear until either the FEX or the switch is reloaded. It is recommended that, after the upgrade, the FEX host interface (HIF) MTU be changed through the network QoS policy as described above.

QoS policy changes affect only F-series and M-series cards.
