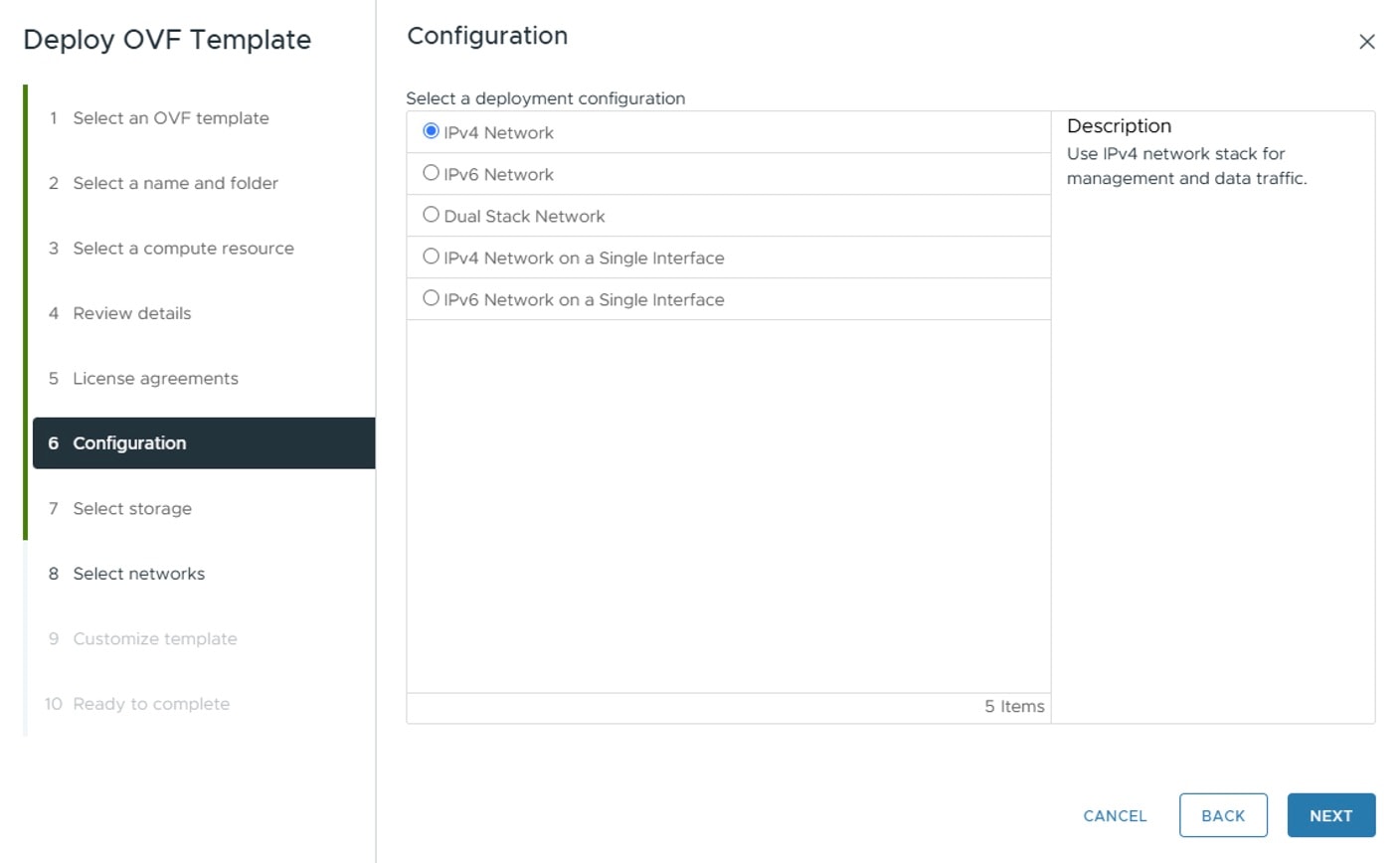

ClusterIPStack |

The IP stack protocol: IPv4, IPv6 or dualstack.

|
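Because the address parameters below can carry IPv4 and/or IPv6 values, a quick pre-deployment check is to confirm that the address families you supply match `ClusterIPStack`. The Python sketch below is illustrative only: the helper `addresses_match_stack` is hypothetical, and it assumes the values are spelled as in the description above (compared case-insensitively) and that a dual-stack deployment supplies both an IPv4 and an IPv6 address.

```python
import ipaddress

# Address families expected for each ClusterIPStack value.
# ASSUMPTION: spelling taken from the parameter description, compared case-insensitively.
EXPECTED_VERSIONS = {
    "ipv4": {4},
    "ipv6": {6},
    "dualstack": {4, 6},
}


def addresses_match_stack(stack: str, addresses: list[str]) -> bool:
    """Return True if the IP versions found in `addresses` match `stack` exactly.

    Raises KeyError for an unknown stack value and ValueError for a malformed address.
    """
    expected = EXPECTED_VERSIONS[stack.lower()]
    found = {ipaddress.ip_address(a).version for a in addresses}
    return found == expected
```

For example, `addresses_match_stack("dualstack", ["192.168.10.21", "2001:db8::21"])` returns `True`, while passing only the IPv4 address with `"dualstack"` returns `False`.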

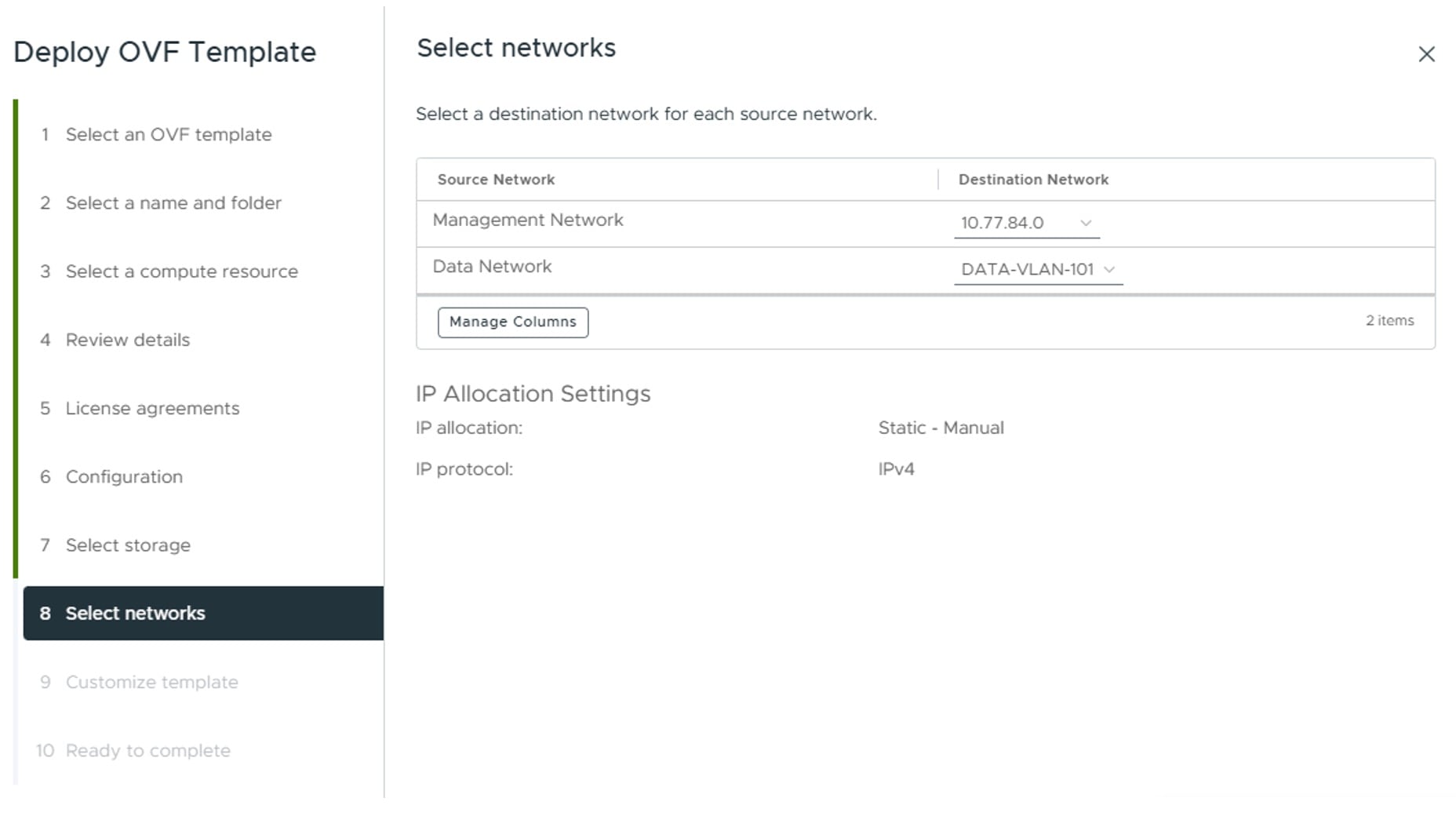

ManagementIPAddress |

The Management IP address of the VM (IPv4 and/or IPv6).

|

ManagementIPNetmask |

The Management IP subnet in dotted decimal format (IPv4 and/or IPv6).

|

ManagementIPGateway |

The gateway IP address on the Management network (IPv4 and/or IPv6). The address must be reachable; otherwise, the installation will fail.

|
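The description only requires that the gateway be reachable. A cheaper local check that catches common typos is to confirm that the netmask is contiguous and that the gateway falls inside the interface's subnet; treating that as a requirement is an assumption here, and passing it does not prove reachability. The hypothetical helpers below accept the mask in dotted (or colon) form or as a bare prefix length, since IPv6 masks are usually written as a prefix length. The same check applies to the Data network parameters.

```python
import ipaddress


def mask_to_prefix(netmask: str, version: int) -> int:
    """Convert a netmask (address form or bare prefix length) to a prefix length."""
    max_bits = 32 if version == 4 else 128
    if netmask.isdigit():
        prefix = int(netmask)
        if prefix > max_bits:
            raise ValueError(f"prefix length {prefix} exceeds {max_bits}")
        return prefix
    mask = ipaddress.ip_address(netmask)
    if mask.version != version:
        raise ValueError("netmask and address use different IP versions")
    value = int(mask)
    prefix = bin(value).count("1")
    # A valid mask is `prefix` one-bits followed only by zero-bits.
    all_ones = (1 << max_bits) - 1
    if value != all_ones ^ ((1 << (max_bits - prefix)) - 1):
        raise ValueError(f"non-contiguous netmask: {netmask}")
    return prefix


def check_interface(ip: str, netmask: str, gateway: str):
    """Return the interface network, or raise ValueError if the gateway is not on it.

    ASSUMPTION: the gateway is expected to be directly connected (same subnet).
    """
    addr = ipaddress.ip_address(ip)
    gw = ipaddress.ip_address(gateway)
    if addr.version != gw.version:
        raise ValueError("address and gateway use different IP versions")
    prefix = mask_to_prefix(netmask, addr.version)
    network = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
    if gw not in network:
        raise ValueError(f"gateway {gw} is outside {network}")
    if gw == addr:
        raise ValueError("gateway must differ from the interface address")
    return network
```

For example, `check_interface("192.168.10.21", "255.255.255.0", "192.168.10.1")` returns the network `192.168.10.0/24`.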

ManagementVIP |

The Management Virtual IP for the Crosswork VM.

|

DataIPAddress |

The Data IP address of the VM (IPv4 and/or IPv6).

|

DataIPNetmask |

The Data IP subnet in dotted decimal format (IPv4 and/or IPv6).

|

DataIPGateway |

The gateway IP address on the Data network (IPv4 and/or IPv6). The address must be reachable; otherwise, the installation will fail.

|

DataVIP |

The Data Virtual IP for the Crosswork VM.

|

DNS |

The IP address of the DNS server (IPv4 and/or IPv6). The address must be reachable; otherwise, the installation will fail.

|

NTP |

The NTP server address or name. The address must be reachable; otherwise, the installation will fail.

|
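To confirm ahead of time that an NTP server answers, you can send a single SNTP client request (the 48-byte packet layout from RFC 5905) from a host on the same network. This is a minimal sketch with hypothetical function names, not the installer's own check; a reply received on your workstation does not guarantee that the VM's network path can reach the same server.

```python
import socket
import struct

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_TO_UNIX = 2208988800


def build_sntp_request() -> bytes:
    """48-byte SNTP client request: LI=0, version=4, mode=3 (client) -> 0x23."""
    return bytes([0x23]) + bytes(47)


def parse_transmit_time(packet: bytes) -> float:
    """Return the server's transmit timestamp (bytes 40-47) as Unix seconds."""
    if len(packet) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    if packet[0] & 0x07 != 4:
        raise ValueError("reply is not in server mode (4)")
    if packet[1] == 0:
        raise ValueError("stratum 0 (kiss-o'-death) reply")
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_TO_UNIX + fraction / 2**32


def query_ntp(server: str, timeout: float = 3.0) -> float:
    """Send one SNTP query to `server` (name, IPv4, or IPv6) on UDP port 123."""
    family, socktype, proto, _, sockaddr = socket.getaddrinfo(
        server, 123, type=socket.SOCK_DGRAM)[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_sntp_request(), sockaddr)
        reply, _ = sock.recvfrom(512)
    return parse_transmit_time(reply)
```

For example, `query_ntp("ntp.example.com")` (a placeholder hostname) returns the server's time as Unix seconds, or raises `OSError` (which includes timeouts and name-resolution failures) if the server cannot be reached.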

DomainName |

The domain name used for the VM.

|

CWPassword |

Password to log in to Cisco Crosswork. When setting up a VM, ensure that the password is strong and meets the following criteria:

- It must be at least 8 characters long and include uppercase and lowercase letters, numbers, and at least one special character.
- The following special characters are not allowed: backslash (\), single quote ('), or double quote (").
- Avoid passwords that resemble dictionary words (such as "Pa55w0rd!"). Although such passwords may meet the specified criteria, they are considered weak and will be rejected, causing the VM setup to fail.

|
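The first two criteria can be checked mechanically before you commit the value to a template. The sketch below does that; the dictionary-word test is only a rough stand-in, because the installer's actual rule is not documented here. `COMMON_WORDS` and the character-substitution table are hypothetical examples, so a password that passes this sketch can still be rejected by the installer.

```python
import re

# Characters the parameter description explicitly forbids.
FORBIDDEN = set("\\'\"")

# HYPOTHETICAL, deliberately tiny word list: the installer's real dictionary
# check is not documented, so this only illustrates the idea.
COMMON_WORDS = {"password", "admin", "cisco", "crosswork", "welcome"}

# Undo common character substitutions (for example "pa55w0rd" -> "password").
LEET = str.maketrans({"0": "o", "1": "l", "3": "e", "4": "a", "5": "s",
                      "7": "t", "@": "a", "$": "s"})


def password_problems(pw: str) -> list[str]:
    """Return the reasons `pw` fails the criteria; an empty list means it passes."""
    problems = []
    if len(pw) < 8:
        problems.append("shorter than 8 characters")
    if not re.search(r"[A-Z]", pw):
        problems.append("no uppercase letter")
    if not re.search(r"[a-z]", pw):
        problems.append("no lowercase letter")
    if not re.search(r"[0-9]", pw):
        problems.append("no digit")
    if not re.search(r"[^A-Za-z0-9]", pw):
        problems.append("no special character")
    if FORBIDDEN & set(pw):
        problems.append("contains a forbidden character (\\, ', or \")")
    normalized = pw.lower().translate(LEET)
    if any(word in normalized for word in COMMON_WORDS):
        problems.append("resembles a dictionary word")
    return problems
```

For example, `password_problems("Pa55w0rd!")` returns `["resembles a dictionary word"]`, mirroring the example in the description.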

VMSize |

Size of the VM. For Crosswork Workflow Manager Solutions deployments, specify the "XLarge" profile.

|

VMName |

Name of the VM.

|

NodeType |

Indicates the type of VM. Choose Hybrid.

|

IsSeed |

Set to "True".

|

InitNodeCount |

Set value to 1.

|

InitMasterCount |

Set value to 1.

|

BackupMinPercent |

Minimum percentage of the data disk space to allocate to the backup partition. The default value is 35 (valid range: 1 to 80).

Use the default value unless otherwise recommended.

Note

The final backup partition size is calculated dynamically; this parameter defines only the minimum.

|
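Because the parameter sets only a floor, the smallest backup partition you can expect is the data disk size multiplied by the percentage. The sketch below assumes the data disk is the one sized by `ManagerDataFsSize` (default 485 GB); `min_backup_gb` is a hypothetical helper, and the installer may allocate more than this value.

```python
def min_backup_gb(data_disk_gb: float, backup_min_percent: int = 35) -> float:
    """Lower bound of the backup partition size, in GB.

    The final size is computed dynamically by the installer; this returns only
    the documented minimum (a percentage of the data disk).
    """
    if not 1 <= backup_min_percent <= 80:
        raise ValueError("BackupMinPercent must be between 1 and 80")
    return data_disk_gb * backup_min_percent / 100
```

With both defaults, the floor is 485 × 35 / 100 = 169.75 GB.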

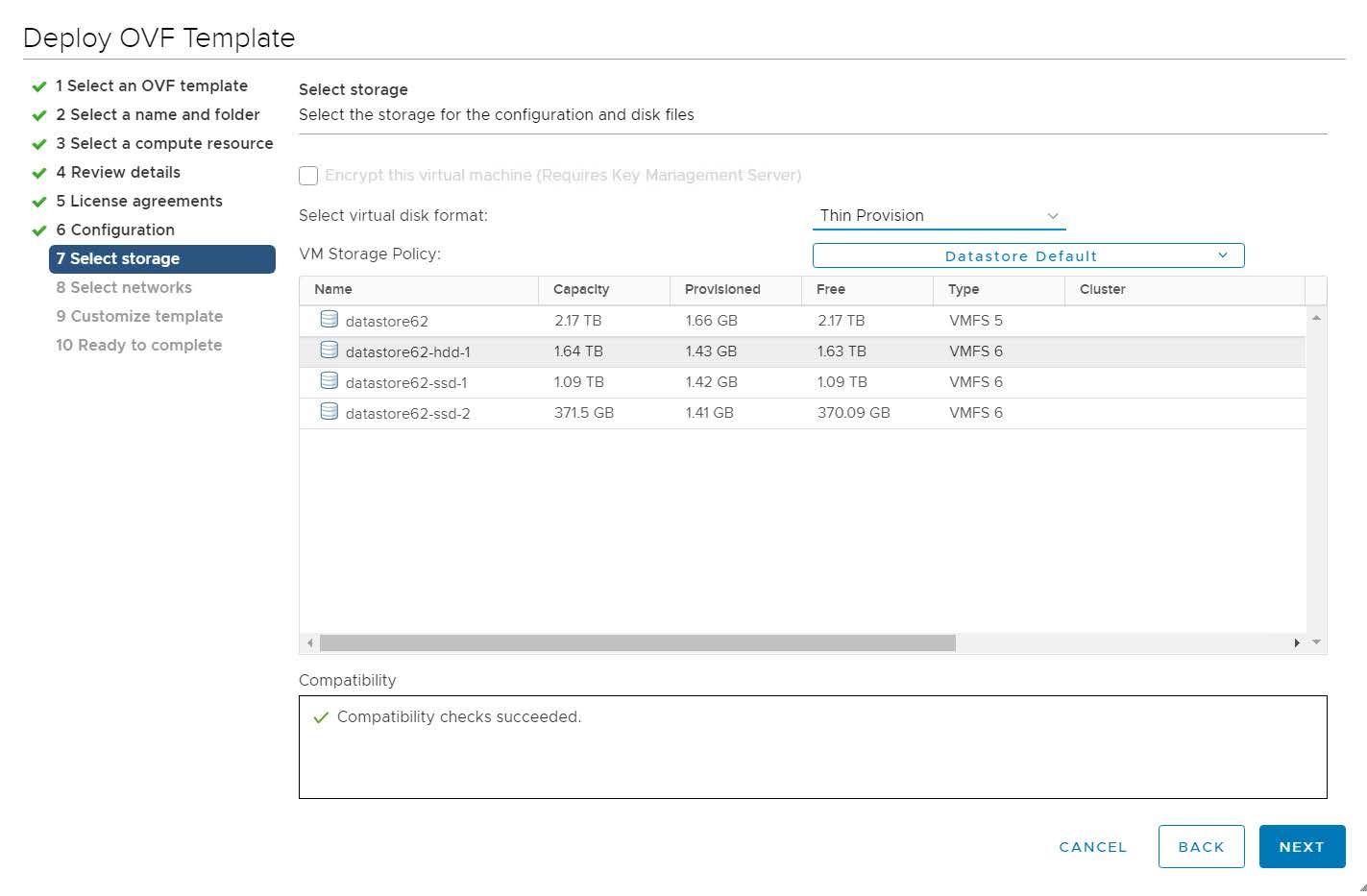

ThinProvisioned |

Set to false for production deployments.

|

SchemaVersion |

The configuration manifest schema version, which indicates the version of the installer to use with this template.

The schema version should match the version packaged with the sample template in the installer tool on cisco.com. Always build a new template from the default template provided with the release you are deploying, because template requirements may change from one release to the next.

|

LogFsSize |

Log partition size, in gigabytes. The minimum value is 20 GB and the maximum value is 1000 GB.

If left blank, the default value (20 GB) is used.

|
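The blank-means-default rule with a bounded range is the same pattern used by `ManagerDataFsSize` (default 485 GB, valid range 485 to 8000). A small hypothetical resolver can apply both rules before a template is written; the limits in the table are copied from the parameter descriptions, and the function itself is only a sketch.

```python
# (default, minimum, maximum) in GB, copied from the parameter descriptions.
SIZE_LIMITS_GB = {
    "LogFsSize": (20, 20, 1000),
    "ManagerDataFsSize": (485, 485, 8000),
}


def resolve_size_gb(name: str, value=None) -> int:
    """Return the effective size in GB: the default if blank, else a range-checked value."""
    default, low, high = SIZE_LIMITS_GB[name]
    if value is None or str(value).strip() == "":
        return default  # a blank field falls back to the documented default
    size = int(value)
    if not low <= size <= high:
        raise ValueError(f"{name}={size} is outside {low}-{high} GB")
    return size
```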

EnableSkipAutoInstallFeature |

Pods marked as "skip auto install" are not brought up unless explicitly requested by a dependent application or pod. The default value is "False".

For Crosswork Workflow Manager Solutions deployments, you must set the value to "True".

Note

- If left blank, the default value ("False") is used.
- This parameter accepts a string value, so be sure to enclose the value in double quotes.

|

EnforcePodReservations |

Enforces minimum resource reservations for the pod. If left blank, the default value ("True") is selected.

This parameter accepts a string value, so be sure to enclose the value in double quotes.

|
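Several flags in this template (`EnableSkipAutoInstallFeature`, `EnforcePodReservations`, `IgnoreDiagnosticsCheckFailure`, `EnableHardReservations`) take the strings "True" or "False" rather than booleans. The sketch below renders them consistently quoted. It assumes a `Name = "Value"` line syntax for the template, which you should confirm against the sample template shipped with your release; `render_flag` is hypothetical and deliberately rejects anything other than the two documented strings.

```python
# Defaults as stated in the parameter descriptions.
FLAG_DEFAULTS = {
    "EnableSkipAutoInstallFeature": "False",
    "EnforcePodReservations": "True",
    "IgnoreDiagnosticsCheckFailure": "False",
    "EnableHardReservations": "True",
}


def render_flag(name: str, value=None) -> str:
    """Render a string-valued flag as a template line (ASSUMED `Name = "Value"` syntax)."""
    if value is None:
        value = FLAG_DEFAULTS[name]  # KeyError for an unknown flag name
    if isinstance(value, bool):
        value = "True" if value else "False"
    if value not in ("True", "False"):
        raise ValueError(f'{name} must be "True" or "False", got {value!r}')
    return f'{name} = "{value}"'
```

For a Crosswork Workflow Manager Solutions deployment, `render_flag("EnableSkipAutoInstallFeature", True)` produces `EnableSkipAutoInstallFeature = "True"`.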

K8sServiceNetwork |

The network address for the Kubernetes service network. By default, the CIDR prefix length is fixed at /16.

|

K8sPodNetwork |

The network address for the Kubernetes pod network. By default, the CIDR prefix length is fixed at /16.

|
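Since both Kubernetes networks are /16 blocks, a common mistake is to choose one that collides with the other or with the Management or Data subnet. Non-overlap is a general Kubernetes networking requirement and is assumed, not stated, here. The hypothetical helper below treats the inputs as IPv4 network addresses, appends /16, and lists every overlapping pair; the addresses in the usage note are examples, not documented defaults.

```python
import ipaddress
from itertools import combinations


def k8s_network_conflicts(service_addr: str, pod_addr: str, node_subnets=()):
    """Return (a, b) pairs of overlapping networks; an empty list means no conflict.

    ASSUMPTION: service/pod inputs are IPv4 network addresses; /16 is appended.
    """
    # strict=True (the default) raises ValueError if host bits are set under /16.
    networks = [
        ipaddress.ip_network(f"{service_addr}/16"),
        ipaddress.ip_network(f"{pod_addr}/16"),
    ]
    networks += [ipaddress.ip_network(s, strict=False) for s in node_subnets]
    return [
        (a, b) for a, b in combinations(networks, 2)
        if a.version == b.version and a.overlaps(b)
    ]
```

For example, `k8s_network_conflicts("10.96.0.0", "10.244.0.0", ["192.168.10.0/24"])` returns an empty list, while passing `"10.96.1.0"` raises `ValueError` because it is not a /16 network address.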

IgnoreDiagnosticsCheckFailure |

Sets the system response to a diagnostic-check failure. If set to "False", the installation terminates when the diagnostic check reports an error. If set to "True", the diagnostic-check result is ignored and the installation continues.

The default value is "False". Cisco recommends leaving the value set to "False" whenever you install in a production environment. If the installation fails with this setting, contact Cisco Customer Experience.

This parameter accepts a string value, so be sure to enclose the value in double quotes.

Note

- The log files (`diagnostic_stdout.log` and `diagnostic_stderr.log`) are located in `/var/log`. The result of each diagnostic run is stored in `/home/cw-admin/diagnosis_report.txt`.
- Use the `diagnostic all` command to run the diagnostics manually on day N.
- Use the `diagnostic history` command to view previous test reports.

|

ManagementVIPName |

Name of the Management Virtual IP for the Crosswork VM. This optional parameter is used to reach the Crosswork Management VIP via a DNS name. If you use it, the corresponding DNS record must exist in the DNS server.

|

DataVIPName |

Name of the Data Virtual IP for the Crosswork VM. This optional parameter is used to reach the Crosswork Data VIP via a DNS name. If you use it, the corresponding DNS record must exist in the DNS server.

|
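When you set `ManagementVIPName` or `DataVIPName`, it is worth confirming that the DNS record exists and points at the matching VIP before installing. This sketch uses the resolver of the host it runs on, which may differ from the server given in the `DNS` parameter; `name_resolves_to` is a hypothetical helper.

```python
import ipaddress
import socket


def name_resolves_to(name: str, vip: str) -> bool:
    """Return True if `name` resolves (via this host's resolver) to the address `vip`."""
    want = ipaddress.ip_address(vip)
    try:
        results = socket.getaddrinfo(name, None)
    except socket.gaierror:
        return False  # no DNS record, or the resolver could not answer
    for _family, _type, _proto, _canon, sockaddr in results:
        # Strip any IPv6 zone suffix (for example "%eth0") before parsing.
        if ipaddress.ip_address(sockaddr[0].split("%")[0]) == want:
            return True
    return False
```

For example, `name_resolves_to("cw-mgmt.example.com", "192.168.10.50")` (placeholder values) returns `True` only if the name resolves to that exact address.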

EnableHardReservations |

Determines whether the VM's CPU and memory profile reservations are enforced. This optional parameter defaults to "True" if not explicitly specified. It accepts a string value, so be sure to enclose the value in double quotes.

If set to "True", the VM's resources are reserved exclusively for it. In this state, the installation fails if there are insufficient CPU cores, memory, or CPU cycles.

If set to "False" (for lab installations only), the VM's resources are provided on a best-effort basis. In this state, insufficient CPU cores can impact performance or cause the installation to fail.

|

ManagerDataFsSize |

This parameter is applicable only when installing with the Docker installer tool.

The data disk size for the Crosswork node, in gigabytes. This optional parameter defaults to 485 if not explicitly specified (valid range: 485 to 8000).

Use the default value unless otherwise recommended.

|

RamDiskSize |

Size of the RAM disk.

This parameter is only used for lab installations (value must be at least 2). When a non-zero value is provided for RamDiskSize, the HSDatastore value is not used.

|

Timezone |

Enter the timezone name. The name must be a standard IANA "TZ" timezone name in English (for example, "America/Chicago").

The name is a string value, so be sure to enclose it in double quotes.

You can find the authoritative list of IANA TZ timezone names at https://data.iana.org/time-zones/tzdb-2021a/zone1970.tab. You can also see the list by entering the following at any Ubuntu command line:

timedatectl list-timezones

Setting the TZ timezone in this manner is optional. If you leave this field blank, the VM sets the system clock when it boots and connects to your local NTP server, and the system clock then uses UTC as provided by the NTP server. UTC ensures proper server time synchronization across the network, but it does not provide local timezone or daylight saving time (DST) adjustments, which can complicate global network management unless your organization has a defined policy for implementing NTP. For help with this, see Use Best Practices for Network Time Protocol.

If you later decide to use an IANA "TZ" timezone name, you can set one from the CNC server VM's command line, as follows:

- Access the command line of the CNC server VM:

  ssh cw-admin@VMIPaddress

- Switch to the administrative user (you may be prompted for the administrative password):

  sudo su

- Set the timezone, using the IANA TZ name you have selected:

  timedatectl set-timezone TZName

- Confirm that the setting was accepted:

  timedatectl status

|
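To check a `Timezone` value offline against the IANA file referenced above, note that each non-comment line of `zone1970.tab` holds tab-separated country codes, coordinates, the TZ name, and an optional comment. The sketch below extracts the names and emits a quoted template line. The `Timezone = "Name"` line syntax and the helper names are assumptions, and because `zone1970.tab` omits backward-compatible link names (such as "US/Central"), a name rejected here may still appear in `timedatectl list-timezones`.

```python
def zone1970_names(tab_text: str) -> set[str]:
    """Return the TZ names listed in the text of a zone1970.tab file."""
    names = set()
    for line in tab_text.splitlines():
        if not line.strip() or line.startswith("#"):
            continue  # skip blank and comment lines
        fields = line.split("\t")
        if len(fields) >= 3:
            names.add(fields[2])  # columns: codes, coordinates, TZ, comments
    return names


def timezone_line(name: str, names: set[str]) -> str:
    """Return a quoted Timezone line (ASSUMED template syntax), or raise ValueError."""
    if name not in names:
        raise ValueError(f"{name!r} is not listed in zone1970.tab")
    return f'Timezone = "{name}"'
```

For example, after reading a downloaded copy with `zone1970_names(open("zone1970.tab", encoding="utf-8").read())`, `timezone_line("America/Chicago", names)` returns `Timezone = "America/Chicago"`.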
