Cisco Data Intelligence Platform on Cisco UCS C4200 with Cloudera Data Platform


Design and Deployment Guide for Modernizing Data Lake with All Flash Cisco UCS C4200 Platform with Cisco UCS C125 M5 on Cloudera Data Platform Private Cloud Base 7.1.3 Managed by Cisco Intersight

Published: October 2020


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series, Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.

Deployment Hardware and Software

Data scientists are constantly searching for newer techniques and methodologies that can unlock the value of big data and distill this data further to identify additional insights which could transform productivity and provide business differentiation.

One such area is Artificial Intelligence/Machine Learning (AI/ML), which has seen tremendous development, bringing in new frameworks and new forms of compute (CPU, GPU, and FPGA) to work on data and provide key insights. While data lakes have historically hosted data-intensive workloads, these advancements in technology have led to growing demand for compute-intensive workloads that operate on the same data.

While data scientists want to use the latest advancements in AI/ML software and hardware technologies on their datasets, the IT team is constantly looking for ways to provide such a platform alongside a data lake. This has led to architecturally siloed implementations. When data that is ingested and processed in a data lake needs to be further operated on by AI/ML frameworks, it often leaves the platform and must be on-boarded to a different platform for processing. This would be fine if the demand were seen on only a small percentage of workloads. However, AI/ML workloads working closely on the data in a data lake are seeing an increase in adoption. For instance, data lakes in customer environments are seeing a deluge of data from new use cases such as IoT, autonomous driving, smart cities, genomics, and financials, all of which are seeing growing demand for AI/ML processing of this data.

IT is demanding newer solutions to enable data scientists to operate on both a data lake and an AI/ML platform (or a compute farm) without worrying about the underlying infrastructure. IT also needs this to seamlessly grow to cloud scale while reducing the TCO of this infrastructure and without affecting utilization. This drives the need to plan a data lake along with an AI/ML platform in a systemic fashion.

Seeing this increasing demand by IT, and also envisioning this as a natural extension of a data lake, we announced the Cisco Data Intelligence Platform. Cisco Data Intelligence Platform is discussed in detail here.

Hadoop enables data engineering, providing very fast ingestion of data and Extract, Transform, and Load (ETL) processing. In a data-intensive workload, computing moves to the data to enable faster, distributed processing of the data. Building a data pipeline that receives data flows from different data sources at higher velocities, performs ETL on this data so that it lands in HDFS, and then makes it available for the serving layer either for real-time streaming or batch processing, is an extremely I/O intensive operation.

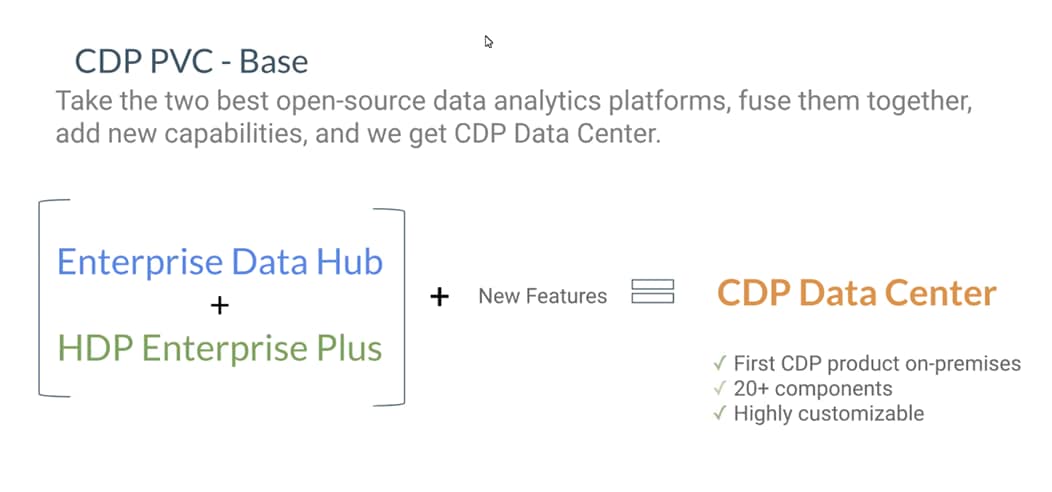

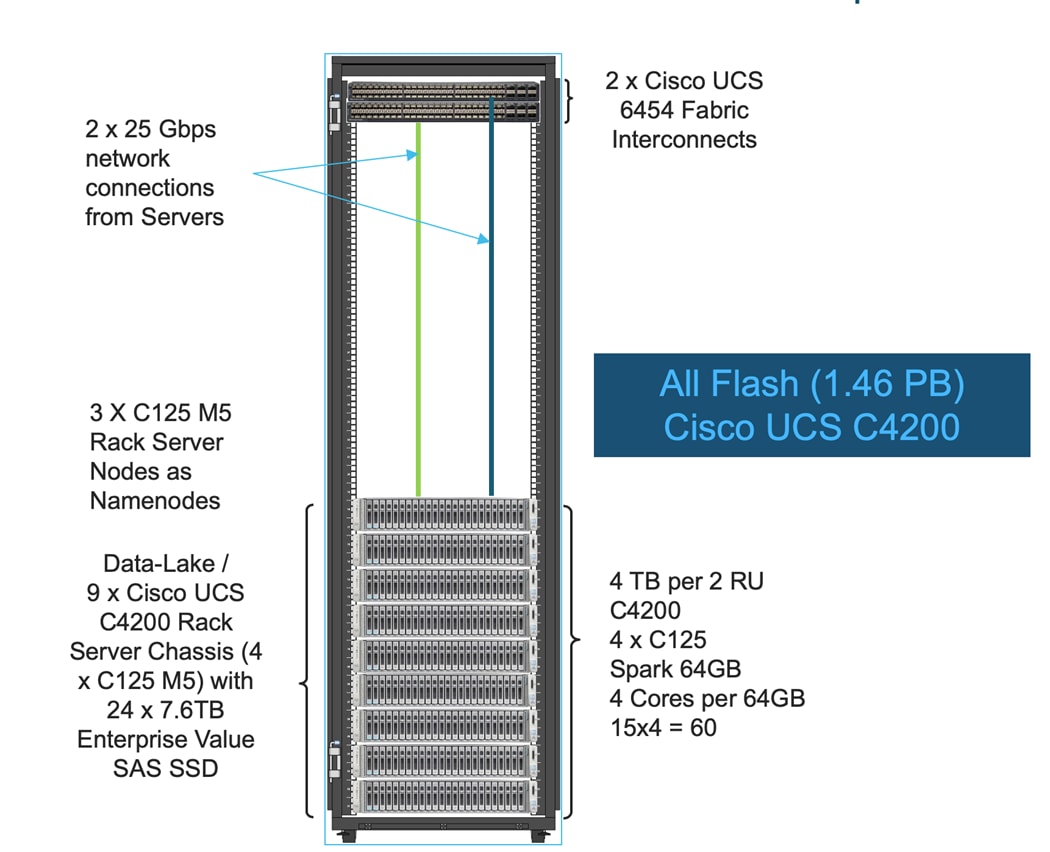

The Cisco UCS C4200 platform is primarily positioned for all-SSD configurations. With AMD EPYC 2 (Rome) series CPUs, which offer the highest core density for the x86 architecture, it is a proven platform for data-intensive and compute-intensive Hadoop applications. Given the higher server density within a 2RU form factor and the additional network capabilities when running as a single socket for Hadoop, the Cisco UCS C125 M5 Rack Server Node is an excellent server architecture for a Hadoop data lake on a Cloudera Data Platform deployment. This CVD implements the data lake tier of the Cisco Data Intelligence Platform with Cloudera Data Platform Data Center (CDP Private Cloud Base). CDP combines the best of both worlds, Cloudera Enterprise Data Hub and Hortonworks Data Platform Enterprise, along with new features and enhancements across the stack. This unified distribution is a scalable and customizable platform where end users can securely run many types of workloads.

Furthermore, this CVD with CDP Private Cloud Base sets the foundation for CDP Private Cloud, which offers a cloud-like user experience with a self-service portal where users can efficiently search, curate, and share data, enabling access to trusted data and analytics in a secure manner.

This solution offers a cohesive platform for both IT and data scientists by providing a scalable infrastructure for IT and an application platform for data scientists.

Both Big Data and machine learning technology have progressed to the point where they are being implemented in production systems running 24x7. There exists a very clear need for a proven, dependable, high-performance platform for the ingestion, processing, storage, and analysis of the data, as well as the seamless dissemination of the output, results, and insights of the analysis.

This solution implements Cloudera Data Platform Private Cloud Base on Cisco UCS Integrated Infrastructure for Big Data and Analytics based on Cisco Data Intelligence Platform (CDIP) architecture, a world-class platform specifically designed for demanding workloads that is both easy to scale and easy to manage, even as the requirements grow to thousands of servers and petabytes of storage.

Many companies, recognizing the immense potential of big data and machine learning technology, are gearing up to leverage these new capabilities, building out departments and increasing hiring. However, these efforts face a new set of challenges:

● Making the data available to the diverse set of people who need it

● Enabling access to high-performance computing resources, such as GPUs, that also scale with the data growth

● Allowing people to work with the data using the environments with which they are familiar

● Publishing their results so the organization can make use of them

● Enabling the automated production of those results

● Managing the data for compliance and governance

● Scaling the system as the data grows

● Managing and administering the system in an efficient, cost-effective way

This solution is based on the Cisco UCS Integrated Infrastructure for Big Data and Analytics and includes computing, storage, connectivity, and unified management capabilities to help companies manage the immense amount of data being collected. It is built on Cisco Unified Computing System (Cisco UCS) infrastructure, using Cisco UCS 6332 or 6454 Series Fabric Interconnects and Cisco UCS C-Series Rack Servers. This architecture is specifically designed for performance and linear scalability for big data and machine learning workloads.

The intended audience of this document includes sales engineers, field consultants, professional services, IT managers, partner engineering and customers who want to deploy the Cloudera Data Platform Data Center on the Cisco UCS Integrated Infrastructure for Big Data and Analytics (Cisco UCS M5 Rack Mount servers).

This document describes the architecture, design choices, and deployment procedures for Cisco Data Intelligence Platform using Cloudera Data Platform DC on Cisco UCS C125 M5 Rack Server Nodes.

This document also serves as a step-by-step guide on how to deploy CDP Private Cloud Base on 48 node cluster of Cisco UCS C125 M5 Rack Server Nodes.

This solution extends the portfolio of Cisco Data Intelligence Platform (CDIP) architecture with Cloudera Data Platform Data Center, a state-of-the-art platform, providing a data cloud for demanding workloads that is easy to deploy, scale, and manage. Furthermore, as the enterprise's requirements and needs change over time, the platform can grow to thousands of servers, providing petabytes of storage.

The following design considerations will be implemented in this validated design:

● Modernizing the data lake with the all-flash Cisco UCS C4200 platform with single-socket AMD-based server nodes using Cloudera Data Platform Data Center for big data and analytics

● Cisco Intersight deployed UCSM managed cluster with some feature limitations (outlined in the Intersight deployment sections)

This CVD showcases the Hadoop cluster deployment using Cisco Intersight (partially covered features) and Cisco UCS Manager. This solution can also be fully deployed using Cisco Intersight in the future. Additional Cisco UCS features will be added to the Appendix. Some of the industry driven platforms and services include the following:

● Cloudera Data Platform Private Cloud

● Apache Ozone – Object Store for disaggregated compute and storage

● A fully integrated CDP on CDIP with

◦ Data lake enabled through fully supported production grade CDP Private Cloud Base

◦ AI/ML enabled through CDP Private Cloud using Red Hat OpenShift

◦ Exabyte storage enabled through Apache Ozone

This CVD details the process of installing Cloudera Data Platform Data Center and the configuration details of the cluster. The current version of Cisco UCS Integrated Infrastructure for Big Data and Analytics offers the following configurations depending on the compute and storage requirements.

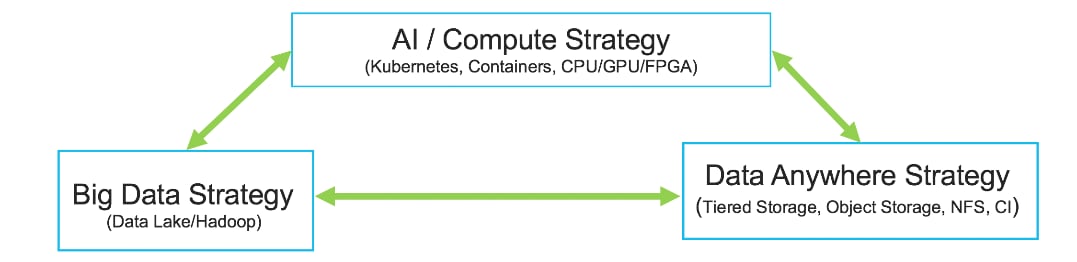

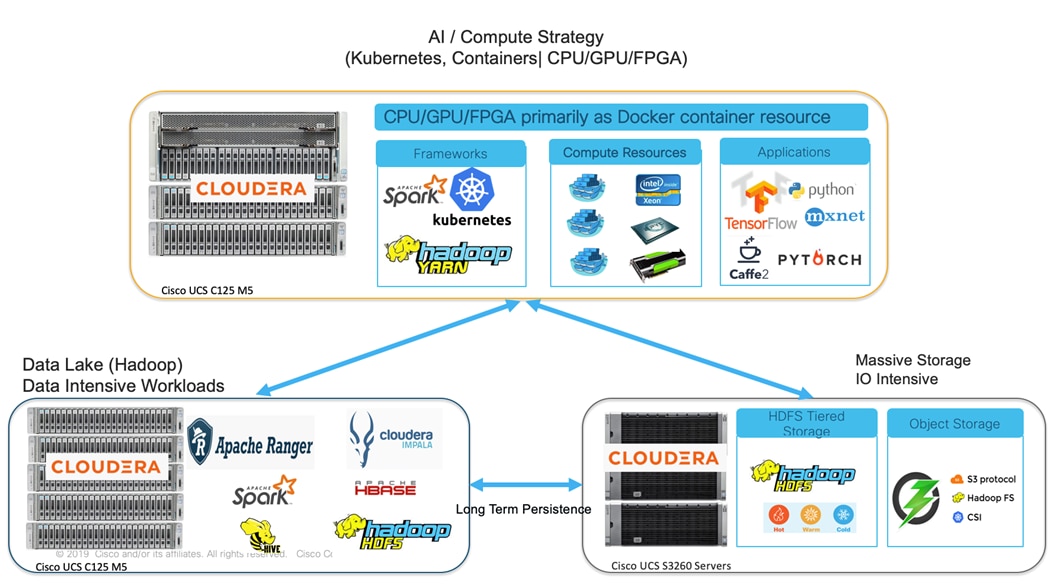

Cisco Data Intelligence Platform

Cisco Data Intelligence Platform (CDIP) is a cloud scale architecture which brings together big data, AI/compute farm, and storage tiers to work together as a single entity while also being able to scale independently to address the IT issues in the modern data center. This architecture allows for:

● Extremely fast data ingest, and data engineering done at the data lake

● AI compute farm allowing for different types of AI frameworks and compute types (GPU, CPU, FPGA) to work on this data for further analytics

● A storage tier that allows data which has been worked on to be gradually retired to a storage-dense system with a lower $/TB, providing a better TCO

● Seamlessly scale the architecture to thousands of nodes with a single pane of glass management using Cisco Application Centric Infrastructure (ACI)

Cisco Data Intelligence Platform caters to the evolving architecture by bringing together a fully scalable infrastructure with centralized management and a fully supported software stack (in partnership with industry leaders in the space) for each of the three independently scalable components of the architecture: data lake, AI/ML, and object stores.

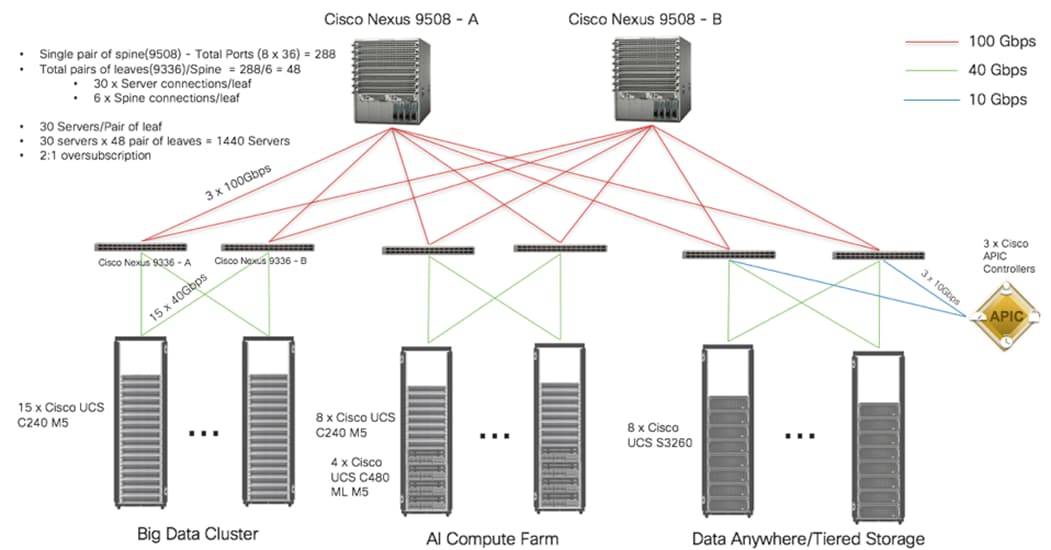

Figure 1. Cisco Data Intelligence Platform

Cisco has developed numerous industry leading Cisco Validated Designs (reference architectures) in the area of Big Data (CVDs with Cloudera, Hortonworks, and MapR), compute farm with Kubernetes (CVD with Red Hat OpenShift), and Object store (Apache Ozone, MinIO, SwiftStack, and others).

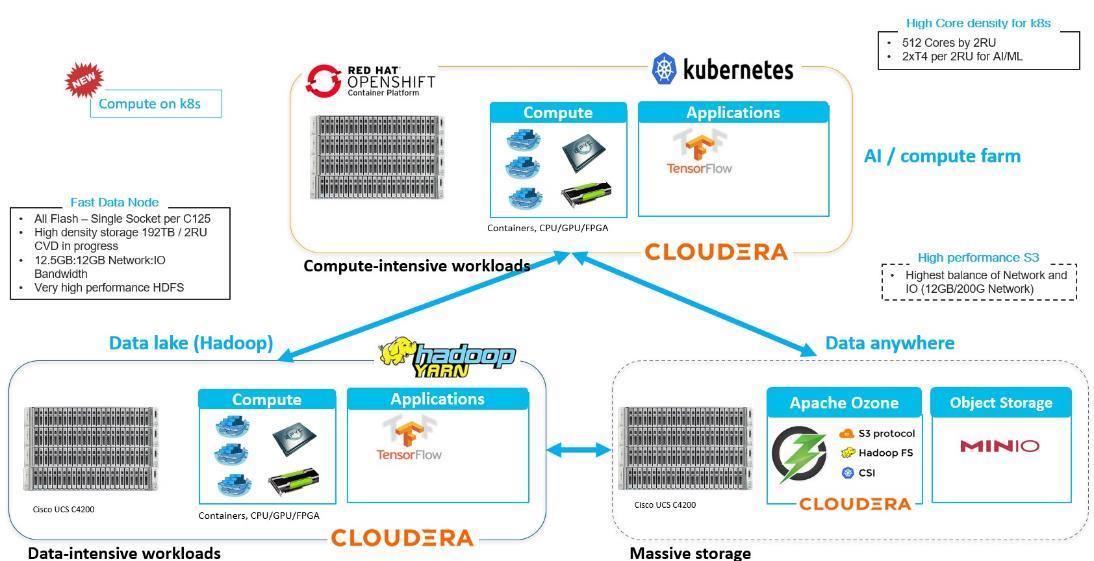

This Cisco Data Intelligence Platform can be deployed in these variants:

● CDIP with Cloudera with Data Science Workbench (powered by Kubernetes) and Tiered Storage with Hadoop

● CDIP with Hortonworks with Apache Hadoop 3.1 and Data Science Workbench (powered by Kubernetes) and Tiered Storage with Hadoop

● CDIP with CDP 7.1.3 with CDSW or CML (powered by Kubernetes/OpenShift) and Tiered Storage with Ozone Object Store

Figure 2. Cisco Data Intelligence Platform with Hadoop, Kubernetes, and Object Store

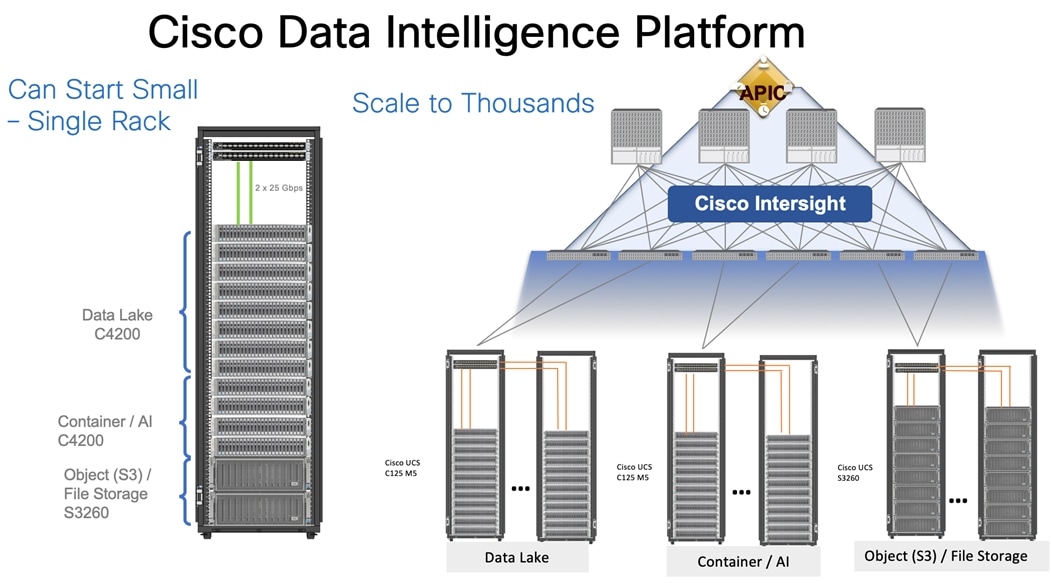

This architecture can start from a single rack and scale to thousands of nodes with a single pane of glass management with Cisco Application Centric Infrastructure (ACI).

Figure 3. Cisco Data Intelligence Platform at Scale

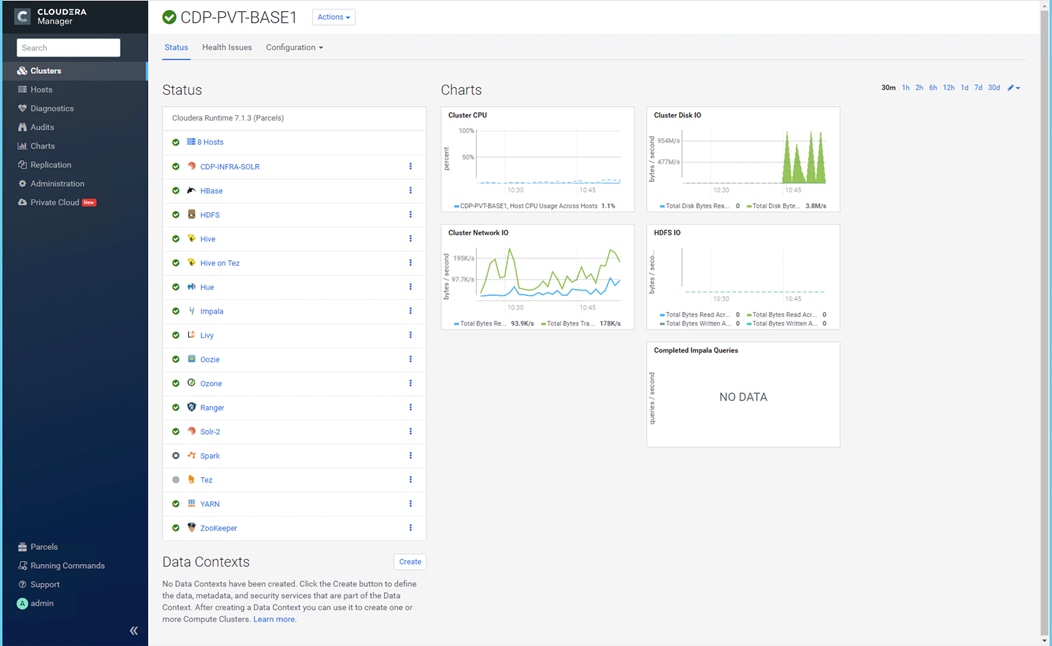

Figure 4. Cloudera Data Platform on Cisco Data Intelligence Platform

A CDIP architecture can fully be enabled by Cloudera Data Platform with the following components:

● Data lake enabled through CDP Private Cloud Base

● AI/ML enabled through CDP Private Cloud and

● Exabyte storage enabled through Apache Ozone

The reference architecture for Cisco UCS C4200 Series Chassis with Cisco UCS C125 M5 Rack Server Nodes powered by AMD EPYC processors and Hortonworks’ big data distribution is optimally designed and tested to help ensure a balance between performance and capacity. It can scale out to meet big data and analytics requirements. It can expand to thousands of servers with Cisco Nexus® 9000 Series Switches using the Cisco® Application Policy Infrastructure Controller (APIC) with a leaf-and-spine design using the Cisco Application Centric Infrastructure (Cisco ACI™) platform. This next generation infrastructure can be deployed to meet a wide variety of computing, storage, and connectivity options.

Data Lake Reference Architecture

Table 1 lists the data lake reference architecture configuration details for Cisco UCS Integrated Infrastructure for Big Data and Analytics.

Table 1. Cisco UCS Integrated Infrastructure for Big Data and Analytics Configuration Options

| | Superior Performance | Performance | Superior Performance | Performance |
|---|---|---|---|---|
| Servers | 9 x Cisco UCS C4200 Series Chassis, each with 4 x Cisco UCS C125 M5 Rack Server Nodes | 16 x Cisco UCS C240 M5 Rack Servers with small-form-factor (SFF) drives | 16 x Cisco UCS C240 M5 Rack Servers with large-form-factor (LFF) drives | 8 x Cisco UCS S3260 Storage Servers |
| CPU | 1 x AMD EPYC 2 7532 Processor (1 x 32 cores, 2.4 GHz) | 2 x 2nd Gen Intel® Xeon® Scalable 6230 processors (2 x 20 cores, at 2.1 GHz) | 2 x 2nd Gen Intel Xeon Scalable 6230 processors (2 x 20 cores, at 2.1 GHz) | 2 x 2nd Gen Intel Xeon Processor Scalable Family 5220 (2 x 18 cores, 2.2 GHz) |
| Memory | 8 x 64 GB RDIMMs (512 GB) | 12 x 32 GB DDR4 (384 GB) | 12 x 32 GB DDR4 (384 GB) | 12 x 32 GB DDR4 (384 GB) |
| Boot | M.2 with 2 x 240 GB SATA SSDs | M.2 with 2 x 240 GB SSDs | M.2 with 2 x 240 GB SSDs | 2 x 240 GB SATA SSDs |
| Storage | 6 x 7.6 TB Enterprise Value SATA SSD | 26 x 2.4 TB 10K rpm SFF SAS HDDs or 12 x 1.6 TB Enterprise Value SATA SSDs | 12 x 8 TB 7.2K rpm LFF SAS HDDs | 28 x 6 TB 7.2K rpm LFF SAS HDDs per server node |
| Virtual interface card (VIC) | 25 Gigabit Ethernet (Cisco UCS VIC 1455) | 40 Gigabit Ethernet (Cisco UCS VIC 1387) or | 25 Gigabit Ethernet (Cisco UCS VIC 1455) | 40 Gigabit Ethernet (Cisco UCS VIC 1387) or |
| Storage controller | Cisco 12-Gbps SAS 9460-8i RAID Controller with 2 GB cache (FBWC) | Cisco 12-Gbps SAS modular RAID controller with 4 GB flash-based write cache (FBWC) or Cisco 12-Gbps modular SAS host bus adapter (HBA) | Cisco 12-Gbps SAS modular RAID controller with 2 GB FBWC or Cisco 12-Gbps modular SAS host bus adapter (HBA) | Cisco 12-Gbps SAS Modular RAID Controller with 4 GB flash-based write cache (FBWC) |
| Network connectivity | 2 x Cisco UCS 6454 Fabric Interconnect | Cisco UCS 6332 Fabric Interconnect or Cisco UCS 6454 Fabric Interconnect | Cisco UCS 6332 Fabric Interconnect or Cisco UCS 6454 Fabric Interconnect | Cisco UCS 6332 Fabric Interconnect |
| GPU (optional) | NA | Up to 2 x NVIDIA Tesla V100 with 32 GB memory each or up to 6 x NVIDIA Tesla T4 with 16 GB memory each | 2 x NVIDIA Tesla V100 with 32 GB memory each or up to 6 x NVIDIA Tesla T4 with 16 GB memory each | NA |

Note: The above-mentioned non-Superior Performance configuration models can also be deployed with the 4th Generation Cisco UCS 6454 Fabric Interconnect with 25G VIC.

Note: In this architecture, we deployed Cisco UCS C125 M5 Rack Server Nodes with Intersight capabilities.
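As a rough sizing aid, the Cisco UCS C125 M5 column of Table 1 can be turned into a cluster-level capacity estimate. The sketch below is illustrative only: it counts every node as a data node (an upper bound, since some nodes host master services), and the replication factor of 3 is an assumption based on the common HDFS default, not a figure stated in this section.

```python
# Figures taken from the Cisco UCS C125 M5 column of Table 1.
CHASSIS = 9
NODES_PER_CHASSIS = 4
SSDS_PER_NODE = 6
SSD_TB = 7.6

# Assumption (not stated in this section): HDFS stores 3 replicas of each block.
HDFS_REPLICATION = 3


def raw_capacity_tb(nodes: int, drives_per_node: int, drive_tb: float) -> float:
    """Total raw SSD capacity across the given nodes, in TB."""
    return nodes * drives_per_node * drive_tb


def replicated_capacity_tb(raw_tb: float, replication: int) -> float:
    """Capacity left for unique data when every block is stored `replication` times."""
    return raw_tb / replication


nodes = CHASSIS * NODES_PER_CHASSIS
raw = raw_capacity_tb(nodes, SSDS_PER_NODE, SSD_TB)
usable = replicated_capacity_tb(raw, HDFS_REPLICATION)
print(f"{nodes} nodes, {raw:.1f} TB raw, about {usable:.1f} TB after replication")
```

The estimate ignores file system overhead and space reserved for temporary data, so real usable capacity will be lower.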

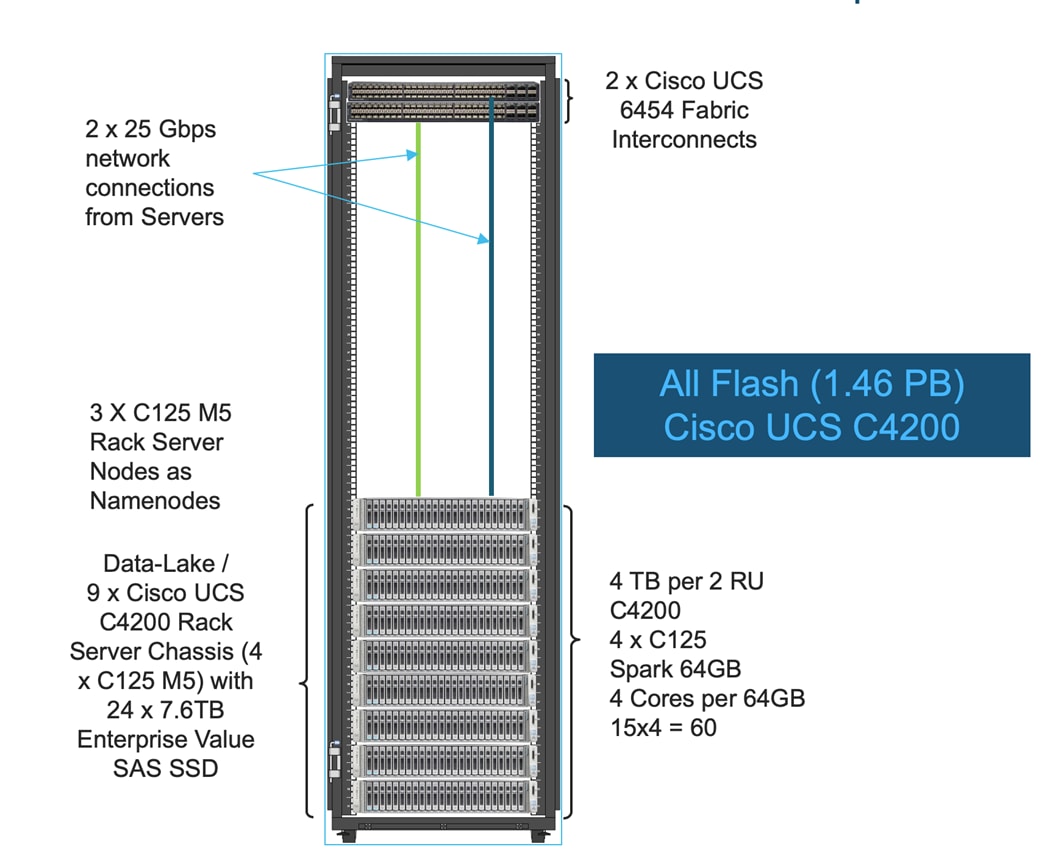

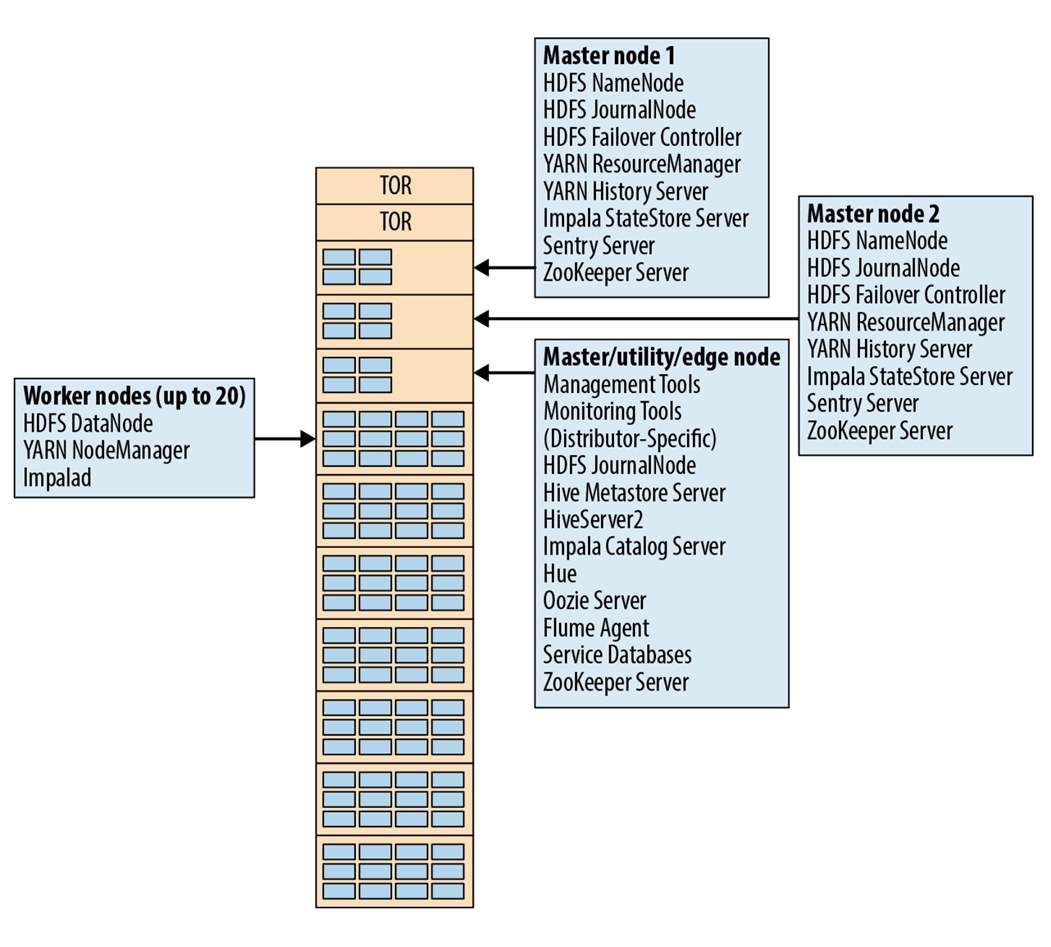

Figure 5 illustrates a 9 node cluster with Rack#1 hosting 32 x Cisco UCS C125 M5 Rack Server Nodes as data nodes and 3 x Cisco UCS C125 M5 Rack Server Nodes as name nodes. Each link in the figure represents a 25 Gigabit Ethernet link from each of the 48 servers directly connected to a Fabric Interconnect.

Figure 5. Cisco Data Intelligence Platform with Cloudera Data Platform Data Center – Data Lake

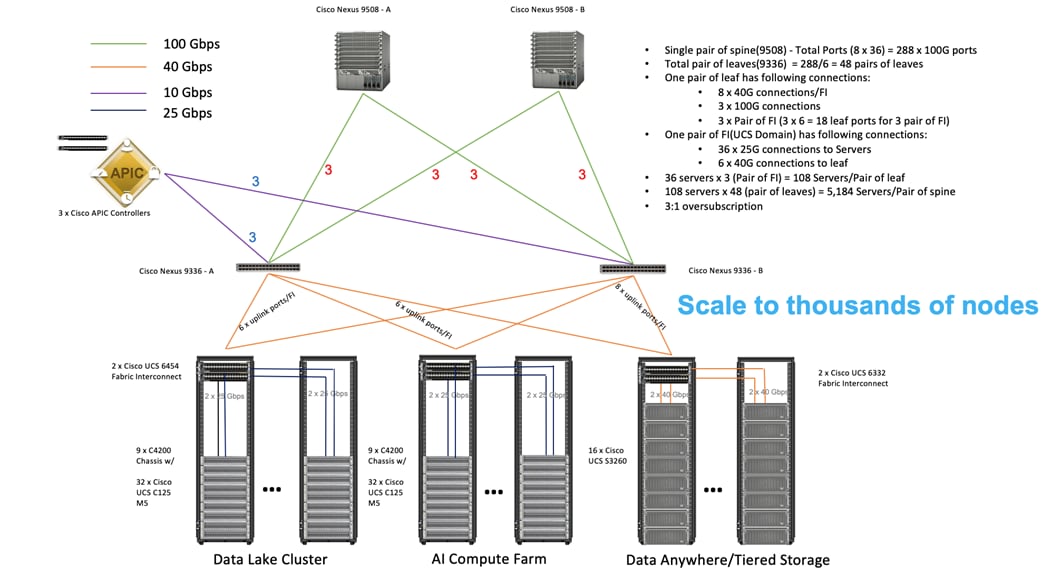

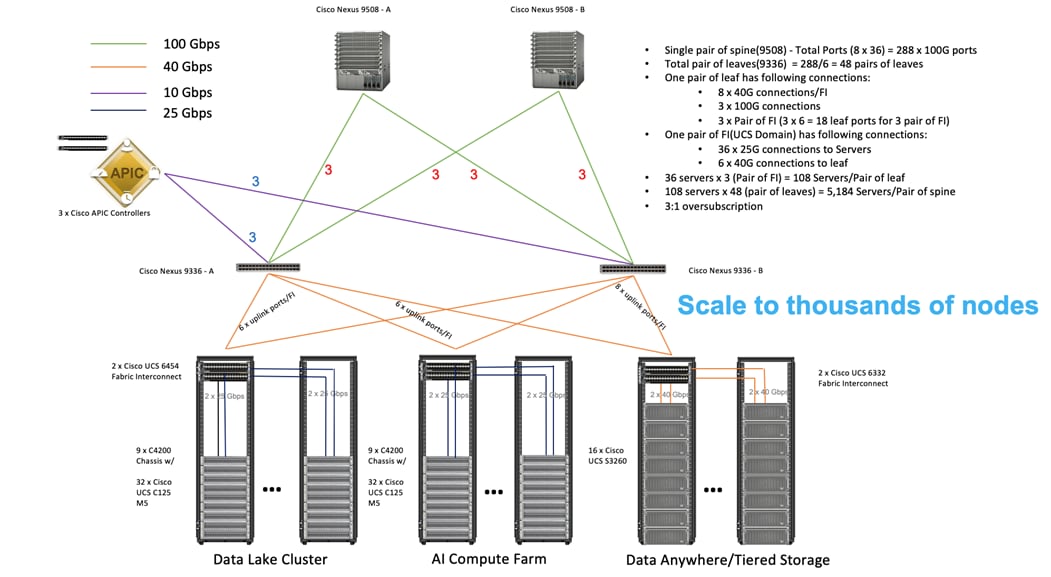

Figure 6 illustrates how to scale the solution. Each pair of Cisco UCS 6454 Fabric Interconnects has 36 Cisco UCS C125 M5 servers connected to it. This allows for six uplinks from each Fabric Interconnect to the Cisco Nexus 9336 switch. Three pairs of Cisco UCS 6454 Fabric Interconnects can connect to a single switch with four uplink ports each. With 36 servers per pair of Fabric Interconnects, a total of 108 servers can be supported. Additionally, this solution can scale to thousands of nodes with the Nexus 9500 series family of switches.

Figure 6. Scaling the Solution

In the reference architectures discussed, each of the components is scaled separately, and for the purposes of this example, scaling is uniform. Two scale scenarios are as follows:

● Scaled architecture with 3:1 oversubscription with Cisco fabric interconnects and Cisco ACI

● Scaled architecture with 2:1 oversubscription with Cisco ACI

In the following scenarios, the goal is to populate up to a maximum of 200 leaf nodes in a Cisco ACI domain. Not all cases reach that number because they use the Cisco Nexus® 9508 Switch for this sizing and not the Cisco Nexus 9516 Switch.

Scaled Architecture with 3:1 Oversubscription with Cisco Fabric Interconnects and Cisco ACI

The architecture discussed here and shown in Figure 7 supports 3:1 network oversubscription from every node to every other node across a multidomain cluster (nodes in a single domain within a pair of Cisco fabric interconnects are locally switched and not oversubscribed).

From the viewpoint of the data lake, 36 x Cisco UCS C125 M5 Servers are connected to a pair of Cisco UCS 6454 Fabric Interconnects (with 48 x 25-Gbps throughput). From each fabric interconnect, 8 x 40-Gbps links connect to a pair of Cisco Nexus 9336 Switches. Three pairs of fabric interconnects can connect to a single pair of Cisco Nexus 9336 Switches (8 x 40-Gbps links per Fabric Interconnect to a pair of Nexus switches). Each of these Cisco Nexus 9336 Switches connects to a pair of Cisco Nexus 9508 Cisco ACI switches with 6 x 100-Gbps uplinks (connecting to a Cisco N9K-X9736C-FX line card). The Cisco Nexus 9508 Switch supports up to 8 such line cards, each with up to 36 x 100-Gbps ports.

Figure 7. Scaled Architecture with 3:1 Oversubscription with Cisco Fabric Interconnects and Cisco ACI
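The oversubscription figure for this scenario can be checked with simple arithmetic. The following sketch assumes each server presents one 25-Gbps link to each fabric interconnect, which is an interpretation of the topology described here; the function name is illustrative.

```python
def oversubscription(servers: int, server_gbps: int,
                     uplinks: int, uplink_gbps: int) -> float:
    """Ratio of server-facing bandwidth to uplink bandwidth on one device."""
    return (servers * server_gbps) / (uplinks * uplink_gbps)


# Per fabric interconnect: 36 servers at 25 Gbps down, 8 x 40 Gbps up.
fi_ratio = oversubscription(servers=36, server_gbps=25, uplinks=8, uplink_gbps=40)

# Three pairs of fabric interconnects share one pair of Cisco Nexus 9336 Switches.
servers_per_nexus_pair = 3 * 36

print(f"FI oversubscription: {fi_ratio:.2f}:1")
print(f"Servers per Nexus 9336 pair: {servers_per_nexus_pair}")
```

Under these assumptions the ratio works out to 900/320, or roughly 2.8:1, which is consistent with the nominal 3:1 figure.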

Scaled Architecture with 2:1 Oversubscription with Cisco ACI

In the scenario discussed here and shown in Figure 8, the Cisco Nexus 9508 Switch supports up to 8 Cisco N9K-X9736C-FX line cards, each with up to 36 x 100-Gbps ports.

Here, for the 2:1 oversubscription, 30 Cisco UCS C125 M5 Rack Servers are connected to a pair of Cisco Nexus 9336 Switches, and each Cisco Nexus 9336 connects to a pair of Cisco Nexus 9508 Switches with three uplinks each. A pair of Cisco Nexus 9336 Switches can support 30 servers and connect to a spine with 6 x 100-Gbps links on each spine. This single pod (pair of Cisco Nexus 9336 Switches connecting to 30 Cisco UCS C125 M5 servers and 6 uplinks to each spine) can be repeated 48 times (288/6) for a given Cisco Nexus 9508 Switch and can support up to 1440 servers.
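The pod and server counts in this scenario follow directly from the spine port budget. A minimal sketch of that arithmetic, using only the figures quoted in this section (the variable names are illustrative):

```python
# Spine port budget: Cisco Nexus 9508 with N9K-X9736C-FX line cards.
PORTS_PER_LINE_CARD = 36
LINE_CARDS_PER_SPINE = 8

# One pod: a pair of Cisco Nexus 9336 Switches serving 30 servers,
# consuming 6 x 100-Gbps ports on each spine.
SPINE_PORTS_PER_POD = 6
SERVERS_PER_POD = 30

spine_ports = PORTS_PER_LINE_CARD * LINE_CARDS_PER_SPINE
max_pods = spine_ports // SPINE_PORTS_PER_POD
max_servers = max_pods * SERVERS_PER_POD

print(f"{spine_ports} spine ports -> {max_pods} pods -> {max_servers} servers")
```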

To reduce the oversubscription ratio (to get 1:1 network subscription from any node to any node), you can use just 15 servers under a pair of Cisco Nexus 9336 Switches and then move to Cisco Nexus 9516 Switches (the number of leaf nodes would double).

To scale beyond this number, multiple spines can be aggregated.

Figure 8. Scaled Architecture with 2:1 Oversubscription with Cisco ACI

Cisco UCS Integrated Infrastructure for Big Data and Analytics

The Cisco UCS Integrated Infrastructure for Big Data and Analytics solution for Cloudera is based on Cisco UCS Integrated Infrastructure for Big Data and Analytics, a highly scalable architecture designed to meet a variety of scale-out application demands with seamless data integration and management integration capabilities built using the components described in this section.

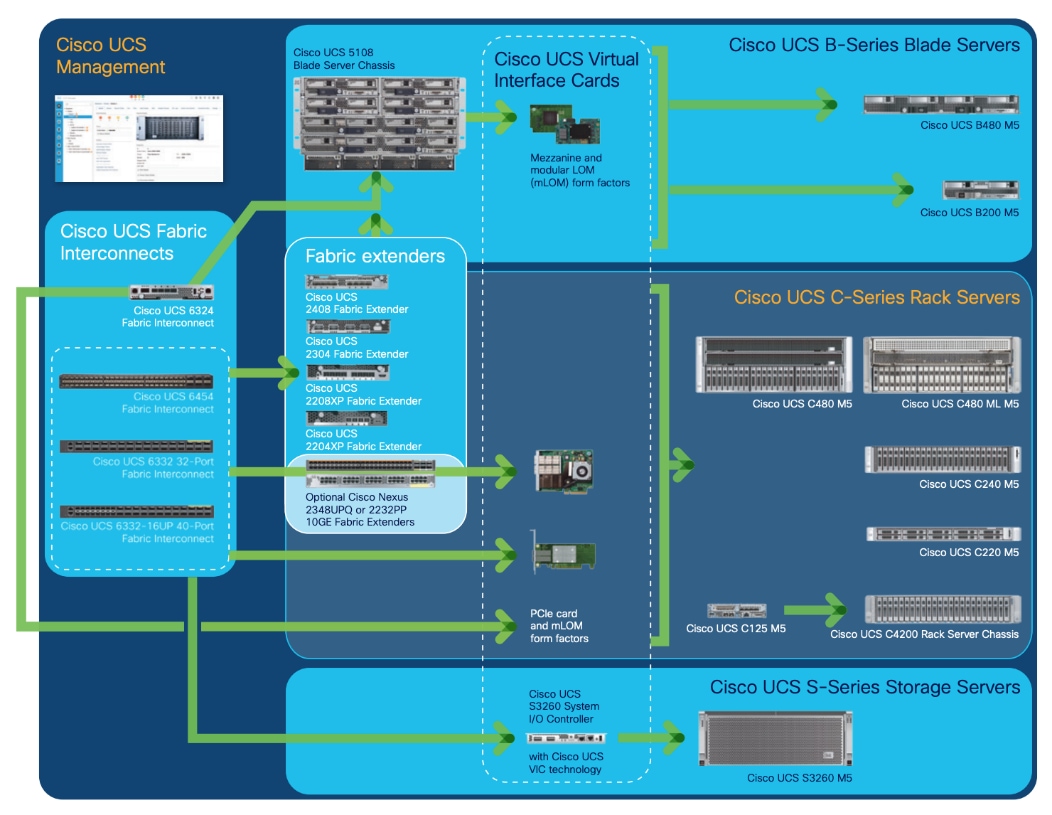

Cisco Unified Computing System™ (Cisco UCS®) is a next-generation data center platform that unites computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce Total Cost of Ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain (Figure 9).

Figure 9. Cisco UCS Component Hierarchy

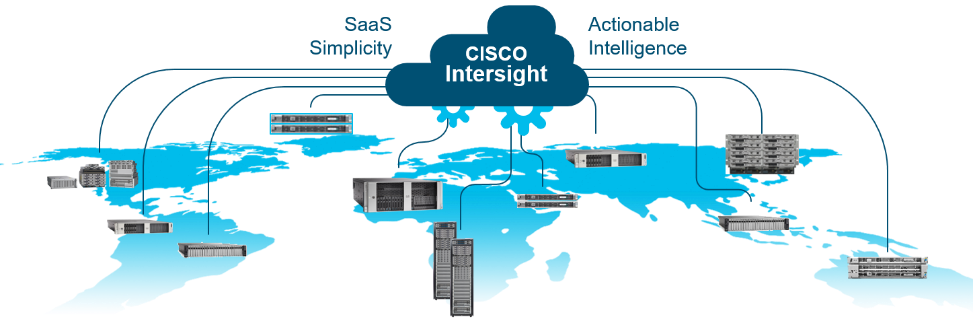

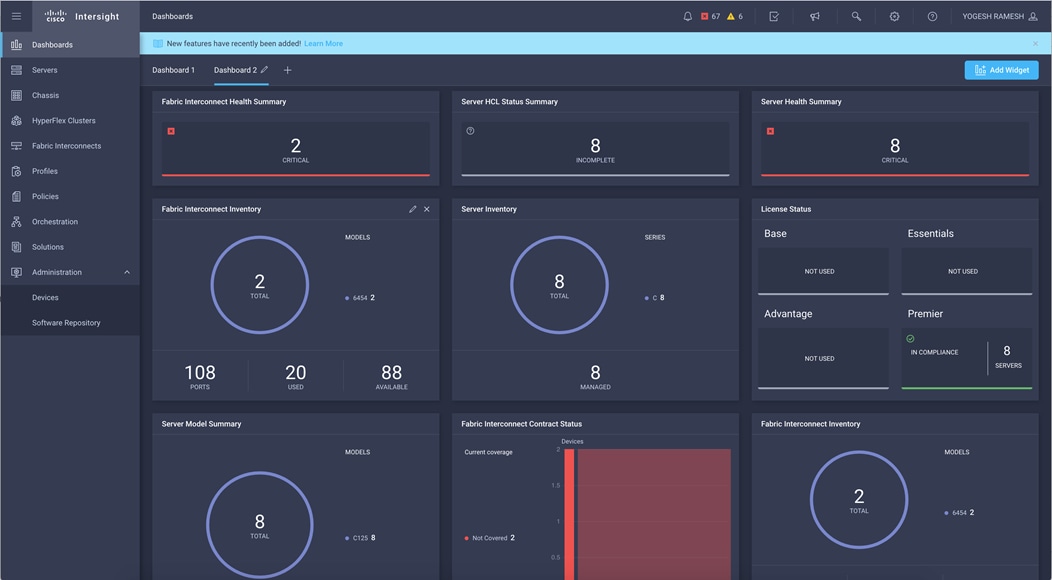

Cisco Intersight is Cisco’s systems management platform that delivers intuitive computing through cloud-powered intelligence. This platform offers a more intelligent level of management that enables IT organizations to analyze, simplify, and automate their environments in ways that were not possible with prior generations of tools. This capability empowers organizations to achieve significant savings in Total Cost of Ownership (TCO) and to deliver applications faster, so they can support new business initiatives.

Cisco Intersight is a Software as a Service (SaaS) infrastructure management platform that provides single-pane-of-glass management of CDIP infrastructure in the data center. Cisco Intersight scales easily, and frequent updates are implemented without impact to operations. Cisco Intersight Essentials enables customers to centralize configuration management through a unified policy engine, determine compliance with the Cisco UCS Hardware Compatibility List (HCL), and initiate firmware updates. Enhanced capabilities and tight integration with Cisco TAC enable more efficient support. Cisco Intersight automates uploading files to speed troubleshooting. The Intersight recommendation engine provides actionable intelligence for IT operations management. The insights are driven by expert systems and best practices from Cisco.

Cisco Intersight offers flexible deployment either as Software as a Service (SaaS) on Intersight.com or running on your premises with the Cisco Intersight virtual appliance. The virtual appliance provides users with the benefits of Cisco Intersight while allowing more flexibility for those with additional data locality and security requirements.

Figure 10. Cisco Intersight

Cisco Intersight has the following:

● Connected TAC

● Security Advisories

● Hardware Compatibility List (HCL) and much more

To learn more about all the features of Intersight go to: https://www.cisco.com/c/en/us/products/servers-unified-computing/intersight/index.html

Cisco UCS Manager (UCSM) resides within the Cisco UCS Fabric Interconnect. It makes the system self-aware and self-integrating, managing all the system components as a single logical entity. Cisco UCS Manager can be accessed through an intuitive graphical user interface (GUI), a command-line interface (CLI), or an XML application-programming interface (API). Cisco UCS Manager uses service profiles to define the personality, configuration, and connectivity of all resources within Cisco UCS, radically simplifying provisioning of resources so that the process takes minutes instead of days. This simplification allows IT departments to shift their focus from constant maintenance to strategic business initiatives.
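To illustrate the XML API mentioned above, the sketch below builds a login request and extracts the session cookie from a reply, without contacting any system. It assumes the `aaaLogin` method with `inName` and `inPassword` attributes and an `outCookie` attribute in the response, as described in the Cisco UCS Manager XML API documentation; the credentials and the sample response are made up for illustration, and the exact attributes should be verified against the API guide for your UCSM release.

```python
import xml.etree.ElementTree as ET


def build_login_request(user: str, password: str) -> str:
    """Return the XML body for a UCS Manager aaaLogin call."""
    elem = ET.Element("aaaLogin", {"inName": user, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def extract_cookie(response_xml: str) -> str:
    """Pull the session cookie out of an aaaLogin response."""
    root = ET.fromstring(response_xml)
    cookie = root.get("outCookie")
    if not cookie:
        raise ValueError("login response did not contain outCookie")
    return cookie


# Hypothetical credentials and response, for illustration only.
request_body = build_login_request("admin", "example-password")
sample_response = '<aaaLogin response="yes" outCookie="illustrative-cookie" />'
session_cookie = extract_cookie(sample_response)
print(request_body)
print(session_cookie)
```

In a live environment the request body would typically be sent as an HTTPS POST to the UCS Manager XML API endpoint, and the returned cookie passed to subsequent query and configuration methods.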

Key Features

● Supports Cisco UCS B-Series Blade and Cisco UCS C-Series Rack Servers, the Cisco UCS C3260 storage server, Cisco UCS Mini, and the Cisco HyperFlex hyperconverged infrastructure.

● Programmatically controls server, network, and storage resources, with a unified, policy-driven management, so they can be efficiently managed at scale through software.

● Works with HTML 5 or Java graphical user interfaces, or with the CLI.

● Can automatically detect, inventory, manage, and provision system components that are added or changed.

● Facilitates integration with third-party systems management tools.

● Builds on existing skills and supports collaboration across disciplines through role-based administration.

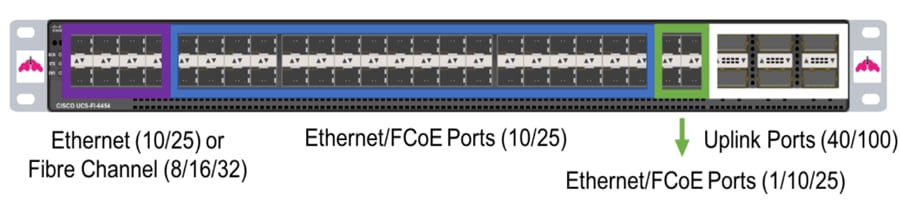

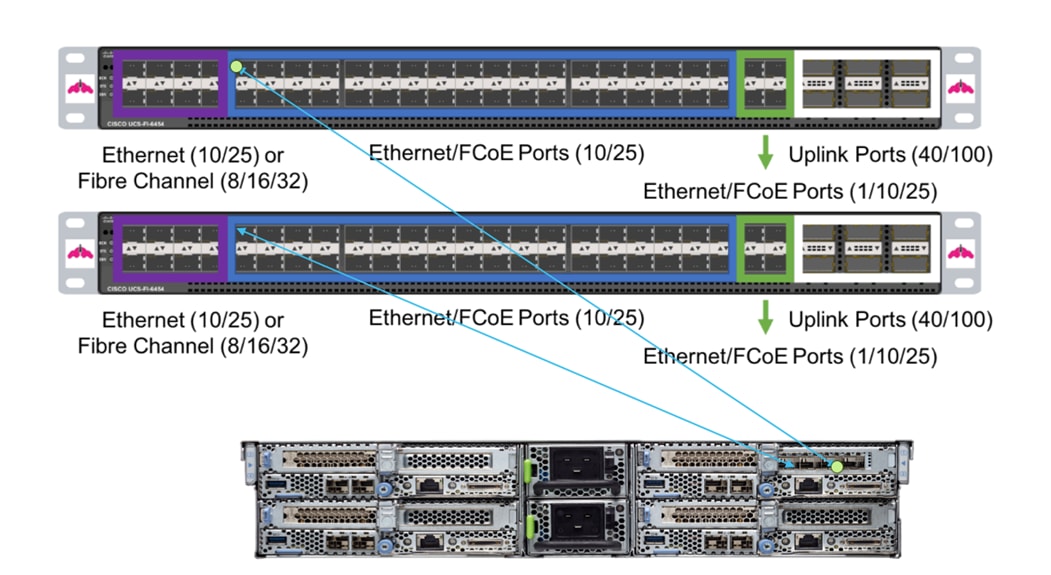

Cisco UCS 6400 Series Fabric Interconnect

The Cisco UCS 6454 provides the management and communication backbone for the Cisco UCS B-Series Blade Servers, Cisco UCS 5108 B-Series Server Chassis, Cisco UCS Managed C-Series Rack Servers, and Cisco UCS S-Series Storage Servers, providing both network connectivity and management capabilities for the system (Figure 11).

From a networking perspective, the Cisco UCS 6454 uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10/25/40/100 Gigabit Ethernet ports, switching capacity of 3.82 Tbps, and 160 Gbps bandwidth between FI 6454 and IOM 2208 per 5108 blade chassis, independent of packet size and enabled services. The product family supports Cisco® low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the Fabric Interconnect. Significant TCO savings come from an FCoE optimized server design in which Network Interface Cards (NICs), Host Bus Adapters (HBAs), cables, and switches can be consolidated.

Figure 11. Cisco UCS 6454 Fabric Interconnect

Cisco UCS C4200 Series Chassis

The Cisco UCS C4200 Series Chassis is a modular, density optimized rack-server chassis that supports:

● Up to four Cisco UCS C125 M5 Rack Server Nodes and up to 256 cores per chassis with AMD EPYC processors: It is excellent for environments requiring dense computing form factors and high core densities, such as scale-out, computing-intensive, general service provider, and bare-metal applications.

● 24 small-form-factor (SFF) drives: The drive bays are allocated so that each rack server node has access to six SAS and SATA HDD or solid-state disks (SSDs) or up to four SSDs and two Non-Volatile Memory Express (NVMe) drives.
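The density figures in the list above can be reproduced from the per-node specifications. This is a small illustrative calculation; the single-socket case uses the 1 x 32-core processor per node and the 9 chassis listed in Table 1 for this design.

```python
NODES_PER_CHASSIS = 4
DRIVE_BAYS_PER_CHASSIS = 24
MAX_SOCKETS_PER_NODE = 2
MAX_CORES_PER_SOCKET = 32


def cores_per_chassis(sockets_per_node: int, cores_per_socket: int) -> int:
    """CPU cores in one fully populated Cisco UCS C4200 chassis."""
    return NODES_PER_CHASSIS * sockets_per_node * cores_per_socket


max_cores = cores_per_chassis(MAX_SOCKETS_PER_NODE, MAX_CORES_PER_SOCKET)
drives_per_node = DRIVE_BAYS_PER_CHASSIS // NODES_PER_CHASSIS

# Configuration used in this design (Table 1): one 32-core processor per node.
design_cores_per_chassis = cores_per_chassis(1, 32)
design_cluster_cores = 9 * design_cores_per_chassis

print(max_cores, drives_per_node, design_cores_per_chassis, design_cluster_cores)
```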

Figure 12. Cisco UCS C4200 Series Chassis

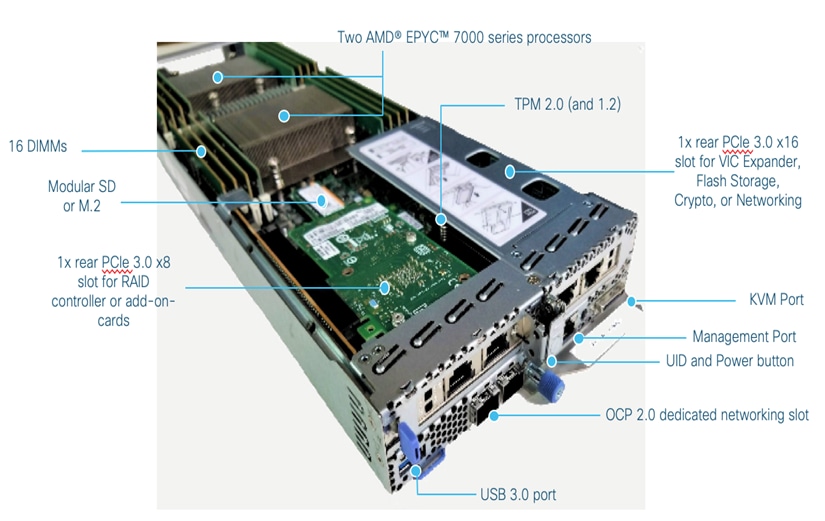

Cisco UCS C125 M5 Rack Server Node

Cisco UCS C125 M5 Rack Server Node has the greatest number of cores commercially available in a multi-node system. It offers 2 sockets per node and from 8 to 32 cores per processor, with the support of the AMD EPYC 7000 series processors, 16 DIMM slots for 2666-MHz DDR4 DIMMs, and capacity points of up to 128 GB per slot for a total of 2 TB per socket. It offers up to 2 half-height and half-length PCI Express (PCIe) 3.0 slots and an optional M.2 SSD module. The C125 supports either SAS RAID through a PCIe 12-Gbps SAS storage controller card or SATA directly from the AMD EPYC processor. The node also includes a dedicated internal LAN mezzanine slot based on the Open Compute Project (OCP) 2.0 standard, supporting networking speeds of up to 100 Gbps. Additionally, a fourth-generation Cisco PCIe virtual interface card (VIC) can be added in the x16 PCIe 3.0 slot.

Figure 13. Cisco UCS C125 M5 Rack Server Node

AMD EPYC 7000 Series Processor

Designed from the foundation for a new generation of solutions, AMD EPYC server processors implement a philosophy of choice without restriction. Choose the number of cores that meet your needs without sacrificing key features such as memory and I/O. Each EPYC processor can have from 8 to 32 cores with access to an exceptional amount of I/O and memory regardless of the number of cores in use. The processors include 128 PCIe Generation 3 lanes and support for up to 2 TB of high-speed memory per socket. The innovative AMD EPYC architecture provides outstanding performance. I/O intensive workloads can use the plentiful I/O bandwidth with the right number of cores, helping organizations avoid overpaying for unneeded power. And computing-intensive workloads can make use of fully loaded core counts, dual sockets, and plenty of memory.

Samsung enterprise SSDs offer a simple yet versatile and comprehensive selection of enterprise data storage and caching options suitable for nearly any application. They are engineered to provide high throughput and a consistent rate of I/O operations per second (IOPS). They offer large capacities of up to 3.84 TB, making them well suited for enterprise storage solutions for businesses seeking to enhance performance and cost effectiveness by upgrading their current servers or workstations from hard-disk drives (HDDs). These newer SSDs dramatically reduce latency and improve the IOPS rate.

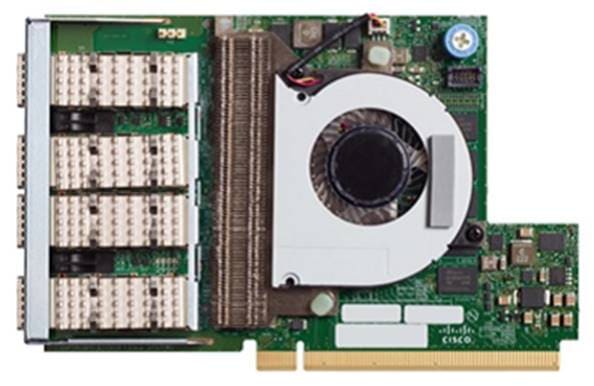

Cisco UCS Virtual Interface Cards (VICs)

This section describes the available Cisco VICs.

Cisco UCS VIC 1457

The Cisco UCS VIC 1457 (Figure 14) is a quad-port Small Form-Factor Pluggable (SFP28) mLOM card designed for the M5 generation of Cisco UCS C-Series Rack Servers. The card supports 10/25-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

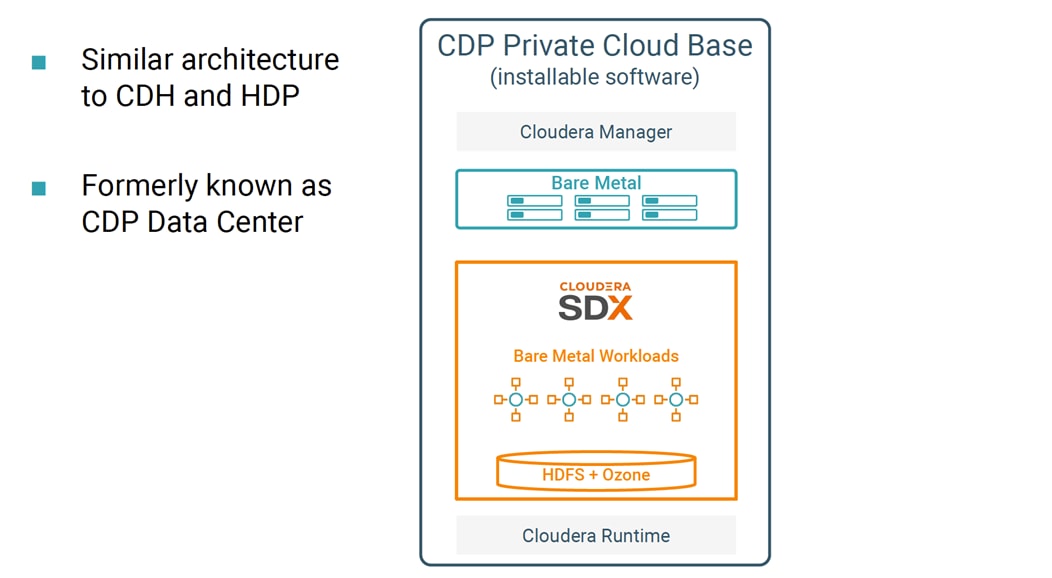

Cloudera Data Platform (CDP) Private Cloud Base

CDP Private Cloud Base is the on-premises version of Cloudera Data Platform.

This new product combines the best of Cloudera Enterprise Data Hub and Hortonworks Data Platform Enterprise along with new features and enhancements across the stack. This unified distribution is a scalable and customizable platform where you can securely run many types of workloads.

CDP PVC Base supports a variety of hybrid solutions where compute tasks are separated from data storage and where data can be accessed from remote clusters, including workloads created using CDP Private Cloud Experiences. This hybrid approach provides a foundation for containerized applications by managing storage, table schema, authentication, authorization, and governance.

CDP PVC Base comprises a variety of components such as Apache HDFS, Apache Hive 3, Apache HBase, and Apache Impala, along with many other components for specialized workloads. You can select any combination of these services to create clusters that address your business requirements and workloads. Several pre-configured packages of services are also available for common workloads.

Figure 15. Cloudera Data Platform Private Cloud Base Overview

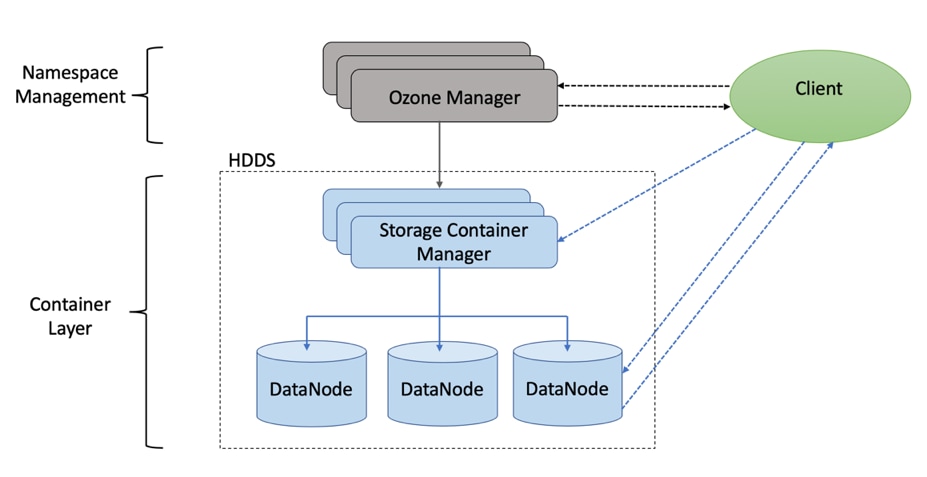

Ozone is a scalable, redundant, and distributed object store optimized for big data workloads. Apart from scaling to billions of objects of varying sizes, Ozone can function effectively in containerized environments such as Kubernetes and YARN.

Ozone consists of three important storage elements: volumes, buckets, and keys. Each key is part of a bucket, which, in turn, belongs to a volume. Only an administrator can create volumes. Depending on their requirements, users can create buckets in volumes. Ozone stores data as keys inside these buckets.

When a key is written to Ozone, the associated data is stored on the DataNodes in chunks called blocks. Therefore, each key is associated with one or more blocks. Within the DataNodes, a series of unrelated blocks is stored in a container, allowing many blocks to be managed as a single entity.

Ozone separates management of namespaces and storage, helping it to scale effectively. Ozone Manager manages the namespaces while Storage Container Manager handles the containers.
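The volume, bucket, and key hierarchy described above can be illustrated with a small in-memory model. This is a minimal sketch for orientation only: the class names, the toy block size, and the integer block IDs are assumptions made for this example and are not part of the Ozone client API.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Bucket:
    # key name -> list of block IDs that hold the key's data
    keys: Dict[str, List[int]] = field(default_factory=dict)


@dataclass
class Volume:
    buckets: Dict[str, Bucket] = field(default_factory=dict)


class ToyOzone:
    """Illustrative namespace model; NOT the real Ozone API."""

    def __init__(self, block_size: int = 4) -> None:
        self.block_size = block_size  # bytes per block (toy value, assumption)
        self.volumes: Dict[str, Volume] = {}
        self._next_block = 0

    def create_volume(self, name: str, is_admin: bool) -> None:
        # Only an administrator can create volumes.
        if not is_admin:
            raise PermissionError("only an administrator can create volumes")
        self.volumes[name] = Volume()

    def create_bucket(self, volume: str, bucket: str) -> None:
        # Users create buckets inside an existing volume.
        self.volumes[volume].buckets[bucket] = Bucket()

    def put_key(self, volume: str, bucket: str, key: str, data: bytes) -> List[int]:
        # Each key maps to one or more blocks (ceiling division, minimum 1).
        count = max(1, -(-len(data) // self.block_size))
        blocks = list(range(self._next_block, self._next_block + count))
        self._next_block += count
        self.volumes[volume].buckets[bucket].keys[key] = blocks
        return blocks


store = ToyOzone(block_size=4)
store.create_volume("vol1", is_admin=True)
store.create_bucket("vol1", "logs")
blocks = store.put_key("vol1", "logs", "day1.txt", b"0123456789")
```

In the real system, Ozone Manager owns the namespace part of this model (volumes, buckets, keys), while Storage Container Manager tracks the containers that group the blocks.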

Figure 16. Basic Architecture for Ozone

Ozone is available for technical preview and considered to be under development. Do not use this component in your production systems.

This solution uses Red Hat Ansible Automation for all pre- and post-deployment steps, automating repeatable tasks to maintain consistency.

Red Hat Ansible Automation is a powerful IT automation tool. It is capable of provisioning numerous types of resources and deploying applications. It can configure and manage devices and operating system components. Due to its simplicity, extensibility, and portability, this solution extensively utilizes Ansible for performing repetitive deployment steps across the nodes.

For more information about Ansible, go to: https://www.redhat.com/en/technologies/management/ansible

The cluster configuration consists of the following:

● 2 Cisco UCS 6454 Fabric Interconnects

● 9 Cisco UCS C4200 Series Chassis

● 1 Cisco R42610 standard rack

● 2 Vertical Power distribution units (PDUs) (Country Specific)

Each rack has two vertical PDUs. The first rack contains two Cisco UCS 6454 Fabric Interconnects and 48 Cisco UCS C125 M5 Rack Server Nodes, each connected to both vertical PDUs for redundancy, which ensures availability during a power source failure. Figure 17 shows a 25 Gigabit Ethernet link from each server connected to both Fabric Interconnects.

Please contact your Cisco representative for country-specific information.

Figure 17. Cisco Data Intelligence Platform - 9 Node Configuration with CDP Private Cloud Base

Port Configuration on Fabric Interconnect

Table 2 lists the port configuration on Cisco UCS FI 6454 Fabric Interconnect.

Table 2. Port Configuration on Fabric Interconnect

| Port Type | Port Number |
| --- | --- |
| Server | 1-48 |
| Network | 49-54 |
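The port allocation in Table 2 can be captured as a small lookup, which is handy when cross-checking cabling sheets. This is a minimal sketch; the `PORT_ROLES` mapping and the `port_role` helper are names invented for this example, not part of any Cisco tool.

```python
# Port roles on each Cisco UCS 6454 Fabric Interconnect, per Table 2.
PORT_ROLES = {
    "Server": range(1, 49),    # ports 1-48
    "Network": range(49, 55),  # ports 49-54
}


def port_role(port: int) -> str:
    """Return the role assigned to a fixed-module port number."""
    for role, ports in PORT_ROLES.items():
        if port in ports:
            return role
    raise ValueError(f"port {port} is not covered by Table 2")
```

For example, `port_role(12)` returns `"Server"` and `port_role(50)` returns `"Network"`.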

Server Configuration and Cabling for Cisco UCS C125 M5 Rack Server Node

The Cisco UCS C4200 chassis is a modular architecture consisting of the following modules:

● Base Chassis: 24 SFF drive bays segmented into four groups of six direct attach drives (one group per node slot), four rear slots supporting C125 M5 server node, four redundant hot-pluggable fans, two 2400W AC high-line redundant power supplies, and a rail mounting kit.

● Server Node: Each C125 M5 has two sockets supporting AMD EPYC 7000 series processors up to 180W TDP, 16 DIMM slots for 2666-MHz DDR4 DIMMs with capacity points up to 64GB, up to 2 half-height/half-length PCI Express (PCIe) 3.0 slots, and an optional M.2/SD module. The C125 supports either SAS RAID via a PCIe 12G SAS storage controller card or SATA direct from the AMD EPYC CPU. The node also includes a dedicated internal LAN mezzanine slot based on the OCP 2.0 standard, supporting networking speeds up to 100Gbps. Additionally, a 4th generation Cisco PCIe Virtual Interface Card (VIC) can be installed in the x16 PCIe 3.0 slot.
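The per-chassis figures implied by the two modules above follow from simple arithmetic. The sketch below only restates numbers given in this section (24 drive bays, four node slots, two sockets per node, up to 32 cores per processor); the variable names are chosen for this example.

```python
# Figures taken from the chassis and server node descriptions.
DRIVE_BAYS_PER_CHASSIS = 24
NODE_SLOTS_PER_CHASSIS = 4
SOCKETS_PER_NODE = 2
MAX_CORES_PER_PROCESSOR = 32

# Six direct-attach drives per node slot.
drives_per_node = DRIVE_BAYS_PER_CHASSIS // NODE_SLOTS_PER_CHASSIS

# Upper bound on cores for a fully populated node and chassis.
cores_per_node = SOCKETS_PER_NODE * MAX_CORES_PER_PROCESSOR
cores_per_chassis = NODE_SLOTS_PER_CHASSIS * cores_per_node

print(drives_per_node, cores_per_node, cores_per_chassis)
```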

Figure 18 illustrates the port connectivity between the Cisco UCS FI 6454 and Cisco UCS C125 M5 Rack Server Nodes. 13 Cisco UCS C125 M5 Rack Server Nodes are installed in this configuration.

For information on physical connectivity and single-wire management, go to:

Figure 18. Fabric Topology for Cisco UCS C125 M5 Rack Server Node

Software Distributions and Firmware Versions

The required software distributions and versions are listed in Table 3.

Table 3. Software Distribution and Version

| Layer | Component | Version or Release |
| --- | --- | --- |
| Compute | Cisco UCS C125 M5 | C125 M5.4.1(2a)C |
| Network | Cisco UCS 6454 | UCS 4.1(2a) A |
| | Cisco UCS VIC1455 Firmware | 5.1(2d) |
| Storage | Cisco 12G Modular Raid Controller | 51.10.0-3151 |
| Software | Red Hat Enterprise Linux Server | 7.8 |
| | Cisco UCS Manager | 4.1(2a)A |
| | Cloudera CDP Private Cloud Base | 7.1.3 |
| | Hadoop | 3.1 |
| | Spark | 2.4 |

The latest drivers can be downloaded from: https://software.cisco.com/download/home/283862063/type/283853158/release/4.1(2a)

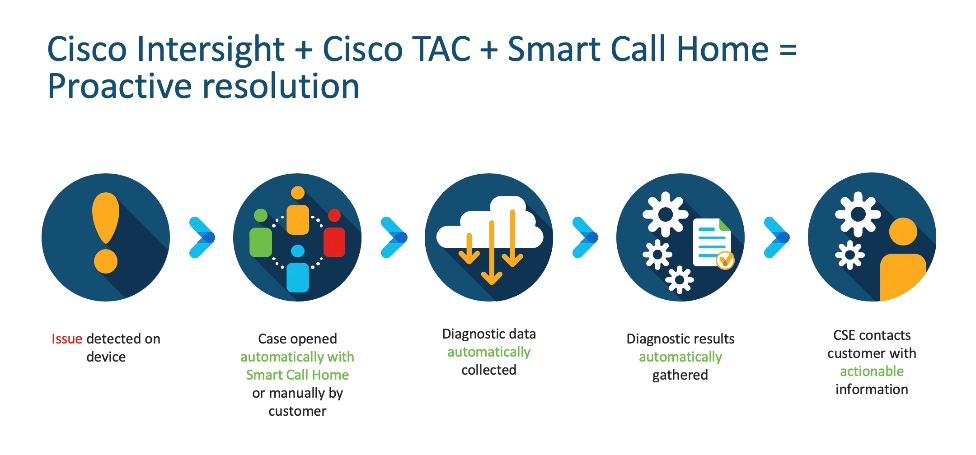

Cisco Intersight provides the following features to simplify operations and administration for IT staff.

Connected TAC is an automated transmission of technical support files to the Cisco® Technical Assistance Center (TAC) for accelerated troubleshooting.

Cisco Intersight enables Cisco TAC to automatically generate and upload Tech Support Diagnostic files when a Service Request is opened. If you have devices that are connected to Intersight but not claimed, Cisco TAC can only check the connection status and will not be permitted to generate Tech Support files. When enabled, this feature works in conjunction with the Smart Call Home service and with an appropriate service contract. Devices that are configured with Smart Call Home and claimed in Intersight can use Smart Call Home to open a Service Request and have Intersight collect Tech Support diagnostic files.

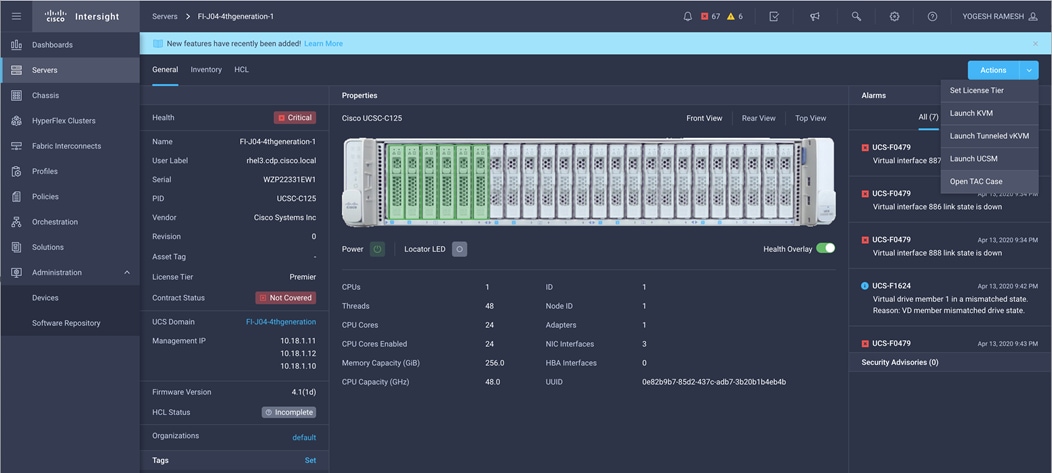

Figure 19. Cisco Intersight: Connected TAC

To enable Connected TAC, follow these steps:

1. Log into Intersight.com.

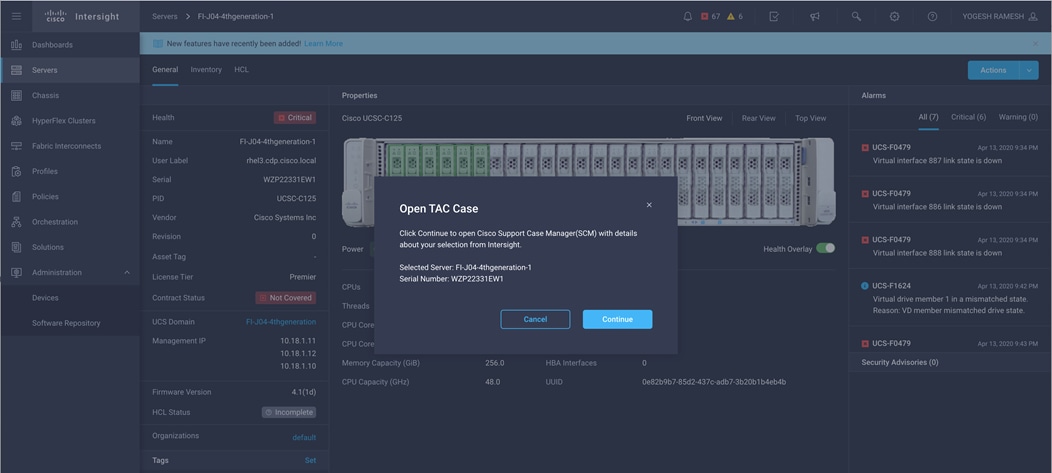

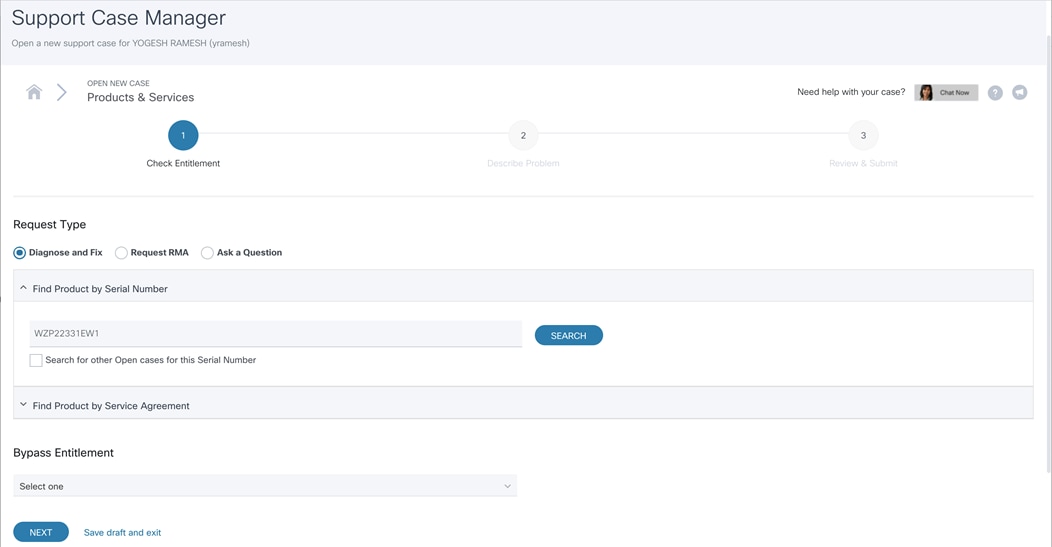

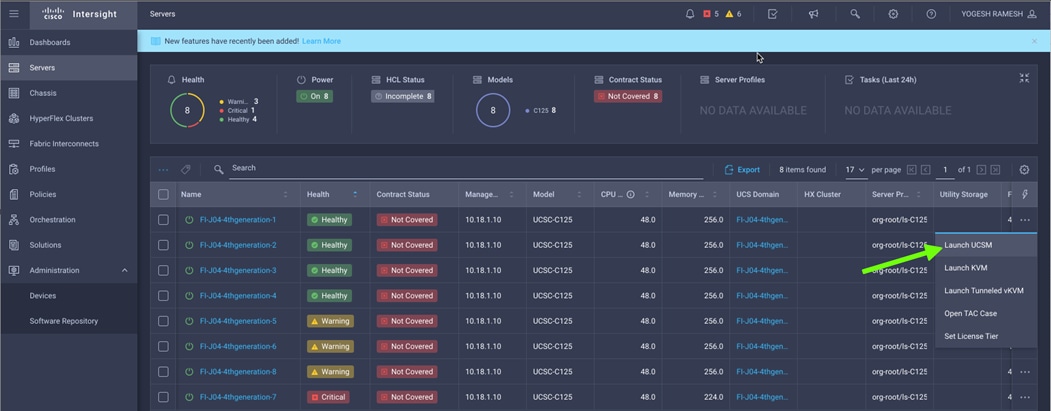

2. Click the Servers tab. Select Server > Actions tab. From the drop-down list, select Open TAC Case.

3. Clicking “Open TAC Case” launches the Cisco Support Case Manager URL, where the associated service contracts for the server or Fabric Interconnect are displayed.

4. Click Continue.

5. Follow the process to open the TAC case.

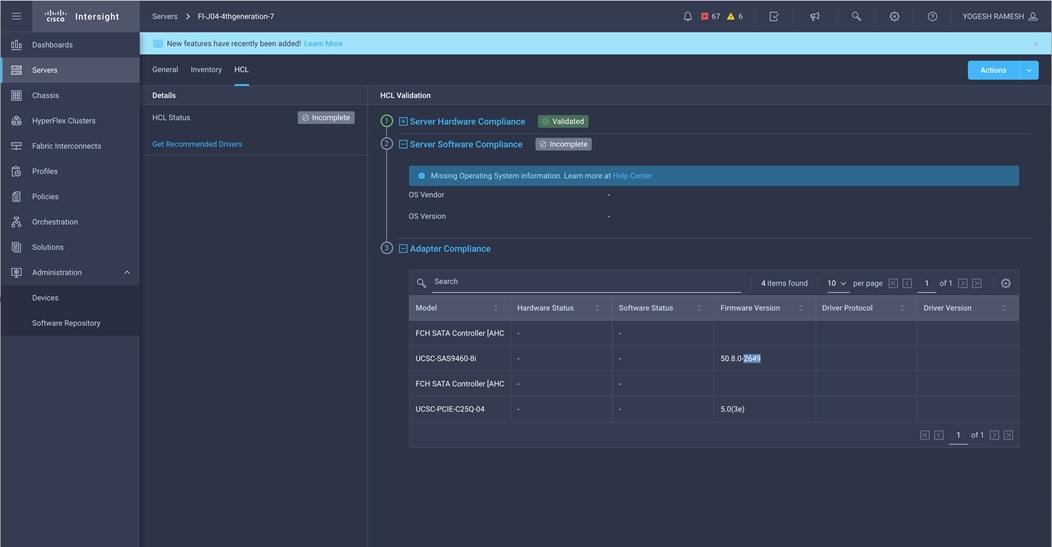

Cisco Intersight Integration for HCL

Cisco Intersight evaluates the compatibility of your Cisco UCS and Cisco HyperFlex systems to check if the hardware and software have been tested and validated by Cisco or Cisco partners. Cisco Intersight reports validation issues after checking the compatibility of the server model, processor, firmware, adapters, operating system, and drivers, and displays the compliance status with the Hardware Compatibility List (HCL).

You can use Cisco UCS Tools, a host utility vSphere Installation Bundle (VIB), or the OS Discovery Tool, an open-source script, to collect OS and driver information for evaluating HCL compliance.

In Intersight, you can view the HCL compliance status in the dashboard (as a widget), the Servers table view, and the Server details page. Below is the server details page.

For more information, go to: https://www.intersight.com/help/features#compliance_with_hardware_compatibility_list_(hcl)

Figure 20. Example of HCL Status and Driver Recommendation for RHEL 7.8

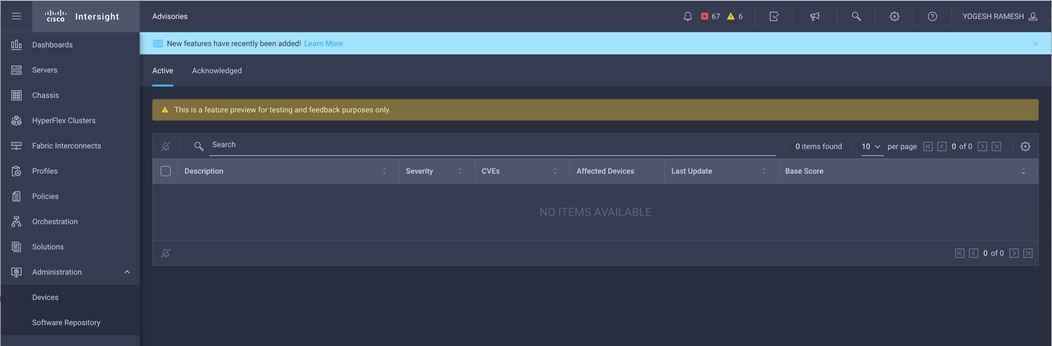

Cisco Intersight sources critical security advisories from the Cisco Security Advisory service to alert users about the endpoint devices that are impacted by the advisories and deferrals. These alerts are displayed as Advisories in Intersight. The Cisco Security Advisory service identifies, monitors, and updates the status of the advisories to provide the latest information on the impacted devices, the severity of the advisory, the impacted products, and any available workarounds. If there are no known workarounds, you can open a support case with Cisco TAC for further assistance. A select list of the security advisories is shown in Intersight under Advisories.

Figure 21. Intersight Dashboard

Figure 22. Example: List of PSIRTs Associated with Sample Intersight Account

Deployment Hardware and Software

Cisco Unified Computing System Configuration

This section details the Cisco Unified Computing System (Cisco UCS) configuration that was done as part of the infrastructure build out. The racking, power, and installation of the Cisco UCS Rack Server is described in the physical topology section earlier in this document. Please refer to the Cisco UCS Manager Getting Started Guide. For more information about each step, see the Cisco UCS Manager - Configuration Guides.

Configure Cisco UCS Fabric Interconnect

This document assumes you are using Cisco UCS Manager Software version 4.1(1b). To upgrade the Cisco UCS Manager software and the Cisco UCS 6454 Fabric Interconnect software to a higher version of the firmware, see the Cisco UCS Manager Install and Upgrade Guides.

Alternatively, if you intend to clear the existing Cisco UCS Manager configuration, follow these steps:

1. Connect a console cable to the console port on what will become the primary fabric interconnect.

2. If the fabric interconnects were previously deployed and you want to erase them to redeploy, follow these steps:

a. Log in with the existing username and password.

#connect local-mgmt

#erase config

#yes (to confirm)

3. After the fabric interconnect restarts, the out-of-box first-time installation prompt appears. Type “console” and press Enter.

4. Follow the Initial Configuration steps as outlined in Cisco UCS Manager Getting Started Guide. When configured, log into UCSM IP Address via the web interface to perform the base Cisco UCS configuration.

Configure Fabric Interconnects for a Cluster Setup

To configure the Cisco UCS Fabric Interconnects, follow these steps:

1. Verify the following physical connections on the fabric interconnect:

a. The management Ethernet port (mgmt0) is connected to an external hub, switch, or router.

b. The L1 ports on both fabric interconnects are directly connected to each other.

c. The L2 ports on both fabric interconnects are directly connected to each other.

Configure Fabric Interconnect A

To configure Fabric Interconnect A, follow these steps:

1. Connect to the console port on the first Cisco UCS 6454 Fabric Interconnect.

At the prompt to enter the configuration method, enter console to continue.

If asked to either perform a new setup or restore from backup, enter setup to continue.

Enter y to continue to set up a new Fabric Interconnect.

Enter y to enforce strong passwords.

2. Enter the password for the admin user.

3. Enter the same password again to confirm the password for the admin user.

When asked if this fabric interconnect is part of a cluster, answer y to continue.

Enter A for the switch fabric.

4. Enter the cluster name for the system name.

5. Enter the Mgmt0 IPv4 address.

6. Enter the Mgmt0 IPv4 netmask.

7. Enter the IPv4 address of the default gateway.

8. Enter the cluster IPv4 address.

To configure DNS, answer y.

9. Enter the DNS IPv4 address.

Answer y to set up the default domain name.

10. Enter the default domain name.

Review the settings that were printed to the console, and if they are correct, answer yes to save the configuration.

11. Wait for the login prompt to make sure the configuration has been saved.

Configure Fabric Interconnect B

To configure Fabric Interconnect B, follow these steps:

1. Connect to the console port on the second Cisco UCS 6454 Fabric Interconnect.

When prompted to enter the configuration method, enter console to continue.

The installer detects the presence of the partner Fabric Interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

2. Enter the admin password that was configured for the first Fabric Interconnect.

3. Enter the Mgmt0 IPv4 address.

4. Answer yes to save the configuration.

5. Wait for the login prompt to confirm that the configuration has been saved.
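Before entering the values at the console prompts, it can help to sanity-check the management addressing. The sketch below uses Python's standard `ipaddress` module and assumes that both Mgmt0 addresses, the cluster address, and the default gateway are expected to sit in one IPv4 subnet. The helper name and the sample addresses (documentation range 192.0.2.0/24) are hypothetical.

```python
import ipaddress
from typing import List


def check_fi_addressing(mgmt_a: str, mgmt_b: str, cluster_ip: str,
                        netmask: str, gateway: str) -> List[str]:
    """Return a list of problems found; an empty list means the plan looks consistent."""
    # Derive the management subnet from FI-A's address and the netmask.
    network = ipaddress.ip_network(f"{mgmt_a}/{netmask}", strict=False)
    addresses = {
        "FI-A Mgmt0": mgmt_a,
        "FI-B Mgmt0": mgmt_b,
        "Cluster IP": cluster_ip,
        "Default gateway": gateway,
    }
    problems = []
    for label, addr in addresses.items():
        if ipaddress.ip_address(addr) not in network:
            problems.append(f"{label} {addr} is outside {network}")
    # Every address must be unique.
    if len(set(addresses.values())) != len(addresses):
        problems.append("duplicate address in the plan")
    return problems


# Hypothetical plan using documentation addresses.
issues = check_fi_addressing("192.0.2.11", "192.0.2.12", "192.0.2.10",
                             "255.255.255.0", "192.0.2.1")
print(issues or "addressing plan looks consistent")
```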

For more information about configuring Cisco UCS 6454 Series Fabric Interconnect, go to: https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/ucs-manager/GUI-User-Guides/Getting-Started/4-0/b_UCSM_Getting_Started_Guide_4_0.html

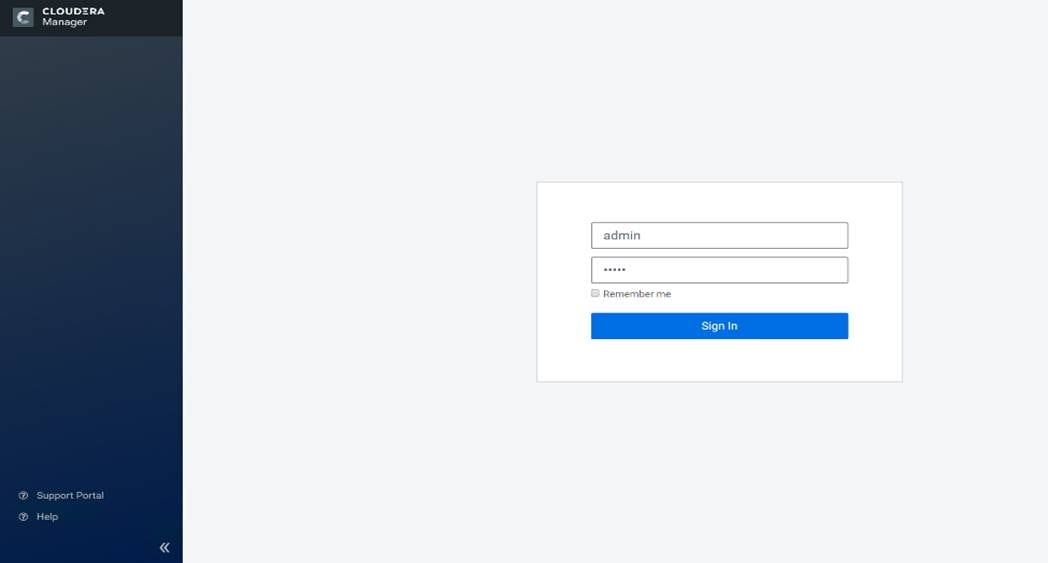

To log into Cisco UCS Manager, follow these steps:

1. Open a Web browser and navigate to the Cisco UCS 6454 Fabric Interconnect cluster address.

2. Click the Launch link to download the Cisco UCS Manager software.

3. If prompted to accept security certificates, accept as necessary.

4. When prompted, enter admin for the username and enter the administrative password.

5. Click Login to log into the Cisco UCS Manager.

Upgrade Cisco UCS Manager Software to Version 4.1(2a)A

This document assumes you are using Cisco UCS 4.1(2a). To upgrade the Cisco UCS Manager software and the Cisco UCS 6454 Fabric Interconnect software to version 4.1(2a), refer to the Cisco UCS 4.1 release documentation. Also, make sure the Cisco UCS C-Series version 4.1(2c) software bundles are installed on the Fabric Interconnects.

Upgrading Cisco UCS firmware is beyond the scope of this document. However for complete Cisco UCS Install and Upgrade Guides, go to: https://www.cisco.com/c/en/us/support/servers-unified-computing/ucs-manager/products-installation-guides-list.html

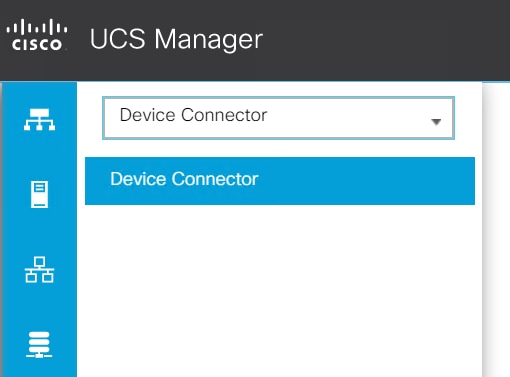

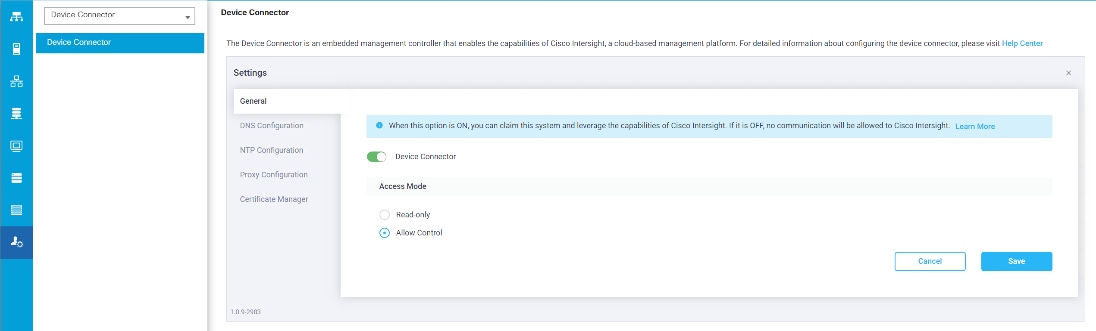

Register Cisco UCS Manager with Intersight

To register UCSM with Intersight, follow these steps:

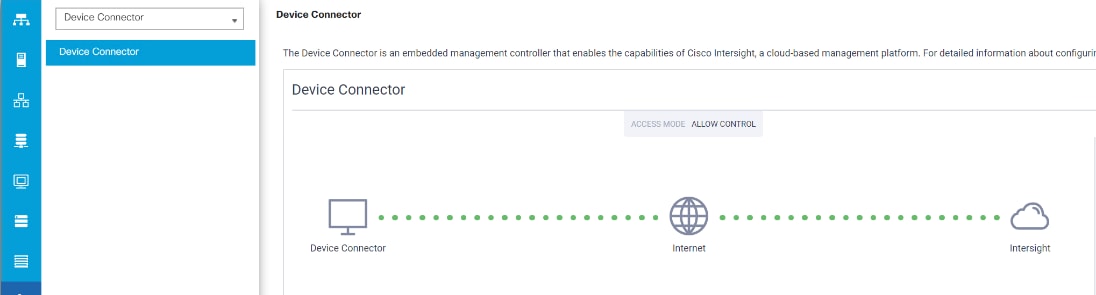

1. Log into the WebUI for Cisco UCS Manager and click the Admin tab. Select Device Connector from the drop-down list. Click Settings.

2. Enable Device Connector. Select Allow Control in Access Mode.

3. Complete the steps for the DNS configuration, NTP Configuration and Proxy Configuration as applicable. Click Save.

4. Make sure UCSM can communicate with Intersight.

5. Copy the Device ID and Claim Code from the right side of the Device Connector screen.

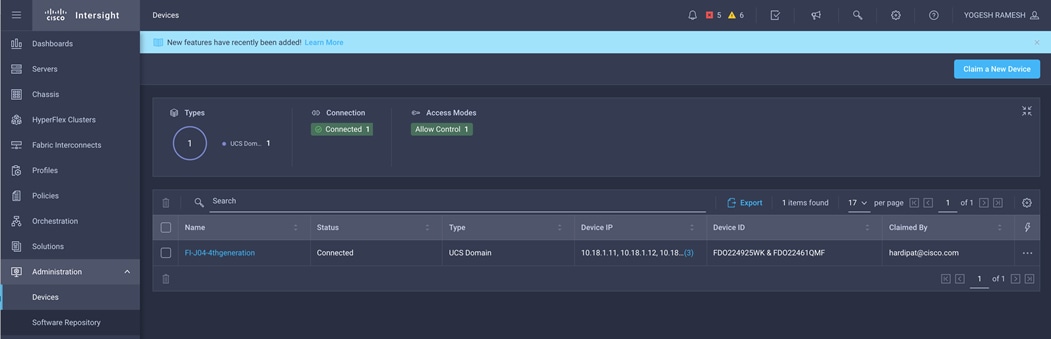

6. Log into Intersight.com

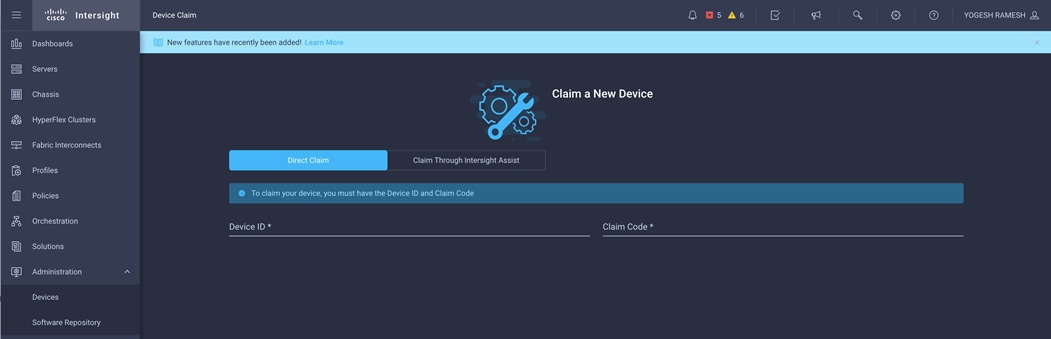

7. Select Devices tab and click Claim a New Device.

8. Enter Device ID and Device Claim Code copied from Cisco UCS Manager. Click Claim.

9. Once Claimed, UCSM can be launched directly from Intersight.

For more information, go to: Claiming a Device

Configure Cisco UCS Manager through Intersight

To configure Cisco UCS Manager, follow these high-level steps:

1. Configure Fabric Interconnects for a Cluster Setup.

2. Set Fabric Interconnects to Fibre Channel End Host Mode.

3. Synchronize Cisco UCS to NTP.

4. Configure Fabric Interconnects for Rack or Chassis and Blade Server Discovery.

5. Configure Global Policies.

6. Configure Server Ports.

7. Configure LAN on Cisco UCS Manager.

8. Configure Ethernet LAN Uplink Ports.

9. Set QoS system class and Jumbo Frames on both Cisco Fabric Interconnects.

10. Create Uplink Port Channels to Cisco Nexus Switches.

11. Configure FC SAN Uplink Ports.

12. Configure VLAN.

13. Configure IP, UUID, Server, MAC Pool and policy:

a. IP Pool Creation

b. UUID Suffix Pool Creation

c. Server Pool Creation

d. Configure Server BIOS Policy

e. Create Adapter Policy

f. Configure Default Maintenance Policy

g. Configure vNIC Template

h. Create Server Boot Policy

Details for each step are discussed in the following sections.

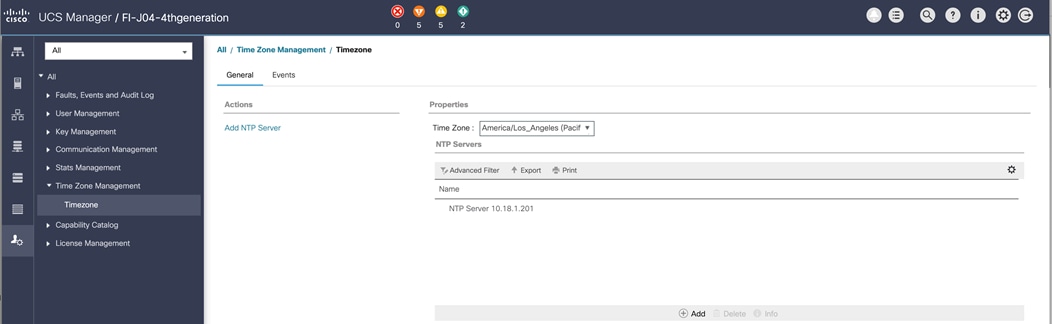

Synchronize Cisco UCSM to NTP

To synchronize the Cisco UCS environment to the NTP server, follow these steps:

1. In Cisco UCS Manager, in the navigation pane, click the Admin tab.

2. Select All > Time zone Management.

3. In the Properties pane, select the appropriate time zone in the Time zone menu.

4. Click Save Changes and then click OK.

5. Click Add NTP Server.

6. Enter the NTP server IP address and click OK.

7. Click OK to finish.

8. Click Save Changes.

Figure 23. Synchronize Cisco UCS Manager to NTP

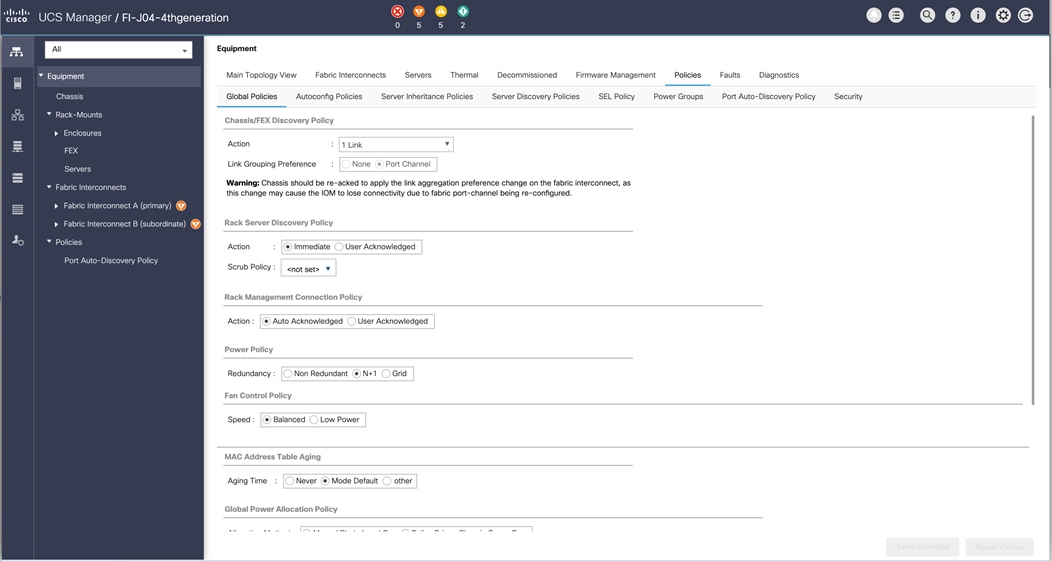

Configure Global Policies

The rack server and chassis discovery policies determine how the system reacts when you add a new rack server or chassis. We recommend using the platform max value as shown. Using platform max helps ensure that Cisco UCS Manager uses the maximum number of IOM uplinks available.

To configure the global policies, follow this step:

1. In Cisco UCS Manager, go to Equipment > Policies > Global Policies and configure the global policies.

Figure 24. Global Policies in UCSM

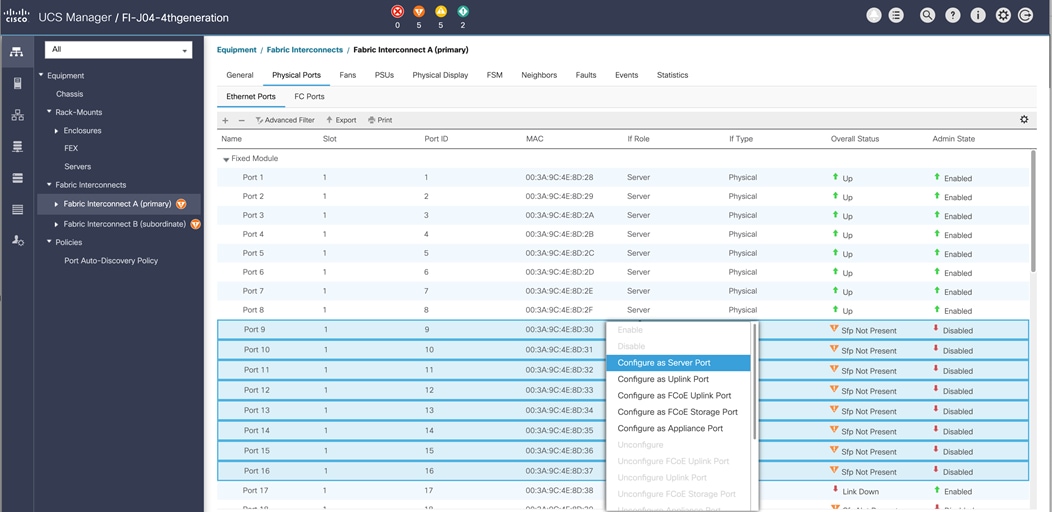

Configure Server Ports

Configure Server Ports to initiate Chassis and Blade discovery. To configure server ports, follow these steps:

1. Go to Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module > Ethernet Ports.

2. Select the ports (for this solution ports are 1-48) which are connected to the Cisco UCS VIC 1455 on Cisco UCS C125 M5 rack server node.

3. Right-click and select Configure as Server Port.

Figure 25. Configure Server Port on Cisco UCS Manager Fabric Interconnect for Server/Chassis Discovery

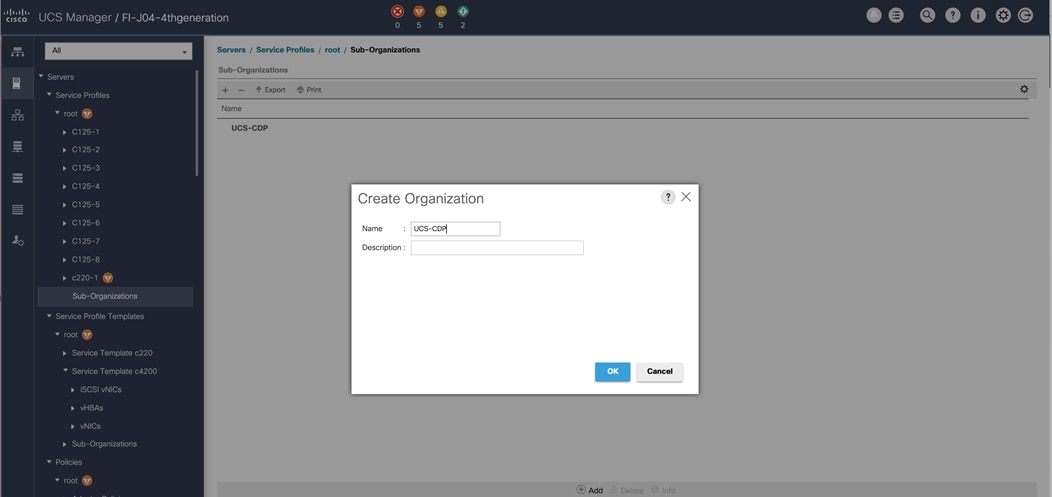

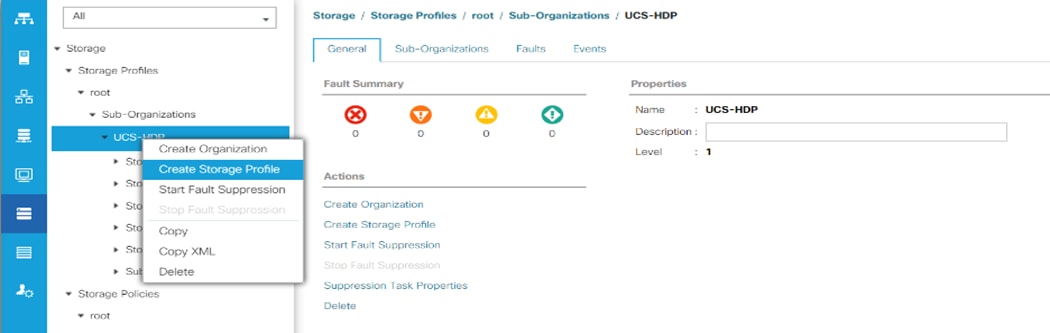

Create New Organization

To configure the necessary Organization for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select root > Sub-Organization.

3. Right-click and select Create Sub-Organization.

4. Enter the name of the Organization.

5. Click OK.

Figure 26. Create New Organization under Sub-Organizations

The Cisco UCS Manager pools and policies required for this solution were created under the newly created “UCS-CDP” Organization.

Configure IP, UUID, Server and MAC Pools

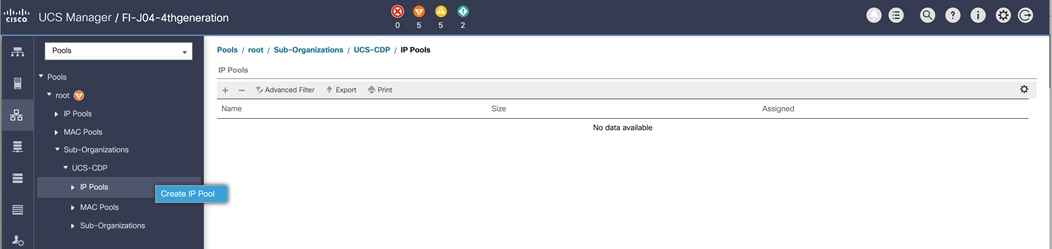

IP Pool Creation

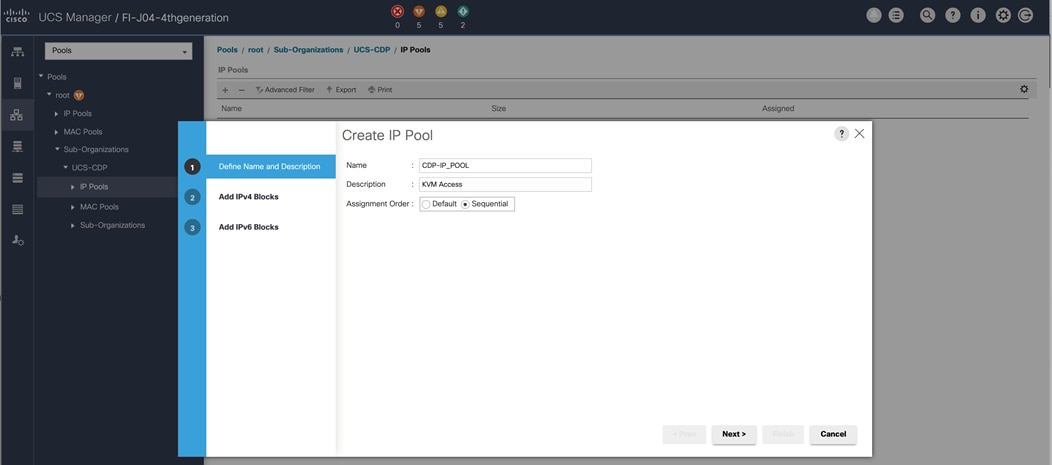

An IP address pool on the out-of-band management network must be created to facilitate KVM access to each compute node in the Cisco UCS domain. To create a block of IP addresses for server KVM access in the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, in the navigation pane, click the LAN tab.

2. Select Pools > root > Sub-Organizations > UCS-CDP> IP Pools > click Create IP Pool.

3. Enter a name for the IP pool, select the Sequential option to assign IP addresses in sequential order, and then click Next.

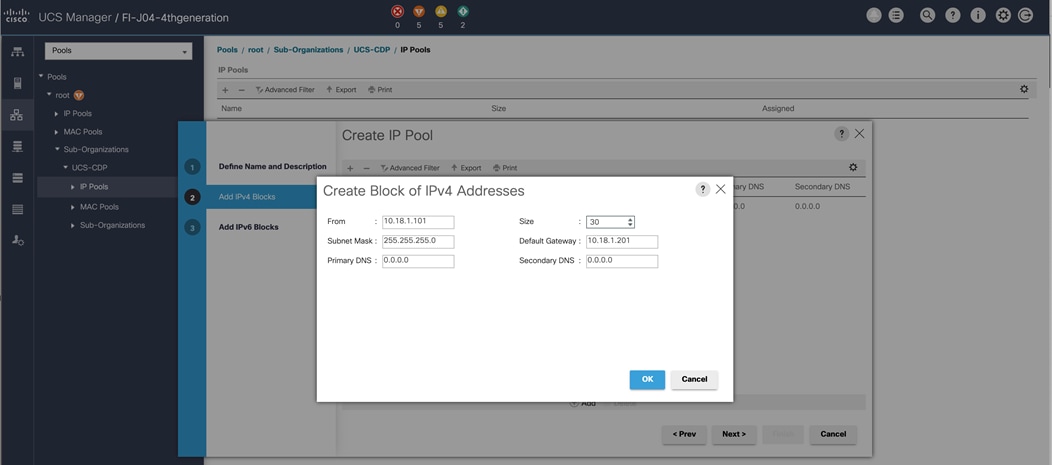

4. Click Add IPv4 Block.

5. Enter the starting IP address of the block and the number of IP addresses required, and the subnet and gateway information as shown below.
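The block entered in step 5 can be checked offline before it is typed into Cisco UCS Manager. This is a minimal sketch using the standard `ipaddress` module; the function name, the sample start address, and the block size of 16 are assumptions for illustration and do not reflect the values used in this solution.

```python
import ipaddress
from typing import List


def ip_block(first: str, size: int, netmask: str, gateway: str) -> List[ipaddress.IPv4Address]:
    """Expand a sequential KVM address block and verify it fits the subnet."""
    start = ipaddress.ip_address(first)
    network = ipaddress.ip_network(f"{first}/{netmask}", strict=False)
    block = [start + offset for offset in range(size)]
    # Every address in the block must fall inside the management subnet.
    if block[-1] not in network:
        raise ValueError(f"block runs past the end of {network}")
    # The gateway must not be handed out to a server.
    if ipaddress.ip_address(gateway) in block:
        raise ValueError("block overlaps the default gateway")
    return block


# Hypothetical block: 16 addresses starting at 192.0.2.101.
block = ip_block("192.0.2.101", 16, "255.255.255.0", "192.0.2.1")
print(block[0], "to", block[-1])
```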

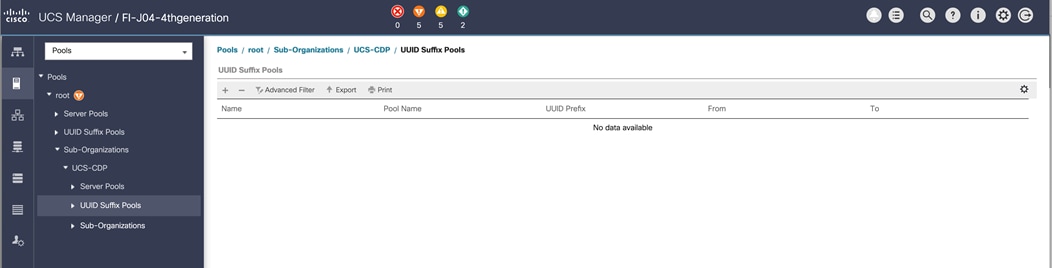

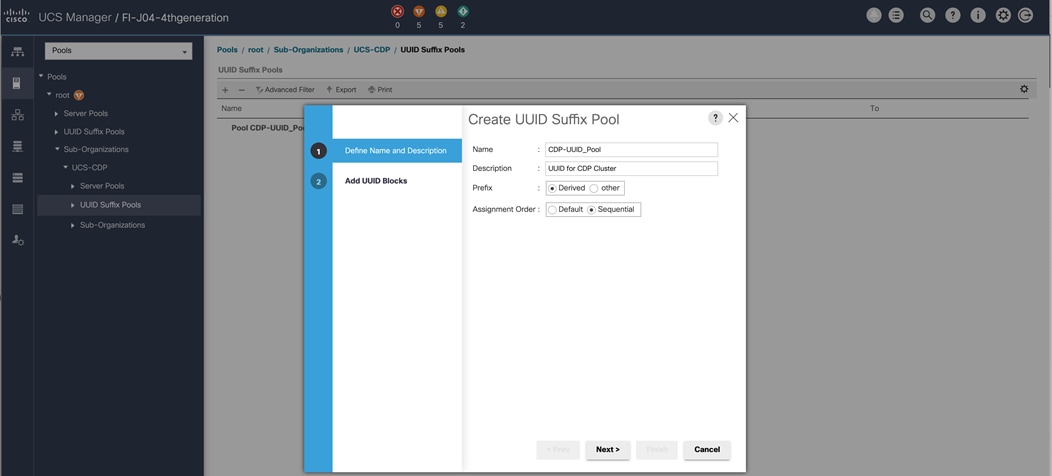

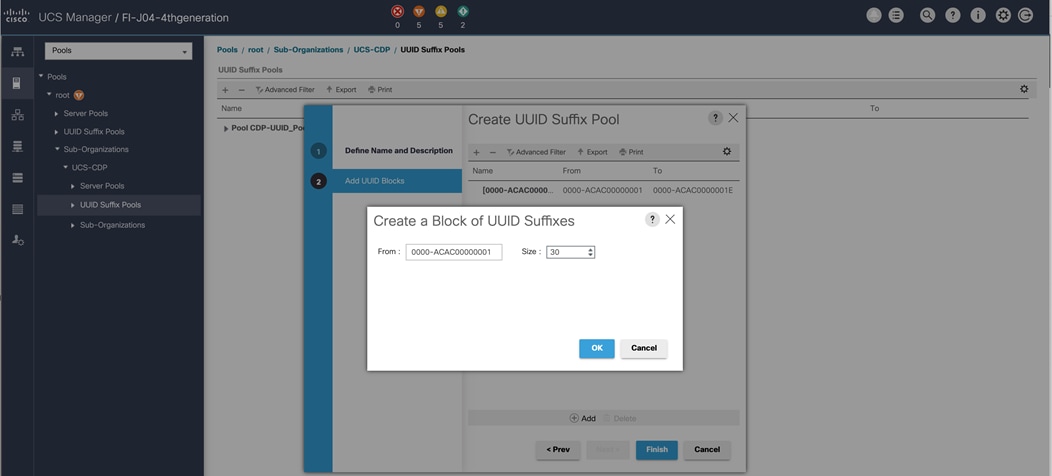

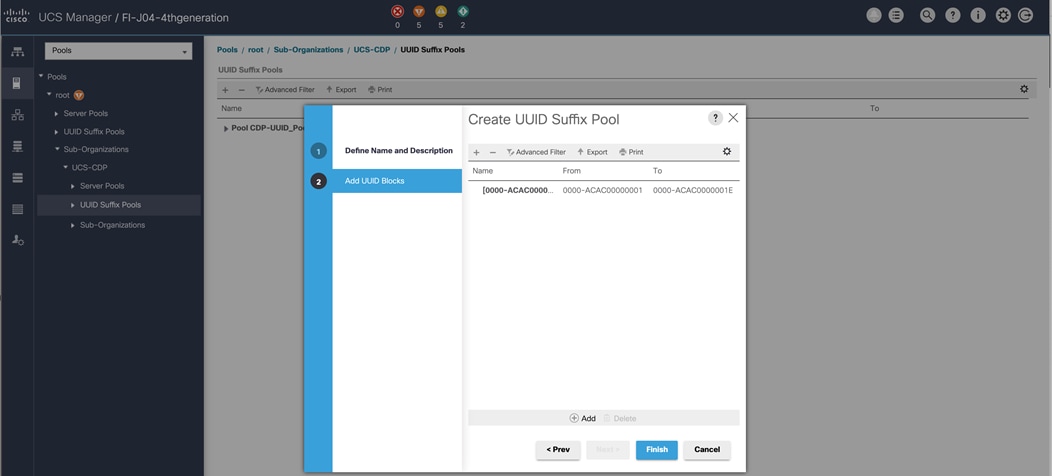

UUID Suffix Pool Creation

To configure the necessary universally unique identifier (UUID) suffix pool for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Pools > root > Sub-Organization > UCS-CDP.

3. Right-click UUID Suffix Pools and then select Create UUID Suffix Pool.

4. Enter the name of the UUID suffix pool.

5. Optional: Enter a description for the UUID pool.

6. Keep the prefix at the derived option, select Sequential as the Assignment Order, and then click Next.

Figure 27. UUID Suffix Pool Creation

Figure 28. Create a Block of UUID Suffixes

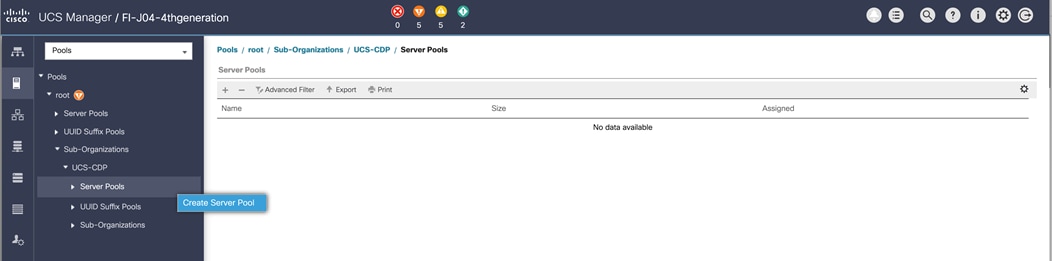

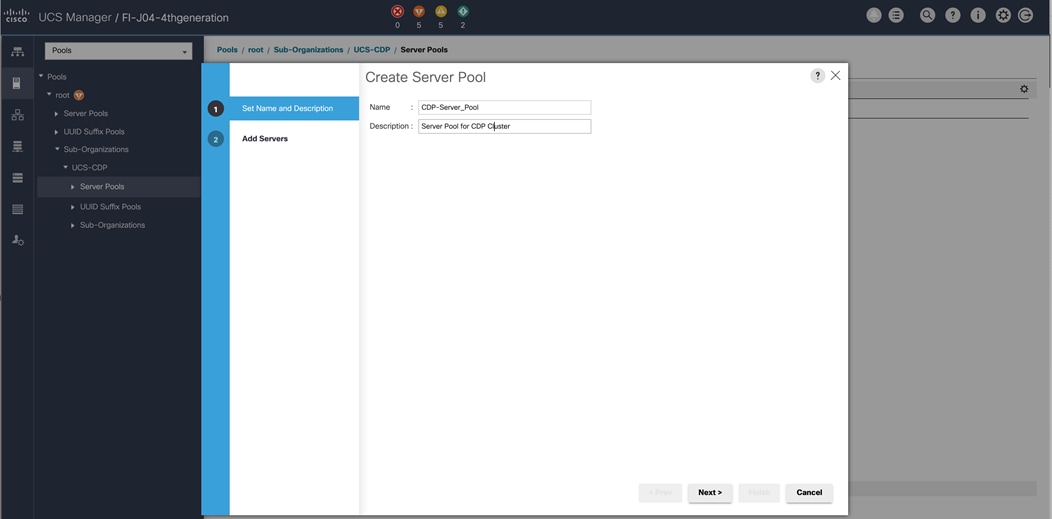

Server Pool Creation

To configure the necessary server pool for the Cisco UCS environment, follow these steps:

Consider creating unique server pools to achieve the granularity that is required in your environment.

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Pools > root > Sub-Organization > UCS-CDP > right-click Server Pools > Select Create Server Pool.

3. Enter name of the server pool.

4. Optional: Enter a description for the server pool then click Next.

Figure 29. Create Server Pool

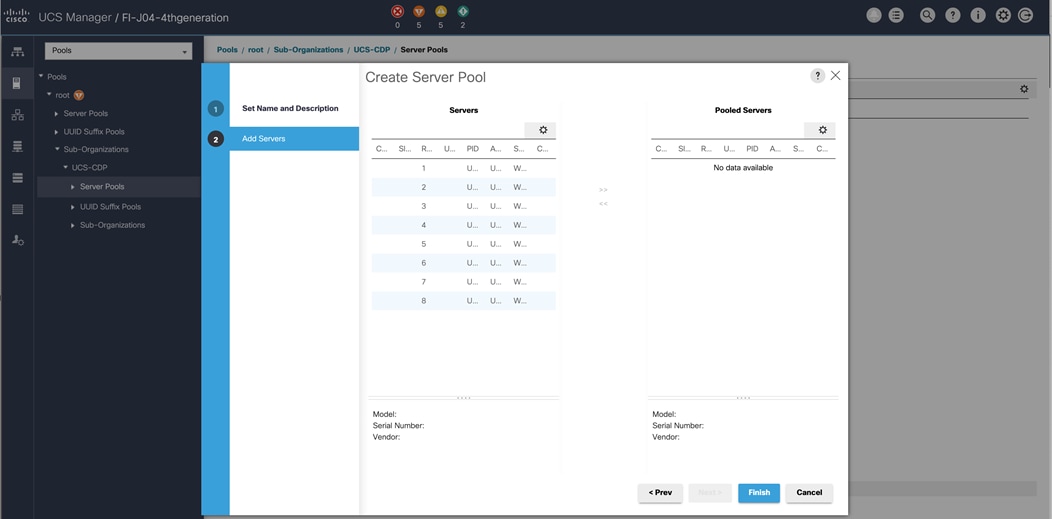

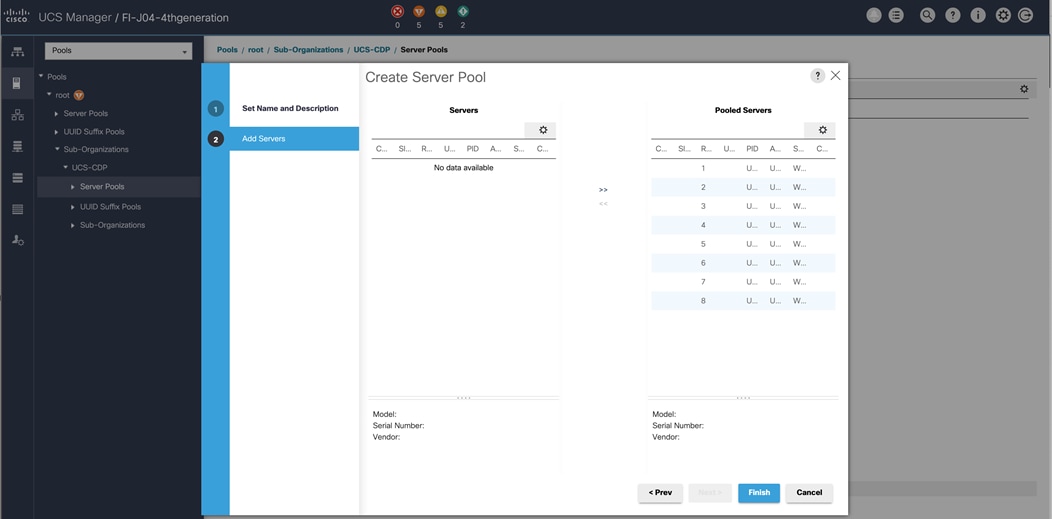

5. Select servers to be used for the deployment and click > to add them to the server pool. In our case we added thirty servers in this server pool.

6. Click Finish and then click OK.

Figure 30. Add Server in the Server Pool

7. Once the added servers appear under Pooled Servers, click Finish.

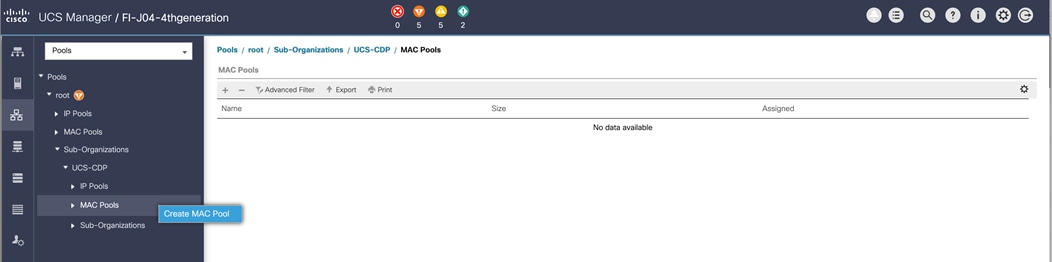

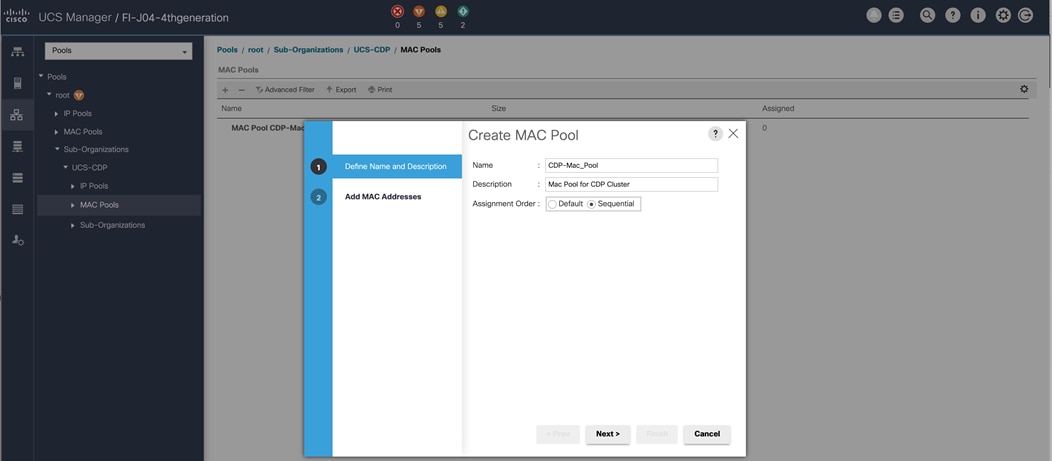

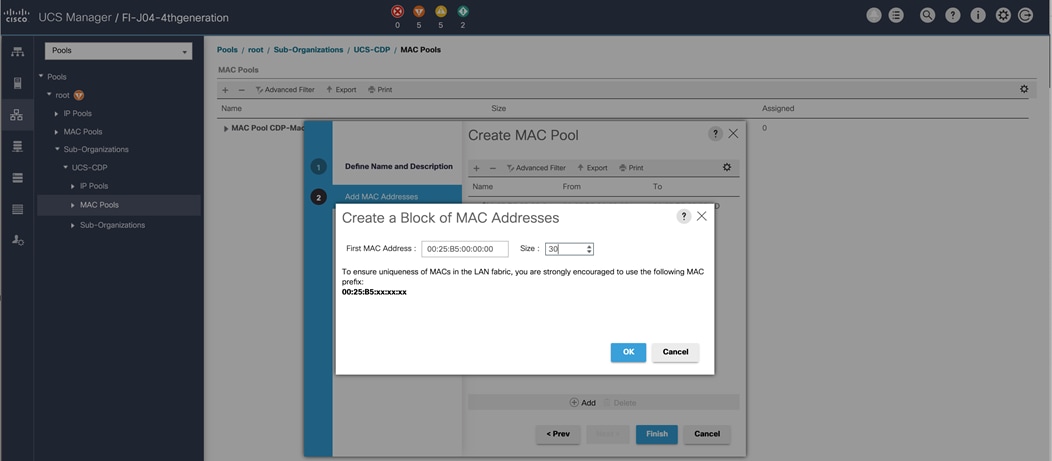

MAC Pool Creation

To configure the necessary MAC address pools for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select Pools > root > Sub-Organization > UCS-CDP> right-click MAC Pools.

3. Select Create MAC Pool to create the MAC address pool.

4. Enter name for MAC pool. Select Assignment Order as “Sequential”.

5. Enter the seed MAC address and provide the number of MAC addresses to be provisioned.

6. Click OK and then click Finish.

7. In the confirmation message, click OK.
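With sequential assignment, the pool hands out addresses by counting up from the seed. The sketch below shows that expansion for planning purposes; the `mac_block` helper and the sample seed are assumptions for this example, so substitute the seed chosen for your environment.

```python
from typing import List


def mac_block(seed: str, size: int) -> List[str]:
    """Expand a seed MAC address into `size` sequential addresses."""
    value = int(seed.replace(":", ""), 16)
    if value + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("block exceeds the 48-bit MAC address space")
    addresses = []
    for offset in range(size):
        raw = f"{value + offset:012X}"  # 12 hex digits, zero padded
        addresses.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return addresses


# Hypothetical seed, chosen to show the carry into the next octet.
macs = mac_block("00:25:B5:00:00:FE", 3)
print(macs)
```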

Figure 31. Creating a Block of MAC Addresses

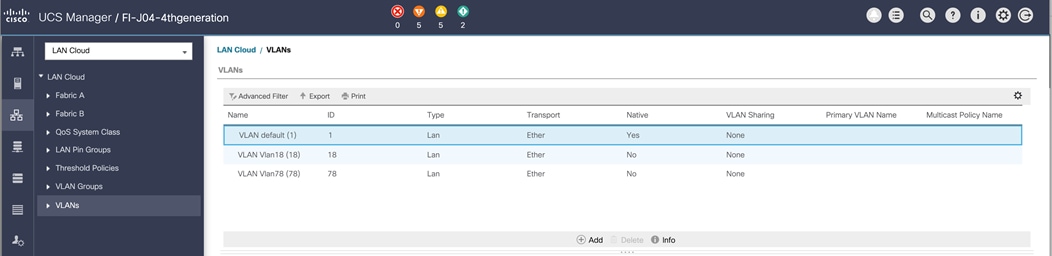

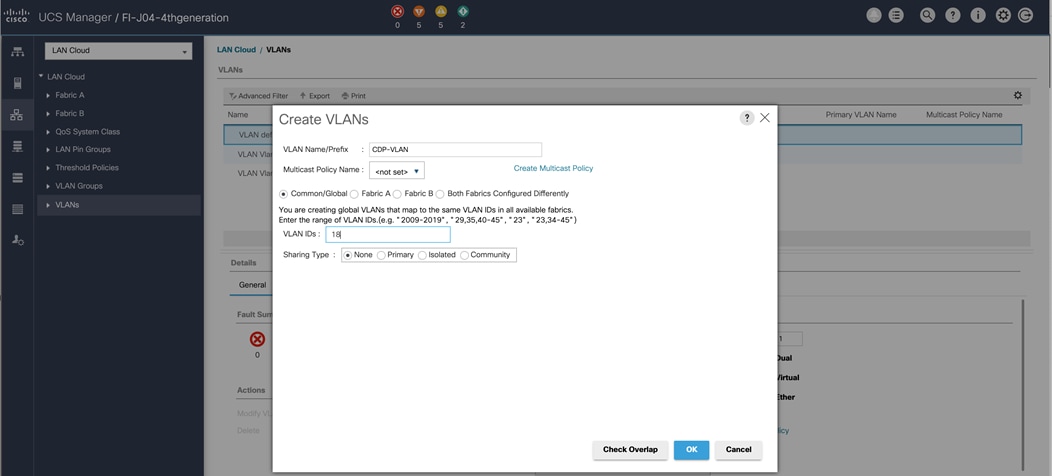

Configure VLAN

To configure the necessary virtual local area networks (VLANs) for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud.

3. Right-click VLANs.

4. Select Create VLANs.

5. Enter the name of the VLAN to be used.

6. Keep the Common/Global option selected for the scope of the VLAN.

7. Enter <VLAN Number> as the VLAN ID.

8. Keep the Sharing Type as None.

Figure 32. Create VLAN

The NIC carries the data traffic from VLAN18. A single vNIC is used in this configuration, and the Fabric Failover feature in the Fabric Interconnects handles any physical port failure, so the transition is seamless from an application perspective.

Figure 33. Create VLANs

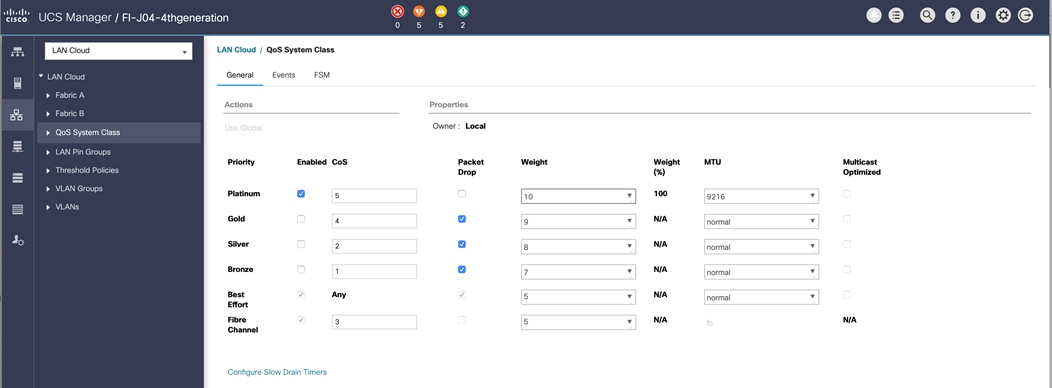

Set System Class QoS and Jumbo Frame in Both Cisco Fabric Interconnects

To configure jumbo frames and enable quality of service in the Cisco UCS fabric, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select LAN > LAN Cloud > QoS System Class.

3. In the right pane, click the General tab.

4. On the Platinum row, enter 9216 in the box under the MTU column.

5. Click Save Changes.

6. Click OK.

Changing the QoS system class MTU requires a reboot of Cisco UCS Fabric Interconnect for changes to be effective.

Figure 34. Configure System Class QoS on Cisco UCS Fabric Interconnects

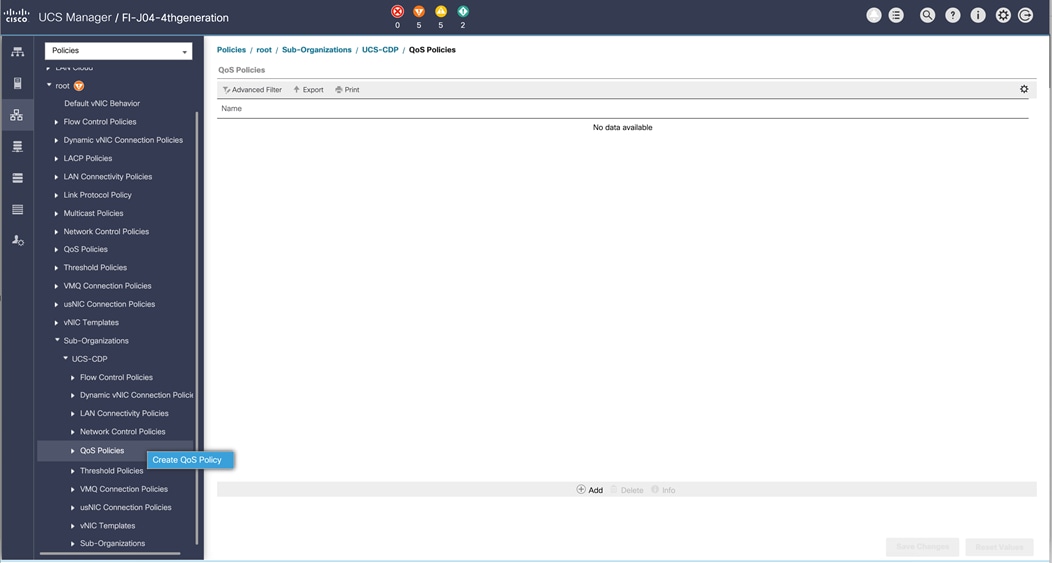

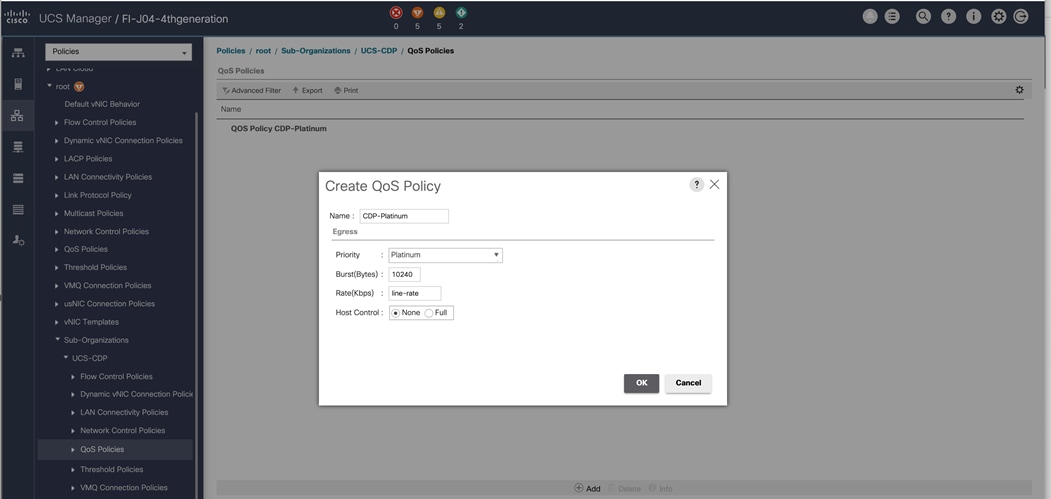

To create the QoS policy to assign priority based on the class using the Cisco UCS Manager GUI, follow these steps:

1. Select LAN tab in the Cisco UCS Manager GUI.

2. Select LAN > Policies > root > UCS-CDP> QoS Policies.

3. Right-click QoS Policies.

4. Select Create QoS Policy.

Figure 35. Create QoS Policy

We created a Platinum class QoS policy for this solution.

Figure 36. Platinum QoS Policy

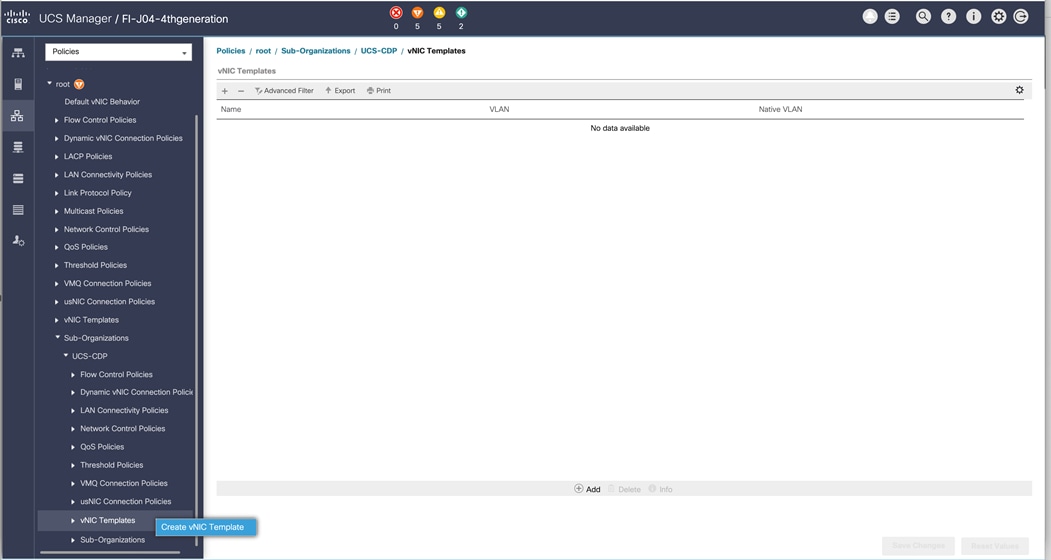

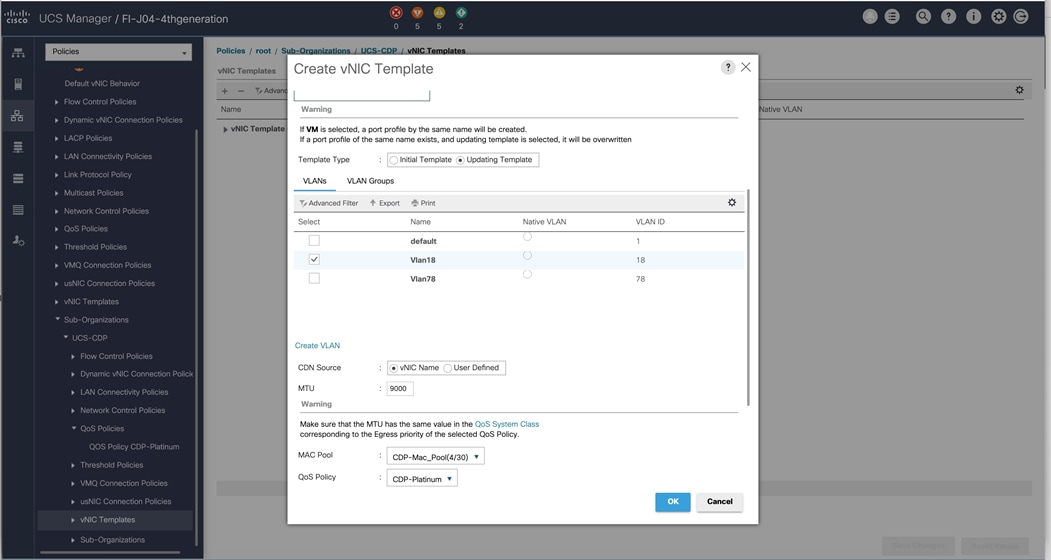

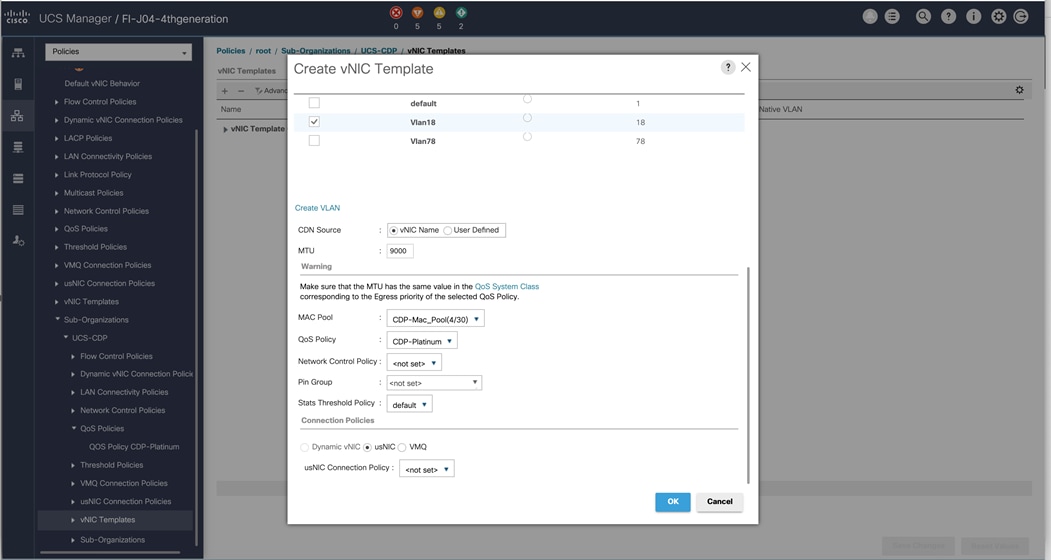

To create multiple virtual network interface card (vNIC) templates for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

2. Select Policies > root > Sub-Organization > UCS-CDP> vNIC Template.

3. Right-click vNIC Templates.

4. Select Create vNIC Template.

5. Enter name for vNIC template.

6. Keep Fabric A selected. Select the Enable Failover checkbox.

7. Select Updating Template as the Template Type.

8. Under VLANs, select the checkboxes for desired VLANs to add as part of the vNIC Template.

9. Set Native-VLAN as the native VLAN.

10. For MTU, enter 9000.

11. In the MAC Pool list, select MAC Pool configured.

12. Select QOS policy created earlier.

13. Select default Network Control Policy.

14. Click OK to create the vNIC template.
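The vNIC template MTU (9000) and the Platinum system class MTU (9216) set earlier should be consistent with each other. The sketch below records the template choices from the steps above as plain data and checks that the vNIC MTU does not exceed the MTU of its QoS class. The dictionary layout, the template name, and the `mtu_fits` helper are assumptions for illustration, not a Cisco UCS Manager export format.

```python
# MTU configured on the QoS system class (Platinum row).
QOS_CLASS_MTU = {"platinum": 9216}

# Settings chosen in the vNIC template steps; the name is hypothetical.
vnic_template = {
    "name": "example-vnic-template",
    "fabric": "A",
    "failover": True,
    "template_type": "updating",
    "mtu": 9000,
    "qos_class": "platinum",
}


def mtu_fits(vnic: dict, class_mtu: dict) -> bool:
    """True when the vNIC MTU does not exceed the MTU of its QoS class."""
    return vnic["mtu"] <= class_mtu[vnic["qos_class"]]


print(mtu_fits(vnic_template, QOS_CLASS_MTU))
```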

Figure 37. Create the vNIC Template

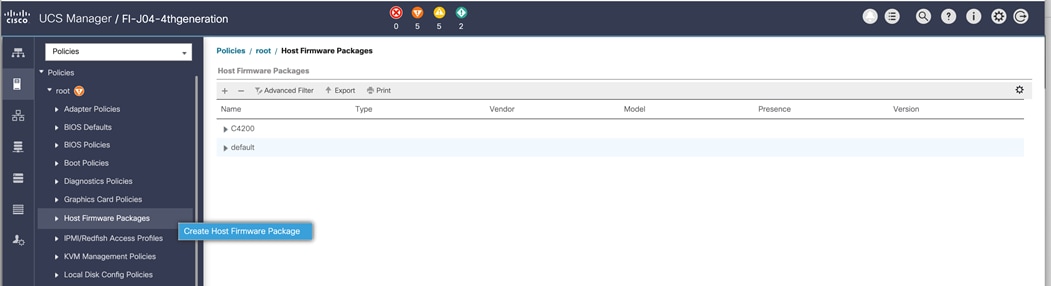

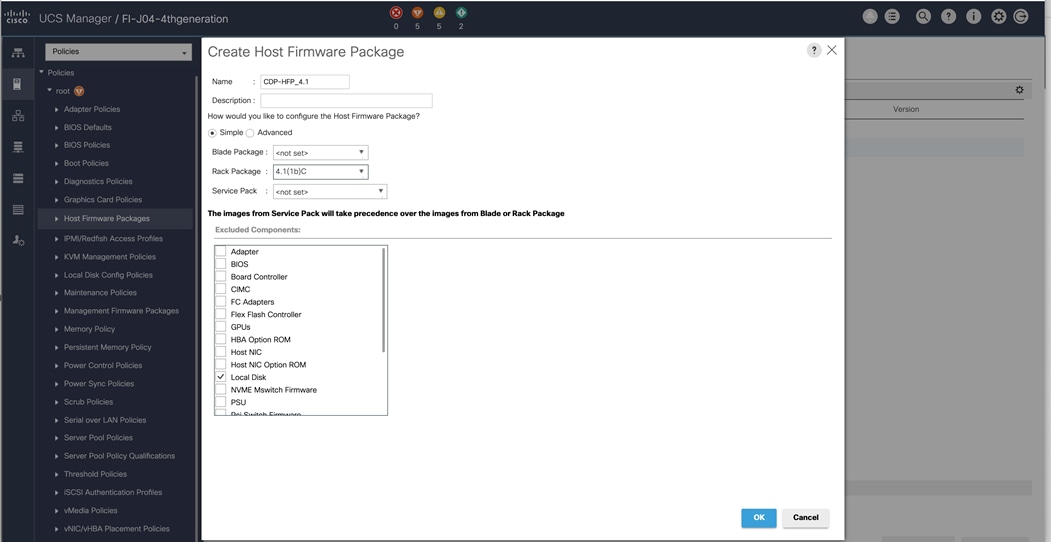

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties.

To create a firmware management policy for a given server configuration in the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organization > UCS-CDP> Host Firmware Packages.

3. Right-click Host Firmware Packages.

4. Select Create Host Firmware Package.

5. Enter name of the host firmware package.

6. Leave Simple selected.

7. Select the version.

8. Click OK to create the host firmware package.

Figure 38. Host Firmware Package

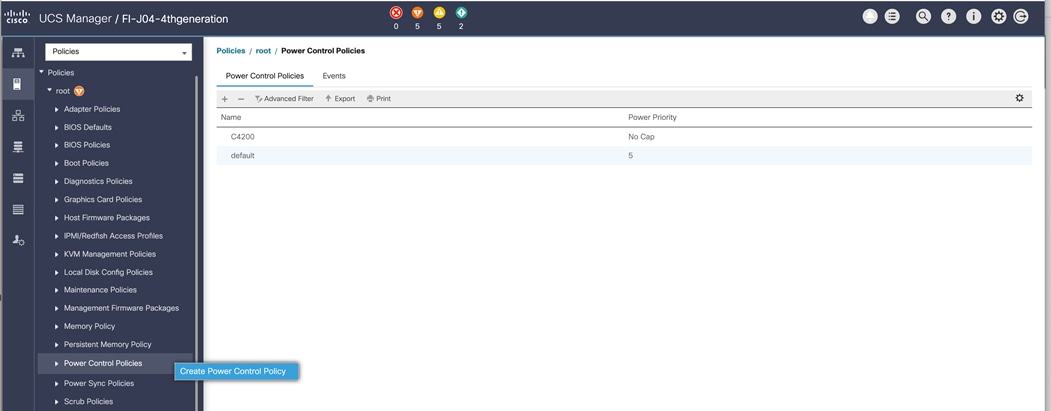

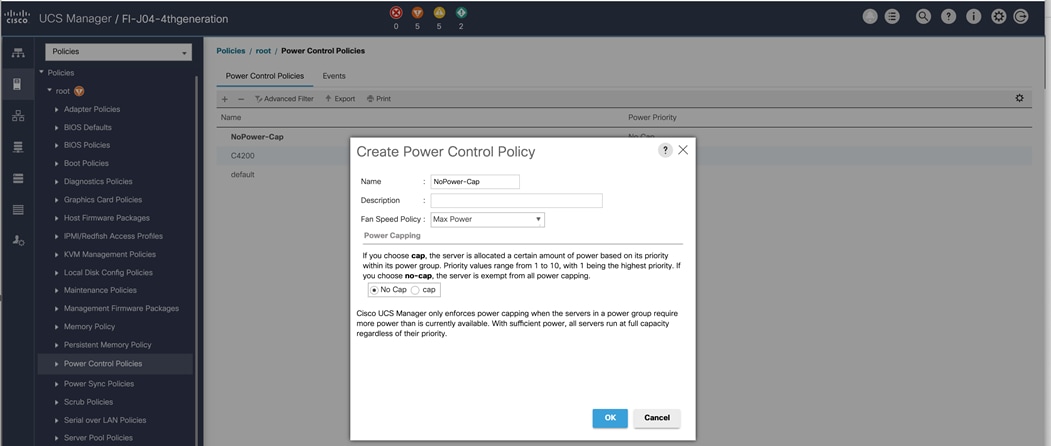

To create a power control policy for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organization > UCS-CDP> Power Control Policies.

3. Right-click Power Control Policies.

4. Select Create Power Control Policy.

5. Select Fan Speed Policy as “Max Power”.

6. Enter NoPowerCap as the power control policy name.

7. Change the power capping setting to No Cap.

8. Click OK to create the power control policy.

Figure 39. Create Power Control Policy

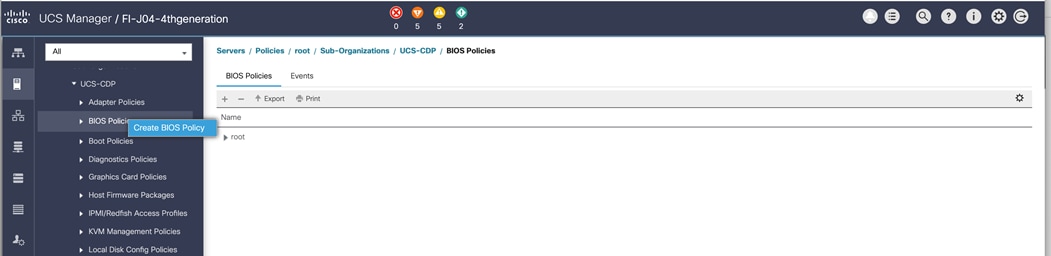

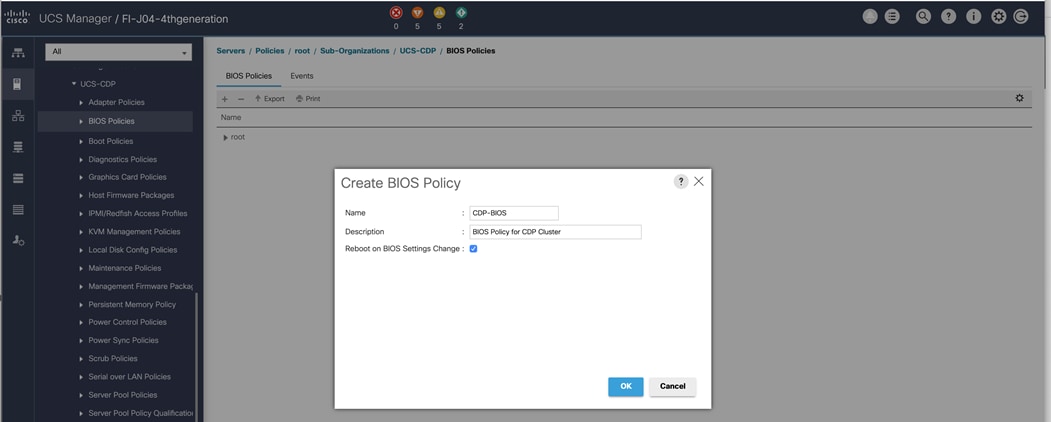

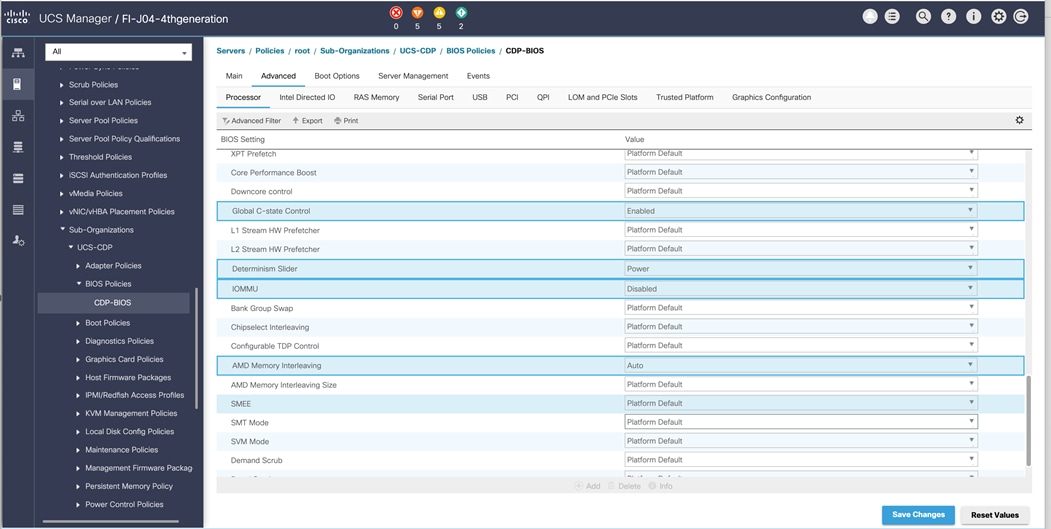

To create a server BIOS policy for the Cisco UCS environment, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organization > UCS-CDP> BIOS Policies.

3. Right-click BIOS Policies.

4. Select Create BIOS Policy.

5. Enter the BIOS policy name.

Figure 40. BIOS Configuration

For more information, go to: “Hadoop Tuning Guide for AMD EPYC Processor Based Servers”

BIOS settings can have a significant performance impact, depending on the workload and the applications. The BIOS settings listed in this section are for configurations optimized for best performance and can be adjusted based on the application, performance, and energy efficiency requirements.

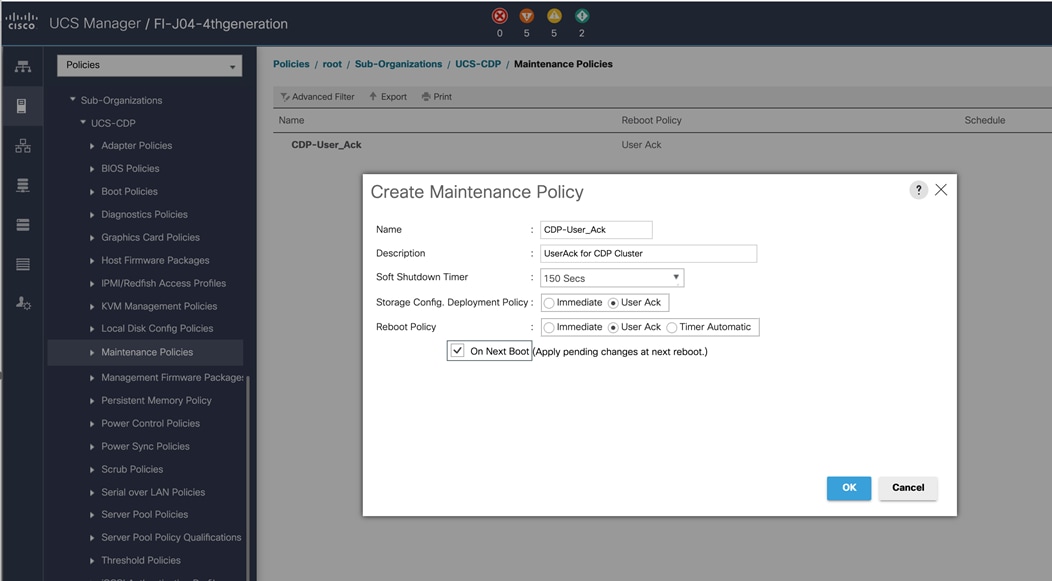

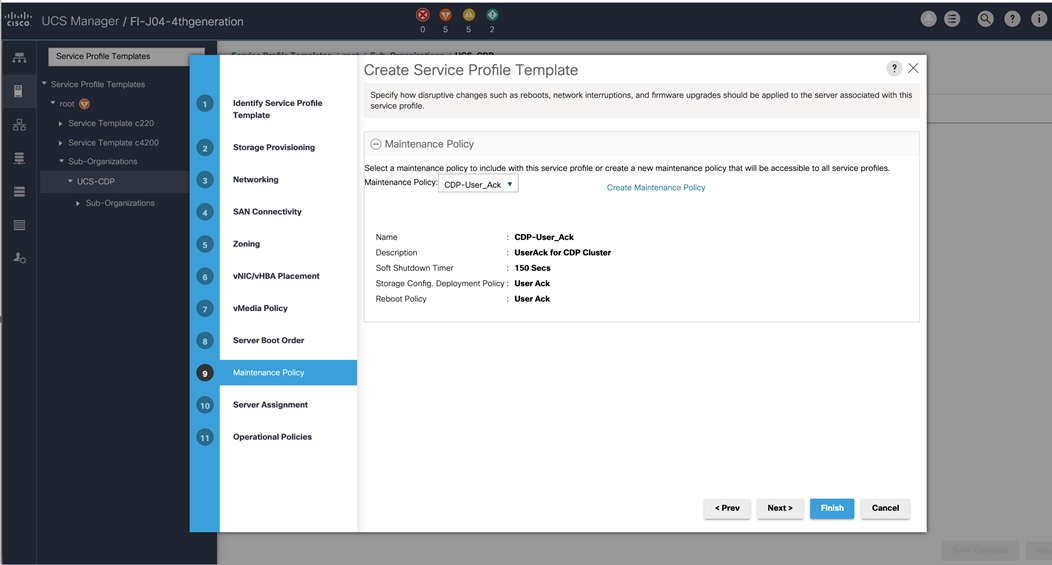

To update the default Maintenance Policy, follow these steps:

1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

2. Select Policies > root > Sub-Organization > UCS-CDP> Maintenance Policies.

3. Right-click Maintenance Policies to create a new policy.

4. Enter name for Maintenance Policy.

5. Change the Reboot Policy to User Ack.

6. Click Save Changes.

7. Click OK to accept the change.

Figure 41. Create Server Maintenance Policy

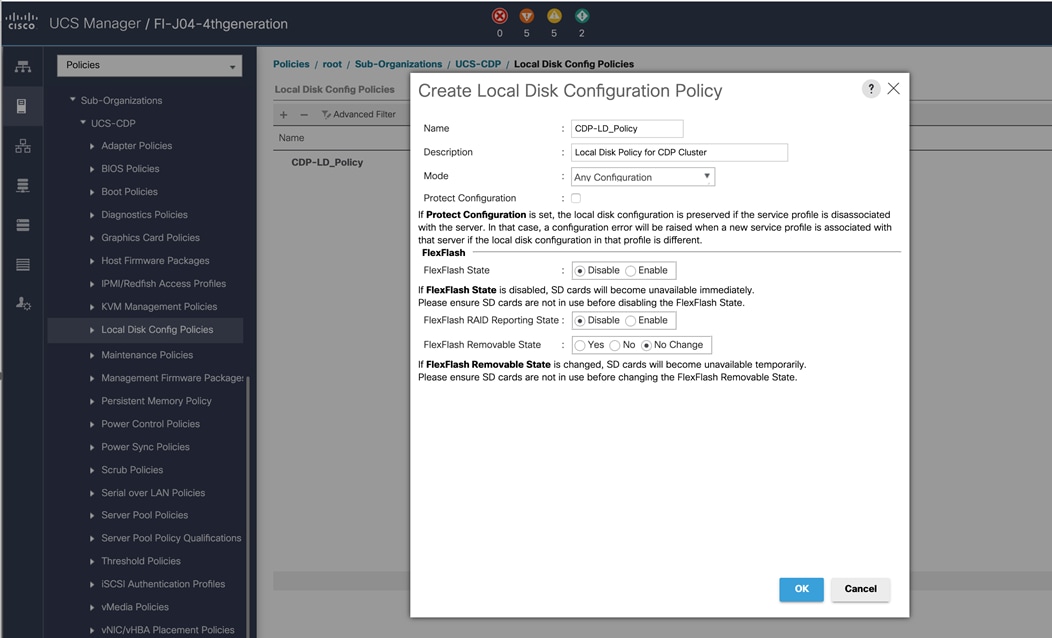

Create the Local Disk Configuration Policy

To create local disk configuration in the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the Cisco UCS Manager GUI.

2. Select Policies > root > Sub-Organization > UCS-CDP> Local Disk Config Policies.

3. Right-click Local Disk Config Policies and Select Create Local Disk Config Policies.

4. Enter UCS-Boot as the local disk configuration policy name.

5. Change the Mode to Any Configuration. Check the Protect Configuration box.

6. Keep the FlexFlash State field as default (Disable).

7. Keep the FlexFlash RAID Reporting State field as default (Disable).

8. Click OK to complete the creation of the Local Disk Configuration Policy.

9. Click OK.

Figure 42. Create the Local Disk Configuration Policy

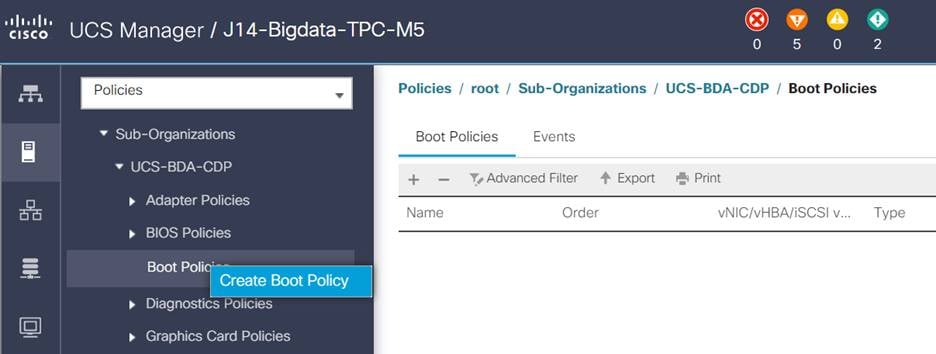

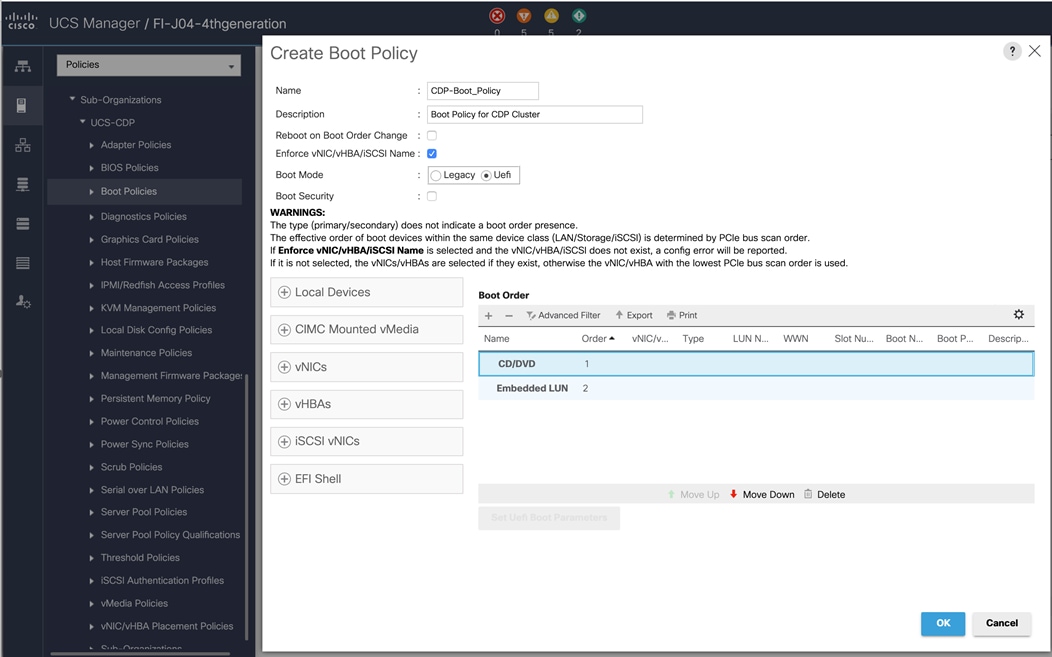

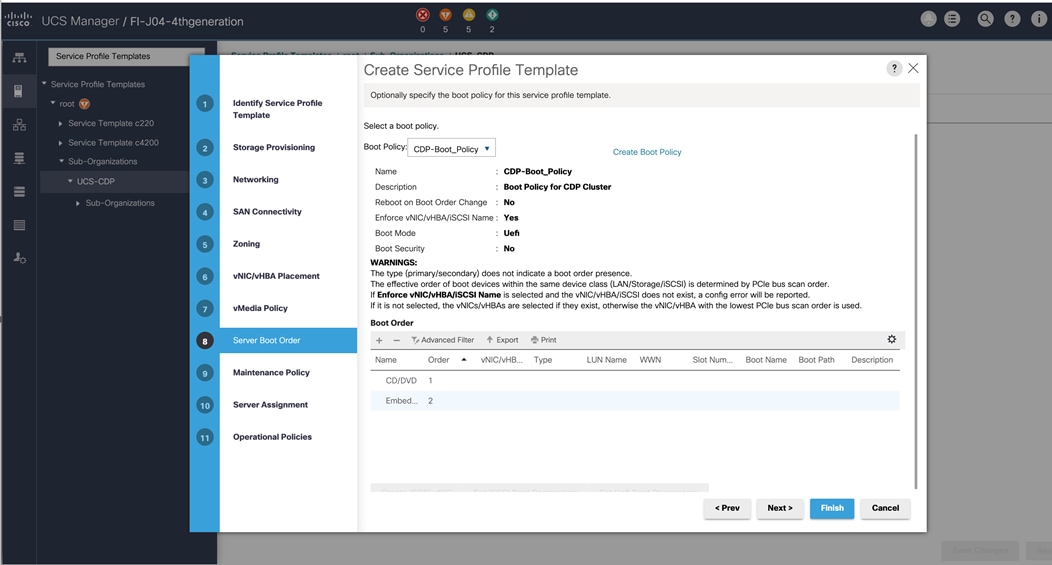

To create boot policies within the Cisco UCS Manager GUI, follow these steps:

1. Select the Servers tab in the Cisco UCS Manager GUI.

2. Select Policies > root.

3. Right-click the Boot Policies.

4. Select Create Boot Policy.

5. Enter “CDP-Boot_Policy” for the boot policy name.

6. (Optional) enter a description for the boot policy.

7. Keep the Reboot on Boot Order Change check box unchecked.

8. Keep Enforce vNIC/vHBA/iSCSI Name check box checked.

9. Keep Boot Mode Default (Legacy).

10. Expand Local Devices > Add CD/DVD and select Add Local CD/DVD.

11. Expand Local Devices and select Add Local Disk.

12. Expand vNICs and select Add LAN Boot and enter eth0.

13. Click OK to add the Boot Policy.

14. Click OK.

Figure 43. Create Boot Policy for Cisco UCS Server(s)

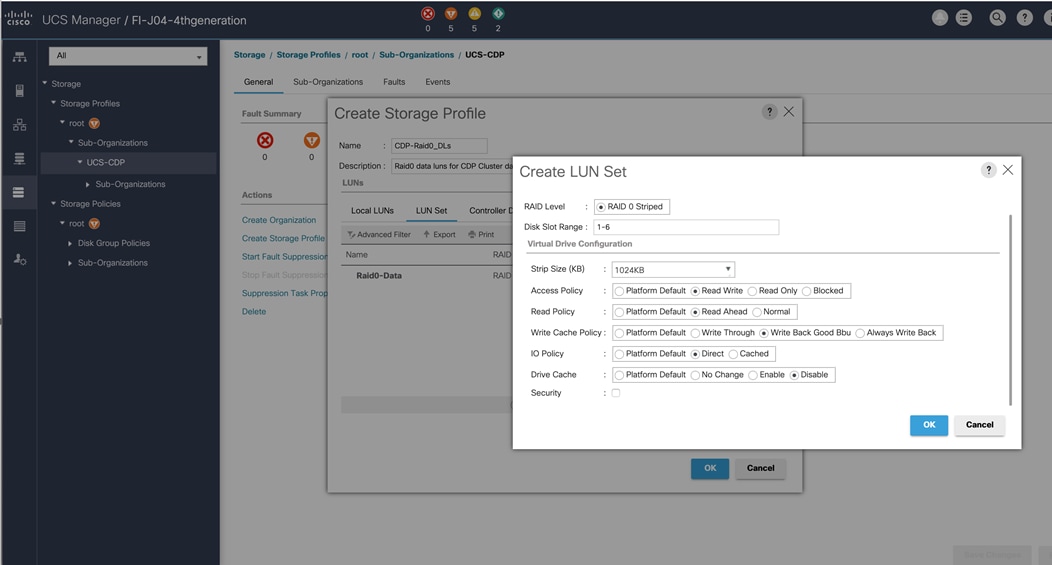

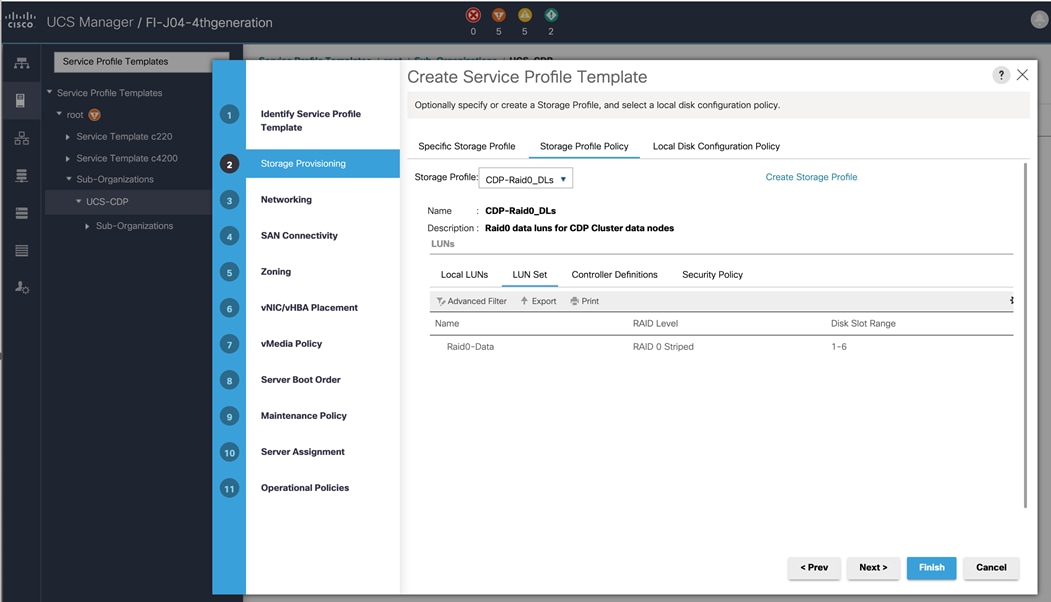

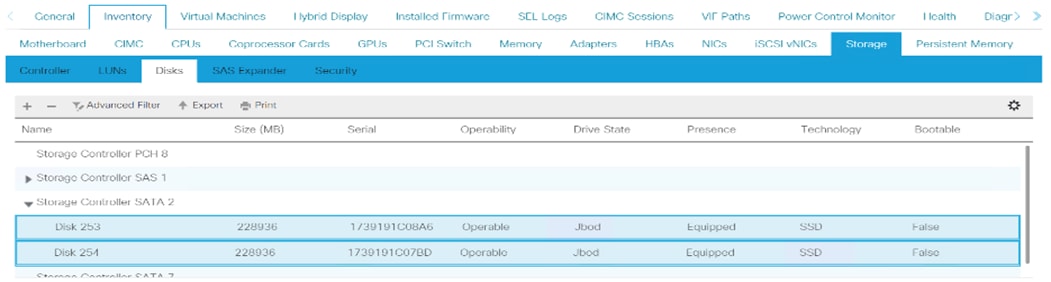

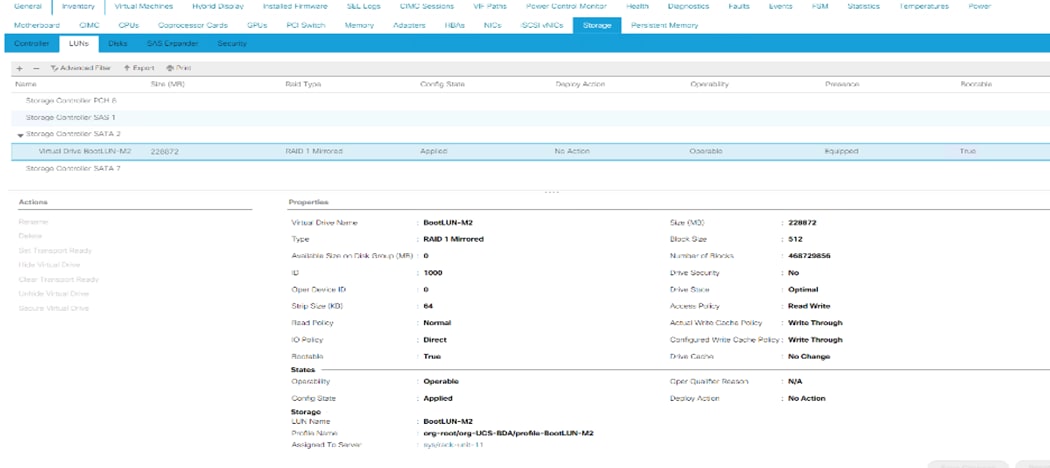

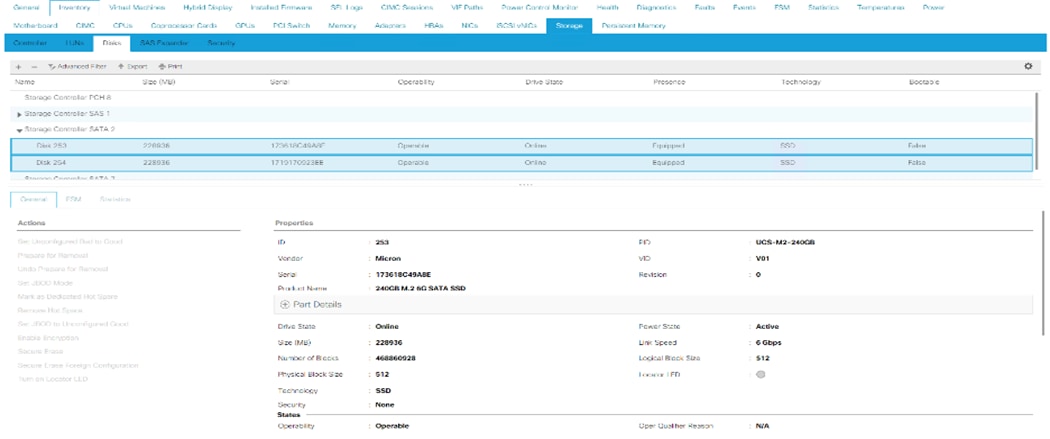

Create Storage Profile for Individual RAID0 for Data Nodes

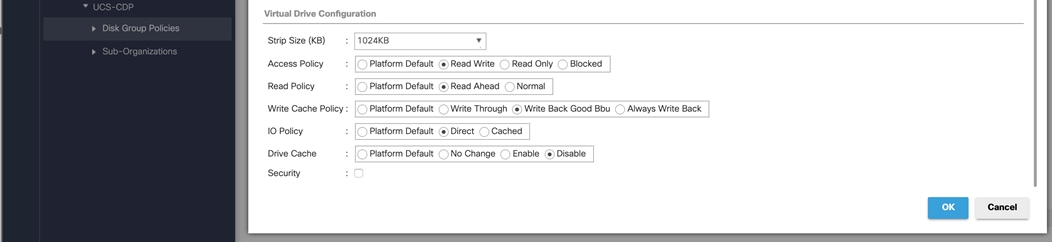

To create the storage profile for the individual RAID0, follow these steps:

1. On the UCSM navigation page, select the Storage tab.

2. From the Storage Profiles drop-down list, right-click and select Create Storage Profile.

3. Enter a name for the Storage Profile and click the LUN Set tab.

4. Click Add.

5. Select the properties for the LUN set:

a. Enter a name for the LUN set and set the Disk Slot Range to 1 – 6 (depending on the number of drives installed in the server).

b. Enter Virtual Drive configuration:

i. Strip Size(kb) – 1024KB

ii. Access Policy – Read Write

iii. Read Policy – Read Ahead

iv. Write Cache Policy – Write Back Good Bbu

v. IO Policy – Direct

vi. Drive Cache – Disable

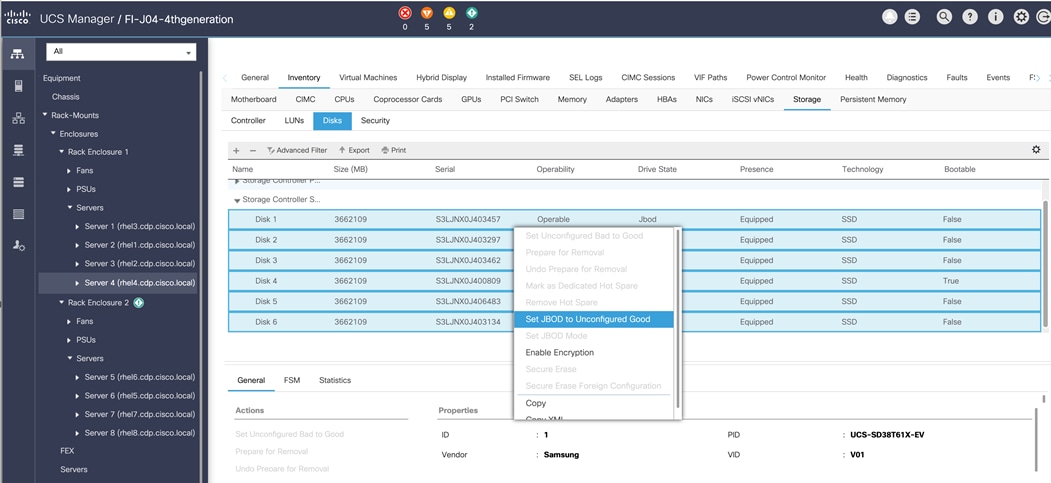

For a LUN set based configuration, set the JBOD disks to unconfigured by selecting all JBOD disk in Server > Inventory > Disks, right-click and select “Set JBOD to Unconfigured Good.”

Figure 44. Set JBOD Disks to Unconfigured Good

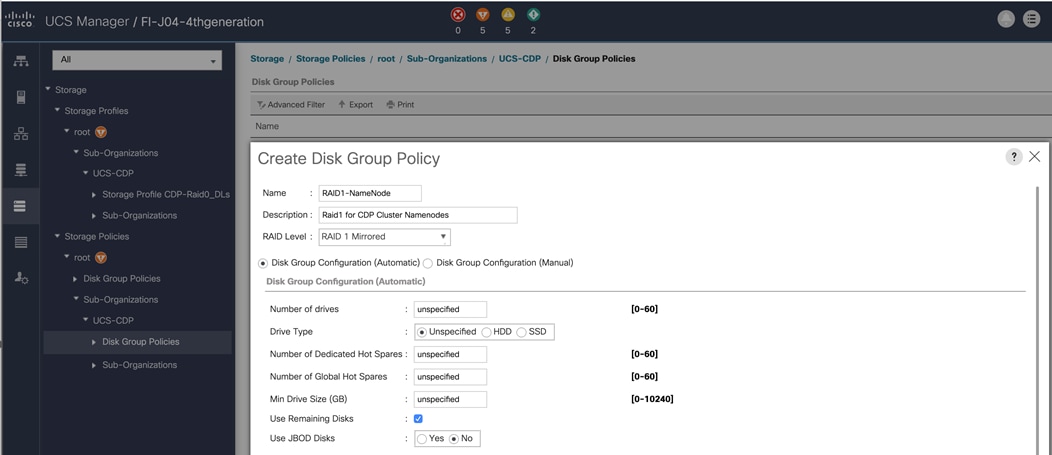

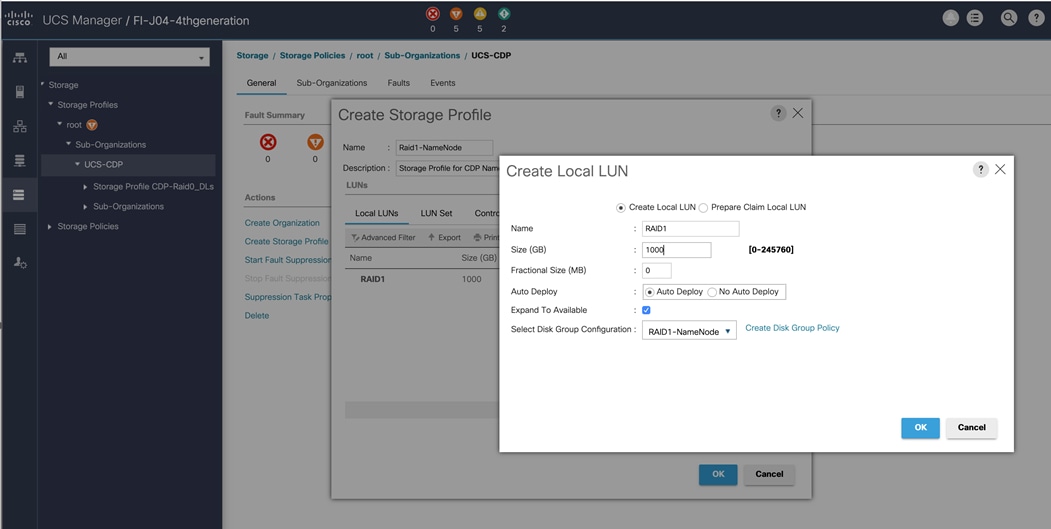

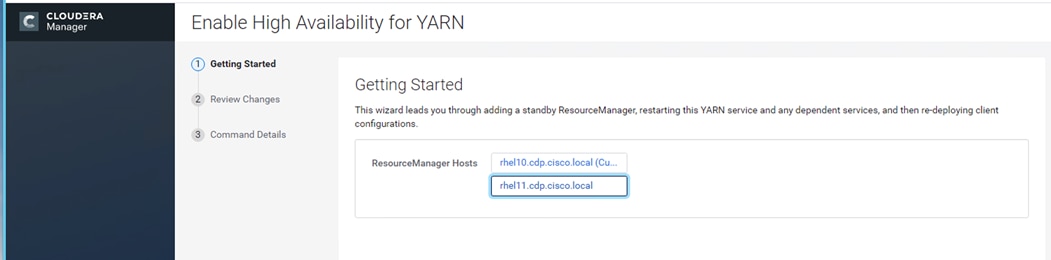

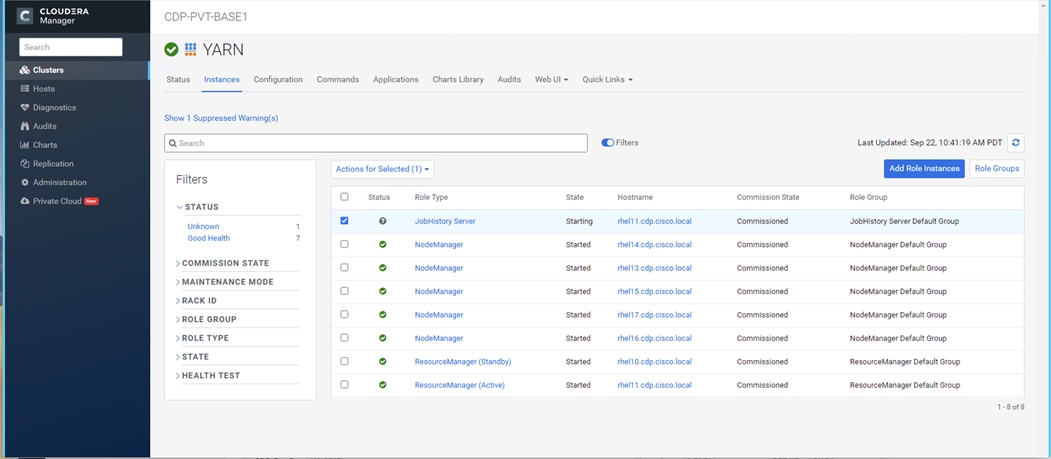

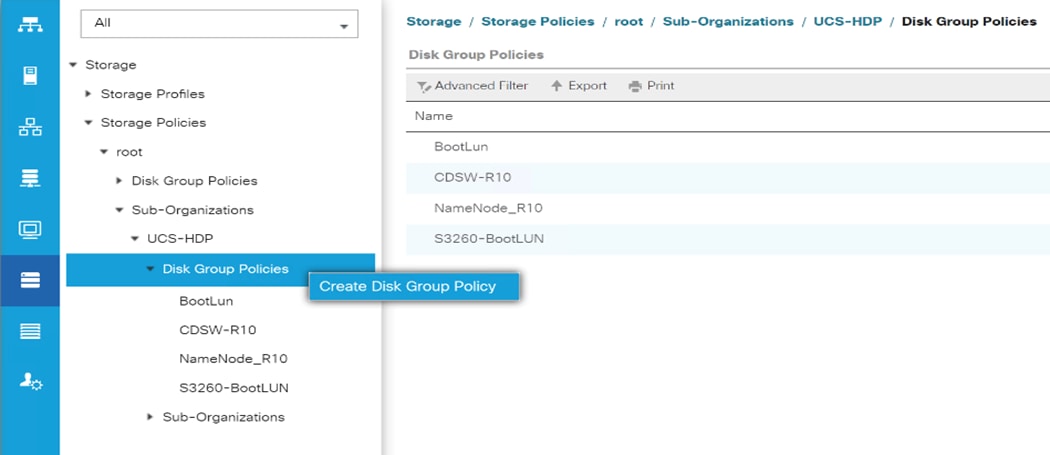

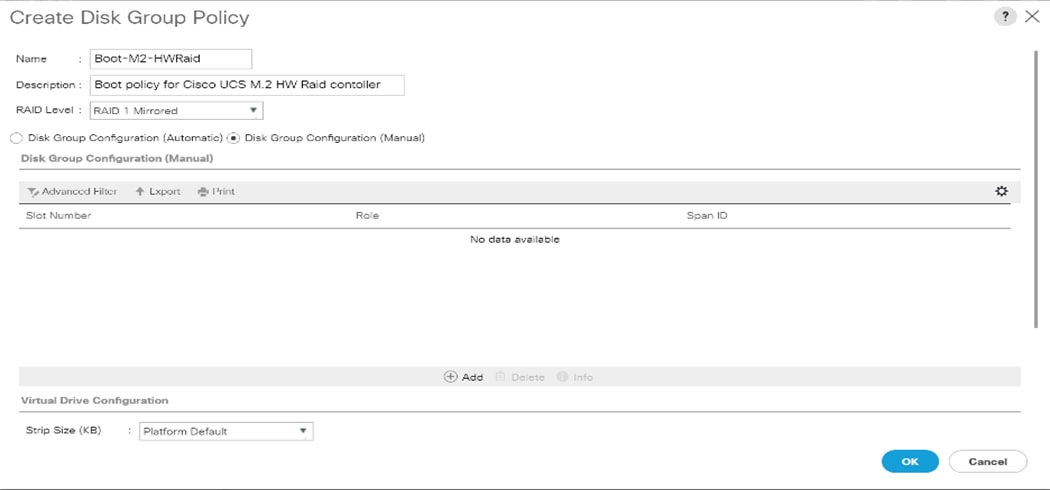

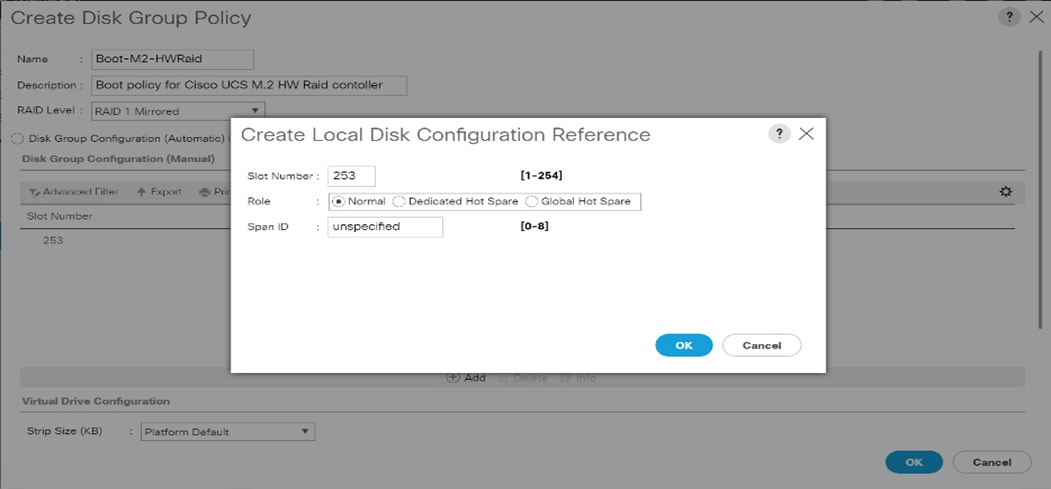

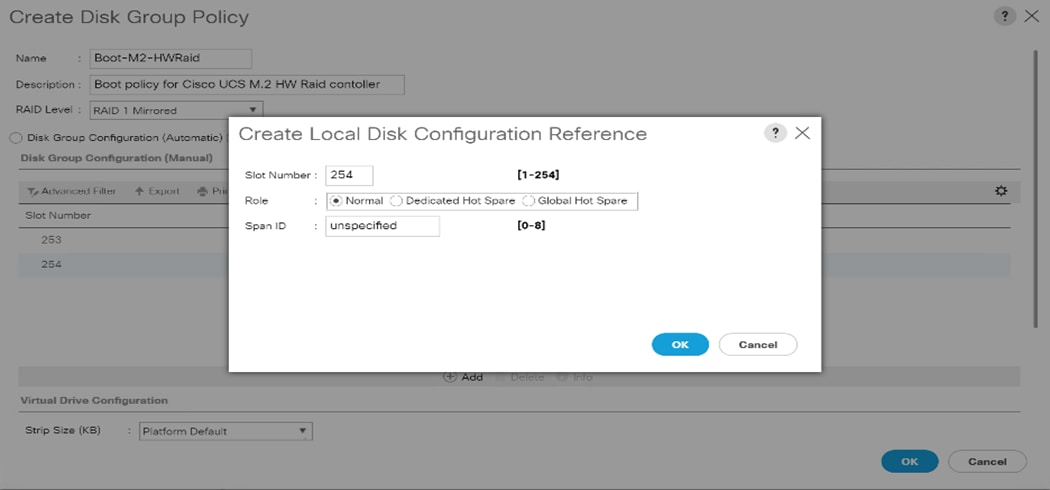

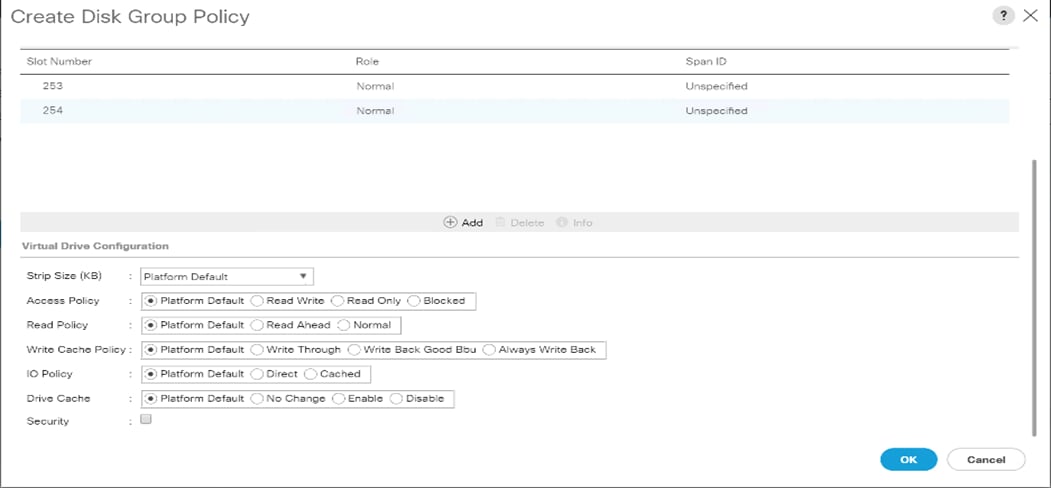

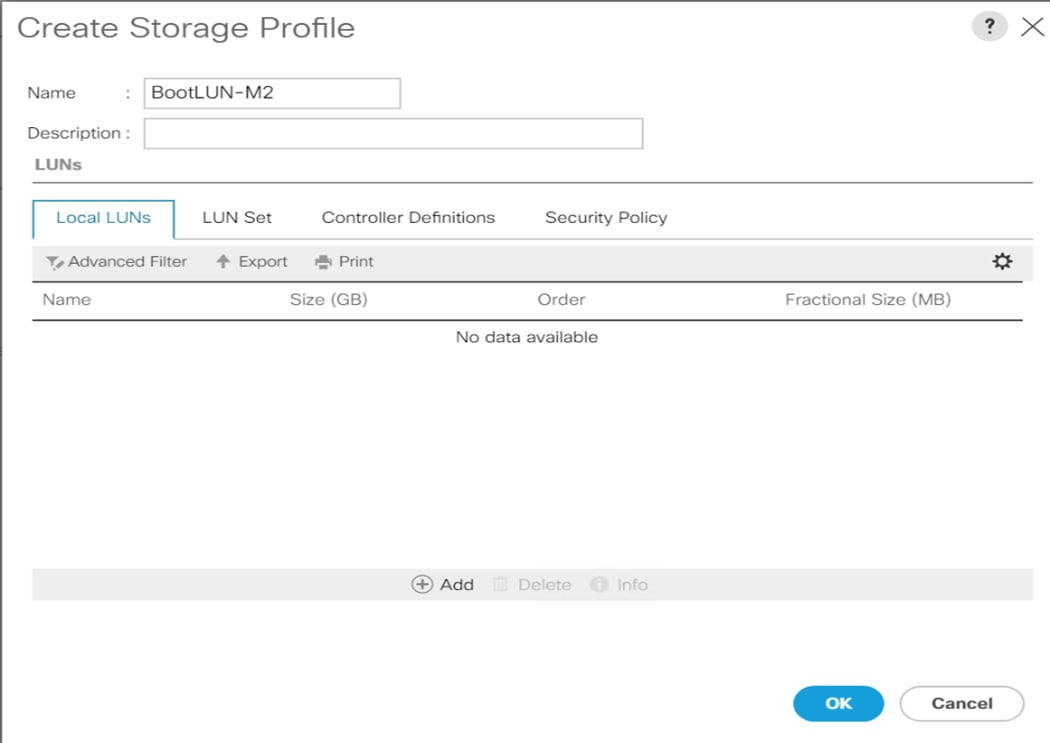

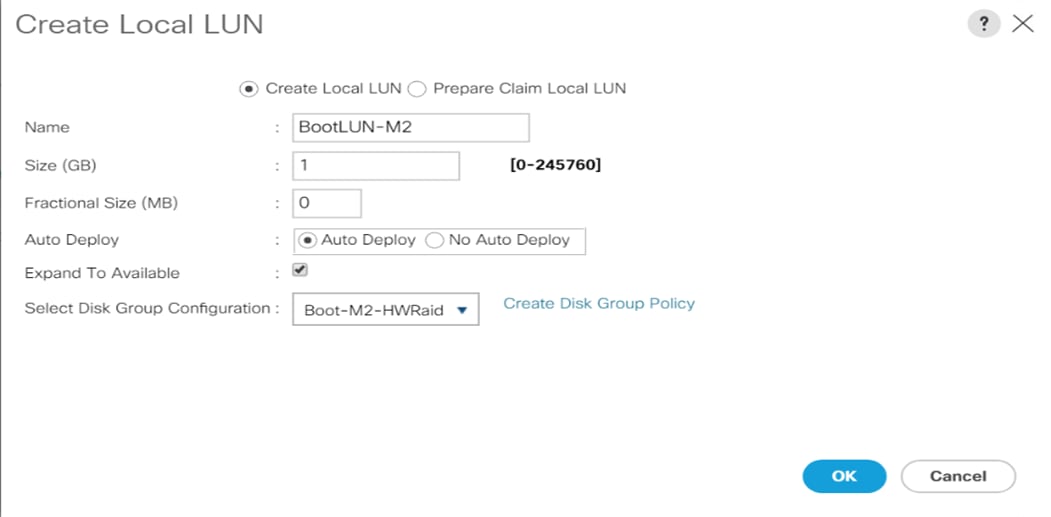

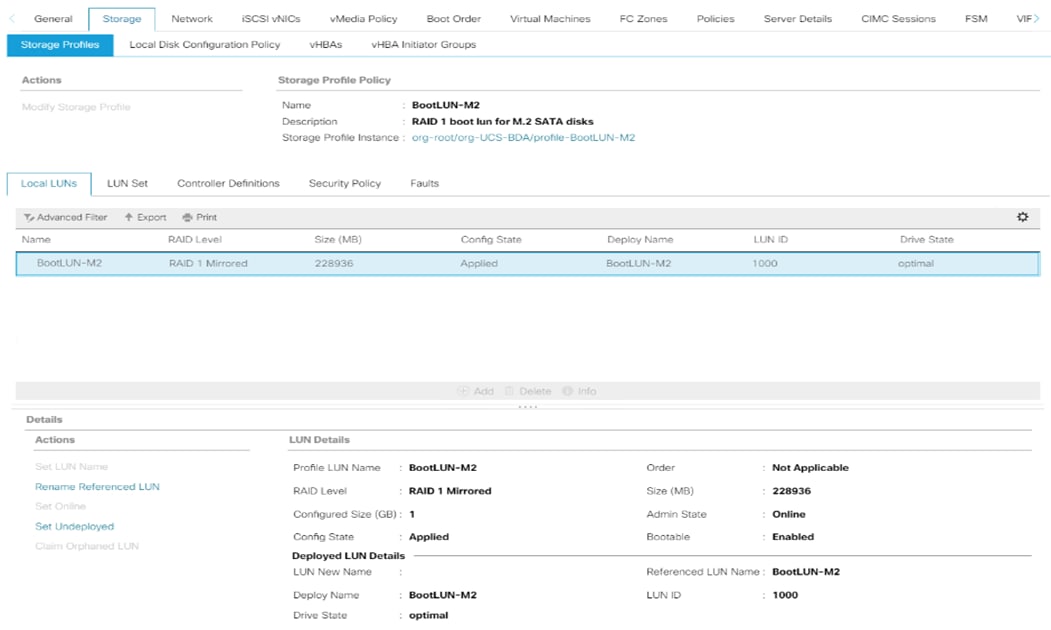

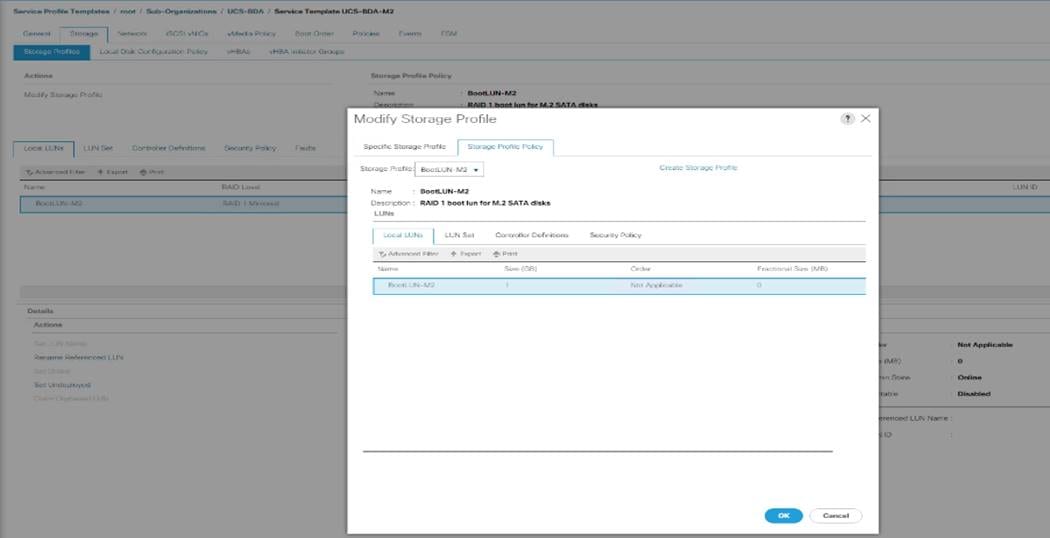

Create Storage Policy and Storage Profile RAID1 for Name Nodes

To create a Storage Profile with multiple RAID LUNs, create Storage Policies and attach them to a Storage Profile.

To create a Storage Policy and attach it to a Storage Profile, follow these steps:

1. Go to the Storage tab and select “Storage Policies”.

2. From the Storage Policies drop-down list, select and right-click “Disk Group Policies”. Select “Create Disk Group Policy”.

3. Enter a name for Disk Group Policy and select RAID level.

4. Select “Disk Group Configuration” (Automatic/Manual).

Figure 45. Disk Group Configuration.

5. Enter the Virtual Drive Configuration.

6. Select Storage Profiles, right-click and select Create Storage Profile

7. Enter a name for the Storage profile and click Add.

8. Enter a Local LUN name and select Auto Deploy.

9. Check the box for Expand to Available and from the drop-down list select the storage policy you want to attach with the Storage Profile. Click OK.

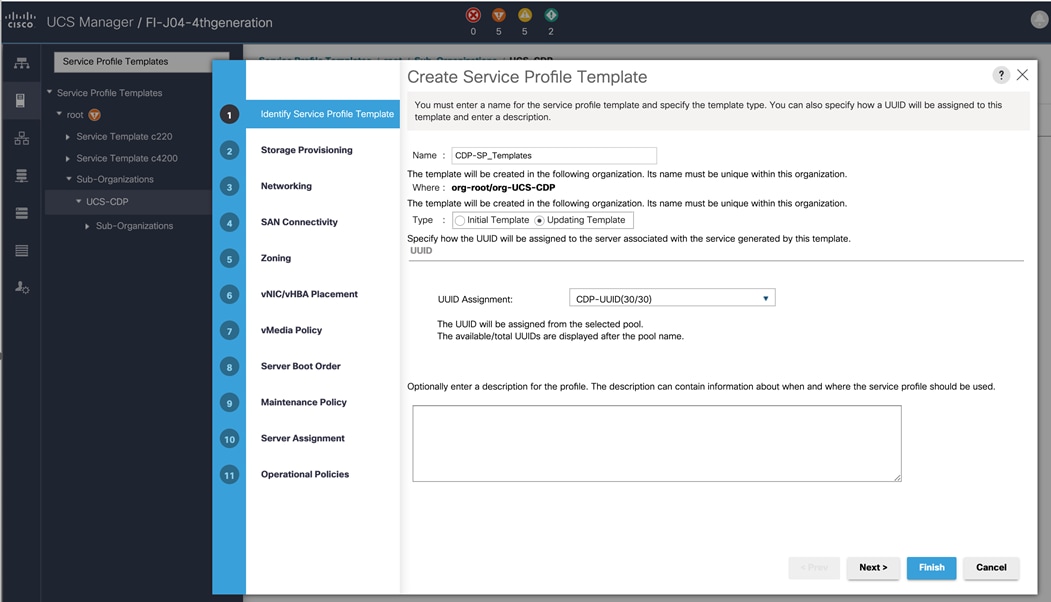

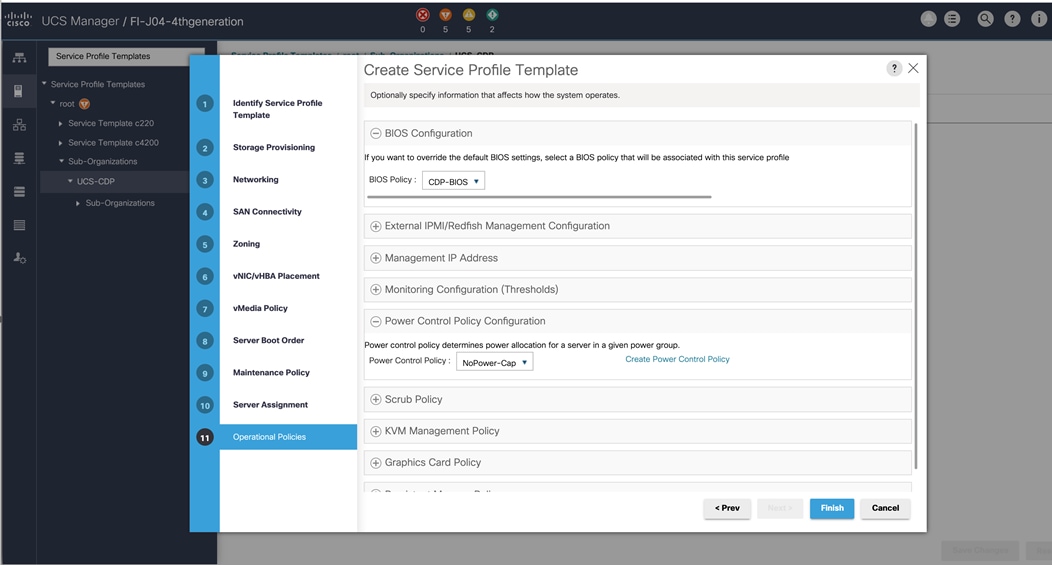

Create Service Profile Template

To create a service profile template, follow these steps:

1. In Cisco UCS Manager, go to Servers > Service Profile Templates > root > Sub-Organization > UCS-CDP, then right-click and select “Create Service Profile Template” as shown below.

2. Enter the Service Profile Template name, select Updating Template as the template type, and select the UUID Pool that was created earlier. Click Next.

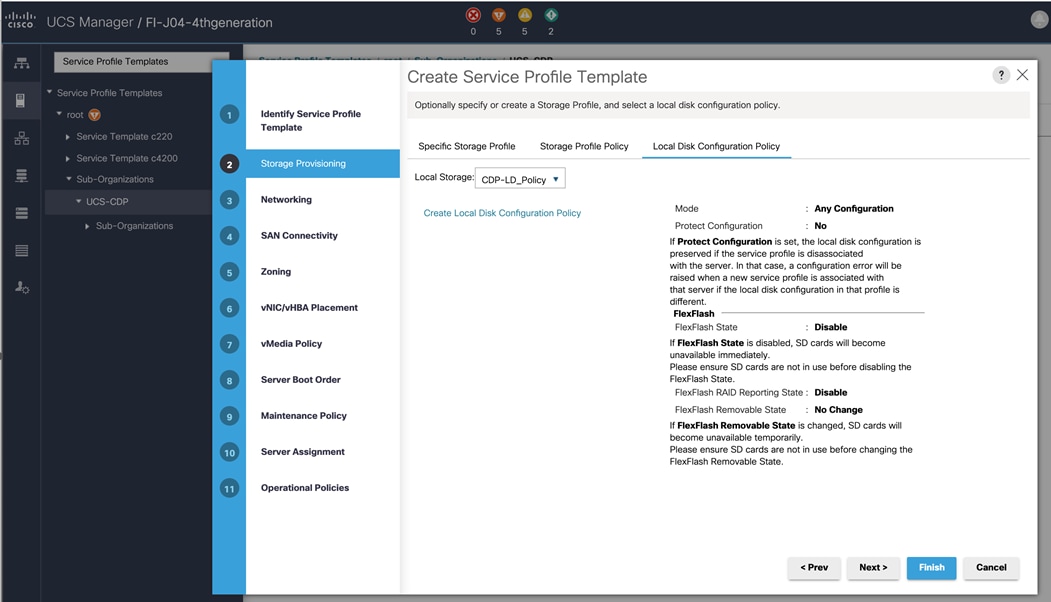

3. Select Local Disk Configuration Policy tab and select Local Storage policy from the drop-down list.

4. On Storage Profile Policy, select the Storage Profile to attach with the server.

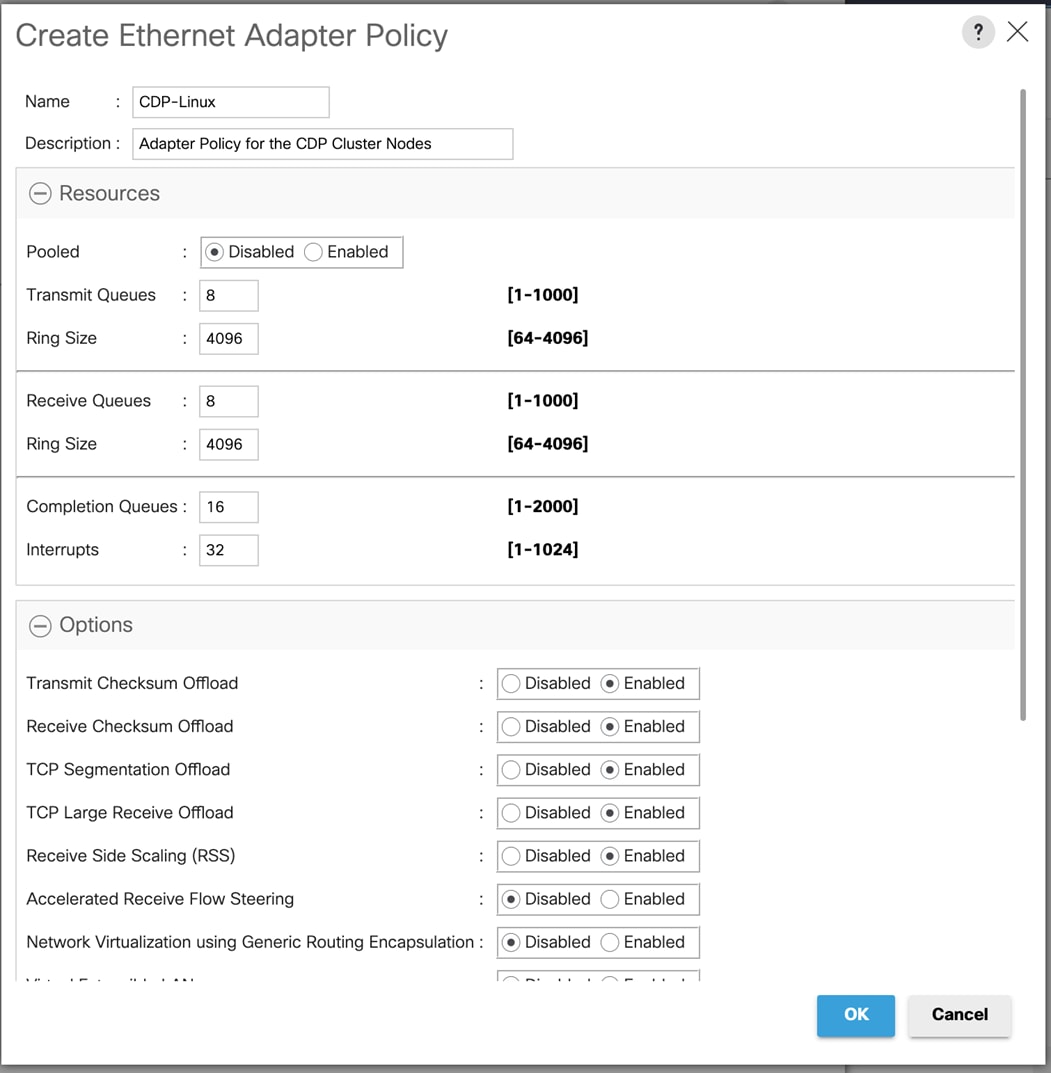

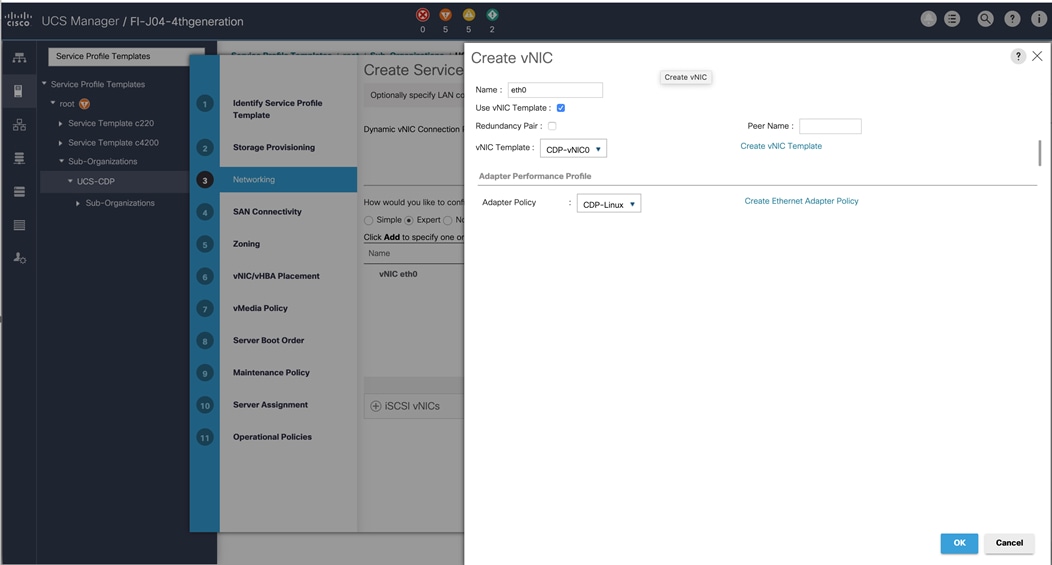

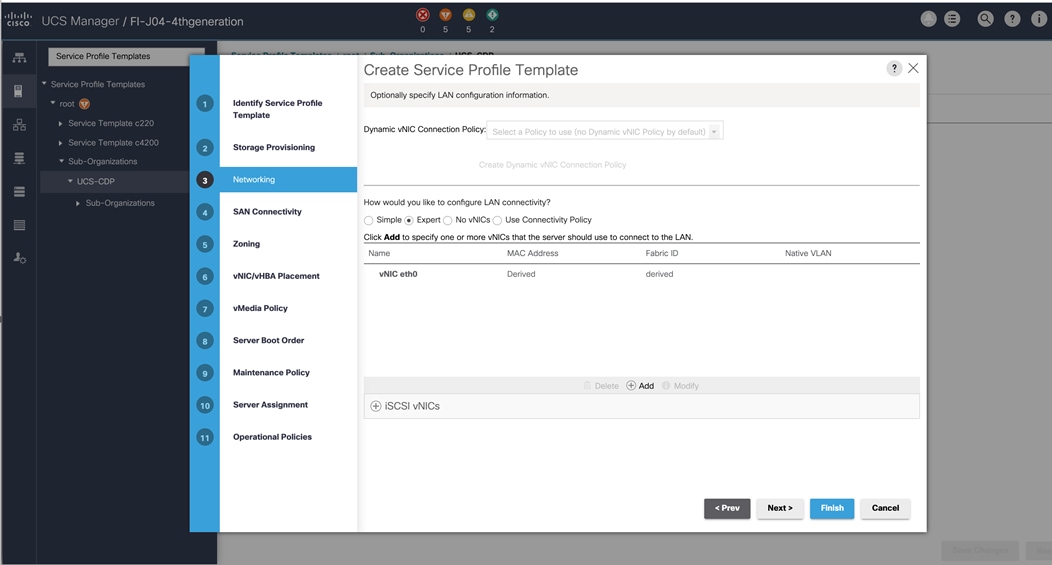

5. In the networking window, select “Expert” and click “Add” to create vNICs. Add one or more vNICs that the server should use to connect to the LAN.

6. In the Create vNIC menu, enter a vNIC name.

7. Select the vNIC Template CDP-vNIC0 and the Adapter Policy CDP-Linux:

a. Tx Q / Rx Q - 8

b. Enable RSS on the server adapter setting

c. Completion Q 16

d. Interrupts 32

e. Tx / Rx ring sizes = 4096/4096

Optionally, Network Bonding can be setup on the vNICs for each host for redundancy as well as for increased throughput.

8. In the SAN Connectivity menu, select no vHBAs.

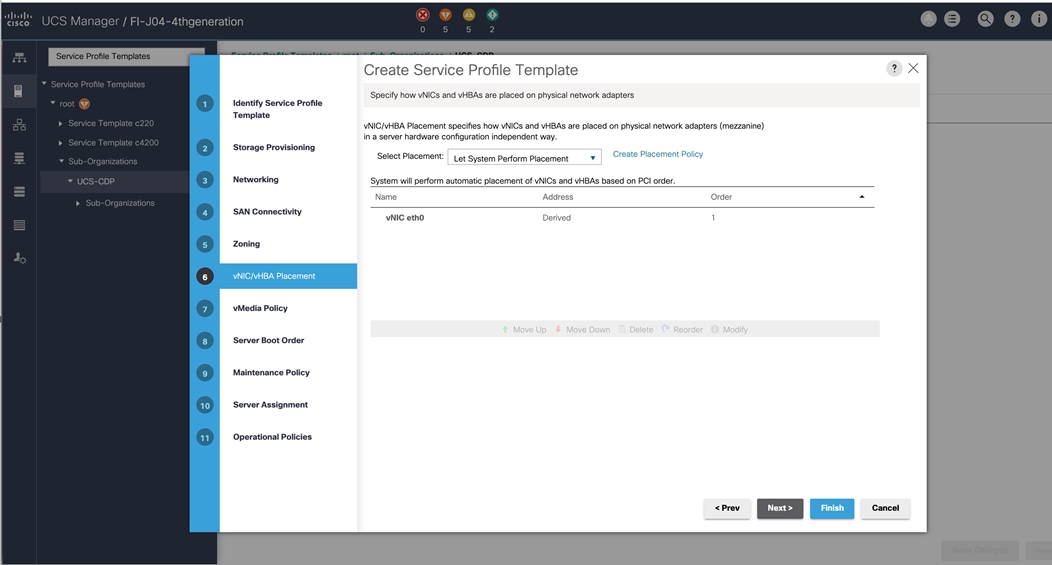

9. Make no changes in the Zoning tab and click Next.

10. Select Let System Perform Placement for vNIC/vHBA Placement. Click Next.

11. Make no changes in the vMedia Policy tab and click Next.

12. Select Boot Policy in the Server Boot Order tab.

13. Select the UserAck maintenance policy, which requires user acknowledgement prior to rebooting the server when changes are made to a policy or pool configuration tied to a service profile.

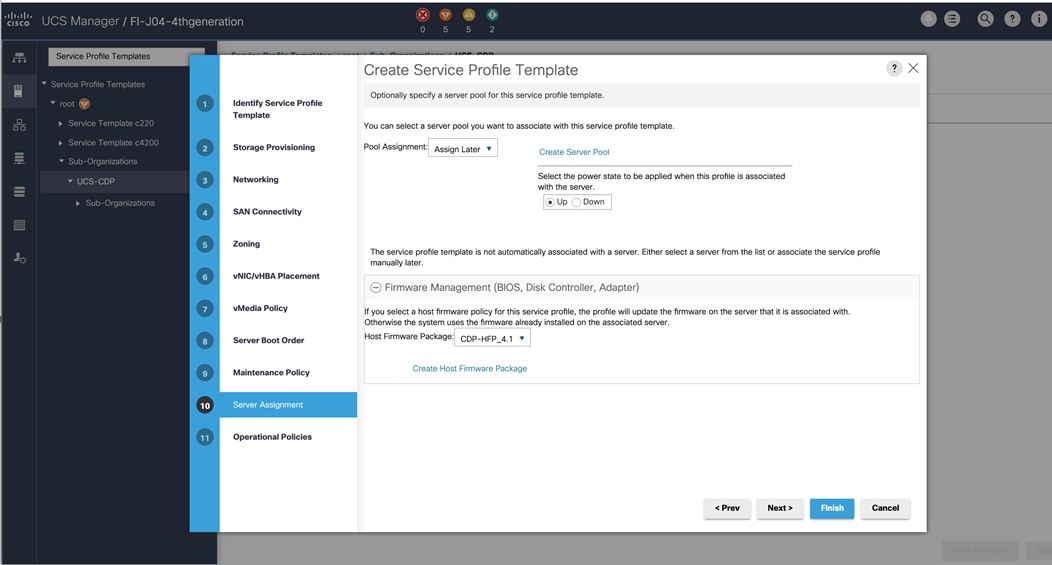

14. Select the Server Pool policy to automatically assign a service profile to a server that meets the requirements for server qualification based on the pool configuration. Select the power state to apply when the Service Profile is associated with the server.

15. On the same page you can configure “Host firmware Package Policy” which helps to keep the firmware in sync when associated to server.

On the Operational Policy page, select the BIOS policy for the Cisco UCS C125 M5 rack server node and set the Power Control Policy to “NoPowerCap” for maximum performance.

16. Click Finish to create the Service Profile template.

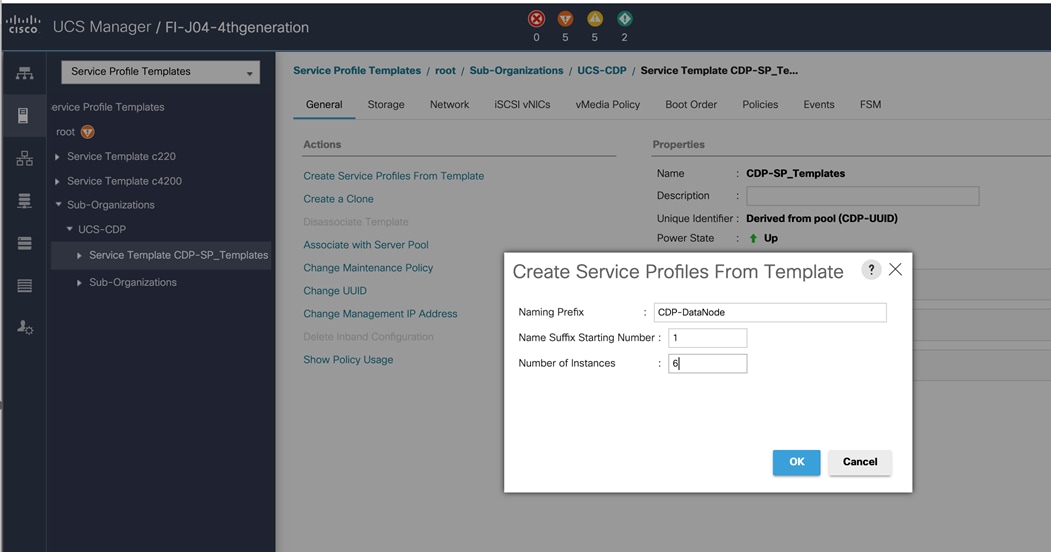

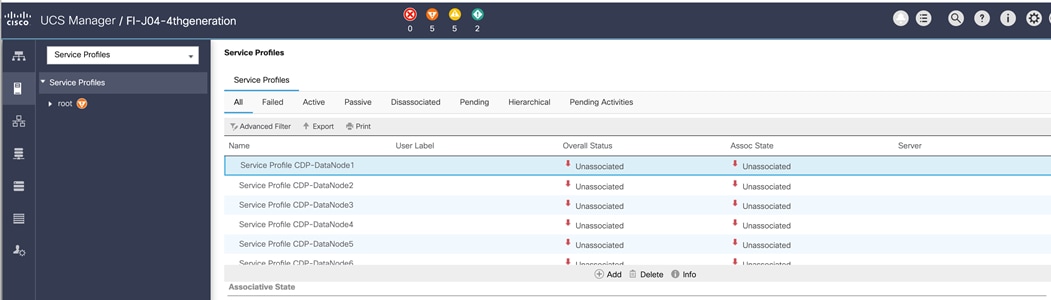

Create Service Profiles from Template

To create a Service Profile from a template, follow these steps:

1. Right-click the Service Profile Template and select Create Service profile from Template.

Figure 46. Create Service Profile from Template

The service profile is automatically assigned to discovered servers that meet the requirements of the Server Pool.

2. Repeat these steps to create service profile template(s) and service profile(s) for each deployment scenario.

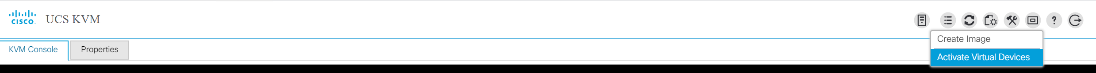

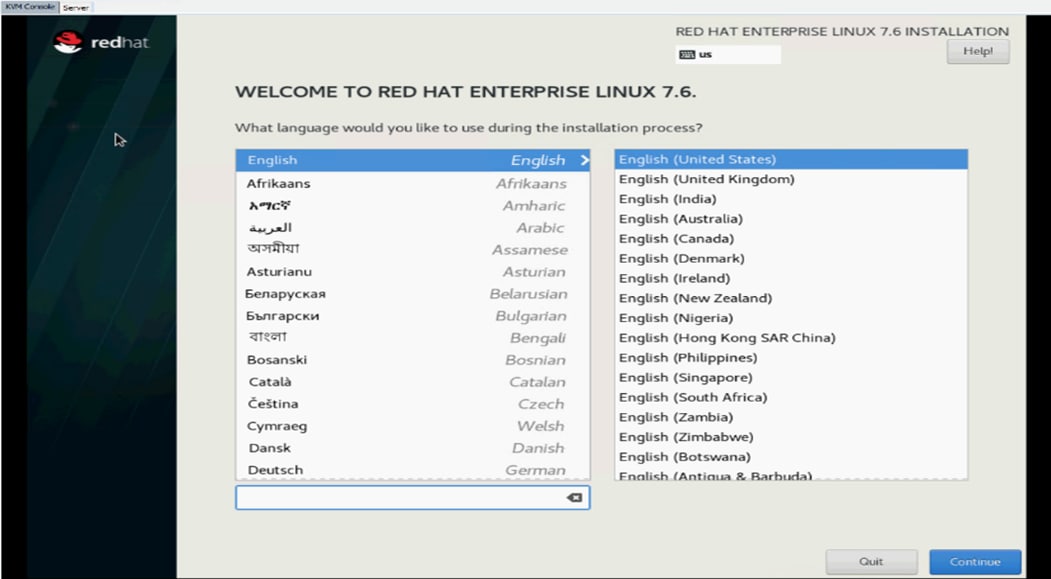

Install Red Hat Enterprise Linux 7.8

This section provides detailed procedures for installing Red Hat Enterprise Linux Server using Software RAID (OS based Mirroring) on Cisco UCS C125 M5 servers. There are multiple ways to install the RHEL operating system. The installation procedure described in this deployment guide uses KVM console and virtual media from Cisco UCS Manager.

To install the Red Hat Enterprise Linux 7.8 operating system, follow these steps:

1. Log into the Cisco UCS Manager.

2. Select the Equipment tab.

3. In the navigation pane expand Rack-Mounts and then Servers.

4. Right-click the server and select KVM console.

5. In the right pane, click the KVM Console >>.

6. Click the link to launch the KVM console.

7. Point the cursor over the top right corner and select the Virtual Media tab.

8. Click the Activate Virtual Devices found in Virtual Media tab.

9. Click the Virtual Media tab to select CD/DVD.

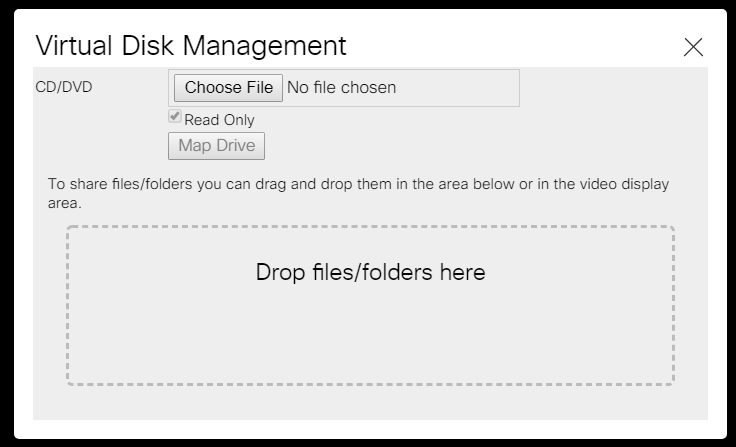

10. Select Map Drive in the Virtual Disk Management window.

11. Browse to the Red Hat Enterprise Linux 7.8 installer ISO image file.

The Red Hat Enterprise Linux 7.8 Server DVD is assumed to be on the client machine.

12. Click Open to add the image to the list of virtual media.

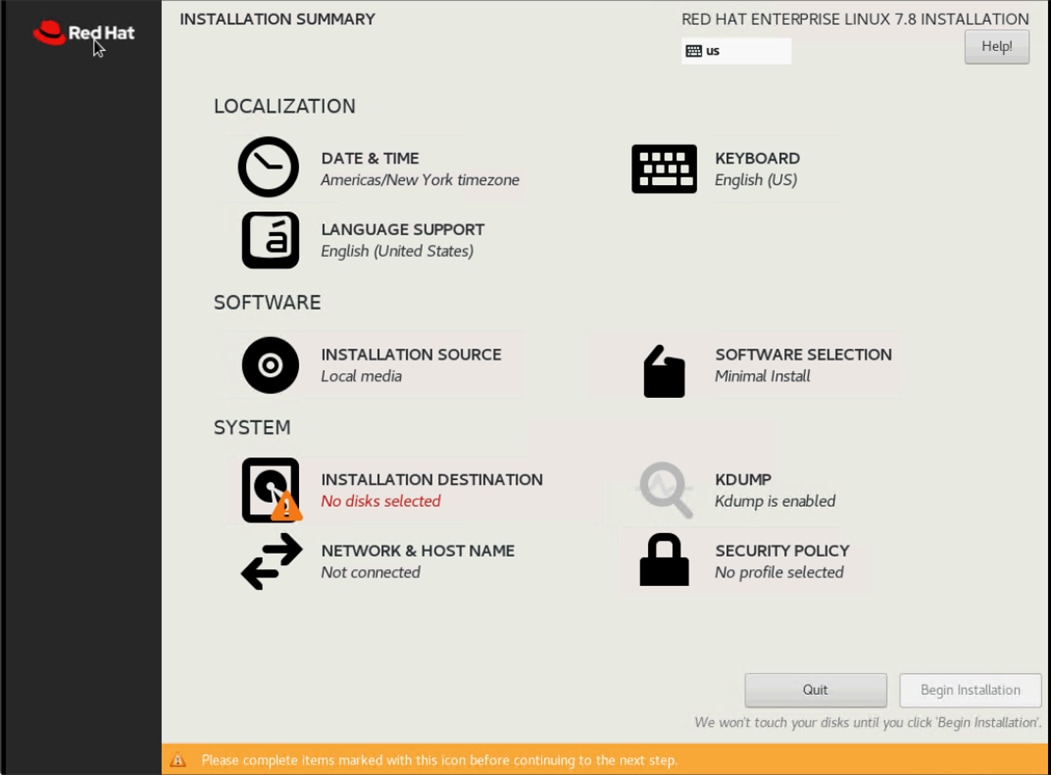

13. Select the Installation option from Red Hat Enterprise Linux 7.8.

14. Select the language for the installation and click Continue.

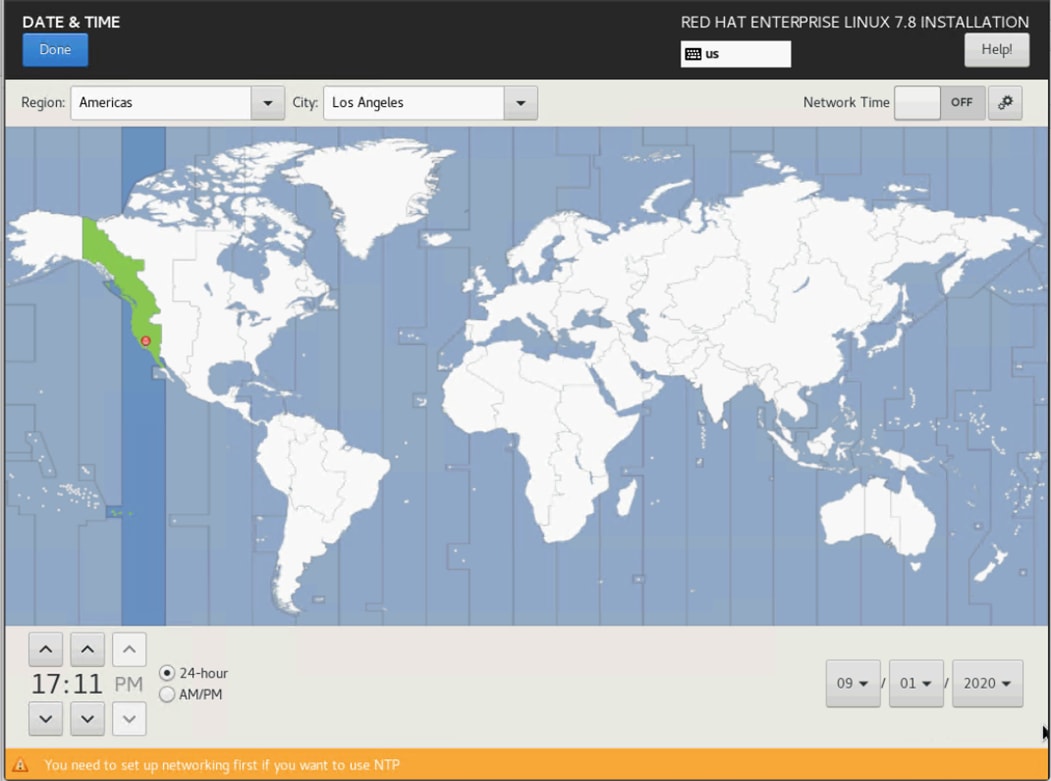

15. Select date and time, which pops up another window as shown below.

16. Select the location on the map, set the time, and click Done.

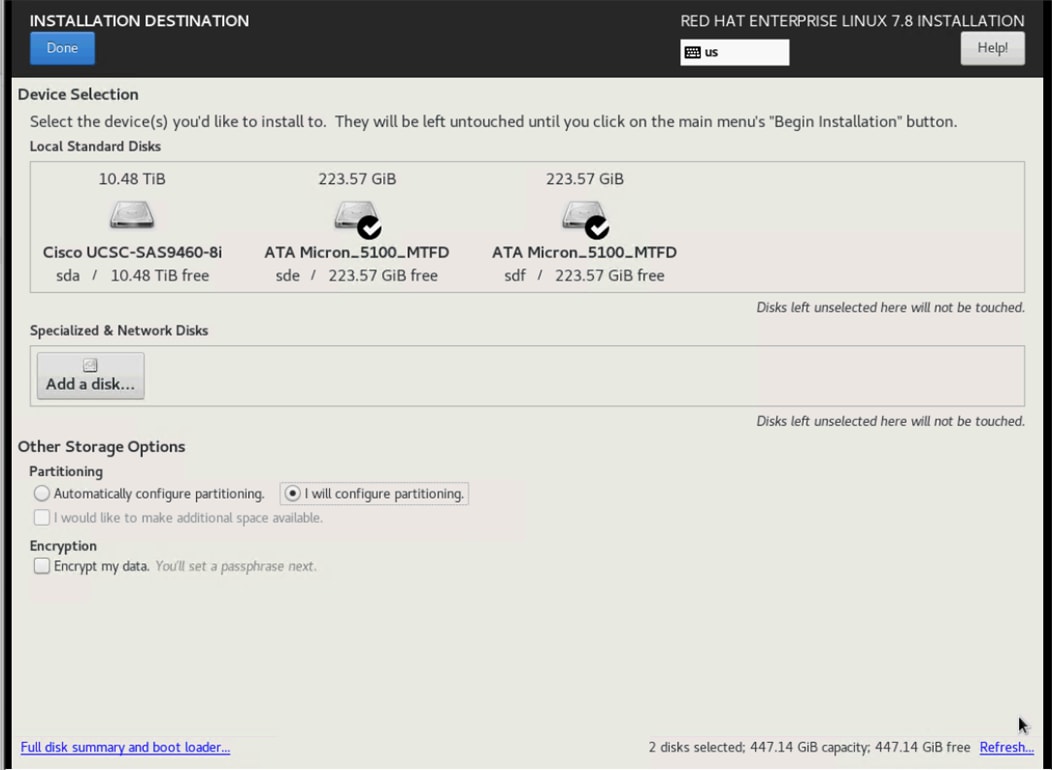

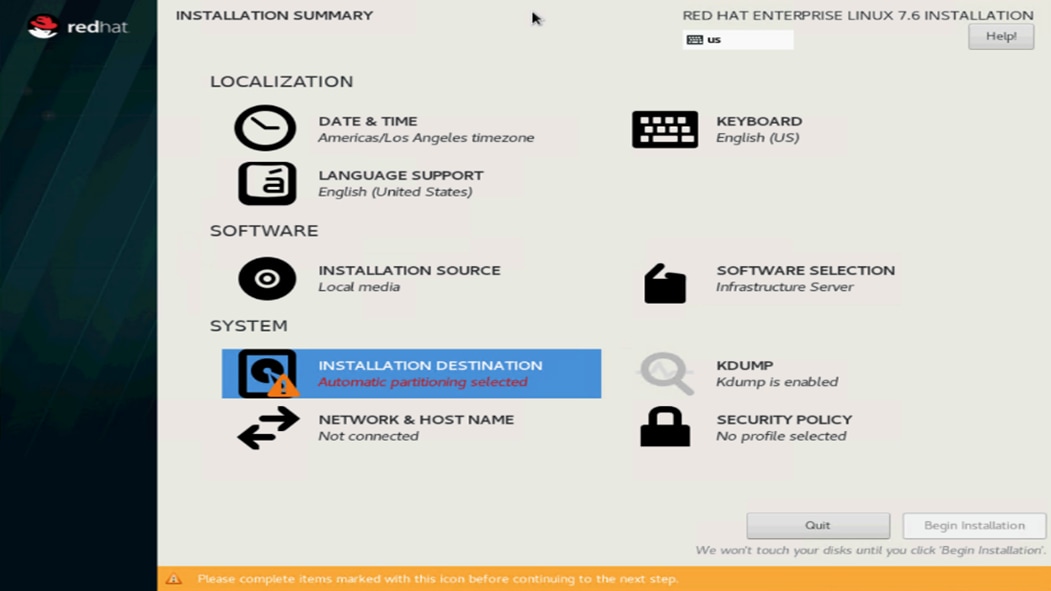

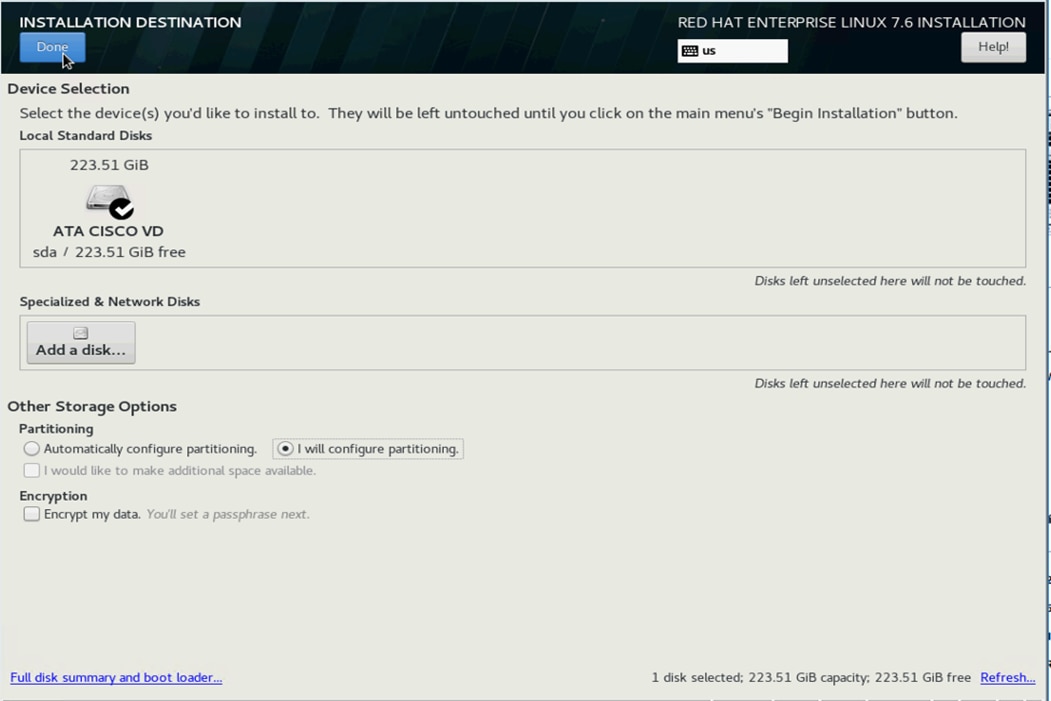

17. Click Installation Destination.

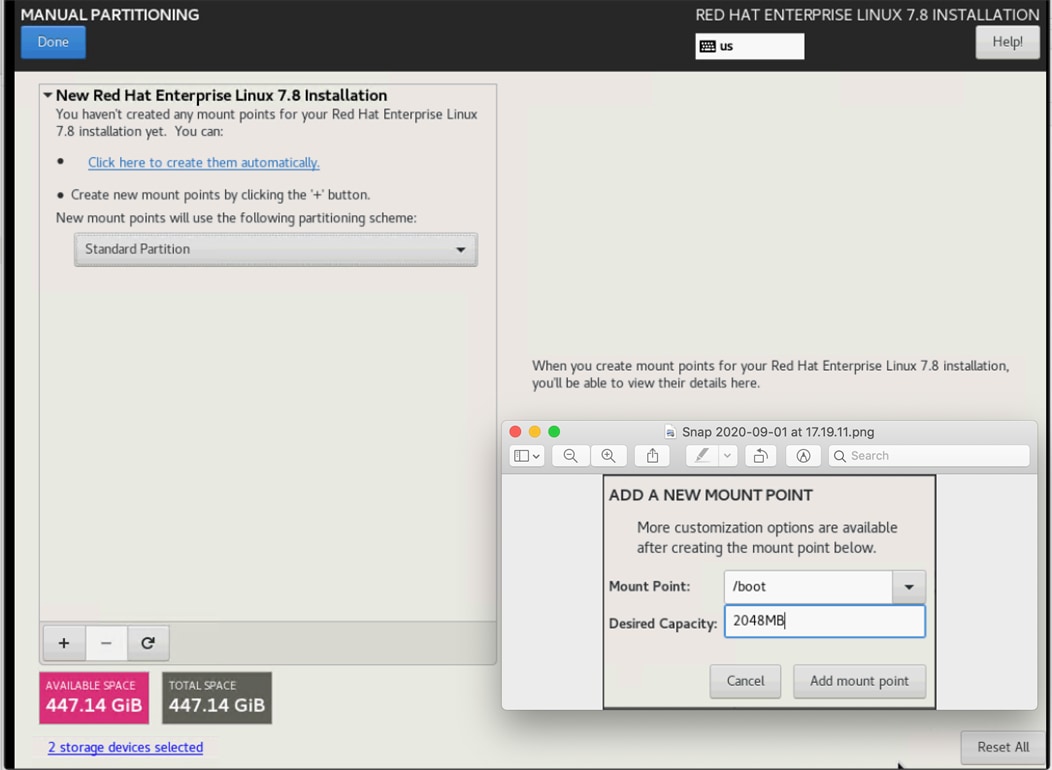

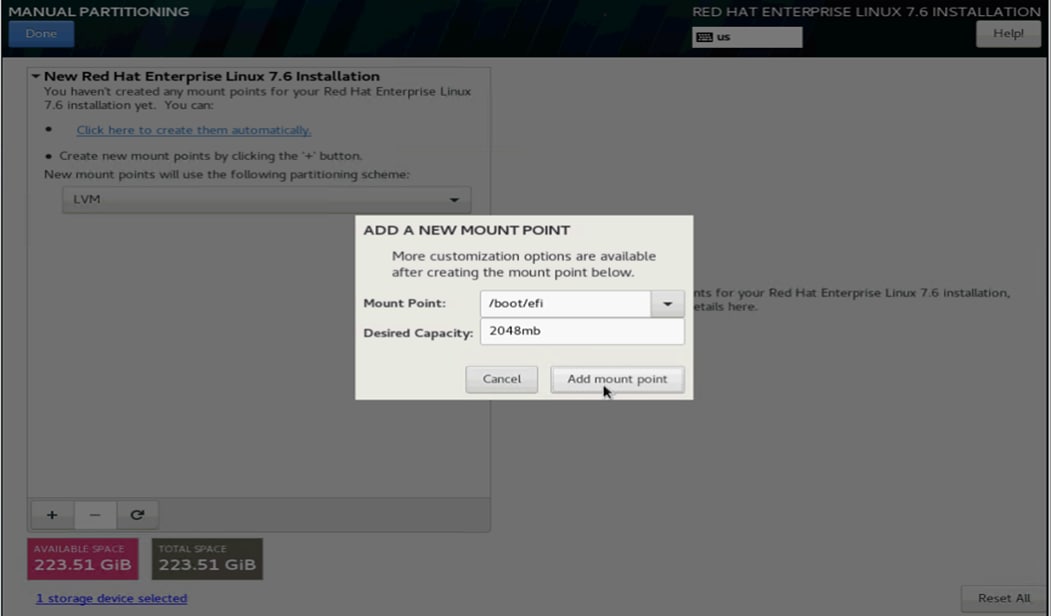

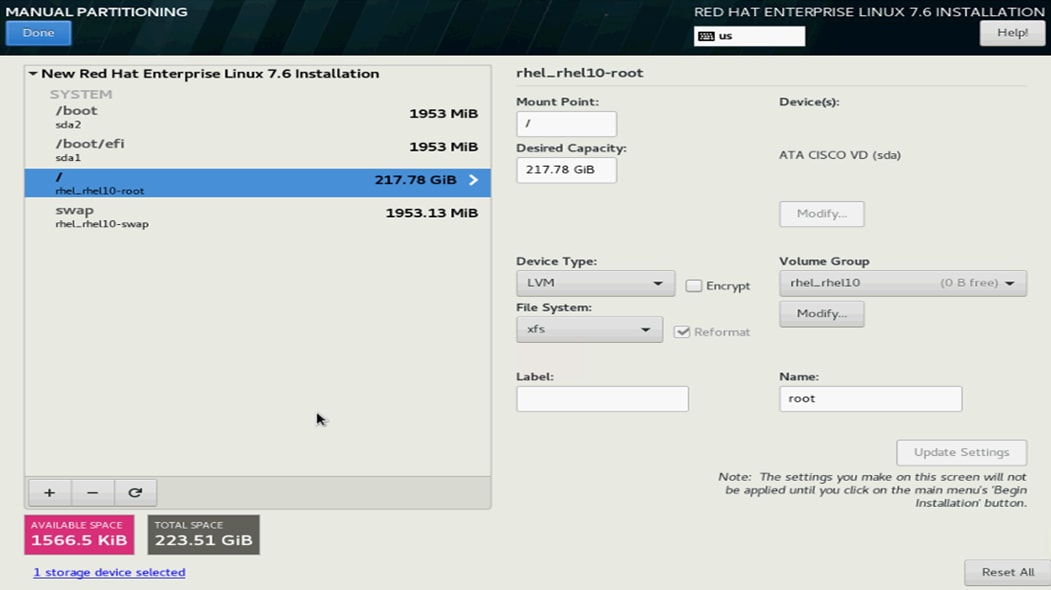

18. This opens a new window with the boot disks. Select a device and choose “I will configure partitioning”. Click Done. We selected two M.2 SATA SSDs.

19. This opens a window to create the partitions. Click the + sign to add a new partition as shown below with a boot partition size 2048 MB.

20. Click Add Mount Point to add the partition.

21. Change the device type to RAID and make sure the RAID level is RAID1 (redundancy) and click Update Settings to save the changes.

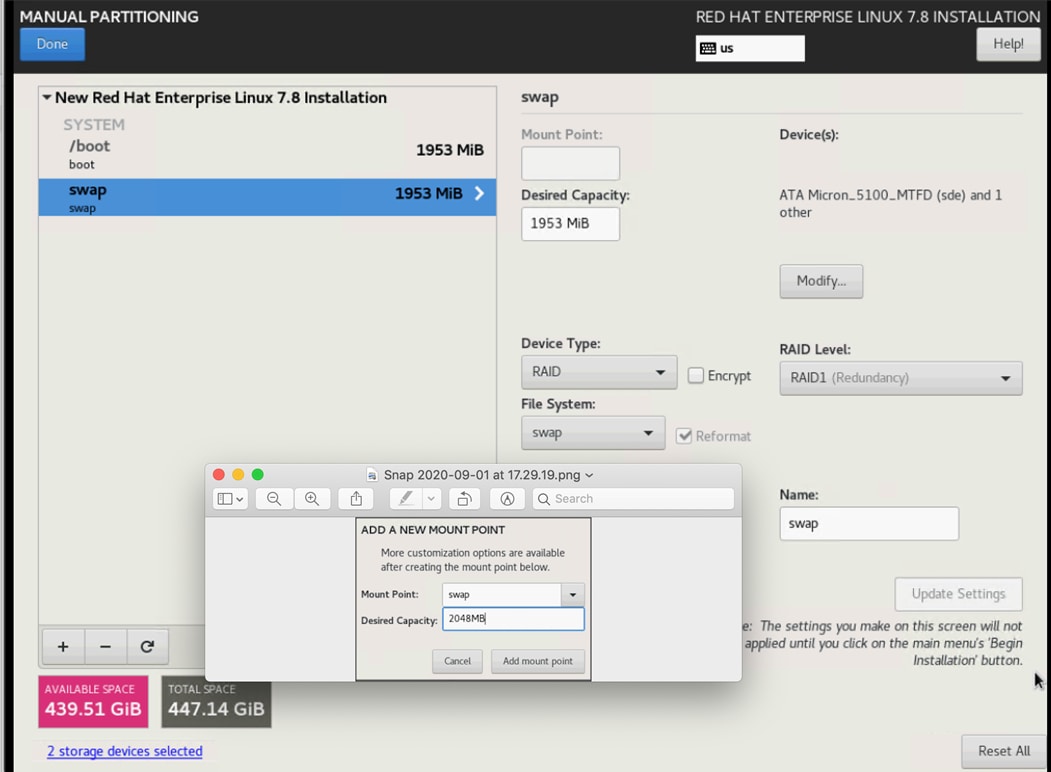

22. Click the + sign to create the swap partition of size 2048 MB. Click Add Mount Point.

23. Change the Device type to RAID and RAID level to RAID1 (Redundancy) and click Update Settings.

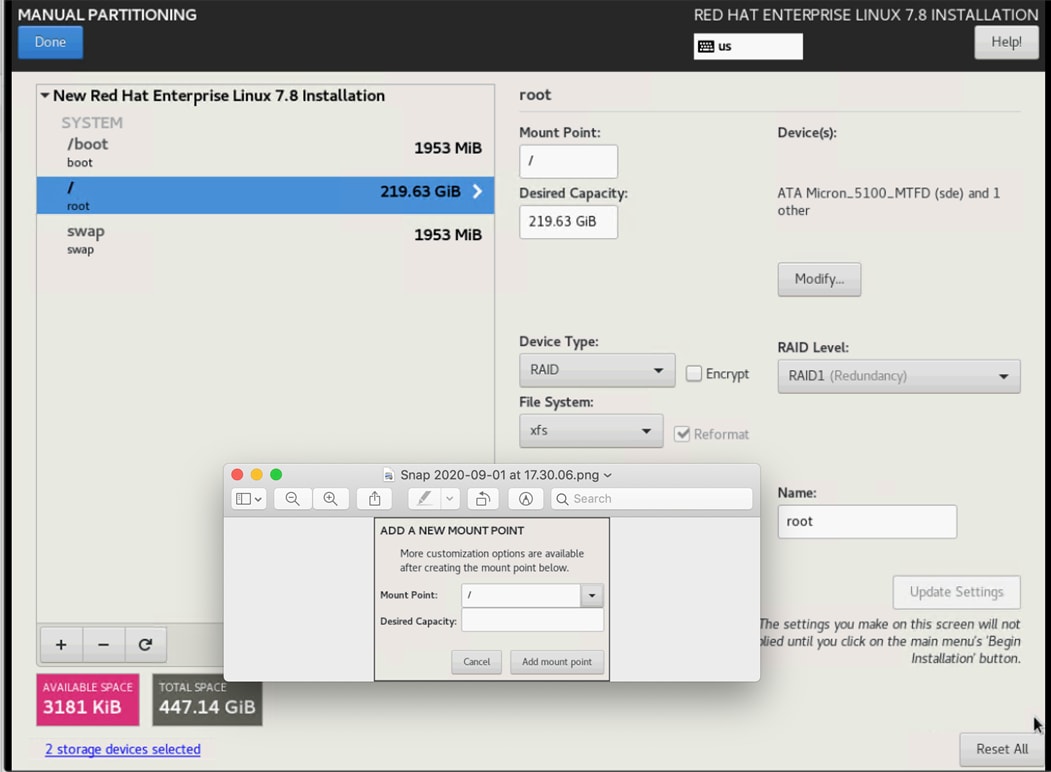

24. Click the + sign to add the / partition. The size can be left empty so that it uses the remaining capacity. Click Add Mount Point.

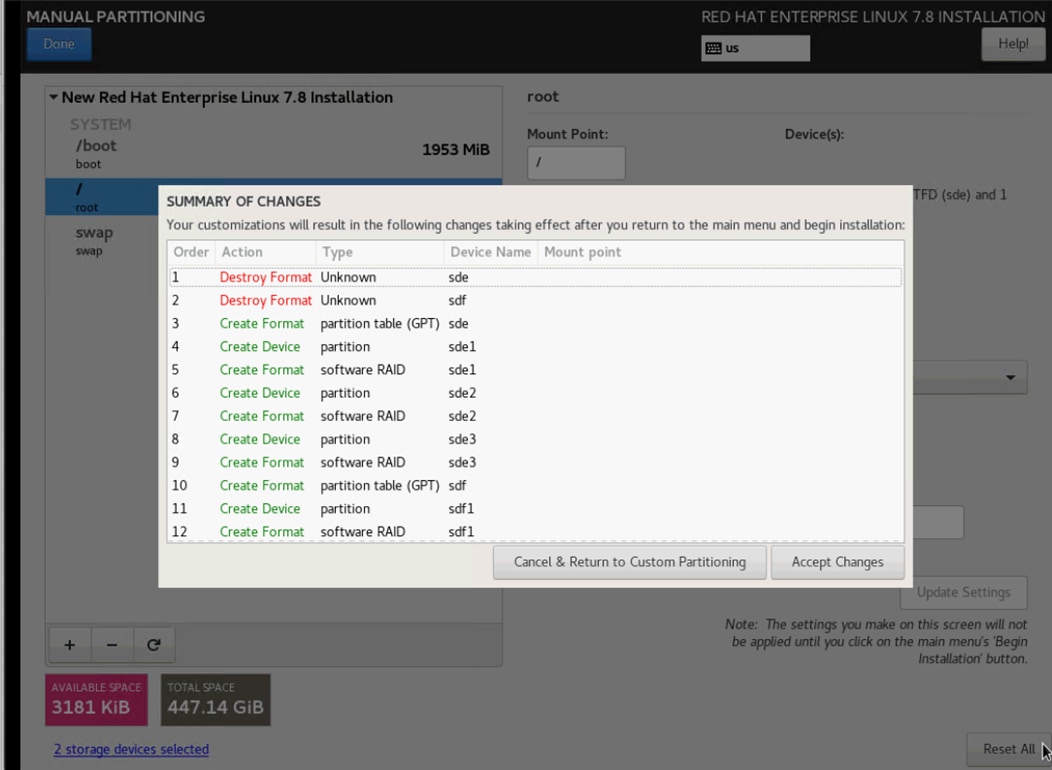

25. Change the Device type to RAID and RAID level to RAID1 (Redundancy). Click Update Settings.

26. Click Accept Changes.

27. Click Done to return to the main screen and continue the Installation.

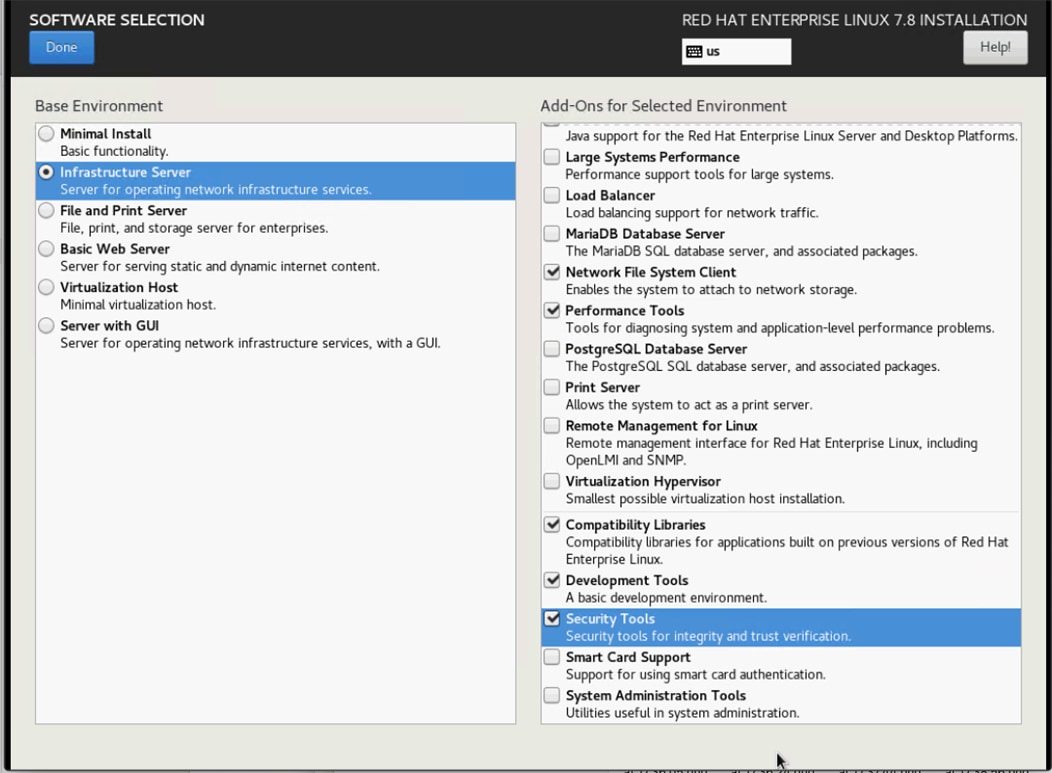

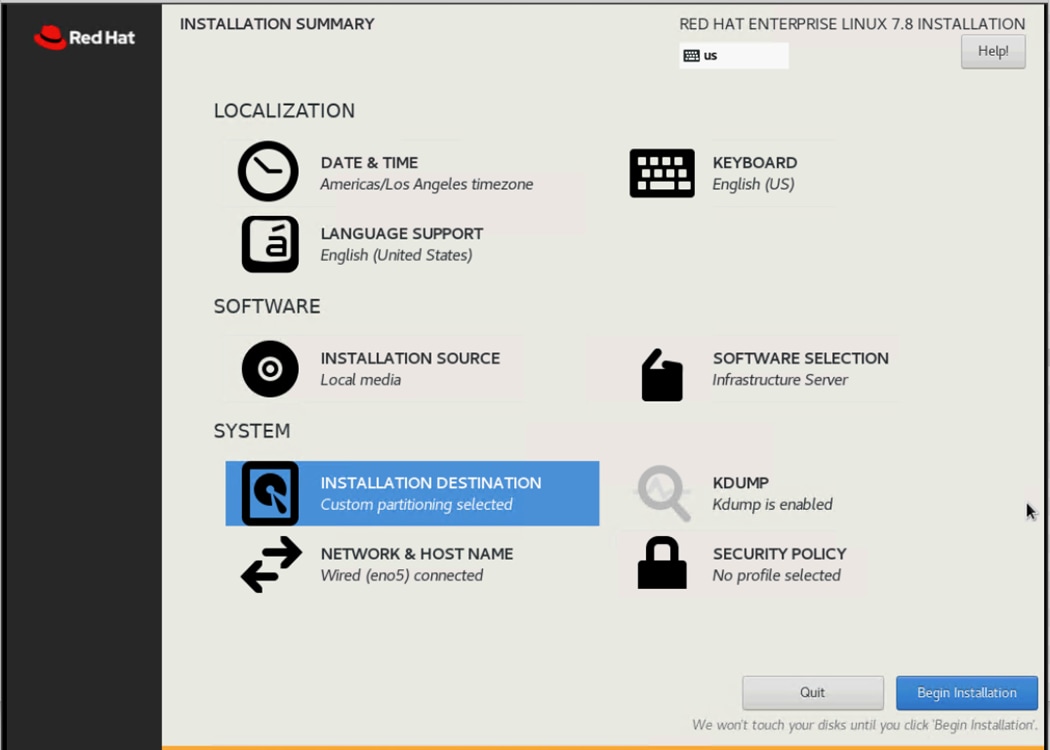

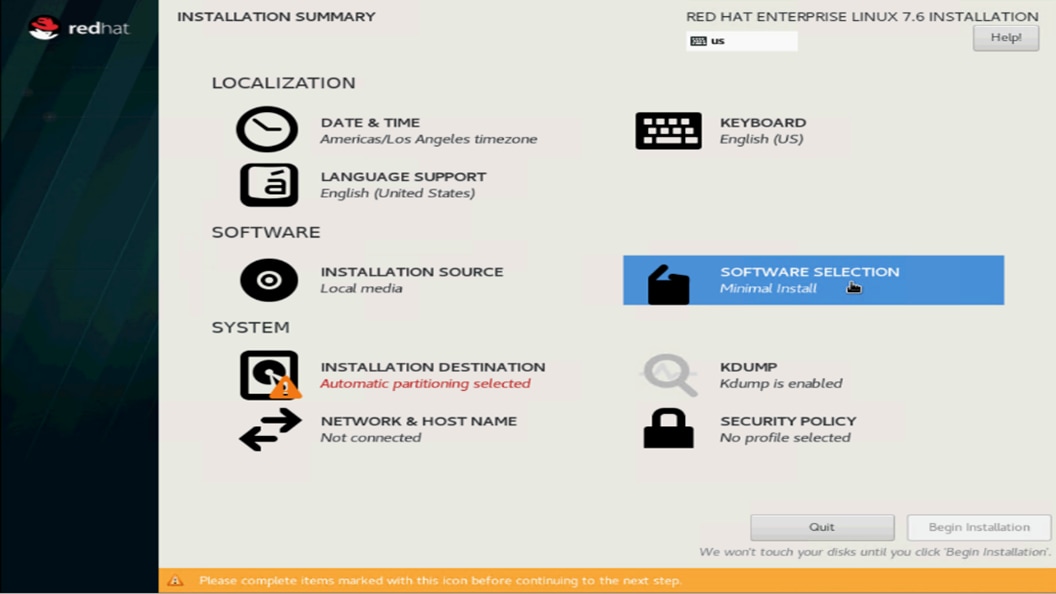

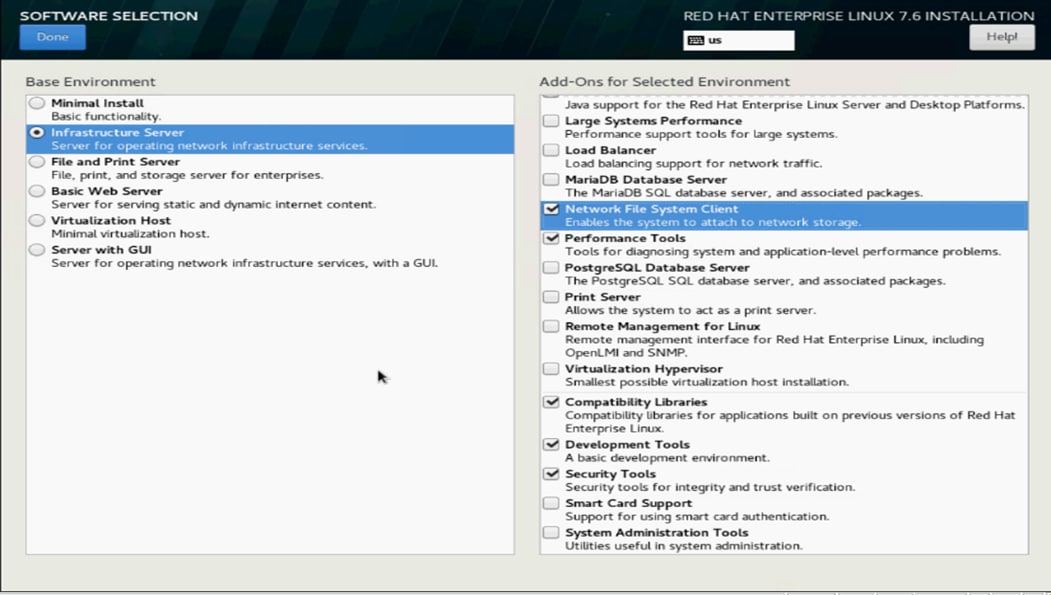

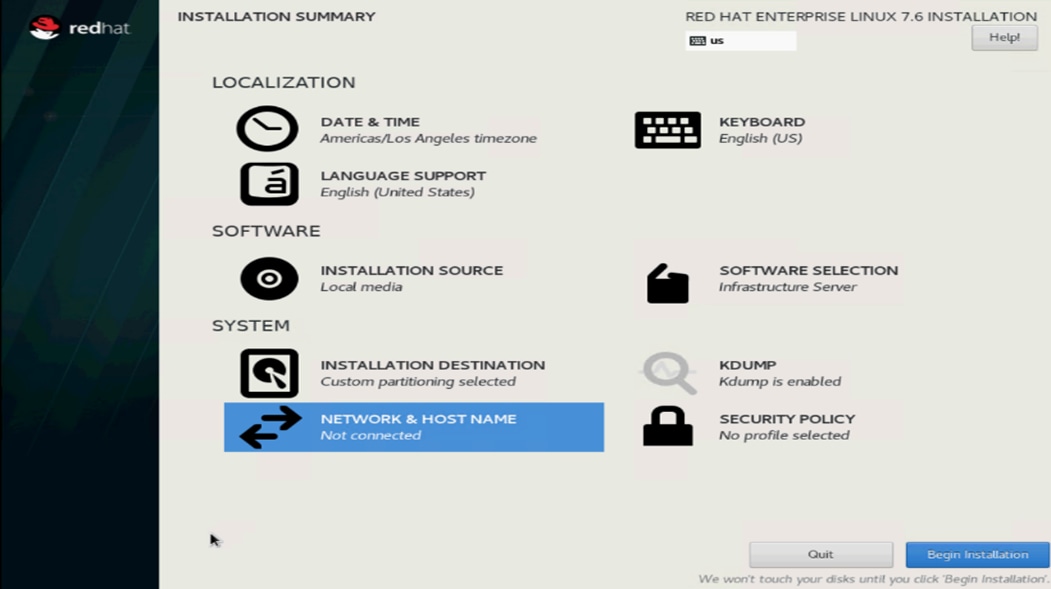

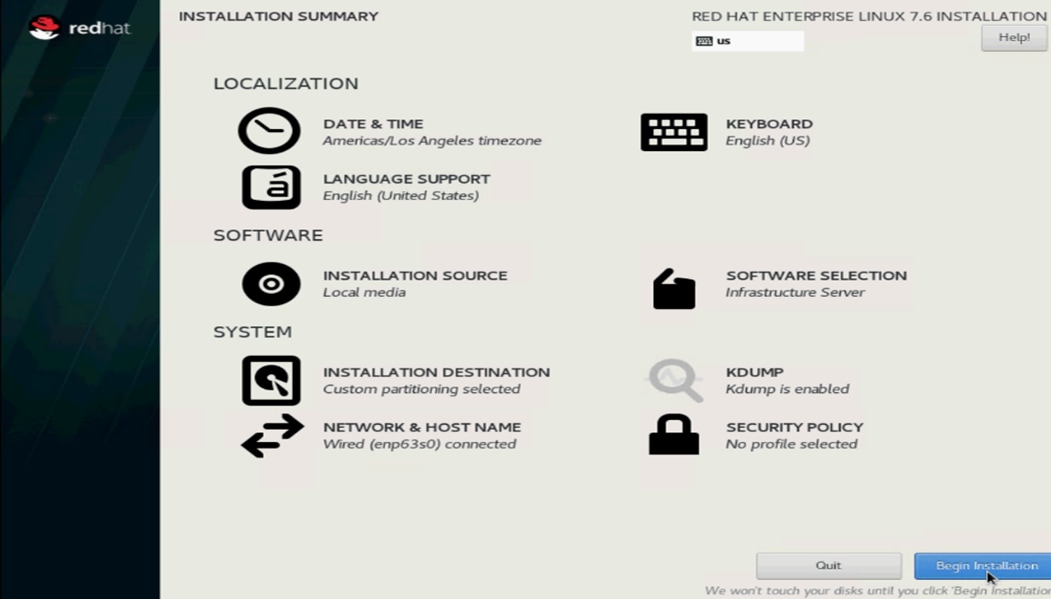

28. Click Software Selection.

29. Select Infrastructure Server and select the Add-Ons as noted below, then click Done:

a. Network File System Client

b. Performance Tools

c. Compatibility Libraries

d. Development Tools

e. Security Tools

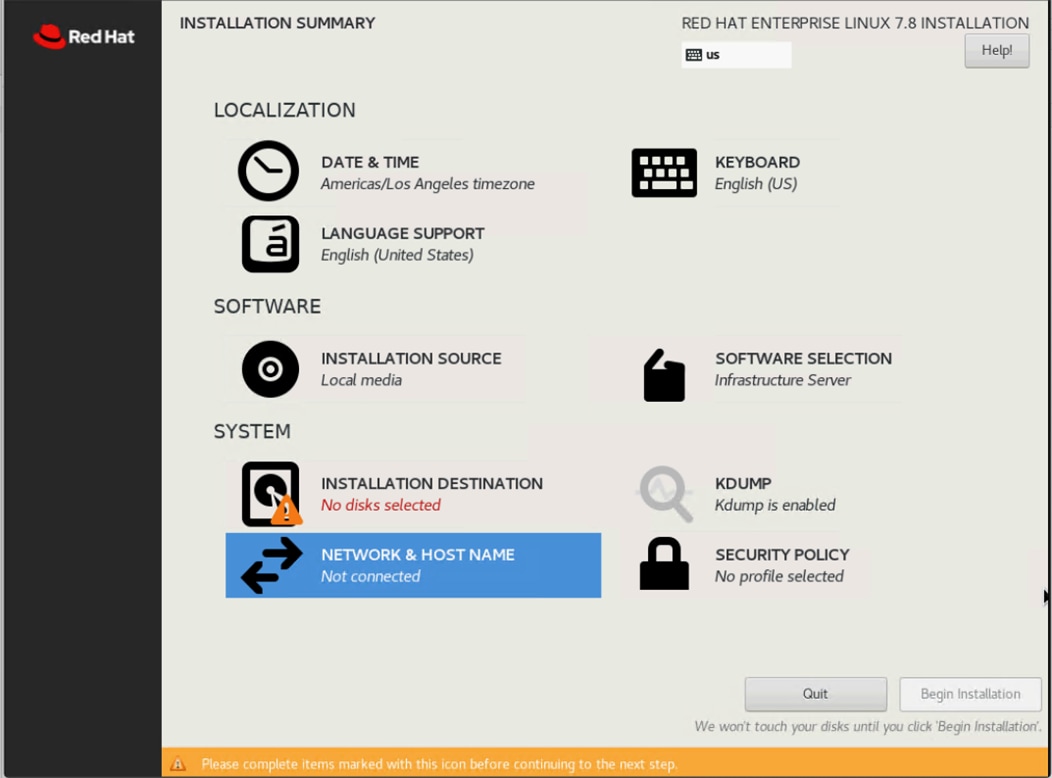

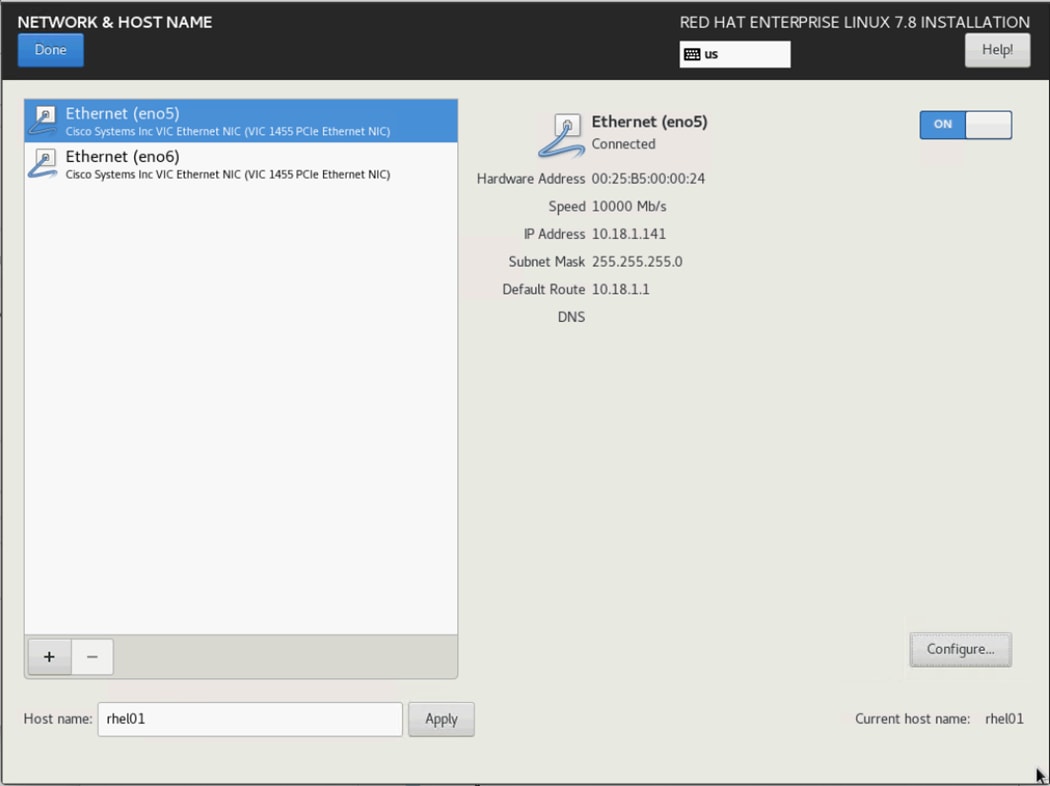

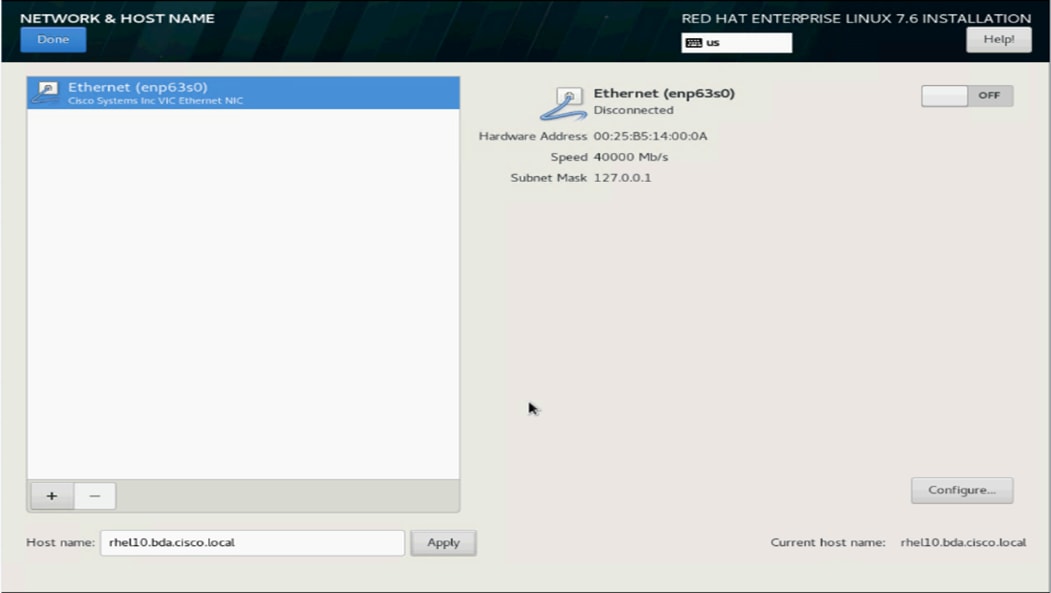

30. Click Network and Hostname and configure Hostname and Networking for the Host.

31. Type in the hostname as shown below.

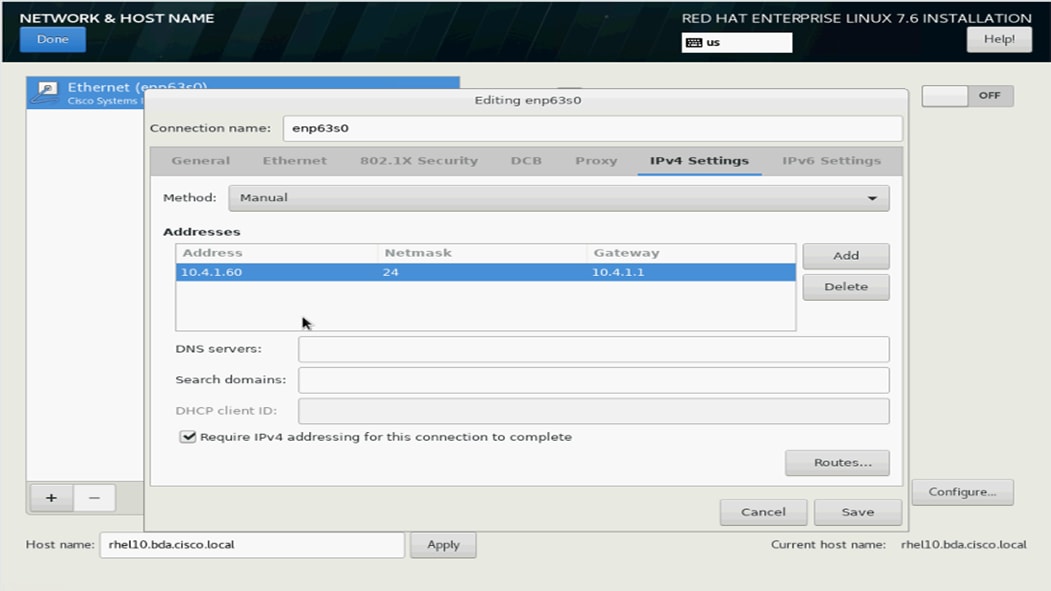

32. Click Configure to open the Network Connectivity window. Click IPv4 Settings.

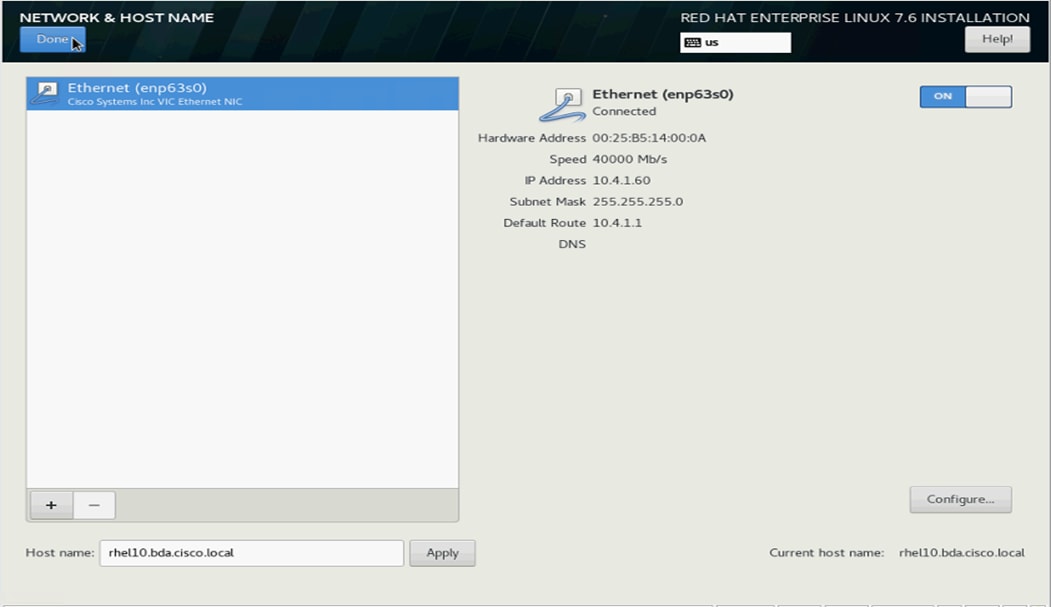

33. Change the Method to Manual and click Add to enter the IP Address, Netmask and Gateway details.

34. Click Save, update the hostname, and turn Ethernet ON. Click Done to return to the main menu.

35. Click Begin Installation in the main menu.

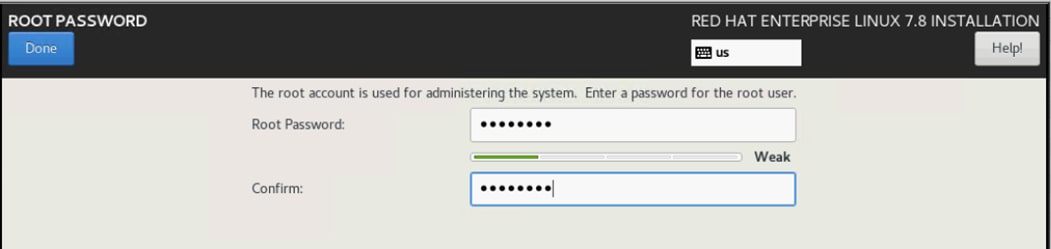

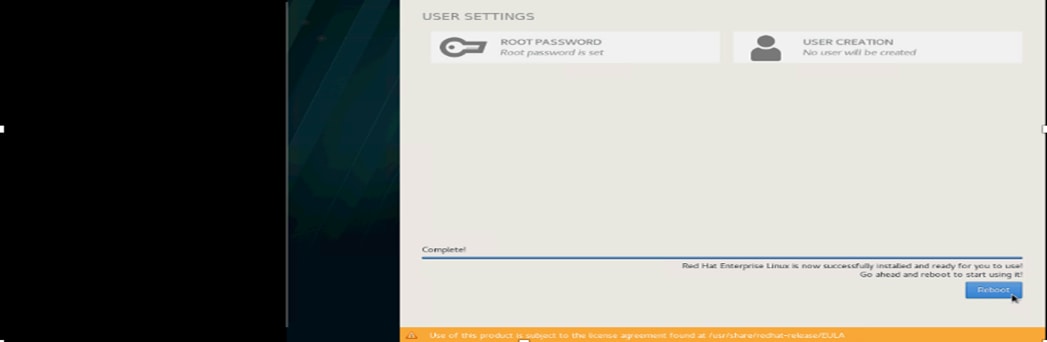

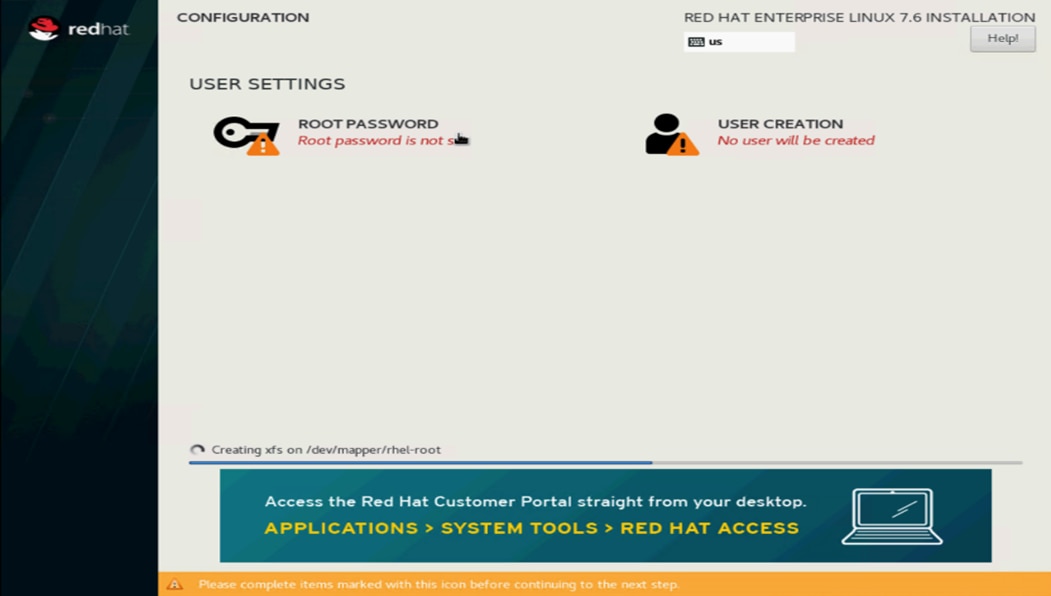

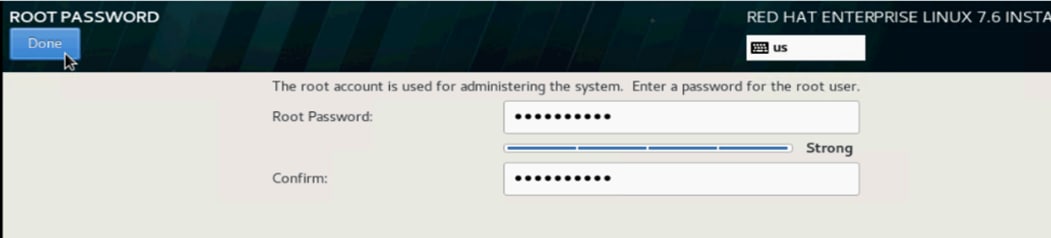

36. Select Root Password in the User Settings.

37. Enter the Root Password and click Done.

38. Once the installation is complete, reboot the system.

39. Repeat steps 1 to 38 to install Red Hat Enterprise Linux 7.8 on the rest of the server nodes (2-48).
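
After the first boot, it can be useful to confirm that the software RAID1 mirrors created in the steps above are healthy. The following is a minimal sketch, not part of the validated procedure: the function name `check_md_raid` is hypothetical, and it assumes the kernel reports the arrays in `/proc/mdstat`, where a degraded mirror shows an underscore in its status field (for example `[U_]`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if at least one md array exists and no
# array is degraded. A degraded mirror shows "_" in its status, e.g. [U_].
check_md_raid() {
  local mdstat=${1:-/proc/mdstat}
  # Array lines in /proc/mdstat begin with the device name (md0, md126, ...).
  if ! grep -q '^md' "$mdstat"; then
    echo "no md arrays found in $mdstat"
    return 1
  fi
  # A status such as [U_] or [_U] means one member of the mirror is missing.
  if grep -Eq '\[[U_]*_[U_]*\]' "$mdstat"; then
    echo "degraded array detected in $mdstat"
    return 1
  fi
  echo "all md arrays healthy"
}
```

Calling `check_md_raid` with no argument reads `/proc/mdstat` on the local node; a file path can be passed instead to inspect a saved copy.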

The OS installation and configuration of the nodes described above can be automated through PXE boot or third-party tools.
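
As one illustration of such automation, the partition layout from the manual steps (a 2048 MB /boot, 2048 MB swap, and / on the remaining capacity, each mirrored with RAID1) could be expressed as Red Hat Kickstart directives. The sketch below is an assumption rather than part of this validated design: the function name `gen_ks_raid1`, the `raid.NN` partition labels, the `md0` to `md2` device names, the xfs file system choice, and the disk names `sda` and `sdb` are all illustrative and should be adjusted to the actual M.2 devices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print Kickstart partitioning directives that mirror
# /boot, swap, and / across two disks with software RAID1.
# The disk names passed in (for example sda and sdb) are assumptions.
gen_ks_raid1() {
  local d1=$1 d2=$2
  cat <<EOF
part raid.11 --size=2048 --ondisk=${d1}
part raid.12 --size=2048 --ondisk=${d2}
part raid.21 --size=2048 --ondisk=${d1}
part raid.22 --size=2048 --ondisk=${d2}
part raid.31 --size=1 --grow --ondisk=${d1}
part raid.32 --size=1 --grow --ondisk=${d2}
raid /boot --level=1 --device=md0 --fstype=xfs raid.11 raid.12
raid swap --level=1 --device=md1 --fstype=swap raid.21 raid.22
raid / --level=1 --device=md2 --fstype=xfs raid.31 raid.32
EOF
}

gen_ks_raid1 sda sdb
```

The output is only the partitioning portion of a Kickstart file; a complete file also needs installation source, network, and package sections, which are outside the scope of this sketch.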

For installation steps for the Cisco Boot Optimized M.2 RAID Controller, go to the Appendix, section Configure Cisco Boot Optimized M.2 RAID Controller.

The hostnames and their corresponding IP addresses are shown in Table 4.

Table 4. Hostname and IP address

| Hostname | Eth0 |
| --- | --- |
| rhel1 | 10.18.1.131 |
| rhel2 | 10.18.1.132 |
| rhel3 | 10.18.1.133 |
| rhel4 | 10.18.1.134 |
| rhel5 | 10.18.1.135 |
| ….. | ….. |
| rhel47 | 10.18.1.177 |
| rhel48 | 10.18.1.178 |

Multi-homing configuration is not recommended in this design, so please assign only one network interface on each host.

For simplicity, outbound NATing is configured for internet access when desired, such as accessing public repos and/or accessing Red Hat Content Delivery Network. However, configuring outbound NAT is beyond the scope of this document.

Choose one of the nodes of the cluster or a separate node as the Admin Node for management, such as CDP PVC Base installation, Ansible, creating a local Red Hat repo, and others. In this document, we used rhel1 for this purpose.

Set up /etc/hosts on the Admin node; this is a pre-configuration step for setting up DNS as shown in the next section.

For simplicity, the /etc/hosts file is configured with hostnames on all the nodes. However, in a large-scale, production-grade deployment, a DNS server setup is highly recommended. Furthermore, the /etc/hosts file is not copied into containers running on the platform.

Below are sample A records for DNS configuration within a Linux environment:

$ORIGIN cdp.cisco.local.

rhel1 A 10.18.1.131

rhel2 A 10.18.1.132

rhel3 A 10.18.1.133

…

…

rhel47 A 10.18.1.177

rhel48 A 10.18.1.178
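
Because the sample records follow a regular pattern, the full list can be generated rather than typed by hand. The sketch below assumes that host rhelN maps to 10.18.1.(130+N), which is inferred from the sample records above; the function name `gen_a_records` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print A records for rhel1..rhelN.
# Assumes host N maps to 10.18.1.(130+N), as in the sample records above.
gen_a_records() {
  local count=${1:-48} i
  for ((i = 1; i <= count; i++)); do
    printf 'rhel%d A 10.18.1.%d\n' "$i" "$((130 + i))"
  done
}

gen_a_records 48
```

The output can be reviewed and pasted into the zone file for the cluster domain; the addressing scheme should be checked against the actual environment first.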

To create the host file on the admin node, follow these steps:

1. Log into the Admin Node (rhel1).

# ssh 10.18.1.131

2. Populate the host file with IP addresses and corresponding hostnames on the Admin node (rhel1) and other nodes as follows:

3. On Admin Node (rhel1):

[root@rhel1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.18.1.131 rhel1.cdp.cisco.local

10.18.1.132 rhel2.cdp.cisco.local

10.18.1.133 rhel3.cdp.cisco.local

10.18.1.134 rhel4.cdp.cisco.local

10.18.1.135 rhel5.cdp.cisco.local

10.18.1.136 rhel6.cdp.cisco.local

10.18.1.137 rhel7.cdp.cisco.local

10.18.1.138 rhel8.cdp.cisco.local

10.18.1.139 rhel9.cdp.cisco.local

10.18.1.140 rhel10.cdp.cisco.local

10.18.1.141 rhel11.cdp.cisco.local

10.18.1.142 rhel12.cdp.cisco.local

10.18.1.143 rhel13.cdp.cisco.local

10.18.1.144 rhel14.cdp.cisco.local

10.18.1.145 rhel15.cdp.cisco.local

10.18.1.146 rhel16.cdp.cisco.local

10.18.1.147 rhel17.cdp.cisco.local

10.18.1.148 rhel18.cdp.cisco.local

10.18.1.149 rhel19.cdp.cisco.local

.

.

.

10.18.1.177 rhel47.cdp.cisco.local

10.18.1.178 rhel48.cdp.cisco.local
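
With 48 entries, a missing or mistyped line is easy to overlook. The following is a minimal sketch of a consistency check, assuming the same mapping as the listing above (rhelN.cdp.cisco.local at 10.18.1.(130+N)); the function name `check_hosts_file` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report cluster hosts whose entry is missing or wrong
# in a hosts file. Assumes rhelN.cdp.cisco.local maps to 10.18.1.(130+N).
check_hosts_file() {
  local file=${1:-/etc/hosts} count=${2:-48} i ip name missing=0
  for ((i = 1; i <= count; i++)); do
    ip="10.18.1.$((130 + i))"
    name="rhel${i}.cdp.cisco.local"
    # Look for a line whose first field is the IP and second field is the FQDN.
    if ! awk -v ip="$ip" -v name="$name" \
         '$1 == ip && $2 == name { found = 1 } END { exit !found }' "$file"; then
      echo "missing or incorrect: $ip $name"
      missing=$((missing + 1))
    fi
  done
  # Return non-zero if any entry was missing or incorrect.
  return $(( missing > 0 ))
}
```

Running `check_hosts_file /etc/hosts 48` on the Admin node prints one line per problem entry and returns a non-zero status if any are found.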

To manage all the nodes in a cluster from the admin node, password-less login needs to be set up. It allows common tasks to be automated with Ansible and shell scripts without having to enter passwords.

To enable password-less login across all the nodes when Red Hat Linux is installed across all the nodes in the cluster, follow these steps:

1. Log into the Admin Node (rhel1).

# ssh 10.18.1.131

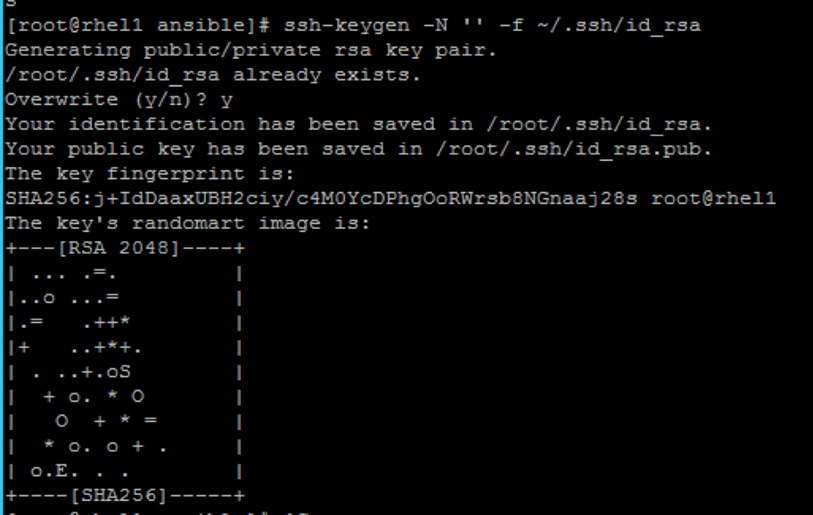

2. Run the ssh-keygen command to create both public and private keys on the admin node.

# ssh-keygen -N '' -f ~/.ssh/id_rsa

Figure 47. ssh-keygen

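3. Run the following command from the admin node to copy the public key id_rsa.pub to all the nodes of the cluster. ssh-copy-id appends the key to the remote host's .ssh/authorized_keys file. The loop uses {1..48} rather than {01..48} because Bash 4 and later zero-pads the latter (rhel01, rhel02, and so on), which would not match the hostnames in /etc/hosts.

# for i in {1..48}; do echo "copying rhel$i.cdp.cisco.local"; ssh-copy-id -i ~/.ssh/id_rsa.pub root@rhel$i.cdp.cisco.local; done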

4. Enter yes for Are you sure you want to continue connecting (yes/no)?

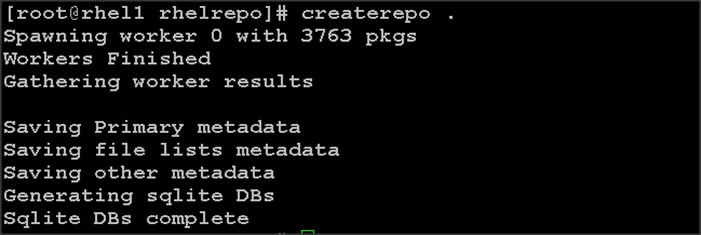

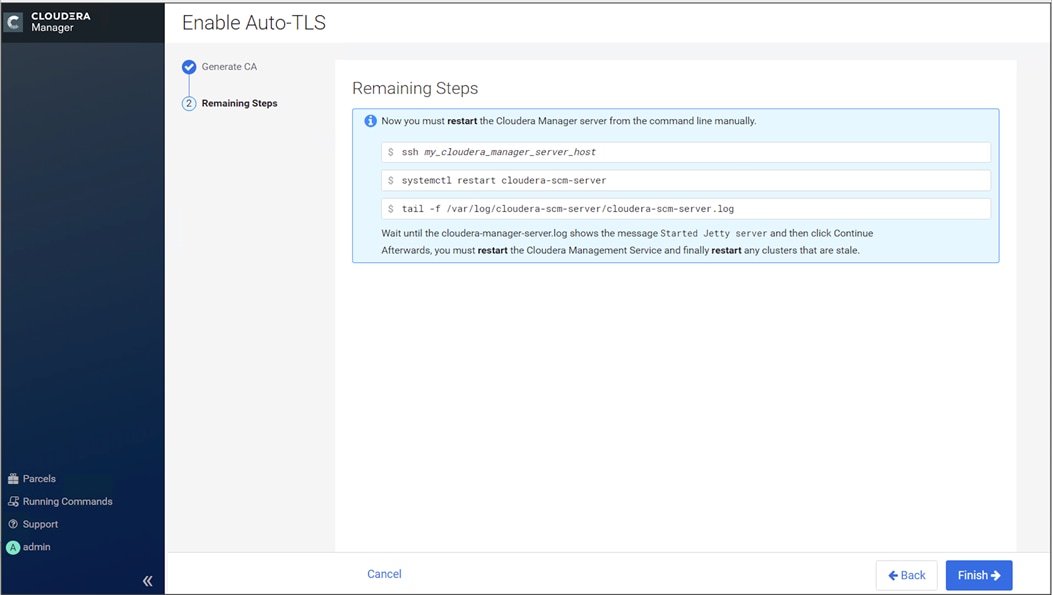

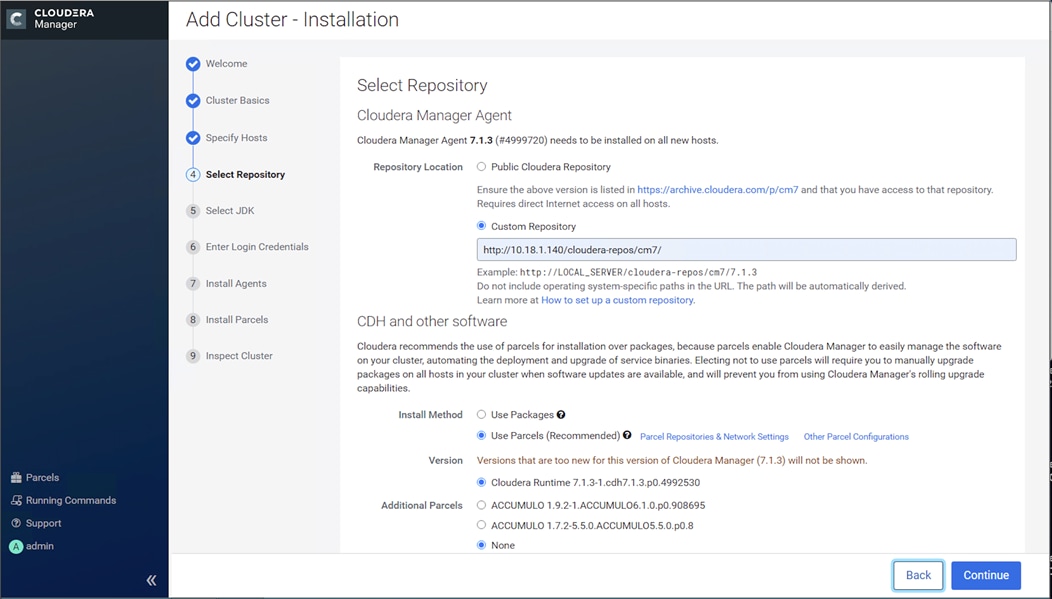

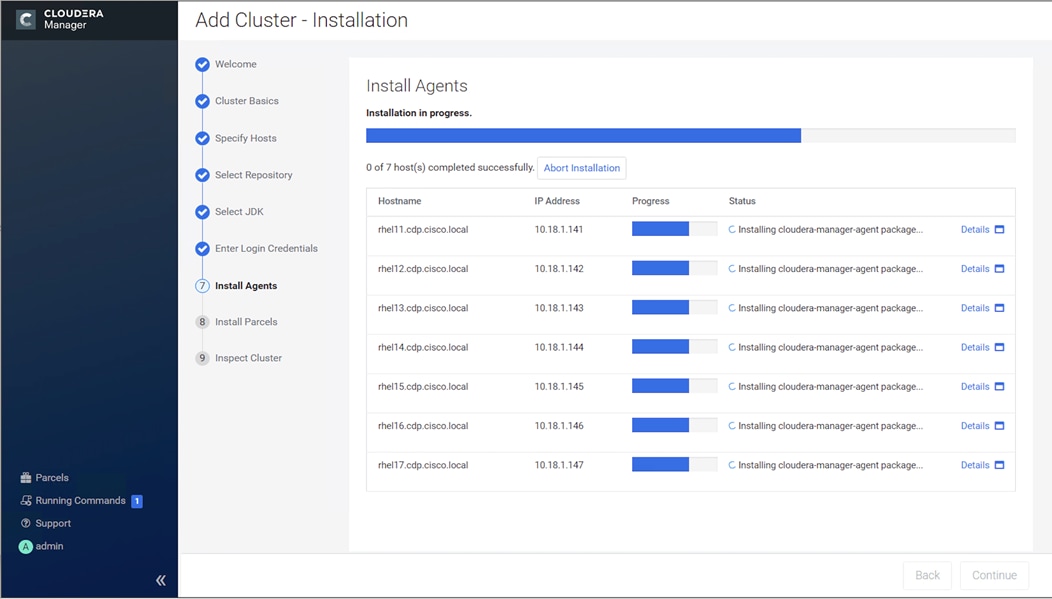

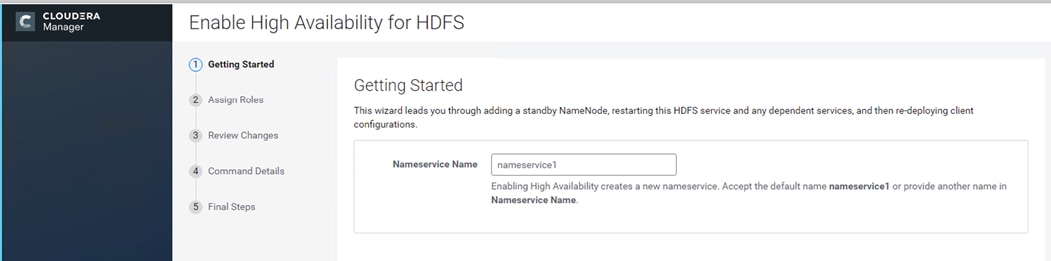

5. Enter the password of the remote host.
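
Once the keys are copied, a quick check can confirm that every node accepts key-based login. The sketch below is illustrative only: the function name `check_passwordless` is hypothetical, and it assumes the rhelN.cdp.cisco.local naming used in this document. The `BatchMode=yes` option makes ssh fail instead of prompting for a password, so a node that still needs a password is reported rather than blocking the loop.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm key-based login works on every node.
# BatchMode=yes makes ssh fail instead of prompting for a password.
check_passwordless() {
  local count=${1:-48} i host failed=0
  for ((i = 1; i <= count; i++)); do
    host="rhel${i}.cdp.cisco.local"
    # Run a no-op command remotely; stdin is detached so ssh cannot read from the terminal.
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "root@${host}" true </dev/null; then
      echo "ok: ${host}"
    else
      echo "FAILED: ${host}"
      failed=$((failed + 1))
    fi
  done
  # Return non-zero if any node rejected key-based login.
  return $(( failed > 0 ))
}
```

Running `check_passwordless 48` from the admin node prints one status line per host; any host reported as FAILED needs the ssh-copy-id step repeated.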