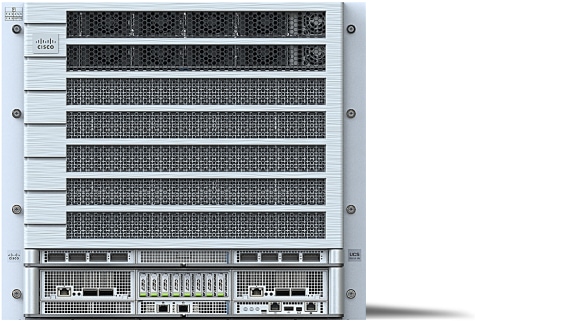

Purpose-built for the pinnacle of AI performance

Engineered to be the heart of large-scale AI infrastructure, Cisco AI GPU servers let you train, fine-tune, and deploy massive models quickly, efficiently, and at unprecedented scale.

Largest-model optimization

Get exceptional GPU parallelism and bandwidth for cutting-edge use cases, from LLMs, deep learning, and generative AI to scientific simulations.

Architectural choice

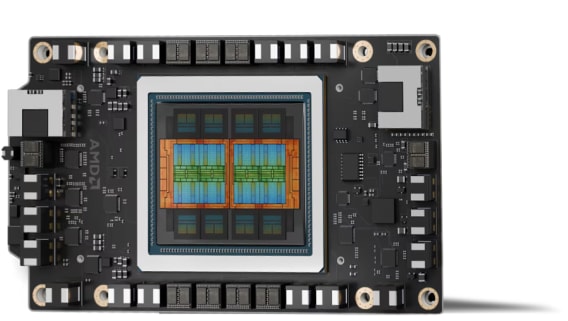

Select NVIDIA HGX for NVLink-connected GPU meshes or choose AMD OAM MI300/MI350X accelerators for modular GPU density—both fully integrated into enterprise-ready Cisco platforms.

Rack-scale efficiency

Maximize performance per square foot of data center space with server designs that carefully balance power, cooling, and form factor.

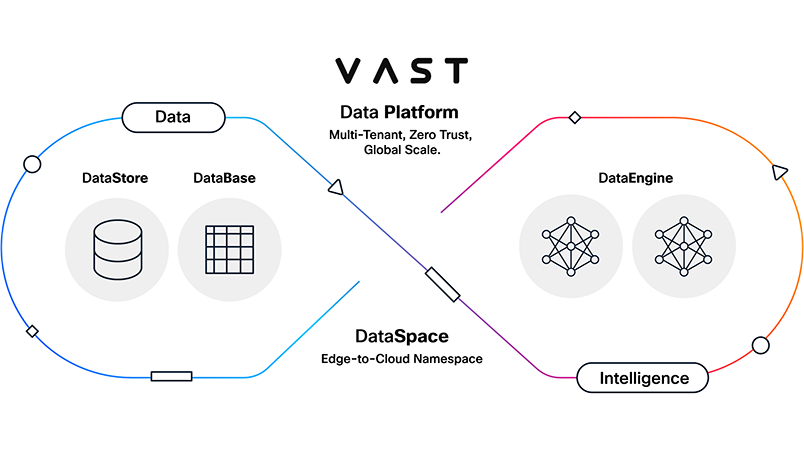

Unified operations

Cisco dense AI GPU servers are integrated with Cisco Intersight for cloud-based lifecycle management, monitoring, and optimization across your entire AI cluster.

Choose your dense AI GPU platform

Cisco dense AI GPU servers support two leading accelerator platforms: NVIDIA HGX for ultra-high-bandwidth GPU meshes and AMD OAM MI300/MI350X for flexible AMD GPU clusters. Both are designed for tight integration, performance, and enterprise-grade management.

- NVIDIA HGX – GPU mesh excellence: NVLink-enabled HGX modules deliver unmatched inter-GPU throughput—ideal for training large-scale models with full-bandwidth interconnect.

- AMD OAM MI300/MI350X – Modular GPU compute: Open Accelerator Modules from AMD give you modular, interchangeable GPU units with dedicated high-bandwidth GPU-to-GPU links (AMD Infinity Fabric), optimized for dense AI workloads on AMD.

- Cisco integration – Ready for the rack: Engineered with power, cooling, and Intersight management to ensure seamless deployment from bare metal to a full AI rack pod.

Discover the Total Economic Impact™ of Cisco Intersight

Hear how Cisco Intersight improved IT productivity and provided a 192% ROI. Explore the full Forrester TEI findings.