Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0

Available Languages

Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.

- US/Canada 800-553-2447

- Worldwide Support Phone Numbers

- All Tools

Feedback

Feedback

In partnership with:

![]()

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone

Executive Summary

Cisco Validated Designs (CVDs) consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

Generative Artificial Intelligence (Generative AI) stands as a transformative force across every industry, driving innovation in multiple use cases. Despite opportunities, integrating generative AI into enterprise settings poses unique challenges. Leveraging internal data effectively is critical for on-premises AI solutions. Building the right infrastructure with appropriate computational resources is critical. Visibility and monitoring of the entire stack is important from the operations point of view.

The Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0 solution takes the complexity out of adopting generative AI by providing prescriptive steps for deploying the underlying infrastructure for Nutanix GPT-in-a- Box 2.0. This solution combines Cisco® servers and SaaS operations with Nutanix software, utilizing the most popular Large Language Models (LLMs) to produce a fully validated AI-ready platform that can simplify and jumpstart your AI initiatives from the data center to the edge.

This document explains the Cisco Validated Design and Deployment for GPT-in-a-Box 2.0 on Cisco Compute Hyperconverged with Nutanix to deploy on-premises Generative AI applications. This solution outlines the design that supports the deployment of an innovative, flexible, and secure generative pretrained transformer (GPT) solution for Generative AI to privately run and manage organization’s choice of AI large language models (LLMs) and applications leveraging it.

Solution Overview

This chapter contains the following:

● Audience

Generative AI is reshaping industries, from dynamic marketing content to interactive virtual assistants and chatbots. However, quick provisioning of models, management, and monitoring of secure LLM end points across several teams within an organization remains a challenge. It is critical to effectively import and deploy certified LLM models and use the internal data of the organization in these applications. A robust infrastructure, observability across the stack, optimized model deployment and serving, high availability, and scaling are some of the other challenges which IT Administrators are facing today.

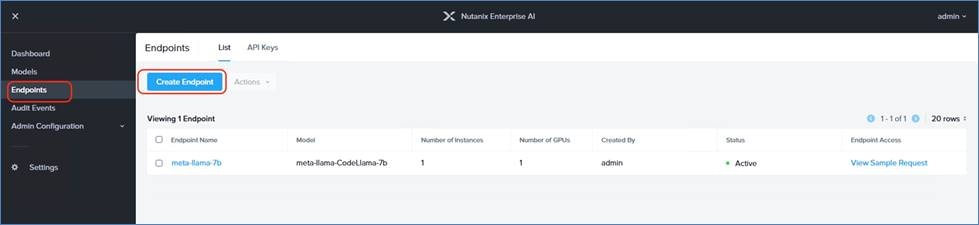

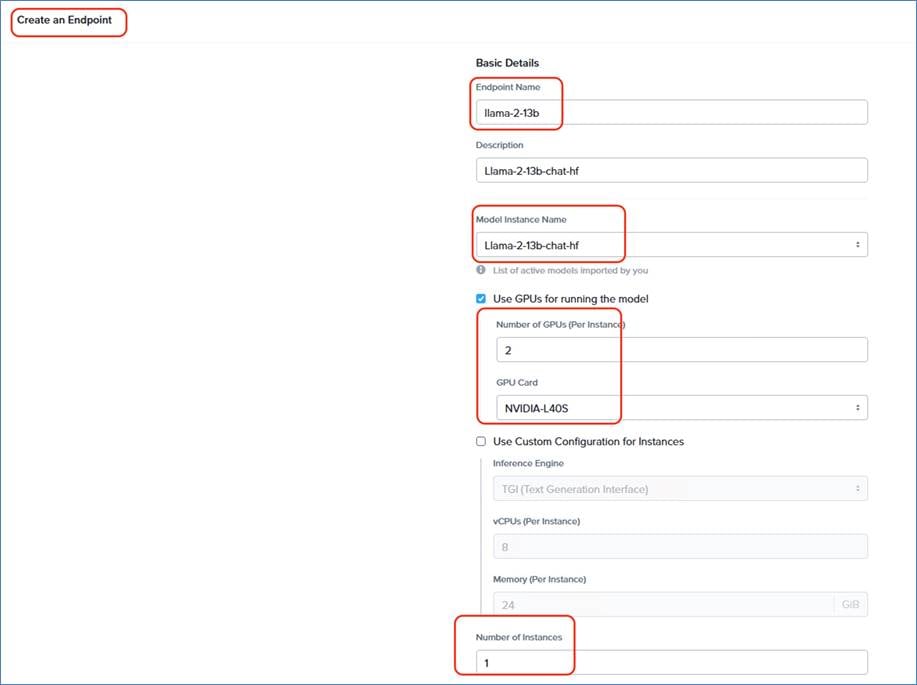

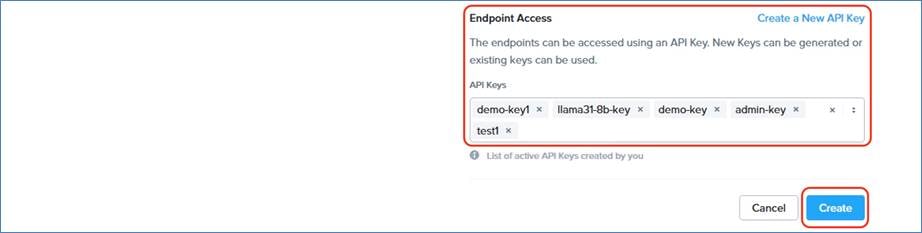

The solution highlights how enterprises can design and deploy Generative Pretrained Transformer (GPT) solution for Generative AI to privately run and manage organization’s choice of AI large language models (LLMs) and applications leveraging it. The solution effectively focusses to import, generate secure LLM end points and monitor the inference end points provisioned across the organization.

The hardware and software components are integrated so that customers can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the ground up.

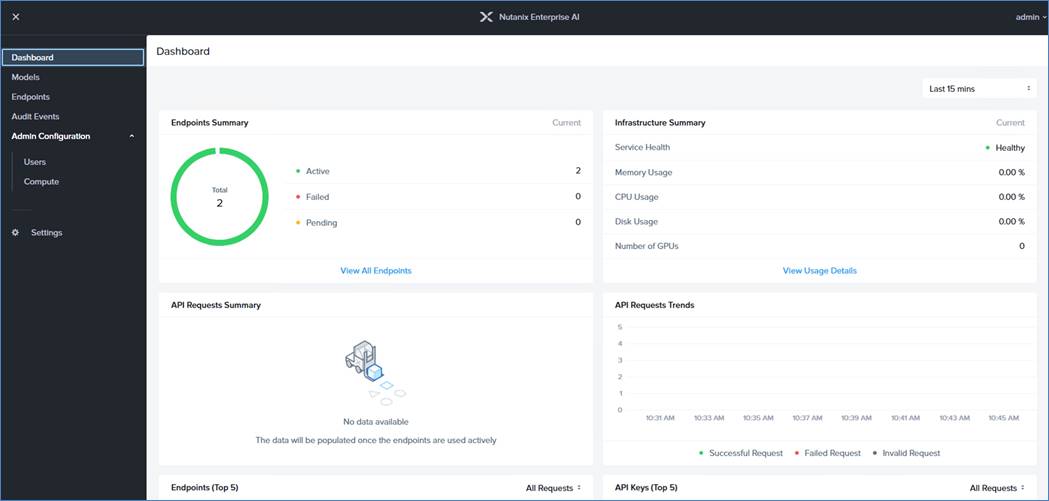

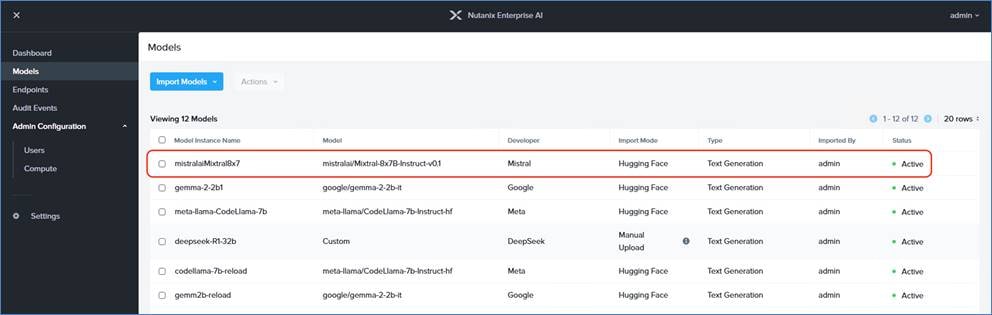

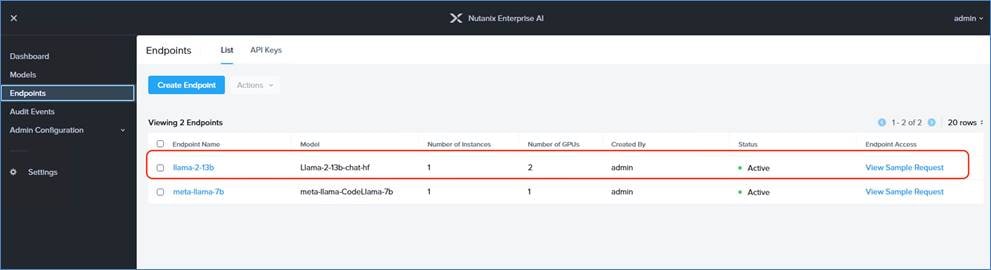

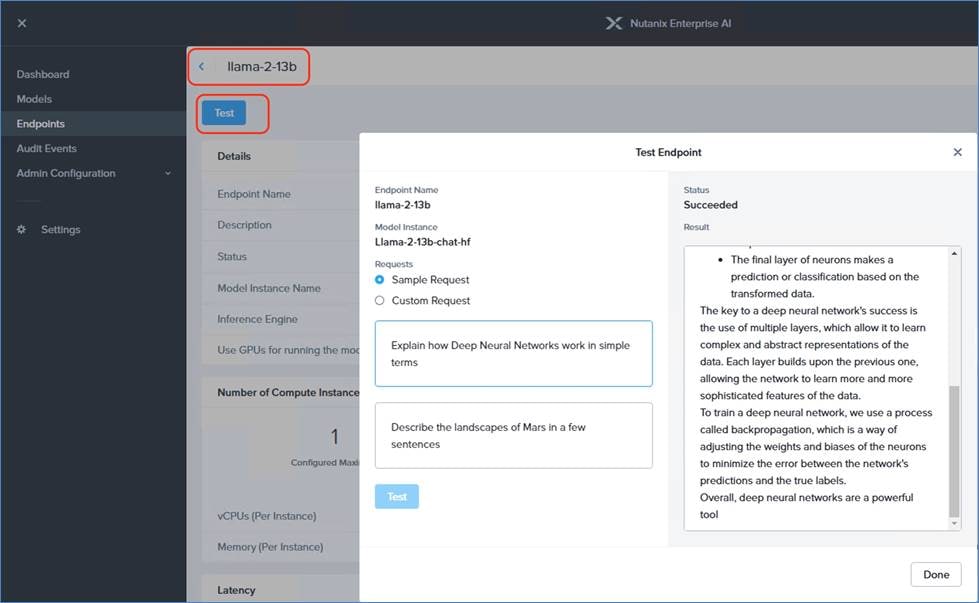

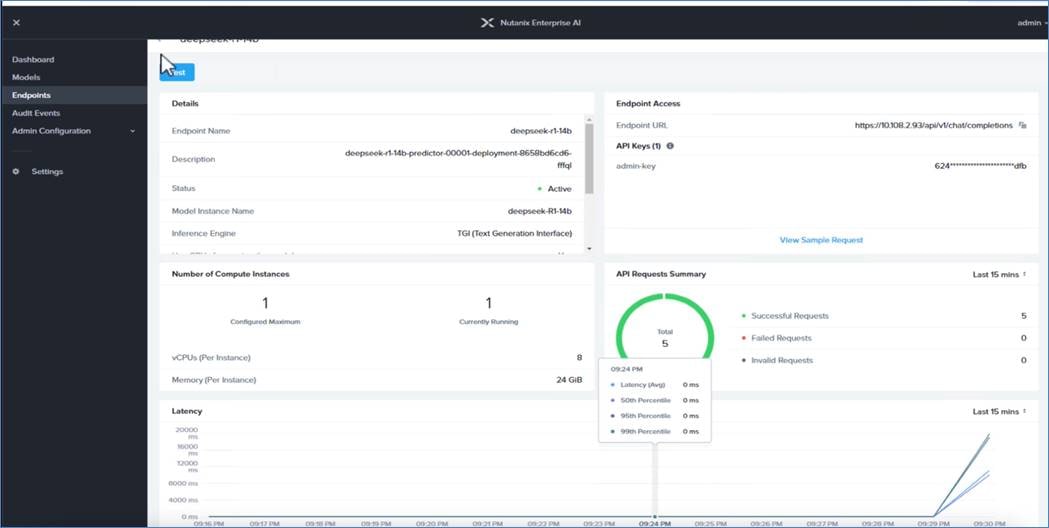

This document explains the Cisco Validated Design and Deployment details for GPT-in-a-Box 2.0 solution on Cisco Compute Hyperconverged with Nutanix. The solution presented in this document will address design, reference architecture, and deployment and validates Nutanix Enterprise AI stack providing ease of importing models and generating secure inference end points through NAI dashboard.

This Cisco Validated Design is just one example of a supported GPT configuration. You can design and build a GPT solution in many ways, and you can deviate from this specific configuration while still following CVD best practices.

GPT-in-a-Box 2.0 provides several new features through the latest offerings from Nutanix Cloud Platform and validated on the industry’s first hyperconverged solution using a modular server architecture:

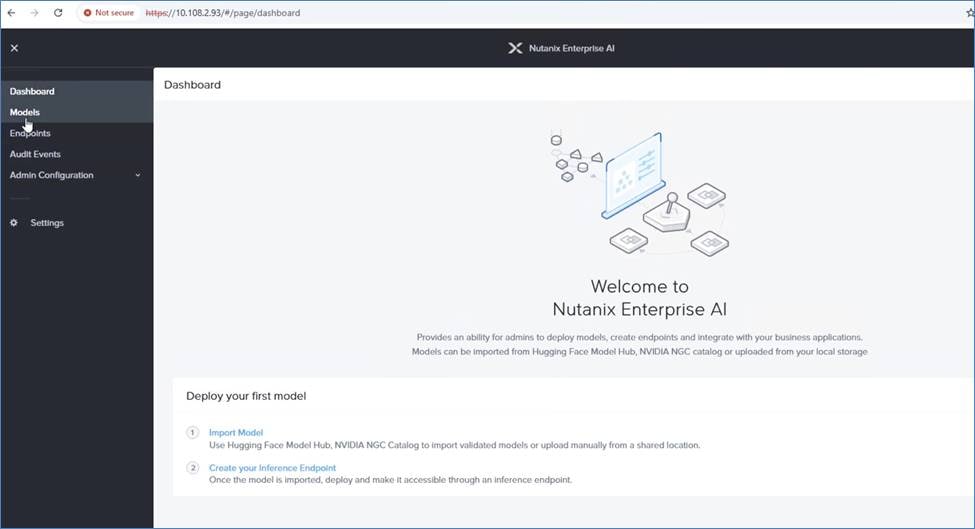

● An enterprise Grade inference endpoint provided by Nutanix Enterprise AI which provides an easy way to deploy your choice of LLMs (large language models) from leading LLM providers and create and manage secure APIs to connect your GenAI applications.

● An enterprise-grade Kubernetes solution Nutanix Kubernetes Platform Integrating seamlessly with Nutanix's hyperconverged infrastructure, NKP is ideal for businesses aiming to accelerate application modernization while maintaining operational consistency and scalability.

● Validated on Cisco Compute Hyperconverged X-Series System combines the operational simplicity of the Nutanix Cloud Platform with the flexibility and efficiency of the award-winning Cisco UCS X-Series Modular System, enabling organizations to easily deploy, scale, and upgrade hyperconverged clusters with a more sustainable, future-ready solution.

● The solution supports Cisco UCS X-Series Direct to address all your edge, retail, and small and remote-office use cases.

The intended audience of this document includes IT decision makers like CTOs and CIOs, IT architects and customers who are working on or interested in design, deployment, and life cycle management of generative AI systems and applications.

GPT-in-a-Box 2.0 on is a new turnkey solution that includes everything needed to build AI-ready infrastructure. AI applications can be easily deployed on top of GPT-in-a-Box 2.0.

Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0 solution is a reference architecture that combines:

● Nutanix GPT-in-a-Box 2.0 software-defined solution

● Cisco Compute Hyperconverged X-Series modular system

● Cisco UCS X210c M7 All-NVMe server with Cisco UCS X440p PCIE node configured with 2x NVIDIA L40S GPU configured and managed through Cisco Intersight

● Nutanix Prism Central for deploying and managing solution software components

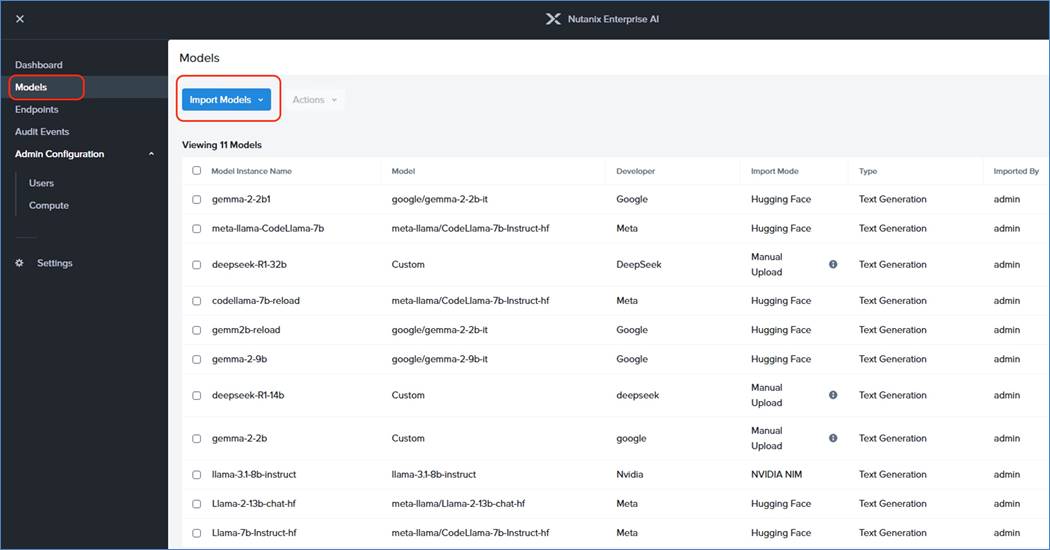

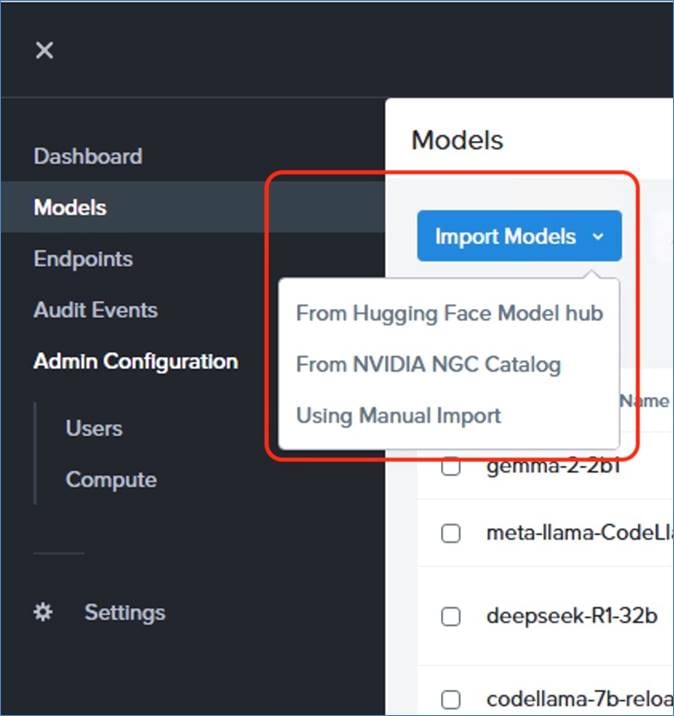

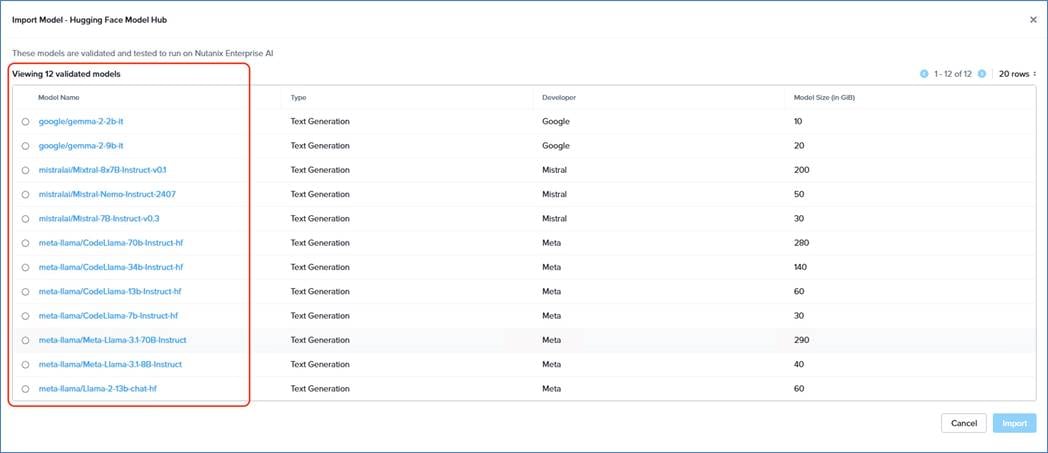

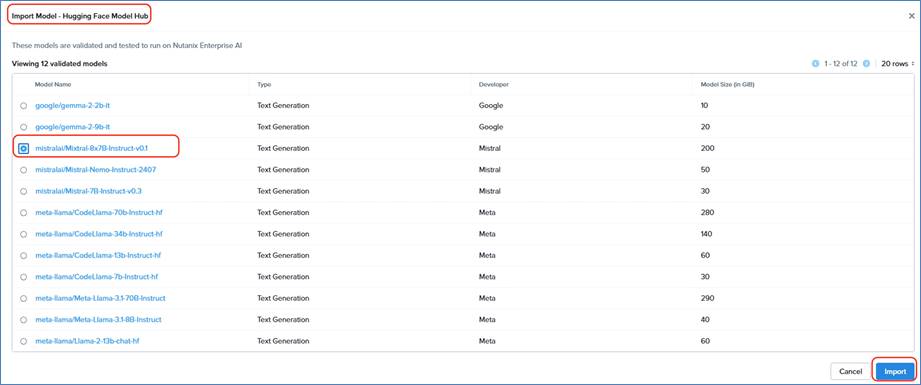

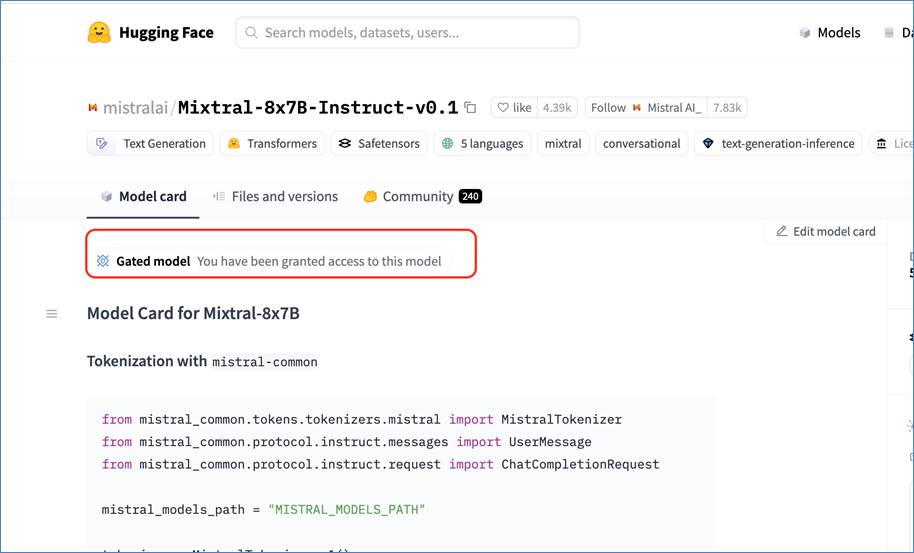

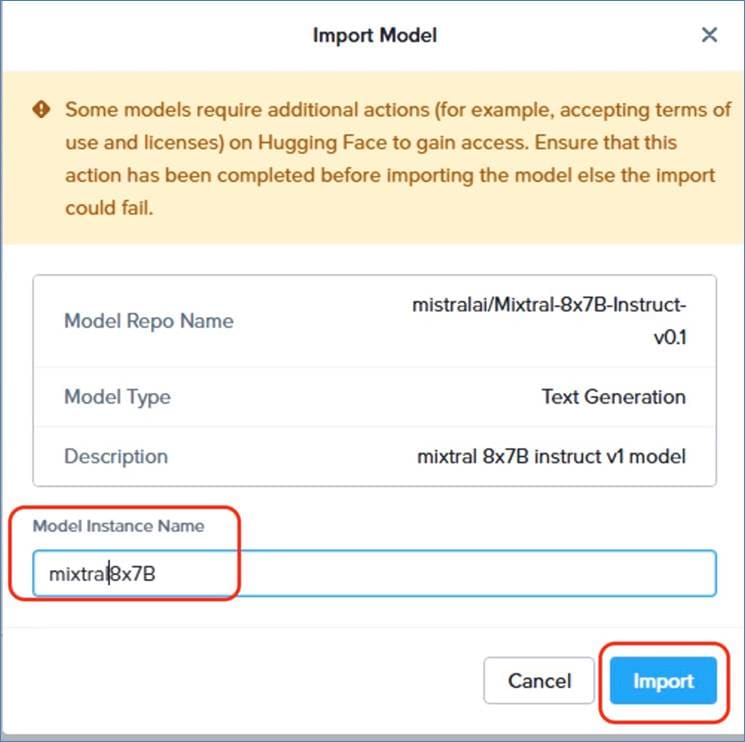

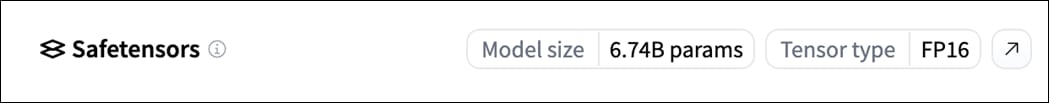

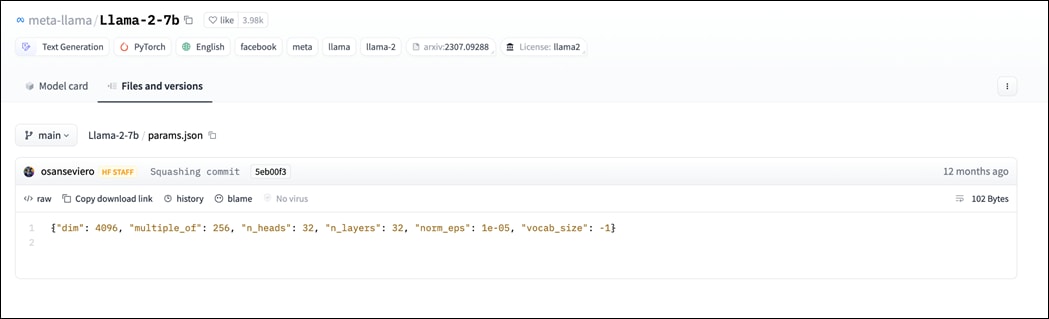

● A range of the Nutanix certified large language models which can be easily imported through Hugging Face or Nvidia NGC Catalog

GPT-in-a-Box 2.0 solution software component includes:

● Uses NKP as Kubernetes Platform and Nutanix Unified Storage (NUS)

● Leverages Nutanix Enterprise AI

● Integrates with Nutanix Unified Storage

Some highlights of the solution include the following:

● AI-ready platform to enable customers to quickly design, size, and deploy an on-premises AI solution

● Quickly deploy off-the-shelf AI applications or empower developers to build their own

● A single-cloud operating model using Nutanix Cloud Platform hyperconverged infrastructure with graphic processing units (GPUs)

● Nutanix Unified Storage (NUS) for total data management, security, privacy, and resilience

● Generate secure inference end points with imported LLM models deployed on GPU pods

● Support for popular AI/ML frameworks

● LLM freedom of choice through custom imports enabled though Nutanix Enterprise AI

Generative AI: Concepts and Components

This chapter contains the following:

● What is Generative AI Inferencing?

This chapter explains various concepts of Generative AI, including model development workflow, inferencing challenges and use cases.

Generative AI is a powerful branch of artificial intelligence that holds immense potential for addressing various challenges faced by enterprises. With generative AI, users and applications can quickly generate new content based on a variety of inputs; inputs and outputs to these models can include text, images, sounds, animation, 3D models, or other types of data. Due to the versatility of generative AI models, applications leveraging them can perform multiple tasks based on available data and inputs, increasing functionality beyond just text and image generation or chat-based Q&A.

How Does Generative AI Compare to Traditional AI?

Generative AI can create new content, chat responses, designs, synthetic data, and more. Traditional AI, on the other hand, is focused on detecting patterns, making decisions, honing analytics, classifying data, and detecting fraud.

As more organizations recognize the value of using AI to create new content, they’re now exploring large language models (LLMs) and other generator models. Since pretrained LLMs are available, known as foundation models, adopting generative AI requires less upfront training compared with traditional AI models. This results in significant cost and time savings when developing, running, and maintaining AI applications in production.

While 2023 has been the year of Generative AI with the introduction of ChatGPT and models like Stable Diffusion, the technology has been in development for some time. NVIDIA and other companies have been researching and innovating in this space for years, which has helped lead us to where we are today. Examples include StyleGAN (2018), which creates realistic images of people, and GauGAN (2019), which allows you to create fingerpaint-style images that instantly become realistic landscapes. NVIDIA has released an app based on this research called Canvas, and these technologies have been used broadly by ecosystem partners.

What is Generative AI Inferencing?

Generative AI inferencing refers to the process of using a trained generative AI model (large language models and non-large language models) to generate new data or content based on input or contextual cues. During inferencing, the model applies its learned knowledge to produce outputs that are not direct repetitions of the training data but are rather novel creations generated by the model.

The inferencing process is crucial for leveraging the generative capabilities of the models in practical applications. It allows users to obtain novel outputs by providing inputs or guiding the model's behavior based on specific requirements or constraints. The generated content can be used for various creative purposes, prototyping, or as a tool for exploration in different domains.

The term "inferencing" in the context of generative AI is associated with generating content like:

● Text Generation

◦ Storytelling: Generative models can create fictional stories, narratives, or even entire chapters of books.

◦ Poetry and Prose: AI models can generate poetic verses, prose, or creative writing.

◦ Dialogues: Conversational agents powered by generative models can produce human-like dialogues.

● Image Generation

◦ Artistic Creations: Generative Adversarial Networks (GANs) can generate visually appealing and artistic images.

◦ Style Transfer: Models can transform images into different artistic styles.

◦ Face Synthesis: GANs can create realistic faces of non-existent individuals.

● Music Composition

◦ Melody Generation: AI models can compose original melodies and music.

◦ Genre-specific Music: Generative models can create music in specific genres, mimicking different styles.

● Code Generation

◦ Source Code: AI models can generate code snippets or even entire programs based on a given task or description.

● Language Translation

◦ Multilingual Text: Models like OpenAI's GPT can generate text in multiple languages.

◦ Translation: AI models can translate text from one language to another while preserving context.

● Content Summarization

◦ Text Summaries: Generative models can summarize large blocks of text into concise and coherent summaries.

● Content Completion

◦ Sentence Completion: AI models can complete sentences or paragraphs in a way that fits the context.

◦ Text Expansion: Generative models can expand on given ideas or concepts.

● Product Descriptions

◦ E-commerce Descriptions: AI models can generate product descriptions for e-commerce websites.

● Scientific Writing

◦ Research Abstracts: Models can generate abstracts or summaries of scientific research papers.

● Conversational Agents

◦ Chatbot Responses: AI-powered chatbots can generate responses in natural language during conversations.

Generative AI is a broad category that includes models designed to generate new and original content. This content can be in various forms, such as images, text, audio, or even video. Large language models are a specific subset of generative AI designed to understand and generate human language. They are primarily focused on natural language processing tasks.

Large language models (LLMs) are a class of natural language processing models which uses deep learning methodologies to comprehend and generate human language. These models are trained in vast amounts of textual data to learn the patterns, structures, and nuances of language.

One of the notable examples of LLMs is the GPT (Generative Pre-trained Transformer) series developed by OpenAI.

Key features of large language models include:

● Scale: LLMs are characterized by their large number of parameters, often ranging from tens of millions to billions. The scale of these models allows them to capture complex linguistic patterns and generate diverse and contextually relevant text.

● Pre-training: LLMs are typically pre-trained on a massive corpus of text data before being fine-tuned for specific tasks. During pre-training, the model learns to predict the next word in a sentence or fill in missing words, which helps it acquire a broad understanding of language.

● Transformer Architecture: LLMs, including GPT, are built on the Transformer architecture, which enables efficient processing of sequential data. Transformers use self-attention mechanisms to capture relationships between words in a sentence, facilitating better context understanding.

● Transfer Learning: LLMs leverage transfer learning, where the knowledge gained during pre-training on a general language understanding task is transferred to specific tasks with minimal additional training. This approach allows these models to excel in a variety of natural language processing (NLP) applications.

● Fine-tuning: After pre-training, LLMs can be fine-tuned for specific tasks, such as text classification, language translation, summarization, and more. This fine-tuning process adapts the model to the nuances of the target application.

● Diverse Applications: Large Language Models find applications in a wide range of tasks, including but not limited to natural language understanding, text generation, sentiment analysis, machine translation, question answering, and chatbot development.

The development of Large Language Models has significantly advanced the field of natural language processing, enabling the creation of sophisticated AI systems capable of understanding, and generating human-like text across various domains. However, ethical considerations, biases in training data, and potential misuse are important considerations associated with the deployment of these models.

Model Parameters

Model parameters are the internal variables or weights that the model learns during the training process. Weights are the coefficients that scale the input features in a neural network. In the context of LLMs, these weights determine the strength of connections between neurons in different layers. For example, in a transformer model, weights are associated with the attention mechanisms and transformations applied to input sequences.

LLMs often consist of multiple layers, each with its set of weights and biases. In transformer architectures, these layers may include self-attention mechanisms and feedforward neural networks. The parameters of each layer capture different aspects of the input data.

The total number of parameters in an LLM is a critical factor in its capacity to capture complex language patterns and nuances.

Typical Generative AI workflow starts with aligning to business objectives while maintaining a concise and accurate technical focus in every stage.

Business Strategy and Use Case Definition: Define generative AI objectives aligning with business goals.

● Key Tasks

◦ Identify use cases.

◦ Clearly define the generative task, whether it's image generation, text generation, style transfer, etc.

◦ Establish goals and success metrics.

Data Preparation and Curation: Ensure high-quality, well-managed dataset availability.

● Key Tasks

◦ Gather a diverse and representative dataset for training the generative model.

◦ Data cleansing and labeling.

◦ Data aggregation and preprocessing.

◦ Increase the diversity of the training data through techniques like rotation, scaling, or flipping.

◦ Anonymization or synthetic data generation if required.

◦ Leveraging MLOps platforms for efficient data management.

Model Training: Utilize accelerated infrastructure for efficient training.

● Key Tasks

◦ Training from scratch or selecting pretrained models.

◦ Allocating heavy computational resources.

◦ Optimizing performance with validated, high-performance infrastructure.

Model Customization: Fine-tuning, prompt learning (including prompt tuning and P-tuning), transfer learning, reinforcement learning.

● Key Tasks

◦ Adapt pretrained models to specific business needs.

◦ Implement customization methods based on requirements.

Inferencing: Deploy and operate trained models for ongoing generation.

● Key Tasks

◦ Scale computing resources (scaling up or out) based on demand.

◦ Iterate on inferencing based on new data and customization opportunities.

◦ Continuous monitoring of inferencing performance.

◦ Identification and optimization of opportunities for further customization and fine-tuning.

This workflow emphasizes technical aspects, highlighting the importance of infrastructure efficiency, model customization techniques, and ongoing optimization in the inferencing phase.

Technology Overview

This chapter contains the following:

● Cisco Compute Hyperconverged X9508 Chassis

● Cisco Compute Hyperconverged with Nutanix

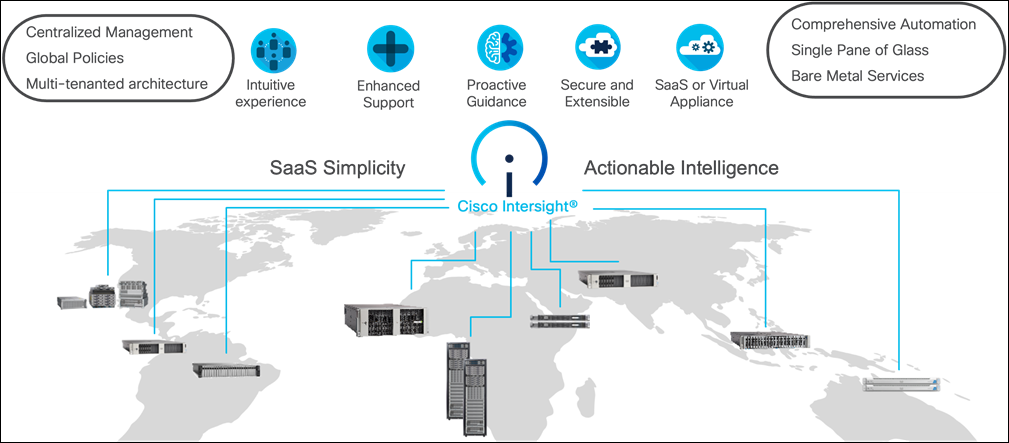

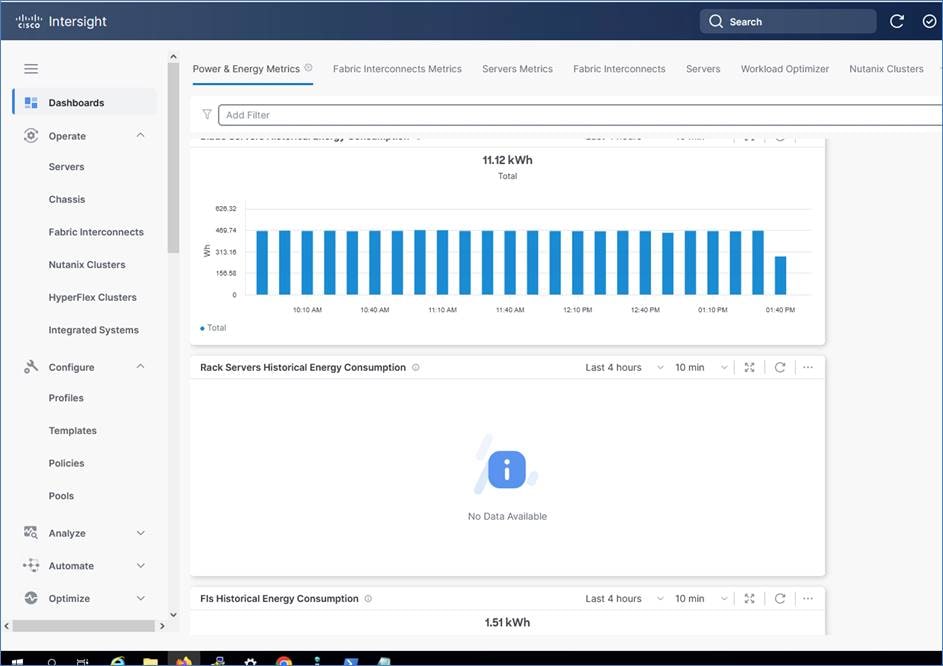

As applications and data become more distributed from core data center and edge locations to public clouds, a centralized management platform is essential. IT agility will be a struggle without a consolidated view of the infrastructure resources and centralized operations. Cisco Intersight provides a cloud-hosted, management and analytics platform for all Cisco Compute for Hyperconverged, Cisco UCS, and other supported third-party infrastructure deployed across the globe. It provides an efficient way of deploying, managing, and upgrading infrastructure in the data center, ROBO, edge, and co-location environments.

Cisco Intersight provides:

● No Impact Transition: Embedded connector allows you to start consuming benefits without forklift upgrade.

● SaaS/Subscription Model: SaaS model provides centralized, cloud-scale management and operations across hundreds of sites around the globe without the administrative overhead of managing the platform.

● Enhanced Support Experience: A hosted platform allows Cisco to address issues platform-wide with the experience extending into TAC supported platforms.

● Unified Management: Single pane of glass, consistent operations model, and experience for managing all systems and solutions.

● Programmability: End to end programmability with native API, SDK’s and popular DevOps toolsets will enable you to deploy and manage the infrastructure quickly and easily.

● Single point of automation: Automation using Ansible, Terraform, and other tools can be done through Intersight for all systems it manages.

● Recommendation Engine: Our approach of visibility, insight and action powered by machine intelligence and analytics provide real-time recommendations with agility and scale. Embedded recommendation platform with insights sourced from across Cisco install base and tailored to each customer.

For more information, go to the Cisco Intersight product page on cisco.com.

Cisco Intersight Virtual Appliance and Private Virtual Appliance

In addition to the SaaS deployment model running on Intersight.com, you can deploy on-premises options separately. The Cisco Intersight virtual appliance and Cisco Intersight private virtual appliance are available for organizations that have additional data locality or security requirements for managing systems. The Cisco Intersight virtual appliance delivers the management features of the Cisco Intersight platform in an easy-to-deploy VMware Open Virtualization Appliance (OVA), Microsoft Hyper-V Server or a Nutanix AHV virtual machine, which allows you to control the system details that leave your premises. The Cisco Intersight private virtual appliance is provided in a form factor designed specifically for users who operate in disconnected (air gap) environments. The private virtual appliance requires no connection to public networks or to Cisco network.

Licensing Requirements

The Cisco Intersight platform uses a subscription-based license with two different tiers. You can purchase a subscription duration of 1, 3, or 5 years and choose the required Cisco UCS server volume tier for the selected subscription duration. You can purchase any of the following higher-tier Cisco Intersight licenses using the Cisco ordering tool:

● Cisco Intersight Essentials: Essentials includes all the functions of the Base license plus additional features, including Cisco UCS Central software and Cisco Integrated Management Controller (IMC) supervisor entitlement, policy-based configuration with server profiles, firmware management, and evaluation of compatibility with the Cisco Hardware Compatibility List (HCL).

● Cisco Intersight Advantage: Advantage offers all the features and functions of the Base and Essentials tiers. It also includes storage widgets and cross-domain inventory correlation across compute, storage, and virtual environments (VMware ESXi). OS installation for supported Cisco UCS platforms is also included.

Servers in the Cisco Intersight Managed Mode require at least the Essentials license. For more information about the features provided in the various licensing tiers, go to: https://www.intersight.com/help/saas/getting_started/licensing_requirements/lic_intro

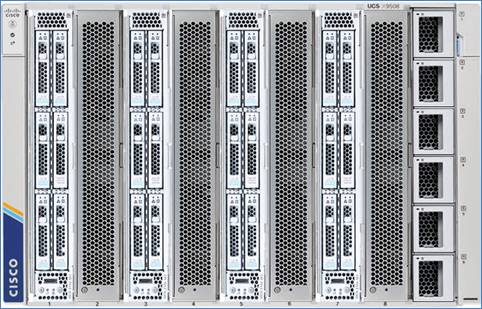

Cisco Compute Hyperconverged X9508 Chassis

Cisco and Nutanix have partnered to introduce the industry’s first hyperconverged solution using a modular server architecture. The Cisco Compute Hyperconverged X-Series System combines the operational simplicity of the Nutanix Cloud Platform with the flexibility and efficiency of the award-winning Cisco UCS X-Series Modular System, enabling organizations to easily deploy, scale, and upgrade hyperconverged clusters with a more sustainable, future-ready solution. There is also support for Cisco UCS X-Series Direct to address all your edge, retail, and small and remote-office use cases.

The Cisco Compute Hyperconverged X-Series System with Nutanix combines the operational simplicity of Nutanix Cloud Platform (NCP) with the efficiency, flexibility, and sustainability of the Cisco UCS X-Series Modular System. The X-Series system comprises modular components that can be assembled into systems through the Cisco Intersight cloud-operations platform.

The Cisco Compute Hyperconverged X-Series System is engineered to be adaptable and future-ready. With a midplane-free design, the system achieves I/O connectivity, using frontloading, vertically oriented compute and accelerator nodes that intersect with horizontally oriented I/O connectivity modules in the rear of the chassis. In the front of the chassis, Cisco Compute Hyperconverged X210c M7 All NVMe Nodes with Intel® Xeon® Scalable Processors offer compute density and storage capacity in a single form factor and the Cisco UCS X440p PCIe Node supports adding GPUs with PCIe Gen 4.0 with the Cisco UCS X9416 X-Fabric Module.

In the rear of the chassis, a unified Ethernet fabric is supplied with Cisco UCS 9108 100G Intelligent Fabric Modules and the Cisco UCS X9416 X-Fabric Module, part of the Cisco UCS X-Fabric Technology, supply PCIe Gen 4 industry-standard protocols for GPU accelerators. Interconnections can be easily updated with new modules supporting faster Ethernet, PCIe Gen 5, and CXL.

Cisco Compute Hyperconverged X-Series Direct is a self-contained system with a pair of integrated fabric interconnects and can be used if you do not want top-of-rack fabric interconnects and need to support edge, retail, and small or remote-office use cases.

The X-Series system was designed with sustainability in mind and is equipped with power-delivery and cooling innovations designed to reduce material waste and energy consumption.

Features and Benefits

● 7-Rack-Unit (7RU) chassis has 8x front-facing flexible slots. These can house a combination of hyperconverged nodes, compute nodes, and a pool of future I/O resources that may include GPU accelerators, disk storage, and nonvolatile memory.

◦ 2x 9108 25G Intelligent Fabric Modules (IFMs) at the top of the chassis that connect the chassis to upstream 6400 Series Fabric Interconnects or 6500 Series fabric interconnects. Each IFM features:

◦ Up to 100 Gbps of unified fabric connectivity per compute node.

◦ 8x 25-Gbps SFP28 uplink ports / 8x 100-Gbps SFP28 uplink ports. The unified fabric carries management traffic to the Cisco Intersight cloud-operations platform, Fibre Channel over Ethernet (FCoE) traffic, and production Ethernet traffic to the fabric interconnects.

● At the bottom are slots ready to house future I/O modules that can flexibly connect the compute modules with I/O devices. The connectivity is named Cisco UCS X-Fabric technology because the “X” is a variable that can evolve with new technology developments.

● Six 2800W Power Supply Units (PSUs) provide 54 V power to the chassis with N, N+1, and N+N redundancy. A higher voltage allows efficient power delivery with less copper and reduced power loss.

● Efficient, 4x100mm, dual counter-rotating fans deliver industry-leading airflow and power efficiency. Optimized thermal algorithms enable different cooling modes to best support the network environment. Cooling is modular so that future enhancements can potentially handle open-or closed-loop liquid cooling to support even higher-power processors.

|

|

|

Benefits

Since we first delivered the Cisco Unified Computing System™ (Cisco UCS) in 2009, our goal has been to simplify the data center. We pulled management out of servers and into the network. We simplified multiple networks into a single unified fabric. And we eliminated network layers in favor of a flat topology wrapped into a single unified system. With the Cisco Compute Hyperconverged X-Series System, we take that simplicity to the next level:

● Simplified operations with a solution that combines the operational simplicity of hyperconverged software with the efficiency and flexibility of a modular system.

● Increased agility and response to the dynamic needs of your business with a solution that is inherently easy to scale and includes support for future generations of processors, storage, accelerators, networking technologies, and SaaS innovations.

● Improved sustainability with a solution that is engineered to be more energy efficient and can be easily upgraded and reused, lowering the consumption of power and raw materials when compared to traditional rack servers.

Cisco UCS X210c M7 All-NVMe/All-Flash Servers

The Cisco Compute Hyperconverged X210c M7 All NVMe Node delivers performance, flexibility, and optimization for deployments in data centers, in the cloud, and at remote sites. This enterprise-class server offers market-leading performance, versatility, and density without compromise for workloads. Up to eight hyperconverged nodes can reside in the 7-Rack-Unit (7RU) Cisco Compute Hyperconverged X9508 Chassis, offering one of the highest densities of compute, I/O, and storage per rack unit in the industry.

For more information, see the Cisco Compute Hyperconverged X210c M7 All NVMe Node Data Sheet.

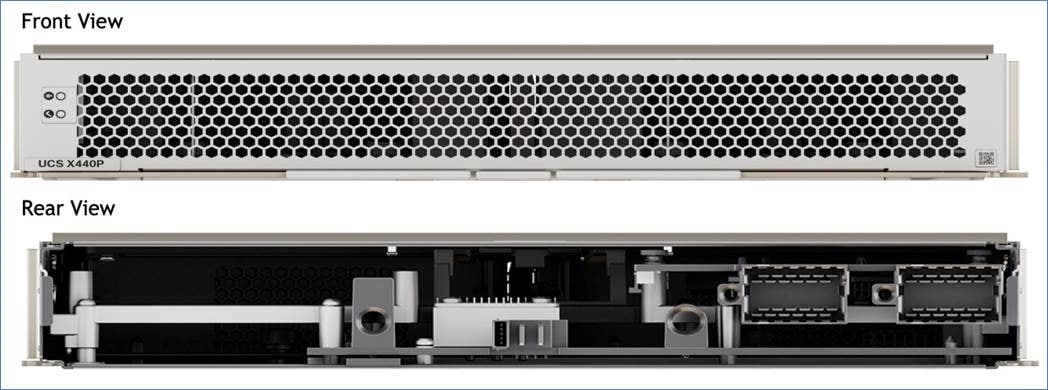

Cisco UCS X440p PCIe Node

The Cisco UCS X440p PCIe Node is the first PCIe resource node to integrate into the Cisco UCS X-Series Modular System. The Cisco UCS X9508 Chassis has eight node slots, up to four of which can be X440p PCIe nodes when paired with a Cisco UCS X210c M6 Compute Node. The Cisco UCS X440p PCIe Node supports two x16 full-height, full-length dual slot PCIe cards, or four x8 full-height, full-length single slot PCIe cards and requires both Cisco UCS 9416 X-Fabric modules for PCIe connectivity. This provides up to 16 GPUs per chassis to accelerate your applications with the Cisco UCS X440p Nodes. If your application needs even more GPU acceleration, up to two additional GPUs can be added on each Cisco UCS X210c compute node.

Cisco UCS X440p supports the following GPU options:

● NVIDIA H100 NVL, 400W, 94GB, 2-slot FHFL GPU

● NVIDIA H100: 350W, 80GB, 2-slot FHFL GPU

● NVIDIA L40S: 350W, 48GB, 2-slot FHFL GPU

● NVIDIA L4 Tensor Core, 70W, 24GB

● NVIDIA A16 PCIE 250W 4X16GB

For more information, see the Cisco Compute Hyperconverged 440p PCIe Node.

Cisco Compute Hyperconverged with Nutanix

Cisco and Nutanix have come together to offer the industry’s most simple, comprehensive HCI solution. The Cisco Compute Hyperconverged with Nutanix solution combines the Cisco Unified Computing System (UCS) innovative server, networking, and SaaS management with Nutanix’s leading HCI foundation, offering a fully integrated and validated system with flexible deployment options and a unified, enhanced support model backed by two world-class organizations.

The solution offers the following key benefits:

● Complete simplicity: The solution offers both SaaS and on-premises management options and includes day-0 through day-N operations, including server profiles for compute, storage, and networking customized for Nutanix to help simplify and accelerate cluster deployment and deliver better performance and resiliency. This also includes preinstalled software configured with a choice of hypervisor for a faster, easier start. Cisco and Nutanix combined and complementary cloud operating models provide control, visibility, and consistency across highly distributed IT environments, including fully integrated cluster installation, expansion, and end-to-end software and firmware upgrades. To simplify the buying experience, the complete solution can be ordered and delivered from Cisco.

● Complete flexibility: The Cisco Compute Hyperconverged with Nutanix solution addresses modern applications and use cases, offering multiple choices in UCS server deployment options, the latest accelerator and drive technologies, and SaaS innovations from two industry powerhouses, including integrations with the leading public cloud providers. Additionally, the solution incorporates Cisco's best-in-class networking technology, including Cisco ACI integrations, to enhance performance and resiliency for data-intensive workloads in hybrid cloud environments.

● Complete resiliency: The joint solution utilizes only enterprise-grade components and offers augmented system protection with a collaborative support model and proactive, automated resilience and security capabilities. This includes integrated support systems and case notes for faster triage. Any time log files are uploaded, or case notes are generated, that information is shared between Cisco TAC and Nutanix support, enabling enhanced collaboration among support teams to resolve issues faster and provide an improved customer experience.

● Our policy-based approach minimizes human error and configuration drift, resulting in consistent, reliable cluster deployments.

● It also enforces overall security posture through centralized authorizations, preventing tampering of configurations.

This solution provides a reference architecture for GPT-In-Box in the enterprises using NVIDIA L40S GPUs.

NVIDIA L40S GPU

The NVIDIA L40S GPU is the most powerful universal GPU for the data center, delivering end-to-end acceleration for the next generation of AI-enabled applications—from gen AI, LLM inference, small-model training and fine-tuning to 3D graphics, rendering, and video applications.

The L40S GPU is optimized for 24/7 enterprise data center operations and designed, built, tested, and supported by NVIDIA to ensure maximum performance, durability, and uptime. The L40S GPU meets the latest data center standards, is Network Equipment-Building System (NEBS) Level 3 ready, and features secure boot with root of trust technology, providing an additional layer of security for data centers.

Table 1. NVIDIA L40S Tensor Core GPU Specifications

|

|

L40S PCIe GPU |

| GPU Architecture |

NVIDIA Ada Lovelace Architecture |

| GPU Memory |

48GB GDDR6 with ECC |

| GPU Memory Bandwidth |

864GB/s |

| Interconnect Interface |

PCIe Gen4 x16: 64GB/s bidirectional |

| NVIDIA Ada Lovelace Architecture-Based CUDA® Cores |

18,176 |

| NVIDIA Third-Generation RT Cores |

142 |

| NVIDIA Fourth-Generation Tensor Cores |

568 |

| RT Core Performance TFLOPS |

209 |

| FP32 TFLOPS |

91.6 |

| TF32 Tensor Core TFLOPS |

183 | 366 |

| BFLOAT16 Tensor Core TFLOPS |

362.05 | 733 |

| FP16 Tensor Core |

362.05 | 733 |

| FP8 Tensor Core |

733 | 1,466 |

| Peak INT8 Tensor TOPS |

733 | 1,466 |

| Peak INT4 Tensor TOPS |

733 | 1,466 |

| Form Factor |

4.4" (H) x 10.5" (L), dual slot |

| Display Ports |

4x DisplayPort 1.4a |

| Max Power Consumption |

350W |

| Power Connector |

16-pin |

| Thermal |

Passive |

| Virtual GPU (vGPU) Software Support Yes |

Yes |

| NVENC | NVDEC |

3x | 3x (includes AV1 encode and decode) |

| Secure Boot With Root of Trust |

Yes |

| NEBS Ready |

Level 3 |

| MIG Support |

No |

| NVIDIA® NVLink® Support |

No |

This chapter contains the following:

● Nutanix Files and Objects Design

● Backup and Disaster Recovery

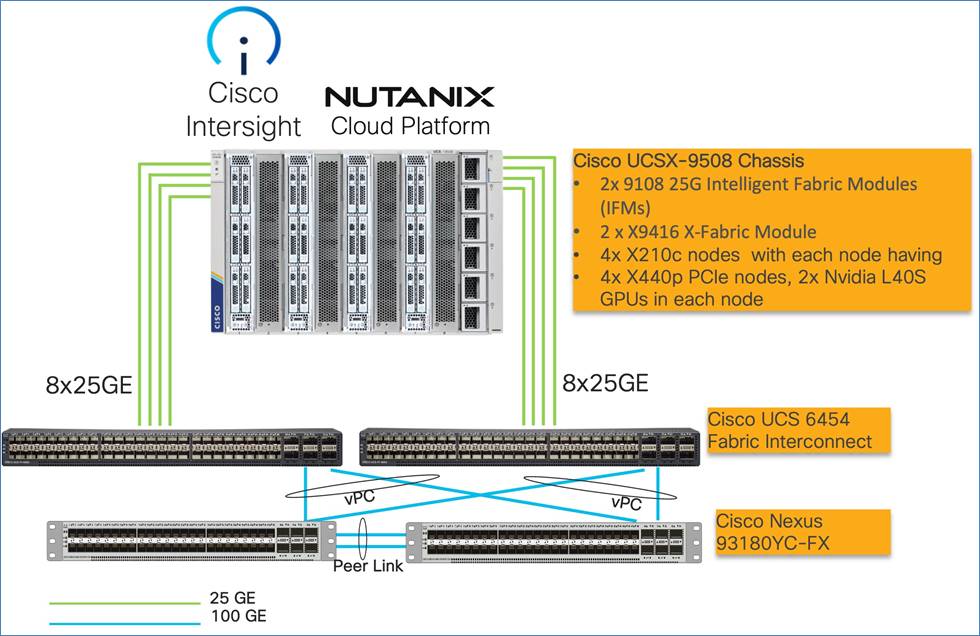

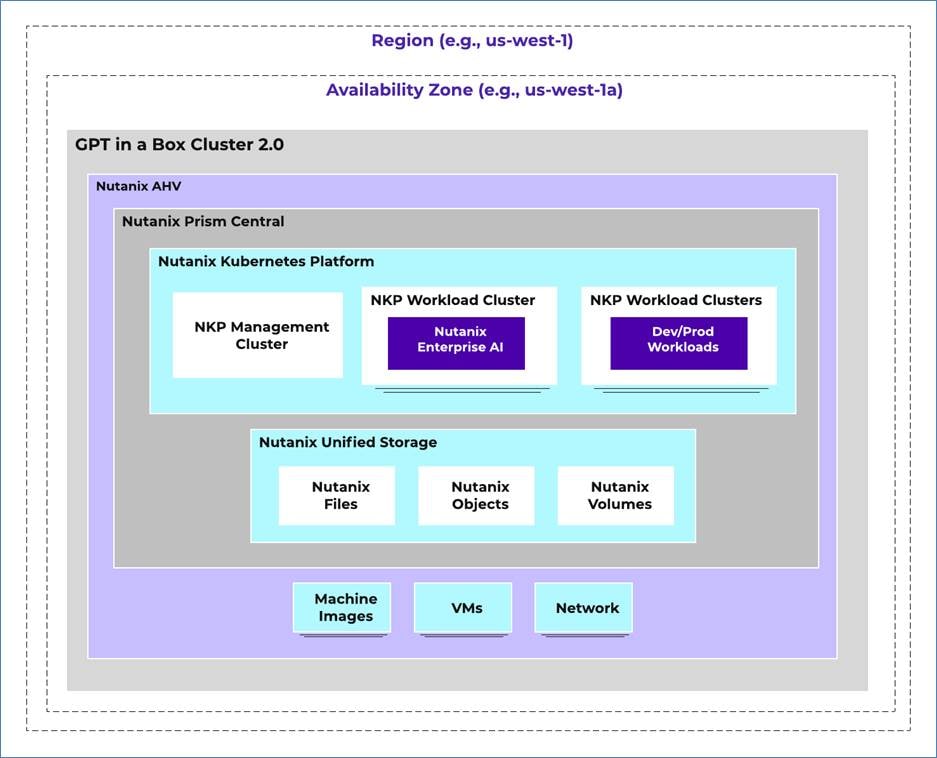

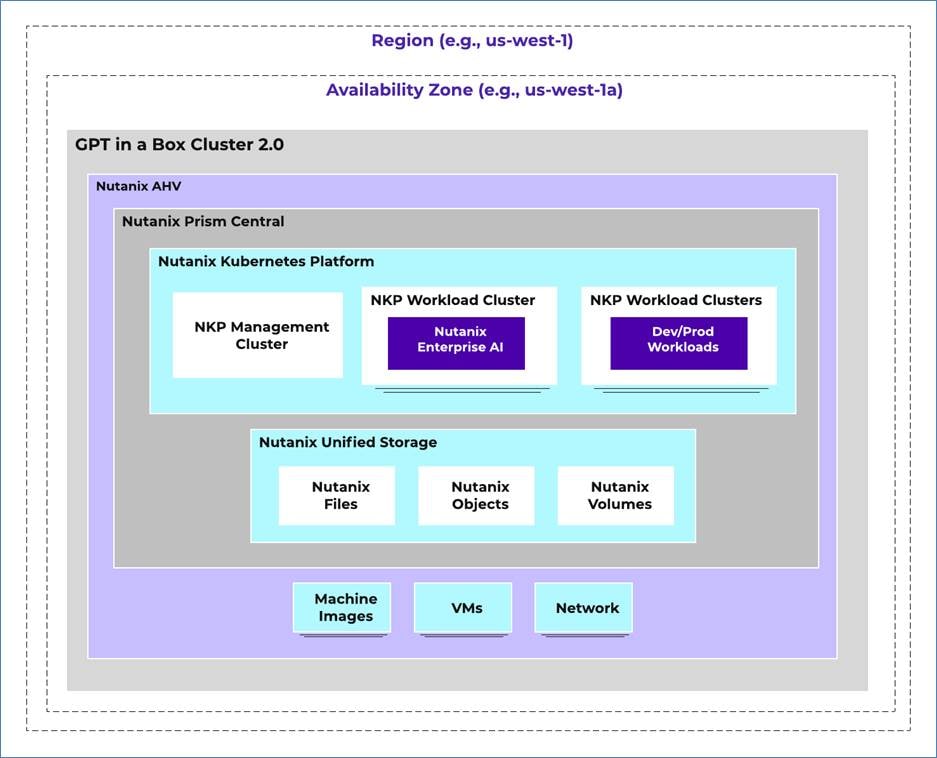

This solution provides a foundational reference architecture for AI-ready platform to enable you to quickly design, size, and deploy an on-premises AI solution. The key design components configured in this solution are elaborated in Figure 5.

This design includes the following hardware and software components:

● Single availability zones (AZs) in a single region in an on-premises Cisco Compute Hyperconverged (CCHC) with Nutanix cluster.

◦ The CCHC with Nutanix (AHV) cluster deployed on Cisco X-Series chassis with 4x X210C All NVMe compute node each paired with UCS X440p PCIe node each equipped with 2x NVIDIA L40S: 350W, 48GB GPUs.

● The CCHC with Nutanix cluster hosts the following key Nutanix services:

◦ Nutanix Unified Storage (NUS).

◦ Nutanix Kubernetes Platform designed to simplify deployment, scaling, and management of containerized applications across hybrid and multicloud environments, integrating seamlessly with Nutanix's hyperconverged infrastructure.

◦ An enterprise Grade inference endpoint provided by Nutanix Enterprise AI which provides an easy way to deploy your choice of LLMs (large language models) from leading LLM providers and create and manage secure APIs to connect your GenAI applications.

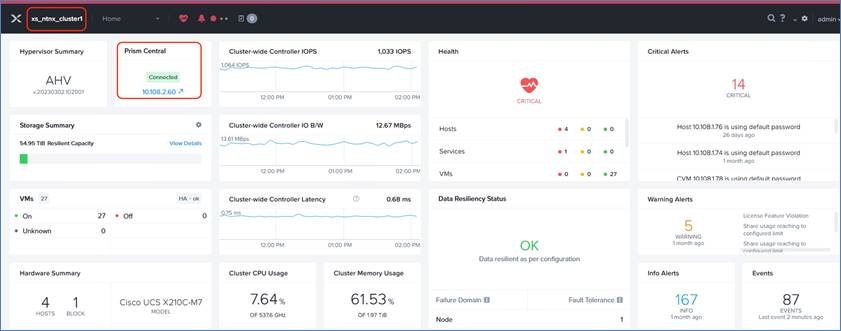

Note: Nutanix Prism Central was hosted on same Nutanix AHV Cluster. Prism Central can be deployed either on the existing cluster or on a separate AHV based Nutanix cluster.

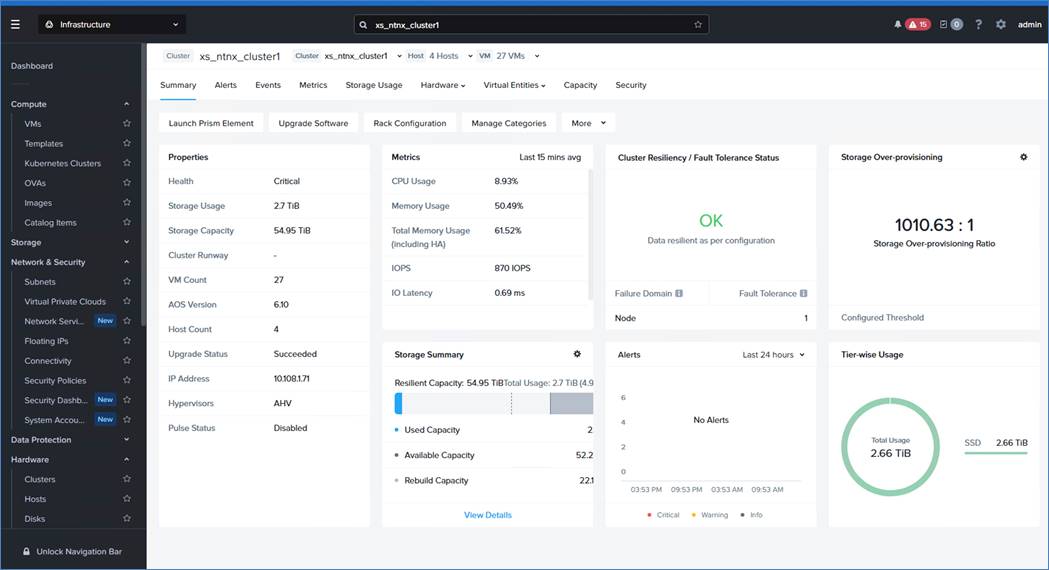

The deployment architecture for Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0 is detailed in the figure below. The entire Day 0 deployment is managed through workflow defined in Nutanix Foundation Central.

CCHC with Nutanix cluster for GPT-in-a-box 2.0 is managed through Prism Central deployed on the same Nutanix AHV cluster. Prism Central can also be deployed on a separate AHV based Nutanix Cluster.

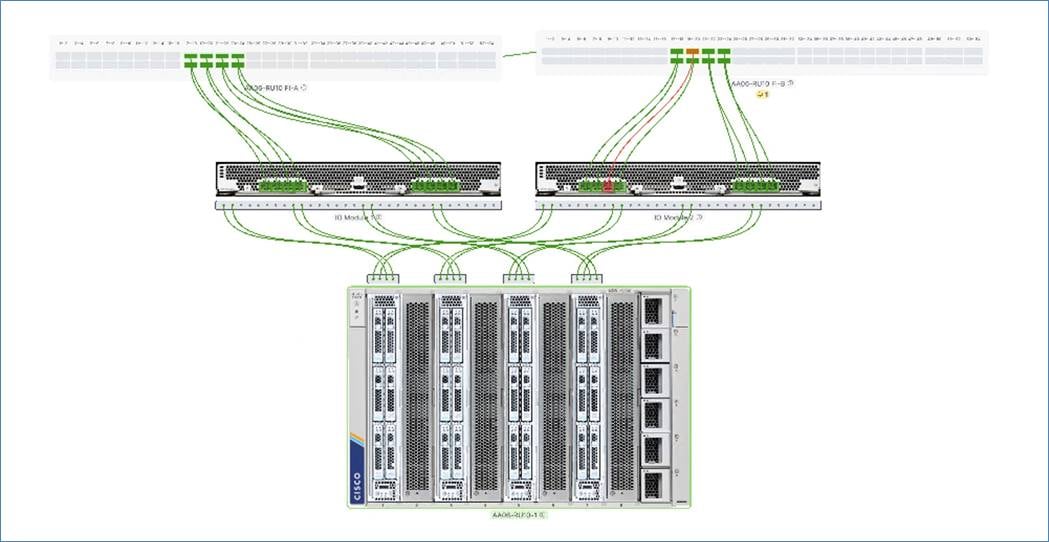

The Cisco UCS X-Series system is connected to a pair of Cisco UCS 6536 Fabric Interconnect managed through Cisco Intersight.

Each X210c M7 All-NVMe server is configured with:

● 2x Intel I6442Y processor (2.6GHz/225W 24C/60MB).

● 1 TB DDR5 memory (32x 32GB DDR5-4800 RDIMM).

● 2x 240GB M.2 card managed through M.2 RAID controller.

● 6x 3.8 TB NVMe.

● 1x Cisco VIC 15420 enabling up to 50 Gbps of unified fabric connectivity to each of the chassis Intelligent Fabric Modules (IFMs) for 100 Gbps connectivity per server.

● 2x NVIDIA L40S: 350W, 48GB GPUs.

Go to the Bill of Materials (BoM) section for the complete specifications of the infrastructure deployed to validate this solution.

Figure 6 illustrates the hardware deployment architecture for Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box.

The solution features a single Nutanix cluster that hosts the following services, among others:

● Nutanix management components, including Prism Central

● NUS to provide NFS storage and S3-compatible storage capabilities

● NKP cluster

● Nutanix Enterprise AI

Software Revisions

Table 2 lists the software revisions for various components of the solution.

| Device |

Image Bundle |

Comments |

| Cisco UCS 6536 Fabric Interconnect |

4.3(4.240066) |

Cisco UCS GA release for infrastructure including FIs and Server Firmware |

| Cisco Compute Hyperconverged X210c M7 All NVMe Node |

5.2(2.240074) |

4x Cisco Compute Hyperconverged X210c M7 All NVMe Node |

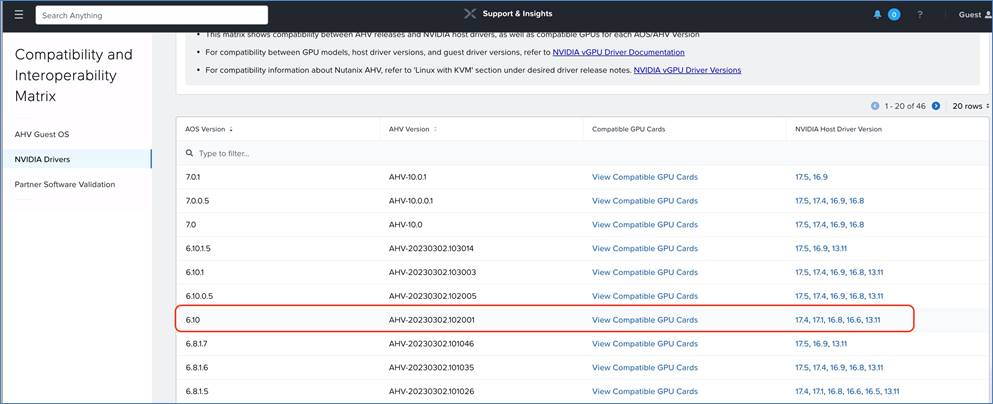

| NVIDIA L40S: 350W, 48GB GPUs |

lcm_nvidia_aie_20230302.102001_550.127.06 |

Download from the Nutanix Portal |

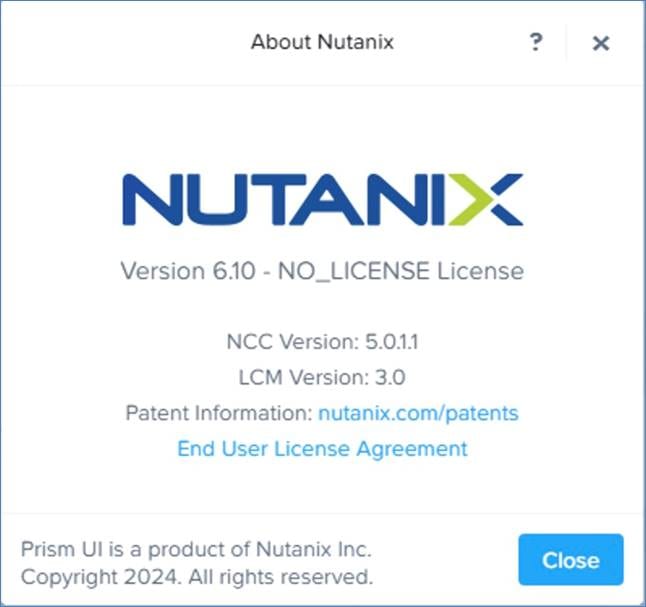

| Nutanix AOS/AHV Cluster on UCS Managed X-Series modular system |

6.10 |

|

| Prism Central hosted on separate Nutanix AHV cluster |

2024.3 |

|

| Nutanix Kubernetes Platform (NKP) |

2.13 |

|

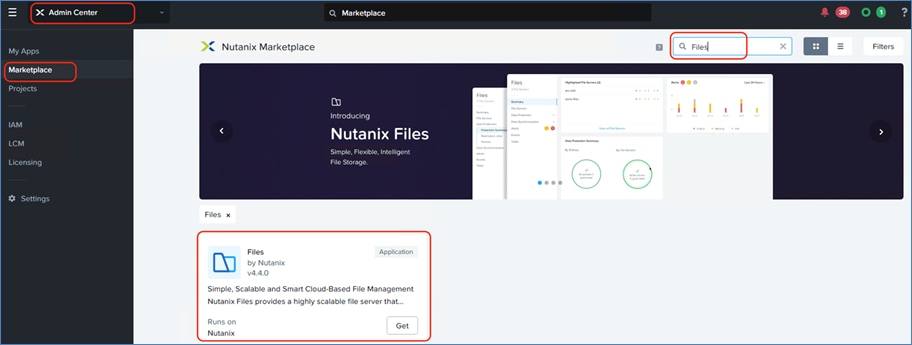

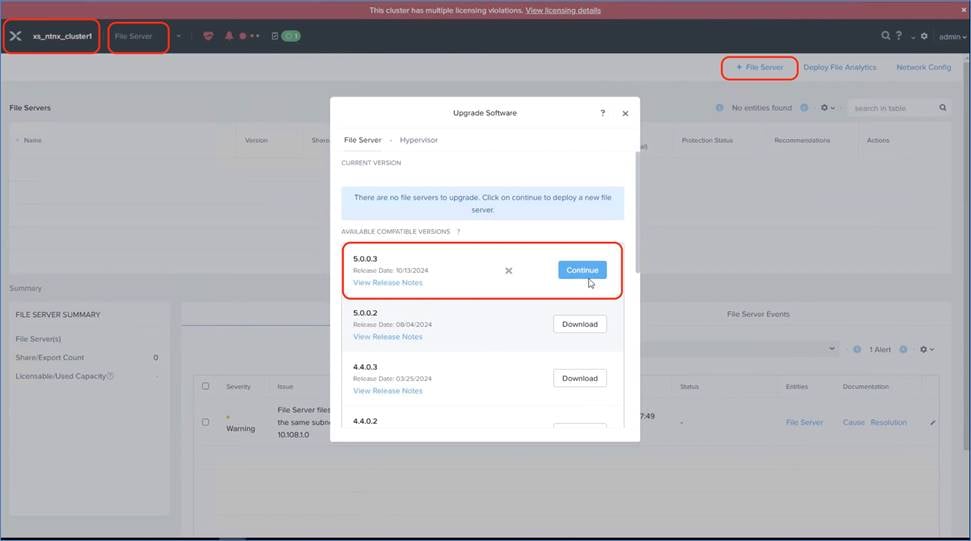

| Files enabled through Nutanix marketplace and hosted on GPT-in-a-Box 2.0 cluster |

5.0.03 |

|

| Nutanix Enterprise AI |

|

|

Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0 utilizes 100GbE end-to-end network.

Figure 8 illustrates the network design used in this solution.

The key aspects of network design are as follows:

● Each of the 2x Cisco UCS Intelligent Fabric Module (IFM) are connected to 8x 25G on Cisco UCS Fabric Interconnects.

● Each node is equipped with 1x Cisco UCS VIC 15420 2x 50G mLOM.

● The network ports in the hypervisor are configured in Active-Passive Mode. These are configured automatically during automated cluster installation.

● The deployment in Intersight Managed Mode and does not support bond mode 4 (LACP).

● Fabric Interconnect ports are separate from 100G Uplink ports and can be connected via the core LAN or through dedicated management network switch.

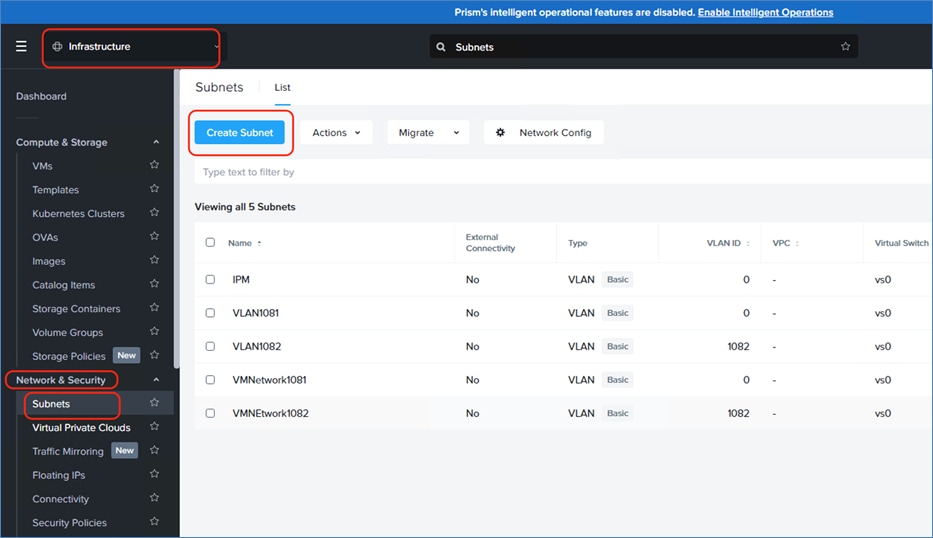

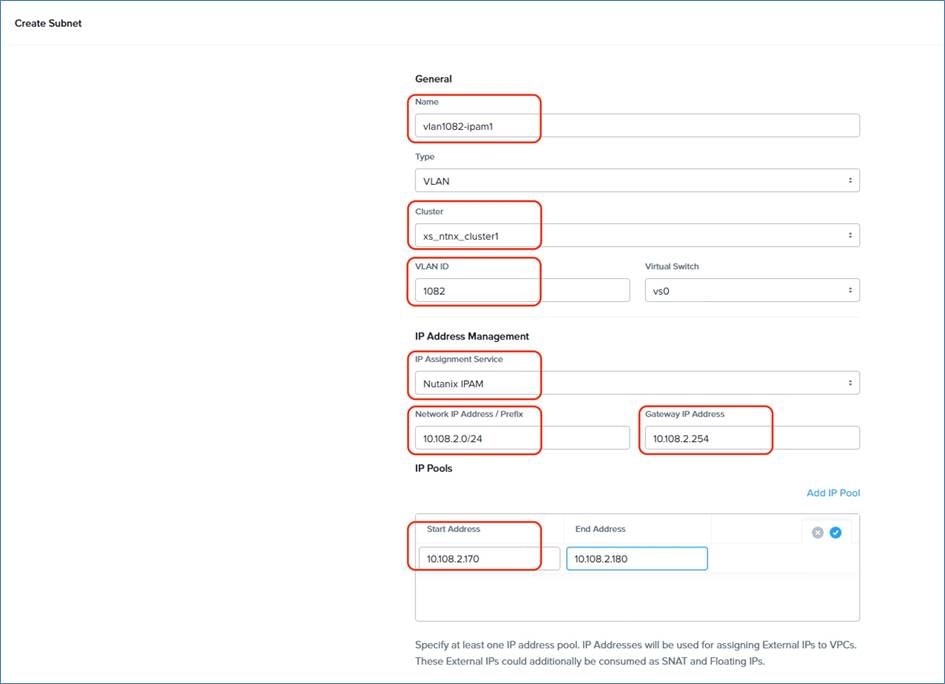

VLAN Configuration

Table 3 lists the VLANs configured for this solution.

| VLAN ID |

Name |

Usage |

IP Subnet used in this deployment |

| 2 |

Native-VLAN |

Use VLAN 2 as native VLAN instead of default VLAN (1). |

|

| 1080 |

OOB-MGMT-VLAN |

Out-of-band management VLAN to connect management ports for various devices. |

10.108.0.0/24; GW: 10.108.0.254 |

| 1081 |

NTNX-VLAN |

VLAN utilized for Nutanix Cluster Management, Files & Objects Services, and Nutanix Kubernetes deployment. |

10.108.1.0/24; GW: 10.108.1.254 |

| 1082 |

NTNX-AI-VLAN |

VLAN utilized, Files & Objects Services, and Nutanix Kubernetes deployment. |

10.108.2.0/24; GW: 10.108.2.254 |

|

|

|

|

|

Table 4 lists the IP Address Assignment for this solution.

| Type |

VLAN |

IP Address Range |

DNS Entries |

Comments |

| UCS Management |

1080 |

10.108.0.110-120 |

|

UCS Management and Out of Band Server Management |

| Nutanix Cluster |

1081 |

10.108.1.71-80 |

|

Nutanix Nodes AHV, CVM, iSCSI Data Service and Cluster VIP |

| Prism Central |

1082 |

10.108.2.51 VIP 10.208.2.60 |

pc2024.nai.rtp4.local |

Prism Central hosted on a separate AHV Nutanix Cluster |

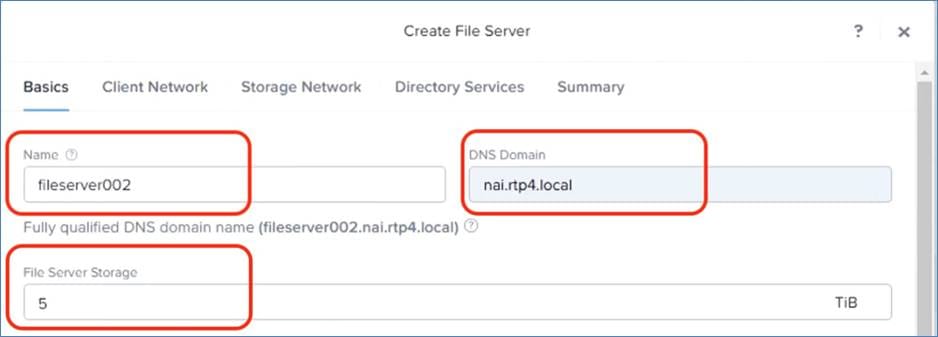

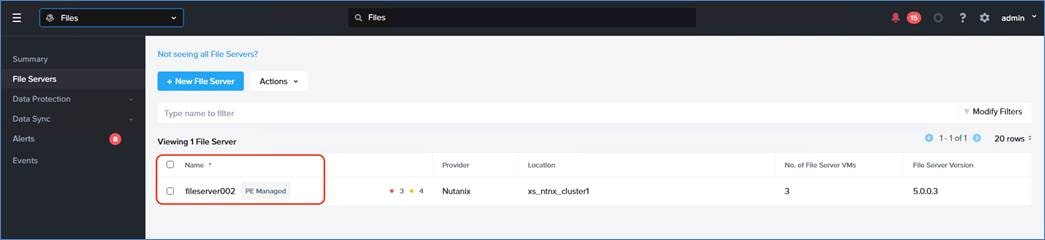

| File Server (PE managed) |

1082 |

10.108.2.94 |

fileserver002.nai.rtp4.local |

Hosted on GPT-in-a-Box 2.0 cluster |

| IPAM for File services |

1082 |

10.108.1.95-101 |

|

Configured on Prism Central |

| IPAM for Nutanix Kubernetes Services |

1082 |

10.102.2.61-75 |

IPAM for Nutanix Kubernetes Services |

1082 |

The design incorporates a single GPU-enabled Nutanix cluster dedicated to GPT-in-a-Box 2.0 workloads that offers access to large language models (LLMs). Supporting management applications like Prism Central and file and object storage are hosted on this cluster.

Size the Nutanix GPT-in-a-Box 2.0 cluster Controller VM (CVM) with 16 vCPU and 64 GB of memory.

This design uses one region with a single AZ that hosts the GPT-in-a-Box 2.0 cluster. This solution doesn't use any replication targets to protect the workloads because the focus is to host the GPT workloads in a single cluster.

Table 5. Cluster Design Decisions

| Design Option |

Validated Selection |

| Cluster size |

Ensure the full redundancy of all components in the datacenter. |

| CPU |

Use at least 24 cores and a high clock rate. |

| Minimum cluster size |

Use at least 4 nodes. |

| Cluster expansion |

Expand in increments of 1. |

| Maximum cluster size |

Use at most 16 nodes. |

| Networking |

Use 100 GbE networking. |

| Cluster replication factor |

Use storage replication factor 2. |

| Cluster high availability configuration |

Guarantee high availability. |

| VM high availability |

Enable high availability reservation on the clusters. |

Cluster Resilience

VM high availability ensures that VMs restart on another AHV host in the AHV cluster when a host becomes unavailable, either because the original AHV host has a complete failure or becomes network partitioned or because of an AHV host management process failure. When the AHV host where a VM is running becomes unavailable, the VM turns off; therefore, from the perspective of the VM operating system, VM high availability involves a full VM start cycle.

Table 6. High Availability Configuration

| Feature |

Description |

Setting |

| High availability reservation |

Guarantee compute failover capacity within cluster for application |

Enabled |

| Rebuild capacity reservation |

Guarantee storage rebuild capacity within cluster |

Enabled |

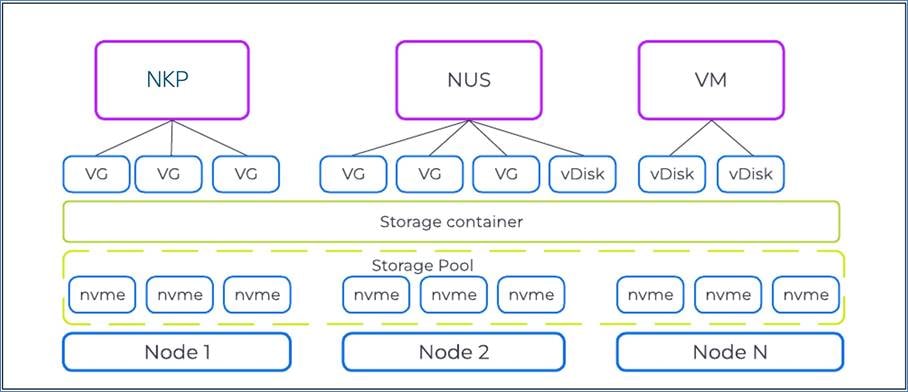

Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0 utilizes a distributed, shared-nothing architecture for storage.

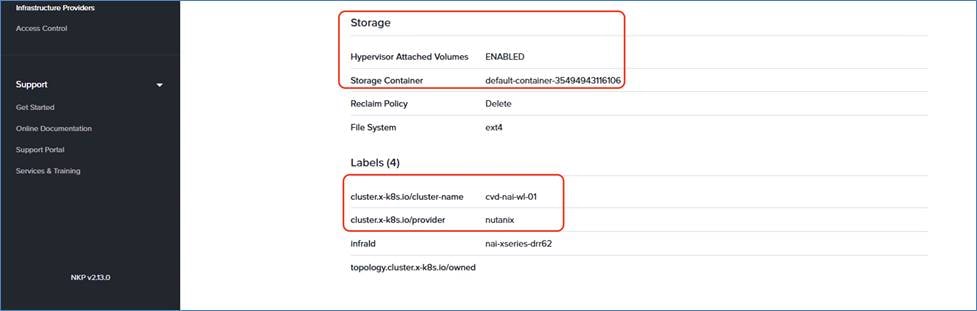

Figure 10 illustrates the storage design used in this solution.

When creating the Nutanix AHV cluster, the following storage containers are automatically created:

● NutanixManagementShare: Used for Nutanix features like Files and Objects and other internal storage needs; doesn't store workload vDisks

● SelfServiceContainer: Used by the NCM Self-Service Portal to provision VMs

● Default-Container-XXXX: Used by VMs to store vDisks for user VMs and applications

In addition to the automatically created storage containers, the following additional storage containers are created during the Nutanix Files and Objects deployment:

● NTNX_<fileserver_name>_ctr: Provides storage for the file server instances, which provide NFS storage for the application tier

● objectsd<uniqueidentifier>: Data container for Nutanix Objects

● objectsm<uniqueidentifier>: Metadata container for Nutanix Objects

The Default-Container stores VMs and their vDisks. The additional containers for Nutanix Files and Objects are created throughout the deployment process of the respective components.

Note: To increase the effective capacity of the cluster, the design enables inline compression on all storage containers. It doesn't use additional functionalities such as deduplication or erasure coding. Replication factor 2 protects against the loss of a single component in case of failure or maintenance.

Data Reduction and Resilience Options

To increase the effective capacity of the cluster, the design enables inline compression on all storage containers. It doesn't use additional functionalities such as deduplication or erasure coding. Replication factor 2 protects against the loss of a single component in case of failure or maintenance.

Table 7. Data Reduction Settings

| Container |

Compression |

Deduplication |

Erasure Coding |

Replication Factor |

| Default-Container-XXXX |

On |

Off |

Off |

2 |

| NutanixManagementShare |

On |

Off |

Off |

2 |

| SelfServiceContainer |

On |

Off |

Off |

2 |

| NTNX_files_ctr |

On |

Off |

Off |

2 |

| objects containers |

On |

Off |

Off |

2 |

Table 8 lists the information about the storage decisions made for this design.

Table 8. Storage Design Decisions

| Design Option |

Validated Selection |

| Sizing a cluster |

Use an all-flash cluster to provide low-latency, high-throughput storage to support the application's active data set. |

| Node type vendors |

Don't mix node types from different vendors in the same cluster. |

| Node and disk types |

Use similar node types that have similar disks. Don't mix nodes that contain NVMe SSDs in the same cluster with hybrid SSD or HDD nodes. |

| Sizing for node redundancy for storage and compute |

Size all clusters for n + 1 failover capacity. |

| Fault tolerance and replication factor settings |

Configure the cluster for fault tolerance 1 and configure the container for replication factor 2. |

| Inline compression |

Enable inline compression. |

| Deduplication |

Don't enable deduplication. |

| Erasure coding |

Don't enable erasure coding. |

| Availability domain for cluster |

Use node awareness. |

| Storage containers in cluster |

The cluster has the following storage containers: NutanixManagementShare, SelfServiceContainer, Default-Container, NTNX_files_ctr, and the two objects storage containers. |

| Reserve rebuild capacity |

Enable reserve rebuild capacity. |

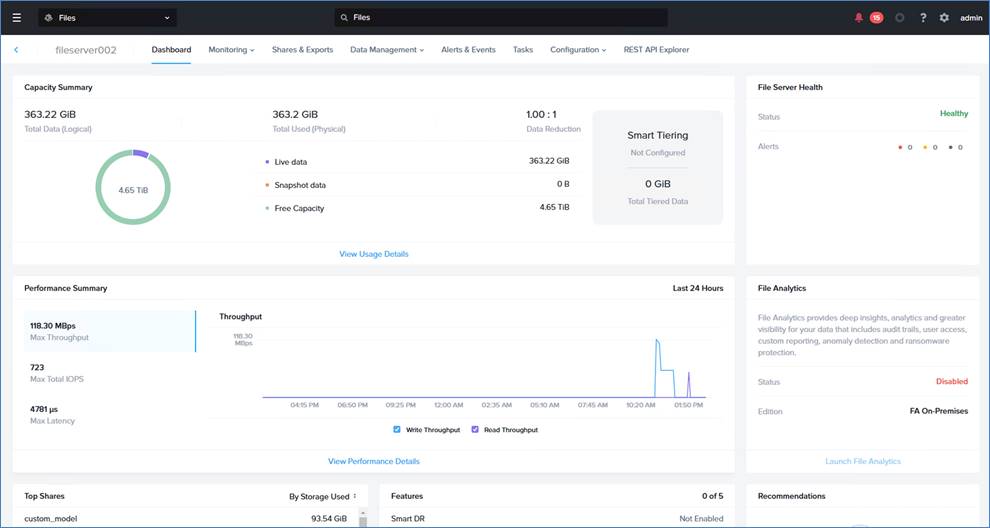

Nutanix Files and Objects Design

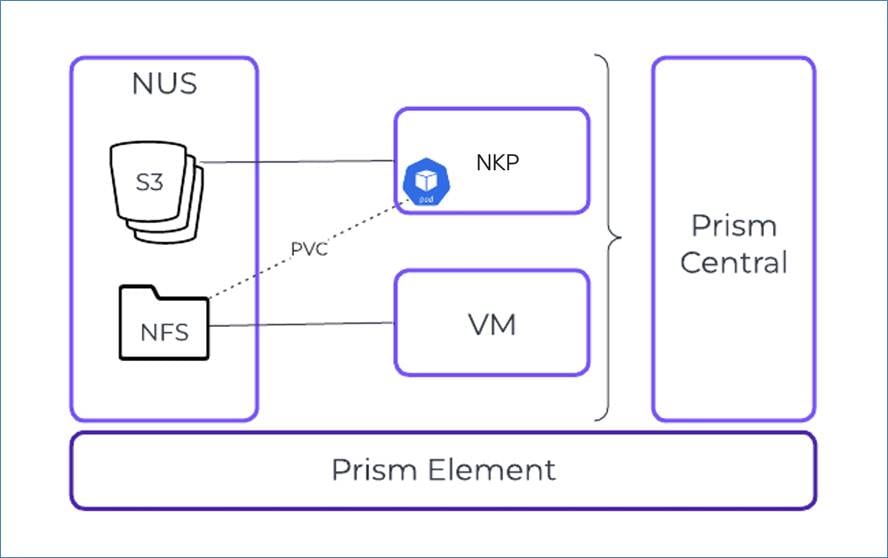

Cisco Compute Hyperconverged with Nutanix GPT-in-a-Box 2.0 utilizes Nutanix Files providing high-performance, shared storage to applications using the NFS protocol. Nutanix Files temporarily stores the LLMs and makes them available across VMs and Kubernetes services.

Nutanix Objects provides S3-compatible storage capabilities to the application and enables users to upload new data models.

Nutanix Files and Objects run on the same Nutanix cluster as the GPT-in-a-Box 2.0 workloads.

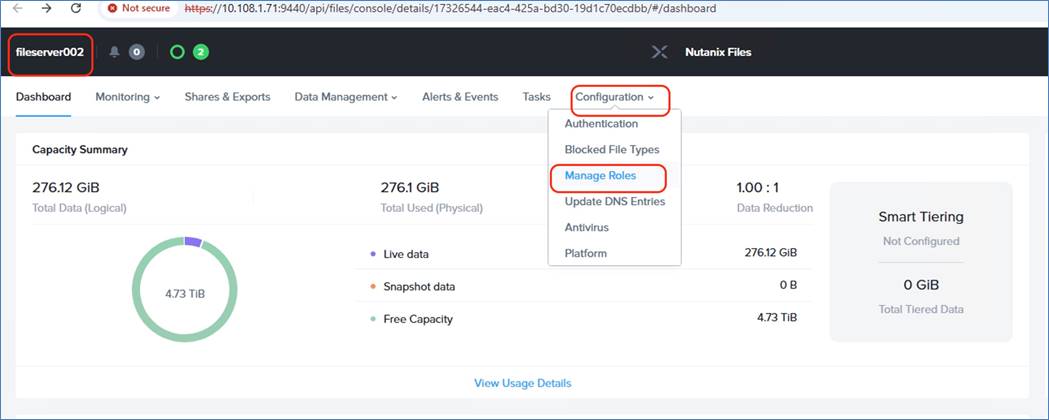

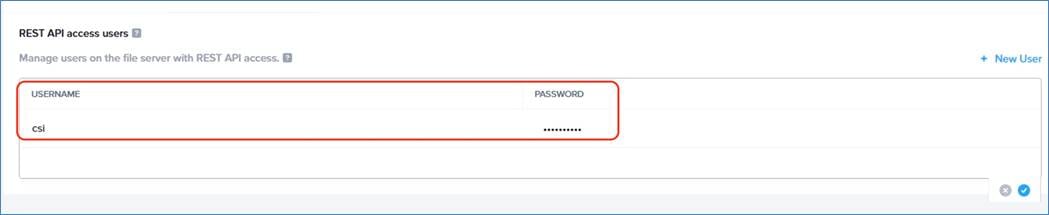

Note: In the existing GPT-in-a-Box 2.0 solution, Prism Element should be utilized to deploy Nutanix Files instance.

Note: If you plan to expand the environment, you can also run Nutanix Files or Nutanix Objects in a dedicated cluster.

Figure 11 illustrates the Nutanix Files and Objects design. The VM displayed in Figure 11 is an end user or jump host used to mount the NFS share and store the LLMs.

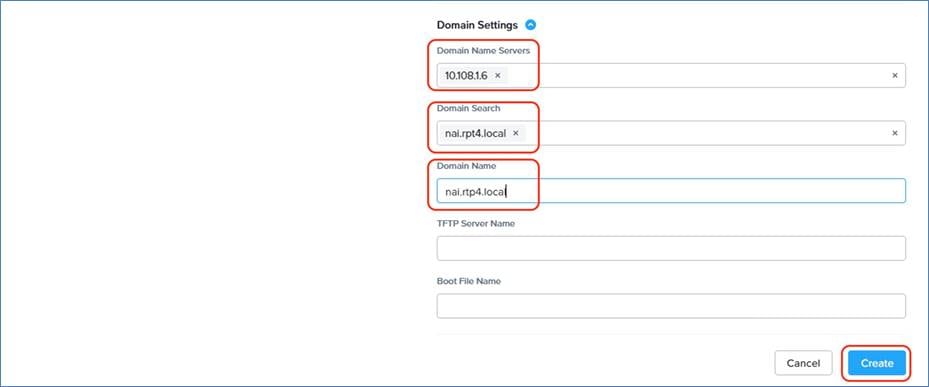

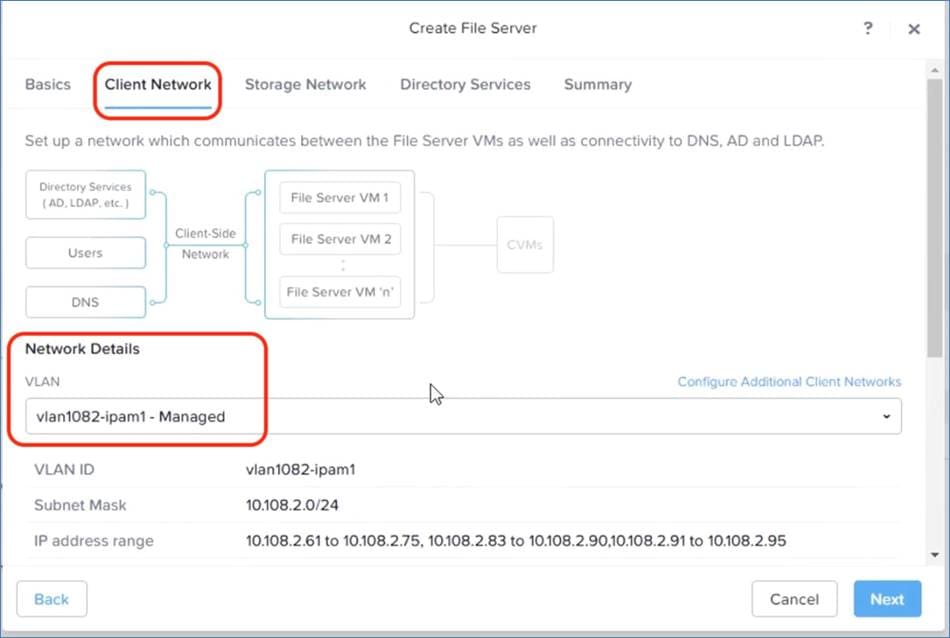

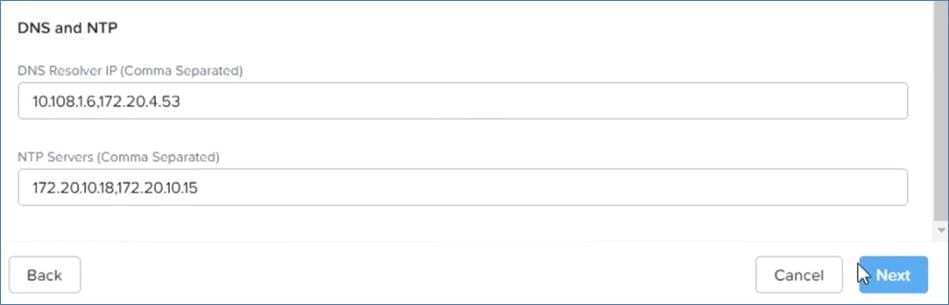

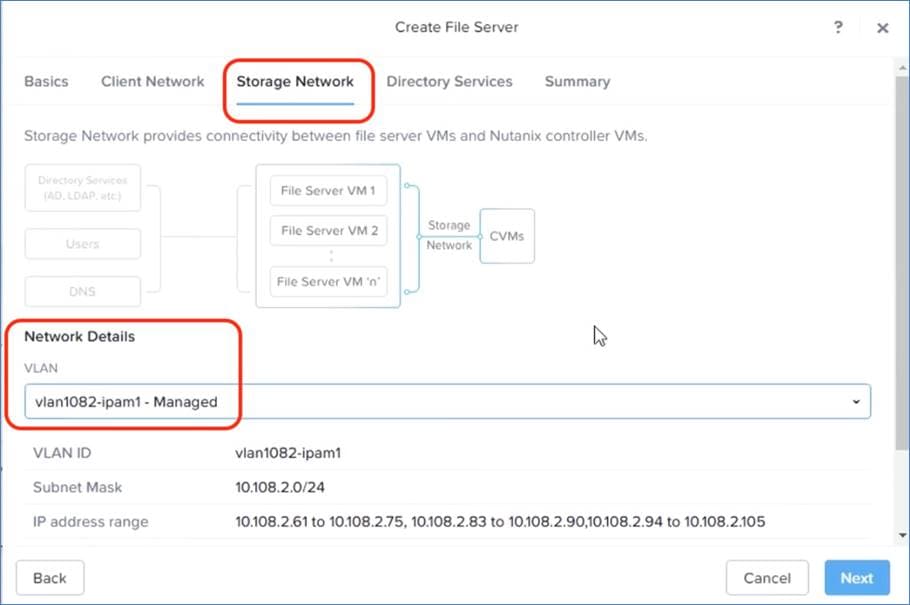

Nutanix Files and Objects have the following network requirements:

● For maximum performance, the storage network for Nutanix Files uses the same subnet as the CVMs.

● To maximize security, the client network connects to a separate subnet.

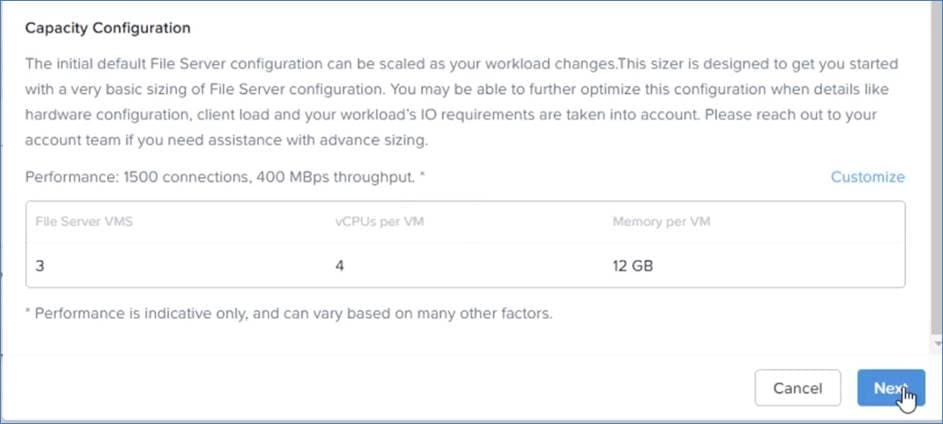

● The GPT-in-a-Box 2.0 cluster that provides a single file server instance requires three storage network IP addresses and four client network IP addresses for its three file server VMs (FSVMs).

Nutanix Objects runs as a containerized service on a Kubernetes microservices platform, which provides benefits such as increased velocity of new features. Several of the required storage IP addresses are for functions related to the underlying microservices platform. You must also manage these networks. Nutanix Objects, with three worker nodes, requires seven storage network IP addresses and two client network IP addresses.

Each cluster provisioned by NKP has minimum IP address requirements. A Kubernetes cluster in a production-level layout with three worker nodes requires one static IP address and eight or more IPAM addresses.

The client network provides all IP addresses. You might need additional IPAM addresses for additional worker nodes.

For more information, go to: Nutanix Files, Nutanix Objects, and Nutanix Kubernetes Engine Network Design.

Table 9 lists information about the Nutanix Files and Objects decisions made for this design.

Table 9. GPT-in-a-Box 2.0 Files Design Decisions

| Design Option |

Validated Selection |

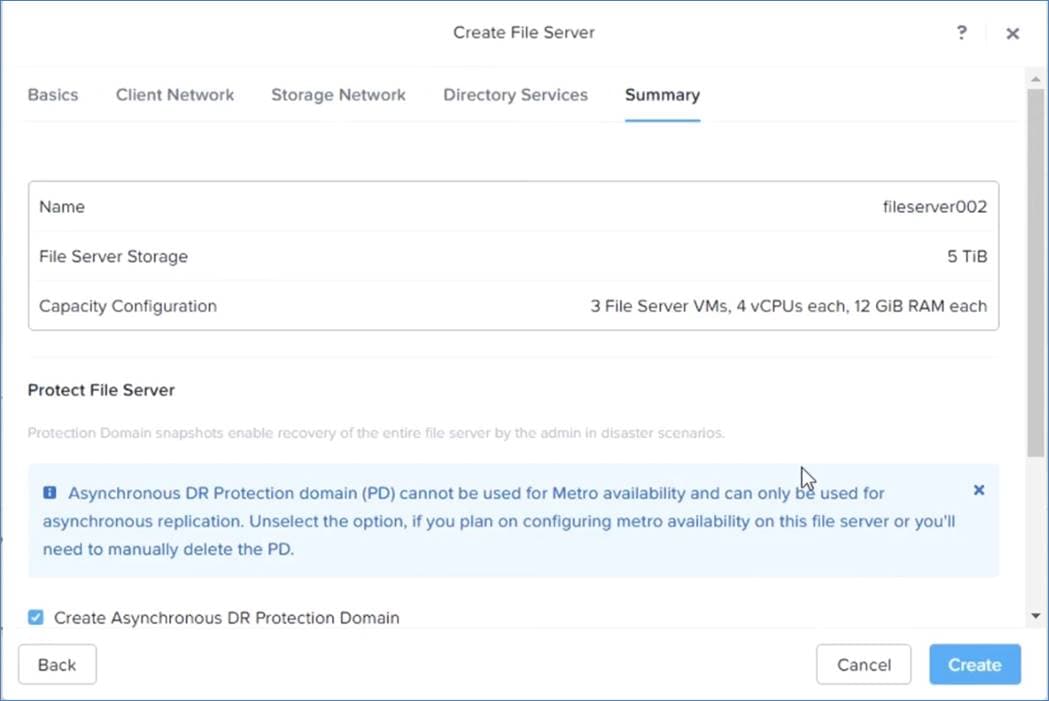

| FSVM cluster size |

Use 3 FSVMs. |

| vCPU and memory for FSVM |

Use 12 vCPU and 64 GB of memory for each FSVM. |

| Storage networking |

Keep the storage network in the same subnet as the CVMs and AHV. |

| Client networking |

Use a separate subnet to provide client access to storage. |

| Fault tolerance and replication factor settings |

Configure the cluster for fault tolerance 1 and configure the container for replication factor 2. |

| Storage containers |

Use a separate storage container to host NUS files. |

| Erasure coding |

Don't enable erasure coding. |

| Compression |

Enable compression. |

| Deduplication |

Don't enable deduplication. |

| Shares to create |

Create 1 share |

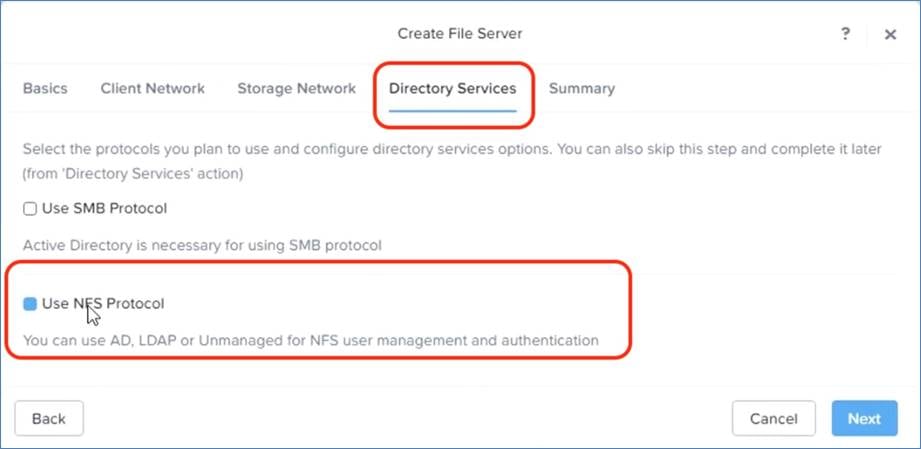

| Protocols for shares |

Use NFS for shares. |

For more information, go to: Nutanix Files and Nutanix Objects Design Decisions.

Table 10. GPT-in-a-Box 2.0 Objects Design Decisions

| Design Option |

Validated Selection |

| Nutanix Objects cluster size |

Use 3 worker nodes and 2 load balancer nodes. |

| Worker node size |

Use 10 vCPU and 32 GB of memory for each worker node. |

| Load balancer node size |

Use 2 vCPU and 4 GB of memory for each load balancer node. |

| Storage networking |

Keep the storage network in the same subnet as the CVMs and AHV. |

| Client networking |

Use a separate subnet to provide client access to storage. |

| Fault tolerance and replication factor settings |

Configure the cluster for fault tolerance 1 and configure the container for replication factor 2. |

| Storage containers |

Use a separate storage container to host NUS Objects. |

| Erasure coding |

Don't enable erasure coding. |

| Compression |

Enable compression. |

| Deduplication |

Don't enable deduplication. |

| Buckets to create |

Create 3 buckets: milvus (vector database), documents (for end-user access), and backup (backup target). |

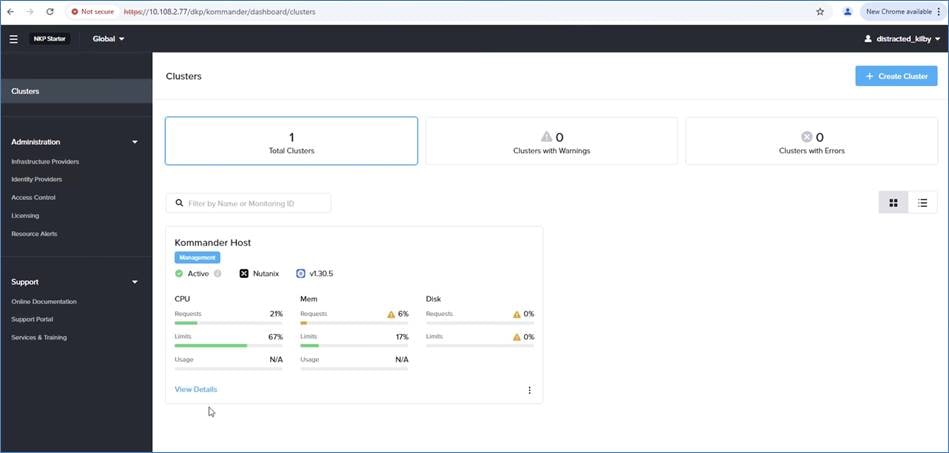

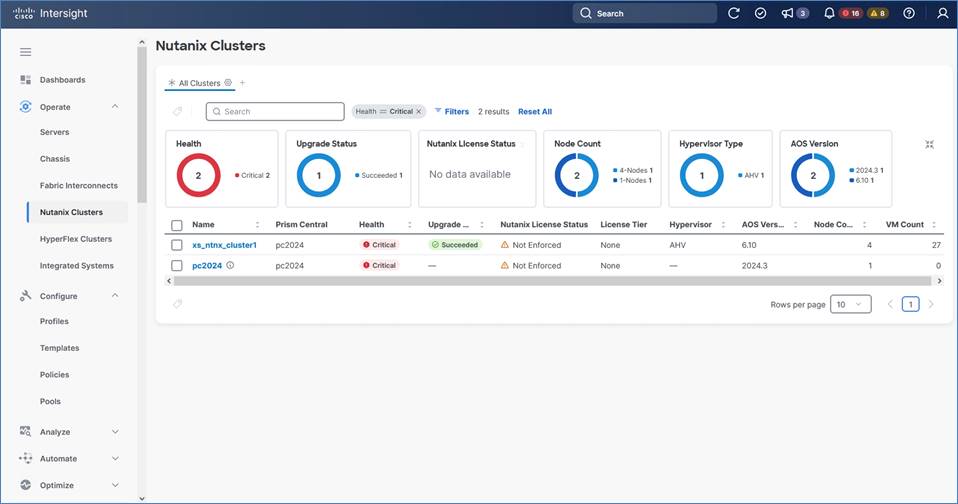

Management components such as Cisco Intersight (cloud managed) Prism Central, Active Directory, DNS, and NTP are critical services that must be highly available. Prism Central is the global control plane for Nutanix, responsible for VM management, application orchestration, micro segmentation, and other monitoring and analytics functions.

This solution utilizes two key management plane:

● Cisco Intersight (cloud managed) supports the entire Cisco UCS X-Series modular system. It enables server, fabric, and storage provisioning as well as device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection.

● Prism Central was deployed as single VM on a separate Nutanix AHV cluster. Prism Central manages the following:

◦ Nutanix Files and Objects

◦ VMs

◦ RBAC

◦ Monitoring, observability, and auditing for the core Nutanix Services

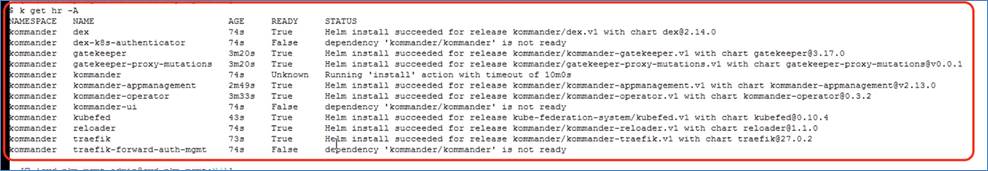

● Nutanix Kubernetes Platform is managed through commander dashboard

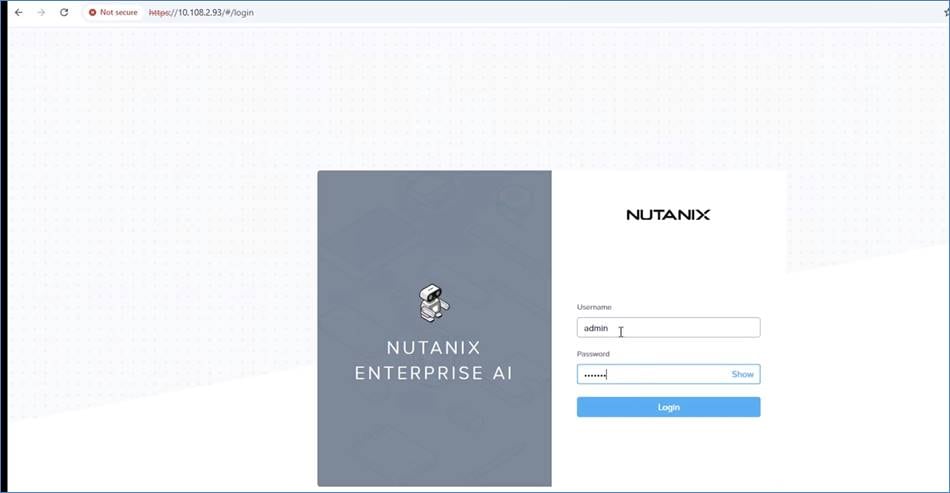

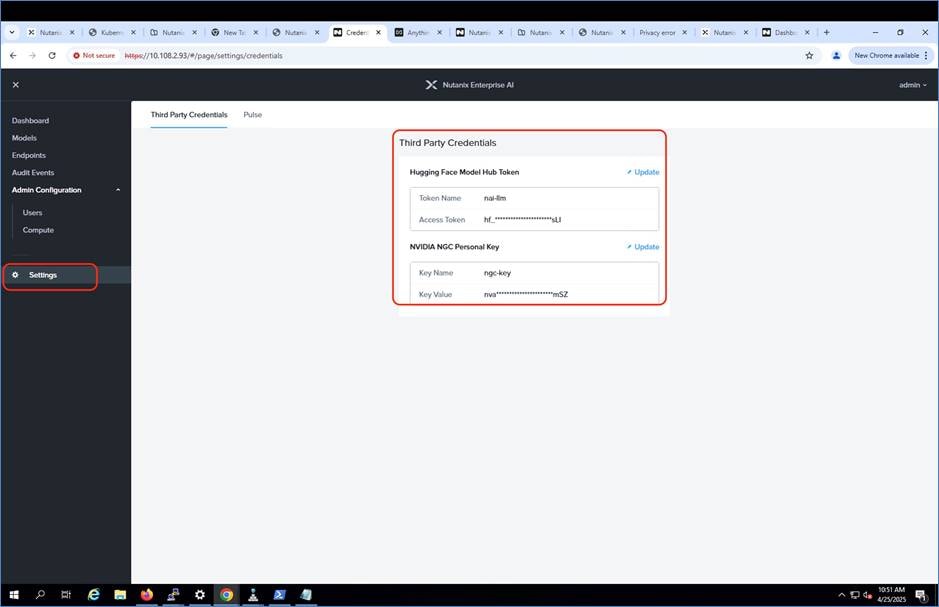

● Nutanix Enterprise AI is managed through NAI dashboard

DNS Management

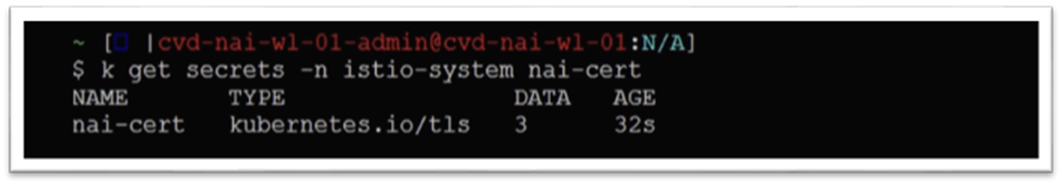

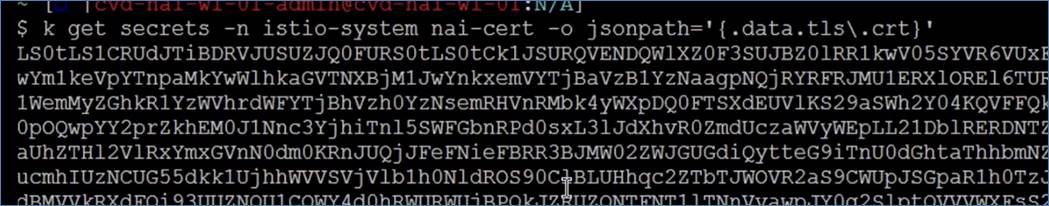

Name resolution and SSL certificate management are critical to deploying and managing Kubernetes clusters. In this solution, DNS root domain is hosted locally on a windows DNS server. Subdomains map the DNS and route the traffic to the Kubernetes clusters.

Note: In the existing deployment, local DNS with self-signed certificates were utilized.

Monitoring

Monitoring in the solution falls into two categories: event monitoring and performance monitoring. Each category addresses different needs and issues.

In a highly available environment, you must monitor events to maintain high service levels. When faults occur, the system must raise alerts on time so that administrators can take remediation actions as soon as possible. This solution configures the Nutanix platform's built-in ability to generate alerts in case of failure.

In addition to keeping the platform healthy, maintaining a healthy level of resource usage is also essential to the delivery of a high-performing environment. Performance monitoring continuously captures and stores metrics that are essential when you need to troubleshoot application performance. A comprehensive monitoring approach tracks the following areas:

● Application and database metrics

● Operating system metrics

● Hyperconverged platform metrics

● Network environment metrics

● Physical environment metrics

By tracking a variety of metrics in these areas, the Nutanix platform can also provide capacity monitoring across the stack. Most enterprise environments inevitably grow, so you need to understand resource usage and the rate of expansion to anticipate changing capacity demands and avoid any business impact caused by a lack of resources.

Monitor the Conceptual Design

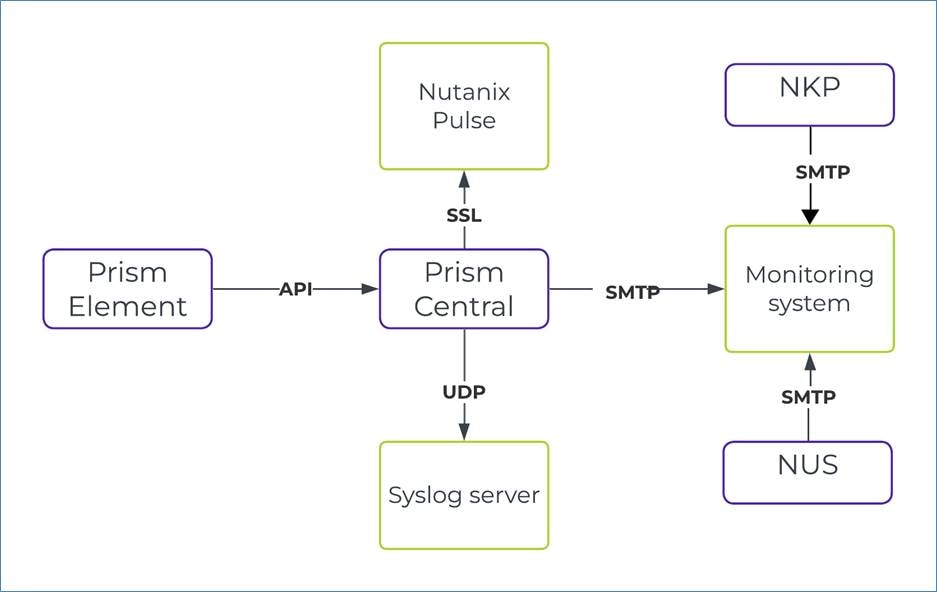

In this design, Prism Central performs event monitoring for the Nutanix core infrastructure. This NVD uses syslog for log collection; for more information, see the Security and Compliance section. SMTP-based email alerts serve as the channel for notifications in this design. To cover situations where Prism Central might be unavailable, each Nutanix cluster in this NVD sends out notifications using SMTP as well. The individual Nutanix clusters send alerts to a different receiving mailbox that's only monitored when Prism Central isn't available.

Prism Element transmits the data to Prism Central using an API. Prism Central then transmits the log to Nutanix Pulse (using the Secure Sockets Layer) and the syslog server using the User Datagram Protocol (UDP). All components (PC, NUS and NKP) send the alerts to the monitoring system using SMTP.

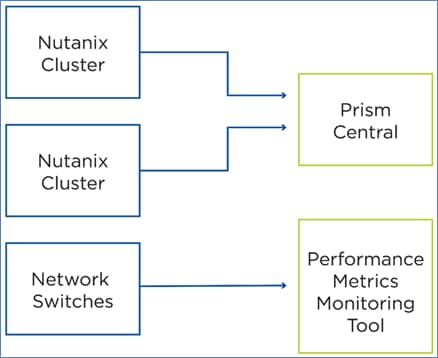

Prism Central monitors cluster performance in key areas such as CPU, memory, network, and storage usage, and captures these metrics by default. When a Prism Central instance manages a cluster, Prism Central transmits all Nutanix Pulse data, so it doesn't originate from individual clusters. When you enable Nutanix Pulse, it detects known issues affecting cluster stability and automatically opens support cases.

The network switches that connect the cluster also play an important role in cluster performance. A separate monitoring tool that's compatible with the deployed switches can capture switch performance metrics. For example, an SNMP-based tool can regularly poll counters from the switches.

Table 11 lists the descriptions of the monitoring design decisions.

Table 11. Monitoring Design Decisions

| Design Option |

Validated Selection |

| Platform performance monitoring |

Prism Central monitors Nutanix platform performance. |

| Network switch performance monitoring |

A separate tool that performs SNMP polling to the switches monitors network switch performance. |

| SMTP alerting |

Use SMTP alerting; use an enterprise SMTP service as the primary SMTP gateway for Prism Element and Prism Central. |

| SMTP alerting source email address |

Configure the source email address to be clustername@<yourdomain>.com to uniquely identify the source of email messages. For Prism Central, use the Prism Central host name in place of cluster name. |

| SMTP alerting Prism Central recipient email address |

Configure the Prism Central recipient email address to be primaryalerts@<yourdomain>.com. |

| SMTP alerting Prism Element recipient email address |

Configure the Prism Element recipient email address to be secondaryalerts@<yourdomain>.com. |

| NCC reports |

Configure daily NCC reports to run at 6:00 AM local time and send them by email to the primary alerting mailbox. |

| Nutanix Pulse |

Configure Nutanix Pulse to monitor the Nutanix cluster and send telemetry data to Nutanix. |

Nutanix recommends a defense-in-depth strategy for layering security throughout any enterprise datacenter solution. This design section focuses on validating the layers that Nutanix can directly oversee at the control and data-plane levels.

Security Domains

Isolate Nutanix cluster management and out-of-band interfaces from the rest of the network using firewalls and only allow direct access to them from the management security domain. In addition, Nutanix recommends separating out-of-band management and cluster management interfaces onto a dedicated VLAN away from the application traffic.

Syslog

For each control plane endpoint (Prism Central), system-level internal logging goes to a centralized third-party syslog server that runs in the existing customer landscape. The system is configured to send logs for all available modules when they reach the syslog Error severity level.

This design assumes that the centralized syslog servers are highly available and redundant, so you can inspect the log files in case the primary log system is unavailable.

Certificates

SSL endpoints serve all Nutanix control plane web pages. In the deployment and validation, self-signed certificates are utilized. The existing solution can replace the default self-signed certificates with certificates signed by an internal certificate authority from a Microsoft public key infrastructure (PKI). Any client endpoints that interact with the control plane should have the trusted certificate authority chain preloaded to prevent browser security errors.

Note: Certificate management is an ongoing activity, and certificates need to be rotated periodically. This solution signs all certificates for one year of validity.

Note: In the existing deployment, local DNS with self-signed certificates were utilized

Table 12. Security Design Decisions

| Design Option |

Validated Selection |

| Data-at-rest encryption (DaRE) |

Don't use DaRE. |

| SSL endpoints |

Sign control plane SSL endpoints with an internal trusted certificate authority (Microsoft PKI). |

| Certificates |

Provision certificates with a yearly expiration date and rotate accordingly. |

| Authentication |

Use Active Directory LDAPS authentication. |

| Control plane endpoint administration |

Use a common administrative Active Directory group for all control plane endpoints. |

| Cluster lockdown mode |

Don't enable cluster lockdown mode (allow password-driven SSH). |

| Non-default hardening options |

Enable AIDE and hourly SCMA. |

| System-level internal logging |

Enable error-level logging to an external syslog server for all available modules. |

| Syslog delivery |

Use UDP transport for syslog delivery. |

Table 13. Security Configuration References

| Design Option |

Validated Selection |

| Active Directory |

AD-admin-group:ntnx-ctrl-admins |

| Syslog Server |

infra-az[1..2]-syslog:6514 (udp) |

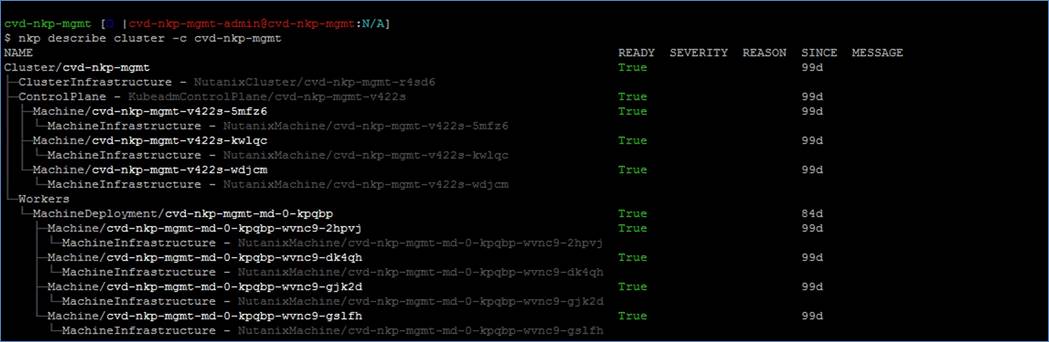

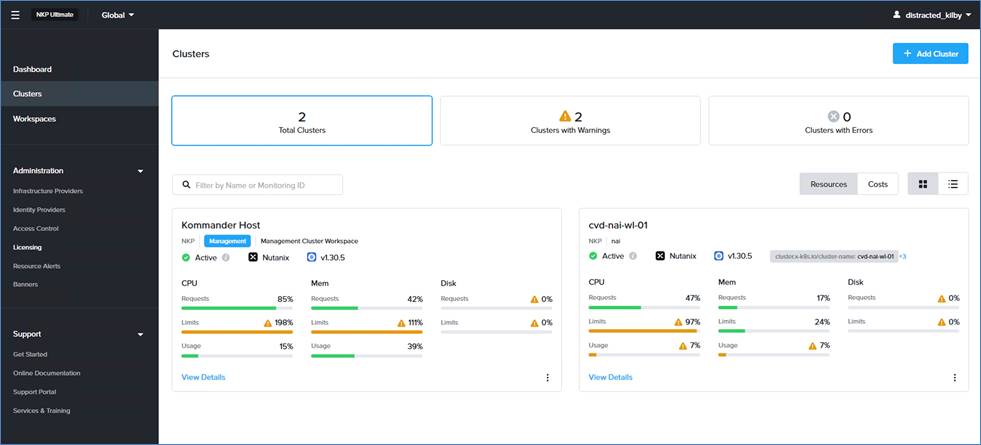

This conceptual design outlines a robust, highly available Kubernetes environment on the Nutanix platform, using Nutanix Kubernetes Platform (NKP) and integrating with essential Kubernetes tools (many of which come pre-canned with NKP) and practices for efficient and secure operations.

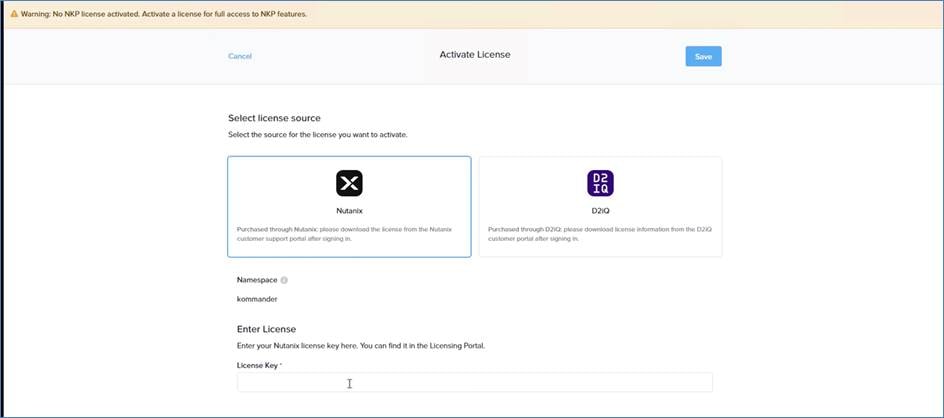

Table 14. GPT-in-a-Box 2.0 Cluster with NKP License Design Decisions

| License Type |

Reason |

| NKP Ultimate |

Application Catalogs & Gitops with built in FluxCD to push out workloads to multiple clusters. Workspaces & Projects provide isolation (in terms of access); cluster profile definition (in terms of Applications that will be deployed to it); and segregation (in terms of cluster-wide and namespace specific workloads). |

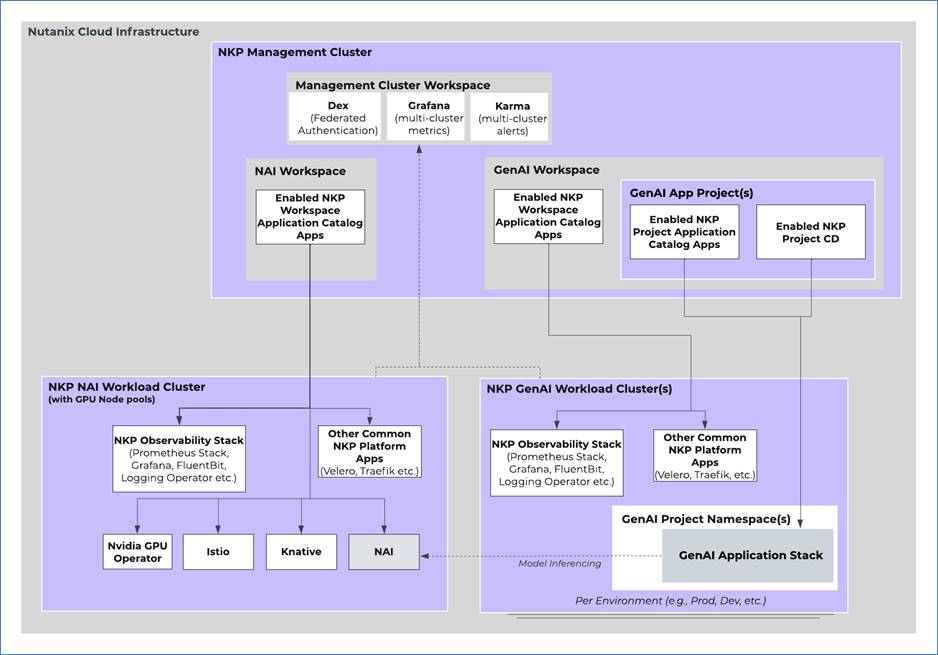

In this design, a single NKP Ultimate Management Cluster is used to manage and push out workloads and configuration to multiple Workload Clusters via NKP Application Catalogs Apps and NKP Continuous Delivery (CD) mechanism. The NKP Management cluster is used for management only. NAI and its supporting components run on one NKP workload cluster with GPU node pools. GenAI Apps and supporting components run on another workload cluster(s). The data resides locally within these clusters or in a S3 bucket (Nutanix Objects) or NFS share (Nutanix Files). All production Kubernetes clusters are set up as production-level clusters using multiple control plane and worker nodes distributed across different physical hosts to ensure high availability.

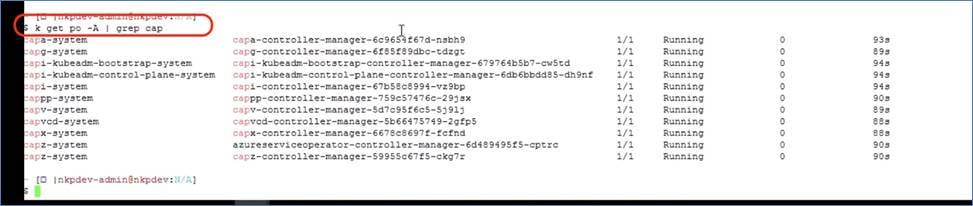

When a NKP cluster is built, the following preconfigured Cloud Native tools are deployed automatically for NKP's internal use:

Note: Although these are for internal use, they can also be used by workloads running on the cluster.

● Certificate Management - Cert-Manager

● Ingress Controller - Traffic

● Authentication - Dex

● CD - FluxCD

● Kubernetes Dashboard

● Policy Admission Webhook - Gatekeeper

● Cost Management - Kubecost

Table 15. GPT-in-a-Box 2.0 Cluster with NKP Workspace & Projects Design Decisions

| Workspaces & Projects |

Reason |

| Workspaces |

Two workspaces are created as there are two category of clusters (such as NAI Cluster and GenAI Cluster(s)), which require different kind of resources (NAI cluster requires GPUs and GenAI does not). Also, these run different kind of workloads and would most likely be managed by two different teams. |

| Projects |

A Project is created for each GenAI Application in the GenAI Workspace (which creates a namespace in the associated cluster(s)), as this allows multiple Application teams to share clusters. The Project Continuous Delivery mechanism makes deploying apps easy across all clusters associated with the project. |

The following NKP workspaces are created and the NKP workload cluster(s) are deployed to:

● NAI Workspace: One NAI Workload Cluster deployed to this workspace

● GenAI Workspace: One or more GenAI Workload Clusters deployed to this workspace

The Common NKP Workspace Applications explicitly enabled from the NKP Application catalog in both Workspaces (NAI and GenAI) are:

● Logging - Logging Operator, Grafana Logging, Grafana Loki, Fluent Bit

● Monitoring - Prometheus Monitoring, Prometheus Adapter

● Backup & Recovery - Velero with Nutanix Objects as the S3 Compatible Object Store used for storage

● Object Store - Rook Ceph, Rook Ceph Cluster

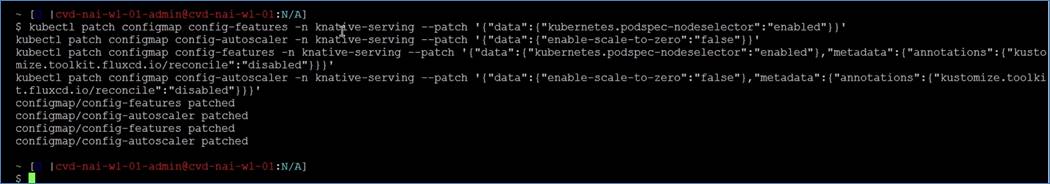

The NKP Workspace Applications explicitly enabled only on the NAI Workspace are:

● Istio Service Mesh

● NVIDIA GPU Operator

● Knative

● Nutanix Enterprise AI

The GenAI App Project is created in the GenAI Workspace and used to deploy the GenAI app leveraging its Continuous Delivery (CD) mechanism. The cluster(s) in the GenAI Workspace is/are explicitly attached to this project.

Note: Additional projects can be added to this workspace to allow more than one App team to share cluster(s) in the GenAI Workspace.

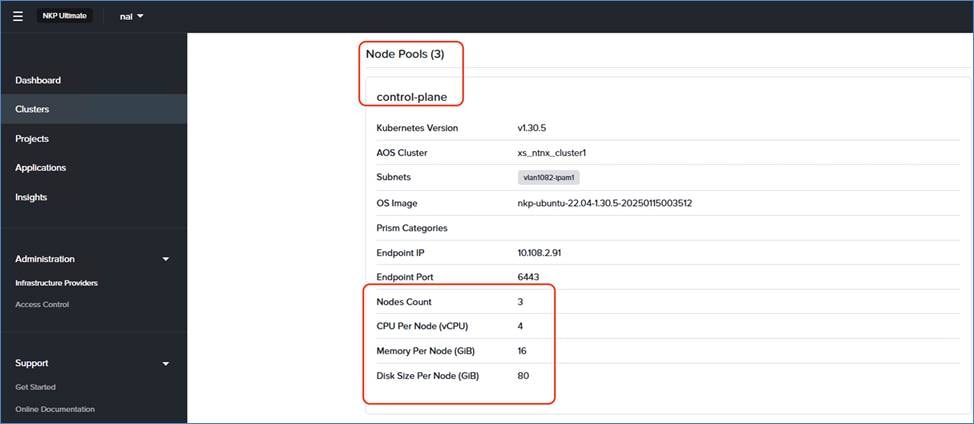

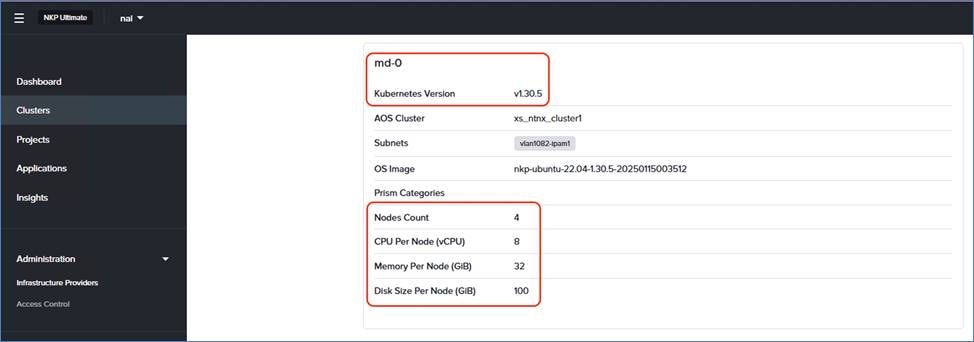

Table 16. GPT-in-a-Box 2.0 Cluster with NKP Scalability Design Decisions

| Design Option |

Validated Selection |

| NKP cluster type |

Use a NKP Ultimate License. |

| Control plane size |

Size the control plane with 4 CPU and 16 GB of memory, and 80 GB of storage. |

| Initial Workload size |

Start with 4 worker nodes with 8 CPU, 32 GB of memory, and 100 GB of storage. |

| GPU pool size (NAI cluster only) |

Use 2 worker nodes with 12 CPU, 40 GB of memory, and 100 GB of storage. |

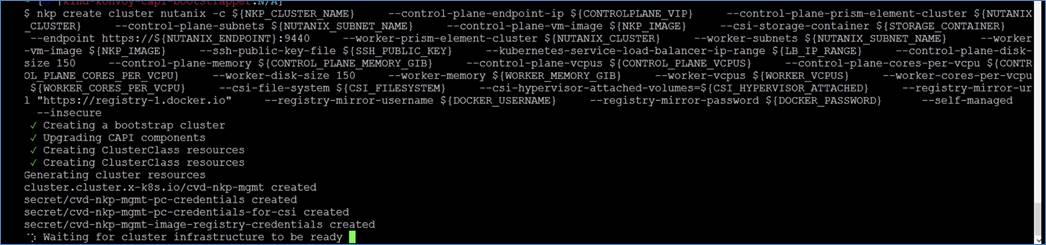

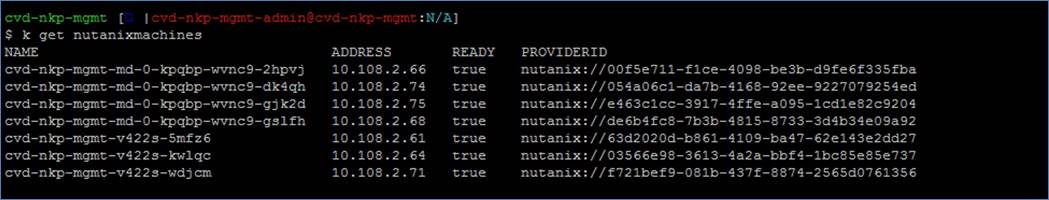

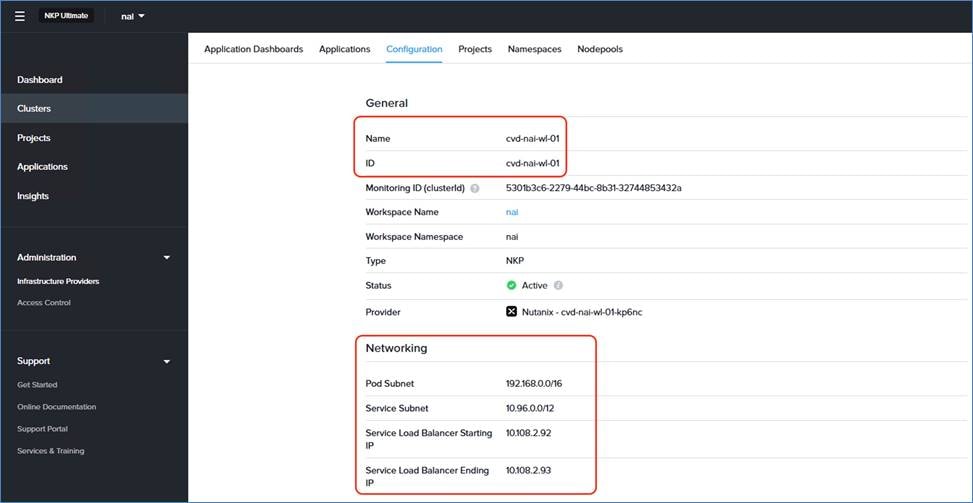

The workloads are divided into NKP Management, GPT-in-a-Box 2.0 NAI, and Production/Development environments. Since there is only a single NKP management cluster, it is named <custom-prefix>-nkp-mgmt. Nutanix Enterprise AI is running in a dedicated cluster in the NAI workspace <custom-prefix>-nai. The following naming convention is used for workload clusters: <custom-prefix>-<cluster_type>-<environment_id>-wl-<app_id>-<optional_app_index_number>.

Note: NKP cluster names use a maximum of 63 characters and have the same restriction as any kubernetes resource (such as the Cluster name must be valid DNS-1035 label). Which means it can only contain lowercase alphanumeric characters and hyphens and must start and end with an alphanumeric character.

In this design, the NKP Management or Workload cluster's worker nodes can be scaled to accommodate different workload sizes. Nutanix recommends scaling out. The total number of workers in the GPU node pools is constrained by the number of physically installed GPUs.

All GPUs are licensed to run NAI, but this design will keep some GPU in spare for dev/experimental workloads:

● NKP Management Cluster:

◦ cvd-nkp-mgmt

● GPT-in-a-Box 2.0 NAI Environment

◦ cvd-nai (2 GPU nodes with 2 L40s)

● Prod-Environment:

◦ cvd-nai-wl-01

Kubernetes Resilience

NKP clusters are production-level clusters that provide a resilient control plane by running multiple nodes for the control plane and etcd. To ensure high availability, Kubernetes deployments use multiple replica pods and implement pod anti-affinity rules. This approach helps maintain service availability, even in the event of a worker node update or failure.

Kubernetes services and ingress controllers perform the essential service of load balancing by evenly distributing network traffic across all available pods, enhancing service reliability and system performance.

Kubernetes Networking

Each deployed cluster uses a base configuration for networking:

● kube-vip: Load-balancing service

● nginx-ingress: Ingress service for L4 and L7 network traffic

● cert-manager: Service that creates valid SSL certificates for TLS application services

● Local DNS with self-signed certificate

Kubernetes Monitoring

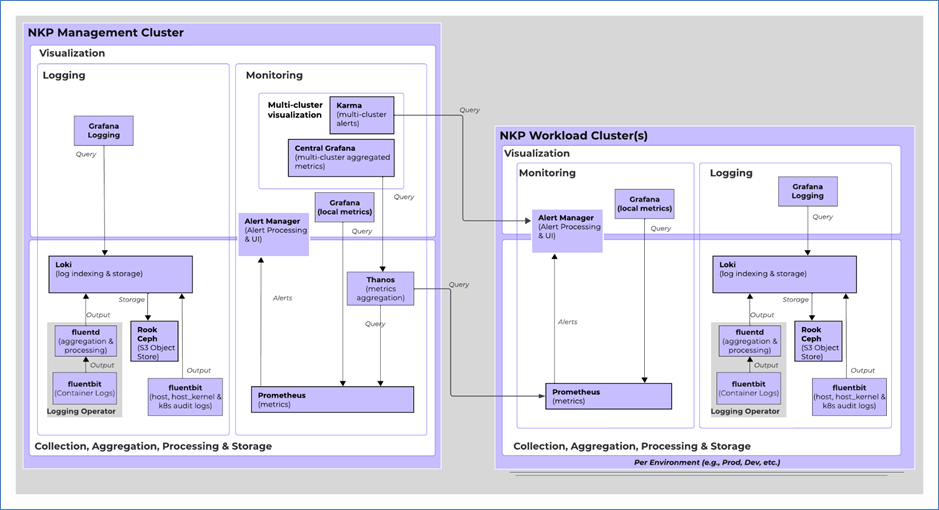

NKP comes with a fully integrated Observability stack out-of-the-box. The logging stack is disabled by default and needs to be enabled explicitly.

The Monitoring stack uses:

● Prometheus for metric collection and alert generation. It uses Prometheus Operator and allows modifying the configuration using CRDs such as PrometheusRules, ServiceMonitors, and so on.

● Prometheus Adapter for serving Kubernetes metric API

● AlertManager for alerting

● Grafana for visualization of metrics with a rich set of pre-created custom dashboards

Additionally, for centralized monitoring, the NKP Management cluster runs Thanos for metric aggregation from all attached clusters. A centralized Grafana instance hosts dashboards to convert these metrics to meaningful information. Similarly, Karma provides a multi-cluster dashboard for Alerts.

The Logging stack uses:

● Fluentbit for host, host_kernel, audit log collection

● Logging-Operator for container log collection and forwarding

● Loki for log indexing and storage (these are stored by default in a locally deployed rook-ceph Object Store)

● Grafana Logging for logs and audit dashboard visualization.

All clusters run their local logging and monitoring stack. A Thanos instance running on the management cluster aggregates metrics from all Prometheus instances across NKP clusters. The management cluster runs an instance of Karma, which pulls alerts from workload clusters and shows them in its dashboard.

Kubernetes and application-level monitoring is based on the core infrastructure monitoring concept. The observability stack uses OpenTelemetry to collect and move the following data between the Kubernetes clusters:

● Kubelet metrics

● Host metrics

● Kubernetes cluster metrics

● Kubernetes events

● Pod logs

● Prometheus metrics from service monitors

● Application-specific data from instrumentalization

Kubernetes Backup

The default storage class installed by NKP during deployment provides persistent storage for the management and workload clusters. The persistent volumes and application data in the clusters can be protected by a Velero backup schedule and stored in a S3 bucket provided by Nutanix Objects. A bucket in the local Nutanix Object store can be configured as the Backup Storage Location and replicated to an external location.

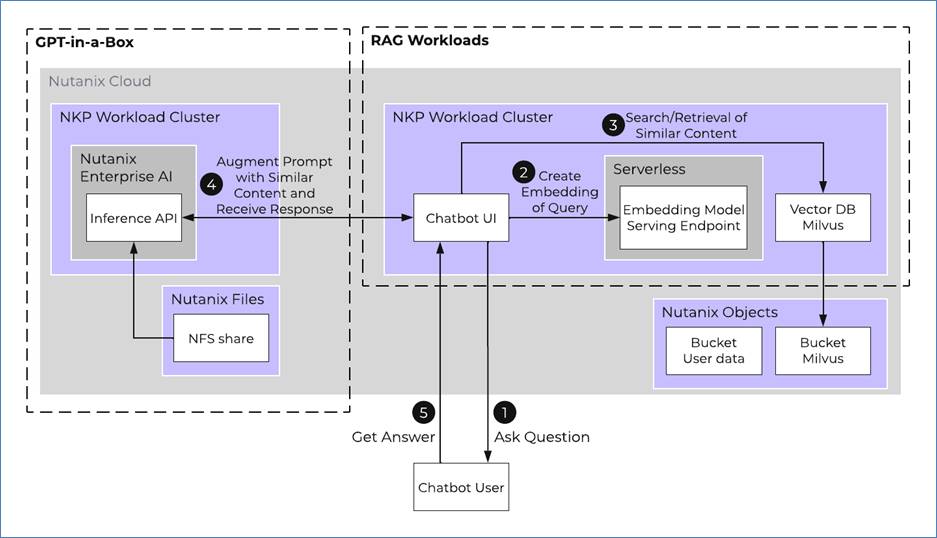

This reference design presents a robust architecture for running LLM applications around the Kubernetes-based Nutanix AI inference endpoint, using NKP as the orchestration platform. It provides a comprehensive, scalable, and efficient framework tailored for building RAG pipeline applications. This framework capitalizes on the latest LLM technologies and supporting tools, ensuring ease of development, deployment, and monitoring.

Large Language Model Logical Design

At the core of this architecture are the LLM inference endpoints, which are provided through the Nutanix Enterprise AI platform. This essential component plays a critical role in deploying and managing machine learning models, particularly for addressing real-time inference requirements. The integration with the Nutanix GPT-in-a-Box 2.0 Design guarantees scalability, reliable accessibility, and consistent performance.

The modular architecture enables independent scaling and updating of each component, providing flexibility for system optimization. Its compatibility with the RAG framework allows the LLM to query and retrieve data from the Milvus vector database, significantly improving the accuracy and relevance of generated outputs.

The data ingestion process is designed for versatility, supporting both batch processing and event-driven workflows using Kafka. This dual approach allows the system to handle large-scale periodic batch uploads as well as continuous real-time data streams seamlessly. Event processing is implemented using serverless functions built on Knative, which are triggered by Nutanix Objects event notifications relayed through Kafka. This architecture ensures efficient, scalable, and highly responsive handling of incoming data streams. Intel AMX features are discovered automatically by embedding function to allow optimized processing of the data.

Once the domain-specific documents are ingested, the data is then vectorized using LangChain, where the embedding model encodes its data into vector representations. These vectors are then stored into Milvus, a scalable and high-performance vector database. Nutanix Objects provides the robust back-end storage necessary to meet the large-scale data demands of Milvus.

During the inference process, its integration with the RAG pipeline architecture allows the LLMs running on Nutanix Enterprise AI to dynamically query and retrieve relevant information from the Milvus vector database, significantly improving the accuracy and relevance of generated outputs. This approach enhances the system's ability to provide accurate, domain-specific answers by reducing reliance on the LLM's internal knowledge, which might be insufficient. Instead, the RAG workflow ensures that responses are informed by up-to-date and highly relevant data, improving both accuracy and contextual relevance for user queries.

In this architecture, NAI leverages NKP’s observability stack to enable seamless monitoring and logging for LLM workloads and GPU performance. Upon deploying the NVIDIA GPU Operator from the NKP catalog, NVIDIA Data Center GPU Manager (DCGM) metrics are automatically configured for Prometheus, along with default Grafana dashboards that detail GPU utilization, memory bandwidth, and thermal performance. Metrics are aggregated with Thanos and visualized in Grafana, while Fluentbit and Loki handle log collection and indexing, resulting in an advanced monitoring solution that goes beyond conventional GPU observability.

Large Language Model Research Workflow

The LLM uses the following research workflow:

1. Ask a question: The interaction begins when the end user poses a question through the UI or chatbot interface.

2. Create a query embedding: The embedding model transforms the user's query into a vector representation. This process is known as vectorization.

3. Search and retrieve similar context: The vector database, which is specifically designed for similarity searches, stores the document embeddings generated by the model. It can efficiently search for and retrieve items based on these embeddings, which encapsulate the semantic meaning of the texts.

4. Send the prompt: The workflow augments the user's query with relevant contextual information retrieved from the database, then sends this enriched query as a prompt to the LLM endpoint. The LLM processes the enriched query and generates a response.

5. Get an answer: The UI or chatbot interface presents the LLM's response as the answer to the user's query.

The scope of this solution is a single standalone GPT-in-a-Box 2.0 cluster, and you must back up the application data on the S3 buckets in the Nutanix Objects store to an external environment.

The persistent volumes and application data in the clusters can be protected by a Velero backup schedule and stored in a S3 bucket provided by Nutanix Objects.

You can use the streaming replication mechanism built into Nutanix Objects to replicate the data at the bucket level to a different S3 object store outside the GPT-in-a-Box 2.0 cluster. You can also use the existing backup solution to back up the persistent application data and store it outside the GPT-in-a-Box 2.0 cluster.

Solution Deployment

This chapter contains the following:

This section details the prerequisites for installing the GPT-in-a-Box 2.0 solution and divided into the following key sections and procedures:

1. Install AHV-based CCHC with Nutanix Cluster.

2. Install the NVIDIA Grid Driver.

3. Enable and Configure Nutanix Files.

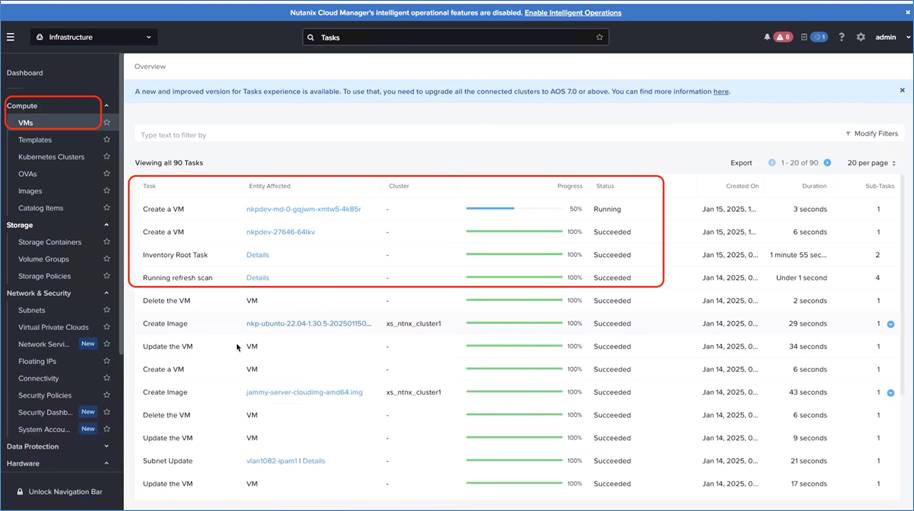

Procedure 1. Install AHV-based CCHC with Nutanix Cluster

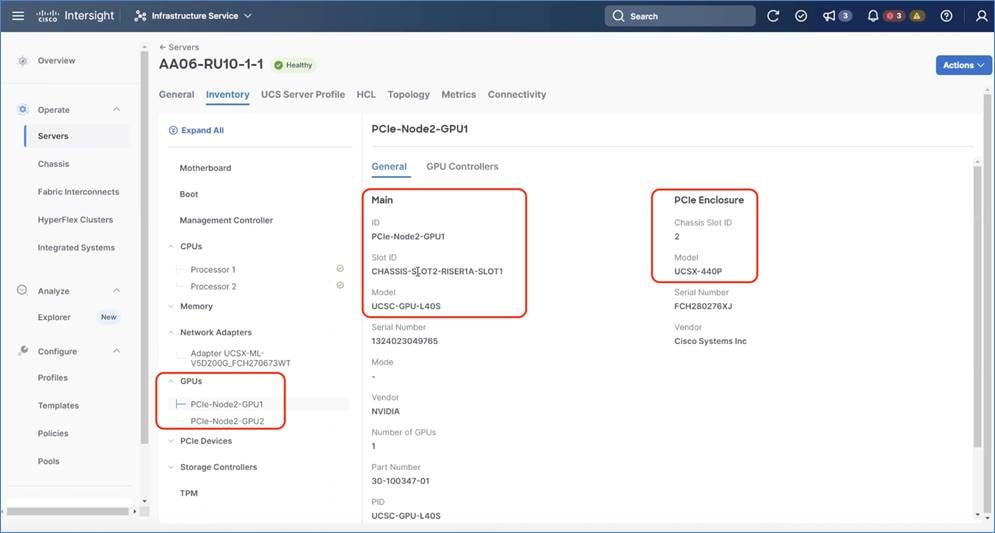

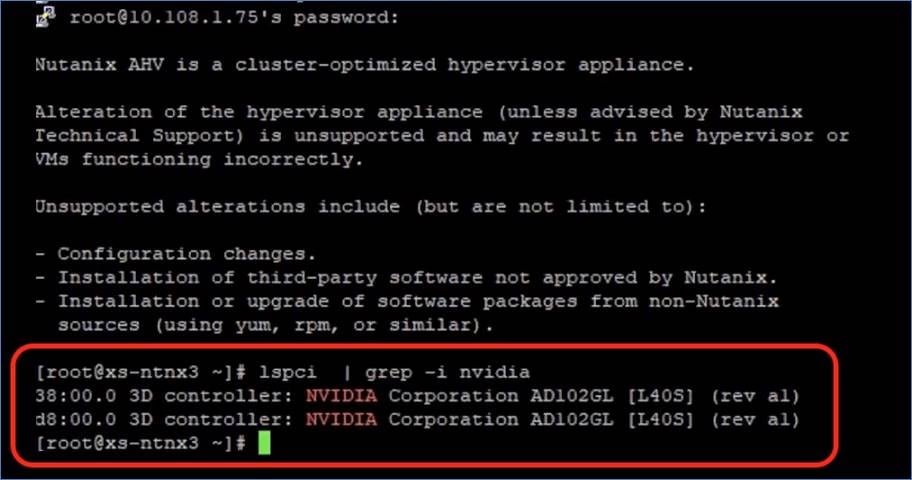

This solution requires a minimum of four (4) Cisco Compute Hyperconverged X210c M7 All NVMe Nodes. Each of the cluster node is enabled with Intel® Xeon® Scalable Processors offering compute density and storage capacity in a single form factor and the Cisco UCS X440p PCIe Nodes supporting 2x NVIDIA L40S GPUs. For detailed specifications on the specification of server nodes, go to the Solution Design chapter.

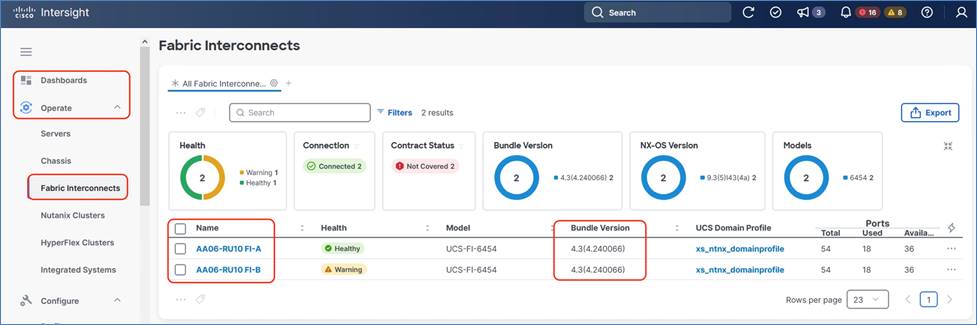

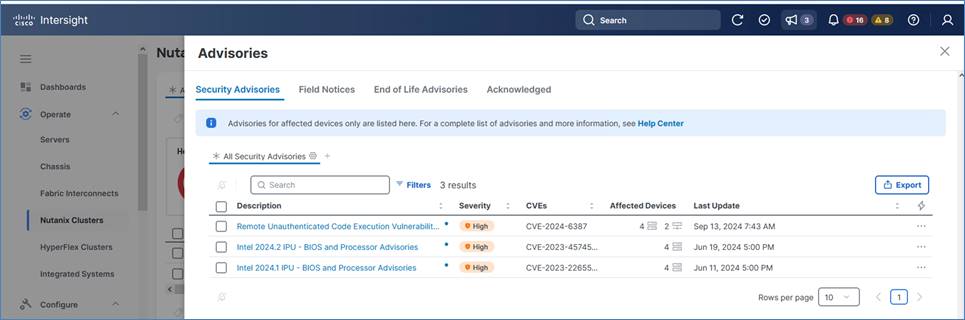

Note: A complete install process of AHV based CCHC with Nutanix cluster is outside the scope of this document. Please refer to the Cisco Compute Hyperconverged with Nutanix IMM Field Guide for detailed installation steps. The key validation prerequisites for the Nutanix Cluster installation are detailed in this section. These validations can be verified after UCS Domain Profile deployment. The steps verify that four (4) X210c M7 All NVMe Nodes with X440P PCIe Nodes enabling 2x L40S GPUS for each compute node are discovered in Cisco Intersight.

Step 1. Ensure the Fabric Interconnect Firmware is 4.3(.240066) or above.

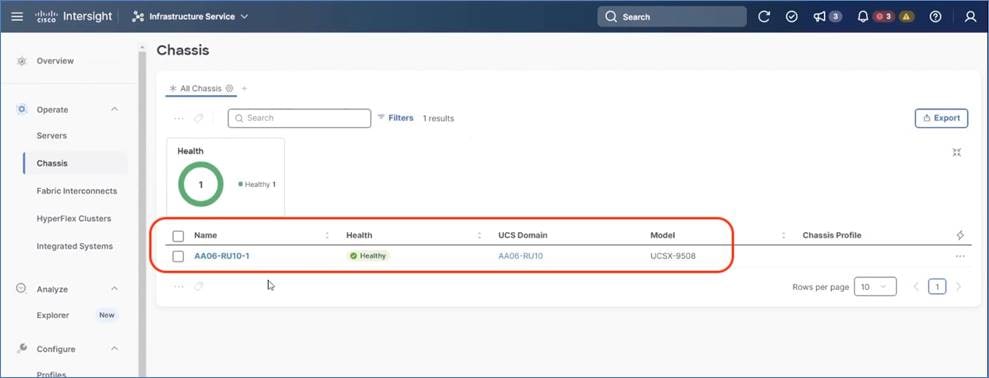

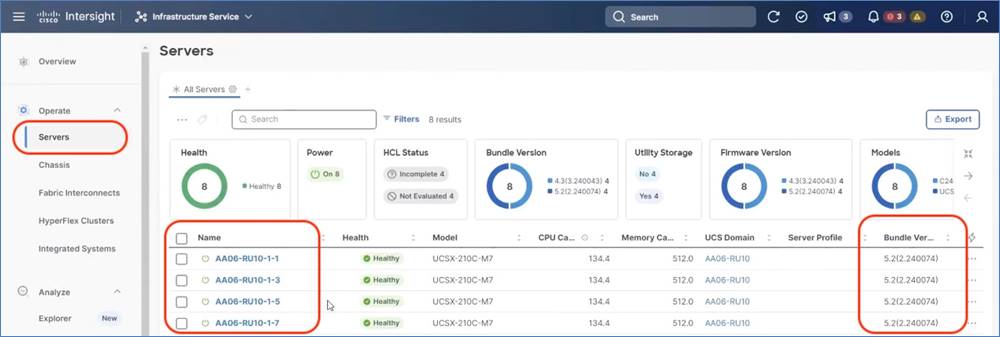

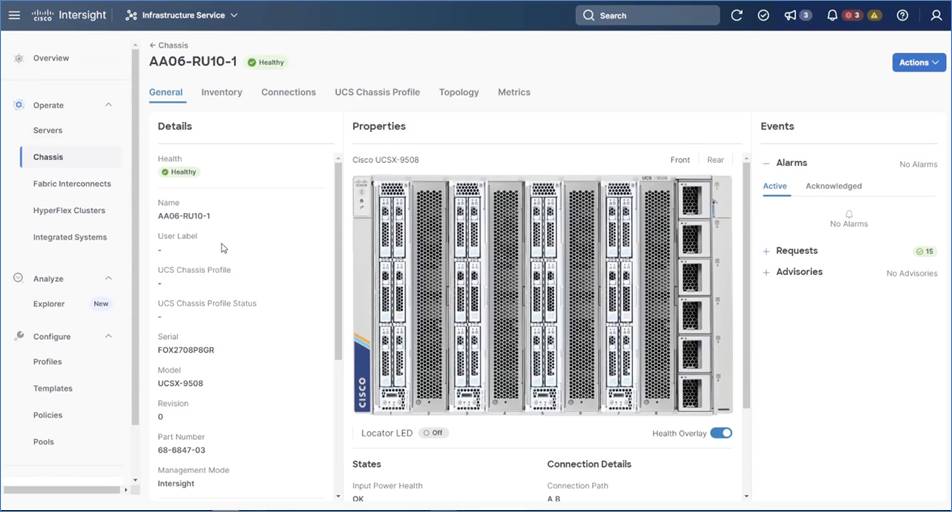

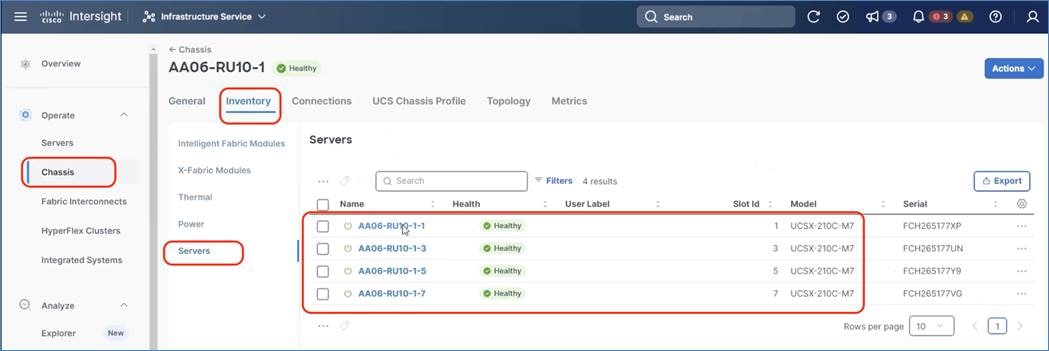

Step 2. From Intersight Dashboard, go to Operate > Chassis and ensure Domain Profile is deployed successfully.

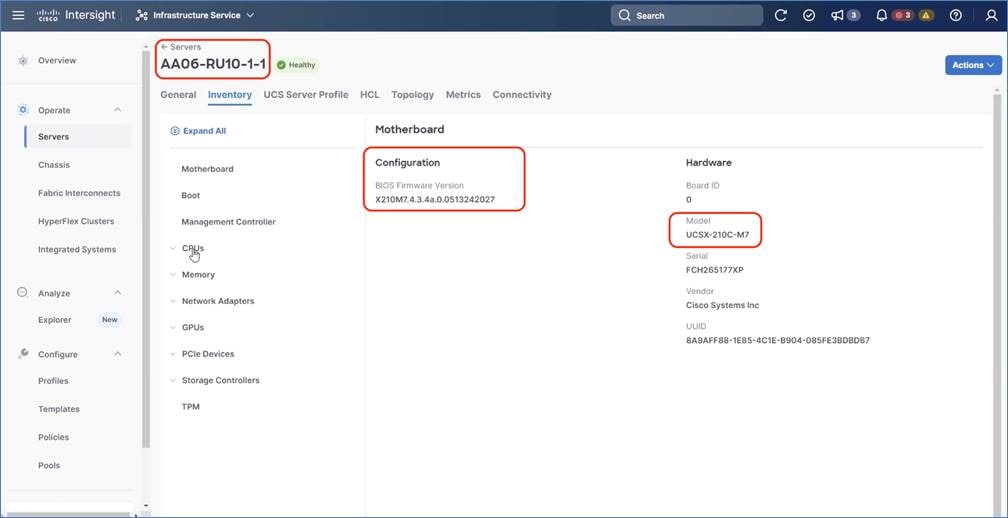

Step 3. Go to Operate > Servers and ensure the X210C compute nodes firmware is 5.2 2.40074 or above.

Step 4. Go to Operate > Chassis > <Chassis Name> and ensure the X210C compute nodes and X440P PCIe nodes are displayed correctly. The compute nodes are placed in Slot 1, 3, 5, 7 and PCIe nodes on Slot 2, 4, 6, 8.

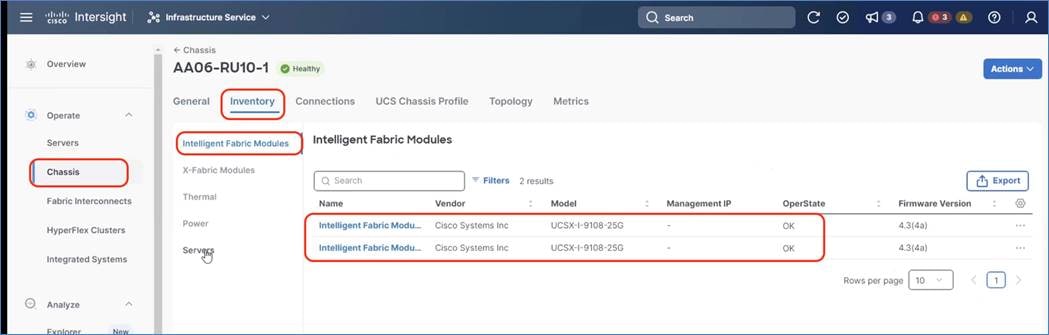

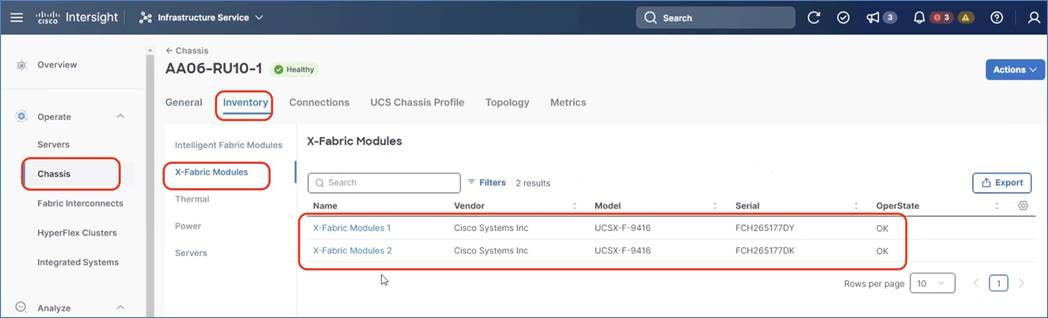

Step 5. Go to Chassis > Inventory tab and verify the IFM (Intelligent Fabric Module), X-Fabric Module, and the X210C compute nodes are displayed on the dashboard.

Step 6. Go to Servers and select one of the servers and view the inventory of the server. Ensure the nodes are attached to UCS X440P PCIe node which is equipped with 2x L40S or H100 GPUs.

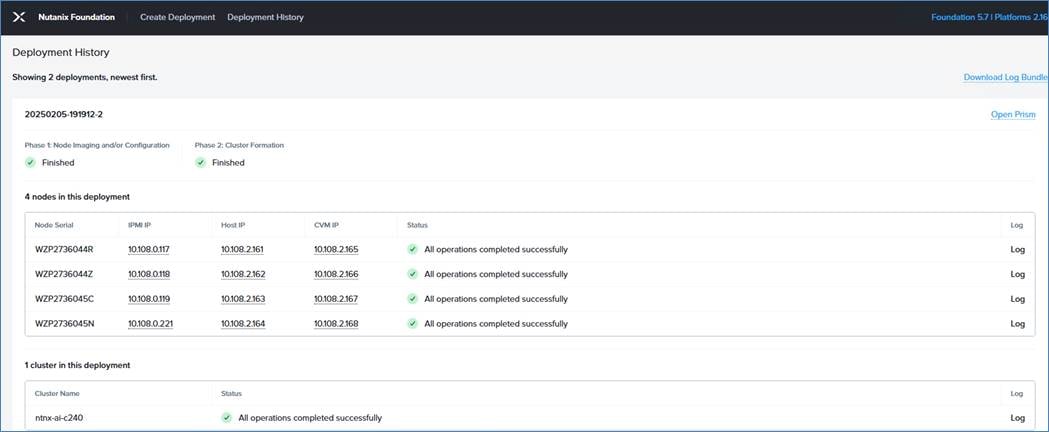

Step 7. Install the Nutanix cluster with AOS 6.7.1. and go to the Field Guide for information to successfully install the cluster.

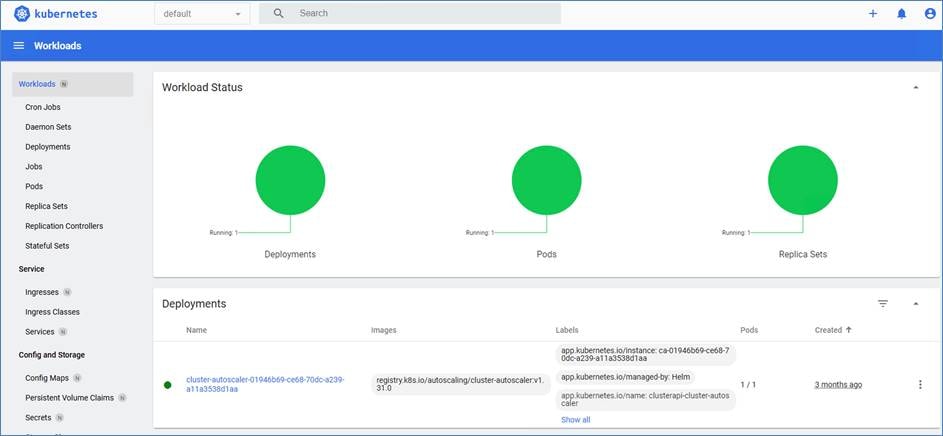

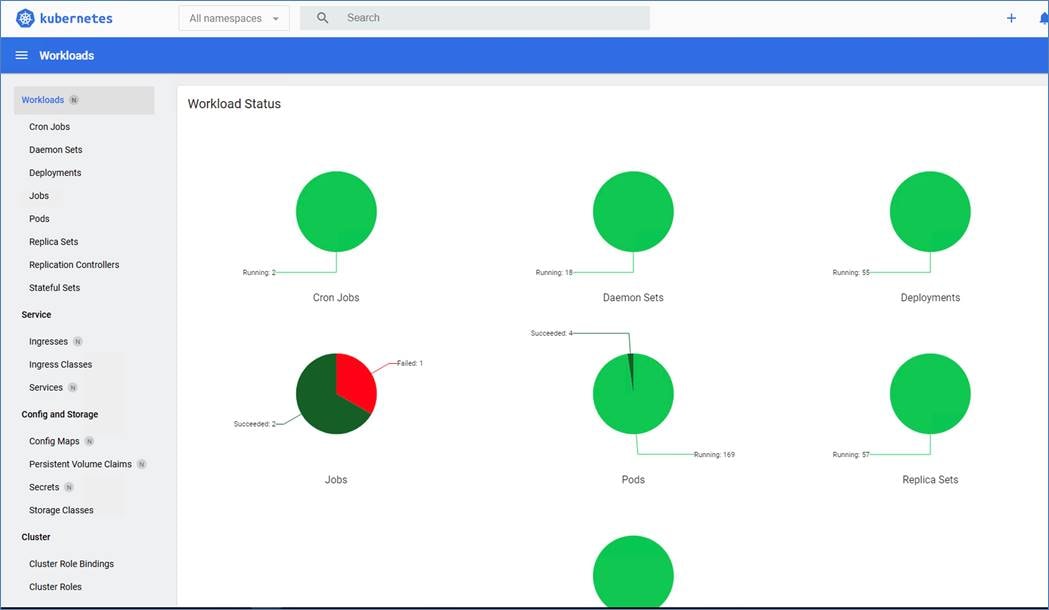

The screenshot below shows four (4) nodes:

Step 8. Provision a Prism Central Instance deployed on the Nutanix cluster and register the existing cluster to Prism Central. You have a choice to either deploy Prism Central on the GPT-in-a-Box cluster or utilize an existing Prism Central Instance.

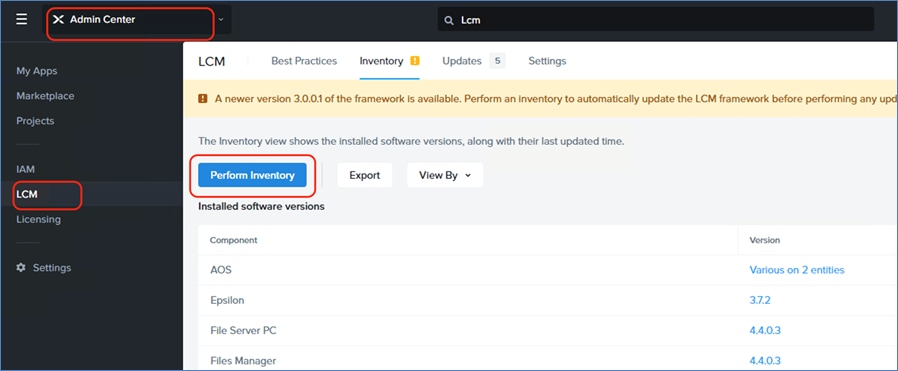

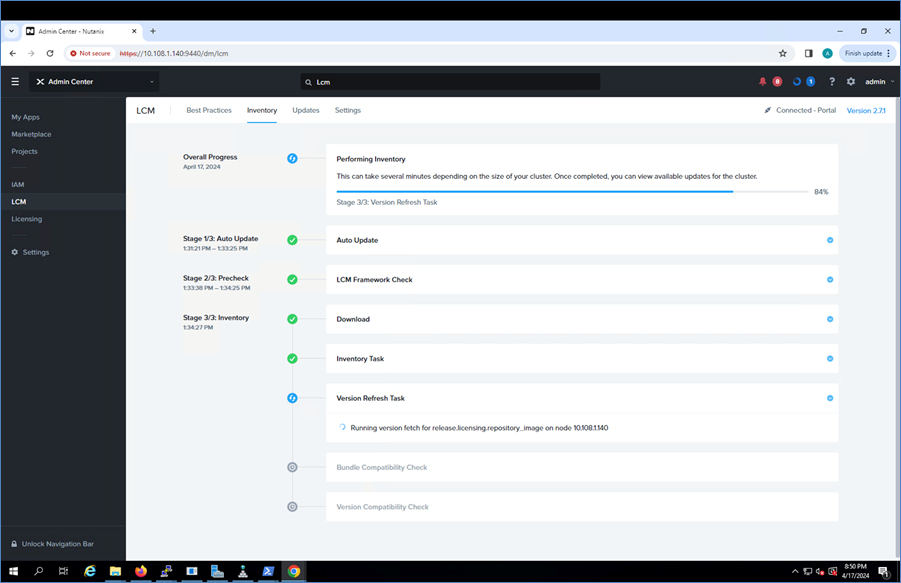

Procedure 2. Install NVIDIA Grid Driver

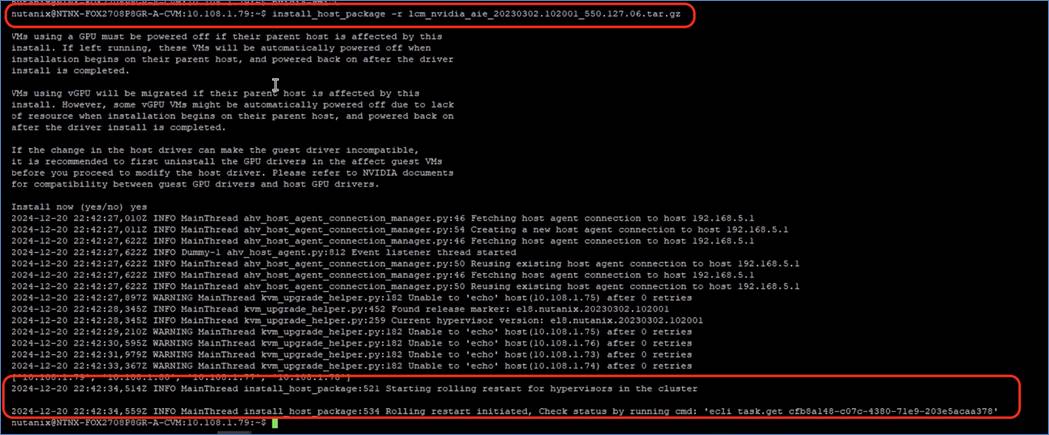

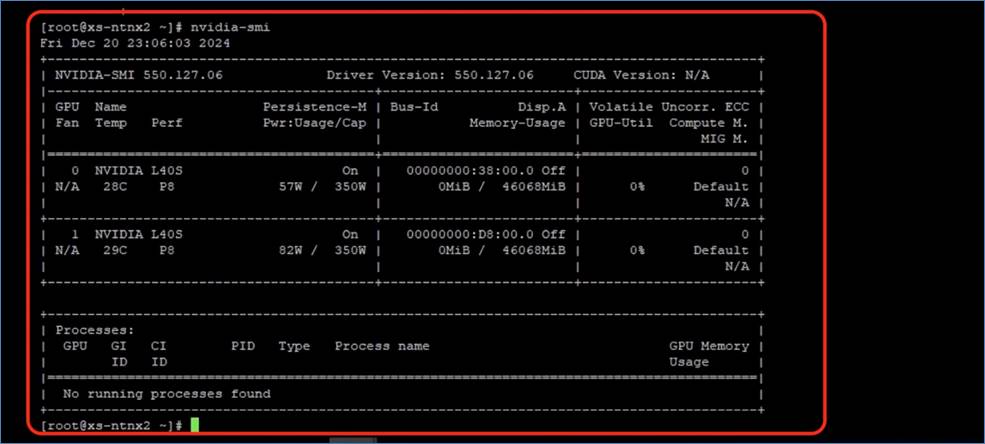

The following steps detail the process to deploy the NVIDIA driver on cluster nodes configured with L40S GPUs.