Accessing CPS vDRA Management CLI

You can access the CPS vDRA Management CLI in two ways: through a web browser or over SSH.

Access Via Web Browser

Perform the following steps to access the CPS vDRA Management CLI through a web browser:

Procedure

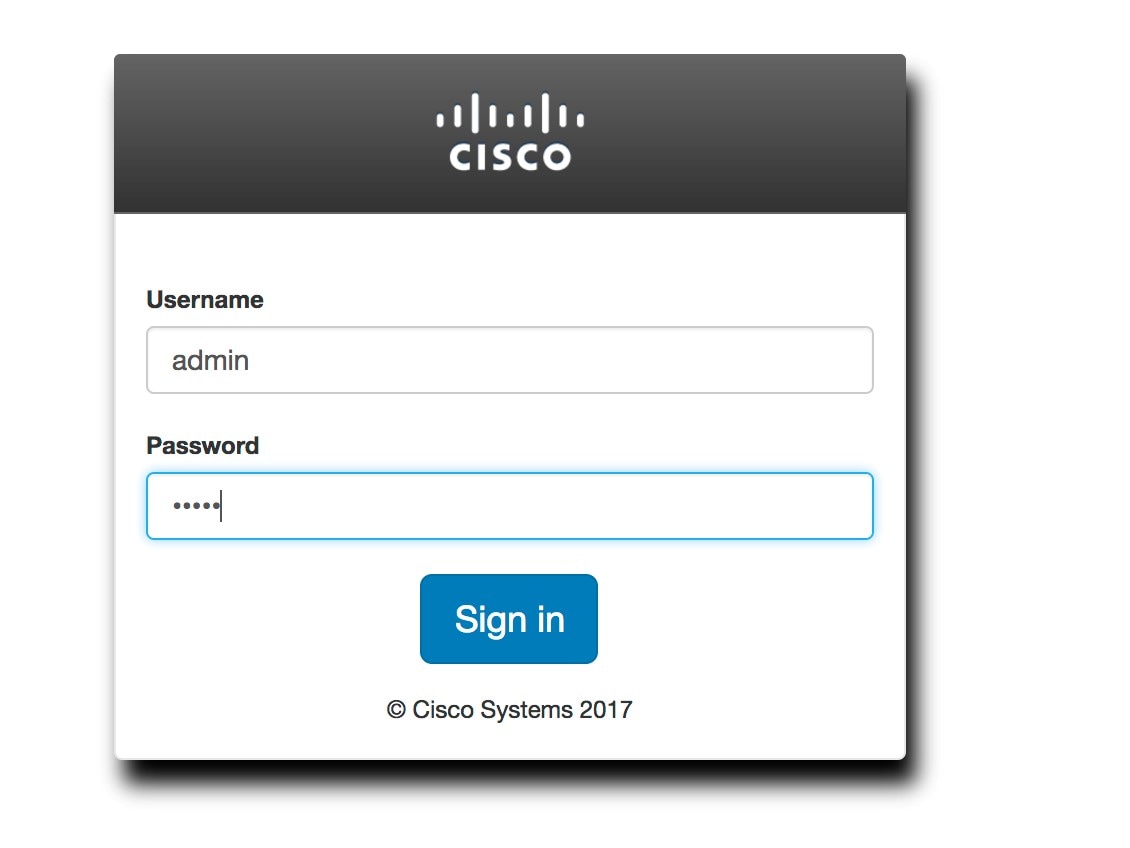

Step 1: Enter the following URL in Firefox or Chrome: https://<masterip>/

Step 2: Log in to the application using your user ID and password.

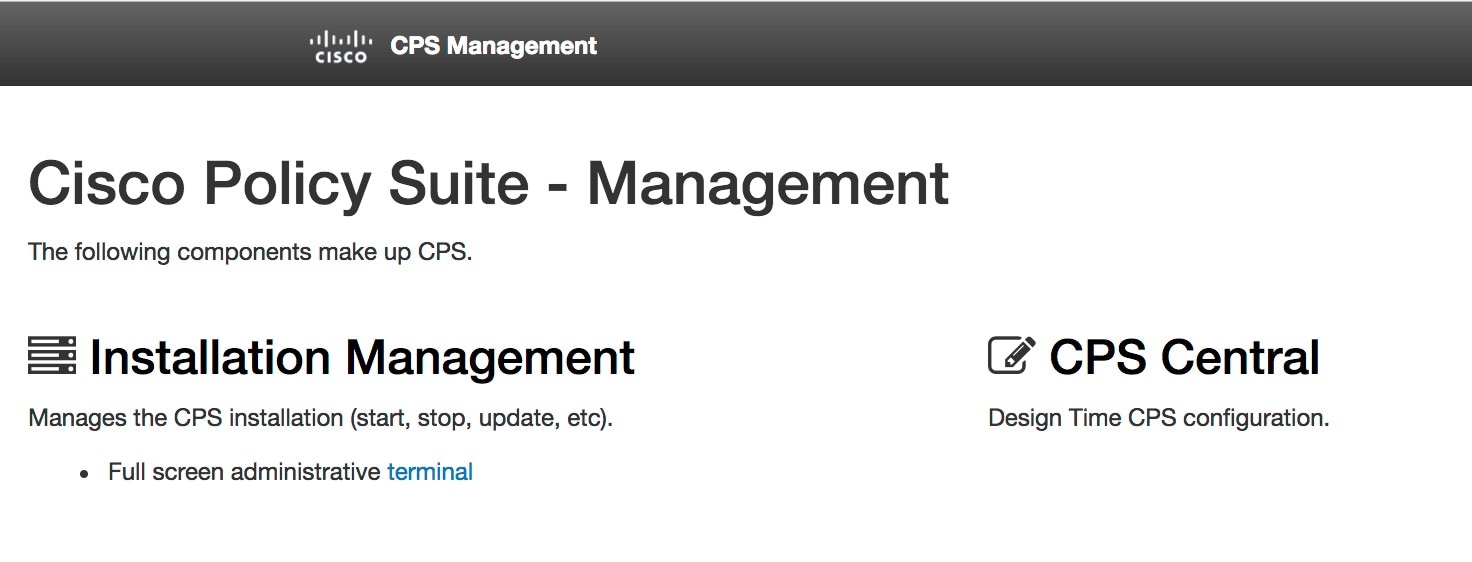

Step 3: Click the Installation Management hyperlink in the following screen:

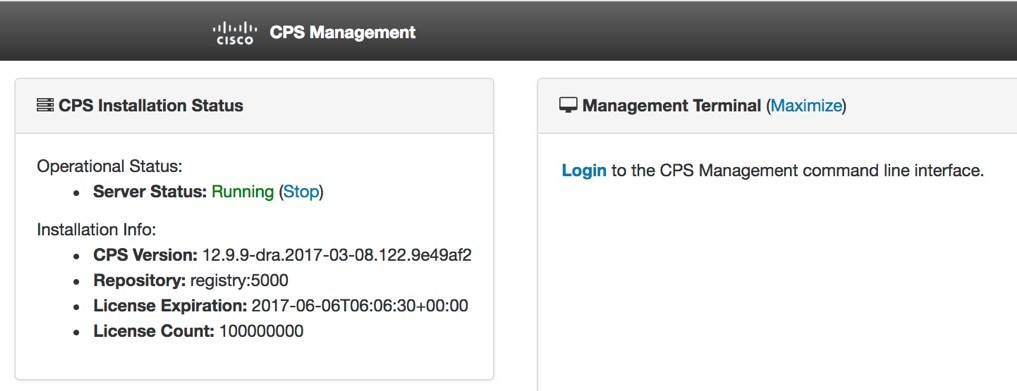

Step 4: In the Management screen, click the Login link to display the in-browser terminal window.

Step 5: Log in with a valid user name and password.

Access Via SSH

The CPS vDRA Management CLI is also accessible over SSH, which listens on port 2024 of the master virtual machine. This port must be open in the OpenStack security rules before you can reach the Management CLI over SSH.
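
As an illustration of the SSH access path, the following Python sketch first checks whether TCP port 2024 on the master virtual machine is reachable (a rough indication that the OpenStack security rules allow it) and then builds the corresponding `ssh` command. The helper names `port_is_open`, `build_ssh_command`, and `connect` are hypothetical and are not part of CPS vDRA, and the sketch assumes a standard OpenSSH client is installed on the workstation.

```python
import socket
import subprocess
from typing import List

# Port on which the Management CLI SSH service listens, as stated in this section.
MGMT_CLI_SSH_PORT = 2024


def port_is_open(host: str, port: int = MGMT_CLI_SSH_PORT, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def build_ssh_command(user: str, host: str, port: int = MGMT_CLI_SSH_PORT) -> List[str]:
    """Build the argument list for an OpenSSH client session on the given port."""
    return ["ssh", "-p", str(port), f"{user}@{host}"]


def connect(user: str, host: str) -> int:
    """Check reachability, then start an interactive SSH session.

    Returns the exit code of the ssh process, or 1 if the port is unreachable.
    """
    if not port_is_open(host):
        print(f"Port {MGMT_CLI_SSH_PORT} on {host} is not reachable; "
              "check the OpenStack security rules.")
        return 1
    return subprocess.run(build_ssh_command(user, host), check=False).returncode
```

For example, `connect("admin", "192.0.2.10")` would attempt a session equivalent to `ssh -p 2024 admin@192.0.2.10`. Both the user name and the address here are placeholders; substitute a valid Management CLI user and the IP address of your master virtual machine.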
