In-Service Software Upgrade to 19.3.0

This section describes the steps to perform an in-service software upgrade (ISSU) of an existing CPS 18.2.0 deployment to CPS 19.3.0. This upgrade allows traffic to continue running while the upgrade is being performed.

In-service software upgrade to 19.3.0 is supported only for Mobile installation. Other CPS installation types cannot be upgraded using ISSU.

Note |

During ISSU from CPS 18.2.0 to CPS 19.3.0, if the following issue is observed, reboot the Cluster Manager and start the ISSU again: The issue is observed only when the kernel is updated for the first time. In subsequent ISSU runs, the kernel issue is not observed. |

Note |

Before the upgrade, you must configure at least one Graphite/Grafana user. Grafana supports credential configuration for the Graphite data source, and the Graphite data source requires a common data source credential to be configured in Grafana for the Grafana user. This credential must be configured before the upgrade. If you do not add the user, Grafana cannot access the Graphite database and you are prompted continuously for Graphite/Grafana credentials. All configured Grafana users remain available after the upgrade. However, you must still configure the Graphite data source in the Grafana UI. Synchronize the Grafana information between the OAM (pcrfclient) VMs by running /var/qps/bin/support/grafana_sync.sh from pcrfclient01. For more information on updating the Graphite data source, see Configuring Graphite User Credentials in Grafana in the CPS Operations Guide. |

Note |

In CPS 19.3.0, additional application and platform statistics are enabled. Hence, there can be an increase in the disk space usage at pcrfclient VMs. Once CPS 19.3.0 is deployed, monitor the disk space usage and if required, increase the disk space. |

Prerequisites

Important |

During the upgrade process, do not make policy configuration changes, CRD table updates, or other system configuration changes. These types of changes should be performed only after the upgrade has been successfully completed and properly validated. |

Note |

During upgrade, the value of Session Limit Overload Protection under System configuration in Policy Builder can be set to 0 (default) which indefinitely accepts all the messages so that the traffic is not impacted but SNMP traps are raised. Once upgrade is complete, you must change the value as per the session capacity of the setup and publish it without restarting the Policy Server (QNS) process. For more information, contact your Cisco Account representative. |

Before beginning the upgrade:

-

Create a backup (snapshot/clone) of the Cluster Manager VM. If errors occur during the upgrade process, this backup is required to successfully roll back the upgrade.

-

Back up any nonstandard customizations or modifications to system files. Only customizations that are made to the configuration files on the Cluster Manager are backed up. Refer to the CPS Installation Guide for VMware for an example of this customization procedure. Any customizations that are made directly to the CPS VMs are not backed up and must be reapplied manually after the upgrade is complete.

-

Remove any previously installed patches. For more information on patch removal steps, refer to Remove a Patch.

-

If necessary, upgrade the underlying hypervisor before performing the CPS in-service software upgrade. The steps to upgrade the hypervisor or troubleshoot any issues that may arise during the hypervisor upgrade are beyond the scope of this document. Refer to the CPS Installation Guide for VMware or CPS Installation Guide for OpenStack for a list of supported hypervisors for this CPS release.

-

Verify that the Cluster Manager VM has at least 10 GB of free space. The Cluster Manager VM requires this space when it creates the backup archive at the beginning of the upgrade process.

-

Synchronize the Grafana information between the OAM (pcrfclient) VMs by running the following command from pcrfclient01:

/var/qps/bin/support/grafana_sync.sh

Also verify that the /var/broadhop/.htpasswd files are the same on pcrfclient01 and pcrfclient02, and copy the file from pcrfclient01 to pcrfclient02 if necessary.

Refer to Copy Dashboards and Users to pcrfclient02 in the CPS Operations Guide for more information.

-

Check the health of the CPS cluster as described in Check the System Health.

-

The following files are overwritten with the latest versions after ISSU. Any modifications made to these files must be merged manually after the upgrade.

/etc/broadhop/logback-debug.xml

/etc/broadhop/logback-netcut.xml

/etc/broadhop/logback-pb.xml

/etc/broadhop/logback.xml

/etc/broadhop/controlcenter/logback.xml

Refer to logback.xml Update for more details.

Refer also to Rollback Considerations for more information about the process to restore a CPS cluster to the previous version if an upgrade is not successful.
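Two of the prerequisites above (free disk space on the Cluster Manager and identical .htpasswd files) lend themselves to a quick scripted check. The following is a minimal sketch, not part of the CPS tooling: the helper names free_gb, has_free_gb, and same_file are hypothetical, and checking the filesystem that holds /var/tmp is an assumption based on where the backup archive is written.

```shell
# Sketch only: helper names are hypothetical and not part of CPS.

# free_gb PATH: print the whole gigabytes available on the filesystem holding PATH.
free_gb() {
    # -P forces single-line POSIX output, -k reports 1024-byte blocks;
    # column 4 of the second line is the available space.
    df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

# has_free_gb PATH MIN_GB: succeed when at least MIN_GB gigabytes are free.
has_free_gb() {
    [ "$(free_gb "$1")" -ge "$2" ]
}

# same_file A B: succeed when the two files are byte-for-byte identical.
same_file() {
    cmp -s "$1" "$2"
}

# Example usage (run manually; /tmp/htpasswd.pcrfclient02 is an assumed
# local copy of the file fetched from pcrfclient02):
#   has_free_gb /var/tmp 10 || echo "Less than 10 GB free" >&2
#   same_file /var/broadhop/.htpasswd /tmp/htpasswd.pcrfclient02 || echo "Files differ" >&2
```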

Overview

The in-service software upgrade is performed in increments:

-

Download and mount the CPS software on the Cluster Manager VM.

-

Divide CPS VMs in the system into two sets.

-

Start the upgrade (install.sh). The upgrade automatically creates a backup archive of the CPS configuration.

-

Manually copy the backup archive (/var/tmp/issu_backup-<timestamp>.tgz) to an external location.

-

Perform the upgrade on the first set while the second set remains operational and processes all running traffic. The VMs included in the first set are rebooted during the upgrade. After upgrade is complete, the first set becomes operational.

-

Evaluate the upgraded VMs before proceeding with the upgrade of the second set. If any errors or issues occurred, the upgrade of set 1 can be rolled back. Once you proceed with the upgrade of the second set, there is no automated method to roll back the upgrade.

-

Perform the upgrade on the second set while the first assumes responsibility for all running traffic. The VMs in the second set are rebooted during the upgrade.
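The manual copy of the backup archive mentioned in the list above could be scripted along the following lines. This is a sketch under stated assumptions: the helper names latest_backup and copy_backup are hypothetical, the newest archive is chosen by file modification time rather than by parsing the timestamp in the name, and the scp destination is a placeholder you supply.

```shell
# Sketch only: helper names are hypothetical and not part of CPS.

# latest_backup [DIR]: print the newest issu_backup-*.tgz in DIR (default /var/tmp).
latest_backup() {
    # ls -t sorts by modification time, newest first; errors are
    # silenced so that nothing is printed when no archive exists.
    ls -1t "${1:-/var/tmp}"/issu_backup-*.tgz 2>/dev/null | head -n 1
}

# copy_backup DEST [DIR]: copy the newest archive to an scp destination.
copy_backup() {
    archive="$(latest_backup "${2:-/var/tmp}")"
    if [ -z "$archive" ]; then
        echo "no backup archive found" >&2
        return 1
    fi
    scp "$archive" "$1"
}

# Example usage (user@backuphost:/backups/ is a placeholder destination):
#   copy_backup user@backuphost:/backups/
```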

Check the System Health

Procedure

| Step 1 |

Log in to the Cluster Manager VM as the root user. |

| Step 2 |

Check the health of the system by running the following command:

Clear or resolve any errors or warnings before proceeding to Download and Mount the CPS ISO Image. |

Download and Mount the CPS ISO Image

Procedure

| Step 1 |

Download the Full Cisco Policy Suite Installation software package (ISO image) from software.cisco.com. Refer to the Release Notes for the download link. |

| Step 2 |

Load the ISO image on the Cluster Manager. For example:

where,

|

| Step 3 |

Execute the following commands to mount the ISO image:

|

| Step 4 |

Continue with Verify VM Database Connectivity. |

Verify VM Database Connectivity

Verify that the Cluster Manager VM has access to all VM ports. If the firewall in your CPS deployment is enabled, the Cluster Manager cannot access the CPS database ports.

To temporarily disable the firewall, run the following command on each of the OAM (pcrfclient) VMs to disable IPTables:

IPv4:

service iptables stop

IPv6:

service ip6tables stop

The iptables service restarts the next time the OAM VMs are rebooted.
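After the firewall is stopped, one way to confirm that a database port is reachable from the Cluster Manager is a plain TCP connection test. The sketch below assumes bash and the coreutils timeout command are available; the helper name port_open is hypothetical, and the host and port in the usage comment are placeholders to be replaced with values from your own deployment.

```shell
# Sketch only: port_open is a hypothetical helper, not part of CPS.

# port_open HOST PORT [TIMEOUT_SECONDS]: succeed when a TCP connection
# to HOST:PORT can be opened within the timeout (default 3 seconds).
port_open() {
    # bash opens a TCP socket when a redirection targets /dev/tcp/HOST/PORT.
    # HOST and PORT are passed as $0 and $1 to avoid quoting problems.
    timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example usage (host name and port number are placeholders):
#   port_open sessionmgr01 27717 || echo "port not reachable" >&2
```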

Create a Backup of CPS 18.2.0 Cluster Manager

Before upgrading Cluster Manager to CPS 19.3.0, create a backup of the current Cluster Manager in case an issue occurs during upgrade.

Procedure

| Step 1 |

On Cluster Manager, remove the following files if they exist: |

| Step 2 |

After removing the files, reboot the Cluster Manager. |

| Step 3 |

Create a backup (snapshot/clone) of Cluster Manager. For more information, refer to the CPS Backup and Restore Guide. |

Create Upgrade Sets

The following steps divide all the VMs in the CPS cluster into two groups (upgrade set 1 and upgrade set 2). These two groups of VMs are upgraded independently in order to allow traffic to continue running while the upgrade is being performed.

Procedure

| Step 1 |

Determine which VMs in your existing deployment should be in upgrade set 1 and upgrade set 2 by running the following command on the Cluster Manager:

|

| Step 2 |

This script outputs two files that define the two sets: /var/tmp/cluster-upgrade-set-1.txt and /var/tmp/cluster-upgrade-set-2.txt |

| Step 3 |

Create the file backup-db in /var/tmp. This file contains the name of the backup-session-db (hot-standby) set, as defined in the /etc/broadhop/mongoConfig.cfg file (for example, SESSION-SETXX). For example:

|

| Step 4 |

Review these files to verify that all VMs in the CPS cluster are included. Make any changes to the files as needed. |

| Step 5 |

Continue with Move the Policy Director Virtual IP to Upgrade Set 2. |
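The review of the two set files could be assisted by a small check like the one below. It is only a sketch: it assumes that each set file lists one VM host name per line, which the text above does not confirm, and that you have prepared your own file with the expected host names. The helper names missing_from_sets and in_both_sets are hypothetical.

```shell
# Sketch only: helper names are hypothetical, and the one-host-per-line
# file format is an assumption.

# missing_from_sets EXPECTED SET1 SET2: print hosts listed in EXPECTED
# that appear in neither set file. Prints nothing when coverage is complete.
missing_from_sets() {
    # -F fixed strings, -x whole-line match, -v invert: keep the lines of
    # EXPECTED that match no line of the combined set files.
    cat "$2" "$3" | grep -Fxv -f - "$1" || true
}

# in_both_sets SET1 SET2: print hosts that are listed in both set files.
in_both_sets() {
    grep -Fx -f "$1" "$2" || true
}

# Example usage (/var/tmp/expected-hosts.txt is a file you create yourself):
#   missing_from_sets /var/tmp/expected-hosts.txt \
#       /var/tmp/cluster-upgrade-set-1.txt /var/tmp/cluster-upgrade-set-2.txt
```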

Move the Policy Director Virtual IP to Upgrade Set 2

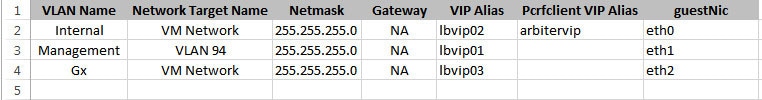

Before beginning the upgrade of the VMs in upgrade set 1, you must transition the Virtual IP (VIP) to the Policy Director (LB) VM in Set 2.

Check which Policy Director VM has the virtual IP (VIP) by connecting (ssh) to lbvip01 from the Cluster Manager VM. This connects you to the Policy Director VM that has the VIP, either lb01 or lb02.

You can also run ifconfig on the Policy Director VMs to confirm the VIP assignment.

-

If the VIP is already assigned to the Policy Director VM that is to be upgraded later (Set 2), continue with Upgrade Set 1.

-

If the VIP is assigned to the Policy Director VM that is to be upgraded now (Set 1), issue the following commands from the Cluster Manager VM to force a switchover of the VIP to the other Policy Director:

ssh lbvip01

service corosync stop

Continue with Upgrade Set 1.
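The decision described above (whether a VIP switchover is needed before upgrading Set 1) can be sketched as a simple lookup. This assumes that the Set 1 file lists one host name per line and that the name reported by the VIP holder matches the name used in that file; neither is confirmed by this guide. The helper name vip_in_set is hypothetical.

```shell
# Sketch only: vip_in_set is a hypothetical helper, not part of CPS.

# vip_in_set HOLDER SET_FILE: succeed when HOLDER appears as a whole line
# in SET_FILE, meaning the VIP holder belongs to that upgrade set.
vip_in_set() {
    grep -Fxq "$1" "$2"
}

# Example usage (run manually from the Cluster Manager):
#   holder="$(ssh lbvip01 hostname -s)"
#   if vip_in_set "$holder" /var/tmp/cluster-upgrade-set-1.txt; then
#       echo "VIP is on a Set 1 VM: switchover needed"
#   fi
```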

Upgrade Set 1

Important |

Perform these steps while connected to the Cluster Manager console via the orchestrator. This prevents a possible loss of a terminal connection with the Cluster Manager during the upgrade process. |

The steps performed during the upgrade, including all console inputs and messages, are logged to /var/log/install_console_<date/time>.log.

Procedure

| Step 1 |

Run the following command to initiate the installation script:

|

||

| Step 2 |

When prompted for the install type, enter mobile.

Please enter install type [mobile|mog|pats|arbiter|andsf|escef]:

|

||

| Step 3 |

When prompted to initialize the environment, enter y.

|

||

| Step 4 |

(Optional) You can skip Step 2 and Step 3 by configuring the following parameters in /var/install.cfg file: Example: |

||

| Step 5 |

When prompted for the type of installation, enter 3. |

||

| Step 6 |

When prompted, open a second terminal session to the Cluster Manager VM and copy the backup archive to an external location. This archive is needed if the upgrade needs to be rolled back. After you have copied the backup archive, enter c to continue. |

||

| Step 7 |

When prompted to enter the SVN repository to back up the policy files, enter the Policy Builder data repository name.

The default repository name is

|

||

| Step 8 |

(Optional) If prompted for a user, enter

|

||

| Step 9 |

(Optional) If prompted for the password for

|

||

| Step 10 |

The upgrade proceeds on Set 1 until the following message is displayed:

For example: |

||

| Step 11 |

Enter y to proceed with the kernel upgrade.

|

||

| Step 12 |

(Optional) The upgrade proceeds until the following message is displayed: |

||

| Step 13 |

(Optional) Open a second terminal to the Cluster Manager VM and run the following command to check that all DB members are UP and in the correct state:

|

||

| Step 14 |

(Optional) After confirming the database member state, enter y to continue the upgrade. |

||

| Step 15 |

The upgrade proceeds until the following message is displayed:

|

||

| Step 16 |

If you have entered y, continue with Evaluate Upgrade Set 1. |

Evaluate Upgrade Set 1

At this point in the in-service software upgrade, the VMs in Upgrade Set 1 have been upgraded and all calls are now directed to the VMs in Set 1.

Before continuing with the upgrade of the remaining VMs in the cluster, check the health of the Upgrade Set 1 VMs. If any of the following conditions exist, the upgrade should be rolled back.

-

Errors were reported during the upgrade of Set 1 VMs.

-

Calls are not processing correctly on the upgraded VMs.

-

about.sh does not show the correct software versions for the upgraded VMs (under CPS Core Versions section).

Note |

|

If diagnostics.sh reports clock skew for one or more VMs, you need to synchronize the time of the redeployed VMs.

For example,

Checking clock skew for qns01...[FAIL]

Clock was off from lb01 by 57 seconds. Please ensure clocks are synced. See: /var/qps/bin/support/sync_times.sh

Synchronize the times of the redeployed VMs by running the following command:

/var/qps/bin/support/sync_times.sh

For more information on sync_times.sh, refer to the CPS Operations Guide.
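When many VMs are involved, the clock skew failures can be picked out of saved diagnostics output with a short filter. The sketch below relies only on the line format shown in the example above ("Checking clock skew for <host>...[FAIL]"); the helper name skewed_hosts and the saved output file in the usage comment are hypothetical.

```shell
# Sketch only: skewed_hosts is a hypothetical helper, not part of CPS.

# skewed_hosts: read diagnostics output on stdin and print the host name
# from every clock skew line that ends in [FAIL].
skewed_hosts() {
    sed -n 's/^Checking clock skew for \(.*\)\.\.\.\[FAIL\][[:space:]]*$/\1/p'
}

# Example usage (/var/tmp/diag.out is an assumed file holding saved output):
#   skewed_hosts < /var/tmp/diag.out
```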

If you observe a "directory not empty" error during Puppet execution, refer to Directory Not Empty for the solution.

Important |

Once you proceed with the upgrade of Set 2 VMs, there is no automated method for rolling back the upgrade. |

If any issues are found which require the upgraded Set 1 VMs to be rolled back to the original version, refer to Upgrade Rollback.

To continue upgrading the remainder of the CPS cluster (Set 2 VMs), refer to Move the Policy Director Virtual IP to Upgrade Set 1.

Move the Policy Director Virtual IP to Upgrade Set 1

Issue the following commands from the Cluster Manager VM to switch the VIP from the Policy Director (LB) on Set 1 to the Policy Director on Set 2:

ssh lbvip01

service corosync stop

If the command prompt does not display again after running this command, press Enter.

Continue with Upgrade Set 2.

Upgrade Set 2

Procedure

| Step 1 |

In the first terminal, when prompted with the following message, enter y after ensuring that all the VMs in Set 1 are upgraded and restarted successfully. |

||

| Step 2 |

The upgrade proceeds on Set 2 until the following message is displayed:

For example: |

||

| Step 3 |

Enter y to proceed with the kernel upgrade.

|

||

| Step 4 |

(Optional) The upgrade proceeds until the following message is displayed: |

||

| Step 5 |

(Optional) In the second terminal to the Cluster Manager VM, run the following command to check that the database members are UP and in the correct state:

|

||

| Step 6 |

(Optional) After confirming the database member state, enter y in the first terminal to continue the upgrade. |

||

| Step 7 |

(Optional) The upgrade proceeds until the following message is displayed: |

||

| Step 8 |

(Optional) In the second terminal to the Cluster Manager VM, run the following command to check that the database members are UP and in the correct state:

|

||

| Step 9 |

(Optional) After confirming the database member state, enter y in the first terminal to continue the upgrade. |

||

| Step 10 |

The upgrade proceeds until the following message is displayed. |

||

| Step 11 |

Once you verify that all VMs in Set 2 are upgraded and restarted successfully, enter y to continue the upgrade. Once the Cluster Manager VM reboots, the CPS upgrade is complete. |

||

| Step 12 |

Continue with Verify System Status and Remove ISO Image. |

||

| Step 13 |

Any Grafana dashboards used prior to the upgrade must be manually migrated. Refer to Migrate Existing Grafana Dashboards in the CPS Operations Guide for instructions. |
