General Troubleshooting

-

Find out if your problem is related to CPS or another part of your network.

-

Gather information that facilitates the support call.

-

Are there specific SNMP traps being reported that can help you isolate the issue?

Gathering Information

Determine the Impact of the Issue

-

Is the issue affecting subscriber experience?

-

Is the issue affecting billing?

-

Is the issue affecting all subscribers?

-

Is the issue affecting all subscribers on a specific service?

-

Is there anything else common to the issue?

-

Have there been any changes performed on the CPS system or any other systems?

-

Has there been an increase in subscribers?

-

Initially, categorize the issue to determine the level of support needed.

Collecting MongoDB Information for Troubleshooting

This section describes how to collect MongoDB information when a customer reports issues with MongoDB:

Procedure

Step 1

Collect the information from

Step 2

Collect

Step 3

Collect the information from the /var/log/broadhop/mongodb-<dbportnum>.log file on the sessionmgr VMs where the database is hosted (primary, secondary, and arbiter for all hosts in the configured replica set). If multiple replica sets are experiencing issues, collect from one replica set.

Step 4

Connect to the primary sessionmgr VM hosting the database and collect the data (for example, for 10 minutes) by executing the following commands:

where <dbportnum> is the MongoDB port for the given database (session/SPR/balance/admin), such as 27717 for the session database.

Step 5

Connect to the primary sessionmgr VM hosting the balance database and collect all the database dumps by executing the following command:

Step 6

Use the following command to check MongoDB statistics on queries, inserts, updates, and deletes for all CPS databases (on all primary and secondary databases) and verify whether there are any abnormalities (for example, a high number of inserts/updates/deletes relative to TPS, or a large number of queries going to the other site). The session database is used as an example here:

For example,
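Steps 4 and 6 do not spell out the collection commands. As a hedged bash sketch, assuming the standard mongostat and mongotop tools are installed and accept --rowcount (MongoDB 3.0 and later tools; older mongotop builds may lack this option), the following function records both for a fixed period on one database port. The function name, the MONGOSTAT/MONGOTOP overrides, the output file names, and the 10-minute/5-second defaults are illustrative choices, not CPS conventions.

```shell
# Hypothetical helper: capture mongostat and mongotop output for one database port.
# Assumption: both tools support --rowcount and take the polling interval as the last argument.
collect_mongo_stats() {
  local host="$1" port="$2"
  local duration="${3:-600}"  # total capture time in seconds (default 10 minutes)
  local interval="${4:-5}"    # seconds between samples
  local outdir="${5:-.}"
  local rows=$(( duration / interval ))  # number of samples that covers the duration
  local stat_bin="${MONGOSTAT:-mongostat}" top_bin="${MONGOTOP:-mongotop}"

  # Run both collectors in parallel; each one exits by itself after $rows samples.
  "$stat_bin" --host "$host" --port "$port" --rowcount "$rows" "$interval" \
    > "$outdir/mongostat-${host}-${port}.log" 2>&1 &
  "$top_bin" --host "$host" --port "$port" --rowcount "$rows" "$interval" \
    > "$outdir/mongotop-${host}-${port}.log" 2>&1 &
  wait
}

# Usage on the primary sessionmgr VM (session database port from the examples in this guide):
# collect_mongo_stats sessionmgr01 27717
```

Running the two tools in parallel keeps their samples aligned in time, which makes it easier to relate a spike in mongostat counters to the collection that mongotop shows as busy.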

High CPU Usage Issue

-

Collect thread details and jstack output as follows:

-

Check the top output to see whether a java process is consuming high CPU.

-

Capture the output of the following command:

ps -C java -L -o pcpu,cpu,nice,state,cputime,pid,tid | sort -k1,1 -rn > tid.log

-

Capture the output of the following command, where <process pid> is the PID of the process causing high CPU (as per the top output):

If the java process is running as the root user:

jstack <process pid> > jstack.log

If the java process is running as the policy server (qns) user:

sudo -u qns jstack <process pid> > jstack.log

If the above commands report an error because the process is hung or not responding, add the -F option after jstack.

Capture another jstack output as above, but with the additional -l option.

-
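To map a busy thread from tid.log to its stack in jstack.log, note that ps reports thread IDs in decimal, whereas jstack prints the native thread ID in hexadecimal as nid=0x.... The bash sketch below assumes tid.log was produced by the numerically sorted ps command above (TID in the last column, busiest thread first); the function names are hypothetical.

```shell
# Convert a decimal thread ID (TID column of ps -L) to the hex form jstack prints as nid=0x...
tid_to_nid() {
  printf '0x%x\n' "$1"
}

# Print the jstack entry of the busiest thread recorded in tid.log.
# Assumes tid.log is sorted by %CPU in descending order and TID is the last column.
show_hottest_thread() {
  local tidlog="${1:-tid.log}" jstacklog="${2:-jstack.log}"
  local tid nid
  # Skip the ps header line (its last column is the literal "TID") and take the first data row.
  tid=$(awk '$NF ~ /^[0-9]+$/ { print $NF; exit }' "$tidlog")
  [ -n "$tid" ] || { echo "no thread IDs found in $tidlog" >&2; return 1; }
  nid=$(tid_to_nid "$tid")
  echo "Hottest TID $tid -> nid=$nid"
  # Print the thread header line and up to 20 following stack-frame lines.
  # The trailing space avoids matching longer IDs that share the same prefix.
  grep -A 20 "nid=$nid " "$jstacklog"
}
```

For example, show_hottest_thread tid.log jstack.log prints the stack of the thread with the highest %CPU, which is usually the first place to look when the java process is busy.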

JVM Crash

The JVM generates a fatal error log file that contains the state of the process at the time of the fatal error. By default, the file name has the format hs_err_pid<pid>.log, and it is generated in the working directory from which the corresponding java process was started (that is, the working directory of the user when the user started the policy server (qns) process). If the working directory is not known, search the system for files named hs_err_pid*.log and look at the file whose timestamp matches the time of the error.
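When the working directory is unknown, a search such as the following bash sketch lists all matching crash logs with their modification times, newest first, so the one matching the time of the error is easy to spot. It relies on GNU find's -printf and skips /proc; the function name is hypothetical.

```shell
# List hs_err_pid*.log files below a start directory, newest first, as "YYYY-MM-DD HH:MM path".
# Searching from / can take a while on large file systems; narrow the start directory if possible.
find_hs_err_logs() {
  local start="${1:-/}"
  find "$start" -path /proc -prune -o \
       -type f -name 'hs_err_pid*.log' -printf '%TY-%Tm-%Td %TH:%TM %p\n' 2>/dev/null |
    sort -r
}

# Example: find_hs_err_logs /opt | head -n 5
```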

High Memory Usage/Out of Memory Error

- The JVM can generate a heap dump in case of an out of memory error. By default, CPS is not configured to generate a heap dump. To generate a heap dump, add the following parameters to the /etc/broadhop/jvm.conf file for the different CPS instances present:

-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/tmp

Note that heap dump generation may fail if the limit for core is not set correctly. The limit can be set in the /etc/security/limits.conf file for the root and policy server (qns) users.

-

If no dump is generated, but memory usage is high and keeps growing for some time before dropping (possibly due to garbage collection), generate the heap dump explicitly by running the following command:

-

If the java process is running as the root user:

jmap -dump:format=b,file=<filename> <process_id>

-

If the java process is running as the policy server (qns) user:

jmap -J-d64 -dump:format=b,file=<filename> <process id>

Example:

jmap -J-d64 -dump:format=b,file=/var/tmp/jmapheapdump_18643.map 13382

Note

-

Capture the heap dump during off-peak hours. In addition, the nice utility can be used to reduce the priority of the process so that it does not impact other running processes.

-

Create an archive of the dump for transfer, and make sure to delete the dump and the archive after the transfer.
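The note above can be followed with a small bash sketch that compresses the dump at the lowest CPU priority and prints a checksum for verifying the transfer. gzip, sha256sum, the .gz naming, and the function name are illustrative assumptions; any archiver approved for your site works equally well.

```shell
# Hypothetical helper: compress a heap dump with low CPU priority and print a checksum
# that the receiving side can compare after the transfer.
archive_dump() {
  local dump="$1"
  [ -f "$dump" ] || { echo "no such file: $dump" >&2; return 1; }
  # nice -n 19 gives compression the lowest scheduling priority, so it competes less with CPS.
  nice -n 19 gzip -c -- "$dump" > "$dump.gz" || return 1
  sha256sum "$dump.gz"
}

# After the archive has been transferred and verified, delete both files, for example:
# rm -f -- /var/tmp/jmapheapdump_18643.map /var/tmp/jmapheapdump_18643.map.gz
```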

-

Use the following procedure to log garbage collection (GC):

-

Log in to the VM instance where GC logging needs to be enabled.

-

Run the following commands:

cd /opt/broadhop/qns-1/bin/

chmod +x jmxterm.sh

./jmxterm.sh

At the jmxterm prompt, run:

open <host>:<port>

bean com.sun.management:type=HotSpotDiagnostic

run setVMOption PrintGC true

run setVMOption PrintGCDateStamps true

run setVMOption PrintGCDetails true

exit

-

Revert the changes once the required GC logs are collected.

-
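Because the revert step needs the same options set back, generating both command lists from one function keeps them consistent. The bash sketch below only prints jmxterm commands to paste at the jmxterm prompt; it assumes the three options were off before GC logging was enabled, and the function name and the <host>:<port> target are placeholders.

```shell
# Hypothetical helper: print the jmxterm commands that switch GC logging on (true) or off (false).
gc_logging_commands() {
  local target="$1" value="${2:-true}" opt
  case "$value" in
    true|false) ;;
    *) echo "value must be true or false" >&2; return 1 ;;
  esac
  echo "open $target"
  echo "bean com.sun.management:type=HotSpotDiagnostic"
  for opt in PrintGC PrintGCDateStamps PrintGCDetails; do
    echo "run setVMOption $opt $value"
  done
  echo "exit"
}

# Enable:  gc_logging_commands '<host>:<port>' true  > gc_on.txt
# Revert:  gc_logging_commands '<host>:<port>' false > gc_off.txt
```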

Issues with Output displayed on Grafana

For Grafana issues, the Whisper database output is required:

whisper-fetch --pretty /var/lib/carbon/whisper/cisco/quantum/qps/hosts/*

For example,

whisper-fetch --pretty /var/lib/carbon/whisper/cisco/quantum/qps/dc1-pcrfclient02/load/midterm.wsp
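whisper-fetch expects a single .wsp file path per invocation, so collecting a whole host directory means walking it. A hedged bash sketch, with a hypothetical function name, a WHISPER_FETCH override, and an output layout of one text file per metric:

```shell
# Hypothetical helper: run whisper-fetch --pretty on every .wsp file below a directory,
# writing one output file per metric (e.g. load/midterm.wsp -> load_midterm.txt).
dump_whisper_dir() {
  local dir="${1%/}" outdir="${2:-.}"
  local fetch="${WHISPER_FETCH:-whisper-fetch}"
  local f name
  while IFS= read -r -d '' f; do
    name="${f#"$dir"/}"     # path relative to the host directory
    name="${name%.wsp}"     # drop the extension
    name="${name//\//_}"    # flatten subdirectories into the file name
    "$fetch" --pretty "$f" < /dev/null > "$outdir/$name.txt"
  done < <(find "$dir" -type f -name '*.wsp' -print0)
}

# Example, using the host directory from the example above:
# dump_whisper_dir /var/lib/carbon/whisper/cisco/quantum/qps/dc1-pcrfclient02 /tmp/whisper_out
```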

Basic Troubleshooting

Capture the following details in most error cases:

Procedure

| Step 1 |

Output of the following commands:

|

| Step 2 |

Collect all the logs:

|

| Step 3 |

CPS configuration details present at /etc/broadhop. |

| Step 4 |

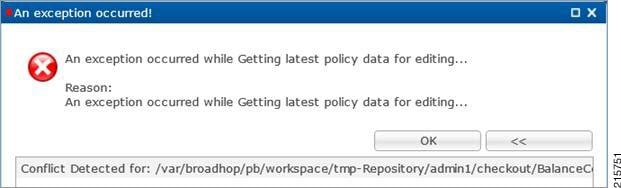

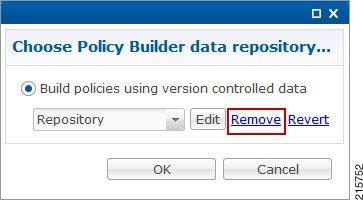

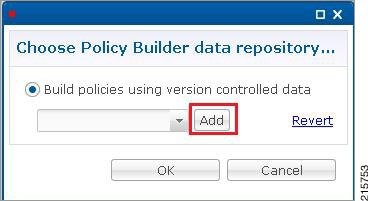

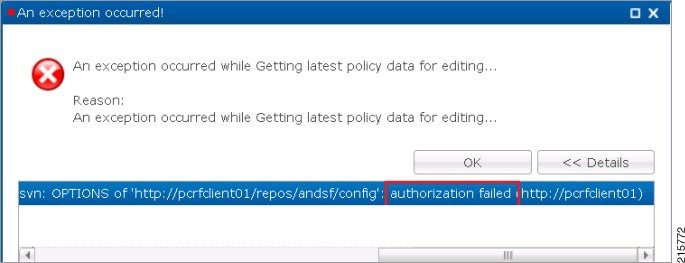

SVN repository To export SVN repository, go to /etc/broadhop/qns.conf and copy the URL specified against com.broadhop.config.url. For example,

Run the following command to export SVN repository:

|

| Step 5 |

Top command on all available VMs to display the top CPU processes on the system:

|

| Step 6 |

Output of the following command from pcrfclient01 VM top_qps.sh with output period of 10-15 min and interval of 5 sec:

|

| Step 7 |

Output of the following command on load balancer (lb) VMs having issue.

|

| Step 8 |

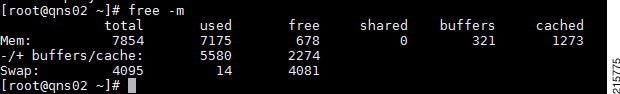

Output of the following command on all VMs.

|

| Step 9 |

Details mentioned in Periodic Monitoring. |
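To script the SVN export described in Step 4, the URL can be read directly from qns.conf. This bash sketch assumes the entry appears as com.broadhop.config.url=<URL> (for example, as a -D JVM option) and that the URL contains no spaces; the function name is hypothetical.

```shell
# Print the first com.broadhop.config.url value found in qns.conf.
get_config_url() {
  local conf="${1:-/etc/broadhop/qns.conf}"
  sed -n 's/.*com\.broadhop\.config\.url=\([^[:space:]"]*\).*/\1/p' "$conf" | head -n 1
}

# Usage (svn export writes a clean copy of the repository without .svn metadata):
# svn export "$(get_config_url)" /tmp/svn_export
```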

Trace Support Commands

This section covers the following two commands:

-

trace.sh

-

trace_ids.sh

For more information on trace commands, refer to the Policy Tracing and Execution Analyzer section in the CPS Operations Guide.

trace.sh

trace.sh usage:

/var/qps/bin/control/trace.sh -i <specific id> -d sessionmgr01:27719/policy_trace

/var/qps/bin/control/trace.sh -x <specific id> -d sessionmgr01:27719/policy_trace

/var/qps/bin/control/trace.sh -a -d sessionmgr01:27719/policy_trace

/var/qps/bin/control/trace.sh -e -d sessionmgr01:27719/policy_trace

This script starts a selective trace and outputs it to standard out.

-

Specific Audit Id Tracing

/var/qps/bin/control/trace.sh -i <specific id>

-

Dump All Traces for Specific Audit Id

/var/qps/bin/control/trace.sh -x <specific id>

-

Trace All

/var/qps/bin/control/trace.sh -a

-

Trace All Errors

/var/qps/bin/control/trace.sh -e
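trace.sh writes the trace to standard out, so for a support case it is convenient to save it to a file for a bounded period. The bash sketch below assumes trace.sh keeps streaming until it is stopped; capture_trace, the TRACE_SH override, the default 300-second window, and the file naming are all illustrative.

```shell
# Hypothetical wrapper: save trace.sh output for one audit ID to a timestamped file,
# stopping the trace after $duration seconds with timeout(1).
capture_trace() {
  local id="$1" duration="${2:-300}" db="${3:-sessionmgr01:27719/policy_trace}"
  local trace="${TRACE_SH:-/var/qps/bin/control/trace.sh}"
  local out="trace_${id}_$(date +%Y%m%d%H%M%S).log"
  timeout "$duration" "$trace" -i "$id" -d "$db" > "$out"
  # timeout exits with 124 when the time limit is reached, which is the expected case here.
  local rc=$?
  [ "$rc" -eq 0 ] || [ "$rc" -eq 124 ] || return "$rc"
  echo "$out"
}

# Example: capture_trace <specific id> 600
```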

trace_ids.sh

trace_ids.sh usage:

/var/qps/bin/control/trace_ids.sh -i <specific id> -d sessionmgr01:27719/policy_trace

/var/qps/bin/control/trace_ids.sh -r <specific id> -d sessionmgr01:27719/policy_trace

/var/qps/bin/control/trace_ids.sh -x -d sessionmgr01:27719/policy_trace

/var/qps/bin/control/trace_ids.sh -l -d sessionmgr01:27719/policy_trace

This script starts a selective trace and outputs it to standard out.

-

Add Specific Audit Id Tracing

/var/qps/bin/control/trace_ids.sh -i <specific id>

-

Remove Trace for Specific Audit Id

/var/qps/bin/control/trace_ids.sh -r <specific id>

-

Remove Trace for All Ids

/var/qps/bin/control/trace_ids.sh -x

-

List All Ids under Trace

/var/qps/bin/control/trace_ids.sh -l
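A common pattern is to add an audit ID to the trace list only for the duration of a test and make sure it is removed afterwards. The bash sketch below wraps trace_ids.sh -i and -r around an arbitrary command; with_traced_id and the TRACE_IDS_SH/TRACE_DB overrides are hypothetical names.

```shell
# Hypothetical helper: keep an audit ID under trace only while a command runs.
# The ID is removed afterwards even if the command fails; the command's exit status is returned.
with_traced_id() {
  local id="$1"; shift
  local ids="${TRACE_IDS_SH:-/var/qps/bin/control/trace_ids.sh}"
  local db="${TRACE_DB:-sessionmgr01:27719/policy_trace}"
  local rc=0
  "$ids" -i "$id" -d "$db" || return 1
  "$@" || rc=$?
  "$ids" -r "$id" -d "$db"
  return "$rc"
}

# Example: with_traced_id <specific id> sleep 120
```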

Periodic Monitoring

-

Run the following command on pcrfclient01 and verify that all the processes are reported as Running.

For CPS 7.0.0 and higher releases:

/var/qps/bin/control/statusall.sh

Sample output:

Program 'cpu_load_trap' status Waiting monitoring status Waiting

Process 'collectd' status Running monitoring status Monitored uptime 42d 17h 23m

Process 'auditrpms.sh' status Running monitoring status Monitored uptime 28d 20h 26m

System 'qns01' status Running monitoring status Monitored

The Monit daemon 5.5 uptime: 21d 10h 26m

Process 'snmpd' status Running monitoring status Monitored uptime 21d 10h 26m

Process 'qns-1' status Running monitoring status Monitored uptime 6d 17h 9m

-

Run the /var/qps/bin/diag/diagnostics.sh command on pcrfclient01 and verify that no errors or failures are reported in the output.

/var/qps/bin/diag/diagnostics.sh

Sample output:

CPS Diagnostics HA Multi-Node Environment ---------------------------

Ping check for all VMs...

Hosts that are not 'pingable' are added to the IGNORED_HOSTS variable...[PASS]

Checking basic ports for all VMs...[PASS]

Checking qns passwordless logins for all VMs...[PASS]

Checking disk space for all VMs...[PASS]

Checking swap space for all VMs...[PASS]

Checking for clock skew for all VMs...[PASS]

Checking CPS diagnostics...

Retrieving diagnostics from qns01:9045...[PASS]

Retrieving diagnostics from qns02:9045...[PASS]

Retrieving diagnostics from qns03:9045...[PASS]

Retrieving diagnostics from qns04:9045...[PASS]

Retrieving diagnostics from pcrfclient01:9045...[PASS]

Retrieving diagnostics from pcrfclient02:9045...[PASS]

Checking svn sync status between pcrfclient01 & 02...

svn is not sync between pcrfclient01 & pcrfclient02...[FAIL]

Corrective Action(s): Run ssh pcrfclient01 /var/qps/bin/support/recover_svn_sync.sh

Checking HAProxy statistics and ports...

-

Perform the following actions to verify that the VM status is reported as UP and healthy and that no alarms are generated for any VM.

-

Log in to the VMware console.

-

Verify the VM statistics, graphs and alarms through the console.

-

-

Verify whether any trap is generated by CPS.

cd /var/log/snmp

tailf trap

-

Verify whether any error is reported in the CPS logs.

cd /var/log/broadhop

grep -i error consolidated-qns.log

grep -i error consolidated-engine.log

-
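The statusall.sh and diagnostics.sh checks above can be scripted against saved output (for example, statusall.sh > statusall.txt and diagnostics.sh > diagnostics.txt). The bash sketch below assumes the output looks like the samples above: monit "Process" entries with a status field, and diagnostics lines ending in [PASS] or [FAIL]. The function names are hypothetical, and the parser accepts the status either on the same line as the process name or on the following line.

```shell
# Print each monit "Process" entry whose status is not Running, as "<name> <status>".
non_running_processes() {
  awk '
    # Join fields from position "start" up to, but not including, the word "monitoring".
    function status_from(start,    s, j) {
      s = ""
      for (j = start; j <= NF && $j != "monitoring"; j++) s = (s == "" ? $j : s " " $j)
      return s
    }
    function report(st) { if (st != "Running") print name, st; pending = 0 }
    $1 == "Process" {
      name = $2; pending = 1
      for (i = 3; i < NF; i++)
        if ($i == "status" && $(i - 1) != "monitoring") { report(status_from(i + 1)); break }
      next
    }
    pending && $1 == "status" { report(status_from(2)); next }
    $1 == "Program" || $1 == "System" { pending = 0 }
  ' "${1:-/dev/stdin}"
}

# Print every diagnostics.sh line that reports [FAIL]; returns 1 when there are none.
diag_failures() {
  grep -F '[FAIL]' "${1:-/dev/stdin}"
}

# Example: non_running_processes statusall.txt; diag_failures diagnostics.txt
```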

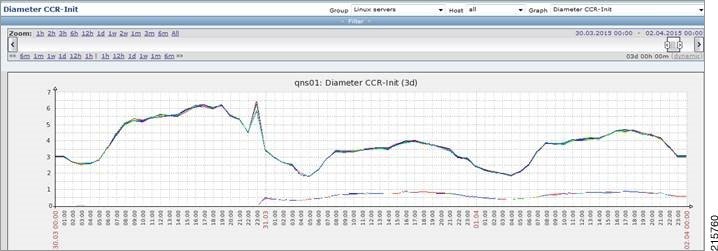

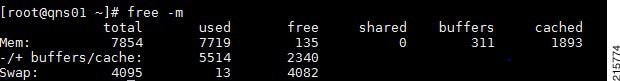

Monitor the following KPIs on Grafana for any abnormal behavior:

-

CPU usage of all instances on all the VMs

-

Memory usage of all instances on all VMs

-

Free disk space on all instances on all VMs

-

Diameter messages load: CCR-I, CCR-U, CCR-T, AAR, RAR, STR, ASR, SDR

-

Diameter messages response time: CCR-I, CCR-U, CCR-T, AAR, RAR, STR, ASR, SDA

-

-

Errors for diameter messages.

Run the following command on pcrfclient01:

tailcons | grep diameter | grep -i error

-

Response time for sessionmgr insert/update/delete/query.

-

Average read, write, and total time per sec:

mongotop --host <sessionmgr VM name> --port <port_number>

-

For requests taking more than 100 ms:

SSH to the sessionmgr VMs and run:

tailf /var/log/mongodb-<portnumber>.log

Note

By default, the above command displays requests taking more than 100 ms, unless the mongod process has been started with the --slowms XYZ parameter, where XYZ is the threshold in milliseconds desired by the user.

-

-

Garbage collection.

Check the service-qns-*.log files on all policy server (QNS), load balancer (lb), and PCRF VMs. In the logs, look for “GC” or “FULL GC”.

-

Session count.

Run the following command on pcrfclient01:

session_cache_ops.sh --count

-

Run the following command on pcrfclient01 and verify that the response time is within the expected value and that no errors are reported.

/opt/broadhop/qns-1/control/top_qps.sh

-

Use the following command to check MongoDB statistics on queries, inserts, updates, and deletes for all CPS databases (on all primary and secondary databases) and verify whether there are any abnormalities (for example, a high number of inserts/updates/deletes relative to TPS, or a large number of queries going to the other site).

mongostat --host <sessionmgr VM name> --port <dBportnumber>

For example,

mongostat --host sessionmgr01 --port 27717

-

Use the following command for all CPS databases and verify whether any high usage is reported in the output. The session database is used as an example here:

mongotop --host <sessionmgr VM name> --port <dBportnumber>

For example,

mongotop --host sessionmgr01 --port 27717

-

Verify that EDRs are being generated by checking the count of entries in the CDR database.

-

Verify that EDRs are being replicated by checking the count of entries in the databases.

-

Determine the most recently inserted CDR record in the MySQL database and compare its insert time with the time the CDR was generated. The difference should be within 2 minutes; a larger difference indicates replication lag.

-

Count of CCR-I/CCR-U/CCR-T/RAR messages from/to the GW.

-

Count of failed CCR-I/CCR-U/CCR-T/RAR messages from/to the GW. If the GW has the capability, capture details at the error code level.

Run the following command on pcrfclient01:

cd /var/broadhop/stats

grep "Gx_CCR-" bulk-*.csv

-

Response time of CCR-I/CCR-U/CCR-T messages at the GW.

-

Count of sessions in PCRF and count of sessions in the GW. The two counts may differ slightly because of the time gap between reading the session count from CPS and from the GW. A large difference may indicate stale sessions on the PCRF or the GW.

-

Count of AAR/RAR/STR/ASR messages from/to the Application Function.

-

Count of failed AAR/RAR/STR/ASR messages from/to the Application Function. If the Application Function has the capability, capture details at the error code level.

Run the following command on pcrfclient01:

cd /var/broadhop/stats

grep "Gx_CCR-" bulk-*.csv

-

Response time of AAR/RAR/STR/ASR messages at the Application Function.

-

Count of sessions in PCRF and count of sessions in the Application Function. The two counts may differ slightly because of the time gap between reading the session count from CPS and from the Application Function. A large difference may indicate stale sessions on the PCRF or the Application Function.

Count of sessions in PCRF:

session_cache_ops.sh --count
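The session-count comparisons above (PCRF against the GW or the Application Function) can be reduced to a simple relative-difference check. In this bash sketch, the 5% threshold and the function name are arbitrary placeholders; the right tolerance depends on the traffic rate and on how far apart in time the two counts were taken.

```shell
# Hypothetical check: flag a PCRF/peer session-count gap larger than a percentage of the larger count.
compare_session_counts() {
  local pcrf="$1" peer="$2" threshold_pct="${3:-5}"
  local diff=$(( pcrf > peer ? pcrf - peer : peer - pcrf ))
  local base=$(( pcrf > peer ? pcrf : peer ))
  local pct=0
  # Integer percentage; skip the division when both counts are zero.
  [ "$base" -gt 0 ] && pct=$(( diff * 100 / base ))
  if [ "$pct" -gt "$threshold_pct" ]; then
    echo "MISMATCH: pcrf=$pcrf peer=$peer diff=$diff (${pct}%) - check for stale sessions"
    return 1
  fi
  echo "OK: pcrf=$pcrf peer=$peer diff=$diff (${pct}%)"
}

# Example: compare_session_counts 1000 990   # prints "OK: pcrf=1000 peer=990 diff=10 (1%)"
```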

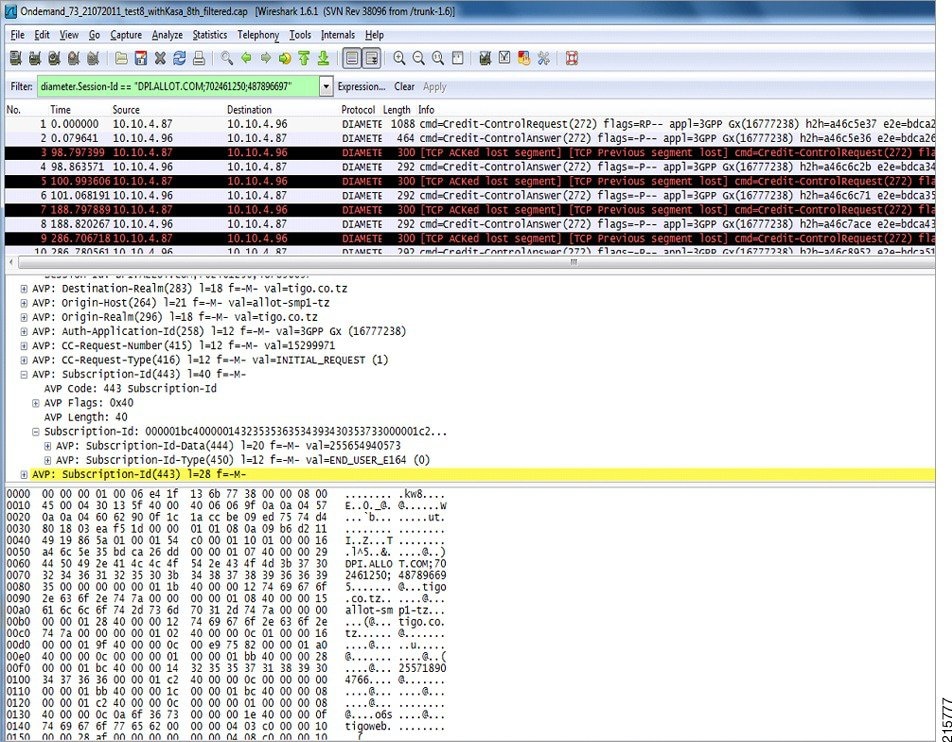

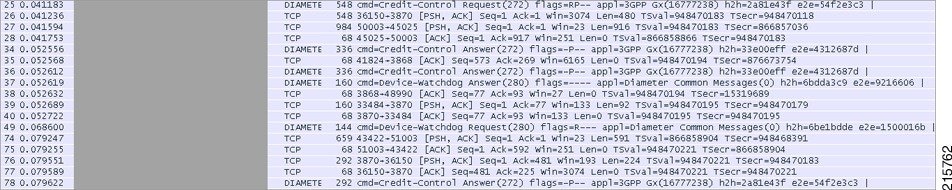

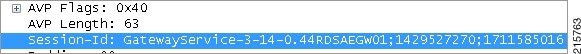

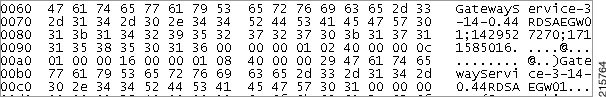

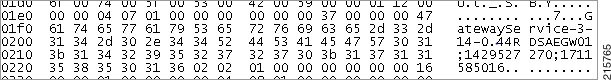

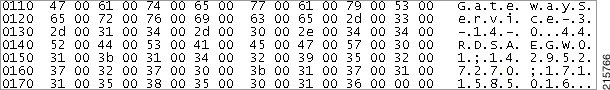

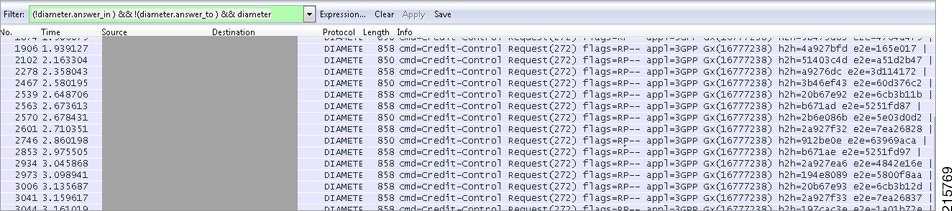

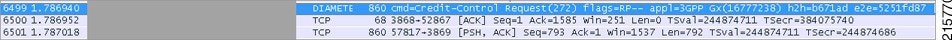
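For the sessionmgr slow-request check in the Periodic Monitoring list, filtering on the trailing duration makes it easier to look at only the slowest operations. This bash sketch assumes the plain-text log format of older MongoDB releases, where each slow-operation line ends in a value such as 153ms; MongoDB 4.4 and later write JSON logs, which it does not parse. The function name is hypothetical.

```shell
# Print mongod log lines whose trailing duration (e.g. "153ms") exceeds a threshold in milliseconds.
slow_ops() {
  local logfile="$1" min_ms="${2:-100}"
  awk -v min="$min_ms" '
    match($NF, /^[0-9]+ms$/) {
      ms = substr($NF, 1, length($NF) - 2) + 0   # strip the "ms" suffix
      if (ms > min + 0) print
    }
  ' "$logfile"
}

# Example: slow_ops /var/log/mongodb-27717.log 500 | tail -n 20
```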

E2E Call Flow Troubleshooting

-

On an All-in-One deployment, run the following command:

tcpdump -i any -s 0 -vv port 80 or 8080 or 1812 or 1700 or 1813 or 3868

-

Append -w /tmp/callflow.pcap to write the capture to a file that can be opened in Wireshark.

-

-

Open the file in WireShark and filter on HTTP to assist debugging the call flow.

-

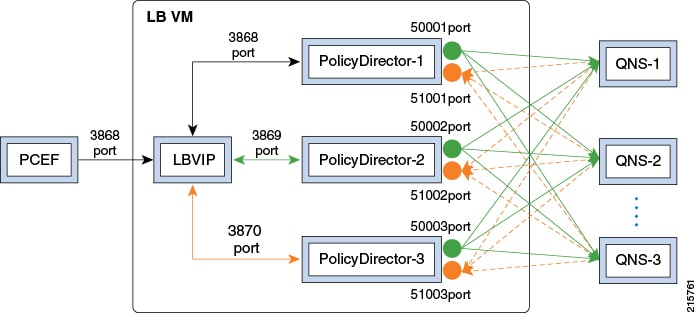

In a distributed model, you need to tcpdump on individual VMs:

-

Load balancers on port 1812, 1813, 1700, 8080 and 3868

-

Correct call flows are shown Call Flows.
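In a distributed deployment, the per-VM captures can be started together. The bash sketch below assumes passwordless SSH to each VM, tcpdump and timeout installed there, and a remote user allowed to run tcpdump; capture_on_hosts, port_filter, the SSH override, and the host names are illustrative. tcpdump -w - writes raw packets to standard out, so each capture lands locally as <host>.pcap and can be opened in Wireshark.

```shell
# Build a BPF filter such as "port 1812 or port 1813" from a list of ports.
port_filter() {
  local filter="" p
  for p in "$@"; do
    filter="${filter:+$filter or }port $p"
  done
  echo "$filter"
}

# Hypothetical helper: capture load balancer traffic on several VMs in parallel
# for a fixed number of seconds, saving each capture locally as <host>.pcap.
capture_on_hosts() {
  local seconds="$1"; shift
  local filter; filter=$(port_filter 1812 1813 1700 8080 3868)
  local ssh_cmd="${SSH:-ssh}" host
  for host in "$@"; do
    "$ssh_cmd" "$host" "timeout $seconds tcpdump -i any -s 0 -w - $filter" > "$host.pcap" &
  done
  wait
}

# Example: capture_on_hosts 60 lb01 lb02
```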
