FlashStack Data Protection with Veeam

Deployment Guide for FlashStack Data Protection with Veeam on Cisco UCS S3260 Storage Server, Cisco UCS C240 All Flash Rack Server, and Pure Storage FlashArray//C with Cisco UCS C220 Rack Server

Published: April 2021

In partnership with Pure Storage and Veeam.

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series, Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2021 Cisco Systems, Inc. All rights reserved.

Cisco Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers. Cisco, Pure Storage, and Veeam have partnered to deliver this document, which serves as a specific step-by-step guide for implementing FlashStack Data Protection with Veeam. This Cisco Validated Design (CVD) provides an efficient architectural design that is based on customer requirements. The solution that follows is a validated approach for deploying Cisco, Pure Storage, and Veeam technologies as a shared, high-performance, resilient data protection solution.

This document includes a reference architecture and design guide for a complete set of data protection options for FlashStack. These options combine Veeam Backup & Replication v11 with Pure Storage FlashArray//C, Cisco UCS C240 All Flash Rack Server, or Cisco UCS S3260 Storage Server as on-premises backup storage targets. FlashStack Data Protection with Veeam provides an end-to-end solution that includes backup, restore, and archive to on-premises and public cloud targets.

FlashStack provides pre-integrated, pre-validated converged infrastructure that combines compute, network, and storage into a platform designed for business-critical applications and a wide variety of workloads. This platform delivers maximum performance, increased flexibility, and rapid scalability. It enables rapid, confident deployment and reduces the management overhead consumed by tasks such as patch upgrades and system updates.

Modern infrastructure also needs modern data protection, and Veeam’s data protection platform integrates backup and replication with advanced monitoring, analytics, intelligent automation, and data re-use. Veeam® Backup & Replication™ helps businesses achieve comprehensive data protection for all workloads, including virtual, physical, file, and cloud. From a single console, Veeam delivers fast, flexible, and reliable backup, recovery, and virtual machine replication of all applications and data, on-premises or in the cloud.

This solution works with FlashStack to deliver the performance and features that help ensure your data and applications are available, while also putting backup data to work through data re-use.

A CVD and pre-validated reference architectures facilitate faster, more reliable, and more predictable customer deployments:

● Each CVD has been extensively tested, validated, and documented by Cisco and partner experts.

● CVDs minimize integration, deployment, and performance risks to ensure always-on availability for enterprise applications.

From design to configuration, instructions to bill of materials (BOMs), CVDs provide everything businesses need to deploy the solutions in the most efficient manner.

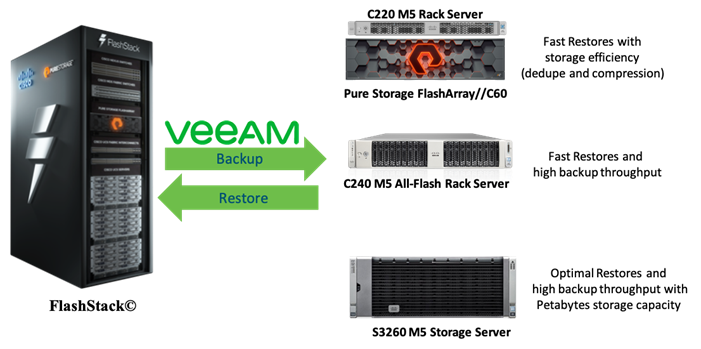

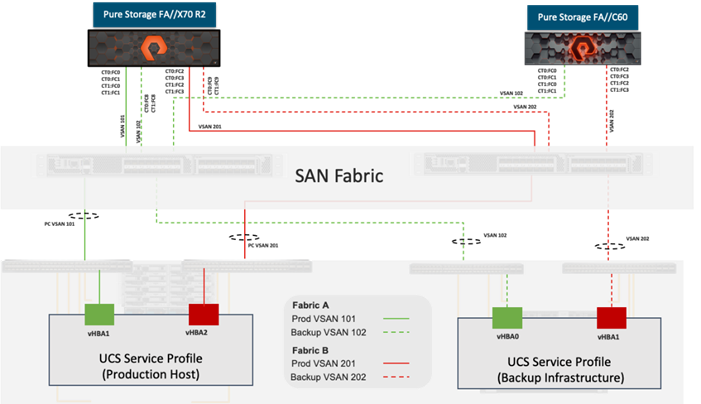

Figure 1 illustrates the deployment model for FlashStack data protection with Veeam, using the three backup target options described in this solution.

Figure 1. High-level Deployment Model – FlashStack Data Protection with Veeam

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT and data protection managers, partner engineers, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

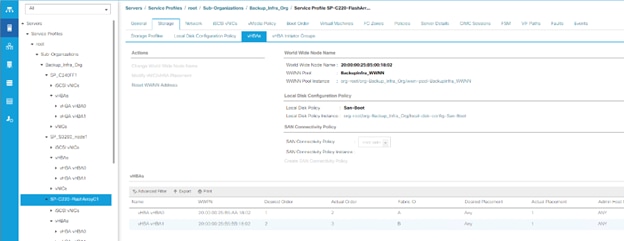

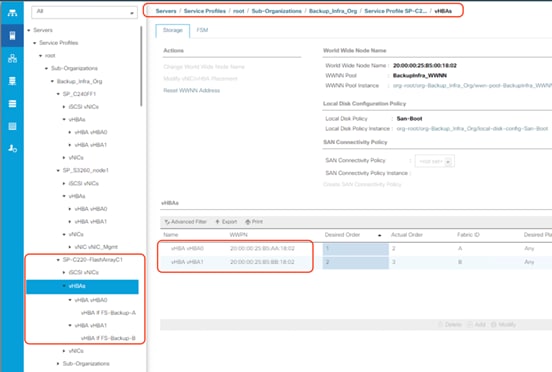

This document provides a step-by-step design, configuration, and implementation guide for the Cisco Validated Design for FlashStack Data Protection with Veeam for virtualized workloads. This document offers three backup target architectures: Cisco UCS S3260 Storage Server, Cisco UCS C240 All Flash Rack Server, and Pure Storage FlashArray//C with a Cisco UCS C220 M5 Rack Server. The choice among these data protection infrastructure platforms depends on backup and restore requirements, backup throughput, storage efficiency, and capacity.

This is the first release of Cisco Validated Design for FlashStack data protection with Veeam.

It incorporates the following features:

● Cisco UCS S3260 Storage Server

● Cisco UCS C240 All Flash Rack Server

● Cisco UCS C220 Rack Server

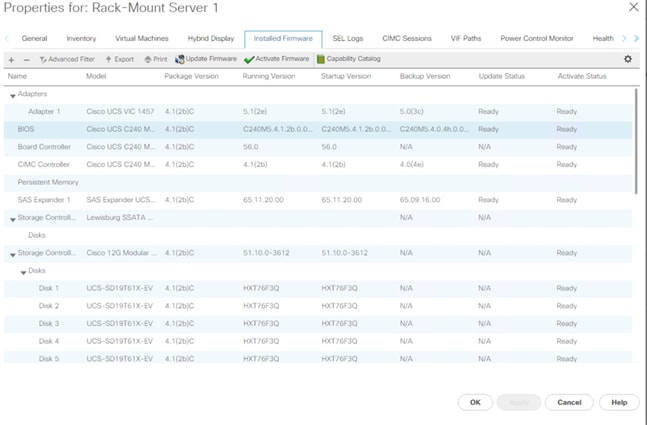

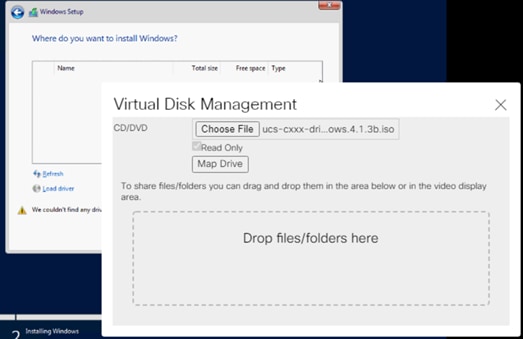

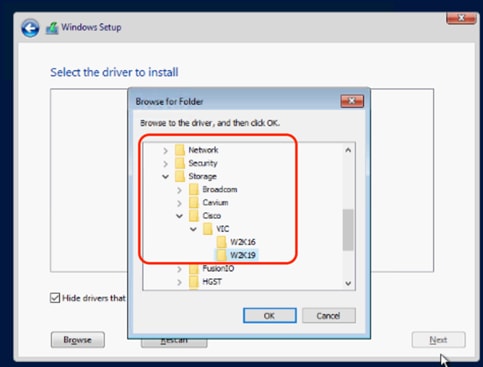

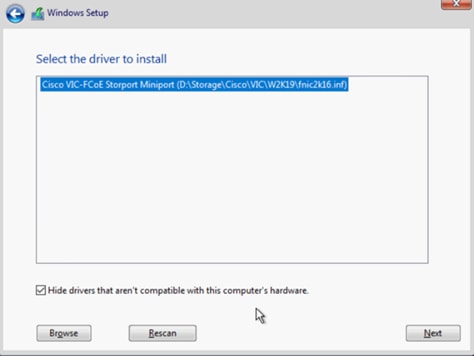

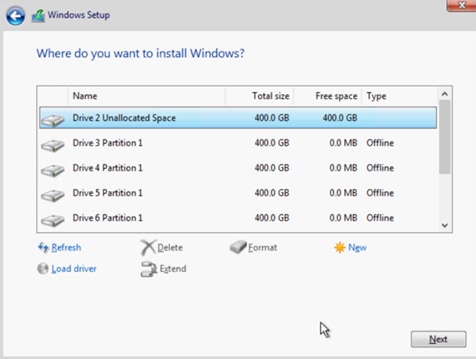

● Support for the Cisco UCS 4.1(3b) release

● Veeam Backup & Replication v11

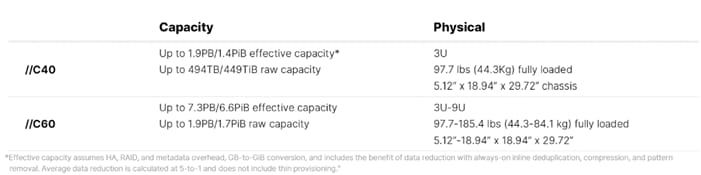

● Support for the latest release of Pure Storage FlashArray//C60 345TB hardware and Purity//FA v6.1.3

● Backup of FlashStack environment through Fibre Channel with Veeam/Pure Storage snapshot integration

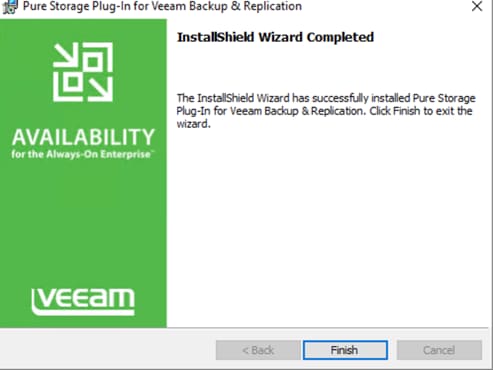

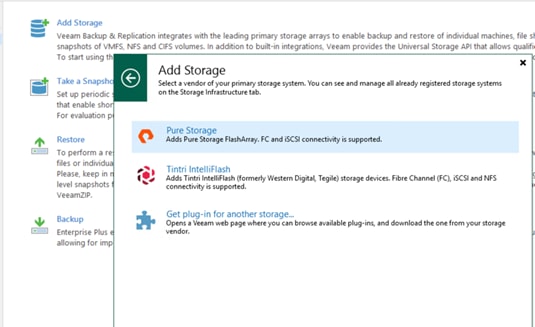

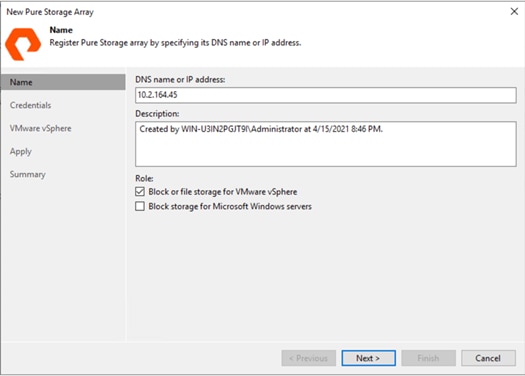

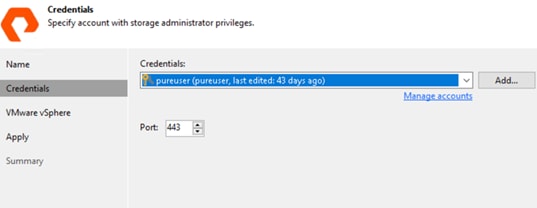

● Pure Storage Universal Storage API Plug-In for Veeam Backup & Replication 1.2.45

● Restore through Veeam SAN Mode

● VMware vSphere 7.0 GA Hypervisor

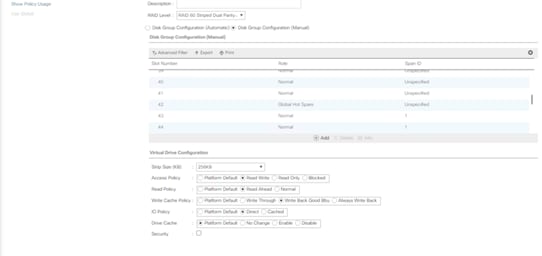

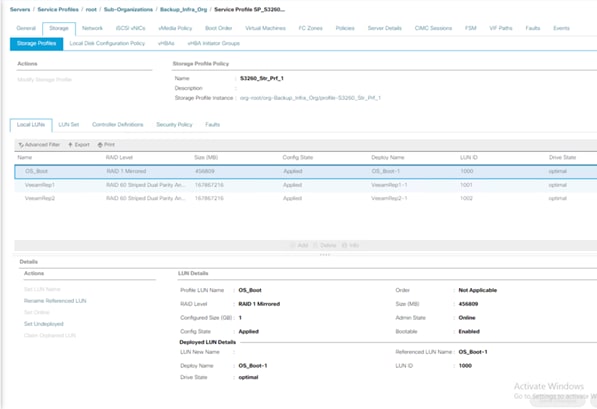

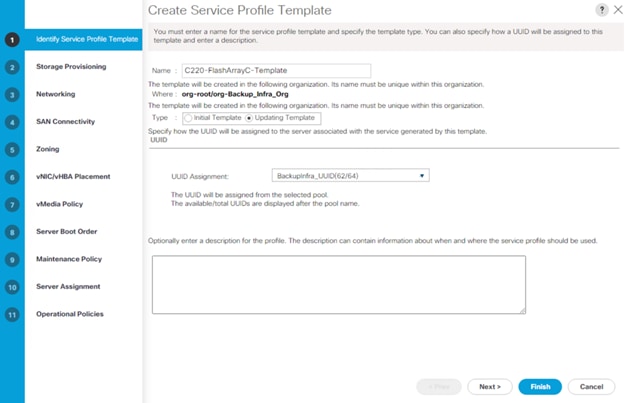

This solution for FlashStack data protection with Veeam Backup & Replication v11 delivers reliable and fast backup and restore of virtual infrastructure provisioned on the FlashStack environment. It protects workloads on FlashStack and provides a choice of backup targets to run Veeam backups and replicas. The target storage and the Veeam services can be consolidated on any of the following backup infrastructure platforms:

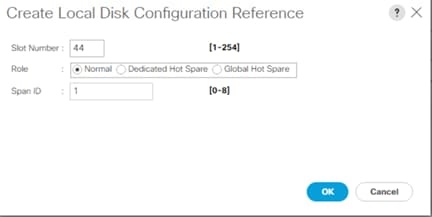

● Cisco UCS S3260 Storage Server running Veeam services on the compute node, with 56 top-load NL-SAS drives as the Veeam Backup Repository

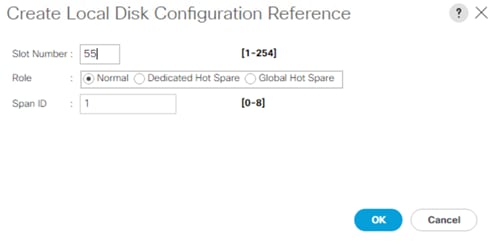

● Cisco UCS C240 All Flash Rack Server running Veeam services, equipped with 24 front-load SSDs as the Veeam Backup Repository

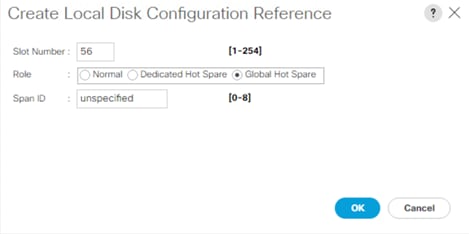

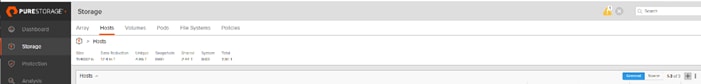

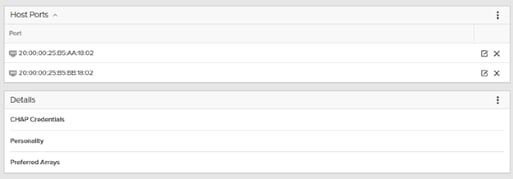

● Pure Storage FlashArray//C as the Veeam Backup Repository, with a Cisco UCS C220 Rack Server providing the compute resources for Veeam services

Customers can choose any of the above backup infrastructure platforms based on key factors such as Recovery Point Objective (RPO), Recovery Time Objective (RTO), and the data efficiency of stored backups. All of the platforms deliver the key features of a three-way solution between Cisco, Veeam, and Pure Storage. Key features common to all three platforms are as follows:

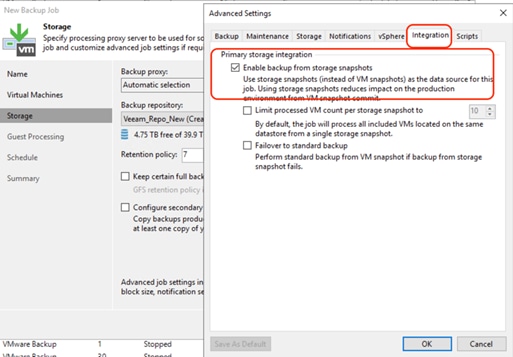

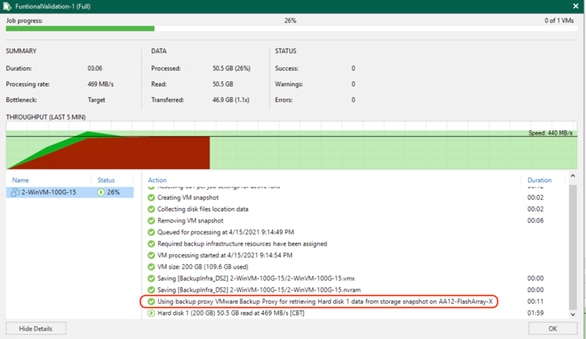

● Veeam’s integration with Pure Storage snapshot technology, enabling backup from a storage snapshot of any volume without pausing workloads and with zero overhead

● Veeam Direct SAN Access mode, which uses the VMware vSphere Storage APIs for Data Protection (VADP) to transport VM data directly from and to FC, FCoE, and iSCSI storage over the SAN. The Direct SAN Access transport method provides the fastest data transfer speed and places no load on the production workloads or networks.

● Ease of management, with scalability of compute and storage elements, through Cisco UCS Manager (UCSM) and Cisco Intersight

● Backup of virtual infrastructure on FlashStack through Fibre Channel
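The bullets above name Direct SAN Access as the transport method used in this design. When a Veeam backup proxy is set to automatic transport selection, Veeam prefers Direct storage access, then Virtual appliance (hot-add), then Network mode, depending on what the proxy can reach. The following Python sketch models that preference order only; `select_transport_mode` and its boolean inputs are hypothetical stand-ins for the checks Veeam performs, not a Veeam API.

```python
from enum import Enum


class TransportMode(Enum):
    """Veeam backup proxy transport modes (names only; no Veeam API is used)."""
    DIRECT_SAN = "Direct storage access"
    HOT_ADD = "Virtual appliance (hot-add)"
    NETWORK = "Network (NBD)"


def select_transport_mode(
    proxy_sees_source_luns: bool,
    proxy_is_vm_with_datastore_access: bool,
    failover_to_network: bool = True,
) -> TransportMode:
    """Hypothetical model of automatic selection: the most efficient usable mode wins."""
    # Direct SAN Access: the proxy is zoned and masked to the FlashArray//X LUNs
    # backing the VMFS datastores, so VM data bypasses the ESXi hosts.
    if proxy_sees_source_luns:
        return TransportMode.DIRECT_SAN
    # Hot-add is only possible when the proxy itself is a virtual machine.
    if proxy_is_vm_with_datastore_access:
        return TransportMode.HOT_ADD
    # Network mode reads VM data through the ESXi hosts over the LAN.
    if failover_to_network:
        return TransportMode.NETWORK
    raise RuntimeError("no usable transport mode for this proxy")


# A physical Cisco UCS proxy that sees the source LUNs over Fibre Channel:
print(select_transport_mode(True, False).value)
```

In this design the proxies run on physical Cisco UCS servers zoned to the FlashArray, which is why the first branch applies.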

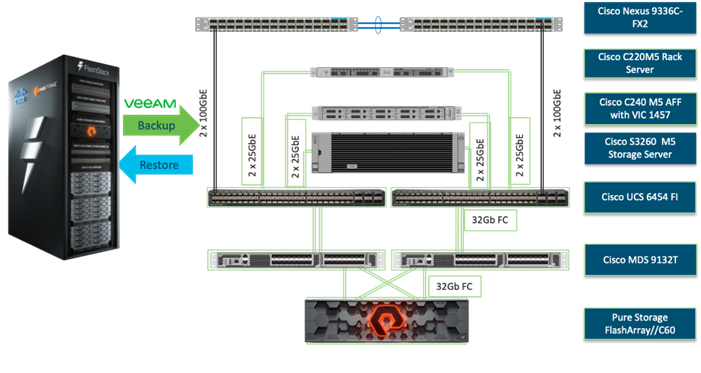

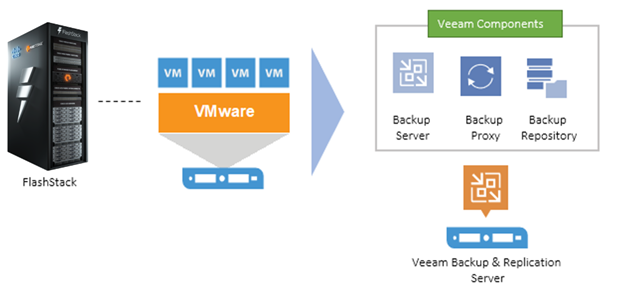

Figure 2 illustrates the high-level solution architecture for protecting the FlashStack environment with Veeam v11.

Figure 2. High-level Solution Architecture – FlashStack Data Protection with Veeam

The key features and benefits of the above three backup infrastructure platforms with Veeam are detailed in the following sections.

FlashArray//C: Fast Restores with Storage Efficiency (Dedupe and Compression)

Veeam, with Pure Storage FlashArray//C and Cisco UCS C220 M5 servers, delivers flash-based performance that can handle multiple workloads, combined with Pure Storage data efficiency features. This solution offers storage capacity without compromise, with flash-based performance at close to disk economics. It targets multiple workloads and large-scale deployments, featuring:

● All-QLC flash storage for cost-effective, capacity-oriented workloads

● Advanced data services and technologies for guaranteed data efficiency

● Scale-up, scale-out architecture to meet the capacity expansion requirements of data-intensive workloads

● Non-disruptive, Evergreen architecture that eliminates risky, complex, and costly upgrades

Cisco UCS C240 All Flash Rack Server: Fast Restores and High Backup Throughput

Veeam, with Cisco UCS C240 M5 all-flash storage servers, delivers the performance and flexibility needed to run and support virtually any workload, while meeting the requirements of a sophisticated data protection environment. It features:

● Architectural and compute flexibility

● Multiple workload capability

● Best-in-class backup and restore performance

● Scale-out capability

Cisco UCS S3260 Storage Server: Dense Platform with Optimal Restores and High Backup Throughput

Veeam, with Cisco UCS S3260 M5 storage servers, delivers superior performance with massive scale-up and scale-out capability and disk economics. This solution includes Cisco Intersight or UCS Manager to reduce cost of ownership, simplify management, and deliver consistent policy-based deployments and scalability.

This dense storage platform, combined with FlashStack and Veeam, offers massive storage capacity and high backup throughput for multiple workloads. You can run Veeam components such as Backup Proxy, Veeam Console and Backup Repository on a single compute and storage platform with the ability to scale both compute and storage through Veeam Scale-Out Backup Repositories (SOBR).

You can deploy a scale-out backup storage platform on a cluster of Cisco UCS S3260 storage servers, providing an S3 archive target for the Veeam Capacity Tier. The Capacity Tier is part of the Veeam Scale-Out Backup Repository (SOBR) architecture, and it makes it possible to copy new backups immediately and to move older backups to more cost-effective cloud or on-premises object storage. Archiving backups in the Capacity Tier can result in up to 10X savings on long-term data retention costs and helps you meet compliance requirements by storing data as long as needed.
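To make the copy and move behavior concrete, here is a small Python sketch of how a Capacity Tier policy could decide what goes to object storage. It assumes a copy policy that sends every new backup to object storage and a move policy that evicts only inactive backup chains whose newest restore point is older than an operational restore window. The `BackupChain` class, `plan_capacity_tier`, and the dates are hypothetical and do not call any Veeam API.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class BackupChain:
    """One backup chain on the SOBR performance tier (hypothetical model)."""
    name: str
    newest_point: date  # date of the most recent restore point in the chain
    active: bool        # True while the chain is still receiving increments


def plan_capacity_tier(chains, today, window_days, copy_policy=True, move_policy=True):
    """Return (to_copy, to_move) chain names for a copy-and-move policy sketch."""
    cutoff = today - timedelta(days=window_days)
    # Copy policy: every backup is copied to object storage as soon as it exists.
    to_copy = [c.name for c in chains] if copy_policy else []
    # Move policy: only inactive chains whose newest point is older than the
    # operational restore window leave the performance tier.
    to_move = [
        c.name
        for c in chains
        if move_policy and not c.active and c.newest_point < cutoff
    ]
    return to_copy, to_move


chains = [
    BackupChain("chain-march", date(2021, 3, 28), active=False),
    BackupChain("chain-mid-april", date(2021, 4, 18), active=False),
    BackupChain("chain-current", date(2021, 4, 29), active=True),
]
to_copy, to_move = plan_capacity_tier(chains, today=date(2021, 4, 30), window_days=14)
print(to_copy, to_move)
```

With a 14-day window, only the March chain is old enough to move, while all three chains are copied.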

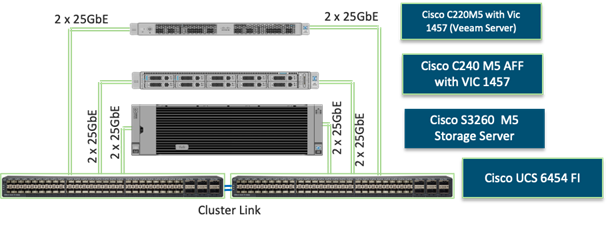

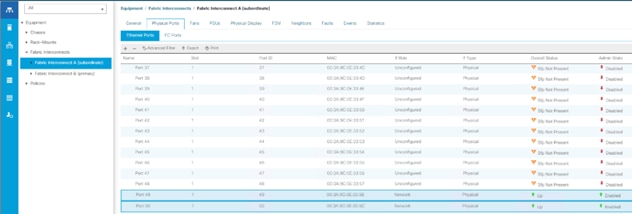

Each rack and storage server in the design is redundantly connected to the managing Fabric Interconnects (FI) with two ports to each FI. Ethernet traffic from the upstream network and Fibre Channel frames coming from the FlashArray are converged within the fabric interconnect onto a unified fabric that carries both Ethernet and Fibre Channel over Ethernet (FCoE) to the UCS server.

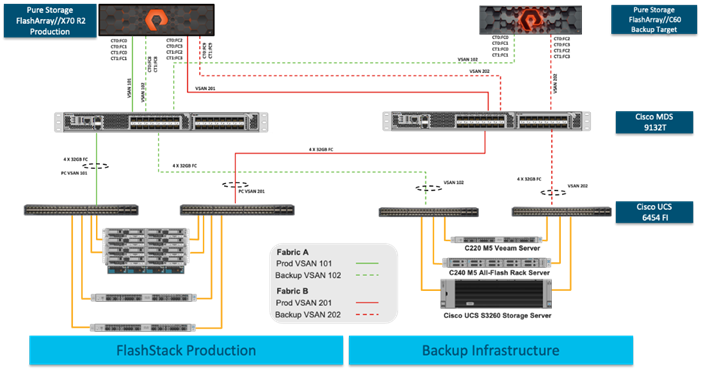

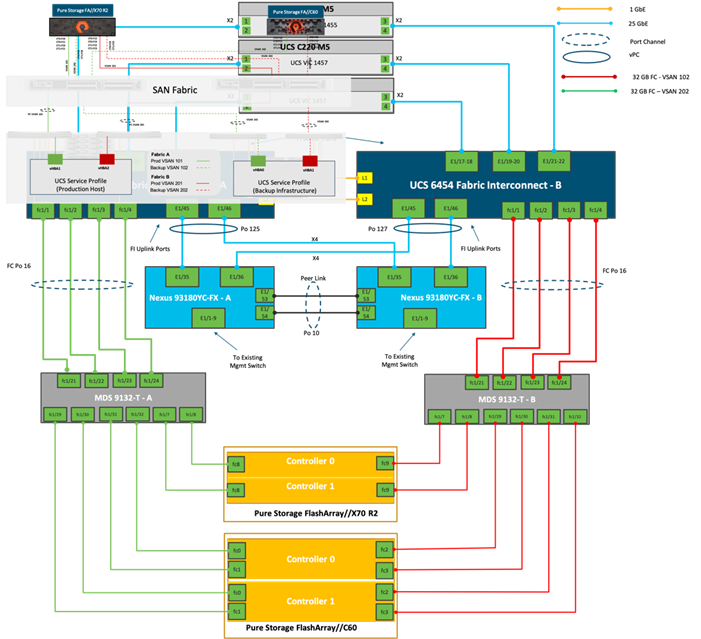

These connections from the fourth-generation Cisco UCS 6454 Fabric Interconnects to the Cisco UCS C220 and Cisco UCS C240 Rack Servers and the Cisco UCS S3260 Storage Server are detailed in Figure 3.

Figure 3. Compute Connectivity

Each rack and storage server in the design is redundantly connected to the managing fabric interconnects with two ports to each Fabric Interconnect (FI). Ethernet traffic from the upstream network and Fibre Channel frames coming from the FlashArray are converged within the fabric interconnect to be both Ethernet and Fibre Channel over Ethernet and transmitted to the UCS server.

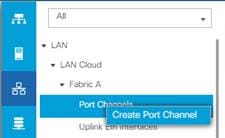

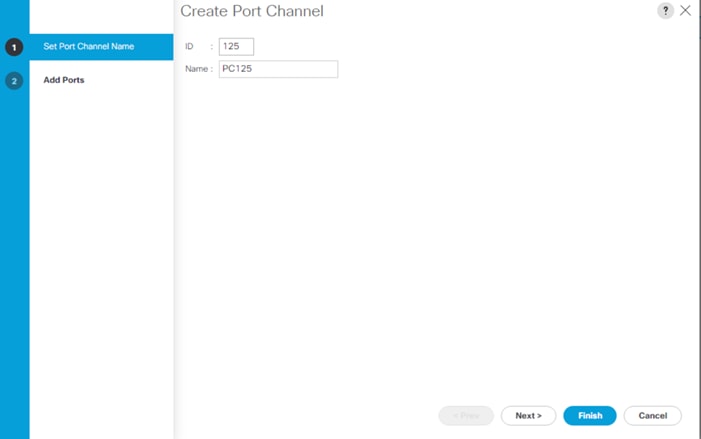

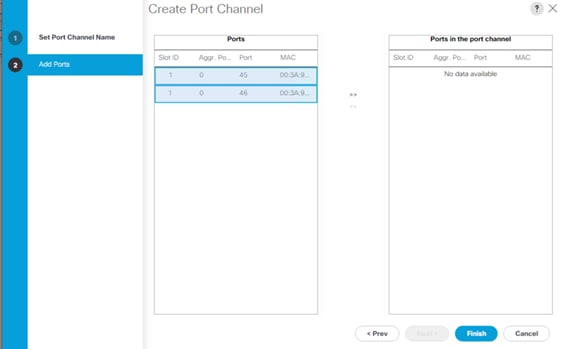

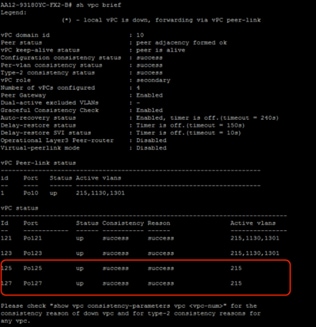

The Layer 2 network connection to each Fabric Interconnect is implemented as a Virtual Port Channel (vPC) from the upstream Cisco Nexus switches. In the switching environment, vPC provides the following benefits:

● Allows a single device to use a Port Channel across two upstream devices

● Eliminates Spanning Tree Protocol blocked ports and uses all available uplink bandwidth

● Provides a loop-free topology

● Provides fast convergence if either one of the physical links or a device fails

● Helps ensure high availability of the network

The upstream network switches can connect to the Cisco UCS 6454 Fabric Interconnects using 10G, 25G, 40G, or 100G port speeds. In this design, 40G connections on the 40/100G ports of the 6454 (ports 1/49-54) were used for the virtual port channels.
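The bandwidth benefit of vPC can be checked with simple arithmetic: because every member link forwards, upstream capacity is the sum of the links that are up. The sketch below assumes two 40G links per fabric interconnect, one to each Cisco Nexus 93180YC-FX. This guide gives only the port range 1/49-54, so the link count is hypothetical, as is the `vpc_uplink_capacity` helper.

```python
def vpc_uplink_capacity(links_per_fi: int, link_gbps: int, failed_links_on_a: int = 0) -> dict:
    """Upstream bandwidth (Gbps) of FI A and FI B, each with one vPC to two Nexus switches.

    With vPC every member link forwards (no Spanning Tree blocked ports), so
    capacity is the sum of the member links that are still up.
    """
    if not 0 <= failed_links_on_a <= links_per_fi:
        raise ValueError("failed_links_on_a must be between 0 and links_per_fi")
    fi_a = (links_per_fi - failed_links_on_a) * link_gbps
    fi_b = links_per_fi * link_gbps
    return {"fi_a": fi_a, "fi_b": fi_b, "total": fi_a + fi_b}


# Hypothetical: two 40G links per FI, one to each Nexus 93180YC-FX.
print(vpc_uplink_capacity(2, 40))                       # all links up
print(vpc_uplink_capacity(2, 40, failed_links_on_a=1))  # one FI A member down
```

A single link failure reduces capacity on that fabric but does not isolate it, which is the fast-convergence behavior listed above.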

Figure 4. Network Connectivity

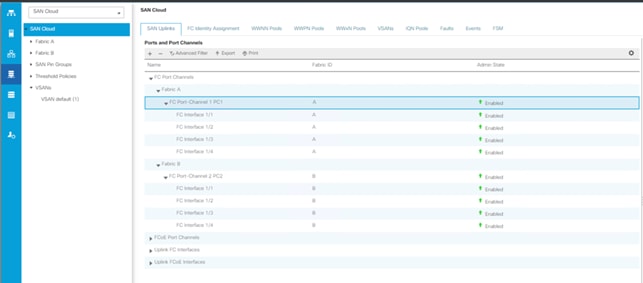

Fibre Channel Storage Connectivity

The Pure Storage FlashArray//X platform and FlashArray//C platform are connected through both MDS 9132Ts to their respective Fabric Interconnects in a traditional air-gapped A/B fabric design. The Fabric Interconnects are configured in N-Port Virtualization (NPV) mode, known as FC end host mode in UCSM. The MDS has N-Port ID Virtualization (NPIV) enabled. This allows F-port channels to be used between the Fabric Interconnect and the MDS, providing the following benefits:

● Increased aggregate bandwidth between the fabric interconnect and the MDS

● Load balancing across the FC uplinks

● High availability in the event of a failure of one or more uplinks

The FlashArray//X platform hosts the source virtual infrastructure, and the FlashArray//C platform hosts the Veeam Backup Repository. Both platforms share the two MDS 9132T switches.
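An F-port channel spreads traffic by hashing each Fibre Channel exchange onto one member link, so frames within an exchange stay in order while different exchanges use different links. Cisco MDS switches typically balance on source ID, destination ID, and originator exchange ID; the Python sketch below mimics that idea with CRC32 as a stand-in hash (not the switch's actual function). The `pick_member` helper, port names, and FC IDs are hypothetical.

```python
import zlib


def pick_member(src_fcid: int, dst_fcid: int, ox_id: int, members: list) -> str:
    """Pick the F-port channel member link that carries one FC exchange.

    All frames of one exchange hash to the same link (preserving in-order
    delivery); different exchanges spread across the active members.
    CRC32 is a stand-in, not the MDS hash function.
    """
    if not members:
        raise ValueError("port channel has no active members")
    key = f"{src_fcid:06x}:{dst_fcid:06x}:{ox_id:04x}".encode()
    return members[zlib.crc32(key) % len(members)]


links = ["fc1/1", "fc1/2", "fc1/3", "fc1/4"]  # hypothetical member ports
host, array = 0x0A0100, 0x0A0200              # hypothetical FC IDs
used = {pick_member(host, array, ox, links) for ox in range(256)}
print(sorted(used))

# If one uplink fails, exchanges are redistributed over the survivors.
survivors = links[:-1]
print(pick_member(host, array, 7, survivors) in survivors)
```

This is why the channel both aggregates bandwidth and survives the loss of a member: the hash simply runs over whatever links remain.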

Figure 5. Fibre Channel Logical Design

End-to-End Physical Connectivity

The FC end-to-end path in the design is a traditional air-gapped fabric with identical data path through each fabric as detailed below:

● Each Cisco UCS Server is equipped with a Cisco UCS VIC 1400 Series adapter

● Cisco UCS C-Series Rack Servers are equipped with a Cisco UCS VIC 1457, and the Cisco UCS S-Series Storage Server is equipped with a Cisco UCS VIC 1455, each providing 2x 25GbE to Fabric Interconnect A and 2x 25GbE to Fabric Interconnect B

● Each Cisco UCS 6454 FI connects to the MDS 9132T for the respective SAN fabric using an F-Port channel

● The Pure Storage FlashArray//X70 R2 and FlashArray//C are connected to both MDS 9132T switches to provide redundant paths through both fabrics
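The VIC connectivity above translates directly into per-server link capacity. This Python sketch sums the 2x 25GbE links to each fabric interconnect and shows what remains if one fabric goes down. It counts raw link capacity only; how much any single vNIC or vHBA can use depends on adapter and policy configuration, which the sketch ignores. The `available_gbps` helper is hypothetical.

```python
# Per-server VIC links from the design: 2 x 25GbE to each fabric interconnect.
VIC_LINKS_GBPS = {"A": [25, 25], "B": [25, 25]}


def available_gbps(links: dict, failed_fabrics: tuple = ()) -> int:
    """Raw link capacity left on the fabrics that are still up."""
    return sum(
        sum(speeds) for fabric, speeds in links.items() if fabric not in failed_fabrics
    )


print(available_gbps(VIC_LINKS_GBPS))                        # both fabrics up
print(available_gbps(VIC_LINKS_GBPS, failed_fabrics=("A",)))  # fabric A down
```

Because the A and B fabrics are identical, either one alone still carries half of the server's raw link capacity.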

Figure 6. FC End-to-End Data Path

The components of this integrated architecture shown in Figure 6 are:

● Cisco Nexus 93180YC-FX – 10/25/40/100GbE-capable LAN connectivity to the Cisco UCS compute resources

● Cisco UCS 6454 Fabric Interconnect – Unified management of Cisco UCS compute, and the compute’s access to storage and networks

● Cisco UCS C-Series – High-powered rack server with fast storage

● Cisco UCS S-Series – High-powered dense storage platform with two compute nodes

● Cisco MDS 9132T – 32Gb Fibre Channel connectivity within the architecture, as well as interfacing to resources present in an existing data center

● Pure Storage FlashArray//X70 R2 – part of the FlashStack environment, providing storage for the virtual infrastructure hosted on Cisco UCS B-Series servers in a Cisco UCS 5108 chassis

● Pure Storage FlashArray//C60 – Veeam Backup Repository

● Cisco UCS S3260 Storage server – Veeam Backup Repository

● Cisco UCS C240 All Flash rack server – Veeam Backup Repository

● Cisco UCS C220 rack server – Veeam Backup Server with Veeam Backup Repository on FlashArray//C60

Solution Reference Architecture

Figure 7 illustrates the FlashStack Data Protection with Veeam architecture used in this validated design to provide fast, reliable, and dense backup targets for virtual infrastructure deployed on the FlashStack environment. It follows Cisco configuration requirements to deliver a highly available and scalable architecture.

Figure 7. FlashStack Data Protection with Veeam Solution Reference Architecture

The reference hardware configuration includes:

● Two Cisco Nexus 93180YC-FX switches

● Two Cisco MDS 9132T 32-Gb Fibre Channel switches

● Two Cisco UCS 6454 Fabric Interconnects

● One Cisco UCS 5108 Blade Chassis

● Four Cisco UCS B200 M5 Blade Servers (virtual infrastructure)

● One Cisco UCS C240 M5 All Flash Rack Server providing compute and storage resources for Veeam services

● One Cisco UCS S3260 Storage Server with a single compute node providing compute and storage resources for Veeam services

● One Pure Storage FlashArray//X70 R2 (FlashStack environment hosting virtual infrastructure)

● One Pure Storage FlashArray//C providing storage resources for the Veeam Backup Repository, with Veeam services running on a Cisco UCS C220 rack server

This document guides you through the detailed steps for deploying the base architecture. This procedure explains everything from physical cabling to network, compute, and storage device configurations.

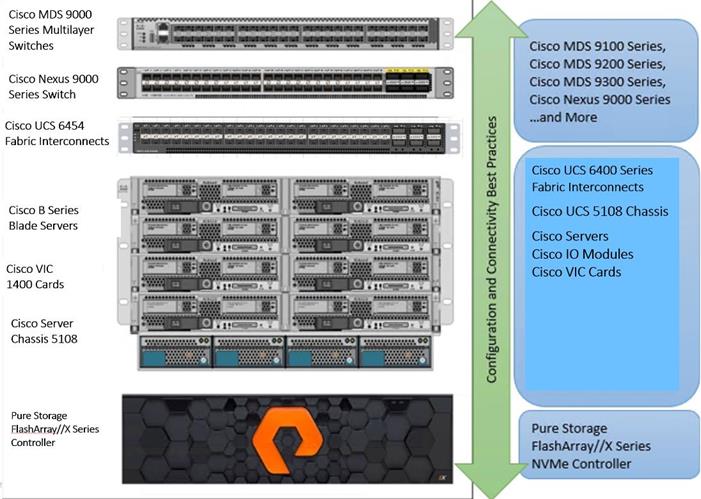

What is FlashStack?

The FlashStack platform, developed by Cisco and Pure Storage, is a flexible, integrated infrastructure solution that delivers pre-validated storage, networking, and server technologies. Cisco and Pure Storage have carefully validated and verified the FlashStack solution architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their data centers to this shared infrastructure model.

FlashStack is a best-practice data center architecture that includes the following components:

● Cisco Unified Computing System

● Cisco Nexus Switches

● Cisco MDS Switches

● Pure Storage FlashArray & FlashBlade

Figure 8. FlashStack Systems Components

As shown in Figure 8, these components are connected and configured according to best practices of both Cisco and Pure Storage and provide the ideal platform for running a variety of enterprise workloads (for example, databases) with confidence. FlashStack can scale up for greater performance and capacity (adding compute, network, or storage resources individually as needed), or it can scale out for environments that require multiple consistent deployments.

The reference architecture covered in this document uses the Pure Storage FlashArray//X70 R2 controller with NVMe-based DirectFlash modules for storage, Cisco UCS B200 M5 Blade Servers for compute, Cisco Nexus 9000 and Cisco MDS 9100 Series switches for the switching element, and Cisco UCS 6400 Series Fabric Interconnects for system management. As shown in Figure 8, FlashStack architecture can maintain consistency at scale. Each of the component families shown in FlashStack (Cisco UCS, Cisco Nexus, Cisco MDS, Cisco FI, and Pure Storage) offers platform and resource options to scale the infrastructure up or down, while supporting the same features and functionality that are required under the configuration and connectivity best practices of FlashStack.

FlashStack is a solution jointly supported by Cisco and Pure Storage, providing a carefully validated architecture built on superior compute, world-class networking, and leading innovations in all-flash storage. The portfolio of validated offerings from FlashStack includes, but is not limited to, the following:

● Consistent Performance and Scalability

◦ Consistent sub-millisecond latency with 100 percent NVMe enterprise flash storage

◦ Consolidate hundreds of enterprise-class applications in a single rack

◦ Scalability through a design for hundreds of discrete servers and thousands of virtual machines, and the capability to scale I/O bandwidth to match demand without disruption

◦ Repeatable growth through multiple FlashStack CI deployments

● Operational Simplicity

◦ Fully tested, validated, and documented for rapid deployment

◦ Reduced management complexity

◦ No storage tuning or tiers necessary

◦ 3x better data reduction without any performance impact

● Lowest TCO

◦ Dramatic savings in power, cooling, and space with Cisco UCS and 100% flash

◦ Industry leading data reduction

◦ Free FlashArray controller upgrades every three years with Pure’s Evergreen Gold Subscription

● Mission Critical and Enterprise Grade Resiliency

◦ Highly available architecture with no single point of failure

◦ Non-disruptive operations with no downtime

◦ Upgrade and expand without downtime or performance loss

◦ Native data protection capabilities: snapshots and replication

Cisco and Pure Storage have also built a robust and experienced support team focused on FlashStack solutions, from customer account and technical sales representatives to professional services and technical support engineers. The support alliance between Pure Storage and Cisco gives customers and channel services partners direct access to technical experts who collaborate across vendors and have access to shared lab resources to resolve potential issues.

This section describes the components used in this solution.

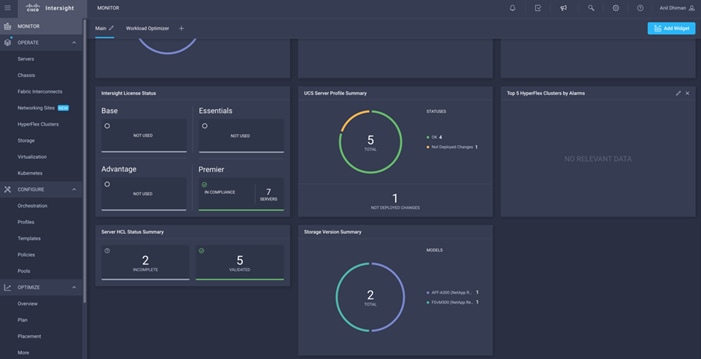

Cisco Intersight Cloud Based Management

Cisco Intersight is Cisco’s new systems management platform that delivers intuitive computing through cloud-powered intelligence. This platform offers a more intelligent level of management that enables IT organizations to analyze, simplify, and automate their environments in ways that were not possible with prior generations of tools. This capability empowers organizations to achieve significant savings in Total Cost of Ownership (TCO) and to deliver applications faster, so they can support new business initiatives. The advantages of the model-based management of the Cisco UCS platform plus Cisco Intersight are extended to Cisco UCS servers.

The Cisco UCS platform uses model-based management to provision servers and the associated storage and fabric automatically, regardless of form factor. Cisco Intersight works in conjunction with Cisco UCS Manager and the Cisco® Integrated Management Controller (IMC). By simply associating a model-based configuration with a resource through service profiles, your IT staff can consistently align policy, server personality, and workloads. These policies can be created once and used repeatedly by IT staff with minimal effort to deploy servers. The result is improved productivity and compliance and lower risk of failures due to inconsistent configuration.
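The template-driven model described above can be illustrated with a small Python sketch: policies are defined once in a template, and each derived service profile reuses them while drawing unique identities (MAC addresses and WWPNs) from pools. The classes, policy names, and pool prefixes here are hypothetical and do not represent the UCS Manager object model.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class IdentityPool:
    """Hypothetical identity pool that hands out sequential suffixes."""
    prefix: str
    counter: count = field(default_factory=lambda: count(1))

    def allocate(self) -> str:
        return f"{self.prefix}{next(self.counter):02X}"


@dataclass(frozen=True)
class ServiceProfileTemplate:
    """Policies defined once and reused for every derived profile."""
    name: str
    policies: tuple


def instantiate(template, servers, mac_pool, wwpn_pool):
    """Derive one profile per server: shared policies, unique identities."""
    return [
        {
            "server": server,
            "template": template.name,
            "policies": template.policies,
            "mac": mac_pool.allocate(),
            "wwpn": wwpn_pool.allocate(),
        }
        for server in servers
    ]


template = ServiceProfileTemplate("veeam-repo", ("bios-veeam", "boot-local", "fw-host"))
macs = IdentityPool("00:25:B5:0A:00:")
wwpns = IdentityPool("20:00:00:25:B5:0A:00:")
for profile in instantiate(template, ["rack-1", "rack-2"], macs, wwpns):
    print(profile["server"], profile["mac"], profile["wwpn"])
```

Every derived profile carries identical policies, so configuration drift between servers can only come from the identities, which the pools keep unique.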

Cisco Intersight is integrated with data center, hybrid cloud platforms, and services to securely deploy and manage infrastructure resources across data center and edge environments. In addition, Cisco will provide future integrations to third-party operations tools to allow customers to use their existing solutions more effectively.

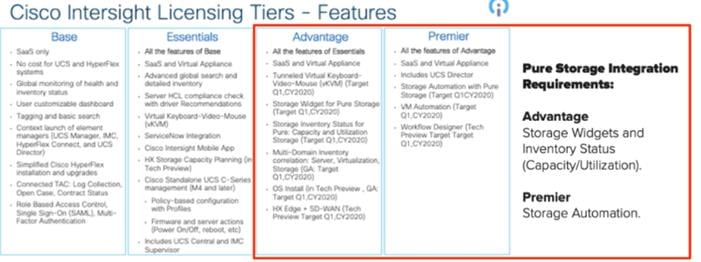

Pure Storage FlashArray with Intersight

The Cisco Intersight Premier edition offers private-cloud Infrastructure-as-a-Service (IaaS) orchestration across Cisco UCS, HyperFlex, and third-party endpoints including VMware vCenter and Pure Storage. This feature, called Cisco Intersight Orchestrator, enables you to create and execute workflows in Cisco Intersight. For example, provisioning a Pure Storage FlashArray or deploying a new virtual machine from a template could involve multiple tasks, but with Cisco Intersight Orchestrator, the administrator has a workflow designer to visualize a workflow definition and monitor the execution of that workflow on any infrastructure element.

Figure 9. Example of User-Customizable Cisco Intersight Dashboard for Cisco UCS Domain

Figure 10. Cisco UCS Manager Device Connector Example

Figure 11. Cisco Intersight License

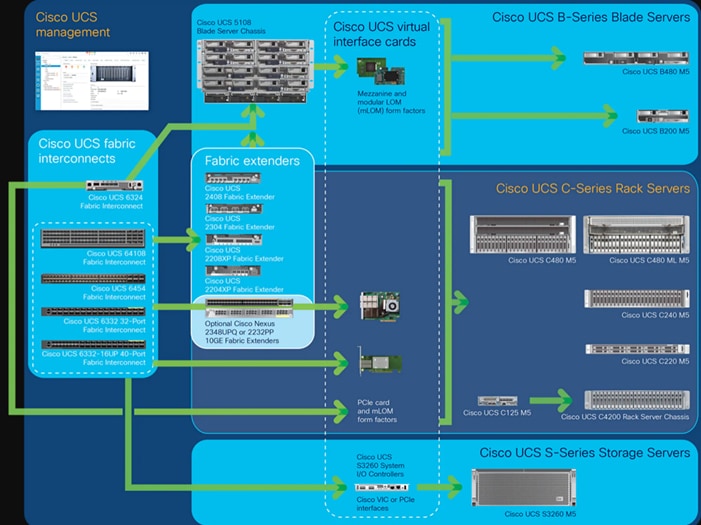

Cisco Unified Computing System

Cisco UCS Manager (UCSM) provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System™ (Cisco UCS) through an intuitive GUI, a CLI, and an XML API. The manager provides a unified management domain with centralized management capabilities and can control multiple chassis and thousands of virtual machines.

Cisco UCS is a next-generation data center platform that unites computing, networking, and storage access. The platform, optimized for virtual environments, is designed using open industry-standard technologies and aims to reduce total cost of ownership (TCO) and increase business agility. The system integrates a low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. It is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain.

Cisco Unified Computing System Components

The main components of Cisco UCS are:

● Compute: The system is based on an entirely new class of computing system that incorporates blade servers based on Intel® Xeon® Scalable Family processors.

● Network: The system is integrated on a low-latency, lossless, 25 Gigabit Ethernet unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing (HPC) networks, which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables needed, and by decreasing the power and cooling requirements.

● Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

● Storage access: The system provides consolidated access to local storage, SAN storage, and network-attached storage (NAS) over the unified fabric. With storage access unified, Cisco UCS can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and Small Computer System Interface over IP (iSCSI) protocols. This capability provides customers with choices for storage access and investment protection. In addition, server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity and management and helping increase productivity.

● Management: Cisco UCS uniquely integrates all system components, enabling the entire solution to be managed as a single entity by Cisco UCS Manager. Cisco UCS Manager has an intuitive GUI, a CLI, and a robust API for managing all system configuration processes and operations.

Figure 12. Cisco Data Center Overview

Cisco UCS is designed to deliver the following benefits:

● Reduced TCO and increased business agility

● Increased IT staff productivity through just-in-time provisioning and mobility support

● A cohesive, integrated system that unifies the technology in the data center; the system is managed, serviced, and tested as a whole

● Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand

● Industry standards supported by a partner ecosystem of industry leaders

Cisco UCS Manager provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System across multiple chassis, rack servers, and thousands of virtual machines. Cisco UCS Manager manages Cisco UCS as a single entity through an intuitive GUI, a CLI, or an XML API for comprehensive access to all Cisco UCS Manager functions.
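As an example of the XML API, the Python sketch below builds the `aaaLogin`, `configResolveClass`, and `aaaLogout` requests and posts them to the UCS Manager `/nuova` endpoint using only the standard library. Treat it as a minimal sketch: the helper names are hypothetical, error handling is minimal, and a UCS Manager with a self-signed certificate will fail default TLS verification unless you supply an appropriate SSL context. Cisco also publishes the `ucsmsdk` Python SDK, which wraps these calls.

```python
import urllib.request
import xml.etree.ElementTree as ET


def aaa_login_body(user: str, password: str) -> bytes:
    """Build an aaaLogin request; the reply carries a session cookie in outCookie."""
    return ET.tostring(ET.Element("aaaLogin", inName=user, inPassword=password))


def resolve_class_body(cookie: str, class_id: str) -> bytes:
    """Build a configResolveClass query for every object of one class."""
    return ET.tostring(
        ET.Element("configResolveClass", cookie=cookie, classId=class_id,
                   inHierarchical="false")
    )


def post(ucsm_host: str, body: bytes) -> ET.Element:
    """POST one XML API request to UCS Manager and parse the XML reply."""
    request = urllib.request.Request(
        f"https://{ucsm_host}/nuova", data=body,
        headers={"Content-Type": "text/xml"},
    )
    with urllib.request.urlopen(request) as response:
        return ET.fromstring(response.read())


def list_rack_units(ucsm_host: str, user: str, password: str) -> list:
    """Log in, return the DN of every managed rack server, then log out."""
    cookie = post(ucsm_host, aaa_login_body(user, password)).get("outCookie")
    if not cookie:
        raise RuntimeError("UCS Manager login failed")
    try:
        reply = post(ucsm_host, resolve_class_body(cookie, "computeRackUnit"))
        return [unit.get("dn") for unit in reply.iter("computeRackUnit")]
    finally:
        # Always release the session cookie, even if the query fails.
        post(ucsm_host, ET.tostring(ET.Element("aaaLogout", inCookie=cookie)))
```

Calling `list_rack_units("<ucsm-address>", "<user>", "<password>")` against a UCS domain would return the DNs of the managed C-Series and S-Series servers.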

The Cisco UCS 6400 Series Fabric Interconnects are a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. The Cisco UCS 6400 Series offer line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions.

The Cisco UCS 6400 Series provides the management and communication backbone for the Cisco UCS B-Series Blade Servers, Cisco UCS 5108 B-Series Server Chassis, Cisco UCS Managed C-Series Rack Servers, and Cisco UCS S-Series Storage Servers. All servers attached to a Cisco UCS 6400 Series Fabric Interconnect become part of a single, highly available management domain. In addition, by supporting a unified fabric, Cisco UCS 6400 Series Fabric Interconnect provides both the LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6400 Series uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10/25/40/100 Gigabit Ethernet ports, a switching capacity of 3.82 Tbps for the 6454 and 7.42 Tbps for the 64108, and 200 Gbps of bandwidth between the 6400 Series Fabric Interconnect and the IOM 2408 per 5108 blade chassis, independent of packet size and enabled services. The product family supports Cisco low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The fabric interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the fabric interconnect. Significant TCO savings come from an FCoE-optimized server design in which Network Interface Cards (NICs), Host Bus Adapters (HBAs), cables, and switches can be consolidated.

Figure 13. Cisco UCS 6400 Series Fabric Interconnect – 6454 Front View

Figure 14. Cisco UCS 6400 Series Fabric Interconnect – 6454 Rear View

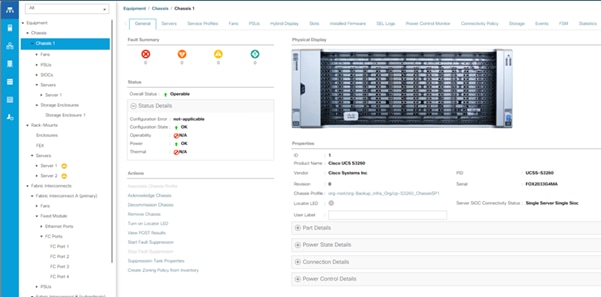

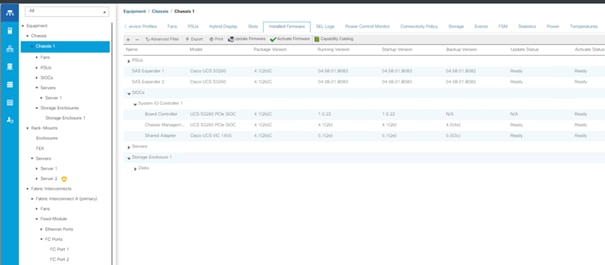

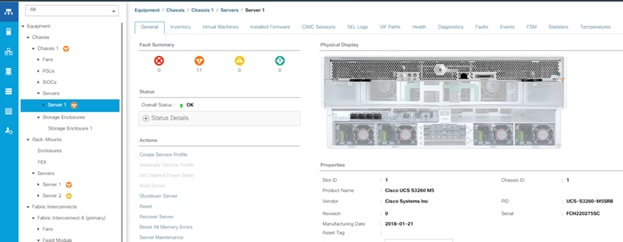

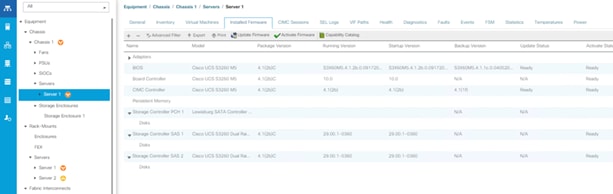

Cisco UCS S3260 Storage Server

The Cisco UCS S3260 Storage Server is a modular, high-density, high-availability dual-node rack server well suited for service providers, enterprises, and industry-specific environments. It addresses the need for dense, cost-effective storage for the ever-growing amounts of data. Designed for a new class of cloud-scale applications and data-intensive workloads, it is simple to deploy and excellent for big data, software-defined storage, and data-protection environments.

Figure 15. Cisco UCS S3260 Storage Server

The Cisco UCS S3260 server helps you achieve the highest levels of data availability and performance. With dual-node capability based on the 2nd Gen Intel® Xeon® Scalable and Intel Xeon Scalable processors, it features up to 840 TB of local storage in a compact 4-Rack-Unit (4RU) form factor. The drives can be configured with enterprise-class Redundant Array of Independent Disks (RAID) redundancy or with a pass-through Host Bus Adapter (HBA) controller. Network connectivity is provided with dual-port 40-Gbps nodes in each server, with expanded unified I/O capabilities for data migration between Network-Attached Storage (NAS) and SAN environments. This storage-optimized server comfortably fits in a standard 32-inch-depth rack, such as the Cisco® R 42610 Rack.

You can deploy Cisco UCS S-Series Storage Servers as standalone servers or as part of a Cisco UCS managed environment to take advantage of Cisco® standards-based unified computing innovations that can help reduce your TCO and increase your business agility.

The Cisco UCS S3260 uses a modular server architecture that, using Cisco’s blade technology expertise, allows you to upgrade the computing or network nodes in the system without the need to migrate data from one system to another. It delivers the following:

● Dual 2-socket server nodes based on 2nd Gen Intel Xeon Scalable and Intel Xeon Scalable processors with up to 48 cores per server node

● Up to 1.5 TB of DDR4 memory per M5 server node and up to 1 TB of Intel Optane™ DC Persistent Memory

● Support for high-performance Nonvolatile Memory Express (NVMe) and flash memory

● Massive 840-TB data storage capacity that easily scales to petabytes with Cisco UCS Manager software

● Policy-based storage management framework for zero-touch capacity on demand

● Dual-port 40-Gbps system I/O controllers with a Cisco UCS Virtual Interface Card 1300 platform embedded chip or PCIe-based system I/O controller for Quad Port 10/25G Cisco VIC 1455 or Dual Port 100G Cisco VIC 1495

● Unified I/O for Ethernet or Fibre Channel to existing NAS or SAN storage environments

Cisco UCS C240 All Flash Rack Server

The Cisco UCS C240 M5 Rack Server is a 2-socket, 2-Rack-Unit (2RU) rack server offering industry-leading performance and expandability. It supports a wide range of storage and I/O-intensive infrastructure workloads, from big data and analytics to collaboration. Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of a Cisco Unified Computing System™ (Cisco UCS) managed environment to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ Total Cost of Ownership (TCO) and increase their business agility.

Figure 16. Cisco UCS C240 SFF Rack Server (All Flash)

In response to ever-increasing computing and data-intensive real-time workloads, the enterprise-class Cisco UCS C240 M5 server extends the capabilities of the Cisco UCS portfolio in a 2RU form factor. It incorporates the Intel® Xeon® Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, and five times more Non-Volatile Memory Express (NVMe) PCI Express (PCIe) Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance. The Cisco UCS C240 M5 delivers outstanding levels of storage expandability with exceptional performance, with:

● The latest second-generation Intel Xeon Scalable CPUs, with up to 28 cores per socket

● Support for the first-generation Intel Xeon Scalable CPUs, with up to 28 cores per socket

● Support for the Intel Optane DC Persistent Memory (128G, 256G, 512G)[1]

● Up to 24 DDR4 DIMMs for improved performance including higher density DDR4 DIMMs

● Up to 26 x 2.5-inch SAS and SATA HDDs and SSDs and up to 4 NVMe PCIe drives

● Support for 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

● Modular LAN-On-Motherboard (mLOM) slot that can be used to install a Cisco UCS Virtual Interface Card (VIC) without consuming a PCIe slot, supporting dual 10- or 40-Gbps network connectivity

● Dual embedded Intel x550 10GBASE-T LAN-On-Motherboard (LOM) ports

● Modular M.2 or Secure Digital (SD) cards that can be used for boot

Cisco UCS C220 SFF Rack Server

The Cisco UCS C220 M5 Rack Server is among the most versatile general-purpose enterprise infrastructure and application servers in the industry. It is a high-density 2-socket rack server that delivers industry-leading performance and efficiency for a wide range of workloads, including virtualization, collaboration, and bare-metal applications. The Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of the Cisco Unified Computing System™ (Cisco UCS) to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ Total Cost of Ownership (TCO) and increase their business agility.

Figure 17. Cisco UCS C220 SFF Rack Server

The Cisco UCS C220 M5 server extends the capabilities of the Cisco UCS portfolio in a 1-Rack-Unit (1RU) form factor. It incorporates the Intel® Xeon® Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, 20 percent greater storage density, and five times more PCIe NVMe Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance. The Cisco UCS C220 M5 delivers outstanding levels of expandability and performance in a compact package, with:

● Latest (second generation) Intel Xeon Scalable CPUs with up to 28 cores per socket

● Supports first-generation Intel Xeon Scalable CPUs with up to 28 cores per socket

● Up to 24 DDR4 DIMMs for improved performance

● Support for the Intel Optane DC Persistent Memory (128G, 256G, 512G)

● Up to 10 Small-Form-Factor (SFF) 2.5-inch drives or 4 Large-Form-Factor (LFF) 3.5-inch drives (77 TB storage capacity with all NVMe PCIe SSDs)

● Support for 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

● Modular LAN-On-Motherboard (mLOM) slot that can be used to install a Cisco UCS Virtual Interface Card (VIC) without consuming a PCIe slot

● Dual embedded Intel x550 10GBASE-T LAN-On-Motherboard (LOM) ports

Cisco Nexus 93180YC-FX Switches

The 93180YC-EX Switch provides a flexible line-rate Layer 2 and Layer 3 feature set in a compact form factor. Designed with Cisco Cloud Scale technology, it supports highly scalable cloud architectures. With the option to operate in Cisco NX-OS or Application Centric Infrastructure (ACI) mode, it can be deployed across enterprise, service provider, and Web 2.0 data centers, and provides the following:

● Architectural Flexibility

◦ Includes top-of-rack or middle-of-row fiber-based server access connectivity for traditional and leaf-spine architectures

◦ Leaf node support for Cisco ACI architecture is provided in the roadmap

◦ Increase scale and simplify management through Cisco Nexus 2000 Fabric Extender support

● Feature Rich

◦ Enhanced Cisco NX-OS Software is designed for performance, resiliency, scalability, manageability, and programmability

◦ ACI-ready infrastructure helps users take advantage of automated policy-based systems management

◦ Virtual Extensible LAN (VXLAN) routing provides network services

◦ Rich traffic flow telemetry with line-rate data collection

◦ Real-time buffer utilization per port and per queue, for monitoring traffic micro-bursts and application traffic patterns

● Highly Available and Efficient Design

◦ High-density, non-blocking architecture

◦ Easily deployed into either a hot-aisle or cold-aisle configuration

◦ Redundant, hot-swappable power supplies and fan trays

● Simplified Operations

◦ Power-On Auto Provisioning (POAP) support allows for simplified software upgrades and configuration file installation

◦ An intelligent API offers switch management through remote procedure calls (RPCs) in JSON or XML over an HTTP/HTTPS infrastructure

◦ Python Scripting for programmatic access to the switch command-line interface (CLI)

◦ Hot and cold patching, and online diagnostics

● Investment Protection

◦ A Cisco 40-GbE bidirectional transceiver allows reuse of an existing 10 Gigabit Ethernet multimode cabling plant for 40 Gigabit Ethernet

◦ Support for 1-GbE and 10-GbE access connectivity for data centers migrating access switching infrastructure to faster speeds

The switch also provides the following:

● 1.8 Tbps of bandwidth in a 1 RU form factor

● 48 fixed 1/10/25-GbE SFP+ ports

● 6 fixed 40/100-GbE QSFP+ ports for uplink connectivity

● Latency of less than 2 microseconds

● Front-to-back or back-to-front airflow configurations

● 1+1 redundant hot-swappable 80 Plus Platinum-certified power supplies

● Hot swappable 3+1 redundant fan trays
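The intelligent API listed under Simplified Operations is exposed on Cisco Nexus switches as NX-API. The following Python sketch builds, but does not send, an NX-API JSON-RPC request that runs show commands on the switch. It assumes NX-API has been enabled with `feature nxapi` and that requests go to the `/ins` endpoint over HTTPS; the switch host name is a placeholder, and authentication (HTTP basic authentication in a real deployment) is omitted.

```python
import json
import urllib.request


def nxapi_jsonrpc_request(switch: str, commands: list[str]) -> urllib.request.Request:
    """Build (but do not send) an NX-API JSON-RPC request for show commands."""
    # One JSON-RPC 2.0 object per CLI command, numbered from 1.
    payload = [
        {"jsonrpc": "2.0", "method": "cli",
         "params": {"cmd": cmd, "version": 1}, "id": i}
        for i, cmd in enumerate(commands, start=1)
    ]
    return urllib.request.Request(
        url=f"https://{switch}/ins",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json-rpc"},
        method="POST",
    )


if __name__ == "__main__":
    req = nxapi_jsonrpc_request("n9k-a.example.com", ["show version"])
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
```

Batching several commands into one list keeps the round trips down; each response object carries the matching `id`, so results can be paired back with their commands.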

Figure 18. Cisco Nexus 93180YC-EX Switch

Cisco MDS 9132T 32-Gb Fibre Channel Switch

The next-generation Cisco® MDS 9132T 32-Gb 32-Port Fibre Channel Switch (Figure 19) provides high-speed Fibre Channel connectivity from the server rack to the SAN core. It empowers small, midsize, and large enterprises that are rapidly deploying cloud-scale applications using extremely dense virtualized servers, providing the dual benefits of greater bandwidth and consolidation.

Small-scale SAN architectures can be built from the ground up using this low-cost, low-power, nonblocking, line-rate, low-latency, fixed standalone SAN switch, which supports bidirectional airflow and connects both storage and host ports.

Medium-size to large-scale SAN architectures built with SAN core directors can expand 32-Gb connectivity to the server rack using these switches either in switch mode or Network Port Virtualization (NPV) mode.

Additionally, investing in this switch for a lower-speed (4-, 8-, or 16-Gb) server rack gives you the option to upgrade to 32-Gb server connectivity in the future using the 32-Gb Host Bus Adapters (HBAs) that are available today. The Cisco® MDS 9132T 32-Gb 32-Port Fibre Channel switch also provides unmatched flexibility through a unique port expansion module (Figure 20) that provides a robust, cost-effective, field-swappable port upgrade option.

This switch also offers state-of-the-art SAN analytics and telemetry capabilities that have been built into this next-generation hardware platform. This new state-of-the-art technology couples the next-generation port ASIC with a fully dedicated Network Processing Unit designed to complete analytics calculations in real time. The telemetry data extracted from the inspection of the frame headers are calculated on board (within the switch) and, using an industry-leading open format, can be streamed to any analytics-visualization platform. This switch also includes a dedicated 10/100/1000BASE-T telemetry port to maximize data delivery to any telemetry receiver including Cisco Data Center Network Manager.

Figure 19. Cisco 9132T 32-Gb MDS Fibre Channel Switch

Figure 20. Cisco MDS 9132T 32-Gb 16-Port Fibre Channel Port Expansion Module

● Features

◦ High performance: MDS 9132T architecture, with chip-integrated nonblocking arbitration, provides consistent 32-Gb low-latency performance across all traffic conditions for every Fibre Channel port on the switch.

◦ Capital Expenditure (CapEx) savings: The 32-Gb ports can be populated with existing 16- or 8-Gb transceivers, reducing initial CapEx with an option to upgrade to 32-Gb transceivers and adapters in the future.

◦ High availability: MDS 9132T switches continue to provide the same outstanding availability and reliability as the previous-generation Cisco MDS 9000 Family switches by providing optional redundancy on all major components such as the power supply and fan. Dual power supplies also facilitate redundant power grids.

◦ Pay-as-you-grow: The MDS 9132T Fibre Channel switch can be deployed with as few as eight 32-Gb Fibre Channel ports in the entry-level variant, which can grow by 8 ports to 16 ports, and thereafter, with a port expansion module of sixteen 32-Gb ports, to up to 32 ports. This approach results in lower initial investment and power consumption for entry-level configurations of up to 16 ports compared to a fully loaded switch. Upgrading through an expansion module also reduces the overhead of managing multiple instances of port activation licenses on the switch. This unique combination of port upgrade options allows four possible configurations: 8, 16, 24, and 32 ports.

◦ Next-generation Application-Specific Integrated Circuit (ASIC): The MDS 9132T Fibre Channel switch is powered by the same high-performance 32-Gb Cisco ASIC with an integrated network processor that powers the Cisco MDS 9700 48-Port 32-Gb Fibre Channel Switching Module. Among all the advanced features that this ASIC enables, one of the most notable is inspection of Fibre Channel and Small Computer System Interface (SCSI) headers at wire speed on every flow in the smallest form-factor Fibre Channel switch without the need for any external taps or appliances. The recorded flows can be analyzed on the switch and also exported using a dedicated 10/100/1000BASE-T port for telemetry and analytics purposes.

◦ Intelligent network services: Slow-drain detection and isolation, VSAN technology, Access Control Lists (ACLs) for hardware-based intelligent frame processing, Smart Zoning, and fabric-wide Quality of Service (QoS) enable migration from SAN islands to enterprise-wide storage networks. Traffic encryption is optionally available to meet stringent security requirements.

◦ Sophisticated diagnostics: The MDS 9132T provides intelligent diagnostics tools such as Inter-Switch Link (ISL) diagnostics, read diagnostic parameters, protocol decoding, network analysis tools, and integrated Cisco Call Home capability for greater reliability, faster problem resolution, and reduced service costs.

◦ Virtual machine awareness: The MDS 9132T provides visibility into all virtual machines logged into the fabric. This feature is available through HBAs capable of priority tagging the Virtual Machine Identifier (VMID) on every FC frame. Virtual machine awareness can be extended to intelligent fabric services such as analytics[1] to visualize performance of every flow originating from each virtual machine in the fabric.

◦ Programmable fabric: The MDS 9132T provides powerful Representational State Transfer (REST) and Cisco NX-API capabilities to enable flexible and rapid programming of utilities for the SAN as well as polling point-in-time telemetry data from any external tool.

◦ Single-pane management: The MDS 9132T can be provisioned, managed, monitored, and troubleshot using Cisco Data Center Network Manager (DCNM), which currently manages the entire suite of Cisco data center products.

◦ Self-contained advanced anticounterfeiting technology: The MDS 9132T uses on-board hardware that protects the entire system from malicious attacks by securing access to critical components such as the bootloader, system image loader and Joint Test Action Group (JTAG) interface.
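The pay-as-you-grow arithmetic can be checked with a short enumeration. This Python sketch assumes the three building blocks described above (an 8-port base, an 8-port license upgrade, and a 16-port expansion module) can be combined independently; under that assumption, the 24-port option is the base plus the expansion module, and the enumeration reproduces the four configurations the text lists.

```python
from itertools import product

BASE_PORTS = 8               # entry-level variant
LICENSE_UPGRADE_PORTS = 8    # on-box port activation upgrade
EXPANSION_MODULE_PORTS = 16  # 16-port expansion module


def port_configurations() -> list[int]:
    """Enumerate port counts from every combination of the two optional upgrades."""
    counts = set()
    for license_upgrade, expansion_module in product((False, True), repeat=2):
        ports = BASE_PORTS
        if license_upgrade:
            ports += LICENSE_UPGRADE_PORTS
        if expansion_module:
            ports += EXPANSION_MODULE_PORTS
        counts.add(ports)
    return sorted(counts)


print(port_configurations())  # [8, 16, 24, 32]
```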

Pure Storage acquired Compuverde in 2019 and has since been integrating this technology into the Purity//FA operating system. The emphasis is on integrating: key parts of Compuverde were incorporated into Purity to deliver native file services alongside block storage.

The SMB and NFS protocols bring consolidated storage to the Purity//FA operating system, complementing its block capabilities, while the file system offers features like directory snapshots and directory-level performance and space monitoring.

Moreover, Purity includes enterprise-grade data security, modern data protection options, and complete business continuity and global disaster recovery through ActiveCluster multi-site stretch cluster and ActiveDR* for continuous replication with near zero RPO. All these features are included with every array.

Figure 21. FlashArray//C Specifications

Table 1. FlashArray Connectivity (Applicable for both FA//X and FA//C)

| Onboard Ports (per controller) | Host I/O Cards (3 slots/controller) |
|---|---|
| 2 x 1/10/25Gb Ethernet | 2-port 10GBase-T Ethernet |
| 2 x 1/10/25Gb Ethernet Replication | 2-port 1/10/25Gb Ethernet |
| 2 x 1Gb Management Ports | 2-port 40Gb Ethernet |
| | 2-port 25/100Gb NVMe/RoCE |
| | 2-port 16/32Gb SCSI FC and NVMe-FC |
| | 4-port 16/32Gb SCSI FC and NVMe-FC |

Customers can deploy storage once and enjoy a subscription to continuous innovation through Pure’s Evergreen Storage ownership model: expand and improve performance, capacity, density, and/or features for 10 years or more – all without downtime, performance impact, or data migrations. Pure has disrupted the industry’s 3-5-year rip-and-replace cycle by engineering compatibility for future technologies right into its products, notably nondisruptive capability to upgrade from //M to //X with NVMe, DirectMemory, and NVMe-oF capability.

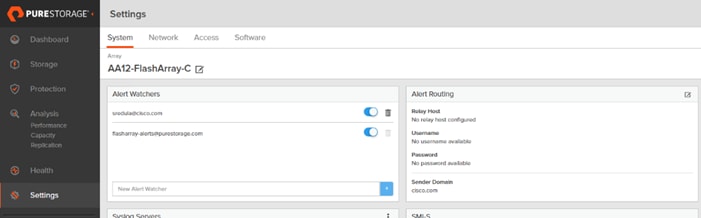

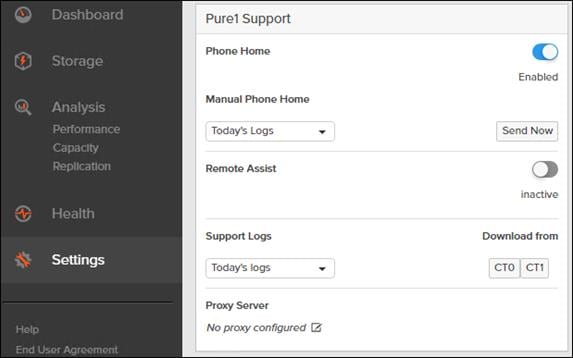

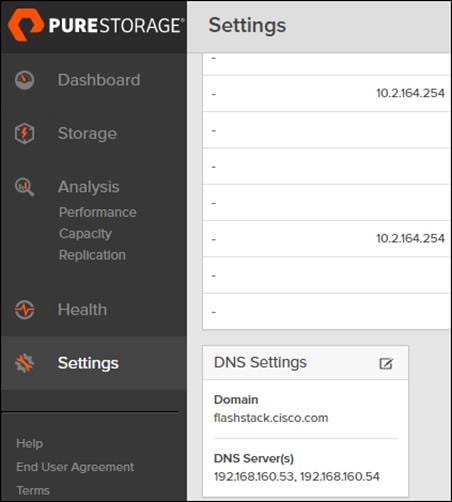

Pure1, a cloud-based management, analytics, and support platform, expands the self-managing, plug-n-play design of Pure all-flash arrays with the machine learning predictive analytics and continuous scanning of Pure1 Meta™ to enable an effortless, worry-free data platform.

In the Cloud IT operating model, installing and deploying management software is an oxymoron: you simply log in. Pure1 Manage is SaaS-based, allowing you to manage your array from any browser or from the Pure1 Mobile App, with nothing extra to purchase, deploy, or maintain. From a single dashboard you can manage all your arrays, with full visibility into the health and performance of your storage.

Pure1 Analyze delivers true performance forecasting – giving customers complete visibility into the performance and capacity needs of their arrays – now and in the future. Performance forecasting enables intelligent consolidation and unprecedented workload optimization.

Pure combines an ultra-proactive support team with the predictive intelligence of Pure1 Meta to deliver unrivaled support that’s a key component in our proven FlashArray 99.9999% availability. Customers are often surprised and delighted when we fix issues they did not even know existed.

The foundation of Pure1 services, Pure1 Meta is global intelligence built from a massive collection of storage array health and performance data. By continuously scanning call-home telemetry from Pure’s installed base, Pure1 Meta uses machine learning predictive analytics to help resolve potential issues and optimize workloads. The result is both a white glove customer support experience and breakthrough capabilities like accurate performance forecasting.

Meta is always expanding and refining what it knows about array performance and health, moving the Data Platform toward a future of self-driving storage.

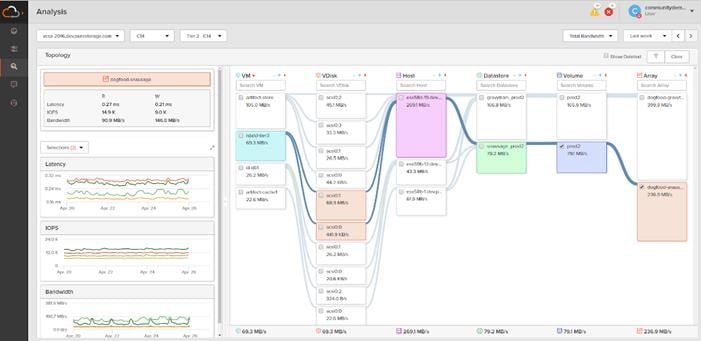

Pure1 helps you narrow down the troubleshooting steps in your virtualized environment. VM Analytics provides you with a visual representation of the IO path from the VM all the way through to the FlashArray. Other tools and features guide you through identifying where an issue might be occurring in order to help eliminate potential candidates for a problem.

VM Analytics doesn’t only help when there’s a problem. The visualization allows you to identify which volumes and arrays particular applications are running on. This brings the whole environment into a more manageable domain.

Veeam Backup & Replication is a 4-in-1 solution combining backup, replication, storage snapshots, and Continuous Data Protection (CDP) under a single platform, delivering faster and more flexible data protection, recovery, and retention options. Veeam Backup & Replication can protect all enterprise workloads, including virtual, physical, cloud, and file for organizations operating out of their own data centers, public cloud, managed cloud, or any combination.

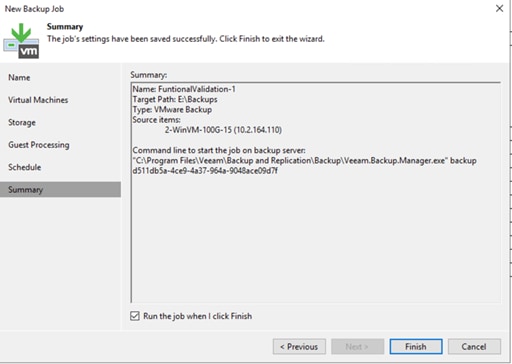

For this CVD, Veeam Backup & Replication was configured to protect VMware virtual machines (VMs) running on FlashStack. When protecting VMs, Veeam Backup & Replication operates at the virtualization layer and uses an image-based approach for VM backup. When backup jobs run, Veeam retrieves VM data through two mechanisms: the VMware vSphere Storage APIs for Data Protection (VADP), and Veeam storage snapshot integration with the Pure Storage FlashArray//X that provides the storage for the FlashStack environment. Veeam Backup & Replication uses a vSphere snapshot for point-in-time backup and application-aware processing. Once Veeam creates the application-aware VMware snapshot, it orchestrates a transactionally consistent storage snapshot on the FlashArray//X. Veeam then removes the VMware snapshot to minimize the impact of backup on the production virtual workloads and backs up the data from the FlashArray//X storage snapshot over the storage network (for example, Fibre Channel or iSCSI).
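The ordering of these steps is what keeps the VMware snapshot short-lived. The Python below is a simplified model, not Veeam's API: the function, the volume name, and the snapshot names are hypothetical, and each step only records an event. What it shows is the sequence: VMware snapshot, array snapshot, immediate VMware snapshot removal, and only then the data read from the array snapshot.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Collects the order in which the (simulated) steps happen."""
    events: list[str] = field(default_factory=list)

    def record(self, message: str) -> None:
        self.events.append(message)


def backup_from_storage_snapshot(vm: str, volume: str, log: EventLog) -> str:
    """Simulate the snapshot sequence; names are hypothetical placeholders."""
    vm_snapshot = f"{vm}-vmsnap"
    # 1. Application-aware vSphere snapshot gives a consistent point in time.
    log.record(f"create vSphere snapshot {vm_snapshot}")
    try:
        # 2. Storage snapshot on the FlashArray volume backing the datastore.
        array_snapshot = f"{volume}.veeam-snap"
        log.record(f"create array snapshot {array_snapshot}")
    finally:
        # 3. Remove the vSphere snapshot right away, even if step 2 failed,
        #    so the production VM does not keep running on a delta disk.
        log.record(f"remove vSphere snapshot {vm_snapshot}")
    # 4. Backup data is read from the array snapshot, not from the live VM.
    log.record(f"read {vm} from {array_snapshot} over the storage network")
    return array_snapshot


log = EventLog()
backup_from_storage_snapshot("sql01", "vol-ds01", log)
for event in log.events:
    print(event)
```

Because the slow part (reading backup data) happens after step 3, the production VM runs on a vSphere snapshot only for the few seconds needed to take the array snapshot.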

Veeam Backup & Replication creates image-based backups that can be used for all types of recovery, including:

● Instant VM Recovery enables you to instantly start a VM directly from a backup file

● Application-Item Recovery leverages Veeam Explorers to enable granular restore of application-specific items

● Full VM recovery enables you to recover a VM from a backup file to its original, or another, location

● VM file recovery enables you to recover separate VM files (for example, virtual disks, configuration files)

● Instant VM Disk Recovery enables you to recover a specific hard drive of a VM from the backup file and attach it to the original VM, or a new VM

● Windows file-level recovery enables you to recover individual Windows guest OS files (from FAT, NTFS, and ReFS file systems)

● Multi-OS file-level recovery enables you to recover files from 15 different guest OS file systems

Veeam Backup & Replication uses the same image-level backup for all data recovery operations. You can restore VMs, VM files and drives, application objects, and individual guest OS files to the most recent state or any available restore point.

In addition to being able to provide these capabilities from backup files, Veeam Backup & Replication can also provide many of these recoveries from Veeam orchestrated storage snapshots on the FlashStack.

Veeam Explorers are powerful recovery tools included in Veeam Backup & Replication. Customers can restore granular application items, directly from Veeam backups or orchestrated storage snapshots. Veeam has application-specific Explorers for the following enterprise applications:

● Microsoft Active Directory: Search and restore all Active Directory object types (e.g., users, groups, computer accounts, contacts, expiring links), Group Policy Objects (GPOs), Active Directory-integrated Microsoft DNS records, and Configuration Partition objects.

● Microsoft Exchange: Get instant visibility into Exchange backups, advanced search capabilities, and quick recovery of individual Exchange items (for example, emails, contacts, notes), online archive mailboxes, Purges folder support, and hard-deleted (that is, permanently deleted) items; eDiscovery features include detailed export reports and export size estimation based on query search criteria.

● Microsoft SharePoint: Get instant visibility into SharePoint backups, search for and quickly restore full SharePoint sites, item permissions, and specific files. Export recovered items directly to their original SharePoint server or send them as an email attachment.

● Microsoft SQL Server: Get fast transaction and table-level recovery of SQL databases, including agentless transaction log backup and replay, so you can restore your SQL databases to a precise point in time and achieve low Recovery Time and Point Objectives (RTPO).

● Oracle: Get transaction-level recovery of Oracle databases including agentless transaction log backup, so you can restore your Oracle databases to a precise point in time, self-service restore, and restore via PowerShell.


Each Explorer has a corresponding user guide.

With instant VM recovery, you can immediately restore a VM into your production environment by running it directly from the backup file. Instant VM recovery helps improve recovery time objectives (RTOs) and minimizes disruption and downtime of production VMs. It is like having a "temporary spare" for a VM: users remain productive while you troubleshoot the failed VM.

When instant VM recovery is performed, Veeam Backup & Replication uses the Veeam vPower technology to mount a VM image to an ESX(i) host directly from a compressed and deduplicated backup file. Since there is no need to extract the VM from the backup file and copy it to production storage, you can restart a VM from any restore point (incremental or full) in a matter of minutes.

After the VM is back online, you can use VMware Storage vMotion to migrate the VM back to production storage.

Veeam Backup & Replication can help you restore specific VM files (for example, .vmdk or .vmx files) if any of these files are deleted or the datastore is corrupted. This option is a useful alternative to a full VM restore, for example, when your VM configuration file is missing and you need to restore it: instead of restoring the whole VM image to production storage, you can restore only that file. Veeam Backup & Replication can also restore a specific hard drive of a VM. If a VM hard drive becomes corrupted for some reason (for example, by a virus), you can restore it from the image-based backup to any known good point in time.

Continuous Data Protection (CDP)

Eliminate downtime and minimize data loss for Tier-1 VMware vSphere workloads and perform immediate recoveries to the latest state or desired point in time with the built-in CDP functionality, achieving the most stringent RTOs and RPOs.

Veeam CDP implementations include:

● No VM snapshots — Veeam CDP captures all write I/O directly in the data path with the VMware-certified I/O filter driver, eliminating the need to create VM snapshots as with classic replication jobs. And with I/O-level tracking, only the data changed is sent over to a DR site, as opposed to larger virtual disk blocks returned by the changed block tracking.

● No workload or hardware dependency — Protect any OS and applications that can run within a vSphere VM. And unlike storage-based replication, Veeam CDP works across all types of storage arrays, hyperconverged storage solutions, and even local vSphere ESXi storage.

● Asynchronous replication — Unlike synchronous array-based replication, Veeam CDP can be used across any distance while requiring significantly lower bandwidth, thanks to I/O consolidation (when the same block is overwritten multiple times, only its latest state needs to be sent) and network traffic compression.

● Policy-based protection — Unlike with regular replication jobs, you don’t have to worry about scheduling at all. Just define the required RPO (maximum data loss allowed in case of a disaster) and the CDP policy will take care of performing the sync cycles as needed. Also, to reduce monitoring events spam, you can define acceptable RPO violation thresholds so that sporadic connectivity issues do not result in alarms.

● Flexible retention — Separately define short-term retention, allowing crash-consistent restores to a point in time with RPO period granularity, and long-term retention policy with optional periodic application-consistent restore points providing an additional layer of protection.

● Flexible deployment models — Depending on the amount of data under protection, you can opt for virtual CDP proxies or use dedicated physical CDP proxies to completely offload all data processing overhead from your vSphere hosts, removing impact to your VM consolidation ratio. In either case, only one proxy per vSphere cluster is required with additional proxies providing redundancy and increased scalability.

● Deployment assistant — A built-in deployment calculator removes the guesswork by looking at the historical I/O of all VMs selected for protection in the CDP policy to estimate required bandwidth to achieve the specified RPO and evaluates whether your currently selected CDP proxy resources are sufficient for the historical I/O change rate.

● No additional licensing — Veeam CDP is included in the Veeam Universal License along with existing data protection methods for vSphere VMs: host-based backup or replication, agent-based backup, application-level backup, and storage snapshots. And just as before, using multiple protection methods on the same VM does not consume additional licenses.
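Two of the behaviors above, I/O consolidation within a sync cycle and RPO violation thresholds, can be illustrated with a small model. In this Python sketch, which is hypothetical and not how Veeam's I/O filter is implemented, writes captured during a cycle are reduced to the newest data per block before transfer, and the replication lag is classified as within RPO, a tolerated violation, or an alarm. The `Write` type, the `tolerance_s` parameter, and the status names are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Write:
    """One captured write (hypothetical representation)."""
    block: int        # block index on the virtual disk
    data: bytes
    timestamp: float  # seconds since an arbitrary epoch


def consolidate(writes: list[Write]) -> dict[int, bytes]:
    """Keep only the newest data for each block written during the cycle."""
    latest: dict[int, Write] = {}
    for w in writes:
        current = latest.get(w.block)
        if current is None or w.timestamp >= current.timestamp:
            latest[w.block] = w
    return {block: w.data for block, w in latest.items()}


def rpo_status(last_sync: float, now: float, rpo_s: float, tolerance_s: float) -> str:
    """Classify replication lag against the RPO and a violation threshold."""
    lag = now - last_sync
    if lag <= rpo_s:
        return "ok"
    if lag <= rpo_s + tolerance_s:
        return "tolerated"  # brief violation, no alarm raised
    return "alarm"


writes = [Write(7, b"a", 1.0), Write(3, b"x", 1.5), Write(7, b"b", 2.0), Write(7, b"c", 3.0)]
print(consolidate(writes))               # {7: b'c', 3: b'x'}
print(rpo_status(100.0, 130.0, 15, 30))  # tolerated
```

Here four captured writes shrink to two blocks on the wire, which is the bandwidth effect the Asynchronous replication bullet describes; the tolerance window is what keeps a short connectivity blip from raising an alarm.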


Veeam CDP functionality requires deploying the I/O filter to both the source and target vSphere cluster. This can be done by right-clicking the cluster in the newly added clusters tree view on the Backup Infrastructure tab.

Veeam Replication can be used for workloads that require tighter RPOs than recovery from backup can provide, but not the near-zero RPOs of Veeam CDP. Veeam Replication complements image-based backup and CDP with image-based replication. Replication is the process of copying a VM from its primary location (source host) to a destination location (target host). Veeam Backup & Replication creates an exact copy of the VM (replica), registers it on the target host, and keeps it in sync with the original VM.

Replication provides tier-2 recovery time objectives (RTOs) and recovery point objectives (RPOs). Veeam Backup & Replication provides the means to perform both onsite replication for high availability (HA) scenarios and remote (offsite) replication for disaster recovery (DR) scenarios. To facilitate replication over WAN or slower connections, Veeam Backup & Replication optimizes traffic transmission by filtering out unnecessary data blocks (such as duplicate data blocks, zero data blocks, or blocks of swap files) and compressing replica traffic. Veeam Backup & Replication also allows you to apply network throttling rules to prevent replication jobs from consuming all the bandwidth available in your environment.
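To make these traffic optimizations concrete, here is a Python sketch of a source-side filter that drops swap-file blocks, sends zero blocks as markers only, replaces repeated blocks with a reference to the first copy, and compresses the remaining blocks with zlib. The record layout, the use of SHA-256 and zlib, and the idea that swap-block indexes are known in advance are assumptions for illustration; Veeam's actual data mover format is not described in this guide.

```python
import hashlib
import zlib


def prepare_replica_stream(blocks: list[bytes], swap_blocks: frozenset[int] = frozenset()):
    """Return (kind, index, payload) records for the blocks worth sending.

    kind is "data" (compressed bytes), "zero" (no payload), or
    "ref" (payload is the index of an identical block already sent).
    """
    first_seen: dict[str, int] = {}
    records = []
    for index, data in enumerate(blocks):
        if index in swap_blocks:
            continue  # swap file contents are not needed on the replica
        if not any(data):
            records.append(("zero", index, None))  # send a marker, not the bytes
            continue
        digest = hashlib.sha256(data).hexdigest()
        if digest in first_seen:
            records.append(("ref", index, first_seen[digest]))  # duplicate block
            continue
        first_seen[digest] = index
        records.append(("data", index, zlib.compress(data)))
    return records


disk = [b"A" * 4096, bytes(4096), b"A" * 4096, b"B" * 4096, b"S" * 4096]
for kind, index, payload in prepare_replica_stream(disk, swap_blocks=frozenset({4})):
    size = len(payload) if isinstance(payload, bytes) else 0
    print(kind, index, size)
```

Of the five 4-KB blocks in the usage example, only two are actually transmitted, and those in compressed form.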

WAN accelerators are optional components in the Veeam infrastructure. You can use WAN accelerators if you replicate VMs or send Backup Copies over a slow connection or over the WAN.

In the replication and Backup Copy process, WAN accelerators are responsible for global data caching and deduplication. To use WAN acceleration, you must deploy two WAN accelerators in the following manner:

● The source WAN accelerator must be deployed on the source side, close to the Veeam Backup Proxy running the source-side Data Mover Service.

● The target WAN accelerator must be deployed on the target side, close to the Veeam Backup Proxy running the target-side Data Mover Service.
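The global caching and deduplication role of the WAN accelerator pair can be illustrated with a small model: the target side keeps a cache of blocks keyed by digest, the source side asks which digests are missing, and only those blocks cross the WAN. This is a hypothetical sketch; `TargetCache` and `transfer` are illustrative names, and Veeam's real digest, cache, and protocol formats are not modeled.

```python
import hashlib


class TargetCache:
    """Hypothetical target-side global cache: block digest -> block data."""

    def __init__(self):
        self.blocks = {}

    def missing(self, digests):
        # Report which digests the target does not hold yet.
        return [d for d in digests if d not in self.blocks]

    def store(self, digest, data):
        self.blocks[digest] = data


def transfer(blocks, cache):
    """Send only blocks the target cache lacks.

    Returns (bytes_sent_over_wan, stream_rebuilt_on_target).
    """
    digests = [hashlib.sha256(b).hexdigest() for b in blocks]
    needed = set(cache.missing(digests))
    sent = 0
    for digest, data in zip(digests, blocks):
        if digest in needed:
            cache.store(digest, data)
            needed.discard(digest)  # do not resend a duplicate within this batch
            sent += len(data)
    # The target rebuilds the full stream from its cache.
    rebuilt = b"".join(cache.blocks[d] for d in digests)
    return sent, rebuilt


cache = TargetCache()
a, b, c = b"a" * 100, b"b" * 100, b"c" * 100
print(transfer([a, b, a], cache)[0])  # 200: first run sends a and b once
print(transfer([a, b, c], cache)[0])  # 100: only c is new to the cache
```

Because the cache persists across jobs, later replication or Backup Copy runs send only data the target has never seen, which is the benefit of placing an accelerator on each side of the slow link.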

In the event of a software or hardware malfunction, you can quickly recover a corrupted VM by failing over to its replica. When you perform failover, a replicated VM takes over the role of the original VM. You can fail over to the latest state of a replica or to any of its known good restore points.

In Veeam Backup & Replication, failover is a temporary intermediate step that must be finalized. Veeam Backup & Replication offers the following options for different disaster recovery scenarios:

· You can perform permanent failover to leave the workload on the target host and let the replica VM act as the original VM. Permanent failover is suitable if the source and target hosts are nearly equal in terms of resources and are located on the same HA site.

· You can perform failback to recover the original VM on the source host or in a new location. Use failback when you have failed over to a DR site that is not intended for continuous operations and want to move operations back to the production site once the consequences of the disaster are eliminated.

Veeam Backup & Replication supports failover and failback operations for a single VM and for several VMs. If one or more hosts fail, you can use failover plans to restore operations with minimum downtime.

Failover Plans

If you have several VMs running interdependent applications, you need to fail them over one by one, as a group. To do this automatically, you can prepare a failover plan.

In a failover plan, you set the order in which VMs must be processed and the time delays for VMs. The time delay is the interval that Veeam Backup & Replication waits before starting the failover operation for the next VM in the list. It helps ensure that some VMs, such as a DNS server, are already running when the dependent VMs start. The failover plan must be created in advance. If the primary VM group goes offline, you can start the corresponding failover plan manually. When you start the procedure, you can choose to fail over to the latest state of a VM replica or to any of its known good restore points.
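The ordering and delay semantics of a failover plan can be sketched as a simple schedule: each VM is failed over in list order, and its delay is the wait before the next VM starts. This is a hypothetical model; `PlanEntry`, `failover_schedule`, `run_failover_plan`, and the VM names are illustrative, and `fail_over_vm` stands in for whatever actually triggers failover of one VM.

```python
import time
from dataclasses import dataclass


@dataclass
class PlanEntry:
    vm: str
    delay_seconds: int  # wait after this VM before starting the next one


def failover_schedule(entries):
    """Return (vm, start_offset_seconds) pairs in plan order."""
    schedule = []
    offset = 0
    for entry in entries:
        schedule.append((entry.vm, offset))
        offset += entry.delay_seconds
    return schedule


def run_failover_plan(entries, fail_over_vm, sleep=time.sleep):
    """Fail over VMs in order; no wait is needed after the last VM."""
    for i, entry in enumerate(entries):
        fail_over_vm(entry.vm)  # hypothetical callback for a single VM
        if i < len(entries) - 1:
            sleep(entry.delay_seconds)


plan = [PlanEntry("dns-01", 60), PlanEntry("sql-01", 120), PlanEntry("app-01", 0)]
print(failover_schedule(plan))
# [('dns-01', 0), ('sql-01', 60), ('app-01', 180)]
```

Placing the DNS server first with a delay gives it time to come up before the database and application VMs that depend on it; the `sleep` parameter is injectable so the plan can be dry-run without waiting.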

Planned Failover

If you know that your primary VMs are about to go offline, you can proactively switch the workload to their replicas. A planned failover is a smooth, manual switch from a primary VM to its replica with minimum interruption in operation. You can use planned failover, for example, if you plan to perform datacenter migration, maintenance, or a software upgrade of the primary VMs. You can also perform planned failover if you have advance notice of an approaching disaster (for example, a hurricane) that will require taking the primary servers offline.

Failback

If you want to resume operation of a production VM, you can fail back to it from a VM replica. When you perform failback, you switch from the VM replica back to the original VM, shift I/O and processes from the target host to the production host, and return to normal operation.

If you managed to restore operation of the source host, you can switch from the VM replica to the original VM on the source host. If the source host is not available, you can restore the original VM to a new location and switch back to it.
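The failover lifecycle described in this section (failover as a temporary state that is finalized either by permanent failover or by failback, with failback going to the source host or to a new location) can be sketched as a small state machine. This is a simplified, hypothetical model covering only the transitions named above; Veeam Backup & Replication offers further operations that are not represented here.

```python
from enum import Enum


class ReplicaState(Enum):
    NORMAL = "normal"                  # original VM runs, replica kept in sync
    FAILOVER = "failover"              # temporary: replica has taken over
    PERMANENT = "permanent_failover"   # replica now acts as the original VM
    FAILED_BACK = "failed_back"        # workload returned to production


# Allowed (state, action) -> next state transitions in this sketch.
ALLOWED = {
    (ReplicaState.NORMAL, "failover"): ReplicaState.FAILOVER,
    (ReplicaState.FAILOVER, "permanent_failover"): ReplicaState.PERMANENT,
    (ReplicaState.FAILOVER, "failback"): ReplicaState.FAILED_BACK,
}


def transition(state, action):
    try:
        return ALLOWED[(state, action)]
    except KeyError:
        raise ValueError(f"{action!r} is not allowed from {state.value!r}") from None


def failback_target(source_host_available):
    """Fail back to the source host if it was restored, else to a new location."""
    if source_host_available:
        return "original VM on source host"
    return "restored VM in new location"


state = transition(ReplicaState.NORMAL, "failover")
print(state.value)             # failover
print(failback_target(False))  # restored VM in new location
```

Rejecting transitions such as failing back from the normal state mirrors the point that failback and permanent failover only make sense after a failover has occurred.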

Veeam Availability Suite

Veeam Availability Suite combines the backup, replication, storage snapshots, and CDP capabilities of Veeam Backup & Replication with the advanced monitoring, reporting, and capacity planning functionality of Veeam ONE. Veeam Availability Suite delivers everything you need to reliably protect and manage your Cisco FlashStack virtual environment. Veeam Backup & Replication is a modular solution that lets you build a scalable backup infrastructure for environments of different sizes and configurations. The installation package of Veeam Backup & Replication includes a set of components that you can use to configure the backup infrastructure. Some components are mandatory and provide core functionality; others are optional and can be installed to provide additional functionality for your business and deployment needs. You can co-install all Veeam Backup & Replication components on the same machine, physical or virtual, or set them up separately for a more scalable approach.

Figure 22 shows an overview of the main Veeam components.

Figure 22. Veeam Backup & Replication Components

Backup Server

The Veeam Backup Server is a Windows-based physical or virtual machine on which Veeam Backup & Replication is installed. It is the core component in the backup infrastructure and fills the role of the “configuration and control center.” The backup server performs all types of administrative activities:

● Coordinates backup, storage snapshots, CDP, replication, recovery verification, and restore tasks

● Controls job scheduling and resource allocation

● Manages all Backup Proxy and Backup Repository servers and other components of the backup infrastructure

The Veeam Backup Server is used to set up and manage backup infrastructure components as well as specify global settings for the backup infrastructure.

Figure 23. Veeam Backup Server Management

In addition to its primary functions, a newly deployed backup server also performs the roles of the default Backup Proxy and the Backup Repository.

The backup server uses the following services and components:

● Veeam Backup Service is a Windows service that coordinates all operations performed by Veeam Backup & Replication, such as backup, storage snapshots, CDP, replication, recovery verification, and restore tasks. The Veeam Backup Service runs under the Local System account or an account that has Local Administrator permissions on the backup server.

● Veeam Backup Shell provides the application user interface and allows user access to the application's functionality.

● Veeam Guest Catalog Service is a Windows service that manages guest OS file system indexing for VMs and replicates system index data files to enable search through guest OS files. Index data is stored in the Veeam Backup Catalog — a folder on the backup server. The Veeam Guest Catalog Service running on the backup server works in conjunction with search components installed on Veeam Backup Enterprise Manager and (optionally) a dedicated Microsoft Search Server.

● Veeam Backup SQL Database is used by Veeam Backup Service, Veeam Backup Shell, and Veeam Guest Catalog Service to store data about the backup infrastructure, jobs, sessions, and so on. The database instance can be located on a SQL Server installed either locally (on the same machine where the backup server is running) or remotely.

● Backup Proxy Services. In addition to dedicated services, the backup server runs a set of data mover services.

Backup Proxy

The Backup Proxy is an architecture component that sits between the data source and backup target and is used to process jobs and deliver backup traffic. In particular, the Backup Proxy tasks include retrieving VM data from the production storage, optionally compressing, deduplicating, and encrypting the data, then sending it to the Backup Repository (for example, if you run a backup job) or to another Backup Proxy (for example, if you run a replication job). As the data handling task is assigned to the Backup Proxy, the backup server becomes the “point of control” for dispatching jobs to Backup Proxy servers.

The role of a Backup Proxy can be assigned to a Windows or Linux server (physical or virtual) in your environment. You can deploy Backup Proxies both in the primary site and in remote sites. To optimize the performance of several concurrent jobs, you can scale out by using multiple Backup Proxies. In this case, Veeam Backup & Replication distributes the backup workload among the available Backup Proxies.
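To illustrate how scaling out spreads work, the sketch below assigns each task to the proxy with the fewest tasks so far, using a heap. This is a hypothetical least-loaded policy chosen for clarity; Veeam's actual scheduler also weighs factors this sketch ignores, and the proxy and VM names are illustrative.

```python
import heapq


def distribute(tasks, proxies):
    """Assign each task to the currently least-loaded proxy.

    Ties are broken by proxy name so the result is deterministic.
    """
    heap = [(0, name) for name in sorted(proxies)]
    heapq.heapify(heap)
    assignment = {name: [] for name in proxies}
    for task in tasks:
        load, name = heapq.heappop(heap)  # proxy with the fewest tasks
        assignment[name].append(task)
        heapq.heappush(heap, (load + 1, name))
    return assignment


print(distribute(["vm1", "vm2", "vm3", "vm4", "vm5"], ["proxy-a", "proxy-b"]))
# {'proxy-a': ['vm1', 'vm3', 'vm5'], 'proxy-b': ['vm2', 'vm4']}
```

Adding a third proxy to the list immediately lowers the per-proxy load, which is the practical effect of scaling out Backup Proxies for concurrent jobs.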

Figure 24. Veeam Distributed Proxy Server Deployment

Using Veeam Backup Proxies lets you easily scale your backup infrastructure up and down based on your demands. Backup Proxies run lightweight services that take a few seconds to deploy from the Veeam console. The primary role of the Backup Proxy is to provide an optimal route for backup traffic and enable efficient data transfer.

The Veeam Backup Proxy uses the Veeam Data Mover Service, which is responsible for deploying and coordinating executable modules that act as "data movers" and perform the main job activities on behalf of Veeam Backup & Replication, such as communicating with VMware Tools, copying VM files, and performing data deduplication and compression.

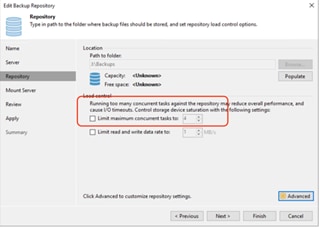

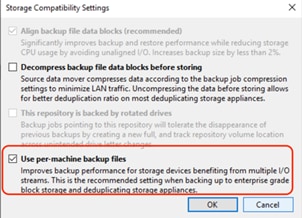

Backup Repository

A Backup Repository is a location used by Veeam Backup & Replication jobs to store backup files and metadata for replicated VMs.

You can configure one of the following types of Backup Repositories:

● Microsoft Windows server with local or directly attached storage. The storage can be a local disk, directly attached disk-based storage (such as a USB hard drive), or iSCSI/FC SAN LUN in case the server is connected to the SAN fabric.

● Linux server with local, directly attached storage, SAN storage or mounted NFS. The storage can be a local disk, directly attached disk-based storage (such as a USB hard drive), NFS share, or iSCSI/FC SAN LUN in case the server is connected to the SAN fabric.

● Hardened backup repository is a Linux-based backup repository with an option for switching on native Linux immutability. Immutability protects your data from loss as a result of malware activity by temporarily prohibiting the deletion of data.

● CIFS (SMB) share. SMB share cannot host Veeam Data Mover Services. For this reason, data to the SMB share is written from the gateway server. By default, this role is performed by a Backup Proxy that is used by the job for data transport.

● NFS share. NFS share cannot host Veeam Data Mover Services. For this reason, data to the NFS share is written from the gateway server. By default, this role is performed by a Backup Proxy that is used by the job for data transport.

● Deduplicating storage appliance. Veeam Backup & Replication supports different deduplicating storage appliances.
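The immutability rule of the hardened repository (deletion is prohibited until a retention period has passed) can be modeled as a time check before any delete. This is a hypothetical sketch of the rule only: the source describes the real mechanism as native Linux immutability, which this plain-Python model does not use, and `ImmutableBackupFile`, `delete_backup`, and the file name are illustrative.

```python
from datetime import datetime, timedelta, timezone


class ImmutableBackupFile:
    """Hypothetical backup file that cannot be deleted while it is locked."""

    def __init__(self, name, created, immutable_days):
        self.name = name
        self.immutable_until = created + timedelta(days=immutable_days)

    def can_delete(self, now):
        return now >= self.immutable_until


def delete_backup(backup, now):
    """Refuse deletion, whoever requests it, until the lock expires."""
    if not backup.can_delete(now):
        raise PermissionError(
            f"{backup.name} is immutable until {backup.immutable_until.isoformat()}"
        )
    return True


created = datetime(2021, 4, 1, tzinfo=timezone.utc)
backup = ImmutableBackupFile("job.vbk", created, immutable_days=7)
print(backup.can_delete(created + timedelta(days=3)))  # False
print(backup.can_delete(created + timedelta(days=7)))  # True
```

The point of the design is that the check does not depend on who asks: malware running with the same credentials as an administrator still cannot remove the file early.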

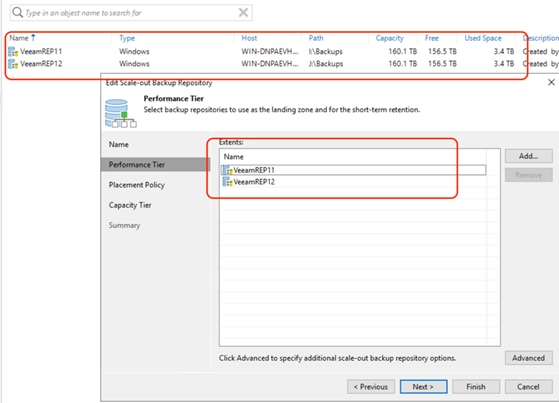

Scale-Out Backup Repository

A Scale-Out Backup Repository is a repository system with horizontal scaling support for multi-tier storage of data. It consists of one or more Backup Repositories, called the performance tier, and can be expanded with object storage repositories for long-term and archive storage: the capacity tier and the archive tier. All the storage devices and systems inside the Scale-Out Backup Repository are joined into a single system.

The main capabilities of Scale-Out Backup Repositories are:

● It provides a convenient way of managing the backup storage.

● The Scale-Out Backup Repository can be expanded at any moment: if the performance extents of your Scale-Out Backup Repository run out of space, you can add a new performance extent to the existing Scale-Out Backup Repository. The free space on this storage system will be added to the capacity of the Scale-Out Backup Repository. As a result, you will not have to move backups to a backup repository of a larger size.

● It supports any backup target supported by Veeam: Windows or Linux servers with local or DAS storage, network shares, deduplicating storage appliances. All the features of any storage device or system are preserved.

● It allows you to set up granular performance policies.

● It provides practically unlimited cloud-based storage capacity. Veeam Backup & Replication can offload data from Backup Repository extents to the cloud object storage for long-term retention.

● A Scale-Out Backup Repository can comprise different tiers or logical levels of storage.

● Performance tier is the level used for fast access to the data. It consists of one or more Backup Repositories called performance extents that live in your datacenter.

● Capacity tier is an additional level for storing data that needs to be accessed less frequently. However, you can still restore your data directly from it. The capacity tier consists of a cloud-based or on-premises object storage repository called a capacity extent.

● Archive tier is an additional level for archive storage of infrequently accessed data. Applicable data from the capacity tier can be transported to the archive tier. Before data can be restored from the archive tier, it must undergo a preparation process.
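The capacity behavior described above (adding a performance extent immediately grows the repository, and older data can move to the capacity tier) can be sketched as follows. This is a hypothetical model: `Extent`, `ScaleOutRepository`, the "most free space" placement rule, and the age-based `offload_candidates` threshold are illustrative simplifications, not Veeam's actual placement or offload policies.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Extent:
    name: str
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self):
        return self.capacity_gb - self.used_gb


@dataclass
class ScaleOutRepository:
    performance: list = field(default_factory=list)  # performance extents

    def total_free_gb(self):
        return sum(e.free_gb for e in self.performance)

    def add_extent(self, extent):
        # The new extent's free space joins the repository at once;
        # existing backups do not have to move.
        self.performance.append(extent)

    def place(self, size_gb):
        """Write to the extent with the most free space (hypothetical rule)."""
        target = max(self.performance, key=lambda e: e.free_gb)
        if target.free_gb < size_gb:
            raise RuntimeError("no performance extent has enough free space")
        target.used_gb += size_gb
        return target.name


def offload_candidates(backups, today, older_than_days):
    """Names of backups older than a hypothetical age threshold."""
    return [name for name, created in backups if (today - created).days > older_than_days]


sobr = ScaleOutRepository([Extent("ext-1", 1000, 900)])
sobr.add_extent(Extent("ext-2", 2000))
print(sobr.total_free_gb())  # 2100
print(sobr.place(500))       # ext-2
```

The usage shows why a full repository does not force a migration: adding `ext-2` raises free space from 100 GB to 2100 GB, and new backups land on the new extent.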

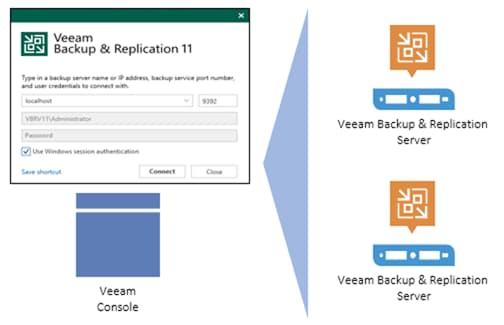

Backup & Replication Console

The Veeam Backup & Replication console is a client-side component that provides access to the backup server. By default, the console is installed locally on the backup server. You can also use it in standalone mode by installing the console on a workstation and accessing Veeam Backup & Replication remotely over the network. The console lets you log in to Veeam Backup & Replication and perform all data protection and disaster recovery operations as if you were working on the backup server.

Figure 25. Veeam Backup & Replication Console

You can install as many remote consoles as you need so that multiple users can access Veeam Backup & Replication simultaneously. Veeam Backup & Replication prevents concurrent modifications on the backup server.

The Veeam Backup Proxy is an architecture component that sits between the backup server and other components of the backup infrastructure. While the backup server administers tasks, the proxy processes jobs and delivers backup traffic.

Basic Backup Proxy tasks include the following:

● Retrieving VM data from the production storage

● Compressing

● Deduplicating

● Encrypting

● Sending the data to the backup repository (for example, if you run a backup job) or to another Backup Proxy (for example, if you run a replication job)

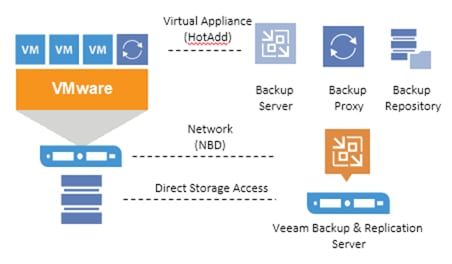

Transport Modes

Job efficiency and time required for job completion greatly depend on the transport mode. The transport mode is a method that is used by the Veeam Data Mover Service to retrieve VM data from the source and write VM data to the target. Depending on your backup architecture, a Backup Proxy can use one of the following data transport modes:

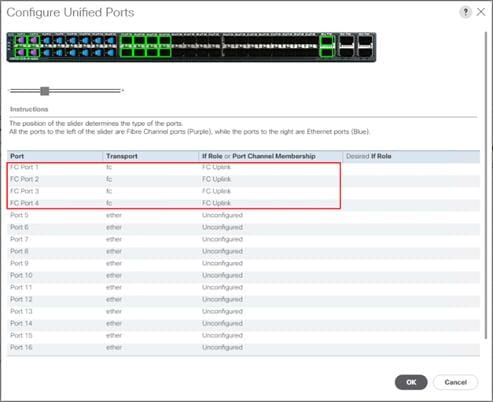

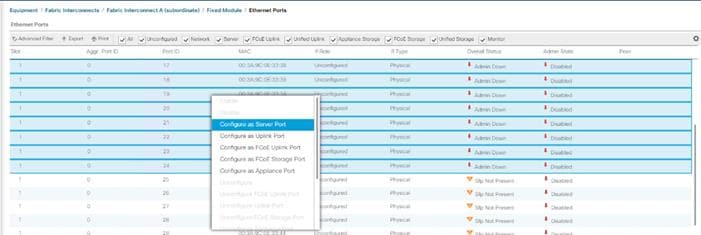

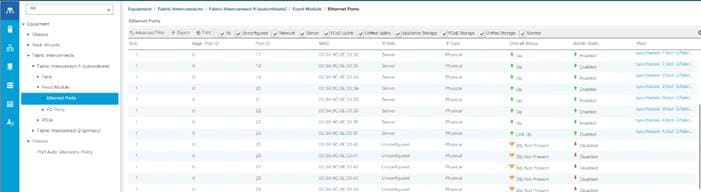

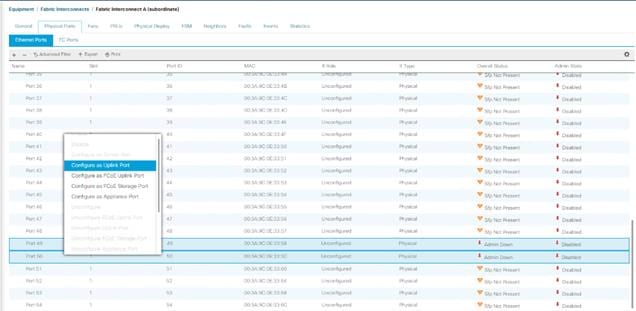

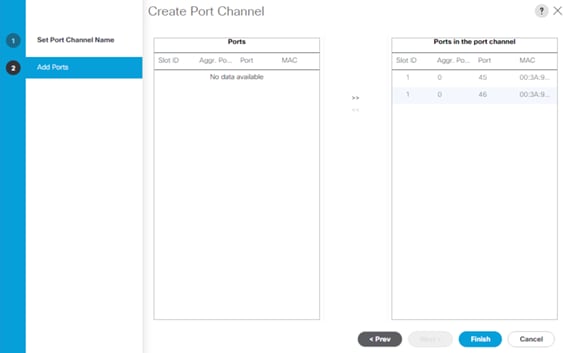

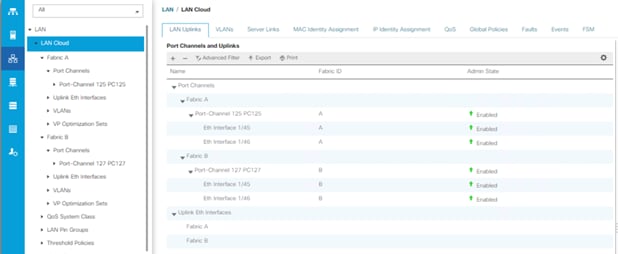

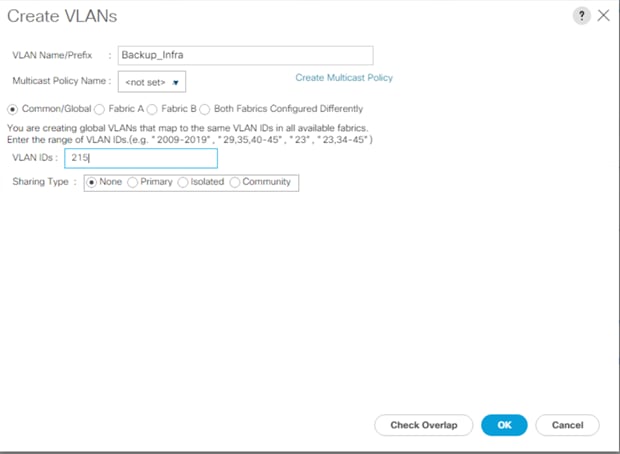

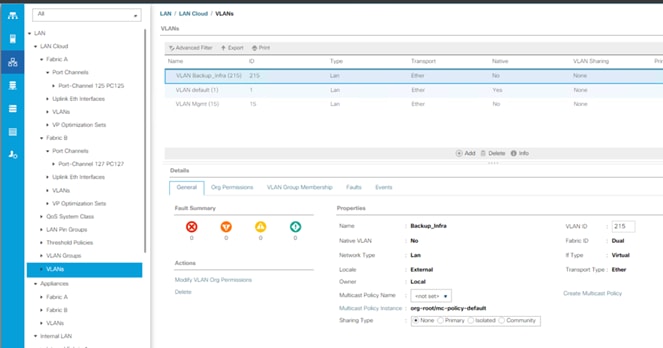

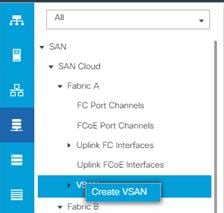

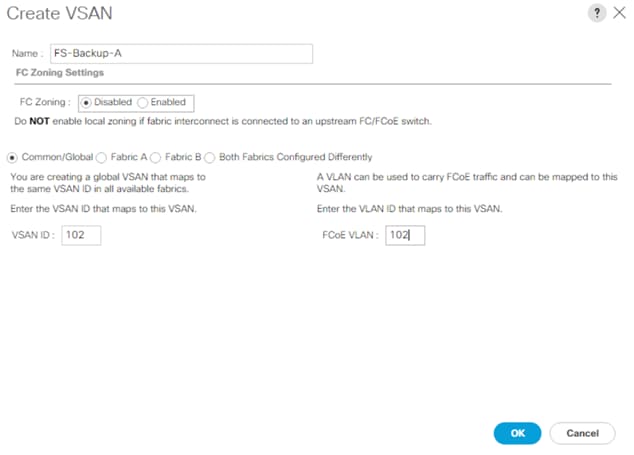

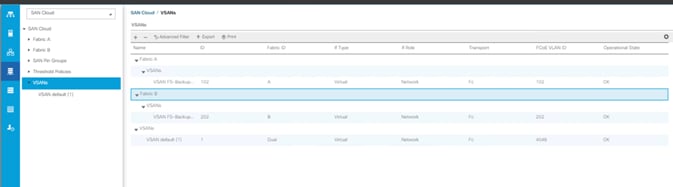

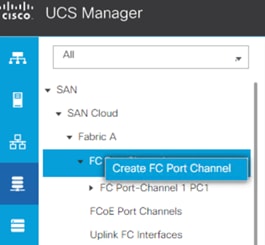

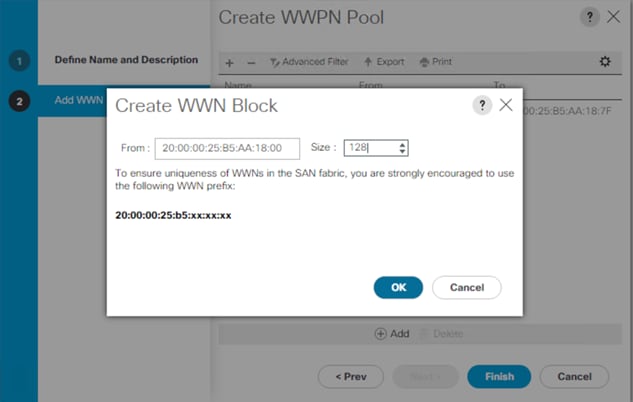

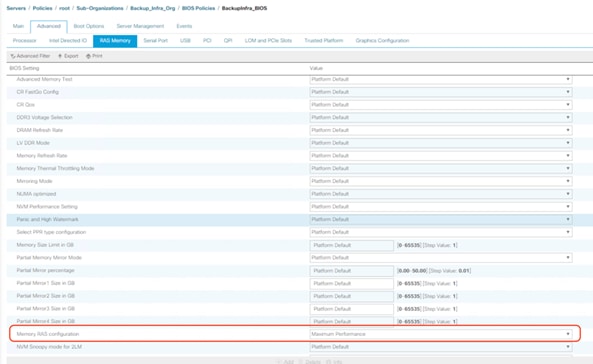

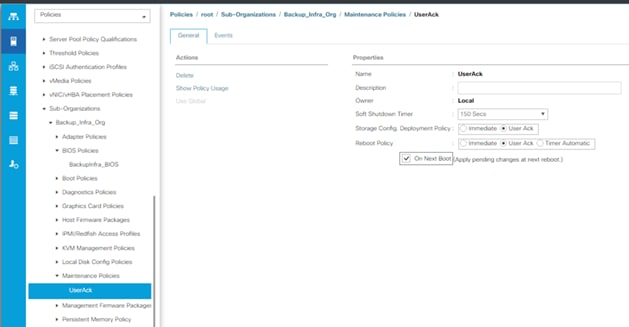

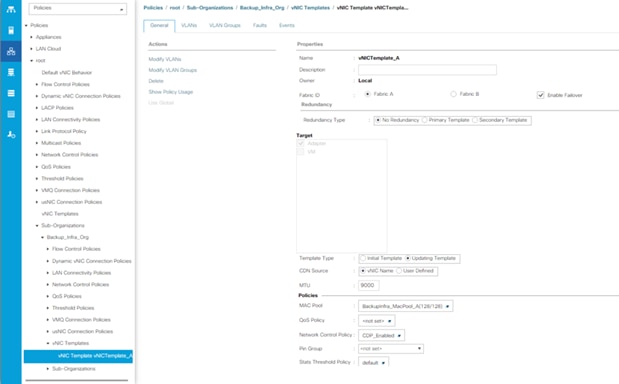

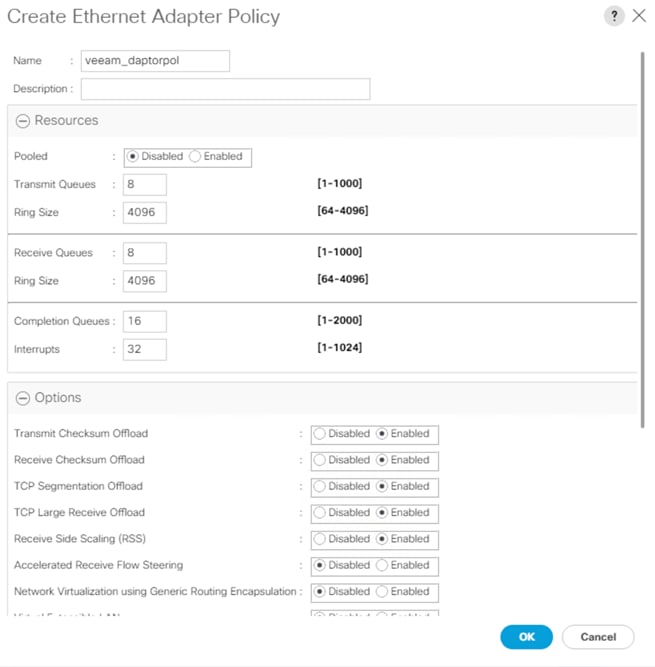

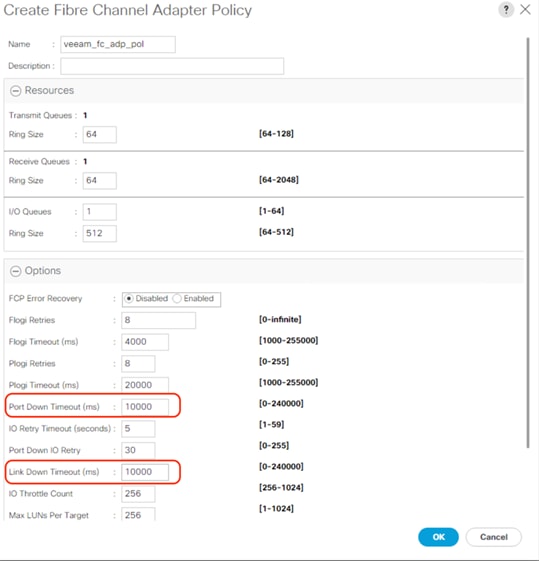

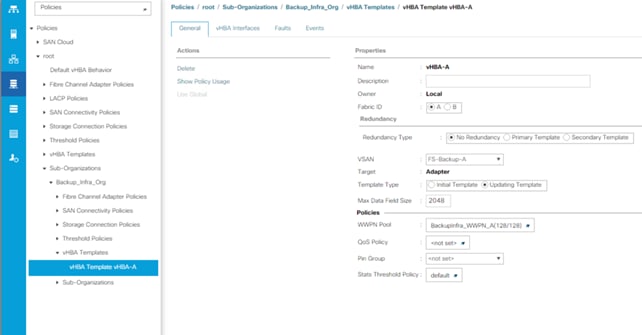

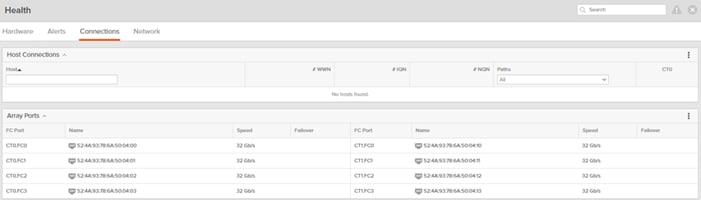

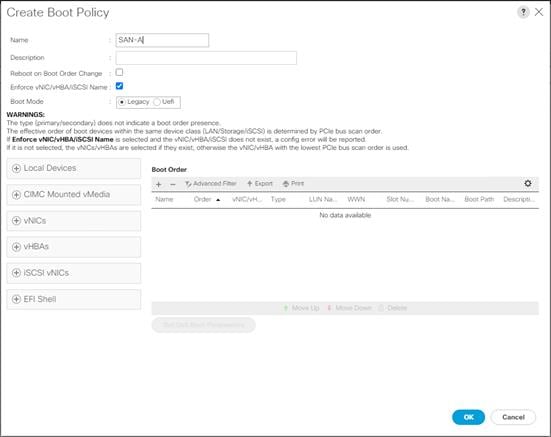

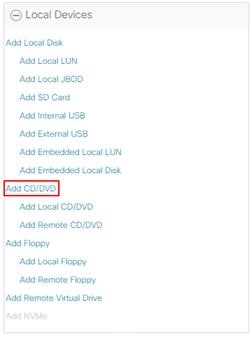

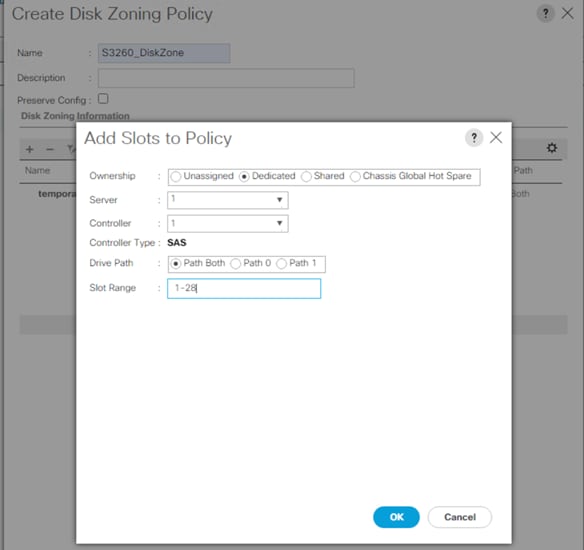

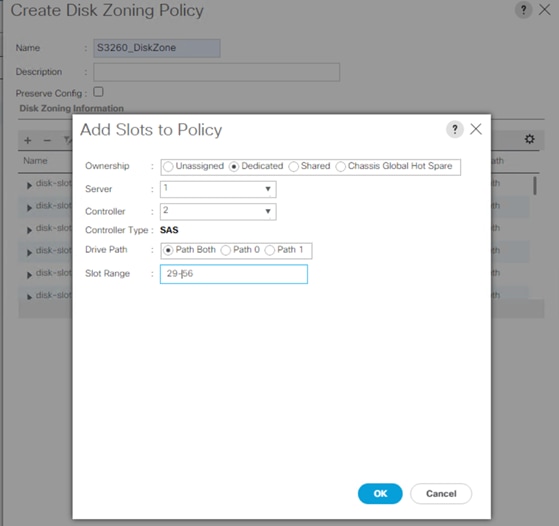

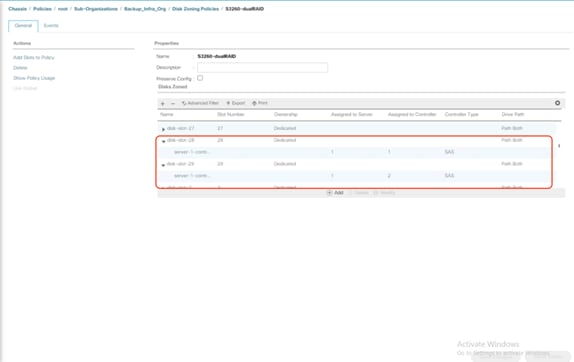

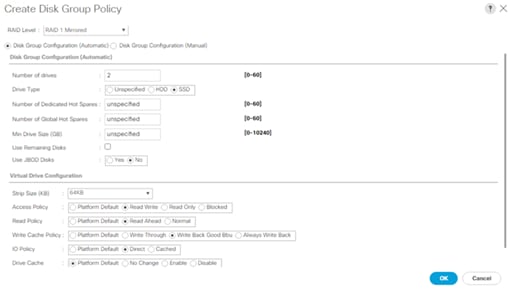

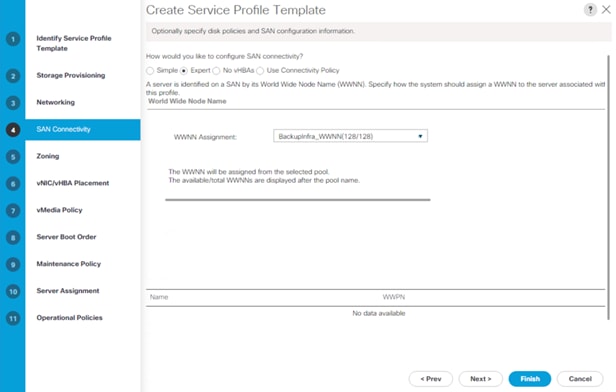

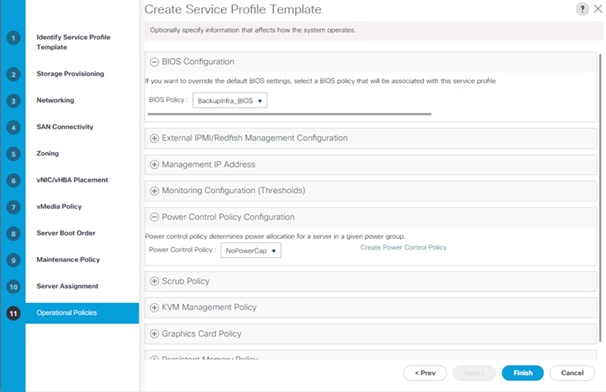

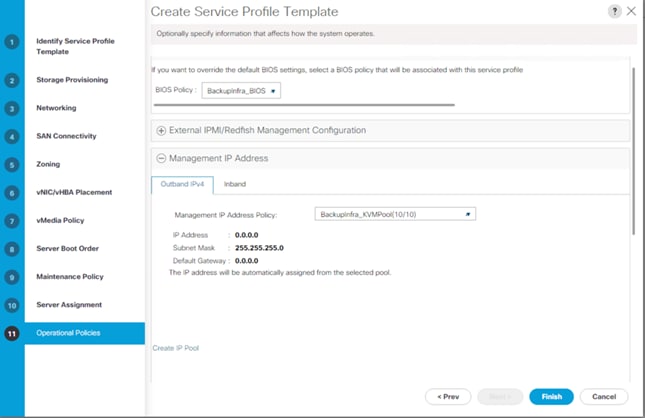

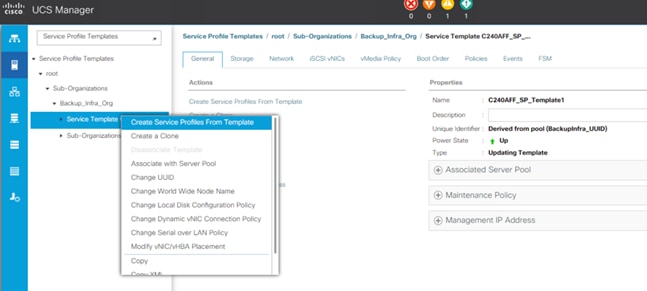

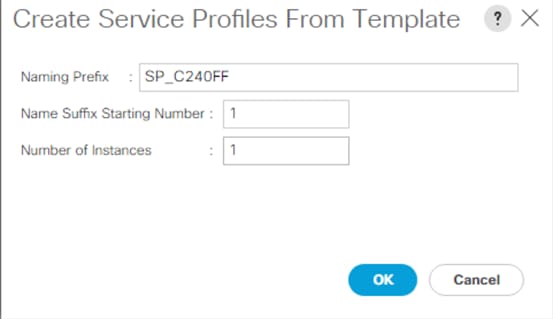

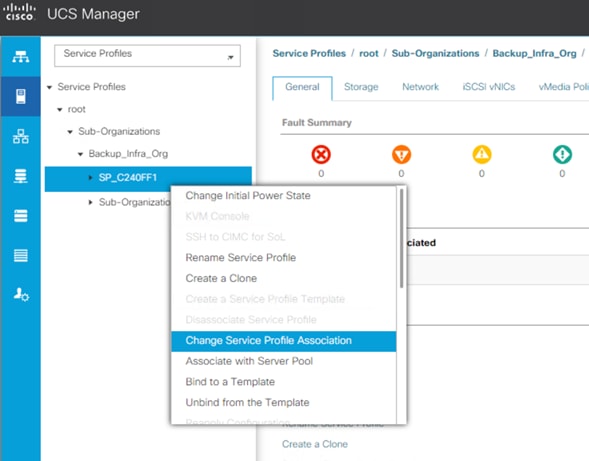

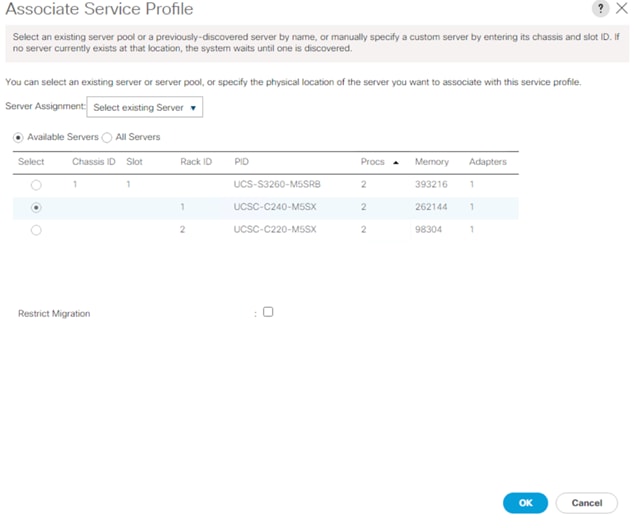

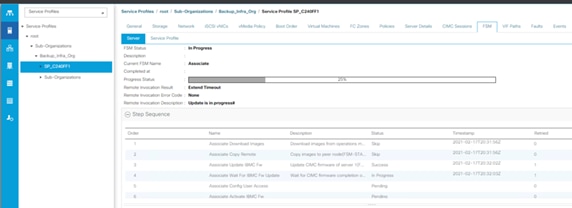

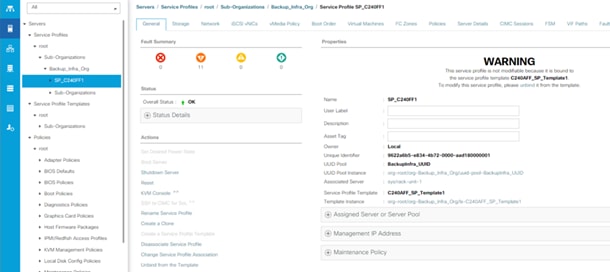

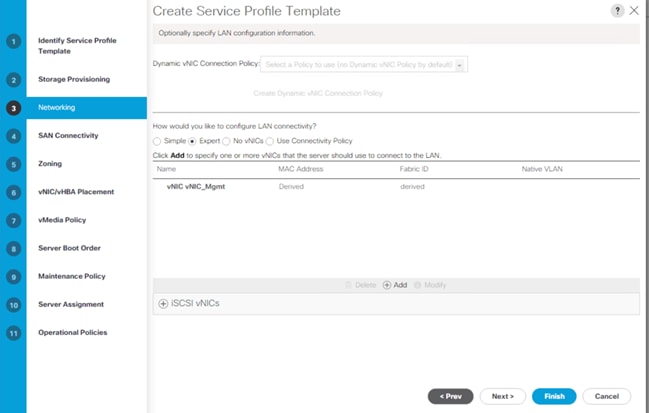

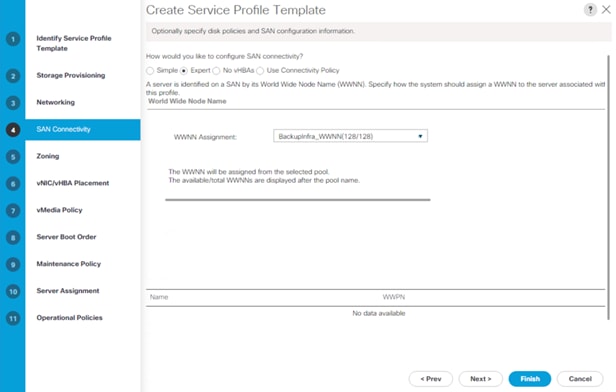

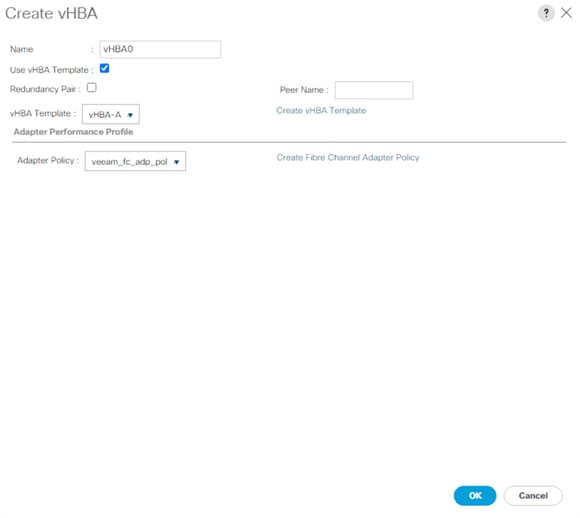

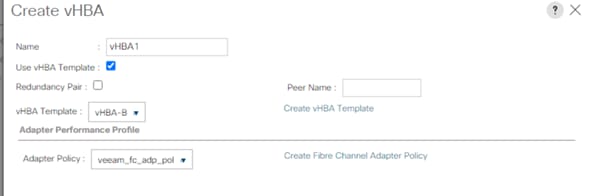

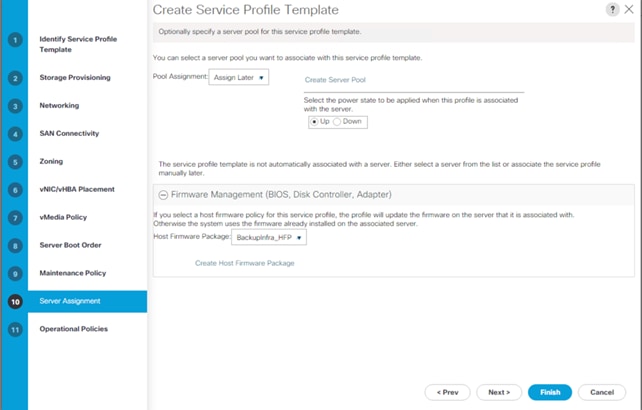

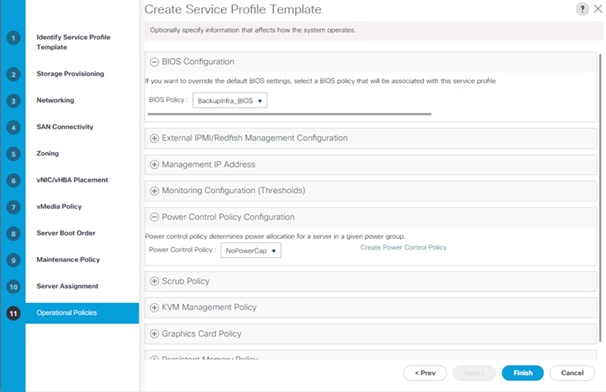

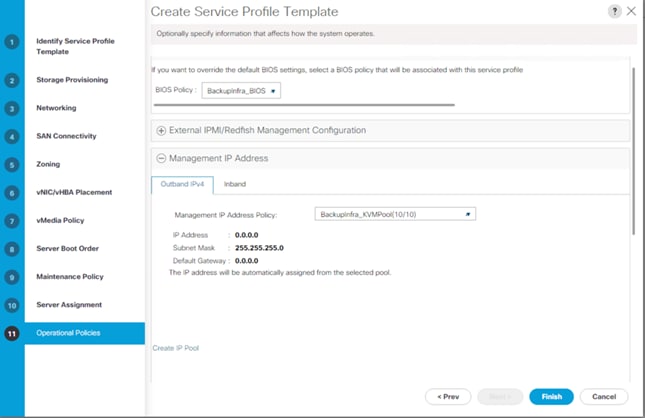

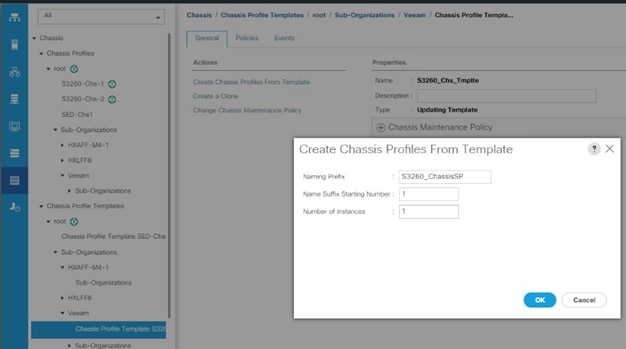

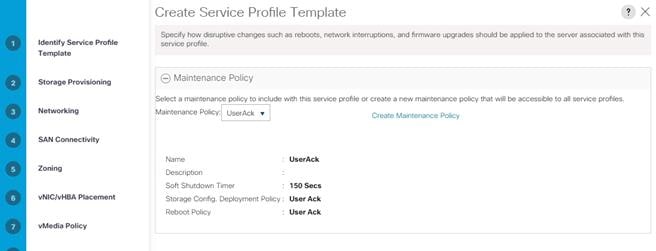

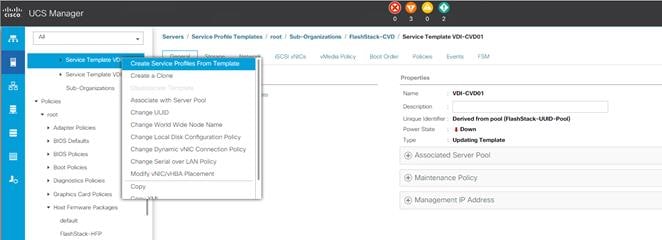

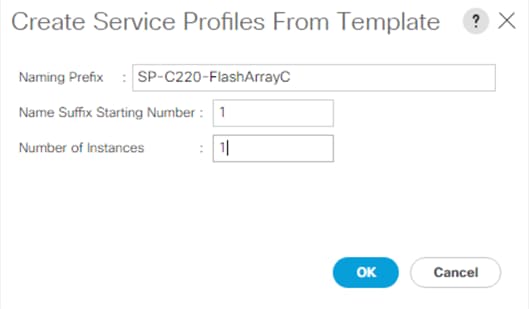

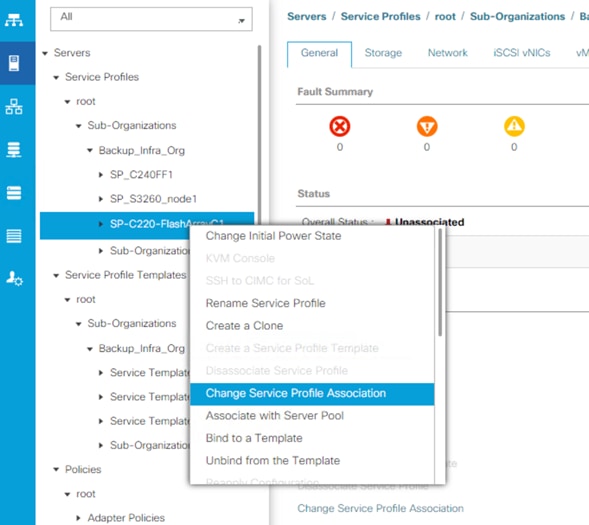

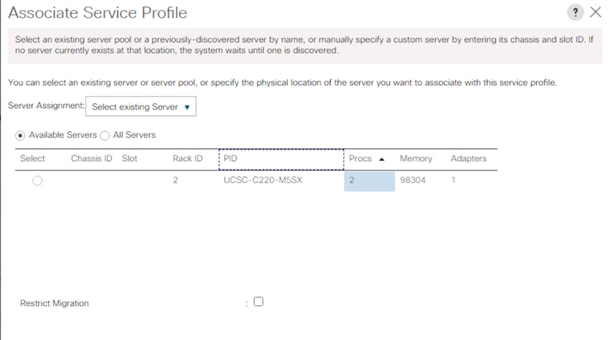

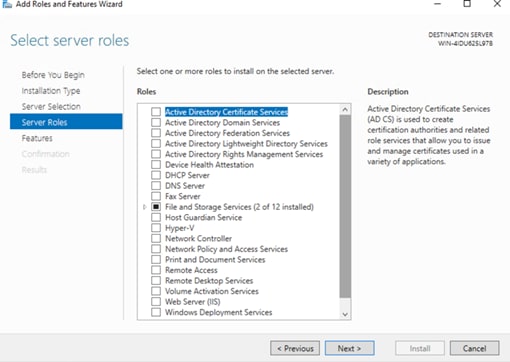

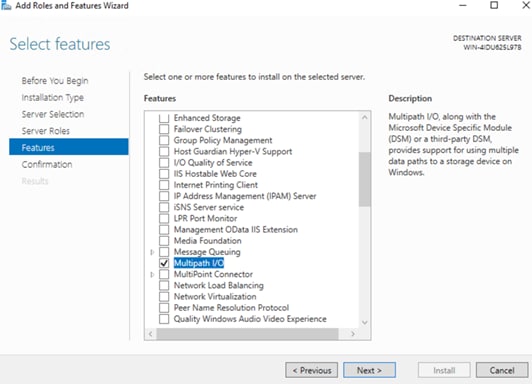

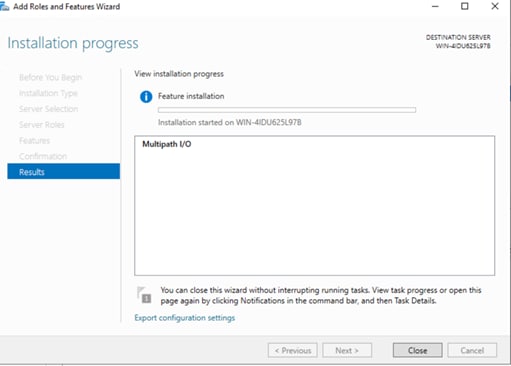

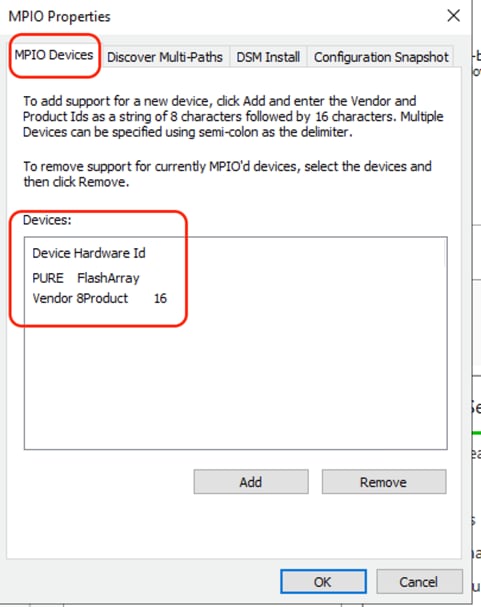

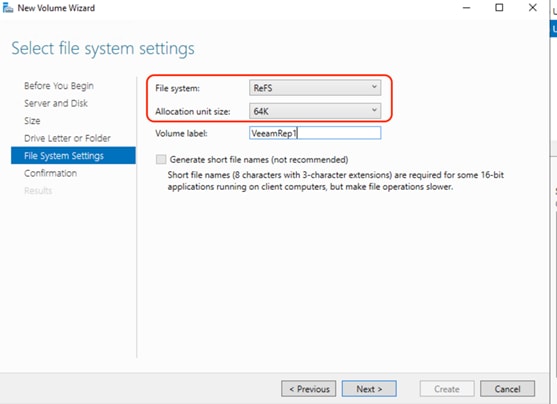

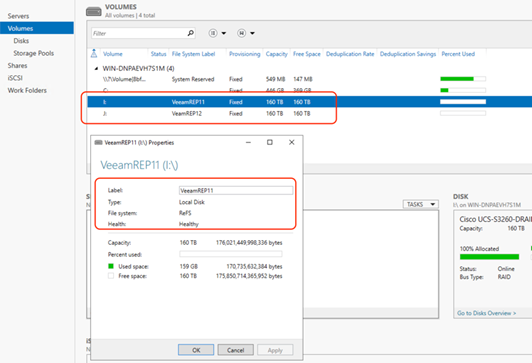

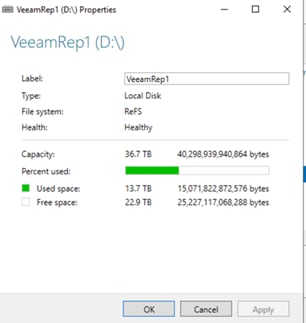

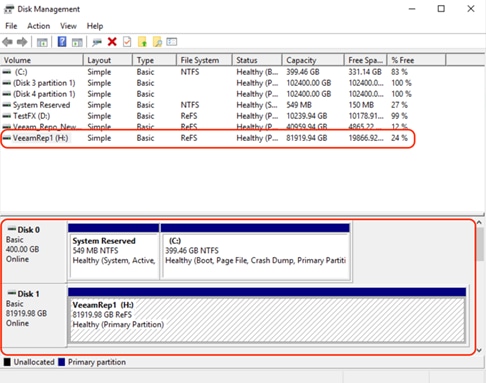

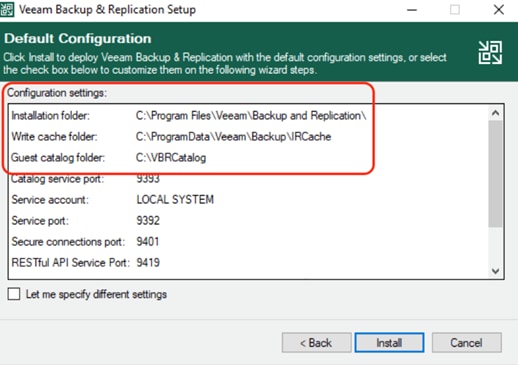

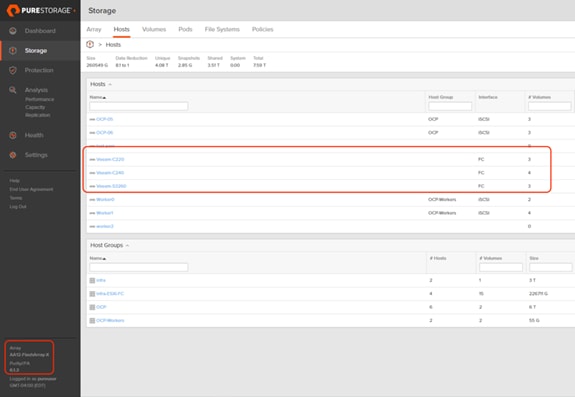

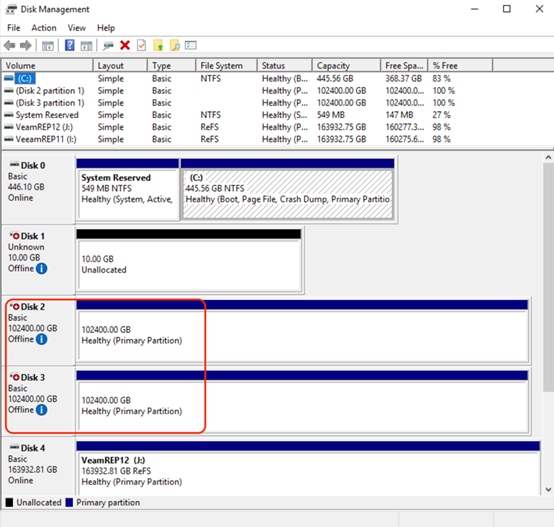

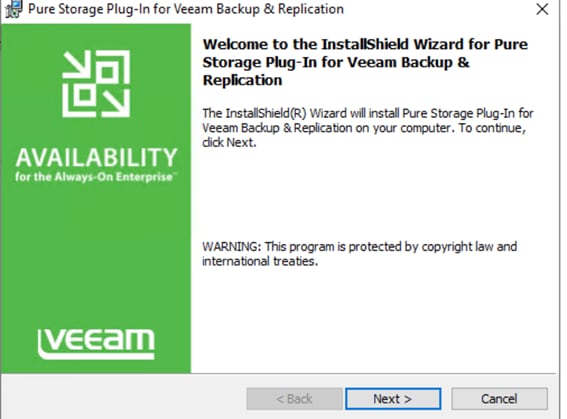

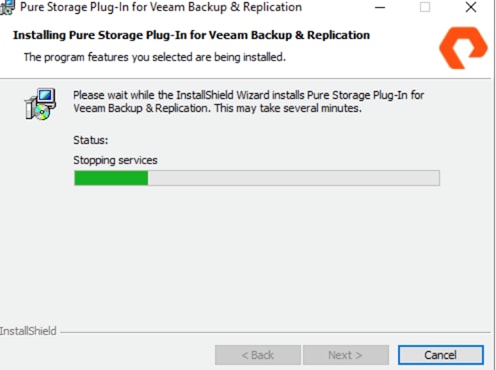

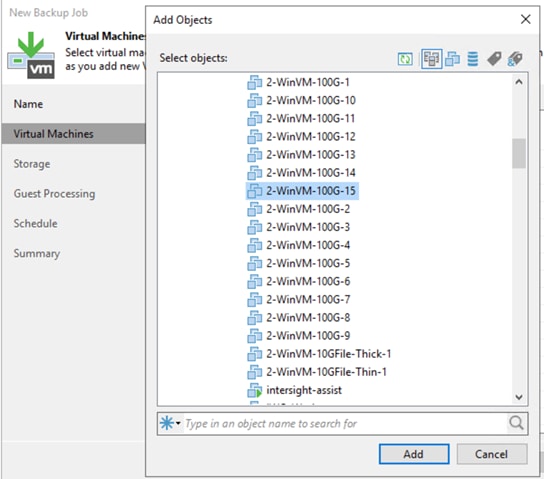

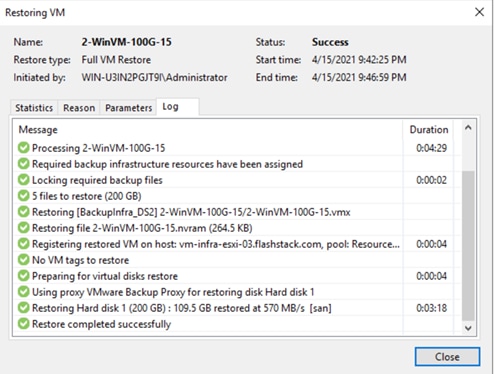

● Direct storage access