Cisco Catalyst SD-WAN Small Branch Design Case Study

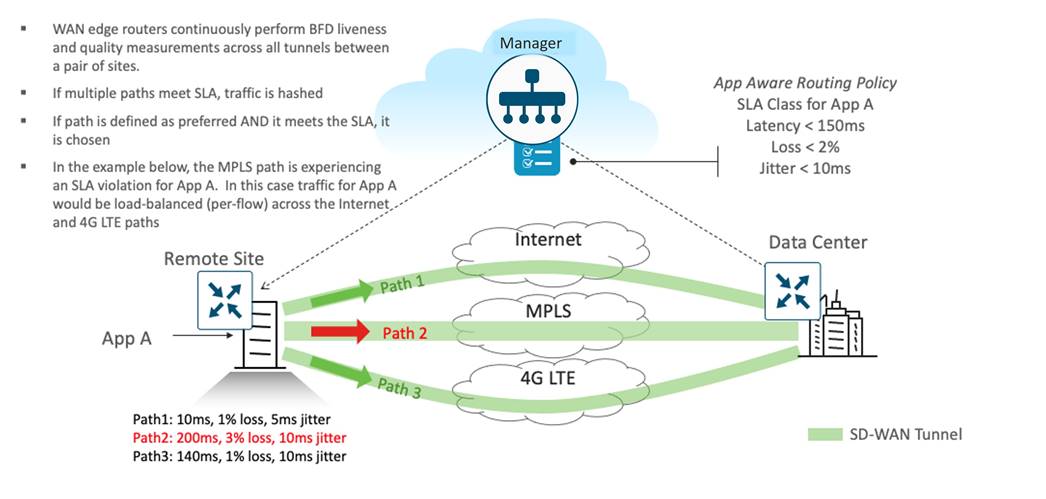

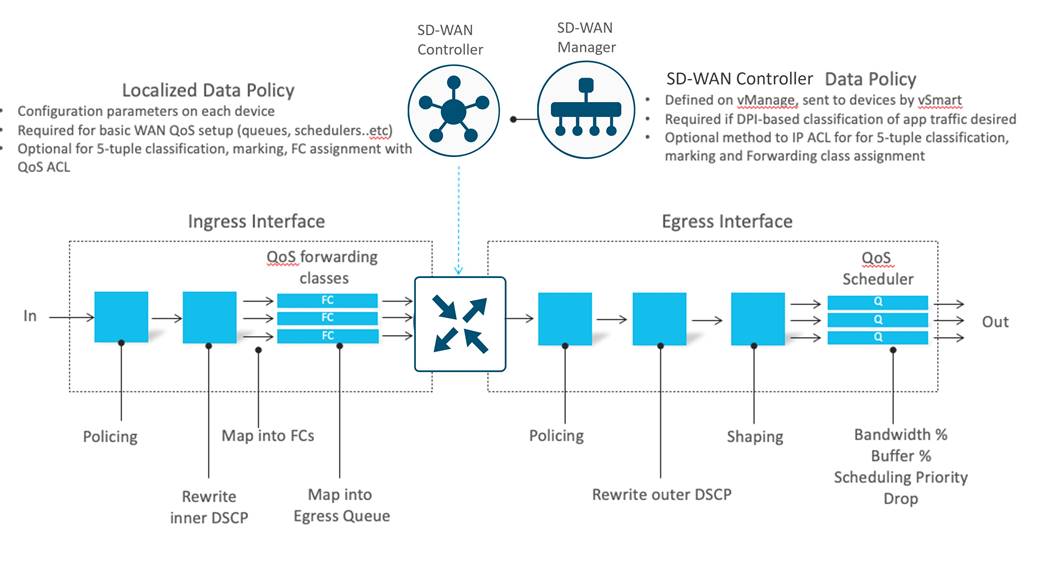

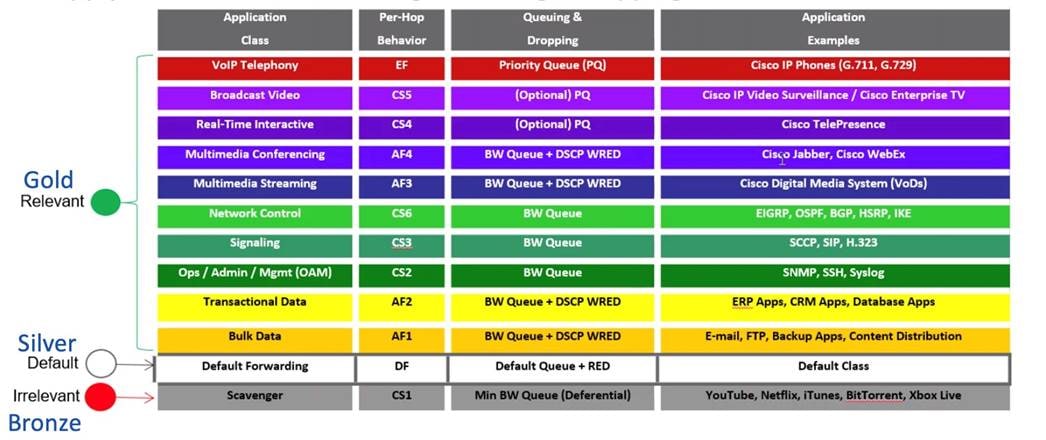

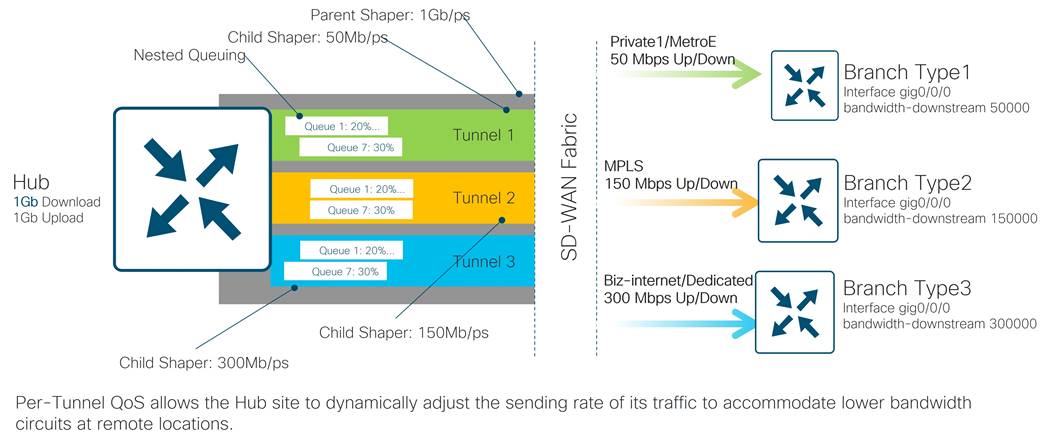

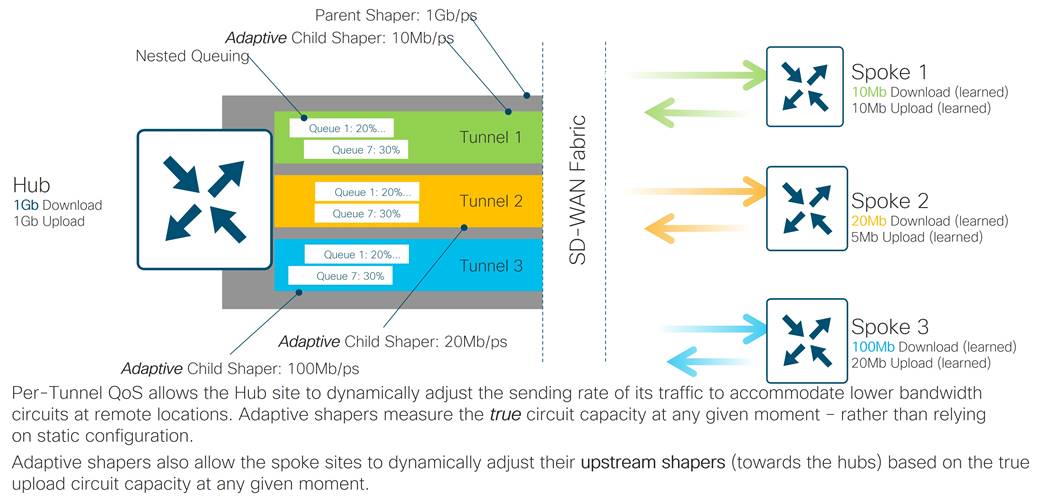

SD-WAN design case studies are deep-dives into the methodologies and technical solutions of how Cisco customers have leveraged SD-WAN use cases to achieve business outcomes. Although the companies covered in these case studies are fictitious, the designs, features, and configurations represent best practices and lessons learned from actual customer deployments across multiple industries.

Design case studies showcase the depth of Cisco’s coverage for the different categories of SD-WAN use cases as defined by the technology research firm Gartner, Inc., in the 2021 SD-WAN Edge Magic Quadrant (MQ) report. Design prototypes for each category have been built in Cisco Catalyst SD-WAN labs to validate the best practices and feature combinations covered in each case study. The categories include:

● Small Branch

● Global WAN

● Security Sensitive

● Cloud First

● Remote Worker

This design case study focuses on an SD-WAN deployment for an enterprise small branch. Gartner characterizes the SD-WAN small branch as a remote site supporting up to 10 people, where simplicity, cost consciousness, and flexibility of transport choices are key. Examples of the small branch category include gas stations, convenience stores, small banks, and fast-food restaurants.

This guide follows a fictitious company, American GasCo, through the planning and design phases and the considerations it addressed during its journey to SD-WAN. American GasCo is not a real customer and the network discussed in this document is not a real network. However, the use cases presented in this guide are based on actual customer deployments. The major topics of this guide include the following:

● SD-WAN high-level and low-level design methodology

● Enterprise considerations for Cisco cloud-hosted control component deployments

● SD-WAN underlay design for multiple types of WAN transports

● Small branch WAN Edge platform and topology considerations

● Cellular 4G/LTE branch deployment best practices

● Dual data center hub-and-spoke overlay routing

● Application-Aware Routing (AAR)

● Quality of Service (QoS)

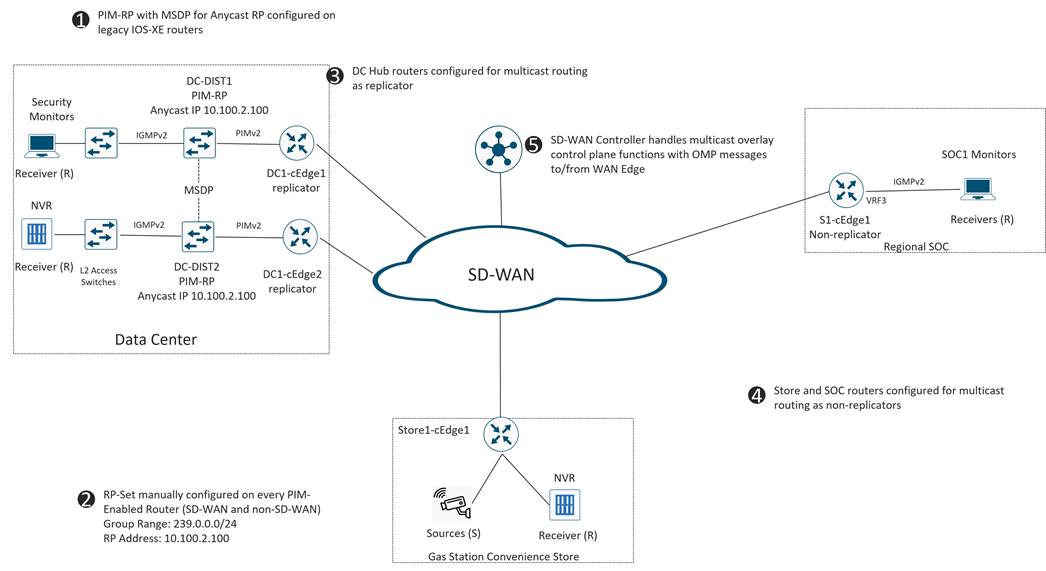

● IP Multicast

This guide is not intended to be a step-by-step “how to” guide for deploying Cisco Catalyst SD-WAN, although enough details are provided for the reader to understand what features and configurations are required on the WAN Edge routers and control components. All use cases and feature combinations were prototyped in a Cisco lab environment using 20.6 SD-WAN Manager/17.6 IOS XE SD-WAN code versions. Supporting documentation can be found in the Cisco Catalyst SD-WAN Design Guide, which also references other existing SD-WAN documentation. Configurations for the devices in this test topology can be referenced at http://cs.co/SDWAN_CaseStudy_1.

Audience

This guide is intended for anyone who wants a better understanding of the Cisco Catalyst SD-WAN solution, especially network architects who need to understand SD-WAN design best practices to make good design choices for their organization.

Cisco Catalyst SD-WAN Overview

| Tech Tip |
| --- |
| Cisco SD-WAN has been rebranded to Cisco Catalyst SD-WAN. As part of this rebranding, the vManage name has been changed to SD-WAN Manager, the vSmart name has been changed to SD-WAN Controller, and the vBond name has been changed to SD-WAN Validator. Together, the vManage, vSmart, and vBond will be referred to as the SD-WAN control components or the SD-WAN control complex in this document. |

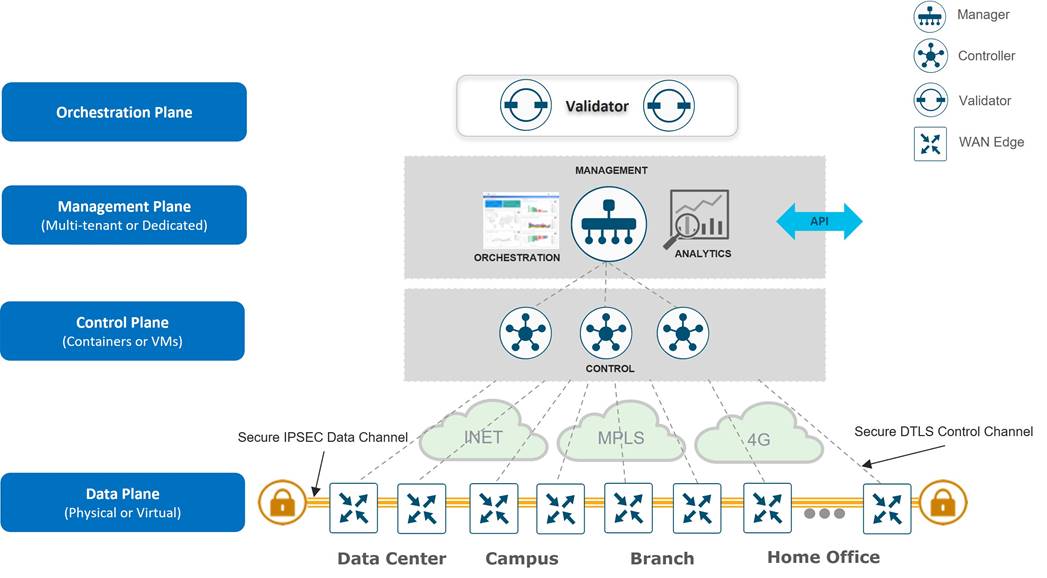

The Cisco Catalyst SD-WAN solution consists of separate orchestration, management, control, and data planes. The major components that make up the solution are as follows:

● SD-WAN Validator (orchestration plane): The software component that performs initial authentication into the SD-WAN network and enables the communication between the SD-WAN control components and devices.

● SD-WAN Manager (management plane): The centralized network management system that provides a GUI to monitor, configure, and maintain the SD-WAN devices.

● SD-WAN Controller (control plane): The software component that is responsible for distributing routing, policy, and crypto key information to the WAN Edge routers.

● WAN Edge router (data plane): The hardware or software-based router that sits at a physical site or in the cloud and provides secure data plane connectivity between sites over one or more WAN transports.

When attempting to join a Cisco Catalyst SD-WAN overlay network, the WAN Edge router first authenticates with the SD-WAN Validator by initiating temporary Datagram Transport Layer Security (DTLS) control connections over each WAN transport. Once authenticated, the WAN Edge router then forms a permanent DTLS or Transport Layer Security (TLS) control connection with the SD-WAN Manager NMS over a single WAN transport, and initiates DTLS or TLS connections to two SD-WAN Controllers by default over all transports. After being onboarded to the SD-WAN overlay by the control components, the WAN Edge router joins the fabric by establishing IPsec (default) or GRE tunnels to other WAN Edge routers. WAN Edge routers initiate continuous Bidirectional Forwarding Detection (BFD) probes over each of these tunnels to ensure peer liveness, while also measuring the loss, latency and jitter associated with each WAN transport. Refer to the Cisco Catalyst SD-WAN Design Guide for more detailed information regarding the interworking of the Cisco Catalyst SD-WAN architecture.
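The connection counts described above can be tallied with a short sketch. It is only an illustration of the paragraph's arithmetic: the function name and dictionary keys are hypothetical, and the default of two SD-WAN Controllers is the default stated in the text rather than a value read from any device.

```python
def control_connections(num_transports: int, num_controllers: int = 2) -> dict:
    """Count the control connections a WAN Edge router initiates.

    Follows the onboarding description in the text; names are illustrative.
    """
    if num_transports < 1:
        raise ValueError("a WAN Edge router needs at least one WAN transport")
    return {
        # Temporary DTLS sessions to the SD-WAN Validator, one per transport.
        "validator_temporary": num_transports,
        # One permanent DTLS/TLS session to the SD-WAN Manager over a single transport.
        "manager_permanent": 1,
        # Permanent DTLS/TLS sessions to each SD-WAN Controller over every transport.
        "controller_permanent": num_controllers * num_transports,
    }


# A branch with two WAN transports and the default of two Controllers.
print(control_connections(num_transports=2))
```

Under these assumptions, adding a third transport adds one temporary Validator session and two permanent Controller sessions, while the single Manager session is unchanged.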

Cisco Catalyst SD-WAN Design Methodology

Cisco recommends a top-down approach be taken with SD-WAN designs to ensure the platforms, use cases and features chosen will support the project goals and business objectives of the organization. American GasCo followed a design methodology that included specific steps that are summarized below:

● Network goals and objectives: The network goals and objectives and the purpose for implementing SD-WAN are captured.

● Current requirements and problem areas: In this phase, current applications in the network and their latency and loss tolerances are identified, along with the traffic patterns, current hardware, software, and transports and their current utilization. In addition, the network performance should be reviewed to identify any problem areas.

● New WAN and site standards: Once an audit has been completed and the SD-WAN use cases that will be implemented are identified, physical and logical topology standards can be developed for how the WAN Edge routers connect to the LAN and WAN infrastructure. Different sets of standards are often necessary when an organization has remote sites with unique business requirements, or when sites are classified by the numbers of users at a site.

Site standards should also take into consideration any future SD-WAN use cases and features that are expected to be deployed, such as direct internet access, cloud networking, on-premise security, multicast routing, or IPv6. These features may have an impact on traffic load, flow patterns, and other factors that will drive decisions on bandwidth, redundancy, and WAN Edge platform selection.

● High-Level Design (HLD): A high-level design can then be developed. In the high-level design, decisions are made around the key elements of the SD-WAN deployment, such as budget allocation, project scope, and orders for circuits and hardware. It includes the information needed to determine the platform choices, such as the features and circuits required, and the bandwidth and number of IPsec tunnels required per device at each site. The following topics were addressed in the high-level design for American GasCo:

◦ Planned use cases and features: The SD-WAN use cases and features to be implemented are determined, such as application visibility, VPN segmentation, Application-Aware Routing (AAR), Quality of Service (QoS), Forward Error Correction (FEC), cloud-based access, on-premise security, multicast, etc.

◦ SD-WAN Manager and WAN Edge router code version selection: There is a balance between choosing a code version that supports all desired features and choosing a version that has had sufficient field time to be considered stable. The code version used in the deployment can influence the design based on what features are supported as well as the control plane scale supported. Newer platforms for the SD-WAN deployment may offer less code choice flexibility, as these devices may only be supported by newer code versions.

◦ WAN transport circuits: The WAN transports that will be used during the deployment are chosen.

◦ Control component deployment: The control component deployment model should be chosen. For on-premise deployments, enough information needs to be gathered to determine the number of control components, the server requirements for them, and how they will be distributed in the network.

◦ VPN segmentation: Identify any VPN segmentation in the network. This might be segmentation for separating traffic between lines of business, or traffic types requiring different topologies, such as full-mesh or spoke-to-spoke connectivity.

◦ Transport color scheme: Transport colors are the means by which the SD-WAN infrastructure associates overlay tunnels with underlying WAN transport circuits. Transport colors are configured as labels in the tunnel configurations of the branch and data center WAN Edge routers and can be leveraged by control and data policy logic. Since a data center often has more transport circuits than any branch, decisions need to be made about whether every unique transport warrants a unique tunnel color, or whether certain transports can be aggregated by the underlay routing infrastructure and treated as the same color. This information will help calculate the IPsec tunnels required in the network.

◦ SD-WAN overlay tunnels: Based on traffic flows during the audit and future traffic requirements, determine what topology is required per VPN (full mesh, hub-and-spoke, partial mesh, etc.), and based on the transport color scheme, calculate the number of tunnels required per device.

◦ WAN Edge router platform selections: Determine the hardware devices (if needed) that can accommodate the new site requirements.

● Low-Level Design (LLD): The low-level design can be worked on in parallel with the high-level design. In the low-level design, specifics about the configurations and policies and other parts of the implementation are developed and validated when necessary. The following topics were addressed in the low-level design for American GasCo:

◦ Control component deployment: For the Cisco-managed, cloud-hosted control components, the LLD should address initial onboarding details of the control components (virtual machine sizing, certificate installation) and reachability to remote site WAN Edge routers and customer NMS servers, when required.

◦ Branch design: Detailed site profiles with new platform and connectivity standards should be developed. Specific transports used to provide redundancy and SLAs necessary for the applications should be determined. If LTE is utilized, considerations should be taken to make the most efficient use of the bandwidth.

◦ Data center design: This topic addresses the connectivity and routing between the WAN Edge routers and the data center WAN transports and LAN backbone.

◦ SD-WAN underlay design: This topic addresses the connectivity and IP routing design for the end-to-end path between WAN Edge router tunnel endpoints.

◦ Firewall considerations: The NAT types for the various sites and what ports need to be opened on the firewalls for SD-WAN required connectivity should be determined.

◦ SD-WAN overlay design: Various aspects of the overlay design should be determined, including the site-ID scheme, control policy, cellular tunnel optimizations, VPN segmentation details, IP unicast and multicast routing, Quality of Service (QoS), and Application-Aware Routing (AAR).
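The transport color scheme and overlay tunnel items in the high-level design lend themselves to a quick calculation. The sketch below counts the tunnels one spoke would build toward a set of hub routers. It rests on several assumptions that are not from this guide: the function name, the color names, and the hub counts are hypothetical, the `restrict` flag models tunnels forming only between matching colors, and underlay reachability and control-policy filtering are ignored.

```python
def tunnels_per_spoke(spoke_colors, hub_routers, restrict=False):
    """Count overlay tunnels one spoke builds toward a set of hub routers.

    spoke_colors: colors configured on the spoke's WAN transports.
    hub_routers:  list of color lists, one list per hub router.
    restrict:     if True, count a tunnel only between matching colors.
    """
    total = 0
    for local in spoke_colors:
        for hub in hub_routers:
            for remote in hub:
                if not restrict or local == remote:
                    total += 1
    return total


# Hypothetical store with two transports and four hub routers,
# each hub terminating the same two colors.
spoke = ["mpls", "biz-internet"]
hubs = [["mpls", "biz-internet"] for _ in range(4)]

print(tunnels_per_spoke(spoke, hubs))                 # 16
print(tunnels_per_spoke(spoke, hubs, restrict=True))  # 8
```

The same function can be run per site type to compare the resulting tunnel counts against the tunnel scale of candidate WAN Edge platforms.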

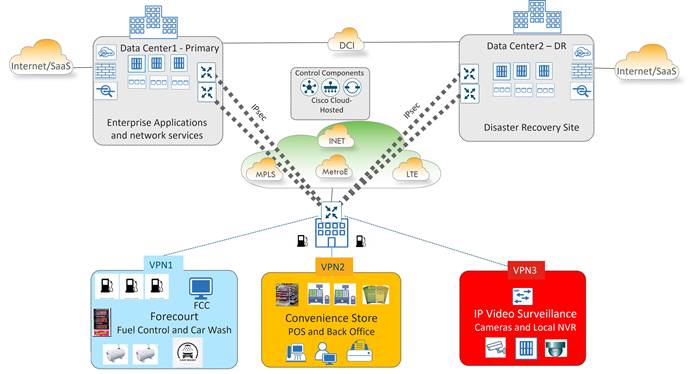

Company Background

American GasCo corporation owns and operates approximately 500 gas station convenience stores in the southeastern region of the US. In addition to selling fuel and car wash services, the company retails food, beverages, tobacco products, periodicals, lottery tickets, and other convenience items. The headquarters location in Atlanta, GA includes the company's primary data center that hosts enterprise services, including outbound access to the Internet for the stores. A backup data center in a colocation facility in Raleigh, NC serves as a disaster recovery site, providing business continuity in the event of a catastrophic outage. Application data stores are replicated nightly from the primary DC to the backup DC over a high bandwidth data center interconnect (DCI) circuit.

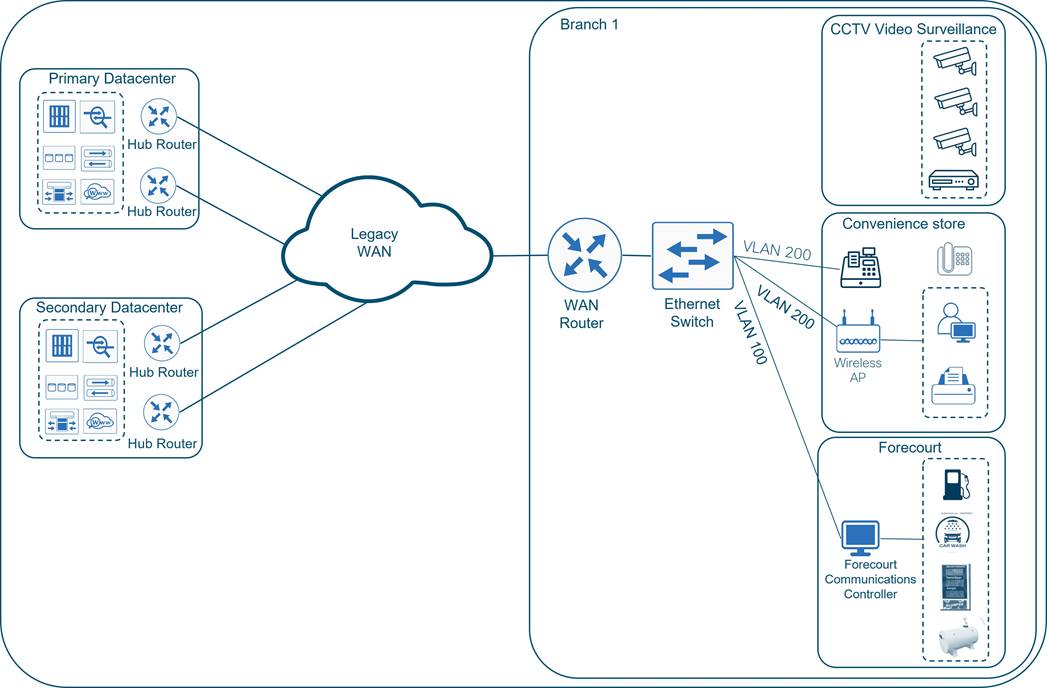

The legacy Wide Area Network (WAN) was built with Cisco routed technologies to securely connect gas station devices and convenience store users to enterprise applications in the data centers and SaaS applications on the Internet. This included a classic MPLS/VPN network and a DMVPN fabric of IPsec tunnels overlaid on top of the Internet service provider transports that extended between a remote site router and pairs of hub routers in each of the data centers. A single Cisco Ethernet switch partitioned into VLANs was installed at the branch to connect remote site operators and devices. The stations rely on three fundamental services:

● Forecourt Controller (FCC) system that controls the fuel dispensers, storage tank controllers, outdoor payment terminals, price poles, and automated car wash devices. American GasCo uses an FCC system with an embedded processor that is reachable over a web server interface for remote monitoring and maintenance.

● Convenience store point-of-sale terminals, back-office computers, and printers.

● Legacy CCTV video surveillance system with in-store monitors and DVR for security monitoring of the convenience store and forecourt areas. It is a self-contained analog system that does not use the IP WAN infrastructure.

Network Modernization Initiative

American GasCo migrated its network to Cisco Catalyst SD-WAN as part of a gas station modernization program that supports multiple IT projects, including:

● Office automation and refresh of back-office computers, fax machines, and printers

● New Point-of-Service (POS) systems for the gas pumps and convenience stores

● Upgraded audio, video, and digital signage systems in the stores and forecourt areas

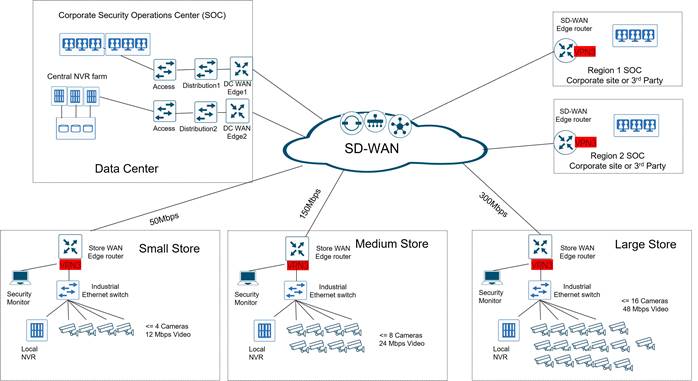

● Replacement of in-store CCTV security systems with a next-generation IP video surveillance system that includes remote monitoring and remote storage of video activity

● Replacement of landline telephones with Cisco IP telephony

● Network equipment refresh to replace end-of-life routers, switches, and wireless access points

All the new initiatives were designed to either increase revenue or decrease store operating costs by improving efficiency. American GasCo understood that WAN availability would be more critical than ever, and that the new IP video surveillance system (in particular) would dramatically increase WAN traffic.

To meet the WAN transport redundancy and capacity demands while avoiding the high cost of provisioning additional MPLS circuits, American GasCo sought to utilize any IP-capable transport available at a store. This included an increased dependence on broadband Internet service delivered through cable or DSL and the introduction of business class Internet and cellular 4G/LTE as additional public WAN transport methods. Private point-to-point circuits and Metro Ethernet services were also planned for store locations where access was available.

American GasCo selected Cisco Catalyst SD-WAN as the replacement for the legacy architecture largely due to the flexibility of the WAN Edge routers in terms of transport connectivity, as well as the application visibility and control that would optimize transport selection for the different applications.

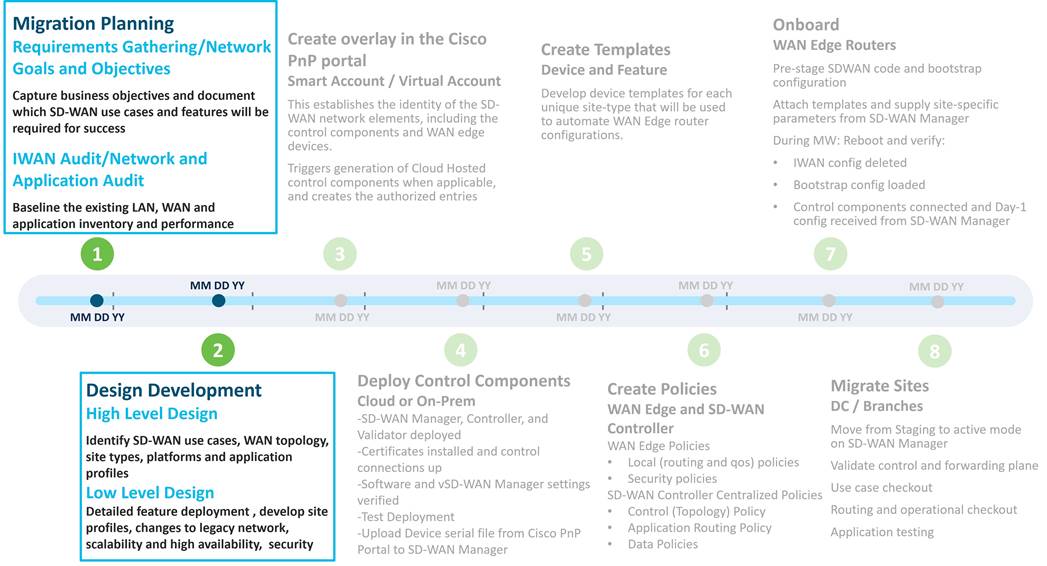

SD-WAN Migration Methodology

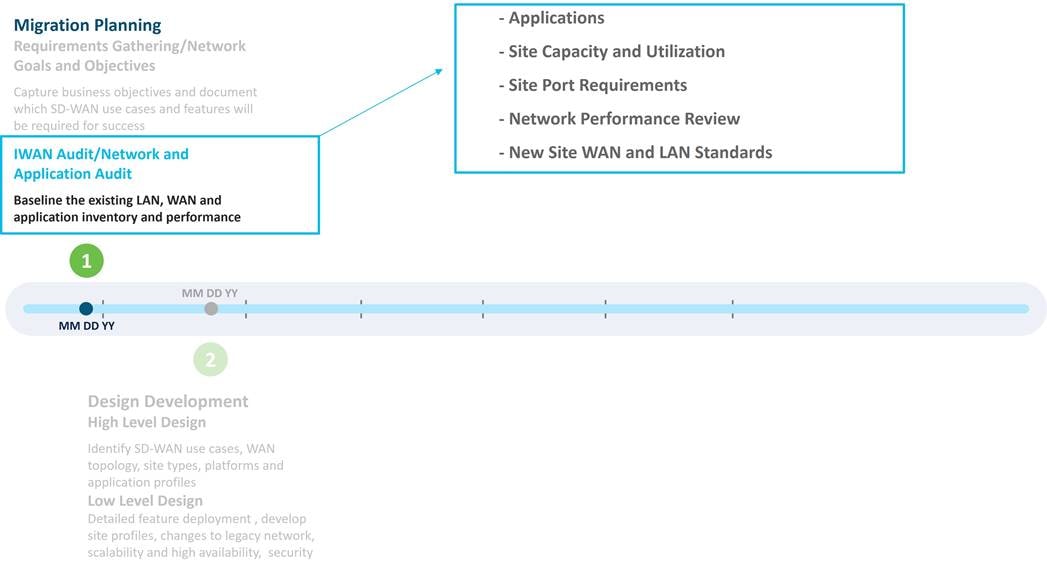

American GasCo followed Cisco’s recommended methodology for SD-WAN design and migration, following the IWAN to Cisco SD-WAN Migration Guide. A summary of the workstreams from this guide is shown below:

American GasCo spent considerable time during the planning and design phases to ensure that the proper platforms, use cases, and features could be thoroughly evaluated. Business requirements were documented, and a baseline of the existing network was taken to understand current LAN/WAN capacity and pain points. This was used as input into the high-level and low-level design documents that were developed and are highlighted in this guide.

The first step in an SD-WAN design is to capture network goals and objectives and then identify the use cases that will be used to satisfy them. These will drive decisions about platform hardware and code selection, transport types, features, configurations, and policies that will be addressed by the design. The following table captures American GasCo's network objectives and SD-WAN use cases planned for deployment.

Table 1. Network Objectives and SD-WAN Use Cases for American GasCo

| SD-WAN Use Cases |

|

| Increase network availability |

Ensure every site has two or more WAN transports capable of handling the full traffic load Leverage cellular at critical sites as a last resort path in case of complete fiber cut of ethernet transports |

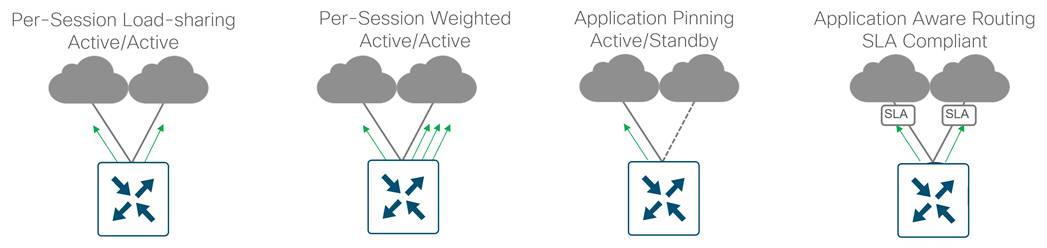

| Improve application performance |

Increase WAN bandwidth at remote sites to reduce congestion Leverage advanced QoS features such as per-tunnel and adaptive QoS to prioritize critical applications Utilize performance monitoring and application-aware routing. Critical applications are routed to the best-performing links |

| Reduce WAN carrier costs |

Leverage Internet and private circuits over MPLS when more bandwidth is required at a site Use application-aware routing and data policies to steer low priority traffic over less expensive links |

| Improve WAN security |

Segment devices, users, and cameras into different VPNs Leverage zero-trust onboarding with certificate-based identity |

| Simplify network operations |

Leverage centralized provisioning, monitoring, and troubleshooting with the SD-WAN Manager Utilize GUI-driven templates and policies for standardized configurations |

Direct Internet Access, SASE/Secure Internet Gateway, and Cloud Networking were all use cases interesting to American GasCo, but it was decided that phase 1 would be limited to deploying a basic SD-WAN secure automated WAN infrastructure. This would allow them to become familiar with operating an SD-WAN overlay and set the foundation for the advanced use cases that would be implemented in future phases.

In this phase, a baseline audit of the applications, site capacity, utilization, and site port requirements was performed, followed by a review of Sev1 tickets and availability reports. New site LAN and WAN standards were then derived to help drive decisions around which platforms would be ordered and how much bandwidth would be necessary for the new SD-WAN deployment.

A telecommunications outsourcing company was contracted by American GasCo to lead the audit and analyze the existing WAN transport performance, capacity, and pricing. Based on the results, the consultant assisted with planning WAN capacity for each site and helped establish the new network requirements. Once completed, the consultant helped evaluate, select, and negotiate contracts with the service providers that would provide new circuits for sites that required additional bandwidth or redundancy, which included alternative bandwidth solutions, such as private Ethernet (P2P, Metro Ethernet), business Internet, and cellular 4G/LTE.

Applications

As a first step, the consultant worked with American GasCo engineers to analyze the current applications and traffic flows. The American GasCo legacy routers were exporting IPFIX and NBAR application data to NetFlow collectors which allowed them to identify traffic patterns of the various applications. The table below represents the top applications in use at the American GasCo store locations.

Table 2. American GasCo Application Audit

| Application | Traffic Patterns | Business Relevance |
| --- | --- | --- |
| Forecourt controller | Store to forecourt servers in DC | Relevant |
| Convenience Store Point of Sales (POS) | Store to POS servers in DC | Relevant |
| | Store to email server in DC | Relevant |
| SNMP, Syslog, SSH | Store to servers in DC | Relevant |
| Facebook, Instagram, Twitter | Store to Internet exit at DC | Irrelevant |
| HTTP/HTTPS | Store to Internet exit at DC | Unknown |
| YouTube | Store to Internet exit at DC | Unknown |
| Wireless CAPWAP tunnels | Store to Wireless LAN controller at DC | Relevant |

Site Capacity and Utilization

WAN circuit capacity and utilization were measured at each store over a period of several weeks to understand the traffic load of the existing applications. Stores were classified into site types based on size and bandwidth requirements as shown in the table below.

Table 3. American GasCo Site Type Inventory and WAN Capacity

| Site Type | Description | Number of Sites | Number of Routers per Site | WAN Transport Circuits | WAN Circuit Capacity | Circuits Exceeding 70% BW Utilization |
| --- | --- | --- | --- | --- | --- | --- |
| Type 1 | Small-size filling stations with outdoor payment terminals, some with a single employee selling convenience items from within a secured enclosure | 147 | 1 | 1x broadband Internet circuit | 5 Mbps | 50 |
| Type 2 | Medium-size filling stations with outdoor payment terminals and a small indoor convenience store | 221 | 1 | 1x broadband Internet circuit | 10 Mbps | 70 |
| Type 3 | Large-size filling stations with outdoor payment terminals and indoor convenience store with limited food-to-go | 120 | 1 | 1x MPLS and 1x broadband Internet circuits | 20 Mbps | 60 |
| Type 4 | Rest stop with outdoor payment terminals and full-service store with an indoor restaurant and wi-fi for guests | 12 | 1 | 2x MPLS circuits | 50 Mbps | 10 |
| Primary data center | Data center server farm to host various applications and Internet access via DC Internet exit. Large on-premise office in the primary DC (HQ – Atlanta) | 1 | 2 | 2x MPLS and 2x broadband Internet circuits (1 each per router) | 2x400 Mbps | 1 |
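To compare congestion across site types, the Table 3 counts can be turned into a share of circuits above the 70% threshold. The sketch below is a rough illustration only: it assumes the last column counts individual circuits rather than sites, and it derives circuits per site from the "WAN Transport Circuits" column. The function and variable names are hypothetical.

```python
# (site type, number of sites, circuits per site, circuits above 70% utilization)
# Values are taken from Table 3; circuits per site is read from the
# "WAN Transport Circuits" column.
AUDIT = [
    ("Type 1", 147, 1, 50),
    ("Type 2", 221, 1, 70),
    ("Type 3", 120, 2, 60),
    ("Type 4", 12, 2, 10),
    ("Primary data center", 1, 4, 1),
]


def congested_share(sites, circuits_per_site, congested):
    """Return the fraction of a site type's circuits above the 70% threshold."""
    return congested / (sites * circuits_per_site)


for name, sites, per_site, congested in AUDIT:
    share = congested_share(sites, per_site, congested)
    print(f"{name}: {congested}/{sites * per_site} circuits ({share:.0%})")
```

If the column instead counts sites with at least one congested circuit, the denominator would be the number of sites and the Type 3 and Type 4 shares would be correspondingly higher.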

Site Port Requirements

The consultant then worked with the store owners to obtain a count of active connections on the Ethernet switches at each site to help determine whether American GasCo could consolidate the switching functions into a WAN Edge router with a Layer 2 switch module. The switch port audit included a count of ports providing Power over Ethernet (PoE) capabilities to devices such as Wi-Fi access points or IP phones that may have been installed at certain stores. The data center sites were not included in this audit since American GasCo had a traditional DC design with distinct core, distribution, and access layer devices.

The audit found a mix of Fast Ethernet (100 Mbps) and Gigabit Ethernet (1000 Mbps) connections on the switches, but no PoE capabilities.

Table 4. American GasCo Store Ethernet Port Counts

| Site Type | Ethernet LAN Ports in Use |
| --- | --- |
| Type 1 | 1-2 |
| Type 2 | 1-4 |
| Type 3 | 3-5 |
| Type 4 | 4-8 |

Network Performance Review

Finally, a review of the help-desk tickets and service provider outage reports was conducted to gain an understanding of the overall network stability and performance at the different sites. The following conclusions were drawn:

● Most major outages were due to circuit instabilities, primarily at sites with single WAN connections.

● Router crashes or failures were infrequent and not considered to be a factor.

● Network performance slowdowns were common at the type 1 and type 2 sites having single broadband Internet circuits, and further examination of the interface queues indicated a large number of packet drops, suggesting network congestion to be the cause.

● It was noted that type 3 sites with dual WAN connections were sending and receiving traffic only on the MPLS link, leaving the Internet link largely unused. This was attributed to an active/backup routing design that preferred the BGP routes learned from the MPLS providers over the EIGRP routes learned over the DMVPN tunnels on the Internet transports.
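The active/backup behavior in the last conclusion is what one would expect if route selection fell back to protocol preference. The sketch below illustrates that idea using the Cisco IOS default administrative distances. The guide does not state that administrative distance was the deciding mechanism, so that is an assumption, and the prefix and next-hop names are hypothetical.

```python
# Cisco IOS default administrative distances (lower is preferred).
DEFAULT_AD = {"ebgp": 20, "eigrp-internal": 90, "eigrp-external": 170, "ibgp": 200}


def best_route(candidates):
    """Pick the candidate whose source protocol has the lowest distance."""
    return min(candidates, key=lambda route: DEFAULT_AD[route["protocol"]])


# Hypothetical: the same data center prefix learned over both transports.
candidates = [
    {"prefix": "10.0.0.0/8", "protocol": "eigrp-internal", "via": "dmvpn-tunnel"},
    {"prefix": "10.0.0.0/8", "protocol": "ebgp", "via": "mpls-pe"},
]

print(best_route(candidates)["via"])  # mpls-pe
```

With only one winner installed per prefix, the Internet path carries traffic only when the MPLS-learned route disappears, which matches the idle Internet links observed at the type 3 sites.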

New Site Type WAN and LAN Standards

Once the audit of the existing network was complete, future requirements were considered based on the modernization projects along with future gas station growth. First, the traffic patterns and business relevance of the future applications were captured:

Table 5. Future Applications

| Application | Traffic Patterns | Business Relevance |
| --- | --- | --- |
| IP Video Surveillance | Store to storage and monitoring systems in DC | Relevant |
| IP Telephony | Store to CCM/SIP servers in DC (VoIP signaling); Store to Data center NOC IP telephony (VoIP bearer); Store to off-net destinations via VoIP gateway in DC (VoIP bearer) | Relevant |
| Office 365 | Store to Internet SaaS sites via DC Internet exit | Relevant |

An estimate of the additional bandwidth required to support the new IP video surveillance, IP telephony, Office 365, and other applications was added to the site baseline to create new WAN standards for each branch. Additional Ethernet and PoE port requirements were determined based on the new applications to create new LAN standards. Bandwidth for the data center was calculated based on the existing audit of applications and the additional load expected from the new applications. N+1 bandwidth redundancy was planned for the data center, so that each WAN Edge router could support the full site bandwidth if the other WAN Edge router lost power or became unavailable for some other reason. The new site type WAN and LAN standards would be the basis for hardware platform selections and could also be used by the telecommunications service providers for pricing estimates.
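The N+1 sizing rule described above reduces to simple arithmetic: the routers that survive a failure must carry the whole site load between them. A minimal sketch follows, with a hypothetical function name and an illustrative load figure that is not taken from the American GasCo design.

```python
def per_router_capacity(site_bandwidth_mbps, routers, tolerated_failures=1):
    """Bandwidth each router must carry so the site survives the given failures."""
    survivors = routers - tolerated_failures
    if survivors < 1:
        raise ValueError("not enough routers to tolerate that many failures")
    return site_bandwidth_mbps / survivors


# Hypothetical site load of 8000 Mbps shared by two data center WAN Edge routers.
print(per_router_capacity(8000, routers=2))  # 8000.0
```

With two routers and one tolerated failure, each router must be sized for the full site bandwidth, which is the condition stated in the paragraph above.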

Table 6. American GasCo New Site Type WAN and LAN Standards

| Site Type | New Site Bandwidth Specification | New WAN Transport Standard | New Ethernet LAN Port Specification | PoE / PoE+ Ports Required? |
| --- | --- | --- | --- | --- |
| Type 1 | Up to 50 Mbps | Single Ethernet WAN transport (Metro Ethernet/MPLS/Broadband Internet) + active “always-on” LTE | Up to 4 | Up to 2 PoE |
| Type 2 | Up to 150 Mbps | Dual Ethernet WAN transports (Metro Ethernet/MPLS + Broadband Internet) | Up to 8 | Up to 2 PoE |
| Type 3 | Up to 300 Mbps | Dual Ethernet WAN transports (Metro Ethernet/MPLS/Business Internet + Broadband Internet) with LTE for backup | Up to 8 | Up to 4 PoE |
| Type 4 | Up to 500 Mbps | Dual Ethernet WAN transports (Metro Ethernet/MPLS + Business Internet) with LTE for backup | Up to 16 | Up to 4 PoE |
| Data center | Up to 2x10 Gbps | Multiple incoming WAN transports (Metro Ethernet + MPLS + Business Internet) | N/A | N/A |

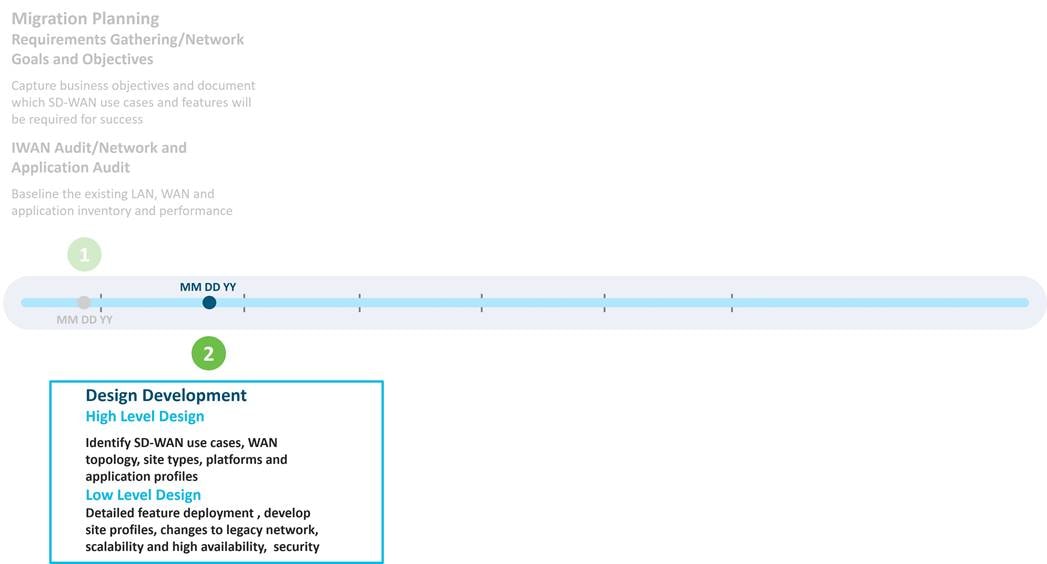

SD-WAN design development often includes creation of High-Level Design (HLD) and Low-Level Design (LLD) deliverables during different phases of the project. The HLD is crucial at the start of a project, when budget is allocated and orders for hardware, software, licenses, control components, WAN transport circuits, and tools must be placed. Some of these items may take months of lead time, particularly when site construction is required to bring in new transports or manufacturing backlogs delay hardware. The SD-WAN high-level design should be lean, easy to read, and clearly document the project scope. The HLD should include the number of sites, number of WAN Edge routers per site, number of transports, planned use cases and features, platform selection, and control component deployment model.

The low-level design includes all the specifics of how the device configurations and policies will be implemented, with an emphasis on connectivity, fabric and site routing, and advanced features that enhance the application experience. The low-level design is where migration considerations, site onboarding, and integration with management tools and operational processes are addressed.

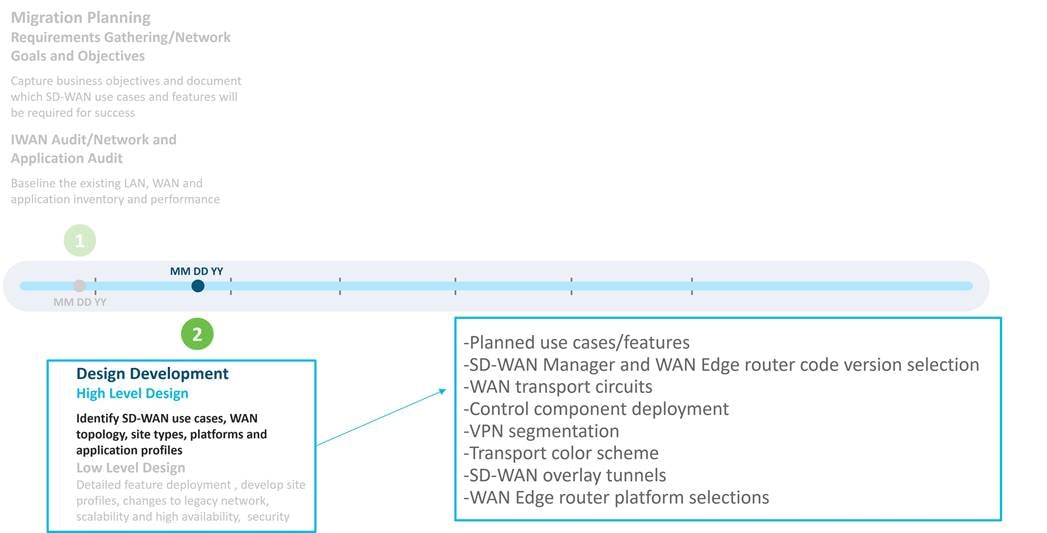

A high-level design was developed by American GasCo to document key decisions around the main elements of the SD-WAN deployment, which includes the following topics:

● Planned use cases/features: the use cases and features that will be implemented in the first phase of the SD-WAN deployment

● SD-WAN Manager and WAN Edge router code version selection: the code version targeted for the SD-WAN devices during the initial SD-WAN deployment

● WAN transport circuits: which WAN transport circuits will be used in the deployment

● Control component deployment: which control component deployment model will be used

● VPN segmentation: what VPNs will be used in the new design

● Transport color scheme: what colors are assigned to the transports in the SD-WAN overlay

● SD-WAN overlay tunnels: calculating the SD-WAN tunnel requirements

● WAN Edge router platform selections: what SD-WAN router platforms are chosen for the different site types in the deployment

Planned Use Cases/Features

For American GasCo, the SD-WAN phase 1 deployment would include the following use cases and features:

● Secure automated WAN

◦ Introduction of Business Internet and Metro Ethernet WAN transport circuits

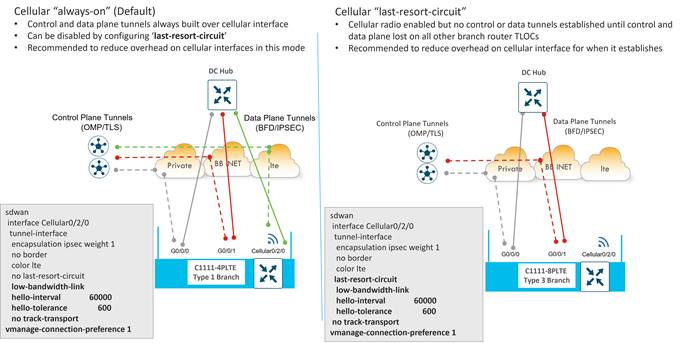

◦ LTE as an “always-on” circuit at some site types and a circuit of last resort at others.

◦ Hub-and-spoke SD-WAN tunnel topology

◦ VPN segmentation in the stores and data centers

◦ IP Multicast

● Application performance optimization

◦ Application visibility

◦ Application-Aware Routing

◦ Quality of Service (QoS)

SD-WAN Manager and WAN Edge Router Code Version Selection

Based on the planned use cases, American GasCo needed to choose an SD-WAN Manager and WAN Edge router code version to deploy. This is defined in the high-level design because the code selection may affect what is supported from a control plane scale perspective and may change the design depending on the features and use cases that are supported.

There are several documentation references to use for choosing the most appropriate software:

● The software release notes are useful for checking on supported features and caveats.

● The SD-WAN device compatibility sheet shows which code versions support which hardware platforms.

● The control components compatibility matrix shows which control component code versions are compatible with which WAN Edge router versions.

● The recommended SD-WAN software versions for control components and WAN Edge routers guide provides guidance to customers on the Cisco Catalyst SD-WAN software lifecycle to assist in code choices. The general idea is to run a release marked as a recommended release under the software downloads page, but if features are needed in higher versions of code, try to stick to extended maintenance releases which have a longer support lifetime. Examples include 20.6/17.6, 20.9/17.9, and 20.12/17.12 (control components image version/IOS XE SD-WAN image version).

American GasCo chose 20.6.3 for the control components and 17.6.3a for the IOS XE SD-WAN routers, since it is an extended maintenance release image, and it is currently on its 3rd maintenance release. By the time American GasCo would be ready to go into production, the code would have had over a year’s worth of field exposure. American GasCo was comfortable with this choice.

20.6.3/17.6.3a also supports the platforms American GasCo would be interested in along with the basic features they are interested in deploying. American GasCo is also interested in features they could deploy in the future which are introduced in the 20.6/17.6 release:

● Quick Connect Workflow for onboarding Cisco IOS XE SD-WAN devices

● Cisco Catalyst SD-WAN EtherChannel support on the service-side VPNs (for the data center hub routers)

● Per-VPN QoS, where you can configure a QoS policy to limit the bandwidth that can be used by traffic belonging to a VPN or group of VPNs

WAN Transport Circuits

WAN performance and overall user experience are highly dependent on the last-mile circuit that connects the WAN to end users and devices at the business location. Cisco Catalyst SD-WAN features such as Application-Aware Routing (AAR), Forward Error Correction (FEC), and TCP Optimization can address transient impairments on the end-to-end WAN path, but they are no substitute for stable last-mile circuits provisioned with adequate bandwidth to handle the traffic loads that occur during peak periods of usage. In other words, having a robust and stable underlay with plenty of bandwidth is of the utmost importance.

As a result of the network audit, it was determined that a lack of circuit redundancy and bandwidth congestion on the existing transport circuits were the major sources of issues on the WAN. American GasCo contracted with several new WAN service providers to provide new transports to augment the site MPLS and broadband Internet circuits that provided transport for the legacy WAN. In addition to MPLS and broadband Internet, they decided to augment with the following transport types:

● Dedicated Internet circuits: Business-class Internet circuits with SLA guarantees for upload and download symmetrical bandwidth. Provisioned at data centers and larger remote sites as a substitute for public, broadband Internet circuits with no bandwidth SLA.

● Metro Ethernet: Ethernet Private Line and Virtual Private Line services. Deployed at data centers and remote sites located in metropolitan areas where service is available as higher bandwidth/lower cost alternative to MPLS.

● Cellular 4G/LTE: Used at small locations having no access to leased circuits or as a secondary, always-on connection. Also used at larger sites as a backup circuit to provide business continuity during failure of leased circuits.

Control Component Deployment

The SD-WAN Manager, SD-WAN Controller, and SD-WAN Validator are deployed as virtual machines on server hardware located on-premise or in a cloud service provider. The high-level design should address the number of control component instances required for scale and redundancy, the amount of compute and storage needed for each VM, where the control components should be hosted, and who should be responsible for deployment and operations. There are three common control component deployment models:

● Cisco cloud-hosted: In this model, control components are deployed in AWS or Azure. Cisco takes care of provisioning the control components and meeting requirements for scale and redundancy. Cisco is responsible for backups/snapshots and disaster recovery and will scale out the control component deployment as the network grows. The customer is given access to the SD-WAN Manager for creating configuration templates and control and data policies for their devices.

● On-premise: In this model, control components are deployed on the customer premises in a data center or regional hub, or in a private/public cloud or co-location facility. The customer is responsible for the server hardware, provisioning the control components, and all maintenance and monitoring activities.

● Managed Service Provider (MSP): In this model, the control components are hosted by the service provider in a private or public cloud and the provider is responsible for backups and disaster recovery.

American GasCo evaluated the pros and cons of each of the three deployment models for control components.

Table 7. Pros and Cons for the Different Control Component Deployment Models

| Deployment Model | Pros | Cons |
| --- | --- | --- |
| Cisco cloud-hosted control components | Rapid deployment of control components in AWS or Azure by Cisco Cloud operations; Cisco responsible for deployment, monitoring, backup and restore functions of the control component infrastructure; Cisco proactively monitors capacity and can expand resources quickly as the network grows | Cisco Cloud Ops is not responsible for monitoring the Cisco Catalyst SD-WAN overlay services |
| On-premise in a private cloud or data center owned by the organization | Customer has complete control of the physical servers and storage environments; Sometimes required by customers with strict security policies that prohibit cloud deployments of network infrastructure | Time to deploy typically longer than cloud-hosted; Cisco does not have visibility to control components for monitoring and troubleshooting; Must plan carefully for growth upfront, as it is more difficult to upgrade physical resources later as the network grows |
| Managed Service Provider (MSP) or partner-hosted cloud | Widely varies depending on the specific MSP’s control components hosting methods and operations | Widely varies depending on the specific MSP’s control components hosting methods and operations; Additional costs may be involved compared to Cisco cloud-hosted option |

American GasCo chose the Cisco CloudOps-managed, cloud-hosted option to accelerate their deployment and avoid the operational burden of ongoing monitoring and maintenance of the control components hardware and infrastructure software.

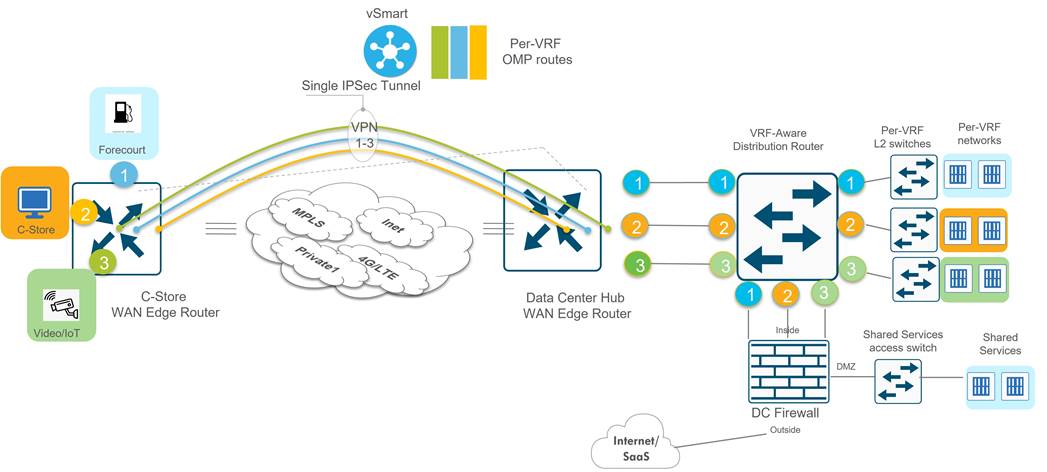

VPN Segmentation

American GasCo security policy mandates video surveillance traffic be kept separate from convenience store and forecourt point-of-sales applications. End-to-end VPN segmentation within the store and across the SD-WAN to the data center was planned to completely isolate each of the following VPNs:

Table 8. American GasCo VPN Definitions

| VPN | Purpose |
| --- | --- |
| VPN 1 | Forecourt applications |
| VPN 2 | Convenience store applications |
| VPN 3 | Video surveillance |

Transport Color Scheme

SD-WAN color attributes are the means by which the SD-WAN infrastructure maps the overlay tunnels to the underlying transport circuits that are terminated on WAN Edge router TLOC interfaces. Color attributes are a key component of centralized control policies that dictate the degree of tunnel meshing and should be worked out in the high-level design.

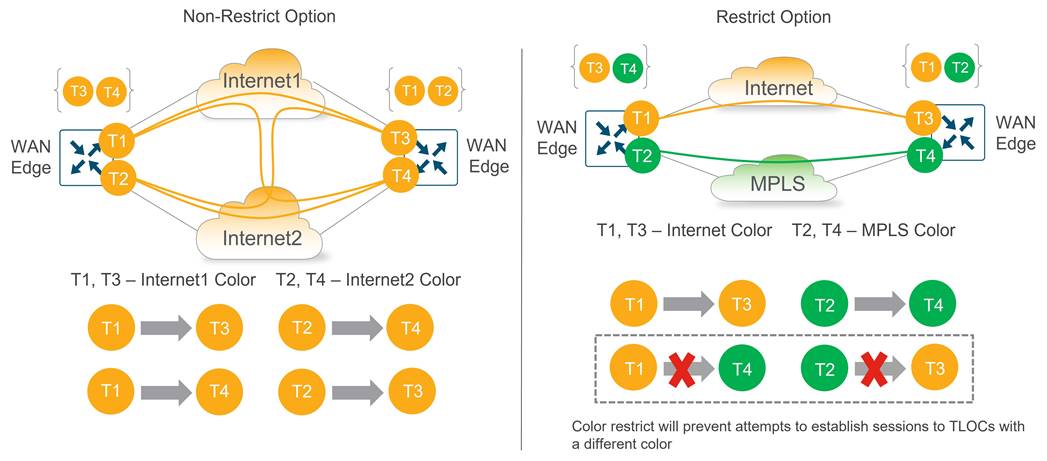

Color Restrict

By default, a WAN Edge router will attempt to create tunnels to every remote TLOC it discovers from OMP during initial onboarding. Tunnel setup to every remote TLOC is attempted over each local WAN transport, including TLOCs that belong to other transports marked with different colors. This is useful when using a public network underlay comprised of different ISPs, but often undesirable when there is a mix of public and private transports with no interconnection points.

To prevent this behavior, a restrict keyword can be specified when defining the tunnel characteristics. This prevents attempts to establish BFD sessions to TLOCs with different colors and is commonly used to limit the number of tunnels and associated overhead on the WAN transport circuits.

| Without any tunnel restriction in the network, BFD sessions and secure IPsec tunnels are formed between all TLOCs. This can substantially increase the number of tunnels in the network, which can be a factor in the ability to scale the network depending on the WAN Edge platforms used. It can give you more paths between TLOCs which could translate into more available paths that meet SLA thresholds when out-of-threshold events occur, but at the expense of more tunnels to manage and troubleshoot if problems do occur. |

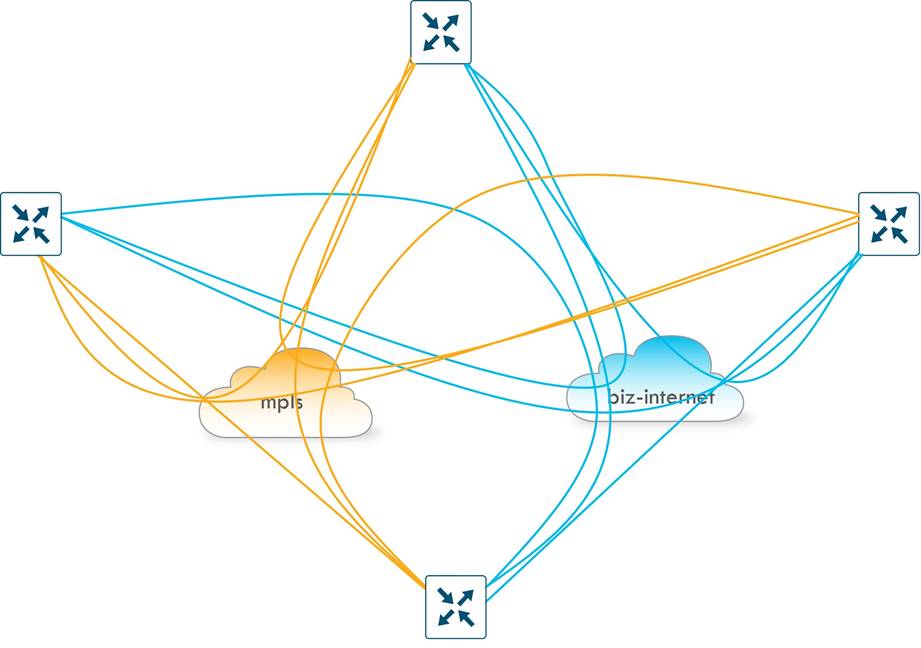

The following diagram shows the non-restrict (default) option as compared to the restrict option. The non-restrict option results in twice as many tunnels between the two WAN Edge routers with two transports involved as shown in the diagram below.

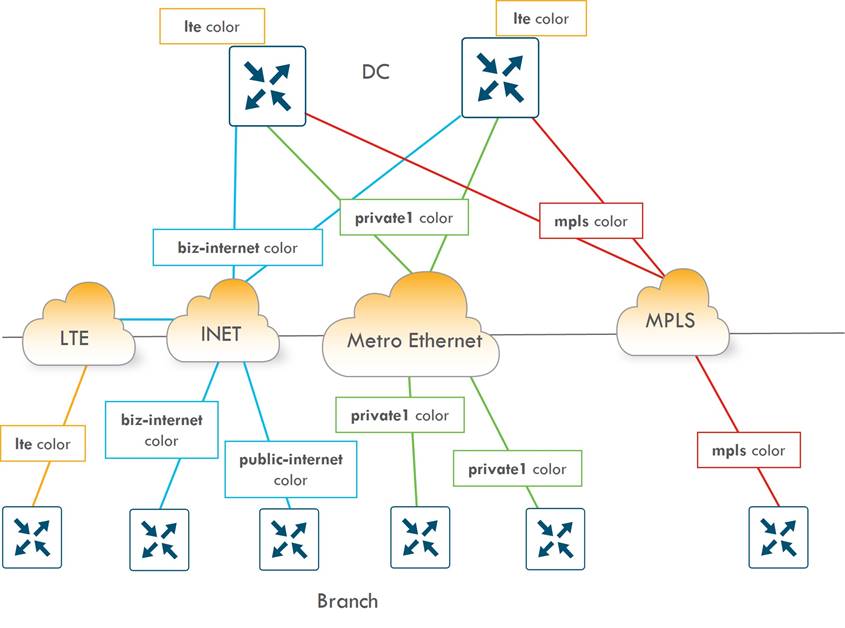

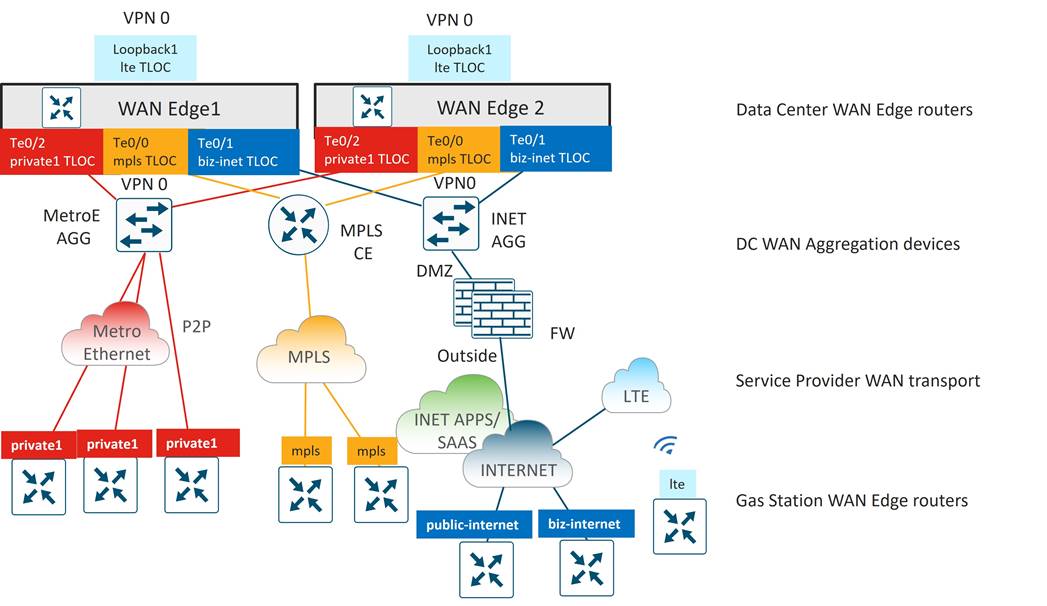

American GasCo Transport Color Scheme

A transport color scheme was developed for American GasCo. On the branch side, three distinct transport colors (public-internet, biz-internet, and lte) were specified for broadband Internet, business class Internet, and cellular 4G/LTE transports, respectively. The private1 color was specified for point-to-point Ethernet or Metro Ethernet transports, and the mpls color was associated with MPLS/VPN transport.

In the datacenter, all Metro Ethernet connections are aggregated into a layer 3 switch, and the connections to this switch correspond to the private1 color. The MPLS circuit uses the mpls color, and the business Internet connection uses the biz-internet color. Any broadband or business Internet or LTE circuit in the branches connects to the Internet transport in the datacenter.

Since LTE is a metered link, it is desirable to implement traffic optimizations, or SD-WAN overhead reductions, such as reduced BFD and OMP intervals. These optimizations are covered later in this case study. Since LTE optimizations are deployed on the branches, an LTE color should be present in the datacenter so the LTE configuration can be tweaked on both ends of the tunnel. If not, the LTE optimizations cannot be fully realized. This can be accomplished by configuring a tunnel with the lte color on a loopback interface on the datacenter hub routers. The tunnel can still use the Internet link as underlay. Only the private1, mpls, and lte colors are configured with the restrict option.

Table 9. American GasCo Transport Color Scheme

| Transport Type | Branch Transport Color | Data Center Transport Color | Restrict? |
| --- | --- | --- | --- |
| Metro Ethernet | private1 | private1 | Y |
| MPLS | mpls | mpls | Y |
| Cellular 4G/LTE | lte | lte (TLOC is a loopback interface on the DC WAN Edge router) | Y |
| Broadband Internet | public-internet | biz-internet | N |
| Business Internet | biz-internet | biz-internet | N |
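The effect of the restrict option on the color scheme in Table 9 can be sketched in a few lines of Python. This is a simplified model rather than the actual WAN Edge implementation: it assumes a tunnel is attempted when the two TLOC colors match, or when neither TLOC is marked restrict, and it ignores control policy filtering and underlay reachability. The `Tloc` class and `tunnel_attempted` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tloc:
    """A transport locator reduced to the two attributes modeled here."""
    color: str
    restrict: bool


# Restrict settings taken from Table 9 (Y -> True, N -> False).
RESTRICT_BY_COLOR = {
    "private1": True,
    "mpls": True,
    "lte": True,
    "public-internet": False,
    "biz-internet": False,
}


def make_tloc(color: str) -> Tloc:
    """Build a Tloc using the restrict setting from Table 9."""
    return Tloc(color=color, restrict=RESTRICT_BY_COLOR[color])


def tunnel_attempted(local: Tloc, remote: Tloc) -> bool:
    """Simplified rule: same color always qualifies; different colors
    qualify only when neither side is configured with restrict."""
    if local.color == remote.color:
        return True
    return not (local.restrict or remote.restrict)


if __name__ == "__main__":
    branch = make_tloc("public-internet")
    for dc_color in ("private1", "mpls", "lte", "biz-internet"):
        dc = make_tloc(dc_color)
        print(f"{branch.color} -> {dc.color}: {tunnel_attempted(branch, dc)}")
```

Under this model, a branch broadband Internet TLOC (public-internet) pairs only with the data center biz-internet TLOC, which matches the last two rows of Table 9.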

The following diagram shows the connection between the physical links and logical colors between the branches and data center:

SD-WAN Overlay Tunnels

An important consideration to factor into WAN Edge platform selection is how many IPsec tunnels are generated to the other WAN Edge routers on the fabric. Cisco Catalyst SD-WAN uses BFD to check path availability and measure performance across every IPsec tunnel, a process that can be CPU-intensive and can affect stability of the platform as the IPsec tunnel limits are reached.

By default, during bring-up, a Cisco WAN Edge router attempts to form encrypted BFD sessions to every other WAN Edge TLOC in the network unless a control policy is in place to filter TLOCs and/or routes. Assuming the restrict option is used, a full mesh of tunnels across each transport is the result, and the number of tunnels on a single WAN Edge router can be calculated with the formula (X-1) * Y, where X represents the total number of WAN Edge routers on the network and Y represents the number of transports.

The following shows 4 WAN Edge routers and 2 transports. With the restrict option, the total number of tunnels per WAN Edge router is (4-1) * 2 = 6. The number of tunnels doubles if the tunnels are not using the restrict option, and with additional transports, the number of tunnels would continue to multiply.
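As a quick check of the formula, the following Python sketch computes the per-router tunnel count for a full mesh. It assumes every router has the same number of transports and that no control policy filters TLOCs. The non-restrict case is modeled as every local TLOC building a tunnel to every remote TLOC, which is an interpretation of the behavior described above; the function name is hypothetical.

```python
def tunnels_per_router(total_routers: int, transports: int, restrict: bool = True) -> int:
    """Estimate the full-mesh IPsec tunnels terminated on one WAN Edge router.

    With restrict, each local TLOC pairs only with the same-color TLOC on each
    remote router: (X - 1) * Y.
    Without restrict (assumed model), each local TLOC pairs with every TLOC on
    each remote router: (X - 1) * Y * Y.
    """
    if total_routers < 1 or transports < 1:
        raise ValueError("total_routers and transports must be positive")
    peers = total_routers - 1
    if restrict:
        return peers * transports
    return peers * transports * transports


if __name__ == "__main__":
    print(tunnels_per_router(4, 2))                  # restrict: (4-1) * 2
    print(tunnels_per_router(4, 2, restrict=False))  # non-restrict: (4-1) * 2 * 2
```

For 4 routers and 2 transports this gives 6 tunnels with restrict and 12 without, matching the doubling described above.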

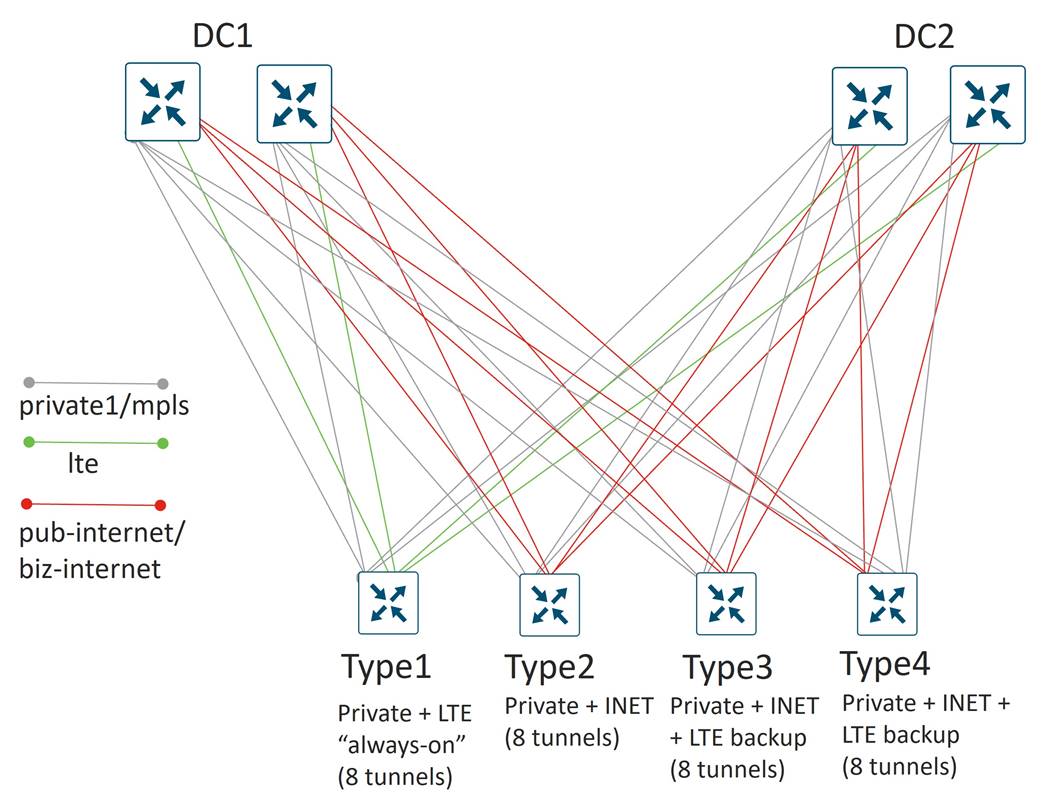

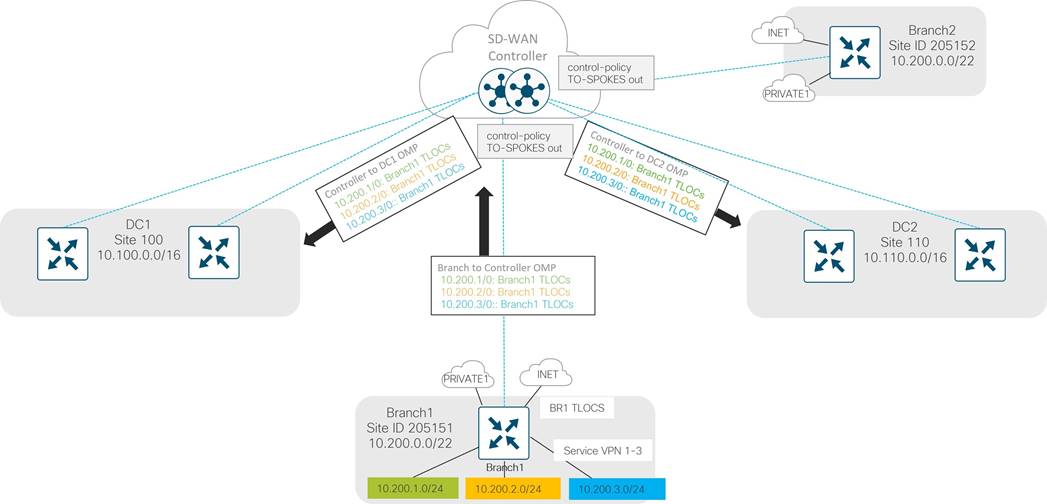

American GasCo Tunnel Topology

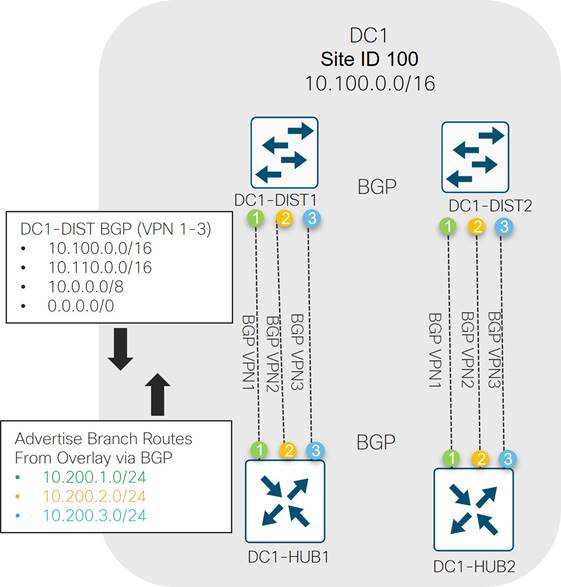

A 500-router network like American GasCo with an average of 2 transports connected on each router with the restrict option enabled would result in (500-1) * 2, or 998 IPsec tunnels created on each router. The number would double to 1996 if the 2 WAN transports were interconnected (as in dual Internet with the non-restrict option) as the local WAN Edge will attempt to build tunnels across every local TLOC to every remote TLOC.

The American GasCo traffic analysis indicated that all traffic flows were “North-South” (branch to data center) and that no “East-West” (branch to branch) traffic existed. As with most small branch deployments, a hub-and-spoke topology could be utilized, which greatly reduced the amount of tunnel state that needed to be kept by the WAN Edge routers and the SD-WAN Manager for statistics aggregation. This allowed American GasCo to leverage lower-end WAN Edge router platforms for the branch deployments.

| Dynamic, on-demand tunnels can be leveraged as an alternative to a full tunnel mesh for deployments that need direct branch-to-branch communication for optimized delivery of East-West traffic (like VoIP). Dynamic, on-demand tunnels were introduced starting in SD-WAN Manager version 20.3/IOS XE version 17.3.1a and require only static tunnels to a hub and backup hub. Tunnels between spokes are dynamically allocated when traffic is required between those spokes, so there is not a need to purchase platforms based on full-mesh capabilities if direct spoke-to-spoke traffic is required. |

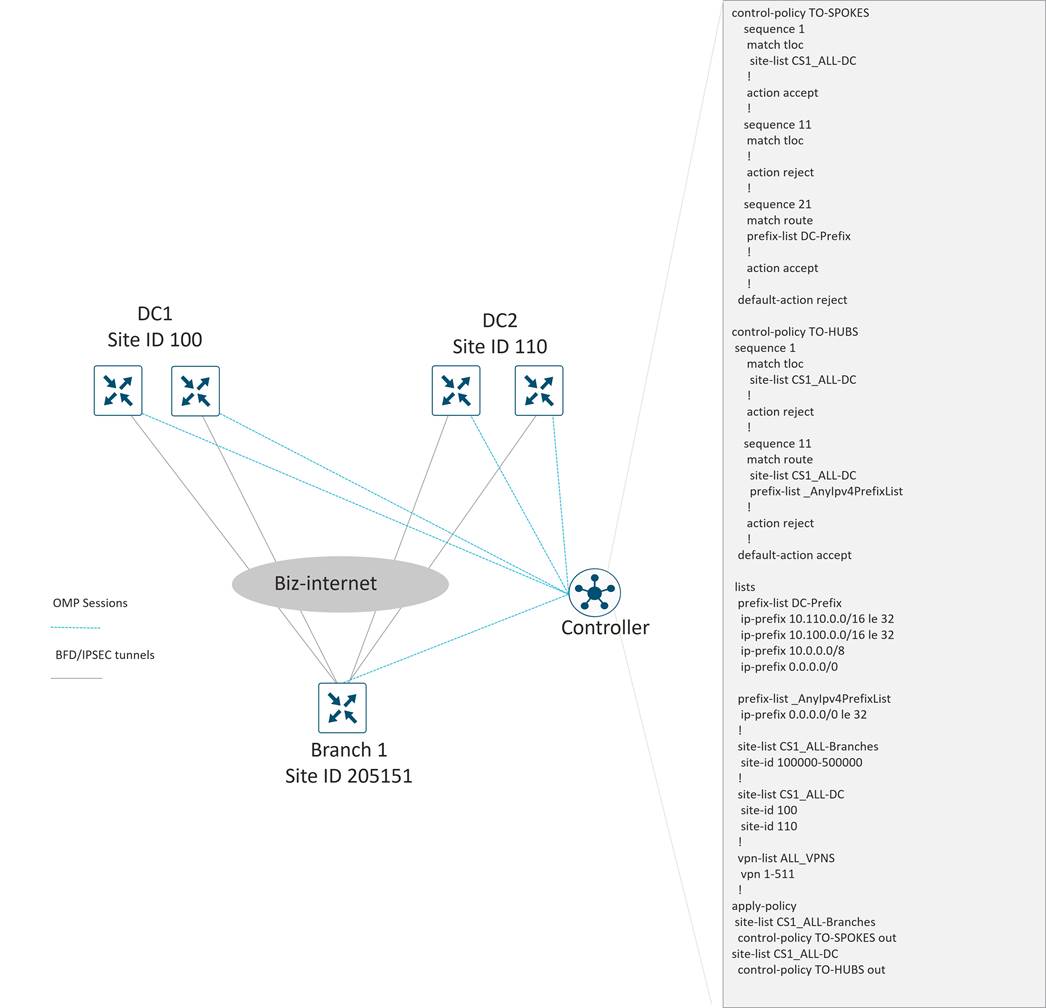

American GasCo Hub-and-Spoke Topology

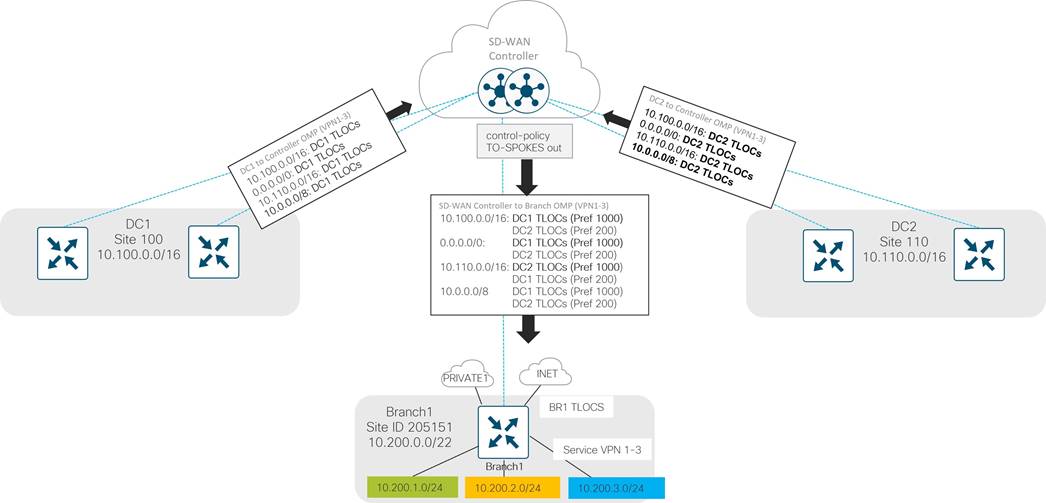

American GasCo decided to implement a hub-and-spoke topology, which is configured using a SD-WAN Controller centralized control policy:

This reduces the number of tunnels down to 4 tunnels per WAN transport on the branch routers (1 tunnel to each hub WAN Edge router in each DC). For type 1 and 2 branches with 2 transports, each then only needs to support 8 tunnels total. On types 3 and 4 branches, the LTE configured as a backup does not activate until the other 2 TLOCs at the site lose their BFD sessions, so these branches only need to support 8 tunnels at one time as well.

Each hub router terminates a tunnel over each transport from each branch. Since there are 2 active transports from each branch, each hub router needs to terminate 2 * 500 spokes, or 1000 tunnels, which is well within the limits of any recommended Cisco Catalyst SD-WAN hub router platform.
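The hub-and-spoke arithmetic above can be reproduced with a short Python sketch. It assumes the topology described in this case study (2 data centers, 2 hub routers per data center, 2 active transports per branch, 500 branches) and that every branch builds one tunnel per active transport to every hub router. The function names are hypothetical.

```python
def branch_tunnels(data_centers: int, hubs_per_dc: int, active_transports: int) -> int:
    """Tunnels a branch router maintains: one per hub router per active transport."""
    return data_centers * hubs_per_dc * active_transports


def hub_tunnels(branches: int, active_transports_per_branch: int) -> int:
    """Tunnels a single hub router terminates: one per active transport per branch."""
    return branches * active_transports_per_branch


if __name__ == "__main__":
    print("Per branch:", branch_tunnels(data_centers=2, hubs_per_dc=2, active_transports=2))
    print("Per hub:", hub_tunnels(branches=500, active_transports_per_branch=2))
```

With these inputs the sketch returns 8 tunnels per branch router and 1000 tunnels per hub router, in line with the figures above.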

WAN Edge Platform Selection

Several factors must be considered, and several questions should be asked when choosing the appropriate WAN Edge platform for a site. Some of these questions include:

● Is a hardware-based or software WAN Edge router on a virtualization platform desired?

● Are redundant WAN Edge routers or components (power supplies, fans) necessary to meet availability targets?

● How many IPsec tunnels will be terminated?

● How much IPsec throughput is required? Throughput performance on branch platforms will vary based on application packet sizes and whether features such as QoS, NAT, Network Based Application Recognition (NBAR), Flexible NetFlow, zone-based firewall, IPS/IDS, and AMP are enabled.

● How many ports are needed to connect to the WAN providers and what are the interface types required?

● Will the router need LAN switch network interface modules to connect users or devices directly, or will LAN functions be handled by an external, standalone switch?

● Is PoE or PoE+ support required for endpoints connecting to LAN switch network modules?

● Will the WAN Edge need to provide advanced branch features such as voice or on-prem security or need to support host compute modules for local virtual machines?

● Are service containers needed for use cases such as SD-WAN embedded security appliances or ThousandEyes monitoring?

American GasCo used the Cisco Enterprise router selector tool to narrow their choices for SD-WAN platforms by providing information about their deployment collected during the network audit. This helped develop a short list of SD-WAN platforms that could be reviewed with their partner and Cisco account team with the following considerations:

● American GasCo decided to limit the Phase1 use cases to basic SD-WAN site-to-site connectivity, with no immediate plans for Direct Internet Access, SASE integration, or multi-cloud optimizations. Platform selection would consider these features as potential use cases for future phases.

● Branch sites would be deployed with a single WAN Edge router. Dual routers were deemed unnecessary for phase1 based on the reliability history of the ISR routers in the DMVPN deployment.

● WAN Edge routers for site types 2-4 were ordered with at least 8GB of memory to support advanced security features should they be required in future phases.

● WAN Edge routers with integrated Gigabit Ethernet LAN switch network interface modules (NIM) were ordered so that external, standalone ethernet switches could be removed.

● Existing ISR 4451-X routers that were recently purchased for the type 4 sites as DMVPN routers would be re-used and migrated to SD-WAN Edge routers.

● Cellular 4G/LTE modules are required for site types requiring LTE as an always-on or backup transport.

● There were no requirements for compute blades such as UCS-E modules.

● No integrated Wi-Fi modules were required since external APs will provide this function.

● There were no immediate plans for cellular/5G since most locations were in areas with no coverage.

● No T1 or SONET interfaces were required.

The following table details the American GasCo WAN Edge platform selections:

Table 10. American GasCo WAN Edge Platform Selections

| American GasCo Site Type | Site Type Specs | WAN Edge Router | Memory | WAN ports | Cellular Ports | Switch ports |
| --- | --- | --- | --- | --- | --- | --- |
| Type 1 | Up to 50 Mbps; 1 GE + Cellular WAN; 4 Switchports; No advanced security required | C1111-4PLTEEA (Fixed Enterprise branch router, 1RU with PoE power modules) | 4G | 1 x GE | Integrated | 4 |
| Type 2 | Up to 150 Mbps; 2 x GE WAN; 8 Switchports; Advanced security features possible in future phase | C1111X-8P (Fixed Enterprise branch router, 1RU with PoE power modules) | 8G | 2 x 1 GE | N/A | 8 |
| Type 3 | Up to 300 Mbps; 2 x GE + Cellular WAN; 8 Switchports; Advanced security features possible in future phase | C1111X-8PLTEEA (Fixed Enterprise branch router, 1RU with PoE power modules) | 8G | 2 x 1 GE | Integrated | 8 |
| Type 4 | Up to 500 Mbps; 2 x GE + Cellular WAN; 16 Switchports; Advanced security features possible in future phase | Existing ISR4451-X routers at type 4 sites to be converted to SD-WAN (Modular, Enterprise branch, 2RU, 3 NIM slots, 2 SM slots with PoE power modules) | 8-32G | 4 x 1 GE onboard; additional 6 with service module SM-X-6X1G | NIM-LTEA-EA | 16 (SM-X-16G4M2X) |
| Data center | Up to 2x10G (1x10G per head-end router); 3 x GE; N/A switchports | C8500-12X (Fixed high-performance routing, 1RU) | 16G/32G/64G | 12 x 1/10 GE ports | N/A | N/A |

The following is the High-Level Design (HLD) topology diagram:

In the low-level design (LLD) developed by American GasCo, design details were explored and documented in different areas, including:

● Control component deployment: covers details on the cloud-hosted deployment, how control components reachability is achieved from the private transports, factors that go into the number of control components and virtual machine (VM) sizing, how control components can access the data center network management servers, and what control components certificates are chosen

● Branch design: covers various design aspects of the branches, mainly the detailed site profiles with the platform and connectivity standards

● Data center design: covers various design aspects of the data center, including the transport and service-side design of the WAN Edge routers and a portion of the IP unicast routing design

● SD-WAN underlay design: covers the underlay routing and other underlay design considerations

● Firewall considerations: covers NAT design considerations for the network as well as firewall port considerations for successful SD-WAN device communication

● SD-WAN overlay design: covers various aspects of the overlay design, including site-ID design, control policy, cellular tunnel optimizations, VPN segmentation details, IP unicast routing, IP multicast routing, Quality of Service (QoS), and Application-Aware Routing (AAR)

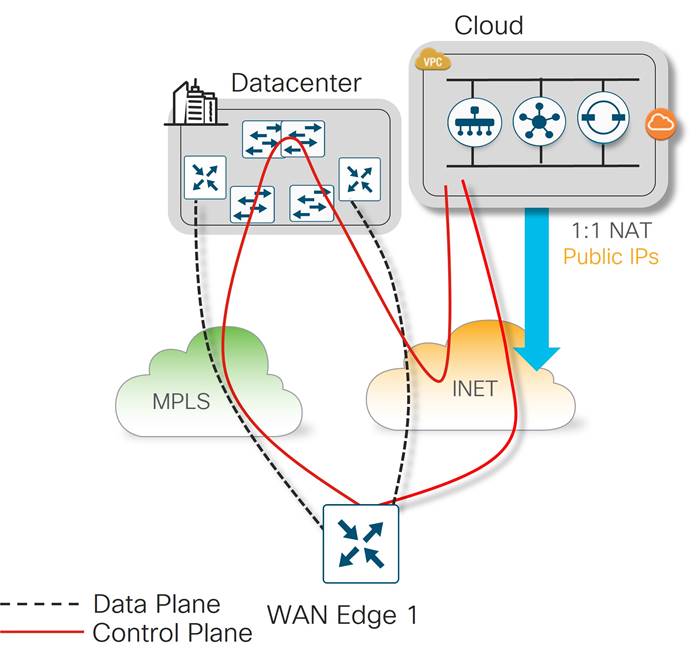

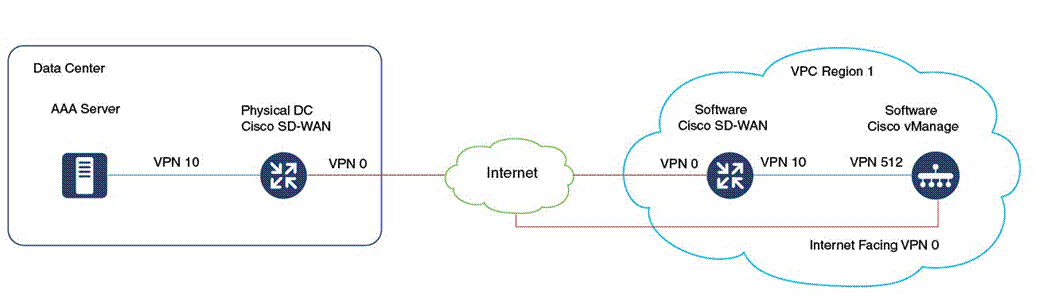

Deployment Model

American GasCo opted for a Cisco cloud-hosted control component deployment in AWS. In this type of deployment, Cisco CloudOps is responsible for the initial overlay provisioning and ongoing monitoring and troubleshooting of the control components VM infrastructure. Cisco CloudOps also provides backups (snapshots) for the SD-WAN Manager configuration and statistics database and performs restoration in cases where disaster recovery is needed. American GasCo operators are provided network access into their AWS VPC and granted administrator rights to the SD-WAN Manager in order to provision and monitor their site WAN Edge devices.

The customer is responsible for managing their SD-WAN network overlay. The customer is also responsible for upgrading software and for opening TAC cases to arrange and authorize service windows when receiving certain notifications from CloudOps. The customer may also need to open TAC cases when notified about potential issues that need further investigation. These notifications are sent by email. Every cloud-hosted overlay has a single customer contact email address registered as the owner to receive alert notifications. This can be changed by opening a TAC case or through the Self-Service Portal at https://ssp.sdwan.cisco.com. Since only one email address is supported, it is recommended that a group mailing list address be used. For further information on the Cisco CloudOps service, along with a matrix of Cisco and Customer responsibilities, refer to the Cisco Catalyst SD-WAN CloudOps end-user guide.

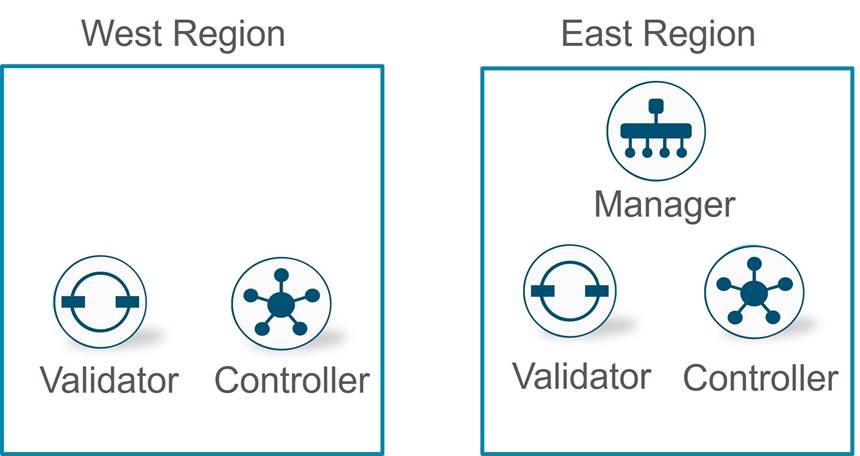

Control Components Redundancy/High Availability

The control components infrastructure consists of multiple SD-WAN Validators, multiple SD-WAN Controllers, and either a single-node SD-WAN Manager or an SD-WAN Manager cluster made up of multiple SD-WAN Manager nodes. CloudOps distributes the SD-WAN Controllers and Validators across multiple cloud regions, so if one region becomes unreachable, the second cloud region can support the WAN Edge routers in the network. An SD-WAN Manager node or cluster is deployed in a single region, and CloudOps takes care of the disaster recovery in a second region should the primary SD-WAN Manager node or cluster fail.

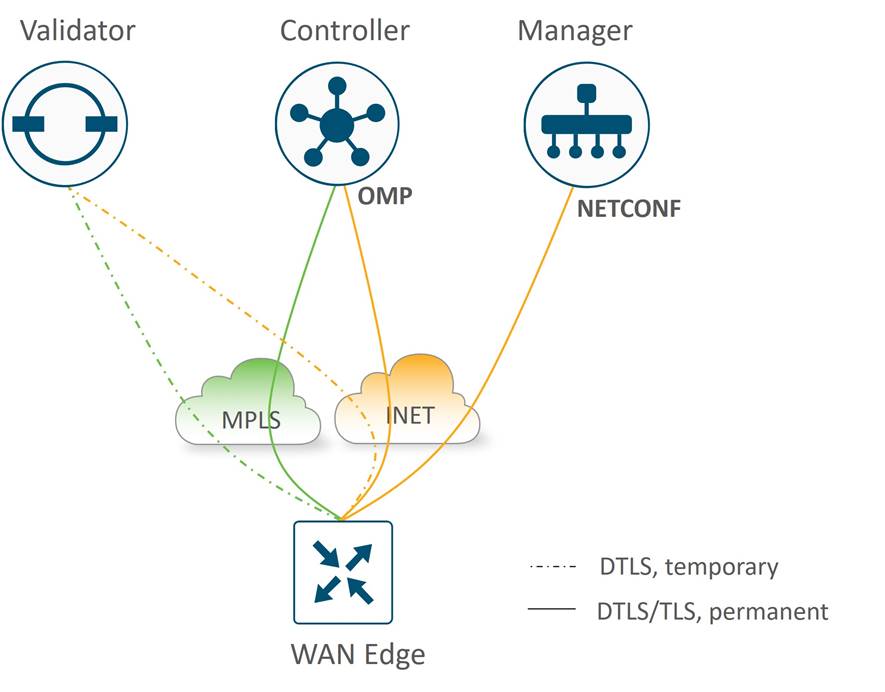

Control Connections

In the Cisco Catalyst SD-WAN network, all SD-WAN devices need to authenticate and form control connections to the various control components (starting with the SD-WAN Validator) before being able to join the SD-WAN overlay. The number of control connections to a particular control component influences that control component’s ability to scale. One also needs to understand how control connections form so that the network design can accommodate these connections through the routing and firewall configurations.

WAN Edge Control Connections

For WAN Edge routers, the following control connections are formed:

● Temporary DTLS connection between each WAN Edge and one SD-WAN Validator – one connection on each transport. The WAN Edge router chooses which SD-WAN Validator to connect to from the list of IP addresses returned by the DNS server during SD-WAN Validator hostname resolution. Once the proper number of control connections have been made to the SD-WAN Manager and Controllers, the SD-WAN Validator connections are torn down. By contrast, the connections that the SD-WAN Controller and Manager control components make to the SD-WAN Validators are persistent.

● Permanent DTLS or TLS (configurable) connection between each WAN Edge and one SD-WAN Manager instance – only one connection over one transport is chosen.

● Permanent DTLS or TLS (configurable) connections between each WAN Edge and two SD-WAN Controllers by default – connections to each over each transport.
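The connection counts in the list above can be tallied with a small Python sketch, which may help when estimating control component load or firewall session counts. It assumes the defaults described above (one temporary SD-WAN Validator connection per transport, one SD-WAN Manager connection in total, and connections to two SD-WAN Controllers over each transport). The function names are hypothetical.

```python
def control_connections(transports: int, controllers: int = 2) -> dict:
    """Count control connections from one WAN Edge router by type."""
    if transports < 1 or controllers < 1:
        raise ValueError("transports and controllers must be positive")
    return {
        # Temporary: torn down once Manager/Controller connections are up.
        "validator_temporary": transports,
        # Permanent: a single connection over one chosen transport.
        "manager_permanent": 1,
        # Permanent: one connection to each Controller over each transport.
        "controller_permanent": controllers * transports,
    }


def steady_state_total(transports: int, controllers: int = 2) -> int:
    """Permanent connections that remain after the Validator sessions close."""
    counts = control_connections(transports, controllers)
    return counts["manager_permanent"] + counts["controller_permanent"]


if __name__ == "__main__":
    print(control_connections(transports=2))
    print("Steady state:", steady_state_total(transports=2))
```

For a branch with 2 transports, this model yields 2 temporary Validator connections during bring-up and 5 permanent connections in steady state.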

Control Component Reachability from Private Transports

With control components being hosted in a public cloud, they are directly reachable by WAN Edge router Internet TLOCs. Since the private TLOCs cannot directly reach Internet-hosted control components, a strategy must be developed for how to handle these control connections.

American GasCo chose to use their data center Internet breakout for private transport reachability to the control components. This allowed control connections to be formed over the private TLOCs so each WAN Edge router has a redundant path to the control components in case of TLOC failures.

Control Components and Virtual Machine (VM) Sizing

In the cloud-hosted deployment model, Cisco Cloud Operations is responsible for instantiating the control component virtual machines in the cloud service provider. To size the VM resources (vCPU, memory, storage) accurately for the anticipated scale, CloudOps worked with American GasCo to obtain specific information about the end-state design. This included the following information:

● Total of 500 branch sites, each with a single WAN Edge (spoke) router

● Two data centers, each with dual WAN Edge (hub) routers

● Four WAN transport colors at the data center and an average of 2 active transport colors at each remote site at a time

● Strict hub-and-spoke topology with dual hub routers in each data center.

● Application visibility enabled using Cisco Catalyst SD-WAN Application Intelligence Engine (SAIE), which sends flow statistics and application identifying information to the SD-WAN Manager for monitoring

| Tech Tip |

| One important data point that must be considered when sizing SD-WAN Manager resources is the volume of statistics expected to be received, processed, and stored by the SD-WAN Manager from the WAN Edge routers. This includes the statistics associated with up/down events on the site WAN Edge routers and the statistics associated with flow and application reporting for each site. While daily up/down events are relatively few in most deployments, flow and application statistics are continuously collected and exported to the SD-WAN Manager during regular intervals. This accounts for most of the traffic received by the SD-WAN Manager. |

Cisco Catalyst SD-WAN Application Intelligence Engine (SAIE)

Cisco Catalyst SD-WAN Application Intelligence Engine (SAIE) is the architecture for application classification. It can determine the contents of the packet for application visibility and can record the information for statistics collection. When application visibility is enabled through localized policy, flow records are enabled on the router and NBAR2 is used as the application classification engine on the WAN Edge router. Traffic flow statistics and their classification information are sent to the SD-WAN Manager, where they are collected and processed and can be displayed in the SD-WAN Manager GUI.

WAN Edge routers store statistics or aggregated statistics (starting in 20.6/17.6 code). The SD-WAN Manager pulls this data from each WAN Edge router at pre-defined intervals, then processes, analyzes, and stores it. Note that these statistics include not only SAIE statistics data but also other statistics, such as interface stats, QoS, App-route stats, firewall stats, etc. SAIE statistics typically make up the larger proportion of the statistics data.

A number of factors can increase the number of statistics being generated and processed by the SD-WAN Manager:

● Short-lived flows increase the SAIE statistics volume

● Running application visibility at the DC and remote sites in a hub-and-spoke topology doubles the amount of SAIE traffic sent to the SD-WAN Manager

● Modifying App-route timers to be more aggressive generates more App-route statistics

● Unstable links can increase the number of statistics being generated to the SD-WAN Manager

American GasCo ran an SD-WAN pilot deployment at 5 different remote sites running real traffic/applications and only enabled application visibility at the remote sites and not the DC sites. One reason for doing this is that the DC sites are dual-router sites, and for application visibility to work with most applications, traffic symmetry would have to be assured. Also, with fewer statistics to deal with, the SD-WAN Manager requires fewer virtual machine resources.

After running the pilot for a week, American GasCo polled their SD-WAN Manager to estimate the volume of statistics being received from the five pilot sites. Using an API call (http://<SD-WAN Manager IP>/dataservice/management/elasticsearch/index/size/estimate), it was found that the 5 sites were generating a cumulative amount of 450MB of statistics per day. Taking this as a baseline, it was approximated that the SD-WAN Manager could expect to receive up to 45GB per day once the 500 sites were migrated to SD-WAN and exporting statistics.
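The extrapolation above is a simple linear scaling, sketched here in Python. It assumes that statistics volume grows in proportion to the number of sites and uses decimal units (1 GB = 1000 MB). Both are simplifications, and real per-site volume will vary with traffic mix and flow duration. The function name is hypothetical.

```python
def projected_daily_stats_gb(pilot_mb_per_day: float, pilot_sites: int, target_sites: int) -> float:
    """Scale the pilot's daily statistics volume linearly to the target site count."""
    if pilot_sites < 1 or target_sites < 0:
        raise ValueError("pilot_sites must be positive and target_sites non-negative")
    per_site_mb = pilot_mb_per_day / pilot_sites   # average MB per site per day
    return per_site_mb * target_sites / 1000       # MB -> GB (decimal units)


if __name__ == "__main__":
    estimate = projected_daily_stats_gb(pilot_mb_per_day=450, pilot_sites=5, target_sites=500)
    print(f"Projected volume: {estimate:.0f} GB/day")
```

With the pilot figures, this works out to 90 MB per site per day, or 45 GB per day across 500 sites.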

Control Component Number Calculations

For the number of control components and virtual machine sizing information, refer to the Cisco Catalyst SD-WAN Control Components Compatibility Matrix and Recommended Computing Resources. From this page, there is a link to the Cisco Catalyst SD-WAN Control Components Release 20.6.x (Cisco Hosted Cloud Deployment), which gives release-specific information. How many of each control component are needed depends on the number of WAN Edge devices in the network and the volume of Cisco Catalyst SD-WAN Application Intelligence Engine (SAIE) statistics being generated. Based on the documentation, for 500 devices generating 45 GB of SAIE traffic per day, the following control component numbers are deployed:

Table 11. American GasCo Control Component Calculations

| Control Component | Number |
| --- | --- |
| SD-WAN Manager | One Node (Large Instance) |
| SD-WAN Validator | 2 |
| SD-WAN Controller | 2 |

CloudOps determines the instance type, vCPUs, memory, and storage for each control component as they can be subject to change.

Control Component Access to DC NMS Servers

American GasCo security policy requires authentication to a TACACS/AAA server in the data center for users accessing the SD-WAN control components via HTTPS or SSH. The control components also need to send logging and SNMP trap information to NMS servers in the data center, which presents a challenge for cloud-hosted control component deployments on regional VPCs having no routed path to the customer internal network. To accommodate this requirement, it is necessary to connect the control component VPCs to the SD-WAN overlay so that they can join the fabric just like any other remote site.

The process to add the regional VPCs to a customer overlay is described in the Cisco SDWAN CloudOps Provisioning guide. It is important to do this step upfront since it affects the way the IP addressing is allocated on the control components. A summary of the steps is described below.

● Request CloudOps to instantiate a new software WAN Edge in each regional VPC, with the service VPN interface connected to the management VLAN that connects to VPN 512 of each control component. Additional licenses may need to be purchased so that the new software WAN Edge routers can be added to the device list of the SD-WAN Manager.

● Request that CloudOps re-allocate the VPN 512 addresses from a private IP subnet that is not in use on the customer network. One /24 private IP address block is needed for each region. By default, the cloud-hosted control components are deployed with 10.0.0.0/16 subnets, which might conflict with an existing subnet in the customer’s network. These IP prefixes are used to create the control components, and the subnets are then configured to be available within the Cisco Catalyst SD-WAN fabric.

● Onboard the new software WAN Edge into the customer SD-WAN overlay as a remote spoke site in each region, provisioning IPsec tunnels to each of the DC hub routers. Verify connectivity to the AAA server (and any other customer NMS servers) from the VPN 512 interface of the control components.
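Before requesting the address re-allocation described in the steps above, it can help to confirm that a candidate /24 block does not overlap anything already routed in the customer network. The following is a minimal sketch using the Python standard library. The customer prefixes and candidate blocks are hypothetical examples, not American GasCo addressing.

```python
import ipaddress

# Default supernet used for cloud-hosted control components (from the text).
DEFAULT_CONTROL_SUPERNET = "10.0.0.0/16"

# Hypothetical prefixes already in use on the customer network.
customer_prefixes = ["10.0.8.0/21", "10.20.0.0/16", "172.16.0.0/12"]


def find_conflicts(candidate, prefixes):
    """Return the customer prefixes that overlap the candidate block."""
    cand = ipaddress.ip_network(candidate)
    return [p for p in prefixes if cand.overlaps(ipaddress.ip_network(p))]


def is_valid_region_block(candidate, prefixes):
    """A usable block is a private /24 with no overlap in the customer network."""
    net = ipaddress.ip_network(candidate)
    return (
        net.prefixlen == 24
        and net.is_private
        and not find_conflicts(candidate, prefixes)
    )


# The default supernet collides with an existing customer subnet.
print(find_conflicts(DEFAULT_CONTROL_SUPERNET, customer_prefixes))

# Check one candidate /24 per region (hypothetical values).
for block in ["192.168.100.0/24", "10.20.5.0/24"]:
    print(block, is_valid_region_block(block, customer_prefixes))
```

In practice the prefix list would come from the customer's IP address management records or routing tables rather than a hard-coded list.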

The following diagram illustrates this concept:

Certificates

Control components require signed device certificates that are used to authenticate other control components, along with the WAN Edge devices in the overlay network. In addition, proper root certificate chains must also be installed in each SD-WAN device so authentication between the devices can succeed since root certificates are used to trust device certificates. Once authenticated, control connections can be secured between the devices. For new Cisco cloud-hosted deployments, the customer can choose a Cisco-signed certificate, or alternatively, an Enterprise CA certificate.

American GasCo chose to implement Cisco PKI because of its simplicity and because it would not require operations staff to maintain and operate a certificate system. There was also no security policy in place at American GasCo that required the use of Enterprise CA certificates.

Detailed Site Profiles – Platform and Connectivity Standards

American GasCo developed a set of standard site profiles for each store site type, specifying the WAN Edge platforms, VPN segmentation, and the LAN and WAN interface connectivity. These standards were used as site connectivity plans and served as the blueprints for the SD-WAN Manager templates and policies that were created during the implementation phase of the project.

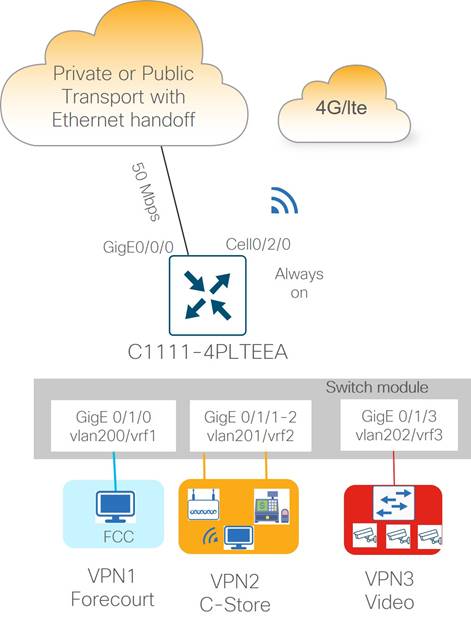

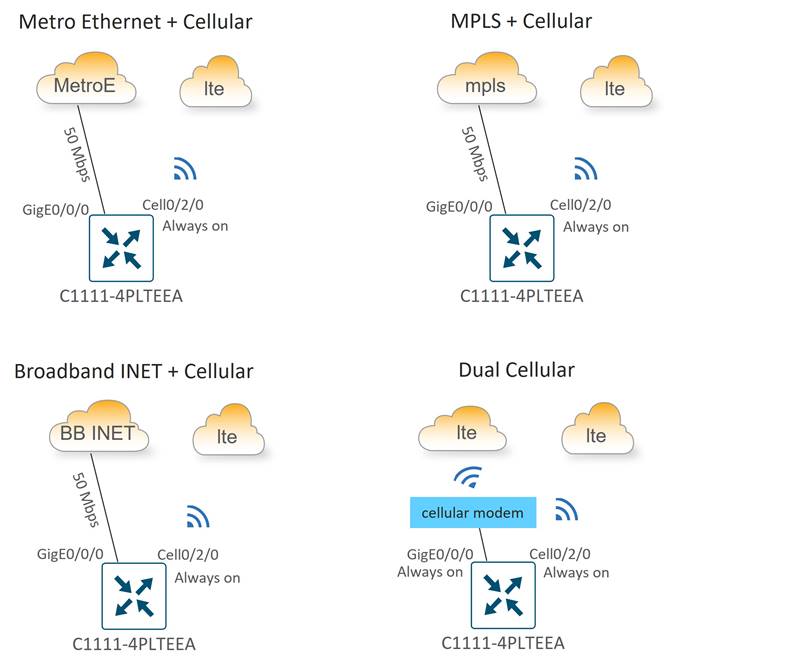

Type 1: Small Store (C1111-4PLTEEA)

Type 1 store site profiles support up to 50 Mbps of site throughput with N+1 transport redundancy using a combination of wired and wireless WAN connectivity. Wired Ethernet connectivity to the transport carrier CPE device is provided by the routed Gigabit Ethernet interface, which supports RJ45/copper and SFP/fiber cabling options. The integrated cellular module provides wireless connectivity to a 4G/LTE Internet service provider for the redundant WAN transport.

| ISR1100 routers with integrated LTE modules include a single cellular modem. The LTE modules support dual SIM cards for carrier redundancy but will only connect to a single LTE network at a time. At the time of this writing, only the industrial version of the ISR1100 (IR1101) supports dual LTE modems. Modular platforms that support pluggable LTE modules do not have this limitation. |

Ethernet LAN connectivity for up to 4 devices (computers, POS terminals, WLAN, Ethernet switch) is provided by the integrated 4-port Gigabit Ethernet LAN switch network interface module (NIM). The Ethernet switch ports are provisioned as access ports in VLANs 200, 201, or 202, with switched virtual interfaces (SVIs) mapped into VRFs 1, 2, or 3, respectively, for site segmentation.

| Tech Tip |

| PoE/PoE+ can be enabled on Gigabit Ethernet interfaces (4/8 PoE or 2/4 PoE+ ports on the 1100-8P and 2 PoE or 1 PoE+ ports on the 1100-4P) to provide power to external devices such as video endpoints and 802.11ac access points. The C1111-8PWB and ISR-1100-POE4 are the Power over Ethernet (PoE) part numbers that must be ordered with the ISR1100 router if PoE or PoE+ is required on the Gigabit Ethernet interfaces of the switch module. Together they provide 10/100/1000 Gigabit Ethernet connectivity with LAN services, 802.11b/ac WiFi, and 802.3at PoE/PoE+ support. |

Small store subtypes were designed with different combinations of WAN transport connectivity to provide maximum flexibility to use whatever transport is available and most cost-effective at a particular location.

Type 1 WAN connectivity options include the following variants:

● Metro Ethernet + “Always-on” Cellular

● MPLS + “Always-on” Cellular

● Broadband Internet + “Always-on” Cellular

● Dual “Always-on” Cellular

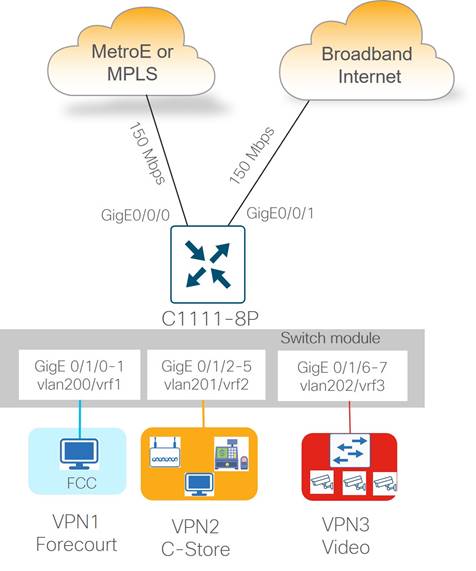

Type 2: Medium Store (C1111X-8P)

Type 2 store site profiles support up to 150 Mbps of site throughput with N+1 transport redundancy provided by private transport (MPLS or Metro Ethernet) and public Internet carrier circuits. Wired Ethernet connectivity to carrier CPEs is provided by the WAN Edge router's integrated Gigabit Ethernet WAN modules, which support RJ45/copper and SFP/fiber cabling.

Ethernet LAN connectivity for up to 8 devices (computers, POS terminals, WLAN AP, and video surveillance aggregation switch) is provided by the integrated 8-port Ethernet switch. The LAN switch Gigabit Ethernet ports are provisioned as access ports in VLANs 200, 201, or 202, with switched virtual interfaces (SVIs) mapped into VRFs 1, 2, or 3, respectively, for site segmentation.

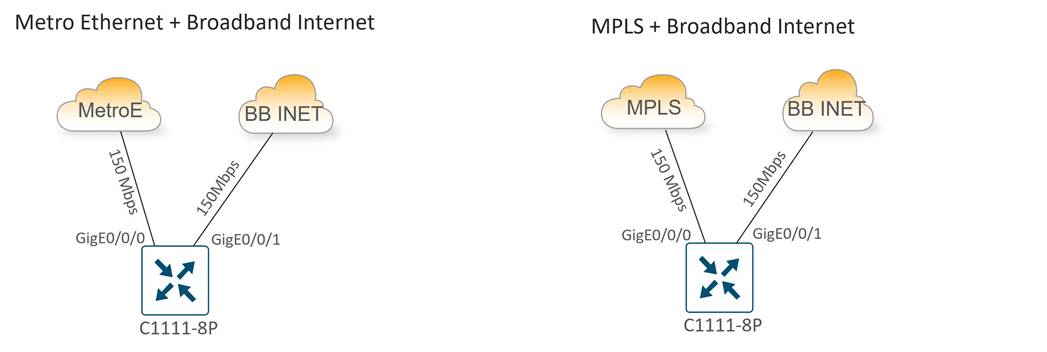

The two subtypes for the medium store include the following:

● Metro Ethernet + Broadband Internet

● MPLS + Broadband Internet

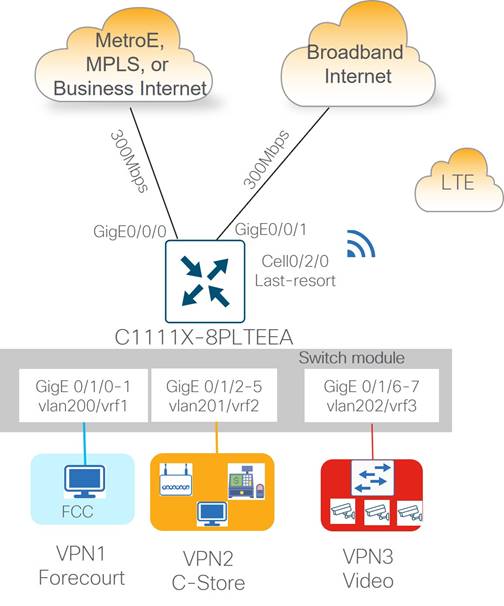

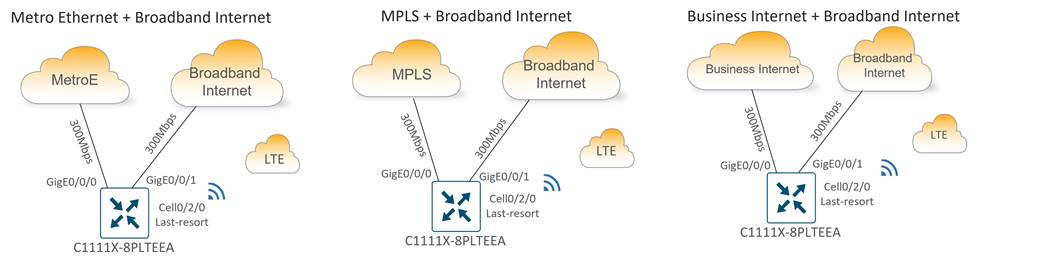

Type 3: Large Store (C1111X-8PLTEEA)

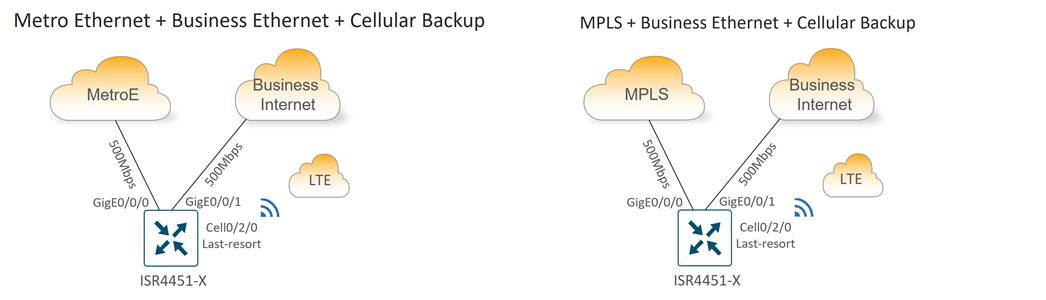

Type 3 store site profiles support up to 300 Mbps of site throughput with N+2 transport redundancy provided by private and public transport circuits with a cellular connection to LTE as a last resort circuit. Dedicated/Business Internet circuits can also be used in place of private circuits as an option. Ethernet connectivity to carrier CPEs is provided by the 2-port GE WAN module, which supports RJ45/copper and SFP/fiber cabling.

Ethernet LAN connectivity for up to 8 devices (computers, POS terminals, WLAN, and Ethernet switch) is provided by the integrated 8-port Ethernet switch. The Ethernet switch ports are provisioned as access ports in VLANs 200, 201, or 202, with switched virtual interfaces (SVIs) mapped into VRFs 1, 2, or 3, respectively, for site segmentation.

There are three main subtypes for the large store:

● Metro Ethernet + Broadband Internet + Cellular Backup

● MPLS + Broadband Internet + Cellular Backup

● Business Internet + Broadband Internet + Cellular Backup

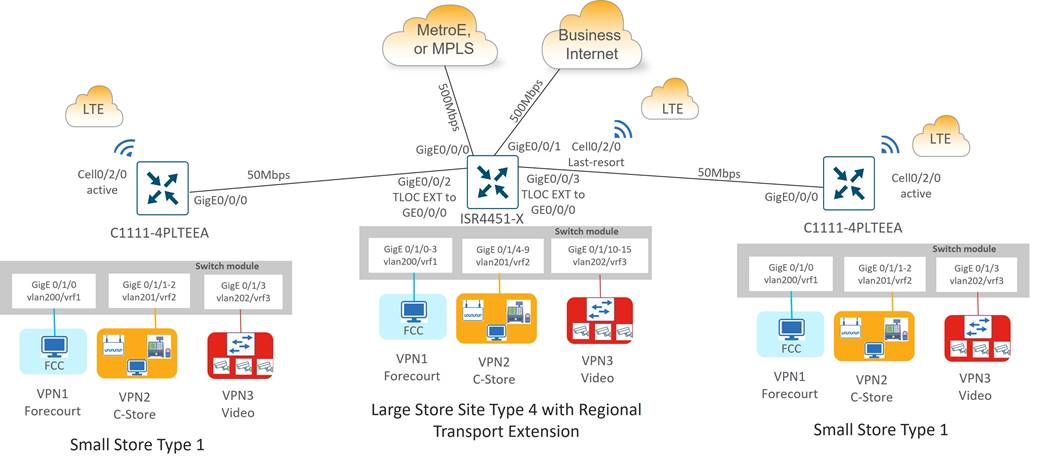

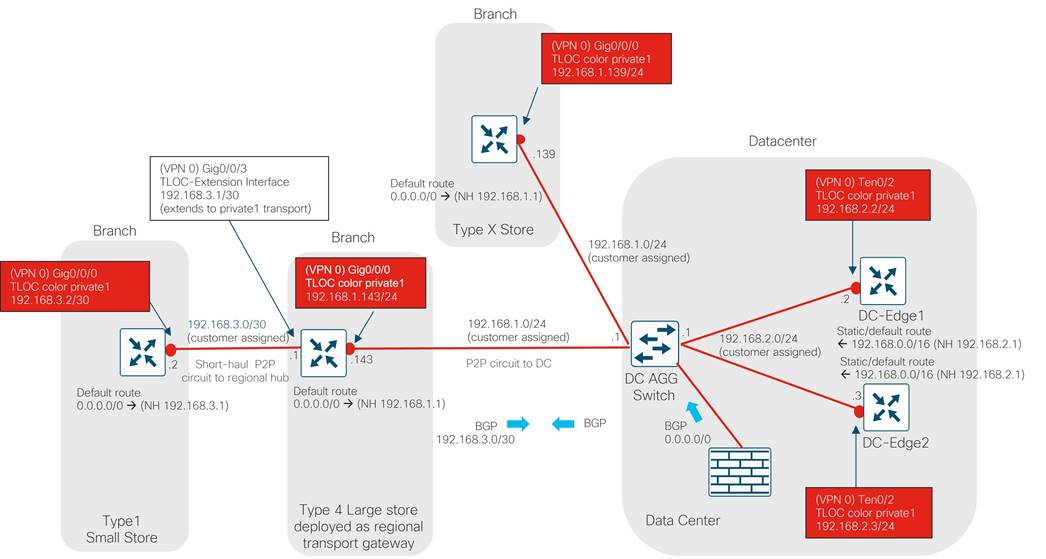

Type 4: Large Store/Regional Transport Gateway (ISR4451-X)

Type 4 sites support up to 500 Mbps of site throughput with N+2 transport redundancy provided by private and public transport circuits, with a cellular connection to LTE as a last-resort circuit. Dedicated Internet is preferred over broadband Internet at type 4 store locations. Ethernet connectivity to carrier CPEs is provided by the 2-port GE WAN module, which supports RJ45/copper and SFP/fiber cabling.

Type 4 large stores are unique because they can accommodate type 1 stores at remote locations that lack affordable access to the American GasCo Metro Ethernet or MPLS transport carriers. These type 4 stores can act as a transport gateway in addition to servicing their own site traffic. A type 1 store can use a short-haul private Ethernet circuit to connect to a type 4 site, which extends the store onto the private transport through a TLOC extension configuration.

| Tech Tip |

| Type 1 stores connect their WAN interface/layer 3 ports to a layer 3 interface port of a type 4 store in the VPN 0 transport. This type 4 store interface is configured as a TLOC extension interface to connect the type 1 store to the private1 or mpls transport. Note that layer 2/SVI interfaces are not supported as TLOC extension interfaces. |

Site LAN connectivity for up to 16 Ethernet devices (computers, wireless APs, and POS terminals) is provided through a 16-port Ethernet switch NIM. Devices are partitioned across three VPNs for security and granular control.

There are two main subtypes for the type 4 large store with regional transport extension:

● Metro Ethernet + Business Internet + Cellular Backup

● MPLS + Business Internet + Cellular Backup
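The four site profiles described in this section can be summarized as a small lookup structure. The sketch below records the platform, throughput ceiling, transport redundancy, and LAN port count stated for each type. The selection function is a hypothetical illustration and not the rule American GasCo used to assign stores to profiles; it ignores factors such as transport availability and the type 4 gateway role.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SiteProfile:
    name: str
    platform: str
    max_throughput_mbps: int
    transport_redundancy: str
    lan_ports: int


# Values taken from the profile descriptions, ordered smallest first.
PROFILES = [
    SiteProfile("Type 1", "C1111-4PLTEEA", 50, "N+1", 4),
    SiteProfile("Type 2", "C1111X-8P", 150, "N+1", 8),
    SiteProfile("Type 3", "C1111X-8PLTEEA", 300, "N+2", 8),
    SiteProfile("Type 4", "ISR4451-X", 500, "N+2", 16),
]


def smallest_fitting_profile(required_mbps: int,
                             required_lan_ports: int) -> Optional[SiteProfile]:
    """Hypothetical rule: pick the first profile that meets both requirements."""
    for profile in PROFILES:
        if (profile.max_throughput_mbps >= required_mbps
                and profile.lan_ports >= required_lan_ports):
            return profile
    return None  # no standard profile fits; the site needs a custom design


site = smallest_fitting_profile(required_mbps=120, required_lan_ports=6)
print(site.name, site.platform, site.transport_redundancy)
```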

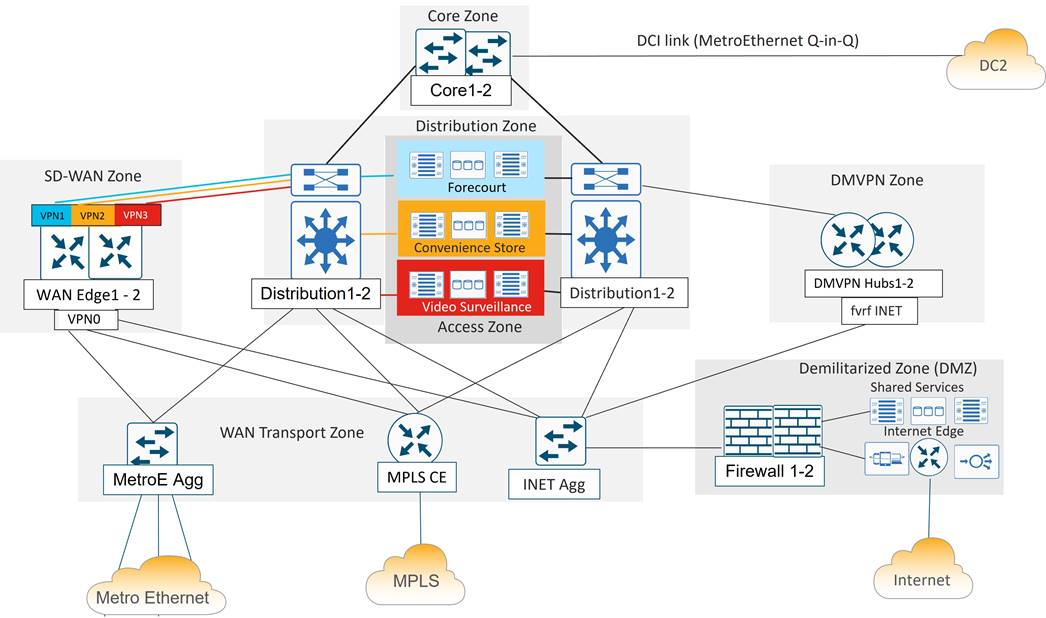

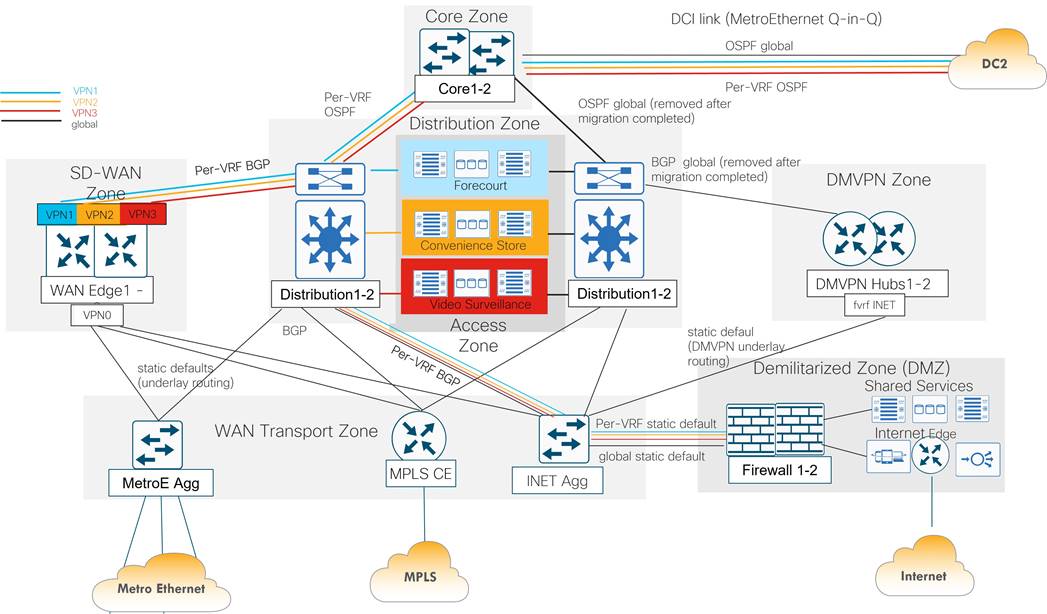

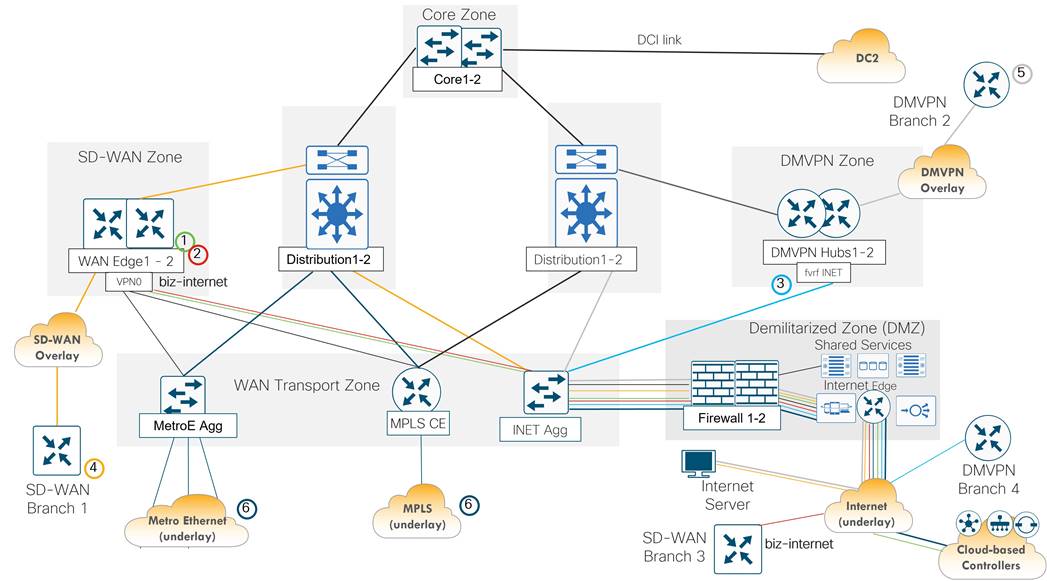

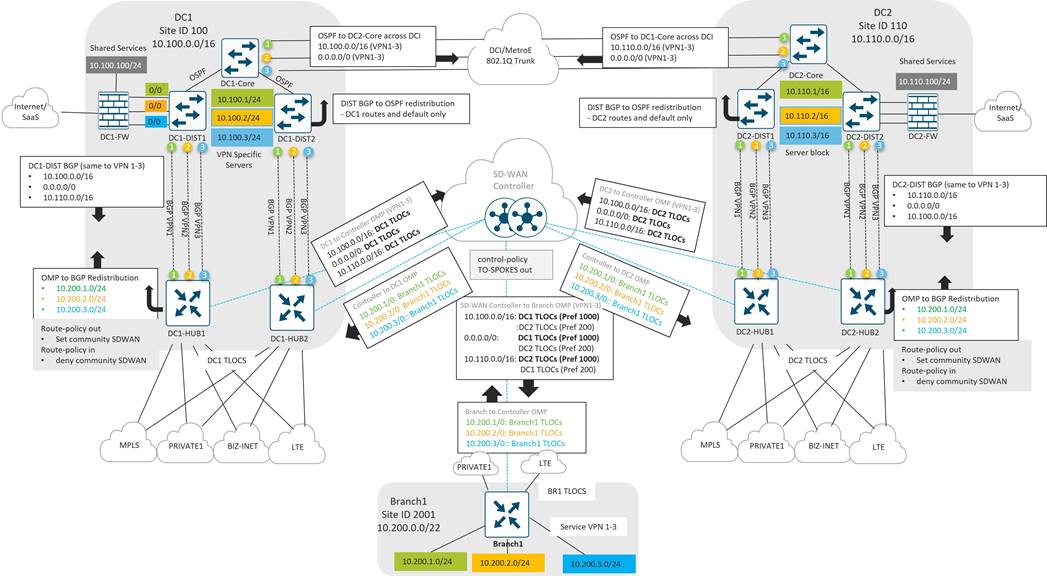

Overview

The Data Center devices are grouped into functional zones as shown in the following diagram. Each device in a zone connects to separate power supplies and is cabled to redundant devices in adjacent zones where applicable.

A brief description of each functional DC zone is as follows:

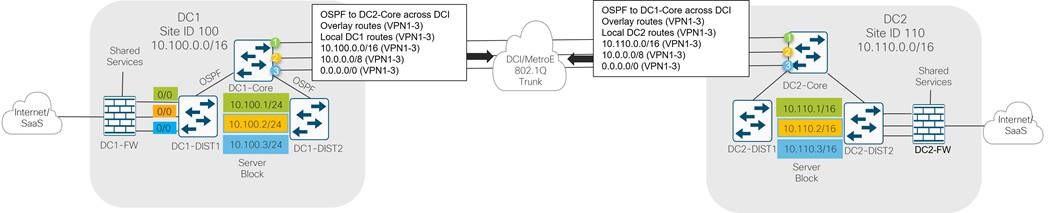

● Core zone: The core zone connects DC1 to DC2 over a private data center interconnect (DCI) circuit. Each DC has a pair of core routers that interconnect over different VLANs of a Metro Ethernet service providing Q-in-Q trunking.

● Distribution zone: The distribution zone connects to all zones in the data center. It includes a pair of layer 3 switches that control the boundary between the WAN, access, and core layers. Distribution routers connect to each zone with redundant 10 Gigabit Ethernet connections and run multiple routing protocols.

● Access zone: The access zone contains layer 2 switches for the server and storage devices hosting the forecourt, convenience store and IP video surveillance applications.

● SD-WAN zone: The SD-WAN zone contains the Catalyst 8500 WAN Edge routers that function as the SD-WAN tunnel and routing “hubs” for the remote “spoke” WAN Edge routers.

● WAN transport zone: The WAN transport zone contains the aggregation devices that allow shared access to the WAN transport circuits. It includes the MPLS CE router, a layer 3 switch connecting to the Internet DMZ firewall pair, and a layer 3 switch that aggregates point-to-point and Metro Ethernet circuits connecting to remote sites. The WAN transport connectivity provided several benefits to American GasCo during the SD-WAN project rollout:

◦ Allowed the SD-WAN Edge routers in the DC to share the MPLS and Internet bandwidth with the legacy WAN devices during the migration to SD-WAN

◦ Provided the remote SD-WAN Edge routers with MPLS and private circuits an underlay path to the shared network services, such as DNS, NTP, syslog, and SNMP

◦ Provided the remote SD-WAN Edge routers with MPLS and private circuits an underlay path to the Internet for access to the SD-WAN cloud-hosted control components

◦ Avoided the complexity associated with enabling an underlay routing path through the DC WAN Edge routers

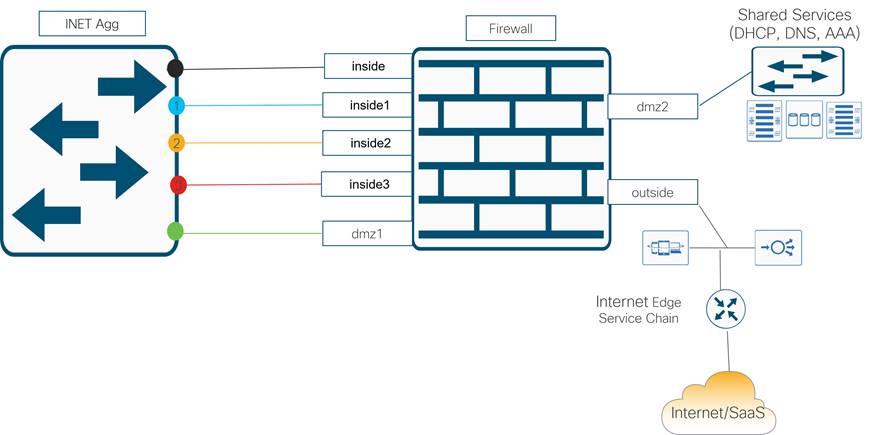

● Demilitarized zone (DMZ): The DMZ provides secure access to shared network services and Internet for users and devices on all VPNs. In the segmented DC design, the DMZ firewall is the only point where traffic from different VPNs is allowed to converge, which is necessary to avoid duplicating shared services and Internet access for each VPN.

● DMVPN zone: The DMVPN zone contains the DMVPN hub routers that aggregate tunnels and routes from the legacy remote sites, utilizing the Internet as an underlay transport. The DMVPN zone was decommissioned after all remote sites were migrated to SD-WAN.

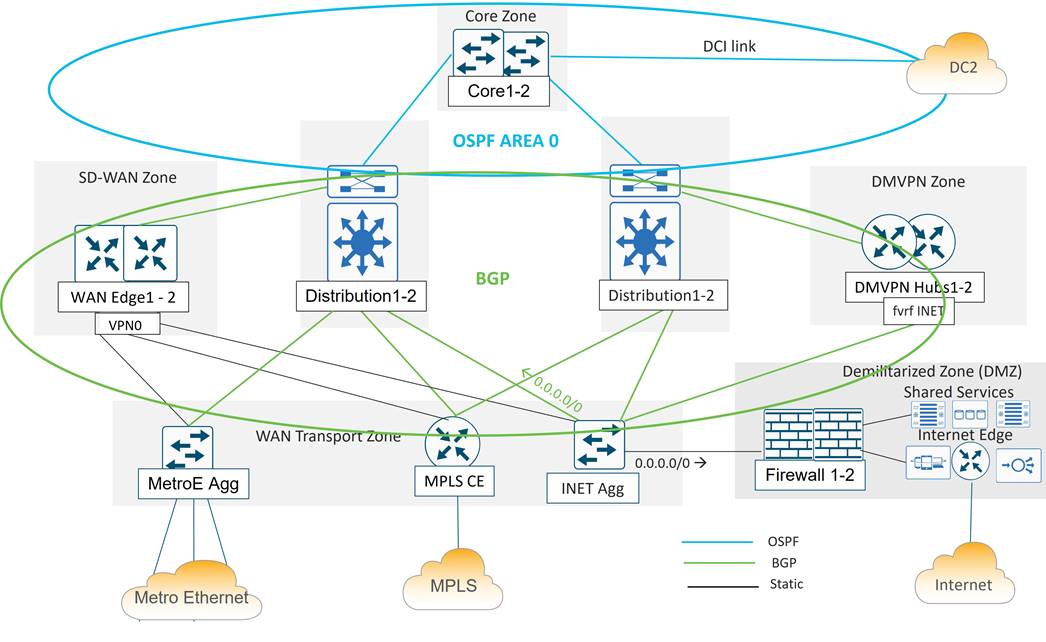

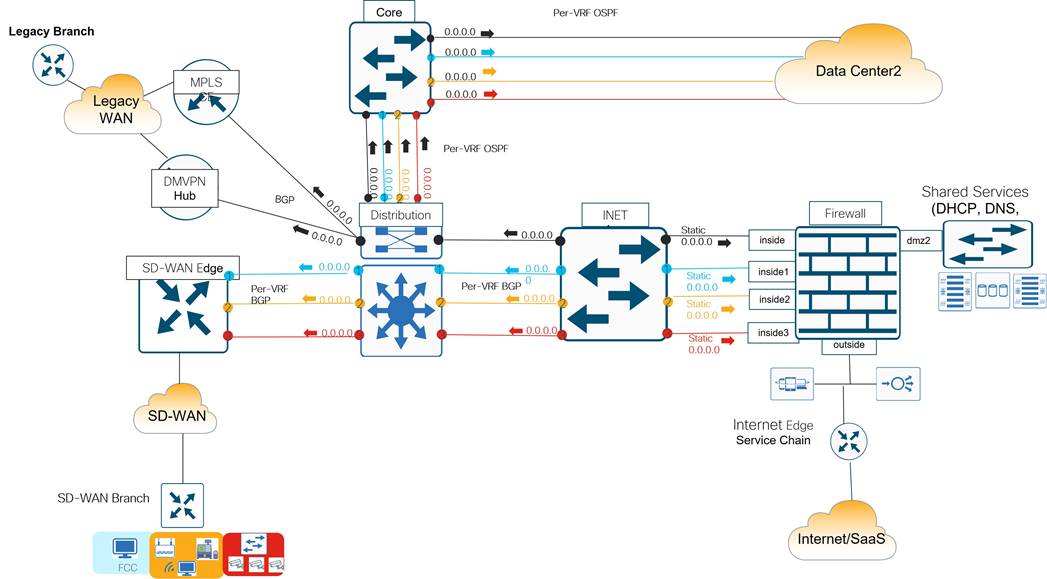

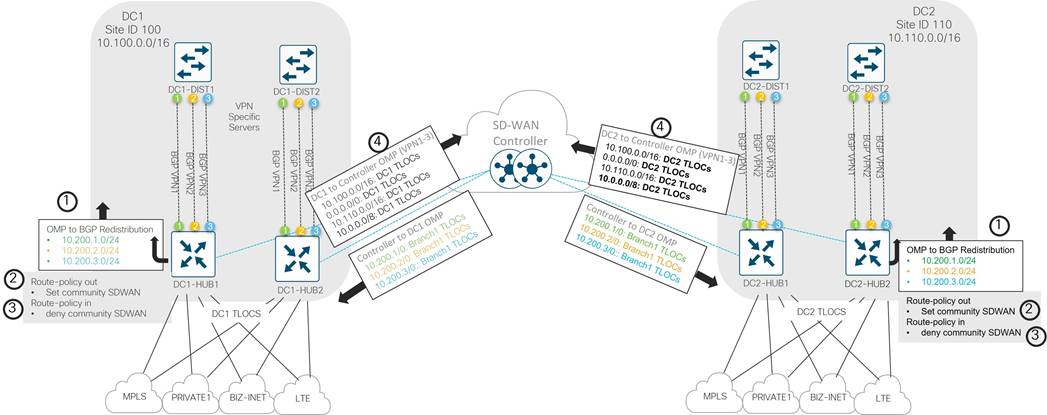

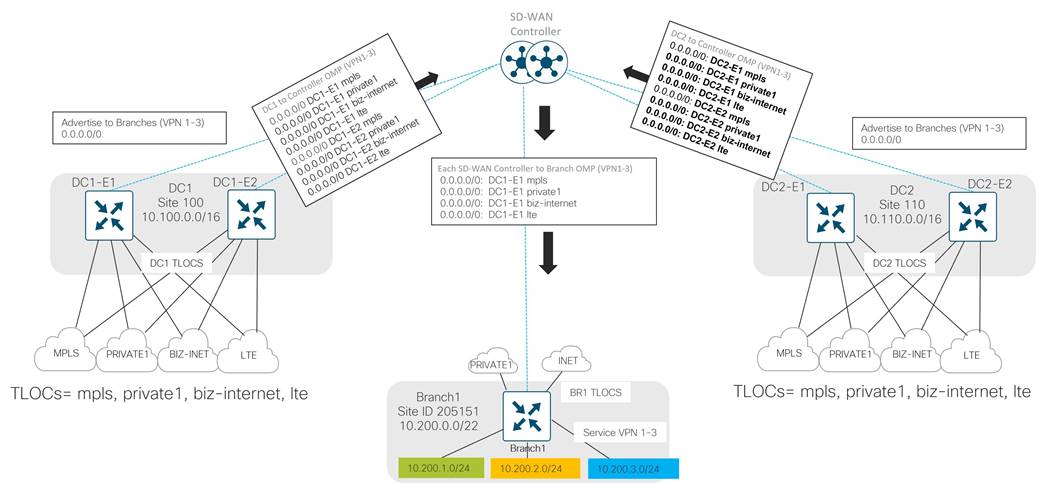

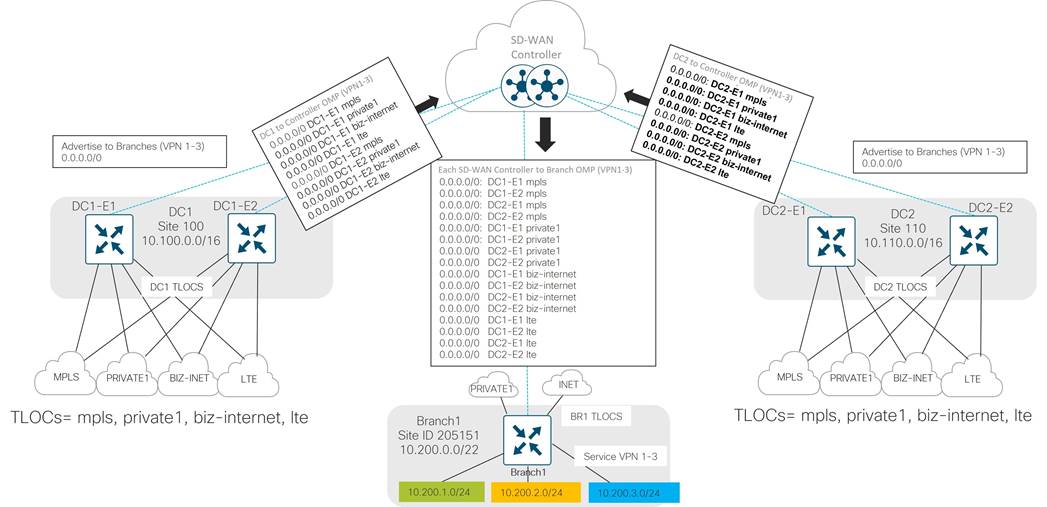

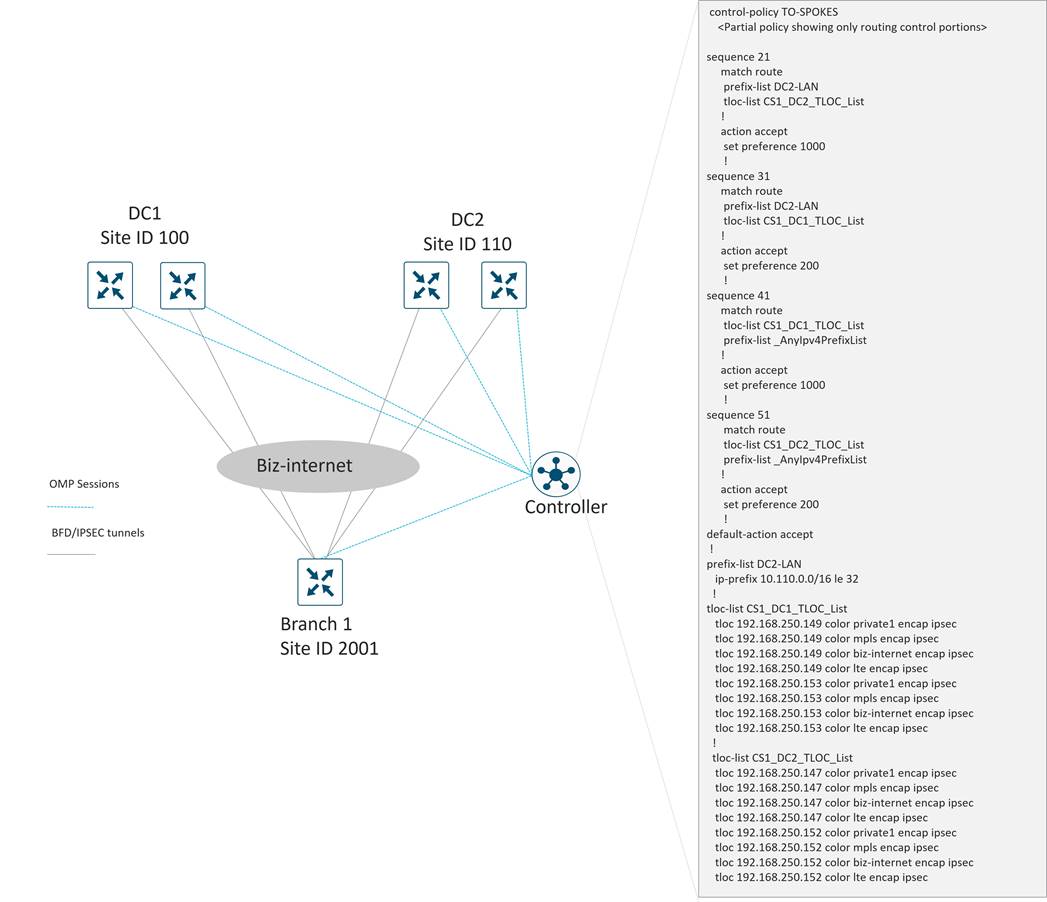

Data Center Routing Overview

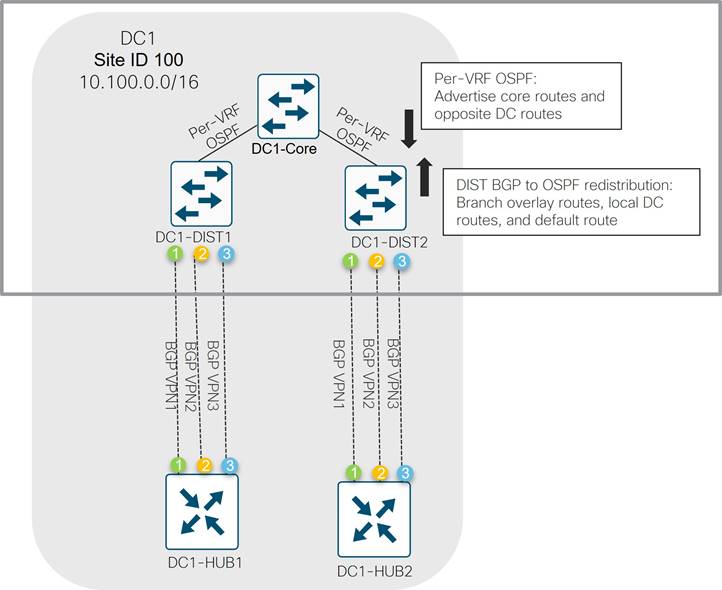

In the data center, both BGP and OSPF are implemented. BGP is implemented throughout the distribution layer, the DMVPN zone, the LAN/service side of the SD-WAN zone, and throughout the WAN transport zone. OSPF is implemented from the distribution to the core routers and across the DCI link.

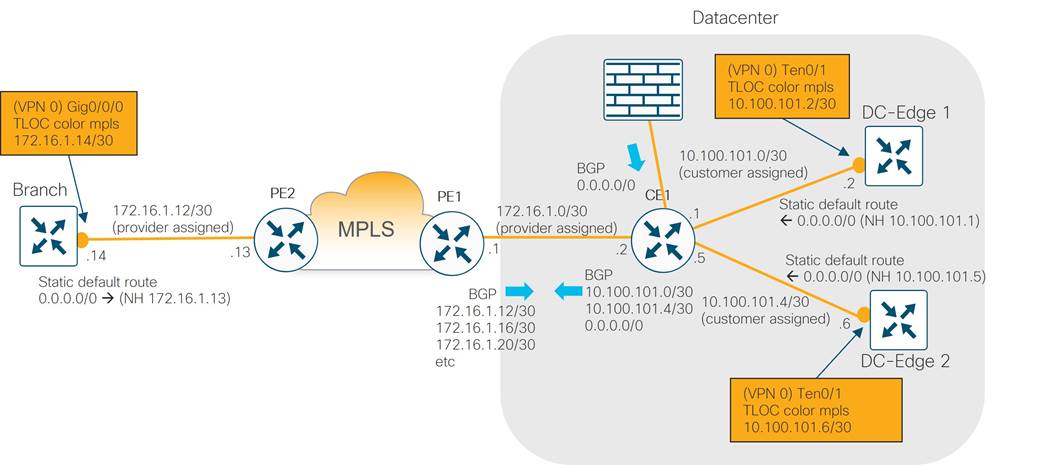

In the diagram below, the Metro Ethernet aggregation switch, the MPLS CE, the Internet aggregation switch, and the WAN Edge routers on the service-side interfaces use the BGP routing protocol to exchange routes with the distribution layer 3 switches. A static default route is distributed from the Internet aggregation switch into the BGP routing protocol for the purposes of outbound Internet routing.

The WAN Edge transport-side interfaces do not participate in BGP but instead use a static default route for underlay routing to establish control plane and data plane tunnels.

SD-WAN Edge Router Deployment and Related Details

A pair of Catalyst 8500 SD-WAN Edge routers were installed and connected to the distribution and transport zone devices with 10 Gigabit Ethernet interfaces. The following sections describe the details of the connectivity, SD-WAN TLOC colors, and service VPN segmentation.

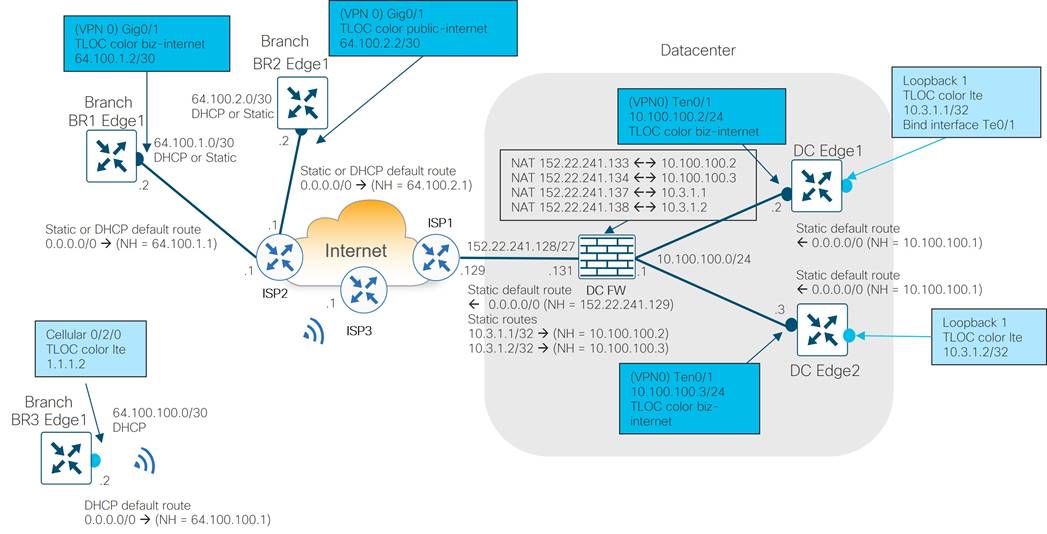

Transport Side Connections and TLOC Colors

Each WAN Edge router is given 10 GE port connectivity to the MPLS CE, Internet, and Metro Ethernet aggregation devices in the transport zone as shown in the diagram below. The IP addresses on the Catalyst 8500 ports map into VPN 0 and represent the tunnel sources for TLOC colors mpls, biz-internet, and private1 for each WAN Edge router. A loopback interface was additionally configured as a tunnel interface on each WAN Edge router and mapped as TLOC color lte. This tunnel was bound to the Te0/1 interface using the bind command so that it shared the same underlay transport path as the biz-internet tunnel.

It would have been possible to avoid creating a separate tunnel color lte on the WAN Edge routers by allowing tunnels between the lte and biz-internet colors (that is, by not using the restrict option). The separate color was created instead so that protocol timers could be tuned specifically on the lte tunnels to minimize SD-WAN control and forwarding plane overhead. Details of these optimizations can be found in the overlay design section.
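The effect of the restrict option on tunnel formation can be illustrated with a simplified model. The sketch below assumes that the remote store lte TLOCs are configured with restrict, as the discussion above implies. It models only the color-matching behavior of restrict and ignores underlay reachability, carrier settings, and control policy. The class and function names are illustrative and are not part of any Cisco API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tloc:
    site: str
    color: str
    restrict: bool = False


def can_form_tunnel(a: Tloc, b: Tloc) -> bool:
    """Simplified rule: a TLOC with restrict only pairs with the same color."""
    if a.site == b.site:
        return False  # tunnels are built between sites, not within one
    if a.restrict or b.restrict:
        return a.color == b.color
    return True


# Assumed configuration: store LTE TLOC uses restrict.
store_lte = Tloc("store-101", "lte", restrict=True)
hub_biz = Tloc("dc1", "biz-internet")
hub_lte = Tloc("dc1", "lte", restrict=True)  # loopback tunnel bound to Te0/1

print(can_form_tunnel(store_lte, hub_biz))  # restrict blocks the color mismatch
print(can_form_tunnel(store_lte, hub_lte))  # same color, tunnel can form

# Alternative noted in the text: drop restrict and reuse biz-internet at the hub.
print(can_form_tunnel(Tloc("store-101", "lte"), hub_biz))
```

With the dedicated lte color at the hub, the lte tunnels form a distinct set on which timers can be adjusted without affecting the biz-internet tunnels.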

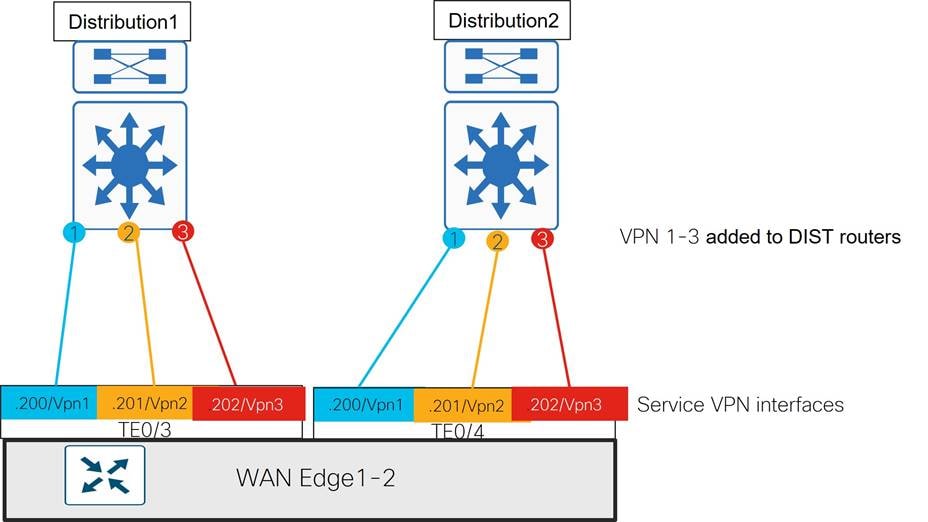

Service Side Connections and VPNs