Overview

Cisco Nexus Dashboard provides a common platform for deploying Cisco Data Center applications. These applications provide real-time analytics, visibility, and assurance for policy and infrastructure.

The Cisco Nexus Dashboard server is required for installing and hosting the Cisco Nexus Dashboard application.

The appliance is orderable in the following versions:

-

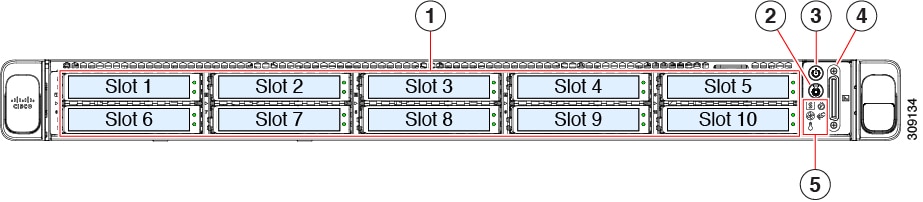

ND-NODE-G5S: Single-node appliance

-

ND-CLUSTERG5S: Three-node version that leverages the same configuration as ND-NODE-G5S but with three appliances included

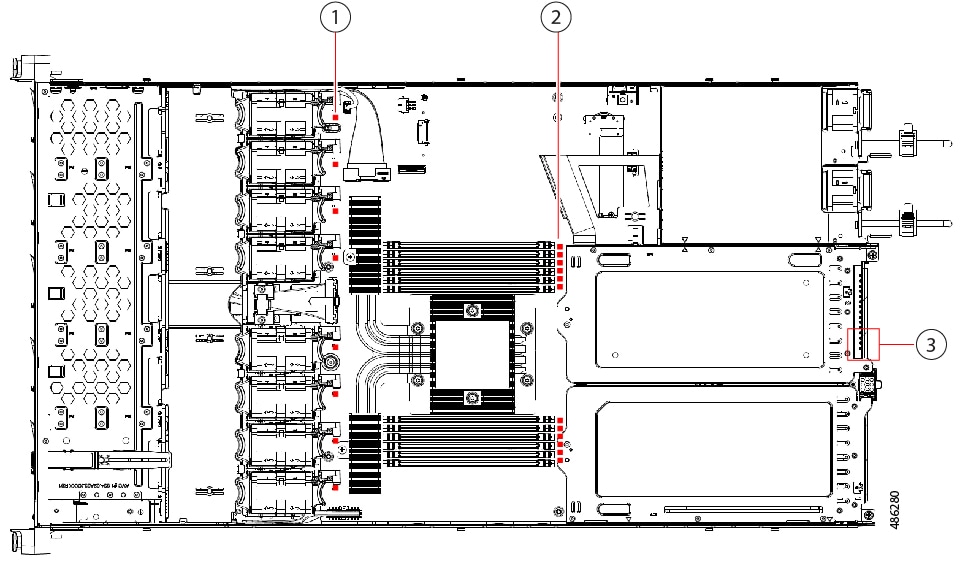

Components

The ND-NODE-G5S appliance is configured with the following components:

-

CIMC-LATEST-D: IMC SW (Recommended) latest release for C-Series Servers

-

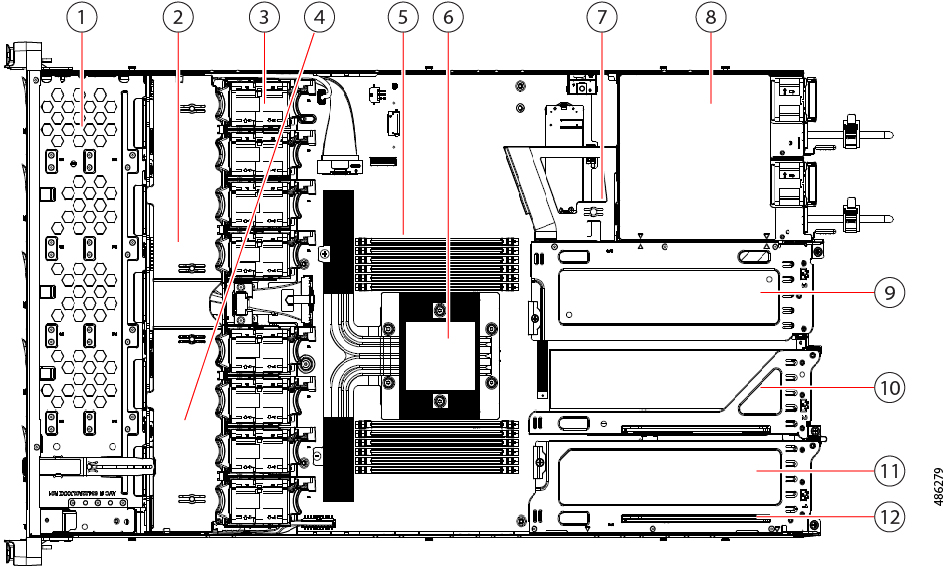

ND-CPU-A9454P: ND AMD 9454P 2.75GHz 290W 48C/256MB Cache DDR5 4800MT/s

-

ND-M2-240G-D: ND 240GB M.2 SATA Micron G2 SSD

-

ND-M2-HWRAID-D: ND Cisco Boot optimized M.2 Raid controller

-

ND-TPM2-002D-D: ND TPM 2.0 FIPS 140-2 MSW2022 compliant AMD M8 servers

-

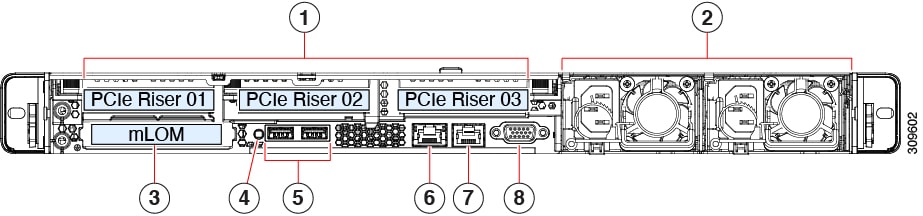

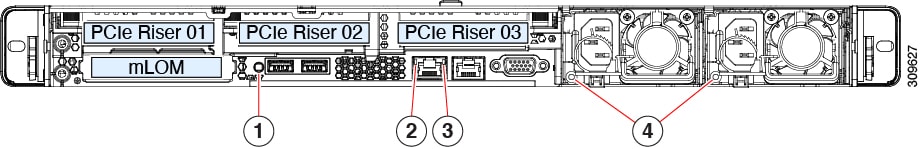

ND-RIS1A-225M8: ND C225 M8 1U Riser 1A PCIe Gen4 x16 HH

-

ND-HD24TB10KJ4-D: ND 2.4TB 12G SAS 10K RPM SFF HDD (4Kn)

-

ND-SD960GBM3XEPD: ND 960GB 2.5in Enter Perf 6G SATA Micron G2 SSD (3X)

-

Power supplies:

-

1200W AC Titanium Power Supply for C-series Rack Servers

-

1050W -48V DC Power Supply for UCS Rack Server

-

1050W -48V DC Power Supply for APIC servers (India)

-

-

ND-MRX32G1RE3: ND 32GB DDR5-5600 RDIMM 1Rx4 (16Gb)

-

ND-RAID-M1L16: ND 24G Tri-Mode M1 RAID Controller w/4GB FBWC 16Drv

-

ND-O-ID10GC-D: Intel X710T2LOCPV3G1L 2x10GbE RJ45 OCP3.0 NIC

-

ND-OCP3-KIT-D: C2XX OCP 3.0 Interposer W/Mech Assy

-

ND-P-V5Q50G-D: Cisco VIC 15425 4x 10/25/50G PCIe C-Series w/Secure Boot
