
Service Modules

The MGX 8230 switch supports Frame Relay, circuit emulation (CE), ATM, Cisco IOS (including IP services), and voice services through an array of service modules.

This chapter includes a summary of the available modules, followed by detailed information on the modules and the services they provide:

•

ATM Deluxe Integrated Port Adapter/Interface

Summary of Modules

The MGX 8230 supports the following service modules.

Service Resource Module (SRM)

Service Resource Module (MGX-SRM-3T3/C)

The optional SRM provides three major functions for service modules: bit error rate testing (BERT) of T1 and E1 lines and ports, loopback of individual N x 64 channels toward the customer premises equipment (CPE), and 1:N redundancy for the service modules.

Frame Relay Service Modules (FRSM)

A variety of Frame Relay Service Modules are supported, as described in the following list.

•

Frame Service Module for eight T1 ports (AX-FRSM-8T1)

The AX-FRSM-8T1 provides interfaces for up to eight fractional T1 lines, each of which can support one 56 kbps or one Nx64 kbps FR-UNI, FR-NNI, ATM-FUNI, or Frame forwarding port. The AX-FRSM-8T1 supports fractional and unchannelized T1 port selection on a per-T1 basis.

•

Frame Service Module for eight E1 ports (AX-FRSM-8E1)

The AX-FRSM-8E1 provides interfaces for up to eight fractional E1 lines, each of which can support one 56 kbps or one Nx64 kbps FR-UNI, FR-NNI, ATM-FUNI, or Frame forwarding port. The AX-FRSM-8E1 supports fractional and unchannelized E1 port selection on a per-E1 basis.

•

Frame Service Module for eight channelized T1 ports (AX-FRSM-8T1-C)

The AX-FRSM-8T1-C allows full DS0 and n x DS0 channelization of the T1s. Each T1 interface is configurable as up to 24 ports, each running at 56 kbps or n x 64 kbps (up to the full line rate), for a maximum of 192 ports per FRSM-8T1-C.

•

Frame Service Module for eight channelized E1 ports (AX-FRSM-8E1-C)

The AX-FRSM-8E1-C allows full DS0 and n x DS0 channelization of the E1s. Each E1 interface is configurable as up to 31 ports, each running at 56 kbps or n x 64 kbps (up to the full line rate), for a maximum of 248 ports per FRSM-8E1-C.

•

Frame Service Module for T3 and E3 (MGX-FRSM-2E3T3)

The MGX-FRSM-2E3/T3 provides interfaces for either two T3 lines (each at 44.736 Mbps) or two E3 lines (each at 34.368 Mbps). Each line can support one FR-UNI, ATM-FUNI, or Frame Forwarding port.

•

Frame Service Module for channelized T3 (MGX-FRSM-2CT3)

The MGX-FRSM-2CT3 supports interfaces for two channelized T3 Frame Relay lines. Each interface supports 56 Kbps, 64 Kbps, Nx56 Kbps, Nx64 Kbps, and T1 ports, which can be freely distributed across the two T3 lines.

•

Frame Service Module for high speed serial (MGX-FRSM-HS1/B)

The FRSM-HS1/B supports the 12-in-1 back card, which supports up to four V.35 or X.25 serial interfaces. The FRSM-HS1/B also supports the two-port HSSI back cards with SCSI-2 connectors.

•

Frame Service Module for unchannelized HSSI (MGX-FRSM-HS2/B)

The MGX-FRSM-HS2/B supports interfaces for two unchannelized HSSI lines. Each interface supports approximately 51 Mbps; with both lines operating, maximum throughput is 70 Mbps.

ATM UNI Service Modules (AUSM)

Two ATM UNI Service Modules are supported.

•

ATM UNI Service Module for T1 (MGX-AUSM/B-8T1)

The MGX-AUSM/B-8T1 provides interfaces for up to eight T1 lines. You can group N x T1 lines to form a single, logical interface (IMA).

•

ATM UNI Service Module for E1 (MGX-AUSM/B-8E1)

The MGX-AUSM/B-8E1 provides interfaces for up to eight E1 lines. You can group N x E1 lines to form a single, logical interface (IMA).

Circuit Emulation Service Modules (CESM)

Three Circuit Emulation Service Modules are supported.

•

Circuit Emulation Service Module for T1 (AX-CESM-8T1)

The AX-CESM-8T1 provides interfaces for up to eight T1 lines, each of which is a 1.544-Mbps structured or unstructured synchronous data stream.

•

Circuit Emulation Service Module for E1 (AX-CESM-8E1)

The AX-CESM-8E1 provides interfaces for up to eight E1 lines, each of which is a 2.048-Mbps structured or unstructured synchronous data stream.

•

Circuit Emulation Service Module for T3 and E3 (MGX-CESM-T3/E3)

The MGX-CESM-T3E3 provides direct connectivity to one T3 or E3 line for full-duplex communications at the DS3 rate of 44.736 Mbps or at the E3 rate of 34.368 Mbps. Each T3 or E3 line consists of a pair of 75-ohm BNC coaxial connectors, one for transmit data and one for receive data, along with three LED indicators for line status.

Voice Service Modules (VISM)

MGX-VISM-8T1 and MGX-VISM-8E1

These cards support eight T1 or E1 ports for transporting digitized voice signals across a packet network. The VISM provides toll-quality voice, fax, and modem transmission and efficient use of wide-area bandwidth through industry-standard implementations of echo cancellation, voice compression, and silence suppression.

Note

For configuration information on the Voice Interworking Service Module (VISM), refer to the Voice Interworking Service Module Installation and Configuration.

Route Processor Module (RPM)


The RPM is a Cisco 7200 series router redesigned as a double-height card. Each RPM uses two single-height back cards. The back card types are single-port Fast Ethernet, four-port Ethernet, and single-port FDDI.

Note

For information on availability and support of the MGX-RPM-128/B and MGX-RPM-PR, see the Release Notes for Cisco WAN MGX 8850, 8230, and 8250 Software.

Note

For configuration information on the Route Processor Module (RPM), see the Cisco MGX Route Processor Module Installation and Configuration.

Frame Relay Services

The primary function of the Frame Relay Service Modules (FRSM) is to convert between Frame Relay-formatted data and ATM/AAL5 cell-formatted data. For an individual connection, you can configure network interworking (NIW), service interworking (SIW), ATM-to-Frame Relay UNI (FUNI), or frame forwarding.
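As a rough illustration of the frame-to-cell conversion, the sketch below counts the ATM cells needed to carry a frame payload of a given length over AAL5. It assumes the standard AAL5 CPCS-PDU layout (payload, 0 to 47 pad octets, and an 8-octet trailer, padded to a multiple of 48 octets) and does not model which Frame Relay header fields are kept or translated under NIW or SIW. The function names are hypothetical.

```python
# Sketch only: assumes standard AAL5 framing; FR header handling is not modeled.
AAL5_TRAILER = 8   # UU (1) + CPI (1) + Length (2) + CRC-32 (4) octets
CELL_PAYLOAD = 48  # payload octets per ATM cell
CELL_SIZE = 53     # 5-octet cell header + 48-octet payload


def aal5_cells(payload_len: int) -> int:
    """Number of ATM cells needed to carry payload_len octets over AAL5."""
    if not 1 <= payload_len <= 65535:
        raise ValueError("AAL5 payload must be 1..65535 octets")
    # Round (payload + trailer) up to a whole number of 48-octet cell payloads.
    return -(-(payload_len + AAL5_TRAILER) // CELL_PAYLOAD)


def aal5_pad(payload_len: int) -> int:
    """Pad octets inserted so the CPCS-PDU exactly fills its last cell."""
    return aal5_cells(payload_len) * CELL_PAYLOAD - payload_len - AAL5_TRAILER


for size in (40, 41, 1500):
    cells = aal5_cells(size)
    print(f"{size}-octet frame: {cells} cells, {aal5_pad(size)} pad octets, "
          f"{cells * CELL_SIZE} octets on the wire")
```

The 40/41-octet pair shows why frame size matters: one extra octet pushes the trailer into a second cell.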

Summary of FRSM Features By Module Version

Table 4-1 summarizes the basic features of the Frame Relay service modules for the MGX 8230.

Features Common to All FRSMs

The features supported by all FRSM cards are as follows:

•

Frame Relay UNI and NNI—Offers standards-compliant Frame Relay UNI and NNI on a variety of physical interfaces, with support for congestion notification (FECN/BECN), ForeSight (optional), bundled connections, frame forwarding, extended traffic management to the router, ELMI, and enhanced loopback tests.

•

Frame-Relay-to-ATM Network Interworking as Defined in FRF.5—The MGX 8230 switch supports a standards-based interworking that segments and maps variable-length Frame Relay frames into ATM cells in both transparent and translational modes. This interworking enables transparent connectivity between large ATM and small Frame Relay locations independently of the WAN protocol.

•

Frame-Relay-to-ATM Service Interworking—The modules provide Frame-Relay-to-ATM service interworking (FRF.8), both transparent and translation modes, configured on a per-PVC basis.

•

Frame Forwarding—The MGX 8230 Frame Relay modules enable efficient transport of frame-based protocols, such as SDLC, X.25, or any other HDLC-based protocol, over Frame Relay interfaces. Application examples include routers interconnected via PPP, mainframes or hosts connected by X.25/HDLC, SNA/SDLC links, and video CODECs that use a frame-based protocol.

•

ATM FUNI—ATM Forum FUNI mode 1A is supported. A CCITT-16 CRC at the end of each frame is checked so that errored frames can be discarded. The service modules also support AAL5 mapping of user payloads to ATM, 16 VPI values, virtual path connections for all nonzero VPI values (up to 15 VPCs), 64 VCI values, and OAM frame/cell flows.

•

Dual Leaky Bucket Policing—Standards-based CIR policing and DE tagging/discarding. Once basic parameters such as committed burst (Bc), excess burst (Be), and CIR have been agreed to, incoming frames are placed in two buckets: those to be checked for compliance with the committed burst rate and those to be checked for compliance with the excess burst rate. Frames that overflow the first bucket are placed in the second bucket. The buckets "leak" at a defined rate, allowing policing without disruption or delay of service.

•

ForeSight—The ForeSight congestion management and bandwidth optimization mechanism continuously monitors the utilization of ATM trunks and adjusts the bandwidth available to all connections, proactively avoiding queuing delays and cell discards.
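The frame CRC check mentioned under ATM FUNI can be sketched as follows. This assumes the HDLC-style CRC-16 frame check sequence (generator x^16 + x^12 + x^5 + 1, bit-reflected, initial value 0xFFFF, final complement), which is the common CCITT-16 FCS used in Frame Relay and FUNI framing. Transmitting the FCS low-order byte first and the function names are assumptions of this sketch, not details taken from the FRSM.

```python
# Sketch only: HDLC/X.25-style CRC-16 FCS; byte order and names are assumptions.

def crc16_ccitt_fcs(data: bytes) -> int:
    """CRC-16 with reflected polynomial 0x8408, init 0xFFFF, final XOR 0xFFFF."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0x8408
            else:
                crc >>= 1
    return crc ^ 0xFFFF


def append_fcs(body: bytes) -> bytes:
    """Append the 2-octet FCS, low-order byte first (assumed order)."""
    return body + crc16_ccitt_fcs(body).to_bytes(2, "little")


def frame_is_valid(frame: bytes) -> bool:
    """Recompute the FCS over the frame body; a mismatch means discard the frame."""
    if len(frame) < 3:
        return False
    body, fcs = frame[:-2], int.from_bytes(frame[-2:], "little")
    return crc16_ccitt_fcs(body) == fcs


# Hypothetical 2-octet address field followed by user data.
frame = append_fcs(bytes([0x18, 0x41]) + b"user data")
print(frame_is_valid(frame))
```

Any single-bit error in the body or FCS makes `frame_is_valid` return `False`, which is the condition under which the frame would be discarded.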
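The dual leaky bucket policing described above can be modeled roughly as follows. This is a simplified sketch, not the FRSM implementation: it assumes bucket 1 (depth Bc) drains at CIR, bucket 2 (depth Be) drains at Be/Tc with Tc = Bc/CIR, frame sizes are in bits, and a frame that fits in neither bucket is discarded. The class and method names are hypothetical.

```python
# Sketch only: leak rates and the discard rule are assumptions, not FRSM internals.
from dataclasses import dataclass


@dataclass
class DualLeakyBucket:
    cir: float            # committed information rate, bits/s
    bc: float             # committed burst size, bits (depth of bucket 1)
    be: float             # excess burst size, bits (depth of bucket 2)
    level1: float = 0.0   # current fill of the committed bucket
    level2: float = 0.0   # current fill of the excess bucket
    last: float = 0.0     # time of the previous frame, seconds

    def _leak(self, now: float) -> None:
        elapsed = now - self.last
        self.last = now
        tc = self.bc / self.cir  # committed rate measurement interval
        self.level1 = max(0.0, self.level1 - self.cir * elapsed)
        self.level2 = max(0.0, self.level2 - (self.be / tc) * elapsed)

    def police(self, now: float, frame_bits: float) -> str:
        """Return 'forward', 'tag-de', or 'discard' for a frame arriving at now."""
        self._leak(now)
        if self.level1 + frame_bits <= self.bc:
            self.level1 += frame_bits
            return "forward"
        if self.level2 + frame_bits <= self.be:  # overflow from bucket 1
            self.level2 += frame_bits
            return "tag-de"
        return "discard"


bucket = DualLeakyBucket(cir=64_000, bc=64_000, be=32_000)  # Tc = 1 s
print([bucket.police(0.0, 16_000) for _ in range(7)])  # 4 forward, 2 tag-de, 1 discard
print(bucket.police(1.0, 16_000))                      # buckets drained after one Tc
```

A burst of seven 16,000-bit frames fills Bc with four frames, tags the next two as discard eligible, and discards the seventh.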

Frame Relay Service Module Special Features

The following special features are supported by all FRSM cards:

•

Support for Initial Burst Size (IBS)—Supported on a per-VC basis to favor connections that have been silent for a long time.

•

Extended ForeSight—Extended ForeSight uses standards-based consolidated link layer management (CLLM) messages to pass ForeSight congestion indications across Frame Relay UNI and NNI service interfaces. Extended ForeSight extends the range of ForeSight flow control beyond the network boundaries of a single carrier.

•

Consolidated Link Layer Management (CLLM)—An out-of-band mechanism to transport congestion-related information to the far end. CLLM (ANSI T1.618) is an industry-standard protocol, and Cisco implements its PVC congestion notification portion. CLLM is not part of the UNI or NNI signaling protocol and uses a different reserved DLCI. CLLM effectively extends congestion notification to external upstream equipment. However, CLLM does not provide the granularity of ForeSight (rate down, rate fast down, and so on); it can only send congestion notification messages indicating congestion or no congestion.

•

Enhanced LMI (ELMI)—Cisco LMI, ANSI T1.617 Annex D, and ITU-T Q.933 Annex A are supported on the FRSMs. In these specifications, there is no way for the network (switches) to inform a user (access device, FRAD, router) about Quality of Service parameters for Permanent Virtual Circuits (PVCs), such as Committed Information Rate (CIR), Committed Burst Size (Bc), Excess Burst Size (Be), Maximum Frame Size, and so on. Thus, once a connection is provisioned within the switching network, this information must be entered again when the connection is provisioned in the router. This makes provisioning susceptible to manual errors and needlessly complicates troubleshooting.

Having the correct information about these parameters is valuable for routers that can make congestion management and prioritization decisions. Cisco enhanced its Frame Relay capability by using CIR, Be, and Bc values for traffic shaping. Without E-LMI, all of these values must be configured manually by the user and can inadvertently be set differently from what the network (service provider) has established. To ease router configuration and ensure consistency with the network, a mechanism to provide this information is required. The Enhanced LMI (E-LMI) feature in the Frame Relay interfaces on Cisco routers and wide area switches provides this mechanism.

•

Advanced Buffer Management—When a frame is received, the depth of the per-VC queue for that LCN is compared against the peak queue depth scaled down by a specified factor. The scale-down factor depends on the amount of congestion in the free buffer pool. As the free buffer pool begins to empty, the scale-down factor is increased, preventing an excessive number of buffers from being held up by any single LCN.

•

OAM (Operation, Administration, and Maintenance) Features—Supports OAM F5 AIS, RAI, and end-to-end/segment loopback, plus the following commands: tstcon, tstdelay, tstconi, and tstdelayi.

•

Standards-Based Management Tools—FRSMs support SNMP, TFTP (for configuration and statistics collection), and a command-line interface. WAN Manager technology provides full graphical user interface support for connection management. CiscoView provides equipment management and MGX 8230 network management functions, including image download, configuration upload, statistics, Telnet, UI, SNMP, traps, and MGX 8230 switch MIB maintenance.
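The advanced buffer management check can be sketched as follows. The text does not give the actual scale-down factors or free-pool thresholds, so the table below is purely hypothetical; the sketch only shows the shape of the rule, where each LCN's allowed queue depth shrinks as the free buffer pool empties.

```python
# Sketch only: SCALE_TABLE values are hypothetical, not FRSM settings.

# (minimum free-pool fraction, scale-down factor); a larger factor allows a
# smaller per-VC queue depth.
SCALE_TABLE = [
    (0.50, 1),  # at least 50% of buffers free: no scaling
    (0.25, 2),  # 25-50% free: allow half the peak queue depth
    (0.10, 4),  # 10-25% free: allow a quarter
    (0.00, 8),  # nearly exhausted: allow an eighth
]


def scale_down_factor(free_buffers: int, total_buffers: int) -> int:
    free_fraction = free_buffers / total_buffers
    for threshold, factor in SCALE_TABLE:
        if free_fraction >= threshold:
            return factor
    return SCALE_TABLE[-1][1]


def accept_frame(queue_depth: int, peak_queue_depth: int,
                 free_buffers: int, total_buffers: int) -> bool:
    """Accept a frame for an LCN only if its queue is below the scaled peak depth."""
    allowed = peak_queue_depth // scale_down_factor(free_buffers, total_buffers)
    return queue_depth < allowed


# The same 100-frame queue is accepted when the pool is mostly free,
# but rejected when only 20% of buffers remain.
print(accept_frame(100, 400, 900, 1000), accept_frame(100, 400, 200, 1000))
```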

Circuit Emulation Services

The CE service modules (CESMs) use a standards-based adaptation of circuit interfaces onto ATM.

CE Service Modules

Table 4-2 summarizes the key attributes of the CE service modules.

CESM-8T1/E1 Features

The CESM-8T1/E1 features are listed below.

•

Provides up to 8 T1/E1 interfaces.

•

Allows structured (SDT) or unstructured (UDT) data transfer per physical interface.

•

Provides N x 64 Kbps fractional DS1/E1 service (SDT only). In Structured Data Transfer mode, the CESM supports fractional DS1/E1 service (time slots must be contiguous). Any N x 64 channels can be mapped to any VC. Therefore, multiple logical ports can be defined, each composed of a unique set of contiguous timeslots, with one connection emulating the data for each logical port.

•

Supports synchronous timing in both Structured Data Transfer (SDT) mode and Unstructured Data Transfer (UDT) mode. Synchronous timing is derived from the switch.

•

Supports asynchronous timing mode (UDT only), using either Synchronous Residual Time Stamp (SRTS) or adaptive clock recovery.

•

Provides ON/OFF hook detection and idle suppression using channel-associated signaling (CAS). Only available in SDT mode.

•

Allows a choice of partially filled AAL1 cell payload to reduce cell delay. The fill range (in bytes) for each type is:

–

T1 Structured: 25-47

–

T1 Unstructured: 33-47

–

E1 Structured: 20-47

–

E1 Unstructured: 33-47

•

Supports a maximum of 192 connections (T1) or 248 connections (E1).
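To see why partial fill reduces delay, the sketch below computes the time needed to fill one AAL1 cell with K bytes. It assumes the fill rate equals the line rate for unstructured service and N x 64 kbps for structured service, and it checks K against the fill ranges listed above. The dictionary and function names are hypothetical.

```python
# Sketch only: names are hypothetical; fill ranges come from the list above.
FILL_RANGES = {  # (min, max) partial fill in bytes
    ("T1", "structured"): (25, 47),
    ("T1", "unstructured"): (33, 47),
    ("E1", "structured"): (20, 47),
    ("E1", "unstructured"): (33, 47),
}
LINE_RATE_BPS = {"T1": 1.544e6, "E1": 2.048e6}


def cell_fill_delay_ms(line: str, mode: str, k: int, n_timeslots: int = 1) -> float:
    """Milliseconds needed to collect K payload bytes for one AAL1 cell."""
    lo, hi = FILL_RANGES[(line, mode)]
    if not lo <= k <= hi:
        raise ValueError(f"K={k} is outside {lo}-{hi} bytes for {line} {mode}")
    if mode == "structured":
        rate_bps = n_timeslots * 64_000  # N x 64 kbps
    else:
        rate_bps = LINE_RATE_BPS[line]   # full line rate
    return k * 8 / rate_bps * 1000.0


# A single DS0 takes about 5.9 ms to fill a full 47-byte payload,
# but only about 3.1 ms with a 25-byte partial fill.
print(cell_fill_delay_ms("T1", "structured", 47), cell_fill_delay_ms("T1", "structured", 25))
```

Partial fill matters most for structured service with few timeslots, where the fill rate is lowest; at full T1 line rate, a 47-byte cell fills in well under a millisecond.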

CESM-8T1/E1 Peak Cell Rate Calculation

The CESM-8T1/E1 peak cell rate (PCR) calculations use the following parameters and formulas:

•

Partial Fill Value (K bytes)

•

SDT Timeslots (N channels)

•

Unstructured T1 cell rate

–

(1.544 x 10^6 bps)/(K octets/cell x 8 bits/octet)

–

4107 cps (for K = 47 bytes)

•

Unstructured E1 cell rate

–

(2.048 x 10^6 bps)/(K octets/cell x 8 bits/octet)

–

5447 cps (for K = 47 bytes)

•

Structured N x 64 cell rate, basic mode

–

(8000 x N) / (K octets/cell)

–

170 cps (for K = 47 and N = 1)

Structured Data Transfer is a feature specific to the CESM-8T1/8E1.
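The peak cell rate formulas above can be evaluated with the short sketch below. It returns exact (unrounded) rates; the figures listed in the text are rounded to whole cells per second. The function names are hypothetical.

```python
# Sketch only: direct evaluation of the PCR formulas; names are hypothetical.

def unstructured_pcr(line_rate_bps: float, k: int = 47) -> float:
    """Cells/s for unstructured (UDT) service: line rate / (K octets x 8 bits)."""
    return line_rate_bps / (k * 8)


def structured_pcr(n_timeslots: int, k: int = 47) -> float:
    """Cells/s for structured N x 64 basic mode: 8000 frames/s x N octets / K."""
    return 8000 * n_timeslots / k


print(f"Unstructured T1, K=47: {unstructured_pcr(1.544e6):.1f} cps")
print(f"Unstructured E1, K=47: {unstructured_pcr(2.048e6):.1f} cps")
print(f"Structured, K=47, N=1: {structured_pcr(1):.1f} cps")
print(f"Structured, K=25, N=24: {structured_pcr(24, k=25):.1f} cps")
```

The last line shows the cost of partial fill: shrinking K raises the cell rate the connection consumes for the same payload.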

T1/E1 Clocking Mechanism

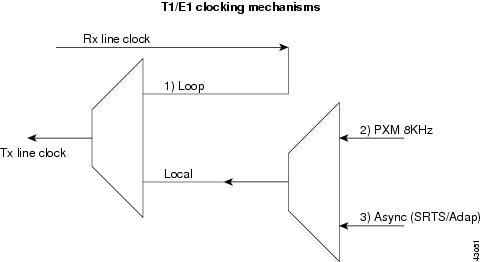

The CESM card provides the choice of physical interface Tx clock from one of the following sources (see Figure 4-1):

1.

Loop clocking derived from Rx line clock.

2.

MGX local switch clock derived on the PXM1 (Synchronous).

3.

SRTS or adaptive clock recovery (for T1/E1 unstructured asynchronous mode only).

Figure 4-1 T1/E1 Clocking Mechanisms

Asynchronous Clocking (SRTS)

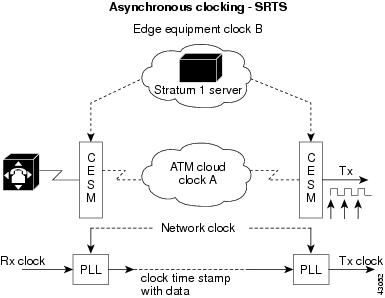

Synchronous Residual Time Stamp (SRTS) clocking requires a Primary Reference Source (PRS) and network clock synchronization services. This mode allows user equipment at the edges of an ATM network to use a clocking signal that is different from (and completely independent of) the clocking signal used in the ATM network. However, SRTS clocking can be used only for unstructured (clear channel) CES services.

For example, in Figure 4-2, user equipment at the edges of the network can be driven by clock B, while the devices within the ATM network are driven by clock A. The user-end device introduces traffic into the ATM network according to clock B. The CESM segments the CBR bit stream into ATM cells and measures the difference between user clock B, which drives it, and network clock A. This delta value (the residual time stamp) is carried once every eight cells. As the destination CESM receives the cells, the card not only reassembles the ATM cells into the original CBR bit stream but also reconstructs the user clock B timing signal from the delta value. Thus, during SRTS clocking, CBR traffic is synchronized between the ingress side of the CES circuit and the egress side of the circuit according to user clock signal B, while the ATM network continues to function according to clock A.

Figure 4-2 Asynchronous Clocking
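The residual time stamp idea can be sketched numerically. This follows the general SRTS scheme of ITU-T I.363.1 in simplified form. The sender counts cycles of a derived network clock f_nx (155.52 MHz divided by a power of two so that f_nx is between one and two times the service clock) over N = 3008 service clock cycles (eight cells of 47 octets), and sends only the 4-bit remainder. The receiver, which knows the nominal count, picks the nearest count with that remainder. The sketch ignores how the 4 bits are carried in the AAL1 headers and the filtering over many periods that a real receiver would apply; all names are hypothetical.

```python
# Simplified SRTS sketch; AAL1 header encoding and receiver filtering are not modeled.
import math

F_NETWORK_HZ = 155.52e6  # network reference clock f_n
N_CYCLES = 3008          # service clock cycles per RTS period (8 cells x 47 octets x 8 bits)


def derived_network_clock(f_service_hz: float) -> float:
    """Divide f_n by a power of two so that 1 <= f_nx / f_service < 2."""
    divisor = 1
    while F_NETWORK_HZ / divisor >= 2 * f_service_hz:
        divisor *= 2
    return F_NETWORK_HZ / divisor


def sender_rts(f_service_hz: float, f_nx: float) -> int:
    """Count f_nx cycles during N service clock cycles; transmit only 4 bits."""
    count = math.floor(f_nx * N_CYCLES / f_service_hz)
    return count % 16


def receiver_count(rts: int, f_nominal_hz: float, f_nx: float) -> int:
    """Pick the count nearest the nominal value whose low 4 bits equal rts."""
    nominal = math.floor(f_nx * N_CYCLES / f_nominal_hz)
    delta = (rts - nominal) % 16
    if delta >= 8:  # map 8..15 to -8..-1 so the closest count is chosen
        delta -= 16
    return nominal + delta


t1 = 1.544e6
user_clock = t1 * (1 + 50e-6)      # user clock B, 50 ppm fast (example value)
f_nx = derived_network_clock(t1)   # network-derived clock shared by both ends
rts = sender_rts(user_clock, f_nx)
print(f_nx, rts, receiver_count(rts, t1, f_nx))
```

Only 4 bits cross the network per period, yet the receiver recovers the full count because the user clock can drift only a small amount from nominal within one period.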

Asynchronous Clocking (Adaptive)

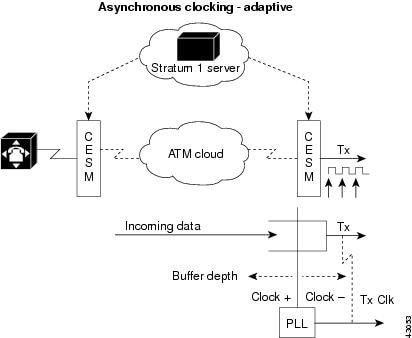

Adaptive clocking requires neither network clock synchronization services nor a global PRS for effective handling of CBR traffic. Rather than using a clocking signal to convey CBR traffic through an ATM network, adaptive clocking infers appropriate timing for data transport by calculating an average data rate for the CBR traffic. However, as with SRTS clocking, adaptive clocking can be used only for unstructured (clear channel) CES services (see Figure 4-3).

For example, if CBR data is arriving at a CES module at a rate of X bits per second, then that rate is used, in effect, to govern the flow of the CBR data through the network. What happens behind the scenes, however, is that the CES module automatically calculates the average data rate. This calculation occurs dynamically as user data traverses the network.

When the CES module senses that its segmentation and reassembly (SAR) buffer is filling up, it increases the rate of the transmit (TX) clock for its output port, thereby draining the buffer at a rate consistent with the rate of data arrival.

Similarly, the CES module slows down the transmit clock of its output port if it senses that the buffer is being drained faster than the CBR data is being received. Adaptive clocking attempts to minimize wide excursions in SAR buffer loading, while at the same time providing an effective means of propagating CBR traffic through the network.

Relative to other clocking modes, implementing adaptive clocking is simple and straightforward. It does not require network clock synchronization services, a PRS, or the advance planning typically associated with developing a logical network timing map. However, adaptive clocking does not support structured CES services, and it exhibits relatively high wander characteristics.

Figure 4-3 Asynchronous Clocking (Adaptive)
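The buffer-driven clock adjustment can be sketched as a simple proportional control loop. This is an assumption for illustration, not the CESM's actual algorithm. The gain, buffer target, time step, and 200 ppm clamp are hypothetical values. The TX clock is nudged up when the SAR buffer is above its target fill and down when it is below, so the output rate converges on the unknown arrival rate.

```python
# Sketch only: proportional control with hypothetical gain, target, and clamp.

def adaptive_clock(f_in_hz: float, f_nominal_hz: float, steps: int = 2000,
                   dt_s: float = 0.01, target_bits: float = 2048.0,
                   gain_hz_per_bit: float = 2.0, max_ppm: float = 200.0):
    """Steer the TX clock from the SAR buffer fill; return (final TX Hz, final fill bits)."""
    max_dev = f_nominal_hz * max_ppm * 1e-6
    fill = target_bits
    f_tx = f_nominal_hz
    for _ in range(steps):
        # Bits arrive at the (unknown) source rate and leave at the current TX rate.
        fill += (f_in_hz - f_tx) * dt_s
        # Buffer above target -> speed the clock up; below target -> slow it down.
        correction = gain_hz_per_bit * (fill - target_bits)
        f_tx = f_nominal_hz + max(-max_dev, min(max_dev, correction))
    return f_tx, fill


f_tx, fill = adaptive_clock(1.544e6 * (1 + 50e-6), 1.544e6)
print(round(f_tx, 3), round(fill, 3))
```

A purely proportional loop settles with a small standing offset in buffer fill rather than at the target itself. That offset is what holds the TX clock at the source rate, and it illustrates why the text describes adaptive clocking as minimizing, not eliminating, excursions in SAR buffer loading.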

CESM Idle Suppression

The CESM T1/E1 card in structured mode can interpret CAS robbed-bit signaling for T1 (ABCD for ESF and AB for SF frames) and CAS for E1 (timeslot 16). The on-hook ABCD code is user configurable per VC (xcnfchan onhkcd = 0-15, where ABCD = 0000 = 0 ... ABCD = 1111 = 15). When an on-hook (idle) state is detected, AAL1 cell transmission is suppressed for the idle channel, thereby reducing the backbone bandwidth consumed. On/off-hook detection and suppression can be enabled or disabled per VC, and on/off-hook states can be forced via SNMP through the NMS.

On the ingress end, the CESM card monitors the signaling bits of the AAL1 cell. Whenever a particular connection goes on-hook or off-hook, the CESM card senses this condition by comparing the ABCD bits in the cell with the preprogrammed idle ABCD code for that channel.

When an on-hook state is detected, keep-alive cells are sent once every second to the far-end CESM. Because no data cells are transmitted during idle suppression, the keep-alive cells prevent the far end from reporting an underrun trap. When the timeslot switches to off-hook, the CESM stops sending keep-alive cells.
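The per-VC idle suppression behavior can be sketched as a small state machine. This is a hypothetical model. The class and method names are invented, and sending the first keep-alive cell immediately on entering the on-hook state is an assumption. The ABCD-to-integer mapping follows the onhkcd convention above, with A as the most significant bit.

```python
# Hypothetical model of CESM idle suppression; names and first-keep-alive timing are assumptions.
from typing import Optional


def abcd_code(a: int, b: int, c: int, d: int) -> int:
    """Map ABCD signaling bits to the 0-15 onhkcd value (A is the most significant bit)."""
    return (a << 3) | (b << 2) | (c << 1) | d


class IdleSuppressor:
    """Decide per tick whether a channel sends data cells, a keep-alive, or nothing."""

    def __init__(self, onhook_code: int, enabled: bool = True, keepalive_s: float = 1.0):
        self.onhook_code = onhook_code  # value configured with xcnfchan onhkcd
        self.enabled = enabled          # detection can be disabled per VC
        self.keepalive_s = keepalive_s  # keep-alive interval while idle
        self.last_keepalive: Optional[float] = None

    def on_tick(self, now_s: float, abcd: int) -> str:
        if not self.enabled or abcd != self.onhook_code:
            self.last_keepalive = None  # off-hook: normal AAL1 cells, no keep-alives
            return "data"
        if self.last_keepalive is None or now_s - self.last_keepalive >= self.keepalive_s:
            self.last_keepalive = now_s  # keeps the far end from raising an underrun trap
            return "keepalive"
        return "suppressed"             # idle channel: no cells sent


vc = IdleSuppressor(onhook_code=abcd_code(0, 1, 0, 1))  # on-hook ABCD = 0101 = 5
print([vc.on_tick(t, code) for t, code in [(0.0, 15), (0.1, 5), (0.5, 5), (1.2, 5), (1.3, 15)]])
```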

CESM-T3/E3 Specific Features

The specific features of the CESM-T3/E3 are as follows:

•

Unstructured Support—Supports T3/E3 unstructured data transfer.

•

Synchronous clocking—Only synchronous timing mode is supported; the clock must be derived from the shelf.

•

Onboard BERT—BERT is supported by an onboard BERT controller; BERT commands are executed on the T3/E3 card.

•

Maximum number of connections—The maximum number of connections is one. In unstructured mode, one logical port represents the T3/E3 line, and one connection is added to the port to emulate the circuit.

CESM-T3/E3 Peak Cell Rate Calculation

The CESM-T3/E3 peak cell rate calculations are as follows. The T3/E3 card supports only unstructured mode and does not support partial fill, so K is always 47 bytes.

•

Unstructured T3 cell rate

–

(44.736 x 10^6 bps)/(47 octets/cell x 8 bits/octet)

–

118980 cps

•

Unstructured E3 cell rate

–

(34.368 x 10^6 bps)/(47 octets/cell x 8 bits/octet)

–

91405 cps

T3/E3 Clocking Mechanisms

The T3/E3 clock configuration is shown in Figure 4-4.

Figure 4-4 T3/E3 Clocking Mechanisms

The CESM card provides the choice of physical interface Tx clock from one of the following sources:

1.

Loop clocking derived from Rx Line Clock.

2.

MGX local switch clock derived on the PXM1 (Synchronous).

ATM Service

The ATM UNI Service Modules (AUSM/Bs) provide native ATM UNI (compliant with ATM Forum Version 3.0 and Version 3.1) interfaces at T1 and E1 speeds, with eight ports per card, providing up to 16 Mbps of bandwidth for ATM service interfaces.

Consistent with Cisco's Intelligent QoS Management features, AUSM/B cards support per-VC queuing on ingress and multiple Class-of-Service queues on egress. AUSM/B cards fully support constant bit rate (CBR), variable bit rate (VBR), unspecified bit rate (UBR), and available bit rate (ABR) service classes.

The AUSM/B-8 cards also support ATM Forum-compliant inverse multiplexing for ATM (IMA). This capability enables multiple T1 or E1 lines to be grouped into a single high-speed ATM port. This N x T1 and N x E1 capability fills the gap between T1/E1 and T3/E3, providing bandwidth up to 12 Mbps (N x T1) or 16 Mbps (N x E1) without requiring a T3/E3 circuit.

A single AUSM/B card can provide hot-standby redundancy for all active AUSM/B cards of the same type in the shelf (1:N redundancy).

AUSM/B modules are supported by standards-based management tools, including SNMP, TFTP (for configuration and statistics collection), and a command line interface. The Cisco WAN Manager application suite also provides full graphical user interface support for connection management, and CiscoView software provides equipment management.

Table 4-3 summarizes the key attributes of the AUSM/B cards.

AUSM/B Key Features

The key features of the AUSM/B card are as follows.

•

T1/E1 ATM UNI or NNI—The ATM ports on the AUSM/B card can be configured to accept either the User-Network Interface (UNI) or Network-Node Interface (NNI) cell header formats.

•

ATM Forum-compliant IMA—The AUSM/B-8 cards also support ATM Forum-compliant inverse multiplexing for ATM (IMA 1.0). This capability enables multiple T1 or E1 lines to be grouped into a single high-speed ATM port. Up to eight IMA groups are supported per module.

•

Policing—The AUSM/B checks for conformance of per-connection traffic contract from CPE. For variable bit rate (VBR) and available bit rate (ABR) connections, peak cell rate (PCR) and sustained cell rate (SCR) are policed. For unspecified bit rate (UBR) and constant bit rate (CBR) connections, only PCR is policed.

•

Ingress per-VC Queuing—Each VC is buffered in separate queues with configurable threshold values for cell loss priority (CLP), explicit forward congestion indicator (EFCI), and early packet discard (EPD).

•

Cell and Frame-Based Traffic Control—The CLP discard capability allows CLP = 1 cells to be selectively discarded during congestion. The following frame- and queue-based controls are also supported:

–

Early Packet Discard (EPD)—An entire data frame is discarded if the queue length exceeds the set threshold.

–

Partial Packet Discard (PPD)—When part of a frame has been discarded because of buffer unavailability, the remaining cells of that frame are also discarded.

–

EFCI—The EFCI bit is set if the queue length exceeds the set threshold.

•

Egress per COS Queuing—Cell traffic is buffered on egress in multiple Class of Service queues (CBR, VBR, ABR, UBR) per port, with configurable CLP and EFCI threshold values.

•

ABR ForeSight—Prestandard ABR closed-loop congestion mechanism used to predict and adjust network traffic to avoid congestion.

•

Connection admission control (CAC)—A means of determining whether a connection request will be accepted or rejected based on the bandwidth available.

•

ILMI, OAM Cells—The Integrated Local Management Interface (ILMI) signaling protocol can be configured on the AUSM/B on a per-port basis. OAM cells are supported for monitoring end-to-end AIS and RDI, and for generating and monitoring segment loopback flows for VPCs and VCCs.

•

System clock extraction—The Model B AUSM/B in the MGX 8230 has the added capability of extracting clocking from the T1/E1 line to feed into the system clock. The MGX 8220 using a Model B AUSM/B will not support this feature.

•

1:N Redundancy—Supported within a group of N+1 AUSM/B cards of the same type on a shelf (with the optional SRM).
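PCR and SCR policing is commonly expressed with the Generic Cell Rate Algorithm (GCRA) from the ATM Forum traffic management specification. The sketch below assumes that model; the text does not say how the AUSM/B implements policing internally. It uses integer time ticks, where the increment is the cell spacing (1/PCR or 1/SCR) and the limit is the tolerance (CDVT, plus burst tolerance for SCR). The class names are hypothetical, and what happens to a non-conforming cell (tagging or discard) is not modeled.

```python
# Sketch only: virtual-scheduling GCRA; treatment of non-conforming cells is not modeled.
from typing import Optional


class Gcra:
    """GCRA(increment, limit) in its virtual-scheduling form, with integer ticks."""

    def __init__(self, increment: int, limit: int):
        self.increment = increment  # ticks between cells at the policed rate
        self.limit = limit          # tolerance in ticks
        self.tat = 0                # theoretical arrival time of the next cell

    def conforms(self, t: int) -> bool:
        return t >= self.tat - self.limit

    def update(self, t: int) -> None:
        self.tat = max(t, self.tat) + self.increment


class Policer:
    """PCR-only policing (CBR, UBR) or PCR plus SCR policing (VBR, ABR)."""

    def __init__(self, pcr: Gcra, scr: Optional[Gcra] = None):
        self.pcr = pcr
        self.scr = scr

    def police(self, t: int) -> bool:
        ok = self.pcr.conforms(t) and (self.scr is None or self.scr.conforms(t))
        if ok:  # only conforming cells advance the schedules
            self.pcr.update(t)
            if self.scr is not None:
                self.scr.update(t)
        return ok


cbr = Policer(Gcra(increment=10, limit=0))
print([cbr.police(t) for t in (0, 10, 15, 20)])  # the cell at t=15 arrives too early

vbr = Policer(Gcra(increment=1, limit=0), Gcra(increment=4, limit=4))
print([vbr.police(t) for t in range(5)])        # SCR tolerance admits a 2-cell burst
```

Checking both buckets before updating either keeps a cell rejected by the SCR test from consuming PCR credit.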

AUSM/B Ports

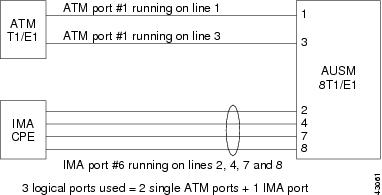

On the AUSM/B card, the term "port" is used to collectively refer to two types of logical interfaces: ATM T1/E1 ports and IMA groups. ATM ports are defined on a T1 or E1 line and one port is mapped to one line. IMA groups are composed of a logical grouping of lines defined by the user.

In total, the AUSM/B-8T1/E1 can support a maximum of eight logical ports (see Figure 4-5), some of which can be ATM T1/E1 ports and some IMA ports. An ATM T1/E1 port numbered i precludes an IMA port numbered i. These logical port numbers are assigned by the user as part of configuration. The terms IMA group and IMA port are used synonymously.

The bandwidth of the logical port or IMA group is equal to:

(number of links) * (T1/E1 speed - overhead of IMA protocol)

Figure 4-5 AUSM/B Ports
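The bandwidth formula above can be made concrete with a small calculation. This sketch assumes the only IMA protocol overhead is one ICP cell per IMA frame of M = 128 cells (the frame length the AUSM/B supports). It ignores ATM cell header overhead, physical-layer framing, and any other IMA control cells. The names are hypothetical.

```python
# Sketch only: counts ICP overhead alone; other overheads are ignored.
LINE_RATE_BPS = {"T1": 1.544e6, "E1": 2.048e6}


def ima_group_bandwidth_bps(line: str, links: int, frame_length: int = 128) -> float:
    """(number of links) x (line rate - IMA overhead), with one ICP cell per frame."""
    if not 1 <= links <= 8:
        raise ValueError("the AUSM/B has eight lines, so a group has 1-8 links")
    icp_overhead = 1 / frame_length  # fraction of cell slots carrying ICP cells
    return links * LINE_RATE_BPS[line] * (1 - icp_overhead)


for line in ("T1", "E1"):
    print(line, [round(ima_group_bandwidth_bps(line, n) / 1e6, 3) for n in (1, 4, 8)])
```

With eight links, this gives roughly 12.26 Mbps for T1 and 16.26 Mbps for E1, in line with the 12 Mbps and 16 Mbps figures quoted for the AUSM/B.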

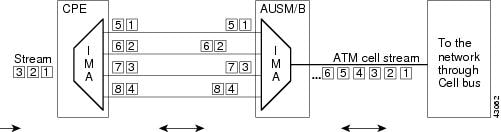

AUSM/B-IMA

IMA offers the user a smooth migration from T1/E1 bandwidth to n * T1/E1 bandwidth without having to use a T3/E3. Multiple T1/E1 lines form a logical pipe called the IMA group.

The IMA group is based on cell-based inverse multiplexing, whereby the stream of incoming cells is distributed over multiple T1/E1 lines (sometimes referred to as links) on a per-cell basis in a cyclic round-robin manner (see Figure 4-6). At the far end, the cells are recombined and the original stream is recreated. From the perspective of the application and the rest of the network, the inverse multiplexing function is transparent, and the IMA group is viewed as any other logical port.

T1/E1 lines routed through different paths (for example, through different carriers) are supported within the same IMA group. The ingress end compensates for the differential delay among individual links in an IMA group.

The maximum link differential delay is 275 ms for T1 and 200 ms for E1.

Within an IMA group, each line is monitored, and lines with persistent errors are taken out of the data round-robin. A link is activated again when it is clear of errors at both ends.

The connectivity test procedure allows misconnected links to be detected. A test pattern is sent on one link of the IMA group, and the far-end (FE) IMA group loops back the test pattern on all links in the group.

Figure 4-6 AUSM/B-IMA Ports

IMA Protocol

IMA protocol is based on IMA framing. An IMA frame is defined as M consecutive cells transmitted on each link in an IMA group. The ATM Forum requires IMA implementations to support M = 128 cells and optionally M = 32, 64, and 256. The current AUSM/B implementation supports only a frame length of 128 cells. The transmitting IMA aligns the transmission of IMA frames on all links within the group.

The IMA protocol uses two types of control cells: IMA Control Protocol (ICP) cells and Filler Cells.

•

ICP cells' primary functions are to maintain IMA frame synchronization and protocol control. These control cells carry a variety of information, such as near-end and far-end transmit and receive states, defect indicators for all links, and group states. An ICP cell is sent on each link once per IMA frame; with M = 128, each link therefore carries one ICP cell for every 127 other cells.

•

Filler cells are sent over links that are not part of the data round-robin. When there is no data to be sent on a link, the sending device transmits filler cells to keep the round-robin process in sync.

ICP and Filler cells have VPI=0, VCI=0, PTI=5, and CLP=1.
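The cell distribution and framing described above can be sketched as a toy transmit scheduler with a matching receiver. The assumptions are as follows. Cells are dealt to links round-robin, one cell slot at a time across all links. Each link carries one ICP cell at the same fixed offset in every frame of M cells; in real IMA, the ICP offset can differ per link. A link with no data cell to send emits a filler cell. Cells are plain strings, and the function names are hypothetical.

```python
# Toy IMA model: ICP offset, string cells, and names are assumptions.
from collections import deque


def ima_transmit(cells, links: int, frame_length: int = 128, icp_offset: int = 0):
    """Build one IMA frame per link; return (frames, cells left for the next frame)."""
    queue = deque(cells)
    frames = [[] for _ in range(links)]
    for slot in range(frame_length):
        for link in range(links):  # visit links in cyclic order for each cell slot
            if slot == icp_offset:
                frames[link].append("ICP")
            elif queue:
                frames[link].append(queue.popleft())
            else:
                frames[link].append("FILLER")  # no data: keep the round-robin in sync
    return frames, list(queue)


def ima_receive(frames):
    """Recombine data cells in the same slot and link order, dropping ICP and filler cells."""
    out = []
    for slot in range(len(frames[0])):
        for link_frame in frames:
            cell = link_frame[slot]
            if cell not in ("ICP", "FILLER"):
                out.append(cell)
    return out


frames, rest = ima_transmit(["c0", "c1", "c2", "c3", "c4"], links=2, frame_length=4)
print(frames)               # each link starts with ICP; the last slot on link 1 is FILLER
print(ima_receive(frames))  # original cell order restored
```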

AUSM/B IMA Features

IMA allows for diverse routing of T1/E1 lines in the IMA group. The ingress end of the IMA port compensates for differential delay among the lines within a set limit. The maximum configurable delay for a T1 is 275 ms and the maximum configurable delay for an E1 is 200 ms.

The IMA group also provides for a level of resiliency. The user can configure a minimum number of links that must be active in order for the IMA group to be active. This allows the IMA group to still carry data traffic even during line failures (errors, signal loss) as long as the number of lines active does not fall below the user-configured value of the minimum number of links.

Manual line deletion and addition to an IMA group can be performed without any data loss. If you plan to create an IMA group eventually, configure the line as an IMA group from the start, because later additions and deletions to an existing group do not disrupt service.

Lines that experience bit errors are detected and automatically removed if the errors are persistent. The threshold for line removal is not user configurable and is set at two consecutive errored IMA frames on a line. The line is automatically added back when frame synchronization is recovered.

The AUSM/B supports only Common Transmit Clock (CTC) mode of operation, whereby the same clock is used for all links in IMA group.

The IMA implemented on the AUSM/B is compliant with the ATM Forum IMA 1.0 Specification. ATM Forum-compliant IMA 1.0 interoperability testing has been conducted with the Cisco 2600/3600, ADC Kentrox AAC-3, Larscom NetEdge and Orion 4000, and Nortel Passport.

The differences between Forum-compliant IMA and the previous proprietary (prestandard) IMA are as follows:

•

Active IMA Group Requirements

–

Prestandard IMA—The maximum number of failed links is configured. The number of errored links must be fewer than the configured limit for the group to be active.

–

Forum-compliant IMA—The minimum number of links out of error is configured. The number of active links must be greater than or equal to the configured minimum.

•

FC-IMA does not support the inband addition of links (this is not part of the IMA 1.0 Specification).

–

Prestandard IMA allowed for automatic configuration at the Far End when a link was added at the Near End.

–

In Forum-Compliant IMA, the automatic configuration at the far end is not supported and thus requires the user to perform the line addition at both ends of the IMA group.
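The two group-activation rules and the automatic link removal described in this section can be compared in a small sketch. The two-consecutive-errored-frames threshold comes from the text. Modeling recovery of frame synchronization as the next error-free frame is a simplifying assumption, and all names are hypothetical.

```python
# Sketch only: recovery is simplified to "next error-free frame"; names are hypothetical.

def forum_group_active(active_links: int, min_links: int) -> bool:
    """Forum-compliant IMA: active links must be >= the configured minimum."""
    return active_links >= min_links


def prestandard_group_active(errored_links: int, max_failed_links: int) -> bool:
    """Prestandard IMA: errored links must be fewer than the configured limit."""
    return errored_links < max_failed_links


class ImaLink:
    """Tracks one link; removed after two consecutive errored IMA frames."""

    REMOVE_AFTER = 2  # fixed threshold, not user configurable

    def __init__(self) -> None:
        self.consecutive_errored = 0
        self.in_round_robin = True

    def frame_received(self, errored: bool) -> None:
        if errored:
            self.consecutive_errored += 1
            if self.consecutive_errored >= self.REMOVE_AFTER:
                self.in_round_robin = False
        else:
            self.consecutive_errored = 0
            self.in_round_robin = True  # simplification: sync recovered, link re-added


links = [ImaLink() for _ in range(4)]
links[0].frame_received(errored=True)
links[0].frame_received(errored=True)  # second consecutive errored frame: link removed
active = sum(link.in_round_robin for link in links)
print(active, forum_group_active(active, min_links=3), forum_group_active(active, min_links=4))
```

With four links and a minimum of three, the group stays active after one link is removed; with a minimum of four, the same failure takes the group down.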

Voice Service—VISM

The Voice Interworking Service Module (VISM) is a high-performance voice module for the Cisco MGX 8230, MGX 8250 and MGX 8850 series wide-area IP+ATM switches. This module is suitable for all service provider voice applications and offers highly reliable standards-based support for voice over ATM and voice over IP.

The VISM provides toll-quality voice, fax and modem transmission and efficient utilization of wide-area bandwidth through industry standard implementations of echo cancellation, voice-compression and silence-suppression techniques.

Service Provider Applications

The MGX 8230 with VISM is the industry's most flexible and highest-density packet voice solution, giving customers the capability to provide VoIP, VoMPLS, VoAAL1, and VoAAL2 and thus enabling service providers to deliver new revenue-generating voice services using their existing network infrastructure.

Point-to-Point Trunking

Service providers worldwide are rushing to move their growing voice traffic onto packet-based infrastructure and stop further expenditure on TDM equipment. With its standards-based AAL2 implementation, the MGX/VISM can provide a cost-effective solution for an integrated voice and data network. By moving all point-to-point TDM voice traffic onto the packet network, cost savings of up to five times can be achieved through efficient use of compression, voice activity detection, and AAL2 sub-cell multiplexing, while guaranteeing transparency of all existing voice services. In addition to the immediate bandwidth savings, the trunking application realizes all the benefits of a single voice and data network. Migration to switched voice services can easily be done by introducing a softswitch, without any changes to the MGX/VISM platform.

Integrated Voice/Data Access

With the MGX/VISM and access products such as the Cisco MC3810, service providers can now offer integrated voice and data services on a single line (T1/E1) to their enterprise customers. By eliminating the high cost of disparate voice and data networks, service providers can build a single network that will enable them to deliver current and future voice and data services.

At the customer premises, the MC3810 acts as a voice and data aggregator. All of the customer's voice traffic (from the PBX) and data traffic (from the routers) is fed into the MC3810. AAL2 PVCs are established between the CPE device and the VISM. Enabling VAD and compression yields significant bandwidth savings. Connection Admission Control (CAC) can be used to control bandwidth utilization for voice traffic. All voice signaling traffic is passed transparently from the VISM to the PSTN. CAS is transported over AAL2 type 3 packets, and CCS is transported over AAL5.

Switched Voice Applications

The VISM supports the industry-standard Media Gateway Control Protocol (MGCP) for interworking with a variety of softswitches (refer to eco-system partners) to offload TDM voice onto packet networks. Together with a softswitch (call agent), the VISM can provide switched voice capability for local tandem, long-distance tandem, and local services. In conjunction with a softswitch, the VISM can act as a high-density PSTN gateway for H.323- and SIP-based networks.

Core Functions

The VISM card provides the following voice processing services to support voice over ATM networks:

1.

Voice Compression

The VISM supports the following standards-based voice coding schemes:

–

ADPCM (G.726)

–

CS-ACELP (G.729a/b)

Support for a range of compression schemes lets customers select the voice quality and bandwidth savings appropriate for their applications: 32-kbps ADPCM and 8-kbps CS-ACELP compression provide high-quality, low-bit-rate voice while reducing total bandwidth requirements.

2.

Voice Activity Detection

Voice activity detection (VAD) uses the latest digital-signal processing techniques to distinguish between silence and speech on a voice connection. VAD reduces the bandwidth requirements of a voice connection by not generating traffic during periods of silence in an active voice connection. Comfort noise generation is supported. VAD reduces bandwidth consumption without degrading voice quality. When combined with compression, VAD achieves significant bandwidth savings.

3.

Onboard Echo Cancellation

The VISM uses digital signal processor (DSP)-based echo cancellation to provide near-end echo cancellation on a per-connection basis. Up to 128 ms of user-configurable near-end delay can be canceled. Onboard echo cancellation reduces equipment cost and potential points of failure, and facilitates high-quality voice connections. The echo canceller complies with ITU standards G.164, G.165, and G.168.

4.

Fax and Modem Detection

The VISM continually monitors and detects fax and modem carrier tones. When a carrier tone is detected from a modem or a fax, the channel is upgraded to PCM to ensure transparent connectivity. Fax and modem tone detection ensures compatibility with all voice-grade data connections.

5.

QOS

The VISM takes full advantage of the Quality of Service (QoS) mechanisms available for IP+ATM networks. IP ToS and precedence values are configurable on the VISM. For VoIP, either the RPM (the integrated routing module on the MGX) or an external router can be used for advanced QoS mechanisms such as traffic classification, congestion avoidance, and congestion management. In conjunction with the RPM, the VISM can also take advantage of the QoS characteristics of MPLS networks (VoMPLS). The MGX's advanced traffic management capabilities, combined with its intelligent QoS management suite, let the VISM support voice services that need predictable delay and reliable transport.

6.

Integrated Network Management

Cisco WAN Manager (CWM) is a standards-based network and element management system that enables operations, administration, and maintenance (OA&M) of the VISM and its shelf. CWM provides an open API for seamless integration with OSS and third-party management systems.
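The voice activity detection described in item 2 can be illustrated with a simple frame-energy detector. This is a minimal sketch, not the VISM's DSP algorithm: it assumes 16-bit linear PCM frames, and the threshold and hangover values (`threshold_db`, `hangover`) are hypothetical tuning parameters.

```python
import math


def frame_energy_db(frame):
    """Mean power of one frame of 16-bit linear PCM samples, in dB."""
    power = sum(s * s for s in frame) / len(frame)
    return 10 * math.log10(power) if power > 0 else -math.inf


def vad(frames, threshold_db=30.0, hangover=2):
    """Flag each frame as speech (True) or silence (False).

    A frame counts as speech when its energy exceeds threshold_db; speech is
    held for `hangover` further frames so word endings are not clipped.
    Both values are hypothetical tuning parameters.
    """
    flags, hold = [], 0
    for frame in frames:
        if frame_energy_db(frame) > threshold_db:
            hold = hangover
            flags.append(True)
        elif hold > 0:
            hold -= 1
            flags.append(True)
        else:
            flags.append(False)  # silence: no packet sent, far end plays comfort noise
    return flags
```

Frames flagged as silence would generate no traffic, which is where the bandwidth saving comes from; the hangover trades a little of that saving for less clipping.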
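The 128-ms echo tail quoted in item 3 corresponds to 1024 filter taps at the 8-kHz telephony sampling rate. The sketch below uses a normalized least-mean-squares (NLMS) adaptive filter, a standard technique for line echo cancellers; the VISM's actual DSP implementation is not documented here, and the step size `mu` is an assumed value.

```python
SAMPLE_RATE_HZ = 8000                            # narrowband telephony
TAIL_MS = 128                                    # maximum echo tail quoted for the VISM
TAIL_TAPS = SAMPLE_RATE_HZ * TAIL_MS // 1000     # 1024 filter taps


def nlms_cancel(far_end, near_end, taps=TAIL_TAPS, mu=0.5, eps=1e-6):
    """Subtract an adaptive estimate of the far-end echo from the near-end signal.

    Returns the residual (echo-cancelled) samples.
    """
    w = [0.0] * taps          # adaptive estimate of the echo path
    x = [0.0] * taps          # most recent far-end samples, newest first
    out = []
    for f, d in zip(far_end, near_end):
        x = [f] + x[:-1]
        y = sum(wi * xi for wi, xi in zip(w, x))        # predicted echo
        e = d - y                                       # residual sent onward
        norm = sum(xi * xi for xi in x) + eps           # input power normalization
        w = [wi + mu * e * xi / norm for wi, xi in zip(w, x)]
        out.append(e)
    return out
```

A short filter (for example `taps=16`) is enough to try the sketch on a synthetic echo path; the full 1024-tap filter is far too slow in pure Python and is shown only to connect the tap count to the 128-ms figure.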
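Detecting the fax and modem carrier tones mentioned in item 4 only requires measuring energy at a few known frequencies, which the Goertzel algorithm does cheaply. This sketch checks for the 1100-Hz fax calling (CNG) tone and the 2100-Hz answer tone; the relative-power threshold of 0.25 and the block length used in the example are assumptions, and the VISM's own detector may work differently.

```python
import math

# Tones of interest: fax calling tone (CNG) and the answer tone used by fax and modems.
TONES = {"fax_cng": 1100, "answer_tone": 2100}


def goertzel_power(samples, freq_hz, rate_hz=8000):
    """Squared spectral magnitude of the samples at freq_hz (Goertzel algorithm)."""
    coeff = 2 * math.cos(2 * math.pi * freq_hz / rate_hz)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def detect_tones(samples, threshold=0.25, rate_hz=8000):
    """Return the names of the tones that dominate this block of samples.

    The Goertzel power is normalized by len(samples) * signal energy, which is
    about 0.5 for a pure tone at the tested frequency; threshold is assumed.
    """
    energy = sum(x * x for x in samples)
    if energy == 0:
        return set()
    n = len(samples)
    return {name for name, freq in TONES.items()
            if goertzel_power(samples, freq, rate_hz) / (n * energy) > threshold}
```

For example, a 205-sample block (about 25 ms) of a pure 2100-Hz sine returns `{"answer_tone"}`; on a detection, the channel would be upgraded to PCM as the text describes.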

Key Features

The VISM uses high performance digital signal processors and dual control processors with advanced software to provide a fully non-blocking architecture that supports the following functions:

•

VoIP using RTP (RFC 1889)—VISM Release 1.5 supports standards-based VoIP using the RTP (RFC 1889) and RTCP protocols, which allows the VISM to interwork with other VoIP gateways.

•

VoAAL2 (with sub-cell multiplexing) PVC—The VISM supports standards-compliant AAL2 adaptation for the transport of voice over an ATM infrastructure. AAL2 trunking mode is supported.

•

Codec Support—G.711 PCM (A-law, µ-law), G.726, G.729a/b.

•

8 T1/E1 Interfaces—The VISM supports 8 T1 or 8 E1 interfaces when G.711 PCM coding is used. For higher complexity coders such as G.726-32K and G.729a-8K, the density drops to 6 T1 or 5 E1 interfaces (max 145 channels).

•

1:N redundancy using SRM.

•

T3 interfaces (via SRM bulk distribution)—T3 interfaces are supported using the SRM bulk distribution capability. In this case, the T3 interfaces are physically terminated at the SRM module. The SRM module breaks out the individual T1s and distributes the T1s via the TDM backplane bus to the individual VISM cards for processing.

•

Echo Cancellation—VISM provides on-board echo cancellation on a per-connection basis. Up to 128 ms of user-configurable near-end delay can be canceled. The echo cancellation is compliant with ITU G.165 and G.168 specifications.

•

Voice Activity Detection (VAD)—VISM uses VAD to distinguish between silence and voice on an active connection. VAD reduces the bandwidth requirements of a voice connection by not generating traffic during periods of silence in an active voice connection. At the far end, comfort noise is generated.

•

Fax/modem detection for ECAN and VAD control—VISM continually monitors and detects fax and modem carrier tones. When carrier tone from a fax or modem is detected, the connection is upgraded to full PCM to ensure transparent connectivity. Fax and modem tone detection ensures compatibility with all voice-grade data connections.

•

CAS tunneling via AAL2 (for AAL2 trunking mode)—VISM in AAL2 mode facilitates transport of CAS signaling information. CAS signaling information is carried transparently across the AAL2 connection using type 3 packets. In this mode, VISM does not interpret any of the signaling information.

•

PRI tunneling via AAL5 (for AAL2 trunking mode)—VISM supports transport of D-channel signaling information over an AAL5 VC. The signaling channel is carried transparently over the AAL5 VC and delivered to the far end. In this mode, VISM does not interpret any of the signaling messages.

•

Voice CAC—VISM can be configured to administer Connection Admission Control (CAC) so that the bandwidth distribution between voice and data can be controlled in AAL2 mode.

•

Type 3 packet for DTMF—VISM in AAL2 mode facilitates transport of DTMF signaling information. DTMF information is carried transparently across the AAL2 connection using type 3 packets.

•

Dual (Redundant) PVCs for bearer/control—VISM provides the capability to configure two PVCs for bearer/signaling traffic terminating on two external routers (dual-homing). VISM continually monitors the status of the active PVC by using OAM loopback cells. Upon detection of failure, the traffic is automatically switched over to the backup PVC.

•

64 K clear channel transport—VISM supports 64-kbps clear channel transport. In this mode, all codecs are disabled and the data is transported transparently through the VISM.

•

DTMF relay for G.729—In VoIP mode, DTMF signaling information is transported across the connection using RTP NSE (Named Signaling Event) packets.

•

MGCP 0.1 for VoIP with Softswitch control—VISM supports Media Gateway Control Protocol (MGCP) Version 0.1. This open protocol allows any Softswitch to interwork with the VISM module.

•

Resource coordination via Simple Resource Control Protocol (SRCP)—provides a heartbeat mechanism between the VISM and the softswitch. In addition, SRCP also provides the softswitch with gateway auditing capabilities.

•

Full COT functions—VISM can initiate continuity tests and can provide loopbacks for continuity tests originated from the far end.

•

Courtesy Down—Provides a mechanism for graceful upgrades. When this feature is enabled, no new calls are allowed on the VISM, but existing calls are not disrupted. When no active calls remain, the card is ready for an upgrade or service interruption.

•

PRI backhaul to the Softswitch using RUDP—Provides PRI termination on the VISM with the Softswitch providing call control. ISDN layer 2 is terminated on the VISM and the layer 3 messages are transported to the softswitch using RUDP.

•

Latency Reduction (<60 ms round trip)—Significant improvements have been made to bring the round-trip delay to less than 60 ms.

•

Codecs Preference—VISM can negotiate the codec between the two endpoints of a call. For a given endpoint, the VISM can be configured with a prioritized list of codecs. Codec negotiation can take place directly between the endpoints or be controlled by a softswitch.

•

31 DS0 for E1 with 240 channels only—All 31 DS0s on an E1 port can be used, but there is a limit of 240 channels per card.

•

New and enhanced CLI commands.

•

A-law and µ-law encoding on any channel

•

Fax and modem upspeed to G.711 for reliable transfer

•

Redundant PVCs for bearer and signaling (MGCP, and so on) traffic

•

1:N Redundancy with standby switchover

•

AAL5 connections for OAM, management and signaling transport are supported.

•

Standard utilities support for configuration, status, statistics collection, card/port/connection management.

•

BERT and loopback support using SRM-3T3 or SRM-T1E1.

•

Loop timing and payload and line loopbacks supported.

•

Supports a wide variety of compression schemes and silence suppression for efficient bandwidth utilization.

•

Statistics collection.

•

Standards-based alarm and fault management.

•

Simple Network Management Protocol (SNMP) configuration and access.
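G.711 µ-law, listed under codec support, compresses each 16-bit linear sample into an 8-bit code made of a sign bit, a 3-bit segment number, and a 4-bit step within the segment. The following is a minimal sketch of the classic bias-and-segment encoder (bias 0x84, clip level 32635); it illustrates the coding rule, not the VISM's DSP firmware.

```python
BIAS = 0x84      # 132, added to the magnitude before the segment lookup
CLIP = 32635     # largest magnitude that stays in range after adding the bias


def linear_to_ulaw(sample):
    """Encode one 16-bit signed linear PCM sample as a G.711 µ-law byte."""
    sign = 0x80 if sample < 0 else 0x00
    magnitude = min(abs(sample), CLIP) + BIAS
    exponent = (magnitude >> 7).bit_length() - 1       # segment number 0..7
    mantissa = (magnitude >> (exponent + 3)) & 0x0F    # 4-bit step within the segment
    return (sign | (exponent << 4) | mantissa) ^ 0xFF  # µ-law codes are sent inverted
```

For example, silence (0) encodes to 0xFF, and full-scale positive and negative samples encode to 0x80 and 0x00.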
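For the VoIP mode, each voice packet starts with the 12-byte RTP fixed header defined in RFC 1889. This sketch packs that header with Python's `struct` module, assuming no padding, header extension, or contributing sources; payload types 0 (PCMU) and 18 (G729) are the values registered with IANA for the RTP audio/video profile.

```python
import struct


def rtp_header(seq, timestamp, ssrc, payload_type, marker=False):
    """Pack the 12-byte RTP fixed header (version 2, no CSRC list)."""
    byte0 = 2 << 6                                   # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
```

At the 8-kHz sampling clock, the timestamp advances by 160 for each 20 ms of audio, while the sequence number advances by one per packet.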
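Sub-cell multiplexing in AAL2 lets several short voice packets share one cell: each CPS packet carries a 3-byte header (CID, LI, UUI, HEC), and packets are packed back to back into the 47 bytes left after each cell's 1-byte start field, straddling cell boundaries when needed. The sketch below counts the cells needed for one packetization interval; it ignores partially filled cells sent on timer expiry, and the packet sizes in the example are assumed values.

```python
import math

CPS_HEADER = 3         # CID (8 bits) + LI (6) + UUI (5) + HEC (5) = 24 bits
CPS_PDU_PAYLOAD = 47   # 48-byte cell payload minus the 1-byte start field


def cells_needed(packets, payload_bytes):
    """Cells needed to carry `packets` CPS packets of payload_bytes each,
    packed back to back across cell boundaries."""
    total = packets * (payload_bytes + CPS_HEADER)
    return math.ceil(total / CPS_PDU_PAYLOAD)
```

For example, 24 channels each sending a 20-byte packet need 12 cells, compared with 24 cells if every packet occupied a cell of its own.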
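The dual-PVC feature monitors the active PVC with OAM loopback cells and moves traffic to the backup PVC on failure. A hypothetical model of that decision follows; the `DualPvc` class and the three-consecutive-miss threshold are assumptions for illustration, not VISM parameters.

```python
class DualPvc:
    """Active/backup PVC selection driven by OAM loopback results (hypothetical)."""

    MISS_LIMIT = 3   # assumed number of consecutive lost loopback cells before switching

    def __init__(self, primary, backup):
        self.active, self.standby = primary, backup
        self.misses = 0

    def on_loopback_result(self, ok):
        """Record one loopback result for the active PVC and return the active PVC."""
        if ok:
            self.misses = 0
        else:
            self.misses += 1
            if self.misses >= self.MISS_LIMIT:
                # Fail over: the backup becomes active and is monitored from now on.
                self.active, self.standby = self.standby, self.active
                self.misses = 0
        return self.active
```

Requiring several consecutive misses avoids switching on a single lost OAM cell.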
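The codec-preference feature picks, from an endpoint's prioritized list, the first codec that the other endpoint also supports. A minimal sketch of that selection rule; the codec name strings are hypothetical labels, not VISM configuration keywords.

```python
def negotiate_codec(local_prefs, remote_supported):
    """Return the highest-priority local codec the remote endpoint supports, else None."""
    remote = set(remote_supported)
    for codec in local_prefs:          # local_prefs is ordered best-first
        if codec in remote:
            return codec
    return None
```

When a softswitch controls negotiation, it would apply a rule like this on behalf of both endpoints.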

VISM Physical Interfaces

Two front cards, VISM-8T1 and VISM-8E1, are available for the MGX 8230 platform. Each has eight T1 or E1 line interfaces.

The following 8-port back cards are used:

•

RJ48-8T1-LM

•

RJ48-8E1-LM

•

SMB-8E1-LM

•

R-RJ48-8T1-LM

•

R-RJ48-8E1-LM

•

R-SMB-8E1-LM

Redundancy

The VISM redundancy strategy is the same as for any of the 8-port cards in the MGX 8230 switch. For the VISM-8T1, 1:N redundancy is supported through the line modules (LMs) using the SRM-3T3 or the SRM-T1E1, and through the distribution bus using the SRM-3T3.

Physical Layer Interface T1

The physical layer interface T1 provides the following features:

Physical Layer Interface E1

The physical layer interface E1 provides the following features:

VISM Card General

The VISM card provides the following general features:

Maintenance/Serviceability:

–

Internal Loopbacks

–

Hot-pluggability

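| Component | Part number | Dimensions |
|---|---|---|
| VISM front card | AX-VISM-8T1/8E1 | 7.25" x 16.25" |
| VISM line module | AX-RJ48-8T1-LM | 7.0" x 4.5" |
| VISM line module | AX-R-RJ48-8T1-LM | 7.0" x 4.5" |
| VISM line module | AX-RJ48-8E1-LM | 7.0" x 4.5" |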

Electrical and Safety Standards

The MGX 8230 conforms to the following electrical and safety standards:

•

FCC Part 15

•

Bellcore GR1089-CORE

•

IEC 801-2, 801-3, 801-4, 801-5, 825-1 (Class 1)

•

EN 55022, 60950

•

UL 1950

Service Resource Module

Currently, the SRM-3T3/C is the only Service Resource Module (SRM) supported on the MGX 8230 platform. It is an optional card.

The SRM-3T3/C provides the following major functions for service modules:

•

Built-in Bit Error Rate Tester (BERT) capabilities for individual nxDS0, T1, and E1 lines and ports

•

Loopback capability for individual Nx64 channels toward the customer premises equipment (CPE)

•

Enables 1:N redundancy capability for T1/E1 Service Modules

•

Bulk Distribution (built-in M13 functionality)


A Service Module (such as FRSM, CESM) operates as a front card and back card combination unless it uses the distribution bus. With the bulk distribution capability of the SRM, certain service modules can communicate through the bus on the backplane and forego the use of back cards.

There are a total of two SRMs per node. The PXM1 in slot 1 controls the SRM in slot 7 and the PXM1 in slot 2 controls the SRM in slot 14. A PXM1 switchover will cause an SRM switchover. A switch with redundant PXMs must have redundant SRMs. The SRM-3T3/C in the MGX 8230 supports bulk distribution in all service module slots.

In bulk mode, each of the SRM's T3 lines can support 28 T1s, which it distributes to T1-based service modules in the switch. Up to 64 T1s (eight 8-port T1 cards) per chassis can be supported.

SRM Architecture

The SRM-3T3/C uses the following buses on the MGX 8230 back plane:

•

Local bus—This bus is used to communicate between the Processor Switch Module (PXM1) and the Service Resource Module (SRM).

•

T1/E1 Redundancy bus—The redundancy bus is used to route the T1/E1 signals from a selected service module's line module to the standby module. Access to this bus is controlled by the SRM.

•

BERT bus—The BERT bus is used to run a variety of bit error rate tests (BERTs) on any individual nx56K/64K, T1, or E1 line or port. The BERT function is built into the SRM module. The BERT bus provides all the necessary signals, including the time slot indications required for this purpose.

•

Distribution bus—This bus is used by the SRM-3T3/C to distribute DS1 signals to the service modules. It provides a point-to-point connection between the SRM-3T3/C and each service module slot.

One of the main applications of the SRM-3T3/C is to eliminate the need for individual T1 lines to directly interface with the service modules. Instead, the DS1s are multiplexed inside the T3 lines. The SRM-3T3/C can accept up to three T3 inputs. When the T3 inputs are selected, the SRM-3T3/C assumes asynchronous mapping of DS1s into the T3 signal. It will demultiplex individual DS1 tributaries directly from the incoming T3 and distribute them into the service modules.
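In an M13 multiplexer, a DS3 carries seven DS2s of four DS1s each, which is where the 28 T1s per T3 come from; three T3s therefore present 84 T1s, of which up to 64 can be distributed. The sketch maps a DS1 tributary number to its DS2 group, assuming tributaries are numbered sequentially through the groups (numbering conventions vary between equipment).

```python
DS1_PER_DS2 = 4
DS2_PER_DS3 = 7


def ds1_position(ds1):
    """Map DS1 tributary 1..28 of a DS3 to (DS2 group, DS1 within the group).

    Assumes sequential numbering: DS1s 1-4 are in DS2 #1, 5-8 in DS2 #2, and so on.
    """
    if not 1 <= ds1 <= DS1_PER_DS2 * DS2_PER_DS3:
        raise ValueError("DS1 number must be 1-28")
    return (ds1 - 1) // DS1_PER_DS2 + 1, (ds1 - 1) % DS1_PER_DS2 + 1
```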

SRM-3T3/C Features

SRM-3T3/C features include:

•

Three DS3 interfaces

•

Supports bulk and nonbulk mode of operation

•

Supports multiple groups of 1:N (T1/E1) service module redundancy

•

Provides BERT capabilities

•

Generates OCU/CSU/DSU latching and nonlatching loopback codes

•

Monitors any individual timeslot for any specified DDS trouble code

Interfaces

The SRM-3T3/C has three DS3 (44.736 Mbps +/-40 ppm) interfaces with dual female 75-ohm BNC coaxial connectors per port (separate RX and TX).

Bulk Mode and Nonbulk Mode

Each of the T3 ports can be used to support up to 28 multiplexed T1 lines, which are distributed to T1 service module ports in the switch. This feature, called bulk distribution, is performed when the SRM is in "bulk mode." It allows large numbers of T1 lines to be supported over three T3 lines rather than over individual T1 lines. Up to 64 T1 channels per service bay can be active at any time. Any T1 in a T3 line can be distributed to any of the eight ports on a service module in any slot of the service bay without restriction.

The SRM-3T3/C can also be operated in "nonbulk mode." For a port configured in nonbulk mode, bulk distribution is disabled and the SRM acts as an SRM-T1/E1, providing BERT and 1:N redundancy functions only. A service module port cannot be used for an individual T1 line and for a distributed T1 channel at the same time.

Multiple Groups of 1:N Redundancy

The SRM enables 1:N redundancy for multiple groups of T1/E1 service modules, where a group consists of N active service modules and one standby service module. For example, if both AUSM/B and FRSM cards are installed in the chassis, you can protect each group of cards separately with its own redundant card. The redundant service module in a group must be a functional superset of the cards it protects. When a failure is detected in any of the service modules, the packets destined for the failed service module are carried over the distribution or redundancy bus (depending on whether the SRM is in bulk or nonbulk mode) to the SRM in its chassis. The SRM receives the packets and switches them to the backup service module. Each active SRM provides redundancy for a maximum of 8 service modules. The failed service module must be replaced and service switched back to the original service module before protection against new service module failures is available.
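The group behavior described here (one standby protecting N active cards, and no further protection until service is switched back) can be modeled in a few lines. `RedundancyGroup` is a hypothetical class for illustration; the slot numbers follow the Figure 4-7 example, in which slot 6 protects slots 4 and 5.

```python
class RedundancyGroup:
    """One 1:N group: N active slots protected by a single standby slot (hypothetical)."""

    def __init__(self, active_slots, standby_slot):
        self.active_slots = set(active_slots)
        self.standby_slot = standby_slot
        self.covering = None      # active slot whose traffic the standby now carries

    def fail(self, slot):
        """Switch a failed slot to the standby; False if the group is already unprotected."""
        if slot not in self.active_slots or self.covering is not None:
            return False
        self.covering = slot
        return True

    def switch_back(self, slot):
        """Manual switch-back after the failed card has been replaced."""
        if self.covering == slot:
            self.covering = None
```

A second failure in the same group is not covered until `switch_back` restores the standby, matching the requirement that service be switched back before new failures are protected.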

BERT Capabilities

After a service module line or port is put into loopback mode, the SRM can generate a test pattern over the looped line or port, read the received data, and report on the error rate. This operation can be performed on a fractional T1/E1, a T1/E1, or an Nx64K channel/bundle. The SRM can support BERT for only one line or port at a time. If a switchover occurs while BERT testing is in progress, the BERT testing must be re-initiated. BERT capabilities are supported on the FRSM-8T1/E1, AUSM/B-8T1/E1, CESM-8T1/E1, and the FRSM-2CT3.

Other Capabilities

The SRM can also generate OCU/CSU/DSU latching and nonlatching loopback codes and monitor any single timeslot for any specified DDS trouble code.

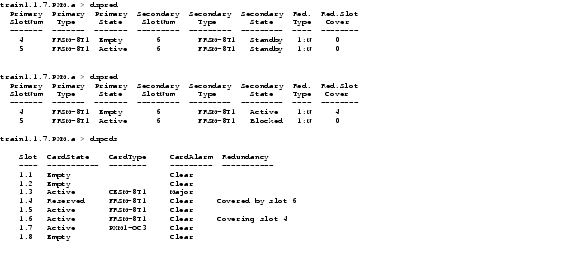

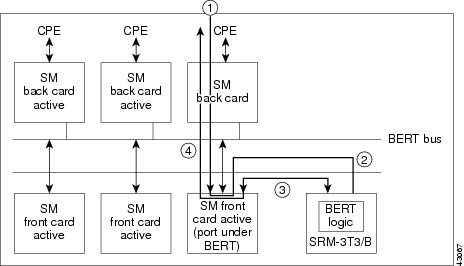

Redundancy

One of the major functions of the SRM-3T3/C is to provide 1:N redundancy. Figure 4-7 illustrates 1:N redundancy. The upper part of the illustration shows that the FRSM-8T1 in slot 6 has been configured to provide 1:N redundancy for the FRSM-8T1s in slots 4 and 5. In the bottom part, the FRSM-8T1 in slot 5 has failed and the one in slot 6 has taken over for the failed service module.

Figure 4-7 1:N Redundancy

1:N Redundancy

Currently, 1:N redundancy is supported only for the 8-port T1/E1 cards (for example, FRSM-8T1, 8E1, 8T1-C, and 8E1-C). Supporting 1:N redundancy for the T3/E3 cards would require a new SRM.

If the system has an SRM-3T3/C, 1:N redundancy can be specified for 8T1/E1 service modules. With 1:N redundancy, a group of service modules has one standby module. When an active card in a group fails, the SRM-3T3/C invokes 1:N redundancy for the group. The back card of the failed service module subsequently directs data to and from the standby service module using the redundancy bus. The SRM-3T3/C can support multiple group failures if the service modules are configured in bulk mode. In this case, the SRM reroutes the T1 data from the failed card to the standby card using the distribution bus.

The standby service module uses a special, redundant version of the back card. The module number of the redundant back card begins with an "R," as in "AX-R-RJ48-8T1".

After the failed card is replaced, you must switch back to normal operation manually; the switch does not do so automatically.

1:1 Redundancy

A 1:1 redundancy is NOT a feature of the SRM. This type of redundancy requires a pair of card sets with a Y-cable for each active line and its redundant standby. Specify one set as active and one set as standby. The configuration is card-level rather than port-level.

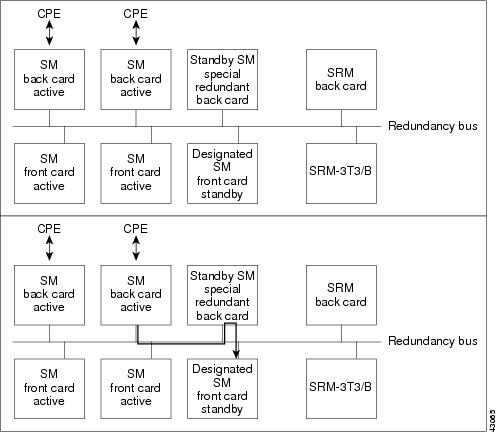

SM Redundancy with Line Modules

Nonbulk mode distribution is a mode of operation where individual T1 lines are directly connected to the line module of each front card. During normal nonbulk mode operation, the T1/E1 data flow is from the service module's line module to its front card and vice-versa. The line modules also contain isolation relays that switch the physical interface signals to a common redundancy bus under SRM-3T3 control in case of service module failure.

When a service module is detected to have failed, the PXM1 initiates a switchover to the standby service module. The relays on the failed service module's line module (all T1/E1s) are switched to drive the signals onto the T1/E1 redundancy bus. The designated standby card's line module (controlled by the SRM-3T3) receives these signals on the T1/E1 redundancy bus. The data path is then from the failed service module's line module, across the T1/E1 redundancy bus, to the line module of the standby service module, and finally to the standby service module itself. The service module redundancy data path is shown in Figure 4-8.

Figure 4-8 SM Redundancy with Line Modules

Line module redundancy is not offered because the line modules contain no service-affecting active devices.

In nonbulk mode, the SRM-3T3 will control the data path relays on the service module's line modules.

The 1:N redundancy is limited to the service bay that the SRM and the service modules are located in. Therefore, each active SRM provides redundancy for a maximum of 8 service modules.

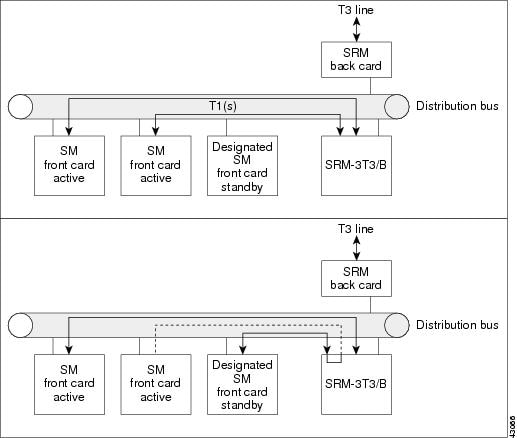

Bulk Mode Distribution/Redundancy

Bulk distribution is a mode of operation in which individual lines are not brought to the service modules; instead, these lines are multiplexed into a few high-speed lines attached to the SRM. The SRM then takes this "bulk" interface, extracts the lines, and distributes them to the service modules. Any cards served by this bulk interface can participate in 1:N redundancy without using the separate redundancy bus. Any T1 in a T3 line can be distributed to any of the eight ports on a service module in any slot of the service bay without restriction.

The 1:N redundancy is limited to the service bay that the SRM and the service modules are located in.

During bulk mode operation, the SRM-3T3/C unbundles T1 data from the incoming T3s and sends it to each service module (see Figure 4-9). Any slot can be used to process T1 data or to house a standby service module. When a service module fails, the PXM1 initiates a switchover to a previously configured standby module. The SRM-3T3/C redirects the recovered T1 traffic to the designated standby module. The switching takes place inside the SRM-3T3/C and requires no special back cards or cabling. The data path to the standby module is still via the Distribution Bus. The Redundancy Bus is NOT used in bulk mode.

The current SRM can support 64 T1/E1s.

Figure 4-9 Bulk Mode Distribution/Redundancy

Loopbacks

The MGX 8230 supports many different types of loops for performance testing. The loop types supported on a card are dependent on the card type and line type. There are three types of loops supported:

•

Local loop—A loop that faces toward the ATM backbone network. Local loops are used to test through the network from a remote MGX 8230.

•

Remote loop—A loop that faces toward the attached end-user equipment. Remote loops are used to test a line or port from a remote test device.

•

Far-end loop—A loop that is implemented on the attached end-user equipment facing back toward the MGX 8230. Far-end loops are used in conjunction with the SRM BERT or to test from a remote MGX 8230 through the network and out to the remote end-user equipment.

Local line loops can also be initiated on the T1/E1 service modules with the addlnloop command; on the T3 service modules, use the addds3loop command. All three line loops are supported by the SRM.

BERT Data Path

The SRM-3T3/C card performs BERT pattern generation and checking for the DS1/DS0 stream. This function is completely separate from the 3T3 distribution features of the SRM-3T3.

The SRM can support BERT on only one line or port at a time. BERT is capable of generating a variety of test patterns, including all ones, all zeros, alternate one zero, double alternate one zero, 2^23-1, 2^20-1, 2^15-1, 2^11-1, 2^9-1, 1 in 8, 1 in 24, DDS1, DDS2, DDS3, DDS4, and DDS5.
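The 2^n-1 pseudorandom patterns are produced by n-stage linear feedback shift registers. This sketch generates three of them with the generator polynomials commonly used for these patterns (x^9 + x^5 + 1, x^11 + x^9 + 1, and x^15 + x^14 + 1, as in ITU-T O.150); it illustrates the pattern structure and is not the SRM's implementation.

```python
# (register length n, feedback tap t) for generator polynomials x^n + x^t + 1
PRBS_TAPS = {9: 5, 11: 9, 15: 14}


def prbs(n, n_bits, seed=None):
    """Return n_bits of the 2^n-1 pseudorandom pattern from an n-stage LFSR."""
    t = PRBS_TAPS[n]
    mask = (1 << n) - 1
    state = mask if seed is None else seed & mask
    if state == 0:
        raise ValueError("an all-zero register never leaves the zero state")
    bits = []
    for _ in range(n_bits):
        # New bit = XOR of the bits produced n and t steps earlier.
        bit = ((state >> (n - 1)) ^ (state >> (t - 1))) & 1
        state = ((state << 1) | bit) & mask
        bits.append(bit)
    return bits
```

Because these polynomials are primitive, each pattern repeats every 2^n-1 bits, and one full period contains exactly 2^(n-1) ones.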

The BERT bus is used to provide the BERT operation to the individual service modules. This bus is also used to drive special codes such as fractional T1 loopback codes, and so on, onto the T1 line. The BERT function is initiated ONLY on one logical T1/E1 N x 64K port per MGX 8230 at any given time and this is controlled by the PXM1. The SRM-3T3 ensures that the BERT patterns are generated and monitored (if applicable) at the appropriate time slots.

The data path for that port (N x 64K) is then from the service module to the SRM-3T3/C (via the BERT bus) and back to the service module (via the BERT bus). On the service module, the transmitted data is switched between the regular data and the BERT data at the appropriate time slots. Similarly, in the receive direction, the received data is diverted to the BERT logic for comparison during the appropriate time slots. Figure 4-10 shows the data path for BERT and loopback operations via the SRM-3T3 module.

Figure 4-10 BERT Data Path

The BERT logic is self-synchronizing to the expected data. It also reports the number of errors for bit error rate calculation purposes.
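A pattern checker can self-synchronize by loading the first n received bits into its own shift register and then predicting every following bit; each mismatch counts as a bit error, and the bit error rate is the error count divided by the number of bits compared. A minimal sketch, assuming the first n received bits are error-free and a generator polynomial x^n + x^t + 1; the SRM's actual checker logic is not documented here.

```python
def count_bit_errors(rx_bits, n, t):
    """Count bit errors in a received 2^n-1 pattern (generator x^n + x^t + 1).

    The first n received bits seed the local register (assumed error-free);
    after that the checker free-runs on its own predictions, so each received
    error is counted once instead of corrupting the reference.
    """
    mask = (1 << n) - 1
    state = 0
    for b in rx_bits[:n]:                      # synchronization phase
        state = ((state << 1) | b) & mask
    errors = 0
    for b in rx_bits[n:]:                      # comparison phase
        expected = ((state >> (n - 1)) ^ (state >> (t - 1))) & 1
        state = ((state << 1) | expected) & mask
        errors += b != expected
    return errors


def bit_error_rate(rx_bits, n, t):
    """Errors divided by the number of bits actually compared."""
    compared = len(rx_bits) - n
    if compared <= 0:
        raise ValueError("need more than n bits to measure an error rate")
    return count_bit_errors(rx_bits, n, t) / compared
```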

BERT testing requires the presence of an SRM-3T3/C card in the service bay in which the card under test is located. The BERT tests can only be initiated by CLI.

Caution: BERT is a disruptive test. Activating this test stops the data flow on all channels configured on the port under test.

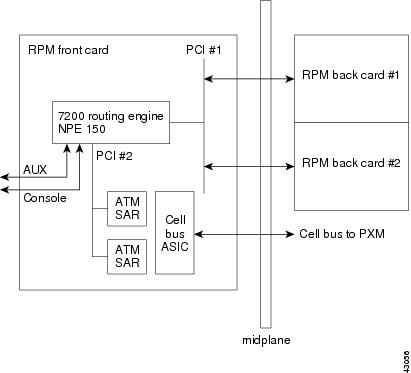

Route Processor Module

The Cisco MGX 8230 Route Processor Module (RPM) extends the switch's service breadth by providing integrated IP in an ATM platform, enabling services such as integrated Point-to-Point Protocol (PPP) and Frame Relay termination and IP virtual private networks (VPNs). The full Cisco IOS software provides the IP services on the RPM.

The Route Processor Module on an MGX 8230 is a Cisco 7200 series router redesigned to fit onto a single double-height card that fits into an MGX 8230 chassis.

The module fits into the MGX 8230 midplane architecture, with the RPM front card providing a Cisco IOS network processing engine (NPE-150) capable of processing up to 140K packets per second (pps). The front card also provides ATM connectivity to the MGX 8230 internal cell bus at full-duplex OC-3c/STM-1 rates. Each module supports two single-height back cards. Initially, three single-height back-card types will be supported: four-port Ethernet, one-port FDDI, and one-port Fast Ethernet.

The RPM enables high-quality, scalable IP+ATM integration on the MGX 8230 platform using MPLS Tag Switching technology. Tag Switching combines the performance and virtual-circuit capabilities of Layer 2 switching with the proven scalability of Layer 3 networking and is the first technology to fully integrate routing and switching for a scalable IP environment.


The RPM can be ordered with 64 MB or 128 MB of DRAM. The RPM currently has 4 MB of Flash memory and does not support PCMCIA slots for Flash memory cards. The Cisco IOS image and configuration files are stored on the PXM1 hard drive or a network server.

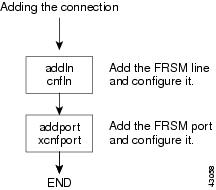

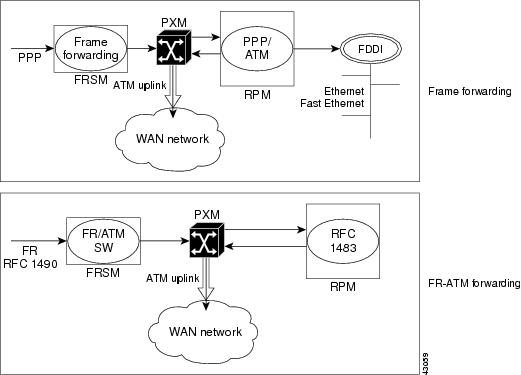

FRSM to RPM Connection

From the FRSM, the frames are forwarded to the RPM via frame forwarding and FR-ATM Interworking, as illustrated in Figure 4-11.

Figure 4-11 FRSM to RPM Connection

Frame Aggregation: Port Forwarding

All frames received on a port are forwarded to the router for Layer 3 processing. For example, an FRSM T1 could be configured for PPP IP access by:

1.

Setting up a frame forwarding (FF) connection from an FRSM T1 port to the RPM cell bus address on a VPI/VCI.

2.

Configuring the router to terminate PPP frames forwarded over an ATM connection on the internal ATM interface via aal5ciscoppp encapsulation (a Cisco proprietary method whereby all HDLC frames received on a port are converted to ATM AAL5 frames with a null encapsulation and are sent over a single VC). Cisco IOS software already supports terminating frame-forwarded PPP over ATM.
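With null encapsulation, each forwarded frame becomes one AAL5 CPCS-PDU: the frame is followed by padding and an 8-byte trailer (CPCS-UU, CPI, length, CRC-32), and the total is a multiple of the 48-byte cell payload. The sketch computes the cell count and padding per frame; `frame_len` is assumed to be the frame length after the FRSM removes the HDLC flags and FCS.

```python
import math

AAL5_TRAILER = 8    # CPCS-UU (1) + CPI (1) + Length (2) + CRC-32 (4)
CELL_PAYLOAD = 48


def aal5_cells(frame_len):
    """Cells needed to carry one frame as an AAL5 CPCS-PDU."""
    return math.ceil((frame_len + AAL5_TRAILER) / CELL_PAYLOAD)


def aal5_pad(frame_len):
    """Padding bytes inserted so that frame + pad + trailer fills whole cells."""
    return aal5_cells(frame_len) * CELL_PAYLOAD - frame_len - AAL5_TRAILER
```

For example, a 40-byte frame fits exactly in one cell, while a 41-byte frame needs two cells with 47 bytes of padding.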

The data flow for a PPP connection destined for the RPM is shown in Figure 4-12. The packet enters the FRSM module as PPP and is frame forwarded to the RPM. The RPM receives the packet in PPP over ATM because the MGX 8230 internal connectivity is ATM. The RPM is running software that understands PPP over ATM encapsulation, allowing the router to reach the IP layer and route the packet to its destination (for example, the Internet). Packets destined to the Internet via a WAN network are then sent back to the PXM1, and out the ATM uplink.

Figure 4-12 FRSM to RPM Connection

FR-ATM Interworking

In this example, all frames received on a given connection are forwarded to the router using the appropriate ATM encapsulation. For example, Frame Relay connections on an FRSM port could be forwarded to the RPM by:

•

Translating a Frame Relay connection to an ATM connection via network interworking (FRF.5) or service interworking (FRF.8).

•

Configuring the router to terminate Frame Relay over ATM (RFC 1483) on the ATM PA port on VCI 0/x.

The data flow for a native Frame Relay connection destined to the RPM is shown in Figure 4-13. This data flow is identical to that of PPP packets, but the encapsulation differs. Standard Frame Relay is encapsulated using RFC 1490. When a packet encapsulated using RFC 1490 is received at the FRSM, standard FR-ATM service interworking translation mode (FRF.8) is performed, so that the packet is encapsulated using RFC 1483 when it is forwarded to the router blade. The router also understands RFC 1483, allowing it to reach the IP layer and route the packet.

Tip

An aal5snap encapsulation is needed to perform interworking functions.
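For routed IP, the FRF.8 translation mode amounts to swapping encapsulation headers: the RFC 1490 control byte 0x03 and NLPID 0xCC are replaced with the RFC 1483 LLC/SNAP header AA-AA-03-00-00-00-08-00, the aal5snap format the router expects. This sketch handles only that one case and assumes the Q.922 address has already been removed; FRF.8 also defines mappings for bridged and other protocols that are not shown, and this is not FRSM code.

```python
RFC1490_ROUTED_IP = bytes([0x03, 0xCC])            # UI control + NLPID for IP
LLC_SNAP_ROUTED_IP = bytes([0xAA, 0xAA, 0x03,      # LLC: SNAP SAPs, UI control
                            0x00, 0x00, 0x00,      # OUI 00-00-00
                            0x08, 0x00])           # EtherType: IPv4


def fr_to_atm_ip(fr_payload):
    """Translate a routed-IP RFC 1490 payload (Q.922 address already removed)
    into an RFC 1483 LLC/SNAP payload for AAL5."""
    if not fr_payload.startswith(RFC1490_ROUTED_IP):
        raise ValueError("not an RFC 1490 routed-IP frame")
    return LLC_SNAP_ROUTED_IP + fr_payload[len(RFC1490_ROUTED_IP):]
```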

AUSM/B to RPM Connection

ATM UNI/NNI connection between the RPM and the AUSM/B is illustrated in Figure 4-13.

Figure 4-13 AUSM/B to RPM Connection

ATM Deluxe Integrated Port Adapter/Interface

The ATM Deluxe port adapter/interface is a permanent, internal ATM interface. It provides a single ATM interface to the MGX 8230 cell bus interface (CBI). Because it is an internal interface that resides on the RPM front card, it has no cabling to install and no interface types to select. It connects directly to the MGX 8230 midplane. (See Figure 4-14.)

The following features from the ATM Deluxe port adapter/interface are supported on the MGX 8230 switch:

•

Support for all 24 bits of the UNI VP/VC field, any arbitrary address

•

Support for 4080 connections

•

ATM Adaptation Layer 5 (AAL5) for data traffic

•

Traffic management

•

Four transmit scheduling priority levels

•

Respond to/generate OAM flows (F4/F5)
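The 24 address bits mentioned in the first item are the 8-bit VPI and 16-bit VCI of the UNI cell header, which also carries a 4-bit GFC, a 3-bit payload type, the CLP bit, and an 8-bit HEC computed as a CRC-8 (x^8 + x^2 + x + 1) over the first four bytes and XORed with 0x55. The sketch builds such a header; it is a general ATM illustration, not code from the RPM.

```python
def hec(header4):
    """ATM HEC: CRC-8 (polynomial x^8 + x^2 + x + 1) over four header bytes, XOR 0x55."""
    crc = 0
    for byte in header4:
        crc ^= byte
        for _ in range(8):
            crc = ((crc << 1) ^ 0x07) & 0xFF if crc & 0x80 else (crc << 1) & 0xFF
    return crc ^ 0x55


def uni_header(vpi, vci, pt=0, clp=0, gfc=0):
    """Build a 5-byte UNI cell header: GFC(4) VPI(8) VCI(16) PT(3) CLP(1) HEC(8)."""
    word = ((gfc & 0xF) << 28 | (vpi & 0xFF) << 20 | (vci & 0xFFFF) << 4
            | (pt & 0x7) << 1 | (clp & 1))
    first4 = word.to_bytes(4, "big")
    return first4 + bytes([hec(first4)])
```

For example, the idle-cell header 00 00 00 01 yields an HEC of 0x52.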

Figure 4-14 ATM Deluxe Integrated Port Adapter

Figure 4-15 shows a block diagram of RPM front and back cards in an MGX 8230.

Figure 4-15 RPM Block Diagram
