Cisco UCS C240 M5 with Scality RING 8


Design and Deployment Guide for Scality RING 8 + Scality NAS Archiver on Cisco UCS C240 M5 with Cisco Intersight and Terraform

Published: October 2020

In partnership with:


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. Lisa.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2020 Cisco Systems, Inc. All rights reserved.

Contents

Deployment Hardware and Software

Installation of Cisco Intersight

Create a Terraform Configuration Environment for Cisco Intersight

Configure Scality RING Infrastructure with Terraform

Prepare Virtual Environment for Scality RING Solution

Manage and Monitor Scality RING

Scality RING Performance Testing

Scality RING High Availability Testing

Cisco Validated Designs consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

The purpose of this document is to describe the design and deployment of Scality RING and Scality NAS Archiver on the latest generation of Cisco UCS C240 Rack Servers. This validated design provides the framework for designing and deploying Scality SDS software on Cisco UCS C240 Rack Servers together with Cisco Intersight. The Cisco Unified Computing System provides the compute, network, and storage access components for Scality RING, deployed as a single cohesive system. The Scality NAS Archiver provides the connectivity between Tier 1 NAS storage solutions and Scality RING. The whole solution is built with the orchestration tool Terraform and the Terraform provider for Cisco Intersight.

The Cisco Validated Design describes how the Cisco Unified Computing System can be used in conjunction with the latest release of Scality RING and Scality NAS Archiver. With the continuous evolution of Software Defined Storage (SDS), there has been increased demand to have small Scality RING solutions validated on Cisco UCS servers. The Cisco UCS C240 Rack Server, originally designed for the data center, together with Scality RING is optimized for such object storage solutions, making it an excellent fit for unstructured data workloads such as active archive, backup, and cloud data. The Cisco UCS C240 Rack Server delivers a complete infrastructure with exceptional scalability for computing and storage resources together with 25 Gigabit Ethernet networking.

Cisco and Scality are collaborating to offer customers a scalable object storage solution for unstructured data that is integrated with Scality RING. With the power of the Cisco Intersight management framework, the solution is cost effective to deploy and manage and will enable the next-generation cloud deployments that drive business agility, lower operational costs, and avoid vendor lock-in.

When combining various storage solutions such as tiered storage or cloud storage, companies struggle to find the right solution, especially when it comes to connecting private cloud storage with other private or public cloud storage in so-called hybrid cloud storage solutions. Hybrid cloud storage utilizes services to connect on-premises or private clouds to data hosted on private clouds or on the public cloud. Many solutions come equipped with a storage gateway to connect local appliances to various cloud storage services. Companies use these tools to increase scalability and integrate multiple data sources. These tools also centralize and streamline many data access and retrieval operations. Hybrid cloud storage solutions typically connect users to a number of file storage and sharing products and object storage products.

Typically, hybrid cloud storage solutions consolidate storage infrastructure across storage environments and provide a storage gateway or local access point for data retrieval. One main use case for hybrid cloud storage is data management – a way to effectively handle data in both worlds. Most often companies rely on an inflexible infrastructure with the use of traditional storage systems like NAS storage to hold the data. When it comes to moving, migrating, or archiving data, there is an enormous effort needed – often combined with additional costs.

Cisco and Scality offer a solution that solves the problem of connecting cloud storage solutions and managing data effectively. Cisco UCS provides an enterprise-grade compute, network, and storage infrastructure, building the foundation for the Scality RING storage platform and the Scality NAS Archiver data management solution. To offer a more intelligent level of management that enables IT organizations to analyze, simplify, and automate their environments, Cisco Intersight, Cisco’s systems management platform, plays a major role in building and managing the infrastructure. Terraform, which builds, changes, and versions infrastructure safely and efficiently, is an ideal tool for building and managing these hybrid cloud storage infrastructures.

Scality RING is a storage platform that is ideal for holding large amounts of colder production data, such as backups and archives, and very large individual files, such as video files, image files, and genomic data. It can also support warm or even hot data when CPU performance and/or memory capacity is increased. Scality RING is a perfect archive target for inactive NAS data, achieving 50% and higher TCO savings versus traditional NAS data protection, along with freeing up a commensurate amount (50%+) of NAS Tier 1 storage capacity.

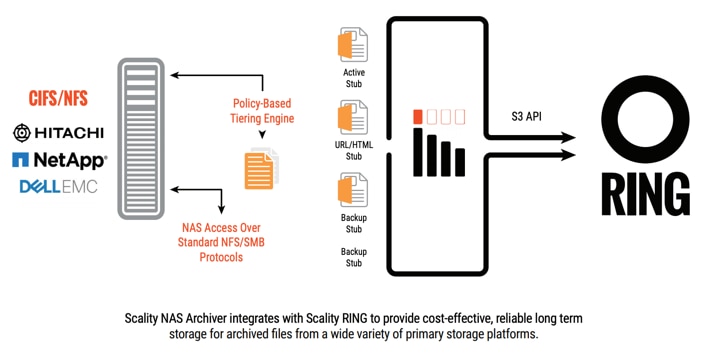

To transparently detect and migrate inactive data to the RING, Scality provides the Scality NAS Archiver as an optimal solution for NAS offload. The Scality NAS Archiver provides a wide range of migration policies based on file size, file types, file age and more.
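The idea of a migration policy based on file size, file type, and file age can be sketched as a simple predicate. This is an illustration only: the class names, field names, and thresholds below are hypothetical and do not reflect the NAS Archiver's actual policy schema or API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class FileInfo:
    """Minimal file description used by the sketch (hypothetical)."""
    path: str
    size_bytes: int
    age_days: float  # days since last access, an assumed age metric


@dataclass(frozen=True)
class ArchivePolicy:
    """Hypothetical policy: all fields are assumptions for illustration."""
    min_size_bytes: int = 0
    min_age_days: float = 0.0
    extensions: Optional[Tuple[str, ...]] = None  # None matches any file type

    def matches(self, f: FileInfo) -> bool:
        # A file qualifies only if it passes every configured criterion.
        if f.size_bytes < self.min_size_bytes:
            return False
        if f.age_days < self.min_age_days:
            return False
        if self.extensions is not None:
            return f.path.lower().endswith(self.extensions)
        return True


# Example: archive video and image files over 1 MB untouched for 180 days.
policy = ArchivePolicy(min_size_bytes=1_000_000, min_age_days=180,
                       extensions=(".mp4", ".tif"))
candidates = [
    FileInfo("/share/video/a.mp4", 5_000_000, 400),  # large, old, matching type
    FileInfo("/share/video/b.mp4", 5_000_000, 10),   # too recent
    FileInfo("/share/docs/c.txt", 2_000_000, 900),   # wrong file type
]
to_archive = [f.path for f in candidates if policy.matches(f)]
```

Here only the first file satisfies all three criteria, so it is the only one selected for offload.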

This document describes the architecture, design, and deployment procedures of Scality RING and Scality NAS Archiver on Cisco UCS C240 M5 servers together with Cisco Intersight and Terraform.

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy Scality RING with Scality NAS Archiver on Cisco UCS C240 M5 Servers with Cisco Intersight and Terraform.

This document describes how to deploy Scality RING and Scality NAS Archiver with Cisco Intersight and Terraform on Cisco UCS C240 M5 Servers.

It presents a tested and validated solution and provides insight into operational best practices.

This is an update of the former Scality RING solution with Scality RING 7.4 and Cisco UCS C240 M5. The update contains the following:

● Cisco Intersight Virtual Appliance

● Terraform Provider for Cisco Intersight

● Scality RING 8

● Scality NAS Archiver

This revision of the CVD focuses on the latest release of Scality’s SDS platform, RING 8, along with Scality NAS Archiver, a brand new offering aimed at offloading inactive, dormant data from premium NAS storage into Scality RING, to reclaim space on the NAS and match the cost of the storage location to the value of the data.

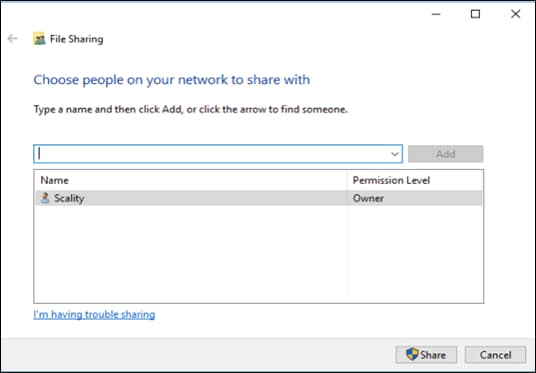

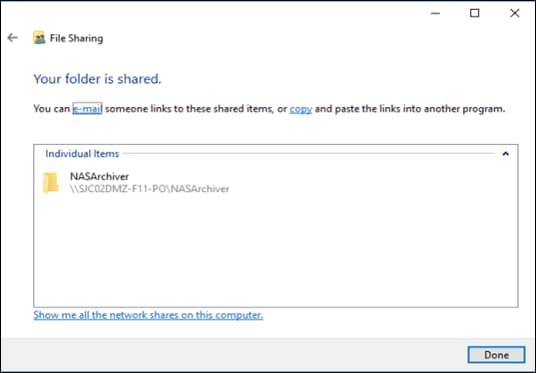

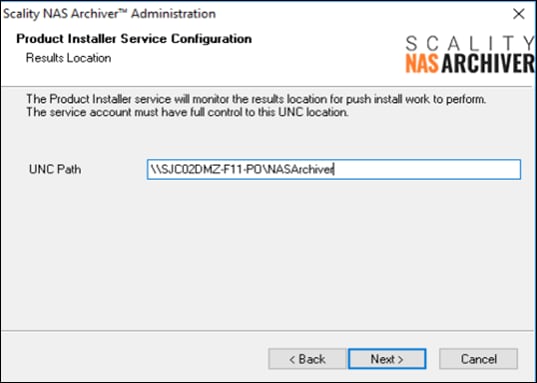

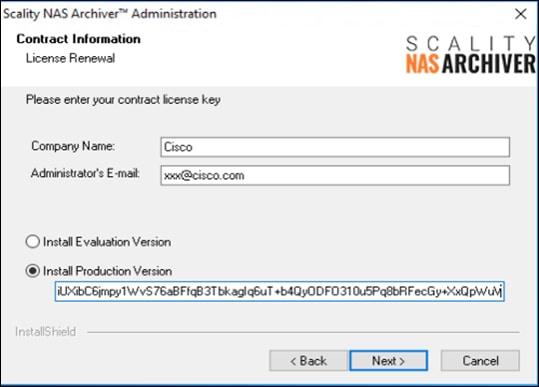

In this architecture we have deployed Scality RING on Cisco UCS C240 M5 with Cisco Intersight. The deployment of Cisco UCS C240 M5 on Cisco Intersight was done through the Terraform provider for Cisco Intersight. In addition, we have deployed the Scality NAS Archiver, a policy-based tiering engine. We have set up a Windows file share to show how the Scality NAS Archiver archives files directly to the Scality RING.

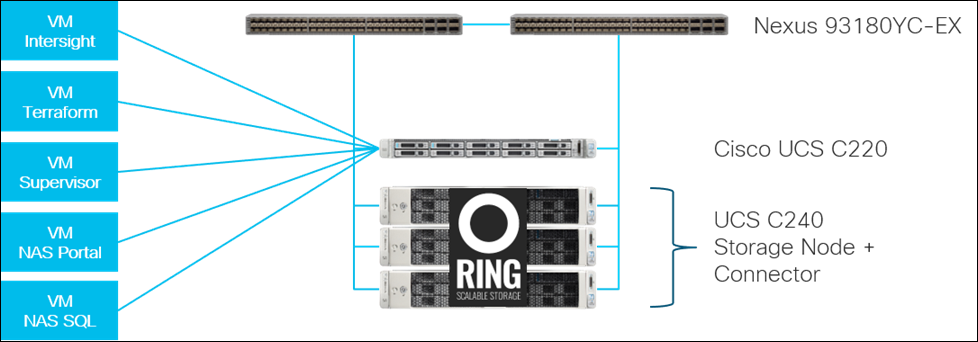

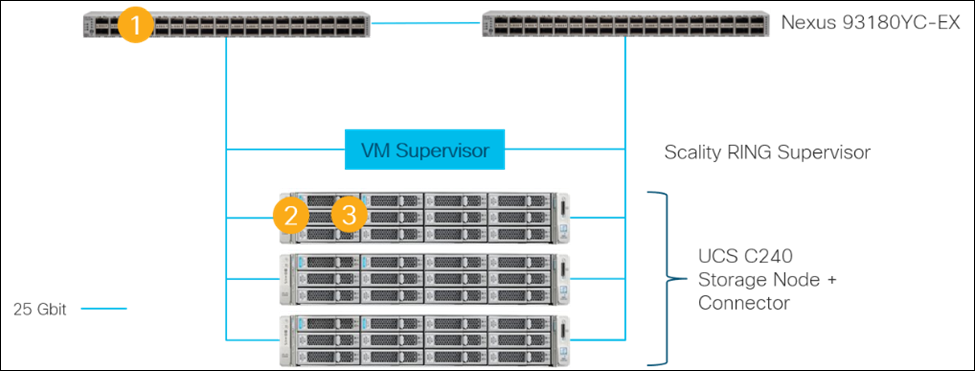

Figure 1. High Level Overview

The configuration uses the following architecture for the deployment:

● 3 x Cisco UCS C240 M5L

● 1 x Cisco UCS C220 M5S

● 2 x Cisco Nexus 93180YC-EX

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a state-of-the-art data center platform that unites computing, network, storage access, and virtualization into a single cohesive system.

The main components of Cisco Unified Computing System are:

● Computing - The system is based on an entirely new class of computing system that incorporates rackmount and blade servers based on Intel Xeon Scalable processors. Cisco UCS servers offer the patented Cisco Extended Memory Technology to support applications with large datasets and allow more virtual machines (VM) per server.

● Network - The system is integrated onto a low-latency, lossless, 10/25/40/100-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

● Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

● Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying the storage access, the Cisco Unified Computing System can access storage over Ethernet (NFS or iSCSI), Fibre Channel, and Fibre Channel over Ethernet (FCoE). This provides customers with choice for storage access and investment protection. In addition, the server administrators can pre-assign storage-access policies for system connectivity to storage resources, simplifying storage connectivity, and management for increased productivity.

The Cisco Unified Computing System is designed to deliver:

● A reduced Total Cost of Ownership (TCO) and increased business agility

● Increased IT staff productivity through just-in-time provisioning and mobility support

● A cohesive, integrated system, which unifies the technology in the data center

● Industry standards supported by a partner ecosystem of industry leaders

The Cisco UCS C240 Rack Server is a 2-socket, 2-Rack-Unit (2RU) rack server offering industry-leading performance and expandability. It supports a wide range of storage and I/O-intensive infrastructure workloads, from big data and analytics to collaboration. Cisco UCS C-Series Rack Servers can be deployed as standalone servers or as part of a Cisco UCS managed environment to take advantage of Cisco’s standards-based unified computing innovations that help reduce customers’ TCO and increase their business agility.

Figure 2. Cisco UCS C240 Rack Server

In response to ever-increasing computing and data-intensive real-time workloads, the enterprise-class Cisco UCS C240 server extends the capabilities of the Cisco UCS portfolio in a 2RU form factor. It incorporates the Intel® Xeon® Scalable processors, supporting up to 20 percent more cores per socket, twice the memory capacity, and five times more Non-Volatile Memory Express (NVMe) PCI Express (PCIe) Solid-State Disks (SSDs) compared to the previous generation of servers. These improvements deliver significant performance and efficiency gains that will improve your application performance. The Cisco UCS C240 M5 delivers outstanding levels of storage expandability with exceptional performance, comprised of the following:

● Latest Intel Xeon Scalable CPUs with up to 28 cores per socket

● Up to 24 DDR4 DIMMs for improved performance

● Up to 26 hot-swappable Small-Form-Factor (SFF) 2.5-inch drives, including 2 rear hot-swappable SFF drives (up to 10 support NVMe PCIe SSDs on the NVMe-optimized chassis version), or 12 Large-Form-Factor (LFF) 3.5-inch drives plus 2 rear hot-swappable SFF drives

● Support for 12-Gbps SAS modular RAID controller in a dedicated slot, leaving the remaining PCIe Generation 3.0 slots available for other expansion cards

● Modular LAN-On-Motherboard (mLOM) slot that can be used to install a Cisco UCS Virtual Interface Card (VIC) without consuming a PCIe slot, supporting dual 10-, 25- or 40-Gbps network connectivity

● Dual embedded Intel x550 10GBASE-T LAN-On-Motherboard (LOM) ports

● Modular M.2 or Secure Digital (SD) cards that can be used for boot

The Cisco UCS C240 rack server is well suited for a wide range of enterprise workloads, including:

● Object Storage

● Big Data and analytics

● Collaboration

● Small and medium-sized business databases

● Virtualization and consolidation

● Storage servers

● High-performance appliances

Cisco UCS C240 rack servers can be deployed as standalone servers or in a Cisco UCS managed environment. When used in combination with Cisco UCS Manager, the Cisco UCS C240 brings the power and automation of unified computing to enterprise applications, including Cisco® SingleConnect technology, drastically reducing switching and cabling requirements.

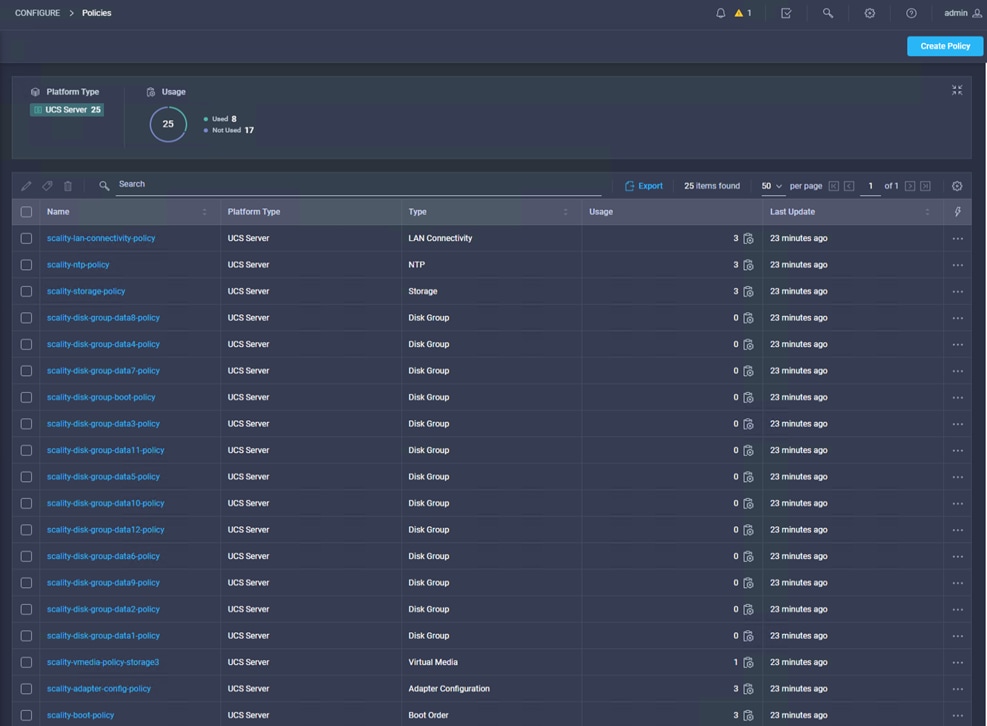

Cisco UCS Manager uses service profiles, templates, and policy-based management to enable rapid deployment and help ensure deployment consistency. It also enables end-to-end server visibility, management, and control in both virtualized and bare-metal environments.

The Cisco Integrated Management Controller (IMC) delivers comprehensive out-of-band server management with support for many industry standards, including:

● Redfish Version 1.01 (v1.01)

● Intelligent Platform Management Interface (IPMI) v2.0

● Simple Network Management Protocol (SNMP) v2 and v3

● Syslog

● Simple Mail Transfer Protocol (SMTP)

● Key Management Interoperability Protocol (KMIP)

● HTML5 GUI

● HTML5 virtual Keyboard, Video, and Mouse (vKVM)

● Command-Line Interface (CLI)

● XML API

Management Software Development Kits (SDKs) and DevOps integrations exist for Python, Microsoft PowerShell, Ansible, Puppet, Chef, and more. For more information about integrations, see Cisco DevNet (https://developer.cisco.com/site/ucs-dev-center/).

The Cisco UCS C240 is Cisco Intersight™ ready. Cisco Intersight is a new cloud-based management platform that uses analytics to deliver proactive automation and support. By combining intelligence with automated actions, you can reduce costs dramatically and resolve issues more quickly.

Cisco UCS Virtual Interface Card 1455

The Cisco UCS VIC 1455 is a quad-port Small Form-Factor Pluggable (SFP28) half-height PCIe card designed for the M5 generation of Cisco UCS C-Series Rack Servers. The card supports 10/25-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

Figure 3. Cisco UCS Virtual Interface Card 1455

The Cisco UCS VIC 1400 series provides the following features and benefits:

● Stateless and agile platform: The personality of the card is determined dynamically at boot time using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and Worldwide Name [WWN]), failover policy, bandwidth, and Quality-of-Service (QoS) policies of the PCIe interfaces are all determined using the service profile. The capability to define, create, and use interfaces on demand provides a stateless and agile server infrastructure.

● Network interface virtualization: Each PCIe interface created on the VIC is associated with an interface on the Cisco UCS fabric interconnect, providing complete network separation for each virtual cable between a PCIe device on the VIC and the interface on the Fabric Interconnect.

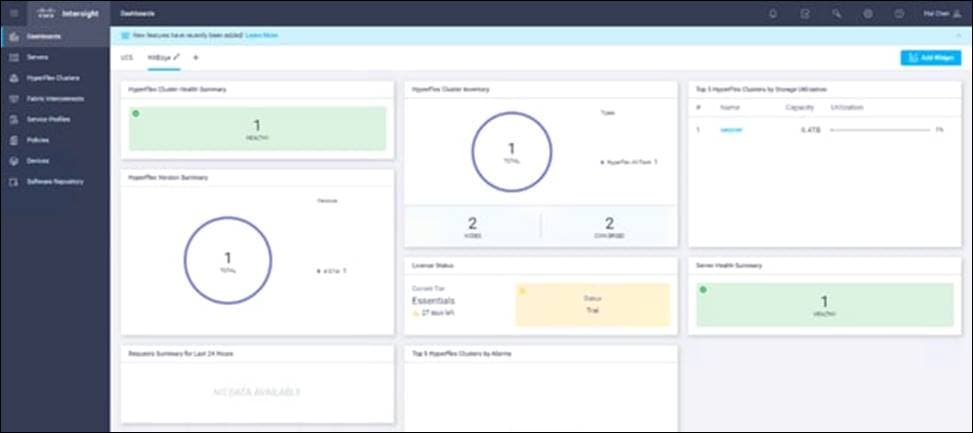

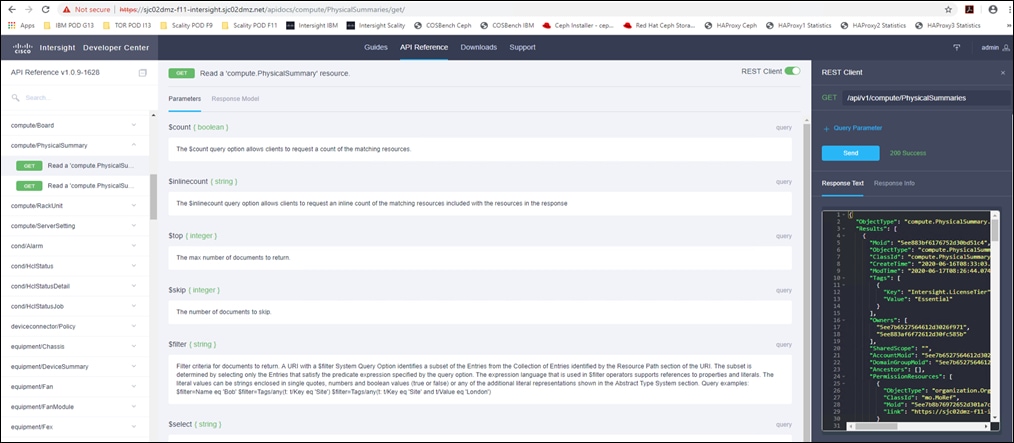

Cisco Intersight (https://intersight.com) is an API-driven, cloud-based system management platform. It is designed to help organizations run their IT management and operations with a higher level of automation, simplicity, and operational efficiency. It is a new generation of global management tool for the Cisco Unified Computing System (Cisco UCS) and Cisco HyperFlex systems and provides a holistic and unified approach to managing customers’ distributed and virtualized environments. Cisco Intersight simplifies the installation, monitoring, troubleshooting, upgrade, and support for your infrastructure with the following benefits:

● Cloud Based Management: The ability to manage Cisco UCS and HyperFlex from the cloud provides the customers the speed, simplicity, and easy scaling in the management of their infrastructure whether in the datacenters or remote and branch office locations.

● Automation: Unified API in Cisco UCS and Cisco HyperFlex systems enables policy driven configuration and management of the infrastructure and it makes Intersight itself and the devices connected to it fully programmable and DevOps friendly.

● Analytics and Telemetry: Intersight monitors the health and relationships of all the physical and virtual infrastructure components. It also collects telemetry and configuration information to develop the intelligence of the platform, in accordance with Cisco information security requirements.

● Connected TAC: Solid integration with Cisco TAC enables more efficient and proactive technical support. Intersight provides enhanced operations automation by expediting sending files to speed troubleshooting.

● Recommendation Engine: Driven by analytics and machine learning, the Intersight recommendation engine provides actionable intelligence for IT operations management, drawing on a continuously growing knowledge base and practical insights learned across the entire system.

● Management as a Service: Cisco Intersight provides management as a service and is designed to be infinitely scalable and easy to implement. It relieves users of the burden of maintaining systems management software and hardware.

Figure 4. Cisco Intersight

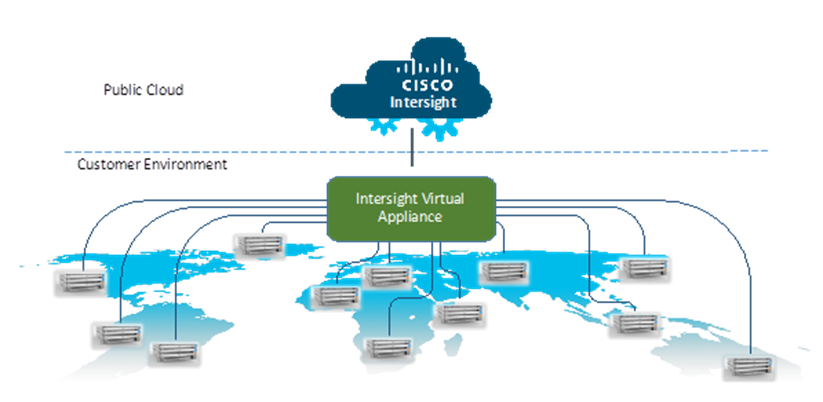

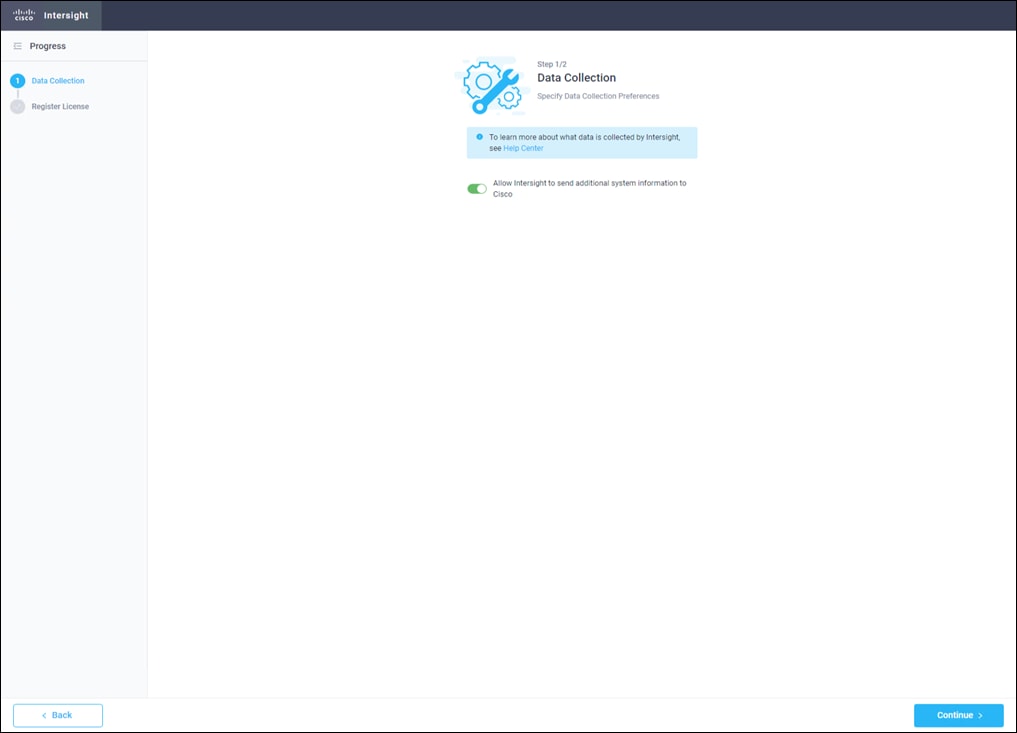

Intersight Virtual Appliance

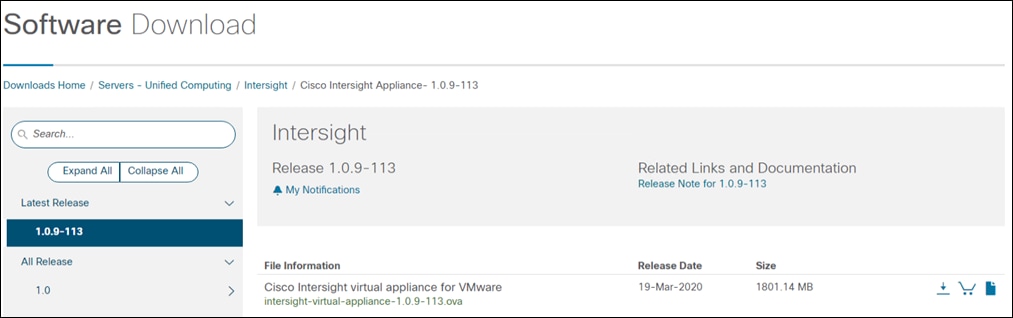

The Cisco Intersight Virtual Appliance delivers the management features of Intersight for Cisco UCS and HyperFlex into the on-premises environment. It is deployed from a VMware OVA and gives additional control over what data is sent back to Cisco, with a single point of egress within the enterprise’s network. The virtual appliance form factor addresses additional data locality, security, or compliance needs that are not completely met by connecting directly to intersight.com in the cloud. However, the Cisco Intersight Virtual Appliance is not intended for an environment with no external connectivity: it requires an internet connection back to Cisco and the cloud-based Intersight services for updates and to deliver some of the product features. Communication back to Cisco can be redirected via a proxy server if direct connectivity is not available or allowed by policy. Updates to the virtual appliance are automated and applied during a user-specified recurring maintenance window. This connection also facilitates the streamlining of Cisco TAC services for Cisco UCS and HyperFlex systems, with features like automated support log collection.

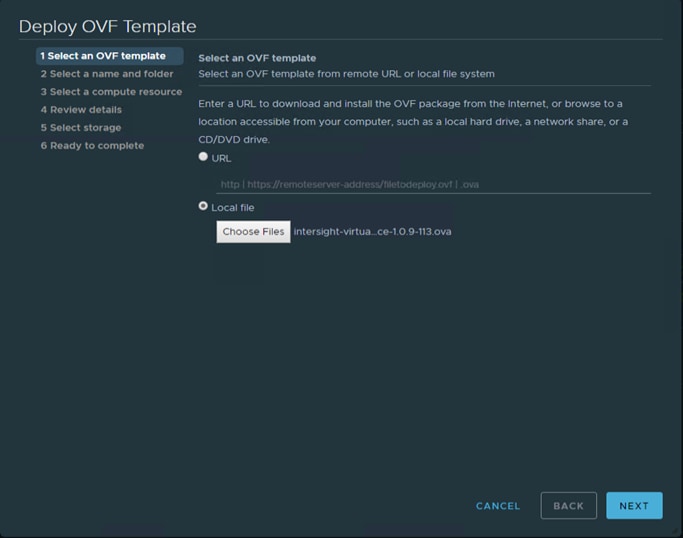

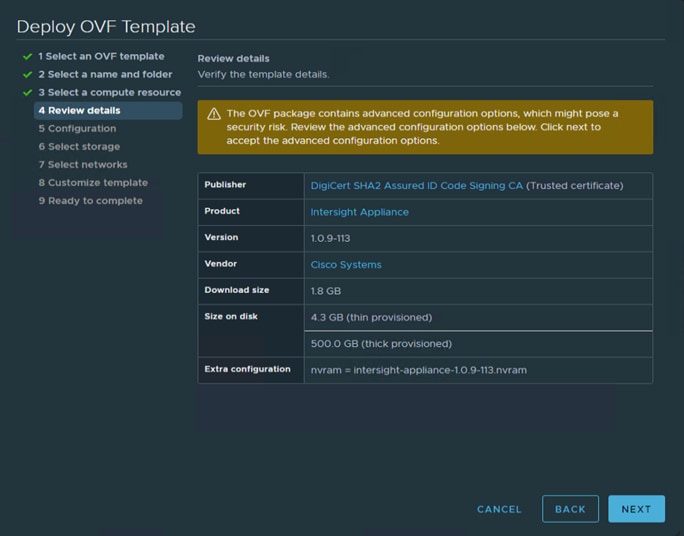

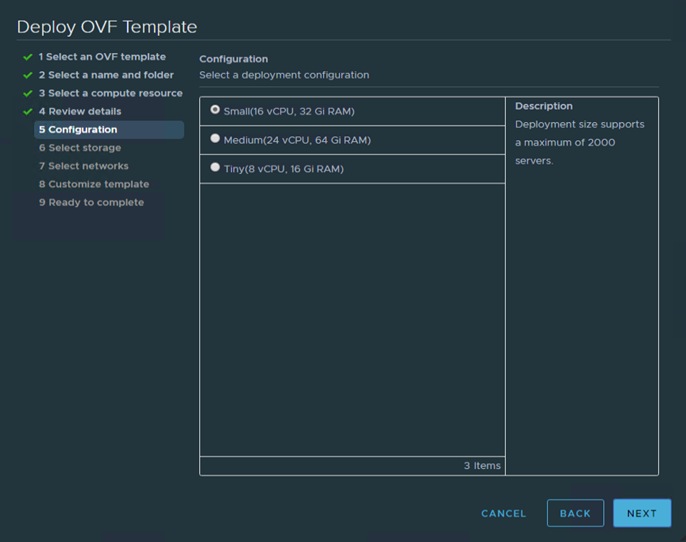

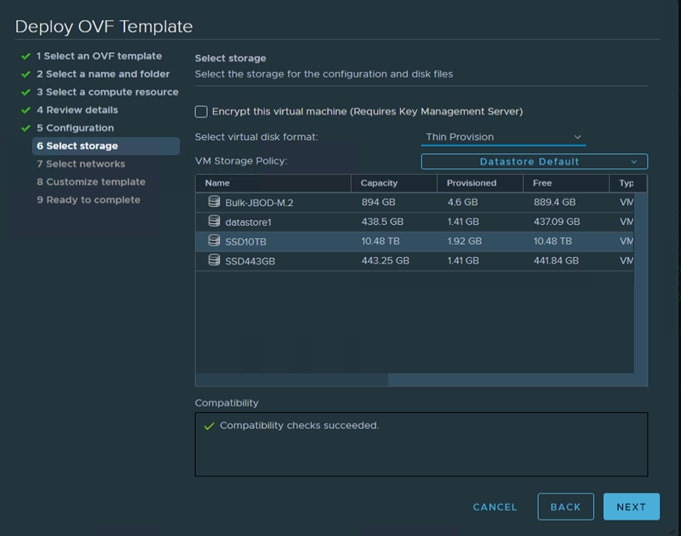

The Cisco Intersight Virtual Appliance OVA can be downloaded from the Cisco website and deployed as a virtual machine in your existing environment. The Cisco Intersight Virtual Appliance uses a subscription-based license delivered via Cisco Smart Licensing. After the installation of the appliance OVA is completed, you must connect the appliance to Cisco Intersight and register the license as part of the initial setup process.

Figure 5. Cisco Intersight Virtual Appliance

The Cisco Nexus® 9300-EX Series switches belong to the fixed Cisco Nexus 9000 platform based on Cisco Cloud Scale technology. The platform supports cost-effective cloud-scale deployments, an increased number of endpoints, and cloud services. The platform is built on modern system architecture designed to provide high performance and meet the evolving needs of highly scalable data centers and growing enterprises.

Cisco Nexus 9300-EX series switches offer a variety of interface options to transparently migrate existing data centers from 100-Mbps, 1-Gbps, and 10-Gbps speeds to 25-Gbps at the server, and from 10- and 40-Gbps speeds to 50- and 100-Gbps at the aggregation layer. The platforms provide investment protection for customers, delivering large buffers, highly flexible Layer 2 and Layer 3 scalability, and performance to meet the changing needs of virtualized data centers and automated cloud environments.

Cisco provides two modes of operation for Cisco Nexus 9000 Series Switches. Organizations can use Cisco NX-OS Software to deploy the switches in standard Cisco Nexus switch environments (NX-OS mode). Organizations can also deploy the infrastructure that is ready to support the Cisco Application Centric Infrastructure (Cisco ACI™) platform to take full advantage of an automated, policy-based, systems-management approach (ACI mode).

The Cisco Nexus 93180YC-EX Switch is a 1-Rack-Unit (1RU) switch with latency of less than 1 microsecond that supports 3.6 Terabits per second (Tbps) of bandwidth and over 2.6 billion packets per second (bpps). The 48 downlink ports on the 93180YC-EX can be configured to work as 1-, 10-, or 25-Gbps ports, offering deployment flexibility and investment protection. The uplink can support up to six 40- and 100-Gbps ports, or a combination of 1-, 10-, 25-, 40-, 50-, and 100-Gbps connectivity, offering flexible migration options. The switch has FC-FEC enabled for 25 Gbps and supports up to 3 m in DAC connectivity. Please check the Cisco Optics Matrix for the most current support information.
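The 3.6-Tbps figure can be reproduced from the port counts given above. The short calculation below is a sanity check, assuming all 48 downlinks run at 25 Gbps, all six uplinks run at 100 Gbps, and the quoted bandwidth counts both directions of each full-duplex port (an assumption about how the figure is derived, not a statement from the data sheet).

```python
# Port counts and maximum speeds are taken from the paragraph above.
DOWNLINK_PORTS = 48
DOWNLINK_GBPS = 25
UPLINK_PORTS = 6
UPLINK_GBPS = 100


def switching_capacity_tbps(full_duplex: bool = True) -> float:
    """Aggregate port bandwidth in Tbps.

    full_duplex=True counts transmit and receive separately, which is
    the assumption made here to match the quoted figure.
    """
    one_way_gbps = DOWNLINK_PORTS * DOWNLINK_GBPS + UPLINK_PORTS * UPLINK_GBPS
    total_gbps = one_way_gbps * (2 if full_duplex else 1)
    return total_gbps / 1000


capacity = switching_capacity_tbps()
```

Under these assumptions the one-way total is 1,800 Gbps (1,200 Gbps of downlinks plus 600 Gbps of uplinks), which doubles to the quoted 3.6 Tbps.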

Figure 6. Cisco Nexus 93180 YC-EX

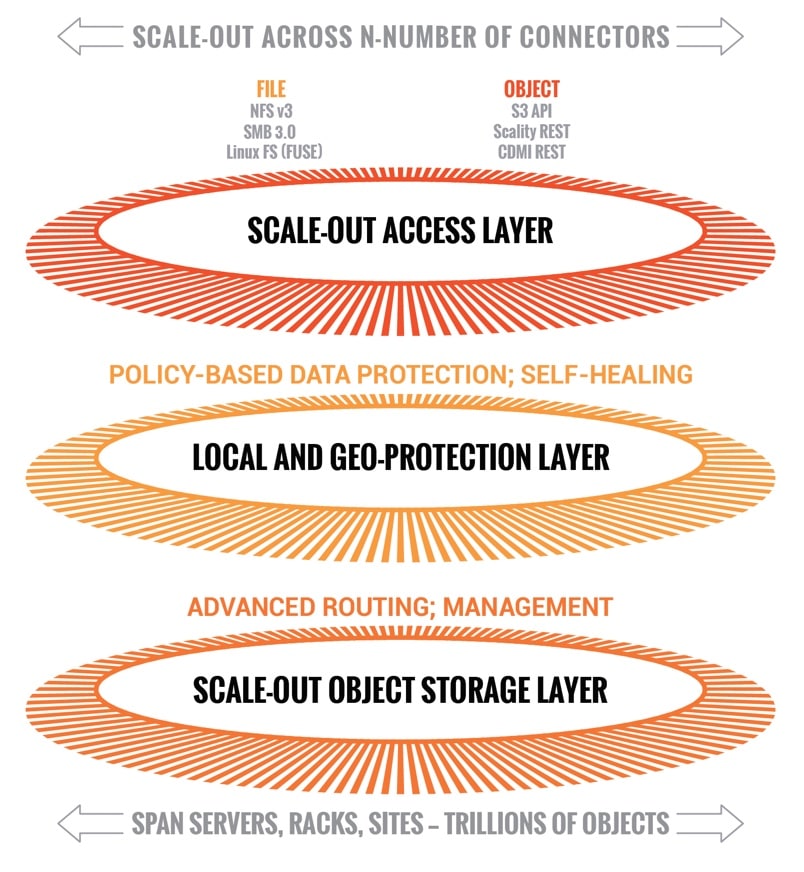

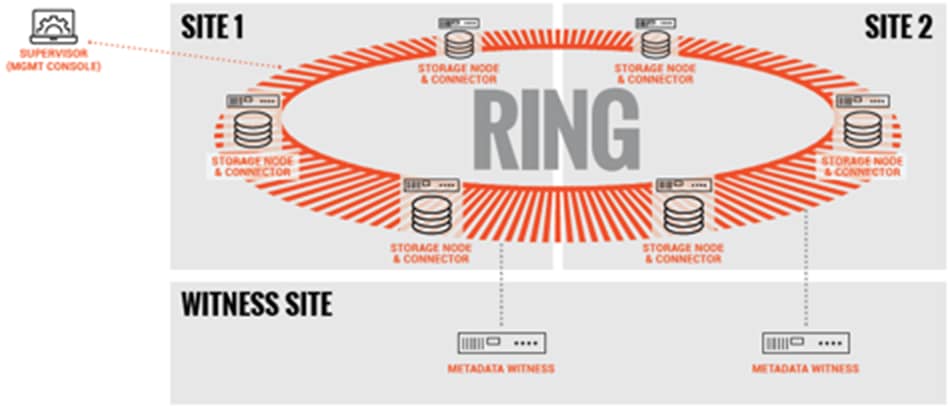

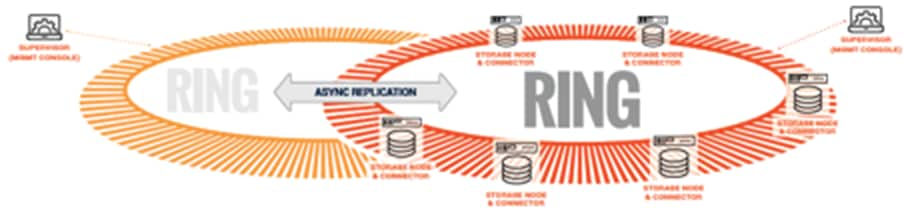

RING is a cloud-scale, distributed software solution for petabyte-scale unstructured data storage. It is designed to create unbounded scale-out storage systems for the many petabyte-scale applications and use cases, both object and file, that are deployed in today’s enterprise data centers. RING is a fully distributed system deployed on industry standard hardware, starting with a minimum of three (3) storage servers and/or 200TB of usable capacity. It is designed to support an unbounded number of storage servers and can grow to hundreds of petabytes of storage capacity.

RING has no single points of failure, and requires no downtime during any upgrades, scaling, planned maintenance or unplanned system events. With self-healing capabilities, it continues operating normally throughout these events. To match performance to increasing capacity, RING can also independently scale out its access layer of protocol Connectors, to enable an even match of aggregate performance to the application load. RING provides data protection and resiliency through local or geo-distributed erasure-coding and replication, with services for continuous self-healing to resolve expected failures in platform components such as servers and disk drives.

RING is fundamentally built on a scale-out object-storage layer that employs a second-generation peer-to-peer architecture. This approach uniquely distributes both the user data and the associated metadata across the underlying nodes to eliminate the typical central metadata database bottleneck. To enable file and object data in the same system, the RING integrates a virtual file system layer through an internal NoSQL scale-out database system, which provides POSIX-based access semantics using standard NFS, SMB and FUSE protocols with shared access to the files as objects using the REST protocol.

Figure 7. Scality RING Diagram

Scality has designed RING along the design criteria spearheaded by the leading cloud-scale service providers, such as Google, Facebook, and Amazon. RING uses loosely coupled, distributed-systems designs that run on commodity, mainstream hardware, along the following key tenets:

● 100 percent parallel design for metadata and data - to enable scaling of capacity and performance to unbounded numbers of objects, with no single points of failures, service disruptions, or forklift upgrades as the system grows.

● Multi-protocol data access – to enable the widest variety of object, file, and host-based applications to leverage RING storage.

● Flexible data protection mechanisms - to protect a wide range of data types and sizes efficiently and durably.

● Self-healing from component failures – to provide high-levels of data durability, the system expects and tolerates failures and automatically resolves them.

● Hardware freedom – to provide optimal platform flexibility, eliminate lock-in and reduce TCO.

RING incorporates these design principles at multiple levels, to deliver the highest levels of data durability, at the highest levels of scale, for optimal economics.

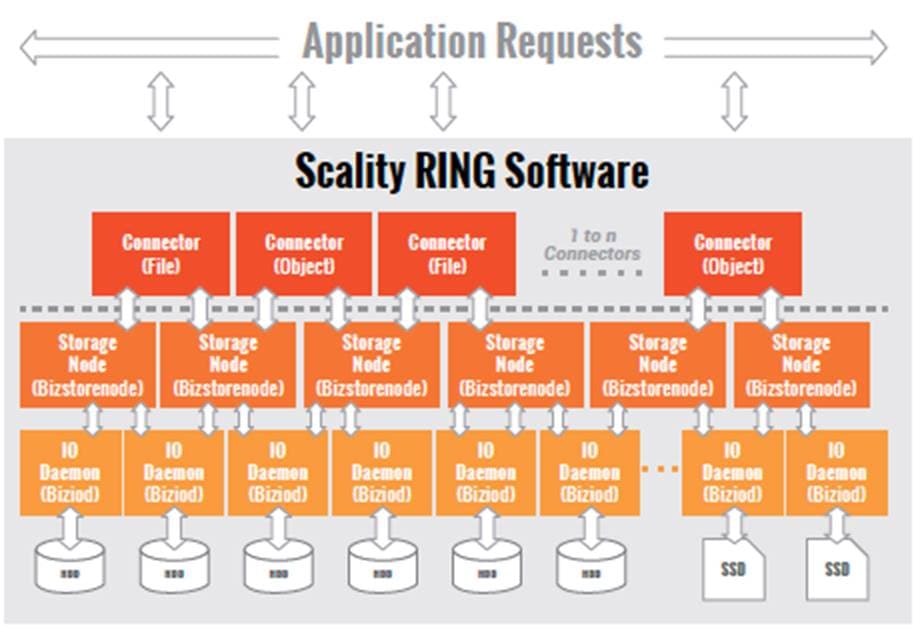

To scale both storage capacity and performance to massive levels, the Scality RING software is designed as a distributed, parallel, scale-out architecture with a set of intelligent services for data access and presentation, data protection and systems management. To implement these capabilities, RING provides a set of fully abstracted software services including a top-layer of scalable access services (Connectors) that provide storage protocols for applications. The middle layers are comprised of a distributed virtual file system layer, a set of data protection mechanisms to ensure data durability and integrity, self-healing processes and a set of systems management and monitoring services. At the bottom of the stack, the system is built on a distributed storage layer comprised of virtual storage nodes and underlying IO daemons that abstract the physical storage servers and disk drive interfaces.

At the heart of the storage layer is a scalable, distributed object key/value store based on a second-generation peer-to-peer routing protocol. This routing protocol ensures that store and lookup operations scale efficiently to very high numbers of nodes.

RING software is comprised of the following main components: RING Connectors, a distributed internal NoSQL database called MESA, RING Storage Nodes and IO daemons, and the Supervisor web-based management portal. The MESA database is used to provide the Scale-Out-File-System (SOFS) file system abstraction layer, and the underlying core routing protocol and Keyspace mechanisms are described later in this paper.

Figure 8. Scality Scale-out Architecture

The Connectors provide the data access endpoints and protocol services for applications that use RING for data storage. As a scale-out system, RING supports any number of Connectors and endpoints to support large and growing application workloads. The RING 7 release provides a family of object and file interfaces:

● AWS S3 API - a comprehensive implementation of the AWS S3 REST API, with support for the Bucket and Object data model, AWS style Signature v4/v2 authentication, and the AWS model of Identity and Access Management (IAM)

● http/REST (sproxyd) - the RING’s native key/value REST API, provides a flat object storage namespace with direct access to RING objects

● NFS v3 - SOFS volumes presented as standard NFSv3 mount points

● SMB 3.0 - SOFS volumes presented as SMB Shares to Microsoft Windows clients. Scality implements a subset of the SMB 3.0 protocol.

● FUSE - SOFS volumes presented as a local Linux file system

● CDMI/REST - support for the SNIA CDMI REST interface, with full compatibility to SOFS file data

● S3 on SOFS - SOFS volumes may be accessed in read-only mode over the S3 protocol, for namespace and data sharing between objects and files

● NFS v4/v3 on S3 - S3 buckets may be exported as NFS v4/v3 mount points
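For readers unfamiliar with the AWS-style Signature v4 authentication mentioned in the S3 connector entry, the sketch below shows the standard key-derivation step that an S3 client performs before signing a request. It uses only the Python standard library. The secret key, date, and region values are placeholders, and the region name accepted by a particular RING S3 endpoint is an assumption to confirm against your deployment.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    """One HMAC-SHA256 step of the Signature v4 key-derivation chain."""
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def derive_sigv4_signing_key(secret_key: str, date_stamp: str,
                             region: str, service: str = "s3") -> bytes:
    """Derive the Signature v4 signing key.

    date_stamp is YYYYMMDD. The chain is:
    secret -> date -> region -> service -> "aws4_request".
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


# Placeholder credentials and scope, for illustration only.
signing_key = derive_sigv4_signing_key("EXAMPLE-SECRET-KEY", "20201001", "us-east-1")
```

In practice an S3 SDK performs this derivation internally; the sketch is only meant to show why the client needs the secret key, date, region, and service name to authenticate.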

Connectors provide storage services for read, write, delete and lookup for objects or files stored into the RING based on either object or POSIX (file) semantics. Applications can make use of multiple connectors in parallel to scale out the number of operations per second, or the aggregate throughput of the RING. A RING deployment may be designed to provide a mix of file access and object access (over NFS and S3 for example), simultaneously—to support multiple application use cases.

The heart of the RING is the Storage Nodes, virtual processes that each own and store the range of objects associated with their portion of the RING’s keyspace. Each physical storage server (host) is typically configured with six (6) storage node processes (termed bizstorenode). Under the storage nodes are the storage daemons (termed biziod), which are responsible for persistence of the data on disk, in an underlying local standard disk file system. Each biziod instance is a low-level software process that manages the IO operations to a particular physical disk drive and maintains the mapping of object keys to the actual object locations on disk. Biziod processes are local to a given server, managing only local, direct-attached storage, and communicating only with Storage Nodes on the same server. The typical configuration is one biziod per physical disk drive, with support for up to hundreds of daemons per server, so the system can support very large, high-density storage servers.

Each biziod stores object payloads and metadata in a set of fixed-size container files on the disk it is managing. With such containerization the system can maintain high-performance access even to small files, without any storage overhead. The biziod daemons typically leverage low-latency flash (SSD or NVMe) devices to store the index files for faster lookup performance. The system provides data integrity assurance and validation through the use of stored checksums on the index and data container files, which are validated upon read access to the data. The use of a standard file system underneath biziod ensures that administrators can use normal operating system utilities and tools to copy, migrate, repair, and maintain the disk files if required.
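The checksum-on-read behavior described above can be illustrated with a minimal Python sketch. It is not the biziod implementation: the in-memory `container` dictionary, the function names, and the choice of CRC32 are all assumptions made for illustration, since the source does not state which checksum algorithm or on-disk layout is used.

```python
import zlib


def store(container: dict, key: str, payload: bytes) -> None:
    """Store a payload together with its checksum (hypothetical layout, CRC32 assumed)."""
    container[key] = (payload, zlib.crc32(payload))


def read(container: dict, key: str) -> bytes:
    """Return the payload only if the stored checksum still matches it."""
    payload, expected = container[key]
    if zlib.crc32(payload) != expected:
        # A mismatch means the payload changed after it was written.
        raise IOError(f"checksum mismatch for key {key!r}")
    return payload
```

A read of an intact entry returns the payload, while a read of an entry whose bytes changed after the write raises an error instead of returning corrupt data.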

The recommended deployment for systems that have both HDD and SSD media on the storage servers is to deploy a data RING on HDD, and the associated metadata in a separate RING on SSD. Typically, the requirements for metadata are approximately 2 percent of the storage capacity of the actual data, so the sizing of SSD should follow that percentage for best effect. Scality can provide specific sizing recommendations based on the expected average file sizes, and number of files for a given application.
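As a rough illustration of the 2 percent guideline, the following Python sketch estimates the SSD capacity to reserve for metadata. The function name and the default ratio parameter are assumptions for illustration only. Real sizing depends on average file size and file count, as noted above.

```python
def metadata_ssd_tb(data_capacity_tb: float, ratio: float = 0.02) -> float:
    """Estimate metadata capacity (TB) as a fraction of data capacity.

    ratio=0.02 reflects the approximate 2 percent rule of thumb; it is a
    starting point, not a vendor-provided sizing result.
    """
    if data_capacity_tb < 0 or ratio < 0:
        raise ValueError("capacity and ratio must be non-negative")
    return data_capacity_tb * ratio
```

For example, `metadata_ssd_tb(200)` yields about 4 TB of SSD for 200 TB of data capacity.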

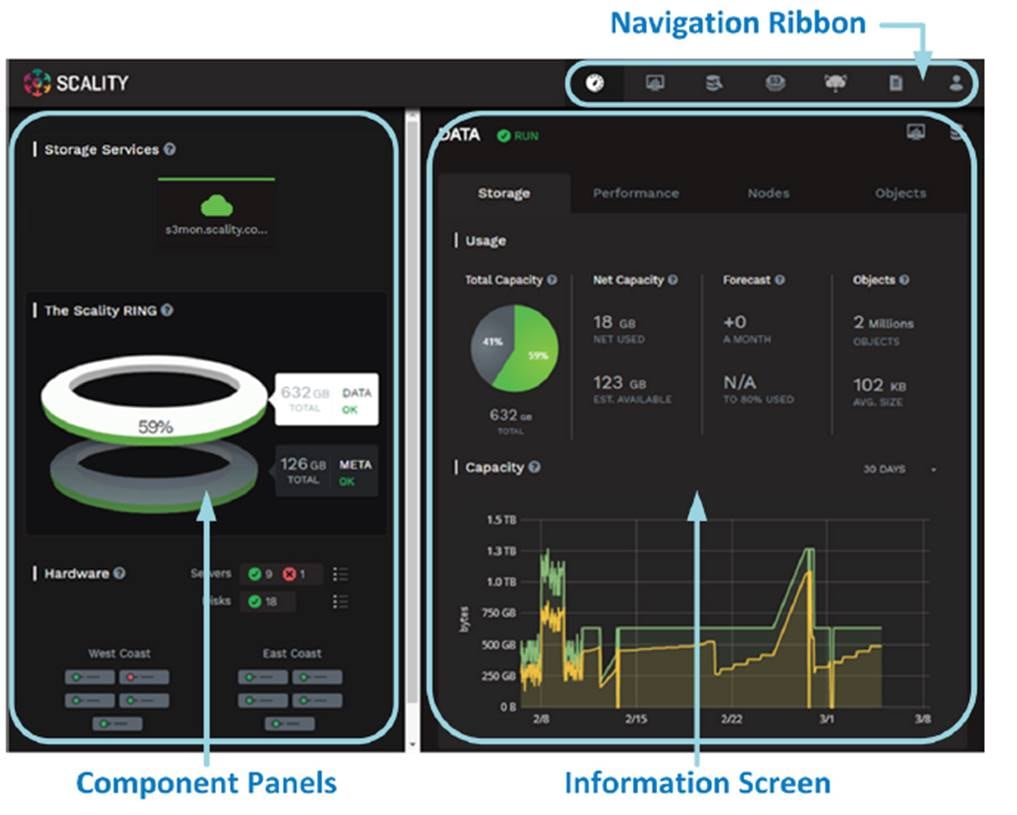

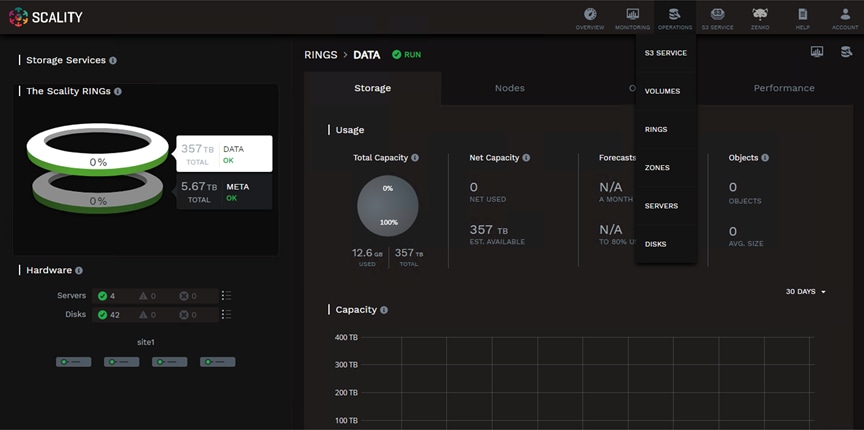

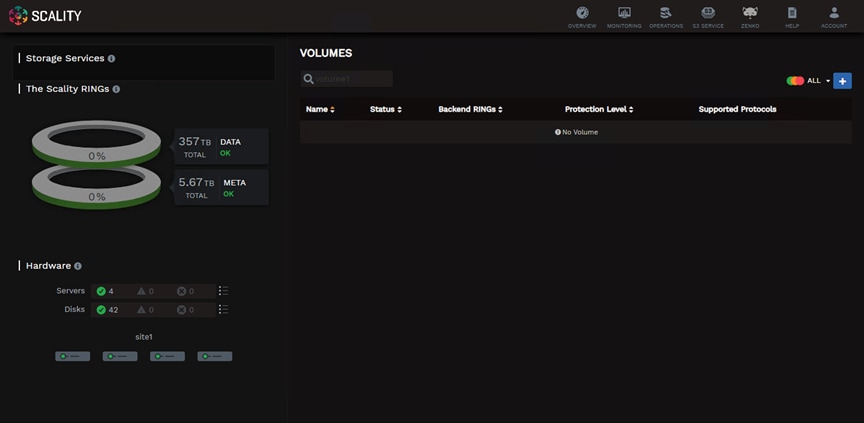

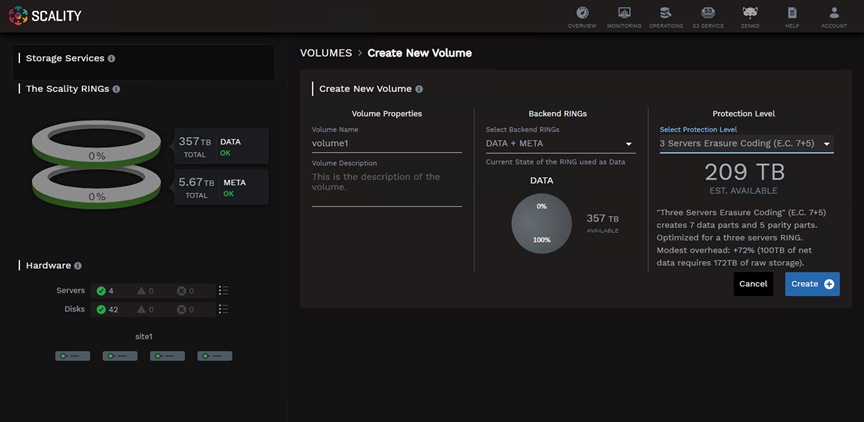

Managing and monitoring the RING is enabled through a cohesive suite of user interfaces, built on top of a family of RESTful interfaces termed the Supervisor API (SupAPI). The SupAPI provides an API based method that may be accessed from scripts, tools, and frameworks for gathering statistics, metrics, health check probes and alerts, and for provisioning new services on the RING. The SupAPI is also enabled with Role Based Access Control (RBAC), by supporting an administrator identity to provide access control privileges for Super-Admin and Monitor admin user Roles.

RING provides a family of tools that use the SupAPI for accessing the same information and services. RING 7 includes the new Scality Supervisor, a browser-based portal for both systems monitoring and management of Scality components. In RING 7, the Supervisor provides capabilities across object (S3) and file (NFS, SMB, FUSE) Connectors, including integrated dashboards with Key Performance Indicators (KPIs) and trending information such as Global Health, Performance, Availability and Forecast. The Supervisor also includes provisioning capabilities to add new servers to the system and a new zone management module to handle customer failure domains for multi-site deployments.

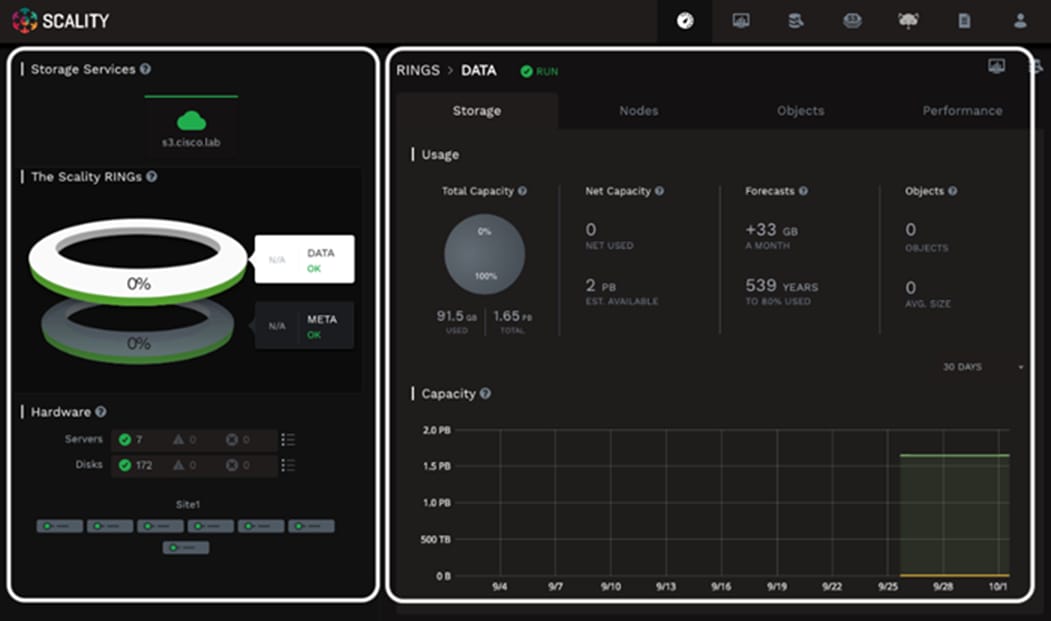

Figure 9. Supervisor Web GUI

RING Supervisor also includes an Advanced Monitoring dashboard where all collected metrics can be graphed and analyzed per component and per server. It is based on a graphing engine that has access to thousands of metrics.

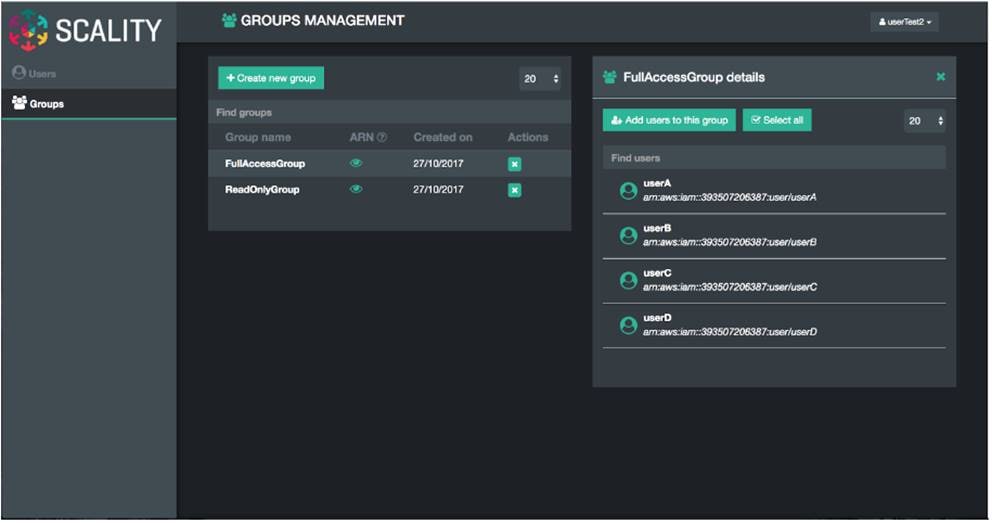

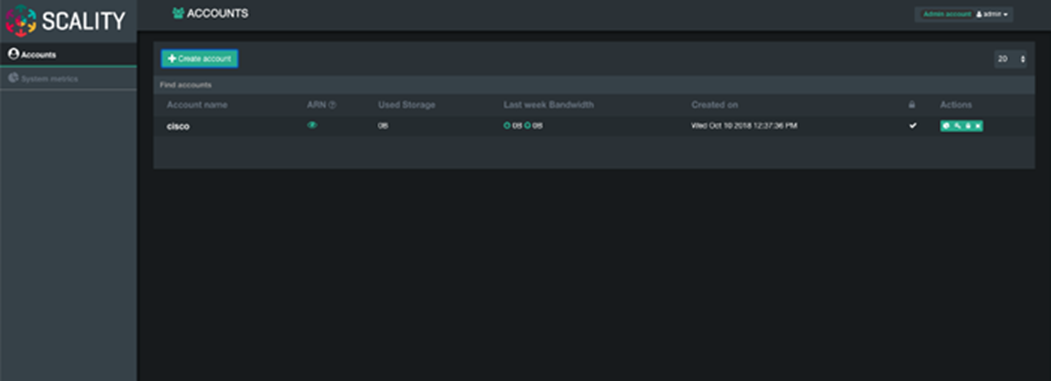

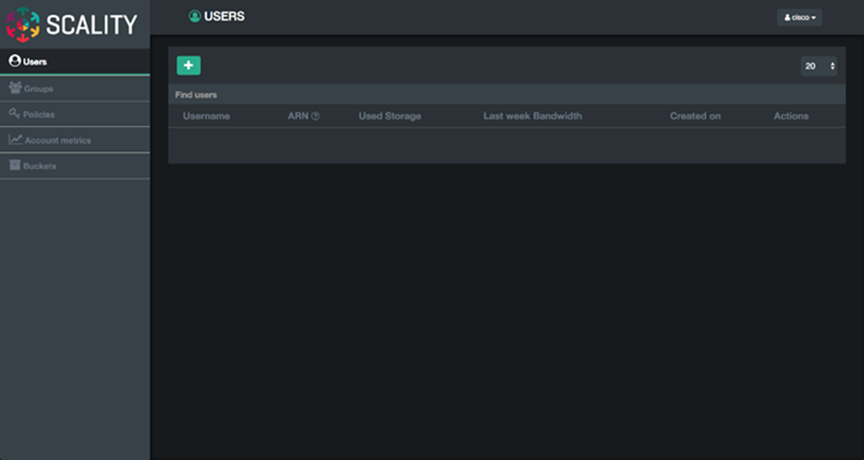

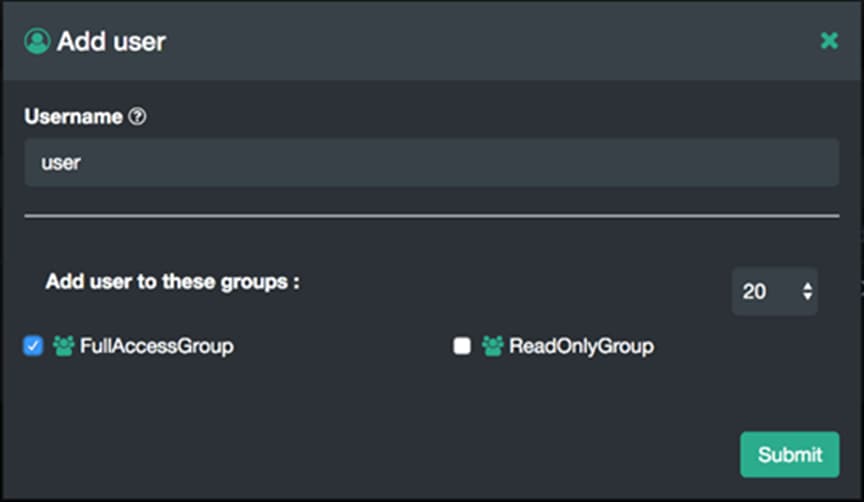

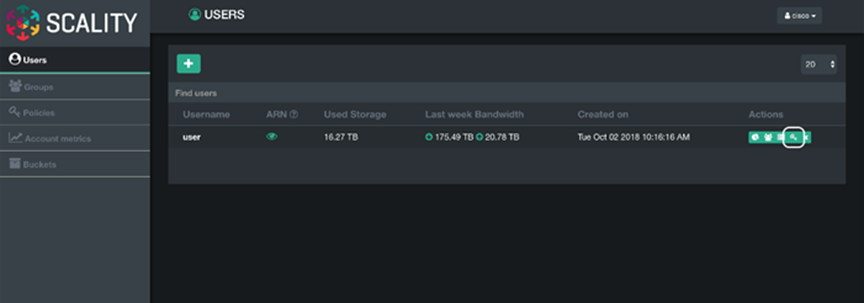

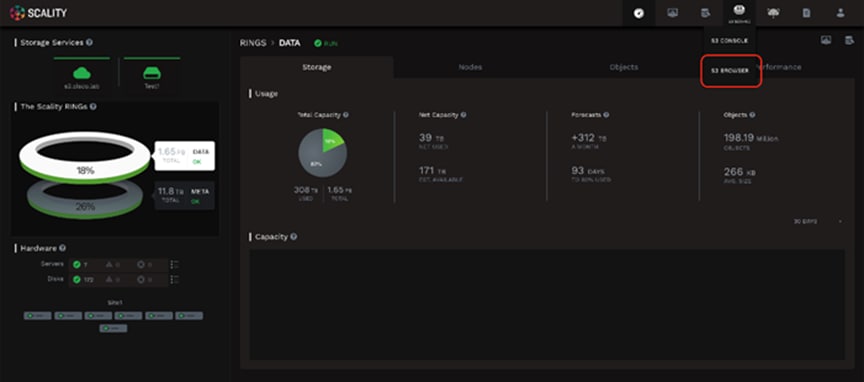

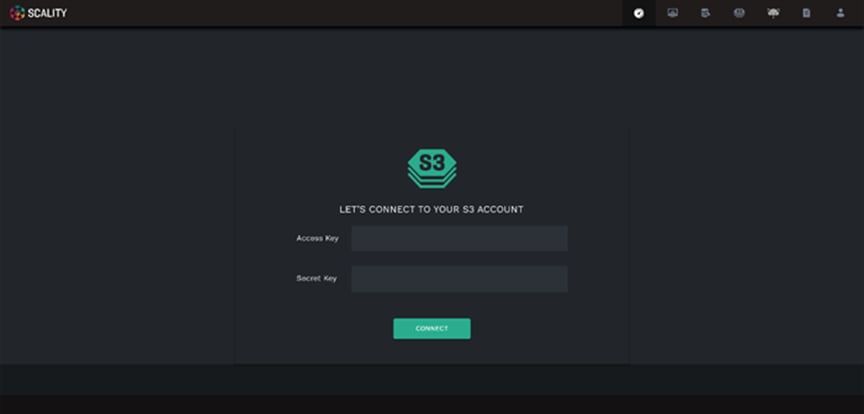

A new S3 Service Management console portal is provided to manage the integrated AWS Identity and Access Management (IAM) model of S3 multi-tenancy in the RING. This provides two-level management of Accounts, Users/Groups and IAM access control policies. The S3 Console may also be easily customized for white-labeling purposes.

Figure 10. S3 Service Management Console

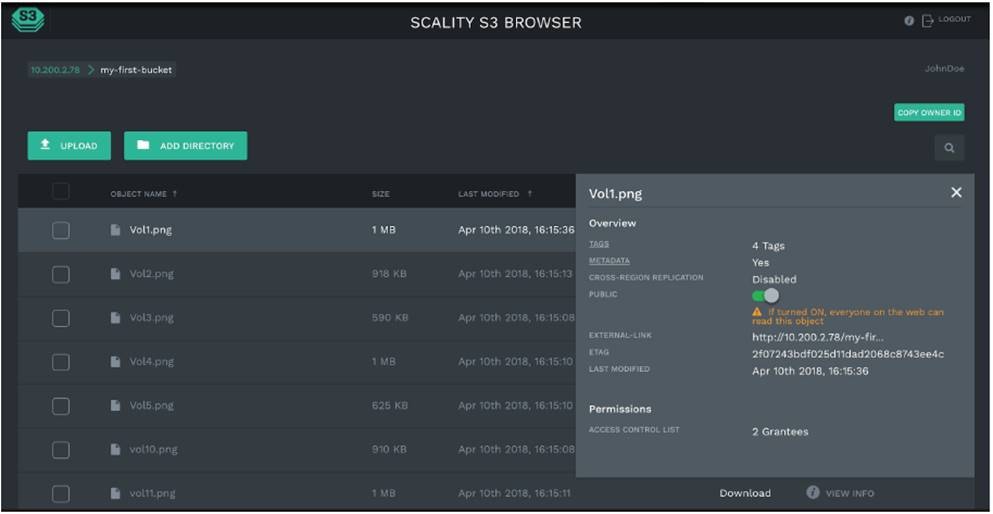

A new Scality S3 Browser is also provided to browse S3 buckets, upload and download object data, and manage key S3 features such as bucket versioning, CORS, editing of metadata attributes and tagging. The S3 Browser is an S3 API client that runs in the user’s web browser and is accessible to both the storage administrator and the S3 end user.

Figure 11. Scality S3 Browser

A scriptable Command Line Interface (CLI) called RingSH is also provided, as well as an SNMP compatible MIB and traps interface for monitoring from standard SNMP consoles. RING is designed to be self-managing and autonomous to free administrators to work on other value-added tasks, and not worry about the component level management tasks common with traditional array-based storage solutions.

S3 Connector: AWS S3 Storage with Identity and Access Management (IAM)

The Scality S3 Connector provides a modern S3 compatible application interface to the Scality RING. The AWS S3 API has become the industry’s default cloud storage API and has emerged as the standard RESTful dialect for object storage, much as NFS was for the NAS generation. The S3 Connector is built on a distributed scale-out architecture to support very high levels of application workloads and concurrent user access. This is based on a highly-available, high-performance metadata engine that can also be scaled out for increased performance. Deployments can be geo-replicated to enable highly-available disaster recovery solutions, both for Metro-Area Network environments (stretched deployments) and through Cross Region Replication (CRR), the asynchronous replication of individual S3 buckets or a full site.

The Scality S3 Connector also provides a full implementation of the AWS multi-tenancy and identity management (AWS IAM) model with federated authentication to LDAP and Active Directory to integrate into enterprise deployment environments. In addition to the RING Supervisor management UI, the S3 Service Provider UI is a web-based user interface to manage multi-tenancy accounts, users, groups, and policies. To support enterprise security, development and operational methodologies, the S3 Connector on RING supports:

● Integration with Enterprise directory/security servers: most commonly Microsoft Active Directory or LDAP servers. Federated authentication integration is supported through a SAML 2.0-compatible Identity Provider such as Microsoft ADFS, and many other SAML compatible products, to enable a complete Single Sign-On (SSO) solution.

● Secure Multi-tenancy support: through IAM Accounts, secure access keys, Users, Groups, access control policies and v4 authentication per-tenant, bucket encryption (integrated with corporate KMS solutions) and auditing

● Utilization reporting to enable chargeback: the S3 Connector Utilization API provides an extended API for reporting on comprehensive usage metrics including capacity, #objects, bandwidth and S3 operations (per unit time). This provides all of the metrics required for consumption into corporate tools for chargeback calculations.

● High-performance, scale-out access: to support many corporate applications and workloads simultaneously reading and writing to the S3 service

● Highly-available disaster-recovery solutions: enabled through multi-data center deployments to provide availability in the event of site failure

● Bucket Versioning and Cross Region Replication (CRR) of Buckets through the S3 API: CRR provides bucket-level asynchronous replication to another S3/RING deployment.

● S3 Object Lock API: designed to render data immutable by preventing it from being deleted or overwritten for a period of time or indefinitely. Combined with backup solutions like Veeam Backup and Replication v10, Scality RING8 provides air-gapped, tamper-proof backup data that stays immune to ransomware, mitigating the impact of an attack and offering a swift recovery path.

RING supports native file system access to RING storage through the integrated Scale-Out-File-System (SOFS) with NFS, SMB and FUSE Connectors for access over these well-known file protocols. SOFS is a POSIX compatible, parallel file system that provides file storage services on the RING without the need for external gateways.

SOFS is more precisely a virtual file system, which is based on an internal distributed database termed MESA (table in Spanish) on top of the RING’s storage services. MESA is a distributed, semi-structured database that is used to store the file system directories and file inode structures. This provides the virtual file system hierarchical view, with the consistency required for file system data, by ensuring that file system updates are always atomic. This means that updates are either committed or rolled back entirely, which guarantees the file system is never left in an intermediate or inconsistent state. A key advantage for scaling is that MESA is itself distributed as a set of objects across all of the RING’s storage nodes in a shared-nothing manner to eliminate any bottlenecks or limitations.

File system lookups are performed using the RING’s standard peer-to-peer routing protocol. For fast access performance, SOFS metadata is recommended to be stored on flash storage, typically on its own dedicated SSD drives in the storage servers, with the SOFS file payloads stored in the data RING on hard disk drives (HDDs). SOFS works directly with the data protection and durability mechanisms present in the RING, including replication and configurable Erasure Coding schemas.

SOFS can be provisioned into one or more volumes and can be scaled in capacity as needed to support application requirements. Each volume can be accessed by any number of Connectors to support the incoming workload, even with mixed protocols (NFS, SMB or FUSE). RING can support an enormous number of volumes (up to 2^32) and can grow to billions of files per volume. There is no need to pre-configure volumes for capacity (the RING effectively supports thin-provisioning of volumes). Volumes will utilize the RING’s storage pool to expand as needed when files are created and updated. For efficient storage of very large files, the RING supports the concept of sparse files, effectively files combined from multiple individual data-stripes.

While multiple Connectors may be used to simultaneously access a volume, the RING currently supports scale-out access for multiple concurrent readers, and a new File Access Coordination mode that allows multiple readers on a file while it is being written from another Connector. This is useful in use-cases such as video streaming where very large video files are written over the course of minutes or hours, but the file must be accessed for content distribution before the write is complete. When multiple Connectors write to the same directory, or one per file within a directory, SOFS maintains view consistency across the Connectors. By supporting scale-out across any number of Connectors, SOFS throughput can be scaled out to support increasing workload demands. When performance saturation is reached, it is always possible to add more connectors or storage nodes (and disk spindles) to the RING to further increase throughput into the system. The system can achieve tens of gigabytes per second of aggregate throughput for parallel workloads through this architecture.

SOFS provides volume-level utilization metering and quota support, in addition to User and Group (uid/gid) quotas. This enables administrators to effectively use the concept of volumes to meter, report, and limit space (capacity) usage at the volume level. This is useful in a multi-tenant environment where multiple applications or use cases are sharing the same RING, but accessing data stored in their own volume.

SOFS also provides integrated failover and load balancer services for the NFS and SMB Connectors. The load balancer uses an integrated DNS service to expose one or more service names (for example, sofs1.companyname.com) on Virtual IP addresses (VIPs), which can be mounted as NFS mount points or SMB shares. The load balancer can be configured with multiple underlying NFS or SMB connector real IP addresses, and provides load balancing of file traffic across these SOFS connectors. In combination with the RING 6.0 Folder Scale-out feature, this also provides transparent multi-connector access to a single folder, as well as enabling failover. In the event one of the underlying NFS or SMB Connectors becomes non-responsive, the load balancer can select another Connector IP address as the access point for the request.
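The load-balancing and failover behavior can be sketched as a simple round-robin selector that skips connectors marked as unhealthy. This is only an illustrative model: the class name, the methods, and the round-robin policy are assumptions, and the source does not describe the actual selection algorithm used by the SOFS load balancer.

```python
from itertools import cycle


class VipBalancer:
    """Hypothetical round-robin selector over connector real IP addresses."""

    def __init__(self, connectors):
        self._connectors = list(connectors)
        self._healthy = set(self._connectors)
        self._ring = cycle(self._connectors)

    def mark_down(self, ip: str) -> None:
        """Record that a connector stopped responding."""
        self._healthy.discard(ip)

    def mark_up(self, ip: str) -> None:
        """Return a known connector to the rotation."""
        if ip in self._connectors:
            self._healthy.add(ip)

    def pick(self) -> str:
        """Return the next healthy connector, skipping any that are down."""
        for _ in range(len(self._connectors)):
            ip = next(self._ring)
            if ip in self._healthy:
                return ip
        raise RuntimeError("no healthy connector available")
```

With two connectors configured, requests alternate between them. Once one is marked down, every request is directed to the remaining connector until the failed one is marked up again.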

Intelligent Data Durability and Self-Healing

RING is designed to expect and manage a wide range of component failures, including failures of disks, servers, and networks, even across multiple data centers, while ensuring that data remains durable and available during these conditions. RING provides data durability through a set of flexible data protection mechanisms optimized for distributed systems, including replication, erasure coding and geo-replication capabilities that allow applications to select the best data protection strategies for their data. These flexible data protection mechanisms implement Scality’s design principle to address a wide spectrum (80 percent) of storage workloads and data sizes. A full description of multi-site data protection is provided in the next section, Multi-Site Geo-Distribution.

Replication Class of Service (COS)

To optimize data durability in a distributed system, the RING employs local replication, or the storage of multiple copies of an object within the RING. RING will attempt to spread these replicas across multiple storage nodes, and across multiple disk drives, in order to separate them from common failures (assuming sufficient numbers of servers and disks are available). RING supports six Class-of-Service levels for replication (0-5), indicating that the system can maintain between 0 and 5 replicas (that is, 1 to 6 copies) of an object. At the highest level, this allows the system to tolerate up to 5 simultaneous disk failures while still preserving access to and storage of the original object. Note that any failure will cause the system to self-heal the lost replica, to automatically bring the object back up to its original Class-of-Service as fast as possible.

While replication is optimal for many use cases where the objects are small and access performance is critical, it does impose a high storage overhead penalty compared to the original data. For example, a 100KB object stored with a Class-of-Service=2 (2 extra copies, so 3 in total) will consume 3 x 100KB = 300KB of actual physical capacity on the RING in order to maintain its 3 replicas. This overhead is acceptable in many cases for small objects but can become a costly burden for megabyte or gigabyte level video and image objects. In this case, the penalty is 200 percent: storing a 1GB object requires 3GB of underlying raw storage capacity for its 3 replicas. When measured across petabytes of objects, this becomes a significant cost burden for many businesses, requiring a more efficient data protection mechanism.
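The arithmetic above generalizes to any replication Class-of-Service. The following Python sketch computes the number of copies, raw capacity, and overhead for a given CoS. The function name and the returned dictionary keys are illustrative assumptions, not part of any Scality interface.

```python
def replication_footprint(object_bytes: int, cos: int) -> dict:
    """Compute the raw footprint of one object stored with replication CoS 0-5."""
    if not 0 <= cos <= 5:
        raise ValueError("replication CoS must be between 0 and 5")
    copies = cos + 1  # the original plus `cos` replicas
    return {
        "copies": copies,
        "raw_bytes": object_bytes * copies,
        "overhead_pct": 100 * cos,   # extra capacity relative to the object size
        "tolerated_failures": cos,   # copies that can be lost while one survives
    }
```

For the example in the text, `replication_footprint(100_000, 2)` reports 3 copies, 300,000 raw bytes, and a 200 percent overhead.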

Scality’s erasure coding (EC) provides an alternative data protection mechanism to replication that is optimized for large objects and files. RING implements Reed-Solomon erasure coding techniques to store large objects with an extended set of parity chunks, instead of multiple copies of the original object. The basic idea with erasure coding is to break an object into multiple chunks (m) and apply a mathematical encoding to produce an additional set of parity chunks (k). A description of the mathematical encoding is beyond the scope of this paper, but it can be simply understood as an extension of the XOR parity calculations used in traditional RAID. The resulting set of chunks (m+k) is then distributed across the RING nodes, providing the ability to access the original object as long as any subset of m data or parity chunks is available. Stated another way, this provides a way to store an object with protection against k failures, with only k/m overhead in storage space.
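To make the chunk-and-parity idea concrete, the sketch below uses plain XOR parity, which corresponds to the simplest case of a single parity chunk (k=1). It is not Reed-Solomon and not Scality's implementation. The function names, the zero-padding scheme, and the chunk layout are assumptions for illustration.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, m: int) -> list:
    """Split data into m equal chunks (zero-padded) and append one XOR parity chunk."""
    size = -(-len(data) // m)  # ceiling division
    padded = data.ljust(size * m, b"\0")
    chunks = [padded[i * size:(i + 1) * size] for i in range(m)]
    chunks.append(reduce(xor_bytes, chunks))  # parity chunk, stored last
    return chunks


def recover(chunks: list, missing: int) -> bytes:
    """Rebuild the chunk at index `missing` by XOR-ing all surviving chunks."""
    survivors = [c for i, c in enumerate(chunks) if i != missing]
    return reduce(xor_bytes, survivors)


def ec_overhead(m: int, k: int) -> float:
    """Storage overhead of an m+k schema, as a fraction of the original size."""
    return k / m
```

With this scheme, any one of the m+1 chunks can be lost and rebuilt from the others. Reed-Solomon extends the same principle to k parity chunks, and `ec_overhead(7, 5)` gives roughly 0.71 for a 7+5 schema.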

Many commercial storage solutions impose a performance penalty on reading objects stored through erasure coding, due to the fact that all of the chunks, including the original data, are encoded before they are stored. This requires mandatory decoding on all access to the objects, even when there are no failure conditions on the main data chunks. With Scality’s EC, the data chunks are stored in the clear, without any encoding, so that this performance penalty is not present during normal read accesses. This means that erasure coded data can be accessed as fast as other data, unless a data chunk is missing which would require a parity chunk to be accessed and decoded. In summary, for single-site data protection, Scality’s replication and erasure coded data protection mechanisms can provide very high-levels of data durability, with the ability to trade-off performance and space characteristics for different data types.

Note that replication and erasure coding may be combined, even on a single Connector, by configuring a policy for the connector to store objects below a certain size threshold with a replication CoS, but files above the file size limit with a specific erasure coding schema. This allows the application to simply store objects without worrying about the optimal storage strategy per object, with the system managing that automatically.
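A size-based policy of this kind can be modeled in a few lines of Python. The class, its field names, and the 64 KiB threshold used in the usage note are hypothetical. The source does not specify the configuration syntax or a default threshold.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionPolicy:
    """Hypothetical per-connector policy: replicate small objects, erasure-code large ones."""
    threshold_bytes: int   # size limit separating the two strategies (assumed value)
    replication_cos: int   # CoS applied below the threshold
    ec_schema: tuple       # (m, k) applied at or above the threshold

    def choose(self, object_bytes: int) -> str:
        """Return the protection strategy for an object of the given size."""
        if object_bytes < self.threshold_bytes:
            return f"replication CoS={self.replication_cos}"
        m, k = self.ec_schema
        return f"erasure coding {m}+{k}"
```

For instance, `ProtectionPolicy(64 * 1024, 3, (7, 5))` selects replication for a 1 KB object and 7+5 erasure coding for a 1 GB object, so the application never has to choose a strategy per object.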

Note that RING does not employ traditional RAID based data protection techniques. While RAID has served the industry well in legacy NAS and SAN systems, industry experts have written at length about the inadequacies of classical RAID technologies when employed on high-density disk drives, in capacity-optimized and distributed storage systems. These deficiencies include higher probabilities of data loss due to long RAID rebuild times, and the ability to protect against only a limited set of failure conditions (for example, only two simultaneous disk failures per RAID6 group). Further information and reading on the limitations of RAID as a data protection mechanism on high-capacity disk drives is widely available.

RING provides self-healing processes that monitor and automatically resolve component failures. This includes the ability to rebuild missing data chunks due to disk drive or server failures, rebalance data when nodes leave and join the RING, and to proxy requests around component failures. In the event a disk drive or even a full server fails, background rebuild operations are spawned to restore the missing object data from its surviving replicas or erasure coded chunks. The rebuild process completes when it has restored the original Class of Service - either the full number of replicas or the original number of erasure coded data and parity chunks. A local disk failure can also be repaired quickly on a node (distinct from a full distributed rebuild), through the use of an in-memory key map maintained on each node. Nodes are also responsible for automatically detecting mismatches in their own Keyspace, rebalancing keys and for establishing and removing proxies during node addition and departure operations. Self-healing provides the RING with the resiliency required to maintain data availability and durability in the face of the expected wide set of failure conditions, including multiple simultaneous component failures at the hardware and software process levels.

To optimize rebuilds as well as mainline IO performance during rebuilds, RING utilizes the distributed power of the entire storage pool. The parallelism of the underlying architecture pays dividends by eliminating any central bottlenecks that might otherwise limit performance or cause contention between servicing application requests, and normal background operations such as rebuilds, especially when the system is under load. To further optimize rebuild operations, the system will only repair the affected object data, not the entire set of disk blocks, as is commonly the case in RAID arrays. Rebuilds are distributed across multiple servers and disks in the system, to utilize the aggregate processing power and available IO of multiple resources in parallel, rather than serializing the rebuilds onto a single disk drive.

By leveraging the entire pool, the impact of rebuilding data stored either with replication or erasure coding is minimized since there will be relatively small degrees of overlap between disks involved in servicing data requests, and those involved in the rebuilds.

Scality RING Multi-Site Deployments

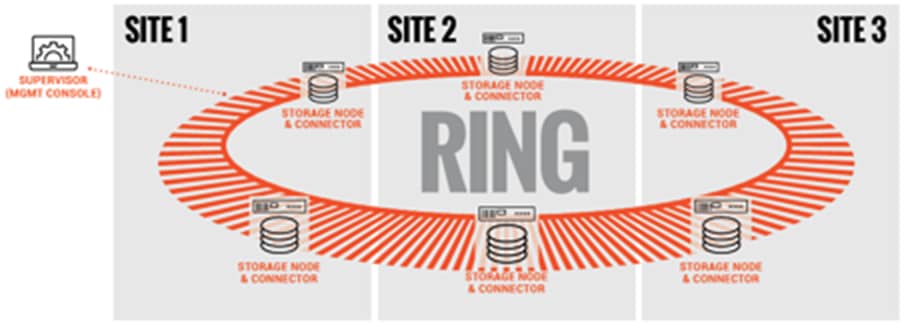

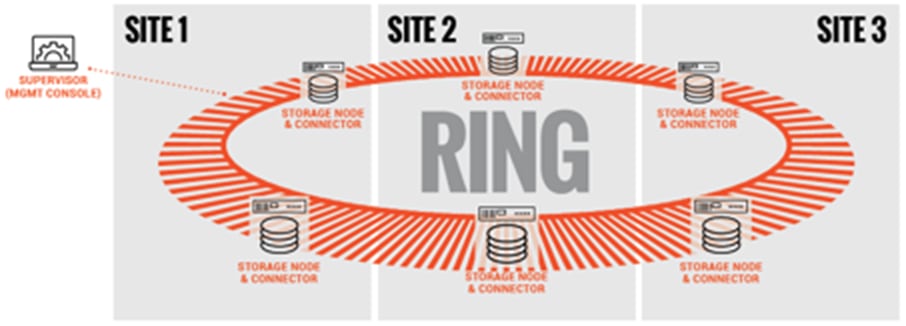

To support multi data center deployments with site protection and complete data consistency between all sites, the RING natively supports a stretched (synchronous) deployment mode across sites. In this mode, a single logical RING is deployed across multiple data centers, with all nodes participating in the standard RING protocols as if they were local to one site.

When a stretched RING is deployed with EC, it provides multiple benefits including full site-level failure protection, active/active access from both data centers, and dramatically reduced storage overhead compared to mirrored RINGs. An erasure coding schema for a three-site stretched RING of 7+5 would provide protection against one complete site failure, or up to four disk/server failures per site, plus one additional disk/server failure in another site, with approximately 70 percent space overhead. This compares favorably to a replication policy that might require 300-400 percent space overhead, for similar levels of protection across these sites.
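The 7+5 example can be checked with a short calculation. The sketch below assumes that the m+k chunks are spread evenly across the sites, which is an assumption made for illustration. The function name and result keys are likewise hypothetical.

```python
def stretched_ec(m: int, k: int, sites: int) -> dict:
    """Summarize an m+k erasure coding schema spread evenly over `sites` sites."""
    total = m + k
    if total % sites:
        raise ValueError("chunks cannot be spread evenly across the sites")
    per_site = total // sites
    return {
        "chunks_per_site": per_site,
        "overhead_pct": round(100 * k / m, 1),
        # Losing a site removes per_site chunks; at most k may be lost in total.
        "survives_site_loss": per_site <= k,
        "extra_failures_after_site_loss": k - per_site,
    }
```

For `stretched_ec(7, 5, 3)`, each site holds 4 chunks, the overhead is about 71 percent, and after the loss of one complete site the schema still tolerates one additional chunk loss, consistent with the figures above.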

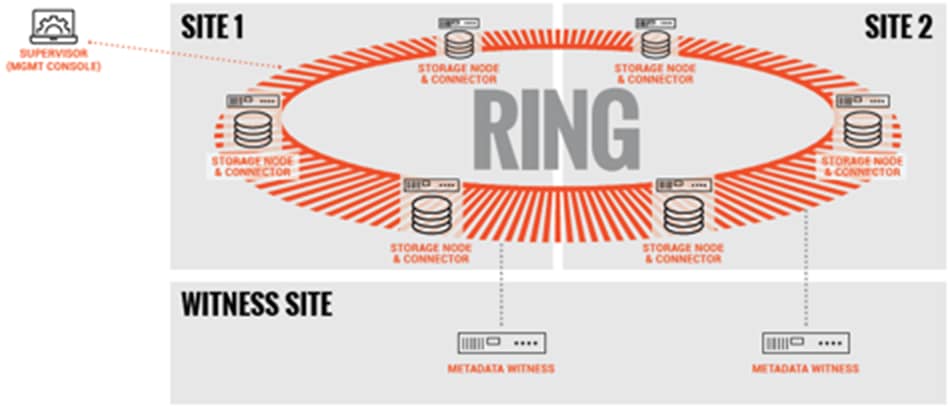

File System (SOFS) Multi-Site Geo-Distribution

The Scality RING can be stretched across 2 to 3 sites within a Metro-Area Network (MAN) to provide full site failover, provided the latency between the sites does not exceed 10ms. The stretched architecture provides zero RTO and RPO since the failover is automated. The same applies to the failback procedure: when the lost site is recovered, the system automatically recovers the data. For the two-site stretched architecture only, 2 witness servers are needed to arbitrate between the 2 sites.

The 2 stretched sites + witness is an Active / Active replication system based on a synchronous replication.

Figure 12. SOFS – Two-site Stretched

The 3 stretched sites are an Active / Active replication system based on a synchronous replication.

Figure 13. SOFS – Three-Site Stretched

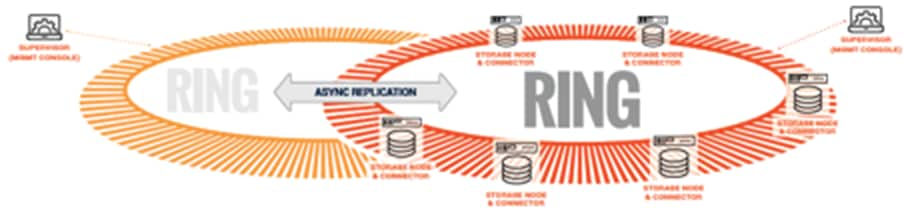

For high latency between sites, Scality supports a two-site, fully asynchronous SOFS replication mechanism at scale to enable the replication of massive amounts of file data across the two sites. Scality also supports a Full Diff mechanism that can compare the content of the two sites at scale to ensure the data is effectively replicated. Should one site be fully lost, Scality provides a mechanism to fully reconstruct it.

To manage replication bursts, Scality integrates a back-pressure system to ensure that the production network link is not overloaded by the replication itself while still respecting the RPO defined during the setup of the installation. This enables Disaster Recovery (DR) by providing a Failover and Failback system to recover in case of partial or full loss.

The 2 sites with high latency between them are an Active / Passive replication system based on an asynchronous replication.

Figure 14. SOFS – Two-Site Asynchronous Replication

S3 Object Multi-Site Geo-Distribution

The same multi-site architectures are supported for S3 as for SOFS, both synchronous and asynchronous. The first is a stretched solution on two or three sites with zero RPO and zero RTO. As for SOFS, a stretched architecture is available within a MAN to provide full site failover, provided the latency between the sites does not exceed 10ms. For the two-site stretched architecture only, 1 witness server is needed to arbitrate between the 2 sites.

The 2 stretched sites + witness is an Active / Active replication system based on a synchronous replication.

Figure 15. S3 – Two-site Stretched

The 3 stretched sites are an Active / Active replication system based on a synchronous replication.

Figure 16. S3 – Three-Site Stretched

For high latency between sites (such as on a Wide Area Network - WAN), Scality supports a two-site, fully asynchronous S3 replication mechanism at scale to enable the replication of massive amounts of data across the two sites. This system is based on the S3 CRR design for replicating a bucket between 2 sites. For site replication, Scality developed its own system to replicate an entire site instead of just a bucket. This enables Disaster Recovery (DR) by providing a Failover and Failback system to recover in case of partial or full loss (flooding, fire, and so on).

The 2 sites with high latency between them are an Active / Passive replication system based on an asynchronous replication.

Figure 17. S3 – Two-Site Asynchronous Replication

Scality NAS Archiver makes it easy to identify and migrate inactive data automatically and transparently to Scality RING, freeing-up premium primary storage capacity while cutting down overall backup and operating costs.

Scality NAS Archiver is a software-based file archiving solution that is designed to meet the needs of today’s modern data center, capitalizing on the benefits of the RING platform. This intelligent file archiving system maximizes the value of existing primary storage by removing the burden of stale data wasting space and compromising performance. Scality NAS Archiver offers an optimal solution for NAS offload and archiving, ensuring that data migration from the NAS to the RING stays seamless and transparent, with no impact on the applications it serves.

The Scality NAS Archiving solution provides TCO savings for users of enterprise NAS systems that are compelling enough for it to pay for itself and more. It provides not only a transparent two-tier storage solution, preserving the normal performance and access characteristics of the NAS filers for users and applications, but also introduces a scalable, long-term, durable, and cost-effective solution for archiving on the RING object storage solution. Perhaps more important than the direct TCO savings, the NAS filer now has 60-80% free capacity, which can be used for additional Tier 1 file data. This opens up new applications and use-cases that the enterprise can use for its key business goals. Moreover, because all data archived to Scality RING remains fully and seamlessly accessible through the NAS without any changes, this also provides a zero RPO/RTO solution in the event the NAS filer fails or becomes inaccessible.

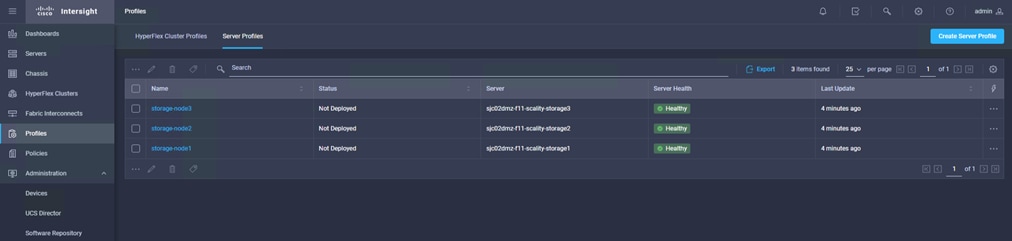

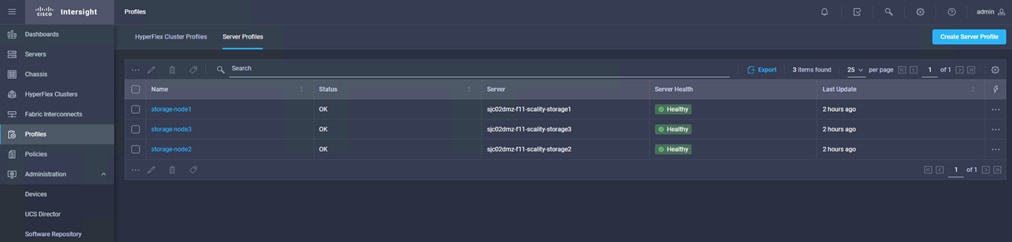

In this architecture, Scality RING and Scality NAS Archiver are deployed on Cisco UCS with Cisco Intersight and the Terraform provider for Cisco Intersight. We automatically set up three Cisco UCS C240 M5L servers with the Terraform provider for Cisco Intersight, simplifying the process of orchestrating a scale-out storage environment. All three servers were installed with the latest Red Hat Enterprise Linux 7 operating system.

We manually deployed the Cisco Intersight Virtual Appliance and Terraform as virtual machines. In addition, we automatically installed three virtual machines with Terraform for VMware vSphere, covering the Scality Supervisor, the Scality NAS Archiver SQL server, and the Scality NAS Archiver Portal. All virtual machines were deployed on a Cisco UCS C220 M5 server, connected to a pair of Cisco Nexus switches.

We set up Scality NAS Archiver with one virtual machine for the SQL database and one virtual machine for the Scality NAS Archiver Portal server, to show by example how to archive data from a Windows File Server to Scality RING.

Figure 18. Solution Overview

This Cisco Validated Design provides a comprehensive, end-to-end guide for deploying Scality RING and Scality NAS Archiver on Cisco UCS C240 M5 with Cisco Intersight and Terraform provider for Cisco Intersight.

The 3-node Scality RING solution has various options to scale capacity. The tested configuration uses ARC (Advanced Resiliency Configuration) 7+5 and COS 3 replication for small objects. A base capacity summary for the tested solution is listed in Table 1. Because the smallest Scality RING license covers 200 TB usable, drives smaller than 10 TB are not an option.

| HDD Type | Number of Disks | Usable Capacity |
|---|---|---|
| 10 TB 7200-rpm LFF NL-SAS | 36 | 196 TB |
| 12 TB 7200-rpm LFF NL-SAS | 36 | 236 TB |
| 14 TB 7200-rpm LFF NL-SAS | 36 | 275 TB |
| 16 TB 7200-rpm LFF NL-SAS | 36 | 314 TB |

The solution setup consists of multiple parts. It covers basic setup of the network components, policies and profiles setup, installations of various parts as well as functional tests and high availability testing. The high-level flow of the solution setup is as follows:

1. Install and configure Cisco Nexus 93180YC-EX.

2. Deploy Cisco Intersight virtual Appliance.

3. Deploy Terraform virtual machine.

4. Install and configure Cisco UCS C240 M5 with Cisco Intersight and Terraform provider for Cisco Intersight.

5. Deploy Scality RING Supervisor, Scality NAS Archiver SQL virtual machine, and Scality NAS Archiver Portal server virtual machine through Terraform for VMware vSphere.

6. Configure and install Scality RING.

7. Configure and install Scality NAS Archiver.

8. Functional tests of the whole solution.

9. Performance tests for S3 and NFS.

10. High Availability testing of the solution.

The following sections detail the physical hardware, software revisions, and firmware versions required to install a single Scality RING cluster on Cisco UCS, as well as Scality NAS Archiver. These details are specific to the solution built in this CVD.

Table 2. Hardware Components used in this CVD

| Component | Model | Quantity | Comments |
|---|---|---|---|
| Switches | Cisco Nexus 93180YC-EX | 2 | |
| Cisco UCS | Cisco UCS C240 M5L | 3 | Each Node: 2 x Intel Xeon Silver 4214 (2.2 GHz, 12 Cores); 256 GB Memory; Cisco 12G Modular Raid Controller with 2GB cache; 2 x 960 GB M.2 6 Gbps SATA SSD for Metadata; 2 x 960 GB 6 Gbps SATA SSD for System; 12 x 10 TB 12 Gbps NL-SAS HDD for Data; 1 x VIC 1455 |
| Cisco UCS | Cisco UCS C220 M5S | 1 | 2 x Intel Xeon Platinum 8180 (2.5 GHz, 28 Cores); 192 GB Memory; Cisco 12G Modular Raid Controller with 2GB cache; 2 x 480 GB 6 Gbps SATA SSD for System; 3 x 3.8 TB 6 Gbps SATA SSD for Data; 1 x VIC 1455 |
| Cisco Intersight Virtual Appliance | Virtual Machine | 1 | 16 vCPU; 32 GB Memory; 500 GB Disk; 1 x Network |
| Terraform | Virtual Machine | 1 | 2 vCPU; 16 GB Memory; 100 GB Disk; 1 x Network |
| Scality Supervisor | Virtual Machine | 1 | 4 vCPU; 16 GB Memory; 800 GB Disk; 2 x Network |
| Scality NAS Archiver SQL | Virtual Machine | 1 | 8 vCPU; 16 GB Memory; 250 GB Disk; 1 x Network |
| Scality NAS Archiver Portal | Virtual Machine | 1 | 4 vCPU; 8 GB Memory; 80 GB Disk; 1 x Network |

The required software distribution versions are listed in Table 3.

| Layer | Component | Version or Release |
|---|---|---|
| Cisco UCS C240 M5L | Firmware Version | 4.1(1f) |
| Cisco UCS C220 M5SX | Firmware Version | 4.1(1f) |
| Network Nexus 93180YC-EX | BIOS | 07.67 |
| Network Nexus 93180YC-EX | NXOS | 9.3(4) |
| Cisco Intersight Virtual Appliance | Version | 1.0.9-164 |
| Software | Terraform | 0.13.2 |
| Software | Terraform Provider for Intersight | 0.1.3 |
| Software | Scality RING | 8.2 |
| Software | Scality NAS Archiver | 8.2 |
| Software | Red Hat Enterprise Linux | 7.8 |
| Hypervisor | VMware ESXi | 6.7 Update 3 |
| Management Server | VMware vCenter | 6.7 Update 3 |

The solution contains one topology configuration. Three Cisco UCS C240 M5 servers and one Cisco UCS C220 M5 server are connected to a pair of Cisco Nexus 93180YC-EX switches. Each Cisco UCS C240 M5 and C220 M5 server connects with one 25-Gbps cable to each Cisco Nexus 93180YC-EX. All three Cisco UCS C240 M5 servers use 802.3ad bonding to achieve high availability and high performance. The Cisco UCS C220 M5 server achieves the same goals with two active network interfaces configured under ESXi.

The following diagram illustrates the details of the configuration.

Figure 19. Datacenter Topology

VLANs and Subnets

For the base configuration, multiple VLANs need to be carried to the Cisco UCS domain from the upstream LAN, and these VLANs are also defined in the Cisco UCS configuration. Table 4 lists the VLANs created by Cisco Intersight for this CVD and their functions:

| VLAN Name | VLAN ID | Subnet | Purpose |
| --- | --- | --- | --- |
| Management/Client | 205 | 172.16.21.0/24, GW 172.16.21.1 | Cisco UCS CIMC management interfaces; Cisco Intersight; Terraform; Client network for Scality RING, Scality NAS Archiver; Windows File Server for Scality NAS Archiver |
| Storage | 206 | 172.16.22.0/24, GW 172.16.22.1 | Storage network for Scality RING |
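
A VLAN and subnet plan like the one in Table 4 can be sanity-checked before any switch is configured. The following is a minimal sketch, not part of the validated design: the values are copied from Table 4, while the dictionary layout and the function name `check_vlan_plan` are assumptions made for illustration.

```python
import ipaddress

# VLAN plan copied from Table 4; the dictionary layout is illustrative only.
VLANS = {
    "Management/Client": {"id": 205, "subnet": "172.16.21.0/24", "gateway": "172.16.21.1"},
    "Storage": {"id": 206, "subnet": "172.16.22.0/24", "gateway": "172.16.22.1"},
}


def check_vlan_plan(vlans):
    """Return a list of problems found in the VLAN plan (empty if none)."""
    problems = []
    seen_ids = set()
    networks = []
    for name, cfg in vlans.items():
        net = ipaddress.ip_network(cfg["subnet"])
        gateway = ipaddress.ip_address(cfg["gateway"])
        # Valid 802.1Q VLAN IDs are 1 through 4094.
        if not 1 <= cfg["id"] <= 4094:
            problems.append(f"{name}: VLAN ID {cfg['id']} is outside 1-4094")
        if cfg["id"] in seen_ids:
            problems.append(f"{name}: duplicate VLAN ID {cfg['id']}")
        seen_ids.add(cfg["id"])
        # The default gateway must be an address inside the VLAN's subnet.
        if gateway not in net:
            problems.append(f"{name}: gateway {gateway} is not inside {net}")
        # Subnets of different VLANs must not overlap.
        for other_name, other_net in networks:
            if net.overlaps(other_net):
                problems.append(f"{name}: {net} overlaps {other_name} ({other_net})")
        networks.append((name, net))
    return problems


if __name__ == "__main__":
    for line in check_vlan_plan(VLANS) or ["VLAN plan is consistent"]:
        print(line)
```

For the two VLANs used in this CVD the function returns an empty list; a gateway typed into the wrong subnet would be reported as a problem.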

Jumbo Frames

All traffic traversing the Client and Storage VLANs and subnets is configured by default to use jumbo frames; to be precise, all communication is configured to send IP packets with a Maximum Transmission Unit (MTU) size of 9000 bytes. A larger MTU means that each IP packet carries a larger payload, so the same amount of data needs fewer packets and incurs less per-packet header and processing overhead, which typically improves throughput.
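
The effect of the MTU on per-packet overhead can be estimated with simple arithmetic. The sketch below is an illustration under stated assumptions, not a measurement from this CVD: it assumes TCP over IPv4 without header options, standard Ethernet framing, and an 802.1Q tag on the link. All function names are hypothetical.

```python
# Per-frame overhead in bytes, assuming IPv4 and TCP headers without options.
ETH_HEADER = 14
ETH_FCS = 4
PREAMBLE_AND_GAP = 20  # 8-byte preamble/SFD plus 12-byte inter-frame gap
DOT1Q_TAG = 4          # present on 802.1Q-tagged links
IPV4_HEADER = 20
TCP_HEADER = 20


def tcp_payload(mtu):
    """TCP payload bytes carried by one full-size IP packet."""
    return mtu - IPV4_HEADER - TCP_HEADER


def wire_bytes(mtu, tagged=True):
    """Bytes one full-size frame occupies on the wire."""
    tag = DOT1Q_TAG if tagged else 0
    return mtu + ETH_HEADER + ETH_FCS + PREAMBLE_AND_GAP + tag


def efficiency(mtu, tagged=True):
    """Fraction of wire bytes that is TCP payload."""
    return tcp_payload(mtu) / wire_bytes(mtu, tagged)


def packets_needed(total_bytes, mtu):
    """Number of full-size packets needed to carry total_bytes of payload."""
    payload = tcp_payload(mtu)
    return -(-total_bytes // payload)  # ceiling division


if __name__ == "__main__":
    one_gib = 1024 ** 3
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: efficiency {efficiency(mtu):.4f}, "
              f"packets for 1 GiB: {packets_needed(one_gib, mtu)}")
```

Under these assumptions, payload efficiency rises from about 95 percent at an MTU of 1500 to about 99 percent at 9000, and the same data needs roughly one sixth as many packets. The gain only materializes when every device on the path accepts the larger frames.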

Naming Scheme and DNS

Configuring DNS servers to resolve Fully Qualified Domain Names (FQDN) is highly recommended. DNS records need to be created before beginning the installation. At a minimum, it is highly recommended to create A records and reverse PTR records.

Use Table 5 to gather the DNS information required for the installation. The table lists the information used in this CVD:

Table 5. DNS Server Information

| Item | Name |
| --- | --- |
| DNS Server | 192.168.10.51 |
| DNS Domain | sjc02dmz.net |
| vCenter Server Name | sjc02dmz-vcsa |
| Cisco Nexus 93180YC-EX #1 | sjc02dmz-f9-n93180ycex-a |
| Cisco Nexus 93180YC-EX #2 | sjc02dmz-f11-n93180ycex-b |
| Cisco Intersight Virtual Appliance | sjc02dmz-f11-intersight |
| Cisco UCS C240 M5 #1 | sjc02dmz-f11-storage1 |
| Cisco UCS C240 M5 #2 | sjc02dmz-f11-storage2 |
| Cisco UCS C240 M5 #3 | sjc02dmz-f11-storage3 |
| Cisco UCS C220 M5 | sjc02dmz-f11-esxi |
| Cisco Intersight | sjc02dmz-f11-intersight |
| Terraform | sjc02dmz-f11-terraform |
| Scality Supervisor | sjc02dmz-f11-supervisor |
| Scality NAS Archiver SQL Server | sjc02dmz-f11-sql |
| Scality NAS Archiver Portal Server | sjc02dmz-f11-portal |
| Windows File Server for Scality NAS Archiver | sjc02dmz-f11-winnas |
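
The A and PTR records recommended above follow mechanically from a host name, the DNS domain, and an IP address. The following sketch shows one way to derive both record names. The host names and the domain come from Table 5; the IP addresses are hypothetical placeholders, because this section does not list per-host addresses, and the function name `dns_records` is an assumption.

```python
import ipaddress

DNS_DOMAIN = "sjc02dmz.net"  # from Table 5

# Host names from Table 5. The IP addresses are HYPOTHETICAL placeholders
# inside the Management/Client subnet; replace them with the real plan.
HOSTS = {
    "sjc02dmz-f11-storage1": "172.16.21.101",
    "sjc02dmz-f11-storage2": "172.16.21.102",
    "sjc02dmz-f11-storage3": "172.16.21.103",
}


def dns_records(hosts, domain):
    """Return (owner, type, value) tuples for the A and PTR record of each host."""
    records = []
    for name, ip in hosts.items():
        fqdn = f"{name}.{domain}."
        # reverse_pointer yields e.g. '101.21.16.172.in-addr.arpa' for IPv4.
        ptr_owner = ipaddress.ip_address(ip).reverse_pointer + "."
        records.append((fqdn, "A", ip))
        records.append((ptr_owner, "PTR", fqdn))
    return records


if __name__ == "__main__":
    for owner, rtype, value in dns_records(HOSTS, DNS_DOMAIN):
        print(f"{owner:45} IN {rtype:4} {value}")
```

The printed lines resemble zone-file entries and can serve as a checklist when creating the records on the DNS server.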

Cabling

The physical layout of the solution was previously described in section Topology Overview. The Cisco Nexus switches and the Cisco UCS servers need to be cabled properly before beginning the installation activities. Table 6 provides the cabling map for installation of a Scality RING solution on Cisco UCS.

Table 6. Cabling Map Cisco Nexus 93180YC-EX

| Device | Port | Connected To | Port | Note |
| --- | --- | --- | --- | --- |
| sjc02dmz-f9-n93180ycex-a | 11 | sjc02dmz-f11-esxi | Port 0 | |
| sjc02dmz-f9-n93180ycex-a | 12 | sjc02dmz-f11-storage1 | Port 0 | |
| sjc02dmz-f9-n93180ycex-a | 13 | sjc02dmz-f11-storage2 | Port 0 | |
| sjc02dmz-f9-n93180ycex-a | 14 | sjc02dmz-f11-storage3 | Port 0 | |
| sjc02dmz-f9-n93180ycex-a | 49 | sjc02dmz-f11-n93180ycex-b | Eth1/49 | vPC Peer Link |
| sjc02dmz-f9-n93180ycex-a | 50 | sjc02dmz-f11-n93180ycex-b | Eth1/50 | vPC Peer Link |
| sjc02dmz-f11-n93180ycex-b | 11 | sjc02dmz-f11-esxi | Port 2 | |
| sjc02dmz-f11-n93180ycex-b | 12 | sjc02dmz-f11-storage1 | Port 2 | |
| sjc02dmz-f11-n93180ycex-b | 13 | sjc02dmz-f11-storage2 | Port 2 | |
| sjc02dmz-f11-n93180ycex-b | 14 | sjc02dmz-f11-storage3 | Port 2 | |
| sjc02dmz-f11-n93180ycex-b | 49 | sjc02dmz-f9-n93180ycex-a | Eth1/49 | vPC Peer Link |
| sjc02dmz-f11-n93180ycex-b | 50 | sjc02dmz-f9-n93180ycex-a | Eth1/50 | vPC Peer Link |
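
A cabling map such as Table 6 can be checked for redundancy before the servers are racked. The sketch below is illustrative only: the server-facing links are copied from Table 6, while the tuple layout and the function names are assumptions. It verifies that every server has a link to both switches and that no switch port is used twice.

```python
# Server-facing links from Table 6: (switch, switch port, server, server port).
LINKS = [
    ("sjc02dmz-f9-n93180ycex-a", 11, "sjc02dmz-f11-esxi", "Port 0"),
    ("sjc02dmz-f9-n93180ycex-a", 12, "sjc02dmz-f11-storage1", "Port 0"),
    ("sjc02dmz-f9-n93180ycex-a", 13, "sjc02dmz-f11-storage2", "Port 0"),
    ("sjc02dmz-f9-n93180ycex-a", 14, "sjc02dmz-f11-storage3", "Port 0"),
    ("sjc02dmz-f11-n93180ycex-b", 11, "sjc02dmz-f11-esxi", "Port 2"),
    ("sjc02dmz-f11-n93180ycex-b", 12, "sjc02dmz-f11-storage1", "Port 2"),
    ("sjc02dmz-f11-n93180ycex-b", 13, "sjc02dmz-f11-storage2", "Port 2"),
    ("sjc02dmz-f11-n93180ycex-b", 14, "sjc02dmz-f11-storage3", "Port 2"),
]
SWITCHES = {"sjc02dmz-f9-n93180ycex-a", "sjc02dmz-f11-n93180ycex-b"}


def uplinks_by_server(links):
    """Map each server to the set of switches it is cabled to."""
    result = {}
    for switch, _port, server, _nic in links:
        result.setdefault(server, set()).add(switch)
    return result


def servers_missing_redundancy(links, switches):
    """Return servers that are not cabled to every switch in the pair."""
    uplinks = uplinks_by_server(links)
    return sorted(server for server, seen in uplinks.items() if seen != switches)


def duplicate_switch_ports(links):
    """Return (switch, port) pairs that appear more than once."""
    seen, duplicates = set(), []
    for switch, port, _server, _nic in links:
        if (switch, port) in seen:
            duplicates.append((switch, port))
        seen.add((switch, port))
    return duplicates


if __name__ == "__main__":
    print("Missing redundancy:", servers_missing_redundancy(LINKS, SWITCHES))
    print("Duplicate ports:", duplicate_switch_ports(LINKS))
```

For the map in Table 6 both checks return empty lists, which matches the design goal of one 25-Gbps link from each server to each switch.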

Deployment Hardware and Software

This section provides the details to configure a fully redundant, highly available Cisco UCS configuration.

● Initial setup of Cisco Nexus 93180YC-EX Switch A and B

Configure Cisco Nexus 93180YC-EX Switch A and B

All Cisco UCS servers in this solution are connected to two Cisco Nexus 93180YC-EX switches for connectivity to applications and clients. The following sections describe the setup of both Cisco Nexus 93180YC-EX switches.

Initial Setup of Cisco Nexus 93180YC-EX Switch A and B

To configure Switch A, connect a console to the console port of the switch, power on the switch, and follow these steps:

1. Type yes.

2. Type n.

3. Type n.

4. Type n.

5. Enter the switch name.

6. Type y.

7. Type your IPv4 management address for Switch A.

8. Type your IPv4 management netmask for Switch A.

9. Type y.

10. Type your IPv4 management default gateway address for Switch A.

11. Type n.

12. Type n.

13. Type y for ssh service.

14. Press <Return> twice to accept the default SSH key type and key size.

15. Type y for ntp server.

16. Type the IPv4 address of the NTP server.

17. Type in L2 for interface layer.

18. Press <Return> twice to accept the default switchport interface state and CoPP system profile.

19. Check the configuration and, if it is correct, press <Return> twice to skip editing and to save the configuration.

The complete setup looks like the following:

---- System Admin Account Setup ----

Do you want to enforce secure password standard (yes/no) [y]:

Enter the password for "admin":

Confirm the password for "admin":

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of

the system. Setup configures only enough connectivity for management

of the system.

Please register Cisco Nexus9000 Family devices promptly with your

supplier. Failure to register may affect response times for initial

service calls. Nexus9000 devices must be registered to receive

entitled support services.

Press Enter at any time to skip a dialog. Use ctrl-c at anytime

to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]:

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : sjc02dmz-f9-n93180ycex-a

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : 172.16.16.4

Mgmt0 IPv4 netmask : 255.255.255.0

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : 192.168.12.3

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [1024]:

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : 173.38.201.115

Configure default interface layer (L3/L2) [L3]: L2

Configure default switchport interface state (shut/noshut) [shut]:

Configure CoPP system profile (strict/moderate/lenient/dense) [strict]:

The following configuration will be applied:

password strength-check

switchname sjc02dmz-f9-n93180ycex-a

vrf context management

ip route 0.0.0.0/0 192.168.12.3

exit

no feature telnet

ssh key rsa 1024 force

feature ssh

ntp server 173.38.201.115

no system default switchport

system default switchport shutdown

copp profile strict

interface mgmt0

ip address 172.16.16.4 255.255.255.0

no shutdown

Would you like to edit the configuration? (yes/no) [n]:

Use this configuration and save it? (yes/no) [y]:

[########################################] 100%

Copy complete.

User Access Verification

sjc02dmz-f9-n93180ycex-a login:

Repeat steps 1-19 for the Cisco Nexus 93180YC-EX Switch B with the exception of configuring a different IPv4 management address in step 7.

Enable Features on Cisco Nexus 93180YC-EX Switch A and B

To enable the UDLD, interface VLAN, LACP, HSRP, and vPC features and jumbo frames, connect to the management interface of each switch via SSH and follow these steps on both Switch A and Switch B:

Switch A

sjc02dmz-f9-n93180ycex-a# configure terminal

Enter configuration commands, one per line. End with CNTL/Z.

sjc02dmz-f9-n93180ycex-a(config)# feature udld

sjc02dmz-f9-n93180ycex-a(config)# feature interface-vlan

sjc02dmz-f9-n93180ycex-a(config)# feature lacp

sjc02dmz-f9-n93180ycex-a(config)# feature vpc

sjc02dmz-f9-n93180ycex-a(config)# feature hsrp

sjc02dmz-f9-n93180ycex-a(config)# system jumbomtu 9216

sjc02dmz-f9-n93180ycex-a(config)# spanning-tree port type edge bpduguard default

sjc02dmz-f9-n93180ycex-a(config)# spanning-tree port type edge bpdufilter default

sjc02dmz-f9-n93180ycex-a(config)# port-channel load-balance src-dst ip-l4port-vlan

sjc02dmz-f9-n93180ycex-a(config)# exit

sjc02dmz-f9-n93180ycex-a#

Switch B

sjc02dmz-f11-n93180ycex-b# configure terminal

Enter configuration commands, one per line. End with CNTL/Z.

sjc02dmz-f11-n93180ycex-b(config)# feature udld

sjc02dmz-f11-n93180ycex-b(config)# feature interface-vlan

sjc02dmz-f11-n93180ycex-b(config)# feature lacp

sjc02dmz-f11-n93180ycex-b(config)# feature vpc

sjc02dmz-f11-n93180ycex-b(config)# feature hsrp

sjc02dmz-f11-n93180ycex-b(config)# system jumbomtu 9216

sjc02dmz-f11-n93180ycex-b(config)# spanning-tree port type edge bpduguard default

sjc02dmz-f11-n93180ycex-b(config)# spanning-tree port type edge bpdufilter default

sjc02dmz-f11-n93180ycex-b(config)# port-channel load-balance src-dst ip-l4port-vlan

sjc02dmz-f11-n93180ycex-b(config)# exit

sjc02dmz-f11-n93180ycex-b#
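
Because both switches receive the same global commands, the session can be generated from one list, and a saved configuration can be compared against that list. The following is a minimal sketch: the commands and switch names are taken from the transcripts above, the function names are hypothetical, and the comparison assumes that a saved running configuration shows each command verbatim on its own line.

```python
# Global commands entered on both switches in this section.
GLOBAL_COMMANDS = [
    "feature udld",
    "feature interface-vlan",
    "feature lacp",
    "feature vpc",
    "feature hsrp",
    "system jumbomtu 9216",
    "spanning-tree port type edge bpduguard default",
    "spanning-tree port type edge bpdufilter default",
    "port-channel load-balance src-dst ip-l4port-vlan",
]
SWITCH_NAMES = ["sjc02dmz-f9-n93180ycex-a", "sjc02dmz-f11-n93180ycex-b"]


def render_session(switchname, commands):
    """Return the CLI lines to enter for one switch, prompts included."""
    lines = [f"{switchname}# configure terminal"]
    lines += [f"{switchname}(config)# {command}" for command in commands]
    lines.append(f"{switchname}(config)# exit")
    return lines


def missing_commands(saved_config_text, commands):
    """Return the expected commands that do not appear as a line in the text.

    Assumes each command appears verbatim on its own line in the saved text.
    """
    present = {line.strip() for line in saved_config_text.splitlines()}
    return [command for command in commands if command not in present]


if __name__ == "__main__":
    for name in SWITCH_NAMES:
        print("\n".join(render_session(name, GLOBAL_COMMANDS)))
        print()
```

Keeping the command list in one place reduces the risk that Switch A and Switch B drift apart when a feature is added later.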

Configure VLANs on Nexus 93180YC-EX Switch A and B

To configure the Client and Storage VLANs, follow these steps on Switch A and Switch B:

Switch A

sjc02dmz-f9-n93180ycex-a# config terminal

Enter configuration commands, one per line. End with CNTL/Z.

sjc02dmz-f9-n93180ycex-a(config)# vlan 205

sjc02dmz-f9-n93180ycex-a(config-vlan)# name Client

sjc02dmz-f9-n93180ycex-a(config-vlan)# exit

sjc02dmz-f9-n93180ycex-a(config)# vlan 206

sjc02dmz-f9-n93180ycex-a(config-vlan)# name Storage

sjc02dmz-f9-n93180ycex-a(config-vlan)# exit

sjc02dmz-f9-n93180ycex-a(config)# interface vlan 205

sjc02dmz-f9-n93180ycex-a(config-if)# no shut

sjc02dmz-f9-n93180ycex-a(config-if)# mtu 9216

sjc02dmz-f9-n93180ycex-a(config-if)# no ip redirects

sjc02dmz-f9-n93180ycex-a(config-if)# ip address 172.16.21.2/24

sjc02dmz-f9-n93180ycex-a(config-if)# no ipv6 redirects

sjc02dmz-f9-n93180ycex-a(config-if)# hsrp version 2

sjc02dmz-f9-n93180ycex-a(config-if)# hsrp 205

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# preempt delay minimum 300

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# priority 110

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# ip 172.16.21.1

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# exit

sjc02dmz-f9-n93180ycex-a(config-if)# exit

sjc02dmz-f9-n93180ycex-a(config)# interface vlan 206

sjc02dmz-f9-n93180ycex-a(config-if)# no shut

sjc02dmz-f9-n93180ycex-a(config-if)# mtu 9216

sjc02dmz-f9-n93180ycex-a(config-if)# no ip redirects

sjc02dmz-f9-n93180ycex-a(config-if)# ip address 172.16.22.2/24

sjc02dmz-f9-n93180ycex-a(config-if)# no ipv6 redirects

sjc02dmz-f9-n93180ycex-a(config-if)# hsrp version 2

sjc02dmz-f9-n93180ycex-a(config-if)# hsrp 206

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# preempt delay minimum 300

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# priority 110

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# ip 172.16.22.1

sjc02dmz-f9-n93180ycex-a(config-if-hsrp)# exit

sjc02dmz-f9-n93180ycex-a(config-if)# exit

sjc02dmz-f9-n93180ycex-a(config)# copy run start
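
The Switch A commands above repeat one pattern per VLAN: an SVI with its own address plus an HSRP group that shares a virtual gateway address. The sketch below renders that pattern from a few parameters. The command text mirrors the transcript, while the function names and the simplified active-router selection are assumptions for illustration; real HSRP breaks priority ties by interface IP address, which this sketch does not model.

```python
def svi_commands(vlan_id, svi_ip, virtual_ip, priority, mtu=9216, preempt_delay=300):
    """Return the SVI and HSRP commands for one VLAN, in the order used above."""
    return [
        f"interface vlan {vlan_id}",
        "no shut",
        f"mtu {mtu}",
        "no ip redirects",
        f"ip address {svi_ip}",
        "no ipv6 redirects",
        "hsrp version 2",
        f"hsrp {vlan_id}",
        f"preempt delay minimum {preempt_delay}",
        f"priority {priority}",
        f"ip {virtual_ip}",
        "exit",  # leave HSRP group mode
        "exit",  # leave interface mode
    ]


def expected_active(priorities):
    """Return the switch with the highest HSRP priority (ties not modeled)."""
    return max(priorities, key=priorities.get)


if __name__ == "__main__":
    # Switch A values for the Client and Storage VLANs, from the transcript.
    for vlan_id, svi_ip, vip in [(205, "172.16.21.2/24", "172.16.21.1"),
                                 (206, "172.16.22.2/24", "172.16.22.1")]:
        print("\n".join(svi_commands(vlan_id, svi_ip, vip, priority=110)))
        print()
```

With preemption enabled, the switch with the higher priority is expected to become the active gateway once the preempt delay has passed. For the VLAN 205 priorities shown in this section (110 on Switch A, 120 on Switch B), `expected_active` selects Switch B.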

Switch B

sjc02dmz-f11-n93180ycex-b# config terminal

Enter configuration commands, one per line. End with CNTL/Z.

sjc02dmz-f11-n93180ycex-b(config)# vlan 205

sjc02dmz-f11-n93180ycex-b(config-vlan)# name Client

sjc02dmz-f11-n93180ycex-b(config-vlan)# exit

sjc02dmz-f11-n93180ycex-b(config)# vlan 206

sjc02dmz-f11-n93180ycex-b(config-vlan)# name Storage

sjc02dmz-f11-n93180ycex-b(config-vlan)# exit

sjc02dmz-f11-n93180ycex-b(config)# interface vlan 205

sjc02dmz-f11-n93180ycex-b(config-if)# no shut

sjc02dmz-f11-n93180ycex-b(config-if)# mtu 9216

sjc02dmz-f11-n93180ycex-b(config-if)# no ip redirects

sjc02dmz-f11-n93180ycex-b(config-if)# ip address 172.16.21.3/24

sjc02dmz-f11-n93180ycex-b(config-if)# no ipv6 redirects

sjc02dmz-f11-n93180ycex-b(config-if)# hsrp version 2

sjc02dmz-f11-n93180ycex-b(config-if)# hsrp 205

sjc02dmz-f11-n93180ycex-b(config-if-hsrp)# preempt delay minimum 300

sjc02dmz-f11-n93180ycex-b(config-if-hsrp)# priority 120

sjc02dmz-f11-n93180ycex-b(config-if-hsrp)# ip 172.16.21.1

sjc02dmz-f11-n93180ycex-b(config-if-hsrp)# exit

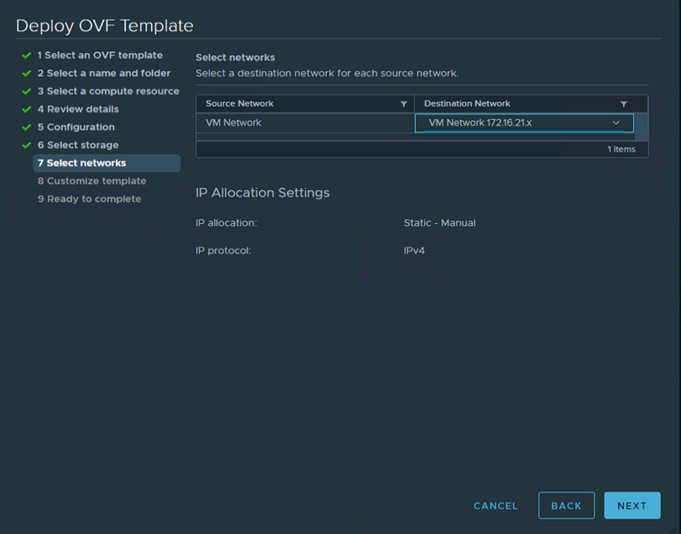

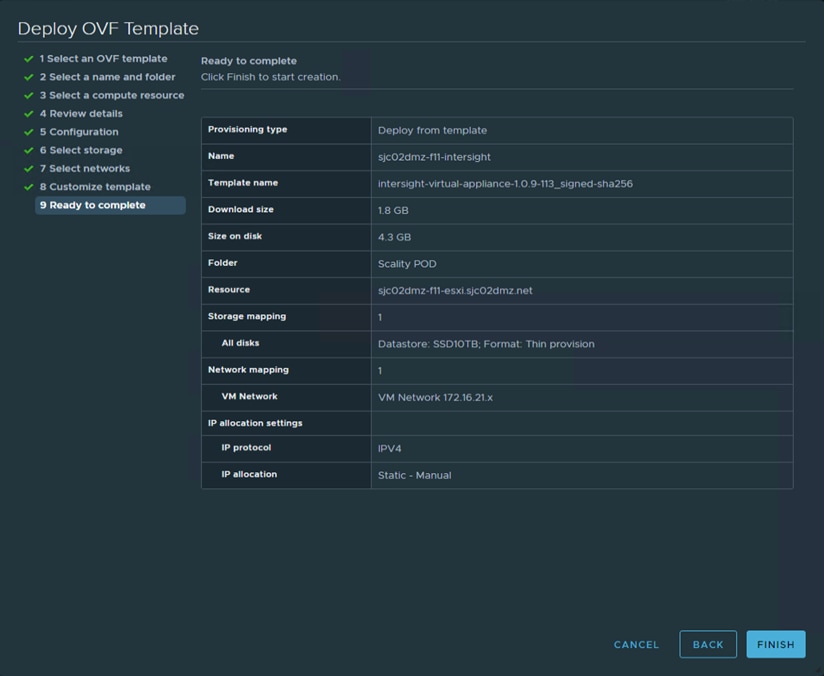

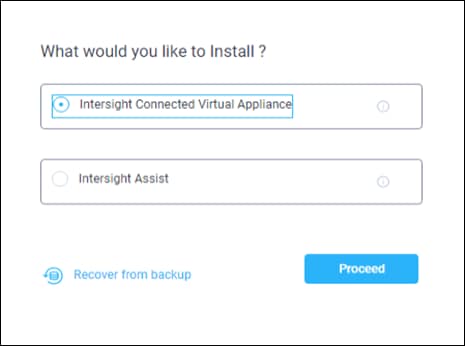

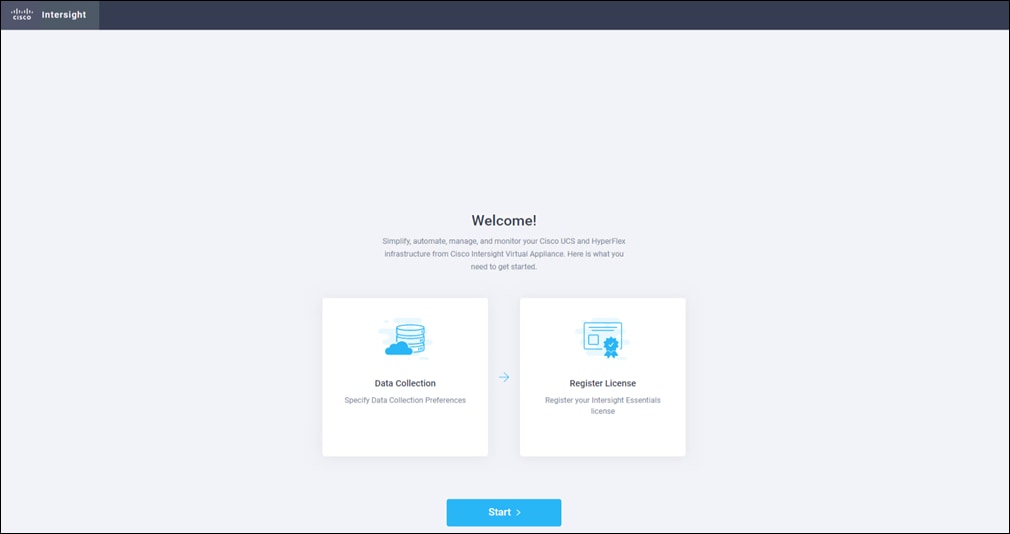

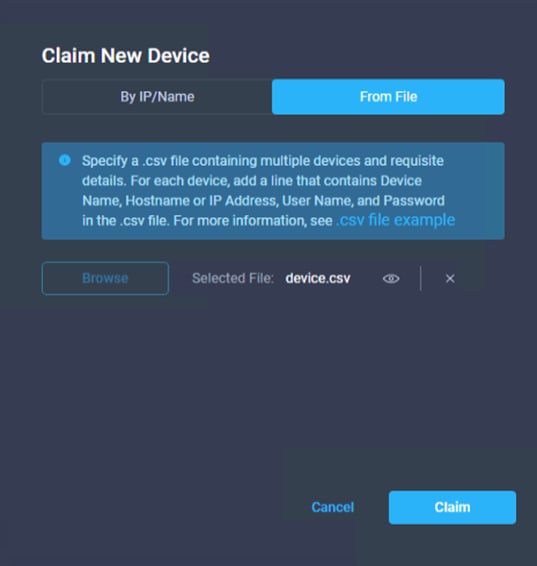

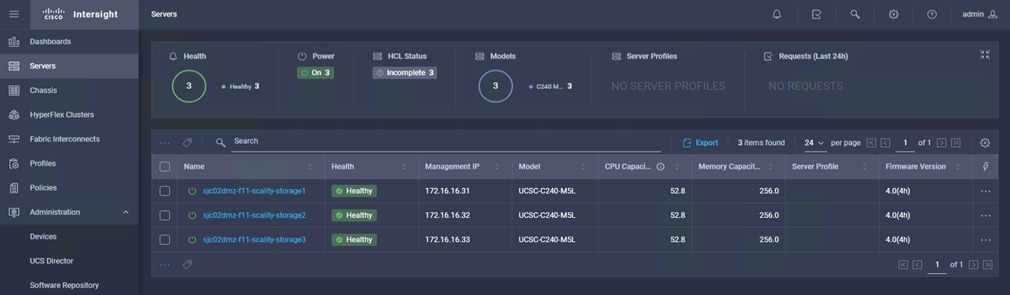

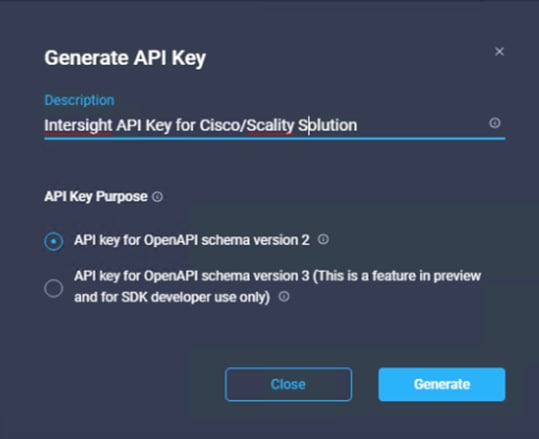

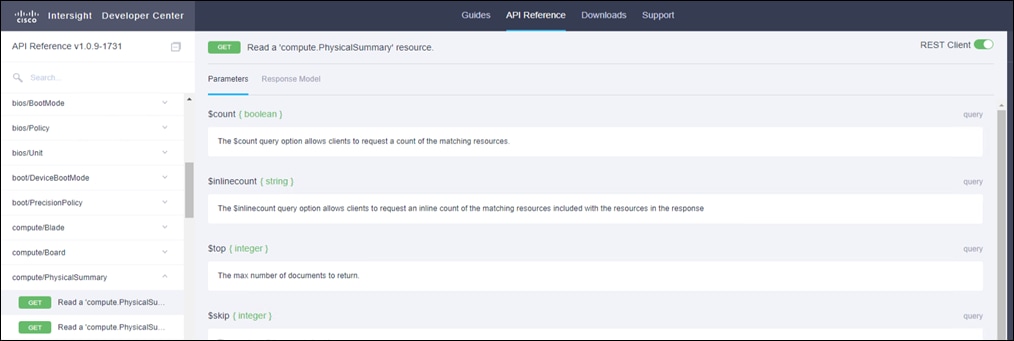

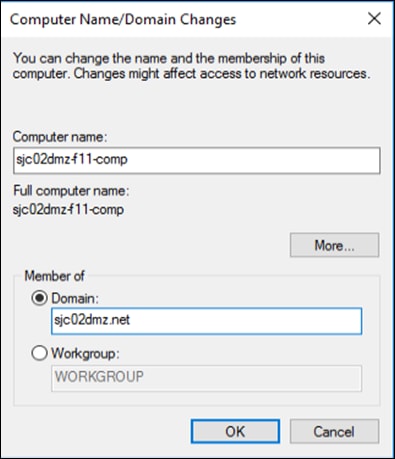

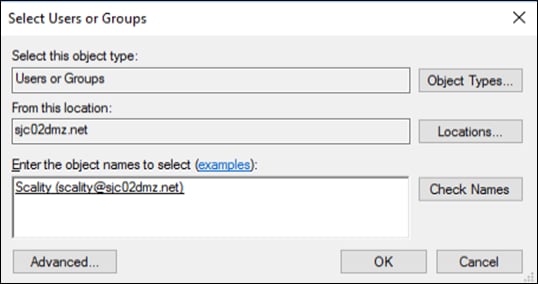

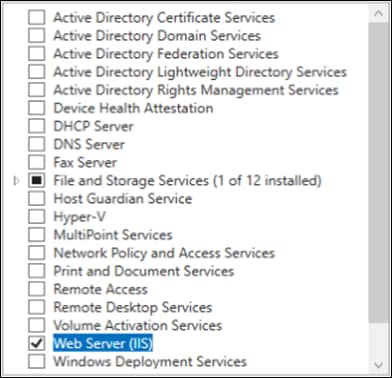

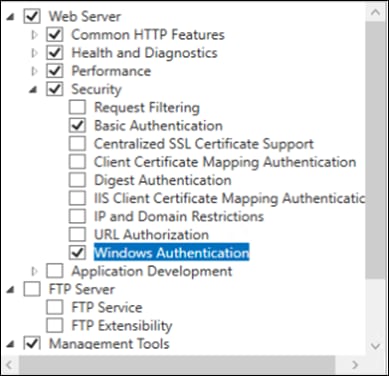

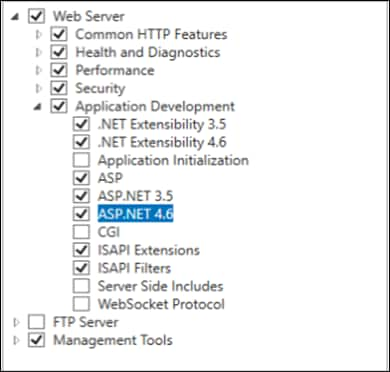

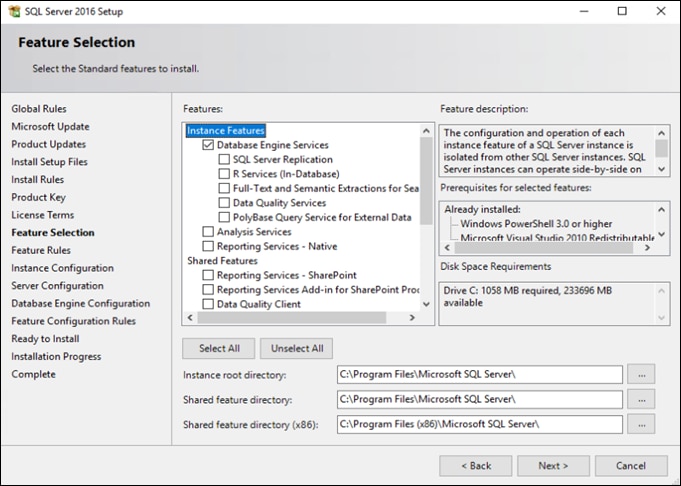

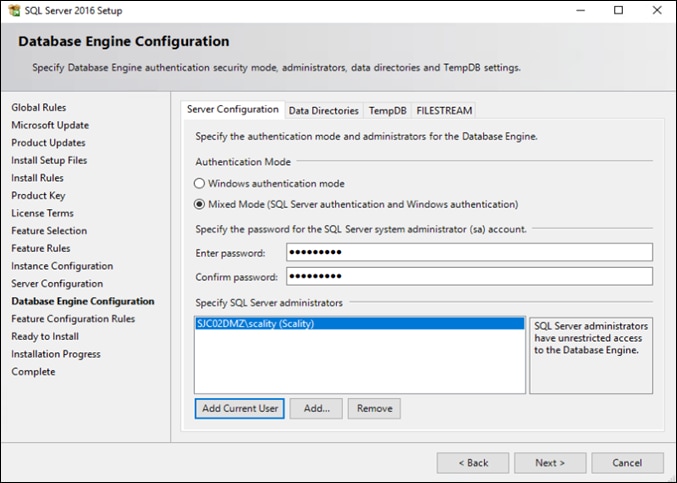

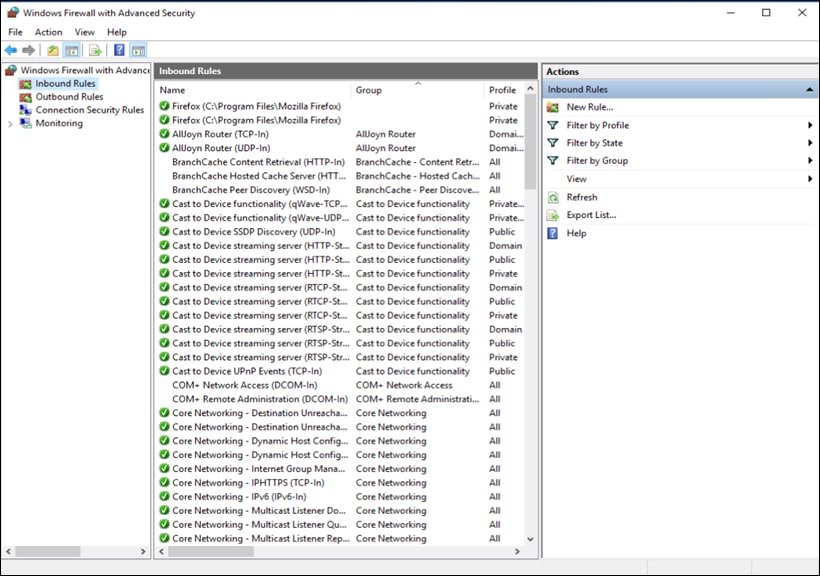

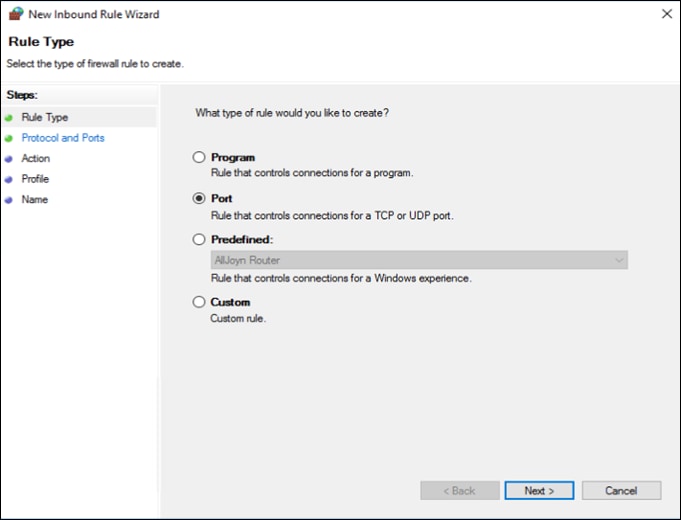

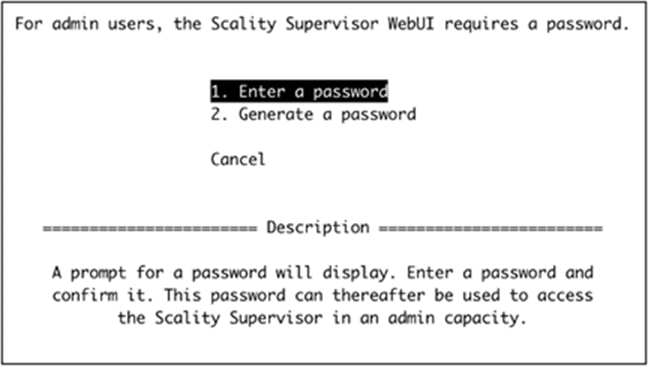

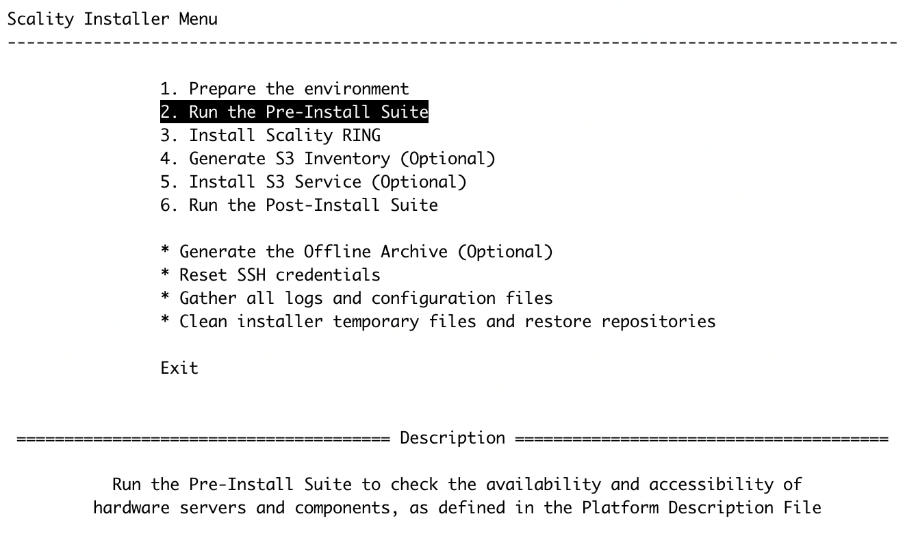

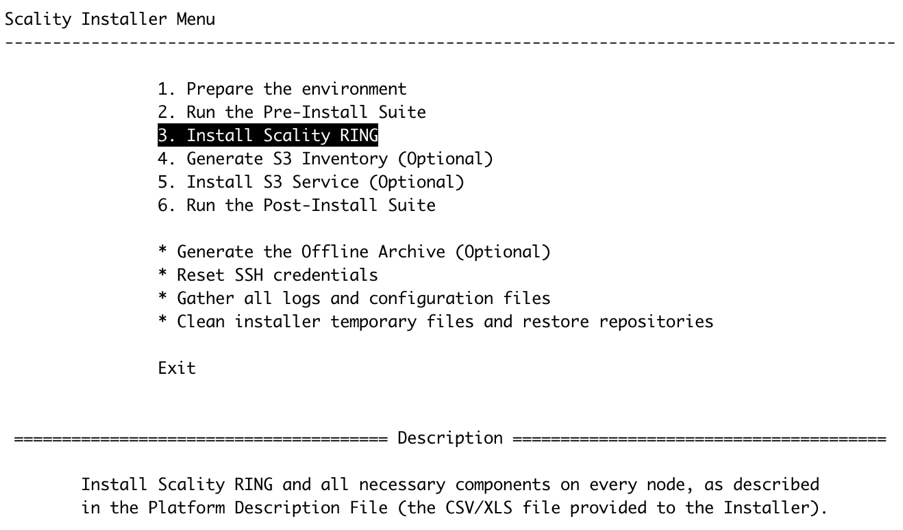

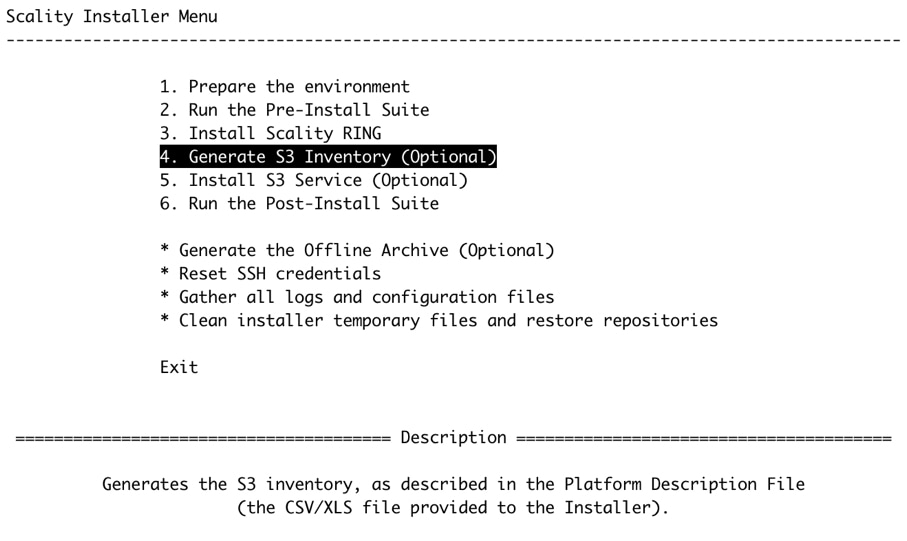

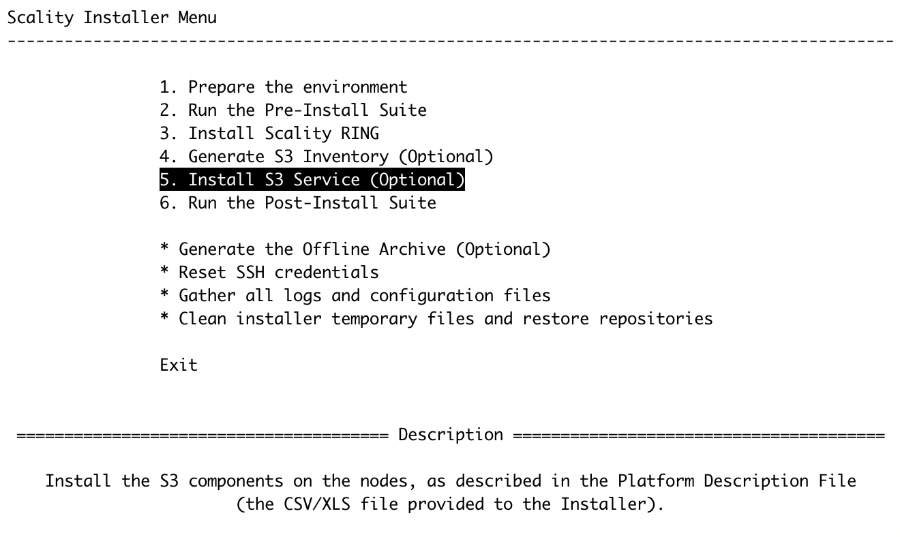

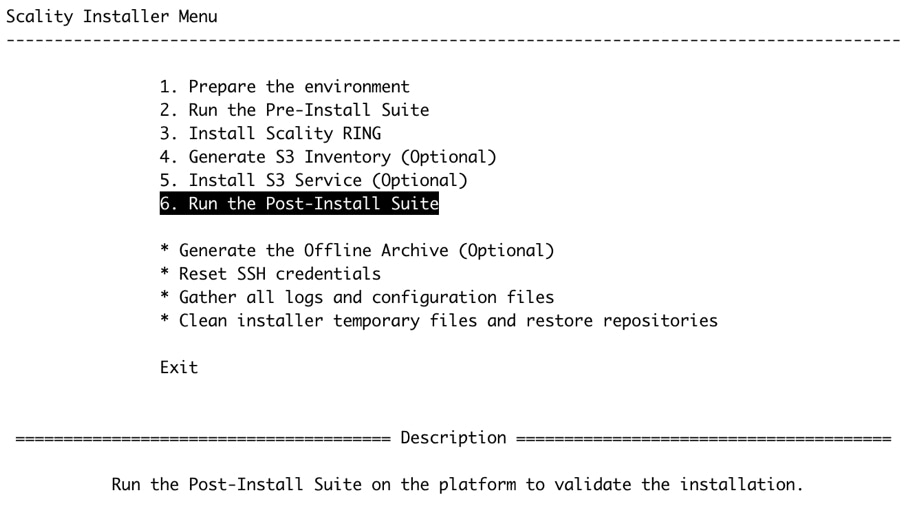

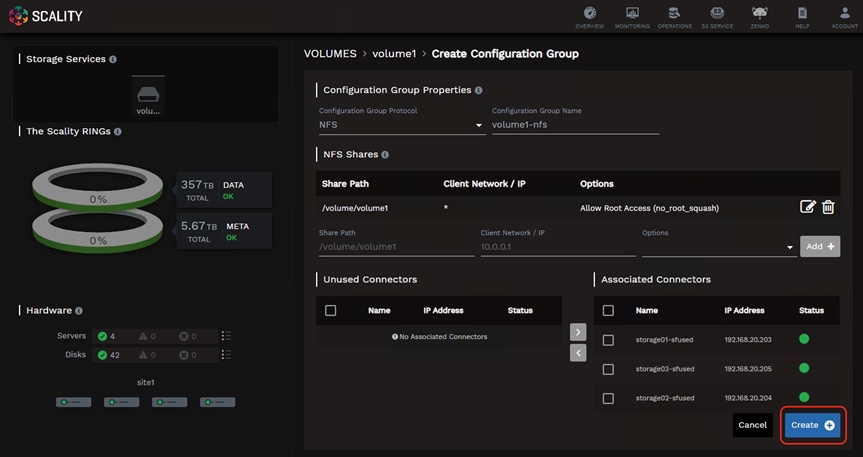

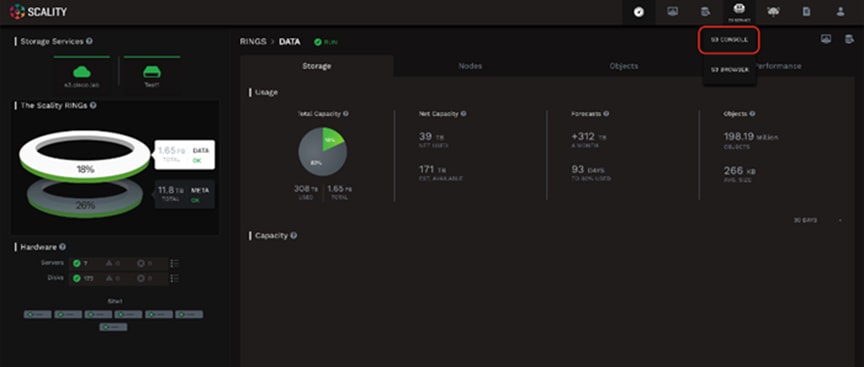

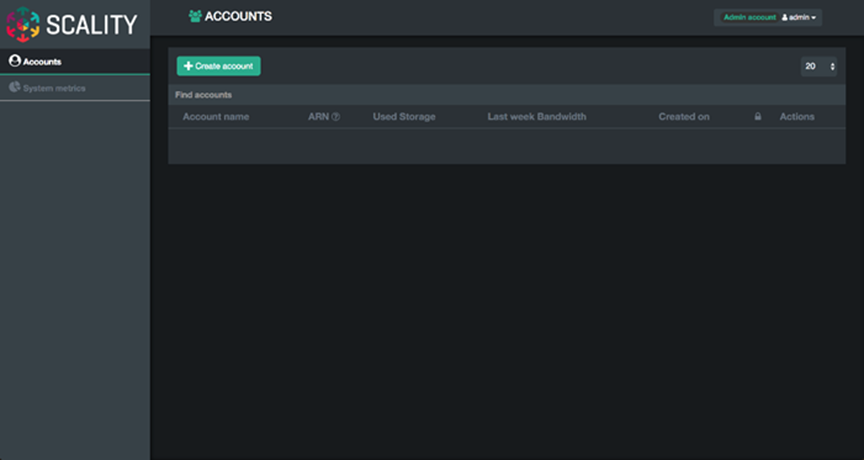

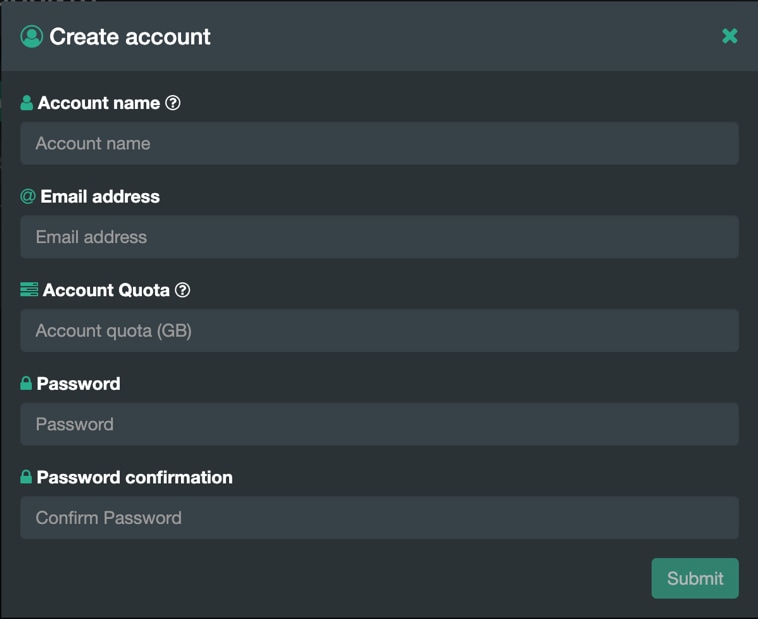

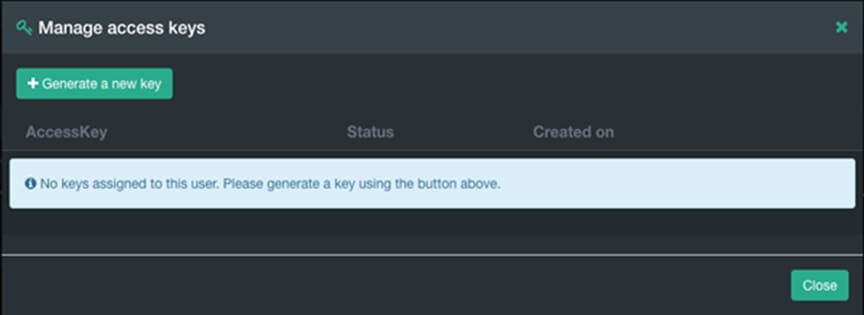

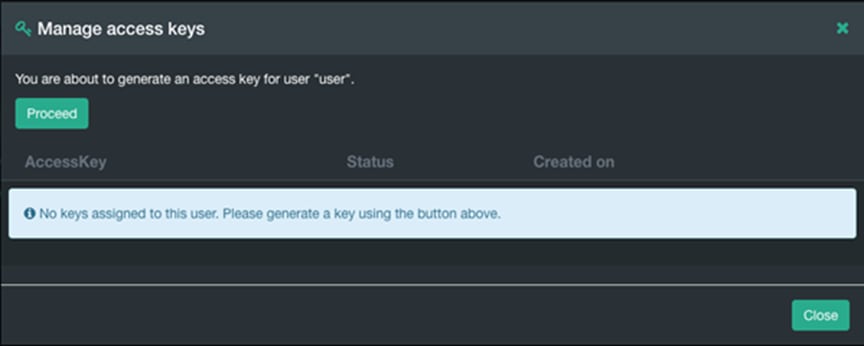

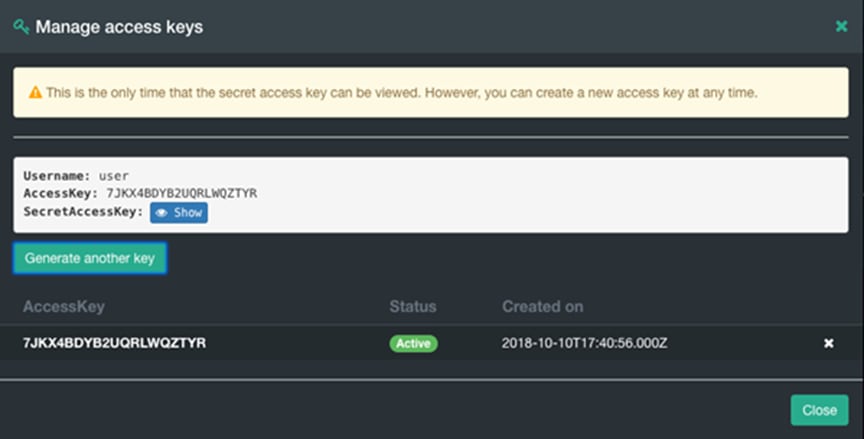

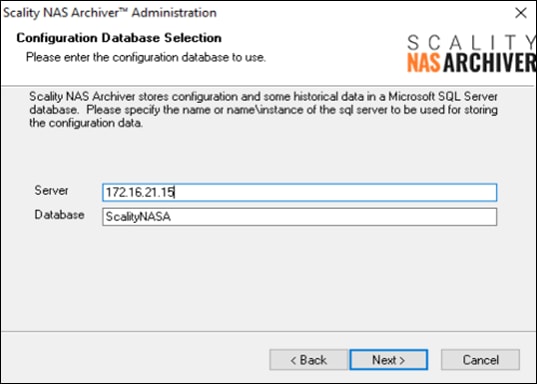

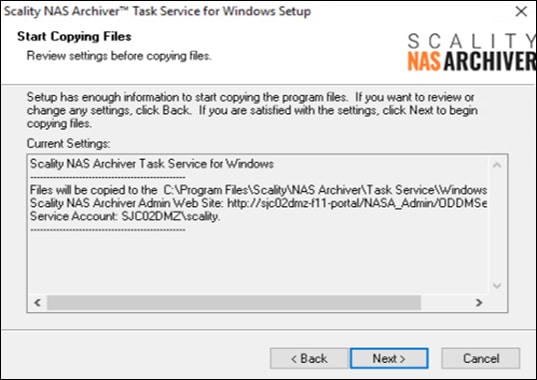

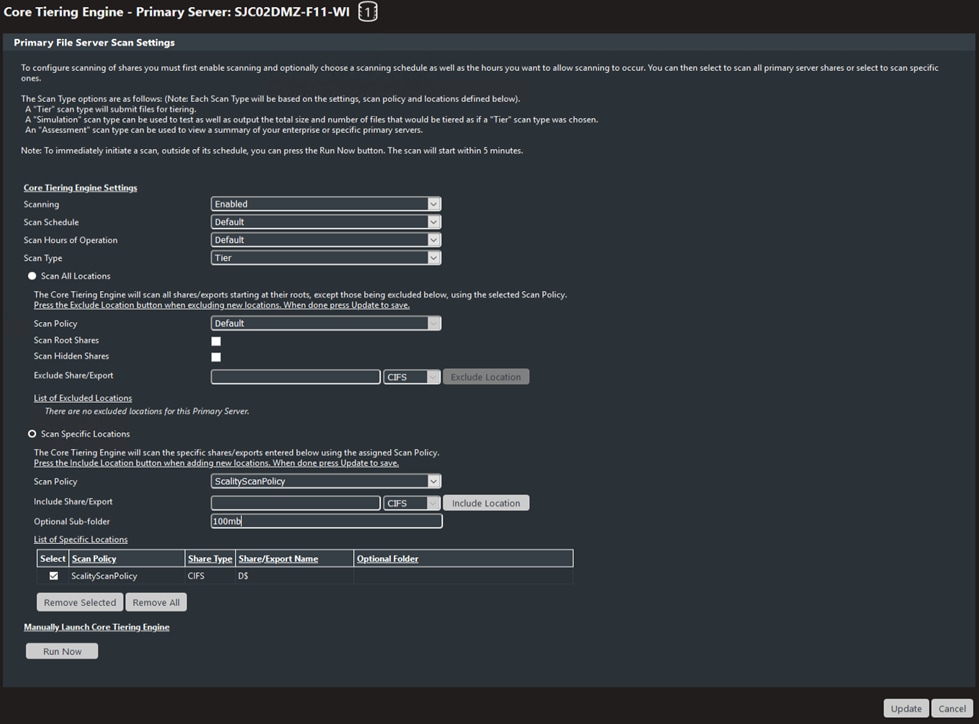

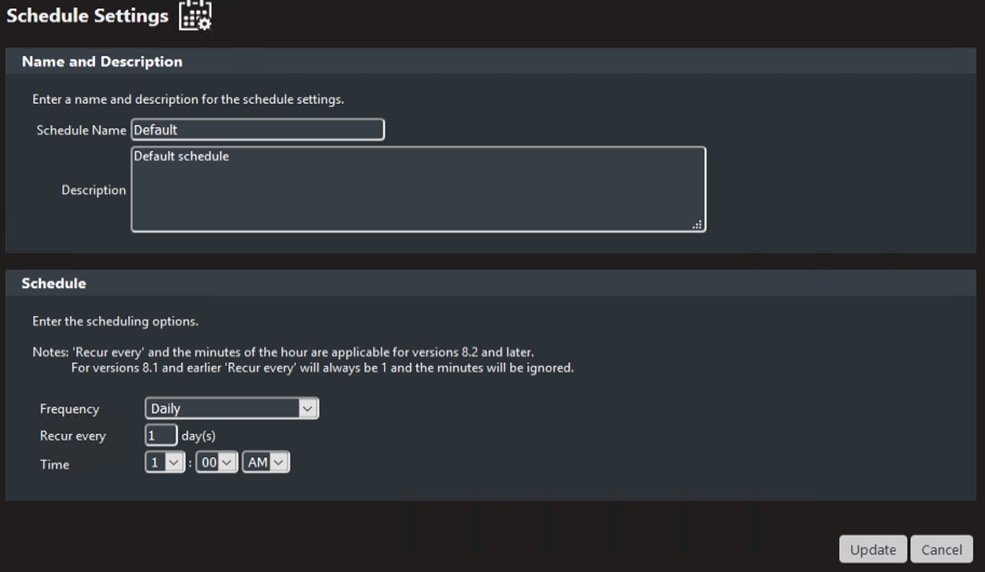

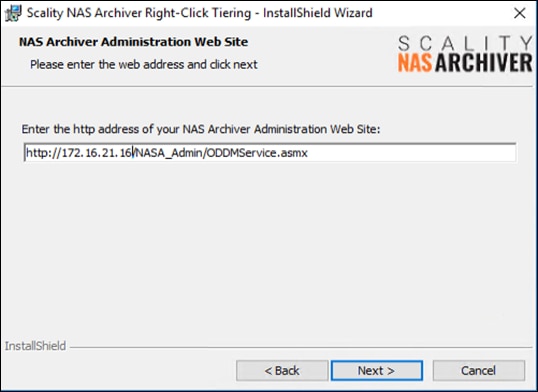

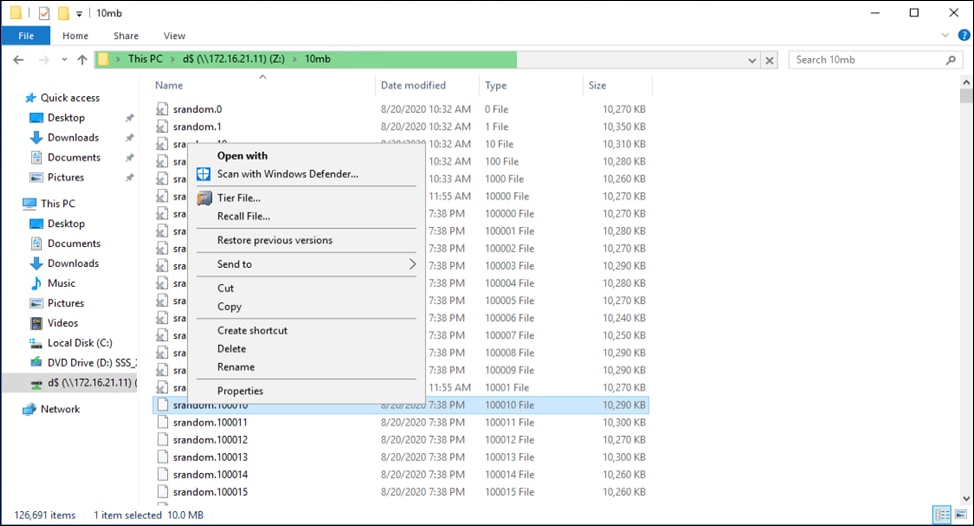

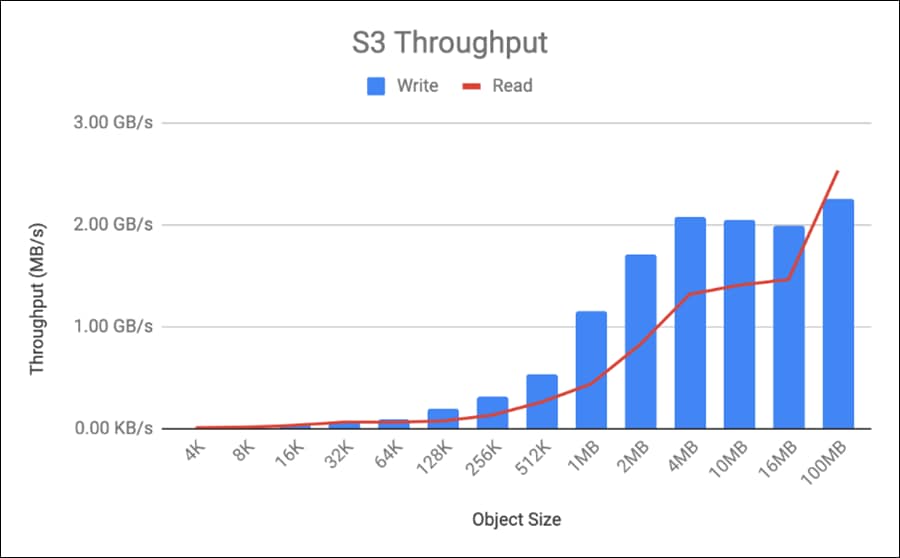

sjc02dmz-f11-n93180ycex-b(config-if)# exit