Cisco HyperFlex 4.0 for Business Continuity using VMware vSphere 6.7 and VXLAN Multi-Site Fabric




Design and Deployment Guide using Cisco HyperFlex Stretched Cluster 4.0(2f), Cisco DCNM 11.5(1), Cisco VXLAN EVPN Multi-Site Fabric, and VMware vSphere 6.7P5

Published: October 2021

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series, Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. (LDW_P)

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2021 Cisco Systems, Inc. All rights reserved.


Cisco Validated Designs (CVDs) include systems and solutions that are designed, tested, and documented to accelerate customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers. CVDs not only address IT pain points but also minimize risk. The design and deployment guidance found in CVDs also serves as a reference for enterprises to guide their rollouts.

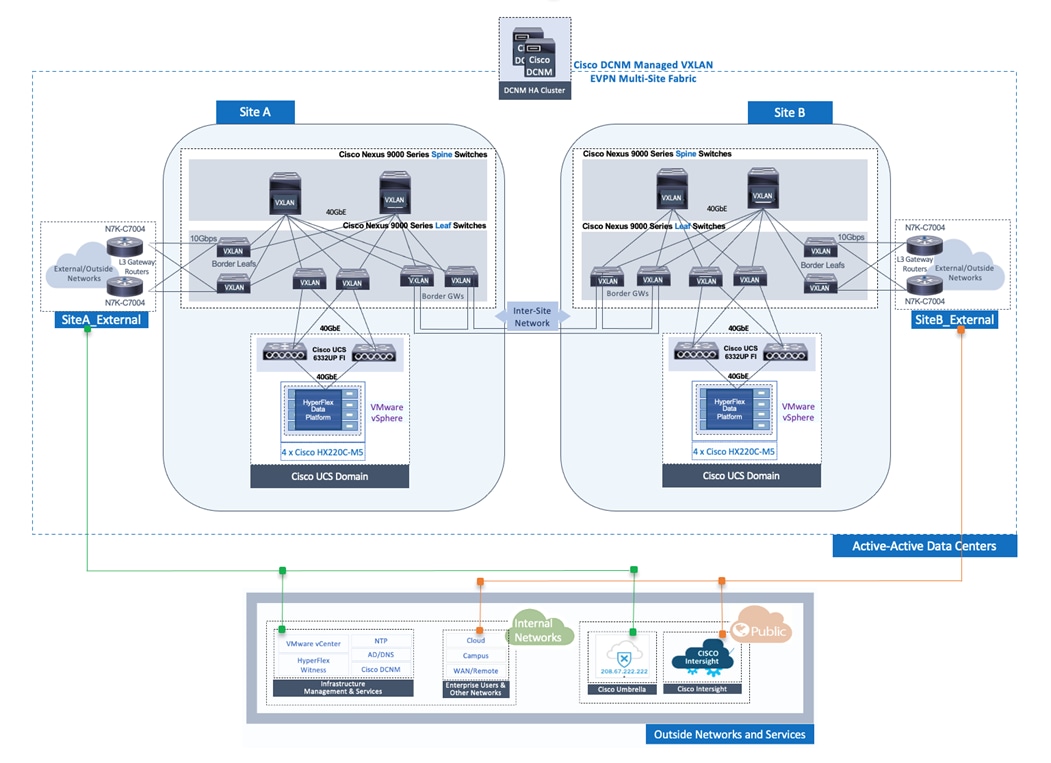

The Cisco HyperFlex Stretched Cluster with Cisco VXLAN Multi-Site Fabric solution is a disaster recovery (DR) and business continuity (BC) solution for Enterprise data centers. The solution uses an active-active design to ensure the availability of at least one data center at all times. The Virtual Server Infrastructure (VSI) is a VMware vSphere cluster running on a Cisco HyperFlex stretch cluster that spans both data centers. A HyperFlex stretch cluster is a single cluster with geographically distributed nodes, typically in separate data centers either within a campus or a metropolitan area. HyperFlex stretch clusters use synchronous replication between sites to ensure that the data is available in both data centers. The maximum supported Round Trip Time (RTT) between sites is 5ms (~100km), with zero data loss, zero recovery point objective (RPO), and near-zero recovery time objective (RTO).

The solution uses a Cisco VXLAN Ethernet VPN (EVPN) Multi-Site fabric for the end-to-end data center network to provide connectivity within and across sites. The VXLAN Multi-Site fabric provides Layer 2 extension and Layer 3 forwarding, enabling applications to be deployed in either data center with seamless connectivity and mobility. The end-to-end VXLAN fabric is built using Cisco Nexus 9000 series cloud-scale switches and managed by Cisco Data Center Network Manager (Cisco DCNM), which serves as a centralized controller for the VXLAN fabric.

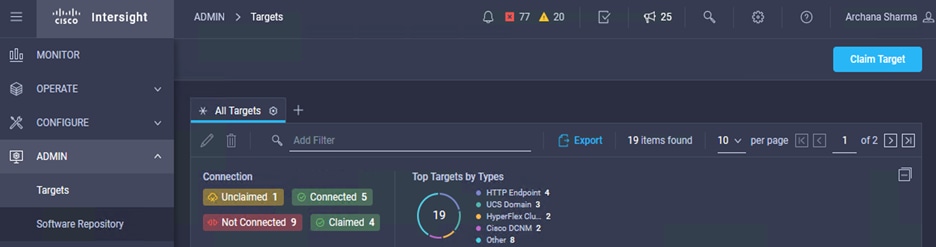

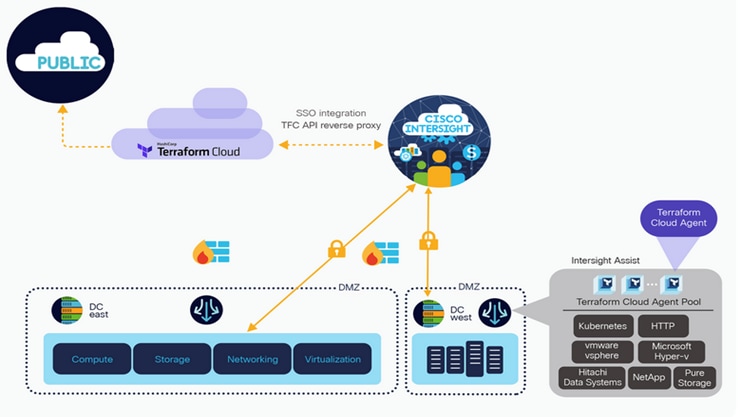

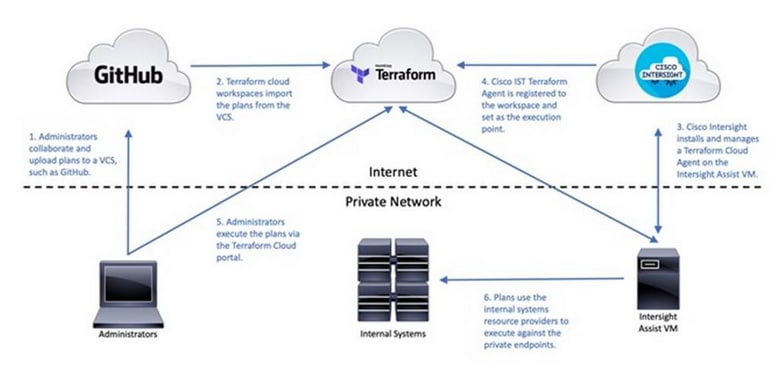

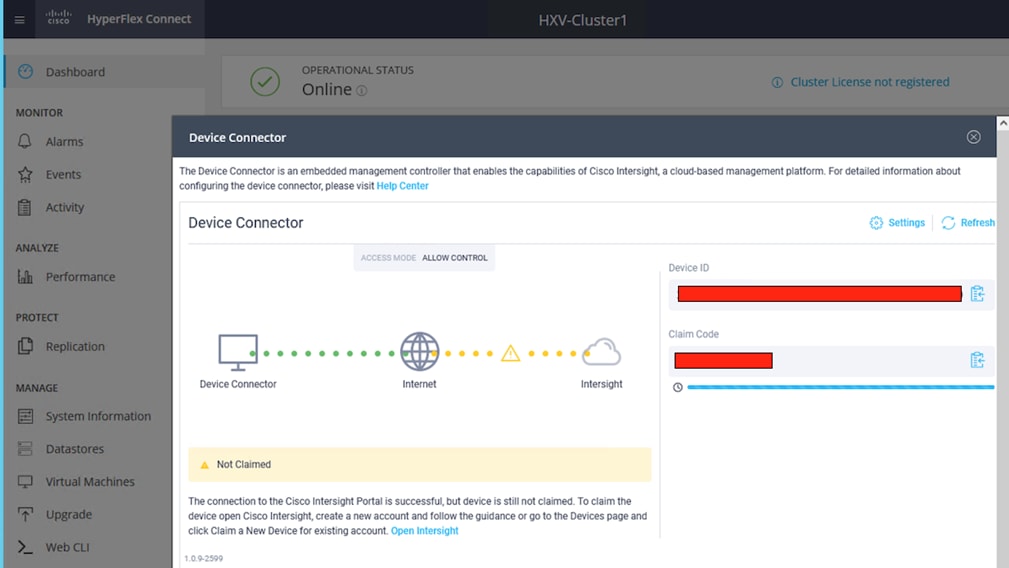

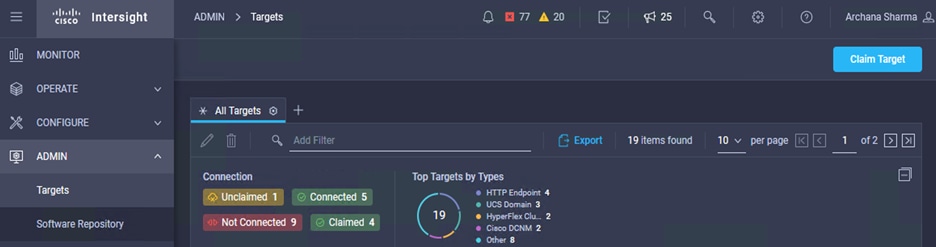

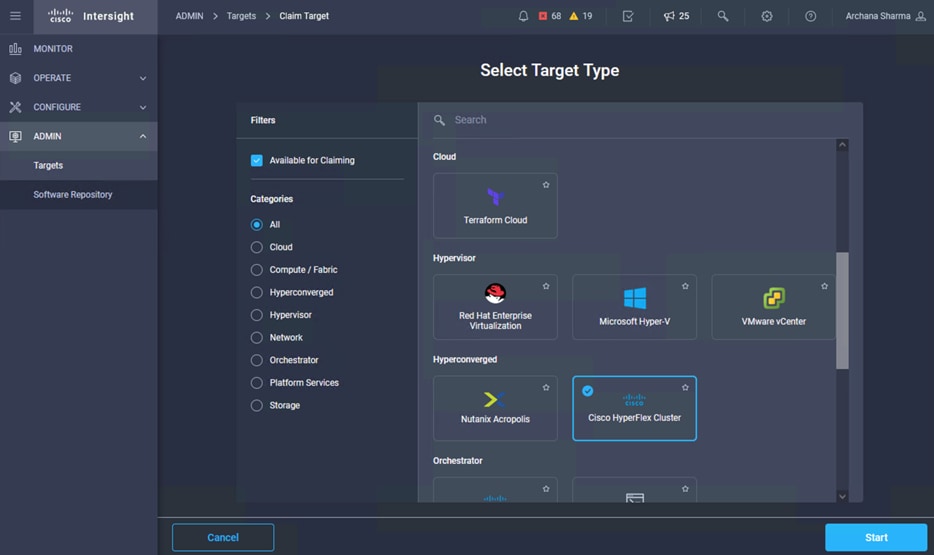

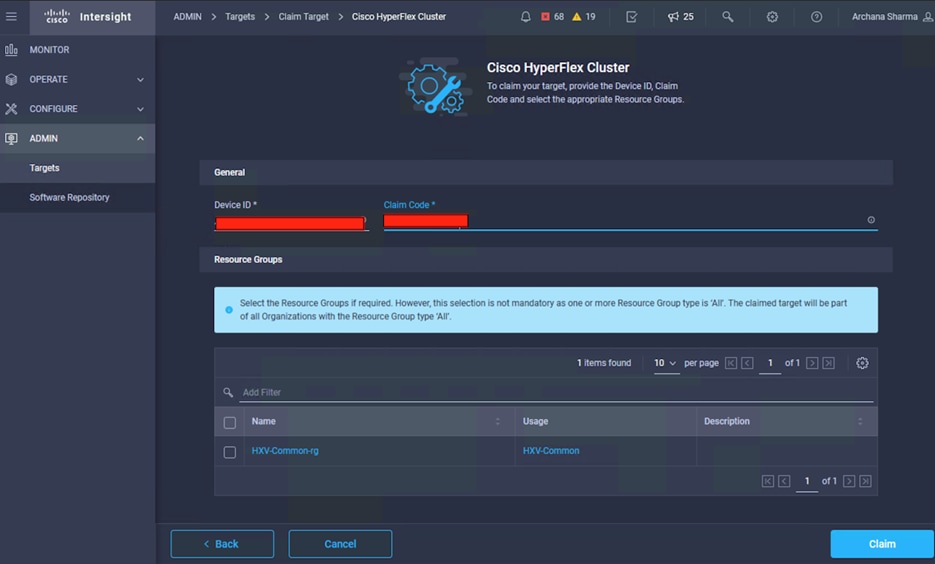

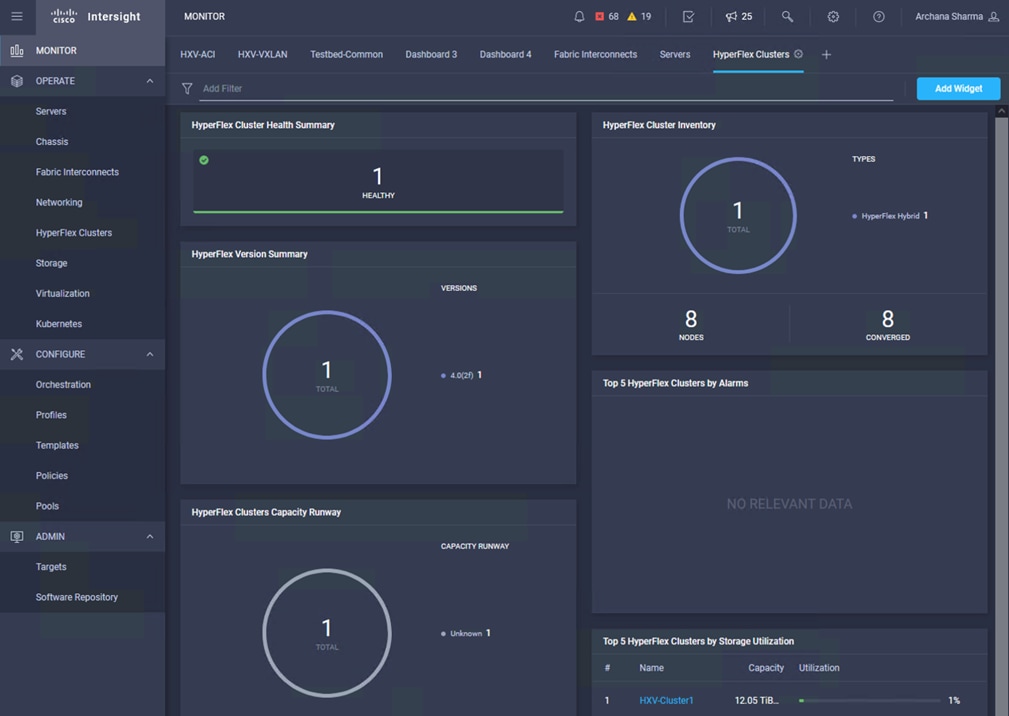

The hyperconverged infrastructure in each site consists of a pair of Cisco Unified Computing System (Cisco UCS) Fabric Interconnects (FIs) and the HyperFlex nodes that connect to them. The infrastructure design is symmetrical in the two active-active data centers, with the stretch cluster nodes evenly distributed between sites. The virtualized infrastructure is a single VMware vSphere cluster that spans both data centers and is managed by VMware vCenter located in a third site. The two data centers are centrally managed from the cloud using Cisco Intersight and on-premises using HyperFlex Connect. Cisco Intersight is a cloud-hosted operations and orchestration platform that uses the continuous integration/continuous delivery (CI/CD) model to continuously deliver new capabilities that Enterprises can leverage for their private and hybrid cloud deployments. Cisco Intersight Cloud Orchestrator (ICO) and Cisco Intersight Service for Terraform (IST) are two orchestration capabilities that Enterprises can use with this solution to accelerate and simplify operations.

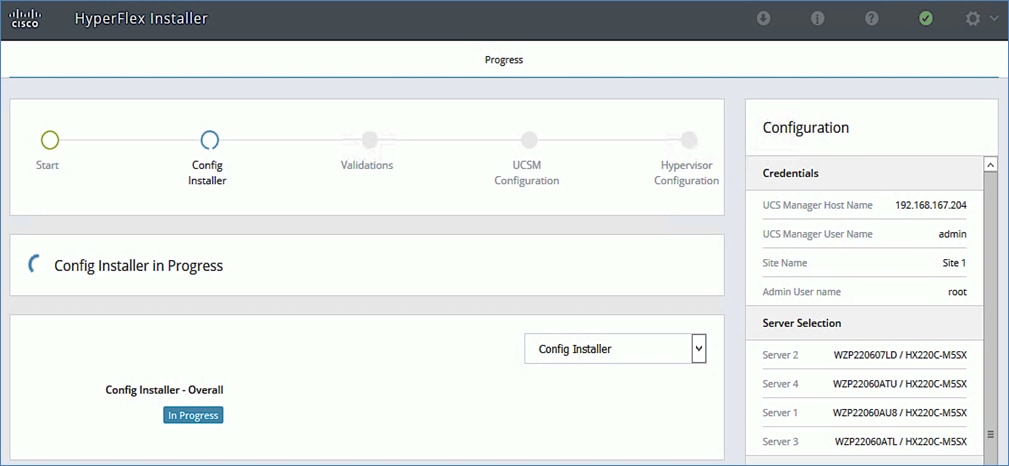

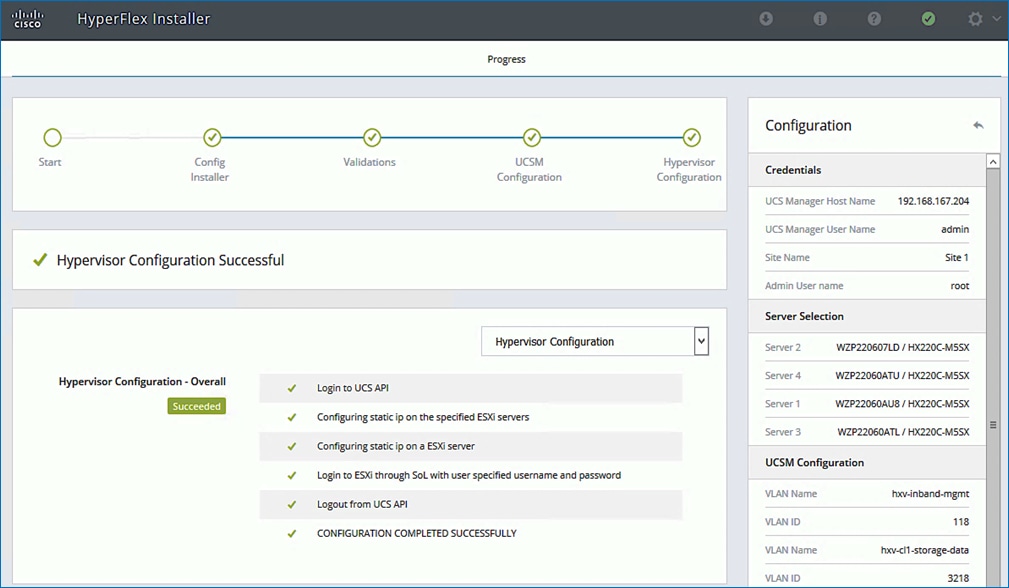

This solution uses GUI-driven automation for Day 0 provisioning and HashiCorp Terraform for Day 1-2 network provisioning. The Cisco DCNM Fabric Builder and HyperFlex Installer can automate the Day 0 deployment of the two active-active data center sites by provisioning the VXLAN fabric and HyperFlex VSI, respectively. The Day 1-2 network setup is automated using the HashiCorp Terraform provider for Cisco DCNM. In hybrid cloud deployments, Enterprises can leverage Cisco IST to execute the same Terraform plans from the cloud.

This solution was validated using Cisco HyperFlex 4.0(2f), Cisco UCS Manager 4.0(4k), VMware vSphere 6.7P05, NX-OS 9.3(7a) and Cisco DCNM 11.5(1) versions of software. This solution is part of a larger portfolio of Cisco HyperFlex VSI solutions. For the complete list, see: https://www.cisco.com/c/en/us/solutions/design-zone/data-center-design-guides/data-center-hyperconverged-infrastructure.html

The Cisco HyperFlex Stretch Cluster with Cisco VXLAN EVPN Multi-Site Fabric is a business continuity and disaster recovery solution for the Enterprise data center. The HyperFlex stretch cluster provides synchronous storage replication between two data center sites to ensure the availability of the data in both sites. The solution enables Enterprises to build a VMware vSphere private cloud on distributed infrastructure interconnected by a VXLAN Multi-Site fabric. This infrastructure solution enables multiple sites to behave in much the same way as a single site while protecting application workloads and data from a variety of failure scenarios, including a complete site failure. Cisco Intersight simplifies operations by providing a unified, centralized point of management for the active-active sites. Cisco Intersight orchestration capabilities such as IST and ICO further accelerate an Enterprise’s journey towards Infrastructure as Code (IaC) in the data center.

Audience

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers interested in an infrastructure built to deliver IT efficiency and enable IT innovation.

This document provides the end-to-end design for a business continuity and disaster recovery solution using a Cisco HyperFlex Stretch cluster and a Cisco VXLAN EVPN Multi-Site fabric. The document also provides guidance for deploying the solution.

The solution delivers the following features and capabilities:

● Validated reference architecture for business continuity and disaster avoidance in Enterprise data centers using an active-active design.

● Solution level integration and validation of Cisco HyperFlex VSI with a Cisco VXLAN EVPN fabric.

● Solution validation using the latest recommended software releases for Cisco HyperFlex Stretch Cluster, Cisco UCS FI, VMware vSphere, and Cisco VXLAN Fabric.

● Use of Cisco Intersight to ease the operational burden of managing a multi-site, multi-data center solution by providing centralized operations and orchestration from the cloud.

● Use of Cisco DCNM to manage the Cisco VXLAN EVPN Multi-Site fabric, simplifying deployment, operations, and automation.

● Operational agility through GUI-driven automation for Day 0 provisioning of the HyperFlex VSI and VXLAN fabric in the solution, and HashiCorp Terraform for Day 2 network automation using Cisco DCNM’s Terraform provider.

The Cisco HyperFlex Stretched Cluster with Cisco VXLAN EVPN Multi-Site Fabric solution is a data center infrastructure solution for mission-critical workloads that require high uptime, with near-zero RTO and zero RPO. The solution uses an active-active data center design to provide business continuity and disaster recovery to handle disaster scenarios and data center-wide failures. The two data centers run active workloads under normal conditions and provide failover and backup during major failure events. The solution incorporates technology, design, and product best practices to deliver a highly resilient design across all layers of the infrastructure stack, both within and across data centers. The data centers can be in one location (for example, different buildings in a campus) or geographically separate locations (for example, different sites in a metropolitan area). The HyperFlex stretch cluster used in this design requires a minimum bandwidth of 10Gbps and an RTT latency of <5ms between data center locations.

In this design, the active-active data centers consist of the following infrastructure components in each site, with shared components located in a third site or in the cloud as noted:

● Cisco HyperFlex (Cisco HX) Stretched Cluster, HyperFlex Witness (3rd site)

● Cisco Unified Computing System (Cisco UCS), Cisco Intersight (cloud)

● Cisco DCNM managed VXLAN EVPN Multi-Site fabric (Cisco DCNM in 3rd site)

● Cisco Nexus 9000 series switches (for VXLAN fabric and Inter-Site Network)

● VMware vSphere, VMware vCenter (3rd site)

A Cisco HyperFlex (4+4) stretched cluster provides the hyperconverged virtual server infrastructure in the two active-active data centers in the solution. The stretched cluster is a single cluster with evenly distributed nodes in two data centers. When there is a failure in one data center, the HyperFlex stretch cluster provides quick recovery by making the data available from the second data center. HyperFlex stretch clusters synchronously replicate data between sites, enabling both sites to be primary for the application virtual machines as needed. The links interconnecting stretch cluster nodes across sites should have an RTT of less than 5ms and a minimum bandwidth of 10Gbps to meet storage latency requirements. The end-to-end network in this solution consists of a VXLAN fabric in each data center and an inter-site network that interconnects them, managed using Cisco DCNM. Cisco DCNM serves as a centralized controller to provision and manage the multi-site fabric.

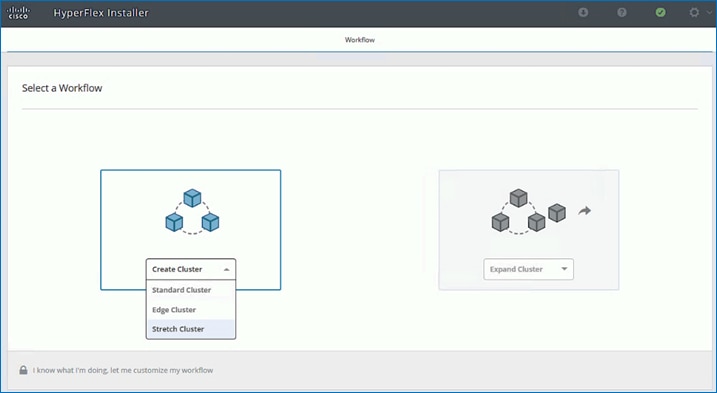

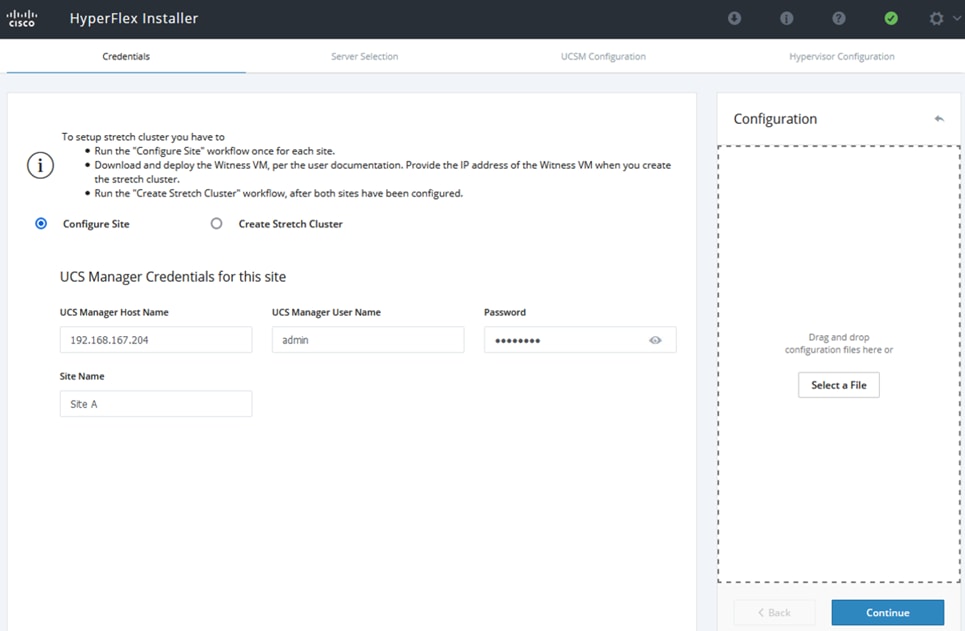

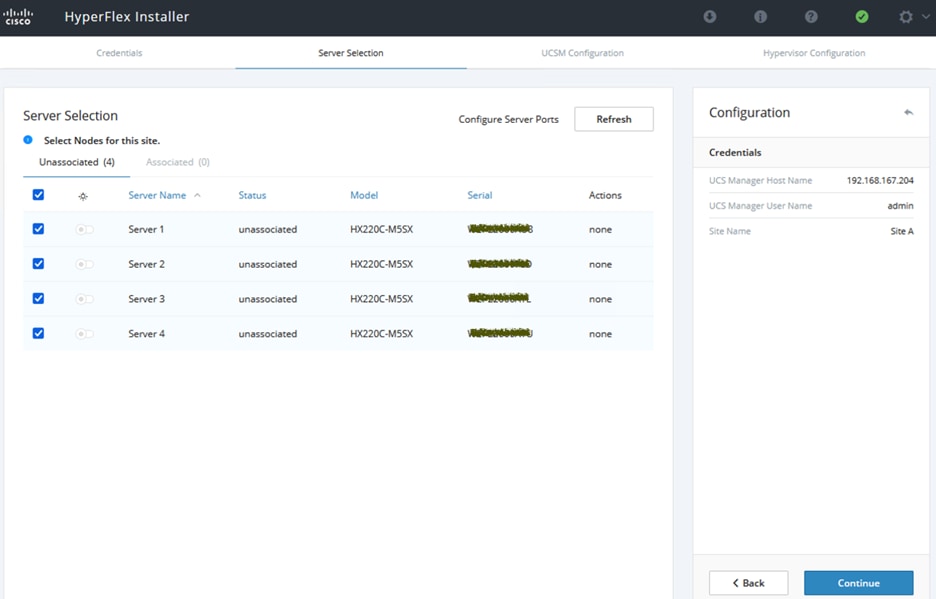

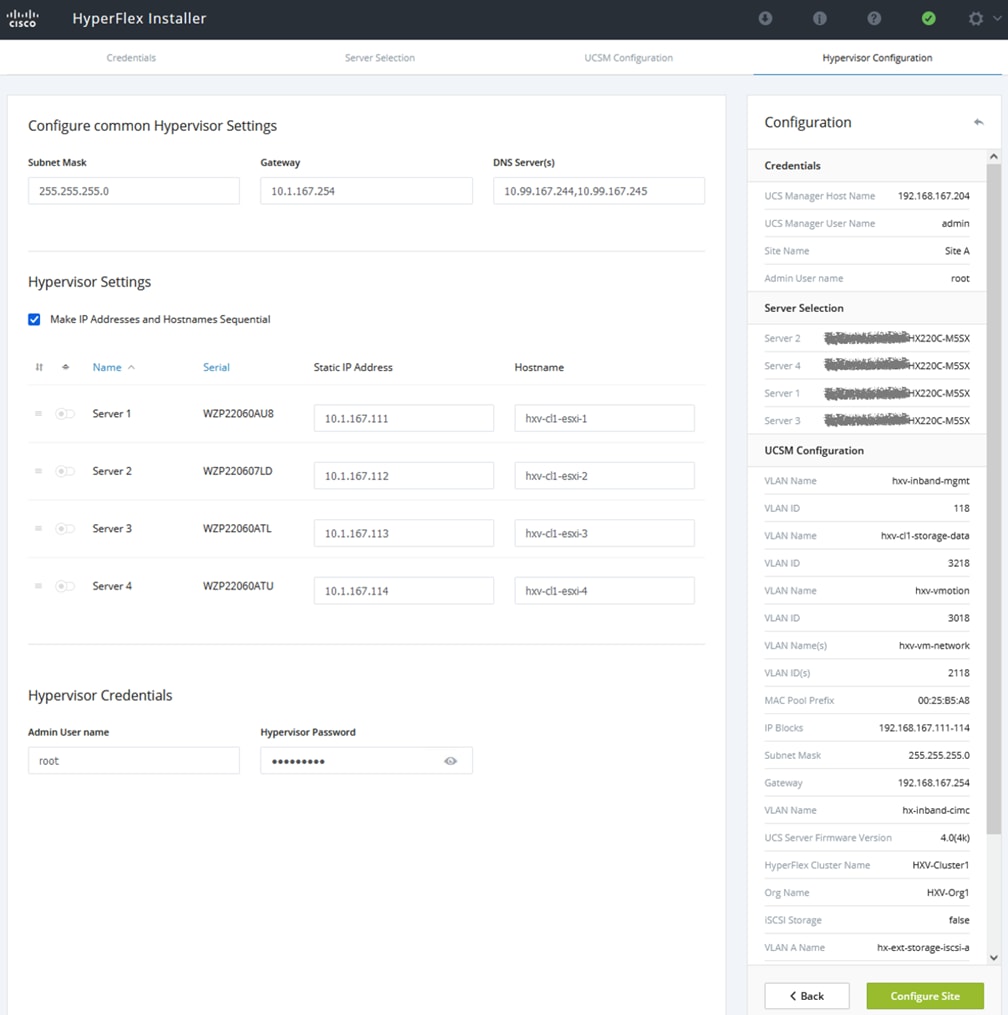

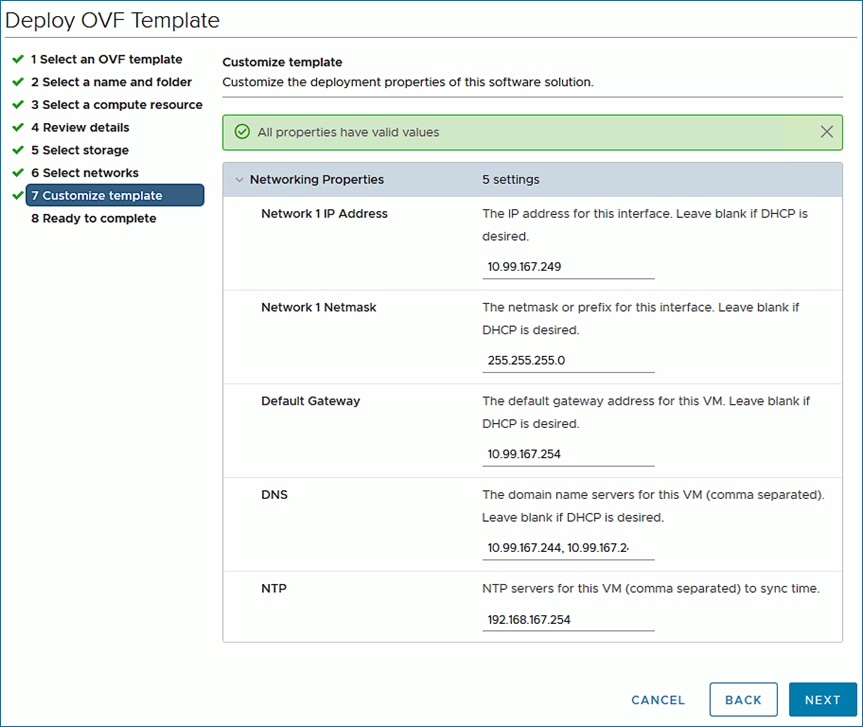

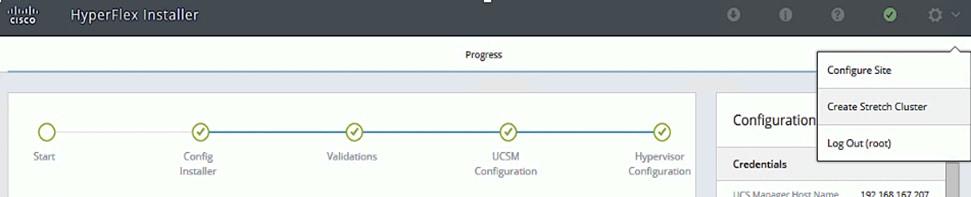

A HyperFlex Installer located at a third site automates the deployment of the HyperFlex stretch cluster and the VMware vSphere cluster that runs on it. The vSphere cluster is a single cluster that spans the two active-active sites, managed using VMware vCenter located in the third site. Stretch clusters also require a HyperFlex Witness node in a third site, which acts as a tiebreaker in split-brain scenarios to provide the quorum necessary to maintain cluster operations. The connectivity between the data centers and the Witness site should have a minimum bandwidth of 100Mbps and a worst-case RTT latency of 200ms for 16kB packet sizes. In large clusters with significant data and load, the latency should be significantly lower to minimize failure recovery times. The HyperFlex Witness VM, Installer, and VMware vCenter are all located in the same site in this design.
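The bandwidth and latency thresholds stated for the inter-site links and the Witness site can be expressed as a simple pre-deployment check. The following Python sketch is illustrative only: `LinkMeasurement`, `check_inter_site`, and `check_witness` are hypothetical names, the thresholds are the values given in this guide, and the measurements are assumed to come from your own testing tools.

```python
from dataclasses import dataclass
from typing import List

# Thresholds as stated in this design guide for the HyperFlex stretch cluster.
INTER_SITE_MIN_MBPS = 10_000   # 10 Gbps between the two active-active sites
INTER_SITE_MAX_RTT_MS = 5.0    # RTT must be below 5 ms
WITNESS_MIN_MBPS = 100         # data centers to the Witness site
WITNESS_MAX_RTT_MS = 200.0     # worst case, 16 kB packets


@dataclass
class LinkMeasurement:
    """Hypothetical record of one measured network path."""
    name: str
    bandwidth_mbps: float
    rtt_ms: float


def check_inter_site(link: LinkMeasurement) -> List[str]:
    """Return the problems found; an empty list means the path qualifies."""
    problems = []
    if link.bandwidth_mbps < INTER_SITE_MIN_MBPS:
        problems.append(f"{link.name}: {link.bandwidth_mbps} Mbps is below 10 Gbps")
    if link.rtt_ms >= INTER_SITE_MAX_RTT_MS:
        problems.append(f"{link.name}: RTT {link.rtt_ms} ms is not below 5 ms")
    return problems


def check_witness(link: LinkMeasurement) -> List[str]:
    """Same check for the path from a data center to the Witness site."""
    problems = []
    if link.bandwidth_mbps < WITNESS_MIN_MBPS:
        problems.append(f"{link.name}: {link.bandwidth_mbps} Mbps is below 100 Mbps")
    if link.rtt_ms > WITNESS_MAX_RTT_MS:
        problems.append(f"{link.name}: RTT {link.rtt_ms} ms exceeds 200 ms")
    return problems


if __name__ == "__main__":
    print(check_inter_site(LinkMeasurement("Site-A to Site-B", 10_000, 1.8)))
    print(check_witness(LinkMeasurement("Site-A to Witness", 50, 40.0)))
```

The Witness check uses the worst-case limit; as noted above, large clusters should target a significantly lower latency than this threshold.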

Cisco Intersight centrally manages the virtualized server infrastructure in the two active-active sites from the cloud. Cisco Intersight is a subscription-based, cloud-hosted service with embedded intelligence for managing Cisco and third-party infrastructure. To simplify Day-2 operations, Cisco Intersight provides features such as proactive support with Cisco TAC integration, integration with the Cisco Hardware Compatibility List (HCL) for compliance verification, proactive monitoring, and so on. The SaaS delivery model enables Cisco Intersight to continuously roll out new features and functionalities that Enterprises can quickly adopt. For more details on the operational capabilities available on Cisco Intersight, go here.

Solution Components

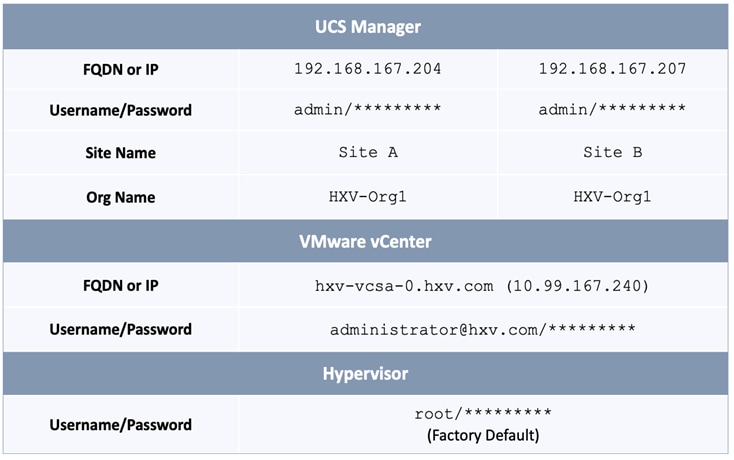

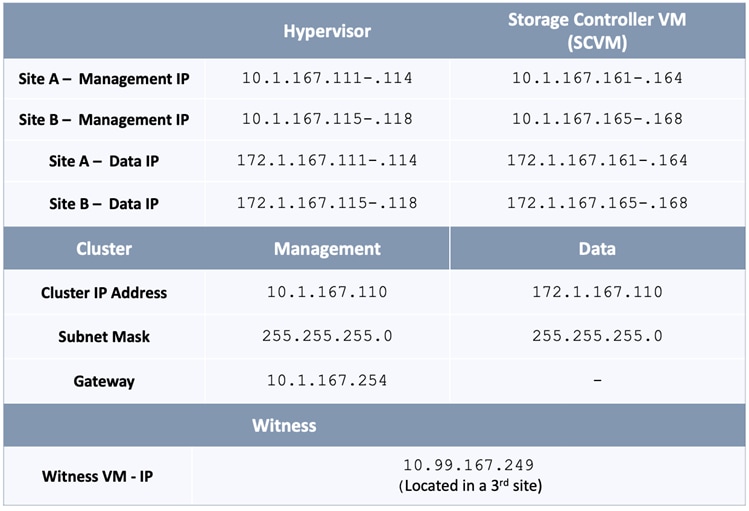

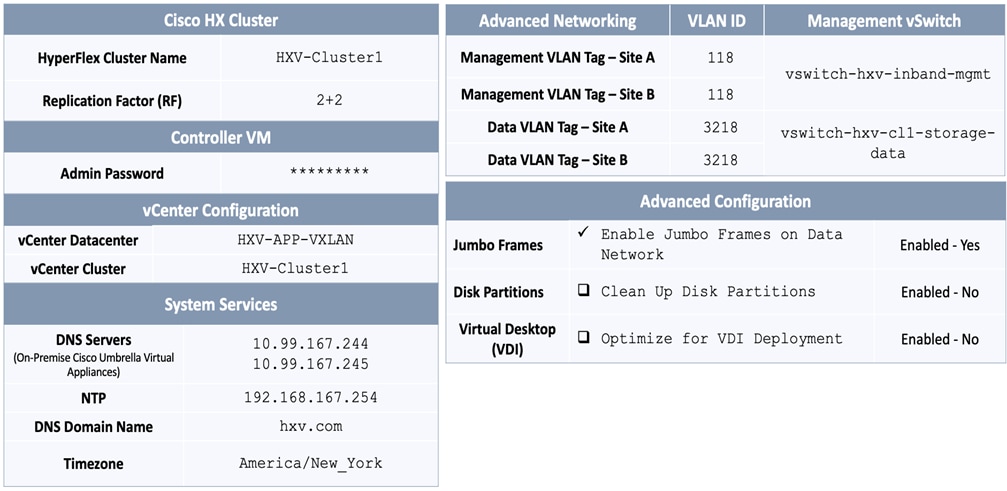

Table 1 lists the component models and versions used for solution validation in Cisco Labs. Other software and hardware combinations can also be used if they are supported in the Cisco and VMware Hardware Compatibility Lists (HCLs).

Table 1. Solution Components per Site

Solution Topology

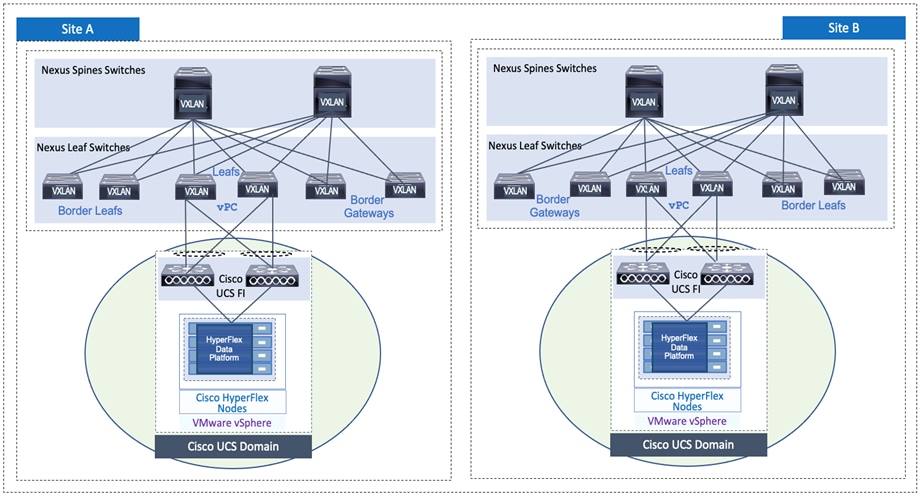

Figure 1 shows the high-level design for the solution using two active-active data center sites.

Figure 1. Solution Topology (High-level)

The Cisco HyperFlex Stretch Cluster with a VXLAN Multi-Site fabric solution is designed to address the following key requirements:

● Business continuity and disaster recovery in the event of a complete data center (site) failure

● Flexible, distributed workload placement with workload mobility across data centers

● Each site should be able to operate independently – no dependency on the other site

● Access to external networks and services directly from each site

● Site Affinity – a virtual machine’s (VM’s) data should be locally accessible under normal conditions

● Quick recovery with zero data loss if a failure occurs

● Simplified administration and operation of the solution

The solution also meets the following high-level design goals:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable design with the ability to independently scale compute, storage, and networking as needed

● Modular design with the ability to upgrade or replace components and sub-systems as needed

● Flexible design across all layers of the solution that includes sub-system design, individual components used, and storage configuration and connectivity options

● Operational agility and simplicity through the use of automation and orchestration tools

● Incorporates technology and product best practices for the different components in the solution

This section provides a detailed overview of the network, compute, storage, and virtualization layer design used in the solution.

The data center network must first be in place before an Enterprise can deploy the HyperFlex VSI in the two active-active data centers. The design discussion will therefore begin with the network design used in the solution.

The network connectivity requirements for deploying and maintaining a HyperFlex VSI in the two data centers are as follows:

● Reachability from Cisco HyperFlex Installer VM to the out-of-band management IP addresses on Cisco UCS Fabric Interconnects and HyperFlex servers in each data center.

● Reachability from Cisco HyperFlex Installer VM to the in-band management (ESXi) IP addresses on Cisco HyperFlex servers in each data center.

● Reachability from the HyperFlex Installer VM to infrastructure services needed to bring up the cluster. In this design, the HyperFlex Installer VM, Cisco HyperFlex Witness, and VMware vCenter are all located in a third site, separate from the active-active data center sites.

● Layer 2 or Layer 3 reachability from HyperFlex cluster nodes in each data center to the Cisco HyperFlex Witness and VMware vCenter used in the solution.

● Layer 2 in-band management and storage-data connectivity between Cisco HyperFlex cluster nodes distributed across the two active-active sites.
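Before running the HyperFlex Installer, these reachability requirements can be spot-checked from the Installer's network. The following Python sketch only tests TCP connectivity. The target names and addresses are placeholders from the 192.0.2.0/24 documentation range, and the assumption that every target accepts HTTPS on port 443 should be confirmed against the port requirements in the HyperFlex documentation. The sketch does not verify the Layer 2 adjacency required between HyperFlex nodes.

```python
import socket


def tcp_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False


# Hypothetical targets; replace with the addresses in your own IP plan.
TARGETS = [
    ("Site-A UCS Manager (OOB)", "192.0.2.10", 443),
    ("Site-B UCS Manager (OOB)", "192.0.2.20", 443),
    ("Site-A HX node 1 ESXi (in-band)", "192.0.2.101", 443),
    ("HyperFlex Witness", "192.0.2.200", 443),
    ("VMware vCenter", "192.0.2.210", 443),
]


def run_checks(targets):
    """Map each target name to its reachability result."""
    return {name: tcp_reachable(host, port) for name, host, port in targets}


if __name__ == "__main__":
    for name, ok in run_checks(TARGETS).items():
        print(f"{'OK  ' if ok else 'FAIL'} {name}")
```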

Network - Cisco VXLAN Fabric Design

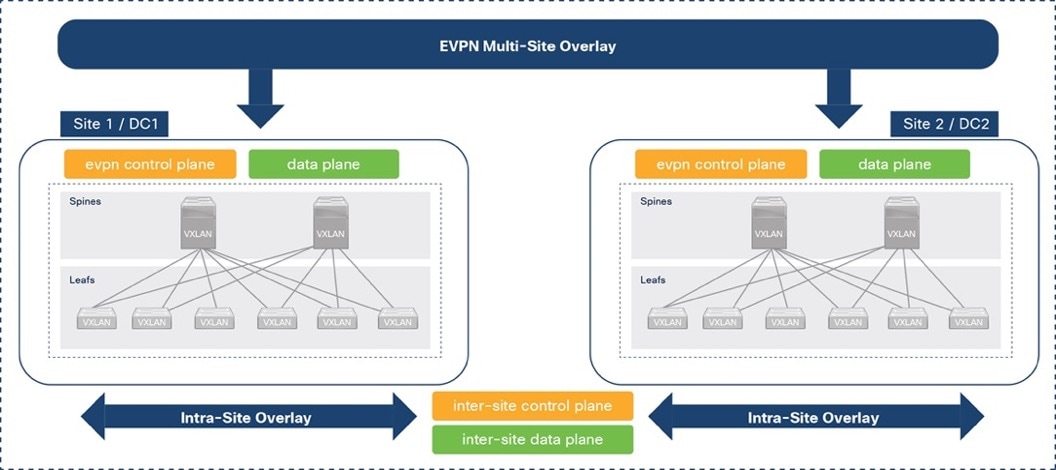

The end-to-end data center network used in this solution is a Cisco VXLAN EVPN Multi-Site fabric. The VXLAN fabric provides a highly flexible, scalable, and resilient multi-site network architecture for enabling business continuity and disaster recovery in Enterprise data centers. The end-to-end VXLAN fabric consists of two VXLAN fabrics, one in each data center site, interconnected by an inter-site network. The VXLAN fabric in each data center is a 2-tier Clos-based spine and leaf architecture, built using Cisco Nexus® 9000 Series spine and leaf switches. Cisco Data Center Network Manager (Cisco DCNM) centrally manages the end-to-end, multi-site fabric. The fabric design is highly resilient, with no single point of failure, and incorporates technology and product best practices in the design.

VXLAN fabrics establish VXLAN overlays (tunnels) across an IP underlay to extend Layer 2 edge networks across a Layer 3 boundary (in this case, a routed data center network). The Layer 2 extension enables East-West communication between applications and services hosted in the data center that need Layer 2 adjacency. In this solution, the HyperFlex stretch cluster needs Layer 2 adjacency between all nodes in the cluster for intra-cluster communication to bring the cluster online and for the overall health and operation of the cluster.
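To make the overlay concept concrete, the following Python sketch builds and parses the 8-byte VXLAN header defined in RFC 7348 and computes the encapsulation overhead for an IPv4 underlay. It is a teaching aid with hypothetical function names. It does not reflect how Nexus switches implement encapsulation, which is done in hardware.

```python
import struct

VXLAN_UDP_PORT = 4789          # IANA-assigned UDP destination port (RFC 7348)
VXLAN_FLAG_VALID_VNI = 0x08    # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits)
    # Word 2: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VXLAN_FLAG_VALID_VNI << 24, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VALID_VNI:
        raise ValueError("I flag not set")
    return vni_word >> 8


# Headers added around each original Ethernet frame (IPv4 underlay, no outer
# 802.1Q tag): outer Ethernet 14 + outer IPv4 20 + UDP 8 + VXLAN 8 bytes.
VXLAN_OVERHEAD_BYTES = 14 + 20 + 8 + 8


def min_underlay_ip_mtu(inner_ip_mtu: int = 1500) -> int:
    """Underlay IP MTU needed to carry an inner IP packet without fragmentation:
    inner Ethernet header (14) + VXLAN (8) + outer UDP (8) + outer IPv4 (20)."""
    return inner_ip_mtu + 14 + 8 + 8 + 20
```

With a standard 1500-byte inner IP MTU, the underlay must carry 1550-byte IP packets. This overhead is why VXLAN underlays are usually configured with an MTU larger than 1500 bytes.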

Layer 2 extension also provides seamless mobility where application endpoints (MAC, IP) can move anywhere in the data center without requiring configuration changes. In this solution, the VXLAN Multi-Site fabric provides Layer 2 extension (and Layer 3 forwarding) with seamless mobility within the data center and between data centers. Seamless mobility is critical for disaster recovery as it enables application VMs to quickly failover and become operational in a second data center. For a HyperFlex stretch cluster, endpoint mobility enables a node in a second data center to take over as the cluster master using the same IP and continue providing data services from the second data center.

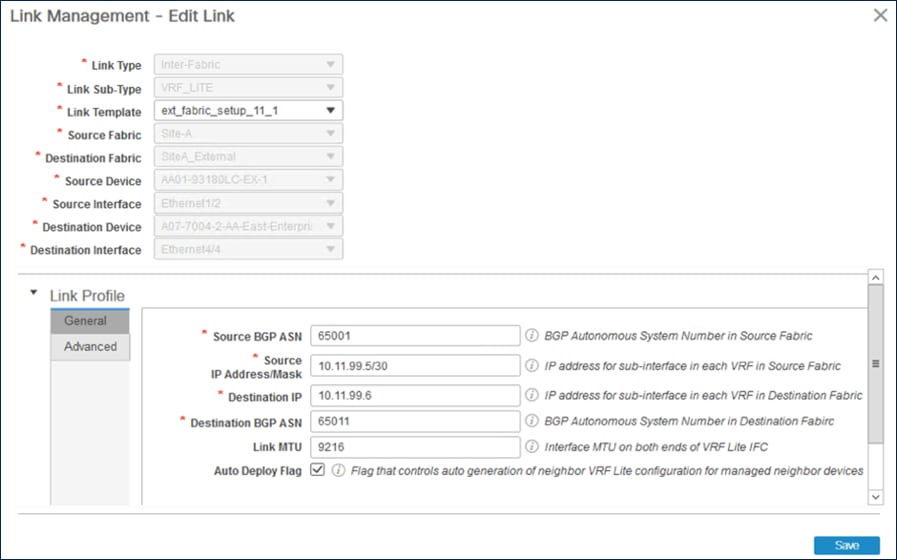

VXLAN overlays are commonly used in data centers due to the flexibility and functionality they provide, but they can also create a flat overlay network that spans data centers, with no fault isolation. Without a control plane, VXLAN overlays also use a data plane flood-and-learn mechanism, similar to Ethernet, for address learning. When VXLAN overlays interconnect data centers, this can create a multi-data center, bridged overlay network, causing large amounts of traffic to be flooded across data centers. To address the problem, the Internet Engineering Task Force (IETF) standardized a control plane mechanism for address learning using an Internet-scale routing protocol, Multiprotocol Border Gateway Protocol (MP-BGP), and a new address family called Ethernet VPN (EVPN). VXLAN fabrics can use the MP-BGP EVPN address family to distribute endpoint reachability information (MAC, IP) and additional information such as the network and tenant (VRF) associated with the endpoint. This method not only reduces flooding but also enables optimal forwarding of traffic within a VXLAN fabric. MP-BGP also provides segmentation and fault isolation in the overlay without sacrificing Layer 2 extension or seamless mobility between data centers. Cisco’s VXLAN Multi-Site architecture uses MP-BGP EVPN to provide a more scalable architecture for interconnecting the active-active data centers in the solution. Figure 2 illustrates this architecture. For more details, see: https://www.cisco.com/c/en/us/products/collateral/switches/nexus-9000-series-switches/white-paper-c11-739942.html
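The difference between flood-and-learn and control-plane learning can be illustrated with a small model. In the following Python sketch, which uses hypothetical names and is a conceptual model rather than the NX-OS implementation, a VTEP installs endpoints learned from simplified EVPN Route Type 2 (MAC/IP Advertisement) routes. It sends known unicast traffic to a single remote VTEP and floods only for unknown destinations. The sequence-number comparison mirrors the MAC Mobility mechanism in RFC 7432, which lets the new location of a moved endpoint, such as a failed-over VM, take precedence over its stale location.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set, Tuple


@dataclass
class MacIpRoute:
    """Simplified EVPN Route Type 2 (MAC/IP Advertisement)."""
    vni: int
    mac: str
    ip: Optional[str]
    next_hop_vtep: str        # VTEP that advertised the endpoint
    mobility_seq: int = 0     # MAC Mobility sequence number (RFC 7432)


@dataclass
class VtepTable:
    """Hypothetical per-VTEP view of overlay forwarding state."""
    # VNI -> remote VTEPs in that segment (used only for BUM traffic)
    flood_list: Dict[int, Set[str]] = field(default_factory=dict)
    # (VNI, MAC) -> best route learned through BGP EVPN
    routes: Dict[Tuple[int, str], MacIpRoute] = field(default_factory=dict)

    def learn(self, route: MacIpRoute) -> None:
        """Install a BGP-learned route; a higher sequence number means the endpoint moved."""
        key = (route.vni, route.mac.lower())
        current = self.routes.get(key)
        if current is None or route.mobility_seq > current.mobility_seq:
            self.routes[key] = route

    def next_hops(self, vni: int, dst_mac: str) -> Set[str]:
        """Known MAC: unicast to one VTEP. Unknown MAC: flood within the VNI."""
        route = self.routes.get((vni, dst_mac.lower()))
        if route is not None:
            return {route.next_hop_vtep}
        return set(self.flood_list.get(vni, set()))
```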

Figure 2. VXLAN EVPN Multi-Site Architecture

Cisco DCNM Design

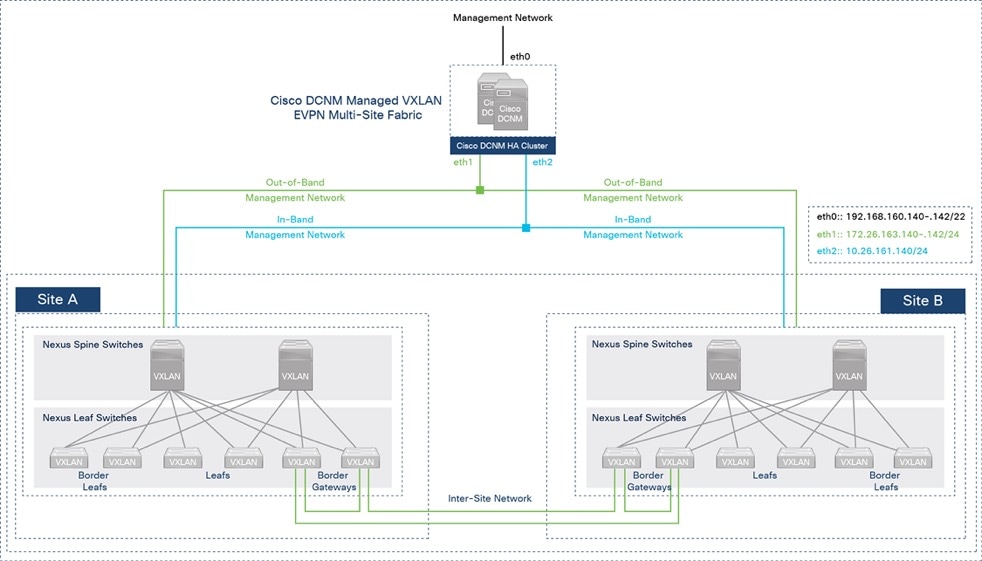

As stated earlier, Cisco DCNM serves as a centralized controller to provision and manage the VXLAN Multi-Site fabric in the solution. Cisco DCNM, though not required, is highly recommended for any VXLAN deployment with more than a few switches. Cisco DCNM is available in three modes: LAN Fabric, SAN, or IP Fabric for Media. This solution uses Cisco DCNM in LAN Fabric mode. LAN Fabric mode simplifies the management of a VXLAN fabric and reduces the deployment time of a data center fabric from days to minutes. Cisco DCNM minimizes configuration errors by using policy templates that generate the configuration deployed on the switches. Templates provide a consistent, less error-prone, and scalable mechanism for deploying and maintaining configuration changes. To ensure configuration compliance, Cisco DCNM continuously monitors the switches and provides alerting with one-click remediation to maintain consistency and prevent configuration drift.

In the solution, Cisco DCNM is deployed as a cluster of multiple nodes for high availability (HA). Two Cisco DCNM virtual machines are deployed in native HA mode and operate as active/standby nodes. Additional compute nodes (or worker VMs) can be added for scalability; three worker VMs are used in this solution to support operational tools such as Cisco Network Insights. The VMs are hosted on three physical servers. The Cisco DCNM VMs and compute/worker VMs are clustered and must be in the same Layer 2 network on each Ethernet interface (eth0, eth1, eth2).

Figure 3 shows the connectivity from Cisco DCNM to the VXLAN Multi-Site fabric in this solution.

Figure 3. Cisco DCNM Connectivity to VXLAN Fabric Switches

The Cisco DCNM GUI is accessible from the management network on the eth0 interface of the VMs in the cluster. Cisco DCNM connects to the VXLAN fabric in each site through the out-of-band (OOB) management network on eth1 and has connectivity to all switches that it manages, including the Nexus 7000 series gateway switches used in the solution for external connectivity. Cisco DCNM uses an in-band (IB) management network on eth2 for bandwidth-intensive operations such as the Endpoint Locator and telemetry features. Cisco DCNM is not in the traffic forwarding path; it is needed only for managing and provisioning the fabric.

Cisco DCNM also provides complete lifecycle management and automation, with capabilities such as automated fabric deployment, automatic consistency checking, automatic remediation, and device lifecycle management. Cisco DCNM provides a real-time health summary of the fabrics, devices, and topologies, with correlated visibility and triggered alarms. Cisco DCNM also offers numerous workflows for operational agility (Return Material Authorization [RMA], install, upgrade) and for deployment, such as customizable Python++ templates for enabling access-layer, multi-site, and external connectivity. All deployment history (underlay, overlay, interface) is also available on a per-switch basis. Cisco DCNM also has other features to simplify and speed up operations, such as interface grouping, vPC peering using virtual links, auto peer matching of vPC peers for provisioning, and VMM workload automation. For a more complete list of features, see Cisco DCNM’s datasheet available here.

Fabric Automation and Agility

Cisco DCNM serves as a single point of automation for the end-to-end VXLAN Multi-Site fabric. Cisco DCNM offers multiple programmability options to automate and achieve the agility that Infrastructure as Code (IaC) can provide. Cisco DCNM provides Red Hat Ansible modules, HashiCorp Terraform providers, and Representational State Transfer (REST) APIs to provision and manage a VXLAN Multi-Site fabric. Cisco DCNM also serves as a single northbound point of integration for DevOps and other IT toolsets.
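As an illustration of the REST option, the following Python sketch prepares, but does not send, two requests using only the standard library. It assumes the DCNM 11.x convention used by the Cisco DCNM Ansible collection: a `POST /rest/logon` with HTTP Basic authentication returns a JSON body containing a `Dcnm-Token`, which is then passed as a request header, and fabrics are listed under `/rest/control/fabrics`. These paths, the `expirationTime` value, and the host name are assumptions to verify against the REST API reference for your DCNM release.

```python
import base64
import json
import urllib.request

# Hypothetical DCNM address; replace with your own.
DCNM_URL = "https://dcnm.example.com"


def logon_request(user: str, password: str, expiration: int = 3_600_000) -> urllib.request.Request:
    """Build the logon call (assumed DCNM 11.x endpoint: POST /rest/logon)."""
    creds = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{DCNM_URL}/rest/logon",
        data=json.dumps({"expirationTime": expiration}).encode(),
        method="POST",
        headers={"Authorization": f"Basic {creds}",
                 "Content-Type": "application/json"},
    )


def list_fabrics_request(token: str) -> urllib.request.Request:
    """Build a fabric listing call authenticated with the assumed Dcnm-Token header."""
    return urllib.request.Request(
        f"{DCNM_URL}/rest/control/fabrics",
        method="GET",
        headers={"Dcnm-Token": token},
    )


# Sending the requests requires a reachable DCNM and, for self-signed
# certificates, an ssl.SSLContext passed as urlopen(..., context=...):
#   token = json.load(urllib.request.urlopen(logon_request(user, pw)))["Dcnm-Token"]
#   fabrics = json.load(urllib.request.urlopen(list_fabrics_request(token)))
```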

In this solution, Cisco DCNM Fabric Builder provides Day-0 automation for deploying the end-to-end VXLAN Multi-Site fabric, and the Cisco DCNM Terraform provider is used for Day-2 automation. Terraform plans automate Day-2 deployment activities such as adding a new leaf switch pair, provisioning access layer connectivity to Cisco UCS FI and HyperFlex VSI, adding tenants, and adding networks. The Fabric Builder deploys a greenfield VXLAN fabric in each site and provides templates for additional connectivity, such as connectivity to outside/external networks, including the configuration of external gateways outside the fabric. Fabric Builder also provisions the multi-site fabric to enable connectivity between the two data center site fabrics. Cisco DCNM uses policy-based Python++ templates for provisioning that incorporate technology and product best practices where possible.
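Terraform also accepts configuration written in JSON syntax (`*.tf.json` files), which makes it straightforward to generate Day-2 network definitions programmatically. The following Python sketch emits a single `dcnm_network` resource for the CiscoDevNet/dcnm provider. The attribute names shown (`fabric_name`, `name`, `network_id`, `vrf_name`, `vlan_id`, `ipv4_gateway`, `mcast_group`) are assumptions based on the provider's documentation and should be checked against the provider version in use. The fabric, VRF, VLAN, and address values are hypothetical, and the provider credentials block is intentionally omitted.

```python
import json


def network_tf_json(fabric: str, name: str, network_id: str, vlan_id: int,
                    vrf_name: str, gateway_cidr: str, mcast_group: str) -> dict:
    """Return a Terraform JSON document declaring one DCNM overlay network."""
    resource_name = name.lower().replace("-", "_")  # Terraform-friendly identifier
    return {
        "terraform": {
            "required_providers": {"dcnm": {"source": "CiscoDevNet/dcnm"}}
        },
        "resource": {
            "dcnm_network": {
                resource_name: {
                    # Attribute names assumed from the provider documentation.
                    "fabric_name": fabric,
                    "name": name,
                    "network_id": network_id,
                    "vrf_name": vrf_name,
                    "vlan_id": vlan_id,
                    "ipv4_gateway": gateway_cidr,
                    "mcast_group": mcast_group,
                }
            }
        },
    }


if __name__ == "__main__":
    doc = network_tf_json("Site-A", "App-Net-1", "30001", 2001,
                          "Tenant-1-VRF", "10.10.1.254/24", "239.1.1.1")
    # Save the output as, for example, app_net_1.tf.json in a Terraform directory.
    print(json.dumps(doc, indent=2))
```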

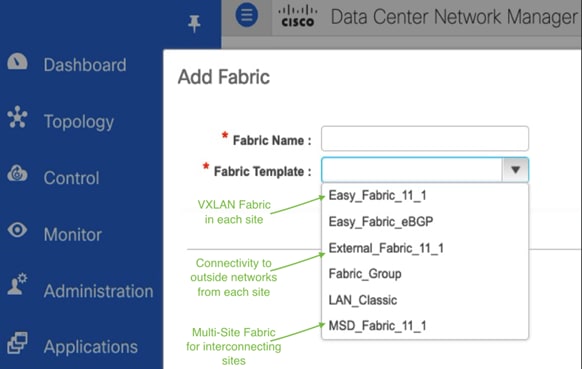

Figure 4 shows the Cisco DCNM Fabric Builder templates used in this solution to automate the deployment of the end-to-end VXLAN Multi-site fabric.

Figure 4. Cisco DCNM LAN Fabric – Fabric Builder Templates

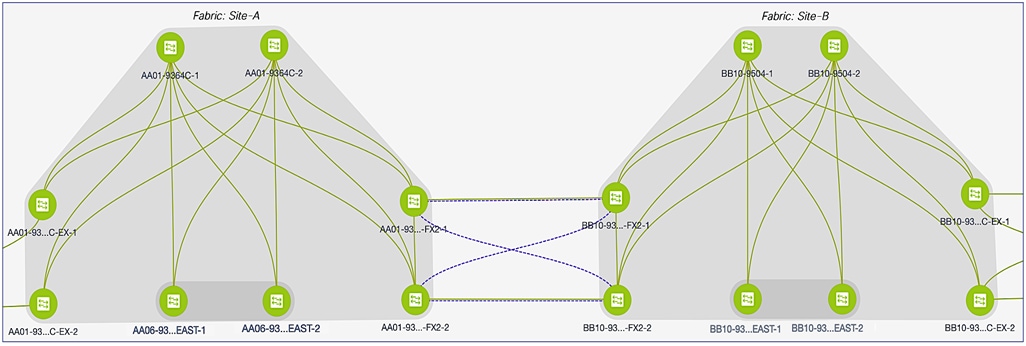

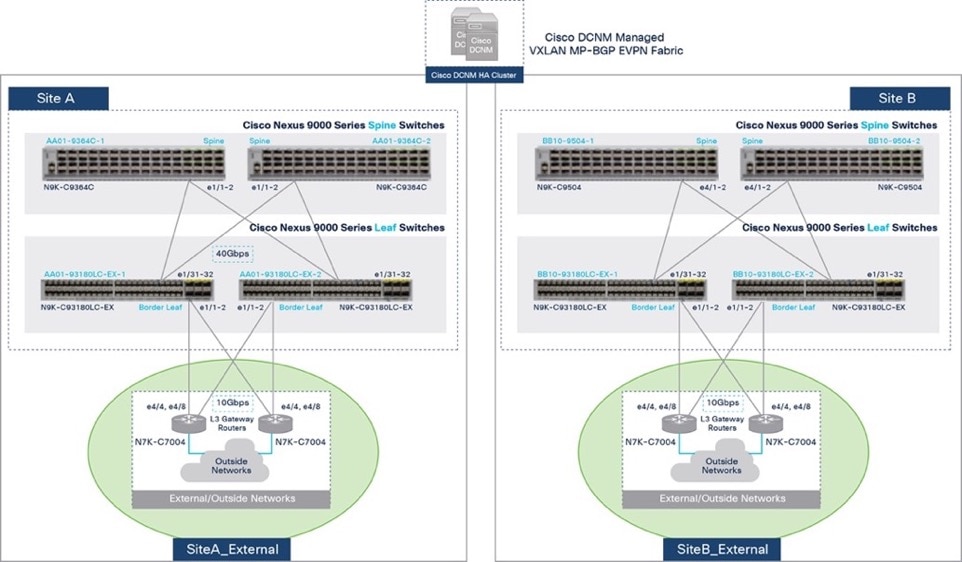

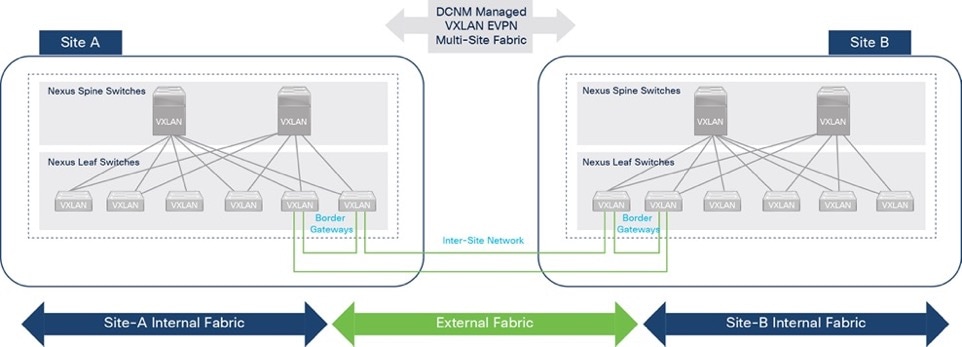

Figure 5 shows the end-to-end VXLAN EVPN Multi-Site fabric deployed by the Fabric Builder templates above. This fabric was used to validate the active-active data center solution in Cisco Labs.

Figure 5. VXLAN EVPN Multi-site Fabric

VXLAN Fabric – Intra-Site Design

In the active-active data center solution, each data center site has an independent VXLAN fabric, built using Cisco Nexus 9000 Series switches in a 2-tier, spine-leaf Clos topology. The intra-site design is highly resilient, with no single point of failure. Cisco DCNM manages the site fabrics as well as the end-to-end multi-site fabric.

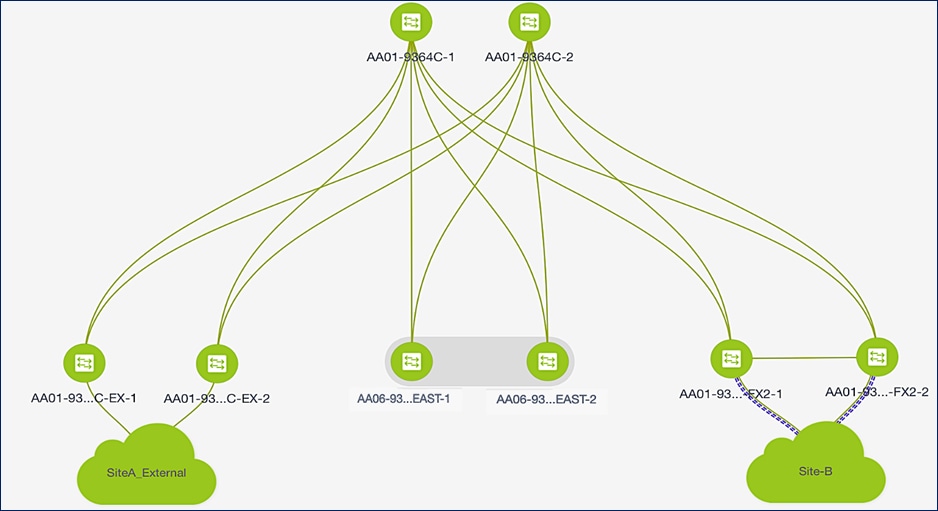

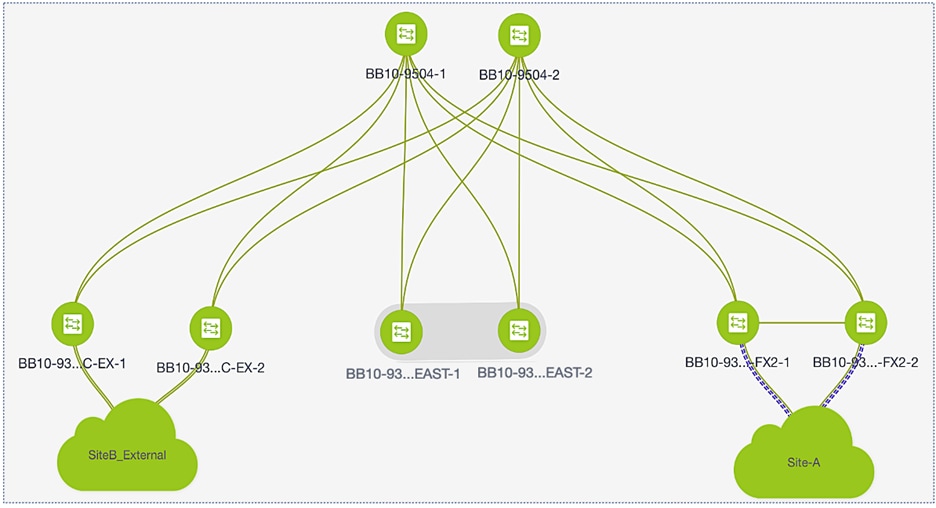

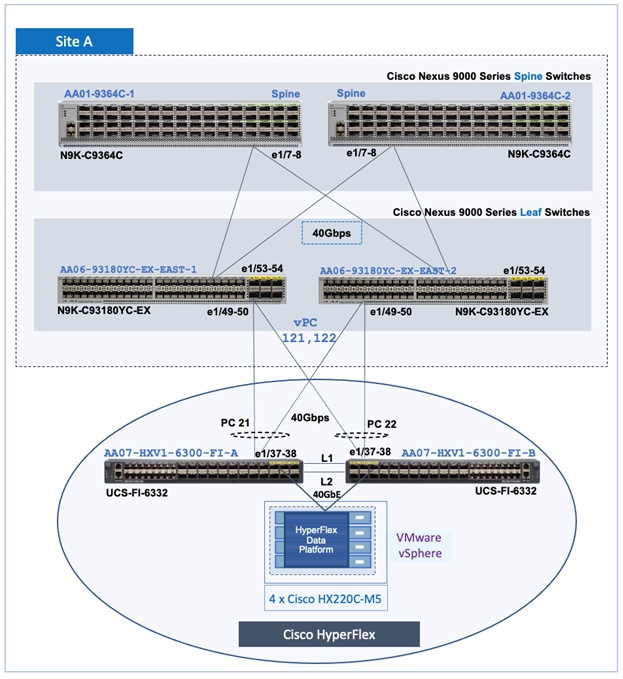

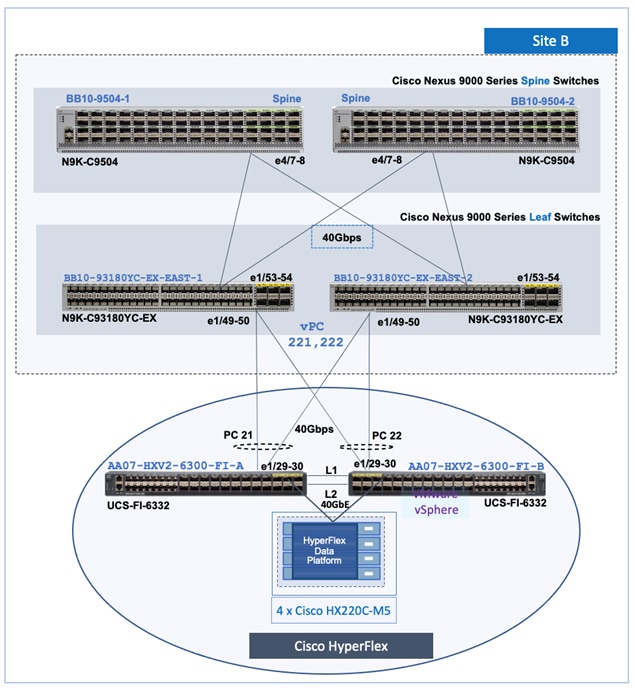

Figures 6 and 7 illustrate the intra-site design for the two data centers (Site-A, Site-B) used in the solution.

Figure 6. Intra-Site Design – Data Center Fabric in Site-A

Figure 7. Intra-Site Design – Data Center Fabric in Site-B

The site fabric design in the two active-active data center sites is very similar. Each site uses a two-tier Clos topology consisting of a pair of spine switches and three pairs of leaf switches. The spine switches provide high-speed core connectivity and serve as redundant Internal Border Gateway Protocol (iBGP) route-reflectors (RR) and as IP Multicast Rendezvous-Points (RP) for each site fabric. The leaf switch pairs provide different functionality depending on their role. The three leaf switch pairs deployed in each site are:

● Access/ToR Leaf switches for connecting to Cisco UCS and HyperFlex infrastructure in each site

● Border Leaf switches for connecting to outside/external networks from each site

● Border Gateway Leaf switches for inter-site connectivity between data centers

For scalability, this design uses separate leaf switch pairs for each role. However, smaller deployments can combine the switch roles and use fewer leaf switch pairs if necessary. The leaf switches are dual-homed to the two spine switches and do not connect directly to other leaf switches. However, the border gateway switches connect directly for inter-site connectivity to establish full-mesh eBGP connectivity between sites. Cross-links minimize the need for the more costly inter-site links. For more details on this design, see the VXLAN EVPN Multi-Site Design and Deployment White Paper.

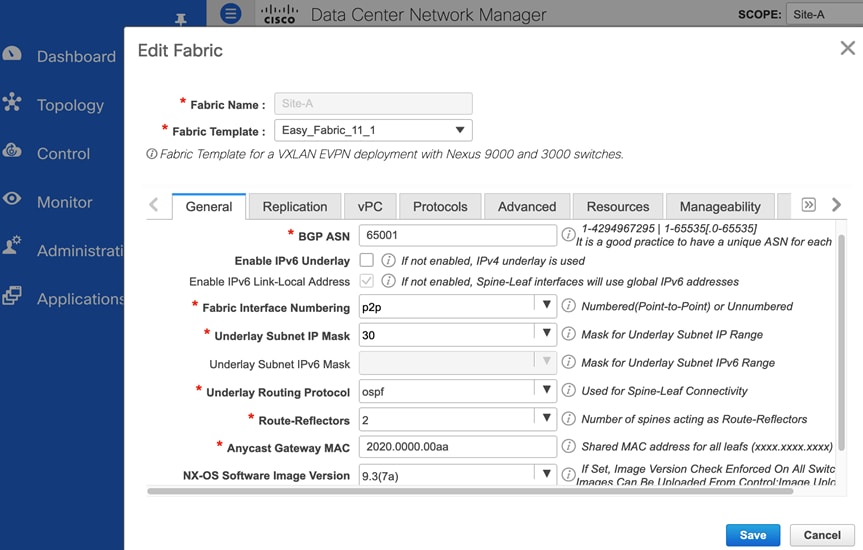

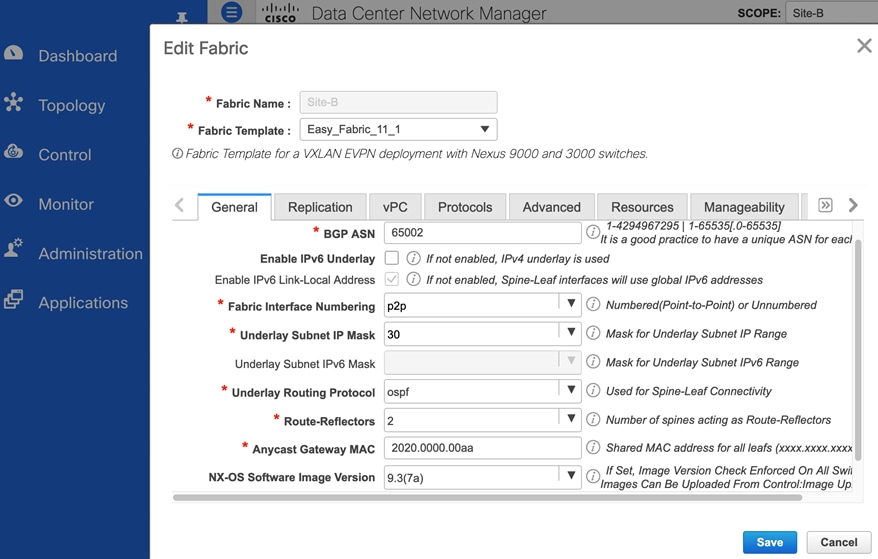

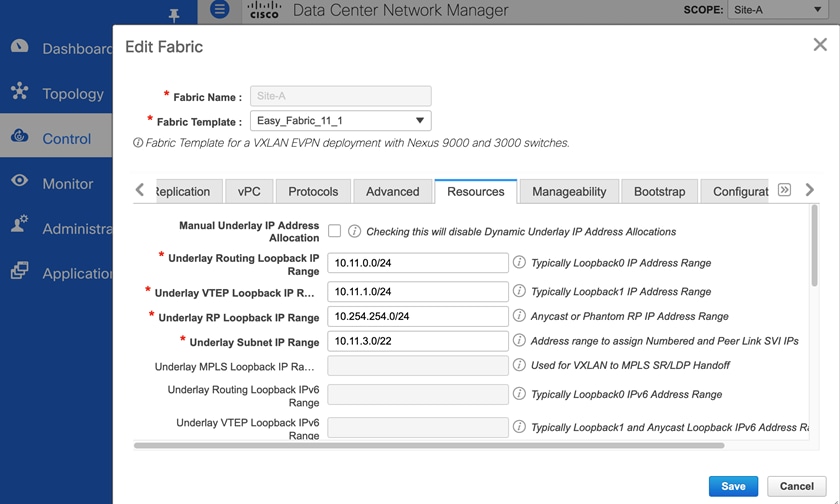

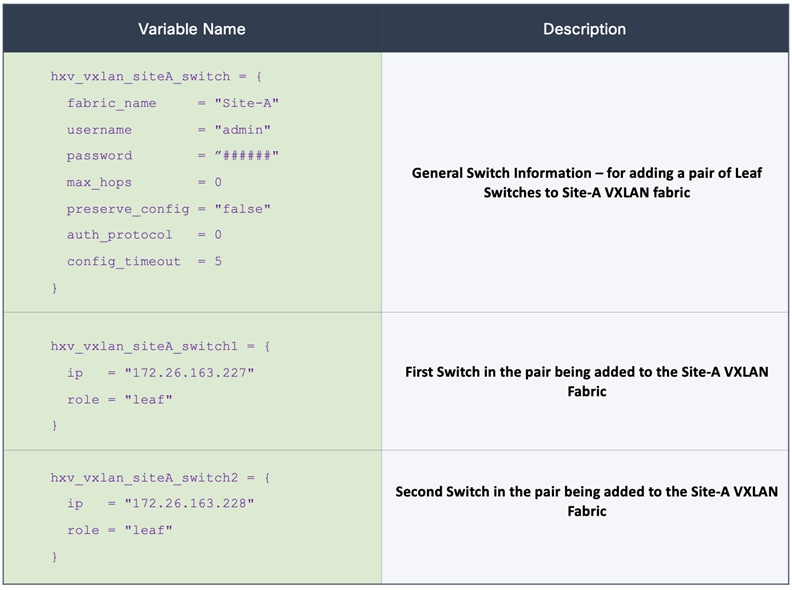

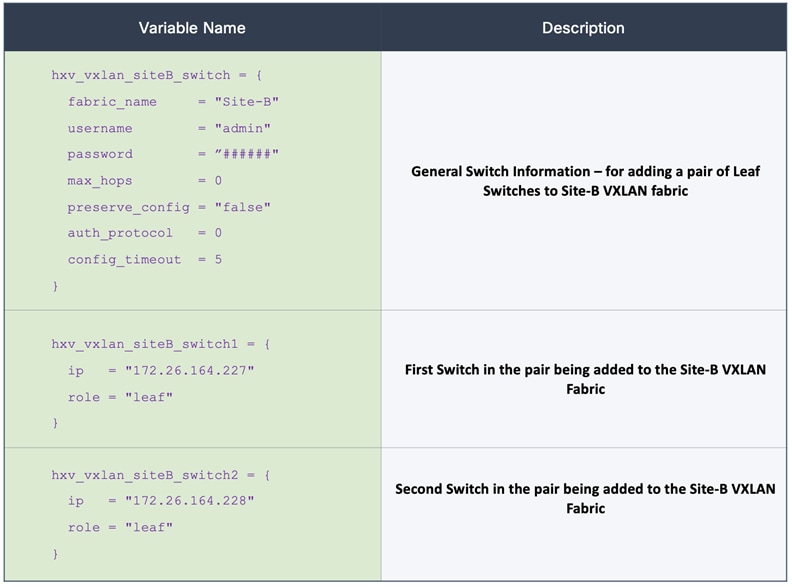

In this solution, the VXLAN fabric is deployed in each site using the Easy_Fabric_11_1 template available in Cisco DCNM Fabric Builder. The template automates the Day 0 provisioning of the VXLAN fabric in each data center site using the inputs specified by the fabric administrator in Cisco DCNM. The specified inputs, both mandatory and optional, can be broadly grouped as outlined below.

● Underlay Networking – for example, BGP, Anycast GW MAC, Interface Numbering, etc.

● Layer 2 Multi-Destination Traffic handling, replication mode and related configuration

● Underlay Routing Protocols – for example, Intermediate System-to-Intermediate System (IS-IS), Open Shortest Path First (OSPF), or Exterior Border Gateway Protocol (eBGP)

● Advanced Configuration (QoS, Encryption, Fabric MTU, Overlay VRF/Network Templates)

Underlay Network

This section of the template captures general information about the underlay, such as the BGP Autonomous System Number (ASN) for each site, the interface type (point-to-point, unnumbered) and subnet mask (/30, /31) on the interfaces, the underlay routing protocol (OSPF, IS-IS), and so on.

In the active-active data center design, both site fabrics use the same configuration except for the BGP ASN for each site. The underlay links are point-to-point IPv4 links with a /30 subnet mask and use OSPF as the underlay routing protocol. All links in the fabric also use jumbo MTU.
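The point-to-point addressing that Fabric Builder generates for underlay links can be approximated with Python's `ipaddress` module. This sketch is a simplified model, not DCNM's allocation algorithm. The 10.4.0.0/16 pool and the switch names are hypothetical, and each link simply takes the next /30 (or /31) subnet from the pool.

```python
import ipaddress
import itertools
from typing import Dict, List, Tuple


def allocate_p2p_links(pool: str, links: List[Tuple[str, str]], prefix: int = 30) -> Dict:
    """Assign one point-to-point subnet per link: {(a, b): (subnet, ip_a, ip_b)}."""
    if prefix not in (30, 31):
        raise ValueError("point-to-point links use /30 or /31")
    candidates = ipaddress.ip_network(pool).subnets(new_prefix=prefix)
    subnets = list(itertools.islice(candidates, len(links)))
    if len(subnets) < len(links):
        raise ValueError("address pool exhausted")
    plan = {}
    for link, subnet in zip(links, subnets):
        if prefix == 31:
            ip_a, ip_b = subnet[0], subnet[1]      # RFC 3021: both addresses usable
        else:
            ip_a, ip_b = list(subnet.hosts())      # /30: the two usable host addresses
        plan[link] = (subnet, ip_a, ip_b)
    return plan


if __name__ == "__main__":
    fabric_links = [("Spine-1", "Leaf-1"), ("Spine-1", "Leaf-2"),
                    ("Spine-2", "Leaf-1"), ("Spine-2", "Leaf-2")]
    for (a, b), (net, ip_a, ip_b) in allocate_p2p_links("10.4.0.0/16", fabric_links).items():
        print(f"{net}: {a} {ip_a} <-> {b} {ip_b}")
```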

Figure 8 and Figure 9 show the underlay settings in Site-A and Site-B, respectively, configured using the Easy_Fabric_11_1 template.

Figure 8. Fabric Builder: Underlay Configuration (Site-A)

Figure 9. Fabric Builder: Underlay Configuration (Site-B)

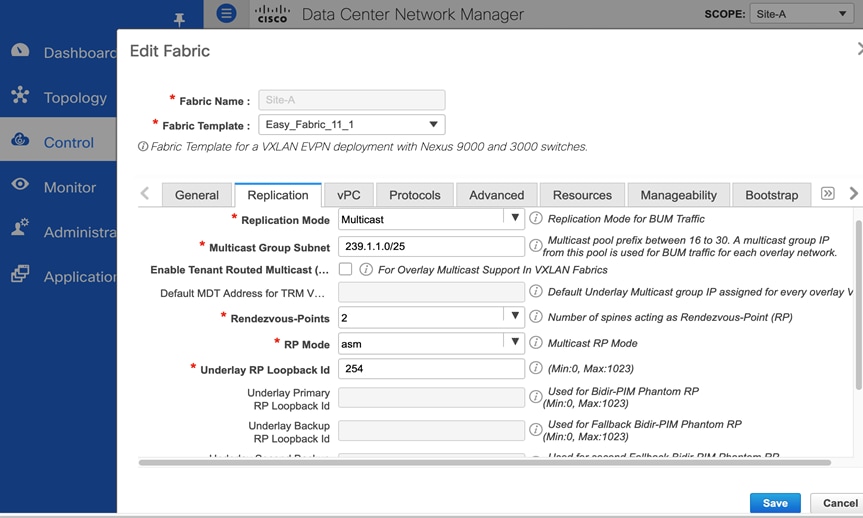

Replication

Ethernet networks use flooding to forward broadcast, unknown unicast (destinations not yet learned), and multicast (BUM) traffic to endpoints in the same Layer 2 broadcast domain. When the Ethernet networks span a VXLAN fabric, the fabric can use either IP Multicast or Ingress Replication to forward the BUM traffic. A local VXLAN Tunnel Endpoint (VTEP), or leaf switch, forwards the BUM traffic it receives to all remote VTEPs handling traffic for that Ethernet segment. If the fabric uses Ingress (headend) Replication, the local VTEP replicates the traffic and sends an individual copy to each remote VTEP. If the fabric uses IP Multicast, the local VTEP forwards it to the IP Multicast group associated with that network. In a VXLAN fabric, each Ethernet network is assigned an IP multicast group for sending and receiving BUM traffic. When the administrator deploys a Layer 2 or Layer 3 network on a VTEP, the VTEP uses Internet Group Management Protocol (IGMP)/Protocol Independent Multicast (PIM) to join the multicast group associated with that network. Cisco recommends using IP multicast for forwarding BUM traffic efficiently across an IP underlay network. This design uses IP multicast.
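The trade-off between the two replication modes can be seen by counting how many copies of a single BUM frame the ingress VTEP itself must transmit. This Python sketch is a simplified model with a hypothetical function name. It ignores the replication performed inside the underlay, where multicast copies are made by the spines and other routers along the distribution tree.

```python
def ingress_vtep_copies(mode: str, remote_vteps: int) -> int:
    """Copies of one BUM frame that the ingress VTEP sends into the underlay.

    "ingress":   headend replication, one unicast copy per remote VTEP.
    "multicast": one copy addressed to the network's multicast group; the
                 underlay replicates it toward the VTEPs that joined the group.
    """
    if remote_vteps < 0:
        raise ValueError("remote_vteps cannot be negative")
    if mode == "ingress":
        return remote_vteps
    if mode == "multicast":
        return 1
    raise ValueError(f"unknown replication mode: {mode}")


if __name__ == "__main__":
    # Example: a network present on 10 remote VTEPs, 1500-byte inner frames.
    for mode in ("ingress", "multicast"):
        copies = ingress_vtep_copies(mode, remote_vteps=10)
        print(f"{mode:9s}: {copies} copies, {copies * 1500} inner-frame bytes sent")
```

With ingress replication, the load on the ingress VTEP grows linearly with the number of remote VTEPs in the segment. With IP multicast, it stays constant.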

When using IP multicast, a multicast routing protocol, either PIM-ASM or PIM-BiDir, is needed. Both protocols use a Rendezvous Point (RP), and the spine switches in each fabric are ideal for providing this functionality because they are centrally located, with connectivity to all leaf switches in the fabric. In this solution, both data center sites use IP multicast with PIM-ASM for BUM forwarding, with the spine switches serving as redundant RPs for each fabric. Cisco DCNM Fabric Builder automatically provisions the configuration necessary to enable IP multicast for BUM forwarding. Figure 10 shows the replication settings used in Site-A, configured using the Easy_Fabric_11_1 template. An identical configuration is used in Site-B. The two sites can also use different replication modes if needed.

Figure 10. Fabric Builder: Replication Configuration (Site-A)

As each network is provisioned, an IP multicast group address must also be provisioned for forwarding BUM traffic. As the number of Layer 2 segments increases, so does the number of multicast groups and forwarding states that must be maintained. By default, Cisco DCNM uses the same IP Multicast group address for all networks unless explicitly specified otherwise. Using the same multicast group reduces the control-plane resources used, but it also means that a VTEP could receive BUM traffic for a network that it does not handle. The VTEP forwards the BUM traffic to a local segment only if the VXLAN Network ID (VNID) in the packet matches that of the local segment. In this solution, however, each HyperFlex infrastructure network is assigned a separate multicast group to make monitoring and troubleshooting easier.
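The trade-off between a shared group and per-network groups can be sketched as a simple receive model. This is an illustrative Python sketch under stated assumptions: the underlay delivers a group's traffic to every VTEP that joined it, and the VTEP then filters on VNID. The VTEP name, VNIDs, and group addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BumPacket:
    vnid: int   # VXLAN Network ID carried in the VXLAN header
    group: str  # outer destination IP multicast group


@dataclass
class Vtep:
    name: str
    local_vnids: set[int]    # networks (VNIDs) deployed on this VTEP
    joined_groups: set[str]  # groups joined via IGMP/PIM for those networks

    def receives(self, pkt: BumPacket) -> bool:
        # The multicast underlay delivers the packet to every VTEP in the group.
        return pkt.group in self.joined_groups

    def forwards_locally(self, pkt: BumPacket) -> bool:
        # A received packet reaches a local segment only on a VNID match;
        # otherwise it is dropped after already consuming bandwidth.
        return self.receives(pkt) and pkt.vnid in self.local_vnids


# leaf3 only hosts VNID 20001 and has joined the group used for it.
leaf3 = Vtep("leaf3", {20001}, {"239.1.1.1"})

# Shared group: BUM traffic for VNID 20002 also uses 239.1.1.1.
shared_pkt = BumPacket(vnid=20002, group="239.1.1.1")
print(leaf3.receives(shared_pkt), leaf3.forwards_locally(shared_pkt))        # True False

# Dedicated groups: VNID 20002 has its own group, which leaf3 never joined.
dedicated_pkt = BumPacket(vnid=20002, group="239.1.1.2")
print(leaf3.receives(dedicated_pkt), leaf3.forwards_locally(dedicated_pkt))  # False False
```

In both cases the traffic never reaches the wrong segment; the difference is whether leaf3 spends bandwidth receiving packets it will discard, versus the extra multicast state needed for one group per network.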

For BUM forwarding between data centers, see “Inter-site Design – Interconnecting Data Centers” section of this document.

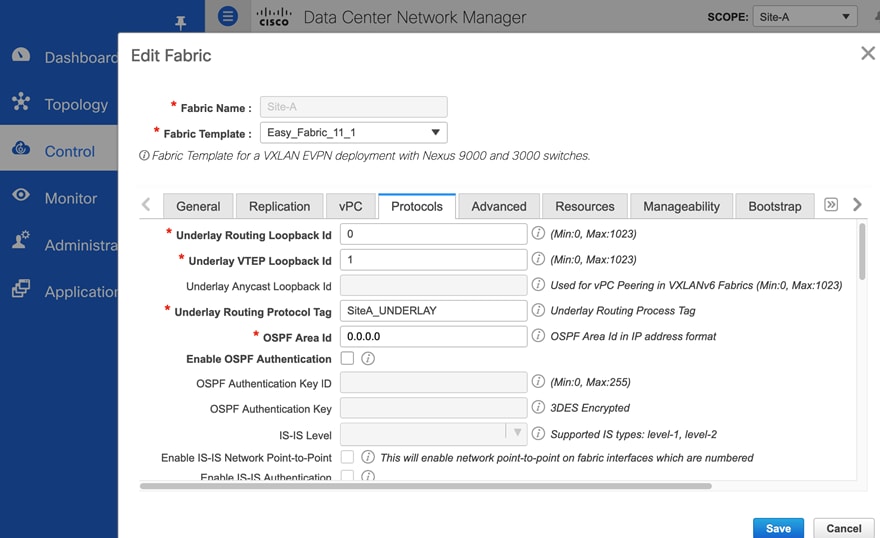

Protocols

A VXLAN fabric uses routing protocols to advertise VTEP and endpoint reachability (address learning). In this solution, OSPF and BGP are used. Cisco DCNM Fabric Builder deploys multiple loopbacks for use as the router ID by the routing protocols, as the tunnel endpoint IP, for vPC peering, and so on.

Figures 11 and 12 show the settings used in Site-A.

Figure 11. Fabric Builder: Underlay Protocols Configuration (Site-A)

Figure 12. Fabric Builder: Underlay Resources Configuration (Site-A)
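Cisco DCNM allocates the loopback and point-to-point link addresses automatically from the pools configured in the fabric settings. To illustrate the addressing scheme (one /32 loopback per switch and one /30 per point-to-point link, as used in this design), here is a minimal Python sketch using the standard `ipaddress` module. The pool ranges and switch names are hypothetical, and the sketch does not reproduce DCNM's own allocation order.

```python
import ipaddress


def allocate_loopbacks(pool: str, switches: list[str]) -> dict[str, ipaddress.IPv4Interface]:
    """Assign one /32 loopback address per switch from a pool, in order."""
    hosts = ipaddress.ip_network(pool).hosts()
    plan = {sw: ipaddress.IPv4Interface(f"{ip}/32") for sw, ip in zip(switches, hosts)}
    if len(plan) != len(switches):
        raise ValueError("loopback pool exhausted or duplicate switch names")
    return plan


def allocate_p2p_links(pool: str, links: list[tuple[str, str]]) -> dict:
    """Carve one /30 per point-to-point link; each end gets one usable host."""
    subnets = ipaddress.ip_network(pool).subnets(new_prefix=30)
    plan = {}
    for (a, b), subnet in zip(links, subnets):
        ip_a, ip_b = list(subnet.hosts())  # a /30 has exactly two usable addresses
        plan[(a, b)] = {a: ipaddress.IPv4Interface(f"{ip_a}/30"),
                        b: ipaddress.IPv4Interface(f"{ip_b}/30")}
    if len(plan) != len(links):
        raise ValueError("link pool exhausted or duplicate links")
    return plan


# Hypothetical pools and switch names.
loopbacks = allocate_loopbacks("10.2.0.0/22", ["spine1", "spine2", "leaf1", "leaf2"])
print(loopbacks["leaf1"])                      # 10.2.0.3/32
links = allocate_p2p_links("10.4.0.0/24", [("spine1", "leaf1"), ("spine2", "leaf1")])
print(links[("spine2", "leaf1")]["leaf1"])     # 10.4.0.6/30
```

A /31 scheme would halve the address consumption per link; this sketch covers only the /30 option used in this design.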

Additional Considerations

This section discusses additional factors to consider when deploying a VXLAN EVPN fabric:

● VXLAN fabrics can use a data-plane flood-and-learn mechanism, similar to Ethernet, for address learning and endpoint reachability. The flooding is done using either IP Multicast or Ingress Replication. Though IP Multicast is efficient, large amounts of multicast traffic can still limit the scalability of a data center fabric. Alternatively, a more scalable, control-plane method using MP-BGP EVPN can also be used for address learning. By default, Cisco DCNM Fabric Builder deploys VXLAN fabrics using MP-BGP EVPN.

● Cisco VXLAN fabrics use MP-BGP to advertise endpoint reachability: internal BGP (iBGP) within a site and external BGP (eBGP) between sites. For iBGP, the switches must either be fully meshed or peer with Route Reflectors (RRs) that relay the routes. By default, Cisco DCNM deploys the route reflectors on the spine switches, since all leaf switches connect to them.

● When an endpoint originates an ARP request, the receiving VTEP or leaf switch floods the ARP broadcast to all VTEPs in the fabric using the multicast group address associated with that network. However, if ARP suppression is enabled, the receiving VTEP first inspects the ARP request, and if it has already learned the target endpoint's information through MP-BGP EVPN, it responds to the ARP request locally. ARP suppression is only supported for Layer 3 networks.

● In VXLAN fabrics, the Integrated Routing and Bridging (IRB) provided by leaf switches can be symmetric or asymmetric. Symmetric IRB is more scalable and less complex from a configuration perspective. By default, Cisco DCNM deploys symmetric IRB.

● Distributed anycast gateways facilitate flexible workload placement and endpoint mobility across a data center fabric. In a VXLAN fabric, each Leaf switch is a distributed anycast gateway for the Layer 3 networks connected to it. All leaf switches configured for a given Layer 3 network will use the same gateway IP and virtual MAC address (2020.0000.00aa), ensuring that the endpoint always has a valid ARP entry for its gateway, regardless of where it moves to within the data center. For each Layer 3 network provisioned, Cisco DCNM will automatically deploy the corresponding anycast gateway function on all relevant switches in the end-to-end VXLAN Multi-Site fabric.

● VXLAN fabrics with MP-BGP EVPN use multi-tenancy concepts similar to those of MPLS Layer 3 VPNs. When advertising routes to other BGP peers, Route Distinguishers (RD) ensure the global uniqueness of routes from different VPNs (VRFs). Route targets (RT) enable flexible route export/import on a per-tenant/VRF basis. In the data plane, VXLAN uses VNIDs to segment the overlay network by mapping each edge network to a VXLAN segment (VNID) and by enforcing VNID/VRF boundaries. In this solution, multi-tenancy separates the infrastructure connectivity from that of the applications hosted on the infrastructure. The design uses an infrastructure tenant (HXV-Foundation) for all connectivity required to build and maintain the HyperFlex VSI and an application tenant for the applications hosted on the HyperFlex VSI. For each VRF provisioned, Cisco DCNM will automatically deploy the corresponding tenancy configuration on all relevant switches in the VXLAN Multi-Site fabric. Enterprises can choose a tenancy model that meets the needs of their business.

● VXLAN uses MAC-in-IP/User Datagram Protocol (UDP) encapsulation, which adds 50 bytes of overhead to VXLAN-encapsulated frames. Per the IETF VXLAN specification (RFC 7348), a VTEP (leaf) must not fragment VXLAN packets. For this reason, the fabric MTU should be at least 50 bytes larger than the largest packet it can receive from an endpoint. By default, Cisco DCNM uses an MTU of 9216 bytes.

● VNID allocation and naming conventions: The VXLAN fabric deployed in this design uses VNIDs in the 20000s range for Layer 2 networks and 30000s range for Layer 3 networks.

Similarly, the design uses a naming convention such as “<NameOfObject>_<Type>” where Type indicates the type of object (for example, Mgmt_VLAN). Enterprises can use a similar approach as it can be helpful from a troubleshooting perspective.
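The ARP suppression behavior described in the list above can be summarized as a small decision function. This Python sketch is a simplified model, not NX-OS code; it assumes the VTEP's IP-to-MAC bindings come from MP-BGP EVPN MAC/IP Advertisement (Route Type 2) routes, and the IP and MAC values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LeafVtep:
    arp_suppression: bool
    # IP-to-MAC bindings learned from MP-BGP EVPN MAC/IP (Route Type 2) routes.
    evpn_bindings: dict[str, str] = field(default_factory=dict)

    def handle_arp_request(self, target_ip: str,
                           layer3_network: bool) -> tuple[str, Optional[str]]:
        """Decide whether to answer an ARP request locally or flood it."""
        if self.arp_suppression and layer3_network:
            mac = self.evpn_bindings.get(target_ip)
            if mac is not None:
                # Binding already known through the control plane: reply locally.
                return ("reply-locally", mac)
        # Suppression disabled, Layer 2-only network, or unknown target:
        # flood using the network's multicast group.
        return ("flood", None)


leaf = LeafVtep(arp_suppression=True, evpn_bindings={"192.0.2.21": "0050.5601.0001"})
print(leaf.handle_arp_request("192.0.2.21", layer3_network=True))   # ('reply-locally', '0050.5601.0001')
print(leaf.handle_arp_request("192.0.2.99", layer3_network=True))   # ('flood', None)
print(leaf.handle_arp_request("192.0.2.21", layer3_network=False))  # ('flood', None)
```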

Intra-Site Design – Core Connectivity

Core connectivity refers to the connectivity between spine and leaf switches within a given data center site. As stated earlier, the VXLAN fabric in each site is a collapsed, 2-tier Clos-based spine and leaf topology, where each leaf switch connects to all spine switches in the top-tier. Clos topologies are designed for modern applications that are increasingly distributed, resulting in large amounts of East-West traffic in today’s data centers. Clos topology provides a simple and scalable design, with predictable latency and performance to meet the needs of modern data centers. Clos topologies provide multiple equal-cost paths that the VXLAN fabric can leverage for load-balancing East-West traffic. Clos fabrics also offer predictability and consistency where connectivity between any two endpoints is always three hops (leaf-spine-leaf). The fabric can also be easily scaled by adding more leaf and spine switches to the topology.
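In a two-tier Clos fabric, the number of equal-cost paths between two leaf switches equals the number of spine switches, and a flow stays on one path while different flows spread across all paths. The following Python sketch illustrates this under stated assumptions: the outer UDP source port is derived from a hash of the inner flow, as RFC 7348 recommends (within the 49152-65535 range), and the next hop is then picked by hashing the outer headers. SHA-256 stands in for the switch's hardware hash, and all addresses and switch names are hypothetical.

```python
import hashlib

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port


def vxlan_source_port(inner_flow: tuple) -> int:
    """Derive the outer UDP source port from a hash of the inner flow."""
    digest = hashlib.sha256(repr(inner_flow).encode()).digest()
    return 49152 + int.from_bytes(digest[:2], "big") % 16384


def pick_spine(src_vtep: str, dst_vtep: str, src_port: int, spines: list[str]) -> str:
    """Pick one equal-cost next hop (spine) by hashing the outer header fields."""
    key = f"{src_vtep}|{dst_vtep}|{src_port}|{VXLAN_UDP_PORT}".encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % len(spines)
    return spines[index]


spines = ["spine1", "spine2"]  # two spines = two equal-cost leaf-to-leaf paths
# 100 hypothetical inner flows (src IP, dst IP, protocol, src port, dst port).
flows = [("192.0.2.10", "192.0.2.20", 6, 40000 + n, 443) for n in range(100)]
chosen = [pick_spine("10.3.0.10", "10.3.0.20", vxlan_source_port(f), spines) for f in flows]
print({s: chosen.count(s) for s in spines})
```

Because the outer source port carries the inner flow's entropy, the underlay can load-balance VTEP-to-VTEP traffic even though all of it shares the same outer source and destination IP addresses.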

In this solution, the VXLAN fabric in each site consists of a pair of spine switches and three pairs of Leaf switches, built using Cisco Nexus 9000 series switches. The Solution Validation section of this document provides the specific Cisco Nexus switch models used in the solution. The design uses 40GbE links for core connectivity and 10GbE links for external and inter-site connectivity. The design ensures that each site can operate independently of the other in the event of a failure, and provides access to outside networks and services directly from each site.

Intra-Site Design – Edge Connectivity

Leaf switches use Link Aggregation Control Protocol (LACP) to bundle links that connect to physical and virtual endpoints in the edge network. Link aggregation provides redundancy and higher aggregate bandwidth. Either a port-channel or a virtual Port-Channel (vPC) can be used, but vPCs are preferred where possible because they also provide node-level resiliency.

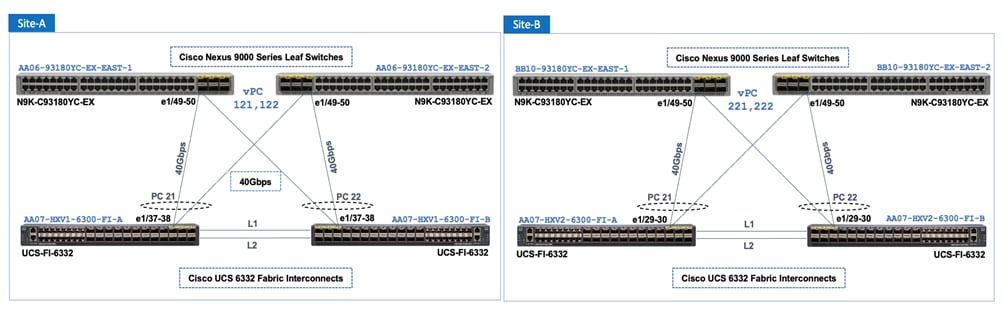

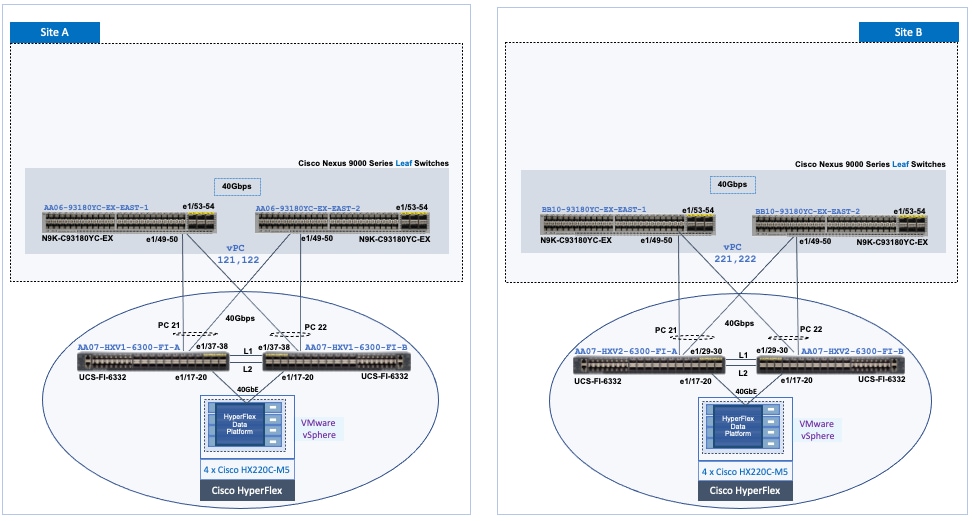

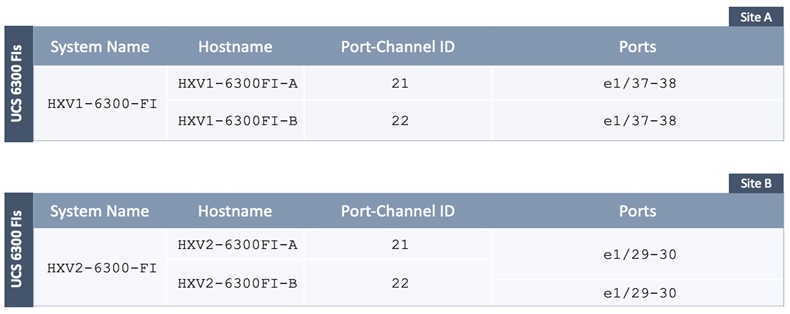

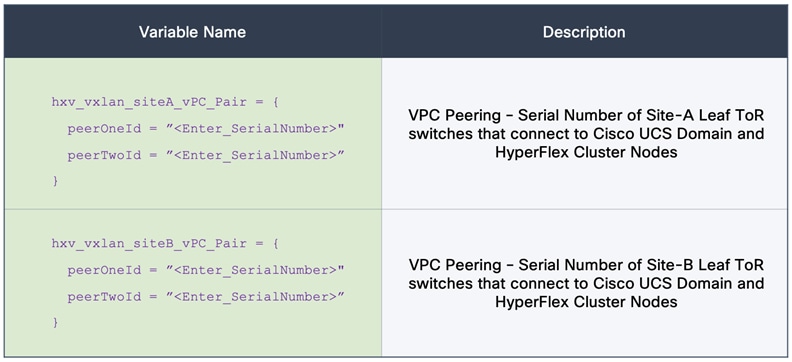

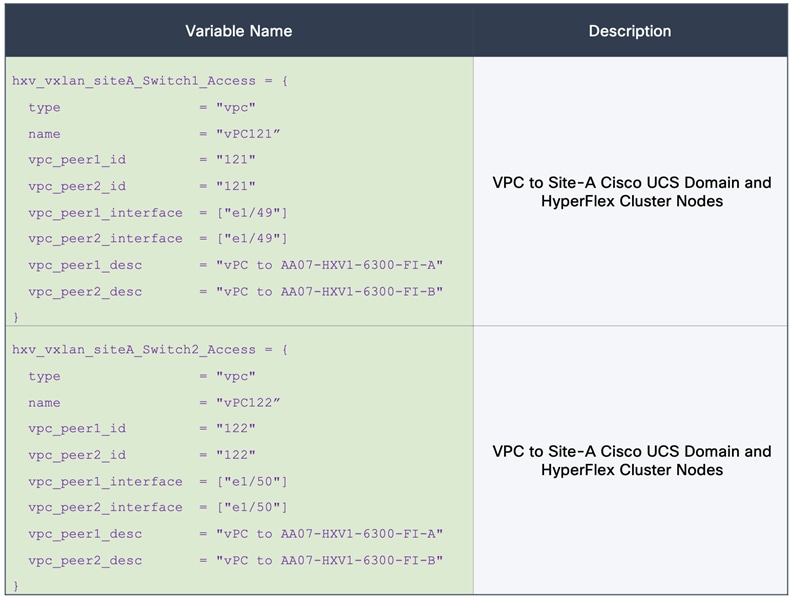

In this solution, leaf switches use vPCs to connect to the Cisco UCS domain (and HyperFlex infrastructure) in each site, as shown in Figure 13. The leaf switches are deployed as vPC peers and use 40GbE links to connect to the Cisco UCS FIs. The vPC design is identical in both active-active data center locations.

Figure 13. Intra-Site Design: Edge Connectivity in Site-A and Site-B

Intra-Site Design – Outside/External Connectivity

Endpoints and applications that connect to the VXLAN fabric require access to networks and services outside the fabric. In this solution, external connectivity is necessary for deploying and managing the HyperFlex VSI. The fabric must provide connectivity to the HyperFlex Installer, HyperFlex Witness, and VMware vCenter located outside the fabric. Applications hosted on the HyperFlex VSI also require access to outside networks and services. In this design, both sites have dedicated connections for external connectivity, enabling each site to operate independently in the event of a failure in the other. Figure 14 shows a high-level view of the external connectivity in each site (Site-A, Site-B) to the external gateways outside the fabric (SiteA_External, SiteB_External).

Figure 14. External Connectivity in Site-A and Site-B (High Level View)

Figure 15 shows the detailed external connectivity in each site.

Figure 15. External Connectivity in Site-A and Site-B (Detailed View)

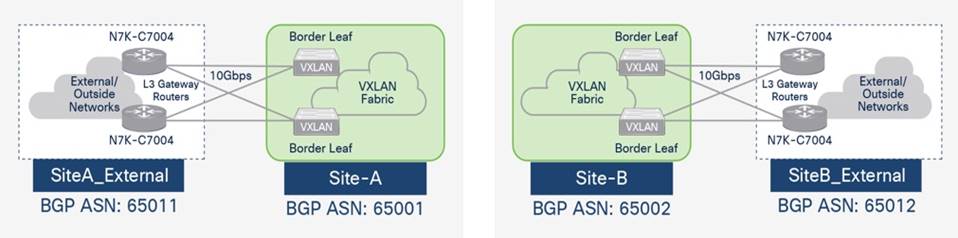

The design uses a pair of Cisco Nexus 7000 Series switches as external gateways in the outside network that connect to the border leaf switches in Site-A and Site-B fabrics using redundant 10GbE links.

Cisco VXLAN fabrics can connect to outside/external networks using either a VRF-to-VRF handoff (to an MPLS-VPN or IP network) or a VRF-to-IP network handoff. This solution uses a VRF-to-VRF handoff to an IP network, which extends multi-tenancy to the external IP network. The design uses MP-BGP to enable this connectivity and to extend multi-tenancy (VRFs) to the external network. The VXLAN fabrics in each site and the external networks are all in different BGP Autonomous Systems, as shown in Figure 16.

Figure 16. MP-BGP External Connectivity in Site-A and Site-B

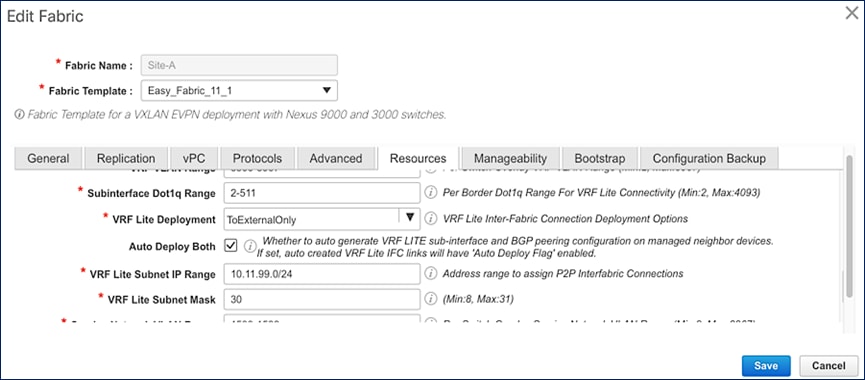

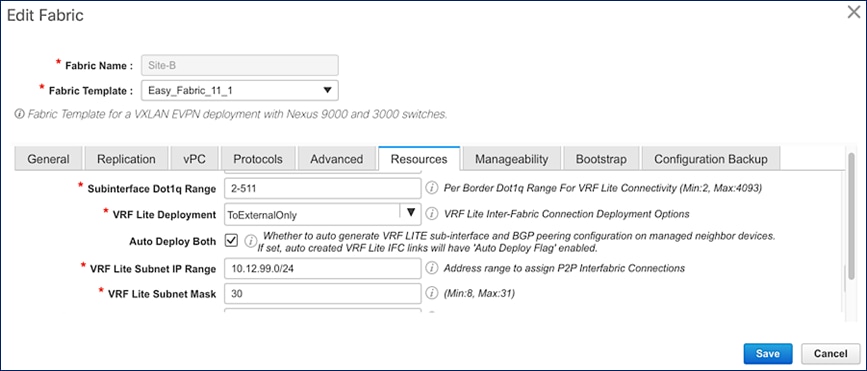

Figures 17 and 18 show the Cisco DCNM Fabric Builder configuration for external connectivity from Site-A and Site-B respectively. This configuration is part of the Easy_Fabric_11_1 template.

Figure 17. Cisco DCNM Fabric Builder – External Connectivity (Site-A)

Figure 18. Cisco DCNM Fabric Builder – External Connectivity (Site-B)

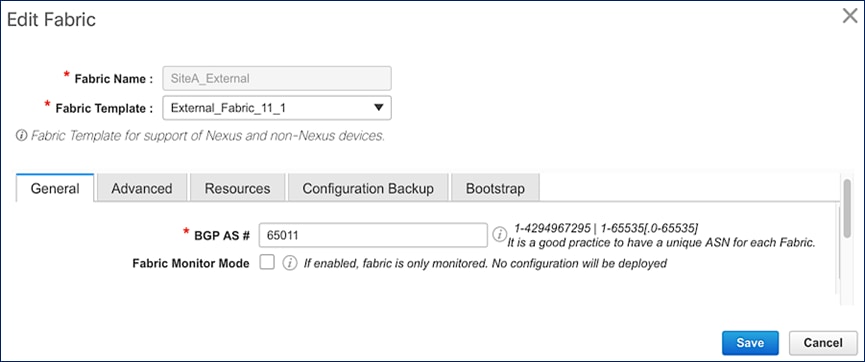

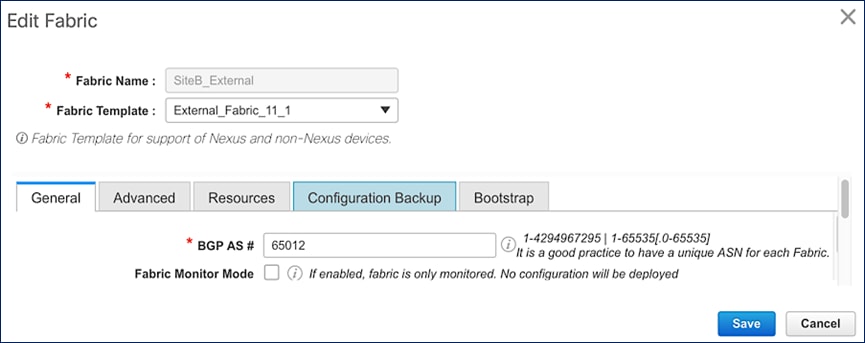

Cisco DCNM can also manage the external network, in either managed or monitored mode. In this solution, the external network is in managed mode, which enables Cisco DCNM to provision the VRF-Lite setup on the external gateways. Cisco DCNM Fabric Builder uses the External_Fabric_11_1 template to deploy the external network and establish connectivity from the external gateways to the border leaf switches in each site.

Figures 19 and 20 show the corresponding Cisco DCNM Fabric Builder configuration for external networks (SiteA_External, SiteB_External) that connect to Site-A and Site-B respectively.

Figure 19. Cisco DCNM Fabric Builder – External Network Setup (Site-A)

Figure 20. Cisco DCNM Fabric Builder – External Network Setup (Site-B)

The access-layer connectivity from each site to the external gateways is enabled through Inter-Fabric links configured for IEEE 802.1Q trunking. For high availability, each border leaf switch connects to both external gateways in a full-mesh topology. Each connection is from a routed, VLAN tagged, VRF interface on the border leaf switch to a routed, VLAN tagged VRF-Lite interface on the external gateway. The Layer 3 connectivity is on a per VRF basis, enabled only for tenants that require connectivity to external/outside networks. In this design, the HXV-Foundation_VRF requires external connectivity (Layer 3) from both sites.
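The full-mesh, per-VRF handoff described above can be enumerated as a small plan. This Python sketch is illustrative only (Cisco DCNM provisions these links itself through the ext_fabric_setup_11_1 policy); the border leaf and gateway names and the 802.1Q tag are hypothetical, and the sketch assumes one tag per VRF across all links, which may not match how DCNM allocates tags.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class VrfLiteSubinterface:
    border_leaf: str
    gateway: str
    vrf: str
    dot1q: int  # 802.1Q tag on the routed subinterface at both ends


def vrf_lite_plan(border_leaves: list[str], gateways: list[str],
                  vrf_tags: dict[str, int]) -> list[VrfLiteSubinterface]:
    """Full mesh: every border leaf connects to every external gateway, and each
    physical link carries one tagged, routed subinterface (and eBGP session) per VRF."""
    return [
        VrfLiteSubinterface(leaf, gw, vrf, tag)
        for (leaf, gw), (vrf, tag) in product(product(border_leaves, gateways),
                                              vrf_tags.items())
    ]


# Switch names and the 802.1Q tag are hypothetical; the VRF name is from this design.
plan = vrf_lite_plan(["SiteA-BL1", "SiteA-BL2"], ["Ext-GW1", "Ext-GW2"],
                     {"HXV-Foundation_VRF": 2})
for sub in plan:
    print(sub)
print(len(plan), "per-VRF eBGP sessions")  # 4 per-VRF eBGP sessions
```

Each additional tenant that needs external connectivity adds one more subinterface and eBGP session on every border-leaf-to-gateway link.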

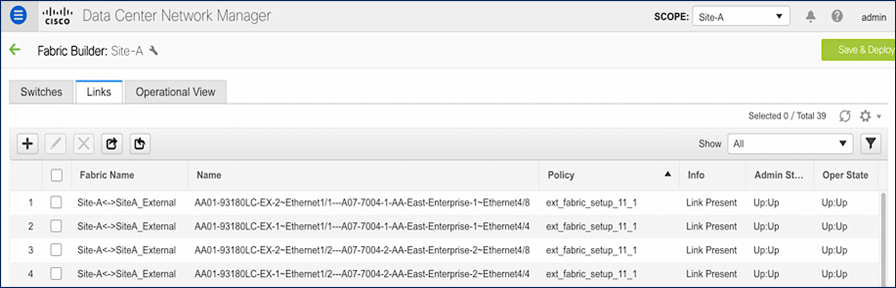

Figure 21 shows the Inter-Fabric connectivity between switches in Site-A and SiteA_External. Cisco DCNM Fabric Builder automatically provisions the links using the ext_fabric_setup_11_1 policy. The setup in Site-B is similar to that of Site-A; only the IP addressing and BGP ASN differ.

Figure 21. Cisco DCNM Fabric Builder – Site-A External Connections

Figure 22 shows the detailed link-level configuration for one link between the VXLAN fabric and external fabric in Site-A. The remaining links in Site-A and Site-B are set up similarly.

Figure 22. VXLAN Fabric and External Fabric Site A – Detailed Configuration

When the external-facing links and the initial setup are complete as previously described, the tenants and VRF interfaces for the HyperFlex infrastructure connectivity or for applications hosted on the HyperFlex infrastructure can be deployed as needed on the border leaf switches to enable external connectivity for those tenants.

Inter-Site Design – Interconnecting Data Centers

The VXLAN EVPN Multi-Site architecture provides seamless Layer 2 and Layer 3 extension between individual VXLAN EVPN fabrics. Inter-site (or data center) connectivity is possible using different Data Center Interconnect (DCI) technologies; however, the VXLAN EVPN Multi-Site approach is a more integrated and scalable architecture. For more details about the Multi-Site approach used in this design, refer to the IETF drafts listed in the References section of this document.

The Inter-Site network provides the Layer 3 connectivity between VXLAN fabric sites in a VXLAN EVPN Multi-Site architecture. In this solution, Border Gateways (BGWs) in the active-active sites directly connect to each other to enable east-west traffic flow between data centers. In Cisco VXLAN fabrics, you can deploy BGWs as standalone leaf switches or combine the function with the spine switches already in each site. The BGW function can also be combined with the border leaf switches that provide connectivity to outside networks and services. This design uses standalone BGW leaf switches, a more scalable option that supports large Enterprise data centers. The BGWs can be deployed as vPC Gateways or Anycast BGWs. vPC Gateway mode is used when endpoints, typically network services such as firewalls and load balancers, connect directly to the BGWs. Anycast BGW mode is used when no endpoints connect directly to them. In this design, BGWs are deployed in Anycast BGW mode. At the time of writing this document, Enterprises can deploy up to four BGWs in each site for higher data-plane scalability. The solution uses two BGWs per site, but you can add BGWs as needed.

The BGWs provide separation between the internal VXLAN fabric and the external or inter-site VXLAN network by implementing internal and external VTEP functions for connecting to the internal and external networks respectively. To the internal fabric, the BGWs in a site are anycast BGWs (A-BGWs); they provide a common anycast virtual IP (VIP) address that is used for all data-plane communication between sites. A dedicated loopback IP address is allocated for this VIP. The distributed BGWs with anycast VIP enable you to use Equal Cost Multi-Pathing (ECMP) to provide active data forwarding across all BGWs for load distribution and redundancy.

To enable BUM traffic forwarding between sites, BGWs use ingress replication. Within a site, however, Enterprises can use either IP multicast or ingress replication, and the choice can differ between sites. This design uses IP Multicast with PIM ASM within each site. In the VXLAN EVPN Multi-Site architecture, a BGW is elected as the designated forwarder for each Layer 2 VNI. The election process distributes the designated-forwarder role for the different networks across the A-BGWs, so each A-BGW typically forwards BUM traffic for one or more networks. Failure detection, together with failover of the VIP and designated-forwarder functions to the other BGWs, is an important advantage of the VXLAN EVPN Multi-Site architecture. Internal and external interfaces on the BGWs are specifically configured with their role in the network and are tracked so that failures are detected quickly. Seamless Layer 2 and Layer 3 extension between sites remains available as long as each site has at least one BGW with one working internal-facing and one working external-facing interface.
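The per-VNI designated-forwarder distribution and its failover behavior can be illustrated with a small model. This Python sketch uses the default modulo-based service carving from the EVPN specification (RFC 7432) as a stand-in: eligible BGWs are ordered by IP address and the DF for VNI V is the one at position V mod N. It is not necessarily the exact election NX-OS BGWs perform. It also assumes that a BGW is eligible only while both its tracked internal and external interfaces are up. BGW names, addresses, and VNIDs are hypothetical.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BorderGateway:
    name: str
    ip: str                   # address used to order the candidates
    internal_up: bool = True  # at least one tracked fabric-facing interface up
    external_up: bool = True  # at least one tracked DCI-facing interface up

    @property
    def eligible(self) -> bool:
        return self.internal_up and self.external_up


def elect_df(l2_vni: int, bgws: list[BorderGateway]) -> Optional[str]:
    """Modulo-based service carving: order eligible BGWs by IP and pick
    the one at position (VNI mod N)."""
    candidates = sorted((b for b in bgws if b.eligible),
                        key=lambda b: ipaddress.ip_address(b.ip))
    if not candidates:
        return None  # no usable BGW left in this site: no extension for this VNI
    return candidates[l2_vni % len(candidates)].name


site_a = [BorderGateway("A-BGW1", "10.10.0.1"), BorderGateway("A-BGW2", "10.10.0.2")]
print({vni: elect_df(vni, site_a) for vni in (20001, 20002)})
# {20001: 'A-BGW2', 20002: 'A-BGW1'}

site_a[1] = BorderGateway("A-BGW2", "10.10.0.2", external_up=False)  # DCI links lost
print({vni: elect_df(vni, site_a) for vni in (20001, 20002)})
# {20001: 'A-BGW1', 20002: 'A-BGW1'}
```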

The control plane for inter-site connectivity uses Multiprotocol External BGP (MP-eBGP), unlike intra-site connectivity, which can use either eBGP or iBGP. For control-plane scalability, you can deploy route servers in the inter-site network and provide the functions similar to route reflectors in iBGP. Route servers are recommended when three or more sites are being connected. Route servers are not used in this solution because it is an active-active, two-data-center solution. However, not using a centralized entity for route peering means that the BGWs in one site will need full-mesh eBGP connectivity to BGWs in the remote site.
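The scaling argument for route reflectors (within a site) and route servers (between sites) comes down to session counts. The following Python sketch computes them; the site and BGW counts beyond this design's two sites with two BGWs each are hypothetical, and RR-to-RR sessions are deliberately not counted.

```python
from itertools import combinations


def ibgp_full_mesh(speakers: int) -> int:
    """iBGP sessions when every switch peers with every other switch."""
    return speakers * (speakers - 1) // 2


def ibgp_with_route_reflectors(clients: int, reflectors: int) -> int:
    """Client-to-RR sessions only (RR-to-RR peering is not counted)."""
    return clients * reflectors


def multisite_full_mesh(bgws_per_site: list[int]) -> int:
    """eBGP overlay sessions when each BGW peers with every BGW in every other site."""
    return sum(a * b for a, b in combinations(bgws_per_site, 2))


def multisite_route_servers(bgws_per_site: list[int], route_servers: int) -> int:
    """eBGP overlay sessions when every BGW peers only with the route servers."""
    return sum(bgws_per_site) * route_servers


print(multisite_full_mesh([2, 2]))                          # 4  (2 sites x 2 BGWs)
print(multisite_full_mesh([4, 4, 4, 4]))                    # 96
print(multisite_route_servers([4, 4, 4, 4], 2))             # 32
print(ibgp_full_mesh(8), ibgp_with_route_reflectors(8, 2))  # 28 16
```

Full mesh grows with the product of BGW counts for every pair of sites, while route servers grow only linearly with the total number of BGWs, which is why they pay off as sites are added but are unnecessary for two sites.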

The EVPN Multi-Site architecture uses VXLAN tunnels to provide Layer 2 extension and Layer 3 forwarding. VXLAN adds 50 to 54 bytes of overhead, so for 1500-byte endpoint packets a minimum MTU of 1550 or 1554 bytes is necessary in the inter-site network. In this design, a jumbo MTU of 9216 bytes is used in the end-to-end VXLAN fabric, including the inter-site network.
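The MTU figures above follow from simple header arithmetic. In this Python sketch, the 50-byte overhead is the encapsulated Ethernet header (14) plus the VXLAN header (8), outer UDP header (8), and outer IPv4 header (20); the 54-byte figure adds a 4-byte IEEE 802.1Q tag. The sketch assumes an IPv4 underlay and that the MTU being checked is the underlay interface MTU; the 9000-byte endpoint MTU is an illustrative value.

```python
INNER_ETHERNET = 14  # encapsulated Ethernet header, untagged
DOT1Q_TAG = 4        # optional IEEE 802.1Q tag
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20


def vxlan_overhead(with_dot1q: bool = False) -> int:
    """Bytes VXLAN adds in front of the endpoint's IP packet (IPv4 underlay)."""
    return (INNER_ETHERNET + (DOT1Q_TAG if with_dot1q else 0)
            + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4)


def min_underlay_mtu(endpoint_mtu: int, with_dot1q: bool = False) -> int:
    """Smallest underlay MTU that carries the encapsulated packet unfragmented."""
    return endpoint_mtu + vxlan_overhead(with_dot1q)


def max_endpoint_mtu(underlay_mtu: int, with_dot1q: bool = False) -> int:
    """Largest endpoint packet a given underlay MTU can carry after encapsulation."""
    return underlay_mtu - vxlan_overhead(with_dot1q)


print(min_underlay_mtu(1500), min_underlay_mtu(1500, with_dot1q=True))  # 1550 1554
print(max_endpoint_mtu(9216, with_dot1q=True))                          # 9162
print(min_underlay_mtu(9000, with_dot1q=True) <= 9216)                  # True
```

Because VTEPs must not fragment, any endpoint packet larger than `max_endpoint_mtu` for the fabric MTU would be dropped rather than split.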

Figure 23 shows the high-level inter-site design with back-to-back gateways used in this solution.

Figure 23. Inter-Site Design with Back-to-Back Border Gateways

The anycast BGWs in each site are also directly connected to each other using a cross-link, resulting in a square topology in the inter-site network. The IP connectivity provided by the square topology is necessary for proper BUM traffic handling.

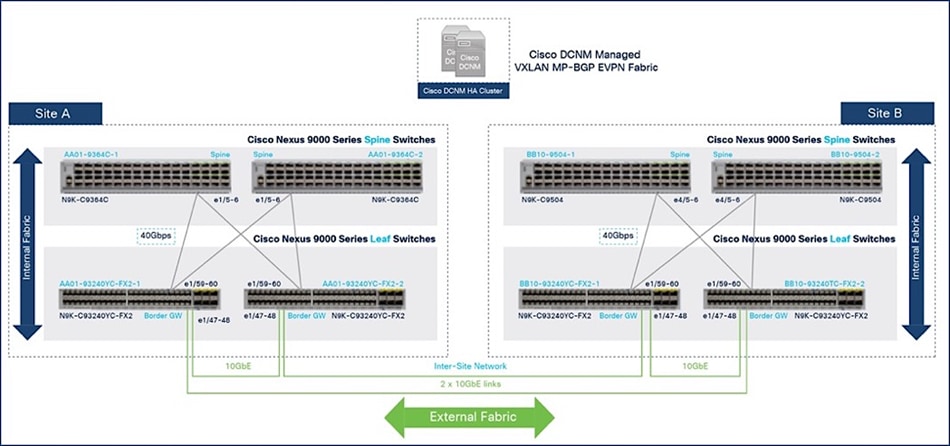

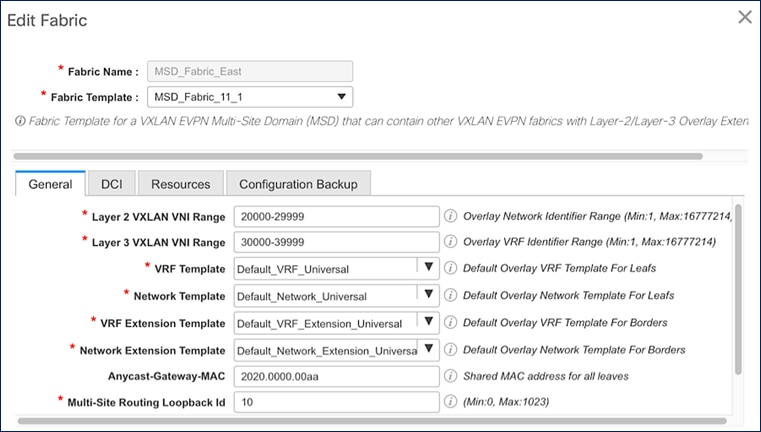

In this solution, Cisco DCNM deploys and manages the inter-site connectivity. Cisco DCNM Fabric Builder deploys a multi-site domain (MSD) fabric using the MSD_Fabric_11_1 template, and the existing fabrics (Site-A, Site-B, SiteA_External, SiteB_External) are then added and integrated into this new MSD fabric with Cisco DCNM managing the end-to-end network.

Figure 24 shows the physical connectivity between sites in the end-to-end MSD fabric.

Figure 24. Inter-Site Design – Physical Connectivity

The border gateways used in this solution are Cisco Nexus 93240YC-FX2 switches, deployed as a pair in each site. The links between the BGWs, both within a site and across sites, are 10GbE, enabling the BGWs to establish full-mesh eBGP sessions across all BGWs in the inter-site network. Within a site, BGWs connect to the spine switches using 40GbE links, the same as the other leaf switches in each fabric.

The BGW is the point of transition from the intra-site to the inter-site fabric, making it a good location for enforcing policies (QoS, security) between data centers. It may also be a transition point between higher- and lower-speed links, where congestion can occur, so it is important to ensure that critical traffic is prioritized. The traffic across the inter-site links should be monitored to understand traffic patterns and to collect baseline data such as the bandwidth consumed and the latency (peak, average) experienced by the flows traversing those links. The baseline serves as a reference point for comparison, so that you can take corrective action before performance issues occur. This monitoring is particularly important for the high-bandwidth, latency-sensitive storage flows that traverse these links. Corrective actions include adding links to increase the available bandwidth and avoid congestion altogether, or using QoS to prioritize the more critical storage traffic.
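The baseline-and-compare approach can be sketched in a few lines. This Python example is a minimal illustration, assuming utilization is sampled as a fraction of link capacity and latency in milliseconds; the sample values, the margins, and the 80 percent congestion level are all hypothetical thresholds, not Cisco recommendations.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkBaseline:
    avg_util: float  # average utilization, fraction of link capacity (0.0-1.0)
    peak_util: float
    avg_latency_ms: float
    peak_latency_ms: float


def build_baseline(util: list[float], latency_ms: list[float]) -> LinkBaseline:
    """Summarize historical samples from an inter-site link into a baseline."""
    return LinkBaseline(statistics.fmean(util), max(util),
                        statistics.fmean(latency_ms), max(latency_ms))


def check_sample(base: LinkBaseline, util: float, latency_ms: float,
                 util_margin: float = 1.2, latency_margin: float = 1.5,
                 congestion_level: float = 0.8) -> list[str]:
    """Compare a new sample against the baseline; thresholds are illustrative."""
    alerts = []
    if util > base.peak_util * util_margin:
        alerts.append("utilization above baseline peak")
    if util >= congestion_level:
        alerts.append("link approaching congestion")
    if latency_ms > base.peak_latency_ms * latency_margin:
        alerts.append("latency above baseline peak")
    return alerts


# Hypothetical samples for one 10GbE inter-site link.
base = build_baseline([0.20, 0.30, 0.25, 0.35], [0.9, 1.1, 1.0, 1.2])
print(check_sample(base, util=0.5, latency_ms=1.0))  # ['utilization above baseline peak']
print(check_sample(base, util=0.3, latency_ms=1.1))  # []
```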

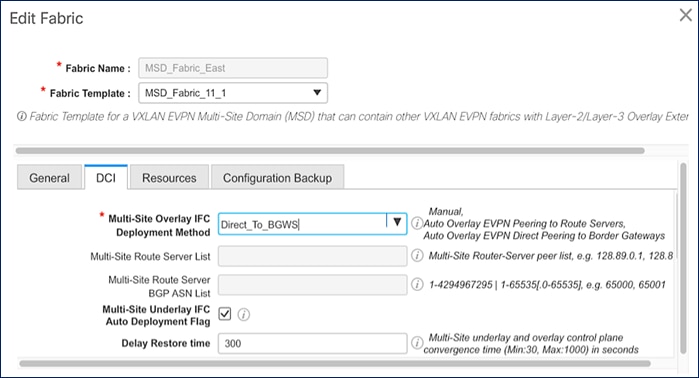

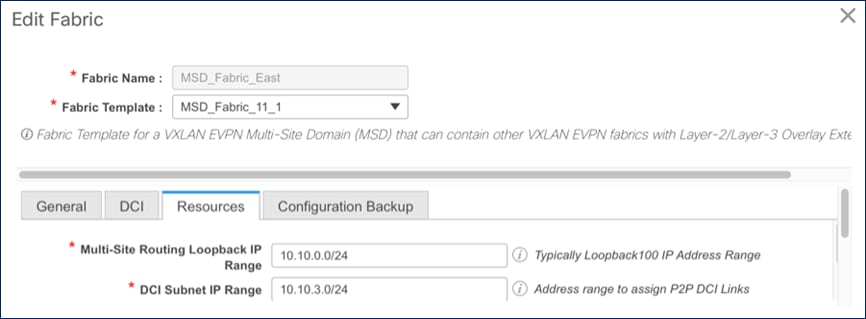

The following figures show the MSD fabric configuration deployed by Cisco DCNM for inter-site connectivity.

Figure 25. MSD Fabric - VNI range and Templates

For Inter-fabric connectivity, Direct_To_BGWS is selected to reflect the back-to-back BGW design used in this solution.

Figure 26. MSD Fabric - DCI Configuration

Figure 27. MSD Fabric - Resources Configuration

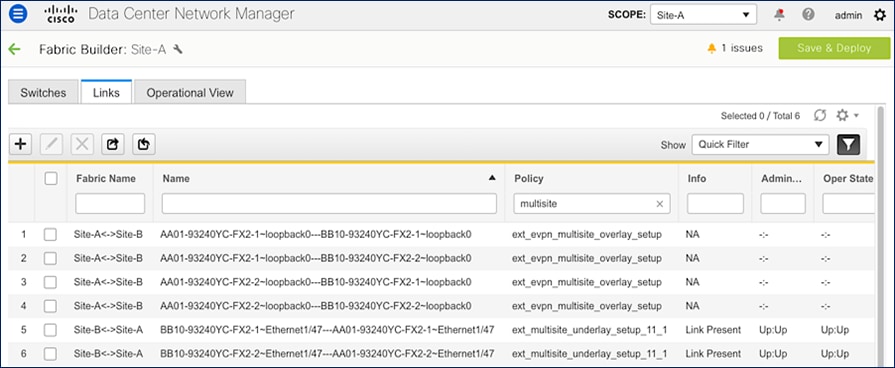

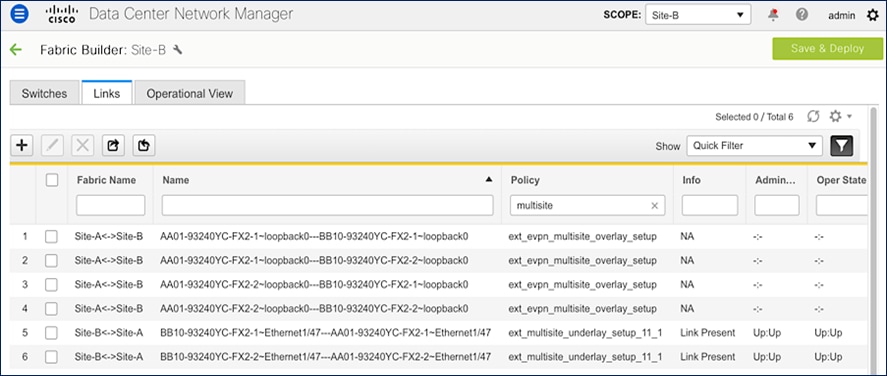

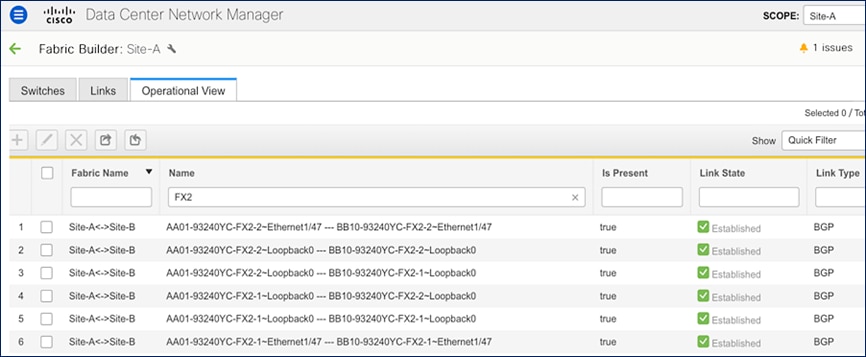

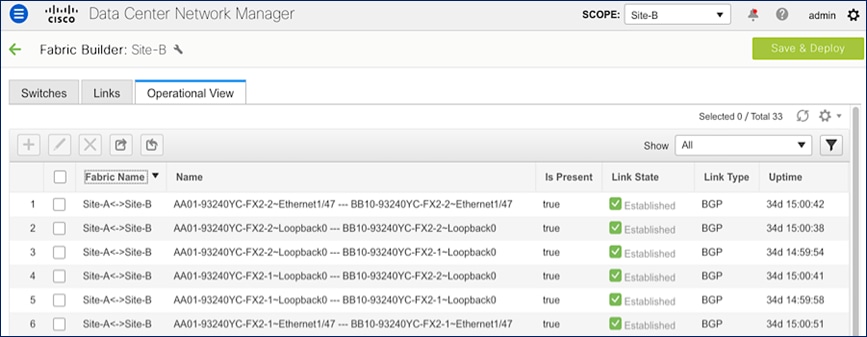

The inter-site connectivity is set up by deploying the ext_multisite_underlay_setup_11_1 and ext_evpn_multisite_overlay_setup policies on the physical and loopback interfaces used for inter-site connectivity. Two 10GbE links (from e1/47 on each BGW) provide connectivity between sites and form the underlay IP transport for the inter-site network. The loopbacks are used to establish VXLAN overlay connectivity between sites. The following screenshots show the connectivity and policies used between sites in this solution.

Figure 28. Inter-Site Network: Overlay and Underlay Connectivity Setup (Site-A)

Figure 29. Inter-Site Network: Overlay and Underlay Connectivity Setup (Site-B)

As a result of this configuration, the following eBGP sessions are established between the BGWs:

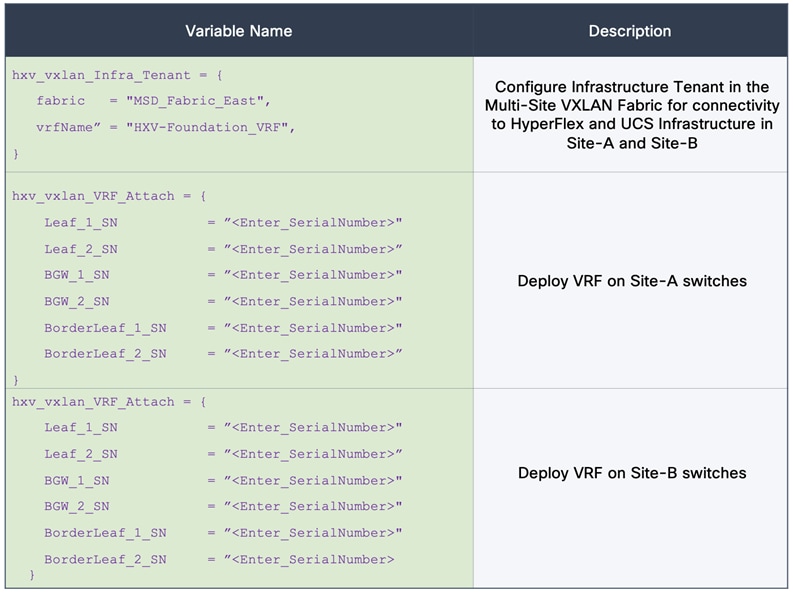

Tenancy Design

The VXLAN MP-BGP EVPN is designed for multitenancy. The tenancy design can be along organizational or functional lines or based on other factors. The tenancy design in this solution is based on connectivity requirements. Two tenants are used in this design: HXV-Foundation_VRF and HXV-Application_VRF. The HXV-Foundation_VRF tenant is used for all HyperFlex infrastructure connectivity. It includes the connectivity required to stand up the virtual server infrastructure within and across data center sites. It also includes connectivity required by management and operational tools that manage the infrastructure. The application tenant, on the other hand, is for any application workloads hosted on the HyperFlex virtual server infrastructure. Enterprises can deploy additional tenants as needed to meet the needs of their deployment.

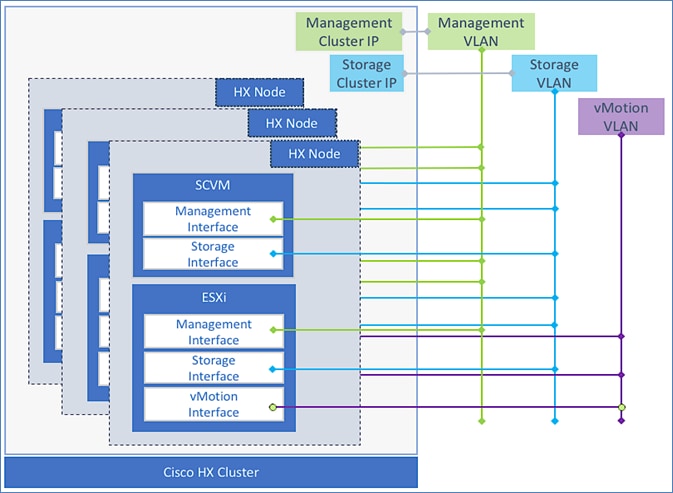

Connectivity to HyperFlex Infrastructure

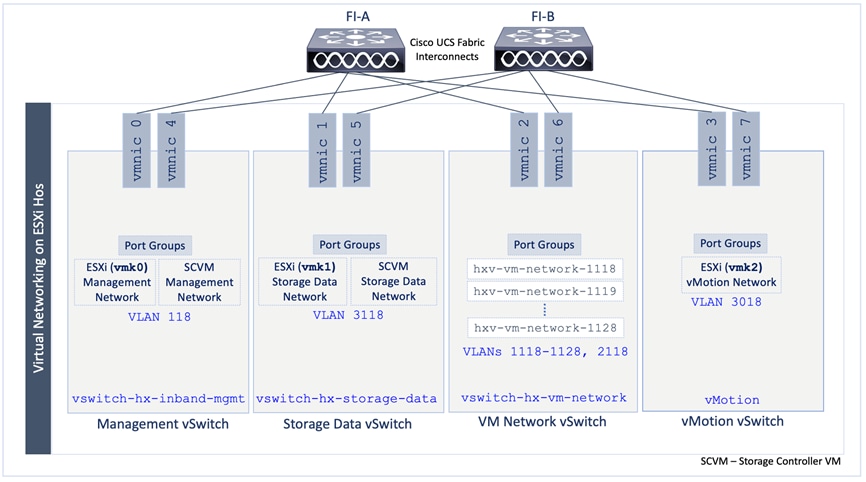

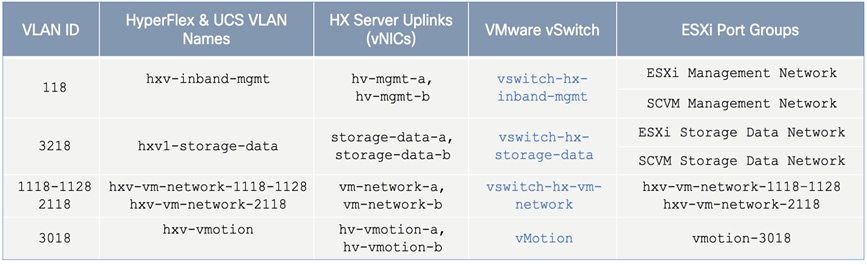

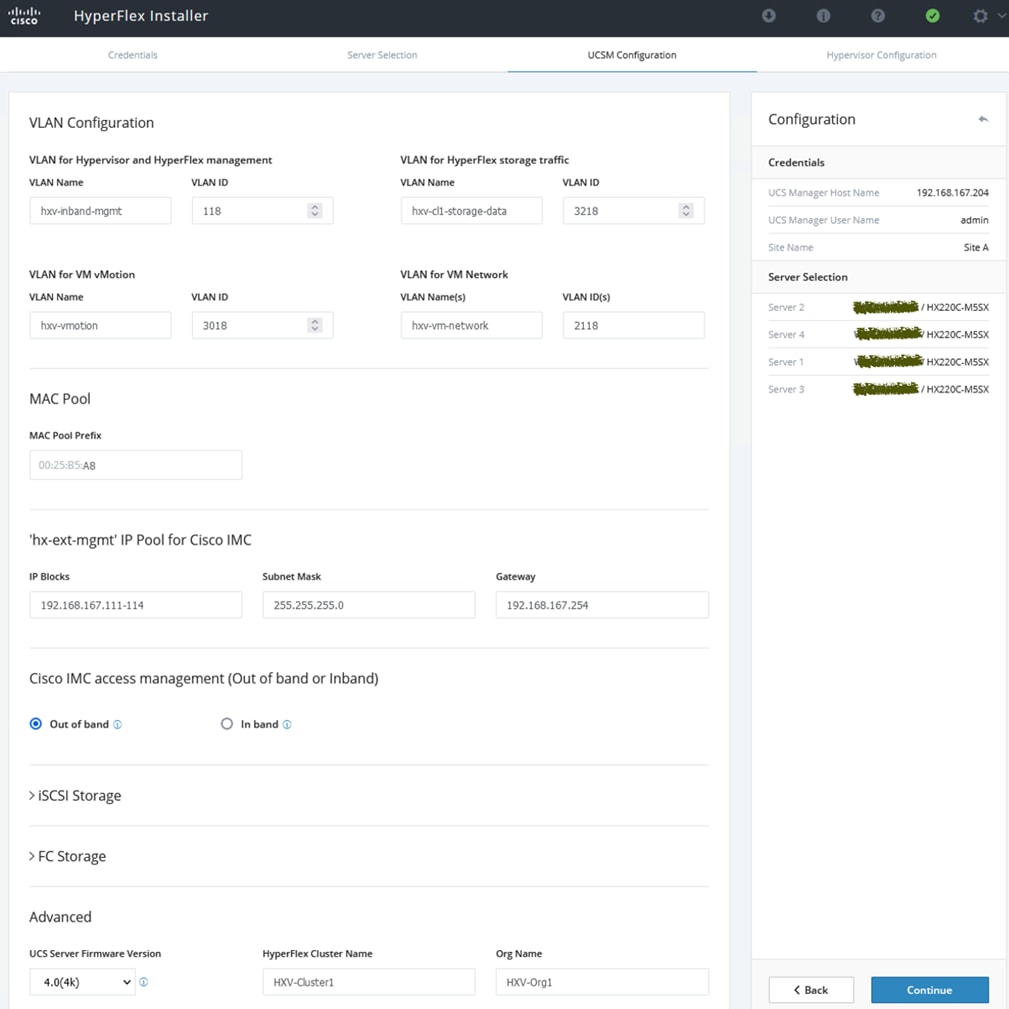

The HyperFlex infrastructure networks are critical for the operation of the HyperFlex stretch cluster and the VMware vSphere cluster. To provide reachability for these networks through the VXLAN fabric, the VXLAN fabric must first be provisioned. The VXLAN fabric in each site also needs connectivity to the Cisco UCS domain where the HyperFlex nodes and ESXi hosts in the cluster reside. As described in the “Intra-Site Design – Edge Connectivity” section of this document, vPCs are used for connecting the HyperFlex infrastructure in the edge or access-layer network to the leaf switches in the VXLAN fabric. To enable connectivity beyond the leaf switches, the VXLAN fabric must extend the infrastructure networks across the inter-site network. In this design, all infrastructure connectivity is handled within a dedicated tenant (HXV-Foundation_VRF) to keep the application and infrastructure connectivity (and traffic) separate.

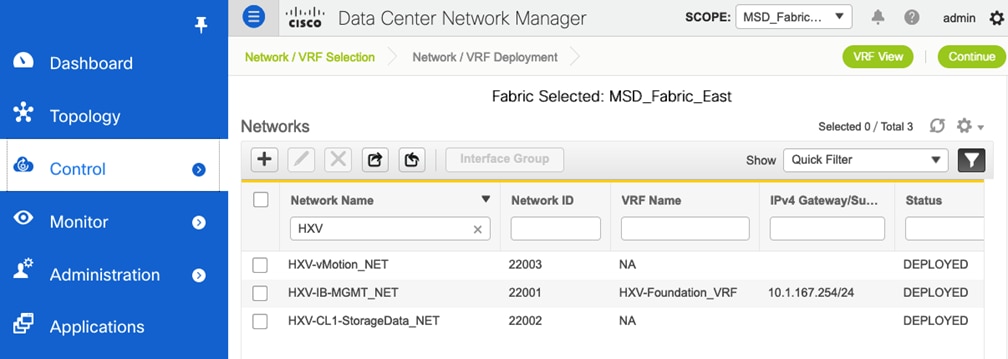

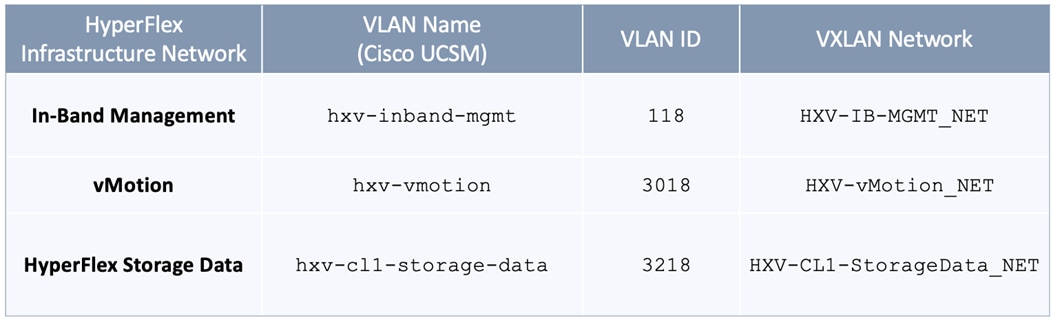

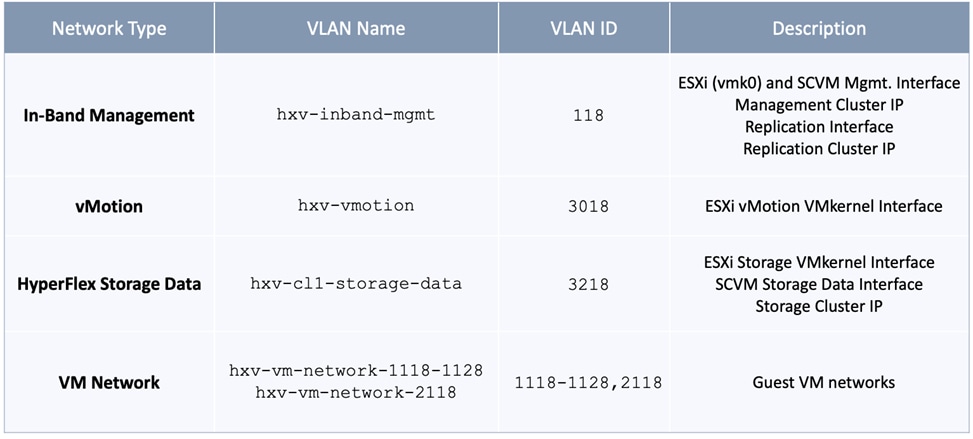

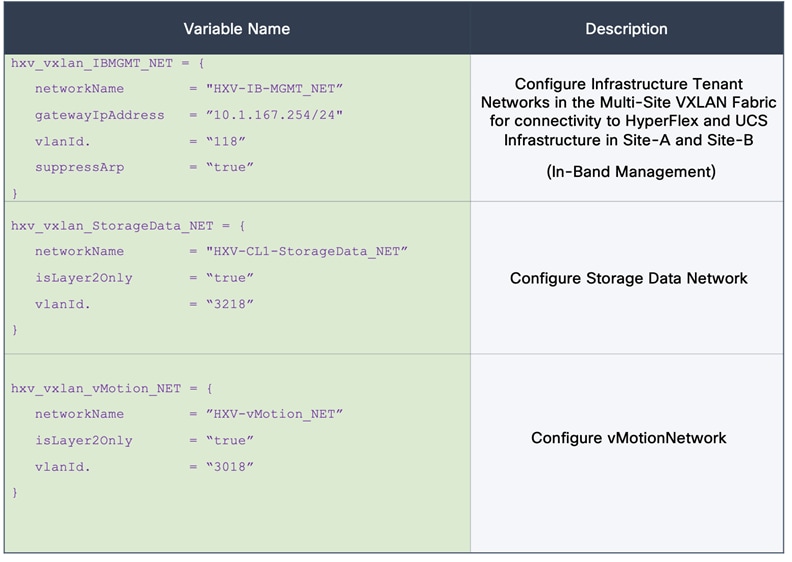

The HXV-Foundation_VRF in the VXLAN fabric enables connectivity for the following HyperFlex infrastructure networks:

● In-band Management: This network is primarily used by HyperFlex nodes and ESXi hosts in the cluster for intra-cluster communications. The HyperFlex in-band management network is mapped to the HXV-IB-MGMT_NET network within the VXLAN fabric to enable traffic forwarding and connectivity between endpoints on that network. This network is deployed as a Layer 3 network with the default gateway in the VXLAN fabric.

● Storage Data Network: This network is primarily used for HyperFlex storage cluster communication, for providing storage services and for accessing datastores hosted on the HyperFlex stretch cluster. The HyperFlex storage data network is mapped to the HXV-CLI1-StorageData_NET network within the VXLAN fabric to enable traffic forwarding and connectivity between endpoints on that network. This network is deployed as a Layer 2 network in the VXLAN fabric.

● VMware vMotion network: To support VMware vMotion for the virtual machines hosted on the HyperFlex VSI, ESXi hosts need connectivity to a VMware vMotion network. The HyperFlex vMotion network is mapped to the HXV-vMotion_NET network within the VXLAN fabric to enable traffic forwarding and connectivity between ESXi hosts in the cluster. It is deployed as a Layer 2 network in the VXLAN fabric.

All provisioning is done using a combination of Cisco DCNM and HashiCorp Terraform automation. Cisco DCNM is used for Day 0 deployment, and Terraform is used for Day 1 and Day 2 provisioning through the Terraform provider for Cisco DCNM.

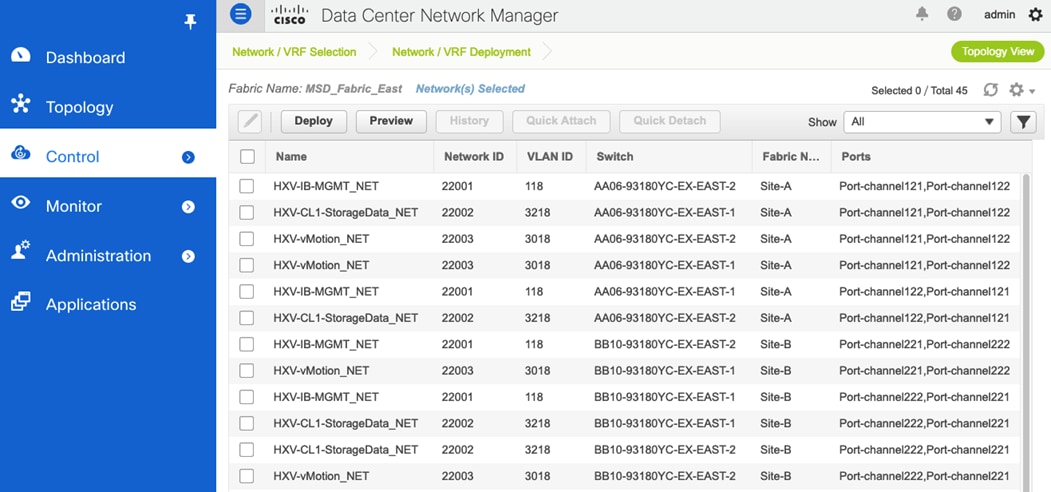

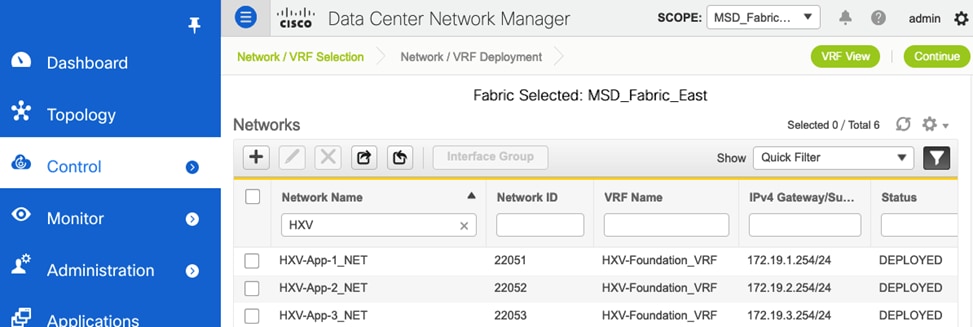

Figure 30 shows the HyperFlex Infrastructure networks provisioned in the VXLAN fabric for this solution.

Figure 30. HyperFlex Infrastructure Networks

The access layer connectivity to the UCS domain where the HyperFlex nodes reside is shown in Figure 31.

Figure 31. Access Layer Connectivity Design - To Cisco UCS Domain

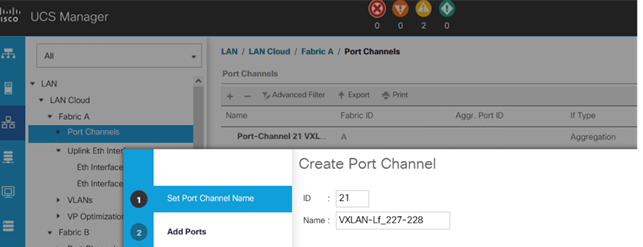

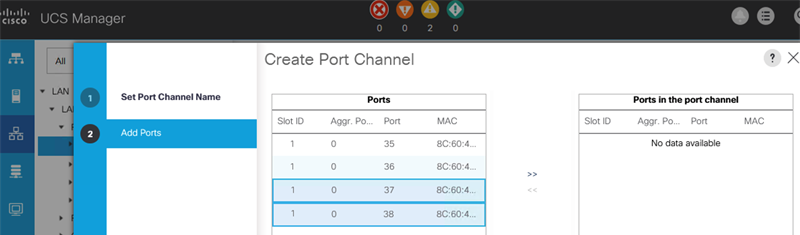

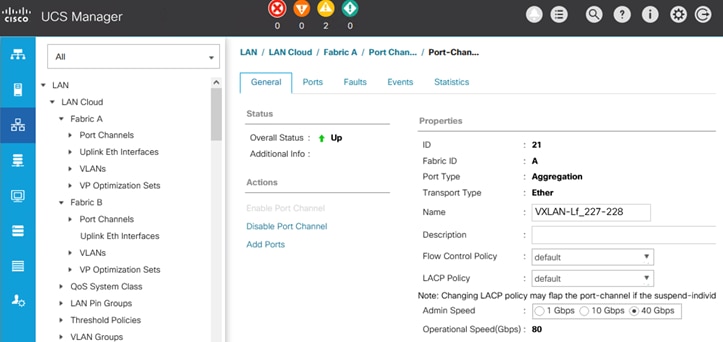

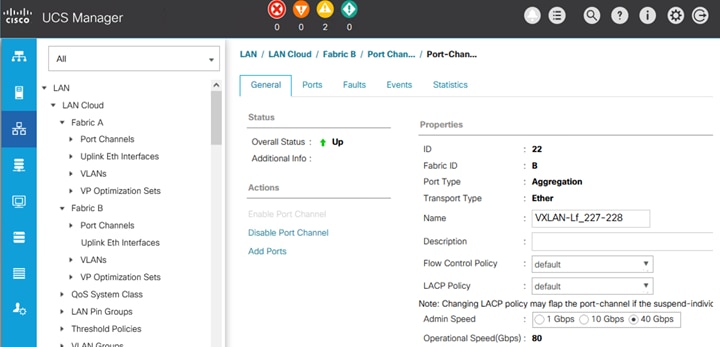

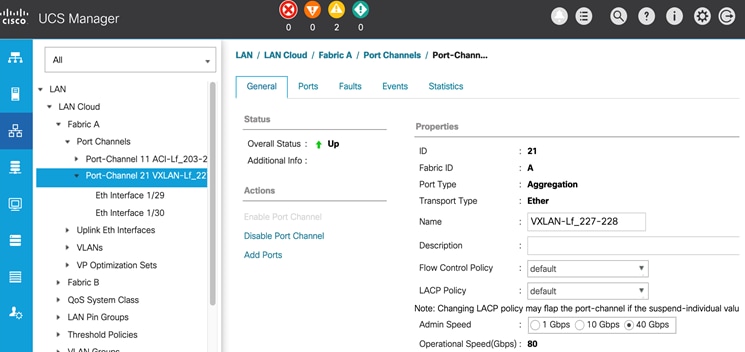

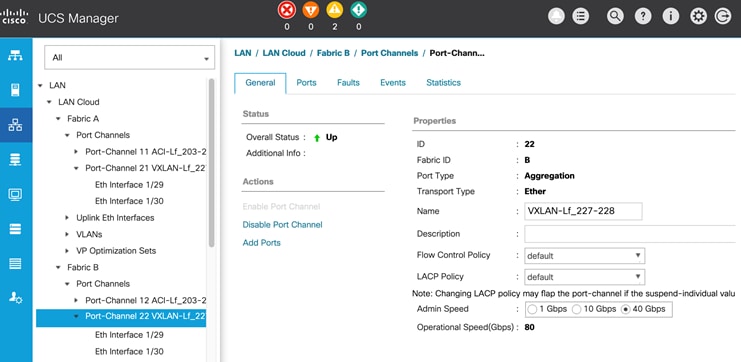

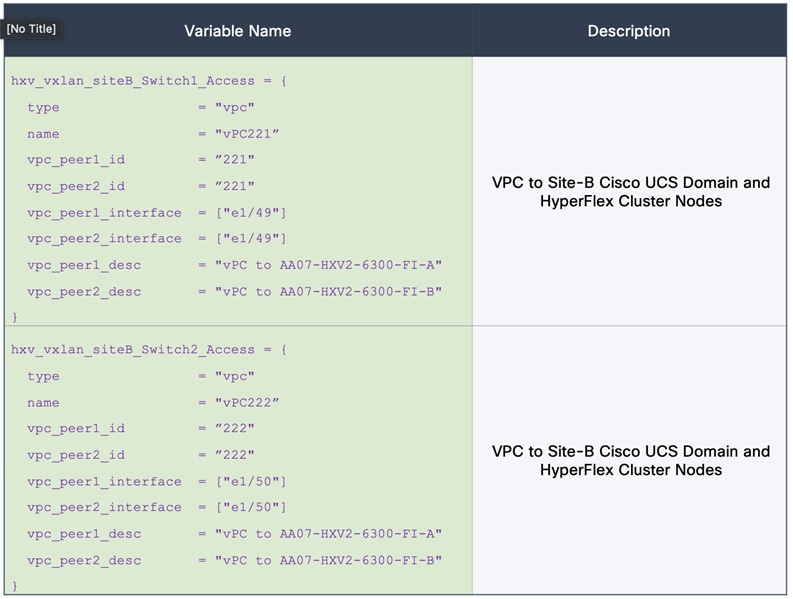

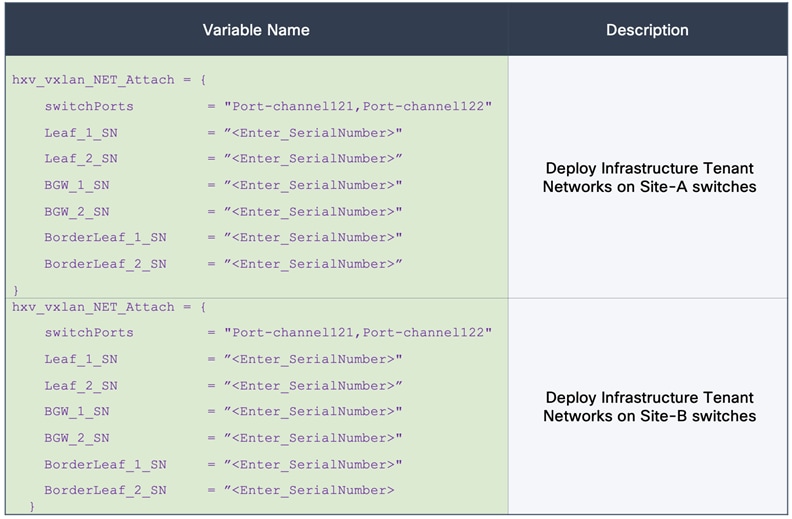

Figure 32 shows the corresponding VXLAN fabric side configuration to enable access-layer connectivity to the HyperFlex and Cisco UCS infrastructure in the access/edge network for Sites A and B, respectively. Note that vPCs 121-122 connect to FI-A and FI-B respectively in Site-A. Similarly, vPCs 221-222 connect to FI-A and FI-B respectively in Site-B.

Figure 32. Access-layer Connectivity to HyperFlex and Cisco UCS Infrastructure

Each HyperFlex infrastructure network (see Table 2) is trunked on the port-channel between the Cisco UCS domain and the VXLAN leaf switches in each site, and its VLAN is mapped to the corresponding network in the VXLAN fabric. Note that the HyperFlex infrastructure networks are part of the HXV-Foundation_VRF tenant.

Table 2. HyperFlex Infrastructure Network

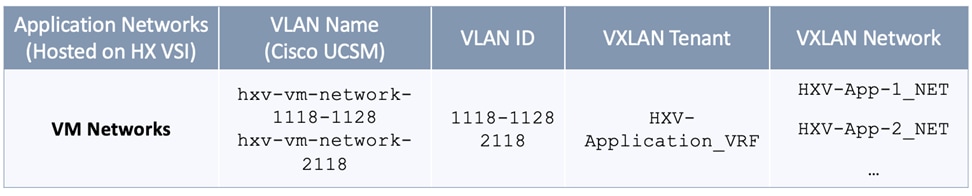
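The VLAN-to-network mapping and trunking described above can be captured as data and checked against the design's conventions. This Python sketch uses the network names, Layer 2/Layer 3 roles, and vPC numbers given in this section; the VLAN IDs and VNIDs are hypothetical placeholders (the actual values are in Table 2), chosen to follow the stated convention of 20000s VNIDs for Layer 2 networks and 30000s for Layer 3 networks.

```python
from dataclasses import dataclass

# vPCs toward the Fabric Interconnects (FI-A, FI-B) in each site, per Figure 32.
UCS_VPCS = {"Site-A": (121, 122), "Site-B": (221, 222)}


@dataclass(frozen=True)
class InfraNetwork:
    name: str
    layer3: bool  # True if the (anycast) default gateway is in the fabric
    vlan: int     # hypothetical; actual values are listed in Table 2
    vnid: int     # hypothetical; follows the 20000s/30000s convention


NETWORKS = [
    InfraNetwork("HXV-IB-MGMT_NET", layer3=True, vlan=118, vnid=30118),
    InfraNetwork("HXV-CLI1-StorageData_NET", layer3=False, vlan=3218, vnid=23218),
    InfraNetwork("HXV-vMotion_NET", layer3=False, vlan=3018, vnid=23018),
]


def convention_problems(net: InfraNetwork) -> list[str]:
    """Check a network against the naming and VNID conventions of this design."""
    problems = []
    if not net.name.endswith("_NET"):
        problems.append("name does not follow <NameOfObject>_<Type>")
    if not 1 <= net.vlan <= 4094:
        problems.append("VLAN ID out of range")
    low = 30000 if net.layer3 else 20000
    if not low <= net.vnid < low + 10000:
        problems.append("VNID outside the expected range")
    return problems


def trunk_plan(networks: list[InfraNetwork]) -> dict[tuple[str, int], list[int]]:
    """Every infrastructure VLAN is trunked on both FI-facing vPCs in both sites."""
    vlans = sorted(n.vlan for n in networks)
    return {(site, vpc): vlans for site, vpcs in UCS_VPCS.items() for vpc in vpcs}


for net in NETWORKS:
    print(net.name, net.vnid, convention_problems(net) or "ok")
print(trunk_plan(NETWORKS)[("Site-B", 221)])  # [118, 3018, 3218]
```

Keeping the mapping as data makes it easy to confirm that both sites trunk the same VLANs, which the stretch cluster depends on.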

Connectivity for Applications and Services Hosted on HyperFlex VSI

Once the HyperFlex cluster is up and running, applications can be deployed in either of the two active-active data centers. In this design, the connectivity for the application networks through the VXLAN fabric is handled in one Application Tenant (HXV-Application_VRF). Customers can choose to use one Application Tenant for all their applications or choose a different tenancy model that best suits their needs. In this design, for validation, the following application tenants and networks were deployed.

The Application Network VLANs are trunked on the same vPCs as the HyperFlex Infrastructure networks to connect to the application virtual machines that use these networks. Application VMs are hosted on the HyperFlex VSI. The configuration is identical in both sites to ensure that the applications can fail over to the second site in the event of a failure in the first site.

High Availability

High availability is a critical consideration for any data center infrastructure design, and more so for a disaster-recovery solution such as this one. The active-active VSI in each data center delivers continuous access to mission-critical workloads, with each site providing backup and seamless failover if a failure occurs in the other. You can deploy applications and services in either data center location using local resources (HyperFlex VSI) or remote resources, depending on the type of failure. To achieve availability at the data center level, the sub-systems that make up the data center infrastructure (compute, storage, network, virtualization) must provide complementary capabilities in each active-active data center, with the ability to fail over to the second data center if a failure occurs in the first one. High availability is also important within a data center to handle smaller failures with minimal impact.

For the network sub-system, the VXLAN EVPN Multi-Site architecture used in this solution provides the network fabrics in each data center location and the connectivity between them, as well as the ability to fail over by extending connectivity and services across data centers. The solution also provides high availability for the network fabric in each data center and in the inter-site network between them, with no single points of failure. The end-to-end network is resilient at the physical link and node level as well as across higher layers of the infrastructure stack. The high availability features the network provides include:

● VXLAN Multi-Site architecture: The architecture fundamentally provides high availability by enabling interconnection of independent fabrics. This feature allows deployment and interconnection of a second fabric and data center to the first data center, thereby enabling the active-active design used in this solution. The architecture also provides fault containment and isolation between sites because each site is a separate failure domain, helping ensure that a failure in one active site does not affect the other.

● Intra-site connectivity: The connectivity within a site is the same for both active-active data centers. Endpoints connect to top-of-rack leaf switches, and each leaf switch connects to all the spine switches in that data center site. This setup provides redundancy while also enabling multiple IP Equal-Cost Multipath (ECMP) routes between leaf switches for VTEP-to-VTEP connectivity. The VXLAN fabric is deployed using two spine switches that serve as redundant BGP route reflectors for the fabric. The routing protocols deployed in the fabric use the physical-layer connectivity to provide multiple ECMP paths between VTEPs for redundancy and load distribution.

● Inter-Site connectivity: Two border gateways in each data center site connect to BGWs in the remote data center, providing two redundant paths between sites. The BGWs establish eBGP sessions for inter-site connectivity.

● Access-layer connectivity: Two leaf switches are used in this design to connect to the HyperFlex infrastructure. vPCs are used to connect to the Cisco UCS Fabric Interconnects in each site, providing node- and link-level redundancy in the access layer.

● Connectivity to outside networks and services: To enable each site to operate as an independent data center, the design uses separate connections from each site for reachability to outside networks, helping ensure access to critical services directly from each data center.

● Cisco DCNM clustering: To provide resiliency and scalability, a Cisco DCNM cluster consisting of multiple nodes is used to manage the end-to-end VXLAN EVPN Multi-Site fabric. The cluster is located outside the fabric, with reachability to both sites. Each site has independent connectivity to the cluster, so a failure in one site does not affect the ability of Cisco DCNM to communicate with and manage the other.

Hyperconverged Infrastructure Design – Cisco HyperFlex and Cisco UCS

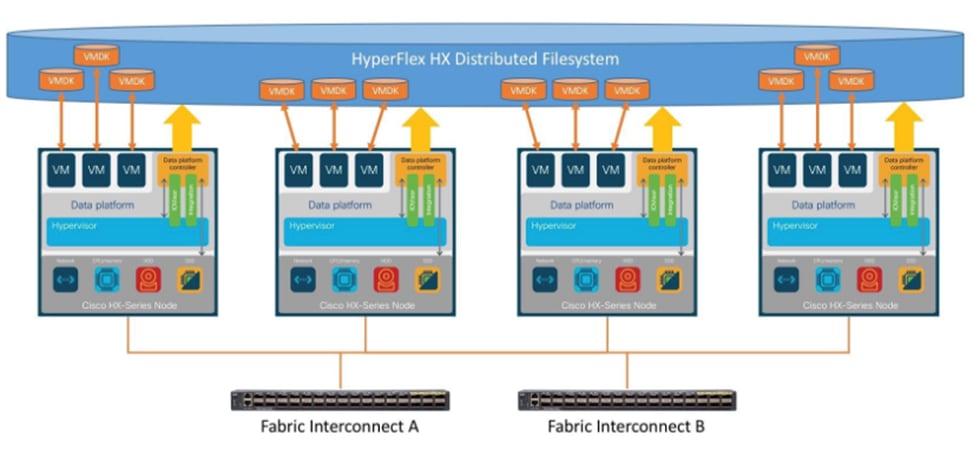

The Cisco HyperFlex system is a fully contained modular virtual server platform with flexible pools of compute and memory resources, integrated networking, and a distributed log-based filesystem for virtual machine storage. HyperFlex uses the high-performance, highly available HyperFlex Data Platform (HXDP) software to deliver a hyperconverged platform with distributed storage and Enterprise-grade data management services. The data platform runs on all servers to implement a scale-out, distributed storage file system using internal flash-based SSDs, NVMe storage, or a combination of flash-based SSDs and high-capacity HDDs to store data. HXDP runs on multiple Cisco HyperFlex HX-Series nodes to create a highly available cluster. The data management features provided by HyperFlex include replication, always-on inline deduplication, always-on inline compression, thin provisioning, instantaneous space-efficient clones, and snapshots. Each node also runs hypervisor software to virtualize the server and connects to Fabric Interconnects in a UCS domain. HyperFlex leverages Cisco UCS technology and architecture to deliver an integrated platform with computing, storage, and server networking. HyperFlex also uses software-defined computing, software-defined networking, and software-defined storage to deliver a fully automated, pre-installed, and pre-provisioned virtualized infrastructure ready for hosting application workloads. A HyperFlex system can be deployed as a standard or stretch cluster in an Enterprise data center or as an Edge cluster in smaller or remote site deployments. Figure 33 illustrates the high-level architecture for a HyperFlex system.

Figure 33. HyperFlex System Architecture

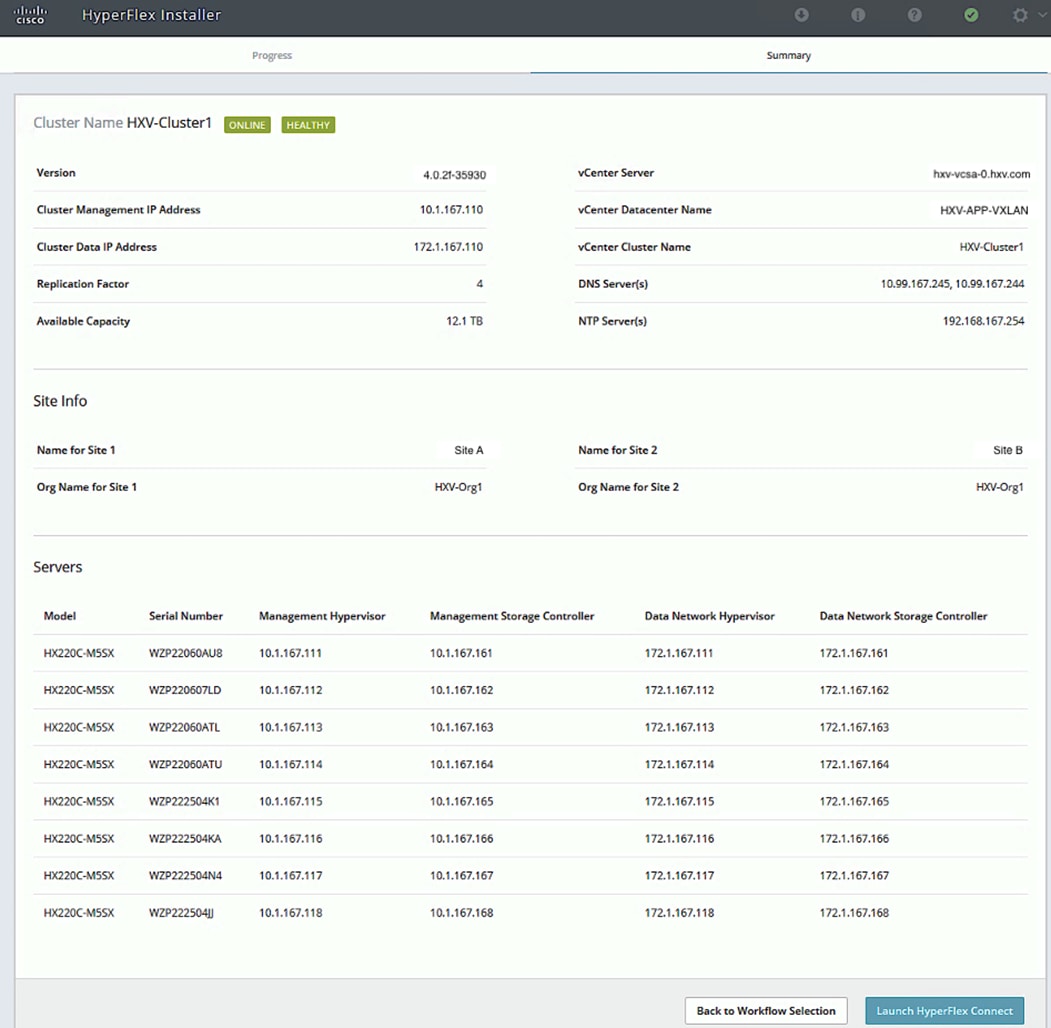

In this solution, a single HyperFlex stretched cluster provides the hyperconverged infrastructure. The cluster serves as an Application cluster that spans both data centers, enabling application virtual machines to be deployed in either data center with seamless connectivity and mobility as needed. The HyperFlex servers and UCS Fabric Interconnects in the two data centers are centrally managed from the cloud using Cisco Intersight. Figure 34 illustrates the HyperFlex and UCS design in the two active-active sites.

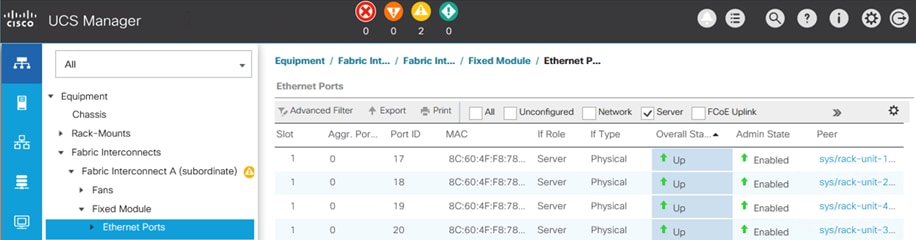

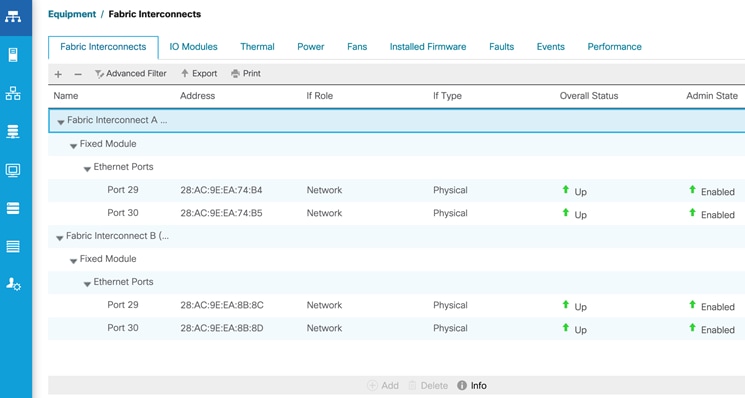

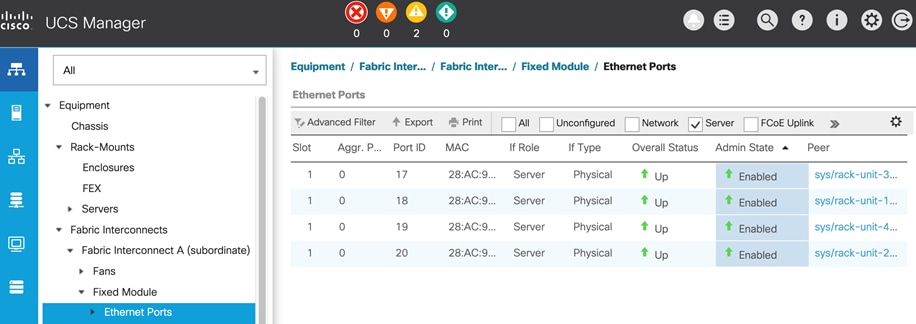

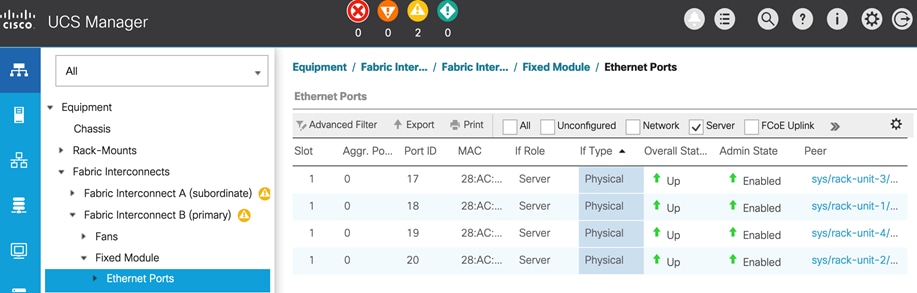

Figure 34. HyperFlex and Cisco UCS Design

The HyperFlex stretch cluster is a 4+4 cluster, with nodes in the cluster evenly distributed between the sites (Site-A, Site-B) to provide the hyperconverged infrastructure in the active-active data centers. The solution is a 40GbE design within a data center site and 10GbE outside the data center, including inter-site connectivity between data centers provided by the VXLAN Multi-Site fabric. In each site, 4 x HX-series server nodes connect to a pair of Cisco UCS Fabric Interconnects, each node using 2 x 40GbE links. The Cisco UCS Fabric Interconnects then connect to the VXLAN fabric in the site for connectivity between sites and to outside networks and services. Under normal conditions, stretch cluster nodes within a site communicate through the local Cisco UCS Fabric Interconnects, but in certain failure scenarios, the traffic may require the local VXLAN fabric to provide connectivity. Inter-site communication between stretch cluster nodes always uses the VXLAN Multi-Site fabric for Layer 2 and Layer 3 connectivity.
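The traffic paths described above can be summarized as a small decision function. This Python sketch is a simplified model with hypothetical node names; the `local_fi_path_ok` flag stands in for the unspecified "certain failure scenarios" and is an assumption for illustration, not a HyperFlex or Cisco UCS setting.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class HxNode:
    name: str  # hypothetical node name
    site: str  # "Site-A" or "Site-B"

def stretch_cluster_path(src: HxNode, dst: HxNode, local_fi_path_ok: bool = True) -> str:
    """Return the network segment that carries traffic between two nodes.

    Simplified model of this design:
    - nodes in different sites always use the VXLAN Multi-Site fabric;
    - nodes in the same site normally use the local Fabric Interconnects;
    - in some failure scenarios, same-site traffic uses the local VXLAN fabric.
    """
    if src.site != dst.site:
        return "VXLAN Multi-Site fabric"
    if local_fi_path_ok:
        return "local Cisco UCS Fabric Interconnects"
    return "local VXLAN fabric"

a1, a2 = HxNode("hx-a1", "Site-A"), HxNode("hx-a2", "Site-A")
b1 = HxNode("hx-b1", "Site-B")
print(stretch_cluster_path(a1, a2))
print(stretch_cluster_path(a1, a2, local_fi_path_ok=False))
print(stretch_cluster_path(a1, b1))
```

Note that the inter-site branch ignores `local_fi_path_ok`: in this design, inter-site traffic never stays within a single UCS domain.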

A HyperFlex installer in a third site, outside the fabric, automates the deployment of the stretched cluster across data centers. The VXLAN EVPN Multi-Site fabric provides the necessary reachability to enable the automated deployment. The fabric in each site provides an external connection for accessing networks and services outside the fabric. The external connectivity is through a pair of Border Leaf switches that connect to a pair of external Nexus 7000 series gateway switches in the external/outside network. In this design, the HyperFlex Installer uses the external connection in each site to communicate with the HyperFlex and UCS infrastructure in the two active-active sites.

Cisco HyperFlex Stretched Cluster

A HyperFlex “stretched” cluster is designed to provide business continuity in the event of a significant disaster, such as a site-wide failure that takes down the data center at that location. It is also used when a highly resilient architecture is needed to protect mission-critical applications and workloads. A stretch cluster provides geographical redundancy, even if the two sites are buildings in a campus environment. Stretch clusters are designed to ensure the availability of the hyperconverged infrastructure and of the VMware vSphere cluster that runs on it. The HyperFlex stretch cluster and the VMware vSphere cluster that runs on it are not multiple clusters, but a single cluster that spans data center locations. The HyperFlex servers and ESXi hosts that make up the cluster are geographically distributed across different data center locations. A single VMware vCenter manages the vSphere cluster. Storage data is mirrored both locally and across data centers.

Stretch clusters also require a Witness node, one per cluster. The HyperFlex Witness is a VM located in a third location that decides which site becomes the primary when a split-brain failure occurs. A split-brain failure occurs when the sites cannot communicate with each other but can still communicate with the Witness. Stretch clusters require a minimum 100Mbps connection to the Witness, with an RTT latency of less than 200ms. Latency to the Witness affects site failure times, so larger clusters with significant load and data should use RTT times on the order of 10ms or lower. Reachability to the Witness in the third site can be Layer 2 or Layer 3; this solution uses Layer 3.

To meet the write latency requirements of applications such as databases, the sites in a stretch cluster require 10Gbps of bandwidth per cluster and a round-trip time (RTT) network latency of less than 5ms.
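The Witness and inter-site link requirements stated in the two paragraphs above can be captured as a simple pre-deployment check. This Python sketch hard-codes the thresholds quoted in this guide; the `Link` type, the `large_cluster` flag, and the sample measurements are hypothetical, and treating "on the order of 10ms" as a hard 10 ms cutoff is a simplification.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Link:
    bandwidth_mbps: float  # measured or provisioned bandwidth
    rtt_ms: float          # measured round-trip time

# Thresholds as quoted in this guide.
WITNESS_MIN_MBPS = 100
WITNESS_MAX_RTT_MS = 200           # must be less than this
WITNESS_LARGE_CLUSTER_RTT_MS = 10  # guidance for larger clusters
INTERSITE_MIN_MBPS = 10_000        # 10 Gbps per cluster
INTERSITE_MAX_RTT_MS = 5           # must be less than this

def check_stretch_links(witness: Link, intersite: Link, large_cluster: bool = False) -> list[str]:
    """Return a list of requirement violations (empty if all checks pass)."""
    issues = []
    if witness.bandwidth_mbps < WITNESS_MIN_MBPS:
        issues.append("witness link below 100 Mbps")
    if witness.rtt_ms >= WITNESS_MAX_RTT_MS:
        issues.append("witness RTT not below 200 ms")
    elif large_cluster and witness.rtt_ms > WITNESS_LARGE_CLUSTER_RTT_MS:
        issues.append("witness RTT above ~10 ms recommended for large clusters")
    if intersite.bandwidth_mbps < INTERSITE_MIN_MBPS:
        issues.append("inter-site bandwidth below 10 Gbps")
    if intersite.rtt_ms >= INTERSITE_MAX_RTT_MS:
        issues.append("inter-site RTT not below 5 ms")
    return issues

# Hypothetical measurements.
print(check_stretch_links(Link(1000, 20), Link(10000, 1.5)))
print(check_stretch_links(Link(1000, 20), Link(10000, 1.5), large_cluster=True))
```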

A “stretched” HyperFlex cluster requires a symmetric configuration between sites (including Fabric Interconnects), with a minimum of two HX-series “converged” nodes (that is, nodes with shared disk storage) in each site. Each site can support a maximum of 16 SFF or 8 LFF converged nodes (at the time of writing this document), but both sites must have the same number of nodes to maintain the symmetric configuration. In a stretch cluster, the converged nodes must be an M5 model or higher. A stretch cluster can also be expanded to include compute-only nodes for additional processing capacity, but the total cannot exceed the supported node count. At the time of writing, a HyperFlex stretch cluster supports a maximum cluster size of 64 nodes (16 per site x 2, or 32 converged nodes, plus 32 compute-only) with SFF converged nodes, and 48 nodes (8 per site x 2, or 16 converged nodes, plus 32 compute-only) with LFF converged nodes.
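The sizing rules in the paragraph above lend themselves to a validation function. This Python sketch encodes only the limits quoted here (symmetric converged node counts, 2 to 16 SFF or 2 to 8 LFF converged nodes per site, M5 or higher, and 64 or 48 total nodes); the `StretchClusterPlan` type and the integer encoding of the server generation are hypothetical, and other requirements in the HyperFlex Stretch Cluster Guide are not modeled.

```python
from dataclasses import dataclass

# Limits quoted in this guide at the time of writing; re-check them against
# the current HyperFlex Stretch Cluster Guide before relying on them.
MAX_CONVERGED_PER_SITE = {"SFF": 16, "LFF": 8}
MAX_TOTAL_NODES = {"SFF": 64, "LFF": 48}
MIN_CONVERGED_PER_SITE = 2
MIN_SERVER_GENERATION = 5  # M5 or higher

@dataclass(frozen=True)
class StretchClusterPlan:
    form_factor: str            # "SFF" or "LFF" converged nodes
    converged_site_a: int
    converged_site_b: int
    compute_only: int = 0
    server_generation: int = 5  # hypothetical encoding: 5 means M5

def validate(plan: StretchClusterPlan) -> list[str]:
    """Return a list of rule violations (empty if the plan passes)."""
    errors = []
    if plan.converged_site_a != plan.converged_site_b:
        errors.append("sites must have the same number of converged nodes")
    per_site_max = MAX_CONVERGED_PER_SITE[plan.form_factor]
    for site, count in (("A", plan.converged_site_a), ("B", plan.converged_site_b)):
        if not MIN_CONVERGED_PER_SITE <= count <= per_site_max:
            errors.append(f"site {site}: {count} converged nodes outside "
                          f"{MIN_CONVERGED_PER_SITE}-{per_site_max}")
    total = plan.converged_site_a + plan.converged_site_b + plan.compute_only
    limit = MAX_TOTAL_NODES[plan.form_factor]
    if total > limit:
        errors.append(f"total of {total} nodes exceeds the {limit}-node maximum")
    if plan.server_generation < MIN_SERVER_GENERATION:
        errors.append("converged nodes must be M5 or higher")
    return errors

print(validate(StretchClusterPlan("SFF", 4, 4)))  # the 4+4 cluster in this solution
print(validate(StretchClusterPlan("LFF", 8, 8, compute_only=40)))
```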

Data is replicated across multiple nodes, depending on the replication factor, for continuous operation in the event of a single-node failure. The default replication factor in a HyperFlex stretch cluster is (2+2), which means that two copies are maintained in each site, for a total of four copies. This addresses the different permutations of failure scenarios in a stretch cluster environment.
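The effect of the (2+2) replication factor can be illustrated by counting how many copies of a single data block remain after different failures. In this Python sketch the node names and the placement of the four copies are hypothetical (HXDP decides actual placement); the count shows only whether at least one copy survives, not whether the cluster stays online, which also depends on other factors such as the Witness.

```python
SITE_A = {"A1", "A2", "A3", "A4"}
SITE_B = {"B1", "B2", "B3", "B4"}
# Hypothetical placement of one block under RF (2+2): two copies on
# distinct nodes in each site, four copies in total.
BLOCK_COPIES = {"A1", "A3", "B2", "B4"}

def surviving_copies(failed_nodes: set[str]) -> int:
    """Number of the block's copies that are not on a failed node."""
    return len(BLOCK_COPIES - failed_nodes)

scenarios = {
    "single node failure": {"A1"},
    "Site-A failure": SITE_A,
    "Site-A failure plus a Site-B copy holder": SITE_A | {"B2"},
}
for name, failed in scenarios.items():
    left = surviving_copies(failed)
    print(f"{name}: {left} of 4 copies remain")
```

Even after losing an entire site and then one more node holding a copy, one copy of the block remains in this model; with only one copy per site, the same double failure would leave none.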

HyperFlex stretch clusters also require a VMware vSphere Enterprise Plus license because they rely on advanced DRS capabilities available only in the premium edition.

Stretch clusters currently do not support self-encrypting drives (SED) and require M5 or higher server models. VMware ESXi is the only hypervisor supported on HyperFlex stretch clusters.


Cisco HyperFlex Data Platform (HXDP)

The foundation for Cisco HyperFlex systems is the Cisco HyperFlex Data Platform software that runs on each node in a HyperFlex cluster. HyperFlex Data Platform is a purpose-built, high-performance, log-structured, scale-out file system that is designed for hyperconverged environments. The data platform runs on Cisco HX-series nodes to create a highly available cluster. Each node includes an HX Data Platform controller that implements the scale-out and distributed file system using internal flash-based SSDs, NVMe storage, or a combination of flash-based SSDs and high-capacity HDDs to store data. The controllers communicate with each other over 10 or 40 GbE to present a single pool of storage that spans the nodes in the cluster. As nodes are added, the cluster scales linearly to deliver computing, storage capacity, and I/O performance.

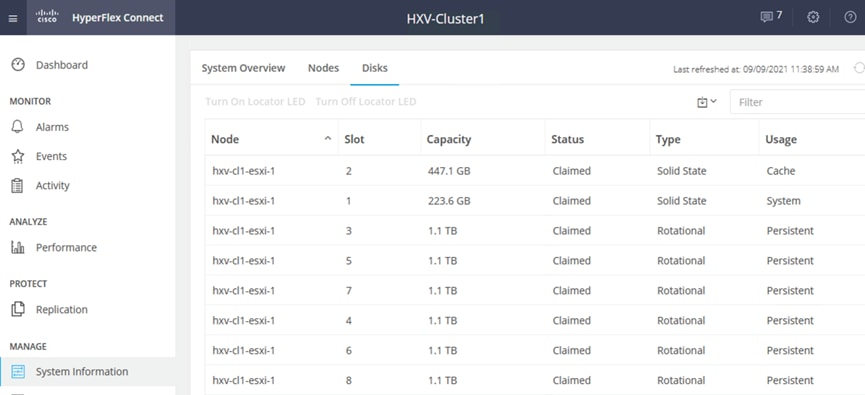

In this solution, the HyperFlex servers communicate with each other using 40GbE links (bundled in some cases) within a site and 10GbE links between sites. Each server in the cluster has 6 x 1.2TB HDDs that are used to build the distributed file system. The server configuration can be changed to meet the needs of the Enterprise. HyperFlex supports multiple server models, with different drive configurations.

For more details on HyperFlex Data Platform software architecture and design, see: https://www.cisco.com/c/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/white-paper-c11-736814.html

Cisco HyperFlex Server

The Cisco HyperFlex portfolio includes a wide range of Hybrid, All-Flash, and All-NVMe server models that can also be used in this solution. The HyperFlex servers come in different form factors and offer great flexibility in capacity, processing, and memory options.

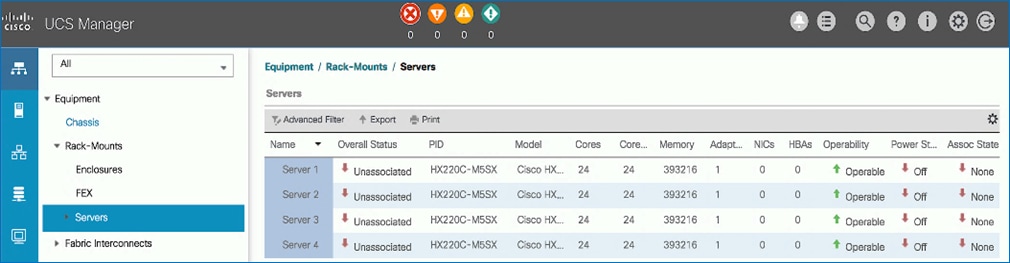

The 4+4 stretch cluster in the solution is built using the HX220C-M5SX model of HyperFlex hybrid servers. The server is a 1RU, small-footprint model with a minimum of six and up to eight 2.4TB, 1.8TB, or 1.2TB SAS hard disk drives (HDDs) for capacity storage, a 240 GB SSD housekeeping drive, a 480 GB or 800 GB SSD caching drive, and a 240 GB M.2 form factor SSD that acts as the boot drive. For configurations requiring self-encrypting drives, the caching SSD is replaced with an 800 GB SAS SED SSD, and the capacity disks are replaced with 1.2TB SAS SED HDDs.

Figure 35. Cisco HX220C-M5SX Server

Either a 480 GB SATA or 800 GB SAS caching SSD may be chosen. This option is provided to allow flexibility in ordering based on product availability, pricing, and lead times. While the SAS option may provide a slightly higher level of performance, the partitioning of the two disk options is the same; therefore, the amount of cache available on the system is the same regardless of the model chosen.

In a HyperFlex cluster, each node with disk storage is equipped with at least one high-performance SSD for data caching and rapid acknowledgment of write requests. Each node is also equipped with additional disks, up to the platform’s physical limit, for long-term storage and capacity. Caching drives are not factored into the overall cluster capacity; only the capacity disks contribute to total cluster capacity. Many models of servers, drives, and form factors are supported in the solution. However, for validation, the HyperFlex nodes in the cluster used the following drive configuration: two SSDs, one 240GB SSD for housekeeping and one 480GB SSD for cache, plus 6 x 1.2TB capacity drives.

Figure 36. Drive Configuration on HyperFlex Servers used in Validation
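Because only capacity drives count toward cluster capacity, the raw capacity of the validated 4+4 cluster follows directly from the drive configuration above. This Python sketch also divides by the four copies kept under the default (2+2) replication factor; it ignores HXDP metadata and reserve overheads, deduplication, compression, and decimal-versus-binary unit differences, so the second number is only a rough figure before overheads and data reduction, not a sizing result.

```python
def capacity_tb(nodes: int, drives_per_node: int, drive_tb: float, copies: int = 1) -> float:
    """Capacity-drive total in TB divided by the number of data copies.

    Cache and housekeeping SSDs are excluded because they do not add capacity.
    """
    return nodes * drives_per_node * drive_tb / copies

raw = capacity_tb(nodes=8, drives_per_node=6, drive_tb=1.2)
per_copy = capacity_tb(nodes=8, drives_per_node=6, drive_tb=1.2, copies=4)  # RF (2+2)
print(f"raw capacity: {raw:.1f} TB")
print(f"before overheads and data reduction: {per_copy:.1f} TB")
```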

For a complete list of HyperFlex server models and specifications supported in this solution, see: https://www.cisco.com/c/en/us/products/hyperconverged-infrastructure/hyperflex-hx-series/index.html#~models

A HyperFlex stretch cluster requires a symmetric configuration across all nodes in the cluster, with M5 or higher server models. For other stretch cluster specific requirements, see the HyperFlex Stretch Cluster Guide.


Cisco UCS VIC Interface Card

Each HyperFlex server in the solution is equipped with a Cisco UCS VIC 1387 MLOM adapter to enable dual 40GbE connectivity to the 2 x Fabric Interconnects in the site. The VIC 1387 is used in conjunction with the Cisco UCS 6332 or 6332-16UP model Fabric Interconnects to support 40GbE connectivity. The Cisco UCS VIC 1387 MLOM is a dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40-GbE and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter that can be installed on any model of Cisco UCS HX-Series Rack Servers.

The mLOM is used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host, each dynamically configured as either a network interface card (NIC) or host bus adapter (HBA). The personality of the interfaces is set programmatically using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, adapter settings, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all specified using the service profile.

Figure 37. Cisco VIC 1387 mLOM Card
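The service-profile model described above, where the type, identity, and policies of each virtual PCIe interface are defined in software, can be sketched as a small data structure. This Python example is hypothetical and is not the Cisco UCS Manager object model or API; the class names, fields, interface names, and MAC addresses are illustrative, and the 256-interface cap is the VIC limit quoted in this guide.

```python
from dataclasses import dataclass, field
from typing import List, Literal

MAX_VIC_INTERFACES = 256  # upper limit quoted for the VIC 1387

@dataclass(frozen=True)
class VirtualInterface:
    name: str
    kind: Literal["NIC", "HBA"]  # static type hint only, not checked at runtime
    identity: str                # MAC address for a NIC, WWN for an HBA
    fabric: Literal["A", "B"]    # which Fabric Interconnect the interface uses
    failover: bool = False
    qos_policy: str = "default"

@dataclass
class ServiceProfile:
    name: str
    interfaces: List[VirtualInterface] = field(default_factory=list)

    def add_interface(self, vif: VirtualInterface) -> None:
        """Add an interface, enforcing the VIC limit and unique names."""
        if len(self.interfaces) >= MAX_VIC_INTERFACES:
            raise ValueError(f"VIC supports at most {MAX_VIC_INTERFACES} interfaces")
        if any(existing.name == vif.name for existing in self.interfaces):
            raise ValueError(f"duplicate interface name: {vif.name}")
        self.interfaces.append(vif)

profile = ServiceProfile("hx-node-a1")
profile.add_interface(VirtualInterface("vnic-mgmt-a", "NIC", "00:25:B5:00:00:01", "A"))
profile.add_interface(VirtualInterface("vnic-mgmt-b", "NIC", "00:25:B5:00:00:02", "B"))
print([(v.name, v.kind, v.fabric) for v in profile.interfaces])
```

Keeping the interface definitions in the profile rather than on the server is what makes the server stateless: associating the same profile with replacement hardware reproduces the same interfaces and identities.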

Hardware revision V03 or later of the Cisco VIC 1387 card is required for the Cisco HyperFlex HX-series servers.


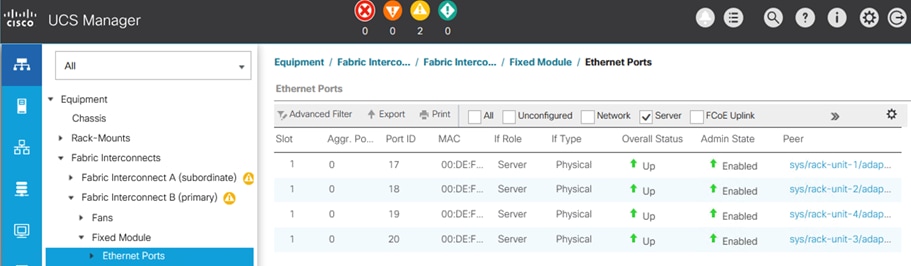

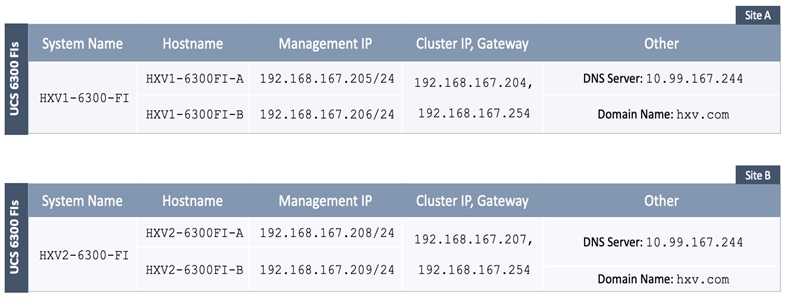

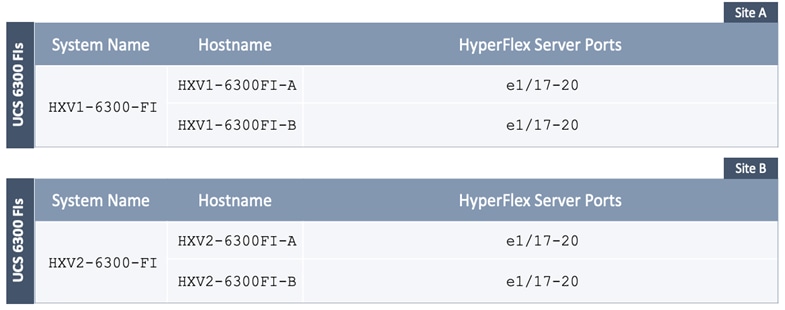

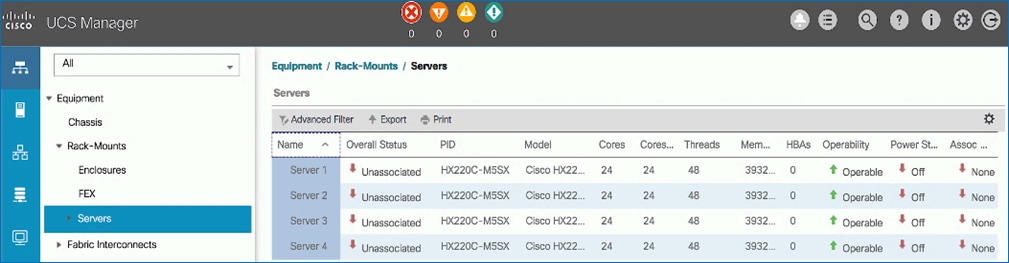

The HyperFlex stretch cluster nodes connect to separate UCS domains, one in each site. A UCS domain consists of a pair of Cisco UCS 6x00 series Fabric Interconnects and the servers that connect to it. A single Cisco UCS domain can support multiple HyperFlex clusters; the exact number depends on the size of the cluster and the port density of the Fabric Interconnect model chosen. Cisco UCS Manager, which manages the Cisco HyperFlex and UCS servers in the UCS domain, runs on the Fabric Interconnects. In this design, the UCS domains and the associated HyperFlex clusters are also managed centrally from the cloud using Cisco Intersight. Cisco Intersight is a cloud operations, orchestration, and management platform for Enterprise data centers and hybrid cloud deployments.
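The dependency on cluster size and port density can be made concrete with a first-order estimate. Because each HX node connects one VIC port to each of the two Fabric Interconnects, the number of server-facing ports on one Fabric Interconnect bounds how many nodes, and therefore clusters, a domain can host. In this Python sketch the port count is a hypothetical input (what remains after reserving uplink ports); Cisco UCS Manager and HyperFlex scale limits are not modeled. For a stretch cluster, only the nodes located in that site count against the local domain.

```python
def max_clusters_per_domain(server_ports_per_fi: int, cluster_nodes_in_domain: int) -> int:
    """First-order estimate of how many equal-size clusters fit in a UCS domain.

    Each HX node uses one port on each Fabric Interconnect, so the
    server-facing port count of a single FI bounds the nodes in the domain.
    """
    if cluster_nodes_in_domain <= 0:
        raise ValueError("cluster_nodes_in_domain must be positive")
    return server_ports_per_fi // cluster_nodes_in_domain

# Hypothetical: 24 server-facing ports per FI; a 4+4 stretch cluster places
# 4 nodes in each site's domain.
print(max_clusters_per_domain(server_ports_per_fi=24, cluster_nodes_in_domain=4))  # 6
```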

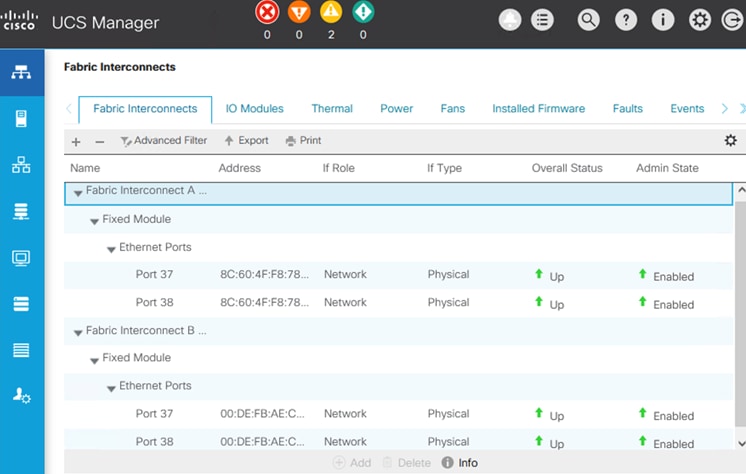

Unified Fabric – Cisco UCS Fabric Interconnects

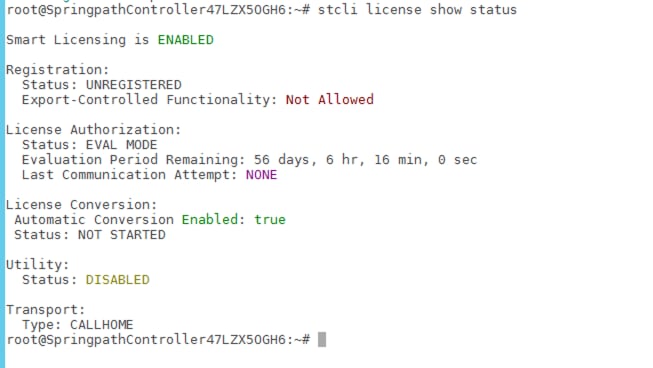

Cisco UCS Fabric Interconnects (FIs) are an integral part of the HyperFlex system. The Fabric Interconnects provide a unified fabric for integrated LAN, SAN, and management connectivity for all HyperFlex servers that connect to them. Fabric Interconnects provide a lossless and deterministic switching fabric, capable of handling I/O traffic from hundreds of servers.