FlexPod Datacenter with Red Hat Enterprise Linux OpenStack Platform Design Guide

Last Updated: October 21, 2015

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2015 Cisco Systems, Inc. All rights reserved.

FlexPod: Cisco and NetApp Verified Architecture

Out of the Box Infrastructure High Availability

Cisco Unified Computing System (UCS)

Cisco UCS 6248UP Fabric Interconnects

Cisco UCS 5108 Blade Server Chassis

Cisco Nexus 9000 Series Switch

Cisco Nexus 1000v for KVM - OpenStack

Cisco Nexus 1000V for OpenStack Solution Offers

ML2 Mechanism Driver for Cisco Nexus 1000v

NetApp Clustered Data ONTAP 8.3 Fundamentals

NetApp Advanced Data Management Capabilities

NetApp E-Series Storage Controllers

NetApp SANtricity Operating System Fundamentals

Domain and Management Software

NetApp OnCommand System Manager

NetApp SANtricity Storage Manager

Red Hat Enterprise Linux OpenStack Platform Installer

Red Hat Enterprise Linux OpenStack Platform

Other OpenStack Supporting Technologies

Hardware and Software Specifications

FlexPod with Red Hat Enterprise Linux OpenStack Platform Physical Topology

FlexPod with Red Hat Enterprise Linux OpenStack Platform Physical Design

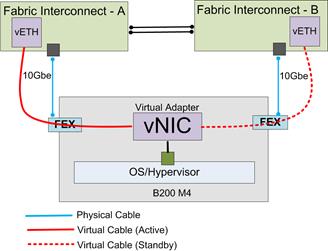

MLOM Virtual Interface Card (VIC)

Validated I/O Components and Servers

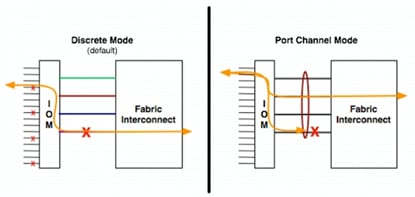

Fabric Failover for Ethernet: Highly Available vNIC

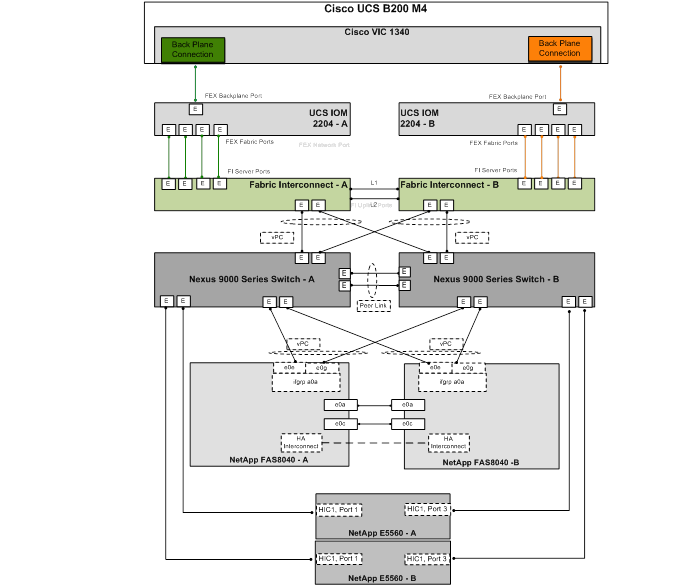

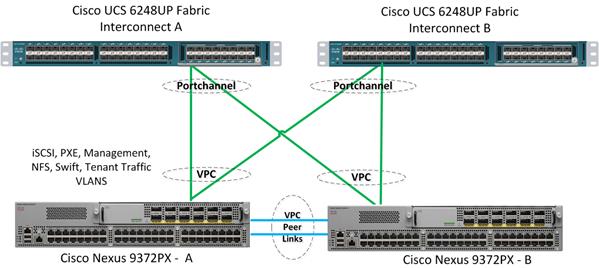

Cisco UCS Physical Connectivity to Nexus 9000

Cisco UCS QoS and Jumbo Frames

Cisco Nexus 9000 Series Modes of Operation

Cisco Nexus 9000 Standalone Mode Design

IP Based Storage and Boot from iSCSI

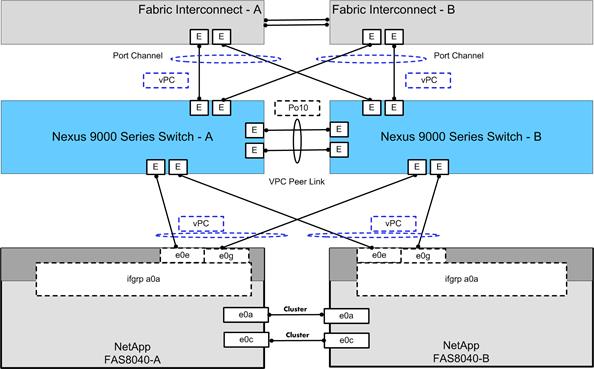

Link Aggregation and Virtual Port Channel

Cisco Nexus 1000v for KVM Solution Design

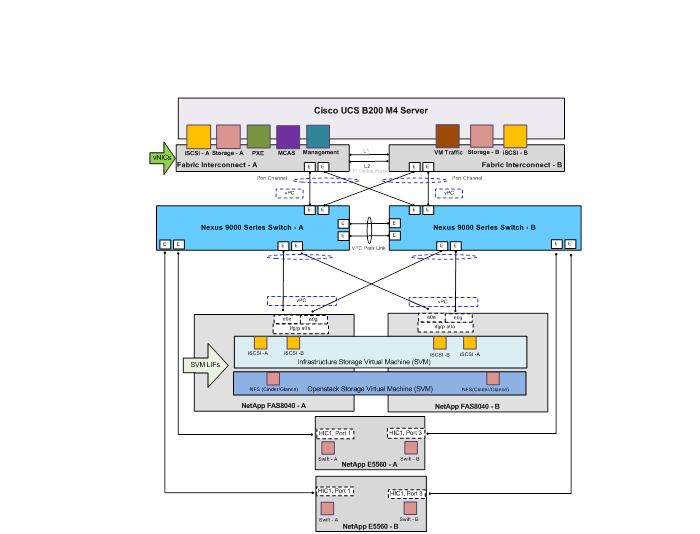

Network and Storage Physical Connectivity

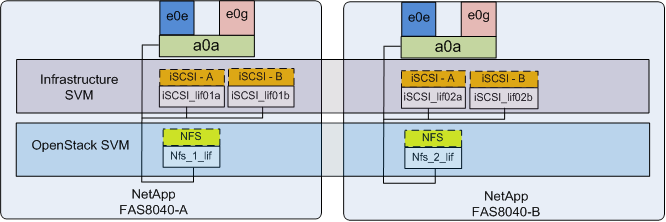

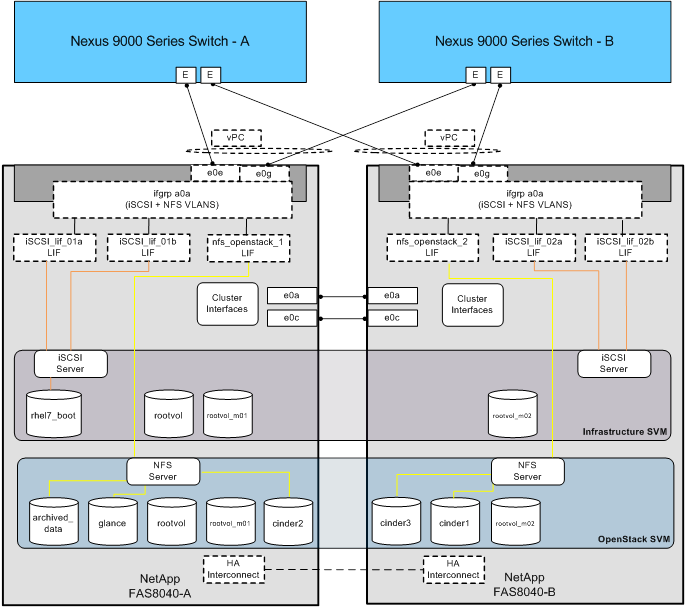

Clustered Data ONTAP Logical Topology Diagram

Clustered Data ONTAP Configuration for OpenStack

Storage Virtual Machine Layout

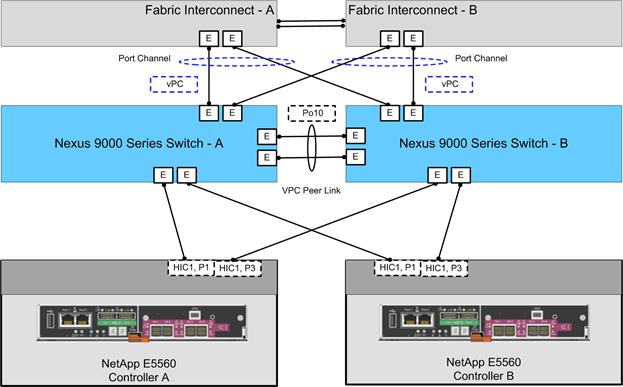

NetApp E-Series Storage Design

Network and Storage Physical Connectivity

SANtricity OS Configuration for OpenStack

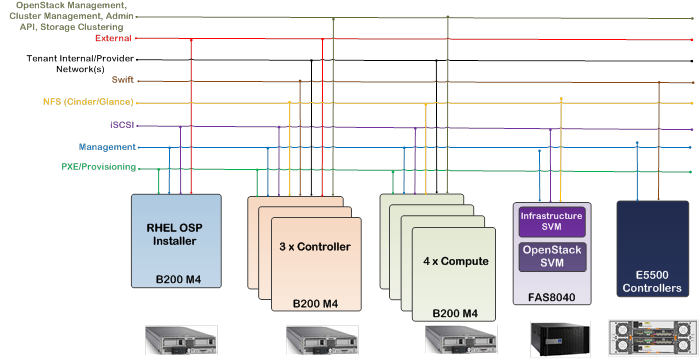

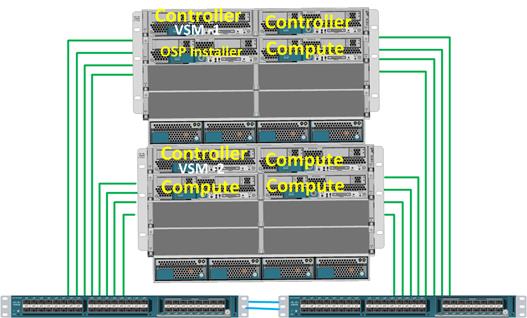

Cisco UCS and NetApp Storage OpenStack Deployment Topology

Hosts Roles and OpenStack Service Placement in Cisco UCS

Red Hat Enterprise Linux OpenStack Platform Installer Network Traffic Types

Cisco Validated Designs consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

The purpose of this document is to describe the Cisco and NetApp® FlexPod® solution, which is a validated approach for deploying Cisco and NetApp technologies as shared cloud infrastructure. This validated design provides a framework for deploying Red Hat Enterprise Linux OpenStack Platform on FlexPod.

FlexPod is a leading converged infrastructure supporting a broad range of enterprise workloads and use cases. With the growing interest in, continuous evolution of, and popular acceptance of OpenStack, customer demand has increased to have the OpenStack platform validated on FlexPod and made available for enterprise private clouds as well as other OpenStack-based Infrastructure as a Service (IaaS) cloud deployments. To accelerate this process and simplify the evolution to a shared cloud infrastructure, Cisco, NetApp, and Red Hat have developed a validated solution, FlexPod with Red Hat Enterprise Linux OpenStack Platform 6.0. This solution enables customers to quickly and reliably deploy OpenStack-based private and hybrid clouds on converged infrastructure while offering the FlexPod Cooperative Support Model, which provides both OpenStack and FlexPod support.

The recommended solution architecture is built on Cisco UCS B200 M4 Blade Servers, Cisco Nexus 9000 Series switches, and NetApp FAS8000 Series and E5000 Series storage arrays. It also includes Red Hat Enterprise Linux 7.1, Red Hat Enterprise Linux OpenStack Platform 6.0, and the Red Hat Enterprise Linux OpenStack Platform Installer.

Introduction

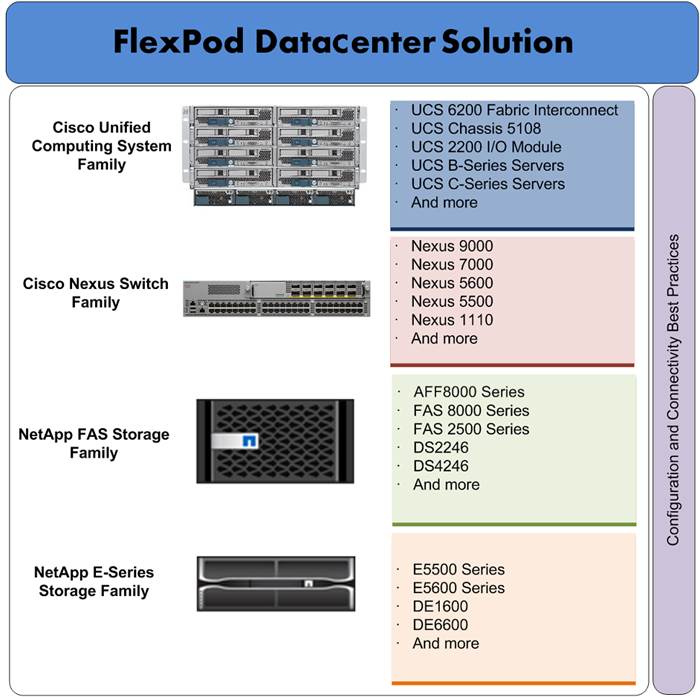

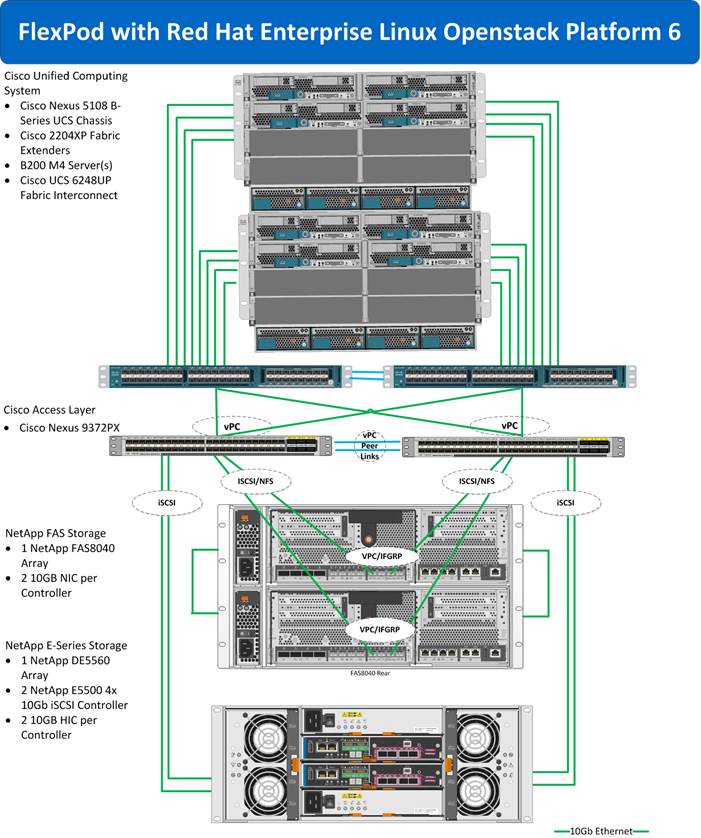

FlexPod is a pre-validated datacenter architecture that follows best practices and is built on the Cisco Unified Computing System (UCS), the Cisco Nexus® family of switches, and NetApp unified storage systems, as shown in Figure 1. FlexPod has been a trusted platform for running a variety of virtualization hypervisors as well as bare metal operating systems. The FlexPod architecture is highly modular, delivers a baseline configuration, and also has the flexibility to be sized and optimized to accommodate many different use cases and requirements. The FlexPod architecture can both scale up (adding additional resources within a FlexPod unit) and scale out (adding additional FlexPod units). The FlexPod with Red Hat Enterprise Linux OpenStack Platform 6.0 validated design extends the FlexPod validated design portfolio to provide an OpenStack solution.

OpenStack is a massively scalable open source architecture that controls compute, network, and storage resources through a web user interface. The OpenStack development community operates on a six-month release cycle with frequent updates, and its code base is composed of many loosely coupled OpenStack projects, which can make the upstream code challenging to deploy and maintain. Red Hat’s OpenStack technology addresses these challenges: it uses the upstream OpenStack open source architecture and enhances it for enterprise and service provider customers with better quality, stability, installation procedure, and support structure.

Red Hat Enterprise Linux OpenStack Platform 6.0, engineered with Red Hat hardened OpenStack Juno code, delivers a stable release for production-scale environments. Adopters of Red Hat Enterprise Linux OpenStack Platform 6.0 gain immediate access to bug fixes and critical security patches, tight integration with Red Hat’s enterprise security features including SELinux, and a steady release cadence between OpenStack versions.

Cisco Unified Computing System (UCS) is a next-generation datacenter platform that unifies computing, networking, storage access, and virtualization into a single cohesive system, which makes Cisco UCS an ideal platform for an OpenStack architecture. The combination of the Cisco UCS platform and the Red Hat Enterprise Linux OpenStack Platform architecture accelerates your IT transformation by enabling faster deployments, greater flexibility of choice, efficiency, and lower risk. Furthermore, the Cisco Nexus family of switches provides the network foundation for the next-generation datacenter.

In FlexPod with Red Hat Enterprise Linux OpenStack Platform 6.0, OpenStack block storage (Cinder) is provided by a highly available enterprise-class NetApp FAS array. From a storage standpoint, NetApp Clustered Data ONTAP® provides the means to scale up or scale out, iSCSI boot for all the infrastructure hosts, and non-disruptive operations and upgrade capabilities. For improved performance, operational efficiency, and a reduced datacenter footprint, NetApp E-Series provides OpenStack object storage (Swift).

Figure 1 FlexPod Component Families

Audience

The audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, and customers who want to take advantage of an infrastructure that is built to deliver IT efficiency and enable IT innovation.

Purpose of this Document

This document describes Red Hat Enterprise Linux OpenStack Platform 6.0, which is based on the “Juno” OpenStack release, built on FlexPod from Cisco and NetApp. This document discusses design choices and best practices for deploying the shared infrastructure.

Solution Summary

The FlexPod® solution portfolio combines NetApp® storage systems, Cisco® Unified Computing System servers, and Cisco Nexus fabric into a single, flexible architecture. FlexPod datacenter can scale up for greater performance and capacity or scale out for environments that require consistent, multiple deployments. FlexPod provides:

· Converged infrastructure of compute, network, and storage components from Cisco and NetApp

· A validated enterprise-class IT platform

· Rapid deployment for business critical applications

· Reduced cost, minimized risk, and increased flexibility and business agility

· Scale-up or scale-out capacity for future growth

This solution is based on the OpenStack “Juno” release, hardened and streamlined by Red Hat Enterprise Linux OpenStack Platform 6.0. The advantages of Cisco Unified Computing System, NetApp, and Red Hat Enterprise Linux OpenStack Platform combine to deliver an OpenStack Infrastructure as a Service (IaaS) deployment that is quick and easy to deploy.

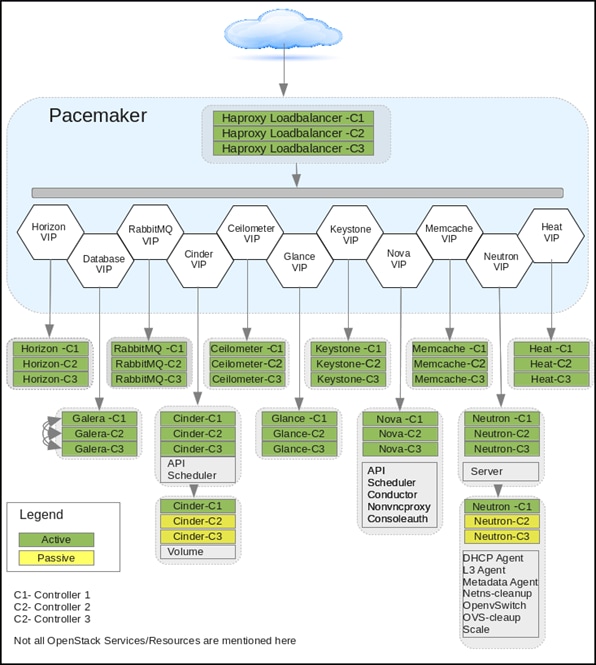

FlexPod with Red Hat Enterprise Linux OpenStack Platform helps IT organizations accelerate cloud deployments while retaining control and choice over their environments with open and interoperable cloud solutions. FlexPod with Red Hat Enterprise Linux OpenStack Platform 6.0 offers a fully redundant architecture from a compute, network, and storage perspective. Furthermore, it includes OpenStack HA through redundant controller nodes. In this solution, OpenStack storage is provided by highly available NetApp storage subsystems. The solution comprises the following key components:

· Cisco Unified Computing System (UCS)

— Cisco UCS 6200 Series Fabric Interconnect

— Cisco VIC 1340

— Cisco UCS 2204XP IO Module or Fabric Extender

— Cisco UCS B200 M4 Blade Server

· Cisco Nexus 9300 Series switch

· Cisco Nexus 1000v switch for KVM

· NetApp Storage

— NetApp FAS8040

— NetApp E5560

· Red Hat Enterprise Linux 7.1

· Red Hat Enterprise Linux OpenStack Platform Installer

· Red Hat Enterprise Linux OpenStack Platform 6.0

FlexPod System Overview

FlexPod is a best practice datacenter architecture that includes these components:

· Cisco Unified Computing System (Cisco UCS)

· Cisco Nexus switches

· NetApp fabric-attached storage (FAS) and/or NetApp E-Series storage systems

These components are connected and configured according to the best practices of both Cisco and NetApp, and provide an ideal platform for running a variety of enterprise workloads with confidence. As previously mentioned, the reference architecture covered in this document leverages the Cisco Nexus 9000 Series switch. One of the key benefits of FlexPod is the ability to maintain consistency at scale, whether scaling up or scaling out. Each of the component families shown in Figure 1 (Cisco Unified Computing System, Cisco Nexus, and NetApp storage systems) offers platform and resource options to scale the infrastructure up or down, while supporting the same features and functionality that are required under the configuration and connectivity best practices of FlexPod.

FlexPod Benefits

As customers transition toward shared infrastructure or cloud computing, they face a number of challenges, such as initial transition hiccups, return on investment (ROI) analysis, infrastructure management, and future growth planning. The FlexPod architecture is designed to help with proven guidance and measurable value. By introducing standardization, FlexPod helps customers mitigate the risk and uncertainty involved in planning, designing, and implementing a new datacenter infrastructure. The result is a more predictable and adaptable architecture capable of meeting and exceeding customers' IT demands.

FlexPod: Cisco and NetApp Verified Architecture

Cisco and NetApp have thoroughly validated and verified the FlexPod solution architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their datacenters to this shared infrastructure model. This portfolio includes, but is not limited to the following items:

· Best practice architectural design

· Workload sizing and scaling guidance

· Implementation and deployment instructions

· Technical specifications (rules for FlexPod configuration dos and don’ts)

· Frequently asked questions (FAQs)

· Cisco Validated Designs (CVDs) and NetApp Verified Architectures (NVAs) focused on a variety of use cases

Cisco and NetApp have also built a robust and experienced support team focused on FlexPod solutions, from customer account and technical sales representatives to professional services and technical support engineers. The Cooperative Support Program extended by NetApp, Cisco, and Red Hat provides customers and channel service partners with direct access to technical experts who collaborate across vendors and have access to shared lab resources to resolve potential issues. FlexPod supports tight integration with virtualized and cloud infrastructures, making it a logical choice for long-term investment. The following IT initiatives are addressed by the FlexPod solution.

Integrated System

FlexPod is a pre-validated infrastructure that brings together compute, storage, and network to simplify, accelerate, and minimize the risk associated with datacenter builds and application rollouts. These integrated systems provide a standardized approach in the datacenter that facilitates staff expertise, application onboarding, and automation as well as operational efficiencies relating to compliance and certification.

Out of the Box Infrastructure High Availability

FlexPod is a highly available and scalable infrastructure that IT can evolve over time to support multiple physical and virtual application workloads. FlexPod has no single point of failure at any level, from the server through the network, to the storage. The fabric is fully redundant and scalable, and provides seamless traffic failover, should any individual component fail at the physical or virtual layer.

FlexPod Design Principles

FlexPod addresses four primary design principles:

· Application availability: Makes sure that services are accessible and ready to use.

· Scalability: Addresses increasing demands with appropriate resources.

· Flexibility: Provides new services or recovers resources without requiring infrastructure modifications.

· Manageability: Facilitates efficient infrastructure operations through open standards and APIs.

Performance and comprehensive security are key design criteria that are not directly addressed in this solution but have been addressed in other collateral, benchmarking, and solution testing efforts. This design guide validates the functionality and basic security elements.

Cisco Unified Computing System (UCS)

The Cisco Unified Computing System is a next-generation solution for blade and rack server computing. The system integrates a low-latency, lossless 10 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. The Cisco Unified Computing System accelerates the delivery of new services simply, reliably, and securely through end-to-end provisioning and migration support for both virtualized and non-virtualized systems. The Cisco Unified Computing System consists of the following components:

· Compute - The system is based on an entirely new class of computing system that incorporates rack mount and blade servers based on Intel Xeon 2600 v2 Series Processors.

· Network - The system is integrated onto a low-latency, lossless, 10-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks, which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

· Virtualization - The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtualized environments to better support changing business and IT requirements.

· Storage access - The system provides consolidated access to both SAN storage and Network Attached Storage (NAS) over the unified fabric. By unifying the storage access, the Cisco Unified Computing System can access storage over Ethernet (SMB 3.0 or iSCSI), Fibre Channel, and Fibre Channel over Ethernet (FCoE). This provides customers with storage choices and investment protection. In addition, the server administrators can pre-assign storage-access policies to storage resources, for simplified storage connectivity and management leading to increased productivity.

· Management - The system uniquely integrates all system components so that the entire solution can be managed as a single entity by Cisco UCS Manager. Cisco UCS Manager has an intuitive graphical user interface (GUI), a command-line interface (CLI), and a powerful scripting library module for Microsoft PowerShell, all built on a robust application programming interface (API) to manage all system configuration and operations.
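As a rough illustration of the API mentioned in the Management item, Cisco UCS Manager exposes an XML API over HTTP(S). The sketch below only builds a login request body with the Python standard library and sends nothing. The user name and password are placeholder values, and the `aaaLogin` method with its `inName` and `inPassword` attributes should be checked against the Cisco UCS Manager XML API programmer's guide for the release in use.

```python
import xml.etree.ElementTree as ET


def build_login_request(username: str, password: str) -> str:
    """Return the XML body of a UCS Manager XML API login request."""
    # aaaLogin opens a session; the response carries a cookie that
    # subsequent requests are expected to present.
    login = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(login, encoding="unicode")


# Placeholder credentials, for illustration only.
body = build_login_request("admin", "example-password")
print(body)
```

In practice the body would be sent as an HTTPS POST to the UCS Manager management address (commonly the `/nuova` path); that step is left out here so the sketch stays self-contained.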

Cisco Unified Computing System (Cisco UCS) fuses access layer networking and servers. This high-performance, next-generation server system provides a datacenter with a high degree of workload agility and scalability.

Cisco UCS 6248UP Fabric Interconnects

· The Cisco UCS Fabric interconnects provide a single point for connectivity and management for the entire system. Typically deployed as an active-active pair, the system’s fabric interconnects integrate all components into a single, highly-available management domain controlled by Cisco UCS Manager. The fabric interconnects manage all I/O efficiently and securely at a single point, resulting in deterministic I/O latency regardless of a server or virtual machine’s topological location in the system.

· Cisco UCS 6200 Series Fabric Interconnects support the system’s 80 Gbps unified fabric with low-latency, lossless, cut-through switching that supports IP, storage, and management traffic using a single set of cables. The fabric interconnects feature virtual interfaces that terminate both physical and virtual connections equivalently, establishing a virtualization-aware environment in which blade servers, rack servers, and virtual machines are interconnected using the same mechanisms. The Cisco UCS 6248UP is a 1-RU Fabric Interconnect that features up to 48 universal ports that can support 10 Gigabit Ethernet, Fibre Channel over Ethernet, or native Fibre Channel connectivity.

For more information, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-6200-series-fabric-interconnects/index.html

Figure 2 Cisco Fabric Interconnect – Front and Rear

Cisco UCS 5108 Blade Server Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis. The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors. Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2204XP or 2208XP Fabric Extenders. A passive mid-plane provides up to 40 Gbps of I/O bandwidth per server slot and up to 80 Gbps of I/O bandwidth for two slots. The chassis is capable of supporting future 40 Gigabit Ethernet standards. For more information, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-5100-series-blade-server-chassis/index.html

Figure 3 Cisco UCS 5108 Blade Chassis

Front view and back view

Cisco UCS Fabric Extenders

The Cisco UCS 2204XP Fabric Extender (Figure 4) has four 10 Gigabit Ethernet, FCoE-capable, SFP+ ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2204XP has sixteen 10 Gigabit Ethernet ports connected through the mid-plane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 80 Gbps of I/O to the chassis.

The Cisco UCS 2208XP Fabric Extender (Figure 4) has eight 10 Gigabit Ethernet, FCoE-capable, Enhanced Small Form-Factor Pluggable (SFP+) ports that connect the blade chassis to the fabric interconnect. Each Cisco UCS 2208XP has thirty-two 10 Gigabit Ethernet ports connected through the midplane to each half-width slot in the chassis. Typically configured in pairs for redundancy, two fabric extenders provide up to 160 Gbps of I/O to the chassis.
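The chassis bandwidth figures quoted for the two fabric extender models follow from simple arithmetic: uplink ports per fabric extender, times 10 Gbps per port, times two fabric extenders per chassis. A minimal sketch of that calculation is shown below; the function name is illustrative, and the port counts are the ones stated in the text.

```python
def chassis_uplink_gbps(uplinks_per_fex: int, fex_count: int = 2,
                        port_speed_gbps: int = 10) -> int:
    """Aggregate chassis-to-fabric-interconnect bandwidth in Gbps."""
    return uplinks_per_fex * port_speed_gbps * fex_count


# Uplink port counts from the text: four on the 2204XP, eight on the 2208XP.
for model, uplinks in {"2204XP": 4, "2208XP": 8}.items():
    print(f"{model}: {chassis_uplink_gbps(uplinks)} Gbps with a redundant pair")
```

These are upper bounds with every uplink cabled; a chassis that uses fewer uplinks per fabric extender scales down proportionally.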

Figure 4 Cisco UCS 2204XP/2208XP Fabric Extender

Cisco UCS 2204XP FEX and Cisco UCS 2208XP FEX

Cisco UCS B200 M4 Servers

The enterprise-class Cisco UCS B200 M4 Blade Server extends the capabilities of Cisco’s Unified Computing System portfolio in a half-width blade form factor. The Cisco UCS B200 M4 uses the power of the latest Intel® Xeon® E5-2600 v3 Series processor family CPUs with up to 768 GB of RAM (using 32 GB DIMMs), two solid-state drives (SSDs) or hard disk drives (HDDs), and up to 80 Gbps throughput connectivity. The UCS B200 M4 Blade Server mounts in a Cisco UCS 5100 Series blade server chassis or UCS Mini blade server chassis. It has 24 total slots for registered ECC DIMMs (RDIMMs) or load-reduced DIMMs (LR DIMMs) for up to 768 GB total memory capacity (B200 M4 configured with two CPUs using 32 GB DIMMs). It supports one connector for Cisco’s VIC 1340 or 1240 adapter, which provides Ethernet and FCoE. For more information, see: http://www.cisco.com/c/en/us/products/servers-unified-computing/ucs-b200-m4-blade-server/index.html
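The maximum memory figure is the product of the DIMM slot count and the DIMM size. The short sketch below reproduces it, assuming all 24 slots are populated with identical DIMMs; the helper name is illustrative.

```python
DIMM_SLOTS = 24  # total DIMM slots on a two-CPU B200 M4, per the text


def max_memory_gb(dimm_size_gb: int, slots: int = DIMM_SLOTS) -> int:
    """Total memory in GB when every slot holds a DIMM of the given size."""
    return slots * dimm_size_gb


print(max_memory_gb(32))  # 24 x 32 GB DIMMs
print(max_memory_gb(64))  # 24 x 64 GB DIMMs
```

With 32 GB DIMMs the result is 768 GB, and with 64 GB DIMMs it is 1536 GB, which matches the larger figure quoted under Extended Memory later in this document.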

Figure 5 Cisco UCS B200 M4 Blade Server

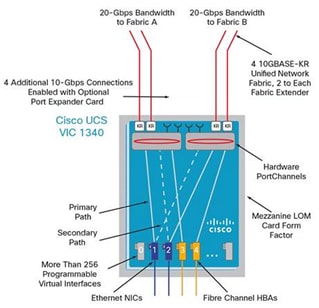

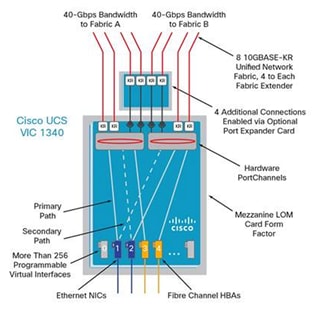

Cisco VIC 1340

The Cisco UCS Virtual Interface Card (VIC) 1340 is a 2-port 40-Gbps Ethernet or dual 4 x 10-Gbps Ethernet, FCoE-capable modular LAN on motherboard (mLOM) designed exclusively for the M4 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1340 is enabled for two ports of 40-Gbps Ethernet. The Cisco UCS VIC 1340 enables a policy-based, stateless, agile server infrastructure that can present over 256 PCIe standards-compliant interfaces to the host, each of which can be dynamically configured as either a network interface card (NIC) or a host bus adapter (HBA). In addition, the Cisco UCS VIC 1340 supports Cisco® Virtual Machine Fabric Extender (VM-FEX) technology, which extends the Cisco UCS Fabric interconnect ports to virtual machines, simplifying server virtualization deployment and management. For more information, see: http://www.cisco.com/c/en/us/products/interfaces-modules/ucs-virtual-interface-card-1340/index.html

Figure 6 Cisco VIC 1340

Cisco UCS Differentiators

Cisco’s Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager.

1. Embedded Management —In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating need for any external physical or virtual devices to manage the servers.

2. Unified Fabric —In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN and management traffic. This converged I/O results in reduced cables, SFPs and adapters – reducing capital and operational expenses of overall solution.

3. Auto Discovery —By simply inserting the blade server in the chassis or connecting rack server to the fabric interconnect, discovery and inventory of compute resource occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of UCS, where compute capability of UCS can be extended easily while keeping the existing external connectivity to LAN, SAN and management networks.

4. Policy Based Resource Classification —Once a compute resource is discovered by UCS Manager, it can be automatically classified to a given resource pool based on policies defined. This capability is useful in multi-tenant cloud computing. This CVD showcases the policy based resource classification of UCS Manager.

5. Combined Rack and Blade Server Management —Cisco UCS Manager can manage Cisco UCS B-series blade servers and C-series rack server under the same Cisco UCS domain. This feature, along with stateless computing makes compute resources truly hardware form factor agnostic.

6. Model based Management Architecture —Cisco UCS Manager architecture and management database is model based and data driven. An open XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems.

7. Policies, Pools, Templates —The management approach in Cisco UCS Manager is based on defining policies, pools and templates, instead of cluttered configuration, which enables a simple, loosely coupled, data driven approach in managing compute, network and storage resources.

8. Loose Referential Integrity —In Cisco UCS Manager, a service profile, port profile or policies can refer to other policies or logical resources with loose referential integrity. A referred policy need not exist at the time of authoring the referring policy, and a referred policy can be deleted even though other policies are referring to it. This allows different subject matter experts to work independently of each other, and provides great flexibility where experts from different domains, such as network, storage, security, server and virtualization, work together to accomplish a complex task.

9. Policy Resolution —In Cisco UCS Manager, a tree structure of organizational unit hierarchy can be created that mimics real-life tenant and/or organization relationships. Various policies, pools and templates can be defined at different levels of the organization hierarchy. A policy referring to another policy by name is resolved in the organization hierarchy with the closest policy match. If no policy with the specified name is found in the hierarchy up to the root organization, then the special policy named “default” is searched for. This policy resolution practice enables automation-friendly management APIs and provides great flexibility to owners of different organizations.

10. Service Profiles and Stateless Computing —A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables procurement of a server within minutes, which used to take days in legacy server management systems.

11. Built-in Multi-Tenancy Support —The combination of policies, pools and templates, loose referential integrity, policy resolution in organization hierarchy and a service profiles based approach to compute resources makes UCS Manager inherently friendly to multi-tenant environment typically observed in private and public clouds.

12. Extended Memory — The enterprise-class Cisco UCS B200 M4 blade server extends the capabilities of Cisco’s Unified Computing System portfolio in a half-width blade form factor. The Cisco UCS B200 M4 harnesses the power of the latest Intel® Xeon® E5-2600 v3 Series processor family CPUs with up to 1536 GB of RAM (using 64 GB DIMMs) – allowing huge VM to physical server ratio required in many deployments, or allowing large memory operations required by certain architectures like big data.

13. Virtualization Aware Network —Cisco VM-FEX technology makes the access network layer aware of host virtualization. This prevents pollution of the compute and network domains with virtualization concerns when the virtual network is managed through port profiles defined by the network administrators’ team. VM-FEX also offloads the hypervisor CPU by performing switching in hardware, thus allowing the hypervisor CPU to do more virtualization-related tasks. VM-FEX technology is well integrated with VMware vCenter, Linux KVM and Hyper-V SR-IOV to simplify cloud management.

14. Simplified QoS —Even though Fibre Channel and Ethernet are converged in the Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes it seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
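The policy resolution behavior described in item 9 can be pictured as a walk up the organization tree. The sketch below is a simplified model of that description, not Cisco UCS Manager's implementation: the `Org` class, the policy names, and the policy values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Org:
    """One node in a hypothetical organization hierarchy."""
    name: str
    parent: Optional["Org"] = None
    policies: Dict[str, str] = field(default_factory=dict)


def _closest(org: Optional[Org], policy_name: str) -> Optional[str]:
    # Walk from the requesting org toward the root; the first hit is
    # the closest match.
    while org is not None:
        if policy_name in org.policies:
            return org.policies[policy_name]
        org = org.parent
    return None


def resolve_policy(org: Org, policy_name: str) -> Optional[str]:
    """Resolve by name, then fall back to the policy named 'default'."""
    found = _closest(org, policy_name)
    return found if found is not None else _closest(org, "default")


root = Org("root", policies={"default": "root default BIOS policy"})
tenant = Org("tenant-a", parent=root, policies={"bios-perf": "tenant-a BIOS policy"})
team = Org("team-1", parent=tenant)

print(resolve_policy(team, "bios-perf"))     # found one level up, in tenant-a
print(resolve_policy(team, "not-yet-made"))  # falls back to "default" at root
```

The second lookup also illustrates loose referential integrity from item 8: a reference to a policy that does not exist yet still resolves to something usable, so the referring object can be authored first.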

Cisco UCS for OpenStack

Cloud-enabled applications can run on organization premises, in public clouds, or on a combination of the two (hybrid cloud) for greater flexibility and business agility. Finding a platform that supports all these scenarios is essential. With Cisco UCS, IT departments can take advantage of technological advancements and lower the cost of their OpenStack deployments.

1. Open Architecture—A market-leading, open alternative to expensive, proprietary environments, the simplified architecture of Cisco UCS running OpenStack software delivers greater scalability, manageability, and performance at a significant cost savings compared to traditional systems, both in the datacenter and the cloud. Using industry-standard x86-architecture servers and open source software, IT departments can deploy cloud infrastructure today without concern for hardware or software vendor lock-in.

2. Accelerated Cloud Provisioning—Cloud infrastructure must be able to flex on demand, providing infrastructure to applications and services on a moment’s notice. Cisco UCS simplifies and accelerates cloud infrastructure deployment through automated configuration. In Cisco UCS Integrated Infrastructure for Red Hat Enterprise Linux, server identity, personality, and I/O connectivity are abstracted from the hardware, which allows these characteristics to be applied on demand. Every aspect of a server’s configuration, from firmware revisions and BIOS settings to network profiles, can be assigned through Cisco UCS Service Profiles. Cisco service profile templates establish policy-based configuration for server, network, and storage resources and can be used to logically preconfigure these resources even before they are deployed in the cloud infrastructure.

3. Simplicity at Scale—With IT departments challenged to deliver more applications and services in shorter time frames, the architectural silos that result from an ad hoc approach to capacity scaling with traditional systems pose a barrier to successful cloud infrastructure deployment. With Cisco UCS, organizations can start with the computing and storage infrastructure needed today and then scale easily by adding components. Because servers and storage systems integrate into the unified system, they do not require additional supporting infrastructure or expert knowledge. The system simply, quickly, and cost-effectively presents more computing power and storage capacity to cloud infrastructure and applications.

4. Virtual Infrastructure Density—Cisco UCS enables cloud infrastructure to meet ever-increasing guest OS memory demands on fewer physical servers. The system’s high-density design increases consolidation ratios for servers, saving the capital, operating, physical space, and licensing costs that would be needed to run virtualization software on larger servers. With the Cisco UCS B200 M4, which combines the latest Intel Xeon E5-2600 v3 Series processors with up to 1536 GB of RAM (using 64 GB DIMMs), OpenStack deployments can host more applications using less-expensive servers without sacrificing performance.

5. Simplified Networking—In OpenStack environments, the underlying infrastructure can become a sprawling complex of networked systems. Unlike traditional server architecture, Cisco UCS provides greater network density with less cabling and complexity. Cisco’s unified fabric integrates Cisco UCS servers with a single high-bandwidth, low-latency network that supports all system I/O. This approach simplifies the architecture and reduces the number of I/O interfaces, cables, and access-layer switch ports compared to the requirements for traditional cloud infrastructure deployments. This unification can reduce network complexity by up to a factor of three, and the system’s wire-once network infrastructure increases agility and accelerates deployment with zero-touch configuration.

6. Installation Confidence—Organizations that choose OpenStack for their cloud can take advantage of the Red Hat Enterprise Linux OpenStack Platform Installer. This software performs the work needed to install a validated OpenStack deployment. Unlike other solutions, this approach provides a highly available, highly scalable architecture for OpenStack services.

7. Easy Management—Cloud infrastructure can be extensive, so it must be easy and cost effective to manage. Cisco UCS Manager provides embedded management of all software and hardware components in Cisco UCS. Cisco UCS Manager resides as embedded software on the Cisco UCS fabric interconnects, fabric extenders, servers, and adapters. No external management server is required, simplifying administration and reducing capital expenses for the management environment.

Cisco Nexus 9000 Series Switch

The Cisco Nexus 9000 Series delivers proven high performance and density, low latency, and exceptional power efficiency in a broad range of compact form factors. Operating in Cisco NX-OS Software mode or in Application Centric Infrastructure (ACI) mode, these switches are ideal for traditional or fully automated datacenter deployments.

Figure 7 Cisco Nexus 9000 Series

The Cisco Nexus 9000 Series Switches offer both modular and fixed 10/40/100 Gigabit Ethernet switch configurations with scalability up to 30 Tbps of non-blocking performance with less than five-microsecond latency, 1152 10 Gbps or 288 40 Gbps non-blocking Layer 2 and Layer 3 Ethernet ports, and wire-speed VXLAN gateway, bridging, and routing support. For more information, see: http://www.cisco.com/c/en/us/products/switches/nexus-9000-series-switches/index.html
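As a quick sanity check on the port counts quoted above, the following sketch computes the aggregate line rate of each maximum port configuration. It is plain arithmetic under a simplifying assumption (one direction, every port at full line rate); the function name is illustrative only.

```python
def aggregate_tbps(port_count: int, port_speed_gbps: int) -> float:
    """Aggregate one-direction line rate in Tbps for identical ports."""
    return port_count * port_speed_gbps / 1000


ten_gig = aggregate_tbps(1152, 10)    # 1152 ports at 10 Gbps
forty_gig = aggregate_tbps(288, 40)   # 288 ports at 40 Gbps

print(f"1152 x 10 Gbps = {ten_gig} Tbps")
print(f" 288 x 40 Gbps = {forty_gig} Tbps")
```

Both configurations work out to the same aggregate figure, which is expected if each 40 Gbps port occupies the capacity of four 10 Gbps ports.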

Cisco Nexus 1000v for KVM - OpenStack

The Cisco Nexus 1000V OpenStack solution is an enterprise-grade virtual networking solution that offers security, policy control, and visibility, all with Layer 2/Layer 3 switching at the hypervisor layer. Cisco Nexus 1000V provides stateful firewall functionality within your infrastructure to isolate tenants and enables isolation of virtual machines with policy-based VM attributes. Cisco Nexus 1000V’s robust policy framework enables centralized enterprise-compliant policy management and pre-provisioning of policies on a network-wide basis, and it simplifies policy additions and modifications across the virtual infrastructure. When it comes to application visibility, Cisco Nexus 1000V provides insight into live and historical VM migrations and advanced automated troubleshooting capabilities to identify problems in seconds. It also enables you to use your existing monitoring tools to provide rich analytics and auditing capabilities across your physical and virtual infrastructure.

Layer2/Layer3 Switching – Cisco Nexus 1000V offers the capability to route East-West traffic within the tenant without having to go to an external router. This capability reduces sub-optimal traffic patterns within the network and increases the network performance.

East-West Security – Cisco Nexus 1000V comes with Cisco Virtual Security Gateway (VSG), which provides Layer 2 zone-based firewall capability. Using VSG, Nexus 1000V can secure east-west machine-to-machine traffic by providing stateful firewall functionality. VSG also enables users to define security attributes based on VM attributes along with network attributes.

Policy Framework – Cisco Nexus 1000V provides an extremely powerful policy framework to define policies per tenant and make these policies available to the end user via the Horizon Dashboard or the Neutron API. This policy framework consists of the popular port profiles and the network policy (for example, VLAN or VXLAN). All these policies can be centrally managed, which makes it easier to roll out new business policies or modify existing business policies instantaneously.

Application Visibility – Nexus 1000V brings a slew of industry-proven monitoring features that exist in the physical Nexus infrastructure to virtual networking. To name a few, Nexus 1000V provides remote-monitoring capabilities by using SPAN/ERSPAN, provides visibility into VM motion by using vTracker, and provides consolidated interface status and traffic statistics by using the Virtual Supervisor Module (VSM).

All the monitoring, management, and functionality features offered on the Nexus 1000V are consistent with the physical Nexus infrastructure. This enables customers to reuse their existing tool chains to manage the new virtual networking infrastructure as well. Customers also experience functionality in the virtual network that is consistent with the physical network.

Cisco Nexus 1000V for OpenStack Solution Offers

Table 1 Use Cases

| Use Case | Description |
| --- | --- |
| Micro-Segmentation | · Stateful firewall functionality for east-west traffic (Layer 2 zone-based firewall) · VM isolation in a common Layer 2 segment (tenant) with no additional security groups |
| VM Visibility | · Monitoring of live application traffic and collection of user statistics · Insight into live and past VM migrations |
| Policy Control | · Centralized location for policy management · Flexible and powerful framework that enables organizations to pre-provision network-wide policies · Policies available via Horizon and Neutron API |

Cisco Nexus 1000V Components

Cisco Nexus 1000v brings the same robust architecture associated with traditional Cisco physical modular switches and with other virtualization environments (for example, VMware vSphere and Microsoft Hyper-V) to OpenStack deployments.

The Cisco Nexus 1000v has the following main components:

· The Cisco Nexus® 1000V Virtual Ethernet Module (VEM) is a software component that is deployed on each Kernel-based Virtual Machine (KVM) host. Each virtual machine on the host is connected to the VEM through virtual Ethernet (vEth) ports.

· The Cisco Nexus 1000V Virtual Supervisor Module (VSM) is the management component that controls multiple VEMs and helps in the definition of virtual machine-focused network policies. It is deployed either as a virtual appliance on any KVM host or on the Cisco Cloud Services Platform appliance.

· The Cisco Virtual Extensible LAN (VXLAN) Gateway is a gateway appliance to facilitate communication between a virtual machine located on a VXLAN with other entities (bare-metal servers, physical firewalls etc.) that are connected to traditional VLANs. It can be deployed as a virtual appliance on any KVM host.

· The OpenStack Neutron plug-in is used for communication between the VSM and OpenStack Neutron service and is deployed as part of the OpenStack Neutron service.

· The OpenStack Horizon integration for policy profile.

Each of these components is tightly integrated with the OpenStack environment:

· The VEM is a hypervisor-resident component and is tightly integrated with the KVM architecture.

· The VSM is integrated with OpenStack using the OpenStack Neutron plug-in.

· The OpenStack Neutron API has been extended to include two additional user-defined resources:

— Network profiles are logical groupings of network segments.

— Policy profiles group port policy information, including security, monitoring, and quality-of-service (QoS) policies.

In Cisco Nexus 1000v for KVM Release 5.2(1) SK3 (2.2a), network profiles are created automatically for each network type. Network profile creation by administrators is not supported.
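The relationship between the two profile types described above can be sketched as a small data model. This is an illustration only and not the Neutron API extension or the Nexus 1000v plug-in schema; every class, field, and value here is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class NetworkSegment:
    name: str
    segment_type: str   # "vlan" or "vxlan"
    segment_id: int


@dataclass
class NetworkProfile:
    """Logical grouping of network segments of one network type."""
    name: str
    segment_type: str
    segments: List[NetworkSegment] = field(default_factory=list)

    def add(self, segment: NetworkSegment) -> None:
        if segment.segment_type != self.segment_type:
            raise ValueError(f"{segment.name} is not a {self.segment_type} segment")
        self.segments.append(segment)


@dataclass
class PolicyProfile:
    """Grouping of port policy: security, monitoring, and QoS settings."""
    name: str
    security: Dict[str, str] = field(default_factory=dict)
    monitoring: Dict[str, str] = field(default_factory=dict)
    qos: Dict[str, str] = field(default_factory=dict)


# One network profile per network type, mirroring the automatic creation noted above.
vlan_profile = NetworkProfile("default-vlan", "vlan")
vlan_profile.add(NetworkSegment("tenant-a-net", "vlan", 101))

web_policy = PolicyProfile("web-tier",
                           security={"acl": "permit-http"},
                           qos={"class": "silver"})
print(vlan_profile.name, [s.name for s in vlan_profile.segments], web_policy.name)
```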

ML2 Mechanism Driver for Cisco Nexus 1000v

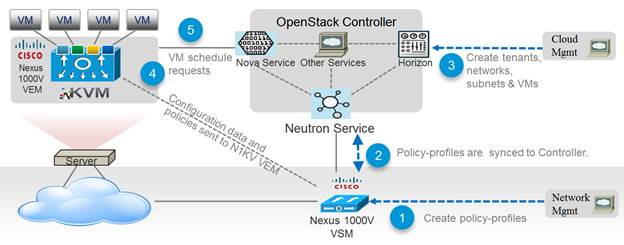

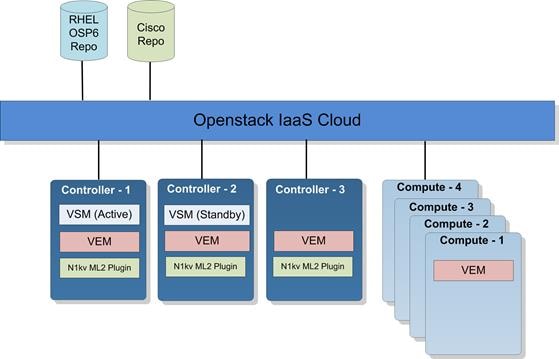

In Red Hat Enterprise Linux OpenStack Platform 6.0, the Cisco Nexus 1000v plug-in added support for accepting REST API responses in JSON format from the Virtual Supervisor Module (VSM), as well as control for enabling policy profile visibility across tenants. Figure 8 shows the operational workflow in an OpenStack environment.

Figure 8 Cisco Nexus 1000v ML2 Driver Workflow

NetApp FAS8000

This FlexPod datacenter solution includes the NetApp fabric-attached storage (FAS) 8040 series unified scale-out storage system for both the OpenStack Block Storage Service (Cinder) and the OpenStack Image Service (Glance). Powered by NetApp Clustered Data ONTAP, the FAS8000 series unifies the SAN and NAS storage infrastructure. Systems architects can choose from a range of models representing a spectrum of cost-versus-performance points. Every model, however, provides the following core benefits:

· HA and fault tolerance. Storage access and security are achieved through clustering, high availability (HA) pairing of controllers, hot-swappable components, NetApp RAID DP® disk protection (allowing two independent disk failures without data loss), network interface redundancy, support for data mirroring with NetApp SnapMirror® software, application backup integration with the NetApp SnapManager® storage management software, and customizable data protection with the NetApp Snap Creator® framework and SnapProtect® products.

· Storage efficiency. Users can store more data with less physical media. This is achieved with thin provisioning (unused space is shared among volumes), NetApp Snapshot® copies (zero-storage, read-only clones of data over time), NetApp FlexClone® volumes and LUNs (read/write copies of data in which only changes are stored), deduplication (dynamic detection and removal of redundant data), and data compression.

· Unified storage architecture. Every model runs the same software (clustered Data ONTAP); supports all popular storage protocols (CIFS, NFS, iSCSI, FCP, and FCoE); and uses SATA, SAS, or SSD storage (or a mix) on the back end. This allows freedom of choice in upgrades and expansions, without the need for re-architecting the solution or retraining operations personnel.

· Advanced clustering. Storage controllers are grouped into clusters for both availability and performance pooling. Workloads can be moved between controllers, permitting dynamic load balancing and zero-downtime maintenance and upgrades. Physical media and storage controllers can be added as needed to support growing demand without downtime.

NetApp Storage Controllers

A storage system running Data ONTAP (also known as a storage controller) is the hardware device that sends data to and receives data from the host. Controller nodes are deployed in HA pairs, with these HA pairs participating in a single storage domain or cluster. This unit detects and gathers information about its own hardware configuration, the storage system components, the operational status, hardware failures, and other error conditions. A storage controller is redundantly connected to storage through disk shelves, which are the containers or device carriers that hold disks and associated hardware such as power supplies, connectivity interfaces, and cabling.

The NetApp FAS8000 features a multicore Intel chipset and leverages high-performance memory modules, NVRAM to accelerate and optimize writes, and an I/O-tuned PCIe gen3 architecture that maximizes application throughput. The FAS8000 series comes with integrated unified target adapter (UTA2) ports that support 16 Gb Fibre Channel, 10GbE, or FCoE. Figure 9 shows a front and rear view of the FAS8040/8060 controllers.

Figure 9 NetApp FAS8040/8060 (6U) - Front and Rear View

If storage requirements change over time, NetApp storage offers the flexibility to change quickly as needed without expensive and disruptive forklift upgrades. This applies to different types of changes:

· Physical changes, such as expanding a controller to accept more disk shelves and subsequently more hard disk drives (HDDs) without an outage

· Logical or configuration changes, such as expanding a RAID group to incorporate these new drives without requiring any outage

· Access protocol changes, such as modification of a virtual representation of a hard drive to a host by changing a logical unit number (LUN) from FC access to iSCSI access, with no data movement required, but only a simple dismount of the FC LUN and a mount of the same LUN, using iSCSI

In addition, a single copy of data can be shared between Linux and Windows systems while allowing each environment to access the data through native protocols and applications. In a system that was originally purchased with all SATA disks for backup applications, high-performance solid-state disks can be added to the same storage system to support Tier-1 applications, such as Oracle®, Microsoft Exchange, or Microsoft SQL Server.

For more NetApp FAS8000 information, see: http://www.netapp.com/us/products/storage-systems/fas8000/

NetApp Clustered Data ONTAP 8.3 Fundamentals

NetApp provides enterprise-ready, unified scale-out storage with clustered Data ONTAP 8.3, the operating system physically running on the storage controllers in the NetApp FAS storage appliance. Developed from a solid foundation of proven Data ONTAP technology and innovation, clustered Data ONTAP is the basis for large virtualized shared-storage infrastructures that are architected for non-disruptive operations over the system lifetime.

Clustered Data ONTAP 8.3 is the first Data ONTAP release to support clustered operation only. The previous version of Data ONTAP, 7-Mode, is not available as a mode of operation in version 8.3.

Data ONTAP scale-out is one way to respond to growth in a storage environment. All storage controllers have physical limits to their expandability; the number of CPUs, memory slots, and space for disk shelves dictate maximum capacity and controller performance. If more storage or performance capacity is needed, it might be possible to add CPUs and memory or install additional disk shelves, but ultimately the controller becomes completely populated, with no further expansion possible. At this stage, the only option is to acquire another controller. One way to do this is to scale up; that is, to add additional controllers in such a way that each is an independent management entity that does not provide any shared storage resources. If the original controller is completely replaced by a newer, larger controller, data migration is required to transfer the data from the old controller to the new one. This is time consuming and potentially disruptive and most likely requires configuration changes on all of the attached host systems.

If the newer controller can coexist with the original controller, then the two storage controllers must be individually managed, and there are no native tools to balance or reassign workloads across them. The situation becomes worse as the number of controllers increases. If the scale-up approach is used, the operational burden increases consistently as the environment grows, and the end result is a very unbalanced and difficult-to-manage environment. Technology refresh cycles require substantial planning in advance, lengthy outages, and configuration changes, which introduce risk into the system.

In contrast, when using a scale-out approach, additional controllers are added seamlessly to the resource pool residing on a shared storage infrastructure as the storage environment grows. Host and client connections as well as volumes can move seamlessly and non-disruptively anywhere in the resource pool, so that existing workloads can be easily balanced over the available resources, and new workloads can be easily deployed. Technology refreshes (replacing disk shelves, adding or completely replacing storage controllers) are accomplished while the environment remains online and continues serving data.

Although scale-out products have been available for some time, these were typically subject to one or more of the following shortcomings:

· Limited protocol support. NAS only.

· Limited hardware support. Supported only a particular type of storage controller or a very limited set.

· Little or no storage efficiency. No thin provisioning, deduplication, or compression.

· Little or no data replication capability.

Therefore, while these products are well positioned for certain specialized workloads, they are less flexible, less capable, and not robust enough for broad deployment throughout the enterprise.

Data ONTAP is the first product to offer a complete scale-out solution, and it offers an adaptable, always-available storage infrastructure for today’s highly virtualized environment.

Scale Out

Datacenters require agility. In a datacenter, each storage controller has CPU, memory, and disk shelf limits. Scale-out means that as the storage environment grows, additional controllers can be added seamlessly to the resource pool residing on a shared storage infrastructure. Host and client connections as well as volumes can be moved seamlessly and non-disruptively anywhere within the resource pool. The benefits of scale-out include:

· Non-disruptive operations

· The ability to add tenants, instances, volumes, networks, and so on without downtime in OpenStack

· Operational simplicity and flexibility

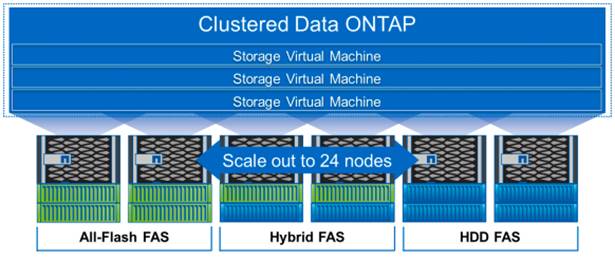

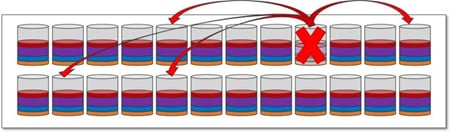

As Figure 10 shows, NetApp Clustered Data ONTAP offers a way to meet the scalability requirements of a storage environment. A NetApp Clustered Data ONTAP system can scale up to 24 nodes, depending on platform and protocol, and can contain different disk types and controller models in the same storage cluster, with up to 101 PB of capacity.

Figure 10 NetApp Clustered Data ONTAP

Non-disruptive Operations

The move to shared infrastructure has made it nearly impossible to schedule downtime for routine maintenance. NetApp clustered Data ONTAP is designed to eliminate the need for planned downtime for maintenance operations and lifecycle operations as well as the unplanned downtime caused by hardware and software failures. NetApp storage solutions provide redundancy and fault tolerance through clustered storage controllers and redundant, hot-swappable components, such as cooling fans, power supplies, disk drives, and shelves. This highly available and flexible architecture enables customers to manage all data under one common infrastructure while meeting mission-critical uptime requirements.

Three standard tools help eliminate possible downtime:

· NetApp DataMotion™ data migration software for volumes (vol move). Allows you to move data volumes from one aggregate to another on the same or a different cluster node.

· Logical interface (LIF) migration. Allows you to virtualize the physical Ethernet interfaces in clustered Data ONTAP. LIF migration allows the administrator to move these virtualized LIFs from one network port to another on the same or a different cluster node.

· Aggregate relocate (ARL). Allows you to transfer complete aggregates from one controller in an HA pair to the other without data movement.

Used individually and in combination, these tools allow you to non-disruptively perform a full range of operations, from moving a volume from a faster to a slower disk all the way up to a complete controller and storage technology refresh.

As storage nodes are added to the system, all physical resources (CPUs, cache memory, network I/O bandwidth, and disk I/O bandwidth) can be easily kept in balance. NetApp Data ONTAP enables users to:

· Move data between storage controllers and tiers of storage without disrupting users and applications

· Dynamically assign, promote, and retire storage, while providing continuous access to data as administrators upgrade or replace storage

· Increase capacity while balancing workloads and reduce or eliminate storage I/O hot spots without the need to remount shares, modify client settings, or stop running applications.

These features allow a truly non-disruptive architecture in which any component of the storage system can be upgraded, resized, or re-architected without disruption to the private cloud infrastructure.
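The idea behind a non-disruptive volume move can be shown with a toy model: clients address data by path, while the volume-to-aggregate placement changes underneath. This is a conceptual sketch only, not Data ONTAP code or its CLI; the class, method, and object names are hypothetical.

```python
class ToyCluster:
    """Tracks which node serves a volume while clients keep using one path."""

    def __init__(self):
        self.aggregate_owner = {}    # aggregate name -> node name
        self.volume_aggregate = {}   # volume name -> aggregate name
        self.junction_volume = {}    # client-visible path -> volume name

    def add_aggregate(self, aggregate, node):
        self.aggregate_owner[aggregate] = node

    def create_volume(self, volume, aggregate, junction_path):
        if aggregate not in self.aggregate_owner:
            raise KeyError(f"unknown aggregate {aggregate}")
        self.volume_aggregate[volume] = aggregate
        self.junction_volume[junction_path] = volume

    def vol_move(self, volume, destination_aggregate):
        # Only the placement changes; the client-visible path is untouched.
        if destination_aggregate not in self.aggregate_owner:
            raise KeyError(f"unknown aggregate {destination_aggregate}")
        self.volume_aggregate[volume] = destination_aggregate

    def locate(self, junction_path):
        volume = self.junction_volume[junction_path]
        aggregate = self.volume_aggregate[volume]
        return self.aggregate_owner[aggregate], aggregate


cluster = ToyCluster()
cluster.add_aggregate("aggr_sas_01", "node-01")
cluster.add_aggregate("aggr_ssd_02", "node-02")
cluster.create_volume("cinder_vol", "aggr_sas_01", "/cinder")

before = cluster.locate("/cinder")
cluster.vol_move("cinder_vol", "aggr_ssd_02")
after = cluster.locate("/cinder")
print("before:", before, "after:", after)
```

The path `/cinder` resolves throughout, which is the property that lets hosts continue without remounting when data is rebalanced across nodes.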

Availability

Shared storage infrastructure provides services to many different tenants in an OpenStack deployment. In such environments, downtime produces disastrous effects. The NetApp FAS eliminates sources of downtime and protects critical data against disaster through two key features:

· High Availability (HA). A NetApp HA pair provides seamless failover to its partner in the event of any hardware failure. Each of the two identical storage controllers in the HA pair configuration serves data independently during normal operation. During an individual storage controller failure, the data service process is transferred from the failed storage controller to the surviving partner.

· RAID-DP®. During any OpenStack deployment, data protection is critical because any RAID failure might disconnect and/or shut off hundreds or potentially thousands of end users from their virtual machines, resulting in lost productivity. RAID-DP provides performance comparable to that of RAID 10, yet it requires fewer disks to achieve equivalent protection. RAID-DP provides protection against double-disk failure, in contrast to RAID 5, which can protect against only one disk failure per RAID group, in effect providing RAID 10 performance and protection at a RAID 5 price point.
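The disk-count comparison in the RAID-DP item can be made concrete with a short calculation. The sketch below uses textbook overheads and several simplifying assumptions: a single RAID group, equal-size disks, no hot spares, and "guaranteed" meaning the worst-case number of disk failures survived. The 16-disk group size is an arbitrary example, not a sizing recommendation.

```python
def data_disks(total_disks: int, scheme: str) -> int:
    """Disks left for data in one RAID group after protection overhead."""
    if scheme == "raid10":
        return total_disks // 2      # every disk is mirrored
    if scheme == "raid5":
        return total_disks - 1       # one parity disk
    if scheme == "raid-dp":
        return total_disks - 2       # two parity disks
    raise ValueError(f"unknown scheme: {scheme}")


# Worst-case number of simultaneous disk failures survived per RAID group.
GUARANTEED_FAILURES = {"raid10": 1, "raid5": 1, "raid-dp": 2}

group_size = 16
for scheme in ("raid10", "raid5", "raid-dp"):
    print(f"{scheme:8s} data disks: {data_disks(group_size, scheme):2d}  "
          f"guaranteed failures survived: {GUARANTEED_FAILURES[scheme]}")
```

Under these assumptions a 16-disk RAID-DP group keeps 14 disks for data and survives any two failures, while RAID 10 keeps 8 and RAID 5 keeps 15 with only one guaranteed failure each.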

For more information, see: Clustered Data ONTAP 8.3 High-Availability Configuration Guide

NetApp Advanced Data Management Capabilities

This section describes the storage efficiencies, advanced storage features, and multiprotocol support capabilities of the NetApp FAS8000 storage controller.

Storage Efficiencies

NetApp FAS includes built-in thin provisioning, data deduplication, compression, and zero-cost cloning with FlexClone, which together offer multilevel storage efficiency across OpenStack instances, installed applications, and user data. This comprehensive storage efficiency enables a significant reduction in storage footprint, with a capacity reduction of up to 10:1, or 90% (based on existing customer deployments and NetApp solutions lab validation). Four features make this storage efficiency possible:

· Thin provisioning. Allows multiple applications to share a single pool of on-demand storage, eliminating the need to provision more storage for one application if another application still has plenty of allocated but unused storage.

· De-duplication. Saves space on primary storage by removing redundant copies of blocks in a volume that hosts hundreds of instances. This process is transparent to the application and the user, and it can be enabled and disabled on the fly or scheduled to run at off-peak hours.

· Compression. Compresses data blocks. Compression can be run whether or not deduplication is enabled and can provide additional space savings whether it is run alone or together with deduplication.

· FlexClone. Offers hardware-assisted rapid creation of space-efficient, writable, point-in-time images of individual VM files, LUNs, or flexible volumes. The use of FlexClone technology in OpenStack deployments provides high levels of scalability and significant cost, space, and time savings. The NetApp Cinder driver provides the flexibility to rapidly provision and redeploy thousands of instances.
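The relationship between an efficiency ratio and a percentage reduction, such as the 10:1 or 90% figure quoted above, follows from reduction = 1 - 1/ratio. A minimal sketch of that arithmetic, with illustrative function names and an arbitrary 100 TB example:

```python
def reduction_percent(ratio: float) -> float:
    """Percentage of physical capacity saved for a logical:physical ratio."""
    if ratio < 1:
        raise ValueError("ratio must be at least 1")
    return (1 - 1 / ratio) * 100


def physical_tb(logical_tb: float, ratio: float) -> float:
    """Physical capacity needed to hold logical_tb at the given ratio."""
    return logical_tb / ratio


for ratio in (2, 5, 10):
    print(f"{ratio}:1 -> {reduction_percent(ratio):.0f}% reduction, "
          f"{physical_tb(100, ratio):.0f} TB physical per 100 TB logical")
```

Actual ratios depend on the data set; the calculation only converts between the two ways of expressing the same saving.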

Advanced Storage Features

NetApp Data ONTAP provides a number of additional features, including:

· NetApp Snapshot™ copy. A manual or automatically scheduled point-in-time copy that writes only changed blocks, with no performance penalty. A Snapshot copy consumes minimal storage space because only changes to the active file system are written. Individual files and directories can easily be recovered from any Snapshot copy, and the entire volume can be restored back to any Snapshot state in seconds. A NetApp Snapshot incurs no performance overhead. Users can comfortably store up to 255 NetApp Snapshot copies per NetApp FlexVol® volume, all of which are accessible as read-only and online versions of the data.

NetApp Snapshots are taken at the FlexVol level, so they cannot be directly leveraged within an OpenStack user context. This is because a Cinder user requests that a snapshot be taken of a particular Cinder volume, not the containing FlexVol volume. Because a Cinder volume is represented as either a file on NFS or as a LUN (in the case of iSCSI or Fibre Channel), Cinder snapshots can be created by using FlexClone, which allows you to create many thousands of Cinder snapshots of a single Cinder volume.

NetApp Snapshots are, however, available to OpenStack administrators for administrative backups, for creating or modifying data protection policies, and so on.

· LIF. A logical interface that is associated with a physical port, interface group, or VLAN interface. More than one LIF may be associated with a physical port at the same time. There are three types of LIFs: NFS LIFs, iSCSI LIFs, and Fibre Channel LIFs. LIFs are logical network entities that have the same characteristics as physical network devices but are not tied to physical objects. LIFs used for Ethernet traffic are assigned specific Ethernet-based details such as IP addresses and iSCSI qualified names and then are associated with a specific physical port capable of supporting Ethernet. LIFs used for FC-based traffic are assigned specific FC-based details such as worldwide port names (WWPNs) and then are associated with a specific physical port capable of supporting FC or FCoE. NAS LIFs can be non-disruptively migrated to any other physical network port throughout the entire cluster at any time, either manually or automatically (by using policies), whereas SAN LIFs rely on MPIO and ALUA to notify clients of any change in the network topology.

· Storage Virtual Machines (SVMs). An SVM is a secure virtual storage server that contains data volumes and one or more LIFs, through which it serves data to the clients. An SVM securely isolates the shared, virtualized data storage and network and appears as a single dedicated server to its clients. Each SVM has a separate administrator authentication domain and can be managed independently by an SVM administrator.
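The Snapshot copy behavior described in this list, where a point-in-time copy consumes space only for blocks that change afterward, can be illustrated with a toy pointer-based model. This is a conceptual sketch and not how Data ONTAP is implemented; the class and the snapshot name are hypothetical.

```python
class ToyVolume:
    """Pointer-based snapshots: new writes go to new blocks, old blocks stay."""

    def __init__(self):
        self._blocks = {}     # physical block id -> data
        self._next_id = 0
        self.active = {}      # logical block number -> physical block id
        self.snapshots = {}   # snapshot name -> frozen pointer map

    def write(self, logical, data):
        # Never overwrite in place, so snapshot pointers stay valid.
        self._blocks[self._next_id] = data
        self.active[logical] = self._next_id
        self._next_id += 1

    def snapshot(self, name):
        # Copies pointers only; no data blocks are duplicated.
        self.snapshots[name] = dict(self.active)

    def read(self, logical, snapshot=None):
        pointers = self.snapshots[snapshot] if snapshot else self.active
        return self._blocks[pointers[logical]]

    def referenced_blocks(self):
        referenced = set(self.active.values())
        for pointers in self.snapshots.values():
            referenced.update(pointers.values())
        return len(referenced)


vol = ToyVolume()
for number, data in enumerate(["a0", "b0", "c0"]):
    vol.write(number, data)

vol.snapshot("hourly.0")
blocks_after_snapshot = vol.referenced_blocks()

vol.write(1, "b1")   # change one block after the snapshot
print(vol.read(1), vol.read(1, "hourly.0"), vol.referenced_blocks())
```

Taking the snapshot adds no referenced blocks; only the single overwritten block causes extra space to be held, while the snapshot still returns the original data.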

Unified Storage Architecture and Multiprotocol Support

NetApp also offers the NetApp Unified Storage Architecture. The term “unified” refers to a family of storage systems that simultaneously support SAN (through FCoE, Fibre Channel (FC), and iSCSI) and network-attached storage (NAS) (through CIFS and NFS) across many operating environments, including OpenStack, VMware®, Windows, Linux, and UNIX. This single architecture provides access to data by using industry-standard protocols, including NFS, CIFS, iSCSI, FCP, SCSI, and NDMP.

Connectivity options include standard Ethernet (10/100/1000Mb or 10GbE) and Fibre Channel (4, 8, or 16 Gb/sec). In addition, all systems can be configured with high-performance solid-state drives (SSDs) or serial-attached SCSI (SAS) disks for primary storage applications, low-cost SATA disks for secondary applications (backup, archive, and so on), or a mix of the different disk types. By supporting all common NAS and SAN protocols on a single platform, NetApp FAS enables:

· Direct access to storage for each client

· Network file sharing across different platforms without the need for protocol-emulation products such as SAMBA, NFS Maestro, or PC-NFS

· Simple and fast data storage and data access for all client systems

· Fewer storage systems

· Greater efficiency from each system deployed

NetApp Clustered Data ONTAP can support several protocols concurrently in the same storage system, and data replication and storage efficiency features are supported across all protocols. The following protocols are supported:

· NFS v3, v4, and v4.1, including pNFS

· iSCSI

· Fibre Channel

· FCoE

· SMB 1, 2, 2.1, and 3

Storage Virtual Machines

The secure logical storage partition through which data is accessed in clustered Data ONTAP is known as an SVM. A cluster serves data through at least one and possibly multiple SVMs. An SVM is a logical abstraction that represents a set of physical resources of the cluster. Data volumes and logical network LIFs are created and assigned to an SVM and can reside on any node in the cluster to which the SVM has been given access. An SVM can own resources on multiple nodes concurrently, and those resources can be moved non-disruptively from one node to another. For example, a flexible volume can be non-disruptively moved to a new node and aggregate, or a data LIF can be transparently reassigned to a different physical network port. The SVM abstracts the cluster hardware and is not tied to specific physical hardware.

An SVM can support multiple data protocols concurrently. Volumes within the SVM can be combined to form a single NAS namespace, which makes all of an SVM’s data available to NFS and CIFS clients through a single share or mount point. For example, a 24-node cluster licensed for UNIX and Windows File Services that has a single SVM configured with thousands of volumes can be accessed from a single network interface on one of the nodes. SVMs also support block-based protocols, and LUNs can be created and exported by using iSCSI, FC, or FCoE. Any or all of these data protocols can be configured for use within a given SVM.

An SVM is a secure entity. Therefore, it is aware of only the resources that have been assigned to it and has no knowledge of other SVMs and their respective resources. Each SVM operates as a separate and distinct entity with its own security domain. Tenants can manage the resources allocated to them through a delegated SVM administration account. An SVM is effectively isolated from other SVMs that share the same physical hardware, and as such, is uniquely positioned to align with OpenStack tenants for a truly comprehensive multi-tenant environment. Each SVM can connect to unique authentication zones, such as AD, LDAP, or NIS.

From a performance perspective, maximum IOPS and throughput levels can be set per SVM by using QoS policy groups, which allow the cluster administrator to quantify the performance capabilities allocated to each SVM.
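To make the idea of a per-SVM ceiling concrete, the following sketch uses a token bucket, a common rate-limiting technique. It is an assumption chosen for illustration and not a description of how Data ONTAP enforces QoS policy groups. The `IopsCeiling` class, the SVM names, and the limits are all hypothetical.

```python
class IopsCeiling:
    """Toy per-SVM IOPS ceiling implemented as a token bucket."""

    def __init__(self, max_iops: int) -> None:
        self.max_iops = max_iops
        self.tokens = float(max_iops)  # start with one second of budget
        self.last = 0.0                # time of the previous refill (seconds)

    def admit(self, now: float, ops: int = 1) -> bool:
        """Return True if `ops` operations fit under the ceiling at `now`."""
        elapsed = max(0.0, now - self.last)
        # Refill in proportion to elapsed time, capped at one second's worth.
        self.tokens = min(self.max_iops, self.tokens + elapsed * self.max_iops)
        self.last = now
        if ops <= self.tokens:
            self.tokens -= ops
            return True
        return False


# Hypothetical SVM names and limits, one ceiling per tenant SVM.
limits = {"tenant_a_svm": IopsCeiling(1000), "tenant_b_svm": IopsCeiling(250)}
tenant_b = limits["tenant_b_svm"]
print(tenant_b.admit(now=0.0, ops=200))  # fits within the 250 IOPS budget
print(tenant_b.admit(now=0.0, ops=100))  # only 50 tokens remain, so refused
print(tenant_b.admit(now=1.0, ops=100))  # budget refilled one second later
```

Because each SVM has its own ceiling object, a busy tenant exhausts only its own budget, which mirrors the way per-SVM limits let an administrator quantify what each tenant receives.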

Clustered Data ONTAP is highly scalable, and additional storage controllers and disks can easily be added to existing clusters to scale capacity and performance to meet rising demands. Because these are virtual storage servers within the cluster, SVMs are also highly scalable. As new nodes or aggregates are added to the cluster, the SVM can be non-disruptively configured to use them. New disk, cache, and network resources can be made available to the SVM to create new data volumes or to migrate existing workloads to these new resources to balance performance.

This scalability also enables the SVM to be highly resilient. SVMs are no longer tied to the lifecycle of a given storage controller. As new replacement hardware is introduced, SVM resources can be moved non-disruptively from the old controllers to the new controllers, and the old controllers can be retired from service while the SVM is still online and available to serve data.

SVMs have three main components:

· Logical interfaces. All SVM networking is done through LIFs created within the SVM. As logical constructs, LIFs are abstracted from the physical networking ports on which they reside.

· Flexible volumes. A flexible volume is the basic unit of storage for an SVM. An SVM has a root volume and can have one or more data volumes. Data volumes can be created in any aggregate that has been delegated by the cluster administrator for use by the SVM. Depending on the data protocols used by the SVM, volumes can contain either LUNs for use with block protocols, files for use with NAS protocols, or both concurrently. For access using NAS protocols, the volume must be added to the SVM namespace through the creation of a client-visible directory called a junction.

· Namespaces. Each SVM has a distinct namespace through which all of the NAS data shared from that SVM can be accessed. This namespace can be thought of as a map to all of the junctioned volumes for the SVM, regardless of the node or the aggregate on which they physically reside. Volumes can be junctioned at the root of the namespace or beneath other volumes that are part of the namespace hierarchy. For more information about namespaces, see: NetApp TR-4129: Namespaces in Clustered Data ONTAP.
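The namespace can be pictured as a lookup table from junction paths to volumes. The sketch below is a simplified illustration under that assumption: the junction paths, volume names, node names, and aggregate names are hypothetical, and the `resolve` helper is not part of any NetApp tool.

```python
from typing import Dict, Tuple

# Junction path -> (volume, node, aggregate). All names are hypothetical.
JUNCTIONS: Dict[str, Tuple[str, str, str]] = {
    "/": ("svm_root", "node-01", "aggr01"),
    "/images": ("glance_vol", "node-02", "aggr02"),
    "/cinder": ("cinder_vol1", "node-01", "aggr01"),
    "/cinder/archive": ("cinder_vol2", "node-02", "aggr02"),
}


def resolve(path: str) -> Tuple[str, str]:
    """Return (volume, path inside that volume) for a namespace path."""
    parts = [p for p in path.split("/") if p]
    # Try the longest prefix first so a nested junction wins over its parent.
    for depth in range(len(parts), -1, -1):
        junction = "/" + "/".join(parts[:depth])
        if junction in JUNCTIONS:
            volume = JUNCTIONS[junction][0]
            return volume, "/" + "/".join(parts[depth:])
    raise KeyError(path)


for client_path in ("/cinder/vol-0001", "/cinder/archive/vol-0002", "/notes.txt"):
    print(client_path, "->", resolve(client_path))
```

A client sees one directory tree, while each junction hands off to a volume that may live on a different node and aggregate. Moving a volume changes only the node and aggregate recorded for it, not the path clients use.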

For more information on Data ONTAP, see: NetApp Data ONTAP 8.3 Operating System.

NetApp E5000 Series

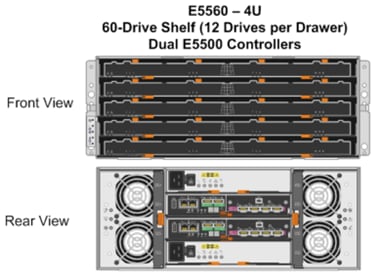

This FlexPod Datacenter solution also makes use of the NetApp E-Series E5560 storage system, primarily for the OpenStack Object Storage service (Swift). An E5560 comprises dual E5500 controllers mated with the 4U, 60-drive DE6600 chassis. The NetApp® E5500 storage system family is designed to meet the most demanding and data-intensive applications and provide continuous access to data. It is from the E-Series line, which offers zero-scheduled downtime systems, redundant hot-swappable components, automated path failover, and online administration capabilities.

The E5560 is shown in Figure 11.

Figure 11 NetApp E-Series E5560

NetApp E-Series Storage Controllers

The E5000 Series hardware delivers an enterprise level of availability with:

· Dual-active controllers, fully redundant I/O paths, and automated failover

· Battery-backed cache memory that is destaged to flash upon power loss

· Extensive monitoring of diagnostic data that provides comprehensive fault isolation, simplifying analysis of unanticipated events for timely problem resolution

· Proactive repair that helps get the system back to optimal performance in minimum time

This storage system additionally provides the following high-level benefits:

· Flexible Interface Options. The E-Series supports a complete set of host or network interfaces designed for either direct server attachment or network environments. With multiple ports per interface, the rich connectivity provides ample options and bandwidth for high throughput. The interfaces include quad-lane SAS, iSCSI, FC, and InfiniBand to connect with and protect investments in storage networking.

· High Availability and Reliability. The E-Series simplifies management and maintains organizational productivity by keeping data accessible through redundant components, automated path failover, and online administration, including online SANtricity® OS and drive firmware updates. Advanced protection features and extensive diagnostic capabilities deliver high levels of data integrity, including Data Assurance (T10-PI) to protect against silent drive errors.

· Maximum Storage Density and Modular Flexibility. The E-Series offers multiple form factors and drive technology options to best meet your storage requirements. The ultra-dense 60-drive system shelf supports up to 360TB in just 4U of space, which suits environments with large amounts of data and limited floor space. Its high-efficiency power supplies and intelligent design can lower power use by up to 40% and cooling requirements by up to 39%.

· Intuitive Management. NetApp SANtricity Storage Manager software offers extensive configuration flexibility, optimal performance tuning, and complete control over data placement. With its dynamic capabilities, SANtricity software supports on-the-fly expansion, reconfigurations, and maintenance without interrupting storage system I/O.

For more information on the NetApp E5560, see: NetApp E5500 Storage System.

NetApp SANtricity Operating System Fundamentals

With over 20 years of storage development behind it, and nearly one million systems shipped, the E-Series platform is based on a field-proven architecture that uses the SANtricity storage management software on the controllers. This OS is designed to provide high reliability and greater than 99.999% availability, data integrity, and security. The SANtricity OS:

· Delivers best-in-class reliability with automated features, online configuration options, state-of-the-art RAID, proactive monitoring, and NetApp AutoSupport™ capabilities.

· Extends data protection through FC- and IP-based remote mirroring, NetApp SANtricity Dynamic Disk Pools (DDPs), enhanced Snapshot copies, data-at-rest encryption, data assurance to ensure data integrity, and advanced diagnostics.

· Includes plug-ins for application-aware deployments of Oracle®, VMware®, Microsoft®, and Splunk® applications.

For more information, see the NetApp SANtricity Operating System product page.

Dynamic Disk Pools

DDPs increase the level of data protection, provide more consistent transactional performance, and improve the versatility of E-Series systems. DDP dynamically distributes data, spare capacity, and parity information across a pool of drives. An intelligent algorithm (seven patents pending) determines which drives are used for data placement, and data is dynamically recreated and redistributed as needed to maintain protection and uniform distribution.

Consistent Performance during Rebuilds

DDP minimizes the performance drop that can occur during a disk rebuild, allowing rebuilds to complete up to eight times faster than with traditional RAID. Therefore, your storage spends more time in an optimal performance mode that maximizes application productivity. Shorter rebuild times also reduce the possibility of a second disk failure occurring during a disk rebuild and protect against unrecoverable media errors. Stripes with several drive failures receive priority for reconstruction.

Overall, DDP provides a significant improvement in data protection; the larger the pool, the greater the protection. A minimum of 11 disks is required to create a disk pool.

How DDP Works

When a disk fails with traditional RAID, data is recreated from parity on a single hot spare drive, creating a bottleneck. All volumes using the RAID group suffer. DDP distributes data, parity information, and spare capacity across a pool of drives. Its intelligent algorithm based on CRUSH defines which drives are used for segment placement, ensuring full data protection. DDP dynamic rebuild technology uses every drive in the pool to rebuild a failed drive, enabling exceptional performance under failure. Flexible disk-pool sizing optimizes utilization of any configuration for maximum performance, protection, and efficiency.

When a disk fails in a Dynamic Disk Pool, reconstruction activity is spread across the pool, and the rebuild can complete up to eight times faster than with traditional RAID.

Figure 12 Dynamic Disk Pool
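The way a pool spreads rebuild work can be illustrated with a small simulation. This is a toy model under stated assumptions, not the DDP algorithm: placement here is plain pseudo-random sampling rather than the CRUSH-based algorithm mentioned above, the stripe width of 10 pieces (8 data plus 2 parity) is an assumption, and the pool size and stripe count are hypothetical.

```python
import random
from collections import Counter

POOL_SIZE = 24     # drives in the pool (hypothetical; the minimum is 11)
STRIPE_WIDTH = 10  # assumed pieces per stripe (8 data + 2 parity)
STRIPES = 2000     # hypothetical number of stripes in the pool

rng = random.Random(42)  # fixed seed so the run is repeatable
drives = list(range(POOL_SIZE))
# Each stripe lands on STRIPE_WIDTH distinct drives chosen pseudo-randomly.
stripes = [set(rng.sample(drives, STRIPE_WIDTH)) for _ in range(STRIPES)]

failed = 0
rebuild_writes = Counter()
for stripe in stripes:
    if failed not in stripe:
        continue  # this stripe lost nothing
    stripe.discard(failed)
    # Rebuild the lost piece on a surviving drive not already in the stripe.
    candidates = [d for d in drives if d != failed and d not in stripe]
    target = rng.choice(candidates)
    stripe.add(target)
    rebuild_writes[target] += 1

total = sum(rebuild_writes.values())
print(f"pieces rebuilt: {total}")
print(f"drives sharing the rebuild writes: {len(rebuild_writes)}")
print(f"largest share on one drive: {max(rebuild_writes.values()) / total:.1%}")
```

With a single hot spare, every rebuilt piece would be written to one drive. In this model the writes are divided among the surviving drives, which is the property that removes the hot-spare bottleneck.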

NetApp Storage for OpenStack

Most options for OpenStack integrated storage solutions aspire to offer scalability, but often lack the features and performance needed for efficient and cost-effective cloud deployment at scale.

NetApp has developed OpenStack interfaces that bring FAS and E-Series value to enterprise customers, giving them a choice in cloud infrastructure deployment, including open-source options that provide lower cost, faster innovation, unmatched scalability, and the promotion of standards. As OpenStack abstracts the underlying hardware from customer applications and workloads, NetApp enterprise storage features and functionality can be exposed through unique integration capabilities built for OpenStack. Features are passed through the interfaces so that standard OpenStack management tools (CLI, Horizon, and so on) can be used to access NetApp capabilities with simplicity and automation.

Once exposed through the OpenStack API set, NetApp technology features such as data deduplication, thin provisioning, cloning, Snapshot copies, DDPs, and mirroring become accessible. Customers can be confident that the storage infrastructure underlying their OpenStack Infrastructure as a Service (IaaS) environment is highly available, flexible, and performant.

NetApp technology is integrated with the following OpenStack services:

· OpenStack Block Storage Service (Cinder)

· OpenStack Object Storage Service (Swift)

· OpenStack Image Service (Glance)

· OpenStack Compute Service (Nova)

· OpenStack File Share Service (Manila)

Because of these integrations, users can build on this proven and highly scalable storage platform not only with greenfield deployments, as illustrated in this CVD, but also with brownfield deployments for customers who wish to optimize their existing NetApp storage infrastructure.

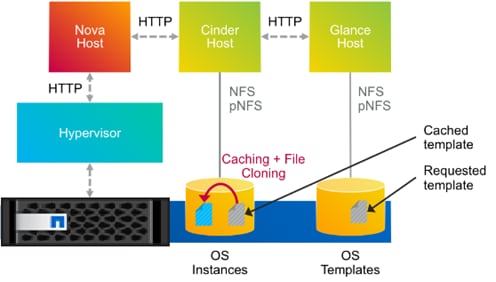

Cinder

The OpenStack Block Storage service provides management of persistent block storage resources. In addition to serving as secondary attached persistent storage, a Cinder volume can hold an image that Nova then uses as a bootable, persistent root volume for an instance.

In this Cisco Validated Design, Cinder volumes are stored on the NetApp FAS8040 storage array and accessed using the pNFS (NFS version 4.1) protocol. The pNFS protocol has a number of advantages at scale in a large, heterogeneous hybrid cloud, including dynamic failover of network paths, high performance through parallelization, and an improved NFS client.

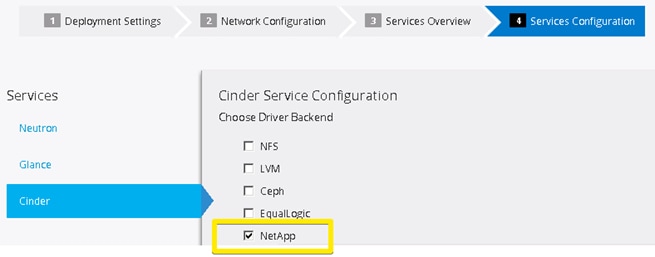

Red Hat Enterprise Linux OpenStack Platform Installer Integration

The Cinder service configuration (and the pertinent configuration files on the resulting Controller hosts) is handled automatically as part of an OpenStack deployment from within the Red Hat Enterprise Linux OpenStack Platform Installer. Customers can select NetApp within the Services Configuration pane of a deployment (Figure 13).

Figure 13 Red Hat Enterprise Linux OpenStack Platform Installer Cinder NetApp Integration

Selectable options include support for NetApp Clustered Data ONTAP, Data ONTAP 7-Mode, and E-Series platforms. In this reference architecture, NetApp Clustered Data ONTAP is selected, and the pertinent details are filled in to represent a NetApp FAS8040 storage subsystem.
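The details entered in the installer ultimately become backend options in the Cinder configuration file. The sketch below generates a stanza of the kind that might result, assuming the option names used by the upstream Cinder NetApp unified driver and NFS driver as understood at the time of writing. The backend name, hostname, login, password, and SVM name are placeholders, and the `vers=4.1` mount option is an assumption about how pNFS would be requested. Verify every option against the documentation for the deployed release.

```python
import configparser
import io

backend = "netapp_nfs"  # hypothetical backend name

conf = configparser.ConfigParser()
conf["DEFAULT"] = {"enabled_backends": backend}
conf[backend] = {
    "volume_backend_name": backend,
    "volume_driver": "cinder.volume.drivers.netapp.common.NetAppDriver",
    "netapp_storage_family": "ontap_cluster",  # clustered Data ONTAP
    "netapp_storage_protocol": "nfs",
    "netapp_server_hostname": "cluster-mgmt.example.com",  # placeholder
    "netapp_server_port": "443",
    "netapp_transport_type": "https",
    "netapp_login": "openstack_admin",  # placeholder account
    "netapp_password": "CHANGE_ME",     # placeholder secret
    "netapp_vserver": "openstack_svm",  # placeholder SVM name
    "nfs_shares_config": "/etc/cinder/nfs_shares",
    "nfs_mount_options": "vers=4.1",    # assumed way to request NFS v4.1
}

# Render the stanza as it would appear in an INI-style configuration file.
buffer = io.StringIO()
conf.write(buffer)
print(buffer.getvalue())
```

The file named by `nfs_shares_config` would list the NFS exports (one `host:/export` entry per line) that Cinder is allowed to place volumes on.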

NetApp Unified Driver for Clustered Data ONTAP with NFS

A Cinder driver is a particular implementation of a Cinder backend that maps the abstract APIs and primitives of Cinder to appropriate constructs within the particular storage solution underpinning the Cinder backend.
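The mapping from abstract operations to backend constructs can be sketched as follows. This is an illustration only: `VolumeDriver` and `NfsFileDriver` are hypothetical classes, not the Cinder driver interface or the NetApp driver, and a temporary directory stands in for a mounted NFS export. The one idea carried over is that an NFS-backed driver can represent each volume as a file on an export.

```python
import os
import tempfile
from abc import ABC, abstractmethod


class VolumeDriver(ABC):
    """Hypothetical stand-in for the abstract operations a backend offers."""

    @abstractmethod
    def create_volume(self, name: str, size_gb: int) -> str:
        """Create a volume and return a backend-specific location."""

    @abstractmethod
    def delete_volume(self, name: str) -> None:
        """Remove the volume from the backend."""


class NfsFileDriver(VolumeDriver):
    """Maps each volume to a file under a mounted NFS export."""

    def __init__(self, mount_point: str) -> None:
        self.mount_point = mount_point

    def _path(self, name: str) -> str:
        return os.path.join(self.mount_point, f"volume-{name}")

    def create_volume(self, name: str, size_gb: int) -> str:
        path = self._path(name)
        with open(path, "wb") as backing_file:
            # Set the length without writing data; most filesystems keep
            # such a file sparse, so no space is consumed up front.
            backing_file.truncate(size_gb * 1024 ** 3)
        return path

    def delete_volume(self, name: str) -> None:
        os.remove(self._path(name))


with tempfile.TemporaryDirectory() as export:  # stands in for an NFS mount
    driver = NfsFileDriver(export)
    location = driver.create_volume("demo", size_gb=1)
    print(os.path.basename(location), os.path.getsize(location))
    driver.delete_volume("demo")
```

Callers depend only on the abstract operations, so a different backend could map the same calls to LUNs or other constructs without any change on the caller's side.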