Distribution Automation—Secondary Substation Design Guide

The Cisco Distribution Automation - Secondary Substation Cisco Validated Design (CVD), which is documented in this Cisco Distribution Automation - Secondary Substation Design Guide, provides a comprehensive explanation of the Cisco Smart Grid FAN solution design for Secondary Substation monitoring use cases, including FLISR and Volt/VAR control.

The document, which includes information about the system's architecture, possible deployment models, and guidelines for deployment, also recommends best practices and highlights potential issues to consider when deploying the reference architecture.

Executive Summary

Several key business drivers underlie the optimization of the distribution grid enabled by this solution. A pervasive, highly available, and well-designed communications network will help enable increased reliability and availability while also reducing OpEx.

Cisco Systems is addressing the networking needs of the utility industry. Specifically, this Distribution Automation - Secondary Substation Design Guide highlights the communications solutions that address the utility distribution grid, with use cases such as SCADA transport; Fault Location, Isolation, and Service Restoration (FLISR); and line voltage monitoring that enables applications such as Volt/VAR Control. Monitoring field devices like transformers can offer predictive maintenance opportunities that will help eliminate customer outages and expensive unscheduled repairs and truck rolls.

The Cisco Distribution Automation validated solution, which is part of the Cisco portfolio of industry-leading, validated, and secure networking solutions for substation automation, Utility WAN, and Field Area Network Advanced Meter Infrastructure (FAN AMI), provides the following unique capabilities for distributed control and protection operations:

■ Cisco Resilient Mesh and cellular networking with FlexVPN technologies that are cost-effectively built to scale for the large number of Distribution Automation devices being enabled in the distribution grid

■ An IT-preferred security architecture, including hardware and software certification management, firewall, and malware protection with robust encryption to help ensure secure network communications and edge applications

■ Enhanced management and serviceability by Cisco Field Network Director (FND) with Zero Touch Deployment (ZTD) and plug-and-play (PnP) functionality to help enable deployment and enhance operations

■ High availability that is designed in the headend and WAN, with redundant control center support

■ Edge application capabilities within FND lifecycle-managed Cisco equipment that include deployment, monitoring, upgrading, and troubleshooting

■ End-to-end testing and validation, which are completed and documented with various Distribution Automation device vendors and use cases

The recent enhancements to Cisco Resilient Mesh have increased the available bandwidth on the 900 MHz field area network nearly tenfold over the first generation, while also reducing the latency between hops, helping enable peer-to-peer communication, and equipping the network with enhanced security features. Cisco has transformed a previously low-performance wireless mesh network that was designed for smart metering into a network that is suitable for Distribution Automation use cases.

Cellular can be applied to areas or use cases where extremely high performance is needed. Because mesh and cellular devices are managed under a single Field Network Director (FND) system, the customer receives a consistent management experience.

Security is a foundational element of any Cisco network, and this DA architecture leverages enhanced security from the control center to the edge of the distribution network. The result is a reliable, scalable, and highly available DA network over wired and wireless links, with a cellular WAN that supports large-scale DA deployments and secures communications to redundant control centers.

Deployment, ongoing operation, and management are simplified via standards-based protocols and ZTD tools for proven large-scale DA network provisioning. This is all addressed in detail as part of this design guide.

This document covers this DA communications solution, which is based on industry-leading innovations in Cisco Resilient Mesh and cellular networking technologies that are built into the Cisco CGR 1240 and CGR 1120 Connected Grid Routers; the Cisco IR510 and IR530 Wi-SUN Mesh Industrial Routers product family; the IR807, IR809, and IR1101 Industrial Router cellular gateways; and the Cisco FND management system.

Navigator

The following table describes the chapters in this document.

Audience

The audience of this guide comprises, but is not limited to, system architects, network/compute/systems engineers, field consultants, Cisco Advanced Services specialists, and customers.

Readers should be familiar with networking protocols, Network Address Translation (NAT), and SCADA protocols, and should have some exposure to the FAN Solution Architecture.

Distribution Automation Architecture for Utilities

Cisco Systems has taken a holistic approach to Distribution Automation, and, in this release, the focus will be the Utility Distribution system. The goal of Distribution Automation in the Utility grid is real-time adjustment to changing loads, distributed generation, and failure conditions within the Distribution grid, usually without operator intervention. This requires control of field devices, which implies that information technology (IT) has developed sufficiently that automated decision making exists in the field and critical information can be relayed to the Utility Control Center. The IT infrastructure includes real-time data acquisition and communication with utility databases and other automated systems. Accurate modeling of distribution operations supports optimal decision making at the control center and in the field. This depends heavily on a highly reliable and high-performing communications infrastructure. This document addresses these communications requirements as an architecture and addresses the key use cases below.

Distribution Automation technologies are commercially available for wide-scale utility deployments. The key for the utility is to identify and unlock the value that these solutions provide. Applications that may have the greatest potential are those that directly affect operations and efficiency, such as management of peak load via demand response, predictive technologies for advanced maintenance or equipment replacement, secure communications for equipment, and system restoration technologies.

Automated control of devices in distribution systems is the closed-loop control of switching devices, voltage controllers, and capacitors based on recommendations of the distribution optimization algorithms. These closed-loop systems often have rigorous communications requirements that vary from manufacturer to manufacturer and by application. The communications system must meet the most rigorous standards and do so at scale. Volt/VAR control is one of the key applications to optimize the distribution grid for the utility.

A utility fault may occur when a short circuit between two phase lines occurs or for other reasons. The fault in any one of the lines can affect a large number of customers. Before the fault on the line can be corrected, it has to be identified and isolated from the larger utility network. This identification and isolation is done by placing reclosers in the network. The reclosers are, in turn, connected to the recloser controller. The recloser controller is a connected gateway, which establishes a connection to the control center.

When a fault is identified, the reclosers perform the trip operation and the fault is isolated from the larger network. This trip operation can be automated or can be sent from the control center. Once the fault is corrected, the close operation on the circuit, which is done from the control center, can be executed. This process is commonly referred to as Fault Location, Isolation, and Service Restoration (FLISR), and is also one of the key use cases for a utility in a grid optimization effort.

This Distribution Automation architecture addresses the utility requirements for Volt/VAR and FLISR via a robust communications infrastructure that addresses the two predominant distribution automation schemes:

■ In Europe, portions of South America, and Asia, the distribution scheme is based on a more centralized transformer design and is commonly referred to as the Secondary Substation.

■ In North America, portions of South America, and along the Pacific Rim, the distribution scheme is based on a decentralized transformer model and this scheme will be referred to throughout this document as a Feeder Network.

The following architecture leverages the latest technologies and recent enhancements to best address these use cases and topologies with a variety of cell-based gateways for the Secondary Substation as well as a combination of 900 MHz mesh and cell gateways at the edge. The architecture addresses the requirements for these edge services and communications, including the edge as Neighborhood Area Network (NAN), the backhaul as Wide Area Network (WAN), and the Operations and Control Centers commonly referred to as the Headend.

Figure 1 Reference Architecture for Distribution Automation

The Headend provides aggregation and security for and between the distribution automation applications typically at the Utility Control Center. This architecture leverages a secure WAN aggregation for scalability since feeder sections may scale to hundreds or more devices with the DA network scaling to thousands of feeder segments and Secondary Substation networks with over 100,000 nodes.

As part of this architecture, the WAN segment is referred to in one of two modes, On-Net and Off-Net:

■ On-Net is a high-speed communications network owned and operated by the utility; the most common examples include SDH/SONET, Carrier Ethernet, and MPLS.

■ On the other hand, the Off-Net network is a service provider-leveraged network that can be based on the same technologies but as a shared service that often includes pre-negotiated service level agreements.

The WAN segment for DA networks is often a cellular backhaul connection because building out a private network in numerous and remote locations, especially in the Secondary Substation model, is frequently cost prohibitive. The NAN Mesh offers opportunities to leverage the On-Net network as backhaul when the radio network gateway can be co-located at a utility-owned facility such as a substation or depot.

The edge or NAN is built on a small form factor gateway or NAN router connected to the edge device such as a Capacitor Bank Controller (CBC) or voltage line monitor based on application or service. The connection to the edge device is often serial, but is rapidly moving to Ethernet. The NAN router can be configured to deliver edge services such as adaptation for serial connections via raw socket encapsulation or translation from serial protocols like IEC-101 to the packet-based IEC-104 protocol. The NAN router also provides security services such as 802.1x port-based authentication, encryption, and routing with possible alternate backhaul options, thus providing a secure connection for the edge device to the control center. The backhaul in the case of Secondary Substations is most often cellular with some satellite or DSL options.

Cisco Resilient Mesh is the latest version of the 900 MHz Connected Grid Mesh radio, with significant performance improvements that make it applicable for many Distribution Automation applications and use cases. However, it is recognized that Resilient Mesh may not be applicable for all use cases. The Distribution Feeder network will likely be a combination of mesh, where the 900 MHz radio network is feasible and where hop count and latency meet application requirements, and cellular, which augments the mesh where hop count, application performance, or latency requirements call for it.
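The mesh-versus-cellular choice described above can be sketched as a simple per-site check. This is only an illustration: the `SiteRequirement` fields, the `choose_backhaul` function, and the default per-hop latency and hop limit are hypothetical placeholders, not values validated in this guide.

```python
from dataclasses import dataclass


@dataclass
class SiteRequirement:
    name: str
    hop_count: int            # mesh hops between the site and the mesh gateway
    latency_budget_ms: float  # maximum latency the application tolerates
    mesh_feasible: bool       # whether 900 MHz mesh coverage exists at the site


def choose_backhaul(site, per_hop_latency_ms=50.0, max_hops=4):
    """Pick 'mesh' when coverage, hop count, and latency all fit; else 'cellular'.

    per_hop_latency_ms and max_hops are assumed planning figures.
    """
    if not site.mesh_feasible:
        return "cellular"
    if site.hop_count > max_hops:
        return "cellular"
    if site.hop_count * per_hop_latency_ms > site.latency_budget_ms:
        return "cellular"
    return "mesh"


if __name__ == "__main__":
    for site in (
        SiteRequirement("cbc-near", hop_count=2, latency_budget_ms=200.0, mesh_feasible=True),
        SiteRequirement("recloser-far", hop_count=3, latency_budget_ms=100.0, mesh_feasible=True),
    ):
        print(site.name, choose_backhaul(site))
```

In practice the planning figures would come from a radio survey and from the latency requirements of each application.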

Distribution Automation Use Cases

This chapter includes the following major topics:

■ Secondary Substation Monitoring and Control

■ Fault, Location, Isolation, Service Restoration

Distribution Automation refers to the monitoring and control of devices located on the distribution feeders, such as line reclosers, load break switches, sectionalizers, capacitor banks and line regulators, and devices located in the distribution substation. DA is an overlay network deployed in parallel to the distribution feeder. It enables two-way communication between controllers used in the distribution feeder and the intelligence application that resides in the Utility Control Center or Secondary Substation for improving grid reliability, availability, and control. Figure 2 depicts a radial distribution feeder.

Figure 2 Radial Distribution Feeder

In Figure 2, the distribution feeder can be observed coming out of the Secondary Substation; various distribution automation controllers (IEDs) in the feeder, such as the recloser controller, voltage regulator controller, and capacitor bank controller, are positioned along the distribution feeder. Key functions and operations of Distribution Automation include protecting the distribution system, managing the fault, measuring the energy usage, managing the assets, and controlling and managing system performance. European feeders are largely three-phase and most European countries have a standard secondary voltage of 220, 230, or 240 V.

This design guide discusses the following EMEA region Distribution Automation use cases:

■ Secondary Substation Monitoring and Control

■ Fault, Location, Isolation, Service Restoration

The radial feeder distribution system design is considered for Volt/VAR regulation use cases and the parallel feeder distribution system is considered for FLISR use cases. Cisco DA Gateways are also well suited for other feeder deployments such as mesh and loop distributed feeder designs.

Secondary Substation Monitoring and Control

Secondary Substation Role

Secondary Substations, which are part of the Distribution Automation system, are used to step down the power voltage from medium to low voltage for end consumer needs. A Secondary Substation has a bus topology that can split the distribution power off in multiple directions. Secondary Substations host transformers as well as a number of devices called intelligent electronic devices (IEDs), such as circuit breakers, voltage sensors, reclosers, surge protectors, and gateways (Secondary Substation Routers or SSRs).

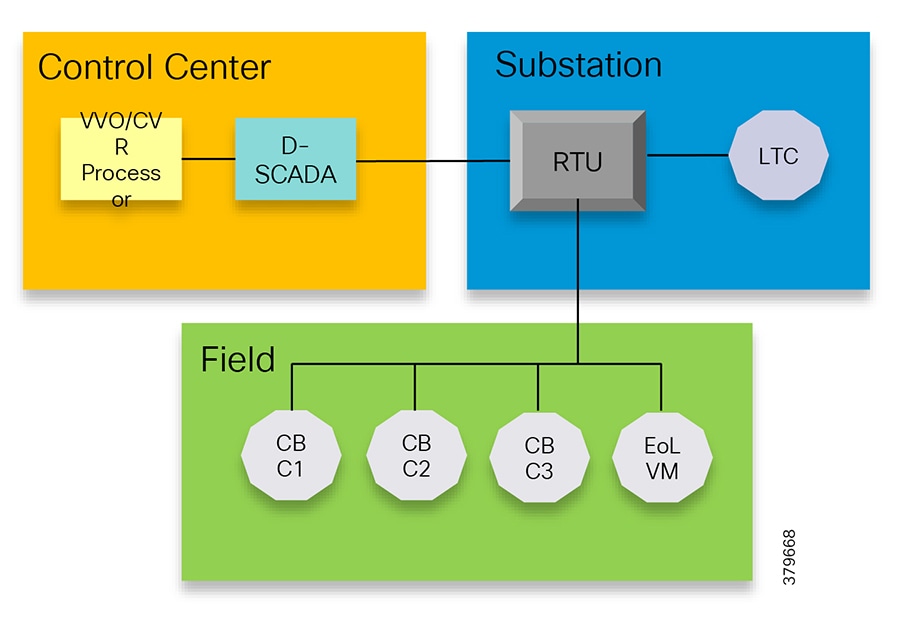

The fundamental function of the SSR is to provide reliable, two-way, real-time communication between the IED and Remote Terminal Unit (RTU) devices that reside in the Secondary Substation and backend SCADA systems running in the centralized control center of the Distribution System Operator (DSO). See Figure 3.

Figure 3 Role of Secondary Substation

Various operational functions, which will be performed on Secondary Substation IEDs/RTUs by central SCADA applications, are listed below:

1. Monitoring: A SCADA application is used to monitor the values of the MV and LV transformers' voltage and current levels using periodic poll operation. This monitoring data will be important for control, protection, and preventive maintenance functions. IEDs will be configured to send unsolicited reports to SCADA systems if measured values exceed configured thresholds or if failure conditions occur.

2. Control: Includes remote control operations such as operation of circuit breakers and switches.

3. Protection: Performs various protection functions for isolating the Secondary Substation from the transmission grid when there is a failure.
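The monitoring behavior in item 1 can be illustrated with a minimal sketch that separates values an IED would hold for the next periodic poll from values that would trigger an unsolicited report. The `Thresholds` type, the function name, and the voltage limits used here are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Thresholds:
    low: float   # assumed lower voltage limit
    high: float  # assumed upper voltage limit


def unsolicited_reports(samples, thresholds):
    """Return the samples an IED would report without waiting for a poll.

    A sample is reported when it falls outside [low, high]; all other
    samples are simply returned on the next periodic poll.
    """
    return [s for s in samples if s < thresholds.low or s > thresholds.high]


if __name__ == "__main__":
    limits = Thresholds(low=207.0, high=253.0)
    print(unsolicited_reports([230.0, 255.0, 205.0, 240.0], limits))
```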

Figure 4 depicts various Secondary Substation components such as RTUs, IEDs, SSR, and meter data concentrator:

Figure 4 Secondary Substation Components

Secondary Substation Router Functions

The Secondary Substation Router aggregates traffic from various IEDs and RTUs and routes traffic to both primary and secondary regional Control Centers hosted by the DSO via public connectivity options such as cellular (LTE) or leased line (Ethernet/Fiber) on an IPv4 or IPv6 backhaul. The SSR encrypts the application traffic using an IPsec tunnel to maintain confidentiality of application data over the public network. The headend router (HER) in the DSO Control Center aggregates the secured tunnels from multiple SSRs and decrypts the encrypted traffic; after decryption, the traffic is routed to the various SCADA applications.

The uplink WAN connectivity, routing, backhaul redundancy, QoS, encryption, and NAT features that will be performed on the SSR are discussed in detail in Design Considerations.

The RTU, having legacy RS232/RS485 interfaces, can be directly connected to the SSR serial interfaces. Raw sockets or protocol translation techniques will be used to transport legacy application traffic such as T101 to the control center. Raw sockets and protocol translation techniques are discussed in detail in Design Considerations.
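As a rough illustration of the raw socket idea, the sketch below splits a serial byte stream into payloads without interpreting the bytes, which is what distinguishes raw socket transport from protocol translation. The function name and the fixed maximum payload size are assumptions; an actual router may also flush on timers or delimiter characters, which are not modeled here.

```python
from typing import List


def packetize(serial_bytes: bytes, max_payload: int) -> List[bytes]:
    """Split an opaque serial byte stream into payloads of at most max_payload bytes.

    The bytes are carried unchanged; no serial protocol fields are parsed.
    """
    if max_payload <= 0:
        raise ValueError("max_payload must be positive")
    return [
        serial_bytes[i:i + max_payload]
        for i in range(0, len(serial_bytes), max_payload)
    ]


if __name__ == "__main__":
    frame = bytes([0x68, 0x04, 0x07, 0x00, 0x00, 0x00])  # arbitrary example bytes
    payloads = packetize(frame, max_payload=4)
    print(payloads)
    # The receiving side recovers the original stream by concatenation.
    print(b"".join(payloads) == frame)
```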

IPv4 IEDs can be directly connected to the Ethernet port of the SSR, which can act as a Dynamic Host Configuration Protocol (DHCP) relay agent to provide IP addresses to the IEDs (IEDs need to support DHCP client functionality) and as a dot1x relay agent (IEDs need to support the dot1x supplicant feature) to perform device-level authentication. If a modern IED supports IPv6 addressing, the SSR can route the IPv6 traffic to IPv6 SCADA applications residing in the control center.

The SSR can aggregate and route meter concentrator data to a metering application residing in the DSO Control Center. It can also be used to transport IP camera traffic and asset monitoring traffic to DSO Control Center applications.

For advanced use cases like Distributed Energy Resources (DER), a fiber will be extended from the Secondary Substation to connect various IEDs residing in the consumer premises. This interface is called the extended LAN interface. A future version of this guide will address this use case.

Design Considerations discusses in detail how the SSR can be deployed in secured Zero Touch fashion over a public internet connection and how different configuration profiles for each vertical use case can be pushed to the SSR.

Volt/VAR Control

Volt/VAR Control Use Case and Benefits

This use case addresses automating dynamic and efficient delivery of power. Utilities look to achieve large savings by enhancing the efficiency of their power distribution infrastructure, in other words, by improving the effectiveness of the flow of electricity. In order to evaluate the process, it is important to review the differences between what is called real power and reactive power:

■ Real power is used to run all lights, devices and production lines. It is the power that “does the work.”

■ Reactive power does not contribute anything to doing work, but it does cause conductors to heat up and it takes up a certain amount of “space” in the wires.

The more reactive power flowing on a line, the less “room” there is for real power and the less efficient the distribution system is.
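The relationship between real and reactive power can be made concrete with the standard power triangle: apparent power is the vector sum of real and reactive power, and power factor is the ratio of real to apparent power. The sketch below uses illustrative load figures; the function names are not taken from any Cisco or SCADA API.

```python
import math


def apparent_power(p_kw: float, q_kvar: float) -> float:
    """Apparent power S (kVA) from real power P (kW) and reactive power Q (kvar)."""
    return math.hypot(p_kw, q_kvar)


def power_factor(p_kw: float, q_kvar: float) -> float:
    """Power factor P / S; 1.0 means no reactive power is flowing."""
    s = apparent_power(p_kw, q_kvar)
    if s == 0:
        raise ValueError("no load: power factor is undefined")
    return p_kw / s


if __name__ == "__main__":
    # Illustrative feeder load: 800 kW real, 600 kvar reactive.
    print(apparent_power(800.0, 600.0))
    print(power_factor(800.0, 600.0))
    # The same real load with the reactive power fully compensated.
    print(power_factor(800.0, 0.0))
```

With 600 kvar of reactive flow, the conductor must carry 1000 kVA to deliver 800 kW of real power; with the reactive flow removed, it carries only 800 kVA.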

Today, in order to eliminate or at least minimize reactive power flows, utilities have deployed devices on their local distribution systems, such as capacitor banks or special transformers, that are typically located at substations or on the feeder. These devices work to keep reactive power flows down, making the full capacity of the conductor available for the real power. This process is known as Volt/VAR regulation or control:

■ Power Factor Regulation/VAR Compensation: Improves efficiency of energy supply by ensuring voltage and current are in phase when supplied to the customer.

■ Conservation Voltage Regulation: At times of peak load, ensures the minimum required voltage level is supplied to the customer.

■ Volt/VAR Control: Power factor regulation + Conservation voltage regulation.

Volt/VAR Actors

Figure 5 depicts various actors used in the Volt/VAR use case. The actors used in the Volt/VAR use case are Load Tap Changers, Voltage Regulators, and Capacitor Bank Controllers (CBC).

Voltage Regulator and Load Tap Controllers

Voltage regulation functions are performed using the Voltage Regulator/Load Tap Controller actors. Voltage can be raised or lowered based on load conditions. Voltage Regulators are types of transformers that make small adjustments to voltage levels in response to changes in load. They are installed in substations (where they are called load tap changers) and along distribution feeders to regulate downstream voltage. Voltage Regulators have multiple “raise” and “lower” positions and can automatically adjust according to feeder configurations, loads, and device settings.

Capacitor Bank Controllers

Capacitor Bank Controllers (CBCs) are used to supply reactive power. Utilities use capacitor banks to compensate for reactive power requirements caused by inductive loads from customer equipment, transformers, or overhead lines. Compensating for reactive power reduces the total amount of power that needs to be provided by power plants, resulting in a flatter voltage profile along the feeder and less energy wasted from electrical losses in the feeder. A distribution capacitor bank consists of a group of capacitors connected together. Capacitor banks are mounted on substation structures or distribution poles, or are “pad-mounted” in enclosures.
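How much reactive power a capacitor bank needs to supply can be estimated with the standard compensation formula Q_c = P (tan φ1 − tan φ2), where φ1 and φ2 are the phase angles at the present and target power factors. The sketch below applies that formula; the function name and load figures are illustrative assumptions.

```python
import math


def required_compensation_kvar(p_kw: float, pf_now: float, pf_target: float) -> float:
    """Capacitive kvar needed to move a load from pf_now to pf_target.

    Uses Q_c = P * (tan(acos(pf_now)) - tan(acos(pf_target))).
    """
    for pf in (pf_now, pf_target):
        if not 0.0 < pf <= 1.0:
            raise ValueError("power factor must be in (0, 1]")
    return p_kw * (math.tan(math.acos(pf_now)) - math.tan(math.acos(pf_target)))


if __name__ == "__main__":
    # Illustrative load: 800 kW at a lagging power factor of 0.8.
    print(required_compensation_kvar(800.0, 0.8, 1.0))
    print(required_compensation_kvar(800.0, 0.8, 0.95))
```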

Volt/VAR Application Flow

In Figure 6, Volt/VAR and SCADA applications are hosted in the DSO Control Center and RTU and load tap controllers are located in the Secondary Substation. The RTU acts as an outstation device that proxies the poll and/or control command to various field devices like the CBC and end-of-line voltage monitor. This guide covers the use case scenario where the Volt/VAR application flow between IED and SCADA happens via RTU and the distribution feeder type considered is radial. A direct application flow from field devices to the control center for the Volt/VAR use case will be covered in future guides.

Figure 6 Volt/VAR Use Case Block Diagram

The detailed application flow between different actors for power factor regulation is depicted in Figure 7:

Figure 7 Power Factor Regulation

1. Event class data poll to the following devices from the RTU:

– Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

– All CBC(s), poll measured Value (Short Floating Point) (0) and double point command (0)

– End-of-line voltage monitor, poll measured Value (Short Floating Point) register (0)

2. The Volt/VAR Optimization processor processes the data received from the devices and makes a control command decision based on the power factor calculation.

3. The control command is sent via SCADA to the RTU, and from the RTU to the CBCs, to close Capacitor Bank Controller N by writing to a Control Relay Output Block (CROB) command register in T104 (the IP packet-based IEC-104 protocol).

4. Event class data poll to the following devices from the RTU:

– Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

– All CBC(s), poll measured Value (Short Floating Point) (0) and double point command (0)

– End-of-line voltage monitor, poll measured Value (Short Floating Point) register (0)

5. All of the above steps are repeated on all the CBCs on the feeder line to maintain a Power Factor value close to 1.
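The poll, decide, command, and re-poll cycle above can be sketched as a small simulation. This is a simplified model rather than the Volt/VAR Optimization processor's actual algorithm: the `CapacitorBank` type, the target power factor, the rule of closing the largest bank that does not overcompensate, and all load figures are assumptions, and real power is assumed to be greater than zero.

```python
import math
from dataclasses import dataclass


@dataclass
class CapacitorBank:
    name: str
    kvar: float
    closed: bool = False


def net_reactive(load_kvar, banks):
    """Reactive power still flowing on the feeder after closed banks compensate."""
    return load_kvar - sum(b.kvar for b in banks if b.closed)


def power_factor(p_kw, q_kvar):
    return p_kw / math.hypot(p_kw, q_kvar)


def regulate(p_kw, load_kvar, banks, target_pf=0.98):
    """Close banks one at a time, re-polling after every command."""
    commands = []
    while True:
        q = net_reactive(load_kvar, banks)      # steps 1 and 4: poll measurements
        if power_factor(p_kw, q) >= target_pf:  # step 2: power factor decision
            break
        candidates = [b for b in banks if not b.closed and b.kvar <= q]
        if not candidates:
            break                               # nothing left that avoids overcompensation
        bank = max(candidates, key=lambda b: b.kvar)
        bank.closed = True                      # step 3: close command to the CBC
        commands.append(bank.name)
    return commands


if __name__ == "__main__":
    banks = [CapacitorBank("CBC1", 300.0), CapacitorBank("CBC2", 200.0),
             CapacitorBank("CBC3", 150.0)]
    print(regulate(800.0, 600.0, banks))
    print(power_factor(800.0, net_reactive(600.0, banks)))
```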

Figure 8 depicts the detailed call flow involved in conservation voltage regulation:

Figure 8 Conservation Voltage Regulation

1. Event class data poll to the following devices from the RTU:

– Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

– All CBC(s), poll measured Value (Short Floating Point) (0) and double point command (0)

– End-of-line voltage monitor, poll measured Value (Short Floating Point) register (0)

2. The Volt/VAR Optimization processor processes the data received from the devices and makes a control command decision based on the power factor calculation.

3. The control command is sent via SCADA to the RTU, and from the RTU to the load tap controller, to lower or raise the LTC by writing to a Control Relay Output Block (CROB) command register in T104.

4. Event class data poll to the following devices from the RTU:

– Substation meter, poll measured Value (Short Floating Point) registers (0 to 4)

– All CBC(s), poll measured Value (Short Floating Point) (0) and double point command (0)

– End-of-line voltage monitor, poll measured Value (Short Floating Point) register (0)

5. The above steps are repeated to maintain a Power Factor value close to 1 along the feeder line.
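A minimal sketch of the raise/lower decision is shown below. It is not the guide's algorithm: it assumes the decision is driven by the end-of-line voltage reading, that each tap step shifts that voltage by a fixed amount, and that 216 V is the minimum acceptable level. All of these values and names are hypothetical.

```python
def tap_command(end_of_line_v, min_v, step_v):
    """Return 'raise', 'lower', or 'hold' for the load tap changer."""
    if end_of_line_v < min_v:
        return "raise"                     # customer voltage is below the minimum
    if end_of_line_v - step_v >= min_v:
        return "lower"                     # room to conserve without violating the minimum
    return "hold"


def regulate_voltage(end_of_line_v, min_v=216.0, step_v=1.5, max_steps=16):
    """Repeat poll and command until the tap position should be held."""
    commands = []
    for _ in range(max_steps):
        cmd = tap_command(end_of_line_v, min_v, step_v)
        if cmd == "hold":
            break
        commands.append(cmd)
        # Assumed effect of one tap step on the end-of-line reading.
        end_of_line_v += step_v if cmd == "raise" else -step_v
    return commands, end_of_line_v


if __name__ == "__main__":
    print(regulate_voltage(221.0))
    print(regulate_voltage(214.0))
```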

Fault, Location, Isolation, Service Restoration

FLISR Use Case and Benefits

Fault, Location, Isolation, Service Restoration (FLISR) is the process for dealing with fault conditions on the electrical grid. The following occurs as part of this process:

1. Detects (and locates) faults

2. Isolates the faults to the smallest segment of the grid possible

3. Restores as much service as possible while the fault is isolated

FLISR includes automatic sectionalizing and restoration and automatic circuit reconfiguration. These applications accomplish DA operations by coordinating operation of field devices, software, and dedicated communication networks in order to automatically determine the location of a fault and rapidly reconfigure the flow of electricity so that some or all of the customers can avoid experiencing outages. Because FLISR operations rely on rerouting power, they typically require feeder configurations that contain multiple paths to single or multiple other substations. This creates redundancies in the power supply for customers located downstream or upstream of a downed power line, fault, or other grid disturbance.
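The locate, isolate, and restore sequence can be illustrated on a simplified feeder model. The sketch assumes a single feeder divided into numbered sections, fed from a source substation at section 0, with a normally open tie switch at the far end that connects to an alternate substation. The model and function name are hypothetical and ignore capacity limits on the alternate source.

```python
def flisr_plan(num_sections, faulted):
    """Return which sections are isolated and how the others are restored.

    Sections are numbered 0..num_sections-1 starting at the source substation.
    """
    if not 0 <= faulted < num_sections:
        raise ValueError("faulted section is outside the feeder")
    return {
        # Open the switches on both sides of the faulted section.
        "isolated": [faulted],
        # Upstream sections are re-energized from the original substation.
        "restored_from_source": list(range(0, faulted)),
        # Downstream sections are fed by closing the tie switch.
        "restored_via_tie": list(range(faulted + 1, num_sections)),
    }


if __name__ == "__main__":
    print(flisr_plan(num_sections=5, faulted=2))
```

Only the faulted section stays de-energized, which is why feeder configurations with multiple supply paths are a prerequisite for FLISR.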

The benefits of FLISR include:

■ Consumers experience minimal outage.

■ Utilities improve the System Average Interruption Duration Index (SAIDI) and the System Average Interruption Frequency Index (SAIFI) numbers and avoid financial penalties being levied by the regulator.
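SAIFI and SAIDI are commonly computed as total customer interruptions and total customer interruption minutes, each divided by the number of customers served. The sketch below applies those definitions to illustrative outage records; the data and function names are assumptions made for the example.

```python
def saifi(interruptions, customers_served):
    """Average number of interruptions per customer served.

    interruptions is a list of (customers_affected, duration_minutes) tuples.
    """
    return sum(n for n, _ in interruptions) / customers_served


def saidi(interruptions, customers_served):
    """Average interruption minutes per customer served."""
    return sum(n * minutes for n, minutes in interruptions) / customers_served


if __name__ == "__main__":
    # Illustrative year: two outages on a system serving 10,000 customers.
    events = [(500, 90.0), (200, 30.0)]
    print(saifi(events, 10_000))
    print(saidi(events, 10_000))
```

Because FLISR shrinks both the number of customers affected by a sustained outage and the time they remain without power, it lowers both indices.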

FLISR application control can be implemented in the following modes:

■ Supervised Mode: No automatic control is performed. The system delivers information to the operator, who initiates manual control actions. Restoration time is longer with this approach. Please refer to the Secondary Substation 1.0 Implementation Guide, which addresses this use case, at the following URL:

– https://salesconnect.cisco.com/#/search/Secondary%2520Substation%2520Implementation%2520Guide/content

■ Semi Automatic Mode: A mix of automatic and supervised control is followed. The DA system automatically isolates the fault and restores the upstream section, which lies between the substation and the faulted section. The downstream section, which lies between the faulted section and the end of the feeder, is restored manually. This guide addresses this mode of operation. In this mode, communication takes place between the IEDs in the field and the Distribution Management System (DMS) application residing in the control center.

■ Fully Automatic Mode: Isolation and restoration happen automatically without any dispatcher intervention. Communication happens directly between a group of associated IEDs. Restoration is very fast (<1 second), but this mode is complex to deploy.

How FLISR Works

Figure 9 is divided into four parts (A, B, C, and D) to show how FLISR operations typically work.

■ In Part A of Figure 9, the FLISR system locates the fault, typically using line sensors that monitor the flow of electricity, measure the magnitudes of fault currents, and communicate conditions to other devices and grid operators.

■ Once the fault is located, FLISR opens switches on both sides of it: one immediately upstream and closer to the source of power supply (Part B of Figure 9), and one downstream and further away (Part C of Figure 9).

■ The fault is now successfully isolated from the rest of the feeder. With the faulted portion of the feeder isolated, FLISR next closes the normally open tie switches to neighboring feeders. This re-energizes the unfaulted portion(s) of the feeder and restores service, from another substation/feeder, to all customers served by these unfaulted feeder sections (Part D of Figure 9).
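The isolate-and-restore sequence above can be sketched as a small planning function. This is a minimal illustration, assuming a single radial feeder with one normally open tie switch at its far end. The section numbering, the switch names (`SW0`, `SW1`, ..., `TIE`), and the function itself are hypothetical and are not part of any DMS product.

```python
def flisr_plan(num_sections, faulted):
    """Plan switching for a radial feeder with a normally open tie at its far end.

    Sections are numbered 0..num_sections-1 starting at the substation.
    Switch i sits on the substation side of section i (SW0 is the feeder
    recloser); "TIE" connects the last section to a neighboring feeder.
    """
    if not 0 <= faulted < num_sections:
        raise ValueError("faulted section out of range")

    last = num_sections - 1
    to_open = [f"SW{faulted}"]                # immediately upstream of the fault
    if faulted < last:
        to_open.append(f"SW{faulted + 1}")    # immediately downstream of the fault

    downstream = list(range(faulted + 1, num_sections))
    # Back-feed through the tie only if there are unfaulted sections beyond the fault.
    to_close = ["TIE"] if downstream else []

    return {
        "open": to_open,
        "close": to_close,
        "isolated": [faulted],
        # Upstream sections stay on the original source once isolation is complete.
        "fed_from_substation": list(range(0, faulted)),
        "fed_from_tie": downstream,
    }


plan = flisr_plan(num_sections=4, faulted=1)
print(plan)
```

For a four-section feeder with a fault in section 1, the plan opens `SW1` and `SW2`, closes `TIE`, keeps section 0 on the original substation, and back-feeds sections 2 and 3 from the neighboring feeder.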

FLISR Actors

■ Recloser: The circuit recloser is a self-contained device with the monitoring circuitry necessary to detect and interrupt over-current conditions and automatically reclose the line.

■ Sectionalizing Switch or Remote Control Switch: Remote Control Switches can be load-break or fault-interrupting devices.

■ Remote Fault Indicator: Used to detect faults.

■ Distribution Management System (DMS): The DMS application residing in the DSO Control Center is the intelligent application that acts as the brain of the FLISR system and performs the circuit reconfiguration logic.

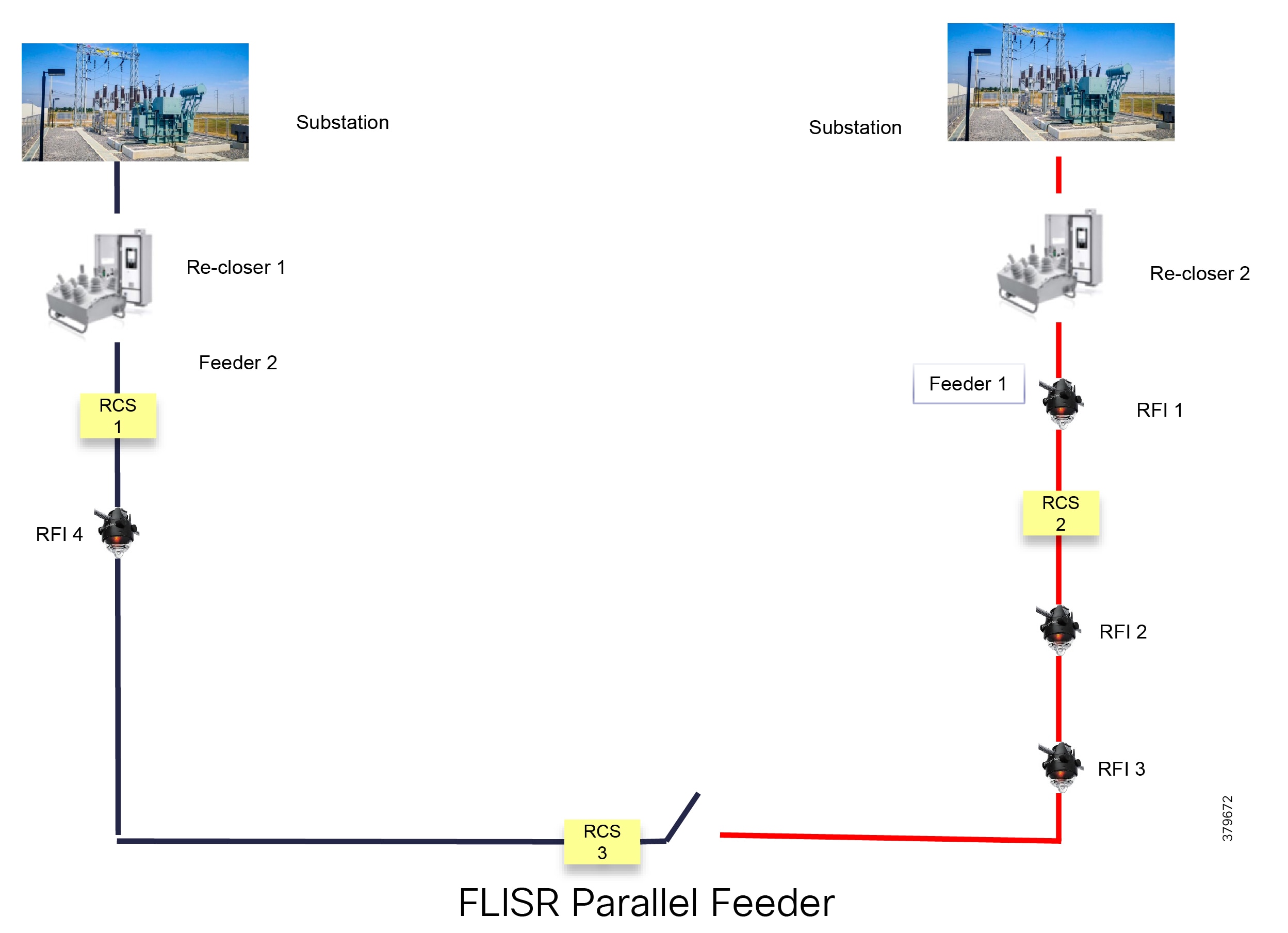

Figure 10 depicts a parallel feeder distribution system. Two distribution feeders come out of two different Secondary Substations, and each feeder has a recloser associated with it. Remote Fault Indicators and Remote Control Switches are distributed across both feeders. RCS 3 is, by default, an open switch.

Figure 10 FLISR Parallel Feeder

Figure 11 FLISR Application Flow

In Figure 11, the application flow runs directly from the feeder devices to the DMS application in the DSO Control Center. The flow is summarized below:

1. Remote Fault Indicator (RFI) 1 reports to the Distribution Management System (DMS) whenever it encounters a fault.

2. Recloser2 opens and sends a report to the DMS when it encounters a temporary fault.

3. Recloser2 opens and sends a report to the DMS when it encounters a permanent fault.

4. Remote Control Switch (RCS) 2 reports no voltage status to the DMS.

5. RCS 2 opens when it encounters a fault for the second time and sends a report to the DMS.

6. The DMS issues a close command to RCS 3.

7. The DMS initiates a periodic poll (every minute) for all feeder devices.

8. The DMS initiates a solicited periodic poll (once every 5 minutes) for all feeder devices.
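The report-then-command part of this flow can be sketched as a simple event replay. The rule used here, that the DMS closes RCS 3 only after it has seen both the permanent-fault report from Recloser2 and the open report from RCS 2, is an assumption inferred from the ordering of the steps above. The event labels and the function name are hypothetical.

```python
def dms_commands(events):
    """Replay (device, report) events and return the commands the DMS issues."""
    permanent_fault = False
    rcs2_open = False
    commands = []
    for device, report in events:
        if (device, report) == ("Recloser2", "open_permanent_fault"):
            permanent_fault = True
        elif (device, report) == ("RCS2", "open"):
            rcs2_open = True
        # Assumed rule: restore via the tie switch once the fault is confirmed
        # as permanent and the faulted section has been isolated by RCS 2.
        if permanent_fault and rcs2_open and ("RCS3", "close") not in commands:
            commands.append(("RCS3", "close"))
    return commands


# Hypothetical event log following steps 1 to 5.
event_log = [
    ("RFI1", "fault"),
    ("Recloser2", "open_temporary_fault"),
    ("Recloser2", "open_permanent_fault"),
    ("RCS2", "no_voltage"),
    ("RCS2", "open"),
]

print(dms_commands(event_log))
```

With a temporary fault only, no command is issued, which matches the intent that the tie switch is closed only when the fault persists.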

Solution Architecture

This chapter includes the following major topics:

■ Distribution Automation Solution Architecture

Places in the Network

The DA Solution is a subset of the Field Area Network (FAN) solution architecture and follows a similar two-tier places-in-the-network model. The WAN tier connects the control center block with the Secondary Substation block or field area block. In turn, the field area or Secondary Substation blocks connect to utility device blocks via different last mile connectivity methods such as Ethernet, Serial, or Wi-Fi.

Figure 12 Distribution Automation Places in the Network

Control Center Block

The control center block acts as a data center for DSOs, which organize these blocks per region (called Regional Network Operating Centers or NOCs). Regional NOCs host the various SCADA applications needed to perform centralized management for the vertical use cases discussed in Distribution Automation Use Cases. The control center blocks house the HER in clustering mode. As per the Cisco DA solution architecture, the ASR 1000 series of routers will be deployed as the HER. The role of the HER is to terminate and aggregate IPSec tunnels from various DA Gateways and SSRs. Additional responsibilities include enforcement of QoS and security policies.

The control center block hosts the IoT Field Network Director (FND), which is a network management system for managing the various gateways. It also hosts the certificate authorities, which support RSA and elliptic-curve cryptography (ECC), and the AAA server, which provides authentication, authorization, and accounting.

For details on the design of the control center, please refer to Cisco FAN-Headend Deep Dive Implementation and FAN Use Cases at the following URL:

■ https://salesconnect.cisco.com/open.html?c=da249429-ec79-49fc-9471-0ec859e83872

The control center security design, with respect to IPS/IDS functionality and application load balancing, will be addressed in detail in upcoming releases. For the current version of the security design, refer to the Cisco Connected Utilities - Field Area Network 2.0 Design and Implementation Guide at the following URL:

■ https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Utilities/FAN/2-0/CU-FAN-2-DIG.html

Secondary Substation Block

A key component of the Secondary Substation block is the Secondary Substation Router (SSR). Cisco IR1101, IR807, and CGR1120 series routers will be deployed as SSRs, which connect and aggregate traffic from various IEDs and RTUs present in Secondary Substations to single or dual Control Centers.

Field Area Block

The field area block hosts various distribution automation gateways. Cisco IR1101, IR807, and IR809 will be deployed as DA Gateways. Most of the DA Gateways will be installed 1:1 to DA Controllers. DA Gateways will connect primarily to cellular or Ethernet backhaul. DA Gateways are managed from the IoT FND Network Management System (NMS) application residing in the control center block. DA Gateways are deployed in zero touch fashion. Zero Touch Deployment (ZTD) of DA Gateways is discussed in detail in Network Management System. DA Gateways will be provisioned based on application use cases and last mile connectivity to the utility control devices.

Utility Devices Block

The utility devices block hosts various DA controllers such as voltage regulator controllers, recloser controllers, RCSs, and CBCs. These controllers are connected to DA Gateways via Ethernet or serial (RS232, RS485) interfaces.

Distribution Automation Solution Architecture

The Distribution Automation Solution Architecture, which is depicted in Figure 13, is a centralized architecture where the control center, which plays a key role, hosts communication, security, utility, and network management applications. The DSO organizes this control center as a regional DSO NOC that aggregates ICT traffic from different SSRs and Distribution Automation gateways in that region.

The firewall segregates the control center into three zones: the external, DMZ, and internal zones.

– An HER interface, which will be installed in clusters for redundancy and scaling purposes

– The Registration Authority (RA), which offloads authentication and authorizing functionalities of the CA and leverages the AAA server for authorization

– The Tunnel Provisioning Server (TPS), which is the proxy for the NMS FND

■ The Internal Zone hosts the Network Time Protocol (NTP), Domain Name System (DNS), and DHCP services; NMS and Edge Application management using FND; the Field Network Director Database (FND-DB); PKI elements like the CA, Active Directory, and AAA; and DA applications like SCADA and DMS.

Figure 13 Distribution Automation Solution Architecture

The DA application bidirectional flow can be classified as follows:

1. SCADA <----> RTU <----> IEDs

2. SCADA <----> IEDs

3. IEDs <----> IEDs

This section addresses the solution architecture for the above three flows.

SCADA <----> RTU <----> IEDs

Bidirectional application traffic between the field IEDs and SCADA in the control center flows via the RTU, which is located in the Secondary Substation. Application traffic is depicted by the yellow arrow in Figure 14:

Figure 14 SCADA RTU IED Flow DA Design

The ICT solution design for this application flow is as follows:

■ DA Gateways are installed 1:1 with the controllers, with Ethernet or serial last mile connectivity.

■ DA Gateways will have public WAN connectivity; in most deployment cases, it would be cellular backhaul.

■ Application traffic would be encrypted using FlexVPN. In this architecture, tunnels from the DA Gateways are terminated on the SSR.

■ The WAN IP address of the Secondary Substation should be a static (fixed IP) address.

■ SSRs route traffic from the IEDs to the RTU, which is co-located.

■ The RTU processes this application traffic as unsolicited reporting or as a response to a poll/control command.

■ The RTU sends the DA application traffic to the SCADA application in the control center.

■ To transport this application traffic, the SSR will have a secure, encrypted FlexVPN tunnel to the HER, which resides in the control center.

For redundancy purposes, two tunnels will be destined to two different regional Control Centers. These tunnels will be designed and deployed as active/active tunnels. Separate control traffic will exist from the NMS (that is, FND) to the DA Gateways and SSRs. This is depicted by the green arrow in Figure 14. As per this design, DA Gateways will have one FlexVPN tunnel for NMS application traffic and a separate FlexVPN tunnel for application traffic. Similarly, the SSR will have two FlexVPN tunnels to two different regional Control Centers. Application and control traffic can flow through the same FlexVPN tunnel. If required, a third FlexVPN tunnel from SSR to HER can be provisioned for control traffic.

SCADA <----> IEDs

Figure 15 depicts a solution architecture where IEDs can directly communicate with the centralized SCADA. In this design, DA Gateways and SSRs directly connect to HER in the regional control center via public WAN connectivity. For redundancy design, DA Gateways can have two active/active tunnels to two different regional Control Centers. DA application traffic and NMS control traffic can flow in the same FlexVPN tunnel.

Figure 15 SCADA-IED Flow DA Design

Figure 16 depicts the IEC61850 MMS communication flow between SCADA and IEDs:

Figure 16 IEC 61850 MMS Communication Flow with IEDs in Local LAN and Extended LAN

Figure 16 highlights two types of communication flows. One communication flow happens from the control center to the MMS IED located in the local LAN. The second communication flow happens from the control center to the MMS IED located in the Extended LAN (for example, fiber extension to DER sites).

IEDs <----> IEDs

The two types of IED to IED communication are:

Locally-Switched IED Communication

In the case of locally switched IED communication (refer to Figure 17), the IEC61850 Layer 2 GOOSE message flows between the devices located in the substation local LAN and the devices located in the extended LAN. This communication is locally switched at the IR1101 cellular gateway itself rather than being switched at the hub.

Although the communication is locally switched, should there be any requirement to forward the Layer 2 GOOSE message to other substation GOOSE devices, IR1101 could be configured to forward these Layer 2 messages to the Hub (HER) for switching at the hub to other substation devices.

Figure 17 IED-IED Flow Locally-Switched DA Design

In Figure 17, GOOSE messages originate from the Local LAN and are locally switched at the IR1101 Cellular Gateway to the devices located in the Extended LAN.

The LAN extension is facilitated with the introduction of a Cisco Industrial Ethernet 2000U series switch, which is connected to the SFP port of the IR1101 expansion module. The SFP port of the expansion module is a Layer 2 port, which could be configured as a trunk port allowing both the GOOSE VLAN and the MMS VLAN.

The GOOSE device could be connected to the IE2000U switch. If the connected GOOSE device is capable of VLAN tagging, the interface on the IE2000U switch could be configured as a trunk port allowing the desired VLAN; additionally, an SVI needs to be created for the corresponding VLAN ID. Otherwise, the interface could be configured as an access port to tag the incoming GOOSE traffic with the GOOSE VLAN.

As a side note, the interface on the IE2000U switch connecting to the MMS device could be configured as an access port, tagging the incoming MMS packets with the MMS VLAN:

■ It is recommended to connect the IE2000U to the GigabitEthernet0/0/5 (SFP port) of the expansion module. This interface could be configured as a trunk port carrying multiple VLANs for Layer 2 GOOSE and Layer 3 MMS protocols.

■ Multiple Layer 2 (bridge) domains could be created by configuring multiple GOOSE VLANs.

Should there be any requirement to forward the Layer 2 GOOSE traffic to the GOOSE devices located in other substations, the northbound Layer 2 connectivity to the Hub could be enabled by configuring the L2TPv3 pseudowire on the GOOSE SVI. At the same time, Layer 3 connectivity could be enabled by configuring the IP address on the MMS SVI.

Hub-Switched IED Communication

Layer 2 frames need to be forwarded between multiple GOOSE IEDs connected to multiple IR1101s. However, the IR1101s are connected using the Layer 3 cellular network. In order to transport Layer 2 communication over the Layer 3 cellular network, an overlay Layer 2 communication infrastructure would be added over the existing secure FlexVPN tunnel.

Figure 18 depicts the solution architecture to enable Layer 2 communication between IEDs over the cellular backhaul. This is achieved with the help of a virtual bridge (emulated using a hub-and-spoke topology). In Figure 18, the bridging operation is performed in the control center using the hub. The DA cellular gateways act as spokes (sending/receiving Layer 2 GOOSE messages). The IEC 61850 Layer 2 GOOSE messages sent by an IED connected to one DA gateway are forwarded to the Control Center, where the Layer 2 communication is bridged to all other GOOSE IEDs connected to other DA gateways.

Figure 18 IED-IED Flow Hub-Switched DA Design

In Figure 18, GOOSE communication from the leftmost GOOSE device enters the leftmost IR1101 and is tunneled at Layer 2 inside the L2TPv3 pseudowire to the HER cluster. The HER cluster emulates the Layer 2 bridge. Therefore, the Layer 2 GOOSE message is bridged to all other pseudowires, down to all the other IR1101s.

L2TPv3 pseudowires are configured over the GOOSE SVI on the IR1101 cellular gateways. The L2TPv3 pseudowire from the IR1101 is terminated on the data center-facing interface of the HER. The Layer 2 frames should be bridged at the HER cluster using an external physical loop or an external switch.

Bridging is performed in the following steps:

1. The VLAN tag of the GOOSE frame is stripped at the hub, and the frame is put into a bridge-domain.

2. The bridge-domain bridges between L2TPv3 pseudowires from multiple cellular gateways.

Every IR1101 is configured with an active L2TPv3 pseudowire terminating on one HER. This pseudowire is protected with a backup pseudowire terminating on another HER. In Figure 18, the primary pseudowire from the last IR1101 cellular gateway terminates on HER2, and the backup pseudowire terminates on HER4. Because the primary pseudowire is down on the last IR1101, the backup pseudowire is up and active.

Either the primary or the backup pseudowire is active and used at any point in time.

The following sequence captures the Layer 2 IEC 61850 GOOSE message flow from the GOOSE device connected to the leftmost IR1101 to the GOOSE devices connected to the rest of the IR1101s (refer to Figure 18).

Sequence of IEC 61850 GOOSE Bridging Flow

1. The six IR1101s at the bottom of Figure 18 are labeled, from left to right, IR1101-1 through IR1101-6. The distribution of pseudowires among the HERs in the cluster is simply for demonstration purposes.

2. The Layer 2 GOOSE message from the first IR1101 goes up to the control center and reaches HER1.

3. At HER1, the physical loopback link enables Layer 2 bridging. Additionally, the bridge-domain is extended to other HERs by connecting to an external switch. This could even be a virtual switch in cases where CSR1000v is used as the virtual HER.

4. With the help of the extended Layer 2 bridge-domain, the Layer 2 frame reaches HER2, HER3, and HER4.

5. HER2 forwards the Layer 2 frame to IR1101-2 over the active pseudowire.

6. HER3 forwards the Layer 2 frame to IR1101-3 and IR1101-5 over their active pseudowires.

7. HER4 forwards the Layer 2 frame to IR1101-4 over the active pseudowire.

8. HER4 forwards the Layer 2 frame to IR1101-6 over the backup pseudowire (which is up, because the primary pseudowire is down).
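The sequence above can be modeled as a flood across the emulated bridge, where each spoke is reached through whichever of its pseudowires is currently up. The sketch below is a minimal model, not router configuration. The HER assignments follow the example in Figure 18 as described in the steps, the backup HERs of the first five spokes are not given in the text and are left unset, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Spoke:
    name: str
    primary_her: str
    backup_her: Optional[str] = None   # not stated in the text for most spokes
    primary_up: bool = True

    def active_her(self):
        """HER that terminates the pseudowire currently in use, if any."""
        return self.primary_her if self.primary_up else self.backup_her


def flood(spokes, source):
    """Map every other reachable spoke to the HER that forwards the frame to it."""
    deliveries = {}
    for spoke in spokes:
        if spoke.name == source:
            continue                   # a bridge does not send the frame back to its source
        her = spoke.active_her()
        if her is not None:
            deliveries[spoke.name] = her
    return deliveries


spokes = [
    Spoke("IR1101-1", "HER1"),
    Spoke("IR1101-2", "HER2"),
    Spoke("IR1101-3", "HER3"),
    Spoke("IR1101-4", "HER4"),
    Spoke("IR1101-5", "HER3"),
    # Primary pseudowire to HER2 is down, so the backup to HER4 is in use.
    Spoke("IR1101-6", "HER2", backup_her="HER4", primary_up=False),
]

for name, her in flood(spokes, source="IR1101-1").items():
    print(f"{her} -> {name}")
```

Because only one pseudowire per spoke is active at a time, each remote IR1101 receives exactly one copy of the frame.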

HER as L2TPv3 Hub for Creating Layer 2 Bridge Domain

Figure 19 HER as L2TPv3 Hub for Creating Layer 2 Bridge Domain

The FlexVPN tunnel from the IR1101s could terminate on any HER of the cluster. From each IR1101, the active pseudowire terminates on one HER, and the backup pseudowire terminates on a different HER. Each HER also has a physical loopback link. In addition, the HERs are connected to each other using trunk ports that allow the bridge-domain VLAN (for example, VLAN 1000). The pseudowire is terminated on the data center-facing interface. The connected physical loop interface removes the VLAN tags and bridges the frames onto bridge-domain 1000. The trunk ports connecting the HERs in the cluster would bridge VLAN 1000 between the HERs in the cluster.

Figure 20, which captures the emulated Layer 2 infrastructure (bridging with hub and spoke), shows the logical hub-and-spoke view of the topology, with HERs acting as hubs and IR1101s acting as spokes. The IR1101s on the left and in the middle have the primary L2TPv3 pseudowire in the active state. The IR1101 on the right has the primary L2TPv3 pseudowire in the down state, with the secondary pseudowire in the UP state taking care of Layer 2 communication.

Figure 20 L2TPv3 Hub and Spoke—Logical View

Figure 21 Encapsulation View (FlexVPN vs L2TPv3 vs Layer 2 vs Layer 3)

In Figure 21, the FlexVPN tunnel is established between the IR1101 cellular gateway and the HER cluster. The L2TPv3 pseudowire is a payload of the FlexVPN tunnel and carries the Layer 2 GOOSE messages between the IR1101 and the HER cluster. The GOOSE message is therefore a payload of the L2TPv3 tunnel, which, in turn, is a payload of the FlexVPN tunnel. The Layer 2 trunk connecting the HER cluster helps in emulating Layer 2 bridging in the control center to enable IED-to-IED GOOSE communication. On the other hand, IEC 61850 MMS, T104, MODBUS/IP, and DNP3/IP are all IP-aware protocols, and their messages could be transmitted directly as a payload of the FlexVPN tunnel.
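The nesting described for Figure 21 can be summarized as a lookup from protocol to encapsulation stack. This is only an illustrative sketch: the layer labels and the function name are hypothetical, and header details are deliberately omitted.

```python
# Protocols named in the text, grouped by how they are carried.
LAYER2_PROTOCOLS = {"GOOSE"}
IP_PROTOCOLS = {"MMS", "T104", "MODBUS/IP", "DNP3/IP"}


def encapsulation_stack(protocol):
    """Return the nesting from the innermost payload to the outermost tunnel."""
    if protocol in LAYER2_PROTOCOLS:
        # Layer 2 frames need the pseudowire before they can ride the IP tunnel.
        return [protocol, "L2TPv3 pseudowire", "FlexVPN tunnel"]
    if protocol in IP_PROTOCOLS:
        # IP-aware protocols are carried by the FlexVPN tunnel directly.
        return [protocol, "FlexVPN tunnel"]
    raise ValueError(f"unknown protocol: {protocol}")


for proto in ("GOOSE", "MMS", "DNP3/IP"):
    print(" inside ".join(encapsulation_stack(proto)))
```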

Solution Components

Table 1 lists various solution components and their role and Table 2 lists third party components of the solution.

Cisco IoT Field Network Director with Oracle Database

The Cisco IoT Field Network Director (formerly called Connected Grid Network Management System or CG-NMS) is a software platform that manages the infrastructure for smart grid applications. It provides enhanced Fault, Configuration, Accounting, Performance, and Security (FCAPS) capabilities for highly scalable and distributed systems such as smart metering and Distribution Automation. Additional capabilities of Cisco IoT FND include:

■ Network topology visualization and integration with the existing Geographic Information System (GIS)

■ Simple, consistent, and scalable network layer security policy management and auditing

■ Extensive network communication troubleshooting tools

■ Northbound APIs for utility applications such as Distribution Management System (DMS), Outage Management System (OMS), and Meter Data Management (MDM)

Tunnel Provisioning Server

The TPS acts as a proxy to allow DA Gateways/SSRs to communicate with Cisco IoT FND when they are first deployed in the field. After TPS provisions the tunnels between DA Gateways/SSRs and the HER, the DA Gateways/SSRs can then communicate with Cisco IoT FND directly.

Headend Routers

The primary function of an HER is to aggregate the WAN connections coming from field area routers. HERs terminate the VPN tunnels from the Cisco Connected Grid Routers (CGRs) and may also enforce QoS, profiling (Flexible NetFlow), and security policies. HERs will be deployed in clusters. HER clustering and scale design are discussed in detail in Security, High Availability & Scale.

Registration Authority

The Registration Authority (RA) acts as a proxy to the CA server in the backend for automated certificate enrollment for the SSR and DA Gateways, which must go through the RA and TPS to establish a secure tunnel with the HER. Before this tunnel is established, the device cannot reach the data center network.

A Cisco IOS router can be configured as a Simple Certificate Enrollment Protocol (SCEP) certificate server in RA mode. The Cisco ISR 4400 series of routers is recommended for high-scale deployments, although the ESR5921 (Virtual Router) or any Cisco ISR 4000 series router could be chosen to serve as the RA in low-scale deployments.

RSA Certificate Authority

The RSA Certificate Authority (CA) provides certificates to network components such as routers and Cisco IoT FND. This solution makes use of the RSA CA within the control center block. Alternatively, an external utility-owned RSA-based CA can be used.

Active Directory

The Active Directory, which is a part of the utility data center and provides directory services, stores identity information for the SSRs and DA Gateways. It provides authentication of the various gateways in the DA solution.

AAA

The Microsoft Network Policy Server (NPS) provides RADIUS-based AAA services for network admission control of SSRs such as CGR 1120 and IR1101 and DA Gateways such as IR807 and IR809. It supports the certificate-based identity authentication used in this solution.

Network Time Protocol Server

Certain services running on the Distribution Automation solution require accurate time synchronization between the network elements. Many of these applications process a time-ordered sequence of events, so the events must be time stamped to a level of precision that allows individual events to be distinguished from one another and correctly ordered. A Network Time Protocol (NTP) Version 4 server running over the IPv4 and IPv6 network layer can act as a Stratum 1 timing source for the network.

NTP might deliver accuracies of 10 to 100 milliseconds over the DA solution, depending on the characteristics of the synchronization source and network paths in the WAN.
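The link between clock accuracy and event ordering can be made concrete with a small check. The sketch below assumes that each device clock is within plus or minus the stated accuracy of true time, so two events stamped by different devices can be ordered reliably only when their timestamps differ by more than twice that accuracy. The function name and the sample timestamps are hypothetical.

```python
def can_order(ts_a_ms, ts_b_ms, accuracy_ms):
    """True if two events stamped by different devices can be ordered reliably.

    Assumption: each clock is within +/- accuracy_ms of true time, so the
    timestamps must differ by more than 2 * accuracy_ms.
    """
    return abs(ts_a_ms - ts_b_ms) > 2 * accuracy_ms


# Hypothetical events: a recloser trip and a switch operation stamped 150 ms apart.
trip_ms, switch_ms = 1_000, 1_150

print(can_order(trip_ms, switch_ms, accuracy_ms=10))
print(can_order(trip_ms, switch_ms, accuracy_ms=100))
```

At 10 ms accuracy the two events can be ordered with confidence; at 100 ms accuracy they cannot, which is why the achievable NTP accuracy matters for event-sequence applications.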

SSR and DA Gateways

IR1101 Industrial Integrated Services Router

The IR1101 is a modular and ruggedized (IP30 specification) platform designed for the utility and machine-to-machine market segment. As part of the Secondary Substation solution, the IR1101 can play the role of both SSR and DA Gateway.

For more details, refer to the IR1101 Industrial Integrated Services Router Hardware Installation Guide at the following URL:

■ https://www.cisco.com/c/en/us/td/docs/routers/access/1101/hardware/installation/guide/1101hwinst/pview.html

As shown in Figure 22, the IR1101 is designed as a modular platform that supports expansion modules and edge compute modules. The IR1101 supports a variety of communication interfaces such as four FE ports, one combo WAN port, one RS232 DTE port, and LTE modules. The cellular modem is pluggable, and dual SIM cards and IPv6 LTE data connections are supported. Raw socket and protocol translation features are available.

Expansion Module for IR1101 Industrial Integrated Services Router

The IR1101 provides investment protection. The base module of the IR1101 provides a pluggable slot for inserting either a pluggable LTE module or a storage module. The expansion module also comes with a pluggable slot for inserting a pluggable LTE module. Overall, two pluggable LTE modules can be inserted in the IR1101 (with an expansion module), thus enabling cellular backhaul redundancy with Dual LTE deployments.

Using the expansion module, an additional fiber (SFP) port and an additional LTE port can be added to the IR1101.

The SFP port on the expansion module is a Layer 2 port. Layer 3 is configured through a Switched Virtual Interface (SVI).

Expansion module for dual active LTE, local storage for applications, SFP, and input/output ports

For more details on the IR1101 expansion module, please refer to the following URL:

■![]() https://www.cisco.com/c/en/us/products/collateral/routers/1101-industrial-integrated-services-router/datasheet-c78-741709.html

Connected Grid Router 1120

The CGR 1120 is designed as an indoor model that is well suited for Secondary Substation deployment. The CGR 1120 has a rich feature set for utilities and energy verticals. The CGR 1120, which acts as an SSR in the DA solution, integrates applications like Distributed Energy Resources (DERs) and Secondary Substation monitoring and control. For more details, please refer to the Cisco 1120 Connected Grid Router specification guide at the following URL:

■![]() https://www.cisco.com/c/en/us/support/routers/1120-connected-grid-router/model.html

IR807 Industrial Integrated Services Router

The IR807 (see Figure 23) is a compact, ruggedized, low-power, and smart-grid compliant router suited for DA and Secondary Substation deployment. The IR807 supports a variety of communication interfaces, such as Ethernet, serial, and built-in cellular modems that support LTE and 3G networks. Dual SIM support is available for high reliability.

For more details on IR807, please refer to the Cisco 807 Industrial Integrated Services Routers Data Sheet at the following URL:

■![]() https://www.cisco.com/c/en/us/products/collateral/routers/800-series-industrial-routers/datasheet-c78-739643.html

IR809 Industrial Integrated Services Router

The Cisco IR809 (see Figure 24) is a compact, ruggedized router designed for harsh environments. It is well suited for the Distribution Automation and asset management solution. It plays the role of a DA Gateway and has edge compute capability, which can be used to host applications that serve mission-critical or time-sensitive requirements for fast decision making at the edge of the IoT network.

For more details on IR809, please refer to the Cisco 809 Industrial Integrated Services Routers Data Sheet at the following URL:

■![]() https://www.cisco.com/c/en/us/products/collateral/routers/809-industrial-router/datasheet-c78-734980.html

Firewall

A high-performance, application-aware firewall with IPS/IDS capability should be installed between the WAN and the head-end infrastructure at the DSO Control Center. The firewall inspects IPv4 and IPv6 traffic to and from the FAN. Its throughput capacity must match the volume of traffic flowing between the application servers and the FANs.

The Cisco Adaptive Security Appliance (ASA) 4150 is recommended. The Cisco ASA 4150 is a high-performance data center security solution. For smaller deployments, low and mid-range firewalls such as the ASA 4110 and the ASA 4120 may be used.

The ASA FirePOWER module may be added for next-generation firewall services such as Intrusion Prevention System (IPS), Application Visibility and Control (AVC), URL filtering, and Advanced Malware Protection (AMP).

Firewalls can be configured for multiple (virtual) security contexts. For instance, IoT FND servers can be on a different context from infrastructure servers for segmentation. Firewalls are best deployed in pairs to permit failover in case of malfunction.

IEDs

This section describes the following IEDs that were considered during the validation of the Distribution Automation solution:

Capacitor Bank Controller

■![]() Eaton CBC-8000—This Capacitor Bank Controller (CBC) is designed to control capacitor banks installed in distribution feeders. This IED plays a key role in power factor regulation. For details, please refer to the following URL:

–![]() http://www.eaton.com/us/en-us/catalog/utility-and-grid-solutions/cbc-8000-capacitor-bank-control.html

■![]() Beckwith M-6280A Digital Capacitor Bank Control—Digital CBC for Remote Capacitor Automation, Monitoring, and Protection. It supports the Classic Automatic mode of operation, that is, Voltage Control, optional VAR Control, or optional Current Control. For details, please refer to the following URL:

Recloser Controller

■![]() Beckwith M-7679 R-PAC—Protection, Automation and Control (PAC) System for Recloser, Switch, Sectionalizer, and Advanced Distribution Automation Applications. This is a key component for the FLISR use case. For details, please refer to the following URL:

Load Tap Controller

■![]() Beckwith M-2001D Tap Changer Control—Digital Tap Changer Control for Transformers and Regulators. This is a key component for the conservation voltage regulation use case. For details, please refer to the following URL:

SCADA and Other IED Simulation

■![]() The Triangle MicroWorks Distributed Test Manager (DTM) tool is used to simulate the DMS (SCADA) and other IEDs such as remote control switches and Line Fault Indicators. It is used for automated testing of RTUs, IEDs, gateways, and SCADA systems. For details, please refer to an overview of DTM at the following URL:

–![]() http://www.trianglemicroworks.com/products/testing-and-configuration-tools/dtm-pages/overview

RTU

■![]() Eximprod ES200 is a Virtual RTU. This RTU application can be hosted as a Docker application on Cisco edge compute platforms such as the IR809 and IR1101. For more details on the ES200, refer to the following URL:

–![]() http://www.epg.ro/wp-content/uploads/2017/09/ES200-Datasheet-public.pdf

■![]() For Virtual RTU implementation, please refer to the Connected Utilities Virtual RTU Implementation Guide at the following URL:

–![]() https://www.cisco.com/c/en/us/td/docs/solutions/Verticals/Utilities/FAN/Virtual-RTU/IG/CU-VRTU-IG/CU-VRTU-IG.html

Design Considerations

This chapter includes the following major topics:

IP Address Schema

The IP address schema is discussed along the following dimensions:

■![]() Addressing at various layers of the solution

■![]() Types of network involved (Underlay Network vs Overlay Network):

–![]() Underlay network—Service provider-assigned addresses used in establishment of tunnel

–![]() Overlay network—Reachable only after a secure overlay network path is established

■![]() Choice of network layer protocol (IPv4 vs IPv6 vs Dual Stack)

■![]() Mode of address assignment (Static vs Dynamic)

Note: SSRs and DA Gateways shall be referred to as IoT Gateways in the abstract context in this document.

Figure 25 IP Address Schema—Addressing at Various Layers of the Solution

Figure 25 serves as the reference base for the discussion of IP address schema used in this solution.

Overlay versus Underlay Network

Because the WAN is an unsecured network, the communication path between the DA Gateways/SSRs and the HERs has to be secured with FlexVPN tunnels.

■![]() The Underlay Network is the name of the underlying public network over which the tunnels are established.

■![]() Once the tunnels are established, any network connectivity built on top of them is called an Overlay Network.

As an example, with reference to Figure 26, the FlexVPN tunnel can be established between the public IP of the DA Gateway (located in the Distribution Network) and the DMZ Virtual IP of the HER Cluster (located in the Utility Public Network). Once the tunnel is established, reachability information of FND and SCADA (located in the Utility Private Network) could be advertised as overlay routes through the tunnel to the DA Gateway.

Figure 26 FlexVPN Tunnels, Underlay vs Overlay Networks

■![]() Both IPv4 and IPv6 addresses are supported for the overlay and underlay networks in this solution.

■![]() The term IP is used to represent both IPv4 and IPv6 addresses throughout the document unless explicitly called out.

In Figure 26, the public IP addresses of the HER cluster and the DA Gateways are used in establishing the FlexVPN tunnel. These are Underlay IP addresses.
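The underlay and overlay distinction can be sketched from the point of view of a single IoT Gateway. The following Python sketch is a simplified model, not a FlexVPN implementation; the class name, method names, and every address (taken from documentation and private ranges) are hypothetical. It assumes that the underlay peer address is always reachable across the WAN, while prefixes learned through the tunnel are reachable only while the tunnel is up.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List, Union

IPAddress = Union[ipaddress.IPv4Address, ipaddress.IPv6Address]
IPNetwork = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass
class GatewayView:
    """Reachability as seen from one IoT Gateway (hypothetical model)."""
    underlay_peer: IPAddress                        # HER cluster public address
    overlay_routes: List[IPNetwork] = field(default_factory=list)
    tunnel_up: bool = False

    def learn_overlay_route(self, prefix: str) -> None:
        """Record a prefix advertised through the tunnel by the HER cluster."""
        self.overlay_routes.append(ipaddress.ip_network(prefix))

    def classify(self, destination: str) -> str:
        """Return 'underlay', 'overlay', or 'unknown' for a destination."""
        addr = ipaddress.ip_address(destination)
        if addr == self.underlay_peer:
            return "underlay"
        if any(addr.version == net.version and addr in net
               for net in self.overlay_routes):
            return "overlay"
        return "unknown"

    def is_reachable(self, destination: str) -> bool:
        """Underlay is always reachable; overlay only while the tunnel is up."""
        kind = self.classify(destination)
        if kind == "underlay":
            return True
        return kind == "overlay" and self.tunnel_up


if __name__ == "__main__":
    # Hypothetical addressing: HER DMZ virtual IP and Utility Private Network.
    gateway = GatewayView(underlay_peer=ipaddress.ip_address("203.0.113.1"))
    gateway.learn_overlay_route("10.10.100.0/24")      # e.g. FND and SCADA (IPv4)
    gateway.learn_overlay_route("2001:db8:100::/64")   # e.g. FND and SCADA (IPv6)

    for state in (False, True):
        gateway.tunnel_up = state
        for dest in ("203.0.113.1", "10.10.100.20", "2001:db8:100::20"):
            print(f"tunnel_up={state} {dest} "
                  f"{gateway.classify(dest)} reachable={gateway.is_reachable(dest)}")
```

The same check applies to IPv4 and IPv6 prefixes, which mirrors the dual-stack support described for both networks.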

The following list includes Overlay IP addresses:

■![]() Advertised by the HER Cluster:

–![]() IP address of the Loopback interface on HER

–![]() IP address of the Utility Private Network components (such as SCADA, FND, and application servers)

–![]() These routes are advertised through the tunnel towards the IoT Gateway

■![]() Advertised by the IoT Gateway:

–![]() IP address of the Loopback interface on the gateway

–![]() Optionally, locally connected devices on IoT Gateway can be advertised

–![]() These routes are advertised through the tunnel towards the HER Cluster

Table 8 captures how underlay addresses are used at different points in the solution.

■![]() Underlay IP addresses on the HER cluster have to be static IP addresses (IPv4 and/or IPv6).

■![]() As long as the tunnel is up, overlay routes to Utility Private Network components such as FND and SCADA are reachable from the SSR or DA Gateway.