AI supply chains are susceptible to risks

Traditional security tools do not address AI model vulnerabilities, license compliance, or geopolitical risks, which hinders safe AI adoption and innovation.

Manage AI model risks proactively

Identify and block malicious code in model components, validate license compliance, and help ensure model provenance before models enter your environment.

Detect threats in AI models and components

Cisco continuously scans public repositories like Hugging Face for malicious code and vulnerabilities within AI model files. Scanning at the source identifies potential threats before they reach your enterprise environment.
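To illustrate why model files need scanning at all: many model formats are based on Python's pickle serialization, which can run arbitrary code when loaded. The sketch below is not Cisco's scanner. It is a minimal heuristic, assuming a small hypothetical denylist (`SUSPICIOUS_MODULES`), that disassembles a pickle stream with the standard-library `pickletools` module and reports imports of risky modules without ever loading the file.

```python
import pickletools

# Hypothetical denylist for illustration only; production scanners use far broader rules.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "builtins", "sys", "socket"}


def find_suspicious_imports(data: bytes) -> list:
    """Return 'module.name' imports in a pickle stream that match the denylist.

    The stream is only disassembled, never unpickled, so embedded code does not run.
    """
    findings = []
    recent_strings = []  # string constants seen so far, used to resolve STACK_GLOBAL
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # pickletools reports these arguments as "module name"
            module, _, name = arg.partition(" ")
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Heuristic: assume the two most recent strings are module and name
            module, name = recent_strings[-2], recent_strings[-1]
        else:
            if isinstance(arg, str):
                recent_strings.append(arg)
            continue
        if module.split(".")[0] in SUSPICIOUS_MODULES:
            findings.append(f"{module}.{name}")
    return findings
```

A file that yields any findings would be a candidate for blocking or manual review. A clean result from a heuristic like this does not prove that a file is safe.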

Check for license compliance

Detect and block AI models with risky or restrictive open-source software licenses, such as the GPL and other copyleft licenses, that pose intellectual property (IP) and compliance risks. This helps ensure license compliance and avoid inadvertent IP violations.
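As a rough illustration of a license check, the sketch below reads the `license` field that model cards on repositories such as Hugging Face commonly declare in their YAML front matter, then compares it with a blocklist. The `BLOCKED_LICENSES` set and the three verdict strings are assumptions made for this example and do not represent Cisco's policy engine.

```python
from typing import Optional

# Hypothetical policy: license identifiers this sketch treats as copyleft.
BLOCKED_LICENSES = {"gpl-2.0", "gpl-3.0", "agpl-3.0"}


def read_declared_license(model_card: str) -> Optional[str]:
    """Extract the top-level `license:` value from a model card's YAML front matter."""
    lines = model_card.splitlines()
    if not lines or lines[0].strip() != "---":
        return None  # no front matter present
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of front matter
        key, sep, value = line.partition(":")
        if sep and key.rstrip() == "license":
            return value.strip().strip("'\"").lower() or None
    return None


def license_verdict(model_card: str) -> str:
    """Return 'block', 'allow', or 'review' for a model card."""
    declared = read_declared_license(model_card)
    if declared is None:
        return "review"  # undeclared license: escalate rather than guess
    return "block" if declared in BLOCKED_LICENSES else "allow"
```

Sending models with no declared license to review, rather than allowing them by default, keeps unknown licensing terms from slipping through.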

Maintain proper provenance of AI models

Flag and enforce policies on AI models that originate from geopolitically sensitive regions. Maintain compliance and mitigate the risks that a model's geopolitical origin may carry.
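A provenance policy of this kind can be pictured as a lookup from a model's recorded origin to an action. The sketch below is purely illustrative: `ModelRecord`, `ORIGIN_POLICY`, and the placeholder country codes `XX` and `YY` are hypothetical and do not reflect any real product schema or policy.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy table; "XX" and "YY" are placeholder country codes.
ORIGIN_POLICY = {"XX": "block", "YY": "flag"}


@dataclass(frozen=True)
class ModelRecord:
    model_id: str
    publisher_country: Optional[str]  # None when the origin is unknown


def provenance_action(record: ModelRecord) -> str:
    """Return 'block', 'flag', or 'allow' based on the model's recorded origin."""
    if record.publisher_country is None:
        return "flag"  # unknown origin is surfaced for review, not silently allowed
    return ORIGIN_POLICY.get(record.publisher_country.upper(), "allow")
```

Separating "flag" from "block" mirrors the distinction between surfacing a model for review and enforcing a hard stop.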

Accelerate enterprise AI adoption from the beginning

Confirm that third-party and open-source models and components used to build your AI applications are secure and compliant from the start of the development process.

Network-based enforcement

Prevent risky AI models from entering your enterprise infrastructure through multiple enforcement points, powered by Cisco Security Cloud.

Cisco Secure Endpoint

Detect and quarantine risky AI assets directly from the endpoint.

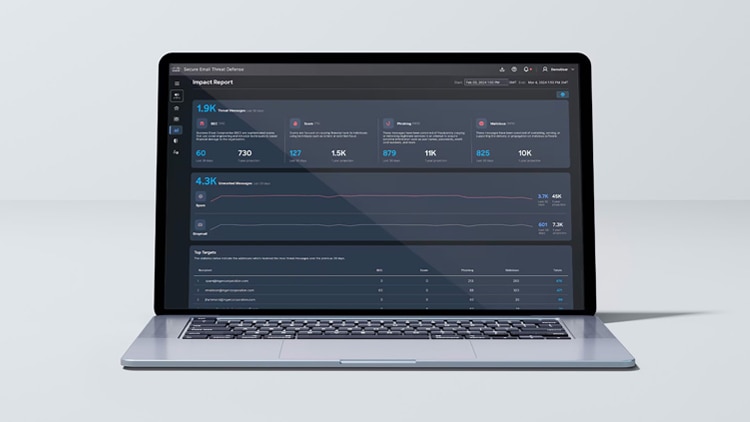

Cisco Email Threat Defense

Prevent delivery of models and components that violate security policies.

Cisco Secure Access

Monitor and block downloads of high-risk AI assets from online repositories.