These policies govern the host-side behavior of the adapter, including how the adapter handles traffic. For example, you can use these policies to change default settings for the following:

Note: For Fibre Channel adapter policies, the values displayed by Cisco UCS Manager may not match those displayed by applications such as QLogic SANsurfer. For example, the following values may result in an apparent mismatch between SANsurfer and Cisco UCS Manager:

- Max LUNs Per Target: SANsurfer has a maximum of 256 LUNs and does not display more than that number. Cisco UCS Manager supports a higher maximum number of LUNs. This parameter applies only to FC-Initiator.

- Link Down Timeout: In SANsurfer, you configure the link down timeout threshold in seconds. In Cisco UCS Manager, you configure this value in milliseconds. Therefore, a value of 5500 ms in Cisco UCS Manager displays as 5 s in SANsurfer.

- Max Data Field Size: SANsurfer allows only the values 512, 1024, and 2048. Cisco UCS Manager allows you to set values of any size. Therefore, a value of 900 in Cisco UCS Manager displays as 512 in SANsurfer.

- LUN Queue Depth: The LUN queue depth setting is available for Windows system FC adapter policies. Queue depth is the number of commands that the HBA can send and receive in a single transmission per LUN. The Windows Storport driver sets this to a default value of 20 for physical miniports and 250 for virtual miniports. This setting adjusts the initial queue depth for all LUNs on the adapter. The valid range for this value is 1 to 254, and the default LUN queue depth is 20. This feature works only with Cisco UCS Manager version 3.1(2) and higher. This parameter applies only to FC-Initiator.

- IO TimeOut Retry: When the target device does not respond to an IO request within the specified timeout, the FC adapter cancels the pending command and then resends the same IO after the timer expires. The valid range for this value is 1 to 59 seconds, and the default IO retry timeout is 5 seconds. This feature works only with Cisco UCS Manager version 3.1(2) and higher.

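To make the mismatches above concrete, the following Python sketch models how a value configured in Cisco UCS Manager might appear in SANsurfer, and checks the documented ranges for LUN Queue Depth and IO TimeOut Retry. All function names are hypothetical and are not part of any Cisco or QLogic tool. The rounding behavior is an assumption inferred from the two examples in the note: Link Down Timeout is assumed to be truncated to whole seconds (5500 ms shows as 5 s), and Max Data Field Size is assumed to display as the largest allowed SANsurfer value that does not exceed the configured value (900 shows as 512).

```python
# Hypothetical helpers; not part of Cisco UCS Manager or QLogic SANsurfer.
SANSURFER_MAX_LUNS = 256                        # SANsurfer shows at most 256 LUNs
SANSURFER_DATA_FIELD_SIZES = (512, 1024, 2048)  # values SANsurfer allows


def sansurfer_max_luns(ucsm_value: int) -> int:
    """Max LUNs Per Target as SANsurfer would display it (capped at 256)."""
    return min(ucsm_value, SANSURFER_MAX_LUNS)


def sansurfer_link_down_timeout_s(ucsm_ms: int) -> int:
    """UCS Manager uses milliseconds; SANsurfer uses seconds.

    Assumption: SANsurfer truncates to whole seconds (5500 ms -> 5 s).
    """
    return ucsm_ms // 1000


def sansurfer_max_data_field_size(ucsm_value: int) -> int:
    """Assumption: largest allowed SANsurfer value <= the UCS Manager value.

    Assumption: values below 512 are shown as 512.
    """
    fitting = [s for s in SANSURFER_DATA_FIELD_SIZES if s <= ucsm_value]
    return max(fitting, default=SANSURFER_DATA_FIELD_SIZES[0])


def validate_fc_initiator_settings(lun_queue_depth: int, io_retry_timeout_s: int) -> None:
    """Check the documented ranges (Cisco UCS Manager 3.1(2) and higher)."""
    if not 1 <= lun_queue_depth <= 254:
        raise ValueError(f"LUN Queue Depth {lun_queue_depth} is outside 1-254")
    if not 1 <= io_retry_timeout_s <= 59:
        raise ValueError(f"IO TimeOut Retry {io_retry_timeout_s} s is outside 1-59")


print(sansurfer_max_luns(1024))              # 256
print(sansurfer_link_down_timeout_s(5500))   # 5
print(sansurfer_max_data_field_size(900))    # 512
validate_fc_initiator_settings(20, 5)        # the documented defaults pass
```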

Operating System Specific Adapter Policies

By default, Cisco UCS provides a set of Ethernet adapter policies and Fibre Channel adapter policies. These policies include the recommended settings for each supported server operating system. Operating systems are sensitive to the settings in these policies. Storage vendors typically require non-default adapter settings. You can find the details of these required settings on the support list provided by those vendors.

Important: We recommend that you use the values in these policies for the applicable operating system. Do not modify any of the values in the default policies unless directed to do so by Cisco Technical Support.

However, if you are creating an Ethernet adapter policy for an OS (instead of using the default adapter policy), you must use the following formulas to calculate values that work for that OS.

Depending on the UCS firmware, your driver interrupt calculations may differ. Newer UCS firmware uses a calculation that differs from previous versions. Later driver releases on Linux operating systems use a different formula to calculate the Interrupt Count: the Interrupt Count is the larger of the number of Transmit Queues and the number of Receive Queues, plus 2.


Interrupt Count in Linux Adapter Policies

Drivers on Linux operating systems use differing formulas to calculate the Interrupt Count, depending on the eNIC driver version.

The UCS 3.2 release increased the number of Tx and Rx queues for the eNIC driver from 8 to 256 each.

Use one of the following strategies, according to your driver version.

For Linux drivers before the UCS 3.2 firmware release, use the following formula to calculate the Interrupt Count.

- Completion Queues = Transmit Queues + Receive Queues

- Interrupt Count = (Completion Queues + 2) rounded up to the nearest power of 2

For example, if Transmit Queues = 1 and Receive Queues = 8 then:

- Completion Queues = 1 + 8 = 9

- Interrupt Count = (9 + 2) rounded up to the nearest power of 2 = 16
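The pre-3.2 formula can be written as a short Python sketch. The function names are hypothetical; the rounding step uses the built-in `int.bit_length()` to find the smallest power of 2 that is greater than or equal to its input.

```python
def next_power_of_two(n: int) -> int:
    """Smallest power of 2 that is >= n (for n >= 1)."""
    return 1 << (n - 1).bit_length()


def interrupt_count_pre_3_2(tx_queues: int, rx_queues: int) -> int:
    """Interrupt Count for Linux eNIC drivers before UCS firmware 3.2 (sketch)."""
    completion_queues = tx_queues + rx_queues          # one CQ per Tx and Rx queue
    return next_power_of_two(completion_queues + 2)    # add 2, then round up


print(interrupt_count_pre_3_2(1, 8))  # 16, matching the example above
```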

On drivers for UCS firmware release 3.2 and higher, the Linux eNIC drivers use the following formula to calculate the Interrupt Count.

Interrupt Count = Max(Transmit Queues, Receive Queues) + 2

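For example, where wq is the number of Transmit (work) queues, rq is the number of Receive Queues, and cq is the number of Completion Queues: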

- wq = 32, rq = 32, cq = 64: Interrupt Count = Max(32, 32) + 2 = 34

- wq = 64, rq = 8, cq = 72: Interrupt Count = Max(64, 8) + 2 = 66

- wq = 1, rq = 16, cq = 17: Interrupt Count = Max(1, 16) + 2 = 18
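A similar Python sketch for the 3.2-and-higher formula reproduces the three examples above. The function name is hypothetical, and the range check assumes that the limit of 256 Tx and 256 Rx queues introduced in UCS 3.2 applies to the values you enter.

```python
MAX_QUEUES = 256  # assumption: per-direction eNIC queue limit from UCS 3.2 onward


def interrupt_count_3_2_plus(tx_queues: int, rx_queues: int) -> int:
    """Interrupt Count for Linux eNIC drivers on UCS firmware 3.2 and higher (sketch)."""
    for q in (tx_queues, rx_queues):
        if not 1 <= q <= MAX_QUEUES:
            raise ValueError(f"queue count {q} is outside 1-{MAX_QUEUES}")
    return max(tx_queues, rx_queues) + 2


# (wq, rq) pairs from the examples; cq is wq + rq
for wq, rq in [(32, 32), (64, 8), (1, 16)]:
    print(f"wq={wq} rq={rq} cq={wq + rq} interrupts={interrupt_count_3_2_plus(wq, rq)}")
# wq=32 rq=32 cq=64 interrupts=34
# wq=64 rq=8 cq=72 interrupts=66
# wq=1 rq=16 cq=17 interrupts=18
```

For the Tx = 1, Rx = 8 configuration used in the pre-3.2 example, this formula gives Max(1, 8) + 2 = 10 interrupts instead of 16, because completion queues no longer drive the count.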

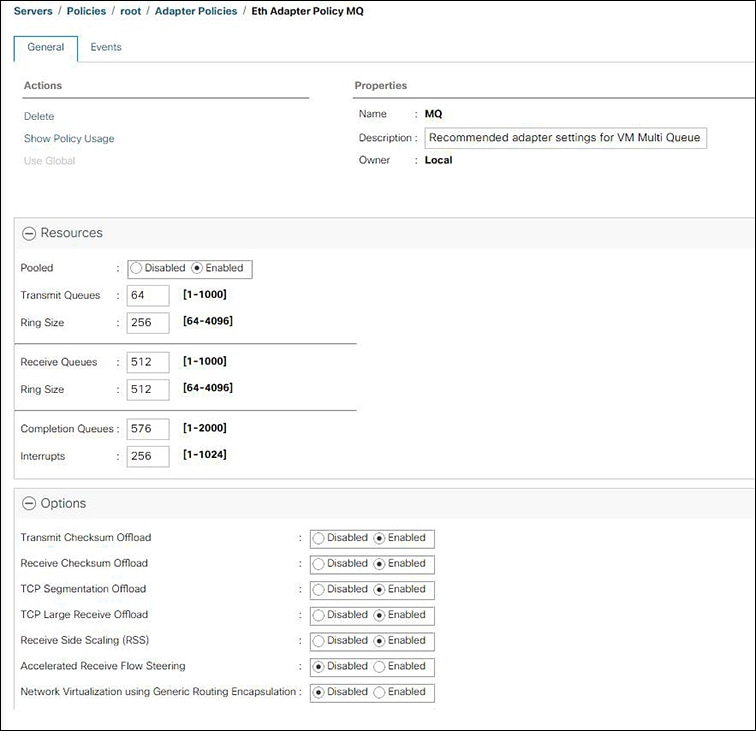

Interrupt Count in Windows Adapter Policies

For Windows, the recommended UCS Manager adapter policy for VIC 1400 series adapters is Win-HPN; if RDMA is used, the recommended policy is Win-HPN-SMB. For VIC 1400 series adapters, the recommended interrupt value is 512, and the Windows VIC driver allocates the required number of interrupts.

For VIC 1300 and VIC 1200 series adapters, the recommended UCS Manager adapter policy is Windows, and the Interrupt value is TX + RX + 2, rounded to the closest power of 2. Windows supports a maximum of 8 Rx queues and 1 Tx queue.

Example for VIC 1200 and VIC 1300 series adapters:

Tx = 1, Rx = 4, CQ = 5, Interrupt = 8 (1 + 4 + 2 = 7, rounded to the nearest power of 2), Enable RSS

Example for VIC 1400 series adapters:

Tx = 1, Rx = 4, CQ = 5, Interrupt = 512, Enable RSS
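The Windows guidance can be sketched the same way in Python. The function name and the series strings are hypothetical. The text above says "rounded to the closest power of 2"; this sketch assumes rounding up, which matches the Linux pre-3.2 formula and gives the documented result of 8 for Tx = 1, Rx = 4, but may differ from UCS Manager for other queue counts.

```python
def windows_interrupt_count(vic_series: str, tx_queues: int, rx_queues: int) -> int:
    """Recommended Interrupt value for a Windows adapter policy (sketch)."""
    if vic_series == "1400":
        # The Windows VIC driver allocates the interrupts it actually needs.
        return 512
    if vic_series in ("1200", "1300"):
        if tx_queues != 1 or not 1 <= rx_queues <= 8:
            raise ValueError("Windows supports 1 Tx queue and at most 8 Rx queues")
        total = tx_queues + rx_queues + 2
        # Assumption: round up to the next power of 2.
        return 1 << (total - 1).bit_length()
    raise ValueError(f"unsupported VIC series: {vic_series}")


print(windows_interrupt_count("1300", 1, 4))  # 8
print(windows_interrupt_count("1400", 1, 4))  # 512
```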

NVMe over Fabrics using Fibre Channel

The NVM Express (NVMe) interface allows host software to communicate with a non-volatile memory subsystem. This interface is optimized for enterprise non-volatile storage, which is typically attached as a register-level interface to the PCI Express (PCIe) interface.

NVMe over Fabrics using Fibre Channel (FC-NVMe) defines a mapping protocol for applying the NVMe interface to Fibre Channel. This protocol defines how Fibre Channel services and specified Information Units (IUs) are used to perform the services defined by NVMe over a Fibre Channel fabric. NVMe initiators can access and transfer information to NVMe targets over Fibre Channel.

FC-NVMe combines the advantages of Fibre Channel and NVMe. You get the improved performance of NVMe along with the flexibility and the scalability of the shared storage architecture. Cisco UCS Manager Release 4.0(2) supports NVMe over Fabrics using Fibre Channel on UCS VIC 14xx adapters.

Cisco UCS Manager provides the recommended FC-NVMe adapter policies in the list of pre-configured adapter policies. To create a new FC-NVMe adapter policy, follow the steps in the Creating a Fibre Channel Adapter Policy section.
