Cisco UCS Integrated Infrastructure with Red Hat Enterprise Linux OpenStack Platform and Red Hat Ceph Storage

Available Languages

Cisco UCS Integrated Infrastructure with Red Hat Enterprise Linux OpenStack Platform and Red Hat Ceph Storage

Deployment Guide

Last Updated: May 4 2016

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit

http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2016 Cisco Systems, Inc. All rights reserved.

Table of Contents

System Hardware and Software Specifications

Cisco Unified Computing System

Cisco UCS Blades Distribution in Chassis

Red Hat Linux OpenStack Platform 7 Director

Reference Architecture Workflow

Cluster Manager and Proxy Server

Cluster Management and Proxy Server

Configure Cisco UCS Fabric Interconnects

Configure the Cisco UCS Global Policies

Configure Server Ports for Blade Discovery and Rack Discovery

Create Server Pools for Controller, Compute and Ceph Storage Nodes

Create a Network Control Policy

Create Storage Profiles for the Controller and Compute Blades

Create Storage Profiles for Cisco UCS C240 M4 Server Blades

Create Service Profile Templates for Controller Nodes

Create Service Profile Templates for Compute Nodes

Create Service Profile Templates for Ceph Storage Nodes

Create Service Profile for Undercloud ( OSP7 Director ) Node

Create Service Profiles for Controller Nodes

Create Service Profiles for Compute Nodes

Create Service Profiles for Ceph Storage Nodes

Create LUNs for the Ceph OSD and Journal Disks

Create Port Channels for Cisco UCS Fabrics

Configure the Cisco Nexus 9372 PX Switch A

Configure the Cisco Nexus 9372 PX Switch B

Configure the Interface VLAN (SVI) on the Cisco Nexus 9K Switch A

Configure the Interface VLAN (SVI) on the Cisco Nexus 9K Switch B

Configure the VPC and Port Channels on Switch A

Configure the VPC and Port Channels on the Cisco Nexus 9K Switch B

Verify the Port Channel Status on the Cisco Nexus Switches

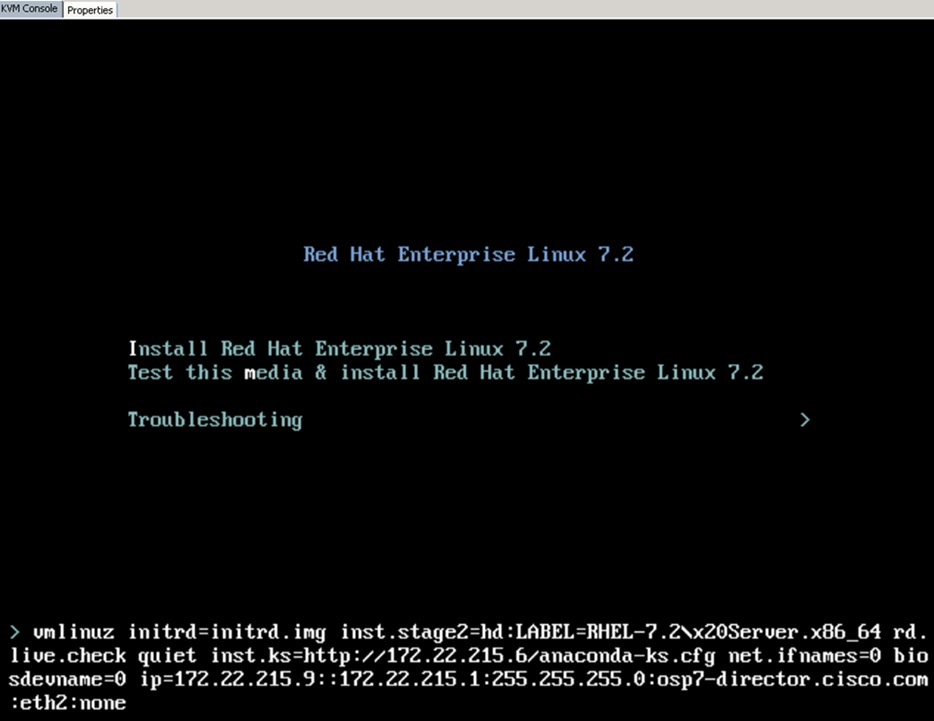

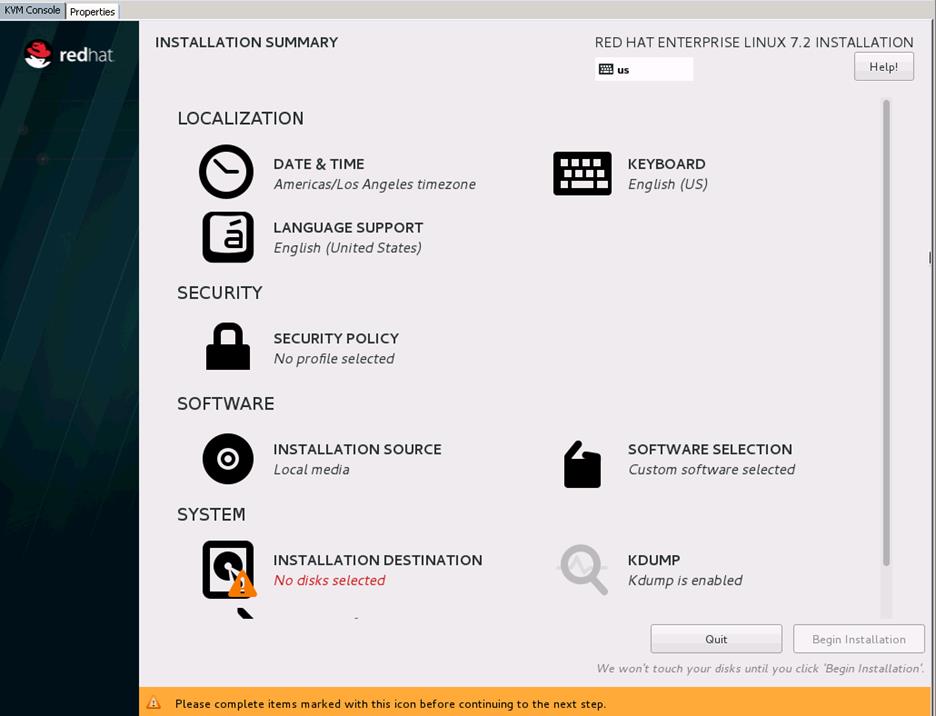

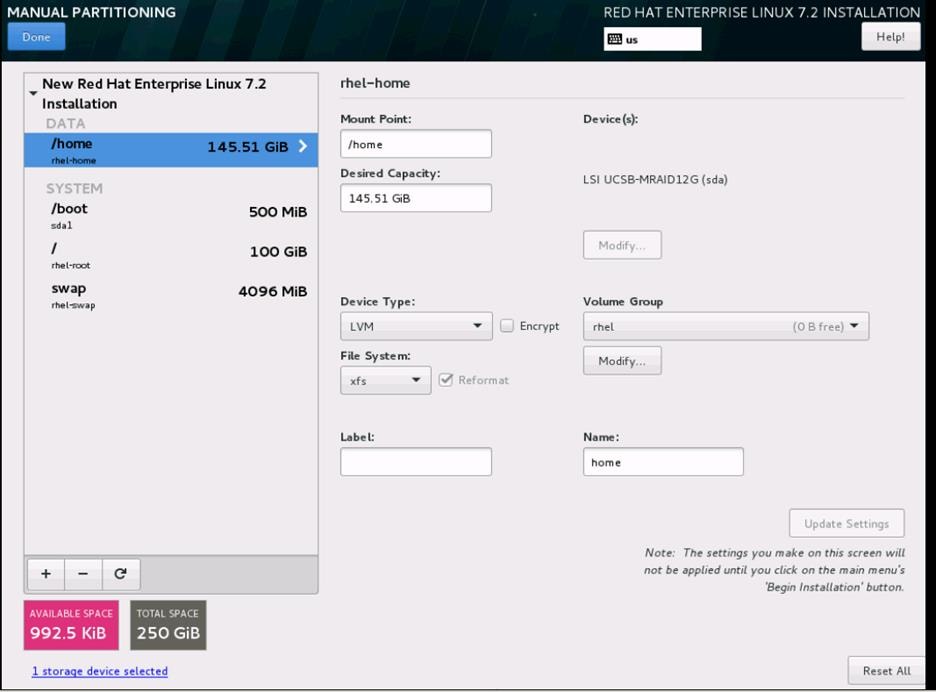

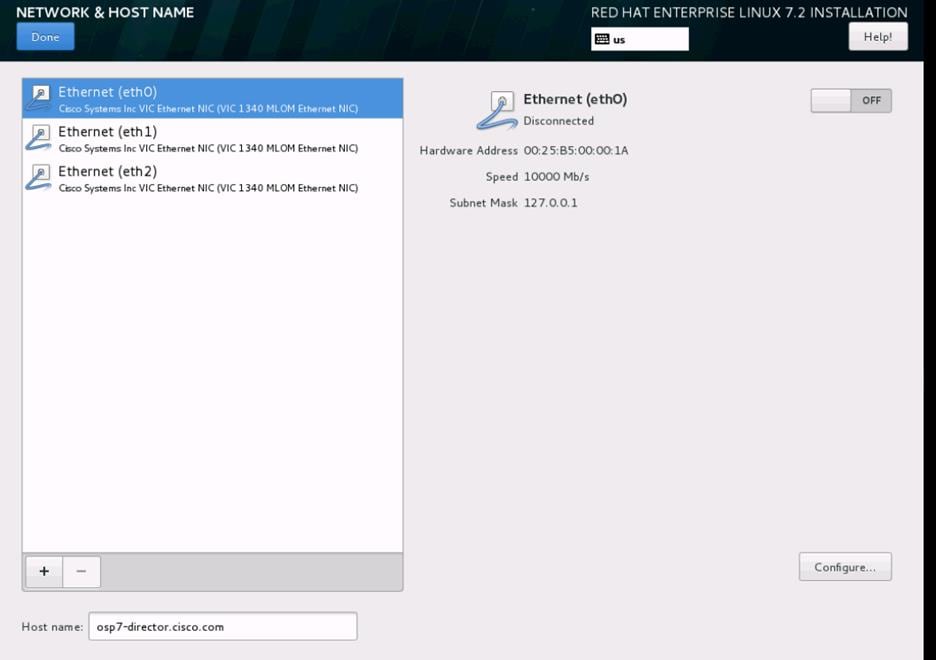

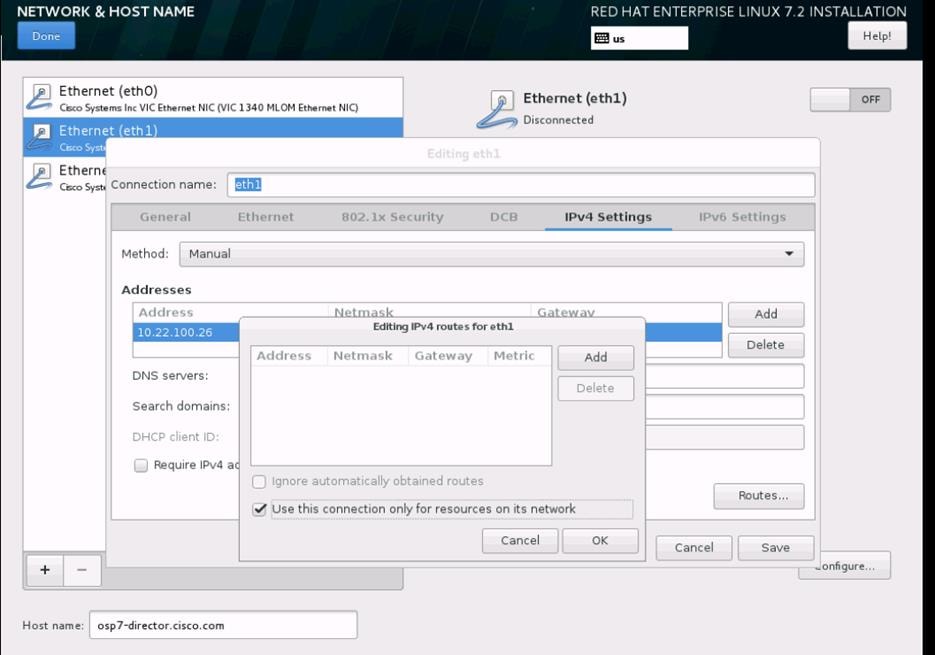

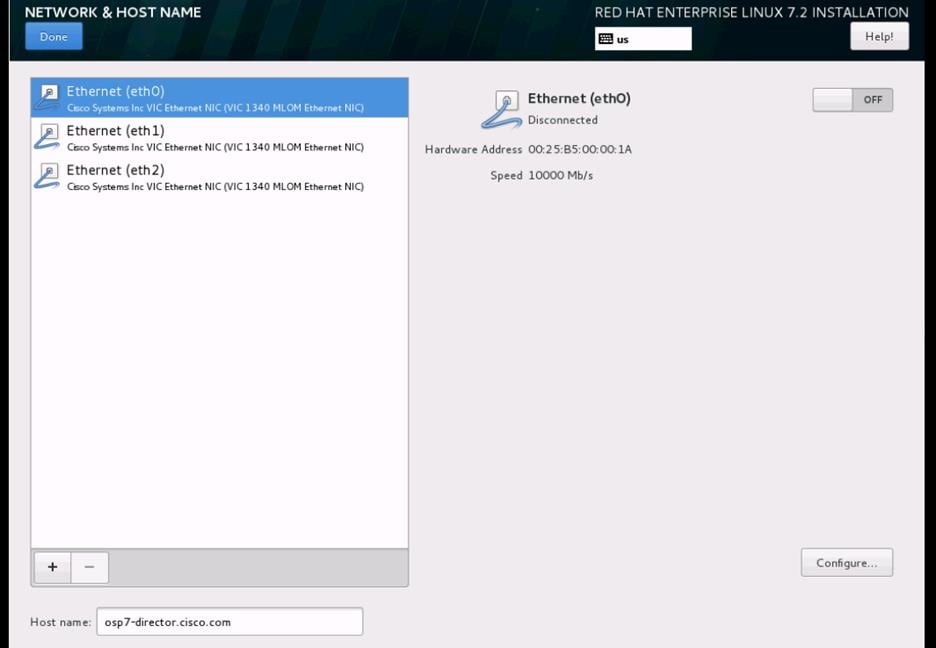

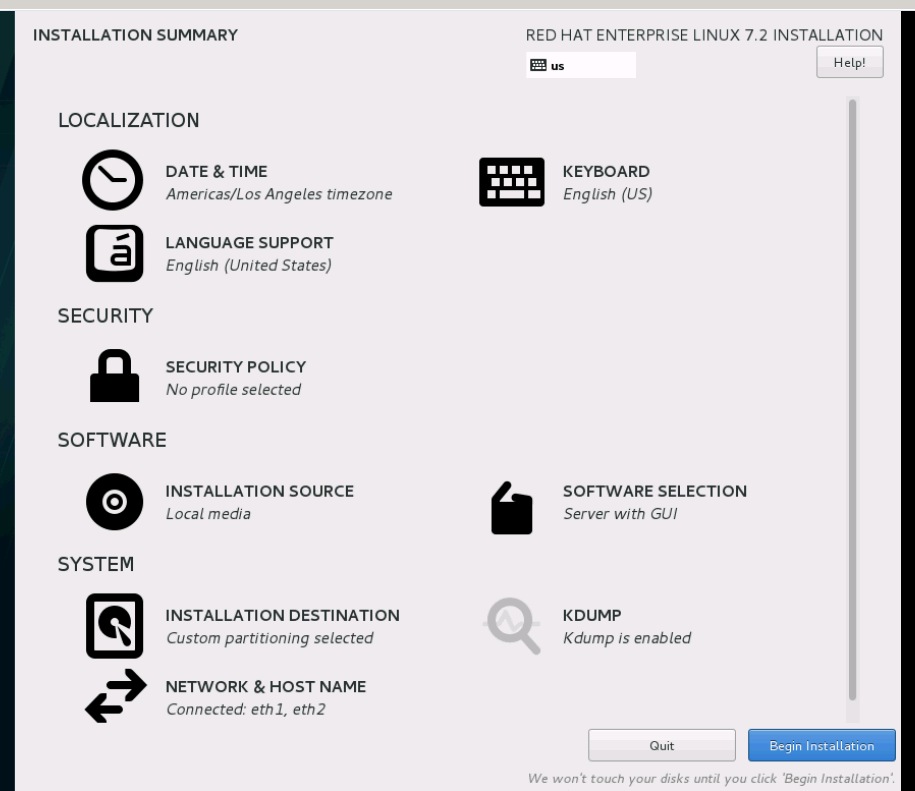

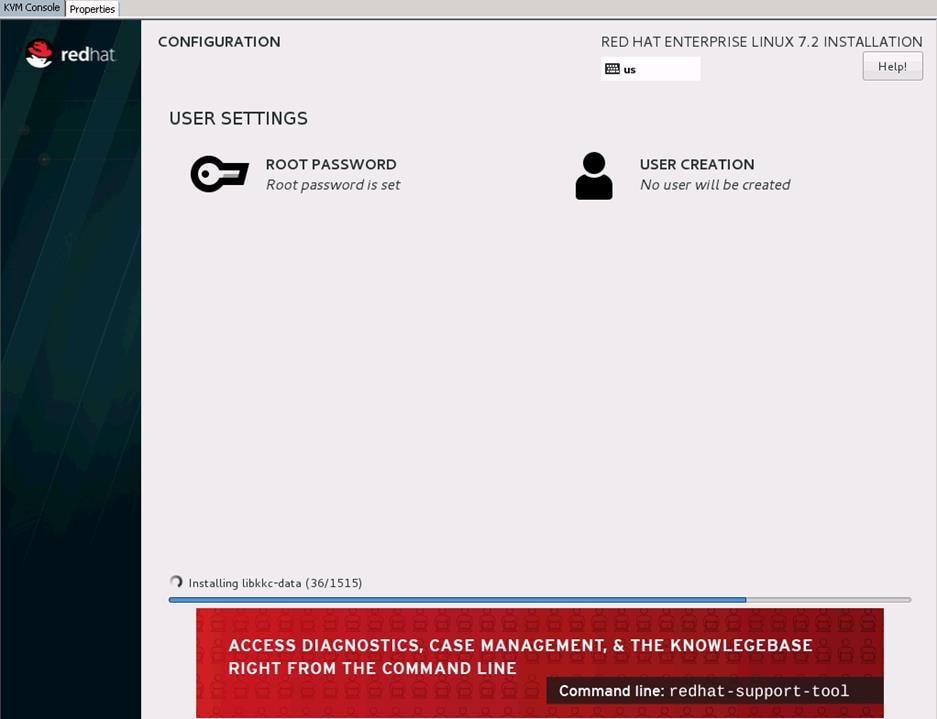

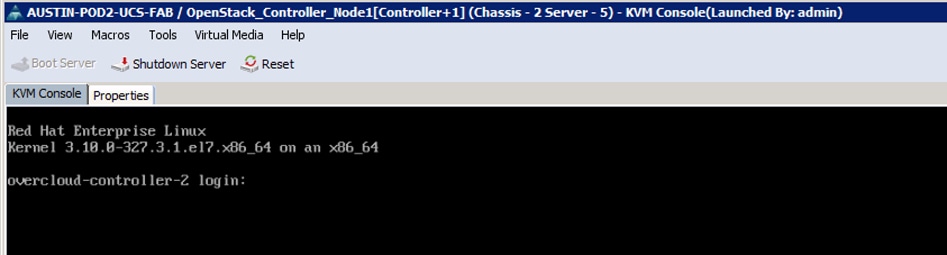

Install the Operating System on the Undercloud Node

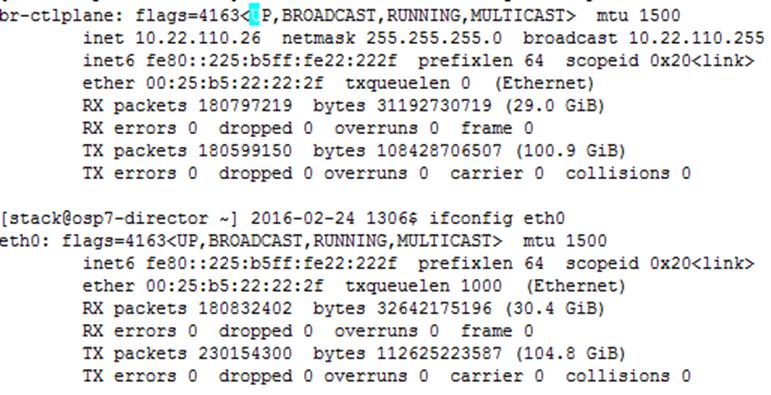

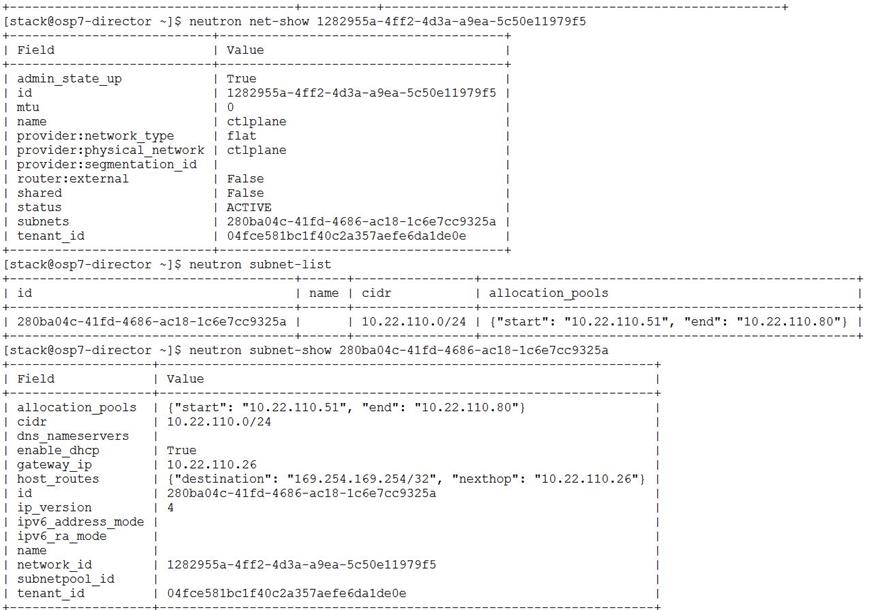

Post Undercloud Installation Checks

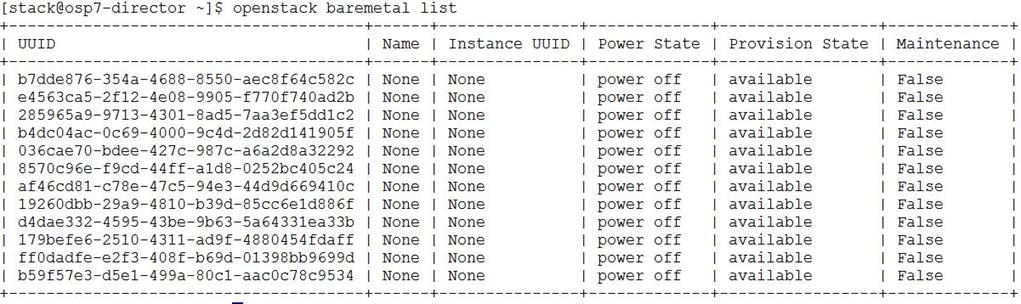

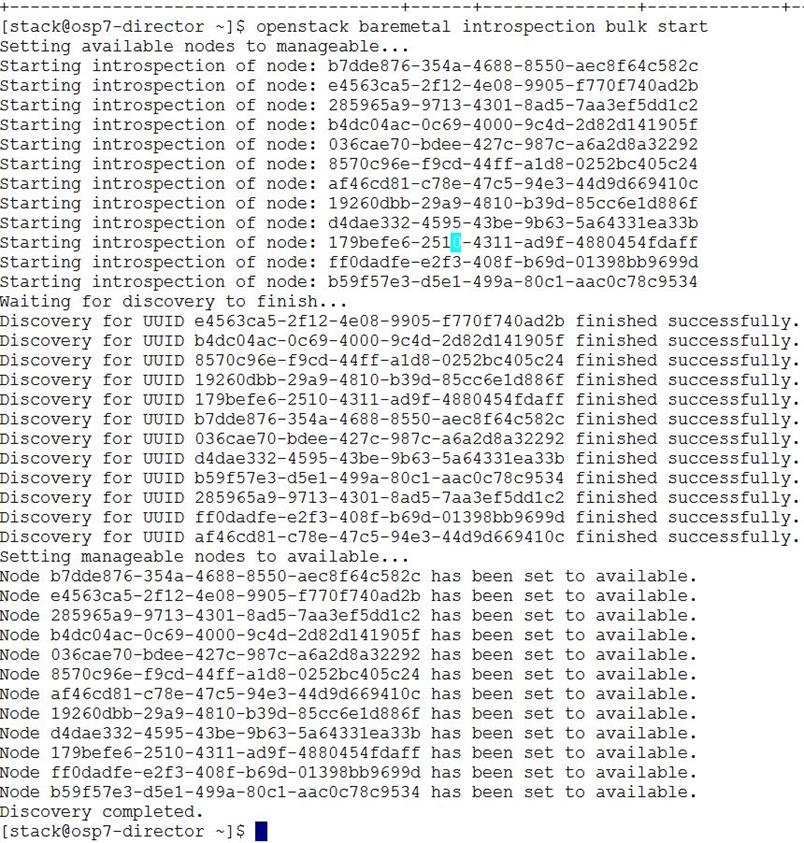

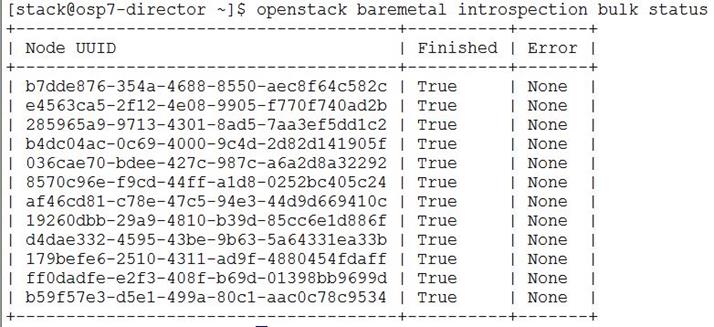

Pre-Installation Checks for Introspection

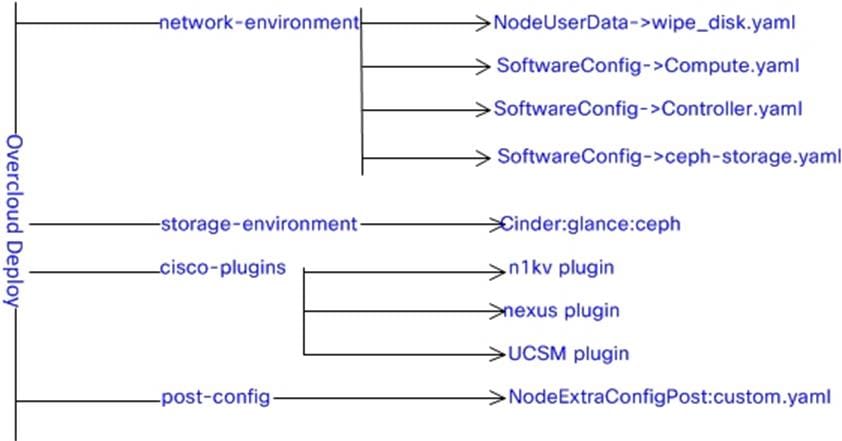

Yaml Configuration Files Overview

Pre-Installation Checks Prior to Deploying Overcloud

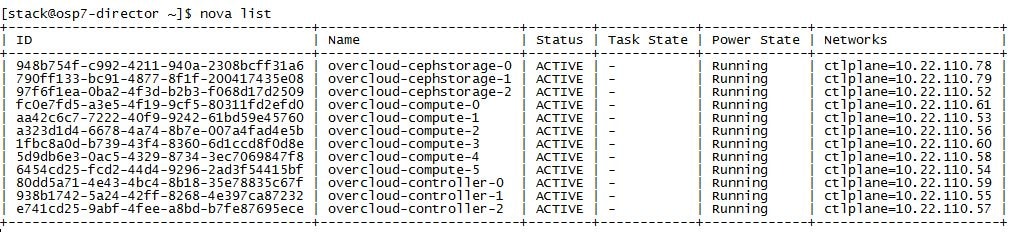

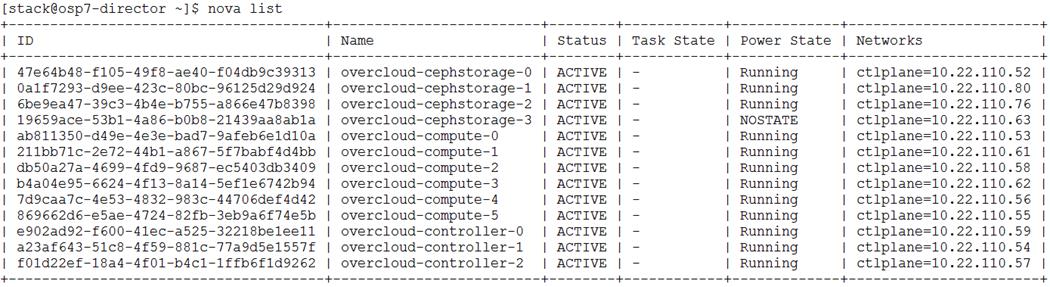

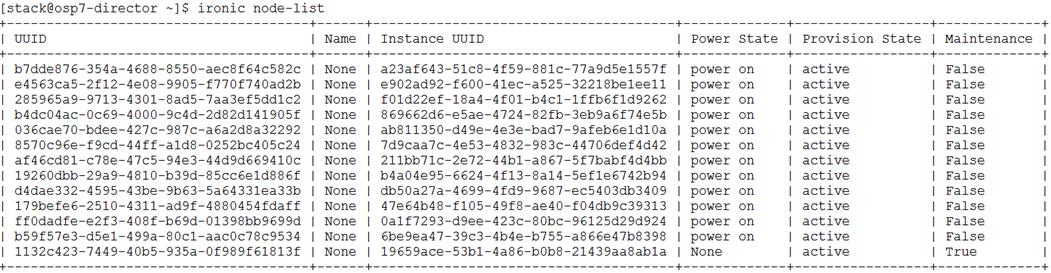

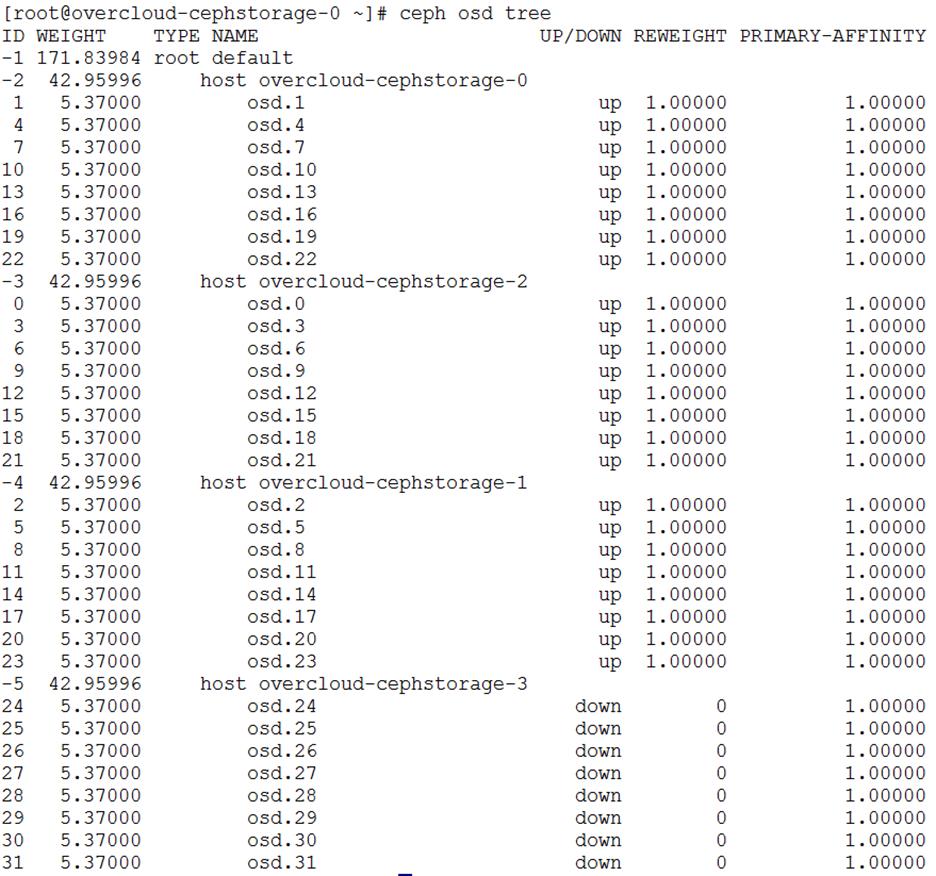

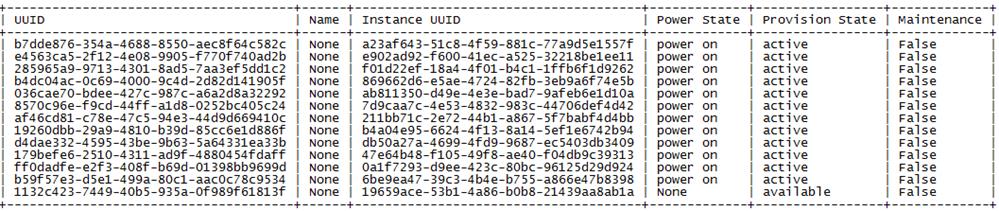

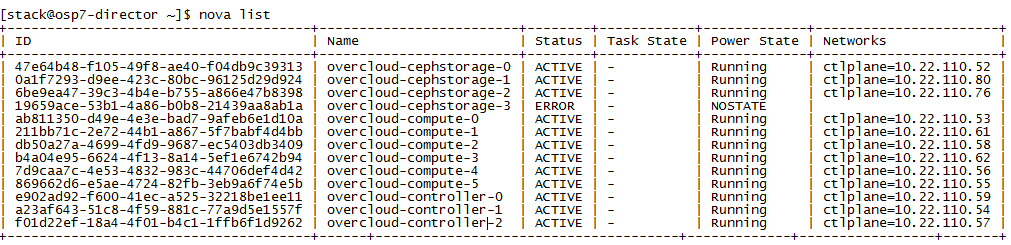

Overcloud Post Deployment Process

Overcloud Post-Deployment Configuration

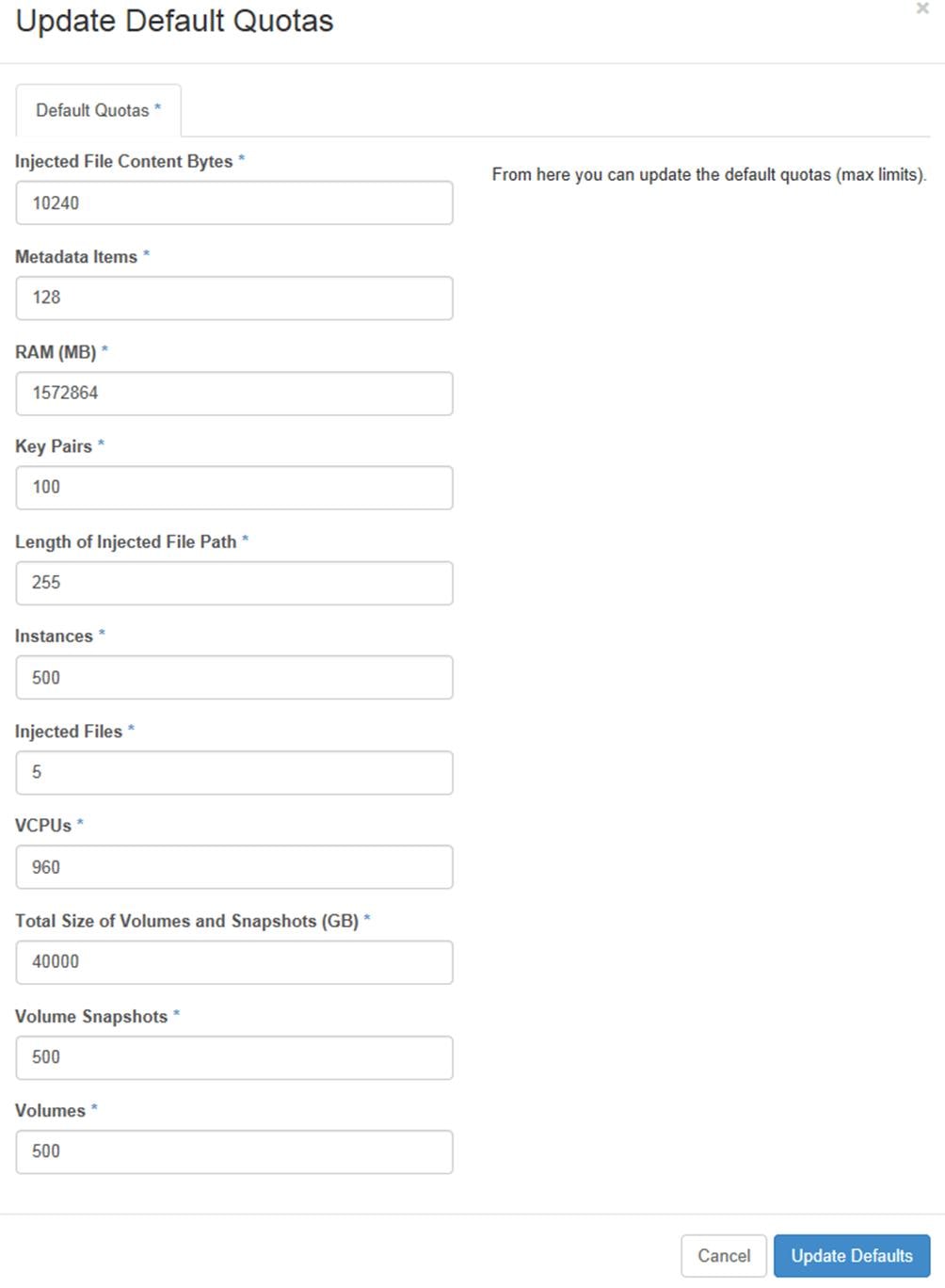

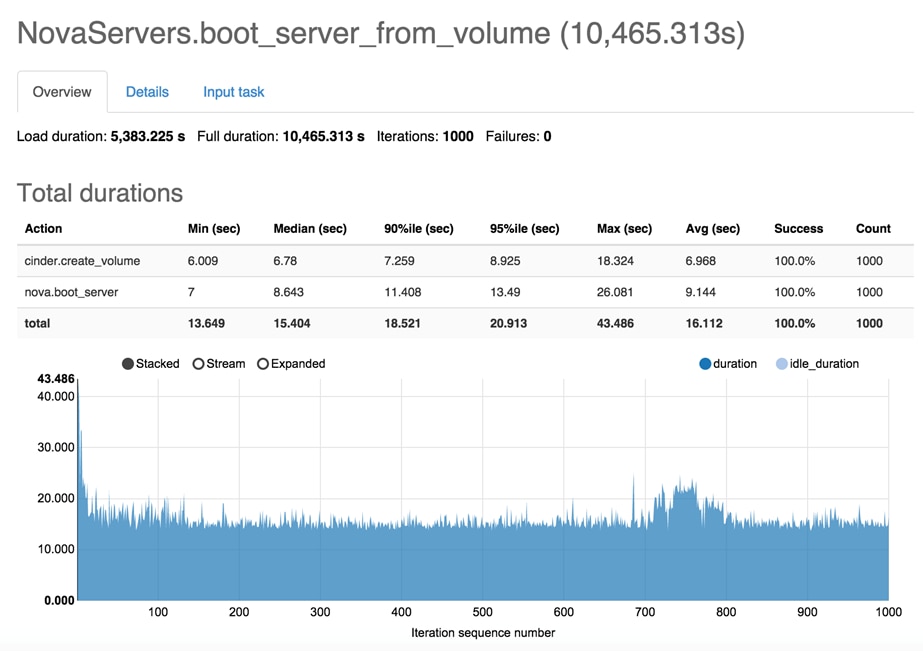

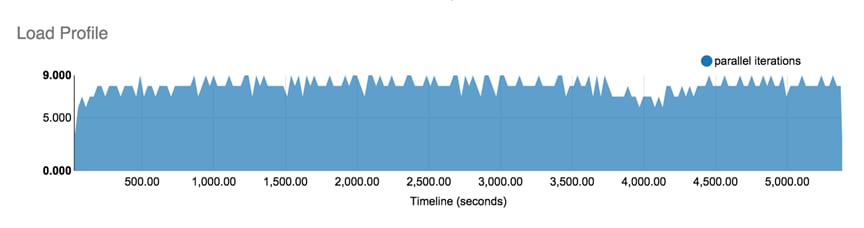

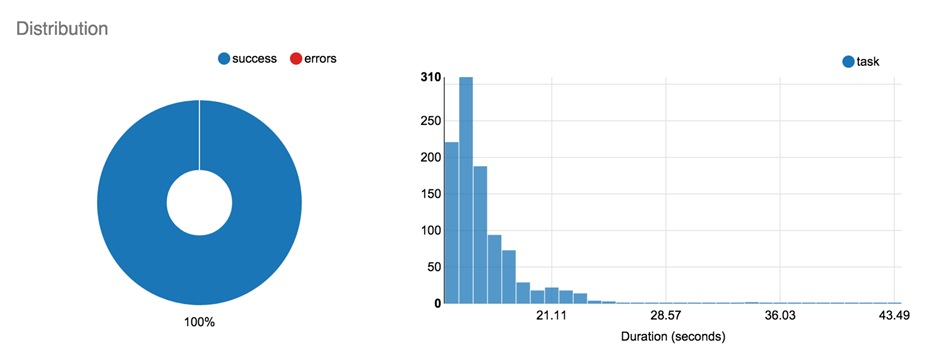

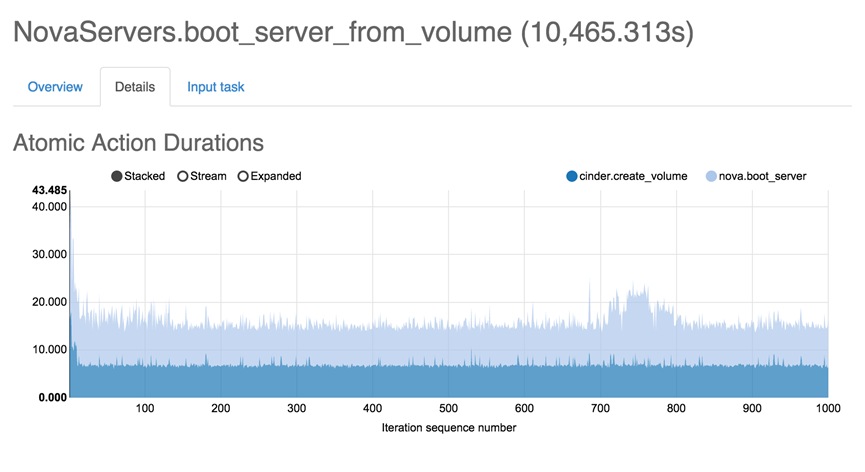

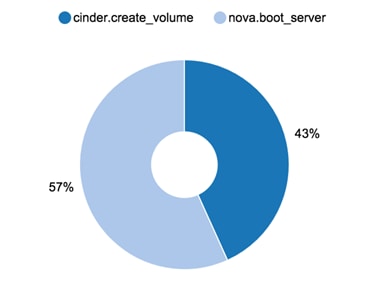

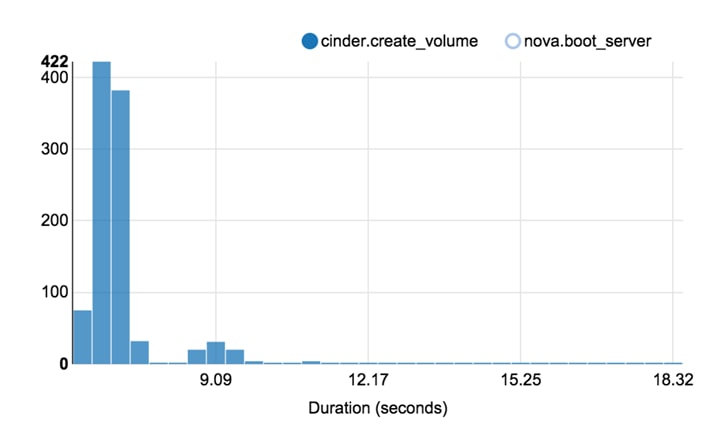

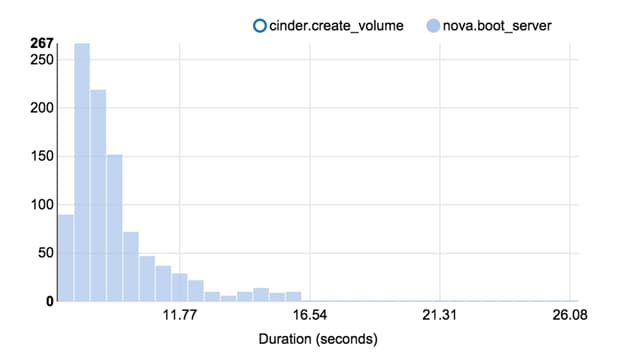

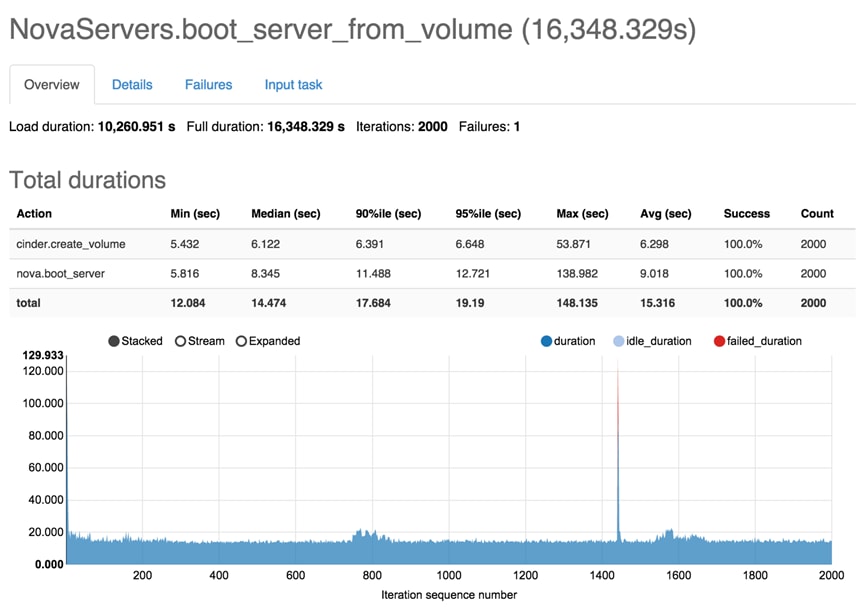

Rally Testing and Measuring Compute Scaling

Rally Scenario Task Configuration

Rally Tests with 1000 Virtual Machines

Rally Tests with 2000 Virtual Machines

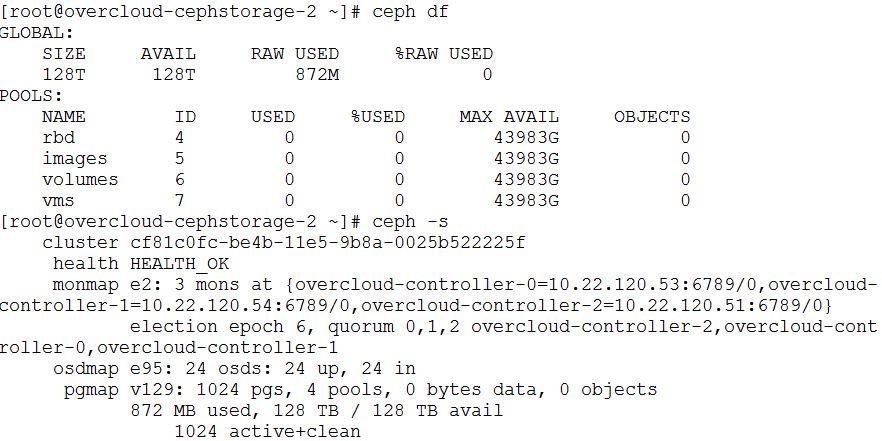

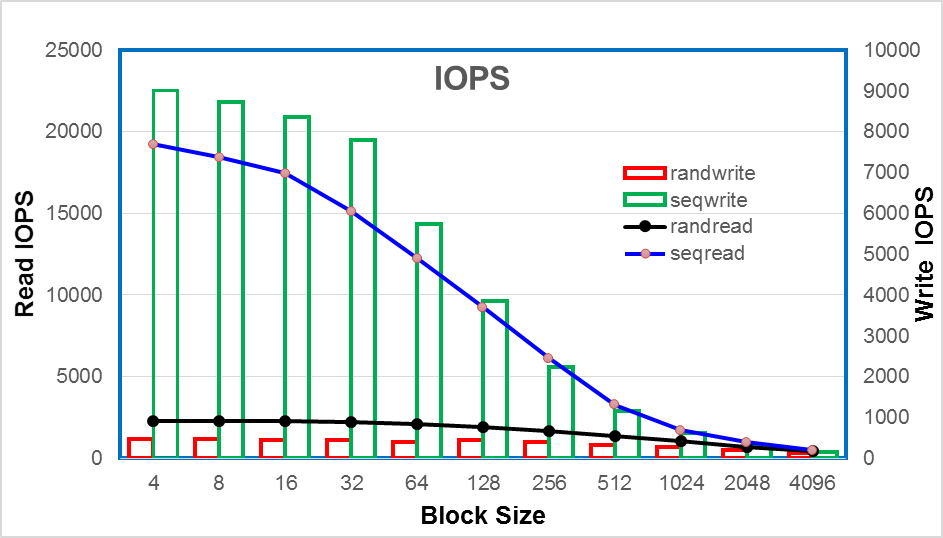

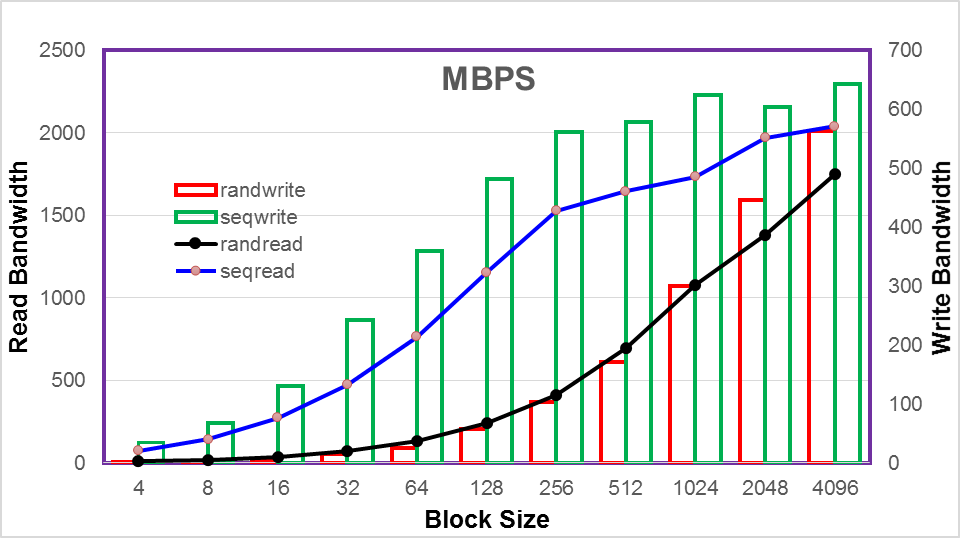

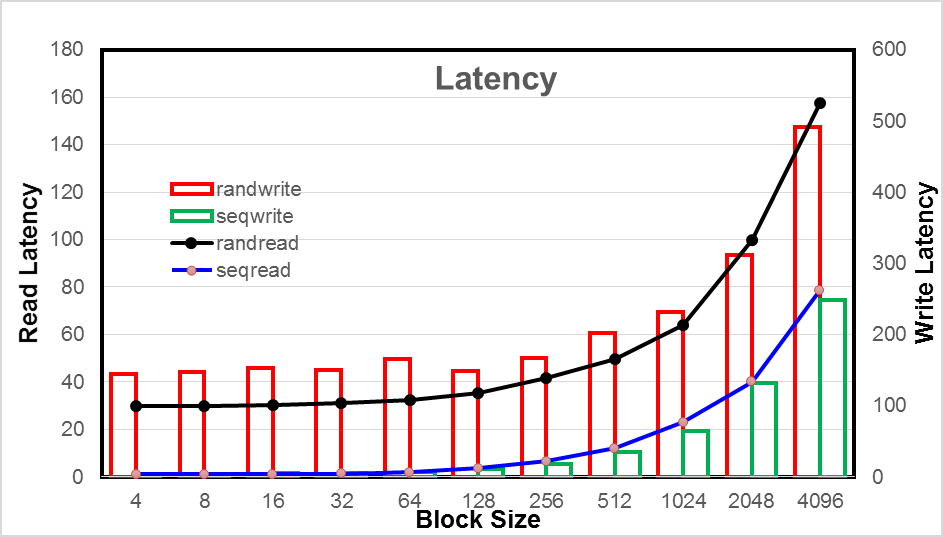

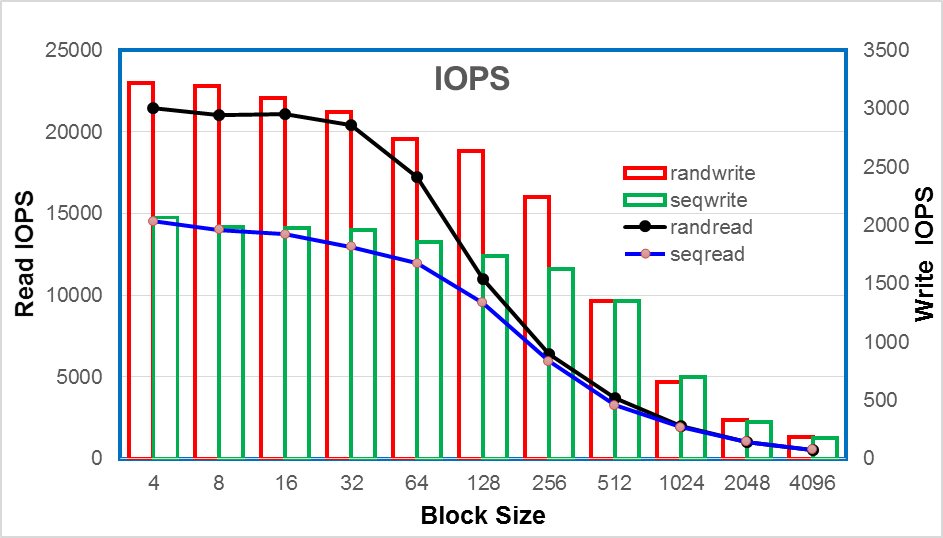

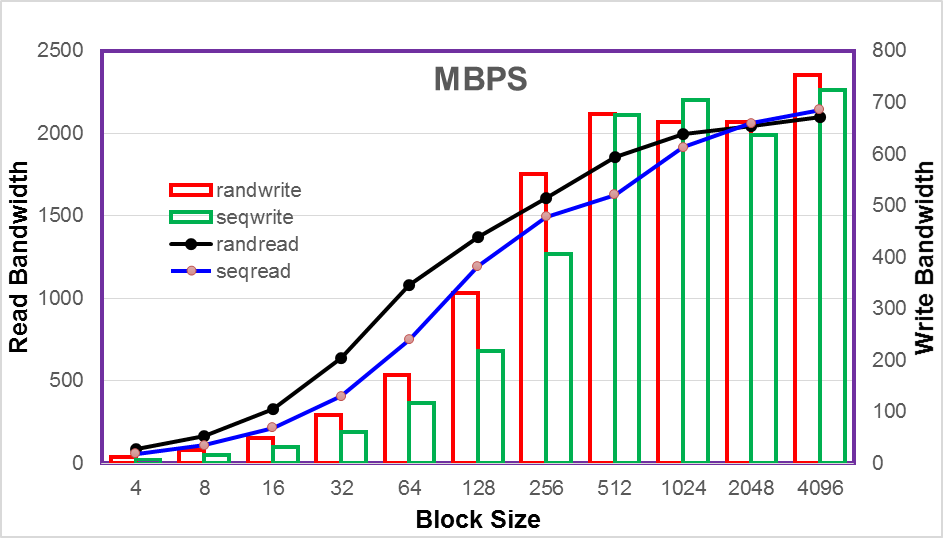

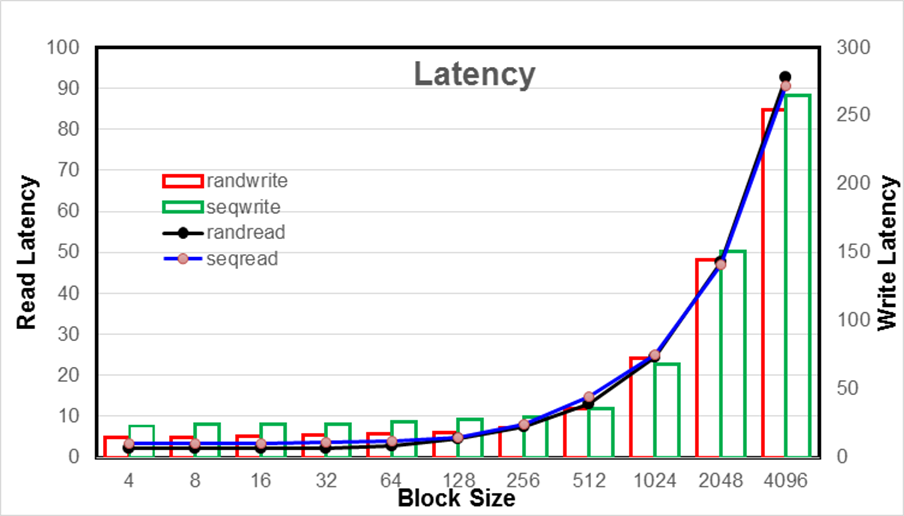

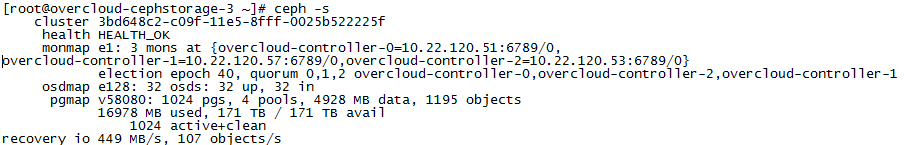

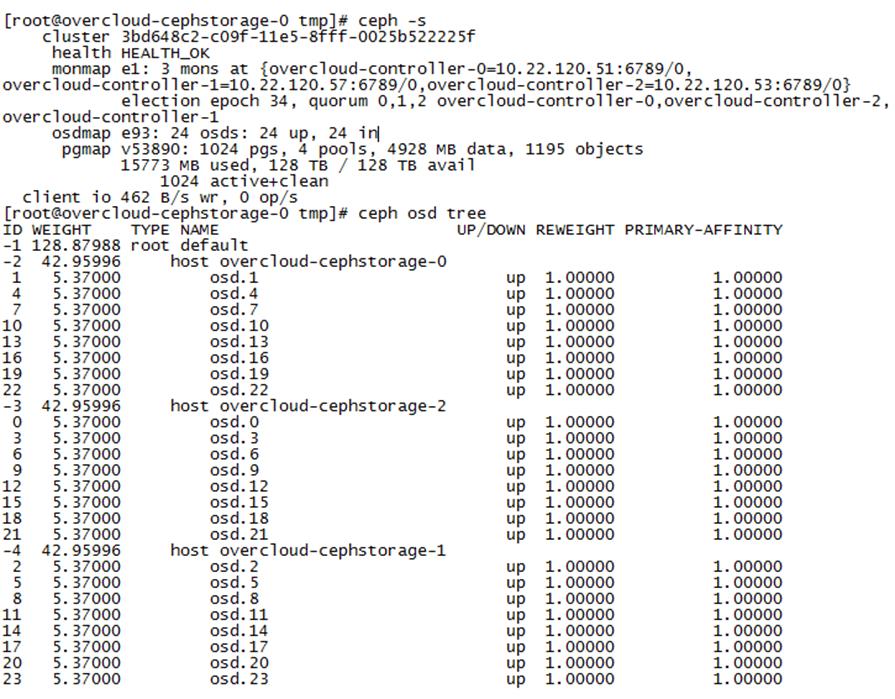

Ceph Benchmark Tool for Ceph Scalability

Create Virtual Machines for Ceph Testing

Live Migration Introduction and Scope

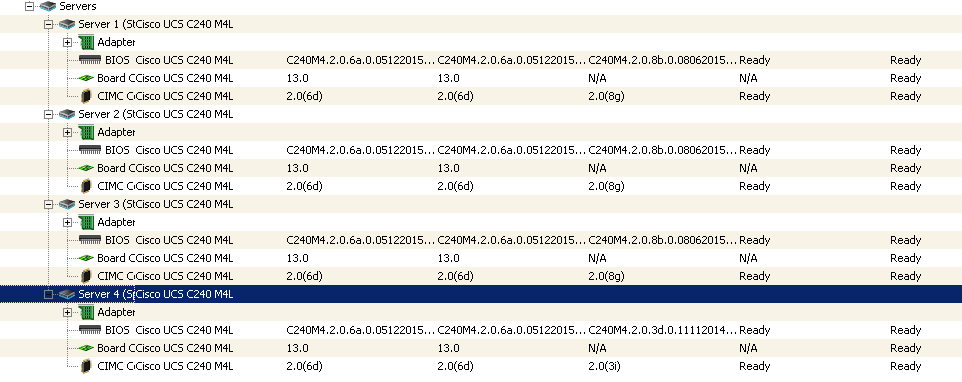

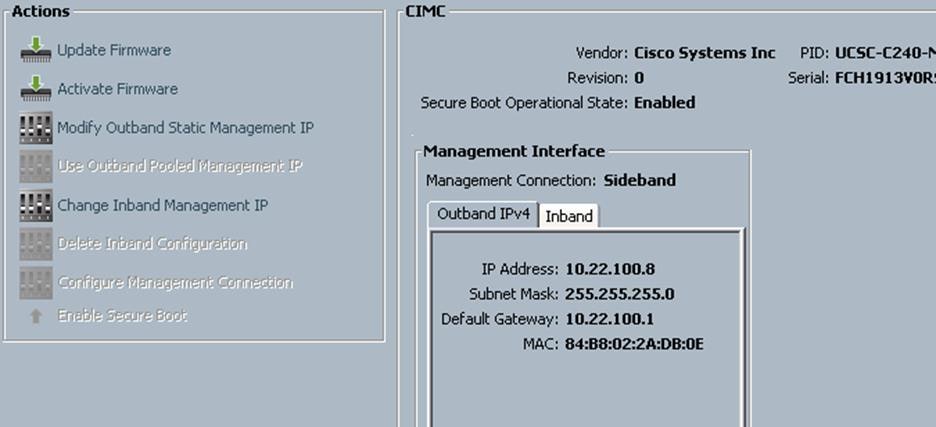

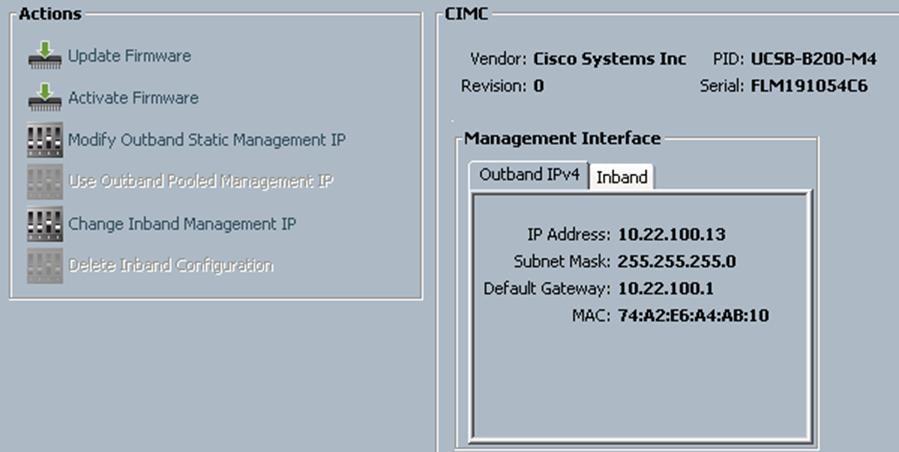

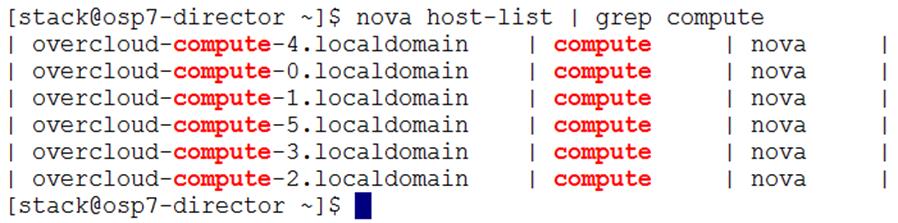

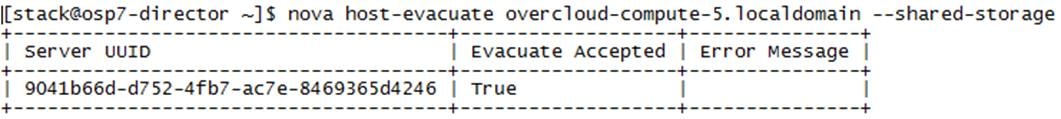

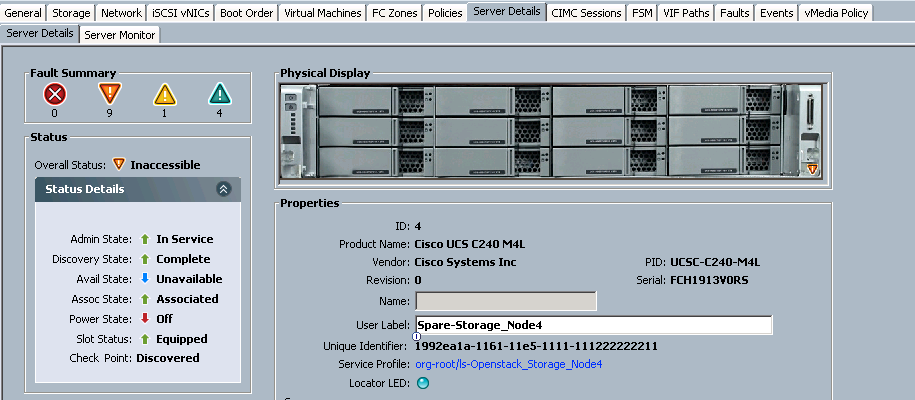

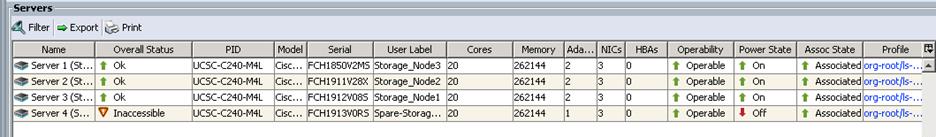

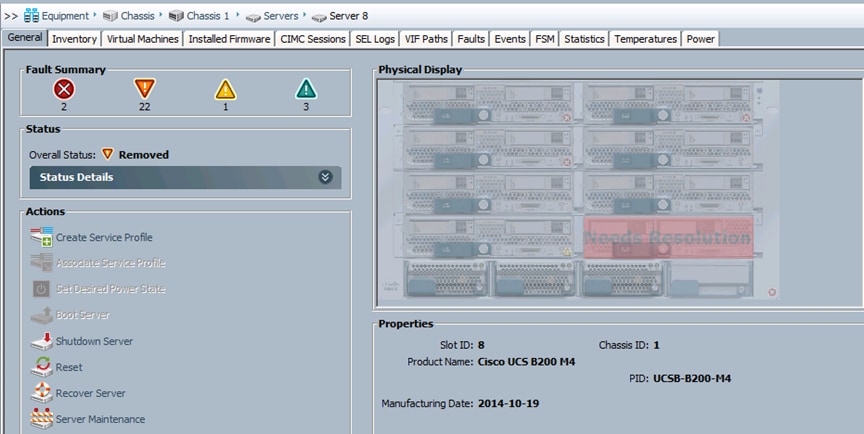

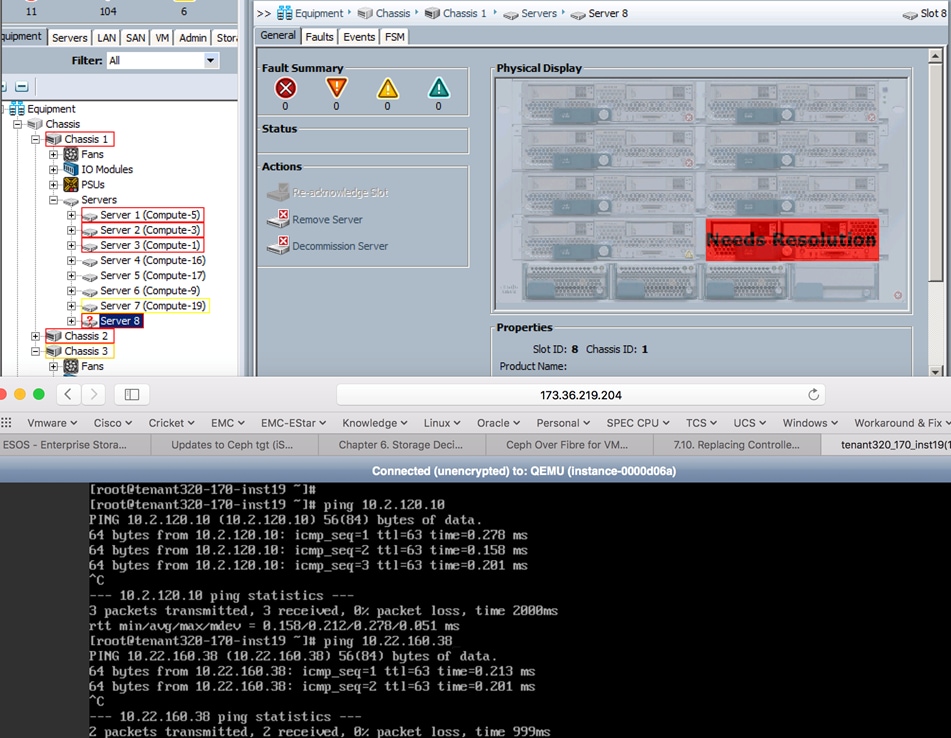

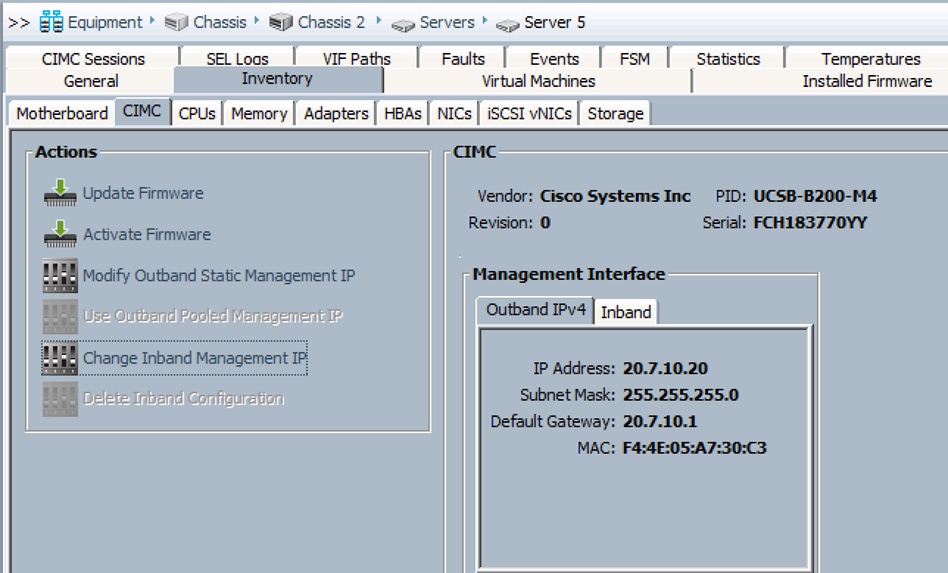

Provision the New Server in Cisco UCS

Provision the New Blade in UCS

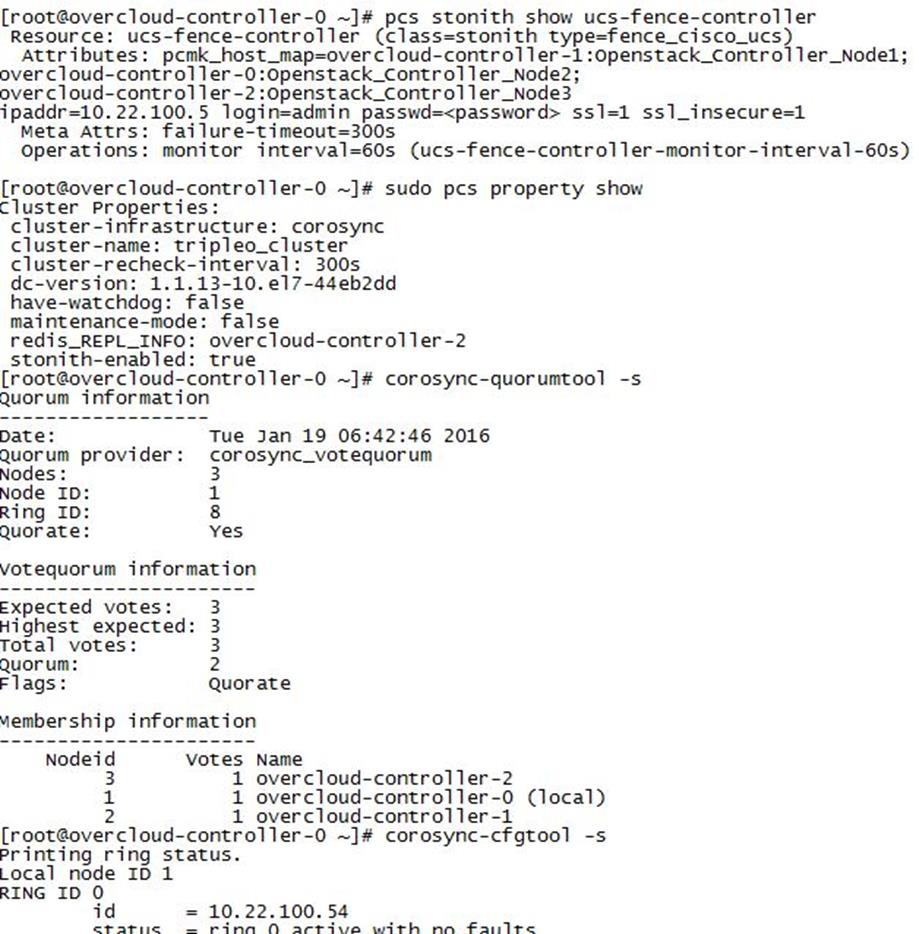

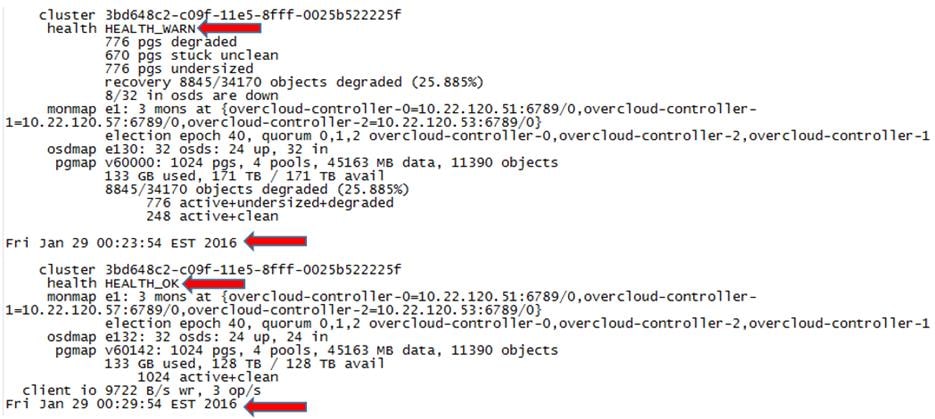

Post Deployment and Health Checks

High Availability of Software Stack

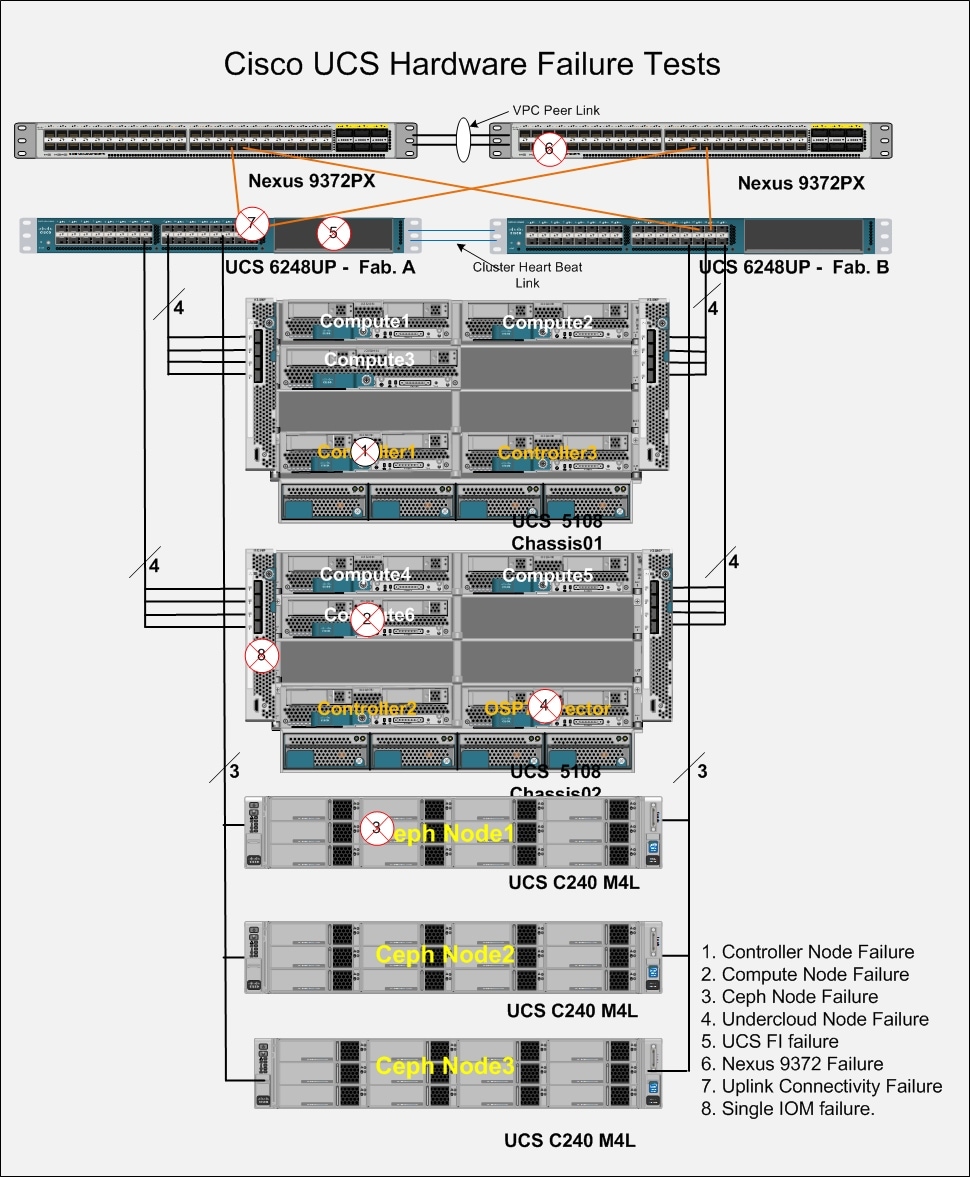

High Availability of Hardware Stack

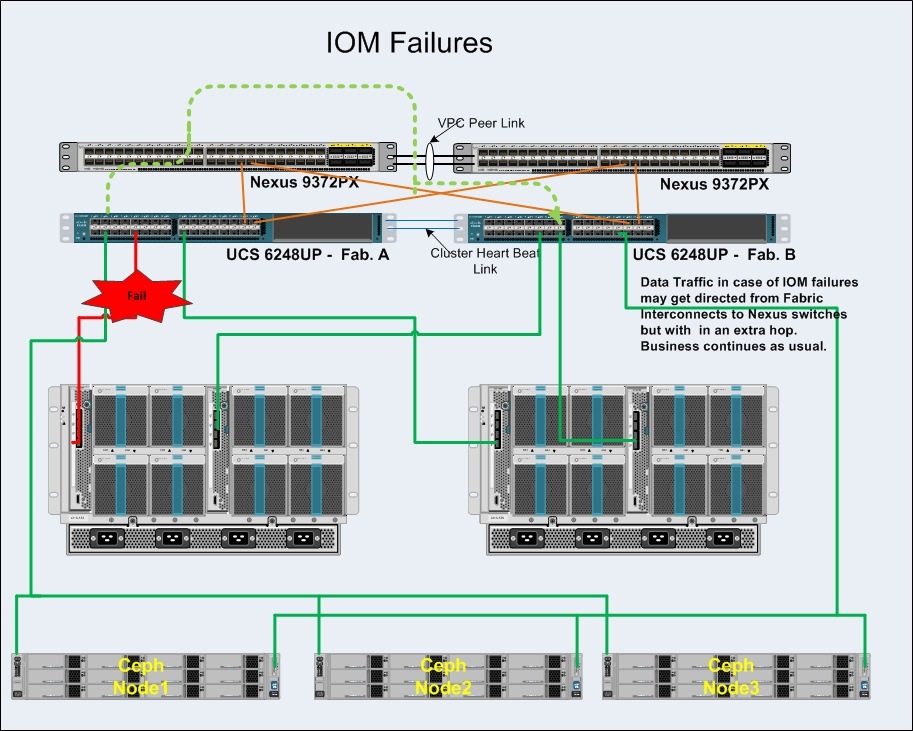

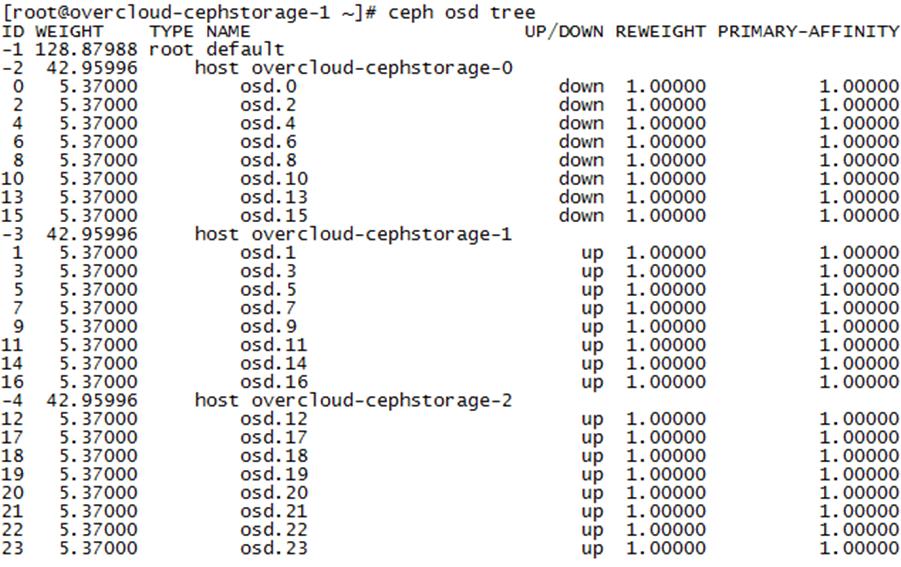

Hardware Failures of IO Modules

OpenStack Dependency on Hardware

Insert the New Blade into the Chassis

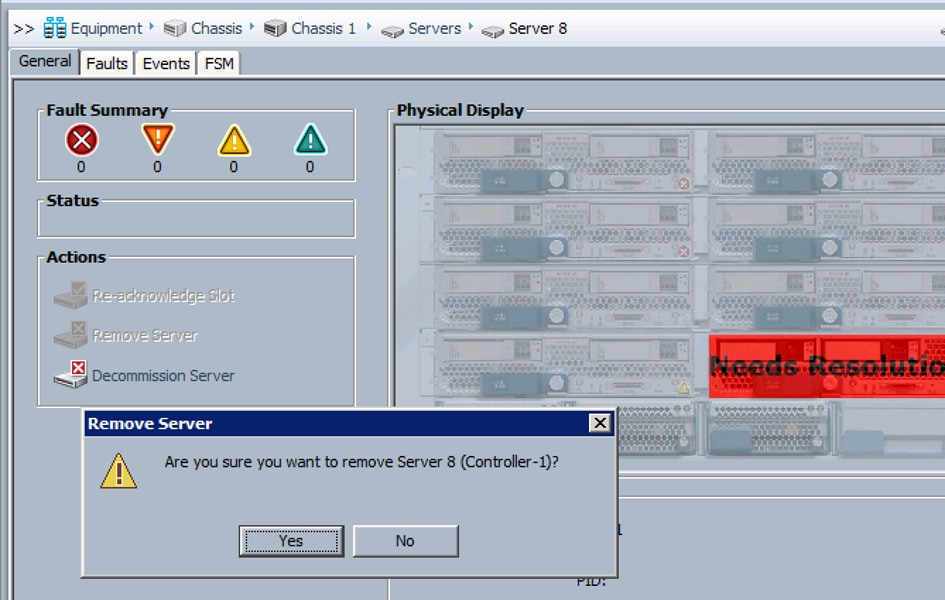

Remove Failed Blade from Inventory

Health Checks Post Replacement

Cisco Unified Computing System

Cisco Unified Computing System

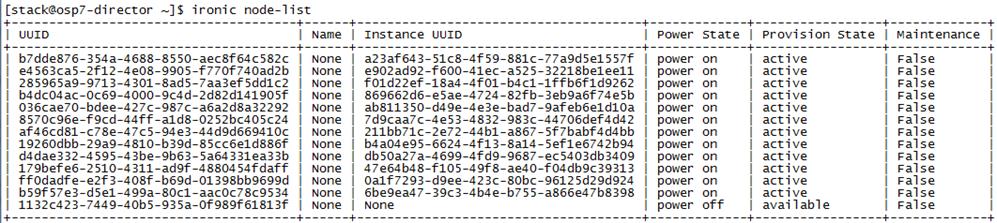

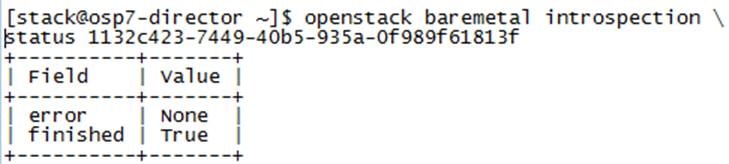

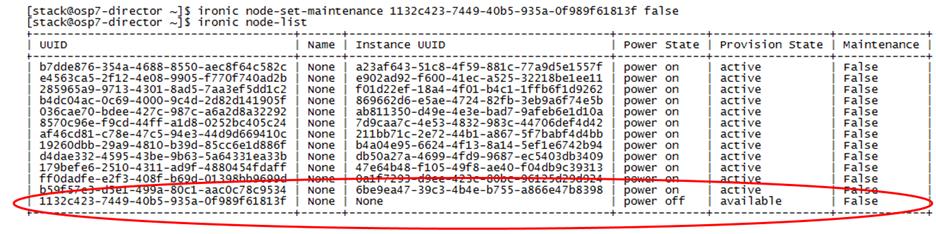

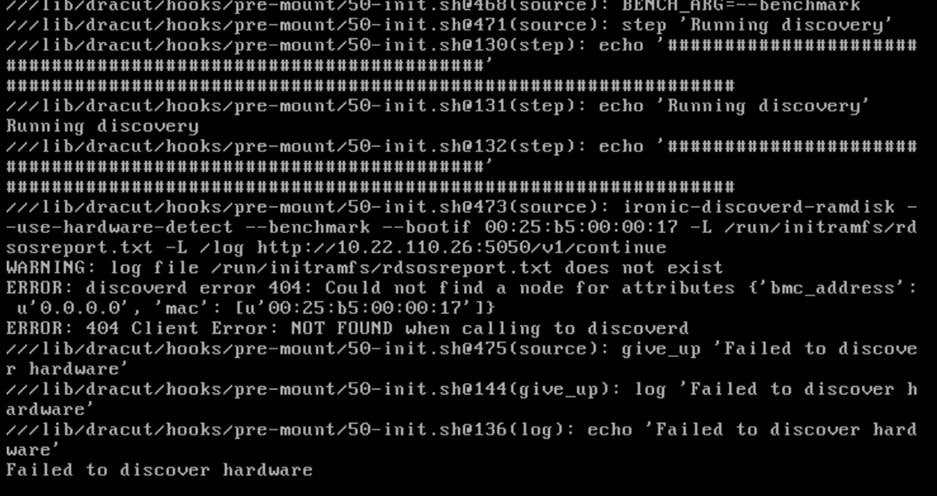

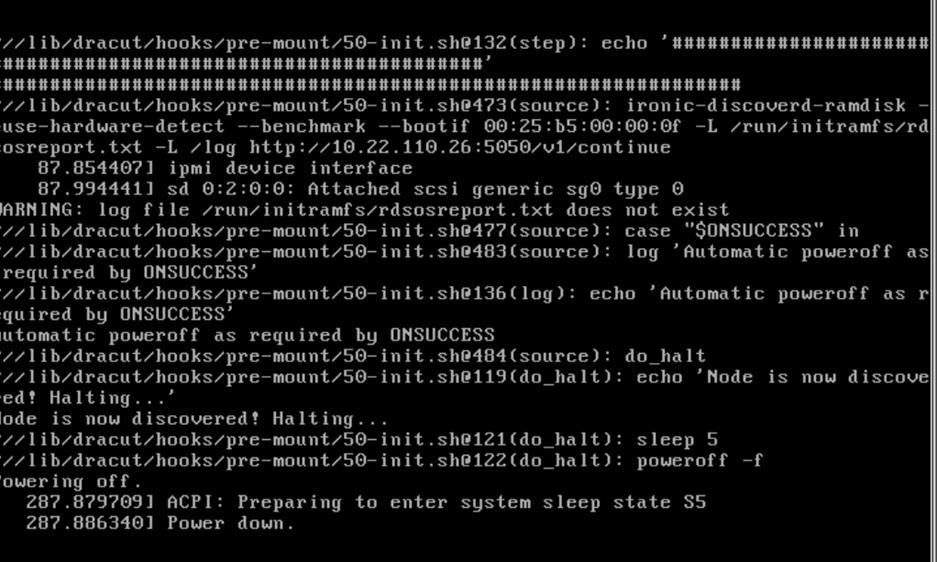

Cleaning Up Failed Introspection

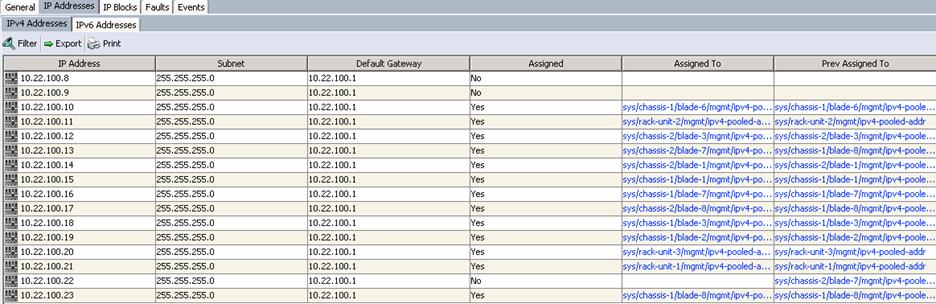

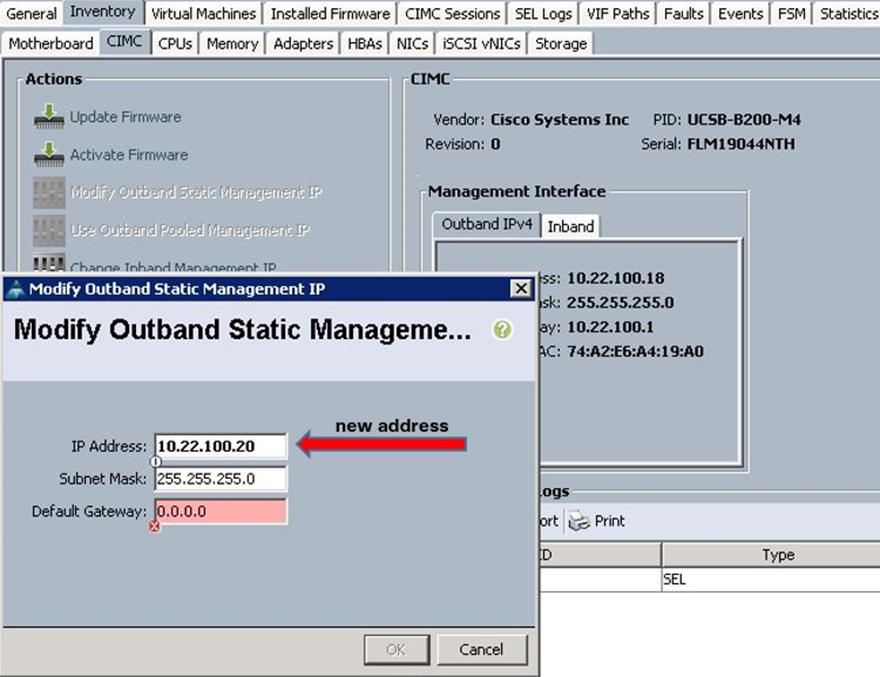

Updating Incorrect MAC or IPMI Addresses

Running Introspection on Failed Nodes

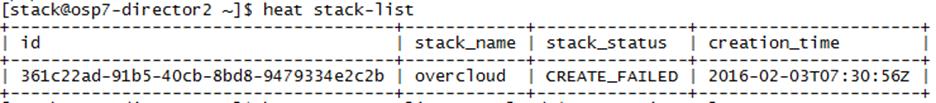

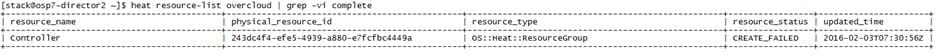

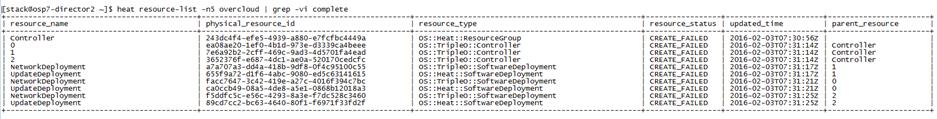

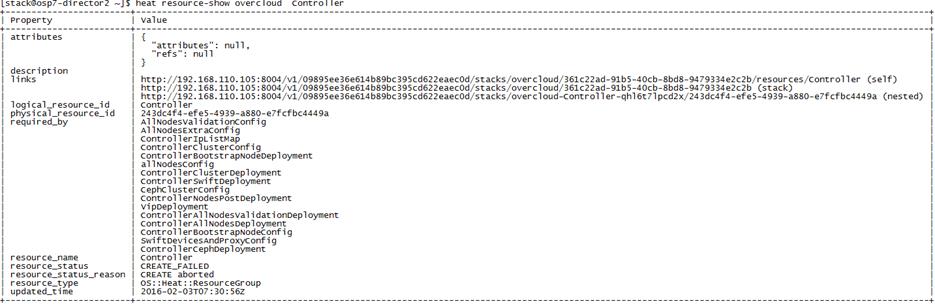

Overcloud Post-Deployment Issues

Cisco UCS Manager Plugin Checks

Cisco Validated Design program consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers.

The reference architecture described in this document is a realistic use case for deploying Red Hat Enterprise Linux OpenStack Platform 7 on Cisco UCS blade and rack servers. The document covers step by step instructions for setting UCS hardware, installing Red Hat Linux OpenStack Director, issues and workarounds evolved during installation, integration of Cisco Plugins with OpenStack, what needs to be done to leverage High Availability from both hardware and software, use case of Live Migration, performance and scalability tests done on the configuration, lessons learnt, best practices evolved while validating the solution and a few troubleshooting steps, etc.

Cisco UCS Integrated Infrastructure for Red Hat Enterprise Linux OpenStack Platform is all in one solution for deploying OpenStack based private Cloud using Cisco Infrastructure and Red Hat Enterprise Linux OpenStack platform. The solution is validated and supported by Cisco and Red Hat, to increase the speed of infrastructure deployment and reduce the risk of scaling from proof-of-concept to full enterprise production.

Introduction

Automation, virtualization, cost, and ease of deployment are the key criteria to meet the growing IT challenges. Virtualization is a key and critical strategic deployment model for reducing the Total Cost of Ownership (TCO) and achieving better utilization of the platform components like hardware, software, network and storage. The platform should be flexible, reliable and cost effective for enterprise applications.

Cisco UCS solution implementing Red Hat Enterprise Linux OpenStack Platform provides a very simplistic yet fully integrated and validated infrastructure to deploy VMs in various sizes to suit your application needs. Cisco Unified Computing System (UCS) is a next-generation data center platform that unifies computing, network, storage access, and virtualization into a single interconnected system, which makes Cisco UCS an ideal platform for OpenStack architecture. The combined architecture of Cisco UCS platform, Red Hat Enterprise Linux OpenStack Platform and Red Hat Ceph Storage can accelerate your IT transformation by enabling faster deployments, greater flexibility of choice, efficiency, and lower risk. Furthermore, Cisco Nexus series of switches provide the network foundation for the next-generation data center.

This deployment guide provides the audience a step by step instruction of Installing Red Hat Linux OpenStack Director and Red Hat Ceph Storage on Cisco UCS blades and rack servers. The traditional complexities of installing OpenStack are simplified by Red Hat Linux OpenStack Director while Cisco UCS Manager Capabilities bring an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. The solution included in this deployment guide is a partnership from Cisco Systems, Inc., Red Hat, Inc., and Intel Corporation.

Audience

The audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineers, IT architects, and customers who want to take advantage of an infrastructure that is built to deliver IT efficiency and enable IT innovation. The reader of this document is expected to have the necessary training and background to install and configure Red Hat Enterprise Linux, Cisco Unified Computing System (UCS) and Cisco Nexus Switches as well as a high level understanding of OpenStack components. External references are provided where applicable and it is recommended that the reader be familiar with these documents.

Readers are also expected to be familiar with the infrastructure, network and security policies of the customer installation.

Purpose of the Document

This document describes the step by step installation of Red Hat Enterprise Linux OpenStack Platform 7 and Red Hat Ceph Storage 1.3 architecture on Cisco UCS platform. It also discusses about the day to day operational challenges of running OpenStack and steps to mitigate them, High Availability use cases, Live Migration, common troubleshooting aspects of OpenStack along with Operational best practices.

Solution Summary

This solution is focused on Red Hat Enterprise Linux OpenStack Platform 7 (based on the upstream OpenStack Kilo release) and Red Hat Ceph Storage 1.3 on Cisco Unified Computing System. The advantages of Cisco UCS and Red Hat Enterprise Linux OpenStack Platform combine to deliver an OpenStack Infrastructure as a Service (IaaS) deployment that is quick and easy to setup. The solution can scale up for greater performance and capacity or scale out for environments that require consistent, multiple deployments. It provides:

Converged infrastructure of Compute, Networking, and Storage components from Cisco UCS is a validated enterprise-class IT platform, rapid deployment for business critical applications, reduces costs, minimizes risks, and increase flexibility and business agility Scales up for future growth.

Red Hat Enterprise Linux OpenStack Platform 7 on Cisco UCS helps IT organizations accelerate cloud deployments while retaining control and choice over their environments with open and inter-operable cloud solutions. It also offers redundant architecture on compute, network, and storage perspective. The solution comprises of the following key components:

· Cisco Unified Computing System (UCS)

— Cisco UCS 6200 Series Fabric Interconnects

— Cisco VIC 1340

— Cisco VIC 1227

— Cisco 2204XP IO Module or Cisco UCS Fabric Extenders

— Cisco B200 M4 Servers

— Cisco C240 M4 Servers

· Cisco Nexus 9300 Series Switches

· Cisco Nexus 1000v for KVM

· Cisco Nexus Plugin for Nexus Switches

· Cisco UCS Manager Plugin for Cisco UCS

· Red Hat Enterprise Linux 7.x

· Red Hat Enterprise Linux OpenStack Platform Director

· Red Hat Enterprise Linux OpenStack Platform 7

· Red Hat Ceph Storage 1.3

The scope is limited to the infrastructure pieces of the solution. It does not address the vast area of the OpenStack components and multiple configuration choices available in OpenStack.

This architecture is based on Red Hat Enterprise Linux OpenStack platform build on Cisco UCS hardware is an integrated foundation to create, deploy, and scale OpenStack cloud based on Kilo OpenStack community release. Kilo version introduces Red Hat Linux OpenStack Director (RHEL-OSP), a new deployment tool chain that combines the functionality from the upstream TripleO and Ironic projects with components from previous installers.

The reference architecture use case provides a comprehensive, end-to-end example of deploying RHEL-OSP7 cloud on bare metal using OpenStack Director and services through heat templates.

The first section in this Cisco Validated Design covers setting up of Cisco hardware the blade and rack servers, chassis and Fabric Interconnects and the peripherals like Nexus 9000 switches. The second section explains the step by step install instructions for installing cloud through RHEL OSP Director. The final section includes the functional and High Availability tests on the configuration, Performance, Live migration tests, and the best practices evolved while validating the solution.

Physical Topology

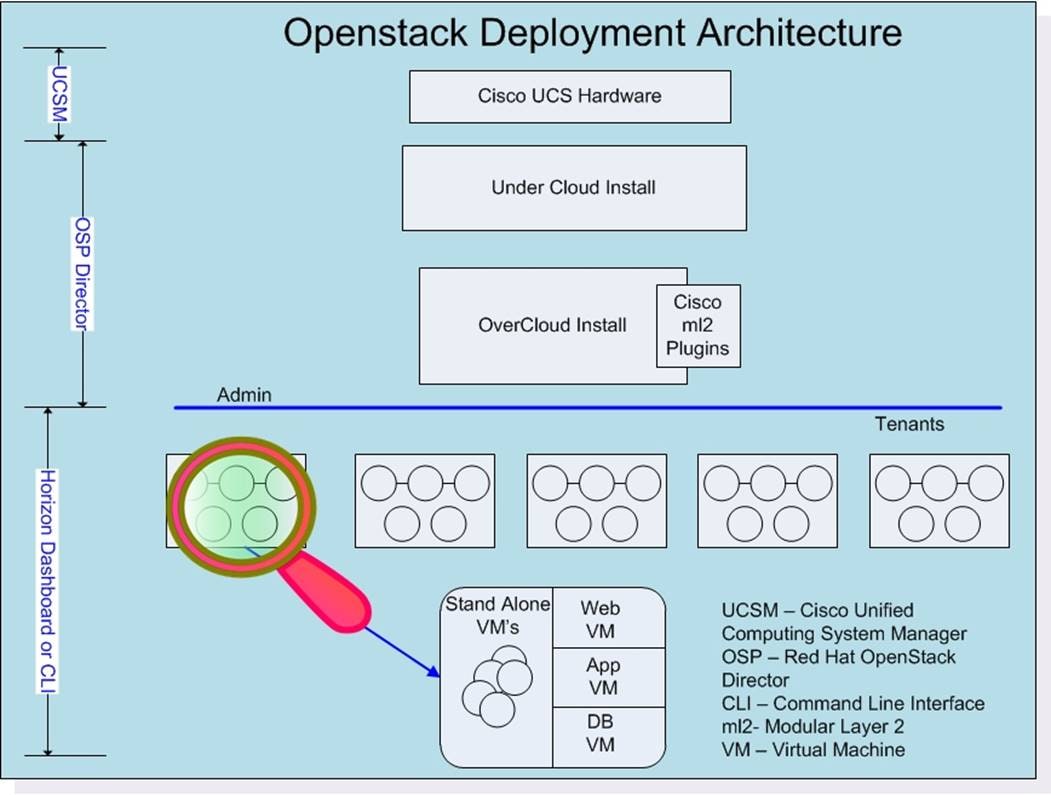

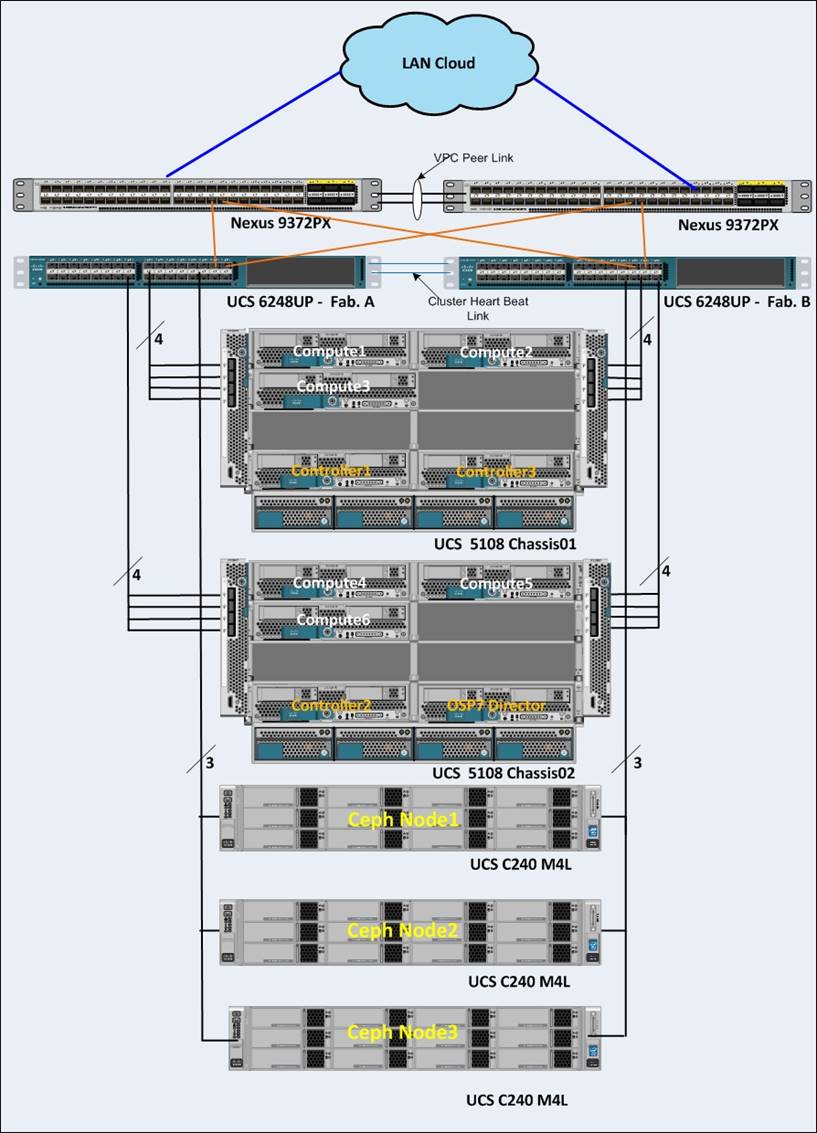

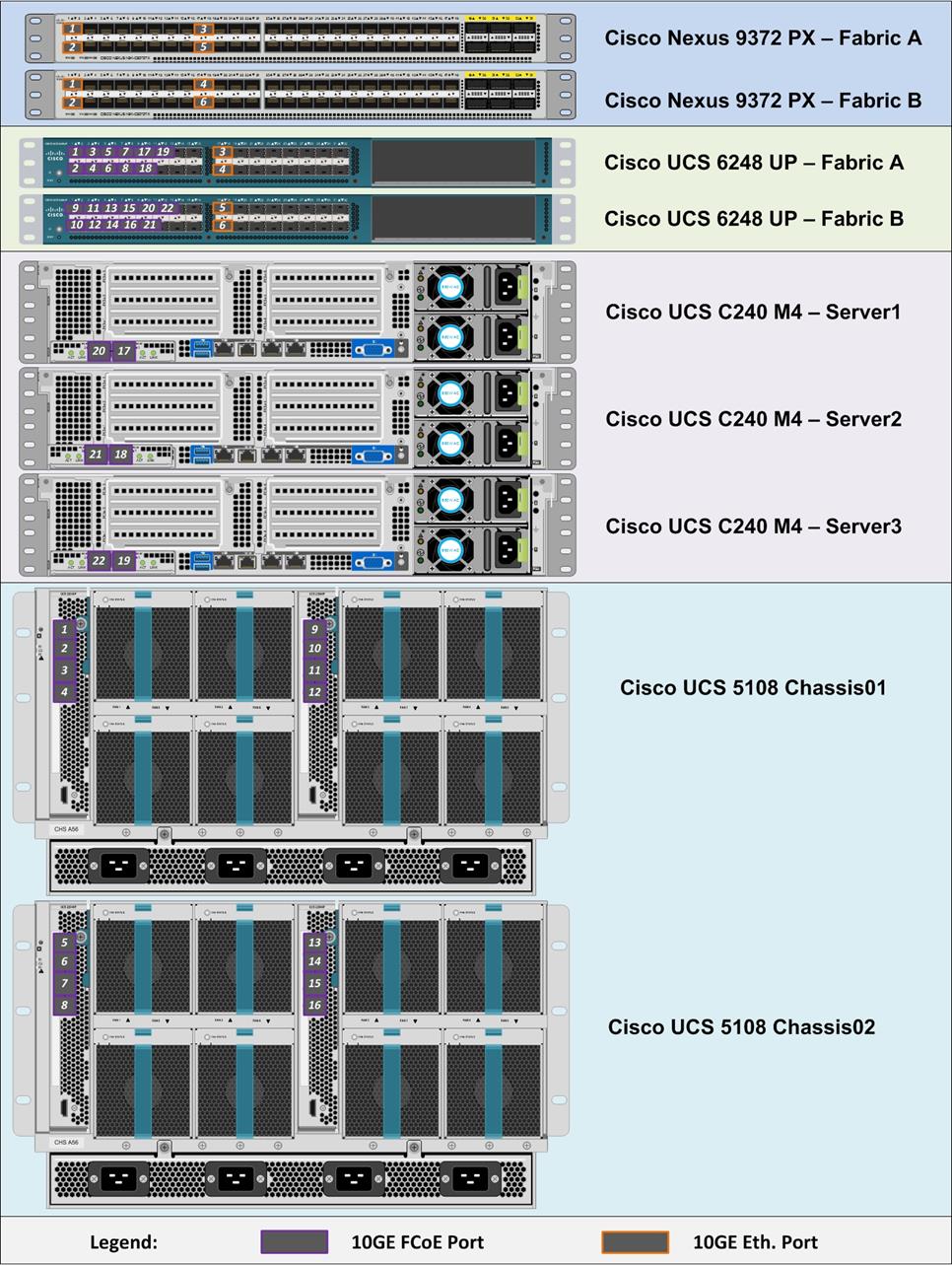

Figure 2 illustrates the physical topology of this solution.

The configuration comprised of 3 controller nodes, 6 compute nodes, 3 storage nodes, a pair of UCS Fabrics and Nexus switches, where most of the tests were conducted. In another configuration the system had 20 Compute nodes, 12 Ceph nodes and 3 controllers distributed across 3 UCS chassis where few install and scalability tests were performed. Needless to say that architecture is scalable horizontally and vertically within the chassis.

· More Compute Nodes and Chassis can be added as desired.

· More Ceph Nodes for storage can be added. The Ceph nodes can be UCS C240M4L or C240M4S.

· If more bandwidth is needed, Cisco IO Modules can be 2208XP as opposed to 2204XP used in the configuration.

· Both Cisco Fabric Interconnects and Cisco Nexus Switches can be on 96 port switches instead of 48 ports as shown above.

Solution Overview

This solution components and diagrams are implemented per the Design Guide and basic overview is provided below.

The Cisco Unified Computing System is an integrated, scalable, multi-chassis platform in which all resources participate in a unified management domain. The Cisco Unified Computing System accelerates the delivery of new services simply, reliably, and securely through end-to-end provisioning and migration support for both virtualized and non-virtualized systems. Cisco UCS manager using single connect technology manages servers and chassis and performs auto-discovery to detect inventory, manage, and provision system components that are added or changed.

The Red Hat Enterprise Linux OpenStack Platform IaaS cloud on Cisco UCS servers is implemented as a collection of interacting services that control compute, storage, and networking resources.

OpenStack Networking handles creation and management of a virtual networking infrastructure in the OpenStack cloud. Infrastructure elements include networks, subnets, and routers. Because OpenStack Networking is software-defined, it can react in real-time to changing network needs, such as creation and assignment of new IP addresses.

Compute serves as the core of the OpenStack cloud by providing virtual machines on demand. Computes supports the libvirt driver that uses KVM as the hypervisor. The hypervisor creates virtual machines and enables live migration from node to node.

OpenStack also provides storage services to meet the storage requirements for the above mentioned virtual machines.

The Keystone provides user authentication to all OpenStack systems.

The solution also includes OpenStack Networking ML2 Core components.

Cisco Nexus 1000V OpenStack solution is an enterprise-grade virtual networking solution, which brings Security, Policy control, and Visibility together with Layer2/Layer 3 switching at the hypervisor layer. When it comes to application visibility, Cisco Nexus 1000V provides insight into live and historical VM migrations and advanced automated troubleshooting capabilities to identify problems in seconds.

The Cisco Nexus driver for OpenStack Neutron allows customers to easily build their infrastructure-as-a-service (IaaS) networks using the industry’s leading networking platform, delivering performance, scalability, and stability with the familiar manageability and control you expect from Cisco® technology.

Cisco UCS Manager Plugin configures compute blades with necessary VLAN’s. The Cisco UCS Manager Plugin talks to the Cisco UCS Manager application running on Fabric Interconnect.

Table 1 lists the Hardware and Software releases used for solution verification.

Table 1 Required Hardware Components

|

|

Hardware |

Quantity |

Firmware Details |

| Director |

Cisco UCS B200M4 blade |

1 |

2.2(5) |

| Controller |

Cisco UCS B200M4 blade |

3 |

2.2(5) |

| Compute |

Cisco UCS B200M4 blade |

6 |

2.2(5) |

| Storage |

Cisco UCS C240M4L rack server |

3 |

2.2(5) |

| Fabrics Interconnects |

Cisco UCS 6248UP FIs |

2 |

2.2(5) |

| Nexus Switches |

Cisco Nexus 9372 NX-OS |

2 |

7.0(3)I1(3) |

Table 2 Software Specifications

|

|

Software |

Version |

| Operating System |

Red Hat Enterprise Linux |

7.2 |

| OpenStack Platform |

Red Hat Enterprise Linux OpenStack Platform |

RHEL-OSP 7.2 |

| Red Hat Enterprise Linux OpenStack Director |

RHEL-OSP 7.2 |

|

|

|

Red Hat Ceph Storage |

1.3 |

| Cisco N1000V |

VSM and VEM modules |

5.2(1)SK3(2.2x) |

| Plugins |

Cisco Nexus Plugin |

RHEL-OSP 7.2 |

|

|

Cisco UCSM Plugin |

RHEL-OSP 7.2 |

|

|

Cisco N1KV Plugin |

RHEL-OSP 7.2 |

This section contains the Bill of Materials used in the configuration.

| Component |

Model |

Quantity |

Comments |

| OpenStack Platform Director Node |

Cisco UCS B200M4 blade |

1 |

CPU – 2 x E5-2630 V3 Memory – 8 x 16GB 2133 MHz DIMM – total of 128G Local Disks – 2 x 300 GB SAS disks for Boot Network Card – 1x1340 VIC Raid Controller – Cisco MRAID 12 G SAS Controller |

| Controller Nodes |

Cisco UCS B200M4 blades |

3 |

CPU – 2 x E5-2630 V3 Memory – 8 x 16GB 2133 MHz DIMM – total of 128G Local Disks – 2 x 300 GB SAS disks for Boot Network Card – 1x1340 VIC Raid Controller – Cisco MRAID 12 G SAS Controller |

| Compute Nodes |

Cisco UCS B200M4 blades |

6 |

CPU – 2 x E5-2660 – V3 Memory – 16 x 16GB 2133 MHz DIMM – total of 256G Local Disks – 2 x 300 GB SAS disks for Boot Network Card – 1x1340 VIC Raid Controller – Cisco MRAID 12 G SAS Controller |

| Storage Nodes |

Cisco UCS C240M4L rack servers |

3 |

CPU – 2 x E5-2630 – V3 Memory – 8 x 16GB 2133 MHz DIMM – total of 128G Internal HDD – None Ceph OSD’s – 8 x 6TB SAS Disks Ceph Journals – 2 x 400GB SSD’s OS Boot – 2 x 1TB SAS Disks Network Cards – 1 x VIC 1227 Raid Controller – Cisco MRAID 12 G SAS Controller |

| Chassis |

Cisco UCS 5108 Chassis |

2 |

|

| IO Modules |

IOM 2204 XP |

4 |

|

| Fabric Interconnects |

Cisco UCS 6248UP Fabric Interconnects |

2 |

|

| Switches |

Cisco Nexus 9372PX Switches |

2 |

|

![]() Deployment and a few performance tests have been evaluated on another configuration with similar hardware and software specifications as listed above but with 20 Compute nodes and 12 Ceph storage nodes.

Deployment and a few performance tests have been evaluated on another configuration with similar hardware and software specifications as listed above but with 20 Compute nodes and 12 Ceph storage nodes.

Cisco Unified Computing System

Server Pools

Server pools will be utilized to divide the OpenStack server roles for ease of deployment and scalability. These pools will also decide the placement of server roles within the infrastructure. The following pools were created.

· OpenStack Controller Server pool

· OpenStack Compute Server pool

· OpenStack Ceph Server pool

![]() The Undercloud node will be a single server and is not associated with any pool. It is a standalone template and is used to create a service profile clone.

The Undercloud node will be a single server and is not associated with any pool. It is a standalone template and is used to create a service profile clone.

The compute server pool allows quick provisioning of additional hosts by adding the new servers to the compute server pool. The newly provisioned compute hosts can be added into an existing OpenStack environment through introspection and Overcloud deploy, covered later in this document.

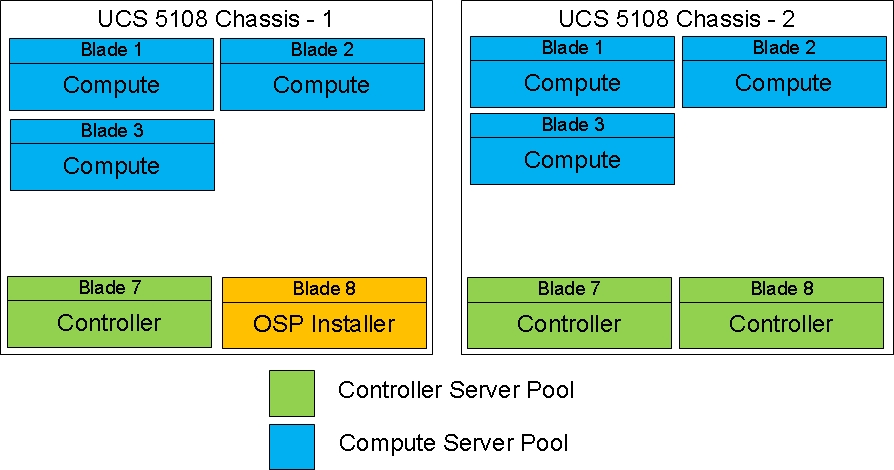

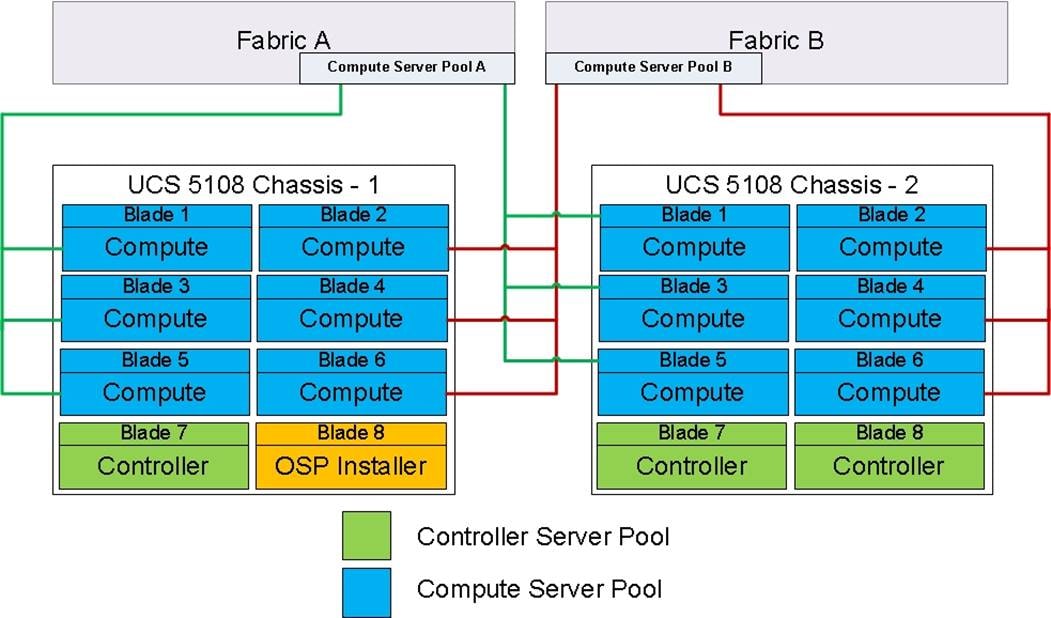

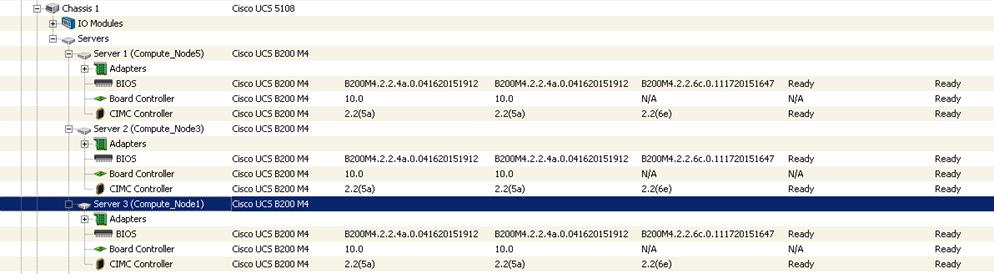

Cisco UCS Blades Distribution in Chassis

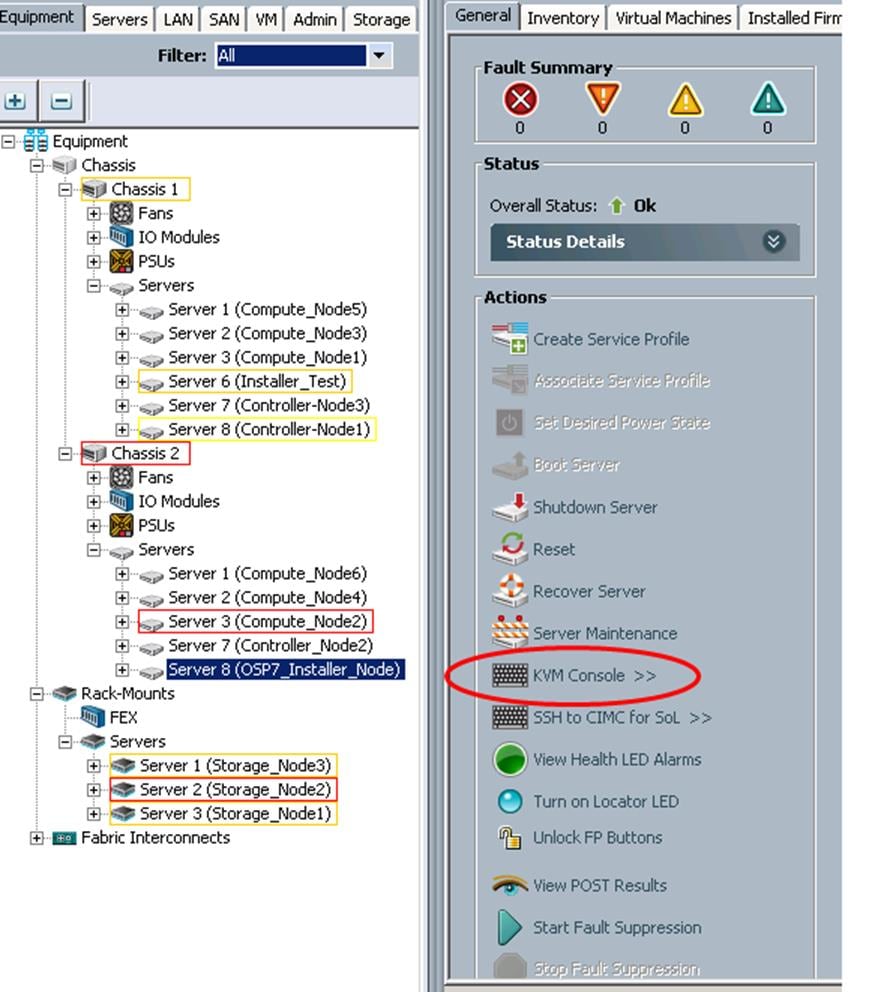

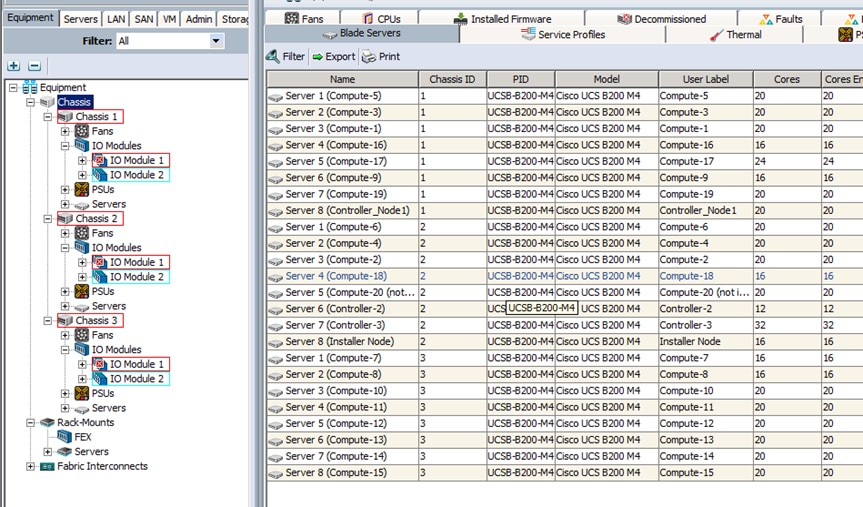

Figure 3 lists the server distribution in the Cisco UCS Chassis.

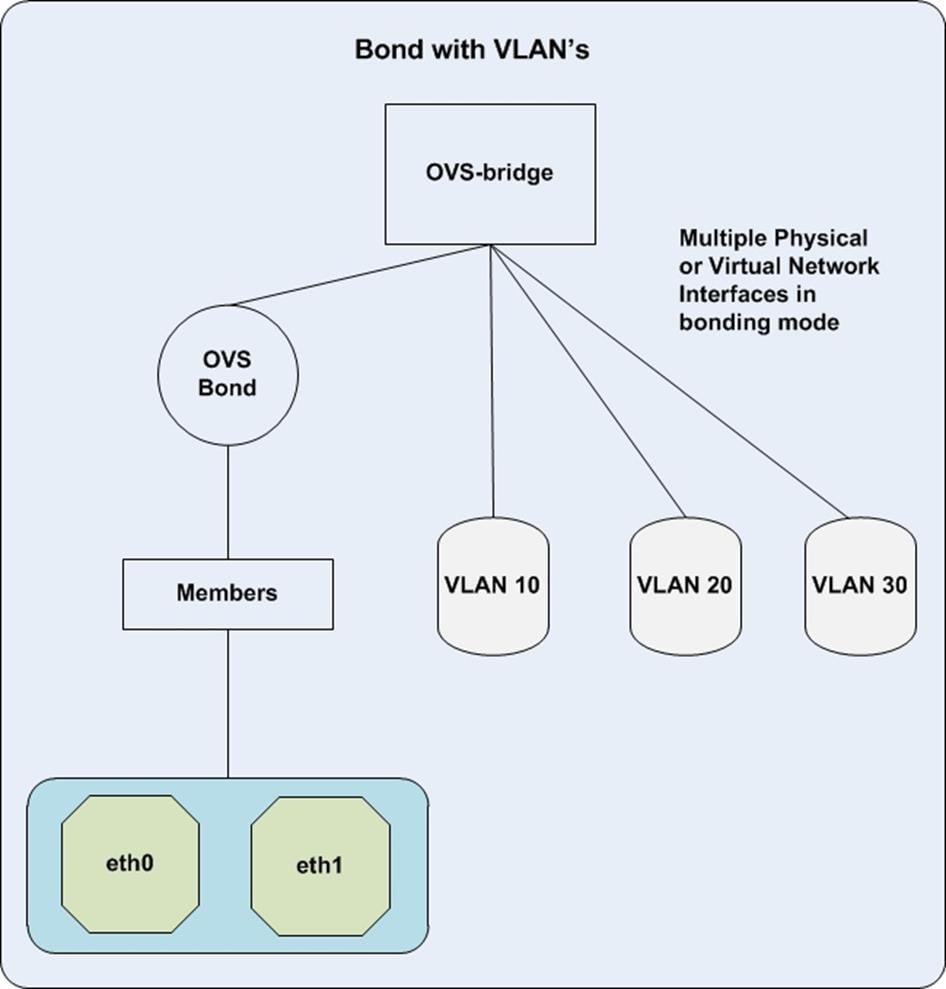

The controllers and computes are distributed across the chassis. This gives High Availability to the stack though a failure of Chassis per se does not happen. There is only one Installer node in the system and can be added in any one of the Chassis as above. In case of larger deployments having 3 or more chassis, it is recommended to distribute one controller in each chassis.

In larger deployments where the chassis are fully loaded with blades a better approach while creating server pools could be distribute manually the tenant and storage traffic across the Fabrics.

Compute pools are created as listed below:

· OpenStack Compute Server pool A

· OpenStack Compute Server pool B

· The Compute Server pool A can be used for the blades on the left side of the chassis pinned to Fabric A, while the Compute Server pool B can be used for the blades on the right side of the chassis. This is achieved with pool A using vNICs pinned to Fabric A while pool B tenant vNICs pinned to Fabric B.

The above method ensures that the tenant traffic is distributed evenly across both the fabrics.

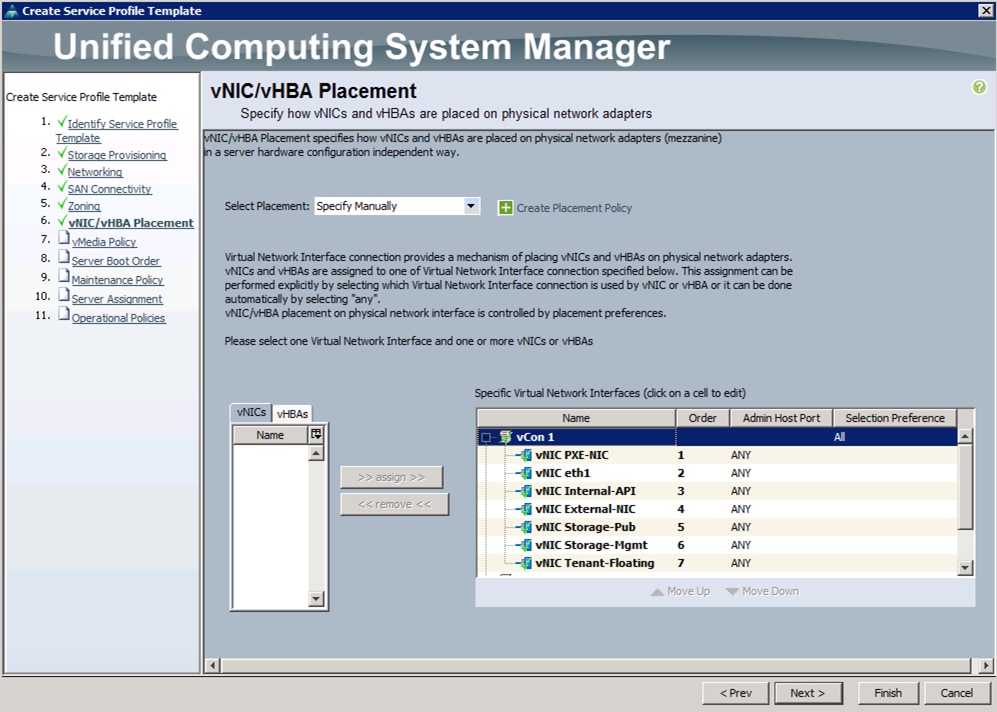

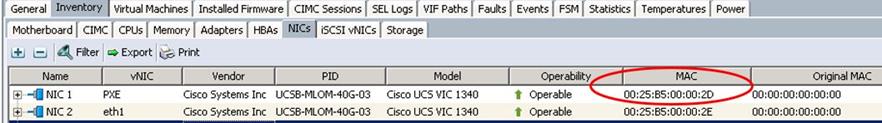

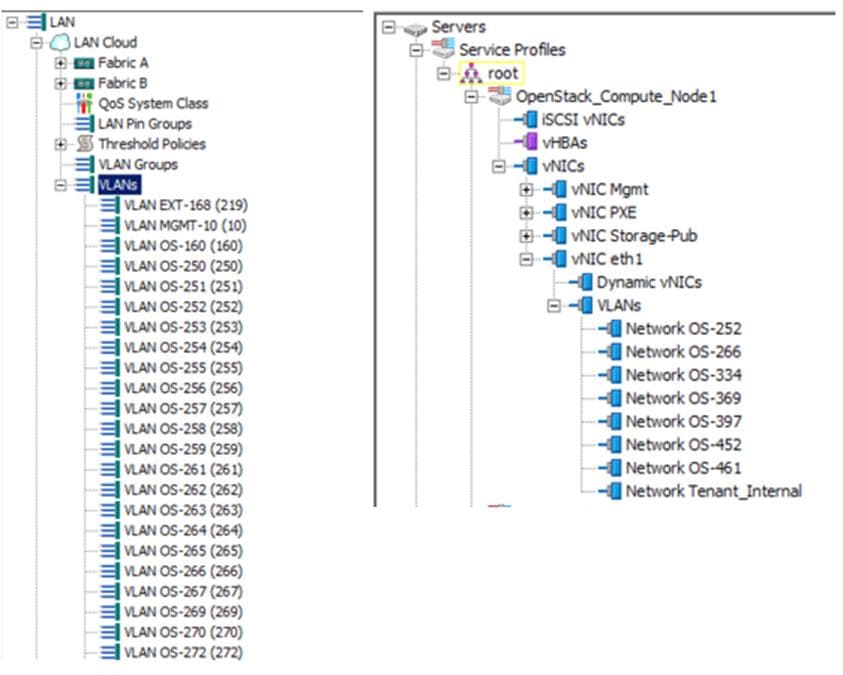

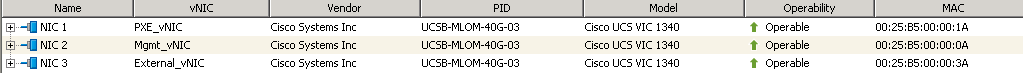

Service Profiles

Service profiles will be created from the service templates. However once successfully created, they will be unbound from the templates. The vNIC to be used for tenant traffic needs to be identified as eth1. This is to take care of the current limitation in Cisco UCSM kilo plugin for OpenStack. This is being addressed while this document is being written and will be taken care in the future releases.

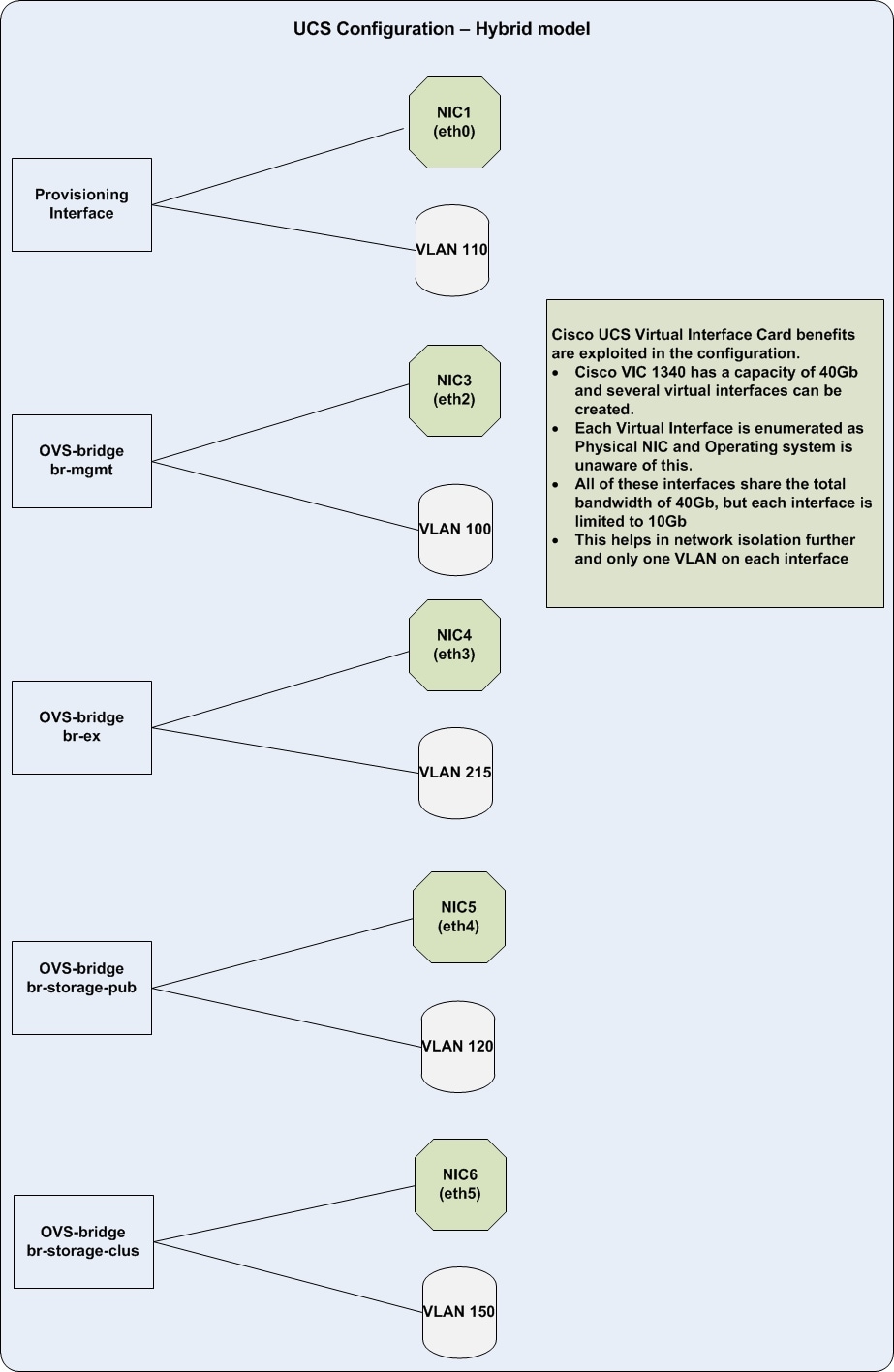

Cisco UCS vNIC Configuration

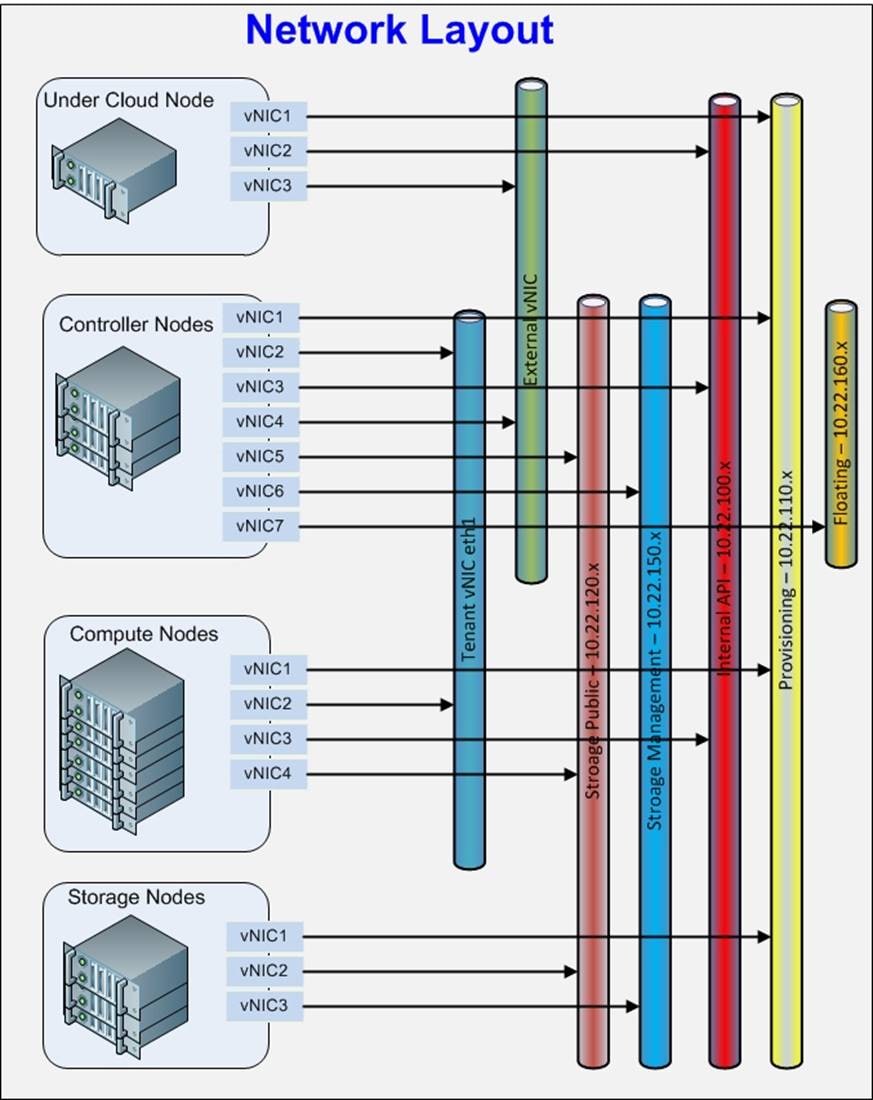

Figure 5 illustrates the network layout.

![]() A Floating or Provider network is not necessary. It has been included in the configuration because of the limitation in the IP’s. Virtual machines can be configured to have direct access through the external network too.

A Floating or Provider network is not necessary. It has been included in the configuration because of the limitation in the IP’s. Virtual machines can be configured to have direct access through the external network too.

A separate network layout was also verified in another POD without any floating IP’s. This is for customers who do not have the limitations of external IP’s as encountered in the configuration. However most of the tests were performed with floating IP’s only. The Network Topology in this design is almost similar to what shown above. The virtual machines can be accessed directly from the external work. The below diagram depicts how the network was configured in this POD without floating network. With this you need not have floating vNIC interface for Controller Service profile, nor you will need a section of block in controller.yaml for floating ip and passing the floating parameter in your overcloud deploy command. Refer Appendix B for details.

The family of vNICs are placed in the same Fabric Interconnect to avoid an extra hop to the upstream Nexus switches.

The following categories of vNICs are used in the setup:

· Provisioning Interfaces pxe vNICs are pinned to Fabric A

· Tenant vNICs are pinned to Fabric A

· Internal API vNICs are pinned to Fabric B

· External Interfaces vNICs are pinned to Fabric A

· Storage Public Interfaces are pinned to Fabric A

· Storage Management Interfaces are pinned to Fabric B

Only one Compute server pool is created in the setup. However, we may create multiple pools if desired as mentioned above.

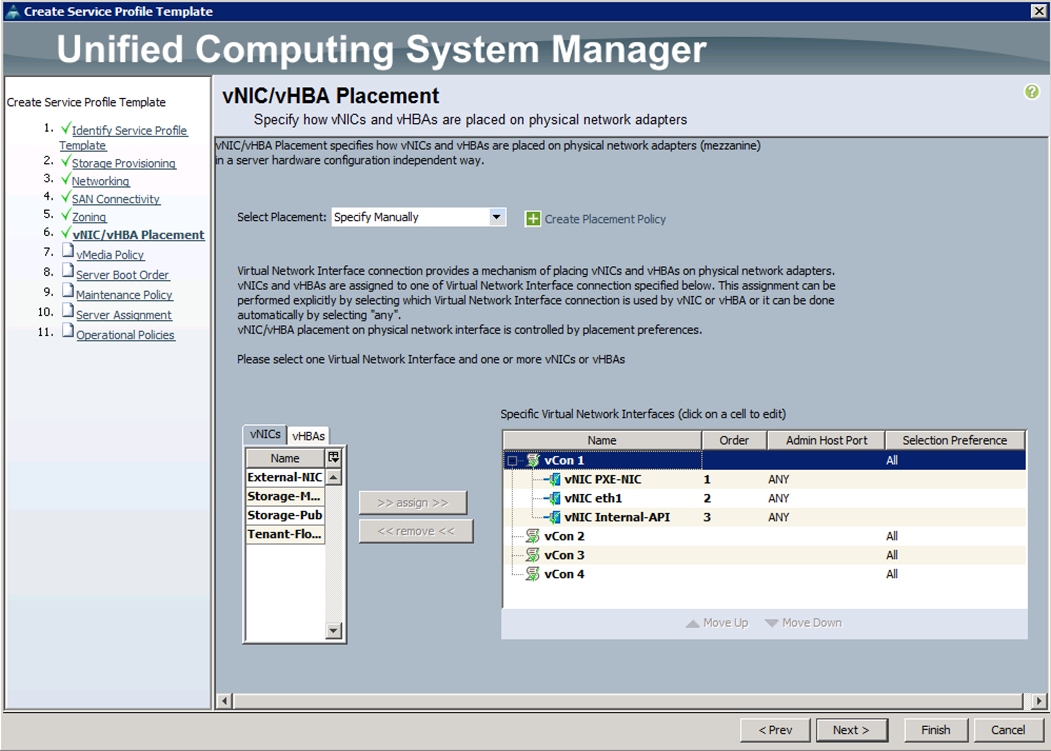

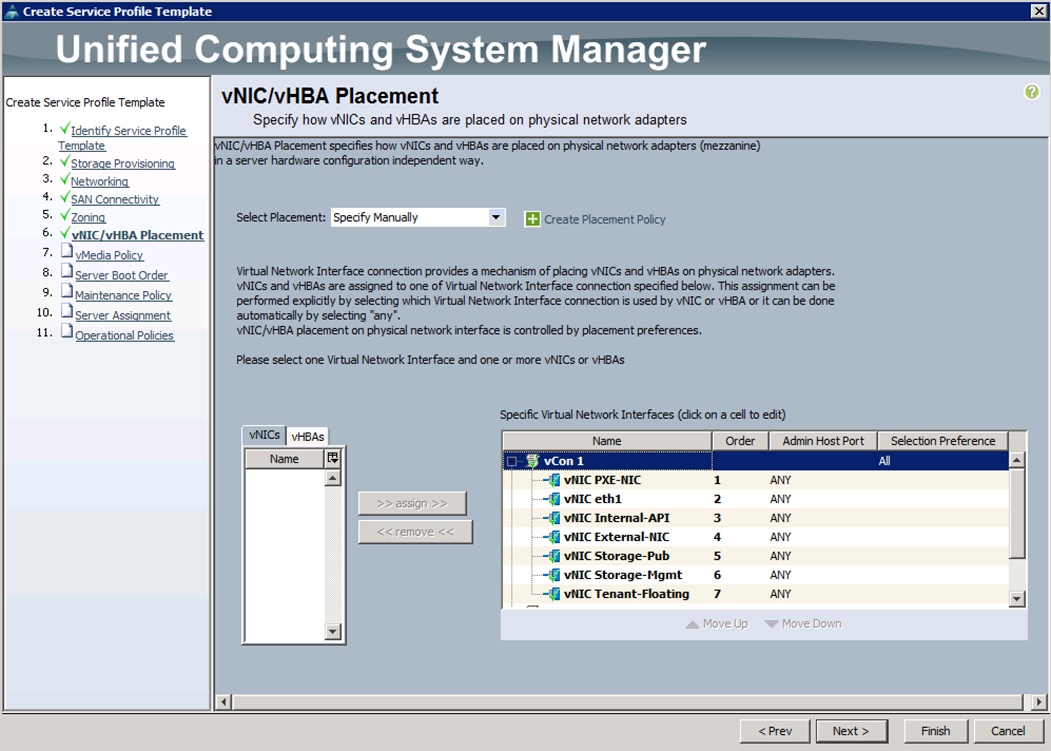

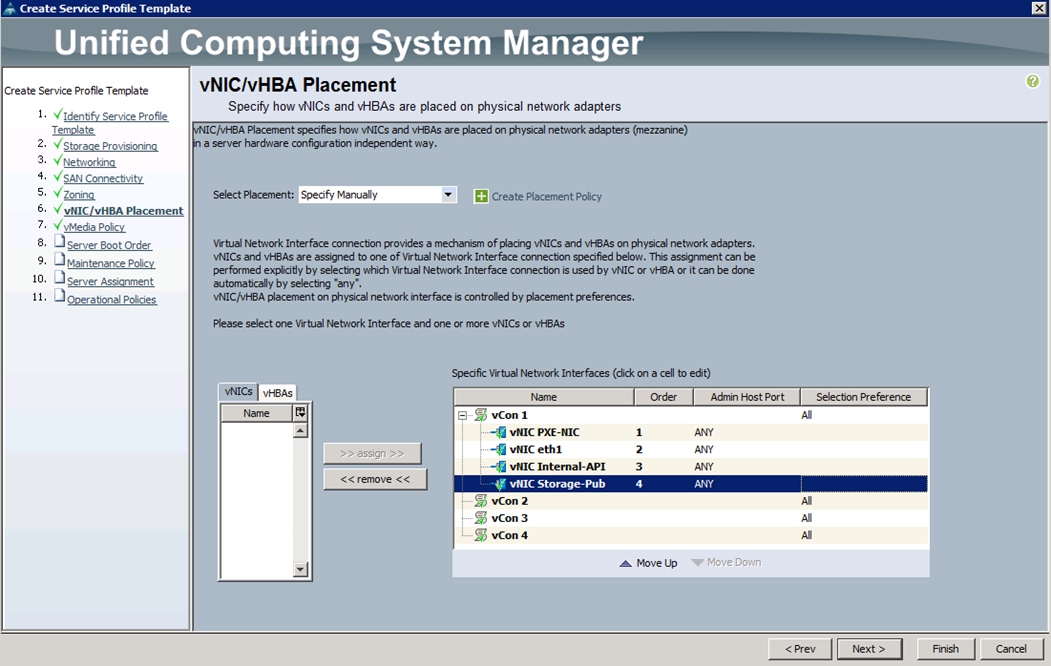

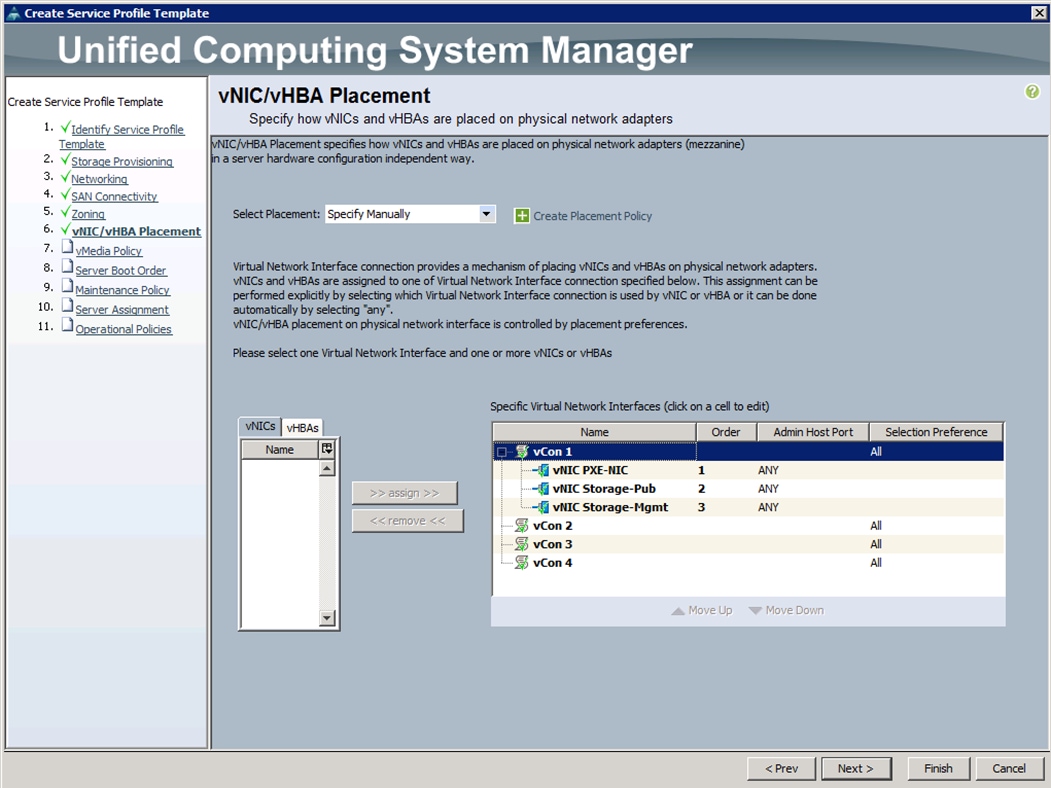

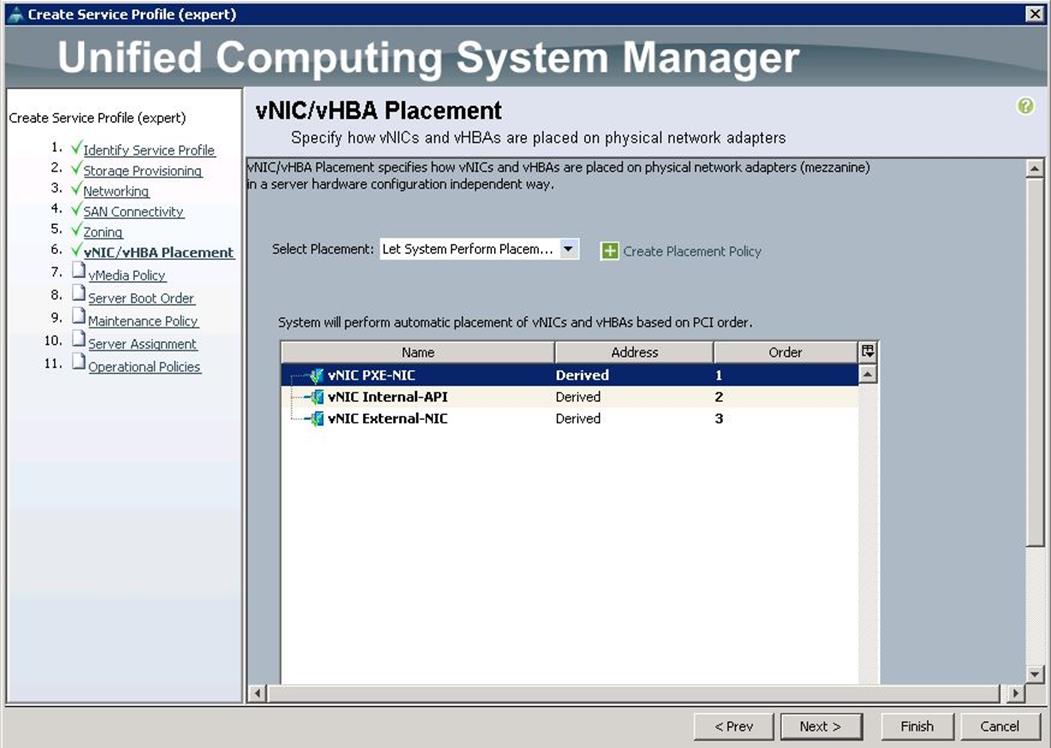

![]() While configuring vNICs in templates and with failover option enabled in Fabrics, the vNICs order has to be specified manually as shown below.

While configuring vNICs in templates and with failover option enabled in Fabrics, the vNICs order has to be specified manually as shown below.

The order of vNIC’s has to be pinned as above for consistent PCI device naming options. The above is an example of controller blade. The same has to be done for all the other servers, the Compute and Storage nodes. This order should match the Overcloud heat templates NIC1, NIC2, NIC3, and NIC4.

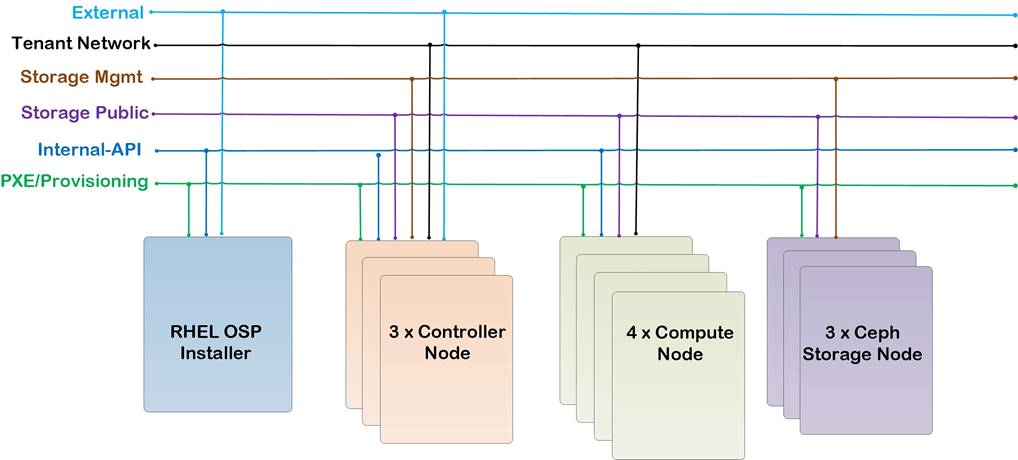

Red Hat Linux OpenStack Platform 7 Director

Red Hat Linux OpenStack Platform (RHEL-OSP7) delivers an integrated foundation to create, deploy, and scale a more secure and reliable public or private OpenStack cloud. RHEL-OSP7 starts with the proven foundation of Red Hat Enterprise Linux and integrates Red Hat’s OpenStack Platform technology to provide production ready cloud platform. RHEL-OSP7 Director is based on community based Kilo OpenStack release. Red Hat RHEL-OSP7 introduces a cloud installation and lifecycle management tool chain. It provides

· Simplified deployment through ready-state provisioning of bare metal resources

· Flexible network definitions

· High Availability with Red Hat Enterprise Linux Server High Availability

· Integrated setup and Installation of Red Hat Ceph Storage 1.3

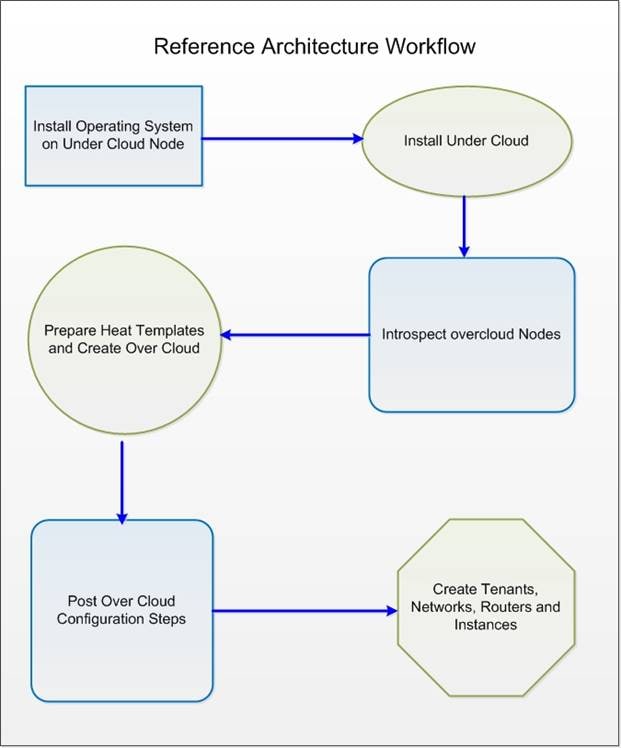

Reference Architecture Workflow

Figure 7 illustrates the reference architecture workflow.

Red Hat Linux OpenStack Platform Director is a new set of tool chain introduced with Kilo that automates the creation of Undercloud and Overcloud nodes as above. It performs the following:

· Install Operating System on Undercloud Node

· Install Undercloud Node

· Perform Hardware Introspection

· Prepare Heat templates and Install Overcloud

· Implement post Overcloud configuration steps

· Create Tenants, Networks and Instances for Cloud

Undercloud Node is the deployment environment while Overcloud nodes are referred to nodes actually rendering the cloud services to the tenants.

The Undercloud is the TripleO (OOO – OpenStack over OpenStack) control plane. It uses native OpenStack APIs and services to deploy, configure, and manage the production OpenStack deployment. The Undercloud defines the Overcloud with Heat templates and then deploys it through the Ironic bare metal provisioning service. OpenStack Director includes predefined Heat templates for the basic server roles that comprise the Overcloud. Customizable templates allow Director to deploy, redeploy, and scale complex Overclouds in a repeatable fashion.

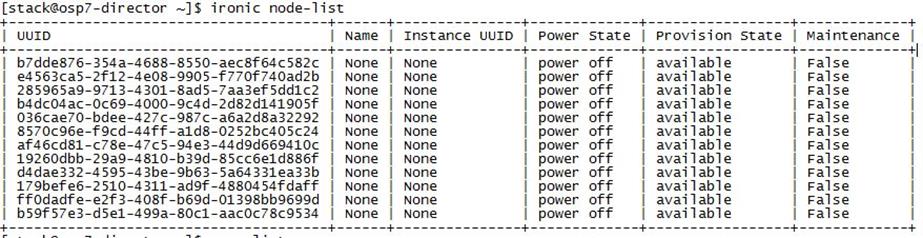

Ironic gathers information about bare metal servers through a discovery mechanism known as introspection. Ironic pairs servers with bootable images and installs them through PXE and remote power management.

Red Hat Linux OpenStack Director deploys all servers with the same generic image by injecting Puppet modules into the image to tailor it for specific server roles. It then applies host-specific customizations through Puppet including network and storage configurations. While the Undercloud is primarily used to deploy OpenStack, the Overcloud is a functional cloud available to run virtual machines and workloads.

The following subsections detail the roles that comprise the Overcloud.

Control

This role provides endpoints for REST-based API queries to the majority of the OpenStack services. These include Compute, Image, Identity, Block, Network, and Data processing. The controller nodes also provide the supporting facilities for the API’s, database, load balancing, messaging, and distributed memory objects. They also provide external access to virtual machines. The controller can run as a standalone server or as a High Availability (HA) cluster. The current configuration was configured with HA.

Compute

This role provides the processing, memory, storage, and networking resources to run virtual machine instances. It runs the KVM hypervisor by default. New instances are spawned across compute nodes in a round-robin fashion based on resource availability.

Ceph-Storage

Ceph is a distributed block, object store and file system. This role deploys Object Storage Daemon (OSD) nodes for Ceph clusters. It also installs the Ceph Monitor service on the controller. The instance distribution is influenced by the currently set filters. The default filters can be altered if needed; for more information, please refer to the OpenStack documentation.

Network Isolation

OpenStack requires multiple network functions. While it is possible to collapse all network functions onto a single network interface, isolating communication streams in their own physical or virtual networks provides better performance and scalability. Each OpenStack service is bound to an IP on a particular network. In a cluster a service virtual IP is shared among all of the HA controllers.

Provisioning

The Control plane installs Overcloud through this network. All nodes must have a physical interface attached to the provisioning network. This network carries DHCP/PXE and TFTP traffic. It must be provided on a dedicated interface or native VLAN to the boot interface. The provisioning interface can also act as a default gateway for to Overcloud; the compute and storage nodes use this provisioning gateway interface on the Undercloud node.

External

The External network is used for hosting the Horizon dashboard and the Public APIs, as well as hosting the floating IPs that are assigned to VMs. The Neutron L3 routers which perform NAT are attached to this interface. The range of IPs that are assigned to floating IPs should not include the IPs used for hosts and VIPs on this network.

Internal API

This network is used for connections to the API servers, as well as RPC messages using RabbitMQ and connections to the database. The Glance Registry API uses this network, as does the Cinder API. This network is typically only reachable from inside the OpenStack Overcloud environment, so API calls from outside the cloud will use the Public APIs.

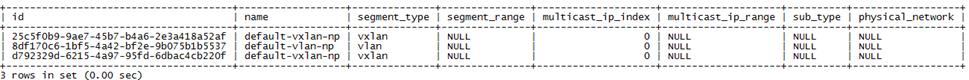

Tenant

Virtual machines communicate over the tenant network. It supports three modes of operation: VXLAN, GRE, and VLAN. VXLAN and GRE tenant traffic is delivered through software tunnels on a single VLAN. Individual VLANs correspond to tenant networks in the case where VLAN tenant networks are used.

Storage

This network carries storage communication including Ceph, Cinder, and Swift traffic. The virtual machine instances communicate with the storage servers through this network. Data-intensive OpenStack deployments should isolate storage traffic on a dedicated high bandwidth interface, i.e. 10 GB interface. The Glance API, Swift proxy, and Ceph Public interface services are all delivered through this network.

Storage Management

Storage management communication can generate large amounts of network traffic. This network is shared between the front and back end storage nodes. Storage controllers use this network to access data storage nodes. This network is also used for storage clustering and replication traffic.

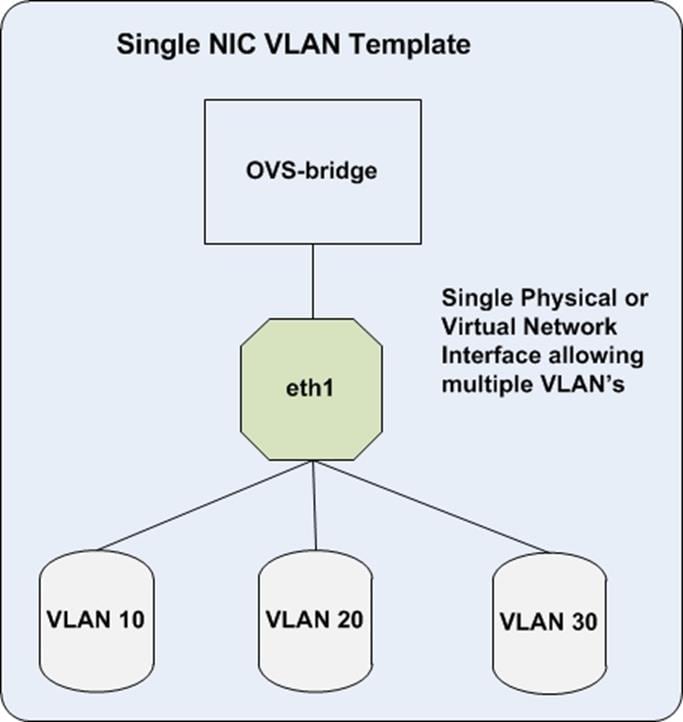

Network traffic types are assigned to network interfaces through Heat template customizations prior to deploying the Overcloud. Red Hat Enterprise Linux OpenStack Platform Director supports several network interface types including physical interfaces, bonded interfaces (not with Cisco UCS Fabric Interconnects), and either tagged or native 802.1Q VLANs.

Network Types by Server Role

The previous section discussed server roles. Each server role requires access to specific types of network traffic. The network isolation feature allows Red Hat Enterprise Linux OpenStack Platform Director to segment network traffic by particular network types. When using network isolation, each server role must have access to its required network traffic types.

By default, Red Hat Enterprise Linux OpenStack Platform Director collapses all network traffic to the provisioning interface. This configuration is suitable for evaluation, proof of concept, and development environments. It is not recommended for production environments where scaling and performance are primary concerns.

Tenant Network Types

Red Hat Enterprise Linux OpenStack Platform 7 supports tenant network communication through the OpenStack Networking (Neutron) service. OpenStack Networking supports overlapping IP address ranges across tenants through the Linux kernel’s network namespace capability. It also supports three default networking types:

VLAN segmentation mode

Each tenant is assigned a network subnet mapped to an 802.1q VLAN on the physical network. This tenant networking type requires VLAN-assignment to the appropriate switch ports on the physical network.

VXLAN segmentation mode

The VXLAN mechanism driver encapsulates each layer 2 Ethernet frame sent by the VMs in a layer 3 UDP packet. The UDP packet includes an 8-byte field, within which a 24-bit value is used for the VXLAN Segment ID. The VXLAN Segment ID is used to designate the individual VXLAN over network on which the communicating VMs are situated. This provides segmentation for each Tenant network

GRE segmentation mode

The GRE mechanism driver encapsulates each layer 2 Ethernet frame sent by the VMs in a special IP packet using the GRE protocol (IP type 47). The GRE header contains a 32-bit key which is used to identify a flow or virtual network in a tunnel. This provides segmentation for each Tenant network.

![]() Cisco Nexus Plugin is bundled in OpenStack Platform 7 kilo release. While it can support both VLAN and VXLAN configurations, only VLAN mode is validated as part of this design. VXLAN will be considered in future releases when the current VIC 1340 Cisco interface card will be certified on VXLAN and Red Hat operating system.

Cisco Nexus Plugin is bundled in OpenStack Platform 7 kilo release. While it can support both VLAN and VXLAN configurations, only VLAN mode is validated as part of this design. VXLAN will be considered in future releases when the current VIC 1340 Cisco interface card will be certified on VXLAN and Red Hat operating system.

Cluster Manager and Proxy Server

Two components drive HA for all core and non-core OpenStack services: the cluster manager and the proxy server.

The cluster manager is responsible for the startup and recovery of an inter-related services across a set of physical machines. It tracks the cluster’s internal state across multiple machines. State changes trigger appropriate responses from the cluster manager to ensure service availability and data integrity.

This section describes the steps to configure networking for Overcloud. The network setup used in the configuration as shown in Figure 5 earlier.

The configuration is done using Heat Templates on the Undercloud prior to deploying the Overcloud. These steps need to be followed after the Undercloud install. In order to use network isolation, we have to define the Overcloud networks. Each will have an IP subnet, a range of IP addresses to use on the subnet and a VLAN ID. These parameters will be defined in the network environment file. In addition to the global settings there is a template for each of the nodes like controller, compute and Ceph that determines the NIC configuration for each role. These have to be customized to match the actual hardware configuration.

Heat communicates with Neutron API running on the Undercloud node to create isolated networks and to assign neutron ports on these networks. Neutron will assign a static port to each port and Heat will use these static IP’s to configure networking on the Overcloud nodes. A utility called os-net-config runs on each node at provisioning time to configure host level networking.

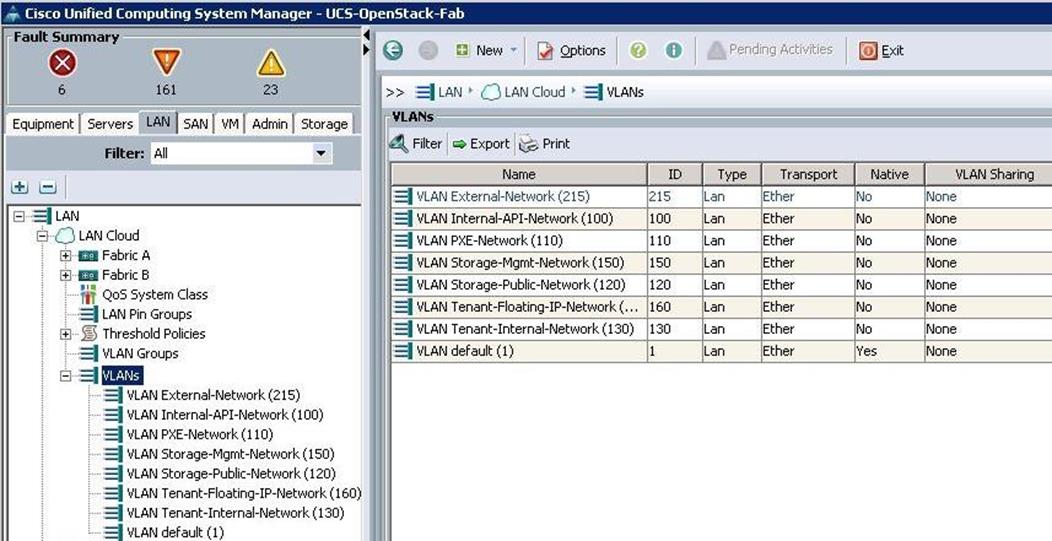

Table 3 lists the VLANs that are created on the configuration.

| VLAN Name |

VLAN Purpose |

VLAN ID or VLAN Range Used in This Design for Reference |

| PXE |

Provisioning Network VLAN |

110 |

| Internal-API |

Internal API Network |

100 |

| External |

External Network |

215 |

| Storage Public |

Storage Public Network |

120 |

| Storage Management |

Storage Cluster or Management Network |

150 |

| Floating |

Floating Network |

160 |

High Availability

Red Hat Linux OpenStack Director’s approach is to leverage Red Hat’s distributed cluster system.

Cluster Management and Proxy Server

The cluster manager is responsible for the startup and recovery of an inter-related services across a set of physical machines. It tracks the cluster’s internal state across multiple machines. State changes trigger appropriate responses from the cluster manager to ensure service availability and data integrity.

In the HA model Clients do not directly connect to service endpoints. Connection requests are routed to service endpoints by a proxy server.

Cluster manager provides state awareness of other machines to coordinate service startup and recovery, shared quorum to determine majority set of surviving cluster nodes after failure, data integrity through fencing and automated recovery of failed instances.

Proxy servers help in load balancing connections across service end points. The nodes can be added or removed without interrupting service.

Red Hat Linux OpenStack Director uses HAproxy and Pacemaker to manage HA services and load balance connection requests. With the exception of RabbitMQ and Galera, HAproxy distributes connection requests to active nodes in a round-robin fashion. Galera and RabbitMQ use persistent options to ensure requests go only to active and/or synchronized nodes. Pacemaker checks service health at one second intervals. Timeout settings vary by service.

The combination of Pacemaker and HAProxy:

· Detects and recovers machine and application failures

· Starts and stops OpenStack services in the correct order

· Responds to cluster failures with appropriate actions including resource failover and machine restart and fencing

RabbitMQ, memcached, and mongodb do not use HAProxy server. These services have their own failover and HA mechanisms.

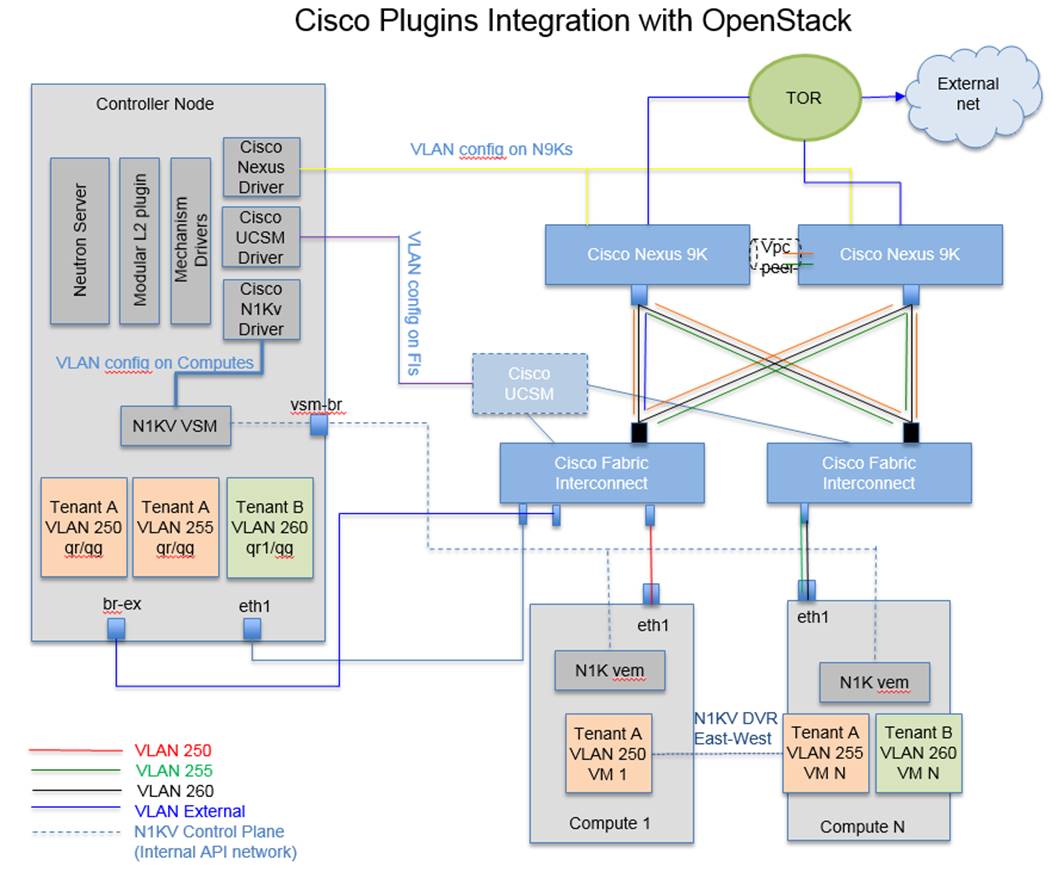

Cisco ML2 Plugins

OpenStack Modular Layer 2 (ML2) allows separation of network segment types and the device specific implementation of segment types. ML2 architecture consists of multiple ‘type drivers’ and ‘mechanism drivers’. Type drivers manage the common aspects of a specific type of network while the mechanism driver manages specific device to implement network types.

Type drivers

· VLAN

· GRE

· VXLAN

Mechanism drivers

· Cisco UCSM

· Cisco Nexus

· Cisco Nexus 1000v

· Openvswitch, Linuxbridge

The Cisco Nexus driver for OpenStack Neutron allows customers to easily build their Infrastructure-as-a-Service (IaaS) networks using the industry’s leading networking platform, delivering performance, scalability, and stability with the familiar manageability and control you expect from Cisco® technology. ML2 Nexus drivers dynamically provision OpenStack managed VLAN’s on Nexus switches. They configure the trunk ports with the dynamically created VLAN’s solving the logical port count issue on Nexus switches. They provide better manageability of the network infrastructure.

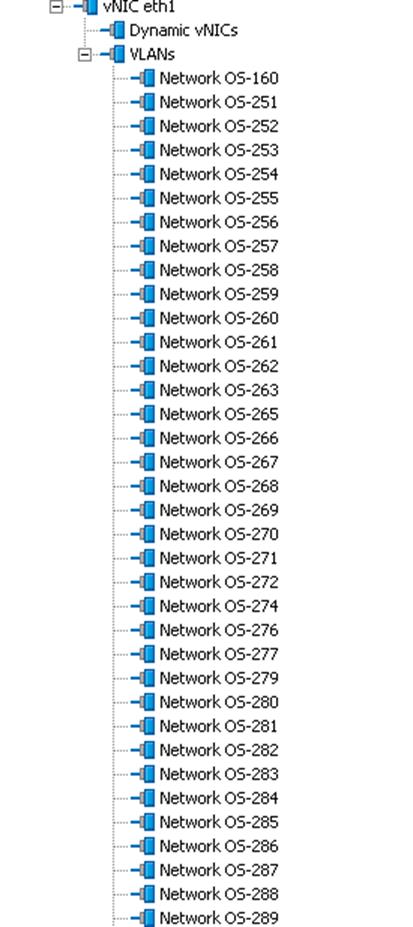

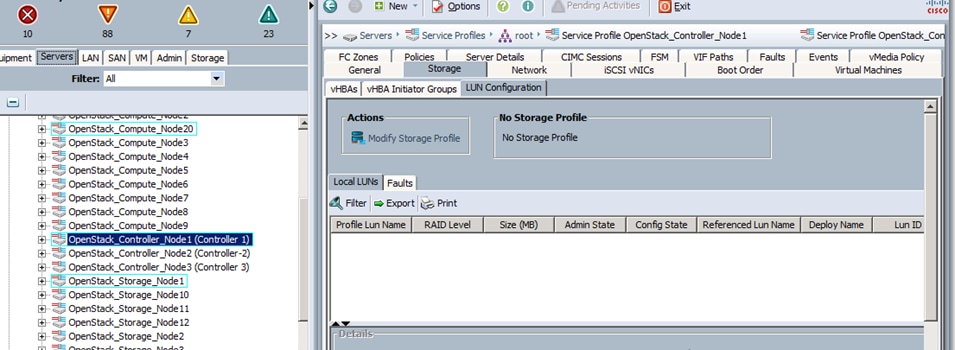

ML2 UCSM drivers dynamically provision OpenStack managed VLAN’s on Fabric Interconnects. They configure VLAN’s on Controller and Compute node VNIC’s. The Cisco UCS Manager Plugin talks to the Cisco UCS Manager application running on Fabric Interconnect and is part of an ecosystem for Cisco UCS Servers that consists of Fabric Interconnects and IO modules. The ML2 Cisco UCS Manager driver does not support configuration of Cisco UCS Servers, whose service profiles are attached to Service Templates. This is to prevent that same VLAN configuration to be pushed to all the service profiles based on that template. The plugin can be used after the Service Profile has been unbound from the template.

Cisco Nexus 1000V OpenStack offers rich features, which are not limited to the following:

· Layer2/Layer3 Switching

· East-West Security

· Policy Framework

· Application Visibility

All the monitoring, management and functionality features offered on the Nexus 1000V are consentient with the physical Nexus infrastructure. This enables customer to reuse the existing tool chains to manage the new virtual networking infrastructure as well. Along with this, customer can also have the peace of mind that the feature functionality they enjoyed in the physical network will now be the same in the virtual network.

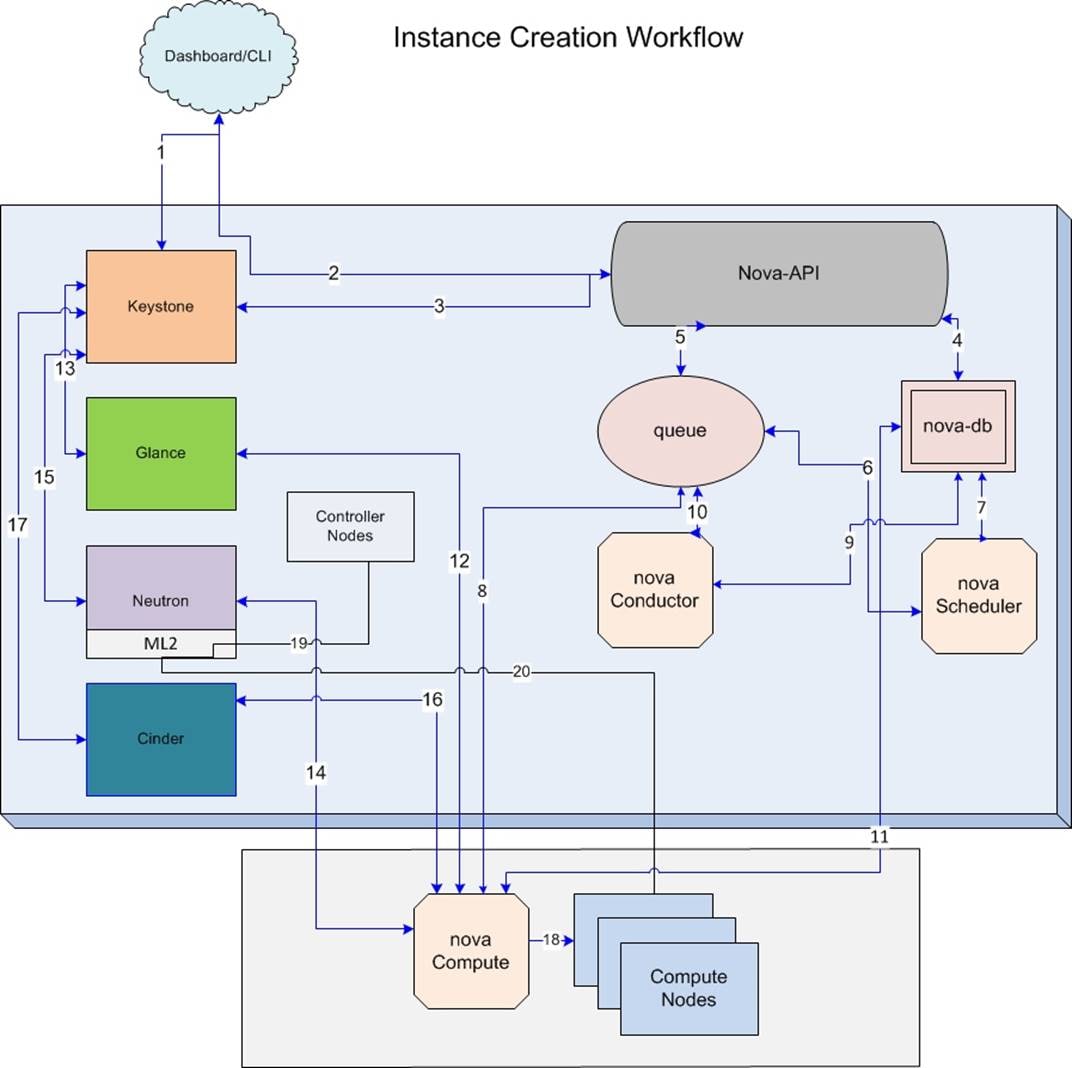

Instance creation work flow

To create a virtual machine, complete the following steps:

1. Dashboard/CLI authenticates with Keystone.

2. Dashboard/CLI sends nova-boot to nova-api.

3. nova-api validates the token with keystone.

4. nova-api checks for conflicts, if not creates a new entry in database.

5. nova-api sends rpc.call to nova-scheduler and gets updated host-entry with host-id.

6. nova-scheduler picks up the request from the queue.

7. nova-scheduler sends the rpc.cast request to nova-compute for launching an instance on the appropriate host after applying filters.

8. nova-compute picks up the request from the queue.

9. nova-compute sends the rpc.call request to nova-conductor to fetch the instance information such as host ID and flavor (RAM, CPU, and Disk).

10. nova-conductor picks up the request from the queue.

11. nova-conductor interacts with nova-database and picks up instance information from queue.

12. nova-compute performs the REST with auth-token to glance-api. Then, nova-compute retrieves the Image URI from the Image Service, and loads the image from the image storage.

13. glance-api validates the auth-token with keystone and nova-compute gets the image data.

14. nova-compute performs the REST call to network API to allocated and configure the network

15. neutron server validates the token and creates network info.

16. Nova-compute performs REST to volume API to attach volume to the instance.

17. Cinder-api validates the token and provides block storage info to nova-compute.

18. Nova compute generates data for the hypervisor driver.

19. DHCP and/or Router port bindings by neutron on controller nodes triggers Cisco ML2 plugins:

— UCSM driver creates VLAN and trunks the eth1 vNICs for the controller node’s service-profile

— Nexus driver creates VLAN and trunks the switches port/s mapped to the controller node

— N1KV VSM receives the logical port information from Neutron. DHCP or Router agents create the port on the N1KV VEM bridge

20. Virtual Machine’s Instance’s Port bindings to a Compute Node triggers again ML2:

— UCSM driver creates VLAN and trunks the eth1 vNICs for the compute node's service-profile

— Nexus driver creates VLAN and trunks the switches’ port(s) mapped to the compute node

— VSM receives logical port information from Neutron. Nova agent creates the port on the N1KV VEM bridge on the compute node

This section details the deployment hardware used in this solution.

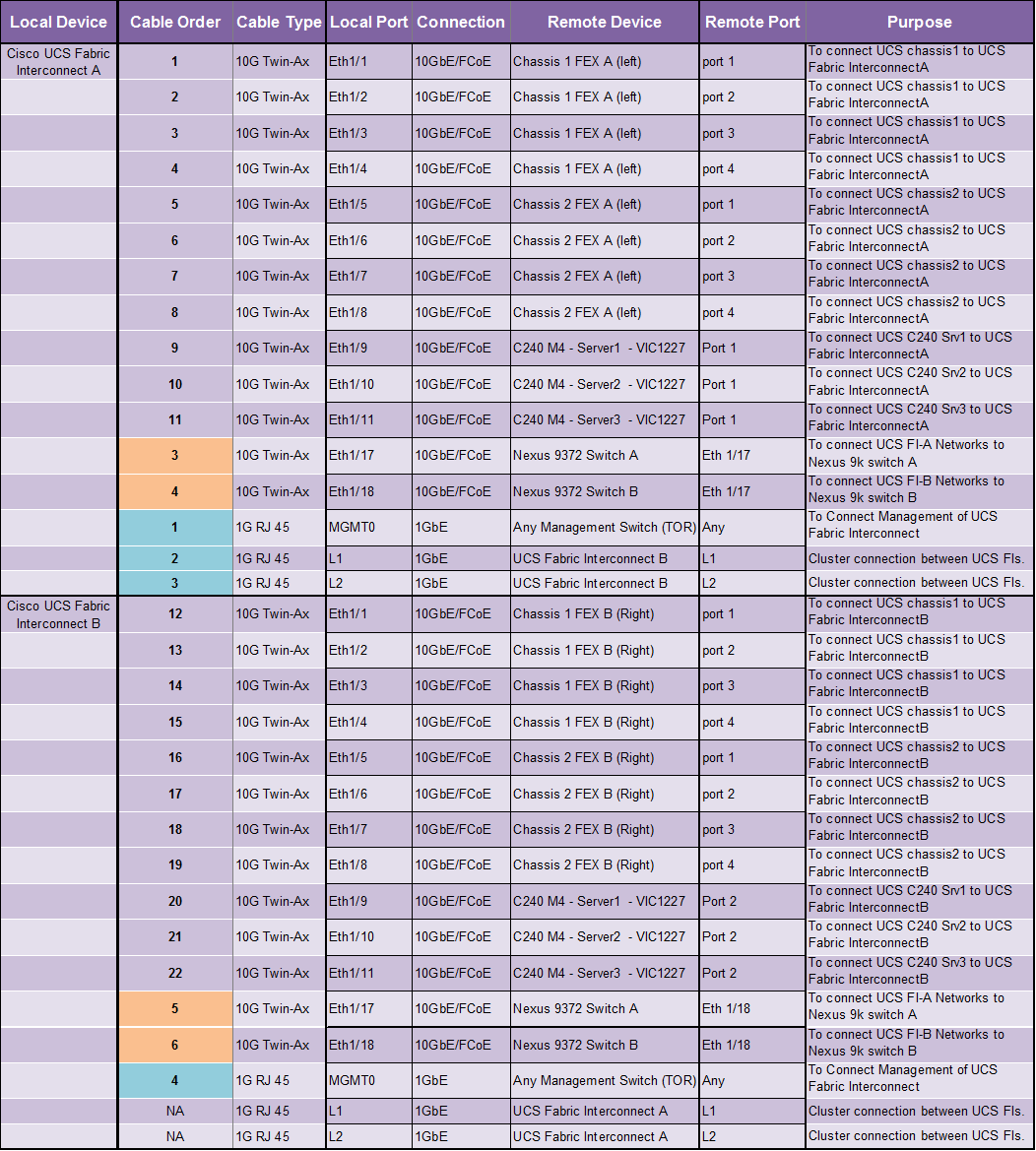

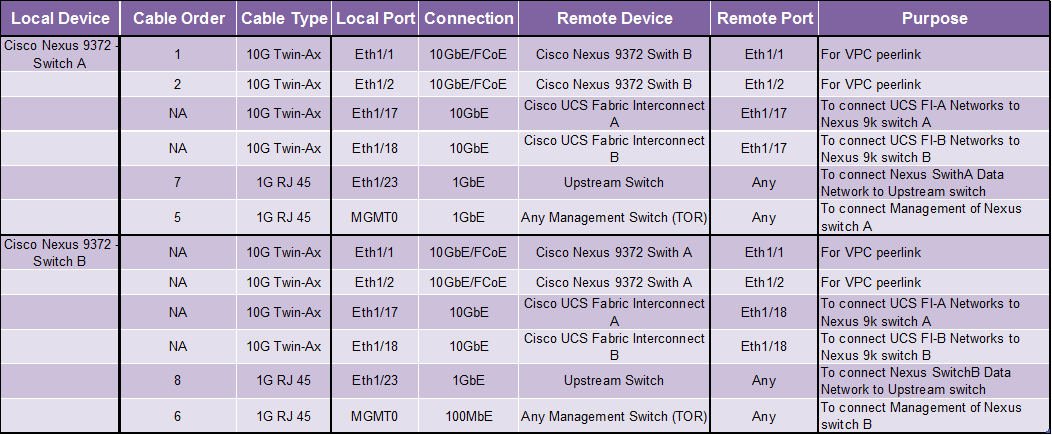

Cabling Details

Table 4 lists the cabling information.

Physical Cabling

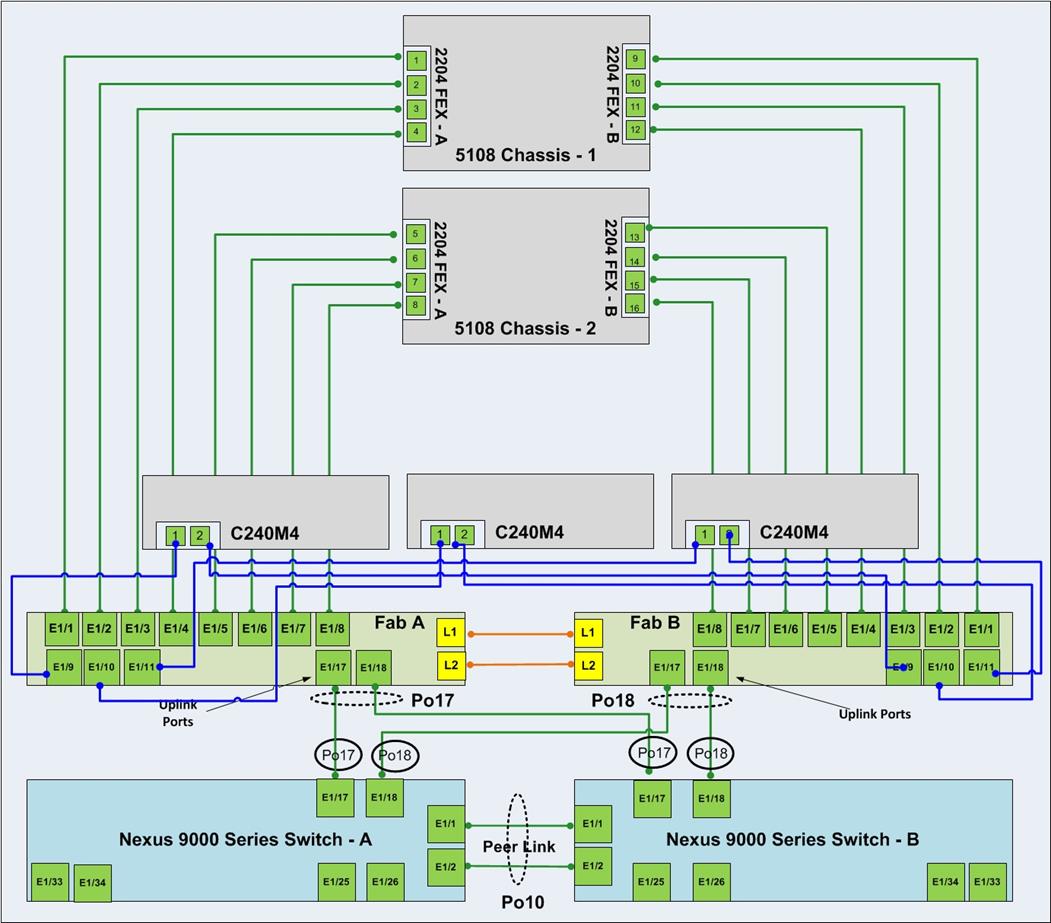

Figure 10 illustrates the physical cabling used in this solution.

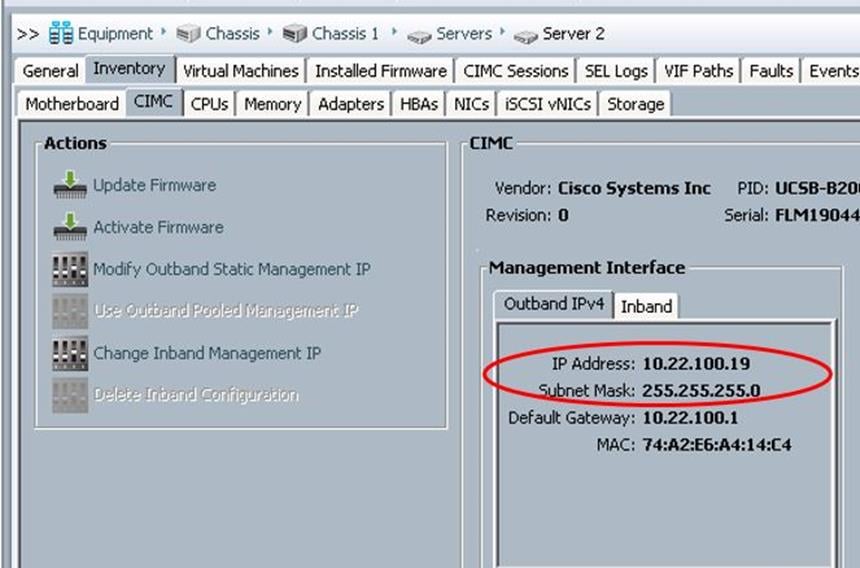

Please note the port numbers on VIC1227 card. Port 1 is on the right and Port 2 is on the left.

Cabling Logic

Figure 11 illustrates the cabling logic used in this solution.

Cisco UCS Configuration

Configure Cisco UCS Fabric Interconnects

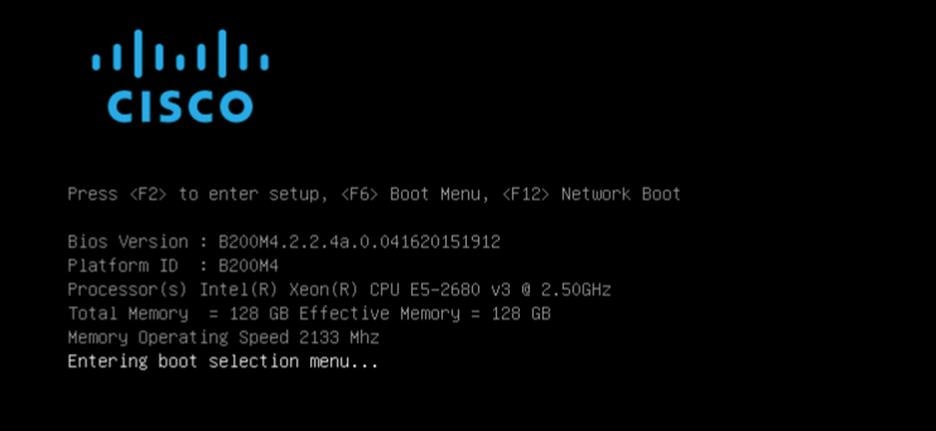

Configure the Fabric Interconnects after the cabling is complete. To hook up the console port on the Fabrics, complete the following steps:

![]() Please replace the appropriate addresses for your setup.

Please replace the appropriate addresses for your setup.

Cisco UCS 6248UP Switch A

Connect the console port to the UCS 6248 Fabric Interconnect switch designated for Fabric A:

Enter the configuration method: console

Enter the setup mode; setup newly or restore from backup.(setup/restore)? setup

You have chosen to setup a new fabric interconnect? Continue? (y/n): y

Enforce strong passwords? (y/n) [y]: y

Enter the password for "admin": <password>

Enter the same password for "admin": <password>

Is this fabric interconnect part of a cluster (select 'no' for standalone)?

(yes/no) [n]:y

Which switch fabric (A|B): A

Enter the system name: UCS-6248-FAB

Physical switch Mgmt0 IPv4 address: 10.22.100.6

Physical switch Mgmt0 IPv4 netmask: 255.255.255.0

IPv4 address of the default gateway: 10.22.100.1

Cluster IPv4 address: 10.22.100.5

Configure DNS Server IPv4 address? (yes/no) [no]: y

DNS IPv4 address: <<var_nameserver_ip>>

Configure the default domain name? y

Default domain name: <<var_dns_domain_name>>

Join centralized management environment (UCS Central)? (yes/no) [n]: Press Enter

You will be prompted to review the settings.

If they are correct, answer yes to apply and save the configuration. Wait for the login prompt to make sure that the configuration has been saved.

Cisco UCS 6248UP Switch B

Connect the console port to Peer UCS 6248 Fabric Interconnect switch designated for Fabric B:

Enter the configuration method: console

Installer has detected the presence of a peer Fabric interconnect. This Fabric

interconnect will be added to the cluster. Do you want to continue {y|n}? y

Enter the admin password for the peer fabric interconnect: <password>

Physical switch Mgmt0 IPv4 address: 10.22.100.7

Apply and save the configuration (select “no” if you want to re-enter)? (yes/no): yes

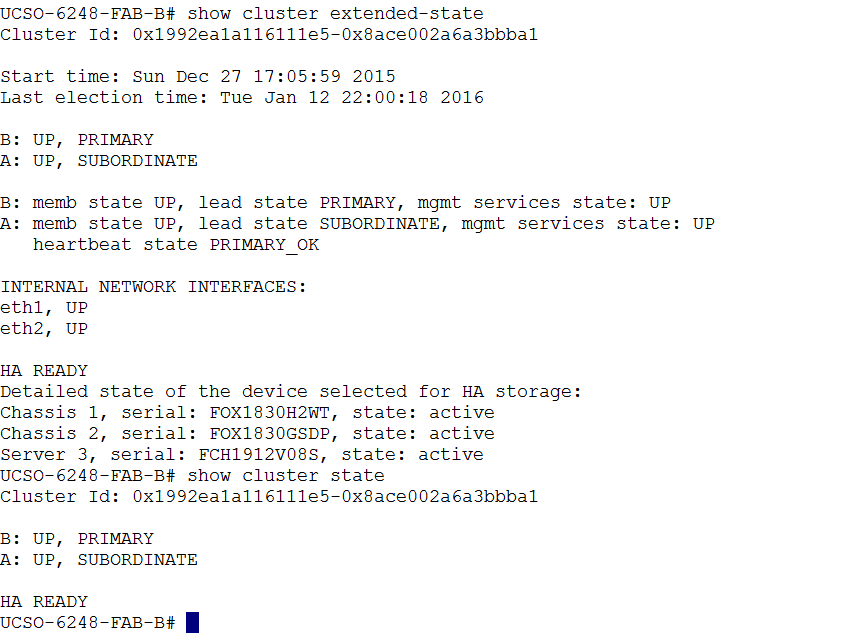

Verify the connectivity:

After completing the FI configuration, verify the connectivity as below by logging to one of the Fabrics or the VIP address and checking the cluster state or extended state as shown below:

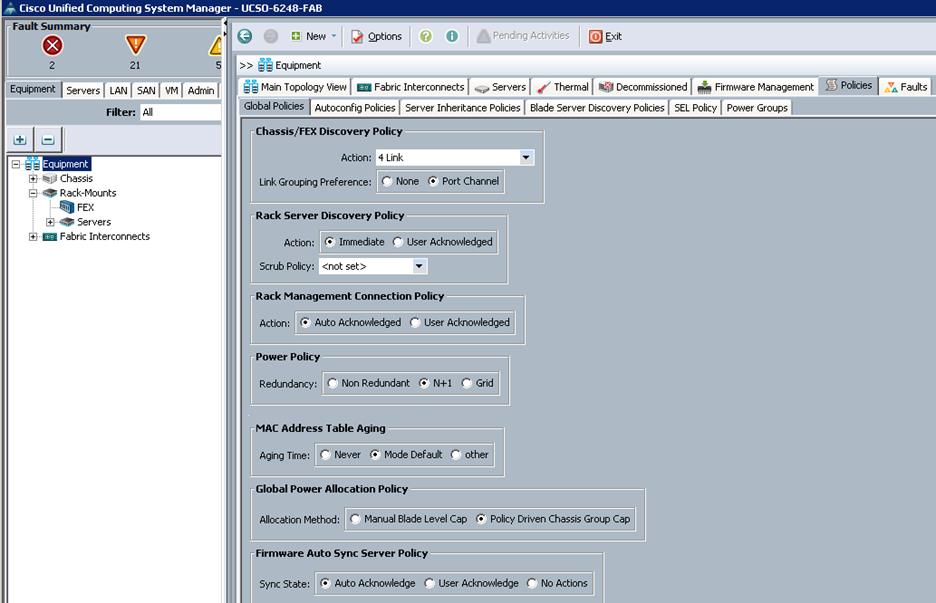

Configure the Cisco UCS Global Policies

To configure the Global policies, log into UCS Manager GUI, and complete the following steps:

1. Under Equipment à Global Policies;

a. Set the Chassis/FEX Discovery Policy to match the number of uplink ports that are cabled between the chassis or fabric extenders and to the fabric interconnects.

b. Set the Power policy based on the input power supply to the UCS chassis. In general, UCS chassis with 5 or more blades recommends minimum of 3 power supplies with N+1 configuration. With 4 power supplies, 2 on each PDUs the recommended power policy is Grid.

c. Set the Global Power allocation Policy as Policy driven Chassis Group cap.

d. Click Save changes to save the configuration.

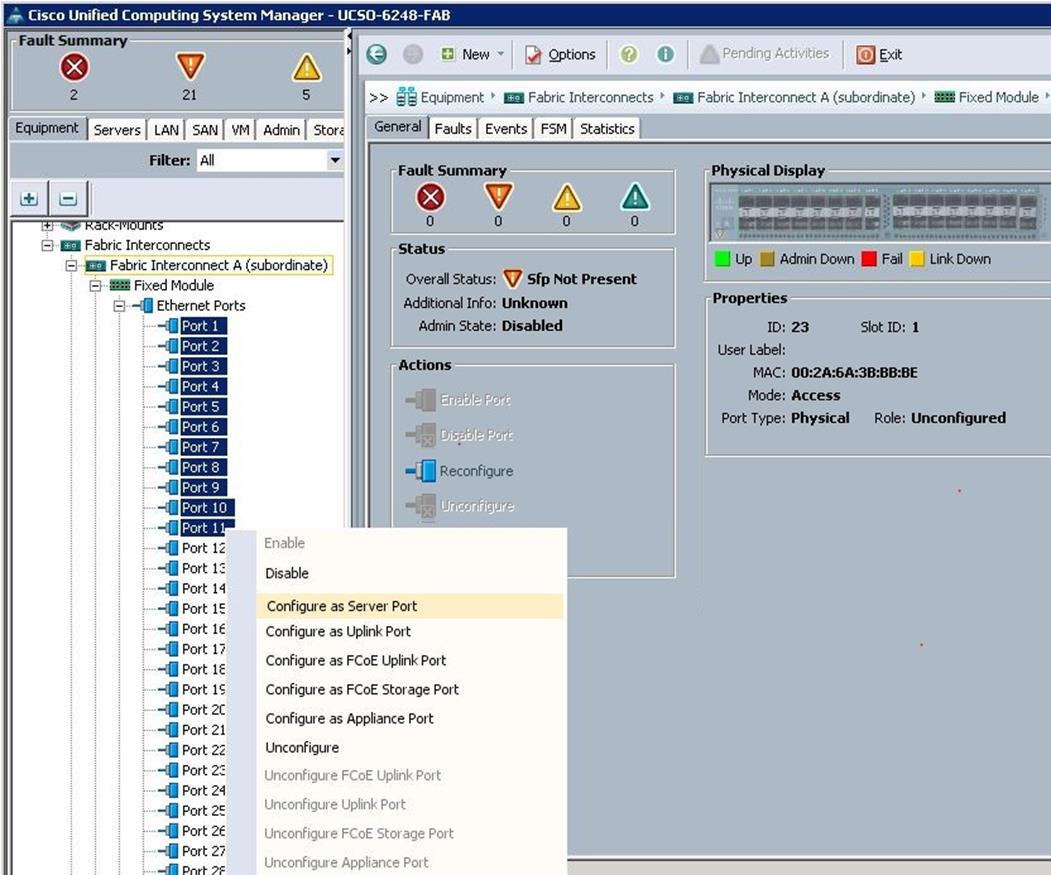

Configure Server Ports for Blade Discovery and Rack Discovery

Navigate to each Fabric Interconnect and configure the server ports on Fabric Interconnects. Complete the following steps:

1. Under Equipment à Fabric Interconnects à Fabric Interconnect A à Fixed Module à Ethernet Ports;

a. Select the ports (Port 1 to 8) that are connected to the left side of each UCS chassis FEX 2204, right-click them and select Configure as Server Port.

b. Select the ports (Port 9 to 11) that are connected to the 10G MLOM (VIC1227) port1 of each UCS C240 M4, right-click them, and select Configure as Server Port.

c. Click Save Changes to save the configuration.

d. Repeat steps 1 and 2 on Fabric Interconnect B and save the configuration.

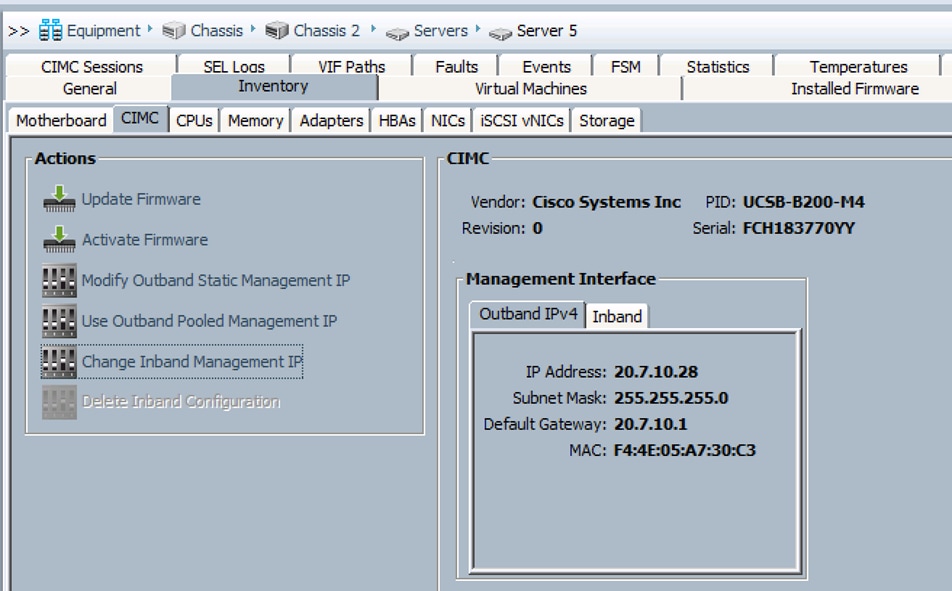

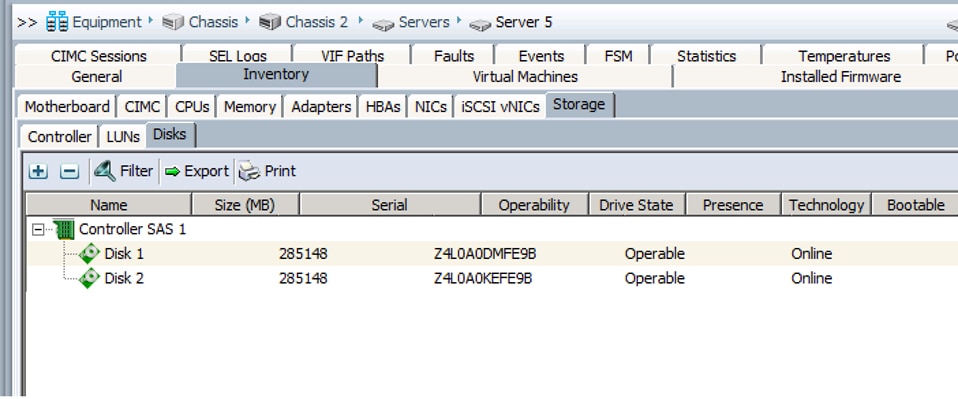

After this the blades and rack servers will be discovered as shown below:

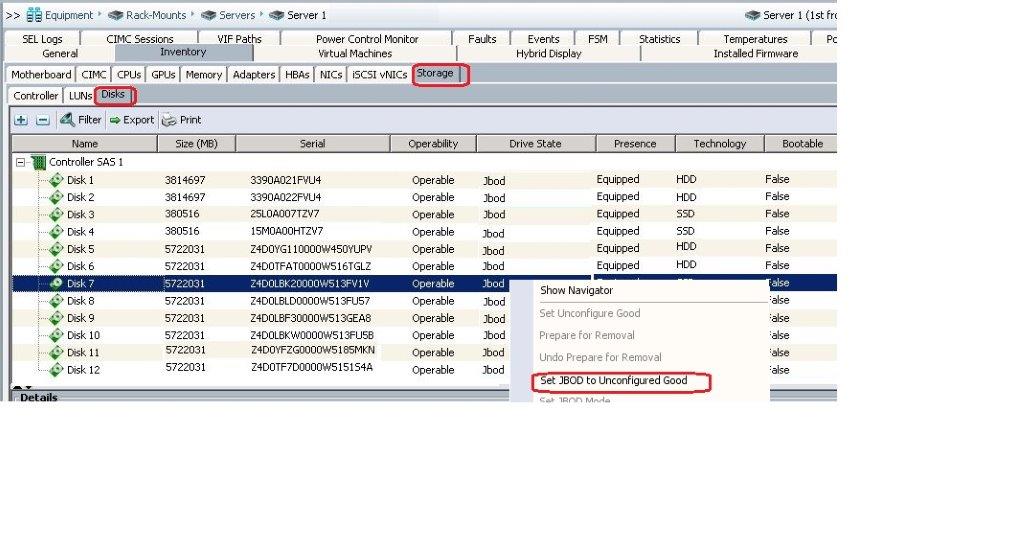

Navigate to each blade and rack servers to make sure that the disks are in Unconfigured Good state, else convert jbod to Uncofigured as below. The below diagram show how to convert a disk to Unconfigured Good state.

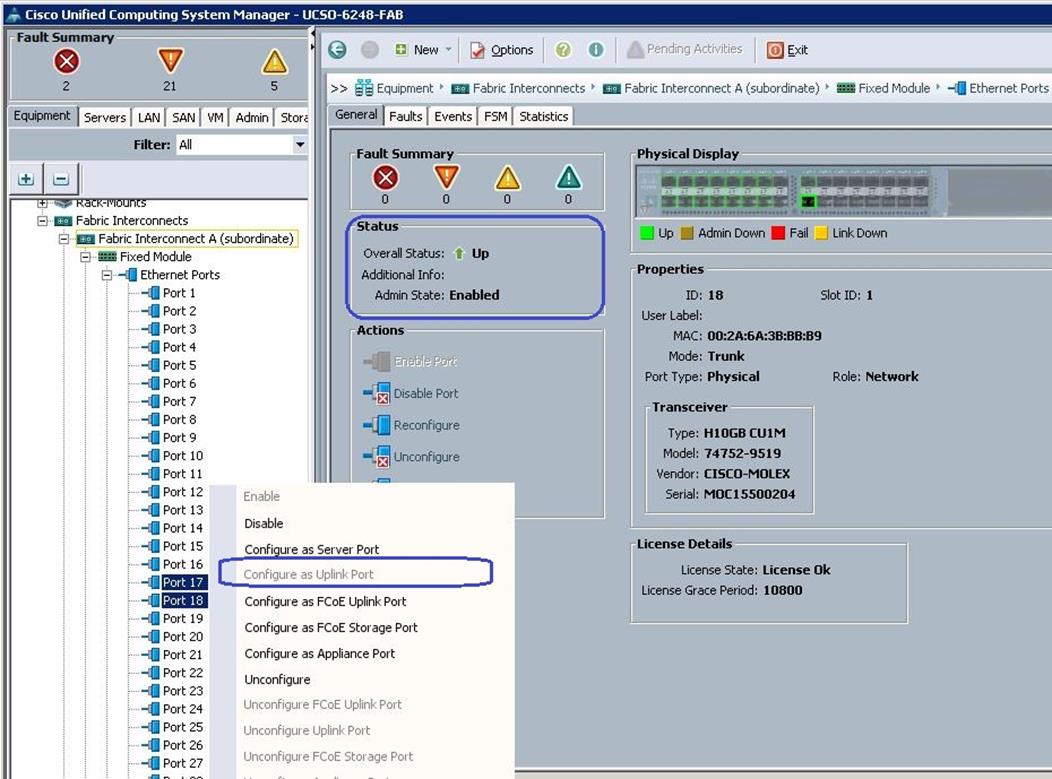

Configure Network Uplinks

Navigate to each Fabric Interconnects and configure the Network Uplink ports on Fabric Interconnects. Complete the following steps:

1. Under Equipment à Fabric Interconnects à Fabric Interconnect A à Fixed Module à Ethernet Ports

a. Select the port 17 and Port18 that are connected to Nexus 9k switches, right-click them and select Configure as Uplink Port.

b. Click Save Changes to save the configuration.

c. Repeat the steps 1 and 2 on Fabric Interconnect B.

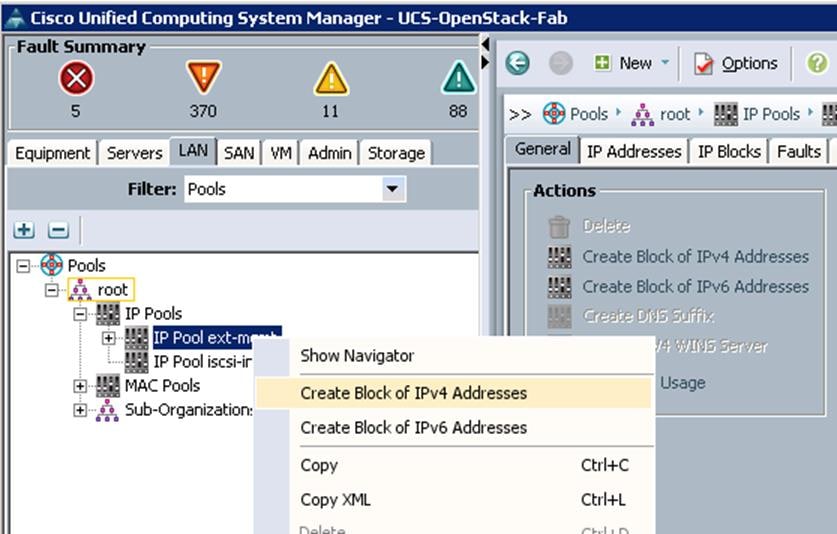

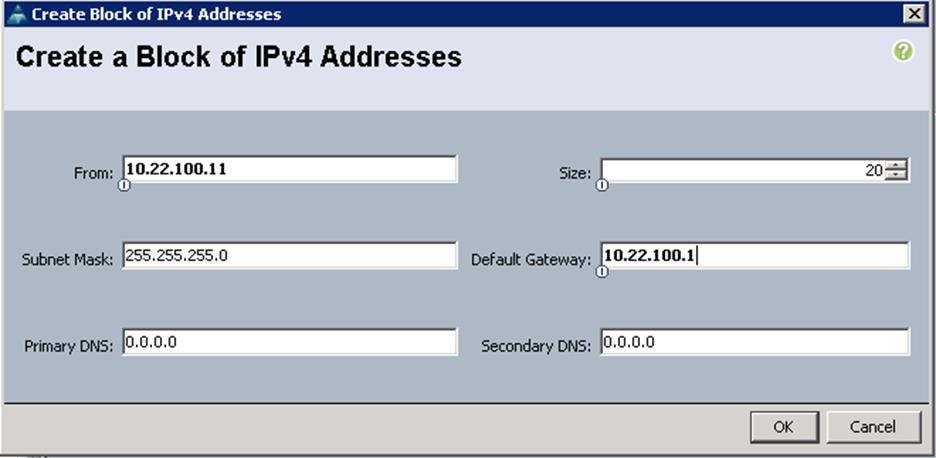

Create KVM IP Pools

To access the KVM console of each UCS Server, create the KVM IP pools from the UCS Manager GUI, and complete the following steps:

1. Under LAN à Pools à root à IP Pools à IP Pool ext-mgmt à right-click and select Create Block of IPV4 addresses.

2. Specify the Starting IP address, subnet mask and gateway and size.

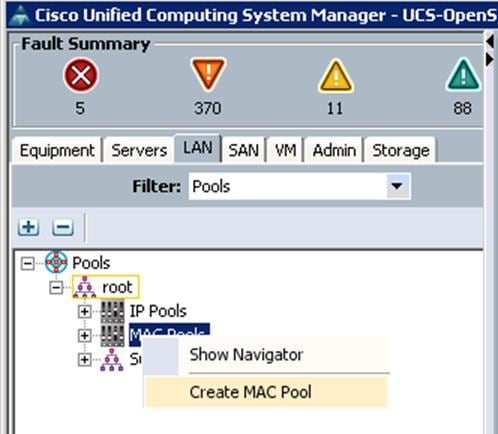

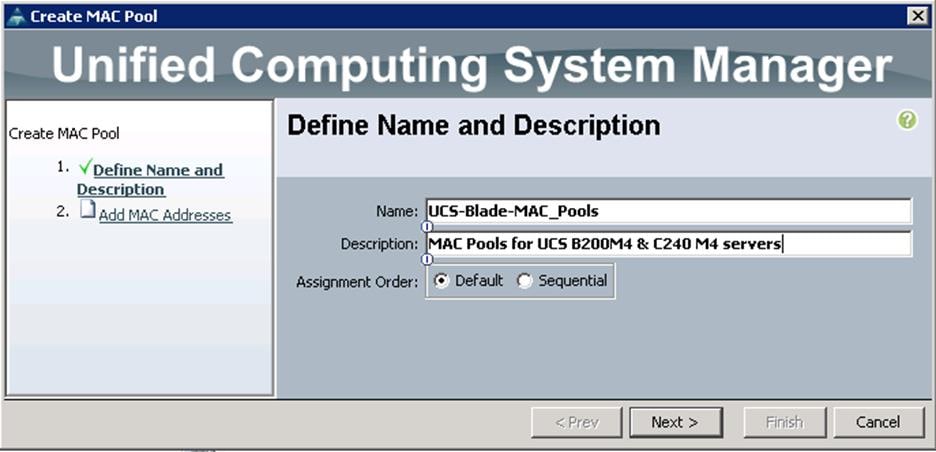

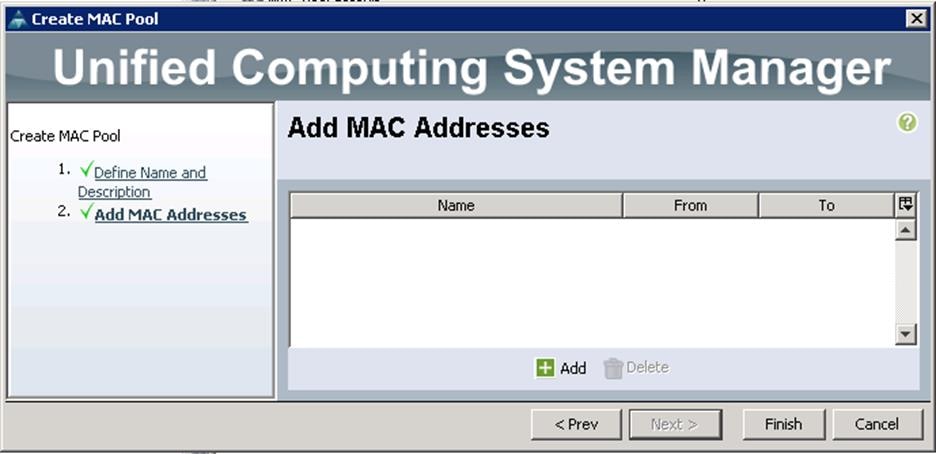

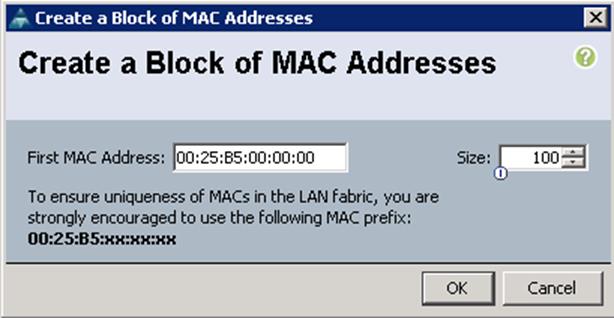

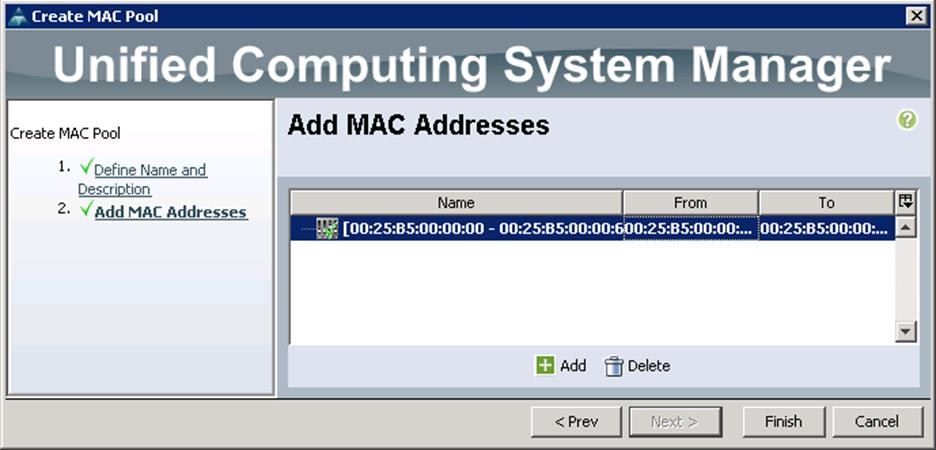

Create MAC Pools

To configure a MAC address for each Cisco UCS Server VNIC interface, create the MAC pools from the Cisco UCS Manager GUI, and complete the following steps:

1. Under LAN à Pools à root à MAC Pools à right-click and select Create MAC Pool.

2. Specify the name and description for the MAC pool.

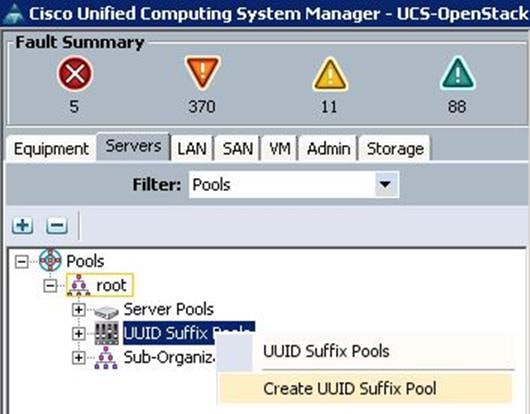

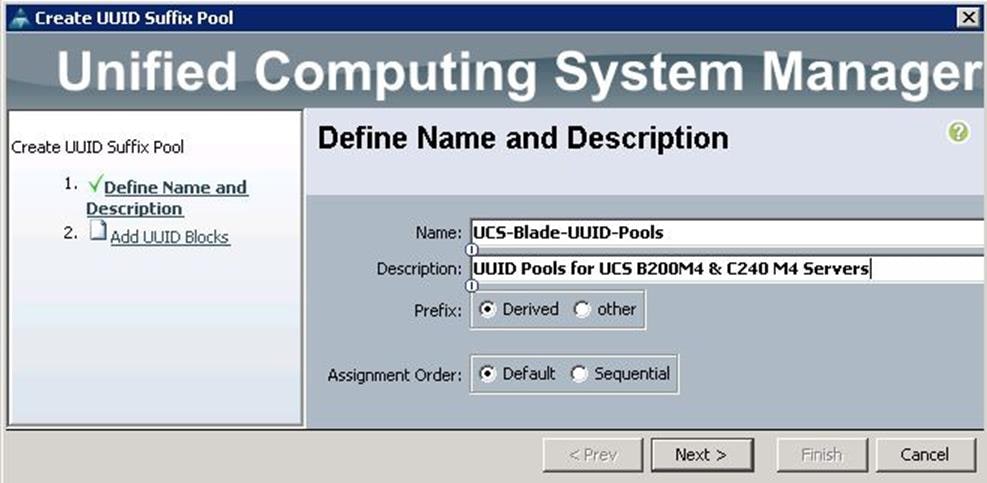

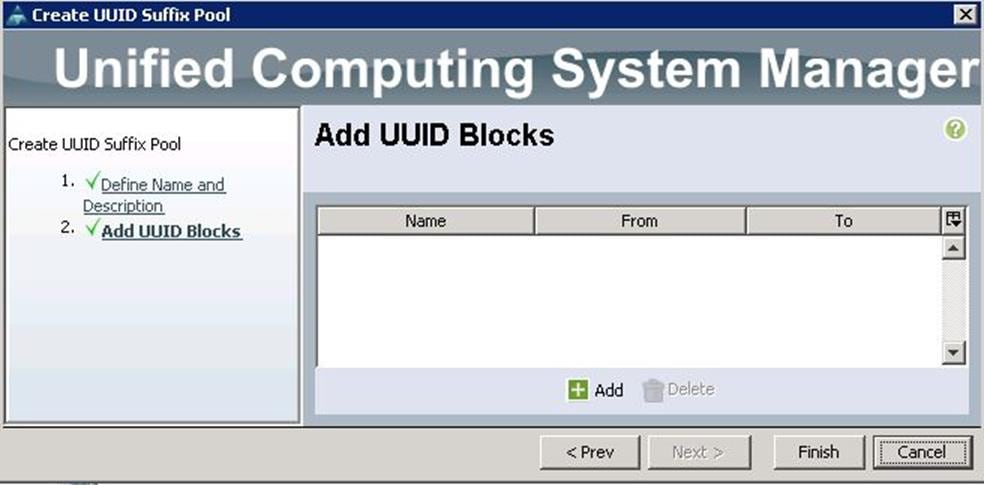

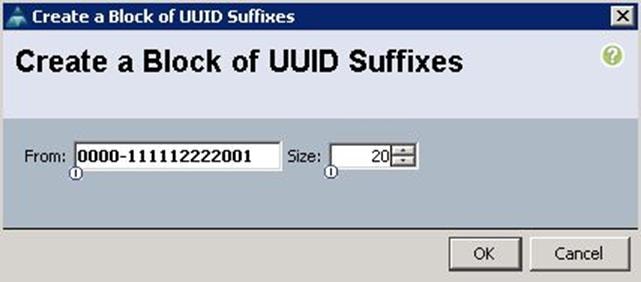

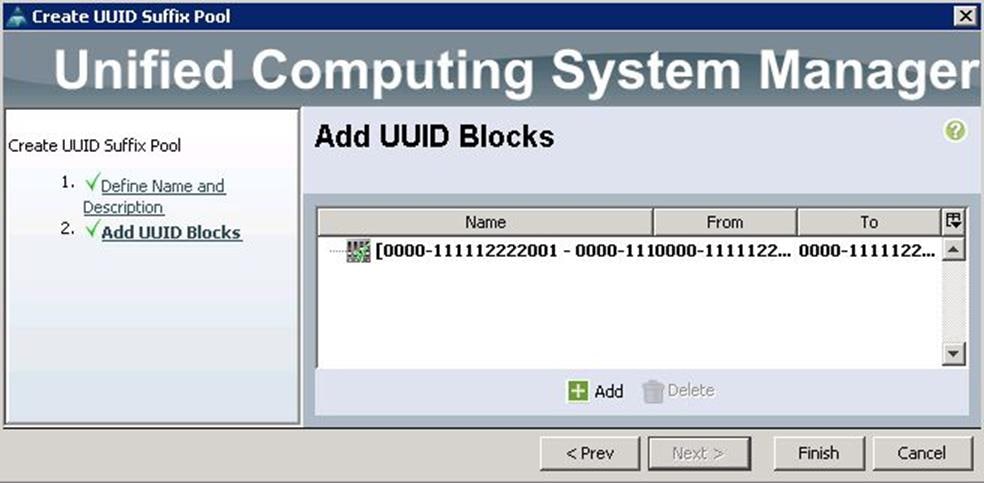

Create UUID Pools

To configure the UUID pools for each UCS Server, create the UUID pools from the Cisco UCS Manager GUI, complete the following steps:

1. Under Servers à Pools à root à UUID Suffix Pools à right-click and select Create UUID Suffix Pool.

2. Specify the name and description for the UUID pool.

3. Click Add.

4. Specify the UUID Suffixes and size for the UUID pool.

5. Click Finish to complete the UUID pool creation.

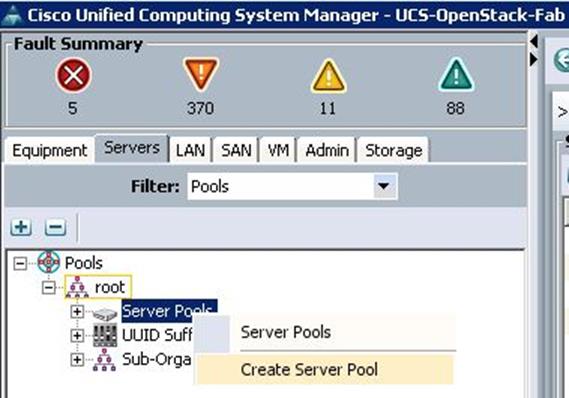

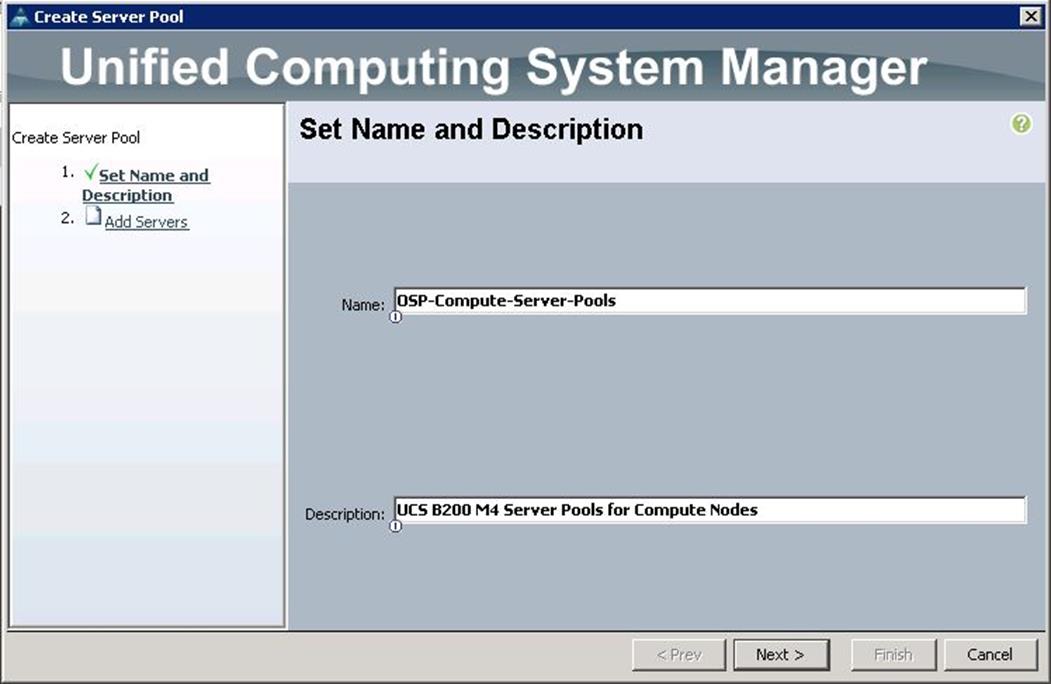

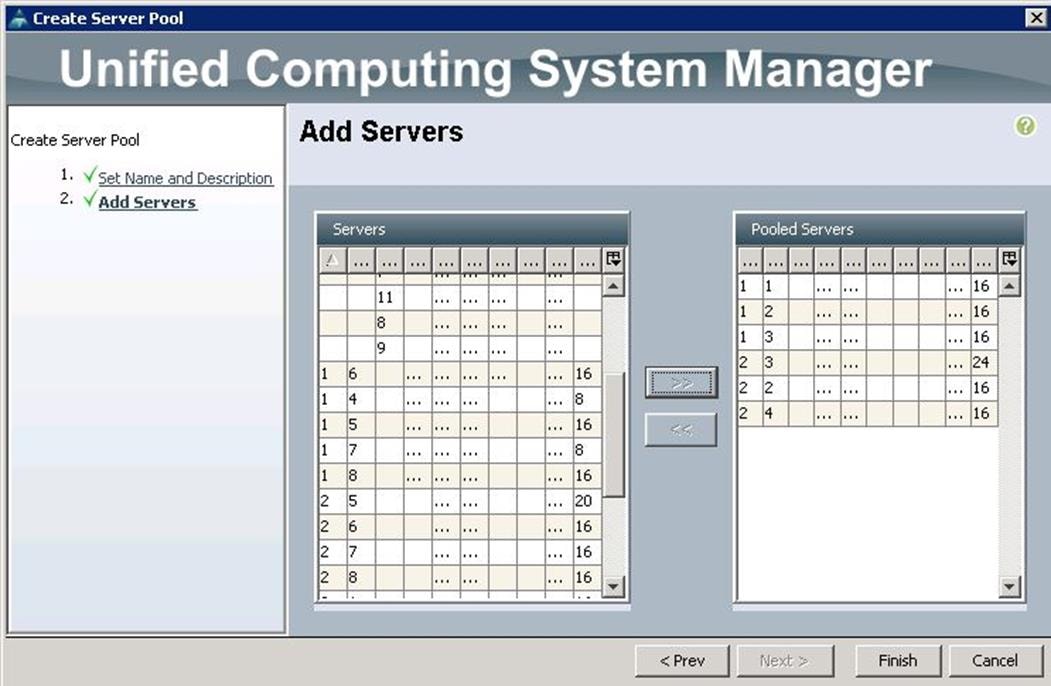

Create Server Pools for Controller, Compute and Ceph Storage Nodes

To configure Server pools for Controller, Compute and Ceph Storage Servers, create Server pools from the UCS Manager GUI, and complete the following steps:

1. Under Servers à Pools à root à Server Pools à right-click and select Create Server Pool.

2. Specify the name and description for the Server pool for Compute Nodes.

3. Similarly, create Server pools for Controller and Ceph Storage Nodes.

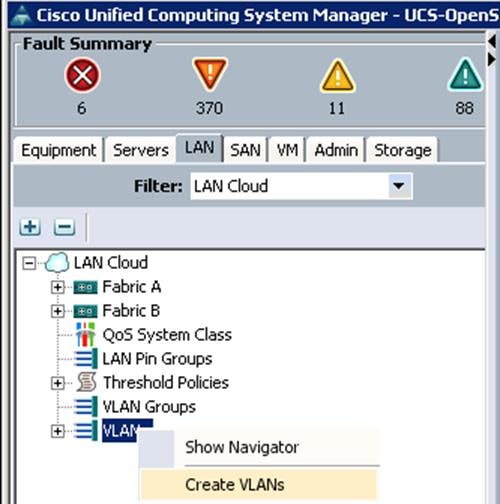

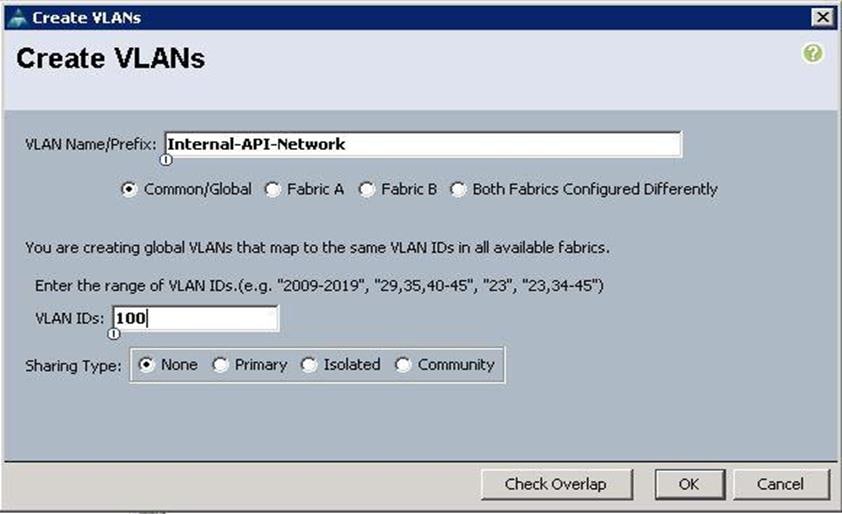

Create VLANs

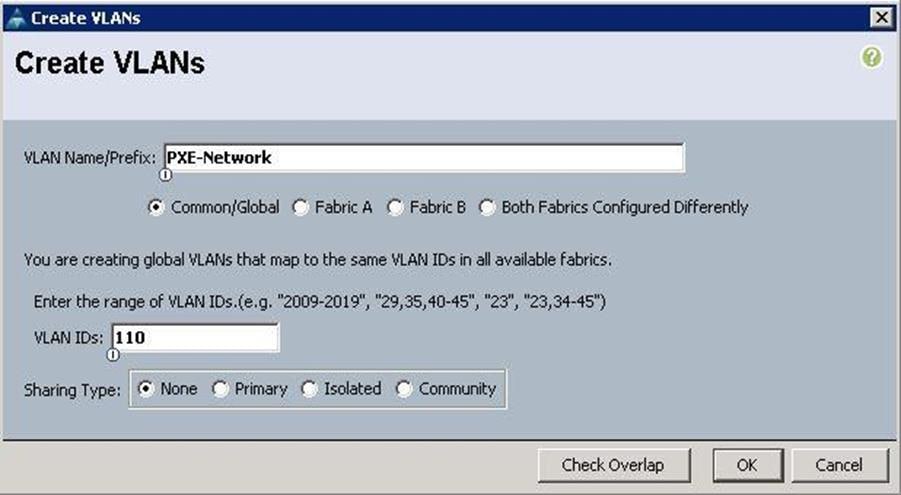

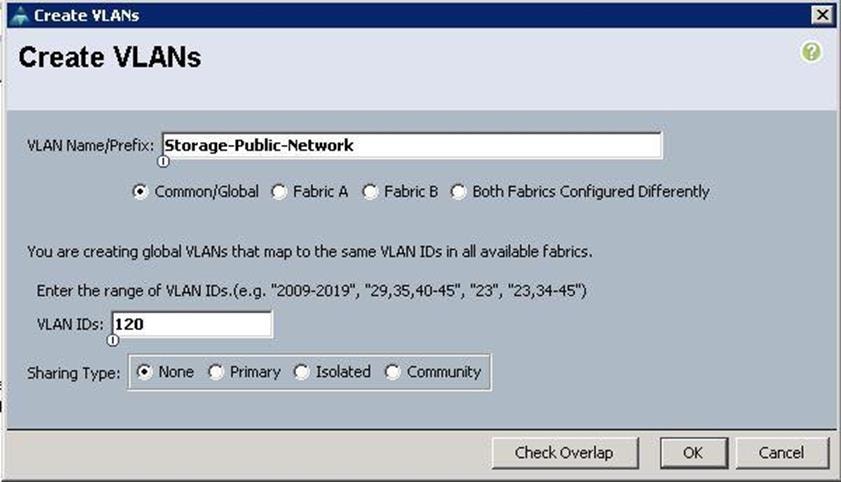

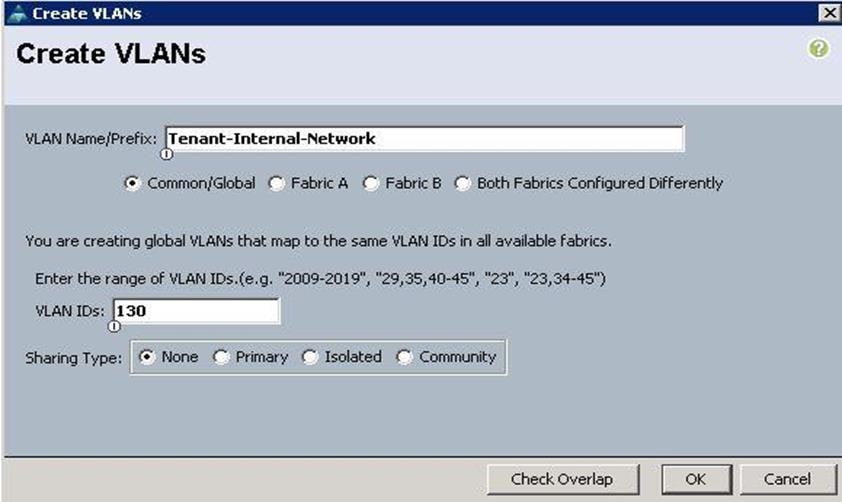

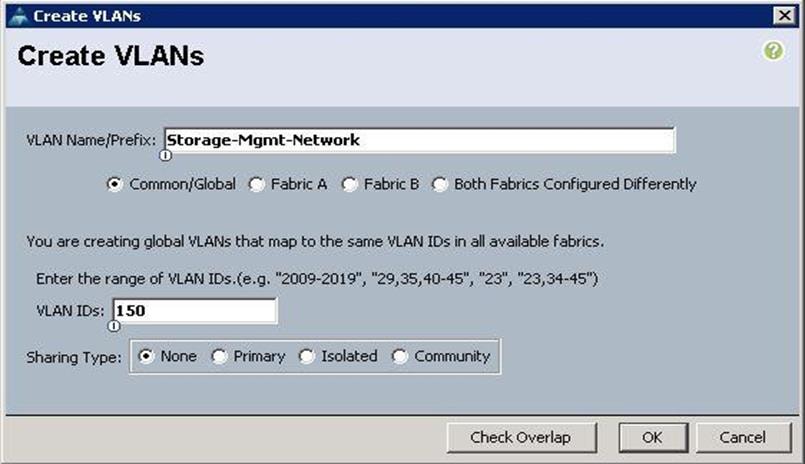

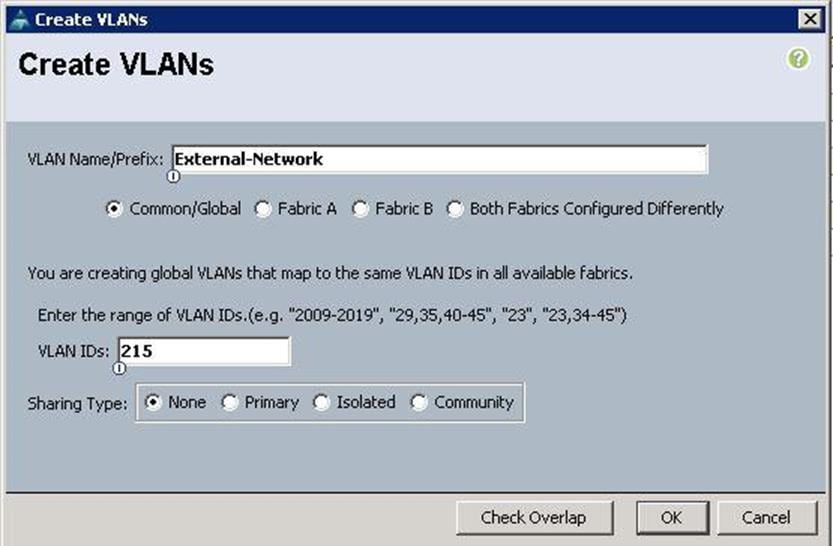

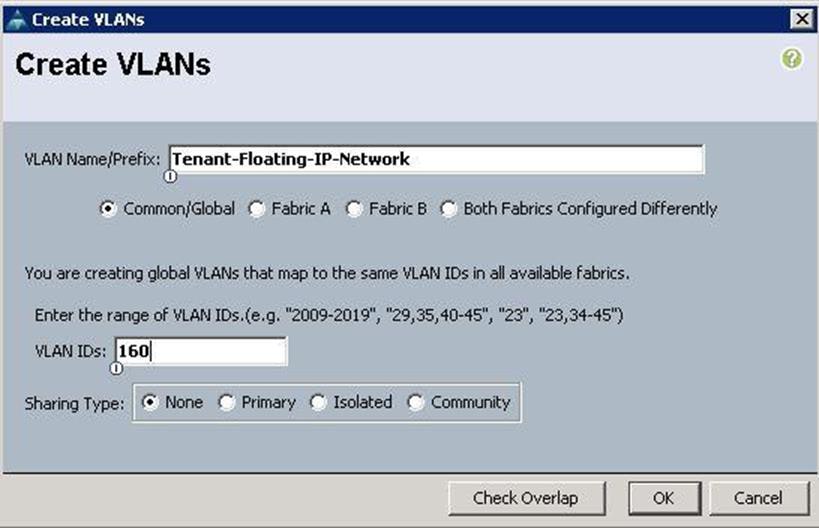

To create VLANs for all OpenStack networks for Controller, Compute and Ceph Storage Servers, from the UCS manager GUI, complete the following steps:

1. Under LAN à LAN Cloud à VLANs à right-click and select Create VLANs.

2. Specify the VLAN name as PXE-Network for Provisioning and specify the VLAN ID as 110 and click OK.

3. Specify the VLAN name as Storage-Public for accessing Ceph Storage Public Network and specify the VLAN ID as 120 and click OK.

4. Specify the VLAN name as Tenant-Internal-Network and specify the VLAN ID as 130 and click OK.

5. Specify the VLAN name as Storage-Mgmt-Network for Managing Ceph Storage Cluster and specify the VLAN ID as 130 and click OK.

6. Specify the VLAN name as External-Network and specify the VLAN ID as 215 and click OK.

7. Specify the VLAN name as Tenant-Floating-Network for accessing Tenant instances externally and specify the VLAN ID as 160 and click OK.

![]() This network is Optional. In this solution, we only used a 24 bit netmask for the External network that had a limitation of 250 IPs for tenant VMs. Due to this limitation, we used a 20 bit netmask for the Tenant Floating Network.

This network is Optional. In this solution, we only used a 24 bit netmask for the External network that had a limitation of 250 IPs for tenant VMs. Due to this limitation, we used a 20 bit netmask for the Tenant Floating Network.

The screenshot below shows the output of VLANs for all the OpenStack Networks created above.

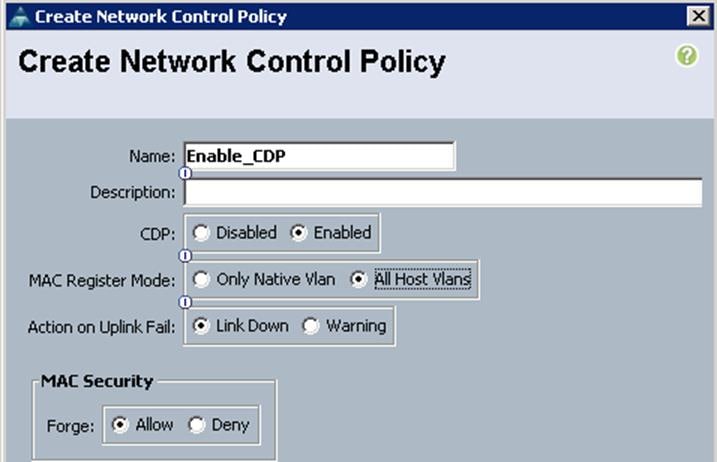

Create a Network Control Policy

To configure the Network Control policy from the UCS Manager, complete the following steps:

1. Under LAN à Policies à root à Network Control Policies à right-click and select Create Network Control Policy.

a. Specify the name and choose CDP as Enabled. Select the MAC register mode as "All hosts VLANs" and Action on Uplink fail as "Link Down" and click OK.

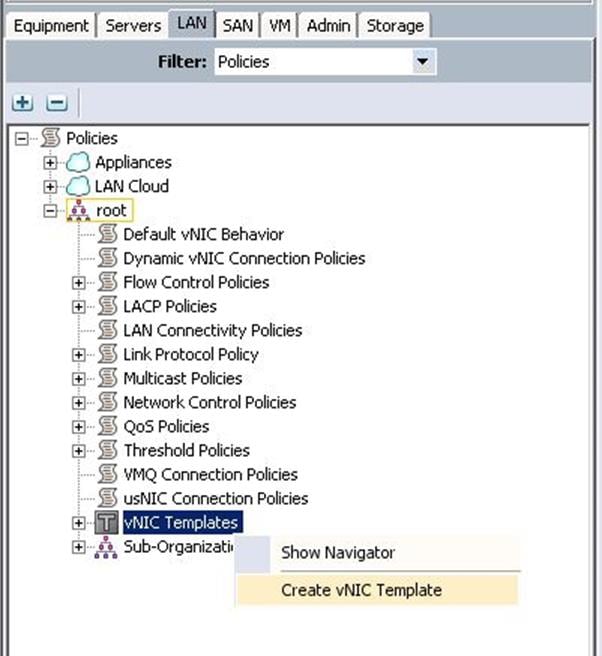

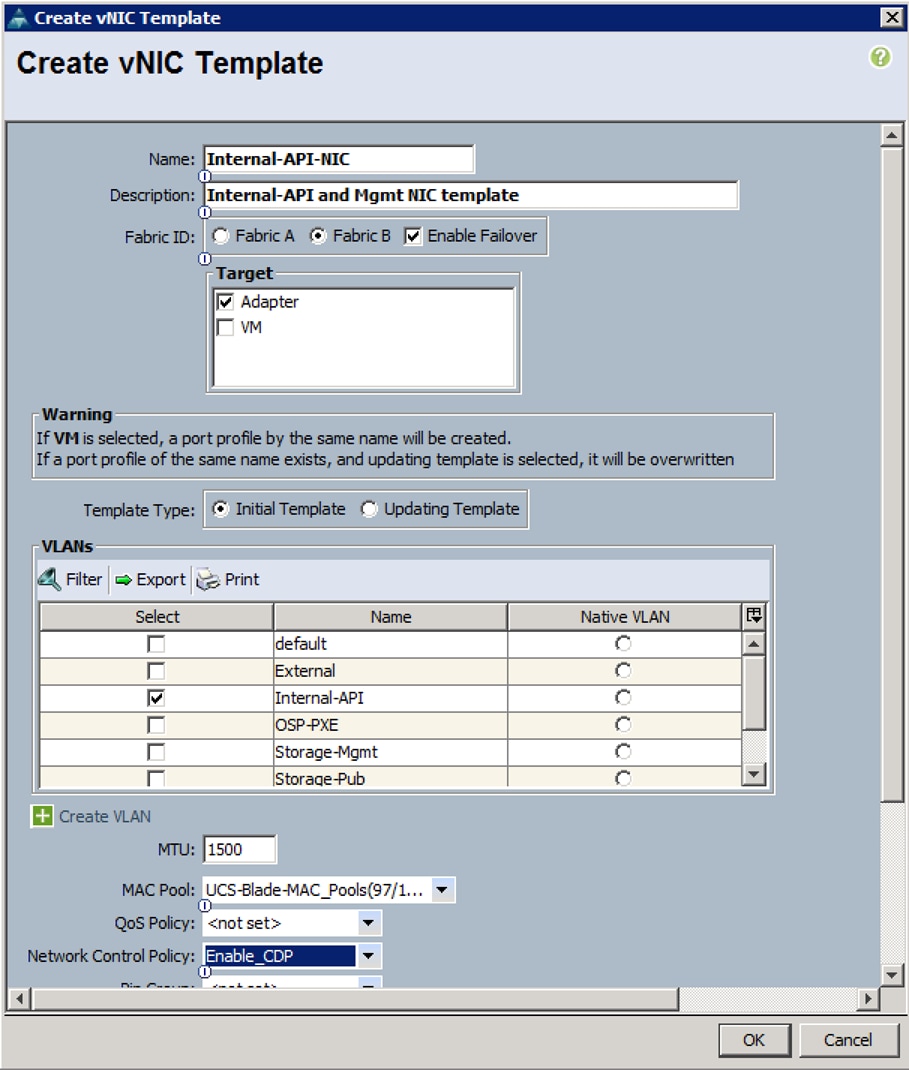

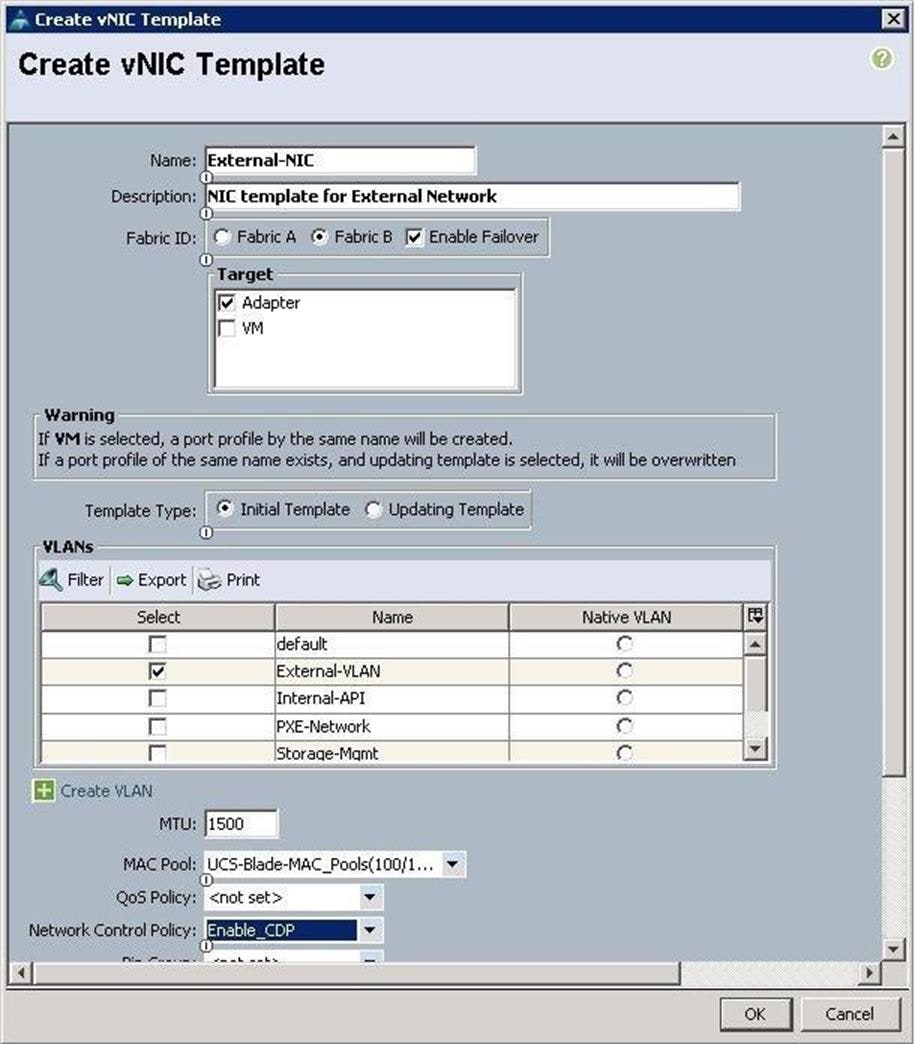

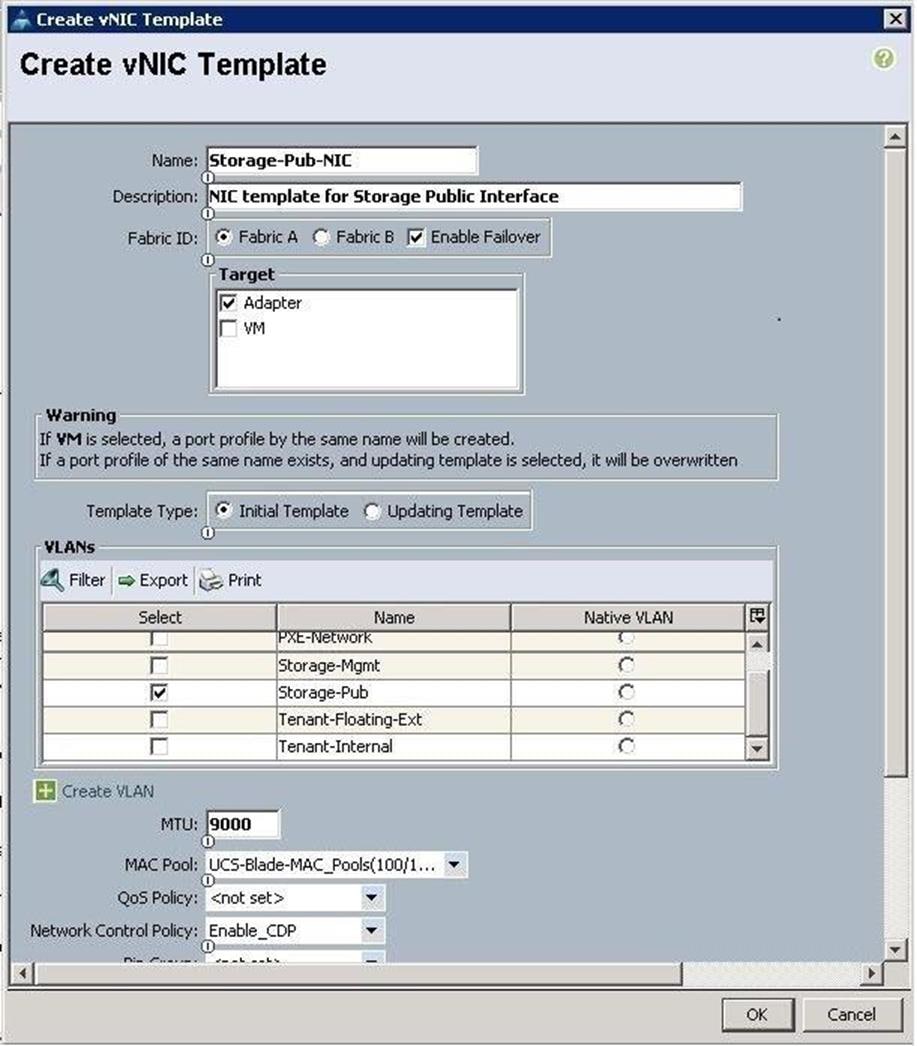

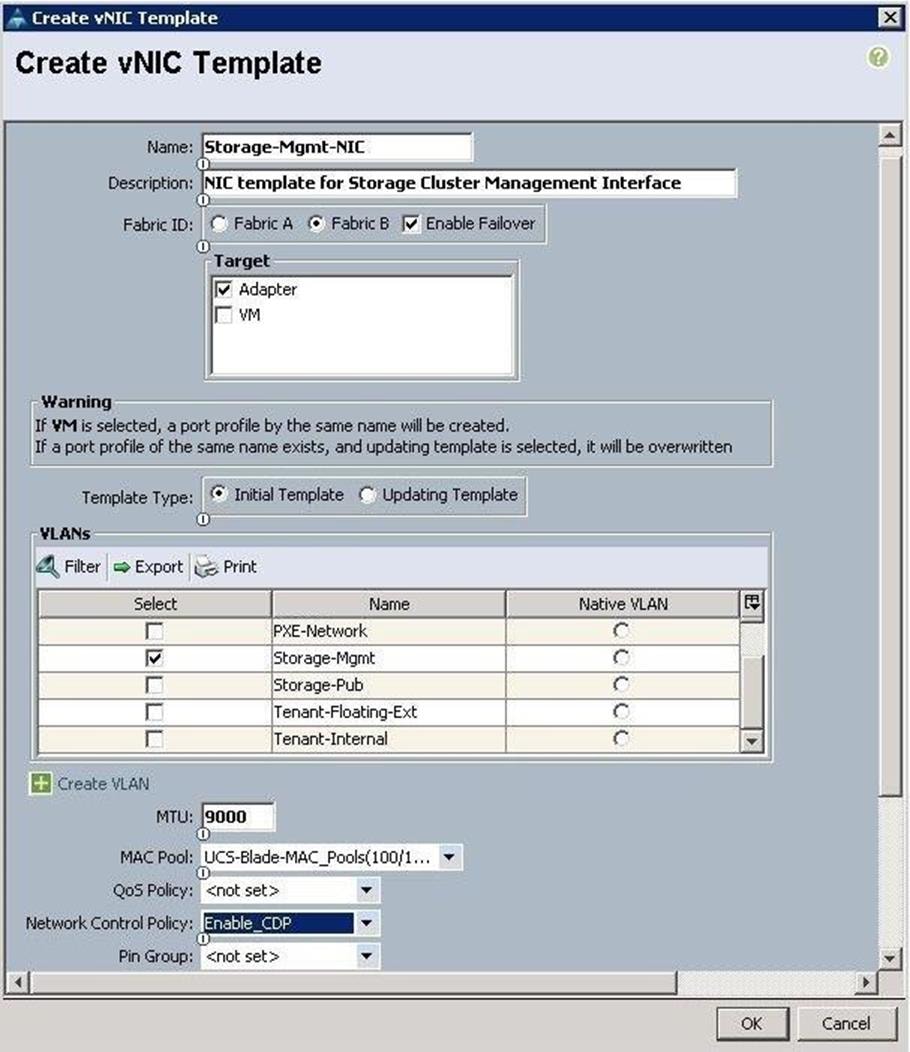

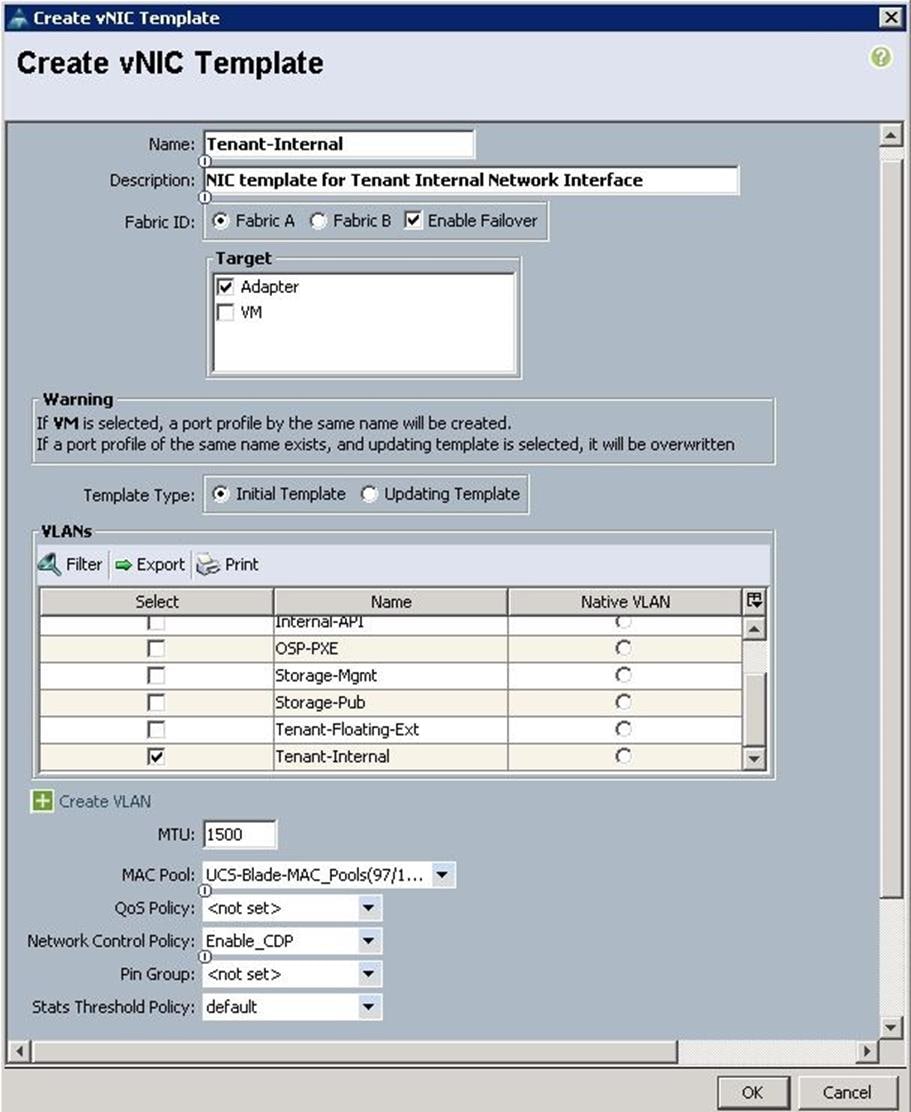

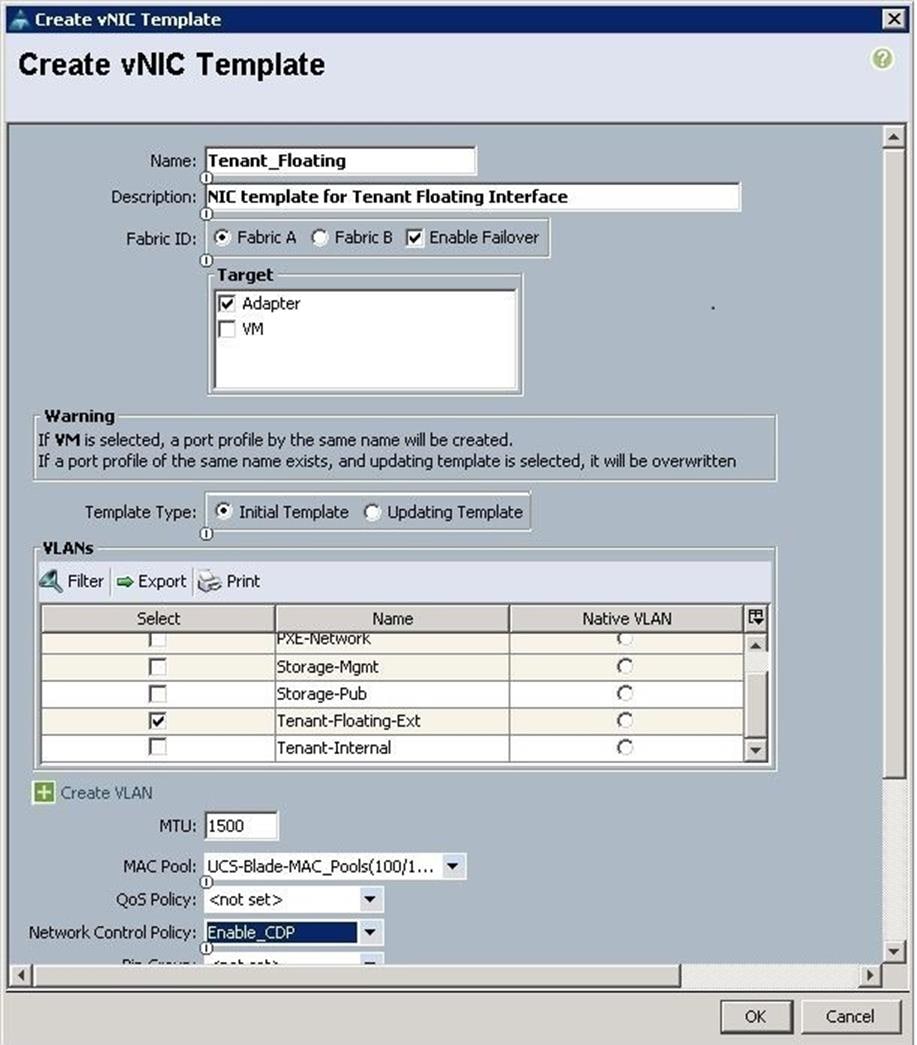

Create vNIC Templates

To Configure VNIC templates for each UCS Server VNIC interfaces, create VNIC templates from the UCS Manager GUI, complete the following steps:

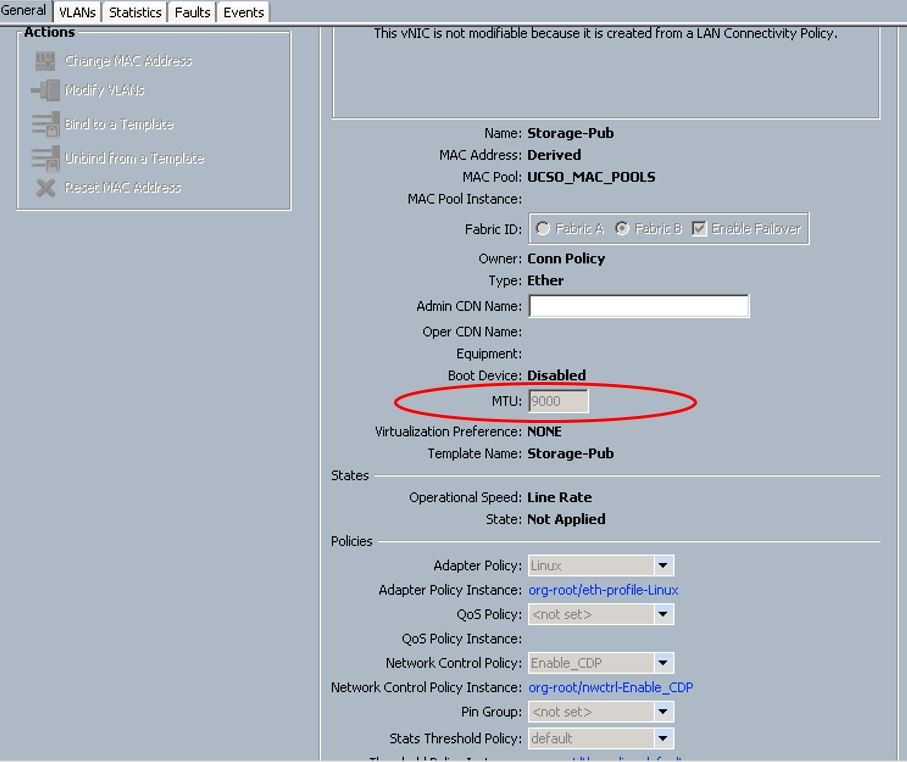

![]() The storage management network is configured with 9000 MTU.

The storage management network is configured with 9000 MTU.

1. Under LAN à Policies à root à VNIC Templates à right-click and select Create VNIC Template.

a. Create VNIC template for Internal-API network. Specify the name, description, Fabric ID, VLAN ID and choose MAC pools from the drop-down list.

b. Create VNIC template for External Network.

c. Create the VNIC template for Storage Public Network.

d. Create VNIC template for Storage Mgmt Cluster network.

e. Create VNIC template for Tenant Internal Network.

f. Create VNIC template for Tenant Floating Network.

After completion, you can see the VNIC templates for each traffic.

![]() For storage interfaces, a MTU value of 9000 has been added.

For storage interfaces, a MTU value of 9000 has been added.

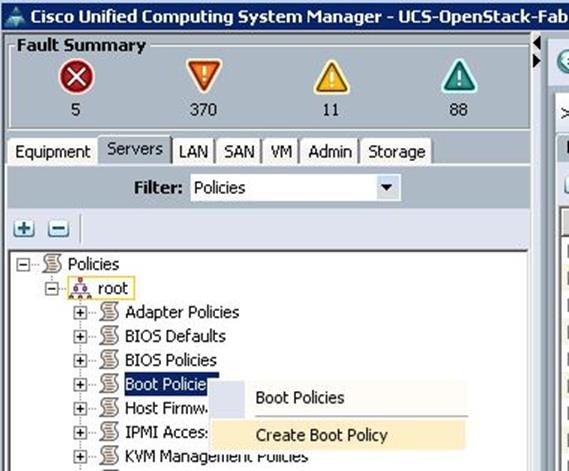

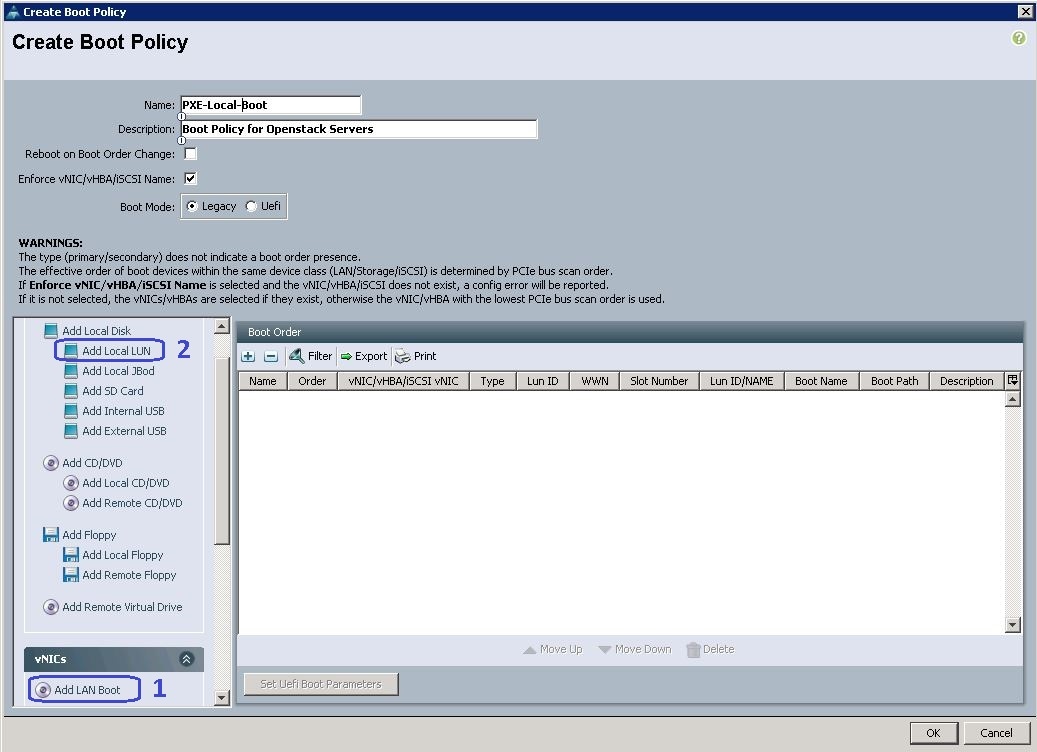

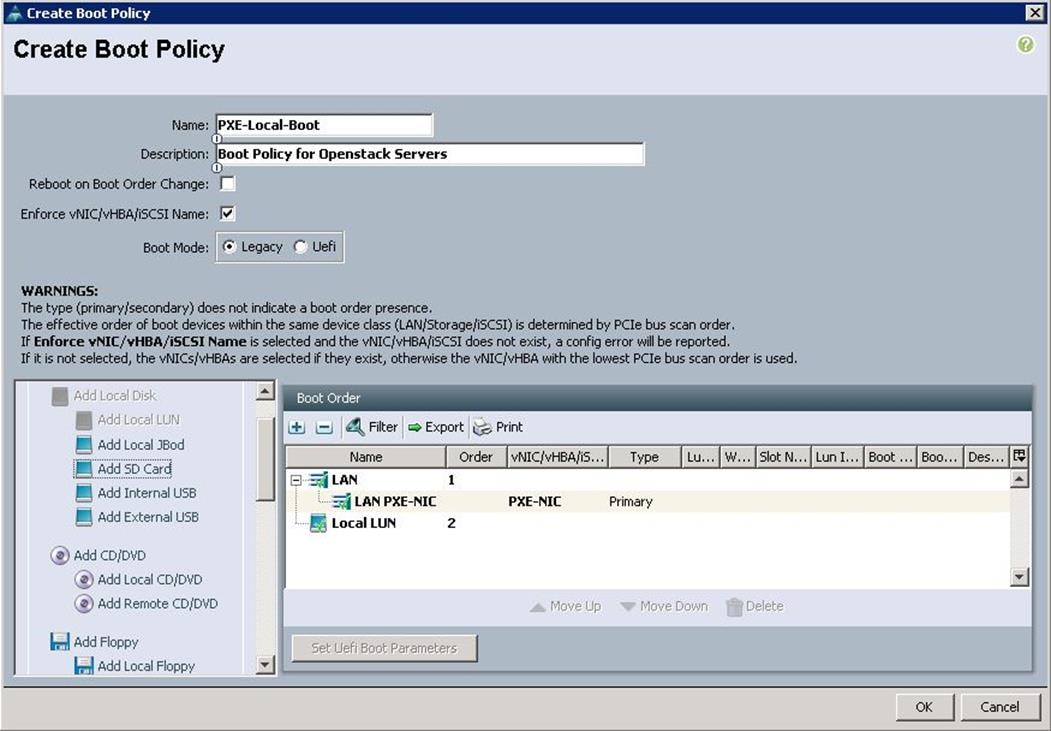

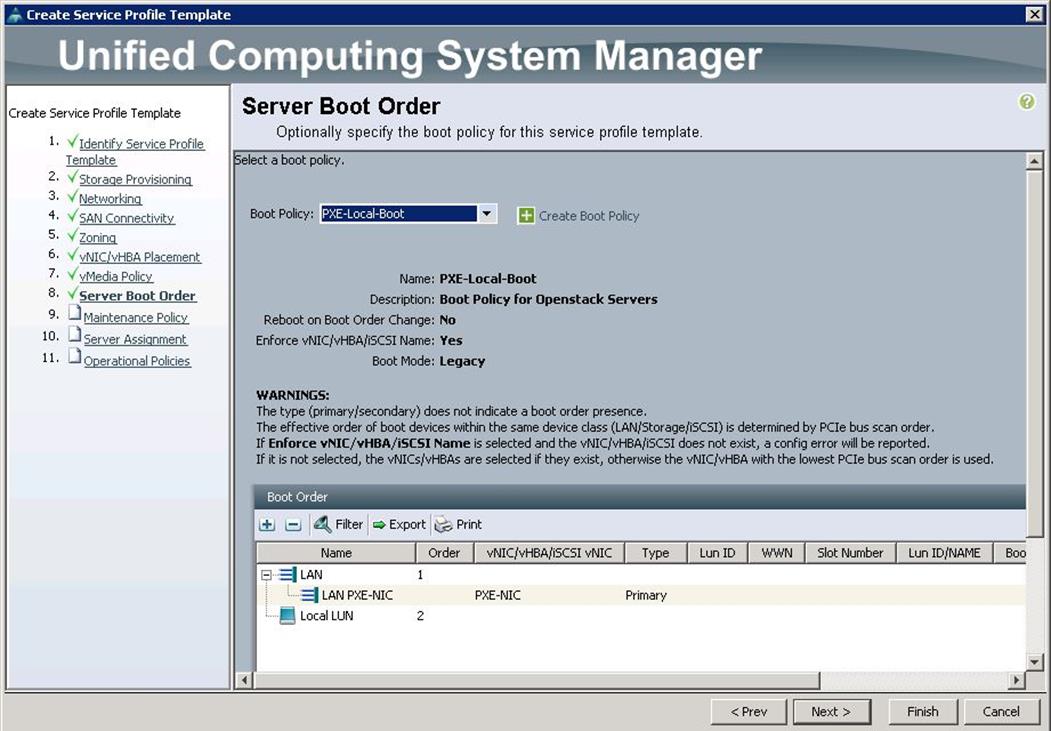

Create Boot Policy

To configure the Boot policy for the Cisco UCS Servers, create a Boot Policy from the Cisco UCS Manager GUI, and complete the following steps:

1. Under Server à Policies à root à Boot Policies à right-click and select Create Boot Policy.

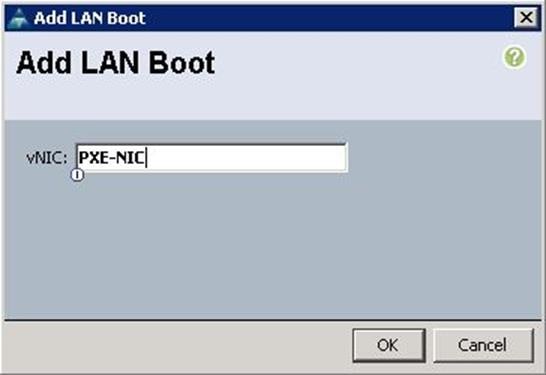

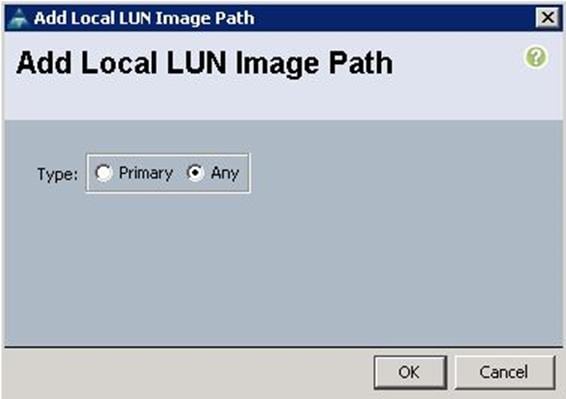

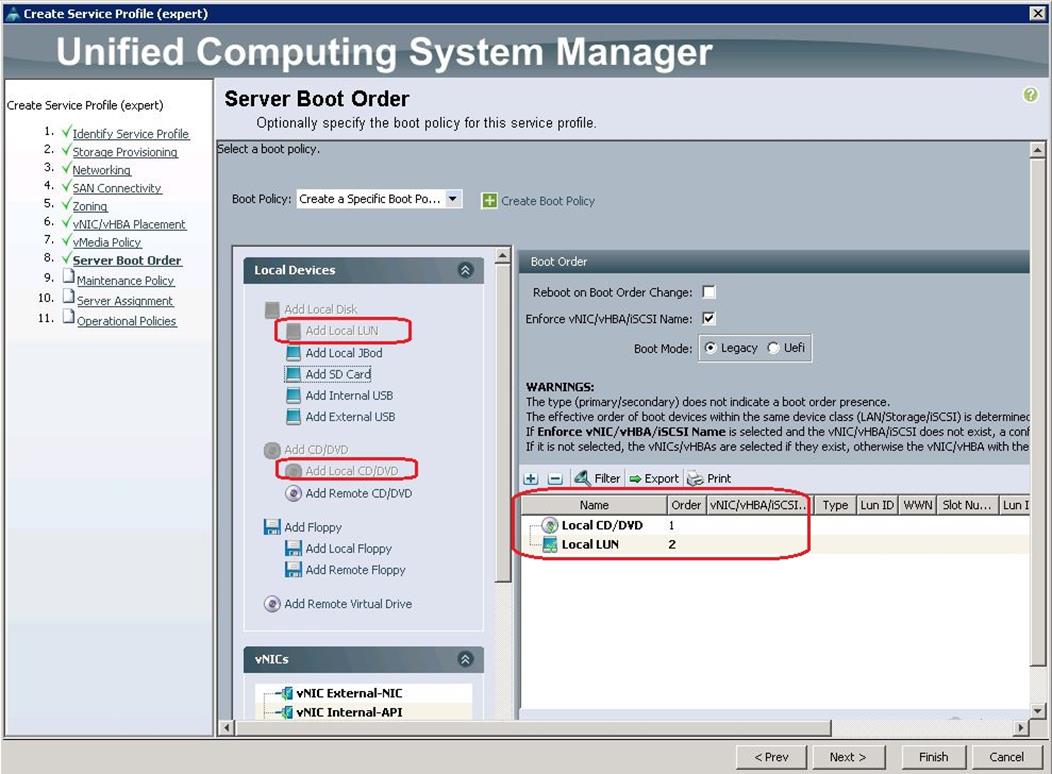

a. Specify the name and description. Select the First boot order as LAN boot and specify the actual VNIC name of the PXE network (PXE-NIC). Then select the second boot order and click Add Local LUN.

b. Specify the VNIC Name as PXE-NIC.

c. Make sure the First boot order is PXE NIC and second boot order is Local LUN and click OK.

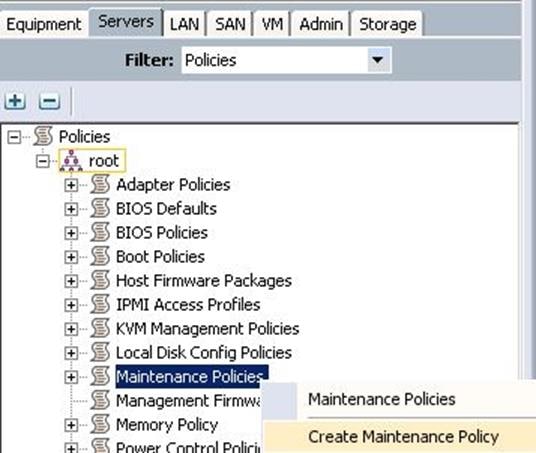

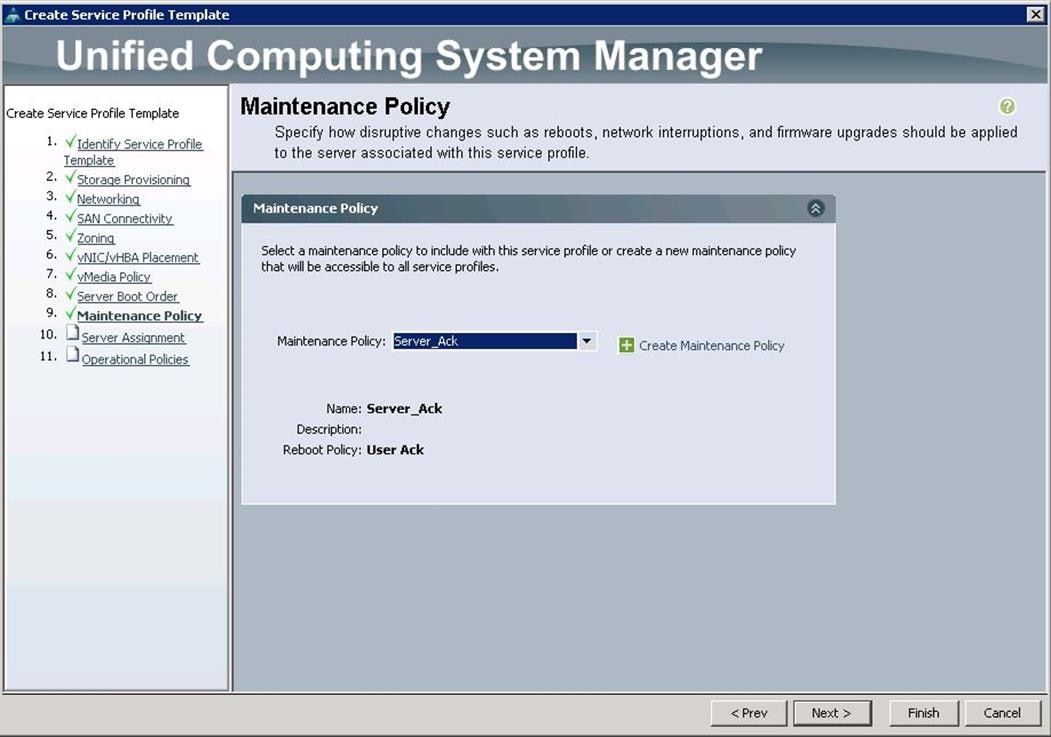

Create a Maintenance Policy

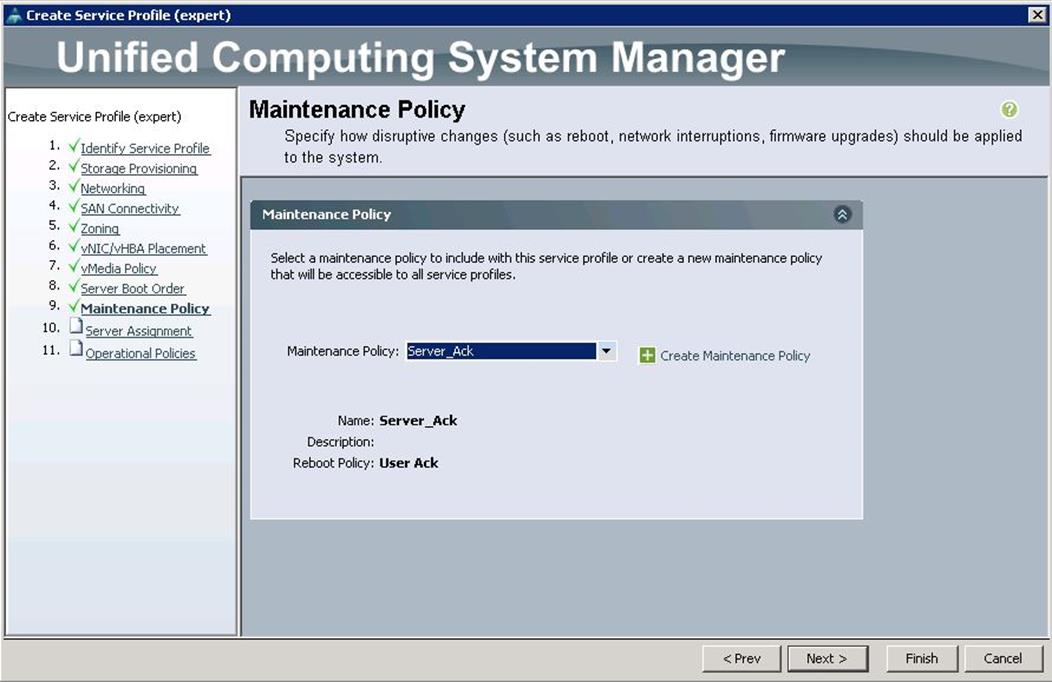

A maintenance policy determines a pre-defined action to take when there is a disruptive change made to the service profile associated with a server. When creating a maintenance policy you have to select a reboot policy which defines when the server can reboot once the changes are applied.

To configure the Maintenance policy from the Cisco UCS Manager, complete the following steps:

1. Under Server à Policies à root à Maintenance Policies à right-click and select Create Maintenance Policy.

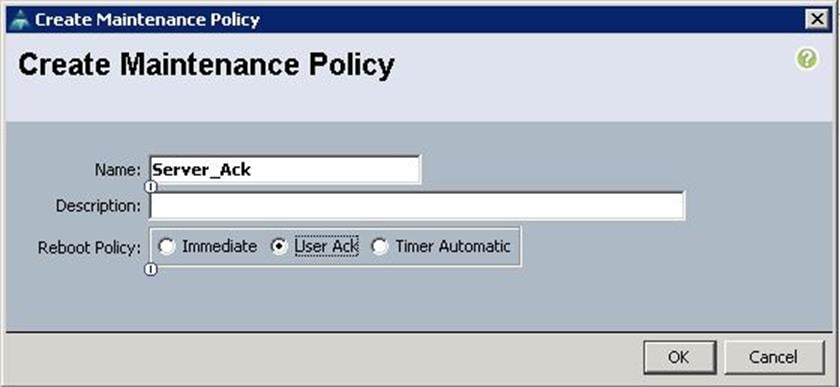

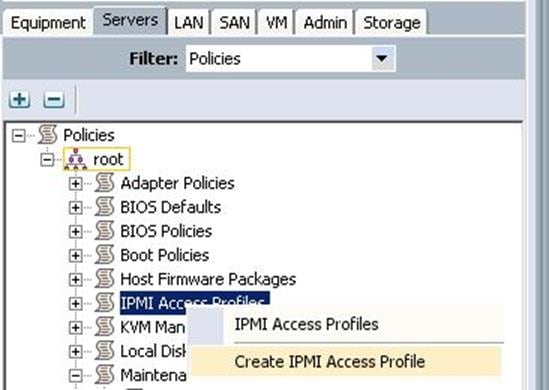

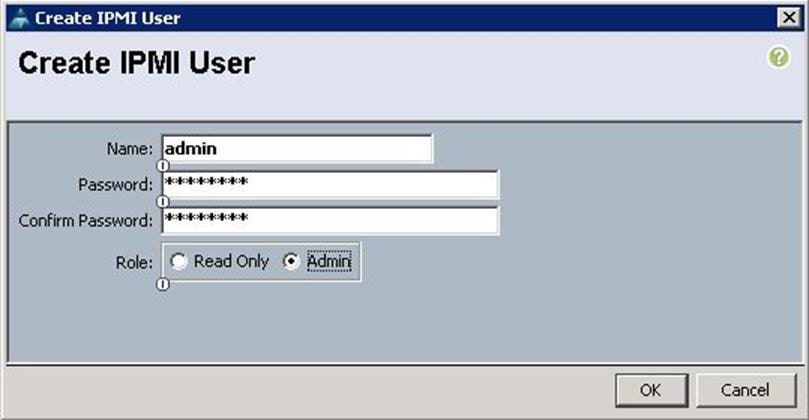

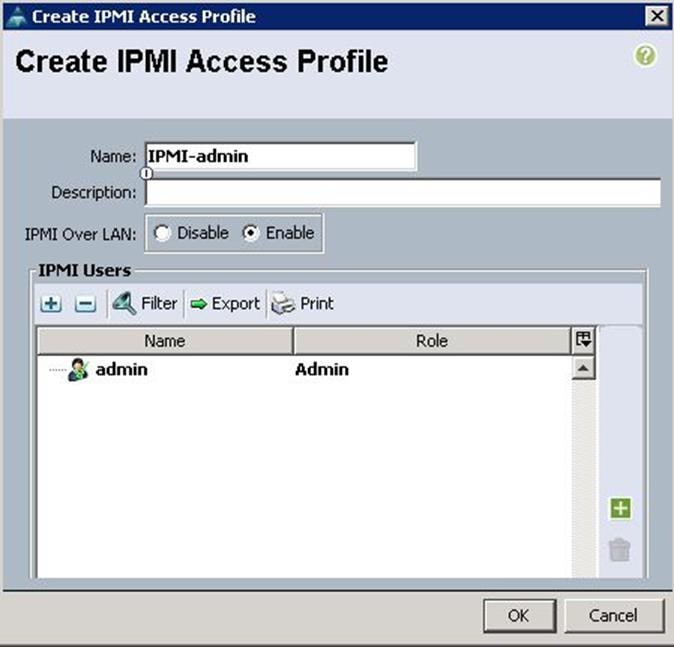

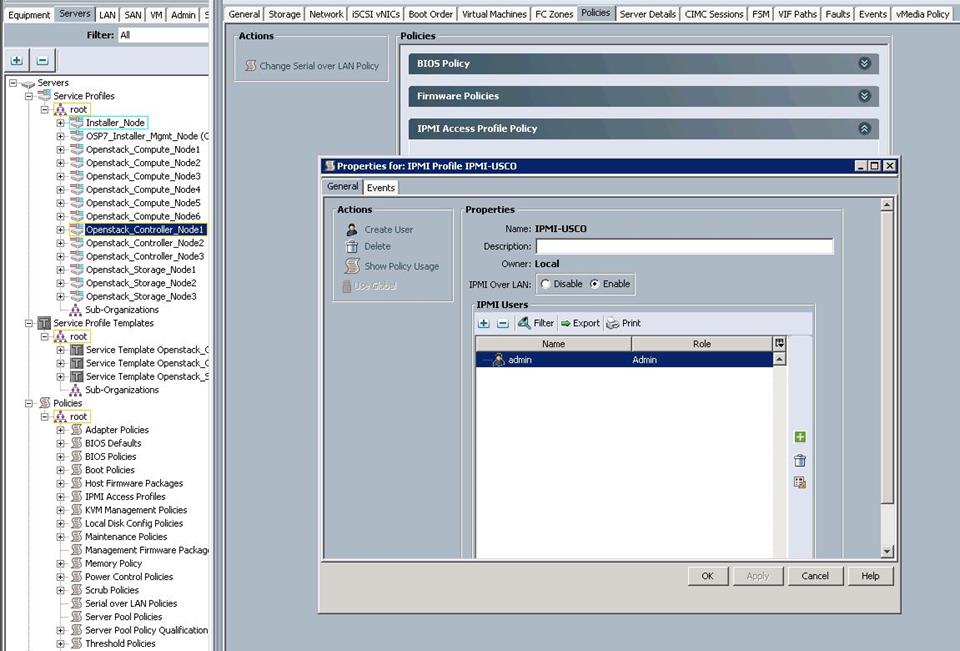

Create an IPMI Access Policy

This policy allows you to determine whether IPMI commands can be sent directly to the server, using the IP address (KVM IP address).

To configure the IPMI Access profiles from the Cisco UCS Manager, complete the following steps:

1. Under Server à Policies à root à IPMI Access profiles à right-click and select Create IPMI Access Profile.

a. Specify the name and click IPMI over LAN as Enabled and click “+”.

b. Specify the username and password. Choose Admin for the Role and click OK.

c. Click OK to create the IPMI access profile.

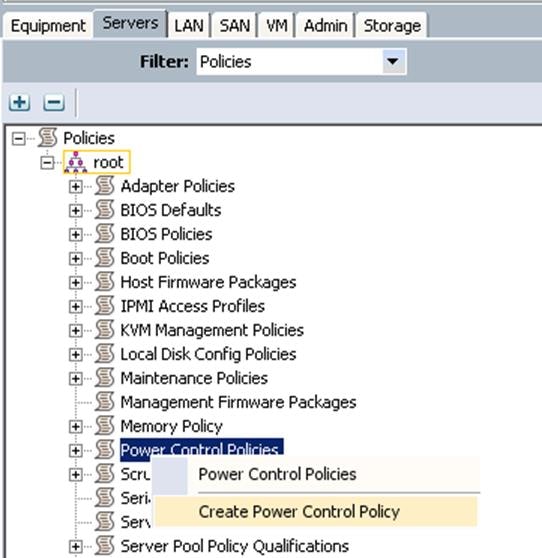

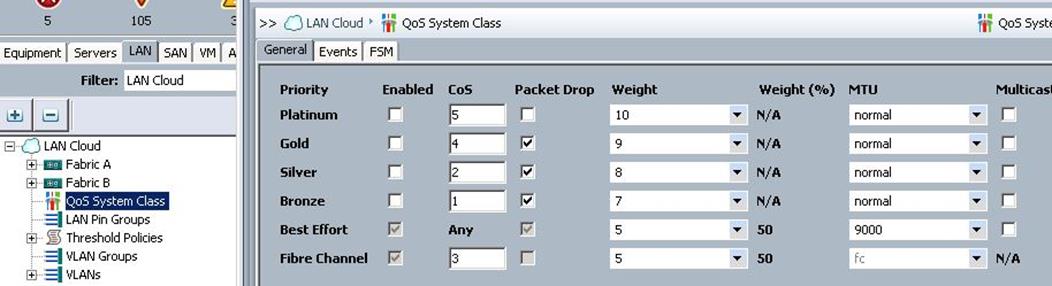

Create a Power Policy

Cisco UCS uses the priority set in the power control policy, along with the blade type and configuration, to calculate the initial power allocation for each blade within a chassis. During normal operation, the active blades within a chassis can borrow power from idle blades within the same chassis. If all blades are active and reach the power cap, service profiles with higher priority power control policies take precedence over service profiles with lower priority power control policies.

To configure the Power Control policy from the UCS Manager, complete the following steps:

1. Under Server à Policies à root à Power Control Policies à right-click and select Create Power Control Policy.

a. Specify the name and description. Choose Power Capping as No Cap.

![]() No Cap keeps the server runs at full capacity regardless of the power requirements of the other servers in its power group. Setting the priority to no-cap prevents Cisco UCS from leveraging unused power from that particular blade server. The server is allocated the maximum amount of power that that blade can reach.

No Cap keeps the server runs at full capacity regardless of the power requirements of the other servers in its power group. Setting the priority to no-cap prevents Cisco UCS from leveraging unused power from that particular blade server. The server is allocated the maximum amount of power that that blade can reach.

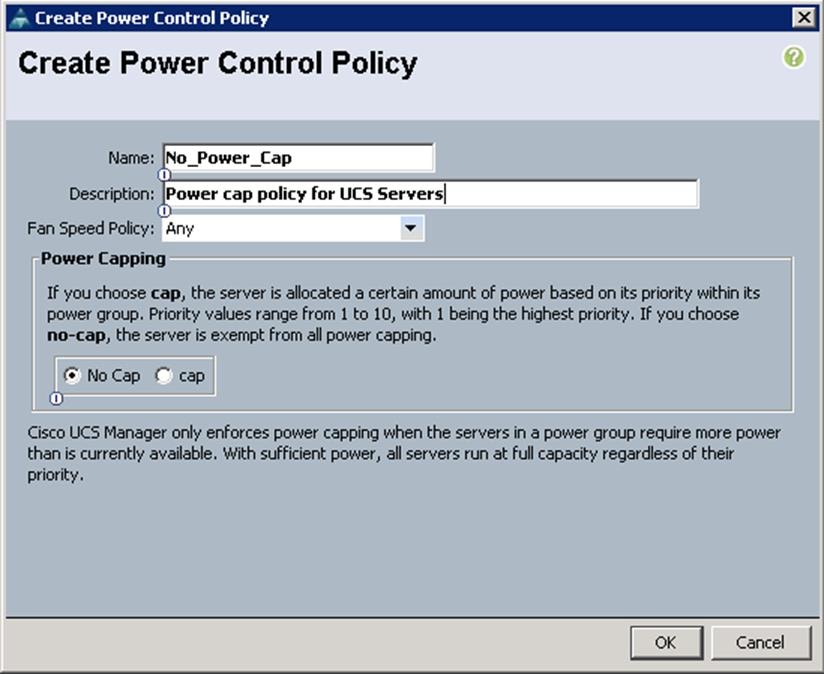

Create a QOS system class

Create a QOS system class as shown below:

Select the Best Effort class as MTU 9000, which will be leveraged in vNIC templates for storage public and storage management vNIC’s.

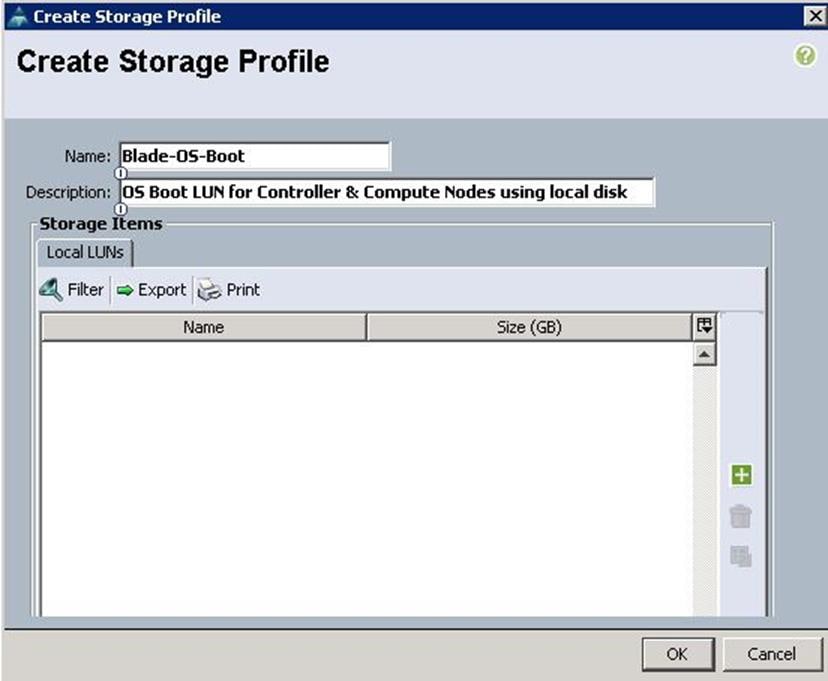

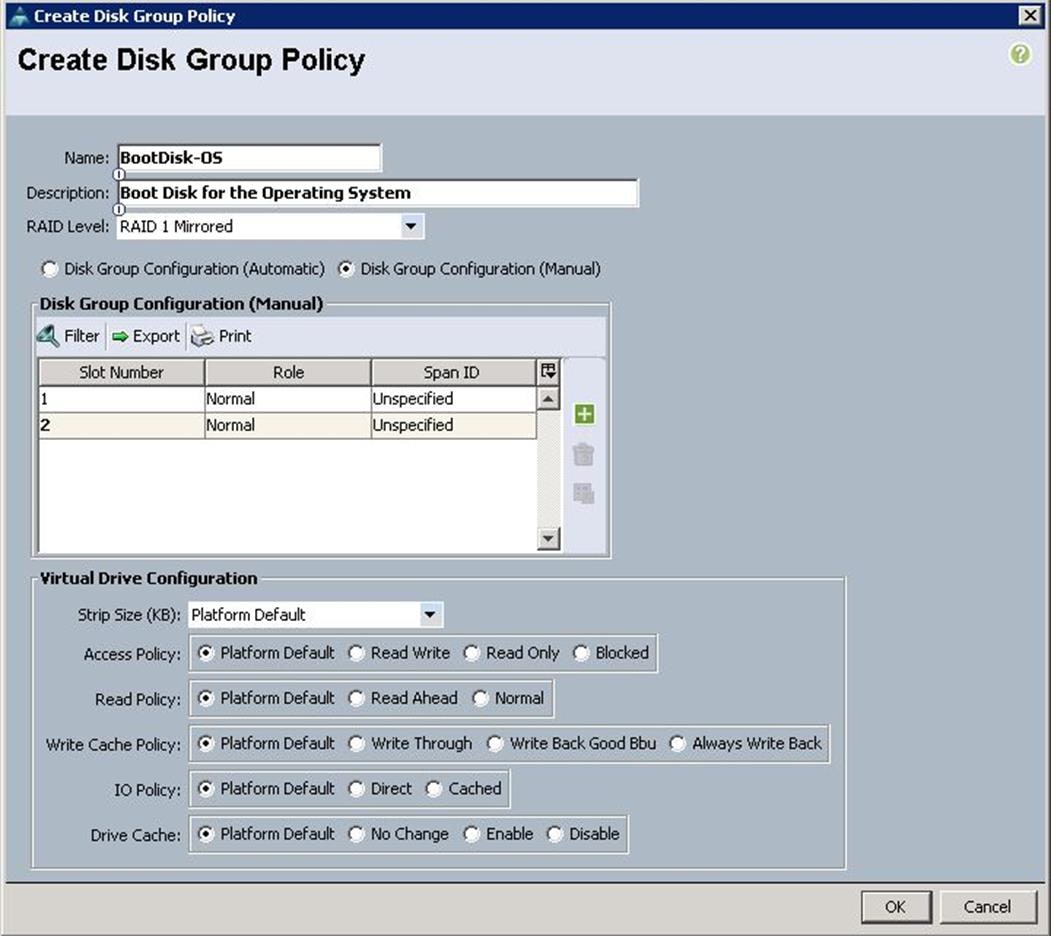

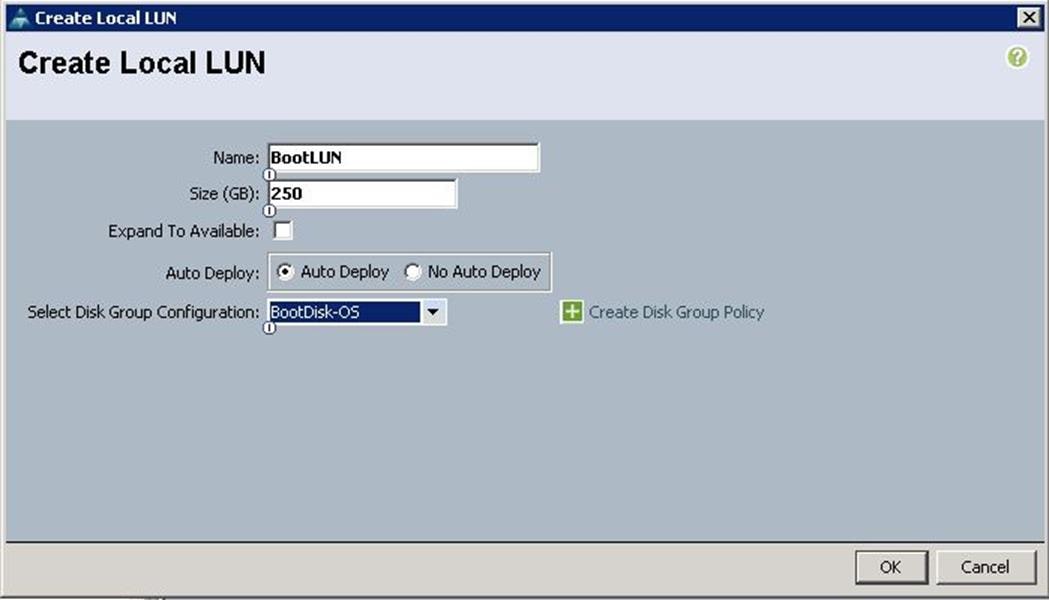

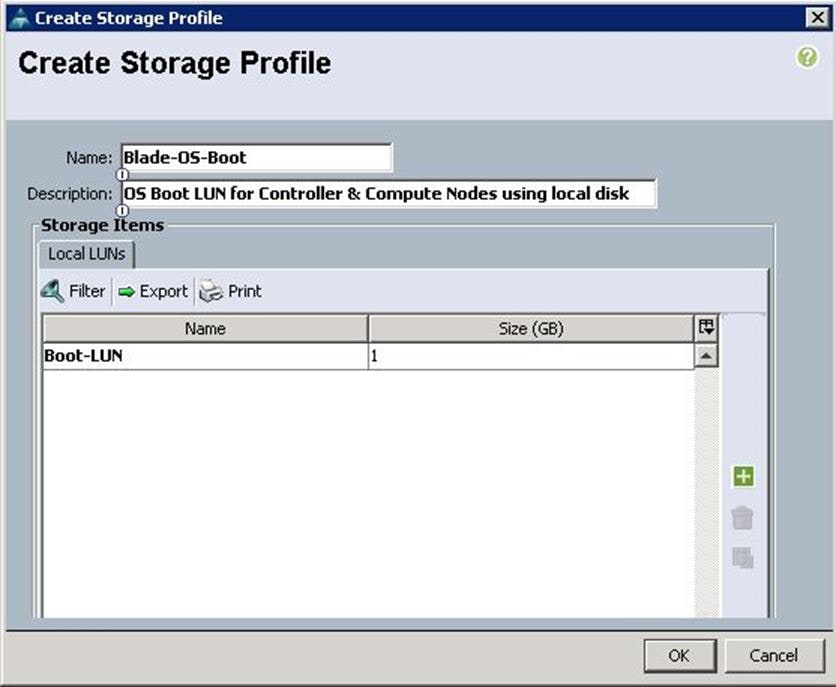

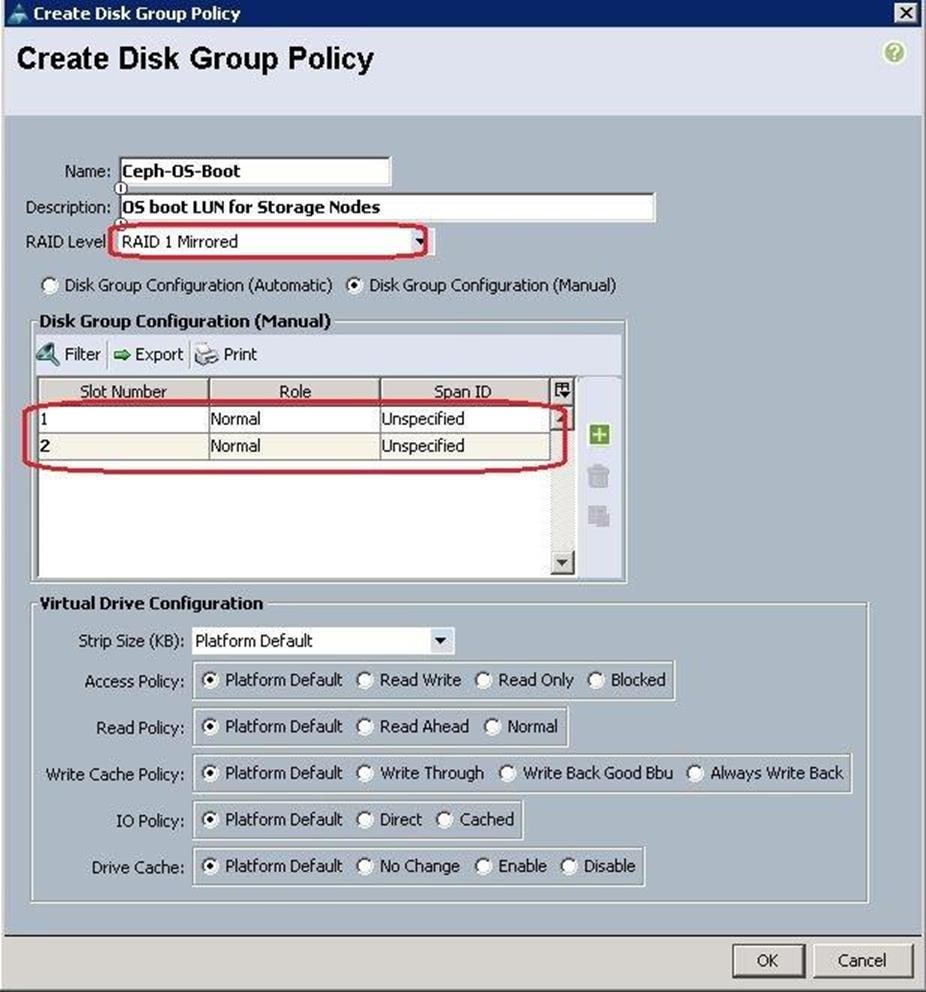

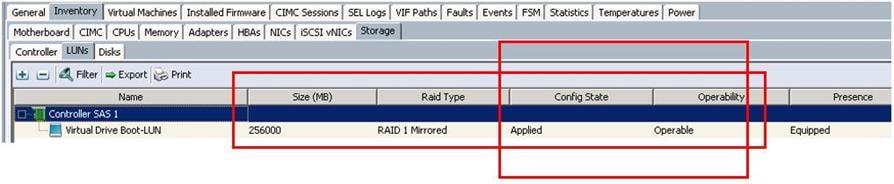

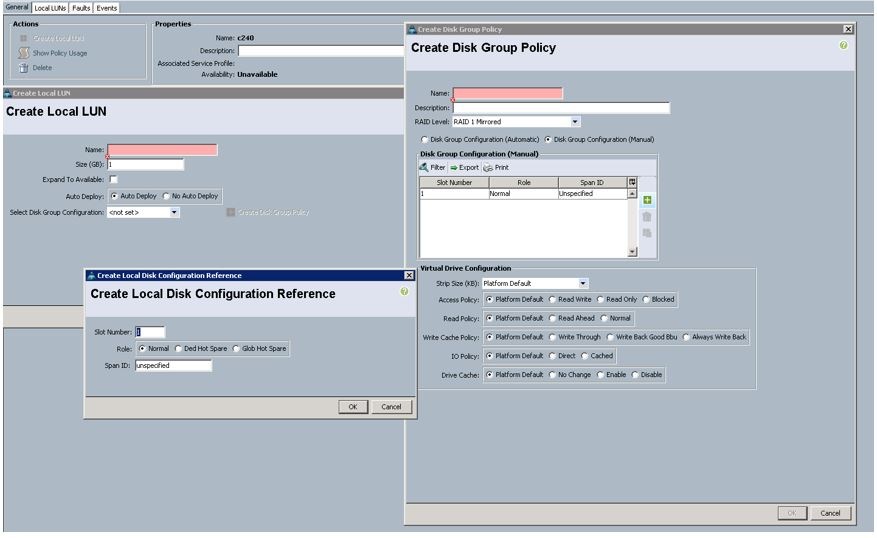

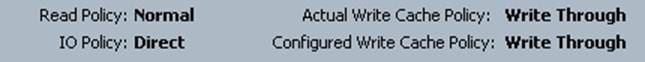

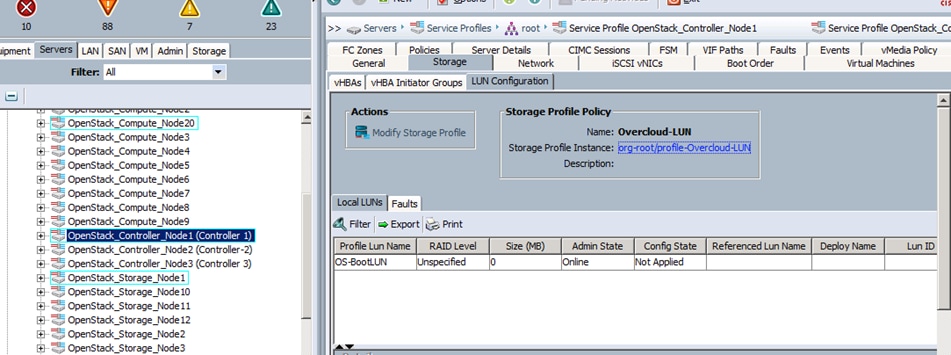

Create Storage Profiles for the Controller and Compute Blades

To allow flexibility in defining the number of storage disks, roles and usage of these disks, and other storage parameters, you can create and use storage profiles. LUNs configured in a storage profile can be used as boot LUNs or data LUNs, and can be dedicated to a specific server. You can also specify a local LUN as a boot device. However, LUN resizing is not supported.

To configure Storage profiles from the Cisco UCS Manager, complete the following steps:

1. Under Storage à Storage Provisioning à Storage Profiles à root à right-click and select Create Storage Profile.

a. Specify the name and click “+”.

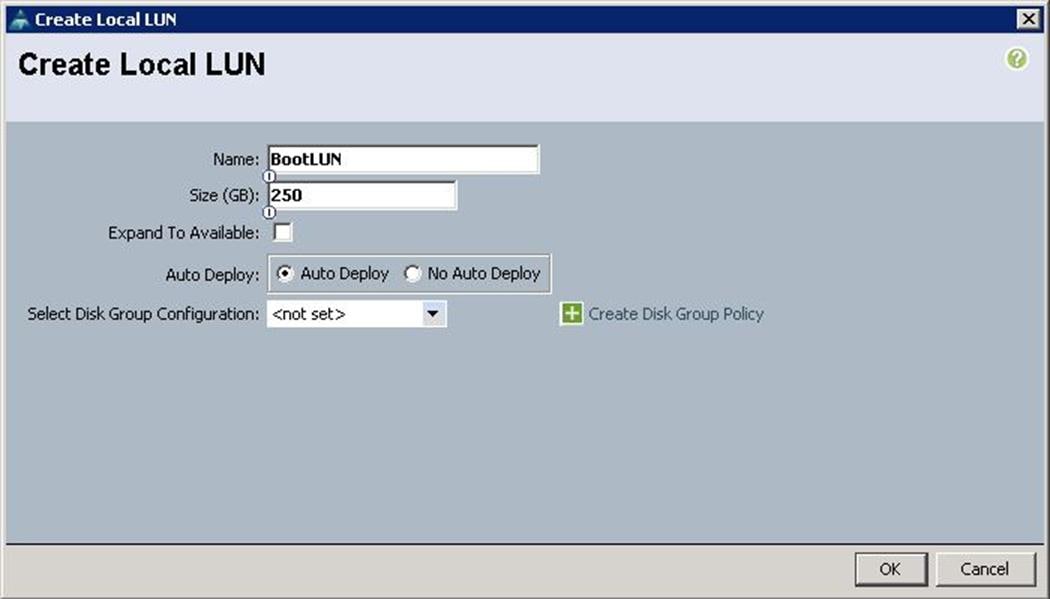

b. Specify the Local LUN name and size as 250 in GBand click Auto Deploy.

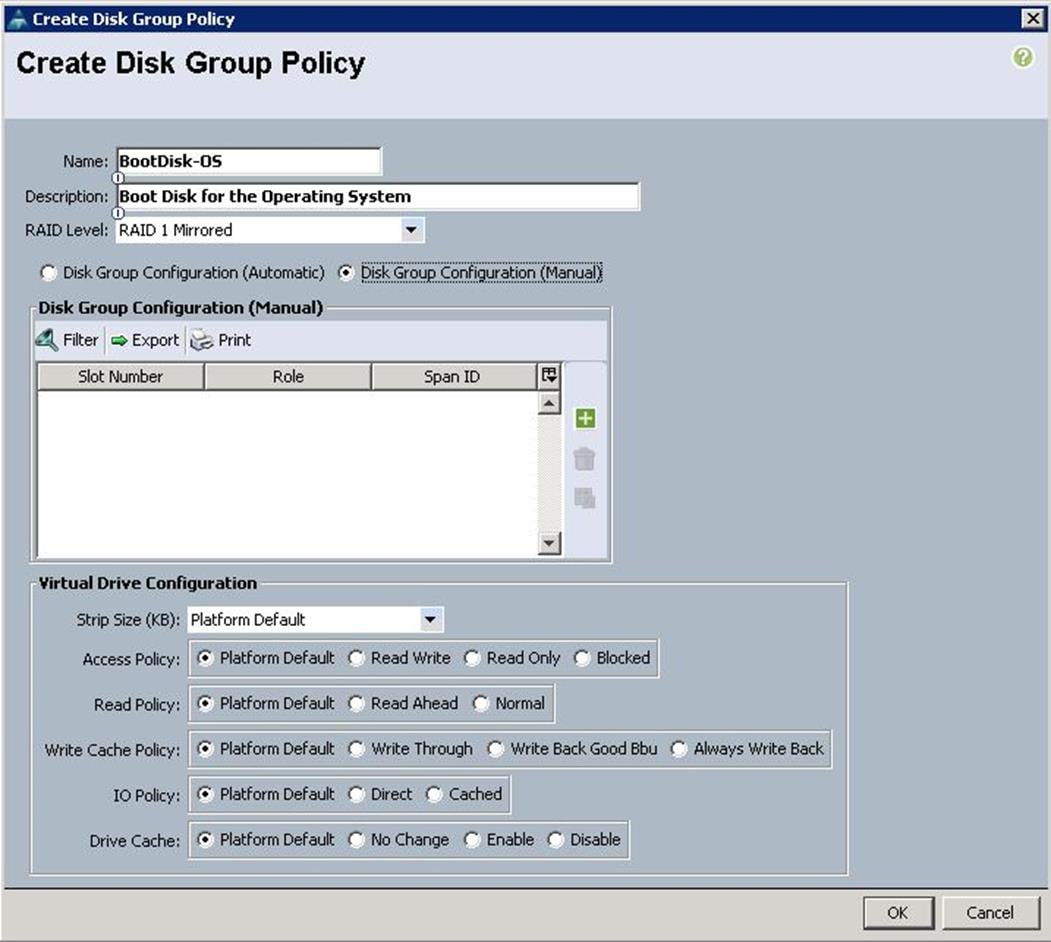

c. To configure RAID levels and configure the number of disks for the disk group, select Create Disk Group Policy.

d. Specify the name and choose RAID level as RAID 1 Mirrored. RAID1 is recommended for the Local boot LUNs.

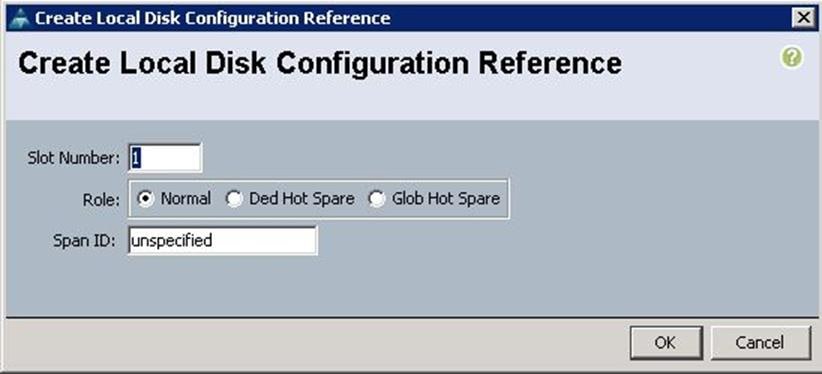

e. Select Disk group Configuration (Manual) and click “+”. Keep the Virtual Drive configuration with the default values.

f. Specify Disk Slot Number as 1 and Role as Normal.

g. Create another Local Disk configuration with the Slot number as 2 and click OK.

![]() In this solution, we used Local Disk 1 and Disk 2 as the boot LUNs with RAID 1 mirror configuration.

In this solution, we used Local Disk 1 and Disk 2 as the boot LUNs with RAID 1 mirror configuration.

h. Choose the Disk group policy Boot Disk-OS for the Local Boot LUN.

i. Click OK to confirm the Storage profile creation.

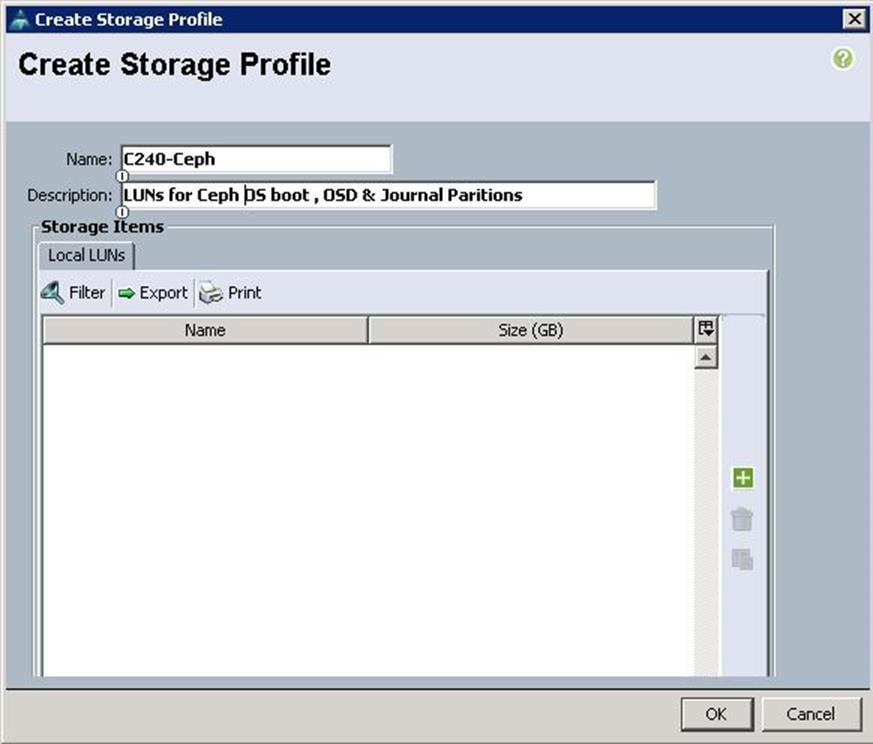

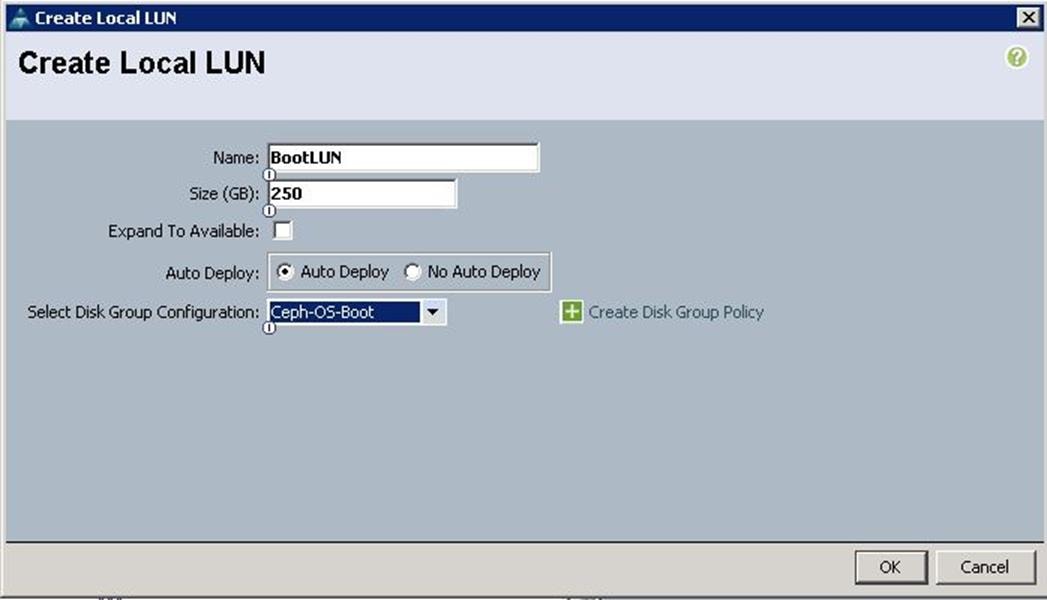

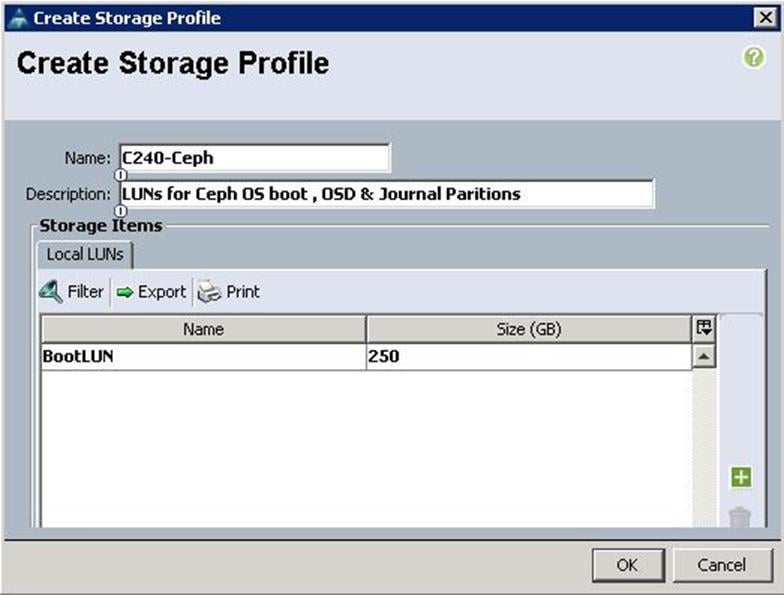

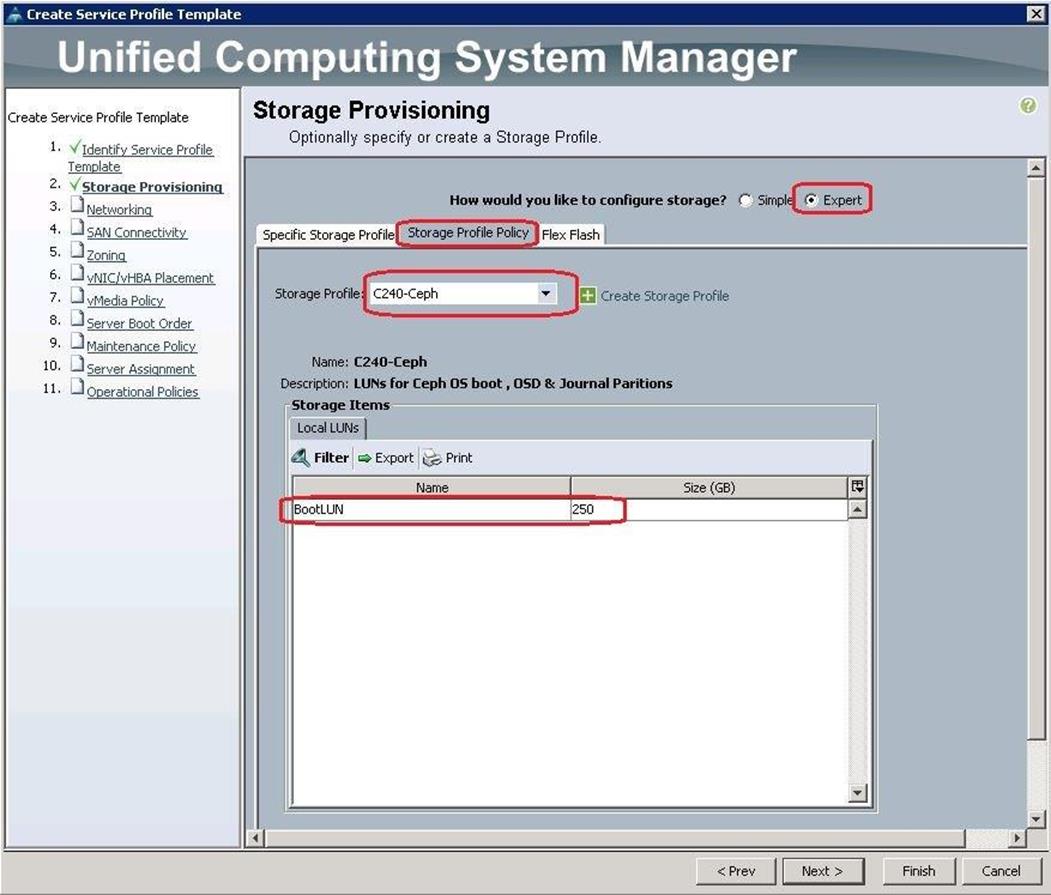

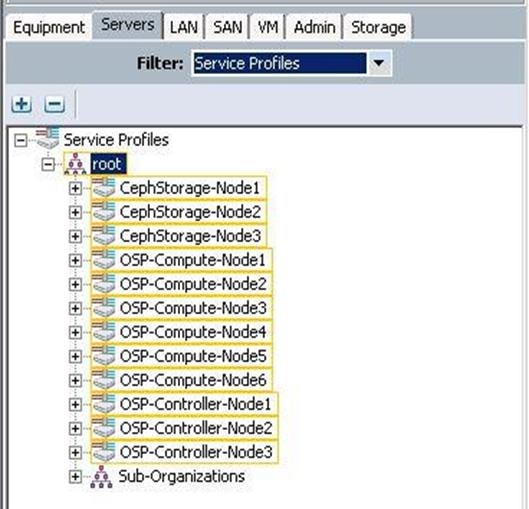

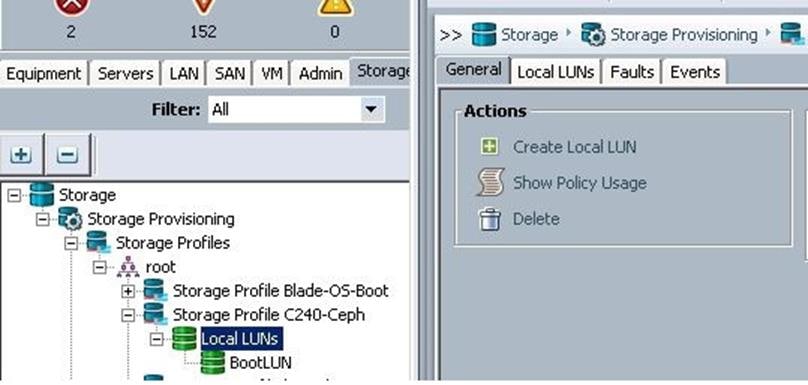

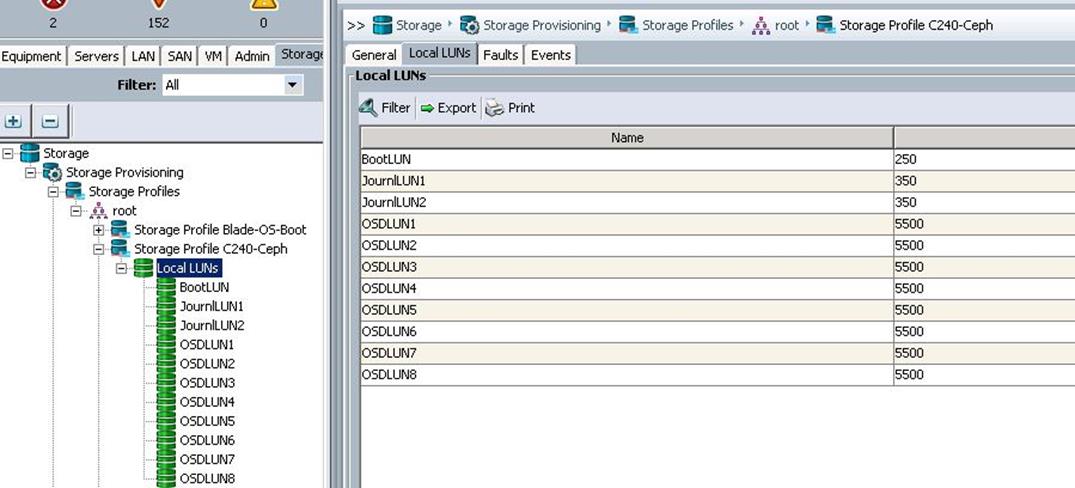

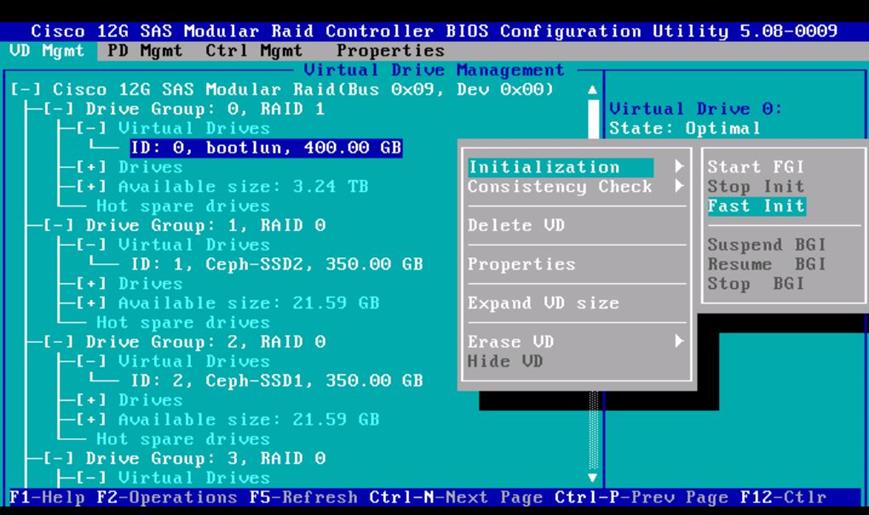

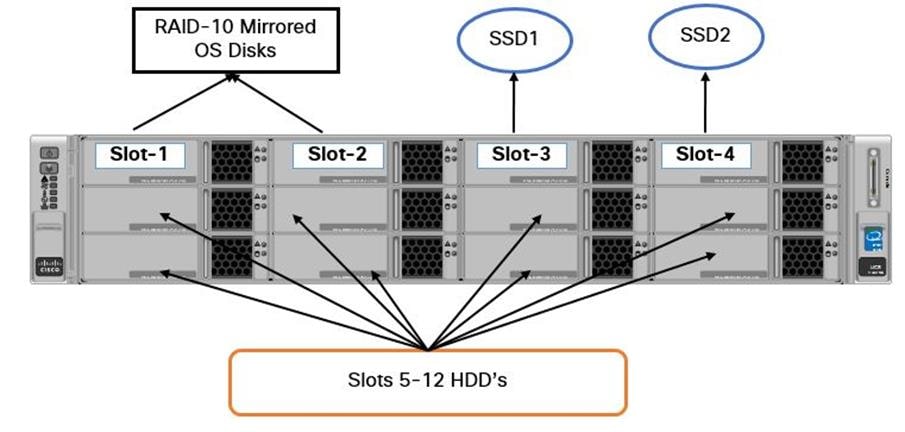

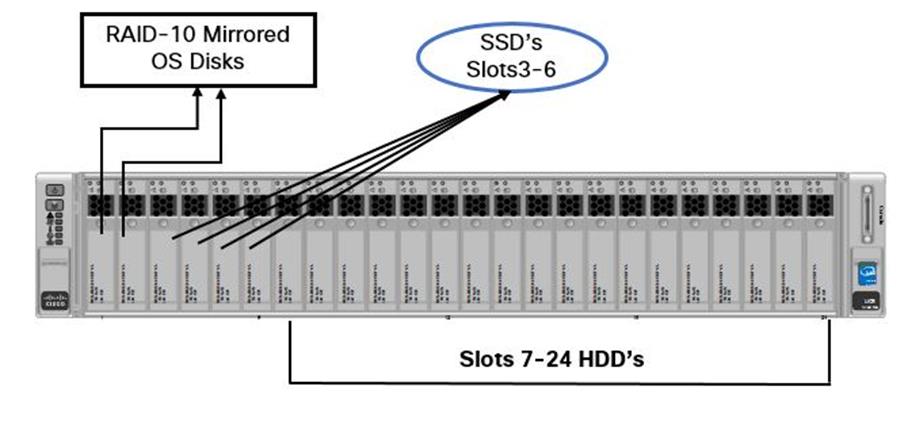

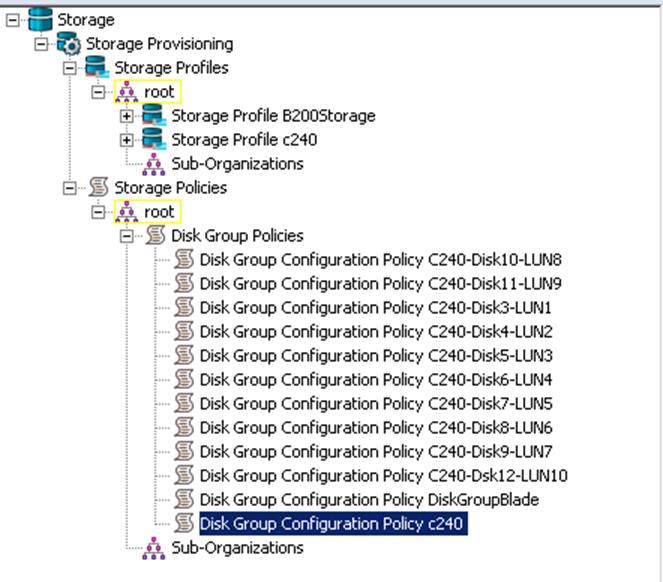

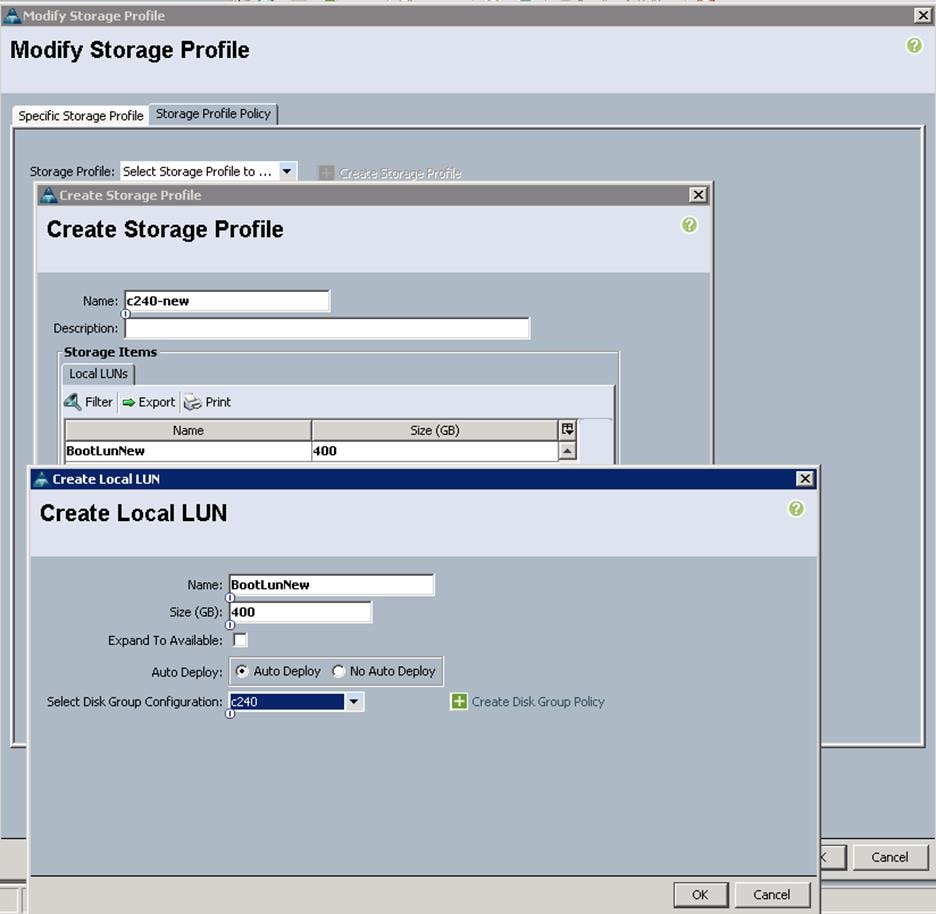

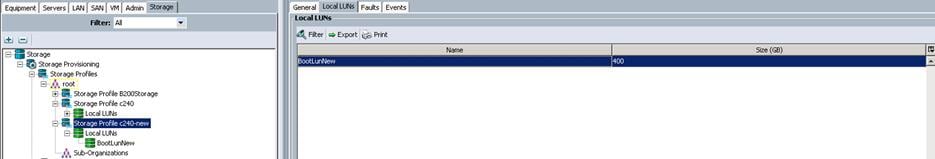

Create Storage Profiles for Cisco UCS C240 M4 Server Blades

To configure the Storage profiles from the UCS Manager, complete the following steps:

1. Under Storage à Storage Provisioning à Storage Profiles à root à right-click and select Create Storage Profile.

a. Specify the Storage profile name as C240-Ceph for the Ceph Storage Servers. Click “+”.

b. Specify the LUN name and size in GB. For the Disk group policy creation, select Disk Group Configuration for Ceph nodes as Ceph-OS-Boot similar to “BootDisk-OS” disk group policy as above.

c. After successful creation of Disk Group Policy, choose Disk Group Configuration as Ceph-OS-Boot and click OK.

d. Click OK to complete the Storage Profile creation for the Ceph Nodes.

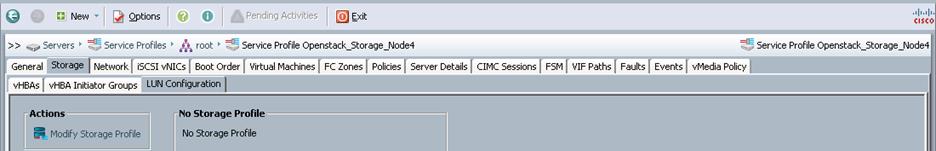

![]() For the Cisco UCS C240 M4 servers, the LUN creation for Ceph OSD disks (6TB SAS) and Ceph Journal disks (400GB SSDs) still remains on the Ceph Storage profile. Due to the Cisco UCS Manager limitations, we have to create OSD LUNs and Journal LUNs after the Cisco UCS C240 M4 server has been successfully associated with the Ceph Storage Service profiles.

For the Cisco UCS C240 M4 servers, the LUN creation for Ceph OSD disks (6TB SAS) and Ceph Journal disks (400GB SSDs) still remains on the Ceph Storage profile. Due to the Cisco UCS Manager limitations, we have to create OSD LUNs and Journal LUNs after the Cisco UCS C240 M4 server has been successfully associated with the Ceph Storage Service profiles.

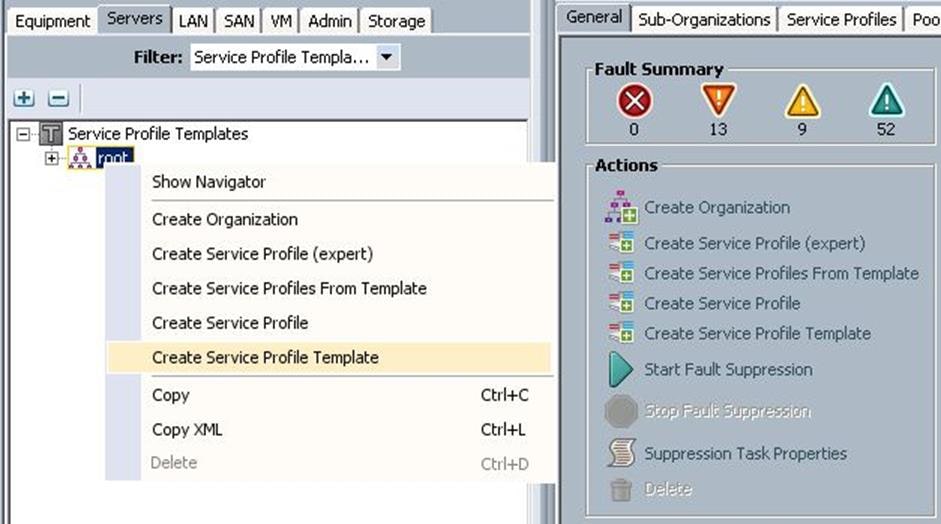

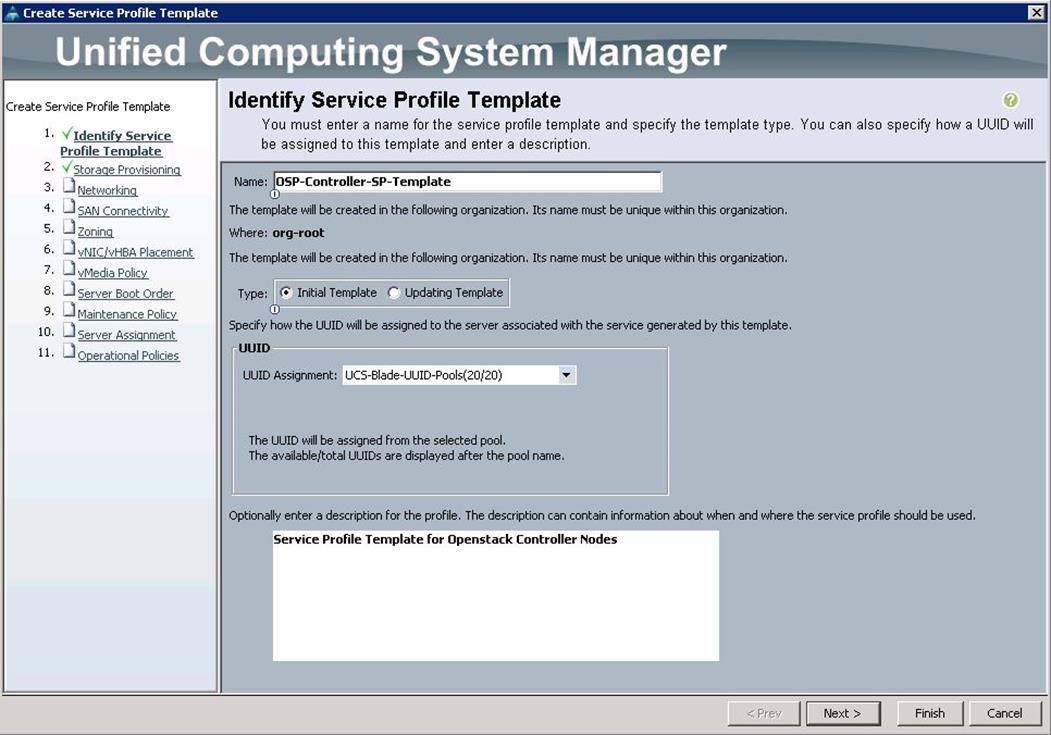

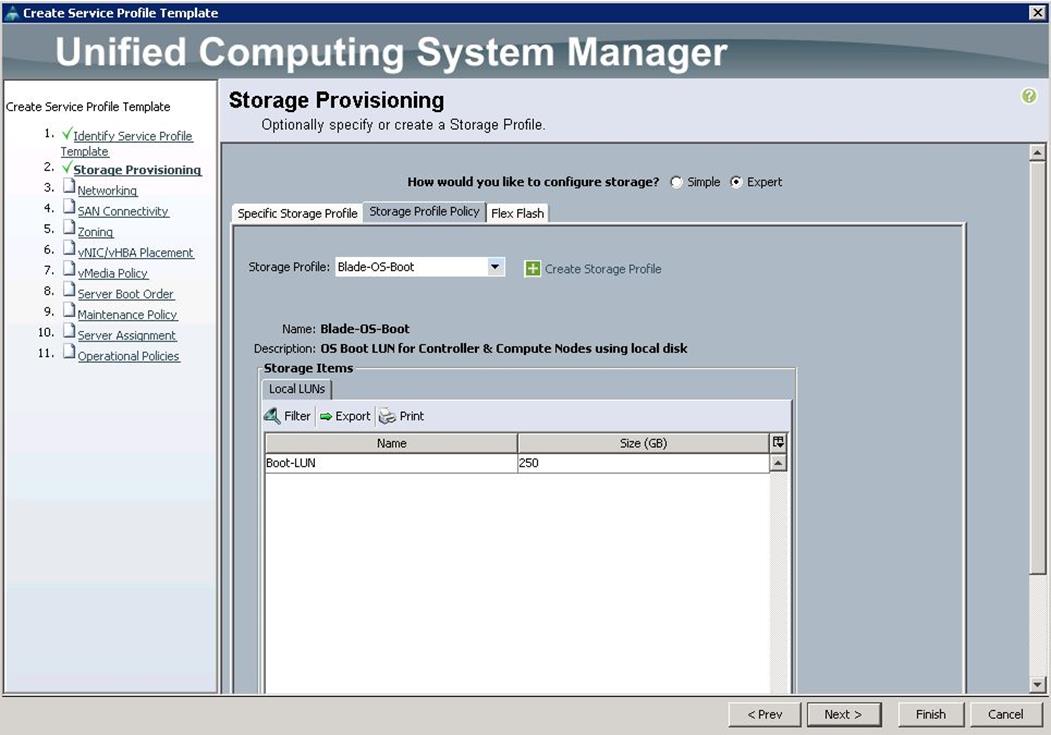

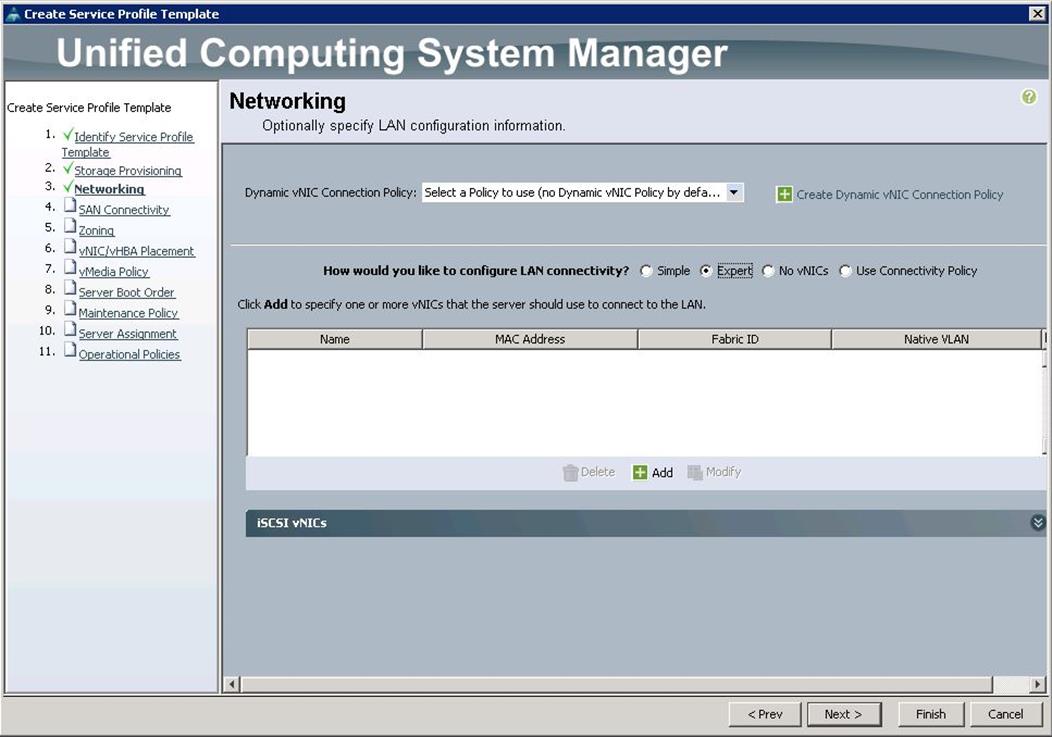

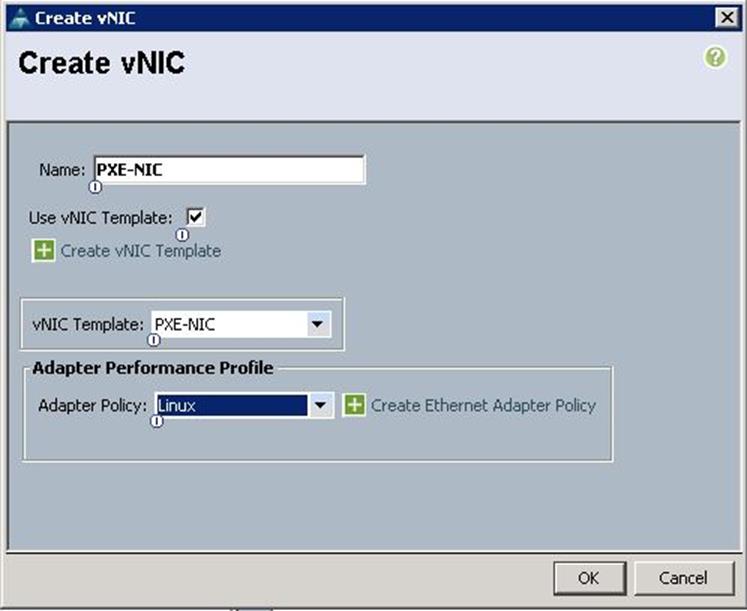

Create Service Profile Templates for Controller Nodes

To configure the Service Profile Templates for the Controller Nodes, complete the following steps:

1. Under Servers à Service Profile Templates à root à right-click and select Create Service Profile Template.

a. Specify the Service profile template name for the Controller node as OSP-Controller-SP-Template. Choose the UUID pools previously created from the drop-down list and click Next.

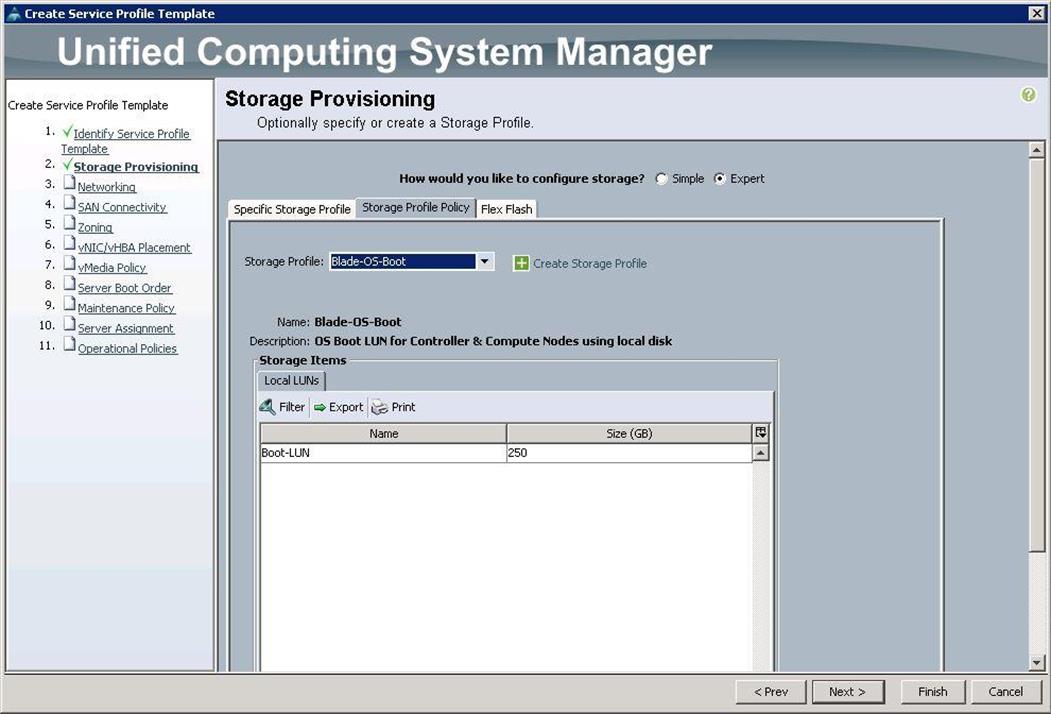

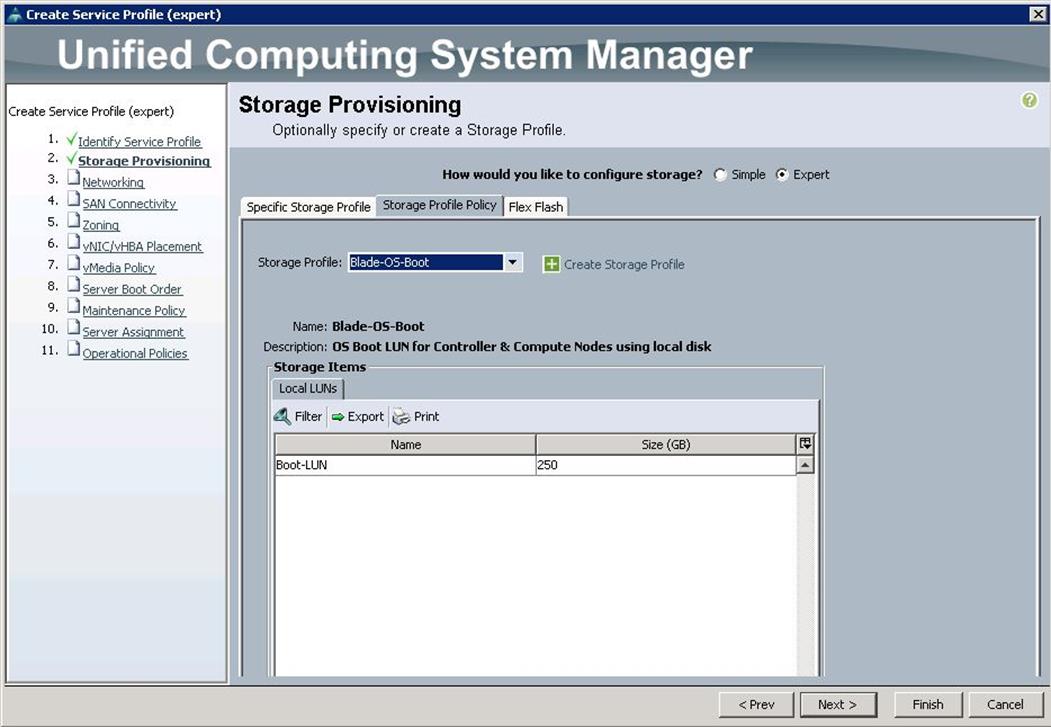

b. For Storage Provisioning, choose Expert and click Storage profile Policy and choose the Storage profile Blade-OS-boot previously created from the drop-down list and click Next.

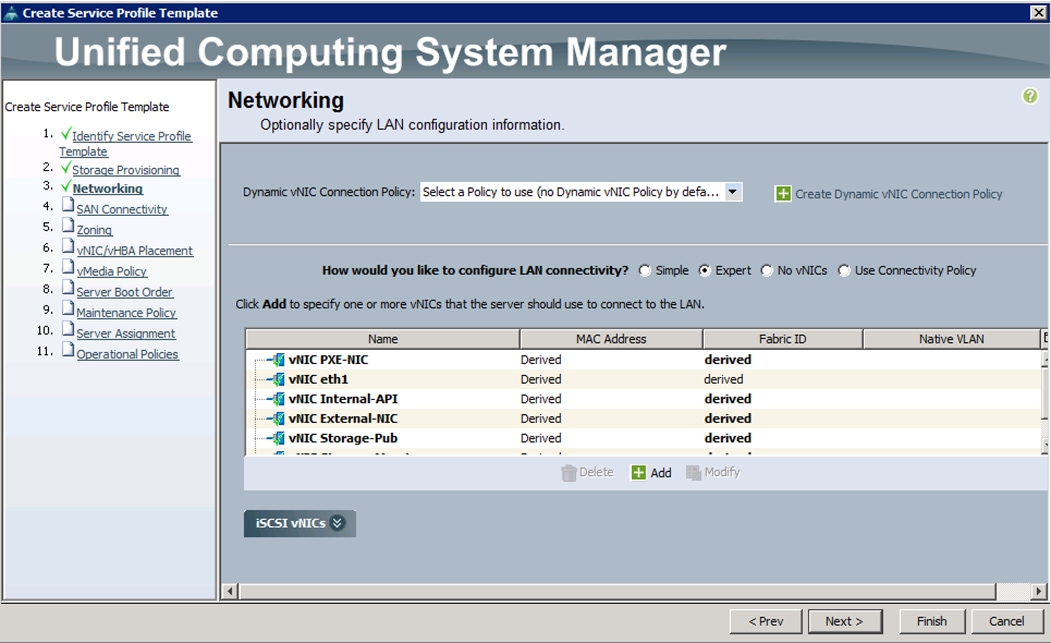

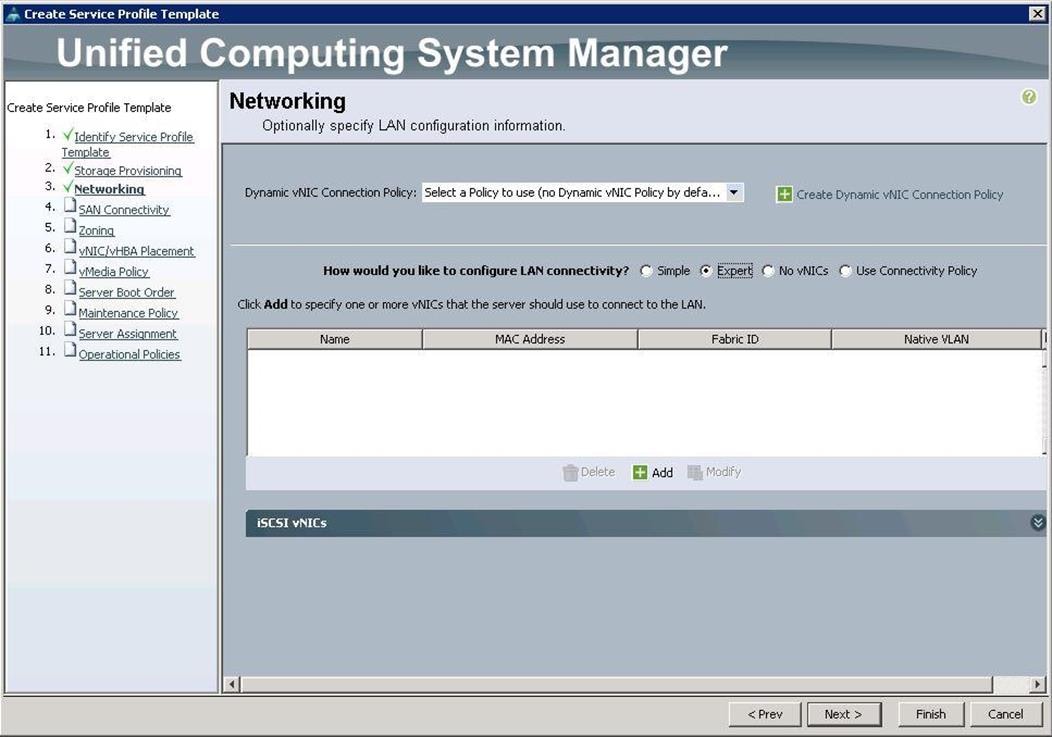

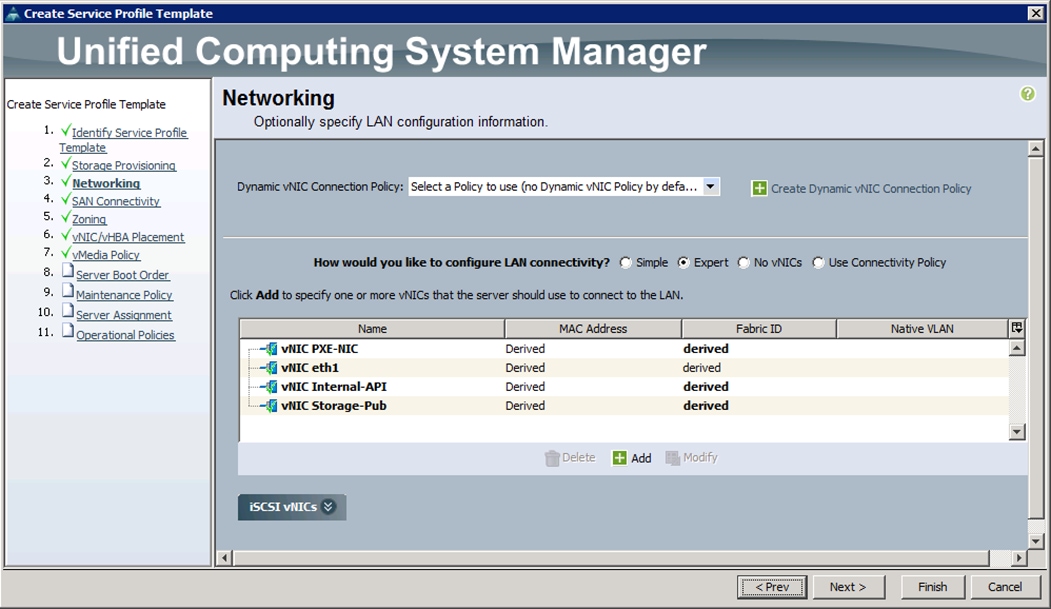

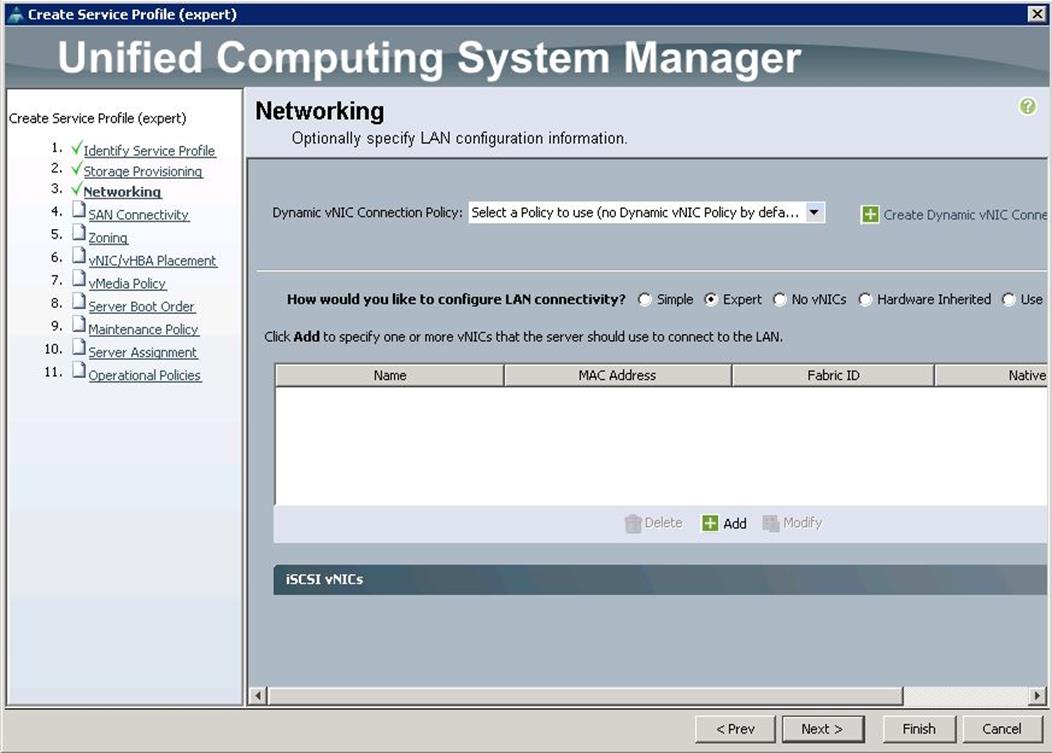

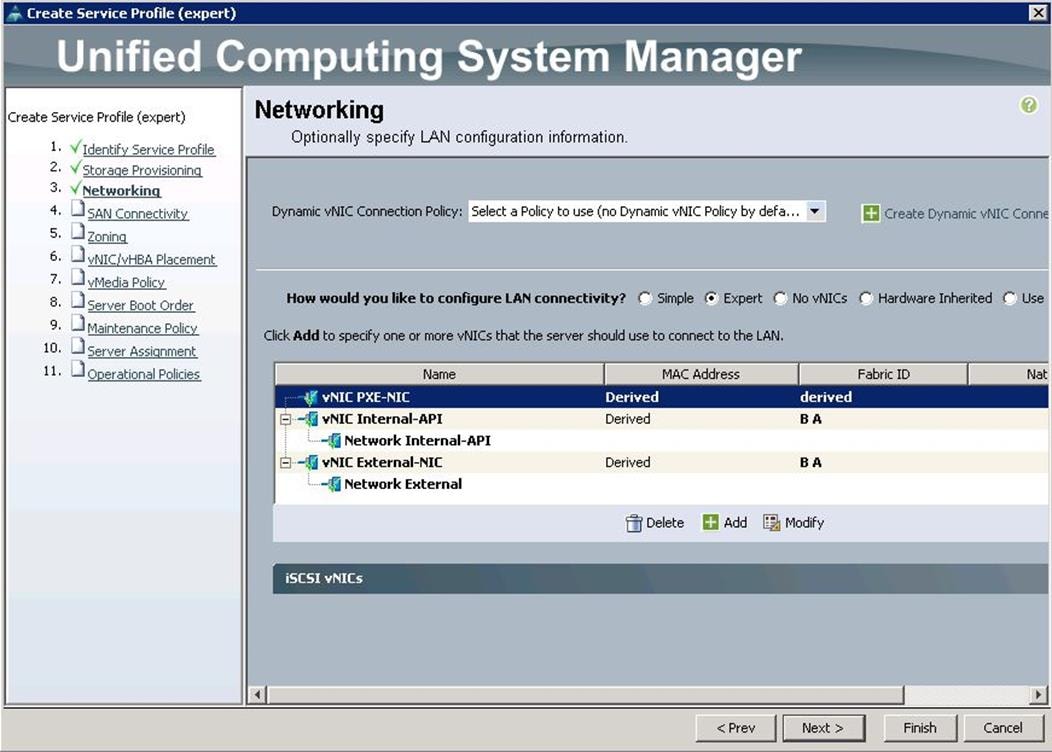

c. For Networking, choose Expert and click “+”.

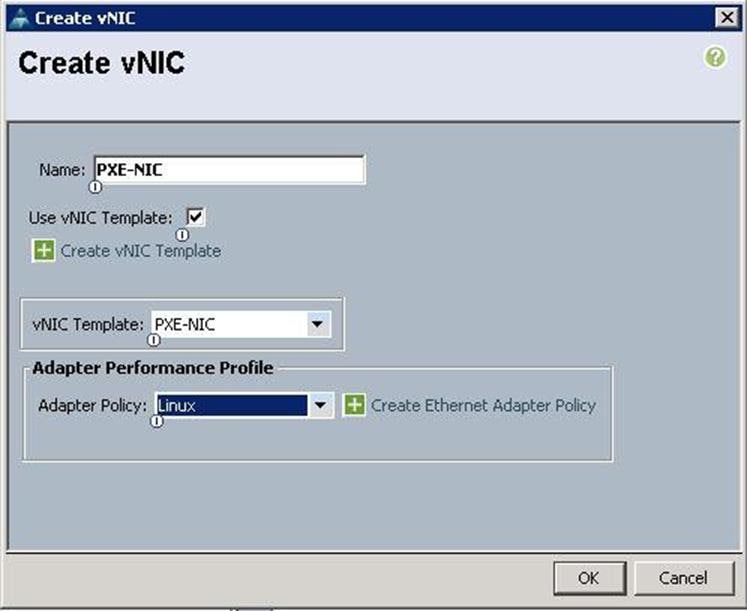

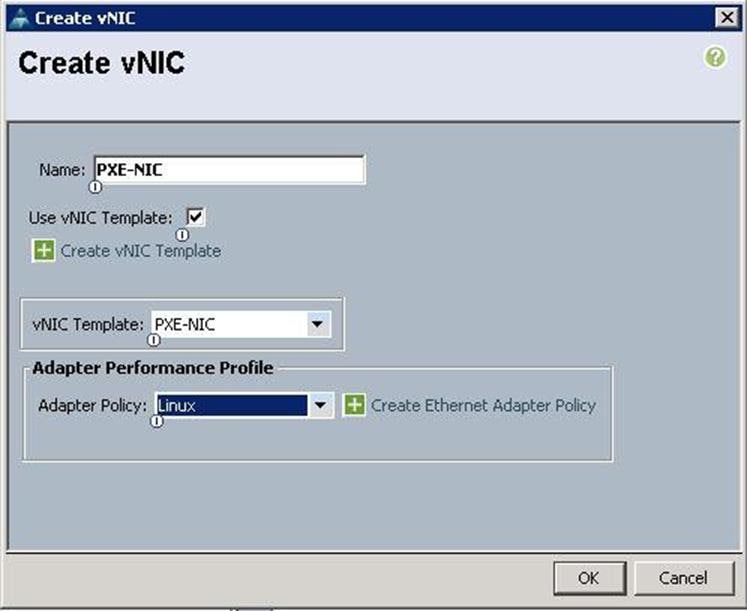

d. Create the VNIC interface for PXE or Provisioning network as PXE-NIC and click the check box Use VNIC template.

e. Under vNIC template, choose the PXE-NIC template previously created from the drop-down list and choose Linux for the Adapter Policy.

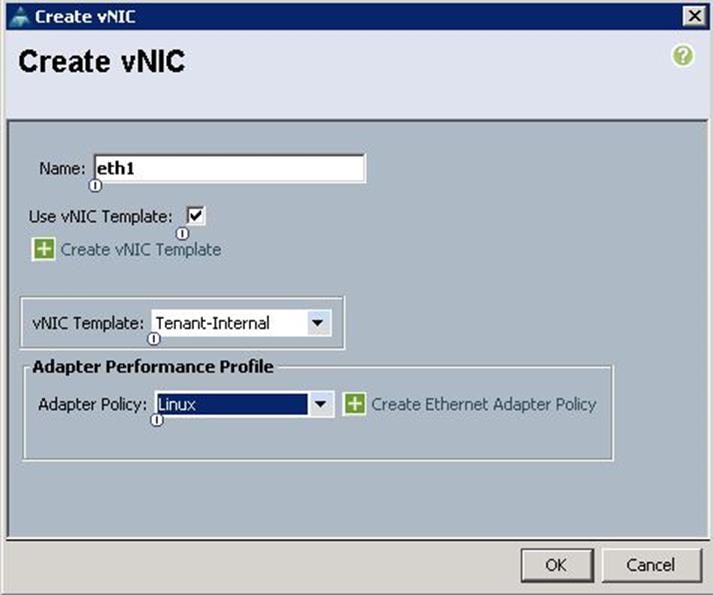

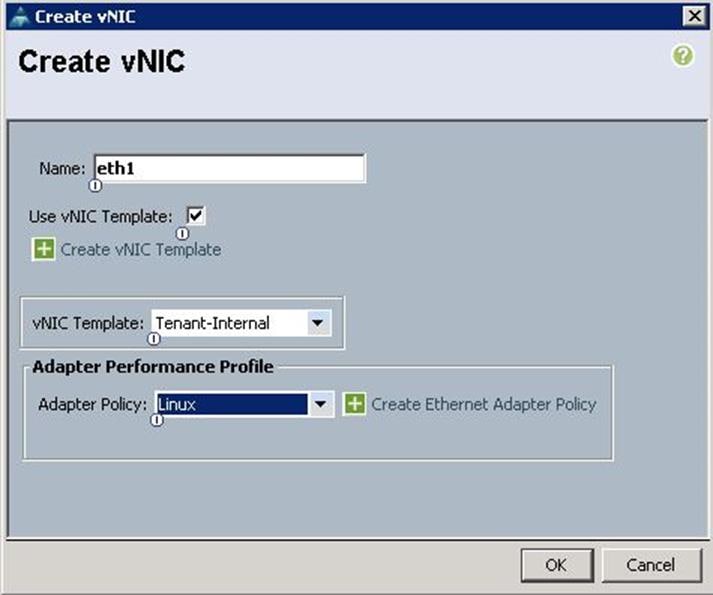

f. Create the VNIC interface for Tenant Internal Network as eth1 and then under vNIC template, choose the “Tenant-Internal” template we created before from the drop-down list and choose Adapter Policy as “Linux”.

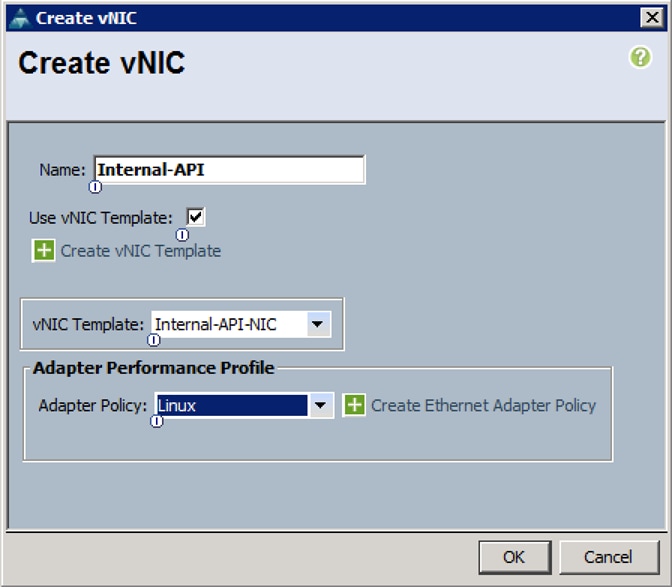

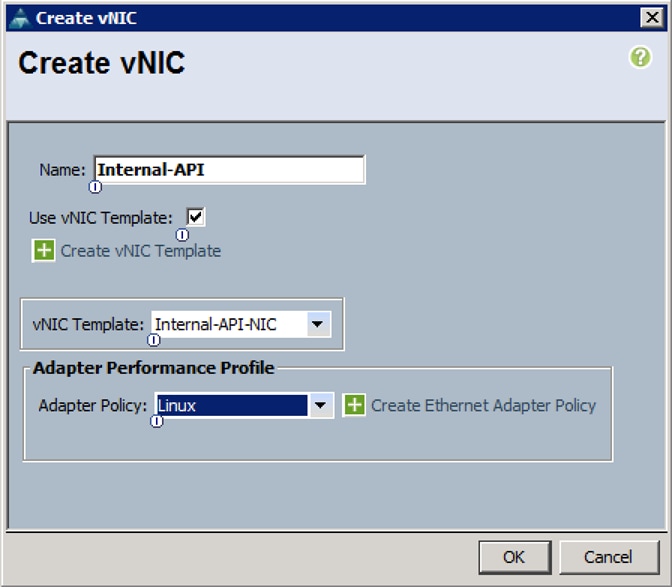

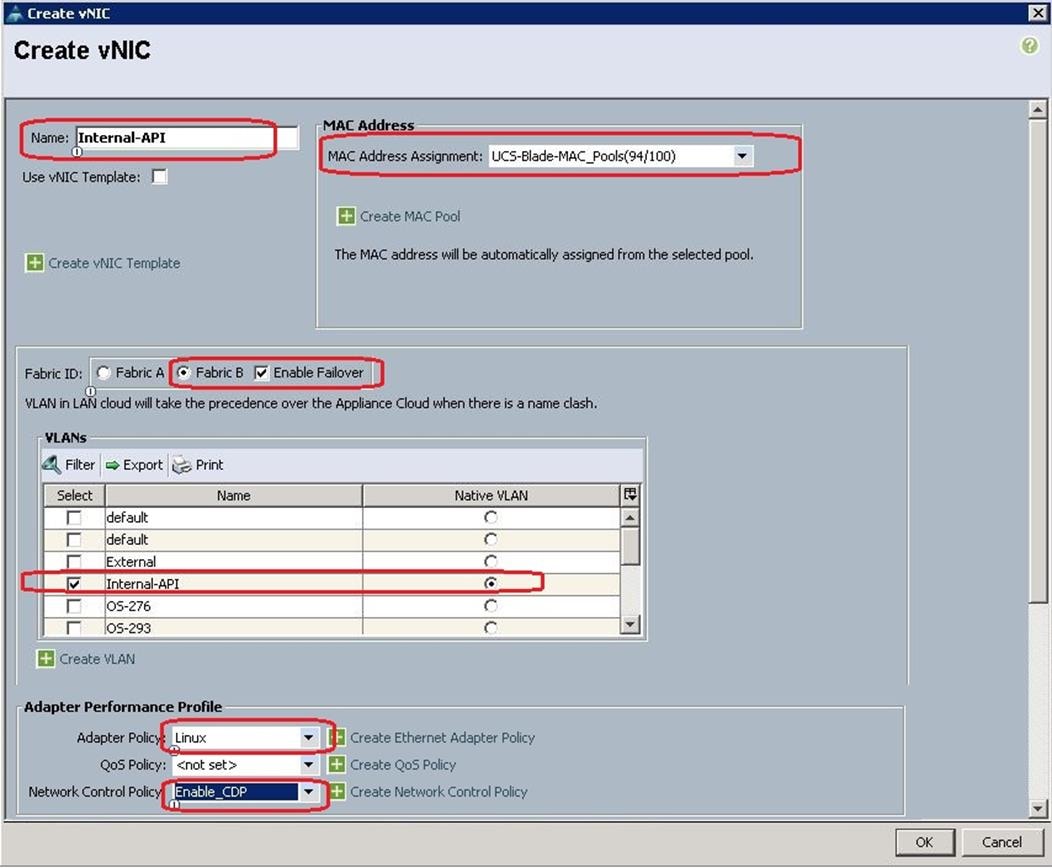

g. Create the VNIC interface for Internal API network as Internal-API and click the check box for Use VNIC template.

h. Under vNIC template, choose the Internal-API-NIC template previously created from the drop-down list and choose Linux for the Adapter Policy.

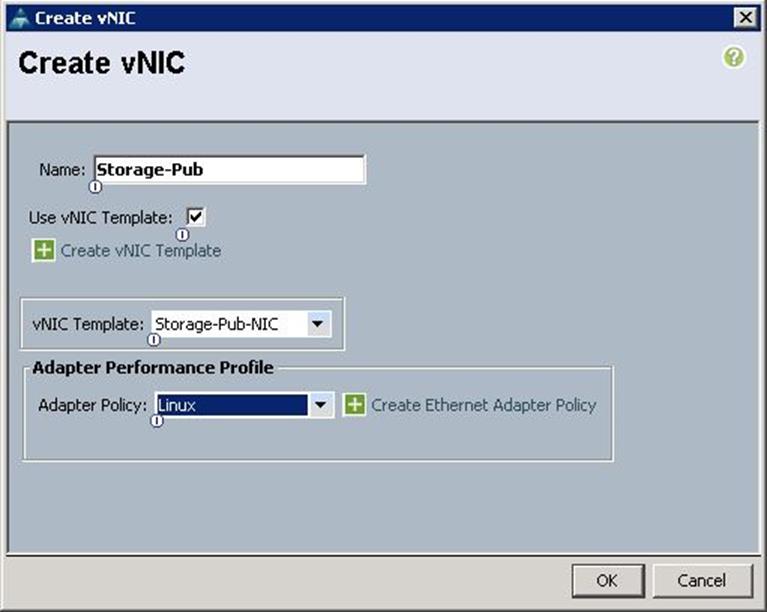

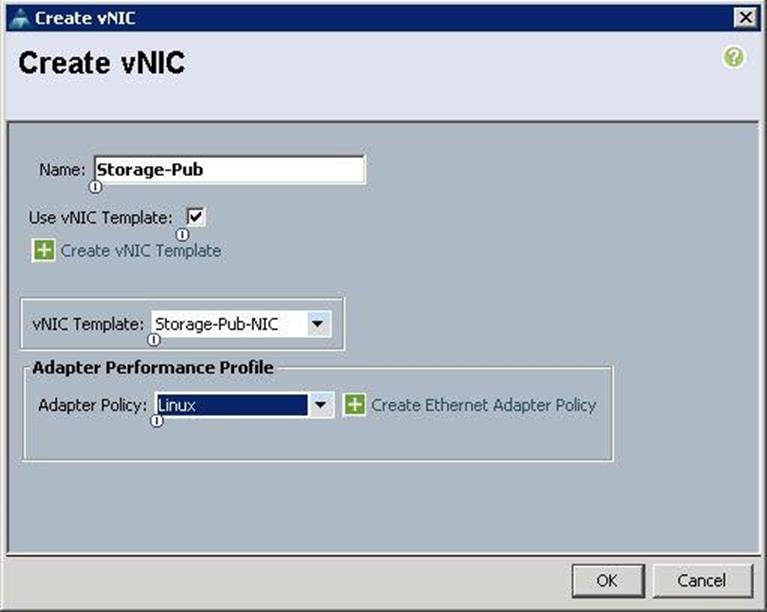

i. Create the VNIC interface for Storage Public Network as Storage-Pub and click the check box for Use VNIC template.

j. Under vNIC template, choose the Storage-Pub-NIC template previously created from the drop-down list and choose Linux for the Adapter Policy.

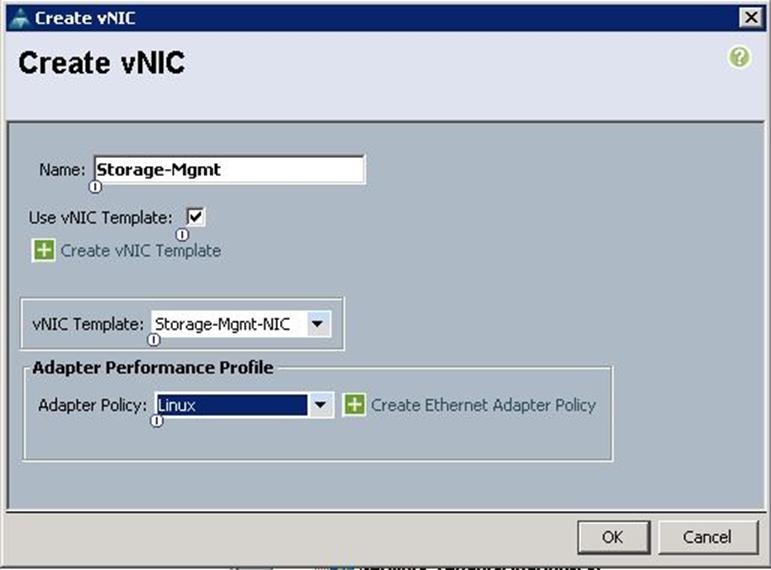

k. Create the VNIC interface for Storage Mgmt Cluster Network as Storage-Mgmt and click the check box for Use VNIC template.

l. Under vNIC template, choose the Storage-Mgmt-NIC template previously created from the drop-down list and choose Linux for the Adapter Policy.

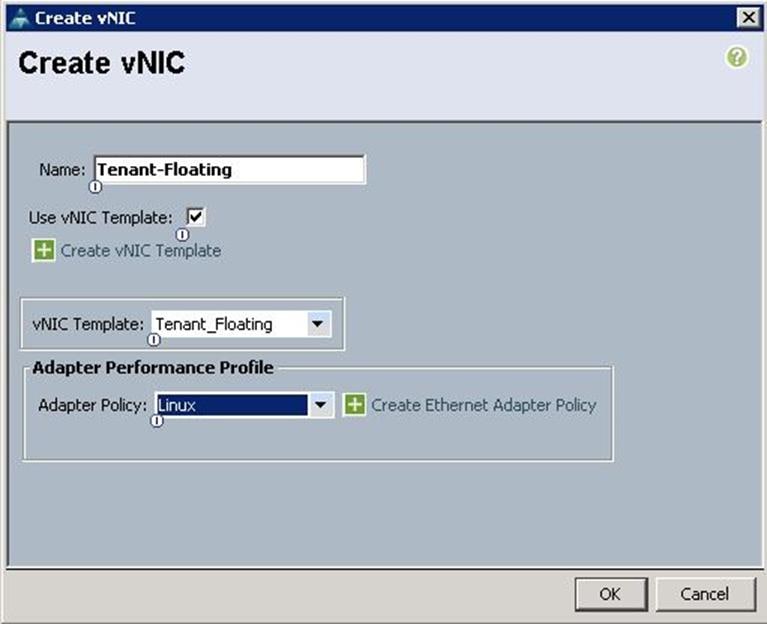

m. Create the VNIC interface for Floating Network as Tenant-Floating and click the check box the Use VNIC template.

n. Under the vNIC template, choose the Tenant-Floating template previously created from the drop-down list and choose Linux for the Adapter Policy.

o. Create the VNIC interface for External Network as External-NIC and click the check box the Use VNIC template.

p. Under the vNIC template, choose the External-NIC template previously created from the drop-down list and choose Linux for the Adapter Policy.

q. After a successful VNIC creation, click Next.

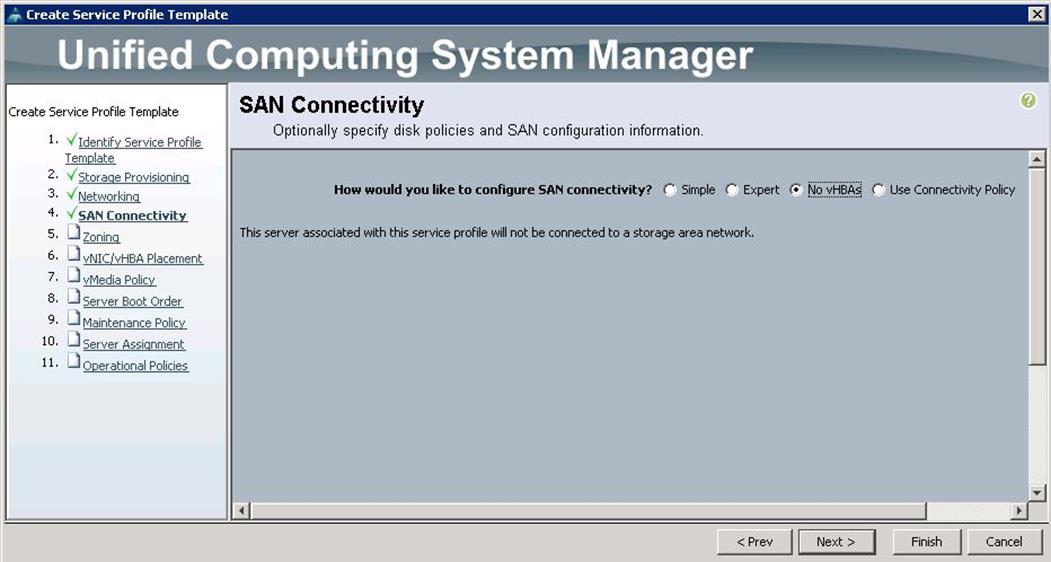

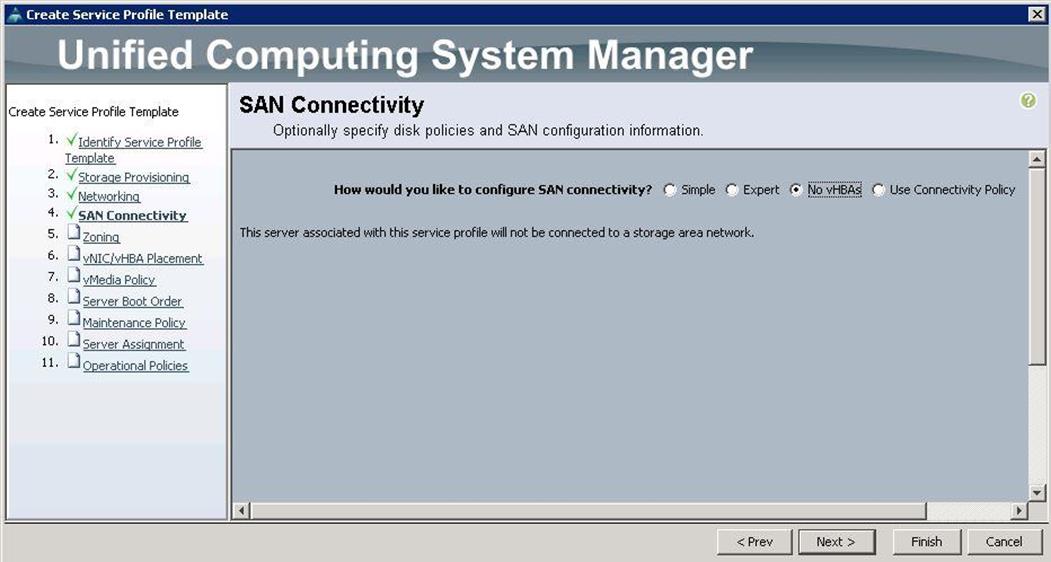

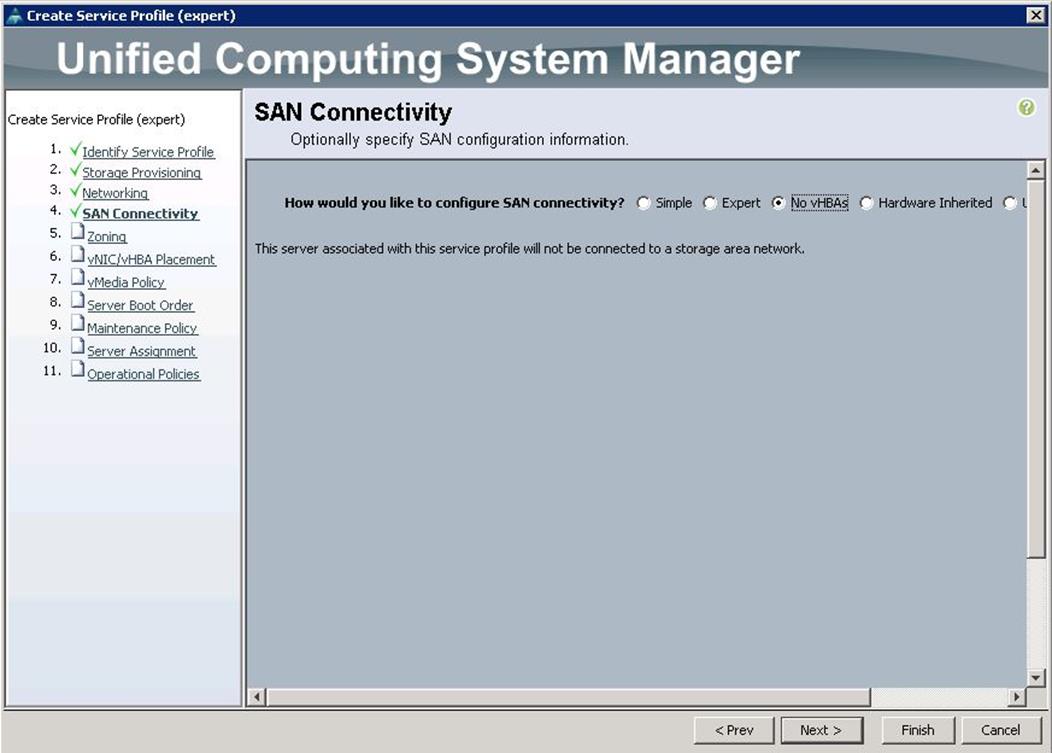

r. Under the SAN connectivity, choose No VHBAs and click Next.

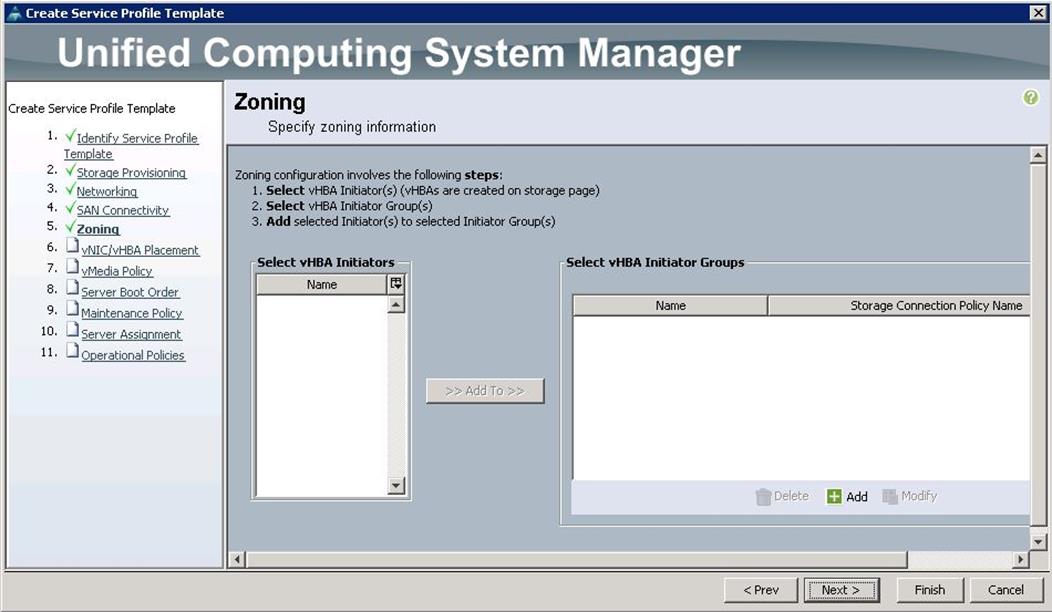

s. Under Zoning, click Next.

t. Under VNIC/VHBA Placement, choose the vNICs PCI order as shown below and click Next.

u. Under vMedia Policy, click Next.

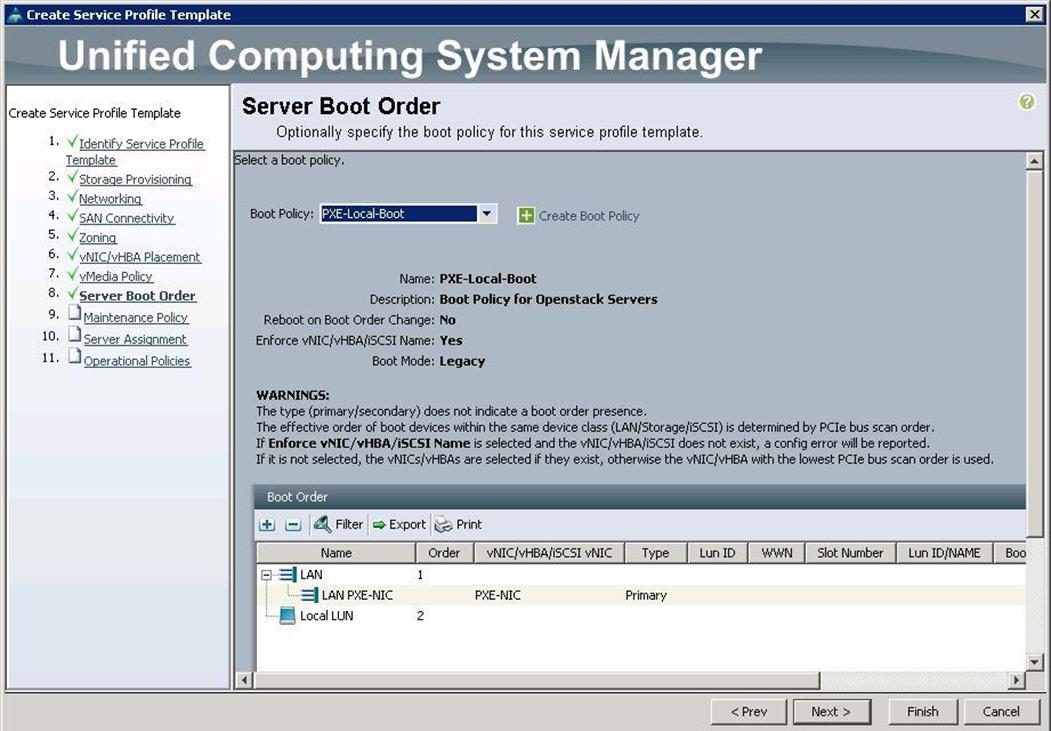

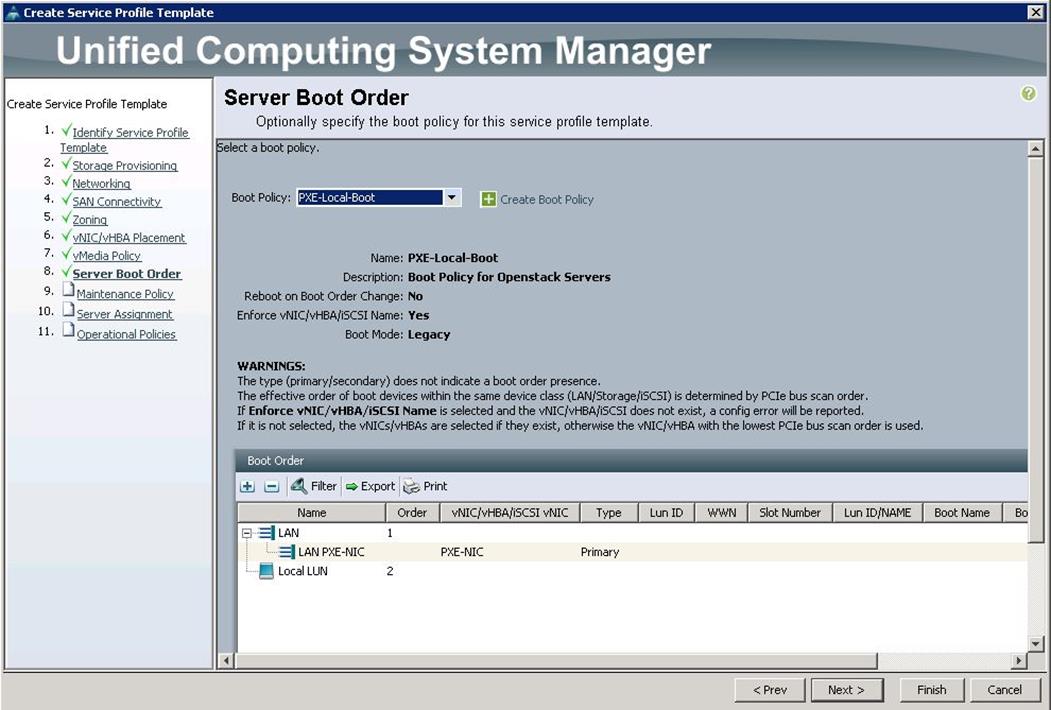

v. Under Server Boot Order, choose the boot policy as PXE-LocalBoot previously created, from the drop-down list and click Next.

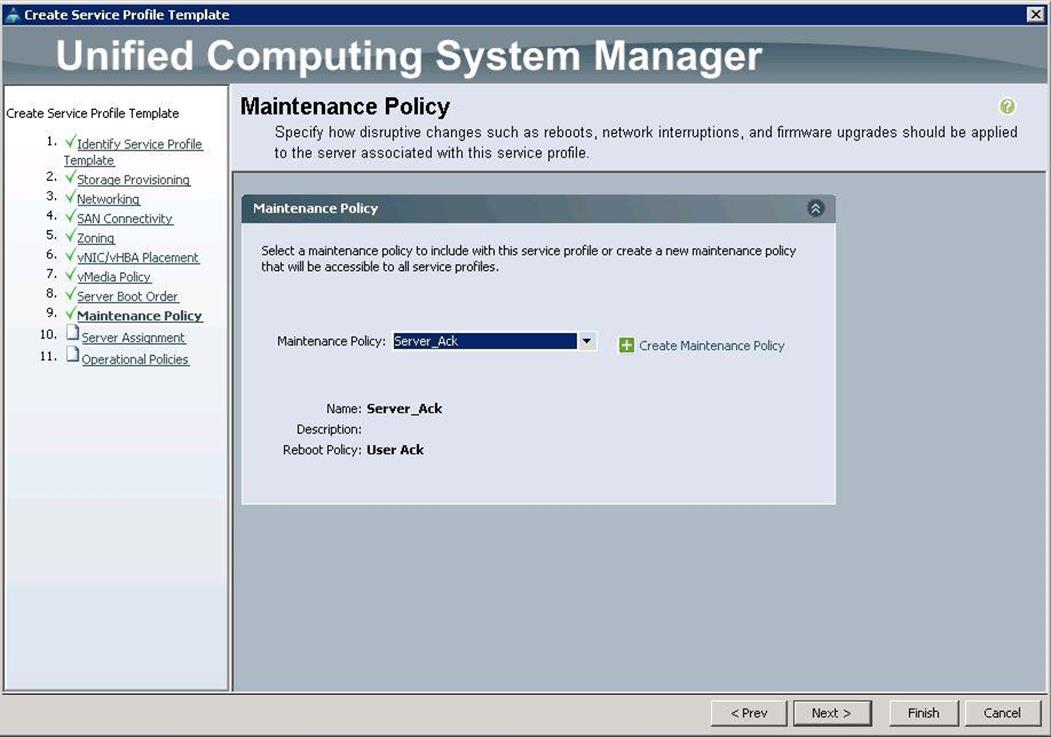

w. Under Maintenance Policy, choose Server_Ack previously created, from the drop-down list and click Next.

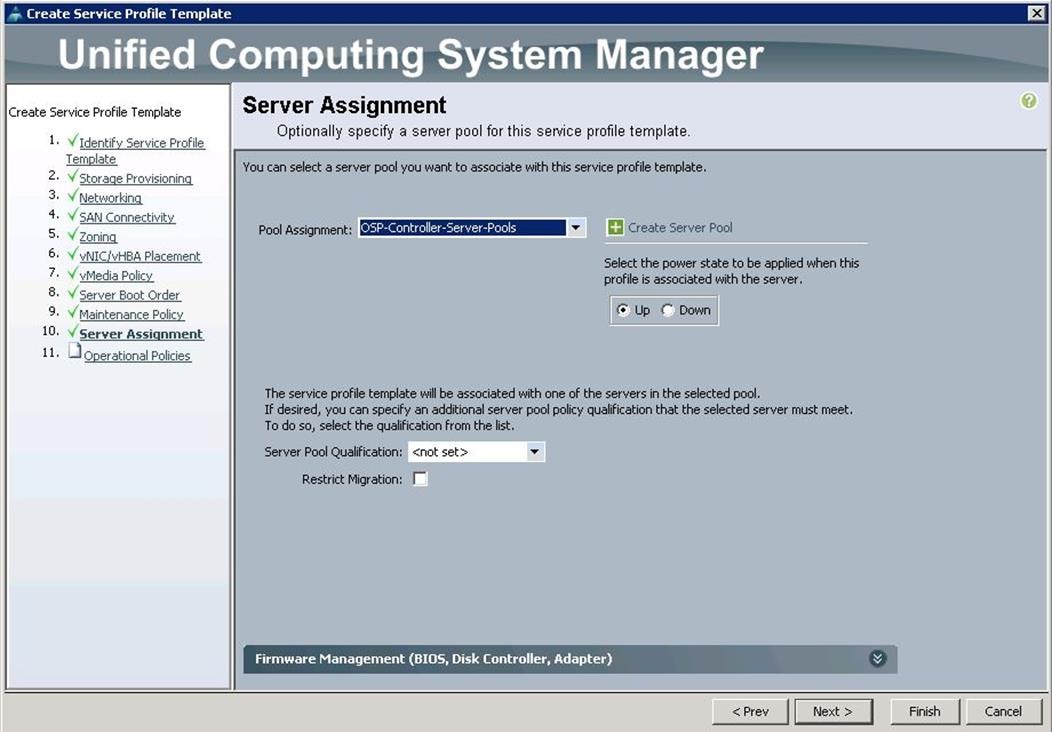

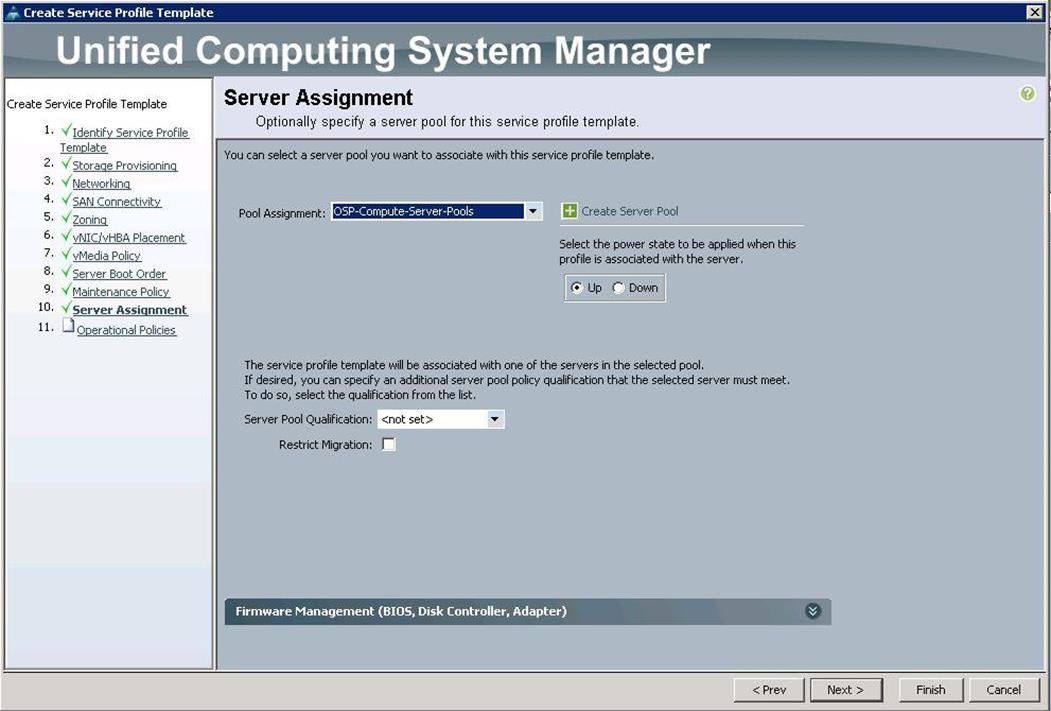

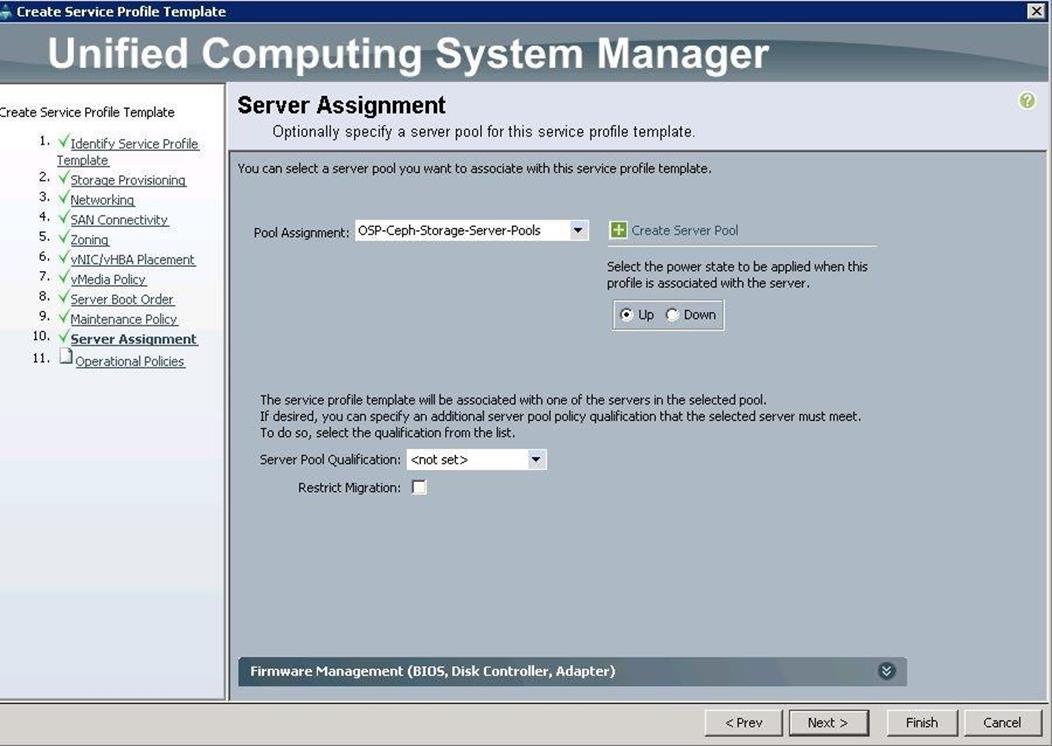

x. Under Server Assignment, choose the Pool Assignment as OSP-Controller-Server-Pools previously created, from the drop-down list and click Next.

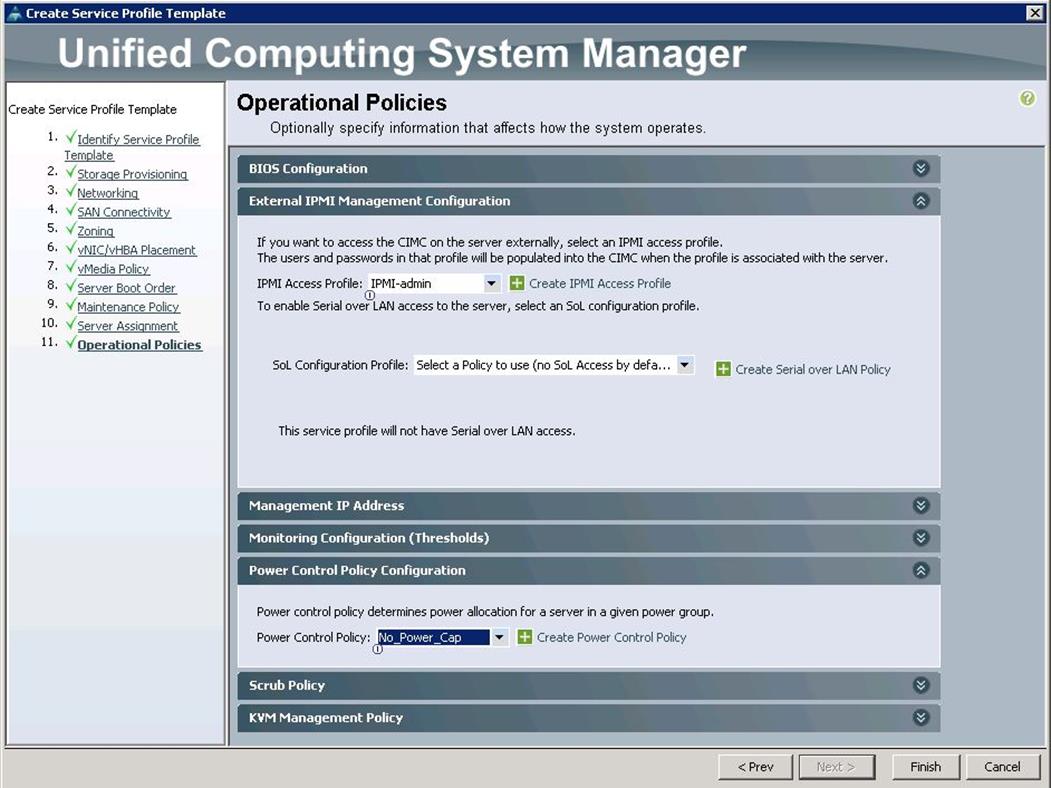

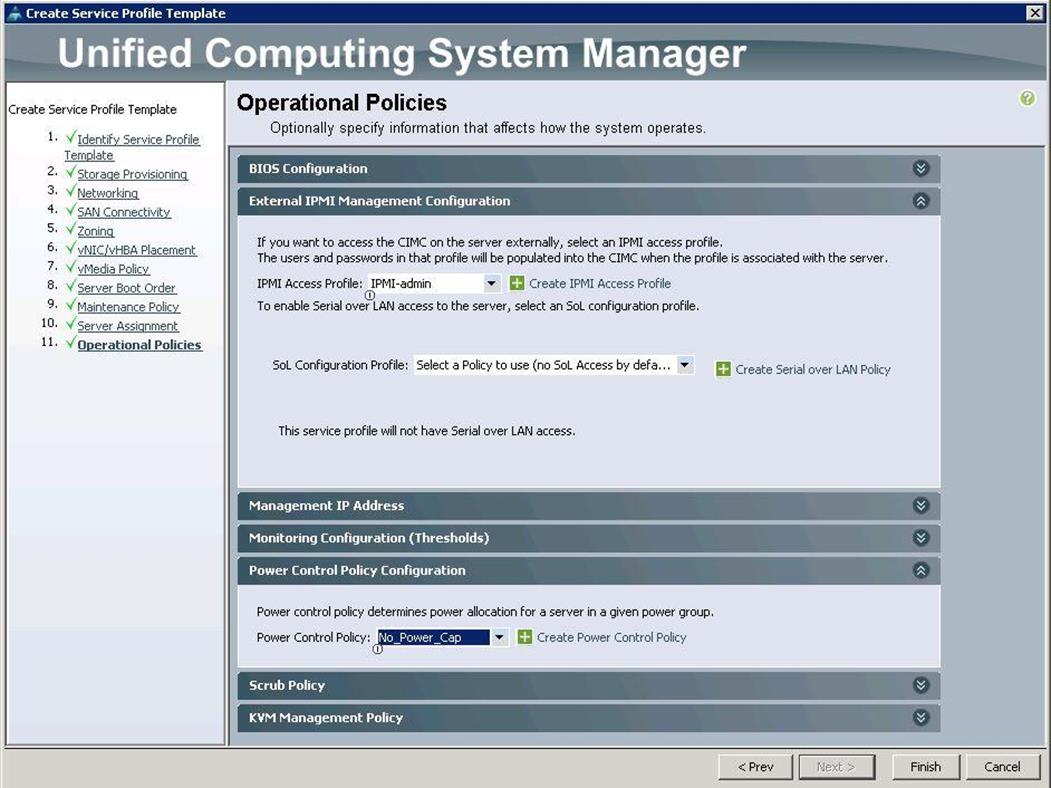

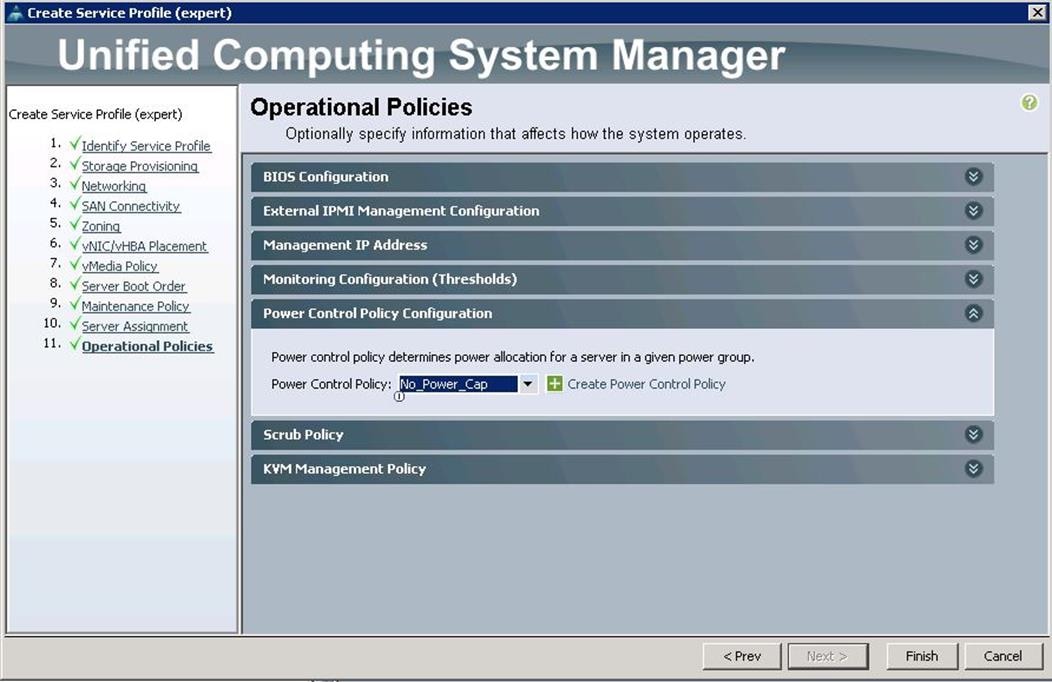

y. Under Operational Policies, choose the IPMI Access Profile as IPMI_admin previously created, from the drop-down list and choose the Power Control Policy as No_Power_Cap and click Finish.

Create Service Profile Templates for Compute Nodes

To create the Service Profile templates for the Compute nodes, complete the following steps:

1. Specify the Service profile template name for the Controller node as OSP-Compute-SP-Template.

2. Choose the UUID pools previously created from the drop-down list and click Next.

3. For Storage Provisioning, choose Expert and click Storage Profile Policy and choose the Storage profile Blade-OS-boot previously created, from the drop-down list and click Next.

4. For Networking, choose Expert and click “+".

5. Create the VNIC interface for PXE or Provisioning network as PXE-NIC and click the check box for Use VNIC template.

6. Under the vNIC template, choose the PXE-NIC template previously created, from the drop-down list and choose Linux for the Adapter Policy.

7. Create the VNIC interface for Tenant Internal Network as eth1 and then under vNIC template, choose the “Tenant-Internal” template we created before from the drop-down list and choose Adapter Policy as “Linux”.

![]() Due to the Cisco UCS Manager Plugin limitations, we have created eth1 as VNIC for Tenant Internal Network..

Due to the Cisco UCS Manager Plugin limitations, we have created eth1 as VNIC for Tenant Internal Network..

8. Create the VNIC interface for Internal API network as Internal-API and click the check box for VNIC template.

9. Under the vNIC template, choose the Internal-API template previously created, from the drop-down list and choose Linux for the Adapter Policy.

10. Create the VNIC interface for Storage Public Network as Storage-Pub and click the check box for Use VNIC template.

11. Under the vNIC template, choose the Storage-Pub-NIC template previously created, from the drop-down list and choose Linux for the Adapter Policy.

12. After a successful VNIC creation, click Next.

13. Under SAN connectivity, choose No VHBAs and click Next.

14. Under Zoning, click Next.

15. Under VNIC/VHBA Placement, choose the vNICs PCI order as shown below and click Next.

16. Under vMedia Policy, click Next.

17. Under Server Boot Order, choose boot policy as PXE-LocalBoot we created from the drop-down list and click Next.

18. Under Maintenance Policy, choose Server_Ack previously created, from the drop-down list and click Next.

19. Under Server assignment, choose Pool Assignment as OSP-Compute-Server-Pools previously, created from the drop-down list and click Next.

20. Under Operational Policies, choose the IPMI Access Profile as IPMI_admin previously created, from the drop-down list and choose the Power Control Policy as No_Power_Cap and click Finish.

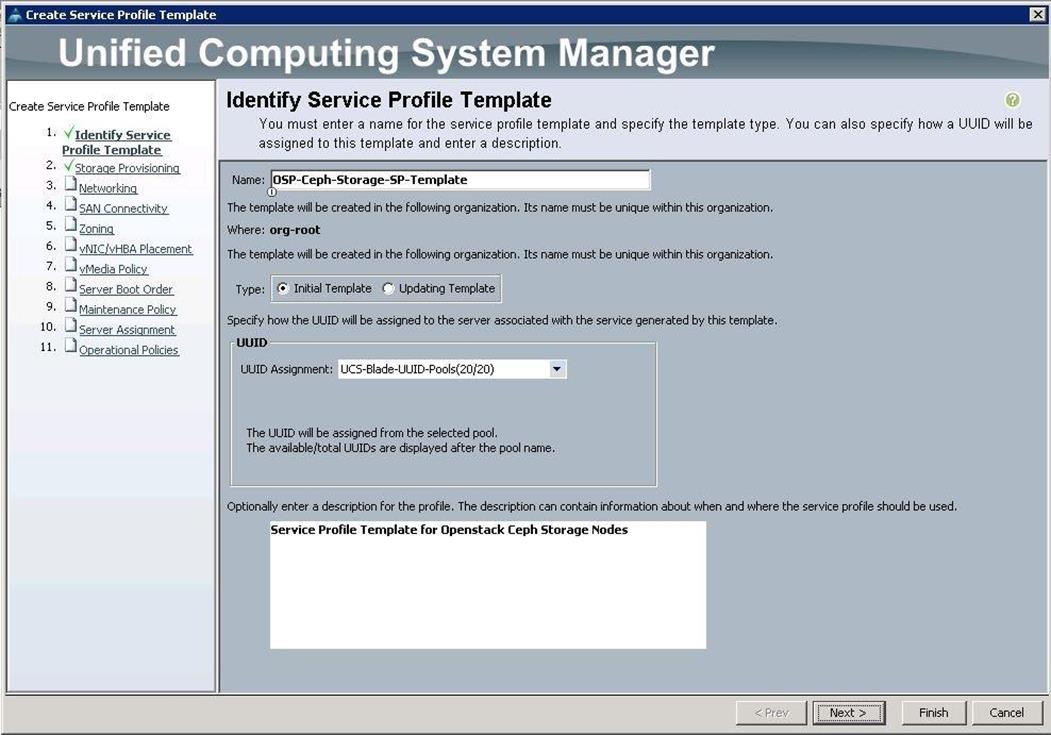

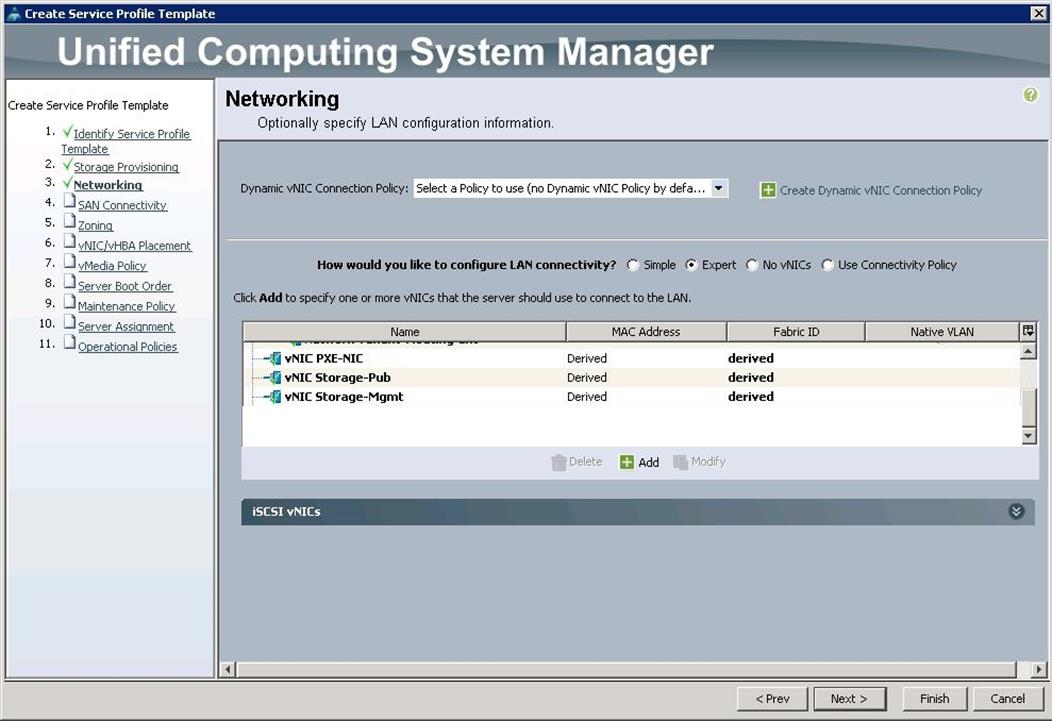

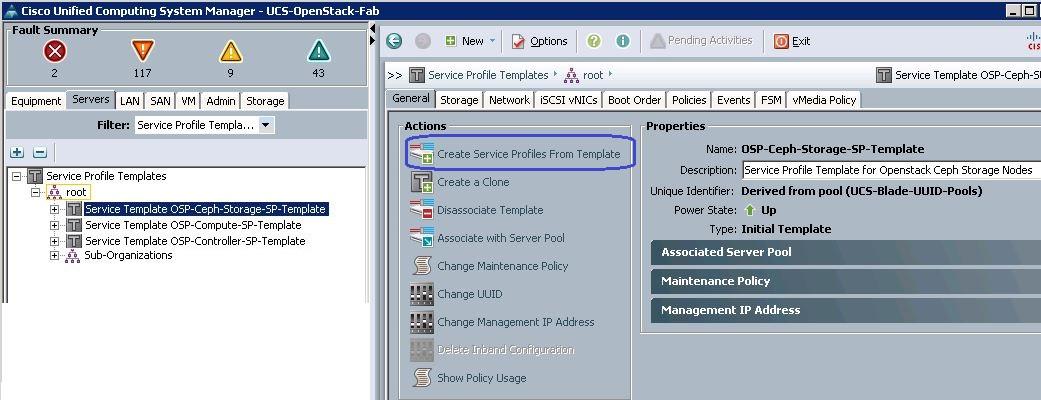

Create Service Profile Templates for Ceph Storage Nodes

To create the Service Profile templates for the Ceph Storage nodes, complete the following steps:

1. Specify the Service profile template name for the Ceph storage node as OSP-Ceph-Storage-SP-Template. Choose the UUID pools previously created, from the drop-down list and click Next.

2. Create vNIC’s for PXE, Stroage-Pub and Storage-Mgmt following steps similar to controller as mentioned here.

3. Click Next and then Choose “Server_Ack” under Maintenance Policy and then choose the “OSP-CephStorage-Server-Pools” under Pool assignment . Then select “No-power-cap” under power control policy. Click on Finish to complete the Service profile template creation for Ceph nodes.

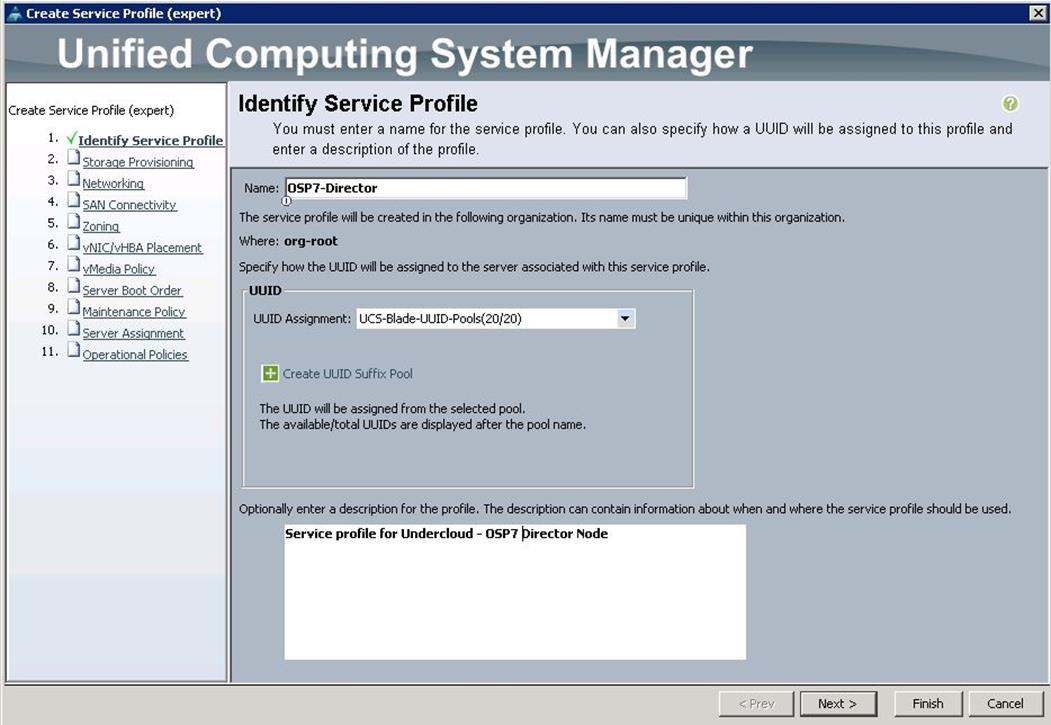

Create Service Profile for Undercloud ( OSP7 Director ) Node

To configure the Service Profile for Undercloud (OSP7 Director) Node, complete the following steps:

![]() As there is only one node for Undercloud, a single Service Profile is created. There are no Service Profile Templates for the undercloud node.

As there is only one node for Undercloud, a single Service Profile is created. There are no Service Profile Templates for the undercloud node.

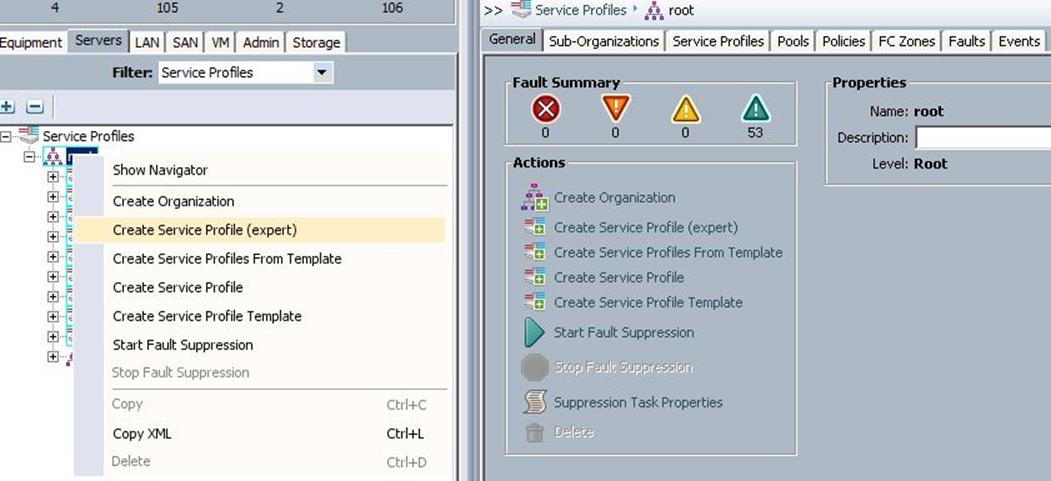

1. Under Servers > Service Profiles > root > right-click and select “Create Service Profile (expert)”

2. Specify the Service profile name for Undercloud node as OSP7-Director. Choose the UUID pools previously created from the drop-down list and click Next.

3. For Storage Provisioning, choose Expert and click Storage profile Policy and choose the Storage profile Blade-OS-boot previously created from the drop-down list and click Next.

4. For Networking, choose Expert and click “+”.

5. Create the VNIC interface for PXE or Provisioning network as PXE-NIC and click the check box Use VNIC template.

6. Under vNIC template, choose the PXE-NIC template previously created from the drop-down list and choose Linux for the Adapter Policy.

7. Create the VNIC interface for Internal API network as Internal-API and from the drop-down list choose MAC pools created before. Then click on “Fabric B” and check the “Enable Failover”.

8. Under VLANs , Select “Internal-API network” as Native VLAN, then choose Adapter Policy as “Linux” and Network Controller Policy as “Enable_CDP”

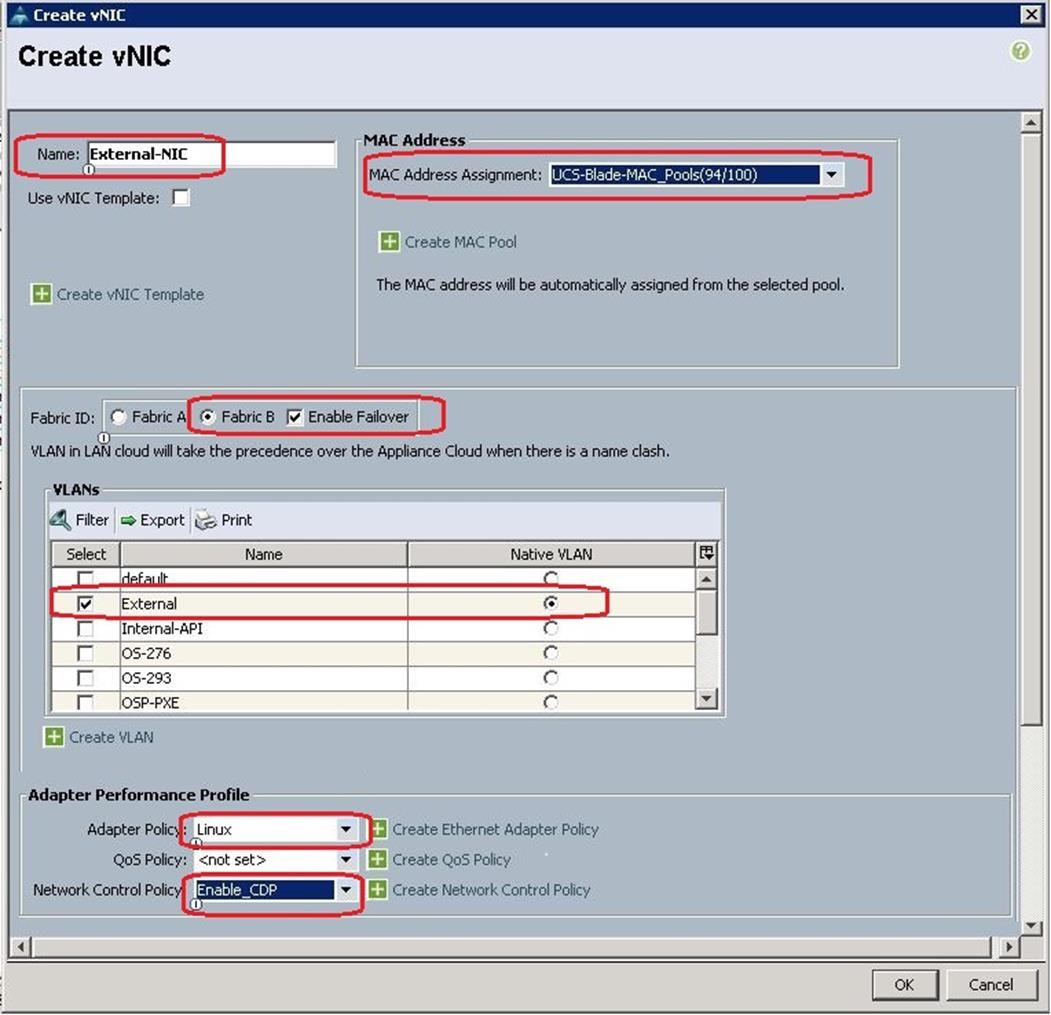

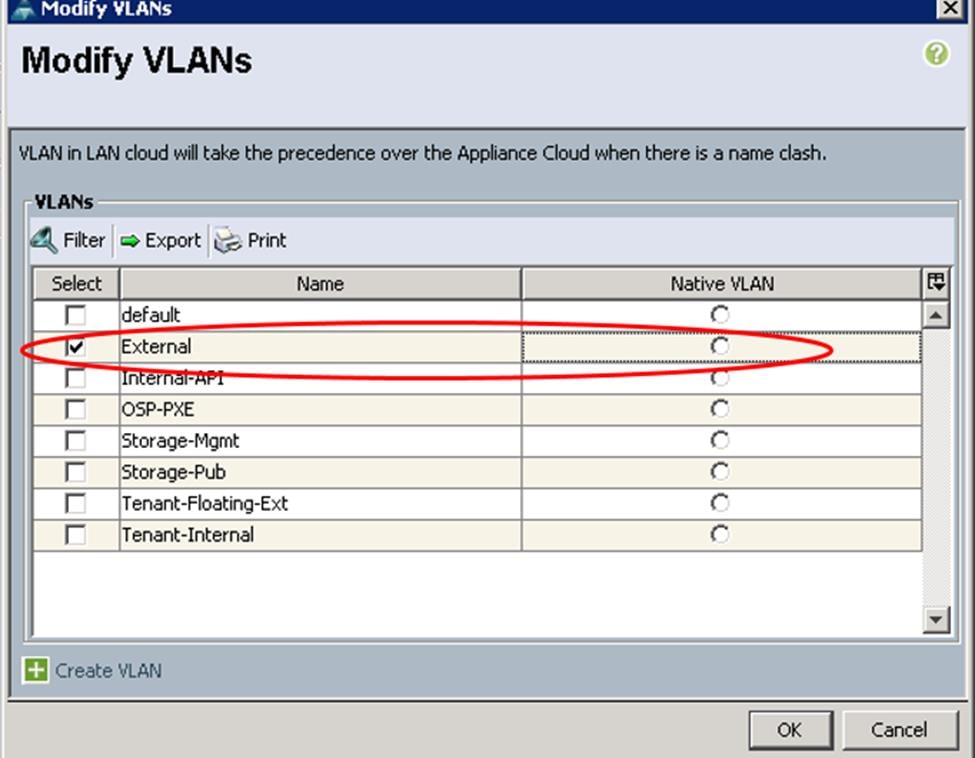

9. Create the the VNIC interface for External network as External-NIC and from the drop-down list choose MAC pools created before. Then click on “Fabric B” and check the “Enable Failover”.

10. Under VLANs , Select “External” as Native VLAN, then choose Adapter Policy as “Linux” and Network Controller Policy as “Enable_CDP”

11. After a successful VNIC creation, click Next.

12. Under the SAN connectivity, choose No VHBAs and click Next.

13. Under Zoning, click Next.

14. Under VNIC/VHBA Placement, choose the vNICs PCI order as shown below and click Next.

15. Under vMedia Policy, click Next.

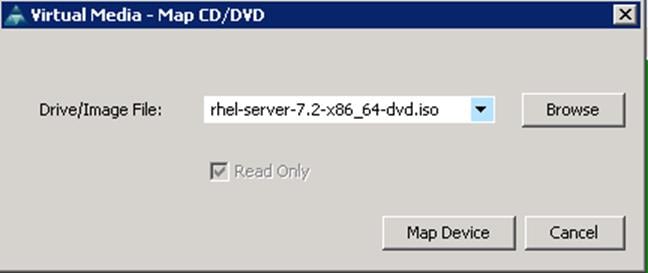

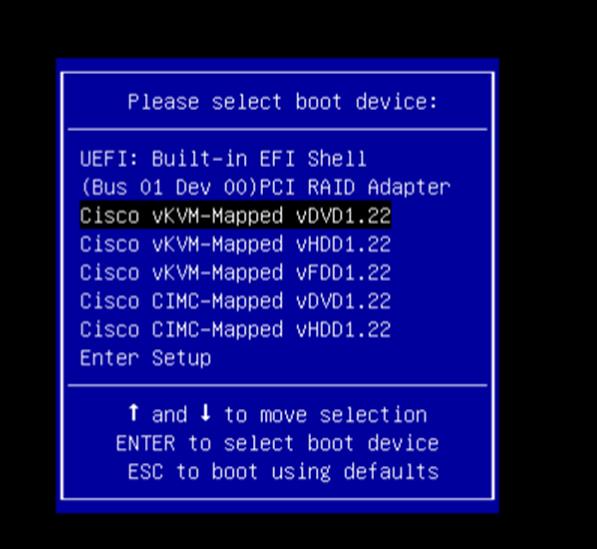

16. Under Server Boot Order, choose the boot policy as “Create a Specific Boot Policy”, from the drop-down list and click Next. Make sure you select “ local CD/DVD” as first boot order and “ local LUN” as second boot order and click Next.

17. Under Maintenance Policy, choose Server_Ack previously created, from the drop-down list and click Next.

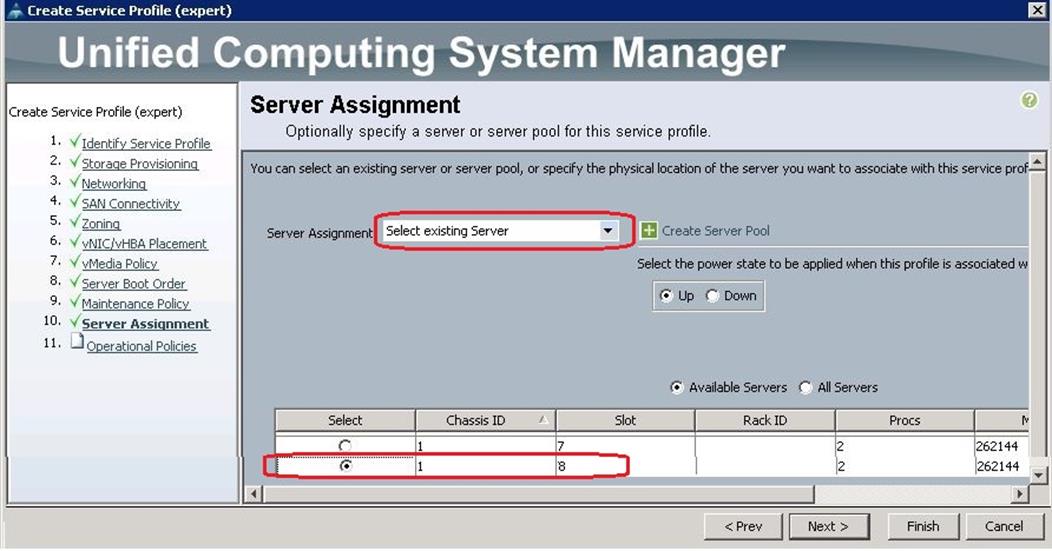

18. Under Server Assignment, choose “Select existing server” and select the respective blade assigned for Director node and click Next.

19. Under Operational Policies, choose the Power Control Policy as “No_Power_Cap” and click Finish.

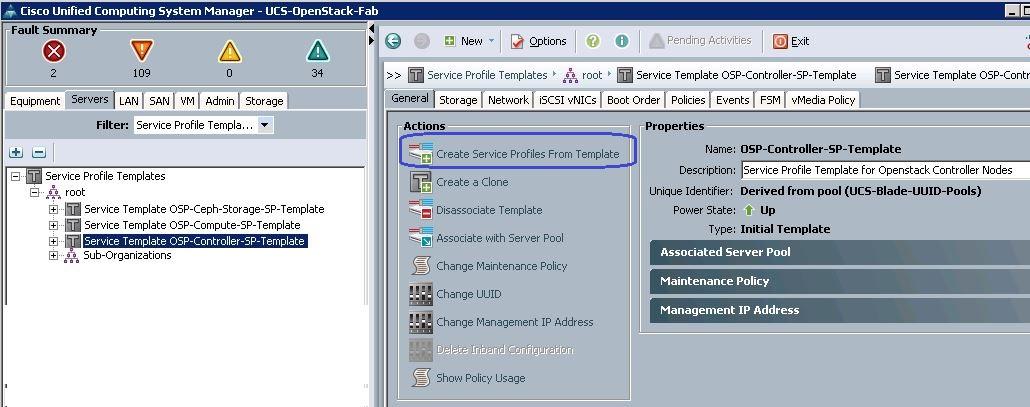

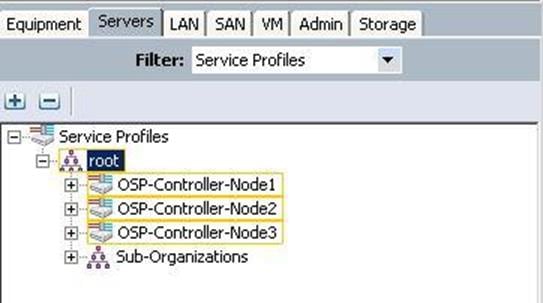

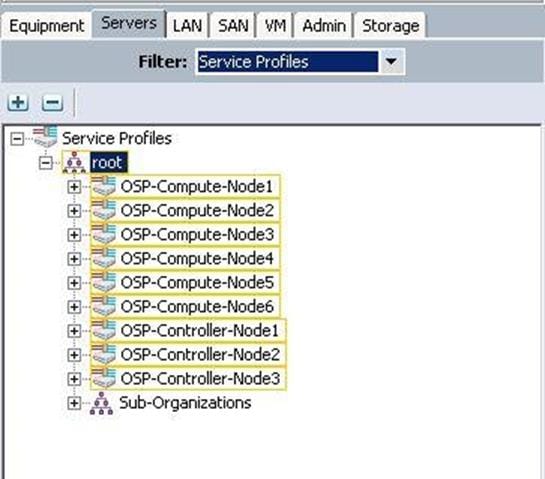

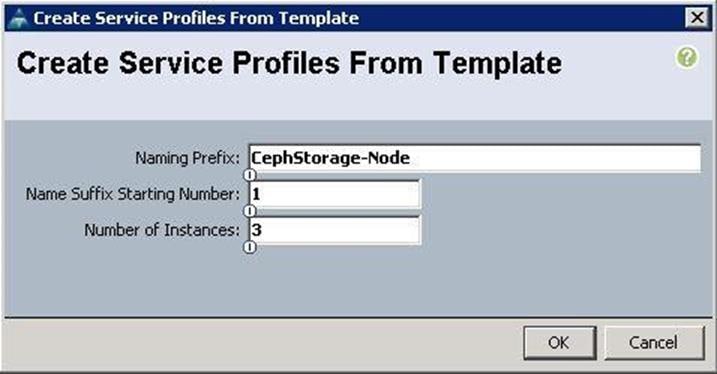

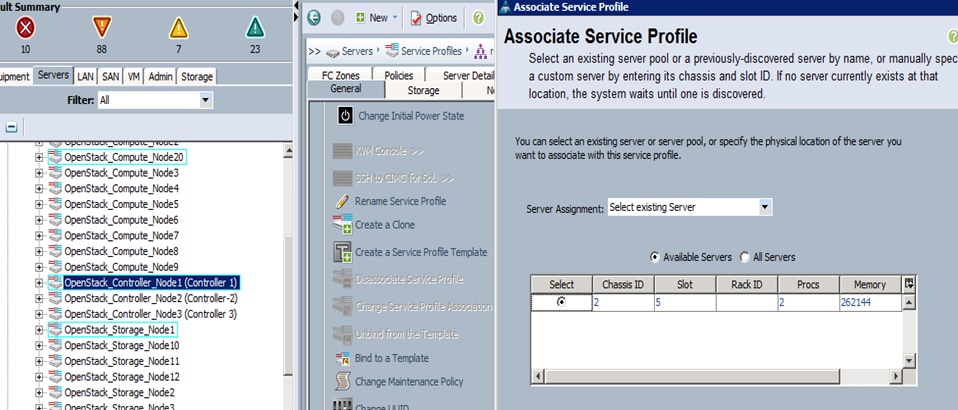

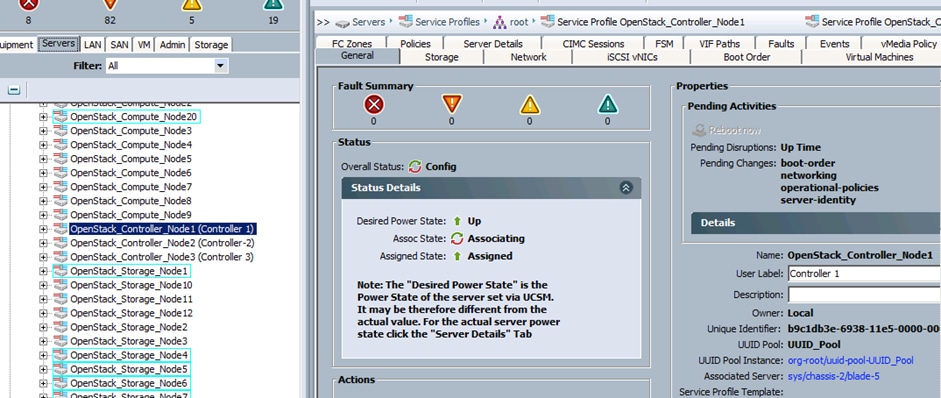

Create Service Profiles for Controller Nodes

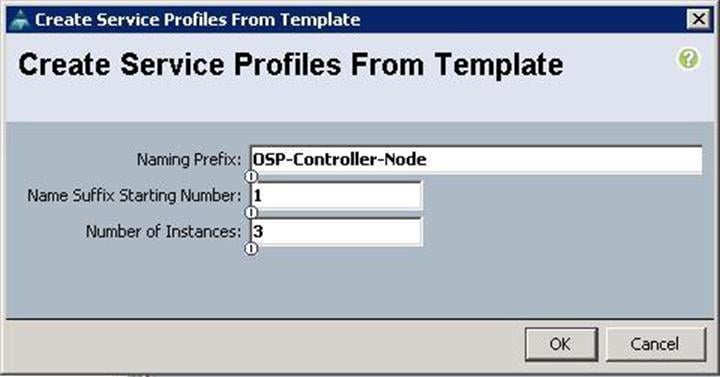

To create Service profiles for Controller nodes, complete the following steps:

1. Under Servers à Service Profile Templates à root à select the Controller Service profile template and click Create Service Profiles from Templates.

a. Specify the Service profile name and the number of instances as 3 for the Controller nodes.

b. Make sure the Service profiles for the Controller nodes have been created.

c. Under Servers à Service profiles à root .

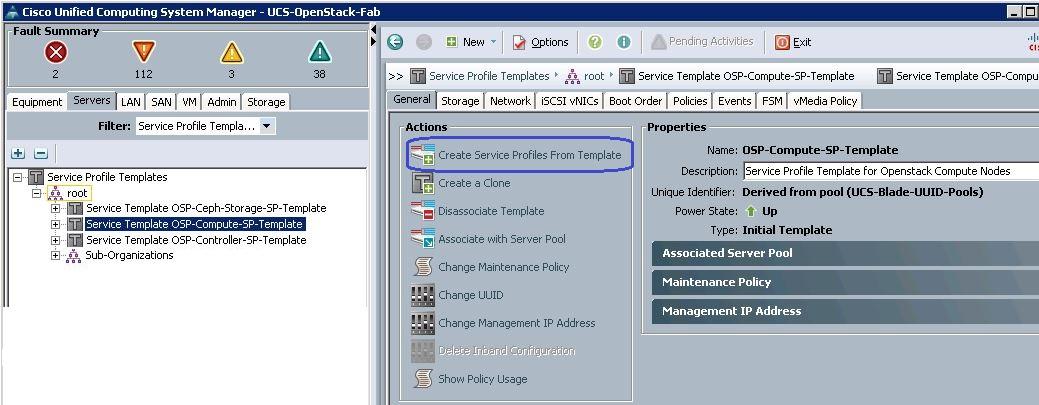

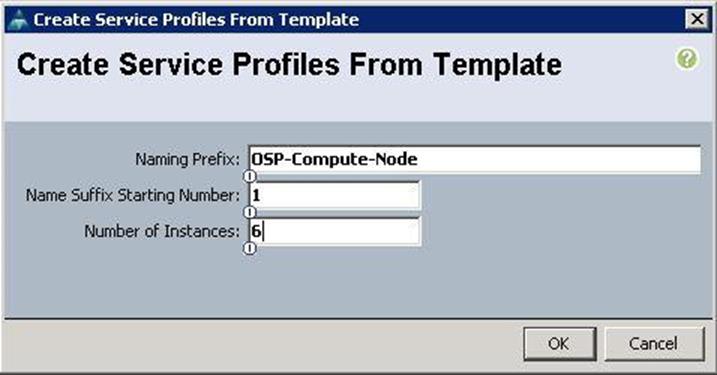

Create Service Profiles for Compute Nodes

To create Service profiles for Compute nodes, complete the following steps:

1. Under Servers à Service Profile Templates à root à select the Compute Service profile template and click Create Service Profiles from Templates.

a. Specify the profile name and set the number of instances to 6 for compute nodes.

b. Make sure the Service profiles for the Compute nodes have been created.

Create Service Profiles for Ceph Storage Nodes

To create Service profiles for Ceph Storage nodes, complete the following steps:

1. Under Servers à Service Profile Templates à root à select the Ceph Storage Service profile template and click Create Service Profiles from Templates.

a. Specify the Service profile name and set the number of instances to 3 for the Ceph Storage nodes.

b. Make sure the Service profiles for the Ceph Storage nodes have been created.

c. Verify the Service profile association with the respective UCS Servers.

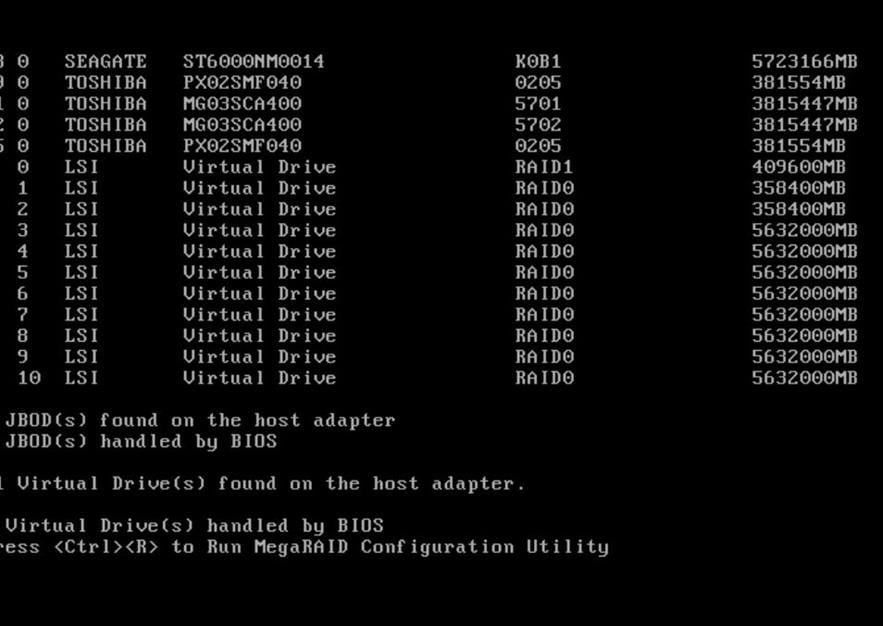

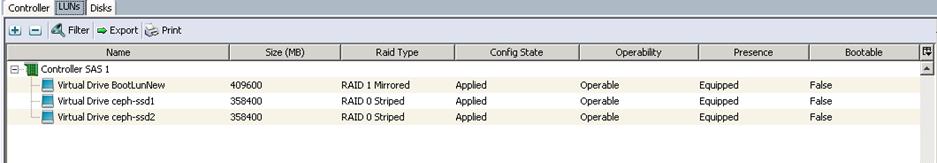

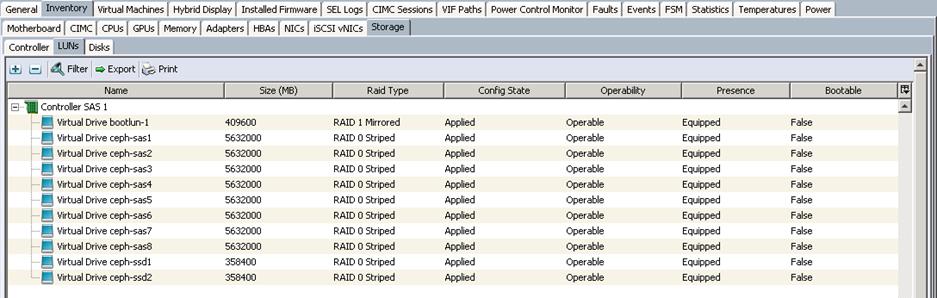

Create LUNs for the Ceph OSD and Journal Disks

After a successful CephStorage Server association, create the remaining LUNs for the Ceph OSD disks and Journal disks.

Create the Ceph Journal LUN

To create the Ceph Journal LUNs, complete the following steps:

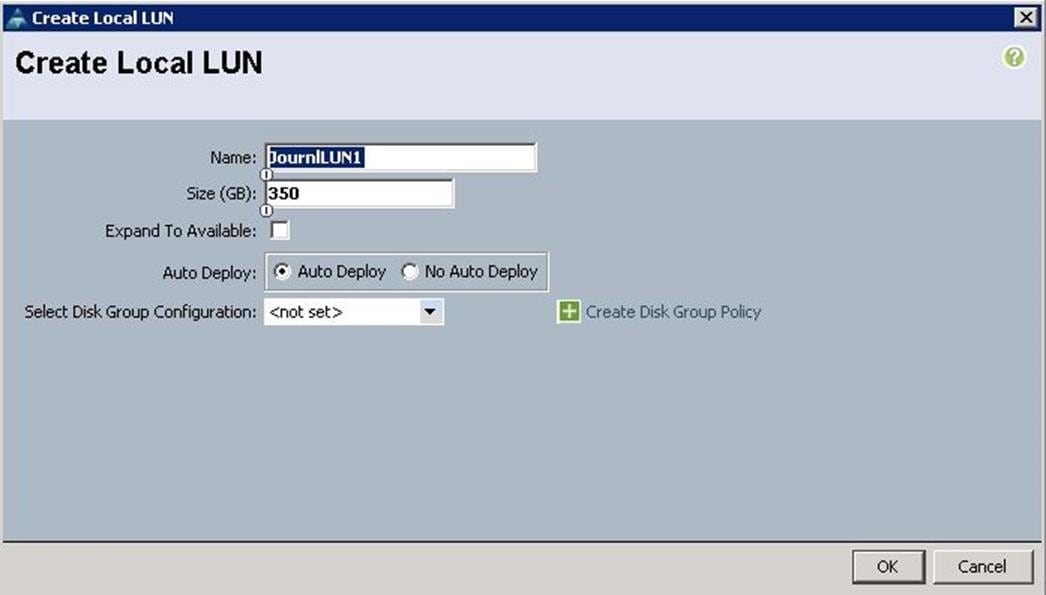

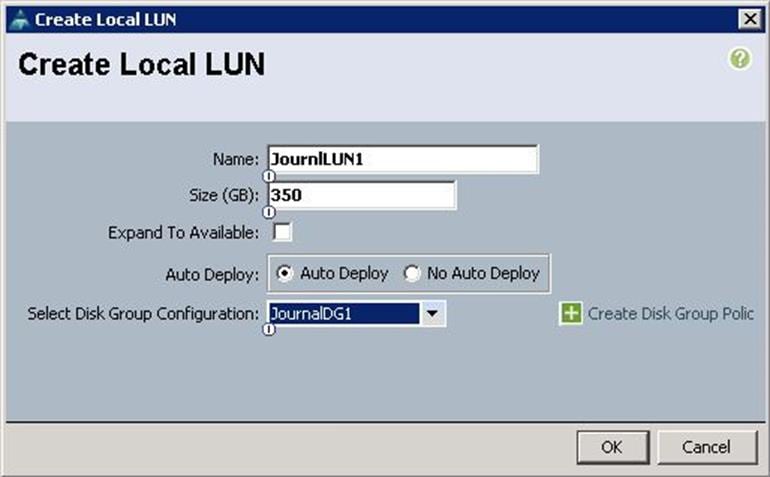

1. Under Storage àStorage Provisioning à root à select the previously created Ceph Sotrage profile C240-Ceph à click Local LUNs à click Create Local LUN.

a. Specify the name as JournaLUN1 and set the size in GB to 350 for the 400GB SSD disks and click Create Disk Group Policy.

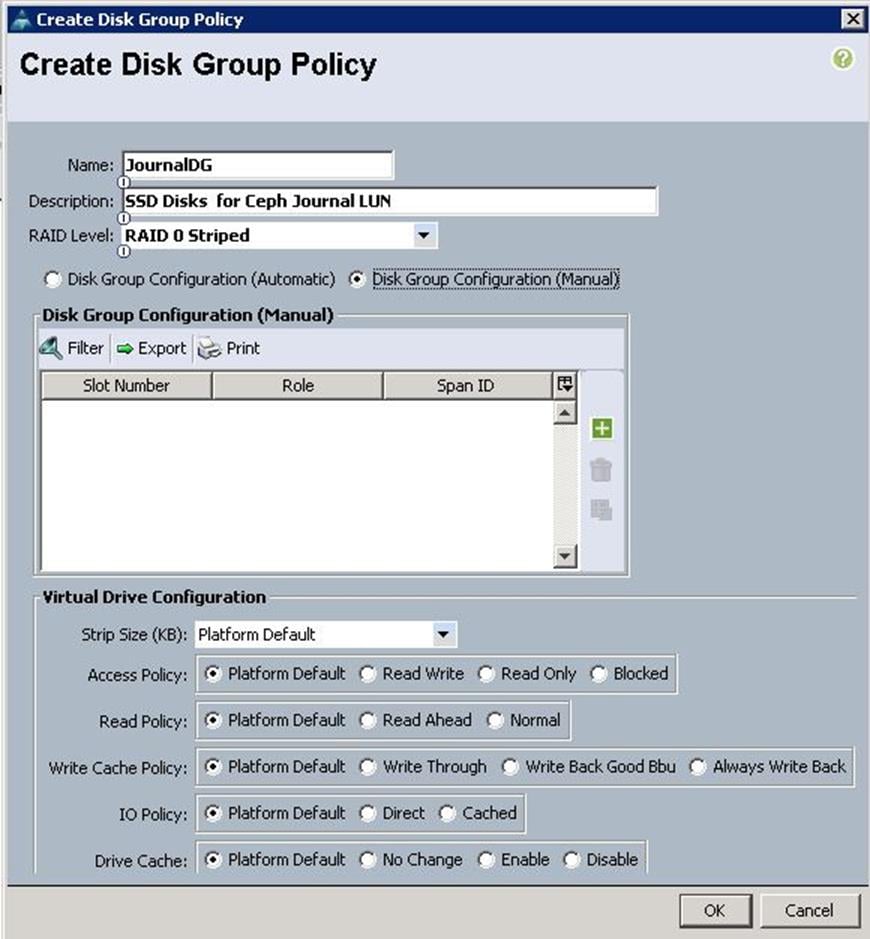

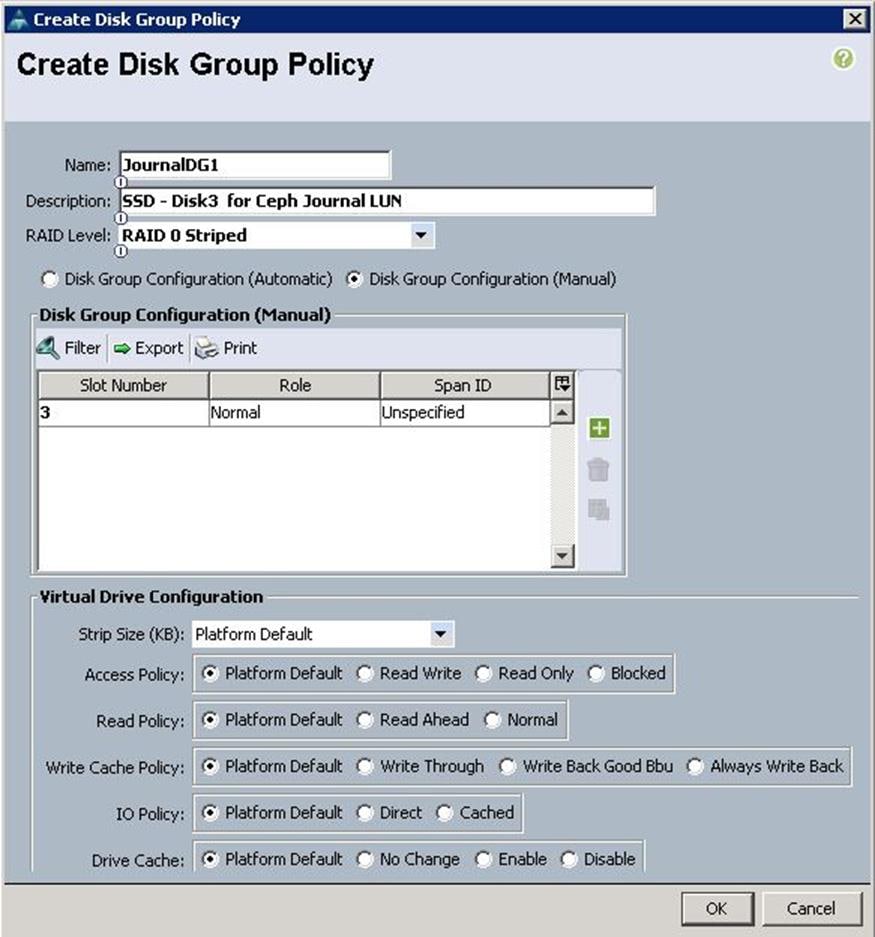

b. Specify the Disk group policy name and choose the RAID level as RAID 0 and select Disk Group Configuration (Manual).

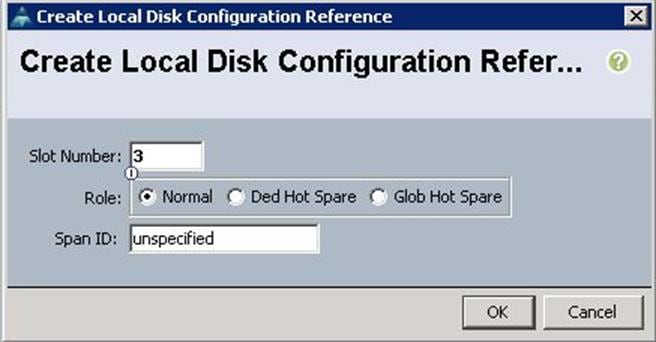

c. Specify the Slot ID as 3, which is the physical disk slot number for 400GB SSDs for the Journal LUN1 and click OK.

d. Click OK to confirm the Disk group policy creation.

e. From the drop-down list, choose the Disk group policy for the Journal LUN as JournalDG1.

f. Create the Local LUN as JournlLUN2 with Disk group policy as JournalDG2 using 400GB SSD on Disk Slot4.

Create the Ceph OSD LUN

To create the Ceph OSD LUN, complete the following steps:

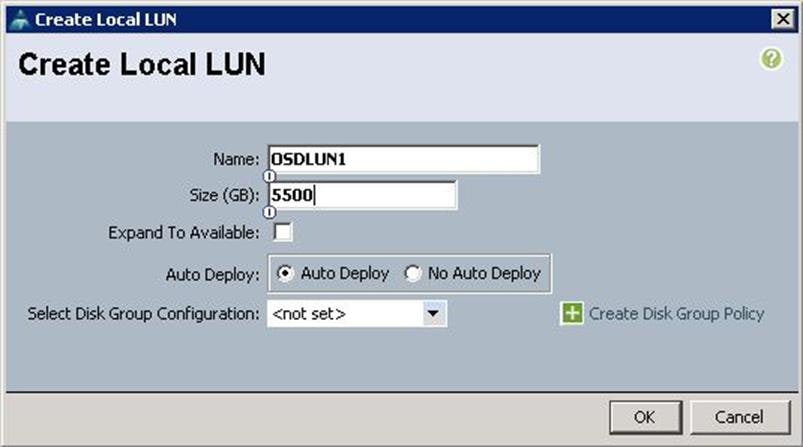

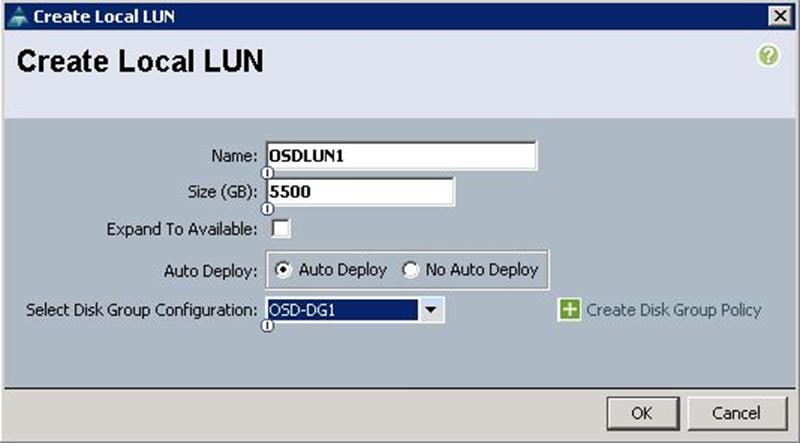

1. Under Storage àStorage Provisioning à root à select the previously created Ceph Storage profile C240-Ceph à click Local LUNs à click Create Local LUN.

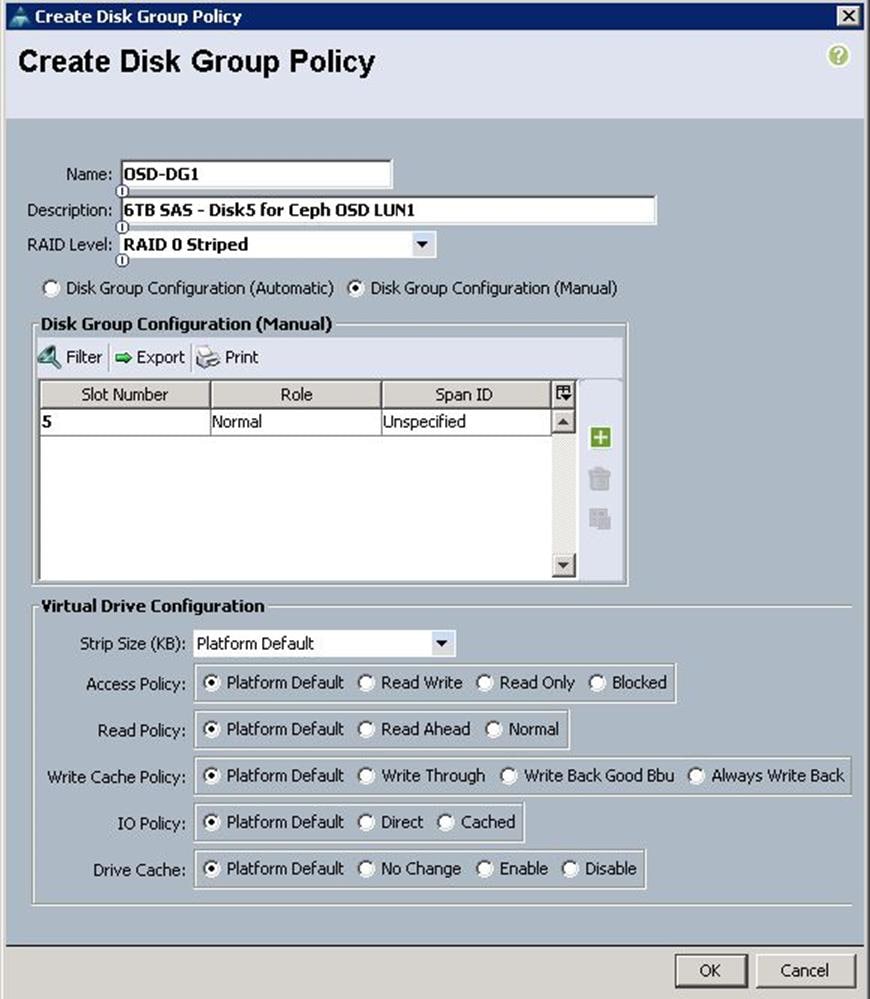

a. Specify the name as OSDLUN1 and the size in GB as 5500 for the 6TB SAS disks and click Create Disk Group Policy.

b. Specify Disk group policy name and Choose RAID level as RAID 0 and select Disk Group Configuration(Manual)

c. Click “+” and Specify Slot ID as 5, which is physical disk slot number for 6TB SAS disks for Ceph OSD LUN1 and click OK.

d. From the drop-down list, choose the Disk group policy for OSDLUN1 as OSD-DG1.

e. Create the remaining OSDLUN3, 4, 5, 6, 7, 8 with the Disk group policy using 6TB SAS disks 6, 7, 8, 9, 10, 11, and 12.

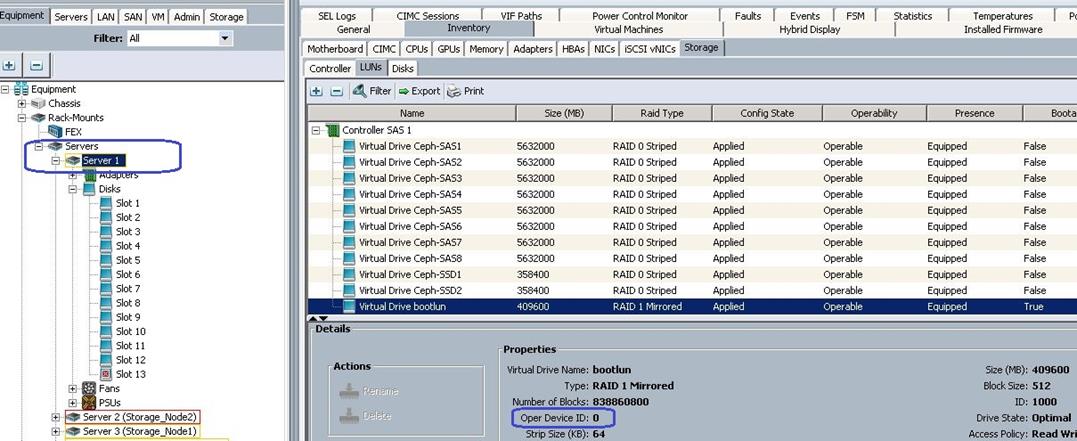

f. Make sure the LUNs for Journals and OSDs are created as shown below.

g. Make sure all the Ceph Storage Servers have the identical LUN ID and Device ID for all the LUNs (OS-boot, Journal and OSD) as shown in the table below:

| Physical Disk Slot |

Disk Type |

Disk Size |

RAID Level |

LUN Size |

LUN ID |

Device ID |

| Disk 1 |

SAS |

300 GB |

RAID 1 |

250 GB |

1000 |

0 |

| Disk 2 |

SAS |

300 GB |

||||

| Disk 3 |

SSD |

400 GB |

RAID 0 |

350 GB |

1001 |

1 |

| Disk 4 |

SSD |

400 GB |

RAID 0 |

350 GB |

1002 |

2 |

| Disk 5 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1003 |

3 |

| Disk 6 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1004 |

4 |

| Disk 7 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1005 |

5 |

| Disk 8 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1006 |

6 |

| Disk 9 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1007 |

7 |

| Disk 10 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1008 |

8 |

| Disk 11 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1009 |

9 |

| Disk 12 |

SAS |

6 TB |

RAID 0 |

5500 GB |

1010 |

10 |

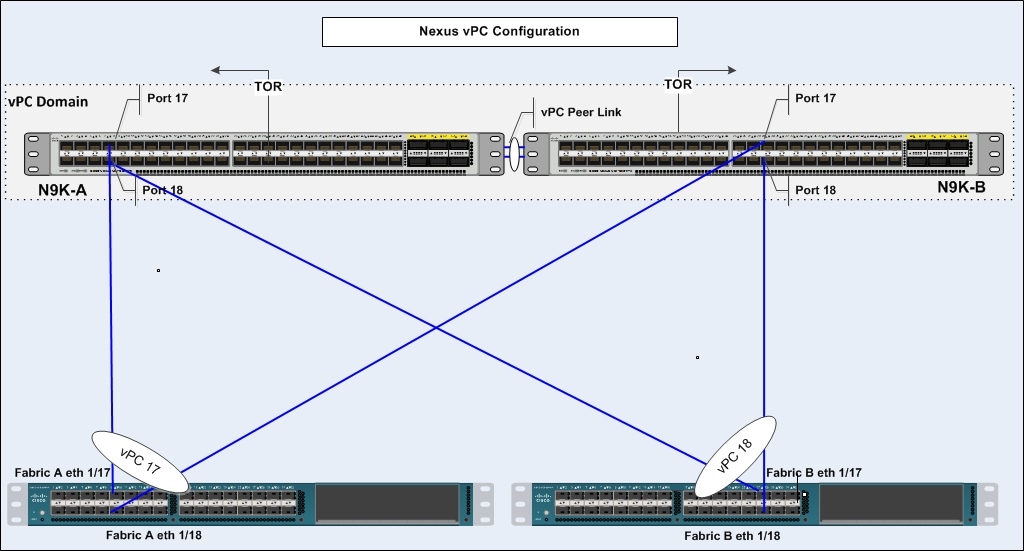

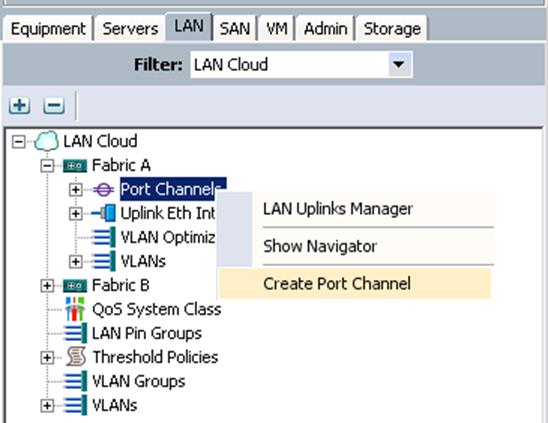

Create Port Channels for Cisco UCS Fabrics

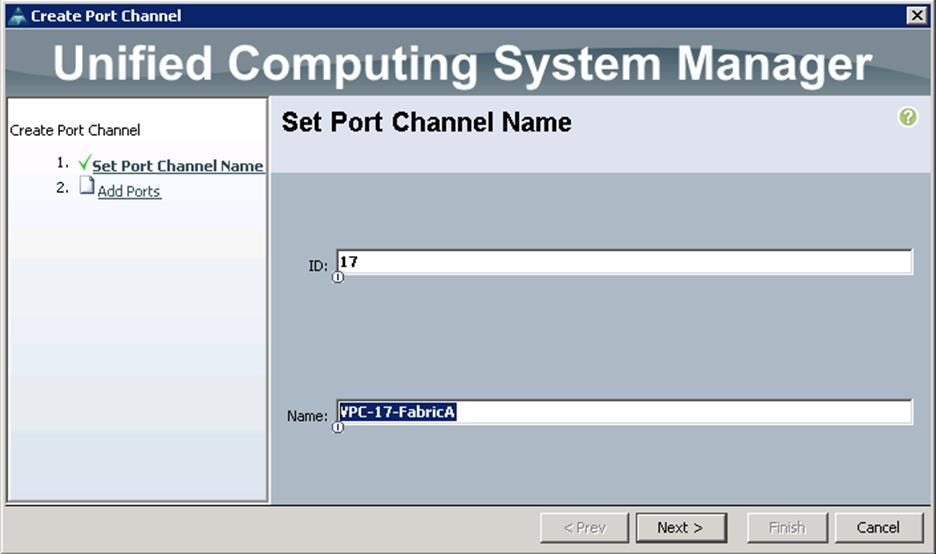

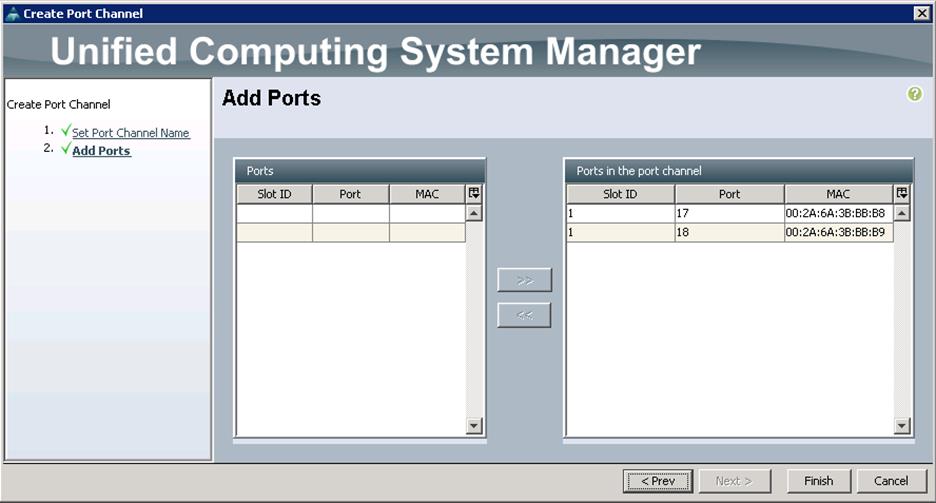

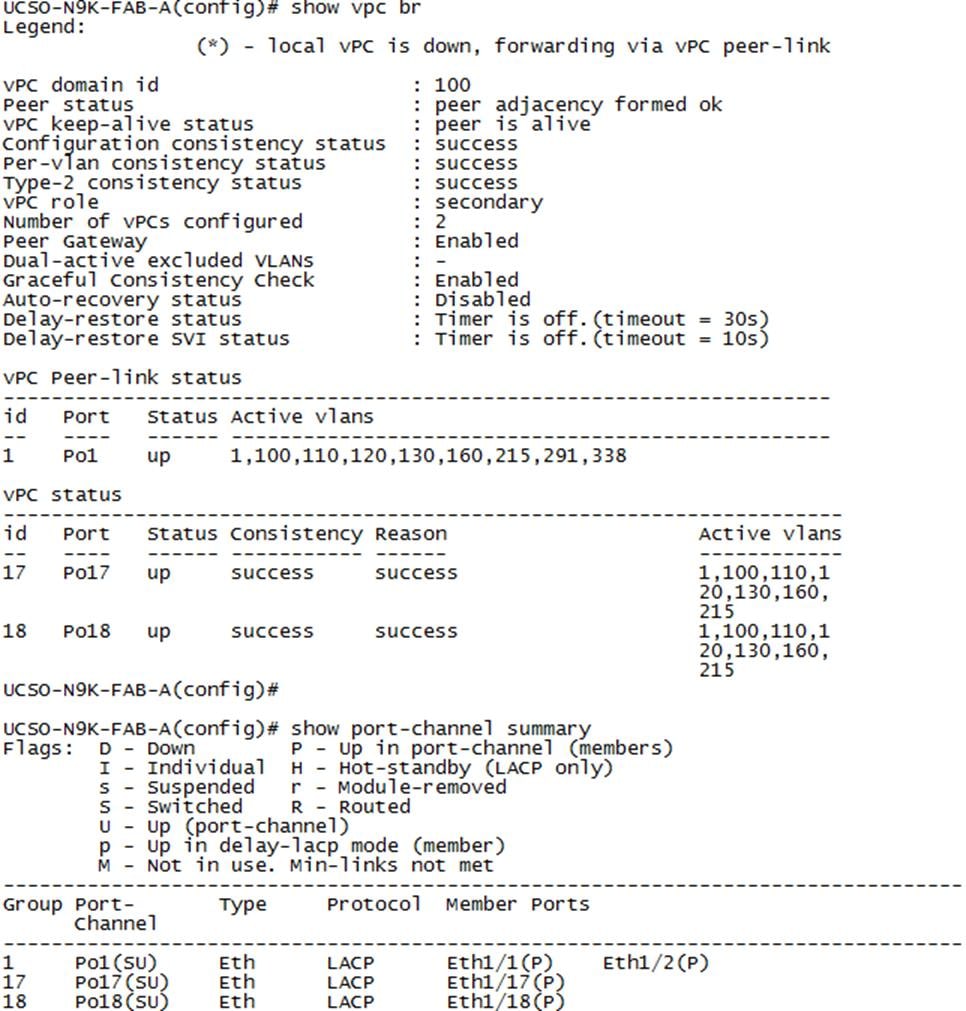

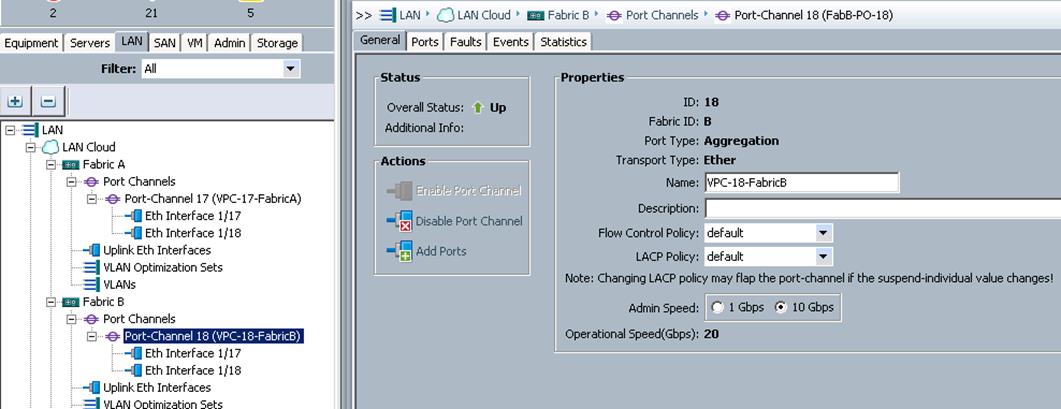

To create the Port Channels, complete the following steps as shown in the screenshots below. Figure 12 illustrates the configuration.

To create Port Channels from the UCS Manager GUI, complete the following steps:

1. Under LAN à LAN Cloud à Fabric A à Port Channels à right-click and select Create Port Channel.

a. Specify the ID and name for the port channel and click Next.

b. Select the ports 17 and 18 from left pane and move to the right pane into Ports in the Port Channel and click Finish.

![]() Repeat the steps shown above on Fabric B with Port-Channel as18.

Repeat the steps shown above on Fabric B with Port-Channel as18.

Cisco Nexus Configuration

Configure the Cisco Nexus 9372 PX Switch A

To configure the Cisco Nexus 9372 PX Switch A, complete the following step:

1. Connect the console port to the Nexus 9372 PX switch designated for Fabric A:

---- Basic System Configuration Dialog VDC: 1 ----

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.

*Note: setup is mainly used for configuring the system initially, when no configuration is present. So setup always assumes system defaults and not the current system configuration values.

Press Enter at anytime to skip a dialog. Use ctrl-c at anytime to skip the remaining dialogs.

Would you like to enter the basic configuration dialog (yes/no): yes

Do you want to enforce secure password standard (yes/no) [y]:

Create another login account (yes/no) [n]:

Configure read-only SNMP community string (yes/no) [n]:

Configure read-write SNMP community string (yes/no) [n]:

Enter the switch name : N9k-FAB-A

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]:

Mgmt0 IPv4 address : 10.22.100.3

Mgmt0 IPv4 netmask : 255.255.255.0

Configure the default gateway? (yes/no) [y]:

IPv4 address of the default gateway : 10.22.100.1

Configure advanced IP options? (yes/no) [n]:

Enable the telnet service? (yes/no) [n]:

Enable the ssh service? (yes/no) [y]:

Type of ssh key you would like to generate (dsa/rsa) [rsa]:

Number of rsa key bits <1024-2048> [2048]:

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address : <<ntp_server_ip>>

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]:

The following configuration will be applied:

password strength-check

switchname N9k-FAB-A

vrf context management

ip route 0.0.0.0/0 10.22.100.1

exit

no feature telnet

ssh key rsa 2048 force

feature ssh

ntp server <<var_global_ntp_server_ip>>

copp profile strict

interface mgmt0

ip address 10.22.100.3 255.255.255.0

no shutdown

Would you like to edit the configuration? (yes/no) [n]: Enter

Use this configuration and save it? (yes/no) [y]: Enter

[########################################] 100%

Copy complete.

Configure the Cisco Nexus 9372 PX Switch B

To configure the Cisco Nexus 9372 PX Switch B, complete the following step:

1. Connect the console port to the Nexus 9372 PX switch designated for Fabric B:

---- Basic System Configuration Dialog VDC: 1 ----

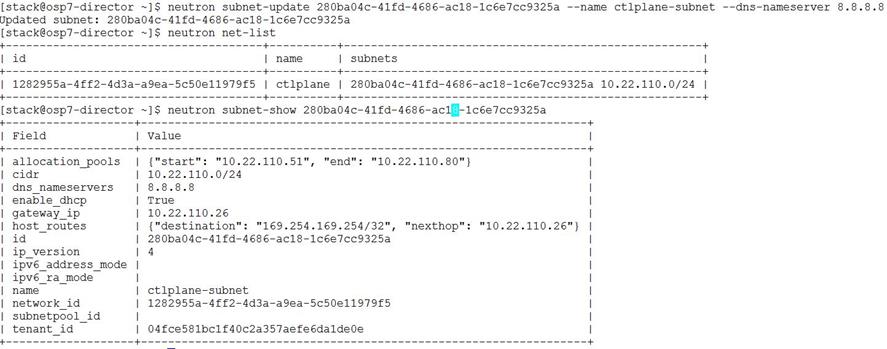

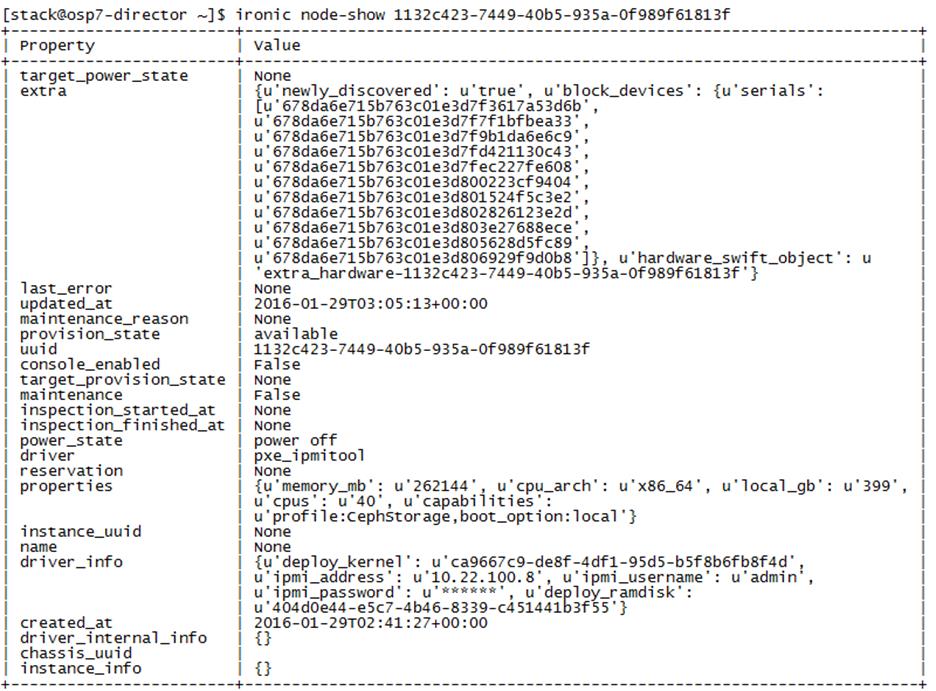

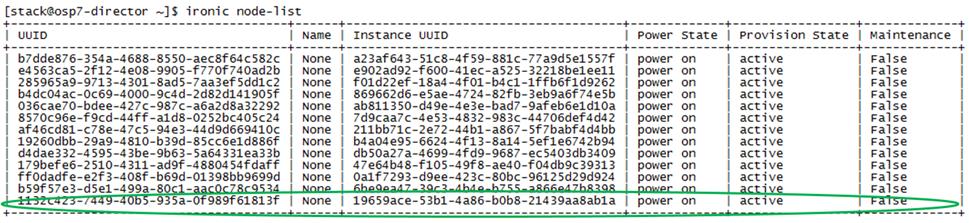

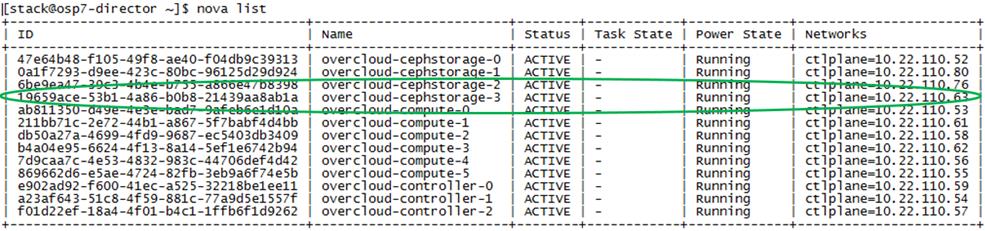

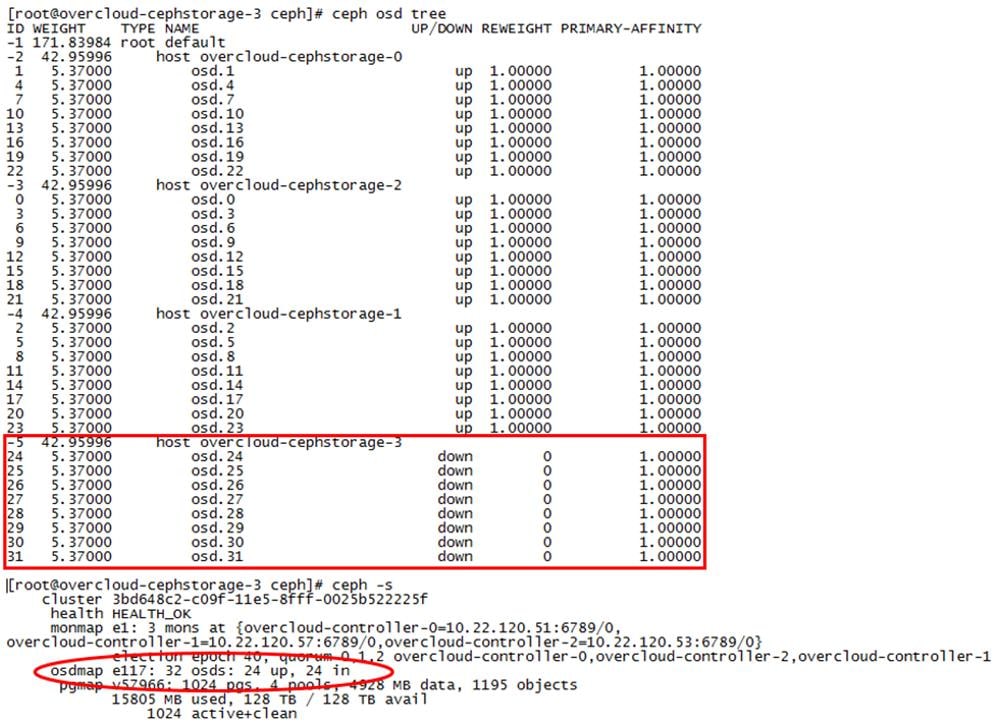

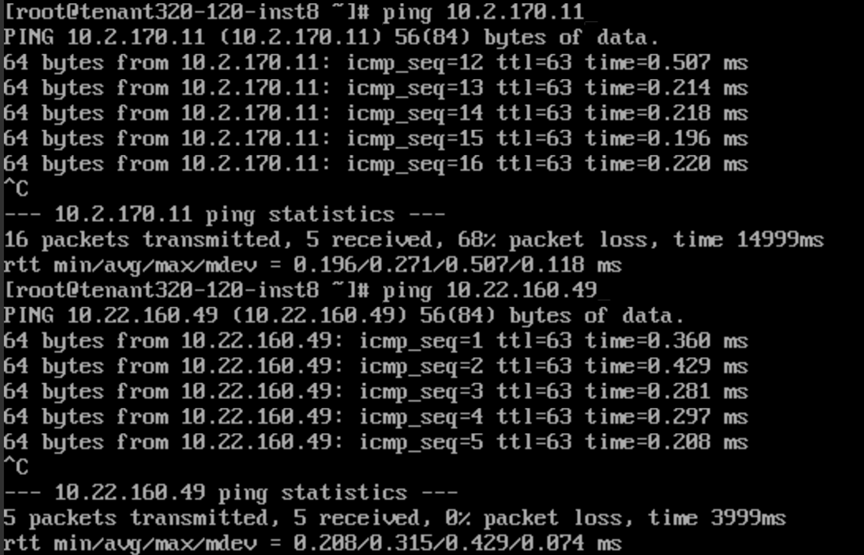

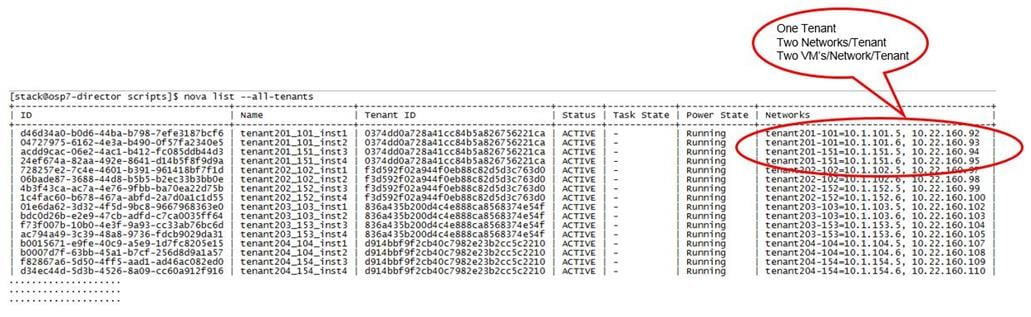

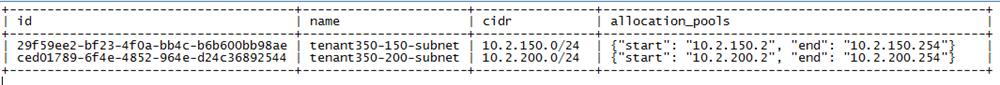

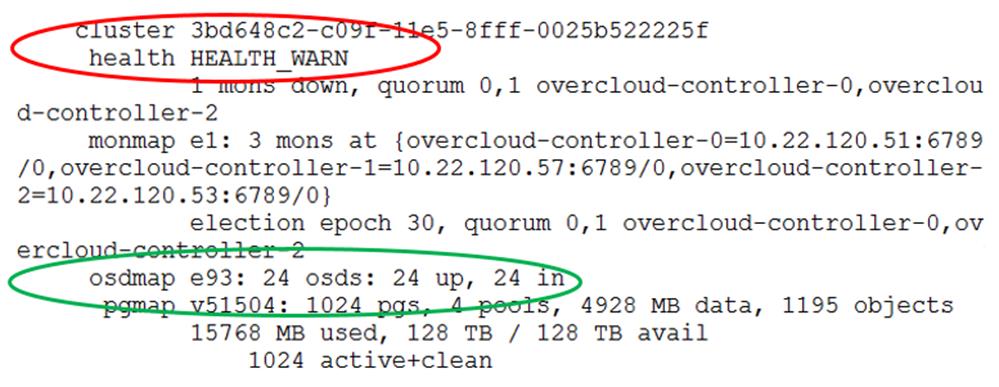

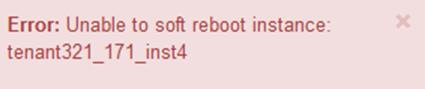

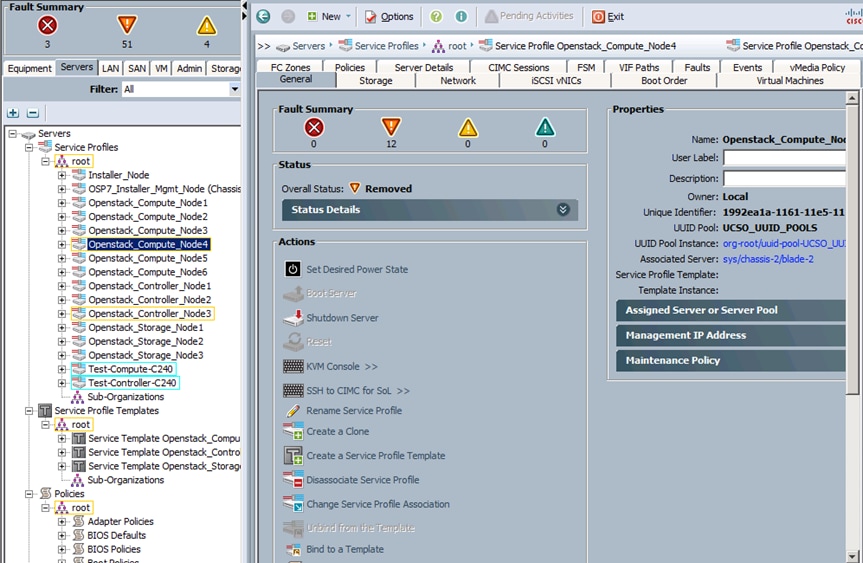

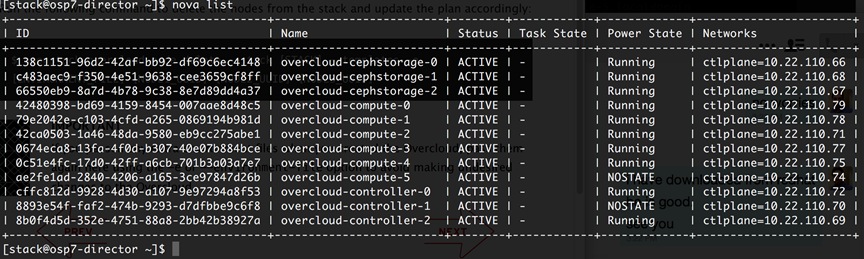

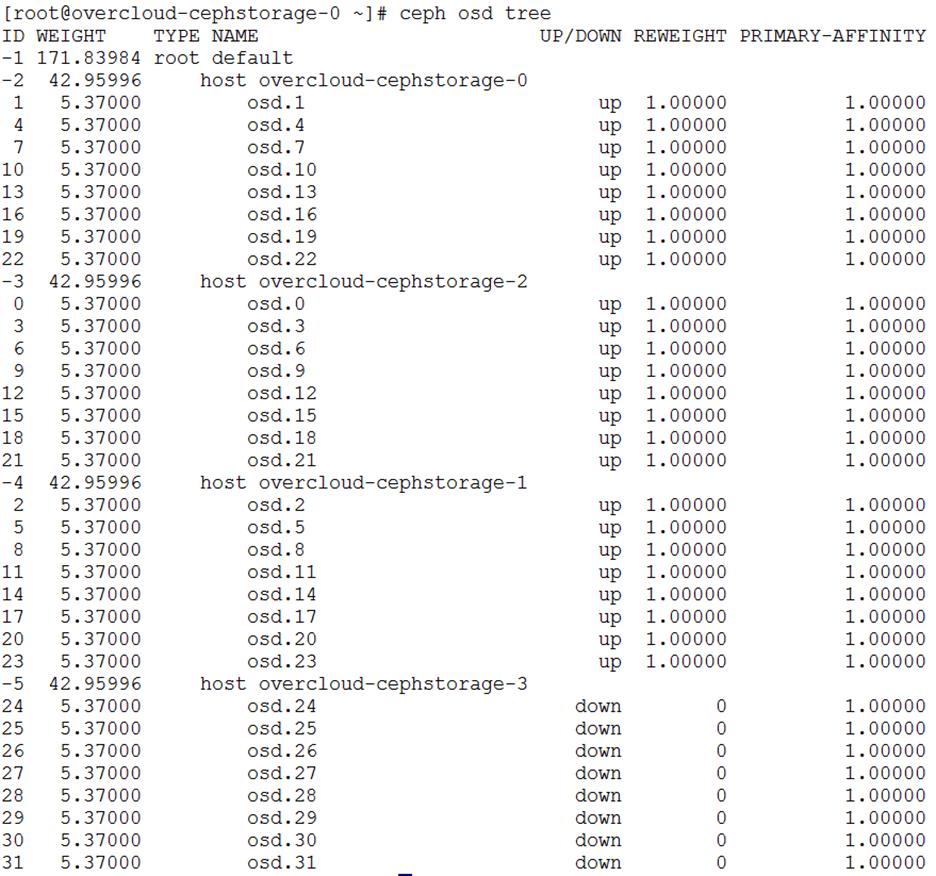

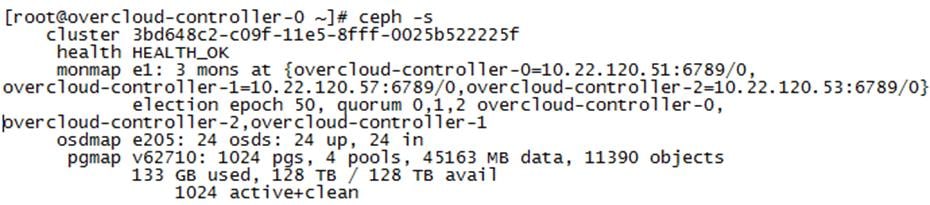

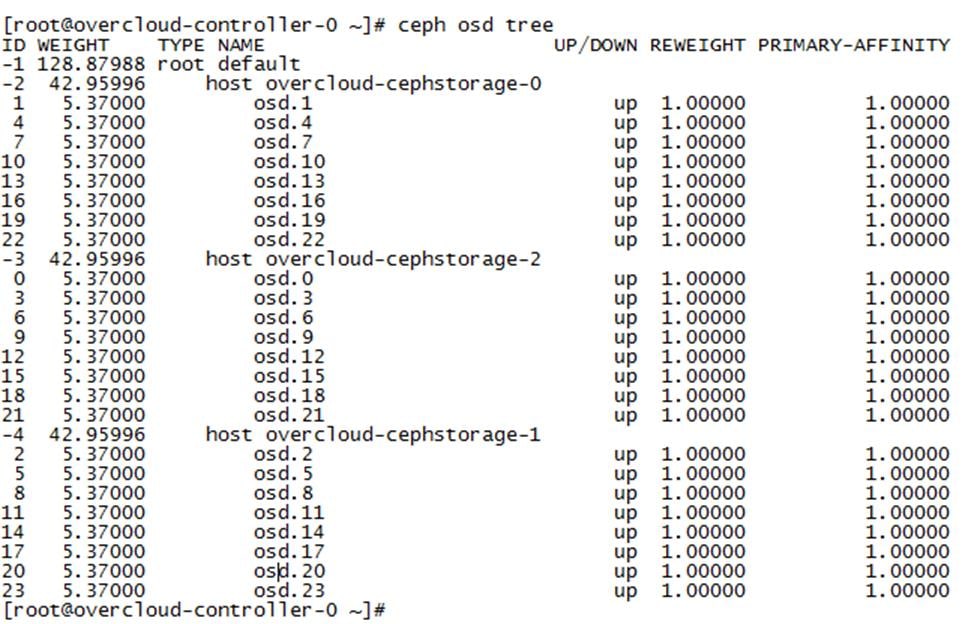

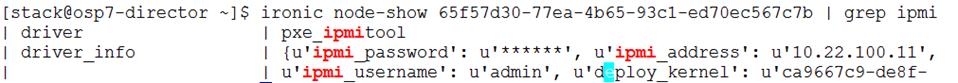

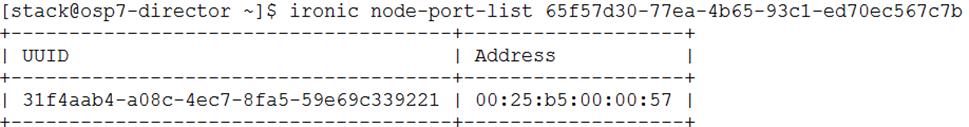

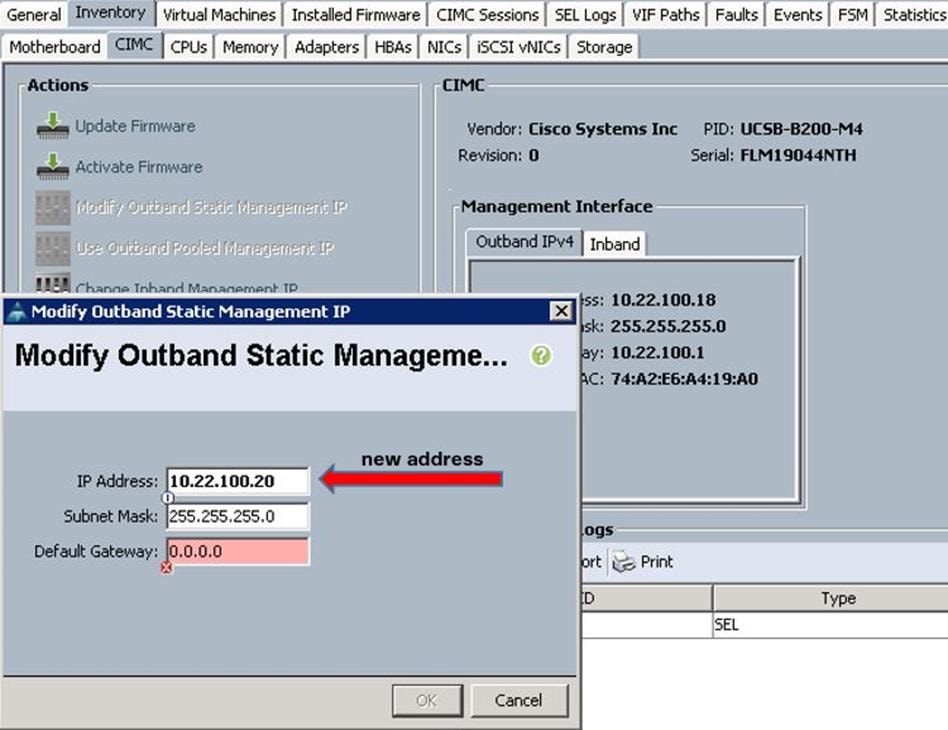

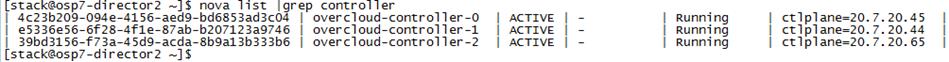

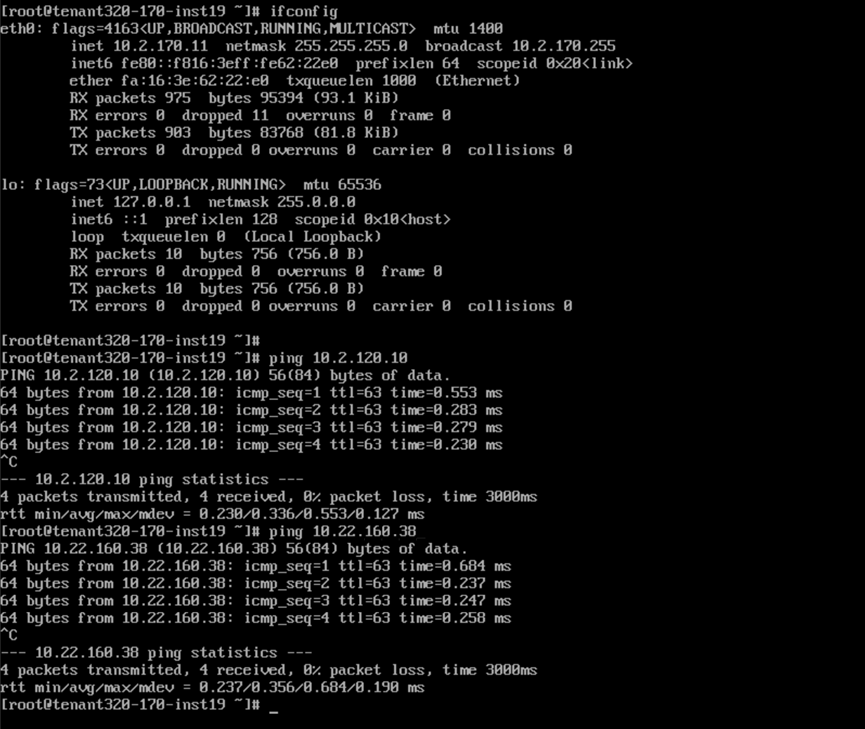

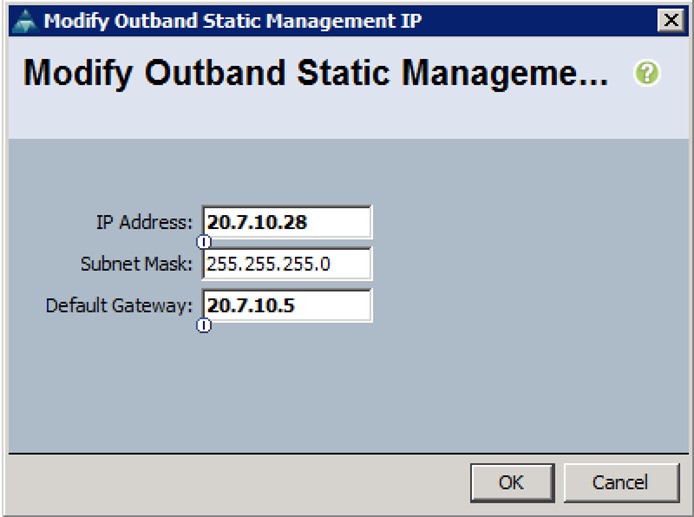

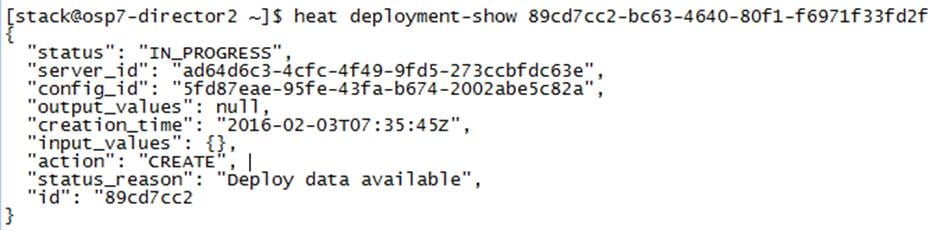

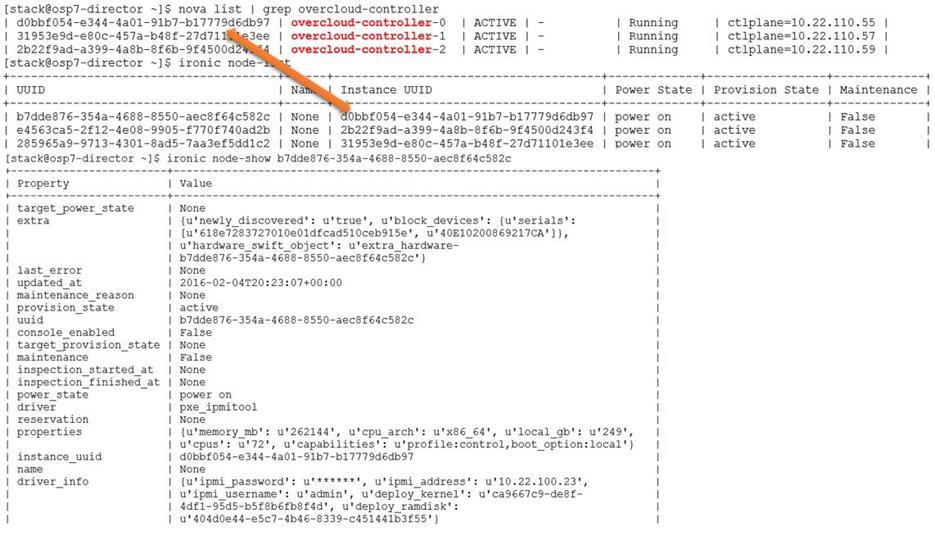

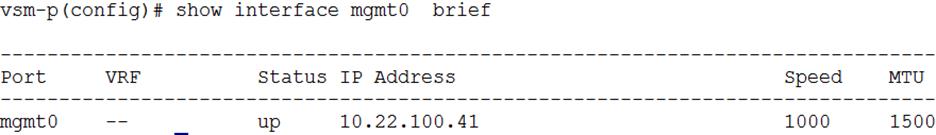

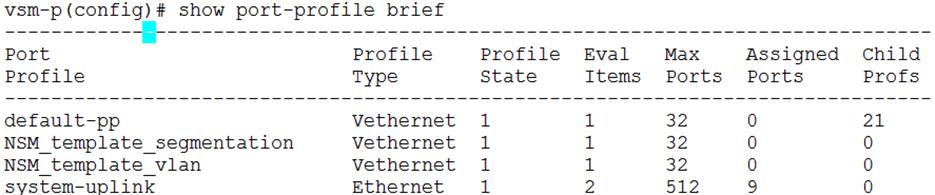

This setup utility will guide you through the basic configuration of the system. Setup configures only enough connectivity for management of the system.