FlashStack Datacenter for Oracle RAC 19c Databases on VMware vSphere


Document Organization

This document is organized into the following chapters:

| Chapter |

Description |

| High-level overview of the solution, benefits, and conclusion. |

|

| Provides the solution overview, intended audience, and new features |

|

| Provides the requirements, considerations, and performance details for the solution design |

|

| Provides the installation and configuration steps |

|

| Provides the OS and database deployment considerations and steps |

|

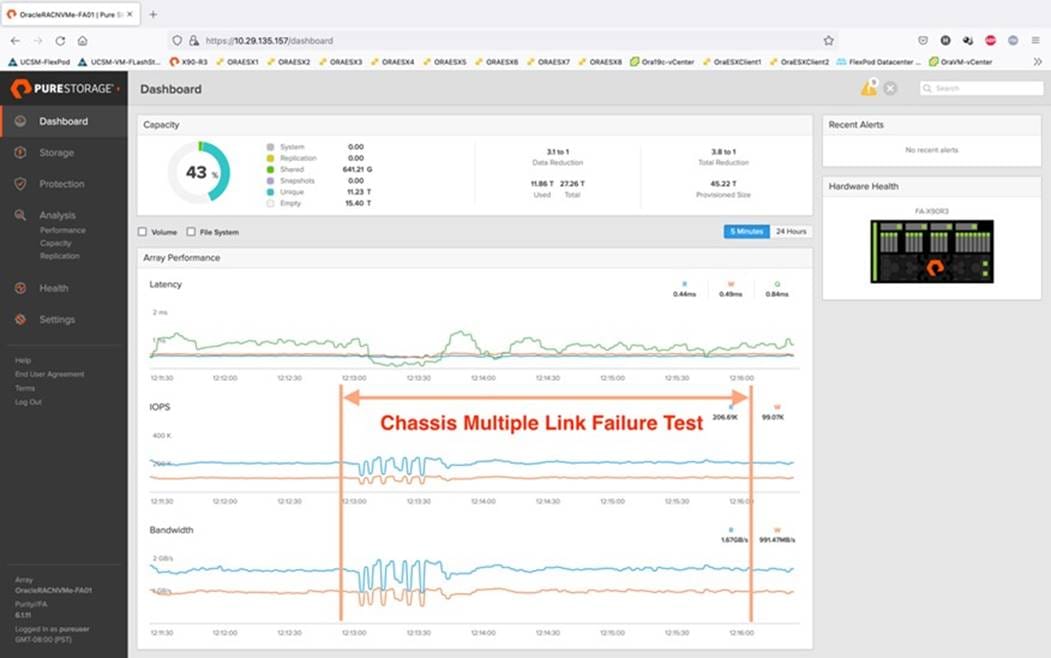

| Provides the tests implemented and results |

|

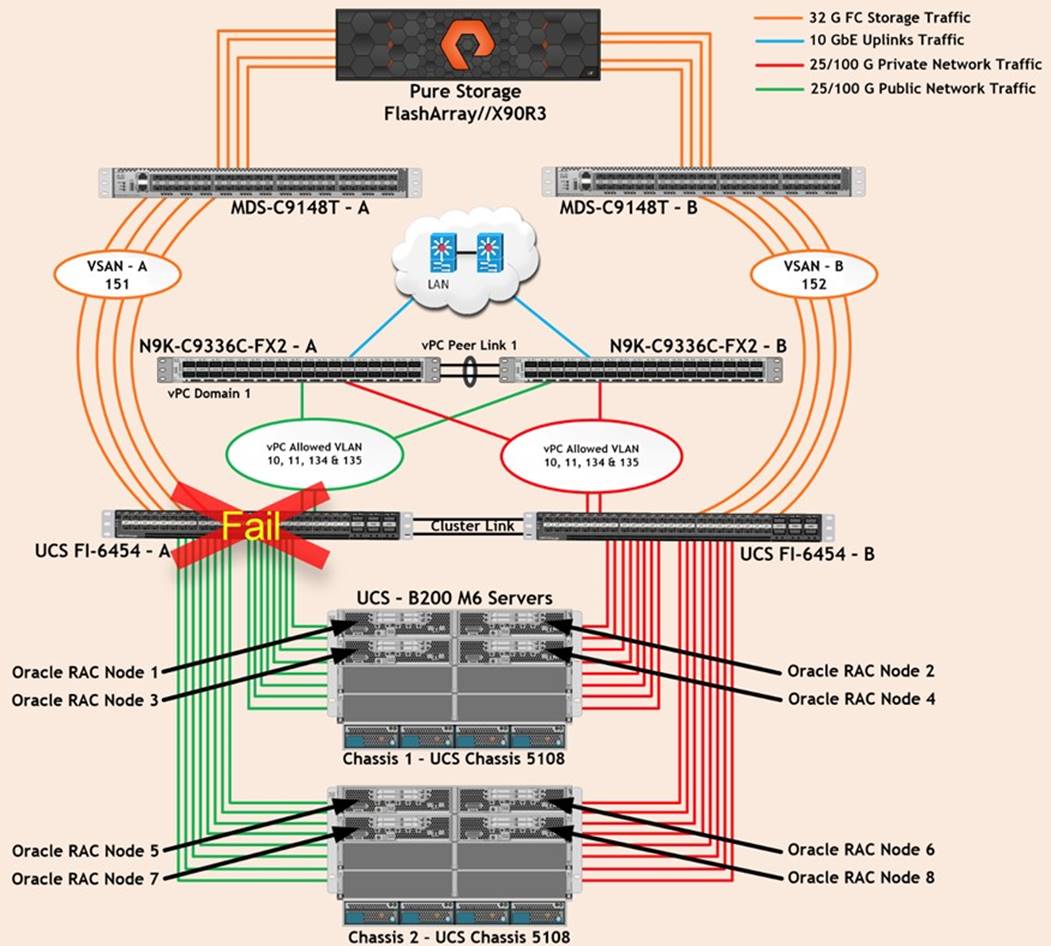

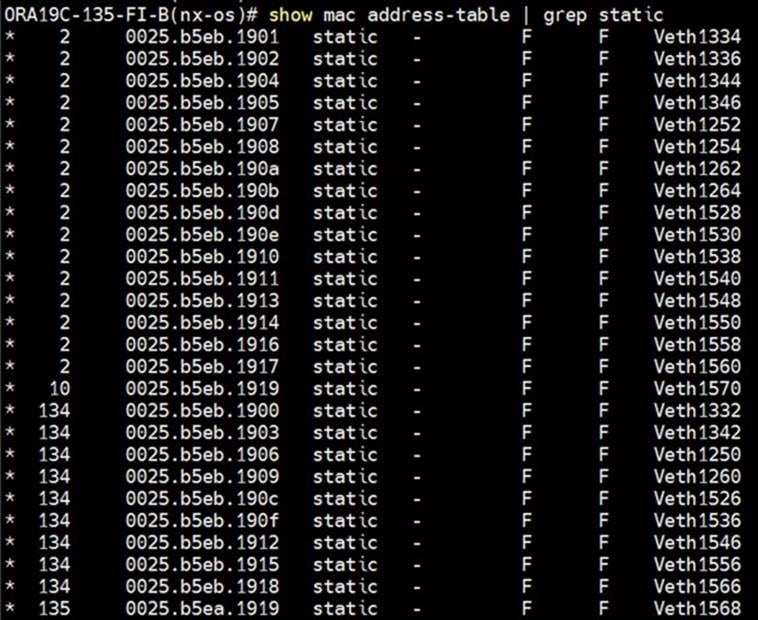

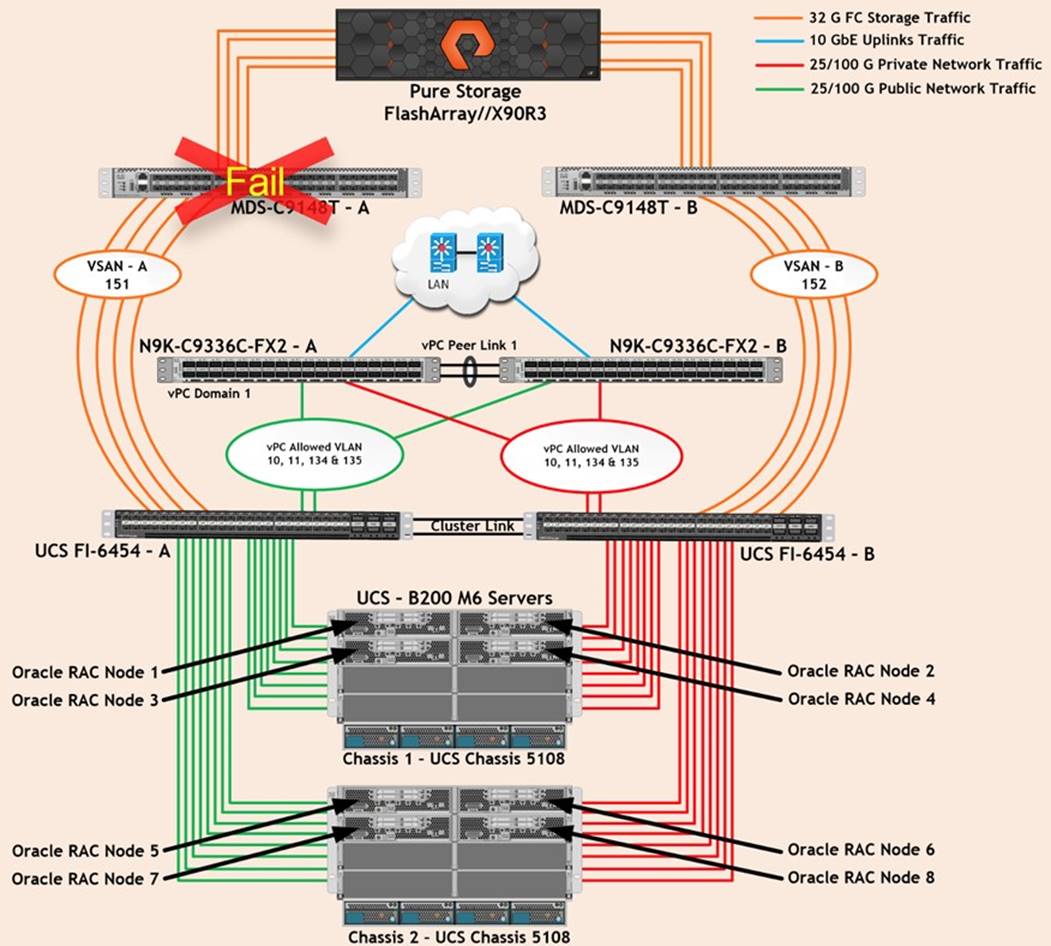

| Details the resiliency of the solution and the failure tests implemented |

|

| Summarizes the solution and its benefits |

|

| Provides information about known issues, enhancements, fixes, and recommendations |

|

| Provides a list of references used in this document |

|

| Provides details about the authors of this CVD |

|

| Additional configuration information and resources |

|

| Provide links for feedback and CVD Program information |

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

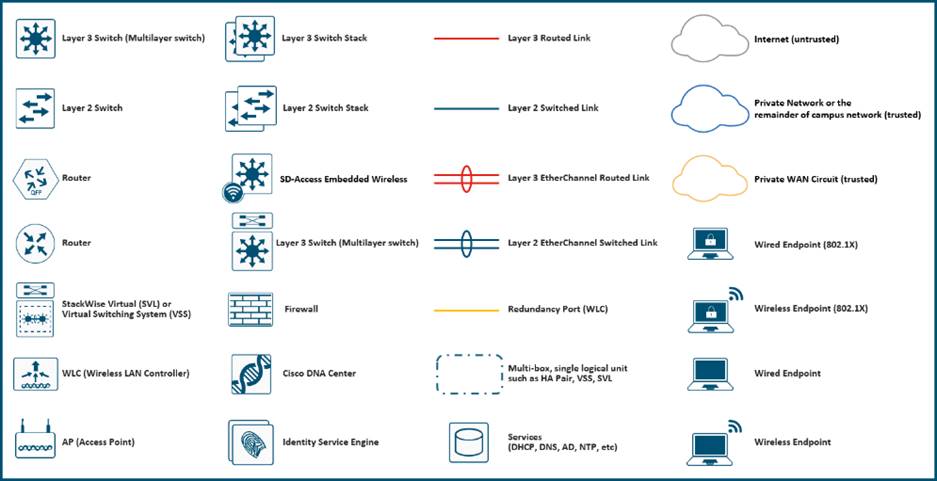

Icons Used in this Document

The IT industry has been transforming rapidly toward converged infrastructure, which enables faster provisioning, scalability, lower data center costs, and simpler management as the technology advances. There is an industry trend toward pre-engineered solutions that standardize the data center infrastructure and offer operational efficiency and agility for enterprise applications and IT services. This standardized data center needs to be seamless instead of siloed when spanning multiple sites, delivering a uniform network and storage experience to the compute systems and end users accessing these data centers. Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that unites computing, network, storage access, and virtualization into a single cohesive system.

The FlashStack solution combines best-of-breed technology from Cisco Unified Computing System and Pure Storage to deliver the benefits of converged infrastructure. It integrates the compute, storage, and network stack with the programmability of Cisco Unified Computing System (Cisco UCS). Cisco Validated Designs (CVDs) consist of systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of customers and to guide them from design to deployment. The combination of Cisco UCS, Pure Storage, Oracle Real Application Clusters (RAC) Database, and VMware vSphere can accelerate your IT transformation by enabling faster deployments, greater flexibility of choice, efficiency, high availability, and lower risk.

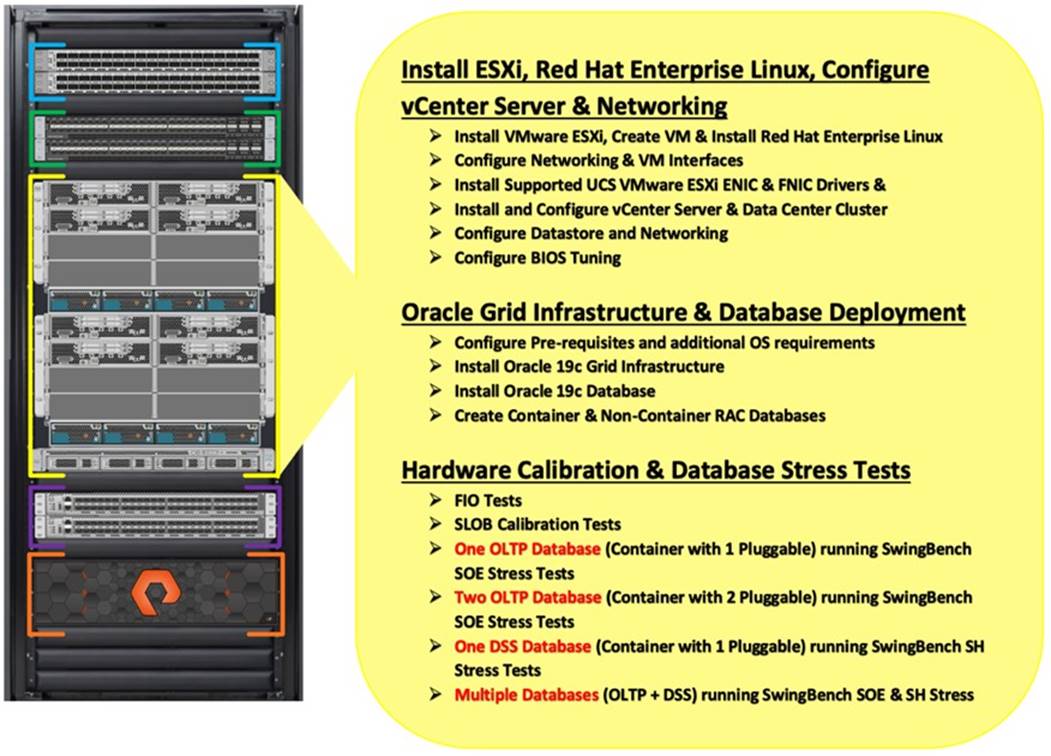

This Cisco Validated Design (CVD) describes a FlashStack reference architecture for deploying a highly available Oracle Multitenant RAC 19c Database environment on Pure Storage FlashArray//X90 R3 using Cisco UCS Compute Servers, Cisco Fabric Interconnect Switches, Cisco Nexus Switches, and Cisco MDS Switches with VMware vSphere and Red Hat Enterprise Linux. Cisco and Pure Storage have validated the reference architecture with database workloads such as OLTP (Online Transaction Processing) and Data Warehouse in Cisco’s UCS Datacenter lab. This document presents the hardware and software configuration of the components involved and the results of the tests performed, and it offers a framework and best-practice guidance for implementing highly available Oracle RAC Databases.

Solution Overview

This Cisco Validated Design (CVD) describes how Cisco Unified Computing System (Cisco UCS) with the new Cisco UCS B200 M6 blade servers can be used in conjunction with the Pure Storage FlashArray//X90 R3 system to implement a mission-critical application such as an Oracle Multitenant Real Application Clusters (RAC) 19c Database solution using VMware vSphere 7.0 on Fibre Channel-based storage access.

The Oracle Multitenant architecture helps customers reduce IT costs by simplifying consolidation, provisioning, upgrades, and more. It allows a container database (CDB) to hold many pluggable databases (PDBs), and it fully complements other options, including Oracle Real Application Clusters and Oracle Active Data Guard. Organizations of all kinds rely on their relational databases for both transaction processing (OLTP) and analytics (OLAP), but many still have challenges in meeting their goals of high availability, security, and performance.

FlashStack embraces the latest technology and efficiently simplifies data center workloads that redefine the way IT delivers value:

● A cohesive, integrated system that is managed, serviced, and tested as a whole.

● Guarantee customer success with prebuilt, pre-tested drivers and Oracle database software.

● Faster Time to Deployment – Leverage a pre-validated platform to minimize business disruption, improve IT agility, and reduce deployment time from months to weeks.

● Reduces Operational Risk – Highly available architecture with no single point of failure, non-disruptive operations, and no downtime.

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, database administrators, IT managers, Oracle database architects, and customers who want to deploy an Oracle RAC 19c database solution on the FlashStack Converged Infrastructure with Pure Storage FlashArray, the Cisco UCS platform, and VMware vSphere. A working knowledge of Oracle RAC Database, Linux, VMware, storage technology, and networking is assumed but is not a prerequisite to read this document.

This document provides a step-by-step configuration and implementation guide for the FlashStack Datacenter with Cisco UCS Compute Servers, Cisco Fabric Interconnect Switches, Cisco MDS Switches, Cisco Nexus Switches, and Pure Storage FlashArray Storage to deploy an Oracle RAC Database solution in a VMware vSphere environment.

The following are the objectives of this reference document:

● Provide reference architecture design guidelines for deploying Oracle RAC Databases on Virtual Server Infrastructures.

● Highlight the performance, manageability, and high availability for OLTP and OLAP type of Oracle Databases on the FlashStack CI Solution.

● Demonstrate the seamless scalability to meet growth needs of Oracle Databases.

● Confirm high availability of Database instances, without performance compromise through software and hardware failures.

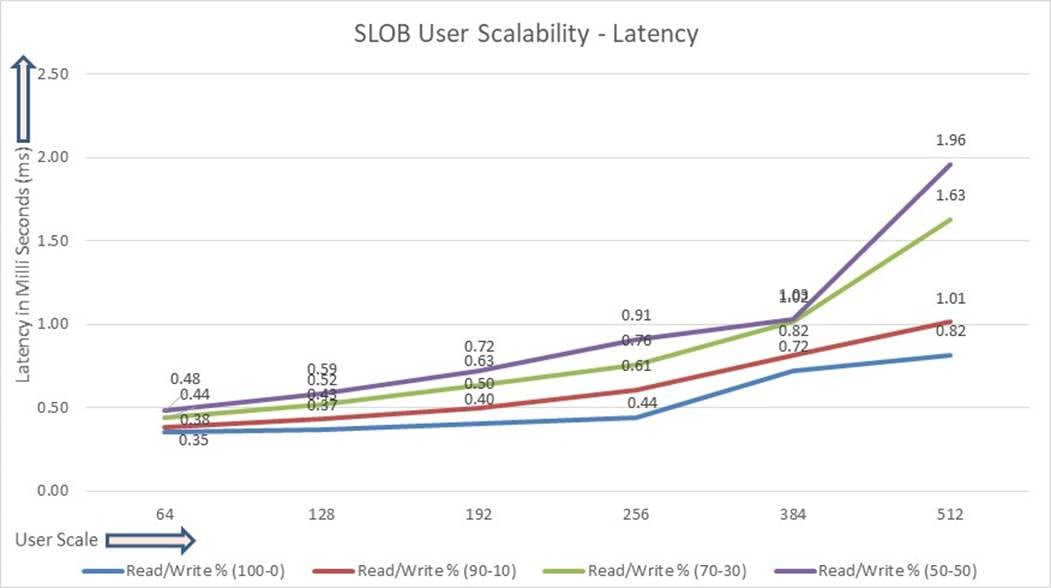

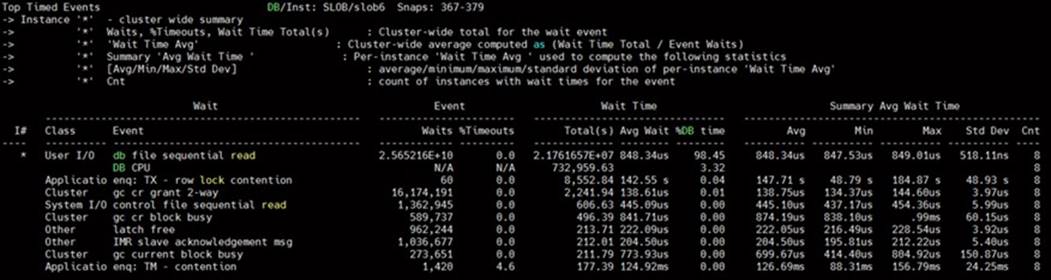

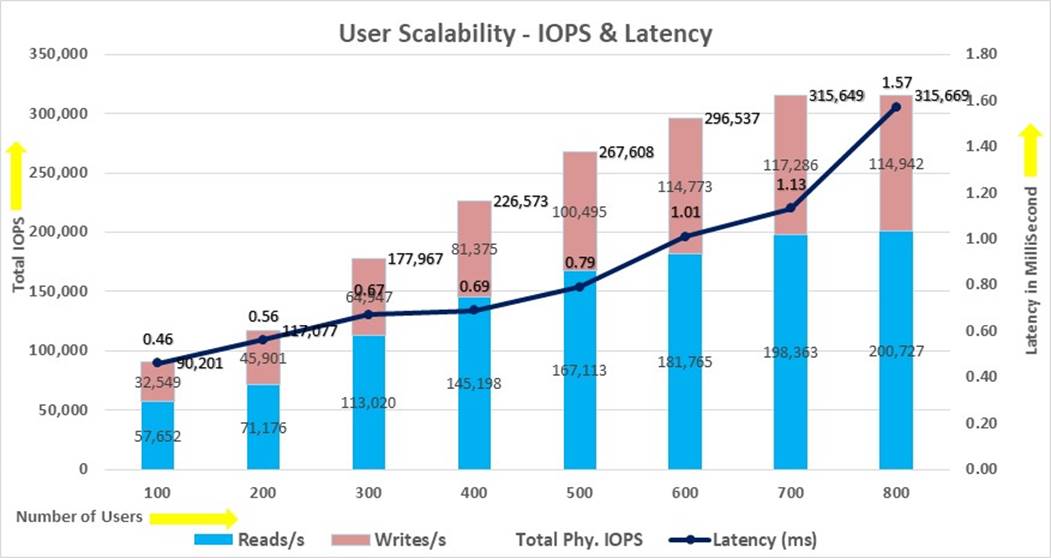

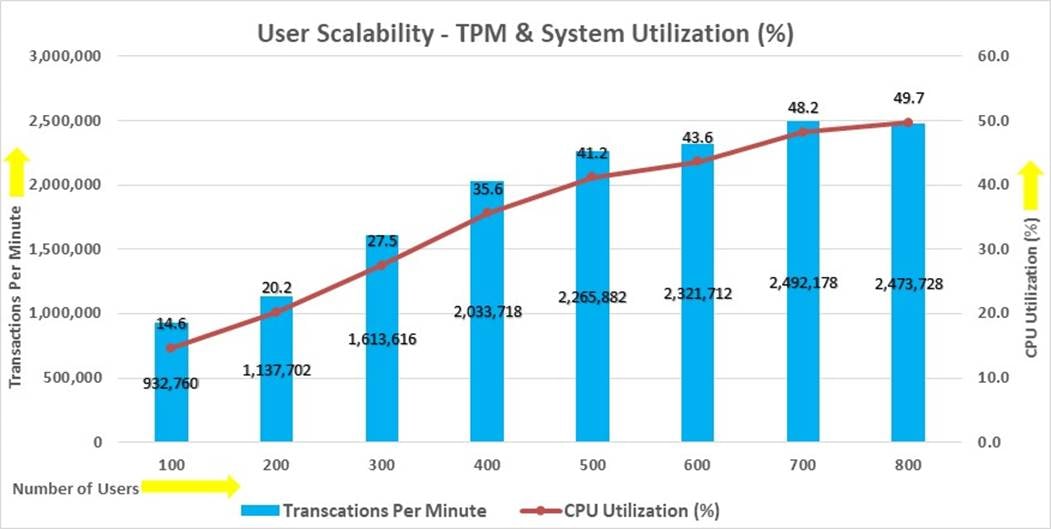

In this solution, we deploy both types of databases (Non-Container Database and Container Database) and test various types of workloads to compare how each performs. We demonstrate the scalability and performance of this solution by running database stress tests such as SwingBench and SLOB (Silly Little Oracle Benchmark) on OLTP (Online Transaction Processing) and DSS (Decision Support System) databases with varying users, nodes, and read/write workload characteristics.

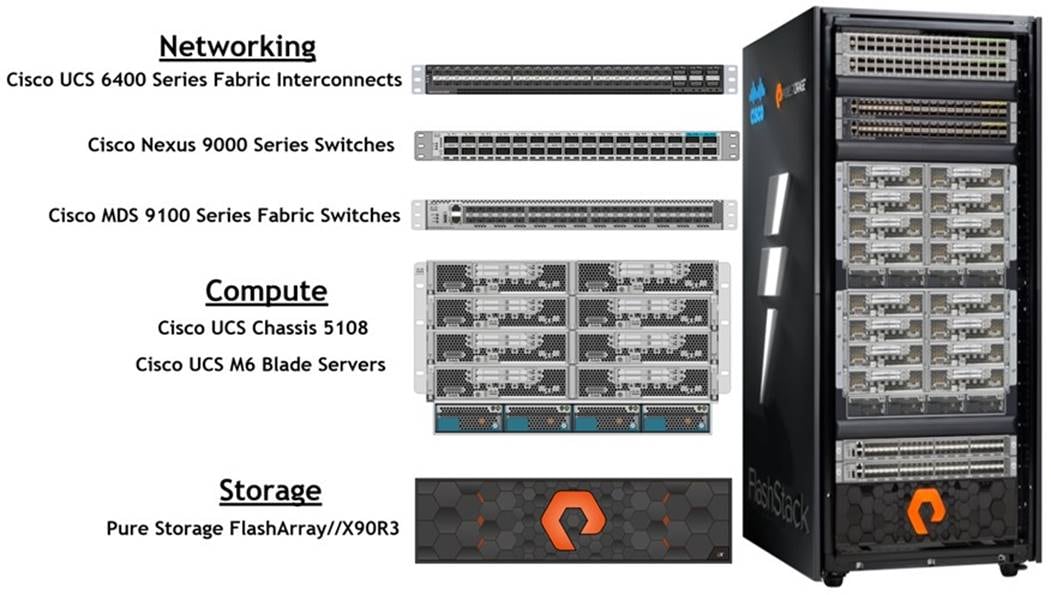

This release introduces the Pure Storage FlashArray//X90 R3 that brings the low latency and high performance of NVMe technology to the storage network along with Cisco UCS B200 M6 Blade Servers (6th Generation) to deploy Oracle RAC Database Release 19c, using traditional Fibre Channel on VMware and Red Hat Enterprise Linux.

It incorporates the following features:

● Cisco UCS B200 M6 Blade Servers with 3rd Gen Intel Xeon Scalable Processors

● Validation of Oracle RAC 19c Container and Non-Container Database deployments

● Support for the Cisco UCS Infrastructure and UCS Manager Software Release 4.2(1i)

● Support for Purity 6.1.11

● Validation of VMware vSphere 7.0.2

The FlashStack platform, developed by Cisco and Pure Storage, is a flexible, integrated infrastructure solution that delivers pre-validated storage, networking, and server technologies. Composed of a defined set of hardware and software, this FlashStack solution is designed to increase IT responsiveness to organizational needs and reduce the cost of computing with maximum uptime and minimal risk. Cisco and Pure Storage have carefully validated and verified the FlashStack solution architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their data centers to this shared infrastructure model.

This portfolio includes, but is not limited to, the following items:

● Best practice architectural design

● Implementation and deployment instructions, with application sizing based on results

As shown in Figure 1, these components are connected and configured according to the best practices of both Cisco and Pure Storage and provide an ideal platform for running a variety of enterprise database workloads with confidence. FlashStack can scale up for greater performance and capacity (adding compute, network, or storage resources individually as needed), or it can scale out for environments that require multiple consistent deployments.

The reference architecture explained in this document leverages the Pure Storage FlashArray//X90 R3 Controller with NVMe-based DirectFlash Fabric for Storage, Cisco UCS B200 M6 Blade Server for Compute, Cisco Nexus 9000 Series Switches for the networking element, Cisco MDS 9000 Series Switches for the SAN Storage Networking element, and Cisco Fabric Interconnects 6400 series for System Management. As shown in Figure 1, the FlashStack architecture can maintain consistency at scale. Each of the component families shown (Cisco UCS, Cisco Nexus, Cisco MDS, Cisco FI, and Pure Storage) offers platform and resource options to scale the infrastructure up or down, while supporting the same features and functionality that are required under the configuration and connectivity best practices of FlashStack.

FlashStack is a solution jointly supported by Cisco and Pure Storage, bringing a carefully validated architecture built on superior compute, world-class networking, and the leading innovations in all-flash storage. The portfolio of validated offerings from FlashStack includes but is not limited to the following:

● Consistent Performance and Scalability

● Operational Simplicity

● Mission Critical and Enterprise Grade Resiliency

Cisco and Pure Storage have also built a robust and experienced support team focused on FlashStack solutions, from customer account and technical sales representatives to professional services and technical support engineers. The support alliance between Pure Storage and Cisco gives customers and channel services partners direct access to technical experts who collaborate across vendors and have access to shared lab resources to resolve potential issues.

Solution Design

The reference FlashStack architecture covered in this document is built on the Pure Storage FlashArray//X90 R3 Series for Storage, Cisco UCS B200 M6 Blade Servers for Compute, Cisco Nexus 9336C-FX2 Switches, Cisco MDS 9148T Fibre Channel Switches, and Cisco UCS 6454 Fabric Interconnects for System Management in a single package. The design is flexible enough that the networking, computing, and storage can fit in one data center rack or be deployed according to a customer's data center design. The reference architecture reinforces the "wire-once" strategy, because as additional storage is added to the architecture, no re-cabling is required from the hosts to the Cisco UCS fabric interconnect.

The processing capabilities of CPUs have increased much faster than the processing demands of most database workloads. Sometimes databases are limited by CPU work, but this is generally a result of the processing limits of a single core rather than a limitation of the CPU as a whole. The result is an increasing number of idle cores on database servers that still must be licensed for the Oracle Database software. This underutilization of CPU resources is a waste of capital expenditure, not only in terms of licensing costs, but also in terms of the cost of the server itself, heat output, and so on. Cisco UCS servers are available with a range of CPU options, including higher clock-speed models that benefit database workloads, so customers can choose a higher clock-speed CPU with fewer cores to keep Oracle licensing costs down.
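To make the licensing argument concrete, the following minimal sketch compares the processor licenses needed for one two-socket server with two different CPUs. It assumes per-core processor licensing with a core factor of 0.5, which is the value commonly listed for Intel Xeon processors; confirm the factor and the rules for virtualized hosts against your own Oracle agreement. The 16-core CPU is a hypothetical alternative, not a part validated in this design.

```python
import math


def licensed_processors(sockets: int, cores_per_socket: int,
                        core_factor: float = 0.5) -> int:
    """Processor licenses for one server: total cores x core factor, rounded up.

    core_factor=0.5 is an assumption for Intel Xeon; check your contract.
    """
    return math.ceil(sockets * cores_per_socket * core_factor)


# 28-core CPU as used in this design versus a hypothetical 16-core,
# higher clock-speed CPU in the same two-socket blade.
dense = licensed_processors(sockets=2, cores_per_socket=28)
lean = licensed_processors(sockets=2, cores_per_socket=16)
print(f"28-core CPUs: {dense} licenses, 16-core CPUs: {lean} licenses")
```

Under these assumptions, the lower core-count option reduces the license count per server from 28 to 16, which is the trade-off the paragraph above describes.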

Requirements


This section describes the hardware and software components used to deploy an eight node Oracle RAC 19c Database solution on this architecture.

The inventory of the components used in this solution architecture is listed in Table 1.

Table 1. Hardware Inventory and Bill of Material

| Name | Model/Product ID | Description | Quantity |
| --- | --- | --- | --- |
| Cisco UCS Blade Server Chassis | UCSB-5108-AC2 | Cisco UCS AC Blade Server Chassis, 6U with Eight Blade Server Slots | 2 |
| Cisco UCS Fabric Extender | UCS-IOM-2408 | Cisco UCS 2408 8x25 Gb Port IO Module | 4 |
| Cisco UCS B200 M6 Blade Server | UCSB-B200-M6 | Cisco UCS B200 M6 2 Socket Blade Server | 8 |
| Cisco UCS VIC 1440 | UCSB-MLOM-40G-04 | Cisco UCS VIC 1440 Blade MLOM | 8 |
| Cisco UCS Port Expander Card | UCSB-MLOM-PT-01 | Port Expander Card for Cisco UCS MLOM | 8 |
| Cisco UCS 6454 Fabric Interconnect | UCS-FI-6454 | Cisco UCS 6454 Fabric Interconnect | 2 |
| Cisco Nexus Switch | N9K-9336C-FX2 | Cisco Nexus 9336C-FX2 Switch | 2 |
| Cisco MDS Switch | DS-C9148T-24PETK9 | Cisco MDS 9148T 32-Gbps 48-Port Fibre Channel Switch | 2 |
| Pure Storage FlashArray | FA-X90 R3 | Pure Storage FlashArray//X90 R3 | 1 |

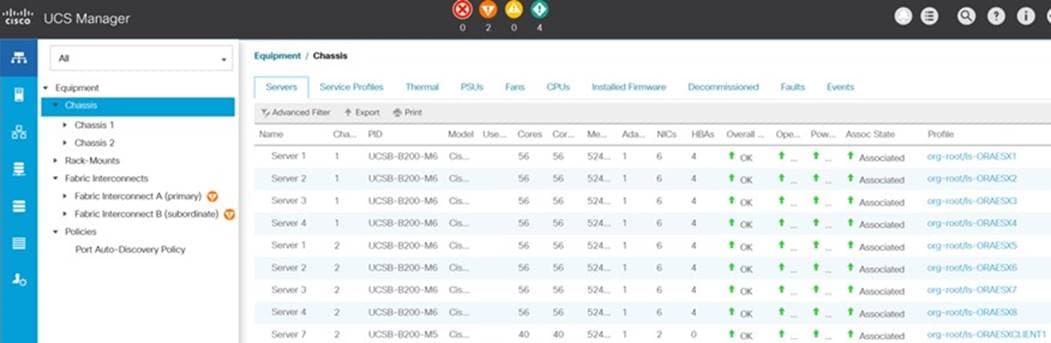

In this solution design, eight identical Cisco UCS B200 M6 Blade Servers were used to host an eight-node Oracle RAC Database. The Cisco UCS B200 M6 is a half-width blade server with two sockets, up to 32 DIMMs, two drive bays, two NVMe slots, Intel Xeon Scalable Family processors, one mLOM, and two Mezz slots. The Cisco UCS B200 M6 Server configuration is listed in Table 2.

Table 2. Cisco UCS B200 M6 Blade Server

| Component | Cisco UCS B200 M6 2 Socket Blade Server Configuration | Product ID |
| --- | --- | --- |
| Processor | 2 x Intel(R) Xeon(R) Gold 6348 2.60 GHz 235W 28C 42.00MB Cache DDR4 3200MHz 6TB | UCS-CPU-I6348 |
| Memory | 16 x Samsung 32GB RDIMM DRx4 3200 | UCS-MR-X32G2RW |
| Cisco UCS VIC 1440 | Cisco UCS VIC 1440 Blade MLOM | UCSB-MLOM-40G-04 |
| Cisco UCS Port Expander Card | Port Expander Card for Cisco UCS MLOM | UCSB-MLOM-PT-01 |
| Storage Controller | Cisco FlexStorage 12G SAS RAID Controller. Supports RAID levels 0, 1, 10 and JBOD. Supports SSD (solid state drives) | UCSB-RAID12G-M6 |
| SSD Disk | 480GB 2.5-inch Enterprise Value 6G SATA SSD | UCS-SD480GBKS4-EV |
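As a quick sanity check on the sizing, the per-blade figures in Table 2 can be multiplied out across the eight blades. This is a minimal sketch; the `BladeConfig` class is a hypothetical helper, and the totals are raw physical resources, before any ESXi or virtual machine overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BladeConfig:
    """Per-blade resources, taken from Table 2 (class name is illustrative)."""
    sockets: int
    cores_per_socket: int
    dimms: int
    dimm_gb: int

    @property
    def cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def memory_gb(self) -> int:
        return self.dimms * self.dimm_gb


B200_M6 = BladeConfig(sockets=2, cores_per_socket=28, dimms=16, dimm_gb=32)
BLADES = 8  # eight-node RAC cluster, one blade per node

total_cores = BLADES * B200_M6.cores
total_memory_gb = BLADES * B200_M6.memory_gb
print(f"{B200_M6.cores} cores and {B200_M6.memory_gb} GB per blade")
print(f"{total_cores} cores and {total_memory_gb} GB across {BLADES} blades")
```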

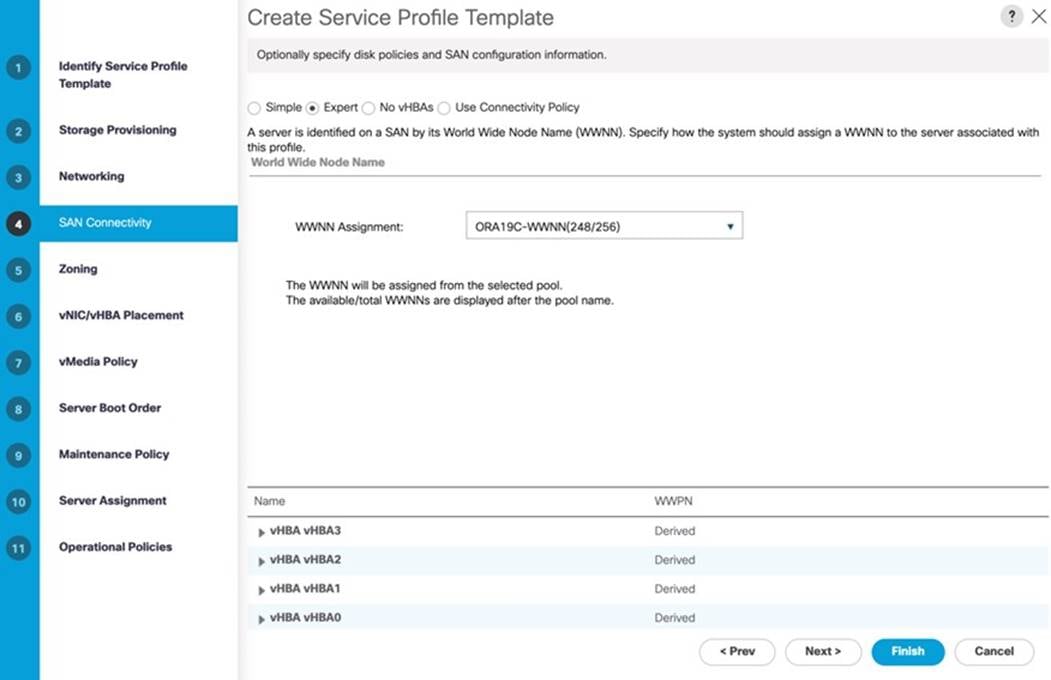

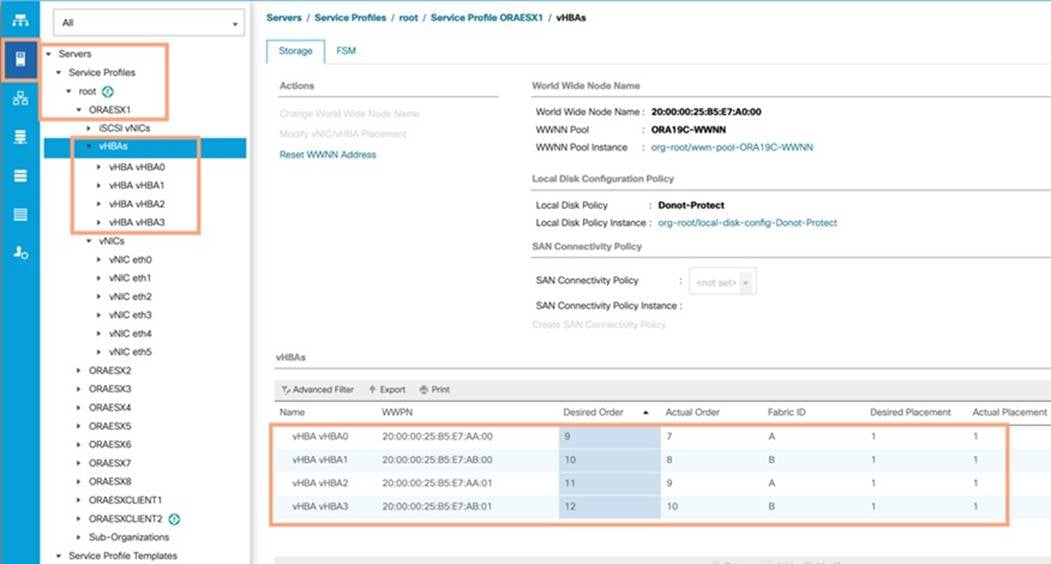

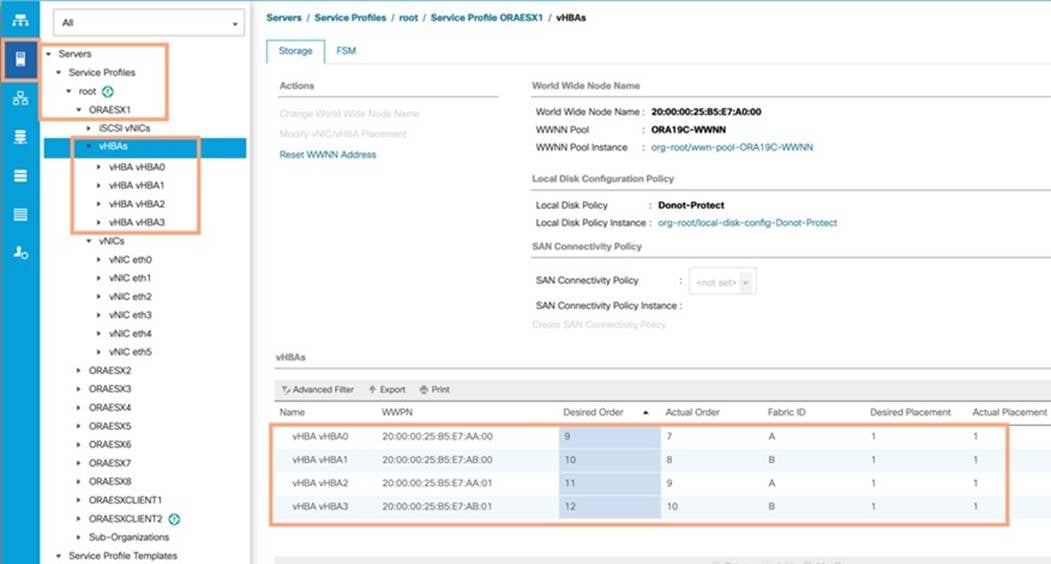

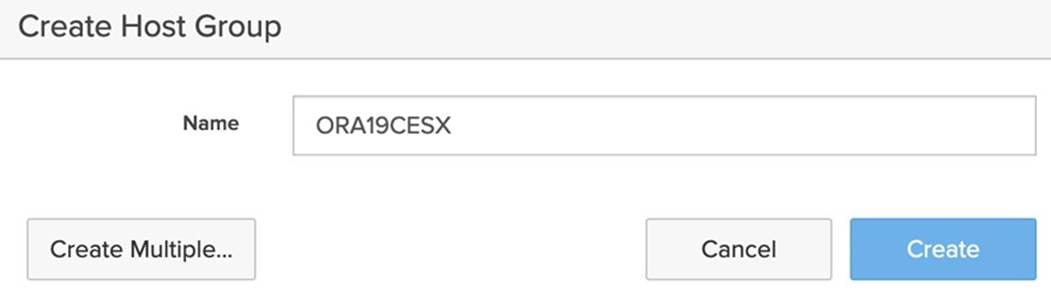

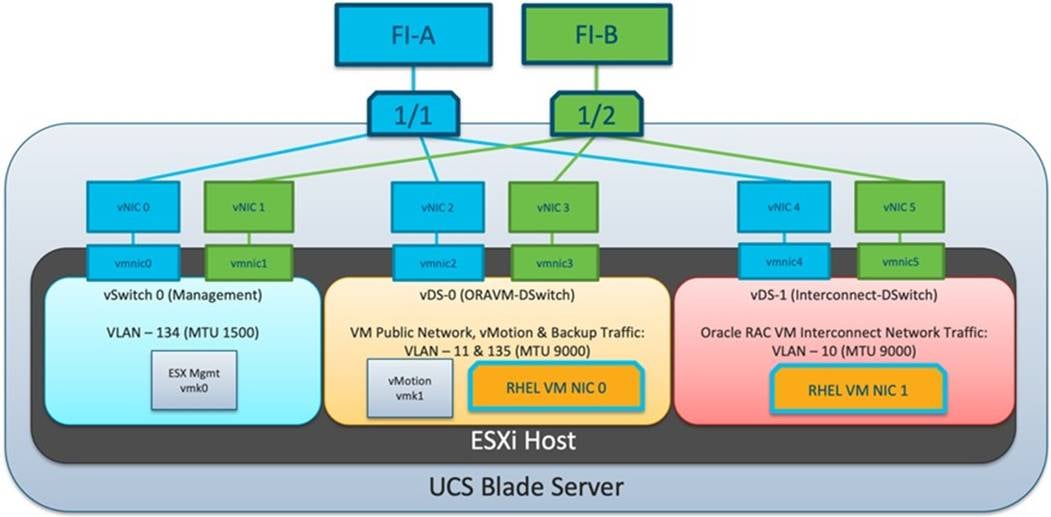

In this solution, six vNICs and four vHBAs were configured on each host to carry all the network and storage traffic as listed in Table 3.

Table 3. vNIC and vHBA Configured on each Linux Host

| vNIC/vHBA | Details |
| --- | --- |
| vNIC 0 (eth0) | ESXi Management Network Traffic Interface on Fabric Interconnect – A (Allowed VLAN 134) MTU = 1500 |
| vNIC 1 (eth1) | ESXi Management Network Traffic Interface on Fabric Interconnect – B (Allowed VLAN 134) MTU = 1500 |
| vNIC 2 (eth2) | VM Management and vMotion Network Traffic Interface on Fabric Interconnect – A (Allowed VLAN 2, 11 & 135) MTU = 9000 |
| vNIC 3 (eth3) | VM Management and vMotion Network Traffic Interface on Fabric Interconnect – B (Allowed VLAN 2, 11 & 135) MTU = 9000 |
| vNIC 4 (eth4) | Private Server-to-Server Network (Cache Fusion) Traffic Interface for Oracle RAC on Fabric Interconnect – A (Allowed VLAN 2 & 10) MTU = 9000 |
| vNIC 5 (eth5) | Private Server-to-Server Network (Cache Fusion) Traffic Interface for Oracle RAC on Fabric Interconnect – B (Allowed VLAN 2 & 10) MTU = 9000 |
| vHBA0 | FC Network (Oracle RAC Storage) Traffic on Fabric Interconnect – A to MDS-A Switch |
| vHBA1 | FC Network (Oracle RAC Storage) Traffic on Fabric Interconnect – B to MDS-B Switch |
| vHBA2 | FC Network (Oracle RAC Storage) Traffic on Fabric Interconnect – A to MDS-A Switch |
| vHBA3 | FC Network (Oracle RAC Storage) Traffic on Fabric Interconnect – B to MDS-B Switch |

The vNICs are configured for redundancy and failover on both the Fabric Interconnects and ESXi as listed in Table 4.

Table 4. vNIC and vHBA Configured on Each Linux Host

| Server vNICs | FI | Redundancy | MTU | Switch Type | ESXi Failover |
| --- | --- | --- | --- | --- | --- |
| vNIC 0 | FI – A | Primary | 1500 | vSwitch-0 | ACTIVE |
| vNIC 1 | FI – B | Secondary | 1500 | vSwitch-0 | ACTIVE |
| vNIC 2 | FI – A | Primary | 9000 | vDS-0 | ACTIVE |
| vNIC 3 | FI – B | Secondary | 9000 | vDS-0 | ACTIVE |
| vNIC 4 | FI – A | Primary | 9000 | vDS-1 | ACTIVE |
| vNIC 5 | FI – B | Secondary | 9000 | vDS-1 | ACTIVE |
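The redundancy rule behind Table 4 is that every virtual switch has one uplink on each fabric and that both uplinks share the same MTU. The following sketch encodes the table as data and checks that rule; the `Vnic` type and `check_uplink_pairs` function are illustrative names, not part of any Cisco or VMware tool.

```python
from collections import defaultdict
from typing import NamedTuple


class Vnic(NamedTuple):
    name: str
    fabric: str   # "A" or "B"
    mtu: int
    switch: str   # vSwitch or vDS the vNIC uplinks to


# Values transcribed from Table 4.
VNICS = [
    Vnic("vNIC 0", "A", 1500, "vSwitch-0"),
    Vnic("vNIC 1", "B", 1500, "vSwitch-0"),
    Vnic("vNIC 2", "A", 9000, "vDS-0"),
    Vnic("vNIC 3", "B", 9000, "vDS-0"),
    Vnic("vNIC 4", "A", 9000, "vDS-1"),
    Vnic("vNIC 5", "B", 9000, "vDS-1"),
]


def check_uplink_pairs(vnics):
    """Return a list of problems; an empty list means the layout is redundant."""
    by_switch = defaultdict(list)
    for vnic in vnics:
        by_switch[vnic.switch].append(vnic)

    problems = []
    for switch, members in sorted(by_switch.items()):
        if {m.fabric for m in members} != {"A", "B"}:
            problems.append(f"{switch}: uplinks do not span both fabrics")
        if len({m.mtu for m in members}) != 1:
            problems.append(f"{switch}: uplinks use different MTU values")
    return problems


print(check_uplink_pairs(VNICS) or "all virtual switches have redundant uplinks")
```

A check like this is mainly useful when the vNIC layout is maintained as data, for example in a service profile template export, and needs to be validated before deployment.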

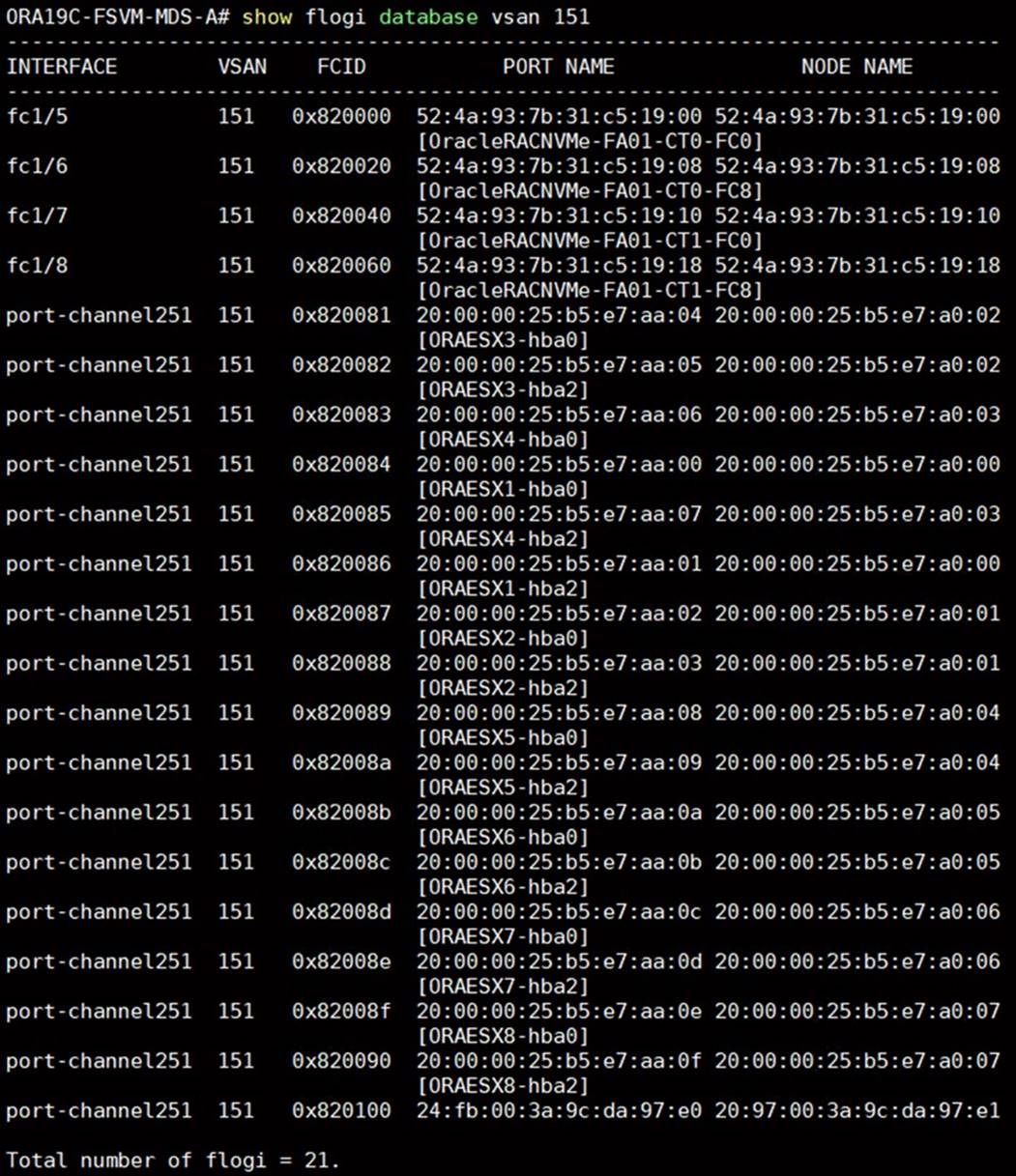

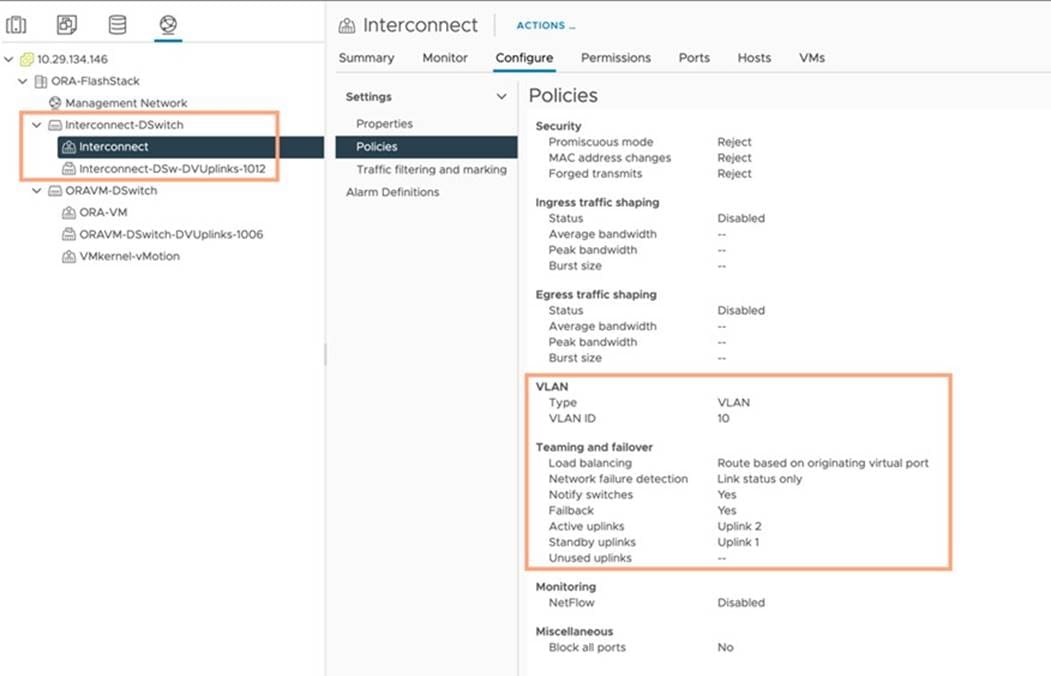

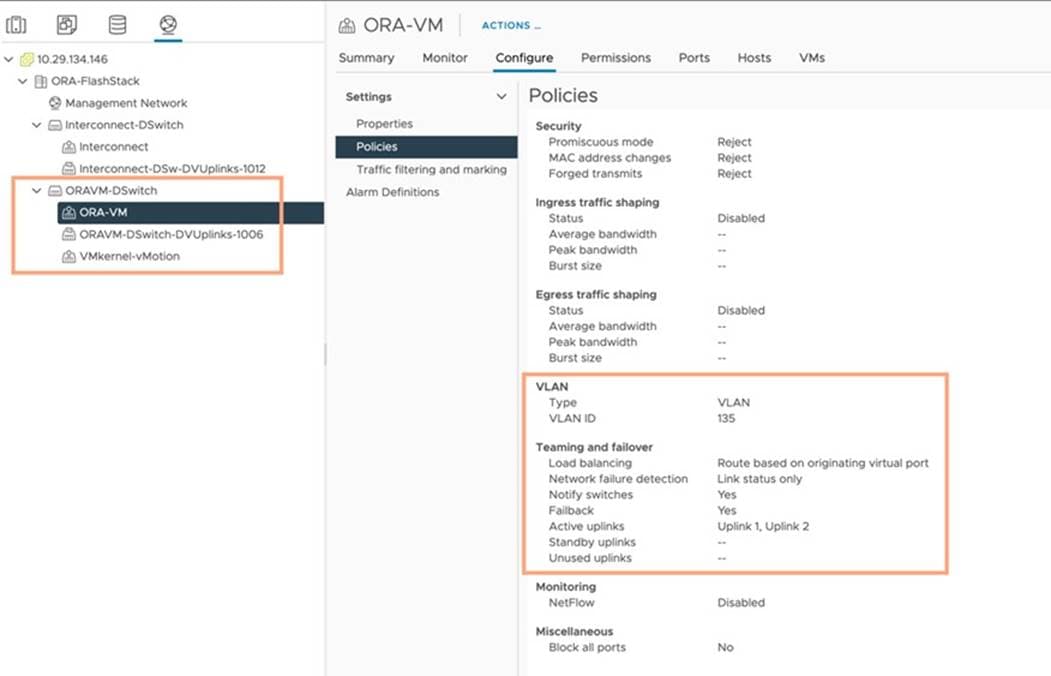

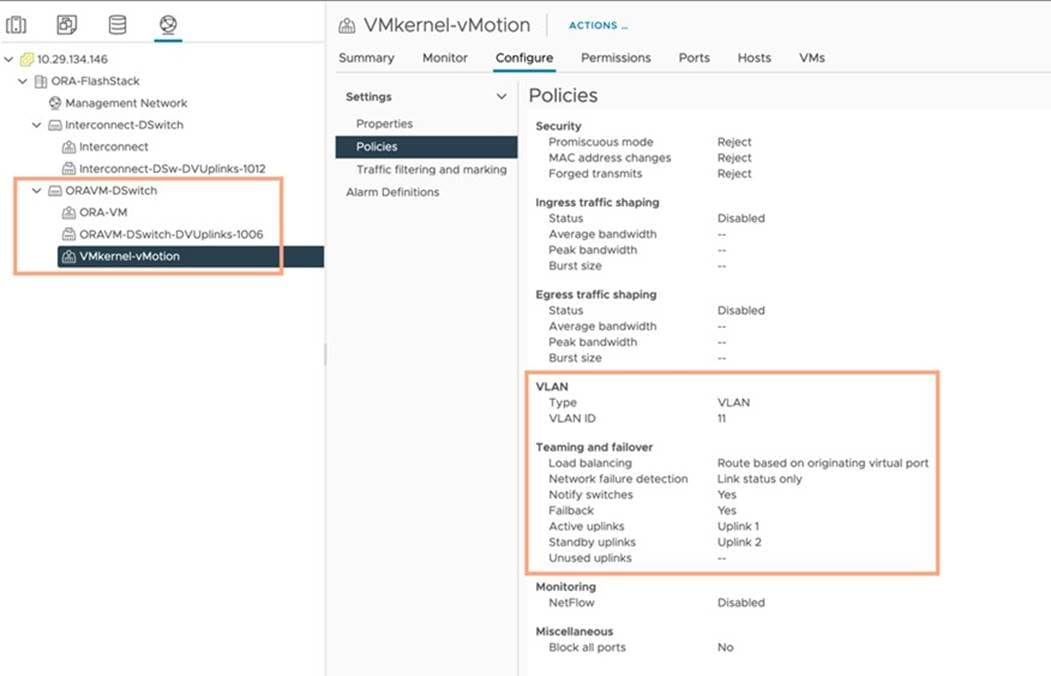

For this solution, five VLANs were configured to carry ESXi Management, VM Management, vMotion, and Oracle RAC private network traffic, as well as two VSANs to carry FC storage traffic, as listed in Table 5.

Table 5. VLAN and VSAN Configuration

| Type | Name | ID | Description |
| --- | --- | --- | --- |
| VLAN | Native VLAN | 2 | Native VLAN |
| VLAN | ESXi Management Network | 134 | VLAN for ESXi Management Network Traffic |
| VLAN | VM Management | 135 | VLAN for VM Management Network Traffic |
| VLAN | Interconnect | 10 | VLAN for Private Server-to-Server Network (Cache Fusion) Traffic for Oracle RAC |
| VLAN | vMotion & Backup | 11 | VLAN for vMotion and Database Backup Network Traffic |
| VSAN | VSAN-A | 151 | FC Network (Oracle RAC Storage) Traffic through Fabric Interconnect A |
| VSAN | VSAN-B | 152 | FC Network (Oracle RAC Storage) Traffic through Fabric Interconnect B |
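Trunk configurations on the switches express VLAN membership as a compact range list. As an illustration, the sketch below collapses the VLAN IDs from Table 5 into that form; `compress_vlans` is a hypothetical helper written for this example.

```python
def compress_vlans(vlan_ids):
    """Collapse VLAN IDs into a comma-separated range list, e.g. [10, 11] -> '10-11'."""
    ranges = []
    start = prev = None
    for vid in sorted(set(vlan_ids)):
        if start is None:
            start = prev = vid
        elif vid == prev + 1:
            prev = vid                      # extend the current run
        else:
            ranges.append((start, prev))    # close the run and start a new one
            start = prev = vid
    if start is not None:
        ranges.append((start, prev))
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


# VLAN names and IDs from Table 5.
VLANS = {
    "Native VLAN": 2,
    "ESXi Management Network": 134,
    "VM Management": 135,
    "Interconnect": 10,
    "vMotion & Backup": 11,
}

print(compress_vlans(VLANS.values()))          # 2,10-11,134-135
print(compress_vlans([1, *VLANS.values()]))    # 1-2,10-11,134-135
```

The second call adds the default VLAN 1, which yields the same allowed-VLAN list that the peer-link trunk configuration later in this document uses.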

This FlashStack solution consists of Pure Storage FlashArray//X90 R3 storage as listed in Table 6.

Table 6. Pure Storage FlashArray//X90 R3 Storage Configuration

| Storage Components | Description |
| --- | --- |
| FlashArray//X90 R3 | Pure Storage FlashArray//X90 R3 |
| Capacity | 27.26 TB |
| Connectivity | 8 x 32 Gb/s redundant FC, 1 Gb/s redundant Ethernet (Management port) |
| Physical | 3 Rack Units |

Table 7 lists the versions of the software and firmware releases used in this FlashStack solution.

Table 7. Software and Firmware Revisions

| Software and Firmware | Version |
| --- | --- |
| Cisco Nexus 9336C-FX2 NXOS | NXOS 9.3(2) |
| Cisco MDS 9148T System | System Version 8.4(2c) |
| Cisco UCS Manager System | 4.2(1i) |
| Cisco UCS Adapter VIC 1440 | Package Version 4.2(1i)B; Running Version 5.2(1b) |
| Pure Storage FlashArray//X90 R3 | Purity//FA 6.1.11 |
| VMware vSphere ESXi Cisco Custom ISO | VMware ESXi, 7.0.2, 17630552 |
| Virtual Machine OS | Red Hat Enterprise Linux 7.9 (64-bit) |
| Cisco UCS VIC Storage Driver for VMware (Driver for ESXi_7.0U2), from esxcli software vib list \| grep nfnic | Cisco_bootbank_nfnic_4.0.0.71-1OEM.670.0.0.8169922.vib 4.0.0.71-1OEM.670.0.0.8169922 |
| Cisco UCS VIC Network Driver for VMware (Driver for ESXi_7.0U2), from esxcli software vib list \| grep nenic | Cisco_bootbank_nenic_1.0.35.0-1OEM.670.0.0.8169922.vib 1.0.35.0-1OEM.670.0.0.8169922 |
| VMware vCenter | 7.0 |
| Oracle Database 19c Grid Infrastructure for Linux x86-64 | 19.12.0.0.0 |
| Oracle Database 19c Enterprise Edition for Linux x86-64 | 19.12.0.0.0 |
| FIO | fio-3.7-3.el8.x86_64 |
| Oracle SwingBench | 2.5.971 |
| SLOB | 2.5.2.4 |

Physical Topology

This solution consists of the following set of hardware combined into a single stack:

● Compute: Cisco UCS B200 M6 Blade Servers with Cisco Virtual Interface Cards (VICs) 1440

● Network: Cisco Nexus 9336C-FX2, Cisco MDS 9148T Fibre Channel and Cisco UCS Fabric Interconnect 6454 for network and management connectivity

● Storage: Pure Storage FlashArray//X90 R3

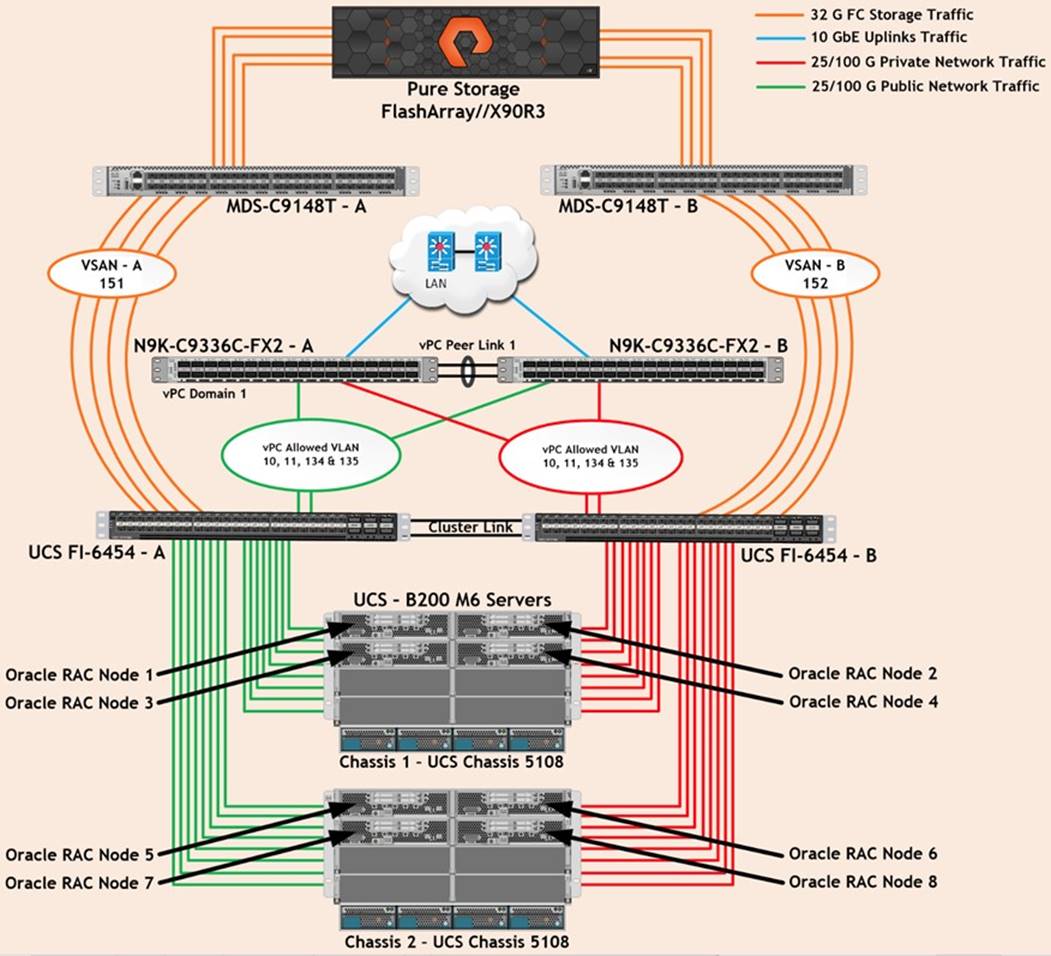

In this solution design, two Cisco UCS 5108 Blade Server Chassis were deployed with eight identical Intel Xeon CPU-based Cisco UCS B200 M6 Blade Servers to host the 8-node Oracle RAC Databases. Each Cisco UCS B200 M6 server has a Virtual Interface Card (VIC) 1440 with a port expander. Eight ports from each Cisco UCS 2408 Fabric Extender in the chassis connect to the Cisco Fabric Interconnects, which in turn connect to the Cisco MDS Switches for upstream SAN connectivity to the Pure Storage FlashArray.

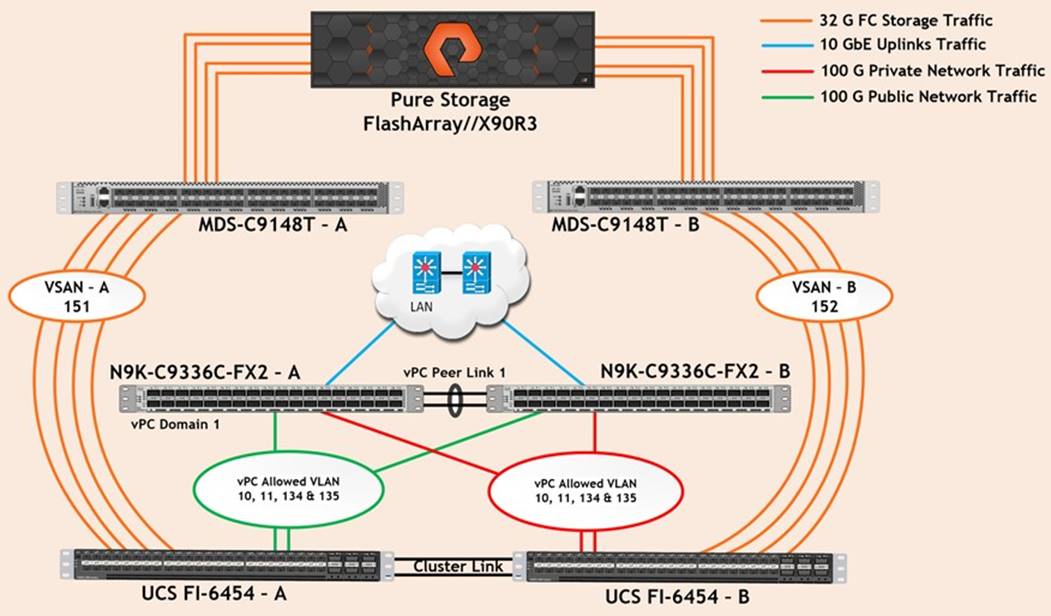

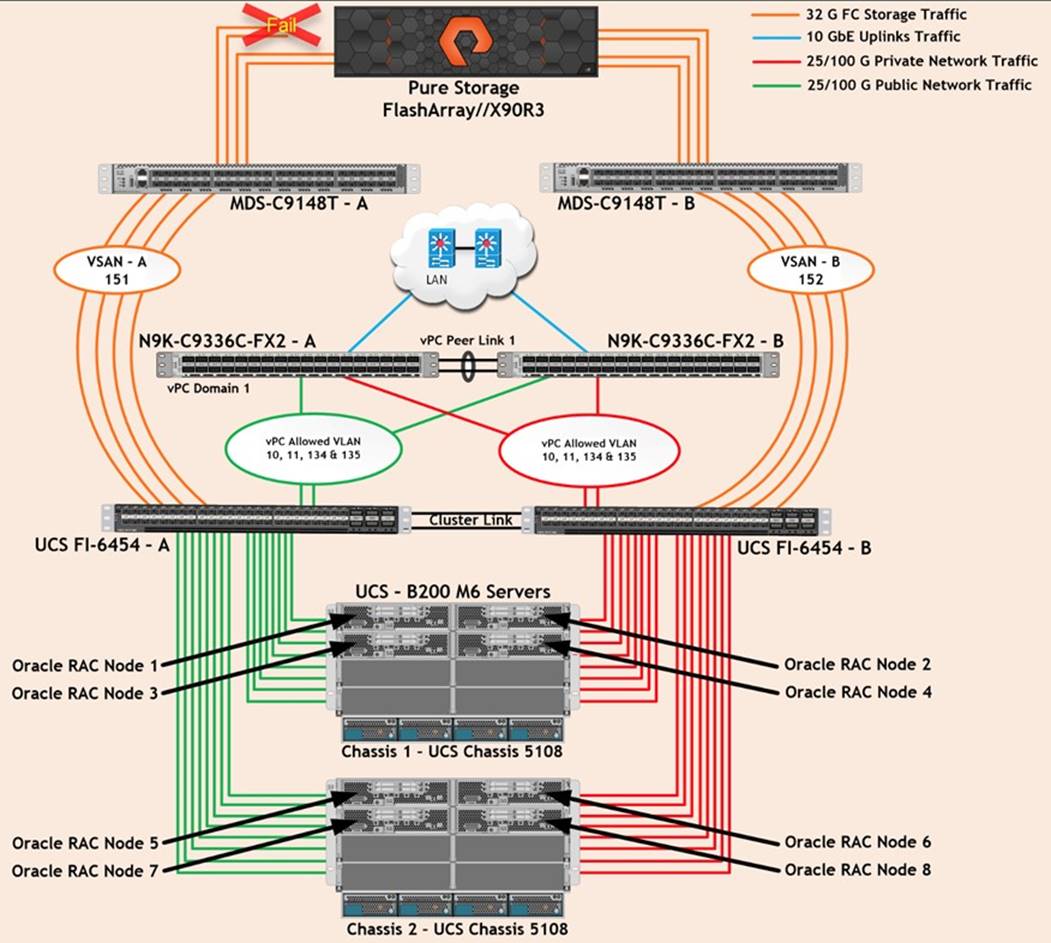

Figure 2 shows the architecture diagram of the FlashStack components deploying an eight-node Oracle RAC 19c Database solution. This reference design is a typical network configuration that can be deployed in a customer's environment.

As shown in Figure 2, a pair of Cisco UCS 6454 Fabric Interconnects (FI) carries both storage and network traffic from the Cisco UCS B200 M6 servers with the help of Cisco Nexus 9336C-FX2 and Cisco MDS 9148T switches. Both the Fabric Interconnects and the Cisco Nexus switches are clustered with a peer link between them to provide high availability.

Figure 2 shows 16 links (8 x 25G links per chassis) going from the blade server chassis to Fabric Interconnect – A. Similarly, 16 links (8 x 25G links per chassis) go from the blade server chassis to Fabric Interconnect – B. Fabric Interconnect – A links are used for Oracle Public Network Traffic (VLAN-134), shown as green lines, while Fabric Interconnect – B links are used for Oracle Private Interconnect Traffic (VLAN-10), shown as red lines. Two virtual Port-Channels (vPCs) are configured to provide public network and private network traffic paths from the server blades to the northbound Nexus switches.

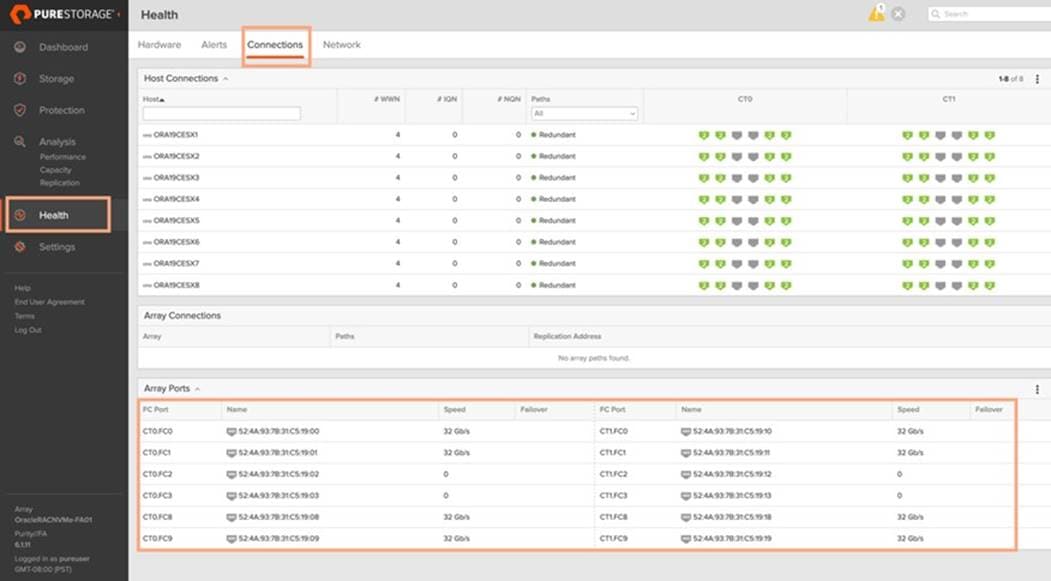

FC storage access from both Fabric Interconnects to the MDS Switches and the Pure Storage FlashArray is shown as orange lines. Four 32Gb links connect FI – A to the MDS – A Switch, and four 32Gb links connect FI – B to the MDS – B Switch. The Pure Storage FlashArray//X90 R3 has eight active FC connections to the Cisco MDS Switches: four FC ports are connected to MDS-A and the other four to MDS-B. On both Controller CT0 and Controller CT1, SAN ports FC0 and FC8 are connected to the MDS – A Switch, while SAN ports FC1 and FC9 are connected to the MDS – B Switch. In addition, two FC Port-Channels (PCs) are configured to provide storage network paths from the server blades to the storage array. Each PC has VSANs created for application and storage network data access.
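The link counts described for this topology translate into nominal aggregate bandwidth as follows. This is a minimal arithmetic sketch using line rates only; it ignores protocol overhead, and the function name is illustrative.

```python
def aggregate_gbps(links: int, gbps_per_link: int) -> int:
    """Nominal aggregate line rate of a bundle of identical links."""
    return links * gbps_per_link


CHASSIS = 2

# Ethernet: 8 x 25G links per chassis to each Fabric Interconnect.
eth_links_per_fi = CHASSIS * 8
eth_gbps_per_fi = aggregate_gbps(eth_links_per_fi, 25)

# Fibre Channel: 4 x 32G from each FI to its MDS switch,
# and 4 x 32G from the array to each MDS switch (8 array ports in total).
fc_gbps_fi_to_mds = aggregate_gbps(4, 32)
fc_gbps_array_per_fabric = aggregate_gbps(4, 32)
fc_gbps_array_total = aggregate_gbps(8, 32)

print(f"Chassis to each FI: {eth_links_per_fi} links, {eth_gbps_per_fi} Gb/s")
print(f"Each FI to its MDS: {fc_gbps_fi_to_mds} Gb/s")
print(f"Array to each MDS:  {fc_gbps_array_per_fabric} Gb/s "
      f"({fc_gbps_array_total} Gb/s across both fabrics)")
```

The FI-to-MDS and array-to-MDS bundles are matched at the same nominal rate on each fabric, so neither hop is the obvious bottleneck on paper.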

| Tech tip |

| For Oracle RAC configuration on Cisco Unified Computing System, we recommend keeping all private interconnect network traffic local to a single Fabric Interconnect. The private traffic then stays local to that Fabric Interconnect and is not routed through the northbound network switch. All inter-server-blade (RAC node private) communication is resolved locally at the Fabric Interconnect, which significantly reduces latency for Oracle Cache Fusion traffic. |
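The recommendation above can be expressed as a simple check: private traffic between two RAC nodes stays inside a Fabric Interconnect only when both nodes have their private vNIC active on the same fabric. The sketch below lists the node pairs that would otherwise be forwarded through the northbound switches. The node names and the failover scenario are hypothetical.

```python
from itertools import combinations


def northbound_pairs(active_fabric):
    """Return node pairs whose private traffic would leave the Fabric Interconnect.

    active_fabric maps a node name to the fabric ("A" or "B") on which its
    private interconnect vNIC is currently active.
    """
    return [
        (a, b)
        for a, b in combinations(sorted(active_fabric), 2)
        if active_fabric[a] != active_fabric[b]
    ]


# All eight nodes keep private traffic on Fabric Interconnect B.
all_on_b = {f"node{i}": "B" for i in range(1, 9)}

# Hypothetical case: node3's private vNIC has failed over to Fabric Interconnect A.
after_failover = dict(all_on_b, node3="A")

print(len(northbound_pairs(all_on_b)))        # 0
print(len(northbound_pairs(after_failover)))  # 7
```

In the failover case, every conversation that involves node3 crosses fabrics, which is the added latency the tech tip is meant to avoid.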

Additional 1Gb management connections are needed for an out-of-band network switch that sits apart from this FlashStack infrastructure. Each UCS FI, MDS and Nexus switch is connected to the out-of-band network switch, and each Pure Storage controller also has two connections to the out-of-band network switch.

Although this is the base design, each of the components can be scaled easily to support specific business requirements. For example, more servers or even blade chassis can be deployed to increase compute capacity, additional disk shelves can be deployed to improve I/O capability and throughput, and special hardware or software features can be added to introduce new features. This document guides you through the detailed steps for deploying the base architecture, as shown in the above figure. These procedures cover everything from physical cabling to network, compute, and storage device configurations.

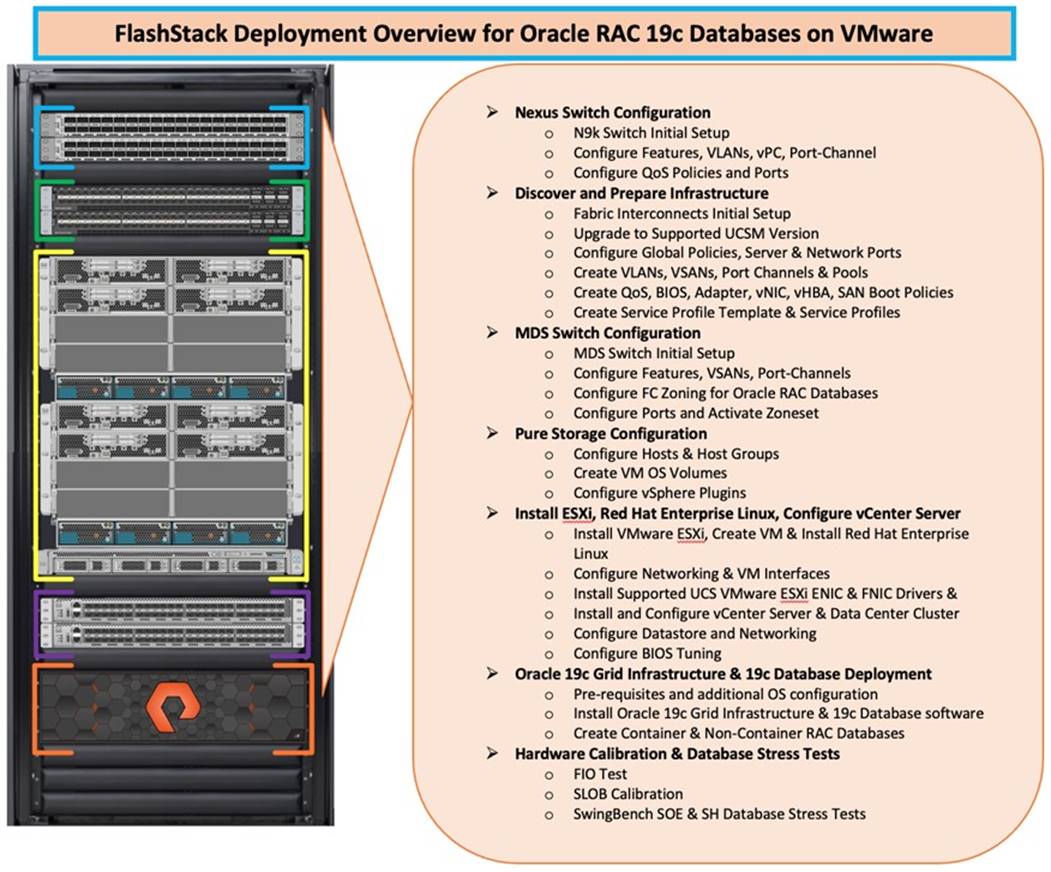


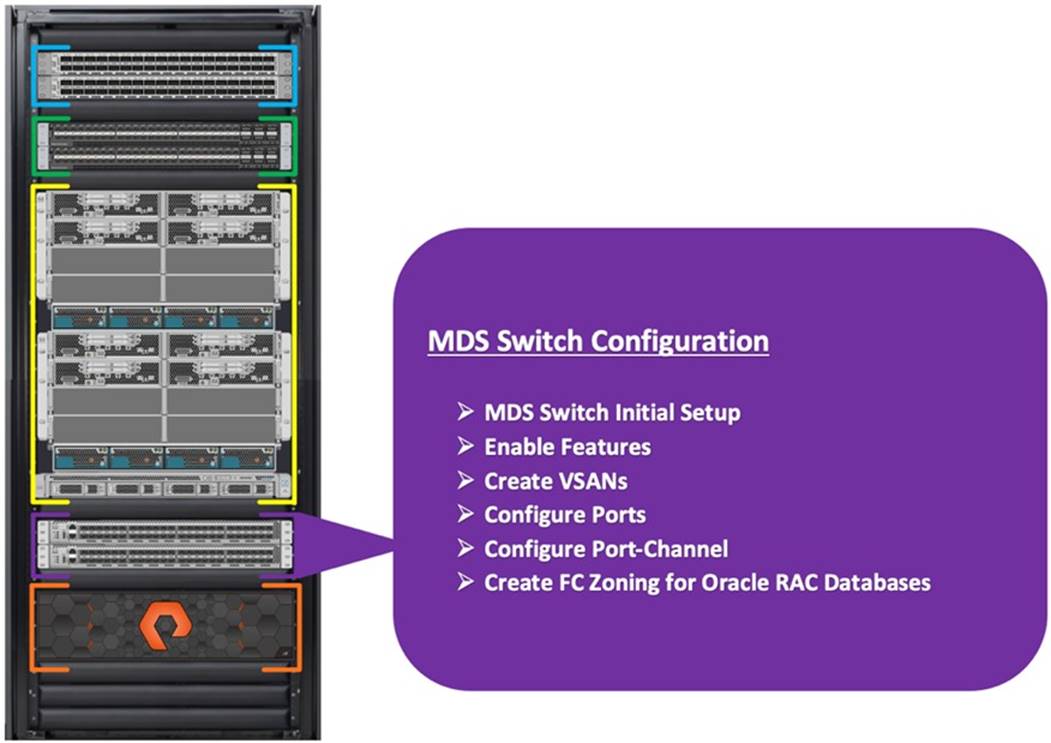

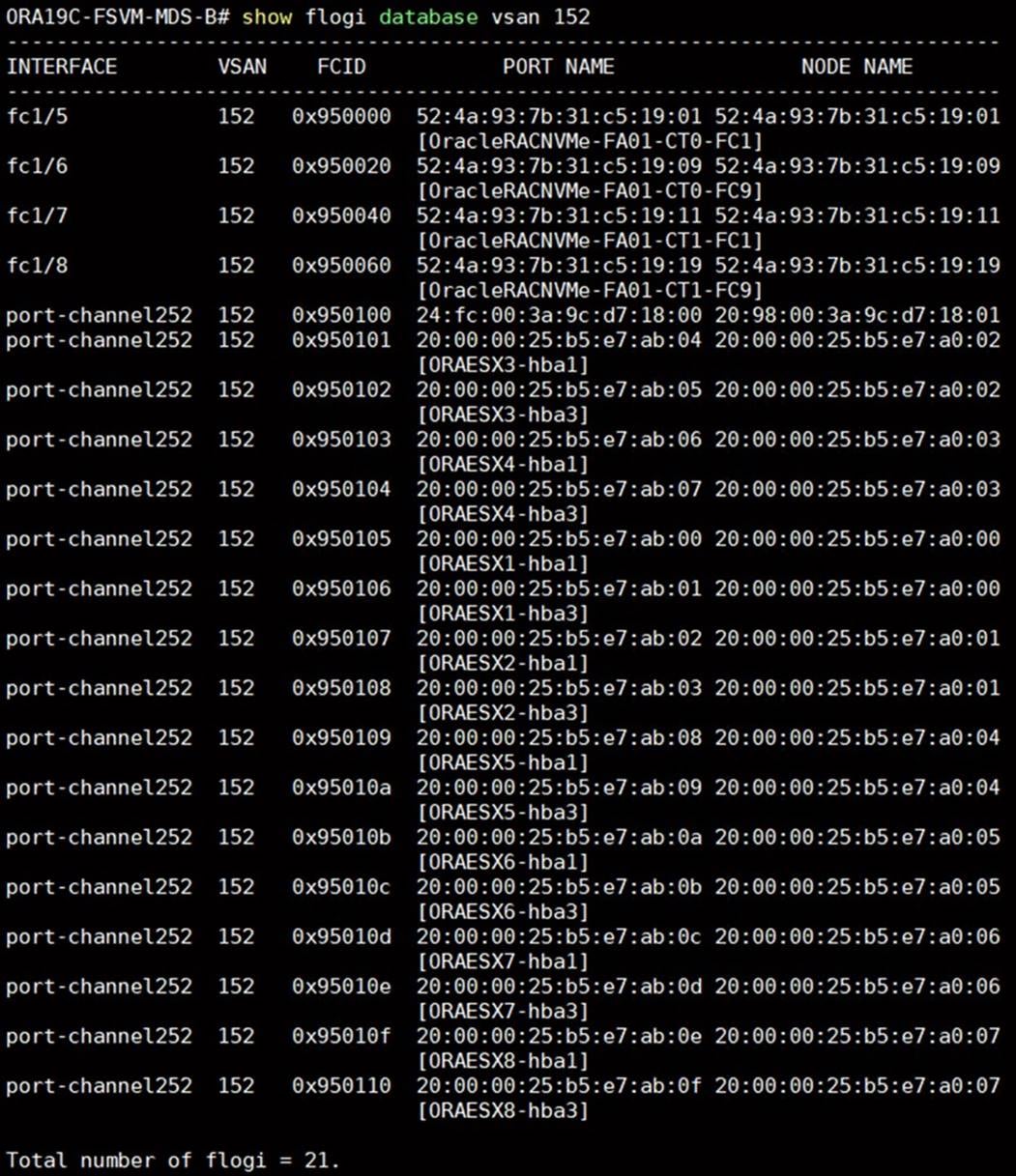

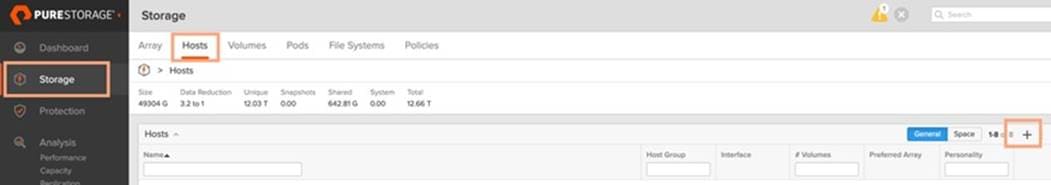

Figure 3 illustrates the high-level overview and steps for configuring various components to deploy and test the Oracle RAC Database 19c on FlashStack reference architecture.

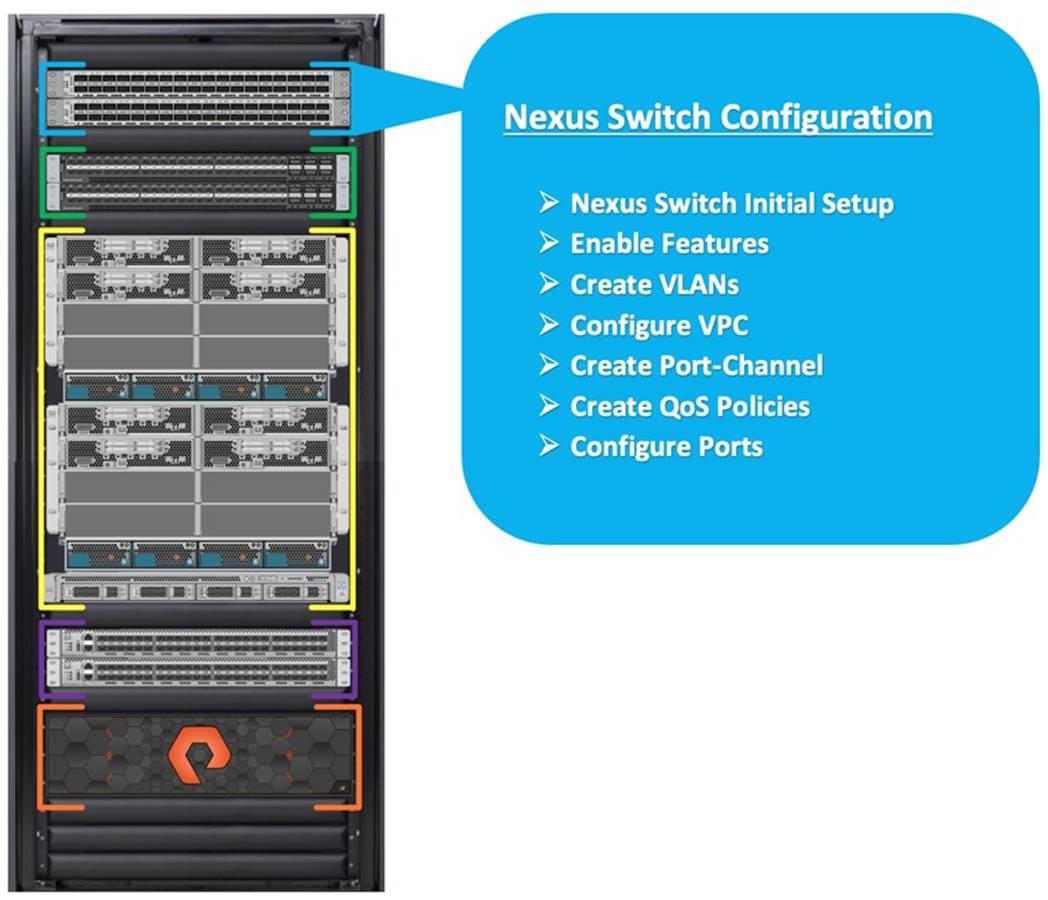

Cisco Nexus Switch Configuration

This section details the high-level steps to configure Cisco Nexus Switches as shown in Figure 4.

The following procedures describe how to configure the Cisco Nexus switches for use in a base FlashStack environment. This procedure assumes you’re using Cisco Nexus 9336C-FX2 switches deployed with the 100Gb end-to-end topology.

Procedure 1. Initial Setup – Cisco Nexus A and B Switch

Note: On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Step 1. Set up the initial configuration for the Cisco Nexus A switch on <nexus-A-hostname>, by running the following:

Abort Power on Auto Provisioning and continue with normal setup? (yes/no) [n]: yes

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-A-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-A-mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-A-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-A-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <global-ntp-server-ip>

Configure default interface layer (L3/L2) [L3]: L2

Configure default switchport interface state (shut/noshut) [noshut]: Enter

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

Step 2. Repeat Step 1 to set up the initial configuration for the Cisco Nexus B Switch, changing the switch hostname and management IP address accordingly.

Procedure 2. Configure Global Settings

Step 1. Login as admin user on the Cisco Nexus Switch A and run the following commands to set the global configurations on Switch A:

configure terminal

feature interface-vlan

feature hsrp

feature lacp

feature vpc

feature udld

feature lldp

spanning-tree port type network default

spanning-tree port type edge bpduguard default

port-channel load-balance src-dst l4port

policy-map type network-qos jumbo

class type network-qos class-default

mtu 9216

system qos

service-policy type network-qos jumbo

vrf context management

ip route 0.0.0.0/0 10.29.135.1

copy run start

Step 2. Repeat Step 1 for the Cisco Nexus Switch B and run the commands to set global configurations on Cisco Nexus Switch B.

Note: Make sure to run copy run start to save the configuration on each switch after the configuration is completed.

Procedure 3. VLAN Configuration

Note: Follow these steps on Cisco Nexus A and B Switches.

Step 1. Login as admin user on Cisco Nexus Switch A.

Step 2. Create VLAN 10 for Oracle RAC Private Network Traffic, VLAN 11 for vMotion, VLAN 134 for ESXi Management and VLAN 135 for VM Management and Oracle RAC Public Network Traffic:

configure terminal

vlan 2

name Native_VLAN

no shutdown

vlan 10

name Oracle_RAC_Private_Network

no shutdown

vlan 11

name vMotion

no shutdown

vlan 134

name ESX_Public_Network

no shutdown

vlan 135

name Oracle_RAC_Public_Network

no shutdown

interface Ethernet1/29

description connect to uplink switch

switchport access vlan 134

speed 1000

interface Ethernet1/31

description connect to uplink switch

switchport access vlan 135

speed 1000

copy run start

Step 3. Repeat Steps 1 and 2 on the Cisco Nexus Switch B to create VLAN 10 for Oracle RAC Private Network Traffic, VLAN 11 for vMotion, VLAN 134 for ESXi Management, and VLAN 135 for VM Management and Oracle RAC Public Network Traffic.

Note: Make sure to run copy run start to save the configuration on each switch after the configuration is completed.
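Because the same VLAN definitions must be entered on both switches, it can help to render the stanzas from one definition. The following sketch generates the `vlan`, `name`, and `no shutdown` lines used in the procedure above; it only builds text and does not connect to a switch. The function name is illustrative.

```python
# VLAN IDs and names exactly as used in the VLAN configuration procedure.
VLAN_NAMES = {
    2: "Native_VLAN",
    10: "Oracle_RAC_Private_Network",
    11: "vMotion",
    134: "ESX_Public_Network",
    135: "Oracle_RAC_Public_Network",
}


def vlan_config(vlans):
    """Build the NX-OS VLAN stanzas as a list of command lines."""
    lines = ["configure terminal"]
    for vlan_id, name in sorted(vlans.items()):
        lines += [f"vlan {vlan_id}", f"  name {name}", "  no shutdown"]
    return lines


print("\n".join(vlan_config(VLAN_NAMES)))
```

The output can be pasted into each switch or handed to whichever automation tool is already in use; the interface-specific commands in the procedure still need to be applied separately.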

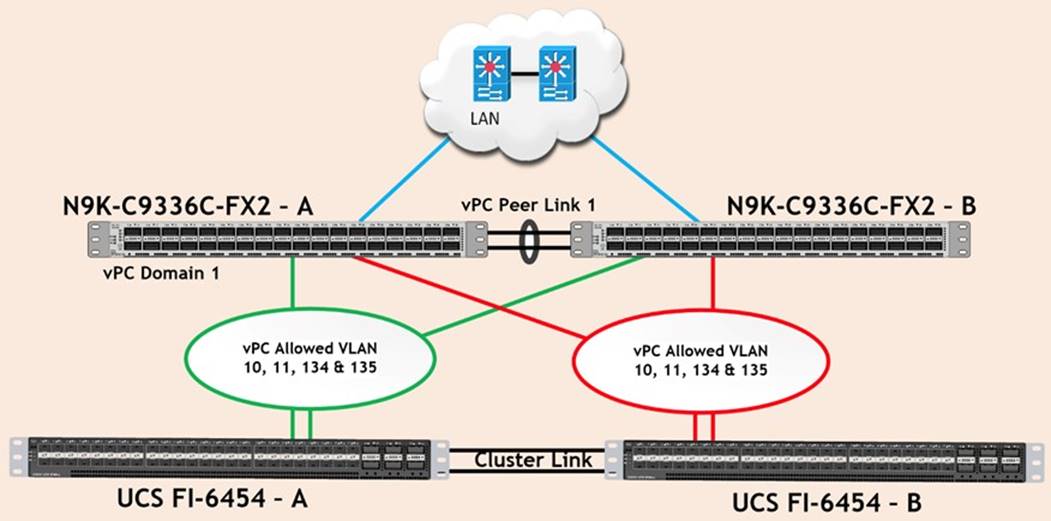

Virtual Port Channel (vPC) for Network Traffic

A port channel bundles individual links into a channel group to create a single logical link that provides the aggregate bandwidth of up to eight physical links. If a member port within a port channel fails, traffic previously carried over the failed link switches to the remaining member ports within the port channel. Port channeling also load balances traffic across these physical interfaces. The port channel stays operational as long as at least one physical interface within the port channel is operational. Using port channels, Cisco NX-OS provides greater bandwidth, redundancy, and load balancing across the channels.
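The behavior described above, where each flow is pinned to one member link and moves to a surviving link after a failure, can be illustrated with a toy model. The sketch below hashes source and destination addresses and Layer 4 ports, similar in spirit to the `src-dst l4port` load-balance setting configured earlier. The CRC32 hash is a stand-in chosen for determinism; the actual hashing on the switch is platform-specific, and the addresses and ports are hypothetical.

```python
import zlib


def pick_member(flow, members):
    """Map a (src_ip, dst_ip, src_port, dst_port) flow onto one operational link.

    CRC32 is an illustrative stand-in, not the hash the switch hardware uses.
    """
    if not members:
        raise ValueError("port channel is down: no operational member links")
    key = "|".join(map(str, flow)).encode()
    return members[zlib.crc32(key) % len(members)]


members = ["Eth1/1", "Eth1/2"]
flow = ("10.0.0.1", "10.0.0.2", 40000, 1521)  # hypothetical addresses and ports

before = pick_member(flow, members)
survivors = [m for m in members if m != before]  # the chosen link fails
after = pick_member(flow, survivors)

print(f"flow carried on {before}, then on {after} after the failure")
```

The flow always lands on exactly one link, so packets within a flow stay in order, and the channel keeps forwarding until the last member link is lost.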

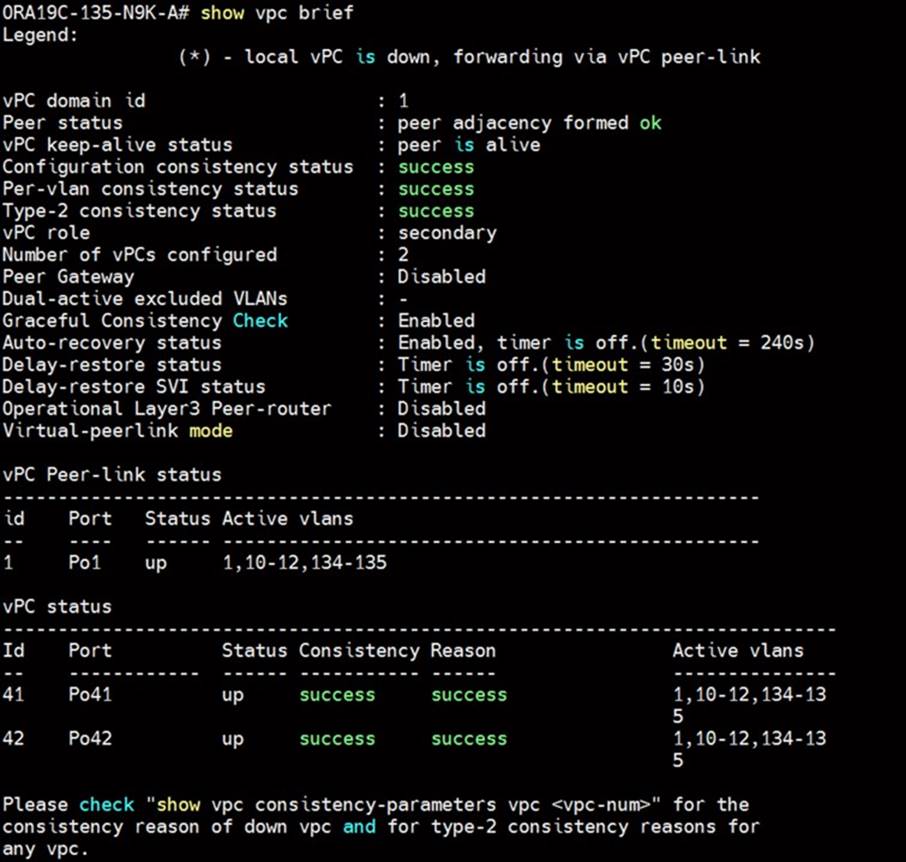

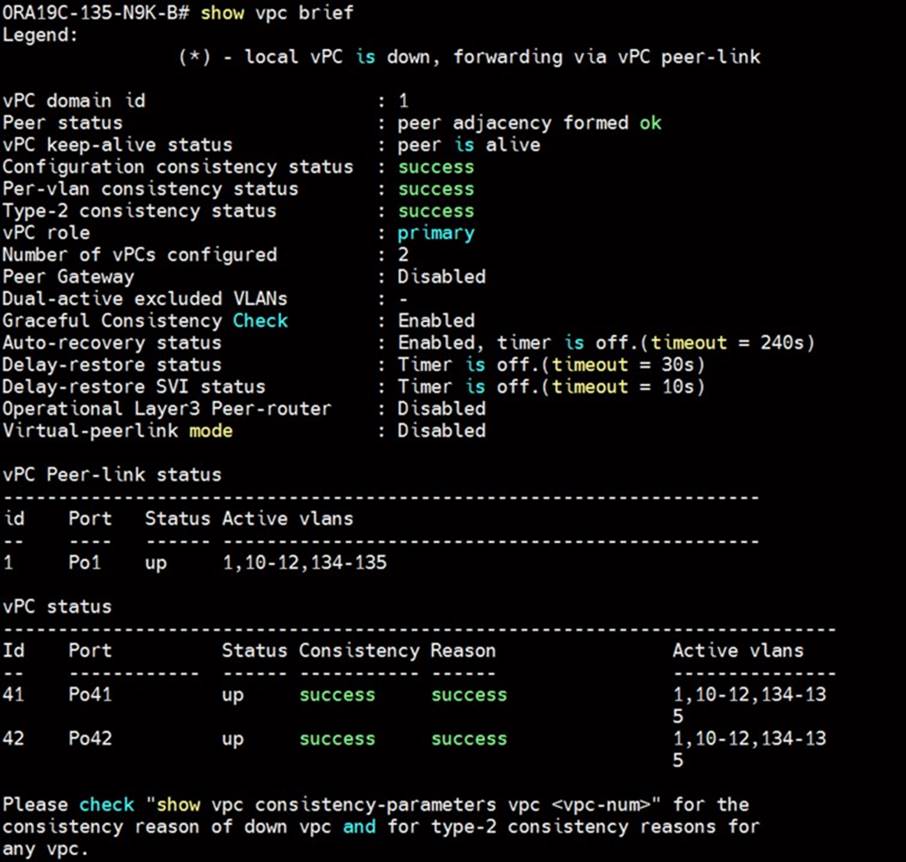

In the Cisco Nexus Switch topology, a single vPC domain is configured to provide HA, faster convergence in the event of a failure, and greater throughput. The vPC domain and the corresponding vPC names and IDs for the Oracle Database Servers are listed in Table 8.

| vPC Domain | vPC Name | vPC ID |
| --- | --- | --- |
| 1 | Peer-Link | 1 |
| 1 | vPC FI-A | 41 |
| 1 | vPC FI-B | 42 |

As listed in Table 8, a single vPC domain with Domain ID 1 is created across the two Cisco Nexus switches to define vPC members to carry specific VLAN network traffic. In this topology, a total of three vPCs are defined.

vPC ID 1 is defined as Peer link communication between the two Cisco Nexus switches. vPC IDs 41 and 42 are configured for both Cisco UCS fabric interconnects. Please follow these steps to create this configuration.

| Tech tip |

| A port channel bundles up to eight individual interfaces into a group to provide increased bandwidth and redundancy. |

Procedure 1. Create vPC Peer-Link

Note: For vPC 1, we used interfaces 1 and 2 for the peer-link. You may choose an appropriate number of ports based on your needs.

Step 1. Login as admin user into the Cisco Nexus Switch A:

configure terminal

vpc domain 1

peer-keepalive destination 10.29.135.154 source 10.29.135.153

auto-recovery

interface port-channel1

description vPC peer-link

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type network

vpc peer-link

interface Ethernet1/1

description Peer link 100g connected to N9K-B-Eth1/1

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

channel-group 1 mode active

interface Ethernet1/2

description Peer link 100g connected to N9K-B-Eth1/2

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

channel-group 1 mode active

copy run start

Step 2. Login as admin user into the Cisco Nexus Switch B and configure the second Cisco Nexus switch as follows:

| Tech tip |

| Make sure to change the description of interfaces and peer-keepalive destination and source IP addresses. |

configure terminal

vpc domain 1

peer-keepalive destination 10.29.135.153 source 10.29.135.154

auto-recovery

interface port-channel1

description vPC peer-link

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type network

vpc peer-link

interface Ethernet1/1

description Peer link 100g connected to N9K-A-Eth1/1

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

channel-group 1 mode active

interface Ethernet1/2

description Peer link 100g connected to N9K-A-Eth1/2

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

channel-group 1 mode active

copy run start
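Once both switches carry the peer-link configuration, the state of the vPC domain can be inspected before any fabric interconnect port channels are added. This is a minimal sketch using standard NX-OS show commands; it assumes the keepalive addresses configured above are reachable over the management network.

```
! Run on both Cisco Nexus Switch A and Switch B
show vpc peer-keepalive
show vpc role
show vpc consistency-parameters global
```

The keepalive should report that the peer is alive, one switch should hold the primary role and the other the secondary role, and the global consistency parameters should match on both sides.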

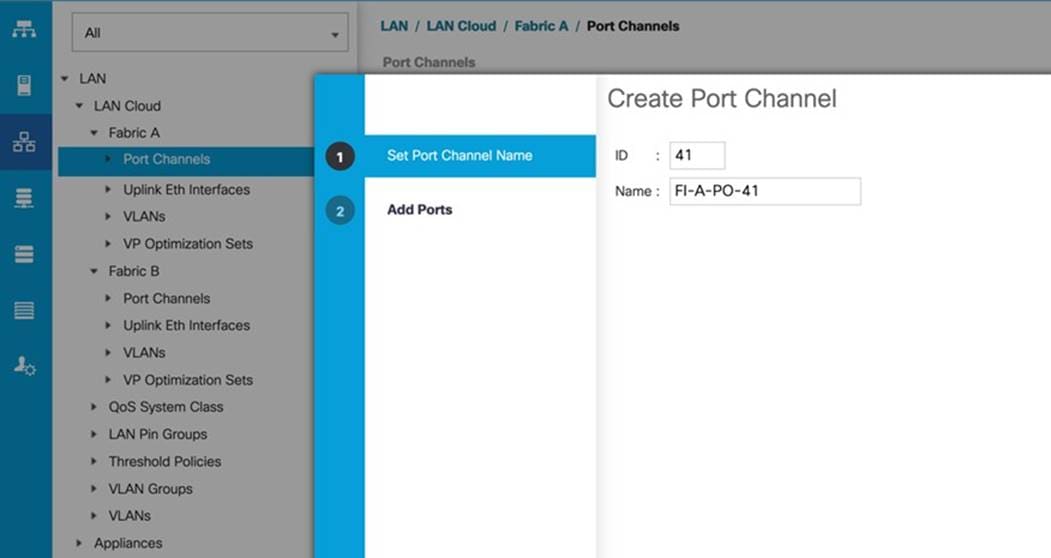

Create vPC Configuration between the Cisco Nexus Switches and Fabric Interconnects

This section describes how to create and configure port channel 41 and 42 for network traffic between the Cisco Nexus and Fabric Interconnect Switches.

Table 9 lists the vPC IDs, allowed VLAN IDs, and Ethernet uplink ports.

| vPC Description | vPC ID | Fabric Interconnects Ports | Cisco Nexus Switch Ports | Allowed VLANs |
| --- | --- | --- | --- | --- |
| Port Channel FI-A | 41 | FI-A Port 1/49 | N9K-A Port 1/25 | 10,11,134,135 |
| Port Channel FI-A | 41 | FI-A Port 1/50 | N9K-B Port 1/25 | 10,11,134,135 |
| Port Channel FI-B | 42 | FI-B Port 1/49 | N9K-A Port 1/26 | 10,11,134,135 |
| Port Channel FI-B | 42 | FI-B Port 1/50 | N9K-B Port 1/26 | 10,11,134,135 |

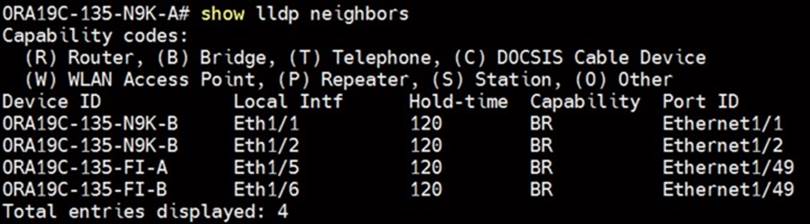

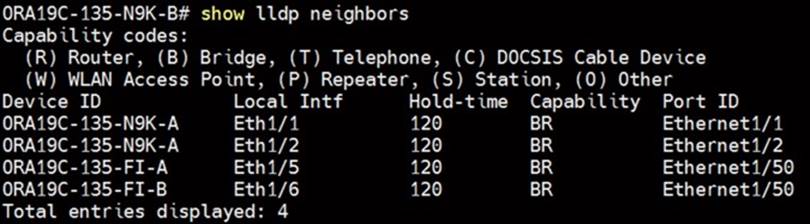

Verify the port connectivity on both Cisco Nexus switches as shown below:
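One common way to confirm which device sits at the far end of each link is CDP. This is a minimal sketch, assuming CDP is left enabled (the NX-OS default) on the Cisco Nexus switches and is advertised by the fabric interconnect uplinks in your environment.

```
! Run on both Cisco Nexus Switch A and Switch B
show cdp neighbors
show interface status
```

The neighbor list should show each fabric interconnect on the local interface that is cabled to it, which can be compared against the port mapping in Table 9.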

Procedure 1. Configure Port Channels on Cisco Nexus Switches

Step 1. Login as admin user into the Cisco Nexus Switch A:

configure terminal

interface port-channel41

description Port-Channel FI-A

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

vpc 41

no shutdown

interface port-channel42

description Port-Channel FI-B

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

vpc 42

no shutdown

interface Ethernet1/5

description 100g link to FI-A Port 49

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

channel-group 41 mode active

no shutdown

interface Ethernet1/6

description 100g link to FI-B Port 49

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

channel-group 42 mode active

no shutdown

copy run start

Step 2. Login as admin user into the Cisco Nexus Switch B and run the following commands to configure the second Cisco Nexus switch:

configure terminal

interface port-channel41

description Port-Channel FI-A

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

vpc 41

no shutdown

interface port-channel42

description Port-Channel FI-B

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

vpc 42

no shutdown

interface Ethernet1/5

description 100g link to FI-A Port 50

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

channel-group 41 mode active

no shutdown

interface Ethernet1/6

description 100g link to FI-B Port 50

switchport mode trunk

switchport trunk allowed vlan 1-2,10-11,134-135

spanning-tree port type edge trunk

mtu 9216

channel-group 42 mode active

no shutdown

copy run start
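After both switches are configured, the applied settings on the fabric interconnect port channels can be read back. This is a minimal sketch using standard NX-OS show commands, with port-channel41 taken from the configuration above; repeat it for port-channel42.

```
! Run on both Cisco Nexus Switch A and Switch B
show running-config interface port-channel41
show interface port-channel41 | include MTU
show interface trunk
```

The MTU line should report 9216 bytes, and the trunk listing should show the allowed VLANs configured above on both port channels.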

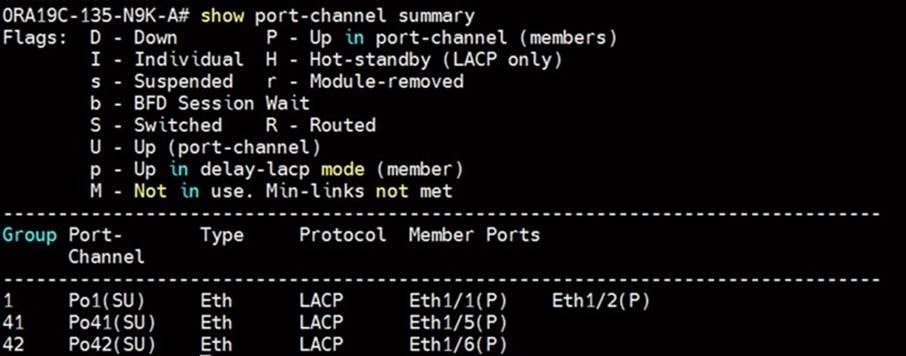

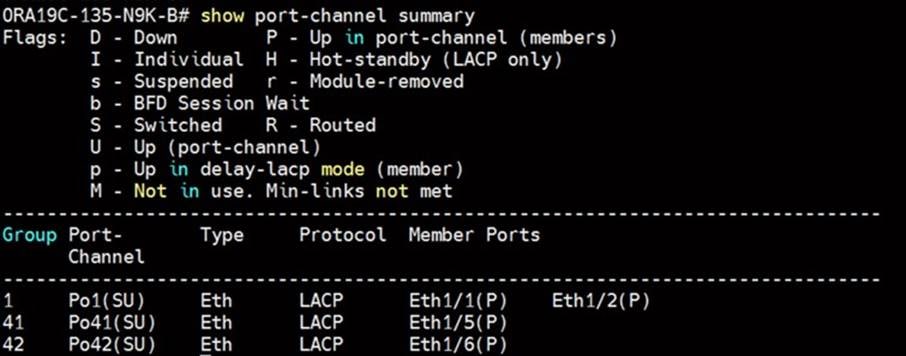

Procedure 2. Verify all vPC status

Step 1. Run this command for the Cisco Nexus Switch A Port-Channel Summary:

Step 2. Run this command for the Cisco Nexus Switch B Port-Channel Summary:

Step 3. Run this command for the Cisco Nexus Switch A vPC Status:

Step 4. Run this command for the Cisco Nexus Switch B vPC Status:
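The steps above do not name the commands, so the following is a minimal sketch that assumes they refer to the standard NX-OS port channel and vPC status commands. Run both commands on Cisco Nexus Switch A and again on Cisco Nexus Switch B.

```
! Steps 1 and 2: port channel summary
show port-channel summary

! Steps 3 and 4: vPC status
show vpc brief
```

In the summary output, member ports that are bundled and up carry the (P) flag. In the vPC output, the peer adjacency and keepalive should be reported as healthy, and vPC IDs 41 and 42 from Table 8 should show as up with a successful consistency check.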

This section details the Cisco UCS configuration that was completed as part of the infrastructure buildout. The racking, power, and installation of the chassis are described in the installation guide, see: https://www.cisco.com/c/en/us/support/servers-unified-computing/ucs-manager/products-installation-guides-list.html

Note: It is beyond the scope of this document to explain the Cisco UCS infrastructure setup and connectivity. The documentation guides and examples are available here: https://www.cisco.com/c/en/us/support/servers-unified-computing/ucs-manager/products-installation-and-configuration-guides-list.html.

Note: This document details all the tasks to configure Cisco UCS but only some screenshots are included.

Using logical servers that are disassociated from the physical hardware removes many limiting constraints around how servers are provisioned. Cisco UCS Service Profiles contain values for a server's property settings, including virtual network interface cards (vNICs), MAC addresses, boot policies, firmware policies, fabric connectivity, external management, and HA information. A service profile represents all the attributes of a logical server in the Cisco UCS model. Because these settings are abstracted from the physical server into a Service Profile, the Service Profile can be deployed to any physical compute hardware within the Cisco UCS domain and can be migrated at any time from one physical server to another. In addition, Cisco offers a unified management platform, with Cisco UCS Service Profiles and hardware abstraction capabilities extending to both blade and rack servers.

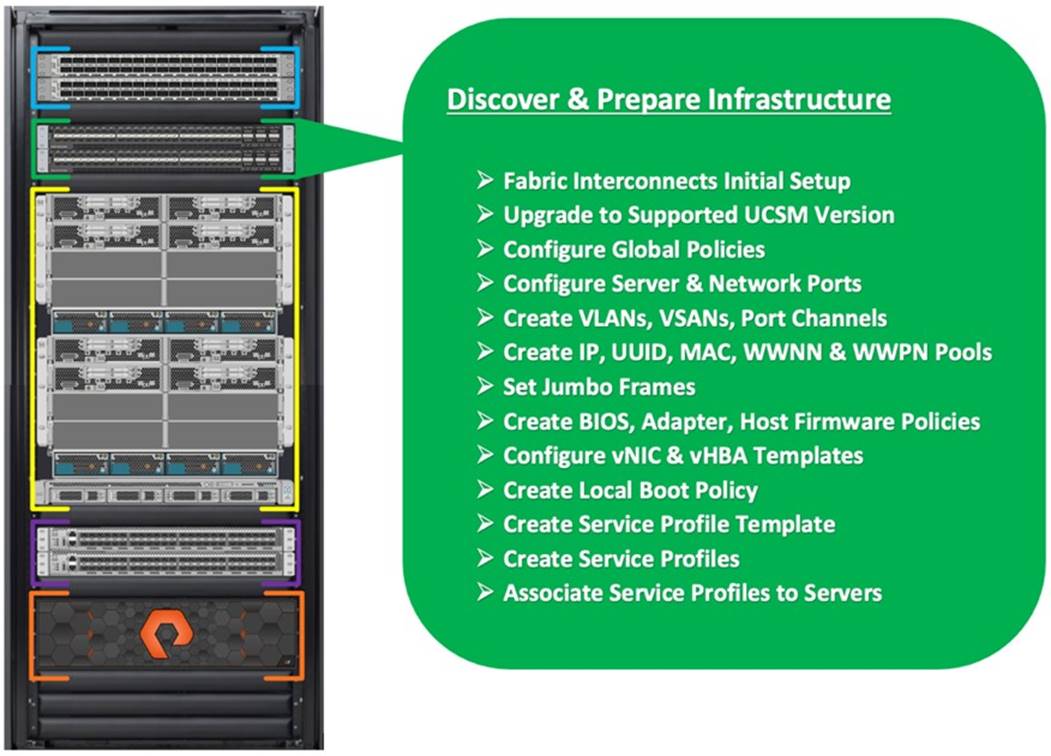

High-Level Steps to Configure Base Cisco UCS

The following are the high-level steps involved for a Cisco UCS configuration:

1. Perform Initial Setup of Fabric Interconnects for a Cluster Setup

2. Upgrade UCS Manager Software to Version 4.2(1i)

3. Synchronize Cisco UCS to NTP

4. Configure Fabric Interconnects for Chassis and Blade Discovery

5. Configure Global Policies

6. Configure Server Ports

7. Configure LAN and SAN

8. Configure Ethernet LAN Uplink Ports

9. Create Uplink Port Channels to Nexus Switches

10. Configure FC SAN Uplink Ports

11. Configure VLANs

12. Configure VSANs

13. Create FC Uplink Port Channels to MDS Switches

14. Enable FC Uplink VSAN Trunking (FCP)

15. Configure IP, UUID, Server, MAC, WWNN and WWPN Pools

16. IP Pool Creation

17. UUID Suffix Pool Creation

18. Server Pool Creation

19. MAC Pool Creation

20. WWNN and WWPN Pool

21. Set Jumbo Frames in both the Fabric Interconnect

22. Configure Server BIOS Policy

23. Create Adapter Policy

24. Create Adapter Policy for Public and Private Network Interfaces

25. Create Adapter Policy for NVMe FC Storage Network Interfaces

26. Configure Update Default Maintenance Policy

27. Configure Host Firmware Policy

28. Configure vNIC and vHBA Template

29. Create Public vNIC Template

30. Create Private vNIC Template

31. Create Storage FC Storage vHBA Template

32. Create Server Boot Policy for SAN Boot

The details for each of these steps are documented in the following sections.

Perform Initial Setup of Cisco UCS 6454 Fabric Interconnects for a Cluster Setup

This section provides detailed procedures for configuring the Cisco Unified Computing System (Cisco UCS) for use in a FlashStack environment.

| Tech tip |

| The steps are necessary to provision the Cisco UCS B-Series and C-Series servers and should be followed precisely to avoid improper configuration. |

Procedure 1. Configure FI-A and FI-B

Step 1. Verify the following physical connections on the fabric interconnect:

● The management Ethernet port (mgmt0) is connected to an external hub, switch, or router

● The L1 ports on both fabric interconnects are directly connected to each other

● The L2 ports on both fabric interconnects are directly connected to each other

Step 2. Connect to the console port on the first Fabric Interconnect and run the following:

Enter the configuration method. (console/gui) ? console

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect. Continue? (y/n): y

Enforce strong password? (y/n) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Is this Fabric interconnect part of a cluster(select 'no' for standalone)? (yes/no) [n]: y

Enter the switch fabric (A/B) []: A

Enter the system name: <ucs-cluster-name>

Physical Switch Mgmt0 IP address : <ucsa-mgmt-ip>

Physical Switch Mgmt0 IPv4 netmask : <ucsa-mgmt-mask>

IPv4 address of the default gateway : <ucsa-mgmt-gateway>

Cluster IPv4 address : <ucs-cluster-ip>

Configure the DNS Server IP address? (yes/no) [n]: y

DNS IP address : <dns-server-1-ip>

Configure the default domain name? (yes/no) [n]: y

Default domain name : <ad-dns-domain-name>

Join centralized management environment (UCS Central)? (yes/no) [n]: Enter

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Step 3. Review the settings printed to the console. Answer yes to apply and save the configuration.

Step 4. Wait for the login prompt to make sure the configuration has been saved to Fabric Interconnect A.

Step 5. Connect to the console port on the second Fabric Interconnect (FI-B) and run the following:

Enter the configuration method. (console/gui) ? console

Installer has detected the presence of a peer Fabric interconnect. This Fabric interconnect will be added to the cluster. Continue (y/n) ? y

Enter the admin password of the peer Fabric interconnect: <password>

Connecting to peer Fabric interconnect... done

Retrieving config from peer Fabric interconnect... done

Peer Fabric interconnect Mgmt0 IPv4 Address: <ucsa-mgmt-ip>

Peer Fabric interconnect Mgmt0 IPv4 Netmask: <ucsa-mgmt-mask>

Cluster IPv4 address : <ucs-cluster-ip>

Peer FI is IPv4 Cluster enabled. Please Provide Local Fabric Interconnect Mgmt0 IPv4 Address

Physical Switch Mgmt0 IP address : <ucsb-mgmt-ip>

Local fabric interconnect model(UCS-FI-6454)

Peer fabric interconnect is compatible with the local fabric interconnect. Continuing with the installer...

Apply and save the configuration (select 'no' if you want to re-enter)? (yes/no): yes

Step 6. Review the settings printed to the console. Answer yes to apply and save the configuration.

Step 7. Wait for the login prompt to make sure the configuration has been saved to Fabric Interconnect B.
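Once both fabric interconnects are back at the login prompt, the cluster state can be checked from the UCS Manager CLI. This is a minimal sketch; the prompt name UCS-A is a placeholder for your system name, and the commands are entered over SSH to the cluster IP address.

```
UCS-A# show cluster state
UCS-A# connect local-mgmt
UCS-A(local-mgmt)# show cluster extended-state
```

Both fabric interconnects should be reported as up, one as primary and the other as subordinate, and the cluster should indicate that HA is ready before you continue.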

Procedure 2. Log into Cisco UCS Manager

Step 1. Log into Cisco Unified Computing System (Cisco UCS) environment.

Step 2. Open a web browser and navigate to the Cisco UCS fabric interconnect cluster address.

Step 3. Click the Launch UCS Manager link under HTML to launch Cisco UCS Manager.

Step 4. If prompted to accept security certificates, accept as necessary.

Step 5. When prompted, enter admin as the username and enter the administrative password.

Step 6. Click Login to log into Cisco UCS Manager.

Procedure 3. Configure Cisco UCS Call Home

| Tech tip |

| Cisco highly recommends configuring Call Home in Cisco UCS Manager. Configuring Call Home accelerates the resolution of support cases. |

Step 1. In Cisco UCS Manager, click Admin.

Step 2. Select All > Communication Management > Call Home.

Step 3. Change the State to On.

Step 4. Fill in all the fields according to your Management preferences and click Save Changes and OK to complete configuring Call Home.

Upgrade Cisco UCS Manager Software to Version 4.2(1i)

This solution was configured on the Cisco UCS 4.2(1i) software release. To upgrade the Cisco UCS Manager software and the Cisco UCS Fabric Interconnect software to version 4.2(1i), go to: https://software.cisco.com/download/home/283612660/type/283655658/release/4.2(1i)

For more information about Install and Upgrade Guides, go to: https://www.cisco.com/c/en/us/support/servers-unified-computing/ucs-manager/products-installation-guides-list.html

Synchronize Cisco UCS to NTP Server

It’s important to synchronize Cisco UCS to the NTP server so that logging information and timestamps carry the accurate time and date.

Procedure 1. Synchronize Cisco UCS to NTP

Step 1. In Cisco UCS Manager, in the navigation pane, click the Admin tab.

Step 2. Select All > Time zone Management.

Step 3. In the Properties pane, select the appropriate time zone in the Time zone menu.

Step 4. Click Save Changes and then click OK.

Step 5. Click Add NTP Server.

Step 6. Enter the NTP server IP address and click OK.

Step 7. Click OK to finish.
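The same NTP server can also be added from the UCS Manager CLI. This is a minimal sketch, not the method used in this solution; UCS-A is a placeholder system name and <ntp-server-ip> is a placeholder for the address of your NTP server.

```
UCS-A# scope system
UCS-A /system # scope services
UCS-A /system/services # create ntp-server <ntp-server-ip>
UCS-A /system/services* # commit-buffer
```

The asterisk in the prompt indicates uncommitted changes; the NTP server takes effect only after commit-buffer is entered.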

Configure Fabric Interconnect for Chassis and Server Discovery

Cisco UCS 6454 Fabric Interconnects are configured for redundancy, which provides resiliency in case of failures. The first step is to establish connectivity between the blades and the Fabric Interconnects.

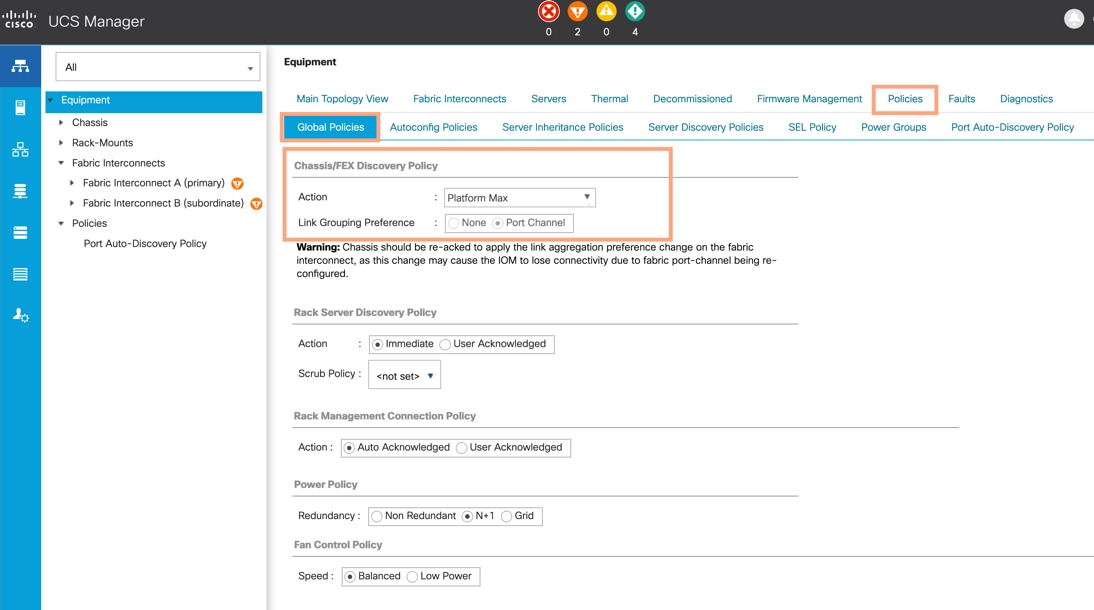

Procedure 1. Configure Global Policies

| Tech tip |

| The chassis discovery policy determines how the system reacts when you add a new chassis. We recommend using the platform max value as shown. Using platform max helps ensure that Cisco UCS Manager uses the maximum number of IOM uplinks available. |

Step 1. Go to Equipment > Policies > Global Policies > Chassis/FEX Discovery Policies. As shown in the screenshot below, select Action as Platform Max from the drop-down list and set Link Grouping to Port Channel.

Step 2. Click Save Changes.

Step 3. Click OK.

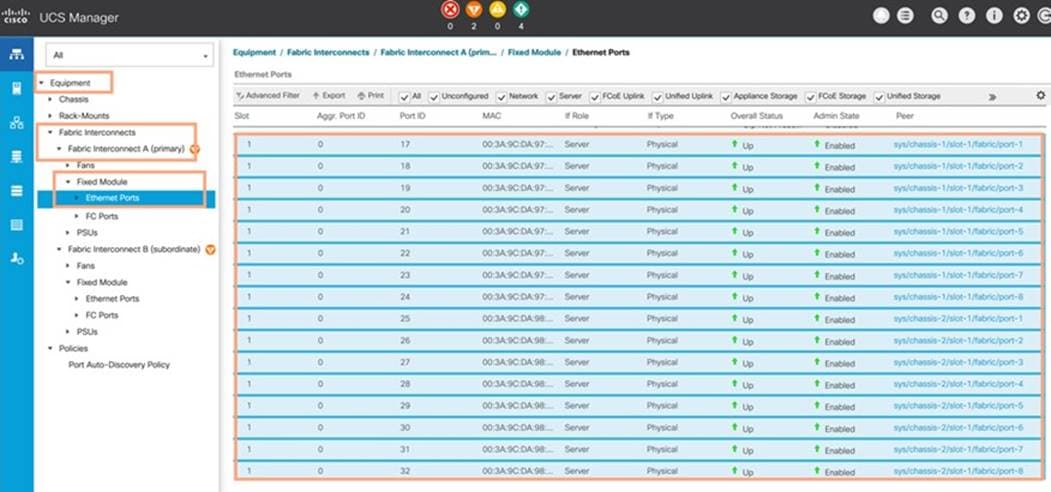

Procedure 2. Configure Server Ports

You need to configure Server Ports to initiate the chassis and blade discovery.

Step 1. Go to Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module > Ethernet Ports.

Step 2. Select the ports (for this solution ports are 17-32) which are connected to the Cisco IO Modules of the two Cisco UCS B-Series 5108 Chassis.

Step 3. Right-click and select Configure as Server Port.

Step 4. Click Yes to confirm and click OK.

Step 5. Repeat steps 1-4 for Fabric Interconnect B.

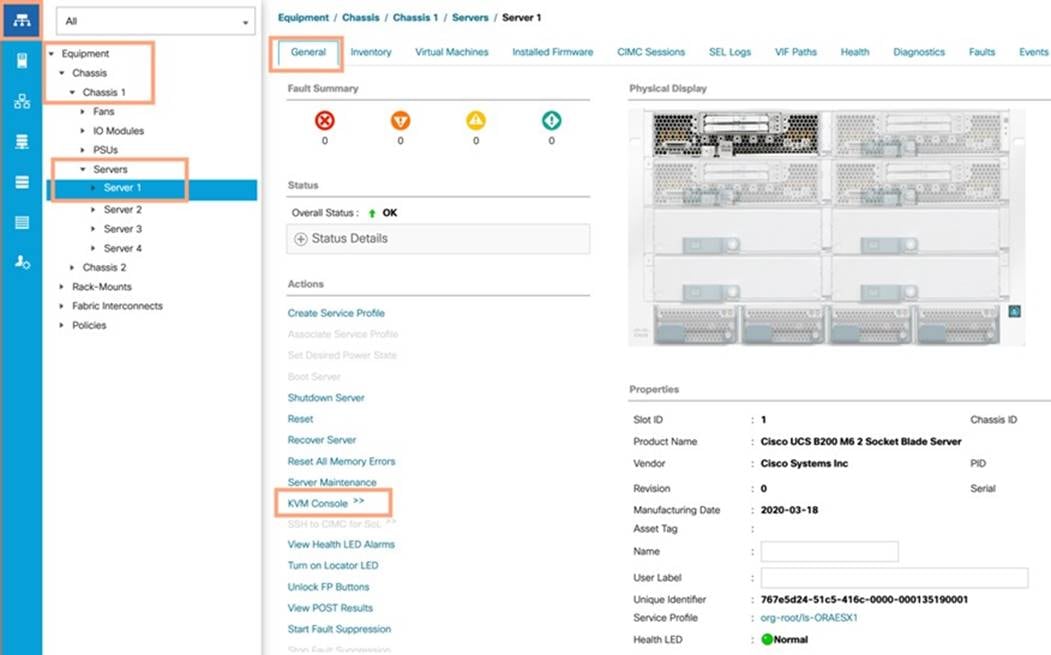

Step 6. After configuring the Server Ports, acknowledge both chassis. Go to Equipment > Chassis > Chassis 1 > General > Actions > select Acknowledge Chassis. Repeat this step to acknowledge Chassis 2.

Step 7. After acknowledging both chassis, re-acknowledge all servers placed in the chassis. Go to Equipment > Chassis 1 > Servers > Server 1 > General > Actions > select Server Maintenance > select option Re-acknowledge and click OK. Repeat this step to re-acknowledge all eight Servers.

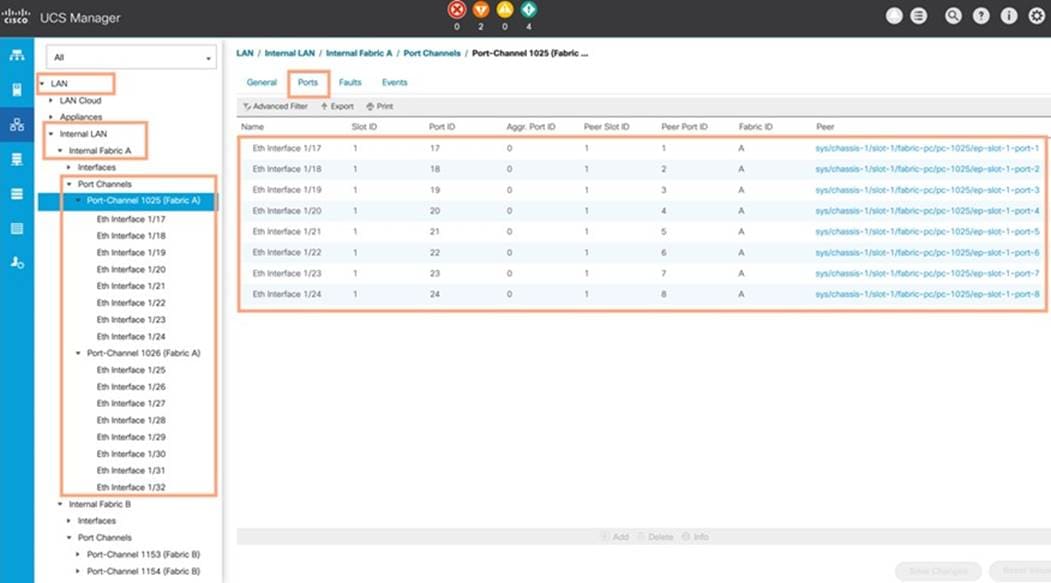

Step 8. When the acknowledgement of the servers is completed, verify the port channel of the Internal LAN on both chassis. Go to the LAN tab > Internal LAN > Internal Fabric A > Port Channels, as shown below.

Step 9. Verify the same for Internal Fabric B.

Configure LAN and SAN on Cisco UCS Manager

Configure Ethernet Uplink Ports and Fibre Channel (FC) Storage ports on Cisco UCS Manager as explained below.

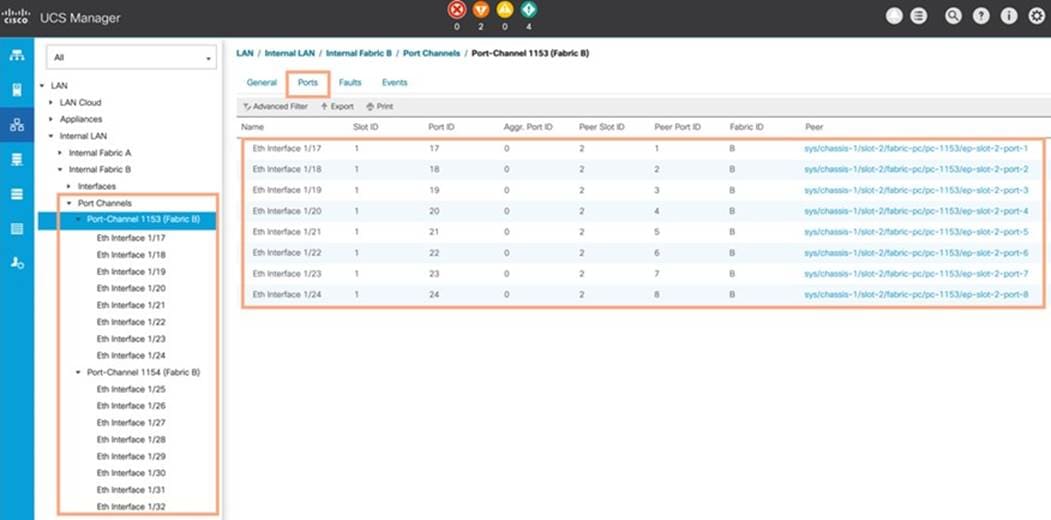

Procedure 1. Configure Ethernet LAN Uplink Ports

Step 1. In Cisco UCS Manager, in the navigation pane, click the Equipment tab.

Step 2. Select Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module.

Step 3. Expand Ethernet Ports.

Step 4. Select ports (for this solution ports are 49-50) that are connected to the Cisco Nexus switches, right-click them, and select Configure as Network Port.

Step 5. Click Yes to confirm ports and click OK.

Step 6. Verify the Ports connected to Nexus upstream switches are now configured as network ports.

Step 7. Repeat steps 1-6 for Fabric Interconnect B. The screenshot shows the network uplink ports for Fabric A.

Now two uplink ports have been created on each Fabric Interconnect as shown above. These ports will be used to create Virtual Port Channel in the next section.

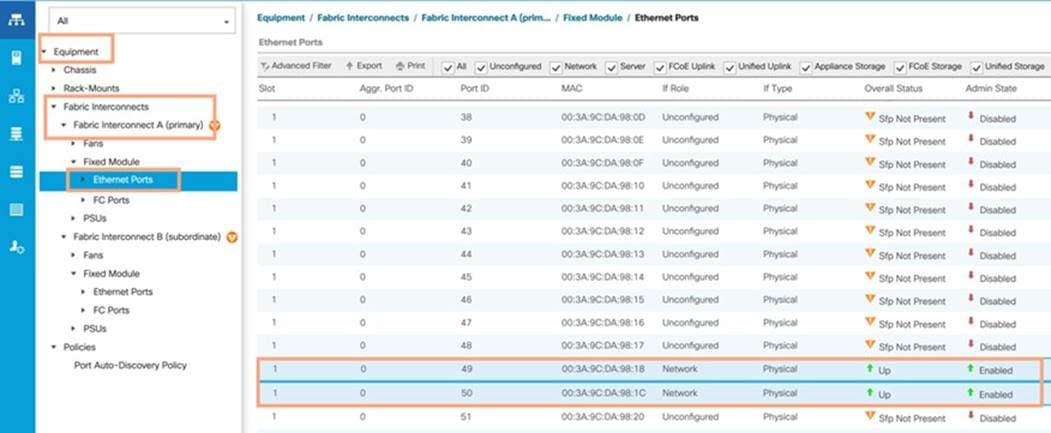

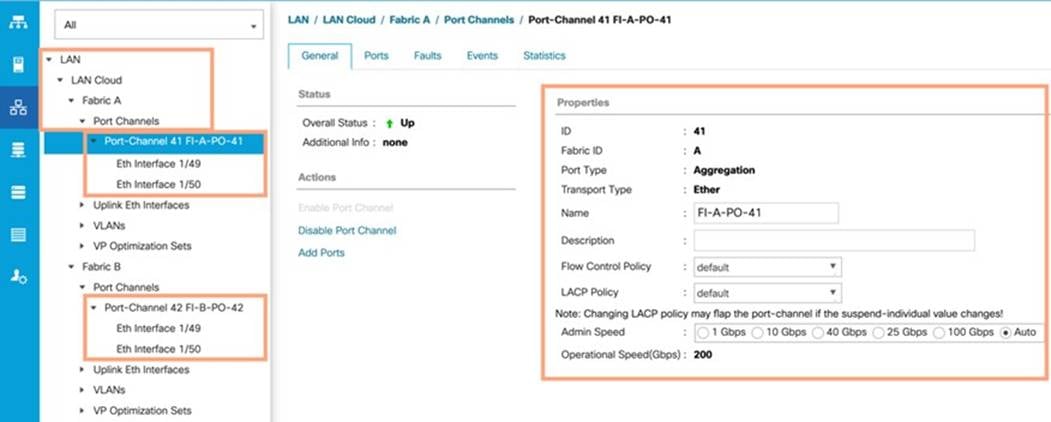

Procedure 2. Create Uplink Port Channels to Cisco Nexus Switches

| Tech tip |

| In this procedure, two port channels are created: one from Fabric A to both Cisco Nexus switches and one from Fabric B to both Cisco Nexus switches. |

Step 1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

Step 2. Under LAN > LAN Cloud, expand the Fabric A tree.

Step 3. Right-click Port Channels.

Step 4. Select Create Port Channel.

Step 5. Enter 41 as the unique ID of the port channel.

Step 6. Enter FI-A-PO-41 as the name of the port channel.

Step 7. Click Next.

Step 8. Select Ethernet ports 49-50 for the port channel.

Step 9. Click >> to add the ports to the port channel.

Step 10. Click Finish to create the port channel and then click OK.

Step 11. Repeat steps 1-10 for Fabric Interconnect B, substituting 42 for the port channel number and FI-B for the name.
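The uplink port channels can also be checked from the fabric interconnect side through the NX-OS shell of each fabric interconnect. This is a minimal sketch; UCS-A is a placeholder system name, and it assumes the Cisco Nexus side of the vPC is already configured as described earlier.

```
UCS-A# connect nxos a
UCS-A(nxos)# show port-channel summary
UCS-A(nxos)# exit
UCS-A# connect nxos b
UCS-B(nxos)# show port-channel summary
```

On each fabric, the uplink port channel should list both member ports (49 and 50) as bundled and up.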

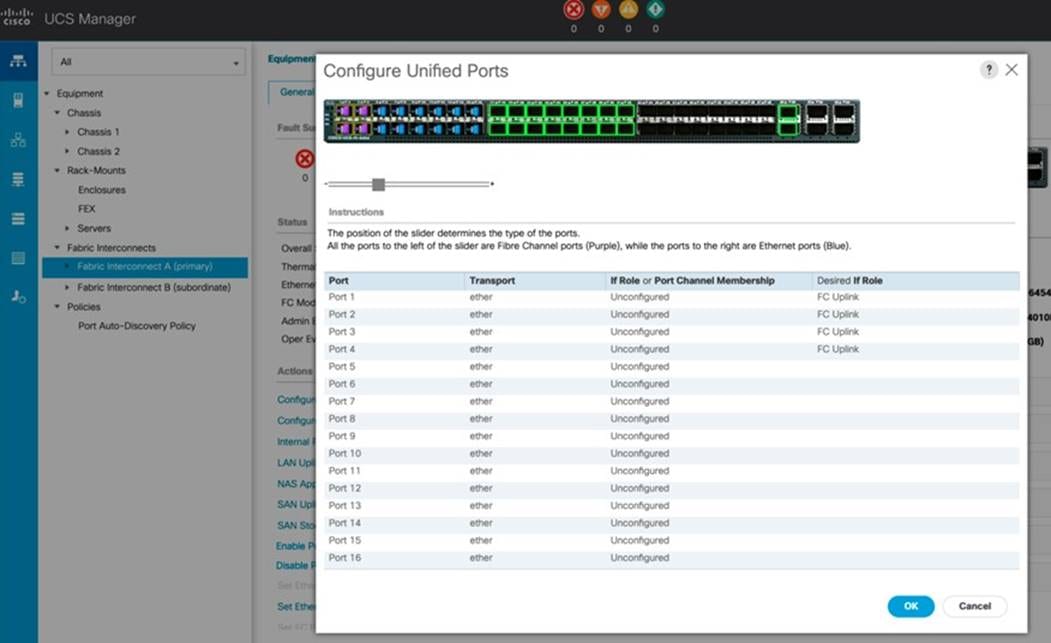

Procedure 3. Configure FC SAN Uplink Ports for Fabric Interconnect 6454

Step 1. In Cisco UCS Manager, click Equipment.

Step 2. Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary).

Step 3. Select Configure Unified Ports.

Step 4. Click Yes on the pop-up window warning that changes to the fixed module will require a reboot of the fabric interconnect and changes to the expansion module will require a reboot of that module.

Step 5. Within the Configured Fixed Ports pop-up window move the gray slider bar from the left to the right to select either 4, 8, or 12 ports to be set as FC Uplinks.

Note: For this solution, we configured the first four ports on the FI as FC Uplink ports.

Step 6. Click OK, then click Yes, then click OK to continue.

Note: Applying this configuration will cause the immediate reboot of Fabric Interconnect and/or Expansion Module(s).

Step 7. Click Equipment > Fabric Interconnects > Fabric Interconnect B (primary).

Step 8. Click Configure Unified Ports.

Step 9. Click Yes on the pop-up window warning that changes to the fixed module will require a reboot of the fabric interconnect and changes to the expansion module will require a reboot of that module.

Step 10. Within the Configured Fixed Ports pop-up window move the gray slider bar from the left to the right to select either 4, 8, or 12 ports to be set as FC Uplinks.

Step 11. Click OK, then click Yes, then click OK to continue.

Step 12. Wait for both Fabric Interconnects to reboot.

Step 13. Log back into Cisco UCS Manager.

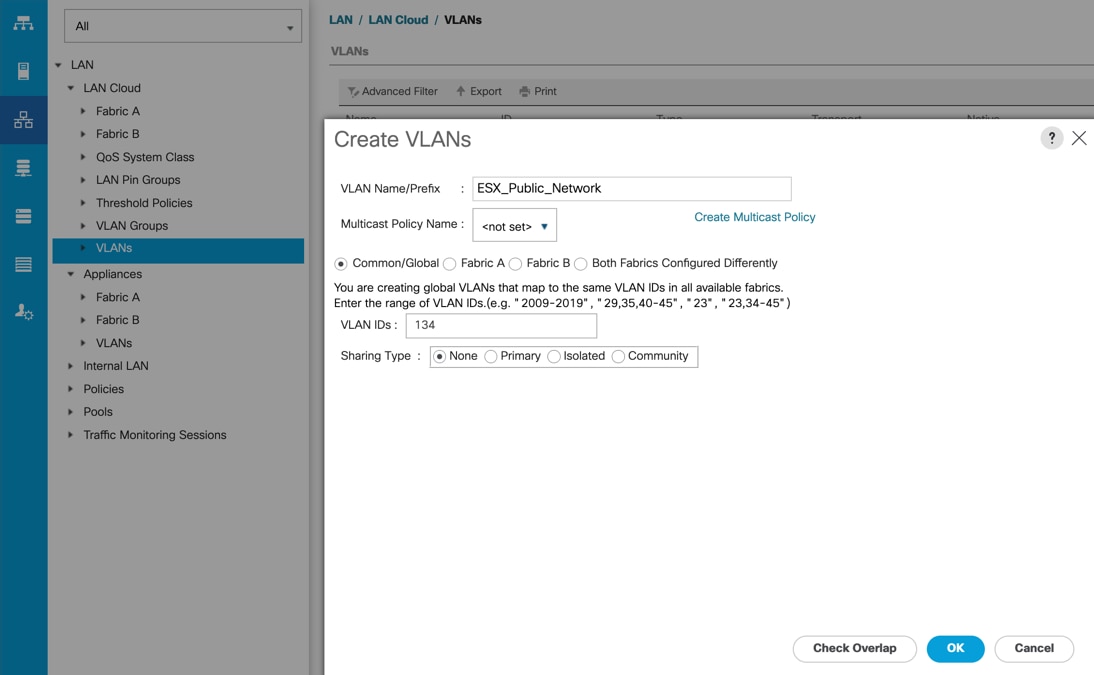

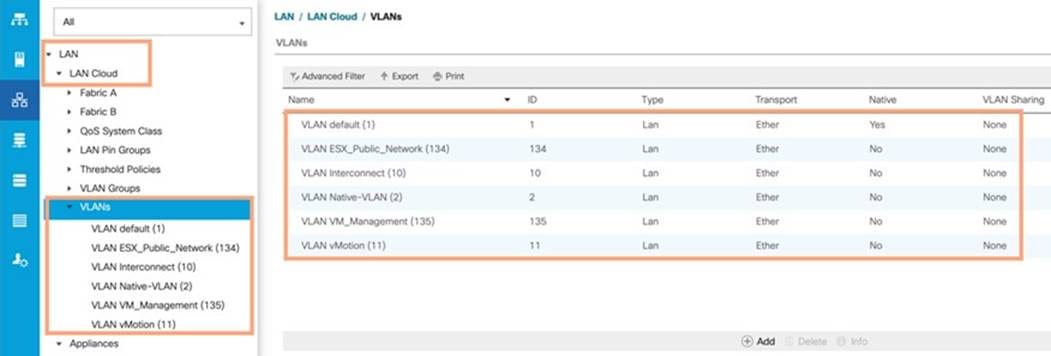

Procedure 4. Configure VLAN

Note: In this solution, five VLANs were created as listed in Table 5: VLAN 2 for Native VLAN, VLAN 134 for ESXi Management Network, VLAN 135 for VM Management Network, VLAN 10 for Private Server-to-Server Network (Cache Fusion) Traffic for Oracle RAC and VLAN 11 for vMotion and Database Backup Network Traffic. These VLANs will be used in the vNIC templates that are discussed later.

| Tech tip |

| It is very important to create all VLANs as global across both fabric interconnects. This way, the VLAN identity is maintained across the fabric interconnects in case of a NIC failover. |

Step 1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

Step 2. Click LAN > LAN Cloud.

Step 3. Right-click VLANs.

Step 4. Click Create VLANs.

Step 5. Enter ESX_Public_Network as the name of the VLAN to be used for ESXi Management Network Traffic.

Step 6. Keep the Common/Global option selected for the scope of the VLAN.

Step 7. Enter 134 as the VLAN ID.

Step 8. Keep the Sharing Type as None.

Step 9. Click OK and then click OK again.

Step 10. Create the remaining VLANs for VM Management Network, Private Server-to-Server Network (Interconnect), vMotion and Native VLAN as shown below:

Note: These VLANs will be used in the vNIC templates that are described in this CVD.
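For reference, a global VLAN can also be created from the UCS Manager CLI. This is a minimal sketch for the first VLAN only, using the name and ID from the steps above; UCS-A is a placeholder system name. A VLAN created in the eth-uplink scope is common to both fabric interconnects.

```
UCS-A# scope eth-uplink
UCS-A /eth-uplink # create vlan ESX_Public_Network 134
UCS-A /eth-uplink/vlan* # commit-buffer
```

The remaining VLANs follow the same pattern with their own names and IDs.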

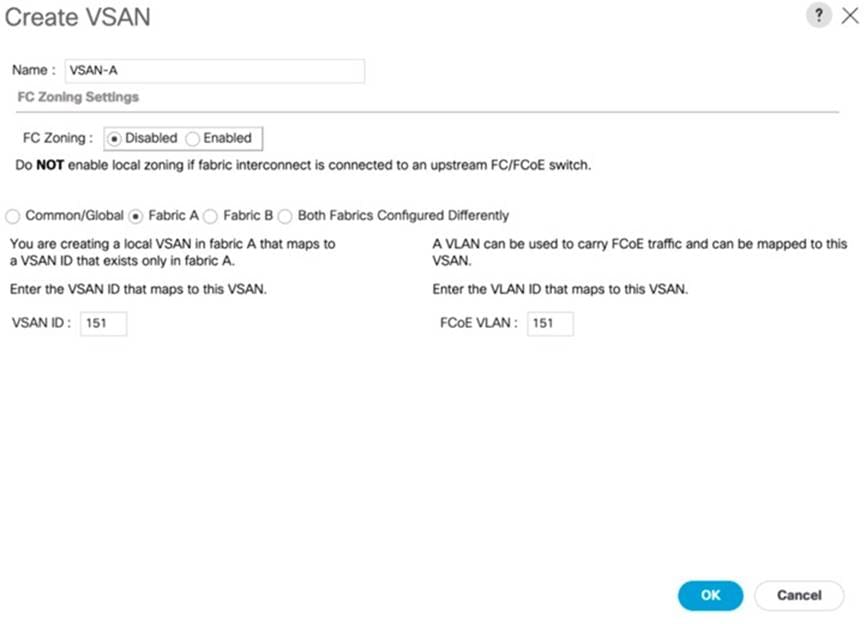

Procedure 5. Configure VSAN

Note: In this solution, we created two VSANs, VSAN-A 151 and VSAN-B 152, for FC SAN Storage Access.

Step 1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

Step 2. Select SAN > SAN Cloud > Fabric A > VSANs.

Step 3. Under VSANs, right-click on VSANs.

Step 4. Select Create VSAN.

Step 5. Enter VSAN-A as the name of the VSAN.

Step 6. Leave FC Zoning set at Disabled.

Step 7. Select Fabric A for the scope of the VSAN.

Step 8. Enter VSAN ID as 151.

Step 9. Click OK and then click OK again.

Step 10. Repeat steps 1-9 to create the VSAN 152 on FI-B.

| Tech tip |

| Enter a unique VSAN ID and a corresponding FCoE VLAN ID that matches the configuration in the MDS switch for Fabric A. It is recommended to use the same ID for both parameters and to use something other than 1. |
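A fabric-scoped VSAN can also be created from the UCS Manager CLI. This is a minimal sketch, assuming the FCoE VLAN ID is set to the same value as the VSAN ID (151), as the tech tip above recommends; UCS-A is a placeholder system name. The arguments to create vsan are the VSAN name, the VSAN ID, and the FCoE VLAN ID, in that order.

```
UCS-A# scope fc-uplink
UCS-A /fc-uplink # scope fabric a
UCS-A /fc-uplink/fabric # create vsan VSAN-A 151 151
UCS-A /fc-uplink/fabric/vsan* # set fc-zoning disabled
UCS-A /fc-uplink/fabric/vsan* # commit-buffer
```

For Fabric B, the same sequence applies with scope fabric b, the name VSAN-B, and the ID 152.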

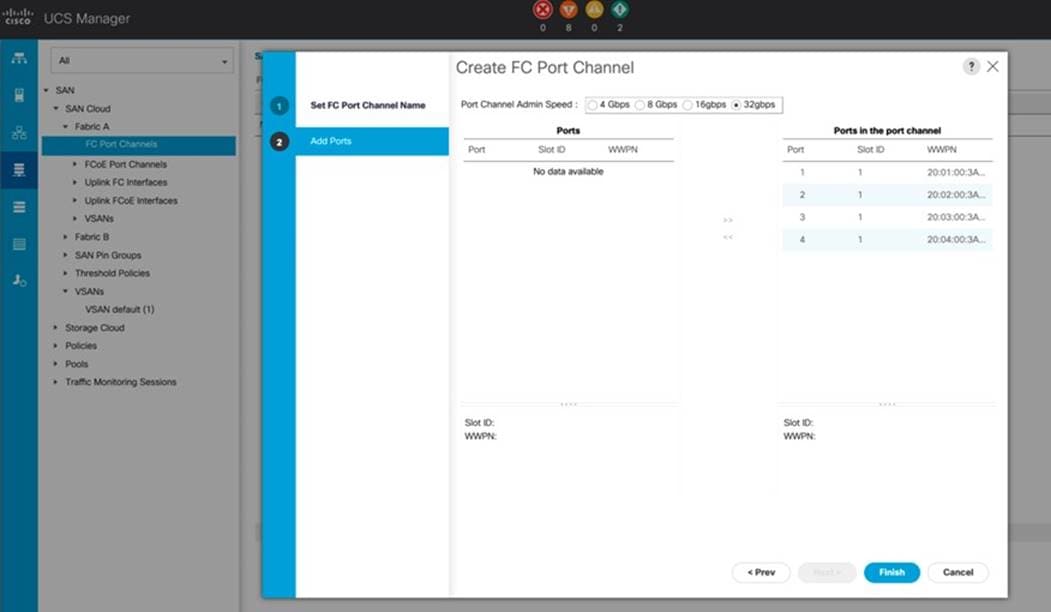

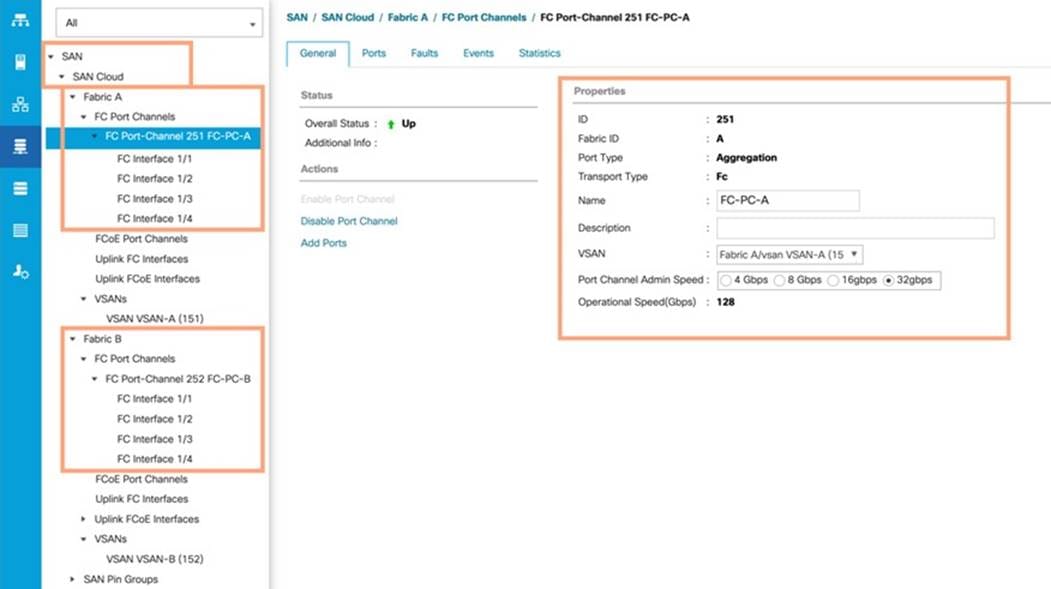

Procedure 6. Create FC Uplink Port Channels to MDS Switches

Note: In this solution, we created two FC Port Channels. The first FC Port Channel is between FI-A and MDS-A, and the second FC Port Channel is between FI-B and MDS-B.

Step 1. In Cisco UCS Manager, click the SAN tab on the left.

Step 2. Click SAN > SAN Cloud > Fabric A > FC Port Channels, and then right-click FC Port Channels.

Step 3. Enter the name of the Port Channel as FC-PC-A and the unique ID as 251, and click Next.

Step 4. Select the appropriate ports of FI-A which are going to MDS-A and click the button >> to select those ports as a member of the Port Channel.

Note: For this solution, we configured all four ports as Port Channel ports as shown in the screenshot below:

Step 5. Click Finish to create this FC Port Channel for FI-A.

Step 6. Repeat steps 1-5 to create the FC Port Channel on FI-B with related FC Ports going to MDS-B.

Note: We configured the FI-B Port Channel as FC-PC-B with unique ID 252 as shown below:

Step 7. Click VSAN-A 151 for FC-PC-A and click Save Changes.

Step 8. Click VSAN-B 152 for FC-PC-B and click Save Changes.

Note: The MDS Switch is configured in the following section and after the appropriate VSAN and FC ports configuration, the FC Ports and Port-Channel will become ACTIVE.

Procedure 7. Enable FC Uplink VSAN Trunking (FCP)

Step 1. In Cisco UCS Manager, click SAN.

Step 2. Expand SAN > SAN Cloud.

Step 3. Choose Fabric A and in the Actions pane choose Enable FC Uplink Trunking.

Step 4. Click Yes on the Confirmation and Warning and then click OK.

Step 5. Choose Fabric B and in the Actions pane choose Enable FC Uplink Trunking.

Step 6. Click Yes on the Confirmation and Warning. Click OK to finish.

| Tech tip |

| Enabling VSAN trunking is optional. It is important that the VSAN trunking configuration on the upstream Cisco MDS switches matches the configuration set in Cisco UCS Manager. |

Configure IP, UUID, Server, MAC, WWNN and WWPN Pools

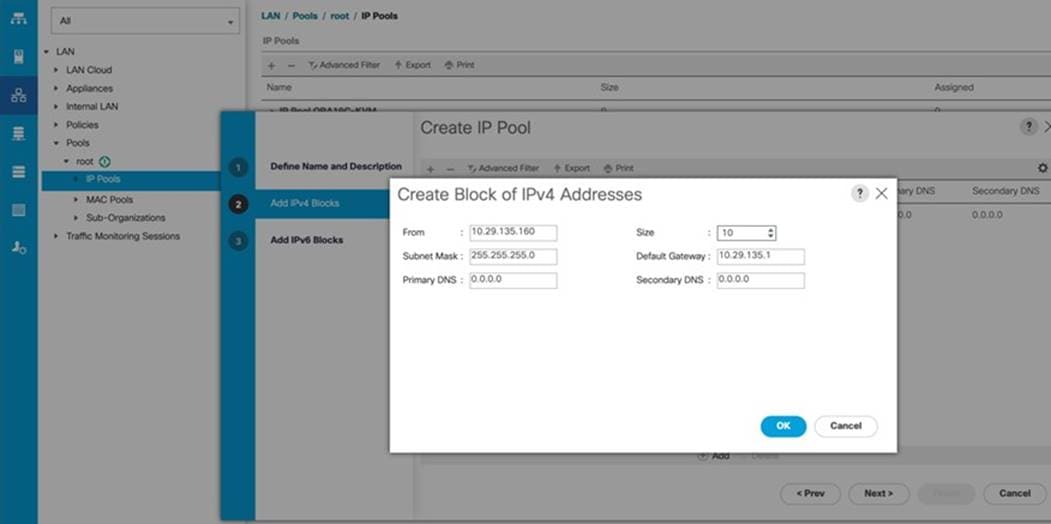

Procedure 1. IP Pool Creation

| Tech tip |

| An IP address pool on the out-of-band management network must be created to facilitate KVM access to each compute node in the UCS domain. |

Step 1. In Cisco UCS Manager, in the navigation pane, click the LAN tab.

Step 2. Click Pools > root > IP Pools > click Create IP Pool.

Note: For this solution, the IP Pool is named ORA19C-KVM Pool.

Step 3. Select the option Sequential to assign IP in sequential order then click Next.

Step 4. Click Add IPv4 Block.

Step 5. Enter the starting IP address of the block and the number of IP addresses required and the subnet and gateway information according to your environment, as shown below:

Step 6. Click Next and then click Finish to create the IP block.

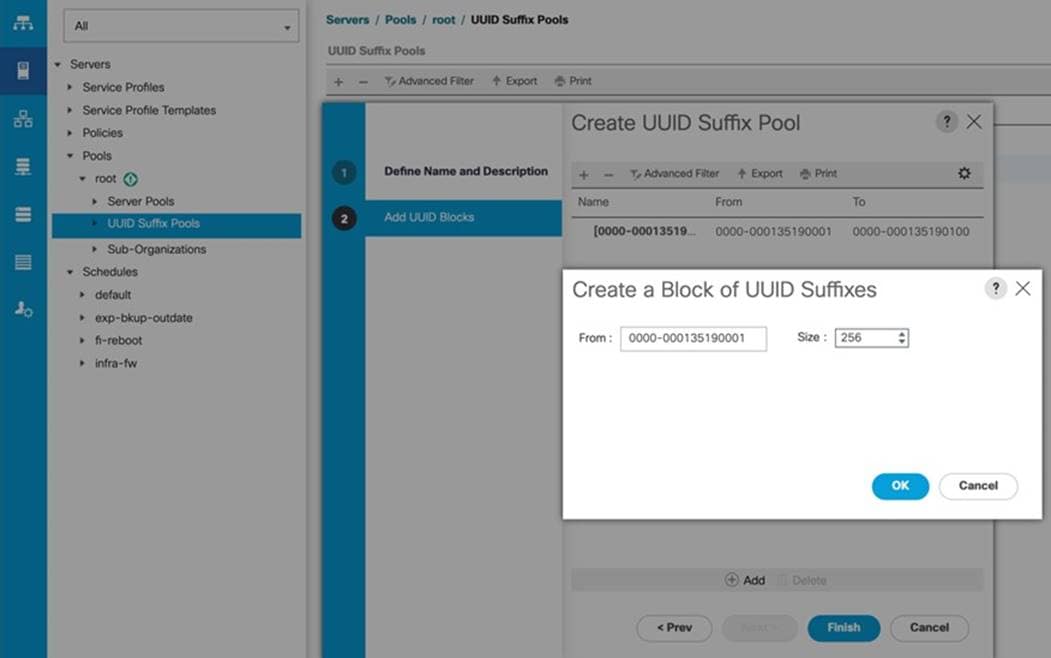

Procedure 2. UUID Suffix Pool Creation

Step 1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

Step 2. Click Pools > root.

Step 3. Right-click UUID Suffix Pools and then select Create UUID Suffix Pool.

Step 4. Enter ORA19C-UUID as the name of the UUID pool.

Step 5. Optional: Enter a description for the UUID pool.

Step 6. Keep the prefix at the derived option, select Sequential as the Assignment Order, and then click Next.

Step 7. Click Add to add a block of UUIDs.

Step 8. Create a starting point UUID as per your environment.

Step 9. Specify a size for the UUID block that is sufficient to support the available blade or server resources.

Step 10. Click OK and then click Finish to complete the UUID Pool configuration.

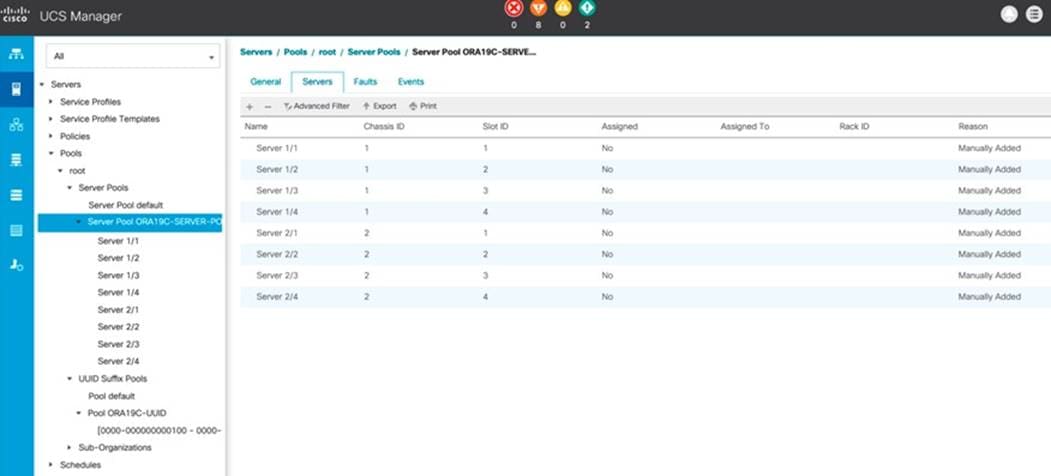

Procedure 3. Server Pool Creation

| Tech tip |

| Consider creating unique server pools to achieve the granularity that is required in your environment. |

Step 1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

Step 2. Select Pools > root > Right-click Server Pools > Select Create Server Pool.

Step 3. Enter ORA19C-SERVER-POOL as the name of the server pool.

Step 4. Optional: Enter a description for the server pool then click Next.

Step 5. Select all eight servers to be used for Oracle RAC and click >> to add them to the server pool.

Step 6. Click Finish and then click OK.

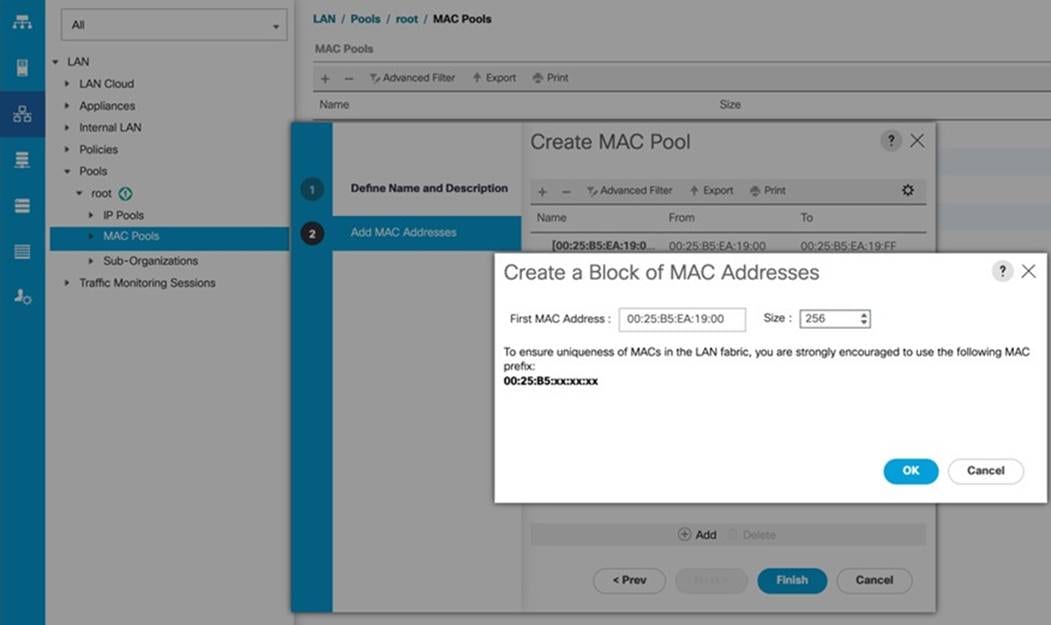

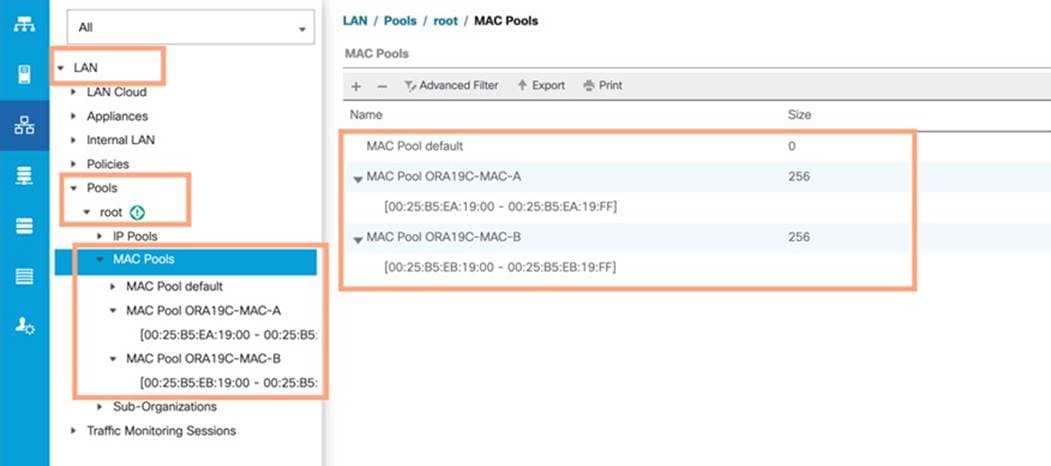

Procedure 4. MAC Pool Creation

Note: In this solution, we created two MAC pools, ORA19C-MAC-A and ORA19C-MAC-B, to provide MAC addresses for all of the network interfaces.

Step 1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

Step 2. Select Pools > root > right-click MAC Pools under the root organization.

Step 3. Select Create MAC Pool to create the MAC address pool.

Step 4. Enter ORA19C-MAC-A as the name for MAC pool.

Step 5. Enter the seed MAC address and provide the number of MAC addresses to be provisioned.

Step 6. Click OK and then click Finish.

Step 7. In the confirmation message, click OK.

Step 8. Create MAC Pool B as “ORA19C-MAC-B” and assign unique MAC Addresses as shown below:

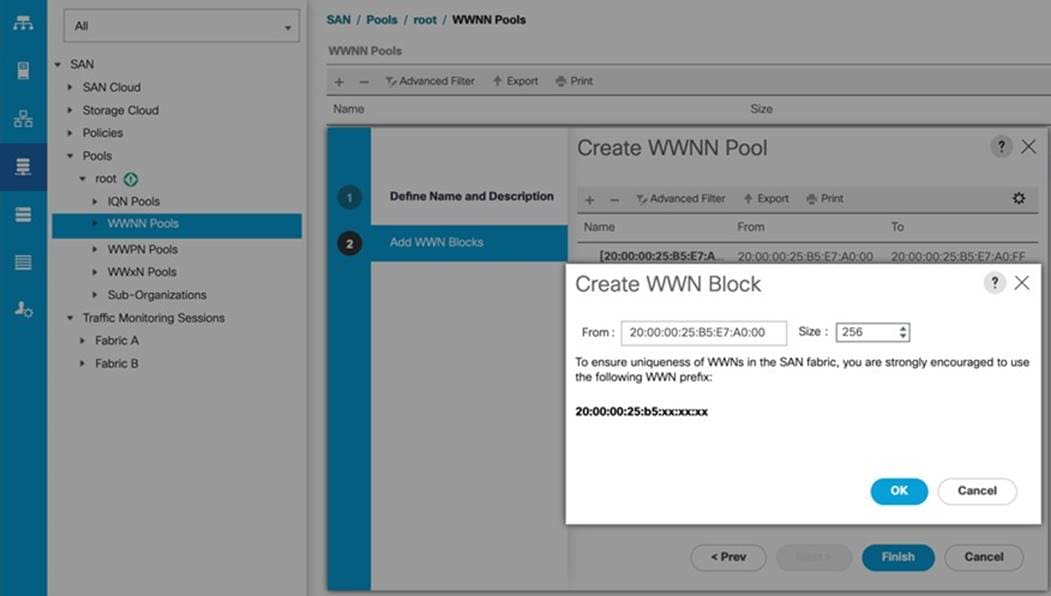

Procedure 5. Create a WWNN Pool

Note: In this solution, we configured one WWNN Pool to provide a SAN access point for the ESX and Linux VM hosts.

| Tech tip |

| These WWNN and WWPN entries will be used to access storage through SAN configuration. |

Step 1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

Step 2. Click Pools > Root > WWNN Pools > right-click WWNN Pools > click Create WWNN Pool.

Step 3. Assign the name ORA19C-WWNN and Assignment Order as sequential and click Next.

Step 4. Click Add and create a WWN Block as shown below:

Step 5. Click OK and then click Finish.

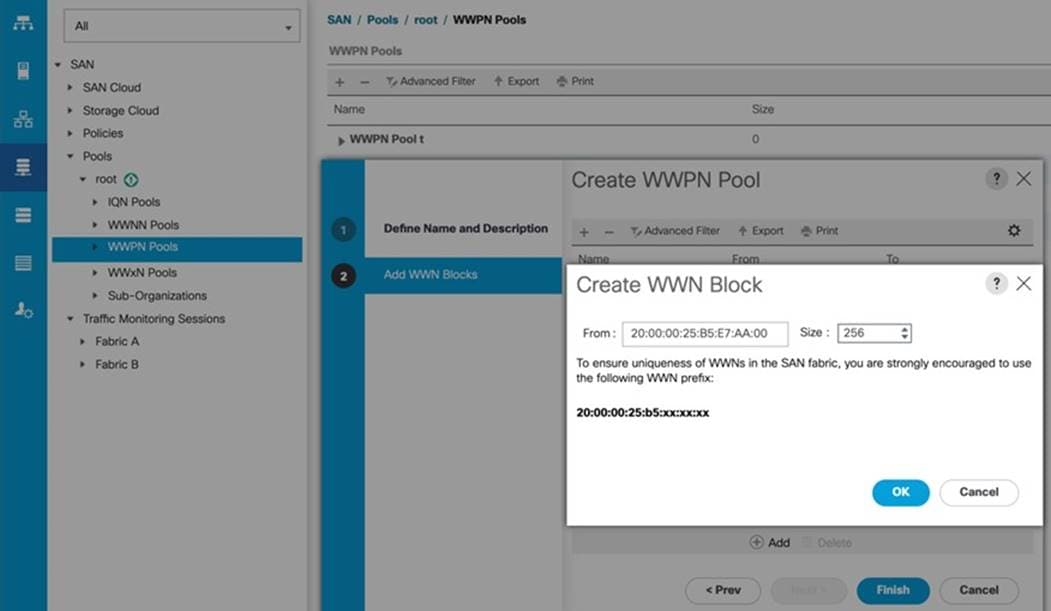

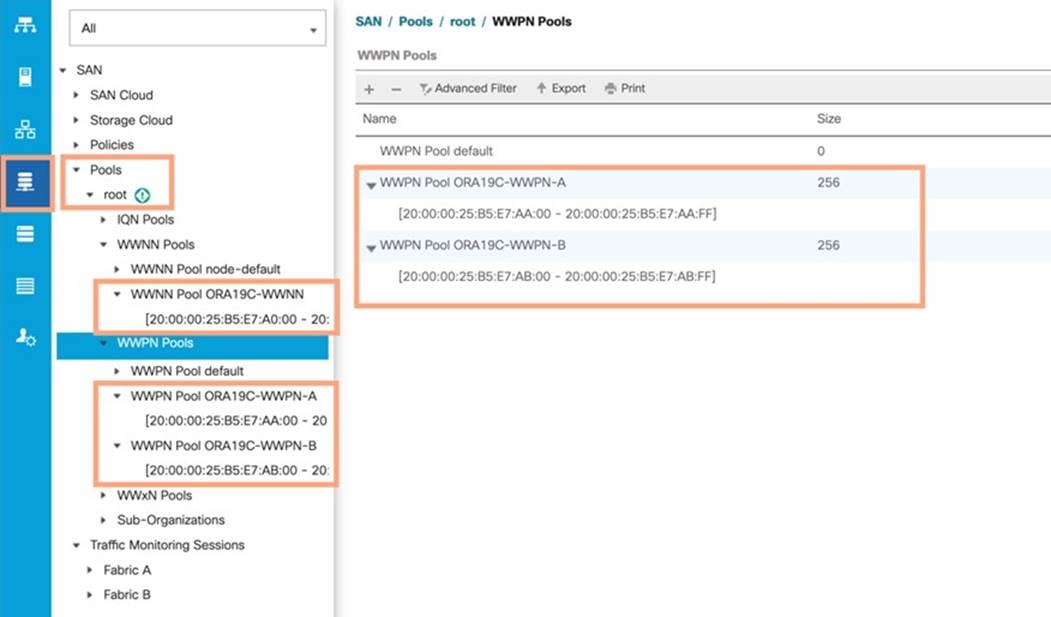

Procedure 6. Create WWPN Pools

Note: In this solution, we created two WWPN pools, ORA19C-WWPN-A and ORA19C-WWPN-B, for the World Wide Port Names.

Step 1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

Step 2. Click Pools > Root > WWPN Pools > right-click WWPN Pools > click Create WWPN Pool.

Step 3. Assign the name ORA19C-WWPN-A and Assignment Order as sequential.

Step 4. Click Next and then click Add to add a block of ports.

Step 5. Enter the starting WWN of the block and the block size.

Step 6. Click OK and then click Finish.

Step 7. Configure the ORA19C-WWPN-B Pool as well and assign the unique block IDs as shown below:

| Tech tip |

| When multiple UCS domains sit adjacent to each other, it is important that the WWNN, WWPN, and MAC blocks hold different values in each domain. |

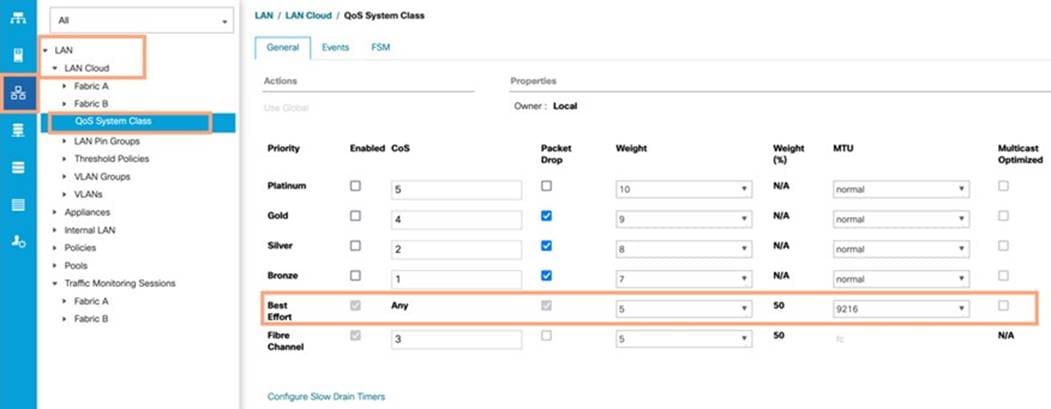

Set Jumbo Frames in both Cisco Fabric Interconnects

This section describes how to configure jumbo frames and enable quality of service in the Cisco UCS fabric.

Procedure 1. Set Jumbo Frames

Step 1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

Step 2. Select LAN > LAN Cloud > QoS System Class.

Step 3. In the right pane, click the General tab.

Step 4. On the Best Effort row, enter 9216 in the box under the MTU column.

Step 5. Click Save Changes in the bottom of the window.

Step 6. Click OK.

Note: Only the Fibre Channel and Best Effort QoS System Classes are enabled in this FlashStack implementation. The Cisco UCS and Cisco Nexus switches are intentionally configured this way so that all IP traffic within the FlashStack will be treated as Best Effort.

| Tech tip |

| Enabling the other QoS System Classes without having a comprehensive, end-to-end QoS setup in place can cause issues that are difficult to troubleshoot. |
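The Best Effort MTU can also be set from the UCS Manager CLI. This is a minimal sketch, assuming the QoS scope names documented in the UCS Manager CLI configuration guide apply to your release; UCS-A is a placeholder system name, and the MTU value 9216 is the one used in the procedure above.

```
UCS-A# scope eth-server
UCS-A /eth-server # scope qos
UCS-A /eth-server/qos # scope eth-best-effort
UCS-A /eth-server/qos/eth-best-effort # set mtu 9216
UCS-A /eth-server/qos/eth-best-effort* # commit-buffer
```

Jumbo frames work only when every hop carries the larger MTU, so this value should agree with the mtu 9216 setting on the Cisco Nexus port channels configured earlier.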

All the Server BIOS policies may be required for your setup. Follow the steps according to your environment and requirements. The following changes were made on the test bed where Oracle RAC was installed. Validate and change them as needed.

For more detailed information on BIOS Settings, go to https://www.cisco.com/c/en/us/products/collateral/servers-unified-computing/ucs-b-series-blade-servers/performance-tuning-guide-ucs-m6-servers.html

Procedure 1. Create a Server BIOS Policy for the Cisco UCS environment

Step 1. In Cisco UCS Manager, click Servers.

Step 2. Click Policies > root.

Step 3. Right-click BIOS Policies.

Step 4. Click Create BIOS Policy.

Step 5. Enter ORA-VM as the BIOS policy name.

Step 6. Select and click the newly created BIOS Policy.

Step 7. Click the Main tab and select CDN Control value as Enabled.

Step 8. Click the Advanced tab, leaving the Processor tab selected within the Advanced tab.

Step 9. Set the following within the Processor tab:

● Enhanced CPU Performance: Disabled

● Intel HyperThreading Tech: Enabled

● Energy Efficient Turbo: Disabled

● IMC Interleave: 1-way Interleave

● Sub NUMA Clustering: Enabled

● Processor C1E: Disabled

● LLC Prefetch: Disabled

● XPT Prefetch: Enabled

● Patrol Scrub: Disabled

● UPI Power Management: Enabled

Step 10. Set the following within the RAS Memory tab:

● LLC Dead Line: Disabled

● Memory Refresh Rate: 1x Refresh

Step 11. Click Save Changes and then click OK.

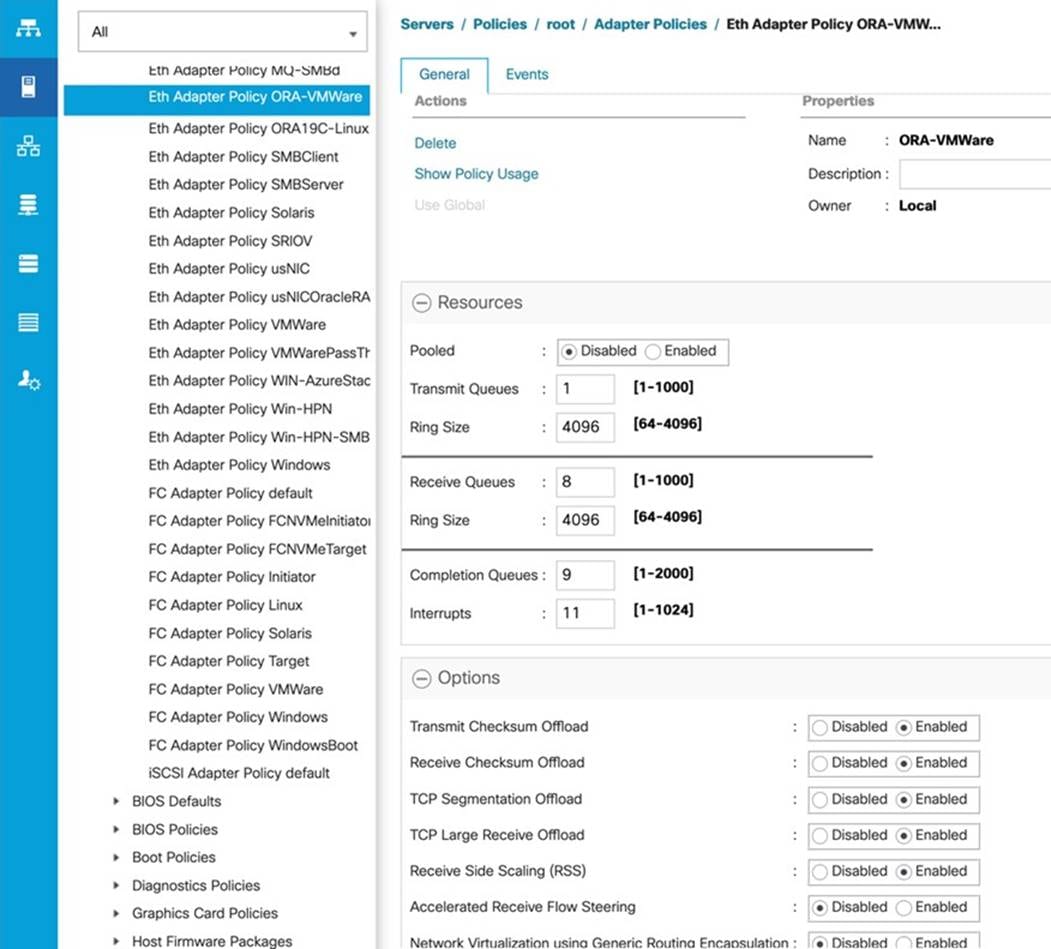

Note: In this solution, we used the default UCS “vmware” adapter policy for the ethernet NICs and fibre channel HBA. However, for the Oracle RAC interconnect NICs, we customized the ethernet adapter policy as explained below.

Procedure 1. Create Adapter Policy for Ethernet Traffic (only for Oracle RAC Private Network Interfaces)

Step 1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

Step 2. Select Policies > root > right-click Adapter Policies.

Step 3. Select Create Ethernet Adapter Policy.

Step 4. Provide a name for the Ethernet adapter policy as “ORA-VMWare”. Change the following fields and click Save Changes:

● Resources:

◦ Transmit Queues: 1

◦ Ring Size: 4096

◦ Receive Queues: 8

◦ Ring Size: 4096

◦ Completion Queues: 9

◦ Interrupts: 11

● Options:

◦ Receive Side Scaling (RSS): Enabled

Step 5. Configure the adapter policy as shown below:

RSS distributes network receive processing across multiple CPUs in multiprocessor systems, as follows:

● Disabled—Network receive processing is always handled by a single processor even if additional processors are available.

● Enabled—Network receive processing is shared across processors whenever possible.
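The resource values in Step 4 are consistent with the general sizing guidance for Cisco VIC Ethernet adapter policies, in which the completion queue count equals the sum of the transmit and receive queues and the interrupt count is the completion queue count plus two. The worked arithmetic below is a sketch of that rule of thumb applied to the values above, not a requirement stated by this solution; check the adapter policy guidance for your operating system before changing the queue counts.

$$
\text{Completion Queues} = \text{Transmit Queues} + \text{Receive Queues} = 1 + 8 = 9
$$

$$
\text{Interrupts} = \text{Completion Queues} + 2 = 9 + 2 = 11
$$

If you raise the number of receive queues for RSS, the completion queue and interrupt counts would need to be recalculated the same way.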

Configure Default Maintenance Policy

You’ll need to configure your default maintenance policy for your specific environment requirements.

Procedure 1. Configure Default Maintenance Policy

Step 1. In Cisco UCS Manager, click the Servers tab in the navigation pane.

Step 2. Click Policies > root > Maintenance Policies > Default.

Step 3. Change the Reboot Policy to User Ack.

Step 4. Click Save Changes.

Step 5. Click OK to accept the changes.

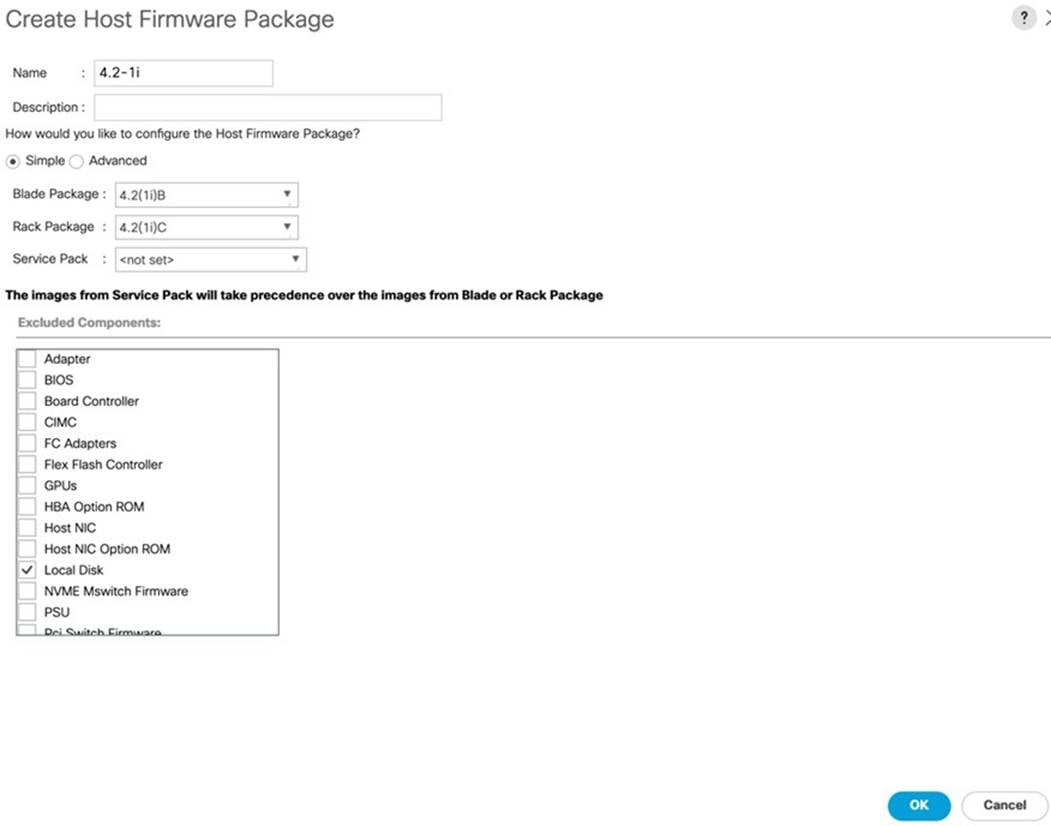

Configure Host Firmware Policy

Firmware management policies allow the administrator to choose the corresponding packages for a given server configuration. These policies often include packages for adapter, BIOS, board controller, FC adapters, host bus adapter (HBA) option ROM, and storage controller properties.

Procedure 1. Create Default Firmware Management Policy

Step 1. In Cisco UCS Manager, click Servers.

Step 2. Expand Policies > root.

Step 3. Expand Host Firmware Packages, then right-click and select “Create Host Firmware Policy.”

Step 4. Give the policy the name 4.2-1i and select the Blade and Rack Packages as shown below:

Step 5. Click OK to create the host firmware package for this UCSM version.

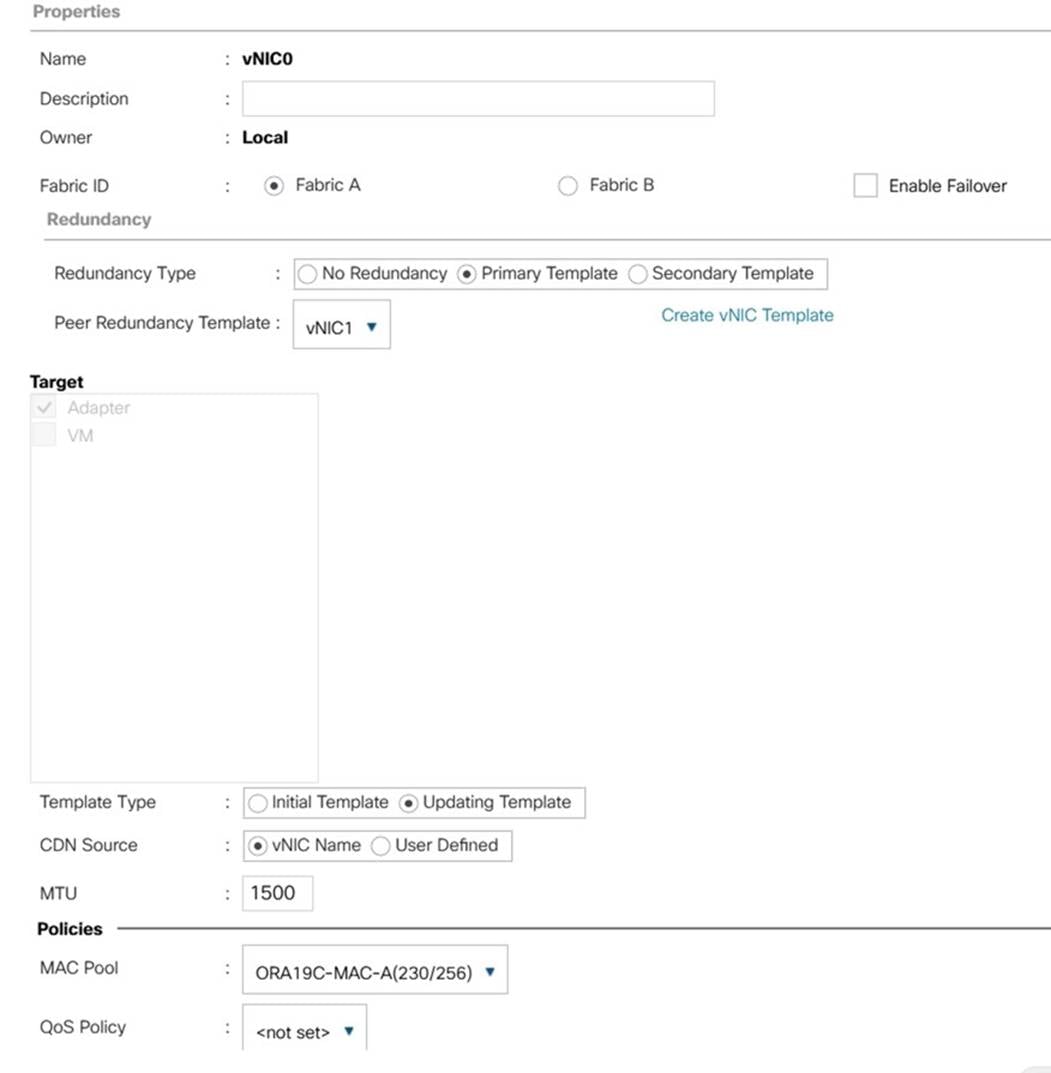

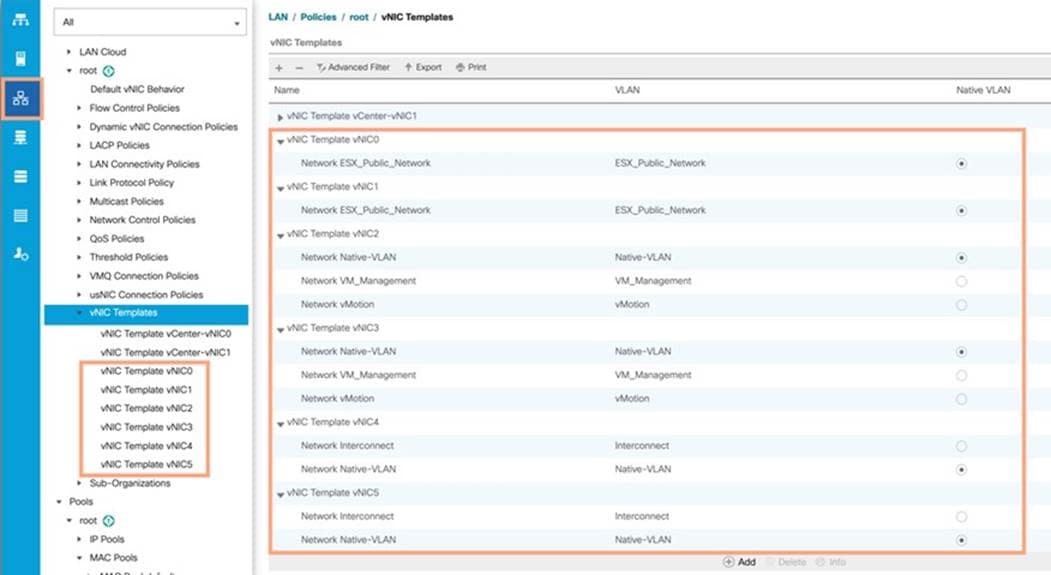

Configure vNIC and vHBA Template

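Note: For this solution, we created six vNIC templates in three primary/secondary pairs: one pair for public network traffic, one for VM management and vMotion traffic, and one for Oracle RAC private interconnect traffic. We will use these vNIC templates when creating the Service Profile later in this section.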

Procedure 1. Create vNIC Template

Step 1. In Cisco UCS Manager, click the LAN tab in the navigation pane.

Step 2. Click Policies > root > vNIC Templates, then right-click vNIC Templates and select Create vNIC Template.

Step 3. Enter “vNIC0” for the vNIC template name and keep Fabric A selected.

Step 4. Select the Redundancy Type “Primary Template” since you are going to configure Primary and Secondary Templates for all the NICs. Leave the Peer Redundancy Template blank since you will configure this when you create vNIC1 as the peer template of vNIC0.

Step 5. For Template Type, select Updating Template.

Step 6. Under VLANs, check the box for ESX_Public_Network and select it as the Native VLAN, with VLAN ID 134.

Step 7. Keep the MTU value at 1500.

Step 8. In the MAC Pool list, click ORA19C-MAC-A.

Step 9. Click OK to create the vNIC0.

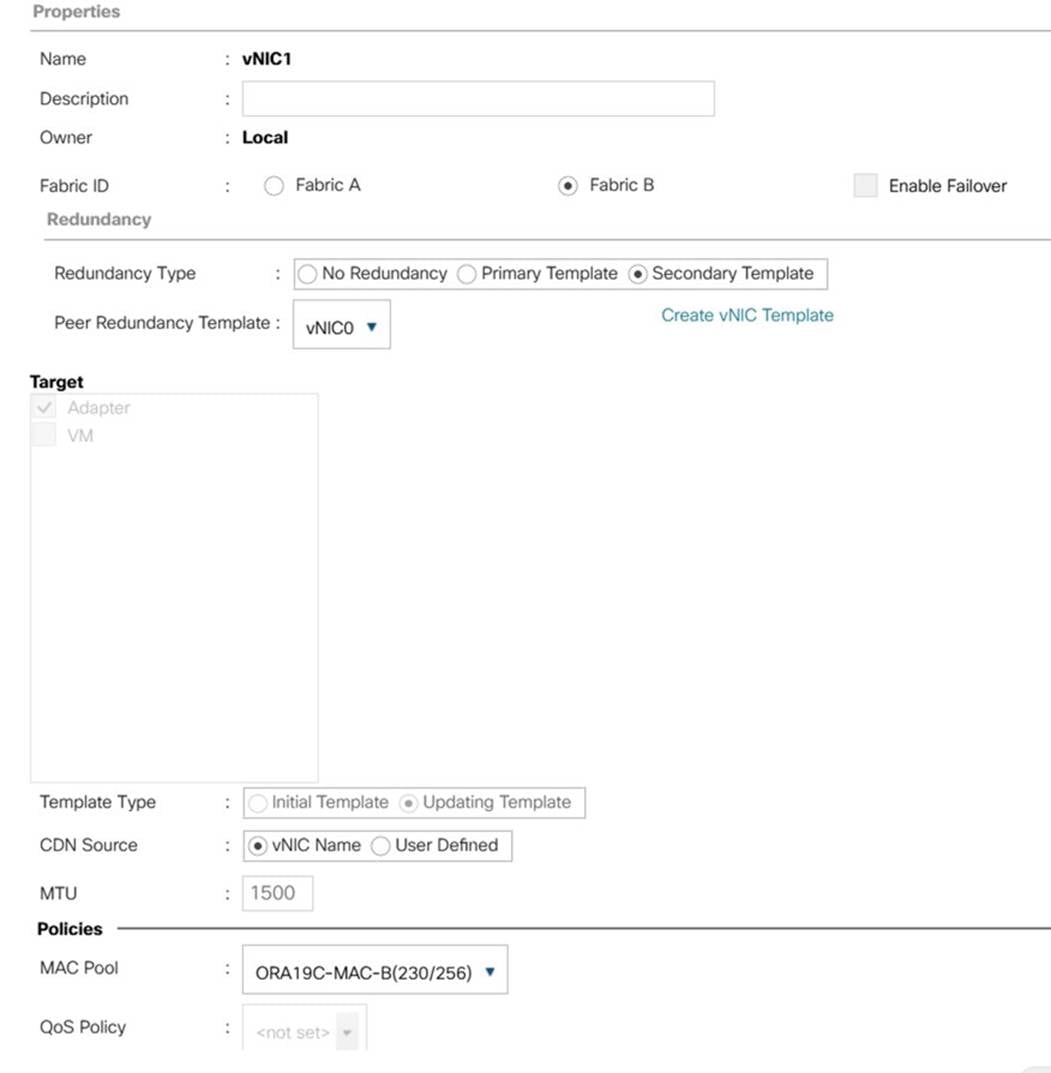

Step 10. Right-click the vNIC Template and select Create vNIC Template.

Step 11. Enter vNIC1 for the vNIC template name and keep Fabric B selected.

Step 12. For Redundancy Type select Secondary Template and from the Peer Redundancy Template drop-down list, select vNIC0 to configure Primary & Secondary Redundancy on FI-A and FI-B.

Step 13. For Template Type, select Updating Template. Under VLANs, check the box for ESX_Public_Network, select it as the Native VLAN with VLAN ID 134, and keep the MTU value at 1500.

Step 14. In the MAC Pool list, select ORA19C-MAC-B and click OK to create the vNIC1 as shown below:

Step 15. Click OK to finish.

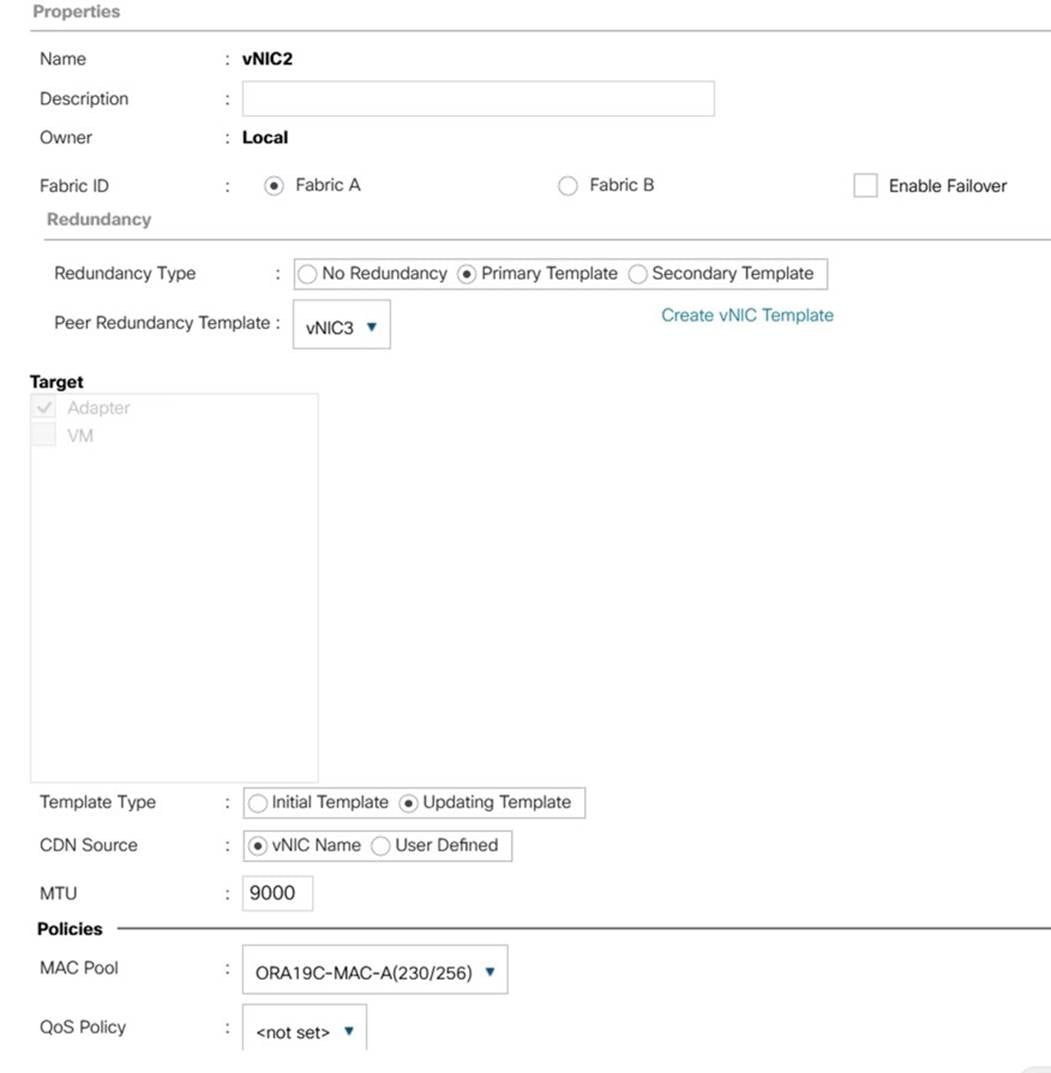

Note: We created vNIC2 and vNIC3 to carry VM Management and vMotion traffic as a redundancy pair across both Fabric Interconnects (FIs).

Step 16. Right-click the vNIC Template and select Create vNIC Template.

Step 17. Enter vNIC2 for the vNIC template name and keep Fabric A selected.

Step 18. For Redundancy Type, select Primary Template since you are going to configure Primary Template as vNIC2 and Secondary Template as vNIC3.

Step 19. Leave the Peer Redundancy Template blank since you will configure this when you create vNIC3 as the peer template of vNIC2.

Step 20. For Template Type, select Updating Template.

Step 21. Under VLANs, check the boxes Native-VLAN, VM_Management, and vMotion. Select VLAN 2 for the Native VLAN.

Step 22. Change the MTU value to 9000.

Step 23. In the MAC Pool list, select ORA19C-MAC-A.

Step 24. Click OK to create the vNIC2.

Step 25. Right-click the vNIC Template and click Create vNIC Template.

Step 26. Enter vNIC3 for the vNIC template name and keep Fabric B selected.

Step 27. For Redundancy Type, select Secondary Template and from the Peer Redundancy Template drop-down list, select vNIC2 to configure Primary & Secondary Redundancy on FI-A and FI-B.

Step 28. For Template Type, select Updating Template and under VLANs, check the boxes Native-VLAN, VM_Management, and vMotion. Select VLAN 2 as Native VLAN.

Step 29. Change the MTU value to 9000.

Step 30. In the MAC Pool list, select ORA19C-MAC-B and click OK to create the vNIC3 as shown below:

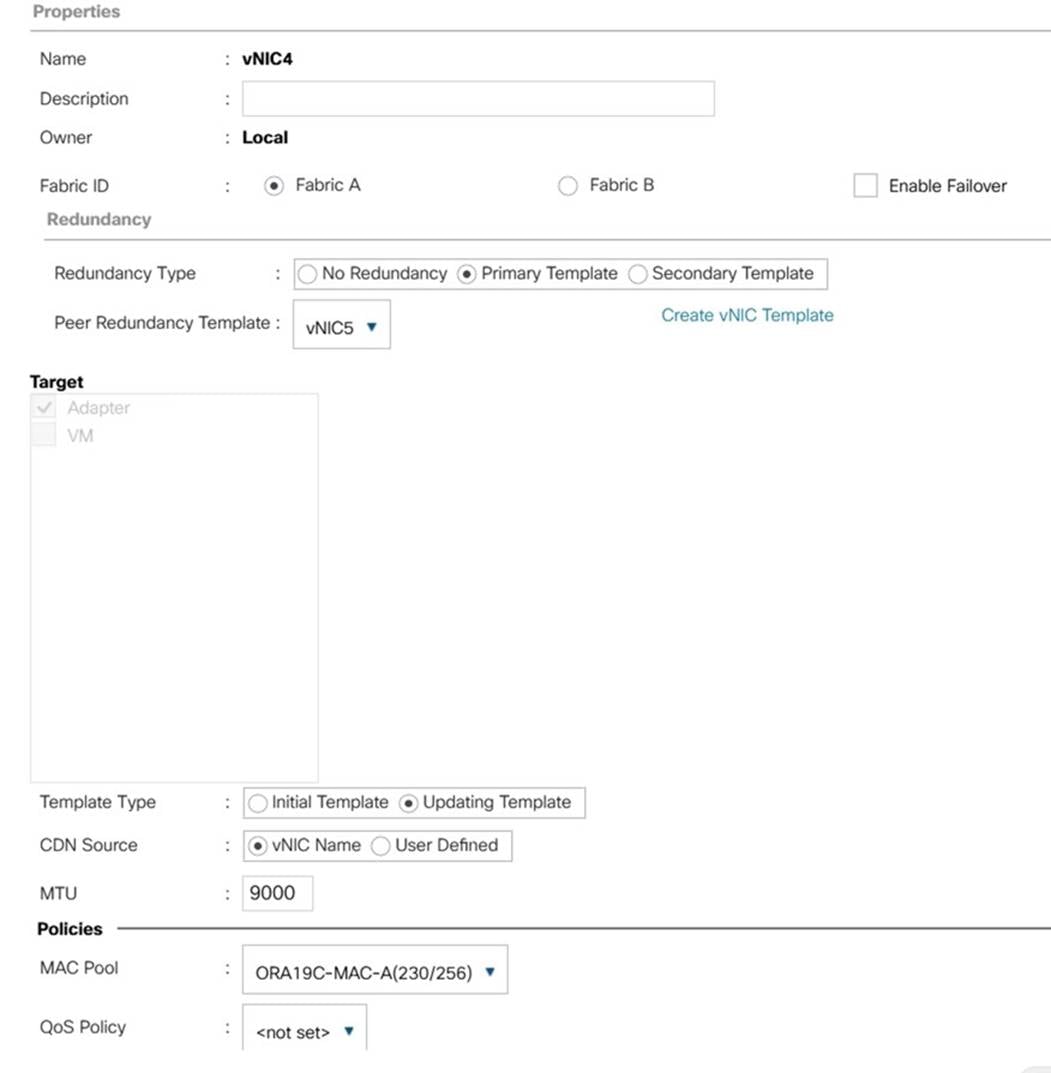

Step 31. Right-click the vNIC Template and select Create vNIC Template.

Step 32. Enter vNIC4 for the vNIC template name and keep Fabric A selected.

Step 33. For Redundancy Type, select Primary Template since you are going to configure the Primary Template as vNIC4 and Secondary Template as vNIC5.

Step 34. Leave the Peer Redundancy Template blank since you will configure this when you create vNIC5 as the peer template of vNIC4.

Step 35. For Template Type, select Updating Template.

Step 36. Under VLANs, check the boxes Native-VLAN and Interconnect. Select VLAN 2 as Native VLAN.

Step 37. Change the MTU value to 9000.

Step 38. In the MAC Pool list, select ORA19C-MAC-A.

Step 39. Click OK to create the vNIC4.

Step 40. Right-click the vNIC Template and select Create vNIC Template.

Step 41. Enter vNIC5 for the vNIC template name and keep Fabric B selected.

Step 42. For the Redundancy Type, select Secondary Template and from the Peer Redundancy Template drop-down list, select vNIC4 to configure Primary & Secondary Redundancy on FI-A and FI-B.

Step 43. For Template Type, select Updating Template and under VLANs, check the boxes Native-VLAN and Interconnect. Select VLAN 2 as Native VLAN.

Step 44. Change the MTU value to 9000.

Step 45. In the MAC Pool list, select ORA19C-MAC-B and click OK to create the vNIC5 as shown below:

The following screenshot shows all the vNIC templates configured for this environment:

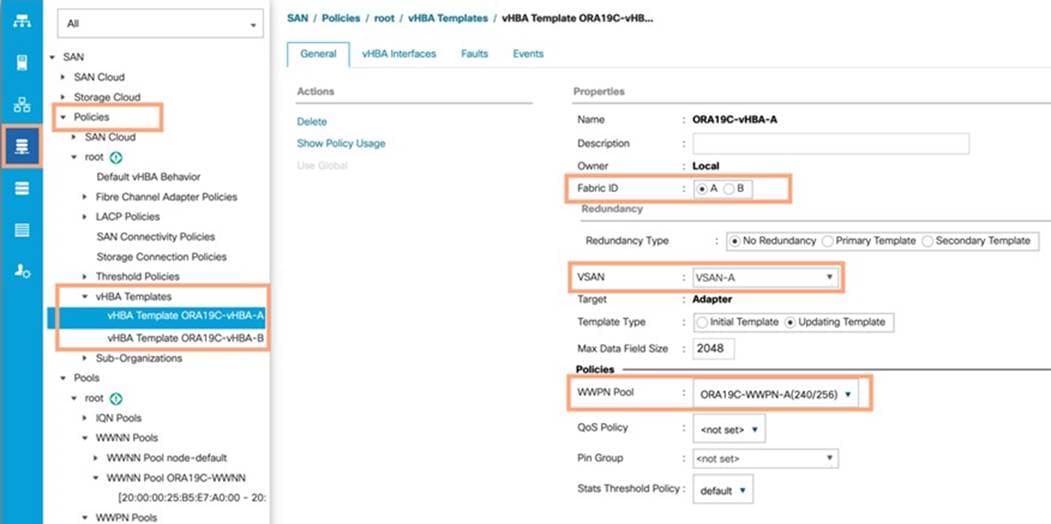

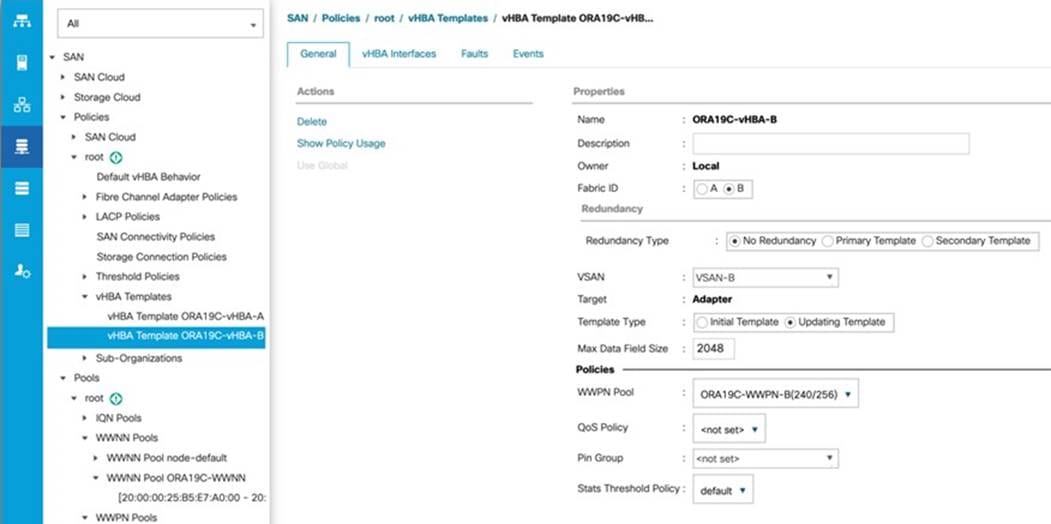

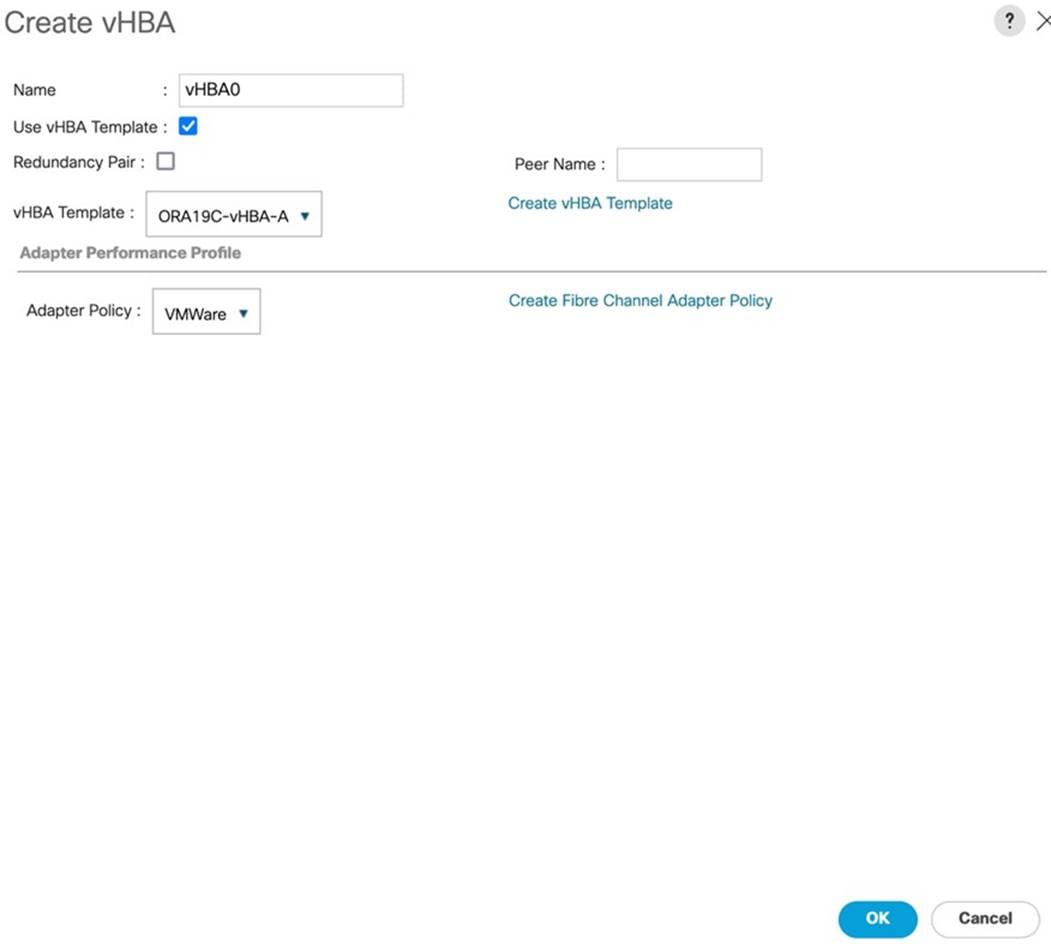
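The six vNIC templates form three primary/secondary pairs, and each pair is expected to agree on VLANs, native VLAN, and MTU while sitting on opposite fabrics. The following is a minimal sketch that records the templates from the steps above as data and checks those expectations. The `VnicTemplate` class and `validate_pairs` function are hypothetical helpers written for illustration; they are not part of UCS Manager or any Cisco SDK.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class VnicTemplate:
    name: str
    fabric: str            # "A" or "B"
    redundancy: str        # "Primary" or "Secondary"
    peer: Optional[str]    # set on the secondary only, as in the steps above
    vlans: frozenset
    native_vlan: str
    mtu: int
    mac_pool: str


PUBLIC = frozenset({"ESX_Public_Network"})
MGMT = frozenset({"Native-VLAN", "VM_Management", "vMotion"})
INTERCONNECT = frozenset({"Native-VLAN", "Interconnect"})

# Values transcribed from the vNIC template steps in this section.
TEMPLATES = [
    VnicTemplate("vNIC0", "A", "Primary", None, PUBLIC, "ESX_Public_Network", 1500, "ORA19C-MAC-A"),
    VnicTemplate("vNIC1", "B", "Secondary", "vNIC0", PUBLIC, "ESX_Public_Network", 1500, "ORA19C-MAC-B"),
    VnicTemplate("vNIC2", "A", "Primary", None, MGMT, "Native-VLAN", 9000, "ORA19C-MAC-A"),
    VnicTemplate("vNIC3", "B", "Secondary", "vNIC2", MGMT, "Native-VLAN", 9000, "ORA19C-MAC-B"),
    VnicTemplate("vNIC4", "A", "Primary", None, INTERCONNECT, "Native-VLAN", 9000, "ORA19C-MAC-A"),
    VnicTemplate("vNIC5", "B", "Secondary", "vNIC4", INTERCONNECT, "Native-VLAN", 9000, "ORA19C-MAC-B"),
]


def validate_pairs(templates):
    """Return a list of problems; an empty list means every pair is consistent."""
    by_name = {t.name: t for t in templates}
    problems = []
    for t in templates:
        if t.redundancy != "Secondary":
            continue
        peer = by_name.get(t.peer)
        if peer is None or peer.redundancy != "Primary":
            problems.append(f"{t.name}: peer {t.peer} is not a primary template")
            continue
        if peer.fabric == t.fabric:
            problems.append(f"{t.name}: same fabric as peer {peer.name}")
        for field in ("vlans", "native_vlan", "mtu"):
            if getattr(t, field) != getattr(peer, field):
                problems.append(f"{t.name}: {field} differs from peer {peer.name}")
    return problems


print(validate_pairs(TEMPLATES))

# Hypothetical mistake: vNIC5 left at the default MTU instead of 9000.
broken = [replace(t, mtu=1500) if t.name == "vNIC5" else t for t in TEMPLATES]
print(validate_pairs(broken))
```

A check like this is most useful for the interconnect pair, where a mismatched MTU between vNIC4 and vNIC5 would only surface after a fabric failover.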

For this solution, we created two vHBA templates: ORA19C-vHBA-A and ORA19C-vHBA-B.

Procedure 1. Create virtual Host Bus Adapter (vHBA) templates

Step 1. In Cisco UCS Manager, click the SAN tab in the navigation pane.

Step 2. Click Policies > root > right-click vHBA Templates > click Create vHBA Template.

Step 3. Enter the name ORA19C-vHBA-A and keep Fabric A selected.

Step 4. For VSAN, select VSAN-A and template type as Updating Template.

Step 5. For WWPN Pool, select ORA19C-WWPN-A from the drop-down list and click OK to create the first vHBA.

Step 6. Create the second vHBA and change the name to ORA19C-vHBA-B.

Step 7. For the Fabric ID, select B, template type as Updating Template, and WWPN as ORA19C-WWPN-B.

Step 8. Click OK to create the second vHBA.

Two vHBA templates have been created as shown below:

Create Local Disk Configuration Policy for Local Disk Boot

Note: For this Cisco Validated Design, we used local disks to install the VMware ESXi hypervisor on all eight blade servers. A local disk configuration policy for Cisco UCS is necessary if the servers in the environment have local disks.

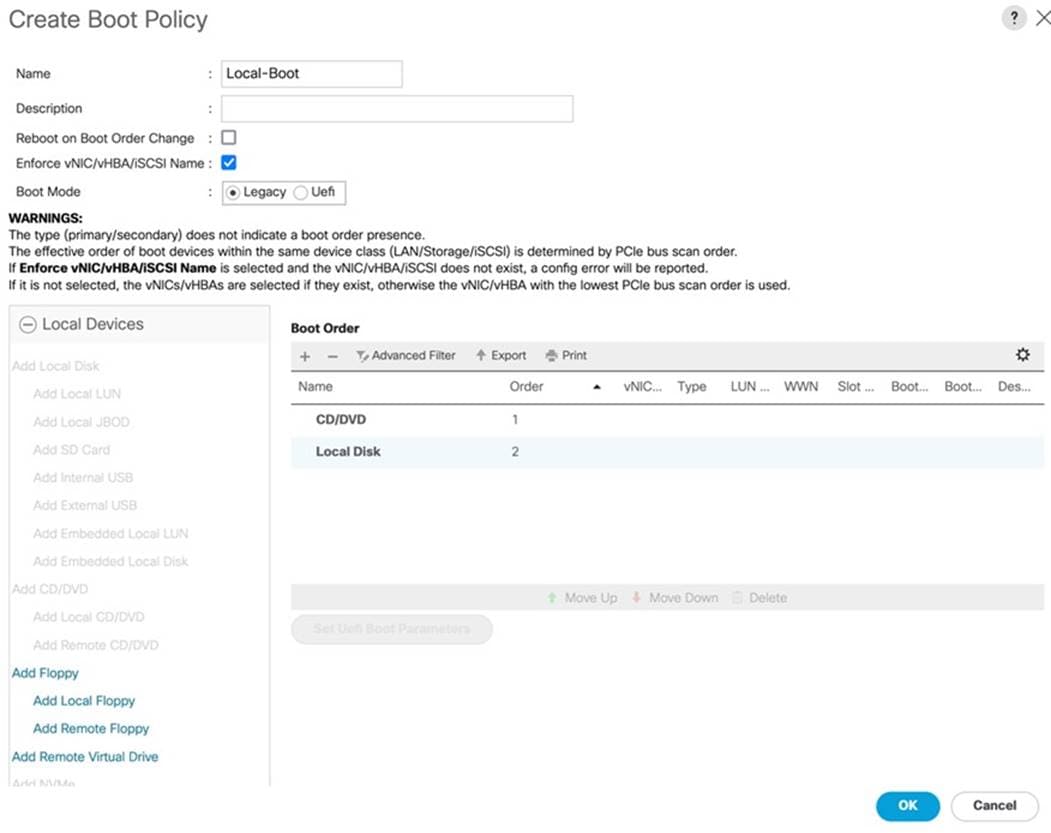

Procedure 1. Create Boot Policies for the Cisco UCS Environments

Step 1. Go to Cisco UCS Manager and then go to Servers > Policies > root > Boot Policies.

Step 2. Right-click and select Create Boot Policy. Enter Local-Boot for the name of the boot policy as shown below:

Note: We used two front disks on each blade server and created a RAID-1 Storage Profile and RAID-1 Storage Policy for high availability in case any disk failure occurs.

| Tech tip |

| You will create the Disk Group Policy first, and then the Storage Profile. |

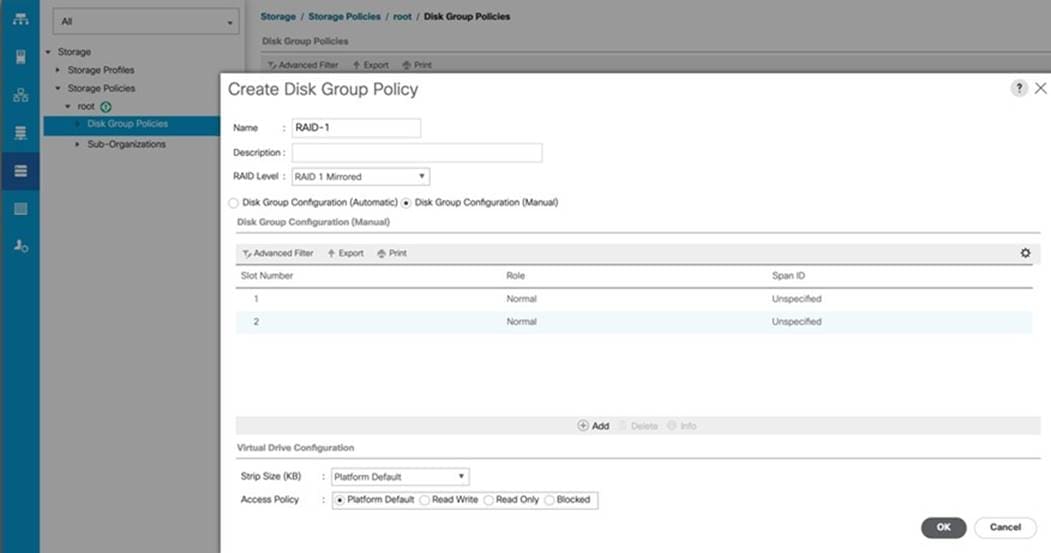

Procedure 1. Create the Disk Group Policy

Step 1. Go to Cisco UCS Manager and click the Storage Tab in the navigation pane.

Step 2. Go to Storage Policies > root > Disk Group Policies.

Step 3. Right-click and select Create Disk Group Policy.

Step 4. Name the Policy “RAID-1” and from the RAID Level drop-down list select RAID 1 Mirrored.

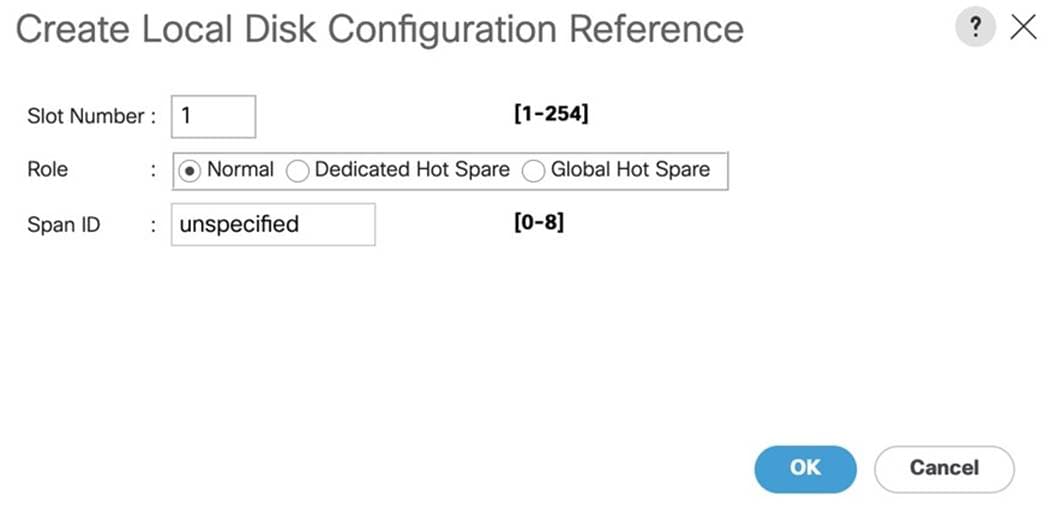

Step 5. Select Disk Group Configuration (Manual) and then click +Add to manually add the disk on slot 1 from the blade server as shown below:

Step 6. Click +Add to manually add the disk on slot 2 from the blade server.

Step 7. Keep the Virtual Drive Configuration options as default and click OK to create the Disk Group Policy as shown below:

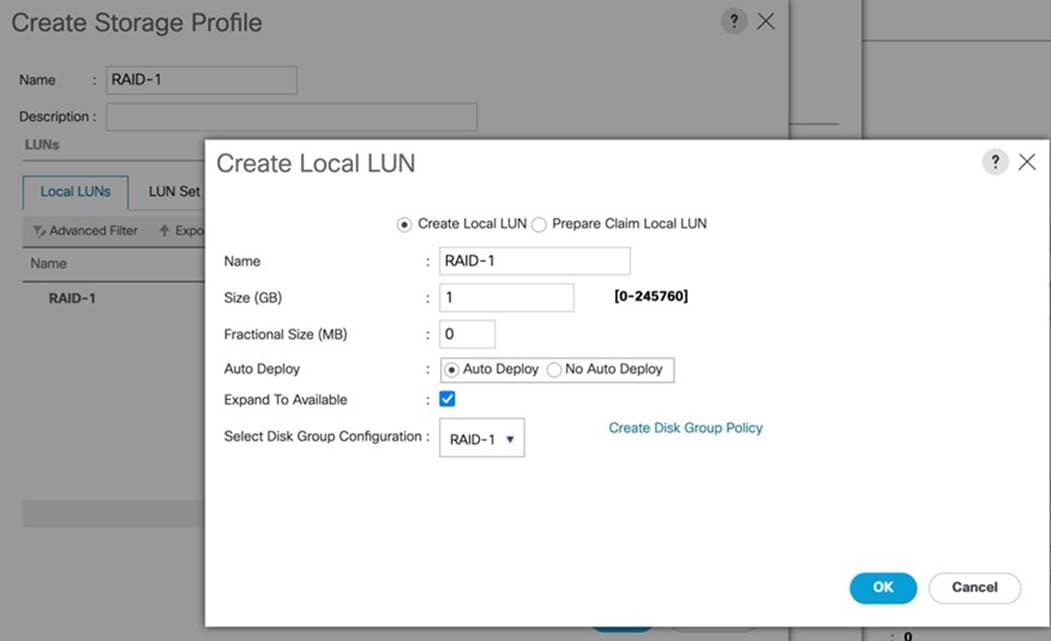

Procedure 2. Create a Storage Profile for RAID-1

Step 1. Go to Cisco UCS Manager and click the Storage Tab in the navigation pane.

Step 2. Go to Storage Profiles > root, then right-click and select Create Storage Profile.

Step 3. Name the Storage Profile “RAID-1” and then click +Add to add Local LUNs into Storage Profile.

Step 4. From the Create Local LUN menu, enter the name “RAID-1” and check the box Expand To Available.

Step 5. For the Select Disk Group Configuration options, select RAID-1 for the Disk Group Policy which you previously created as shown below:

Step 6. Click OK to Create Local LUN and then click OK to finish creating the Storage Profile.

Note: For this solution, we used the front disks in slot 1 and slot 2 to create a RAID-1 mirrored Local LUN and presented this LUN as the Boot Option for the blade server so that the blade server can boot the OS from this local RAID-1 Volume. We installed VMware Hypervisor ESXi on this local RAID-1 Volume for each of the blade servers. We will assign this boot profile and local boot policy to the Service Profiles as explained in the following section.
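Because the Local LUN is a two-disk mirror created with Expand To Available, its usable size is bounded by the smaller of the two member disks. The following is a small sketch of that arithmetic. It ignores any controller metadata overhead, the function name is hypothetical, and the disk sizes are made-up values because this document does not state them.

```python
def raid1_usable_gb(disk_sizes_gb):
    """Approximate usable capacity of a two-disk RAID 1 mirror.

    Every block is written to both disks, so the mirror can be no larger
    than the smaller member. Controller overhead is ignored in this sketch.
    """
    if len(disk_sizes_gb) != 2:
        raise ValueError("this sketch models a two-disk mirror only")
    return min(disk_sizes_gb)


# Hypothetical disk sizes for the slot 1 and slot 2 front disks.
print(raid1_usable_gb([240, 240]))
print(raid1_usable_gb([240, 480]))
```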

Create and Configure Service Profile Template

Service profile templates enable policy-based server management that helps ensure consistent server resource provisioning suitable to meet predefined workload needs.

Cisco UCS service profiles with a SAN boot policy provide the following benefits:

● Scalability: Rapid deployment of new servers to the environment in very few steps.

● Manageability: Enables seamless hardware maintenance and upgrades without any restrictions.

● Flexibility: Easy to repurpose physical servers for different applications and services as needed.

● Availability: Hardware failures have less impact. In the rare case of a server failure, the logical service profile can be associated with another healthy physical server to reduce the impact.

| Tech tip |

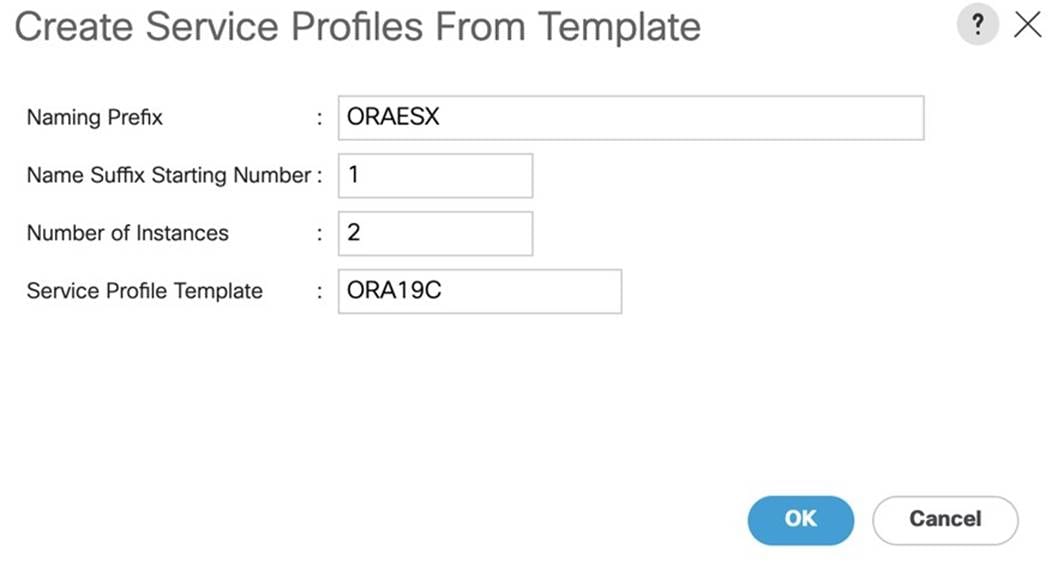

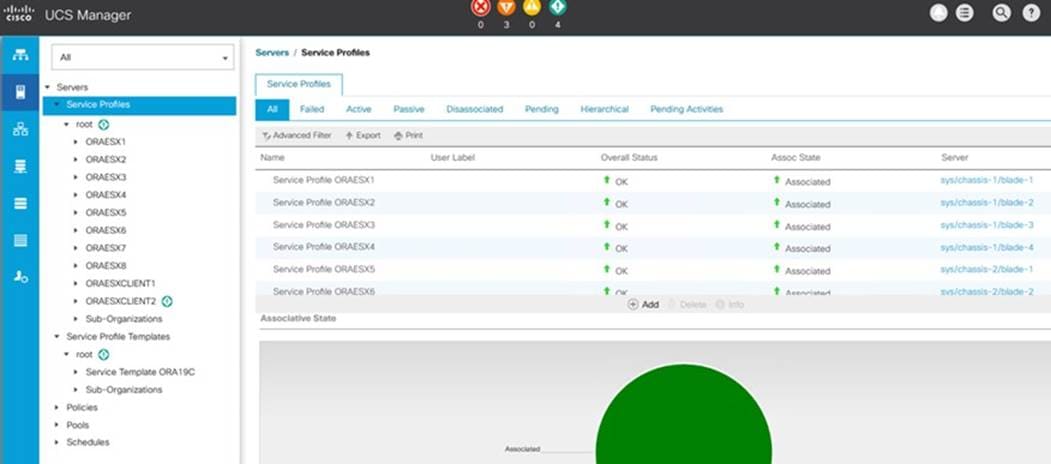

| For this solution, you will create one Service Profile Template “ORA19C.” |

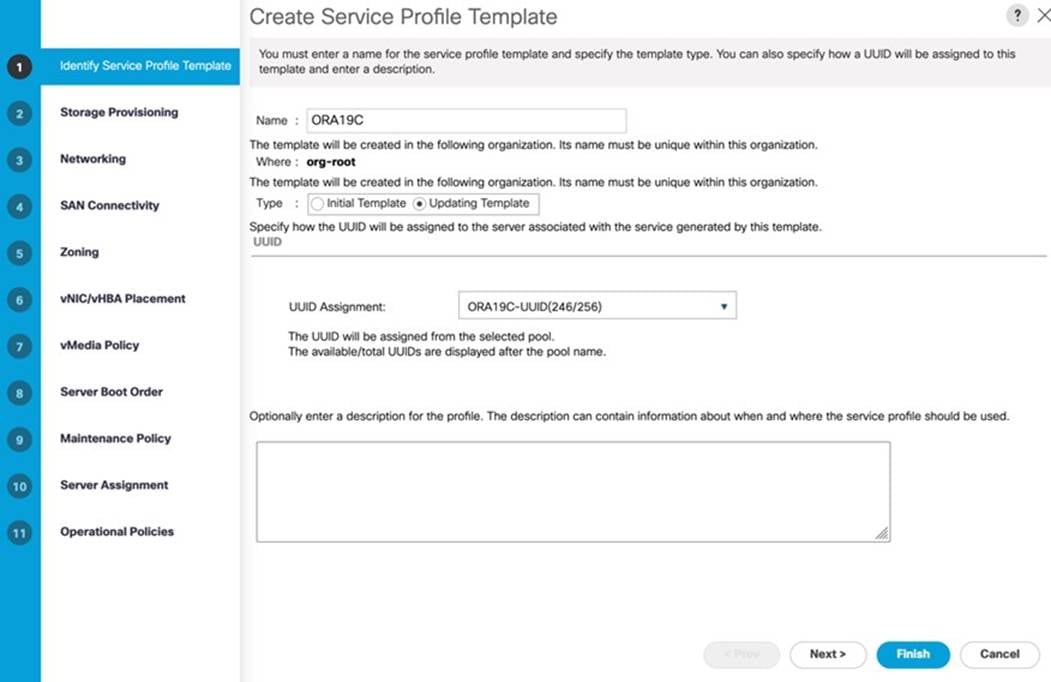

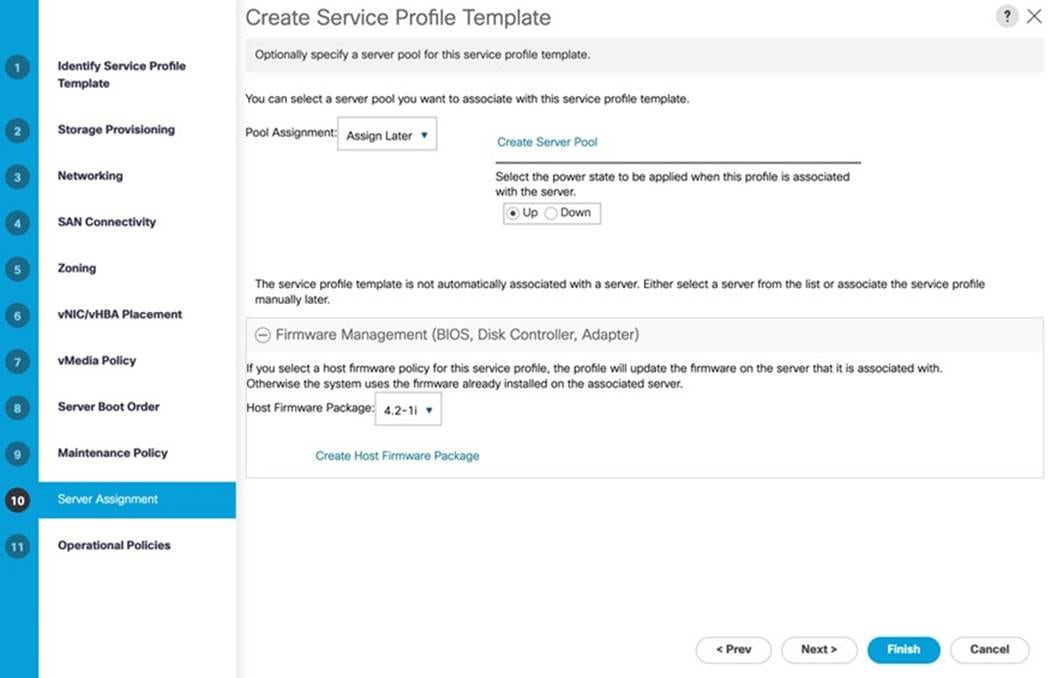

Procedure 1. Create Service Profile Template

Step 1. In Cisco UCS Manager, go to Servers > Service Profile Templates > root, then right-click and select Create Service Profile Template as shown below:

Step 2. Enter “ORA19C” for the Service Profile Template name, select the UUID Pool that was created earlier, and click Next.

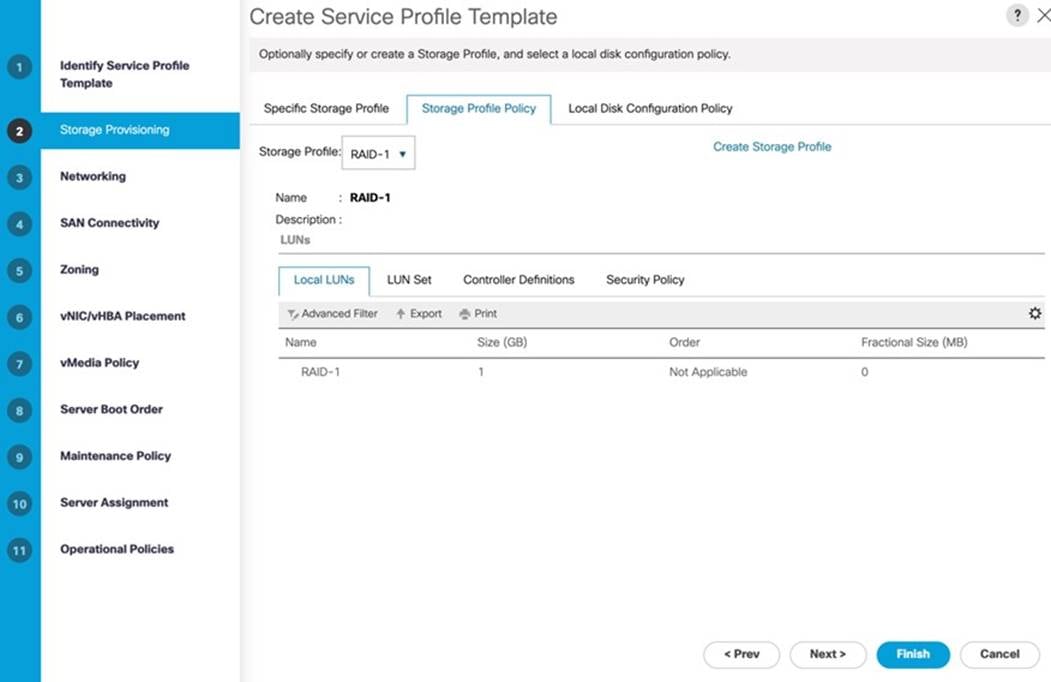

Step 3. In the Storage Provisioning menu, go to the Storage Profile Policy tab and for the Storage Profile select “RAID-1” as shown below:

Step 4. Go to the Local Disk Configuration Policy tab, select the default option for Local Storage, and click Next.

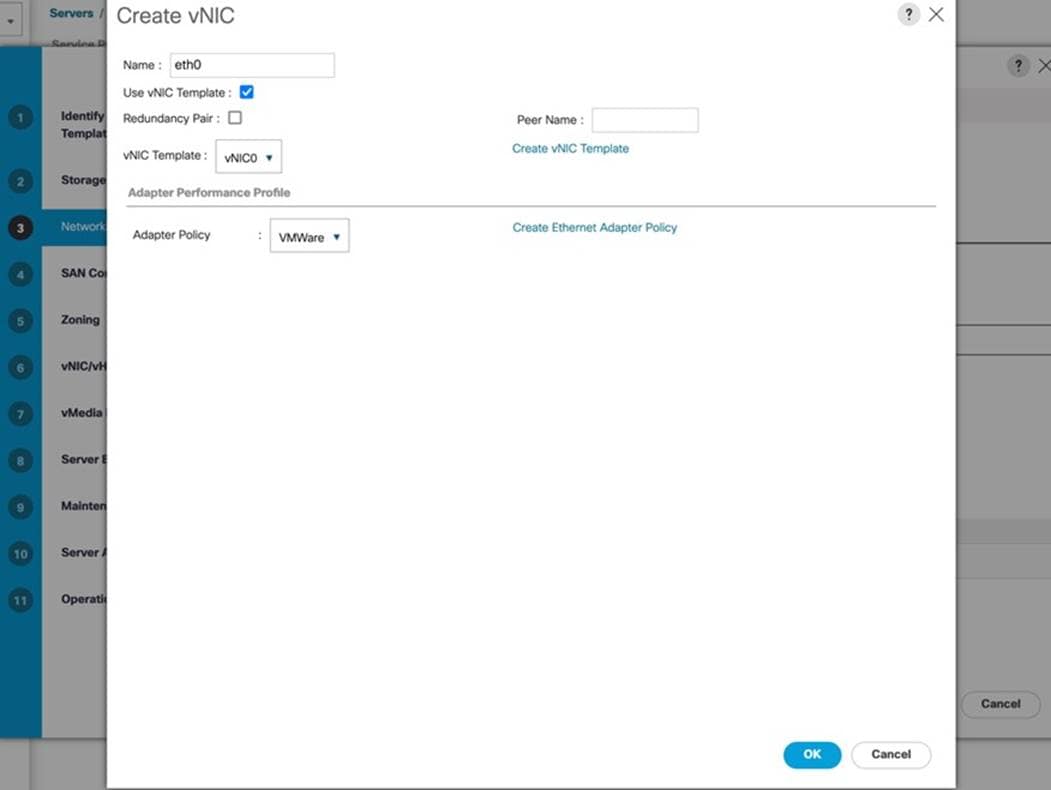

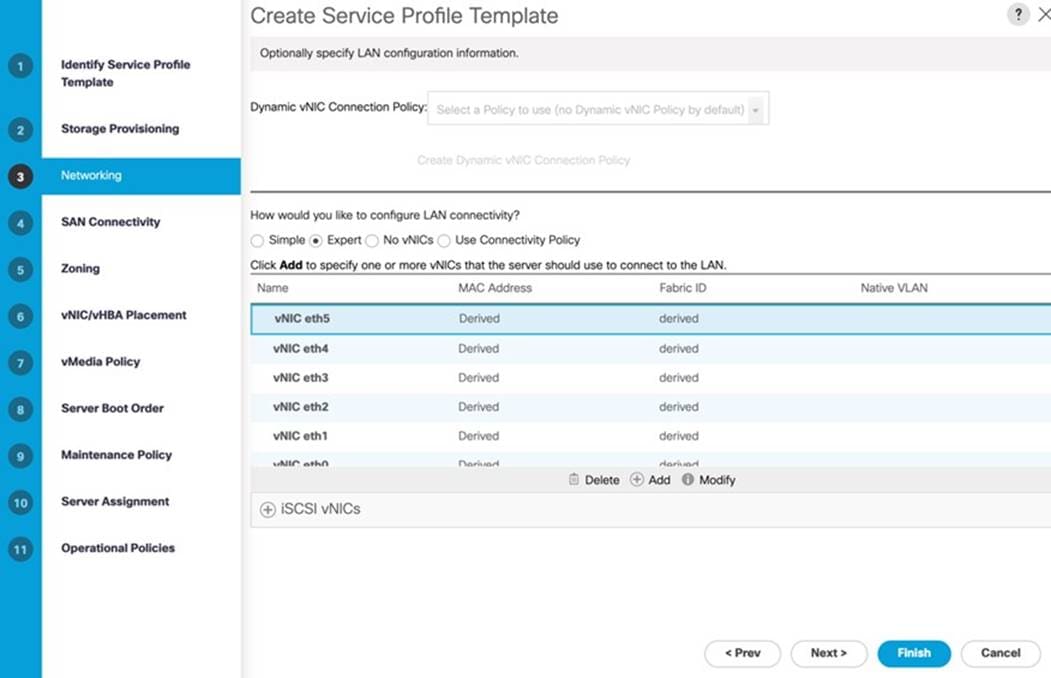

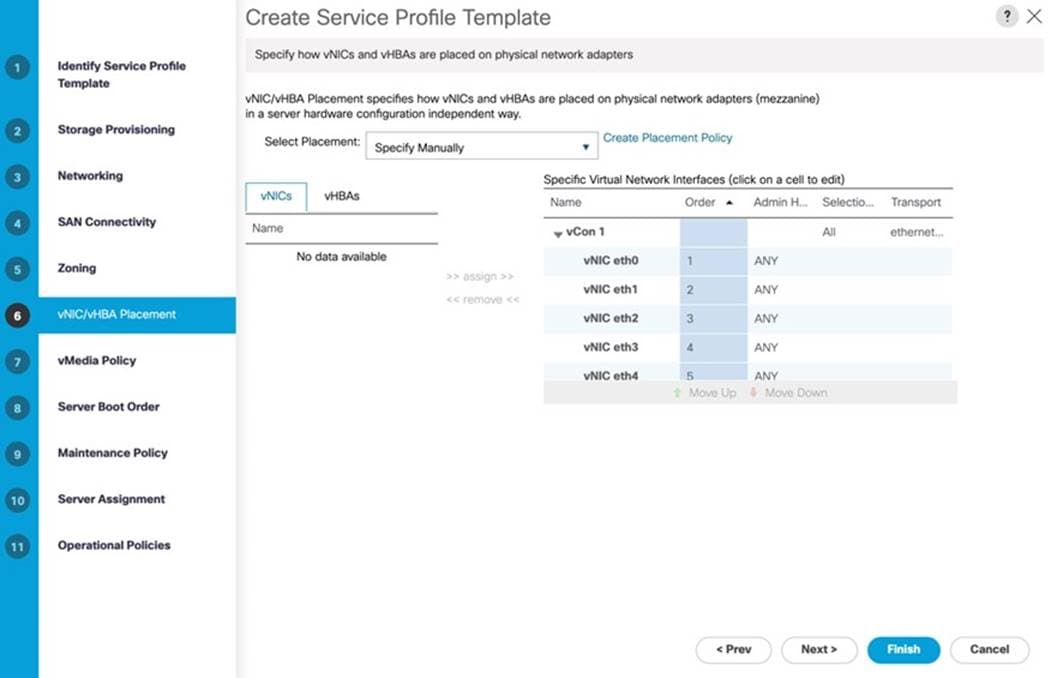

Step 5. In the Networking window, select Expert and click Add to create vNICs that the server should use to connect to the LAN.

Note: In this solution, we created six vNICs as previously explained. We named the first vNIC “eth0” and the second vNIC “eth1”. Similarly, we named the third vNIC “eth2”, the fourth vNIC “eth3”, the fifth vNIC “eth4”, and the sixth vNIC “eth5”, as explained below.

The six vNICs were created as follows:

● vNIC0 using vNIC Template vNIC0

● vNIC1 using vNIC Template vNIC1

● vNIC2 using vNIC Template vNIC2

● vNIC3 using vNIC Template vNIC3

● vNIC4 using vNIC Template vNIC4 and vNIC5 using vNIC Template vNIC5, both with the Adapter Policy “ORA-VMWare” created previously

● vNIC0 through vNIC3 use the Adapter Policy “VMWare”
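The mapping between the service profile vNIC names, the vNIC templates, and the adapter policies follows a simple pattern, which the following sketch spells out. The function name and the tuple layout are hypothetical and exist only to illustrate the pattern used in Steps 6 through 11 of this procedure.

```python
# Templates that carry Oracle RAC private interconnect traffic.
INTERCONNECT_TEMPLATES = {"vNIC4", "vNIC5"}


def service_profile_vnics(count=6):
    """Return (vNIC name, vNIC template, adapter policy) for eth0..eth{count-1}."""
    rows = []
    for i in range(count):
        template = f"vNIC{i}"
        # Only the interconnect vNICs use the customized adapter policy.
        policy = "ORA-VMWare" if template in INTERCONNECT_TEMPLATES else "VMWare"
        rows.append((f"eth{i}", template, policy))
    return rows


for name, template, policy in service_profile_vnics():
    print(f"{name}: template={template}, adapter policy={policy}")
```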

Step 6. In the Create vNIC menu, enter the name “eth0” and check the box “Use vNIC Template.” Select vNIC Template “vNIC0” with the Adapter Policy “VMWare.”

Step 7. Add the second vNIC “eth1” and check the box for “Use vNIC Template.” Select the vNIC Template vNIC1 and Adapter Policy as VMWare.

Step 8. Add the third vNIC “eth2” and check the box for “Use vNIC Template.” Select the vNIC Template vNIC2 and Adapter Policy as VMWare.

Step 9. Add the fourth vNIC “eth3” and check the box for “Use vNIC Template.” Select the vNIC Template vNIC3 and Adapter Policy as VMWare.

Step 10. Add the fifth vNIC “eth4” and check the box for “Use vNIC Template.” Select the vNIC Template vNIC4 and the Adapter Policy “ORA-VMWare” previously created for Oracle RAC Private Interconnect Traffic.

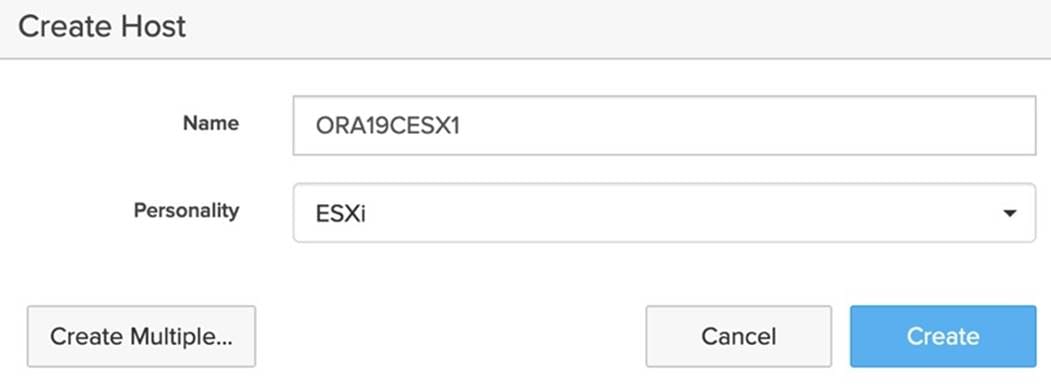

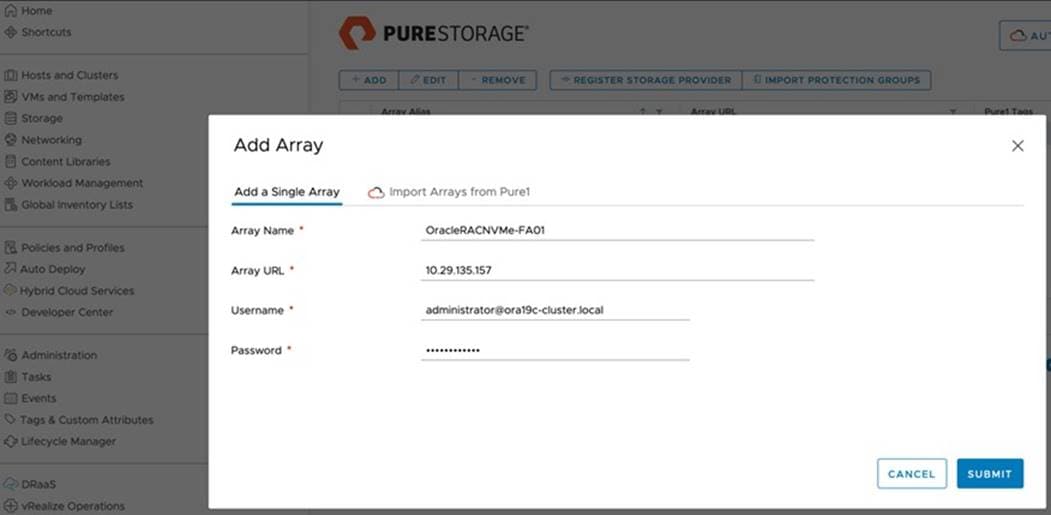

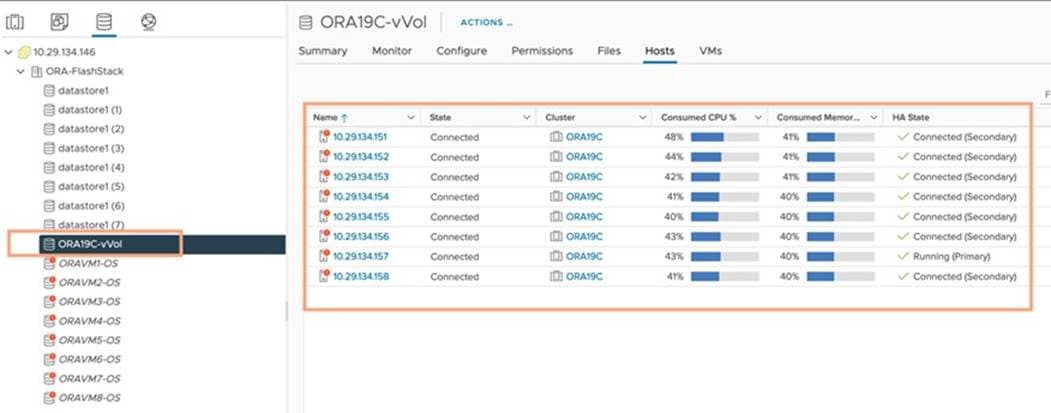

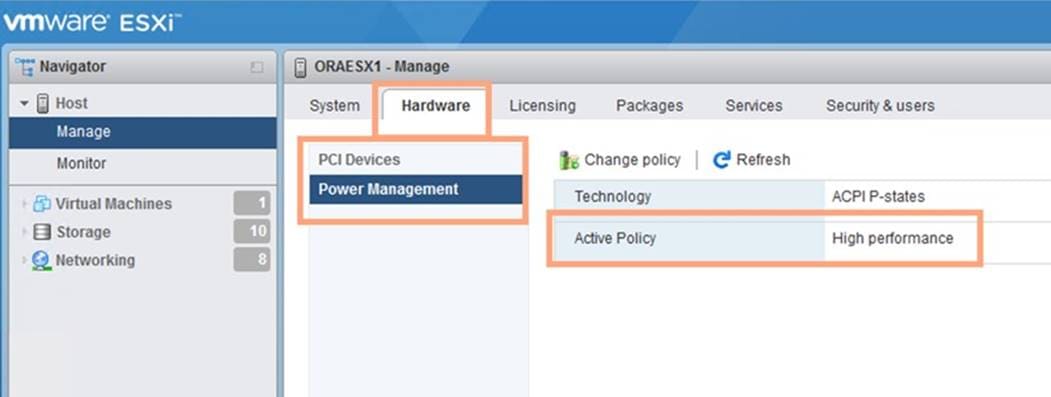

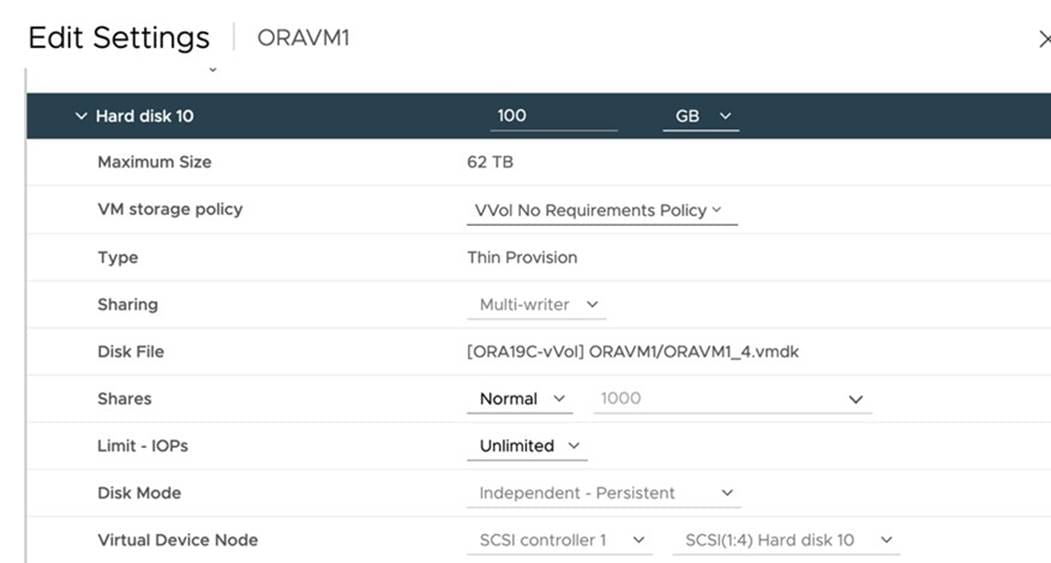

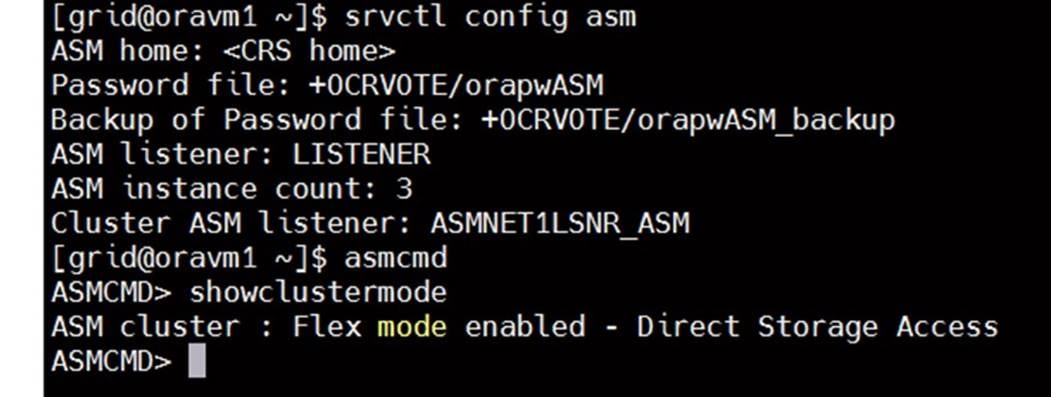

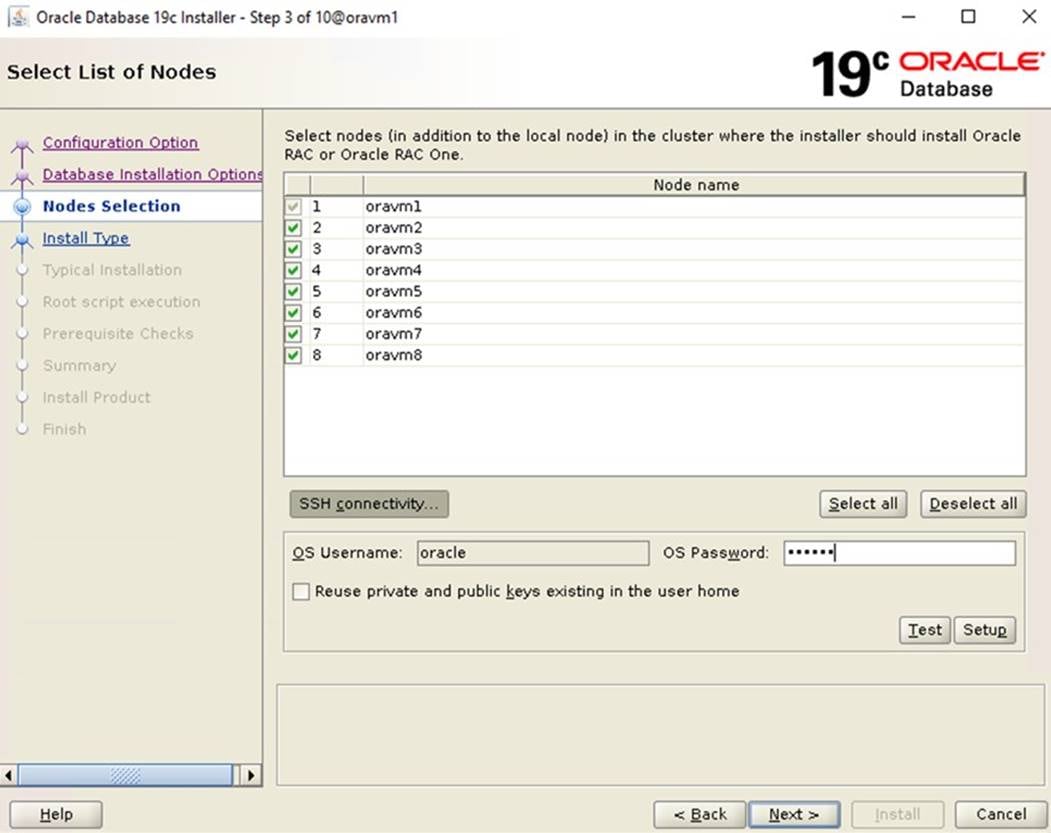

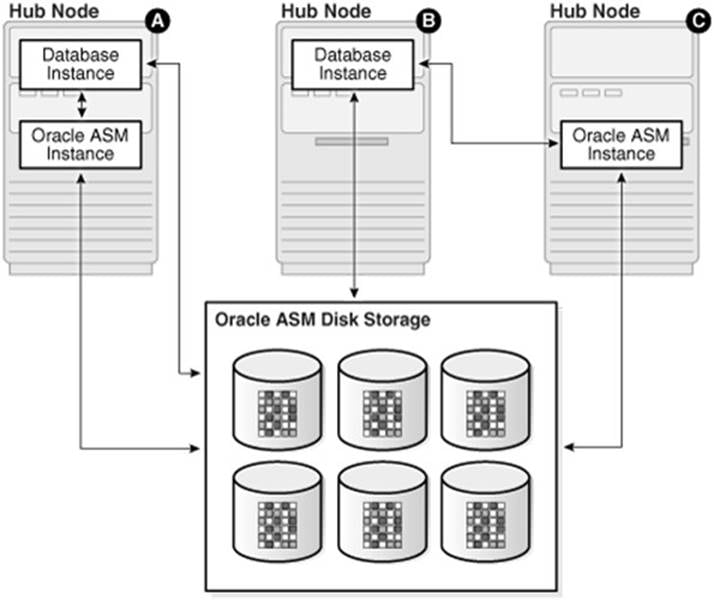

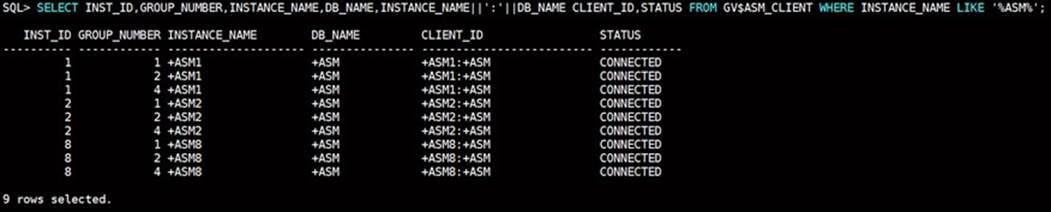

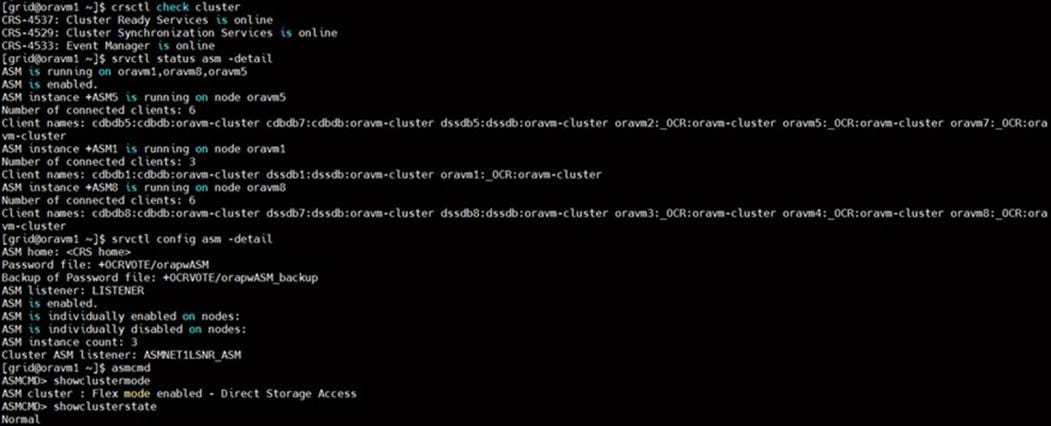

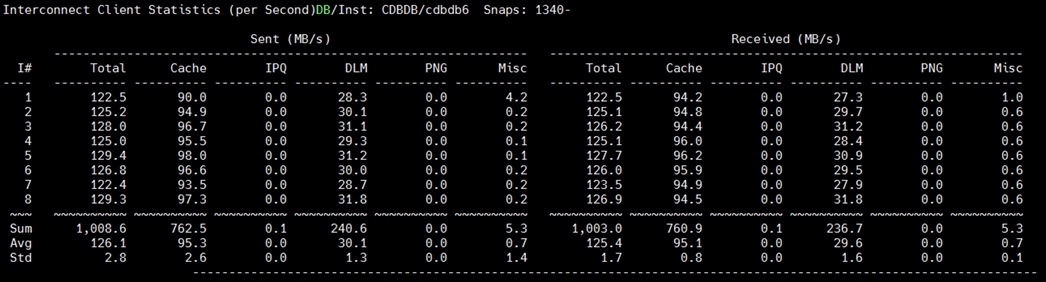

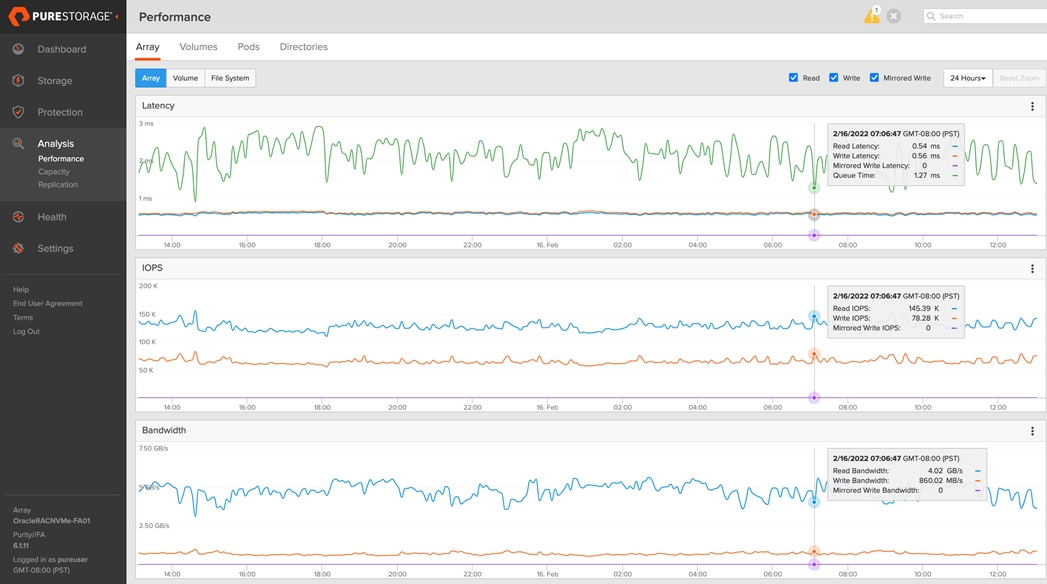

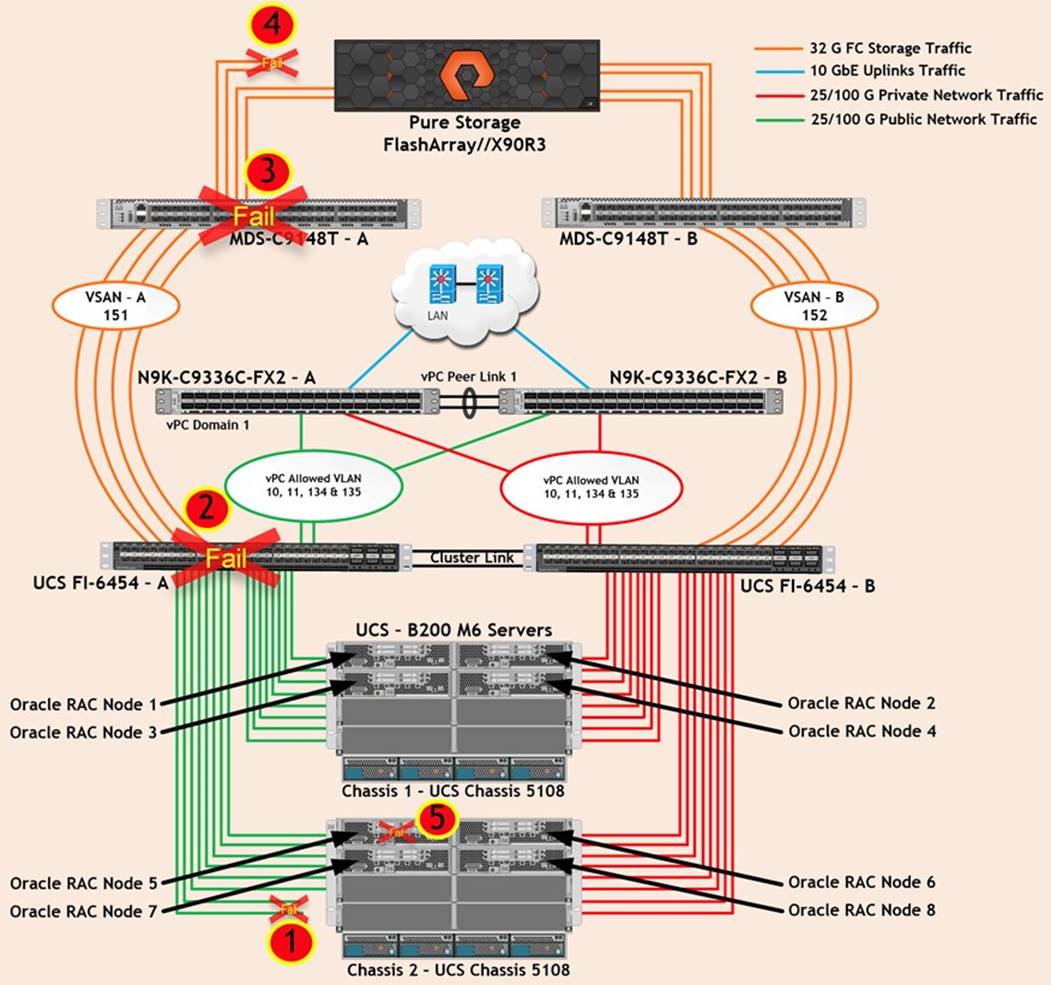

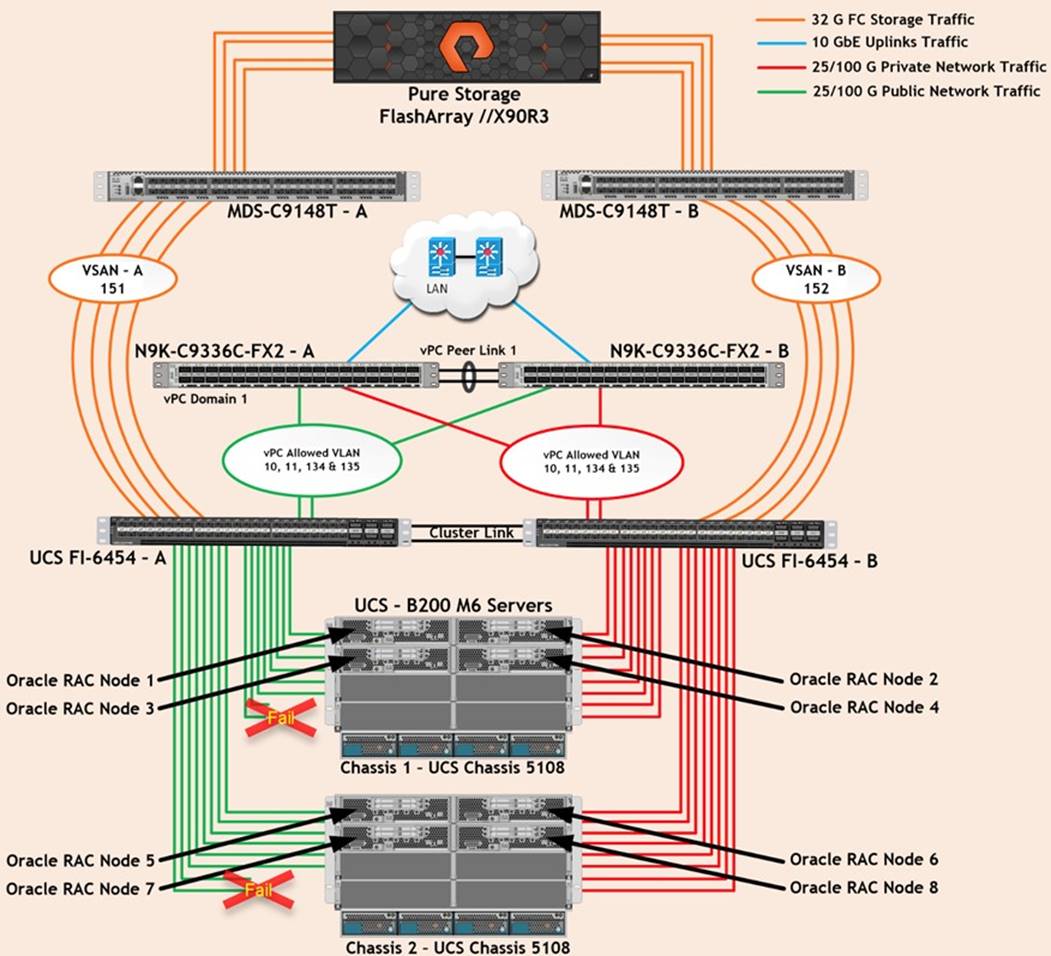

Step 11. Add the sixth vNIC “eth5” and check the box for “Use vNIC Template.” Select the vNIC Template vNIC5 and Adapter Policy as “ORA-VMWare”. As shown below, we configured six vNICs as eth0 to eth5 so the servers could connect to the LAN.