Cisco ACI VM Networking Support for Virtual Machine Managers

Benefits of ACI VM Networking

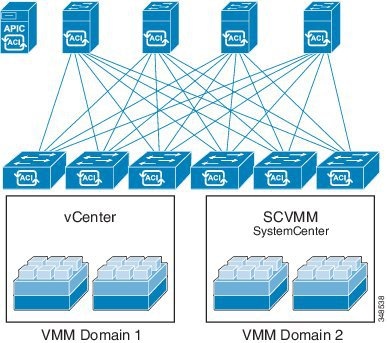

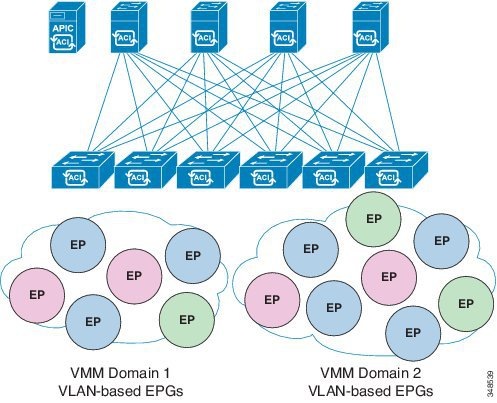

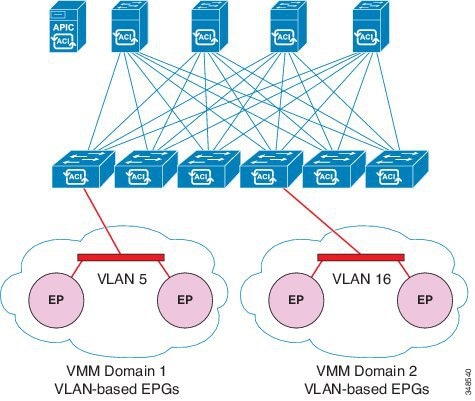

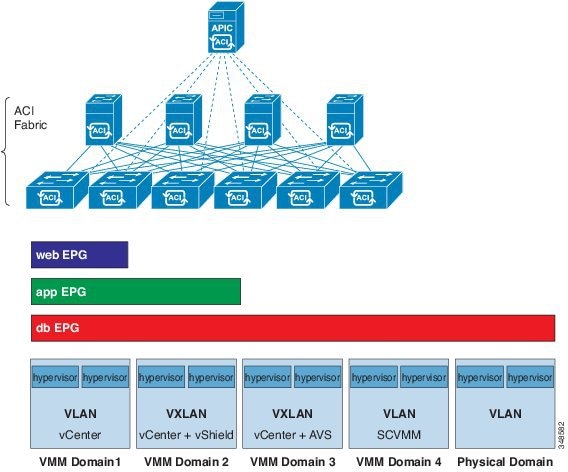

Cisco ACI virtual machine (VM) networking supports hypervisors from multiple vendors. It gives these hypervisors programmable, automated access to high-performance, scalable virtualized data center infrastructure.

Programmability and automation are critical features of scalable data center virtualization infrastructure. The open Cisco ACI REST API lets virtual machine environments integrate with, and orchestrate, the policy model-based Cisco ACI fabric. Cisco ACI VM networking enforces policies consistently across physical workloads and the virtual workloads managed by hypervisors from multiple vendors.
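
As a rough illustration of the REST API mentioned above, the following Python sketch uses only the standard library to build an APIC login request and a class query for VMM domains (class `vmmDomP`), and to parse the `imdata` list that APIC returns. The host name, credentials, and sample response are hypothetical, and the sketch only builds requests without sending them. Verify endpoints and object classes against the APIC REST API documentation for your release.

```python
import json
import urllib.request

# Hypothetical host used for illustration only.
APIC_HOST = "apic.example.com"


def build_login_request(host: str, username: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the APIC aaaLogin endpoint."""
    body = {"aaaUser": {"attributes": {"name": username, "pwd": password}}}
    return urllib.request.Request(
        f"https://{host}/api/aaaLogin.json",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def token_from_login(response: dict) -> str:
    """Pull the session token out of a parsed aaaLogin JSON response."""
    return response["imdata"][0]["aaaLogin"]["attributes"]["token"]


def build_class_query(host: str, class_name: str, token: str) -> urllib.request.Request:
    """Build a GET that returns every managed object of one class, e.g. vmmDomP."""
    return urllib.request.Request(
        f"https://{host}/api/class/{class_name}.json",
        headers={"Cookie": f"APIC-cookie={token}"},
        method="GET",
    )


def vmm_domain_names(response: dict) -> list:
    """Extract VMM domain names from a parsed class-query response body."""
    return [
        item["vmmDomP"]["attributes"]["name"]
        for item in response.get("imdata", [])
        if "vmmDomP" in item
    ]


if __name__ == "__main__":
    # Illustrative response shape only; the DNs and names are made up.
    sample = {
        "totalCount": "2",
        "imdata": [
            {"vmmDomP": {"attributes": {"dn": "uni/vmmp-VMware/dom-prod-vds", "name": "prod-vds"}}},
            {"vmmDomP": {"attributes": {"dn": "uni/vmmp-Microsoft/dom-scvmm-1", "name": "scvmm-1"}}},
        ],
    }
    print(vmm_domain_names(sample))  # ['prod-vds', 'scvmm-1']
```

To actually send a request, pass it to `urllib.request.urlopen`. APIC controllers often present self-signed certificates, so you may need to supply an appropriate `ssl.SSLContext`.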

Attachable entity profiles make it easy to support VM mobility and to place workloads anywhere in the Cisco ACI fabric. The Cisco Application Policy Infrastructure Controller (APIC) provides centralized troubleshooting, application health scores, and virtualization monitoring. Cisco ACI multi-hypervisor VM automation reduces or eliminates manual configuration and the errors it introduces, which enables virtualized data centers to support large numbers of VMs reliably and cost-effectively.
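
In the APIC object model, an attachable entity profile (class `infraAttEntityP`) groups one or more domains, including VMM domains, and is referenced by the leaf access-port policies. This is how a domain becomes available on the ports where hypervisor hosts connect. The Python sketch below builds the JSON body that associates a VMM domain with an AEP through `infraRsDomP` relations. The AEP name, domain name, and host are hypothetical, and the helper functions are illustrative rather than part of any Cisco library. Check the class and DN formats against the APIC Management Information Model reference for your release.

```python
import json


def vmm_domain_dn(vendor: str, domain: str) -> str:
    """DN of a VMM domain, e.g. uni/vmmp-VMware/dom-prod-vds."""
    return f"uni/vmmp-{vendor}/dom-{domain}"


def aep_payload(aep_name: str, domain_dns: list) -> dict:
    """Body for an attachable entity profile (infraAttEntityP) with one
    infraRsDomP relation per associated domain DN."""
    return {
        "infraAttEntityP": {
            "attributes": {"name": aep_name},
            "children": [
                {"infraRsDomP": {"attributes": {"tDn": dn}}} for dn in domain_dns
            ],
        }
    }


def aep_url(host: str, aep_name: str) -> str:
    """URL to POST the payload to: the AEP's own distinguished name."""
    return f"https://{host}/api/mo/uni/infra/attentp-{aep_name}.json"


if __name__ == "__main__":
    # Hypothetical names for illustration only.
    dns = [vmm_domain_dn("VMware", "prod-vds")]
    print(aep_url("apic.example.com", "vm-hosts-aep"))
    print(json.dumps(aep_payload("vm-hosts-aep", dns), indent=2))
```

Keeping the domain list as plain DNs lets the same helper attach VMM domains from different vendors, or a physical domain, to one AEP.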

Supported Vendors

Cisco ACI supports virtual machine managers (VMMs) from the following products and vendors:

- Cisco Application Centric Infrastructure Virtual Edge

  For information, see the Cisco ACI Virtual Edge documentation on Cisco.com.

- Cisco Application Virtual Switch (AVS)

  For information, see the chapter "Cisco ACI with Cisco AVS" in the Cisco ACI Virtualization Guide and the Cisco AVS documentation on Cisco.com.

- Cloud Foundry

  Cloud Foundry integration with Cisco ACI is supported beginning with Cisco APIC Release 3.1(2).

- Kubernetes

  For information, see the knowledge base article "Cisco ACI and Kubernetes Integration" on Cisco.com.

- Microsoft System Center Virtual Machine Manager (SCVMM)

  For information, see the chapters "Cisco ACI with Microsoft SCVMM" and "Cisco ACI with Microsoft Windows Azure Pack" in the Cisco ACI Virtualization Guide.

- OpenShift

  For information, see the OpenShift documentation on Cisco.com.

- OpenStack

  For information, see the OpenStack documentation on Cisco.com.

- Red Hat Virtualization (RHV)

  For information, see the knowledge base article "Cisco ACI and Red Hat Integration" on Cisco.com.

- VMware Virtual Distributed Switch (VDS)

  For information, see the chapter "Cisco ACI with VMware VDS Integration" in the Cisco ACI Virtualization Guide.

See the Cisco ACI Virtualization Compatibility Matrix for the most current list of verified interoperable products.
