Introduction

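This tech note describes general support guidelines for HyperFlex systems and the following networking topologies:

- Cisco VIC Series Adapters and UCS 6400 Series Fabric Interconnects
- Multi-VIC Support
- Third Party NIC Support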

New and Changed Information for this Release

The following table provides an overview of the significant changes to this guide for the current release. It is not an exhaustive list of all changes made to this guide or of all new features in this release.

| Feature | Description | Date Added | Where Documented |
|---|---|---|---|
| 6400 Series Fabric Interconnects Support | Added table describing VIC 1400 Series Support for UCS Fabric Interconnects in Cisco HX Release 5.0(x) and later. Updated title in UCS Fabric Interconnects Matrix for all 6400 Series. | May 5, 2022 | Cisco UCS VIC 1400 Series and 6400 Series Fabric Interconnects and Cisco 6400 Series Fabric Interconnects |
| 6400 Series Fabric Interconnects Support | Updated the 6400 Series FIs support connectivity with Cisco UCS VIC 1400 Series, Cisco UCS VIC 1300 Series, and Cisco UCS VIC 1200 Series Adapters. | HX Release 3.5(2a) | Cisco UCS VIC 1400 Series and 6400 Series Fabric Interconnects and Cisco 6400 Series Fabric Interconnects |
| 6400 Series Fabric Interconnects Support | The 6400 Series FIs now support connectivity with Cisco UCS VIC 1400 Series, Cisco UCS VIC 1300 Series, and Cisco UCS VIC 1200 Series Adapters. | HX Release 3.5(1a) | Cisco UCS VIC 1400 Series and 6400 Series Fabric Interconnects and Cisco 6400 Series Fabric Interconnects |

Communications, Services, Bias-free Language, and Additional Information

- To receive timely, relevant information from Cisco, sign up at Cisco Profile Manager.
- To get the business impact you’re looking for with the technologies that matter, visit Cisco Services.
- To submit a service request, visit Cisco Support.
- To discover and browse secure, validated enterprise-class apps, products, solutions and services, visit Cisco Marketplace.
- To obtain general networking, training, and certification titles, visit Cisco Press.
- To find warranty information for a specific product or product family, access Cisco Warranty Finder.

Documentation Feedback

To provide feedback about Cisco technical documentation, use the feedback form available in the right pane of every online document.

Cisco Bug Search Tool

Cisco Bug Search Tool (BST) is a web-based tool that acts as a gateway to the Cisco bug tracking system that maintains a comprehensive list of defects and vulnerabilities in Cisco products and software. BST provides you with detailed defect information about your products and software.

Bias-Free Language

The documentation set for this product strives to use bias-free language. For purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on standards documentation, or language that is used by a referenced third-party product.

Cisco VIC Series Adapters and UCS 6400 Series Fabric Interconnects

Cisco UCS VIC 1400 Series and 6400 Series Fabric Interconnects

The Cisco UCS VIC 1400 series is based on 4th generation Cisco ASIC technology, and is well-suited for next-generation networks requiring 10/25 Gigabit Ethernet for C-Series and S-Series servers and 10/40 Gigabit Ethernet connectivity for B-Series servers. Additional features support low latency kernel bypass for performance optimization such as usNIC, RDMA/RoCEv2, DPDK, Netqueue, and VMQ/VMMQ.

The following list summarizes a set of general support guidelines for the VIC 1400 series and the 6400 Series FIs.

- For Cisco HX Data Platform releases 3.5(1a) and later, Cisco UCS VIC 1400 series is:
  - Supported for both compute and converged nodes.
  - Not supported in Hyper-V clusters.
- For Cisco HX Data Platform releases 3.5(2a) and later, Cisco UCS VIC 1400 series is:
  - Supported for both compute and converged nodes.
- All converged nodes must have the same connectivity speed. For example:
  - Mixed M4/M5/M6 clusters are supported with: VIC 1227 on M4 nodes; VIC 1457 in 1x10G mode only; and VIC 1467 in 1x10G mode only.
  - A uniform M6 cluster should have either all VIC 1467s or all VIC 1477s (for example, you may not mix VIC 1477 with VIC 1467 in the same cluster).
  - A uniform M6 cluster should have all VIC 1467 ports running at the same speed and with the same port count (for example, do not combine 10G and 25G, and use the same number of uplinks for all nodes).
  - A uniform M5 cluster should have all VIC 1457s and may not mix VIC 1387 with VIC 1457.
  - A uniform M5 cluster should have all VIC 1457 ports running at the same speed and with the same port count (for example, do not combine 10G and 25G, and use the same number of uplinks for all nodes).
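The uniform-cluster guidelines above can be expressed as a small pre-installation check. The following Python sketch is illustrative only: the `NodeNic` record and the `uniformity_problems` function are hypothetical names, not part of any Cisco tool, and the check applies to clusters intended to be uniform (mixed M4/M5/M6 clusters follow the separate 10G rule listed above).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeNic:
    """Hypothetical record describing one converged node's VIC setup."""
    node: str
    vic_model: str   # for example "VIC 1457"
    speed_gbps: int  # link speed of each uplink
    uplinks: int     # number of uplinks per fabric interconnect


def uniformity_problems(nodes):
    """Return a list of problems for a cluster that is meant to be uniform."""
    checks = (
        ("VIC model", lambda n: n.vic_model),
        ("link speed (Gbps)", lambda n: n.speed_gbps),
        ("uplink count", lambda n: n.uplinks),
    )
    problems = []
    for label, key in checks:
        # Collect the distinct values seen across all nodes for this attribute.
        values = sorted({str(key(n)) for n in nodes})
        if len(values) > 1:
            problems.append(f"mixed {label}: {', '.join(values)}")
    return problems
```

An empty result means every node reports the same VIC model, link speed, and uplink count; each entry in a non-empty result names the attribute that differs.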

The following tables describe the topologies supported with Cisco UCS VIC 1400 Series and UCS Fabric Interconnects 6300 and 6400 Series:

| M6 Cisco UCS VIC 1400 Series Connectivity | Cisco UCS VIC 1400 Series Adapters for both B-Series and C-Series | |
|---|---|---|
| | 6400 Series | 6300 Series |
| 10G | HX Release 5.0(x) and later | HX Release 5.0(x) and later |
| 25G | HX Release 5.0(x) and later | HX Release 5.0(x) and later |
| 40G | Not Supported | HX Release 5.0(x) and later |

Note: FI 6200 series is not supported with M6 servers.

| M5 Cisco UCS VIC 1400 Series Connectivity | Cisco UCS VIC 1400 Series Adapters for both B-Series and C-Series | | |
|---|---|---|---|
| | 6400 Series | 6300 Series | 6200 Series |
| 10G | HX Release 3.5(2a) and later | Not Supported | HX Release 3.5(1a) and later |
| 25G | HX Release 3.5(2a) and later | Not Supported | Not Supported |

| M5 Cisco UCS VIC 1400 Series Connectivity | Cisco UCS VIC 1400 Series Adapters for both B-Series and C-Series | | |
|---|---|---|---|
| | 6400 Series | 6300 Series | 6200 Series |
| 10G | Not Supported | Not Supported | Support starting Release 3.5(1a) |
| 25G | Not Supported | Not Supported | Not Supported |
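A support matrix like this lends itself to a lookup table. The sketch below encodes the M6 table and the first of the two M5 tables above; the second M5 table lists different values and is not encoded here. The names `SUPPORT`, `parse_release`, and `is_supported` are hypothetical, and the release-string parser assumes releases are written in the `3.5(2a)` style used in this note, with `5.0` standing in for "5.0(x)".

```python
import re

# (server generation, FI series, speed) -> minimum HX release, or None if not supported.
SUPPORT = {
    ("M6", "6400", "10G"): "5.0",
    ("M6", "6400", "25G"): "5.0",
    ("M6", "6400", "40G"): None,
    ("M6", "6300", "10G"): "5.0",
    ("M6", "6300", "25G"): "5.0",
    ("M6", "6300", "40G"): "5.0",
    ("M5", "6400", "10G"): "3.5(2a)",
    ("M5", "6400", "25G"): "3.5(2a)",
    ("M5", "6300", "10G"): None,
    ("M5", "6300", "25G"): None,
    ("M5", "6200", "10G"): "3.5(1a)",
    ("M5", "6200", "25G"): None,
}


def parse_release(text):
    """Turn a release string such as '3.5(2a)' or '5.0' into a sortable tuple."""
    match = re.fullmatch(r"(\d+)\.(\d+)(?:\((\d+)([a-z])\))?", text)
    if match is None:
        raise ValueError(f"unrecognized release string: {text!r}")
    major, minor, patch, letter = match.groups()
    return (int(major), int(minor), int(patch or 0), letter or "")


def is_supported(generation, fi_series, speed, release):
    """Return True if the combination is listed as supported at this release."""
    # Combinations absent from the tables (for example M6 with FI 6200) are unsupported.
    minimum = SUPPORT.get((generation, fi_series, speed))
    if minimum is None:
        return False
    return parse_release(release) >= parse_release(minimum)
```

For example, `is_supported("M5", "6400", "25G", "4.0(2c)")` evaluates to `True`, while `is_supported("M6", "6400", "40G", "5.0(2a)")` evaluates to `False` because the table marks that combination as not supported.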

Cisco 6400 Series Fabric Interconnects

The Cisco UCS 6400 Series Fabric Interconnect is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. The Cisco UCS 6400 Series offers line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions.

Note: Cisco 6400 Series Fabric Interconnects are not supported in Cisco HX Release 3.5(1a).

The following table presents an HX modular LAN-on-Motherboard (mLOM) and UCS Fabric Interconnects matrix:

| mLOM VIC | Interfaces | 6400 Series |
|---|---|---|
| HX-MLOM-25Q-04 (VIC 1457) | 2 or 4 ports, 10-Gbps Ethernet | 10Gbps supported in HX Release 3.5(2a) and later. |
| HX-MLOM-25Q-04 (VIC 1457) | 2 or 4 ports, 25-Gbps Ethernet | 25Gbps supported in HX Release 3.5(2a) and later. |
| HX-MLOM-C40Q-03 (VIC 1387) | 2 ports, 10-Gbps Ethernet (with QSA Adapter) | 10Gbps supported in HX Release 3.5(2a) and later. |
| HX-MLOM-C40Q-03 (VIC 1387) | 2 ports, 40-Gbps Ethernet | Not Supported |
| HX-MLOM-CSC-02 (VIC 1227) | 2 ports, 10-Gbps Ethernet | 10Gbps supported in HX Release 3.5(2a) and later. |

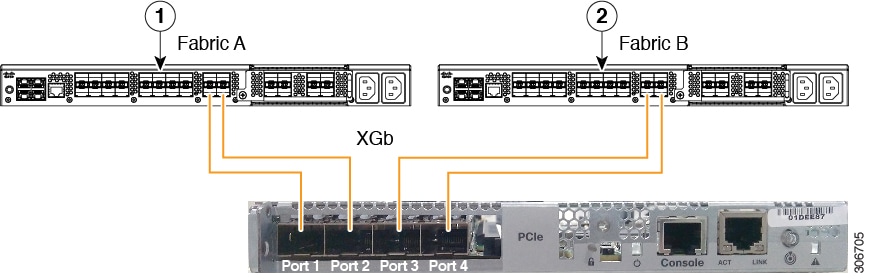

Physical Connectivity Illustrations for Direct Connect Mode Cluster Setup

The following images show a sample of direct connect mode physical connectivity for C-Series Rack-Mount Server with Cisco UCS VIC 1455. The port connections remain the same for Cisco UCS VIC 1457.

Warning: If your configuration is a Fabric Interconnect 6400 connected to VIC 1455/1457 using SFP-H25G-CU3M or SFP-H25G-CU5M cables, do not use UCS Server Firmware versions 4.0(4), or 4.1(1d) and earlier. For more information on the supported upgrade sequence, see Release Notes for Cisco HX Data Platform, Release 4.0. For more information on the issue, see CSCvu25233.

Note: The following restriction applies: connect port 1 to Fabric Interconnect A and port 3 to Fabric Interconnect B. This is due to the internal port-channeling architecture inside the card. Ports 1 and 3 are used because the connections between ports 1 and 2 (also 3 and 4) form an internal port-channel.

Caution: Do not connect port 1 to Fabric Interconnect A, and port 2 to Fabric Interconnect B. Use ports 1 and 3 only. Using ports 1 and 2 results in discovery and configuration failures.
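The cabling rule in the caution can be checked mechanically before discovery. This is a minimal sketch that assumes the two-link layout described here (port 1 to Fabric Interconnect A, port 3 to Fabric Interconnect B); it does not model four-link configurations. The `EXPECTED` mapping and the `cabling_problems` function are hypothetical names used for illustration.

```python
# Expected cabling for the two-link direct connect layout: VIC port -> fabric.
EXPECTED = {1: "A", 3: "B"}


def cabling_problems(cabling):
    """Check a mapping of VIC port number -> fabric interconnect ('A' or 'B')."""
    problems = []
    # Any cabled port other than 1 or 3 violates the "ports 1 and 3 only" rule.
    for port in sorted(cabling):
        if port not in EXPECTED:
            problems.append(f"port {port} is cabled; use ports 1 and 3 only")
    # Ports 1 and 3 must both be present and reach the expected fabric.
    for port, fabric in EXPECTED.items():
        actual = cabling.get(port)
        if actual is None:
            problems.append(f"port {port} is not cabled to FI {fabric}")
        elif actual != fabric:
            problems.append(f"port {port} goes to FI {actual}, expected FI {fabric}")
    return problems
```

Calling `cabling_problems({1: "A", 2: "B"})` reports both that port 2 is in use and that port 3 is missing, which is the failing layout the caution warns against.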

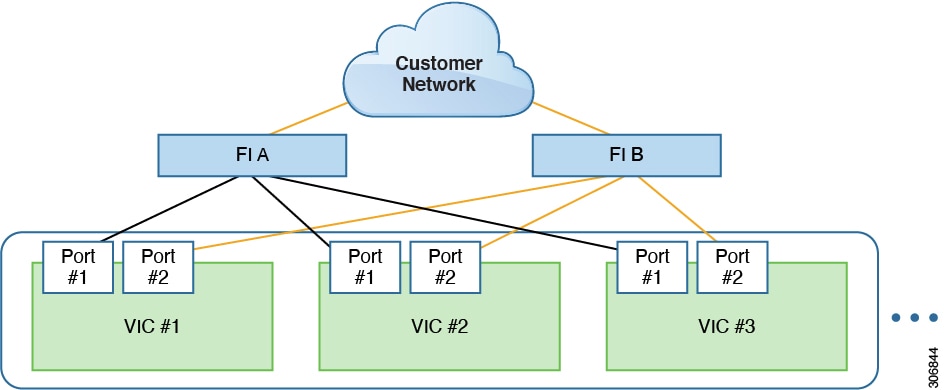

Multi-VIC Support

Multi-VIC Support in HyperFlex Clusters

About Multi-VIC Support

Multiple VIC adapters may be added in HyperFlex clusters as depicted in the following illustration, and offer the following benefits:

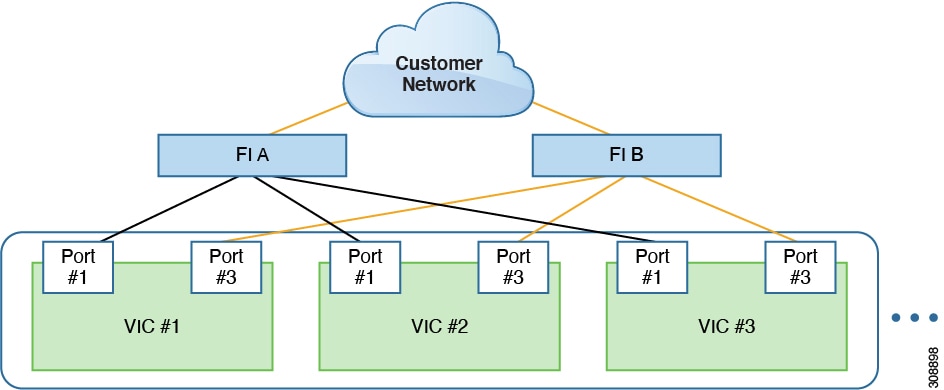

For each VIC 1400 series adapter, port 1 connects to FI A, and Port 3 connects to FI B as depicted in the following illustration:

-

Adaptive network infrastructure.

-

Maximum network design flexibility.

-

Physical VIC redundancy auto-failover (See the following section on Multi-VIC support with Auto-failover.

Guidelines and Special Requirements

Use the following set of guidelines and special requirements to add more than one VIC adapter in your HyperFlex clusters.

- Important: Only supported on new deployments with Cisco HX Data Platform, Release 3.5(1a) and later. In other words, existing clusters deployed prior to release 3.5(1a) CANNOT install multiple VICs.
- Supported for VMware ESXi clusters only. Multi-VIC is not supported on Hyper-V clusters.
- Supported for HyperFlex M5 Converged nodes or Compute-only nodes.
- Use with FI-attached systems only (not supported for HX Edge Systems).
- A VIC 1387 (mLOM) or VIC 1457 (mLOM) is required.
- (Optional) You can add one or two VIC 1385 or VIC 1455 PCIe VICs.
- You may not combine VIC 1300 series and VIC 1400 series in the same node or within the same cluster.
- Interface speeds must be the same: either all 10GbE (with enough QSAs if using VIC 1300), or all 40GbE. All VIC ports MUST be connected and discovered before starting installation. Installation checks that all VIC ports are properly connected to the FIs.
- All nodes should use the same number of uplinks. This is important for VIC 1400, which can use either 1 or 2 uplinks per fabric interconnect.
- PCIe VIC links will be down during discovery, because the discovery process only occurs with the mLOM slot. Once the discovery process is complete, it is expected behavior for the PCIe links to the FI pair to remain down. This is because only the mLOM slot receives standby power while the server is powered off. Once the association process begins and the server powers on, the PCIe VIC links come online as well.
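Several of the guidelines above are simple rules about which adapters may sit in one node, so they can be sketched as a validation function. The code below is an illustration under stated assumptions: `multi_vic_problems` and its parameters are hypothetical, it covers only the hypervisor, FI-attachment, adapter model, adapter count, and series-mixing rules, and it does not check interface speeds, uplink counts, or the new-deployment requirement.

```python
# Adapter model -> VIC series, limited to the models named in the guidelines.
SERIES = {"VIC 1387": 1300, "VIC 1385": 1300, "VIC 1457": 1400, "VIC 1455": 1400}
MLOM_ALLOWED = {"VIC 1387", "VIC 1457"}
PCIE_ALLOWED = {"VIC 1385", "VIC 1455"}


def multi_vic_problems(mlom, pcie, hypervisor="ESXi", fi_attached=True):
    """Return guideline violations for one node's VIC layout.

    mlom is the mLOM adapter model; pcie is a list of PCIe adapter models.
    """
    problems = []
    if hypervisor != "ESXi":
        problems.append("multi-VIC is supported on VMware ESXi clusters only")
    if not fi_attached:
        problems.append("multi-VIC requires an FI-attached system (not HX Edge)")
    if mlom not in MLOM_ALLOWED:
        problems.append(f"mLOM must be VIC 1387 or VIC 1457, got {mlom}")
    if len(pcie) > 2:
        problems.append("at most two PCIe VICs may be added")
    for card in pcie:
        if card not in PCIE_ALLOWED:
            problems.append(f"unsupported PCIe VIC: {card}")
    # Mixing 1300 and 1400 series adapters in one node is not allowed.
    series = {SERIES[card] for card in [mlom, *pcie] if card in SERIES}
    if len(series) > 1:
        problems.append("VIC 1300 and VIC 1400 series may not be combined")
    return problems
```

Running the same check over every node, and then comparing the series found on each node, would extend the series-mixing rule from a single node to the whole cluster.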

Multi-VIC Support with Auto Failover

Important: Place only new customer-defined vNICs on these unused ports to avoid contention with production HX traffic. Do not place them on the ports used for HX services.

Third Party NIC Support

Introduction

Third-party NIC support in HyperFlex is designed to give customers maximum flexibility in configuring their servers as needed for an expanding set of applications and use cases. Refer to the following section for important considerations when adding additional networking hardware to HyperFlex servers.

Prerequisites

- Installing third-party NIC cards: Before cluster installation, install third-party NIC cards either uncabled, or cabled with the links shut down. After deployment is complete, you may enable the links and create additional vSwitches and port groups for any application or VM requirements.

General guidelines for support

-

Supported for VMware ESXi clusters only. Third party NICs are not supported on Hyper-V clusters.

-

Additional vSwitches may be created that use leftover vmnics of third party network adapters. Care should be taken to ensure no changes are made to the vSwitches defined by HyperFlex. Third party vmnics should never be connected to the pre-defined vSwitches created by the HyperFlex installer. Additional user created vSwitches are the sole responsibility of the administrator, and are not managed by HyperFlex.

-

Third party NICs are supported on all HX converged and compute-only systems, including HX running under FIs and HX Edge.

-

Refer to the UCS HCL Tool for the complete list of network adapters supported. HX servers follow the same certification process as C-series servers and will support the same adapters.

-

The most popular network adapters will be available to order preinstalled from Cisco when configuring HX converged or compute-only nodes. However, any supported adapter may be ordered as a spare, and installed in the server before beginning HyperFlex installation.

-

Support for third party adapters begins with HX Data Platform release 3.5(1a) and later.

-

Adding new networking hardware after a cluster is deployed, regardless of HXDP version, is not recommended. Physical hardware changes can disrupt existing virtual networking configurations and require manual intervention to restore HyperFlex and other applications running on that host.

-

The maximum quantity of NICs supported is based on physical PCIe space in the server. Mixing of various port speeds, adapter vendors, and models is allowed, as these interfaces are not used to run the HyperFlex infrastructure.

-

Third party NICs may be directly connected to any external switch. Connectivity through the Fabric Interconnects is not required for third party NICs.

-

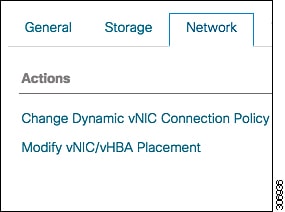
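The rule that third party vmnics must never be attached to the vSwitches created by the HyperFlex installer can be audited from an inventory of vSwitch uplinks. The sketch below assumes that inventory has already been collected as plain Python data; `misplaced_uplinks` and the vSwitch and vmnic names in the example are hypothetical placeholders, not the names the installer actually creates.

```python
def misplaced_uplinks(vswitch_uplinks, hx_vswitches, third_party_vmnics):
    """Find third party vmnics attached to HyperFlex-defined vSwitches.

    vswitch_uplinks maps a vSwitch name to the list of vmnics used as uplinks.
    hx_vswitches is the set of vSwitch names created by the HyperFlex installer.
    third_party_vmnics is the set of vmnics that belong to third party adapters.
    Returns a sorted list of (vswitch, vmnic) pairs that violate the guideline.
    """
    return sorted(
        (vswitch, vmnic)
        for vswitch, uplinks in vswitch_uplinks.items()
        if vswitch in hx_vswitches
        for vmnic in uplinks
        if vmnic in third_party_vmnics
    )


# Example inventory using placeholder names.
example = {
    "hx-vswitch-example": ["vmnic0", "vmnic1", "vmnic8"],
    "app-vswitch": ["vmnic9"],
}
violations = misplaced_uplinks(example, {"hx-vswitch-example"}, {"vmnic8", "vmnic9"})
```

In this example `violations` contains the single pair `("hx-vswitch-example", "vmnic8")`; `vmnic9` is acceptable because it sits on a user-created vSwitch.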

Important—Special consideration must be taken when using third party adapters on HX systems running under FIs. Manual policy modification is required during installation. Follow these steps:

-

Launch Cisco UCS Manager and login as an administrator.

-

In the Cisco UCS Manager UI, go to the Servers tab.

-

Navigate to the HX Cluster org that you wish to change and fix the issue when you get a configuration failure message on each Service Profile, such as There is not enough resources all for connection-placement.

-

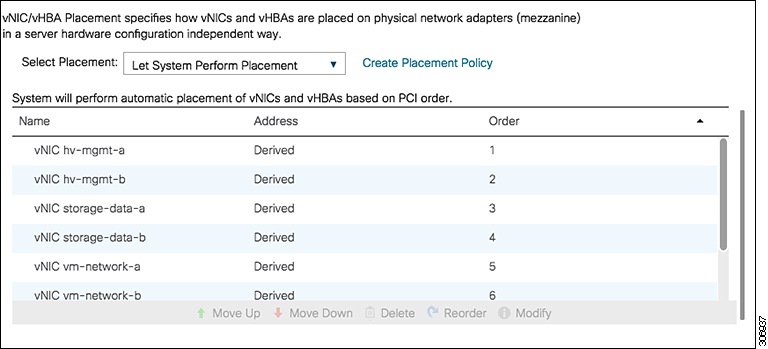

On each Service Profile template listed (hx-nodes, hx-nodes-m5, compute-nodes, compute-nodes-m5), change vNIC / vHBA policy to Let System Placement

-

Click Modify vNIC / vHBA Placement.

-

Change the Placement back to Let System Perform Placement as shown in the following illustration.

-

This action will trigger all the Service Profiles associated to any of the 4 Service Profile Templates to go into a pending acknowledgement state. You must reboot each affected system to fix this issue. This should ONLY be done on a fresh cluster and not an existing cluster during upgrade or expansion.

-

-

If the config failure fault on the HX service profiles does not clear in UCS Manager, perform the following additional steps:

-

Click Modify vNIC / vHBA Placement.

-

Change the Placement back to HyperFlex and click OK.

-

Click Modify vNIC / vHBA Placement.

-

Change the Placement back to Let System Perform Placement and click OK.

-

Confirm the faults are cleared from the service profiles on all HX servers.

-

-
