DC-No-FI Overview

Cisco HyperFlex Datacenter without Fabric Interconnect (DC-No-FI) brings the simplicity of hyperconvergence to data center deployments without requiring the converged nodes to be connected to a Cisco Fabric Interconnect.

Starting with HyperFlex Data Platform Release 4.5(2b) and later:

-

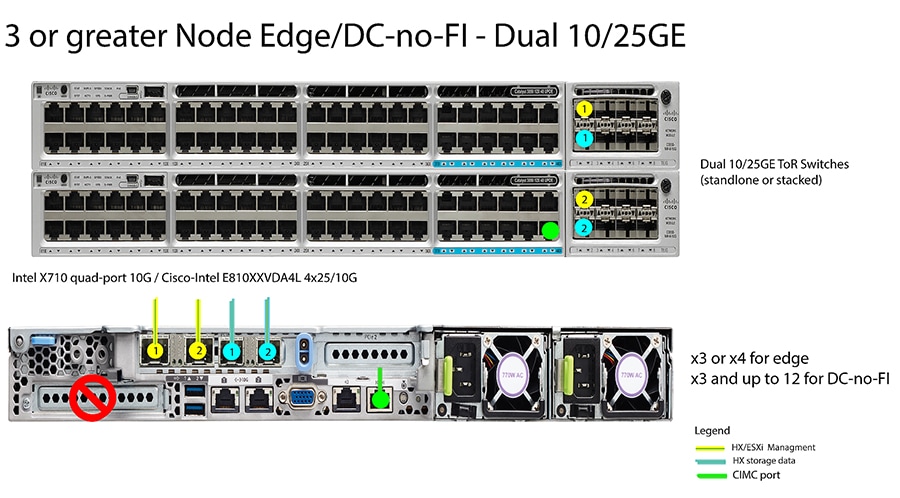

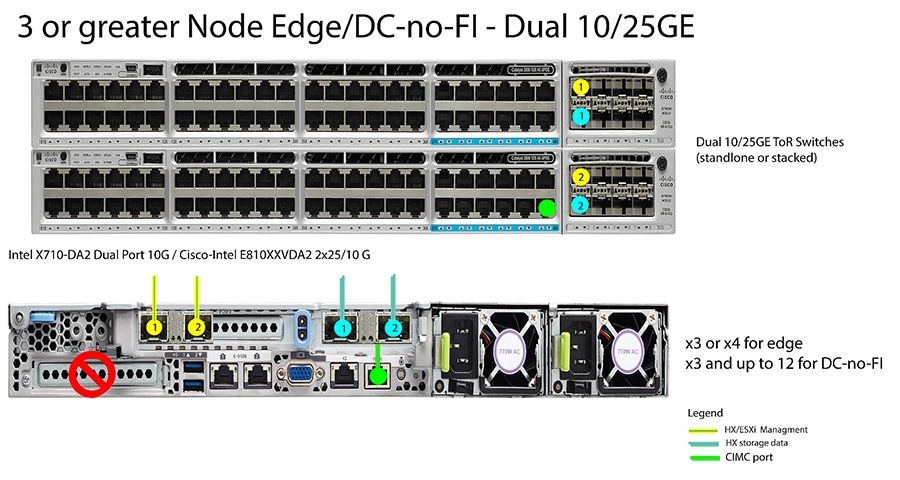

Support for DC-No-FI deployment from 3 to 12 converged nodes.

-

For clusters larger than 8 nodes, it is recommended to enable Logical Availibility Zones (LAZ) as part of your cluster deployment.

-

Support for cluster expansion with converged and compute nodes on HyperFlex DC-No-FI clusters. For more information see Expand Cisco HyperFlex Clusters in Cisco Intersight.

-

The expansion of HyperFlex Edge clusters beyond 4 nodes changes the deployment type from Edge type to DC-No-FI type.

-

Support for DC-No-FI as a target cluster for N:1 Replication.

-

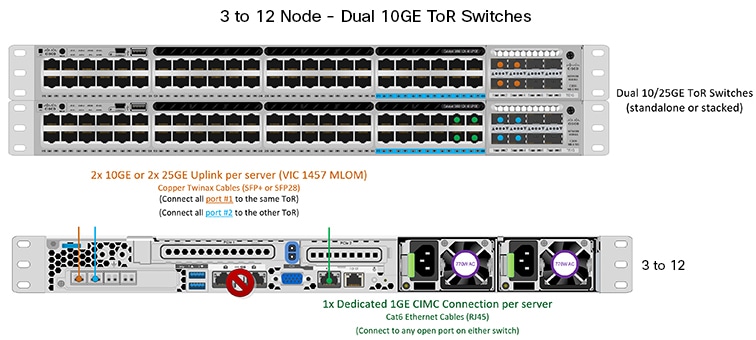

VIC-based and NIC-based clusters are supported. See the Preinstall Checklist for more details in the following section.
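The sizing rules in the list above can be expressed as a small planning check. The following is a minimal sketch, not part of any Cisco tool: the function names `check_dc_no_fi_size` and `deployment_type_after_expansion` are hypothetical, and only the numeric limits (3 to 12 converged nodes, LAZ recommended above 8 nodes, Edge changing to DC-No-FI beyond 4 nodes) come from the text.

```python
from typing import List

# Limits taken from the overview text; all names here are illustrative only.
MIN_CONVERGED_NODES = 3
MAX_CONVERGED_NODES = 12
LAZ_RECOMMENDED_ABOVE = 8
EDGE_MAX_NODES = 4


def check_dc_no_fi_size(converged_nodes: int, laz_enabled: bool = False) -> List[str]:
    """Return advisory notes for a planned DC-No-FI cluster size.

    Raises ValueError when the converged node count is outside 3 to 12.
    """
    if not MIN_CONVERGED_NODES <= converged_nodes <= MAX_CONVERGED_NODES:
        raise ValueError(
            f"DC-No-FI supports {MIN_CONVERGED_NODES} to {MAX_CONVERGED_NODES} "
            f"converged nodes, got {converged_nodes}"
        )
    notes = []
    if converged_nodes > LAZ_RECOMMENDED_ABOVE and not laz_enabled:
        notes.append("Enabling Logical Availability Zones (LAZ) is recommended.")
    return notes


def deployment_type_after_expansion(current_type: str, new_node_count: int) -> str:
    """Expanding an Edge cluster beyond 4 nodes changes its type to DC-No-FI."""
    if current_type == "Edge" and new_node_count > EDGE_MAX_NODES:
        return "DC-No-FI"
    return current_type


if __name__ == "__main__":
    print(check_dc_no_fi_size(10))
    print(deployment_type_after_expansion("Edge", 5))
```

A check like this is only useful for early planning; the Intersight installer and the preinstallation checklist remain the authority on what a given release accepts.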

Note: Starting with HXDP Release 5.0(2a), DC-No-FI clusters support HX nodes connected to different pairs of leaf switches for better redundancy and rack distribution, allowing you to scale the cluster as needed. This is supported with a spine-leaf network architecture where all the HX nodes in a cluster belong to a single network fabric in the same data center.

HyperFlex Data Platform Datacenter Advantage license or higher is required. For more information on HyperFlex licensing, see Cisco HyperFlex Software Licensing in the Cisco HyperFlex Systems Ordering and Licensing Guide.

Note: A 1:1 converged:compute ratio requires an HXDP DC Advantage license or higher, and a 1:2 converged:compute ratio requires an HXDP DC Premier license.
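As an illustration of the licensing note, the sketch below maps a planned node mix to the license tier named in the text. It assumes the ratios are upper bounds (up to one compute node per converged node for Advantage, up to two for Premier); that reading, and the function name `required_license`, are assumptions rather than statements from the licensing guide.

```python
def required_license(converged_nodes: int, compute_nodes: int) -> str:
    """Return the minimum HXDP DC license tier for a converged/compute mix.

    Assumption: "1:1" and "1:2" are treated as maximum converged:compute ratios.
    """
    if converged_nodes < 1 or compute_nodes < 0:
        raise ValueError("node counts must be positive (compute may be zero)")
    if compute_nodes <= converged_nodes:
        # Up to 1:1, which the text covers with Advantage or higher.
        return "Advantage"
    if compute_nodes <= 2 * converged_nodes:
        # Above 1:1 and up to 1:2, which the text covers with Premier.
        return "Premier"
    # The source text does not describe ratios beyond 1:2.
    raise ValueError("converged:compute ratios beyond 1:2 are not covered here")


if __name__ == "__main__":
    print(required_license(4, 4))
    print(required_license(4, 6))
```

Confirm the actual entitlement against the Cisco HyperFlex Systems Ordering and Licensing Guide before ordering.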

The Cisco Intersight HX installer rapidly deploys HyperFlex clusters. The installer constructs a pre-configuration definition of your cluster, called an HX Cluster Profile. This definition is a logical representation of the HX nodes in your HyperFlex DC-No-FI cluster. Each HX node provisioned in Cisco Intersight is specified in an HX Cluster Profile.

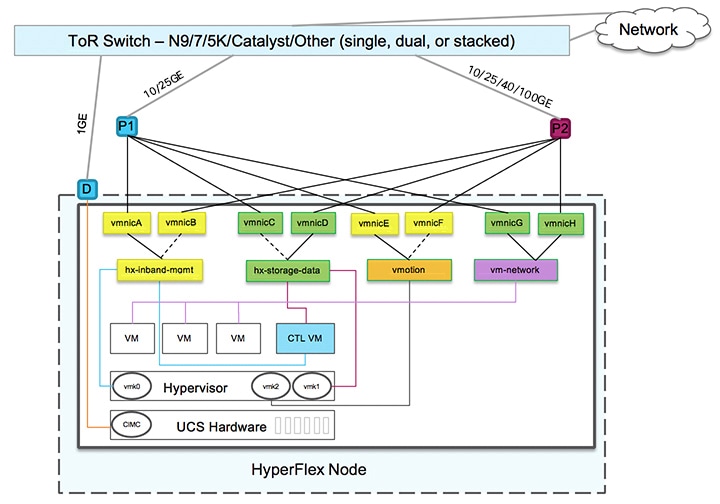

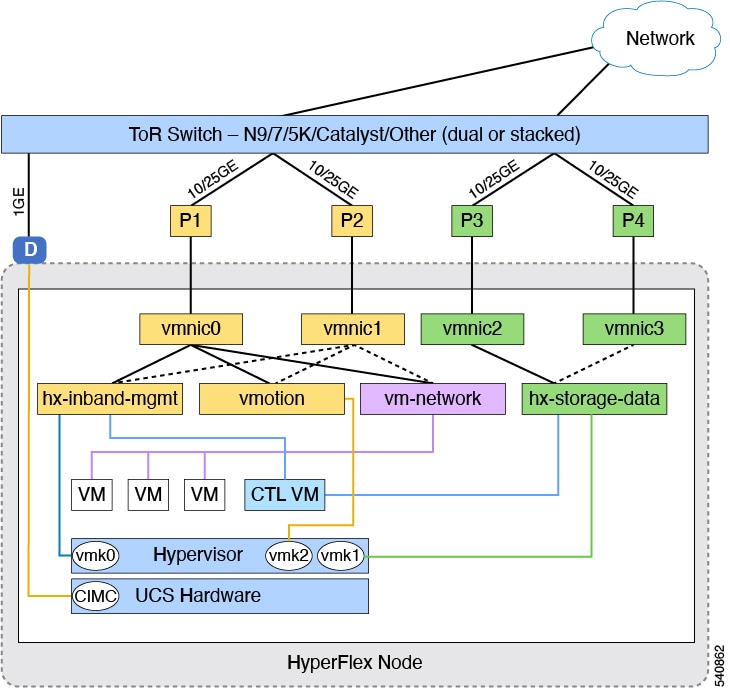

Additional guest VM VLANs are optional. You may use the same management VLAN above for guest VM traffic in environments where a simplified, flat network design is preferred.

Note: Each cluster should use a unique storage data VLAN to keep all storage traffic isolated. Reuse of this VLAN across multiple clusters is highly discouraged.
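When planning several clusters, the unique storage data VLAN recommendation can be checked with a short script. The sketch below is illustrative only: the function name `find_shared_storage_vlans`, the cluster names, and the VLAN IDs are all hypothetical, and the input is a plain mapping that you would populate from your own planning data.

```python
from collections import defaultdict
from typing import Dict, List


def find_shared_storage_vlans(cluster_vlans: Dict[str, int]) -> Dict[int, List[str]]:
    """Return storage data VLAN IDs that are used by more than one cluster.

    cluster_vlans maps a cluster name to its storage data VLAN ID.
    The result maps each reused VLAN ID to the sorted cluster names using it.
    """
    clusters_by_vlan = defaultdict(list)
    for cluster, vlan in cluster_vlans.items():
        clusters_by_vlan[vlan].append(cluster)
    return {
        vlan: sorted(names)
        for vlan, names in clusters_by_vlan.items()
        if len(names) > 1
    }


if __name__ == "__main__":
    # Hypothetical plan: two clusters accidentally share VLAN 101.
    plan = {"hx-dc-a": 101, "hx-dc-b": 102, "hx-dc-c": 101}
    for vlan, names in find_shared_storage_vlans(plan).items():
        print(f"Storage data VLAN {vlan} is reused by: {', '.join(names)}")
```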

Note: Because the Cisco VIC carves multiple vNICs from the same physical port, guest VM traffic configured on vswitch-hx-vm-network cannot communicate at Layer 2 with interfaces or services running on the same host. It is recommended to either a) use a separate VLAN and perform L3 routing, or b) place any guest VMs that need access to management interfaces on the vswitch-hx-inband-mgmt vSwitch. In general, guest VMs should not be placed on any of the HyperFlex-configured vSwitches except for the vm-network vSwitch. For example, if you need to run vCenter on one of the nodes and it requires connectivity to manage the ESXi host it is running on, use one of the recommendations above to ensure uninterrupted connectivity.

The following table summarizes the installation workflow for DC-No-FI clusters:

| Step | Description | Reference |
|---|---|---|
| 1. | Complete the preinstallation checklist. | Preinstallation Checklist for Datacenter without Fabric Interconnect |
| 2. | Ensure that the network is set up. | |
| 3. | Log in to Cisco Intersight. | |
| 4. | Claim Targets. | Claim Targets for DC-no-FI Clusters |
| 5. | Verify Cisco UCS Firmware versions. | Verify Firmware Version for HyperFlex DC-No-FI Clusters |
| 6. | Run the HyperFlex Cluster Profile Wizard. | Configure and Deploy HyperFlex Datacenter without Fabric Interconnect Clusters |
| 7. | Run the post installation script through the controller VM. | Post Installation Tasks for DC-no-FI Clusters |
