FlashStack for Hybrid Multicloud GitOps White Paper

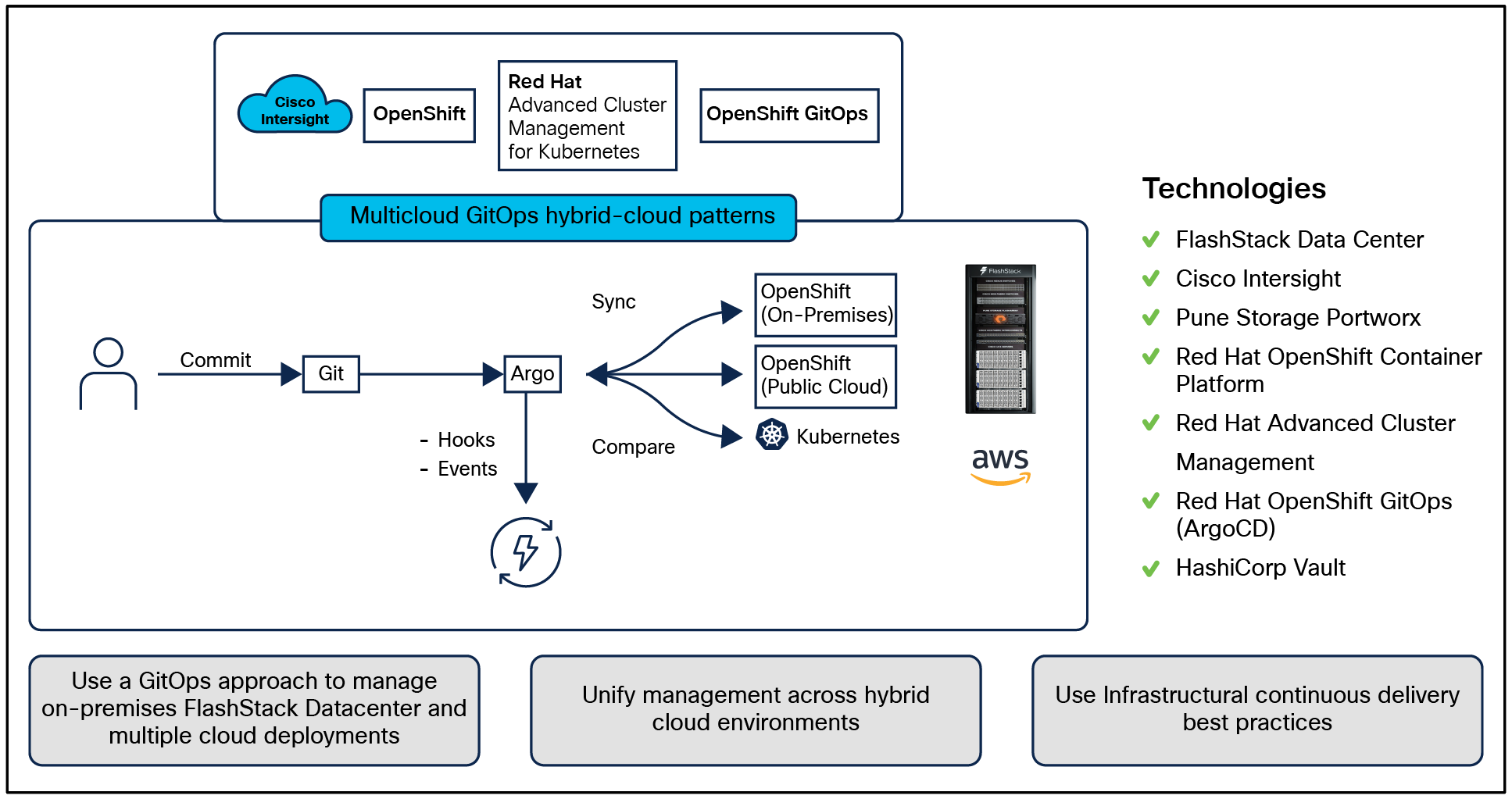

The solution presented in this document uses a GitOps approach to manage hybrid-cloud and multicloud deployments across on-premises and public cloud environments to provide cross-cluster governance and application lifecycle management on the Cisco® validated hybrid-cloud infrastructure solution for containerized workloads. This solution enables on-premises infrastructure provisioning at cloud scale with Cisco Intersight® powered by automation.

Validated patterns are living code architectures for different hybrid-cloud use cases. Architects and advanced developers use them to bring together products across the Red Hat® portfolio for a specific use case, and the patterns are tested and maintained across the product lifecycle.

This paper explains the methodology to deploy the Red Hat validated pattern for Multicloud GitOps on a Cisco® validated hybrid-cloud infrastructure solution with Cisco UCS® X-Series based FlashStack Data Center, Red Hat OpenShift® Container Platform, Red Hat OpenShift GitOps, Red Hat Advanced Cluster Management for Kubernetes, and Portworx Enterprise Kubernetes Storage Platform.

On-premises infrastructure is built with FlashStack Virtual Server Infrastructure (VSI) with the Cisco UCS X-Series Modular System and managed using Cisco Intersight. The FlashStack solution is a validated, converged infrastructure developed jointly by Cisco and Pure Storage. The solution offers a predesigned data center architecture that incorporates computing, storage, and network design best practices to reduce IT risk by validating the architecture and helping ensure compatibility among the components. The FlashStack solution is successful because of its ability to evolve and incorporate both technology and product innovations in the areas of management, compute, storage, and networking.

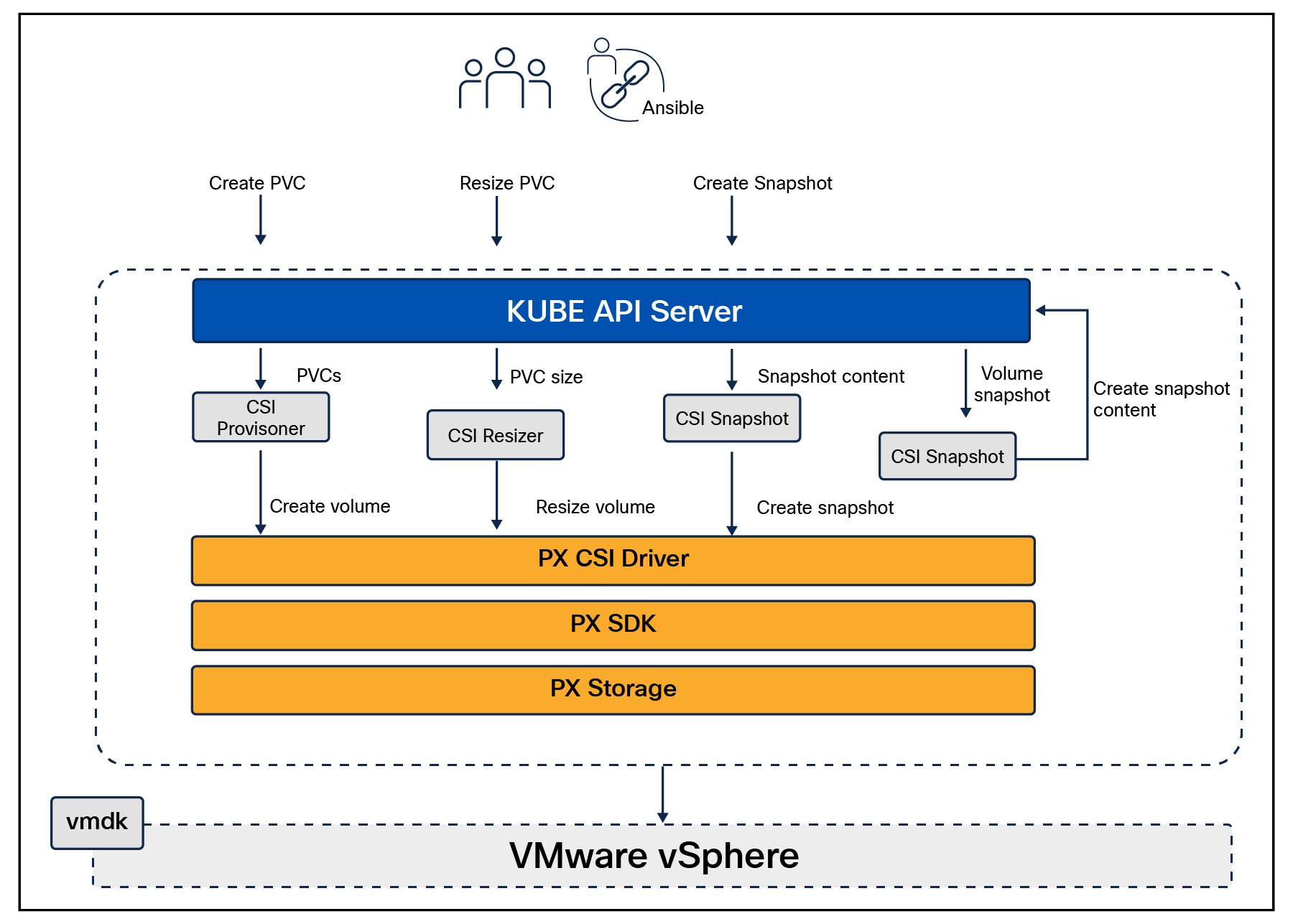

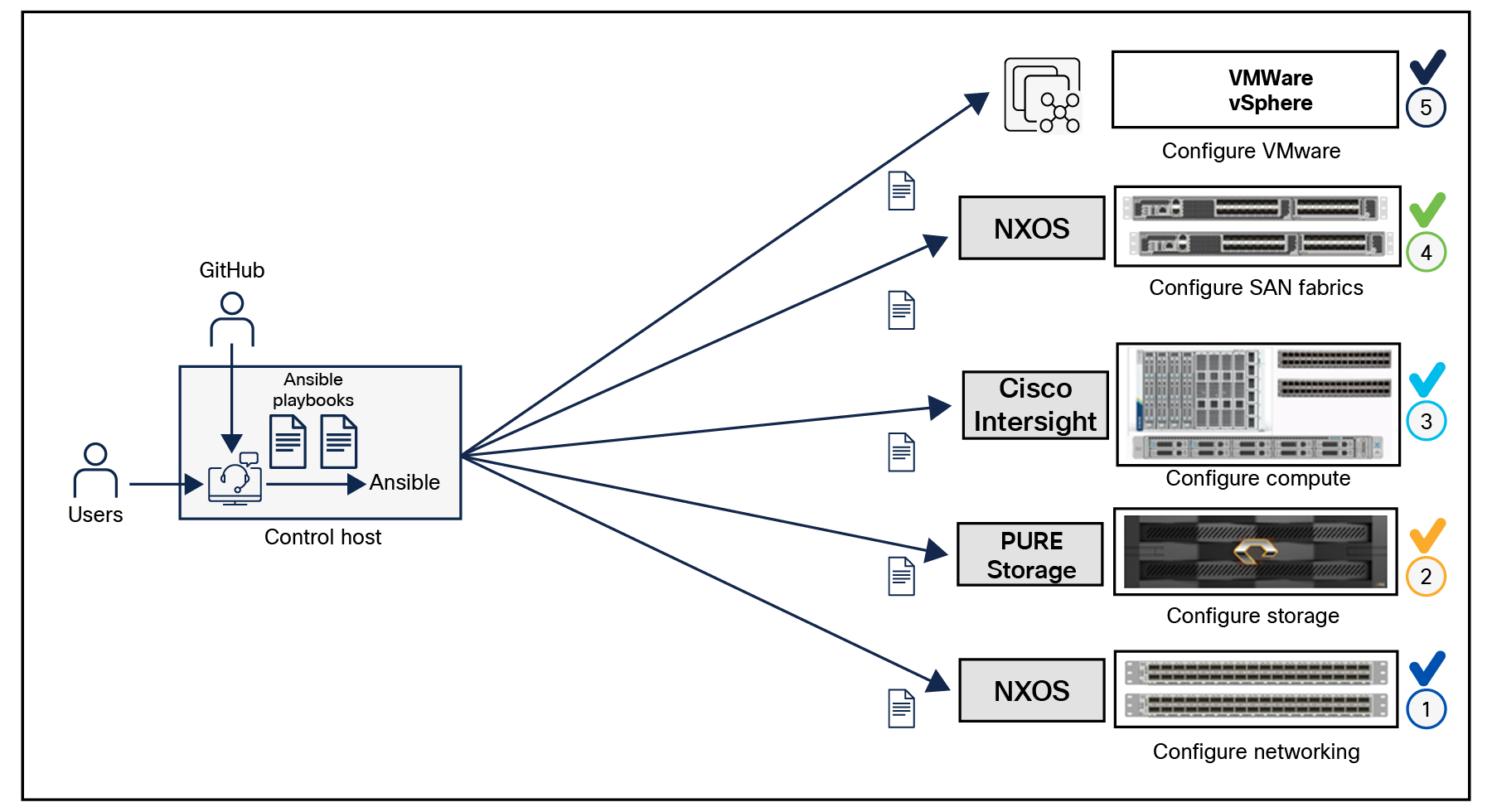

The on-premises infrastructure deployment is automated using Red Hat Ansible® to provide infrastructure as code (IaC) that can be integrated into existing CI/CD pipelines or other automation to accelerate deployments.

Hybrid cloud has become the de facto deployment and operating model in most enterprises. In a study conducted by 451 Research across 2500 organizations from around the globe, 82 percent of the IT decision makers responded that they are already using a hybrid-cloud model. Cloud computing from hyperscalers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud offers limitless scale and flexibility, but it also comes with increasingly high costs, and sometimes higher risk, leaving enterprises with less control over their business-critical applications and data. As a result, enterprises are adopting a hybrid strategy that allows them to optimally use both on-premises and public cloud infrastructure to meet their computing needs.

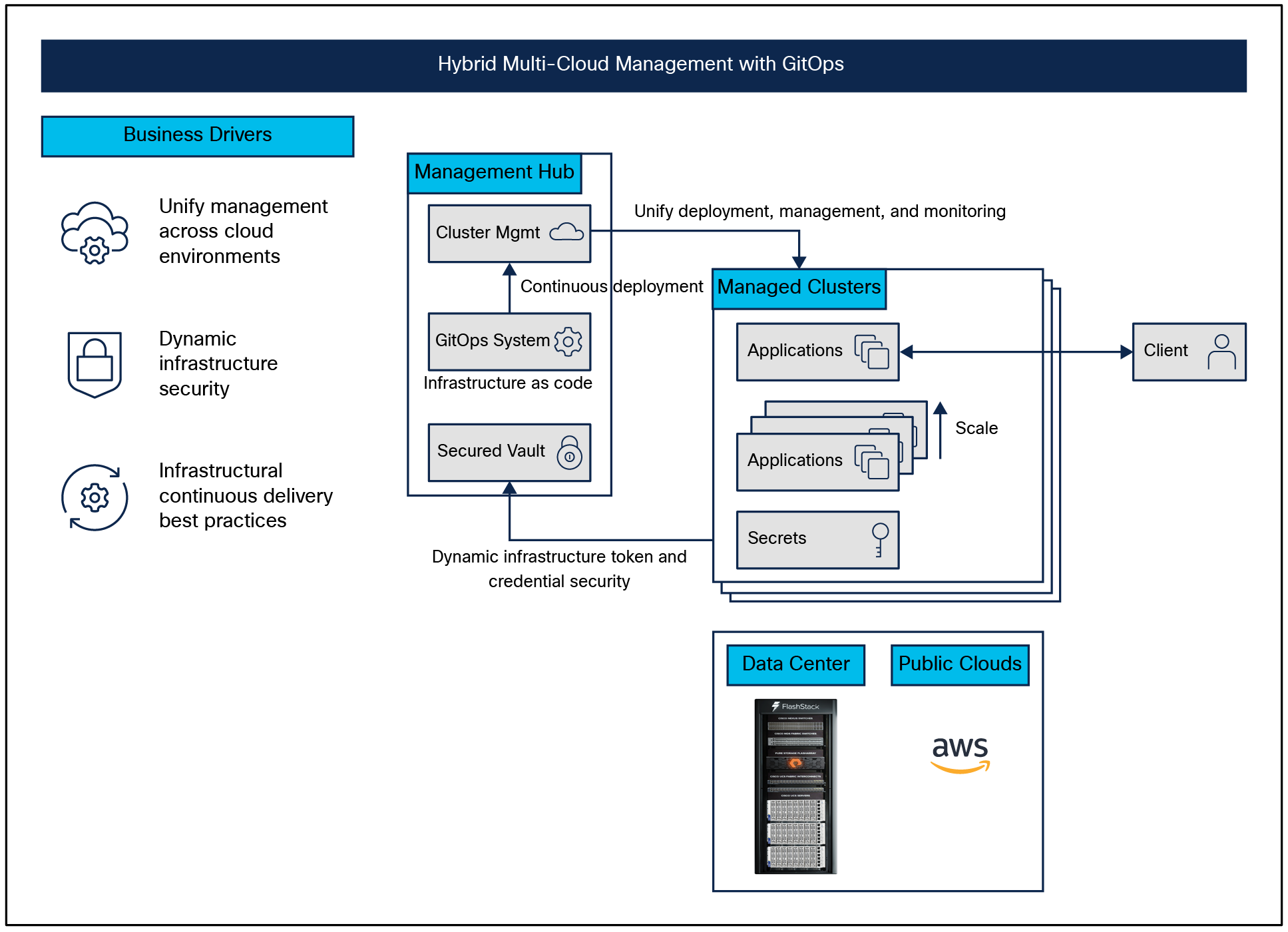

This solution covers hybrid and multicloud management with GitOps on the Cisco Validated Hybrid-cloud FlashStack‒based infrastructure solution for containerized workloads. At a high level, this requires a management hub, for DevOps and GitOps, and infrastructure that extends to one or more managed clusters running on-premises and/or public clouds. The automated infrastructure-as-code approach can manage the versioning of components and deploy according to the infrastructure-as-code configuration.

Solution overview

Benefits of hybrid multicloud management with GitOps on FlashStack‒based Cisco validated hybrid-cloud infrastructure solution include:

● Deployment of a Cisco validated hybrid-cloud infrastructure solution

● Infrastructure provisioning at cloud scale with Cisco Intersight powered by automation

● Unified management across cloud environments

● Dynamic infrastructure security

● Infrastructural continuous delivery best practices

● Multiple Cisco validated solutions on FlashStack Virtual Server Infrastructure

The reference architecture defined in this solution is built using Cisco X-Series modular‒based FlashStack, Cisco Intersight, Amazon Web Services (AWS), Red Hat OpenShift Container Platform (OCP), Red Hat OpenShift GitOps, Red Hat Advanced Cluster Management for Kubernetes, and Portworx Enterprise Storage Platform.

● Use a GitOps approach to manage hybrid and multicloud deployments across both public and private clouds

● Enable cross-cluster governance and application lifecycle management

● Securely manage secrets across the deployment

The intended audience of this document includes but is not limited to IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who are working on or interested in designing and deploying Cisco’s hybrid-cloud solutions.

This document provides architecture guidance to deploy and manage Multicloud GitOps validated patterns with Cisco FlashStack Data Center on-premises. Hardware and software components used to validate the solution in Cisco’s internal labs are also provided. The document addresses various considerations and best practices for a successful deployment that enables enterprises to deploy applications using hybrid and multicloud GitOps.

The OpenShift Container Platform deployment is done on Cisco UCS M6 platform servers (Cisco UCS X-Series compute nodes), but Cisco UCS M7 platform servers can also be used.

Validated patterns are living code architectures for different edge computing and hybrid-cloud use cases deployed using a declarative GitOps framework. They are created by using GitOps to continuously deliver resources based on a defined distributed architecture. Validated patterns use operators to deploy applications, together with Helm charts, which are collections of files that describe sets of related Kubernetes resources. Both play a critical role in deploying distributed architectures, including Red Hat portfolio products with other technology ecosystem products, to help build a repeatable, reproducible solution architecture that can be extended across different platforms and delivery models, such as data centers, cloud platforms, and the edge. Validated patterns also incorporate a Continuous Integration (CI) pipeline to ensure that the use case continues to work across product updates to the ecosystem code base, providing consistency and trust as the lifecycle of the underlying products changes over time. Using a validated pattern gives the confidence of using a best practice, reduces the risk of falling behind a crucial release point, and makes your deployment operable at scale.

These predefined computing configurations contain all the code necessary to build a comprehensive edge or hybrid-cloud stack. You can even create a pattern that goes beyond this documentation by using automated processes in GitOps that simplify deployment and ensure consistency across multiple sites and clusters. Each use case’s Git repository is open, and Red Hat regularly collaborates with customers to update use cases or to add partner technologies to configurations.

Validated patterns achieve the following, based on GitOps principles:

● Reproducibility: The declarative GitOps framework ensures that your applications do not fall out of sync with your desired state.

● Repeatability: Once the desired state has been created, it can be deployed at multiple locations and modified to support different infrastructure deployments.

● Lifecycle management: Validated patterns have their own lifecycle and are maintained over time, which allows for pattern versions to be tested based on new ecosystem product versions.

● Applicability: Each validated pattern includes a use case demo that shows how the pattern is being used in a real world scenario.

● Customizability: Validated patterns are meant to provide users with an accelerated time to value, delivering 80 percent or more of a desired deployment. As such, they incorporate a modular design so that architects can modify the pattern for their own use case and individual functions can be replaced to apply to other solutions.

Kubernetes provides a scalable orchestration platform for microservices and containers. Red Hat OpenShift, built on Kubernetes, enables organizations to build and deploy cloud-native applications and use cases, extending workflows from the core to the edge. Incorporating applications, services, and tools can greatly augment organizations’ ability to build workflows that significantly impact results; however, developing a distributed architecture with all of these collaborative technologies is not easy. Solution architects need to discover dependencies within products, how they interact with one another, and how they might change from version to version. Once an architecture is created, tested, and promoted to production, it is typically not repeatable, there is no consistency from one architecture to the next, and the architectures cannot be extended to other platforms or locations. In short, the process is not scalable.

This is where validated patterns can help. Best practices and a deep understanding of how organizations are running patterns in their environments are the basis for a validated pattern. They help to operationalize a distributed architecture by using declarative GitOps principles and continuous integration pipelines. The result is an architecture that is built into code, so parts of the solution are built, deployed, and maintained together. The pattern framework can then be used to reproduce the solution and scale the solution across platforms; it can be repeated, modified, and extended for different workloads and use cases.

One benefit of using a validated pattern is that Red Hat maintains the configuration across product lifecycles, including testing against prerelease bits, assessing the interoperability of the distributed technologies as they evolve, so you can trust that the use case is going to work as intended.

Validated patterns also contain the code necessary to build your stack, and by using GitOps principles, developers can rely on automated processes to pinpoint potential issues more easily. A validated pattern makes the division of labor between various management pieces clear and easy to monitor and maintain.

Finally, patterns are developed using open source principles, so they remain open and customizable. With validated patterns, known workloads can be replicated anywhere, with easy ways to modify the pattern for different use cases. As the technologies you use continue to improve and your use cases change, validated patterns can be automated and updated to suit your needs.

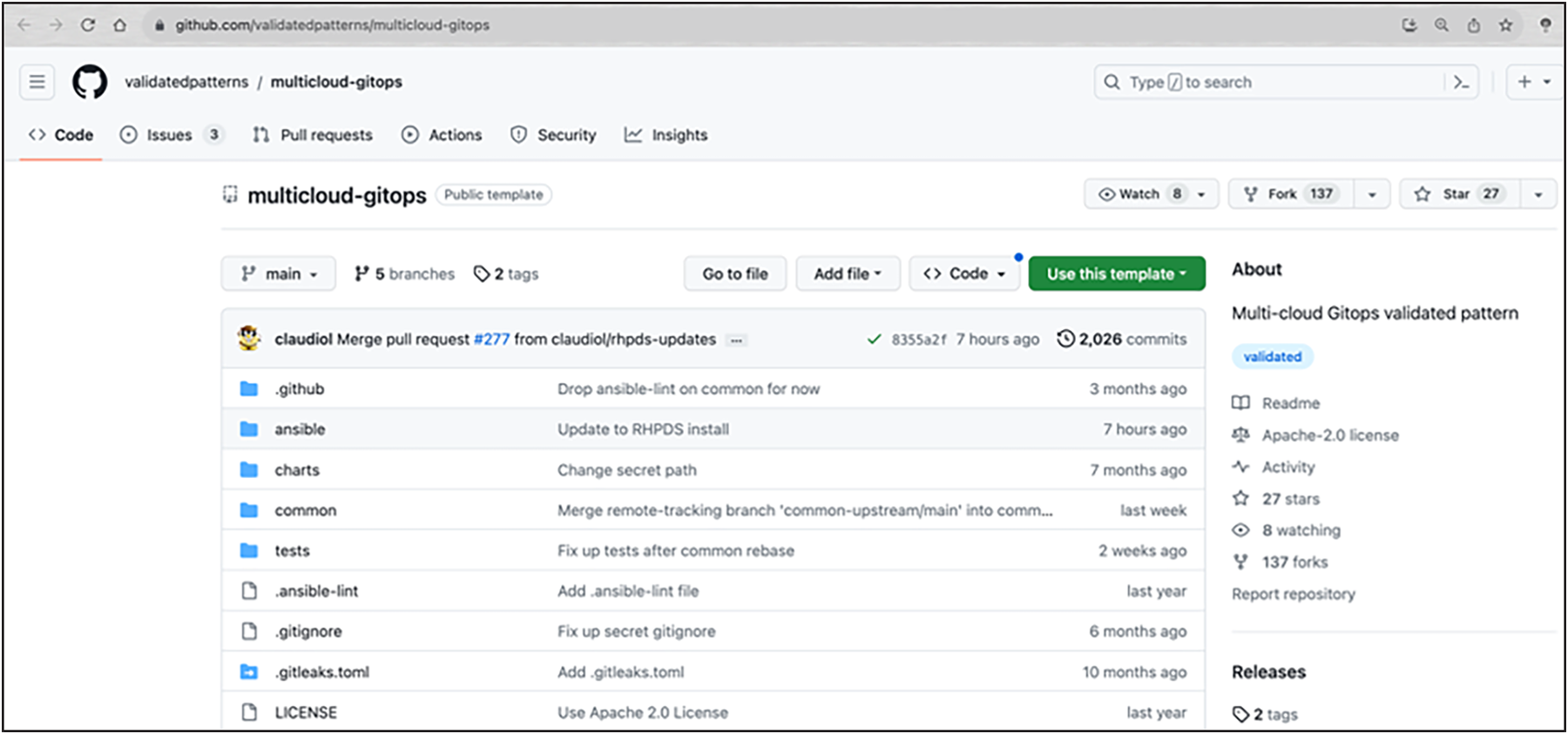

Validated pattern: Multicloud GitOps

Organizations are aiming to develop, deploy, and operate applications on an open hybrid cloud in a stable, simple, and secure way. This hybrid strategy includes multicloud deployments where workloads might be running on multiple clusters and on multiple clouds, private or public. This strategy requires an infrastructure-as-code approach: GitOps. GitOps uses Git repositories as a single source of truth to deliver infrastructure as code. Submitted code is checked by the Continuous Integration (CI) process, while the Continuous Delivery (CD) process checks and applies requirements for such things as security, infrastructure as code, or any other boundaries set for the application framework. All changes to code are tracked, making updates easy while also providing version control should a rollback be needed.

The following technologies are used in this solution:

Red Hat OpenShift Platform

Red Hat OpenShift Platform is an enterprise-ready Kubernetes container platform built for an open hybrid-cloud strategy. It provides a consistent application platform to manage hybrid-cloud, public cloud, and edge deployments. It delivers a complete application platform for both traditional and cloud-native applications, allowing them to run anywhere. Red Hat OpenShift has a preconfigured, preinstalled, and self-updating monitoring stack that provides monitoring for core platform components. It also enables the use of external secret management systems (for example, HashiCorp Vault in this case) to securely add secrets into the Red Hat OpenShift platform.

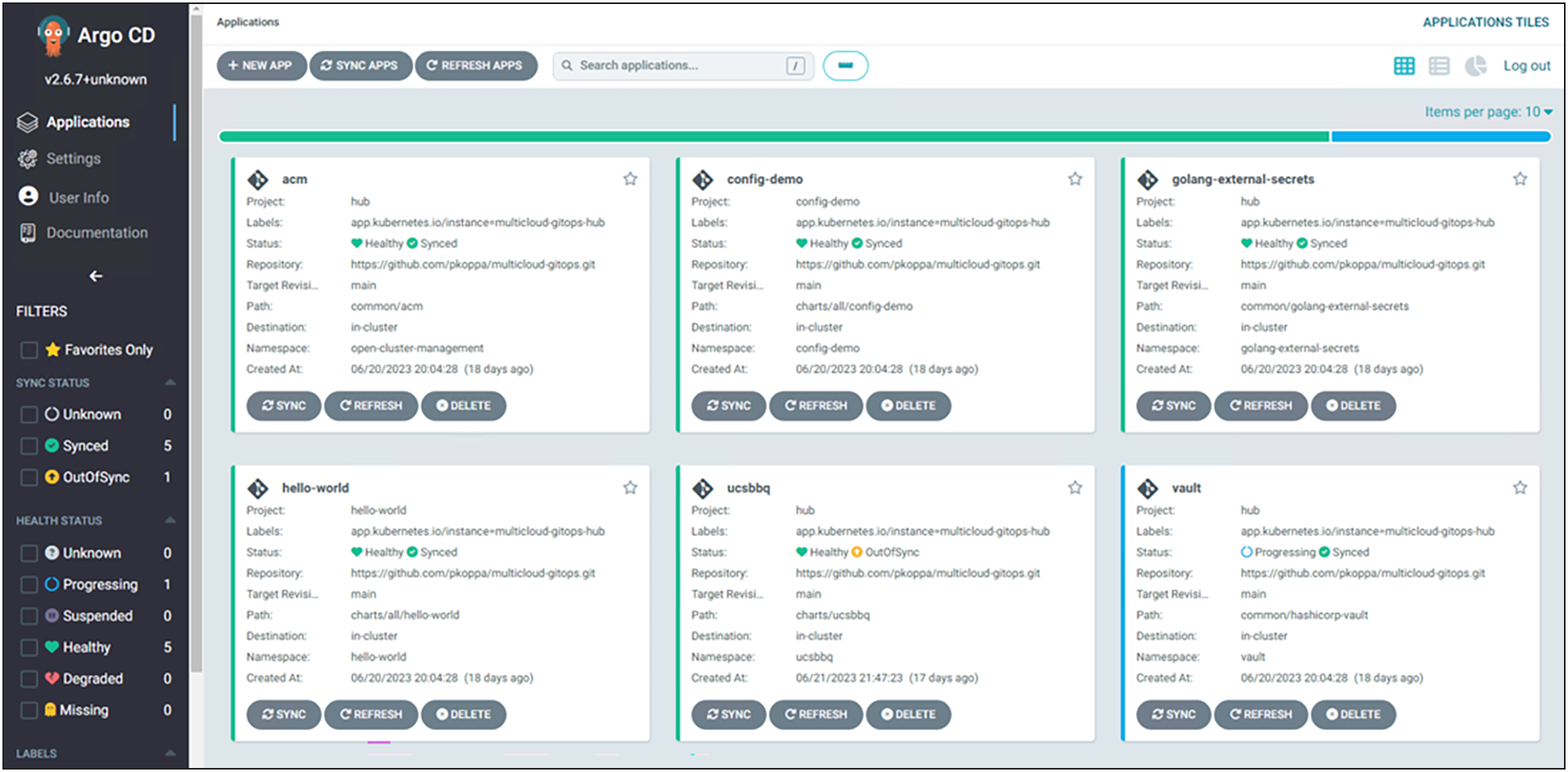

Red Hat OpenShift GitOps

Red Hat OpenShift GitOps is a declarative continuous delivery tool for Kubernetes applications, based on the Argo CD project. Application definitions, configurations, and environments are declarative and version-controlled in Git. It can automatically push the desired application state into a cluster, quickly determine whether the application state is in sync with the desired state, and manage applications in multicluster environments.
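The in-sync check described above can be illustrated with a minimal sketch. This is not how Argo CD or OpenShift GitOps is implemented: `diff_state`, `sync_status`, and the dictionaries are hypothetical stand-ins for manifests stored in Git and resources observed in a cluster, and the status labels are chosen for illustration.

```python
from typing import Any, Dict, List

Manifest = Dict[str, Any]


def diff_state(desired: Dict[str, Manifest], live: Dict[str, Manifest]) -> List[str]:
    """Return the actions needed to make `live` match `desired`.

    Keys are hypothetical resource names; values are simplified specs.
    """
    actions = []
    for name, spec in sorted(desired.items()):
        if name not in live:
            actions.append(f"create {name}")   # declared in Git, missing in cluster
        elif live[name] != spec:
            actions.append(f"update {name}")   # present, but drifted from Git
    for name in sorted(live):
        if name not in desired:
            actions.append(f"prune {name}")    # in cluster, no longer declared in Git
    return actions


def sync_status(desired: Dict[str, Manifest], live: Dict[str, Manifest]) -> str:
    """Report whether the live state already matches the desired state."""
    return "Synced" if not diff_state(desired, live) else "OutOfSync"


# Illustrative data only: "Git" declares two resources, the cluster has drifted.
desired = {"deployment/web": {"replicas": 3}, "service/web": {"port": 8080}}
live = {"deployment/web": {"replicas": 2}, "configmap/old": {"k": "v"}}

print(sync_status(desired, live))
for action in diff_state(desired, live):
    print(action)
```

Because the desired state lives entirely in Git, rolling back amounts to reverting a commit and letting the same comparison run again.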

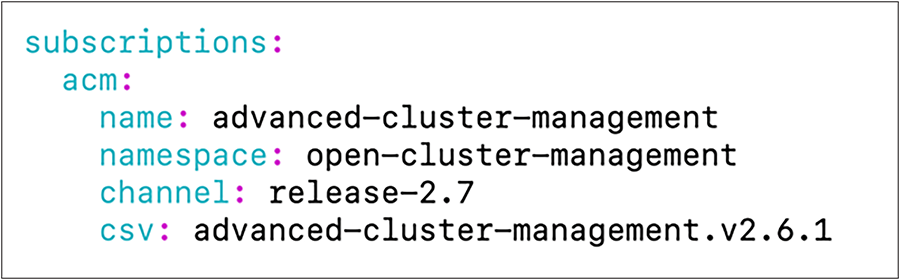

Red Hat Advanced Cluster Management for Kubernetes

Red Hat Advanced Cluster Management for Kubernetes controls clusters and applications from a single console, with built-in security policies. It extends the value of Red Hat OpenShift by deploying applications, managing multiple clusters, and enforcing policies across multiple clusters at scale.

Red Hat Ansible Automation Platform

Red Hat Ansible Automation Platform provides an enterprise framework for building and operating IT automation at scale across hybrid-clouds, including edge deployments. It enables users across an organization to create, share, and manage automation, from development and operations to security and network teams.

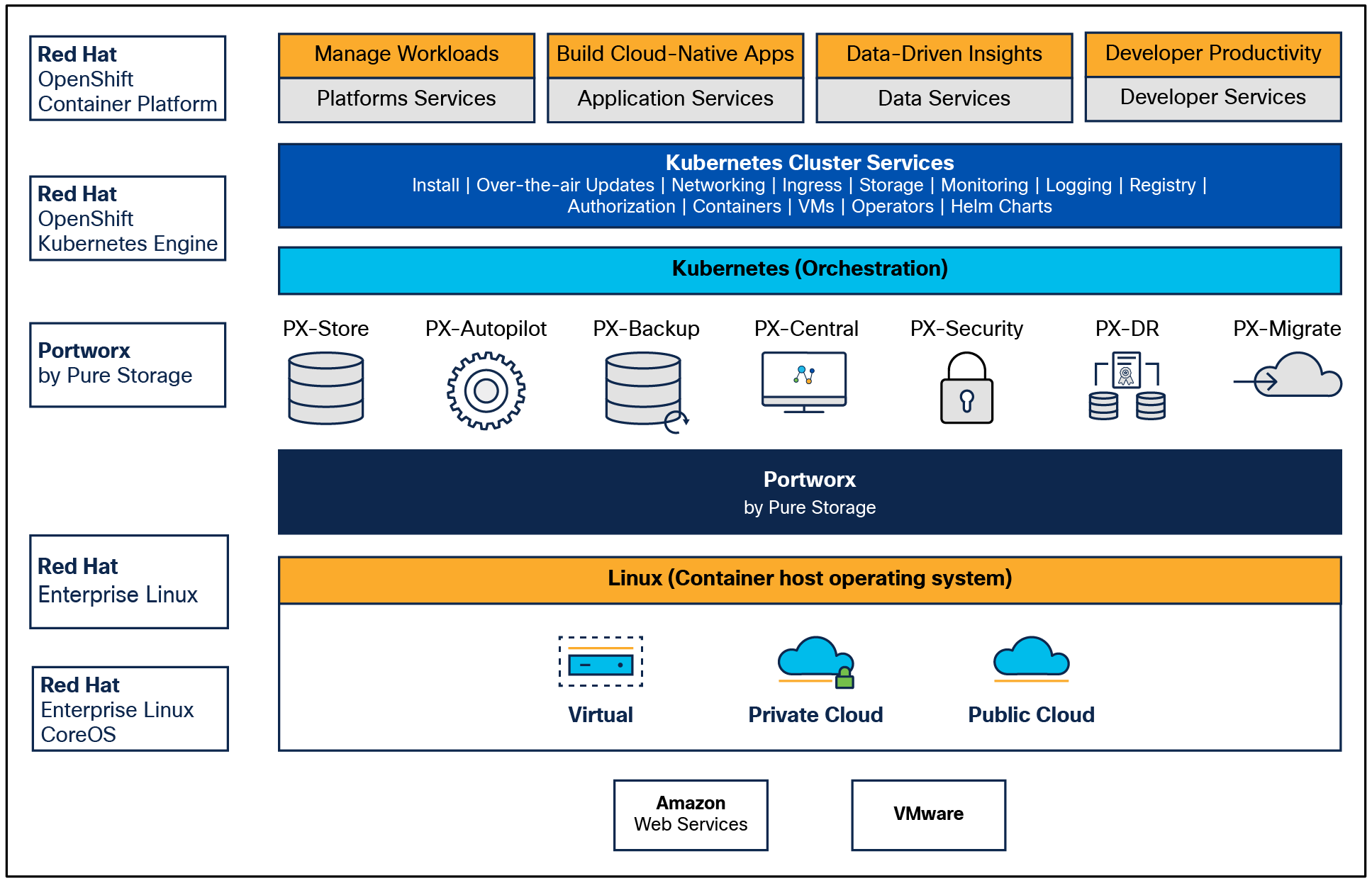

Portworx Enterprise

Portworx Enterprise provides persistent storage and Kubernetes data services to Red Hat OpenShift. Persistence is necessary for stateful applications in Kubernetes environments. Portworx also provides business continuity with Portworx Backup and Portworx Disaster Recovery products that will be incorporated in a future GitOps pattern.

HashiCorp Vault

HashiCorp Vault provides a secure, centralized store for dynamic infrastructure and applications across clusters, including over low-trust networks between clouds and data centers.

This solution also uses a variety of observability tools, including the Prometheus monitoring system and a Grafana dashboard, that are integrated with OpenShift, as well as components of the Observatorium metaproject, which includes Thanos and the Loki API.

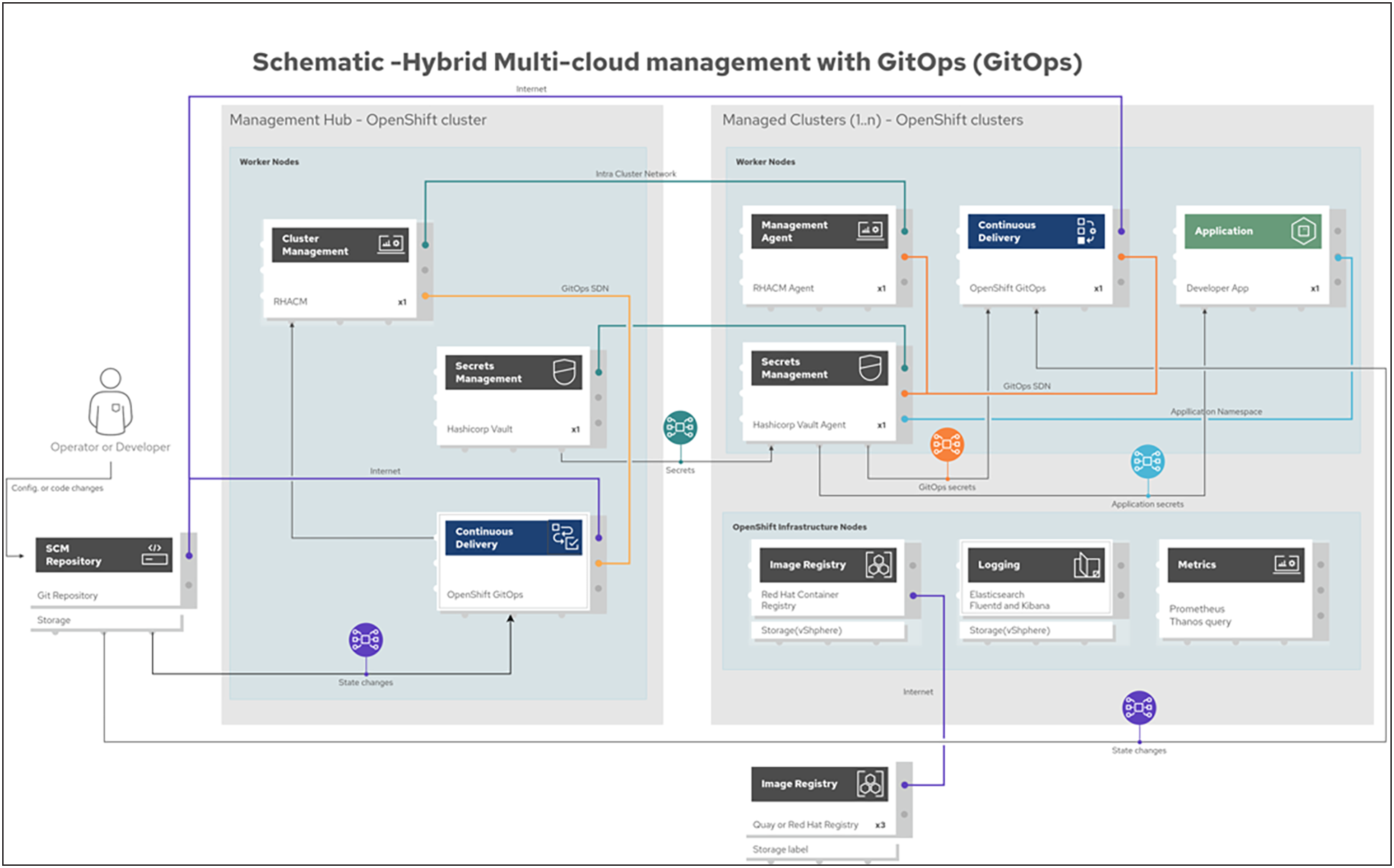

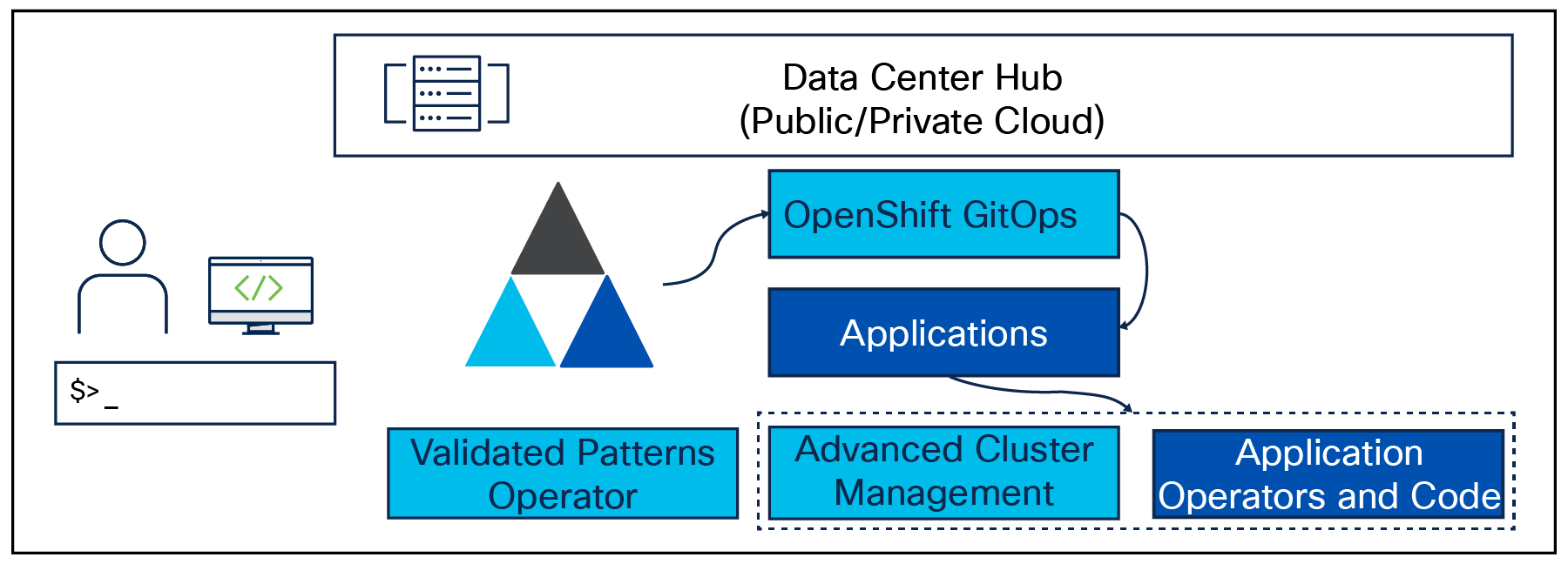

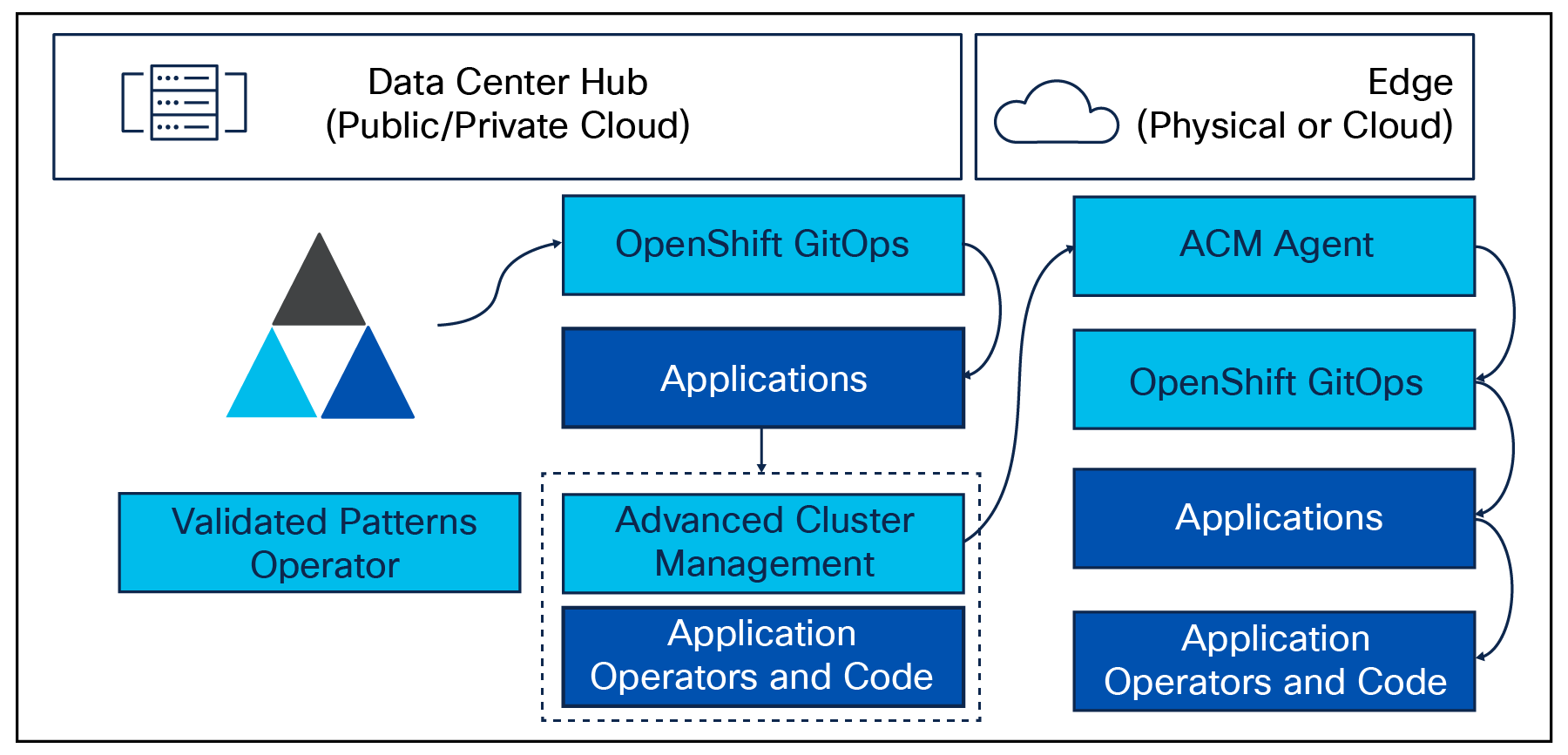

The following figure provides a schematic diagram overview of the complete solution, including both components and data flows.

Schematic diagram

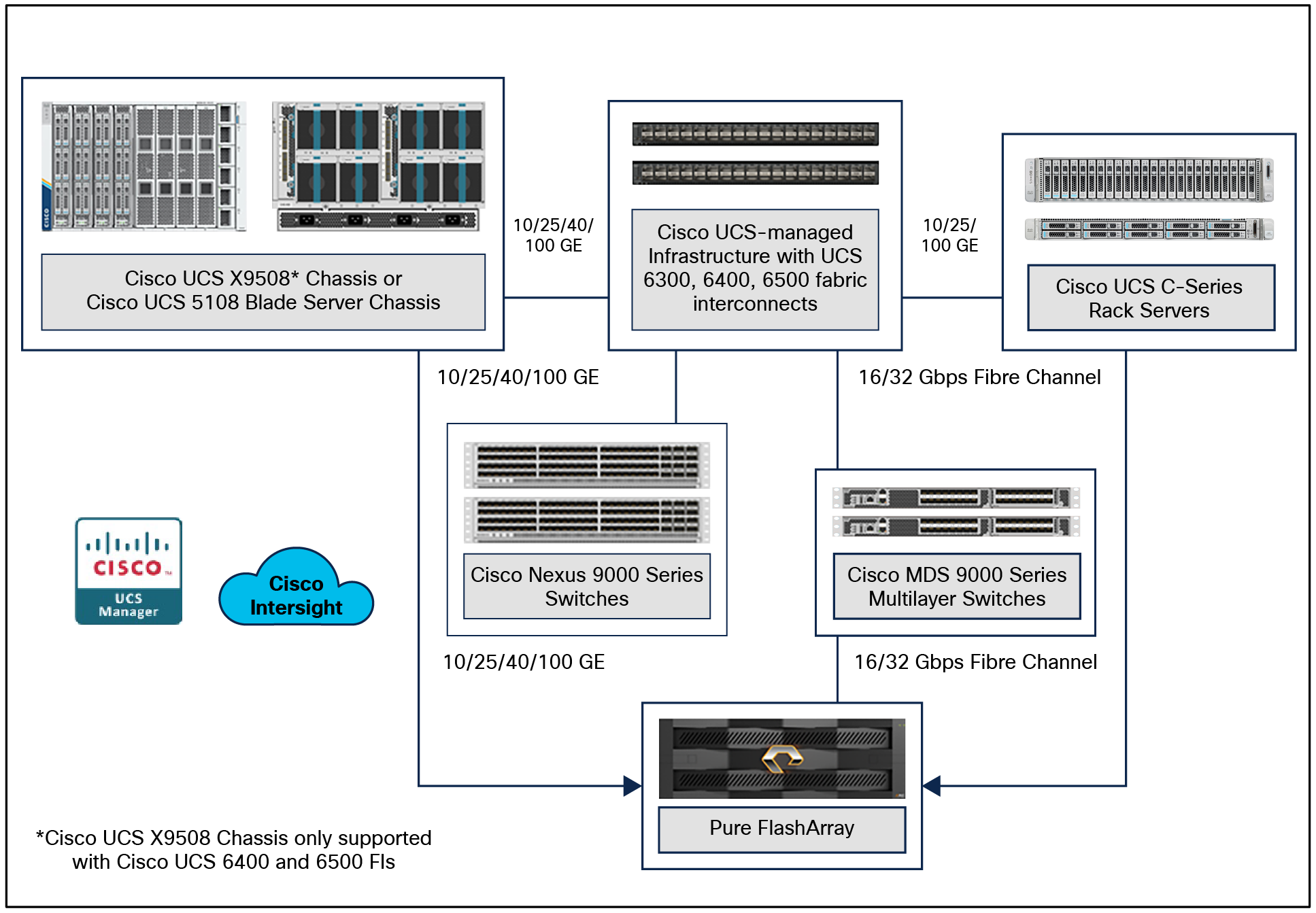

Cisco and Pure Storage have partnered to deliver many Cisco Validated Designs, which use best-in-class storage, server, and network components to serve as the foundation for virtualized workloads, enabling efficient architectural designs that you can deploy quickly and confidently.

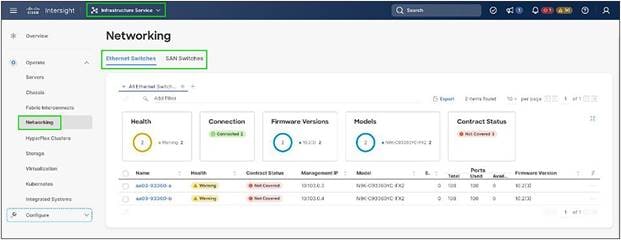

FlashStack architecture is built using the following infrastructure components for compute, network, and storage (Figure 3):

● Cisco Unified Computing System (Cisco UCS)

● Cisco Nexus® switches

● Cisco MDS 9000 Series Multilayer Switches

● Pure Storage FlashArray

All FlashStack components are integrated, so customers can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the foundation. One of the main benefits of FlashStack is its ability to maintain consistency at scale. Each of the component families shown in Figure 4 (Cisco UCS, Cisco Nexus, Cisco MDS, and Pure Storage FlashArray systems) offers platform and resource options to scale up or scale out the infrastructure while supporting the same features and functions.

The FlashStack solution with Cisco UCS X-Series uses the following hardware components:

● Cisco UCS X9508 Chassis with any number of Cisco UCS X210c M6 Compute Nodes.

● Cisco UCS fourth-generation 6454 fabric interconnects to support 25- and 100-GE connectivity from various components.

● High-speed Cisco NX-OS‒based Nexus 93180YC-FX3 switching designed to support up to 100-GE connectivity.

● Pure Storage FlashArray//XL170 with high-speed Ethernet or Fibre Channel connectivity.

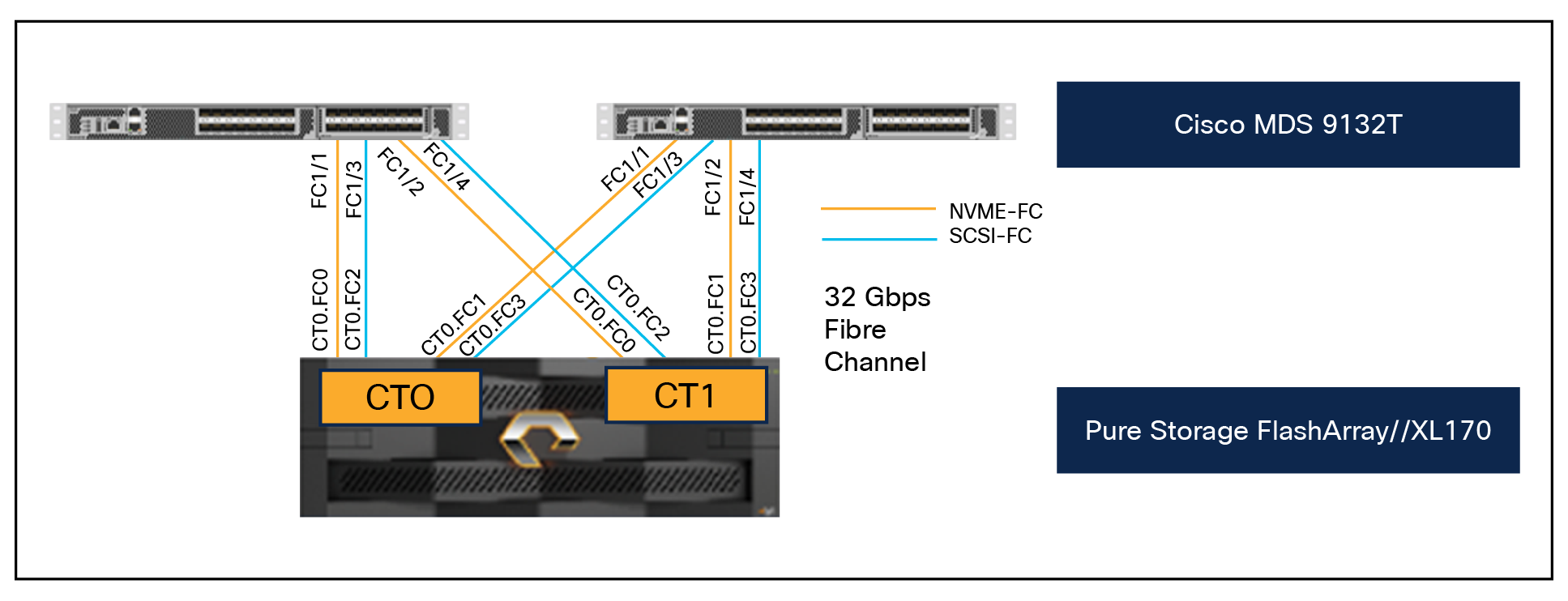

● Pure FlashArray//XL170 storage with 25GbE connectivity to a Cisco Nexus switching fabric, and 32Gb FC connectivity to a Cisco MDS switching fabric.

The software components consist of:

● Cisco Intersight platform to deploy, maintain, and support the FlashStack components.

● Cisco Intersight Assist virtual appliance to help connect the Pure Storage FlashArray and VMware vCenter with the Cisco Intersight platform.

● For virtualized clusters, VMware vCenter 8.0 to set up and manage the virtual infrastructure as well as integration of the virtual environment with Cisco Intersight software.

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership and increase business agility. The system integrates a low latency, lossless 10-100 Gigabit Ethernet unified network fabric with enterprise class, x86-architecture servers. The system is an integrated, scalable, multichassis platform with a unified management domain for managing all resources.

Cisco Unified Computing System consists of the following subsystems:

● Compute – The compute piece of the system incorporates servers based on 2nd Generation Intel® Xeon® Scalable processors. Servers are available in blade and rack form factors, managed by Cisco UCS Manager.

● Network – The integrated network fabric in the system provides a low latency, lossless, 10/25/40/100 Gbps Ethernet fabric. Networks for LAN, SAN, and management access are consolidated within the fabric. The unified fabric uses the innovative single connect technology to lower costs by reducing the number of network adapters, switches, and cables. This in turn lowers the power and cooling needs of the system.

● Virtualization – The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtual environments to support evolving business needs.

Cisco Unified Computing System is revolutionizing the way servers are managed in the data center. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

● Embedded management – In Cisco UCS, the servers are managed by the embedded firmware in the fabric interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

● Unified fabric – In Cisco UCS, from blade server chassis or rack servers to fabric interconnects, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in reduced cables, SFPs and adapters – reducing capital and operational expenses of the overall solution.

● Auto discovery – By simply inserting the blade server into the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto discovery enables the wire once architecture of Cisco UCS, where the compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

Cisco UCS Manager (UCSM) provides unified, integrated management for all software and hardware components of Cisco UCS. Using Cisco® single connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive Graphical User Interface (GUI), a Command Line Interface (CLI), or through a robust Application Programming Interface (API).

Cisco UCS X-Series Modular System

The Cisco UCS X-Series Modular System is designed to take the current generation of the Cisco UCS platform to the next level with its design that will support future innovations and management in the cloud. Decoupling and moving platform management to the cloud allows the Cisco UCS platform to respond to features and scalability requirements much faster and more efficiently. Cisco UCS X-Series state of the art hardware simplifies the data center design by providing flexible server options. A single server type that supports a broader range of workloads results in fewer data center products to manage and maintain. The Cisco Intersight cloud management platform manages the Cisco UCS X-Series as well as integrating with third party devices. These devices include VMware vCenter and Pure Storage to provide visibility, optimization, and orchestration from a single platform, thereby enhancing agility and deployment consistency.

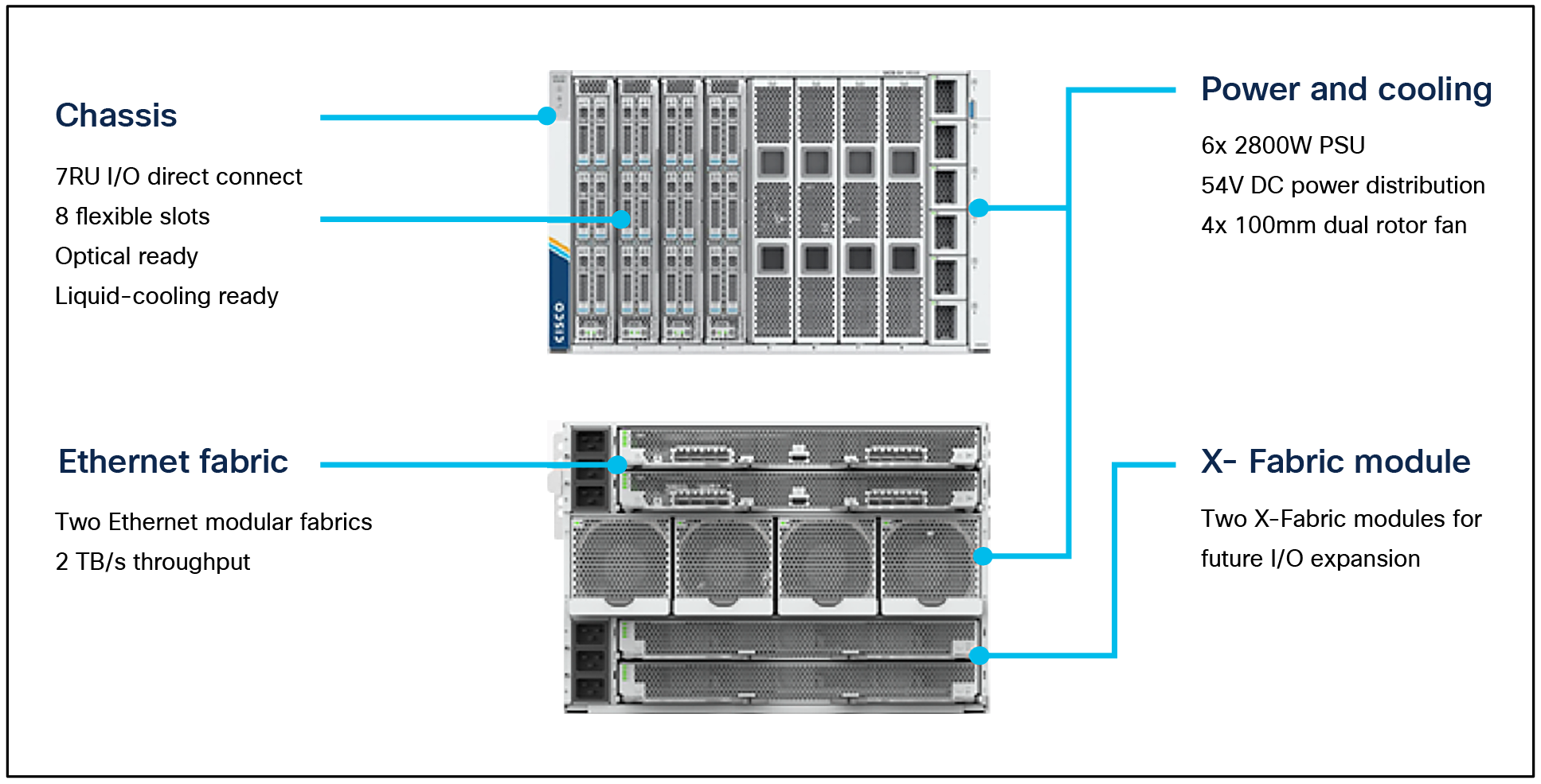

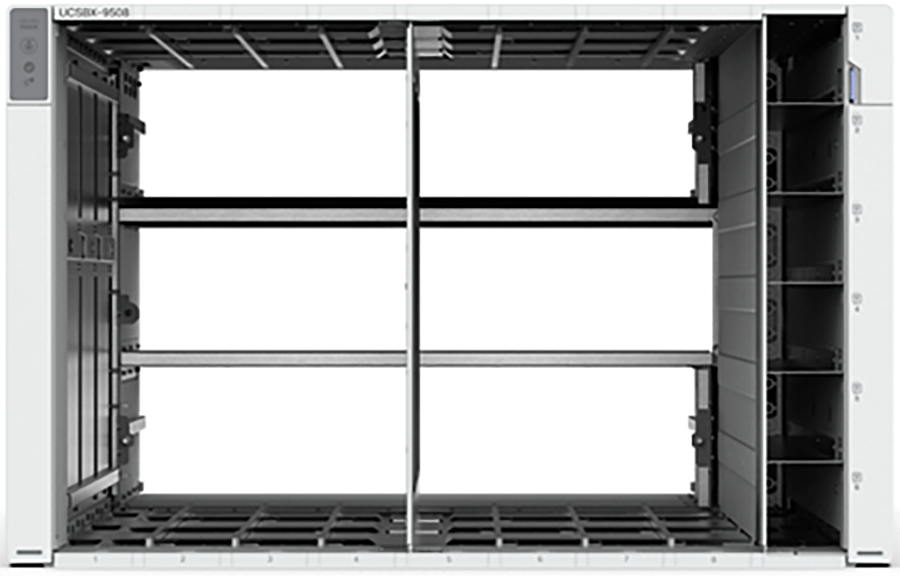

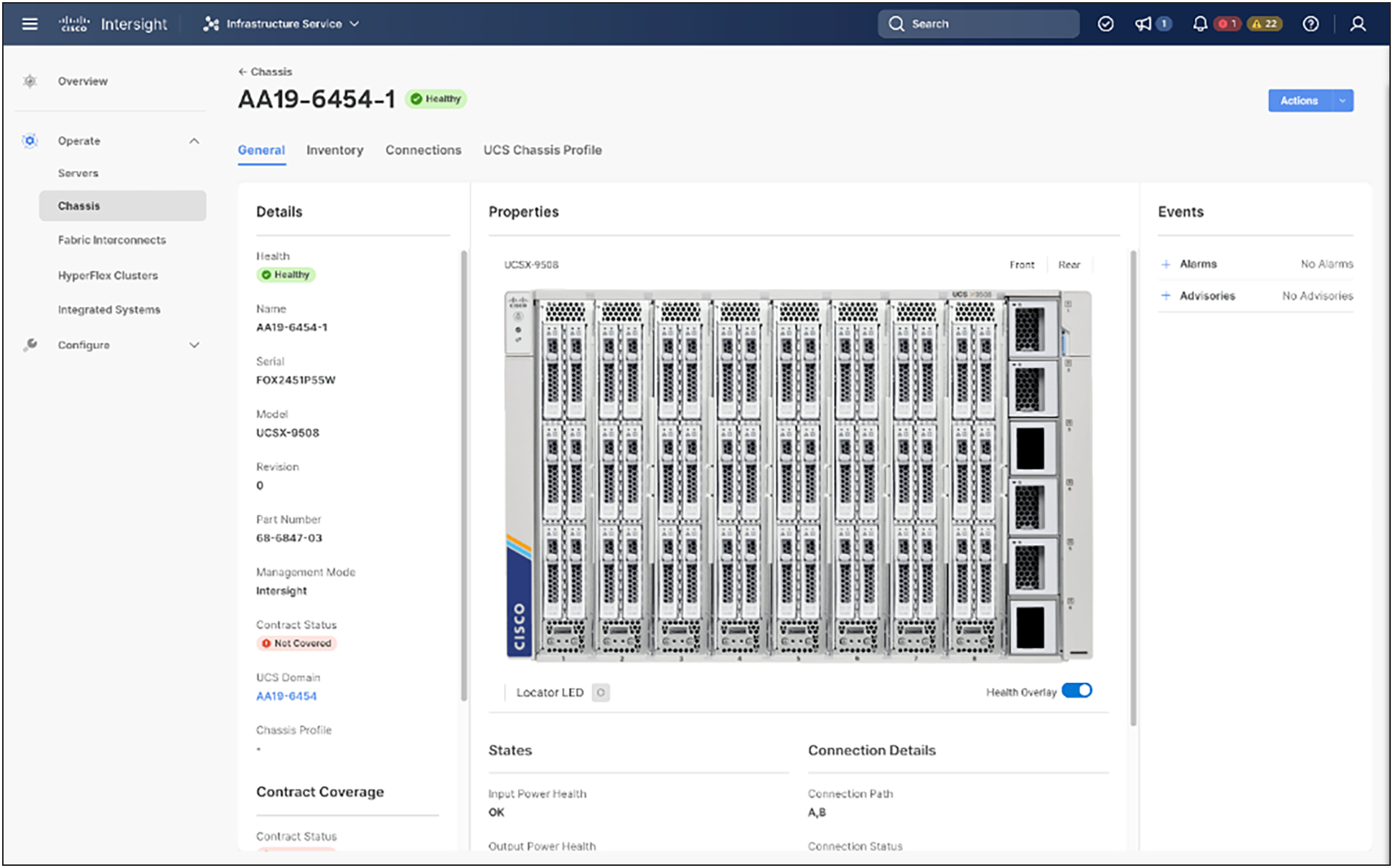

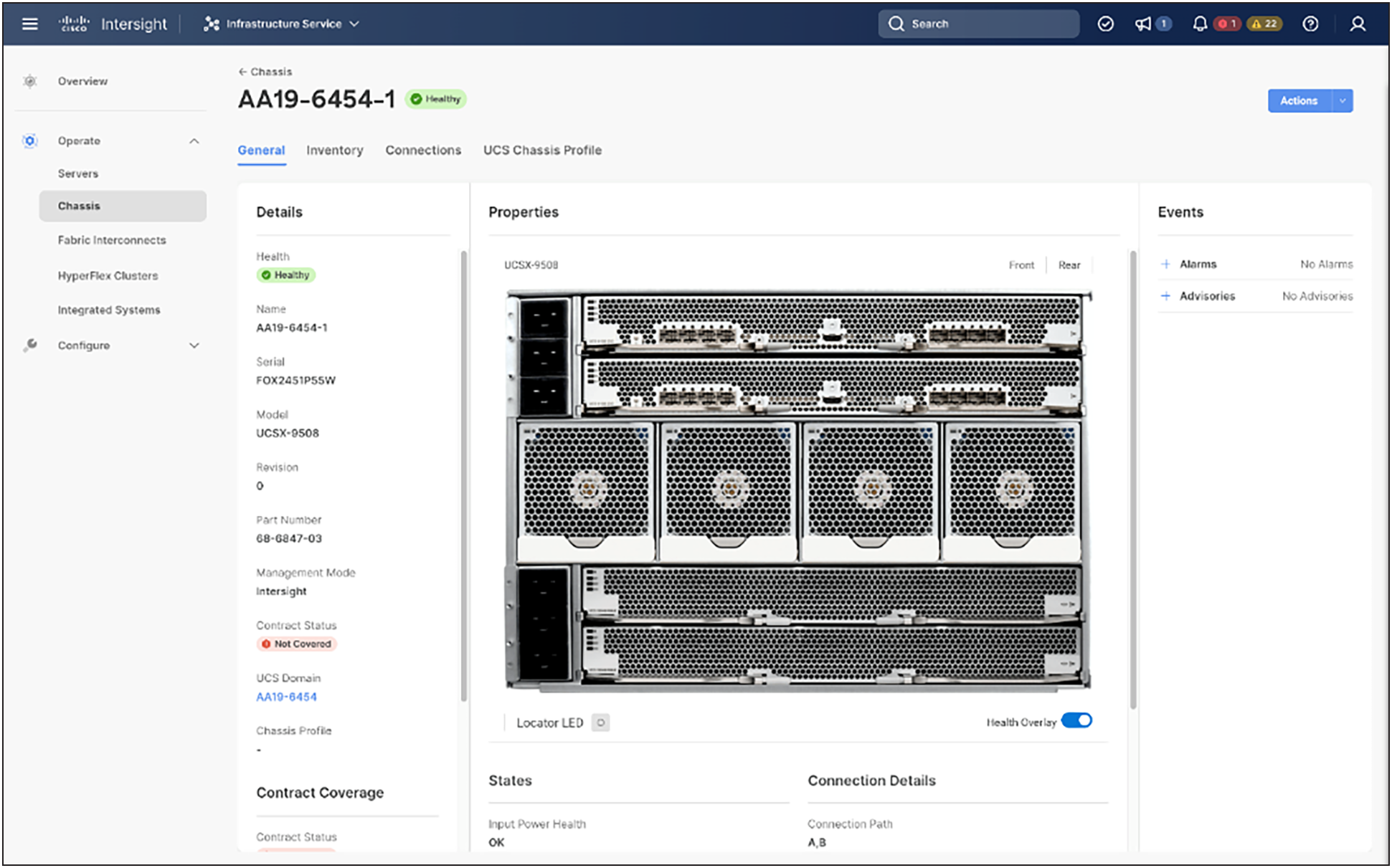

The Cisco UCS X9508 Chassis is engineered to be adaptable and flexible. As seen in Figure 5, the Cisco UCS X9508 has only a power distribution midplane. This innovative design provides fewer obstructions for better airflow. For I/O connectivity, vertically oriented compute nodes intersect with horizontally oriented fabric modules, allowing the chassis to support future fabric innovations. The superior packaging of the Cisco UCS X9508 Chassis enables larger compute nodes, thereby providing more space for actual compute components, such as memory, GPUs, drives, and accelerators. Improved airflow through the chassis enables support for higher-power components, and more space allows for future thermal solutions (such as liquid cooling) without limitations.

Cisco UCS X9508 Chassis – innovative design

The Cisco UCS X9508 7-rack-unit (7RU) chassis has eight flexible slots. These slots can house a combination of compute nodes and a pool of future I/O resources that may include GPU accelerators, disk storage, and nonvolatile memory. At the top rear of the chassis are two Intelligent Fabric Modules (IFMs) that connect the chassis to upstream Cisco UCS 6400 Series Fabric Interconnects. At the bottom rear of the chassis are slots ready to house future UCS X-Fabric modules that can flexibly connect the compute nodes with I/O devices. Six 2800W Power Supply Units (PSUs) provide 54V power to the chassis with N, N+1, and N+N redundancy. A higher voltage allows efficient power delivery with less copper and reduced power loss. Efficient, 100mm, dual counter-rotating fans deliver industry-leading airflow and power efficiency, and optimized thermal algorithms enable different cooling modes to best support the customer’s environment.
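The redundancy modes above translate into simple nameplate arithmetic. The sketch below is illustrative only: it assumes that N+1 holds one PSU in reserve and N+N holds half of the PSUs in reserve, and it ignores input voltage, derating, and any real power-budgeting policy. `usable_watts` is a hypothetical helper, not a Cisco tool.

```python
PSU_COUNT = 6      # from the text: six PSUs per chassis
PSU_WATTS = 2800   # from the text: 2800W per PSU (nameplate)


def usable_watts(mode: str) -> int:
    """Nameplate power left once the redundant PSUs are held in reserve.

    Assumption: N reserves nothing, N+1 reserves one PSU,
    and N+N reserves half of the installed PSUs.
    """
    reserve = {"N": 0, "N+1": 1, "N+N": PSU_COUNT // 2}[mode]
    return (PSU_COUNT - reserve) * PSU_WATTS


for mode in ("N", "N+1", "N+N"):
    print(f"{mode}: {usable_watts(mode)} W")
```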

Cisco UCSX 9108-25G Intelligent Fabric Modules

For the Cisco UCS X9508 Chassis, network connectivity is provided by a pair of Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs). Like the fabric extenders used in the Cisco UCS 5108 Blade Server Chassis, these modules carry all network traffic to a pair of Cisco UCS 6400 Series Fabric Interconnects (FIs). IFMs also host the Chassis Management Controller (CMC) for chassis management. In contrast to systems with fixed networking components, Cisco UCS X9508’s midplane free design enables easy upgrades to new networking technologies as they emerge, making it easier to accommodate new network speeds or technologies in the future.

Cisco UCSX 9108-25G Intelligent Fabric Module

Each IFM supports eight 25Gb uplink ports for connecting the Cisco UCS X9508 Chassis to the FIs and 32 25Gb server ports for the eight compute nodes. IFM server ports can provide up to 200 Gbps of unified fabric connectivity per compute node across the two IFMs. The uplink ports connect the chassis to the Cisco UCS FIs, providing up to 400Gbps connectivity across the two IFMs. The unified fabric carries management, VM, and Fibre Channel over Ethernet (FCoE) traffic to the FIs, where management traffic is routed to the Cisco Intersight cloud operations platform, FCoE traffic is forwarded to the native Fibre Channel interfaces through unified ports on the FI (to Cisco MDS switches), and data Ethernet traffic is forwarded upstream to the data center network (through Cisco Nexus switches).
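The bandwidth figures in this paragraph follow directly from the port counts. As a quick check, assuming the 32 server ports on each IFM are divided evenly among the eight compute nodes (the variable names are chosen for this sketch):

```python
PORT_GBPS = 25               # each IFM port runs at 25 Gbps
UPLINK_PORTS_PER_IFM = 8     # IFM-to-fabric-interconnect ports
SERVER_PORTS_PER_IFM = 32    # IFM-to-compute-node ports
COMPUTE_NODES = 8            # slots in the X9508 chassis
IFMS = 2                     # IFMs are deployed as a pair

# Assumption: server ports are split evenly across the compute nodes.
ports_per_node_per_ifm = SERVER_PORTS_PER_IFM // COMPUTE_NODES

# Unified fabric bandwidth available to one compute node across both IFMs.
per_node_gbps = ports_per_node_per_ifm * PORT_GBPS * IFMS

# Uplink bandwidth from the chassis to the fabric interconnects.
chassis_uplink_gbps = UPLINK_PORTS_PER_IFM * PORT_GBPS * IFMS

print(ports_per_node_per_ifm, per_node_gbps, chassis_uplink_gbps)
```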

Cisco UCSX 9108-100G Intelligent Fabric Modules

The Cisco UCS 9108-100G and 9108-25G Intelligent Fabric Modules (IFMs) bring the unified fabric into the blade server enclosure, providing connectivity between the blade servers and the fabric interconnect, simplifying diagnostics, cabling, and management.

This FlashStack solution with Cisco UCS X-Series and Cisco UCS 5th Generation Unified Fabric technology uses Cisco UCS 9108 100G IFM.

Cisco UCS X9108-100G Intelligent Fabric Module

The Cisco UCS 9108 100G IFM connects the I/O fabric between the Cisco UCS 6536 Fabric Interconnect and the Cisco UCS X9508 Chassis, enabling a lossless and deterministic converged fabric to connect all blades and chassis together. Because the fabric module is similar to a distributed line card, it does not perform any switching and is managed as an extension of the fabric interconnects. This approach removes switching from the chassis, reducing overall infrastructure complexity, and enabling Cisco UCS to scale to many chassis without multiplying the number of switches needed, reducing TCO and allowing all chassis to be managed as a single, highly available management domain. The Cisco UCS 9108 100G IFM also manages the chassis environment (power supply, fans, and blades) in conjunction with the fabric interconnect. Therefore, separate chassis management modules are not required.

The IFM plugs into the rear side of the Cisco UCS X9508 Chassis. The IFM provides a data path from the chassis compute nodes to the Cisco UCS 6536 Fabric Interconnect. Up to two Intelligent Fabric Modules (IFMs) plug into the back of the Cisco UCS X9508 Chassis.

The IFMs serve as line cards in the chassis and multiplex data from the compute nodes to the Fabric Interconnect (FI). They also monitor and manage chassis components such as fan units, power supplies, environmental data, the LED status panel, and other chassis resources. The server compute node Keyboard, Video, and Mouse (KVM) data, Serial over LAN (SoL) data, and Intelligent Platform Management Interface (IPMI) data also travel to the IFMs for monitoring and management purposes. To provide redundancy and failover, the IFMs are always used in pairs.

There are 8 x QSFP28 external connectors on an IFM to interface with a Cisco UCS 6536 Fabric Interconnect. The IFM internally provides 1 x 100G or 4 x 25G connections toward each Cisco UCS X210c Compute Node in the Cisco UCS X9508 Chassis.

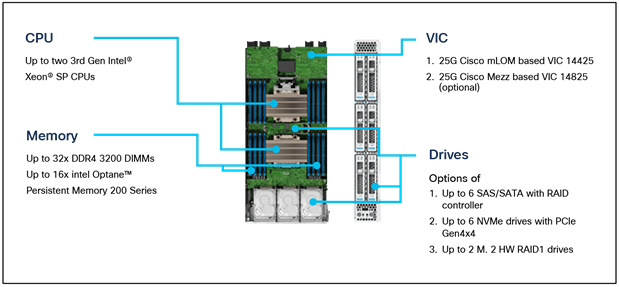

Cisco UCS X210c M6 Compute Node

The Cisco UCS X9508 Chassis is designed to host up to 8 Cisco UCS X210c M6 Compute Nodes. The hardware details of the Cisco UCS X210c M6 Compute Nodes are shown in Figure 8.

Cisco UCS X210c M6 Compute Node

The Cisco UCS X210c M6 Compute Node features:

● CPU: Up to 2x 3rd Gen Intel Xeon Scalable Processors with up to 40 cores per processor and 1.5 MB Level 3 cache per core

● Memory: Up to 32 x 256 GB DDR4-3200 DIMMs for a maximum of 8 TB of main memory. The UCS X210c M6 can also be configured for up to 16 x 512-GB Intel Optane persistent memory DIMMs for a maximum of 12 TB of memory.

● Disk storage: Up to 6 SAS or SATA drives can be configured with an internal RAID controller, or customers can configure up to 6 NVMe drives. 2 M.2 memory cards can be added to the UCS X210c M6 with RAID 1 mirroring.

● Virtual interface card (VIC): Up to 2 VICs, including an mLOM Cisco UCS VIC 14425 and a mezzanine Cisco UCS VIC 14825, can be installed in a UCS X210c M6.

● Security: The server supports an optional Trusted Platform Module (TPM). Additional security features include a secure boot FPGA and ACT2 anticounterfeit provisions.
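The memory maximums in the feature list above can be reproduced with a short calculation. The 8 TB figure follows directly from the stated DIMM count and size. The 12 TB figure is reproduced here under an assumption the text implies but does not state: that the 16 persistent memory DIMMs are paired with 16 x 256-GB DRAM DIMMs in the remaining slots.

```python
from typing import Iterable, Tuple

GB_PER_TB = 1024


def total_tb(dimm_groups: Iterable[Tuple[int, int]]) -> float:
    """Sum capacity for (count, size_in_gb) DIMM groups and return terabytes."""
    return sum(count * size_gb for count, size_gb in dimm_groups) / GB_PER_TB


# DRAM only: 32 x 256-GB DDR4 DIMMs (from the text).
dram_only_tb = total_tb([(32, 256)])

# Assumed mix: 16 x 512-GB persistent memory plus 16 x 256-GB DRAM.
mixed_tb = total_tb([(16, 512), (16, 256)])

print(dram_only_tb, mixed_tb)
```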

Cisco UCS Virtual Interface Cards (VICs)

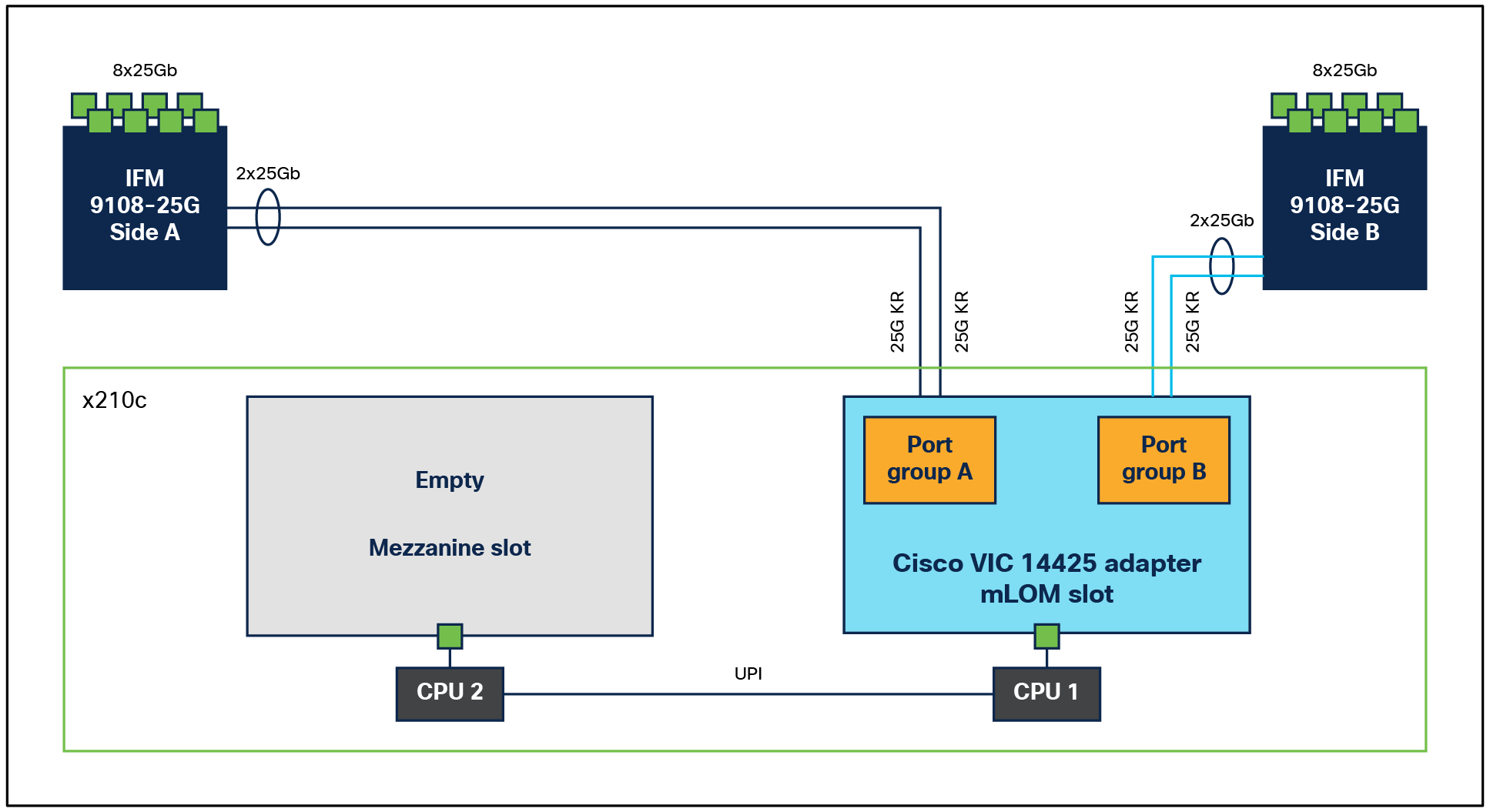

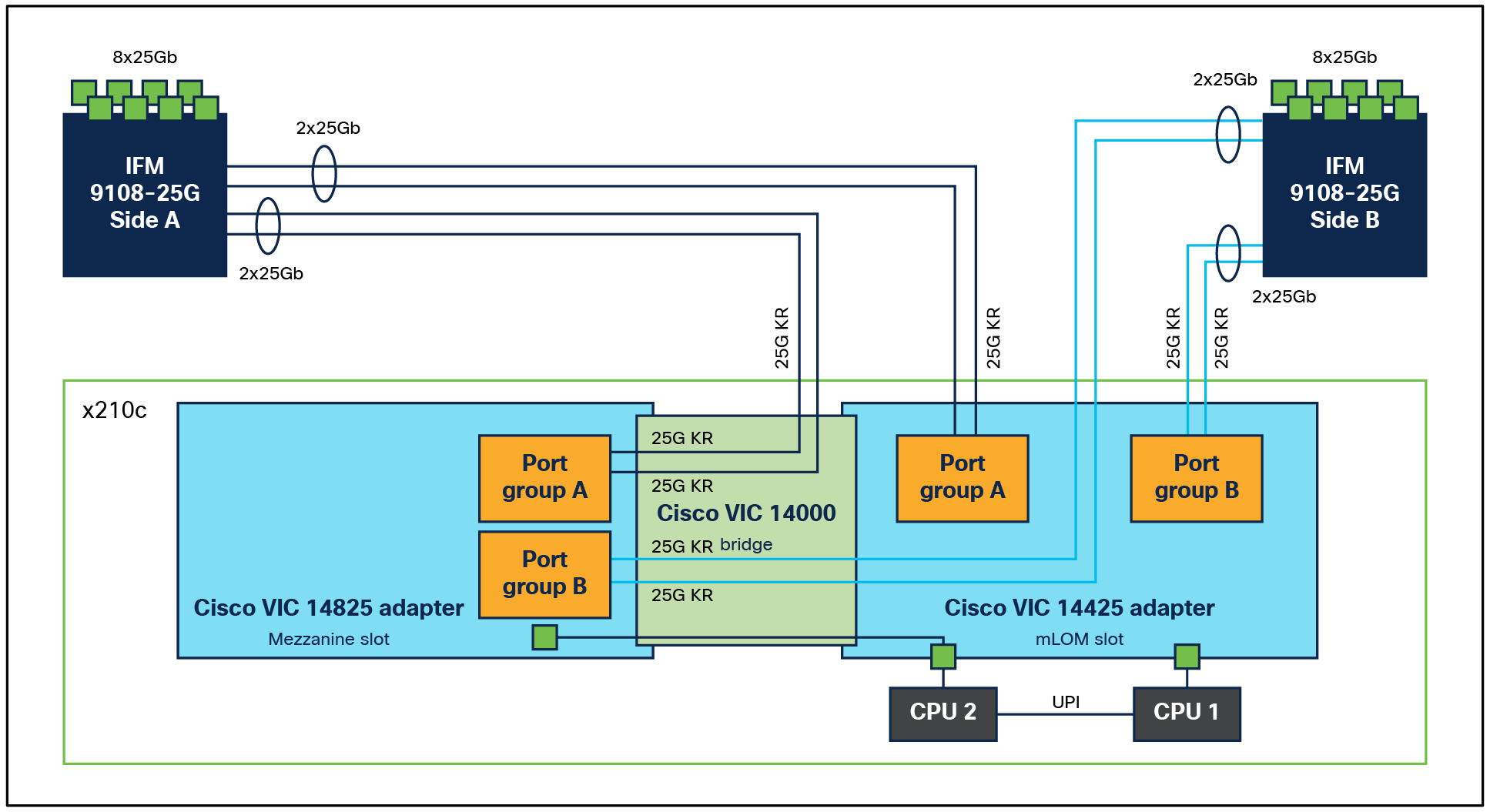

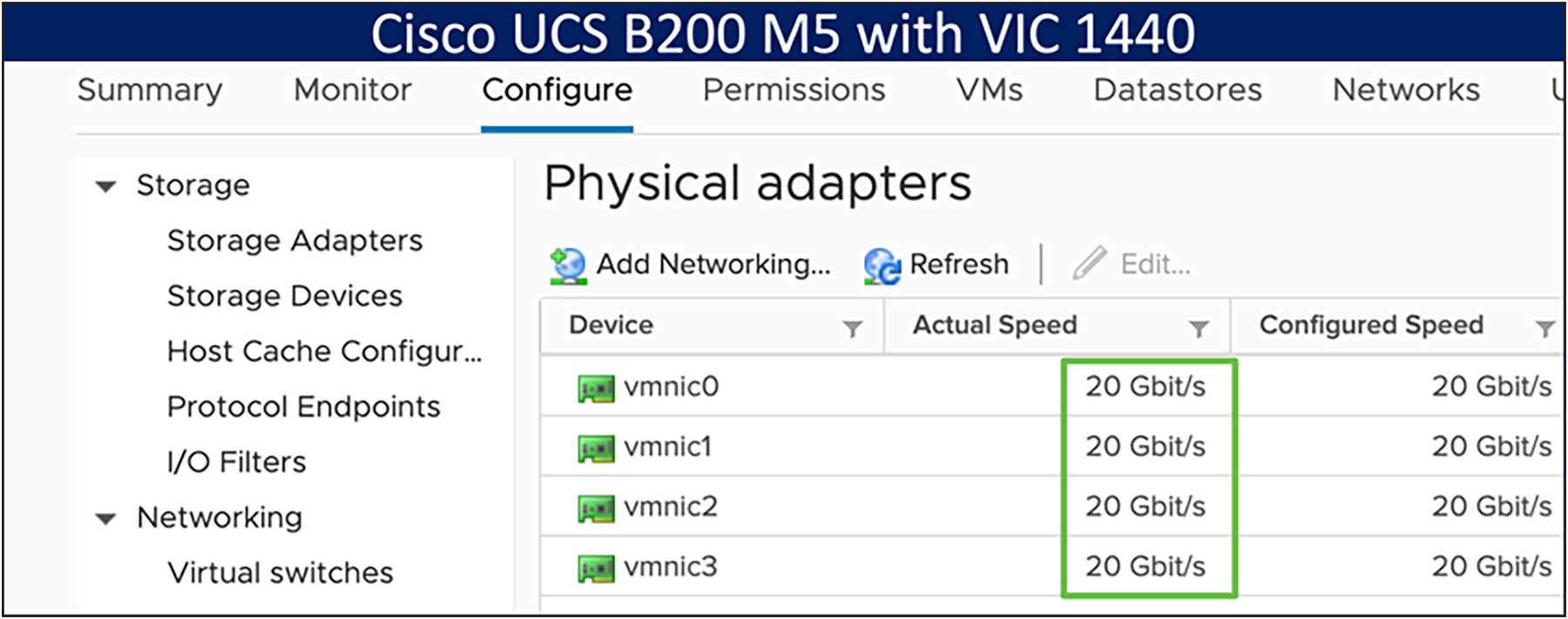

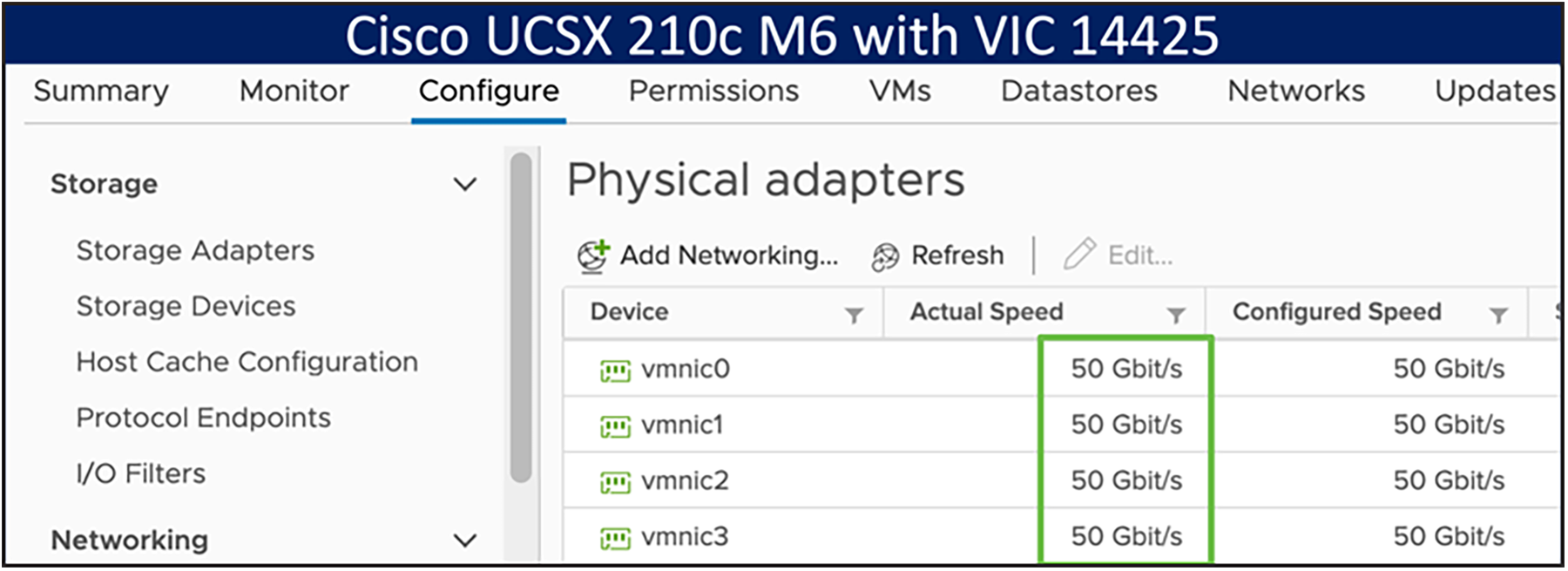

Cisco UCS X210c M6 Compute Nodes support the following Cisco fourth generation VICs:

Cisco UCS VIC 14425 fits the mLOM slot in the Cisco UCS X210c M6 Compute Node and enables up to 50 Gbps of unified fabric connectivity to each of the chassis IFMs for a total of 100 Gbps of connectivity per server. Cisco UCS VIC 14425 connectivity to the IFM and fabric interconnects is delivered through 4x 25-Gbps connections, which are configured automatically as 2x 50-Gbps port channels. The Cisco UCS VIC 14425 supports 256 virtual interfaces (both Fibre Channel and Ethernet) along with the latest networking innovations, such as NVMe-oF over RDMA (RoCEv2), VXLAN/NVGRE offload, and so on.

Single Cisco UCS VIC 14425 in Cisco UCS X210c M6 Compute Node

The connections between the 4th generation Cisco UCS VIC 1440 in the Cisco UCS B200 Blade Servers and the I/O modules in the Cisco UCS 5108 Blade Server Chassis comprise multiple 10Gbps KR lanes. The same connections between Cisco UCS VIC 14425 and IFMs in the Cisco UCS X-Series comprise multiple 25Gbps KR lanes, resulting in 2.5x better connectivity in Cisco UCS X210c M6 Compute Nodes.

The optional Cisco UCS VIC 14825 fits the mezzanine slot on the server. A bridge card (UCSX-V4-BRIDGE) extends this VIC’s 2x 50 Gbps of network connections up to the mLOM slot and out through the mLOM’s IFM connectors, bringing the total bandwidth to 100 Gbps per fabric for a total bandwidth of 200 Gbps per server.
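The per-fabric and per-server bandwidth figures for the fourth-generation VICs can be tallied as follows. This is a sketch using only the numbers quoted in the text; the variable and function names are illustrative.

```python
FABRICS = 2  # one IFM per fabric (A and B)


def per_server_gbps(per_fabric_gbps: int) -> int:
    """Total server bandwidth when each fabric carries `per_fabric_gbps`."""
    return per_fabric_gbps * FABRICS


# VIC 14425 alone: 4 x 25-Gbps lanes, bundled as 2 x 50-Gbps port channels.
vic_14425_per_fabric = (4 * 25) // FABRICS

# Adding VIC 14825 through the bridge card contributes another 2 x 50 Gbps.
with_14825_per_fabric = vic_14425_per_fabric + (2 * 50) // FABRICS

# KR lane speed: 25 Gbps in the X-Series versus 10 Gbps in the UCS 5108 chassis.
lane_speedup = 25 / 10

print(vic_14425_per_fabric, per_server_gbps(vic_14425_per_fabric))
print(with_14825_per_fabric, per_server_gbps(with_14825_per_fabric))
print(lane_speedup)
```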

Cisco UCS VIC 14425 and 14825 in Cisco UCS X210c M6 Compute Node

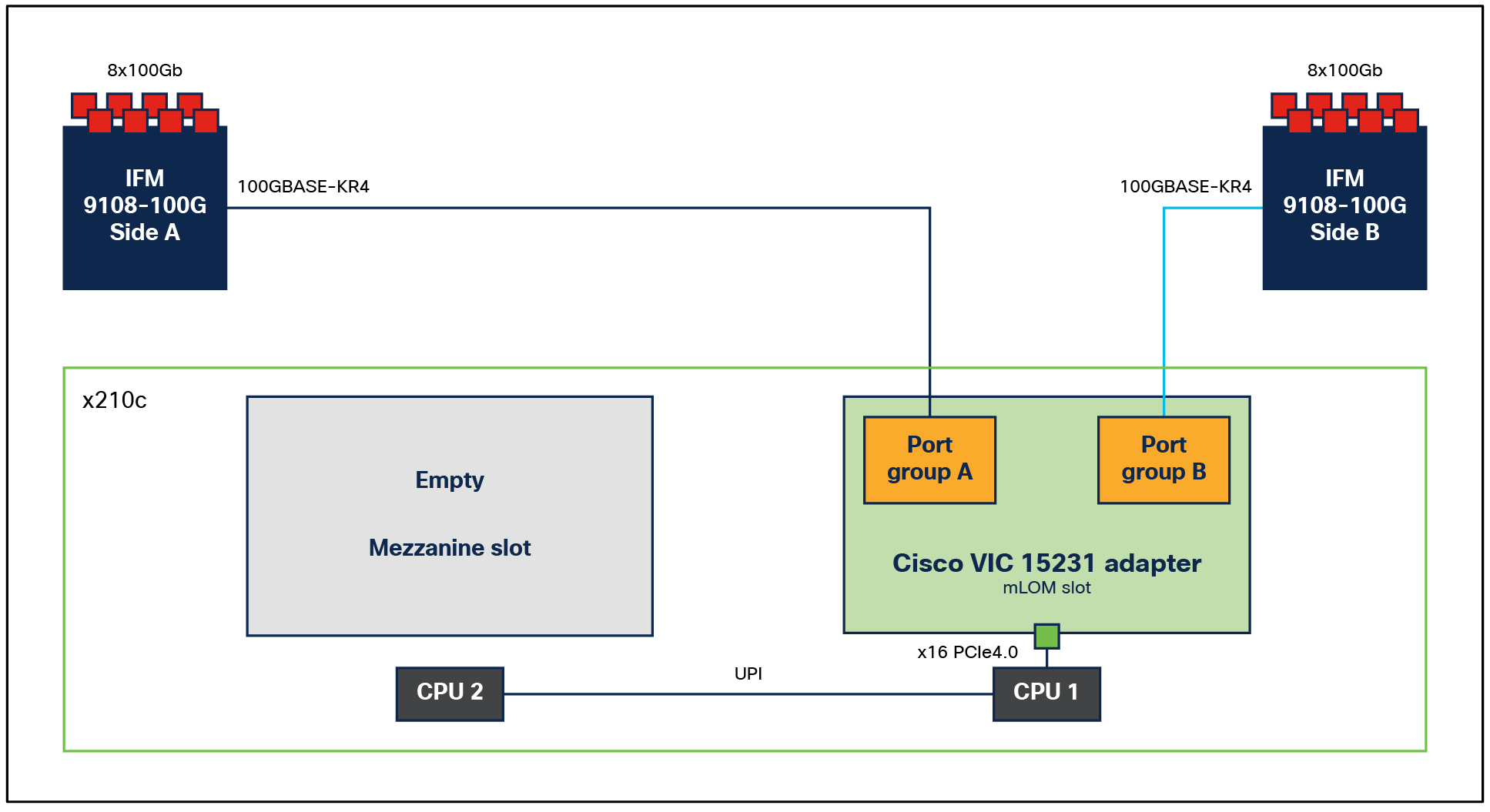

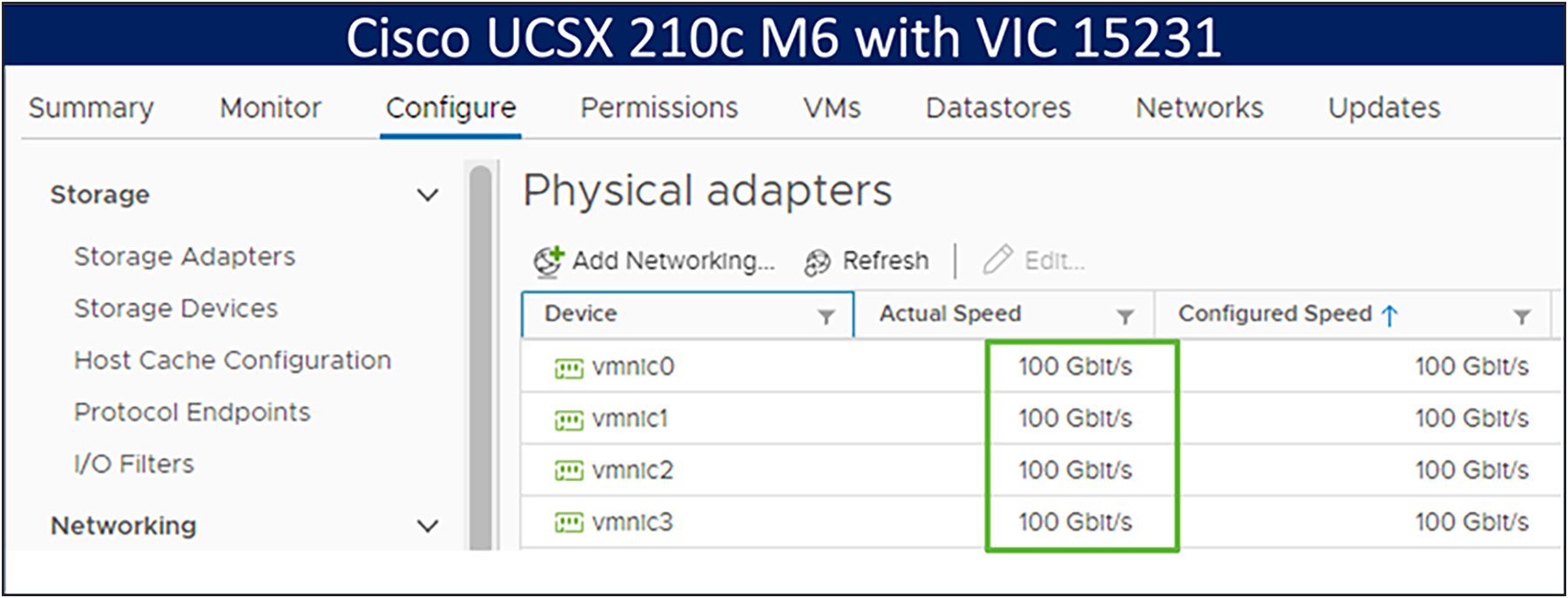

Cisco UCS VIC 15231 fits the mLOM slot in the Cisco X210c M6 Compute Node and enables up to 100 Gbps of unified fabric connectivity to each of the chassis IFMs for a total of 200 Gbps of connectivity per server.

Cisco UCS VIC 15231 mLOM

Cisco UCS VIC 15231 connectivity to the IFM and up to the fabric interconnects is delivered through 2x 100-Gbps connections. Cisco UCS VIC 15231 supports 256 virtual interfaces (both Fibre Channel and Ethernet) along with the latest networking innovations such as NVMe-oF over RDMA (RoCEv2), VXLAN/NVGRE/GENEVE offload, and so on.

Single Cisco VIC 15231 in Cisco UCS X210c M6 Compute Node

The connections between Cisco UCS VIC 15231 and IFMs in the Cisco UCS X-Series result in 2x better connectivity in Cisco UCS X210c M6 Compute Nodes compared to the 4th generation Cisco UCS VIC 14425 in the Cisco UCS X210c M6 Compute Nodes.

The network interface speed comparison between VMware ESXi installed on a Cisco UCS B200 M5 Blade Server with a Cisco UCS VIC 1440, a Cisco UCS X210c M6 Compute Node with a Cisco UCS VIC 14425, and a Cisco UCS X210c M6 Compute Node with a Cisco UCS VIC 15231 is shown in Figure 13.

Network interface speed comparison

Cisco UCS 6400 Series Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point of connectivity and management for the entire Cisco UCS system. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by the Cisco UCS Manager or Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.

Cisco UCS 6454 Fabric Interconnect

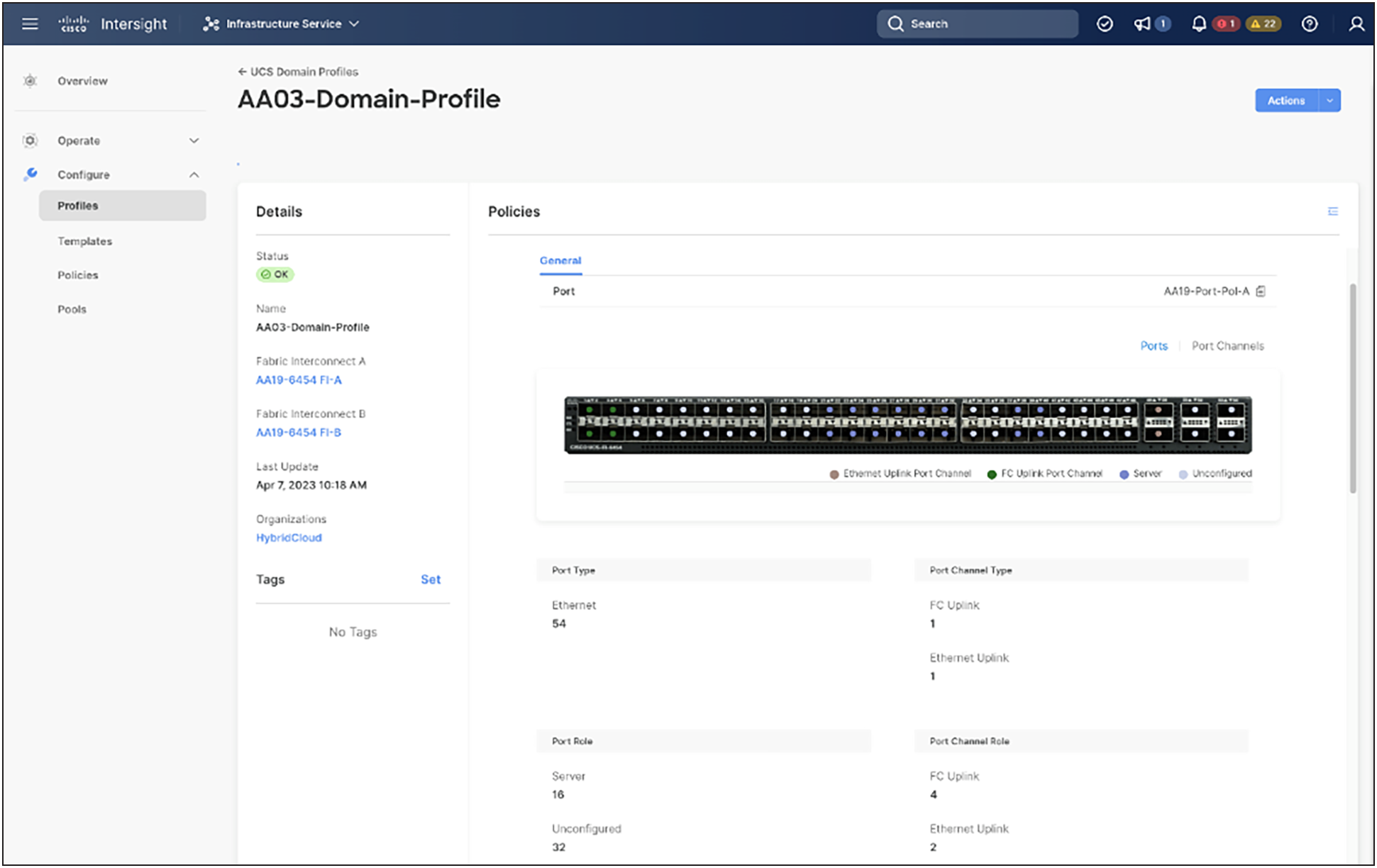

The Cisco UCS 6454 utilized in the current design is a 54-port fabric interconnect. This single-RU device includes 28 10/25 Gbps Ethernet ports, 4 1/10/25-Gbps Ethernet ports, 6 40/100-Gbps Ethernet uplink ports, and 16 unified ports that can support 10/25 Gigabit Ethernet or 8/16/32-Gbps Fibre Channel, depending on the SFP.

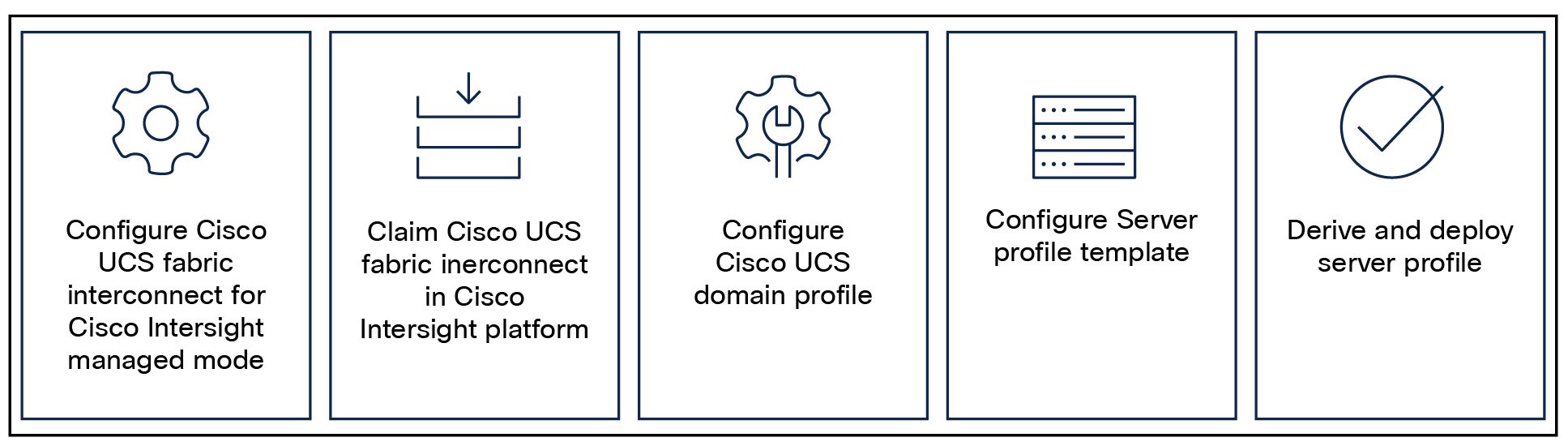

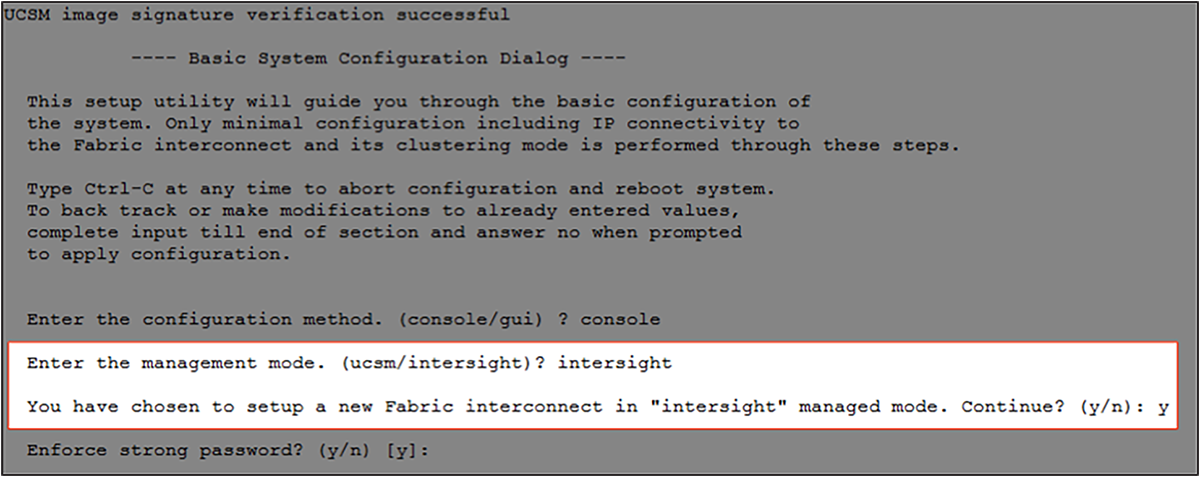

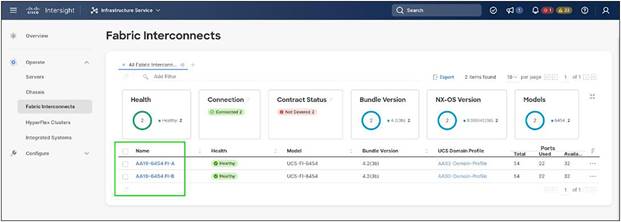

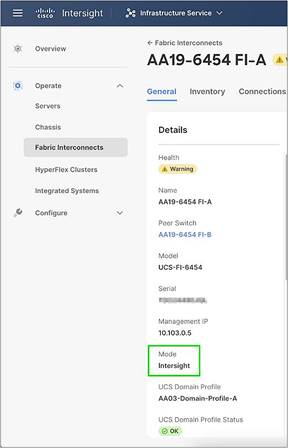

Note: For supporting the Cisco UCS X-Series, the fabric interconnects must be configured in Cisco Intersight Managed Mode (IMM). This option replaces the local management with Cisco Intersight cloud or appliance based management.

5th Generation Cisco UCS Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point of connectivity and management for the entire Cisco UCS system. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by the Cisco UCS Manager or Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low latency, lossless, cut through switching that supports LAN, SAN, and management traffic using a single set of cables.

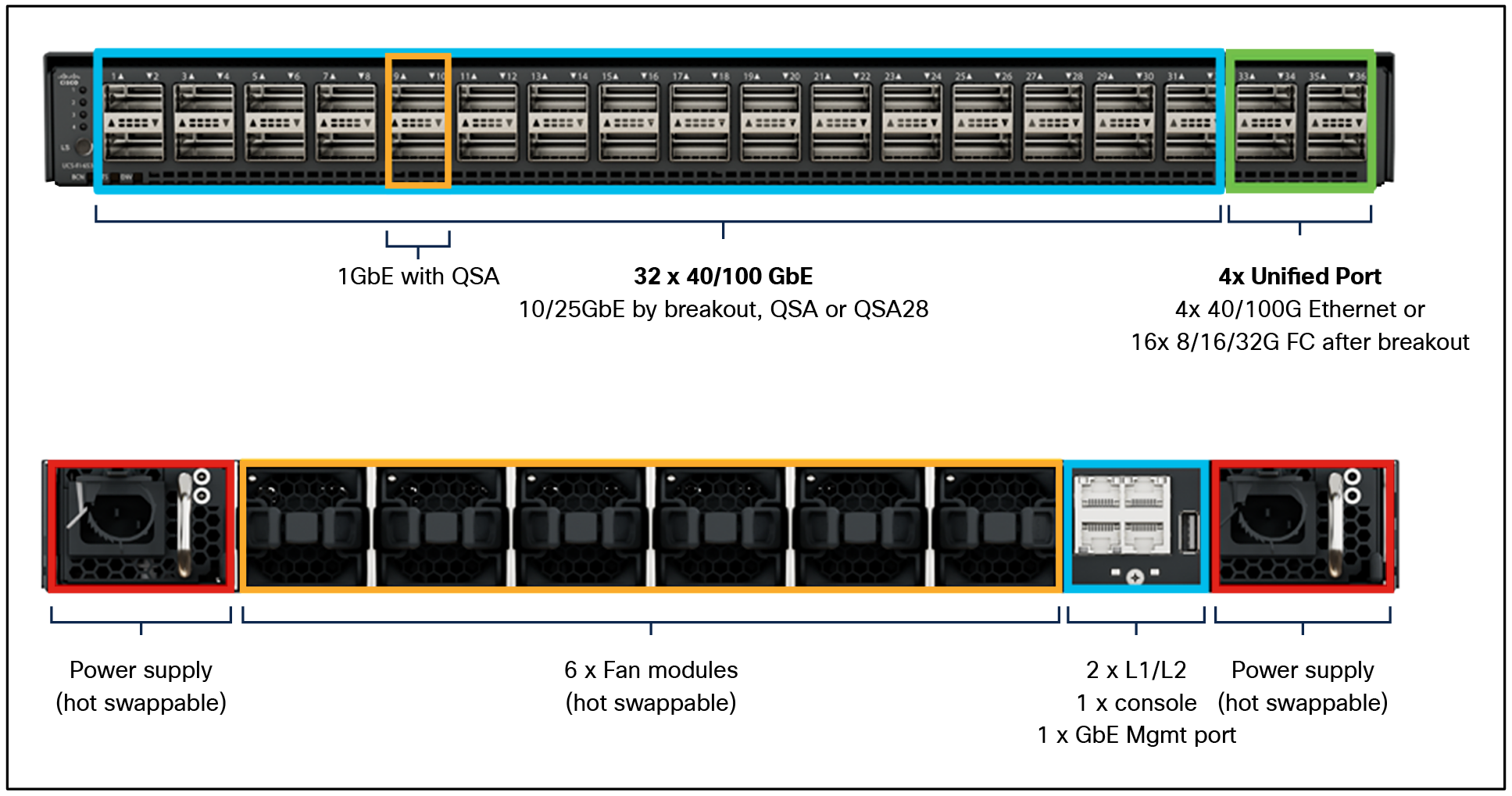

Cisco UCS 6536 Fabric Interconnect – front and rear view

The Cisco UCS 6536 Fabric Interconnect utilized in the current design is a one Rack Unit (1RU) 1/10/25/40/100 Gigabit Ethernet, FCoE, and Fibre Channel (FC) switch offering up to 7.42 Tbps throughput and up to 36 ports. The switch has 32 40/100-Gbps Ethernet ports and 4 unified ports that can support 40/100-Gbps Ethernet ports or 16 Fibre Channel ports after breakout at 8/16/32-Gbps FC speeds. The 16 FC ports after breakout can operate as FC uplinks or FC storage ports. The switch also supports two ports at 1-Gbps speed using QSA, and all 36 ports can break out for 10- or 25-Gbps Ethernet connectivity. All Ethernet ports can support FCoE.

The Cisco UCS 6536 Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. The Cisco UCS 6536 Fabric Interconnect offers line rate, low latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel, NVMe over Fabric, and Fibre Channel over Ethernet (FCoE) functions.

The Cisco UCS 6536 Fabric Interconnect provides the communication backbone and management connectivity for the Cisco UCS X-Series compute nodes, Cisco UCS X9508 Chassis, Cisco UCS B-series blade servers, Cisco UCS 5108 B-series server chassis, and Cisco UCS C-series rack servers. All servers attached to a Cisco UCS 6536 Fabric Interconnect become part of a single, highly available management domain. In addition, by supporting a unified fabric, Cisco UCS 6536 Fabric Interconnect provides both LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6536 uses a cut through architecture, supporting deterministic, low latency, line rate 10/25/40/100 Gigabit Ethernet ports, a switching capacity of 7.42 Tbps per FI and 14.84 Tbps per unified fabric domain, independent of packet size and enabled services. It enables 1600Gbps bandwidth per UCS X9508 chassis with X9108-IFM-100G in addition to enabling end-to-end 100G ethernet and 200G aggregate bandwidth per UCS X210c compute node. With the X9108-IFM-25G and the IOM 2408, it enables 400Gbps bandwidth per chassis per FI domain. The product family supports Cisco low latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric capabilities, which increases the reliability, efficiency, and scalability of Ethernet networks. The UCS 6536 Fabric Interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the fabric interconnect. Significant TCO savings come from the unified fabric‒optimized server design in which Network Interface Cards (NICs), Host Bus Adapters (HBAs), cables, and switches can be consolidated.
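The per-chassis and per-node bandwidth figures above can be reproduced with simple arithmetic. The sketch below is illustrative only: it assumes eight uplink ports per IFM and two IFMs per chassis (one per fabric), neither of which is stated in this section, and the function names are hypothetical.

```python
# Assumptions (not stated in the text above): each IFM exposes 8 uplink
# ports, and each Cisco UCS X9508 chassis carries 2 IFMs (one per fabric).
UPLINKS_PER_IFM = 8
IFMS_PER_CHASSIS = 2


def chassis_bandwidth_gbps(port_speed_gbps: int) -> int:
    """Aggregate uplink bandwidth of one chassis across both IFMs."""
    return UPLINKS_PER_IFM * IFMS_PER_CHASSIS * port_speed_gbps


def node_bandwidth_gbps(per_fabric_gbps: int, fabrics: int = 2) -> int:
    """Aggregate bandwidth of one compute node across both fabrics."""
    return per_fabric_gbps * fabrics


print("Chassis with 100G IFMs:", chassis_bandwidth_gbps(100), "Gbps")
print("Chassis with 25G IFMs: ", chassis_bandwidth_gbps(25), "Gbps")
print("Compute node (100G per fabric):", node_bandwidth_gbps(100), "Gbps")
```

Under these assumptions the results match the 1600-Gbps, 400-Gbps, and 200-Gbps figures quoted in the paragraph.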

Cisco UCS unified fabric: I/O consolidation

The Cisco UCS 6536 Fabric Interconnect is built to consolidate LAN and SAN traffic onto a single unified fabric, saving on Capital Expenditures (CapEx) and Operating Expenses (OpEx) associated with multiple parallel networks, different types of adapter cards, switching infrastructure, and cabling within racks. The unified ports allow ports in the fabric interconnect to support direct connections from Cisco UCS to existing native Fibre Channel SANs. The capability to connect to a native Fibre Channel protects existing storage system investments while dramatically simplifying in rack cabling.

The Cisco UCS 6536 Fabric Interconnect supports I/O consolidation with end-to-end network virtualization, visibility, and Quality of Service (QoS) guarantees for the following LAN and SAN traffic:

● FC SAN, IP storage (iSCSI, NFS), NVMe-oF (NVMe/FC, NVMe/TCP, NVMe over RoCEv2)

● Server management and LAN traffic

Cisco UCS unified fabric

The I/O consolidation under the Cisco UCS 6536 Fabric Interconnect, along with the stateless policy driven architecture of Cisco UCS and the hardware acceleration of the Cisco UCS virtual interface card, provides great simplicity, flexibility, resiliency, performance, and TCO savings for the customer’s compute infrastructure.

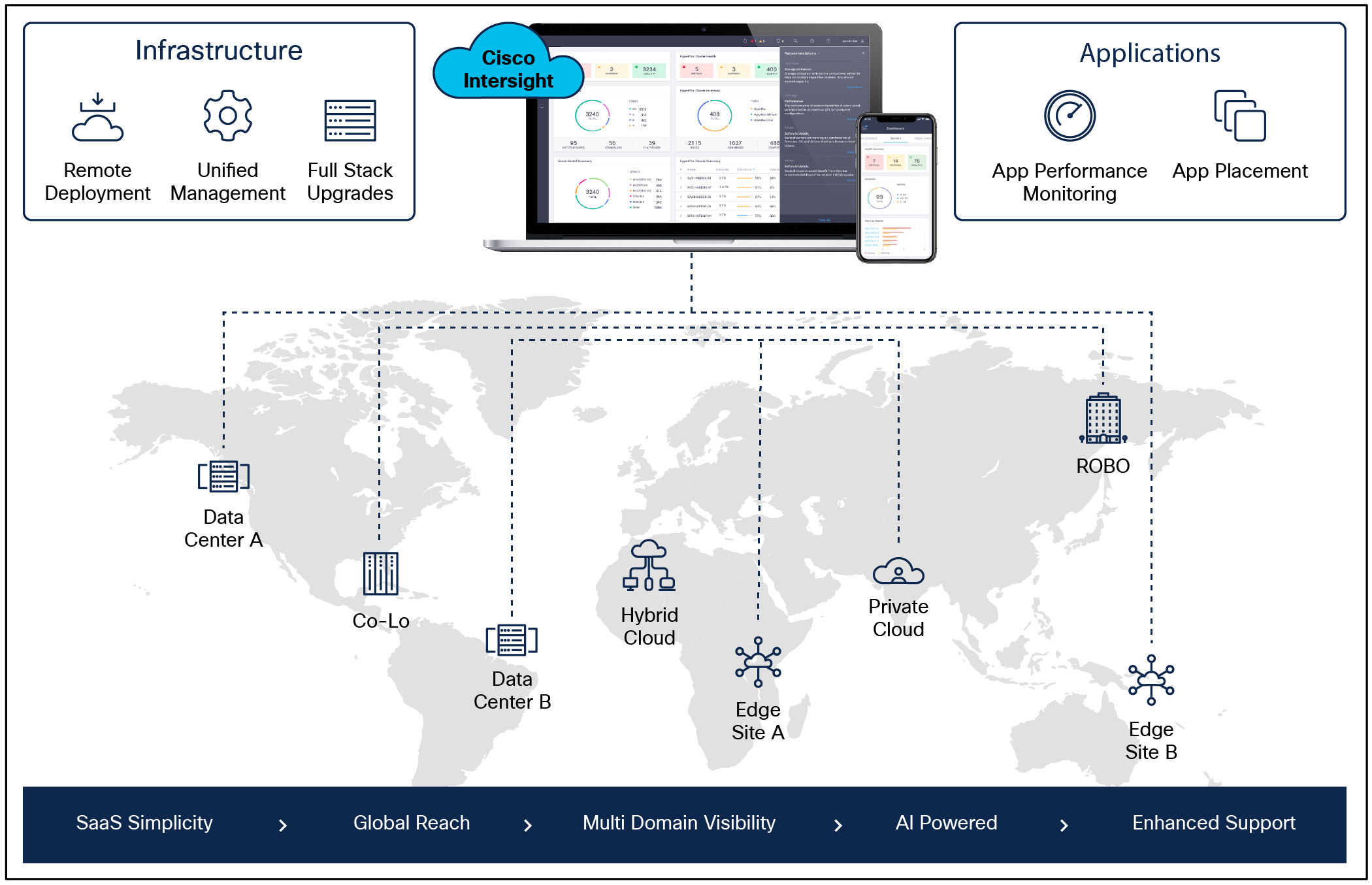

As applications and data become more distributed from the core data center and edge locations to public clouds, a centralized management platform is essential. IT agility will be a struggle without a consolidated view of infrastructure resources and centralized operations. Cisco Intersight provides a cloud hosted management and analytics platform for all Cisco UCS and other supported third party infrastructure across the globe. It provides an efficient way of deploying, managing, and upgrading infrastructure in the data center and remote or branch offices, at the edge, and in colocation environments.

Cisco Intersight

Cisco Intersight provides:

● No impact transition: An embedded connector within Cisco UCS will allow customers to start consuming benefits without a forklift upgrade.

● SaaS/subscription model: SaaS model provides for centralized, cloud scale management and operations across hundreds of sites around the globe without the administrative overhead of managing the platform.

● Enhanced support experience: A hosted platform allows Cisco to address issues platform wide, and this experience extends into platforms supported by the Cisco Technical Assistance Center (Cisco TAC).

● Unified management: A single pane of glass and a consistent operations model provide a unified experience for managing all systems and solutions in one place.

● Programmability: End-to-end programmability with native APIs, SDKs, and popular DevOps toolsets will enable customers to consume natively.

● Single point of automation: Automation using Red Hat Ansible, HashiCorp Terraform, and other tools can be done through Intersight for all systems it manages.

● Recommendation engine: Our approach, which includes visibility, insight and action powered by machine intelligence and analytics, provides real time recommendations with agility and scale. An embedded recommendation engine offers insights sourced from across the network and tailored to each customer.

The main benefits of Cisco Intersight infrastructure services are as follows:

● Simplify daily operations by automating many daily manual tasks.

● Combine the convenience of a SaaS platform with the capability to connect from anywhere and manage infrastructure through a browser or mobile app.

● Stay ahead of problems and accelerate trouble resolution through advanced support capabilities.

● Gain global visibility of infrastructure health and status along with advanced management and support capabilities.

● Upgrade to add workload optimization when needed.

In this solution, Cisco Intersight unifies and simplifies the hybrid-cloud operations of FlashStack Data Center components wherever they are deployed.

Cisco Intersight Virtual Appliance and Private Virtual Appliance

In addition to the SaaS deployment model running on Intersight.com, on-premises options can be purchased separately. The Cisco Intersight Virtual Appliance and Cisco Intersight Private Virtual Appliance are available for organizations that have additional data locality or security requirements for managing systems. The Cisco Intersight Virtual Appliance delivers the management features of the Cisco Intersight platform in an easy to deploy VMware Open Virtual Appliance (OVA) or Microsoft Hyper-V Server virtual machine that allows you to control the system details that leave your premises. The Cisco Intersight Private Virtual Appliance is provided in a form factor specifically designed for users who operate in disconnected (air gap) environments. The Private Virtual Appliance requires no connection to public networks or back to Cisco to operate.

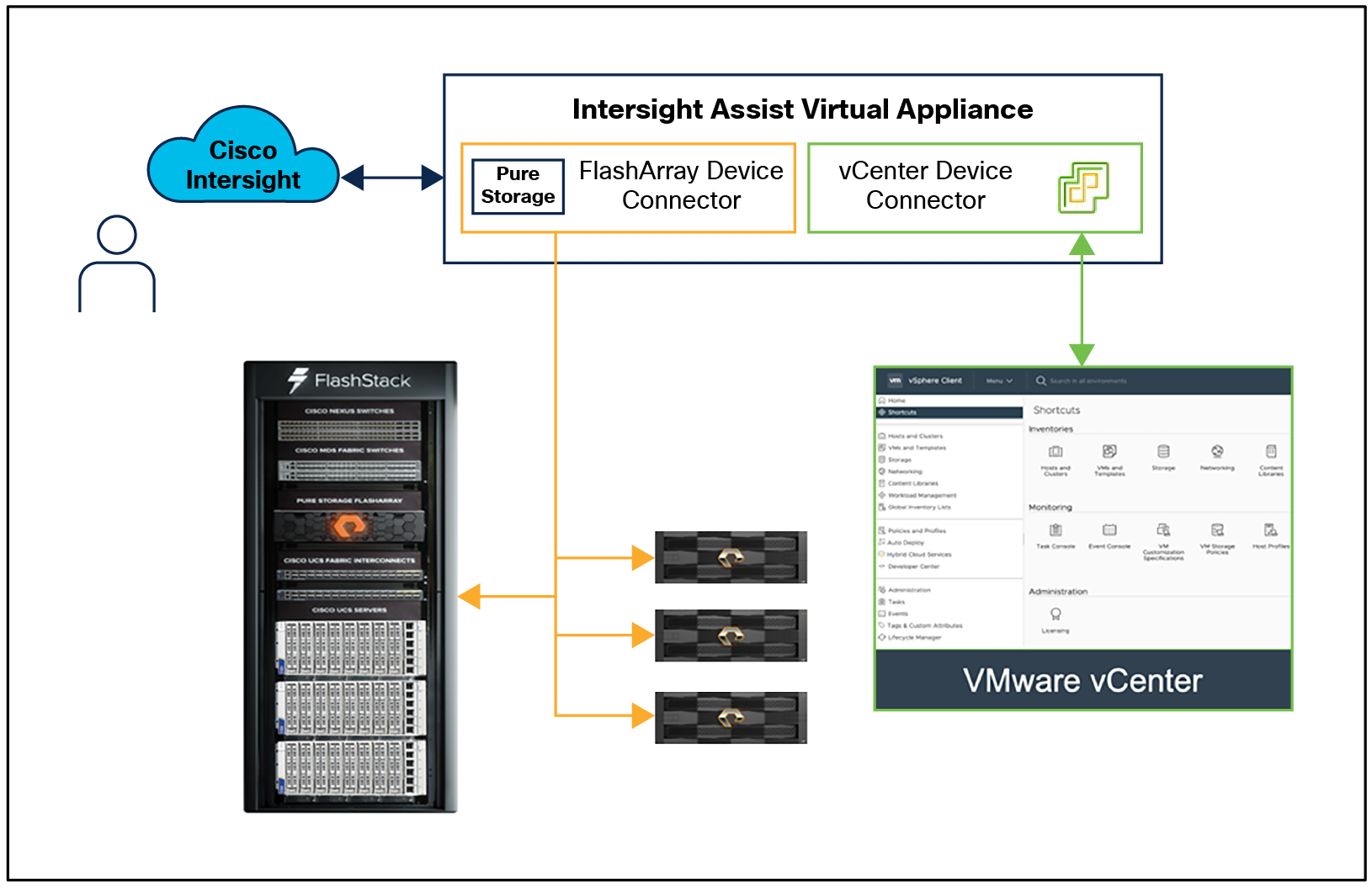

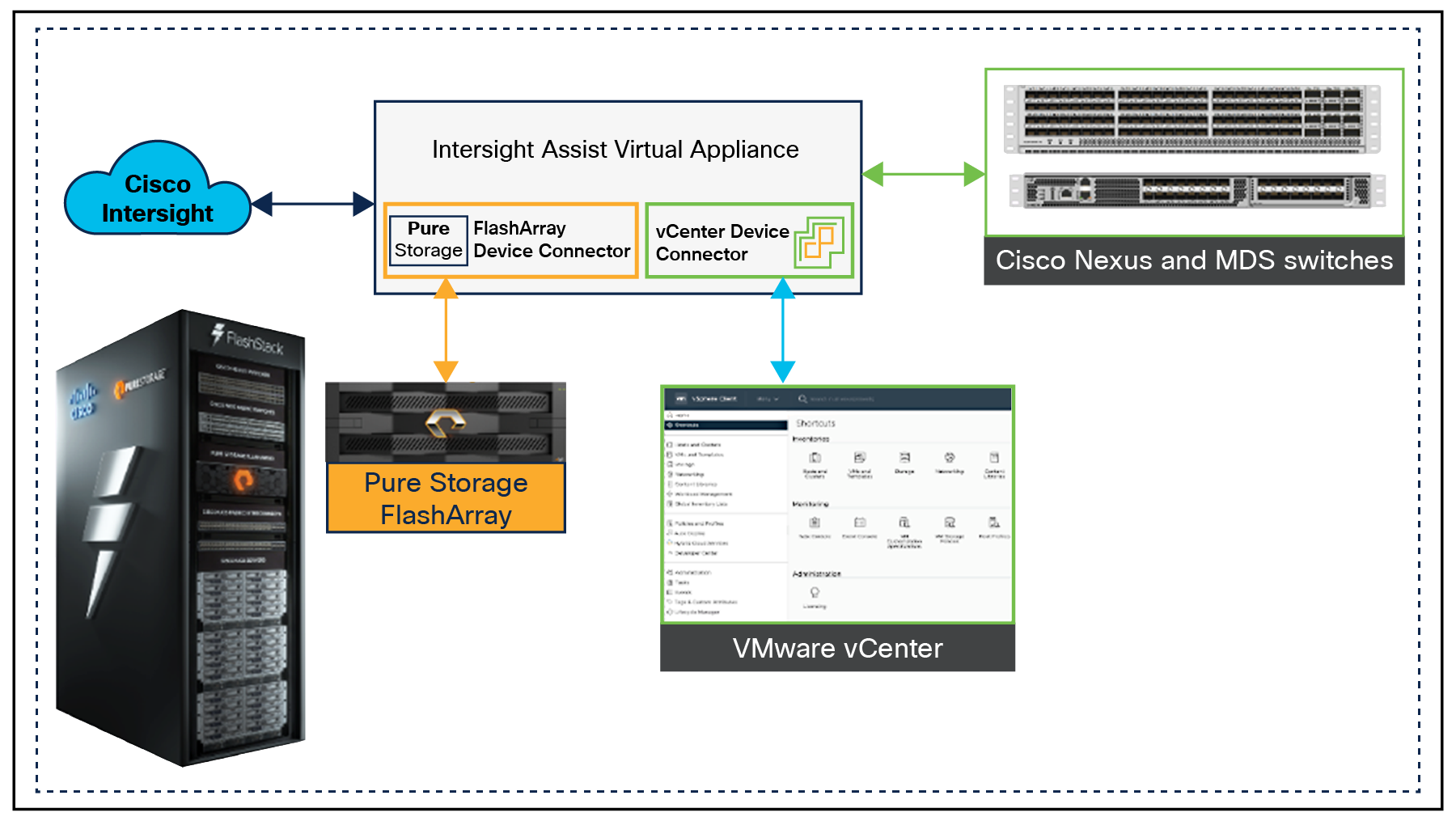

Cisco Intersight Assist and Device Connectors

Cisco Intersight Assist helps customers add endpoint devices to Cisco Intersight. A data center could have multiple devices that do not connect directly with Cisco Intersight. Any device that is supported by Cisco Intersight but does not connect to Intersight directly needs Cisco Intersight Assist to provide the necessary connectivity. FlashStack, VMware vCenter, and Pure Storage FlashArray connect to Intersight with the help of the Intersight Assist appliance.

Cisco Intersight Assist is available within the Cisco Intersight Virtual Appliance, which is distributed as a deployable virtual machine contained within an Open Virtual Appliance (OVA) file format. More details about the Cisco Intersight Assist VM deployment configuration are covered in later sections.

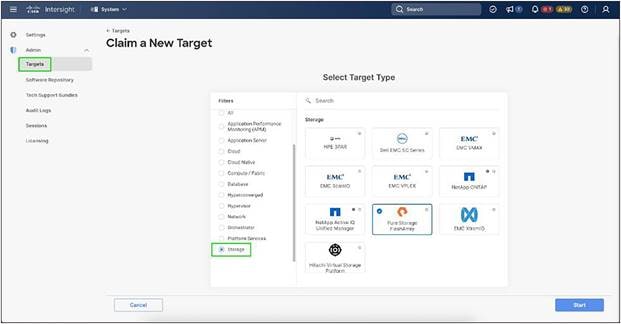

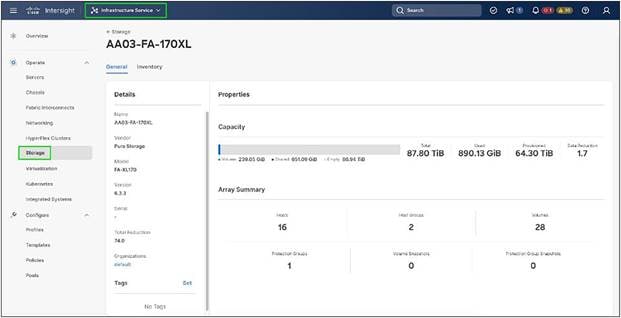

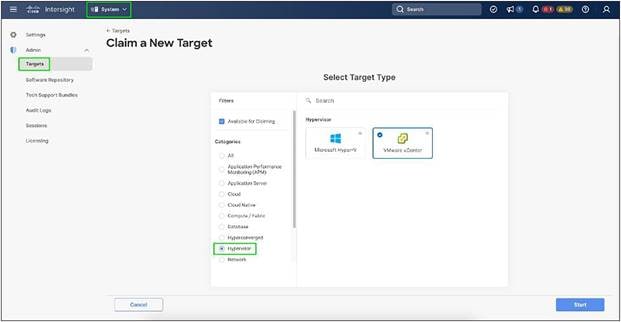

Cisco Intersight integrates with VMware vCenter and Pure Storage FlashArray as follows:

● Cisco Intersight uses the device connector running within the Cisco Intersight Assist virtual appliance to communicate with the VMware vCenter.

● Cisco Intersight uses the device connector running within a Cisco Intersight Assist virtual appliance to integrate with Pure Storage FlashArray//XL170.

Cisco Intersight and vCenter and Pure Storage integration

The device connector provides a safe way for connected targets to send information and receive control instructions from the Cisco Intersight portal using a secure Internet connection. The integration brings the full value and simplicity of Cisco Intersight infrastructure management service to VMware hypervisor and FlashArray storage environments. The integration architecture enables FlashStack customers to use new management capabilities with no compromise in their existing VMware or FlashArray operations. IT users will be able to manage heterogeneous infrastructure from a centralized Cisco Intersight portal. At the same time, the IT staff can continue to use VMware vCenter and the Pure Storage dashboard for comprehensive analysis, diagnostics, and reporting of virtual and storage environments. The next section addresses the functions that this integration provides.

Cisco Nexus 9000 Series Switches

The Cisco Nexus 9000 Series Switches offer both modular and fixed 1/10/25/40/100 Gigabit Ethernet switch configurations with scalability up to 60 Tbps of nonblocking performance with less than five microsecond latency, wire speed VXLAN gateway, bridging, and routing support.

Cisco Nexus 93180YC-FX3 Switch

The Cisco Nexus 9000 Series Switch featured in this design is the Cisco Nexus 93180YC-FX3 configured in Cisco NX-OS standalone mode. Cisco NX-OS is a purpose built data center operating system designed for performance, resiliency, scalability, manageability, and programmability at its foundation. It provides a robust and comprehensive feature set that meets the demanding requirements of virtualization and automation.

The Cisco Nexus 93180YC-FX3 switch is a 1RU switch that supports 3.6 Tbps of bandwidth and 1.2 bpps. The 48 downlink ports on the 93180YC-FX3 can support 1-, 10-, or 25-Gbps Ethernet, offering deployment flexibility and investment protection. The six uplink ports can be configured as 40- or 100-Gbps Ethernet, offering flexible migration options.
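The 3.6-Tbps figure is consistent with the port counts given above if bandwidth is counted in both directions. The following sketch shows that arithmetic; the full-duplex counting convention is an assumption on our part, not something this section states.

```python
# Port counts and maximum speeds taken from the paragraph above.
DOWNLINK_PORTS, DOWNLINK_GBPS = 48, 25
UPLINK_PORTS, UPLINK_GBPS = 6, 100

# Sum of all port speeds in one direction.
one_direction_gbps = DOWNLINK_PORTS * DOWNLINK_GBPS + UPLINK_PORTS * UPLINK_GBPS

# Assumption: the quoted switch bandwidth counts both directions (full duplex).
full_duplex_gbps = 2 * one_direction_gbps

print(f"One direction: {one_direction_gbps} Gbps")
print(f"Full duplex:   {full_duplex_gbps / 1000} Tbps")
```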

Cisco MDS 9132T 32G Multilayer Fabric Switch

The Cisco MDS 9132T 32G Multilayer Fabric Switch is the next generation of the highly reliable, flexible, and low cost Cisco MDS 9100 Series switches. It combines high performance with exceptional flexibility and cost effectiveness. This powerful, compact one Rack Unit (1RU) switch scales from 8 to 32 line rate 32 Gbps Fibre Channel ports.

Cisco MDS 9132T 32G Multilayer Fabric Switch

The Cisco MDS 9132T delivers advanced storage networking features and functions with ease of management and compatibility with the entire Cisco MDS 9000 family portfolio for reliable end-to-end connectivity. This switch also offers state of the art SAN analytics and telemetry capabilities that have been built into this next generation hardware platform. This new state of the art technology couples the next generation port Cisco ASIC with a fully dedicated network processing unit designed to complete analytics calculations in real time. The telemetry data extracted from the inspection of the frame headers are calculated on board (within the switch) and, using an industry leading open format, can be streamed to any analytics visualization platform. This switch also includes a dedicated 10/100/1000BASE-T telemetry port to maximize data delivery to any telemetry receiver, including Cisco Data Center Network Manager.

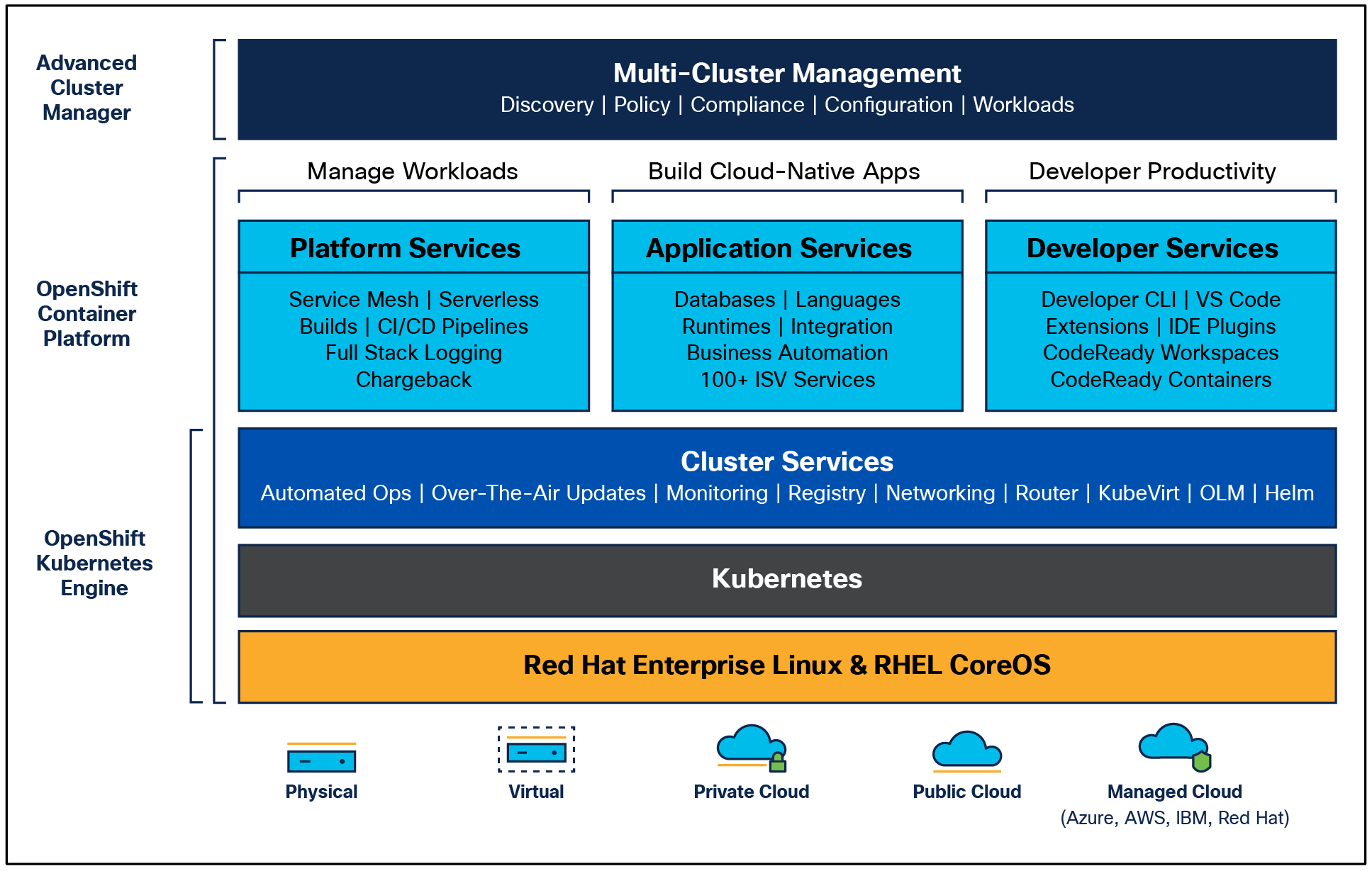

Red Hat OpenShift Container Platform

The Red Hat OpenShift Container Platform (OCP) is a container application platform that brings together CRI-O and Kubernetes and provides an API and web interface to manage these services. CRI-O is a lightweight implementation of the Kubernetes CRI (container runtime interface) that enables the use of Open Container Initiative (OCI)‒compatible runtimes including runC, crun, and Kata containers.

OCP allows customers to create and manage containers. Containers are standalone processes that run within their own environment, independent of the operating system and the underlying infrastructure. OCP helps develop, deploy, and manage container based applications. It provides a self service platform to create, modify, and deploy applications on demand, thus enabling faster development and release life cycles. OCP has a microservices based architecture of smaller, decoupled units that work together. It is powered by Kubernetes with data about the objects stored in etcd, a reliable clustered key value store.

OpenShift Container Platform overview

Some of the capabilities in Red Hat OCP include:

● Automated deployment of OCP clusters on-premises (bare metal, VMware vSphere, Red Hat OpenStack Platform, and Red Hat Virtualization) and in public clouds.

● Automated upgrades of OCP clusters with seamless over the air upgrades initiated from the web console or OpenShift CLI (oc).

● Add services with push button ease: Once a cluster is deployed, Red Hat OpenShift uses Kubernetes operators to deploy additional capabilities and services on the cluster. Red Hat Certified and community supported operators are available in the embedded operator hub and can be deployed with the click of a button.

● Multicluster management using Red Hat’s cloud based Hybrid-cloud Console or enterprise managed Advanced Cluster Management (ACM) provides a consolidated view of all clusters, with the ability to easily access and use other Kubernetes technologies and services. OCP clusters can also be individually managed using a web based cluster console or APIs.

● Persistent storage support: OCP provides support for a broad range of ecosystem storage partners including the Portworx Enterprise Storage Platform used in this solution.

● Scalability: OCP can scale to meet the largest and smallest compute use cases as needed.

● Automate container and application builds, deployments, scaling, cluster management, and more with ease.

● Self-service provisioning: Developers can quickly and easily create applications on demand from the tools they use most, while operations retain full control over the entire environment.

● Source-to-image deployment: OCP provides a toolkit and workflow for producing ready to run images by injecting source code into a container and letting the container prepare that source code for execution.

For more information, see: Red Hat OpenShift Container Platform product page on redhat.com.

Within OpenShift Container Platform, Kubernetes manages containerized applications across a set of CRI-O runtime hosts and provides mechanisms for deployment, maintenance, and application scaling. The CRI-O service packages, instantiates, and runs containerized applications.

A Kubernetes cluster consists of one or more control plane nodes and a set of worker nodes. This solution design includes HA functionality at the hardware as well as the software stack. An OCP cluster is designed to run in HA mode with three control plane nodes and a minimum of two worker nodes to help ensure that the cluster has no single point of failure.
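The choice of three control plane nodes follows from majority-quorum arithmetic. The sketch below assumes that each control plane node runs one etcd member and that etcd needs a strict majority of members to stay available; the function names are illustrative.

```python
def quorum(members: int) -> int:
    """Smallest strict majority of a cluster with the given member count."""
    return members // 2 + 1


def failures_tolerated(members: int) -> int:
    """Members that can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 2, 3, 5):
    print(f"{n} member(s): quorum={quorum(n)}, tolerates {failures_tolerated(n)} failure(s)")
```

With three members the cluster survives the loss of one control plane node, whereas two members tolerate no failures at all, which is why an even count adds no resilience.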

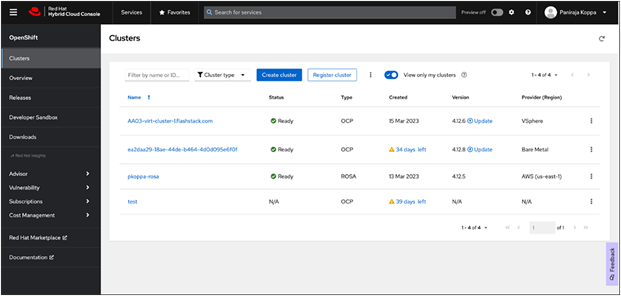

Red Hat Hybrid-cloud Console is a centralized SaaS based management console for deploying and managing multiple OCP clusters. It is used in this solution to provide consistent container management across a hybrid environment. The SaaS model enables enterprises to develop, deploy, and innovate faster across multiple infrastructures and quickly take advantage of new capabilities without the overhead of managing the tool. The console gives enterprises more control and visibility as environments grow and scale. The Hybrid-cloud Console also provides tools to proactively address issues, open and manage support cases, manage cloud costs, subscriptions, and more.

Red Hat Hybrid-cloud Console Dashboard

For more information, see: Red Hat Hybrid-cloud Console product page on redhat.com.

Red Hat OpenShift is available as a managed service by Red Hat and major cloud providers or as a self managed service where the enterprise manages and maintains the OCP cluster. Red Hat OCP as a managed service is hosted on major public clouds with Red Hat’s expert SRE teams providing a fully managed application platform, enabling the enterprise to focus on its applications and core business. Red Hat OpenShift is a complete, production ready application platform with additional services such as CI/CD pipelines, monitoring, security, container registry, service mesh, and more included on top of Kubernetes. OpenShift services include Red Hat OpenShift Service on AWS, Microsoft Azure Red Hat OpenShift, Red Hat OpenShift Dedicated on Google Cloud or AWS, and Red Hat OpenShift on IBM Cloud.

Red Hat Enterprise Linux CoreOS (RHCOS) is deployed automatically using configurations in the ignition files. The OCP installer creates the ignition configuration files necessary to deploy the OCP cluster with RHCOS. The configuration is based on the user provided responses to the installer. These files and images are downloaded and installed on the underlying infrastructure by the installer.

● openshift-install is a command line utility for installing OCP in cloud environments and on-premises. It collects information from the user, generates manifests, and uses Terraform to provision and configure infrastructure that will compose a cluster.

● Assisted Installer is a cloud hosted installer available at https://console.redhat.com as both an API and a guided web UI. After defining a cluster, the user downloads a custom “discovery ISO” and boots it on the systems that will be provisioned into a cluster, at which point each system connects to console.redhat.com for coordination. Assisted Installer offers great flexibility and customization while ensuring success by running an extensive set of validations prior to installation.

● Agent-based Installer is a command line utility that delivers the functionality of Assisted Installer in a standalone format that can be run in disconnected and air-gapped environments, creating a cluster without requiring any other running systems besides a container registry.

● Red Hat Advanced Cluster Management for Kubernetes (see the section below) includes Assisted Installer running on-premises behind a Kubernetes API in addition to a web UI. OpenShift’s bare metal platform features, especially the bare metal operator, can be combined with Assisted Installer to create an integrated end-to-end provisioning flow that uses Redfish Virtual Media to automatically boot the discovery ISO on managed systems.

Red Hat Enterprise Linux CoreOS

Red Hat Enterprise Linux CoreOS (RHCOS) is a lightweight operating system specifically designed for running containerized workloads. It is based on the secure, enterprise grade Red Hat Enterprise Linux (RHEL). RHCOS is the default operating system on all Red Hat OCP cluster nodes. RHCOS is tightly controlled, allowing only a few system settings to be modified using the ignition configuration files. RHCOS is designed to be installed as part of an OCP cluster installation process with minimal user configuration. Once the cluster is deployed, the cluster will fully manage the RHCOS subsystem configuration and upgrades.

RHCOS includes:

● Ignition: for initial bootup configuration and disk related tasks on OCP cluster nodes

Ignition serves as a first boot system configuration utility for initially bringing up and configuring the nodes in the OCP cluster. Starting from a tightly controlled OS image, the complete configuration of each system is expressed and applied using ignition. It also creates and formats disk partitions, writes files, creates file systems and directories, configures users, etc. During a cluster install, the control plane nodes get their configuration files from the temporary bootstrap machine used during install, and the worker nodes get theirs from the control plane nodes. After an OCP cluster is installed, subsequent configurations of nodes are done using the Machine Config Operator to manage and apply ignition.

● CRI-O: container engine running on OCP cluster nodes

CRI-O is a stable, standards based, lightweight container engine for Kubernetes that runs and manages the containers on each node. CRI-O implements the Kubernetes Container Runtime Interface (CRI) for running Open Container Initiative (OCI) compliant runtimes. OCP’s default container runtime is runC. CRI-O has a small footprint and a small attack surface, with an emphasis on security and simplicity. CRI-O is a Cloud Native Computing Foundation (CNCF) incubating project.

● Kubelet: Kubernetes service running on OCP cluster nodes

Kubelet is a Kubernetes service running on every node in the cluster. It communicates with the control plane components and processes requests for running, stopping, and managing container workloads.

● Container tools

RHCOS includes a set of container tools (including Podman, Skopeo, and crictl) for managing containers and container image actions such as start, stop, run, list, remove, build, sign, push, and pull.

● rpm-ostree combines RPM package management with libostree’s immutable content addressable operating system image management. RHCOS is installed and updated using libostree, guaranteeing that the installed OS is in a known state, with transactional upgrades and support for rollback.

Note: RHCOS was used on all control plane and worker nodes to support the automated Red Hat OpenShift 4 deployment.
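To make the role of Ignition described above more concrete, the sketch below builds a minimal Ignition-style configuration that writes one file and configures one user. It is an illustration, not the configuration generated by the OCP installer: the spec version (3.2.0), hostname, and SSH key placeholder are all assumptions chosen for the example.

```python
import json
from urllib.parse import quote


def data_url(text: str) -> str:
    """Encode inline file contents as a data URL for a file entry."""
    return "data:," + quote(text)


# Hypothetical values for illustration only.
HOSTNAME = "worker-0.example.com"
SSH_KEY = "ssh-ed25519 AAAA...placeholder core@example"

ignition_config = {
    "ignition": {"version": "3.2.0"},  # assumed spec version
    "passwd": {
        # Configure a user with an authorized SSH key.
        "users": [{"name": "core", "sshAuthorizedKeys": [SSH_KEY]}]
    },
    "storage": {
        # Write a file on first boot; mode 0o644 is serialized as decimal 420.
        "files": [
            {
                "path": "/etc/hostname",
                "mode": 0o644,
                "overwrite": True,
                "contents": {"source": data_url(HOSTNAME + "\n")},
            }
        ]
    },
}

rendered = json.dumps(ignition_config, indent=2)
print(rendered)
```

In an installed cluster, changes of this kind are normally delivered through the Machine Config Operator rather than by editing Ignition files by hand, as noted above.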

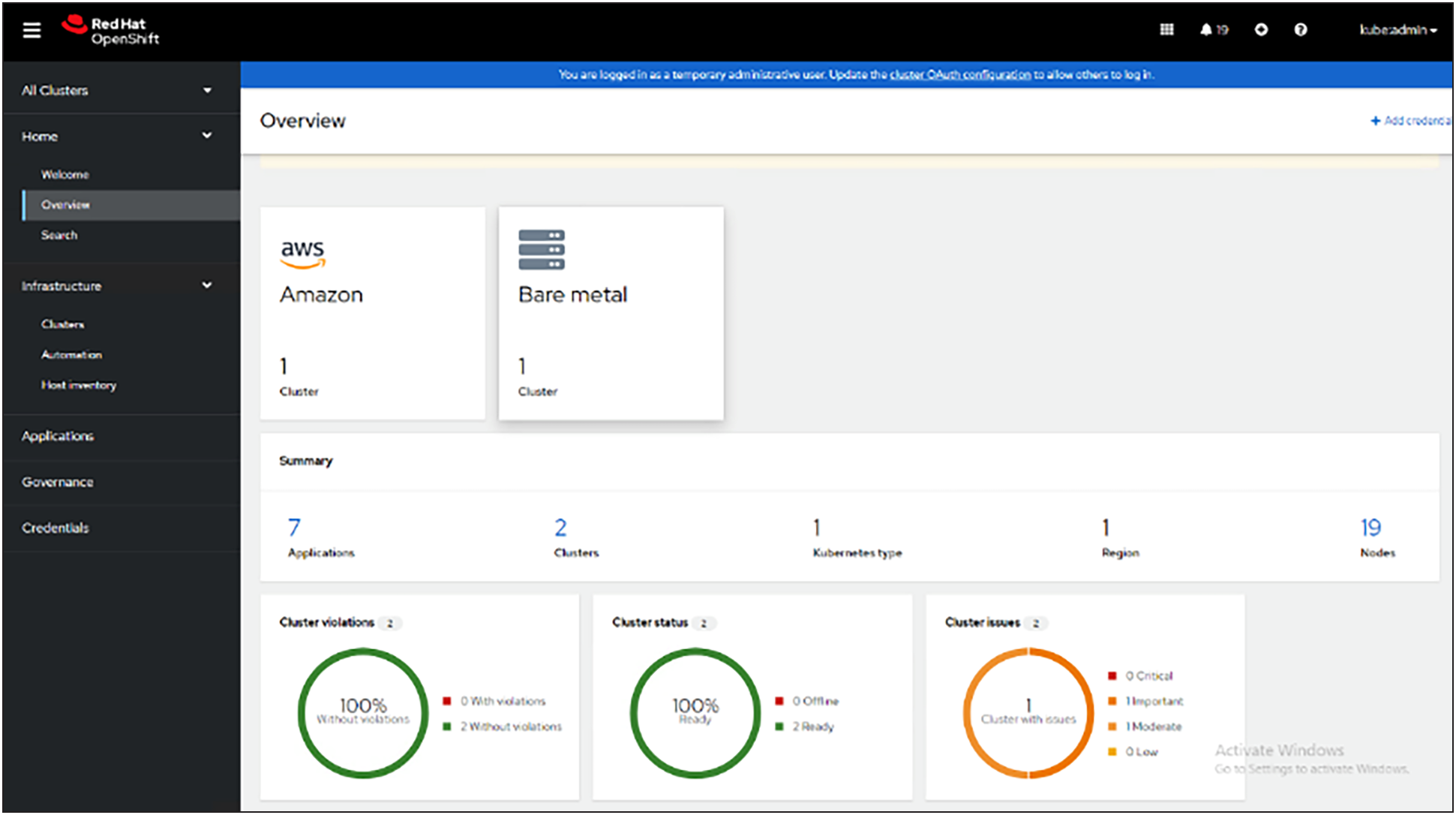

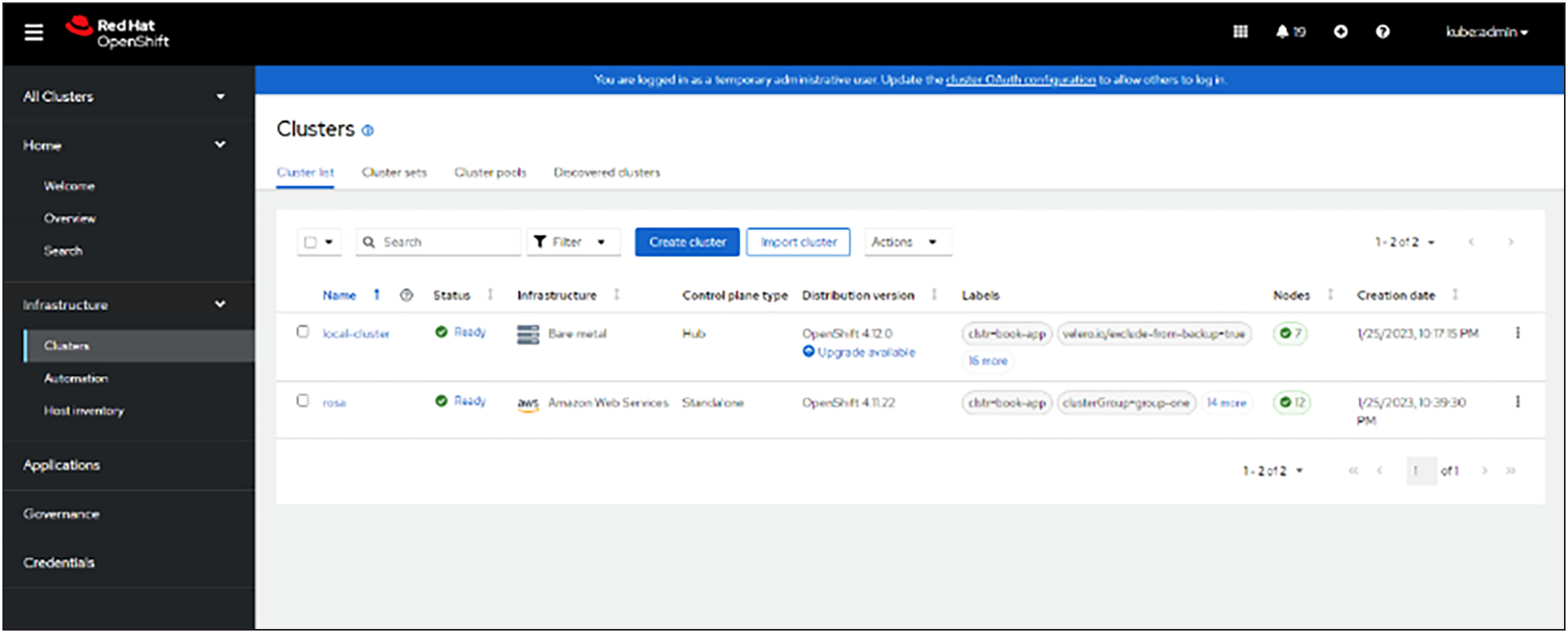

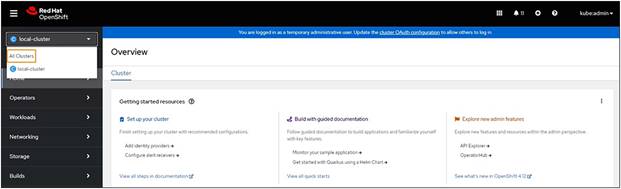

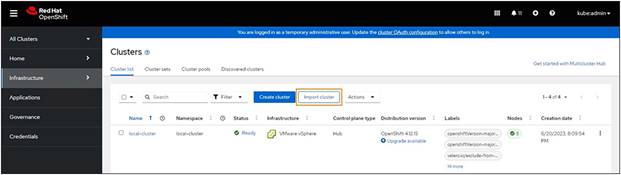

Red Hat Advanced Cluster Management for Kubernetes

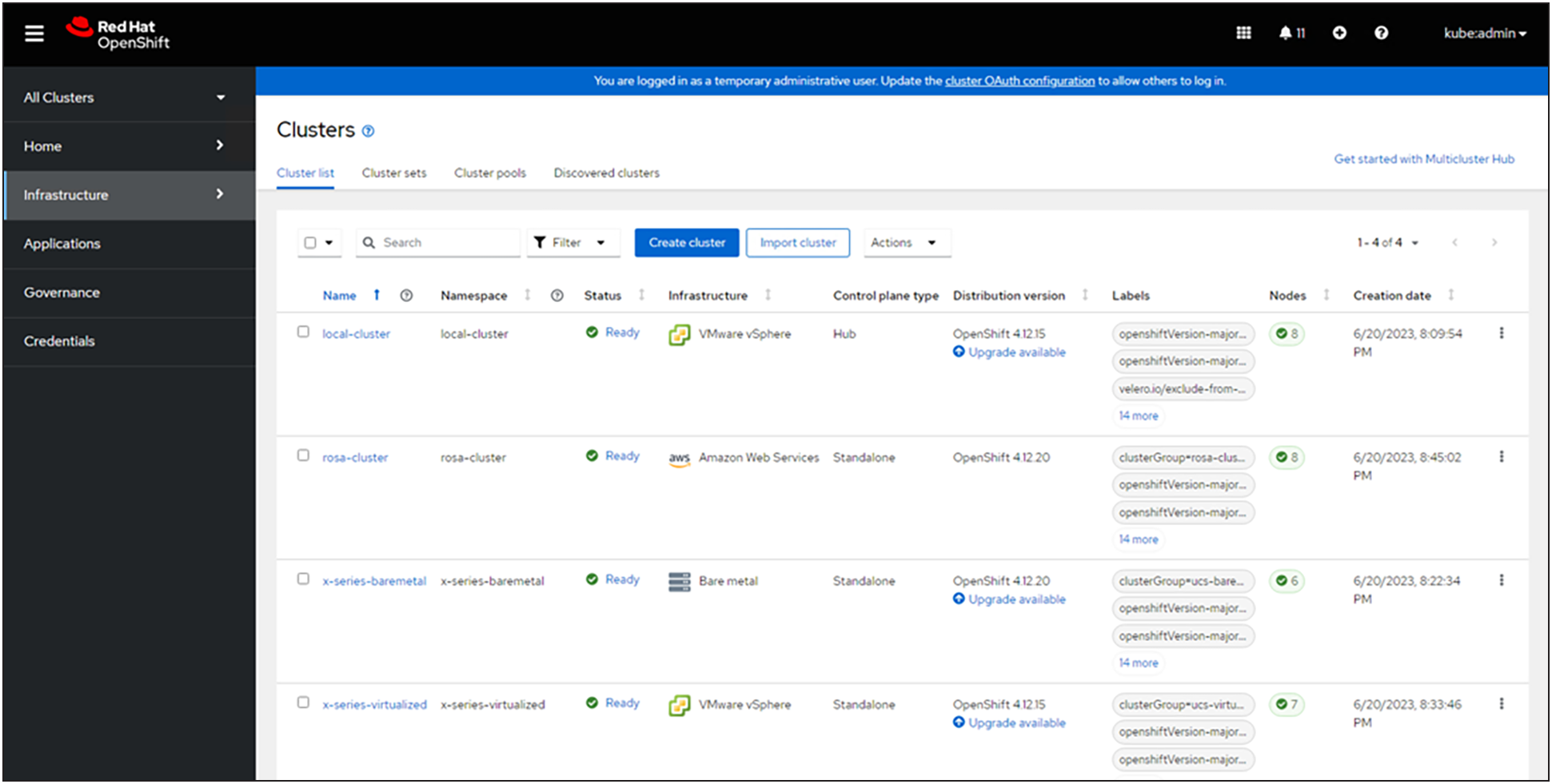

Red Hat Advanced Cluster Management for Kubernetes (ACM) controls clusters and applications from a single console, with built in security policies. It extends the value of OpenShift by deploying applications, managing multiple clusters, and enforcing policies across multiple clusters at scale. Red Hat’s solution ensures compliance, monitors usage, and maintains consistency.

Red Hat Advanced Cluster Management for Kubernetes dashboard

Red Hat Advanced Cluster Management for Kubernetes cluster overview

Running on Red Hat OpenShift, Red Hat Advanced Cluster Management for Kubernetes includes capabilities that unify multicluster management, provide policy-based governance, and extend application lifecycle management.

Unified multicluster management

● Centrally create, update, and delete Kubernetes clusters across multiple private and public clouds.

● Search, find, and modify any Kubernetes resource across the entire domain.

● Quickly troubleshoot and resolve issues across your federated domain.

● When creating or updating clusters, automate tasks such as configuring cloud defined storage, static IP addresses, updating network components (such as firewalls or load balancers), and more with the integration of Red Hat Ansible Automation Platform.

Policy-based governance, risk, and compliance

● Centrally set and enforce policies for security, applications, and infrastructure.

● Quickly visualize detailed auditing on configuration of applications and clusters.

● Get immediate visibility into your compliance posture based on your defined standards.

● Automate remediation of policy violations and gather audit information about the clusters for analysis with the integration of Red Hat Ansible Automation Platform.
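As an illustration of centrally defined policy, the sketch below assembles a governance policy manifest that checks whether a namespace exists on managed clusters. The policy name, namespaces, and helper function are hypothetical, and the manifest is emitted as JSON for simplicity. Treat it as a sketch of the general shape of such a policy under these assumptions, not as the policy set used in this solution.

```python
import json


def namespace_must_exist_policy(policy_name: str, policy_namespace: str,
                                required_namespace: str,
                                remediation: str = "inform") -> dict:
    """Build a Policy that wraps one ConfigurationPolicy template.

    remediation is "inform" (report violations only) or "enforce"
    (create the missing object on the managed cluster).
    """
    configuration_policy = {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "ConfigurationPolicy",
        "metadata": {"name": f"{policy_name}-config"},
        "spec": {
            "remediationAction": remediation,
            "severity": "low",
            "object-templates": [
                {
                    "complianceType": "musthave",
                    "objectDefinition": {
                        "apiVersion": "v1",
                        "kind": "Namespace",
                        "metadata": {"name": required_namespace},
                    },
                }
            ],
        },
    }
    return {
        "apiVersion": "policy.open-cluster-management.io/v1",
        "kind": "Policy",
        "metadata": {"name": policy_name, "namespace": policy_namespace},
        "spec": {
            "remediationAction": remediation,
            "disabled": False,
            "policy-templates": [{"objectDefinition": configuration_policy}],
        },
    }


# Hypothetical names for illustration only.
policy = namespace_must_exist_policy("require-prod-namespace", "policies", "prod")
print(json.dumps(policy, indent=2))
```

A policy like this still needs a placement and a binding to select the managed clusters it applies to; those objects are omitted here to keep the example short.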

Advanced application lifecycle management

● Define and deploy applications across clusters based on policy.

● Quickly view service endpoints and pods associated with your application topology – with all the dependencies.

● Automatically deploy applications to specific clusters based on channel and subscription definitions.

● When deploying or updating applications, automate configurations such as networking, databases, and more with the integration of Red Hat Ansible Automation Platform.

Multicluster observability for health and optimization

● Get an overview of multicluster health and optimization using out of the box multicluster dashboards with the capability to store long term data.

● Easily sort, filter, and do a deep scan of individual clusters or of aggregated multiclusters.

● Get an aggregated view of cluster metrics.

● Troubleshoot faster using the Dynamic Search and Visual Web Terminal capabilities.

Multicluster networking with Red Hat Submariner

● Provide cross-cluster network infrastructure with Red Hat Submariner (Submariner) for direct and encrypted communication.

● Use DNS service discovery for Kubernetes clusters connected by Submariner in multicluster environments.

● Uniformly manage and observe microservices based applications’ network flow for behavioral insight, control, and troubleshooting.

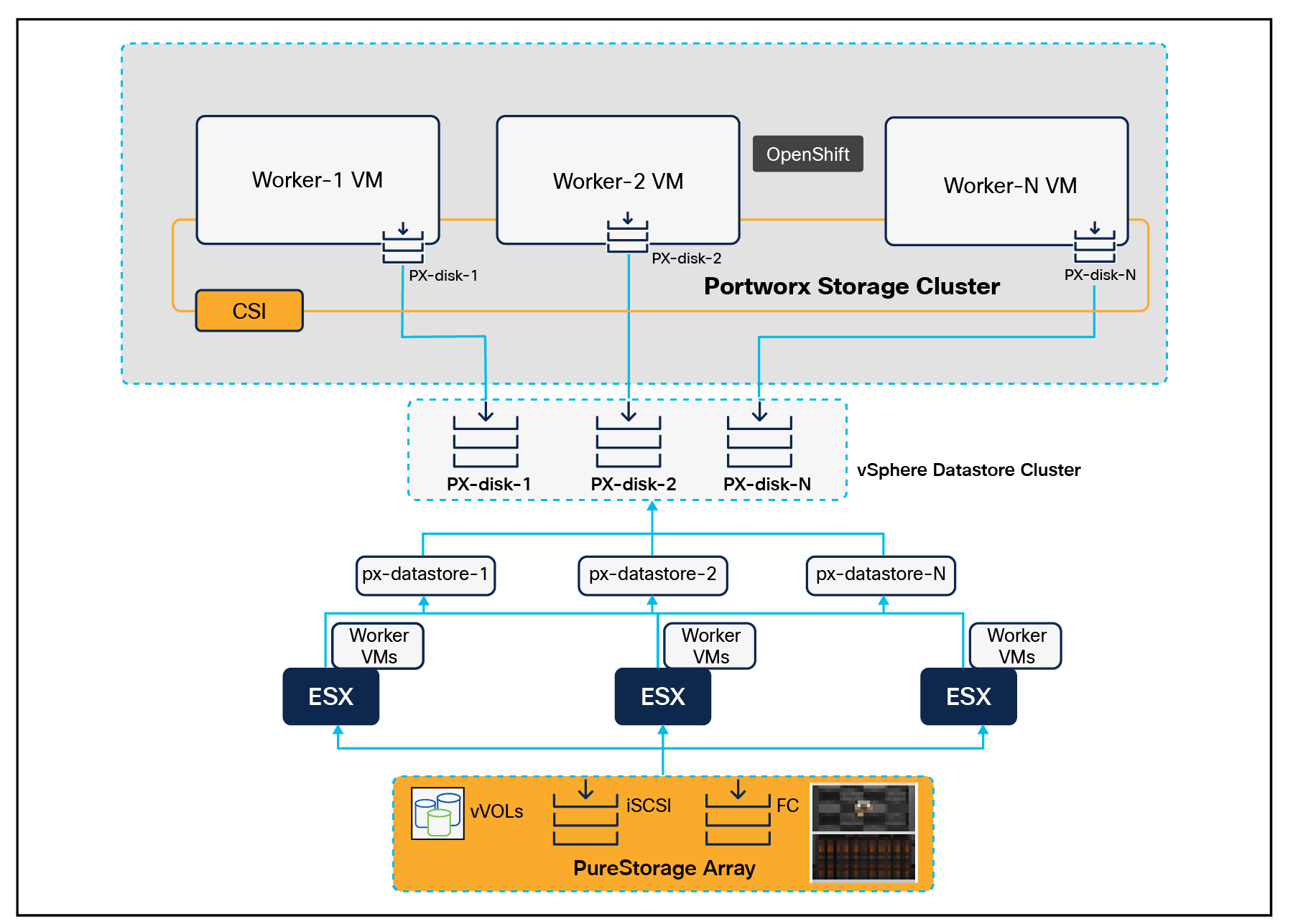

Portworx Enterprise Kubernetes storage platform

Portworx Enterprise is a multicloud ready software defined storage platform for running mission critical applications. Portworx (PX) is a fully integrated solution for persistent storage, disaster recovery, data security, cross cloud data migrations, and automated capacity management for applications.

Portworx provides container-optimized storage for applications with no downtime, using features such as elastic scaling and high availability across nodes, racks, and availability zones. Portworx is designed to deliver consistent application performance through storage-aware Class of Service (CoS) and application-aware I/O tuning.

Portworx Enterprise storage

Portworx secures the environment with encryption and access controls. It provides cluster-wide encryption as well as container or storage class‒based BYOK encryption. Portworx supports Role Based Access Control (RBAC) over both cluster operations and volume operations, and it integrates with Active Directory and LDAP through OIDC.

For cloud native applications, Portworx allows local, application consistent/aware snapshots for multicontainer applications. Portworx Autopilot (PX-Autopilot) for Capacity Management can automatically resize individual container volumes or your entire collection of storage pools. The Portworx rules-based engine, with its customization capabilities, can optimize applications based on performance requirements. PX-Autopilot can easily integrate with cloud storage services such as Amazon Elastic Block Store, Google Persistent Disk, and Azure Blob Storage.

Portworx Backup (PX-Backup) can capture entire applications, including data, application configurations, and Kubernetes objects/metadata, and move them to any backup location at the click of a button, and its point and click recovery for any Kubernetes application makes it easy for developers. Portworx Disaster Recovery (PX-DR) has the ability to set DR policies at the container granular level and set multisite synchronous and asynchronous replication for a near zero RPO DR across a metro area.

This solution is for use cases and features that help administrators deploy and operate a robust Kubernetes stack for their developers.

Portworx solution overview

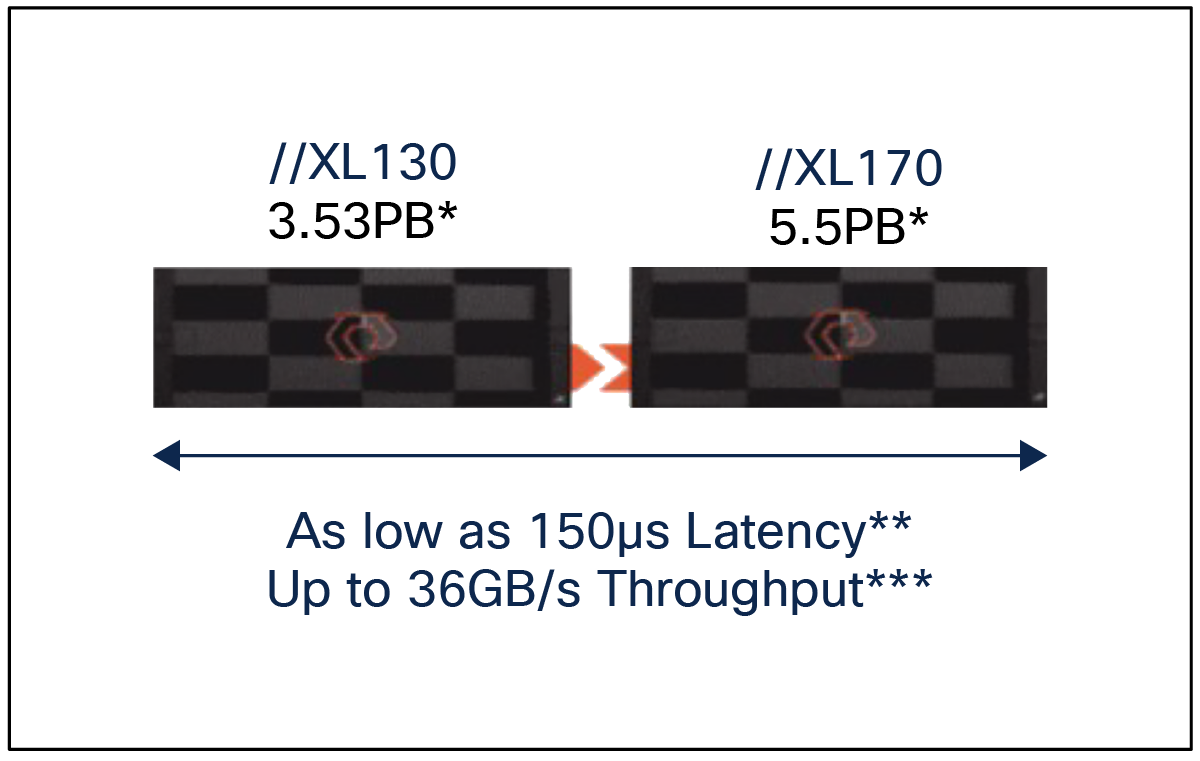

Key highlights of Pure Storage FlashArray//XL series:

● Increased capacity and performance: FlashArray//XL is designed for today’s higher powered multicore CPUs, allowing FlashArray//XL to increase performance over the FlashArray//X models. It provides more space for fans and airflow, which improves cooling efficiency, and for wider controllers, which enable performance to scale today and well into future generations of FlashArray//XL. With greater storage density, FlashArray//XL supports up to 40 DirectFlash modules in the main chassis.

● Increased connectivity, greater reliability, and improved redundancy: FlashArray//XL doubles the host I/O ports compared to FlashArray//X, for up to 36 ports per controller, and the //XL model provides more expansion slots for configuration flexibility. It doubles the bandwidth for each slot, including full bandwidth for mixed protocols. FlashArray//XL offers multiple 100GbE RDMA over Converged Ethernet (RoCE) links that are very robust to hot plug and provide faster controller failover speed.

● DirectFlash modules with distributed NVRAM: DirectFlash modules include onboard distributed non-volatile random access memory (DFMD). With DFMD, NVRAM capacity, NVRAM write bandwidth, and array capacity all scale with the number of modules, lifting the limit on write throughput.

● DirectCompress Accelerator: Included with every FlashArray//XL shipment, the DirectCompress Accelerator (DCA) increases compression efficiency by offloading inline compression to a dedicated PCIe card. It ensures maximum compression rates, even when the system is under a heavy load, and stretches capacity to reduce overall storage costs and to extend the value of your FlashArray//XL.

Pure Storage FlashArray//XL series

Table 1. FlashArray technical specifications

| Model | Capacity | Physical |
| --- | --- | --- |
| //XL170 | Up to 5.5PB / 5.13PiB effective capacity* | 5-11U; 1850-2355W (nominal-peak) |
| | Up to 1.4PB / 1.31PiB raw capacity** | 167lbs (75.7kg) fully loaded; 8.72” x 18.94” x 29.72”** |
| //XL130 | Up to 3.53PB / 3.3PiB effective capacity | 5-11U; 1550-2000W (nominal-peak) |
| | Up to 968TB / 880TiB raw capacity | 167lbs (75.7kg) fully loaded; 8.72” x 18.94” x 29.72” |
| DirectFlash Shelf | Up to 1.9PB effective capacity | 3U; 460-500W (nominal-peak) |
| | Up to 512TB / 448.2TiB raw capacity | 87.7lbs (39.8kg) fully loaded; 5.12” x 18.94” x 29.72” |

Table 2. FlashArray Connectivity

| Connectivity | Ports |
| --- | --- |
| Onboard Ports | 2 x 1Gb (RJ45) |
| I/O Expansion Cards (6 slots/controller) | 2-port 10/25Gb Ethernet, NVMe/TCP, NVMe/RoCE |
| | 2-port 40/100Gb Ethernet, NVMe/TCP, NVMe/RoCE |
| | 2-port 16/32/64†Gb FCP, NVMe/FC |
| | 4-port 16/32/64Gb FCP, NVMe/FC |
| Management Ports | 1 x RJ45 Serial |
| | 1 x VGA |
| | 4 x USB 3.0 |

Advantages of using FlashArray as backend storage for Portworx Enterprise Storage Platform

Pure Storage FlashArray provides all-flash storage backed by an enterprise-class array with six-nines reliability, data-at-rest encryption, and industry-leading data reduction technology. Portworx supports any storage type, including Direct Attached Storage (DAS) and array-based storage. When Portworx replicas are used to ensure data availability for application pods across nodes, and all replicas are provisioned from the same underlying FlashArray, the standard data reduction rate for the application data is multiplied by the number of replicas for the persistent volume.
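The multiplication described above can be illustrated with a short calculation. This is a sketch only, not Pure Storage or Portworx tooling: the function names, the 4:1 baseline rate, the replication factor, and the volume size are hypothetical values chosen for illustration, and the sketch assumes that identical replica copies deduplicate completely on the array.

```python
def effective_reduction_rate(base_rate: float, replicas: int) -> float:
    """Reduction rate observed when every replica of a volume lands on one array.

    Assumption (illustrative): replica copies are identical and deduplicate
    completely, so physical usage does not grow with the replica count.
    """
    if base_rate <= 0 or replicas < 1:
        raise ValueError("base_rate must be positive and replicas must be >= 1")
    return base_rate * replicas


def physical_capacity_tib(logical_tib: float, base_rate: float) -> float:
    """Physical capacity consumed by a single copy of the data."""
    return logical_tib / base_rate


if __name__ == "__main__":
    base_rate = 4.0     # hypothetical 4:1 baseline data reduction
    replicas = 3        # hypothetical Portworx replication factor
    logical_tib = 12.0  # hypothetical application data per replica

    written = logical_tib * replicas
    stored = physical_capacity_tib(logical_tib, base_rate)
    rate = effective_reduction_rate(base_rate, replicas)
    print(f"written by Portworx:  {written} TiB")
    print(f"stored on FlashArray: {stored} TiB")
    print(f"effective rate:       {rate}:1")
```

In practice the achieved rate depends on the workload and on how the array reduces the replica data, so the result should be read as an upper bound under the stated assumption.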

Portworx combined with Pure Storage FlashArray can be used as a cloud storage provider. This allows administrators to store data on-premises with FlashArray while benefiting from Portworx cloud drive features such as automatic provisioning of block volumes, cluster expansion by adding new drives or expanding existing ones, and support for PX-Backup and Autopilot. Portworx on Kubernetes can also attach FlashArray as a Direct Access volume. Used in this way, Portworx directly provisions FlashArray volumes, maps them to a user PVC, and mounts them to pods. FlashArray Direct Access volumes support CSI operations such as filesystem operations, snapshots, and QoS.

Container ready infrastructure: Portworx on top of Pure Storage FlashArray benefits from Kubernetes native storage and data management. Operate, scale, and secure modern applications and databases on FlashArray and FlashBlade with just a few clicks.

Amazon Web Services (AWS) and Red Hat OpenShift Service on AWS

AWS provides a flexible application computing environment for deploying cloud native infrastructure and applications. Red Hat OpenShift can accelerate application development and delivery by providing a consistent experience for developers and operators across both on-premises and public clouds. One set of Kubernetes APIs and management tooling, updated on the same schedule, and supported by the same industry leading vendor, can be deployed across all of an enterprise’s cloud and on-premises environments.

AWS is globally available, enabling enterprises to extend their enterprise deployments to a variety of AWS regions as needed. Red Hat OCP cluster nodes can also be distributed across multiple AWS Availability Zones (AZ) to ensure cluster and application availability.
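The idea of spreading cluster nodes across Availability Zones can be sketched as a simple round-robin placement. This is a conceptual illustration only; it is not how the OpenShift installer assigns machines, and the node and zone names are hypothetical.

```python
from collections import defaultdict
from itertools import cycle


def spread_across_zones(nodes, zones):
    """Assign nodes to zones round-robin so zone sizes differ by at most one."""
    if not zones:
        raise ValueError("at least one availability zone is required")
    placement = defaultdict(list)
    # cycle() repeats the zone list for as long as there are nodes to place.
    for node, zone in zip(nodes, cycle(zones)):
        placement[zone].append(node)
    return dict(placement)


if __name__ == "__main__":
    # Hypothetical node and zone names, for illustration only.
    nodes = [f"worker-{i}" for i in range(5)]
    zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
    for zone, members in spread_across_zones(nodes, zones).items():
        print(zone, members)
```

With this placement, the loss of a single zone removes at most one more node than any other zone would, which is the property that protects cluster and application availability.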

OCP is available as a managed service on AWS, Red Hat OpenShift Service on AWS (ROSA), and as a self-managed application platform. This solution uses the self-managed option and the openshift-install command-line installation method. The automated installation uses several AWS services such as Route 53, DHCP, load balancers, Virtual Private Cloud (VPC), and EC2 instances that are deployed or used as a part of the installation process. Transit Gateways (TGWs) attached to the VPC provide connectivity to on-premises resources and services, including Kubernetes clusters and application workloads.

A VPC in AWS provides an isolated virtual networking environment on a shared infrastructure where users can deploy resources to support application workloads. Enterprises can deploy VPCs in AWS and connect them directly to the on-premises data center to enable connectivity between applications, services, and resources in each environment. One mechanism for enabling this connectivity is to use a site to site VPN to establish an IPsec VPN tunnel between the two locations.
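When a VPC is routed to an on-premises data center over a site-to-site VPN, the address ranges on the two sides generally must not overlap (unless NAT is used). The following sketch shows one way to check a planned set of CIDR blocks before building the tunnel. The network names and CIDR values are hypothetical and are not taken from the validated design.

```python
import ipaddress


def overlapping_pairs(networks):
    """Return every pair of named CIDR blocks whose address ranges overlap.

    `networks` maps a descriptive name to a CIDR string.
    """
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    names = sorted(parsed)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if parsed[a].overlaps(parsed[b])
    ]


if __name__ == "__main__":
    # Hypothetical address plan, for illustration only.
    plan = {
        "on-prem-ib-mgmt": "10.10.31.0/24",
        "on-prem-ocp-data": "10.10.32.0/24",
        "aws-vpc": "10.20.0.0/16",
    }
    conflicts = overlapping_pairs(plan)
    print("conflicts:", conflicts if conflicts else "none")
```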

Red Hat OpenShift Service on AWS

Red Hat OpenShift Service on AWS (ROSA) is a fully managed application platform that is integrated with AWS and managed by a global team of expert SREs. ROSA enables enterprises to focus on delivering value through their applications and workloads. It is easy to extend an on-premises OpenShift environment into the public cloud with ROSA’s self-service deployment and robust SLAs.

Infrastructure as Code with Red Hat Ansible

Red Hat Ansible is an open source tool for Infrastructure as Code (IaC). Ansible is also used for configuration management and application software deployment. Ansible is designed to be agentless, secure, and simple. Ansible, available in Red Hat’s Ansible Automation Platform, is part of a suite of tools supported by Red Hat. Ansible manages endpoints and infrastructure components in an inventory file, formatted in YAML or INI. The inventory file can be a static file populated by an administrator or dynamically updated. Passwords and other sensitive data can be encrypted using Ansible Vault. Ansible uses playbooks to orchestrate provisioning and configuration management. Playbooks are written in human readable YAML format that is easy to understand. Ansible playbooks are executed against a subset of components in the inventory file. From a control machine, Ansible uses Secure Shell (SSH) or Windows Remote Management to remotely configure and provision target devices in the inventory based on the playbook tasks.
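As an illustration of a dynamically generated inventory, the sketch below follows the JSON convention that Ansible expects from an inventory script: groups with a `hosts` list, plus a `_meta.hostvars` section. The group names, host names, and addresses are hypothetical placeholders and are not the inventory shipped with the solution's playbooks.

```python
#!/usr/bin/env python3
import argparse
import json

# Hypothetical devices; names and addresses are placeholders only.
DEVICES = {
    "nexus": {"nexus-a": "192.0.2.11", "nexus-b": "192.0.2.12"},
    "flasharray": {"fa-xl170": "192.0.2.21"},
}


def build_inventory(devices):
    """Build the group/hostvars structure Ansible reads from inventory scripts."""
    inventory = {"_meta": {"hostvars": {}}}
    for group, hosts in devices.items():
        inventory[group] = {"hosts": sorted(hosts)}
        for host, address in hosts.items():
            inventory["_meta"]["hostvars"][host] = {"ansible_host": address}
    return inventory


def main(argv=None):
    parser = argparse.ArgumentParser(description="Example dynamic inventory")
    parser.add_argument("--list", action="store_true", help="print all groups")
    parser.add_argument("--host", help="print variables for a single host")
    args = parser.parse_args(argv)

    inventory = build_inventory(DEVICES)
    if args.host:
        print(json.dumps(inventory["_meta"]["hostvars"].get(args.host, {})))
    else:
        print(json.dumps(inventory, indent=2))


if __name__ == "__main__":
    main()
```

Saved as an executable file (for example, a hypothetical `inventory.py`), a script of this shape can be inspected with `ansible-inventory -i inventory.py --list`. In a real deployment the device list would come from a source of truth rather than a hard-coded dictionary.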

Ansible is simple and powerful, allowing users to easily manage various physical devices within FlashStack, including the configuration of Cisco UCS bare-metal servers, Cisco Nexus switches, Pure FlashArray storage, and VMware vSphere. Ansible’s playbook-based automation is easy to use and integrates into your current provisioning infrastructure. This solution offers Ansible playbooks, available from a GitHub repository, that customers can use to automate the FlashStack deployment.

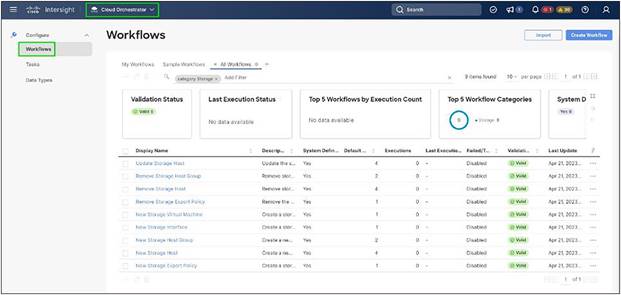

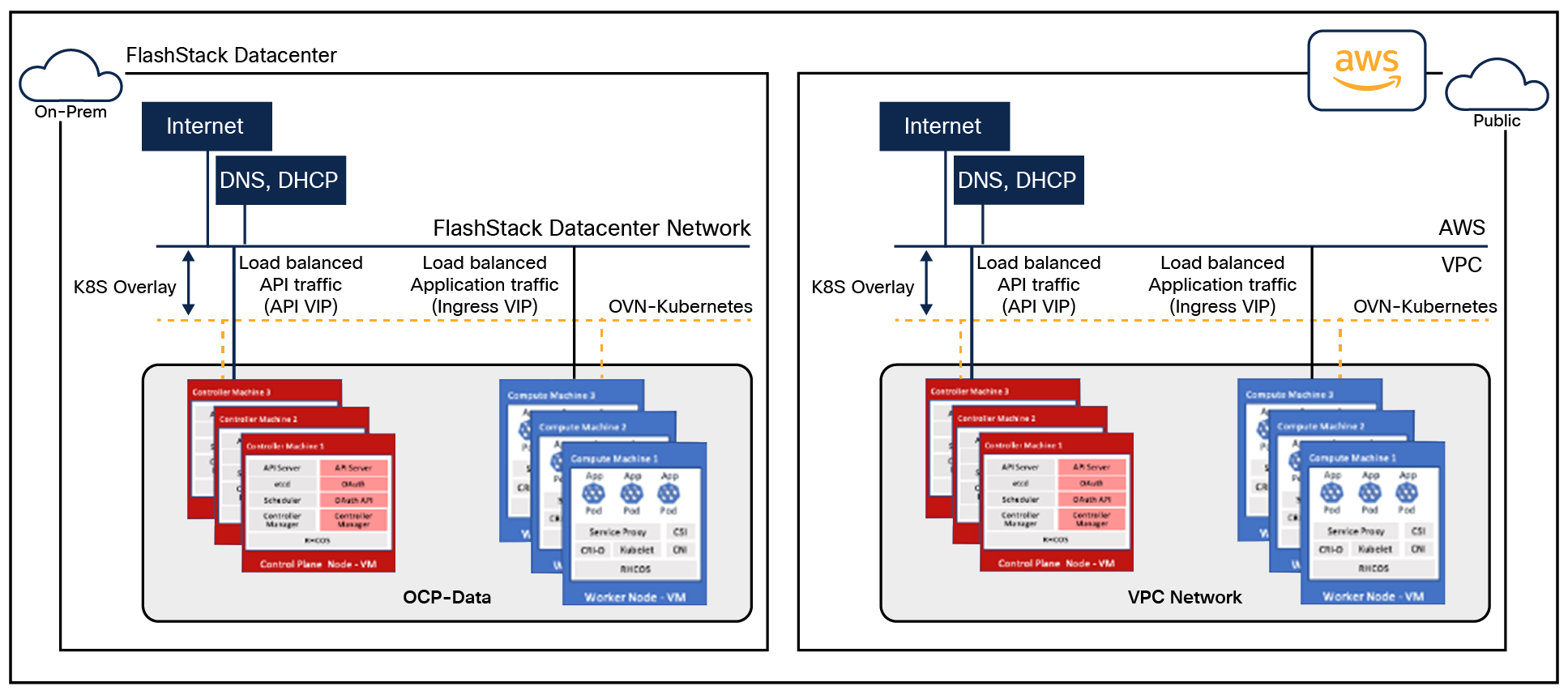

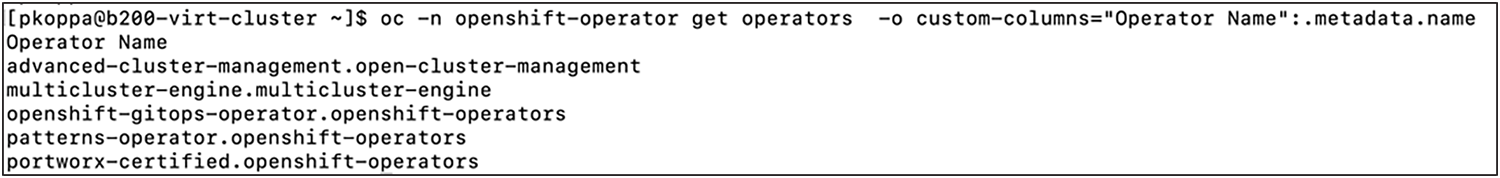

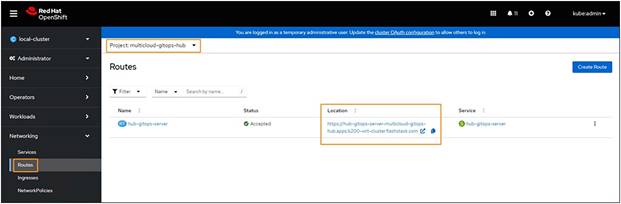

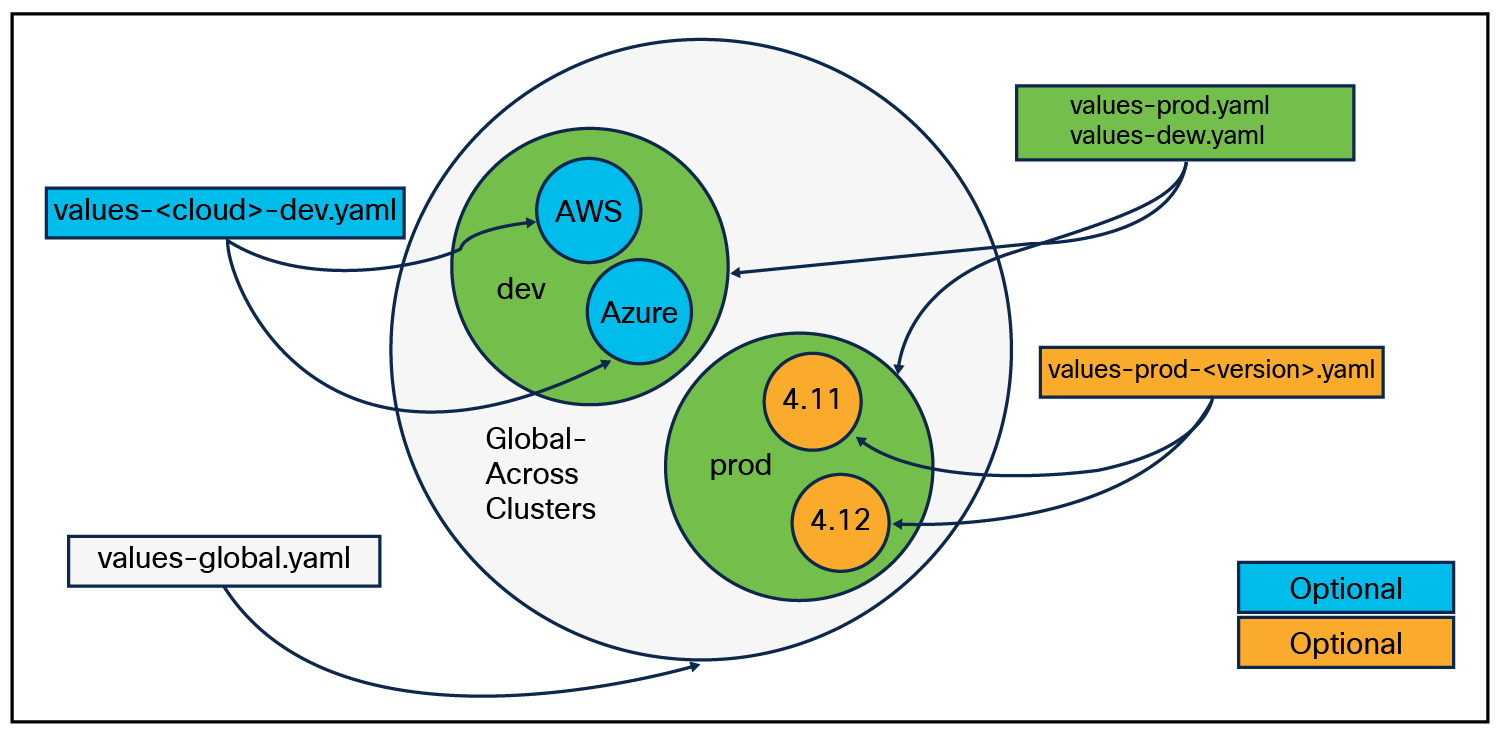

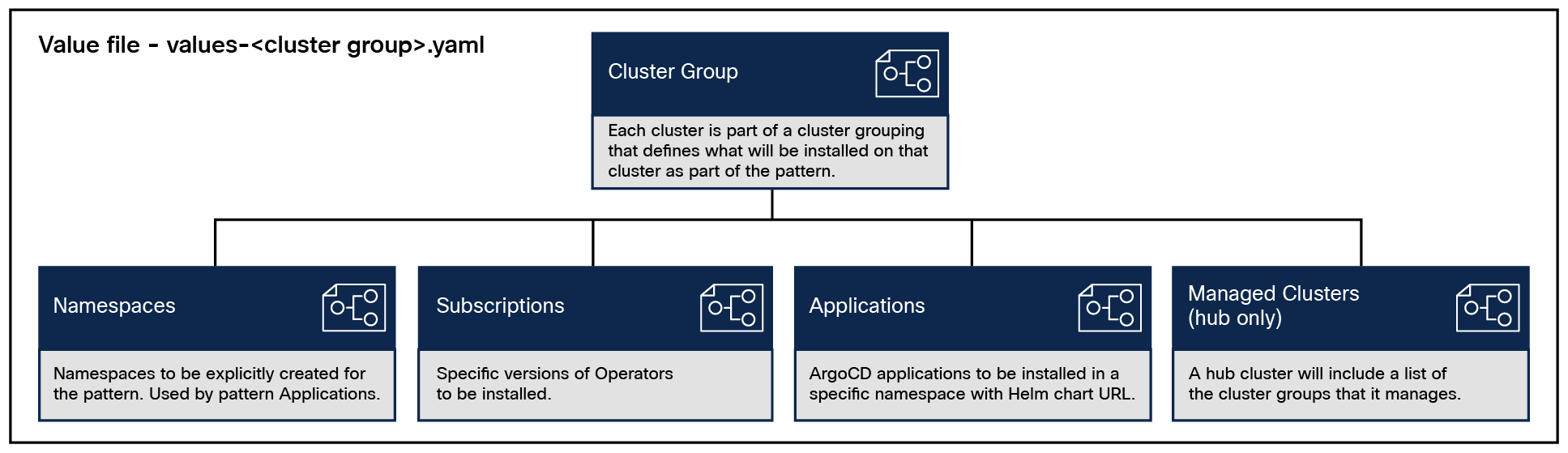

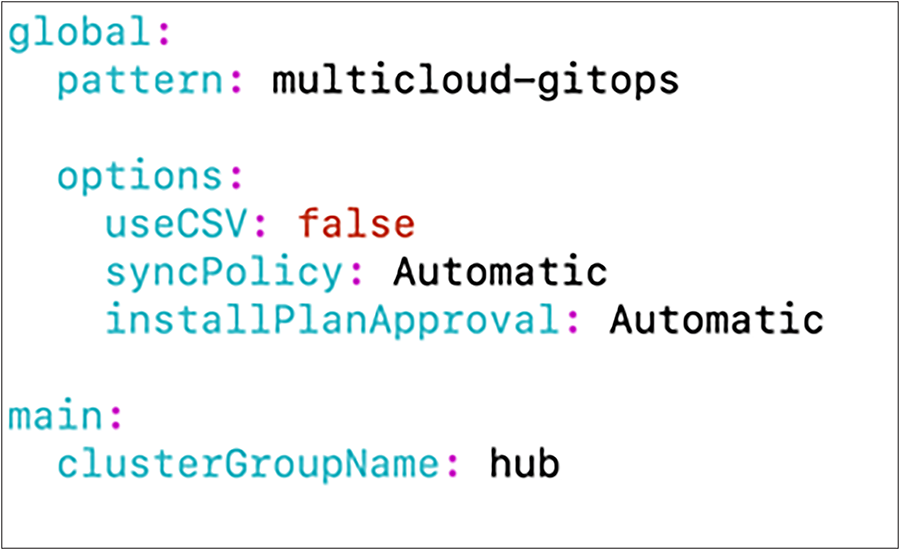

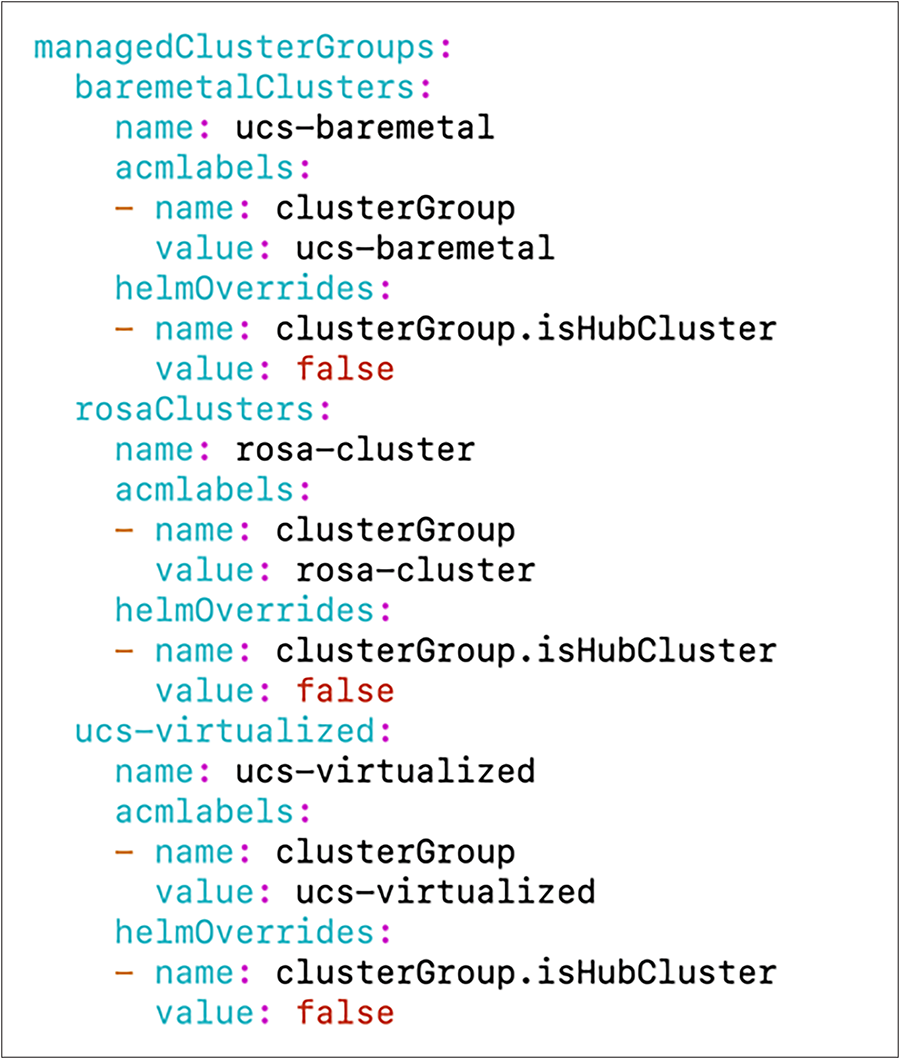

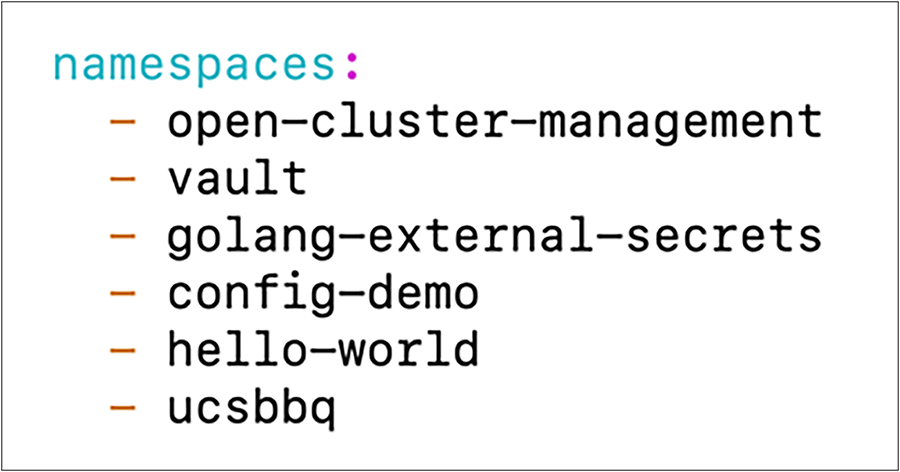

This architecture covers hybrid and multicloud management with GitOps as shown in the following figure. At a high level, this requires a management hub for GitOps and infrastructure that extends to one or more managed clusters running on the on-premises FlashStack Data Center and/or public clouds. The automated infrastructure-as-code approach can manage the versioning of components and deploy according to the infrastructure-as-code configuration.

Overview of Multicloud validated patterns

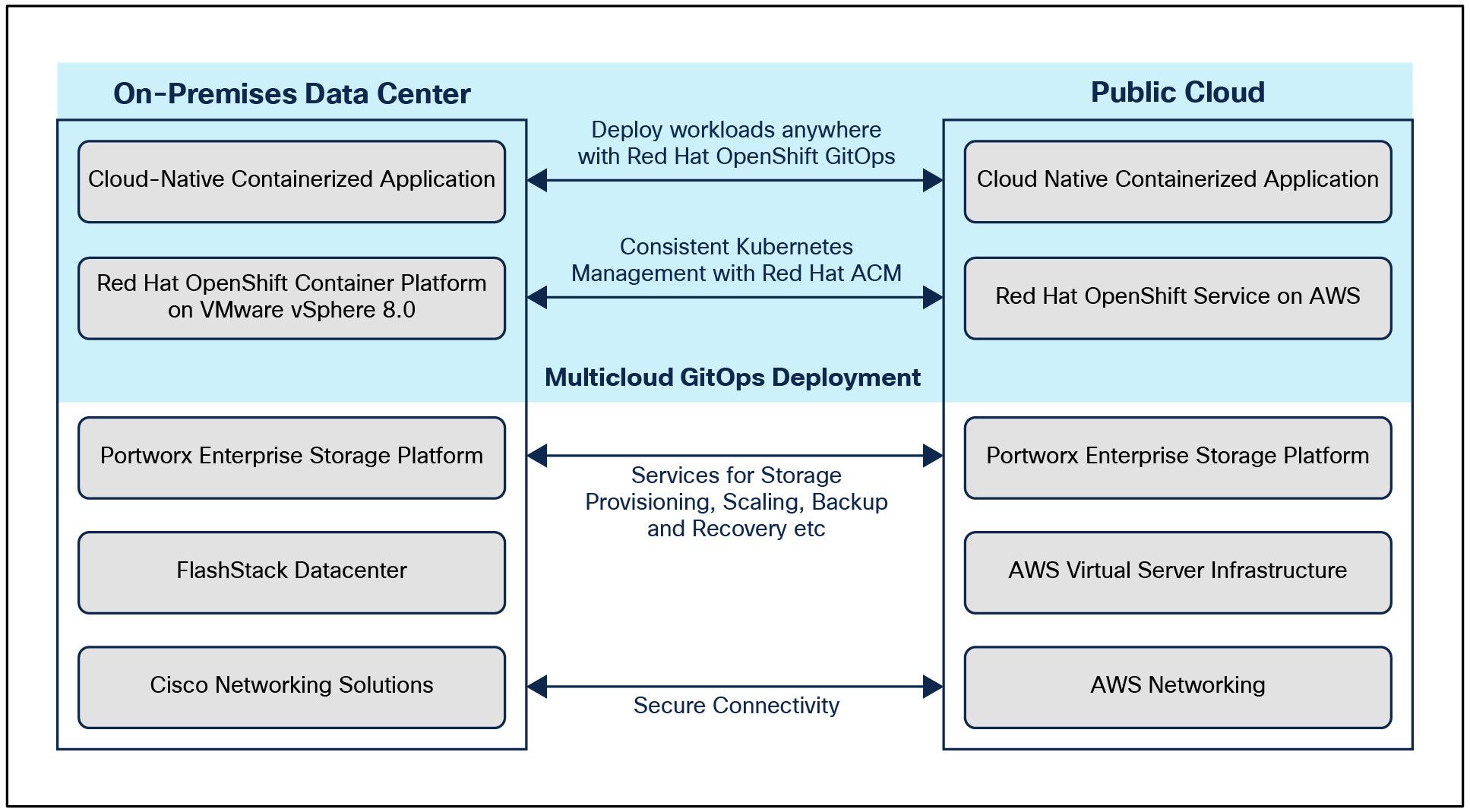
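The core GitOps idea, comparing the desired state declared in Git with the live state of a managed cluster and acting on the difference, can be sketched as follows. This is a conceptual illustration only, not how Red Hat OpenShift GitOps is implemented; the resource names and version strings are hypothetical.

```python
def plan_sync(desired, live):
    """Compare desired state (from Git) with live state (from a cluster).

    Both arguments map a resource name to its declared configuration.
    Returns the names to create, update, and prune to converge on Git.
    """
    create = sorted(name for name in desired if name not in live)
    update = sorted(
        name for name in desired if name in live and desired[name] != live[name]
    )
    prune = sorted(name for name in live if name not in desired)
    return {"create": create, "update": update, "prune": prune}


if __name__ == "__main__":
    # Hypothetical resources and versions, for illustration only.
    desired = {
        "config-demo": {"image": "demo:1.2"},
        "hello-world": {"image": "hello:2.0"},
    }
    live = {
        "config-demo": {"image": "demo:1.1"},
        "legacy-app": {"image": "legacy:0.9"},
    }
    for action, names in plan_sync(desired, live).items():
        print(f"{action}: {names}")
```

Because the plan is derived entirely from the repository contents, rolling back a change is a matter of reverting the commit and letting the next comparison converge the cluster again.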

The hybrid-cloud infrastructure design in this solution consists of an on-premises data center, a public cloud infrastructure, and a secure network interconnecting the two environments. The Red Hat Validated Multicloud GitOps pattern is configured on the Red Hat OpenShift installed on the provisioned infrastructure.

Solution overview

FlashStack Virtual Server Infrastructure

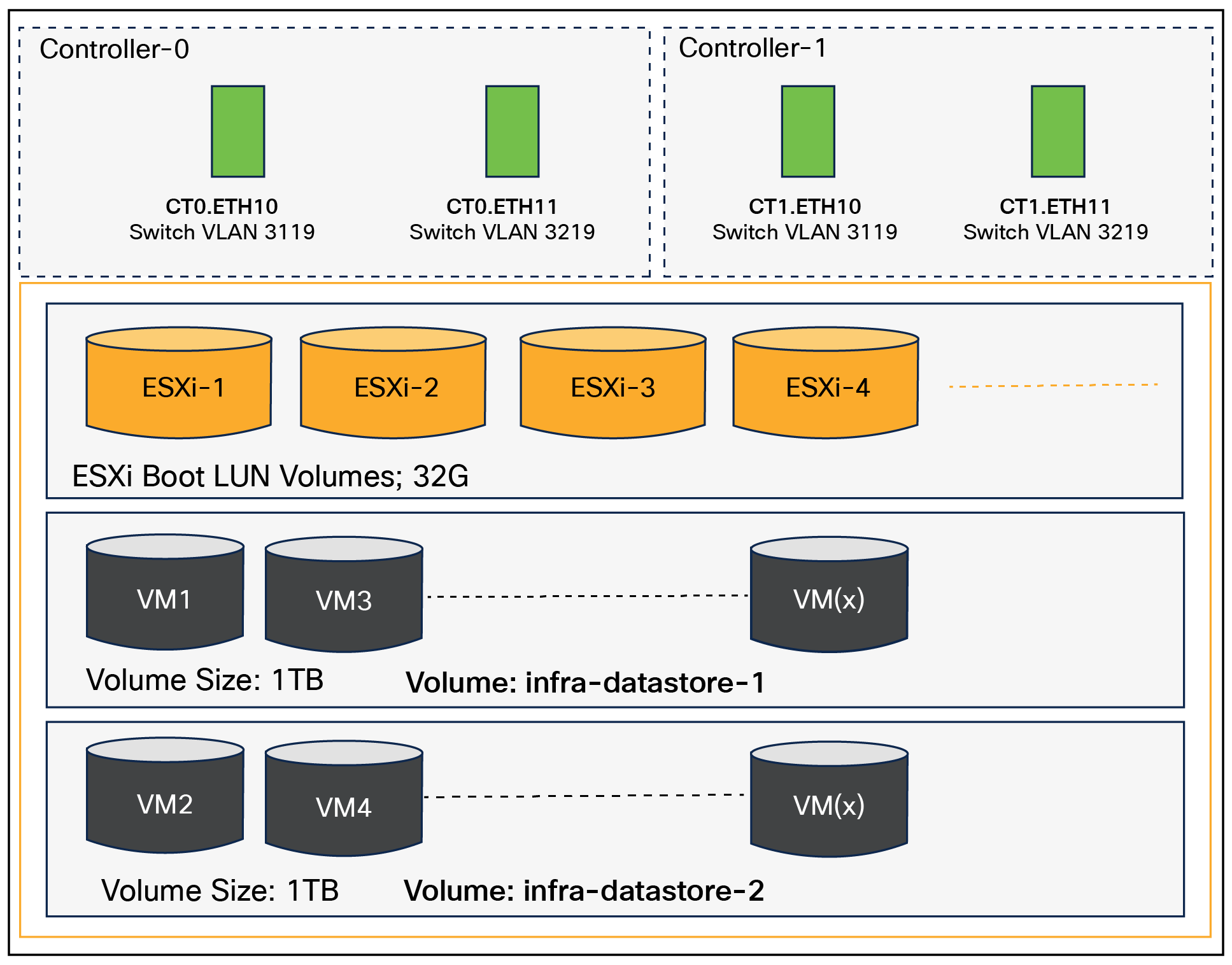

The on-premises FlashStack Virtual Server Infrastructure (VSI) in the solution consists of:

● 6 x Cisco UCS X210c M6 Compute Nodes form a VMware vSphere 8.0 cluster. Control plane and worker nodes run as virtual machines on the VMware vSphere 8.0 cluster. There can be more than one OCP cluster in a VMware vSphere 8.0 cluster.

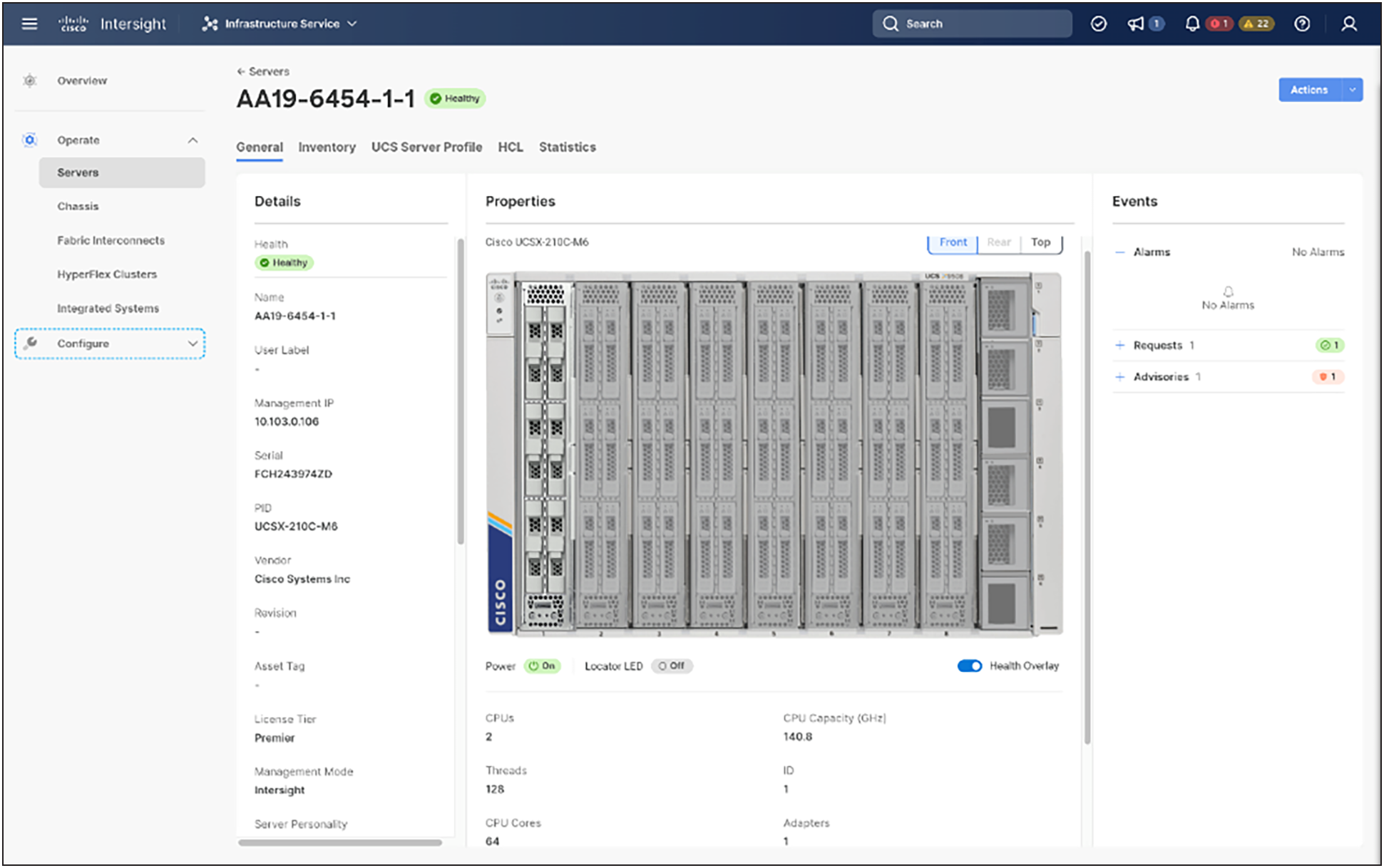

● The cluster is deployed and managed from the cloud using Cisco Intersight.

● 2 x Cisco UCS X210c M6 Compute Nodes form a management cluster, a VMware vSphere 8.0 cluster that hosts services and management components to support the application cluster. The cluster is deployed and managed from the cloud using Cisco Intersight. The services deployed include a VMware vCenter instance that manages the application cluster, as well as DNS, DHCP, and an OCP installer workstation. The management cluster can also host a management OCP cluster to run services and other components. For example, Red Hat Advanced Cluster Management for Kubernetes requires a seed OCP cluster to run on before it can be used for multicluster management.

Public virtual server infrastructure

The public virtual server infrastructure in the solution consists of Red Hat OpenShift Service on AWS (ROSA), a fully managed, turnkey application platform.

Two redundant IPsec VPN connections provide secure connectivity between the cloud native environments. The VPN connections are between two Cisco Cloud Services Router (CSR) 1000V Series routers on-premises and transit gateway routers in the public cloud.

Red Hat OCP clusters provide a Kubernetes environment for cloud native applications and use cases. The clusters are deployed on FlashStack Data center and on AWS EC2 instances using Red Hat Hybrid-cloud and managed using Red Hat Advanced Cluster Management for Kubernetes.

Portworx Enterprise Kubernetes Storage Platform

Portworx Enterprise provides cloud native storage for applications running in the FlashStack Data center and on AWS. Portworx also provides various data services such as:

● PX-DR, which enables asynchronous disaster recovery for the solution.

● PX-Backup, which delivers enterprise grade application and data protection with fast recovery.

● PX Central, which provides a monitoring, metrics, and data management interface for Portworx Enterprise.

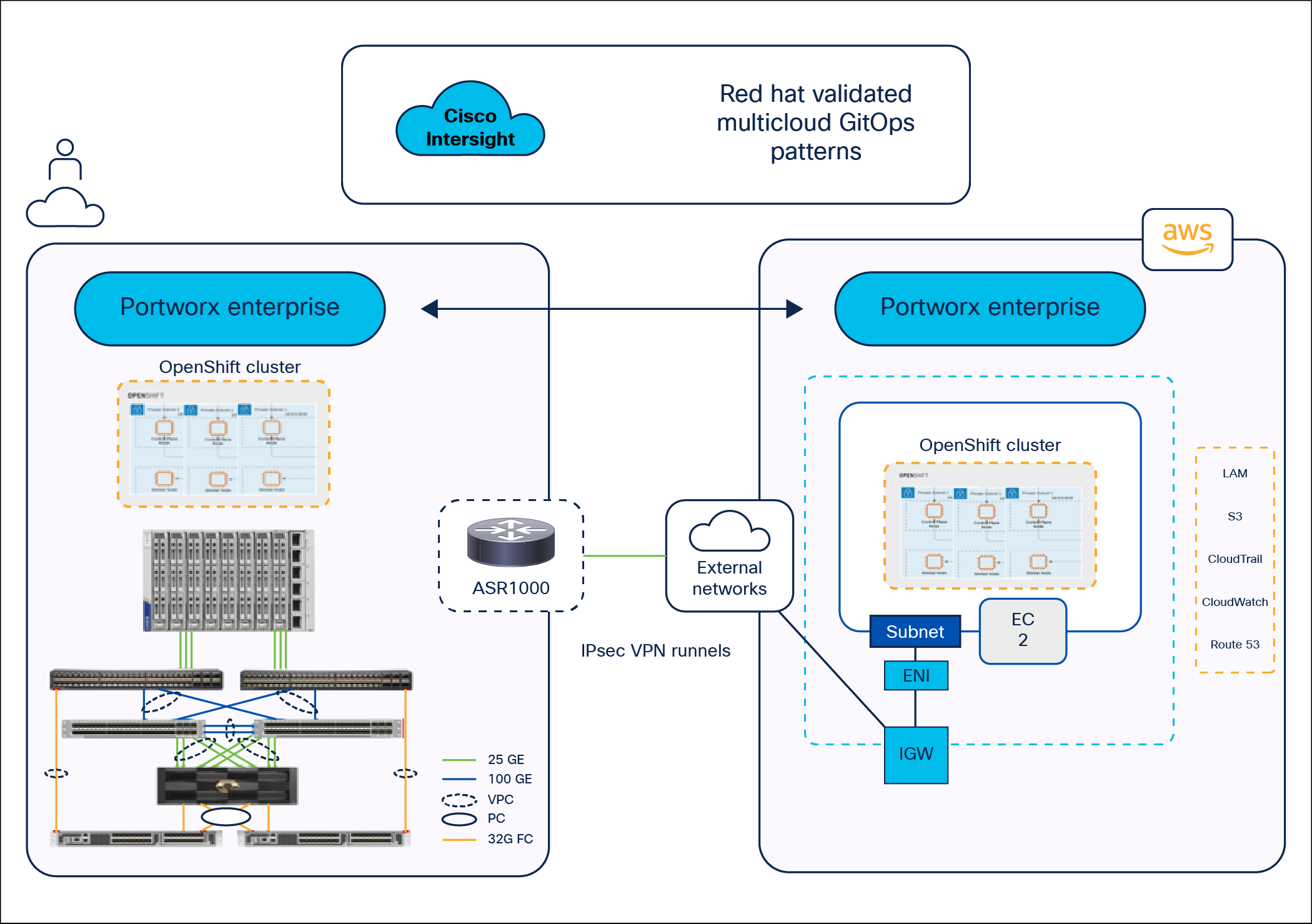

The figure below illustrates the end-to-end solution that was designed, built, and validated in Cisco internal labs.

Solution topology

● Cisco UCS X-Series‒based FlashStack provides customers compute density, expandability, and all flash infrastructure in a single system. The Cisco UCS X210c M6 Compute Node allows customers to utilize the latest hardware innovations for running compute intensive workloads.

● Portworx provides data services across a hybrid cloud. The Portworx Container Storage Interface (CSI) driver provides dynamic provisioning of persistent storage from FlashStack. Portworx is Red Hat‒certified and available for deployment from the Red Hat OperatorHub.

● FlashStack and Cisco Intersight can quickly deliver a production ready continuous integration stack for virtualized and containerized workloads.

● Cisco Intersight simplifies operations by providing a comprehensive set of day 2 capabilities and tools.

● The Red Hat Validated Multicloud GitOps pattern is configured on the Red Hat OpenShift installed on the provisioned infrastructure.

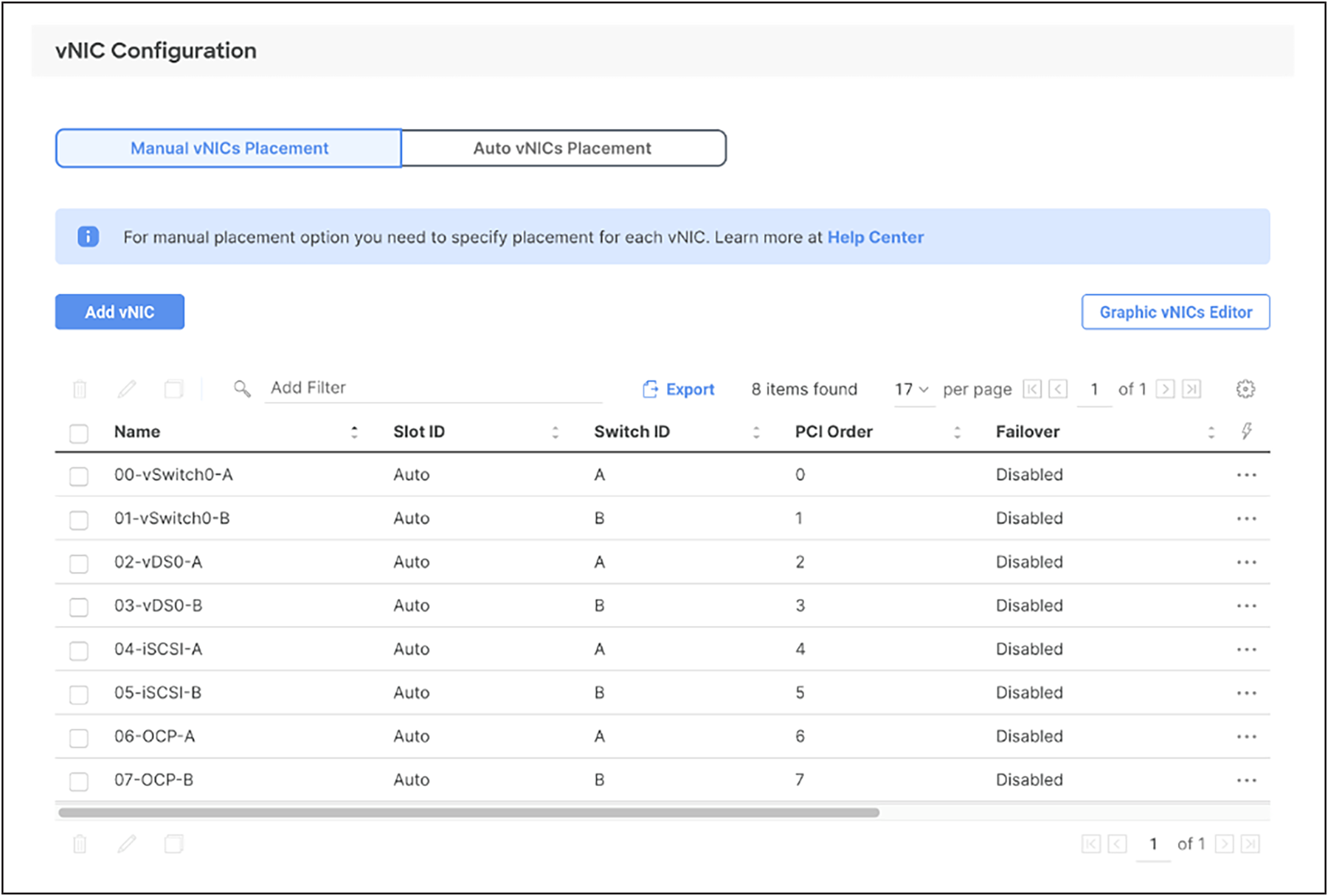

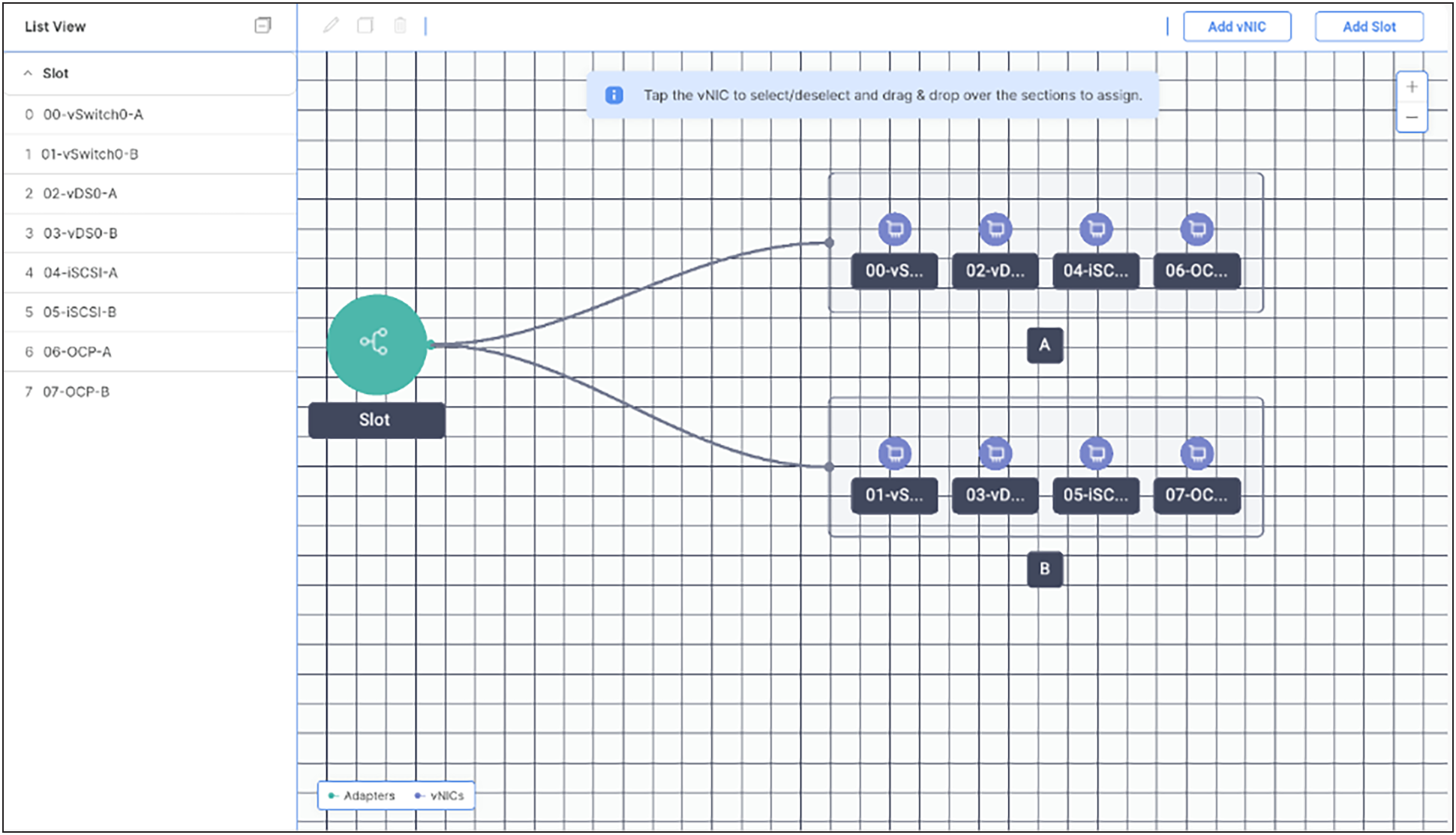

On-premises infrastructure includes the FlashStack Data Center. This FlashStack design utilizes Cisco UCS X-Series servers connected through Cisco UCS fabric interconnects and managed with Cisco Intersight Managed Mode (IMM).

FlashStack with Cisco UCS X-Series supports both IP-based and Fibre Channel (FC)‒based storage access designs. For the IP-based solution, an iSCSI configuration on Cisco UCS and Pure Storage FlashArray is utilized to set up storage access, including boot-from-SAN configurations for the compute nodes. For the Fibre Channel‒based designs, Pure Storage FlashArray and Cisco UCS X-Series are connected using Cisco MDS 9132T switches, and storage access, including boot from SAN, is provided over the Fibre Channel network. The physical connectivity details for both IP and Fibre Channel designs are explained in the following sections.

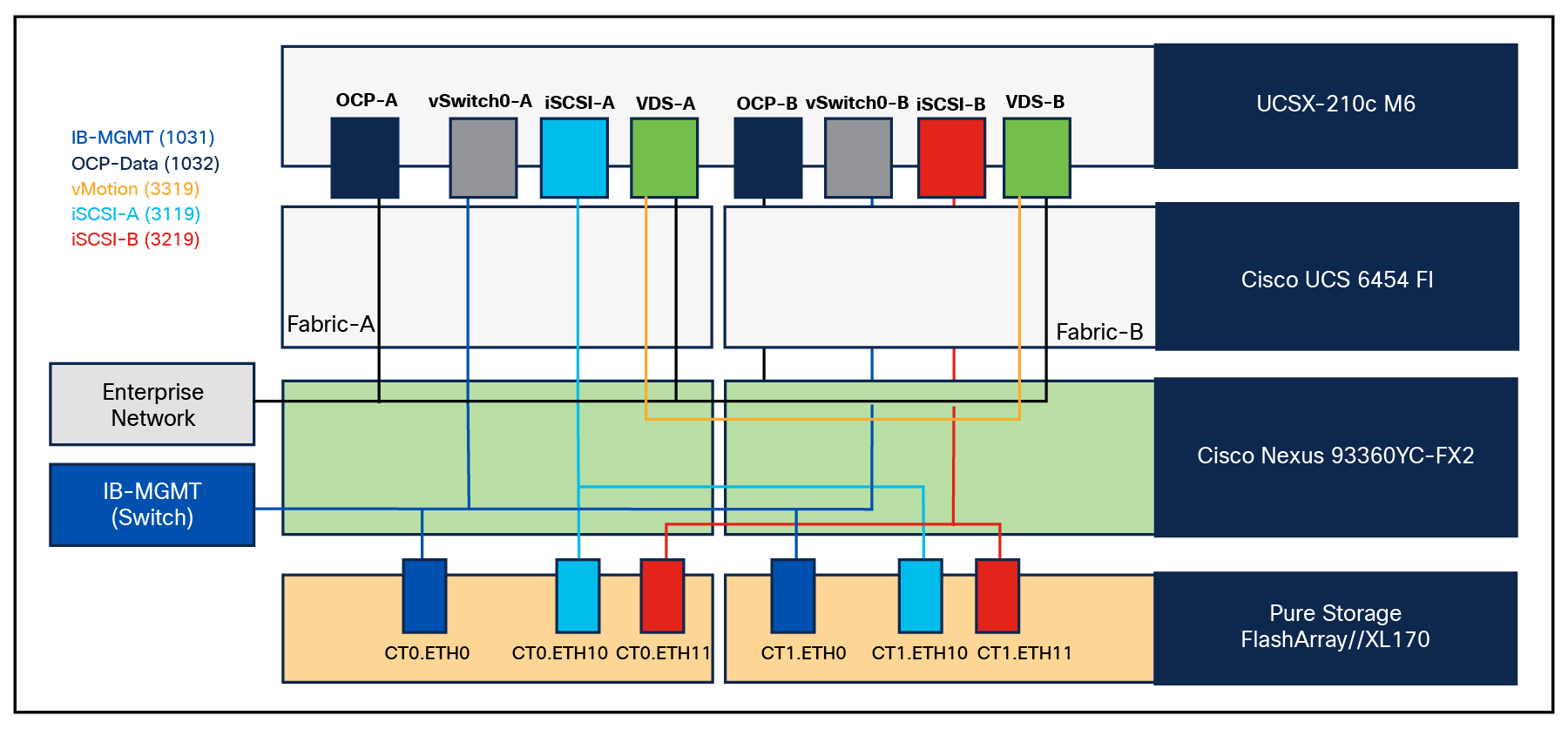

IP-based storage access: iSCSI

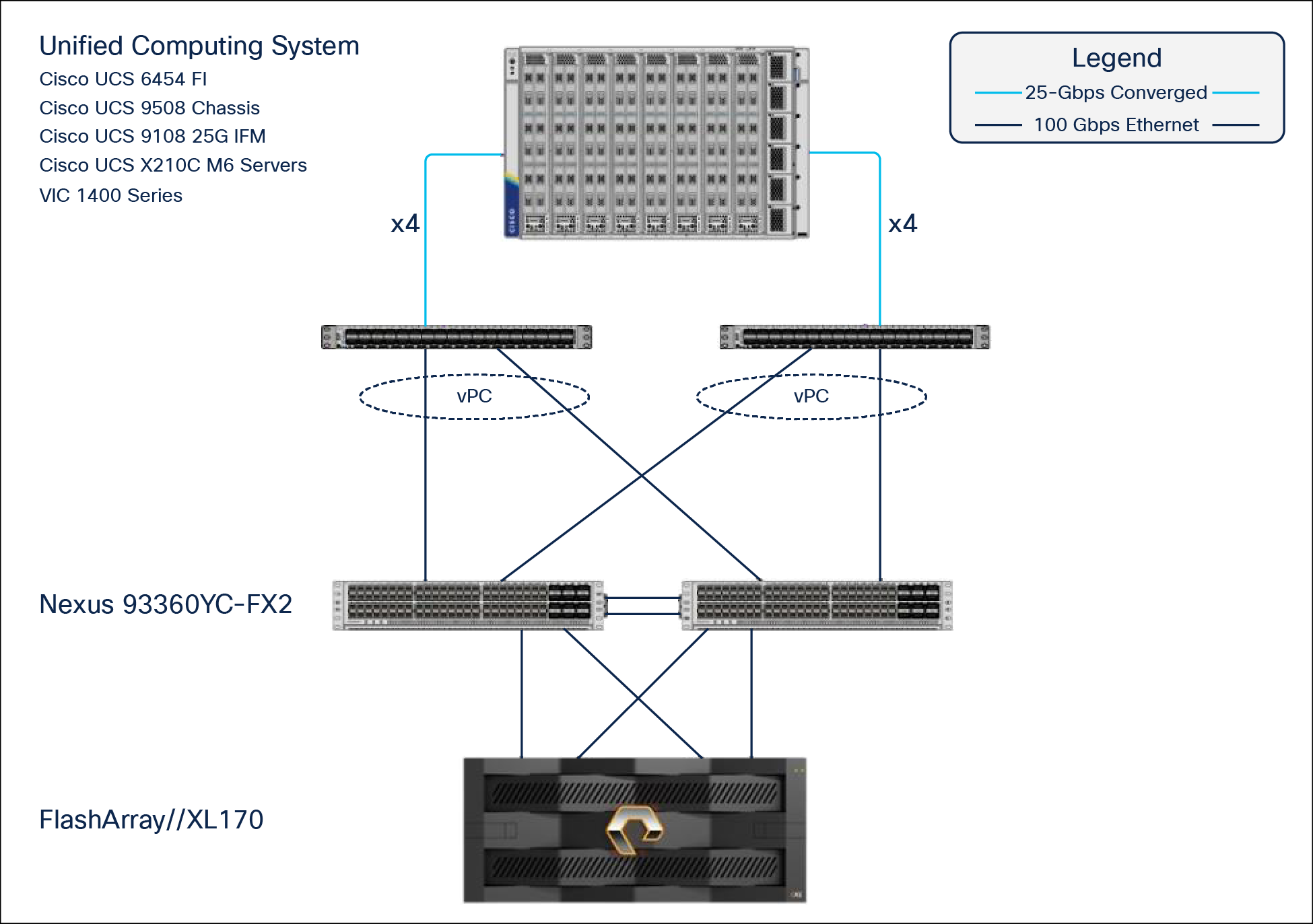

The physical topology for the IP based FlashStack is shown in Figure 31.

FlashStack ‒ physical topology for IP connectivity

To validate the IP based storage access in a FlashStack configuration, the components are set up as follows:

● Cisco UCS 6454 Fabric Interconnects provide the chassis and network connectivity.

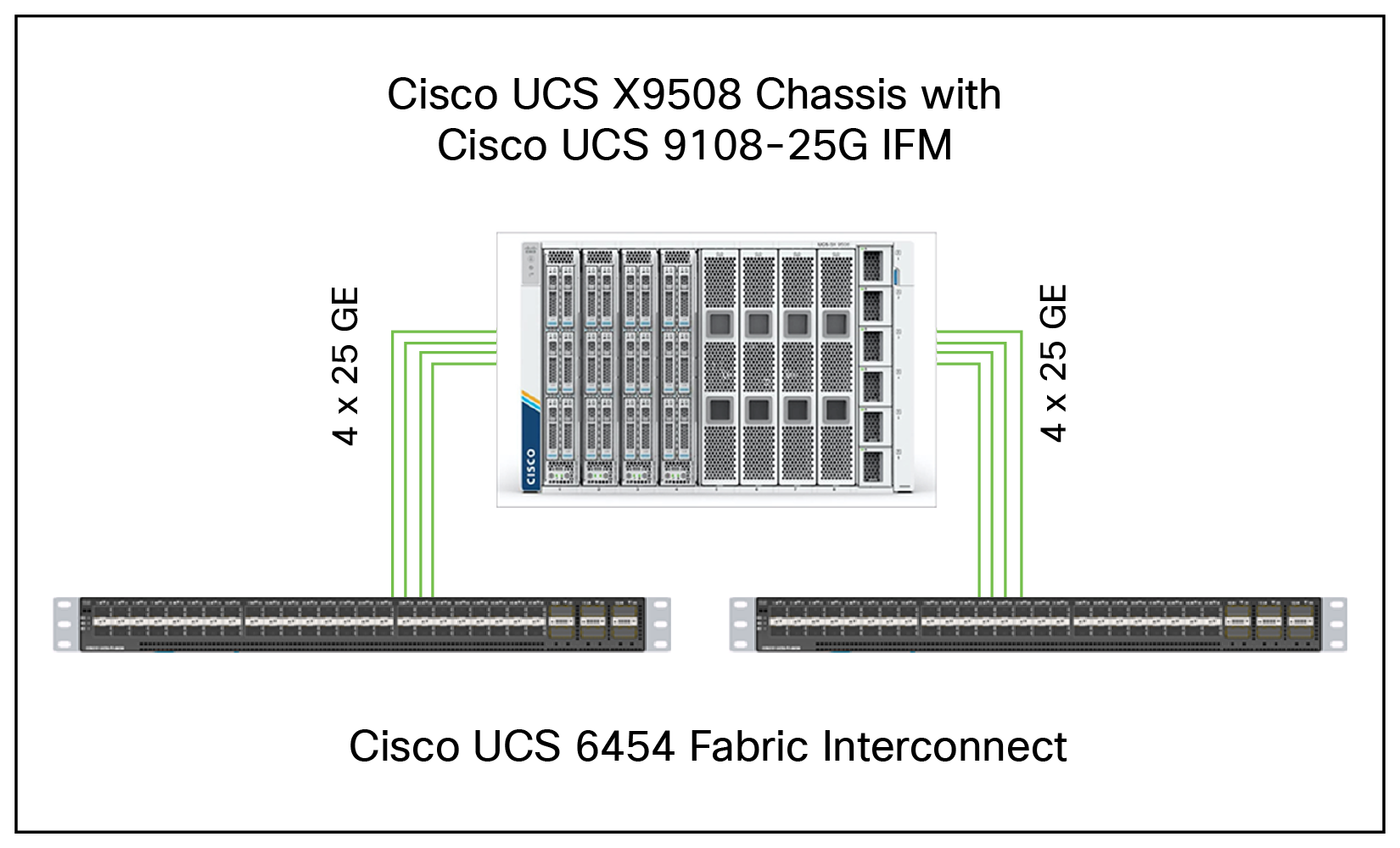

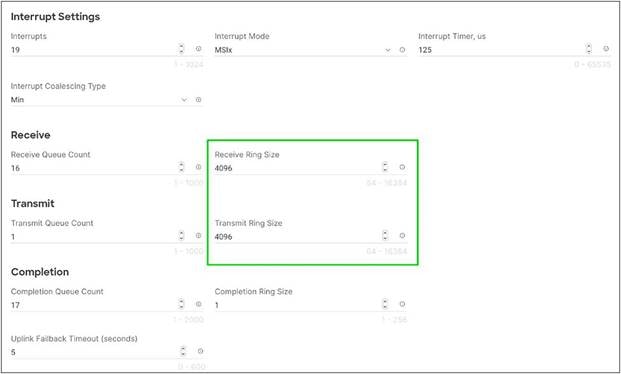

● The Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs), where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI. If additional bandwidth is required, all eight 25G ports can be utilized.

● Cisco UCS X210c M6 Compute Nodes contain fourth-generation Cisco UCS VIC 14425 virtual interface cards.

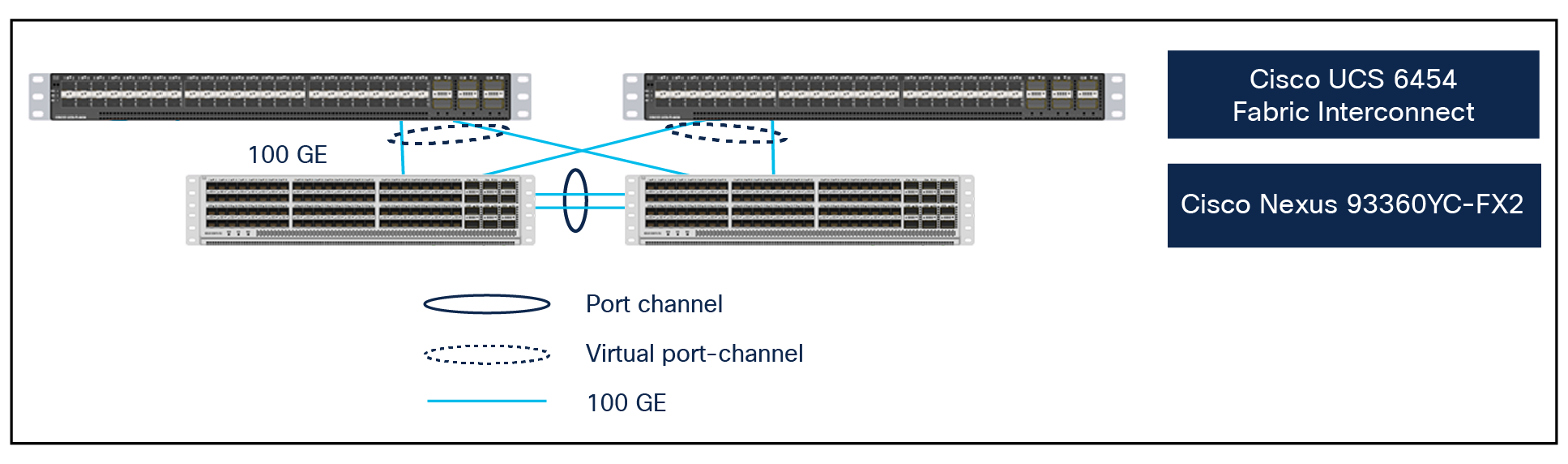

● Cisco Nexus 93360YC-FX2 Switches in Cisco NX-OS mode provide the switching fabric.

● Cisco UCS 6454 Fabric Interconnect 100-Gigabit Ethernet uplink ports connect to Cisco Nexus 93360YC-FX2 Switches in a virtual Port Channel (vPC) configuration.

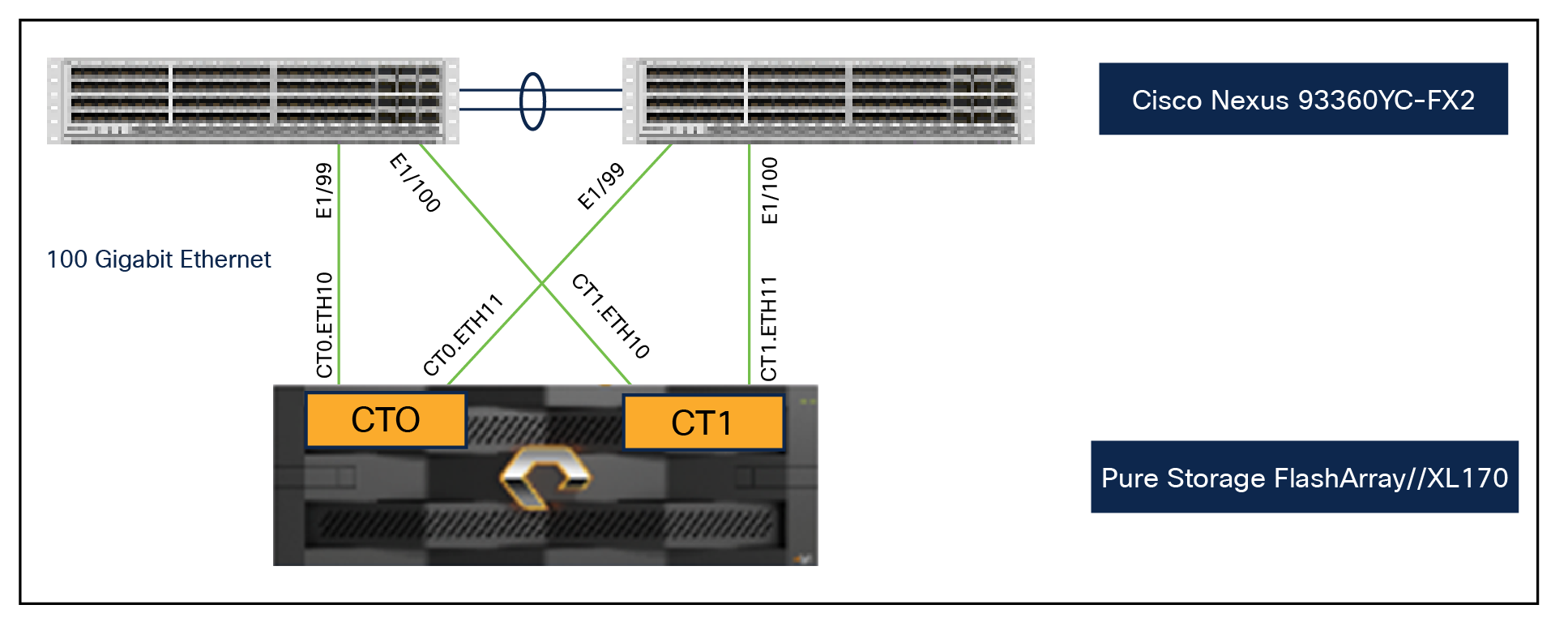

● The Pure Storage FlashArray//XL170 connects to the Cisco Nexus 93360YC-FX2 Switches using four 25-GE ports.

● VMware ESXi 8.0 is installed on Cisco UCS X210c M6 Compute Nodes to validate the infrastructure.

● Red Hat OpenShift software is installed on a VMware vSphere 8.0 cluster.
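The uplink bandwidth implied by the IFM cabling above is simple arithmetic, sketched below. The figure of two IFMs per chassis (one per fabric) is an assumption stated here for the calculation, and the function names are hypothetical.

```python
def ifm_uplink_gbps(ports_in_use: int, port_speed_gbps: int = 25) -> int:
    """Aggregate uplink bandwidth of one 9108-25G IFM toward its fabric interconnect."""
    if not 1 <= ports_in_use <= 8:
        raise ValueError("a 9108-25G IFM has eight 25G uplink ports")
    return ports_in_use * port_speed_gbps


def chassis_uplink_gbps(ports_per_ifm: int, ifms_per_chassis: int = 2) -> int:
    """Aggregate uplink bandwidth of a chassis.

    Assumption: two IFMs per chassis, one connected to each fabric interconnect.
    """
    return ifms_per_chassis * ifm_uplink_gbps(ports_per_ifm)


if __name__ == "__main__":
    for ports in (4, 8):
        per_ifm = ifm_uplink_gbps(ports)
        per_chassis = chassis_uplink_gbps(ports)
        print(f"{ports} ports per IFM: {per_ifm} Gbps per IFM, {per_chassis} Gbps per chassis")
```

Under these assumptions, the validated cabling of four ports per IFM provides 100 Gbps per IFM, and using all eight ports doubles that figure.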

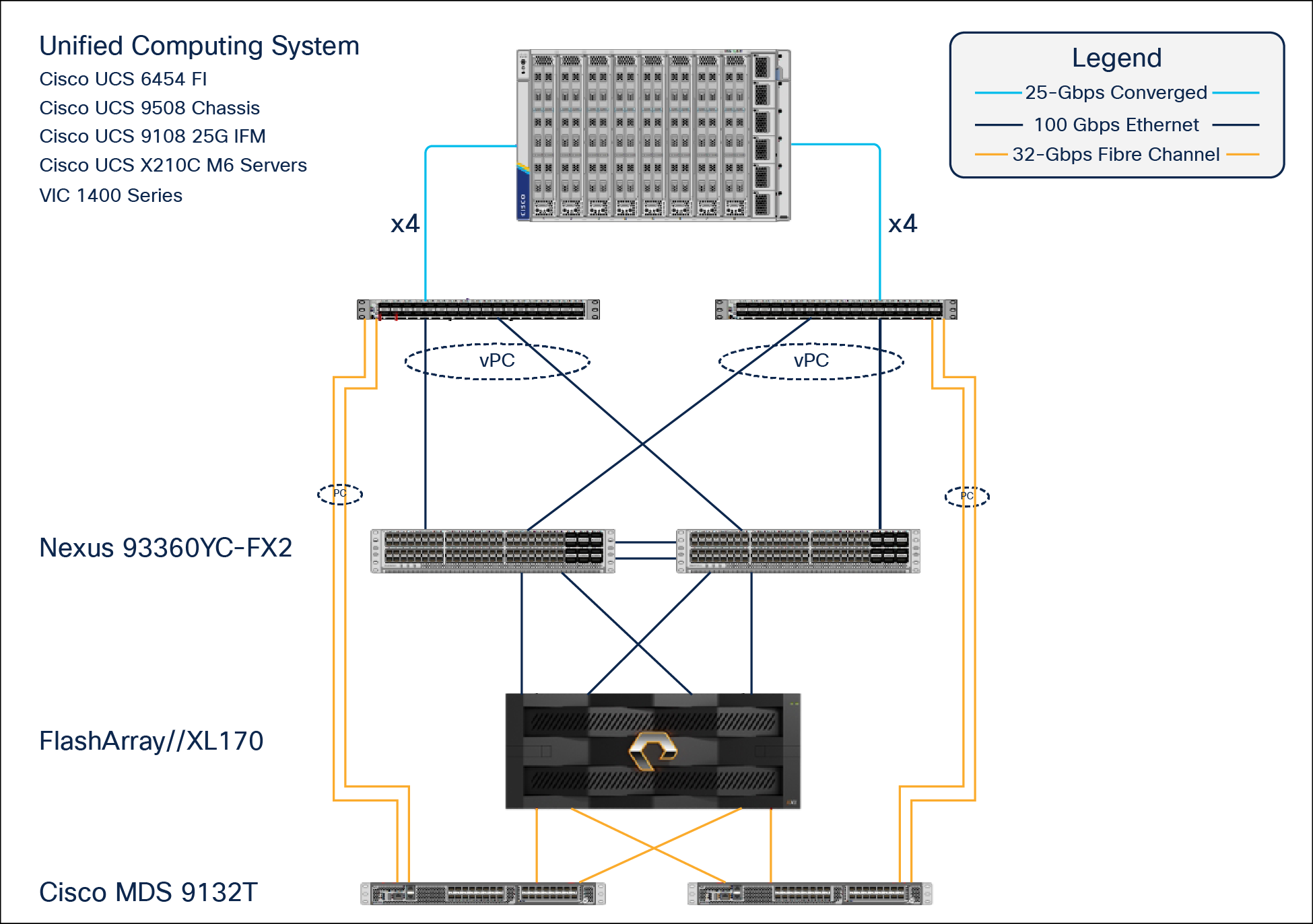

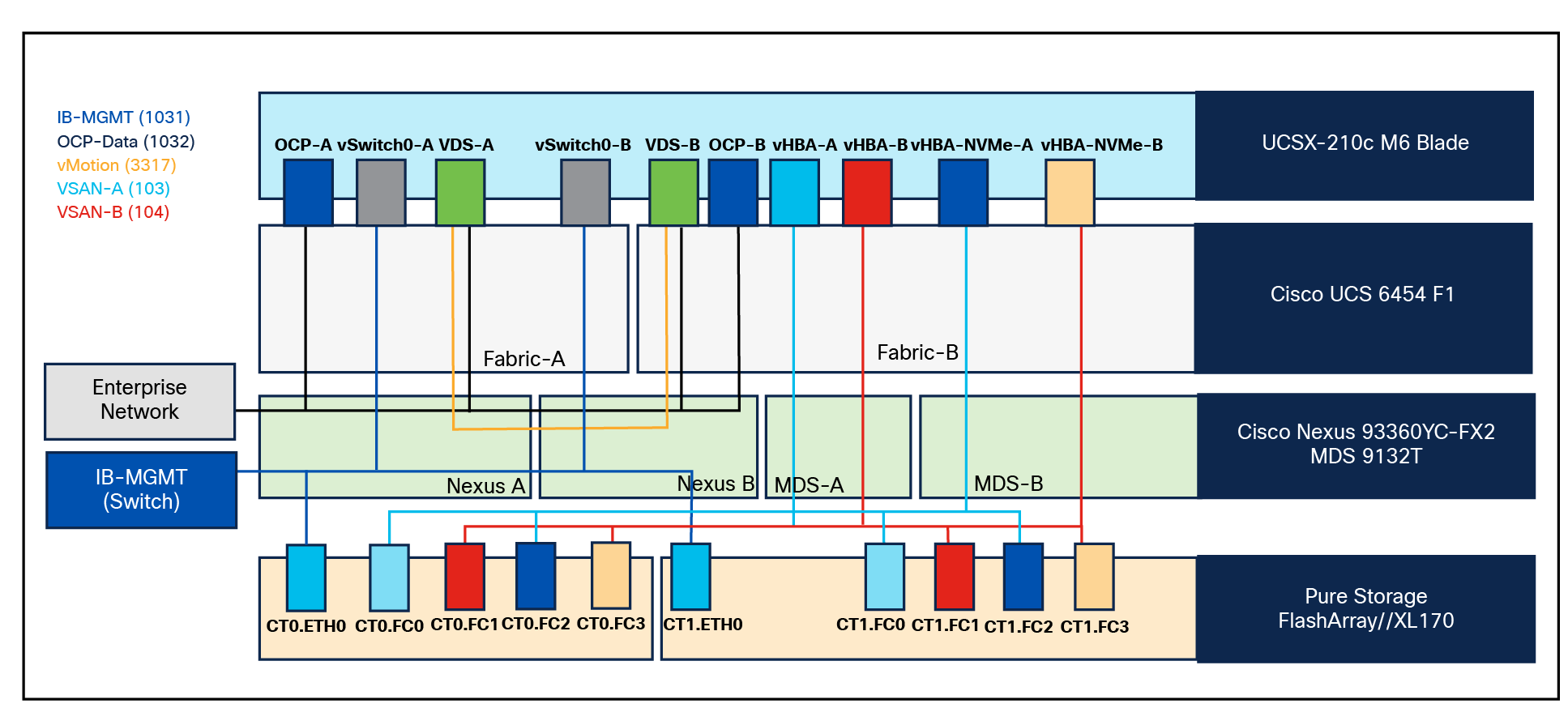

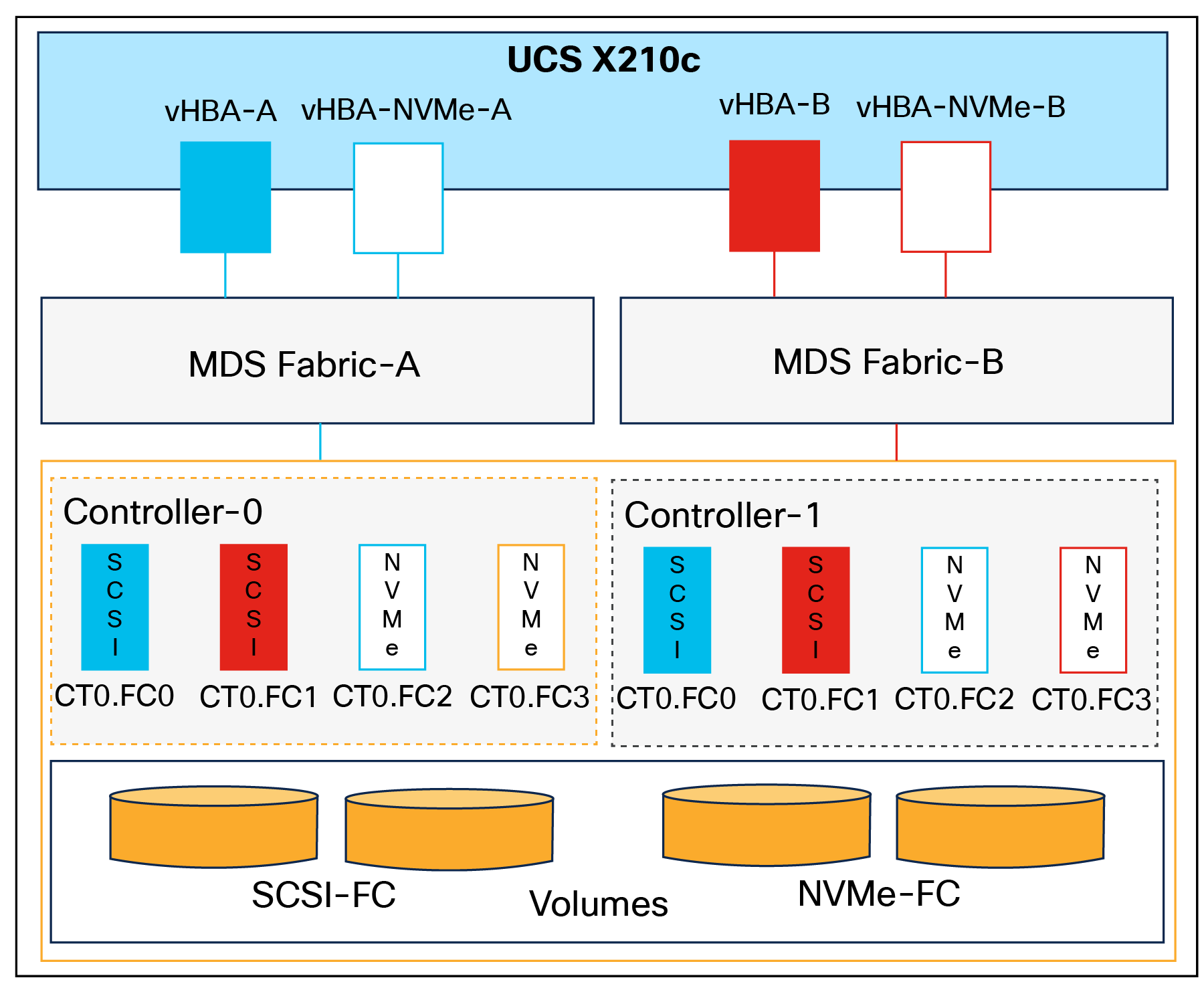

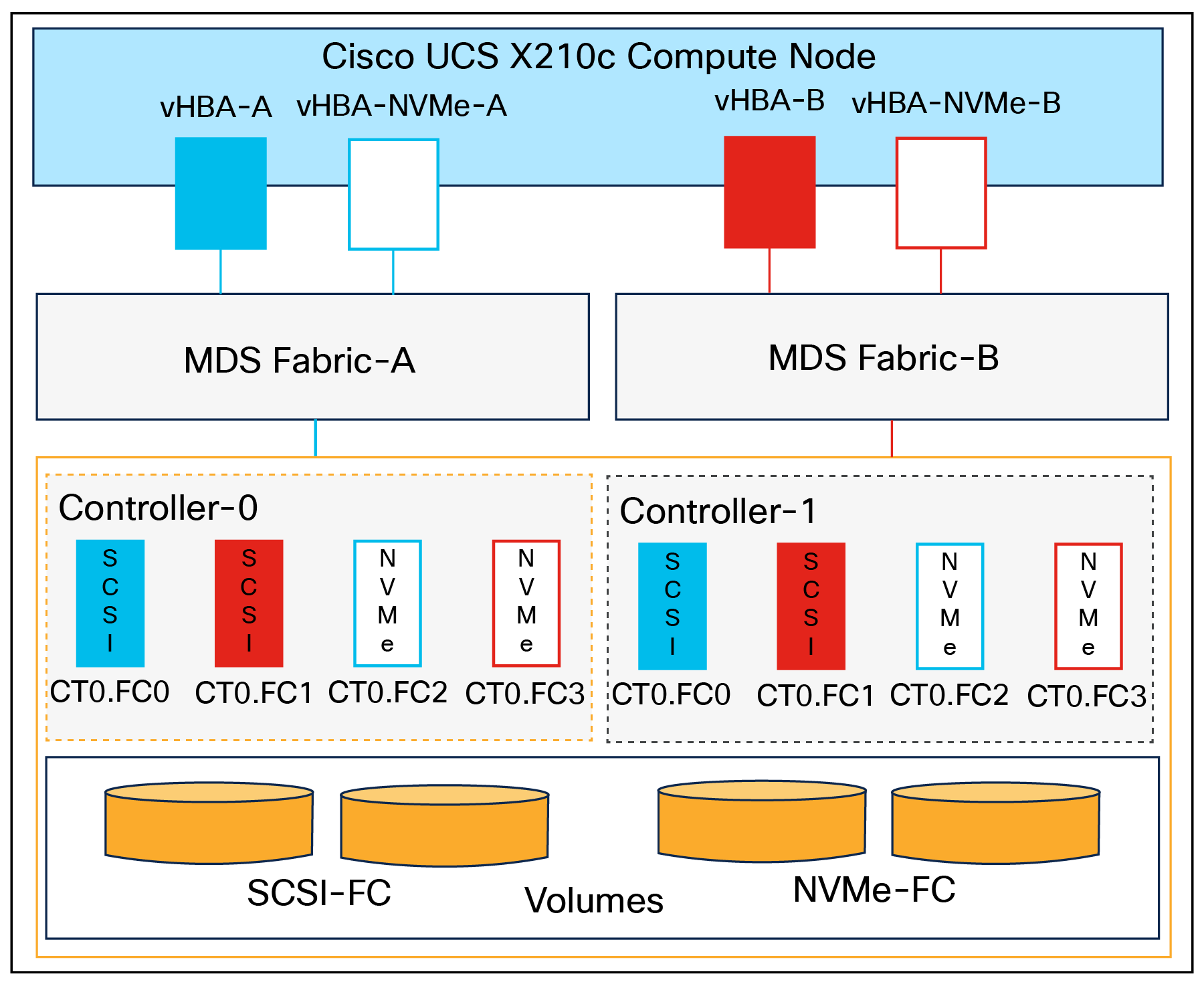

Fibre Channel‒based storage access: Fibre Channel and FC-NVMe

The physical topology of the FlashStack for Fibre Channel connectivity is shown in Figure 32.

FlashStack ‒ physical topology for Fibre Channel connectivity

To validate the Fibre Channel‒based storage access in a FlashStack configuration, the components are set up as follows:

● Cisco UCS 6454 Fabric Interconnects provide the chassis and network connectivity.

● The Cisco UCS X9508 Chassis connects to fabric interconnects using Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs), where four 25 Gigabit Ethernet ports are used on each IFM to connect to the appropriate FI.

● Cisco UCS X210c M6 Compute Nodes contain fourth-generation Cisco UCS VIC 14425 virtual interface cards.

● Cisco Nexus 93360YC-FX2 Switches in Cisco NX-OS mode provide the switching fabric.

● Cisco UCS 6454 Fabric Interconnect 100 Gigabit Ethernet uplink ports connect to Cisco Nexus 93360YC-FX2 Switches in a vPC configuration.

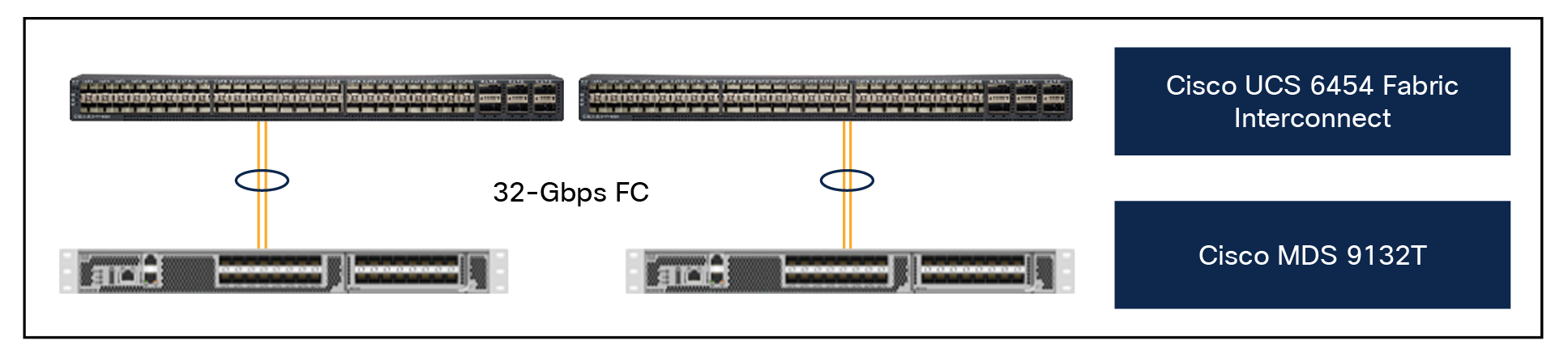

● Cisco UCS 6454 Fabric Interconnects are connected to the Cisco MDS 9132T switches using 32-Gbps Fibre Channel connections configured as a single port channel for SAN connectivity.

● The Pure Storage FlashArray//XL170 connects to the Cisco MDS 9132T switches using 32-Gbps Fibre Channel connections for SAN connectivity.

● VMware ESXi 8.0 is installed on Cisco UCS X210c M6 Compute Nodes to validate the infrastructure.

● Red Hat OpenShift software is installed on a VMware vSphere 8.0 cluster.

Table 3 lists the VLANs configured for setting up the FlashStack environment.

| VLAN ID | Name | Usage |
| --- | --- | --- |
| 3 | Native-VLAN | Use VLAN 3 as native VLAN instead of default VLAN (1). |
| 1030 | OOB-MGMT-VLAN | Out-of-Band Management VLAN to connect the management ports for various devices. |
| 1031 | IB-MGMT-VLAN | In-Band Management VLAN utilized for all in-band management connectivity, for example, ESXi hosts, VM management, and so on. |
| 1032 | OCP-Data | Data traffic VLAN from/to RH OCP Virtual Machines. |
| 3119 | iSCSI-A* | iSCSI-A path for supporting boot from SAN for both Cisco UCS B-Series and Cisco UCS C-Series servers. |
| 3219 | iSCSI-B* | iSCSI-B path for supporting boot from SAN for both Cisco UCS B-Series and Cisco UCS C-Series servers. |
| 3319 | vMotion | VMware vMotion traffic. |
| 3419 | VM-Traffic | VM data traffic VLAN. |
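The VLAN plan in Table 3 can also be captured as data and checked before it is pushed to switches or fabric interconnects. The sketch below is illustrative only: the `Vlan` class and `validate` function are hypothetical helpers, while the IDs and names are copied from the table. It checks that each ID falls in the usable 802.1Q range (1 to 4094) and that no ID or name is repeated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vlan:
    vlan_id: int
    name: str


# IDs and names copied from Table 3.
VLANS = [
    Vlan(3, "Native-VLAN"),
    Vlan(1030, "OOB-MGMT-VLAN"),
    Vlan(1031, "IB-MGMT-VLAN"),
    Vlan(1032, "OCP-Data"),
    Vlan(3119, "iSCSI-A"),
    Vlan(3219, "iSCSI-B"),
    Vlan(3319, "vMotion"),
    Vlan(3419, "VM-Traffic"),
]


def validate(vlans):
    """Return a list of human-readable problems; an empty list means the plan is consistent."""
    problems = []
    seen_ids, seen_names = set(), set()
    for vlan in vlans:
        if not 1 <= vlan.vlan_id <= 4094:
            problems.append(f"{vlan.name}: VLAN ID {vlan.vlan_id} is outside 1-4094")
        if vlan.vlan_id in seen_ids:
            problems.append(f"{vlan.name}: VLAN ID {vlan.vlan_id} is already in use")
        if vlan.name in seen_names:
            problems.append(f"{vlan.name}: name is already in use")
        seen_ids.add(vlan.vlan_id)
        seen_names.add(vlan.name)
    return problems


if __name__ == "__main__":
    issues = validate(VLANS)
    print("VLAN plan OK" if not issues else "\n".join(issues))
```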

Some of the key highlights of VLAN usage are as follows:

● VLAN 1030 allows customers to manage and access out-of-band management interfaces of various devices and is brought into the infrastructure to allow CIMC access to the Cisco UCS servers; it is also available to infrastructure Virtual Machines (VMs). Interfaces in this VLAN are configured with MTU 1500.

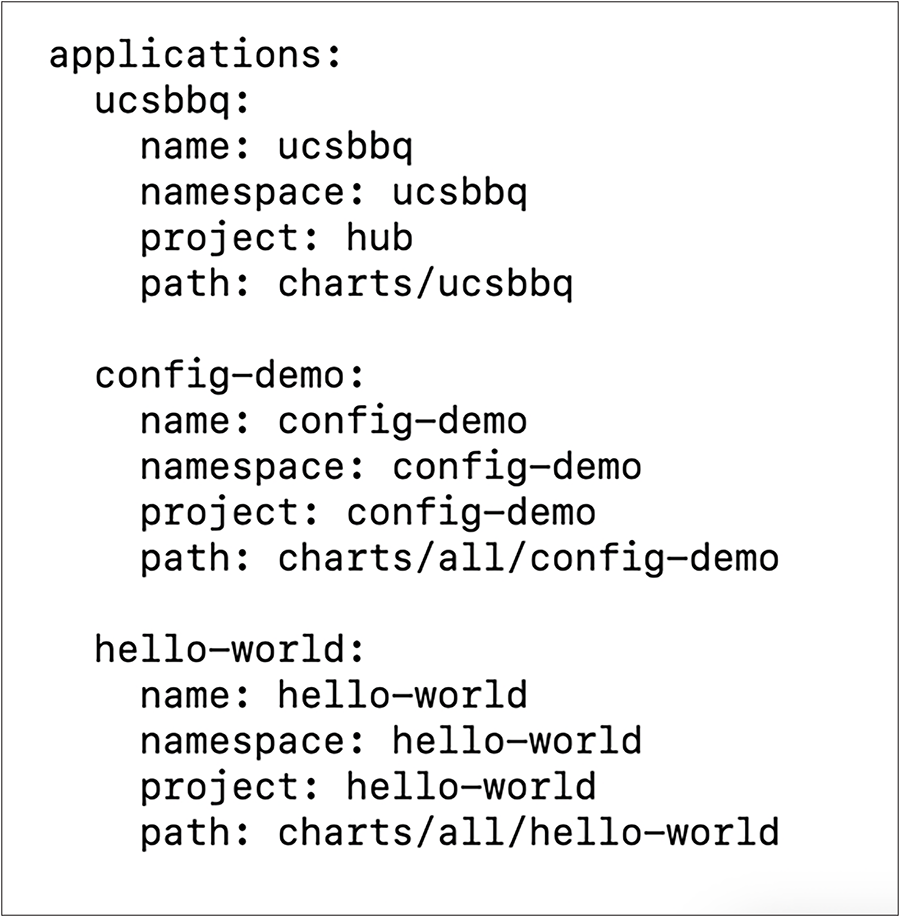

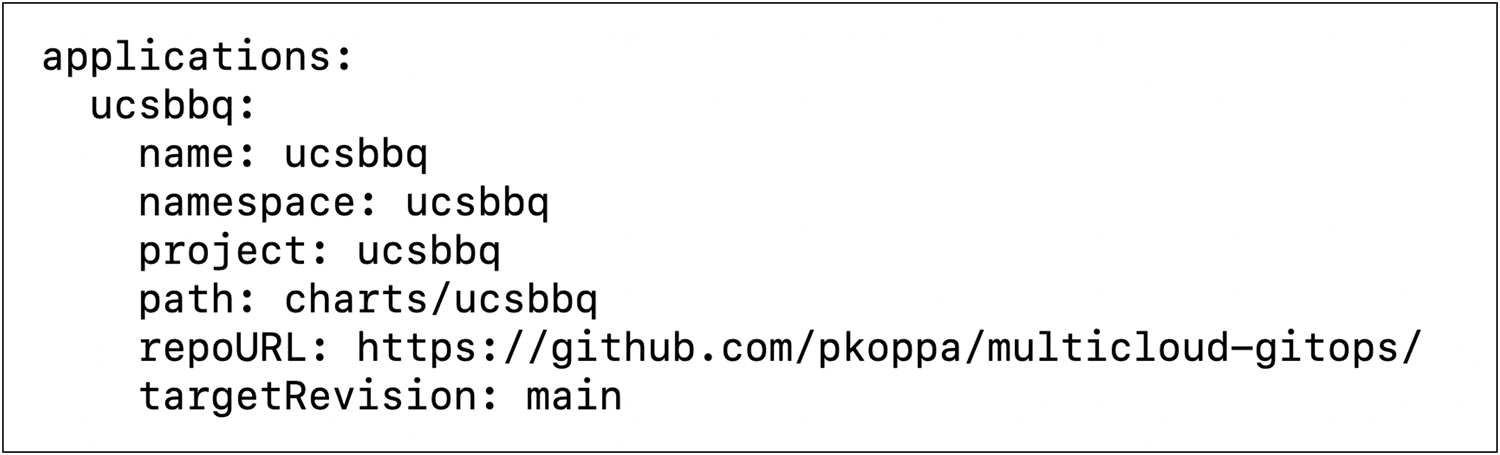

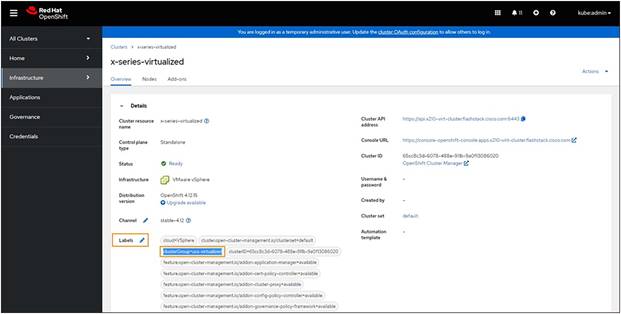

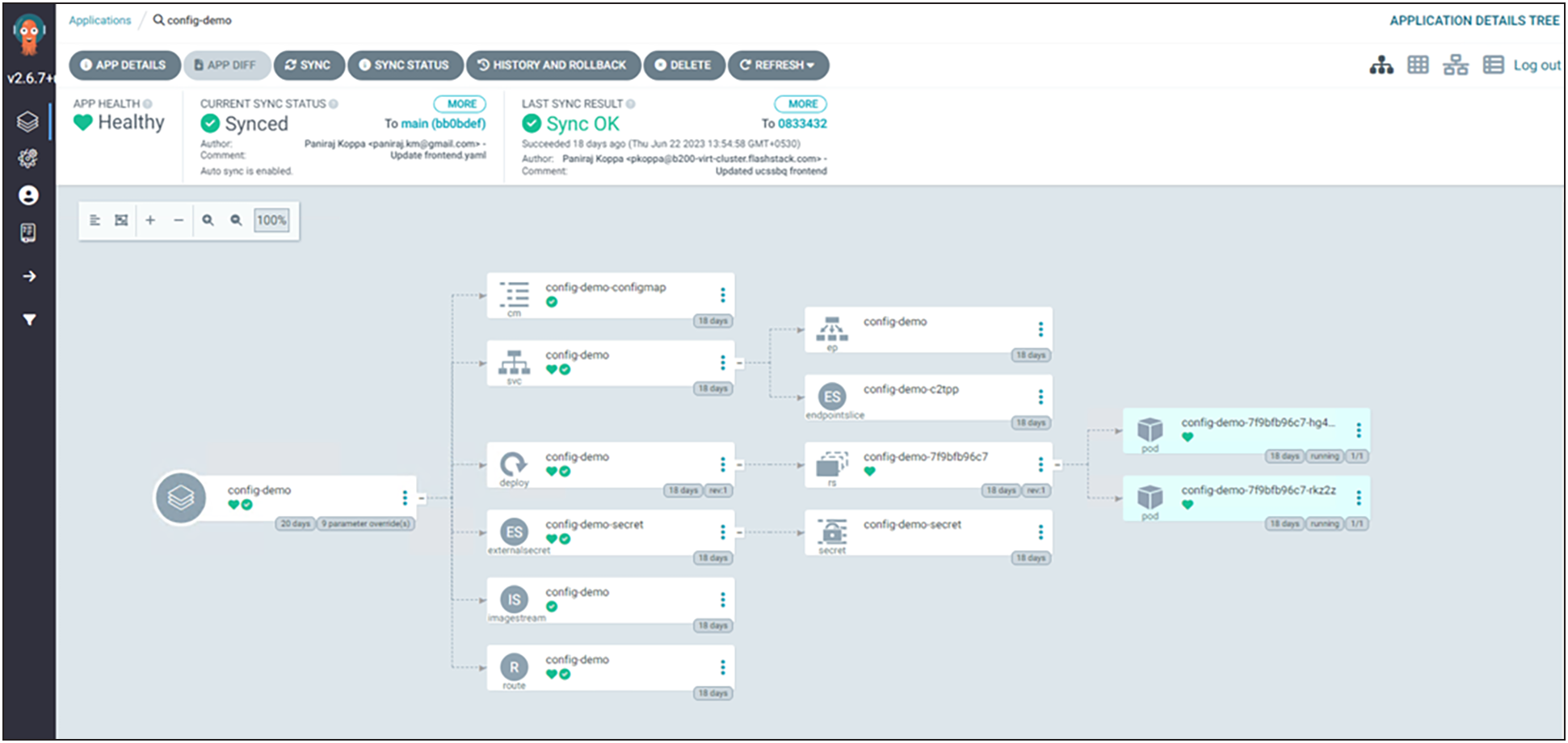

● VLAN 1031 is used for in-band management of VMs, hosts, and other infrastructure services. Interfaces in this VLAN are configured with MTU 1500.