Troubleshooting Cisco MDS DMM

This chapter describes procedures used to troubleshoot the data migration feature in the Cisco MDS 9000 Family multilayer directors and fabric switches. This chapter contains the following sections:

•

Initial Troubleshooting Checklist

•

Troubleshooting Connectivity Issues

•

Troubleshooting Job Creation Issues

•

Troubleshooting Job Execution Issues

•

Troubleshooting General Issues

DMM Overview

Cisco MDS DMM is an intelligent software application that runs on the Storage Services Module (SSM) of an MDS switch. With Cisco MDS DMM, no rewiring or reconfiguration is required for the server, the existing storage, or the SAN fabric. The SSM can be located anywhere in the fabric, as Cisco MDS DMM operates across the SAN. Data migrations are enabled and disabled by software control from the Cisco Fabric Manager.

Cisco MDS DMM provides a graphical user interface (GUI) (integrated into Fabric Manager) for configuring and executing data migrations. Cisco MDS DMM also provides CLI commands for configuring data migrations and displaying information about data migration jobs.

Best Practices

You can avoid problems when using DMM if you observe the following best practices:

•

Use the Storage LUN Discovery (SLD) tool.

The DMM feature includes the Array-Specific Library (ASL), which is a database of information about specific storage array products. DMM uses ASL to automatically correlate LUN maps between multipath port pairs.

Use the SLD CLI or GUI output to ensure that your storage devices are ASL classified.

For migration jobs involving active-passive arrays, use the SLD output to verify the mapping of active and passive LUNs to ports. Only ports with active LUNs should be included in migration jobs.

For more information about the SLD tool, see Checking Storage ASL Status, page 3-2.

•

Create a migration plan.

Cisco MDS DMM is designed to minimize both the dependency on multiple organizations and service disruption. However, even with Cisco MDS DMM, data migration is a fairly complex activity. We recommend that you create a plan to ensure a smooth data migration.

•

Configure enclosures.

Before creating a migration job with the DMM GUI, you need to ensure that server and storage ports are included in enclosures. You need to create enclosures for server ports. If the server has multiple single-port HBAs, all of these ports need to be included in one enclosure. Enclosures for existing and new storage ports are typically created automatically.

•

Follow the topology guidelines.

Restrictions and recommendations for DMM topology are described in the "DMM Topology Guidelines" section on page 6-3.

•

Ensure that all required ports are included in the migration job.

When creating a data migration job, you must include all server HBA ports that can access the LUNs being migrated, because all writes to a migrated LUN must be mirrored to the new storage until cutover so that no writes are lost.

For additional information about selecting ports for server-based jobs, refer to the "Ports to Include in a Server-Based Job" section on page 6-4.
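The port-selection rule above amounts to a set comparison: every server HBA port that can reach a migrated LUN must appear in the job. The following Python sketch illustrates that check under stated assumptions. The data model (a mapping from server HBA pWWN to the LUNs that port can access) and the function name `missing_job_ports` are hypothetical and are not part of DMM or Fabric Manager; in practice you would gather this information from your zoning and LUN masking configuration.

```python
from typing import Dict, Set


def missing_job_ports(
    lun_access: Dict[str, Set[str]],  # hypothetical: server HBA pWWN -> LUN IDs it can access
    migrated_luns: Set[str],          # LUNs being migrated by the job
    job_ports: Set[str],              # server HBA pWWNs selected for the job
) -> Set[str]:
    """Return server HBA ports that access a migrated LUN but are not in the job."""
    required = {
        pwwn
        for pwwn, luns in lun_access.items()
        if luns & migrated_luns  # this port reaches at least one migrated LUN
    }
    return required - job_ports


if __name__ == "__main__":
    # Hypothetical server with two single-port HBAs, both able to access LUN 0x0.
    # The second pWWN is invented for illustration.
    access = {
        "21:00:00:e0:8b:92:fc:dc": {"0x0"},
        "21:01:00:e0:8b:b2:fc:dc": {"0x0"},
    }
    gaps = missing_job_ports(access, {"0x0"}, {"21:00:00:e0:8b:92:fc:dc"})
    print(sorted(gaps))  # ['21:01:00:e0:8b:b2:fc:dc']
```

A non-empty result means writes from the missing port would not be mirrored to the new storage, so that port should be added to the job.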

License Requirements

Each SSM with Cisco MDS DMM enabled requires a DMM license. DMM operates without a license for a grace period of 180 days.

DMM licenses are described in the "Using DMM Software Licenses" section on page 2-1.

Initial Troubleshooting Checklist

Begin troubleshooting DMM issues by checking the troubleshooting checklist in Table 5-1.

Common Troubleshooting Tools

The following navigation paths may be useful in troubleshooting DMM issues using Fabric Manager:

•

Select End Devices > SSM Features to access the SSM configuration.

•

Select End Devices > Data Mobility Manager to access the DMM status and configuration.

The following CLI commands on the SSM module may be useful in troubleshooting DMM issues:

•

show dmm job

•

show dmm job job-id job-id detail

•

show dmm job job-id job-id session

Note

You need to connect to the SSM module using the attach module command prior to using the show dmm commands.

Troubleshooting Connectivity Issues

This section covers the following topics:

•

Cannot Connect to the SSM

•

No Peer-to-Peer Communication

•

Connection Timeouts

Cannot Connect to the SSM

Problems connecting to the SSM can be caused by SSH, zoning, or routing configuration issues. Table 5-2 shows possible solutions.

Table 5-2 Cannot Connect to the SSM

Symptom: Cannot connect to the SSM.

Possible Cause: SSH not enabled on the supervisor module.
Solution: Enable SSH on the switch that hosts the SSM. See Configuring SSH on the Switch, page 2-2.

Possible Cause: Zoning configuration error.
Solution: If VSAN 1 default zoning is set to deny, ensure that the VSAN 1 interface (supervisor module) and the CPP IPFC interface have the same zoning. See Configuring IP Connectivity, page 2-3.

Possible Cause: IP routing not enabled.
Solution: Ensure that IPv4 routing is enabled. Use the ip routing command in configuration mode.

Possible Cause: IP default gateway not configured.
Solution: Configure the default gateway for the CPP IPFC interface to be the VSAN 1 IP address. See Configuring IP Connectivity, page 2-3.

No Peer-to-Peer Communication

Table 5-3 shows possible solutions to problems connecting to the peer SSM.

Table 5-3 No Peer-to-Peer Communication

Symptom: Cannot ping the peer SSM.

Possible Cause: No route to the peer SSM.
Solution: Configure a static route to the peer SSM. See Configuring IP Connectivity, page 2-3.

Connection Timeouts

If the DMM SSH connection is generating too many timeout errors, you can change the SSL and SSH timeout values. These properties are stored in the Fabric Manager Server properties file (Cisco Systems/MDS 9000/conf/server.properties). You can edit this file with a text editor, or you can set the properties through the Fabric Manager Web Services GUI, under the Admin tab.

The following server properties are related to DMM:

•

dmm.read.timeout: Read timeout for job creation. The default value is 60 seconds. The value is expressed in milliseconds, so the default appears as 60000.

•

dmm.read.ini.timeout: Read timeout for a job or session query. The default value is 5 seconds. The value is expressed in milliseconds, so the default appears as 5000.

•

dmm.connect.timeout: SSH connection attempt timeout. The default value is 6 seconds. The value is expressed in milliseconds, so the default appears as 6000.

•

dmm.connection.retry: If set to true, DMM retries the connection when the first attempt fails. The default is true.
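To make the millisecond convention concrete, the following Python sketch reads the DMM timeout entries from the text of a server.properties file and reports each value in seconds, falling back to the defaults listed above when an entry is absent. It assumes simple key=value lines (a simplification of the Java properties format: no line continuations or escape sequences). The function name `dmm_timeouts_seconds` and the sample value 120000 are hypothetical; the sketch only reads values and does not change Fabric Manager behavior.

```python
from typing import Dict

# Defaults documented above; the properties file stores timeouts in milliseconds.
DMM_DEFAULTS_MS = {
    "dmm.read.timeout": 60000,     # job creation read timeout (60 s)
    "dmm.read.ini.timeout": 5000,  # job or session query read timeout (5 s)
    "dmm.connect.timeout": 6000,   # SSH connection attempt timeout (6 s)
}


def dmm_timeouts_seconds(properties_text: str) -> Dict[str, float]:
    """Return each DMM timeout in seconds, using simplified key=value parsing."""
    values: Dict[str, str] = {}
    for raw in properties_text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue  # skip blank lines and comment lines
        key, sep, value = line.partition("=")
        if sep:
            values[key.strip()] = value.strip()
    return {
        key: int(values.get(key, default)) / 1000
        for key, default in DMM_DEFAULTS_MS.items()
    }


if __name__ == "__main__":
    # Hypothetical excerpt in which the job creation read timeout was doubled.
    sample = "# excerpt\ndmm.read.timeout=120000\ndmm.connection.retry=true\n"
    print(dmm_timeouts_seconds(sample))
```

If you raise a timeout, enter the new value in milliseconds (for example, 120000 for two minutes), not in seconds.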

Troubleshooting Job Creation Issues

The DMM GUI displays error messages to help you troubleshoot basic configuration mistakes when using the job creation wizards. See Creating a Server-Based Migration Job, page 4-4. A list of potential configuration errors is included after the last step in the task.

The following sections describe other issues that may occur during job creation:

•

Failures During Job Creation

•

DMM License Expires

•

Scheduled Job is Reset

•

Failures during Sessions Creation

Failures During Job Creation

If you make a configuration mistake while creating a job, the job creation wizard displays an error message to help you troubleshoot the problem. You need to correct your input before the wizard will let you proceed.

Table 5-4 shows other types of failures that may occur during job creation.

Table 5-4 Failures During Job Creation

Symptom: Create Job failures.

Possible Cause: No SSM available.
Solution: Ensure that the fabric has an SSM with DMM enabled and a valid DMM license.

Possible Cause: Job infrastructure setup error. Possible causes are an incorrect selection of server/storage port pairs, server and existing storage ports that are not zoned, or incorrectly configured IP connectivity between SSMs.
Solution: The exact error is displayed in the job activity log. See the "Opening the Job Error Log" section.

Possible Cause: LUN discovery failures.
Solution: Use the SLD command in the CLI to check that the LUNs are being discovered properly.

Opening the Job Error Log

To open the job activity log, follow these steps:

Step 1

(Optional) Drag the wizard window to expose the Data Migration Status command bar.

Step 2

Click the refresh button.

Step 3

Select the job that you are troubleshooting from the list of jobs.

Step 4

Click the Log command to retrieve the job error log.

Step 5

The job information and error strings (if any) for each SSM are displayed.

Step 6

Click Cancel in the wizard to delete the job.

Note

You must retrieve the job activity log before deleting the job.

DMM License Expires

If a time-bound license expires (or the default grace period expires), note the following behavior:

•

All jobs currently in progress will continue to execute until they are finished.

•

Jobs that are configured but have not yet started will run when their schedule starts.

•

Jobs that are stopped or in a failure state can also be started and executed.

•

If the switch or SSM module performs a restart, the existing jobs cannot be restarted until the switch has a valid DMM license.

Scheduled Job is Reset

If the SSM or the switch performs a restart, all scheduled DMM jobs are placed in Reset state. Use the Modify command to restore jobs to the Scheduled state.

For each job, perform the following task:

Step 1

Select the job to be verified from the job list in the Data Migration Status pane.

Step 2

Click the Modify button in the Data Migration Status tool bar.

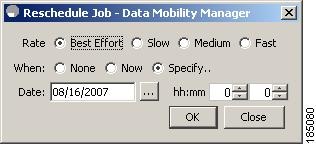

You see the Reschedule Job pop-up window, as shown in Figure 5-1.

Figure 5-1 Modify Job Schedule

Step 3

The originally configured values for migration rate and schedule are displayed. Modify the values if required.

Step 4

Click OK.

The job is automatically validated. If validation is successful, the job transitions to the Scheduled state. If you selected the Now radio button, the job starts immediately.

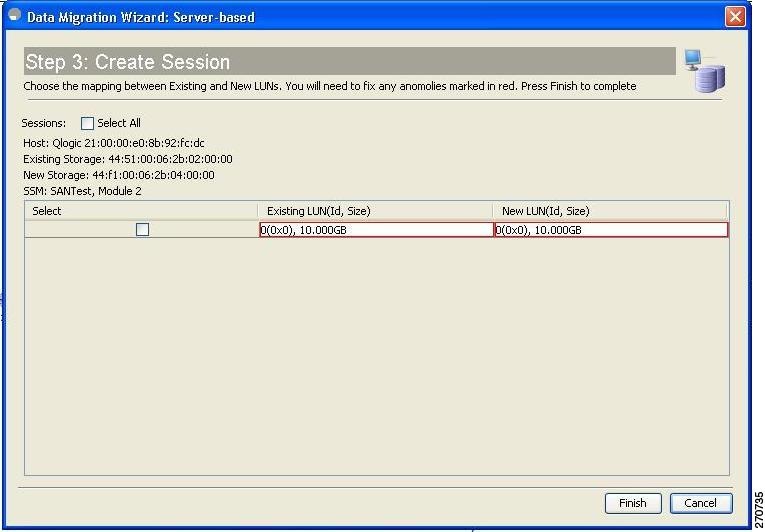

Failures during Sessions Creation

Figure 5-2 Failures during sessions creation

This section helps you troubleshoot an error that occurs when the new storage is smaller than the existing storage. The DMM configuration wizard screen shown in Figure 5-2 lets you configure the sessions for a data migration job. The wizard displays a default session configuration. A session marked in red (as in Figure 5-2) indicates that the session LUN in the new storage is smaller than the session LUN in the existing storage.

Although the LUN sizes displayed in the wizard may appear identical, the wizard rounds the size in gigabytes (GB) to the third decimal place, so a small difference might not be visible.

To verify the actual size of the LUNs with the show commands on the SSM CLI, complete the following steps:

•

Note the host pWWN, the existing storage pWWN, and the new storage pWWN displayed on the wizard screen. In the example shown in Figure 5-2, the values are:

–

Host: 21:00:00:e0:8b:92:fc:dc

–

Existing storage: 44:51:00:06:2b:02:00:00

–

New storage: 44:f1:00:06:2b:04:00:00

•

Note the SSM information displayed on the wizard screen. In the example, the SSM chosen for the session is "SSM:SANTest, Module 2", where SANTest is the switch name and the SSM is module 2 on that switch.

•

From the switch console, connect to the SSM console using the attach module command.

–

Example: SANTest# attach module 2

•

On the SSM CLI, display the job information:

module-2# show dmm job
=============================================================================================================
Data Mobility Manager Job Information
=============================================================================================================
Num Job Identifier Name Type Mode Method DMM GUI IP Peer SSM DPP Session Status
=============================================================================================================
1 1205521523 admin_2008/03/14-12:05 SRVR ONL METHOD-1 10.1.1.5 NOT_APPL 5 CREATED
Number of Jobs :1

•

Using the job identifier from the CLI output, display the job details:

module-2# show dmm job job-id 1205521523 detail

In the output, find the server information and note the virtual initiator (VI) pWWN that corresponds to the selected host port:

-------------------------------------------------------------------------
Server Port List (Num Ports :1)
-------------------------------------------------------------------------
Num VSAN Server pWWN Virtual Initiator pWWN
-------------------------------------------------------------------------
1 4 21:00:00:e0:8b:92:fc:dc 26:72:00:0d:ec:4a:63:82

•

Using the storage pWWN and the VI pWWN, run the following command to display the LUN information for the existing and new storage.

Output for existing storage:

module-2# show dmm job job-id 1205521523 storage tgt-pwwn 44:51:00:06:2b:02:00:00 vi-pwwn 26:72:00:0d:ec:4a:63:82
show dmm job job-id 1205521523 storage tgt-pwwn 0x445100062b020000 vi-pwwn 0x2672000dec4a6382
Data Mobility Manager LUN Information
StoragePort: 00:00:02:2b:06:00:51:44 VI : 82:63:4a:ec:0d:00:72:26
-------------------------------------------------------------------------------
LUN Number: 0x0
VendorID : SANBlaze
ProductID : VLUN FC RAMDisk
SerialNum : 2fff00062b0e445100000000
ID Len : 32
ID : 600062b0000e44510000000000000000
Block Len : 512
Max LBA : 20973567
Size : 10.000977 GB

Output for new storage:

module-2# show dmm job job-id 1205521523 storage tgt-pwwn 44:f1:00:06:2b:04:00:00 vi-pwwn 26:72:00:0d:ec:4a:63:82
show dmm job job-id 1205521523 storage tgt-pwwn 0x44f100062b040000 vi-pwwn 0x2672000dec4a6382
Data Mobility Manager LUN Information
StoragePort: 00:00:04:2b:06:00:f1:44 VI : 82:63:4a:ec:0d:00:72:26
-------------------------------------------------------------------------------
LUN Number: 0x0
VendorID : SANBlaze
ProductID : VLUN FC RAMDisk
SerialNum : 2fff00062b0e44f100000000
ID Len : 32
ID : 600062b0000e44f10000000000000000
Block Len : 512
Max LBA : 20971519
Size : 10.000000 GB

As the example shows, the LUN on the new storage is smaller than the LUN on the existing storage:

Existing storage: Max LBA : 20973567, Size : 10.000977 GB
New storage: Max LBA : 20971519, Size : 10.000000 GB

•

Fix the LUN size on the new storage so that it is at least as large as the LUN on the existing storage, and then reconfigure the job.
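The Size values in the sample output are consistent with (Max LBA + 1) × Block Len bytes expressed in binary gigabytes (2^30 bytes): (20973567 + 1) × 512 = 10,738,466,816 bytes ≈ 10.000977 GB, and (20971519 + 1) × 512 = 10,737,418,240 bytes = 10.000000 GB. The new LUN is therefore 2048 blocks (1 MiB) short. The following Python sketch parses the StoragePort, Block Len, and Max LBA fields from saved output of the storage command and flags a new LUN that is smaller than the existing one. It assumes one LUN per saved output and field formats matching the samples above; the function and class names are hypothetical. It also reverses the byte order of the StoragePort field, which in the sample output shows the target pWWN with its bytes reversed, so you can confirm that the output belongs to the pWWN you entered.

```python
import re
from dataclasses import dataclass

GIB = 1024 ** 3  # the sample Size values match binary gigabytes (2**30 bytes)


@dataclass(frozen=True)
class LunInfo:
    pwwn: str       # target pWWN recovered from the StoragePort field
    block_len: int  # "Block Len" in bytes
    max_lba: int    # "Max LBA": highest addressable block, counted from 0

    @property
    def size_bytes(self) -> int:
        return (self.max_lba + 1) * self.block_len

    @property
    def size_gb(self) -> float:
        return round(self.size_bytes / GIB, 6)


def _field(pattern: str, text: str) -> str:
    match = re.search(pattern, text)
    if match is None:
        raise ValueError(f"field not found: {pattern}")
    return match.group(1)


def parse_lun_info(output: str) -> LunInfo:
    """Parse saved 'show dmm job ... storage' output (format assumed from the samples)."""
    port = _field(r"StoragePort:\s*([0-9a-fA-F:]{23})", output)
    return LunInfo(
        pwwn=":".join(reversed(port.split(":"))),  # StoragePort appears byte-reversed
        block_len=int(_field(r"Block Len\s*:\s*(\d+)", output)),
        max_lba=int(_field(r"Max LBA\s*:\s*(\d+)", output)),
    )


def new_lun_too_small(existing_output: str, new_output: str) -> bool:
    """True if the new storage LUN holds fewer bytes than the existing LUN."""
    return parse_lun_info(new_output).size_bytes < parse_lun_info(existing_output).size_bytes


if __name__ == "__main__":
    existing = "StoragePort: 00:00:02:2b:06:00:51:44 VI : 82:63:4a:ec:0d:00:72:26\nBlock Len : 512\nMax LBA : 20973567\n"
    new = "StoragePort: 00:00:04:2b:06:00:f1:44 VI : 82:63:4a:ec:0d:00:72:26\nBlock Len : 512\nMax LBA : 20971519\n"
    print(parse_lun_info(existing).pwwn, parse_lun_info(existing).size_gb)
    print(parse_lun_info(new).pwwn, parse_lun_info(new).size_gb)
    print(new_lun_too_small(existing, new))  # True
```

Comparing byte counts rather than the rounded GB values avoids the rounding effect described earlier in this section.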

Troubleshooting Job Execution Issues

If a failure occurs during the execution of a data migration job, DMM halts the migration job and the job is placed in Failed or Reset state.

You must validate a data migration job before restarting it. When a DMM job is in Reset state, its FC-Redirect entries are removed. In the DMM GUI, the job is validated automatically when you restart it. In the CLI, the job must be in Reset state before you can validate it; you cannot validate a job in Failed state.
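The validation rules above can be summarized as a small decision function. This Python sketch is a hypothetical model for illustration only: the `JobState` enum and the `restart_plan` function are not part of any DMM interface, and the sketch covers only the two halted states described in this section.

```python
from enum import Enum


class JobState(Enum):
    FAILED = "Failed"
    RESET = "Reset"  # FC-Redirect entries have been removed


def restart_plan(state: JobState, using_gui: bool) -> str:
    """Describe how to validate a halted job before restarting it (hypothetical model)."""
    if using_gui:
        # The DMM GUI validates the job automatically when you restart it.
        return "restart from the GUI; validation runs automatically"
    if state is JobState.RESET:
        # In the CLI, validation is allowed only in Reset state.
        return "validate the job in the CLI, then restart it"
    # A job in Failed state cannot be validated from the CLI.
    return "cannot validate in the CLI while the job is in Failed state"


if __name__ == "__main__":
    for state in JobState:
        print(state.value, "| CLI:", restart_plan(state, using_gui=False))
```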

Note

If a new port becomes active in the same zone as a migration job in progress, DMM generates a warning message in the system logs.

Troubleshooting job execution failures is described in the following sections:

•

DMM Jobs in Fail State

•

DMM Jobs in Reset State

DMM Jobs in Fail State

If DMM encounters an SSM I/O error to the storage, the job is placed in Failed state. Table 5-5 shows possible solutions for jobs in Failed state.

DMM Jobs in Reset State

Table 5-6 shows possible causes and solutions for jobs in Reset state.

Troubleshooting General Issues

If you need assistance with troubleshooting an issue, save the output from the relevant show commands.

You must connect to the SSM to execute DMM show commands. Use the attach module slot command to connect to the SSM.

The show dmm job command provides useful information for troubleshooting DMM issues. For detailed information about using this command, see the DMM CLI Command Reference appendix.

When reporting a DMM problem to the technical support organization, save the output of the show dmm tech-support command to a file.

Also run the show tech-support fc-redirect command on all switches with FC-Redirect entries, and save the output to a file.

DMM Error Reason Codes

If DMM encounters an error while running the job creation wizard, a pop-up window displays the error reason code. Error reason codes are also captured in the Job Activity Log. Table 5-7 provides a description of the error codes.
