Cisco UCS S3260 Storage Servers with Cohesity SmartFiles

Deployment and Configuration Guide for Cohesity Helios Platform and Cohesity SmartFiles on Cisco S3260 M5 Storage Servers

Published: January 2022

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unified Computing System (Cisco UCS), Cisco UCS B-Series Blade Servers, Cisco UCS C-Series Rack Servers, Cisco UCS S-Series Storage Servers, Cisco UCS Manager, Cisco UCS Management Software, Cisco Unified Fabric, Cisco Application Centric Infrastructure, Cisco Nexus 9000 Series, Cisco Nexus 7000 Series. Cisco Prime Data Center Network Manager, Cisco NX-OS Software, Cisco MDS Series, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries. (LDW_P1)

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2022 Cisco Systems, Inc. All rights reserved.

According to Gartner, data will grow by 800% over the next five years, of which 80% will be unstructured in the form of file shares, backups, archives, logs, media files, dev/test and analytics. Traditional network attached storage (NAS) was designed over a decade ago for traditional use cases, and without serious architectural consideration for cloud and hybrid IT environments. The reality today is that there are multiple data silos resulting in mass data fragmentation across the data center and cloud. Challenges are not limited to the cost and management of multiple silos or delivering hybrid cloud seamlessly. Storage costs often destroy storage budgets. Addressing compliance, regulatory, and governance requirements adds to complexity and cost.

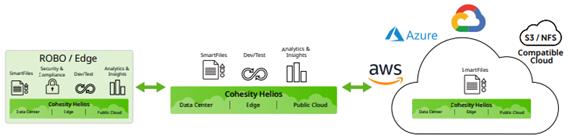

Cohesity SmartFiles is the industry’s first software-defined, data-centric, multiprotocol file and object solution for the enterprise. SmartFiles is a discrete offering that is provided on top of the Cohesity Helios platform and goes beyond traditional scale-out NAS in terms of global data manageability, multicloud scale, storage efficiency, policy-based automation, integrated applications, AI-powered cybersecurity, and multi-tiered data management. Unlike traditional NAS, the Cohesity Helios data platform with SmartFiles is data-centric, not storage-centric or infrastructure-centric. This means there is no need to move data to apps because the apps and data are integrated in the same platform. This removes the complexity and cost of running disparate file ecosystem apps and associated infrastructure for file environments. Cohesity Marketplace apps and machine-learning-assisted management provide the intelligence and ease of management that separate SmartFiles from traditional scale-out NAS appliances. It’s smart and provides virtually effortless management, regardless of scale.

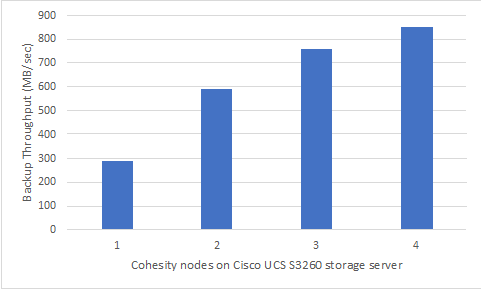

The Cisco UCS S3260 Storage Server is a modular storage server with dual M5 server nodes and is optimized for large datasets used in environments such as big data, cloud, object storage, video surveillance, and content delivery. The present deployment is optimized for best-in-class performance to capacity ratio, providing dual compute nodes and dense storage across a 4RU chassis. With UCS Management and stateless Service Profile capabilities, customers can easily expand to additional Cohesity nodes with minimal hardware and software configurations. Moreover, with UCS Managed S3260 storage chassis and Cohesity Helios platform, customers can achieve rolling upgrades of both software and hardware firmware with no cluster downtime.

This Cisco Validated Design and Deployment Guide provides prescriptive guidance for the design, setup, configuration, and ongoing use of the Cohesity Helios Platform with Cohesity SmartFiles, providing multi-protocol (NFS/CIFS/S3) file services and object storage capabilities on the Cisco UCS S3260 dense storage server. This unique integrated solution is designed to solve siloed infrastructure, operational, and data management challenges faced by enterprises and service providers worldwide. The best-of-breed solution combines the web-scale simplicity and efficiency of Cohesity software with the power and flexibility of Cisco UCS servers. As a result, customers can more efficiently and effectively manage backup and unstructured data growth, acquire new insights, and reduce costs and complexity with a single, integrated solution. For more information on joint Cisco-Cohesity solutions, please see cohesity.com/cisco.

The Cisco UCS® S3260 Storage Server is a modular dual-node x86 server designed for investment protection. Its architectural flexibility provides high performance or high capacity for your data-intensive workloads. Combined with UCS Manager, customers can easily deploy storage capacity from terabytes to petabytes within minutes.

The Cohesity Helios platform redefines data management with a web-scale solution that radically simplifies the way companies protect, control and extract value from their data. This software-defined platform spans across core, cloud, and edge, can be managed from a single GUI, and enables independent apps to run in the same environment. It is the only solution built on a hyperconverged, scale-out design that converges backup, files & objects, dev/test and analytics, and uniquely allows applications to run on the same platform to extract insights from data. Designed with Google-like principles, it delivers true global deduplication and impressive storage efficiency that spans edge to core to the public cloud.

Cohesity SmartFiles runs on top of the Helios platform. SmartFiles is an enterprise-class, software-defined, data-centric, multiprotocol file and object solution that transcends traditional offerings in terms of manageability, scale, security, efficiency, and multi-tiered data management. SmartFiles modernizes and simplifies data and application management by providing one platform for multiple workloads. It is a modern converged target for consolidating data silos and securely managing unstructured content and application data, including digital libraries, archives, rich media, video surveillance, big data, and backup data sets.

Cohesity SmartFiles on the Cisco UCS S3260 storage server provides a dense-storage, enterprise-class, software-defined, data-centric, multiprotocol file and object solution that goes beyond traditional offerings in terms of global manageability, multicloud scale, AI-powered security, efficiency, and multi-tiered data management. With the Cohesity Helios management platform, customers can easily manage clusters deployed across data centers. The present architecture enables a high-density file services solution that provides a modernized converged target for consolidating data silos and securely managing unstructured content and application data, including digital libraries, archives, rich media, video surveillance, big data, and backup data sets.

The 4RU Cisco UCS S3260 storage server is equipped with dual compute nodes, each managing 4 x 3.2 TB SSDs as the flash tier and 24 x 16 TB HDDs as the capacity tier. The Cohesity SmartFiles on Cisco UCS solution comprises, at minimum, a four-node Cohesity Helios SmartFiles cluster across two Cisco UCS S3260 storage chassis. With Cohesity web-scale technology, the cluster can scale without limits, providing optimized efficiency and broad multiprotocol interoperability supporting NFS, SMB, S3, OpenStack Swift, and multiple leading public clouds. The solution ensures resiliency, consistency, and linear performance and scale, so you can grow at your own pace by incrementally adding nodes while eliminating disruptive upgrades.
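As a rough sizing aid, the raw capacity implied by the drive counts above can be worked out with a few lines of Python. This is a minimal sketch: the function and constant names are illustrative, and the figures are raw drive capacity only. Usable capacity after formatting, resiliency overhead, and data reduction is not modeled here.

```python
# Raw capacity arithmetic for the configuration described in the text.
# Drive counts and sizes come from the document; everything else
# (names, structure) is illustrative.

SSD_PER_NODE = 4        # flash-tier drives per compute node
SSD_TB = 3.2            # TB per SSD
HDD_PER_NODE = 24       # capacity-tier drives per compute node
HDD_TB = 16             # TB per HDD
NODES_PER_CHASSIS = 2   # dual-node S3260 chassis
MIN_CHASSIS = 2         # the minimum cluster spans two chassis


def raw_capacity_tb(chassis: int) -> dict:
    """Return node count and raw flash/HDD capacity (TB) for a chassis count."""
    nodes = chassis * NODES_PER_CHASSIS
    return {
        "nodes": nodes,
        "flash_tb": round(nodes * SSD_PER_NODE * SSD_TB, 1),
        "hdd_tb": nodes * HDD_PER_NODE * HDD_TB,
    }


if __name__ == "__main__":
    # Minimum four-node cluster across two chassis.
    print(raw_capacity_tb(MIN_CHASSIS))
```

For the minimum two-chassis cluster this gives four nodes, 51.2 TB of raw flash, and 1,536 TB of raw HDD capacity.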

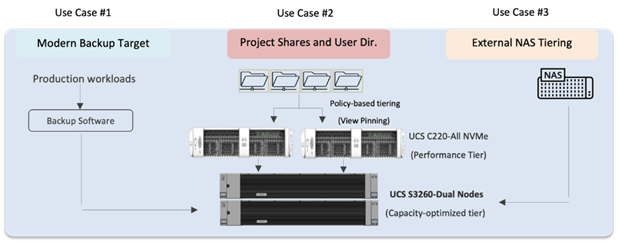

The solution extends across the following:

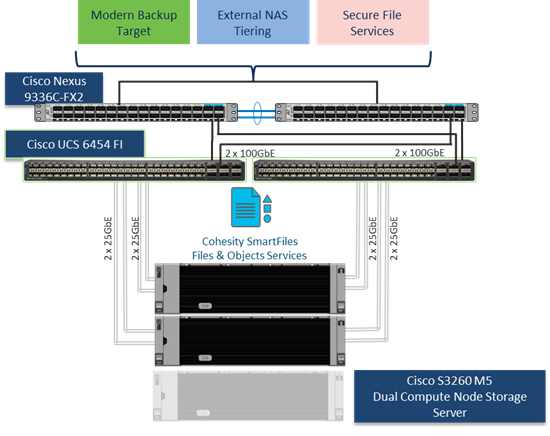

● Cohesity Helios Platform deployed as a four-node cluster across 2 x Cisco UCS S3260 Storage servers, each equipped with dual compute nodes, managed through a pair of Cisco Fabric Interconnect 6454.

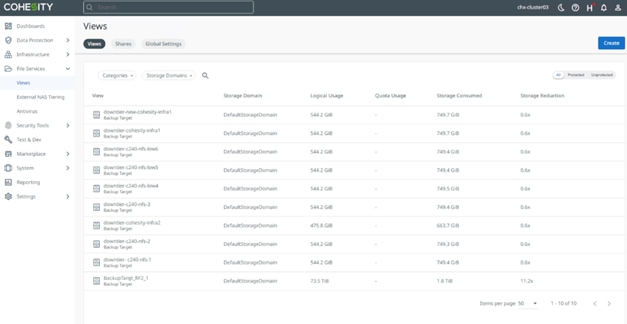

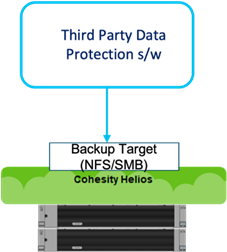

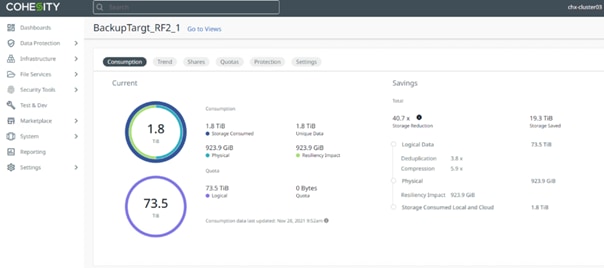

● Cohesity SmartFiles cluster as a Backup target for external Backup software.

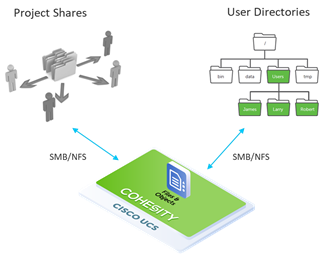

● Cohesity SmartFiles as a host for Secure File Services, thus eliminating inefficient and costly silos and allowing for provisioning of faster SSD storage or slower, less expensive HDD storage, depending on the use case.

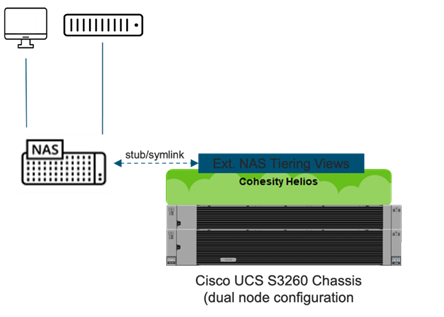

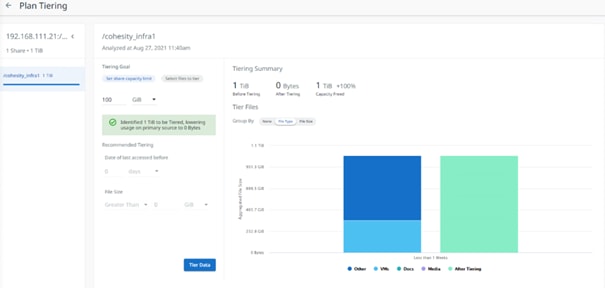

● Cohesity SmartFiles for External NAS Tiering to allow tiering of unused or infrequently used data from NAS primary storage to the Cohesity cluster.

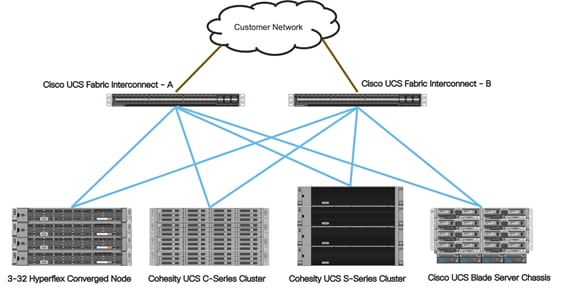

Figure 1 provides a high-level view of various use cases configured and validated in this solution.

Figure 1. Cohesity SmartFiles on Cisco UCS S3260 Storage Server

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, IT engineers, partners, and customers who are interested in learning about and deploying the Cohesity SmartFiles solution for non-latency-sensitive use cases, such as target storage for backups, replication, archiving, file services with NFS, SMB/CIFS and S3 backed object storage.

This document describes the installation, configuration, and validated use cases for the Cohesity Helios Platform with Cohesity SmartFiles on dual node Cisco UCS S3260 Storage chassis. A reference architecture is provided to configure the Cohesity Helios Platform on Cisco UCS S-Series Storage servers. This document does not specifically explain the configuration of the Cohesity SmartFiles features; this is described here: Cohesity SmartFiles

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation data center platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership and increase business agility. The system integrates a low-latency, lossless 10-100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform with a unified management domain for managing all resources.

Cisco Unified Computing System consists of the following subsystems:

Compute: The compute piece of the system incorporates servers based on the Second-Generation Intel® Xeon® Scalable processors. Servers are available in blade and rack form factors, managed by Cisco UCS Manager.

Network: The integrated network fabric in the system provides a low-latency, lossless, 10/25/40/100 Gbps Ethernet fabric. Networks for LAN, SAN, and management access are consolidated within the fabric. The unified fabric uses the innovative Single Connect technology to lower costs by reducing the number of network adapters, switches, and cables. This in turn lowers the power and cooling needs of the system.

Virtualization: The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtual environments to support evolving business needs.

Storage access: The Cisco UCS system provides consolidated access to both SAN storage and Network Attached Storage over the unified fabric. This provides customers with storage choices and investment protection. Server administrators can also pre-assign storage-access policies to storage resources, which simplifies storage connectivity and management and increases productivity.

Management: The system uniquely integrates compute, network, and storage access subsystems, enabling it to be managed as a single entity through Cisco UCS Manager software. Cisco UCS Manager increases IT staff productivity by enabling storage, network, and server administrators to collaborate on Service Profiles that define the desired physical configurations and infrastructure policies for applications. Service Profiles increase business agility by enabling IT to automate and provision resources in minutes instead of days.

Cisco Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

Embedded Management — In Cisco UCS, the servers are managed by the embedded firmware in the Fabric Interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

Unified Fabric — In Cisco UCS, from blade server chassis or rack servers to FI, there is a single Ethernet cable used for LAN, SAN, and management traffic. This converged I/O results in reduced cables, SFPs and adapters – reducing capital and operational expenses of the overall solution.

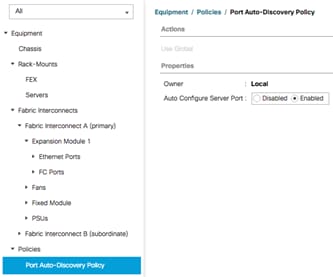

Auto Discovery — By simply inserting the blade server in the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

Policy Based Resource Classification — Once a compute resource is discovered by Cisco UCS Manager, it can be automatically classified to a given resource pool based on policies defined. This capability is useful in multi-tenant cloud computing. This CVD showcases the policy-based resource classification of Cisco UCS Manager.

Combined Rack and Blade Server Management — Cisco UCS Manager can manage Cisco UCS B-series blade servers and Cisco UCS C-series rack servers under the same Cisco UCS domain. This feature, along with stateless computing makes compute resources truly hardware form factor agnostic.

Model based Management Architecture — The Cisco UCS Manager architecture and management database is model based, and data driven. An open XML API is provided to operate on the management model. This enables easy and scalable integration of Cisco UCS Manager with other management systems.

Policies, Pools, Templates — The management approach in Cisco UCS Manager is based on defining policies, pools, and templates, instead of cluttered configuration, which enables a simple, loosely coupled, data driven approach in managing compute, network, and storage resources.

Loose Referential Integrity: In Cisco UCS Manager, a service profile, port profile, or policy can refer to other policies or logical resources with loose referential integrity. A referred policy need not exist at the time the referring policy is authored, and a referred policy can be deleted even though other policies refer to it. This allows subject matter experts to work independently of each other, and it provides great flexibility when experts from different domains, such as network, storage, security, server, and virtualization, work together to accomplish a complex task.

Policy Resolution — In Cisco UCS Manager, a tree structure of organizational unit hierarchy can be created that mimics the real-life tenants and/or organization relationships. Various policies, pools and templates can be defined at different levels of organization hierarchy. A policy referring to another policy by name is resolved in the organizational hierarchy with closest policy match. If no policy with specific name is found in the hierarchy of the root organization, then the special policy named “default” is searched. This policy resolution practice enables automation friendly management APIs and provides great flexibility to owners of different organizations.

Service Profiles and Stateless Computing: A service profile is a logical representation of a server, carrying its various identities and policies. This logical server can be assigned to any physical compute resource as long as it meets the resource requirements. Stateless computing enables procurement of a server within minutes, which used to take days in legacy server management systems.

Built-in Multi-Tenancy Support — The combination of policies, pools and templates, loose referential integrity, policy resolution in the organizational hierarchy and a service profiles-based approach to compute resources makes Cisco UCS Manager inherently friendly to multi-tenant environments typically observed in private and public clouds.

Extended Memory — The enterprise-class Cisco UCS Blade server extends the capabilities of the Cisco Unified Computing System portfolio in a half-width blade form factor. It harnesses the power of the latest Intel® Xeon® Scalable Series processor family CPUs and Intel® Optane DC Persistent Memory (DCPMM) with up to 18TB of RAM (using 256GB DDR4 DIMMs and 512GB DCPMM).

Simplified QoS — Even though Fibre Channel and Ethernet are converged in the Cisco UCS fabric, built-in support for QoS and lossless Ethernet makes it seamless. Network Quality of Service (QoS) is simplified in Cisco UCS Manager by representing all system classes in one GUI panel.
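The policy resolution behavior described in the list above (closest match by name in the organizational hierarchy, then a fallback to the policy named "default") can be modeled in a few lines. This is a conceptual sketch only, not Cisco UCS Manager code: the `Org` class, the `resolve_policy` function, and the sample organization and policy names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Org:
    """Hypothetical model of an organization node that holds named policies."""
    name: str
    parent: Optional["Org"] = None
    policies: Dict[str, str] = field(default_factory=dict)


def _search_upward(org: Optional[Org], policy_name: str) -> Optional[str]:
    """Walk from the given org toward the root and return the closest match."""
    while org is not None:
        if policy_name in org.policies:
            return org.policies[policy_name]
        org = org.parent
    return None


def resolve_policy(org: Org, policy_name: str) -> Optional[str]:
    """Resolve a policy by name; fall back to the policy named 'default'."""
    found = _search_upward(org, policy_name)
    if found is None:
        found = _search_upward(org, "default")
    return found


# Illustrative hierarchy: root -> tenant-a -> team-1
root = Org("root", policies={"default": "root/default", "gold": "root/gold"})
tenant = Org("tenant-a", parent=root, policies={"gold": "tenant-a/gold"})
team = Org("team-1", parent=tenant)

if __name__ == "__main__":
    print(resolve_policy(team, "gold"))     # closest match is in tenant-a
    print(resolve_policy(team, "missing"))  # falls back to root's default
```

Because references are stored as plain names and resolved only when needed, this model also reflects loose referential integrity: a reference to a policy that does not exist yet is still valid and simply resolves to the default.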

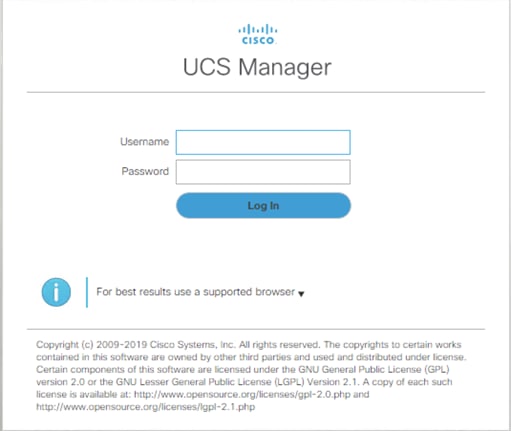

Cisco UCS Manager (UCSM) provides unified, integrated management for all software and hardware components in Cisco UCS. Using Cisco Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive graphical user interface (GUI), a command-line interface (CLI), or a robust application programming interface (API).

Cisco UCS Manager is embedded into the Cisco UCS Fabric Interconnect and provides a unified management interface that integrates server, network, and storage. Cisco UCS Manager performs auto-discovery to detect, inventory, manage, and provision system components that are added or changed. It offers a comprehensive set of XML APIs for third-party integration, exposes thousands of integration points, and facilitates custom development for automation, orchestration, and new levels of system visibility and control.
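To give a feel for the XML API, the sketch below builds two request documents with the Python standard library: a login request and a class query. The method and attribute names (`aaaLogin`, `configResolveClass`, `outCookie`) follow the Cisco UCS Manager XML API as we understand it, but the host name, credentials, and helper function names are placeholders, and no request is actually sent. Treat it as an illustration rather than a reference client; for real automation, consult the XML API programmer's guide for your UCS Manager release.

```python
import xml.etree.ElementTree as ET

# Placeholder endpoint (assumption): substitute your own UCS Manager address.
UCSM_URL = "https://ucsm.example.com/nuova"


def build_login(username: str, password: str) -> bytes:
    """Build an aaaLogin request body; the response carries a session cookie."""
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem)


def build_resolve_class(cookie: str, class_id: str) -> bytes:
    """Build a configResolveClass query for all objects of one class."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "classId": class_id, "inHierarchical": "false"},
    )
    return ET.tostring(elem)


def parse_cookie(response_xml: bytes) -> str:
    """Extract the session cookie from an aaaLogin response body."""
    return ET.fromstring(response_xml).attrib["outCookie"]


if __name__ == "__main__":
    # These bodies would be POSTed to UCSM_URL over HTTPS by a real client.
    print(build_login("admin", "example-password").decode())
    print(build_resolve_class("example-cookie", "equipmentChassis").decode())
```

Keeping request construction separate from transport makes the request bodies easy to inspect and test before pointing a client at a production Fabric Interconnect.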

Cisco UCS™ Manager 4.0 provides unified, embedded management of all software and hardware components of the Cisco Unified Computing System™ (Cisco UCS) across multiple chassis and Cisco UCS servers. Cisco UCS Manager 4.0 is a unified software release for all supported Cisco UCS hardware platforms. Release 4.0 enables support for UCS 6454 Fabric Interconnects, VIC 1400 series adapter cards on Cisco UCS M5 servers, the Second-Generation Intel® Xeon® Scalable processor refresh, and Intel® Optane™ Data Center persistent memory modules on UCS Intel-based M5 servers.

For more information on Cisco UCS Manager Release 4.0, refer to the Release Notes page.

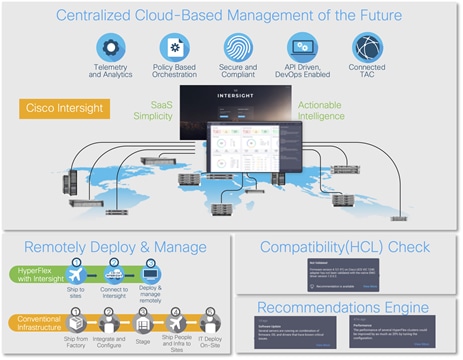

Cisco Intersight™ is a lifecycle management platform for your infrastructure, regardless of where it resides. In your enterprise data center, at the edge, in remote and branch offices, at retail and industrial sites—all these locations present unique management challenges and have typically required separate tools. Cisco Intersight Software as a Service (SaaS) unifies and simplifies your experience of the Cisco Unified Computing System™ (Cisco UCS®) and Cisco HyperFlex™ systems.

Intersight software delivers a new level of cloud-powered intelligence that supports lifecycle management with continuous improvement. It is tightly integrated with the Cisco® Technical Assistance Center (TAC). Expertise and information flow seamlessly between Cisco Intersight and IT teams, providing global management of Cisco infrastructure, anywhere. Remediation and problem resolution are supported with automated upload of error logs for rapid root-cause analysis.

Figure 2. Cisco Intersight

Automate your infrastructure

Cisco has a strong track record for management solutions that deliver policy-based automation to daily operations. Intersight SaaS is a natural evolution of our strategies. Cisco designed Cisco UCS and HyperFlex to be 100 percent programmable. Cisco Intersight simply moves the control plane from the network into the cloud. Now you can manage your Cisco UCS and HyperFlex infrastructure wherever it resides through a single interface.

Deploy your way

If you need to control how your management data is handled, comply with data locality regulations, or consolidate the number of outbound connections from servers, you can use the Cisco Intersight Virtual Appliance for an on-premises experience. Cisco Intersight Virtual Appliance is continuously updated just like the SaaS version, so regardless of which approach you implement, you never have to worry about whether your management software is up to date.

DevOps ready

If you are implementing DevOps practices, you can use the Cisco Intersight API with either the cloud-based or virtual appliance offering. Through the API you can configure and manage infrastructure as code—you are not merely configuring an abstraction layer; you are managing the real thing. Through the API and support of cloud-based RESTful API, Terraform providers, Microsoft PowerShell scripts, or Python software, you can automate the deployment of settings and software for both physical and virtual layers. Using the API, you can simplify infrastructure lifecycle operations and increase the speed of continuous application delivery.

Pervasive simplicity

Simplify the user experience by managing your infrastructure regardless of where it is installed.

Automate updates to Cisco HyperFlex™ Data Platform software, reducing complexity and manual efforts.

Actionable intelligence

Use best practices to enable faster, proactive IT operations.

Gain actionable insight for ongoing improvement and problem avoidance.

Manage anywhere

Deploy in the data center and at the edge with massive scale.

Get visibility into the health and inventory detail for your Intersight Managed environment on-the-go with the Cisco Intersight Mobile App.

For more information about Cisco Intersight and the different deployment options, go to: Cisco Intersight – Manage your systems anywhere.

The Cisco UCS Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, the Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless 10 Gigabit, 25 Gigabit, 40 Gigabit, or 100 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE) and Fibre Channel connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for the Cisco UCS C-Series, S-Series and HX-Series Rack-Mount Servers, Cisco UCS B-Series Blade Servers, and Cisco UCS 5100 Series Blade Server Chassis. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain. In addition, by supporting unified fabrics, the Cisco UCS Fabric Interconnects provide both the LAN and SAN connectivity for all servers within its domain.

For networking performance, the Cisco UCS 6454 Series uses a cut-through architecture, supporting deterministic, low latency, line rate 10/25/40/100 Gigabit Ethernet ports, 3.82 Tbps of switching capacity, and 320 Gbps bandwidth per Cisco 5108 blade chassis when connected through the IOM 2208 model. The product family supports Cisco low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over the Ethernet fabric from the servers to the uplinks. Significant TCO savings come from an FCoE-optimized server design in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.

Cisco UCS 6454 Fabric Interconnect

The Cisco UCS 6454 Fabric Interconnect is a one-rack-unit (1RU) 10/25/40/100 Gigabit Ethernet, FCoE, and Fibre Channel switch offering up to 3.82 Tbps throughput and up to 54 ports. The switch has eight (8) 10/25-Gbps fixed Ethernet ports, which can optionally be configured as 8/16/32-Gbps FC ports (ports 1 to 8), thirty-six (36) 10/25-Gbps fixed Ethernet ports (ports 9 to 44), four (4) 1/10/25-Gbps Ethernet ports (ports 45 to 48), and six (6) 40/100-Gbps Ethernet uplink ports (ports 49 to 54). For more information, refer to the Cisco UCS 6454 Fabric Interconnect spec sheet: https://www.cisco.com/c/dam/en/us/products/collateral/servers-unified-computing/ucs-b-series-blade-servers/6400-specsheet.pdf

Figure 3. Cisco UCS 6454 Fabric Interconnect
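The port layout described above can be captured as a small lookup table, which is handy when planning cabling or validating a port assignment in a script. The sketch below is illustrative only: the port ranges and speeds come from the text, while the table and function names are hypothetical.

```python
# Port groups of the Cisco UCS 6454 Fabric Interconnect as listed in the text:
# (first_port, last_port, description)
PORT_GROUPS = [
    (1, 8, "10/25-Gbps Ethernet or 8/16/32-Gbps FC"),
    (9, 44, "10/25-Gbps Ethernet"),
    (45, 48, "1/10/25-Gbps Ethernet"),
    (49, 54, "40/100-Gbps Ethernet uplink"),
]


def port_count(first: int, last: int) -> int:
    """Number of ports in an inclusive range."""
    return last - first + 1


def describe_port(port: int) -> str:
    """Return the capability description for a given port number."""
    for first, last, description in PORT_GROUPS:
        if first <= port <= last:
            return description
    raise ValueError(f"port {port} is outside the range 1-54")


if __name__ == "__main__":
    total = sum(port_count(first, last) for first, last, _ in PORT_GROUPS)
    print(f"total ports: {total}")
    print(f"port 50: {describe_port(50)}")
```

The four groups of 8, 36, 4, and 6 ports add up to the 54 ports quoted for the switch.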

Cisco UCS S-Series Cohesity-Certified Nodes

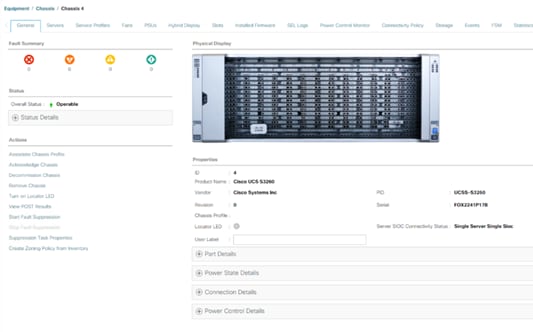

The Cisco UCS S3260 Storage Server is a modular, high-density, high-availability dual-node rack server well suited for service providers, enterprises, and industry-specific environments. It addresses the need for dense, cost-effective storage for the ever-growing amounts of data. Designed for a new class of cloud-scale applications and data-intensive workloads, it is simple to deploy and excellent for big data, software-defined storage, and data-protection environments.

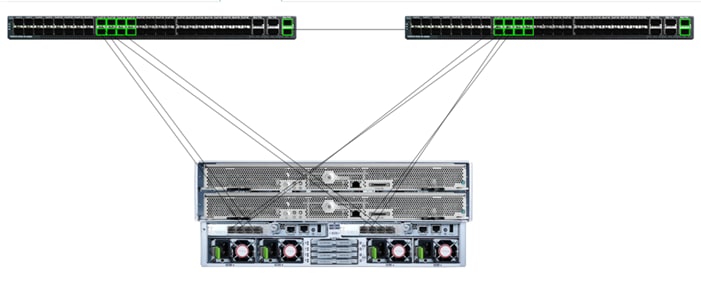

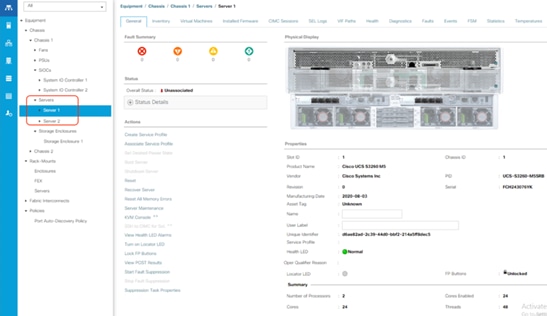

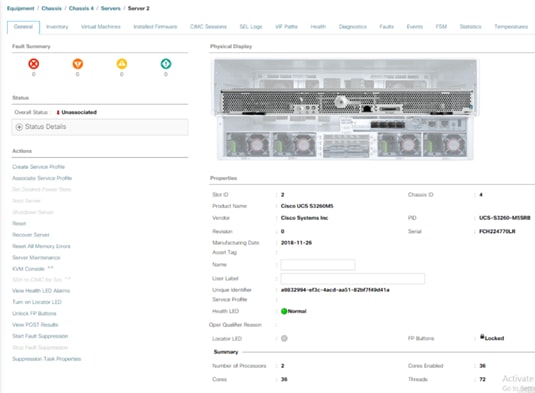

Figure 4. Cisco UCS S3260 Storage Server

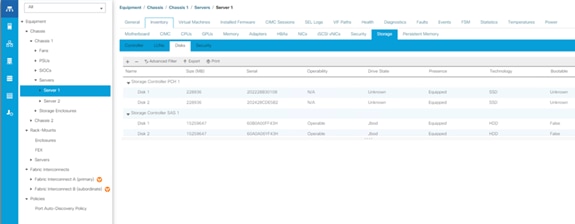

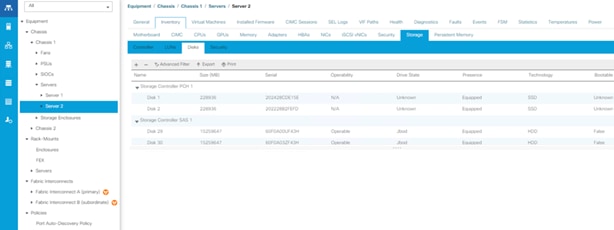

The Cisco UCS S3260 is a four-rack-unit (4RU) storage server, providing a dense storage platform for Cohesity clusters. Each node in the S3260 chassis can be configured with a PCIe-based system I/O controller for the Quad Port 10/25G Cisco VIC 1455 or the Dual Port 100G Cisco VIC 1495. The Cisco S3260 storage server for Cohesity SmartFiles is a dual-compute-node system equipped with eight 3.2 TB high-performance SSD drives for data caching and 48 NL-SAS drives, each with 16 TB capacity. For more information, please refer to the Cisco S3260 storage server spec sheet.

Cisco UCS C-Series Cohesity-Certified Nodes

A Cohesity cluster requires a minimum of three Cisco UCS C-Series nodes (with disk storage). Data is replicated across at least two of these nodes, and a third node is required for continuous operation in the event of a single-node failure. Each node is equipped with two high-performance SSD drives for data caching and rapid acknowledgment of write requests. Each node also is equipped with additional hard disks for long term storage and overall capacity.

This two-rack-unit (2RU) Cisco C240 M5 Large Form Factor (LFF) model server contains a pair of 240 GB M.2 form factor solid-state disks (SSDs) that act as the boot drives, a pair of 1.6 TB or 3.2 TB NVMe SSD drives installed in the rear drive slots, and twelve 4 TB or 10 TB SATA HDD drives for storage capacity.

Figure 5. Cisco C240 M5 LFF Server

Cisco UCS VIC 1457 MLOM Interface Card

The Cisco UCS VIC 1457 Card is a quad-port Enhanced Small Form-Factor Pluggable (SFP+) 10/25-Gbps Ethernet and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter installed in the Cisco UCS C-Series Rack Servers. The VIC 1457 is used in conjunction with the Cisco UCS 6454 model Fabric Interconnects. The mLOM slot can be used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host, each dynamically configured as either a network interface card (NIC) or host bus adapter (HBA). The personality of the interfaces is set programmatically using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and Worldwide Name [WWN]), failover policy, adapter settings, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all specified using the service profile.

Figure 6. Cisco UCS VIC 1457 mLOM Card

Cohesity has built a unique solution based on the same architectural principles employed by cloud hyperscalers managing consumer data but optimized for the enterprise world. The secret to the hyperscalers’ success lies in their architectural approach, which has three major components: a distributed file system—a single platform—to store data across locations, a single logical control plane through which to manage it, and the ability to run and expose services atop this platform to provide new functionality through a collection of applications. The Cohesity platform takes this same three-tier hyperscaler architectural approach and adapts it to the specific needs of enterprise data management.

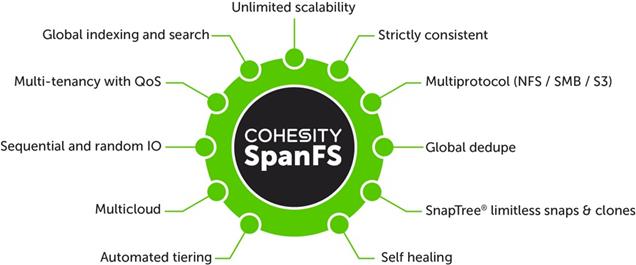

SpanFS: A Unique File System that Powers the Cohesity Helios Platform

The foundation of the Cohesity Helios Platform is Cohesity SpanFS®, a 3rd generation web-scale distributed file system. SpanFS enables the consolidation of all data management services, data, and apps onto a single software-defined platform, eliminating the need for the complex jumble of siloed infrastructure required by the traditional approach.

Predicated on SpanFS, Cohesity Helios Platform’s patented design allows all data management infrastructure functions (including backup and recovery, disaster recovery, long-term archival, file services and object storage, test data management, and analytics) to be run and managed in the same software environment at scale, whether in the public cloud, on-premises, or at the edge. Data is shared rather than siloed, stored efficiently rather than wastefully, and visible rather than kept in the dark, simultaneously addressing the problem of mass data fragmentation while allowing both IT and business teams to holistically leverage its value for the first time.

To meet modern data management requirements, Cohesity SpanFS provides the following key attributes:

Unlimited Scalability: Start with as little as three nodes and grow limitlessly on-premises or in the cloud with a pay-as-you-grow model.

Strictly Consistent: Ensure data resiliency with strict consistency across nodes within a cluster.

Multi-Protocol: Support traditional NFS and SMB based applications as well as modern S3-based applications. Read and write to the same data volume with simultaneous multiprotocol access.

Global Dedupe: Significantly reduce data footprint by deduplicating across data sources and workloads with global variable-length deduplication.

Unlimited Snapshots and Clones: Create and store an unlimited number of snapshots and clones with significant space savings and no performance impact.

Self-Healing: Auto-balance and auto-distribute workloads across a distributed architecture.

Automated Tiering: Automatic data tiering across SSD, HDD, and cloud storage for achieving the right balance between cost optimization and performance.

Multi Cloud: Native integrations with leading public cloud providers for archival, tiering, replication, and protection of cloud-native applications.

Sequential and Random IO: High I/O performance by auto-detecting the I/O profile and placing data on the most appropriate media.

Multitenancy with QoS: Native ability to support multiple tenants with QoS support, data isolation, separate encryption keys, and role-based access control.

Global Indexing and Search: Rapid global search due to indexing of file and object metadata.

Cohesity SmartFiles is an enterprise-class, software-defined, datacentric, multiprotocol file and object solution for the enterprise that transcends traditional offerings in terms of manageability, scale, security, efficiency and multi-tiered data management. It is based on the Cohesity Helios multicloud data platform, which delivers a patented distributed architecture for scale-out storage in a pay-as-you-grow model that never requires disruptive upgrades.

SmartFiles modernizes and simplifies data and application management by providing one platform for multiple workloads. It is a modern converged target for consolidating data silos and securely managing unstructured content and application data, including digital libraries, archives, rich media, video surveillance, big data and backup data sets.

Simplified Multicloud Data Management

SmartFiles software works efficiently on-premises as well as in the public cloud to make data visible and manageable. Its multicloud capabilities ensure seamless and rapid data mobility and application portability. Wherever data resides, it is always protected and preserved with integrated layers of security features and file integrity functions. Intelligent policy-based management and automation affirm efficient placement, governance and protection of volumes of data.

Do More With Data

SmartFiles enables you to extract value from data through an integrated Cohesity and third-party application ecosystem that runs directly on data in-place to mitigate risk from cyber threats and ransomware, accelerate content and metadata search, streamline compliance and eDiscovery, and detect anomalies and develop actionable insights with powerful analytics. SmartFiles also increases operational efficiency with multiple protocols, including native S3 compatibility, to integrate with leading business applications and clouds.

Flexible, Efficient And Cost Optimized

Available as a software defined solution, SmartFiles delivers drastically lower cost of ownership by providing the flexibility to match workload, capacity and cost requirements with a range of hardware choices, including flash-optimized options. Innovative data deduplication, compression and small file optimization extend storage capacity and enhance performance. Simplified tiering of cold content off valuable Tier 1 storage also assures investment protection while reducing the frequency of capacity upgrades on existing 3rd-party systems.

SmartFiles key capabilities include:

Multicloud Simplicity

● Enterprise Class: Designed to radically simplify enterprise data management and unlock unlimited value from enterprise data.

● Deploy Anywhere: As a software-defined solution, SmartFiles offers broad flexibility. Deploy on your choice of hardware, on virtual machines, or in one or more public clouds. It’s easy to migrate workloads across these deployments to optimize capacity, performance and costs.

● Unlimited Scale: Grow compute and storage independently. Support for heterogeneous disk and all-flash platforms allows for seamless deployment of new platforms alongside older platforms. You can also retire end-of-life platforms without expensive data migrations or forklift upgrades.

● Global Space Efficiency: SmartFiles is highly efficient and can deliver as much as 2x or 3x capacity savings compared to traditional alternatives. This translates to less physical storage costs, a smaller footprint, lower energy consumption and easier management.

● Multiprotocol Flexibility: An API-first design for maximum flexibility and ease of automation. SmartFiles provides native multiprotocol file and object services with unified permissions via NFS, SMB, S3 and OpenStack Swift.

Global Data Management

● One Global Control Plane: The singular Helios UI allows data management functions—including backup and recovery, disaster recovery, long-term archival, file and object services, test data management, security and compliance, and analytics — to be run and managed at scale, whether in the public cloud, on-premises, or at the edge. It offers real-time multi-cluster monitoring, pre-built and custom global reporting, global policy configuration and management, orchestrated multi-cluster upgrades, and global search.

● Automated Data Movement: Scale anywhere – on-premises or in the cloud – wherever it makes the most sense for your business. Policy-based automated tiering, archiving and replication to the cloud are also all supported.

● Burst for Compute: Replicate and instantly access data for processing in the cloud(s).

● Data Lifecycle Management: SmartFiles provides the ability to simply, reliably and cost effectively administer data creation, utilization, sharing, storage, and deletion.

● Deep Data Insights: Cohesity Helios leverages machine-learning-based algorithms to provide data-driven insights to proactively assess your environment and automate infrastructure resources across multiple locations. From the Cohesity App Marketplace, Cohesity-developed apps and third-party apps are also available that can run directly on the platform and operate on data in-place for search, audit, and antivirus scanning.

● Simple Data Migration: Use built-in external NAS tiering capability to transparently move cold data off of your NAS device to SmartFiles.

AI-Powered Cyber Security

● Anti-Ransomware: Helios looks at a large cross-section of an organization's data footprint to enable accurate and proactive actions. Anti-ransomware alerts can scan backups across the footprint to detect encryption-based ransomware attacks.

● WORM/Legal Hold: SmartFiles prevents data tampering and helps meet compliance requirements with an immutable file system, software encryption, over the wire encryption, multi-factor authentication, DataLock (WORM), legal hold settings, and adherence to FIPS 140-1 and 140-2 standards.

● Classification: Define classification policies and make discovering data across multiple clusters and data sources simple and automatic. SmartFiles incorporates the ability to choose a pre-built compliance template or search and define custom classification policies directly from the user interface.

● User Behavior: Avoid paying for expensive third-party tools to help answer questions about who moved data, who deleted data and what happened to the data. We've built those capabilities directly into the free Spotlight app on the Marketplace.

● Risk Exposure: SmartFiles includes a number of features for preventing unwanted data exposure, including secure views, in-flight and at-rest encryption, role-based access control and support for secure SSH.

The following sections detail the physical hardware, software revisions, and firmware versions required to install Cohesity SmartFiles clusters running on Cisco Unified Computing System. A Cohesity SmartFiles cluster requires a minimum of two Cisco UCS S3260 Storage chassis, with each chassis equipped with dual Cisco UCS S3260 M5 server nodes.

Table 1. Cohesity Helios System Components

| Component | Hardware Required |
| --- | --- |
| Fabric Interconnects | Two (2) Cisco UCS 6454 Fabric Interconnects |
| Servers | Minimum of two (2) Cisco UCS S3260 storage server chassis, each with dual server nodes |

Table 2 lists the required hardware components and disk options for the Cisco UCS S3260 Storage Server Base Chassis, which are required for installing Cohesity SmartFiles.

Table 2. Cisco UCS S3260 M5 Chassis Options

| Cisco UCS S3260 M5 options | Hardware Required |
| --- | --- |
| Chassis | Cisco UCS S3260 Storage Server Base Chassis |
| Server Node | 2x Cisco UCS S3260 M5 Server Node for Intel Scalable CPUs |
| Processors | Each server node equipped with two Intel 4214R 2.4GHz/100W 12C/16.50MB CPUs |
| Memory | Each server node equipped with 256 GB of total memory using eight (8) RDIMM/2Rx4 (8Gb) 1.2v modules |
| Disk Controller | Cisco UCS S3260 Dual Pass Through based on Broadcom IT Firmware |
| System I/O Controller (SIOC) | PCIe slot based with Cisco UCS VIC 1455 Quad Port 10/25G adapter |
| Storage: SSDs | 8 x Cisco UCS C3000 Top Load 3X 3.2TB SSD |
| Storage: HDDs | 48 x 16TB 4Kn NL-SAS 7200 RPM 12Gb HDD |
| Network | Cisco UCS VIC 1455 Quad Port 10/25G SFP28 CNA PCIE |
| Rear Drive / Boot Device | 4 x UCS S3260 240G Boot SSD |

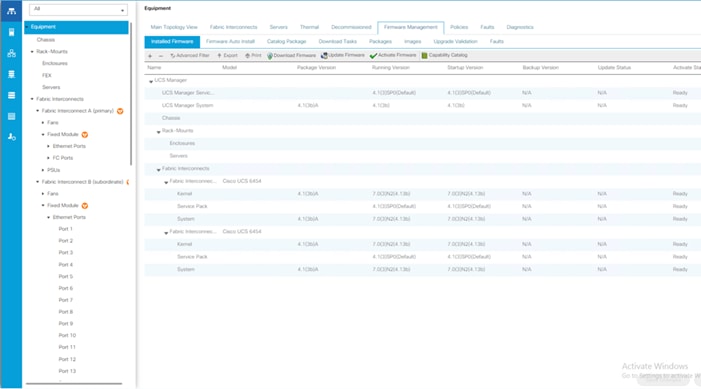

Table 3 lists the software components and the versions required for a single cluster of the Cohesity Helios Platform running in Cisco UCS, as tested and validated in this document.

| Component | Software Required |
| --- | --- |
| Cohesity Helios, Cohesity SmartFiles | 6.6.0d_release-20211028_8aa8fd67 or later |
| Cisco UCS Firmware | Cisco UCS Infrastructure software, B-Series and C-Series bundles, revision 4.1(3b) or later |

Cisco UCS systems and the Cohesity software must be properly licensed for all software features in use, and for all ports in use on the Cisco UCS Fabric Interconnects. Please contact your sales partner or your direct Cisco and Cohesity sales teams to ensure you order all the necessary and appropriate licenses for your solution.

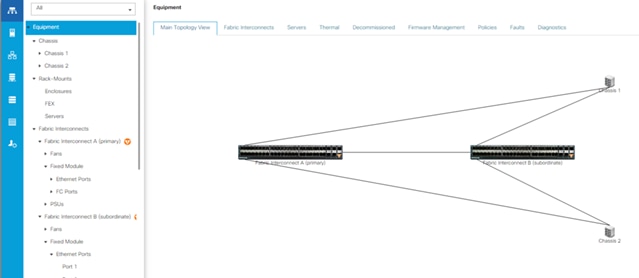

Cisco Unified Computing System is composed of a pair of Cisco UCS Fabric Interconnects along with up to 160 Cisco UCS B-Series blade servers, Cisco UCS C-Series rack-mount servers, HX-Series hyper-converged servers, or S-Series storage servers per UCS domain. Inside a Cisco UCS domain, multiple environments can be deployed for differing workloads. For example, a Cisco HyperFlex cluster can be built using Cisco HX-Series rack-mount servers; a Cohesity cluster can be built using high-density Cisco UCS S-Series storage server chassis or Cisco UCS C-Series rack-mount servers; and Cisco UCS B-Series blade servers inside Cisco UCS 5108 blade chassis can be deployed for various bare-metal or virtualized environments. The two Fabric Interconnects both connect to every Cisco UCS C-Series, HX-Series, or Cisco UCS S-Series storage server, and also connect to every Cisco UCS 5108 blade chassis. Upstream network connections, also referred to as “northbound” network connections, are made from the Fabric Interconnects to the customer datacenter network at the time of installation.

Figure 7. Cisco UCS Example Physical Topology

Cisco UCS Fabric Interconnects

Cisco UCS Fabric Interconnects (FI) are deployed in pairs, wherein the two units operate as a management cluster, while forming two separate network fabrics, referred to as the A side and B side fabrics. Therefore, many design elements will refer to FI A or FI B, alternatively called fabric A or fabric B. Both Fabric Interconnects are active at all times, passing data on both network fabrics for a redundant and highly available configuration. Management services, including Cisco UCS Manager, are also provided by the two FIs but in a clustered manner, where one FI is the primary, and one is secondary, with a roaming clustered IP address. This primary/secondary relationship is only for the management cluster and has no effect on data transmission.

Fabric Interconnects have the following ports, which must be connected for proper management of the Cisco UCS domain:

● Mgmt: A 10/100/1000 Mbps port for managing the Fabric Interconnect and the Cisco UCS domain through GUI and CLI tools. This port is also used by remote KVM, IPMI and SoL sessions to the managed servers within the domain. This is typically connected to the customer management network.

● L1: A cross connect port for forming the Cisco UCS management cluster. This port is connected directly to the L1 port of the paired Fabric Interconnect using a standard CAT5 or CAT6 Ethernet cable with RJ45 plugs. It is not necessary to connect this to a switch or hub.

● L2: A cross connect port for forming the Cisco UCS management cluster. This port is connected directly to the L2 port of the paired Fabric Interconnect using a standard CAT5 or CAT6 Ethernet cable with RJ45 plugs. It is not necessary to connect this to a switch or hub.

● Console: An RJ45 serial port for direct console access to the Fabric Interconnect. This port is typically used during the initial FI setup process with the included serial to RJ45 adapter cable. This can also be plugged into a terminal aggregator or remote console server device.

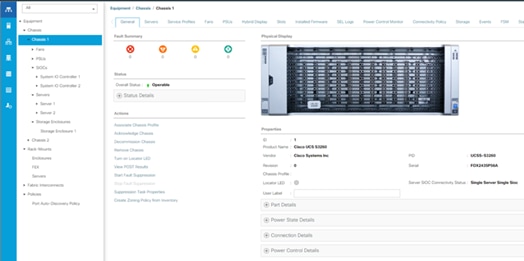

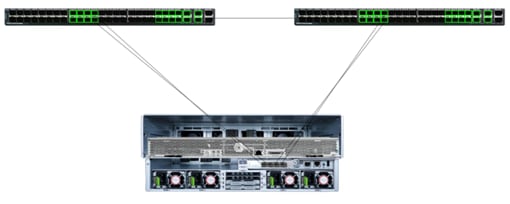

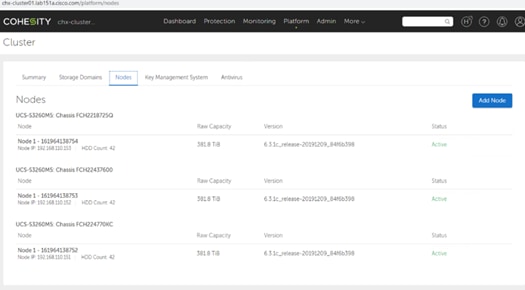

Cisco UCS S-Series Storage Server Chassis

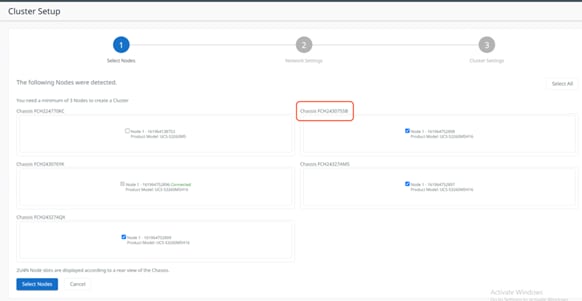

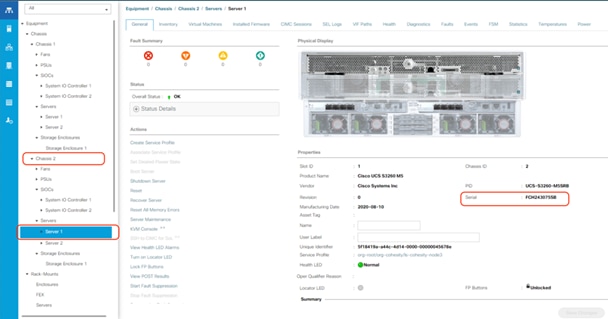

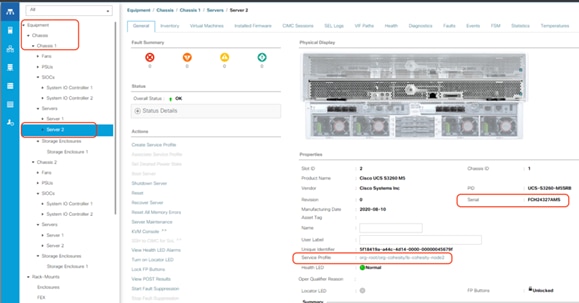

Cohesity UCS clusters for SmartFiles require a minimum of three (3) compute nodes. Each Cisco UCS S-Series Storage Server for Cohesity SmartFiles is equipped with dual server nodes. The Cisco UCS S-Series Storage Servers are connected directly to the Cisco UCS Fabric Interconnects in Direct Connect mode. Internally, the Cisco UCS S-Series servers are configured with the PCIe-based system I/O controller for the Quad Port 10/25G Cisco VIC 1455. The standard and redundant connection practice is to connect port 1 and port 2 of each server’s VIC card to numbered ports on FI A, and port 3 and port 4 of each server’s VIC card to the same numbered ports on FI B. The design also supports connecting just port 1 to FI A and port 3 to FI B. Ports 1 and 3 are used because ports 1 and 2 form an internal port-channel, as do ports 3 and 4. This allows an optional two-cable connection method, which is not used in this design.

Note: Failure to follow this cabling practice can lead to errors, discovery failures, and loss of redundant connectivity.

WARNING! Do not connect port 1 of the VIC 1455 to Fabric Interconnect A, and then connect port 2 of the VIC 1455 to Fabric Interconnect B. Splitting ports 1 and 2 between FI A and FI B will lead to discovery and configuration failures.
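The cabling rule above can be expressed as a small check that is run against a cabling plan before servers are racked. The following is a minimal sketch, not a Cisco tool: the function name and the input format (a mapping of VIC port number to "A" or "B") are assumptions made for illustration.

```python
# Expected Fabric Interconnect for each VIC 1455 port, following the cabling
# practice described above: ports 1-2 go to FI A, ports 3-4 go to FI B.
EXPECTED_FI = {1: "A", 2: "A", 3: "B", 4: "B"}


def check_vic_cabling(cabling):
    """Return a list of problems found in one server node's cabling plan.

    `cabling` is a hypothetical input format: it maps a VIC port number (1-4)
    to the Fabric Interconnect it is cabled to ("A" or "B"). Ports that are
    not cabled are simply omitted.
    """
    problems = []
    for port, fi in sorted(cabling.items()):
        expected = EXPECTED_FI.get(port)
        if expected is None:
            problems.append(f"port {port}: not a valid VIC 1455 port")
        elif fi != expected:
            problems.append(f"port {port}: cabled to FI {fi}, expected FI {expected}")

    # Redundancy requires at least one correctly cabled link to each fabric.
    good_fabrics = {fi for port, fi in cabling.items() if EXPECTED_FI.get(port) == fi}
    for fabric in ("A", "B"):
        if fabric not in good_fabrics:
            problems.append(f"no valid link to FI {fabric}")
    return problems


if __name__ == "__main__":
    print(check_vic_cabling({1: "A", 2: "A", 3: "B", 4: "B"}))  # four-cable method
    print(check_vic_cabling({1: "A", 2: "B"}))                  # the miscabling warned about above
```

An empty list means the plan follows the practice; both the four-cable method and the optional two-cable method (ports 1 and 3 only) pass this check.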

Figure 8. Cisco UCS S-Series Storage Server Connectivity

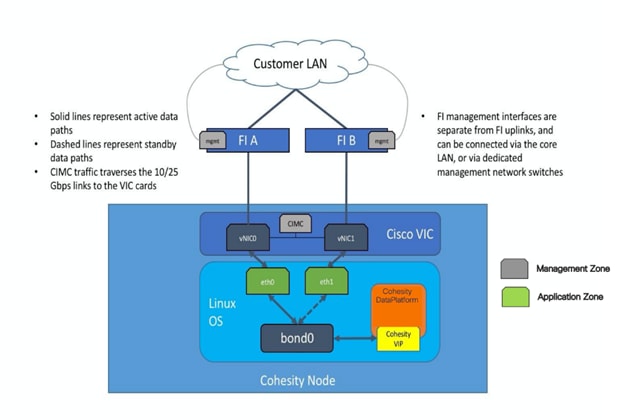

The Cohesity Helios Platform for Cohesity SmartFiles running on Cisco UCS has communication pathways that fall into two defined zones:

● Management Zone: This zone comprises the connections needed to manage the physical hardware, and the configuration of the Cisco UCS domain. These interfaces and IP addresses need to be available to all staff who will administer the UCS system, throughout the LAN/WAN. All IP addresses in this zone must be allocated from the same layer 2 (L2) subnet. This zone must provide access to Domain Name System (DNS), Network Time Protocol (NTP) services, and allow communication through HTTP/S and Secure Shell (SSH). In this zone are multiple physical and virtual components:

◦ Fabric Interconnect management ports.

◦ Cisco Integrated Management Controller (CIMC) management interfaces used by each of the rack-mount servers and blades, which answer through the FI management ports.

◦ IPMI access over LAN, allowing the Cohesity operating system to obtain information about system hardware health to proactively raise alerts and warnings.

● Application Zone: This zone comprises the connections used by the Cohesity Helios software and the underlying operating system on the nodes. These interfaces and IP addresses need to be able to always communicate with each other for proper operation, and they must be allocated from the same L2 subnet. The VLAN used for Cohesity application traffic must be accessible to and from all environments utilizing Cohesity File Services, such as the external NAS or external backup software utilizing Cohesity Views as a backup target. This zone must provide access to Domain Name System (DNS) and Network Time Protocol (NTP) services, and allow communication through HTTP/S and Secure Shell (SSH). Finally, the VLAN must be able to traverse the network uplinks from the Cisco UCS domain, reaching FI A from FI B directly through the northbound switches, and vice-versa. In this zone are multiple components:

◦ A static IP address configured for the underlying Linux operating system of each Cohesity node. Two UCS vNICs are configured per node, one on the A side fabric, and one on the B side fabric. The two interfaces are configured as slave interfaces in a bond within the Linux operating system, using bond mode 1 (active/passive).

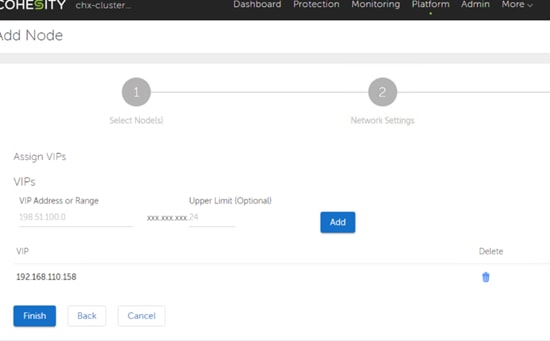

◦ A floating virtual IP address (VIP), one per node, that is used by Cohesity for all management, backup, and file services access. The assignment of the addresses is handled by the Cohesity software and will be re-assigned to an available node if any node should fall offline. These floating addresses are all assigned in DNS to a single A record, and the DNS server must respond to queries for that A record using DNS round-robin.
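To illustrate the DNS requirement above, the sketch below renders one A record per node VIP under a single hostname, which is the record set a DNS server would rotate through when answering with round-robin. The hostname, the addresses (taken from the 192.0.2.0/24 documentation range), and the TTL are all placeholders chosen for this example, not values from this design.

```python
import ipaddress


def round_robin_a_records(hostname, vips, ttl=300):
    """Render zone-file style A records, one per VIP, for a single hostname.

    The TTL default is an assumption for illustration; use the value that
    matches your DNS policy.
    """
    records = []
    for vip in vips:
        address = ipaddress.ip_address(vip)  # raises ValueError on a malformed address
        records.append(f"{hostname}. {ttl} IN A {address}")
    return records


if __name__ == "__main__":
    # Hypothetical four-node cluster: one VIP per node, all under one name.
    vips = ["192.0.2.11", "192.0.2.12", "192.0.2.13", "192.0.2.14"]
    for line in round_robin_a_records("cohesity.example.com", vips):
        print(line)
```

Round-robin itself is a behavior of the DNS server; the sketch only shows the shape of the record set that has to exist for it to work.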

Figure 9. Logical Network Design

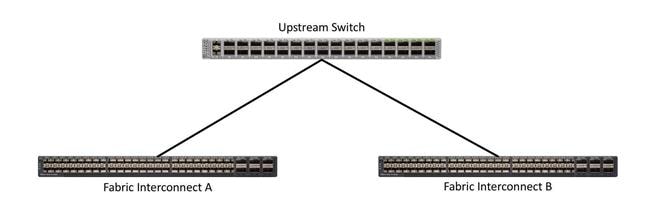

Cisco UCS network uplinks connect “northbound” from the pair of Cisco UCS Fabric Interconnects to the LAN in the customer datacenter. All Cisco UCS uplinks operate as trunks, carrying multiple 802.1Q VLAN IDs across the uplinks. The default Cisco UCS behavior is to assume that all VLAN IDs defined in the Cisco UCS configuration are eligible to be trunked across all available uplinks.

Cisco UCS Fabric Interconnects appear on the network as a collection of endpoints rather than as another network switch. Internally, the Fabric Interconnects do not participate in spanning-tree protocol (STP) domains, and the Fabric Interconnects cannot form a network loop, as they are not connected to each other with a layer 2 Ethernet link. All link up/down decisions through STP will be made by the upstream root bridges.

Uplinks need to be connected and active from both Fabric Interconnects. For redundancy, multiple uplinks can be used on each FI, either as 802.3ad Link Aggregation Control Protocol (LACP) port-channels or using individual links. For the best level of performance and redundancy, uplinks can be made as LACP port-channels to multiple upstream Cisco switches using the virtual port channel (vPC) feature. Using vPC uplinks allows all uplinks to be active and passing data, and protects against both an individual link failure and the failure of an upstream switch. Other uplink configurations can be redundant; however, spanning-tree protocol loop avoidance may disable links if vPC is not available.

All uplink connectivity methods must allow for traffic to pass from one Fabric Interconnect to the other, or from fabric A to fabric B. There are scenarios where cable, port or link failures would require traffic that normally does not leave the Cisco UCS domain, to now be forced over the Cisco UCS uplinks. Additionally, this traffic flow pattern can be seen briefly during maintenance procedures, such as updating firmware on the Fabric Interconnects, which requires them to be rebooted. The following sections and figures detail several uplink connectivity options.

Single uplinks to single switch

This connection design is susceptible to failures at several points; single uplink failures on either Fabric Interconnect can lead to connectivity losses or functional failures, and the failure of the single uplink switch will cause a complete connectivity outage.

Figure 10. Connectivity with Single Uplink to Single Switch

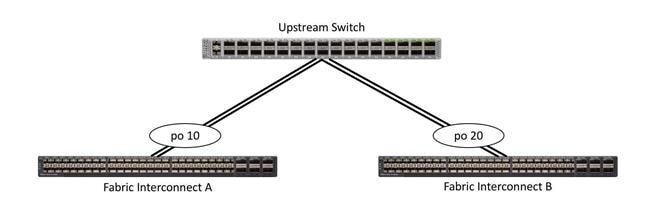

Port channels to single switch

This connection design is now redundant against the loss of a single link but remains susceptible to the failure of the single switch.

Figure 11. Connectivity with Port-Channels to Single Switch

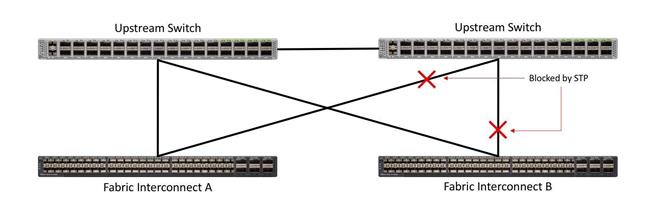

Single uplinks or port channels to multiple switches

This connection design is redundant against the failure of an upstream switch, and redundant against a single link failure. In normal operation, STP is likely to block half of the links to avoid a loop across the two upstream switches. The side effect of this is to reduce bandwidth between the Cisco UCS domain and the LAN. If any of the active links were to fail, STP would bring the previously blocked link online to provide access to that Fabric Interconnect through the other switch. It is not recommended to connect both links from a single FI to a single switch, as that configuration is susceptible to a single switch failure breaking connectivity from fabric A to fabric B. For enhanced redundancy, the single links in the figure below could also be port-channels.

Figure 12. Connectivity with Multiple Uplink Switches

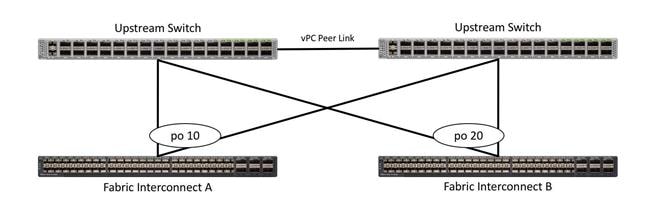

vPC to multiple switches

This recommended connection design relies on using Cisco switches that have the virtual port channel feature, such as Catalyst 6000 series switches running VSS, Cisco Nexus 5000 series, and Cisco Nexus 9000 series switches. Logically the two vPC enabled switches appear as one, and therefore spanning-tree protocol will not block any links. This configuration allows for all links to be active, achieving maximum bandwidth potential, and multiple redundancy at each level.

Figure 13. Connectivity with vPC

For the Cohesity system configuration, only one VLAN needs to be carried to the Cisco UCS domain from the upstream LAN, and this VLAN is also defined in the Cisco UCS configuration. Table 4 lists the VLAN required by Cohesity in Cisco UCS, and its function:

| VLAN Name | VLAN ID | Purpose |
| --- | --- | --- |
| <<cohesity_vlan>> | Customer supplied | Cohesity node Linux OS interfaces; Cohesity node software virtual IP addresses |

All Cohesity traffic traversing the <<cohesity_vlan>> VLAN and subnet is configured by default to use standard Ethernet frames.

Prior to the installation of the cluster, proper consideration must be given to the number of nodes required for the Cohesity cluster, and the usable capacity that will result.

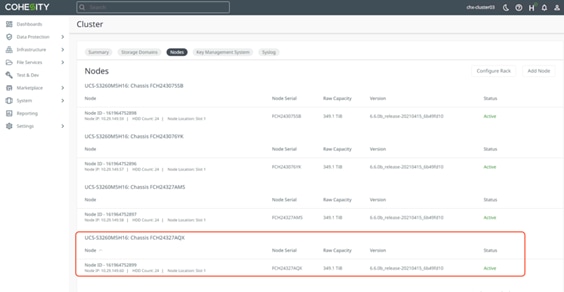

Cohesity clusters require a minimum of three (3) compute nodes; each Cisco UCS S3260 storage chassis can be equipped with two compute nodes. From that point, the cluster can grow to whatever size the end user requires to meet overall storage space requirements. This limitless scaling is a key feature of Cohesity that allows future growth without fear of reaching an overall capacity restriction. The Cohesity Data Platform allows multiple nodes to be added simultaneously.
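As a quick planning aid, the node minimum and the two-nodes-per-chassis layout translate into a chassis count as sketched below. The function and constant names are illustrative only.

```python
import math

MIN_NODES = 3          # minimum compute nodes in a Cohesity cluster
NODES_PER_CHASSIS = 2  # each Cisco UCS S3260 chassis holds two server nodes


def chassis_required(nodes):
    """Return the number of S3260 chassis needed to house `nodes` compute nodes."""
    if nodes < MIN_NODES:
        raise ValueError(f"a cluster needs at least {MIN_NODES} nodes, got {nodes}")
    return math.ceil(nodes / NODES_PER_CHASSIS)


if __name__ == "__main__":
    for n in (3, 4, 5, 8):
        print(n, "nodes ->", chassis_required(n), "chassis")
```

A three-node minimum therefore still needs two chassis, which matches the two-chassis minimum stated for this design.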

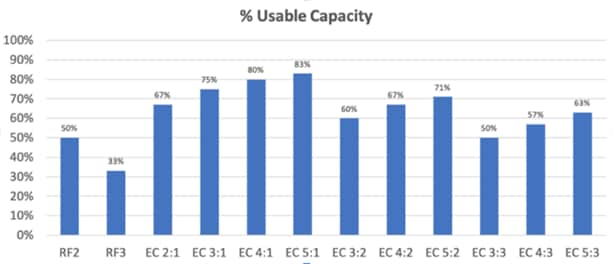

Cohesity provides configurable resiliency against HDD or node failures. Both Replication Factor RF2 and RF3, along with Erasure Coding (EC) schemes, are supported by the Cohesity SpanFS file system. RF refers to the number of replicas of a unit of data. The unit of replication is a chunk file, and a chunk file is mirrored to either one or two other nodes depending on the RF number chosen. RF2 provides resilience against a single data unit failure, and RF3 provides resilience against two data unit failures.

EC refers to a scheme where a number of usable data stripe units can be protected from failures using code stripe units, which are in turn derived from the usable data stripe units. A single code stripe unit can protect against one data (or code) stripe failure, and two code stripe units can protect against two data (or code) stripe unit failures.

Based on the resiliency and fault tolerance chosen, the raw to usable capacity varies. The figure below provides a high-level understanding of the usable capacity when different types of RF or EC schemes are chosen.

Figure 14. Usable Capacity

For more information, please see the Cohesity resilience white paper: (https://info.cohesity.com/Cohesity-Fault-Tolerance-White-Paper.html)
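The relationship between the protection scheme and usable capacity can be approximated with simple ratios: RF keeps N full copies, so roughly 1/N of the physical space holds unique data, while an EC scheme with d data stripe units and c code stripe units leaves d/(d+c). The sketch below is a simplification under those assumptions; it ignores file system overhead and metadata, and the scheme-string format it parses ("RF2", "EC 2:1") is just a convention for this example.

```python
def usable_fraction(scheme):
    """Approximate fraction of physical capacity left for data.

    Accepts "RF<n>" (n full replicas) or "EC <data>:<code>" (stripe units).
    Overheads other than replication or parity are not modeled.
    """
    text = scheme.upper().strip()
    if text.startswith("RF"):
        copies = int(text[2:])
        return 1 / copies
    if text.startswith("EC"):
        data, code = (int(part) for part in text[2:].strip().split(":"))
        return data / (data + code)
    raise ValueError(f"unknown scheme: {scheme!r}")


def capacity_after_protection(physical_tib, scheme):
    """Scale a physical capacity (in TiB) by the scheme's usable fraction."""
    return physical_tib * usable_fraction(scheme)


if __name__ == "__main__":
    for scheme in ("RF2", "RF3", "EC 2:1"):
        print(scheme, round(capacity_after_protection(109.2, scheme), 1), "TiB")
```

For a node with 109.2 TiB of physical capacity, these ratios give 54.6 TiB at RF2 and 72.8 TiB at EC 2:1.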

Disk drive manufacturers have adopted a size reporting methodology using calculation by powers of 10, also known as decimal prefix. As an example, a 120 GB disk is listed with a minimum of 120 x 10^9 bytes of usable addressable capacity, or 120 billion bytes. However, many operating systems and file systems report their space based on standard computer binary exponentiation, or calculation by powers of 2, also called binary prefix. In this system, 2^10 or 1024 bytes make up a kilobyte, 2^10 kilobytes make up a megabyte, 2^10 megabytes make up a gigabyte, and 2^10 gigabytes make up a terabyte. As the values increase, the disparity between the two systems of measurement and notation grows; at the terabyte level, the deviation between a decimal prefix value and a binary prefix value is nearly 10 percent.

The International System of Units (SI) defines values and decimal prefix by powers of 10 as listed in Table 5.

Table 5. SI Unit Values (Decimal Prefix)

| Value | Symbol | Name |
| --- | --- | --- |
| 1000 bytes | kB | Kilobyte |
| 1000 kB | MB | Megabyte |
| 1000 MB | GB | Gigabyte |
| 1000 GB | TB | Terabyte |

The International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) define values and binary prefix by powers of 2 in ISO/IEC 80000-13:2008 Clause 4 as listed in Table 6.

Table 6. IEC Unit Values (binary prefix)

| Value | Symbol | Name |
| --- | --- | --- |
| 1024 bytes | KiB | Kibibyte |
| 1024 KiB | MiB | Mebibyte |
| 1024 MiB | GiB | Gibibyte |
| 1024 GiB | TiB | Tebibyte |

For the purpose of this document, the decimal prefix numbers are used only for raw disk capacity as listed by the respective manufacturers. For all calculations where raw or usable capacities are shown from the perspective of the Cohesity software, filesystems or operating systems, the binary prefix numbers are used. This is done primarily to show a consistent set of values as seen by the end user from within the Cohesity HTML management dashboard when viewing cluster capacity, allocation, and consumption, and also within most operating systems.
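The conversion between the two notations is a ratio of powers of 1000 and 1024. The sketch below shows the arithmetic; the function name and the `exponent` parameter (1 for kilo, 2 for mega, 3 for giga, 4 for tera) are illustrative choices, not part of any Cohesity or Cisco tooling.

```python
def decimal_to_binary(value, exponent):
    """Convert a decimal-prefix quantity to the matching binary-prefix quantity.

    exponent: 1 = kB -> KiB, 2 = MB -> MiB, 3 = GB -> GiB, 4 = TB -> TiB.
    """
    if exponent not in (1, 2, 3, 4):
        raise ValueError("exponent must be 1 (kilo) through 4 (tera)")
    return value * 1000**exponent / 1024**exponent


if __name__ == "__main__":
    # The 120 GB disk from the example above, expressed in GiB.
    print(round(decimal_to_binary(120, 3), 2), "GiB")
    # Twelve 4 TB capacity disks, expressed in TiB.
    print(round(decimal_to_binary(4 * 12, 4), 1), "TiB")
```

Published capacity tables may round per-disk values before multiplying, so results computed this way can differ from a table in the last digit.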

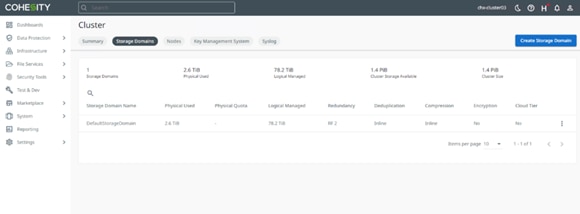

Table 7 lists a set of Cohesity Helios cluster usable capacity values, using binary prefixes, for an array of cluster configurations. These values provide an example of the capacity calculations, for determining the appropriate size of Cohesity cluster to initially purchase. Additional savings from deduplication and compression will raise the effective logical capacity far beyond the physical capacity of the nodes. Additionally, the choice of replication factor 2, or erasure coding, will determine the overall efficiency of the real data being stored on the nodes.

Table 7. Cohesity Cluster Usable Physical Capacities

| Cisco UCS C-Series Server Model | Capacity Disk Size (each) | Capacity Disk Quantity (per node) | Usable Capacity (per node) | Capacity per node @ RF2 | Capacity per node with EC 2:1 |
| --- | --- | --- | --- | --- | --- |
| C240-M5L | 4 TB | 12 | 43.7 TiB | 21.8 TiB | 29.1 TiB |
| C240-M5L | 10 TB | 12 | 109.2 TiB | 54.6 TiB | 72.8 TiB |
| S3260 M5 | 10 TB | 42 | 382.2 TiB | 191.1 TiB | 254.8 TiB |
| S3260 M5 | 10 TB | 21 | 191.1 TiB | 95.6 TiB | 127.4 TiB |

Configuration and Installation

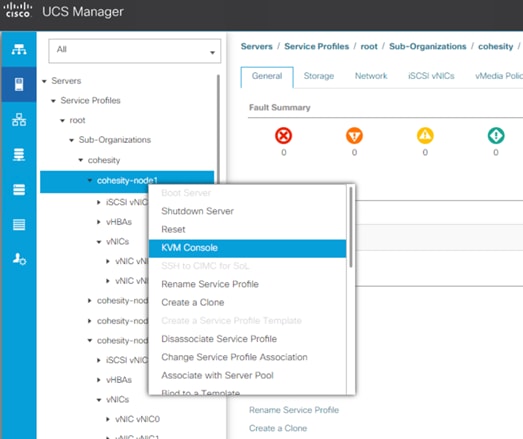

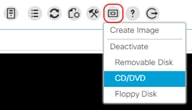

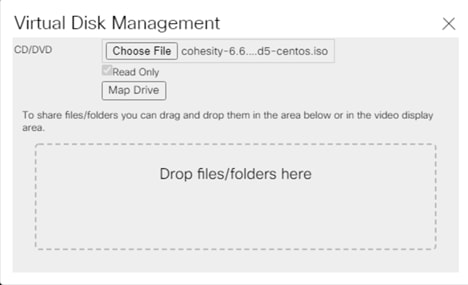

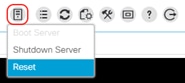

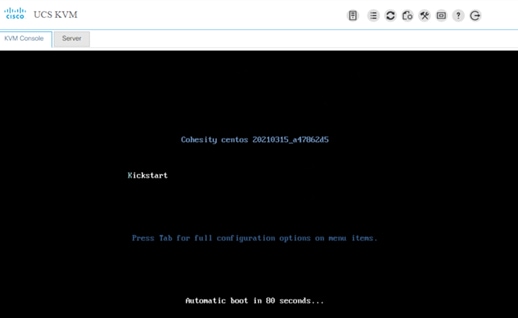

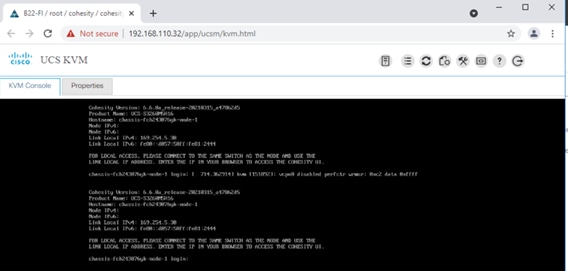

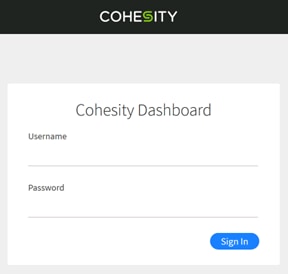

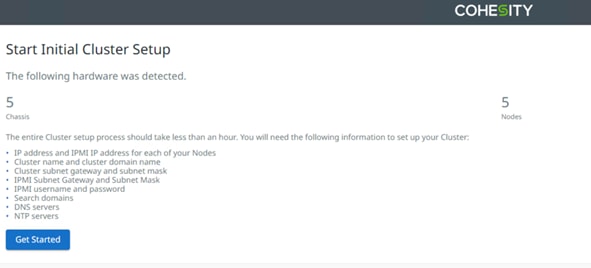

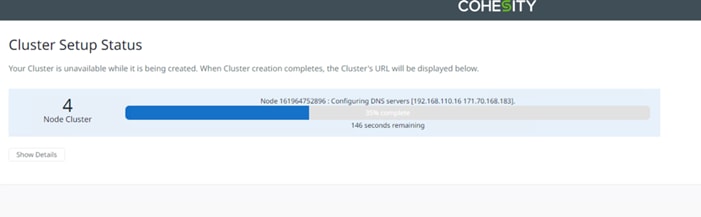

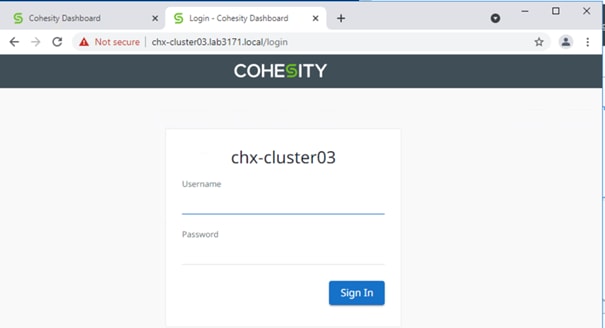

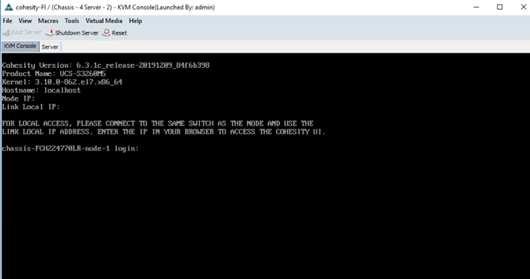

Installing the Cohesity Helios system is done through mounting a virtual DVD image to each Cisco UCS S-Series Storage Server node, which is available for download from Cohesity as an ISO file. The installation DVD validates the hardware configuration, installs the Linux operating system, copies the Cohesity software packages, and completes with the nodes ready for their final configuration to form a cluster. Prior to using the installation DVD, the configuration of the Cisco UCS domain, its policies, templates, and service profiles to be associated to the servers must be completed. The following sections will guide you through the prerequisites and manual steps needed to configure Cisco UCS Manager prior to booting the Cohesity installation DVD, the steps to install Cohesity to each node, and how to perform the remaining post-installation tasks to configure the Cohesity cluster. Finally, a basic configuration example is given for configuring Cohesity Storage Domains, Sources, Policies, Protection Jobs, file services Views, and Test/Dev virtual machine services.

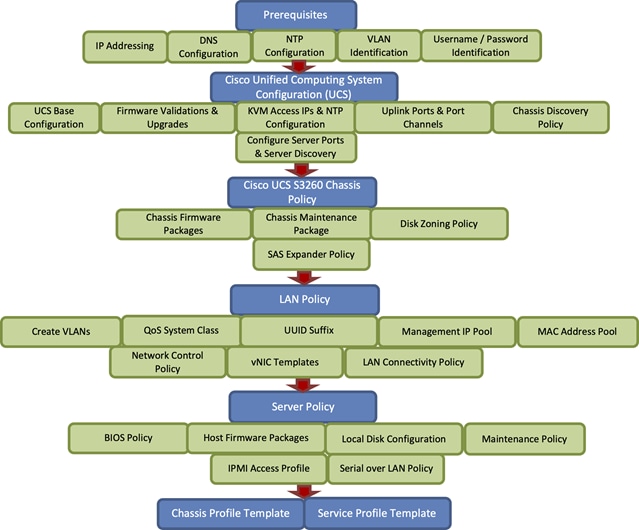

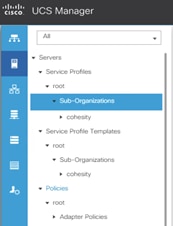

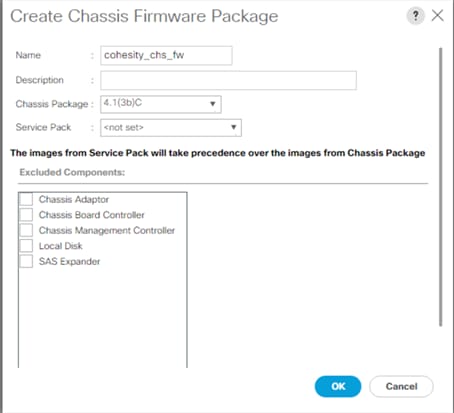

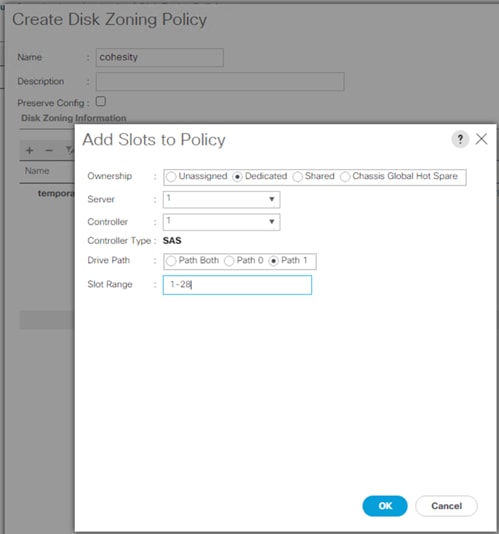

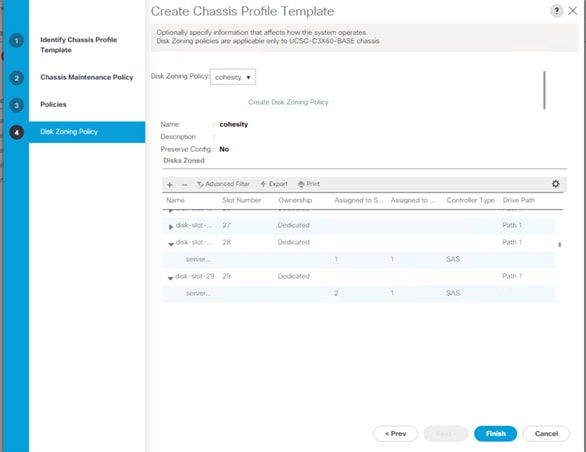

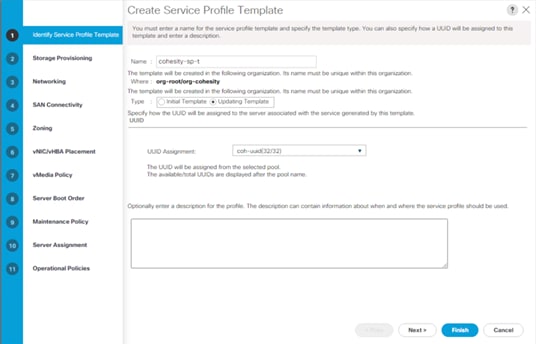

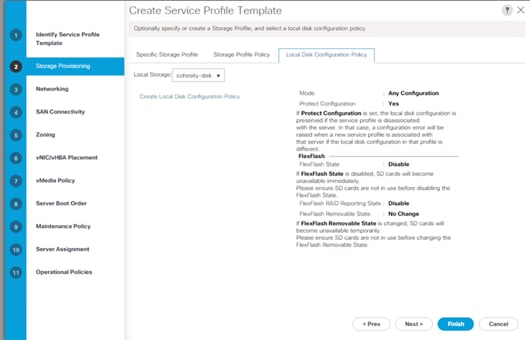

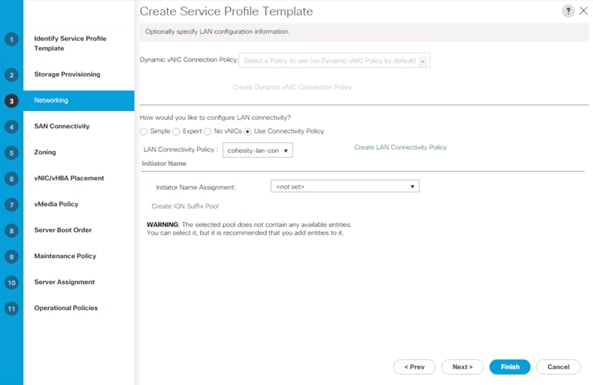

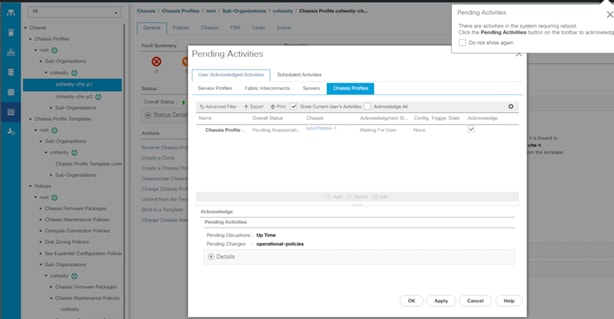

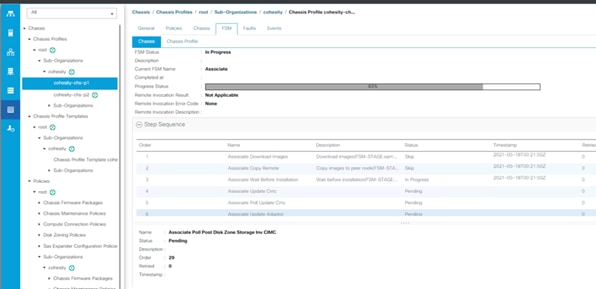

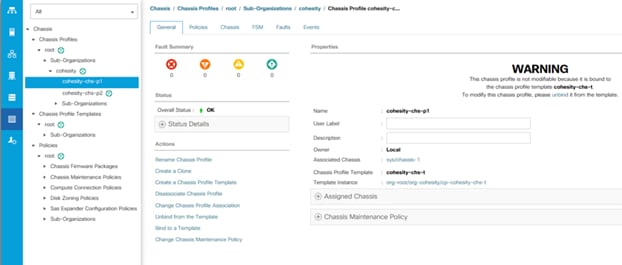

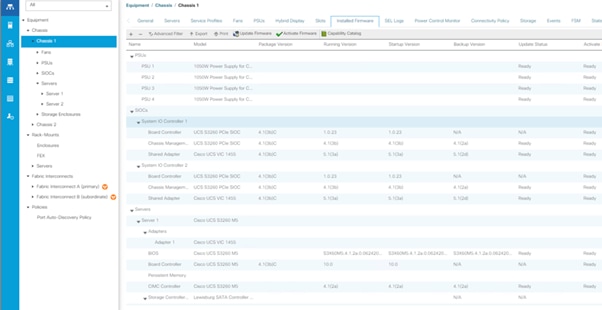

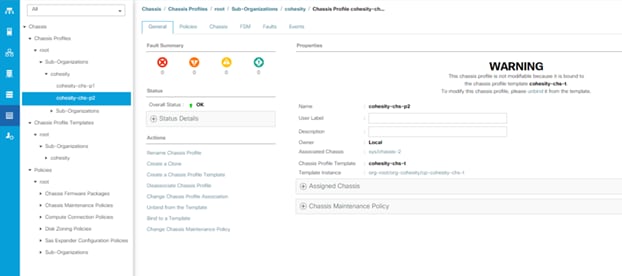

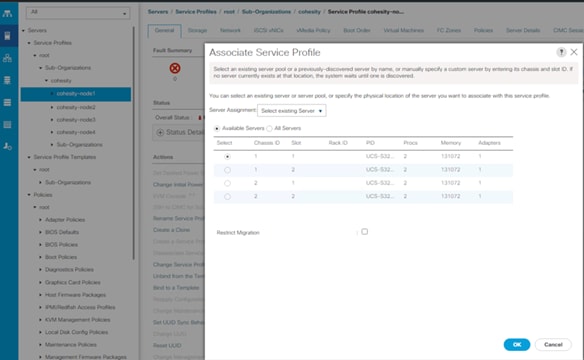

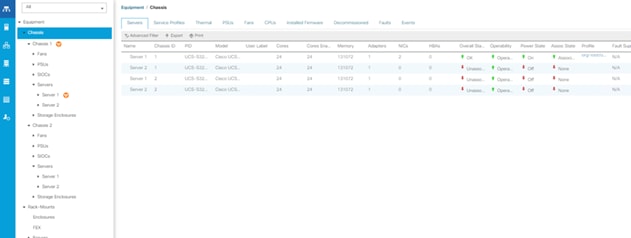

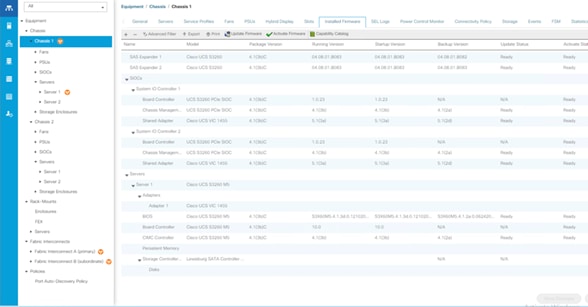

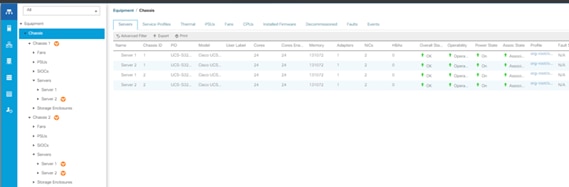

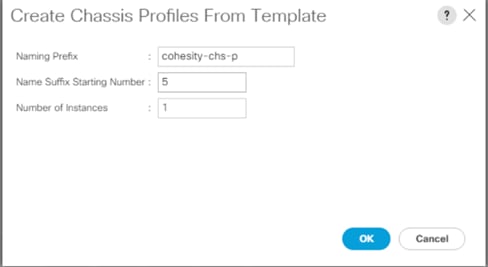

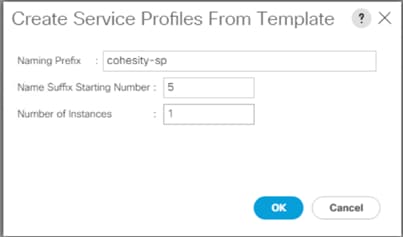

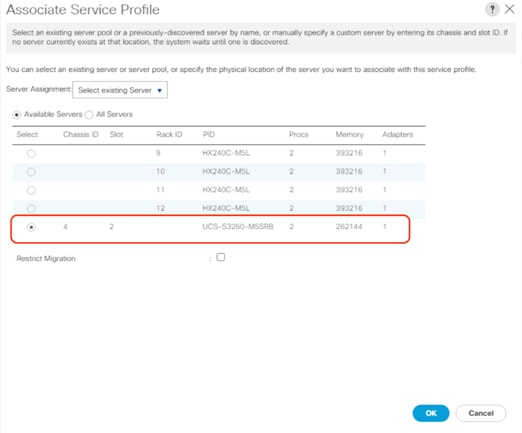

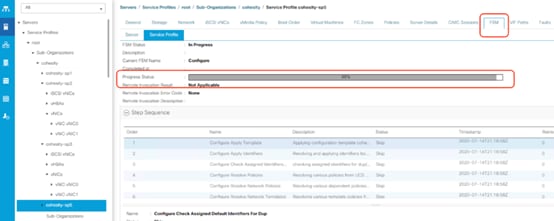

The workflow to configure Cisco UCS for a Cohesity cluster with the Cisco UCS S3260 Storage Server is shown in the figure below. This is a one-time process to configure the Cisco UCS chassis and service profile templates for the Cohesity cluster. Chassis profiles and service profiles can then be instantiated from the templates and attached to multiple Cisco UCS S3260 chassis and server nodes within the same Cisco UCS domain. A Cisco UCS domain allows you to quickly align computing resources in the data center with rapidly changing business requirements. This built-in flexibility is determined by whether you choose to fully implement the stateless computing feature.

Figure 15. Cisco UCS Configuration Workflow

Note: The workflow (Figure 15) to configure the Cisco UCS chassis and server profiles templates for Cohesity Cluster is a one-time process. The chassis profiles and service profiles can be instantiated from templates and attached to multiple Cisco UCS S3260 chassis and server nodes within the same Cisco UCS domain.

Prior to beginning the installation activities, complete the following necessary tasks and gather the required information.

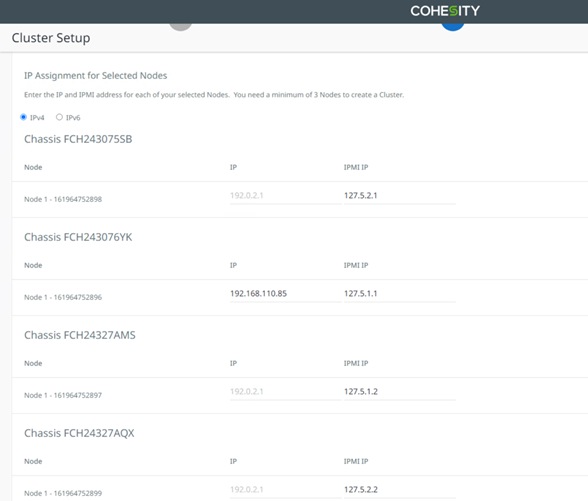

IP addresses for the Cohesity system on Cisco UCS need to be allocated from the appropriate subnets and VLANs to be used. IP addresses that are used by the system fall into the following groups:

● Cisco UCS Management: These addresses are used and assigned by Cisco UCS Manager. Three IP addresses are used by Cisco UCS Manager; one address is assigned to each Cisco UCS Fabric Interconnect, and the third IP address is a roaming address for management of the Cisco UCS cluster. In addition, at least one IP address per Cisco UCS S-series compute node is required for the Cohesity external management IP address pool, which is assigned to the CIMC interface of the physical servers. Since these management addresses are assigned from a pool, they need to be provided in a contiguous block of addresses. These addresses must all be in the same subnet.

● Cohesity Application: These addresses are used by the Linux OS on each Cohesity node and by the Cohesity software. Two IP addresses per node in the Cohesity cluster are required, both from the same subnet. These addresses can be assigned from the same subnet as the Cisco UCS Management addresses, or from a separate subnet.
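The addressing rules above (management pool in one contiguous block, every address inside its subnet, one node IP and one VIP per node) can be checked before installation. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `check_ip_plan` and its parameters are illustrative assumptions and are not part of any Cisco or Cohesity tool. The sample values are taken from the example in Table 9.

```python
import ipaddress


def check_ip_plan(mgmt_subnet, app_subnet, mgmt_pool, node_ips, vips):
    """Return a list of human-readable problems found in an address plan.

    Hypothetical helper for illustration only; an empty list means the
    plan passed these basic checks.
    """
    problems = []
    seen = {}
    groups = [
        ("management pool", mgmt_pool, ipaddress.ip_network(mgmt_subnet)),
        ("node IP", node_ips, ipaddress.ip_network(app_subnet)),
        ("VIP", vips, ipaddress.ip_network(app_subnet)),
    ]
    for group, addresses, network in groups:
        for text in addresses:
            addr = ipaddress.ip_address(text)
            if addr not in network:
                problems.append(f"{group} address {addr} is outside {network}")
            if addr in seen:
                problems.append(f"{addr} is listed under {seen[addr]} and {group}")
            seen[addr] = group

    # The management pool must be a contiguous block of addresses.
    pool = sorted(ipaddress.ip_address(a) for a in mgmt_pool)
    for previous, current in zip(pool, pool[1:]):
        if int(current) - int(previous) != 1:
            problems.append(f"management pool gap between {previous} and {current}")

    # Each node needs one node IP and one VIP.
    if len(node_ips) != len(vips):
        problems.append("node IP count and VIP count differ")
    return problems


# Example values from Table 9 (4-node cluster, single /24 subnet).
example_problems = check_ip_plan(
    mgmt_subnet="192.168.110.0/24",
    app_subnet="192.168.110.0/24",
    mgmt_pool=[f"192.168.110.{n}" for n in range(146, 150)],
    node_ips=[f"192.168.110.{n}" for n in range(151, 155)],
    vips=[f"192.168.110.{n}" for n in range(155, 159)],
)
print(example_problems)
```

The check returns a list rather than raising on the first error so that all problems in a worksheet can be corrected in one pass.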

Use the following tables to list the required IP addresses for the installation of a 4-node standard Cohesity cluster and review an example IP configuration.

Note: Table cells shaded in black do not require an IP address.

Table 8. Cohesity Cluster IP Addressing

| Address Group: | UCS Management | Cohesity Application | |
|---|---|---|---|
| VLAN ID: | | | |
| Subnet: | | | |
| Subnet Mask: | | | |
| Gateway: | | | |
| Device | UCS Management Addresses | Node IP | Cohesity VIP |
| Fabric Interconnect A | | | |
| Fabric Interconnect B | | | |
| UCS Manager | | | |
| Cohesity Node #1 | | | |
| Cohesity Node #2 | | | |
| Cohesity Node #3 | | | |
| Cohesity Node #4 | | | |

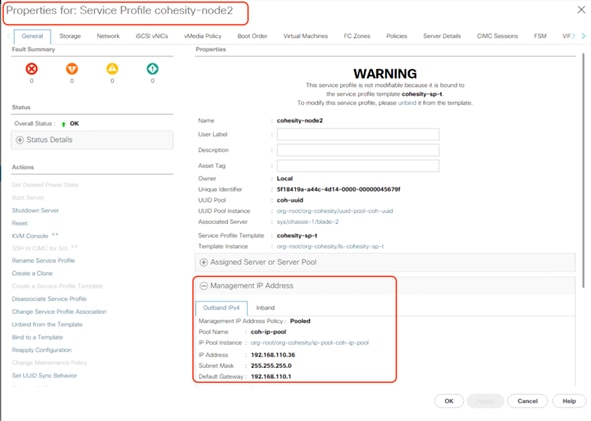

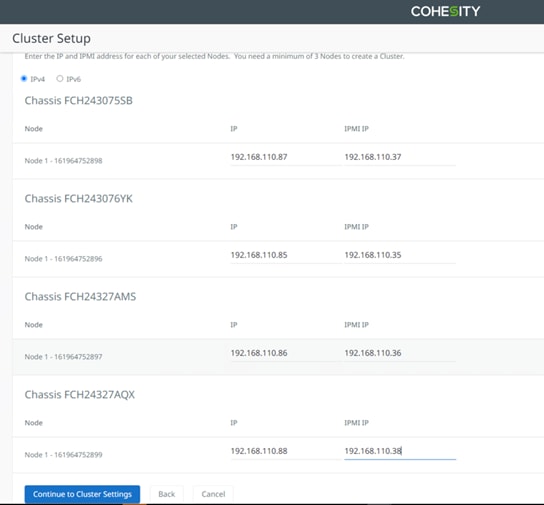

Table 9. Example Cohesity Cluster IP Addressing

| Address Group: | UCS Management | Cohesity Application | |
|---|---|---|---|
| VLAN ID: | 3171 | 3171 | |
| Subnet: | 192.168.110.0 | 192.168.110.0 | |
| Subnet Mask: | 255.255.255.0 | 255.255.255.0 | |
| Gateway: | 192.168.110.1 | 192.168.110.1 | |
| Device | UCS Management Addresses | Node IP | Cohesity VIP |
| Fabric Interconnect A | 192.168.110.33 | | |
| Fabric Interconnect B | 192.168.110.34 | | |
| UCS Manager | 192.168.110.32 | | |
| Cohesity Node #1 | 192.168.110.146 | 192.168.110.151 | 192.168.110.155 |
| Cohesity Node #2 | 192.168.110.147 | 192.168.110.152 | 192.168.110.156 |
| Cohesity Node #3 | 192.168.110.148 | 192.168.110.153 | 192.168.110.157 |
| Cohesity Node #4 | 192.168.110.149 | 192.168.110.154 | 192.168.110.158 |

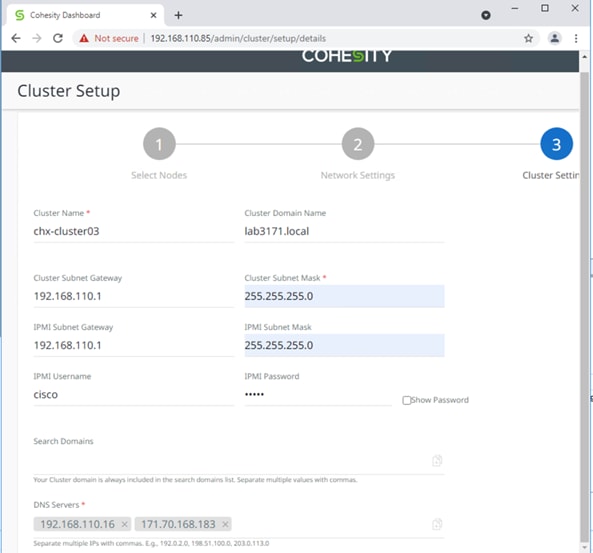

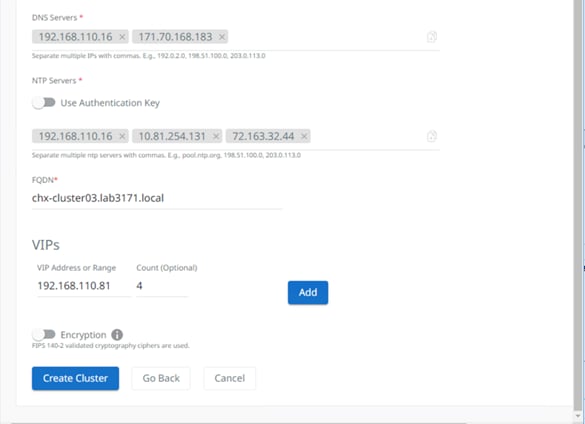

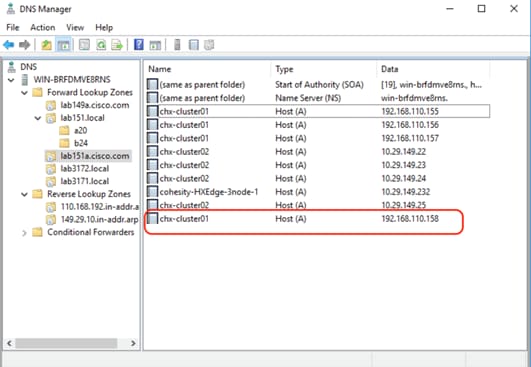

DNS servers must be configured to resolve Fully Qualified Domain Names (FQDNs) in the Cohesity Application group. DNS records need to be created before beginning the installation. At a minimum, create a single A record for the name of the Cohesity cluster that answers with each of the virtual IP addresses used by the Cohesity nodes in round-robin fashion. Some DNS servers are not configured by default to return multiple addresses in round-robin fashion in response to a request for a single A record. Ensure that your DNS server is properly configured for round-robin before continuing. You can test the configuration by querying the DNS name of the Cohesity cluster from multiple clients and verifying that all of the different IP addresses are given as answers in turn.
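The round-robin test described above can be scripted. The following is a minimal sketch that relies only on `socket.gethostbyname_ex` from the Python standard library; the helper names `missing_vips` and `first_answers` are illustrative assumptions. The `resolver` parameter exists only so the logic can be exercised without a live DNS server. Note that a caching resolver on the client may hide rotation, so results from a single client are indicative rather than conclusive.

```python
import socket


def missing_vips(name, expected_vips, resolver=socket.gethostbyname_ex):
    """Return the expected VIPs that the A record for `name` does not return."""
    _hostname, _aliases, addresses = resolver(name)
    return sorted(set(expected_vips) - set(addresses))


def first_answers(name, attempts=8, resolver=socket.gethostbyname_ex):
    """Query `name` several times and collect the first address of each reply.

    With round-robin enabled, more than one distinct address is expected
    to appear in first position across repeated queries.
    """
    firsts = set()
    for _ in range(attempts):
        _hostname, _aliases, addresses = resolver(name)
        if addresses:
            firsts.add(addresses[0])
    return firsts


# Usage against live DNS (the cluster name is site-specific, so it is left
# as a placeholder here):
#   missing_vips("<cluster FQDN>", ["192.168.110.155", "192.168.110.156",
#                                   "192.168.110.157", "192.168.110.158"])
#   first_answers("<cluster FQDN>")
```

An empty list from `missing_vips` indicates that every VIP is present in the record; a single-element set from `first_answers` suggests that rotation is not taking place or is being masked by caching.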

Use the following tables to list the required DNS information for the installation and review an example configuration.

Table 10. DNS Server Information

| Item | Value | A Records |
|---|---|---|
| DNS Server #1 | | |
| DNS Server #2 | | |
| DNS Domain | | |
| UCS Domain Name | | |
| Cohesity Cluster Name | | |
| | | |
| | | |
| | | |

Table 11. DNS Server Example Information

| Item | Value | A Records |
|---|---|---|
| DNS Server #1 | 192.168.110.16 | |
| DNS Server #2 | | |
| DNS Domain | | |
| UCS Domain Name | B22-FI | |
| Cohesity Cluster Name | 192.168.110.155 | 192.168.110.155 |
| | | 192.168.110.156 |
| | | 192.168.110.157 |
| | | 192.168.110.158 |

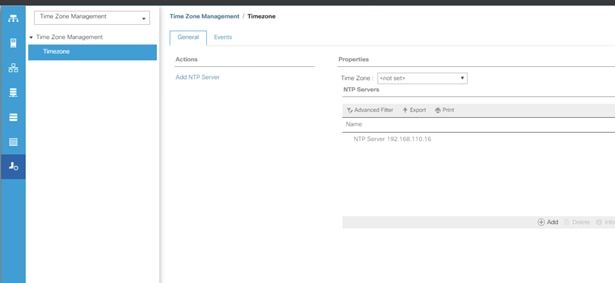

Consistent clock synchronization is required across the components of the Cohesity cluster. It is provided by reliable NTP servers, which must be accessible for querying from both the Cisco UCS Management network group and the Cohesity Application group.
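Whether an NTP server is reachable from a given network can be checked with a simple SNTP query. The following is a minimal sketch using only the Python standard library; the function names are illustrative assumptions, and the offset it reports ignores network delay, so it is a reachability and sanity check rather than a precise measurement.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2208988800


def build_sntp_request():
    """Return a 48-byte SNTP client request (LI=0, version=3, mode=3)."""
    return b"\x1b" + 47 * b"\x00"


def parse_transmit_time(packet):
    """Return the server transmit timestamp of an NTP reply as Unix seconds."""
    if len(packet) < 48:
        raise ValueError("NTP reply shorter than 48 bytes")
    # The transmit timestamp occupies bytes 40-47: 32-bit seconds, 32-bit fraction.
    seconds, fraction = struct.unpack("!II", packet[40:48])
    return seconds - NTP_EPOCH_OFFSET + fraction / 2**32


def clock_offset(server, timeout=2.0):
    """Return a rough offset in seconds (server time minus local time).

    Raises socket.timeout (an OSError) if the server does not answer.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_sntp_request(), (server, 123))
        packet, _sender = sock.recvfrom(512)
    return parse_transmit_time(packet) - time.time()


# Usage, with the example NTP server from Table 13:
#   clock_offset("192.168.110.16")
```

Running the check from a host in each address group helps confirm that the NTP servers answer on both networks.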

Use the following tables to list the required NTP information for the installation and review an example configuration.

Table 12. NTP Server Information

| Item | Value |
|---|---|
| NTP Server #1 | |
| NTP Server #2 | |
| Timezone | |

Table 13. NTP Server Example Information

| Item | Value |
|---|---|
| NTP Server #1 | 192.168.110.16 |
| NTP Server #2 | |
| Timezone | (UTC-8:00) Pacific Time |

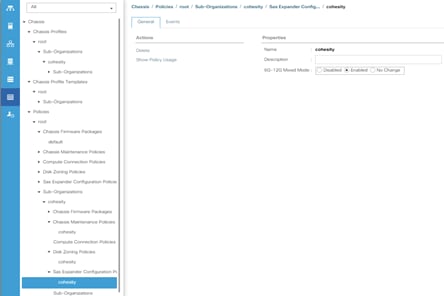

Before the installation, the required VLAN IDs need to be documented, and created in the upstream network if necessary. One VLAN needs to be trunked to the two Cisco UCS Fabric Interconnects that manage the Cohesity cluster: the VLAN for the Cohesity Application group. The VLAN IDs must be supplied during the Cisco UCS configuration steps, and the VLAN names should be customized to make them easily identifiable.

Use the following tables to list the required VLAN information for the installation and review an example configuration:

Table 14. VLAN Information

| Name | ID |
|---|---|
| <<cohesity_vlan>> | |

Table 15. VLAN Example Information

| Name | ID |
|---|---|
| VLAN3171 | 3171 |

The Cisco UCS uplink connectivity design needs to be finalized prior to beginning the installation. Refer to the network uplink design possibilities in the Network Design section.

Use the following tables to list the required network uplink information for the installation and review an example configuration.

Table 16. Network Uplink Configuration

| Fabric Interconnect | Port | Port Channel | Port Channel Type | Port Channel ID | Port Channel Name |
|---|---|---|---|---|---|
| A | | ☐ Yes ☐ No | ☐ LACP ☐ vPC | | |
| | | ☐ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |
| B | | ☐ Yes ☐ No | ☐ LACP ☐ vPC | | |
| | | ☐ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |

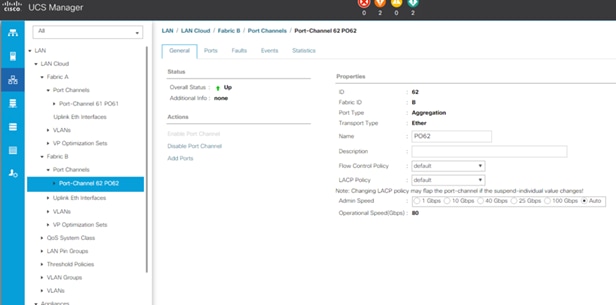

Table 17. Network Uplink Example Configuration

| Fabric Interconnect | Port | Port Channel | Port Channel Type | Port Channel ID | Port Channel Name |
|---|---|---|---|---|---|
| A | 1/53 | ☒ Yes ☐ No | ☐ LACP ☒ vPC | 31 | vpc31 |
| | 1/54 | ☒ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |
| B | 1/53 | ☒ Yes ☐ No | ☐ LACP ☒ vPC | 32 | vpc32 |
| | 1/54 | ☒ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |
| | | ☐ Yes ☐ No | | | |

Several usernames and passwords need to be defined or known as part of the Cohesity installation and configuration process. Use the following tables to list the required username and password information and review an example configuration.

Table 18. Usernames and Passwords

| Account | Username | Password |
|---|---|---|
| UCS Administrator | admin | <<ucs_admin_pw>> |
| Cohesity Administrator | admin | <<cohesity_admin_pw>> |

Table 19. Example Usernames and Passwords

| Account | Username | Password |
|---|---|---|
| UCS Administrator | admin | xxxx |
| Cohesity Administrator | admin | xxxx |

Install the Fabric Interconnects and the Cisco UCS S-Series storage servers according to their corresponding hardware installation guides listed below:

Cisco UCS 6454 Fabric Interconnect:

https://www.cisco.com/c/en/us/td/docs/unified_computing/ucs/hw/6454-install-guide/6454.pdf

Cisco UCS S Series Storage Server:

https://www.cisco.com/c/dam/en/us/td/docs/unified_computing/ucs/s/hw/S3260/installb/S3260.pdf

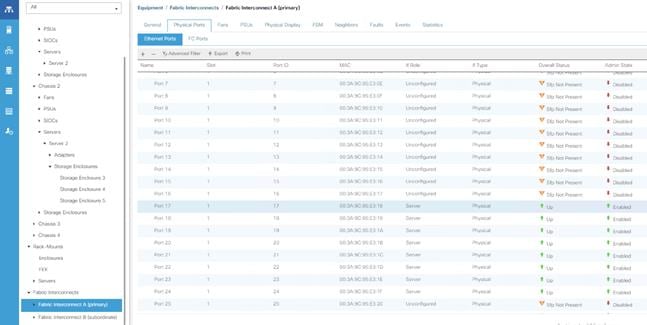

The physical layout of the Cohesity system was previously described in section Physical Topology. The Fabric Interconnects and S-series chassis need to be cabled properly before beginning the installation activities.

Table 20 lists an example cabling map for the installation of a Cohesity system that uses four compute nodes. Each Cisco UCS S3260 chassis is equipped with two compute nodes.

| Device | Port | Connected To | Port | Type | Length | Note |
|---|---|---|---|---|---|---|
| UCS6454-A | L1 | UCS6454-B | L1 | CAT5 | 1FT | |
| UCS6454-A | L2 | UCS6454-B | L2 | CAT5 | 1FT | |
| UCS6454-A | mgmt0 | Customer LAN | | CAT5 | | Management interface |
| UCS6454-A | 1/17 | Cohesity Chassis #1 server node#1 | mLOM port 1 | Twinax | 3M | Chassis1/Server1 |
| UCS6454-A | 1/18 | Cohesity Chassis #1 server node#1 | mLOM port 2 | Twinax | 3M | Chassis1/Server1 |
| UCS6454-A | 1/19 | Cohesity Chassis #1 server node#2 | mLOM port 1 | Twinax | 3M | Chassis1/Server2 |
| UCS6454-A | 1/20 | Cohesity Chassis #1 server node#2 | mLOM port 2 | Twinax | 3M | Chassis1/Server2 |
| UCS6454-A | 1/21 | Cohesity Chassis #2 server node#1 | mLOM port 1 | Twinax | 3M | Chassis2/Server1 |
| UCS6454-A | 1/22 | Cohesity Chassis #2 server node#1 | mLOM port 2 | Twinax | 3M | Chassis2/Server1 |
| UCS6454-A | 1/23 | Cohesity Chassis #2 server node#2 | mLOM port 1 | Twinax | 3M | Chassis2/Server2 |
| UCS6454-A | 1/24 | Cohesity Chassis #2 server node#2 | mLOM port 2 | Twinax | 3M | Chassis2/Server2 |
| UCS6454-A | 1/53 | Customer LAN | | | | uplink |
| UCS6454-A | 1/54 | Customer LAN | | | | uplink |
| UCS6454-B | L1 | UCS6454-A | L1 | CAT5 | 1FT | |
| UCS6454-B | L2 | UCS6454-A | L2 | CAT5 | 1FT | |
| UCS6454-B | mgmt0 | Customer LAN | | CAT5 | | Management interface |
| UCS6454-B | 1/17 | Cohesity Chassis #1 server node#1 | mLOM port 3 | Twinax | 3M | Chassis1/Server1 |
| UCS6454-B | 1/18 | Cohesity Chassis #1 server node#1 | mLOM port 4 | Twinax | 3M | Chassis1/Server1 |
| UCS6454-B | 1/19 | Cohesity Chassis #1 server node#2 | mLOM port 3 | Twinax | 3M | Chassis1/Server2 |
| UCS6454-B | 1/20 | Cohesity Chassis #1 server node#2 | mLOM port 4 | Twinax | 3M | Chassis1/Server2 |
| UCS6454-B | 1/21 | Cohesity Chassis #2 server node#1 | mLOM port 3 | Twinax | 3M | Chassis2/Server1 |
| UCS6454-B | 1/22 | Cohesity Chassis #2 server node#1 | mLOM port 4 | Twinax | 3M | Chassis2/Server1 |
| UCS6454-B | 1/23 | Cohesity Chassis #2 server node#2 | mLOM port 3 | Twinax | 3M | Chassis2/Server2 |
| UCS6454-B | 1/24 | Cohesity Chassis #2 server node#2 | mLOM port 4 | Twinax | 3M | Chassis2/Server2 |
| UCS6454-B | 1/53 | Customer LAN | | | | uplink |
| UCS6454-B | 1/54 | Customer LAN | | | | uplink |
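The server-port rows of the cabling map follow a regular pattern that can be generated and checked in a few lines. The following is a minimal sketch; the function names are illustrative, and it assumes that mLOM ports 1 and 2 of every server node connect to Fabric Interconnect A and mLOM ports 3 and 4 connect to Fabric Interconnect B, with server ports allocated consecutively from 1/17 on each fabric.

```python
from collections import Counter


def cabling_map(chassis_count=2, servers_per_chassis=2, first_port=17):
    """Return (device, fi_port, node, mlom_port) rows for the server links.

    Assumption: mLOM ports 1-2 go to fabric A and mLOM ports 3-4 go to
    fabric B, using the same FI port numbers on both fabrics.
    """
    rows = []
    for fabric, mlom_ports in (("A", (1, 2)), ("B", (3, 4))):
        port = first_port
        for chassis in range(1, chassis_count + 1):
            for server in range(1, servers_per_chassis + 1):
                for mlom in mlom_ports:
                    rows.append((
                        f"UCS6454-{fabric}",
                        f"1/{port}",
                        f"Chassis{chassis}/Server{server}",
                        f"mLOM port {mlom}",
                    ))
                    port += 1
    return rows


def duplicate_fi_ports(rows):
    """Return (device, fi_port) pairs that appear more than once."""
    counts = Counter((device, port) for device, port, _node, _mlom in rows)
    return [key for key, count in counts.items() if count > 1]


rows = cabling_map()
for row in rows:
    print(" | ".join(row))
print("duplicate FI ports:", duplicate_fi_ports(rows))
```

The duplicate check is useful when a cabling worksheet is edited by hand, because a Fabric Interconnect port that appears twice on the same device indicates a transcription error.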

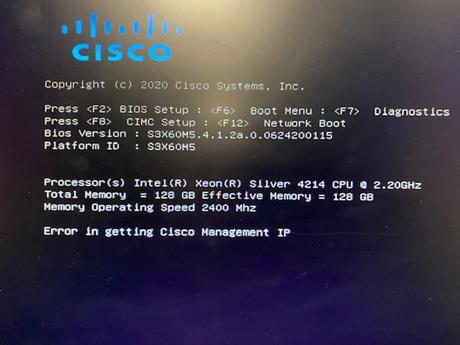

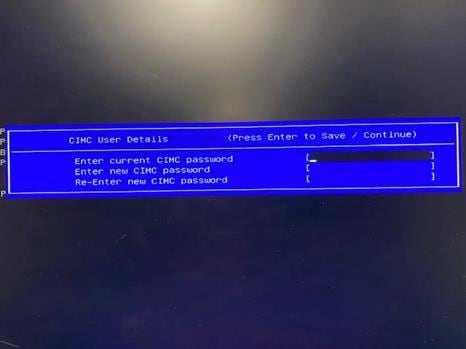

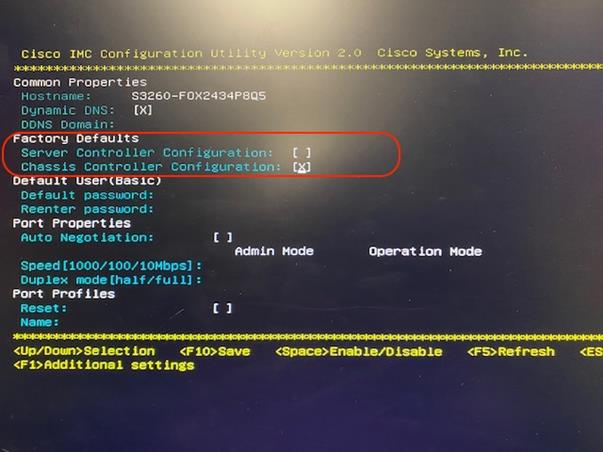

This section describes the steps to initialize and configure the Cisco UCS Fabric Interconnects to prepare them for the Cohesity installation. For installations of Cohesity being integrated into an existing Cisco UCS domain, the steps that cover the initial setup of the Fabric Interconnects and their uplink port configuration can be skipped. In this situation, the steps from the configuration of the server ports and server discovery onwards, including sub-organizations, policies, pools, templates, and service profiles, must still be performed.

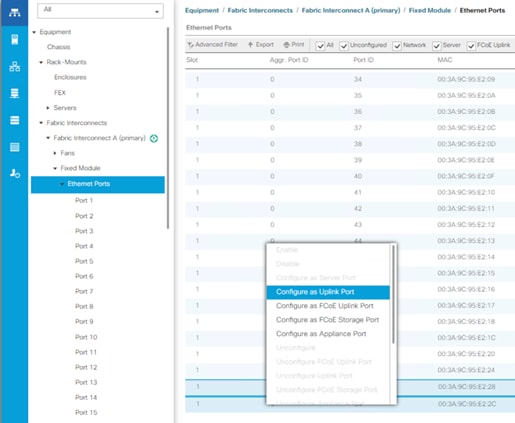

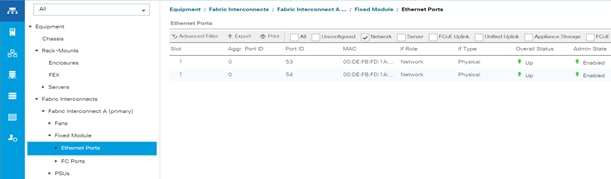

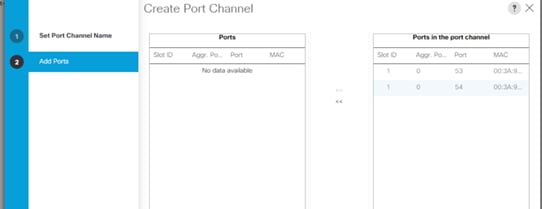

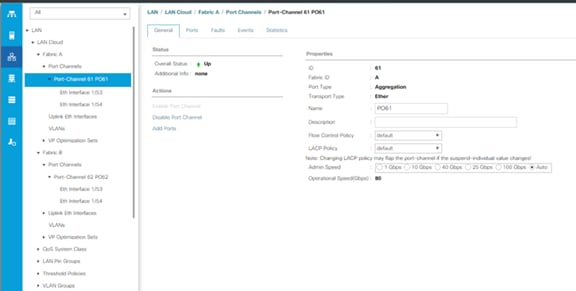

Procedure 1. Configure Cisco UCS Fabric Interconnect A

Step 1. Make sure the Fabric Interconnect cabling is properly connected, including the L1 and L2 cluster links, and the management ports, then power the Fabric Interconnects on by inserting the power cords.

Step 2. Connect to the console port on the first Fabric Interconnect, which will be designated as the A fabric device. Use the supplied Cisco console cable (CAB-CONSOLE-RJ45=), and connect it to a built-in DB9 serial port, or use a USB to DB9 serial port adapter.

Step 3. Start your terminal emulator software.

Step 4. Create a connection to the COM port of the computer’s DB9 port, or the USB to serial adapter. Set the terminal emulation to VT100, and the settings to 9600 baud, 8 data bits, no parity, and 1 stop bit.

Step 5. Open the connection which was just created. You may have to press ENTER to see the first prompt.

Step 6. Configure the first Fabric Interconnect, using the following example as a guideline:

---- Basic System Configuration Dialog ----

This setup utility will guide you through the basic configuration of

the system. Only minimal configuration including IP connectivity to

the Fabric interconnect and its clustering mode is performed through these steps.

Type Ctrl-C at any time to abort configuration and reboot system.

To back track or make modifications to already entered values,

complete input till end of section and answer no when prompted

to apply configuration.

Enter the configuration method. (console/gui) ? console

Enter the setup mode; setup newly or restore from backup. (setup/restore) ? setup

You have chosen to setup a new Fabric interconnect. Continue? (y/n): y

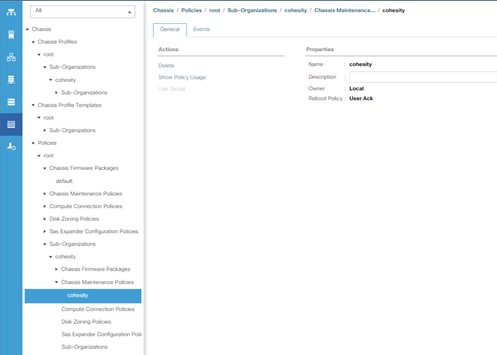

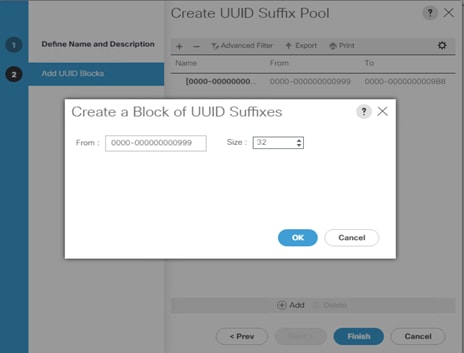

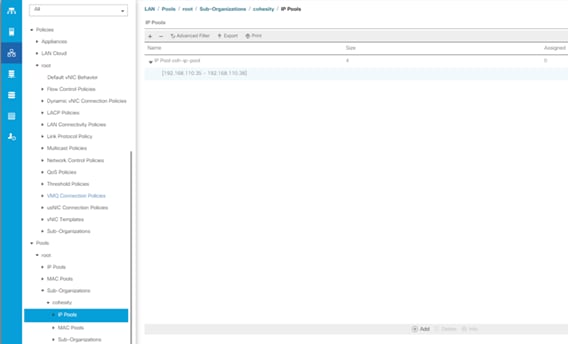

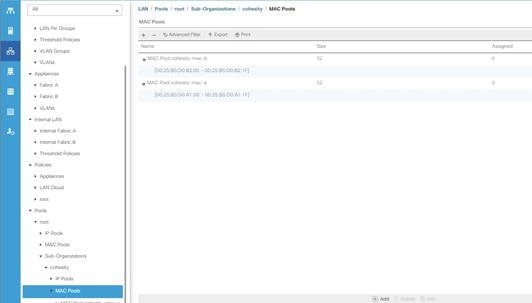

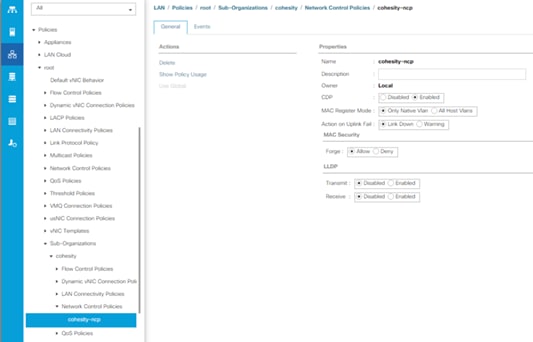

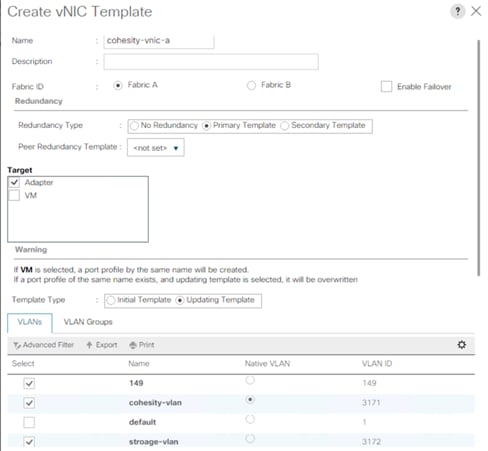

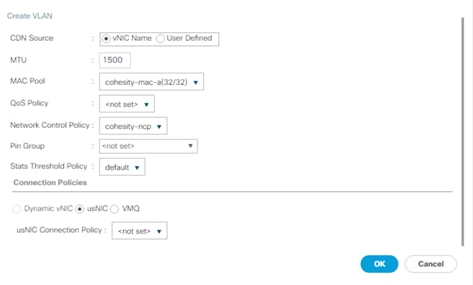

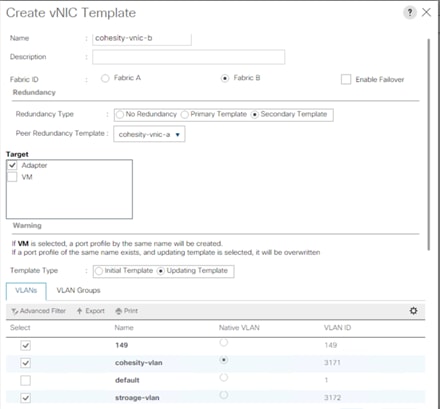

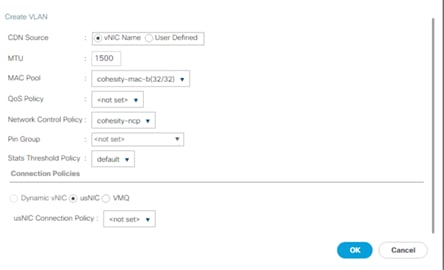

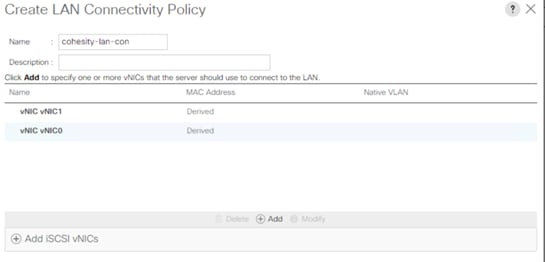

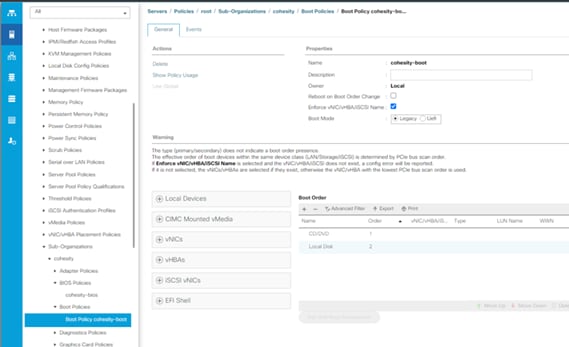

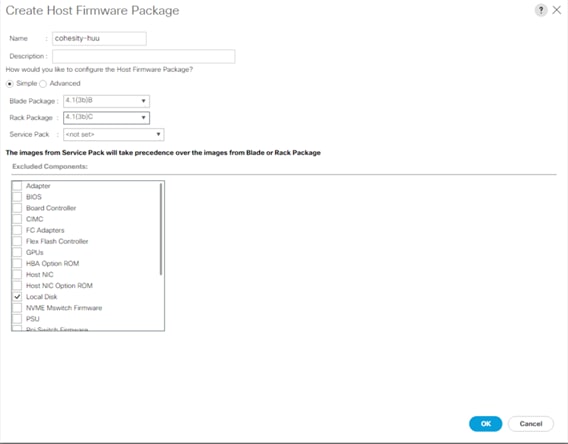

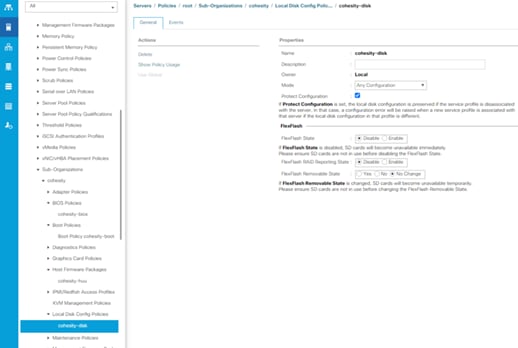

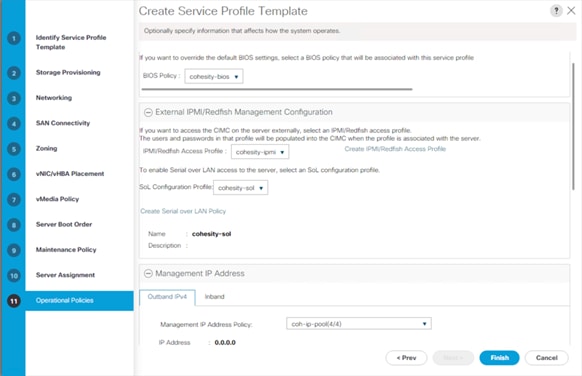

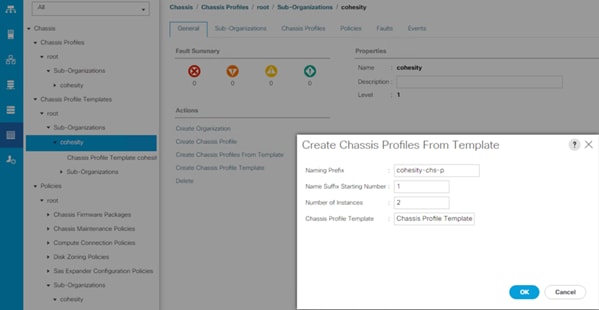

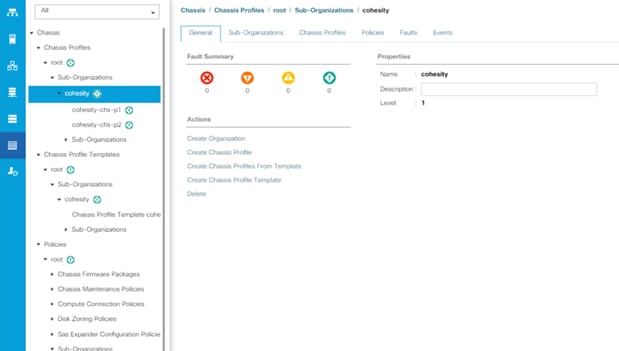

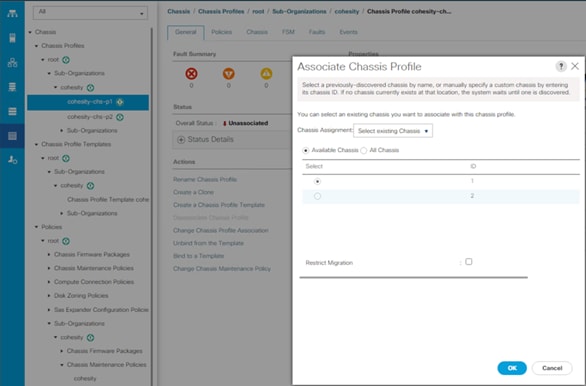

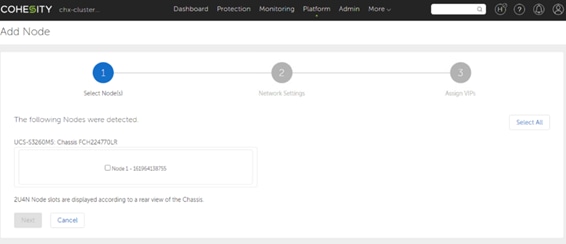

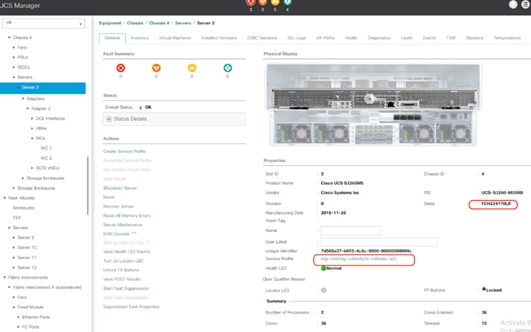

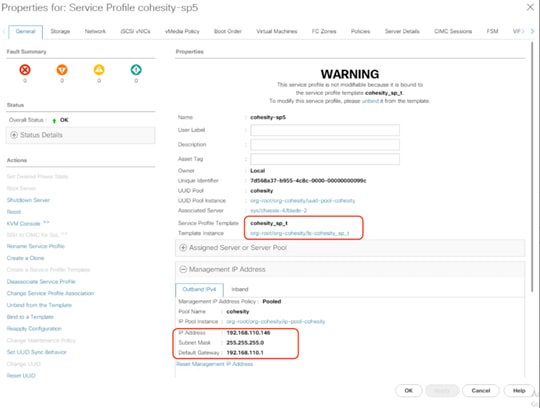

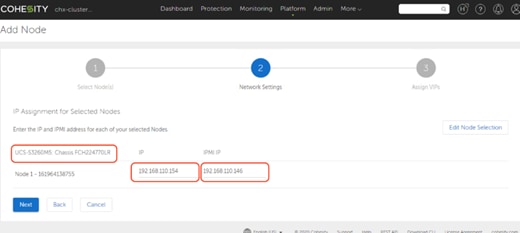

Enforce strong password? (y/n) [y]: y