Cisco HyperFlex and Red Hat OCP for Hybrid Cloud

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Hybrid cloud has become the de facto deployment and operating model in most Enterprises. In a study conducted by 451 Research across 2500 organizations from around the globe, 82% of the IT decision makers responded that they are already using a hybrid cloud model. Cloud computing from hyper-scalers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud offers limitless scale and flexibility, but it also comes with increasingly high costs and, at times, higher risk, leaving Enterprises with less control over their business-critical applications and data. As a result, Enterprises are adopting a hybrid strategy that allows them to optimally use both on-prem and public cloud infrastructure to meet their computing needs.

A hybrid cloud model enables Enterprises to:

● Leverage public cloud for specific use cases, for example, to meet short-term spikes in demand or for disaster recovery (DR). An Enterprise can minimize their CAPEX investment by not having to maintain under-utilized on-prem resources for these scenarios. However, a hybrid cloud DR strategy that requires the movement of data back to the Enterprise could get very costly as cloud providers charge considerably more for moving the data out of the cloud than for moving data into the cloud.

● Benefit from higher availability inherent in the hybrid cloud model. The Enterprise’s data center is now distributed across different infrastructures in different geographical locations, one managed by the Enterprise and the other by the cloud provider. As such, in most cases and if designed properly, a failure in one location should only impact that location.

● Accelerate innovation through increased agility as Enterprises can quickly spin up environments in the public cloud to start their development efforts and still have the option to deploy the application on-prem for testing or production where it might be easier to integrate into existing tools and processes. It also allows them to retain control of their data.

● Select an optimal infrastructure and location that best meets their business needs. Each organization has unique cost, compliance, security, performance, and other requirements, and it helps to have more options.

Some of the common Enterprise use cases for hybrid cloud are:

● Enabling cloud-native environments anywhere, either on-prem or public cloud, with consistent life cycle management across a hybrid infrastructure environment. Enterprises need this to accelerate their application modernization efforts and for developing new applications. In production, the hybrid model enables them to deploy some applications in the cloud while keeping others on-prem, or to host applications in both environments for redundancy, load balancing, and so on.

● Development and Test (Dev/Test) where multiple teams in an application’s build/release cycle need multiple infrastructure environments for development, testing, production, and so on. For example, organizations may start their initial development in the public cloud where they can quickly spin up an environment, but then deploy that application into production on-prem where they can easily access backend data, tooling, and other resources.

● Backup and recovery where the application resides either on-prem or distributed across both on-prem and cloud, but the data is backed up in the cloud. Recovery in this case can be to on-prem and/or cloud depending on the application.

● Cloud bursting or data center extension where an application scales into the cloud to meet peak demands or to enhance the on-prem application using Machine Learning or other data-intensive computations running in the cloud.

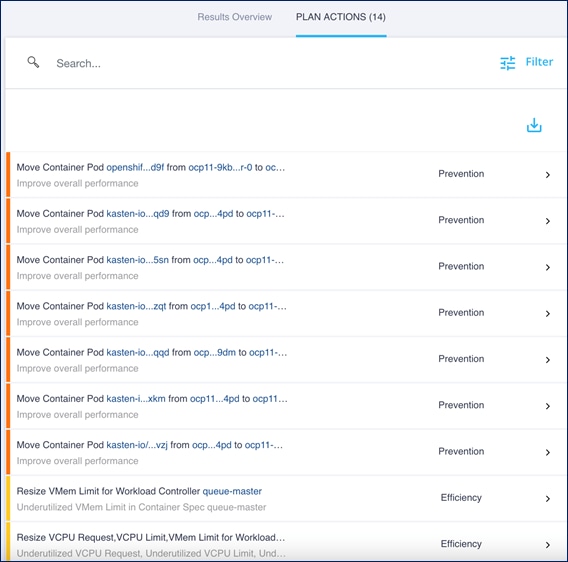

The solution presented in this document will address the first two use cases and deliver a foundational cloud-native hybrid-cloud infrastructure with operational simplicity and ease. It will enable developers and operators to quickly deploy cloud-native workloads anywhere with consistent operational experience across both environments. The solution is built using Cisco HyperFlex, Cisco Intersight, Amazon Web Services (AWS), and Red Hat OpenShift Container Platform (OCP). The solution also uses Cisco HyperFlex Container Storage Interface (CSI) to provide persistent storage for stateful Kubernetes (K8S) workloads running on Cisco HyperFlex, and Cisco Intersight Workload Optimizer (IWO) to ensure application performance, optimize resource usage, and manage cloud costs in a hybrid cloud deployment. The on-prem infrastructure deployment is automated using Red Hat Ansible to provide Infrastructure as Code (IaC) that can be integrated into existing CI/CD pipelines or other automation to accelerate deployments.

This chapter contains the following:

● Audience

Hybrid architectures are complex and challenging, and the ad-hoc deployments that often occur organically in many Enterprises only add to that challenge, making hybrid cloud riskier and more costly. To address this, Enterprises need a hybrid cloud strategy and architecture that can deliver an agile, responsive infrastructure to their users to power new applications and innovations. The solution presented here can serve as a foundational hybrid-cloud reference architecture for Enterprises, with the simplicity, agility, and operational consistency that IT and Dev-Ops need.

The intended audience for this document includes, but is not limited to, sales engineers, field consultants, professional services, IT teams, partners, and customers who are working on or interested in designing and deploying Cisco’s Hybrid Cloud solutions.

This document is a Cisco Validated Design (CVD) for a Cisco hybrid cloud infrastructure solution for cloud-native workloads. The document provides the end-to-end design with detailed procedures for implementing the solution across a Cisco data center and public cloud. Hardware and software components used to validate the solution in Cisco’s internal labs are also provided.

This is the initial release of Cisco’s hybrid cloud solution with Cisco HyperFlex and Red Hat OCP. At a high level, the solution delivers a simple, flexible, and scalable infrastructure for an Enterprise’s cloud-native efforts, enabling workloads to be deployed anywhere from on-prem to a public cloud. The solution supports the following hybrid cloud use cases:

● Enable cloud-native environments anywhere with consistent management

● Development and Test

Other capabilities in this release of the solution are:

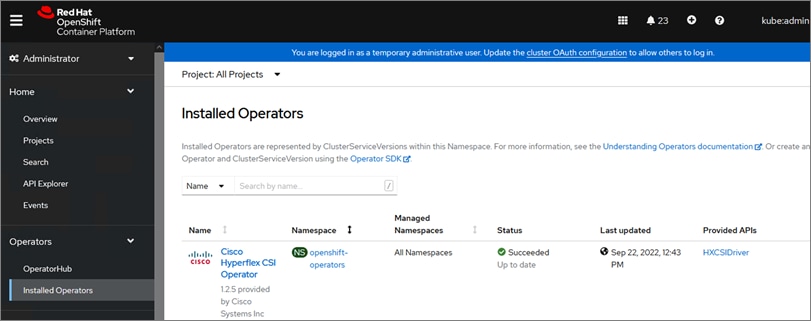

● Support for Cisco HyperFlex CSI 1.2.5

● Support for Cisco HyperFlex release 5.0(2a)

● Support for Red Hat OpenShift Container Platform 4.10

● Cisco Intersight for consistent, centralized operations across a hybrid environment

● Red Hat Hybrid Cloud Console and OpenShift cluster console for consistent, centralized K8S management across a hybrid environment

● Application performance monitoring with cloud cost management and resource optimization using Cisco Intersight Workload Optimizer (IWO) to ensure the performance of containerized applications in a hybrid deployment.

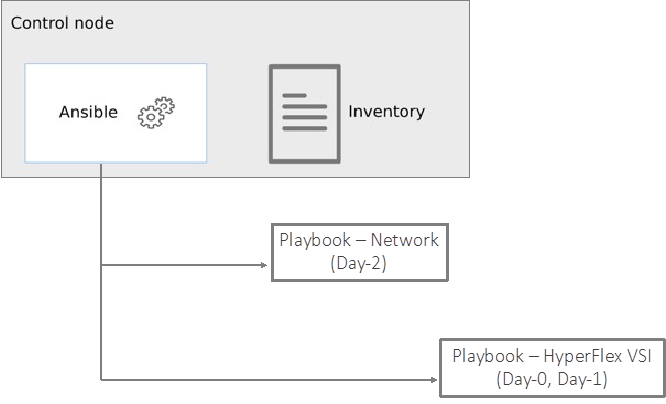

● IaC using Red Hat Ansible for the automated deployment of on-prem compute, storage, and networking

● Automated Install of Red Hat OCP on HyperFlex Virtual Server Infrastructure (VSI) and on AWS EC2 instances using Installer Provisioned Infrastructure (IPI) method

This solution provides a foundational reference architecture for a hybrid cloud infrastructure solution. The solution enables Enterprises to deploy and develop cloud-native applications anywhere, with consistent management and operational experience for both developers and IT Operations/Dev-Ops teams. The solution also supports two common hybrid cloud use cases as outlined earlier.

A hybrid cloud, by definition, is a cloud-computing architecture consisting of at least one on-prem location, a public cloud, and a secure network that interconnects the two locations. This solution delivers a hybrid cloud using a combination of Cisco, Red Hat, and AWS products and technologies as outlined below.

● Cisco HyperFlex standard clusters provide Enterprise-class software-defined compute, storage, and server networking for the on-prem Enterprise data center. HyperFlex delivers operational simplicity and agility without compromising scale, performance, or flexibility.

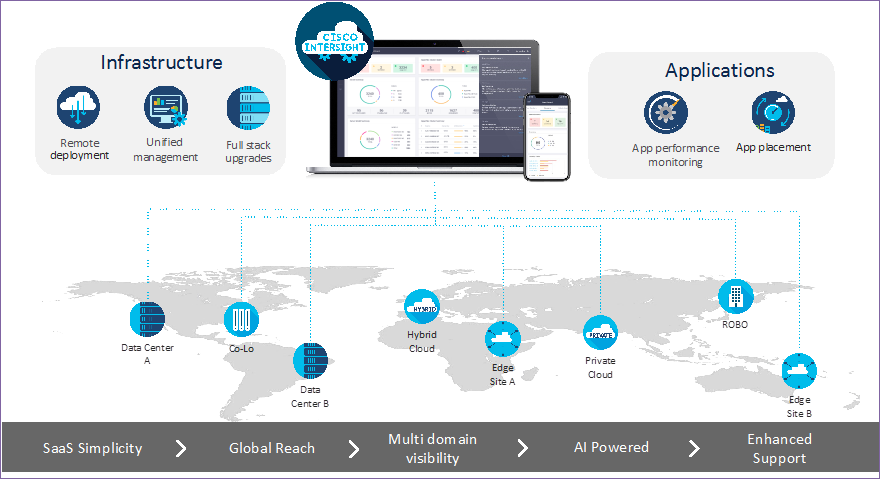

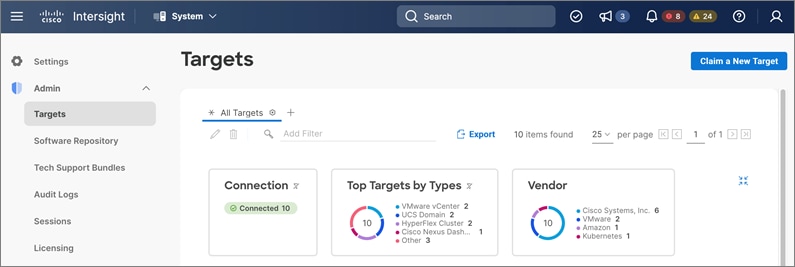

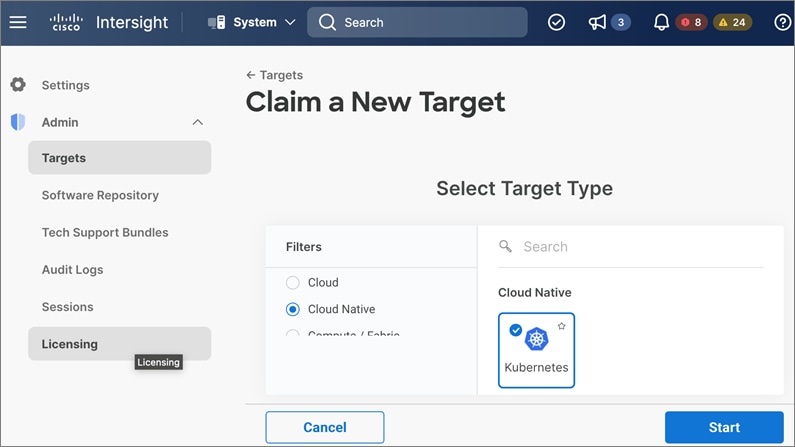

● Cisco Intersight provides cloud-based infrastructure management with centralized visibility and operations for all HyperFlex, Cisco UCS, and supported third-party infrastructure that an Enterprise has located anywhere across the globe, from edge locations to Enterprise data centers. The SaaS delivery model enables IT teams to benefit from the continuous delivery of innovations and features without having to manage the life cycle of the management platform itself. Integration of vCenter, AWS, and OCP nodes in Intersight enables full-stack visibility, monitoring, and resource optimization.

● Cisco Networking using on-prem CSR 1000v and AWS Transit Gateways provides secure hybrid cloud connectivity.

● Red Hat OpenShift Container Platform provides a highly secure, Enterprise-class container orchestration platform with development and operational tools that simplify cloud-native efforts. OCP also delivers a consistent operational experience across both on-prem and public cloud.

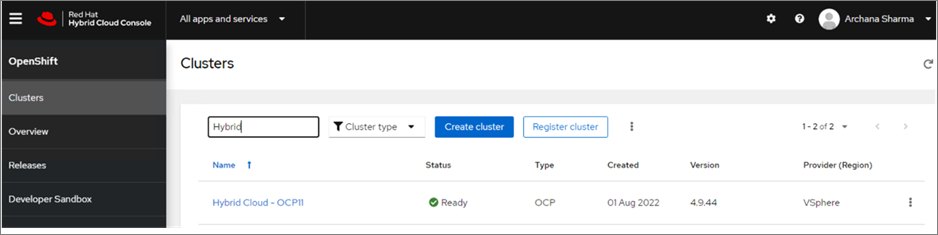

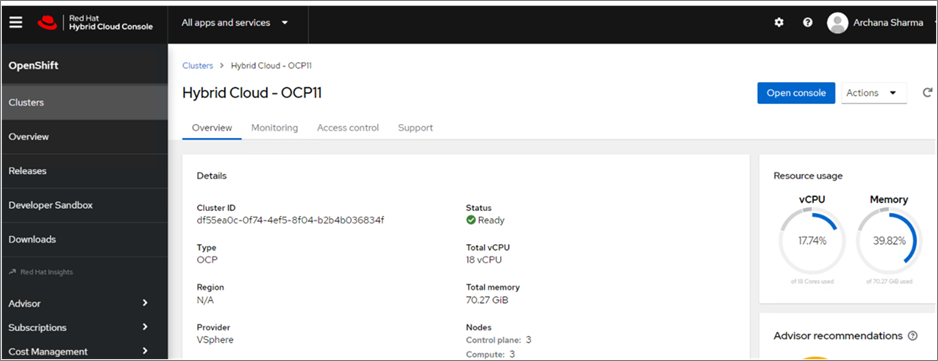

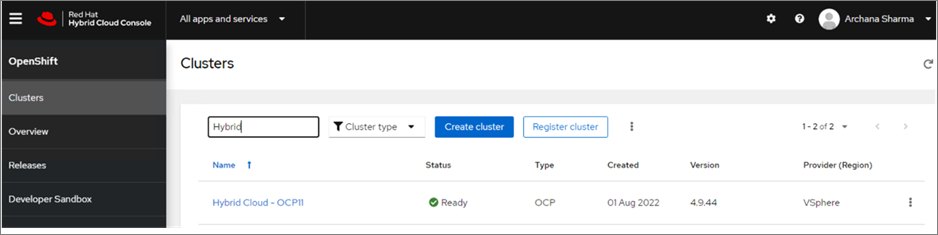

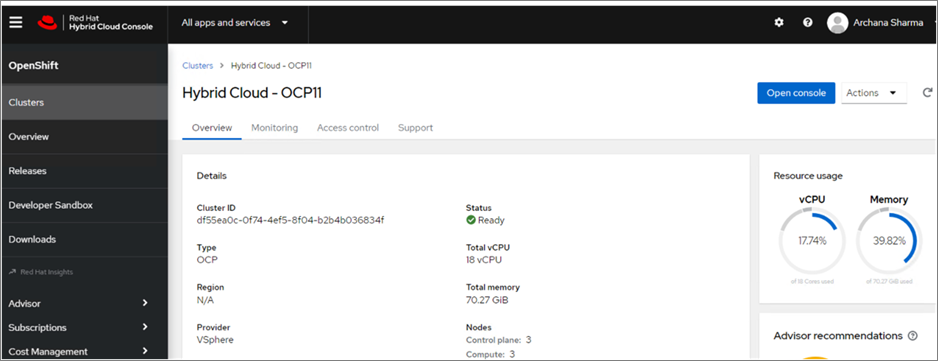

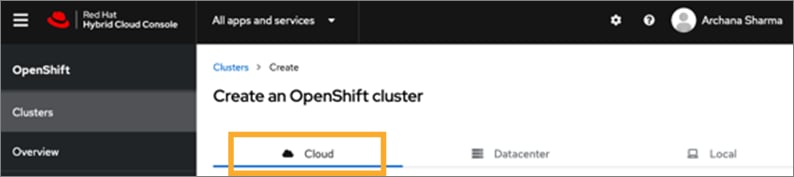

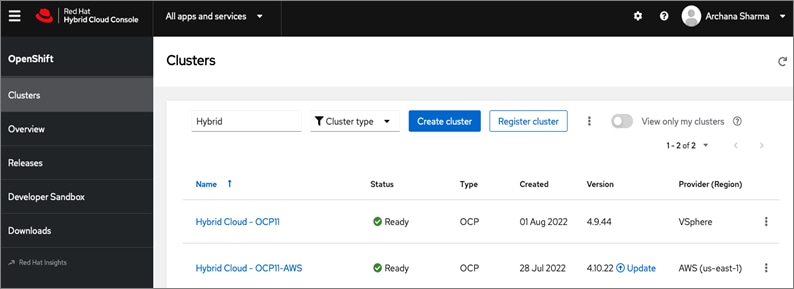

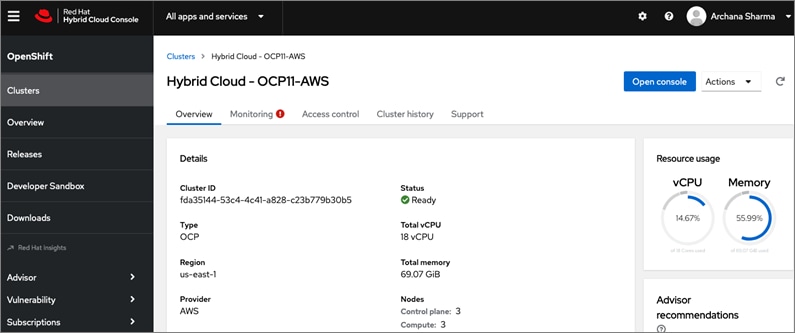

● Red Hat Hybrid Cloud Console provides cloud-based centralized management of OCP clusters distributed across on-prem and public clouds in a hybrid deployment. The application OCP clusters in the solution, hosted on HyperFlex VSI and AWS, are also deployed from the Red Hat Hybrid Cloud Console.

● Cisco HyperFlex CSI provides persistent container storage for cloud-native workloads hosted on Cisco HyperFlex using the underlying HyperFlex storage. It is Red Hat certified and can be quickly deployed on a cluster using a simple click-button install.

● VMware vSphere provides the virtualization on HyperFlex infrastructure. OCP clusters are deployed on VMs on HyperFlex and EC2 instances on AWS.

● Cisco Intersight Workload Optimizer ensures application performance with real-time analytics and automated decision-making that can adapt to changes in real time through actions such as moving workloads or adding compute and memory resources. IWO continuously monitors components and resources to optimize resource usage and manage cloud costs with full-stack visibility across a hybrid deployment.

● Infrastructure as Code using Red Hat Ansible automates the deployment of on-prem hyperconverged infrastructure and networking to speed up deployment and to allow integration into existing Enterprise automation and/or CI/CD pipelines.

The end-to-end solution was validated in Cisco’s internal labs with Cisco and partner recommended best practices in place.

This chapter contains the following:

● Red Hat OpenShift Container Platform (OCP)

● Cisco Intersight Workload Optimizer (IWO)

Cisco HyperFlex

HyperFlex is a highly flexible, resilient, and scalable hyper-converged virtual server infrastructure (VSI) platform capable of supporting an organization’s most mission-critical and performance-intensive workloads, both traditional and cloud-native. HyperFlex systems with Intel® Xeon® Scalable processors are certified as an Intel® Select Solution for Hybrid Cloud deployments. HyperFlex provides software-defined infrastructure with software-defined computing and storage enabled by Cisco Unified Computing System (Cisco UCS) servers and Cisco HyperFlex Data Platform (HXDP) software. HyperFlex systems can be deployed and managed from the cloud using Cisco Intersight to quickly deliver software-defined compute and storage to any location within the Enterprise. Cisco Intersight offers centralized management of all HyperFlex systems (and Cisco UCS) with a comprehensive set of features and capabilities to simplify and ease operations.

HyperFlex is engineered for data integrity, reliability, and availability to ensure business continuity and data protection. HyperFlex provides Enterprise-class shared storage with data services such as:

● Replication stripes and replicates data across nodes in the cluster, based on the configured replication factor, to protect against single or multi-component failures.

● Native replication replicates data to other clusters for backup or disaster-recovery purposes. Each node participates in the data transfer, minimizing the performance impact.

● Synchronous replication in HyperFlex stretch clusters maintains data in two locations at the same time to enable failover from one location to the other with zero Recovery Point Objective (RPO) and very short Recovery Time Objective (RTO).

● Data deduplication is always on to reduce the storage capacity consumed. Virtualized clusters, where virtual machines run multiple instances of an operating system (often the same one), would otherwise hold large amounts of duplicate data.

● Data compression further reduces storage requirements, lowering costs. HyperFlex uses a log-structured file system that is designed to store variable-sized blocks, which reduces internal fragmentation.

● Thin provisioning allows large data volumes to be created without requiring actual storage until it is needed so that storage capacity isn’t being reserved without being used.

● Fast, space-efficient clones rapidly replicate storage volumes so that virtual machines can be replicated simply through metadata operations, with actual data copied only for write operations.

● Data protection API enables enterprise backup tools to access data volumes for consistent per-VM backup operations.
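The clone behavior described in the list above (a metadata-only copy, with data written only when a clone changes) can be illustrated with a toy copy-on-write model. This is a conceptual sketch only: the `Volume` class and its block map are hypothetical teaching constructs and do not represent the HXDP implementation.

```python
class Volume:
    """Toy volume: maps logical block numbers to entries in a shared block store."""

    def __init__(self, store, block_map=None):
        self.store = store                      # shared dict: block id -> bytes
        self.block_map = dict(block_map or {})  # logical block -> block id

    def write(self, logical_block, data):
        # Every write allocates a new block, so volumes that share
        # older blocks never see each other's changes.
        block_id = len(self.store)
        self.store[block_id] = data
        self.block_map[logical_block] = block_id

    def read(self, logical_block):
        return self.store[self.block_map[logical_block]]

    def clone(self):
        # Metadata-only operation: copy the block map, not the data.
        return Volume(self.store, self.block_map)


store = {}
base = Volume(store)
base.write(0, b"os-image")
base.write(1, b"app-data")

clone = base.clone()
blocks_after_clone = len(store)   # cloning added no data blocks
clone.write(1, b"changed")        # only now is a new block stored
blocks_after_write = len(store)
```

In this model the clone shares every block with its source until it is written to, which is why cloning is fast and consumes almost no additional space.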

Cisco HyperFlex Data Platform (HXDP)

The foundation for Cisco HyperFlex systems is the Cisco HyperFlex Data Platform software that runs on each node in a HyperFlex cluster. HyperFlex Data Platform is a purpose-built, high-performance, log-structured, scale-out file system with enterprise-class storage features. The HXDP software runs on Cisco HyperFlex (HX-series) nodes to create a highly available cluster. Each HyperFlex server/node includes a HyperFlex Data Platform controller or Storage Controller Virtual Machine (SCVM) that implements the scale-out and distributed file system using internal flash-based SSDs, NVMe storage, or a combination of NVMe, flash-based SSDs and high-capacity HDDs to store data. The distributed controller design prevents the controller from becoming a bottleneck, unlike many Enterprise storage systems. Each controller assumes direct control of all drives on a node via PCI passthrough and communicates with other controllers over 10 or 40 GbE to aggregate all available capacity across all nodes into a single pool of storage. As nodes are added, the cluster scales linearly to deliver computing, storage capacity, and I/O performance.

Each HyperFlex node also runs IOvisor as a software VIB (SCVM client) inside the hypervisor to enable access to the datastores presented by the HyperFlex storage cluster. HyperFlex provides both high performance and resiliency as the stored data is distributed across all nodes and all nodes participate in serving I/O.

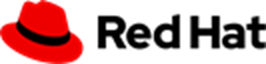

HyperFlex provides several hardware configuration options. Enterprises can customize the HyperFlex server configuration as needed to meet business and workload requirements.

For more details on HyperFlex Data Platform software architecture and design, see: https://www.cisco.com/c/en/us/products/collateral/hyperconverged-infrastructure/hyperflex-hx-series/white-paper-c11-736814.html

HyperFlex Design Options

HyperFlex offers enterprise-class features and performance with distributed, scale-out storage and operational simplicity for an Enterprise’s cloud-native efforts in a hybrid environment. Some additional factors that make HyperFlex particularly well-suited as a hybrid cloud platform are:

● Flexibility

HyperFlex supports modern and traditional business-critical Enterprise applications with GPU acceleration, flexible choice of processors (Intel, AMD), disk capacity and type, network bandwidth and interface type, form factor, and storage. Virtual Machines and containers running on HyperFlex can leverage HyperFlex storage using NFS and HX CSI, respectively. HyperFlex can also provide iSCSI storage to external iSCSI clients such as Windows servers, Linux servers, and so on. HyperFlex can integrate into existing environments; VMs running on HyperFlex can mount existing Enterprise storage using NFS, iSCSI, or FC. HyperFlex systems can also connect to existing Cisco UCS FIs with other Cisco UCS server platforms.

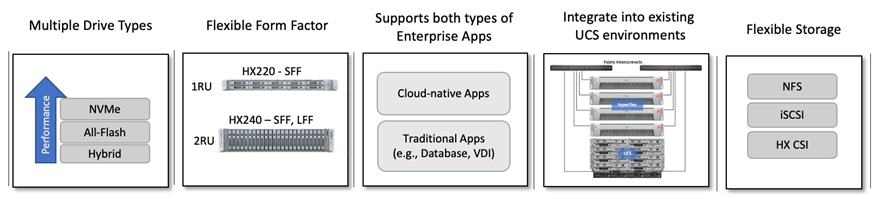

HyperFlex is also a very flexible, adaptable, and scalable platform that can be deployed anywhere from Enterprise data centers to edge locations with centralized deployment and management from Cisco Intersight. It is available in multiple configuration options such as HX edge and stretched cluster to support different Enterprise use cases.

HyperFlex, with support for multiple drive types (Hybrid, All-Flash, NVMe) and node types (HX220, HX240), can independently scale compute and storage. The cluster can also be expanded incrementally, one node at a time, with linear performance.

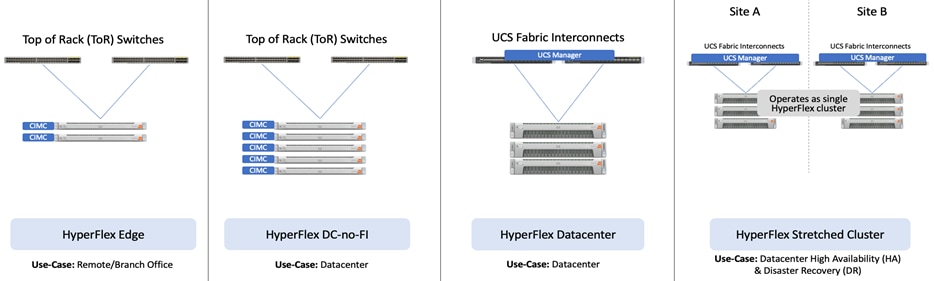

● Scalability

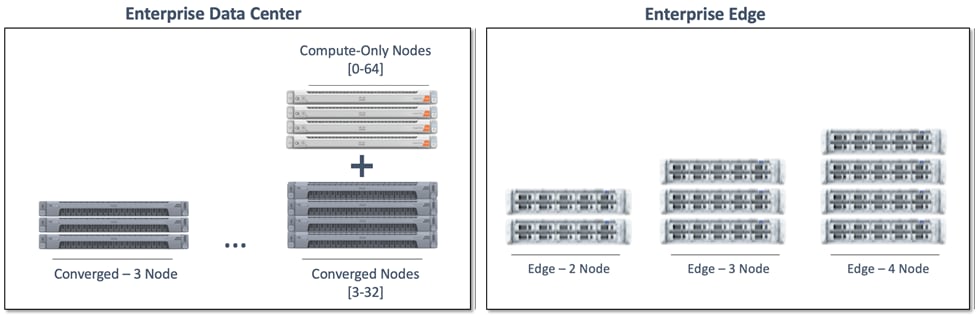

At the time of writing this document, a HyperFlex cluster can start with as few as two nodes in a HyperFlex edge cluster and expand up to 96 nodes in a single cluster using a combination of converged and compute-only nodes. The flexibility to independently scale compute and storage allows Enterprises to tune the cluster performance to meet workload requirements using a combination of CPU, memory, GPU acceleration, and storage. A 4-node, 2RU HyperFlex system with 15TB NVMe drives and 16 x 256GB DIMMs can provide over a petabyte of storage capacity and 4TB of memory, respectively.
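The arithmetic behind the sizing example above can be checked with a short calculation. This is a sketch under stated assumptions: the text does not give the number of drives per node or say whether the petabyte figure is raw capacity, so the code only computes how many 15TB drives each of the four nodes would need for raw capacity to exceed one petabyte (taken as 1000 TB), and what 16 x 256GB DIMMs add up to. The function names are illustrative.

```python
import math

NODES = 4
DRIVE_TB = 15
DIMM_COUNT = 16
DIMM_GB = 256
TARGET_TB = 1000  # one petabyte, in decimal terabytes (assumption)


def min_drives_per_node(target_tb, nodes, drive_tb):
    """Smallest drive count per node whose raw total reaches target_tb."""
    return math.ceil(target_tb / (nodes * drive_tb))


def dimm_total_tb(dimm_count, dimm_gb):
    """Total capacity of a set of DIMMs, in TB (1 TB = 1024 GB here)."""
    return dimm_count * dimm_gb / 1024


drives_needed = min_drives_per_node(TARGET_TB, NODES, DRIVE_TB)
raw_tb = drives_needed * NODES * DRIVE_TB
memory_tb = dimm_total_tb(DIMM_COUNT, DIMM_GB)

print(f"{drives_needed} drives per node -> {raw_tb} TB raw")
print(f"{DIMM_COUNT} x {DIMM_GB}GB DIMMs -> {memory_tb} TB")
```

Note that raw capacity is not usable capacity: the configured replication factor and data services such as deduplication and compression change how much data the cluster can actually hold.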

The HyperFlex platforms also offer multiple network interface options with speeds of 1-, 10-, and 25-Gbps on the edge platforms and 10-, 25-, 40-, and 100-Gbps on core data center platforms.

● Security

Security is an integral part of HyperFlex with several security features as outlined below:

◦ Data-at-Rest-Encryption with self-encrypting drives (SED) with Enterprise key management software using local passphrase or remote key management.

◦ Native Software Encryption of filesystem built into HyperFlex with key management via Intersight

◦ Security Baseline System Checks is part of HyperFlex Health Check capabilities in Intersight

◦ Secure CLI/Shell reduces attack surface by limiting commands and access via SSH to ‘admin’ user only.

◦ Secure Boot verifies the trustworthiness of ESXi hypervisor by chaining each stage of boot with a hardware root of trust provided by the Cisco Trust Anchor Module

◦ Security Hardened APIs with support for Security Technical Implementation Guides (STIGs) for all vSphere components

For more information, see: Cisco HyperFlex - Hyperconverged Infrastructure (HCI) on cisco.com

A standard HyperFlex cluster requires a minimum of three HX-Series converged nodes with compute and storage. Data is replicated across at least two of these nodes, and a third node is required for continuous operation in the event of a single-node failure. Each node that has disk storage is equipped with at least one high-performance SSD drive for data caching and rapid acknowledgment of write requests. Each node also is equipped with additional disks, up to the platform’s physical limit, for long term storage and capacity.

HyperFlex servers come in multiple form-factors (1RU, 2RU) with a range of Intel and AMD processor, memory, and drive options. For a complete list of server options and specifications, see: https://www.cisco.com/c/en/us/products/hyperconverged-infrastructure/hyperflex-hx-series/index.html#~models

The servers in the solution are equipped with Cisco VIC 1387 mLOM interface cards.

The mLOM slot is used to install a Cisco VIC without consuming a PCIe slot, which provides greater I/O expandability. It incorporates next-generation converged network adapter (CNA) technology from Cisco, providing investment protection for future feature releases. The card enables a policy-based, stateless, agile server infrastructure that can present up to 256 PCIe standards-compliant interfaces to the host, each dynamically configured as either a network interface card (NIC) or host bus adapter (HBA). The personality of the interfaces is set programmatically using the service profile associated with the server. The number, type (NIC or HBA), identity (MAC address and World Wide Name [WWN]), failover policy, adapter settings, bandwidth, and quality-of-service (QoS) policies of the PCIe interfaces are all specified using the service profile.

The Cisco UCS VIC 1387 Card is a dual-port Enhanced Quad Small Form-Factor Pluggable (QSFP+) 40-Gbps Ethernet, and Fibre Channel over Ethernet (FCoE)-capable PCI Express (PCIe) modular LAN-on-motherboard (mLOM) adapter installed in the Cisco UCS HX-Series Rack Servers. The Cisco UCS VIC 1387 is used in conjunction with the Cisco UCS 6332 or 6332-16UP model Fabric Interconnects.

Cisco UCS Fabric Interconnect

The Cisco UCS Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. Depending on the model chosen, the Cisco UCS Fabric Interconnect offers line-rate, low-latency, lossless 10 Gigabit, 25 Gigabit, 40 Gigabit, and 100 Gigabit Ethernet connectivity. Cisco UCS Fabric Interconnects provide the management and communication backbone for Cisco UCS and HyperFlex series servers. All servers and chassis, and therefore all blades, attached to the Cisco UCS Fabric Interconnects become part of a single, highly available management domain, and the Fabric Interconnects provide LAN connectivity to all servers within a Cisco UCS domain. The product family supports Cisco low-latency, lossless Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The Fabric Interconnect supports multiple traffic classes over the Ethernet fabric from the servers to the uplinks.

There are several models of Cisco UCS Fabric Interconnects. A pair of Cisco UCS 6332 Fabric Interconnects are used in this design.

Cisco UCS 6332 Fabric Interconnect

The Cisco UCS 6332 Fabric Interconnect is a one-rack-unit (1RU) 40 Gigabit Ethernet and FCoE switch offering up to 2560 Gbps of throughput. The switch has 32 40-Gbps fixed Ethernet and FCoE ports. Up to 24 of the ports can be reconfigured as 4x10Gbps breakout ports, providing up to 96 10-Gbps ports, although Cisco HyperFlex nodes must use a 40GbE VIC adapter in order to connect to a Cisco UCS 6300 Series Fabric Interconnect.
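The port and throughput figures quoted for the Cisco UCS 6332 can be cross-checked with simple arithmetic. The sketch below assumes the 2560 Gbps figure counts traffic in both directions (full duplex), which the text does not state explicitly; the constant and function names are illustrative.

```python
PORTS = 32                    # fixed 40-Gbps ports on the 6332
PORT_GBPS = 40
BREAKOUT_CAPABLE_PORTS = 24   # ports that can be split into 4 x 10 Gbps
LANES_PER_BREAKOUT = 4


def aggregate_throughput_gbps(ports, port_gbps, full_duplex=True):
    """Sum of port bandwidth; doubled when both directions are counted."""
    one_way = ports * port_gbps
    return one_way * 2 if full_duplex else one_way


def breakout_port_count(ports, lanes):
    """Number of lower-speed ports produced by breaking out each port."""
    return ports * lanes


throughput = aggregate_throughput_gbps(PORTS, PORT_GBPS)
ten_gig_ports = breakout_port_count(BREAKOUT_CAPABLE_PORTS, LANES_PER_BREAKOUT)

print(f"Aggregate throughput: {throughput} Gbps")
print(f"10-Gbps breakout ports: {ten_gig_ports}")
```

Under that full-duplex assumption, both results agree with the figures given in the paragraph above.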

Other models of Cisco UCS Fabric Interconnects are the Cisco UCS 6500 and 6400 series in addition to the Cisco UCS 6300 series. Table 1 lists some key differences between these three Cisco UCS FI series.

Table 1. Cisco UCS Fabric Interconnect Models

| Cisco UCS Fabric Interconnects | UCS 6500 Series | UCS 6400 Series | UCS 6300 Series |
| --- | --- | --- | --- |
| Port Density | 36 | 54 or 108 | 32 x 40GbE -or- 24 x 40GbE and 16 x 1- and 10GbE |
| Port Speeds | 1/10/25/40/100-Gbps Ethernet or FCoE | 1/10/25/40/100-Gbps Ethernet or FCoE | 1/10/40-Gbps |
| Throughput | Up to 7.42 Tbps | 6454: Up to 3.82 Tbps; 64108: Up to 7.42 Tbps | Up to 2.56 Tbps |

Cisco HyperFlex Container Storage Interface (CSI)

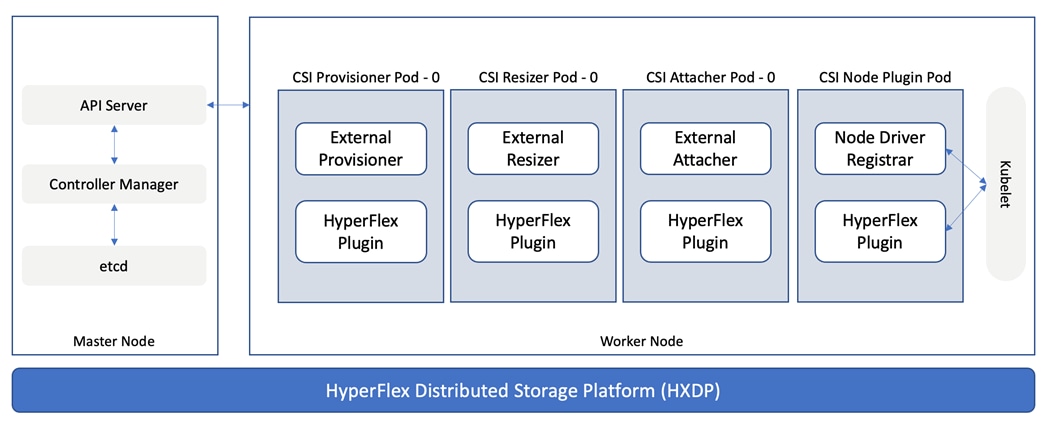

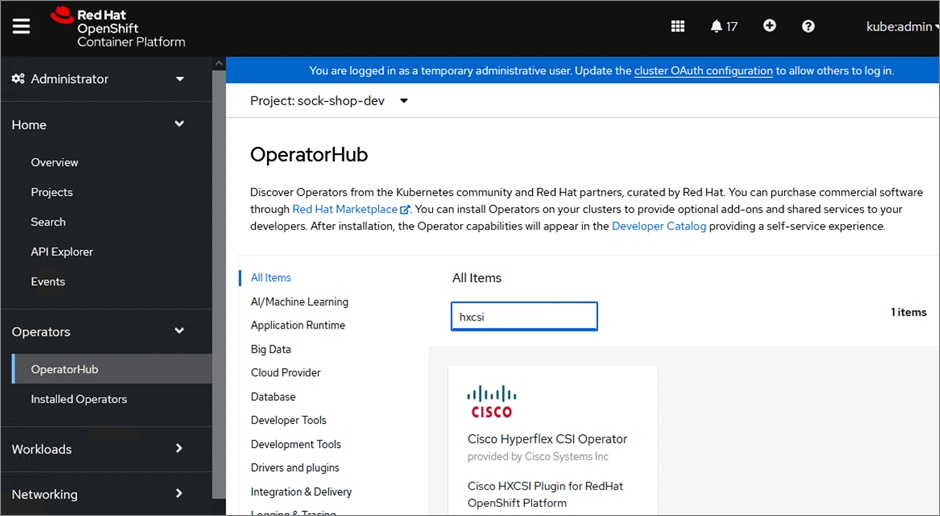

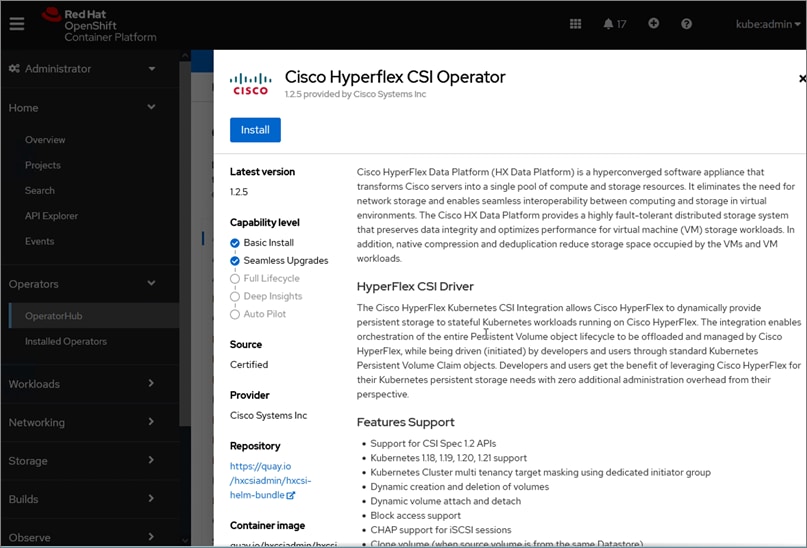

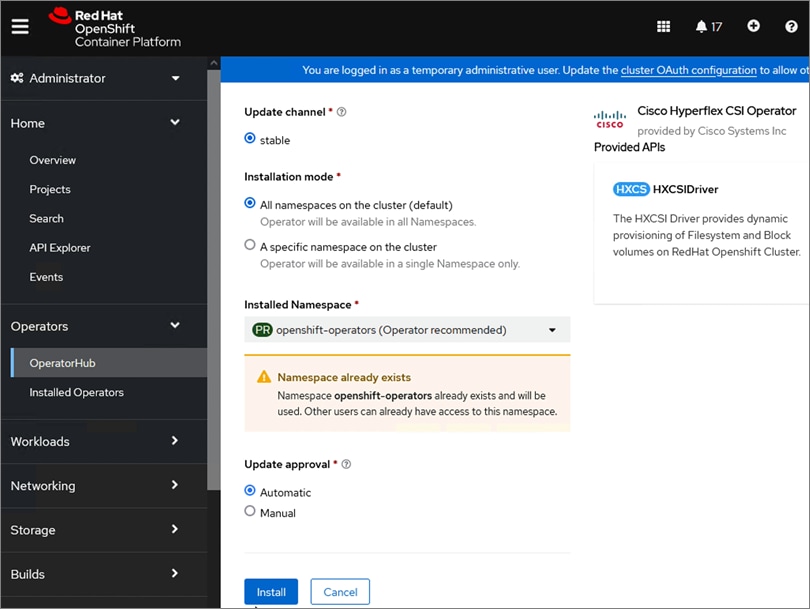

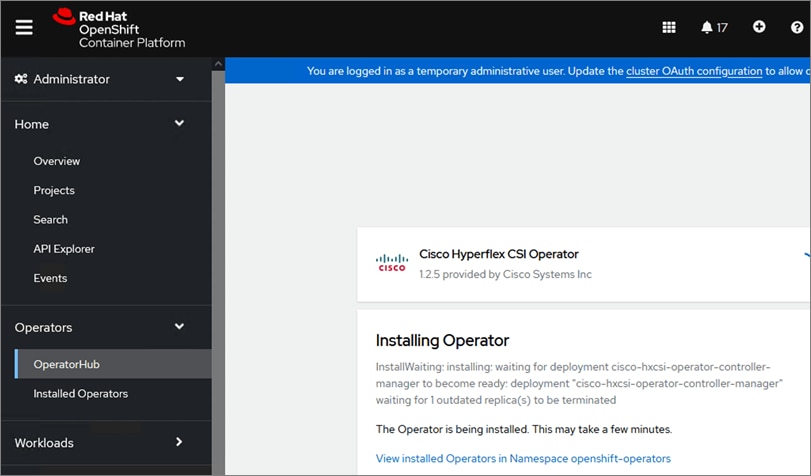

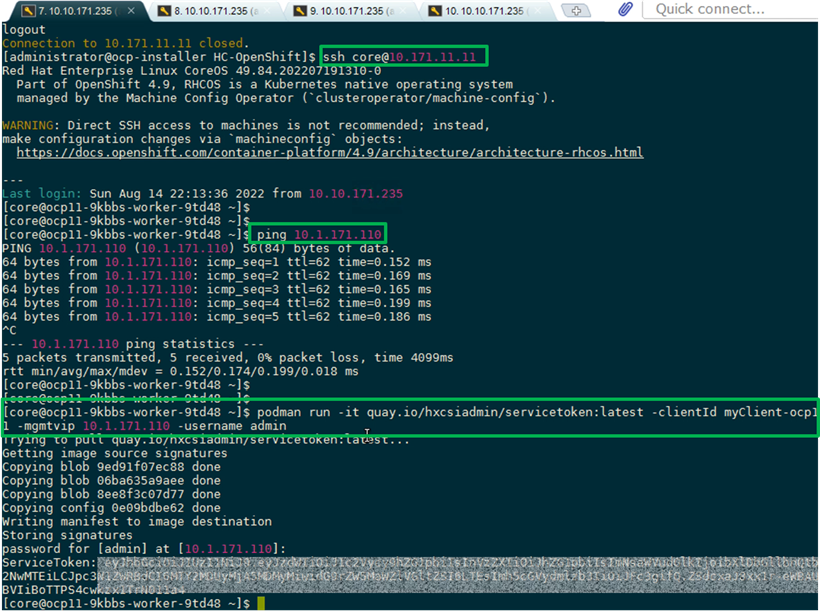

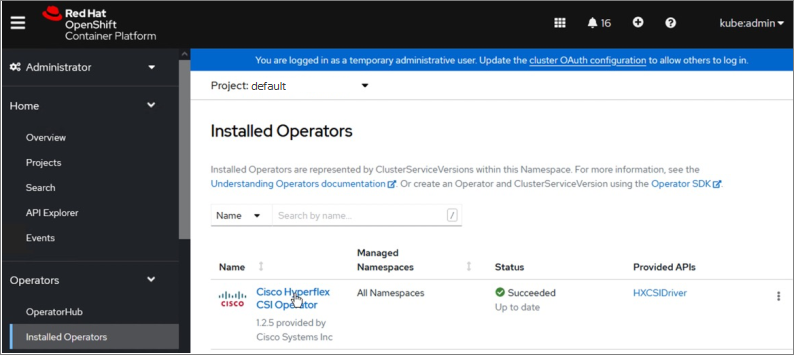

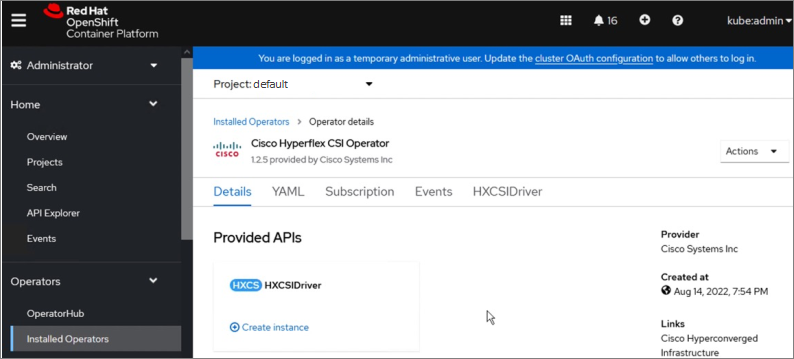

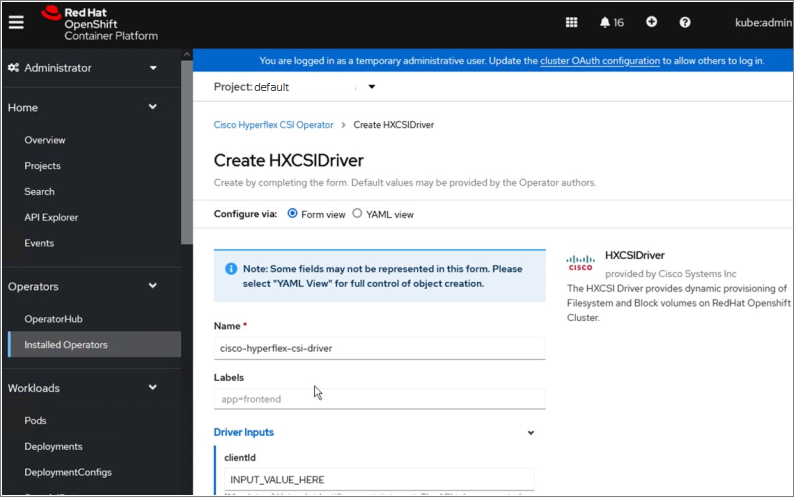

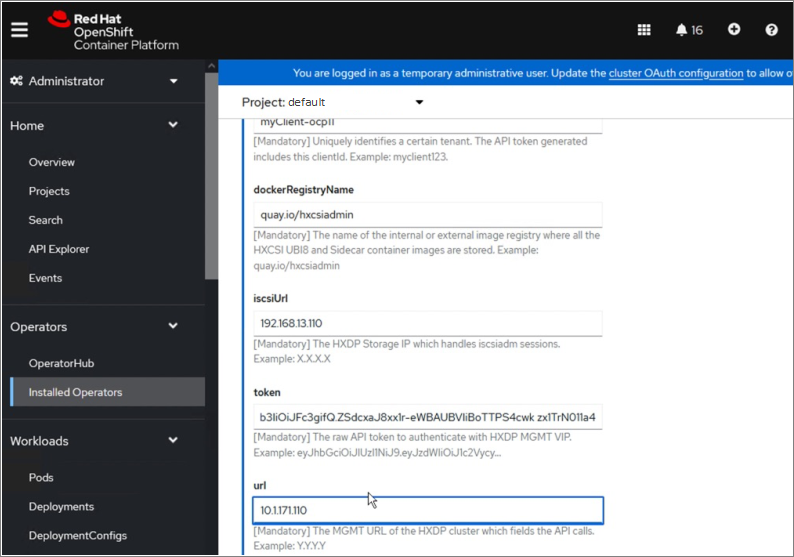

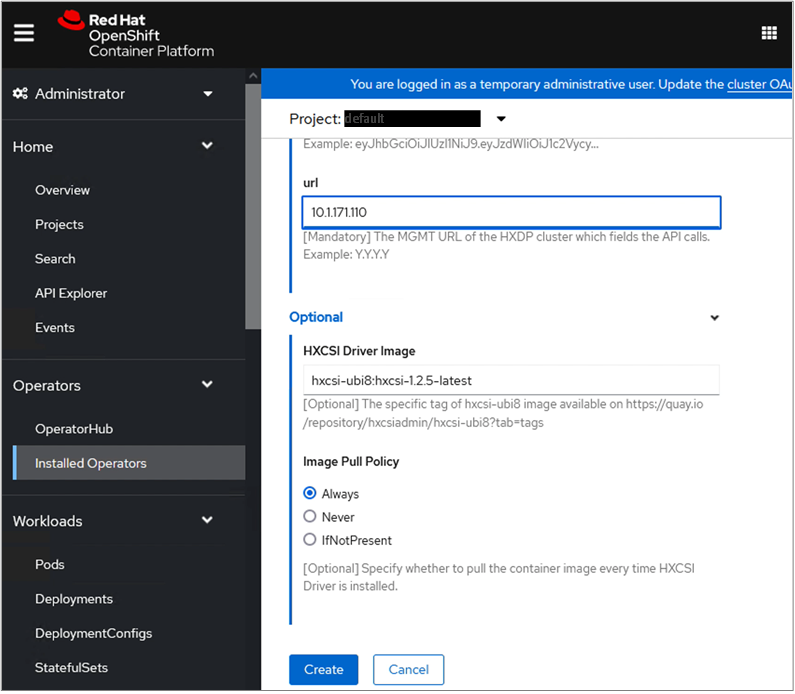

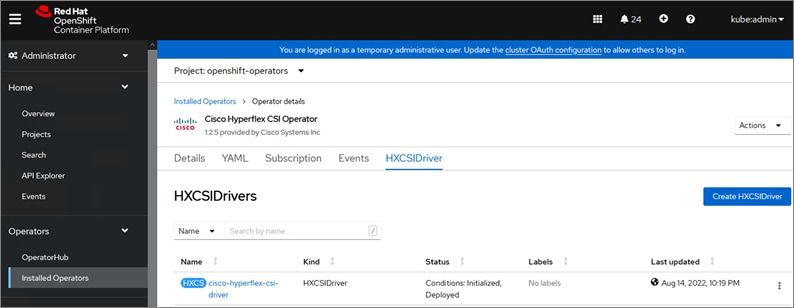

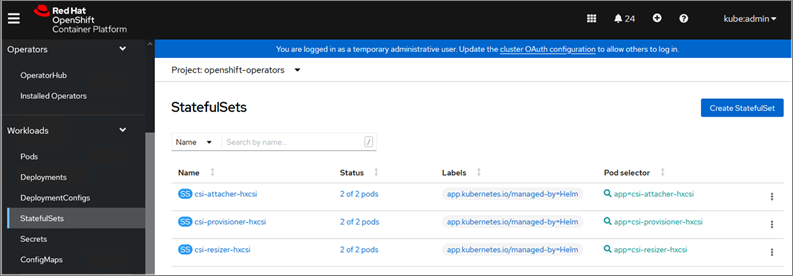

Cisco HyperFlex CSI is an out-of-tree, container-based Kubernetes storage plugin that enables stateful container workloads to dynamically request persistent storage from the underlying HyperFlex storage. Developers and operators can leverage their Cisco HyperFlex systems to meet both Kubernetes compute and storage needs with minimal additional administrative overhead. With Cisco HyperFlex CSI, the performance, efficiency, and resiliency of Cisco HyperFlex can now be extended to stateful Kubernetes applications. HyperFlex CSI is Red Hat certified and available as an operator from Red Hat’s Operator Hub. The Cisco HyperFlex CSI operator is developed and published using a family of tools and capabilities (Operator SDK, Operator Lifecycle Manager, and Operator Registry); see Operator Framework for more information. The Cisco HyperFlex CSI Operator, along with the driver, is published on Red Hat’s registry (Quay.io) and made available to Kubernetes developers and operators via the embedded Operator Hub on the OCP cluster console.

To deploy and consume HyperFlex storage, the HyperFlex CSI uses native Kubernetes objects and primitives (for example, Storage Classes) that Kubernetes users are already familiar with, making it easier to adopt and use. A storage request made through a standard Kubernetes Persistent Volume Claim (PVC) offloads orchestration of the entire Persistent Volume lifecycle to Cisco HyperFlex. The CSI plugin can be quickly deployed by searching for it in Red Hat’s Operator Hub and then clicking 'Install'. Once the plugin and driver are deployed and set up, any stateful application running on HyperFlex can request and use storage as needed.
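As an illustration of the PVC-based workflow described above, the following minimal sketch builds a standard Kubernetes PersistentVolumeClaim manifest and prints it as JSON, which Kubernetes tooling accepts alongside YAML. The claim name `demo-data` and the storage class name `hx-csi-example` are hypothetical placeholders; the actual storage class name depends on how the HyperFlex CSI driver is configured on a given cluster.

```python
import json


def build_pvc(name, storage_class, size, access_mode="ReadWriteOnce"):
    """Return a PersistentVolumeClaim manifest as a plain dictionary."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            # Hypothetical name; use the storage class defined on your cluster.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


pvc = build_pvc("demo-data", "hx-csi-example", "10Gi")
print(json.dumps(pvc, indent=2))
```

The printed manifest could be saved to a file and applied with `oc apply -f <file>`, after which a Pod can reference the claim by name to mount the volume.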

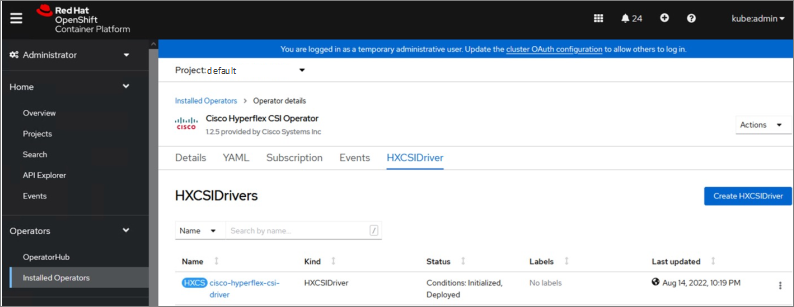

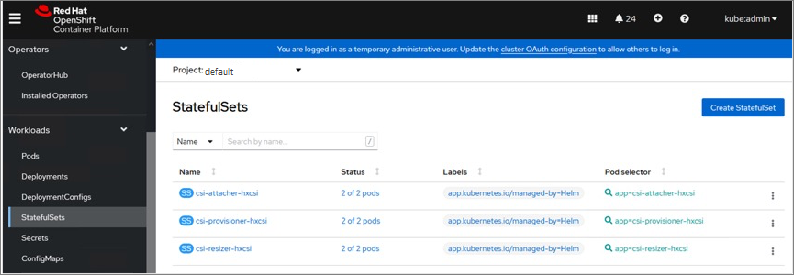

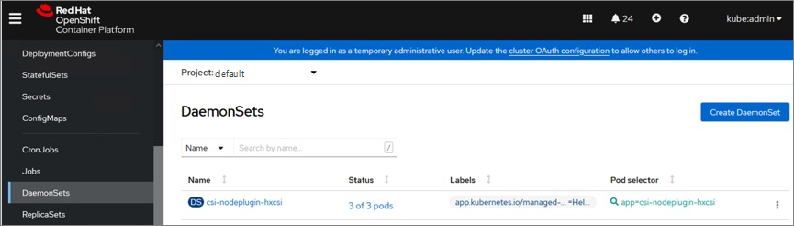

The Cisco HyperFlex CSI components run as Pods and containers on the Red Hat OCP cluster where the plugin is installed. The different HyperFlex CSI components deployed on a cluster are shown in Figure 3.

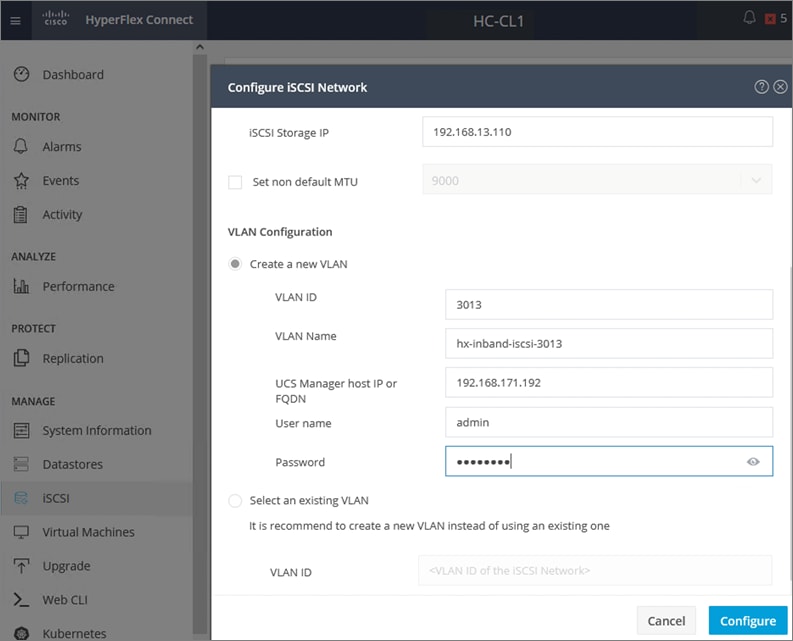

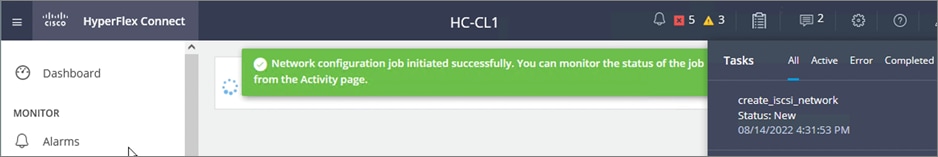

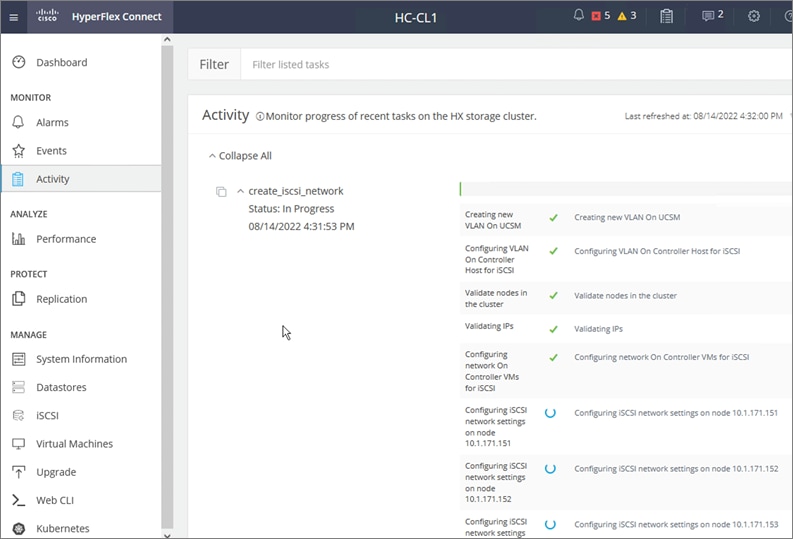

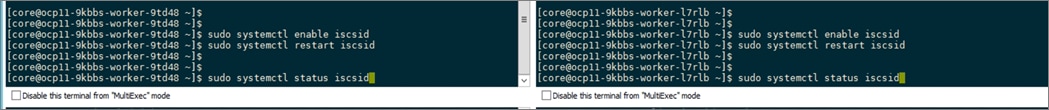

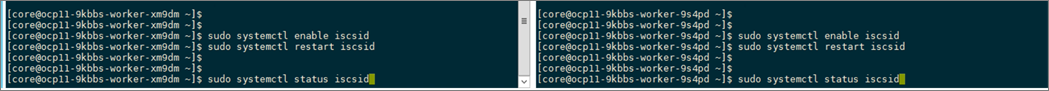

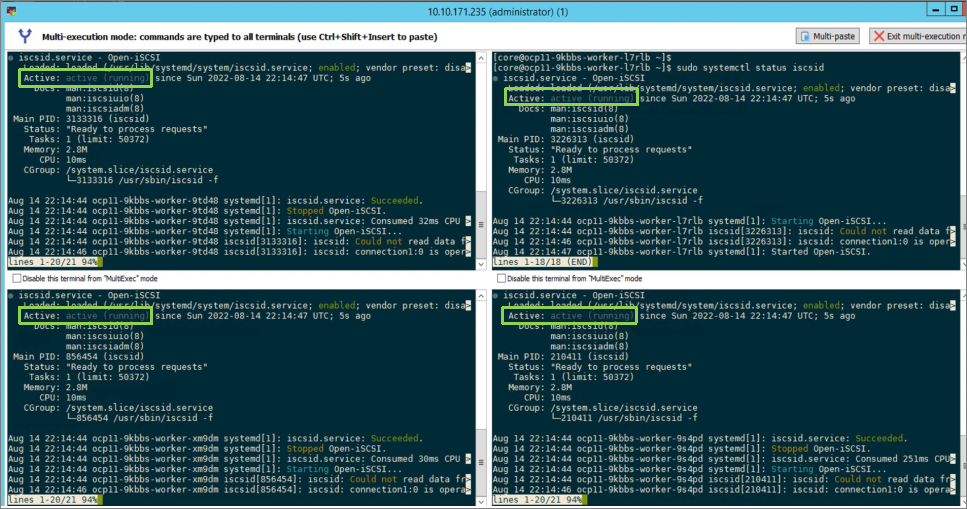

On the HyperFlex side, the internal distributed storage file system is presented using Internet Small Computer Systems Interface (iSCSI) protocol to applications and other external entities. The CSI Plugin leverages the HyperFlex iSCSI capability to dynamically create iSCSI volumes and present them to the Red Hat OCP cluster to be utilized by Kubernetes Pods for persistent storage. To enable OCP pods to mount HyperFlex storage as iSCSI volumes, a dedicated iSCSI storage network is utilized that routes traffic from OCP cluster in the HyperFlex VM network to the iSCSI storage data network.

At the time of writing this document, Cisco HyperFlex CSI supports the following features:

● Support for CSI Spec 1.2 APIs

● Kubernetes 1.18, 1.19, 1.20, 1.21 support

● Kubernetes Cluster multi tenancy target masking using dedicated initiator group

● Dynamic creation and deletion of volumes

● Dynamic volume attach and detach

● Block access support

● CHAP support for iSCSI sessions

● Clone volume (when source volume is from the same Datastore)

● PV support with different filesystems (Ext4, Ext3, XFS)

● Volume space statistics reporting per CSI specs

● Multi-writer support (ReadWriteMany) for Block Mode only

● Volume resize support for block mode volumes and ext3, ext4 filesystem volumes (expansion)

● Software encrypted volumes (requires HXDP 5.0(2a) or higher)

● Volume snapshots (requires HXDP 5.0(2a) or higher)

● CHAP protection for volumes (requires HXDP 5.0(2a) or higher)
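Some of the constraints in the feature list above can be expressed as a simple pre-flight check on a volume request. The sketch below encodes only what the list states: ReadWriteMany is listed for block mode only, filesystem volumes are listed for ext4, ext3, and XFS, and expansion is listed for block volumes and ext3/ext4 filesystems. The `check_claim` function and its parameters are hypothetical and are not part of the HyperFlex CSI driver.

```python
SUPPORTED_FILESYSTEMS = {"ext4", "ext3", "xfs"}
RESIZABLE_FILESYSTEMS = {"ext4", "ext3"}


def check_claim(volume_mode, access_mode, fs_type=None, wants_resize=False):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    if volume_mode not in ("Block", "Filesystem"):
        problems.append(f"unknown volume mode: {volume_mode}")
        return problems
    if access_mode == "ReadWriteMany" and volume_mode != "Block":
        problems.append("ReadWriteMany is listed for Block mode only")
    if volume_mode == "Filesystem":
        if fs_type not in SUPPORTED_FILESYSTEMS:
            problems.append(f"filesystem not listed as supported: {fs_type}")
        elif wants_resize and fs_type not in RESIZABLE_FILESYSTEMS:
            problems.append(f"expansion is not listed for filesystem: {fs_type}")
    return problems


print(check_claim("Block", "ReadWriteMany"))
print(check_claim("Filesystem", "ReadWriteMany", fs_type="ext4"))
print(check_claim("Filesystem", "ReadWriteOnce", fs_type="xfs", wants_resize=True))
```

A check like this only reflects the list as written at the time of the document; the driver's release notes remain the reference for what a given version supports.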

For more information, see the Cisco HyperFlex CSI Operator page on catalog.redhat.com.

As applications and data become more distributed, from core data center and edge locations to public clouds, a centralized management platform is essential. IT agility will suffer without a consolidated view of the infrastructure resources and centralized operations. Cisco Intersight provides a cloud-hosted management and analytics platform for all HyperFlex, Cisco UCS, and other supported third-party infrastructure across the globe. It provides an efficient way of deploying, managing, and upgrading infrastructure in the data center, ROBO, edge, and co-location environments.

Cisco Intersight provides:

● No Impact Transition: The embedded connector (Cisco HyperFlex, Cisco UCS) allows customers to start consuming benefits without a forklift upgrade.

● SaaS/Subscription Model: SaaS model provides for centralized, cloud-scale management and operations across hundreds of sites around the globe without the administrative overhead of managing the platform.

● Enhanced Support Experience: A hosted platform allows Cisco to address issues platform-wide, and the experience extends into TAC-supported platforms.

● Unified Management: Single pane of glass, consistent operations model, and experience for managing all systems and solutions

● Programmability: End-to-end programmability with a native API, SDKs, and popular DevOps toolsets enables customers to consume the platform natively.

● Single point of automation: Automation using Ansible, Terraform and other tools can be done through Intersight for all systems it manages.

● Recommendation Engine: An approach of visibility, insight, and action powered by machine intelligence and analytics provides real-time recommendations with agility and scale. The embedded recommendation platform draws on insights sourced from across the Cisco install base and tailors them to each customer.

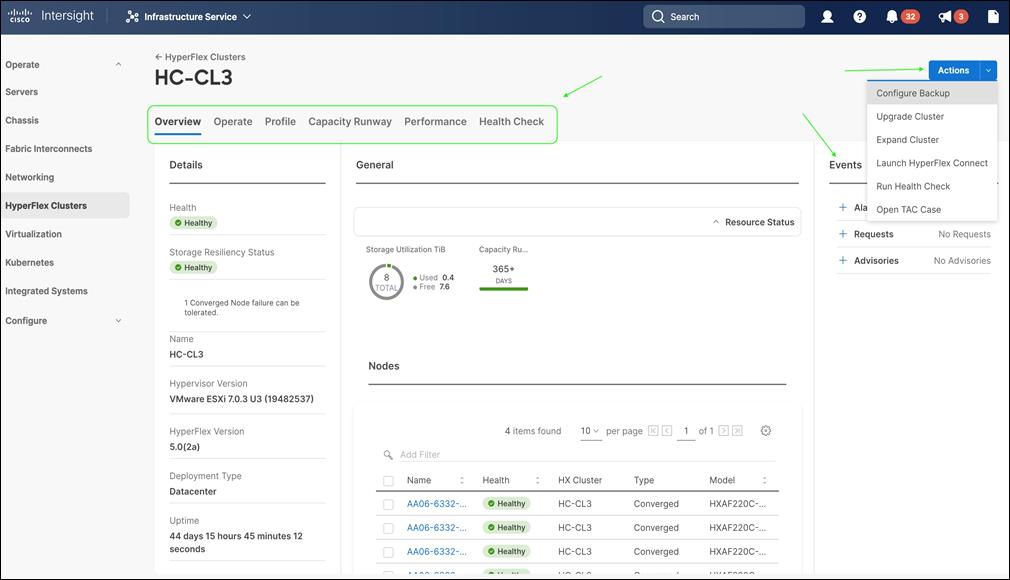

In this solution, Cisco Intersight unifies and simplifies the hybrid cloud operations of Cisco HyperFlex clusters wherever they are deployed. The life cycle management capabilities that Intersight offers specifically for HyperFlex systems are shown below.

● Deploy HyperFlex standard/data center cluster including DC-no-FI configurations, and edge clusters

● Full-stack upgrade of a HyperFlex cluster, from HyperFlex/Cisco UCS server firmware and HXDP software to VMware vSphere

● Monitor the status of all actions initiated via Intersight (for example, HyperFlex install)

● Get security alerts, software advisories with recommendations, alarms, and other notifications

● Detailed hardware and software component inventory for each system, including hosts, virtual machines, data stores, disks, and encryption key management.

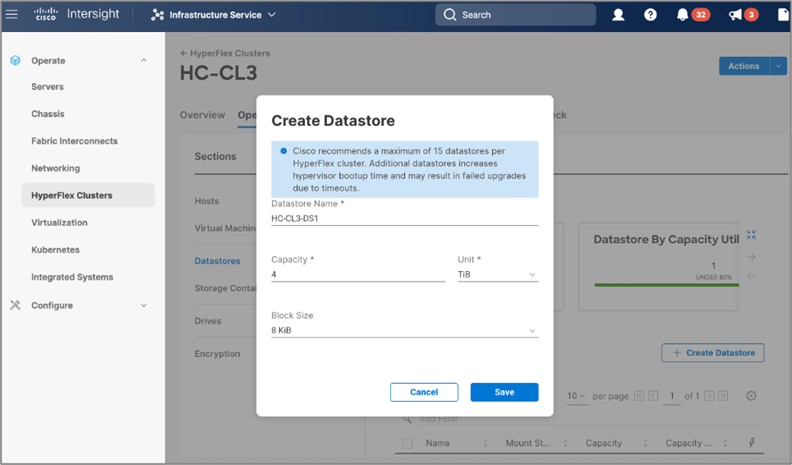

● Storage management through features such as create, delete, mount, and unmount data stores

● Tools for Hardware Compatibility List (HCL) checks, capacity planning, storage performance monitoring (IOPS, throughput, latency), and health checks.

● Launch vKVM, Cisco UCS Manager, and HyperFlex Connect to access individual cluster management tools.

● Provision backups, manage encryption keys, open support cases that are populated with the serial numbers of the servers in the clusters, and expand clusters

● Customizable dashboard with pre-configured widgets to view the information that is of interest to the administrator

For more information, go to the Cisco Intersight product page on cisco.com.

Red Hat OpenShift Container Platform (OCP)

Enterprises are modernizing their applications using scalable, cloud-native architectures and technologies such as containers and micro-services that can run anywhere, from private to public to hybrid clouds. Kubernetes (K8s) is an open-source orchestration system that automates the deployment, scaling, and management of containerized applications. Kubernetes is a project within Cloud Native Computing Foundation (CNCF); it is the de facto industry standard for container orchestration. Kubernetes projects have over 3 million contributions from a large open-source community that includes companies such as Red Hat and Cisco. Red Hat is also one of the top contributors to the Kubernetes project.

Red Hat OpenShift

Red Hat OpenShift is a commercially packaged product derived from the upstream open-source Kubernetes. OpenShift goes beyond standard Kubernetes to provide a broader cloud-native ecosystem of tools and extensions that make it easier to design, develop, test, and operate a cloud-native environment. OpenShift provides extensions such as built-in security, a user-friendly dashboard, integrated image registries, an embedded operator hub, and integrated CI/CD pipelines. It is a secure Enterprise platform with certified third-party integrations, testing, and support from Red Hat.

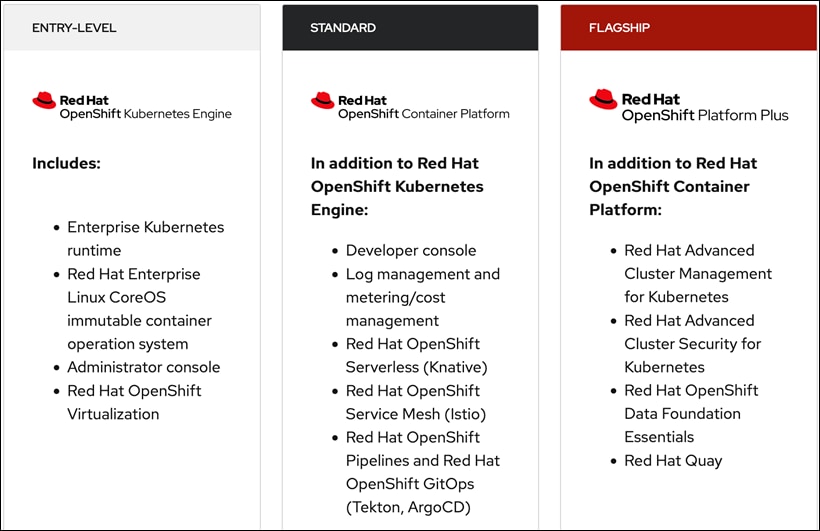

Red Hat OpenShift is a suite of three products:

● OpenShift Kubernetes Engine (OKE)

● OpenShift Container Platform (OCP)

● OpenShift Container Platform Plus

Each product includes an Enterprise-class container orchestration system bundled with different value-added components as shown in Figure 4.

Note: This solution uses Red Hat OpenShift Container Platform, but Enterprises can also use Red Hat OpenShift Container Platform Plus to leverage advanced capabilities.

Red Hat OpenShift Container Platform

Red Hat OCP is a secure, enterprise-class, Kubernetes platform for building, deploying, and managing cloud-native environments and applications across a hybrid cloud. OCP provides enterprise-grade Kubernetes but with developer-focused (for example, a developer console, service mesh) and operational (for example, log and cost management, CI/CD pipelines, GitOps) capabilities and tools to support an Enterprise’s cloud-native efforts. Red Hat OCP uses Red Hat Enterprise Linux CoreOS (RHCOS) as a foundation for running and managing containers using container technologies such as CRI-O and runc. OCP is designed to provide consistent developer and operator experience across a hybrid environment.

Some of the capabilities in Red Hat OCP include:

● Automated deployment of OCP clusters on-prem (bare metal, VMware vSphere, Red Hat OpenStack® Platform, Red Hat Virtualization) and in public clouds (Amazon Web Services, Google Cloud Platform, IBM Cloud, Microsoft Azure).

● Automated upgrades of OCP clusters with over-the-air upgrades using web console or OpenShift CLI (oc)

● Add services with push-button ease – Once a cluster is deployed, Red Hat OpenShift uses Kubernetes Operators to deploy additional capabilities and services on the cluster. Red Hat and community supported operators are available in the embedded Operator Hub and can be deployed with the click of a button.

● Multi-cluster management using Red Hat’s cloud-based Hybrid Cloud Console or enterprise-managed Advanced Cluster Management (ACM) provides a consolidated view of all clusters, with the ability to easily access and use other K8s technologies and services. OCP clusters can also be individually managed using a web-based cluster console or APIs.

● Persistent storage support – OCP provides support for a broad range of eco-system storage partners including the HyperFlex storage used in this solution.

● Scalability – OCP can scale to thousands of instances across hundreds of nodes in seconds as needed.

● Automate container and application builds, deployments, scaling, cluster management, and more with ease.

● Self-service provisioning – Developers can quickly and easily create applications on demand from the tools they use most, while operations retain full control over the entire environment.

● Source-to-image deployment – OCP provides a toolkit and workflow for producing ready-to-run images by injecting source code into a container and letting the container prepare that source code for execution.

For more information, see: Red Hat OpenShift Container Platform product page on redhat.com.

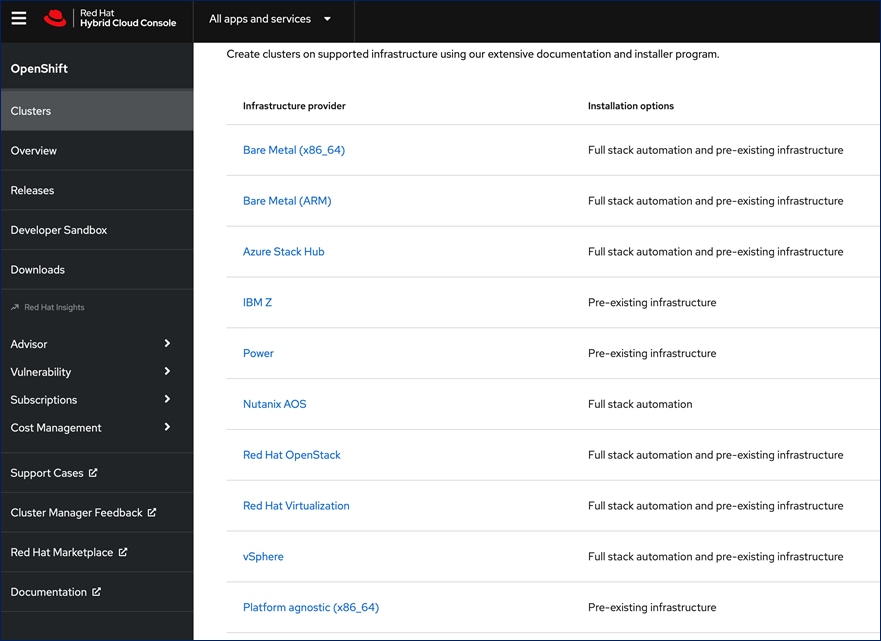

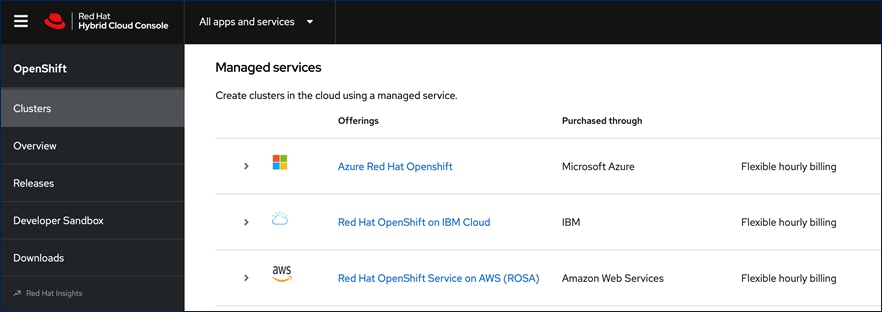

Red Hat Hybrid Cloud Console

Red Hat Hybrid Cloud Console is a centralized, SaaS-based management console for managing multiple OCP clusters. It is used in this solution to provide consistent container management across a hybrid environment. The SaaS model enables Enterprises to develop, deploy, and innovate faster across multiple infrastructures and quickly take advantage of new capabilities without the overhead of managing the tool. The console gives Enterprises more control and visibility as environments grow and scale. The Hybrid Cloud Console also provides tools to proactively address issues, open and manage support cases, manage cloud costs, subscriptions, etc.

For more information, see: Red Hat Hybrid Cloud Console product page on redhat.com

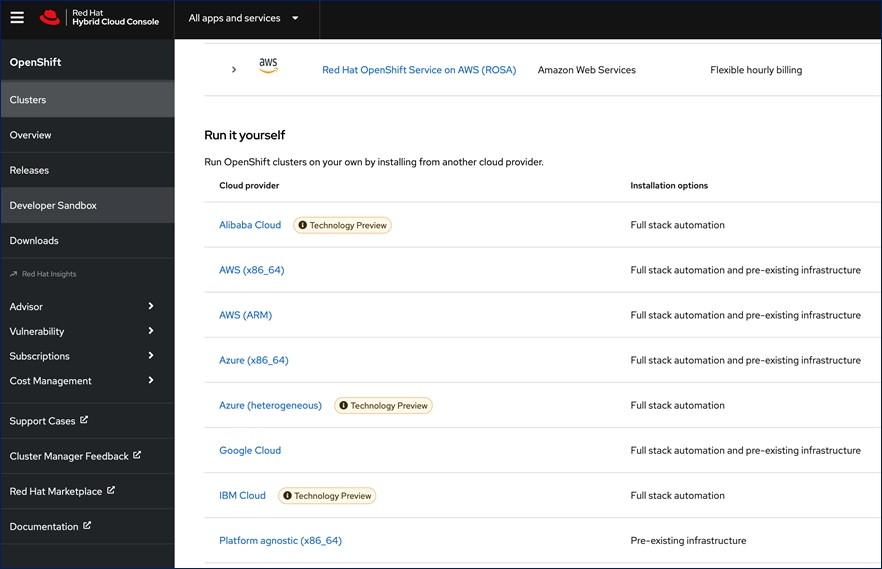

Consumption Models

Red Hat OpenShift is available as a service managed by Red Hat and the cloud provider, or as a self-managed service where the Enterprise manages and maintains the OCP cluster. Unlike other managed Kubernetes services, including the ones offered by the public cloud providers, Red Hat OCP managed and hosted on the same public provider environments provides support for the full stack rather than just Kubernetes, with a consistent experience across the hybrid environment. Red Hat OpenShift is a complete, production-ready application platform with additional services such as CI/CD pipelines, monitoring, security, registry, and service mesh included on top of upstream Kubernetes. The managed cloud-hosted services include Red Hat OpenShift Service on AWS, Microsoft Azure Red Hat OpenShift, Red Hat OpenShift Dedicated on Google Cloud or AWS, and Red Hat OpenShift on IBM Cloud.

Installation Options

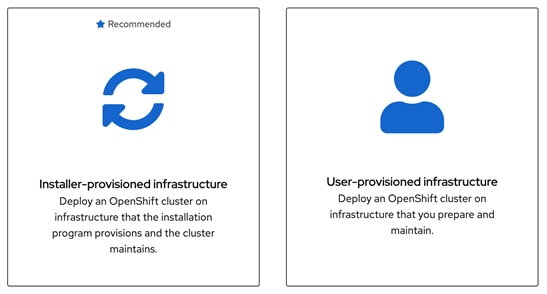

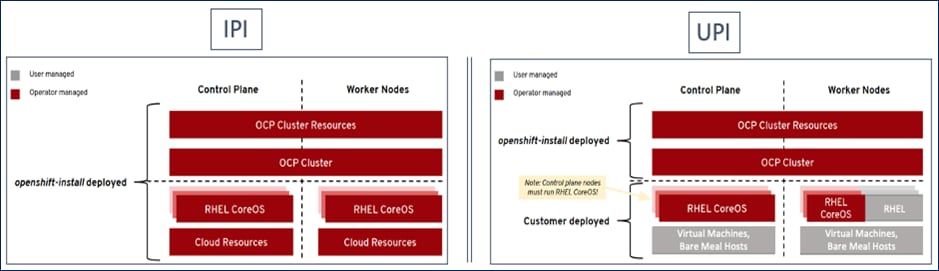

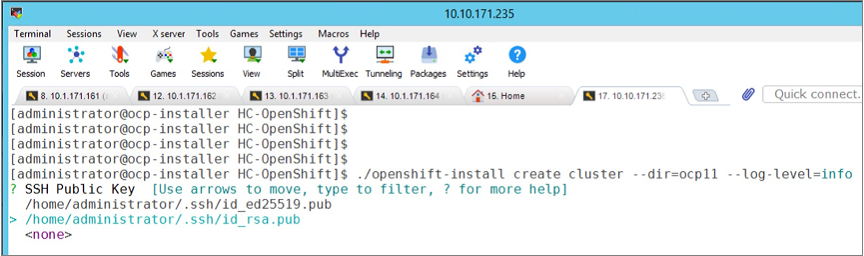

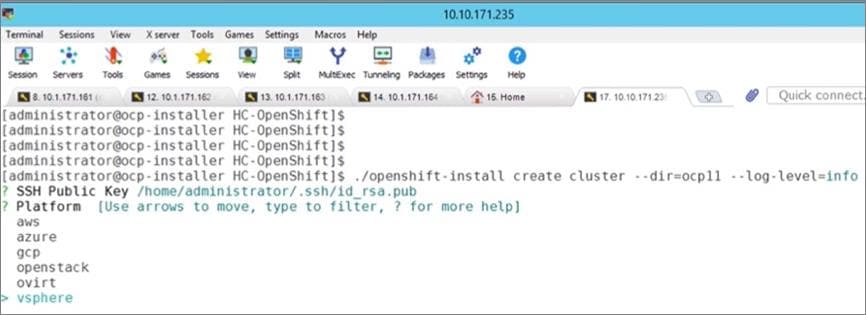

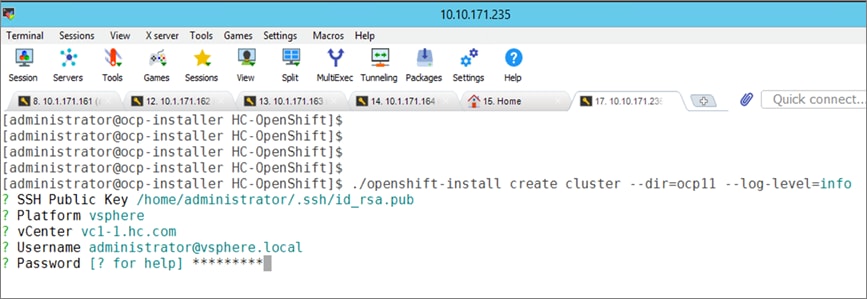

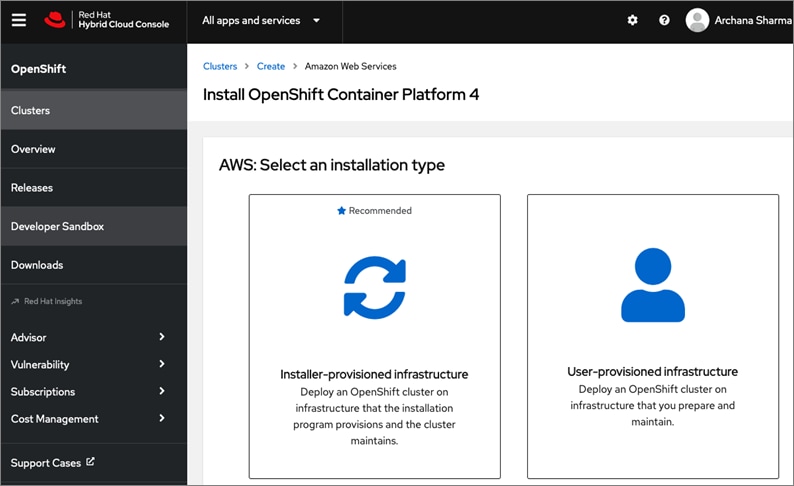

The Red Hat OpenShift Container Platform installer can install and deploy a cluster using either the Installer-Provisioned Infrastructure (IPI) or the User-Provisioned Infrastructure (UPI) method.

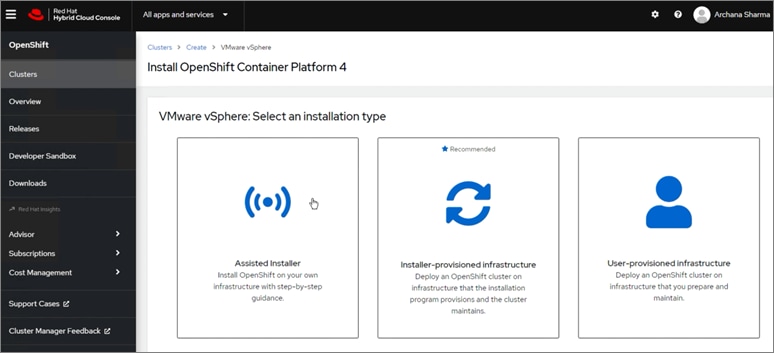

The IPI installation is an opinionated, prescriptive approach, but it is the quickest way to deploy a cluster. The install implements best practices and is fully automated. It takes approximately 45 minutes and can be completed with minimal understanding of the underlying infrastructure. In the UPI method, the administrator is responsible for loading the operating system (RHCOS, RHEL) and bringing up the different nodes (for example, bootstrap, master, worker) in an OCP cluster.

In on-prem environments, a third option, Assisted Installer, is also supported where the user provisions and maintains the infrastructure but with step-by-step guidance from the installer.

Both installation mechanisms offer either the default install or customizations. For example, IPI Install on AWS can deploy a cluster in a restricted or private network, existing virtual private cloud (VPC), government, or top-secret region, with network customizations or a fully custom install where the installer still provisions the infrastructure.

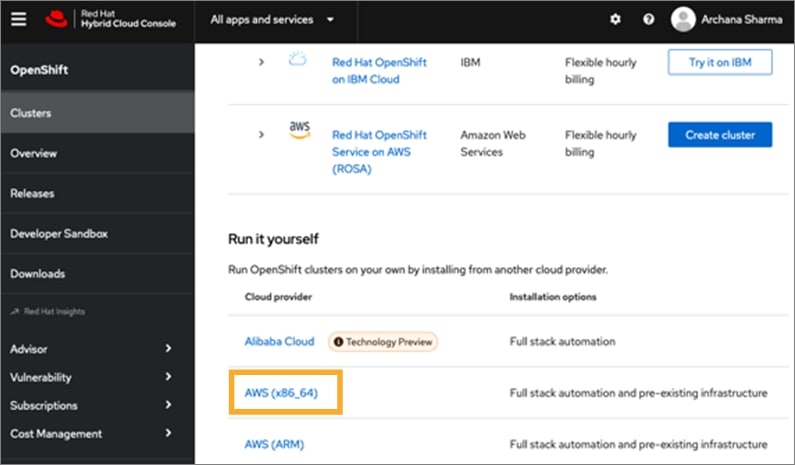

The Red Hat OCP installer is flexible and provides several options for deploying a cluster in a variety of infrastructure environments. The environments supported by the Hybrid Cloud Console at the time of writing of this document are:

● On-prem (Bare Metal, Virtual, Private cloud)

● Public Cloud – Managed service

● Public Cloud – Self-managed

Note: For a complete list of all environments supported using the IPI and UPI installation methods, please refer to the Red Hat OCP documentation for a given release.

Red Hat Enterprise Linux CoreOS (RHCOS)

RHCOS is a lightweight, container-specific operating system, specifically designed for running container workloads. It is based on the secure, enterprise-grade Red Hat Enterprise Linux (RHEL). RHCOS is the default operating system on all Red Hat OCP cluster nodes, and the only operating system supported on control/master nodes. The recommended IPI installation method deploys RHCOS on all cluster machines, including compute/worker nodes, although both RHCOS and RHEL are supported on compute/worker nodes. RHCOS is tightly controlled, allowing only a few system settings to be modified using the Ignition configuration files. RHCOS is designed to deploy an OCP cluster with minimal user configuration; once the cluster is deployed, the cluster fully manages the RHCOS subsystem.

RHCOS includes:

● Ignition – for initial bootup configuration and disk related tasks on OCP cluster nodes

● CRI-O – Container Engine running on OCP cluster nodes

● Kubelet – Kubernetes service running on OCP cluster nodes

● Set of container tools

Ignition serves as a first-boot system configuration utility for initially bringing up and configuring the nodes in the OCP cluster. It also creates and formats disk partitions, writes files, creates file systems and directories, configures users, and so on. During a cluster install, the control/master nodes get their configuration file from the temporary bootstrap machine used during install, and the worker nodes get theirs from the control/master nodes. Ignition is designed to initialize systems; all subsequent configuration is done using the Machine Config Operator in OCP.
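As an illustration of the kind of first-boot data Ignition consumes, the following is a minimal sketch that builds an Ignition-style configuration as a Python dictionary and prints it as JSON. The spec version (3.2.0), the hostname, and the SSH key are assumptions chosen for the example, and the helper name make_ignition_config is hypothetical. In an actual OCP install, the installer generates these files, so this is only meant to show the general shape of the users and files sections.

```python
import base64
import json


def make_ignition_config(hostname: str, ssh_public_key: str) -> dict:
    """Build a minimal Ignition-style config as a plain dictionary (illustrative only)."""
    # File contents are passed as a data URL; here the file holds just the hostname.
    encoded = base64.b64encode((hostname + "\n").encode()).decode()
    return {
        "ignition": {"version": "3.2.0"},  # assumed spec version for this sketch
        "passwd": {
            # Configure a user and the SSH key allowed to log in as that user.
            "users": [{"name": "core", "sshAuthorizedKeys": [ssh_public_key]}]
        },
        "storage": {
            # Write a single file on first boot.
            "files": [
                {
                    "path": "/etc/hostname",
                    "mode": 420,  # decimal form of octal 0644
                    "contents": {"source": f"data:text/plain;base64,{encoded}"},
                }
            ]
        },
    }


# Hypothetical node name and placeholder key, used only for the example.
config = make_ignition_config("worker-0.example.com", "ssh-ed25519 AAAA... user@example")
print(json.dumps(config, indent=2))
```

The sketch covers only two of the tasks listed above (writing files and configuring users); disk partitioning and file system creation would be expressed in additional sections of the same document.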

CRI-O is a stable, standards-based, lightweight container engine for Kubernetes that runs and manages the containers. CRI-O implements the Kubernetes Container Runtime Interface (CRI) for running Open Container Initiative (OCI) compliant runtimes. OCP uses the default OCI runtime, runc, as the container runtime. CRI-O has a small footprint and a smaller attack surface, making it more secure. CRI-O, along with RHCOS, provides capabilities such as starting, stopping, and restarting containers. CRI-O is an independent project in the Cloud Native Computing Foundation (CNCF), which also supports and maintains Kubernetes.

Kubelet is a Kubernetes service running on every node in the cluster. It communicates with the control plane components and processes requests for running, stopping, and managing container workloads.

Container Tools: RHCOS also includes a set of container tools (for example, podman, skopeo, crictl) for managing containers and container images. These tools cover container operations such as start, stop, run, list, and remove, and image operations such as copy, authenticate, and sign. RHCOS uses the rpm-ostree system to pull, extract, and write container images to disk for cluster updates.

RHCOS is deployed using configurations in Ignition files. The OCP installer creates the Ignition configuration files necessary to deploy the OCP cluster with RHCOS. The configuration is based on the user-provided responses to the installer. When using the IPI method, the installer downloads these files and images and installs them on the underlying infrastructure. When using the UPI method, however, the user must download the RHCOS images, generate the Ignition configuration files, and use them to provision the cluster machines.

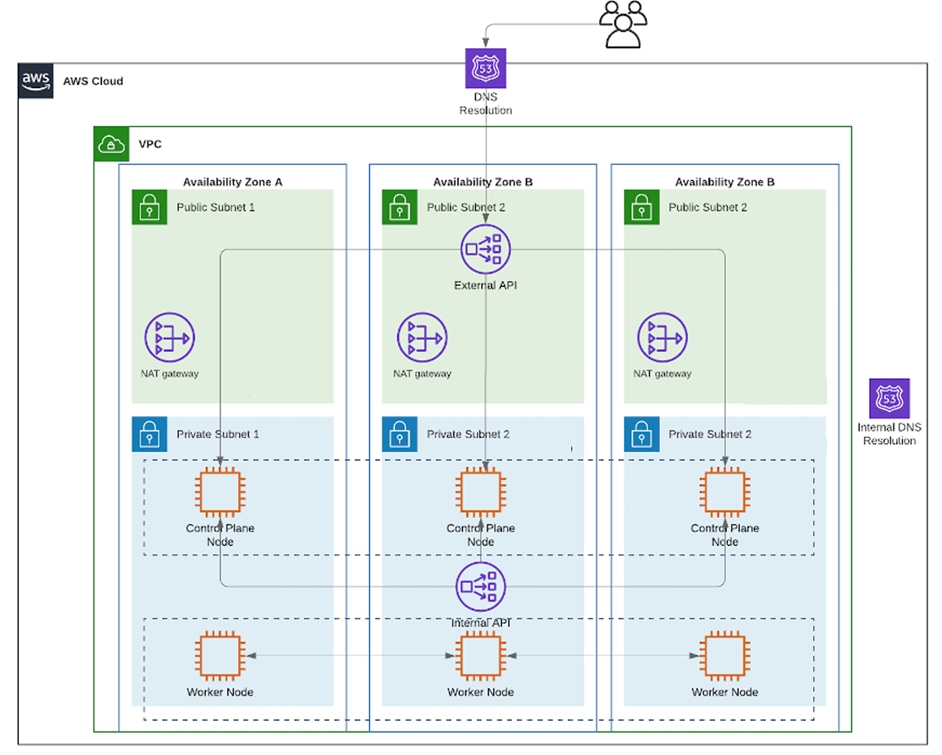

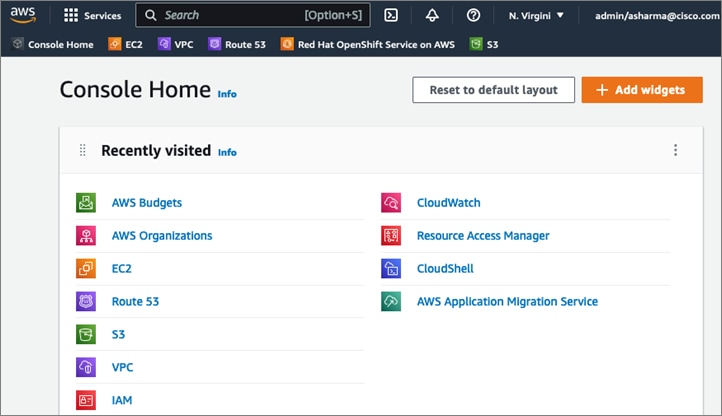

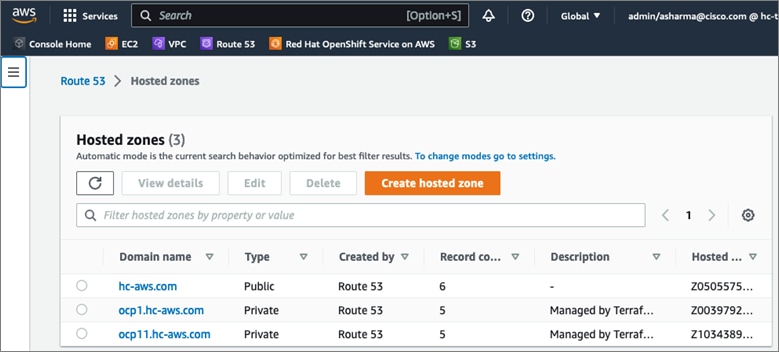

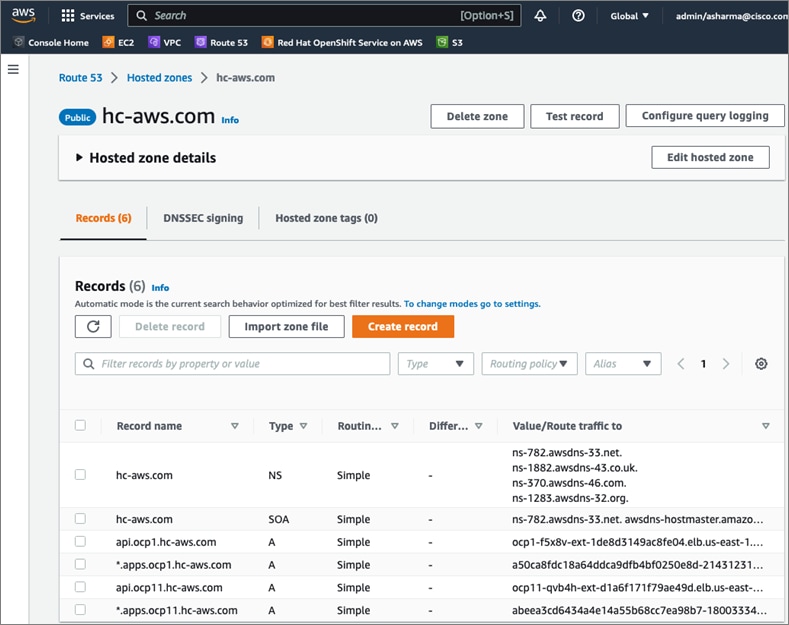

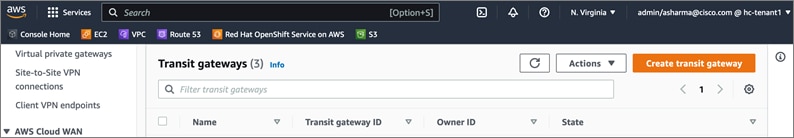

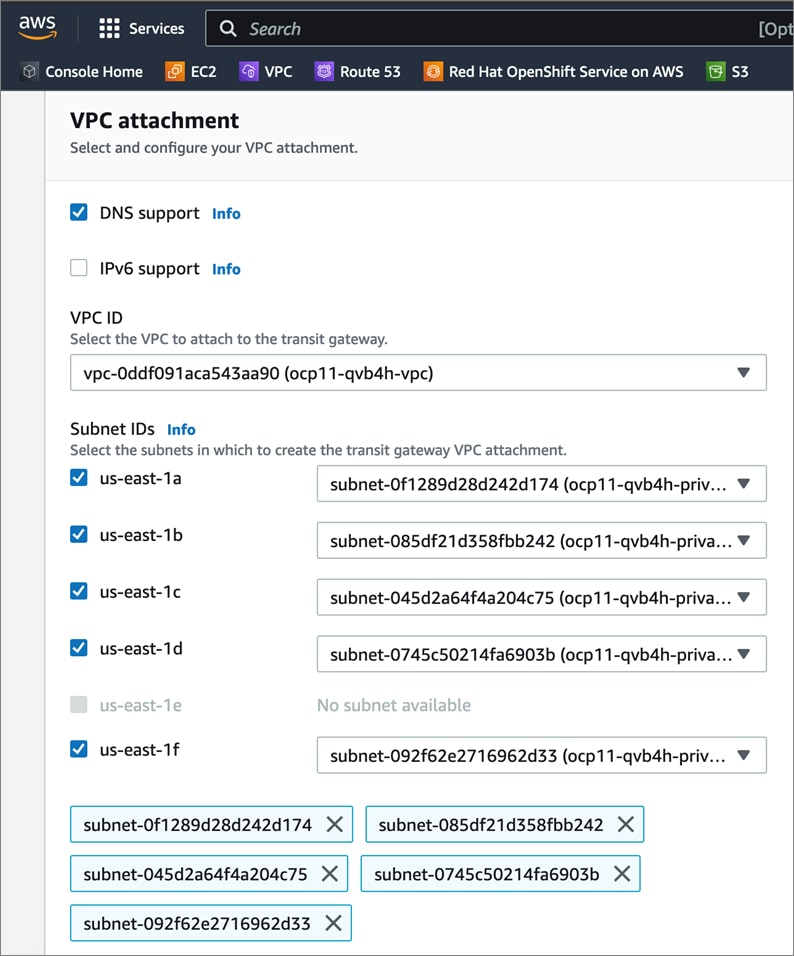

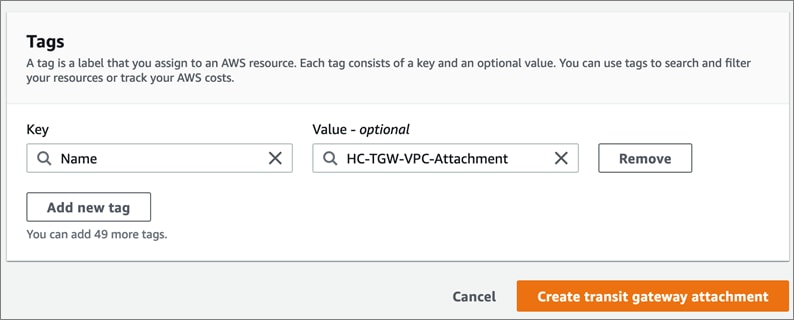

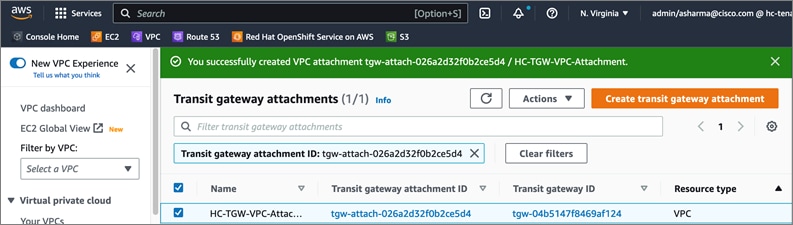

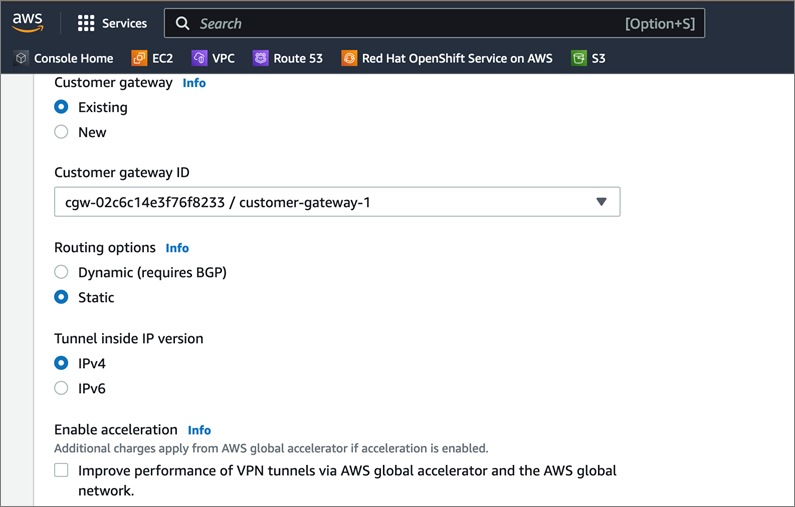

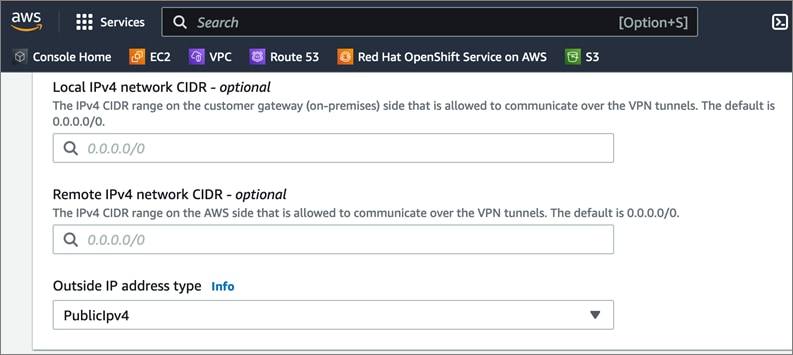

AWS provides a flexible application computing environment for deploying cloud-native applications. Red Hat OCP cluster on AWS can accelerate application development and delivery by providing a consistent experience for developers and operators across both on-prem and public cloud. AWS is globally available, enabling Enterprises to extend their enterprise deployments to a variety of AWS regions as needed. Red Hat OCP cluster nodes can also be distributed across multiple AWS Availability Zones (AZ) to ensure availability. OCP is also supported on other cloud providers such as Google Cloud Platform, Microsoft Azure, and IBM Cloud. OCP is available as a managed service on AWS, Red Hat OCP Service on AWS (ROSA) and as self-managed. This solution uses the self-managed service and the IPI installation method that was used on-prem. The automated IPI installation method uses several AWS services such as Route 53, DHCP, load balancers, Virtual Private Cloud (VPC) and EC2 instances that are deployed or used as a part of the installation process. Transit Gateways (TGW) attached to the VPC provide connectivity to on-prem resources and services, including K8s clusters and application workloads.

A VPC in AWS provides an isolated virtual networking environment on a shared infrastructure where users can deploy resources to support applications workloads. Enterprises can deploy VPCs in AWS cloud and connect them directly to the on-prem datacenter to enable connectivity between applications, services, and resources in each environment. One mechanism for enabling this connectivity is to use a Site-to-Site VPN to establish an IPsec VPN tunnel between the two locations.

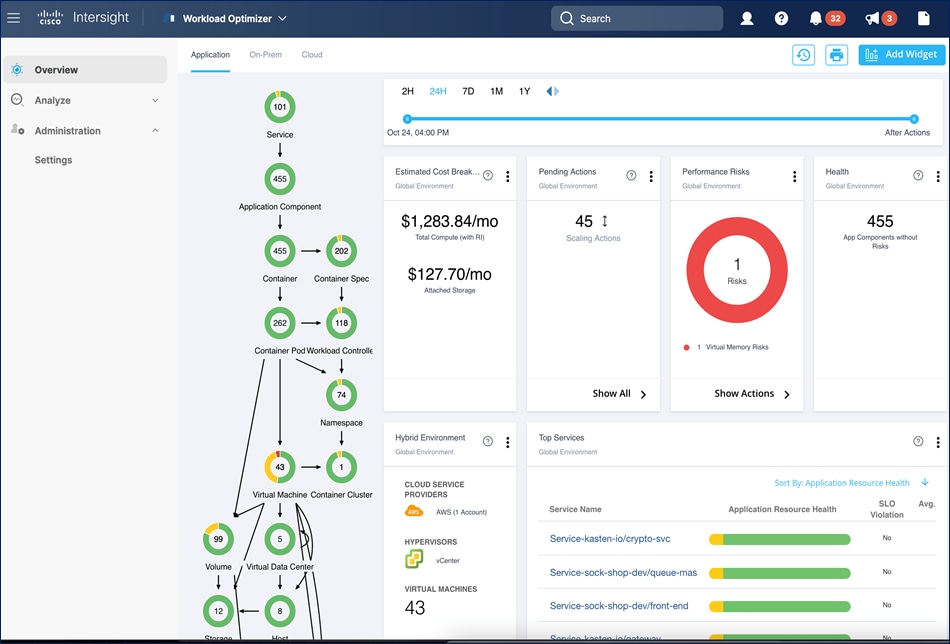

Cisco Intersight Workload Optimizer (IWO)

Cisco IWO provides resource management for cloud-native workloads in a hybrid cloud deployment. It takes a top-down approach to monitoring all resources in a hybrid cloud deployment to ensure that applications are healthy and the environment is operating in an optimal manner. IWO uses real-time AI-powered analytics to continuously monitor and analyze application workloads and their resource consumption. IWO uses the historical and real-time data to make actionable recommendations (for example, provision additional resources) that can be automatically implemented to ensure application performance before users and services are impacted. IWO provides a cost analysis with each recommendation so that administrators can make better decisions: ones that minimize cloud costs, prevent sprawl, and generally use resources more efficiently. IWO recommendations can also be implemented to consolidate workloads and minimize infrastructure needs. Cisco IWO can also be used for planning purposes, to model future workload growth scenarios and to estimate the additional infrastructure that your organization will need and when.

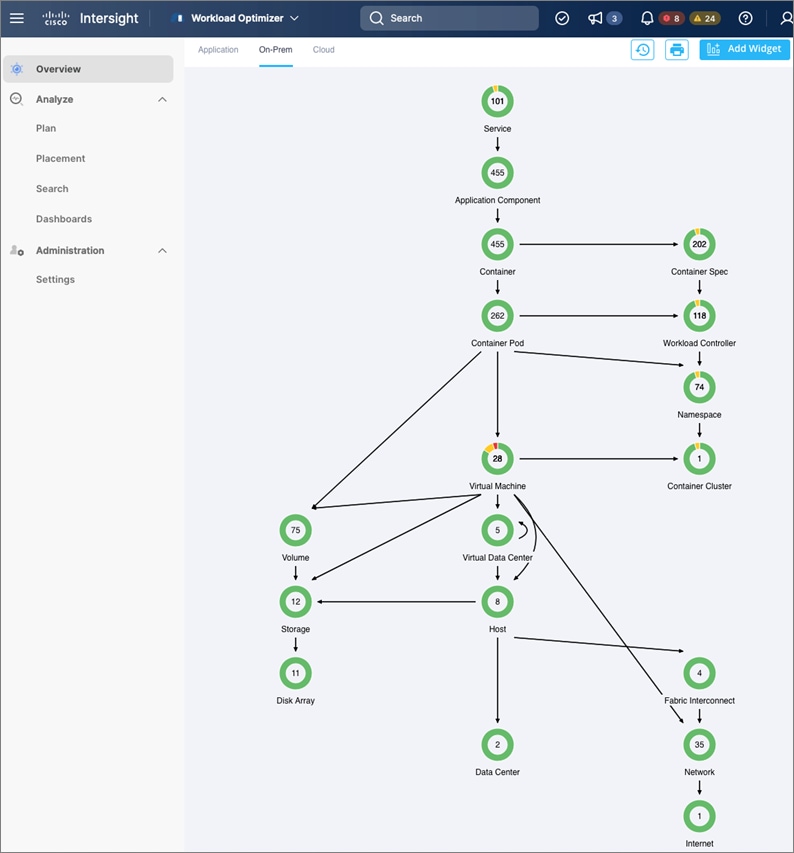

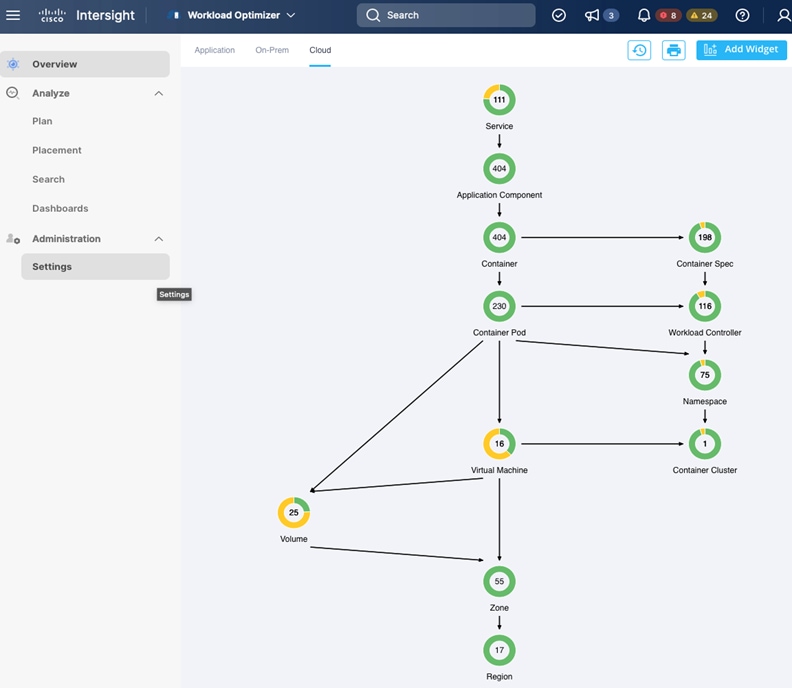

To perform application resource management, Cisco IWO discovers software and hardware components running in your environment and creates an inter-dependency map of all components from an application perspective. It then monitors and analyzes the resources to optimize resource usage while assuring application performance. The inter-dependency map includes all layers of the infrastructure, from the containers and VMs down to the underlying compute, storage, and network infrastructure.

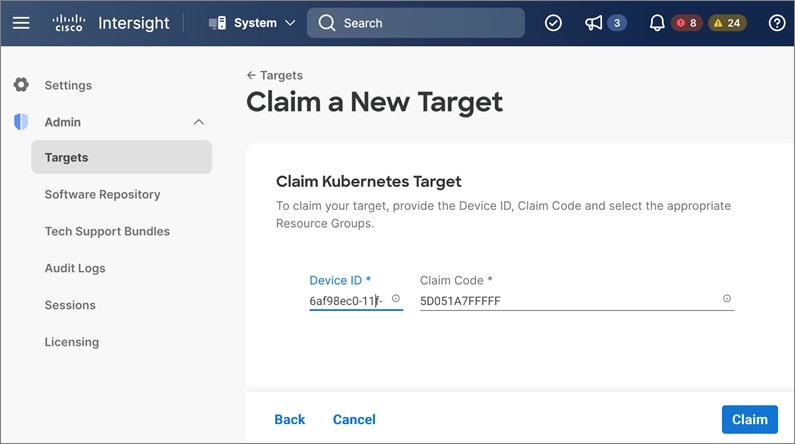

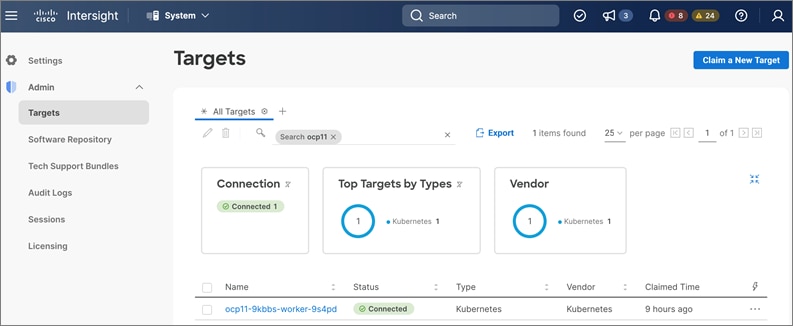

To evaluate and monitor the resource usage of applications, Cisco IWO collects telemetry data from both on-prem and public cloud components. The starting point for this data collection is the set of targets claimed in Intersight. Intersight supports a broad range of Cisco and third-party products to provide a holistic top-down view of all infrastructure entities or components for a given application or in each location (on-prem, public). For cloud-native environments, Cisco IWO discovers and monitors the following container-specific entities: Service, Application Component, Container, Container Pod, Container Spec, Workload Controller, Container Cluster, Namespace, Virtual Machine, and Volume. Figure 5 shows the inter-dependency map based on the discovered entities from an application perspective, along with widgets that show the estimated cost breakdown, pending IWO recommendations and actions, performance risks, overall health of the application, and the top application services.

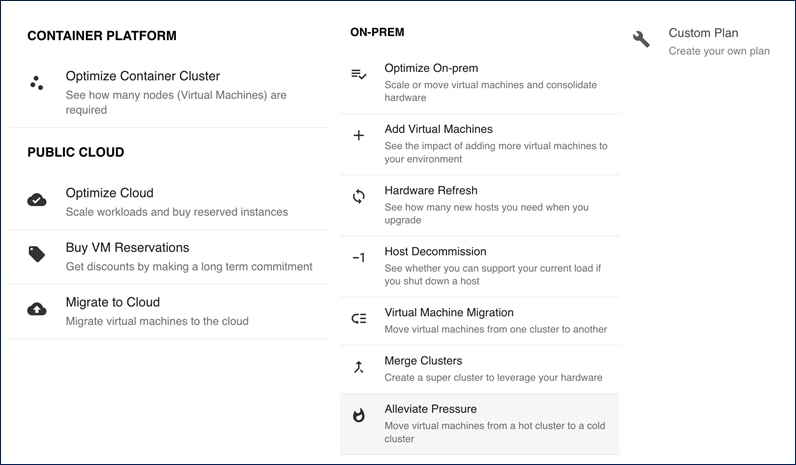

You can also use IWO to run simulations for planning purposes and placement recommendations. You can then schedule and implement the changes at your convenience. Intersight Workload Optimizer supports the following action modes:

● Recommend — Recommend the action so a user can execute it from the domain manager rather than from IWO

● Manual — Recommend the action, and provide an option to execute that action through the IWO Web GUI interface

● Automatic — Execute the action automatically without user involvement. IWO waits five minutes between actions.

Action modes specify the degree of automation that should be used for implementing the actions. The action modes in a policy are used to set the business rule. Some of the action types and actions supported by IWO are:

● Types: Placement, Scaling, RI Optimization, Configuration, Start/Buy, Stop, Delete

● Actions: Provision, Start, Resize, Buy RI, Reconfigure, Move, Suspend, Delete
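The three action modes described above can be pictured as a simple dispatch on the degree of automation. The following is a minimal sketch, not the IWO implementation or API: the ActionMode enum, the handle_action function, and the returned messages are all hypothetical names introduced for illustration.

```python
from enum import Enum


class ActionMode(Enum):
    """Degree of automation for a recommended action (names mirror the list above)."""
    RECOMMEND = "recommend"
    MANUAL = "manual"
    AUTOMATIC = "automatic"


def handle_action(action: str, mode: ActionMode, approved: bool = False) -> str:
    """Return what happens to a recommended action under the given mode."""
    if mode is ActionMode.RECOMMEND:
        # Only surfaced; a user executes it outside IWO, from the domain manager.
        return f"recommended only: {action}"
    if mode is ActionMode.MANUAL:
        # Surfaced with an option to execute it from the IWO interface.
        if approved:
            return f"executed after user approval: {action}"
        return f"awaiting user approval: {action}"
    # AUTOMATIC: executed without user involvement.
    return f"executed automatically: {action}"


print(handle_action("resize container vCPU limit", ActionMode.RECOMMEND))
print(handle_action("resize container vCPU limit", ActionMode.MANUAL, approved=True))
print(handle_action("move pod to another node", ActionMode.AUTOMATIC))
```

A policy would then associate one mode with each action type, which is how the business rule mentioned above is expressed in this simplified model.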

IWO also provides several pre-defined plans to analyze and optimize various aspects of the deployment. Some of the plans available are:

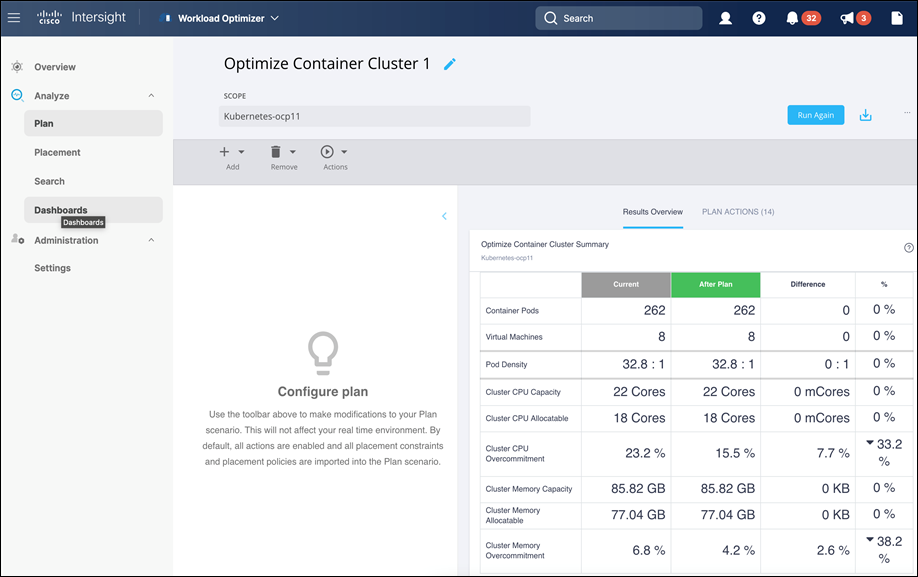

The figure below shows the results of a full container optimization plan that was executed and the actions that IWO recommended as a result.

Some of the actions recommended by the execution of this plan are provided below as an example.

Note: The plan was executed on an under-utilized OCP cluster in Cisco’s internal labs so many of the recommendations are to reduce the vCPU or vMem that the containers can use.

Cisco IWO also provides these recommendations without running a plan, based on its ongoing monitoring and analysis. These can be viewed by selecting and browsing to the entity directly from the inter-dependency map.

For more information, see: Cisco Intersight Workload Optimizer product page on cisco.com

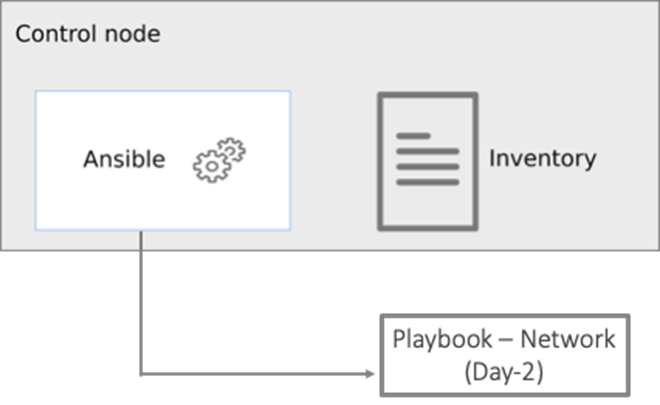

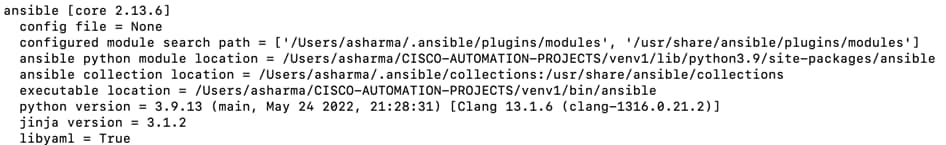

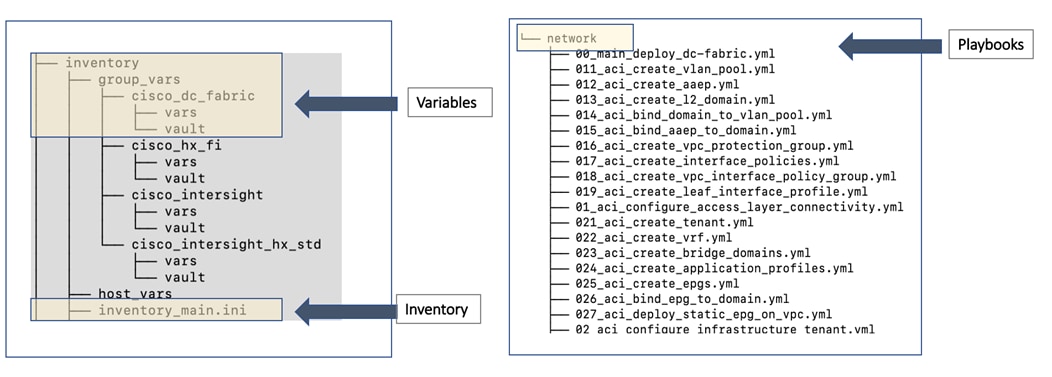

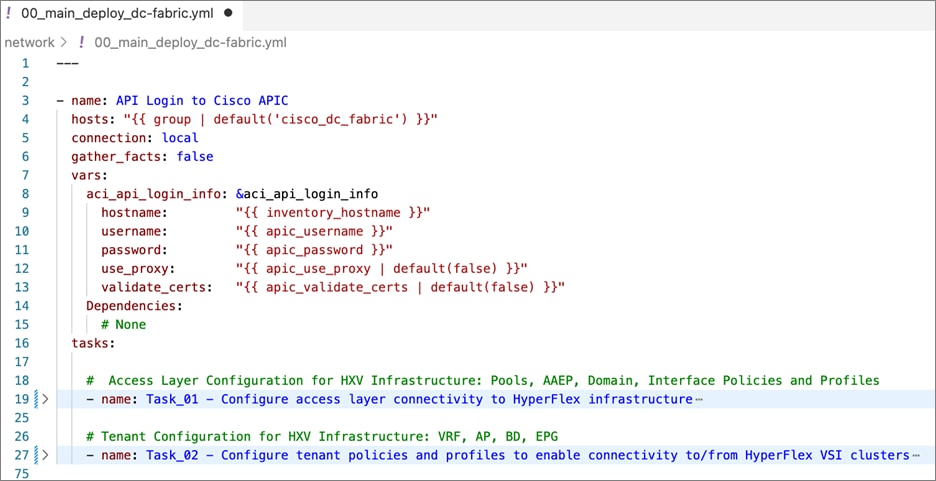

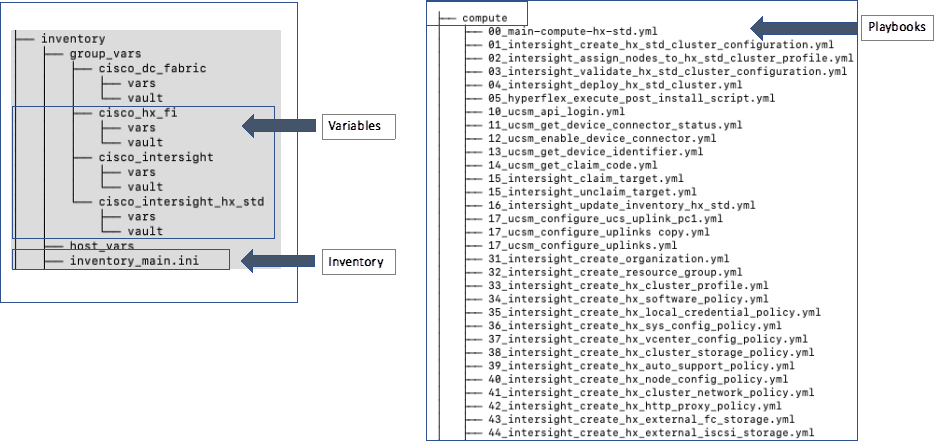

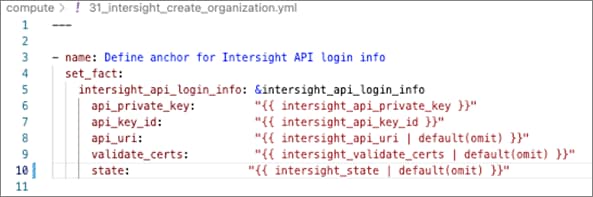

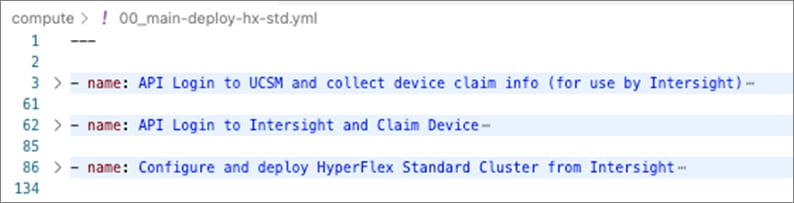

Ansible is an open-source tool for Infrastructure as Code (IaC). Ansible is also used for configuration management and application software deployment. Ansible is designed to be agentless, secure, and simple. Ansible available in Red Hat’s Ansible Automation Platform is part of a suite of tools supported by Red Hat. Ansible manages endpoints and infrastructure components in an inventory file, formatted in YAML or INI. The inventory file can be a static file populated by an administrator or dynamically updated. Passwords and other sensitive data can be encrypted using Ansible Vault. Ansible uses playbooks to orchestrate the provisioning. Playbooks are written in human readable YAML format that is easy to understand. Ansible playbooks are executed against a subset of components in the inventory file. From a control machine, Ansible uses SSH or Windows Remote Management to remotely configure and provision target devices in the inventory based on the playbook tasks.

Ansible is used to provision the Cisco HyperFlex VSI and Cisco ACI fabric infrastructure in the solution. Ansible also provides robust container and Kubernetes management, including for Red Hat OpenShift Container Platform, which Enterprises can leverage for their automation efforts.
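To make the inventory concept above more concrete, the following is a minimal sketch that models a static inventory as a nested Python dictionary, following the layout used by YAML inventories (groups under all.children, hosts under each group's hosts key). The group names and host names are hypothetical and are not taken from the validated setup; the hosts_in_group helper is likewise an illustrative name.

```python
import json

# Hypothetical static inventory: two groups, mirroring the two infrastructure
# domains that Ansible provisions in this solution.
inventory = {
    "all": {
        "children": {
            "hyperflex": {
                "hosts": {
                    "hx-app.example.com": {},
                    "hx-mgmt.example.com": {},
                }
            },
            "aci": {
                "hosts": {
                    "apic1.example.com": {},
                }
            },
        }
    }
}


def hosts_in_group(inv: dict, group: str) -> list:
    """Return the sorted host names that a play targeting `group` would run against."""
    return sorted(inv["all"]["children"][group]["hosts"])


print(json.dumps(inventory, indent=2))
print(hosts_in_group(inventory, "hyperflex"))
```

Selecting a group in this way corresponds to a playbook being executed against a subset of the components in the inventory rather than against every endpoint.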

This chapter contains the following:

● Overview

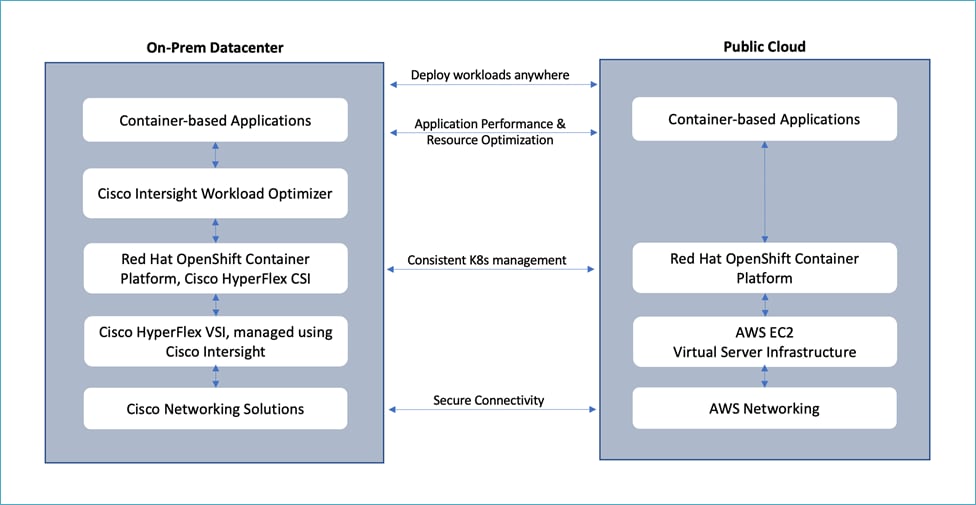

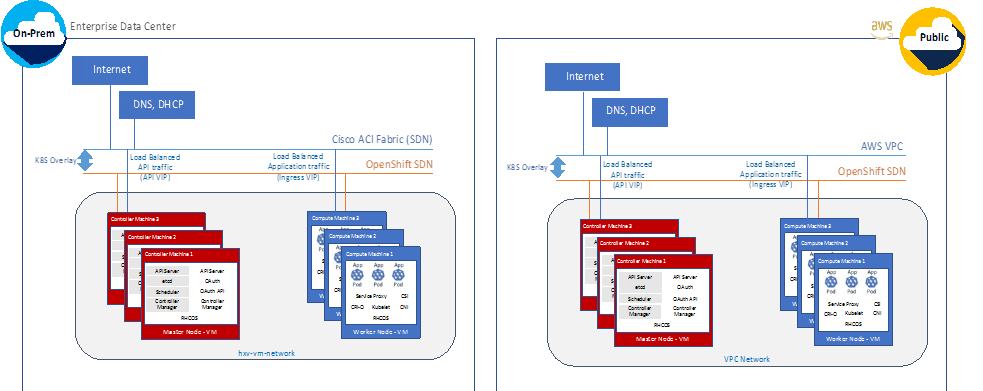

At a high level, the hybrid cloud infrastructure design in this solution consists of an on-prem datacenter, public cloud infrastructure, and a secure network interconnecting the two environments, as shown below:

Virtual Server Infrastructure (VSI)

The on-prem Virtual Server Infrastructure in the solution consists of:

● Four (4) node HyperFlex standard (or HyperFlex Datacenter) cluster as an application cluster for running cloud-native workloads on a Red Hat OCP cluster. The cluster is deployed and managed from the cloud using Cisco Intersight.

● Four (4) node HyperFlex standard cluster as a management cluster for hosting services and management components to support the application cluster. The cluster is deployed and managed from the cloud using Cisco Intersight. The services deployed include VMware vCenter managing the application cluster, DNS, DHCP, and the OCP Installer workstation. The management cluster can also host a management OCP cluster to run services and other components. For example, Red Hat’s Advanced Cluster Management requires a seed OCP cluster to run on before it can be used for multi-cluster management (see Red Hat OCP in the Technology Review section).

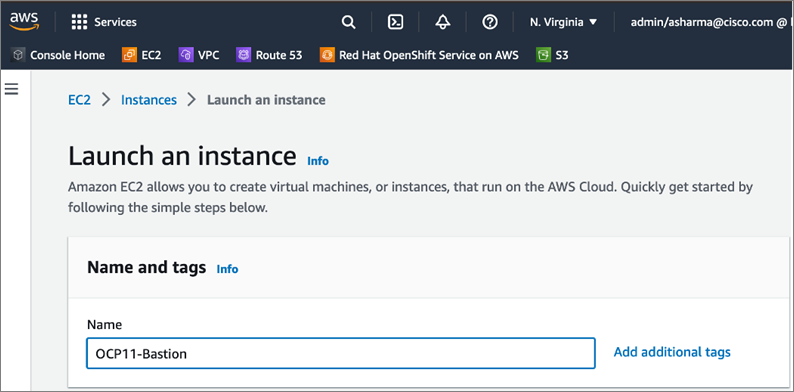

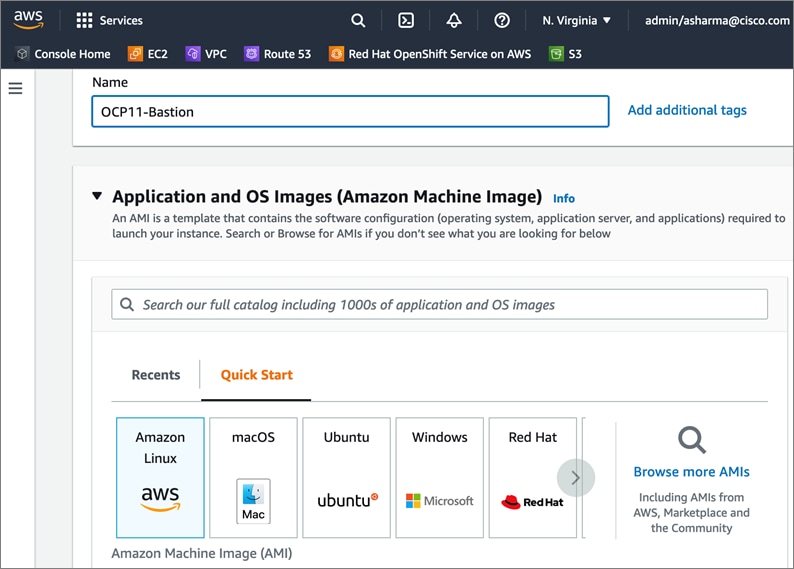

The public Virtual Server Infrastructure in the solution consists of:

● Amazon Elastic Compute Cloud (Amazon EC2) instances in the Amazon Web Services (AWS) Cloud provide the virtual server infrastructure in the public cloud. Red Hat OCP clusters are deployed on EC2 instances in a Virtual Private Cloud (VPC). The OCP cluster nodes in the VPC are distributed across three Availability Zones (AZ) in the region for resiliency.
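The effect of distributing cluster nodes across three Availability Zones can be sketched with a simple round-robin placement. The zone names, node names, and node counts below are assumptions made for the example (the source states only that three AZs are used), and spread_across_zones is a hypothetical helper, not part of the OCP installer.

```python
from collections import defaultdict
from itertools import cycle


def spread_across_zones(nodes: list, zones: list) -> dict:
    """Assign nodes to zones in round-robin order and return zone -> node list."""
    placement = defaultdict(list)
    for node, zone in zip(nodes, cycle(zones)):
        placement[zone].append(node)
    return dict(placement)


# Assumed zone names and node counts, for illustration only.
zones = ["us-east-1a", "us-east-1b", "us-east-1c"]
nodes = [f"master-{i}" for i in range(3)] + [f"worker-{i}" for i in range(3)]

placement = spread_across_zones(nodes, zones)
for zone, members in placement.items():
    print(zone, members)
```

With three control plane nodes and three workers in this sketch, each zone ends up with one node of each role, so the loss of a single zone leaves two of the three control plane nodes available.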

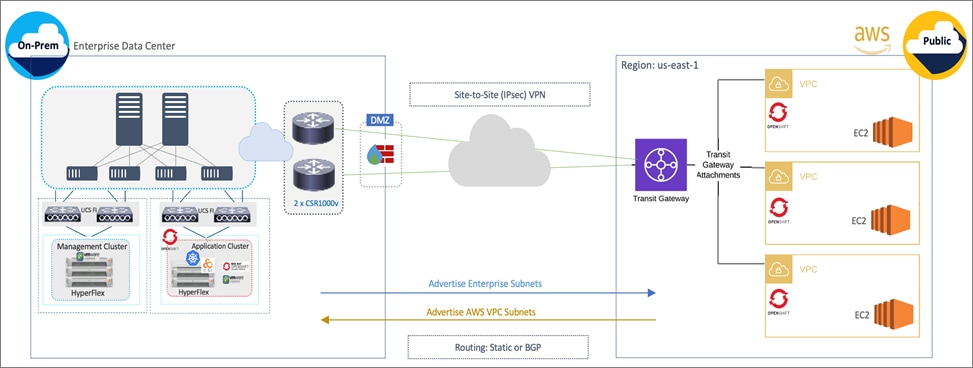

Network Connectivity

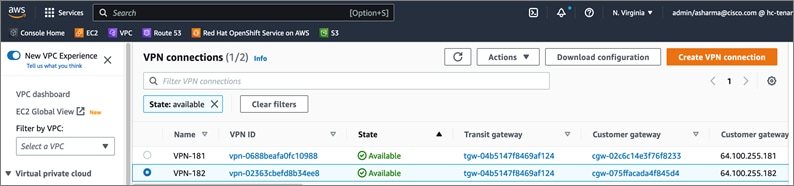

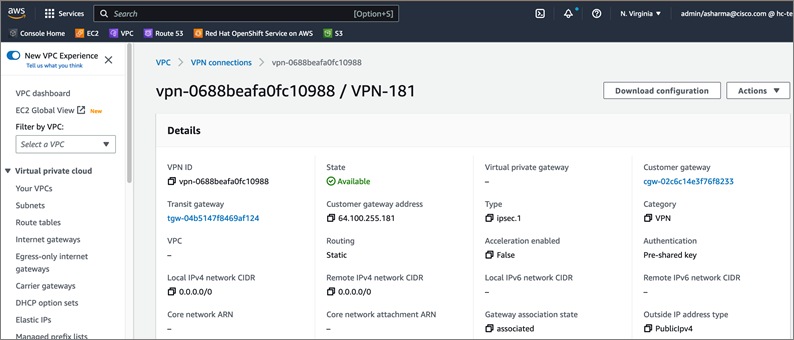

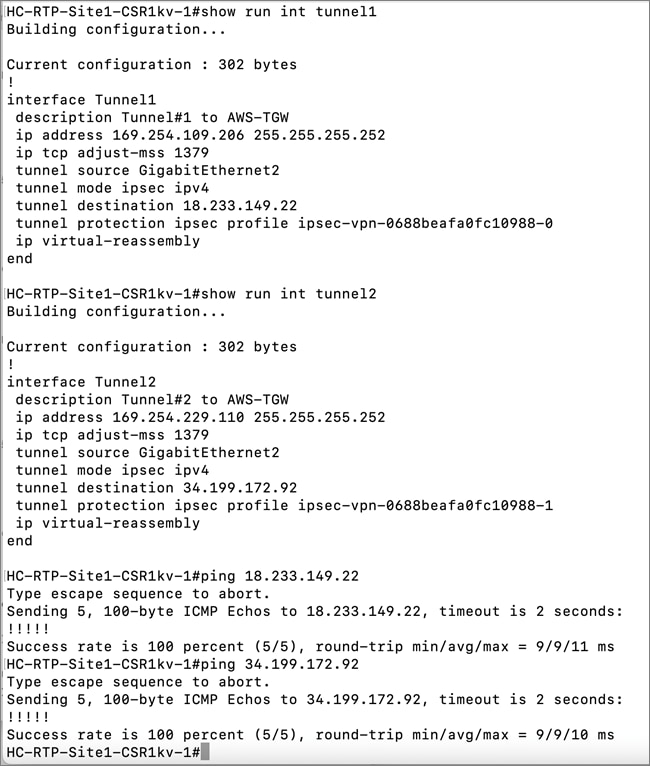

● Two redundant IPsec VPN connections provide secure connectivity between the cloud-native environments. The VPN connections are between 2 x CSR1000v routers on-prem and transit gateway routers in the public cloud.

Kubernetes Infrastructure

● Red Hat OCP cluster(s) provide a Kubernetes environment for cloud-native applications and use cases. The clusters are deployed from the cloud on HyperFlex VSI and on AWS EC2 instances using Red Hat Hybrid Cloud Console

● HyperFlex CSI provides persistent storage for stateful workloads hosted on HyperFlex VSI

Application Performance

● Cisco Intersight Workload Optimizer ensures application performance. IWO also provides resource monitoring, optimization, and cost management across this environment.

Figure 6 illustrates the end-to-end solution that was designed, built, and validated in Cisco internal labs.

Hybrid Cloud deployments give Enterprises complete flexibility in selecting an optimal location for their workloads based on performance, cost, compliance, and other factors. Some of the design requirements that this hybrid cloud solution addresses are outlined below.

● Operational simplicity and agility with the flexibility to deploy and manage workloads anywhere. The on-prem infrastructure that the Enterprise manages, must be easy to deploy and manage without compromising functionality, scale, or performance.

● The infrastructure must also be available as code for integration into existing Enterprise automation or CI/CD pipelines.

● The solution must continuously monitor and optimize the hybrid environment to ensure application performance and manage cloud costs.

The overall solution also addresses the following high-level design goals:

● Resilient design across all layers of the infrastructure with no single point of failure

● Scalable design with the ability to independently scale compute, storage, and networking as needed

● Modular design with the ability to upgrade or replace components and sub-systems as needed

● Flexible design across all layers of the solution that includes sub-system design, individual components used, and storage configuration and connectivity options

● Operational agility and simplicity through best-of-breed products, SaaS operations, automation, and orchestration tools

● Incorporates technology and product best practices for the different components in the solution

The design also addresses two commonly seen use cases in hybrid cloud deployments:

● Enable cloud-native deployments anywhere, from on-prem to public cloud, while maintaining a consistent management experience

● Dev/Test for cloud-native workloads where organizations have multiple teams in a CI/CD pipeline that need separate environments, both on-prem and in the public cloud. Development teams may start the work in the cloud, but the application is staged and deployed into production on-prem.

The Cisco Validated Design (CVD) in this document provides a foundational infrastructure architecture for a secure, scalable, enterprise-class hybrid cloud solution to run cloud-native workloads. The main aspects of the design are:

● On-prem infrastructure (compute, storage, virtualization, and network) design

● Infrastructure as Code (IaC)

● Operational ease and consistency as the hybrid model expands to other Enterprise locations

● Secure hybrid cloud connectivity

● Cloud-native Infrastructure design (on-prem, public cloud)

● Persistent Storage for stateful, cloud-native workloads

● Operational ease with consistent cloud-native development and operational experience regardless of the environment

● Application resource monitoring, visibility, and cost management across on-prem and public cloud

● Ensuring application performance in any environment

Infrastructure Design

For cloud-native efforts, Enterprise IT teams need the ability to quickly deploy and manage the infrastructure in the Enterprise data center. The infrastructure in the on-prem location, unlike the public cloud, is typically deployed and managed by the Enterprise. Because of this, IT and DevOps teams need infrastructure that is simple and easy to deploy and operate without compromising performance, scale, or functionality. The data center infrastructure design outlined in this section is intended to meet these needs.

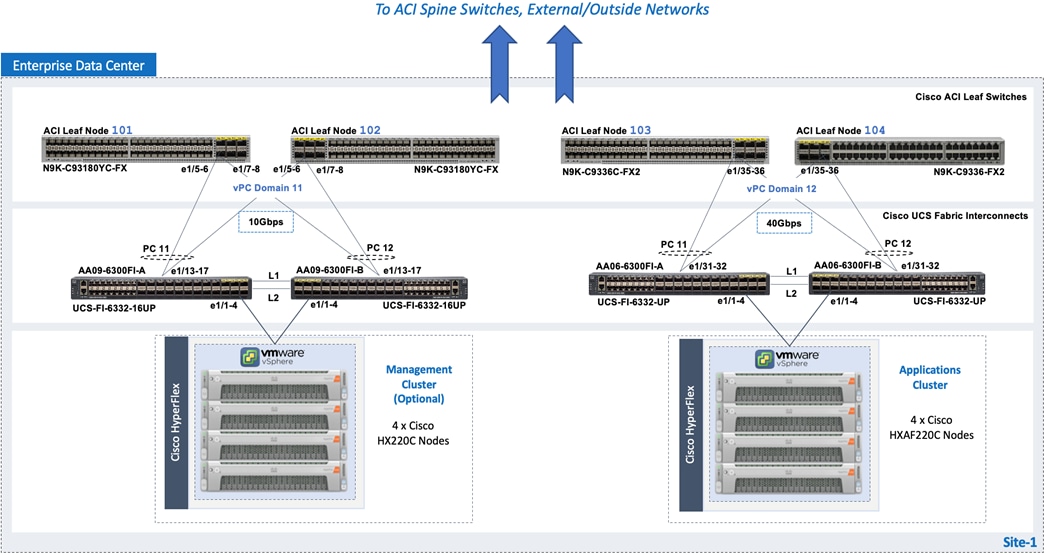

Figure 7 shows the on-prem infrastructure design in this solution.

Cisco HyperFlex provides the on-prem virtual server infrastructure in the solution. HyperFlex delivers software-defined compute, storage, and virtualization as a fully integrated product, enabling IT teams to spend less time on infrastructure management and more time on other business initiatives. The design uses two HyperFlex clusters: an application cluster for running application workloads, and a management cluster for hosting infrastructure management and other services. The management HyperFlex cluster is optional in the design, as the services running on the management cluster can also be deployed on an Enterprise's existing infrastructure. Both are HyperFlex standard (data center) clusters with 4 nodes in each cluster.

The nodes in the cluster connect to a Cisco UCS domain consisting of a pair of Cisco UCS 6300 Series Fabric Interconnects. The management and application clusters attach to different Cisco UCS domains in this solution, but they could also connect to the same UCS domain if needed. A single UCS domain consisting of a pair of Fabric Interconnects can support multiple HyperFlex clusters (and Cisco UCS systems). The exact number depends on the number of servers in a given cluster and the port density of the Fabric Interconnect model chosen.

The data center fabric in the solution is a Cisco ACI fabric. The HyperFlex clusters connect to the ACI fabric through the Cisco UCS 6300 Fabric Interconnects in their UCS domain. Some HyperFlex systems (for example, DC-no-FI, Edge) can also connect directly to the leaf switches in the ACI fabric. However, connecting to Cisco UCS Fabric Interconnects provides some benefits. It serves as an aggregation point for a group of servers with unified management. The two Cisco UCS Fabric Interconnects in a UCS domain form a highly available, unified, low-latency switching fabric capable of handling I/O traffic from hundreds of servers. It provides connectivity between the servers and systems that connect to it and connectivity to the upstream data center fabric to connect to other UCS domains and networks. Cisco UCS Manager (UCSM) provides unified management for all HyperFlex and Cisco UCS systems in that Cisco UCS domain. It runs as embedded software on the Fabric Interconnects. The design can use centralized (via Intersight) and local management (using Cisco UCSM), which can be helpful in troubleshooting situations. The unified fabric can also provide Quality-of-Service (QoS) for the different types of traffic traversing the fabric, including HyperFlex infrastructure (management, storage data, vMotion, replication) and application traffic.

The HyperFlex UCS domains connect to Nexus 9000 series leaf switches in the ACI fabric for northbound connectivity to other networks within and outside the Enterprise and intra-cluster connectivity when traffic needs to go from one Fabric Interconnect (FI-A) to the other (FI-B) within the same domain. In a HyperFlex cluster, this typically happens in failure situations. Each Fabric Interconnect connects to a pair of leaf switches for redundancy. For the application cluster, the uplink connections from the Cisco UCS 6300 Fabric Interconnects are:

● 2 x 40GbE links from FI-A, one to each switch in the leaf switch pair

● 2 x 40GbE links from FI-B, one to each switch in the leaf switch pair

The multiple uplinks provide higher bandwidth and resiliency. Cisco UCS FI and Nexus switches support the 802.3ad standard for aggregating links into a port channel (PC) using Link Aggregation Control Protocol (LACP). The 40Gbps links on the FI side are bundled using a port channel, while the links on the Nexus 9000 leaf switch pair are bundled using a virtual port channel (vPC). The Fabric Interconnects use two port channels to connect to the ACI leaf switch pair, one from each FI. The 2 x 40GbE links on each FI connect to different Nexus switches in the leaf switch pair. In the reverse direction, the ACI leaf switch pair uses two vPCs to connect to the Fabric Interconnects, one to each FI. vPC enables links from two switches to be bundled such that they appear as a "single logical" port channel to a third device (in this case, the FI). The vPC design provides higher aggregate bandwidth with both link and node-level redundancy.

In this design, the total uplink bandwidth for the application UCS domain is 160Gbps (40Gbps per link x 2 uplinks per FI x 2 FIs). You can also add more links to the PC/vPC bundle to increase the uplink bandwidth. The Fabric Interconnects can also use higher speed links to connect to the upstream Nexus switches. The Cisco UCS 6300 series Fabric Interconnects used in this solution support 10G and 40G, but other hardware models are available that support 25G and 100G connectivity. The HyperFlex management cluster is connected similarly, using a PC/vPC design, but uses 4 x 10GbE links for uplink connectivity.

The FI uplinks to the ACI fabric operate as trunks, carrying traffic from multiple 802.1Q VLAN IDs to the ACI fabric. The uplinks trunk VLAN traffic for HyperFlex infrastructure (in-band management, vMotion, storage data) and application networks. The VLANs are also configured on the individual virtual NIC (vNIC) templates going to each server in the HX cluster.
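The uplink bandwidth arithmetic for the application UCS domain can be checked with a short calculation. This is a minimal sketch; the function name aggregate_uplink_gbps is hypothetical, and the four-uplink case is an illustrative what-if rather than a validated configuration.

```python
def aggregate_uplink_gbps(link_speed_gbps: int, uplinks_per_fi: int, fi_count: int = 2) -> int:
    """Total uplink bandwidth = link speed x uplinks per FI x number of FIs."""
    return link_speed_gbps * uplinks_per_fi * fi_count


# Application UCS domain as described: 40Gbps links, 2 uplinks per FI, 2 FIs.
app_domain_total = aggregate_uplink_gbps(40, 2)

# Bandwidth carried by a single FI's port channel, which is what remains
# available if the other FI is out of service.
single_fi_total = aggregate_uplink_gbps(40, 2, fi_count=1)

# Illustrative what-if: doubling the links in each PC/vPC bundle.
expanded_total = aggregate_uplink_gbps(40, 4)

print(app_domain_total, single_fi_total, expanded_total)  # prints: 160 80 320
```

The same formula applies to other link speeds, for example when using Fabric Interconnect models that support 25G or 100G uplinks.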

The HyperFlex nodes in a cluster are dual-homed to the Fabric Interconnects in the UCS domain for high availability. Each server in the application cluster uses a VIC 1387 adapter with two 40Gbps uplink ports to connect to each FI, resulting in two redundant paths, one through each fabric (FI-A, FI-B). The two uplinks provide each server with 2x40Gbps of uplink bandwidth and redundancy in the event of a failure.

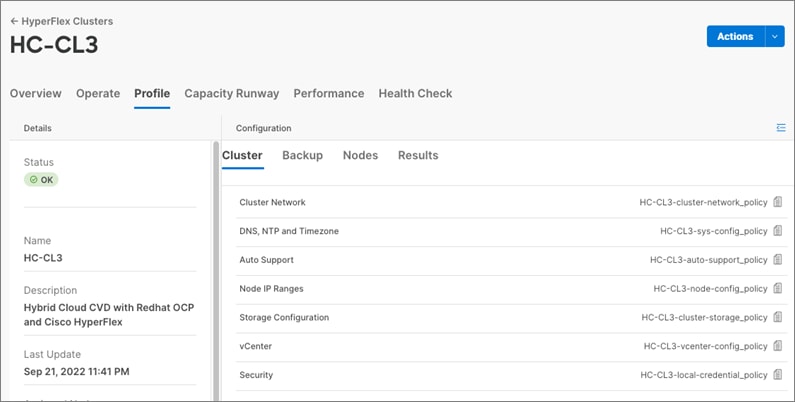

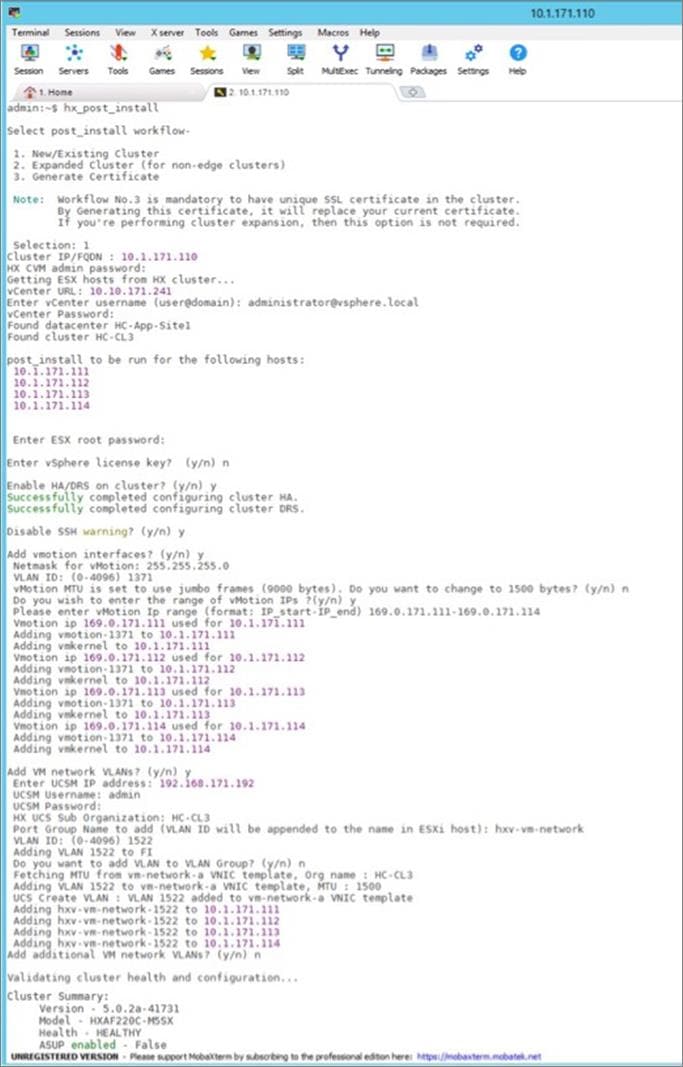

HyperFlex uses an automated installation process to deliver a production-ready HyperFlex VSI cluster in less than an hour. Either an Installer VM (OVF) or Cisco Intersight can be used to initiate the deployment. Intersight provides a simple workflow/wizard to gather input from the user, validate and begin the installation process. HyperFlex systems use a best-practice configuration combined with user-provided inputs to generate a cluster profile. The installer uses this profile to deploy and configure the cluster. The profile can also be cloned and re-used (with minimal changes) to deploy additional clusters as needed. HyperFlex systems typically undergo a factory install process that pre-installs some of the firmware and software required (for example, server firmware, VMware ESXi software), which makes the customer site installation and setup much quicker and easier.

The Cisco UCS Fabric Interconnects (when used) must be claimed as a target for Intersight to discover the servers in the cluster. Intersight and other components will also require connectivity to multiple networks to deploy the cluster. The Cisco ACI fabric provides this connectivity – see the Data Center Fabric – Cisco ACI section for additional details.

The two HyperFlex clusters in the solution are deployed and managed using Cisco Intersight. The installation results in a complete virtual server infrastructure that is ready for deploying a Red Hat OCP cluster and cloud-native workloads. The virtual server infrastructure is managed using VMware vCenter running on the management HyperFlex cluster.

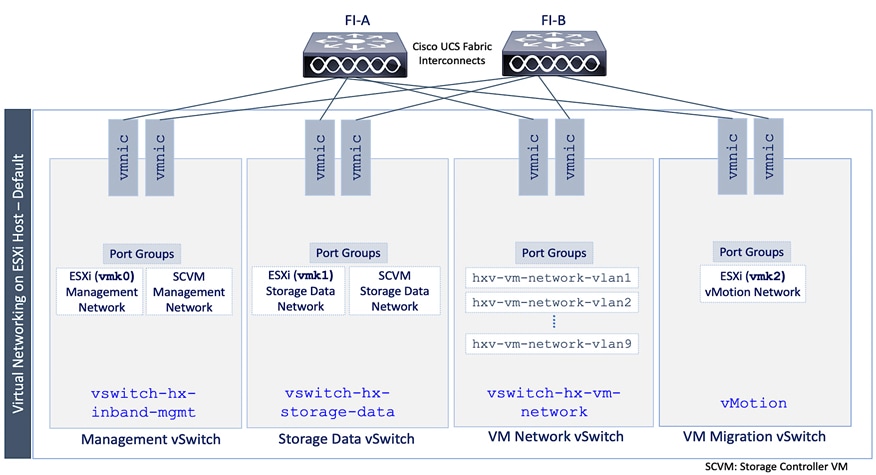

Virtual Networking Design

The automated HyperFlex installation process deploys a VMware vSphere cluster with a pre-defined virtual networking design. The design is identical on all ESXi hosts in the cluster. The design uses four VMware virtual switches (vSwitch) for different types of traffic. The virtual switches deployed by the automated installation process are:

● vswitch-hx-inband-mgmt: This is the default ESXi vSwitch0 that is renamed by the ESXi kickstart file as part of the automated installation process. The switch has two uplinks, active on fabric A and standby on fabric B – by default, jumbo frames are not enabled on these uplinks. The installer deploys multiple port groups on this vSwitch for ESXi management, storage controller VM management and replication (if enabled). The management interfaces include ESXi management interface, SCVM management and replication interface, and a roaming management cluster IP (one per cluster).

● vswitch-hx-storage-data: This vSwitch has two uplinks, active on fabric B and standby on fabric A – by default, jumbo frames are enabled on these uplinks. The installer deploys multiple port groups on this vSwitch for ESXi and storage controller VM storage-data networks. The storage-data interfaces include ESXi host, SCVM storage interface, and a roaming storage cluster IP (one per cluster).

● vswitch-hx-vm-network: This vSwitch has two uplinks, active on both fabrics A and B – by default, jumbo frames are not enabled on these uplinks. However, in this design, the uplinks have been reconfigured for jumbo frames through Cisco UCS Manager. The VLANs associated with the port groups on this vSwitch are all tagged VLANs (not native VLANs) in Cisco UCS vNIC templates. Therefore, these VLANs are also explicitly configured in ESXi/vSphere.

● vmotion: This vSwitch has two uplinks, active on fabric A and standby on fabric B – by default, jumbo frames are enabled on these uplinks. The IP addresses of the VMkernel ports (vmk2) are configured using a post-install script. As with the VM network vSwitch, the VLANs associated with the port groups on this vSwitch are all tagged VLANs (not native VLANs) in Cisco UCS vNIC templates. Therefore, these VLANs are also explicitly configured in ESXi/vSphere.
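The four installer-deployed vSwitches described above can be summarized as a small data structure. This is only an illustrative sketch: the `VSwitch` class and its field names are assumptions made for this example, while the switch names, active/standby fabrics, and jumbo-frame defaults are taken from the list above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VSwitch:
    """Hypothetical record describing one installer-deployed vSwitch."""
    name: str
    active_fabrics: tuple
    standby_fabrics: tuple
    jumbo_default: bool  # installer default, before any reconfiguration


DEFAULT_VSWITCHES = [
    VSwitch("vswitch-hx-inband-mgmt", ("A",), ("B",), False),
    VSwitch("vswitch-hx-storage-data", ("B",), ("A",), True),
    VSwitch("vswitch-hx-vm-network", ("A", "B"), (), False),
    VSwitch("vmotion", ("A",), ("B",), True),
]


def jumbo_enabled_by_default(vswitches):
    """Return the names of vSwitches whose uplinks default to jumbo frames."""
    return [v.name for v in vswitches if v.jumbo_default]


print(jumbo_enabled_by_default(DEFAULT_VSWITCHES))
```

Note that management and storage-data traffic are active on opposite fabrics by default, which keeps the two traffic types on separate Fabric Interconnects during normal operation.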

Enterprises can migrate the VM network virtual switch to a VMware distributed virtual switch (vDS), but the management and storage-data virtual switches should stay on the installer-deployed VMware vSwitches. The VMware vDS can be optionally deployed and managed by Cisco ACI using the Virtual Machine Manager (VMM) integration feature. With VMM integration, ACI can dynamically provision port groups on the vDS when corresponding endpoint groups (or VLANs) are provisioned in the fabric.

The installer deploys four virtual switches on each ESXi host in the cluster, with two uplinks or VMware virtual NICs (vmnics) per vSwitch. The vmnics at the hypervisor level map to virtual NICs (vNICs) on the Cisco UCS VIC adapter deployed in each server. The HyperFlex installation process configures the service-profiles in Cisco UCS Manager to create the different vNICs and vmnics that are used by the virtual switches running on the ESXi hosts in the HyperFlex VSI cluster.

Figure 8 shows the default virtual networking deployed on the ESXi hosts in a cluster.

The port groups on each vSwitch correspond to tagged VLANs in the server’s vNIC template. The automated installation provisions the vNIC templates and VLANs on the server side and maps them to port groups on the VMware vSwitch. The vMotion vSwitch port groups and VLAN configuration are completed post-deployment using a post-install script.

The virtual machine port groups and VLANs can be provisioned during the initial install or post-deployment using the post-install script. You can also use ACI VMM integration to dynamically provision the ESXi networking as needed to roll out new applications and services. However, the ACI VMM integration will not provision the VLANs on the Cisco UCS Fabric Interconnects, so these VLANs must be provisioned separately.

The Red Hat OCP clusters deployed in this solution use the default VMware vSwitch for VM networks, on a VM network VLAN deployed by the installer.

Data Center Fabric – Cisco ACI

The data center fabric in the solution is a Cisco ACI fabric. ACI provides connectivity to the different components in the solution (HyperFlex servers, Cisco UCS Fabric Interconnects, VMware vCenter, DNS, DHCP, NTP) and other networks, including external connectivity to Cisco Intersight and Red Hat Hybrid Cloud Console. The design places all infrastructure connectivity necessary for deploying and maintaining the HyperFlex VSI cluster in a separate ACI tenant (HXV-Foundation). This tenant is responsible for providing the following HyperFlex infrastructure connectivity:

● Management: A HyperFlex cluster requires in-band management connectivity to ESXi hosts and storage controller virtual machines (SCVM) in the cluster. The roaming management cluster IP and other endpoints must be accessible to administrators, tools, and other entities outside the fabric. VMware vCenter managing the VSI cluster also needs access to the ESXi management interfaces.

● Storage-data: A HyperFlex cluster requires storage-data connectivity to ESXi hosts and storage controller virtual machines in the cluster. The roaming storage-data cluster IP and other endpoints must be accessible from the data center fabric.

● VMware vMotion: To enable vMotion of guest VMs running on the application HyperFlex cluster, the vMotion network must be reachable from VMware vCenter running on the management cluster.

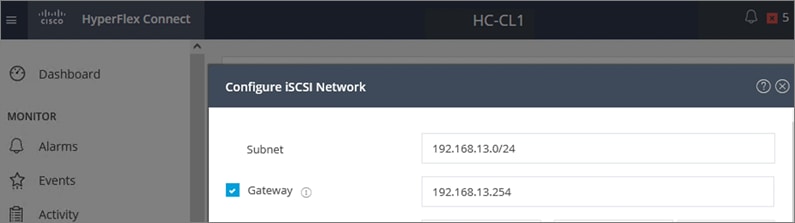

● iSCSI Storage-data: The HyperFlex CSI used in this design uses iSCSI. A dedicated network is created for the iSCSI storage data traffic.

Multiple HyperFlex clusters connected to the same ACI fabric can use the same management and vMotion infrastructure networks, but the storage-data traffic should be on a dedicated network, one for each cluster. The ACI fabric also provides the following infrastructure connectivity:

● Internet Access for reachability to Cisco Intersight. The UCS domain must be claimed as a target in Intersight before it can be used to deploy the HyperFlex VSI cluster.

● Reachability to networks and services within the Enterprise, both within and outside the ACI fabric.

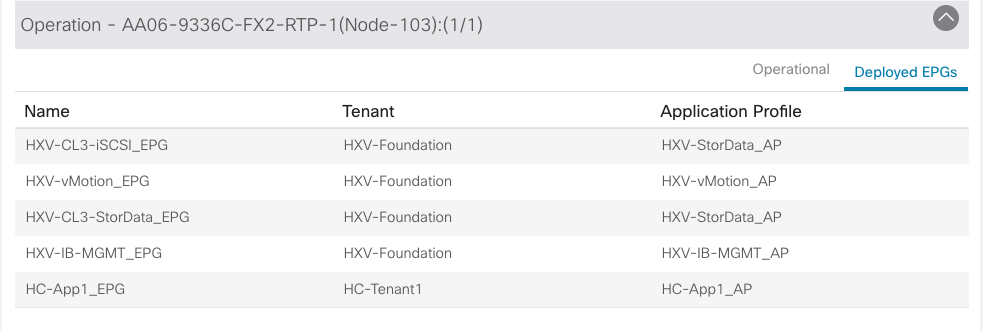

For the on-prem Red Hat OCP cluster and application workloads running on the HyperFlex VSI, the design uses a dedicated Application Tenant (for example, HC-Tenant1) to provide connectivity for the following HyperFlex networks:

● VM network(s): Application networks deployed on the HyperFlex application cluster will need connectivity to various networks and services depending on the application requirements. In this solution, the Red Hat OCP cluster is deployed on Guest VM networks and will require reachability to various internal and external entities required to install and operate the OCP cluster.

● Persistent Storage: For Red Hat OCP clusters to access persistent storage on HyperFlex using the HyperFlex CSI plugin, the ACI fabric will need to route traffic from the OCP cluster network (VM network) to the HyperFlex iSCSI storage-data network.

The ACI tenancy design will separate the infrastructure and application traffic. Multiple application tenants can be deployed as needed. For Red Hat OCP clusters and cloud native workloads, the ACI fabric also provides the following connectivity:

● Internet Access for deploying the Red Hat OCP cluster on HyperFlex VSI. Cloud-native applications running on the cluster will most likely need Internet access as well.

● Reachability to internal Enterprise networks and services, both within and outside the ACI fabric.

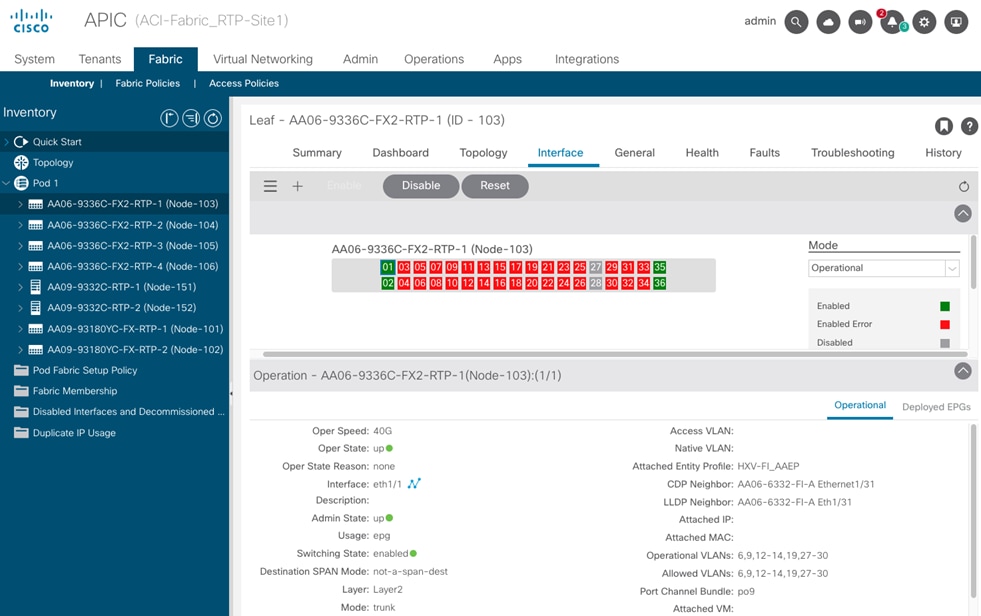

In the ACI architecture, ACI constructs (Tenants, Application profiles, Bridge domains, EPGs, etc.) define and enable the connectivity through the fabric. To meet the infrastructure connectivity requirements outlined above, EPGs and other ACI constructs are defined in the HXV-Foundation tenant. The infrastructure VLAN networks provisioned by the HyperFlex installer are then mapped to endpoint groups in ACI to enable forwarding between endpoints in the same network and to other networks. The same is done for application connectivity, but within the application tenant (HC-Tenant1). The ACI constructs to enable this forwarding are provided in the Solution Deployment section (Table 5).

To enable connectivity through the fabric, the ACI fabric must also provide access layer connectivity to the UCS domains and HyperFlex servers. The access layer connectivity includes:

● Physical connectivity to the UCS domains that HyperFlex clusters connect to. The PC/vPC design and connectivity are described in the Detailed Design - Cisco HyperFlex section.

● Access Layer configuration to enable connectivity to/from the Fabric Interconnects and HyperFlex servers in the UCS domain. In ACI, fabric access policies represent the configuration for connecting to access layer devices. To enable this connectivity, policies are first created and then applied to the leaf switch interfaces that connect to the access layer devices.

The specific policies and configuration used to enable the access layer connectivity to the Cisco UCS Fabric Interconnects and HyperFlex servers for the application cluster in this solution are provided in the Solution Deployment section (Table 4). The policies and profiles will create virtual port channels on the ACI leaf switch pair and enable access layer connectivity to the Cisco UCS Fabric Interconnects in the UCS domain.

The Cisco ACI fabric, together with the Cisco UCS FIs, provides the connectivity necessary to bring a HyperFlex cluster online and maintain the cluster post-deployment for hosting application workloads. The on-prem virtual server infrastructure is now ready for deploying a cloud-native environment using Red Hat OpenShift Container Platform.

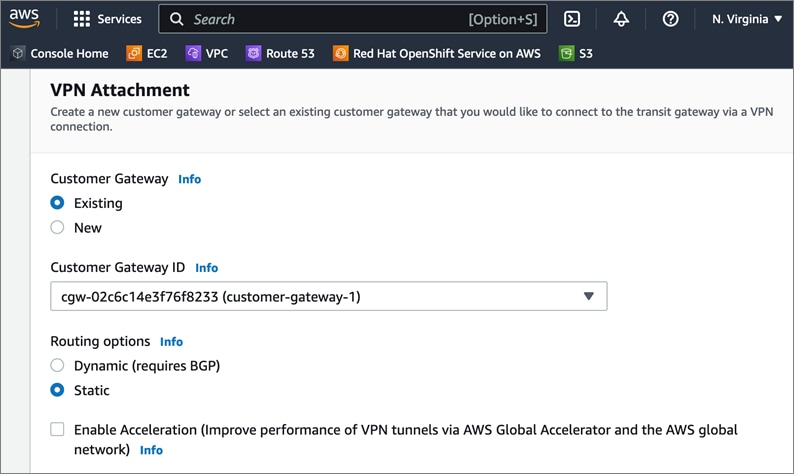

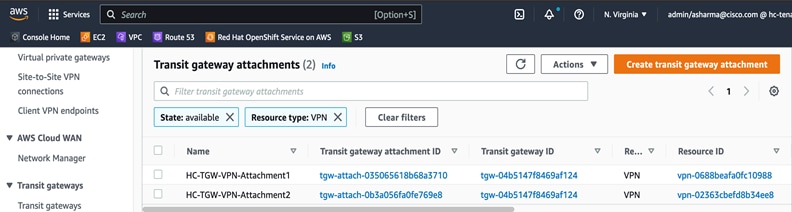

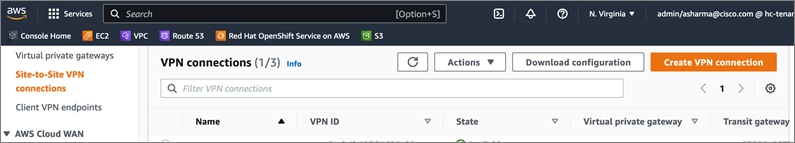

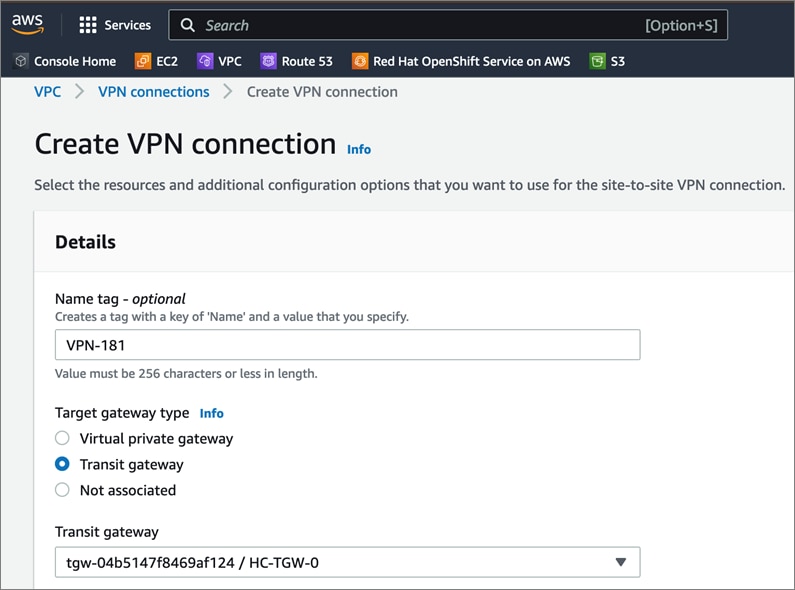

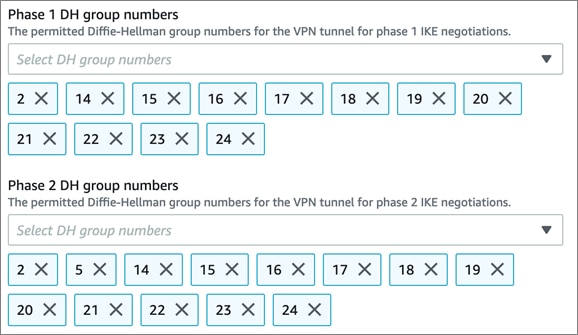

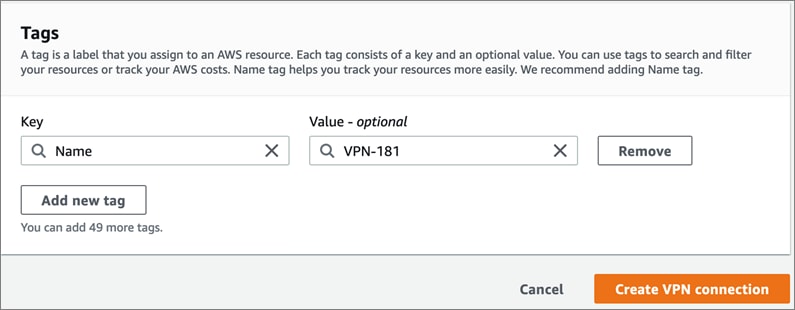

Hybrid Cloud Connectivity

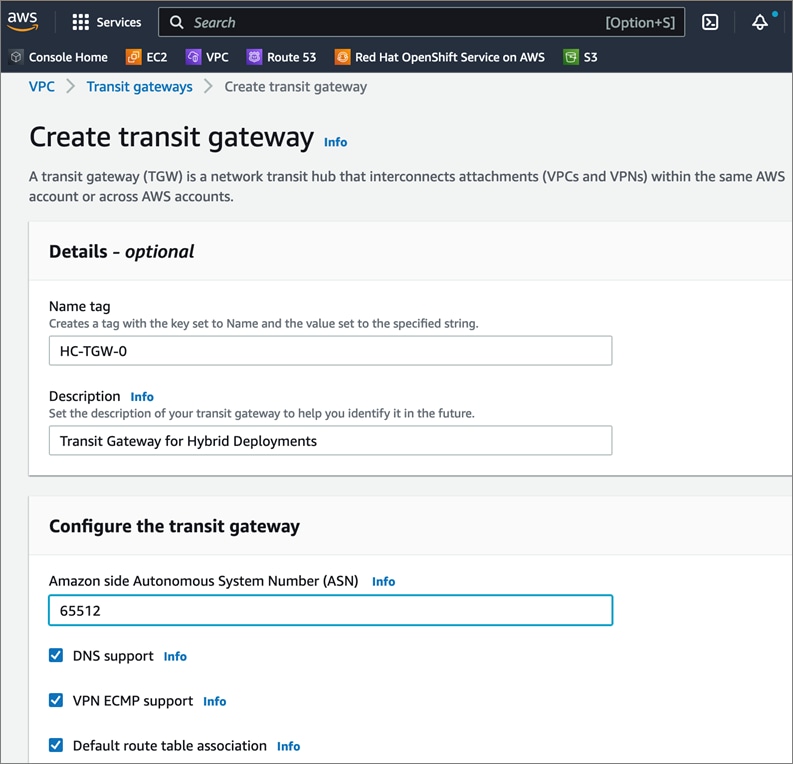

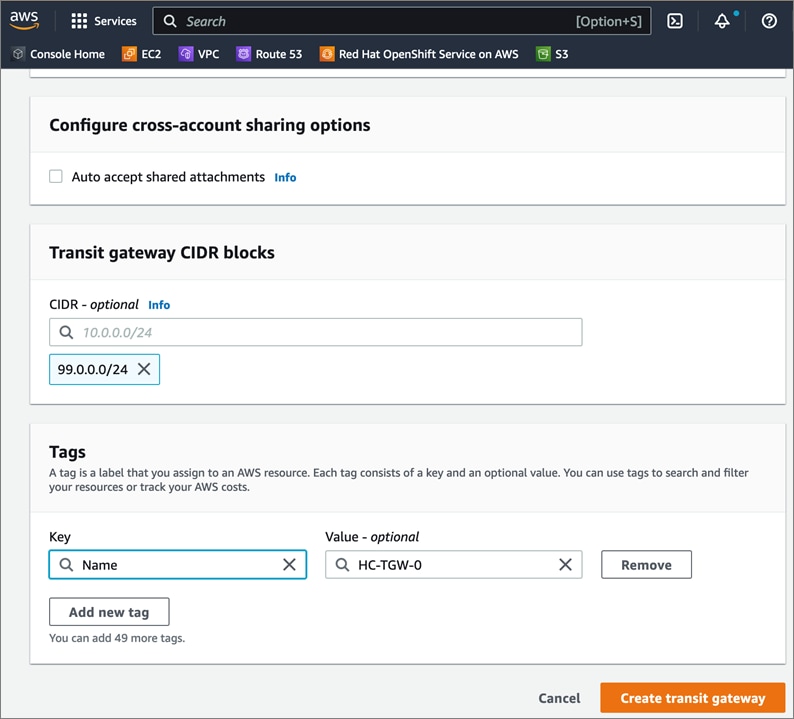

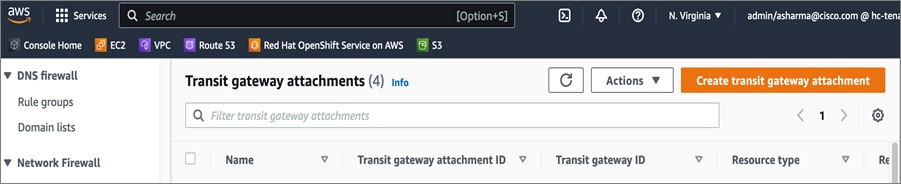

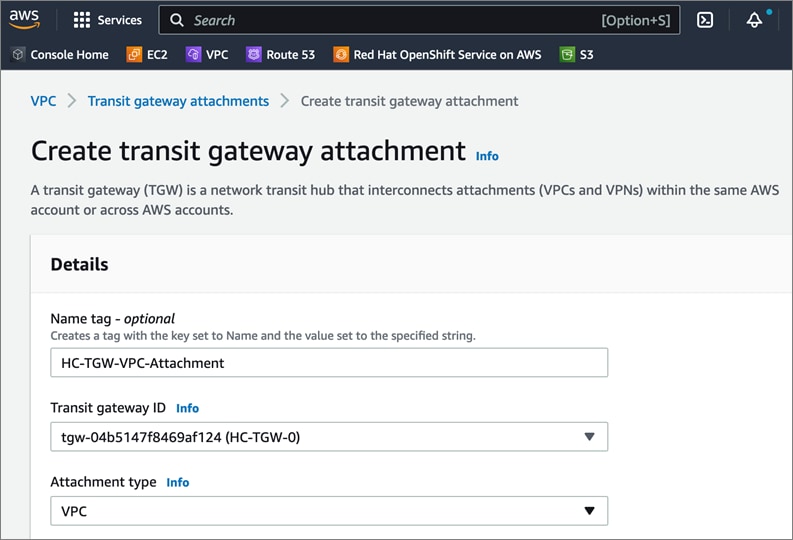

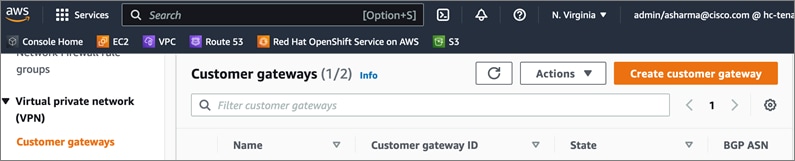

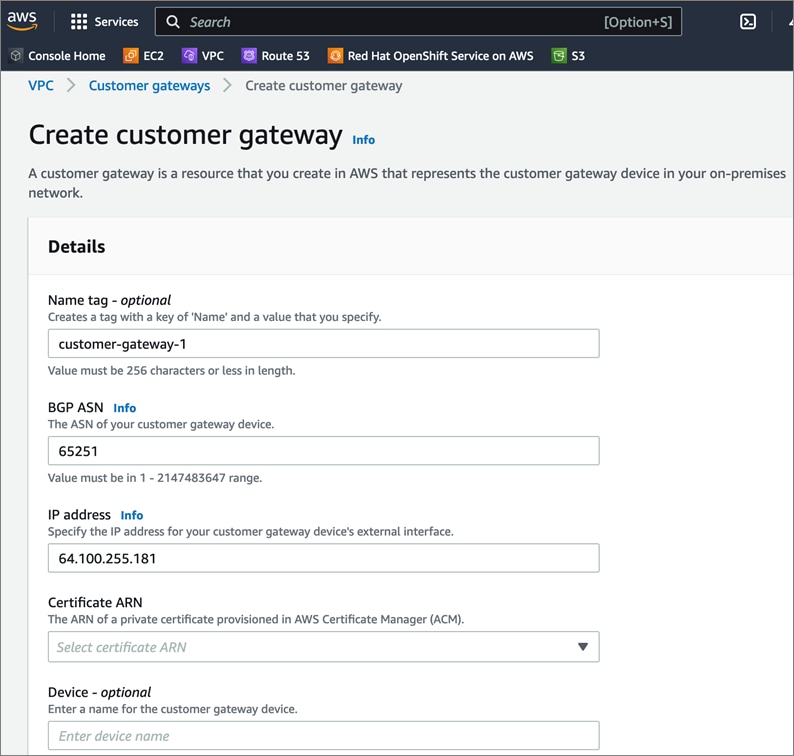

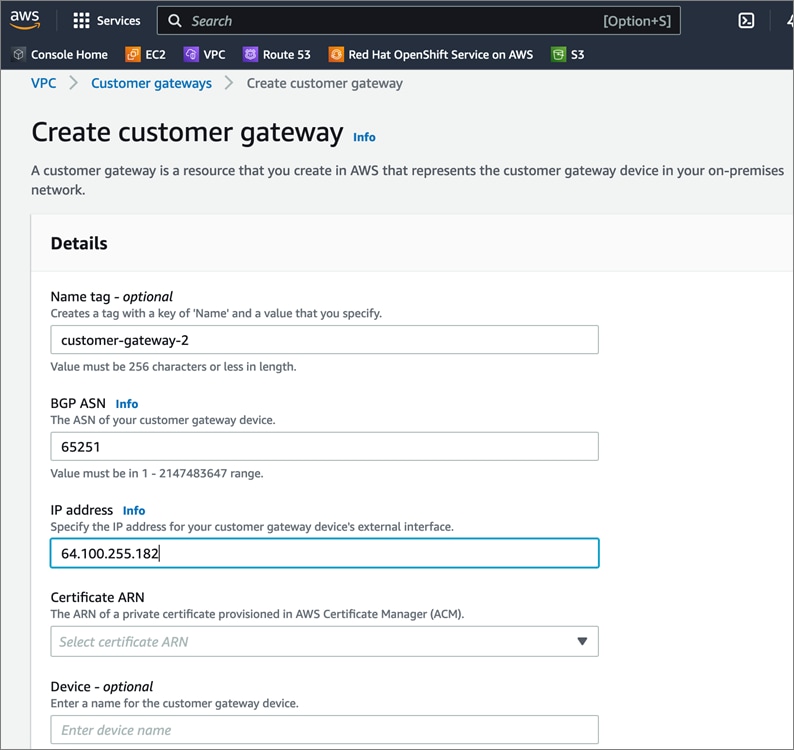

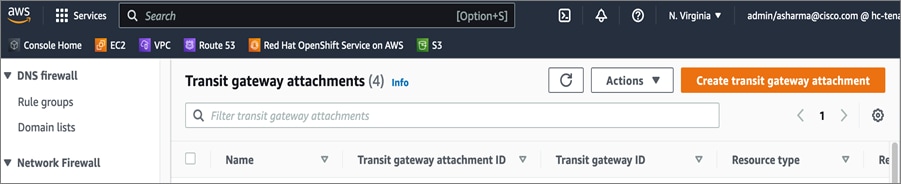

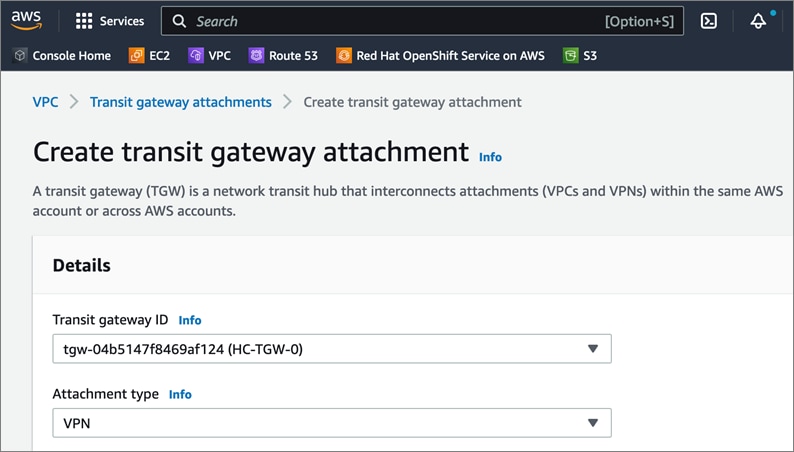

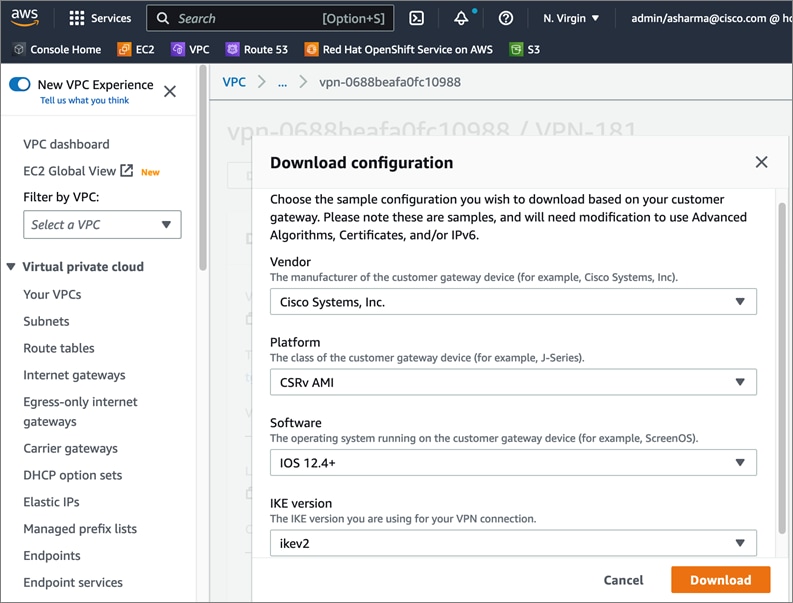

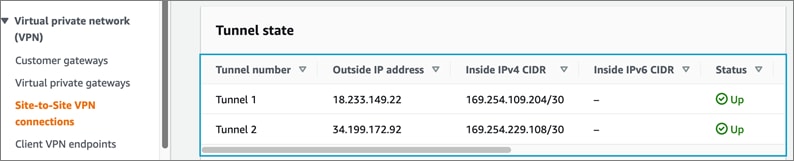

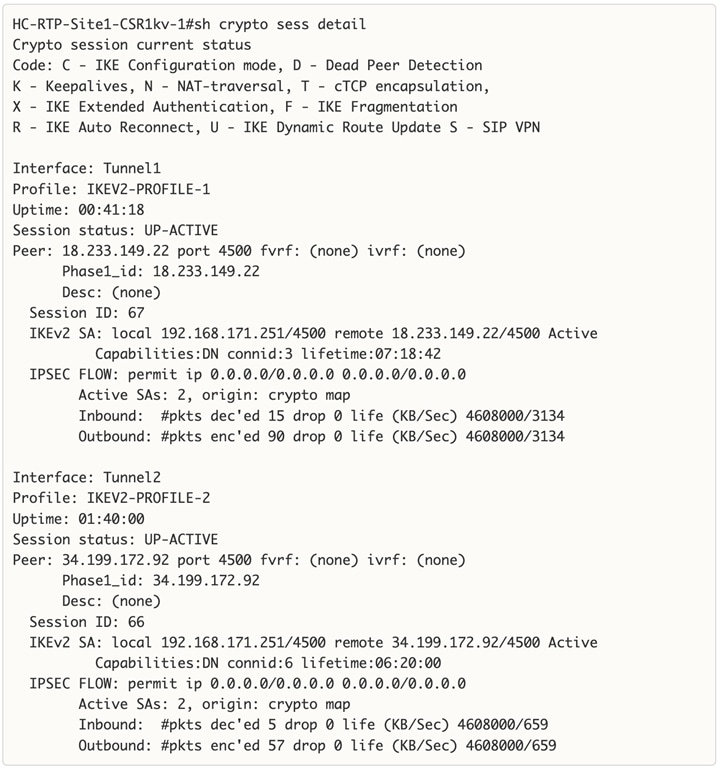

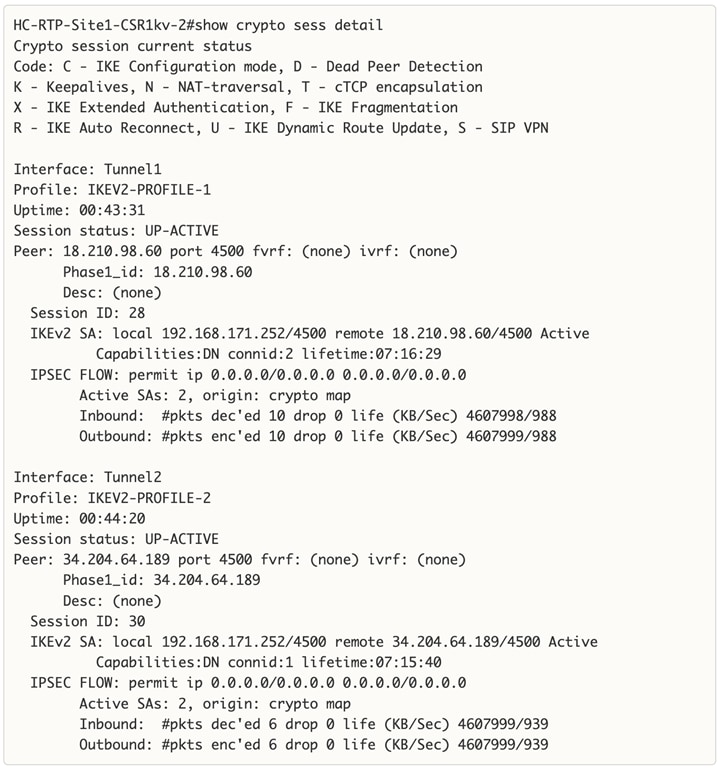

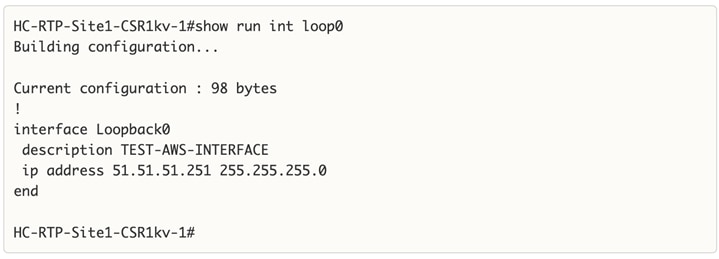

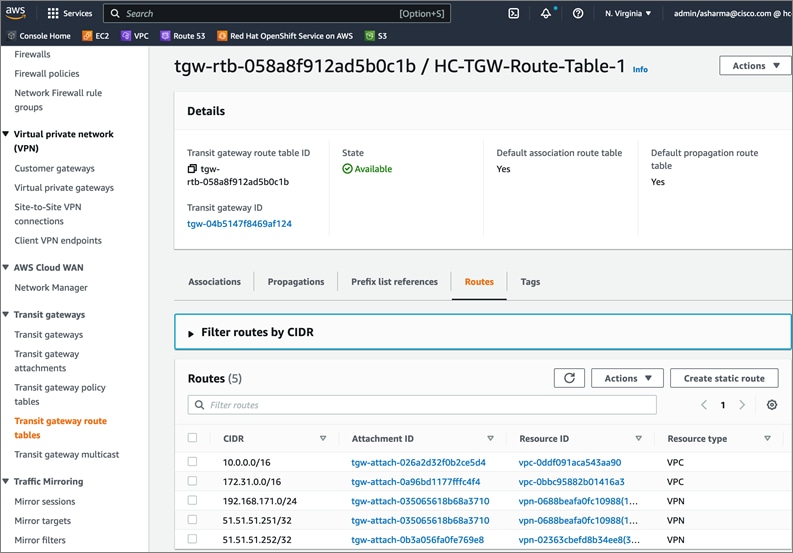

Cisco offers multiple options for hybrid cloud connectivity between an on-prem location and public cloud. Options include Cisco ACI or VXLAN EVPN fabrics with Nexus Dashboard Orchestrator and Cloud Controller to orchestrate and extend the on-prem networking into the public cloud, SD-WAN, IPsec VPN, and dedicated connections. The hybrid cloud connectivity in this solution is established over the Internet using IPsec VPN tunnels, also referred to as Site-to-Site VPNs. The encrypted tunnels provide secure connectivity for Enterprise traffic in a hybrid deployment, enabling applications, services, and other components to securely access networks and entities in the other location.

The IPsec VPN tunnels are established between Transit Gateways (TGW) in AWS and a pair of Cisco Cloud Services Router 1000V Series (CSR1kv) routers. TGWs in AWS serve as transit hubs that can be used to consolidate the hybrid cloud connectivity from multiple VPCs by adding an attachment in each. Alternatively, you can use a Virtual Private Gateway (VGW) in each VPC to establish IPsec VPN tunnels to the Enterprise data center, but you can only attach one VPC to this connection. When interconnecting locations in this manner, the address spaces must not overlap; otherwise, routing issues can occur.

Figure 9 shows the hybrid cloud connectivity used in this solution. The AWS transit gateway establishes two tunnels to each customer/enterprise gateway (CSR1kv) for redundancy. The design uses two CSRs for higher availability, providing a total of 4 IPsec VPN tunnels between locations. The 4 tunnels require 4 public IPs to establish the tunnels across the Internet. Enterprise firewalls must be provisioned to allow IPsec protocols and traffic from the AWS side tunnel addresses.

By default, AWS expects the enterprise gateway to initiate the Internet Key Exchange (IKE) negotiation process to bring up the tunnel by generating traffic. It is recommended that AWS be configured to initiate the IKE negotiation to prevent the tunnel from going down when there is a lull in the traffic flow.
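Two points above lend themselves to a quick pre-deployment check: the address spaces at the two locations must not overlap, and the tunnel count follows from the number of enterprise gateways. The sketch below uses Python's standard `ipaddress` module; the CIDR ranges and helper names are hypothetical and are not the addressing used in this solution.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs):
    """Return every pair of CIDR blocks in `cidrs` that overlap."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    return [(str(a), str(b)) for a, b in combinations(nets, 2) if a.overlaps(b)]


def tunnel_count(enterprise_gateways, tunnels_per_gateway=2):
    """AWS establishes two tunnels to each enterprise gateway."""
    return enterprise_gateways * tunnels_per_gateway


# Hypothetical address plan, for illustration only.
on_prem_cidrs = ["10.1.0.0/16", "172.20.0.0/24"]
aws_vpc_cidrs = ["10.2.0.0/16", "10.1.128.0/20"]  # second VPC collides with on-prem

print(find_overlaps(on_prem_cidrs + aws_vpc_cidrs))
print(tunnel_count(enterprise_gateways=2))  # 4
```

An empty list from `find_overlaps` indicates that the address plan has no conflicts; any returned pair should be readdressed before the tunnels are brought up.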

When using Transit Gateways, there are some service limits in terms of MTU, routes, routing protocols, bandwidth, etc. that you should be aware of. Please review the AWS documentation for details on the VPN limits: https://docs.aws.amazon.com/vpn/latest/s2svpn/vpn-limits.html.

Kubernetes Infrastructure Design

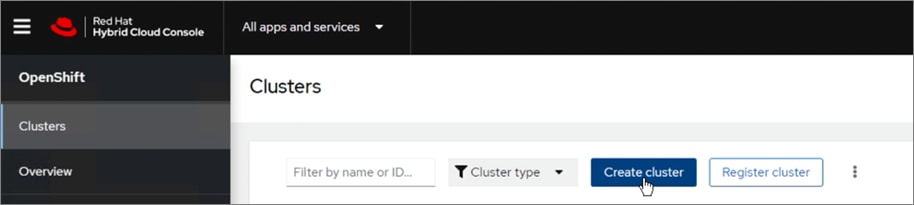

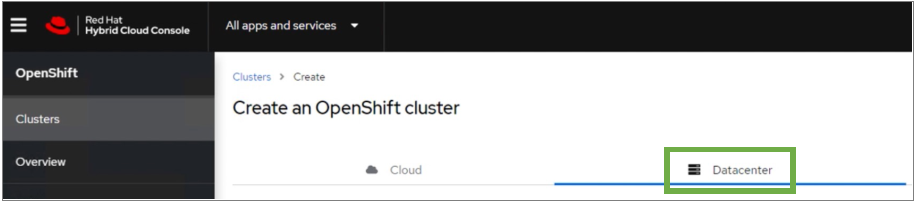

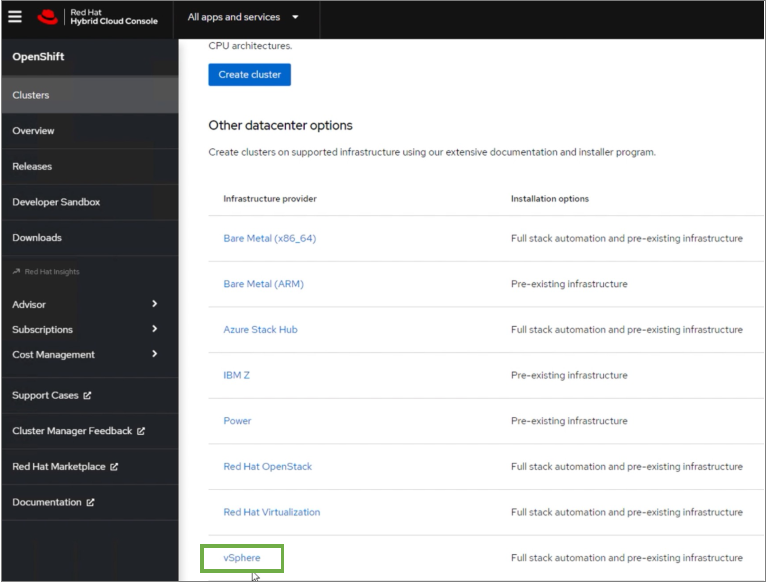

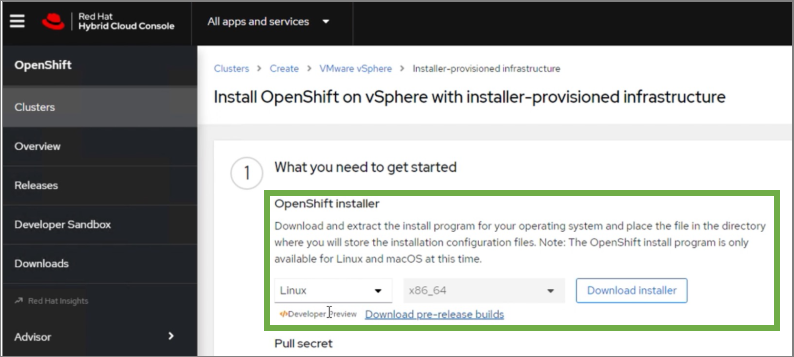

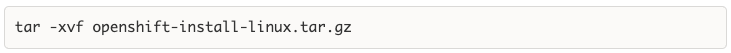

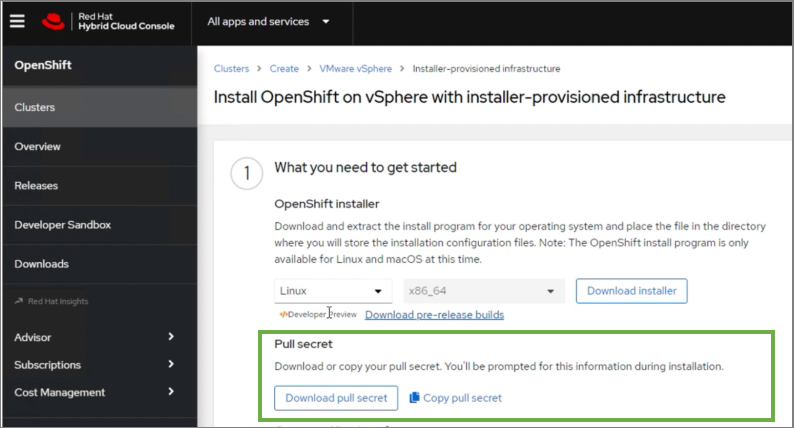

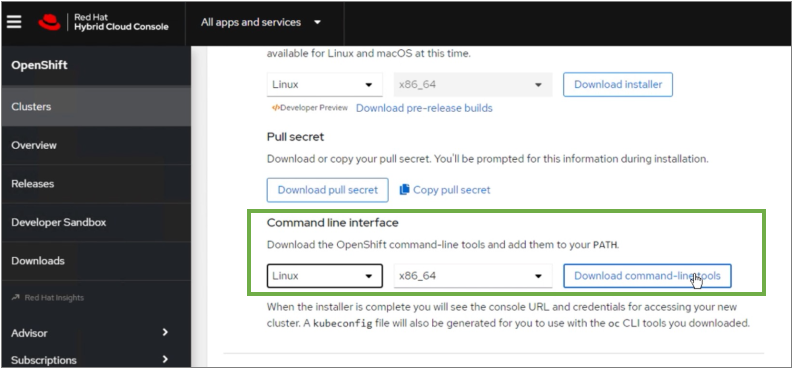

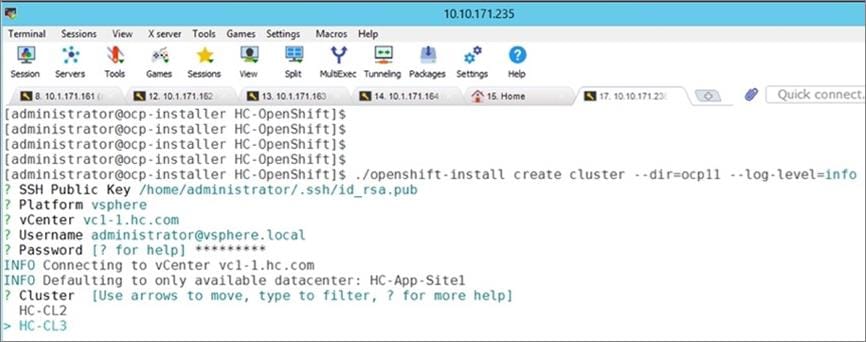

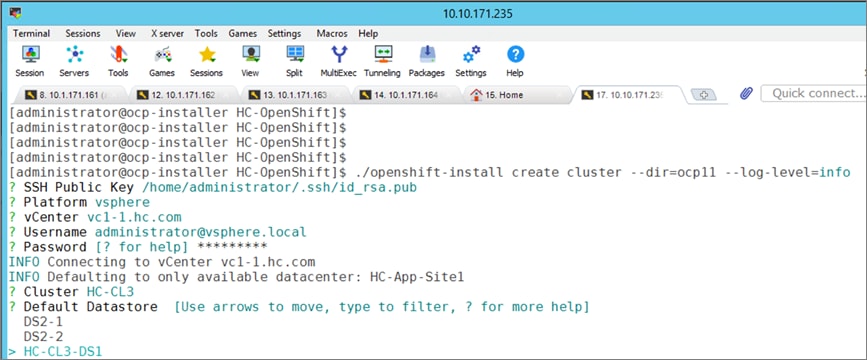

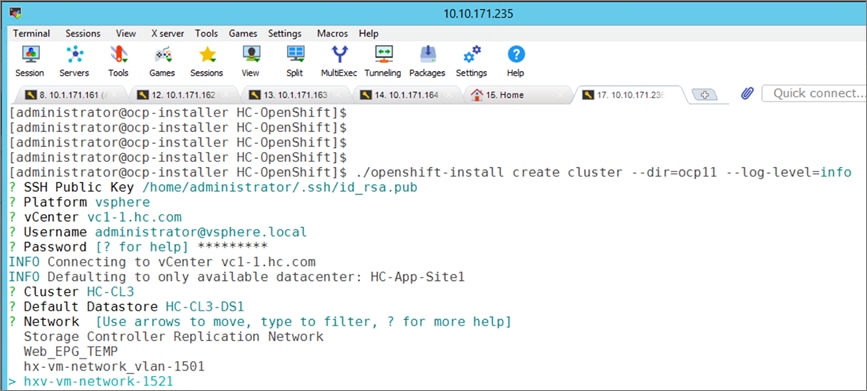

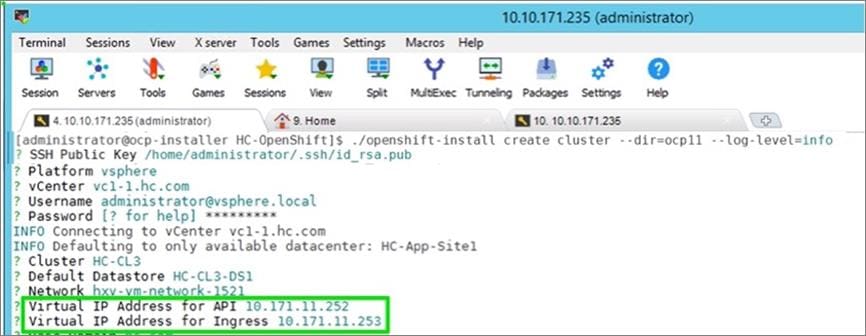

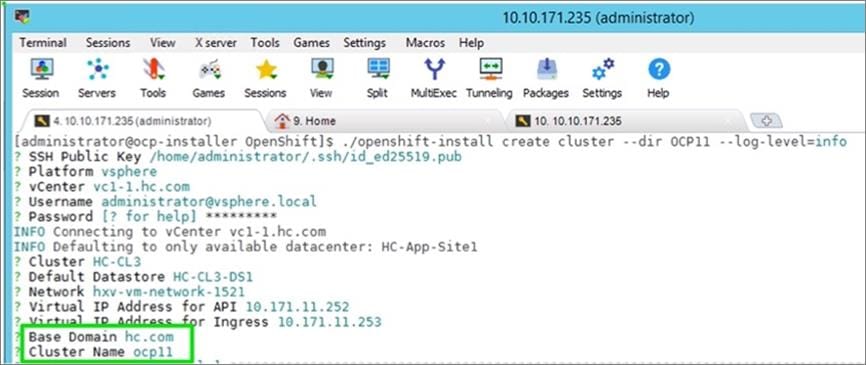

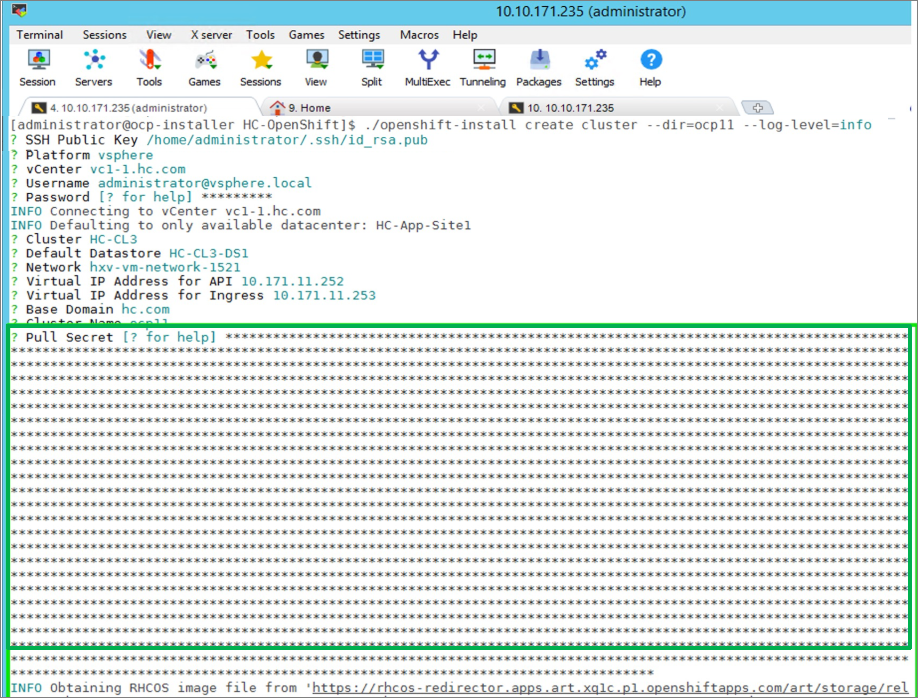

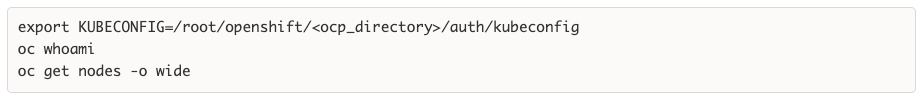

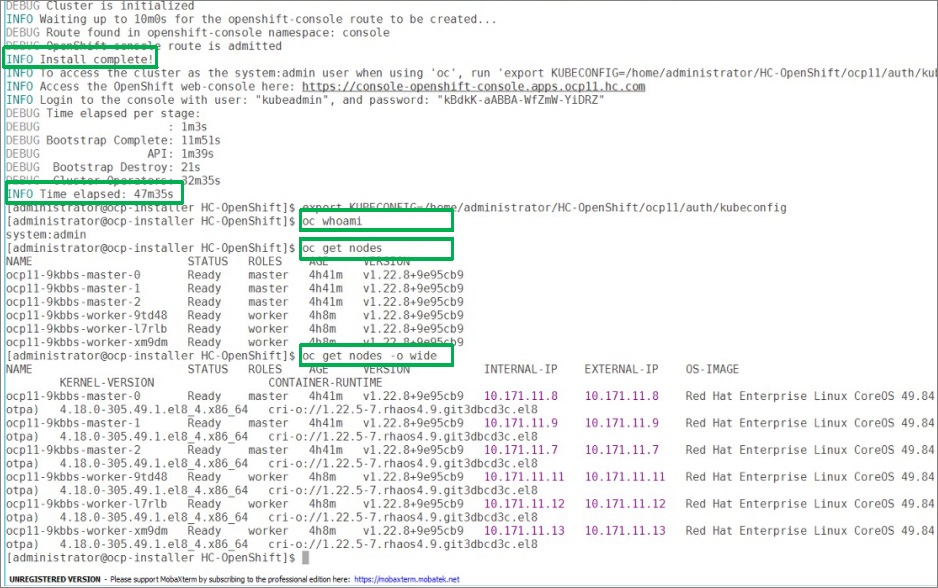

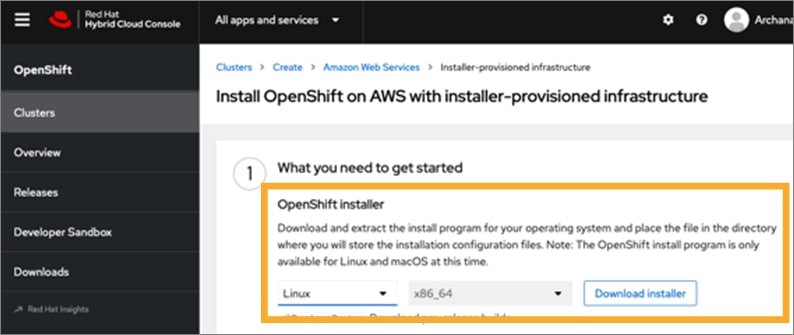

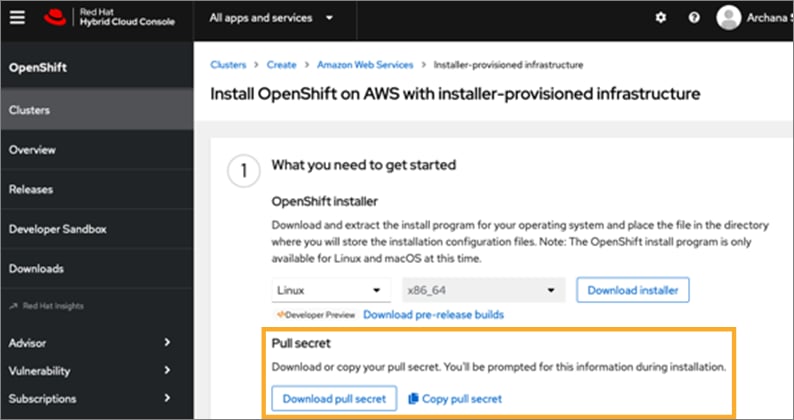

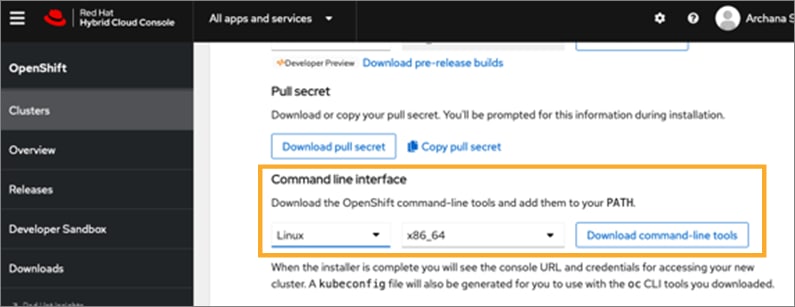

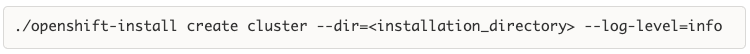

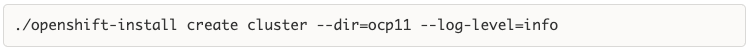

Red Hat OCP provides a secure, enterprise-class Kubernetes environment for developing, deploying, and managing cloud-native applications. The Red Hat Hybrid Cloud Console provides centralized, SaaS-based management of OCP clusters deployed anywhere, from on-prem to public cloud. Hybrid Cloud Console provides access to the latest installer package and other tools necessary for securely accessing and managing the cluster for each environment (for example, bare metal, VMware vSphere, AWS, Azure, GCP) and consumption (managed, unmanaged) model that OCP supports.

In this solution, Hybrid Cloud Console is used to deploy OCP clusters both on-prem and in the public cloud using the Red Hat recommended Installer Provisioned Installation (IPI) method. This method deploys the full infrastructure using an opinionated, prescriptive approach with best practices implemented. This is the fastest way to deploy an OCP cluster, and OCP continues to manage the infrastructure components post-deployment. The automated OCP installer for each infrastructure environment collects minimal information from the user (for example, the on-prem datastore). It does offer some customizations (for example, deploying into an existing VPC on AWS), but for more extensive infrastructure customizations, the User Provisioned Installation (UPI) method is available. However, UPI increases the operational burden on the IT/DevOps teams, unlike IPI where OCP manages the infrastructure components.

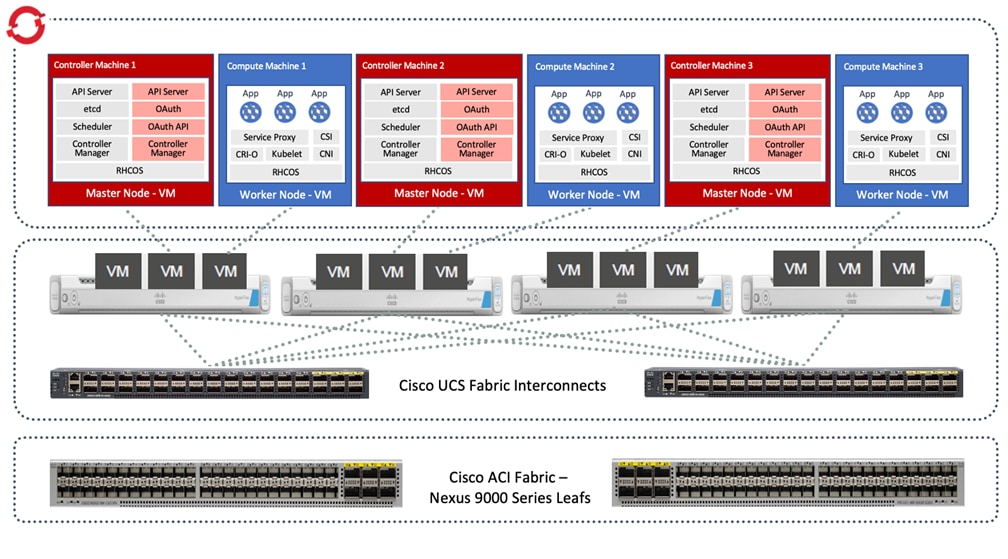

On-Prem – Red Hat OCP on HyperFlex VSI

The Red Hat OCP cluster in the solution is deployed on HyperFlex VSI in the Enterprise data center as shown in Figure 10.

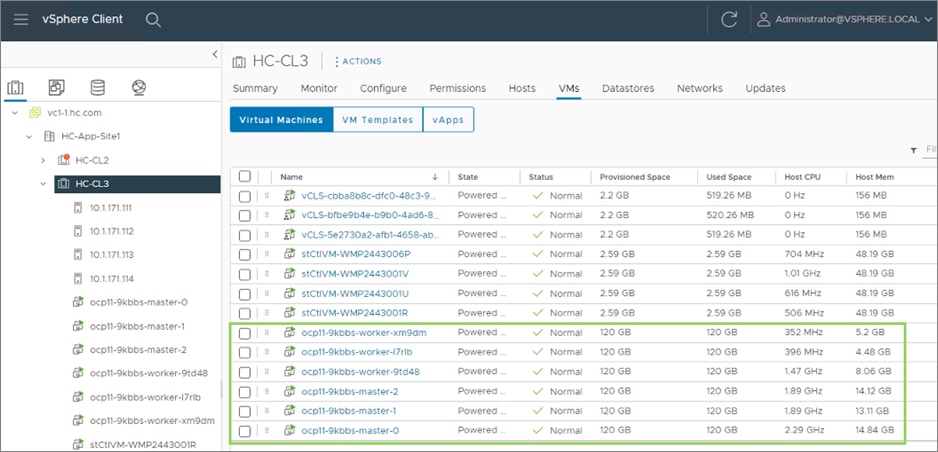

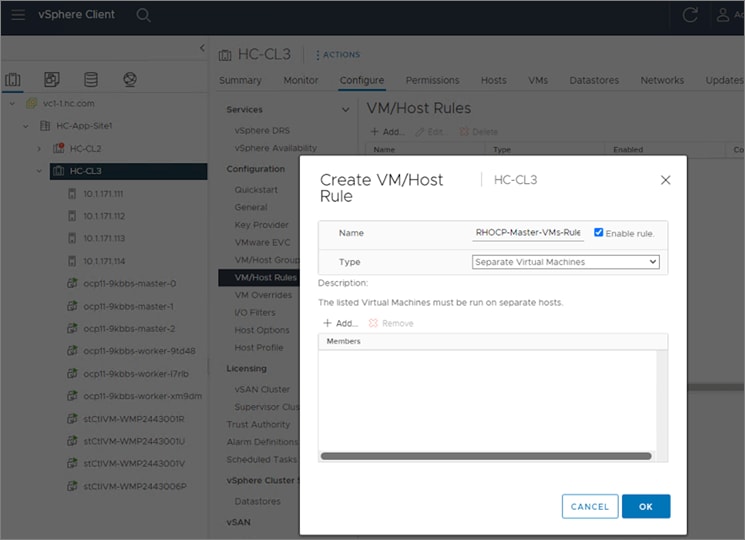

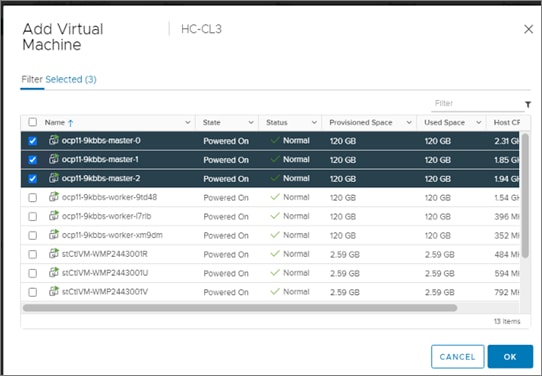

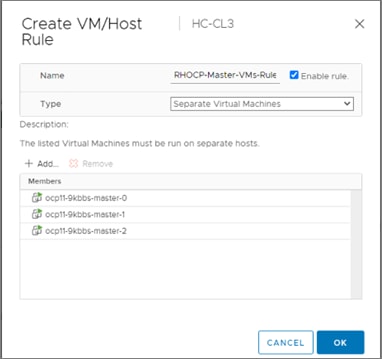

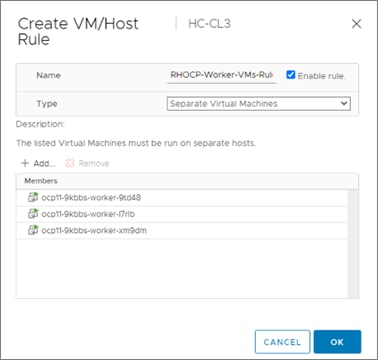

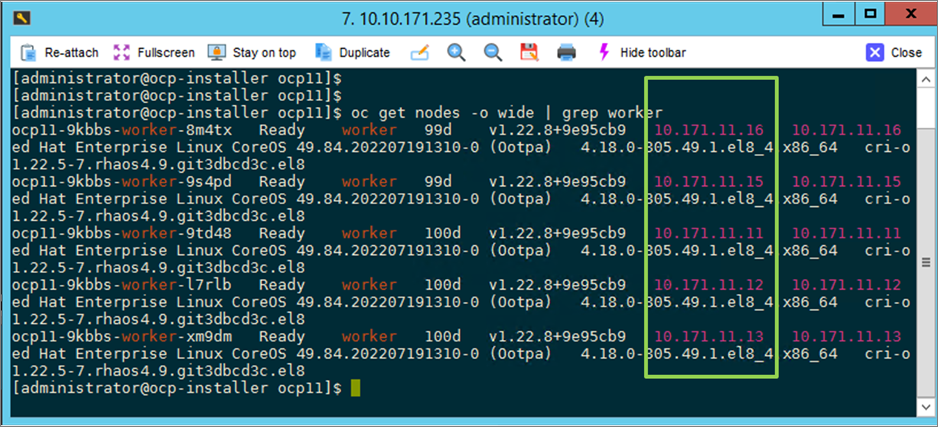

The nodes in the K8s cluster are VMs running on the Application HyperFlex VSI cluster, managed by VMware vCenter running on the management HyperFlex VSI cluster. The default IPI install deploys 3 x control/master and 3 x compute/worker nodes (VMs) – additional worker nodes can be added as needed. To isolate applications from infrastructure and management, the OCP cluster and HyperFlex VSI are deployed on separate tenants in the Cisco ACI fabric. The same tenant can be used for additional OCP clusters, or new tenants can be added as needed. The OCP cluster is deployed on a VMware vSwitch VM network that was provisioned as a part of the HyperFlex VSI install.

Post-install, the control/master and compute/worker nodes in the cluster are distributed across the ESXi hosts in the HyperFlex VSI cluster using VMware VM affinity rules. Each control node runs on a separate physical ESXi host in the 4-node HyperFlex VSI cluster. Red Hat recommends three control nodes in all production deployments; therefore, a HyperFlex VSI cluster with a minimum of 3 nodes, but ideally 4 nodes, should be used for OCP deployments. VM affinity rules are also used to distribute the compute/worker nodes across the available ESXi hosts.
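The intended outcome of the placement described above can be illustrated with a short sketch. It does not use or model the vSphere rule engine; it only shows a round-robin distribution that keeps each control node on its own host. All host and VM names are hypothetical.

```python
def spread(vms, hosts, start=0):
    """Assign VMs to hosts round-robin, beginning at host index `start`."""
    return {vm: hosts[(start + i) % len(hosts)] for i, vm in enumerate(vms)}


def on_separate_hosts(placement):
    """True when no two VMs in `placement` share a host."""
    return len(set(placement.values())) == len(placement)


# Hypothetical names for a 4-node HyperFlex VSI cluster and a default IPI install.
esxi_hosts = ["esxi-1", "esxi-2", "esxi-3", "esxi-4"]
control_nodes = ["control-0", "control-1", "control-2"]
worker_nodes = ["worker-0", "worker-1", "worker-2"]

control_placement = spread(control_nodes, esxi_hosts)
# Start workers on the host that has no control node yet.
worker_placement = spread(worker_nodes, esxi_hosts, start=len(control_nodes))

print(control_placement)
print(worker_placement)
print(on_separate_hosts(control_placement))  # True
```

With fewer than three hosts, `on_separate_hosts` would return False for the control nodes, which is why a 3-node cluster is the minimum for this layout.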

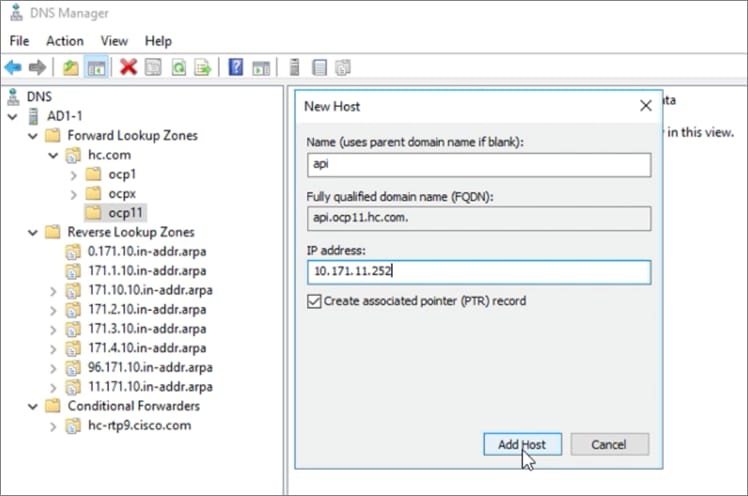

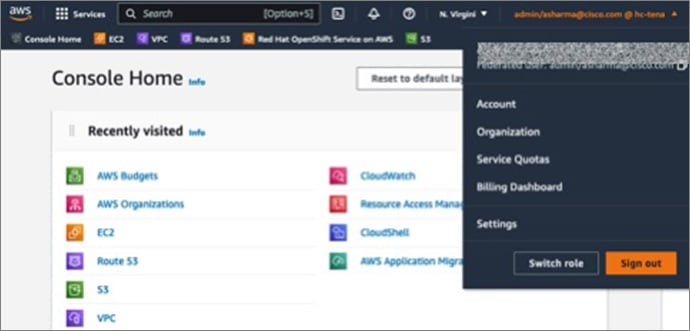

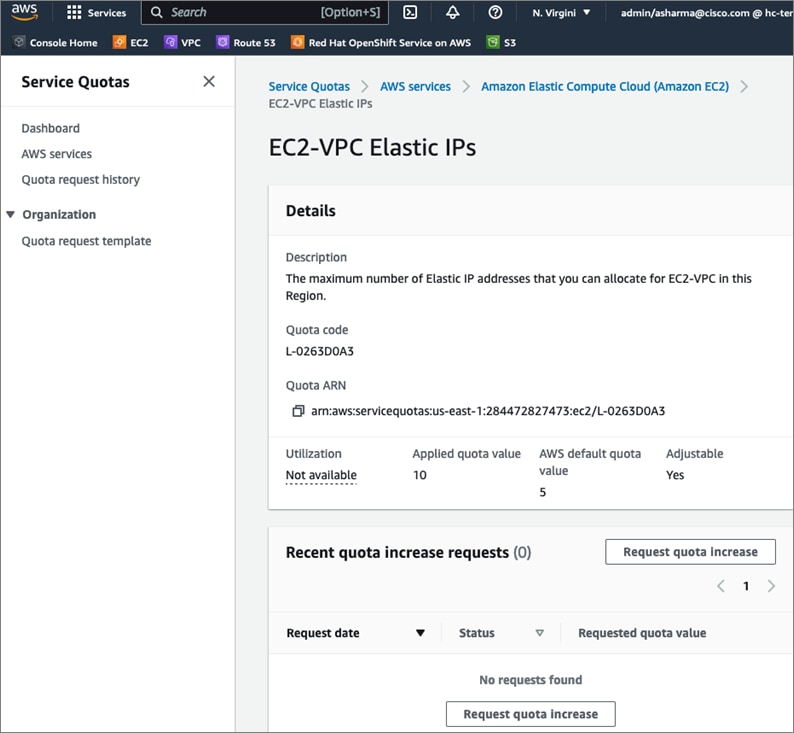

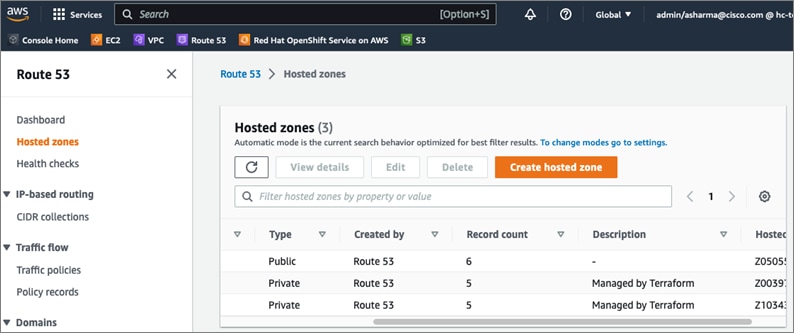

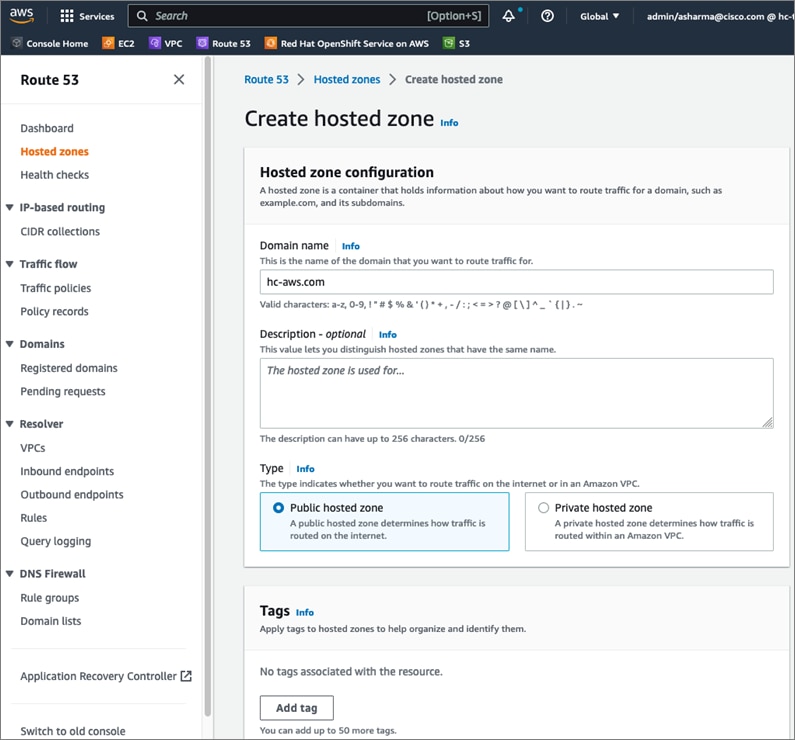

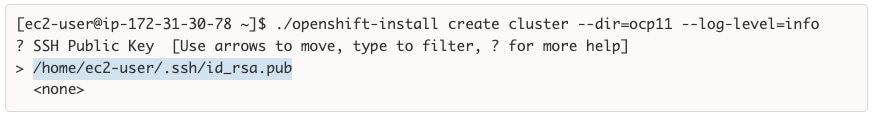

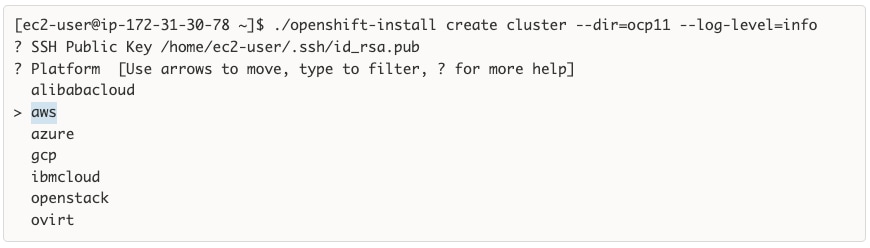

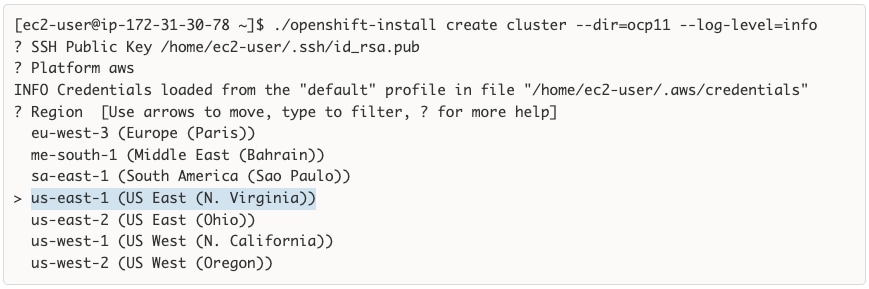

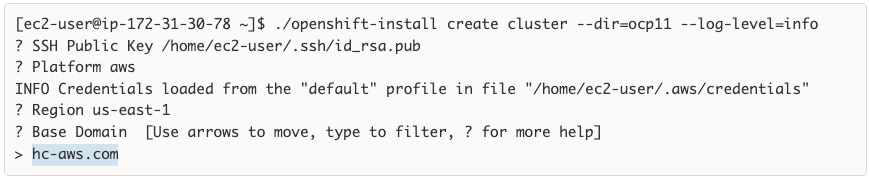

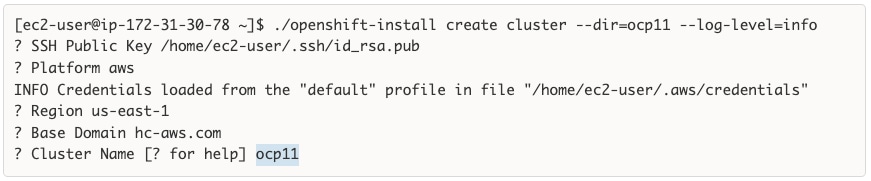

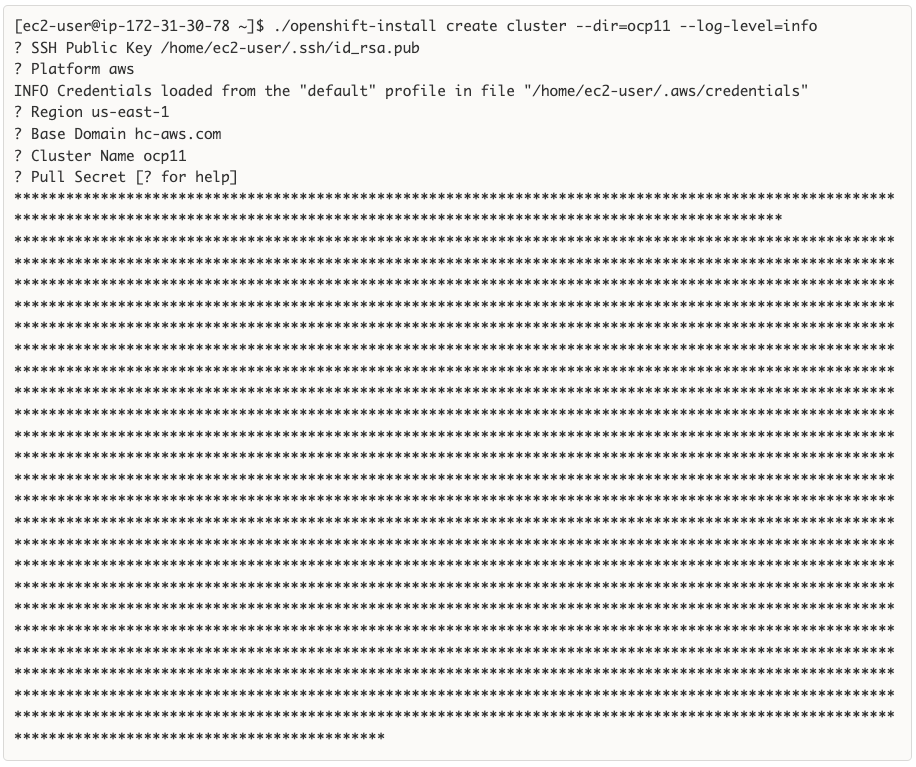

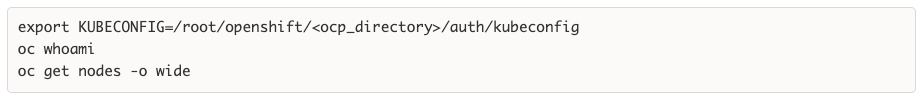

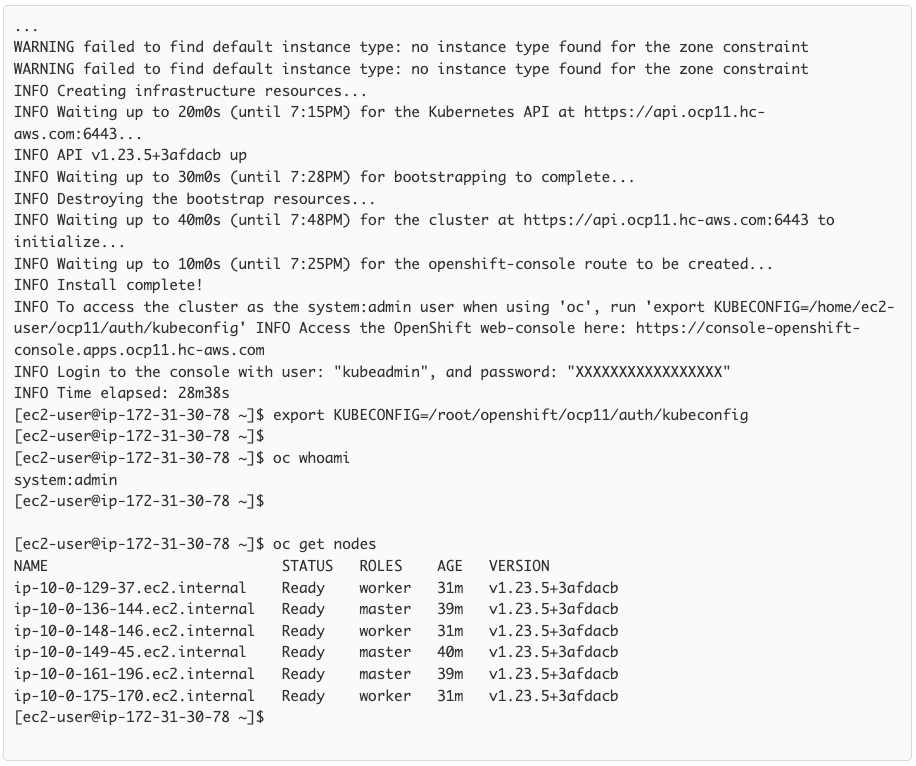

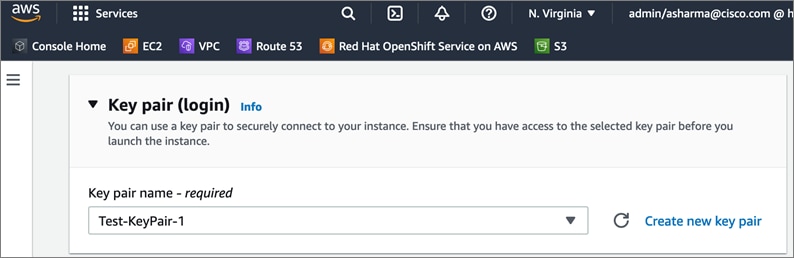

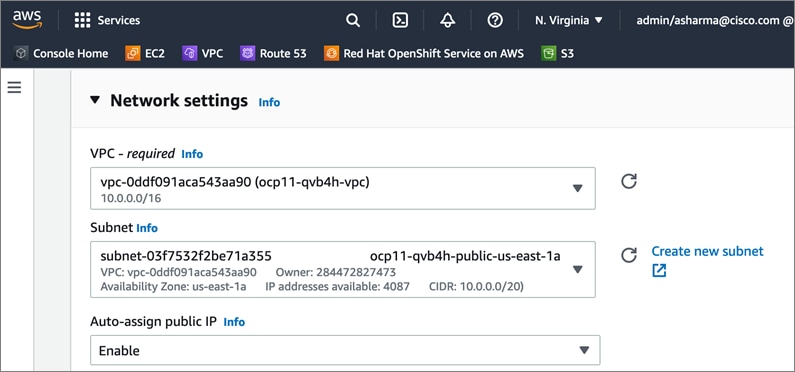

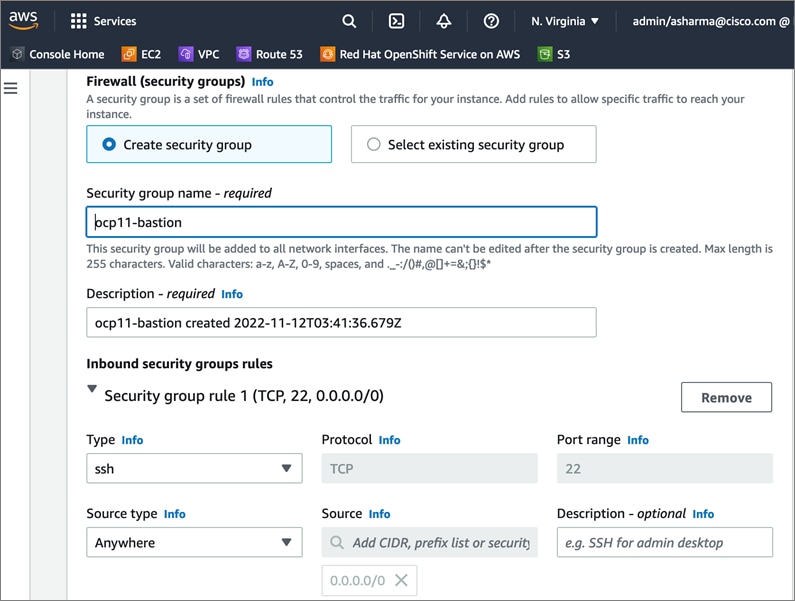

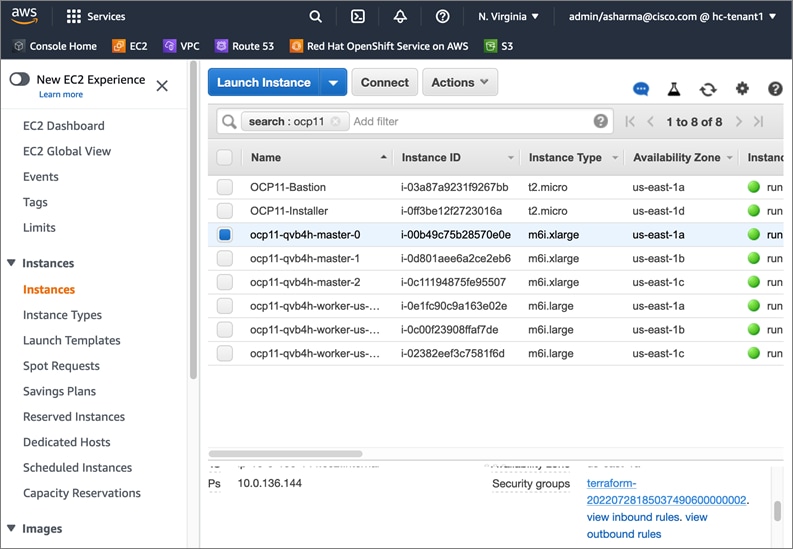

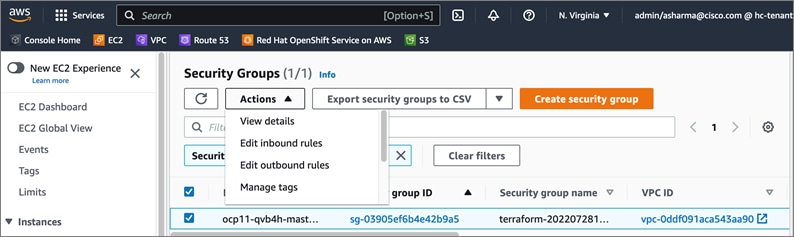

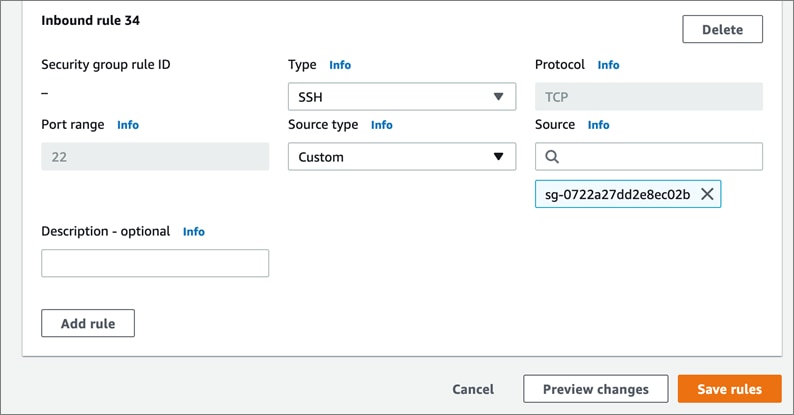

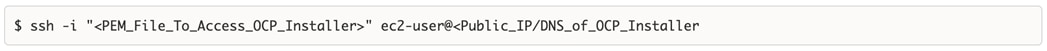

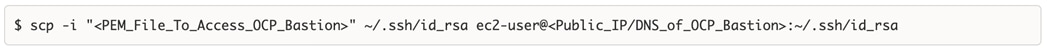

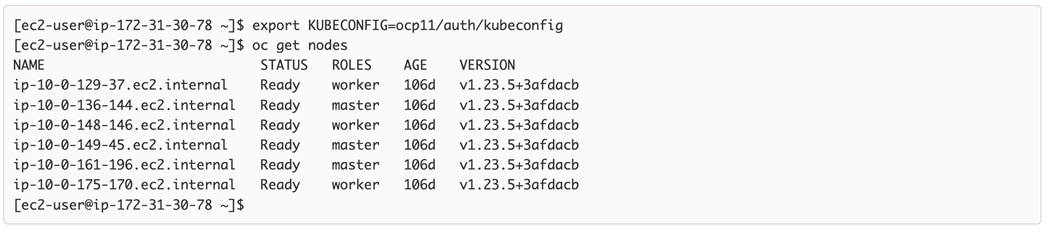

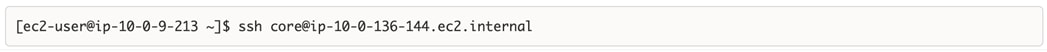

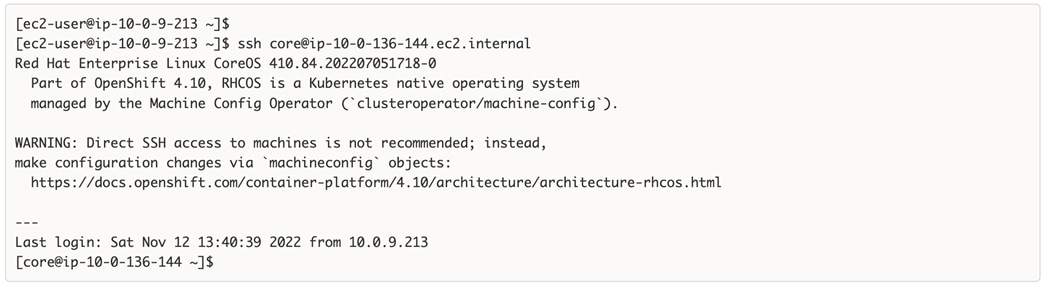

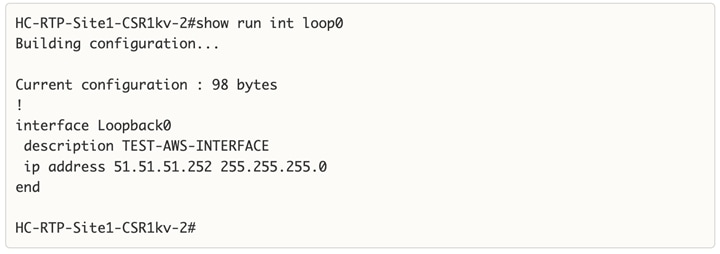

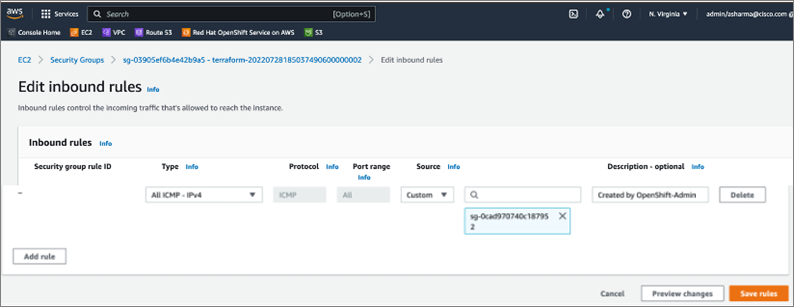

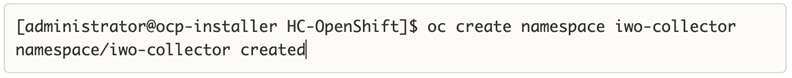

Public Cloud – Red Hat OCP on AWS