FlexPod Datacenter with Oracle 21c RAC on Cisco UCS X-Series M7 and NetApp AFF900 with NVMe/FC


Bias-Free Language

The documentation set for this product strives to use bias-free language. For the purposes of this documentation set, bias-free is defined as language that does not imply discrimination based on age, disability, gender, racial identity, ethnic identity, sexual orientation, socioeconomic status, and intersectionality. Exceptions may be present in the documentation due to language that is hardcoded in the user interfaces of the product software, language used based on RFP documentation, or language that is used by a referenced third-party product. Learn more about how Cisco is using Inclusive Language.


Published: December 2023

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to: http://www.cisco.com/go/designzone.

Cisco Validated Designs include systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. The success of the FlexPod solution is driven through its ability to evolve and incorporate both technology and product innovations in the areas of management, compute, storage, and networking. This document explains the design details of incorporating the Cisco Unified Computing System (Cisco UCS) X-Series Modular Systems platform with end-to-end 100Gbps networking into a FlexPod Datacenter and the ability to monitor and manage FlexPod components from the cloud using Cisco Intersight.

The FlexPod Datacenter with NetApp All Flash AFF system is a converged infrastructure platform that combines best-of-breed technologies from Cisco and NetApp into a powerful platform for enterprise applications. Cisco and NetApp work closely with Oracle to support the most demanding transactional and response-time-sensitive databases required by today’s businesses.

This Cisco Validated Design (CVD) describes the reference FlexPod Datacenter architecture using Cisco UCS X-Series and NetApp All Flash AFF Storage for deploying a highly available Oracle 21c Multitenant Real Application Clusters (RAC) Database environment. This document shows the hardware and software configuration of the components involved, presents the results of various tests, and offers implementation and best-practice guidance for implementing Oracle RAC Databases on NVMe/FC using Cisco UCS X-Series Compute Servers, Cisco Fabric Interconnect Switches, Cisco Nexus Switches, Cisco MDS Switches, and NetApp AFF Storage.

FlexPod Datacenter with end-to-end 100Gbps Ethernet is configurable according to demand and usage. You can purchase the exact infrastructure you need for your current application requirements and then scale up by adding more resources to the FlexPod system or scale out by adding more FlexPod instances. By moving management from the fabric interconnects into the cloud, the solution can respond to the speed and scale of your deployments with a constant stream of new capabilities delivered through the Cisco Intersight software-as-a-service model at cloud scale. For those who require management within a secure datacenter, Cisco Intersight is also offered as an on-site appliance with both connected and internet-disconnected options.

This chapter contains the following:

· Audience

· Key Elements of a Datacenter FlexPod Solution

The Cisco Unified Computing System X-Series (Cisco UCSX) with Intersight Managed Mode (IMM) is a modular compute system, configured and managed from the cloud. It is designed to meet the needs of modern applications and to improve operational efficiency, agility, and scale through an adaptable, future-ready, modular design. The Cisco Intersight platform is a Software-as-a-Service (SaaS) infrastructure lifecycle management platform that delivers simplified configuration, deployment, maintenance, and support.

Powered by the Cisco Intersight cloud-operations platform, the Cisco UCS X-Series enables the next-generation cloud-operated FlexPod infrastructure that not only simplifies data-center management but also allows the infrastructure to adapt to the unpredictable needs of modern applications as well as traditional workloads.

This CVD describes how the Cisco UCS X-Series can be used in conjunction with NetApp AFF A900 All Flash storage systems to implement a mission-critical application such as an Oracle 21c RAC database solution using modern SANs on NVMe over Fabrics (specifically NVMe over Fibre Channel, or NVMe/FC).

The intended audience for this document includes, but is not limited to, customers, field consultants, database administrators, IT architects, Oracle database architects, and sales engineers who want to deploy an Oracle RAC 21c database solution on FlexPod Converged Infrastructure with NetApp clustered Data ONTAP and the Cisco UCS X-Series platform using Intersight Managed Mode (IMM) to deliver IT efficiency and enable IT innovation. A working knowledge of Oracle RAC Database, Linux, storage technology, and networking is assumed but is not a prerequisite to read this document.

This document provides a step-by-step configuration and implementation guide for the FlexPod Datacenter with Cisco UCS X-Series Compute Servers, Cisco Fabric Interconnect Switches, Cisco MDS Switches, Cisco Nexus Switches, and NetApp AFF Storage to deploy an Oracle RAC Database solution. It also provides references for incorporating the Cisco Intersight-managed Cisco UCS X-Series platform with end-to-end 100Gbps networking within a FlexPod Datacenter infrastructure. This document introduces various design elements and explains the considerations and best practices for a successful deployment.

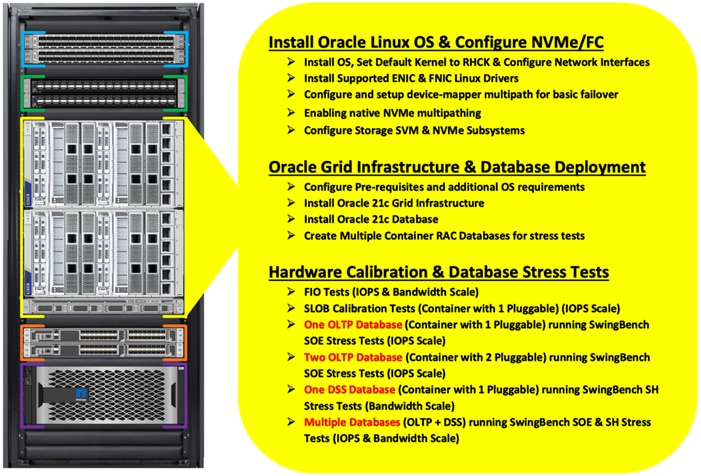

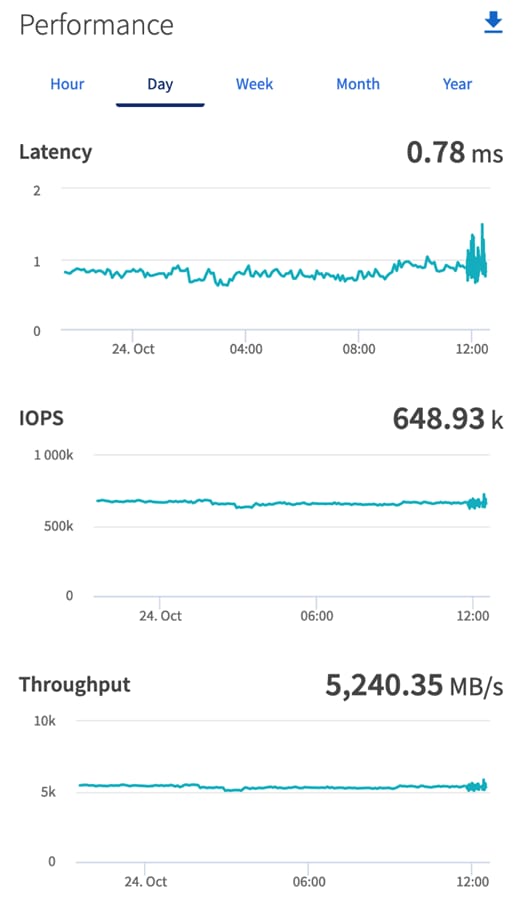

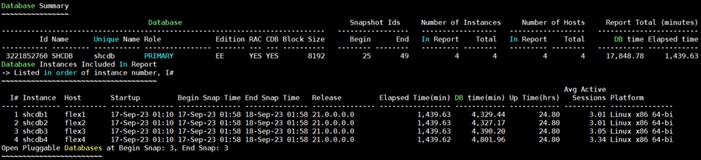

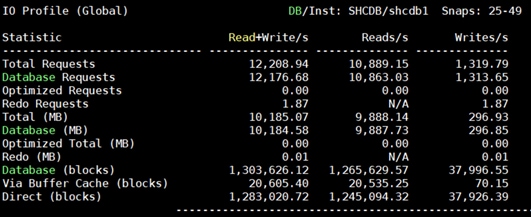

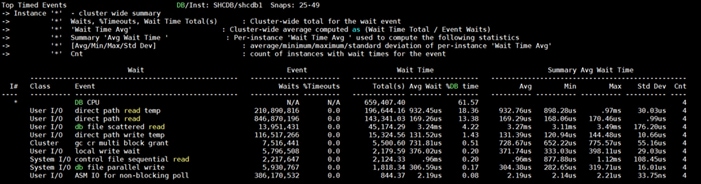

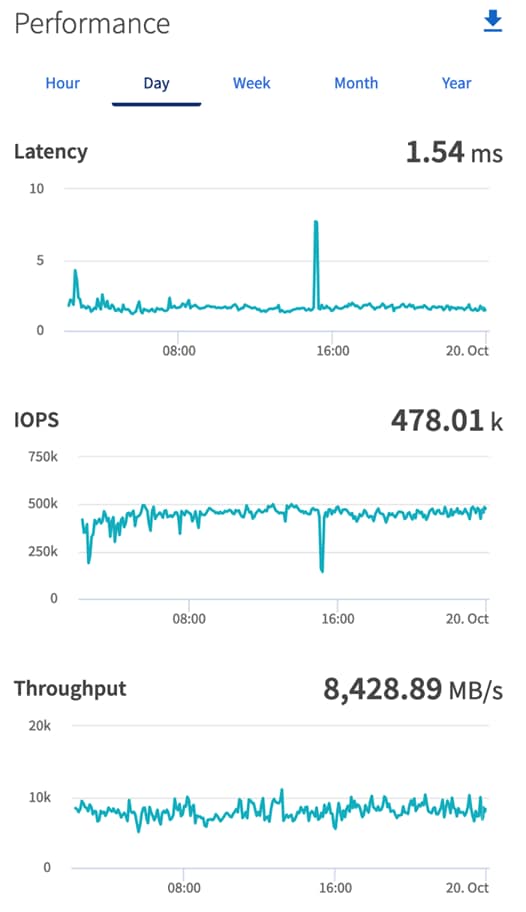

The document also highlights the design and product requirements for integrating compute, network, and storage systems to Cisco Intersight to deliver a true cloud-based integrated approach to infrastructure management. The goal of this document is to build, validate and evaluate the performance of this FlexPod reference architecture while running various types of Oracle OLTP and OLAP workloads using various benchmarking exercises and showcase Oracle database server read latency, peak sustained throughput and IOPS under various stress tests.

The following design elements distinguish this version of FlexPod from previous models:

· Deploying and managing Cisco UCS X9508 chassis equipped with Cisco UCS X410c M7 compute nodes from the cloud using Cisco Intersight

· Support for NVMe/FC on Cisco UCS and NetApp storage

· Implementation of FC and NVMe/FC on the same architecture

· Integration of the 5th Generation Cisco UCS 6536 Fabric Interconnect into FlexPod Datacenter

· Integration of the 5th Generation Cisco UCS 15000 Series VICs into FlexPod Datacenter

· Integration of the Cisco UCSX-I-9108-100G Intelligent Fabric Module into the Cisco UCS X9508 Chassis

· Implementation of end-to-end 100G network to optimize the I/O path between Oracle databases and the RAC Servers

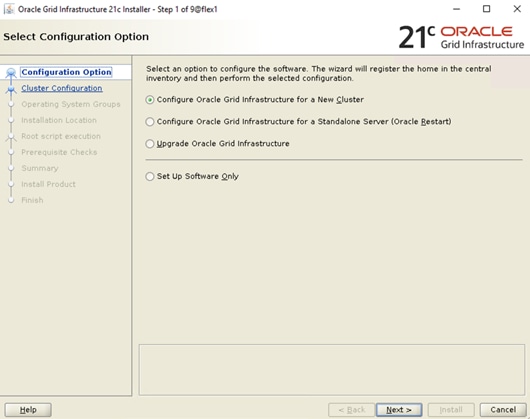

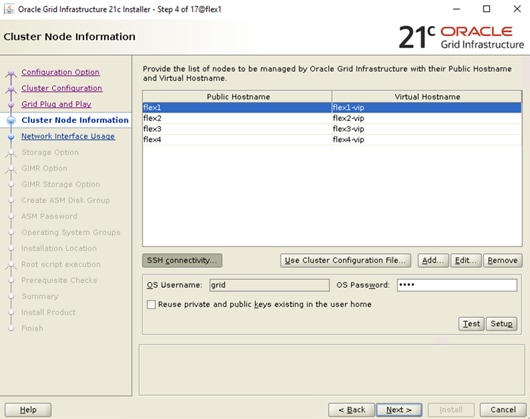

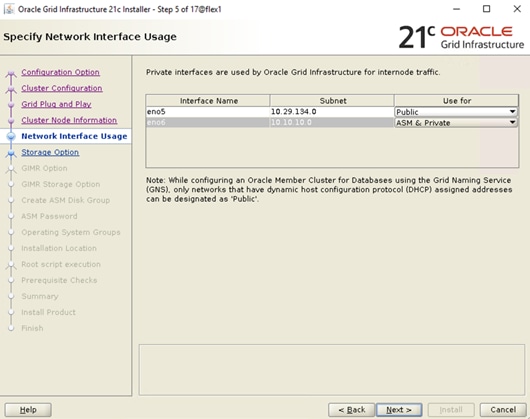

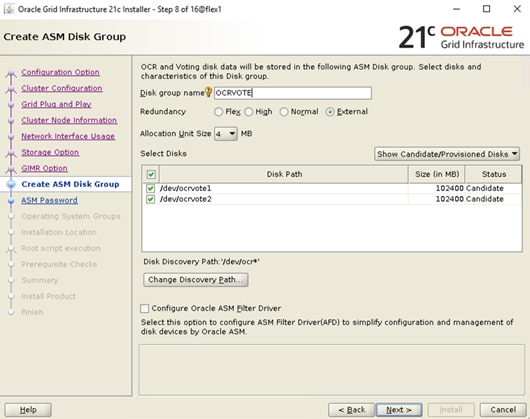

· Validation of Oracle 21c Grid Infrastructure and 21c Databases

· Support for the release of NetApp ONTAP 9.12.1

Built on groundbreaking technology from NetApp and Cisco, the FlexPod converged infrastructure platform meets and exceeds the challenges of simplifying deployments for best-in-class data center infrastructure. FlexPod is a defined set of hardware and software that serves as an integrated foundation for both virtualized and non-virtualized solutions. Composed of pre-validated storage, networking, and server technologies, FlexPod is designed to increase IT responsiveness to organizational needs and reduce the cost of computing with maximum uptime and minimal risk. Simplifying the delivery of data center platforms gives enterprises an advantage in delivering new services and applications.

FlexPod provides the following differentiators:

· Flexible design with a broad range of reference architectures and validated designs.

· Elimination of costly, disruptive downtime through Cisco UCS and NetApp ONTAP.

· A pre-validated platform that minimizes business disruption, improves IT agility, and reduces deployment time from months to weeks.

· Cisco Validated Designs (CVDs) and NetApp Validated Architectures (NVAs) covering a variety of use cases.

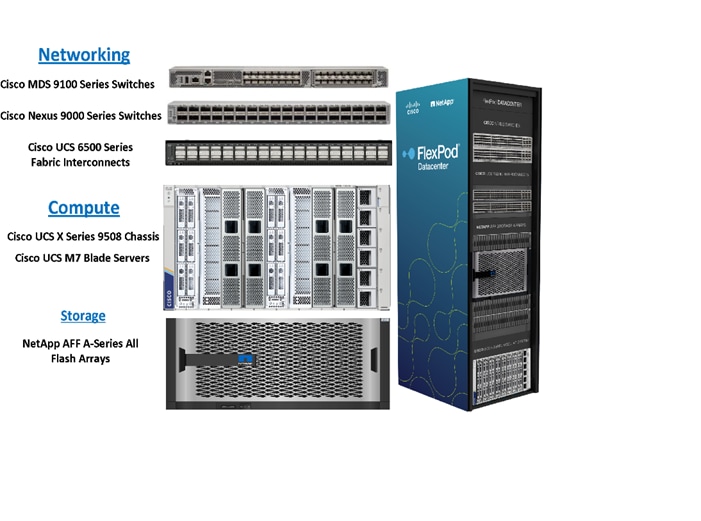

Key Elements of a Datacenter FlexPod Solution

Cisco and NetApp have carefully validated and verified the FlexPod solution architecture and its many use cases while creating a portfolio of detailed documentation, information, and references to assist customers in transforming their data centers to this shared infrastructure model.

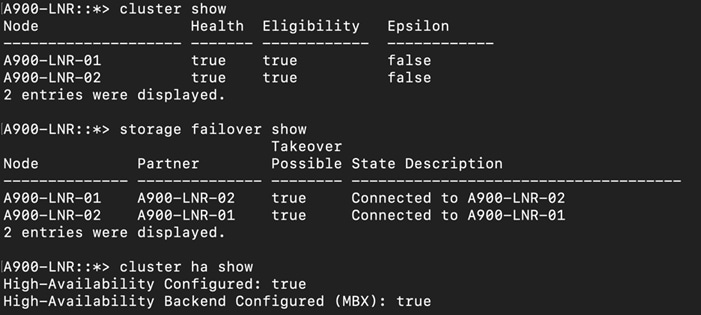

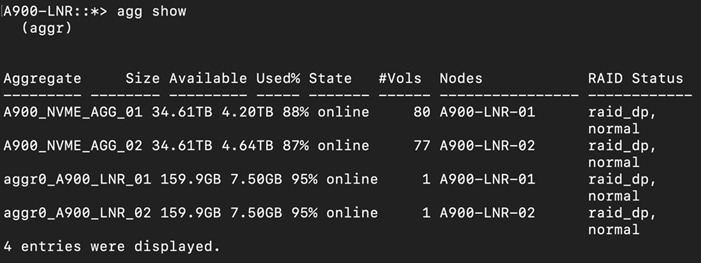

This reference FlexPod Datacenter architecture is built using the following infrastructure components for compute, network, and storage:

· Compute – Cisco UCS X-Series Chassis with Cisco UCS X410c M7 Blade Servers

· Network – Cisco UCS Fabric Interconnects, Cisco Nexus switches and Cisco MDS switches

· Storage – NetApp AFF All Flash Storage systems

All FlexPod components have been integrated so you can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the ground up. One of the main benefits of FlexPod is its ability to maintain consistency at scale. Each of the component families (Cisco UCS, Cisco FI, Cisco Nexus, Cisco MDS, and NetApp controllers) shown in the figure above offers platform and resource options to scale up or scale out the infrastructure while supporting the same features. The design is flexible enough that the networking, computing, and storage can fit in one data center rack or be deployed according to a customer's data center design. The reference architecture reinforces the "wire-once" strategy: as additional storage is added to the architecture, no re-cabling is required from the hosts to the Cisco UCS fabric interconnect.

This FlexPod Datacenter solution for deploying Oracle RAC 21c Databases is built using the following hardware components:

· Fifth-generation Cisco UCS 6536 Fabric Interconnects supporting 10/25/40/100GbE, together with the Cisco Intersight platform to deploy, maintain, and support Cisco UCS and FlexPod components.

· Two Cisco UCS X9508 Chassis with each chassis having two Cisco UCSX-I-9108-100G Intelligent Fabric Modules to deploy end to end 100GE connectivity.

· A total of four Cisco UCS X410c M7 Compute Nodes (two nodes per chassis), each with one Cisco UCS Virtual Interface Card (VIC) 15231.

· High-speed Cisco NX-OS-based Cisco Nexus C9336C-FX2 switching design to support up to 100GE connectivity and Cisco MDS 9132T Fibre Channel Switches for Storage Networking

· NetApp AFF A900 end-to-end NVMe storage with 100GE/32GFC connectivity.

Cisco UCS can be configured in one of two modes: UCS Manager (UCSM) or Intersight Managed Mode (IMM). This reference solution was deployed using IMM. The best practices and setup recommendations are described later in this document.

Note: In this validated and deployed solution, the Cisco UCS X-Series is only supported in IMM mode.

This solution provides an end-to-end architecture with Cisco UCS and NetApp technologies to demonstrate the benefits for running Oracle RAC Database 21c environment with superior performance, scalability and high availability using NVMe over Fibre Channel (NVMe/FC).

Non-Volatile Memory Express (NVMe) is an optimized, high-performance, scalable interface designed to work with current and next-generation NVM technologies. The NVMe interface is defined to enable host software to communicate with nonvolatile memory over PCI Express (PCIe). It was designed from the ground up for low-latency solid-state media, eliminating many of the bottlenecks seen in legacy protocols for running enterprise applications. NVMe devices are connected to the PCIe bus inside a server. NVMe over Fabrics (NVMe-oF) extends the high-performance and low-latency benefits of NVMe across the network fabrics that connect servers and storage. NVMe-oF keeps the lightweight, streamlined NVMe command set and its more efficient queueing model, and replaces the PCIe transport with alternate transports such as Fibre Channel, RDMA over Converged Ethernet (RoCE v2), and TCP.

NVMe over Fibre Channel (NVMe/FC) is implemented through the Fibre Channel NVMe (FC-NVMe) standard, which is designed to enable NVMe-based message commands to transfer data and status information between a host computer and a target storage subsystem over a Fibre Channel network fabric. FC-NVMe maps the NVMe command set onto basic FCP instructions. Because Fibre Channel is designed for storage traffic, functionality such as discovery, management, and end-to-end qualification of equipment is built into the system.
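On a Linux host, you can check which NVMe controllers are reached over Fibre Channel rather than PCIe. The kernel NVMe driver exposes a `transport` attribute for each controller under `/sys/class/nvme`, and reading it is enough. The sketch below assumes that sysfs layout, with `transport` and `subsysnqn` files in each `nvmeN` directory. The function names are illustrative and are not part of any Cisco or NetApp tooling.

```python
"""List NVMe controllers and their transport by reading Linux sysfs.

Assumes the /sys/class/nvme/nvmeN/{transport,subsysnqn} attributes exposed by
the Linux NVMe driver. The root path is a parameter so the functions can also
be pointed at a copy of that directory tree.
"""
from pathlib import Path


def nvme_controllers(sysfs_root: str = "/sys/class/nvme") -> dict[str, tuple[str, str]]:
    """Return {controller name: (transport, subsystem NQN)} for each nvmeN entry."""
    result: dict[str, tuple[str, str]] = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return result  # NVMe driver not loaded, or not a Linux host
    for ctrl in sorted(root.glob("nvme*")):
        transport_file = ctrl / "transport"
        nqn_file = ctrl / "subsysnqn"
        transport = transport_file.read_text().strip() if transport_file.exists() else "unknown"
        nqn = nqn_file.read_text().strip() if nqn_file.exists() else ""
        result[ctrl.name] = (transport, nqn)
    return result


def fc_controllers(sysfs_root: str = "/sys/class/nvme") -> list[str]:
    """Names of controllers whose transport is Fibre Channel ("fc")."""
    return [name for name, (transport, _nqn) in nvme_controllers(sysfs_root).items()
            if transport == "fc"]


if __name__ == "__main__":
    for name, (transport, nqn) in nvme_controllers().items():
        print(f"{name}: transport={transport} subsystem={nqn}")
```

On a RAC node in this design, the NVMe/FC namespaces presented by the AFF A900 would be expected to show `transport=fc`, while any local drives would show `pcie`.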

Most high-performance, latency-sensitive applications and workloads run on FCP today. Because NVMe/FC and traditional Fibre Channel use the same underlying transport protocol (FCP), they can use common hardware components. It is even possible to use the same switches, cables, and ONTAP target ports to communicate with both protocols at the same time. The ability to use either protocol alone, or both at the same time on the same hardware, makes transitioning from FCP to NVMe/FC simple and seamless.

Large-scale block flash-based storage environments that use Fibre Channel are the most likely to adopt NVMe over FC. FC-NVMe offers the same structure, predictability, and reliability characteristics for NVMe-oF that Fibre Channel does for SCSI. Plus, NVMe-oF traffic and traditional SCSI-based traffic can run simultaneously on the same FC fabric.

This FlexPod solution showcases the Cisco UCS system with a NetApp AFF storage array running NVMe over Fibre Channel (NVMe/FC). This combination pairs the efficiency and performance of NVMe with the benefits of a robust, all-flash, scale-out storage system: low-latency performance, comprehensive data management, built-in efficiencies, integrated data protection, multiprotocol support, and nondisruptive operations.

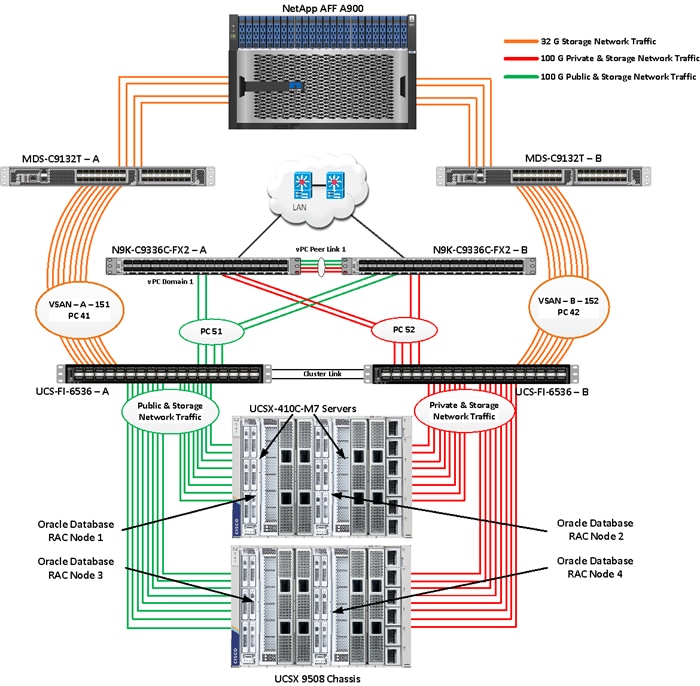

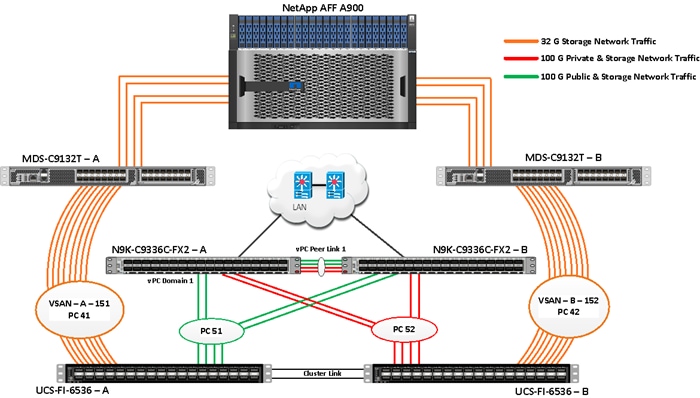

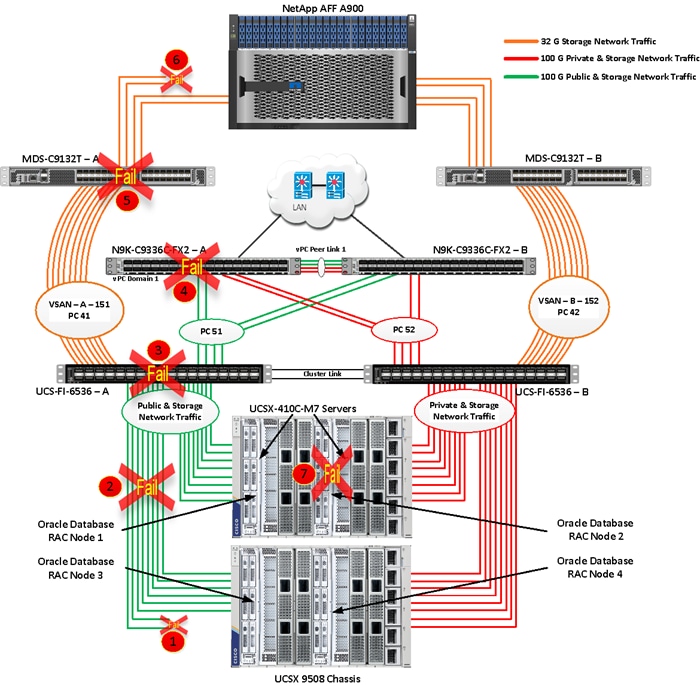

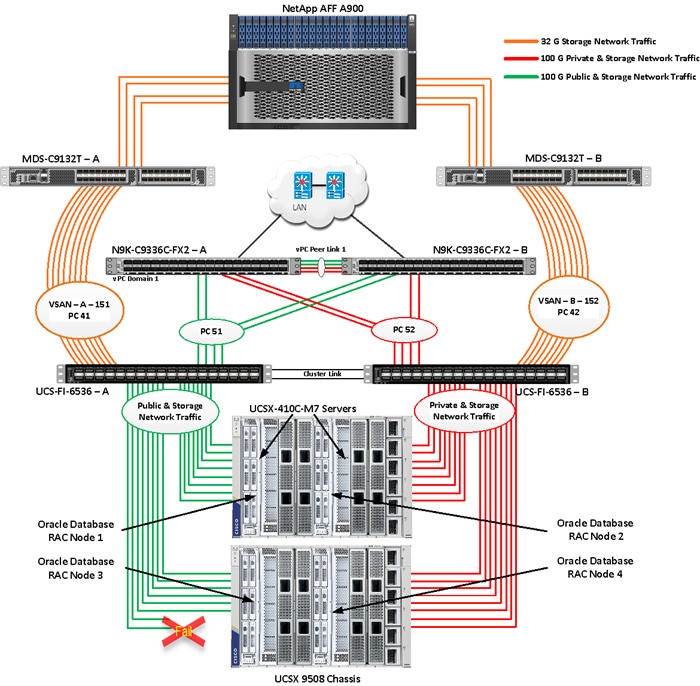

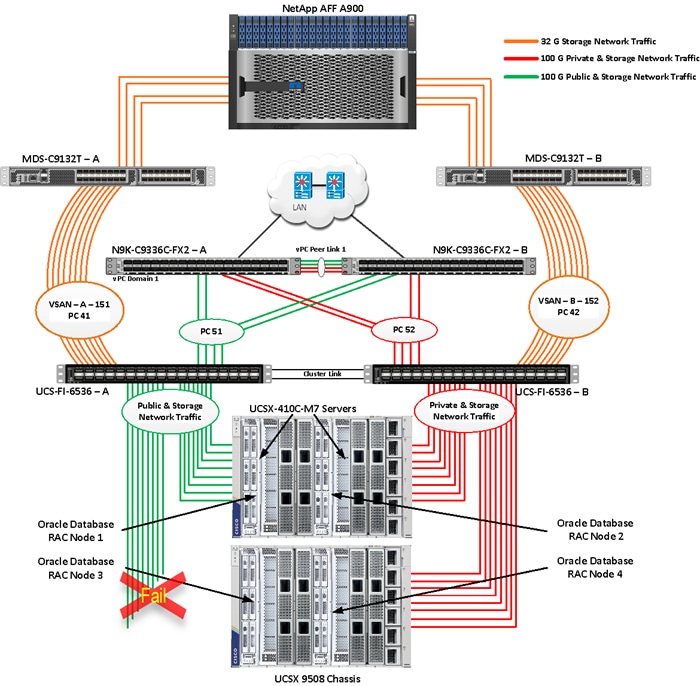

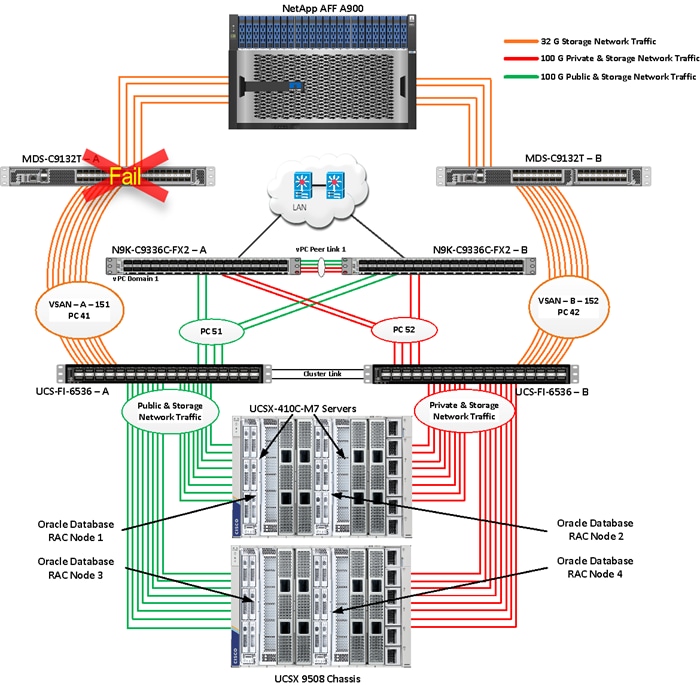

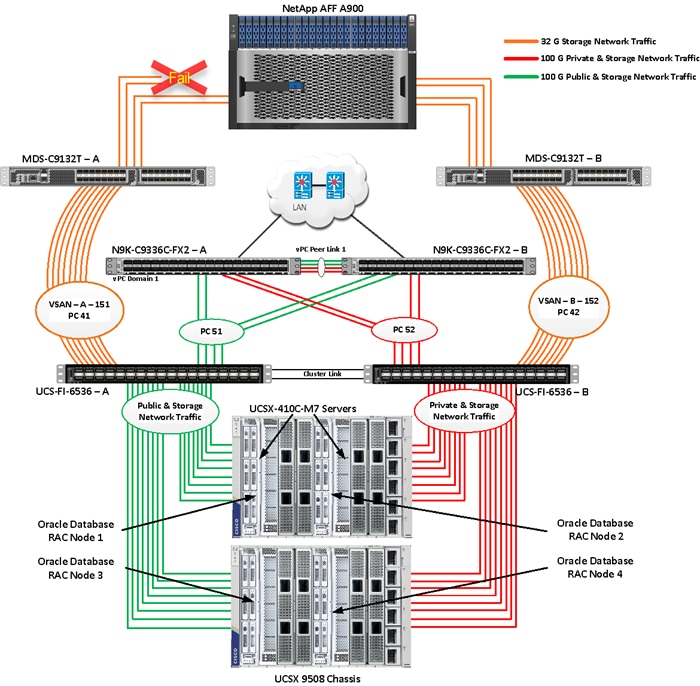

Figure 1 shows the architecture diagram of the FlexPod components used to deploy a four-node Oracle RAC 21c Database solution on NVMe/FC. This reference design is a typical network configuration that can be deployed in a customer's environment.

Figure 1. FlexPod components architecture

As shown in Figure 1, a pair of Cisco UCS 6536 Fabric Interconnects (FIs) carries both storage and network traffic from the Cisco UCS X410c M7 servers with the help of Cisco Nexus 9336C-FX2 switches and Cisco MDS 9132T switches. The Fabric Interconnects and the Cisco Nexus switches are clustered, with peer links between them, to provide high availability.

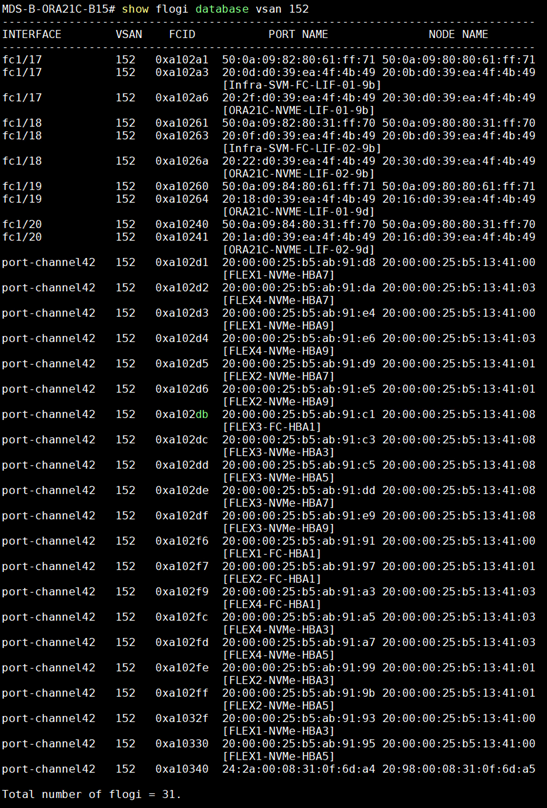

As illustrated in Figure 1, 16 links (8 x 100G links per chassis) from the two blade server chassis go to Fabric Interconnect A. Similarly, 16 links (8 x 100G links per chassis) go to Fabric Interconnect B. Fabric Interconnect A links are used for Oracle public network traffic (VLAN 134) and storage network traffic (VSAN 151), shown as green lines. Fabric Interconnect B links are used for Oracle private interconnect traffic (VLAN 10) and storage network traffic (VSAN 152), shown as red lines. Two virtual port channels (vPCs) are configured to provide public and private network traffic paths from the server blades to the northbound Nexus switches.
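The uplink counts above translate into per-fabric bandwidth with simple arithmetic. The sketch below assumes, as in this design, that each X9508 chassis has one UCSX-I-9108-100G IFM cabled to each fabric interconnect with eight 100G links. Protocol overhead is ignored, so the results are nominal link rates rather than measured throughput.

```python
"""Nominal uplink arithmetic for the two X9508 chassis in this design."""
CHASSIS = 2          # Cisco UCS X9508 chassis
LINKS_PER_IFM = 8    # 100G uplinks per UCSX-I-9108-100G IFM
LINK_GBPS = 100


def uplinks_per_fi(chassis: int = CHASSIS, links_per_ifm: int = LINKS_PER_IFM) -> int:
    # Each chassis has one IFM cabled to FI-A and another cabled to FI-B,
    # so each FI receives one IFM's worth of links from every chassis.
    return chassis * links_per_ifm


def bandwidth_per_fi_gbps(link_gbps: int = LINK_GBPS) -> int:
    return uplinks_per_fi() * link_gbps


if __name__ == "__main__":
    print(f"Uplinks per FI: {uplinks_per_fi()}")                  # 16
    print(f"Bandwidth per FI: {bandwidth_per_fi_gbps()} Gbps")    # 1600
    print(f"Both fabrics: {2 * bandwidth_per_fi_gbps()} Gbps")    # 3200
```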

FC and NVMe/FC Storage access from both Fabric Interconnects to MDS Switches and NetApp Storage Array are shown as orange lines. Eight 32Gb links are connected from FI – A to MDS – A Switch. Similarly, eight 32Gb links are connected from FI – B to MDS – B Switch. The NetApp Storage AFF A900 has eight active FC connections that go to the Cisco MDS Switches. Four FC ports are connected to MDS-A, and the other four FC ports are connected to MDS-B Switch.

SAN ports 9a and 9c on NetApp controllers CT1 and CT2 are connected to the MDS-A switch, while ports 9b and 9d on both controllers are connected to the MDS-B switch. Two FC port channels (41 and 42) are also configured to provide storage network paths from the server blades to the storage array. VSANs 151 and 152 are created on these paths for application and storage network data access.
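The cabling rule above can be expressed as a small data structure and checked mechanically: every controller should have target ports on both MDS switches. The sketch below encodes the port-to-switch mapping described above, the eight 32Gb FI-to-MDS links per fabric, and the 32 Gb/s storage port speed from Table 5. The helper functions are hypothetical and only illustrate the check.

```python
"""Check that every AFF A900 controller has FC target ports on both fabrics."""
# (controller, port) -> MDS switch, as cabled in this design.
STORAGE_PORTS = {
    ("CT1", "9a"): "MDS-A", ("CT1", "9c"): "MDS-A",
    ("CT2", "9a"): "MDS-A", ("CT2", "9c"): "MDS-A",
    ("CT1", "9b"): "MDS-B", ("CT1", "9d"): "MDS-B",
    ("CT2", "9b"): "MDS-B", ("CT2", "9d"): "MDS-B",
}
FC_LINK_GBPS = 32
FI_TO_MDS_LINKS = 8  # per fabric (FI-A to MDS-A, FI-B to MDS-B)


def fabrics_per_controller(ports: dict) -> dict[str, set[str]]:
    """Collect the set of MDS switches each controller is cabled to."""
    seen: dict[str, set[str]] = {}
    for (controller, _port), fabric in ports.items():
        seen.setdefault(controller, set()).add(fabric)
    return seen


def is_redundant(ports: dict) -> bool:
    """True if every controller reaches both MDS switches."""
    return all(f == {"MDS-A", "MDS-B"} for f in fabrics_per_controller(ports).values())


def storage_gbps_per_fabric(ports: dict, fabric: str) -> int:
    """Nominal storage-side FC bandwidth into one fabric."""
    return sum(FC_LINK_GBPS for f in ports.values() if f == fabric)


if __name__ == "__main__":
    print("Both controllers on both fabrics:", is_redundant(STORAGE_PORTS))
    print("FI-to-MDS per fabric:", FI_TO_MDS_LINKS * FC_LINK_GBPS, "Gbps")        # 256
    print("MDS-to-storage per fabric:",
          storage_gbps_per_fabric(STORAGE_PORTS, "MDS-A"), "Gbps")               # 128
```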

Note: For the Oracle RAC configuration on Cisco Unified Computing System, we recommend keeping all private interconnect network traffic local on a single Fabric interconnect. In this case, the private traffic will stay local to that fabric interconnect and will not be routed through the northbound network switch. This way all the inter server blade (or RAC node private) communications will be resolved locally at the fabric interconnects and this significantly reduces latency for Oracle Cache Fusion traffic.

Additional 1Gb management connections are needed for an out-of-band network switch that is separate from this FlexPod infrastructure. Each Cisco UCS FI, Cisco MDS switch, and Cisco Nexus switch is connected to the out-of-band network switch, and each NetApp AFF controller also has two connections to it.

Although this is the base design, each of the components can be scaled easily to support specific business requirements. For example, more servers or even blade chassis can be deployed to increase compute capacity, additional disk shelves can be deployed to improve I/O capability and throughput, and special hardware or software features can be added to introduce new capabilities. This document guides you through the detailed steps for deploying the base architecture, as shown in Figure 1. These procedures cover everything from physical cabling to network, compute, and storage device configurations.

This section describes the hardware and software components used to deploy a four-node Oracle RAC 21c Database solution on this architecture.

The inventory of the components used in this solution architecture is listed in Table 1.

Table 1. Hardware Inventory and Bill of Materials

| Name | Model/Product ID | Description | Quantity |
|---|---|---|---|
| Cisco UCS X Blade Server Chassis | UCSX-9508 | Cisco UCS X-Series Blade Server Chassis, 7RU, which can house a combination of compute nodes and a pool of future I/O resources that may include GPU accelerators, disk storage, and nonvolatile memory | 2 |
| Cisco UCS 9108 100G IFM (Intelligent Fabric Module) | UCSX-I-9108-100G | Connects the I/O fabric between the Cisco UCS X9508 Chassis and the Cisco UCS 6536 Fabric Interconnects; 800 Gb/s (8 x 100 Gb/s) port I/O module for compute nodes | 4 |
| Cisco UCS X410c M7 Compute Server | UCSX-410c-M7 | Cisco UCS X410c M7 4-socket blade server (4 x 4th Gen Intel Xeon Scalable Processors) | 4 |
| Cisco UCS VIC 15231 | UCSX-ML-V5D200G | Cisco UCS VIC 15231 2x100/200G mLOM for X-Series compute nodes | 4 |
| Cisco UCS 6536 Fabric Interconnect | UCS-FI-6536 | Cisco UCS 6536 Fabric Interconnect providing both network connectivity and management capabilities for the system | 2 |
| Cisco MDS Switch | DS-C9132T-8PMESK9 | Cisco MDS 9132T 32-Gbps 32-Port Fibre Channel Switch | 2 |
| Cisco Nexus Switch | N9K-9336C-FX2 | Cisco Nexus 9336C-FX2 Switch | 2 |
| NetApp AFF Storage | AFF A900 | NetApp AFF A-Series All Flash Array with NS224 NSM disk shelf module | 1 |

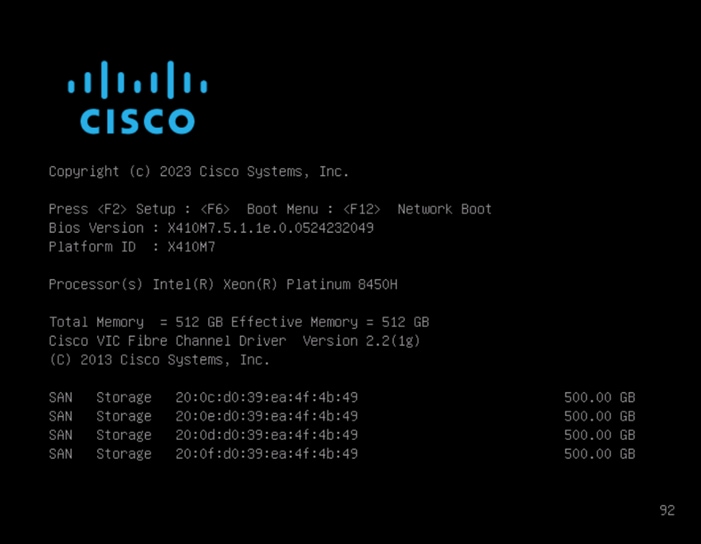

Note: In this solution design, we used four identical Cisco UCS X410c M7 Blade Servers to install the Red Hat Enterprise Linux 8.7 operating system and then deploy a four-node Oracle RAC Database. The Cisco UCS X410c M7 Server configuration is listed in Table 2.

Table 2. Cisco UCS X410c M7 Compute Server Configuration

| Component | Configuration | PID |
|---|---|---|
| Processor | 4 x Intel(R) Xeon(R) Platinum 8450H CPU @ 2GHz 250W 28C 75MB Cache (4 x 28 CPU cores = 112 cores total) | UCS-CPU-I8450H |
| Memory | 16 x Samsung 32GB DDR5-4800-MHz (512 GB) | UCS-MRX32G1RE1 |
| VIC 15231 | Cisco UCS VIC 15231 Blade Server mLOM (200G per compute node) (2x100G through each fabric) | UCSX-ML-V5D200G |
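The totals in Table 2 follow directly from the per-socket and per-DIMM figures. The sketch below recomputes them per node and for the four-node cluster. The thread count assumes Hyper-Threading with two threads per core, which Table 2 does not state.

```python
"""Per-node and cluster totals derived from Table 2."""
SOCKETS = 4
CORES_PER_SOCKET = 28       # Intel Xeon Platinum 8450H
DIMMS = 16
DIMM_GB = 32
NODES = 4                   # four-node Oracle RAC cluster
THREADS_PER_CORE = 2        # assumption: Hyper-Threading enabled


def node_totals() -> dict[str, int]:
    cores = SOCKETS * CORES_PER_SOCKET
    return {
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "memory_gb": DIMMS * DIMM_GB,
    }


def cluster_totals(nodes: int = NODES) -> dict[str, int]:
    return {key: value * nodes for key, value in node_totals().items()}


if __name__ == "__main__":
    print("Per node:", node_totals())    # {'cores': 112, 'threads': 224, 'memory_gb': 512}
    print("Cluster:", cluster_totals())  # {'cores': 448, 'threads': 896, 'memory_gb': 2048}
```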

Table 3. vNIC and vHBA Configured on each Linux Host

| vNIC Details |

|

| vNIC 0 (eth0) |

Management and Public Network Traffic Interface for Oracle RAC. MTU = 1500 |

| vNIC 1 (eth1) |

Private Server-to-Server Network (Cache Fusion) Traffic Interface for Oracle RAC. MTU = 9000 |

| vHBA0 |

FC Network Traffic & Boot from SAN through MDS-A Switch |

| vHBA1 |

FC Network Traffic & Boot from SAN through MDS-B Switch |

| vHBA2 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-A Switch |

| vHBA3 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-B Switch |

| vHBA4 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-A Switch |

| vHBA5 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-B Switch |

| vHBA6 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-A Switch |

| vHBA7 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-B Switch |

| vHBA8 |

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-A Switch |

| vHBA9 |

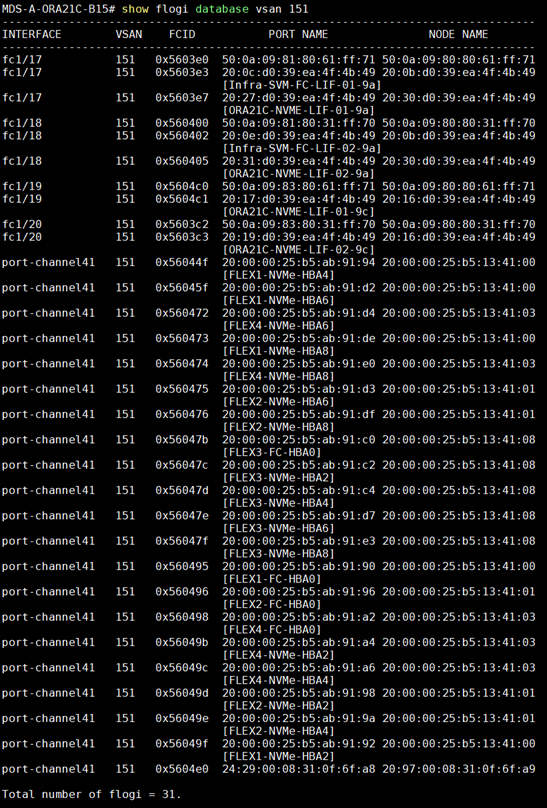

NVMe/FC Network Traffic (Oracle RAC Storage Traffic) through MDS-B Switch |
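Table 3 follows a regular pattern. Even-numbered vHBAs use MDS-A and odd-numbered vHBAs use MDS-B. The first pair carries FC and boot from SAN, and the remaining eight carry NVMe/FC. The sketch below regenerates the vHBA rows from that rule and adds the VSAN for each fabric from Table 4 (151 for Fabric A, 152 for Fabric B). It is a hypothetical planning aid, not an Intersight policy export.

```python
"""Regenerate the vHBA layout of Table 3 from one rule."""
from typing import NamedTuple


class VHBA(NamedTuple):
    name: str
    fabric: str
    switch: str
    vsan: int
    purpose: str


def vhba_layout(count: int = 10) -> list[VHBA]:
    layout = []
    for i in range(count):
        on_a = i % 2 == 0  # even index -> Fabric A / MDS-A, odd -> Fabric B / MDS-B
        layout.append(VHBA(
            name=f"vHBA{i}",
            fabric="A" if on_a else "B",
            switch="MDS-A" if on_a else "MDS-B",
            vsan=151 if on_a else 152,
            purpose="FC + boot from SAN" if i < 2 else "NVMe/FC (Oracle RAC storage)",
        ))
    return layout


if __name__ == "__main__":
    for v in vhba_layout():
        print(f"{v.name:7} fabric {v.fabric}  {v.switch}  VSAN {v.vsan}  {v.purpose}")
```

Generating the layout from a rule makes an asymmetric change easy to spot, for example adding an NVMe/FC vHBA on one fabric only.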

Note: For this solution, we configured two VLANs to carry public and private network traffic, as well as two VSANs to carry FC and NVMe/FC storage traffic, as listed in Table 4.

Table 4. VLAN and VSAN Configuration

| VLAN Name | VLAN ID | Description |
|---|---|---|
| Default VLAN | 1 | Native VLAN |
| Public VLAN | 134 | VLAN for Public Network Traffic |
| Private VLAN | 10 | VLAN for Private Network Traffic |

| VSAN Name | VSAN ID | Description |
|---|---|---|
| VSAN-A | 151 | FC and NVMe/FC Network Traffic through Fabric Interconnect A |
| VSAN-B | 152 | FC and NVMe/FC Network Traffic through Fabric Interconnect B |

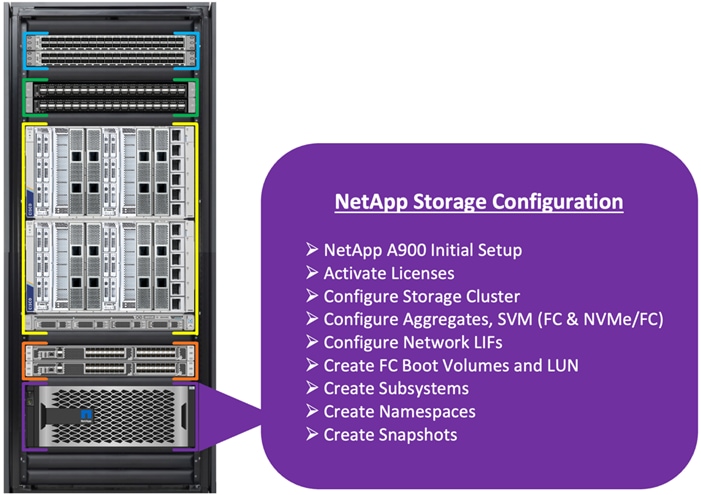

This FlexPod solution uses the NetApp All Flash AFF A900 storage configuration listed in Table 5.

Table 5. NetApp AFF A900 Storage Configuration

| Storage Components | Description |
|---|---|
| AFF A900 Flash Array | NetApp All Flash AFF A900 Storage Array (24 x 3.49 TB NVMe SSD Drives) |
| NSM 100 Disk Shelf | NetApp Disk Shelf NS224NSM100 Expansion Storage Shelf, 24 x 3.84 TB NVMe SSD Drives (X4011WBORA3T8NTF) |
| Capacity | 72.9 TB |
| Connectivity | 8 x 32 Gb/s redundant FC and NVMe/FC; 1 Gb/s redundant Ethernet (management port) |
| Physical | 10 Rack Units |

Table 6. Software and Firmware Revisions

| Software and Firmware | Version |
|---|---|
| Cisco UCS FI 6536 | Bundle Version 4.2(3e) or NX-OS Version 9.3(5)I42(3d); Image Name: intersight-ucs-infra-5gfi.4.2.3e.bin |
| Cisco UCS X410c M7 Server | 5.2(0.230041); Image Name: intersight-ucs-server-410c-m7.5.2.0.230041.bin |
| Cisco UCS Adapter VIC 15231 | 5.3(2.32) |
| Cisco eNIC (Cisco VIC Ethernet NIC Driver) (modinfo enic) | 4.5.0.7-939.23 (kmod-enic-4.5.0.7-939.23.rhel8u7_4.18.0_425.3.1.x86_64) |
| Cisco fNIC (Cisco VIC FC HBA Driver) (modinfo fnic) | 2.0.0.90-252.0 (kmod-fnic-2.0.0.90-252.0.rhel8u7.x86_64) |
| Red Hat Enterprise Linux Server | Red Hat Enterprise Linux release 8.7 (Kernel 4.18.0-425.3.1.el8.x86_64) |
| Oracle Database 21c Grid Infrastructure for Linux x86-64 | 21.3.0.0.0 |
| Oracle Database 21c Enterprise Edition for Linux x86-64 | 21.3.0.0.0 |
| Cisco Nexus 9336C-FX2 NX-OS | NX-OS System Version 9.3(3) & BIOS Version 05.40 |
| Cisco MDS 9132T Software | System Version 9.3(2) & BIOS Version 1.43.0 |
| NetApp Storage AFF A900 | ONTAP 9.12.1P4 |
| NetApp NS224NSM100 Disk Shelf | NSM100:0210 |
| FIO | fio-3.19-3.el8.x86_64 |
| Oracle Swingbench | 2.7 |
| SLOB | 2.5.4.0 |
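To compare a RAC node against the kernel and VIC driver versions in Table 6, the standard Linux `uname -r` value and the `modinfo -F version <module>` query are enough. In Python, `uname -r` is available as `platform.release()`. The sketch below hard-codes the expected values from Table 6 and reports any mismatch. It assumes the `enic` and `fnic` modules report the versions shown in the table through their `version` field; run it on each node and review the output.

```python
"""Compare a RAC node's kernel and Cisco VIC driver versions with Table 6."""
import platform
import subprocess
from typing import Optional

# Expected values copied from Table 6.
EXPECTED = {
    "kernel": "4.18.0-425.3.1.el8.x86_64",
    "enic": "4.5.0.7-939.23",
    "fnic": "2.0.0.90-252.0",
}


def module_version(module: str) -> Optional[str]:
    """Return the `version` field reported by modinfo, or None if unavailable."""
    try:
        result = subprocess.run(
            ["modinfo", "-F", "version", module],
            capture_output=True, text=True, check=True,
        )
    except (OSError, subprocess.CalledProcessError):
        return None  # modinfo missing, or module not found
    return result.stdout.strip() or None


def mismatches(installed: dict, expected: dict = EXPECTED) -> dict:
    """Return {component: (installed, expected)} for every differing component."""
    return {name: (installed.get(name), want)
            for name, want in expected.items()
            if installed.get(name) != want}


if __name__ == "__main__":
    found = {
        "kernel": platform.release(),  # same value as `uname -r` on Linux
        "enic": module_version("enic"),
        "fnic": module_version("fnic"),
    }
    problems = mismatches(found)
    for name, (have, want) in problems.items():
        print(f"{name}: installed={have} expected={want}")
    if not problems:
        print("All checked versions match Table 6.")
```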

Solution Configuration

This chapter contains the following:

· Cisco Nexus Switch Configuration

· Cisco UCS X-Series Configuration – Intersight Managed Mode (IMM)

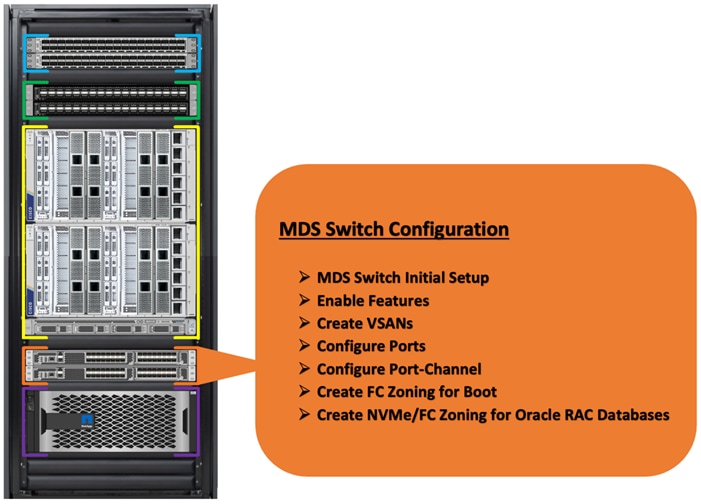

· Cisco MDS Switch Configuration

· NetApp AFF A900 Storage Configuration

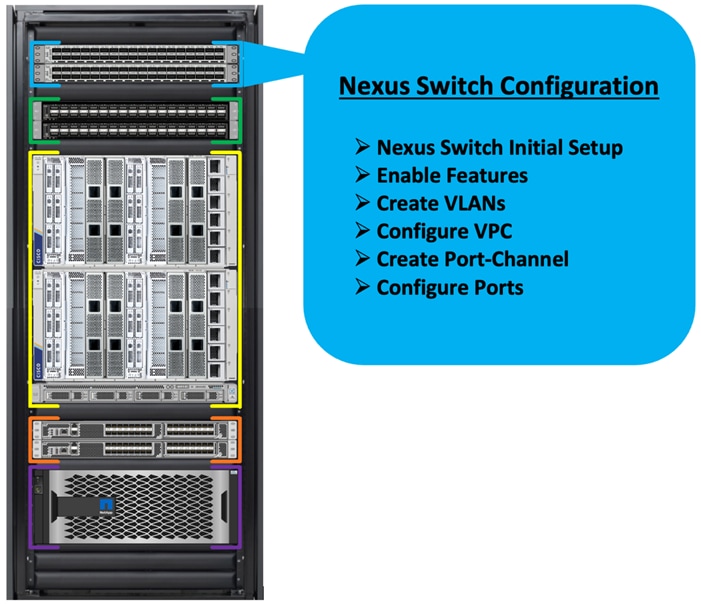

Cisco Nexus Switch Configuration

This section details the high-level steps to configure Cisco Nexus Switches.

Figure 2 illustrates the high-level overview and steps to configure various components to deploy and test the Oracle RAC Database 21c for this FlexPod reference architecture.

Figure 2. Cisco Nexus Switch configuration architecture

The following procedures describe how to configure the Cisco Nexus switches to use in a base FlexPod environment. This procedure assumes you’re using Cisco Nexus 9336C-FX2 switches deployed with the 100Gb end-to-end topology.

Note: On initial boot and connection to the serial or console port of the switch, the NX-OS setup should automatically start and attempt to enter Power on Auto Provisioning.

Procedure 1. Initial Setup for the Cisco Nexus A Switch

Step 1. To set up the initial configuration for the Cisco Nexus A Switch on <nexus-A-hostname>, run the following:

Abort Power on Auto Provisioning and continue with normal setup? (yes/no) [n]: yes

Do you want to enforce secure password standard (yes/no) [y]: Enter

Enter the password for "admin": <password>

Confirm the password for "admin": <password>

Would you like to enter the basic configuration dialog (yes/no): yes

Create another login account (yes/no) [n]: Enter

Configure read-only SNMP community string (yes/no) [n]: Enter

Configure read-write SNMP community string (yes/no) [n]: Enter

Enter the switch name: <nexus-A-hostname>

Continue with Out-of-band (mgmt0) management configuration? (yes/no) [y]: Enter

Mgmt0 IPv4 address: <nexus-A-mgmt0-ip>

Mgmt0 IPv4 netmask: <nexus-A-mgmt0-netmask>

Configure the default gateway? (yes/no) [y]: Enter

IPv4 address of the default gateway: <nexus-A-mgmt0-gw>

Configure advanced IP options? (yes/no) [n]: Enter

Enable the telnet service? (yes/no) [n]: Enter

Enable the ssh service? (yes/no) [y]: Enter

Type of ssh key you would like to generate (dsa/rsa) [rsa]: Enter

Number of rsa key bits <1024-2048> [1024]: Enter

Configure the ntp server? (yes/no) [n]: y

NTP server IPv4 address: <global-ntp-server-ip>

Configure default interface layer (L3/L2) [L3]: L2

Configure default switchport interface state (shut/noshut) [noshut]: Enter

Configure CoPP system profile (strict/moderate/lenient/dense/skip) [strict]: Enter

Would you like to edit the configuration? (yes/no) [n]: Enter

Cisco Nexus B Switch

Similarly, follow the steps in the procedure Initial Setup for the Cisco Nexus A Switch to set up the initial configuration for the Cisco Nexus B Switch, changing the switch hostname and management IP address to match your environment.

Procedure 1. Configure Global Settings

Configure the global settings on both Cisco Nexus switches.

Step 1. Log in as the admin user to Cisco Nexus Switch A and run the following commands to set the global configuration on switch A:

configure terminal

feature interface-vlan

feature hsrp

feature lacp

feature vpc

feature lldp

spanning-tree port type network default

spanning-tree port type edge bpduguard default

port-channel load-balance src-dst l4port

policy-map type network-qos jumbo

class type network-qos class-default

mtu 9216

system qos

service-policy type network-qos jumbo

vrf context management

ip route 0.0.0.0/0 10.29.134.1

copy run start

Step 2. Log in as the admin user to Cisco Nexus Switch B and run the same commands to set the global configuration on switch B.

Note: Make sure to run copy run start to save the configuration on each switch after the configuration is completed.

Procedure 2. VLANs Configuration

Create the necessary virtual local area networks (VLANs) on both Cisco Nexus switches.

Step 1. Log in as the admin user to Cisco Nexus Switch A.

Step 2. Create VLAN 134 for public network traffic and VLAN 10 for private network traffic, and configure the uplink to the management switch. Storage traffic is carried over the FC and NVMe/FC VSANs rather than Ethernet VLANs.

configure terminal

vlan 134

name Oracle_RAC_Public_Traffic

no shutdown

vlan 10

name Oracle_RAC_Private_Traffic

no shutdown

interface Ethernet 1/29

description To-Management-Uplink-Switch

switchport access vlan 134

speed 1000

copy run start

Step 3. Log in as the admin user to Cisco Nexus Switch B and, in the same way, create VLAN 134 for Oracle RAC public network traffic and VLAN 10 for Oracle RAC private network traffic.

Note: Make sure to run copy run start to save the configuration on each switch after the configuration is completed.
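Both Nexus switches must carry identical VLAN definitions, so one way to keep them consistent is to generate the configuration from a single definition. The sketch below is a hypothetical helper that renders the VLAN stanzas from Procedure 2. It also derives the allowed-VLAN list used on the vPC peer link, which is native VLAN 1 plus the RAC VLANs. Sub-commands are indented for readability only. The switch-specific uplink on Ethernet 1/29 is left out.

```python
"""Render the Procedure 2 VLAN stanzas from one definition (hypothetical helper)."""
VLANS = {
    134: "Oracle_RAC_Public_Traffic",
    10: "Oracle_RAC_Private_Traffic",
}
NATIVE_VLAN = 1


def render_vlans(vlans: dict[int, str]) -> str:
    """Return NX-OS VLAN configuration lines, one stanza per VLAN."""
    lines = ["configure terminal"]
    for vlan_id, name in vlans.items():
        lines += [f"vlan {vlan_id}", f"  name {name}", "  no shutdown"]
    lines.append("copy run start")
    return "\n".join(lines)


def allowed_vlans(vlans: dict[int, str]) -> str:
    """Comma-separated trunk allowed list: native VLAN plus every RAC VLAN."""
    return ",".join(str(v) for v in sorted({NATIVE_VLAN, *vlans}))


if __name__ == "__main__":
    print(render_vlans(VLANS))
    print("Peer-link allowed VLANs:", allowed_vlans(VLANS))  # 1,10,134
```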

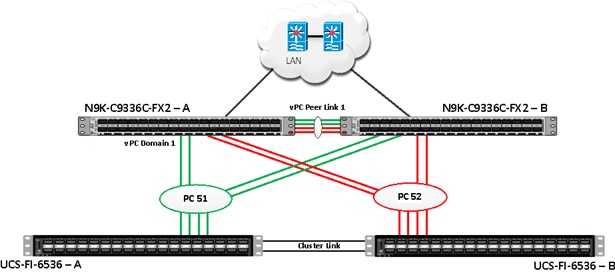

Virtual Port Channel (vPC) Summary for Network Traffic

A port channel bundles individual links into a channel group to create a single logical link that provides the aggregate bandwidth of up to eight physical links. If a member port within a port channel fails, traffic previously carried over the failed link switches to the remaining member ports within the port channel. Port channeling also load balances traffic across these physical interfaces. The port channel stays operational as long as at least one physical interface within the port channel is operational. Using port channels, Cisco NX-OS provides wider bandwidth, redundancy, and load balancing across the channels.
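The toy model below illustrates two of the port-channel properties described above. A given flow always hashes to one member link, and when a member fails, flows are redistributed across the surviving links. It is not the NX-OS hashing algorithm. This design selects `port-channel load-balance src-dst l4port`, and that hardware hash is not reproduced here. SHA-256 over the source and destination L4 ports stands in for it, and port 1521 is only an example destination.

```python
"""Toy model of flow-based load balancing across port-channel members."""
import hashlib


def member_for_flow(src_port: int, dst_port: int, members: list[str]) -> str:
    """Pick the member link for a flow from its L4 source/destination ports."""
    if not members:
        raise ValueError("port channel has no operational members")
    key = f"{src_port}-{dst_port}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return members[digest % len(members)]


if __name__ == "__main__":
    links = ["Eth1/1", "Eth1/2", "Eth1/3", "Eth1/4"]
    # Example flows: eight client ports talking to one (assumed) listener port.
    flows = [(40000 + i, 1521) for i in range(8)]
    before = {f: member_for_flow(*f, links) for f in flows}
    survivors = [link for link in links if link != "Eth1/2"]  # simulate a failed member
    after = {f: member_for_flow(*f, survivors) for f in flows}
    for f in flows:
        print(f, before[f], "->", after[f])
```

Because the link is chosen per flow, a single flow never exceeds the bandwidth of one member link. The aggregate bandwidth of the port channel is reached only across many flows.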

In the Cisco Nexus switch topology, the vPC feature is enabled to provide high availability (HA), faster convergence in the event of a failure, and greater throughput. The Cisco Nexus vPC configuration for the Oracle Database servers, with the vPC domain and the corresponding vPC names and IDs, is listed in Table 7.

Table 7. vPC Summary

| vPC Domain | vPC Name | vPC ID |
|---|---|---|
| 1 | Peer-Link | 1 |
| 1 | vPC FI-A | 51 |
| 1 | vPC FI-B | 52 |

As listed in Table 7, a single vPC domain with domain ID 1 is created across the two Nexus switches to define the vPC members that carry specific VLAN network traffic. In this topology, we defined a total of three vPCs.

vPC ID 1 is defined as Peer link communication between the two Cisco Nexus switches. vPC IDs 51 and 52 are configured for both Cisco UCS Fabric Interconnects.

Note: A port channel bundles up to eight individual interfaces into a group to provide increased bandwidth and redundancy.

Procedure 3. Create vPC Peer-Link

Note: For vPC 1 (the peer link), we used interfaces Ethernet 1/1 to 1/4. You may choose an appropriate number of ports based on your needs.

Create the necessary port channels between devices on both Cisco Nexus Switches.

Step 1. Log in as the admin user to Cisco Nexus Switch A:

```
configure terminal
vpc domain 1
  peer-keepalive destination 10.29.134.44 source 10.29.134.43
  auto-recovery
interface port-channel 1
  description vPC peer-link
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type network
  vpc peer-link
  no shut
interface Ethernet 1/1
  description Peer link connected to ORA21C-N9K-B-Eth1/1
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
interface Ethernet 1/2
  description Peer link connected to ORA21C-N9K-B-Eth1/2
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
interface Ethernet 1/3
  description Peer link connected to ORA21C-N9K-B-Eth1/3
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
interface Ethernet 1/4
  description Peer link connected to ORA21C-N9K-B-Eth1/4
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
end
copy run start
```

Step 2. Log in to Cisco Nexus Switch B as the admin user and repeat step 1 to configure the second Cisco Nexus switch.

Note: Make sure to change the interface descriptions and to swap the peer-keepalive destination and source IP addresses.

Step 3. To configure the vPC on the other Cisco Nexus switch, log in to Cisco Nexus Switch B as the admin user and run the following commands:

```
configure terminal
vpc domain 1
  peer-keepalive destination 10.29.134.43 source 10.29.134.44
  auto-recovery
interface port-channel 1
  description vPC peer-link
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type network
  vpc peer-link
  no shut
interface Ethernet 1/1
  description Peer link connected to ORA21C-N9K-A-Eth1/1
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
interface Ethernet 1/2
  description Peer link connected to ORA21C-N9K-A-Eth1/2
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
interface Ethernet 1/3
  description Peer link connected to ORA21C-N9K-A-Eth1/3
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
interface Ethernet 1/4
  description Peer link connected to ORA21C-N9K-A-Eth1/4
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  channel-group 1 mode active
  no shut
end
copy run start
```
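The two peer-link configurations differ only in two places: the swapped peer-keepalive addresses and the peer switch named in each interface description. Because of that, both can be rendered from one template.

The following Python sketch is a hypothetical helper, not a Cisco tool. It assumes the values used in this solution: vPC domain 1, keepalive addresses 10.29.134.43 and 10.29.134.44, member ports Ethernet 1/1 to 1/4, and VLANs 1,10,134. It only produces NX-OS command text for review before you enter it on a switch.

```python
# Hypothetical helper: render the vPC domain and peer-link commands for one
# Cisco Nexus switch. Defaults mirror the values used in this solution.

def peer_link_config(local_ip: str, peer_ip: str, peer_name: str,
                     members=(1, 2, 3, 4), vlans: str = "1,10,134") -> str:
    """Return NX-OS commands for vPC domain 1 and peer-link port-channel 1."""
    lines = [
        "configure terminal",
        "vpc domain 1",
        # The keepalive destination is always the *peer* switch address.
        f"  peer-keepalive destination {peer_ip} source {local_ip}",
        "  auto-recovery",
        "interface port-channel 1",
        "  description vPC peer-link",
        "  switchport mode trunk",
        f"  switchport trunk allowed vlan {vlans}",
        "  spanning-tree port type network",
        "  vpc peer-link",
        "  no shutdown",
    ]
    for port in members:
        lines += [
            f"interface Ethernet1/{port}",
            f"  description Peer link connected to {peer_name}-Eth1/{port}",
            "  switchport mode trunk",
            f"  switchport trunk allowed vlan {vlans}",
            "  channel-group 1 mode active",
            "  no shutdown",
        ]
    # Leave configuration mode before saving the running configuration.
    lines += ["end", "copy running-config startup-config"]
    return "\n".join(lines)


# Switch A and switch B differ only in swapped addresses and the peer name.
config_a = peer_link_config("10.29.134.43", "10.29.134.44", "ORA21C-N9K-B")
config_b = peer_link_config("10.29.134.44", "10.29.134.43", "ORA21C-N9K-A")
print(config_b)
```

Generating both switches from one function keeps the allowed VLAN list and the member ports identical on both peers. vPC expects the two peers to be configured consistently.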

Create vPC Configuration between Cisco Nexus and Fabric Interconnect Switches

This section describes how to create and configure port channels 51 and 52 for network traffic between the Cisco Nexus switches and the Fabric Interconnects.

Table 8 lists the vPC IDs, allowed VLAN IDs, and Ethernet uplink ports.

| vPC Description | vPC ID | Fabric Interconnect Ports | Cisco Nexus Switch Ports | Allowed VLANs |
|---|---|---|---|---|
| Port Channel FI-A | 51 | FI-A Port 1/27 | N9K-A Port 1/9 | 10,134 (Note: VLAN 10 is needed for failover.) |
| | | FI-A Port 1/28 | N9K-A Port 1/10 | |
| | | FI-A Port 1/29 | N9K-B Port 1/9 | |
| | | FI-A Port 1/30 | N9K-B Port 1/10 | |
| Port Channel FI-B | 52 | FI-B Port 1/27 | N9K-A Port 1/11 | 10,134 (Note: VLAN 134 is needed for failover.) |
| | | FI-B Port 1/28 | N9K-A Port 1/12 | |
| | | FI-B Port 1/29 | N9K-B Port 1/11 | |
| | | FI-B Port 1/30 | N9K-B Port 1/12 | |
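The cabling in Table 8 can be checked mechanically before any configuration is pushed. This Python sketch is a hypothetical helper that encodes Table 8 and verifies two properties of this design:

- No Cisco Nexus port is used twice.
- Each FI port channel lands on both Cisco Nexus switches, with the same number of members on each.

The even split reflects this design; it is not an NX-OS requirement.

```python
from collections import Counter

# Table 8 cabling: (vPC ID, FI port, Nexus switch, Nexus port)
CABLING = [
    (51, "FI-A 1/27", "N9K-A", "1/9"),
    (51, "FI-A 1/28", "N9K-A", "1/10"),
    (51, "FI-A 1/29", "N9K-B", "1/9"),
    (51, "FI-A 1/30", "N9K-B", "1/10"),
    (52, "FI-B 1/27", "N9K-A", "1/11"),
    (52, "FI-B 1/28", "N9K-A", "1/12"),
    (52, "FI-B 1/29", "N9K-B", "1/11"),
    (52, "FI-B 1/30", "N9K-B", "1/12"),
]


def check_cabling(cabling):
    """Return a list of problems found in the cabling plan (empty if OK)."""
    problems = []
    # Each Nexus port may terminate only one cable.
    nexus_ports = Counter((sw, port) for _, _, sw, port in cabling)
    for (sw, port), n in nexus_ports.items():
        if n > 1:
            problems.append(f"{sw} {port} used {n} times")
    # Each vPC must span both Nexus switches, split evenly in this design.
    by_vpc = {}
    for vpc, _, sw, _ in cabling:
        by_vpc.setdefault(vpc, Counter())[sw] += 1
    for vpc, per_switch in by_vpc.items():
        if set(per_switch) != {"N9K-A", "N9K-B"}:
            problems.append(f"vPC {vpc} does not span both switches")
        elif len(set(per_switch.values())) != 1:
            problems.append(f"vPC {vpc} is unbalanced: {dict(per_switch)}")
    return problems


print(check_cabling(CABLING) or "cabling matches the vPC design")
```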

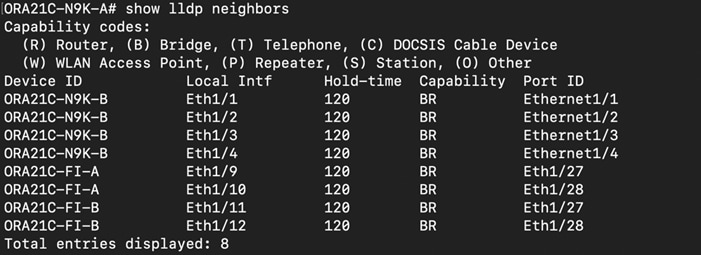

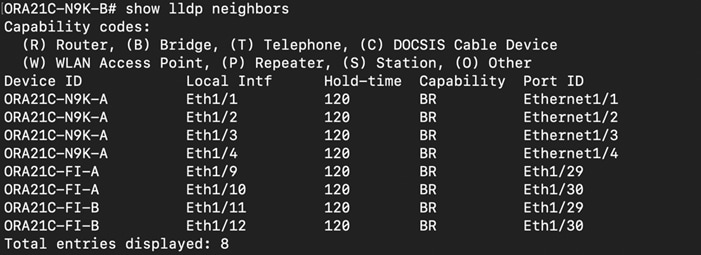

Verify the port connectivity on both Cisco Nexus Switches

Figure 3. Cisco Nexus A Connectivity

Figure 4. Cisco Nexus B Connectivity

Procedure 1. Configure the port channels on the Cisco Nexus Switches

Step 1. Log in to Cisco Nexus Switch A as the admin user and run the following commands:

```
configure terminal
interface port-channel 51
  description connect to ORA21C-FI-A
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  vpc 51
  no shutdown
interface port-channel 52
  description connect to ORA21C-FI-B
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  vpc 52
  no shutdown
interface Ethernet 1/9
  description Fabric-Interconnect-A-27
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 51 mode active
  no shutdown
interface Ethernet 1/10
  description Fabric-Interconnect-A-28
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 51 mode active
  no shutdown
interface Ethernet 1/11
  description Fabric-Interconnect-B-27
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 52 mode active
  no shutdown
interface Ethernet 1/12
  description Fabric-Interconnect-B-28
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 52 mode active
  no shutdown
end
copy run start
```

Step 2. Log in to Cisco Nexus Switch B as the admin user and run the following commands to configure the second Cisco Nexus switch:

```
configure terminal
interface port-channel 51
  description connect to ORA21C-FI-A
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  vpc 51
  no shutdown
interface port-channel 52
  description connect to ORA21C-FI-B
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  vpc 52
  no shutdown
interface Ethernet 1/9
  description Fabric-Interconnect-A-29
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 51 mode active
  no shutdown
interface Ethernet 1/10
  description Fabric-Interconnect-A-30
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 51 mode active
  no shutdown
interface Ethernet 1/11
  description Fabric-Interconnect-B-29
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 52 mode active
  no shutdown
interface Ethernet 1/12
  description Fabric-Interconnect-B-30
  switchport mode trunk
  switchport trunk allowed vlan 1,10,134
  spanning-tree port type edge trunk
  mtu 9216
  channel-group 52 mode active
  no shutdown
end
copy run start
```

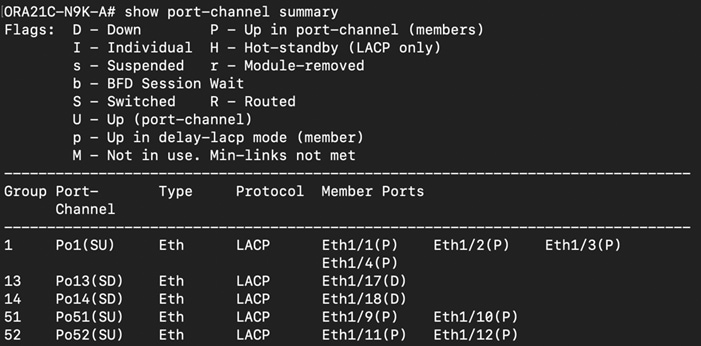

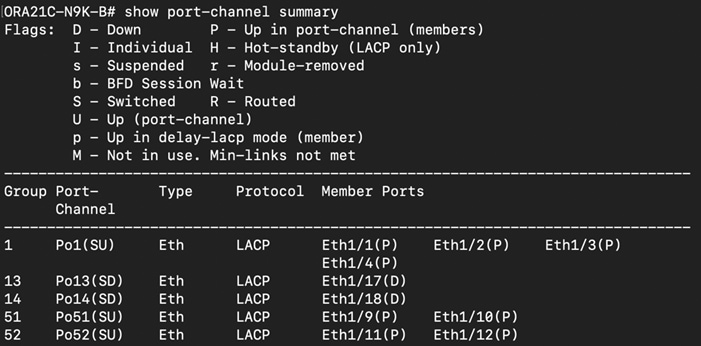

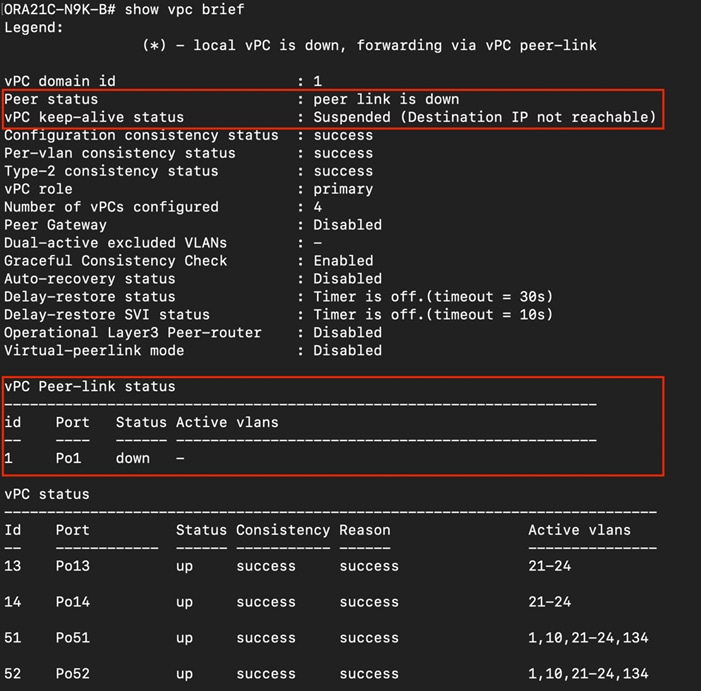

Verify All vPC Status

Procedure 1. Verify the status of all port-channels using Cisco Nexus Switches

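Step 1. Verify the port-channel summary on Cisco Nexus Switch A (for example, by running show port-channel summary):

Step 2. Verify the port-channel summary on Cisco Nexus Switch B (for example, by running show port-channel summary):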

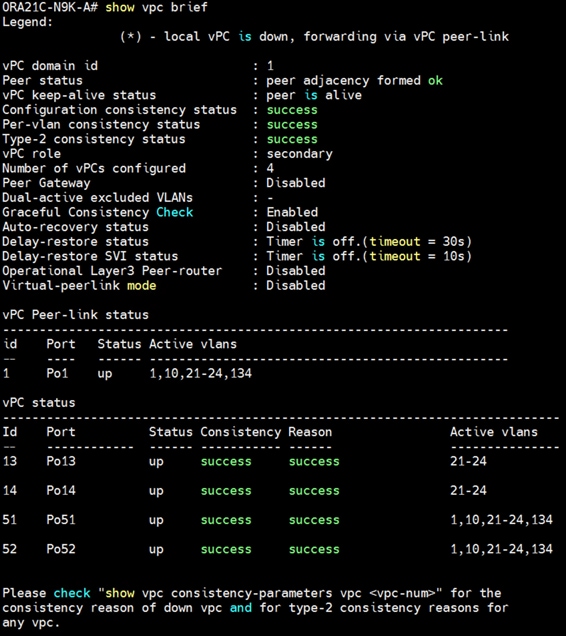

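Step 3. Verify the vPC status on Cisco Nexus Switch A (for example, by running show vpc brief):

Step 4. Verify the vPC status on Cisco Nexus Switch B (for example, by running show vpc brief):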

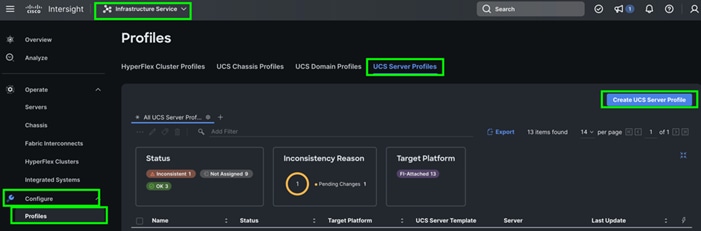

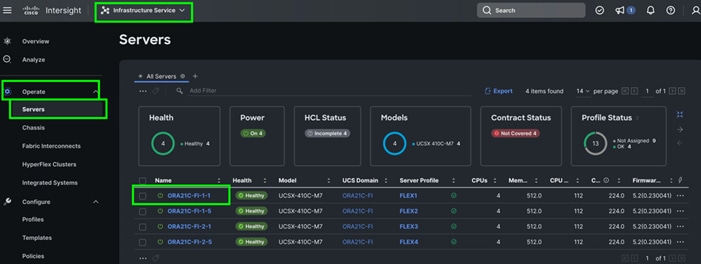
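The port-channel check can also be scripted. The following Python sketch parses text laid out like the output of the NX-OS show port-channel summary command. It reports any port channel whose flags are not SU (switched, up) and any member port not flagged P (up in port-channel).

The SAMPLE text is illustrative only, with Eth1/12 deliberately marked D (down). Column layout can vary between NX-OS releases, so treat the regular expressions as assumptions to adapt to your own output.

```python
import re

# Illustrative output modeled on "show port-channel summary"; not captured
# from this solution's switches.
SAMPLE = """\
Group Port-       Type     Protocol  Member Ports
      Channel
--------------------------------------------------------------------------------
1     Po1(SU)     Eth      LACP      Eth1/1(P)    Eth1/2(P)    Eth1/3(P)
                                     Eth1/4(P)
51    Po51(SU)    Eth      LACP      Eth1/9(P)    Eth1/10(P)
52    Po52(SU)    Eth      LACP      Eth1/11(P)   Eth1/12(D)
"""

GROUP_RE = re.compile(r"^(\d+)\s+Po\d+\((\w+)\)")          # "51    Po51(SU)"
MEMBER_RE = re.compile(r"(Eth\d+/\d+(?:/\d+)?)\((\w+)\)")  # "Eth1/9(P)"


def parse_summary(text):
    """Map group ID -> {'flags': str, 'members': {port: flag}}."""
    groups, current = {}, None
    for line in text.splitlines():
        m = GROUP_RE.match(line)
        if m:
            current = int(m.group(1))
            groups[current] = {"flags": m.group(2), "members": {}}
        elif current is None or not line.startswith(" "):
            continue  # header, separator, or blank line
        # Members appear on the group line and on indented continuation lines.
        groups[current]["members"].update(MEMBER_RE.findall(line))
    return groups


def unhealthy(groups):
    """List port channels or members that are not fully up."""
    issues = []
    for gid, g in groups.items():
        if g["flags"] != "SU":
            issues.append(f"Po{gid} flags {g['flags']}")
        for port, flag in g["members"].items():
            if flag != "P":
                issues.append(f"Po{gid} member {port} flag {flag}")
    return issues


print(unhealthy(parse_summary(SAMPLE)))
```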

Cisco UCS X-Series Configuration – Intersight Managed Mode (IMM)

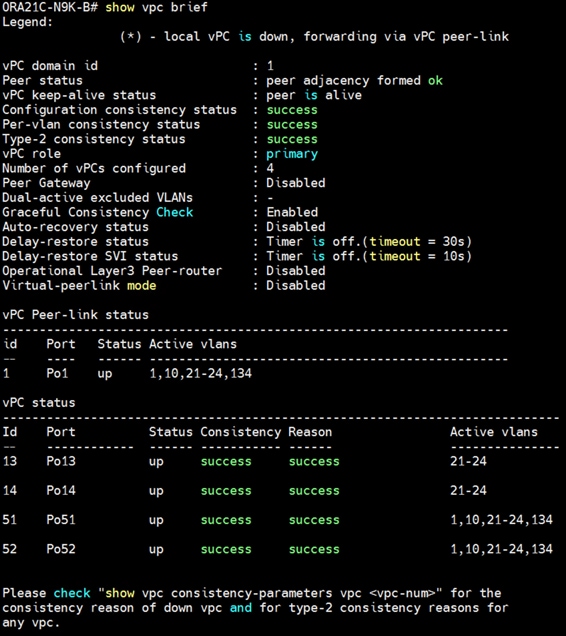

This section details the high-level steps for the Cisco UCS X-Series Configuration in Intersight Managed Mode.

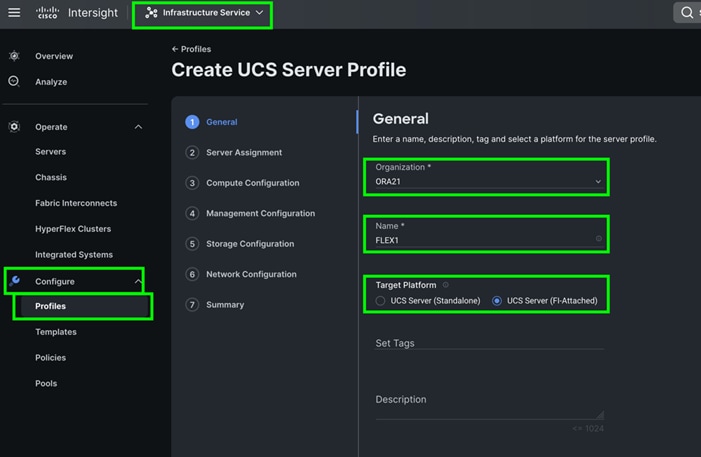

Cisco Intersight Managed Mode standardizes policy and operation management for Cisco UCS X-Series. The compute nodes in Cisco UCS X-Series are configured using server profiles defined in Cisco Intersight. These server profiles derive all the server characteristics from various policies and templates. At a high level, configuring Cisco UCS using Intersight Managed Mode consists of the steps shown in Figure 5.

Figure 5. Configuration Steps for Cisco Intersight Managed Mode

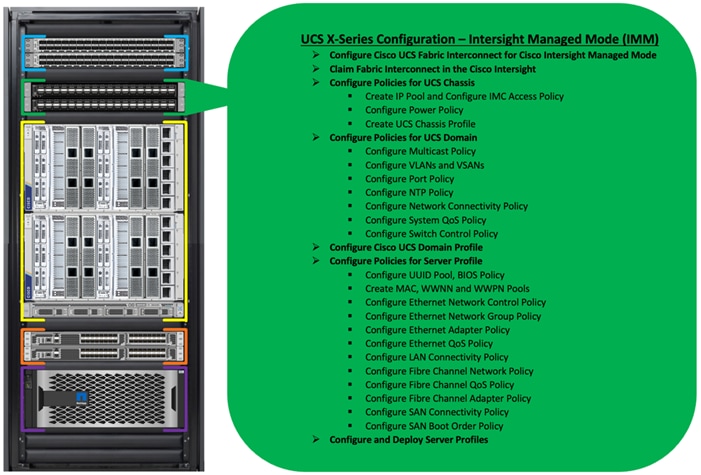

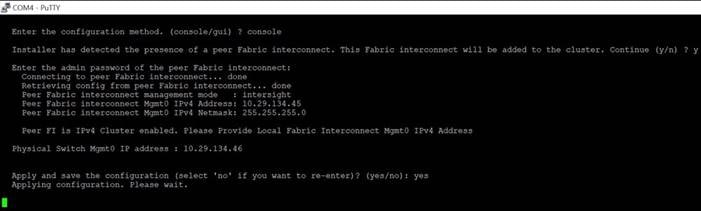

Procedure 1. Configure Cisco UCS Fabric Interconnect for Cisco Intersight Managed Mode

During the initial configuration, the setup wizard lets you choose whether to manage the fabric interconnects through Cisco UCS Manager or through the Cisco Intersight platform. You can switch the management mode of the fabric interconnects between Cisco Intersight and Cisco UCS Manager at any time. However, the Cisco UCS FIs must be set up in Intersight Managed Mode (IMM) to configure the Cisco UCS X-Series system.

Step 1. Verify the following physical connections on the fabric interconnect:

· The management Ethernet port (mgmt0) is connected to an external hub, switch, or router.

· The L1 ports on both fabric interconnects are directly connected to each other.

· The L2 ports on both fabric interconnects are directly connected to each other.

Step 2. Connect to the console port on the first fabric interconnect and configure the first FI as shown below:

Step 3. Connect to the console port on the second fabric interconnect (FI-B) and configure it as shown below:

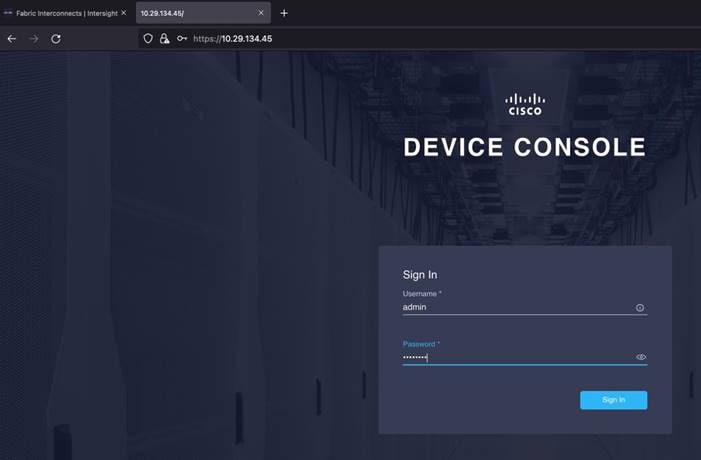

Step 4. After configuring the management addresses of both FIs, open a web browser and navigate to the Cisco UCS fabric interconnect management address that you configured. If prompted to accept security certificates, accept them as necessary.

Step 5. Log into the device console for FI-A by entering your username and password.

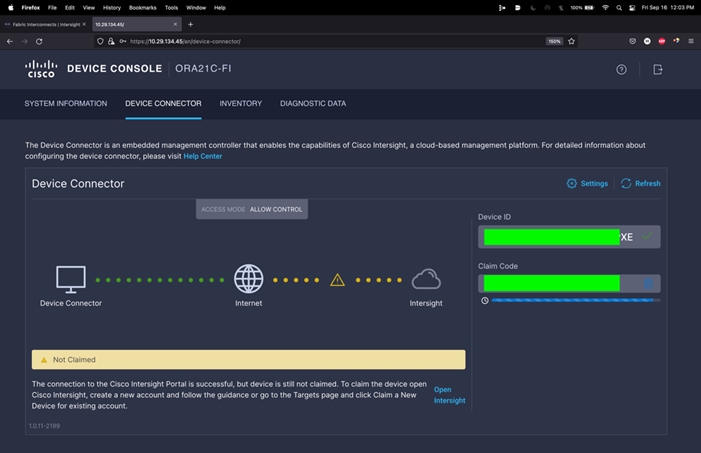

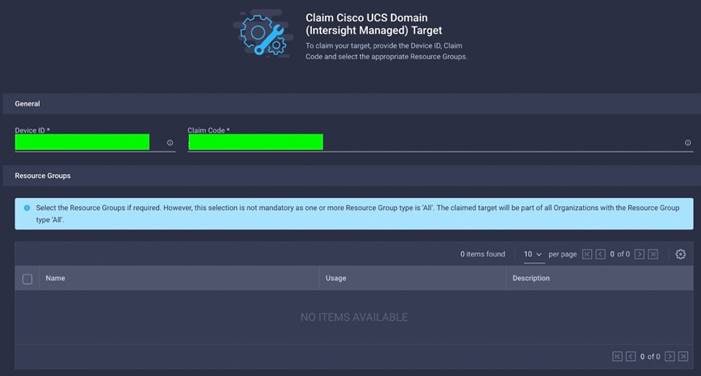

Step 6. Go to the Device Connector tab and record the Device ID and Claim Code as shown below:

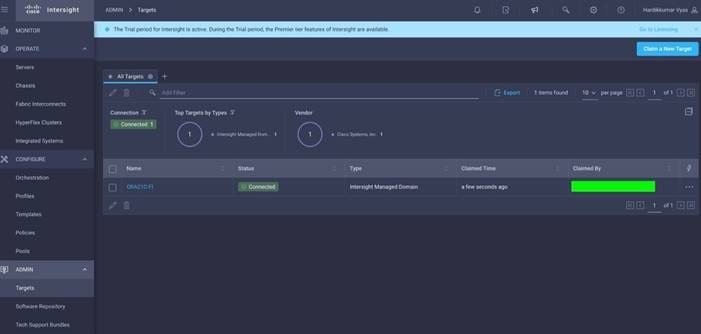

Procedure 2. Claim Fabric Interconnect in Cisco Intersight Platform

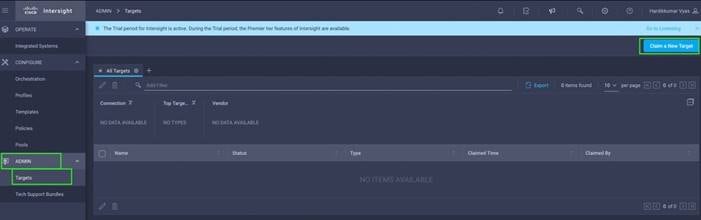

After the Cisco UCS fabric interconnects are set up for Cisco Intersight Managed Mode, they can be claimed to a new or an existing Cisco Intersight account. Once a Cisco UCS Fabric Interconnect has been added to the Cisco Intersight platform, all further configuration steps are completed in the Cisco Intersight portal. After recording the Device ID and Claim Code of the FI, go to https://intersight.com/.

Step 7. Sign in with your Cisco ID or if you don’t have one, click Sing Up and setup your account.

Note: We created the “FlexPod-ORA21C” account for this solution.

Step 8. After logging into your Cisco Intersight account, go to > ADMIN > Targets > Claim a New Target.

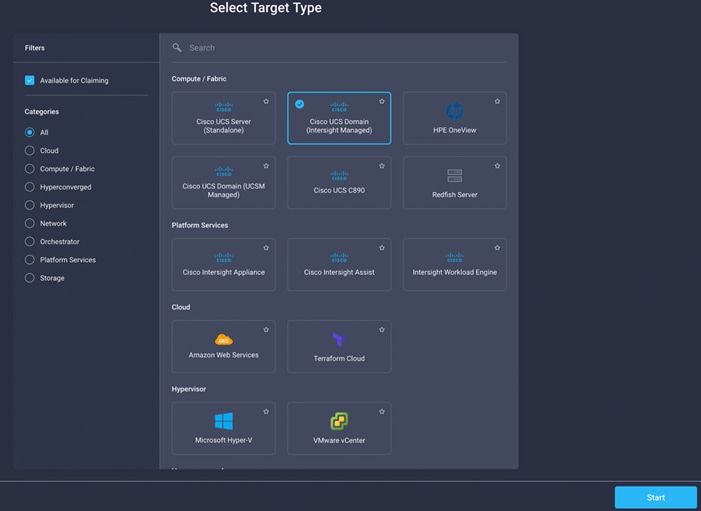

Step 9. For the Select Target Type, select “Cisco UCS Domain (Intersight Managed)” and click Start.

Step 10. Enter the Device ID and Claim Code which was previously captured. Click Claim to claim this domain in Cisco Intersight.

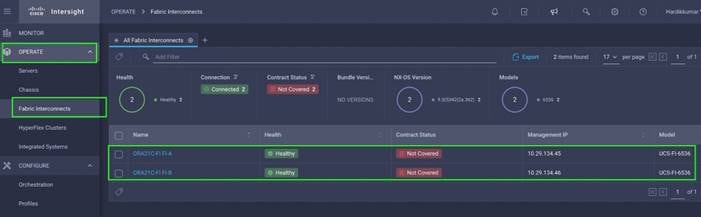

After the domain is claimed, both FIs appear under it. Verify that the domain is in Intersight Managed Mode:

Procedure 3. Configure Policies for Cisco UCS Chassis

Note: For this solution, we created the organization “ORA21.” Configure all profiles, pools, and policies under this common organization to better consolidate resources.

Step 1. To create an organization, go to Cisco Intersight > Settings > Organization and create one that suits your environment.

Note: We configured the IP Pool, IMC Access Policy, and Power Policy for the Cisco UCS Chassis profile as explained below.

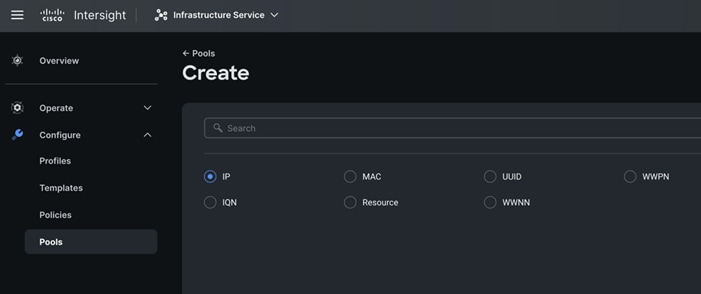

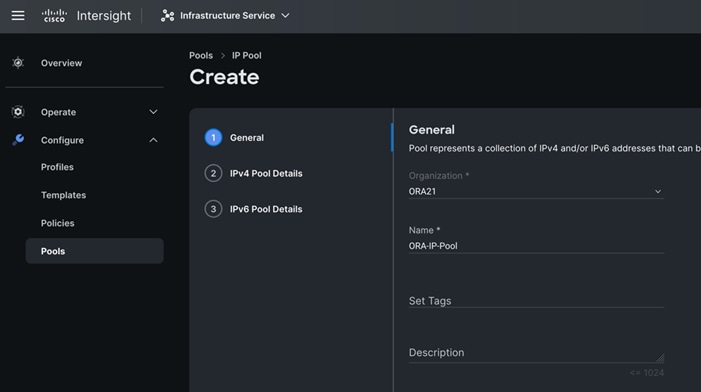

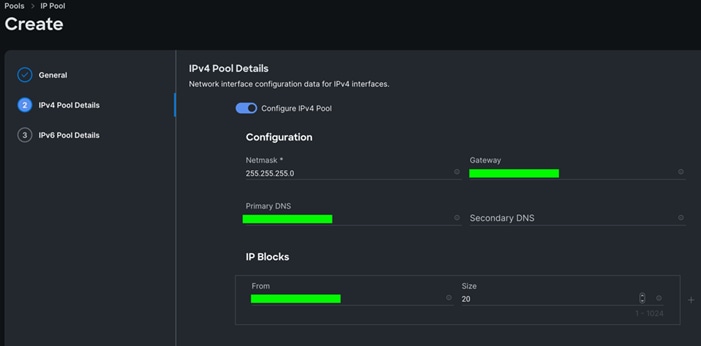

Procedure 4. Create IP Pool

Step 1. To configure the IP Pool for the Cisco UCS Chassis profile, go to Infrastructure Service > Configure > Pools and click Create Pool in the top right corner.

Step 2. Select option “IP” as shown below to create the IP Pool.

Step 3. In the IP Pool Create section, for Organization select “ORA21,” enter the name “ORA-IP-Pool,” and click Next.

Step 4. Enter Netmask, Gateway, Primary DNS, IP Blocks and Size according to your environment and click Next.

Note: For this solution, we did not configure the IPv6 Pool. Keep the Configure IPv6 Pool option disabled and click Create to create the IP Pool.
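Before you enter the IP block, it helps to confirm that the block fits the subnet and does not collide with addresses already in use. This Python sketch uses the standard ipaddress module.

The following values are hypothetical: the 10.29.134.0/24 subnet, gateway 10.29.134.1, block start 10.29.134.150, and size 32. The Cisco Nexus peer-keepalive addresses from the vPC configuration are treated as reserved.

```python
import ipaddress


def check_ip_pool(network, gateway, start, size, reserved=()):
    """Return a list of problems with a proposed IP pool block (empty if OK)."""
    net = ipaddress.ip_network(network)
    first = ipaddress.ip_address(start)
    last = first + (size - 1)
    problems = []
    # The whole block must sit inside the subnet, excluding network/broadcast.
    if not (net.network_address < first and last < net.broadcast_address):
        problems.append(f"{first}-{last} is outside the usable hosts of {net}")
    # The gateway and reserved addresses must not be handed out by the pool.
    for addr in (gateway, *reserved):
        if first <= ipaddress.ip_address(addr) <= last:
            problems.append(f"{addr} overlaps the pool block")
    return problems


# Hypothetical values for ORA-IP-Pool on the VLAN 134 management subnet.
nexus_keepalive = ["10.29.134.43", "10.29.134.44"]
print(check_ip_pool("10.29.134.0/24", "10.29.134.1", "10.29.134.150", 32,
                    reserved=nexus_keepalive) or "IP block looks usable")
```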

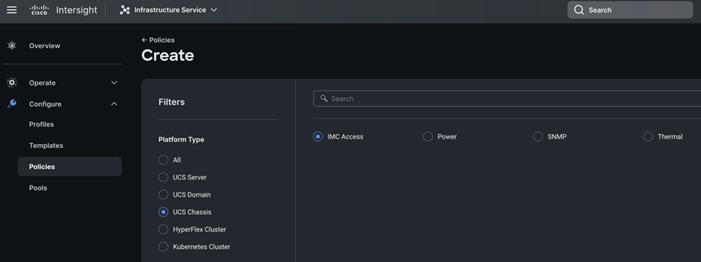

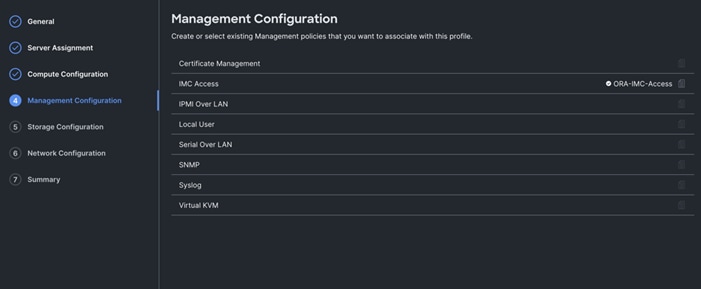

Procedure 5. Configure IMC Access Policy

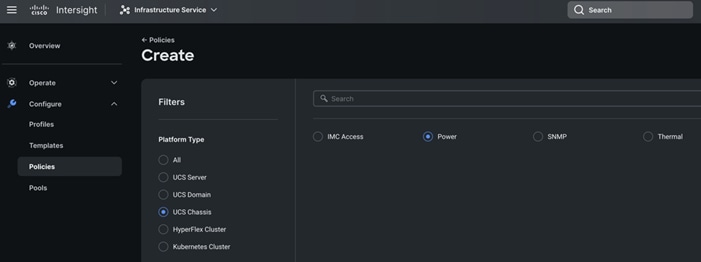

Step 1. To configure the IMC Access Policy for the Cisco UCS Chassis profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. Select the platform type “UCS Chassis” and select “IMC Access” policy.

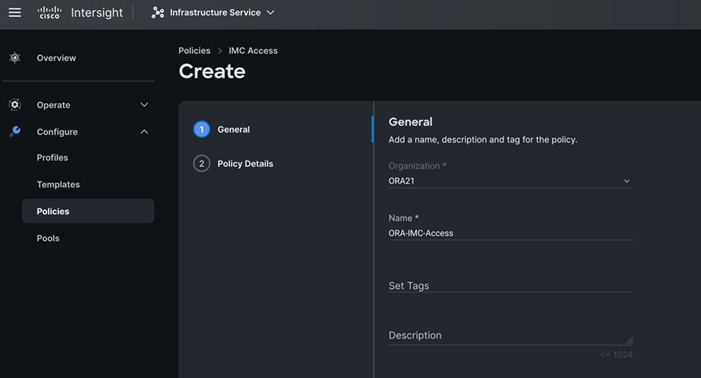

Step 3. In the IMC Access Create section, for Organization select “ORA21” and enter the Policy name “ORA-IMC-Access” and click Next.

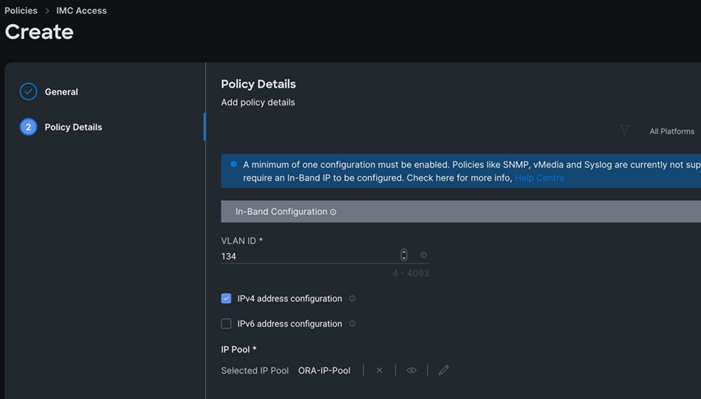

Step 4. In the Policy Details section, enter the VLAN ID as 134 and select the IP Pool “ORA-IP-Pool.”

Step 5. Click Create to create this policy.

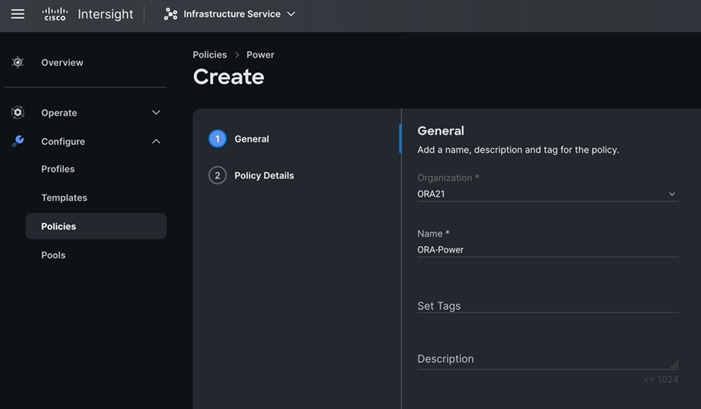

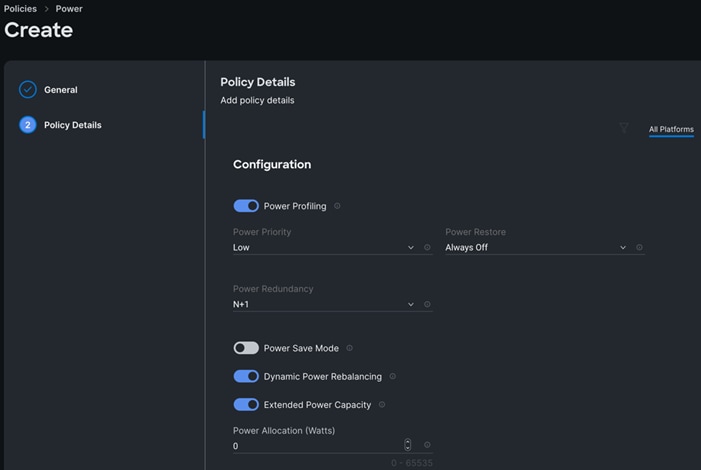

Procedure 6. Configure Power Policy

Step 1. To configure the Power Policy for the Cisco UCS Chassis profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. Select the platform type “UCS Chassis” and select “Power.”

Step 3. In the Power Policy Create section, for Organization select “ORA21” and enter the Policy name “ORA-Power” and click Next.

Step 4. In the Policy Details section, for Power Redundancy select N+1 and turn off Power Save Mode.

Step 5. Click Create to create this policy.

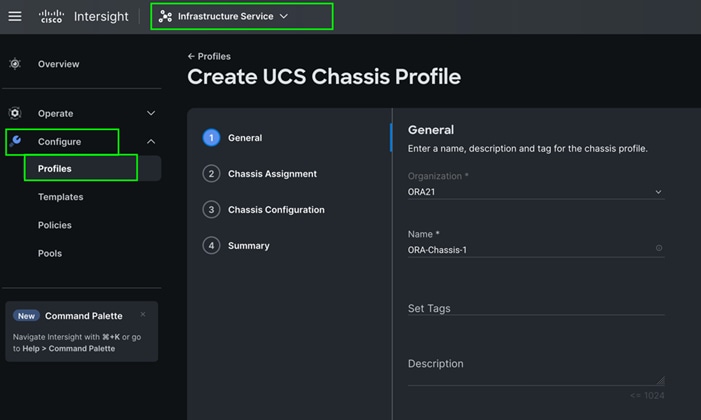

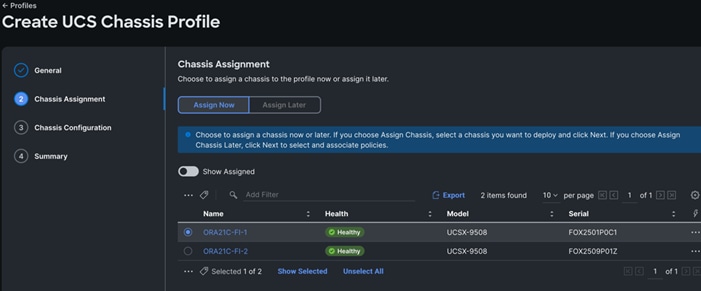

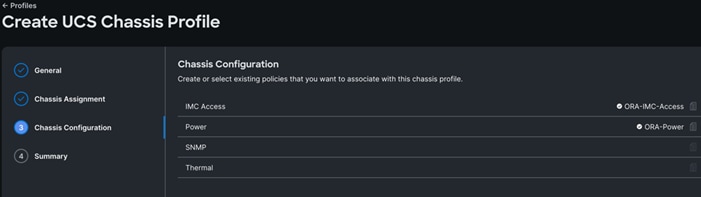

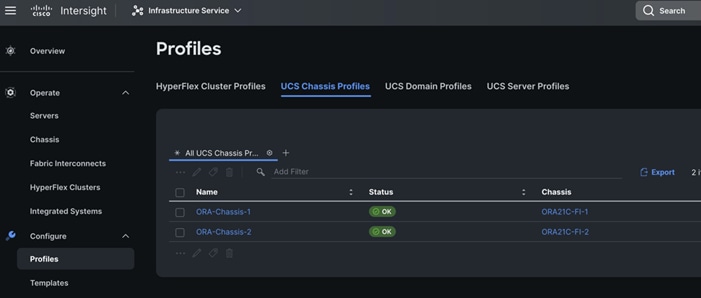

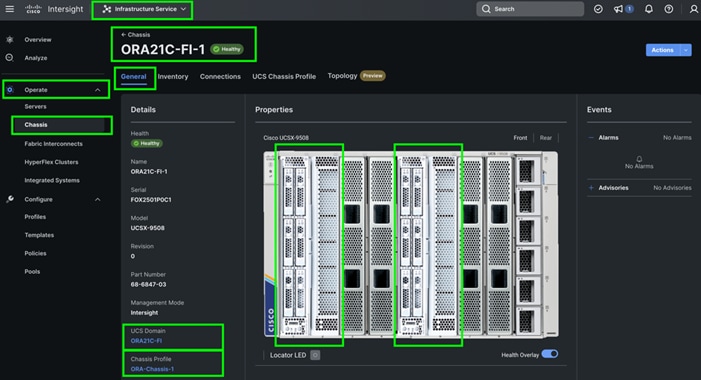

Procedure 7. Create Cisco UCS Chassis Profile

A Cisco UCS Chassis profile enables you to create and associate chassis policies to an Intersight Managed Mode (IMM) claimed chassis. When a chassis profile is associated with a chassis, Cisco Intersight automatically configures the chassis to match the configurations specified in the policies of the chassis profile. The chassis-related policies can be attached to the profile either at the time of creation or later. For more information, go to: https://intersight.com/help/saas/features/chassis/configure#chassis_profiles.

The chassis profile in a FlexPod is used to set the power policy for the chassis. By default, Cisco UCS X-Series power supplies are configured in GRID mode. The power policy can instead set the power supplies to non-redundant or to N+1/N+2 redundant modes.
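The redundancy modes are easiest to compare as arithmetic on the number of supplies held in reserve. The following Python sketch is a simplified model, not Cisco's power-budget calculation. It assumes that:

- Non-redundant mode uses every supply.
- N+1 and N+2 hold back one and two supplies.
- Grid mode holds back half of the supplies.

The six-supply, 2800 W chassis in the example is a hypothetical input. Check the actual supply count and rating of your chassis.

```python
def usable_power(psu_count: int, psu_watts: int, mode: str) -> int:
    """Simplified usable capacity (W) for a chassis power redundancy mode."""
    spare = {
        "non-redundant": 0,
        "n+1": 1,
        "n+2": 2,
        "grid": psu_count // 2,  # half the supplies back up the other half
    }[mode.lower()]
    if spare >= psu_count:
        raise ValueError("not enough power supplies for this mode")
    return (psu_count - spare) * psu_watts


# Hypothetical chassis: six 2800 W supplies.
for mode in ("Grid", "N+1", "N+2", "Non-Redundant"):
    print(mode, usable_power(6, 2800, mode))
```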

Step 1. To create a Cisco UCS Chassis Profile, go to Infrastructure Service > Configure > Profiles. On the UCS Chassis Profiles tab, click Create UCS Chassis Profile.

Step 2. In the Chassis Assignment menu, for the first chassis, click “ORA21C-FI-1” and click Next.

Step 3. In the Chassis configuration section, for the policy for IMC Access select “ORA-IMC-Access” and for the Power policy select “ORA-Power.”

Step 4. Review the configuration settings summary for the Chassis Profile and click Deploy to create the Cisco UCS Chassis Profile for the first chassis.

Note: For this solution, we created two chassis profiles (ORA-Chassis-1 and ORA-Chassis-2) and assigned one to each chassis as shown below:

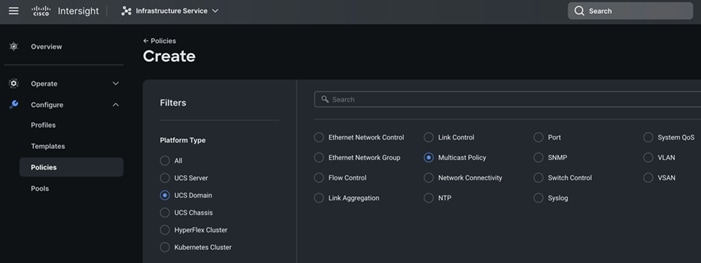

Configure Policies for Cisco UCS Domain

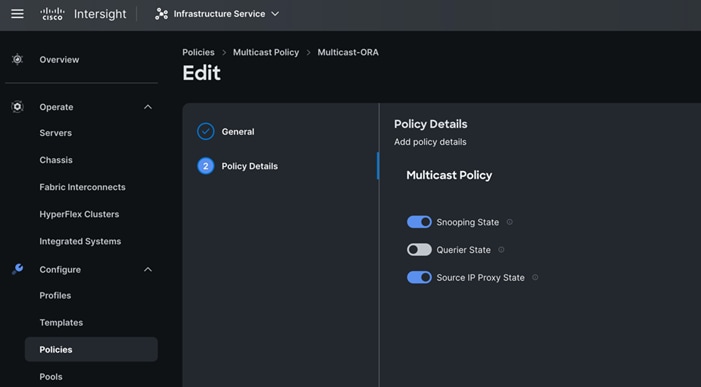

Procedure 1. Configure Multicast Policy

Step 1. To configure the Multicast Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for Policy, select “Multicast Policy.”

Step 2. In the Multicast Policy Create section, for the Organization select “ORA21” and for the Policy name enter “Multicast-ORA.” Click Next.

Step 3. In the Policy Details section, enable Snooping State and Source IP Proxy State.

Step 4. Click Create to create this policy.

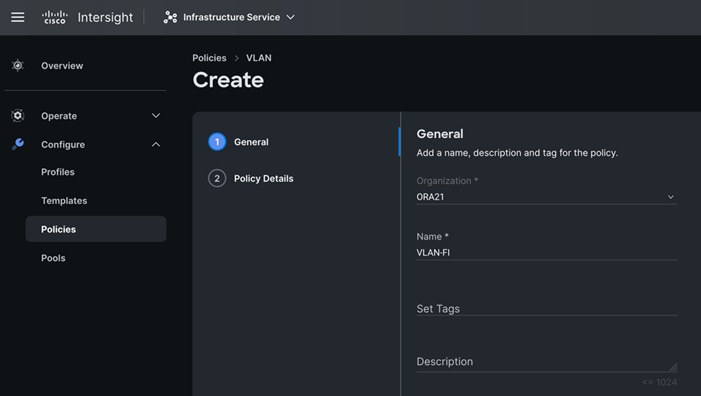

Procedure 2. Configure VLANs

Step 1. To configure the VLAN Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the Policy select “VLAN.”

Step 2. In the VLAN Policy Create section, for the Organization select “ORA21” and for the Policy name enter “VLAN-FI.” Click Next.

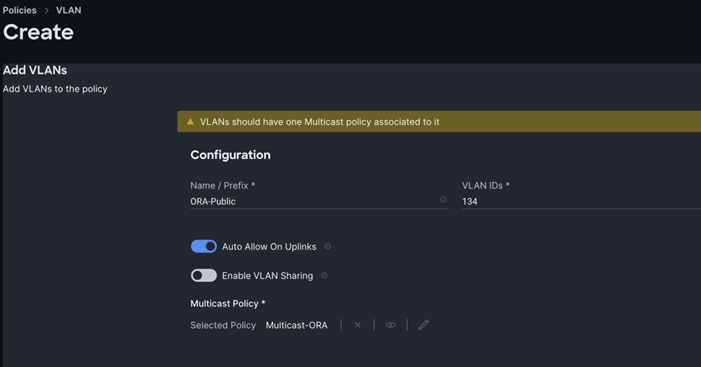

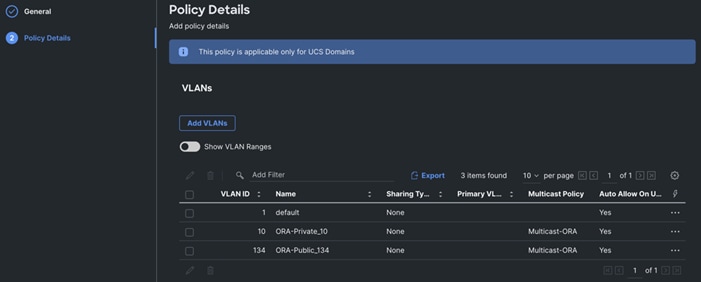

Step 3. In the Policy Details section, to configure the individual VLANs, select "Add VLANs." Provide a name, VLAN ID for the VLAN and select the Multicast Policy as shown below:

Step 4. Click Add to add this VLAN to the policy. Repeat the process to add VLAN 10, naming each VLAN after the network traffic it carries in this solution.

Step 5. Click Create to create this policy.
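Every VLAN defined in the VLAN-FI policy must also be allowed on Cisco Nexus port channels 51 and 52. Otherwise, traffic on that VLAN cannot pass over the uplinks to the Cisco Nexus switches.

This Python sketch expands an NX-OS style allowed-VLAN list, including ranges such as 100-102, and reports any UCS VLAN that is missing. The VLAN names Public-134 and Private-10 are hypothetical placeholders for the names you assign.

```python
def parse_vlan_list(spec: str) -> set[int]:
    """Expand an NX-OS style VLAN list such as '1,10,100-102' into a set."""
    vlans = set()
    for part in spec.split(","):
        part = part.strip()
        if "-" in part:
            lo, hi = (int(x) for x in part.split("-"))
            vlans.update(range(lo, hi + 1))
        elif part:
            vlans.add(int(part))
    return vlans


# Hypothetical VLAN names in the VLAN-FI policy (name -> VLAN ID).
ucs_vlans = {"Public-134": 134, "Private-10": 10}
# Allowed VLANs on the Nexus port channels 51 and 52.
nexus_allowed = parse_vlan_list("1,10,134")

missing = {name: vid for name, vid in ucs_vlans.items() if vid not in nexus_allowed}
print(missing or "all UCS VLANs are allowed on the Nexus uplinks")
```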

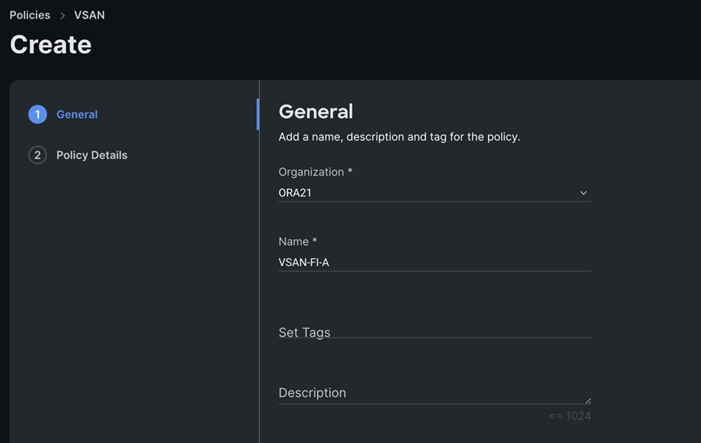

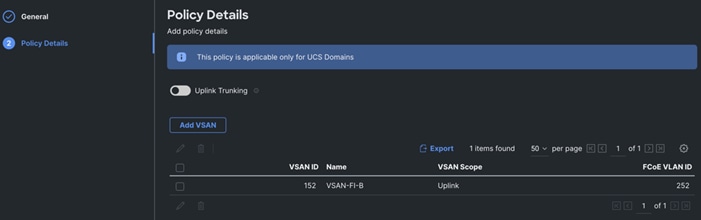

Procedure 3. Configure VSANs

Step 1. To configure the VSAN Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the Policy select “VSAN.”

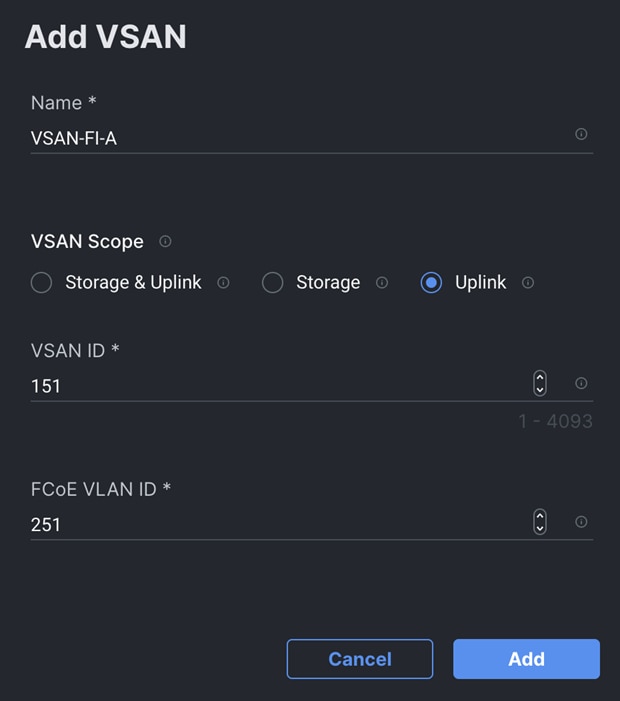

Step 2. In the VSAN Policy Create section, for the Organization select “ORA21” and for the Policy name enter “VSAN-FI-A.” Click Next.

Step 3. In the Policy Details section, to configure the individual VSAN, select "Add VSAN." Provide a name, VSAN ID, FCoE VLAN ID and VSAN Scope for the VSAN on FI-A side as shown below:

Note: The Storage & Uplink VSAN scope allows you to provision SAN and Direct Attached Storage using the fabric interconnect running in FC Switching mode. The zones for this VSAN must be provisioned externally on the upstream FC/FCoE switches.

The Storage VSAN scope allows you to connect and configure Direct Attached Storage using the fabric interconnect running in FC Switching mode. You can configure local zones on this VSAN using FC Zone policies. All unmanaged zones in the fabric interconnect are cleared when this VSAN is configured for the first time. Do NOT configure this VSAN on upstream FC/FCoE switches.

Note: Uplink scope VSAN allows you to provision SAN connectivity using the Fabric Interconnect.

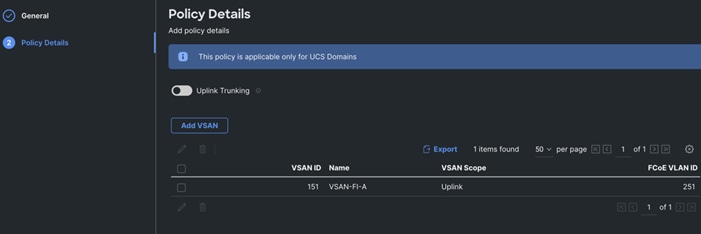

Step 4. Click Add to add this VSAN to the policy.

Step 5. Click Create to create this VSAN policy for FI-A.

Step 6. Configure VSAN policy for FI-B:

a. To configure the VSAN Policy for FI-B, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the Policy select “VSAN.”

b. In the VSAN Policy Create section, for the Organization select “ORA21” and for the Policy name enter “VSAN-FI-B.” Click Next.

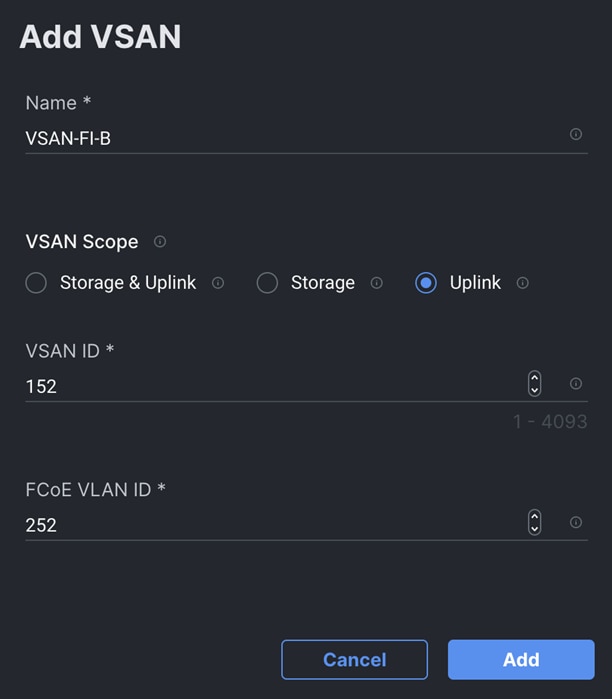

c. In the Policy Details section, to configure the individual VSAN, select "Add VSAN." Provide a name, VSAN ID, FCoE VLAN ID and VSAN Scope for the VSAN on FI-B side as shown below:

Step 7. Click Add to add this VSAN to the policy.

Step 8. Click Create to create this VSAN policy for FI-B.
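Two consistency rules behind the VSAN policies can be checked in a few lines:

- The FI-A and FI-B VSANs should be different, because each fabric carries its own storage traffic.
- Each FCoE VLAN ID should not reuse an Ethernet VLAN ID from the VLAN-FI policy.

In this Python sketch, VSAN 151 for FI-A comes from the port policy in this section. VSAN 152 for FI-B and both FCoE VLAN IDs are hypothetical values.

```python
def check_vsans(vsans: dict[str, dict], ethernet_vlans: set[int]) -> list[str]:
    """Return a list of problems with the per-fabric VSAN plan (empty if OK)."""
    problems = []
    ids = [v["vsan_id"] for v in vsans.values()]
    if len(set(ids)) != len(ids):
        problems.append("FI-A and FI-B should use different VSAN IDs")
    for fabric, v in vsans.items():
        # An FCoE VLAN must not collide with an Ethernet VLAN in the domain.
        if v["fcoe_vlan"] in ethernet_vlans:
            problems.append(f"{fabric}: FCoE VLAN {v['fcoe_vlan']} overlaps an Ethernet VLAN")
    return problems


vsans = {
    "FI-A": {"policy": "VSAN-FI-A", "vsan_id": 151, "fcoe_vlan": 151},  # FCoE VLAN hypothetical
    "FI-B": {"policy": "VSAN-FI-B", "vsan_id": 152, "fcoe_vlan": 152},  # hypothetical
}
print(check_vsans(vsans, {1, 10, 134}) or "VSAN plan is consistent")
```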

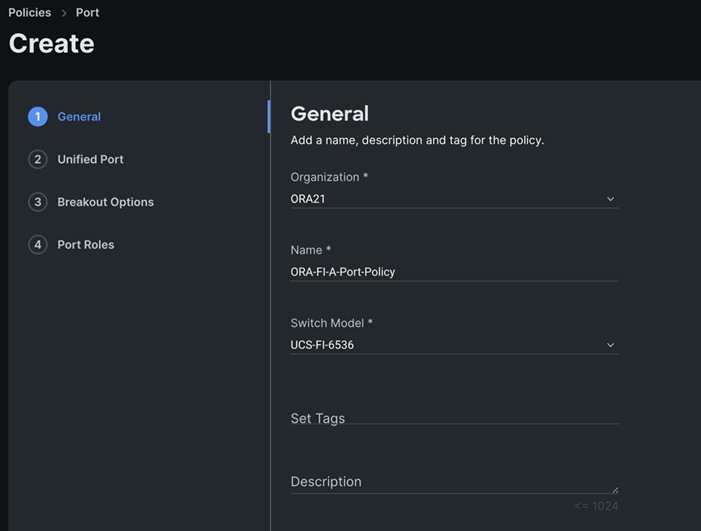

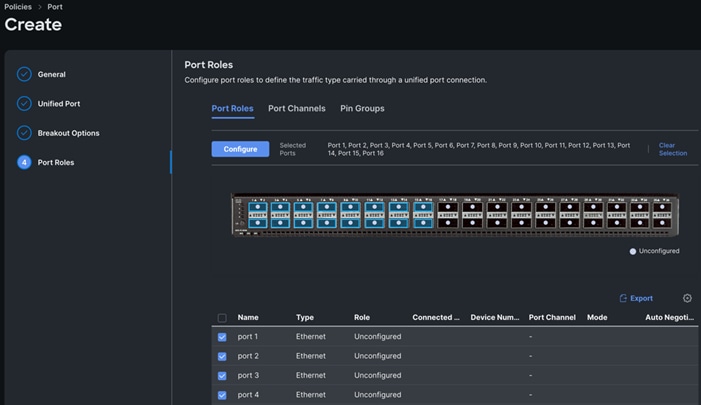

Procedure 4. Configure Port Policy

Step 1. To configure the Port Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the policy, select “Port.”

Step 2. In the Port Policy Create section, for the Organization select “ORA21,” for the policy name enter “ORA-FI-A-Port-Policy,” and for the Switch Model select “UCS-FI-6536.” Click Next.

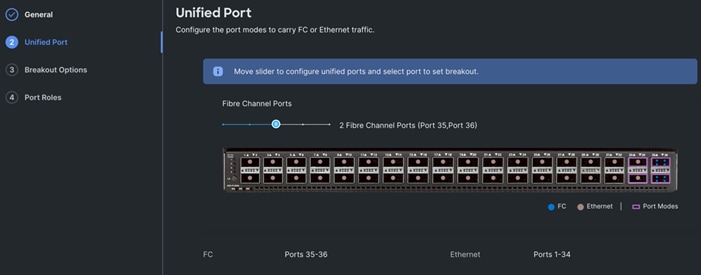

Note: For this solution, we configured ports 35 and 36 as Fibre Channel ports, using the Unified Port and Breakout Options sections as described in the following steps.

Step 3. In the Unified Port section, move the slider to the right as shown below. This converts ports 35 and 36 to FC ports.

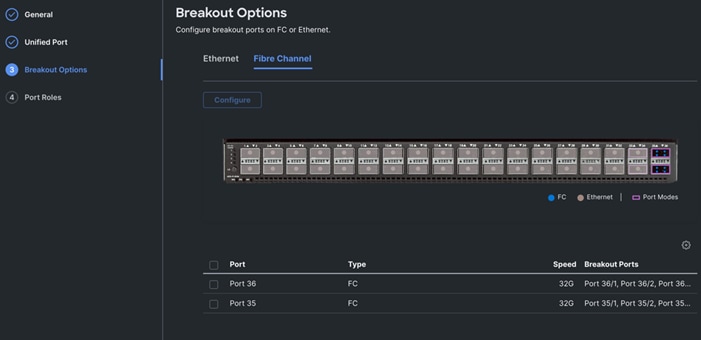

Step 4. In the Breakout Options section, go to the Fibre Channel tab, select ports 35 and 36, and click Configure. Set ports 35 and 36 to “4x32G” and click Next.

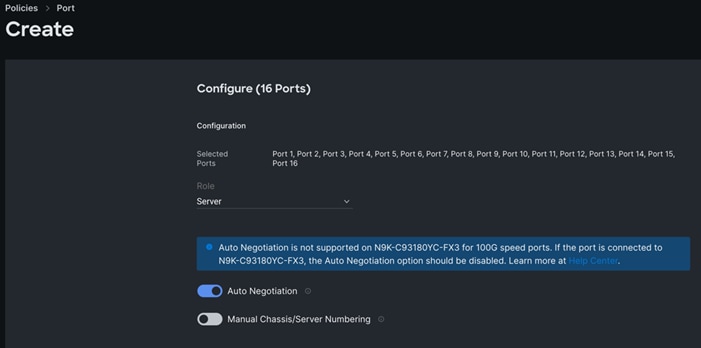

Step 5. In the Port Role section, select ports 1 to 16 and click Configure.

Step 6. In the Configure section, for Role select Server and keep the Auto Negotiation ON.

Step 7. Click SAVE to add this configuration for port roles.

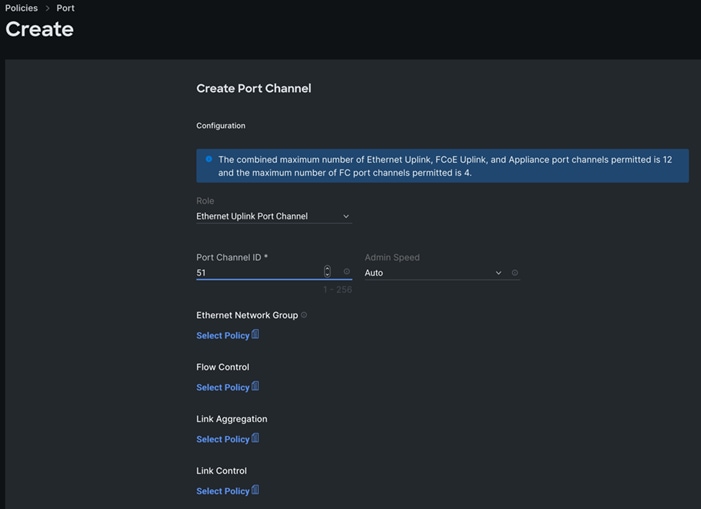

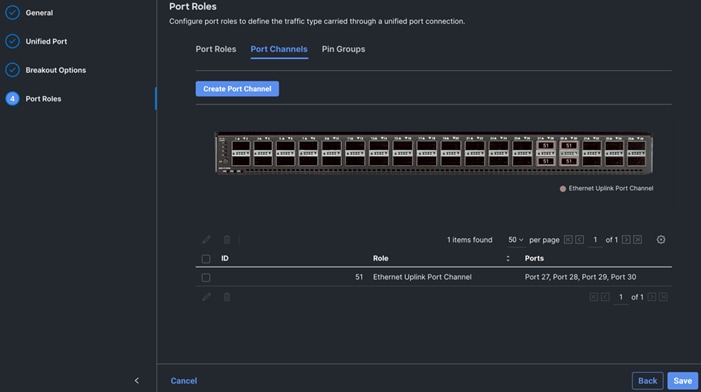

Step 8. Go to the Port Channels tab, select ports 27 to 30, and click Create Port Channel to create the port channel between FI-A and both Cisco Nexus switches. In the Create Port Channel section, for Role select Ethernet Uplink Port Channel, for the Port Channel ID enter 51, and for the Admin Speed select Auto.

Step 9. Click SAVE to add this configuration for uplink port roles.

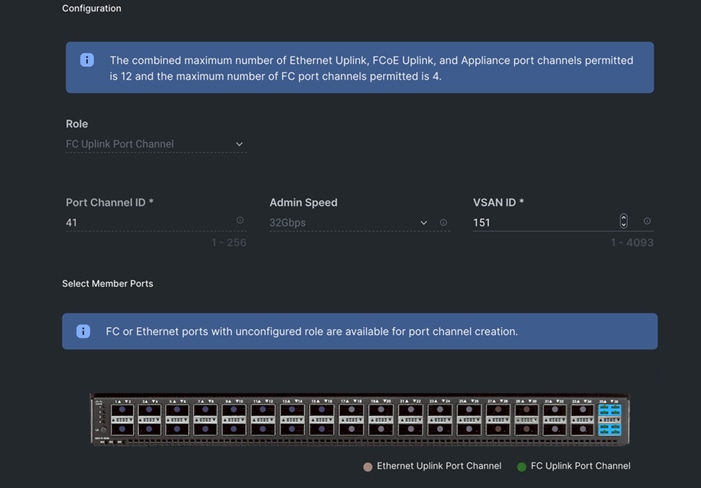

Step 10. On the Port Channels tab, select ports 35/1 to 35/4 and 36/1 to 36/4, and click Create Port Channel to create the port channel between FI-A and the Cisco MDS A switch. In the Create Port Channel section, for Role select FC Uplink Port Channel, for the Port Channel ID enter 41, and for the VSAN ID enter 151.

Step 11. Click SAVE to add this configuration for storage uplink port roles.

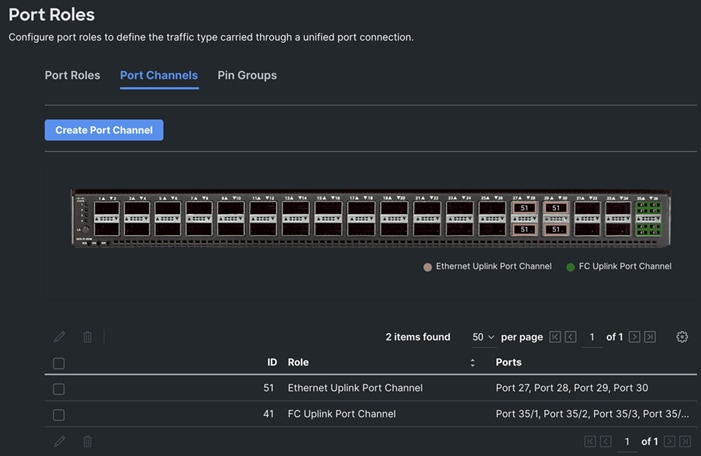

Step 12. Verify both port channels as shown below:

Step 13. Click SAVE to complete this configuration for all the server ports and uplink port roles.

Note: We configured the FI-B ports and created a Port Policy for FI-B, “ORA-FI-B-Port-Policy.”

Note: In the FI-B port policy, we also configured unified ports and the 4x32G breakout option on ports 35 and 36 for FC traffic.

Note: As with FI-A, on FI-B we configured ports 1 to 16 as server ports, ports 27 to 30 as Ethernet uplink port-channel ports, and ports 35/1-35/4 and 36/1-36/4 as FC uplink port-channel ports.

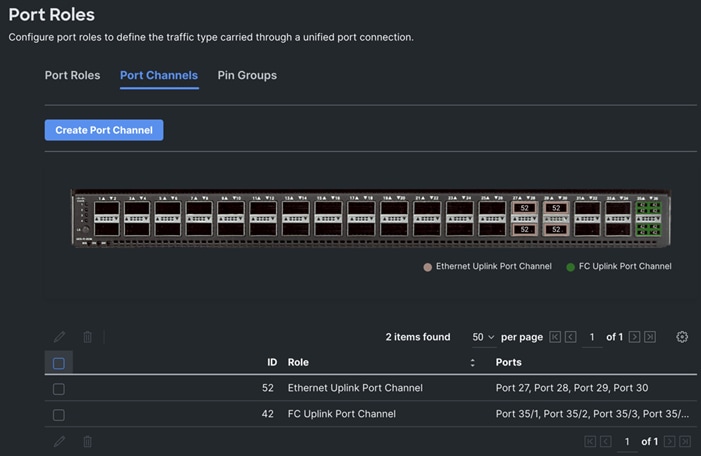

Note: For FI-B, we configured Port-Channel ID as 52 for Ethernet Uplink Port Channel and Port-Channel ID as 42 for FC Uplink Port Channel as shown below:

This completes the Port Policy for FI-A and FI-B for Cisco UCS Domain profile.
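The port policy implies a simple bandwidth budget per fabric interconnect. This Python sketch lists the eight FC breakout members created from ports 35 and 36 and computes nominal uplink capacity. It assumes that the four Ethernet uplinks (ports 27 to 30) run at 100 Gbps and that each breakout lane runs at 32 Gbps. These are line rates, not measured throughput.

```python
def breakout_members(ports, lanes=4):
    """Name the lanes of breakout ports, for example 35 -> 35/1 .. 35/4."""
    return [f"{p}/{lane}" for p in ports for lane in range(1, lanes + 1)]


eth_uplinks = [27, 28, 29, 30]            # Ethernet uplink port channel (51 or 52)
fc_members = breakout_members([35, 36])   # 4x32G breakout -> 8 FC ports

eth_gbps = len(eth_uplinks) * 100         # assumes 100-Gbps uplink ports
fc_gbps = len(fc_members) * 32            # 32-Gbps FC per breakout lane

print(fc_members)
print(f"Per FI: {eth_gbps} Gbps Ethernet uplink, {fc_gbps} Gbps FC uplink")
```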

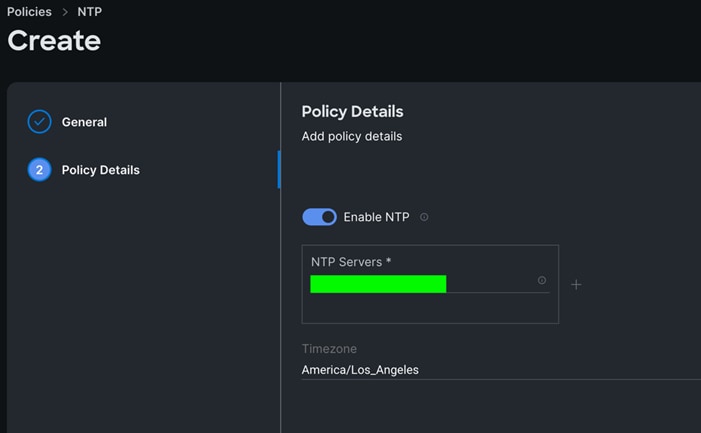

Procedure 5. Configure NTP Policy

Step 1. To configure the NTP Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the policy select “NTP.”

Step 2. In the NTP Policy Create section, for the Organization select “ORA21” and for the policy name enter “NTP-Policy.” Click Next.

Step 3. In the Policy Details section, select the option to enable the NTP Server and enter your NTP Server details as shown below.

Step 4. Click Create.

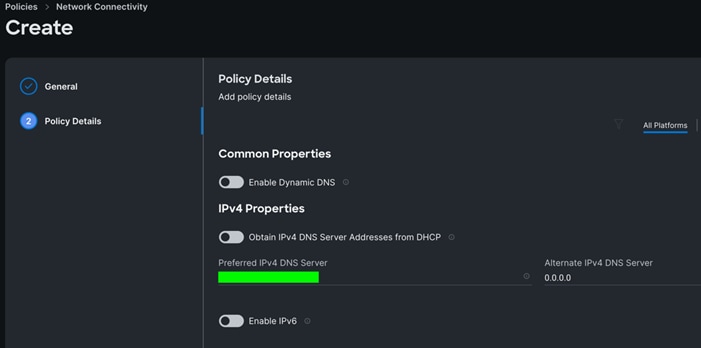

Procedure 6. Configure Network Connectivity Policy

Step 1. To configure the Network Connectivity Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the policy select “Network Connectivity.”

Step 2. In the Network Connectivity Policy Create section, for the Organization select “ORA21” and for the policy name enter “Network-Connectivity-Policy.” Click Next.

Step 3. In the Policy Details section, enter the IPv4 DNS Server information according to your environment details as shown below:

Step 4. Click Create.

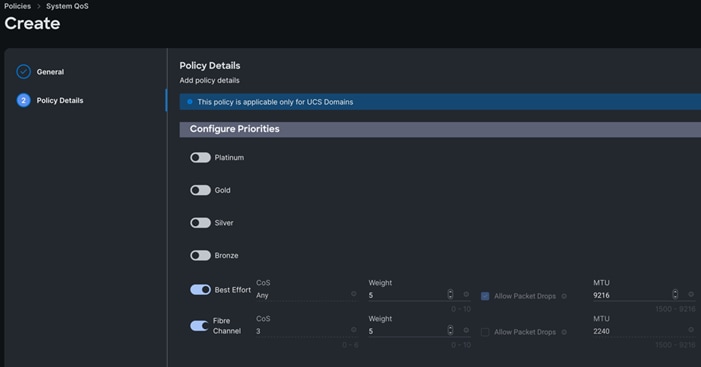

Procedure 7. Configure System QoS Policy

Step 1. To configure the System QoS Policy for the Cisco UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the policy select “System QoS.”

Step 2. In the System QoS Policy Create section, for the Organization select “ORA21” and for the policy name enter “ORA-QoS.” Click Next.

Step 3. In the Policy Details section under Configure Priorities, select Best Effort and set the MTU size to 9216.

Step 4. Click Create.
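Jumbo frames only work if every hop on the path accepts them. This Python sketch compares a host interface MTU against the MTU configured at each hop in this section.

The 9000-byte host MTU is a hypothetical example for the Oracle RAC private interconnect. The check compares configured values only; it does not model Ethernet header overhead.

```python
def hops_too_small(host_mtu: int, path: dict[str, int]) -> list[str]:
    """Return the hops whose configured MTU is smaller than host_mtu."""
    return [hop for hop, mtu in path.items() if mtu < host_mtu]


# Configured MTUs from this section and the Nexus port-channel configuration.
path = {
    "Nexus port-channels 51/52": 9216,
    "UCS System QoS (Best Effort)": 9216,
}
print(hops_too_small(9000, path) or "path supports the host MTU")
```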

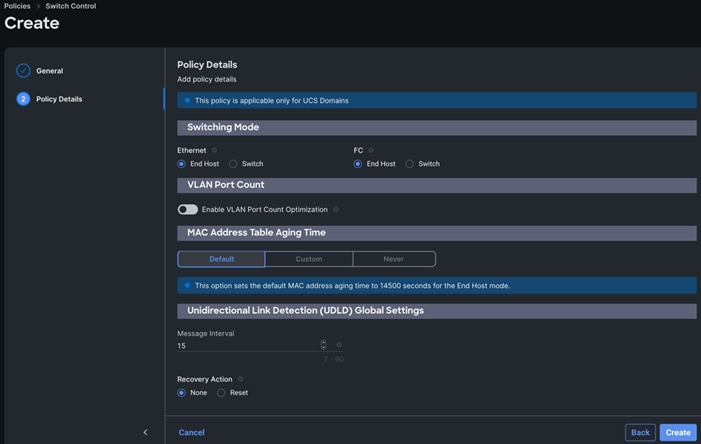

Procedure 8. Configure Switch Control Policy

Step 1. To configure the Switch Control Policy for the UCS Domain profile, go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Domain” and for the policy select “Switch Control.”

Step 2. In the Switch Control Policy Create section, for the Organization select “ORA21” and for the policy name enter “ORA-Switch-Control.” Click Next.

Step 3. In the Policy Details section, keep the Switching Mode for both Ethernet and FC set to “End Host” mode.

Step 4. Click Create to create this policy.

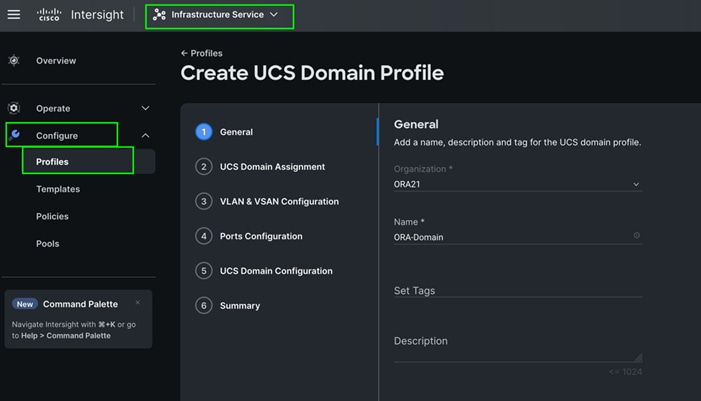

Configure Cisco UCS Domain Profile

With Cisco Intersight, a domain profile configures a fabric interconnect pair through reusable policies, allows for configuration of the ports and port channels, and configures the VLANs and VSANs in the network. It defines the characteristics of and configures ports on fabric interconnects. You can create a domain profile and associate it with a fabric interconnect domain. The domain-related policies can be attached to the profile either at the time of creation or later. One UCS Domain profile can be assigned to one fabric interconnect domain. For more information, go to: https://intersight.com/help/saas/features/fabric_interconnects/configure#domain_profile

Some of the characteristics of the Cisco UCS domain profile in the FlexPod environment are:

· A single domain profile (ORA-Domain) is created for the pair of Cisco UCS fabric interconnects.

· Unique port policies are defined for the two fabric interconnects.

· The VLAN configuration policy is common to the fabric interconnect pair because both fabric interconnects are configured for the same set of VLANs.

· The VSAN configuration policy differs between the two fabric interconnects because each fabric interconnect carries separate storage traffic through its own VSAN.

· The Network Time Protocol (NTP), network connectivity, and system Quality-of-Service (QoS) policies are common to the fabric interconnect pair.
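The characteristics above come down to a few rules about which policies are shared and which are per fabric. The following Python sketch encodes the ORA-Domain assignments as plain data and checks those rules. The dataclass and function names are hypothetical and are not part of the Cisco Intersight API.

```python
from dataclasses import dataclass


@dataclass
class FabricPolicies:
    """Per-fabric policy assignments in a UCS domain profile (illustrative)."""
    vlan: str
    vsan: str
    port: str


def check_domain(fi_a: FabricPolicies, fi_b: FabricPolicies) -> list[str]:
    """Shared VLAN policy; separate VSAN and port policies per FI."""
    problems = []
    if fi_a.vlan != fi_b.vlan:
        problems.append("VLAN policy should be shared by both FIs")
    if fi_a.vsan == fi_b.vsan:
        problems.append("each FI needs its own VSAN policy")
    if fi_a.port == fi_b.port:
        problems.append("each FI needs its own port policy")
    return problems


ora_domain = {
    "FI-A": FabricPolicies("VLAN-FI", "VSAN-FI-A", "ORA-FI-A-Port-Policy"),
    "FI-B": FabricPolicies("VLAN-FI", "VSAN-FI-B", "ORA-FI-B-Port-Policy"),
}
# Policies common to the FI pair.
shared = ["NTP-Policy", "Network-Connectivity-Policy", "ORA-QoS", "ORA-Switch-Control"]
print(check_domain(ora_domain["FI-A"], ora_domain["FI-B"]) or "ORA-Domain layout matches the design")
```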

Procedure 1. Create a domain profile

Step 1. To create a domain profile, go to Infrastructure Service > Configure > Profiles > then go to the UCS Domain Profiles tab and click Create UCS Domain Profile.

Step 2. For the domain profile name, enter “ORA-Domain,” and for the Organization select the organization you configured previously (ORA21). Click Next.

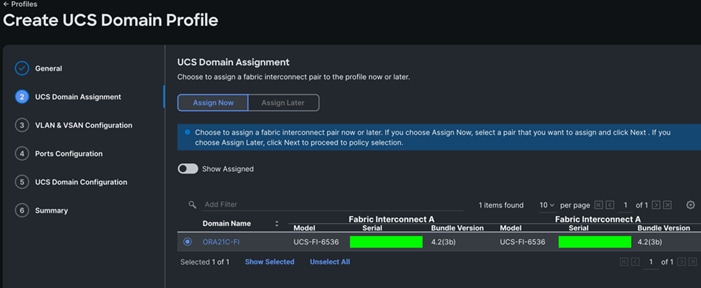

Step 3. In the UCS Domain Assignment menu, for the Domain Name select “ORA21C-FI” which was added previously into this domain and click Next.

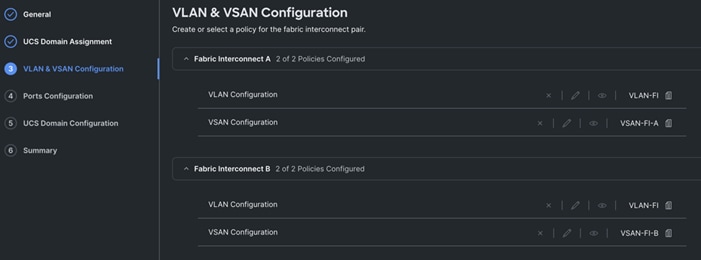

Step 4. In the VLAN & VSAN Configuration screen, for the VLAN Configuration for both FIs, select VLAN-FI. For the VSAN configuration for FI-A, select VSAN-FI-A and for FI-B select VSAN-FI-B that were configured in the previous section. Click Next.

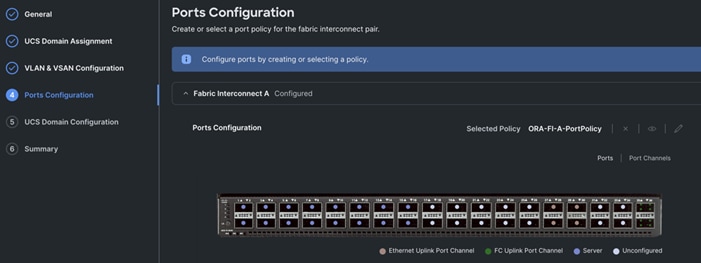

Step 5. In the Port Configuration section, for the Port Configuration Policy for FI-A select ORA-FI-A-Port-Policy, and for FI-B select ORA-FI-B-Port-Policy.

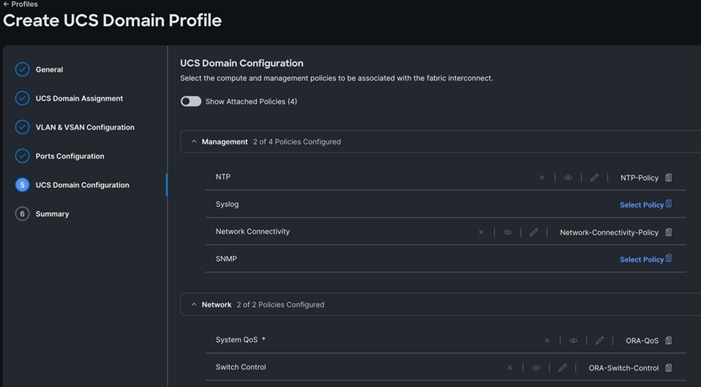

Step 6. In the UCS Domain Configuration section, select the policy for NTP, Network Connectivity, System QoS and Switch Control as shown below:

Step 7. In the Summary window, review the policies and click Deploy to create Domain Profile.

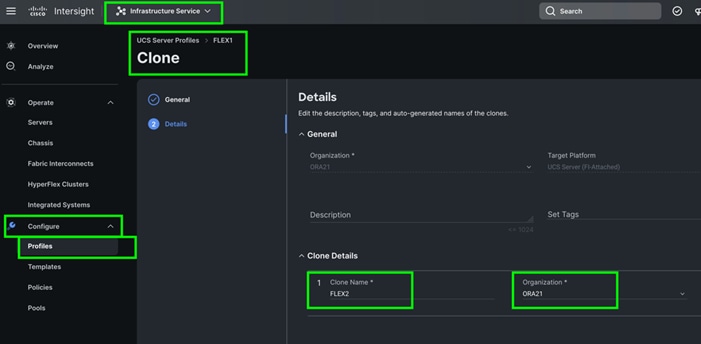

After the Cisco UCS domain profile has been successfully created and deployed, the policies including the port policies are pushed to the Cisco UCS fabric interconnects. The Cisco UCS domain profile can easily be cloned to install additional Cisco UCS systems. When cloning the Cisco UCS domain profile, the new Cisco UCS domains utilize the existing policies for the consistent deployment of additional Cisco UCS systems at scale.

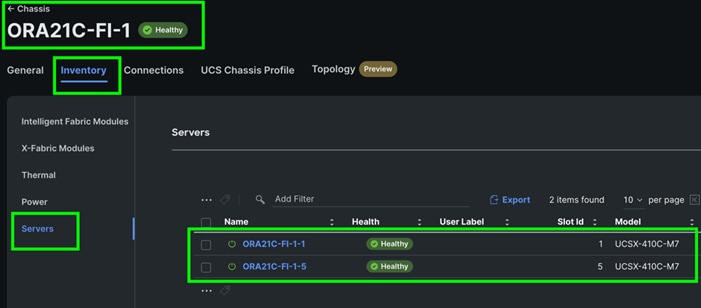

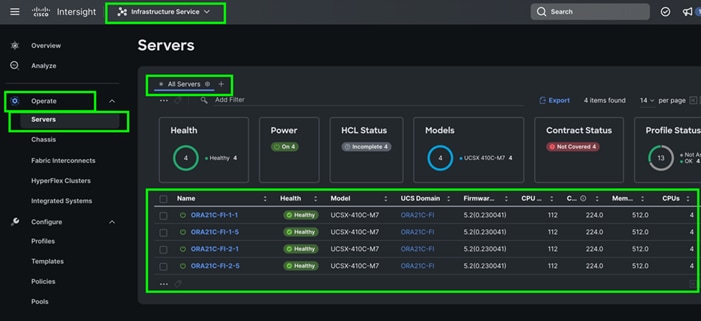

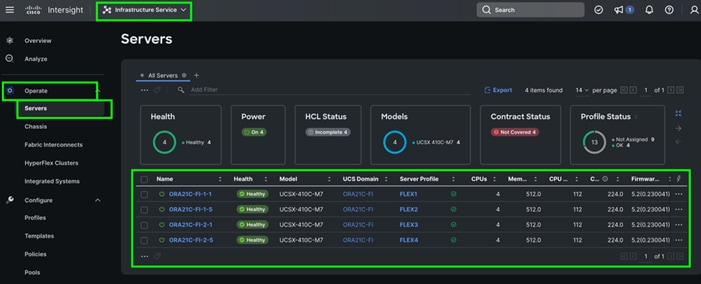

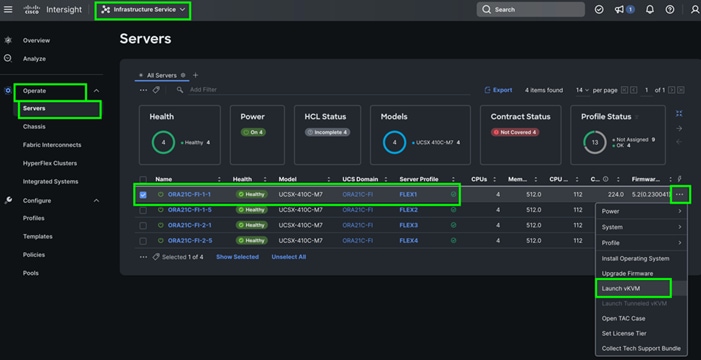

The Cisco UCS X9508 Chassis and Cisco UCS X410c M7 Compute Nodes are automatically discovered when the ports are successfully configured using the domain profile as shown below:

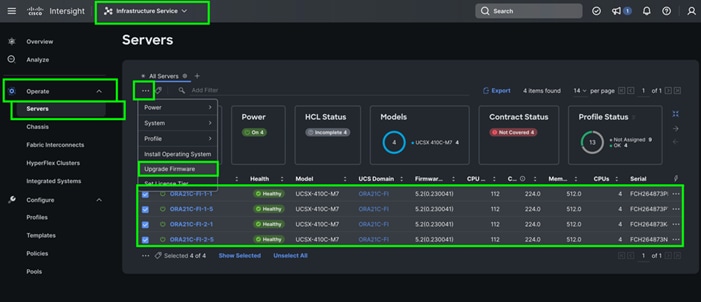

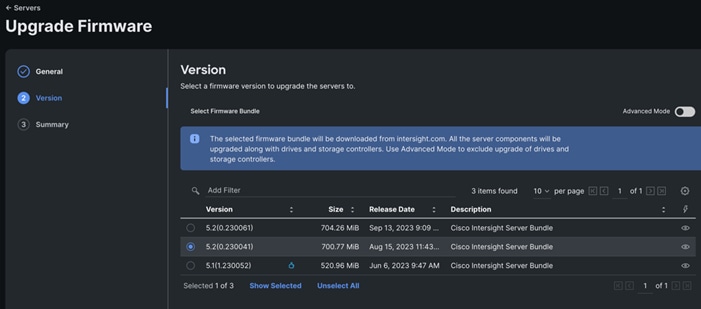

Step 8. After the servers are discovered, use IMM to upgrade the firmware on all servers to the supported release. To do this, select the All Servers check box, click the ellipsis (...), and select Upgrade Firmware from the drop-down list.

Step 9. In the Upgrade Firmware section, select all servers and click Next. In the Version section, for the supported firmware version release select “5.2(0.230041)” and click Next, then click Upgrade to upgrade the firmware on all servers simultaneously.

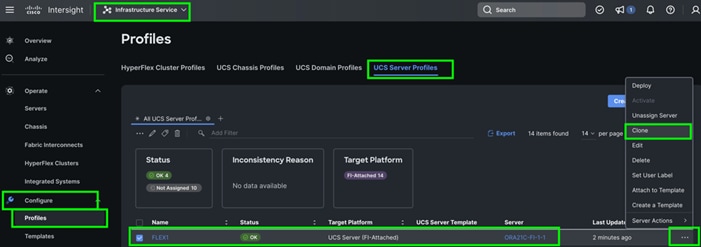

After the successful firmware upgrade, you can create a server profile template and a server profile for IMM configuration.

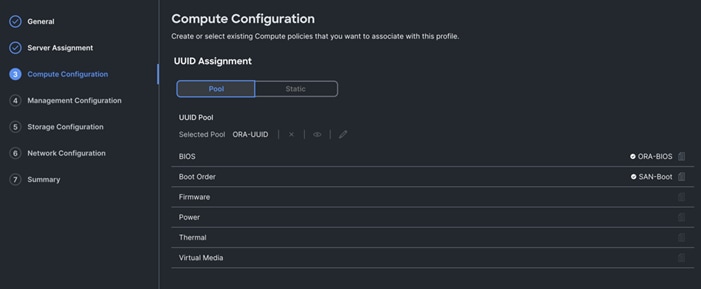

Configure Policies for Server Profile

A server profile enables resource management by simplifying policy alignment and server configuration. The server profile wizard groups the server policies into the following categories to provide a quick summary view of the policies that are attached to a profile:

· Compute Configuration: BIOS, Boot Order, and Virtual Media policies.

· Management Configuration: Certificate Management, IMC Access, IPMI (Intelligent Platform Management Interface) Over LAN, Local User, Serial Over LAN, SNMP (Simple Network Management Protocol), Syslog and Virtual KVM (Keyboard, Video, and Mouse).

· Storage Configuration: SD Card, Storage.

· Network Configuration: LAN connectivity and SAN connectivity policies.

Some of the characteristics of the server profile template for FlexPod are as follows:

· BIOS policy is created to specify various server parameters in accordance with FlexPod best practices.

· Boot order policy defines virtual media (KVM mapper DVD) and SAN boot through NetApp storage.

· IMC access policy defines the management IP address pool for KVM access.

· LAN connectivity policy is used to create two virtual network interface cards (vNICs). One vNIC carries server node management and public network traffic; the second vNIC carries the private server-to-server network (Cache Fusion) traffic for Oracle RAC.

· SAN connectivity policy is used to create a total of 10 virtual host bus adapters (vHBAs) per server: 2 vHBAs for FC SAN boot and 8 vHBAs for NVMe/FC database traffic. This lets each server node boot from the FC SAN and run NVMe/FC traffic.

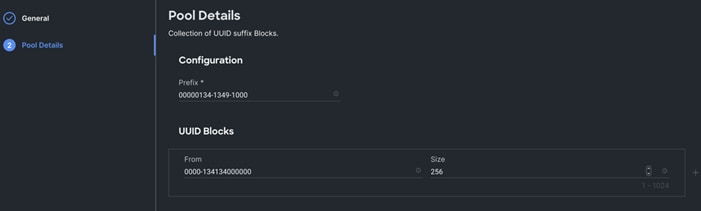

Procedure 1. Configure UUID Pool

Step 1. To create a UUID Pool for Cisco UCS, go to Infrastructure Service > Configure > Pools and click Create Pool. Select the UUID option.

Step 2. In the UUID Pool Create section, for the Organization select ORA21 and for the name enter ORA-UUID. Click Next.

Step 3. Enter the Prefix, UUID block, and size according to your environment and click Create.
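A UUID pool block is a fixed prefix followed by an incrementing suffix. This Python sketch expands such a block. It assumes the layout used by Cisco UCS UUID pools: an 8-4-4 hexadecimal prefix and a 4-12 hexadecimal suffix. The prefix and starting suffix values are hypothetical.

```python
import uuid


def uuid_block(prefix: str, first_suffix: str, size: int) -> list[uuid.UUID]:
    """Expand a pool block: fixed 8-4-4 prefix + incrementing 4-12 suffix."""
    start = int(first_suffix.replace("-", ""), 16)
    if start + size > 16 ** 16:
        raise ValueError("block runs past the largest 16-digit suffix")
    out = []
    for i in range(size):
        suffix = f"{start + i:016x}"  # 16 hex digits, zero-padded
        out.append(uuid.UUID(f"{prefix}-{suffix[:4]}-{suffix[4:]}"))
    return out


# Hypothetical prefix and suffix for the ORA-UUID pool.
block = uuid_block("a0000000-0000-0000", "0000-000000000001", 4)
for u in block:
    print(u)
```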

Procedure 2. Configure BIOS Policy

Note: For more information, see “Performance Tuning Best Practices Guide for Cisco UCS M7 Platforms.”

Note: For this specific database solution, we created a BIOS policy that uses all “Platform Default” values.

Step 1. To create a BIOS Policy, go to Infrastructure Service > Configure > Policies and click Create Policy. Select UCS Server as the platform type, select BIOS, and click Start.

Step 2. In the BIOS Create General section, for the Organization select ORA21 and for the Policy name enter ORA-BIOS. Click Next.

Step 3. Click Create to create the platform default BIOS policy.

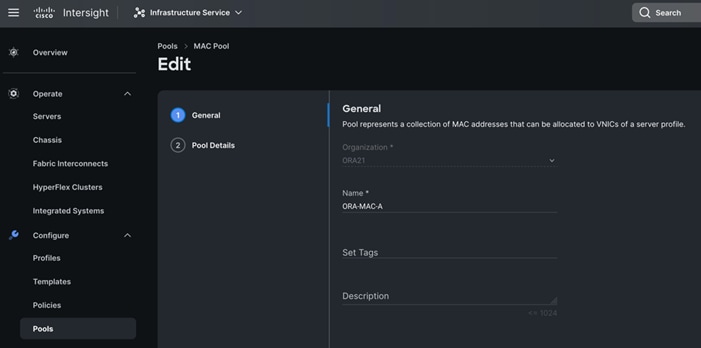

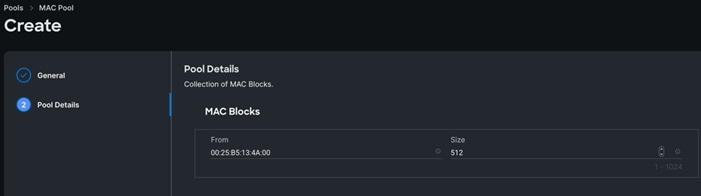

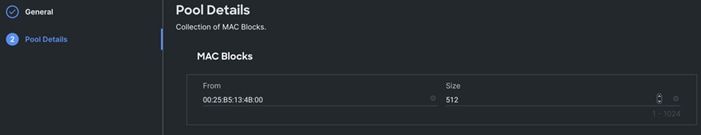

Procedure 3. Create MAC Pool

Step 1. To configure a MAC Pool for the server vNICs, go to Infrastructure Service > Configure > Pools and click Create Pool. Select the MAC option to create a MAC Pool.

Step 2. In the MAC Pool Create section, for the Organization, select ORA21 and for the Policy name ORA-MAC-A. Click Next.

Step 3. Enter the starting address (From) and the Size of the MAC block according to your environment and click Create.

Note: For this solution, we configured two MAC Pools. ORA-MAC-A provides the MAC addresses for the VLAN 134 (public network traffic) vNICs on all servers through FI-A. ORA-MAC-B provides the MAC addresses for the VLAN 10 (private network traffic) vNICs on all servers through FI-B.

Step 4. Create a second MAC Pool to provide MAC addresses to all vNICs running on VLAN 10.

Step 5. Go to Infrastructure Service > Configure > Pools and click Create Pool. Select the MAC option to create a MAC Pool.

Step 6. In the MAC Pool Create section, for the Organization, select ORA21 and for the Policy name “ORA-MAC-B.” Click Next.

Step 7. Enter the starting address (From) and the Size of the MAC block according to your environment and click Create.
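The two MAC pools must not overlap, and encoding the fabric in the address makes troubleshooting easier. This Python sketch expands a MAC block from its first address and size.

00:25:B5 is the prefix commonly used for Cisco UCS MAC pools. The A1/B1 fourth octet (marking FI-A and FI-B) and the block size of 16 are hypothetical conventions.

```python
def mac_block(first: str, size: int) -> list[str]:
    """Expand a MAC pool block from its first address and size."""
    start = int(first.replace(":", ""), 16)
    if start + size > 1 << 48:
        raise ValueError("block runs past FF:FF:FF:FF:FF:FF")
    macs = []
    for i in range(size):
        raw = f"{start + i:012X}"  # 12 uppercase hex digits
        macs.append(":".join(raw[j:j + 2] for j in range(0, 12, 2)))
    return macs


# Hypothetical blocks: 4th octet A1 for ORA-MAC-A (FI-A), B1 for ORA-MAC-B (FI-B).
ora_mac_a = mac_block("00:25:B5:A1:00:00", 16)
ora_mac_b = mac_block("00:25:B5:B1:00:00", 16)
print(ora_mac_a[:2], ora_mac_b[-1])
```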

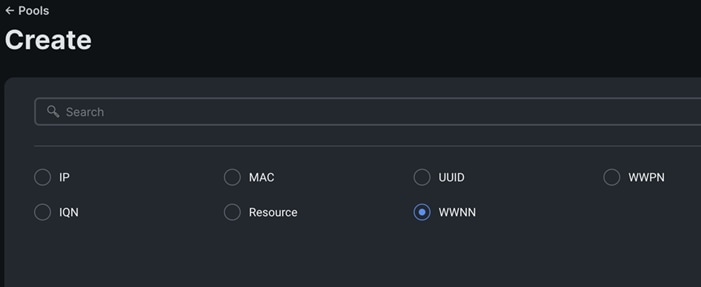

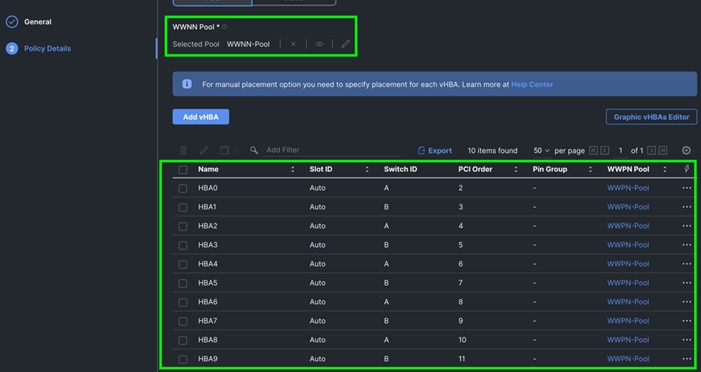

Procedure 4. Create WWNN and WWPN Pools

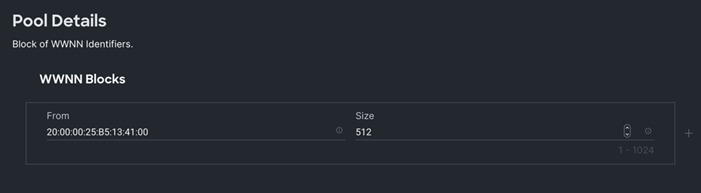

Step 1. To create a WWNN Pool, go to Infrastructure Service > Configure > Pools and click Create Pool. Select the WWNN option.

Step 2. In the WWNN Pool Create section, for the Organization select ORA21 and name it “WWNN-Pool.” Click Next.

Step 3. Add a WWNN block and the size of the pool according to your environment.

Step 4. Click Create to create this policy.

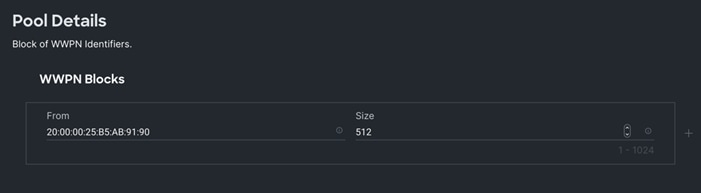

Step 5. To create a WWPN Pool, go to Infrastructure Service > Configure > Pools and click Create Pool. Select the WWPN option.

Step 6. In the WWPN Pool Create section, for the Organization select ORA21 and name it “WWPN-Pool.” Click Next.

Step 7. Add a WWPN block and the size of the pool according to your environment.

Step 8. Click Create to create this policy.

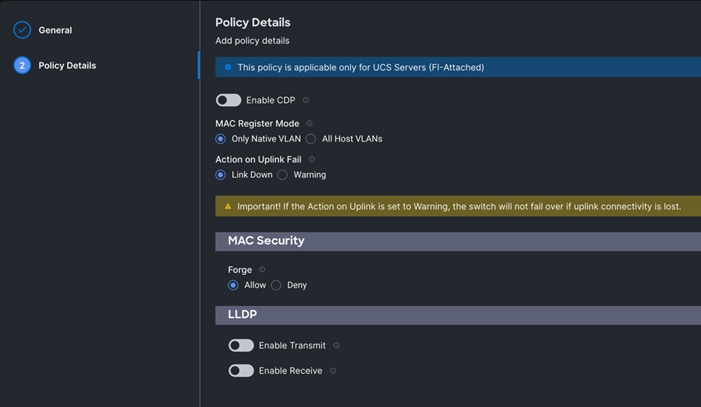
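Each pool must be at least as large as the number of identities the server profiles draw from it. This Python sketch derives minimum pool sizes from the per-server counts described earlier in this section:

- One UUID and one WWNN per server.
- One vNIC on each fabric: public traffic through ORA-MAC-A and private traffic through ORA-MAC-B.
- 10 vHBAs per server.

The server count of 8 is a hypothetical example. Size the pools for your own deployment, plus headroom for growth.

```python
def pool_sizes(servers: int, vnics_per_fabric: int = 1, vhbas: int = 10) -> dict[str, int]:
    """Minimum pool sizes for servers built from one server profile template."""
    return {
        "ORA-UUID": servers,                      # one UUID per server
        "ORA-MAC-A": servers * vnics_per_fabric,  # public vNIC via FI-A
        "ORA-MAC-B": servers * vnics_per_fabric,  # private vNIC via FI-B
        "WWNN-Pool": servers,                     # one node name per server
        "WWPN-Pool": servers * vhbas,             # 2 boot + 8 NVMe/FC vHBAs
    }


sizes = pool_sizes(8)  # hypothetical: 8 compute nodes
print(sizes)
```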

Procedure 5. Configure Ethernet Network Control Policy

Step 1. To configure the Ethernet Network Control Policy for the UCS server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet Network Control.

Step 3. In the Ethernet Network Control Policy Create section, for the Organization select ORA21 and for the policy name enter “ORA-Eth-Network-Control.” Click Next.

Step 4. In the Policy Details section, keep the parameters as shown below:

Step 5. Click Create to create this policy.

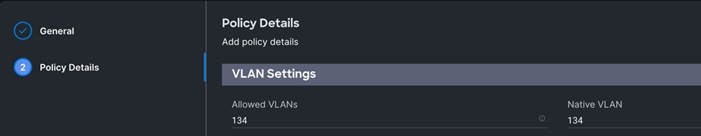

Procedure 6. Configure Ethernet Network Group Policy

Note: For this solution, we configured two Ethernet Network Group policies, one for each of the two VLANs.

Step 1. To configure the Ethernet Network Group Policy for the UCS server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet Network Group.

Step 3. In the Ethernet Network Group Policy Create section, for the Organization select ORA21 and for the policy name enter “Eth-Network-134.” Click Next.

Step 4. In the Policy Details section, for the Allowed VLANs and Native VLAN enter 134 as shown below:

Step 5. Click Create to create this policy for VLAN 134.

Step 6. Create “Eth-Network-10” and add VLAN 10 for the Allowed VLANs and Native VLAN.

Note: For this solution, we applied these Ethernet Network Group policies to different vNICs so that each vNIC carries the traffic of a single VLAN.

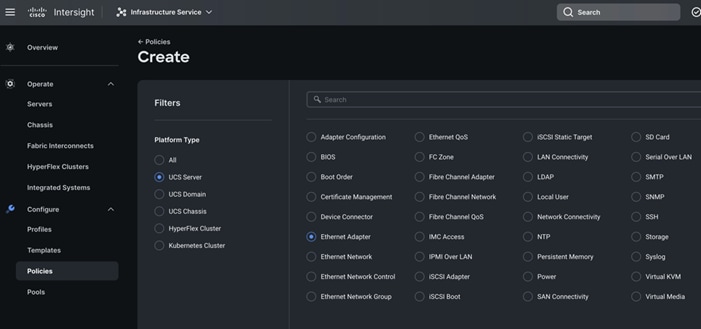

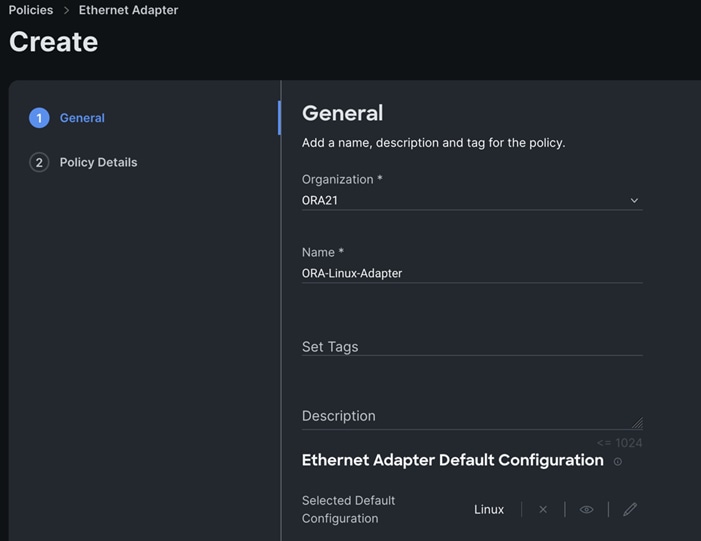

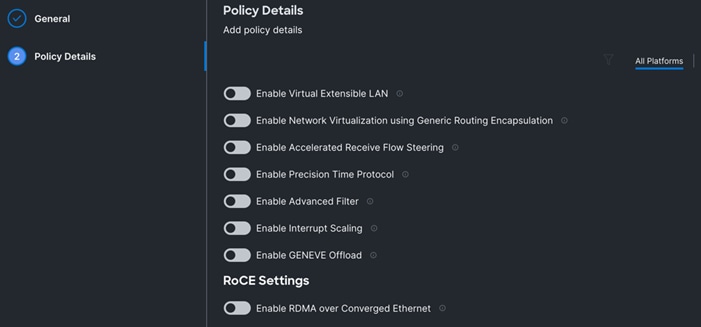

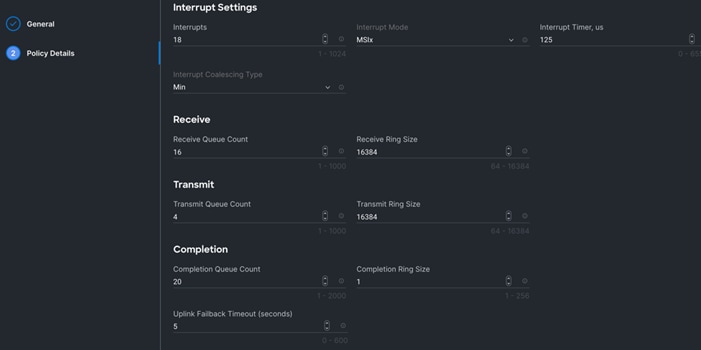

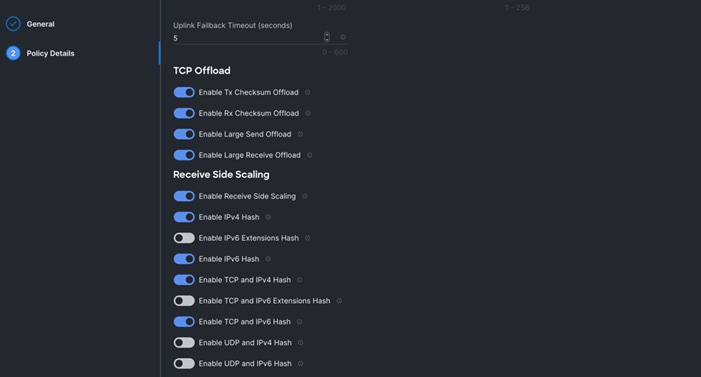

Procedure 7. Configure Ethernet Adapter Policy

Step 1. To configure the Ethernet Adapter Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet Adapter.

Step 3. In the Ethernet Adapter Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-Linux-Adapter.”

Step 4. Select the Default Ethernet Adapter Configuration option and select Linux from the popup menu. Click Next.

Step 5. In the Policy Details section, keep the “Interrupt Settings” parameters unchanged for recommended Ethernet adapter performance.

Step 6. Click Create to create this policy.

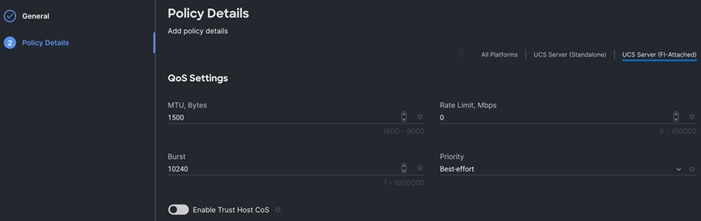

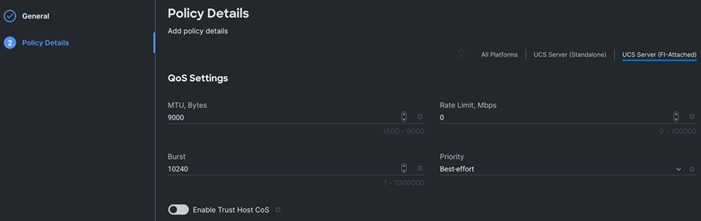

Procedure 8. Create Ethernet QoS Policy

Step 1. To configure the Ethernet QoS Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Ethernet QoS.

Step 3. In the Create Ethernet QoS Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-Eth-QoS-1500.” Click Next.

Step 4. Enter the QoS settings as shown below to configure a 1500 MTU for the management vNIC.

Step 5. Click Create to create this policy for vNIC0.

Step 6. Create another QoS policy for the second vNIC, which carries the Oracle private network and interconnect traffic.

Step 7. In the Create Ethernet QoS Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-Eth-QoS-9000.” Click Next.

Step 8. Enter the QoS settings as shown below to configure a 9000 MTU for the Oracle database private interconnect vNIC traffic.

Step 9. Click Create to create this policy for vNIC1.
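The larger MTU matters on the interconnect because Oracle RAC nodes exchange database blocks over the private network. As a rough worked example, assume an 8 KB block (the Oracle default block size) sent as one UDP datagram over IPv4 with no IP options; the Python sketch below counts the IP packets needed at each MTU. Real interconnect messages add their own headers, so treat the result as approximate.

```python
import math

IPV4_HEADER = 20  # bytes, assuming no IP options
UDP_HEADER = 8    # bytes


def ipv4_packets(udp_payload: int, mtu: int) -> int:
    """IPv4 packets (fragments) needed to carry one UDP datagram at a given MTU."""
    ip_payload = udp_payload + UDP_HEADER
    # Every fragment except the last must carry a multiple of 8 data bytes.
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    return math.ceil(ip_payload / per_fragment)


DB_BLOCK = 8192  # assumed 8 KB database block
for mtu in (1500, 9000):
    print(f"MTU {mtu}: {ipv4_packets(DB_BLOCK, mtu)} packet(s) per block")
# MTU 1500: 6 packet(s) per block
# MTU 9000: 1 packet(s) per block
```

Under these assumptions, a 1500 MTU splits every block into six fragments that must all arrive and be reassembled, while a 9000 MTU carries the block in a single frame.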

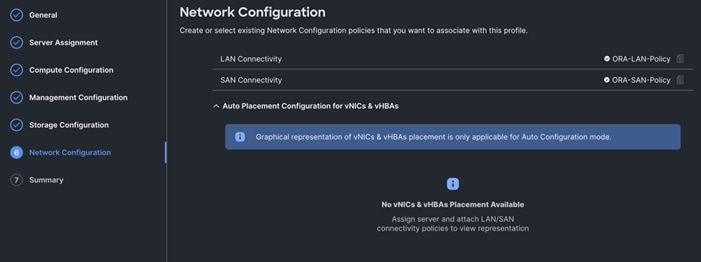

Procedure 9. Configure LAN Connectivity Policy

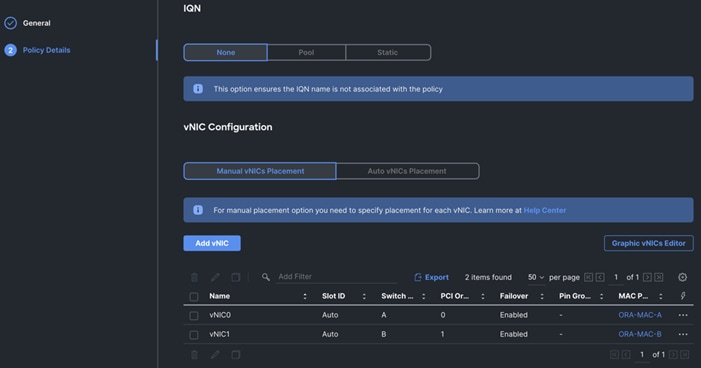

Two vNICs were configured per server as shown in Table 10.

| Name | Switch ID | PCI-Order | MAC Pool | Fail-Over |
|---|---|---|---|---|
| vNIC0 | FI-A | 0 | ORA-MAC-A | Enabled |
| vNIC1 | FI-B | 1 | ORA-MAC-B | Enabled |

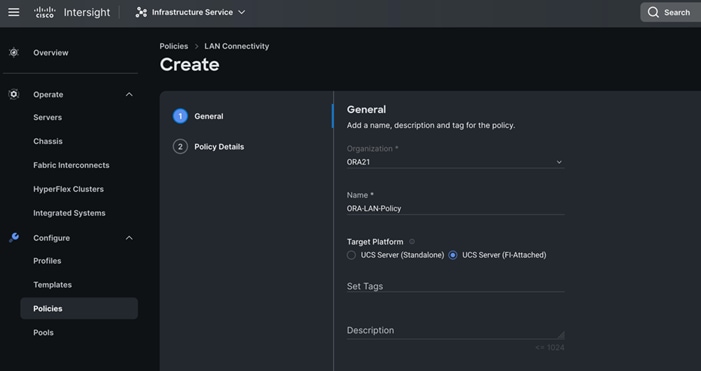

Step 1. Go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select “UCS Server” and for the policy select “LAN Connectivity.”

Step 2. In the LAN Connectivity Policy Create section, for the Organization select ORA21, for the policy name enter “ORA-LAN-Policy” and for the Target Platform select UCS Server (FI-Attached). Click Next.

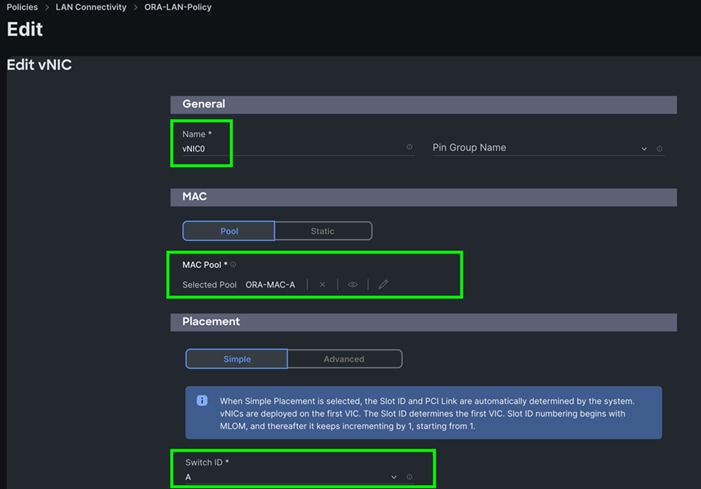

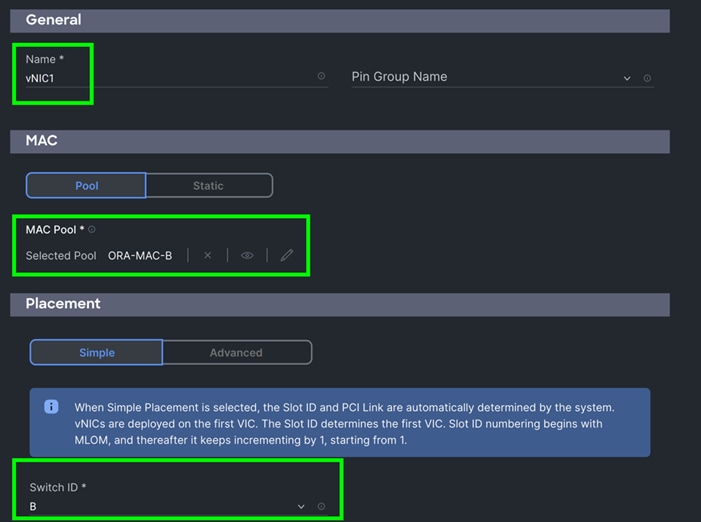

Step 3. In the Policy Details section, click Add vNIC. In the Add vNIC section, for the Name enter “vNIC0” and for the MAC Pool select ORA-MAC-A.

Step 4. In the Placement option, select Simple and for the Switch ID select A as shown below:

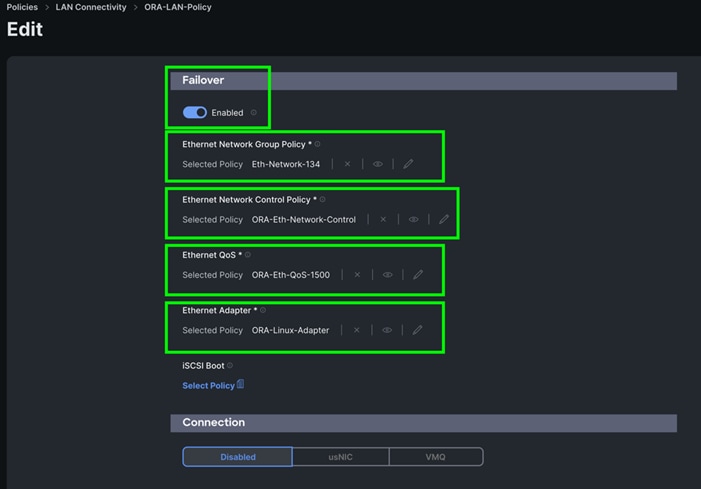

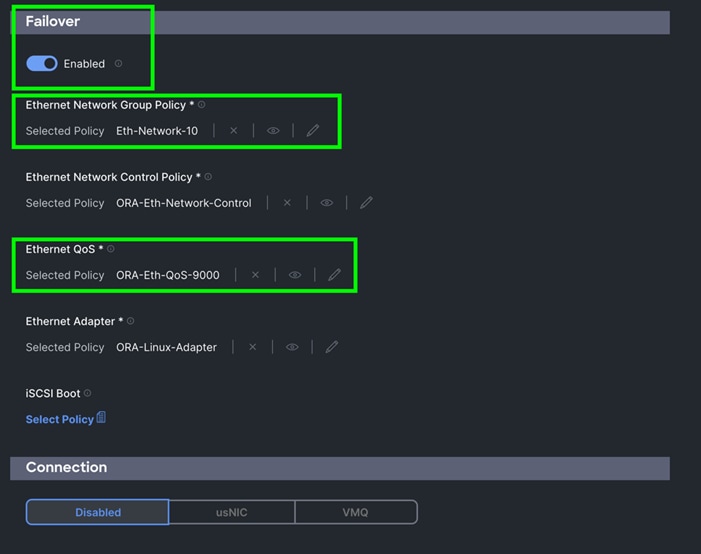

Step 5. For Failover select Enable for this vNIC configuration. This enables the vNIC to fail over to the other FI.

Step 6. For the Ethernet Network Group Policy, select Eth-Network-134. For the Ethernet Network Control Policy select ORA-Eth-Network-Control. For Ethernet QoS, select ORA-Eth-QoS-1500, and for the Ethernet Adapter, select ORA-Linux-Adapter. Click Add to add vNIC0 to this policy.

Step 7. Add a second vNIC. For the name enter "vNIC1" and for the MAC Pool select ORA-MAC-B.

Step 8. In the Placement option, select Simple and for the Switch ID select B as shown below:

Step 9. For Failover select Enable for this vNIC configuration. This enables the vNIC to fail over to the other FI.

Step 10. For the Ethernet Network Group Policy, select Eth-Network-10. For the Ethernet Network Control Policy, select ORA-Eth-Network-Control. For the Ethernet QoS, select ORA-Eth-QoS-9000, and for the Ethernet Adapter, select ORA-Linux-Adapter.

Step 11. Click Add to add vNIC1 to this policy.

Step 12. After adding these two vNICs, review and make sure the Switch ID, PCI Order, Failover Enabled, and MAC Pool are as shown below:

Step 13. Click Create to create this policy.
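The review in Step 12 can also be expressed as a small consistency check. The Python sketch below transcribes the two vNICs from Table 10 and the steps of this procedure into plain data and flags duplicate PCI orders, disabled failover, and a MAC pool whose suffix does not match the vNIC's fabric. The suffix rule is an assumption based on this design's naming (ORA-MAC-A on FI-A, ORA-MAC-B on FI-B), and the `VNic` class and `check_lan_policy` function are hypothetical helpers, not part of any Cisco tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VNic:
    name: str
    switch_id: str      # "A" or "B"
    pci_order: int
    mac_pool: str
    failover: bool
    network_group: str  # Ethernet Network Group policy
    qos_policy: str     # Ethernet QoS policy


# Values transcribed from Table 10 and Steps 3-10 of this procedure.
ORA_LAN_POLICY = [
    VNic("vNIC0", "A", 0, "ORA-MAC-A", True, "Eth-Network-134", "ORA-Eth-QoS-1500"),
    VNic("vNIC1", "B", 1, "ORA-MAC-B", True, "Eth-Network-10", "ORA-Eth-QoS-9000"),
]


def check_lan_policy(vnics: list[VNic]) -> list[str]:
    """Return human-readable problems; an empty list means the layout looks consistent."""
    problems = []
    orders = [v.pci_order for v in vnics]
    if len(orders) != len(set(orders)):
        problems.append("two vNICs share a PCI order")
    for v in vnics:
        # Assumed naming convention: the pool suffix matches the fabric.
        if not v.mac_pool.endswith(f"-{v.switch_id}"):
            problems.append(f"{v.name}: {v.mac_pool} does not match fabric {v.switch_id}")
        if not v.failover:
            problems.append(f"{v.name}: fabric failover is disabled")
    return problems


print(check_lan_policy(ORA_LAN_POLICY))  # []
```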

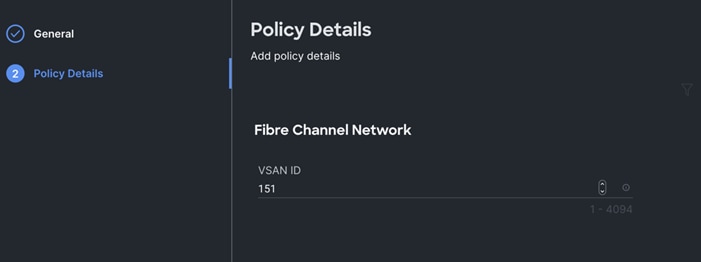

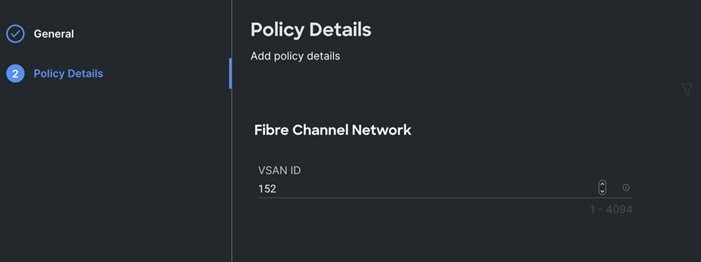

Procedure 10. Create Fibre Channel Network Policy

Step 1. To configure the Fibre Channel Network Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Fibre Channel Network.

Note: For this solution, we configured two Fibre Channel Network policies, “ORA-FC-Network-151” and “ORA-FC-Network-152,” to carry the traffic of VSAN 151 and VSAN 152, one VSAN on each Fabric Interconnect.

Step 3. In the Create Fibre Channel Network Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-FC-Network-151.” Click Next.

Step 4. For the VSAN ID enter 151 as shown below:

Step 5. Click Create to create this policy for VSAN 151.

Step 6. To create another Fibre Channel Network Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 7. For the platform type select UCS Server and for the policy select Fibre Channel Network.

Step 8. In the Create Fibre Channel Network Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-FC-Network-152.” Click Next.

Step 9. For the VSAN ID enter 152 as shown below:

Step 10. Click Create to create this policy for VSAN 152.

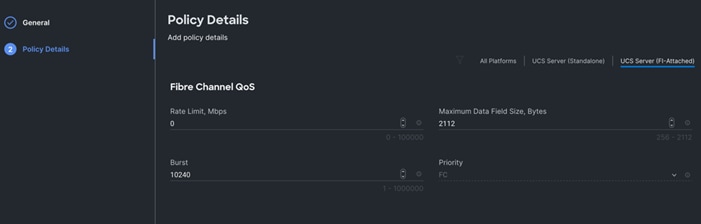

Procedure 11. Create Fibre Channel QoS Policy

Step 1. To configure the Fibre Channel QoS Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Fibre Channel QoS.

Step 3. In the Create Fibre Channel QoS Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-FC-QoS.” Click Next.

Step 4. Enter the QoS settings as shown below to configure Fibre Channel QoS for the vHBAs:

Step 5. Click Create to create this policy for Fibre Channel QoS.

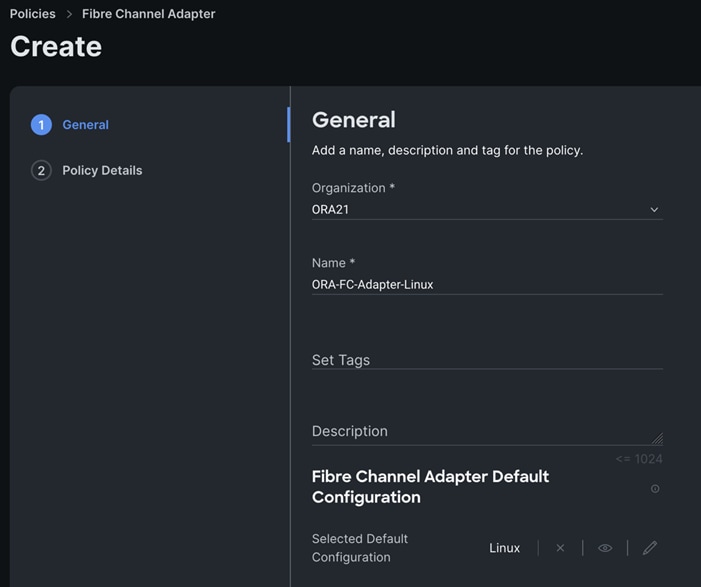

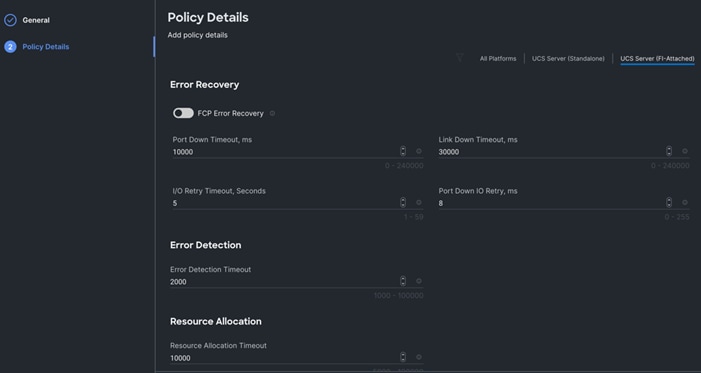

Procedure 12. Create Fibre Channel Adapter Policy

Step 1. To configure the Fibre Channel Adapter Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.

Step 2. For the platform type select UCS Server and for the policy select Fibre Channel Adapter.

Step 3. In the Create Fibre Channel Adapter Configuration section, for the Organization select ORA21 and for the policy name enter “ORA-FC-Adapter-Linux”. For the Fibre Channel Adapter Default Configuration, select Linux and click Next.

Note: For this solution, we used the default Linux adapter settings for both the FC and NVMe/FC vHBAs.

Step 4. Click Create to create this policy for vHBA.

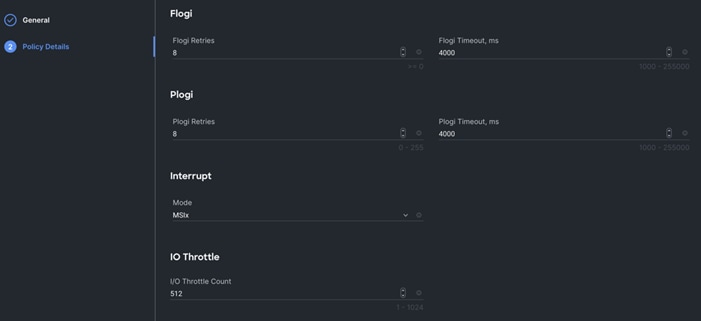

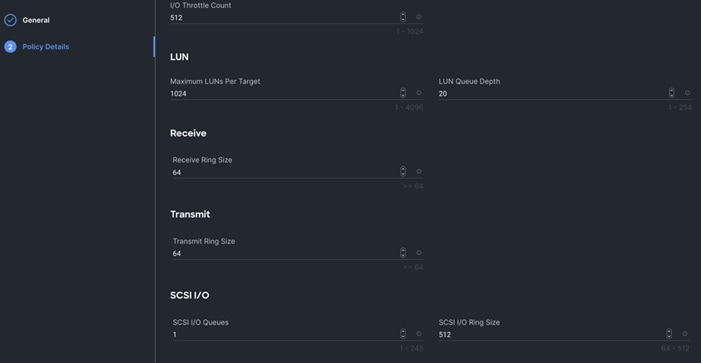

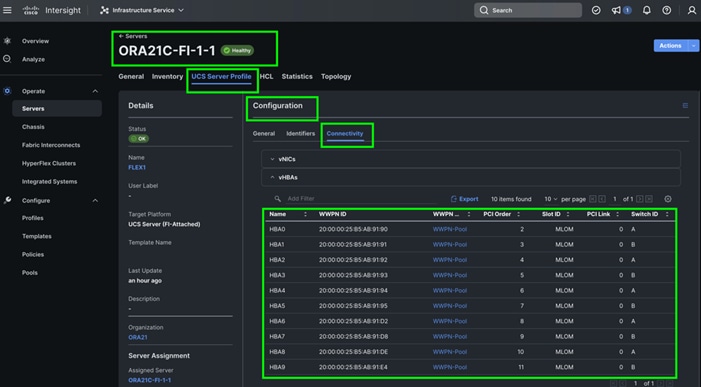

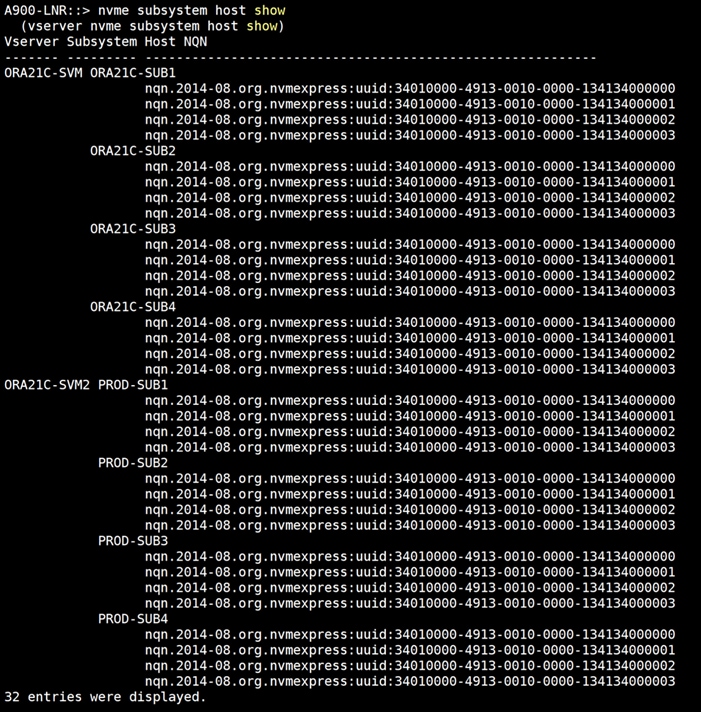

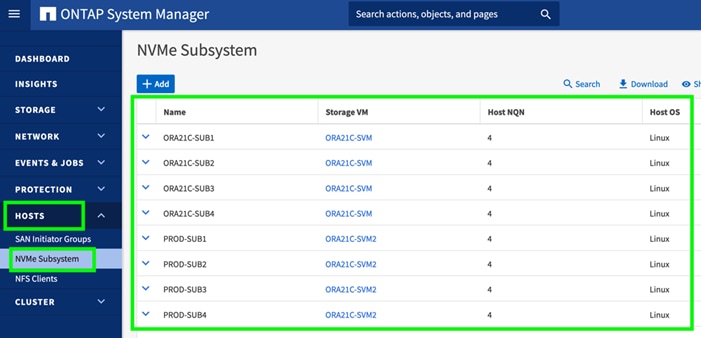

Procedure 13. Configure SAN Connectivity Policy

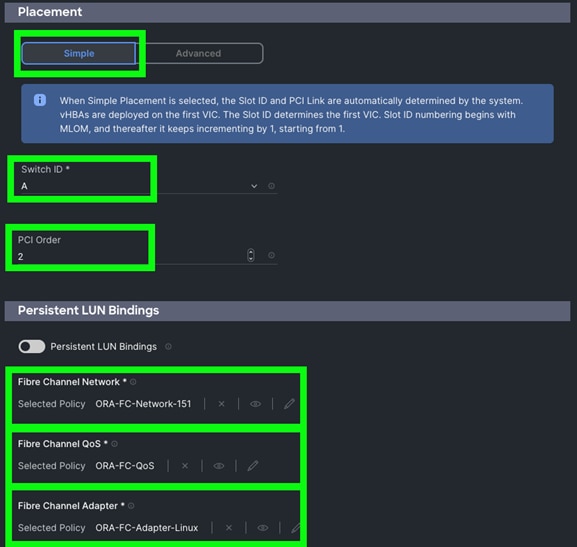

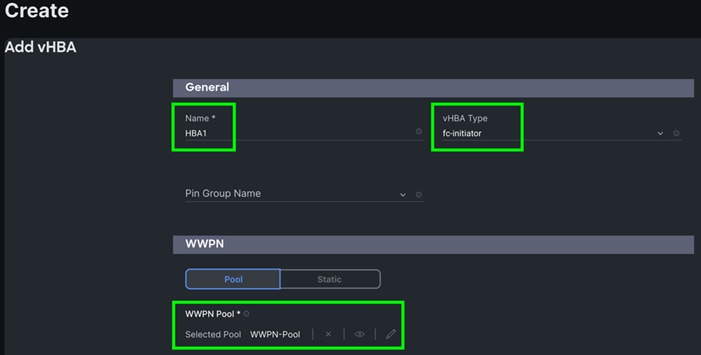

As mentioned previously, two vHBAs (HBA0 and HBA1) were configured for Boot from SAN on two VSANs. HBA0 was configured to carry the FC network traffic on VSAN 151 and boot from SAN through the MDS-A switch, while HBA1 was configured to carry the FC network traffic on VSAN 152 and boot from SAN through the MDS-B switch.

Note: For best performance, we recommend creating at least eight vHBAs for NVMe/FC traffic. This solution uses eight vHBAs for the NVMe/FC workload.

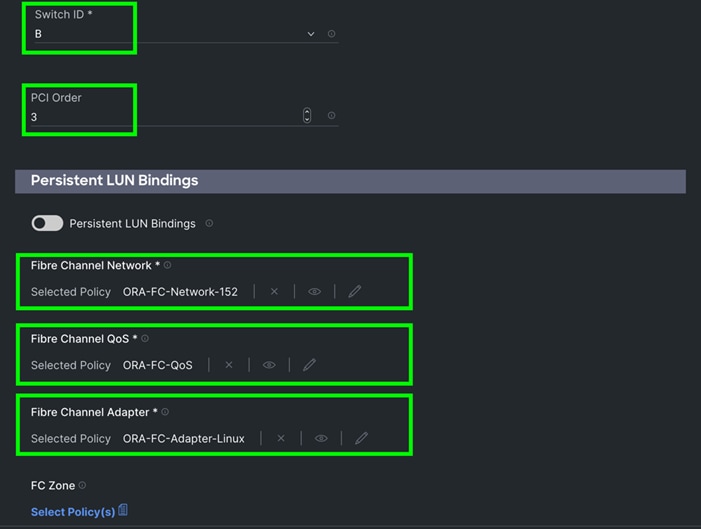

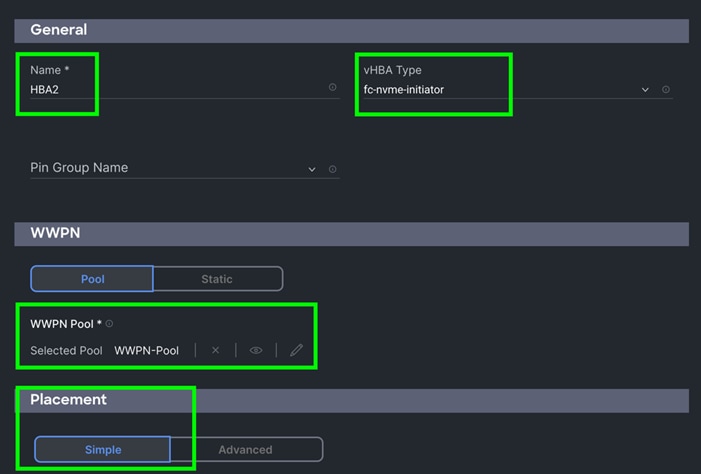

A total of eight vHBAs were configured to carry the NVMe/FC network traffic for the database on two VSANs. Four vHBAs (HBA2, HBA4, HBA6, and HBA8) were configured to carry the NVMe/FC traffic on VSAN 151 for Oracle RAC database storage through the MDS-A switch. Four vHBAs (HBA3, HBA5, HBA7, and HBA9) were configured to carry the NVMe/FC traffic on VSAN 152 for Oracle RAC database storage through the MDS-B switch.

For each server node, a total of 10 vHBAs were configured as listed in Table 10.

| Name | vHBA Type | Switch ID | PCI-Order | Fibre Channel Network | Fibre Channel Adapter | Fibre Channel QoS |
|---|---|---|---|---|---|---|
| HBA0 | fc-initiator | FI-A | 2 | ORA-FC-Network-151 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA1 | fc-initiator | FI-B | 3 | ORA-FC-Network-152 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA2 | fc-nvme-initiator | FI-A | 4 | ORA-FC-Network-151 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA3 | fc-nvme-initiator | FI-B | 5 | ORA-FC-Network-152 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA4 | fc-nvme-initiator | FI-A | 6 | ORA-FC-Network-151 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA5 | fc-nvme-initiator | FI-B | 7 | ORA-FC-Network-152 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA6 | fc-nvme-initiator | FI-A | 8 | ORA-FC-Network-151 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA7 | fc-nvme-initiator | FI-B | 9 | ORA-FC-Network-152 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA8 | fc-nvme-initiator | FI-A | 10 | ORA-FC-Network-151 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
| HBA9 | fc-nvme-initiator | FI-B | 11 | ORA-FC-Network-152 | ORA-FC-Adapter-Linux | ORA-FC-QoS |
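The vHBA layout follows a simple pattern: even-numbered vHBAs go to FI-A on VSAN 151, odd-numbered ones to FI-B on VSAN 152, the first two are FC boot initiators, and PCI order starts at 2 because vNIC0 and vNIC1 already use 0 and 1. The Python sketch below regenerates Table 10 from that pattern, which can be handy for cross-checking the values entered in the SAN Connectivity Policy. The `VHba` class and `build_vhbas` function are hypothetical helpers, and requiring an even NVMe/FC count is a choice of this sketch to keep both fabrics balanced.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VHba:
    name: str
    vhba_type: str
    switch_id: str
    pci_order: int
    fc_network: str
    fc_adapter: str = "ORA-FC-Adapter-Linux"
    fc_qos: str = "ORA-FC-QoS"


def build_vhbas(nvme_count: int = 8, first_pci_order: int = 2) -> list[VHba]:
    """Rebuild the vHBA layout of Table 10.

    HBA0/HBA1 are FC boot vHBAs; the rest are NVMe/FC. Even-numbered vHBAs
    use fabric A (VSAN 151) and odd-numbered vHBAs use fabric B (VSAN 152).
    """
    if nvme_count % 2:
        raise ValueError("use an even NVMe/FC count to keep both fabrics balanced")
    layout = []
    for i in range(2 + nvme_count):
        fabric = "A" if i % 2 == 0 else "B"
        vsan = 151 if fabric == "A" else 152
        layout.append(VHba(
            name=f"HBA{i}",
            vhba_type="fc-initiator" if i < 2 else "fc-nvme-initiator",
            switch_id=fabric,
            pci_order=first_pci_order + i,
            fc_network=f"ORA-FC-Network-{vsan}",
        ))
    return layout


for v in build_vhbas():
    print(v.name, v.vhba_type, v.switch_id, v.pci_order, v.fc_network)
# First line printed: HBA0 fc-initiator A 2 ORA-FC-Network-151
```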

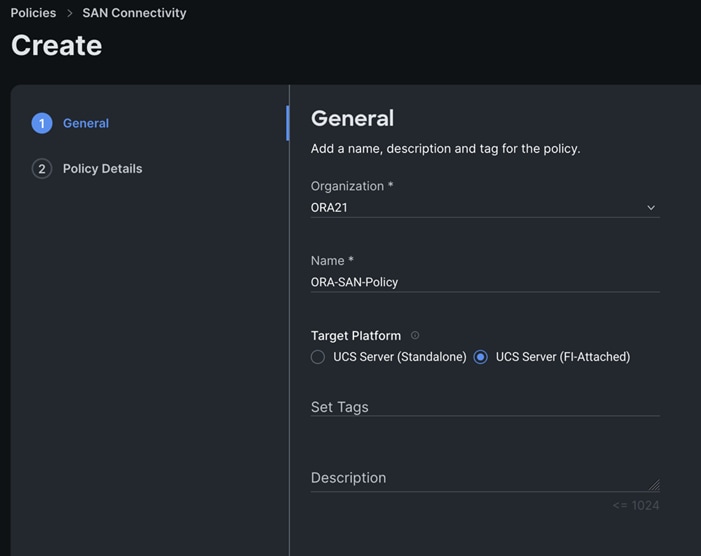

Step 1. Go to Infrastructure Service > Configure > Policies and click Create Policy. For the platform type select UCS Server and for the policy select SAN Connectivity.

Step 2. In the SAN Connectivity Policy Create section, for the Organization select ORA21, for the policy name enter “ORA-SAN-Policy” and for the Target Platform select UCS Server (FI-Attached). Click Next.

Step 3. In the Policy Details section, select WWNN Pool and then select WWNN-Pool that you previously created. Click Add vHBA.

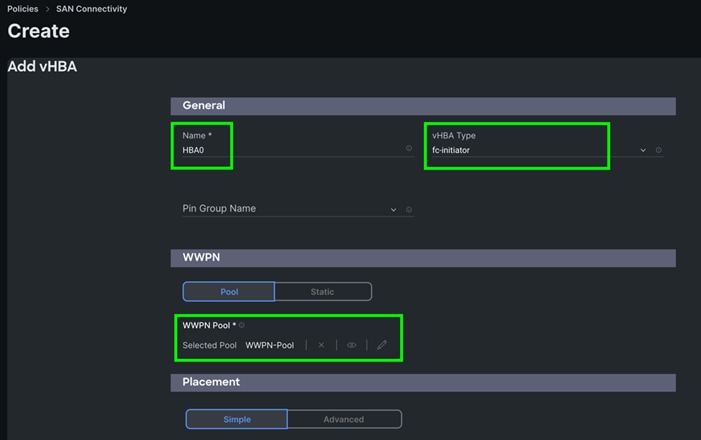

Step 4. In the Add vHBA section, for the Name enter “HBA0” and for the vHBA Type select fc-initiator.

Step 5. For the WWPN Pool, select the WWPN-Pool that you previously created, as shown below:

Step 6. For the Placement, keep the option Simple and for the Switch ID select A and for the PCI Order select 2.

Step 7. For the Fibre Channel Network select ORA-FC-Network-151.

Step 8. For the Fibre Channel QoS select ORA-FC-QoS.

Step 9. For the Fibre Channel Adapter select ORA-FC-Adapter-Linux.

Step 10. Click Add to add HBA0.

Step 11. Click Add vHBA to add a second HBA.

Step 12. In the Add vHBA section, for the Name enter “HBA1” and for the vHBA Type select fc-initiator.

Step 13. For the WWPN Pool select the WWPN-Pool that you previously created, as shown below:

Step 14. For the Placement, keep the option Simple and for Switch ID select B and for the PCI Order select 3.

Step 15. For the Fibre Channel Network select ORA-FC-Network-152.

Step 16. For the Fibre Channel QoS select ORA-FC-QoS.

Step 17. For the Fibre Channel Adapter select ORA-FC-Adapter-Linux.

Step 18. Click Add to add HBA1.

Note: For this solution, we added eight more vHBAs for NVMe/FC.

Step 19. Click Add vHBA.

Step 20. In the Add vHBA section, for the Name enter “HBA2” and for the vHBA Type select fc-nvme-initiator.

Step 21. For the WWPN Pool select WWPN-Pool, which was previously created, as shown below:

Step 22. For the Placement, keep the option Simple and for the Switch ID select A and for the PCI Order select 4.

Step 23. For the Fibre Channel Network select ORA-FC-Network-151.

Step 24. For the Fibre Channel QoS select ORA-FC-QoS.

Step 25. For the Fibre Channel Adapter select ORA-FC-Adapter-Linux.

Step 26. Click Add to add HBA2.

Note: For this solution, we added the remaining seven NVMe/FC vHBAs (HBA3 through HBA9) the same way.

Step 27. Click Add vHBA and select the appropriate vHBA Type, WWPN Pool, Simple Placement, Switch ID, PCI Order, Fibre Channel Network, Fibre Channel QoS, and Fibre Channel Adapter for each of the remaining vHBAs listed in Table 10.

Step 28. After adding the ten vHBAs, review and make sure the Switch ID, PCI Order, and HBA Type are as shown below:

Step 29. Click Create to create this policy.

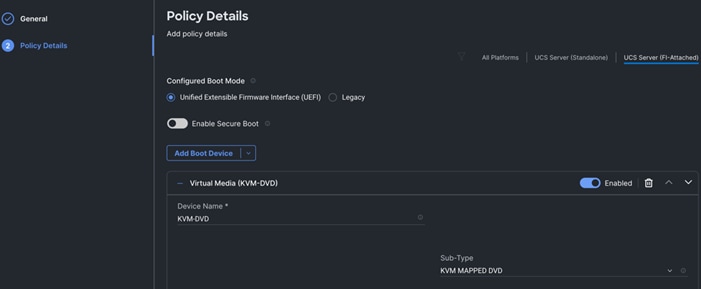

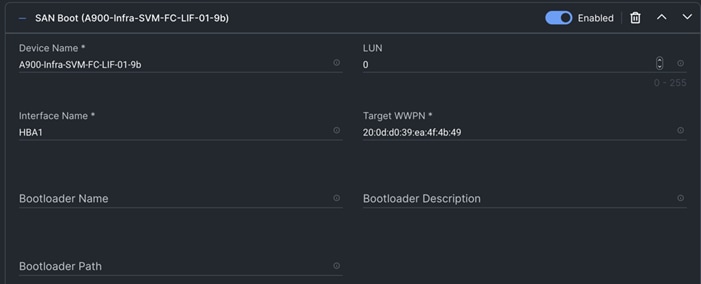

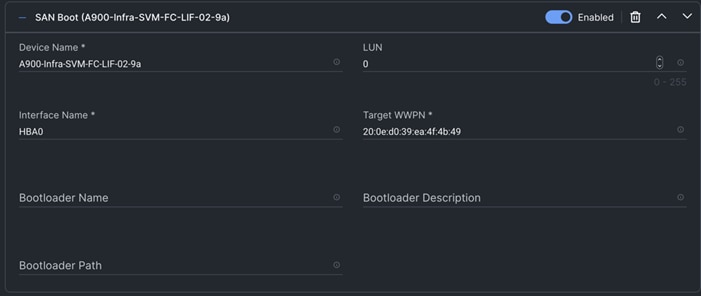

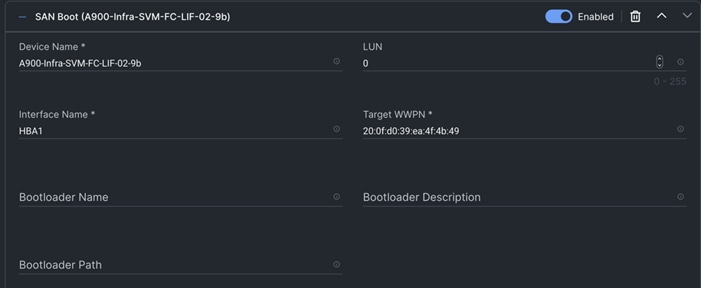

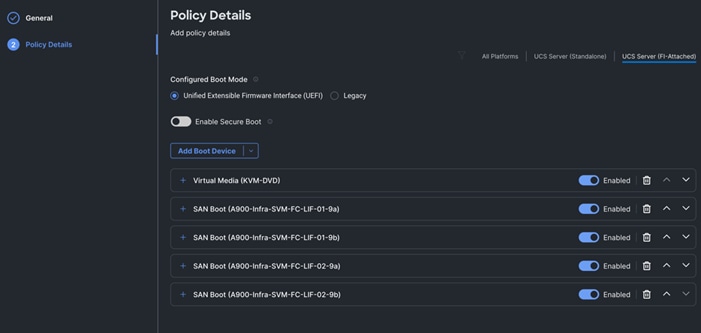

Procedure 14. Configure Boot Order Policy

All Oracle server nodes are set to boot from SAN for this Cisco Validated Design, as part of the Service Profile. Booting from SAN offers several benefits: simpler disaster recovery, lower cooling and power requirements for each server because no local drive is required, and better performance. We strongly recommend using “Boot from SAN” to realize the full benefits of Cisco UCS stateless computing features, such as service profile mobility.

Note: For this solution, we used SAN Boot and configured the SAN Boot order policy as detailed in this procedure.

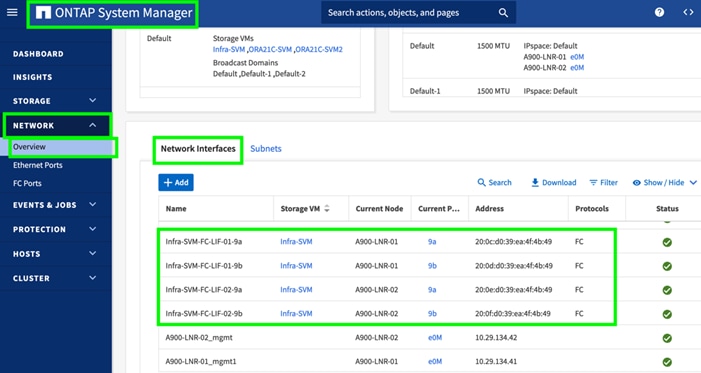

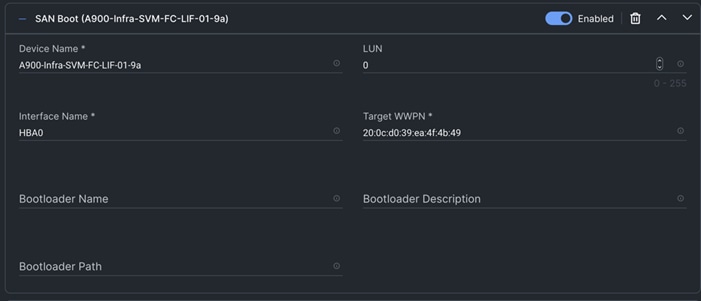

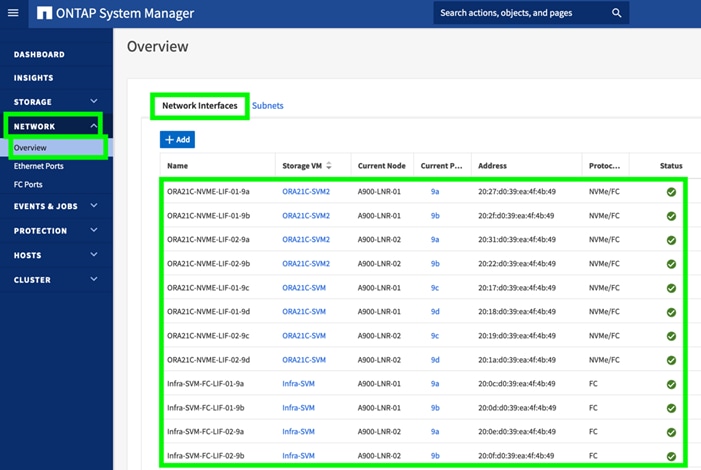

To create the SAN Boot Order Policy, you need to enter the WWPNs of the NetApp storage target ports. The screenshot below shows the FC ports of both NetApp AFF A900 controllers and their WWPNs:
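Target WWPNs are often copied between tools that display them differently (with or without separators, upper or lower case). As a convenience, the hypothetical Python helper below normalizes a 64-bit WWPN to eight colon-separated hex byte pairs and rejects anything of the wrong length. The lowercase output is an arbitrary choice of this sketch, and the sample WWPN is made up for illustration; always take the real values from the storage controllers.

```python
import re

_HEX16 = re.compile(r"[0-9a-f]{16}")


def normalize_wwpn(text: str) -> str:
    """Return a WWPN as eight colon-separated lowercase hex byte pairs.

    Accepts input with or without ':' or '-' separators and surrounding spaces.
    """
    digits = re.sub(r"[\s:\-]", "", text).lower()
    if not _HEX16.fullmatch(digits):
        raise ValueError(f"not a 64-bit WWPN: {text!r}")
    return ":".join(digits[i:i + 2] for i in range(0, 16, 2))


# Hypothetical target WWPN copied without separators.
print(normalize_wwpn("200100A098ABCDEF"))  # 20:01:00:a0:98:ab:cd:ef
```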

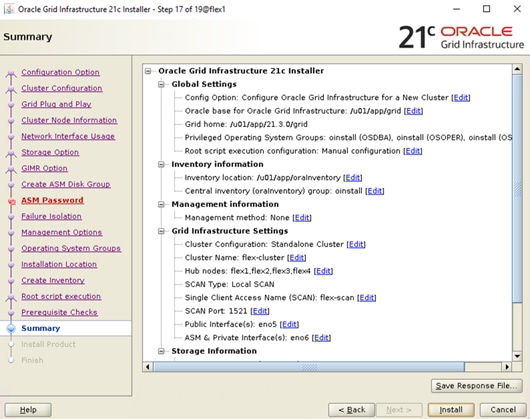

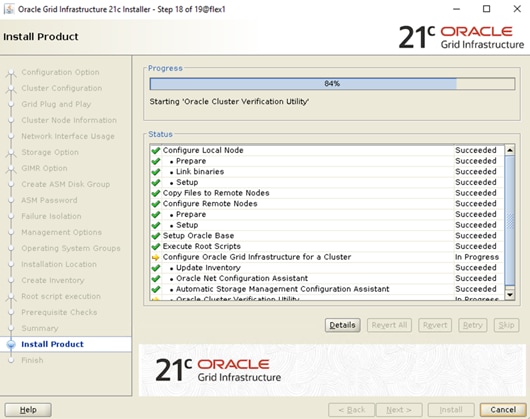

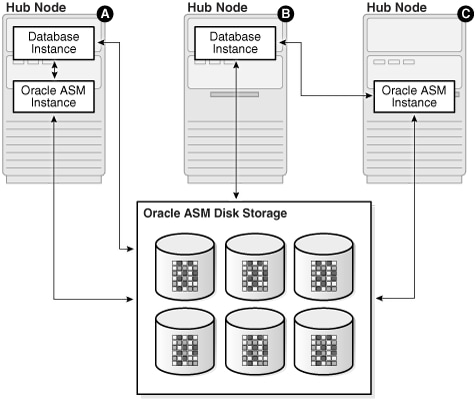

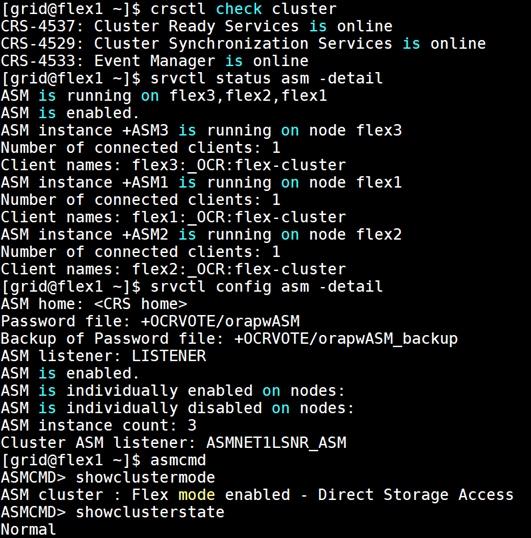

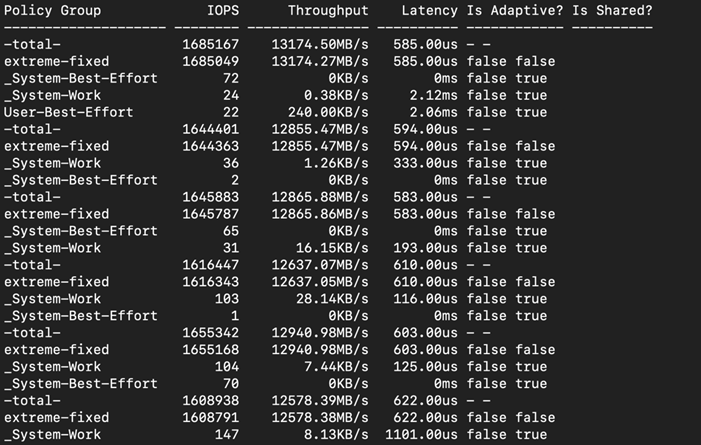

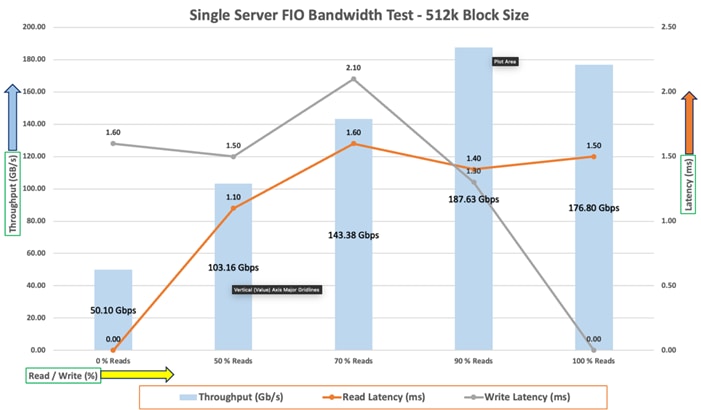

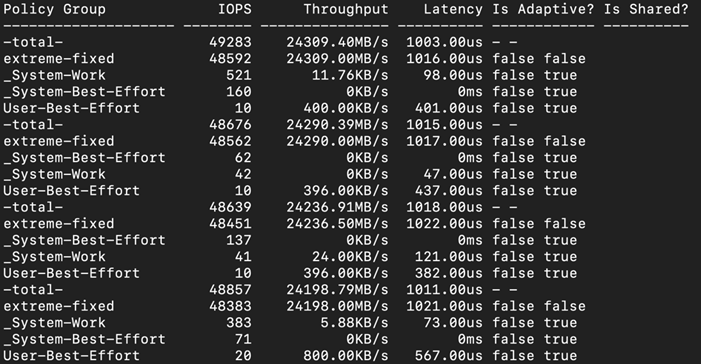

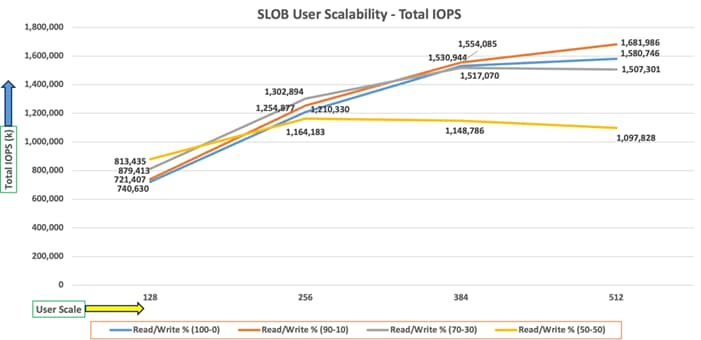

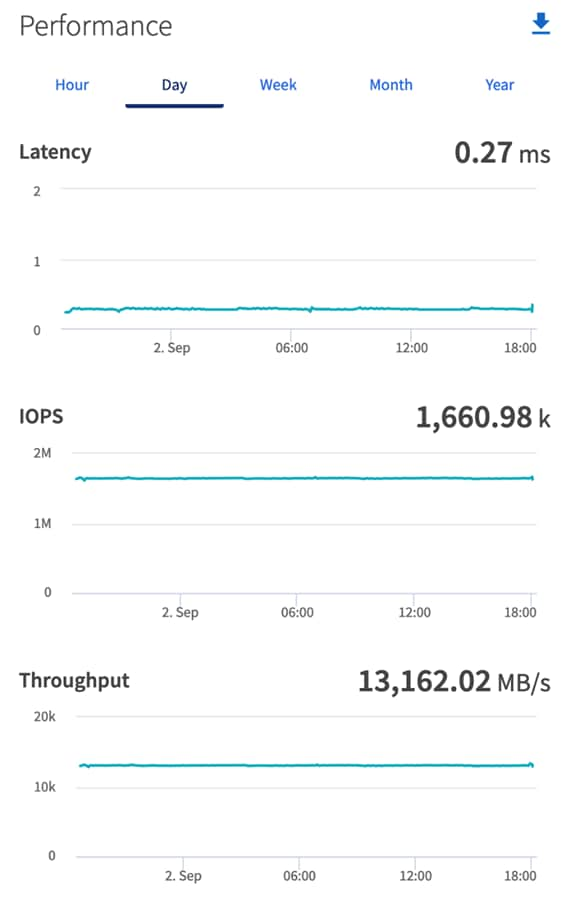

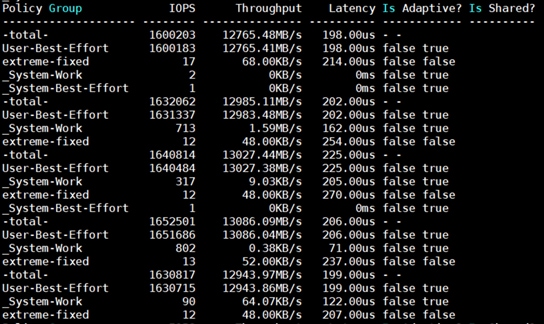

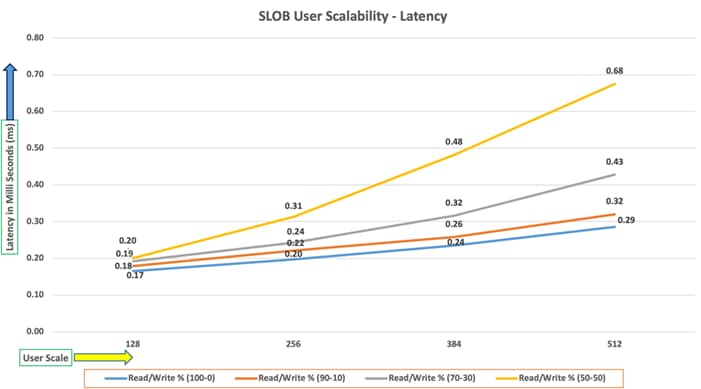

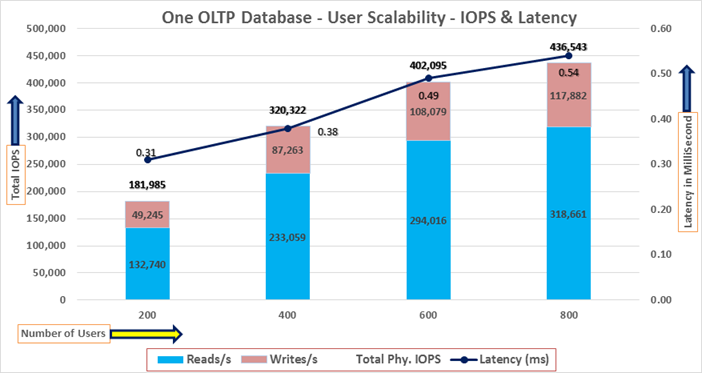

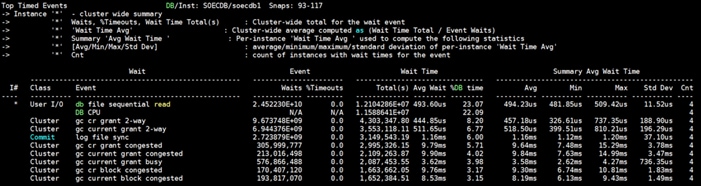

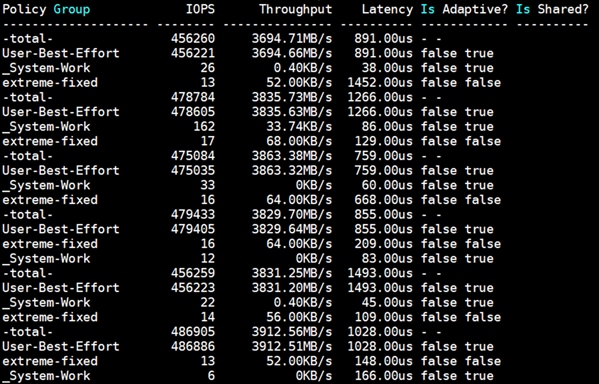

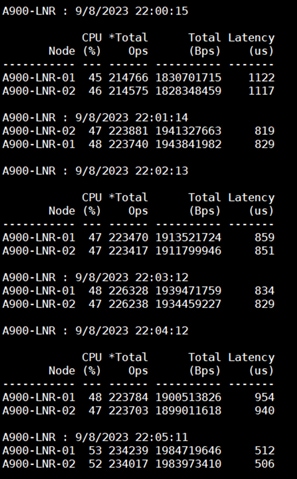

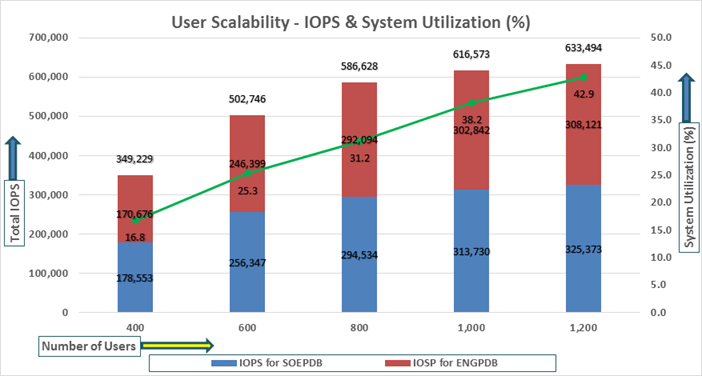

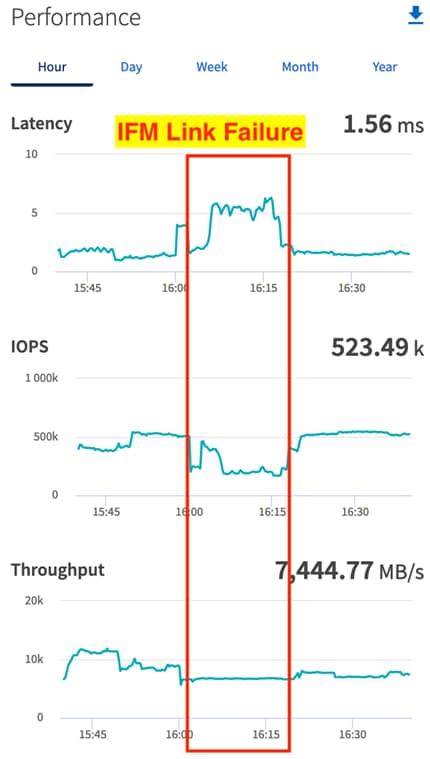

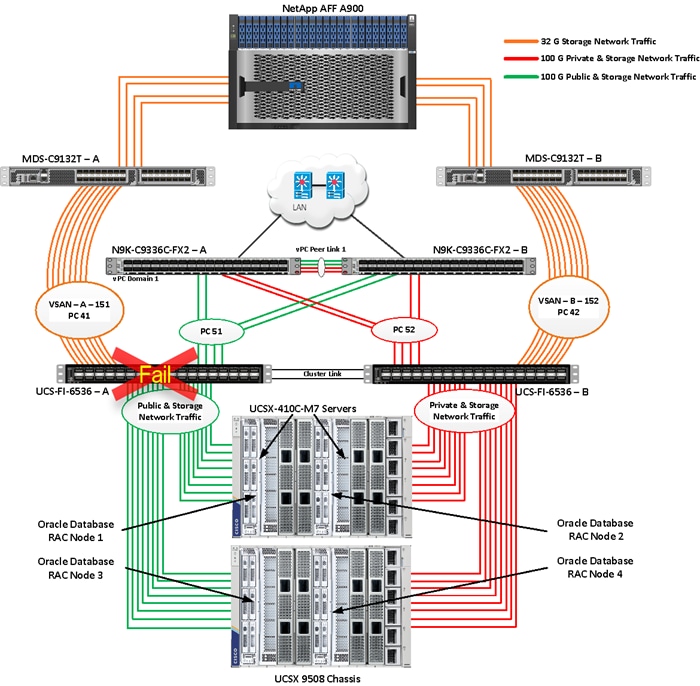

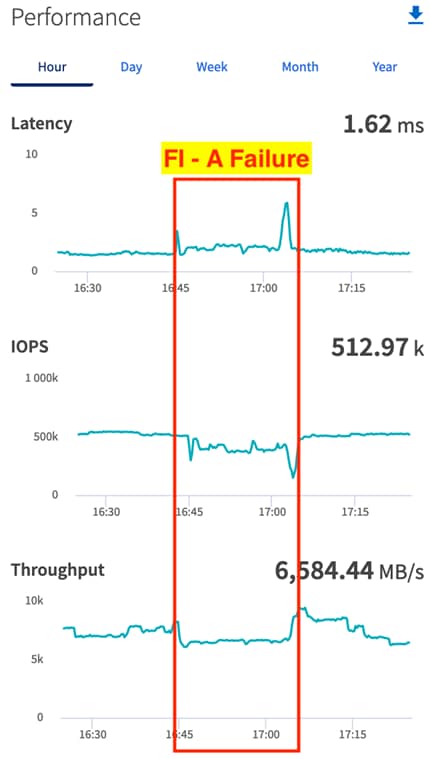

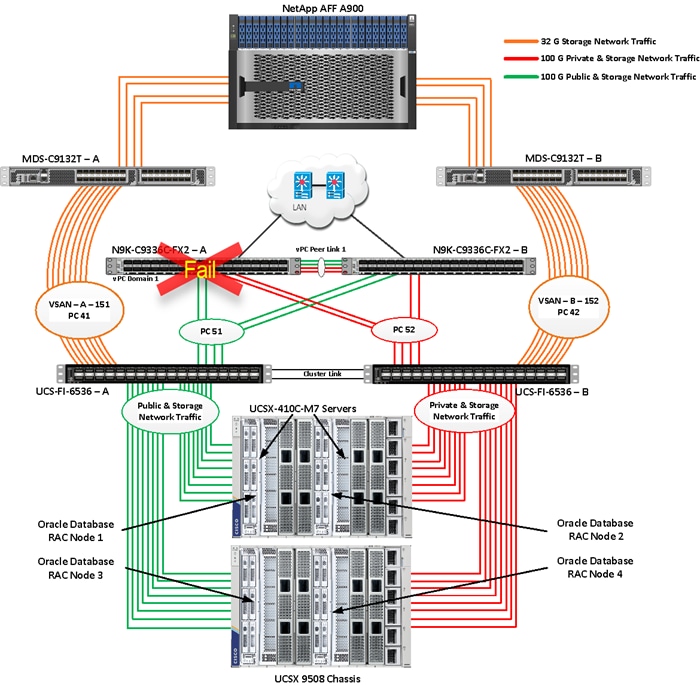

Step 1. To configure the Boot Order Policy for the UCS Server profile, go to Infrastructure Service > Configure > Policies and click Create Policy.