Fibre Channel Connectivity Overview

Cisco ACI supports Fibre Channel (FC) connectivity on a leaf switch using N-Port Virtualization (NPV) mode. NPV allows the switch to aggregate FC traffic from locally connected host ports (N ports) into a node proxy (NP port) uplink to a core switch.

A switch enters NPV mode when NPV is enabled, and the mode applies to the entire switch. Each end device connected to an NPV-mode switch must log in as an N port to use this feature (loop-attached devices are not supported). All links from the edge switches (in NPV mode) to the NPV core switches are established as NP ports, rather than the E ports that are used for typical inter-switch links.

Note: In the FC NPV application, the role of the ACI leaf switch is to provide a path for FC traffic between the locally connected SAN hosts and a locally connected core switch. The leaf switch does not perform local switching between SAN hosts, and the FC traffic is not forwarded to a spine switch.
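Because a leaf switch in NPV mode relays host logins to the core switch instead of acting as its own FC domain, the FCIDs that hosts receive carry the core switch's domain ID. The following is a minimal sketch of that idea, assuming the standard 24-bit FCID layout (8-bit domain, area, and port fields). The `Fcid` type, the `parse_fcid` helper, and the sample FCID values are hypothetical and are not part of any ACI API.

```python
from typing import NamedTuple


class Fcid(NamedTuple):
    """A 24-bit Fibre Channel ID split into its three 8-bit fields."""
    domain: int
    area: int
    port: int


def parse_fcid(fcid: int) -> Fcid:
    """Split a 24-bit FCID into its domain, area, and port fields."""
    if not 0 <= fcid <= 0xFFFFFF:
        raise ValueError(f"FCID out of 24-bit range: {fcid:#x}")
    return Fcid((fcid >> 16) & 0xFF, (fcid >> 8) & 0xFF, fcid & 0xFF)


# Hypothetical FCIDs handed out by a core switch whose domain ID is 0x21.
# Hosts behind different NPV-mode leaf switches all fall under that one domain.
host_fcids = [0x210100, 0x210101, 0x210200]
domains = {parse_fcid(f).domain for f in host_fcids}
print(f"distinct domain IDs in use: {len(domains)}")
```

All three sample hosts resolve to the same domain field, which is why adding NPV-mode switches does not consume additional domain IDs.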

FC NPV Benefits

FC NPV provides the following:

- Increases the number of hosts that connect to the fabric without adding domain IDs in the fabric. The domain ID of the NPV core switch is shared among multiple NPV switches.
- FC and FCoE hosts connect to SAN fabrics using native FC interfaces.
- Automatic traffic mapping for load balancing. For newly added servers connected to NPV, traffic is automatically distributed among the external uplinks based on current traffic loads.
- Static traffic mapping. A server connected to NPV can be statically mapped to an external uplink.
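The two mapping behaviors can be illustrated with a short sketch. It assumes a simple "least-loaded uplink" rule for automatic mapping; the source does not describe the algorithm ACI actually uses, so treat this as a conceptual model only. The `Uplink` class, the `assign_uplink` function, the `load` metric, and the uplink and server names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Uplink:
    """Hypothetical model of one external NP uplink."""
    name: str
    load: int = 0  # assumed load metric, for example the number of mapped servers
    servers: list = field(default_factory=list)


def assign_uplink(server, uplinks, static_map=None, cost=1):
    """Map a server to an uplink.

    A static mapping, if present for the server, always wins.
    Otherwise the uplink with the lowest current load is chosen.
    """
    static_map = static_map or {}
    if server in static_map:
        name = static_map[server]
        if name not in uplinks:
            raise KeyError(f"static uplink {name!r} does not exist")
        chosen = uplinks[name]
    else:
        chosen = min(uplinks.values(), key=lambda u: u.load)
    chosen.load += cost
    chosen.servers.append(server)
    return chosen


uplinks = {"np1": Uplink("np1", load=3), "np2": Uplink("np2", load=1)}

# Automatic mapping: the new server lands on the least-loaded uplink.
auto_choice = assign_uplink("srv-a", uplinks)

# Static mapping: the server is pinned to np1 regardless of load.
static_choice = assign_uplink("srv-b", uplinks, static_map={"srv-b": "np1"})

print(auto_choice.name, static_choice.name)
```

Keeping the static map as a plain override makes the precedence explicit: a pinned server never takes part in load-based selection.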

FC NPV Mode

In ACI, the fcoe-npv feature set is enabled automatically when the first FCoE or FC configuration is pushed.

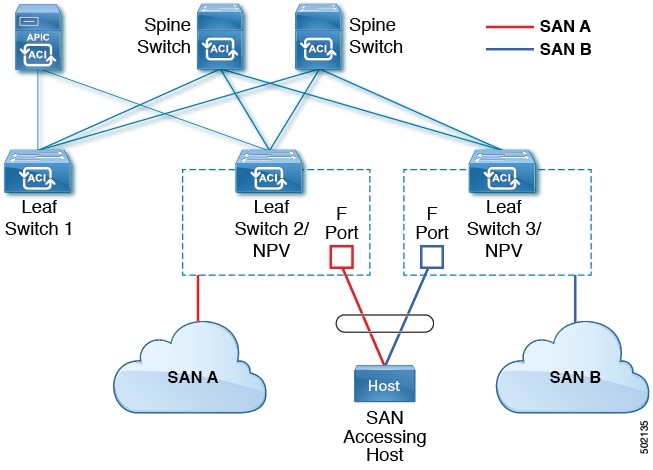

FC Topology

The topology of various configurations supporting FC traffic over the ACI fabric is shown in the following figure:

- Server/storage host interfaces on the ACI leaf switch can be configured to function as either native FC ports or virtual FC (FCoE) ports.
- An uplink interface to an FC core switch can be configured as any of the following port types:
  - native FC NP port
  - SAN-PO NP port
- An uplink interface to an FCF switch can be configured as any of the following port types:
  - virtual (vFC) NP port
  - vFC-PO NP port

- N-Port ID Virtualization (NPIV) is supported and enabled by default, allowing an N port to be assigned multiple N port IDs or Fibre Channel IDs (FCIDs) over a single link.
- Trunking can be enabled on an NP port to the core switch. Trunking allows a port to support more than one VSAN. An NP port with trunk mode enabled is referred to as a TNP port.
- Multiple FC NP ports can be combined as a SAN port channel (SAN-PO) to the core switch. Trunking is supported on a SAN port channel.
- FC F ports support 4/16/32 Gbps and auto speed configuration; 8 Gbps is not supported for host interfaces. The default speed is "auto."
- FC NP ports support 4/8/16/32 Gbps and auto speed configuration. The default speed is "auto."
- Multiple FDISC followed by FLOGI (nested NPIV) is supported with FC/FCoE host and FC/FCoE NP links.
- An FCoE host behind a FEX is supported over an FCoE NP/uplink.
- Starting in the APIC 4.1(1) release, an FCoE host behind a FEX is supported over the Fibre Channel NP/uplink.
- All FCoE hosts behind one FEX can either be load balanced across multiple vFC and vFC-PO uplinks, or through a single Fibre Channel/SAN port channel uplink.
- SAN boot is supported on a FEX through an FCoE NP/uplink.
- Starting in the APIC 4.1(1) release, SAN boot is also supported over an FC/SAN-PO uplink.
- SAN boot is supported over vPC for FCoE hosts that are connected through FEX.
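The speed and trunking rules in the list above lend themselves to a small pre-check before a configuration is pushed. The following is a sketch only: the `SUPPORTED_SPEEDS` table is taken from the speeds listed above, while the `validate_fc_speed` and `np_port_label` helpers and the `"f"`/`"np"` mode strings are hypothetical names chosen for illustration, not APIC API identifiers.

```python
# Supported speeds in Gbps per port mode, as listed above. "auto" is the default.
SUPPORTED_SPEEDS = {
    "f": {"auto", "4", "16", "32"},        # host-facing F ports (8 Gbps not supported)
    "np": {"auto", "4", "8", "16", "32"},  # NP uplinks to the core switch
}


def validate_fc_speed(port_mode: str, speed: str = "auto") -> str:
    """Return the speed if it is valid for the port mode, else raise ValueError."""
    mode = port_mode.lower()
    if mode not in SUPPORTED_SPEEDS:
        raise ValueError(f"unknown port mode: {port_mode!r}")
    if speed not in SUPPORTED_SPEEDS[mode]:
        allowed = ", ".join(sorted(SUPPORTED_SPEEDS[mode]))
        raise ValueError(
            f"speed {speed!r} is not supported on {mode.upper()} ports "
            f"(allowed: {allowed})"
        )
    return speed


def np_port_label(trunking: bool) -> str:
    """An NP port with trunk mode enabled is referred to as a TNP port."""
    return "TNP" if trunking else "NP"


print(validate_fc_speed("np", "8"))   # 8 Gbps is valid on an NP uplink
print(np_port_label(trunking=True))   # trunking NP port
try:
    validate_fc_speed("f", "8")       # 8 Gbps is rejected on a host F port
except ValueError as err:
    print(err)
```

Encoding the per-mode speeds as data rather than branching logic keeps the check easy to update if a later release changes the supported set.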
