|

Collaboration Virtualization Hardware

|

|

| Introduction |

|

|

Note: Unless specifically indicated otherwise, references to UCS B-Series include both UCS Mini and the regular UCS 5100 Series Blade Server Chassis. UCS Mini is allowed as long as the UCS B-Series and C-Series servers in UCS Mini conform to Collaboration support policies.

Note: Not all UC apps support all hardware options. For supported options, check the application's page in At a Glance.

This web page describes supported compute, storage and network hardware for Virtualization of Cisco Unified Communications, including UC on UCS (Cisco Unified Communications on Cisco Unified Computing System). See the "How to..." links for guidelines on how to design, quote and procure a virtualized UC solution that follows Cisco's support policy.

Cisco uses three different support models:

- UC on UCS Tested Reference Configuration (TRC), some of which are available as packaged collaboration solutions like Cisco Business Edition 6000 or Cisco Business Edition 7000.

- "TRC" used by itself means "UC on UCS Tested Reference Configuration (TRC)".

- UC on UCS Specs-based

- "UC on UCS" used by itself refers to both UC on UCS TRC and UC on UCS Specs-based.

- Third-party Server Specs-based

- "Specs-based" used by itself refers to the common rules of UC on UCS Specs-based and Third-party Server Specs-based.

Below is a comparison of the hardware support options. Note that the following are identical regardless of the support model chosen:

- Virtual machine (OVA) definitions

- VMware product, version and feature support

- VMware configuration requirements for UC

- Application/VM Co-residency policy (specifically regarding application mix, 3rd-party support, no reservations / oversubscription, virtual/physical sizing rules and max VM count per server).

|

UC on UCS TRC |

UC on UCS Specs-based |

Third-Party Server Specs-based |

Other hardware |

| Basic Approach |

Configuration-based |

Rules-based |

Rules-based |

Not supported - does not satisfy this page's policy |

| Allowed for which UC apps? |

See At a Glance |

See At a Glance |

See At a Glance |

Not Supported |

| UC-required Virtualization Software |

Click here for general requirements.

VMware vCenter is optional.

VMware vSphere is mandatory.

- Click here for supported versions, editions, features, capacities and purchase options.

|

Click here for general requirements.

VMware vCenter is mandatory. Also mandatory to capture Statistics Level 4 for maximum duration at each level.

One of the following is mandatory:

- Cisco UC Virtualization Foundation

- VMware vSphere

- Click here for supported versions, editions, features, capacities and purchase options.

|

Click here for general requirements.

VMware vCenter is mandatory. Also mandatory to capture Statistics Level 4 for maximum duration at each level.

VMware vSphere is mandatory:

- Click here for supported versions, editions, features, capacities and purchase options.

|

N/A - not supported |

| Allowed Servers |

Select Cisco UCS listed in Table 1. Must follow all TRC rules in this policy. |

Any Cisco UCS that satisfies this page's policy |

Any 3rd-party server model that satisfies this page's policy |

None |

| Required Level of Virtualization/Server Experience |

Low / Medium |

High |

High |

N/A |

| Cisco-tested? |

Joint validation of apps and server hardware by UC and UCS teams |

Generic server hardware validation by UCS team. Not jointly validated with UC apps by Cisco. |

No server hardware validation by Cisco. Not jointly validated with UC apps by Cisco. |

No Cisco testing (unsupported hardware) |

| Server Model, CPU and Component Choices |

Less (customer accepts tradeoff of less hardware flexibility for more UC predictability). |

More (customer assumes more test/design ownership to get more hardware flexibility) |

More (customer assumes more test/design ownership to get more hardware flexibility) |

None (unsupported hardware) |

| Does Cisco TAC support UC apps?

|

Yes, when all TRC rules in this policy are followed.

UC apps on C-Series DAS-only TRC: Supported with Guaranteed performance

UC apps on C-Series FC SAN TRC or B-Series FC SAN TRC: Supported with Guaranteed performance provided all shared storage requirements in this policy are met. |

Yes, when all Specs-based rules in this policy are followed. Supported with performance Guidance only |

Yes, when all Specs-based rules in this policy are followed. Supported with performance Guidance only |

UC apps not supported when deployed on unsupported hardware. |

| Does Cisco TAC support the server? |

Yes. If used with UC apps, then all TRC rules in this policy must be followed. |

Yes. If used with UC apps, then all UC on UCS Specs-based rules in this policy must be followed. |

No. Cisco TAC supports products purchased from Cisco with a valid, paid-up maintenance contract. |

No. Cisco TAC supports products purchased from Cisco with a valid, paid-up maintenance contract. Also note that UC apps are not supported when deployed on unsupported hardware. |

| Who designs/determines the server's BOM? |

Customer wants Cisco to own |

Customer wants to own, with assistance from Cisco |

Customer wants to own |

N/A |

For more details on Cisco UCS servers in general, see the following:

Cisco UCS B-Series Servers Documentation Roadmap

Cisco UCS C-Series Servers Documentation Roadmap

Cisco UCS C-Series Integrated Management Controller Documentation

Cisco UCS Manager Documentation

Cisco UCS home page

Cisco UC on UCS solution home page

|

|

| Cisco Business Edition Appliance and UC on UCS Tested Reference Configurations (TRCs) Servers |

|

Note: Definition of a Tested Reference Configuration (TRC):

- Includes specification of server model and local components (CPU, RAM, adapters, local storage) at the orderable part number level.

- For UCS B-Series servers, does NOT include specification of blade server chassis or switching.

- For UCS E-Series servers, does NOT include specification of Cisco Integrated Services Router (ISR) chassis unless purchased as part of a Cisco Business Edition 6000S appliance.

- For TRCs using DAS storage, includes specification of required RAID configuration (e.g. RAID5, RAID10, etc) - including battery backup cache or SuperCap.

- Includes guidance on hardware installation and basic setup (full Cisco UCS installation documentation is on http://www.cisco.com/go/ucs and is the authoritative source).

- Does not include specification of virtual-to-physical network interface mapping as this is design-dependent.

- Does not include specification of adapter settings (such as Cisco VIC, 3rd-party CNA / NIC / HBA) as this is design-dependent.

- Does not include configuration settings or step by step procedures for hardware BIOS, firmware, drivers, RAID setup outside the guidance in the Cisco Collaboration on Virtual Servers document. See the Cisco UCS documentation for details.

- Does not include design, installation and configuration of external hardware such as:

- Network routing and switching (e.g. routers, gateways, MCUs, ethernet/FC/FCoE switches, Cisco Catalyst/Nexus/MDS, etc.)

- QoS configuration of route/switch network devices.

- Cisco UCS B-Series chassis and switching components (e.g. Cisco UCS 6100/6200, Cisco UCS 2100/2200, Cisco UCS 5100)

- Storage arrays (such as those from EMC, NetApp or other vendors)

- Does not include configuration settings, patch recommendations or step by step procedures for required VMware virtualization software.

Click here for basic guidance on TRC hardware setup.

If you have a UCS C-Series server and need assistance identifying its hardware specs for comparison with the configurations below, see the Cisco UCS C-Series Servers Integrated Management Controller GUI Configuration Guide.

|

|

UConUCS Tested Reference Configurations

|

End of Sale Hardware

|

Click here to download End of Sale Servers

|

| |

|

BE6000S and Extra-Small UConUCS TRCs

|

Hardware Configuration Name and

Part Numbers / SKUs / BOM |

Form Factor and Physical Specs |

Capacity Available to VMs (using required Sizing Rules) |

Extra-Small

UConUCS TRC

(XS TRC) |

|

TRC =

Cisco ISR Single-wide Blade Server

Single D-1528 (6-core / 1.9 GHz)

32 GB RAM

VMware + UC apps boot from DAS (2x 900GB SAS, RAID1)

Onboard internal and external ethernet ports |

6 total physical cores (note: Intel Xeon Processor-D only supported on this TRC and only for select deployment models)

28 GB physical RAM

~900 GB

External port for CIMC, internal and external 1Gb ports used for LAN access. |

Click here to download specifications and BOM for End of Sale Servers.

|

BE6000M and Small UConUCS TRCs

|

Hardware Configuration Name and

Part Numbers / SKUs / BOM |

Form Factor and Physical Specs |

Capacity Available to VMs (using required Sizing Rules) |

| Cisco Business Edition 6000M or Small

UConUCS TRC

(S TRC) |

BE6000M (M5)

UCS C220 M5SX TRC#1

Click here for BOM |

1RU Rack-mount Server

Single Xeon 4114

48 GB RAM

VMware + UC apps boot from DAS (single 6-disks-RAID5 of 300GB 12G SAS 10K SFF)

Ethernet ports on motherboard |

10 total physical cores ("Restricted UC Performance" CPU type)

40 GB physical RAM

~1TB

1x 1GbE (CIMC), 2x 10GbE (LOM) |

Click here to download specifications and BOM for End of Sale Servers.

|

BE6000H and Small Plus UConUCS TRCs

|

Hardware Configuration Name and

Part Numbers / SKUs / BOM |

Form Factor and Physical Specs |

Capacity Available to VMs (using required Sizing Rules) |

| Cisco Business Edition 6000H or Small Plus

UConUCS TRC

(S+ TRC) |

BE6000H (M5)

UCS C220 M5SX TRC#2

Click here for BOM |

1RU Rack-mount Server

Dual Xeon 4114

64 GB RAM

VMware + UC apps boot from DAS (single 8-disks-RAID5 of 300GB 12G SAS 10K SFF)

Ethernet ports on motherboard and single NIC |

20 total physical cores ("Restricted UC Performance" CPU type)

56 GB physical RAM

~1TB

1x 1GbE (CIMC), 2x 10GbE and 4x 1GbE (LOM) |

Click here to download specifications and BOM for End of Sale Servers.

|

BE7000M and Medium UConUCS TRCs

|

Hardware Configuration Name and

Part Numbers / SKUs / BOM |

Form Factor and Physical Specs |

Capacity Available to VMs (using required Sizing Rules) |

| Cisco Business Edition 7000M or Medium

UConUCS TRC

(M TRC) |

BE7000M (M5)

UCS C240 M5SX TRC#1

Click here for BOM |

2RU Rack-mount Server

Single Xeon 6132

96 GB RAM

VMware + UC apps boot from DAS (dual 7-disks-RAID5 of 300GB 12G SAS 10K SFF)

Ethernet ports on motherboard and dual NIC |

14 total physical cores ("Full UC Performance" CPU type)

88 GB physical RAM

Two volumes, each with ~1TB usable disk space

1x 1GbE (CIMC), 2x 10GbE and 8x 1GbE (LOM) |

Click here to download specifications and BOM for End of Sale Servers.

|

BE7000H and Large UConUCS TRCs

|

Hardware Configuration Name and

Part Numbers / SKUs / BOM |

Form Factor and Physical Specs |

Capacity Available to VMs (using required Sizing Rules) |

| Cisco Business Edition 7000H or Large

UConUCS TRC

(L TRC) |

HyperFlex HX220c M5SX TRC#1

Click here for BOM |

2RU Rack-mount HyperFlex node

Dual Xeon 6142 (16-core, 2.6 GHz)

256 GB RAM

VMware + UC apps boot from HX Data Platform

Ethernet ports on motherboard + Cisco VIC |

24 total physical cores ("Full UC Performance" CPU type) after Storage Controller overhead

200 GB RAM (i.e. 256GB less 48GB for HX Data Platform and 8GB for ESXi)

Usable storage space ~5.76 TB (3-node HX cluster with RF=2)

1x 1GbE (CIMC), 2x 10GbE (LOM), 2x 40GbE (VIC) |

BE7000H (M5)

UCS C240 M5SX TRC#2

Click here for BOM |

2RU Rack-mount Server

Dual Xeon 6132

192 GB RAM

VMware + UC apps boot from DAS (quad 6-disks-RAID5 of 300GB 12G SAS 10K SFF)

Ethernet ports on motherboard and dual NIC |

28 total physical cores ("Full UC Performance" CPU type)

184 GB physical RAM

Four volumes, each with ~1TB usable disk space

1x 1GbE (CIMC), 2x 10GbE and 8x 1GbE (NIC) |

Click here to download specifications and BOM for End of Sale Servers.

|

|

|

|

| Virtualization Software Requirements |

|

|

VMware vCenter is required for UC on UCS Specs-based, Specs-based 3rd-party infrastructure and HX TRC (because HX requires vCenter).

VMware vCenter is optional for other UC on UCS TRC deployments.

Like some of the UC applications, vCenter can be configured to save more performance data. The more historical data is saved, the more disk space the vCenter database needs. Note that this is one of the main reasons to use vCenter rather than going directly to the ESXi host for performance data: vCenter can save historical data that the ESXi host does not keep.

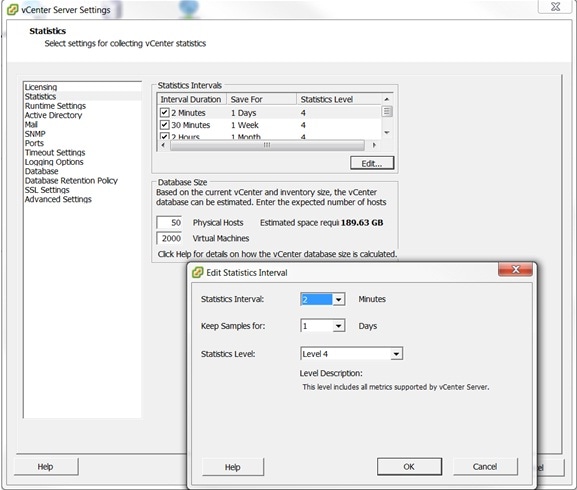

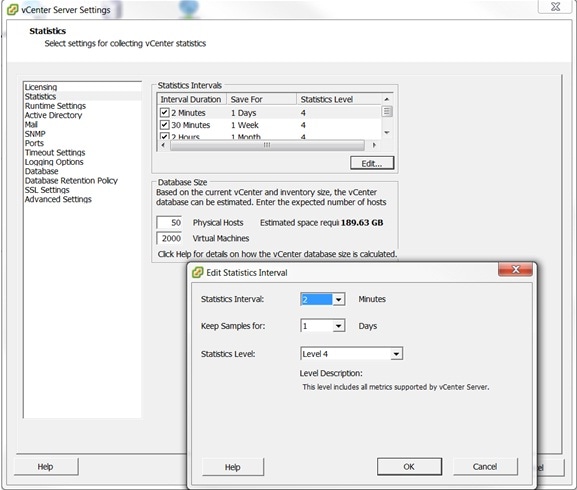

The settings that control the amount of historical data saved by vCenter are located in the vSphere client under Administration > Server Settings. The statistics level can be set for each interval duration and save time. Statistics levels range from 1 to 4, with level 4 containing the most data. View the data size estimates to ensure there is enough space to keep all statistics.

For a UC on UCS Specs-based or Specs-based 3rd-party infrastructure, Statistics Level 4 is required on all statistics. Configuring VMware vCenter to capture detailed logs, as shown in Figure 1 below, is strongly recommended. If not configured by default, Cisco TAC may request enabling these settings in order to troubleshoot problems.

Figure 1

VMware virtualization software is required for Cisco TAC support.

See the Introduction for basic virtualization software requirements, including what is optional and what is mandatory.

For Cisco UCS, no UC applications run or install directly on the server hardware; all applications run only as virtual machines. Cisco UC does not support a physical, bare-metal, or nonvirtualized installation on Cisco UCS server hardware.

All UC virtualization deployments must align with the VMware Hardware Compatibility List (HCL).

All UC virtualization deployments must follow UC rules for supported VMware products, versions, editions and features as described here.

Note: For UC on UCS Specs-based and Third-party Server Specs-based, use of VMware vCenter is mandatory, and Statistics Level 4 logging is mandatory. See above for how to configure VMware vCenter to capture these logs. If not configured by default, Cisco TAC may request enabling these settings in order to troubleshoot problems.
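The Statistics Level 4 requirement lends itself to a simple automated check once the interval settings have been read out of vCenter. The following is a minimal sketch only: it assumes the settings have already been exported into a list of (interval name, level) pairs, which is a hypothetical format chosen for illustration. It does not call any VMware API, and the interval names in the example are placeholders.

```python
REQUIRED_LEVEL = 4  # Specs-based deployments require Statistics Level 4 on all statistics


def intervals_below_required(intervals, required=REQUIRED_LEVEL):
    """Return the names of statistics intervals whose level is below `required`.

    `intervals` is an iterable of (name, level) pairs. This layout is an
    assumption made for this sketch, not a vCenter export format.
    """
    return [name for name, level in intervals if level < required]


# Hypothetical snapshot of the vCenter statistics settings.
example = [("5 minutes", 4), ("30 minutes", 4), ("2 hours", 3), ("1 day", 1)]

non_compliant = intervals_below_required(example)
if non_compliant:
    print("Raise these intervals to level 4:", ", ".join(non_compliant))
else:
    print("All intervals capture Statistics Level 4.")
```

An empty result means every interval already captures level 4 data; anything else lists the intervals to adjust under Administration > Server Settings.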

|

|

|

| "Can I use this server?" |

|

|

UC virtualization hardware support is most dependent on the Intel CPU model and the VMware Hardware Compatibility List (HCL).

The server model only matters in the context of:

whether or not it is on the VMware HCL

which Intel CPU models it carries (and whether those CPU models are allowed for UC virtualization)

whether its hardware component options can satisfy all other requirements of this policy

For additional considerations, see TAC TechNote 115955.

Note:

- UC does not support every CPU model

- A given server model may not carry every (or any) CPU model that UC supports.

- Therefore your server model choices may be artificially limited by which CPUs the server models carry.
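The three questions above can be read as a checklist. The sketch below expresses them as one function, under stated assumptions: the `Server` record, its field names and the `allowed_cpus` set are hypothetical, and the CPU names in the example are placeholders rather than the contents of CPU Table 1. Determining each input (HCL listing, allowed CPU list, remaining policy requirements) still has to be done against the authoritative sources.

```python
from dataclasses import dataclass


@dataclass
class Server:
    model: str
    on_vmware_hcl: bool        # on the VMware HCL for the ESXi version required by UC
    cpu_model: str             # CPU model the server carries
    meets_other_policy: bool   # satisfies all other requirements of this policy


def can_use_server(server, allowed_cpus):
    """Return (ok, reasons); `reasons` lists every failed check."""
    reasons = []
    if not server.on_vmware_hcl:
        reasons.append("not on the VMware HCL")
    if server.cpu_model not in allowed_cpus:
        reasons.append(f"CPU {server.cpu_model} is not allowed for UC")
    if not server.meets_other_policy:
        reasons.append("other policy requirements not met")
    return (not reasons, reasons)


# Placeholder allowed-CPU set, for illustration only.
allowed = {"Xeon 6132", "Xeon 4114"}

candidate = Server("Example-1U", on_vmware_hcl=True,
                   cpu_model="Example-CPU", meets_other_policy=True)
ok, reasons = can_use_server(candidate, allowed)
print(ok, reasons)
```

Returning the full list of reasons rather than stopping at the first failure mirrors the note above: a server model can fail solely because of the CPUs it carries.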

|

UC on UCS TRC |

UC on UCS Specs-based |

Third-Party Server Specs-based |

Not Supported |

| Allowed Servers:

Vendors

Models / Generations

Form Factors |

Only Cisco Unified Computing System B-Series Blade Servers and C-Series Rack-mount Servers listed in Table 1 are supported.

HyperFlex M5 TRC permits chassis substitution of 1RU, 2RU, hybrid storage and all-flash models. TRC may also be used with HyperFlex Edge with HXDP 4.0+ and 10/25/40 GE network connections (1 GE network connections not supported).

|

Any Cisco Unified Computing System server is supported as long as:

it is on the VMware HCL for the version of VMware vSphere ESXi required by UC.

it carries a CPU model supported by UC (described later in this policy).

it satisfies all other requirements of this policy

Otherwise, any Cisco UCS model, generation, form factor (rack, blade) may be used. |

Any 3rd-party server model is supported as long as:

it is on the VMware HCL for the version of VMware vSphere ESXi required by UC.

it carries a CPU model supported by UC (described later in this policy).

it satisfies all other requirements of this policy

Otherwise, any 3rd-party vendor, model, generation, form factor (rack, blade) may be used. |

The following are NOT supported:

Cisco or 3rd-party server models that do not satisfy the rules of this policy.

Cisco 7800 Series Media Convergence Servers (MCS 7800) regardless of CPU model

Cisco UCS Express (SRE-V 9xx on ISR router hardware)

Cisco UCS E-Series Blade Servers (E14x/16x on ISR router hardware) with CPUs that do not meet Processor policy requirements.

For additional considerations, please see TAC TechNote 115955. |

| Server or Component "Embedded Software"

BIOS

Firmware

Drivers |

Always enable "Intel Virtualization Technology" in the hardware BIOS if the option is available.

Always enable hyperthreading in the hardware BIOS if the option is available, but always deploy 1 vCPU per physical CPU core (regardless of whether hyperthreading is enabled or not). For more details, see Collaboration Virtualization Sizing.

Cisco apps will specify supported versions of VMware vSphere ESXi. Follow server vendor guidelines for versions, levels and settings of BIOS, firmware and drivers required for the version of VMware vSphere ESXi in use.

|

| Mechanical and Environmental |

Note: Energy-saving features that cause reduction in CPU performance or real-time relocation/powering-down of virtual machines (such as CPU throttling or VMware Dynamic Power Management) are not supported.

Otherwise, there are no UC-specific requirements for form factor, rack mounting hardware, cable management hardware, power supplies, fans or cooling systems. Follow server vendor guidelines for these components.

If you use a Cisco UCS bundle SKU, note that the rail kit, cable management and power supply options may not match what is available with non-bundled Cisco UCS.

Redundant power supplies are highly recommended, particularly for UC on UCS.

For Cisco UCS, it is strongly recommended to use the Cisco default rail kit, unless you have different rack types such as telco racks or racks proprietary to another server vendor. Cisco does not sell any other types of rack-mounting hardware; you must purchase such hardware from a third party. |

|

|

| Processors / CPUs |

|

|

Allowed vs. Supported CPU policies are different for UC on UCS Tested Reference Configuration, UC on UCS Specs-based and 3rd-party Server Specs-based. Required physical core speeds (base frequencies) are different for applications using 1vcpu:1pcore sizing approach (see Collaboration Virtualization Sizing) vs. applications that support CPU Reservations, such as UCM/IMP (see UCM and IMP Caveated Support for VMware CPU Reservations and Distributed Resource Scheduler). The rest of this section covers physical CPU requirements for 1vcpu:1pcore sizing approach.

Read the notes and BOTH CPU tables below to understand these support policy differences before locking in CPU parts choices.

Note:

- If an application has application-specific rules on its page on www.cisco.com/go/uc-virtualized, that rule takes precedence over this policy. Check EVERY app that will share a physical server before finalizing CPU choice.

- CPU architectures are NOT allowed for Specs-based until explicitly listed below. Until listed below, they are not allowed, even if believed to be "better" since "newer".

- Some CPU architectures, or CPU models within an architecture/family, are not a good fit for Collaboration requirements and will NEVER be allowed or listed below. Do not assume that all CPU architectures, or all models within an architecture, will be allowed.

- Collaboration application support for new CPU architectures/models may lag the release date from Intel and/or server vendors.

- Collaboration applications require minimum physical core speeds on allowed CPU architectures (normal not turbo). Higher capacity VM configurations will usually require higher minimum physical core speeds. Expect these speeds to be higher than what is required for many traditional business applications. Speeds need to be what is shown at ark.intel.com in the CPU model's description, or for Cisco UCS in the description of the CPU SKU. This is normal speed not "turbo". This speed may not be what is actually returned by running software such as OS CLIs.

- Slower-speed CPUs categorized as "Restricted UC Performance" are only allowed for certain VM configurations of certain Collaboration apps, and they are usually lower capacity points of those applications. Do not assume large capacity VMs are allowed on slow-speed CPUs.

- Physical CPU choices for UC on UCS Tested Reference Configurations are stricter. They are a subset of what is allowed for Specs-based. See CPU Table 2.

- Physical CPUs shipping as part of a Packaged Collaboration Solution like Cisco Business Edition may not be changed.

CPU Table 1 - Allowed Shipping Specs-based CPUs

Click here for Allowed Older (end of sale) CPUs

|

CPU Table 2 - Additional TRC and Specs-based rules for CPU

|

UC on UCS TRC |

UC on UCS Specs-based |

Third-party Server Specs-based |

Not supported |

| Physical Sockets / CPU Quantity |

Must exactly match what is listed in TRC Table 1. |

Customer choice (subject to what server model allows). |

The following CPUs are NOT supported for UC:

Intel CPUs that are IN one of the supported architectures/families, but do NOT meet minimum physical core speeds, are not supported for UC.

Unlisted Intel CPU architectures/families (such as Intel Xeon 6500, E5-16xx, Core-i7, etc.) are NOT supported for UC. An Intel CPU architecture is not supported for UC unless listed in CPU Table 1 or in the Allowed Older (end of sale) CPUs. Note that end of sale CPU hardware may be carried by a server that is no longer supported by the server vendor.

Other CPU vendors such as AMD are not supported for UC.

Cisco TAC is not obligated to troubleshoot UC app issues when deployed on unsupported hardware. |

| Physical CPU Vendor and CPU model |

Must either exactly match the TRC BOM's CPU model in TRC Table 1 or use a CPU model that satisfies the following requirements:

- Same physical CPU core count as the TRC BOM's CPU model in Table 1.

- Same CPU architecture as the TRC BOM's CPU model in Table 1.

- Physical CPU core speed same or higher than that of the TRC BOM's CPU model in Table 1.

E.g. if the TRC BOM was tested with 2-socket Intel Xeon E5-2680 v1 (SandyBridge, 8-core, 2.7 GHz), then substitution of a pair of any other E5-2600 v1 with 8 cores and speed 2.7 GHz or higher would be allowed and the server is still a TRC. Substitution with E5-2600 v2 or v3 would NOT be a TRC due to different architecture, and would make the server UC on UCS Specs-based. |

See app links in table on http://www.cisco.com/go/virtualized-collaboration or Supported Applications for which CPU Types are allowed for a given VM configuration of a UC app. E.g. the Unified Communications Manager 7500 user VM configuration is only allowed on a Full UC Performance CPU. |

| Total Physical CPU Cores |

Total available is fixed based on the CPU models in TRC Table 1. |

Total available depends on the physical server's socket count and the CPU model the customer selects. |

Total required is based on Collaboration Virtualization Sizing.

You can use https://www.cisco.com/go/vmpt or http://www.cisco.com/go/quotecollab for help with VM placement.

Cisco TAC is not obligated to troubleshoot UC app issues in deployments with insufficient physical processor cores or speed. |

|

|
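The TRC CPU substitution rule in CPU Table 2 (same core count, same architecture, same or higher base speed) can be sketched as a predicate. This is an illustration under assumptions, not a Cisco tool: the `Cpu` record and function name are hypothetical, and the figures for the two candidate CPUs are illustrative values that should be verified at ark.intel.com before use. Only the tested CPU (E5-2680 v1, SandyBridge, 8-core, 2.7 GHz) comes from the example in the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    model: str
    architecture: str
    cores: int
    base_ghz: float  # normal (base) speed, not turbo


def keeps_trc_status(tested, candidate):
    """True if `candidate` may replace the TRC BOM's `tested` CPU as a TRC."""
    return (candidate.cores == tested.cores
            and candidate.architecture == tested.architecture
            and candidate.base_ghz >= tested.base_ghz)


tested = Cpu("E5-2680 v1", "SandyBridge", 8, 2.7)

# Candidate values below are illustrative; confirm against ark.intel.com.
faster_same_arch = Cpu("E5-2690 v1", "SandyBridge", 8, 2.9)
newer_arch = Cpu("E5-2680 v2", "IvyBridge", 10, 2.8)

for cpu in (faster_same_arch, newer_arch):
    verdict = "still a TRC" if keeps_trc_status(tested, cpu) else "UC on UCS Specs-based"
    print(f"{cpu.model}: {verdict}")
```

A candidate that fails the predicate is not necessarily unsupported; per the table it moves the server from TRC to UC on UCS Specs-based, where the Specs-based CPU rules apply instead.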

| Memory / RAM |

|

Note: Virtualization software licenses such as Cisco UC Virtualization Foundation or VMware vSphere limit the amount of total vRAM that can be used (and therefore the amount of physical RAM that can be used for UC VMs, due to UC sizing rules). See Unified Communications VMware Requirements for these limits. In general larger deployments, or deployments with high VM counts, will require very high vRAM totals and will therefore need to use VMware vSphere instead of Cisco UC Virtualization Foundation. If using high-memory-capacity servers, use VMware vSphere instead to ensure use of all physical memory.

|

UC on UCS TRC |

Specs-based (UCS or 3rd-party Server) |

| Physical RAM |

Total available is listed in Table 1. Additional memory may be added. |

Total available depends on the server chosen. |

| Total required is dependent on the virtual machine quantity/size mix deployed on the hardware:

2-4 GB required for virtualization software, depending on version. See Memory/RAM section of General sizing rules.

plus the sum of UC virtual machines' vRAM.

while following co-residency support policy rules. Per these rules, recall that UC does not support physical memory oversubscription (1 GB of vRAM must equal 1 GB of physical RAM). Cisco TAC is not obligated to troubleshoot UC app issues if the deployment has insufficient physical RAM. |

Memory Module/DIMM

Speed and Population |

For what was tested in a TRC, see Table 1.

Follow server vendor guidelines for optimum memory population for the memory capacity required by UC.

For Cisco UCS, use the Specs Sheets at UCS Quick Catalog. E.g. for a UCS B200 M3 with 96GB total RAM, optimal is 4x8GB DIMM + 4x4GB DIMM. Using 6x16GB DIMM is not optimal.

Otherwise, there are no UC-specific requirements (primarily because UC does not support memory oversubscription).

UC allows any DIMM speed (e.g. 1333 MHz, 1600 MHz, etc.).

UC allows any memory hardware module size, density and quantity (including changing the DIMM population on a Tested Reference Configuration) as long as UC-required RAM capacity is met, and the server vendor supports the intended memory configuration. |

|

|
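Because memory oversubscription is not supported, the physical RAM requirement described above is plain addition: the virtualization software overhead plus the sum of every VM's vRAM. The sketch below assumes a 4 GB overhead (the upper end of the 2-4 GB range stated above) and uses hypothetical vRAM sizes; real values come from each application's OVA definition and the General sizing rules.

```python
def required_physical_ram_gb(vm_vram_gb, hypervisor_gb=4):
    """Physical RAM needed: virtualization overhead plus the sum of VM vRAM.

    No oversubscription is applied: 1 GB of vRAM counts as 1 GB of physical RAM.
    `hypervisor_gb` defaults to 4, an assumption taken from the 2-4 GB range.
    """
    return hypervisor_gb + sum(vm_vram_gb)


def fits(physical_ram_gb, vm_vram_gb, hypervisor_gb=4):
    """True if the server's physical RAM covers the requirement."""
    return required_physical_ram_gb(vm_vram_gb, hypervisor_gb) <= physical_ram_gb


# Hypothetical VM mix (vRAM in GB), for illustration only.
vms = [8, 8, 6, 4]

print(required_physical_ram_gb(vms))  # 4 + 26 = 30
print(fits(32, vms))
print(fits(28, vms))
```

The same check should be repeated against the vRAM limit of the virtualization software license in use, since that limit can be lower than the installed physical RAM.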

| Storage |

|

|

To be supported for UC, all storage systems - whether TRC or specs-based - must meet the following requirements:

- Compatible with the VMware HCL and compatible with the supported server model used

- Host-level kernel disk command latency < 4ms (no spikes above) and physical device command latency < 20 ms (no spikes above). For NFS NAS, guest latency < 24 ms (no spikes above)

- Published vDisk capacity requirements of UC VMs.

- See VM specs of application pages in At a Glance table on http://www.cisco.com/go/virtualized-collaboration

- For DAS-only TRCs (including Cisco Business Edition 6000), thin provisioning (either from VMware or from storage array) is not supported. Thick provisioning must be used.

- For diskless TRCs and any Specs-based server, thin provisioning (either from VMware or from storage array) is allowed with the caveat that disk space must be available to the VM as needed. Running out of disk space due to thin provisioning will crash the application and corrupt the virtual disk (which may also prevent restore from backup on the virtual disk).

- Published IOPS capacity requirements of UC VMs (including excess capacity provisioned to handle IOPS spikes such as during Cisco Unified Communications Manager upgrades).

- Other storage system design requirements (click here).
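The latency limits in the list above can be checked against collected samples. The following is a minimal sketch, assuming latency samples in milliseconds have already been gathered by some monitoring tool and exported as plain lists; how they are collected is outside its scope, and the sample values are made up. Because the policy states limits as "less than, with no spikes above", any single sample at or over the limit is treated as a violation.

```python
KERNEL_LIMIT_MS = 4.0      # host-level kernel disk command latency
DEVICE_LIMIT_MS = 20.0     # physical device command latency
NFS_GUEST_LIMIT_MS = 24.0  # guest latency for NFS NAS


def latency_violations(samples_ms, limit_ms):
    """Return every sample that is not strictly below `limit_ms`."""
    return [s for s in samples_ms if s >= limit_ms]


# Hypothetical samples, in milliseconds.
kernel_samples = [0.4, 1.2, 3.9, 5.1]
device_samples = [8.0, 12.0, 19.5]

print(latency_violations(kernel_samples, KERNEL_LIMIT_MS))
print(latency_violations(device_samples, DEVICE_LIMIT_MS))
```

Checking individual samples rather than an average matters here: an average can sit comfortably under the limit while short spikes still breach the requirement.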

Note: UC on UCS TRCs using only DAS storage (such as C220 M3S TRC#1) have been pre-designed and tested to meet the above requirements for any UC with UC co-residency scenario that will fit on the TRC. Detailed capacity planning is not required unless deploying

- non-UC/3rd-party apps

- VM OVA templates created later than the TRC

- VM OVA templates with very large vDisks (300GB+).

Note: All of the above requirements must be met for Cisco UC to function properly. Except for UC on UCS TRCs using DAS only, it is the customer's responsibility to design a storage system that meets the above requirements. Cisco TAC is not obligated to troubleshoot UC app issues when customer-provided storage is insufficient, overloaded or otherwise not meeting the above requirements.

See below for supported storage hardware options.

|

UC on UCS TRC |

Specs-based (UCS or 3rd-party Server) |

| Supported Storage Options |

TRCs are only defined for:

DAS-only with UC-specified configuration (C260 M2, C240 M3S, C220 M3S, C210 M1/M2, C200 M2)

FC SAN with VMware local boot from DAS (B200 M1/M2, C210 M1/M2)

Diskless / boot from FC SAN (B440 M2, B230 M2, B200 M3, C210 M2)

|

DAS with customer-defined configuration (including local disks, external SAS, etc.)

FC, iSCSI, FCoE or Infiniband SAN

Diskless / boot from SAN via above transport options (only supported with VMware vSphere ESXi 4.1+ and compatible UC app versions).

NFS NAS |

DAS Support Details

|

UC on UCS TRC |

Specs-based (UCS or 3rd-party Server) |

| Disk Size and Speed |

B-Series TRC

may use the disk size/speed listed in Table 1 BOMs, or any other orderable size/speed for the blade server (since local disks are only used to boot VMware).

C-Series TRC

Both must be same or higher than specs listed in Table 1.

E.g. for a TRC tested with 300 GB 10K rpm disks, then:

300GB 15K rpm is supported (faster)

146GB 10K rpm not supported (too small)

7.2K rpm disk of any size not supported (too slow)

HyperFlex M5 TRC

For Capacity Disks, may substitute other disk technologies (SFF/LFF SAS, SED, SSD) on HyperFlex HCL that provide same or higher space and speed. For Boot / System / Cache Disks, may substitute other disks on HyperFlex HCL. |

DAS is supported with customer-determined disk size, speed, quantity, technology, form factor and RAID configuration as long as:

compatible with the VMware HCL and compatible with the server model used

all UC latency, performance and capacity requirements are met. To ensure optimum UC app performance, be sure to use Battery Backup cache or SuperCap on RAID controllers for DAS.

|

| TRC BOMs are updated as orderable disk drive options change. E.g. UCS C210 M2 TRC#1 was tested with 146GB 15K rpm disks, but due to 146GB disk EOL, the BOM now specifies 300GB 15K rpm disks (still supported as TRC since both size and speed are "same or higher" than what was tested). |

| Disk Quantity, Technology, Form Factor |

C-Series TRCs must exactly match what is listed in Table 1. E.g. if the TRC was tested with ten 2.5" SAS drives, then that must be used regardless of disk size or speed.

HyperFlex M5 TRC

See previous row. |

| RAID Configuration |

C-Series TRCs RAID configuration, including physical-to-logical volume mapping, must exactly match Table 1 and the RAID instructions in the document Cisco Collaboration on Virtual Servers here.

N/A for HyperFlex M5 TRC (does not use RAID). |
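The C-Series TRC "same or higher" rule for disk size and speed can be written as a two-condition check. The sketch below is illustrative only; the function name and parameters are hypothetical. It reproduces the example from the table (a TRC tested with 300 GB 10K rpm disks) and covers size and speed only: disk quantity, technology, form factor and RAID configuration must still match Table 1 exactly.

```python
def disk_substitution_ok(tested_gb, tested_rpm, candidate_gb, candidate_rpm):
    """True if the candidate disk is same-or-higher in both size and speed."""
    return candidate_gb >= tested_gb and candidate_rpm >= tested_rpm


TESTED_GB, TESTED_RPM = 300, 10_000  # TRC tested with 300 GB 10K rpm disks

candidates = [
    (300, 15_000),  # faster
    (146, 10_000),  # too small
    (600, 7_200),   # too slow, regardless of size
]

for size_gb, rpm in candidates:
    ok = disk_substitution_ok(TESTED_GB, TESTED_RPM, size_gb, rpm)
    print(f"{size_gb} GB at {rpm} rpm:", "supported" if ok else "not supported")
```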

SAN / NAS Support Details

- Applies to any TRC or Specs-based configuration connecting to FC, iSCSI, FCoE or NFS storage.

- No UC requirement to dedicate arrays or storage groups to UC (vs. non-UC), or to one UC app vs. other UC apps.

- The storage solution must be compatible with the server model used. E.g. for Cisco Unified Computing System: Cisco UCS Interoperability

- The storage solution must be compatible with the VMware HCL. For example, refer to the "SAN/Storage" tab at http://www.vmware.com/resources/compatibility/search.php?sourceid=ie7&rls=com.microsoft:en-us:IE-SearchBox&ie=&oe=

- No UC requirements on disk size, speed, technology (SAS, SATA, FC disk), form factor or RAID configuration as long as requirements for compatibility, latency, performance and capacity are met. "Tier 1 Storage" is generally recommended for UC deployments. See the UC Virtualization Storage System Design Requirements for an illustration of a best practices storage array configuration for UC.

- There is no UC-specific requirement for NFS version. Use what VMware and the server vendor recommend for the vSphere ESXi version required by UC.

- Use of storage network and array "features" (such as thin provisioning or EMC Powerpath) is allowed.

- Otherwise any shared storage configuration is allowed as long as UC requirements for VMware HCL, server compatibility, latency, capacity and performance are met.

Removable Media

|

UC on UCS TRC |

Specs-based (UCS or 3rd-party Server) |

| Boot from SD cards, USB flash or other |

Not allowed or supported by Cisco for apps or VMware vSphere ESXi. TRCs are only validated as either boot from DAS or diskless boot from FC SAN depending on Table 1.

Note all current TRCs are either diskless blades or C-Series DAS/HDD. SD cards in C-Series TRCs are used for convenience to get the UCS utilities (like SCU and HUU), in lieu of a DVD drive.

HyperFlex TRCs must boot from Cisco FlexFlash and are not user-changeable. |

Not allowed or supported by Cisco for apps. Must boot from DAS, SAN or NAS per Specs-based Storage requirements.

Allowed for VMware vSphere ESXi as long as it is supported by the server vendor. Cisco Collaboration does not test, validate or troubleshoot these boot methods; work with the server vendor if boot issues occur. |

Otherwise, there are no UC-specific requirements or restrictions. The different methods of installing UC apps into VMs can leverage the following distribution types of Cisco UC software:

- Physical delivery of UC apps via ISO image file on DVD.

- Cisco eDelivery of UC apps via email with link to ISO image file download.

|

|

| IO Adapters, Controllers and Devices for LAN Access and Storage Access |

(top) |

All adapters used (NIC, HBA, CNA, VIC, etc.) must be on the VMware Hardware Compatibility List for the version of vSphere ESXi required by UC.

|

UC on UCS TRC |

Specs-based (UCS or 3rd-party Server) |

| Physical Adapter Hardware (NIC, HBA, VIC, CNA) |

For UCS B-Series TRC

- Adapter vendor/model/technology - either match the adapters listed in Table 1 BOMs or substitute with any other supported adapter for the blade server model (which adapter "should" be used is dependent on deployment, design and UC apps).

- Adapter quantity may be one or two.

For UCS C-Series TRC:

- Must exactly match the adapter vendor/model/technology (e.g. Intel i350 for 1GbE or QLogic QLE2462 for FC) listed in Table 1 BOMs.

- Allowed NIC quantity must be same or higher than what is listed in Table 1 BOMs.

- Allowed HBA/VIC/CNA quantity must exactly match Table 1 BOMs.

- Any other changes are not allowed for a UC on UCS TRC, but are allowed for UC on UCS Specs-based.

For HyperFlex M5 TRC:

- For VIC, may substitute quantity 1+ of any 10/25/40GE or faster VIC on the HyperFlex HCL. If the TRC is used with HyperFlex Edge, 10/25/40GE interfaces must be used (1GE interface options are not supported).

- For NIC, may substitute quantity 1+ of any NIC 1GE or faster on HyperFlex HCL.

|

Only the following I/O Devices are supported:

- HBA for storage access

- Fibre Channel - 2Gbps or faster

- InfiniBand

- NIC for LAN and/or shared storage access

- Ethernet - 1Gbps or faster. Includes NFS and iSCSI for storage access.

- Cisco VIC or 3rd-party Converged Network Adapter for LAN and/or storage access

- RAID Controllers for DAS storage access

- SAS

- SAS SATA Combo

- SAS-RAID

- SAS/SATA-RAID

- SATA

The customer is also responsible for configuring redundant devices on the server (e.g. redundant NIC, HBA, VIC or CNA adapters).

There are no UC restrictions on hardware vendors for I/O Devices other than that VMware HCL and the server vendor/model must be compatible with them and support them. |
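As a rough illustration of the UCS C-Series TRC adapter rules in this row (exact vendor/model match, NIC quantity same or higher, HBA/VIC/CNA quantity exact), the sketch below lists the rule violations for a proposed adapter set. The function name, the `(kind, model)` key layout and the message wording are assumptions made for the example; the sample BOM uses two Intel i350 NICs, as in the C240 M5SX BOMs on this page.

```python
from typing import Dict, List, Tuple

# (adapter kind, model) -> quantity; this layout is an assumption for the sketch.
Adapters = Dict[Tuple[str, str], int]


def c_series_trc_adapter_violations(bom: Adapters, proposed: Adapters) -> List[str]:
    """List the ways `proposed` departs from the C-Series TRC adapter rules."""
    violations = []
    # Vendor/model/technology must exactly match the BOM, so extra models are flagged.
    for kind, model in proposed:
        if (kind, model) not in bom:
            violations.append(f"{model} ({kind}) is not listed in the TRC BOM")
    for (kind, model), required in bom.items():
        actual = proposed.get((kind, model), 0)
        if kind == "NIC":
            # NIC quantity may be the same or higher than the BOM quantity.
            if actual < required:
                violations.append(f"{model}: NIC quantity {actual} is below {required}")
        elif actual != required:
            # HBA/VIC/CNA quantity must match the BOM exactly.
            violations.append(f"{model}: {kind} quantity {actual} must equal {required}")
    return violations


trc_bom = {("NIC", "Intel i350"): 2}

print(c_series_trc_adapter_violations(trc_bom, {("NIC", "Intel i350"): 3}))
print(c_series_trc_adapter_violations(trc_bom, {("NIC", "Intel i350"): 1}))
print(c_series_trc_adapter_violations(
    trc_bom, {("NIC", "Intel i350"): 2, ("HBA", "QLogic QLE2462"): 1}
))
```

A configuration that fails this check is not necessarily unsupported: per the policy, such changes move the server from UC on UCS TRC to UC on UCS Specs-based.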

| IO Capacity and Performance |

In most cases detailed capacity planning is not required for LAN IO or storage access IO. TRC adapter choices have been made to accommodate the IO of all UC on UCS app co-residency scenarios that will fit on the TRC. For guidance on active vs. standby network ports, see the Cisco UC Design Guide and QoS Design Considerations for Virtual UC with UCS.

It is the customer's responsibility to ensure the external LAN and storage access meet UC app design requirements.

|

LAN access adapters must be able to accommodate the LAN usage of UC VMs (described in UC app design guides).

Storage access adapters must be able to accommodate the storage IOPS (described in the Storage section of this policy).

Cisco TAC is not obligated to troubleshoot UC app issues in a deployment with insufficient or overloaded I/O devices.

|

|

|

| |

| Hardware Bills of Material (BOMs) |

(top) |

Note:

Do not assume that other UCS bundle SKUs on Cisco Commerce Build and Price can be used with UC on UCS. Before quoting one of these bundles, identify the BOM that it ships and see below:

- If the bundle meets TRC requirements on this page, it may be quoted for UC on UCS TRC.

- If the bundle does NOT meet TRC requirements but DOES meet Specs-based requirements, then it may be quoted for UC on UCS Specs-based only.

- If the bundle does NOT meet TRC requirements and also does NOT meet Specs-based requirements, then it may NOT be quoted for UC on UCS at all without modification.
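The three cases above form a small decision rule, sketched below. The enum and function names are hypothetical; whether a given bundle actually meets the TRC or Specs-based requirements still has to be determined by checking its BOM against this page.

```python
from enum import Enum


class QuoteOption(Enum):
    """Possible outcomes for a UCS bundle SKU (names are illustrative)."""
    TRC = "may be quoted for UC on UCS TRC"
    SPECS_BASED_ONLY = "may be quoted for UC on UCS Specs-based only"
    NOT_QUOTABLE = "may not be quoted for UC on UCS without modification"


def classify_bundle(meets_trc: bool, meets_specs_based: bool) -> QuoteOption:
    """Apply the bundle rules in order: TRC first, then Specs-based."""
    if meets_trc:
        return QuoteOption.TRC
    if meets_specs_based:
        return QuoteOption.SPECS_BASED_ONLY
    return QuoteOption.NOT_QUOTABLE


print(classify_bundle(meets_trc=False, meets_specs_based=True).value)
```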

|

End of Sale Hardware |

Click here to download EOS Bills of Material

|

|

HX220c M5SX TRC#1 |

|

Large HyperFlex node TRC. See details

Below is hardware-only configuration for a single HyperFlex TRC node and does not include required virtualization software licensing or required HyperFlex HX Data Platform (HXDP) subscription (click here for more details).

A minimum supported solution requires 3 HyperFlex TRC nodes and a pair of 6x00 Fabric Interconnect switches. Click here for a sample deployment.

This configuration is also quotable as part of bundle HX-UC-C2X0M5-TRC1.

| Quantity |

Cisco Part Number |

Description |

| 1 |

HX220C-M5SX |

Cisco HyperFlex HX220c M5 Node |

| 2 |

Either:

HX-CPU-6142

HX-CPU-I6242 |

2.6 GHz 6142/150W 16C/22MB Cache/DDR4 2666MHz

Intel 6242 2.8GHz/150W 16C/24.75MB 3DX DDR4 2933 MHz |

8 or 4 |

Either:

HX-MR-X32G2RS-H

HX-ML-X64G4RT-H |

32GB DDR4-2666-MHz RDIMM/PC4-21300/dual rank/x4/1.2v

64GB DDR4-2933-MHz LRDIMM/4Rx4/1.2v |

| 6 |

HX-HD12TB10K12N |

1.2 TB 12G SAS 10K RPM SFF HDD |

| 1 |

HX-SD480G63X-EP |

480GB 2.5in Enterprise Performance 6GSATA SSD(3X endurance) |

| 1 |

Either:

HX-SD240G61X-EV

HX-SD240GM1X-EV |

240GB 2.5 inch Enterprise Value 6G SATA SSD

240GB 2.5 inch Enterprise Value 6G SATA SSD |

| 1 |

HX-M2-240GB |

240GB SATA M.2 |

| 1 |

HX-SAS-M5 |

Cisco 12G Modular SAS HBA (max 16 drives) |

| 1 |

HX-MLOM-C40Q-03 |

Cisco VIC 1387 Dual Port 40Gb QSFP CNA MLOM |

| 2 |

CVR-QSFP-SFP10G |

QSFP to SFP10G adapter |

1

(optional) |

HX-PCIE-IRJ45 |

Intel i350 Quad Port 1Gb Adapter |

| 1 |

HX-RAILF-M4 |

Friction Rail Kit for C220 M4 rack servers |

| 2 |

HX-PSU1-1050W |

Cisco UCS 1050W AC Power Supply for Rack Server |

| 1 |

HX-MSD-32G |

32GB Micro SD Card for UCS M5 servers |

2

(auto-include) |

UCSC-BBLKD-S2 |

UCS C-Series M5 SFF drive blanking panel |

1

(auto-include) |

UCSC-BZL-C220M5 |

C220 M5 Security Bezel |

2

(auto-include) |

UCSC-HS-C220M5 |

Heat sink for UCS C220 M5 rack servers 150W CPUs & below |

1

(auto-include) |

UCS-MSTOR-M2 |

Mini Storage carrier for M.2 SATA/NVME (holds up to 2) |

| Note |

HXDP Software Subscription required - sold separately.

License required for VMware vSphere ESXi - sold separately. |

|

|

C240 M5SX TRC#2 |

|

Large TRC. See details

This configuration available as Business Edition 7000H appliance BE7H-M5-K9 or BE7H-M5-XU.

Note: The C240 M5L is only permitted under UC on UCS Specs-based.

Note: BE7000 appliances with ESXi 6.5 require vmfs5 (vmfs6 is not supported). For non-appliance TRCs, vmfs6 may be used with two caveats: some Collaboration applications do not support it (see their virtualization page on Collaboration Virtualization), and a VMware patch is required to ensure storage performance.

Note: For Intel Spectre/Meltdown concerns, see cisco-sa-20180104-cpusidechannel.

| Quantity |

Cisco Part Number |

Description |

| 1 |

UCSC-C240-M5SX |

UCS C240 M5 24 SFF + 2 rear drives w/o CPU,mem,HD,PCIe,PS |

| 2 |

UCS-CPU-6132 |

2.6 GHz 6132/140W 14C/19.25MB Cache/DDR4 2666MHz |

| 12 |

UCS-MR-X16G1RS-H |

16GB DDR4-2666-MHz RDIMM/PC4-21300/single rank/x4/1.2v |

| 24 |

UCS-HD300G10K12N |

300GB 12G SAS 10K RPM SFF HDD |

| 1 |

UCSC-RAID-M5HD |

Cisco 12G Modular RAID controller with 4GB cache |

| 1 |

R2XX-RAID5 |

Enable RAID 5 Setting |

| 2 |

UCSC-PCIE-IRJ45 |

Intel i350 Quad Port 1Gb Adapter |

| 1 |

UCSC-PCI-1B-240M5 |

Riser 1B incl 3 PCIe slots (x8, x8, x8); all slots from CPU1

|

| 1 |

UCSC-RAILB-M4 |

Ball Bearing Rail Kit for C220 & C240 M4 & M5 rack servers

|

| 2 |

UCSC-PSU1-1050W |

Cisco UCS 1050W AC Power Supply for Rack Server |

| 2 (auto-included) |

UCSC-BBLKD-S2 |

UCS C-Series M5 SFF drive blanking panel |

| 1 (auto-included) |

UCSC-PCIF-240M5 |

C240 M5 PCIe Riser Blanking Panel |

| 1 (auto-included) |

CBL-SC-MR12GM5P |

Super Cap cable for UCSC-RAID-M5HD |

| 1 (auto-included) |

UCSC-SCAP-M5 |

Super Cap for UCSC-RAID-M5, UCSC-MRAID1GB-KIT |

| 2 (auto-included) |

UCSC-HS-C240M5 |

Heat sink for UCS C240 M5 rack servers 150W CPUs & below |

| 1 |

CIMC-LATEST |

IMC SW (Recommended) latest release for C-Series Servers. |

| 1 |

C1UCS-OPT-OUT |

Cisco ONE Data Center Compute Opt Out Option |

|

|

C240 M5SX TRC#1 |

|

Medium TRC. See details

This configuration available as Business Edition 7000M appliance BE7M-M5-K9 or BE7M-M5-XU.

Note: The C240 M5L is only permitted under UC on UCS Specs-based.

Note: BE7000 appliances with ESXi 6.5 require vmfs5 (vmfs6 is not supported). For non-appliance TRCs, vmfs6 may be used with two caveats: some Collaboration applications do not support it (see their virtualization page on Collaboration Virtualization), and a VMware patch is required to ensure storage performance.

Note: For Intel Spectre/Meltdown concerns, see cisco-sa-20180104-cpusidechannel.

| Quantity |

Cisco Part Number |

Description |

| 1 |

UCSC-C240-M5SX |

UCS C240 M5 24 SFF + 2 rear drives w/o CPU,mem,HD,PCIe,PS |

| 1 |

UCS-CPU-6132 |

2.6 GHz 6132/140W 14C/19.25MB Cache/DDR4 2666MHz |

| 6 |

UCS-MR-X16G1RS-H |

16GB DDR4-2666-MHz RDIMM/PC4-21300/single rank/x4/1.2v |

| 14 |

UCS-HD300G10K12N |

300GB 12G SAS 10K RPM SFF HDD |

| 1 |

UCSC-RAID-M5HD |

Cisco 12G Modular RAID controller with 4GB cache |

| 1 |

R2XX-RAID5 |

Enable RAID 5 Setting |

| 2 |

UCSC-PCIE-IRJ45 |

Intel i350 Quad Port 1Gb Adapter |

| 1 |

UCSC-PCI-1B-240M5 |

Riser 1B incl 3 PCIe slots (x8, x8, x8); all slots from CPU1 |

| 1 |

UCSC-RAILB-M4 |

Ball Bearing Rail Kit for C220 & C240 M4 & M5 rack servers |

| 2 |

UCSC-PSU1-1050W |

Cisco UCS 1050W AC Power Supply for Rack Server |

| 12 (auto-included) |

UCSC-BBLKD-S2 |

UCS C-Series M5 SFF drive blanking panel |

| 1 (auto-included) |

UCSC-PCIF-240M5 |

C240 M5 PCIe Riser Blanking Panel |

| 1 (auto-included) |

CBL-SC-MR12GM5P |

Super Cap cable for UCSC-RAID-M5HD |

| 1 (auto-included) |

UCSC-SCAP-M5 |

Super Cap for UCSC-RAID-M5, UCSC-MRAID1GB-KIT |

| 1 (auto-included) |

UCSC-HS-C240M5 |

Heat sink for UCS C240 M5 rack servers 150W CPUs & below |

| 1 |

CIMC-LATEST |

IMC SW (Recommended) latest release for C-Series Servers. |

| 1 |

C1UCS-OPT-OUT |

Cisco ONE Data Center Compute Opt Out Option |

|

|

C220 M5SX TRC#2 |

|

Small Plus TRC. See details

This configuration available as Business Edition 6000H appliance BE6H-M5-K9 or BE6H-M5-XU.

Note: The C220 M5L is only permitted under UC on UCS Specs-based.

Note: BE6000 appliances with ESXi 6.5 require vmfs5 (vmfs6 is not supported). For non-appliance TRCs, vmfs6 may be used with two caveats: some Collaboration applications do not support it (see their virtualization page on Collaboration Virtualization), and a VMware patch is required to ensure storage performance.

Note: For Intel Spectre/Meltdown concerns, see cisco-sa-20180104-cpusidechannel.

| Quantity |

Cisco Part Number |

Description |

| 1 |

UCSC-C220-M5SX |

UCS C220 M5 SFF 10 HD w/o CPU, mem, HD, PCIe, PSU |

| 2 |

UCS-CPU-4114 |

2.2 GHz 4114/85W 10C/13.75MB Cache/DDR4 2400MHz |

| 4 |

UCS-MR-X16G1RS-H |

16GB DDR4-2666-MHz RDIMM/PC4-21300/single rank/x4/1.2v |

| 8 |

UCS-HD300G10K12N |

300GB 12G SAS 10K RPM SFF HDD |

| 1 |

UCSC-RAID-M5 |

Cisco 12G Modular RAID controller with 2GB cache |

| 1 |

R2XX-RAID5 |

Enable RAID 5 Setting |

| 1 |

UCSC-PCIE-IRJ45 |

Intel i350 Quad Port 1Gb Adapter |

| 1 |

UCSC-RAILB-M4 |

Ball Bearing Rail Kit for C220 & C240 M4 & M5 rack servers |

| 2 |

UCSC-PSU1-770W |

Cisco UCS 770W AC Power Supply for Rack Server |

| 2 (auto-included) |

UCSC-BBLKD-S2 |

UCS C-Series M5 SFF drive blanking panel |

| 1 (auto-included) |

CBL-SC-MR12GM52 |

Super Cap cable for UCSC-RAID-M5 on C240 M5 Servers |

| 1 (auto-included) |

UCSC-SCAP-M5 |

Super Cap for UCSC-RAID-M5, UCSC-MRAID1GB-KIT |

| 2 (auto-included) |

UCSC-HS-C220M5 |

Heat sink for UCS C220 M5 rack servers 150W CPUs & below

|

| 1 |

CIMC-LATEST |

IMC SW (Recommended) latest release for C-Series Servers. |

| 1 |

C1UCS-OPT-OUT |

Cisco ONE Data Center Compute Opt Out Option |

|

|

C220 M5SX TRC#1 |

|

Small TRC. See details

This configuration available as Business Edition 6000M appliance BE6M-M5-K9 or BE6M-M5-XU.

Note: The C220 M5L is only permitted under UC on UCS Specs-based.

Note: BE6000 appliances with ESXi 6.5 require vmfs5 (vmfs6 is not supported). For non-appliance TRCs, vmfs6 may be used with two caveats: some Collaboration applications do not support it (see their virtualization page on Collaboration Virtualization), and a VMware patch is required to ensure storage performance.

Note: For Intel Spectre/Meltdown concerns, see cisco-sa-20180104-cpusidechannel.

| Quantity |

Cisco Part Number |

Description |

| 1 |

UCSC-C220-M5SX |

UCS C220 M5 SFF 10 HD w/o CPU, mem, HD, PCIe, PSU |

| 1 |

UCS-CPU-4114 |

2.2 GHz 4114/85W 10C/13.75MB Cache/DDR4 2400MHz |

| 3 |

UCS-MR-X16G1RS-H |

16GB DDR4-2666-MHz RDIMM/PC4-21300/single rank/x4/1.2v |

| 6 |

UCS-HD300G10K12N |

300GB 12G SAS 10K RPM SFF HDD |

| 1 |

UCSC-RAID-M5 |

Cisco 12G Modular RAID controller with 2GB cache |

| 1 |

R2XX-RAID5 |

Enable RAID 5 Setting |

| 1 |

UCSC-RAILB-M4 |

Ball Bearing Rail Kit for C220 & C240 M4 & M5 rack servers

|

| 1 |

UCSC-PSU1-770W |

Cisco UCS 770W AC Power Supply for Rack Server |

| 4 (auto-included) |

UCSC-BBLKD-S2 |

UCS C-Series M5 SFF drive blanking panel |

| 1 (auto-included) |

UCSC-PSU-BLKP1U |

Power Supply Blanking Panel for C220 M4 servers |

| 1 (auto-included) |

CBL-SC-MR12GM52 |

Super Cap cable for UCSC-RAID-M5 on C240 M5 Servers |

| 1 (auto-included) |

UCSC-SCAP-M5 |

Super Cap for UCSC-RAID-M5, UCSC-MRAID1GB-KIT |

| 1 (auto-included) |

UCSC-HS-C220M5 |

Heat sink for UCS C220 M5 rack servers 150W CPUs & below

|

| 1 |

CIMC-LATEST |

IMC SW (Recommended) latest release for C-Series Servers. |

| 1 |

C1UCS-OPT-OUT |

Cisco ONE Data Center Compute Opt Out Option |
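For quick comparison, the per-server totals implied by the four C-Series M5 BOMs above can be worked out from the listed quantities. The sketch below simply multiplies the BOM quantities (CPU count by cores per CPU, DIMM count by 16GB, disk count by 300GB); the dictionary layout and function name are illustrative. The disk figure is raw capacity before RAID 5 overhead, so usable capacity will be lower and depends on the RAID layout given in Table 1.

```python
DIMM_GB = 16   # UCS-MR-X16G1RS-H
DISK_GB = 300  # UCS-HD300G10K12N

# (CPU count, cores per CPU, DIMM count, disk count), copied from the BOM tables.
TRC_BOMS = {
    "C240 M5SX TRC#2": (2, 14, 12, 24),
    "C240 M5SX TRC#1": (1, 14, 6, 14),
    "C220 M5SX TRC#2": (2, 10, 4, 8),
    "C220 M5SX TRC#1": (1, 10, 3, 6),
}


def summarize(cpus: int, cores_per_cpu: int, dimms: int, disks: int) -> dict:
    """Return total physical cores, memory and raw (pre-RAID) disk capacity."""
    return {
        "physical_cores": cpus * cores_per_cpu,
        "memory_gb": dimms * DIMM_GB,
        "raw_disk_gb": disks * DISK_GB,
    }


for name, bom in TRC_BOMS.items():
    print(name, summarize(*bom))
# C240 M5SX TRC#2 {'physical_cores': 28, 'memory_gb': 192, 'raw_disk_gb': 7200}
# C240 M5SX TRC#1 {'physical_cores': 14, 'memory_gb': 96, 'raw_disk_gb': 4200}
# C220 M5SX TRC#2 {'physical_cores': 20, 'memory_gb': 64, 'raw_disk_gb': 2400}
# C220 M5SX TRC#1 {'physical_cores': 10, 'memory_gb': 48, 'raw_disk_gb': 1800}
```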

|

|

E160S M3 TRC#1 |

|

Extra-Small TRC. See details

For a-la-carte ordering of this blade server as an option of an ISR router top-level SKU, note that the blade server SKU will vary by ISR model. Non-exhaustive examples are provided below.

Note this is a special server configuration with deployment model restrictions. See www.cisco.com/go/vmpt for allowed application virtual machine mixes.

| Quantity |

Cisco Part Number |

Description |

| 1 |

Either:

Blade-server-only

UCS-E160S-M3/K9=

Blade server as option of ISR

(example only, SKU varies by ISR model)

UCS-E160S-M3/K9 |

UCS-E, SingleWide, 6 Core CPU, 8 GB Flash, 8 GB RAM, 1-2 HDD

UCS-E, SingleWide, 6 Core CPU, 8 GB Flash, 8 GB RAM, 1-2 HDD |

| 1 |

EM3-MEM-8U16G |

8 to 16 GB upgrade, 1200MHz VLP RDIMM/PC4-2400 1R for UCSEM3 |

| 1 |

EM3-MEM-16G |

16 GB 1200MHz VLP RDIMM/PC4-2400 1R for UCS-E M3 |

| 2 |

E100S-HDD-SAS900G |

900 GB, SAS hard disk drive for SingleWide UCS-E |

| 1 |

DISK-MODE-RAID-1 |

Configure hard drives as RAID 1 (Mirror) |

|

|

|

|