
Collaboration Virtualization Sizing

Introduction

This article provides specifics and examples to aid in sizing Unified Communications applications for Cisco UCS B-Series and C-Series servers.

OVAs, VMs, Users and Servers

What is an OVA? A virtual machine template defines the configuration of a virtual machine's virtual hardware, or the "VM configuration". Open Virtualization Format (OVF) is an open standard for describing a VM configuration, and Open Virtualization Archive (OVA) is an open standard for packaging and distributing these templates. Files in OVA format have the extension ".ova". Each Cisco Collaboration application provides an OVA file containing all of the VM configurations required or supported for that application.
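An OVA is a tar archive that wraps an OVF descriptor (an XML file). Because of this, you can inspect which VM configurations an application's OVA offers before deploying it. The following is a minimal Python sketch built on a few assumptions: the OVA follows the DMTF OVF 1.x layout, and it lists its VM configurations as `Configuration` elements in a `DeploymentOptionSection`. The function name `list_ova_configurations` is illustrative only. It is not a Cisco tool, and it does not replace deploying the OVA through vSphere.

```python
import tarfile
import xml.etree.ElementTree as ET

# Namespace used by OVF 1.x envelopes (DMTF DSP0243).
OVF_NS = "http://schemas.dmtf.org/ovf/envelope/1"


def list_ova_configurations(ova_path):
    """Return (config_id, label) pairs from the OVF descriptor inside an OVA.

    Assumption: the VM configurations appear as <Configuration> elements
    inside a <DeploymentOptionSection> of the first .ovf member.
    """
    with tarfile.open(ova_path, "r") as tar:
        # The OVF spec places the descriptor first; search by suffix to be tolerant.
        ovf_member = next(
            (m for m in tar.getmembers() if m.name.endswith(".ovf")), None
        )
        if ovf_member is None:
            raise ValueError(f"no .ovf descriptor found in {ova_path}")
        ovf_xml = tar.extractfile(ovf_member).read()

    root = ET.fromstring(ovf_xml)
    ns = {"ovf": OVF_NS}
    configs = []
    for cfg in root.iter(f"{{{OVF_NS}}}Configuration"):
        config_id = cfg.get(f"{{{OVF_NS}}}id")  # the ovf:id attribute
        label = cfg.findtext("ovf:Label", default="", namespaces=ns)
        configs.append((config_id, label))
    return configs
```

For example, `list_ova_configurations("app.ova")` (a hypothetical file name) returns one `(id, label)` pair per VM configuration in that OVA. Those pairs are the choices you would see in the deployment wizard.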

Does Cisco require use of the OVA files provided by the Collaboration apps? Yes. To be supported by Cisco TAC, virtual machines for Cisco Unified Communications applications must use a VM configuration from the OVA file provided by that application.

- The OVA VM configurations are the configurations the UC apps have been validated with.

- It is the only way to ensure UC apps are deployed on "aligned" disks for SAN deployments (i.e. pre-aligned filesystem disk partitions for the VM's vDisks).

- Use the readme that accompanies the OVA download as the authoritative source of information on supported VM configurations. When in doubt, or if the readme conflicts with online web pages, follow the readme.

- Changes to the VM configuration are NOT allowed unless specifically permitted in technical documentation such as OVA readme files or application-specific documents like Replace a Single Server or Cluster for Cisco Unified Communications Manager. This includes virtual hardware specs, vNIC adapter types, virtual SCSI adapter types, file system alignment and all other configuration aspects. Resizing vDisks or changing their quantity beyond what is specifically allowed for a given application can break the VM's filesystem alignment. This can later manifest as a performance problem or as an inability to upgrade to a new application release.

Where do I download these OVA files? Each Collaboration application posts its OVA file in Download Software on www.cisco.com. Most product pages under Cisco Unified Communications in a Virtualized Environment link to the folder containing these files. The configuration of a UC application virtual machine must match a supported virtual machine template.

To obtain the virtual machine template for a UC application on virtualized servers, perform the following procedure:

- Go to the virtualization page for the product from Cisco Unified Communications in a Virtualized Environment.

- Click the "click to download OVA file for this version" link on that page.

- If necessary, navigate to Virtual Machine Templates within Download Software.

- To download a single OVA file, click the Download File button next to that file. To download multiple OVA files, click the Add to Cart button next to each file that you want to download, then click on the Download Cart link. A Download Cart page appears.

- Click the Proceed with Download button on the Download Cart page. A Software License Agreement page appears.

- Read the Software License Agreement, then click the Agree button.

- On the next page, click either the Download Manager link (requires Java) or the Non Java Download Option link. A new browser window appears.

- If you selected Download Manager, a Select Location dialog box appears. Specify the location where you want to save the file, and click Open to save the file to your local machine.

- If you selected Non Java Download Option, click the Download link on the new browser window. Specify the location and save the file to your local machine.

What does the term "users" mean in a sizing context? Many Collaboration apps use "users" in the label of a VM configuration. Here, "users" means a specific user count at a particular busy hour call attempts (BHCA) rate, with a particular number of devices per user, feature mix per VM, device mix per VM, and so on. Use the sizing tools and design guides at www.cisco.com/go/uc-virtualized to determine how many devices a given VM configuration can support. Actual user capacity and total supported devices depend on your design. Follow all rules in the design guides and sizing tools to determine what your application can actually support.

Application Co-residency Support Policy

Cisco UC virtualization only supports application co-residency under the specific conditions described below and as clarified in TAC Technote Document ID: 113520.

This policy only covers the rules for physical/virtual hardware sizing, co-resident application mix and maximum VM count per physical server. All other UC virtualization rules still apply (e.g., supported VMware vSphere ESXi versions or hardware options). Co-residency rules apply equally to all hardware options:

Note: UC app VM performance is only guaranteed when installed on a UC on UCS Tested Reference Configuration, and only if all other conditions in this policy are followed.

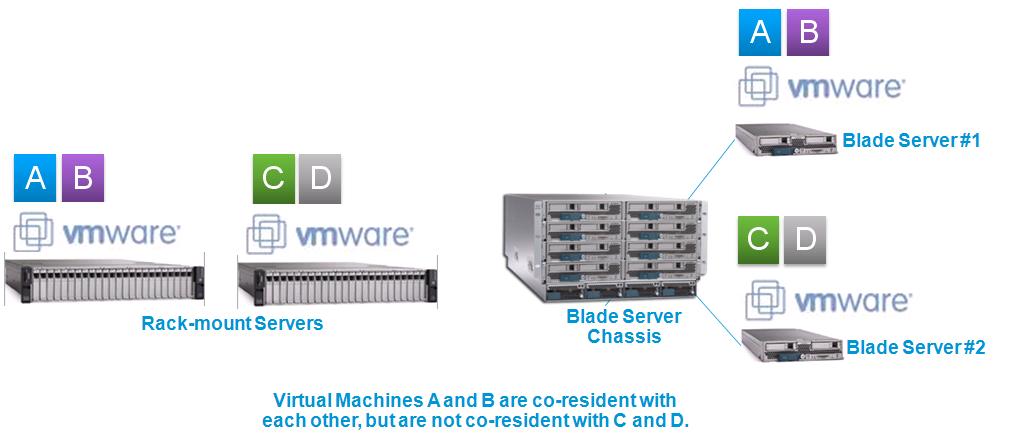

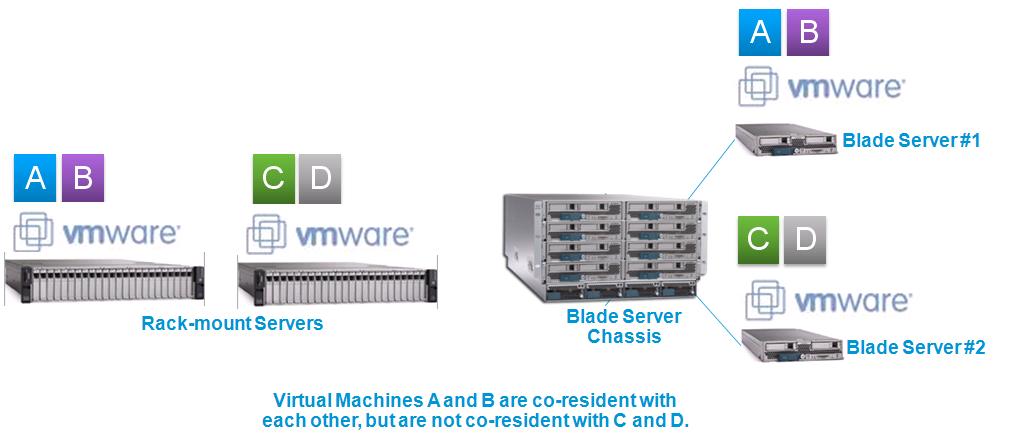

"Application co-residency" in this UC support policy is defined as VMs sharing the same physical server and the same virtualization software host:

- E.g. VMs running on the same VMware vSphere ESXi host on the same physical rack-mount server, such as Cisco UCS C-Series.

- E.g. VMs running on the same VMware vSphere ESXi host on the same physical blade server in the same blade server chassis, such as Cisco UCS B-Series.

- "Co-resident application mix" in this UC support policy refers to the set of VMs sharing a physical server and a virtualization software host.

- VMs running on different virtualization hosts and different physical servers are not co-resident.

- E.g. VMs running on two different Cisco UCS C-Series rack-mount servers are not co-resident.

- E.g. VMs running on two different Cisco UCS B-Series blade servers in the same UCS 5100 blade server chassis are not co-resident.
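The definition above reduces to a grouping key: two VMs are co-resident only if they share both the physical server and the virtualization host. The following minimal Python sketch groups VMs that way. The `VmPlacement` record and its field names are hypothetical, chosen for illustration. In practice, this information would come from your vCenter inventory.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class VmPlacement:
    name: str
    physical_server: str  # e.g. a C-Series server, or chassis/slot for a B-Series blade
    esxi_host: str        # the ESXi host the VM runs on


def co_resident_groups(vms):
    """Group VM names by (physical server, ESXi host).

    Only VMs that share BOTH the same physical server and the same
    virtualization host end up in the same group (i.e. are co-resident).
    """
    groups = defaultdict(list)
    for vm in vms:
        groups[(vm.physical_server, vm.esxi_host)].append(vm.name)
    return dict(groups)
```

For example, VMs on two different blades in the same UCS 5100 chassis (say `"chassis1/blade1"` and `"chassis1/blade2"`) land in separate groups, which matches the rule that they are not co-resident.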

Virtual Machines (VMs) are categorized as follows for purposes of this UC support policy:

- Cisco UC app VMs (or simply UC app VMs): a VM for one of the Cisco UC apps at Unified Communications in a Virtualized Environment.

- Cisco non-UC app VMs (or simply non-UC VMs): a VM for a Cisco application not listed at Unified Communications in a Virtualized Environment, such as the VM for Cisco Nexus 1000V's VSM.

- 3rd-party application VMs (or simply 3rd-party app VMs): a VM for a non-Cisco application, such as VMware vCenter, 3rd-party Cisco Technology Developer Program applications, non-Cisco-provided TFTP/SFTP/DNS/DHCP servers, Directories, Groupware, File/print, CRM, customer home-grown applications, etc.

Note: If you are using the virtualization software called "Cisco UC Virtualization Hypervisor" or "Cisco UC Virtualization Foundation" (as described in Unified Communications VMware Requirements), there are restrictions on allowed non-UC and 3rd-party application VMs. You may be required to deploy on VMware vSphere Standard, Enterprise or Enterprise Plus Edition instead.

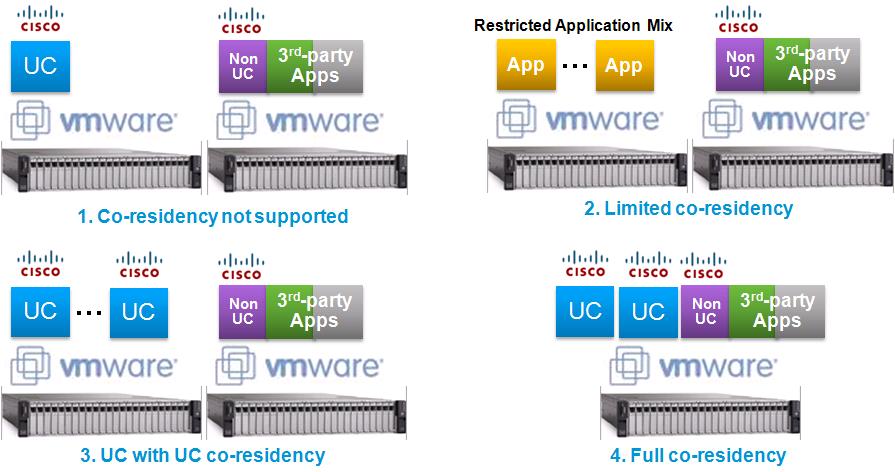

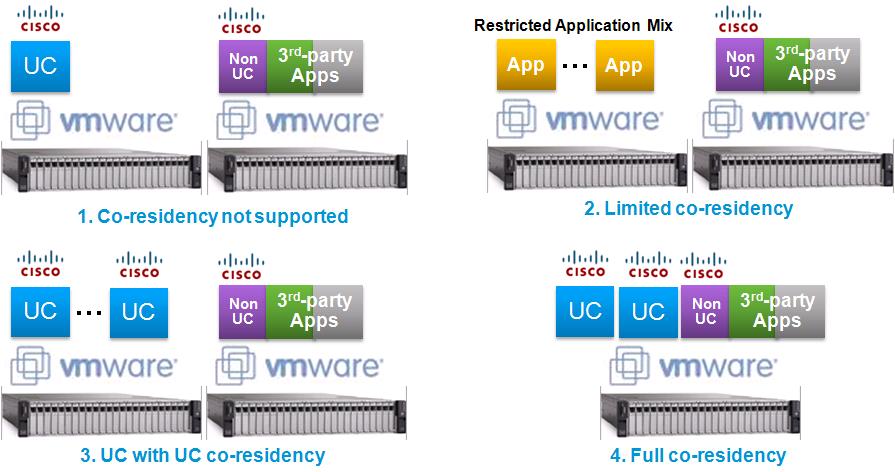

Each Cisco UC app supports one of the following four types of co-residency:

Note: Troubleshooting UC VMs co-resident with non-UC/3rd-party app VMs may require the changes described at TAC TechNote Document ID #113520. To be supported by Cisco TAC, customers must agree to these changes if required by Cisco TAC.

- None: Co-residency is not supported. The UC app only supports a single instance of itself in a single VM on the virtualization host / physical server. No co-residency with ANY other VM is allowed, whether Cisco UC app VM, Cisco non-UC VM, or 3rd-party application VM.

- Limited: The co-resident application mix is restricted to specified VM combinations only. Click the "Limited" entry in the tables below to see which VM combinations are allowed. Co-residency with any VMs outside these combinations, including other Cisco VMs, is not supported; those VMs must be placed on a separate physical server. The deployment must also follow the General Rules for Co-residency and Physical/Virtual Hardware Sizing listed below.

- UC with UC only: The co-resident application mix is restricted to VMs for UC apps listed at Unified Communications in a Virtualized Environment. Co-residency with Cisco non-UC VMs and/or 3rd-party application VMs is not supported; those VMs must be placed on a separate physical server. The deployment must also follow the General Rules for Co-residency and Physical/Virtual Hardware Sizing below.

- Full: The co-resident application mix may contain any combination of UC app VMs, Cisco non-UC VMs and 3rd-party application VMs. The deployment must follow the General Rules for Co-residency and Physical/Virtual Hardware Sizing below. The deployment must also follow the Special Rules for non-UC and 3rd-party Co-residency below.
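The four policy types can be read as a rule applied to every UC app VM in a proposed co-resident mix. The following minimal Python sketch encodes them under several assumptions:

- You supply each app's policy yourself from the tables in this article. The app names and policies in the usage example are illustrative only, not Cisco's actual assignments.
- The combinations a "Limited" app allows are app-specific, so they are passed in as a hypothetical `limited_combinations` set.
- The sketch checks only the co-residency policy. The General Rules and the Special Rules still apply separately.

```python
from enum import Enum


class Category(Enum):
    UC = "Cisco UC app VM"
    CISCO_NON_UC = "Cisco non-UC VM"
    THIRD_PARTY = "3rd-party app VM"


class Policy(Enum):
    NONE = "None"
    LIMITED = "Limited"
    UC_WITH_UC = "UC with UC only"
    FULL = "Full"


def mix_allowed(vms, limited_combinations=frozenset()):
    """Check a proposed co-resident mix against each UC app's co-residency policy.

    vms: list of (app_name, Category, Policy) tuples, one per VM on the host;
         the Policy entry is ignored for non-UC and 3rd-party VMs.
    limited_combinations: hypothetical set of frozensets of app names that the
         "Limited" apps in the mix explicitly allow together.
    """
    if len(vms) <= 1:
        return True  # a single VM is not co-resident with anything
    app_names = frozenset(name for name, _, _ in vms)
    has_non_uc = any(cat is not Category.UC for _, cat, _ in vms)
    for _, cat, policy in vms:
        if cat is not Category.UC:
            continue  # policies of non-UC/3rd-party apps are outside this sketch
        if policy is Policy.NONE:
            return False  # "None": no other VM of any kind may share the host
        if policy is Policy.UC_WITH_UC and has_non_uc:
            return False  # "UC with UC only": no non-UC or 3rd-party VMs
        if policy is Policy.LIMITED and app_names not in limited_combinations:
            return False  # "Limited": only the explicitly allowed combinations
    return True  # every UC app's policy ("Full" included) permits this mix
```

For example, a hypothetical UC app with a "UC with UC only" policy sharing a host with a vCenter VM returns `False`. The same pairing returns `True` if that app's policy were "Full".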

General Rules for Co-residency and Physical/Virtual Hardware Sizing

Note: For Unified Contact Center Enterprise or Packaged Contact Center Enterprise, first refer to the Solution Design Guide for solution-specific rules that may differ from below.

See the tables after the rules for the co-residency policy of each UC app.

Note: Virtualization and co-residency support vary by UC app version, so also double-check inter-UC-app version compatibility; see Cisco Unified Communications System Documentation.

"Matching" Support Policies

All co-resident applications must "match" in the following areas:

- Same "server" support for compute/network/storage hardware (see Unified Communications in a Virtualized Environment).

- E.g. if you want to host co-resident apps on UCS C260 M2 TRC#1, all co-resident apps must have a hardware support policy that permits this.

- E.g. if you want to deploy instead as UC on UCS Specs-based with a diskless UCS C260 M2 and a SAN/NAS storage array, all co-resident apps must support this.

- You must pick a hardware option that all the co-resident apps support. For example, some UC apps do not support Specs-based for UC on UCS or 3rd-party Servers, and some UC apps do not support certain Tested Reference Configurations such as UC on UCS C200 M2 TRC#1 (as opposed to UC on UCS C200 M2 specs-based).

- Same support for virtualization software product and version.

- E.g. if one app supports vSphere 5.0 but the other app only supports vSphere 4.1, vSphere 5.0 may not be used for this co-resident application mix.

- All apps must support a co-residency policy that permits the desired co-resident application mix.

- E.g. if one app has a "Full" policy and another app has a "UC with UC" policy, co-resident non-UC or 3rd-party app VMs are not allowed.

- E.g. if one app has a "UC with UC" policy and another app has a "Limited" policy, the desired combination may not be allowed by the "Limited" app, even though all apps are UC.

- E.g. if one app has a "None" policy, no other apps can be co-resident with it, regardless of their policies.

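- If the support policies of a given co-resident app mix do not match, then the "least common denominator" is required: only the hardware, virtualization software and co-residency options that every app in the mix supports may be used.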
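The "least common denominator" rule is a set intersection for hardware options and vSphere versions. For co-residency policies, it means picking the most restrictive policy in the mix. The following minimal Python sketch makes a few assumptions:

- Each app's supported options are entered by hand as plain strings.
- "Limited" is ranked as stricter than "UC with UC", as the "Limited" example above suggests.
- The hardware labels and version numbers in the usage example simply mirror the examples in this list. They are not a statement of any app's actual support.

```python
# Co-residency policies ranked from most to least restrictive.
POLICY_RANK = {"None": 0, "Limited": 1, "UC with UC": 2, "Full": 3}


def least_common_denominator(apps):
    """apps: mapping of app name -> {"hardware": set, "vsphere": set, "policy": str}.

    Returns (hardware options all apps support, vSphere versions all apps
    support, most restrictive co-residency policy in the mix). An empty set
    means no option of that kind is valid for this co-resident mix.
    """
    if not apps:
        raise ValueError("need at least one application")
    specs = list(apps.values())
    hardware = set.intersection(*(set(s["hardware"]) for s in specs))
    vsphere = set.intersection(*(set(s["vsphere"]) for s in specs))
    policy = min((s["policy"] for s in specs), key=POLICY_RANK.__getitem__)
    return hardware, vsphere, policy
```

With one app supporting vSphere 4.1 and 5.0 under a "Full" policy, and another supporting only 4.1 under "UC with UC", the result allows only vSphere 4.1 and a "UC with UC" mix.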

Virtual Machine Configurations

All UC applications must use a supported virtual machine OVA template from Unified Communications Virtualization Downloads (including OVA/OVF Templates).

No Hardware Oversubscription

All VMs require a one-to-one mapping between virtual hardware and physical hardware. See specifics below.

CPU

- Make sure your Tested Reference Configuration or Specs-based CPU is the right CPU Type for the particular UC VMs. E.g. for Cisco Unified Communications Manager using the 1vcpu:1pcore sizing approach, the 2.5K user, 7.5K user and 10K user VMs may only run on a server with a "Full UC Performance" CPU Type. If you use the CPU Reservations approach instead (see UCM and IMP Caveated Support for VMware CPU Reservations and Distributed Resource Scheduler), it may be possible to run them on a "Restricted UC Performance" CPU Type. The rest of this section covers the 1vcpu:1pcore sizing approach.

- Reminder: you must use a VM configuration from the Cisco-provided OVA file. Editing the "Number of Virtual Sockets" and "Number of Cores per Socket" in the Cisco-required VM configurations is not supported.

- You can use https://www.cisco.com/go/vmpt or http://www.cisco.com/go/quotecollab for help with VM placement.

- Always enable hyperthreading in the hardware BIOS if the CPU supports it.

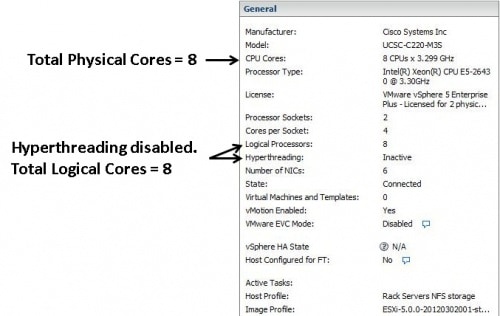

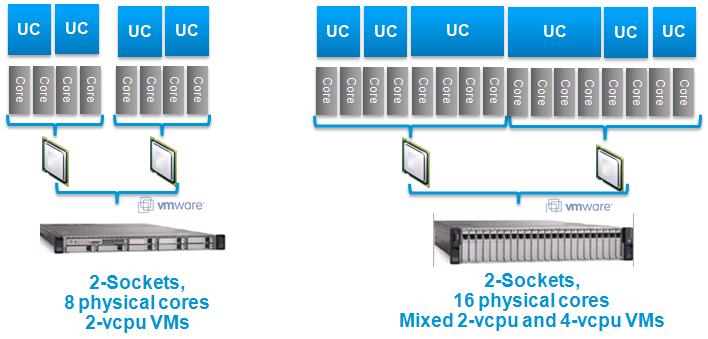

Always deploy 1 vCPU per physical CPU core (regardless of whether hyperthreading is enabled or not).

- This is a Cisco deployment model rule, not a configuration setting in ESXi.

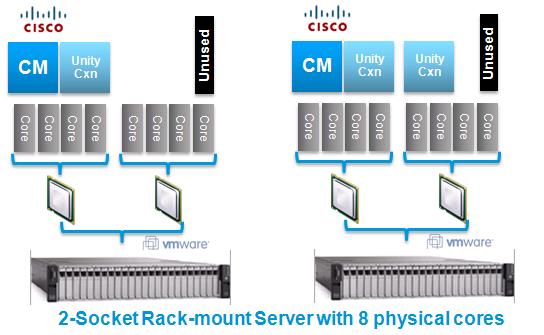

- The sum of total vCPU for all VMs must NOT exceed the sum of physical cores. For example, if you have a host with 12 total physical cores, then you can deploy any combination of virtual machines whose total vCPU sums to no more than 12.

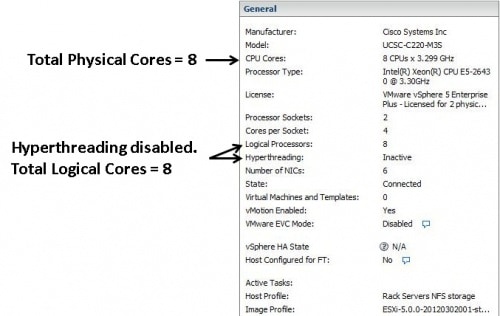

- Do NOT deploy 1 vCPU per Logical Core or Logical Processor.

- Here is a sample of an ESXi management screen that distinguishes Logical Processors/Cores from physical cores.

- The requirement is based on physical cores on CPU architectures that Cisco has verified have equivalent performance. E.g. for UC sizing purposes, one core on E5-2600 at 2.5+ GHz is equivalent to one core on E7-2800 at 2.4+ GHz, which are both equivalent to one core on 5600 at 2.53+ GHz.

- Cisco Unity VMs also require VMware CPU Affinity (Cisco Unity Connection VMs do not).

- If there is at least one live Unity Connection VM on the physical server, you must do one of the following:

- Unity Connection 10.5(2) or higher with ESXi 5.5 or higher, using "Latency Sensitivity":

- Each Unity Connection VM must have the ESXi "Latency Sensitivity" setting set to "High".

- At least one VM on the same host that is not Unity Connection must have "Latency Sensitivity" set to "Normal". It is recommended that all VMs on the host other than Unity Connection VMs be set to "Normal".

- Note: VMs should be powered off when enabling "Latency Sensitivity". To enable "Latency Sensitivity", do the following:

- Log in to the VMware vSphere Web Client.

- Navigate to DataCenter -> Virtual Machines and select the VM for which Latency Sensitivity will be enabled.

- Select VM Options, Advanced settings, Edit.

- Set the Latency Sensitivity value to "High" and click OK to apply.

- Any Unity Connection version that supports virtualization, with ESXi 4.0 to 5.1 (which cannot use "Latency Sensitivity") or with ESXi 5.5 or later (but choosing not to use "Latency Sensitivity"):

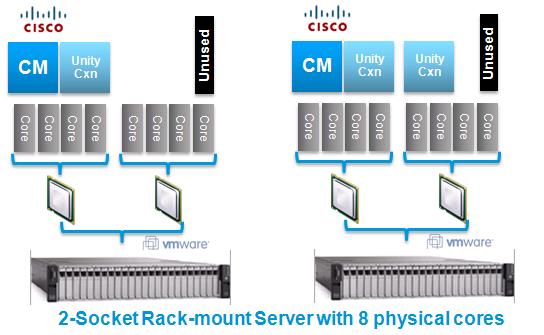

- One physical CPU core per physical server must be left unused (it is actually used by the ESXi scheduler).

- For example, if you have a host with 12 total physical cores and one or more of the VMs on that host will be Unity Connection, then you can deploy any combination of virtual machines whose total vCPU adds up to no more than 11, with the 12th core left unused. This applies regardless of how many Unity Connection VMs are on that host.
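The CPU rules in this list reduce to a small amount of arithmetic. The following minimal Python sketch assumes the following:

- Physical cores are computed as sockets times cores per socket. Hyperthreads are ignored, per the "no logical cores" rule.
- `uses_latency_sensitivity=True` stands for the Unity Connection 10.5(2)+ on ESXi 5.5+ option. The sketch does not check those versions itself.
- The function names are hypothetical helpers, not a Cisco or VMware API.

For real placement work, use the VM placement tool referenced above.

```python
def count_physical_cores(sockets, cores_per_socket):
    """Physical cores only; hyperthreads / logical processors are NOT counted."""
    return sockets * cores_per_socket


def vcpu_budget(physical_cores, has_unity_connection=False,
                uses_latency_sensitivity=False):
    """Maximum total vCPU across all VMs on one host (1 vCPU : 1 physical core)."""
    if has_unity_connection and not uses_latency_sensitivity:
        # One core is left unused for the ESXi scheduler, however many
        # Unity Connection VMs are on the host.
        return physical_cores - 1
    return physical_cores


def placement_fits(vm_vcpus, physical_cores, **unity_options):
    """True if the summed vCPU of all VMs stays within the host's budget."""
    return sum(vm_vcpus) <= vcpu_budget(physical_cores, **unity_options)


def latency_sensitivity_ok(vms):
    """vms: iterable of (is_unity_connection, latency_sensitivity) pairs.

    Every Unity Connection VM must be "High", and at least one other VM
    on the host must be "Normal".
    """
    vms = list(vms)
    unity = [level for is_cuc, level in vms if is_cuc]
    others = [level for is_cuc, level in vms if not is_cuc]
    return all(level == "High" for level in unity) and "Normal" in others
```

For a host with 2 sockets of 6 cores each, the budget is 12 vCPU even though ESXi reports 24 logical processors with hyperthreading. The budget drops to 11 once a Unity Connection VM is present and Latency Sensitivity is not used.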

Special Rules for non-UC and 3rd-party Co-residency

See the tables after these rules for the co-residency policy of each Cisco UC app.

The choice of virtualization software license may restrict which non-UC and/or 3rd-party application VMs can be used, and how many of those VMs may reside on a particular host. See Virtualization Software Requirements.

Non-UC VMs and 3rd-party app VMs that will be co-resident with Cisco UC app VMs are also required to align with all of the following:

"Matching" Support Policies

All co-resident VMs must follow the "Matching" Support Policies rule in General Rules for Co-residency and Physical/Virtual Hardware Sizing. Cisco Collaboration Virtualization does not describe policies for Cisco non-UC apps or 3rd-party apps.

Virtual Machine Configuration

Cisco non-UC apps and 3rd-party apps own the definition of their supported VM OVA templates (or the specs for creating one), similar to what Cisco UC app VMs require in General Rules for Co-residency and Physical/Virtual Hardware Sizing. Cisco Unified Communications in a Virtualized Environment does not describe VM templates for Cisco non-UC apps or 3rd-party apps.

CPU

All co-resident VMs - including non-UC VMs and 3rd-party app VMs - must follow the No Hardware Oversubscription rules for CPU in General Rules for Co-residency and Physical/Virtual Hardware Sizing.

Memory/RAM

- To enforce "no memory oversubscription", each co-resident VM (whether UC, non-UC or 3rd-party) must have a vRAM reservation that covers all the vRAM of the virtual machine. For example, a virtual machine configured with 4 GB of vRAM must also have a reservation of 4 GB of vRAM.

- In addition, all co-resident VMs, including non-UC VMs and 3rd-party app VMs, must follow the No Hardware Oversubscription rules for Memory/RAM in General Rules for Co-residency and Physical/Virtual Hardware Sizing. The 2 GB for VMware vSphere is in addition to the sum of the vRAM reservations for the VMs.
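The two memory rules above can be checked together. First, every VM reserves all of its vRAM. Second, the reservations plus 2 GB for VMware vSphere must fit in the host's physical RAM. The following is a minimal Python sketch, assuming all figures are in GB. The helper name is hypothetical.

```python
# 2 GB for VMware vSphere, on top of the VMs' vRAM reservations (per the policy text).
VSPHERE_RESERVED_GB = 2


def memory_fits(vms, host_ram_gb):
    """vms: iterable of (vram_gb, reservation_gb) pairs, one per co-resident VM."""
    vms = list(vms)
    # Rule 1: every VM must reserve all of its configured vRAM.
    if any(reservation_gb < vram_gb for vram_gb, reservation_gb in vms):
        return False
    # Rule 2: the sum of reservations plus the vSphere allowance must fit in RAM.
    total_gb = sum(reservation_gb for _, reservation_gb in vms) + VSPHERE_RESERVED_GB
    return total_gb <= host_ram_gb
```

For example, three fully reserved VMs with 4, 6 and 8 GB of vRAM need 18 + 2 = 20 GB of physical RAM, so they fit on a 24 GB host.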

Storage

- Non-UC VMs and 3rd-party app VMs must define their storage capacity requirements (ideally in an OVA template) and storage performance requirements. These requirements are not captured at Cisco Unified Communications in a Virtualized Environment.

- All co-resident VMs - including non-UC VMs and 3rd-party app VMs - must follow the No Hardware Oversubscription rules for Storage in General Rules for Co-residency and Physical/Virtual Hardware Sizing, including provisioning sufficient disk space, IOPS and low latency to handle the total VM load.

- If DAS storage is to be used with non-UC / 3rd-party app VMs, pre-deployment testing is highly recommended, with all VMs pushed to their highest level of IOPS generation. This is because DAS environments are generally more capacity- and performance-constrained and more dependent on RAID controller adapter caches. In addition, Cisco DAS testing was only done for UC apps on UCS Tested Reference Configurations.
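The "no storage oversubscription" check is an aggregate: the summed disk capacity and summed peak IOPS of all co-resident VMs must fit what the datastore can deliver. The following minimal Python sketch makes these assumptions:

- The per-VM figures come from each app's own storage requirements. The keys `disk_gb` and `peak_iops` are hypothetical names.
- The example numbers are illustrative, not Cisco figures.
- Latency is not modeled. It still has to be validated separately, for example through the pre-deployment testing recommended above for DAS.

```python
def storage_fits(vms, capacity_gb, max_iops):
    """vms: iterable of dicts with hypothetical keys 'disk_gb' and 'peak_iops'.

    Checks aggregate capacity and aggregate peak IOPS only; storage latency
    is not modeled here and must be validated separately.
    """
    vms = list(vms)
    total_gb = sum(vm["disk_gb"] for vm in vms)
    total_iops = sum(vm["peak_iops"] for vm in vms)
    return total_gb <= capacity_gb and total_iops <= max_iops
```

Summing peak IOPS rather than averages reflects the worst case in which all VMs are busy at once. This is the same condition the recommended DAS testing tries to reproduce.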

Network/LAN

Network, QoS and Shared Storage Design Considerations

See QoS Design Considerations for Virtual UC with UCS.

See Storage System Design Requirements.
