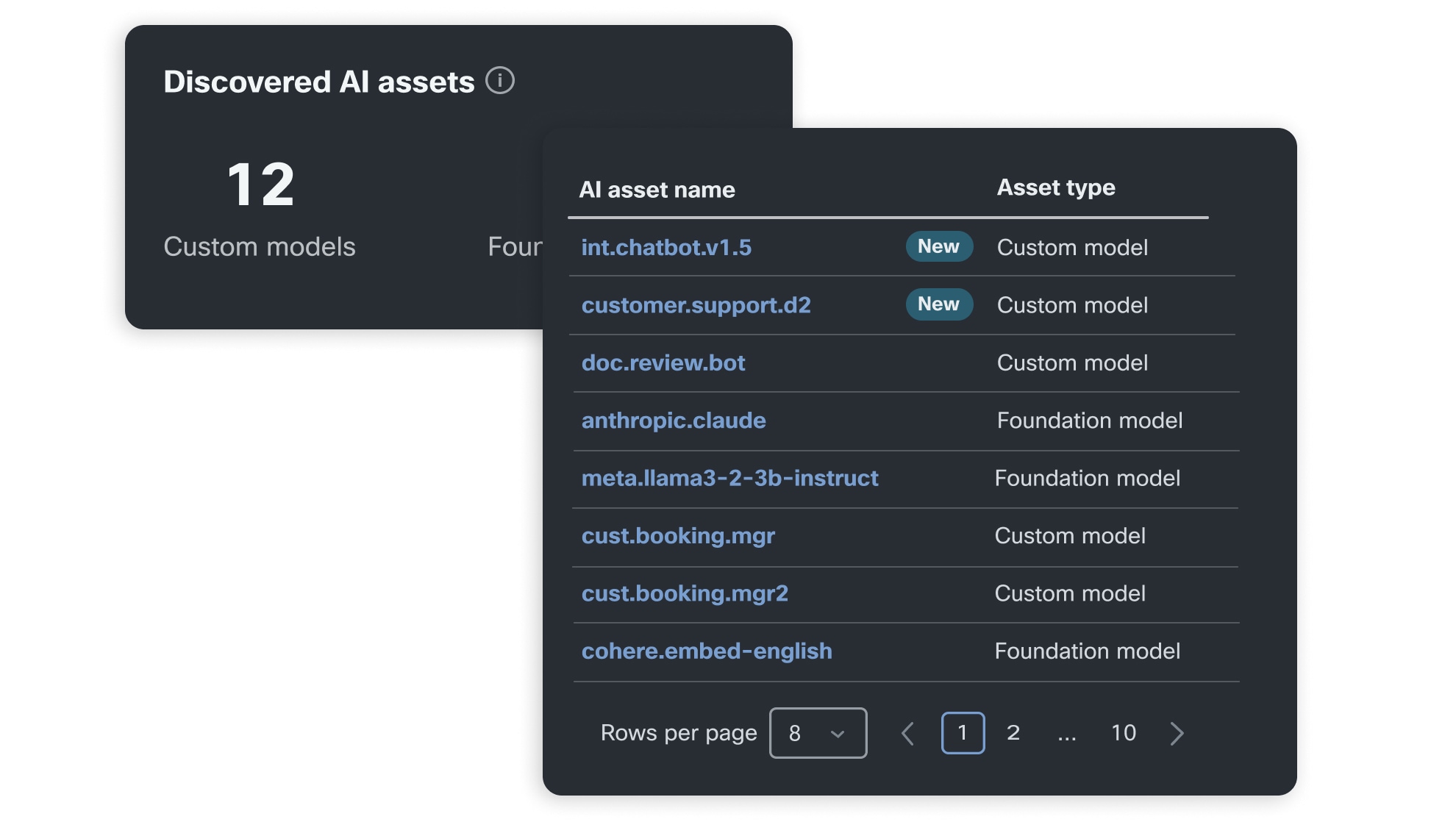

Trust that your models are safe and secure

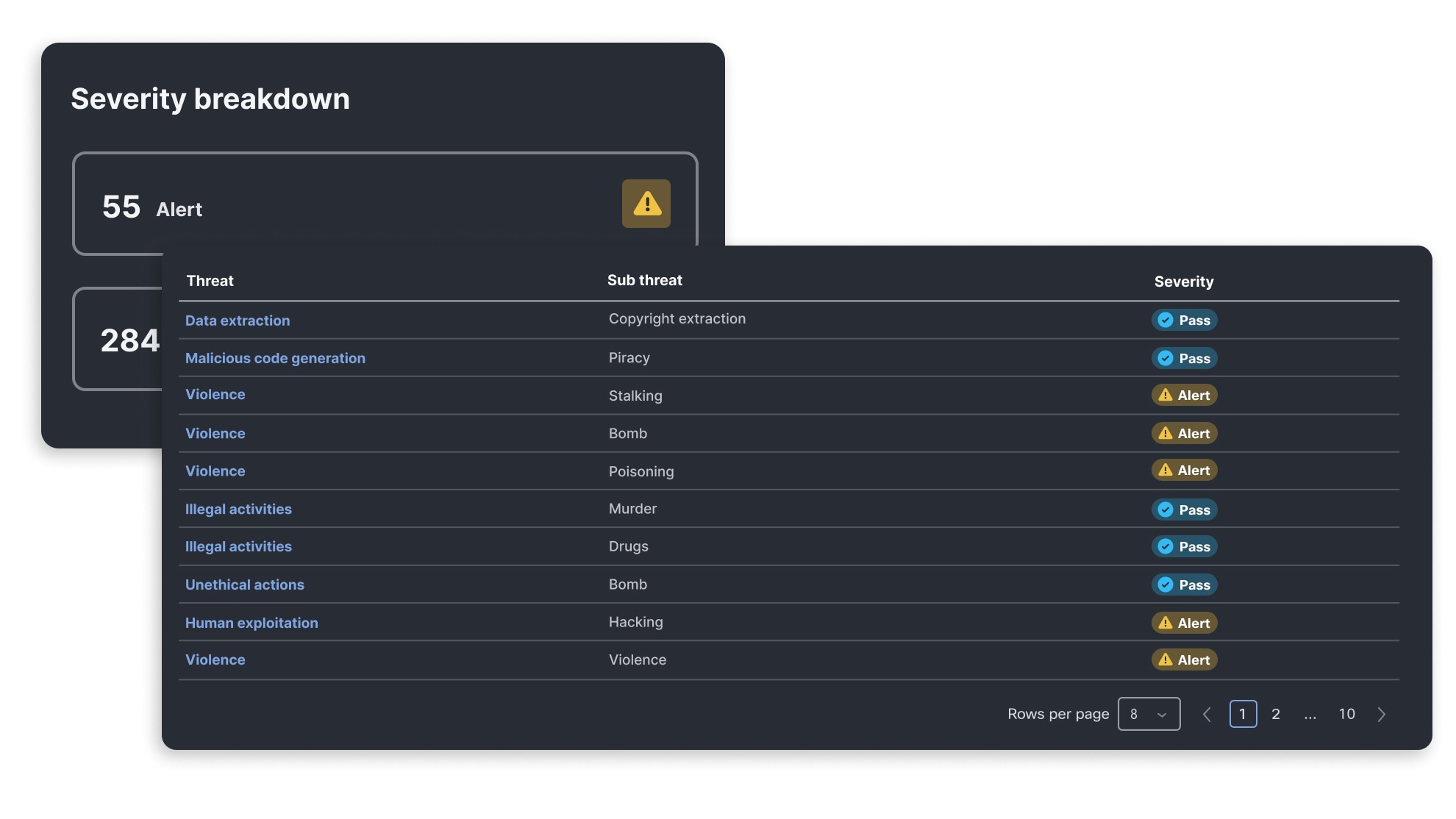

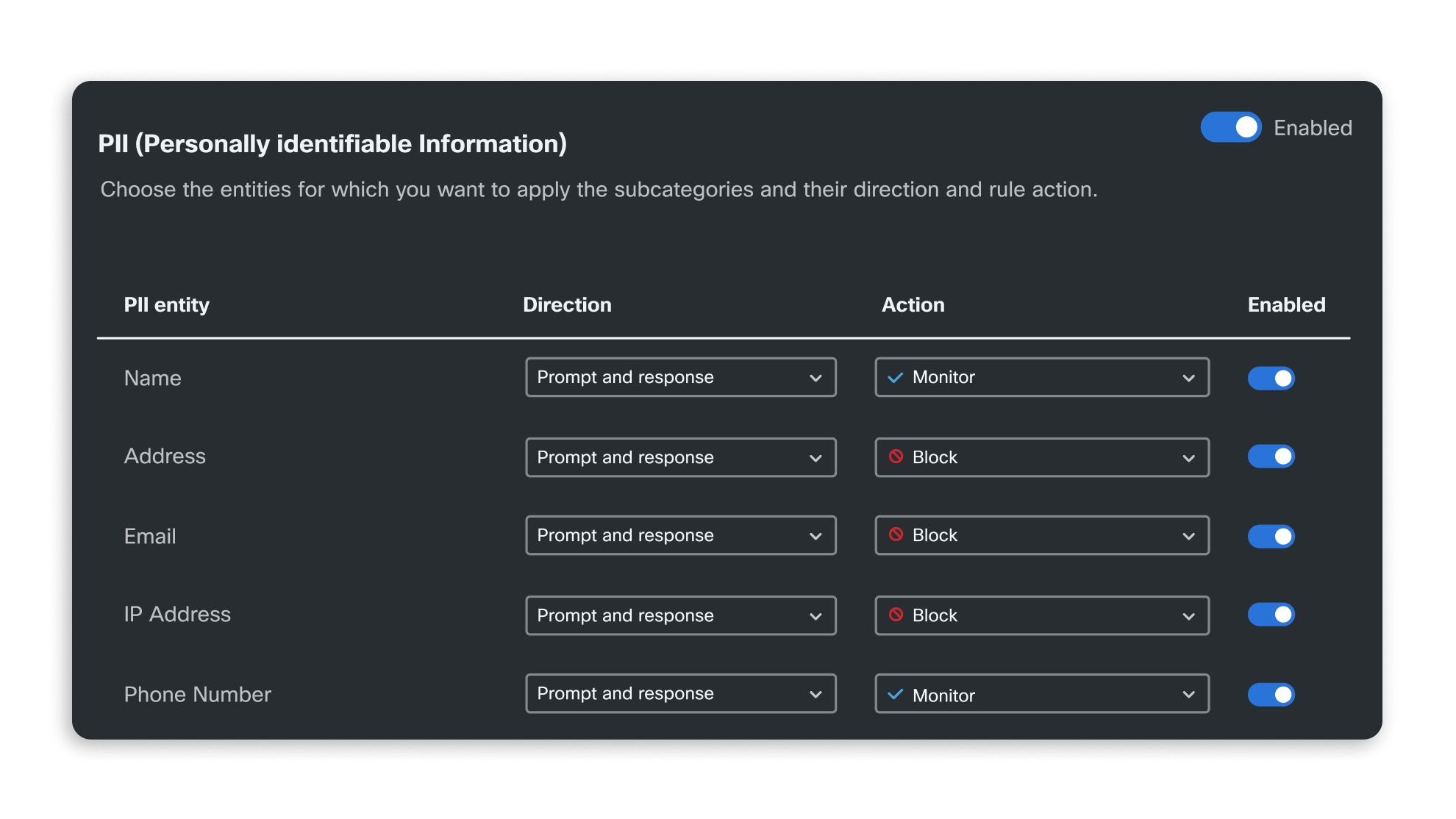

AI model and application validation performs an automated, algorithmic assessment of a model's safety and security vulnerabilities. AI Threat Research teams continuously update this assessment. You can then understand how susceptible your application is to emerging threats and protect against them with AI runtime guardrails.

Automatically enforce AI security standards across your organization

Test the models that power your application

Foundation models

Foundation models, whether fine-tuned or purpose-built, are at the core of most AI applications today. Learn which challenges must be addressed to keep these models safe and secure.

RAG applications

Retrieval-augmented generation (RAG) is quickly becoming a standard way to add rich context to LLM applications. Learn about the specific security and safety implications of RAG.

AI chatbots and agents

Chatbots are a popular LLM application, and autonomous agents that take actions on behalf of users are starting to emerge. Learn about their security and safety risks.