New and Changed Information

The following table provides an overview of the significant changes up to the current release. It is not an exhaustive list of all changes or new features up to this release.

| Cisco ACI CNI plug-in Release Version | Feature |
|---|---|
| 5.2(3) | Cisco Application Centric Infrastructure (ACI) supports Red Hat OpenShift 4.10 on a bare metal server. |

OpenShift 4.10 on Bare Metal

This document describes how to install OpenShift Container Platform (OCP) with the ACI CNI. To identify and resolve infrastructure issues that are unrelated to the ACI CNI, first install OCP on your bare metal nodes using the default OpenShift SDN CNI, following the relevant installation guide in the OpenShift 4.10 Container Platform documentation.

Note: This document cannot be used standalone. Use it together with the Red Hat OpenShift 4.10 Installing a Cluster on Bare Metal with Network Customizations document to perform the OpenShift cluster installation.

Requirements for supporting OpenShift 4.10 on a Bare Metal Server

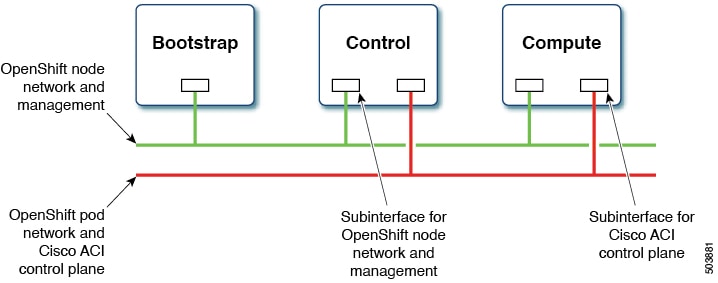

At least two network interfaces are required for bare metal nodes, one for the node network, and the second for the pod network. The design separates OpenShift node traffic from the pod traffic. The separation results in the control and compute machines having two network interfaces, as shown in the following illustration:

The first interface is used for the node network and the second for the pod network. The second interface also carries Cisco ACI control plane traffic: a VLAN-tagged subinterface configured on it carries both the cluster's pod traffic and the Cisco ACI control plane traffic.

Installation Process

The following sections detail the steps required to install the OpenShift cluster using the ACI CNI.

Configuring the OpenShift Installer

Use this procedure to configure the OpenShift installer.

Before you begin

Download the OpenShift installer and OC client.

For details on where to download the installer, see the OpenShift 4.10 document titled Installing a Cluster on Bare Metal with Network Customizations.

Procedure

|

Create the |

Configuring ACI Infra and CNI

Use this procedure to configure the ACI infra and CNI using acc-provision.

Procedure

|

Sample ACI configuration:

Customize the sample This generates a new |

Configuring Ignition Files

Use this procedure to configure ignition files for the bare metal nodes.

Before you begin

Prepare the Ignition Files

From Github download the config.yaml and update_ign.py files. This script updates the CoreOS ignition file, with additional NIC configuration, required to extend the Cisco ACI

internal network (Infra VLAN) up to the server level. This interface is used to carry VxLAN traffic from OVS towards the ACI

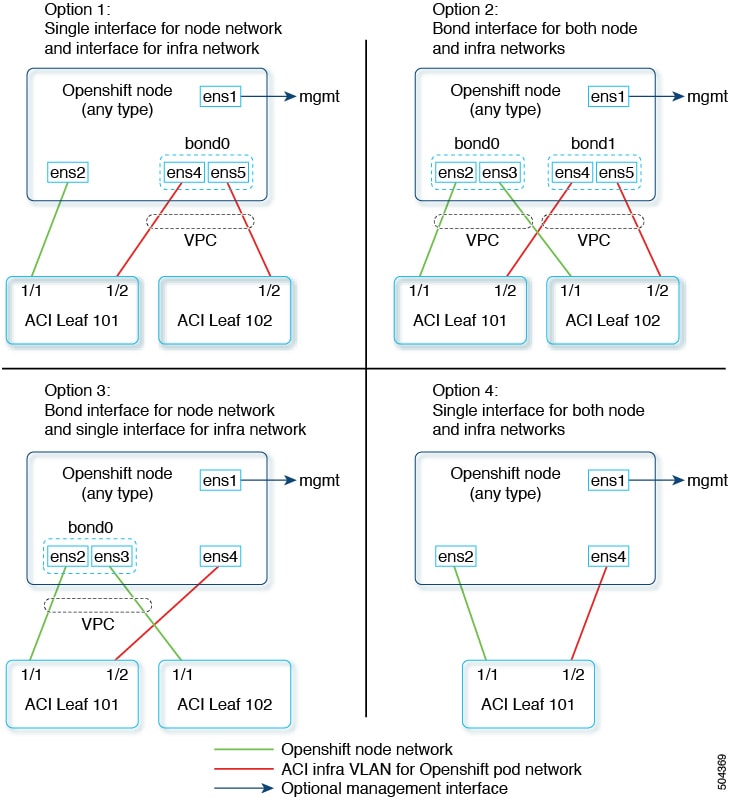

leaf switch with an appropriate tag for the pod network. The script offers four options for node and pod network configuration:

-

Single interface for node network and bond interface for infra network.

-

Bond interface for both node and infra networks.

-

Bond interface for node network and single interface for infra network.

-

Single interface for both node and infra networks.

Each of the above options have been illustrated as shown below:

Note: The node network is configured as a VLAN subinterface of either bond0 or a physical NIC. You can configure the server with additional VLANs for management purposes, or use the node network as the management network. The design might depend on the server provisioning method (PXE or manual ISO boot).
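The four options listed above can be told apart from the two interface lists in config.yaml (node_network_interface and aci_infra_network_interface). The following is a minimal sketch, not the logic of update_ign.py itself. It assumes, without confirmation from this document, that a list with one entry means a single interface and a list with two entries means a bond. The function name `describe_layout` is hypothetical.

```python
# Hypothetical helper, not part of update_ign.py.
# Assumption: one listed NIC = single interface, two listed NICs = bond.

def describe_layout(node_ifaces, infra_ifaces):
    """Return the wording of the matching option from the list of four."""

    def kind(ifaces):
        if len(ifaces) == 1:
            return "single"
        if len(ifaces) == 2:
            return "bond"
        raise ValueError(f"expected 1 or 2 interfaces, got {len(ifaces)}")

    node_kind = kind(node_ifaces)
    infra_kind = kind(infra_ifaces)
    if node_kind == infra_kind:
        return f"{node_kind.capitalize()} interface for both node and infra networks"
    return (
        f"{node_kind.capitalize()} interface for node network "
        f"and {infra_kind} interface for infra network"
    )


if __name__ == "__main__":
    # Interface names taken from the sample config.yaml in this document.
    print(describe_layout(["ens2", "ens3"], ["ens4", "ens5"]))
```

Check the instructions at the top of update_ign.py for the rule the script actually applies before relying on this mapping.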

Read the instructions provided at the top of the update_ign.py script and accordingly update the config.yaml file to match your environment.


    all:
      infra_vlan: 4093
      kubeapi_vlan: 3
      service_vlan: 202
      network_interfaces:
        node:
          mtu: 1500
        opflex:
          mtu: 1700
      os_compute_nodes_number: 2
      os_cp_nodes_number: 3
      node_network_interface:
        - ens2
        - ens3
      aci_infra_network_interface:
        - ens4
        - ens5

Procedure

| Step 1 | Create a root folder for your cluster. |
| Step 2 | Copy the |
| Step 3 | Create the manifests. |
| Step 4 | Extract all the ACI manifest files in |
| Step 5 | Create the ignition configurations. |
| Step 6 | Update the ignition files with the ACI CNI specific configuration(s). The ignition files are now ready and can be copied to your HTTP server, so they can be served to your nodes. The |
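Before Step 6 runs update_ign.py, the values in config.yaml can be sanity-checked. The following is a minimal sketch under stated assumptions, not part of the Cisco tooling. `SAMPLE` mirrors the sample config.yaml as a Python dict, and its nesting under `all` is inferred from the key order in the sample. The names `check_config` and `vxlan_payload_mtu` are hypothetical. The VLAN range check follows the 802.1Q usable range of 1 to 4094. The 50-byte figure is the general VXLAN-over-IPv4 encapsulation overhead, not a documented ACI requirement.

```python
# Values copied from the sample config.yaml; the nesting is an assumption.
SAMPLE = {
    "all": {
        "infra_vlan": 4093,
        "kubeapi_vlan": 3,
        "service_vlan": 202,
        "network_interfaces": {"node": {"mtu": 1500}, "opflex": {"mtu": 1700}},
        "os_compute_nodes_number": 2,
        "os_cp_nodes_number": 3,
        "node_network_interface": ["ens2", "ens3"],
        "aci_infra_network_interface": ["ens4", "ens5"],
    }
}

# Outer Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8) headers.
VXLAN_IPV4_OVERHEAD = 50


def check_config(config):
    """Return a list of problems found; an empty list means none were found."""
    problems = []
    settings = config.get("all", {})

    # 802.1Q VLAN IDs 0 and 4095 are reserved, so usable IDs are 1..4094.
    for key in ("infra_vlan", "kubeapi_vlan", "service_vlan"):
        value = settings.get(key)
        if not isinstance(value, int) or not 1 <= value <= 4094:
            problems.append(f"{key} must be a VLAN ID in 1..4094, got {value!r}")

    for key in ("node_network_interface", "aci_infra_network_interface"):
        ifaces = settings.get(key)
        if not isinstance(ifaces, list) or not ifaces:
            problems.append(f"{key} must be a non-empty list of interface names")

    for key in ("os_compute_nodes_number", "os_cp_nodes_number"):
        value = settings.get(key)
        if not isinstance(value, int) or value < 0:
            problems.append(f"{key} must be a non-negative integer, got {value!r}")

    return problems


def vxlan_payload_mtu(config):
    """Largest inner packet that fits in the opflex MTU after VXLAN encapsulation."""
    opflex_mtu = config["all"]["network_interfaces"]["opflex"]["mtu"]
    return opflex_mtu - VXLAN_IPV4_OVERHEAD


if __name__ == "__main__":
    for problem in check_config(SAMPLE):
        print("problem:", problem)
    print("inner MTU available over VXLAN:", vxlan_payload_mtu(SAMPLE))
```

The checks are deliberately limited to what the sample itself shows. They do not test whether the VLANs exist on the fabric or whether the interface names exist on the server.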

What to do next

Updating the Default Ingress Controller

To update the default Ingress Controller publish strategy to use the ACI load balancer, log in as a user with cluster-admin privileges and run the following command:

    oc replace --force --wait --filename - <<EOF
    apiVersion: operator.openshift.io/v1
    kind: IngressController
    metadata:
      namespace: openshift-ingress-operator
      name: default
    spec:
      endpointPublishingStrategy:
        type: LoadBalancerService
        loadBalancer:
          scope: External
    EOF

For more details, see the Configuring the Default Ingress Controller for your Cluster to be Internal section in the Ingress Operator in OpenShift Container Platform Red Hat guide.
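The nesting of the IngressController manifest matters, so it can help to see it built as a data structure. The following is a minimal sketch that assembles the same manifest as a Python dict and prints it as JSON, which `oc` accepts in place of YAML. The function name `ingress_controller_manifest` and its `scope` parameter are hypothetical conveniences, not part of any Cisco or Red Hat tool.

```python
import json

# Scope values defined by the IngressController API for LoadBalancerService.
VALID_SCOPES = ("External", "Internal")


def ingress_controller_manifest(scope="External"):
    """Build the default IngressController manifest used in the command above."""
    if scope not in VALID_SCOPES:
        raise ValueError(f"scope must be one of {VALID_SCOPES}, got {scope!r}")
    return {
        "apiVersion": "operator.openshift.io/v1",
        "kind": "IngressController",
        "metadata": {
            "namespace": "openshift-ingress-operator",
            "name": "default",
        },
        "spec": {
            "endpointPublishingStrategy": {
                "type": "LoadBalancerService",
                "loadBalancer": {"scope": scope},
            }
        },
    }


if __name__ == "__main__":
    # Print JSON so the output can be piped to: oc replace --force --wait --filename -
    print(json.dumps(ingress_controller_manifest(), indent=2))
```

Saved under a file name of your choice, the script's output can be piped into the same `oc replace --force --wait --filename -` command in place of the here-document.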
