FlexPod Datacenter for OpenShift Container Platform 4

Deployment Guide for FlexPod Datacenter for OpenShift Container Platform 4

Published: August 2020

In partnership with:

About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information, go to:

http://www.cisco.com/go/designzone.

© 2020 Cisco Systems, Inc. All rights reserved.

Table of Contents

Hardware and Software Revisions

OpenShift Container Platform Design

OpenShift Container Platform Deployment

OpenShift Container Platform - Networking Configuration

OpenShift Container Platform Management Connectivity

OpenShift Container Platform Storage Connectivity

OpenShift Container Platform – Installation Requirements

Infrastructure Virtual Machines

OpenShift Container Platform Virtual Machines

Virtual Machine Hostnames and IP addresses

OpenShift Container Platform – Infrastructure Setup

Deploy Infrastructure Virtual Machines in VMware

Install and Configure the DNS Server

Install and Configure the DHCP Server

Install and Configure Web Server

Install and Configure Load Balancer

Verify Infrastructure Services

OpenShift Container Platform – Installation

Obtain the Installation Programs

Create the Installation Configuration File

Create the Ignition Configuration Files

Create Red Hat Enterprise Linux CoreOS (RHCOS) VM Template

Install the OpenShift CLI by Downloading the Binary

Prepare the Terraform Installer

Approve the Certificate Signing Requests

Log into OpenShift Container Platform

Setup NFS Volume for Image Registry on NetApp

VMware Configuration for OCP Virtual Machines

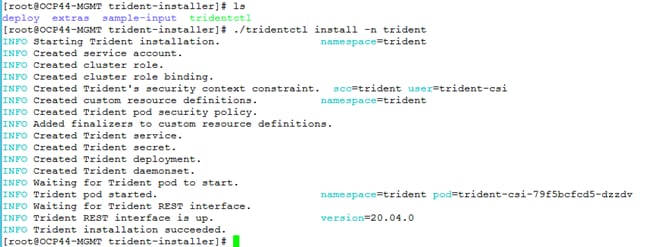

Storage Configuration for NetApp Trident

Create Load-Sharing Mirrors of SVM Root Volume

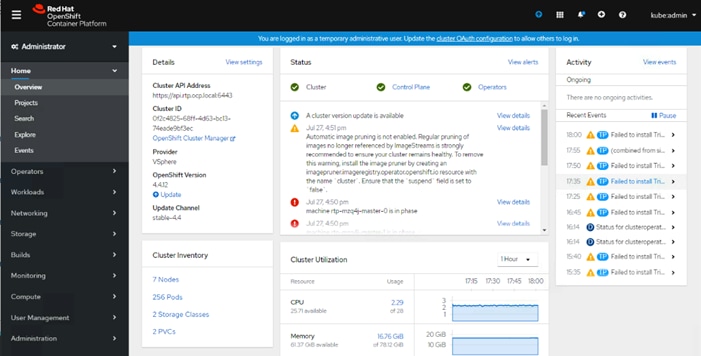

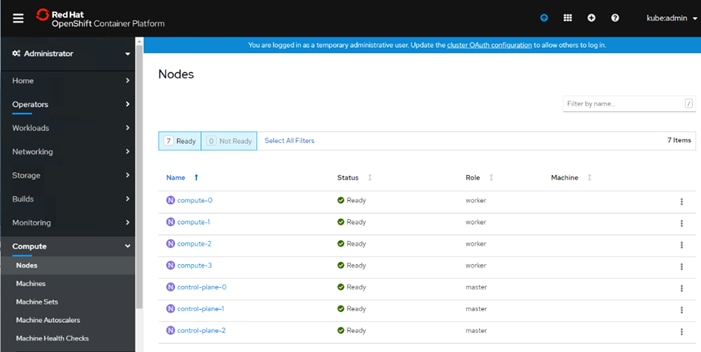

OCP Web Console - Compute Nodes

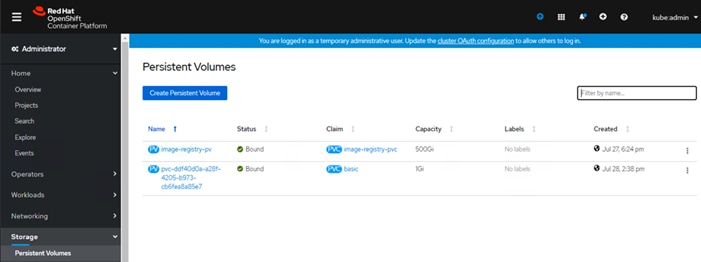

OCP Web Console – Persistent Volumes

Load Balancer Configuration File

Red Hat OpenShift Container Platform

Cisco Validated Designs (CVDs) deliver systems and solutions that are designed, tested, and documented to facilitate and improve customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of the customers and to guide them from design to deployment.

Customers looking to deploy applications using a shared datacenter infrastructure face several challenges. A recurring infrastructure challenge is to achieve the required levels of IT agility and efficiency that can effectively meet the company’s business objectives. Addressing these challenges requires having an optimal solution with the following key characteristics:

· Availability: Help ensure application and service availability at all times, with no single point of failure

· Flexibility: Ability to support new services without requiring underlying infrastructure modifications

· Efficiency: Facilitate efficient operation of the infrastructure through re-usable policies

· Manageability: Ease of deployment and ongoing management to minimize operating costs

· Scalability: Ability to expand and grow with significant investment protection

· Compatibility: Minimize risk by ensuring compatibility of integrated components

Cisco and NetApp have partnered to deliver a series of FlexPod solutions that enable strategic datacenter platforms with the above characteristics. The FlexPod solution delivers an integrated architecture that incorporates compute, storage, and network design best practices, and minimizes IT risk by validating the integrated architecture to ensure compatibility between its components. The solution also addresses IT pain points by providing documented design guidance, deployment guidance, and support that can be used in the planning, design, and implementation stages of a deployment.

Red Hat® OpenShift® is an enterprise-ready Kubernetes container platform for managing hybrid cloud and multi-cloud deployments. Red Hat OpenShift Container Platform includes everything needed for hybrid cloud, enterprise container, and Kubernetes development and deployments. It includes an enterprise-grade Linux operating system, container runtime, networking, monitoring, container registry, authentication, and authorization solutions.

Combining Red Hat OpenShift with the FlexPod Datacenter solution can simplify the deployment and the management of the container infrastructure. Customers can benefit from improved efficiency, better data protection, lower risk, and the flexibility to scale this highly available enterprise-grade infrastructure stack to accommodate new business requirements. The pre-validated converged solution approach helps organizations achieve the speed, flexibility, and scale required for all of their application modernization and digital transformation initiatives.

Introduction

The FlexPod Datacenter solution is a pre-designed, integrated, and validated data center architecture that combines Cisco UCS servers, the Cisco Nexus family of switches, Cisco MDS fabric switches, and NetApp ONTAP storage arrays into a single, flexible architecture. FlexPod solutions are designed for high availability, with no single points of failure, while maintaining cost-effectiveness and flexibility in the design to support a wide variety of workloads. The FlexPod design can support different hypervisor options and bare metal servers, and can be sized and optimized based on customer workload requirements.

To help customers, business partners, and other deployment teams with their digital transformation and to enhance their cloud-native and application modernization practices, this document provides a reference architecture that includes design guidance, best practices, and other recommendations for deploying Red Hat OpenShift Container Platform (OCP) 4 on FlexPod DC architecture.

This document is a detailed walkthrough of the solution build-out for deploying OCP 4 on FlexPod DC and provides step-by-step deployment instructions for the various components.

Audience

The intended audience of this document includes but is not limited to data scientists, IT architects, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to take advantage of an infrastructure built to deliver IT efficiency and enable IT innovation.

What’s New in this Release?

The following design elements distinguish this version of FlexPod from previous models:

· Deploying Red Hat OpenShift Container Platform (OCP) 4.4 on FlexPod Datacenter infrastructure running vSphere 6.7 Update 3.

· Showcase NetApp storage integration with the underlying container orchestration using NetApp Trident to provision and manage persistent volumes for the containerized applications.

Architecture

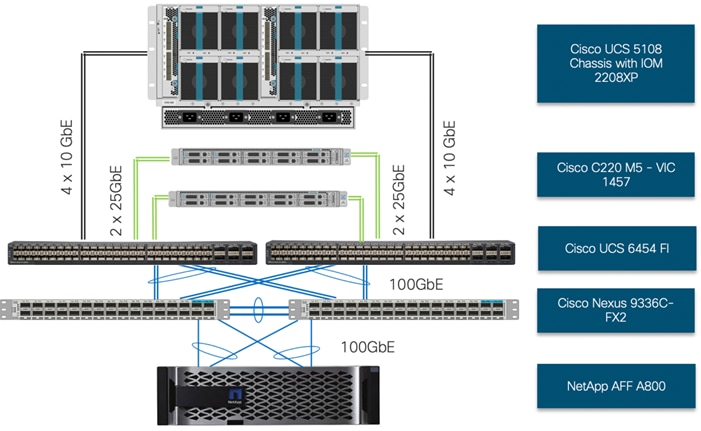

FlexPod Datacenter for OCP comprises the following core hardware components:

· Cisco UCS Manager on Cisco 4th generation 6454 Fabric Interconnects to support 10GbE, 25GbE and 100GbE connectivity from various components.

· Cisco UCS 5108 Chassis with Cisco UCS B200 M5 blade servers and Cisco UCS C220 M5 rack servers to support the VMware vSphere environment where Red Hat OCP is deployed.

· High-speed Cisco NX-OS-based Nexus 9336C-FX2 switching design to support up to 100GbE connectivity.

· NetApp AFF A800 NVMe storage with 100GbE connectivity to Cisco Nexus switching fabric.

The FlexPod Datacenter solution for OCP closely aligns with the latest NX-OS-based FlexPod CVD, the FlexPod Datacenter with NetApp ONTAP 9.7, Cisco Intersight, and VMware vSphere 6.7 U3 Design Guide, and meets the following general design requirements:

1. Resilient design across all layers of the infrastructure with no single point of failure.

2. Scalable design with the flexibility to add compute capacity, storage, or network bandwidth as needed.

3. Modular design that can be replicated to expand and grow as the needs of the business grow.

4. Flexible design that can support components beyond what is validated and documented in this guide.

5. Simplified design with ability to automate and integrate with external automation and orchestration tools.

For Red Hat OCP 4 integration into a traditional FlexPod Datacenter solution, the following specific design considerations are also observed:

1. High Availability of master nodes with a minimum of 3 master node VMs deployed.

2. A minimum of 4 worker node VMs with ability to increase the nodes as the load requirements increase.

3. Automating the OCP installation by utilizing Terraform scripts provided by Red Hat to simplify the installation and reduce the deployment time.

4. Present persistent storage (volumes) to the containerized applications by utilizing the NetApp Trident storage orchestrator.

Physical Topology

The physical topology for FlexPod Datacenter for OCP 4 deployment is shown in Figure 1.

To validate the design, an environment with the following components was set up:

· Cisco UCS 6454 Fabric Interconnects (FI) to support Cisco UCS 5108 chassis and Cisco UCS C220 M5 servers.

· Cisco UCS 5108 chassis connected to FIs using 2208XP IOMs.

· Cisco Nexus 9336C running in NX-OS mode provides the switching fabric.

· Cisco UCS 6454 FI’s 100GbE uplink ports were connected to Nexus 9336C as port-channels.

· NetApp AFF A800 controllers connected to Nexus 9336C switch using 100GbE port-channels.

· VMware ESXi 6.7 Update 3 software installed on Cisco UCS B200 M5 and C220 M5* servers.

* The solution was validated using both Cisco UCS B200 M5 and Cisco C220 M5 servers to show the versatility of the Cisco UCS platform. Customers can choose to deploy OCP on just the Cisco UCS B-Series or Cisco UCS C-Series servers depending on their requirements.

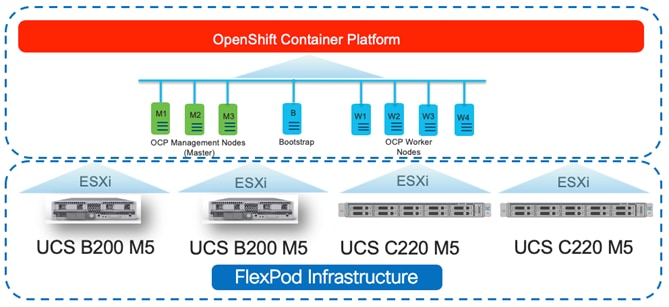

Base Infrastructure

The reference architecture described in this document leverages the components explained in the FlexPod Datacenter with NetApp ONTAP 9.7, Cisco Intersight, and VMware vSphere 6.7 U3 Design Guide. FlexPod Datacenter for OCP extends the virtual infrastructure architecture by deploying Red Hat OpenShift Container Platform virtual machines (VMs) on the VMware vSphere infrastructure, as shown in Figure 2.

This deployment guide explains the OCP setup and relevant storage configuration.

This deployment guide explains the OCP setup including the infrastructure prerequisites and appropriate storage configuration. However, the base virtual machine infrastructure configuration and setup is beyond the scope of this document. Customers are encouraged to refer to FlexPod Datacenter with NetApp ONTAP 9.7, Cisco Intersight, and VMware vSphere 6.7 U3 for step-by-step configuration procedures.

Hardware and Software Revisions

Table 1 lists the software versions for hardware and software components used in this solution. Each version used has been certified within interoperability matrixes supported by Cisco, NetApp, and VMware. For more information about supported versions, consult the following sources:

· Cisco UCS Hardware and Software Interoperability Tool

· NetApp Interoperability Matrix

Table 1 Hardware and Software Revisions

| Layer | Component | Software |
|---|---|---|
| Network | Cisco Nexus 9336C-FX2 | 7.0(3)I7(6) |
| Compute | Cisco UCS Fabric Interconnect 6454 | 4.0(4g) |
| | Cisco UCS B-Series and C-Series M5 Servers | 4.0(4g) |
| | VMware ESXi | 6.7 U3 |
| | ESXi ENIC Driver | 1.0.29.0 |
| | VMware vCenter Appliance | 6.7 U3 |
| Storage | NetApp ONTAP | 9.7 |
| | NetApp NFS Plugin for VMware VAAI | 1.1.2-3 |
| | NetApp Virtual Storage Console | 9.7 |
| | NetApp Trident | 20.04 |
| Software | OpenShift Container Platform | 4.4.12 |
| | Red Hat Enterprise Linux CoreOS | 4.4.3 |

Required VLANs

Table 2 lists the VLANs configured for setting up the FlexPod environment, including their specific usage.

| VLAN ID | Name | Usage |
|---|---|---|
| 2 | Native-VLAN | Use VLAN 2 as Native VLAN instead of default VLAN (1) |
| 20 | OOB-MGMT-VLAN | Out of Band Management VLAN to connect the management ports for various devices |
| 120 | IB-MGMT-VLAN | In Band Management VLAN utilized for all the OCP components. This VLAN is also used for accessing NetApp SVMs for NFS volumes |
| 3100 | vMotion | VMware vMotion traffic |
| 3111 (Fabric A only) | iSCSI-A | iSCSI-A path for supporting boot-from-san for both Cisco UCS B-Series and Cisco UCS C-Series servers |
| 3121 (Fabric B only) | iSCSI-B | iSCSI-B path for supporting boot-from-san for both Cisco UCS B-Series and Cisco UCS C-Series servers |
| 3151 | NFS-VLAN | NFS VLAN for mounting ESXi datastores to host VMs |

Some of the key highlights of VLAN usage are as follows:

· VLAN 20 allows customers to manage and access out of band management interfaces of various devices.

· VLAN 120 is used for all the OCP infrastructure (DNS, DHCP, and so on) as well as OCP cluster VMs. This VLAN is also utilized for providing access to the dedicated NetApp Storage Virtual Machine (OCP-SVM) used by NetApp Trident for configuring persistent volumes.

· VLAN 3151 provides ESXi hosts access to the NFS datastores hosted on the NetApp Controllers for deploying VMs.

· A pair of iSCSI VLANs (3111 and 3121) are configured to provide access to boot LUNs for ESXi hosts. These VLANs are defined on individual Fabric Interconnects.

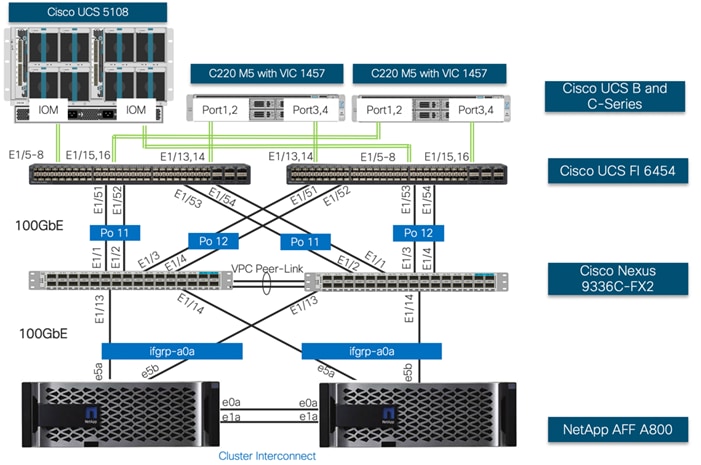

Physical Infrastructure

FlexPod Cabling

The information in this section is provided as a reference for cabling the physical equipment in a FlexPod environment. Customers can adjust the ports according to their individual setup. This document assumes that out-of-band management ports are plugged into an existing management infrastructure at the deployment sites. The interfaces shown in Figure 3 will be used in various configuration steps. Additional 1Gb management connections will be needed for an out-of-band network switch that sits apart from the FlexPod infrastructure. Each Cisco UCS fabric interconnect, Cisco Nexus switch and NetApp AFF controller is connected to the out-of-band network switch. Layer 3 network connectivity is required between the Out-of-Band (OOB) and In-Band (IB) Management Subnets.

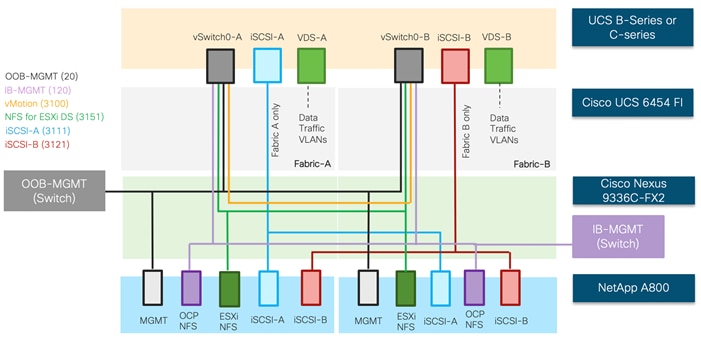

VMware Infrastructure Design

In FlexPod Datacenter deployments, each Cisco UCS server (B-Series or C-Series), equipped with a Cisco Virtual Interface Card (VIC), is configured for multiple virtual interfaces (vNICs) which appear as standards-compliant PCIe endpoints to the OS. The service profile configuration for an ESXi host is shown in Figure 4.

Each ESXi service profile supports:

· Managing the ESXi hosts using a common management segment

· Diskless SAN boot using iSCSI with persistent operating system installation for true stateless computing

· Six vNICs where:

- 2 redundant vNICs (vSwitch0-A and vSwitch0-B) carry out-of-band management, in-band management, and ESXi host NFS datastore VLANs. The MTU value for this interface is set as a Jumbo MTU (9000).

- 2 redundant vNICs (VDS-A and VDS-B) are used by the vSphere Distributed switch and carry VMware vMotion traffic and customer application data traffic. The MTU for these interfaces is set to Jumbo MTU (9000).

- 1 iSCSI-A vNIC utilizes iSCSI-A VLAN (defined only on Fabric A) to provide access to iSCSI-A path. The MTU value for this interface is set as a Jumbo MTU (9000).

- 1 iSCSI-B vNIC utilizes iSCSI-B VLAN (defined only on Fabric B) to provide access to iSCSI-B path. The MTU value for this interface is set as a Jumbo MTU (9000).

· Each ESXi host (blade) accesses NFS datastores hosted on NetApp A800 controllers to be used for deploying virtual machines.

· Each ESXi host provides access to the OCP image registry NFS volume using the in-band management network.

· Each ESXi host also provides access to a dedicated NetApp SVM utilized by NetApp Trident using in-band management VLAN.

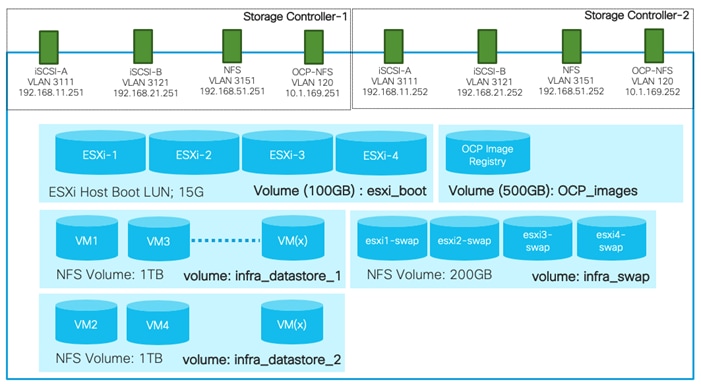

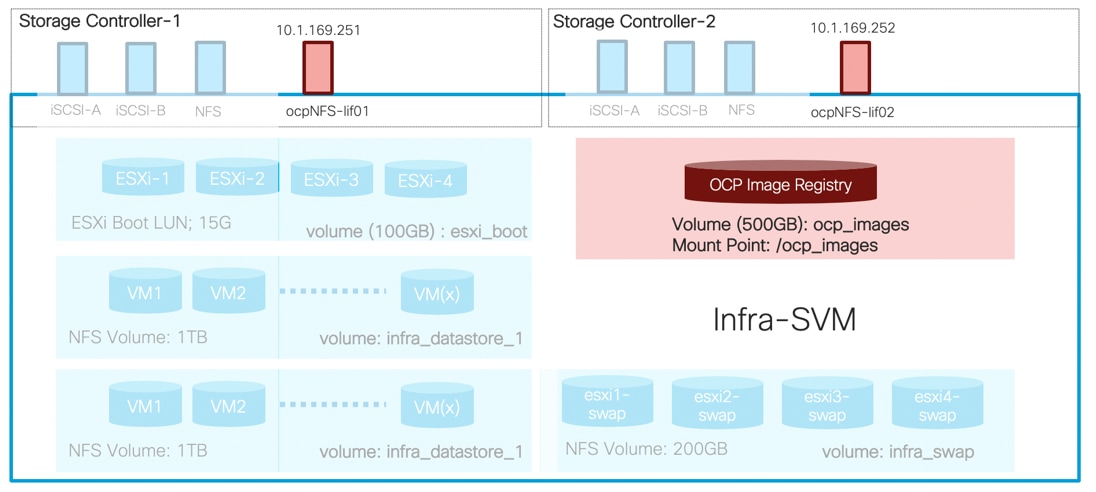

Storage Design

To provide the necessary data segregation and management, two separate SVMs are configured for this design. These SVMs are as follows:

· Infra-SVM

- This SVM hosts:

§ ESXi boot LUNs

§ NFS datastores for vSphere environment

§ NFS volume for OCP image registry

The volume, VLAN, and logical interface (LIF) details are shown in Figure 5. The Infra-SVM setup is captured in FlexPod Datacenter with NetApp ONTAP 9.7, Cisco Intersight, and VMware vSphere 6.7 U3. The setup for the OCP image registry volume is covered in this deployment guide.

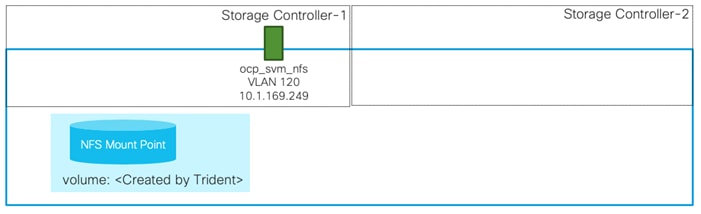

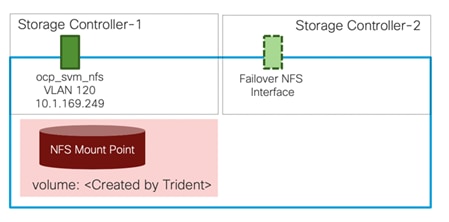

· OCP-SVM

This SVM is used by NetApp Trident to deploy persistent volumes for the container applications.

The persistent volumes are dynamically created by NetApp Trident as needed for the containers. The VLAN and Interface (LIF) details are shown in Figure 6. A single LIF is created in this instance to access the persistent storage volumes. Failover is enabled for this LIF to protect against controller failures.

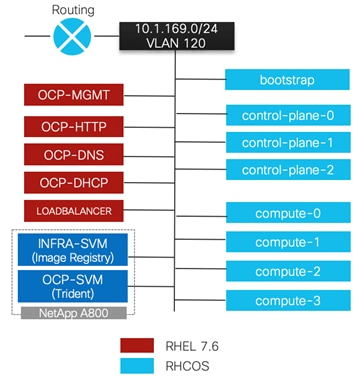

OpenShift Container Platform Design

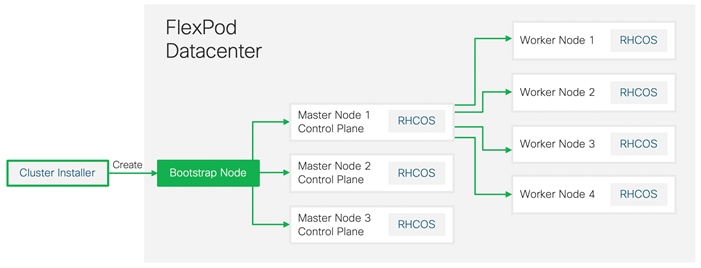

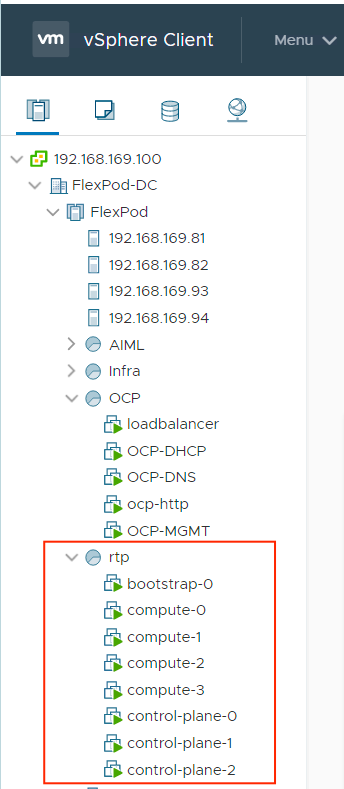

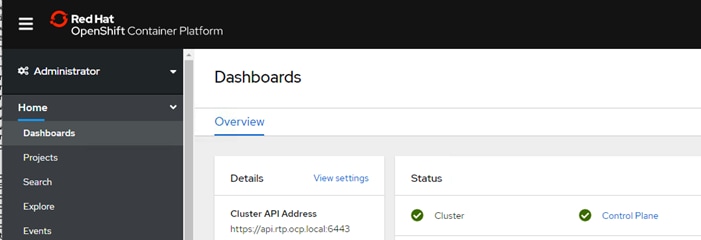

OCP 4.4 is deployed on the VMware infrastructure as a set of VMs. Three master nodes and four worker nodes are deployed in the validation environment, and additional worker nodes can easily be added to increase the scalability of the solution. Figure 7 shows the VMs used for deploying and managing a Red Hat OCP cluster. The specific roles and sizing guidelines for these VMs are explained in the next section.

The FlexPod Datacenter for OCP was built on a 4-node ESXi cluster using two Cisco UCS B200 M5 and two Cisco UCS C220 M5 servers. A high-level overview of the VMs and their connectivity is shown in Figure 7.

For detailed installation instructions, refer to https://docs.openshift.com/container-platform/4.4/welcome/index.html. Choose the documentation that matches the OCP version being deployed by selecting it from the version drop-down list.

OpenShift Container Platform - Networking Configuration

OpenShift Container Platform Management Connectivity

Master nodes and worker nodes have management connectivity using the in-band management VLAN (120), as shown in Figure 7. This VLAN is defined on vSwitch0 of all the ESXi hosts, and the customer's routing and switching network provides access to the Internet for downloading various packages during OCP installation. In the lab validation, the in-band management network was utilized to deploy all the VMs and associated services including access to storage. Customers can also choose to deploy the OCP VMs using an application data VLAN on the ESXi Virtual Distributed Switch (VDS).

All the Red Hat Enterprise Linux CoreOS (RHCOS) machines require network access during initial boot to fetch Ignition config files from the Machine Config Server. During the initial boot, the machines require either a DHCP server or that static IP addresses be set in order to establish a network connection to download their Ignition config files.

While installing all the prerequisites needed to deploy the OpenShift Container Platform configuration, all the nodes in this deployment require Internet access to complete the following actions:

· Download the installation program

· Obtain the packages required to install and update the cluster

· Perform subscription management

The in-band management subnet is configured for Internet access and a DHCP server is deployed to provide IP addresses to the OCP VMs. A DNS server with appropriate DNS records is also deployed in the same subnet. Details of the DHCP and DNS servers are provided in the upcoming sections.

OpenShift Container Platform Storage Connectivity

The OpenShift Container Platform virtual machines require access to the NetApp storage system for the following two types of storage configurations:

· An NFS volume to host Image Registry in the Infra-SVM.

· Management and data (NFS) access to a dedicated SVM (OCP-SVM) for NetApp Trident. NetApp Trident accesses the NetApp A800 controller and provisions the persistent volumes in the OCP-SVM as needed for various applications.

As shown in Figure 7, this connectivity is achieved by defining data and management LIFs in the in-band management subnet over VLAN 120. All the VMs and Trident then have direct access to NFS mount points and NetApp Trident can access the A800 Management interface for persistent volume configurations.

OpenShift Container Platform – Installation Requirements

FlexPod Datacenter for OCP uses the user-provisioned infrastructure (UPI) cluster configuration for OCP installation. Therefore, when provisioning and managing the FlexPod DC infrastructure, you must provide all of the supporting cluster infrastructure and resources, including the bootstrap machine, networking, load balancing, storage, and individual cluster machines.

The following supporting cluster resources are required for the UPI installation:

· The control plane and compute machines that make up the cluster

· Load balancer(s)

· Cluster networking, including the DNS records and required subnets

· Storage for the cluster infrastructure and applications

For the user-provisioned infrastructure, RHEL-based worker virtual machines can also be deployed; however, for this validation, RHCOS was used for both the control plane and worker VMs.

Infrastructure Virtual Machines

The following infrastructure service VMs were deployed to support the OCP cluster:

· 1 DNS server (RHEL 7.6)

· 1 HA Proxy Load Balancer (RHEL 7.6)

· 1 Web/HTTP Server (RHEL 7.6)

· 1 DHCP Server (RHEL 7.6)

· 1 Management VM (RHEL 7.6) to set up and manage the OCP environment.

The deployment details for these VMs are listed in Table 3.

Table 3 Infrastructure services VM Details

| Machine | OS | vCPU | RAM (GB) | Storage (GB) | Comment |
|---|---|---|---|---|---|
| DNS | RHEL 7.6* | 2 | 8 | 100 | DNS server for the lab validation |
| DHCP | RHEL 7.6* | 2 | 4 | 100 | DHCP server for the lab validation |
| HTTP | RHEL 7.6* | 2 | 4 | 100 | Apache Server on RHEL |
| MGMT | RHEL 7.6* | 2 | 8 | 100 | This node is used to deploy and manage OCP. Various packages including Terraform are installed |
| HA Proxy | RHEL 7.6* | 2 | 16 | 100 | Single Load Balancer instance for the lab validation |

The virtual machine sizes listed above are for lab deployment only. Customers should size their VMs according to their individual requirements.

* While RHEL 7.6 was utilized during this validation, customers can use the latest RHEL versions for their specific deployments. Most of these services should already be deployed in the customer environments.

OpenShift Container Platform Virtual Machines

The following OCP virtual machines were set up by the Terraform scripts for the cluster deployment:

· 1 Bootstrap VM (Red Hat Enterprise Linux CoreOS - RHCOS)

· 3 Control Plane VMs (RHCOS)

· 4 Compute Node VMs (RHCOS)

The bootstrap virtual machine can be safely deleted once the OCP cluster is installed. These virtual machines are deployed using a VMware OVA; therefore, the required resources are set automatically by the OVA and the OCP installer. Table 4 lists the number of virtual machines and their specifications as deployed in this validation.

| Machine | Number of Nodes | OS | vCPU | RAM (GB) | Storage (GB) | Comment |
|---|---|---|---|---|---|---|
| Bootstrap | 1 | RHCOS | 4 | 16 | 60 | Bootstrap node |
| Control plane | 3 | RHCOS | 4 | 16 | 60 | Control plane/Master nodes |
| Compute | 4 | RHCOS | 4 | 8 | 60 | Compute/Worker nodes |
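As a quick sizing check, the per-node values in Table 4 can be multiplied by the node counts to estimate the total footprint that the OCP VMs place on the ESXi cluster. The following is a minimal shell sketch of that arithmetic. The vm_table variable and the ocp_vm_totals function are illustrative names, not part of the validated procedure, and the figures are simply copied from Table 4.

```shell
#!/bin/sh
# Rows copied from Table 4 (lab validation values):
# role, node count, vCPU per node, RAM (GB) per node, storage (GB) per node.
vm_table='bootstrap 1 4 16 60
control-plane 3 4 16 60
compute 4 4 8 60'

# Illustrative helper: multiply each per-node value by the node count
# and print the totals across all rows.
ocp_vm_totals() {
    printf '%s\n' "$1" | awk '
        { vcpu += $2 * $3; ram += $2 * $4; disk += $2 * $5 }
        END { printf "vCPU=%d RAM_GB=%d Storage_GB=%d\n", vcpu, ram, disk }'
}

ocp_vm_totals "$vm_table"
```

With the lab values this prints vCPU=32 RAM_GB=96 Storage_GB=480. Once the bootstrap VM is deleted, the steady-state footprint drops to 28 vCPUs, 80 GB of RAM, and 420 GB of storage.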

Virtual Machine Hostnames and IP addresses

Table 5 shows the hostnames and IP addresses of all the virtual machines used in this deployment guide. These hostnames and IP addresses will be used throughout this document. Customers should plan all the hostnames and IP addresses before initiating the installation process.

Table 5 Virtual Machine Information

| Hostname | IP address | Description |
|---|---|---|
| ocp-mgmt | 10.1.169.5 | Management host used for setting up OCP |
| ocp-dns | 10.1.162.10 | DNS server |
| ocp-dhcp | 10.1.162.253 | DHCP Server |
| ocp-http | 10.1.162.9 | Web server to host config file(s) |
| loadbalancer | 10.1.162.100 | Load-Balancer (HA-Proxy) |
| control-plane-0 | 10.1.169.11 | Master Node |
| control-plane-1 | 10.1.169.12 | Master Node |
| control-plane-2 | 10.1.169.13 | Master Node |
| compute-0 | 10.1.169.21 | Compute Node |
| compute-1 | 10.1.169.22 | Compute Node |
| compute-2 | 10.1.169.23 | Compute Node |
| compute-3 | 10.1.169.24 | Compute Node |

OpenShift Container Platform – Infrastructure Setup

This section describes setting up the user-provisioned infrastructure services for deploying Red Hat OCP. The following steps need to be completed to set up the infrastructure:

1. Configure DNS

2. Configure DHCP

3. Configure Web Server

4. Configure Load Balancer

5. Prepare the management host by installing Terraform and other software dependencies

All the infrastructure nodes are configured as virtual machines on the FlexPod infrastructure. The ocp-mgmt virtual machine is the management host used to run the OpenShift installation program and to host the Terraform platform for automating the installation.

OpenShift Container Platform requires a fully functional DNS server in the environment. A set of records must be configured in the DNS to provide name resolution for hosts and containers running on the platform.

The required bootstrap Ignition configuration files and the raw installation images were hosted on a locally configured web server (ocp-http) used as the Machine Config Server.

The following domain and OCP cluster names are used in this deployment guide:

· Base Domain: ocp.local

· OCP Cluster Name: rtp

Deploy Infrastructure Virtual Machines in VMware

Log into the VMware vCenter and deploy 5 infrastructure virtual machines as shown in Table 3. Make sure all the virtual machines are added to the in-band network. Install the RHEL 7.6 operating system on all the VMs and assign them an IP address and hostname. The host names and IP addresses for these VMs can be obtained from Table 5.

Install and Configure the DNS Server

In this deployment, a RHEL 7.6 based virtual machine was used to set up and configure a DNS server. To install and configure the DNS server, follow these steps:

1. Log into the DNS Linux VM as root and make sure subscription manager is up to date:

[root@OCP-DNS ~]# subscription-manager attach --auto

2. Install bind and bind-utils:

[root@OCP-DNS ~]# yum install bind bind-utils

3. Update the /etc/named.conf as well as the forward and reverse zone configuration files in /var/named. The DNS needs to be updated with the following values:

| Name | Record | Destination | Notes |
|---|---|---|---|
| Kubernetes API | api.<cluster_name>.<base_name>. | OpenShift Admin Load Balancer | Must be resolvable by all external clients and cluster nodes |
| Kubernetes Internal | api-int.<cluster_name>.<base_name>. | OpenShift Admin Load Balancer | Must be resolvable by all cluster nodes |
| Application Routes | *.apps.<cluster_name>.<base_name>. | OpenShift Application Ingress | Must be resolvable by all external clients and cluster nodes |
| Master nodes | etcd-<index>.<cluster_name>.<base_name>. | Master nodes | Must be resolvable by all cluster nodes |
| SSL Server | _etcd-server-ssl._tcp.<cluster_name>.<base_name>. | Master nodes | Refer below. |

In Table 6, the following variables are used:

· <index> - the number of the master node, starting at 0. For example, the first master node would be etcd-0.

· <cluster_name> - the domain name used for the cluster (rtp).

· <base_name> - the base domain name for the intranet (ocp.local).

The complete files used in the lab configuration are included in the Appendix-DNS Configuration Files for your reference. When creating the forward and reverse zone files, verify the file permissions are set correctly.

4. Make sure the DNS requests are allowed through the firewall:

[root@OCP-DNS ~]# firewall-cmd --permanent --add-port=53/udp

[root@OCP-DNS ~]# firewall-cmd --reload

5. Alternatively, the firewall services can be stopped and disabled:

[root@OCP-DNS ~]# systemctl stop firewalld

[root@OCP-DNS ~]# systemctl disable firewalld

6. Start the named services:

[root@OCP-DNS ~]# systemctl enable named

Created symlink from /etc/systemd/system/multi-user.target.wants/named.service to /usr/lib/systemd/system/named.service.

[root@OCP-DNS ~]# systemctl start named

[root@OCP-DNS ~]#

7. You may need to restart the VM for these changes to take effect.

For detailed configuration steps, please refer to: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/networking_guide/sec-bind
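
As a minimal sketch of the records listed in Table 6, the following shell function prints BIND-style forward records for a given cluster name and base domain. The function name print_ocp_dns_records and every IP address are hypothetical placeholders, and the sketch assumes that a single load balancer address serves both the API and the application ingress. The SRV line uses the etcd record format from the OpenShift 4.4 documentation (priority 0, weight 10, port 2380). The zone files actually used in the validation are in the Appendix.

```shell
#!/usr/bin/env bash
# Print BIND-style forward records for the names in Table 6.
# Arguments: cluster name, base domain, load balancer IP, then one IP per master.
# All IP addresses used here are hypothetical placeholders.
print_ocp_dns_records() {
  local cluster="$1" base="$2" lb_ip="$3"
  shift 3
  local fqdn="${cluster}.${base}."

  # API, internal API, and wildcard application routes point at the load balancer.
  printf 'api.%s IN A %s\n' "$fqdn" "$lb_ip"
  printf 'api-int.%s IN A %s\n' "$fqdn" "$lb_ip"
  printf '*.apps.%s IN A %s\n' "$fqdn" "$lb_ip"

  # One A record and one SRV record per master node; the index starts at 0.
  local index=0 ip
  for ip in "$@"; do
    printf 'etcd-%d.%s IN A %s\n' "$index" "$fqdn" "$ip"
    printf '_etcd-server-ssl._tcp.%s 86400 IN SRV 0 10 2380 etcd-%d.%s\n' "$fqdn" "$index" "$fqdn"
    index=$((index + 1))
  done
}

print_ocp_dns_records rtp ocp.local 10.1.169.100 10.1.169.11 10.1.169.12 10.1.169.13
```

The printed lines are meant to be reviewed and merged into the forward zone file by hand; the matching reverse (PTR) records are not covered by this sketch.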

Install and Configure the DHCP Server

Dynamic IP address allocation is required during the creation of cluster nodes by Terraform so that the nodes can access the ignition files. In this deployment, a RHEL 7.6 based VM was used to set up and configure a DHCP server. To install and configure the DHCP server, follow these steps:

1. Log into the DHCP Linux VM as root and install dhcp:

[root@OCP-DHCP ~]# yum install dhcp

2. Configure the DHCP server by updating the IP subnet and various parameters in the configuration file /etc/dhcp/dhcpd.conf.

The complete file used in the lab configuration is included in the Appendix-DHCP Configuration File for your reference.

3. Make sure the DHCP requests are allowed through the firewall:

[root@OCP-DHCP ~]# firewall-cmd --permanent --add-service=dhcp

[root@OCP-DHCP ~]# firewall-cmd --reload

Alternatively, the firewall services can be stopped and disabled:

[root@OCP-DHCP ~]# systemctl stop firewalld

[root@OCP-DHCP ~]# systemctl disable firewalld

4. Enable and start or restart the dhcpd service:

[root@OCP-DHCP ~]# systemctl enable dhcpd

[root@OCP-DHCP ~]# systemctl start dhcpd
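
To illustrate the kind of subnet declaration that step 2 refers to, the following sketch renders a minimal dhcpd.conf block. The function name render_dhcpd_subnet and all addresses are hypothetical; the file actually used in the validation is in the Appendix and may contain additional options.

```shell
#!/usr/bin/env bash
# Render a minimal dhcpd.conf subnet declaration on stdout.
# Arguments: network, netmask, range start, range end, router, DNS server, domain.
render_dhcpd_subnet() {
  local network="$1" netmask="$2" first="$3" last="$4" router="$5" dns="$6" domain="$7"
  cat <<EOF
subnet ${network} netmask ${netmask} {
  range ${first} ${last};
  option routers ${router};
  option domain-name-servers ${dns};
  option domain-name "${domain}";
}
EOF
}

# Hypothetical values; substitute the addresses of your in-band network.
render_dhcpd_subnet 10.1.169.0 255.255.255.0 10.1.169.100 10.1.169.150 10.1.169.254 10.1.169.5 ocp.local
```

After merging a block like this into /etc/dhcp/dhcpd.conf, the syntax can be checked with dhcpd -t -cf /etc/dhcp/dhcpd.conf before restarting the service.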

Install and Configure Web Server

In this deployment, a RHEL 7.6 based virtual machine was used to set up and configure a web server. No specific configuration is required for the OCP installation other than creating a directory to hold the ignition files. To install and configure the Apache web server, follow these steps:

1. Log into the web server Linux VM (ocp-http) as root and install httpd:

[root@ocp-http ~]# yum install httpd

2. Update the configuration file /etc/httpd/conf/httpd.conf as listed in “Appendix: Web Server config File”.

3. Add the following firewall rules to allow client connections to the HTTP server:

[root@ocp-http ~]# firewall-cmd --zone=public --permanent --add-service=http

[root@ocp-http ~]# firewall-cmd --reload

The default web server port has been changed to 8080 during this validation. Refer to the httpd.conf file in the Appendix for implementation details.

[root@ocp-http ~]# firewall-cmd --zone=public --permanent --add-port=8080/tcp

[root@ocp-http ~]# firewall-cmd --reload

4. Alternatively, the firewall services can be stopped and disabled:

[root@ocp-http ~]# systemctl stop firewalld

[root@ocp-http ~]# systemctl disable firewalld

5. Start or restart the web server and enable the httpd service:

[root@ocp-http ~]# systemctl restart httpd

[root@ocp-http ~]# systemctl enable httpd

Install and Configure Load Balancer

In this lab deployment, a RHEL 7.6 based VM was set up and configured as an external load balancer. Customers can configure an existing load balancer if the service already exists. To install and configure the haproxy server, follow these steps:

1. Log into the loadbalancer Linux VM as root and install haproxy:

[root@loadbalancer ~]# yum install haproxy

2. Update the configuration file /etc/haproxy/haproxy.cfg with the following information:

| Description | Incoming Port | Mode | Destination | Dest. Port | Balance |
|---|---|---|---|---|---|
| OpenShift Admin | 6443 | TCP | Master Nodes | 6443 | Source |
| OpenShift Installation (Removed once built) | 22623 | TCP | Bootstrap and Master Nodes | 22623 | Source |
| OpenShift Application Ingress | 80 | TCP | Worker Nodes | 80 | Source |
| OpenShift Application Ingress | 443 | TCP | Worker Nodes | 443 | Source |

The complete file used in the lab configuration is included in the Appendix – Load Balancer Configuration File for your reference.

3. Add the following firewall rules to allow client connections to HAProxy server:

[root@loadbalancer ~]# firewall-cmd --permanent --add-service=haproxy

[root@loadbalancer ~]# firewall-cmd --reload

4. Alternatively, the firewall services can be stopped and disabled:

[root@loadbalancer ~]# systemctl stop firewalld

[root@loadbalancer ~]# systemctl disable firewalld

5. Start or restart the haproxy service:

[root@loadbalancer ~]# systemctl restart haproxy

[root@loadbalancer ~]# systemctl enable haproxy

If the haproxy service does not start and SELinux is enabled, run the following command to allow haproxy to bind to non-standard ports: setsebool -P haproxy_connect_any on
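
Each row of the load balancer table in step 2 maps to one TCP frontend and backend pair in haproxy.cfg. The following sketch renders such a pair with source balancing. The function name render_haproxy_tcp, the section names, and the server names and addresses are hypothetical; the complete file used in the validation is in the Appendix.

```shell
#!/usr/bin/env bash
# Render one TCP frontend/backend pair for haproxy.cfg on stdout.
# Arguments: section name, port, then one or more "server_name:ip" pairs.
render_haproxy_tcp() {
  local name="$1" port="$2"
  shift 2

  printf 'frontend %s\n' "$name"
  printf '    bind *:%s\n' "$port"
  printf '    mode tcp\n'
  printf '    default_backend %s-be\n' "$name"
  printf '\nbackend %s-be\n' "$name"
  printf '    mode tcp\n'
  printf '    balance source\n'

  # Split each "server_name:ip" pair and forward to the same destination port.
  local pair
  for pair in "$@"; do
    printf '    server %s %s:%s check\n' "${pair%%:*}" "${pair#*:}" "$port"
  done
}

# Hypothetical master node addresses for the OpenShift Admin (API) row.
render_haproxy_tcp openshift-api 6443 master-0:10.1.169.11 master-1:10.1.169.12 master-2:10.1.169.13
```

The same function can be called for ports 22623, 80, and 443 with the destinations from the table. After editing the file, haproxy -c -f /etc/haproxy/haproxy.cfg validates the configuration before the service is restarted.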

Verify Infrastructure Services

The status of all the infrastructure services can be verified using the commands below. Verify that all the services are up and running before proceeding with the OpenShift Container Platform installation.

[root@OCP-DNS named]# systemctl status named

● named.service - Berkeley Internet Name Domain (DNS)

Loaded: loaded (/usr/lib/systemd/system/named.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-05-08 15:29:30 EDT; 2 months 1 days ago

Process: 21991 ExecReload=/bin/sh -c /usr/sbin/rndc reload > /dev/null 2>&1 || /bin/kill -HUP $MAINPID (code=exited, status=0/SUCCESS)

Process: 6079 ExecStart=/usr/sbin/named -u named -c ${NAMEDCONF} $OPTIONS (code=exited, status=0/SUCCESS)

Process: 6059 ExecStartPre=/bin/bash -c if [ ! "$DISABLE_ZONE_CHECKING" == "yes" ]; then /usr/sbin/named-checkconf -z "$NAMEDCONF"; else echo "Checking of zone files is disabled"; fi (code=exited, status=0/SUCCESS)

Main PID: 6088 (named)

CGroup: /system.slice/named.service

└─6088 /usr/sbin/named -u named -c /etc/named.conf

Jul 09 15:33:37 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:34:35 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:35:19 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:36:00 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:36:29 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:37:58 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:38:17 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:38:38 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:39:13 OCP-DNS.ocp.local named[6088]: resolver priming query complete

Jul 09 15:40:09 OCP-DNS.ocp.local named[6088]: resolver priming query complete

---------------------------------------------------------------------------------------------------------

[root@OCP-DHCP ~]# systemctl status dhcpd

● dhcpd.service - DHCPv4 Server Daemon

Loaded: loaded (/usr/lib/systemd/system/dhcpd.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-05-13 17:38:00 EDT; 1 months 26 days ago

Docs: man:dhcpd(8)

man:dhcpd.conf(5)

Main PID: 10311 (dhcpd)

Status: "Dispatching packets..."

CGroup: /system.slice/dhcpd.service

└─10311 /usr/sbin/dhcpd -f -cf /etc/dhcp/dhcpd.conf -user dhcpd -group dhcpd --no-pid

Jul 09 07:05:49 OCP-DHCP dhcpd[10311]: DHCPACK on 10.1.169.108 to 00:50:56:a0:c3:7f (OCP-Jump) via ens192

Jul 09 08:55:31 OCP-DHCP dhcpd[10311]: Wrote 8 leases to leases file.

Jul 09 08:55:31 OCP-DHCP dhcpd[10311]: DHCPREQUEST for 10.1.169.109 from 00:50:56:a0:f2:bd (Al-Jump) via ens192

Jul 09 08:55:31 OCP-DHCP dhcpd[10311]: DHCPACK on 10.1.169.109 to 00:50:56:a0:f2:bd (Al-Jump) via ens192

Jul 09 13:05:49 OCP-DHCP dhcpd[10311]: Wrote 8 leases to leases file.

Jul 09 13:05:49 OCP-DHCP dhcpd[10311]: DHCPREQUEST for 10.1.169.108 from 00:50:56:a0:c3:7f (OCP-Jump) via ens192

Jul 09 13:05:49 OCP-DHCP dhcpd[10311]: DHCPACK on 10.1.169.108 to 00:50:56:a0:c3:7f (OCP-Jump) via ens192

Jul 09 14:55:33 OCP-DHCP dhcpd[10311]: Wrote 8 leases to leases file.

Jul 09 14:55:33 OCP-DHCP dhcpd[10311]: DHCPREQUEST for 10.1.169.109 from 00:50:56:a0:f2:bd (Al-Jump) via ens192

Jul 09 14:55:33 OCP-DHCP dhcpd[10311]: DHCPACK on 10.1.169.109 to 00:50:56:a0:f2:bd (Al-Jump) via ens192

---------------------------------------------------------------------------------------------------------

[root@ocp-http ~]# systemctl status httpd

● httpd.service - The Apache HTTP Server

Loaded: loaded (/usr/lib/systemd/system/httpd.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2020-05-08 16:17:12 EDT; 2 months 1 days ago

Docs: man:httpd(8)

man:apachectl(8)

Process: 4181 ExecReload=/usr/sbin/httpd $OPTIONS -k graceful (code=exited, status=0/SUCCESS)

Main PID: 5930 (httpd)

Status: "Total requests: 0; Current requests/sec: 0; Current traffic: 0 B/sec"

CGroup: /system.slice/httpd.service

├─4182 /usr/sbin/httpd -DFOREGROUND

├─4183 /usr/sbin/httpd -DFOREGROUND

├─4184 /usr/sbin/httpd -DFOREGROUND

├─4185 /usr/sbin/httpd -DFOREGROUND

├─4186 /usr/sbin/httpd -DFOREGROUND

└─5930 /usr/sbin/httpd -DFOREGROUND

Jun 07 03:31:01 ocp-http systemd[1]: Reloading The Apache HTTP Server.

Jun 07 03:31:01 ocp-http systemd[1]: Reloaded The Apache HTTP Server.

Jun 14 03:47:01 ocp-http systemd[1]: Reloading The Apache HTTP Server.

Jun 14 03:47:01 ocp-http systemd[1]: Reloaded The Apache HTTP Server.

Jun 21 03:20:01 ocp-http systemd[1]: Reloading The Apache HTTP Server.

Jun 21 03:20:01 ocp-http systemd[1]: Reloaded The Apache HTTP Server.

Jun 28 03:31:01 ocp-http systemd[1]: Reloading The Apache HTTP Server.

Jun 28 03:31:01 ocp-http systemd[1]: Reloaded The Apache HTTP Server.

Jul 05 03:32:01 ocp-http systemd[1]: Reloading The Apache HTTP Server.

Jul 05 03:32:01 ocp-http systemd[1]: Reloaded The Apache HTTP Server.

---------------------------------------------------------------------------------------------------------

[root@loadbalancer ~]# systemctl status haproxy

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2020-05-13 11:13:48 EDT; 1 months 26 days ago

Main PID: 9733 (haproxy-systemd)

CGroup: /system.slice/haproxy.service

├─9733 /usr/sbin/haproxy-systemd-wrapper -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid

├─9734 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

└─9735 /usr/sbin/haproxy -f /etc/haproxy/haproxy.cfg -p /run/haproxy.pid -Ds

May 13 11:13:48 loadbalancer systemd[1]: Stopped HAProxy Load Balancer.

May 13 11:13:48 loadbalancer systemd[1]: Started HAProxy Load Balancer.

May 13 11:13:48 loadbalancer haproxy-systemd-wrapper[9733]: haproxy-systemd-wrapper: executing /usr/sbin/hapr...-Ds

Hint: Some lines were ellipsized, use -l to show in full.

[root@loadbalancer ~]# netstat -ltnp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 6991/haproxy

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 6991/haproxy

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 6311/sshd

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 6991/haproxy

tcp 0 0 0.0.0.0:22623 0.0.0.0:* LISTEN 6991/haproxy

tcp6 0 0 :::22 :::* LISTEN 6311/sshd
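
The status checks above can be condensed into a single pass or fail result. The following sketch reads "service state" pairs, shaped like the output of systemctl is-active, and reports any service that is not active. The function name all_services_active is hypothetical, and because the services run on different VMs, collecting the states from each host (for example over SSH) is assumed and not shown.

```shell
#!/usr/bin/env bash
# Read "service state" pairs on stdin and report any that are not "active".
# Returns non-zero if at least one service is not active.
all_services_active() {
  local name state rc=0
  while read -r name state; do
    [ -z "$name" ] && continue
    if [ "$state" != "active" ]; then
      echo "NOT READY: ${name} (${state:-unknown})"
      rc=1
    fi
  done
  return "$rc"
}

# Example input; on a real host each state would come from
# `systemctl is-active <service>` run on the VM that hosts the service.
printf '%s\n' 'named active' 'dhcpd active' 'httpd active' 'haproxy active' \
  | all_services_active && echo "All infrastructure services are active"
```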

Set Up the Management Host

In the following section, the installation steps are performed on the management VM (ocp-mgmt) to prepare the VM for OCP installation.

Install Terraform

Terraform is an Infrastructure as Code tool developed by HashiCorp for building, changing, and versioning infrastructure safely and efficiently. In this deployment, Terraform is used to fully automate the VM provisioning of the OCP cluster nodes.

The installer GitHub template was developed with Terraform version 0.11; therefore, Terraform 0.11.14 was installed on the management host.

Terraform consists of the following components:

· Configuration files (.tf). Terraform uses its own configuration language, designed to allow concise descriptions of infrastructure. The Terraform language is declarative, describing an intended goal rather than the steps to reach that goal.

· The Terraform binary (executable) file, which is written in the Go language. To install Terraform, find the appropriate package for your system and download it from: https://releases.hashicorp.com/terraform/0.11.14/

· The Terraform state file (.tfstate), a JSON file that records the running configuration.

To install Terraform, run the following commands on the management host:

[root@OCP-MGMT ~]# mkdir terraform

[root@OCP-MGMT ~]# export TERRAFORM_VERSION=0.11.14

[root@OCP-MGMT ~]# cd terraform/

[root@OCP-MGMT terraform]# wget https://releases.hashicorp.com/terraform/${TERRAFORM_VERSION}/terraform_${TERRAFORM_VERSION}_linux_amd64.zip

https://releases.hashicorp.com/terraform/0.11.14/terraform_0.11.14_linux_amd64.zip

Resolving releases.hashicorp.com (releases.hashicorp.com)... 151.101.1.183, 151.101.65.183, 151.101.129.183, ...

Connecting to releases.hashicorp.com (releases.hashicorp.com)|151.101.1.183|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 12569267 (12M) [application/zip]

Saving to: ‘terraform_0.11.14_linux_amd64.zip’

100%[=====================================================>] 12,569,267 40.5MB/s in 0.3s

2020-05-08 18:04:05 (40.5 MB/s) - ‘terraform_0.11.14_linux_amd64.zip’ saved [12569267/12569267]

[root@OCP-MGMT terraform]# unzip terraform_${TERRAFORM_VERSION}_linux_amd64.zip -d ~/bin/

Archive: terraform_0.11.14_linux_amd64.zip

inflating: /root/bin/terraform

[root@OCP-MGMT terraform]# terraform -v

Terraform v0.11.14

Your version of Terraform is out of date! The latest version

is 0.12.24. You can update by downloading from www.terraform.io/downloads.html
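
Because the installer template targets Terraform 0.11, a small guard can catch an accidental upgrade before the installer is run. The following sketch checks the banner line printed by terraform -v; the function name is_terraform_0_11 is hypothetical.

```shell
#!/usr/bin/env bash
# Return 0 when the given `terraform -v` banner line reports a 0.11.x release.
is_terraform_0_11() {
  case "$1" in
    "Terraform v0.11."*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example with the banner shown above; on the management host this could be
# called as: is_terraform_0_11 "$(terraform -v | head -n 1)"
if is_terraform_0_11 "Terraform v0.11.14"; then
  echo "Terraform 0.11.x detected"
fi
```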

Generate an SSH Private Key and Add it to the Agent

To perform installation debugging or disaster recovery on the OpenShift cluster, an SSH key must be added to both the ssh-agent and the installation program. During cluster deployment, the key is added to the core user’s ~/.ssh/authorized_keys list on each node, which enables password-less SSH access to the cluster nodes as the user core.

To generate the SSH key for password-less authentication, on the management host, follow these steps:

1. Run the following command and specify the path and file name:

[root@OCP-MGMT ~]# ssh-keygen -t rsa -b 4096 -N '' -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:W60YYTG1EQD/6yDOZ8lfKF0cJQqXFq0ZYQlntIxs3pE root@OCP-MGMT.rtp.ocp.local

The key's randomart image is:

+---[RSA 4096]----+

| ..*=%B. . |

| o @=*.o |

| B.E+. |

| + +o+ . |

| S + + |

| * = |

| ..=.= . |

| o .=+ . |

| oo .o |

+----[SHA256]-----+

2. Running the above command generates an SSH key that does not require a password when connecting to the cluster VMs from the management host ocp-mgmt.

3. Start the ssh-agent process as a background task:

[root@OCP-MGMT ~]# eval "$(ssh-agent -s)"

Agent pid 7758

4. Specify the path and file name for your SSH private key, such as ~/.ssh/id_rsa

[root@OCP-MGMT ~]# ssh-add ~/.ssh/id_rsa

Identity added: /root/.ssh/id_rsa (/root/.ssh/id_rsa)

During OpenShift Container Platform installation, the SSH public key needs to be provided to the installation program. Since we are installing the cluster on user provided infrastructure, the key we just generated will be provided to the cluster machines.

OpenShift Container Platform – Installation

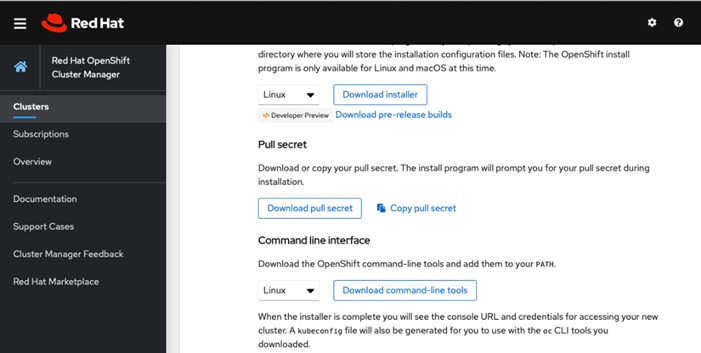

Obtain the Installation Programs

To download the OCP 4.4 installation files on the management host, access the URLs listed below and download the appropriate packages.

All the files listed below can also be downloaded from the portal page https://cloud.redhat.com/openshift/install/vsphere/user-provisioned; however, the portal provides the latest version of OCP (4.5 at the time of writing). To download an older OCP (4.4) release, use the URLs listed below. The stable release for 4.4 is updated regularly, and it is recommended to use the latest stable release. At the time of validation, the latest stable release was 4.4.12.

1. On the management host (OCP-MGMT), create a directory to host all the install files (/root/install_files).

2. Access the following URL: https://cloud.redhat.com/openshift/install/vsphere/user-provisioned, log in with your Red Hat user ID, and download the pull secret, saving it as a text file:

pull-secret.txt

3. Access the following URL to download the RHCOS for VMware: https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/4.4/latest/. Download the OVA on the management host which can access the vCenter and upload the OVA for virtual machine template creation.

rhcos-4.4.3-x86_64-vmware.x86_64.ova

4. Access the following URL: https://mirror.openshift.com/pub/openshift-v4/clients/ocp/stable-4.4/ to download the OpenShift install and client programs:

openshift-install-linux-4.4.12.tar.gz

openshift-client-linux-4.4.12.tar.gz

At the time of this validation, the latest stable version of OCP 4.4 was 4.4.12. Red Hat updates this version frequently.

5. Extract the installation program at the location of your choice (/root/install_files):

[root@OCP-MGMT install_files]# tar xvf openshift-install-linux-4.4.12.tar.gz

README.md

openshift-install

[root@OCP-MGMT install_files]#

[root@OCP-MGMT install_files]# ls -l

total 1408884

-rw-r--r-- 1 root root 25285926 Jul 6 01:52 openshift-client-linux-4.4.12.tar.gz

-rwxr-xr-x 1 root root 354095104 Jul 6 01:56 openshift-install

-rw-r--r-- 1 root root 83895994 Jul 6 01:56 openshift-install-linux-4.4.12.tar.gz

-rw-r--r-- 1 root root 2723 Jul 23 08:24 pull-secret.txt

-rw-r--r-- 1 root root 706 Jul 6 01:56 README.md

[root@OCP-MGMT install_files]#

The installation program creates several files on the management host that are required to install the cluster. Keep both the installation program and the files that it creates after you finish installing the cluster.
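
Before extracting the downloaded archives, it can be worth confirming that they were not corrupted in transit. The following sketch compares a file's SHA-256 digest with its entry in a checksum list. The function name verify_sha256 is hypothetical, and the sketch assumes that a checksum list in the usual sha256sum format (for example, a sha256sum.txt file published alongside the archives) has been downloaded into the same directory.

```shell
#!/usr/bin/env bash
# Compare a file's SHA-256 digest with the entry for that file name in a
# checksum list whose lines look like "<digest>  <file name>".
# Returns 0 only if an entry exists and the digests match.
verify_sha256() {
  local file="$1" sums="$2" expected actual
  expected="$(awk -v f="$(basename "$file")" '$2 == f || $2 == ("*" f) {print $1}' "$sums")"
  actual="$(sha256sum "$file" | awk '{print $1}')"
  [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example call (file names as downloaded in step 4; the checksum file name is an assumption):
#   verify_sha256 openshift-install-linux-4.4.12.tar.gz sha256sum.txt && echo "checksum OK"
```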

Create the Installation Configuration File

For installations of OpenShift Container Platform that use user-provisioned infrastructure, an installation configuration file must be created manually. Follow these steps to create an installation configuration file:

1. Create an installation directory on the management host to store the installation assets:

[root@OCP-MGMT install_files]# mkdir ocp44

Some installation assets, such as bootstrap X.509 certificates, have short expiration intervals; therefore, the installation directory (ocp44 in this example) cannot be reused. If you want to reuse the files you are creating, copy them into a different (new) directory. Because the file names for the installation assets might change between releases, use caution when copying installation files from an earlier OpenShift Container Platform version.

2. Manually create an installation configuration file and name this file install-config.yaml:

[root@OCP-MGMT ocp44]# touch install-config.yaml

3. For the details of the install-config.yaml file, go to: https://docs.openshift.com/container-platform/4.4/installing/installing_vsphere/installing-vsphere.html#installation-vsphere-config-yaml_installing-vsphere. The following input is required to create the file:

· base domain

· OCP cluster id

· OCP pull secret

· ssh public key (~/.ssh/id_rsa.pub)

· vCenter host

· vCenter user

· vCenter password

· vCenter datacenter

· vCenter datastore

A sample install-config.yaml file is provided below.

Sample install-config.yaml file for VMware vSphere

Customize the install-config.yaml file below to match your installation requirements:

[root@OCP-MGMT ocp44]# more install-config.yaml

apiVersion: v1

baseDomain: ocp.local

compute:

- hyperthreading: Enabled

  name: worker

  replicas: 0

controlPlane:

  hyperthreading: Enabled

  name: master

  replicas: 3

metadata:

  name: rtp

platform:

  vsphere:

    vcenter: 192.168.169.100

    username: administrator@vsphere.local

    password: <password>

    datacenter: FlexPod-DC

    defaultDatastore: infra_datastore_1

fips: false

pullSecret: '{"auths":{"cloud.openshift.com <SNIP> }'

sshKey: 'ssh-rsa <SNIP> == root@OCP-MGMT.rtp.ocp.local'

The sample file and the explanation of all the parameters are covered here: https://docs.openshift.com/container-platform/4.4/installing/installing_vsphere/installing-vsphere.html#installation-vsphere-config-yaml_installing-vsphere.

Use extreme caution when adding the pull secret and rsa keys to the file to make sure there are no unnecessary line breaks. The installation will fail if the installer cannot read these strings correctly.

Back up the install-config.yaml file so that it can be reused to install multiple clusters (if required). The installation program consumes and deletes the install-config.yaml file when it generates the manifests. Back up the file using the following command:

[root@OCP-MGMT ocp44]# cp install-config.yaml install-config.`date '+%s'`.bak

[root@OCP-MGMT ocp44]# ls

install-config.1589318105.bak install-config.yaml
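
One way to avoid stray line breaks in the pull secret and SSH key is to generate the file instead of pasting the strings by hand. The following sketch renders the sample install-config.yaml shown above, reading both strings from files and flattening each to a single line. The function name render_install_config is hypothetical, the field values are passed as arguments, and the vCenter password is left as a placeholder to be filled in afterwards.

```shell
#!/usr/bin/env bash
# Render install-config.yaml content on stdout.
# Arguments: base domain, cluster name, vCenter host, vCenter user,
#            datacenter, datastore, pull secret file, SSH public key file.
# The pull secret and SSH public key are flattened to one line each so that
# no line breaks end up inside the quoted YAML strings.
render_install_config() {
  local base_domain="$1" cluster="$2" vcenter="$3" user="$4"
  local datacenter="$5" datastore="$6" pull_secret_file="$7" ssh_pub_file="$8"
  local pull_secret ssh_key
  pull_secret="$(tr -d '\r\n' < "$pull_secret_file")"
  ssh_key="$(tr -d '\r\n' < "$ssh_pub_file")"
  cat <<EOF
apiVersion: v1
baseDomain: ${base_domain}
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: ${cluster}
platform:
  vsphere:
    vcenter: ${vcenter}
    username: ${user}
    password: <password>
    datacenter: ${datacenter}
    defaultDatastore: ${datastore}
fips: false
pullSecret: '${pull_secret}'
sshKey: '${ssh_key}'
EOF
}

# Example call with the values used in this guide:
#   render_install_config ocp.local rtp 192.168.169.100 administrator@vsphere.local \
#     FlexPod-DC infra_datastore_1 pull-secret.txt ~/.ssh/id_rsa.pub > ocp44/install-config.yaml
```

The sketch assumes that neither string contains a single quote, which holds for a standard pull secret and an RSA public key.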

Create the Ignition Configuration Files

The previously downloaded OpenShift installer is used to create the Ignition configuration files. The installer expects the YAML formatted configuration file created in the last step to generate the cluster configuration information. To modify cluster definition files and to manually start the cluster machines, Kubernetes manifest and ignition config files are generated.

To create the ignition configuration files, follow these steps:

1. Generate the Kubernetes manifests for the cluster, which define the objects that the bootstrap node must create initially:

[root@OCP-MGMT install_files]# ./openshift-install create manifests --dir=./ocp44

INFO Consuming Install Config from target directory

WARNING Making control-plane schedulable by setting MastersSchedulable to true for Scheduler cluster settings

The installation directory in the command above (ocp44) contains the install-config.yaml. The warning can safely be ignored because compute machines are created later in the installation process.

The Ignition config files that the installation program generates contain certificates that expire after 24 hours. You must complete your cluster installation and keep the cluster running for 24 hours in a non-degraded state to ensure that the first certificate rotation has finished.

2. Modify the manifests/cluster-scheduler-02-config.yml Kubernetes manifest file to prevent Pods from being scheduled on the control plane machines:

a. Open the manifests/cluster-scheduler-02-config.yml file.

b. Locate the “mastersSchedulable” parameter and set its value to “False”.

c. Save and exit the file.

[root@OCP-MGMT ocp44]# more manifests/cluster-scheduler-02-config.yml

apiVersion: config.openshift.io/v1

kind: Scheduler

metadata:

  creationTimestamp: null

  name: cluster

spec:

  mastersSchedulable: False

  policy:

    name: ""

status: {}

3. Create the Ignition config files. Ignition is the utility that is used by RHCOS to manipulate disks during initial configuration. It completes common disk tasks, including partitioning disks, formatting partitions, writing files, and configuring users. On first boot, Ignition reads its configuration from the installation media or the location specified and applies the configuration to the machines.

[root@OCP-MGMT install_files]# ./openshift-install create ignition-configs --dir=./ocp44

INFO Consuming Worker Machines from target directory

INFO Consuming Master Machines from target directory

INFO Consuming Openshift Manifests from target directory

INFO Consuming Common Manifests from target directory

INFO Consuming OpenShift Install (Manifests) from target directory

[root@OCP-MGMT install_files]#

The installation directory in the command above (ocp44) contains the manifests that were generated from install-config.yaml in the previous steps.

4. Verify the following files were generated in the installation directory (ocp44). You can install the tree command using “yum install tree” or use “ls” command to verify the contents of the directory.

[root@OCP-MGMT install_files]# tree ocp44

ocp44

├── auth

│ ├── kubeadmin-password

│ └── kubeconfig

├── bootstrap.ign

├── install-config.1589318657.bak

├── master.ign

├── metadata.json

└── worker.ign

1 directory, 7 files

The ignition files are valid for 24 hours - so if the installation takes longer than 24 hours due to any reason, new ignition files need to be generated.

5. Change the permissions of the generated bootstrap.ign file and copy it to the web server so that the file can be downloaded using HTTP:

[root@OCP-MGMT ocp44]# chmod 777 bootstrap.ign

[root@OCP-MGMT ocp44]# scp bootstrap.ign root@ocp-http:/var/www/html

root@ocp-http's password: *****

bootstrap.ign 100% 291KB 18.4MB/s 00:00

[root@OCP-MGMT ocp44]#

6. Verify that the file can be downloaded from the HTTP server:

[root@OCP-MGMT ocp44]# cd ..

[root@OCP-MGMT install_files]# wget ocp-http:8080/bootstrap.ign

--2020-05-12 17:35:58-- http://ocp-http:8080/bootstrap.ign

Resolving ocp-http (ocp-http)... 10.1.169.9

Connecting to ocp-http (ocp-http)|10.1.169.9|:8080... connected.

HTTP request sent, awaiting response... 200 OK

Length: 298087 (291K)

Saving to: ‘bootstrap.ign’

100%[==================================>] 298,087 --.-K/s in 0.001s

2020-05-12 17:35:58 (212 MB/s) - ‘bootstrap.ign’ saved [298087/298087]
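
Two of the steps above lend themselves to small helpers: the manifest edit in step 2 and the 24-hour validity window of the ignition files. The following sketch shows one possible form of each. The function names are hypothetical, and the freshness check uses the file modification time only as a proxy for certificate validity, which is an assumption of this sketch.

```shell
#!/usr/bin/env bash
# Set mastersSchedulable to false in a scheduler manifest, keeping its indentation.
disable_masters_schedulable() {
  local manifest="$1" tmp
  tmp="$(mktemp)"
  sed 's/^\( *mastersSchedulable:\).*/\1 false/' "$manifest" > "$tmp" \
    && cat "$tmp" > "$manifest"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Return 0 if the file exists and was modified less than 24 hours (1440 minutes) ago.
# File age is only a proxy for certificate validity (assumption of this sketch).
ignition_is_fresh() {
  [ -n "$(find "$1" -mmin -1440 2>/dev/null)" ]
}

# Example calls from the install_files directory:
#   disable_masters_schedulable ocp44/manifests/cluster-scheduler-02-config.yml
#   ignition_is_fresh ocp44/bootstrap.ign || echo "Regenerate the ignition files"
```

The manifest helper must run after create manifests and before create ignition-configs, because the manifests are consumed when the ignition files are generated.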

Create Red Hat Enterprise Linux CoreOS (RHCOS) VM Template

For installing the OCP cluster on VMware vSphere, RHCOS machines will be deployed using a VM template on vSphere. To create the virtual machine template using the previously downloaded RHCOS OVA, follow these steps:

The RHCOS images might not change with every release of OpenShift Container Platform. You must download an image with the highest version that is less than or equal to the OpenShift Container Platform version that you install. Use the image version that matches your OpenShift Container Platform version if it is available.

1. Log into the VMware vCenter using a web browser.

2. From the Hosts and Clusters tab, right-click the cluster’s name and click Deploy OVF Template.

3. On the Select an OVF tab, select the RHCOS OVA file that was locally downloaded.

4. On the Select a name and folder tab, set a Virtual machine name, such as RHCOS, and click the name of your vSphere cluster.

5. On the Select a compute resource tab, select the name of the vSphere cluster.

6. On the Select storage tab, configure the storage options for your virtual machine.

7. Select Thin Provision.

8. Select the datastore (infra_datastore_1) that was specified in install-config.yaml file.

9. On the Select network tab, specify the in-band management network (or a network of your choice).

10. Do not specify values for the Customize template tab.

11. Verify the selected parameters and click Finish.

In a typical FlexPod Datacenter deployment, all ESXi hosts synchronize their time with an NTP server. To avoid any certificate validation issues due to mismatched time, this virtual machine is set to synchronize its time with the ESXi host.

12. Right-click the VM name and select Edit Settings.

13. Click VM Options and expand VMware Tools.

14. Check the box: Synchronize guest time with host.

15. Click OK.

16. Right-click the newly created VM, and in the resulting context menu, select Template > Convert to Template.

17. When prompted, click Yes to confirm the template creation.

Install the OpenShift CLI by Downloading the Binary

To install the OpenShift CLI (oc) on the management host in order to interact with OpenShift Container Platform using a command-line interface, follow these steps:

1. Change to the install_files directory on the management host, where the client software (openshift-client-linux-4.4.12.tar.gz) was previously downloaded.

2. Extract the compressed file:

[root@OCP-MGMT install_files]# tar xvf openshift-client-linux-4.4.12.tar.gz

README.md

oc

kubectl

[root@OCP-MGMT install_files]#

3. Copy oc and kubectl executable files to a directory in your PATH (for example, ~/bin/):

[root@OCP-MGMT install_files]# cp oc ~/bin

[root@OCP-MGMT install_files]# cp kubectl ~/bin

4. After the CLI is installed, it is available using the oc command:

[root@OCP-MGMT install_files]# oc

OpenShift Client

This client helps you develop, build, deploy, and run your applications on any

OpenShift or Kubernetes cluster. It also includes the administrative

commands for managing a cluster under the 'adm' subcommand

<SNIP>
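As a quick sanity check after copying the binaries, a short script can confirm that both executables resolve through the search path. This is a minimal sketch using only the Python standard library; the function name `missing_tools` is a hypothetical helper, not part of the OpenShift client.

```python
import shutil


def missing_tools(tools, search_path=None):
    """Return the names in `tools` that cannot be found as executables.

    `search_path` is a directory list in PATH format; None means the
    current PATH environment variable is used.
    """
    return [name for name in tools if shutil.which(name, path=search_path) is None]


if __name__ == "__main__":
    missing = missing_tools(["oc", "kubectl"])
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("oc and kubectl are available on PATH")
```

If either tool is reported as missing, check that ~/bin exists and is included in the PATH of the current shell.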

Prepare the Terraform Installer

Download the Terraform installer used in this validation from the following location:

https://github.com/ucs-compute-solutions/openshift43-installer

The code reference to the installer can be found at Cisco DevNet Exchange: https://developer.cisco.com/codeexchange/github/repo/ucs-compute-solutions/openshift43-installer. This installer automates the OCP node virtual machine deployment and has been tested with OCP 4.3 and 4.4. If the installer does not work in your environment, or you do not want to use Terraform to automate the VM deployment, refer to the manual VM creation procedure covered in the Red Hat documentation: https://docs.openshift.com/container-platform/4.4/installing/installing_vsphere/installing-vsphere.html#installation-vsphere-machines_installing-vsphere.

To prepare the Terraform installer, follow these steps:

1. Create a directory on the management host to store the required Terraform repository:

[root@OCP-MGMT ~]# mkdir TFscripts

[root@OCP-MGMT ~]# cd TFscripts

[root@OCP-MGMT TFscripts]#

2. Clone the repo and change to the install directory:

[root@OCP-MGMT TFscripts]# git clone https://github.com/ucs-compute-solutions/openshift43-installer

Cloning into 'openshift43-installer'...

remote: Enumerating objects: 109463, done.

remote: Total 109463 (delta 0), reused 0 (delta 0), pack-reused 109463

Receiving objects: 100% (109463/109463), 95.26 MiB | 32.13 MiB/s, done.

Resolving deltas: 100% (67555/67555), done.

3. There is an example terraform.tfvars file in this directory named terraform.tfvars.example. Copy the file and adjust the variables according to your environment.

[root@OCP-MGMT TFscripts]# cd openshift43-installer

[root@OCP-MGMT openshift43-installer]# cp terraform.tfvars.example terraform.tfvars

4. The sample config file used in this installation is shown below. Update the variables according to your environment:

[root@OCP-MGMT openshift43-installer]# more terraform.tfvars

// ID identifying the cluster to create. Use your username so that resources created can be tracked back to you.

cluster_id = "rtp"

// Domain of the cluster. This should be "${cluster_id}.${base_domain}".

cluster_domain = "rtp.ocp.local"

// Base domain from which the cluster domain is a subdomain.

base_domain = "ocp.local"

// Name of the vSphere server. The dev cluster is on "vcsa.vmware.devcluster.openshift.com".

vsphere_server = "192.168.169.100"

// User on the vSphere server.

vsphere_user = "administrator@vsphere.local"

// Password of the user on the vSphere server.

vsphere_password = "<PASSWORD>"

// Name of the vSphere cluster. The dev cluster is "devel".

vsphere_cluster = "FlexPod"

// Name of the vSphere data center. The dev cluster is "dc1".

vsphere_datacenter = "FlexPod-DC"

// Name of the vSphere data store to use for the VMs. The dev cluster uses "nvme-ds1".

vsphere_datastore = "infra_datastore_1"

// Name of the network to use for the VMs. The dev cluster uses "ocp-network".

vm_network = "10-1-169-NET"

// Name of the VM template to clone to create VMs for the cluster. The dev cluster has a template named "rhcos-latest".

vm_template = "RHCOS44"

// The machine_cidr where IP addresses will be assigned for cluster nodes.

// Additionally, IPAM will assign IPs based on the network ID.

machine_cidr = "10.1.169.0/24"

// The number of control plane VMs to create. Default is 3.

control_plane_count = 3

// The number of compute VMs to create. Default is 3.

compute_count = 4

// URL of the bootstrap ignition. This needs to be publicly accessible so that the bootstrap machine can pull the ignition.

bootstrap_ignition_url = "http://10.1.169.9:8080/bootstrap.ign"

// Ignition config for the control plane machines. You should copy the contents of the master.ign generated by the installer.

control_plane_ignition = <<END_OF_MASTER_IGNITION

{"ignition":{"config":{"append":[{"source":"https://apiint.rtp.ocp.local:22623/config/master","verification":{}}]},"security":{"tls":{"certificateAuthorities":[{"source":"data:text/plain;charset=utf-8;base64,LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0t

<SNIP>

VJUSUZJQ0FURS0tLS0tCg==","verification":{}}]}},"timeouts":{},"version":"2.2.0"},"networkd":{},"passwd":{},"storage":{},"systemd":{}}

END_OF_MASTER_IGNITION

// Ignition config for the compute machines. You should copy the contents of the worker.ign generated by the installer.

compute_ignition = <<END_OF_WORKER_IGNITION

{"ignition":{"config":{"append":[{"source":"https://apiint.rtp.ocp.local:22623/config/worker","verification":{}}]},"security":{"tls":{"certificateAuthorities":[{"source":"data:text/plain;charset=utf-8;base64,LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tC

<SNIP>

N2tUUWI0PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==","verification":{}}]}},"timeouts":{},"version":"2.2.0"},"networkd":{},"passwd":{},"storage":{},"systemd":{}}

END_OF_WORKER_IGNITION

// Set bootstrap_ip, control_plane_ips, and compute_ips if you want to use static

// IPs reserved elsewhere, rather than the IPAM server.

// Update the IP address to assign to the bootstrap VM.

bootstrap_ip = "10.1.169.20"

// The IP addresses to assign to the control plane VMs. Update them for your

// environment; the length of this list must match the value of control_plane_count.

control_plane_ips = ["10.1.169.11", "10.1.169.12", "10.1.169.13"]

// The IP addresses to assign to the compute VMs. Update them for your

// environment; the length of this list must match the value of compute_count.

compute_ips = ["10.1.169.21", "10.1.169.22", "10.1.169.23", "10.1.169.24"]

5. Configure the Gateway and DNS in the TFscripts/openshift43-installer/machine/ignition.tf file:

GATEWAY=10.1.169.254

DNS1=10.1.169.10
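Several of the terraform.tfvars comments state constraints that are easy to break when adapting the sample: the cluster domain must be the cluster ID joined to the base domain, and each IP list must have as many entries as the matching count. The sketch below checks those rules on a plain dictionary. The function name is hypothetical, and two of the checks (every static IP falls inside machine_cidr, and no IP is used twice) are assumptions added for illustration rather than rules stated by the installer.

```python
import ipaddress


def check_cluster_settings(cfg):
    """Return a list of human-readable problems found in `cfg` (empty if none)."""
    problems = []

    # Stated in the tfvars comments: cluster_domain = cluster_id.base_domain
    expected_domain = f"{cfg['cluster_id']}.{cfg['base_domain']}"
    if cfg["cluster_domain"] != expected_domain:
        problems.append(f"cluster_domain should be {expected_domain}")

    # Stated in the tfvars comments: list lengths must match the counts.
    if len(cfg["control_plane_ips"]) != cfg["control_plane_count"]:
        problems.append("control_plane_ips length != control_plane_count")
    if len(cfg["compute_ips"]) != cfg["compute_count"]:
        problems.append("compute_ips length != compute_count")

    # Assumption: static IPs should sit inside machine_cidr and be unique.
    network = ipaddress.ip_network(cfg["machine_cidr"])
    all_ips = [cfg["bootstrap_ip"], *cfg["control_plane_ips"], *cfg["compute_ips"]]
    for ip in all_ips:
        if ipaddress.ip_address(ip) not in network:
            problems.append(f"{ip} is outside {cfg['machine_cidr']}")
    if len(set(all_ips)) != len(all_ips):
        problems.append("duplicate IP address in the static assignments")

    return problems


# Values copied from the sample terraform.tfvars in this section.
sample = {
    "cluster_id": "rtp",
    "base_domain": "ocp.local",
    "cluster_domain": "rtp.ocp.local",
    "machine_cidr": "10.1.169.0/24",
    "control_plane_count": 3,
    "compute_count": 4,
    "bootstrap_ip": "10.1.169.20",
    "control_plane_ips": ["10.1.169.11", "10.1.169.12", "10.1.169.13"],
    "compute_ips": ["10.1.169.21", "10.1.169.22", "10.1.169.23", "10.1.169.24"],
}

print(check_cluster_settings(sample) or "no problems found")
```

The dictionary is typed in by hand here; the sketch does not parse terraform.tfvars itself.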

At this point, all the necessary prerequisites are complete and the OpenShift installation can start.

OCP Installation

Because each machine in the cluster requires information about the cluster when it is provisioned, OCP uses a temporary bootstrap VM during initial configuration to provide the required information to the permanent control plane. This VM boots by using the Ignition config file that describes how to create the cluster. The bootstrap machine creates the master machines that make up the control plane, and the control plane machines then create the compute machines. Figure 8 illustrates this process:

Bootstrapping a cluster involves the following high-level steps:

· The bootstrap machine boots and starts hosting the remote resources required for the master machines to boot.

· The master machines fetch the remote resources from the bootstrap machine and finish booting.

· The master machines use the bootstrap machine to form an etcd cluster.

· The bootstrap machine starts a temporary Kubernetes control plane using the new etcd cluster.

· The temporary control plane schedules the production control plane to the master machines.

· The temporary control plane shuts down and passes control to the production control plane.

· The bootstrap machine injects OpenShift Container Platform components into the production control plane.

· The control plane sets up the worker nodes.

· The control plane installs additional services in the form of a set of Operators.

· Customers can manually delete the bootstrap machine when the installation completes.
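The master and worker Ignition configs pasted into terraform.tfvars earlier are small pointer files: besides a certificate authority, they mainly carry a URL from which the machine fetches its full configuration while it boots. The sketch below reads such a file and reports the spec version and the remote source URLs. The function name is hypothetical, and the sample is a trimmed stand-in for master.ign with the certificate data omitted.

```python
import json


def describe_pointer_ignition(text):
    """Extract the spec version and remote config URLs from an Ignition JSON string."""
    ignition = json.loads(text)["ignition"]
    appended = ignition.get("config", {}).get("append", [])
    sources = [entry["source"] for entry in appended]
    return {"version": ignition.get("version"), "sources": sources}


# Trimmed stand-in for master.ign, following the structure shown in the
# sample terraform.tfvars; the security/TLS section is left out.
sample_master_ign = json.dumps({
    "ignition": {
        "config": {
            "append": [
                {
                    "source": "https://apiint.rtp.ocp.local:22623/config/master",
                    "verification": {},
                }
            ]
        },
        "timeouts": {},
        "version": "2.2.0",
    },
    "networkd": {},
    "passwd": {},
    "storage": {},
    "systemd": {},
})

info = describe_pointer_ignition(sample_master_ign)
print("Ignition spec version:", info["version"])
for url in info["sources"]:
    print("Fetches remaining config from:", url)
```

A check like this can help catch a pasted config that points at the wrong cluster domain before any VMs are created.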

To start the OCP installation process, from the management host follow these steps:

1. Make sure you are still in the OpenShift installer directory, where the Terraform files (*.tf) exist:

[root@OCP-MGMT openshift43-installer]# pwd

/root/TFscripts/openshift43-installer

2. Initialize the Terraform working directory to download all the required providers:

For more information on terraform init and Terraform providers, refer to the Terraform documentation: https://www.terraform.io/docs/.

[root@OCP-MGMT openshift43-installer]# terraform init

Initializing modules...

- module.folder

Getting source "./folder"

- module.resource_pool

Getting source "./resource_pool"

- module.bootstrap

Getting source "./machine"

- module.control_plane

Getting source "./machine"

- module.compute

Getting source "./machine"

Initializing provider plugins...

- Checking for available provider plugins on https://releases.hashicorp.com...

- Downloading plugin for provider "vsphere" (1.18.1)...

- Downloading plugin for provider "ignition" (1.1.0)...

- Downloading plugin for provider "external" (1.2.0)...

- Downloading plugin for provider "template" (2.1.2)...

- Downloading plugin for provider "null" (2.1.2)...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.external: version = "~> 1.2"

* provider.null: version = "~> 2.1"

* provider.template: version = "~> 2.1"

* provider.vsphere: version = "~> 1.18"

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

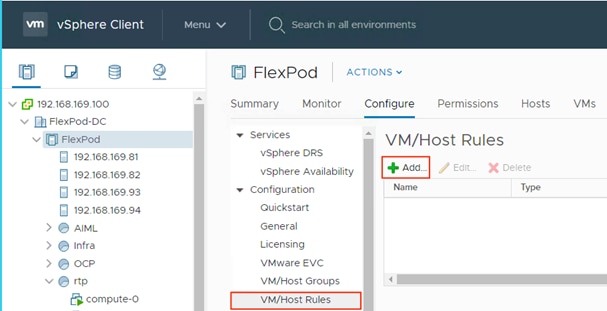

should now work.