FlashStack for Generative AI Inferencing Design Guide


Published: January 2024


About the Cisco Validated Design Program

The Cisco Validated Design (CVD) program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. These designs incorporate a wide range of technologies and products into a portfolio of solutions that have been developed to address the business needs of our customers. For more information, go to: http://www.cisco.com/go/designzone.

Generative AI stands as a transformative force across every industry, driving innovation in content generation, image and video creation, virtual assistants, chatbots, and beyond. Despite these opportunities, integrating generative AI into enterprise settings poses unique challenges. Building the right infrastructure with appropriate computational resources is critical. Efficient inferencing models and optimized model deployment and serving are crucial for performance. From an operations point of view, visibility into and monitoring of the entire stack are important.

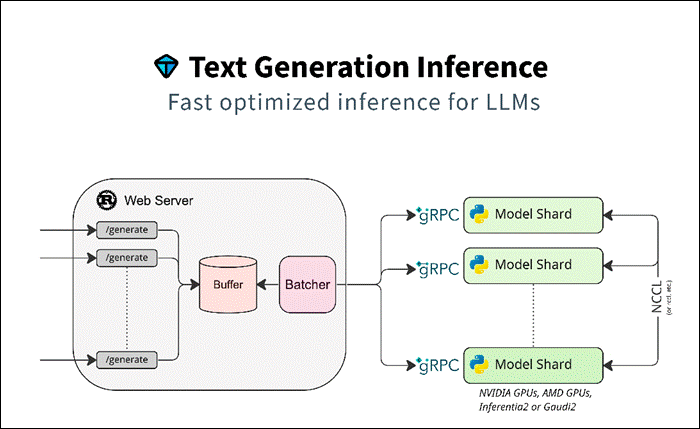

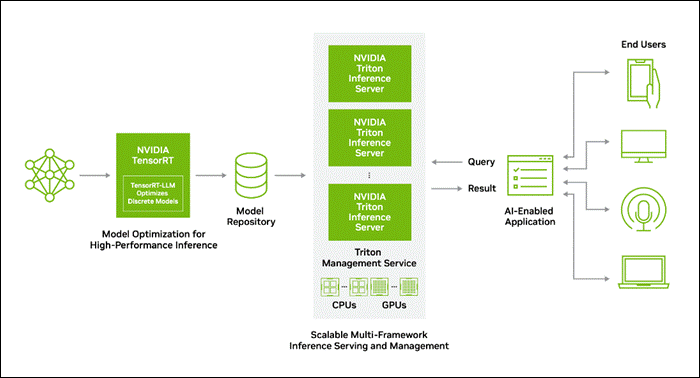

This document explains the Cisco Validated Design for Generative AI Inferencing. It outlines the design and reference architecture for a scalable, high-performance solution for deploying Large Language Models (LLMs) and other generative AI models in the enterprise with operational simplicity and ease. The document covers a spectrum of generative AI models, along with inferencing servers and backends.

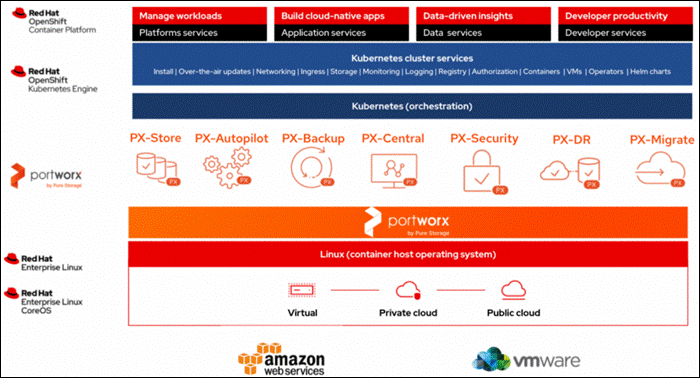

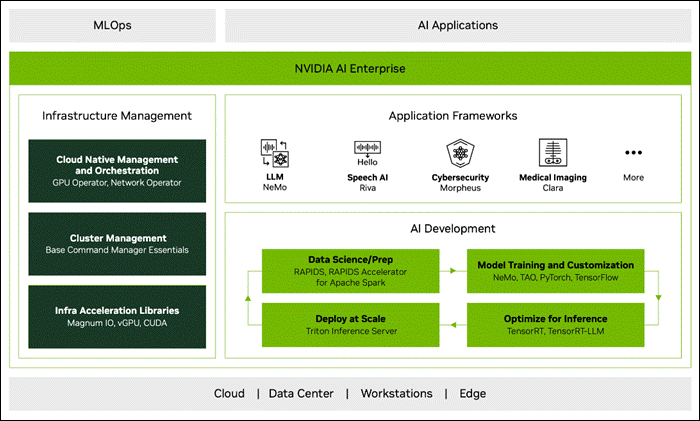

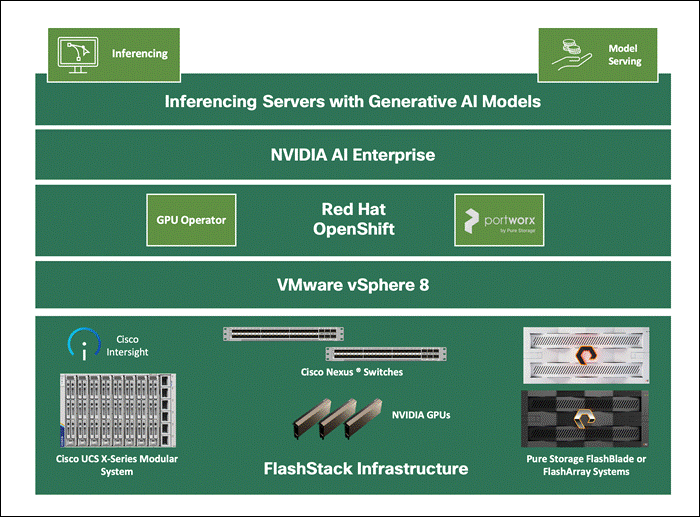

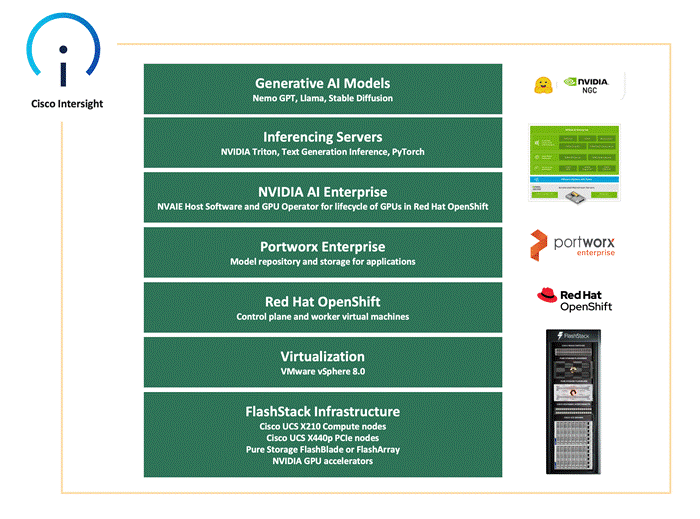

FlashStack Datacenter, powered by NVIDIA, incorporates accelerated computing, essential AI software, and pre-trained models. This holistic stack simplifies the deployment of AI models across diverse applications, offering a comprehensive solution for a wide range of use cases.

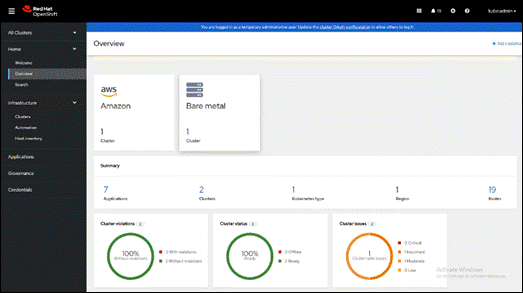

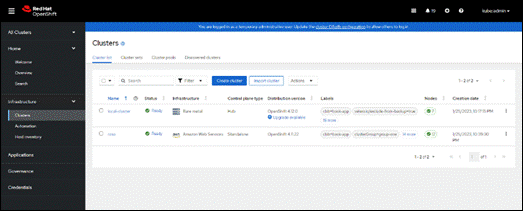

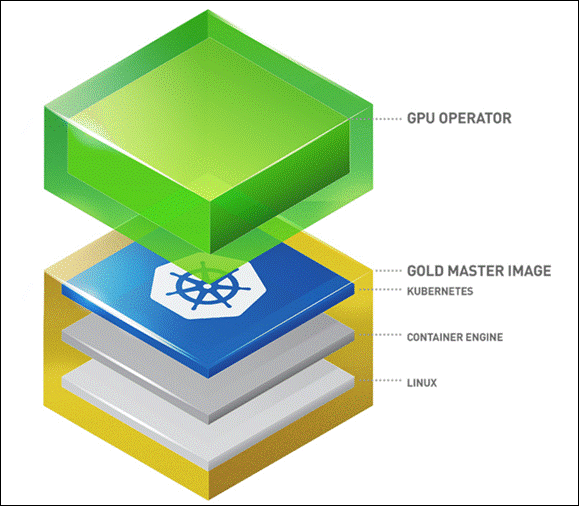

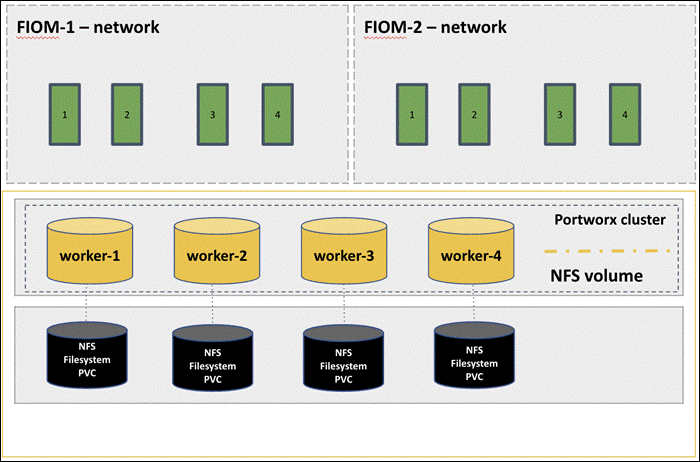

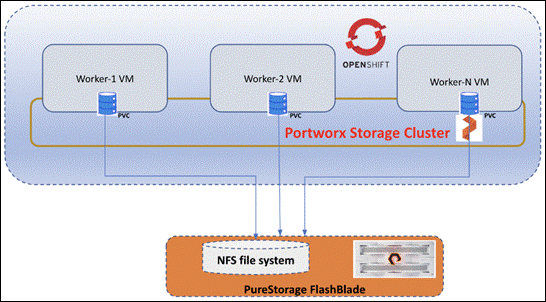

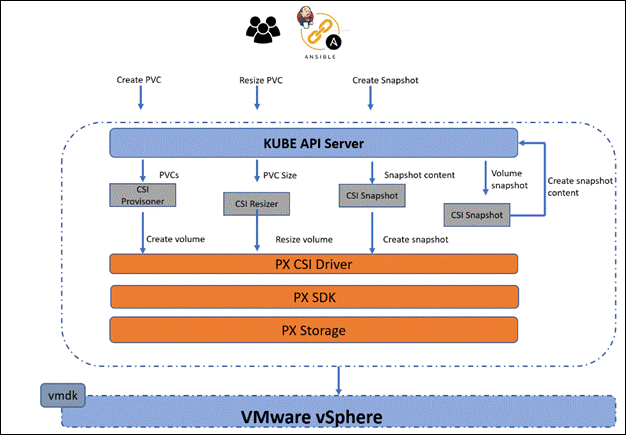

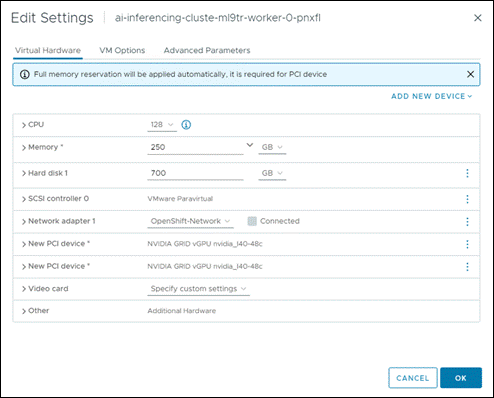

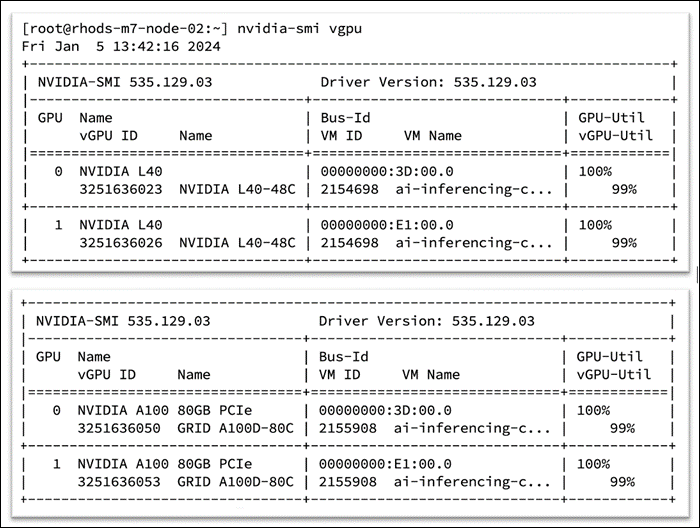

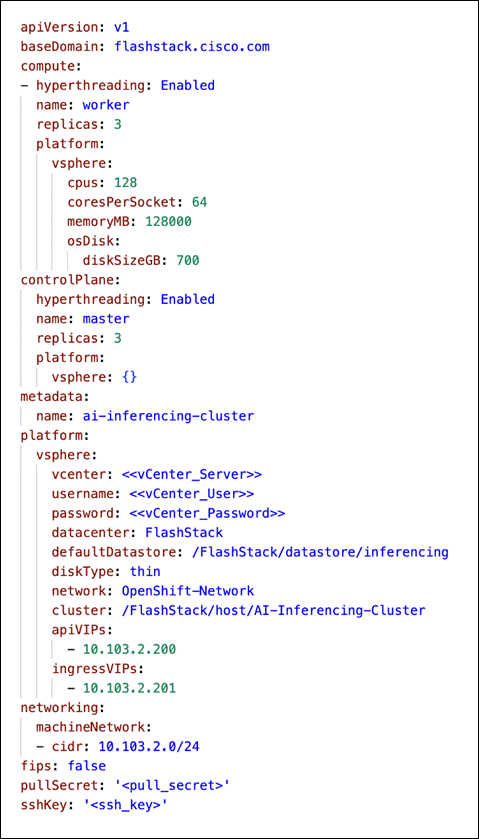

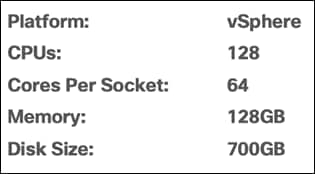

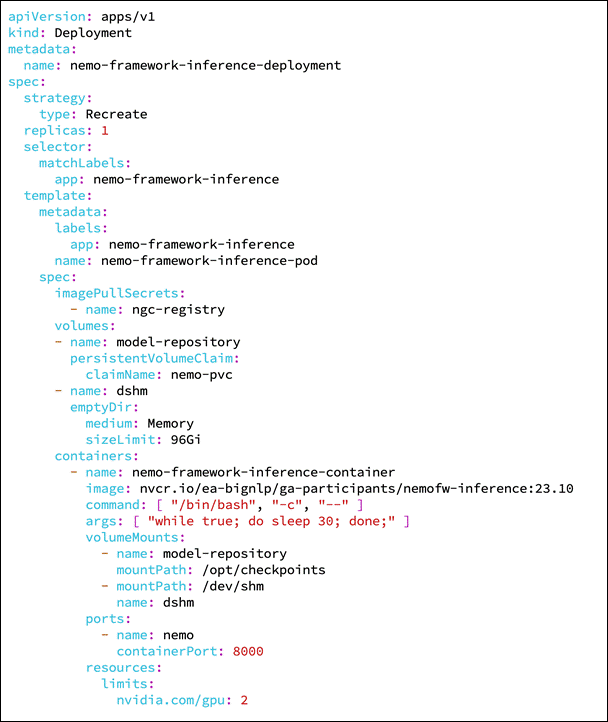

The infrastructure is built on FlashStack VSI, based on the Cisco UCS X-Series modular platform and managed using Cisco Intersight. The deployment consists of Red Hat OpenShift Container Platform clusters deployed on VMware vSphere, installed on Cisco UCS X210c M7 compute nodes with NVIDIA GPUs. NVIDIA AI Enterprise, the software layer of the NVIDIA AI platform, powers the inferencing workflow. Portworx Enterprise, backed by Pure Storage FlashArray and FlashBlade, provides all-flash enterprise storage as well as cloud-native storage for the model repository and other storage and data services.

The deployment is automated using Red Hat Ansible to provide Infrastructure as Code (IaC) that can be integrated into existing CI/CD pipelines or MLOps platforms to accelerate deployments.

This chapter contains the following:

● Audience

Generative AI is reshaping industries, from dynamic marketing content to interactive virtual assistants and chatbots. However, unlocking its potential within enterprises poses challenges, including the need for a robust infrastructure, observability across the stack, optimized model deployment and serving, high availability, and scaling.

The solution highlights how enterprises and AI practitioners can deploy Large Language Models and other Generative AI models quickly and efficiently for intelligent enterprise applications.

The hardware and software components are integrated so that customers can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the ground up.

The intended audience of this document includes IT decision makers such as CTOs and CIOs, IT architects, and customers who are working on or interested in the design, deployment, and life cycle management of generative AI systems.

This document explains the Cisco Validated Design for Generative AI Inferencing. The solution presented in this document addresses the design and reference architecture for a scalable, high-performing, and cloud-native solution to deploy generative AI models for inferencing in the enterprise with operational simplicity and ease.

The document addresses various considerations for a successful deployment of generative AI models, along with the inferencing servers and backends, in a customer environment.

This solution provides a foundational reference architecture for generative AI inferencing in the enterprise. The solution enables enterprises to deploy Large Language Models and other generative AI models. It also provides a consistent management and operational experience, along with visibility across the stack, data, and storage services.

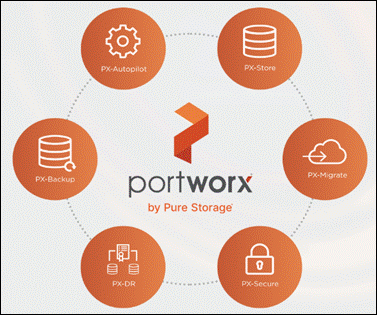

The solution is built using FlashStack based on the Cisco UCS X-Series modular platform with NVIDIA GPUs, Cisco Intersight, NVIDIA AI Enterprise, Red Hat OpenShift Container Platform (OCP), and Portworx Enterprise Storage Platform. Portworx uses FlashArray and FlashBlade for backend storage.

The end-to-end solution was validated in Cisco’s internal labs with Cisco and partner-recommended best practices in place.

The FlashStack Converged Infrastructure in this solution is a Cisco Validated Design that eliminates the need for Enterprise IT teams to handle the entire process of designing, building, integrating, validating, and automating solutions in-house. Instead, teams can rely on a comprehensive design and implementation guide based on industry best practices, which saves time, accelerates infrastructure deployments, and reduces risks.

The FlashStack VSI solution outlined in this document offers the following benefits:

● Provides a highly available and scalable platform with a flexible architecture that supports various deployment models.

● Simplifies global solution management through a cloud-based approach.

● Delivers a hybrid-cloud-ready, policy-driven modular design.

● Incorporates a cooperative support model and Cisco Solution Support.

● Offers an easily deployable, consumable, and manageable architecture, saving time and resources typically spent on researching, procuring, and integrating off-the-shelf components.

● Supports component monitoring, solution automation and orchestration, as well as workload optimization.

Generative AI Inferencing: Concepts, Components, and Market Context

This chapter contains the following:

● What is Generative AI Inferencing?

● Generative AI Industry Use Cases

● Generative AI Inferencing Challenges

This chapter explains various concepts of Generative AI, including model development workflow, inferencing challenges and use cases.

Generative AI is a powerful branch of artificial intelligence that holds immense potential for addressing various challenges faced by enterprises. With generative AI, users and applications can quickly generate new content based on a variety of inputs; inputs and outputs to these models can include text, images, sounds, animation, 3D models, or other types of data. Due to the versatility of generative AI models, applications leveraging them can perform multiple tasks based on available data and inputs, increasing functionality beyond just text and image generation or chat-based Q&A.

How Does Generative AI Compare to Traditional AI?

Generative AI can create new content, chat responses, designs, synthetic data, and more. Traditional AI, on the other hand, is focused on detecting patterns, making decisions, honing analytics, classifying data, and detecting fraud.

As more organizations recognize the value of using AI to create new content, they’re now exploring large language models (LLMs) and other generative models. Because pretrained LLMs, known as foundation models, are available, adopting generative AI requires less upfront training than traditional AI models. This results in significant cost and time savings when developing, running, and maintaining AI applications in production.

While 2023 has been called the year of generative AI, with ChatGPT and models like Stable Diffusion bringing the technology into the mainstream, generative AI has been in development for some time. NVIDIA and other companies have been researching and innovating in this space for years, which has helped lead us to where we are today. Examples include StyleGAN (2018), which creates realistic images of people, and GauGAN (2019), which allows you to create fingerpaint-style images that instantly become realistic landscapes. NVIDIA has released an app based on this research called Canvas, and these technologies have been used broadly by ecosystem partners.

What is Generative AI Inferencing?

Generative AI inferencing refers to the process of using a trained generative AI model (a large language model or another type of generative model) to generate new data or content based on input or contextual cues. During inferencing, the model applies its learned knowledge to produce outputs that are not direct repetitions of the training data but novel creations generated by the model.

The inferencing process is crucial for leveraging the generative capabilities of the models in practical applications. It allows users to obtain novel outputs by providing inputs or guiding the model's behavior based on specific requirements or constraints. The generated content can be used for various creative purposes, prototyping, or as a tool for exploration in different domains.

The term "inferencing" in the context of generative AI is associated with generating content like:

● Text Generation:

◦ Storytelling: Generative models can create fictional stories, narratives, or even entire chapters of books.

◦ Poetry and Prose: AI models can generate poetic verses, prose, or creative writing.

◦ Dialogues: Conversational agents powered by generative models can produce human-like dialogues.

● Image Generation:

◦ Artistic Creations: Generative Adversarial Networks (GANs) can generate visually appealing and artistic images.

◦ Style Transfer: Models can transform images into different artistic styles.

◦ Face Synthesis: GANs can create realistic faces of non-existent individuals.

● Music Composition:

◦ Melody Generation: AI models can compose original melodies and music.

◦ Genre-specific Music: Generative models can create music in specific genres, mimicking different styles.

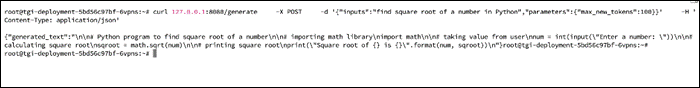

● Code Generation:

◦ Source Code: AI models can generate code snippets or even entire programs based on a given task or description.

● Language Translation:

◦ Multilingual Text: Models like OpenAI's GPT can generate text in multiple languages.

◦ Translation: AI models can translate text from one language to another while preserving context.

● Content Summarization:

◦ Text Summaries: Generative models can summarize large blocks of text into concise and coherent summaries.

● Content Completion:

◦ Sentence Completion: AI models can complete sentences or paragraphs in a way that fits the context.

◦ Text Expansion: Generative models can expand on given ideas or concepts.

● Product Descriptions:

◦ E-commerce Descriptions: AI models can generate product descriptions for e-commerce websites.

● Scientific Writing:

◦ Research Abstracts: Models can generate abstracts or summaries of scientific research papers.

● Conversational Agents:

◦ Chatbot Responses: AI-powered chatbots can generate responses in natural language during conversations.

Generative AI is a broad category that includes models designed to generate new and original content. This content can be in various forms, such as images, text, audio, or even video. Large language models are a specific subset of generative AI designed to understand and generate human language. They are primarily focused on natural language processing tasks.

Large language models (LLMs) are a class of natural language processing models that use deep learning methodologies to comprehend and generate human language. These models are trained on vast amounts of textual data to learn the patterns, structures, and nuances of language.

One of the notable examples of LLMs is the GPT (Generative Pre-trained Transformer) series developed by OpenAI.

Key features of large language models include:

● Scale: LLMs are characterized by their large number of parameters, often ranging from tens of millions to billions. The scale of these models allows them to capture complex linguistic patterns and generate diverse and contextually relevant text.

● Pre-training: LLMs are typically pre-trained on a massive corpus of text data before being fine-tuned for specific tasks. During pre-training, the model learns to predict the next word in a sentence or fill in missing words, which helps it acquire a broad understanding of language.

● Transformer Architecture: LLMs, including GPT, are built on the Transformer architecture, which enables efficient processing of sequential data. Transformers use self-attention mechanisms to capture relationships between words in a sentence, facilitating better context understanding.

● Transfer Learning: LLMs leverage transfer learning, where the knowledge gained during pre-training on a general language understanding task is transferred to specific tasks with minimal additional training. This approach allows these models to excel in a variety of natural language processing (NLP) applications.

● Fine-tuning: After pre-training, LLMs can be fine-tuned for specific tasks, such as text classification, language translation, summarization, and more. This fine-tuning process adapts the model to the nuances of the target application.

● Diverse Applications: Large Language Models find applications in a wide range of tasks, including but not limited to natural language understanding, text generation, sentiment analysis, machine translation, question answering, and chatbot development.
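To make the self-attention mechanism described above more concrete, the following is a minimal single-head scaled dot-product self-attention sketch in pure Python. It is illustrative only, under stated assumptions: the helper names (`matmul`, `attention_weights`, `self_attention`) are hypothetical, the embeddings and projection matrices are toy values, and real Transformer layers learn the query, key, and value projections during training, use multiple heads, and (in decoder-only LLMs such as GPT) apply a causal mask so each token attends only to earlier tokens. Those details are omitted here.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Normalize scores into weights that sum to 1 (max subtracted for stability)."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q: Matrix, k: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)): how strongly each token attends to every token."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (seq_len x seq_len) token-to-token scores
    return [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Matrix:
    """Single-head self-attention: queries, keys, and values all come from sequence x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    # Each output row is a weighted average of the value rows for the whole sequence.
    return matmul(attention_weights(q, k), v)


# Toy example: three 2-dimensional token embeddings and identity projections.
identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens, identity, identity, identity):
    print([round(value, 3) for value in row])
```

Because every attention row sums to 1, each output vector is a context-dependent blend of the whole input sequence, which is how the model captures relationships between words regardless of their distance in the sentence.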

The development of Large Language Models has significantly advanced the field of natural language processing, enabling the creation of sophisticated AI systems capable of understanding and generating human-like text across various domains. However, ethical considerations, biases in training data, and potential misuse are important concerns associated with the deployment of these models.

Model Parameters

Model parameters are the internal variables or weights that the model learns during the training process. Weights are the coefficients that scale the input features in a neural network. In the context of LLMs, these weights determine the strength of connections between neurons in different layers. For example, in a transformer model, weights are associated with the attention mechanisms and transformations applied to input sequences.

LLMs often consist of multiple layers, each with its set of weights and biases. In transformer architectures, these layers may include self-attention mechanisms and feedforward neural networks. The parameters of each layer capture different aspects of the input data.

The total number of parameters in an LLM is a critical factor in its capacity to capture complex language patterns and nuances.
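As a worked example of where these parameter counts come from, the sketch below tallies the weights and biases of a GPT-2-style decoder-only Transformer: learned token and position embeddings; per layer, a fused query/key/value projection, an attention output projection, a feedforward block with a 4x hidden expansion, and two layer norms; plus a final layer norm, with the output layer tied to the token embedding. These architectural assumptions are GPT-2's; models with untied output heads, gated feedforward layers, or grouped-query attention will differ. The helper names are hypothetical. The second function converts the count into the memory needed just to hold the weights, a first-order input when sizing GPU memory for inferencing; it ignores the KV cache, activations, and runtime overhead.

```python
def decoder_params(vocab: int, context: int, d_model: int, n_layers: int) -> int:
    """Count parameters of a GPT-2-style decoder (tied input/output embeddings)."""
    embeddings = vocab * d_model + context * d_model   # token + learned position tables
    attention = d_model * 3 * d_model + 3 * d_model    # fused Q/K/V projection + bias
    attention += d_model * d_model + d_model           # attention output projection + bias
    ffn_hidden = 4 * d_model                           # GPT-2 uses a 4x expansion
    feedforward = d_model * ffn_hidden + ffn_hidden    # up-projection + bias
    feedforward += ffn_hidden * d_model + d_model      # down-projection + bias
    layer_norms = 2 * (2 * d_model)                    # two LayerNorms (scale + shift)
    per_layer = attention + feedforward + layer_norms  # equals 12*d^2 + 13*d
    final_norm = 2 * d_model
    return embeddings + n_layers * per_layer + final_norm


def weight_memory_gib(n_params: int, bytes_per_param: float) -> float:
    """Memory needed only to store the weights, in GiB (e.g. 2 bytes/param for FP16)."""
    return n_params * bytes_per_param / 2**30


# GPT-2 small: 50,257-token vocabulary, 1,024-token context, d_model=768, 12 layers.
n = decoder_params(vocab=50257, context=1024, d_model=768, n_layers=12)
print(n)  # 124439808
print(round(weight_memory_gib(n, 2), 3))
```

The per-layer term grows with the square of the hidden size, so widening a model increases its parameter count, and therefore its GPU memory footprint, much faster than adding layers of the same width.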

Generative AI is capturing the attention of industry leaders and organizations worldwide, prompting developers and executives alike to seek a deeper understanding of the technology and how they can use it to differentiate themselves in the market.

The momentum surrounding generative AI presents a significant opportunity for organizations looking to unlock its transformative potential.

By embracing generative AI, both startups and large organizations can immediately extract knowledge from their proprietary datasets, tap into additional creativity to create new content, understand underlying data patterns, augment training data, and simulate complex scenarios. This enables them to unlock new opportunities, drive innovation, improve decision-making, boost efficiency, and gain a competitive advantage in today's fast-paced and evolving market.

Some key benefits are:

● Extract Knowledge From Proprietary Data

◦ Pretrained foundation models are trained on the knowledge of the internet.

◦ Models can be augmented with proprietary data, so they have knowledge specific to the business and domain they operate within.

◦ Using retrieval augmented generation (RAG), models can generate responses using external and current data.

● Increase Creativity and Create New Content

◦ Generative AI models excel at generating original outputs, such as music, images, text, and 3D models, mimicking human creativity.

◦ Enterprises can harness generative AI to explore new avenues for content creation, expanding their creative possibilities.

● Understand Underlying Data Patterns

◦ Generative models have the unique ability to understand and learn intricate patterns and structures present in input data.

◦ This understanding enables them to generate outputs that resemble the input data while adding unique variations.

◦ By using generative AI, enterprises can gain valuable insights into underlying data patterns to aid decision-making and optimize processes.

● Simulate Complex Scenarios

◦ Generative models can help create synthetic data to simulate complex scenarios. This capability is useful for creating simulated environments for reinforcement learning or generating synthetic datasets for training other machine learning models.

◦ Enterprises can use generative AI to create realistic 3D scenes for simulations and generate diverse synthetic data, enhancing their understanding and decision-making in complex areas such as visual inspection, robotics, and autonomous vehicles.

● Augment Training Data

● Unsupervised Learning

◦ Many generative AI models, including transformer-based models, diffusion-based models, generative adversarial networks (GANs), and variational autoencoders (VAEs), leverage unsupervised learning techniques.

◦ These models learn to represent the underlying structure of data without explicit labels, providing valuable insights into data distribution and generating novel outputs.

● Probabilistic Outputs

◦ Unlike deterministic AI systems, generative models produce probabilistic outputs.

◦ This characteristic allows them to generate different outputs each time the same input is provided, adding variability and creativity to the results.

◦ Enterprises can benefit from generative AI's probabilistic outputs by introducing diversity and adaptability in their processes and experiences.

● Data Augmentation

◦ Generative models can generate new examples that resemble the original training data, enabling data augmentation.

◦ This capability is especially valuable when training data is limited or expensive to acquire, improving the performance and robustness of machine learning models.
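The probabilistic outputs described above typically come from how text is decoded: the model assigns a score (logit) to every candidate next token, a softmax turns the scores into probabilities, and a token is sampled from that distribution. The sketch below illustrates this with temperature scaling in pure Python. The vocabulary and logits are made up for illustration, the function names are hypothetical, and production inference servers usually add further controls such as top-k or top-p filtering.

```python
import math
import random
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw scores into probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(vocab: List[str], logits: List[float],
                      temperature: float, rng: random.Random) -> str:
    """Draw one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Hypothetical next-token candidates and scores for the prompt "The sky is".
vocab = ["blue", "clear", "cloudy", "falling"]
logits = [3.0, 2.0, 1.5, -1.0]

rng = random.Random(0)
print([sample_next_token(vocab, logits, 1.0, rng) for _ in range(5)])  # depends on the seed
print(softmax_with_temperature(logits, 0.1))  # almost all probability on "blue"
```

Running the same prompt with a different seed can yield a different continuation, which is the source of the variability described above; lowering the temperature trades that variety for more predictable output.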

Generative AI Industry Use Cases

Generative AI for Healthcare and Life Sciences

Healthcare

Generative AI is transforming healthcare by unlocking high-quality data and insights for medical device companies, pharmaceutical organizations, and academic medical centers, leading to faster discoveries and improved clinical outcomes. Generative AI for medical imaging analysis can help identify complex disease mechanisms, predict clinical outcomes, and prescribe tailored treatments for patients. Generative AI can generate synthetic medical images of the human anatomy, including high-resolution images of the most complex structures like the human brain. These synthetic medical images can be used to ensure that good-quality data is used to train deep learning models and reach downstream decision-making algorithms.

Life Sciences (Drug Discovery)

Improving the speed and quality of early preclinical drug discovery pipelines is directly related to unlocking new therapies that can improve patient outcomes and save lives. Generative AI models have the potential to revolutionize numerous areas of drug discovery, from transforming the screening of large databases for potential drugs to testing their binding properties to specific proteins in the body. It’s a powerful tool, helping to predict 3D protein structures, generate small molecules and proteins, predict protein and molecule properties, and predict the binding structure of a small molecule to a protein.

● Molecule generation: De novo molecule generation—the process of generating new small molecules from scratch—can aid in discovering molecule-protein binding affinities, patterns and relationships between molecular structures and activities, and a host of other downstream tasks. Nowhere is the potential of generative AI greater than in molecule generation, where transformer-based models are using SMILES, a string notation for representing the chemical structure of small molecules, to understand latent chemical space.

● Protein generation: Just like molecules, protein sequences can be generated from scratch to explore unique structures, properties, and functions of cells—even with limited training data. Generative AI models for protein generation only need a small set of input protein sequences to generate new sequences with specific mutations or modifications.

● Docked pose predictions: Diffusion generative models are unlocking new possibilities in molecular docking, which is critical in identifying small molecules that bind well with protein targets. While these identifications were nearly impossible and computationally expensive before, generative AI can now help scientists predict and manage molecular conformations, ultimately leading to more accurate predictions of interactions with protein targets.
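To illustrate how a string notation like SMILES becomes input for a transformer-based model, the sketch below splits a SMILES string into tokens with a regular expression, so that bracketed atoms, two-letter elements such as Cl and Br, bonds, branches, and ring-closure labels each become one token. The pattern is a simplified assumption in the spirit of regex tokenizers used for molecular transformers, not a specific published implementation, and it is not a chemistry parser: it does not check that the molecule is valid, which production pipelines typically delegate to a cheminformatics toolkit.

```python
import re
from typing import List

# Simplified SMILES token pattern (illustrative assumption). Order matters:
# "Br" and "Cl" must be tried before the single-letter atoms "B" and "C".
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+\]|Br|Cl|B|C|N|O|S|P|F|I|b|c|n|o|s|p"
    r"|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%\d{2}|\d)"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into model-ready tokens, rejecting unknown characters."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:  # some character was not covered by the pattern
        raise ValueError(f"could not tokenize: {smiles!r}")
    return tokens


# Aspirin (acetylsalicylic acid) written in SMILES.
print(tokenize_smiles("CC(=O)Oc1ccccc1C(=O)O"))
```

Each token is then mapped to an integer ID, just as words or subwords are for a language model, which is what lets the same Transformer machinery learn the latent chemical space.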

Generative AI for Financial Services

The top AI use cases in the financial services industry (FSI) are customer service and document automation in banking, and finding signals in unstructured data in capital markets. In these areas, generative natural language processing models and LLMs are used to better respond to customer inquiries and uncover investment insights. Generative recommender systems power personalized banking experiences, marketing optimization, and investment guidance.

FSIs can train LLMs on domain-specific and proprietary data, which is more attuned to finance applications. Financial transformers, or “FinFormers,” can learn context and understand the meaning of unstructured financial data. They can power Q&A chatbots, summarize and translate financial texts, provide early warning signs of counterparty risk, quickly retrieve data, and identify data-quality issues.

These generative AI tools rely on frameworks that can integrate proprietary data into model training and fine-tuning, integrate data curation to prevent bias, and add guardrails to keep conversations finance-specific.

Fintech startups and large international banks are expanding their use of LLMs and generative AI to develop sophisticated virtual assistants that serve internal and external stakeholders, create hyperpersonalized customer content, automate document summarization to reduce manual work, and analyze terabytes of public and private data to generate investment insights.

Generative AI for Telecommunications

Generative AI has the power to deliver cost-savings, efficiency benefits, and new revenue opportunities to the telecommunications industry. Current efforts from telcos are focused on realizing cost savings in two main domains:

● Customer Service

Generative AI is transforming telco customer service. LLMs trained on ticket logs, call transcriptions, and other domain-specific data improve self-service channels and chatbots with human-like interactions. Call center agents can be assisted with suggested responses and relevant resolutions presented in near real time, with no need to search through documents and resources.

● Network Operations

Generative AI is improving the way telcos design, build, and operate their networks. For example, LLMs trained on data sources such as technical manuals, network performance data, and ticket issues can support network engineers and architects, putting the information they need only a text or voice query away. Fast identification and resolution of network issues and security threats and accurate prediction of equipment failures result in real business value.

Challenges to Adoption

Telecom operators don't always have the resources and expertise needed to develop, scale, and maintain LLMs in house. Partners, including independent software vendors (ISVs) and global system integrators (GSIs), can help telcos overcome these challenges and achieve their AI goals.

Beyond a ready solution that simplifies getting started, the key features telcos are looking for include the ability to bring models to the data, control content with guardrails, and support multi-cloud, multi-model, and multilingual deployments.

New Revenue Opportunities for Telcos

Generative AI is also opening new revenue opportunities for telcos. With large edge infrastructure and access to vast datasets, telcos around the world are now offering generative AI as a service to enterprise and government customers.

Generative AI for Retail

From operations to customer relations and retention, generative AI is positioned to transform the retail industry.

Generative AI can be used to enhance the shopping experience with features such as interior design assistants, voice enabled search, user review summaries, and smart recommenders that understand the context of requests.

New state-of-the-art generative AI models for text, images, high-fidelity videos, and 3D assets can be trained and fine-tuned with a retailer’s proprietary data, representing their specific brand and tone and with appropriate guardrails to complete domain-specific tasks. For example, they can generate robust product descriptions that improve search engine optimization (SEO) rankings and help shoppers find the exact product they’re looking for. AI models can use metatags containing product attributes to generate more comprehensive product descriptions that include terms like “low sugar” or “gluten free.”

Generative AI assistants can be used for back-office tasks, including dynamic pricing, customer segmentation, and customer experience management. Virtual assistants can check resource-planning systems and generate customer service messages to inform shoppers about which items are available and when orders will ship and even assist customers with order-change requests.

Retailers will continue to deploy generative AI to capture and retain customer attention, deliver superior shopping experiences, and drive revenue by matching shoppers with the right products at the right time.

Generative AI for Public Sector

One opportunity for generative AI in the public sector is helping public servants perform their jobs more efficiently. The vast majority of federal employees work in administrative roles and carry out time-consuming tasks such as drafting, editing, and summarizing documents, updating databases, recording expenditures for auditing and compliance, and responding to citizen inquiries.

Generative AI’s ability to summarize documents has great potential to boost the productivity of policymakers and staffers, civil servants, procurement officers, and contractors. With reports and legislation often spanning hundreds of pages of dense academic or legal text, AI-powered summaries generated in seconds can quickly break down complex content into plain language, relieving employees of the tedious, time-consuming task.

AI virtual assistants and question-and-answer chatbots, powered by LLMs, can instantly deliver relevant information to people online, taking the burden off of overstretched staff who work phone banks at agencies like the Treasury Department, IRS, and DMV.

With simple question-and-answer text inputs, AI content generation can help public servants create and distribute publications, email correspondence, reports, press releases, and public service announcements.

Another important element of generative AI to highlight is retrieval-augmented generation, also known as RAG. RAG is an AI framework that retrieves facts from external, or proprietary, sources. This allows the language model to have the most current and accurate information possible so that it can give users the insights they need in near-real time.

Incorporating RAG in an LLM-based question-answering system has benefits for the public sector. RAG helps ensure that the model has access to the most reliable data and that its users have access to the model’s sources. This means that its generated content can be checked for accuracy, reducing the likelihood of the model producing inaccurate information. Ultimately, implementing guardrails like RAG helps maintain the trustworthiness of LLM-based models.
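The RAG flow described above (retrieve relevant passages from a trusted source, then ground the model's answer in them while keeping track of where they came from) can be sketched in a few lines. This is a toy under explicit assumptions: it ranks documents by bag-of-words cosine similarity instead of the learned embeddings and vector database a production system would typically use, the knowledge-base entries and source IDs are hypothetical, and it stops at building the grounded prompt rather than calling any particular LLM or inference server.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, str]]:
    """Return the k (source_id, text) pairs most similar to the query."""
    q = bag_of_words(query)
    ranked = sorted(docs.items(), key=lambda item: cosine(q, bag_of_words(item[1])), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, passages: List[Tuple[str, str]]) -> str:
    """Place retrieved passages, tagged with their source IDs, ahead of the question."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return ("Answer using only the sources below and cite them by ID.\n"
            f"{context}\n\nQuestion: {query}\nAnswer:")


# Hypothetical agency knowledge base (illustrative content only).
docs = {
    "faq-12": "Vehicle registration can be renewed online before it expires.",
    "faq-31": "Refund status can be checked with the online status tool.",
    "memo-07": "Office hours are 8am to 5pm on weekdays.",
}
query = "Can I renew my vehicle registration online?"
print(build_grounded_prompt(query, retrieve(query, docs, k=1)))
```

Keeping the source ID next to each passage is what lets users trace an answer back to its origin, which is the accuracy-checking benefit described above.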

Generative AI for Media and Entertainment

Generative AI in the media and entertainment industry serves a growing ecosystem of over 100 million creators, enabling new creative possibilities and business opportunities.

Generative AI can help artists, developers, and business decision-makers accelerate, personalize, and monetize their content in ways that weren't possible before. To achieve this, studios and broadcasters are training, customizing, and deploying GPU-accelerated generative AI models on premises and in the cloud.

● Content creation: Generative AI models generate new text, images, videos, sound effects, music and voice, 3D objects, and animations based on user prompts. This newly created content can be used for various purposes, including artistic exploration, data augmentation, style transfer, video synthesis and enhancement, and animations. Using RAG, responses from LLMs can leverage external databases and current data to improve accuracy and safety of content.

● Data analytics and personalization: Generative AI platforms help achieve hyper-personalization by analyzing vast amounts of data and tailoring content to individual preferences and behaviors in real time. This includes generating personalized content and narratives based on viewer interactions or as part of a recommender engine. Highly targeted advertising means greater revenue from enhanced audience engagement and more subscription renewals. Specifically tailored downloadable content opens up new opportunities for revenue streams.

Organizations that have developed strategies around deploying generative AI in the production pipeline have reduced content costs and increased revenues through advertisements and subscriptions by attracting and engaging viewers. This is necessary in a market that's saturated with more shows, films, and live streams, all vying for audience attention. This makes generative AI critical to the survival of an organization in this industry.

Generative AI for Architecture, Engineering, Construction, and Operations (AECO)

Generative AI is used in the architecture, engineering, construction, and operations (AECO) industry to enhance creativity, efficiency, and innovation.

Design Optimization

Generative AI can help architects translate between different modes such as text, 2D, 3D, video, and sketches. It can be used to build designs that meet specific parameters for energy efficiency, structural integrity, daylighting, natural ventilation, and budget. AI-generated design optimizations can also be use-case specific. For example, urban planners can optimize for factors like transportation, infrastructure, and green space. Interior designers can optimize for aesthetics. And structural engineers can optimize for materials and construction costs.

Predictive AI Physics

For simulation and analysis, generative AI can predict the structural integrity of buildings, bridges, dams, and other infrastructure under varying environmental conditions like earthquakes, floods, and winds. AI can also be applied to energy-efficiency modeling, acoustic simulations, and thermal-comfort analysis. Generative AI can conduct ongoing evaluations and offer real-time insights that can be directly integrated into projects under design.

Construction Management

Generative AI can help estimate construction costs for design options and aid in budgeting and decision-making. By regularly evaluating images from the construction site, generative AI can predict scheduling delays and cost overruns and make recommendations to keep projects on track. Vision AI lets construction site managers identify construction defects, errors, and safety risks, and generative AI can give them recommendations to resolve the issues.

Generative AI tools empower AECO professionals to explore innovative design solutions, reduce design time, and make data-informed decisions, ultimately leading to more efficient, sustainable, and cost-effective projects.

Generative AI for Higher Education and Research

Generative AI can be used in multiple areas of education, including research, teaching, and administration. Researchers can use generative AI to support scientific writing and coding and to create synthetic data for model training. Faculty can use generative AI as teaching assistants and to support course development. Universities are beginning to build their own generative AI tools to support operational efficiency and student communications and support.

To prepare the next generation of workers for the future, universities across the globe should build AI into their curriculums, invest in computing infrastructure, and support research initiatives not only in STEM fields but also in the arts, social sciences, and every other domain on campus.

Universities that provide generative AI tools—including the necessary infrastructure to support researchers, faculty, and students—will have an advantage in attracting top talent and funding.

Student Support

Universities can train interactive generative AI chatbots to support students with general information, course registration, financial aid, and more.

Research

With generative AI, researchers can automate experiments, collect data, create synthetic data for model training, and conduct data analysis. AI software, hardware, and funding to support research can help universities compete for top researchers and other talent.

Faculty

Professors will soon have the ability to augment their course preparation and administration with generative AI tools that can generate syllabi and course materials, automate scoring, and dynamically update course content.

Generative AI for Smart Cities and Spaces

In smart cities and spaces, generative AI can be used to enhance citizen support services, improve infrastructure design, manage traffic, enrich tourism experiences, and more.

Urban Planning and Design

Generative AI algorithms can analyze vast amounts of data, including traffic patterns, energy consumption, and environmental factors, to generate optimized urban plans and designs. This can help city planners and architects create more sustainable, eco-friendly urban environments.

Traffic Management

AI-driven traffic management systems can generate predictive models and algorithms to optimize traffic flow, reduce congestion, and improve transportation efficiency. This can include dynamically adapting traffic signal timings, suggesting optimal routes, and providing traffic pattern predictions to enhance overall mobility.

Public Safety and Security

Urban planners can use generative AI to simulate natural disasters such as wildfires, earthquakes, hurricanes, and floods to identify existing inefficiencies and plan for a more resilient urban infrastructure. This creates the possibility of proactive monitoring, early detection, and efficient allocation of resources for public safety.

Tourism and Citizen Support Services

With vast amounts of historical and real-time data, generative AI can enhance tourism experiences with personalized recommendations based on individual preferences. AI can optimize itineraries, taking into consideration weather conditions, transportation options, crowd density, and more. Multilingual speech-enabled avatars can interact with visitors and serve as guides, offering immersive experiences on local landmarks, historical sites, and culture and providing real-time language translation and navigation assistance. AI-driven citizen support services can also enable seamless communication and interaction with local businesses, police, and other public servants.

Generative AI for Automotive

Automakers are harnessing the power of generative AI to enhance various aspects of their operations, including vehicle design, engineering, manufacturing, autonomous vehicle software development, marketing, and sales.

Transforming Design Processes

Generative AI has the potential to revolutionize the automotive industry’s traditional design processes. It can transform 2D sketches into 3D non-uniform rational B-splines (NURBS) models. This innovative approach empowers automakers to leverage visual datasets for generative design, ultimately expediting design iterations and reducing design timelines.

Smart Factories

Manufacturers are embracing generative AI tools to optimize factory layouts before production begins. With the ability to include video and 3D data in design plans, manufacturers can run simulations and optimize for efficiency in advance, minimizing costly change orders and eliminating waste.

Autonomous Vehicle Development

Generative AI is driving innovation in the development of autonomous vehicles. Innovative technologies such as neural radiance fields (NeRF) reconstruct interactive 3D simulations from sensor data. Using large language models (LLMs), developers can use text prompts to add diversity and detail to a simulated scene. This unlocks more diverse ways to use simulation for AV development, accelerating a new era of safe and efficient self-driving cars.

Marketing, Sales, and Support

Generative AI can power digital vehicle configurators that enhance customer shopping experiences. With configurators hosted on a unified cloud platform, automakers can connect design and marketing pipelines, allowing marketers to launch campaigns earlier in the design process. These same configurators can be used online or in dealership showrooms, letting shoppers interact with vehicles, customize features, and create 3D scenes for test drives, even if their preferred model isn’t on the lot.

At dealerships, AI chatbots can answer pricing questions and inform shoppers which models are in stock. In vehicles, onboard AI assistants can execute natural language voice commands for navigation and infotainment, generate vehicle diagnostics, and query user manuals, ensuring drivers can keep both hands on the wheel. Data insights from driver interactions can also help manufacturers improve vehicle design and operating software to enhance the driving experience.

Generative AI for Manufacturing

Generative AI is transforming the manufacturing industry through more streamlined and efficient processes for product development, smart manufacturing, and supply chain management.

Digital Engineering

Generative AI can be trained on legacy product designs and manufacturing information alongside contemporary scientific literature, providing quick and valuable R&D insights to engineers. By analyzing text data, image data, and computer-aided design (CAD) data, generative AI can make suggestions to help designers and engineers broaden the design space and arrive at an innovative solution more quickly. It can also identify patterns and trends to inform design optimizations. At the end of the product development cycle, AI can be used to generate patents and internal documents, saving valuable time and resources.

Smart Manufacturing and Industrial Field Service

Generative AI can be used to generate code and scripts for computer numerical control (CNC) and programmable logic controller (PLC) systems, saving developers valuable time and manual effort. Generative AI can be used for technician training and assistance with interactive equipment manuals and repair guides. Technicians in the field can use handheld devices equipped with large language models to query equipment documentation and instantly access installation and repair instructions, images, video guides, and even digital twin platforms. This helps to increase safety, minimize field hours, and maximize equipment uptime.

Supply Chain Management

Generative AI can improve supply chain management with highly accurate demand forecasting models that account for historical sales, market trends, and macroeconomic conditions, helping businesses optimize inventory levels and avoid stockouts. Generative AI can help with supplier selection by analyzing performance, pricing, and distance to important markets. Generative AI can also support procurement departments by generating requests for proposal (RFP) documents and negotiation scripts to optimize pricing and terms. Interactive chatbots for shippers and receivers can surface documents and information on shipping, customs, tax responsibilities, general Q&A, and more.

A typical generative AI workflow starts by aligning with business objectives and maintains a concise, accurate technical focus at every stage.

Business Strategy and Use Case Definition: Define generative AI objectives aligning with business goals.

● Key Tasks:

◦ Identify use cases.

◦ Clearly define the generative task, whether it's image generation, text generation, style transfer, etc.

◦ Establish goals and success metrics.

Data Preparation and Curation: Ensure high-quality, well-managed dataset availability.

● Key Tasks:

◦ Gather a diverse and representative dataset for training the generative model.

◦ Data cleansing and labeling.

◦ Data aggregation and preprocessing.

◦ Increase the diversity of the training data through techniques like rotation, scaling, or flipping.

◦ Anonymization or synthetic data generation if required.

◦ Leveraging MLOps platforms for efficient data management.

Model Training: Utilize accelerated infrastructure for efficient training.

● Key Tasks:

◦ Training from scratch or selecting pretrained models.

◦ Allocating heavy computational resources.

◦ Optimizing performance with validated, high-performance infrastructure.

Model Customization: Fine-tuning, prompt learning (including prompt tuning and P-tuning), transfer learning, reinforcement learning.

● Key Tasks:

◦ Adapt pretrained models to specific business needs.

◦ Implement customization methods based on requirements.

Inferencing: Deploy and operate trained models for ongoing generation.

● Key Tasks:

◦ Scale computing resources (scaling up or out) based on demand.

◦ Iterate on inferencing based on new data and customization opportunities.

◦ Continuous monitoring of inferencing performance.

◦ Identification and optimization of opportunities for further customization and fine-tuning.

This workflow emphasizes technical aspects, highlighting the importance of infrastructure efficiency, model customization techniques, and ongoing optimization in the inferencing phase.
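Scaling inference capacity to demand, as called for in the inferencing stage, can be estimated with Little's law: the average number of requests in flight equals the arrival rate multiplied by the average time each request spends in the system. The following is a minimal sketch under that assumption. `ReplicaProfile` and `replicas_needed` are hypothetical names, and the per-replica concurrency and latency values must come from your own benchmarks of the model on your hardware. They are not FlashStack measurements.

```python
import math
from dataclasses import dataclass


@dataclass
class ReplicaProfile:
    """Benchmarked capacity of one inference server replica (hypothetical values)."""
    max_concurrent_requests: int  # requests one replica serves at once within its latency target
    avg_latency_s: float          # average end-to-end latency per request, in seconds


def replicas_needed(peak_rps: float, profile: ReplicaProfile, headroom: float = 0.2) -> int:
    """Estimate the replica count for a peak request rate using Little's law.

    Little's law: average requests in flight = arrival rate x average latency.
    The headroom fraction keeps replicas below full utilization.
    """
    in_flight = peak_rps * profile.avg_latency_s
    target = in_flight * (1 + headroom)
    return max(1, math.ceil(target / profile.max_concurrent_requests))
```

For example, `replicas_needed(40, ReplicaProfile(max_concurrent_requests=16, avg_latency_s=2.0), headroom=0.25)` returns 7: 80 requests in flight plus 25% headroom is 100, which needs seven replicas that each serve 16 concurrent requests. On a Kubernetes platform such as Red Hat OpenShift, an estimate like this is a starting point for autoscaling limits, not a substitute for load testing.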

Generative AI Inferencing Challenges

Deploying and managing generative AI inferencing systems presents several challenges. Common challenges include:

● High Computational Demands

Generative models, especially those with large numbers of parameters, demand significant computational resources for inferencing. Although inferencing requires fewer computing resources than model training or fine-tuning, serving models in production can still require significant compute and memory resources.

● Latency and Real-time Requirements

For applications requiring real-time responses, minimizing inference latency is crucial. This may require optimized hardware or distributed computing architectures.

● Model Size and Complexity

The size and complexity of generative models, especially in the case of transformers with billions of parameters, can pose challenges in terms of storage requirements and data access times.

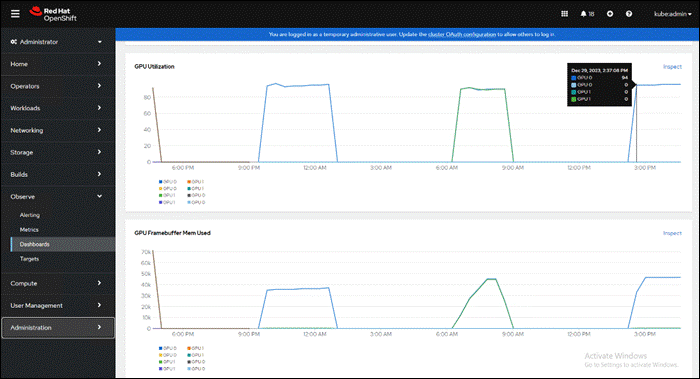

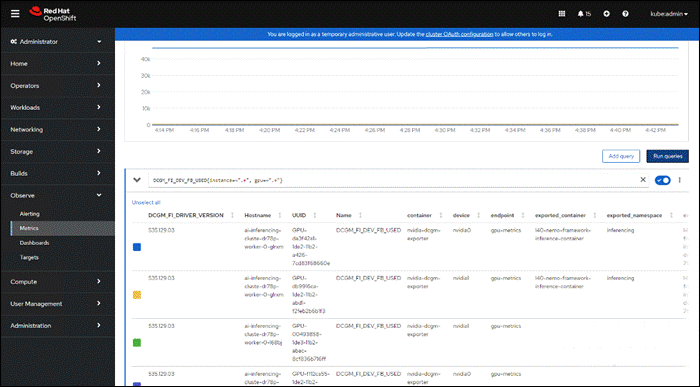

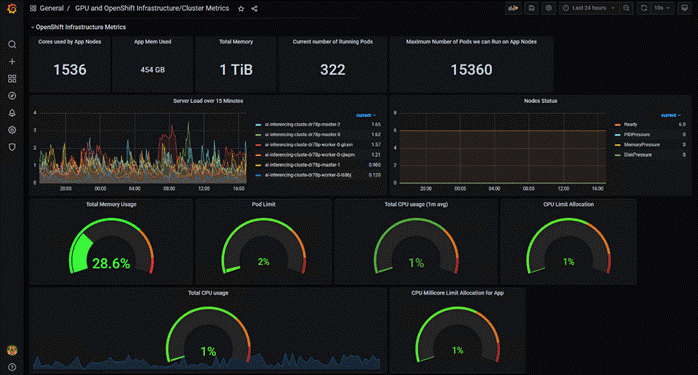

● Visibility and Monitoring

Ensuring the health and performance of the deployed models through continuous monitoring and maintenance is essential for reliability and uptime.

● Memory Management

Efficient memory usage is critical, especially when dealing with large batches of data or when deploying on edge devices with limited memory resources.

● Data Transfer Overhead

Generative models may require large amounts of data to be transferred between storage and computing resources, putting strain on network bandwidth.

● Scaling for Demand

Handling variable workloads and scaling infrastructure to meet increased demand, especially during peak times, can be challenging.

● Updating Trained Models

Introducing updates to generative models, whether for improvements or security patches, may necessitate a robust deployment strategy to minimize downtime and ensure a smooth transition.

● Model Optimization

Optimizing models to reduce their footprint is crucial. A smaller model needs less memory and fewer computational resources, which makes it easier to deploy efficiently on different devices or platforms. However, finding the right balance between model size, inference speed, and accuracy can be challenging.

● Model Security

Protecting generative models from adversarial attacks or unauthorized access is crucial. Security measures must be implemented to ensure the integrity and confidentiality of the models.

● Optimizing Costs

Managing the costs associated with computational resources, especially in cloud environments, requires careful optimization to avoid unnecessary expenses.
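Several of these challenges (model size, memory management, data transfer overhead, and model optimization) come down to simple arithmetic on the number of model parameters. The following minimal sketch estimates the size of the weights at different numeric precisions, the time to move them across a network link, and the minimum number of GPUs needed to hold them. It is a rough approximation: it counts only the weights and ignores the key-value (KV) cache, activations, and runtime overhead. The 70-billion-parameter model, 80-GB GPU, and 90% usable-memory figures used here are hypothetical examples.

```python
import math

# Bytes used to store one parameter at common inference precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weights_gb(num_params: float, precision: str) -> float:
    """Approximate size of the model weights alone, in GB (10**9 bytes).

    Excludes the KV cache, activations, and runtime overhead.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def transfer_time_s(size_gb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Seconds to move size_gb gigabytes over a link_gbps gigabit/s link.

    efficiency=1.0 gives the theoretical minimum; real links deliver less.
    """
    return size_gb * 8 / (link_gbps * efficiency)


def min_gpus(size_gb: float, gpu_mem_gb: float, usable_fraction: float = 0.9) -> int:
    """Fewest GPUs whose combined usable memory can hold size_gb of weights."""
    return math.ceil(size_gb / (gpu_mem_gb * usable_fraction))
```

For a hypothetical 70-billion-parameter model, the weights take about 140 GB at FP16 but about 35 GB at INT4, which is why quantization is a common optimization. Moving 140 GB over a single 100-Gbps link takes at least 11.2 seconds before protocol and storage overheads, and with 80-GB GPUs the FP16 weights alone need at least two GPUs.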

Addressing these infrastructure challenges involves a combination of selecting appropriate infrastructure, optimizing algorithms, implementing effective deployment strategies, and using a scalable, flexible infrastructure solution that has already been validated. Continuous monitoring and adaptation to evolving technology can help maintain a robust and efficient generative AI inferencing infrastructure.

FlashStack Datacenter powered by NVIDIA is designed with accelerated computing, essential AI software, and pretrained models. This end-to-end stack enables you to deploy AI models for a wide range of applications.

Technology Overview

This chapter contains the following:

● Cisco Unified Computing System

● Cisco Intersight Assist and Device Connectors

● Cisco Nexus Switching Fabric

● Cisco MDS 9132T 32G Multilayer Fabric Switch

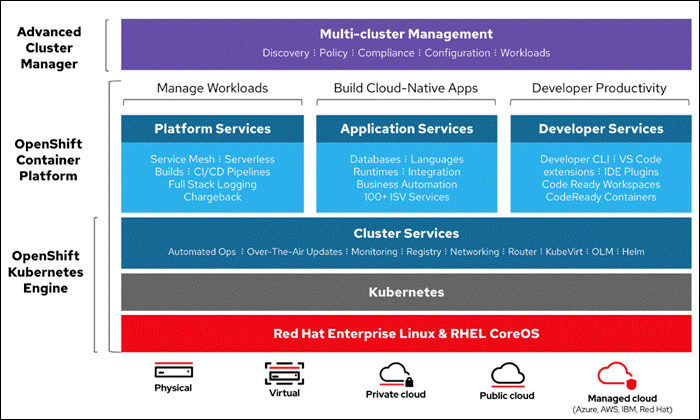

● Red Hat OpenShift Container Platform

● Red Hat Advanced Cluster Management for Kubernetes

● Portworx Enterprise Kubernetes Storage and Data Management Platform

● Infrastructure as Code with Red Hat Ansible

● NVIDIA Triton Inference Server

Cisco and Pure Storage have partnered to deliver many Cisco Validated Designs, which use best-in-class storage, server, and network components to serve as the foundation for virtualized workloads, enabling efficient architectural designs that you can deploy quickly and confidently.

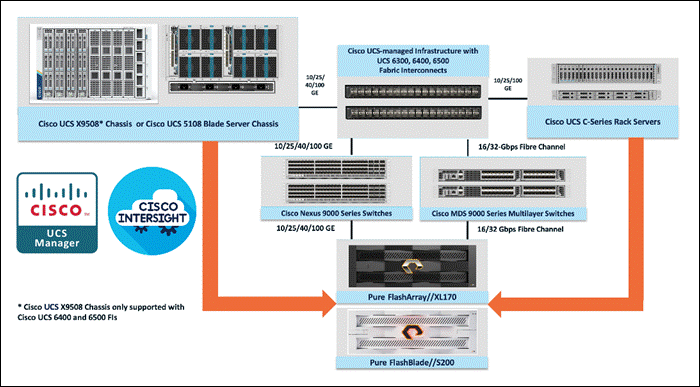

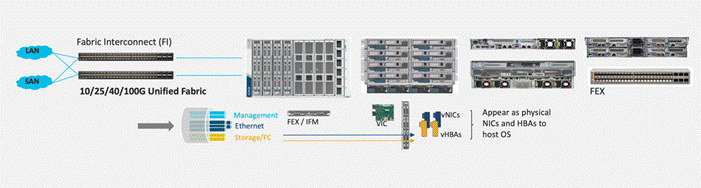

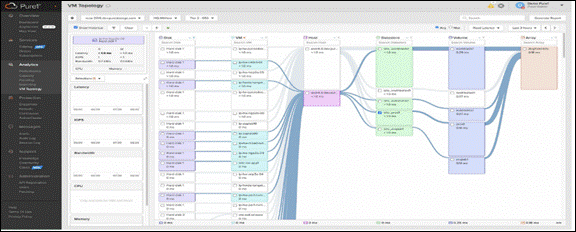

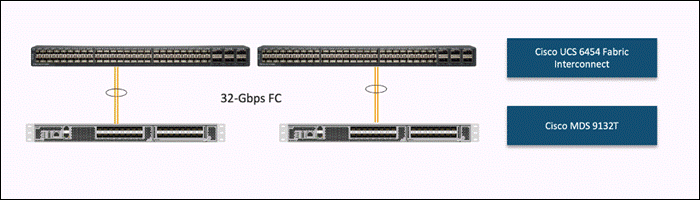

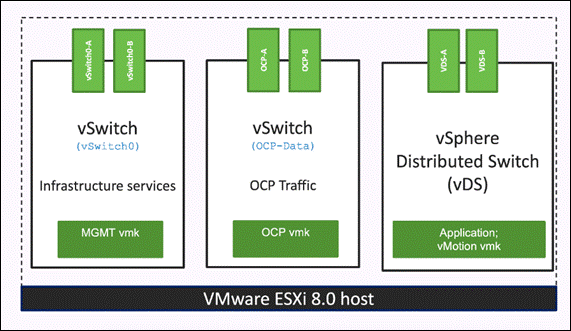

FlashStack architecture is built using the following infrastructure components for compute, network, and storage (Figure 1):

● Cisco Unified Computing System (Cisco UCS)

● Cisco Nexus switches

● Cisco MDS 9000 switches

● Pure Storage FlashArray and FlashBlade

All FlashStack components are integrated, so you can deploy the solution quickly and economically while eliminating many of the risks associated with researching, designing, building, and deploying similar solutions from the ground up. One of the main benefits of FlashStack is its ability to maintain consistency at scale. Each of the component families shown in Figure 1 (Cisco UCS, Cisco Nexus, Cisco MDS, and Pure Storage FlashArray and FlashBlade systems) offers platform and resource options to scale up or scale out the infrastructure while supporting the same features and functions.

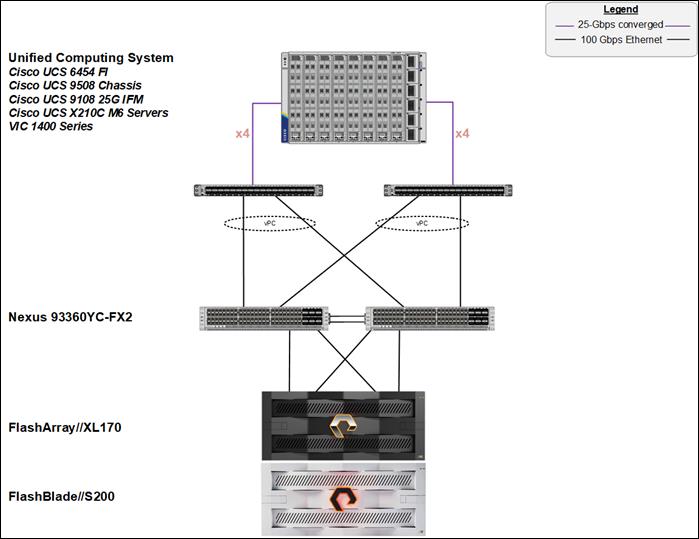

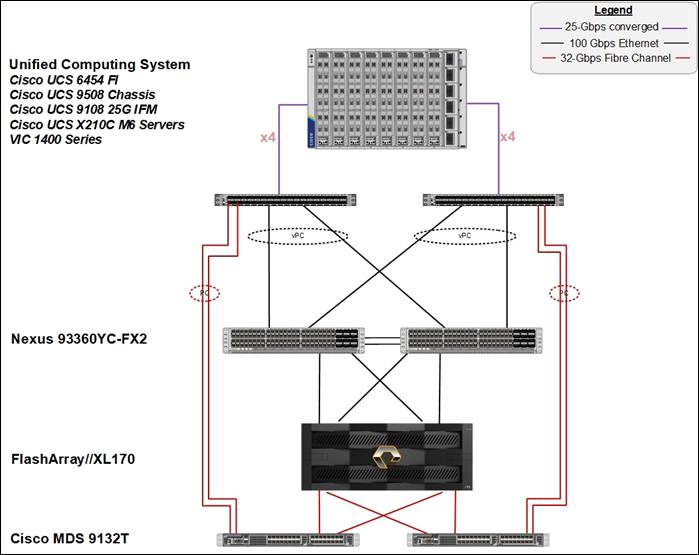

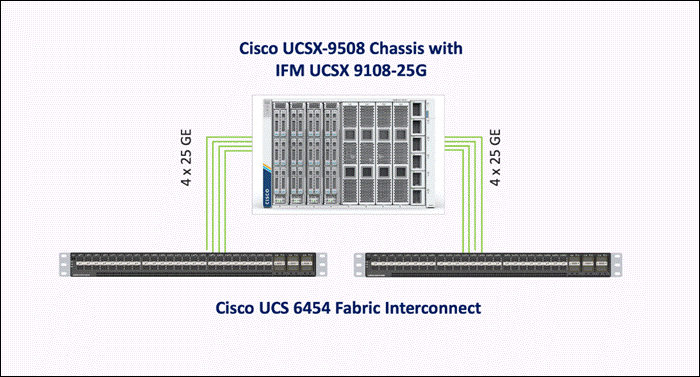

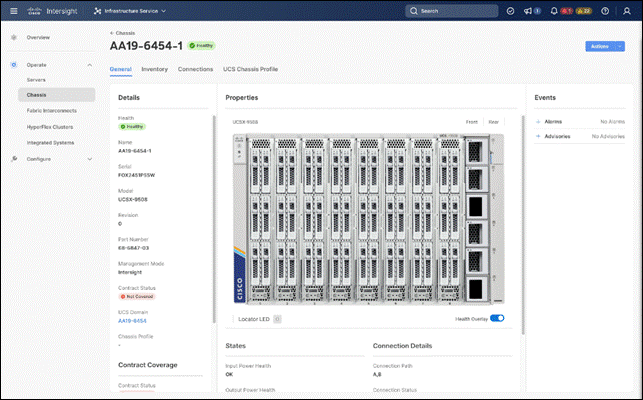

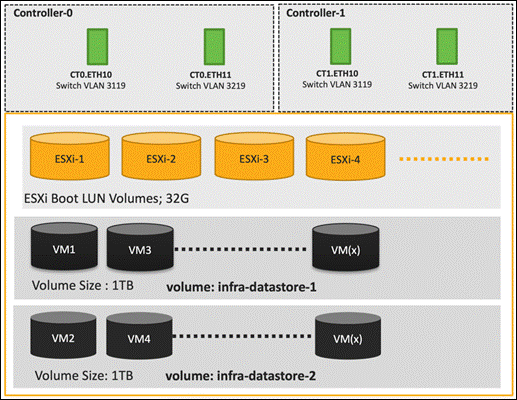

The FlashStack solution with Cisco UCS X-Series uses the following hardware components:

● Cisco UCS X9508 chassis with up to eight Cisco UCS X210c M7 compute nodes per chassis.

● Cisco UCS fourth-generation 6454 fabric interconnects to support 25- and 100-GE connectivity from various components.

● High-speed Cisco NX-OS-based Nexus 93180YC-FX3 switching design to support up to 100-GE connectivity.

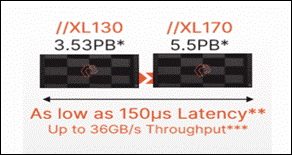

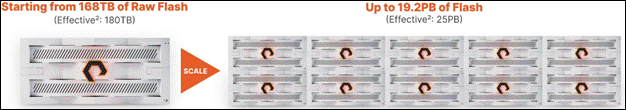

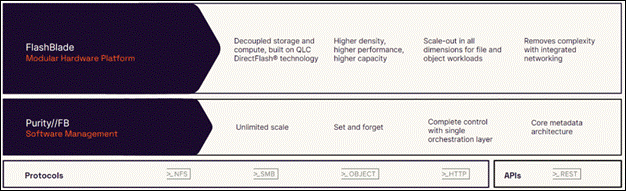

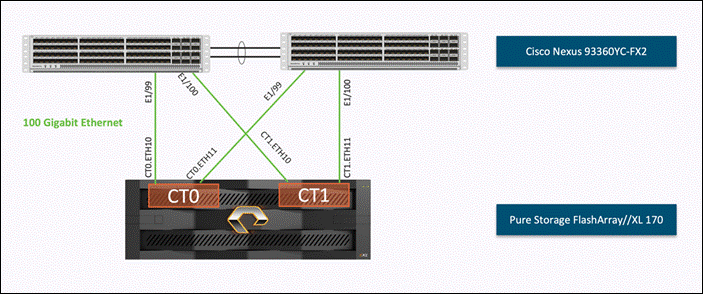

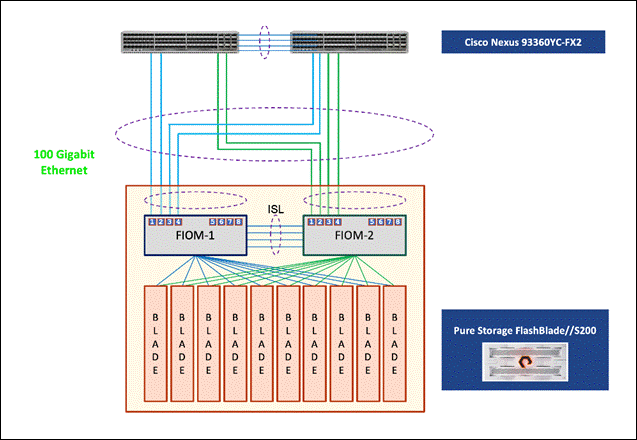

● Pure Storage FlashBlade//S500 scale-out file and object storage with 100GE connectivity to Cisco Nexus switching fabric.

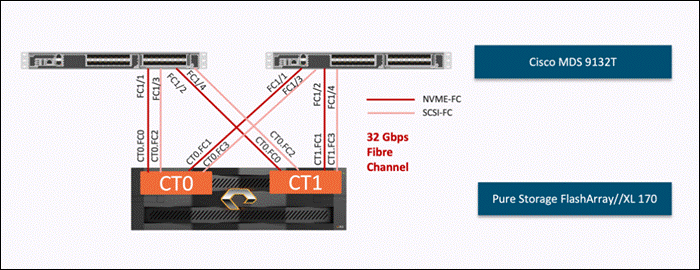

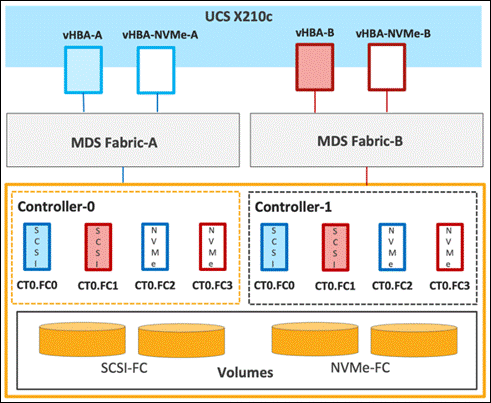

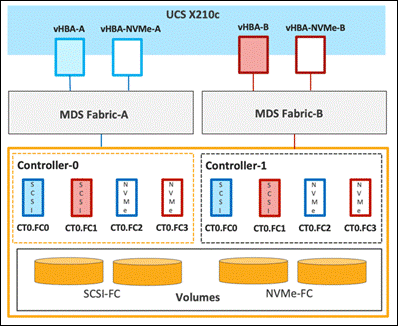

● Pure FlashArray//XL170 storage with 25GbE connectivity to Cisco Nexus switching fabric and 32Gb FC connectivity to Cisco MDS switching fabric.

The software components consist of:

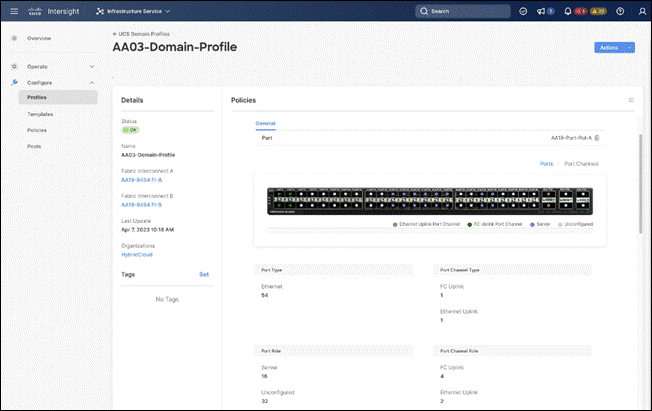

● Cisco Intersight platform to deploy, maintain, and support the FlashStack components.

● Cisco Intersight Assist virtual appliance to help connect the Pure Storage FlashArray and VMware vCenter with the Cisco Intersight platform.

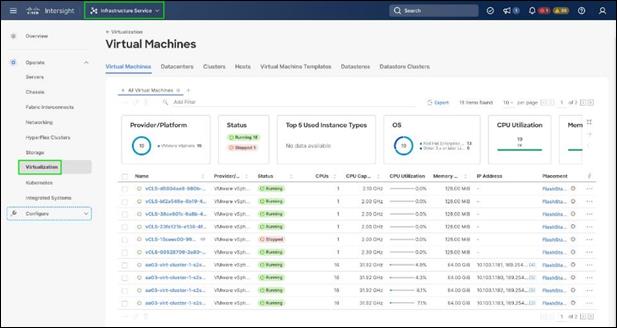

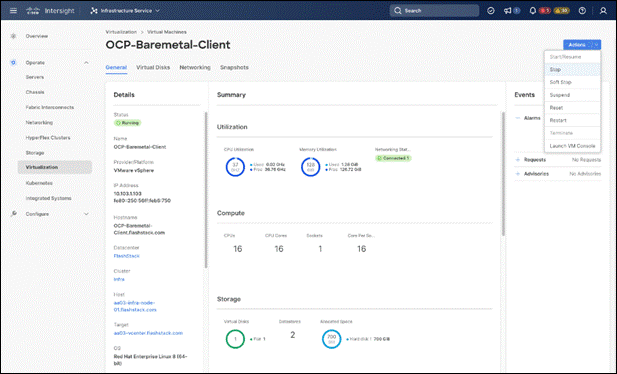

● For virtualized clusters, VMware vCenter 8.0 to set up and manage the virtual infrastructure and to integrate the virtual environment with Cisco Intersight software.

Cisco Unified Computing System

Cisco Unified Computing System (Cisco UCS) is a next-generation datacenter platform that integrates computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce total cost of ownership and increase business agility. The system integrates a low-latency, lossless 10-100 Gigabit Ethernet unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform with a unified management domain for managing all resources.

Cisco Unified Computing System consists of the following subsystems:

● Compute—The compute piece of the system incorporates servers based on Fourth Generation Intel Xeon Scalable processors. Servers are available in blade and rack form factors, managed by Cisco UCS Manager.

● Network—The integrated network fabric in the system provides a low-latency, lossless, 10/25/40/100 Gbps Ethernet fabric. Networks for LAN, SAN and management access are consolidated within the fabric. The unified fabric uses the innovative Single Connect technology to lower costs by reducing the number of network adapters, switches, and cables. This in turn lowers the power and cooling needs of the system.

● Virtualization—The system unleashes the full potential of virtualization by enhancing the scalability, performance, and operational control of virtual environments. Cisco security, policy enforcement, and diagnostic features are now extended into virtual environments to support evolving business needs.

Cisco Unified Computing System is revolutionizing the way servers are managed in the datacenter. The following are the unique differentiators of Cisco Unified Computing System and Cisco UCS Manager:

● Embedded Management—In Cisco UCS, the servers are managed by the embedded firmware in the fabric interconnects, eliminating the need for any external physical or virtual devices to manage the servers.

● Unified Fabric—In Cisco UCS, a single Ethernet cable from the blade server chassis or rack server to the fabric interconnect (FI) carries LAN, SAN, and management traffic. This converged I/O reduces the number of cables, SFPs, and adapters, reducing the capital and operational expenses of the overall solution.

● Auto Discovery—By simply inserting the blade server in the chassis or connecting the rack server to the fabric interconnect, discovery and inventory of compute resources occurs automatically without any management intervention. The combination of unified fabric and auto-discovery enables the wire-once architecture of Cisco UCS, where compute capability of Cisco UCS can be extended easily while keeping the existing external connectivity to LAN, SAN, and management networks.

Cisco UCS Manager (UCSM) provides unified, integrated management for all software and hardware components in Cisco UCS. Using Cisco Single Connect technology, it manages, controls, and administers multiple chassis for thousands of virtual machines. Administrators use the software to manage the entire Cisco Unified Computing System as a single logical entity through an intuitive graphical user interface (GUI), a command-line interface (CLI), or through a robust application programming interface (API).

Cisco Unified Computing System X-Series

The Cisco Unified Computing System X-Series (Cisco UCSX) is a modular, next-generation data center platform that builds upon the unique architecture and advantages of the previous Cisco UCS 5108 system but with the following key enhancements that simplify IT operations:

● Cloud-managed infrastructure: With Cisco UCS X-Series, the management of the network infrastructure is moved to the cloud, making it easier and simpler for IT teams to respond quickly and at scale to meet the needs of your business. The Cisco Intersight cloud-operations platform allows you to adapt the resources of the Cisco UCS X-Series Modular System to meet the specific requirements of a workload. Additionally, you can seamlessly integrate third-party devices such as Pure Storage and VMware vCenter. This integration also enables global visibility, monitoring, optimization, and orchestration for all your applications and infrastructure.

● Adaptable system designed for modern applications: Today's cloud-native and hybrid applications are dynamic and unpredictable. Application and DevOps teams frequently deploy and redeploy resources to meet evolving requirements. To address this, the Cisco UCS X-Series provides an adaptable system that doesn't lock you into a fixed set of resources. It combines the density, manageability, and efficiency of blade servers with the expandability of rack servers, allowing you to consolidate multiple workloads onto a single platform. This consolidation results in improved performance, automation, and efficiency for both hybrid and traditional data center applications.

● Platform engineered for the future: The Cisco UCS X-Series is designed to adapt to emerging technologies with minimal risk. It is a modular system that can support future generations of processors, storage, nonvolatile memory, accelerators, and interconnects. This eliminates the need to purchase, configure, maintain, power, and cool separate management modules and servers. Cloud-based management through Intersight ensures automatic updates and access to new capabilities delivered through a software-as-a-service model.

● Broad support for diverse workloads: The Cisco UCS X-Series supports a broad range of workloads, reducing the need for different products. This lowers support and training costs and gives you more flexibility in your data center environment.

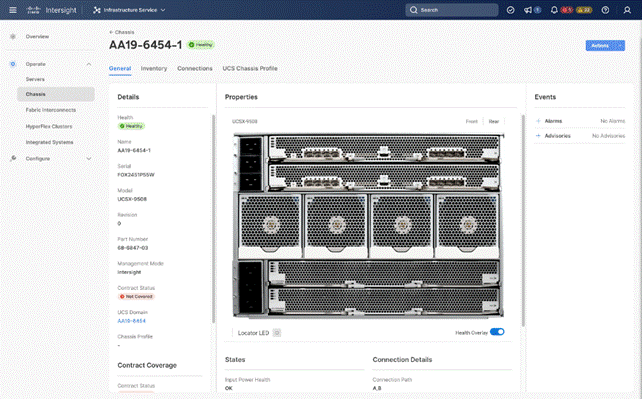

Cisco UCS X9508 Chassis

The Cisco UCS X-Series chassis is engineered to be adaptable and flexible. As shown in Figure 2, Cisco UCS X9508 chassis has only a power-distribution midplane. This innovative design provides fewer obstructions for better airflow. For I/O connectivity, vertically oriented compute nodes intersect with horizontally oriented fabric modules, allowing the chassis to support future fabric innovations. Cisco UCS X9508 Chassis’ superior packaging enables larger compute nodes, thereby providing more space for actual compute components, such as memory, GPU, drives, and accelerators. Improved airflow through the chassis enables support for higher power components, and more space allows for future thermal solutions (such as liquid cooling) without limitations.

The Cisco UCS X9508 7-Rack-Unit (7RU) chassis has eight flexible slots (Figure 3). These slots can house a combination of compute nodes and a pool of future I/O resources that may include GPU accelerators, disk storage, and nonvolatile memory.

At the top rear of the chassis are two Intelligent Fabric Modules (IFMs) that connect the chassis to upstream Cisco UCS 6500 Series Fabric Interconnects. At the bottom rear of the chassis are slots ready to house future Cisco UCS X-Series fabric modules that can flexibly connect the compute nodes with I/O devices. Six 2800W Power Supply Units (PSUs) provide 54V power to the chassis with N, N+1, and N+N redundancy. A higher voltage allows efficient power delivery with less copper and reduced power loss. Efficient, 100mm, dual counter-rotating fans deliver industry-leading airflow and power efficiency, and optimized thermal algorithms enable different cooling modes to best support your environment.
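The usable power budget under each redundancy mode follows from the PSU count and rating given above. The following sketch assumes that N+1 holds one PSU in reserve and N+N holds half of the PSUs in reserve. It ignores PSU efficiency, input-voltage derating, and the power policies that Cisco Intersight actually applies; `usable_watts` is a hypothetical helper for illustration only.

```python
PSU_WATTS = 2800  # rating of each PSU in the Cisco UCS X9508
PSU_COUNT = 6     # PSUs installed in the chassis


def usable_watts(mode: str, count: int = PSU_COUNT, watts: int = PSU_WATTS) -> int:
    """Power available to chassis components while keeping a redundancy mode.

    "N":   all PSUs share the load; no spare capacity.
    "N+1": one PSU is held in reserve.
    "N+N": half of the PSUs are held in reserve (for example, to survive
           the loss of one power feed).
    """
    reserved = {"N": 0, "N+1": 1, "N+N": count // 2}[mode]
    return (count - reserved) * watts
```

Under these assumptions, six 2800W PSUs give 16,800W in N mode, 14,000W in N+1 mode, and 8,400W in N+N mode, so choosing a stronger redundancy mode reduces the power available to compute nodes and accelerators.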

Cisco UCSX 9108-25G Intelligent Fabric Modules

For the Cisco UCS X9508 Chassis, network connectivity is provided by a pair of Cisco UCSX 9108-25G Intelligent Fabric Modules (IFMs). Like the fabric extenders used in the Cisco UCS 5108 Blade Server Chassis, these modules carry all network traffic to a pair of Cisco UCS 6400 Series Fabric Interconnects (FIs). IFMs also host the Chassis Management Controller (CMC) for chassis management. In contrast to systems with fixed networking components, the midplane-free design of the Cisco UCS X9508 enables easy upgrades to new networking technologies as they emerge, making it straightforward to accommodate new network speeds or technologies in the future.


Each IFM supports eight 25Gb uplink ports for connecting the Cisco UCS X9508 Chassis to the FIs and 32 25Gb server ports for the eight compute nodes. IFM server ports can provide up to 200 Gbps of unified fabric connectivity per compute node across the two IFMs. The uplink ports connect the chassis to the Cisco UCS FIs, providing up to 400Gbps connectivity across the two IFMs. The unified fabric carries management, VM, and Fibre Channel over Ethernet (FCoE) traffic to the FIs, where management traffic is routed to the Cisco Intersight cloud operations platform, FCoE traffic is forwarded to the native Fibre Channel interfaces through unified ports on the FI (to Cisco MDS switches), and data Ethernet traffic is forwarded upstream to the datacenter network (via Cisco Nexus switches).
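The bandwidth figures above follow directly from the port counts. The following sketch reproduces them, assuming all eight uplinks on each IFM are cabled and every port runs at 25 Gbps. `ifm_bandwidth` is a hypothetical helper, not a Cisco tool.

```python
NODES_PER_CHASSIS = 8  # compute node slots in a Cisco UCS X9508
IFMS_PER_CHASSIS = 2   # IFMs are deployed as a redundant pair


def ifm_bandwidth(uplinks: int, server_ports: int, port_gbps: int) -> dict:
    """Bandwidth figures for one chassis with a pair of identical IFMs.

    uplinks and server_ports are counted per IFM.
    """
    ports_per_node = server_ports // NODES_PER_CHASSIS  # per IFM
    server_gbps_per_ifm = server_ports * port_gbps
    uplink_gbps_per_ifm = uplinks * port_gbps
    return {
        "per_node_gbps": ports_per_node * port_gbps * IFMS_PER_CHASSIS,
        "chassis_uplink_gbps": uplink_gbps_per_ifm * IFMS_PER_CHASSIS,
        "oversubscription": server_gbps_per_ifm / uplink_gbps_per_ifm,
    }


if __name__ == "__main__":
    print(ifm_bandwidth(uplinks=8, server_ports=32, port_gbps=25))
```

With the Cisco UCSX 9108-25G values (8 uplinks and 32 server ports at 25 Gbps), the sketch returns 200 Gbps per compute node and 400 Gbps of uplink bandwidth per chassis. It also shows that each IFM exposes 800 Gbps of server-facing bandwidth against 200 Gbps of uplinks, a 4:1 server-to-uplink ratio when all nodes transmit at line rate.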

Cisco UCSX 9108-100G Intelligent Fabric Modules

The Cisco UCS 9108-100G and 9108-25G Intelligent Fabric Modules (IFMs) bring the unified fabric into the blade server enclosure, providing connectivity between the blade servers and the fabric interconnect, simplifying diagnostics, cabling, and management.

This FlashStack solution with Cisco UCS X-Series and 5th Generation Fabric technology uses the Cisco UCS 9108-100G IFM.

The Cisco UCS 9108 100G IFM connects the I/O fabric between the 6536 Fabric Interconnect and the Cisco UCS X9508 Chassis, enabling a lossless and deterministic converged fabric to connect all blades and chassis together. Because the fabric module is similar to a distributed line card, it does not perform any switching and is managed as an extension of the fabric interconnects. This approach removes switching from the chassis, reducing overall infrastructure complexity, and enabling Cisco UCS to scale to many chassis without multiplying the number of switches needed, reducing TCO, and allowing all chassis to be managed as a single, highly available management domain. The Cisco UCS 9108 100G IFM also manages the chassis environment (power supply, fans, and blades) in conjunction with the fabric interconnect. Therefore, separate chassis-management modules are not required.

Up to two IFMs plug into the rear of the Cisco UCS X9508 chassis. The IFMs provide the data path from the chassis compute nodes to the Cisco UCS 6536 Fabric Interconnects.

The IFMs serve as line cards in the chassis and multiplex data from the compute nodes to the Fabric Interconnect (FI). They also monitor and manage chassis components such as fan units, power supplies, environmental data, LED status panel, and other chassis resources. The server compute node Keyboard-Video-Mouse (KVM) data, Serial over LAN (SoL) data, and Intelligent Platform Management Interface (IPMI) data also travel to the IFMs for monitoring and management purposes. In order to provide redundancy and failover, the IFMs are always used in pairs.

Each IFM has eight QSFP28 external connectors to interface with a Cisco UCS 6536 Fabric Interconnect. Internally, the IFM provides 1 x 100G or 4 x 25G connections to each Cisco UCS X210c Compute Node in the Cisco UCS X9508 chassis.
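Compared with the 9108-25G module, whose 32 server ports at 25 Gbps share eight 25-Gbps uplinks, the 9108-100G provides as much uplink bandwidth as server-facing bandwidth. The following sketch computes these figures, assuming all eight QSFP28 ports on each IFM are cabled at 100 Gbps and each compute node uses its full 100-Gbps internal connection (either 1 x 100G or 4 x 25G). `ifm_100g_summary` is a hypothetical helper for illustration.

```python
QSFP28_GBPS = 100     # a QSFP28 port carries up to 100 Gbps
UPLINKS_PER_IFM = 8   # external QSFP28 connectors per IFM
NODES = 8             # compute node slots in a Cisco UCS X9508
IFMS = 2              # IFMs are always used in pairs


def ifm_100g_summary(node_link_gbps: int = 100) -> dict:
    """Bandwidth figures for a chassis with two Cisco UCS 9108-100G IFMs.

    node_link_gbps is the internal link from one IFM to one compute node;
    1 x 100G and 4 x 25G both give 100 Gbps.
    """
    uplink_per_ifm = UPLINKS_PER_IFM * QSFP28_GBPS
    server_per_ifm = NODES * node_link_gbps
    return {
        "uplink_per_ifm_gbps": uplink_per_ifm,
        "uplink_per_chassis_gbps": uplink_per_ifm * IFMS,
        "per_node_gbps": node_link_gbps * IFMS,
        "oversubscription": server_per_ifm / uplink_per_ifm,
    }
```

With the default values, the sketch reports 800 Gbps of uplink bandwidth per IFM, 1.6 Tbps per chassis, 200 Gbps per compute node, and a 1:1 ratio of server-facing to uplink bandwidth.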

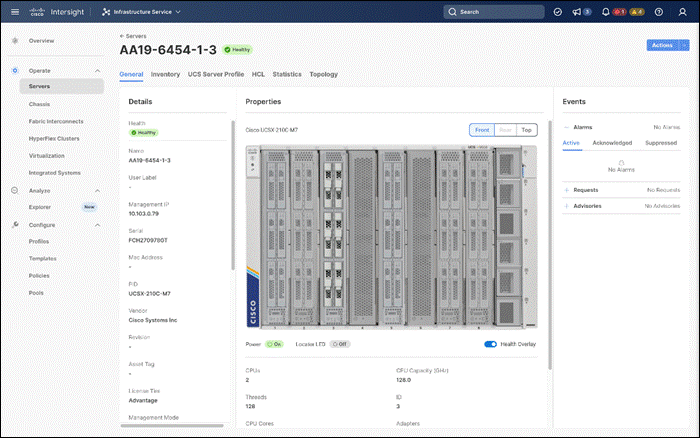

Cisco UCS X210 M7 Server

The Cisco UCS X210 M7 server is a high-performance and highly scalable server designed for data centers and enterprise environments. Some of the key benefits of this server are:

● Performance: The Cisco UCS X210 M7 server is built to deliver exceptional performance. It features 4th Gen Intel Xeon Scalable processors, providing high processing power for demanding workloads such as virtualization, database management, and analytics. The server's architecture is designed to optimize performance across a wide range of applications.

● Scalability: The Cisco UCS X210 M7 server offers flexible scalability options, allowing organizations to scale their computing resources as their needs grow. Each compute node supports up to two 4th Gen Intel Xeon Scalable processors and 32 DIMM slots, and a Cisco UCS X9508 chassis holds up to eight compute nodes, so the platform can accommodate large memory configurations and high core counts for resource-intensive applications and virtualization environments.

● Memory Capacity: The server supports a large memory footprint, making it suitable for memory-intensive workloads. It accommodates up to 8 TB of DDR5 memory across its 32 DIMM slots, providing high memory capacity for applications that require significant data processing and analysis.

● Enhanced Virtualization Capabilities: The Cisco UCS X210 M7 server is designed to optimize virtualization performance. It includes features such as Intel Virtualization Technology (VT-x) and Virtual Machine Device Queues (VMDq), which improve virtual machine density and network performance in virtualized environments. These capabilities enable organizations to consolidate their workloads and achieve efficient resource utilization.

● Simplified Management: The Cisco Unified Computing System (Cisco UCS) management software provides a unified and streamlined approach to server management. The Cisco UCS Manager software allows administrators to manage multiple servers from a single interface, simplifying operations and reducing management complexity. Additionally, the server integrates with Cisco's ecosystem of management tools, providing enhanced visibility, automation, and control.

● High Availability and Reliability: The Cisco UCS X210 M7 server is built with redundancy and fault tolerance in mind. It includes features such as hot-swappable components, redundant power supplies, and redundant fans, ensuring high availability and minimizing downtime. The server's architecture is designed to support mission-critical applications that require continuous operation.

● Energy Efficiency: Cisco UCS servers are designed to be energy-efficient. The Cisco UCS X210 M7 server incorporates power management features that optimize power usage and reduce energy consumption. This not only helps organizations reduce their carbon footprint but also lowers operating costs over time.

Note: It's important to understand that the specific benefits and features may vary depending on the configuration and usage scenario. Organizations should evaluate their specific requirements and consult with Cisco or their authorized resellers to determine how the Cisco UCS X210 M7 server can best meet their needs.

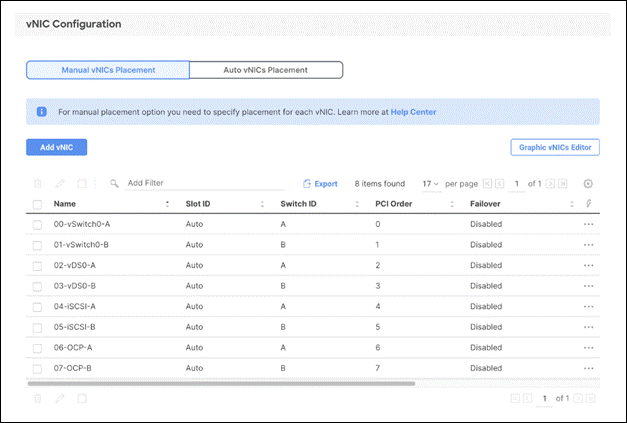

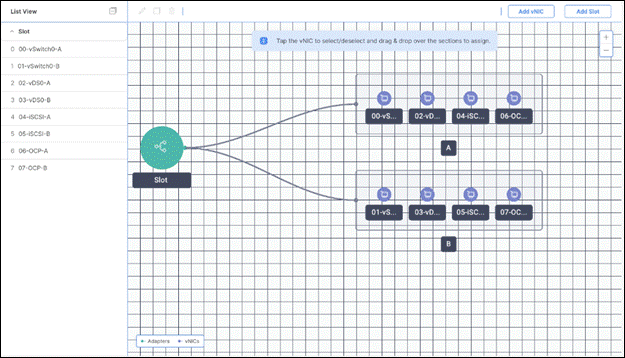

Cisco UCS Virtual Interface Cards (VICs)

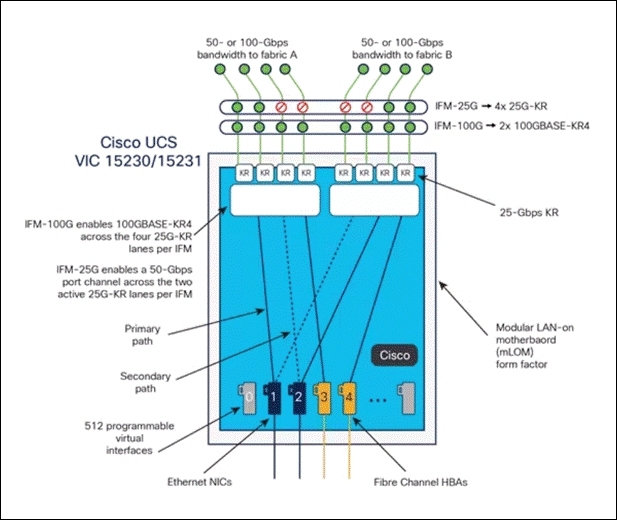

Cisco UCS X210c M7 Compute Nodes support multiple Cisco UCS VIC cards. This design uses the Cisco UCS VIC 15231 adapter.

Cisco UCS VIC 15231

Cisco UCS VIC 15231 fits the mLOM slot in the Cisco UCS X210c M7 Compute Node and enables up to 100 Gbps of unified fabric connectivity to each of the chassis IFMs, for a total of 200 Gbps of connectivity per server.
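The 200-Gbps per-server figure is one 100-Gbps VIC link to each chassis IFM. The minimal Python sketch below makes that multiplication explicit; the helper name is hypothetical, and the two-IFMs-per-chassis constant is an assumption taken from the X9508 chassis design rather than from any Cisco tool.

```python
# Hypothetical helper, not a Cisco tool: aggregate fabric bandwidth for one
# compute node whose VIC has one link to each chassis IFM.
IFMS_PER_CHASSIS = 2  # assumption: two IFMs per Cisco UCS X9508 chassis


def node_fabric_bandwidth_gbps(per_ifm_gbps: int, ifm_count: int = IFMS_PER_CHASSIS) -> int:
    """Return the total unified-fabric bandwidth (Gbps) available to one node."""
    if per_ifm_gbps <= 0 or ifm_count <= 0:
        raise ValueError("bandwidth and IFM count must be positive")
    return per_ifm_gbps * ifm_count


if __name__ == "__main__":
    # Cisco UCS VIC 15231 with 100 Gbps to each of the two IFMs.
    print(node_fabric_bandwidth_gbps(100))  # prints 200
```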

Cisco UCS VIC 15231 connectivity to the IFM-100G, and onward to the 5th Generation Cisco UCS 6536 fabric interconnects, is delivered through 2x 100-Gbps connections. The connections between Cisco UCS VIC 15231 and the IFM-25Gs in the Cisco UCS X-Series enable a 2x 50-Gbps connection per IFM.

Cisco UCS VIC 15231 supports 256 virtual interfaces (both Fibre Channel and Ethernet) along with the latest networking innovations, such as NVMe-oF over RDMA (RoCEv2) and VXLAN/NVGRE/GENEVE offload.

Cisco UCS VIC 15428

The Cisco UCS VIC 15428 is a quad-port small-form-factor pluggable (SFP+/SFP28/SFP56) mLOM card designed for Cisco UCS C-Series M6/M7 rack servers. The card supports 10/25/50-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

When a Cisco UCS rack server with a Cisco UCS VIC 15428 is connected to a fabric interconnect (FI-6536/6400/6300), the Cisco UCS VIC 15428 is provisioned through Cisco Intersight or Cisco UCS Manager (UCSM) policies. When the server is instead connected to a ToR switch such as a Cisco Nexus 9000 Series switch, the Cisco UCS VIC 15428 is provisioned through Cisco IMC or Cisco Intersight policies for a standalone server.

Cisco UCS VIC 15238

The Cisco UCS VIC 15238 is a dual-port quad small-form-factor pluggable (QSFP/QSFP28/QSFP56) mLOM card designed for Cisco UCS C-Series M6 and M7 rack servers. The card supports 40/100/200-Gbps Ethernet or FCoE. The card can present PCIe standards-compliant interfaces to the host, and these can be dynamically configured as either NICs or HBAs.

When a Cisco UCS rack server with a Cisco UCS VIC 15238 is connected to a Cisco UCS fabric interconnect (FI-6536/6300), the Cisco UCS VIC 15238 is provisioned through Cisco Intersight (IMM) or Cisco UCS Manager (UCSM) policies. When the server is instead connected to a ToR switch such as a Cisco Nexus 9000 Series switch, the Cisco UCS VIC 15238 is provisioned through Cisco IMC or Cisco Intersight policies for a Cisco UCS standalone server.
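The provisioning rules for the two rack-server VICs reduce to a small decision: which fabric interconnects each VIC is listed for, and whether the server is FI-attached or ToR-attached. The Python sketch below encodes only what the VIC 15428 and VIC 15238 descriptions state; the lookup table, function name, and string labels are hypothetical and are not a Cisco API.

```python
# Hypothetical lookup built from the VIC 15428 and VIC 15238 descriptions;
# this is not a Cisco API.
SUPPORTED_FABRIC_INTERCONNECTS = {
    "VIC 15428": {"FI-6536", "FI-6400", "FI-6300"},
    "VIC 15238": {"FI-6536", "FI-6300"},
}


def provisioning_options(vic: str, upstream: str) -> list[str]:
    """Return the tools that provision a rack-server VIC for a given upstream device."""
    if vic not in SUPPORTED_FABRIC_INTERCONNECTS:
        raise ValueError(f"unknown VIC: {vic}")
    if upstream.startswith("FI-"):
        if upstream not in SUPPORTED_FABRIC_INTERCONNECTS[vic]:
            raise ValueError(f"{vic} is not listed for {upstream}")
        # FI-attached: the server joins a managed Cisco UCS domain.
        return ["Cisco Intersight (IMM)", "Cisco UCS Manager (UCSM)"]
    # ToR-attached (for example, a Cisco Nexus 9000 switch): standalone server.
    return ["Cisco IMC", "Cisco Intersight (standalone)"]


if __name__ == "__main__":
    print(provisioning_options("VIC 15238", "FI-6536"))
    print(provisioning_options("VIC 15428", "Nexus 9000"))
```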

Cisco UCS Fabric

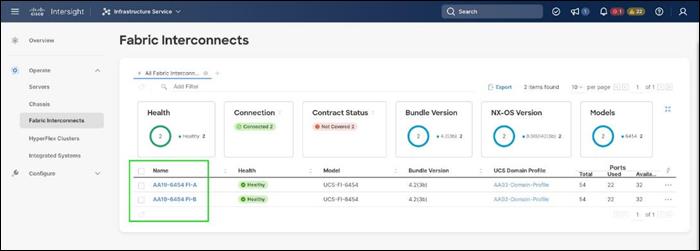

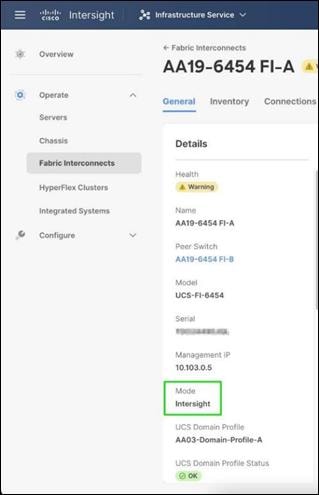

Cisco UCS 6400 Series Fabric Interconnects

The Cisco UCS Fabric Interconnects (FIs) provide a single point of connectivity and management for the entire Cisco UCS system. Typically deployed as an active/active pair, the system’s FIs integrate all components into a single, highly available management domain controlled by the Cisco UCS Manager or Cisco Intersight. Cisco UCS FIs provide a single unified fabric for the system, with low-latency, lossless, cut-through switching that supports LAN, SAN, and management traffic using a single set of cables.


The Cisco UCS 6454 utilized in the current design is a 54-port fabric interconnect. This 1RU device includes 28 10/25-Gbps Ethernet ports, four 1/10/25-Gbps Ethernet ports, six 40/100-Gbps Ethernet uplink ports, and 16 unified ports that can support 10/25-Gigabit Ethernet or 8/16/32-Gbps Fibre Channel, depending on the SFP.
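As a quick sanity check, the four port groups listed for the Cisco UCS 6454 add up to its 54-port total. The short Python snippet below is illustrative only; the dictionary keys are descriptive labels chosen for this example, not Cisco port names.

```python
# Port mix of the Cisco UCS 6454 as listed above (illustrative check only).
UCS_6454_PORTS = {
    "10/25-Gbps Ethernet": 28,
    "1/10/25-Gbps Ethernet": 4,
    "40/100-Gbps Ethernet uplink": 6,
    "unified (10/25-GbE or 8/16/32-Gbps FC)": 16,
}

# Sum every port group to get the fabric interconnect's total port count.
total_ports = sum(UCS_6454_PORTS.values())

if __name__ == "__main__":
    print(f"Cisco UCS 6454 total ports: {total_ports}")  # prints "Cisco UCS 6454 total ports: 54"
```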

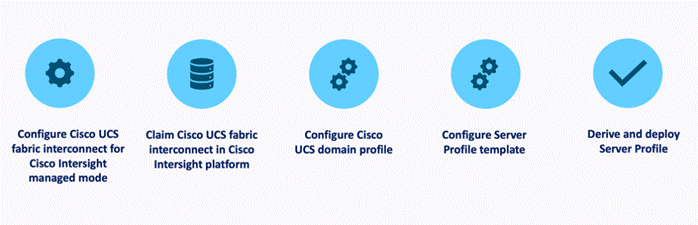

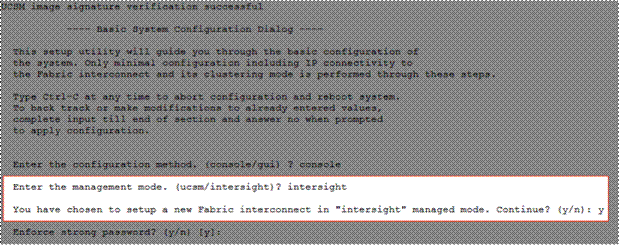

Note: To support the Cisco UCS X-Series, the fabric interconnects must be configured in Intersight Managed Mode (IMM). This option replaces the local management with Cisco Intersight cloud or appliance-based management.
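The IMM requirement can be expressed as a simple pre-deployment check. The Python sketch below is a hypothetical validation under stated assumptions: `FabricDomain` and `validate_domain` are illustrative names, the domain names in the usage lines are made up, and nothing here calls Cisco Intersight.

```python
from dataclasses import dataclass


@dataclass
class FabricDomain:
    """Hypothetical model of a fabric interconnect pair (not an Intersight object)."""
    name: str
    management_mode: str  # "IMM" (Intersight Managed Mode) or "UCSM"
    has_x_series: bool


def validate_domain(domain: FabricDomain) -> None:
    """Raise if a domain with Cisco UCS X-Series hardware is not in IMM."""
    if domain.has_x_series and domain.management_mode != "IMM":
        raise ValueError(
            f"{domain.name}: Cisco UCS X-Series requires Intersight Managed Mode (IMM)"
        )


if __name__ == "__main__":
    validate_domain(FabricDomain("fi-pair-a", "IMM", has_x_series=True))  # passes
    try:
        validate_domain(FabricDomain("fi-pair-b", "UCSM", has_x_series=True))
    except ValueError as err:
        print(err)
```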

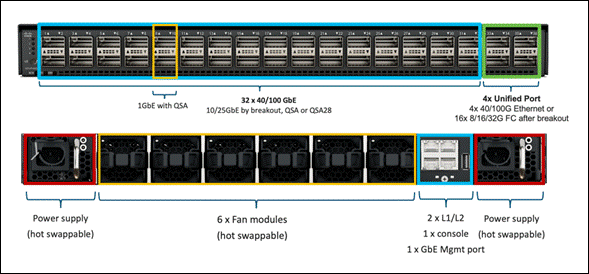

5th Generation Cisco UCS Fabric Interconnects


The Cisco UCS 6536 Fabric Interconnect utilized in the current design is a one-rack-unit (1RU) 1/10/25/40/100 Gigabit Ethernet, FCoE, and Fibre Channel switch offering up to 7.42 Tbps of throughput and up to 36 ports. The switch has 32 40/100-Gbps Ethernet ports and four unified ports that can support 40/100-Gbps Ethernet or, after breakout, 16 Fibre Channel ports at 8/16/32-Gbps FC speeds. The 16 FC ports after breakout can operate as FC uplink or FC storage ports. The switch also supports two ports at 1-Gbps speed using QSA, and all 36 ports can break out for 10- or 25-Gbps Ethernet connectivity. All Ethernet ports can support FCoE.
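The breakout figures follow from a four-way split per port: four unified ports become 16 FC ports. The Python sketch below reproduces that arithmetic under the assumption of a 4x breakout per port (implied by the 4-to-16 FC figure); the Ethernet value it computes is only a theoretical arithmetic upper bound, and supported breakout counts should be confirmed in the Cisco UCS 6536 data sheet.

```python
# Breakout arithmetic for the Cisco UCS 6536 using the figures above.
# Assumption: a breakout splits one port into four lanes, which is how the
# four unified ports yield 16 FC ports.
TOTAL_PORTS = 36
UNIFIED_PORTS = 4
LANES_PER_BREAKOUT = 4

fc_ports_after_breakout = UNIFIED_PORTS * LANES_PER_BREAKOUT
# Theoretical upper bound if every port were broken out to 10/25-GbE.
max_breakout_ethernet_ports = TOTAL_PORTS * LANES_PER_BREAKOUT

if __name__ == "__main__":
    print(fc_ports_after_breakout)      # prints 16
    print(max_breakout_ethernet_ports)  # prints 144
```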

The Cisco UCS 6536 Fabric Interconnect (FI) is a core part of the Cisco Unified Computing System, providing both network connectivity and management capabilities for the system. The Cisco UCS 6536 Fabric Interconnect offers line-rate, low-latency, lossless 10/25/40/100 Gigabit Ethernet, Fibre Channel, NVMe over Fabric, and Fibre Channel over Ethernet (FCoE) functions.

The Cisco UCS 6536 Fabric Interconnect provides the communication backbone and management connectivity for the Cisco UCS X-Series compute nodes, Cisco UCS X9508 X-series chassis, Cisco UCS B-Series blade servers, Cisco UCS 5108 B-Series server chassis, and Cisco UCS C-Series rack servers. All servers attached to a Cisco UCS 6536 Fabric Interconnect become part of a single, highly available management domain. In addition, by supporting a unified fabric, Cisco UCS 6536 Fabric Interconnect provides both LAN and SAN connectivity for all servers within its domain.

From a networking perspective, the Cisco UCS 6536 uses a cut-through architecture, supporting deterministic, low-latency, line-rate 10/25/40/100 Gigabit Ethernet ports and a switching capacity of 7.42 Tbps per FI and 14.84 Tbps per unified fabric domain, independent of packet size and enabled services. It enables 1600 Gbps of bandwidth per X9508 chassis with the X9108-IFM-100G, in addition to end-to-end 100G Ethernet and 200G of aggregate bandwidth per X210c compute node. With the X9108-IFM-25G and the IOM 2408, it enables 400 Gbps of bandwidth per chassis per FI domain. The product family supports Cisco low-latency, lossless 10/25/40/100 Gigabit Ethernet unified network fabric capabilities, which increase the reliability, efficiency, and scalability of Ethernet networks. The 6536 Fabric Interconnect supports multiple traffic classes over a lossless Ethernet fabric from the server through the fabric interconnect. Significant TCO savings come from the unified-fabric-optimized server design, in which network interface cards (NICs), host bus adapters (HBAs), cables, and switches can be consolidated.
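The chassis and domain bandwidth figures can be reproduced with simple multiplication. The Python sketch below assumes two IFMs (or IOMs) per chassis with eight uplink ports each; those two constants come from Cisco UCS hardware specifications rather than from this paragraph, and the function names are hypothetical.

```python
# Assumptions (Cisco UCS hardware, not stated in this paragraph):
# two IFMs (or IOMs) per chassis, each with eight uplink ports.
IFMS_PER_CHASSIS = 2
UPLINKS_PER_IFM = 8
FI_SWITCHING_TBPS = 7.42  # per Cisco UCS 6536, from the text


def chassis_uplink_gbps(port_speed_gbps: int) -> int:
    """Aggregate chassis-to-FI bandwidth across both IFMs."""
    return IFMS_PER_CHASSIS * UPLINKS_PER_IFM * port_speed_gbps


def domain_switching_tbps(fis_per_domain: int = 2) -> float:
    """Switching capacity of a unified fabric domain built from a pair of FIs."""
    return round(fis_per_domain * FI_SWITCHING_TBPS, 2)


if __name__ == "__main__":
    print(chassis_uplink_gbps(100))  # X9108-IFM-100G: prints 1600
    print(chassis_uplink_gbps(25))   # X9108-IFM-25G or IOM 2408: prints 400
    print(domain_switching_tbps())   # prints 14.84
```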

Cisco UCS Unified Fabric: I/O Consolidation

The Cisco UCS 6536 Fabric Interconnect is built to consolidate LAN and SAN traffic onto a single unified fabric, saving on the Capital Expenditures (CapEx) and Operating Expenses (OpEx) associated with multiple parallel networks, different types of adapter cards, switching infrastructure, and cabling within racks. The unified ports allow ports in the fabric interconnect to support direct connections from Cisco UCS to existing native Fibre Channel SANs. The capability to connect to native Fibre Channel SANs protects existing storage-system investments while dramatically simplifying in-rack cabling.

The Cisco UCS 6536 Fabric Interconnect supports I/O consolidation, with end-to-end network virtualization, visibility, and QoS guarantees, for the following LAN and SAN traffic:

● FC SAN, IP storage (iSCSI, NFS), and NVMe-oF (NVMe/FC, NVMe/TCP, NVMe over RoCEv2)

● Server management and LAN traffic

The I/O consolidation under the Cisco UCS 6536 fabric interconnect along with the stateless policy-driven architecture of Cisco UCS and the hardware acceleration of the Cisco UCS Virtual Interface card provides great simplicity, flexibility, resiliency, performance, and TCO savings for the customer’s compute infrastructure.

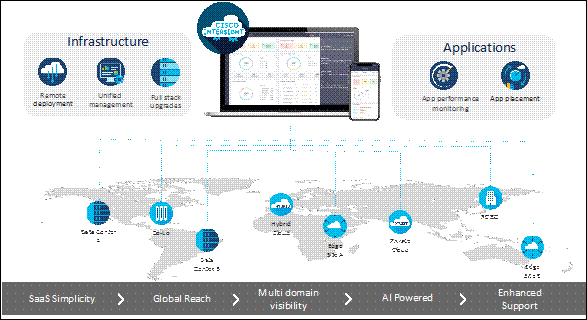

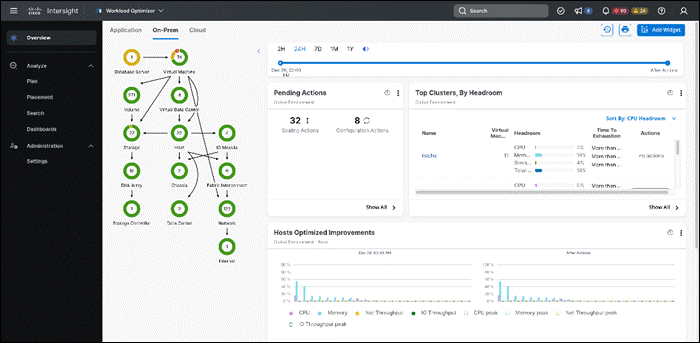

As applications and data become more distributed, from the core datacenter and edge locations to public clouds, a centralized management platform is essential. IT agility will struggle without a consolidated view of the infrastructure resources and centralized operations. Cisco Intersight provides a cloud-hosted management and analytics platform for all Cisco UCS and other supported third-party infrastructure across the globe. It provides an efficient way of deploying, managing, and upgrading infrastructure in datacenter, ROBO, edge, and co-location environments.

Cisco Intersight provides:

● No Impact Transition: The embedded connector within Cisco UCS allows you to start consuming benefits without a forklift upgrade.

● SaaS/Subscription Model: The SaaS model provides centralized, cloud-scale management and operations across hundreds of sites around the globe without the administrative overhead of managing the platform.

● Enhanced Support Experience: The hosted platform enables Cisco to address issues platform-wide, and the experience extends into TAC-supported platforms.

● Unified Management: A single pane of glass, consistent operations model, and experience for managing all systems and solutions.

● Programmability: End-to-end programmability, with a native API, SDKs, and popular DevOps toolsets, enables customers to consume Cisco Intersight natively.

● Single point of automation: Automation using Ansible, Terraform and other tools can be done through Cisco Intersight for all systems it manages.

● Recommendation Engine: Our approach of visibility, insight, and action, powered by machine intelligence and analytics, provides real-time recommendations with agility and scale. The embedded recommendation platform draws on insights sourced from across the Cisco install base and is tailored to each customer.

The main benefits of Cisco Intersight infrastructure services are as follows:

● Simplify daily operations by automating many daily manual tasks.

● Combine the convenience of a SaaS platform with the capability to connect from anywhere and manage infrastructure through a browser or mobile app.

● Stay ahead of problems and accelerate trouble resolution through advanced support capabilities.

● Gain global visibility of infrastructure health and status along with advanced management and support capabilities.

● Upgrade to add workload optimization when needed.

In this solution, Cisco Intersight unifies and simplifies the hybrid cloud operations of FlashStack datacenter components wherever they are deployed.

Cisco Intersight Virtual Appliance and Private Virtual Appliance

In addition to the SaaS deployment model running on Intersight.com, on-premises options can be purchased separately. The Cisco Intersight Virtual Appliance and Cisco Intersight Private Virtual Appliance are available for organizations that have additional data locality or security requirements for managing systems. The Cisco Intersight Virtual Appliance delivers the management features of the Cisco Intersight platform in an easy-to-deploy VMware Open Virtualization Appliance (OVA) or Microsoft Hyper-V Server virtual machine that allows you to control the system details that leave your premises. The Cisco Intersight Private Virtual Appliance is provided in a form factor specifically designed for users who operate in disconnected (air gap) environments. The Private Virtual Appliance requires no connection to public networks or back to Cisco to operate.
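The choice among the three Cisco Intersight deployment options follows from two questions in the paragraph above: is the environment air-gapped, and are there data locality or security requirements? The Python sketch below is a simplified, hypothetical decision rule; the enum and function names are illustrative, and real sizing and licensing considerations are out of scope.

```python
from enum import Enum


class IntersightDeployment(Enum):
    SAAS = "Cisco Intersight SaaS (Intersight.com)"
    VIRTUAL_APPLIANCE = "Cisco Intersight Virtual Appliance"
    PRIVATE_VIRTUAL_APPLIANCE = "Cisco Intersight Private Virtual Appliance"


def choose_deployment(air_gapped: bool, data_locality_or_security: bool) -> IntersightDeployment:
    """Simplified, hypothetical decision rule based on the paragraph above."""
    if air_gapped:
        # Operates with no connection to public networks or back to Cisco.
        return IntersightDeployment.PRIVATE_VIRTUAL_APPLIANCE
    if data_locality_or_security:
        # On-premises OVA or Hyper-V VM that controls what leaves the premises.
        return IntersightDeployment.VIRTUAL_APPLIANCE
    return IntersightDeployment.SAAS


if __name__ == "__main__":
    print(choose_deployment(air_gapped=False, data_locality_or_security=True).value)
```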

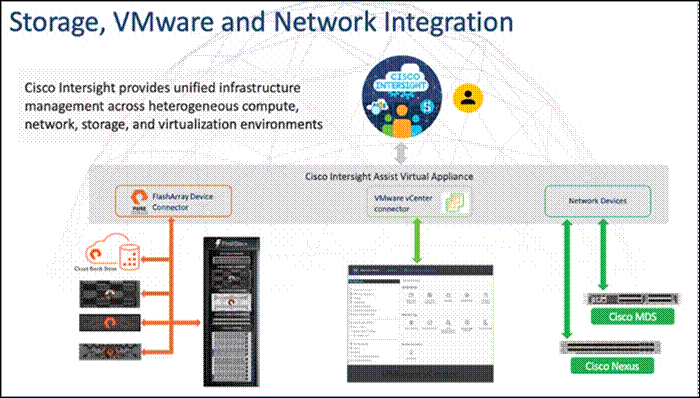

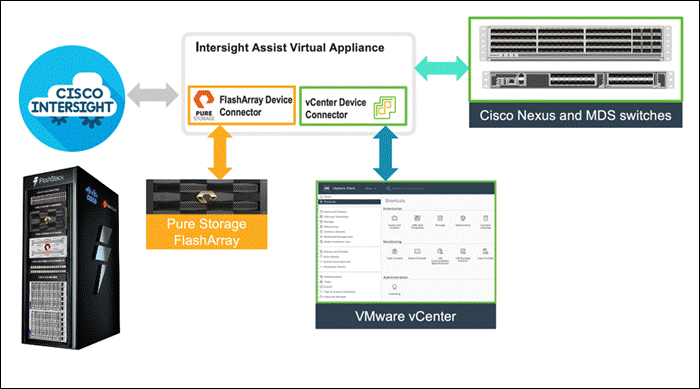

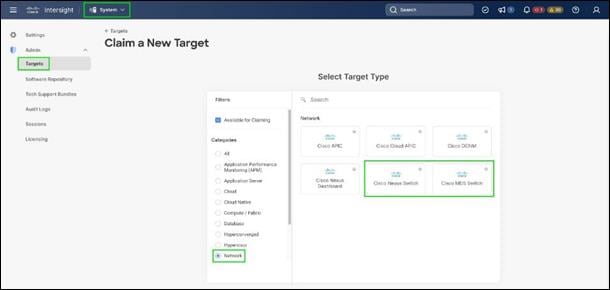

Cisco Intersight Assist and Device Connectors

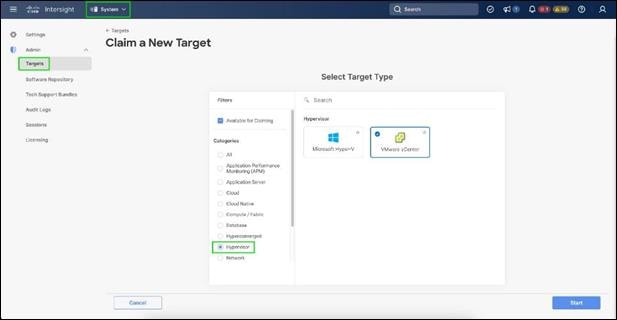

Cisco Intersight Assist helps customers add endpoint devices to Cisco Intersight. A datacenter could have multiple devices that do not connect directly with Cisco Intersight. Any device that is supported by Cisco Intersight but does not connect to it directly needs Cisco Intersight Assist to provide the necessary connectivity. In FlashStack, VMware vCenter and Pure Storage FlashArray connect to Intersight with the help of the Cisco Intersight Assist appliance.

Cisco Intersight Assist is available within the Cisco Intersight Virtual Appliance, which is distributed as a deployable virtual machine contained within an Open Virtual Appliance (OVA) file format.

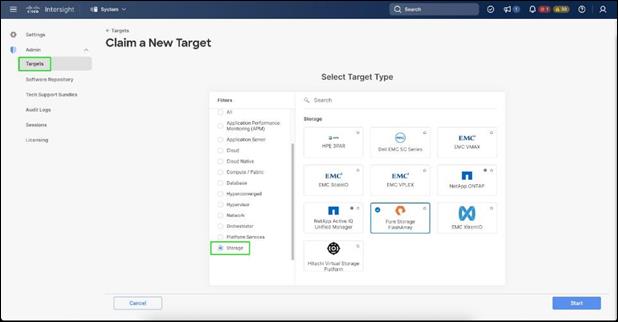

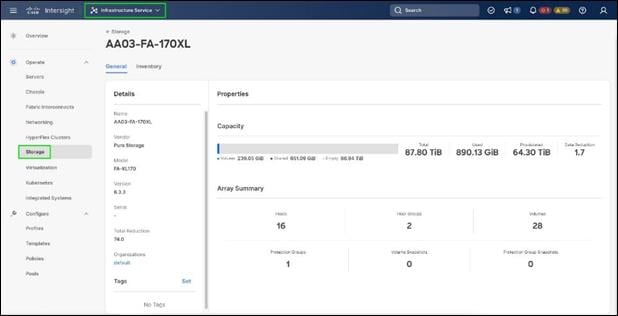

Cisco Intersight integrates with VMware vCenter and Pure Storage FlashArray as follows:

● Cisco Intersight uses the device connector running within a Cisco Intersight Assist virtual appliance to communicate with VMware vCenter.

● Cisco Intersight uses the device connector running within a Cisco Intersight Assist virtual appliance to integrate with Pure Storage FlashArray//XL170.
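The integration pattern above amounts to a routing rule: Cisco UCS reaches Intersight through its embedded device connector, while vCenter and FlashArray are reached through the device connector in Cisco Intersight Assist. The Python sketch below models only the targets named in this section; the sets, the function name, and the returned labels are hypothetical.

```python
# Simplified, hypothetical model of how FlashStack targets reach Cisco Intersight.
# Cisco UCS has an embedded device connector; VMware vCenter and Pure Storage
# FlashArray are integrated through the connector in Cisco Intersight Assist.
EMBEDDED_CONNECTOR_TARGETS = {"Cisco UCS"}
ASSIST_TARGETS = {"VMware vCenter", "Pure Storage FlashArray"}


def connection_path(target: str) -> str:
    """Return how a target connects to Cisco Intersight in this model."""
    if target in EMBEDDED_CONNECTOR_TARGETS:
        return "direct (embedded device connector)"
    if target in ASSIST_TARGETS:
        return "via Cisco Intersight Assist"
    raise ValueError(f"{target} is not modeled in this sketch")


if __name__ == "__main__":
    for name in ("Cisco UCS", "VMware vCenter", "Pure Storage FlashArray"):
        print(f"{name}: {connection_path(name)}")
```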

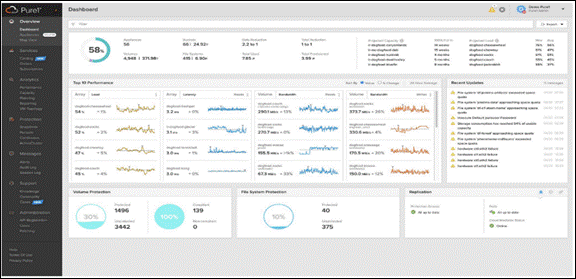

The device connector provides a safe way for connected targets to send information and receive control instructions from the Cisco Intersight portal using a secure Internet connection. The integration brings the full value and simplicity of Cisco Intersight infrastructure management service to VMware hypervisor and FlashArray storage environments. The integration architecture enables FlashStack customers to use new management capabilities with no compromise in their existing VMware or FlashArray operations. IT users will be able to manage heterogeneous infrastructure from a centralized Cisco Intersight portal. At the same time, the IT staff can continue to use VMware vCenter and the Pure Storage dashboard for comprehensive analysis, diagnostics, and reporting of virtual and storage environments. The next section addresses the functions that this integration provides.

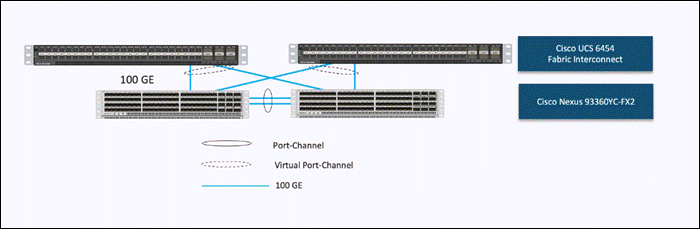

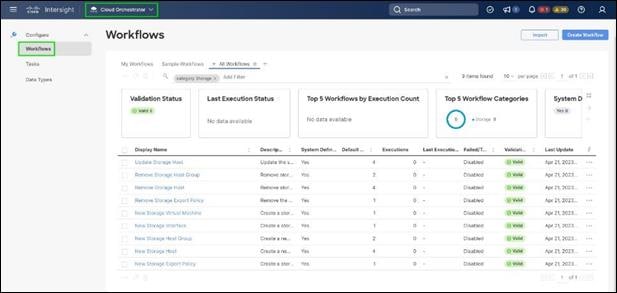

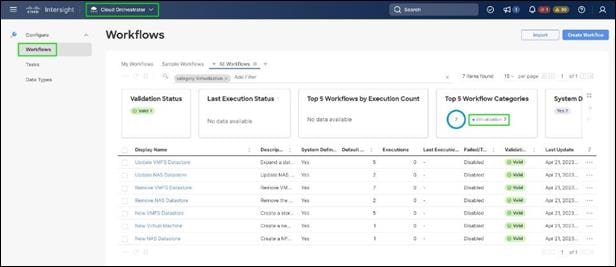

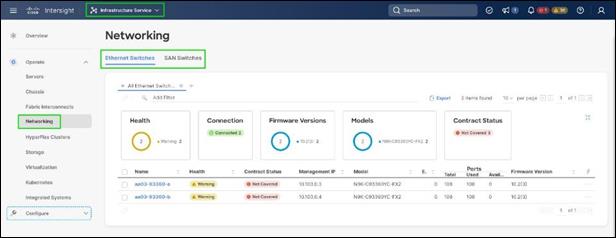

The Cisco Nexus 9000 Series Switches offer both modular and fixed 1/10/25/40/100 Gigabit Ethernet switch configurations with scalability up to 60 Tbps of nonblocking performance with less than five-microsecond latency, wire speed VXLAN gateway, bridging, and routing support.
