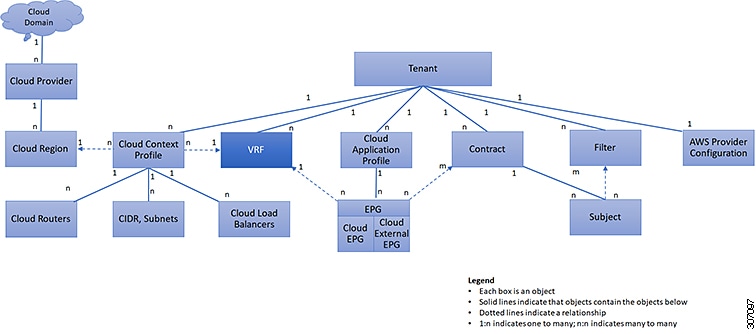

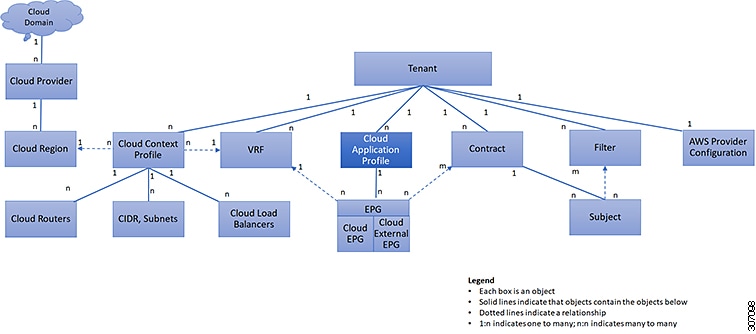

In Cisco APIC, a pre-defined tenant (the tenant common) is available to provide common services to all tenants, such as shared L3Out, private networks, DNS, DHCP, and Active Directory.

Prior to release 26.0(3), endpoints in an on-premises ACI tenant, and endpoints in a user tenant using networking resources from the on-premises tenant common, could not communicate with endpoints in the cloud user tenant. Beginning with release 26.0(3), support is available for inter-tenant shared services between the on-premises tenant common and cloud user tenants.

Cisco Cloud Network Controller, used in conjunction with Nexus Dashboard Orchestrator, supports inter-tenant shared services in a hybrid cloud environment. This allows you to deploy resources in on-premises tenants and cloud tenants, with the contracts deployed in tenant common. The tenant common still exists on the Cloud Network Controller; however, it is not associated with any cloud account. It is used only to store filters and contracts that can later be used for a shared service policy. Beginning with release 26.0(3), you can have resources in the on-premises Cisco APIC tenant common for both application EPGs and external EPGs, and you can use inter-tenant shared services in a hybrid cloud environment.

For example, assume that you already have an on-premises Cisco APIC tenant common deployed with a VRF. You can have a bridge domain or EPG in the tenant common as you normally would, or you can now create a new user tenant that uses the VRF and bridge domain in the tenant common.

Prior to release 26.0(3), the following variants of the standard tenant were supported:

With this update in release 26.0(3), the following variants of the on-premises ACI tenant common are also supported:

- Regular EPG in the tenant common to a cloud tenant
- External EPG in the tenant common to a cloud tenant
- Regular EPG in a user tenant with a bridge domain and VRF in the tenant common to a cloud tenant
- External EPG in a user tenant with a VRF in the tenant common to a cloud tenant
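To make the four variants concrete, the following Python sketch classifies the on-premises side of a shared-service relationship by which tenant owns the EPG, the bridge domain, and the VRF. It is a hypothetical illustration only: the `OnPremEpg` record, the `common_tenant_variant()` helper, and the tenant name `tenant-a` are assumptions made for this example and are not part of any Cisco APIC or Nexus Dashboard Orchestrator API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model for illustration; not a Cisco APIC or NDO API.
COMMON = "common"  # the pre-defined tenant common


@dataclass(frozen=True)
class OnPremEpg:
    kind: str                        # "application" or "external"
    epg_tenant: str                  # tenant that owns the EPG
    vrf_tenant: str                  # tenant that owns the VRF
    bd_tenant: Optional[str] = None  # tenant that owns the bridge domain (application EPGs only)


def common_tenant_variant(epg: OnPremEpg) -> Optional[str]:
    """Return the matching tenant-common variant, or None if the EPG does not
    match any of the four variants added in release 26.0(3)."""
    if epg.vrf_tenant != COMMON:
        return None  # every one of the four variants uses a VRF in tenant common
    if epg.kind == "application":
        if epg.epg_tenant == COMMON:
            return "Regular EPG in the tenant common"
        if epg.bd_tenant == COMMON:
            return "Regular EPG in a user tenant with a bridge domain and VRF in the tenant common"
    elif epg.kind == "external":
        if epg.epg_tenant == COMMON:
            return "External EPG in the tenant common"
        return "External EPG in a user tenant with a VRF in the tenant common"
    return None


# Example: an external EPG in a hypothetical user tenant that uses a VRF in tenant common.
print(common_tenant_variant(OnPremEpg("external", "tenant-a", COMMON)))
```

A result of `None` only means the EPG does not match one of these four tenant-common variants; the sketch does not check any other configuration rule.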

Use Cases

This section describes several use case examples related to the support for inter-tenant shared services in hybrid cloud environments in release 26.0(3).

On-Premises Cisco APIC Tenant Common Use Case

In this use case, an on-premises Cisco APIC tenant common is deployed with either or both of these configurations:

There is also a contract configured with a user tenant in a cloud site.

The user tenant in the cloud site can be stretched to all sites, including the on-premises site and other cloud sites, and traffic still flows between the on-premises tenant common and the user tenant across all sites.

| Site1: On-Premises Site | Site2: Cloud Site |
|---|---|
| VRF in tenant common in Site1: VRF1 | VRF in tenant in Site2: VRF2 |
| EPG in Site1: EPG1 | EPG in Site2: EPG2 |
| Tenant in Site2 stretched to Site1 | Tenant common in Site1 available in Site2 |
| External EPG available in VRF1 in tenant common | External EPG can be created on Site1 |
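The relationships in this table can be written down as plain data. The following Python sketch is a hypothetical illustration, not a Cisco API: it describes EPG1 and EPG2 from the table and checks that the contract between them is defined in tenant common and links EPGs in different tenants, one on the on-premises site and one on the cloud site. The cloud tenant name `cloud-tenant`, the contract name `common-to-cloud`, and the choice of EPG1 as provider and EPG2 as consumer are assumptions; the use case does not state the contract direction.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical model of the use case; not a Cisco APIC or NDO API.


@dataclass(frozen=True)
class Epg:
    name: str
    tenant: str  # tenant that owns the EPG
    vrf: str     # VRF the EPG belongs to
    site: str    # site where the EPG is deployed


@dataclass(frozen=True)
class Contract:
    name: str
    tenant: str  # tenant in which the contract and its filters are defined
    provider: Epg
    consumer: Epg


def is_hybrid_common_shared_service(contract: Contract, site_types: Dict[str, str]) -> bool:
    """Return True if the contract is defined in tenant common and links EPGs
    in different tenants, one on an on-premises site and one on a cloud site."""
    a, b = contract.provider, contract.consumer
    site_kinds = {site_types[a.site], site_types[b.site]}
    return (
        contract.tenant == "common"
        and a.tenant != b.tenant
        and site_kinds == {"on-premises", "cloud"}
    )


SITE_TYPES = {"Site1": "on-premises", "Site2": "cloud"}
epg1 = Epg("EPG1", tenant="common", vrf="VRF1", site="Site1")
epg2 = Epg("EPG2", tenant="cloud-tenant", vrf="VRF2", site="Site2")  # hypothetical tenant name
# Hypothetical contract name; provider/consumer direction is assumed.
shared = Contract("common-to-cloud", tenant="common", provider=epg1, consumer=epg2)

print(is_hybrid_common_shared_service(shared, SITE_TYPES))
```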

Site User Tenants Use Case

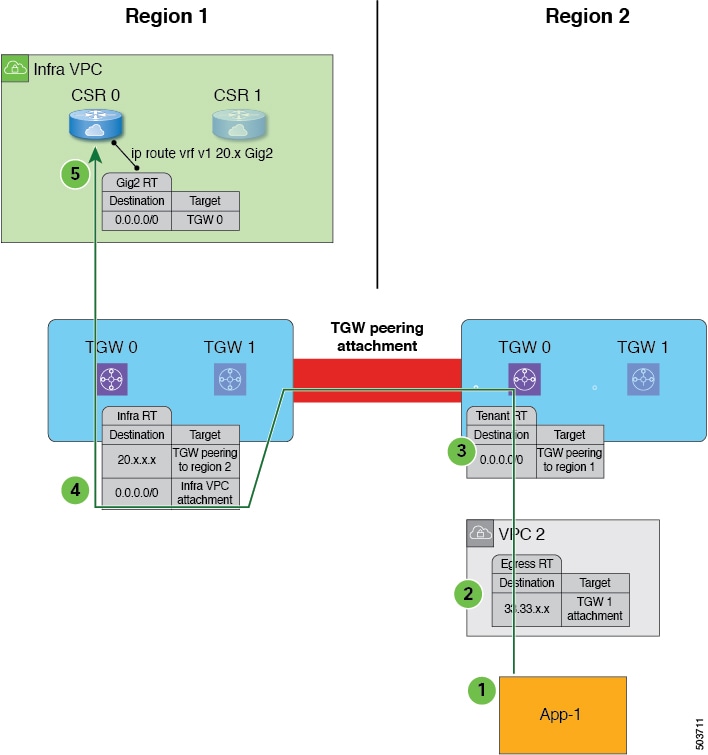

In this use case, a tenant (Tenant1) is deployed only in Site1, which is either an on-premises site or a cloud site, and another tenant (Tenant2) is deployed only in Site2, which is a cloud site. A contract is shared across the tenants.

| Site1: On-Premises or Cloud Site | Site2: Cloud Site |
|---|---|
| VRF in tenant (Tenant1) in Site1: VRF1 | VRF in tenant (Tenant2) in Site2: VRF2 |
| EPG in Site1: EPG1 | EPG in Site2: EPG2 |
| Tenant2 in Site2 stretched to Site1 | Tenant1 in Site1 stretched to Site2 |
| External EPG available in VRF1 in Tenant1 | External EPG can be created on Site1 |

Example Configuration Process

The following general steps provide an example for configuring inter-tenant shared services in hybrid cloud environments.

See the Nexus Dashboard Orchestrator documentation for more details.

-

Define the tenants, if necessary.

In this example scenario, two tenants need to be defined:

-

Cloud only tenant that is associated with a cloud account

-

On-premises common tenant, which is already defined through APIC and exists in both the on-premises ACI and the cloud by default

-

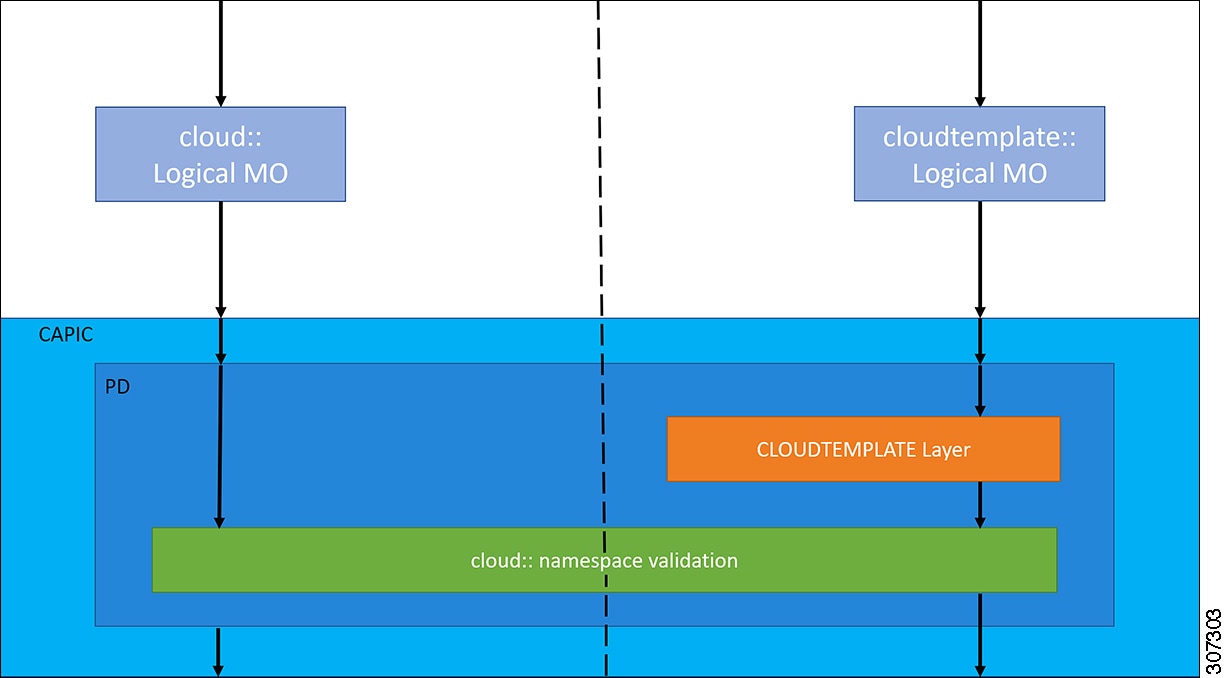

Define the tenant templates in Nexus Dashboard Orchestrator (NDO) that are associated with the two tenants.

In this example scenario, you will define two tenant templates in NDO:

-

Create a schema (for example, common-schema) with the necessary templates.

You can have multiple templates within a schema. For example, you could create two templates within this schema:

-

common-policy: In this example scenario, we will make the following configurations in this template:

-

We will associate this template with the common tenant in the cloud site. This template is to deploy the contracts and filter to the common tenant on the cloud (though the common tenant is not associated with any cloud account) and the common tenant on the on-premises ACI site.

-

We will also create two contracts in this template:

-

We will also configure the necessary policy contract and filters within this template.

-

common-app: In this example scenario, we will associate this template only with the tenant common in the on-premises site, and we will make the necessary configurations with this on-premises site, such as configurations

related to an application profile, VRF, bridge domain, L3Out, external EPG, and so on.

-

Create a second schema (for example, cloud-schema) with a single template (cloud-only), where we will associate this template only with the cloud only tenant, and we will make the necessary configurations with

this cloud site, such as configuruations related to an application profile, VNet/vPC, and so on.

-

Configure contracts using the contracts that you defined when you created the schemas.

-

Deploy the configurations in NDO.
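The resulting NDO layout can be summarized as plain data before it is deployed. The following Python sketch is a hypothetical model of the two schemas and three templates described in these steps; it is not an NDO API or export format. The site names `on-prem-site` and `cloud-site`, the tenant name `cloud-only-tenant`, and the contract names `contract-1` and `contract-2` are placeholders. The hypothetical `check_layout()` helper flags any template that holds contracts but is not associated with tenant common or is not deployed to every site, reflecting the example's design of deploying contracts and filters to tenant common on both the on-premises and cloud sites.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical model of the example layout; not an NDO API or export format.
COMMON = "common"
ON_PREM_SITE = "on-prem-site"  # placeholder site names
CLOUD_SITE = "cloud-site"


@dataclass
class Template:
    name: str
    tenant: str       # tenant the template is associated with
    sites: List[str]  # sites the template is deployed to
    contracts: List[str] = field(default_factory=list)


@dataclass
class Schema:
    name: str
    templates: List[Template]


def check_layout(schemas: List[Schema]) -> List[str]:
    """Return a list of problems found in templates that hold contracts."""
    all_sites = {s for schema in schemas for t in schema.templates for s in t.sites}
    problems = []
    for schema in schemas:
        for t in schema.templates:
            if not t.contracts:
                continue  # only templates that define contracts are checked
            where = f"{schema.name}/{t.name}"
            if t.tenant != COMMON:
                problems.append(f"{where}: contracts should be defined in tenant common")
            if set(t.sites) != all_sites:
                problems.append(f"{where}: contracts are not deployed to every site")
    return problems


schemas = [
    Schema("common-schema", [
        # Contracts and filters, deployed to tenant common on both sites.
        Template("common-policy", COMMON, [ON_PREM_SITE, CLOUD_SITE],
                 contracts=["contract-1", "contract-2"]),
        # Application profile, VRF, bridge domain, L3Out, external EPG (on-premises only).
        Template("common-app", COMMON, [ON_PREM_SITE]),
    ]),
    Schema("cloud-schema", [
        # Application profile, VNet/VPC, and so on for the cloud-only tenant.
        Template("cloud-only", "cloud-only-tenant", [CLOUD_SITE]),
    ]),
]

print(check_layout(schemas))  # an empty list means no problems were found
```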
